Abstract

COVID-19 has affected the whole world drastically. A huge number of people have lost their lives due to this pandemic. Early detection of COVID-19 infection is helpful for treatment and quarantine. Therefore, many researchers have designed a deep learning model for the early diagnosis of COVID-19-infected patients. However, deep learning models suffer from overfitting and hyperparameter-tuning issues. To overcome these issues, in this paper, a metaheuristic-based deep COVID-19 screening model is proposed for X-ray images. The modified AlexNet architecture is used for feature extraction and classification of the input images. Strength Pareto evolutionary algorithm-II (SPEA-II) is used to tune the hyperparameters of modified AlexNet. The proposed model is tested on a four-class (i.e., COVID-19, tuberculosis, pneumonia, or healthy) dataset. Finally, the comparisons are drawn among the existing and the proposed models.

1. Introduction

Severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) causes this disease. Several other viruses like MERS, flu, and SARS [1–3] have also been detected in the past few decades, but they have not affected the world as COVID-19 does. Many countries are working on preparing a vaccine to get over this pandemic. Since this is an infectious disease and appropriate treatment is not available to date, it is highly required that the disease is detected in the early stages so that its further spreading can be prevented [4]. The symptoms of COVID-19 are sore throat, fever, headache, breathing issues, and cough [5]. Some other symptoms like tiredness, aches, loss of taste, and smell have also been found in some patients. However, in many of the infected patients, no symptoms were reported [6]. Because of the absence of symptoms, it became much difficult to detect the COVID-19 infection. Hence, many countries declared lockdown so that the chain of the disease can be broken. But still, for treating the disease, efficient screening of patients is needed.

Real-time reverse transcription-polymerase chain reaction (RT-PCR) is widely accepted as a COVID-19 detection tool [7]. It can provide results ranging from hours to two days. But, because of the unavailability of kits and RT-PCR's low sensitivity, the imaging techniques utilizing radiography emerged as another option for COVID-19 detection [8]. Several research articles also validate the suitability of chest scans for the detection of COVID-19 [7, 8]. Among available radiography techniques, the chest CT scan and X-ray are extensively utilized techniques. But the availability of machines and lesser impact of radiations on patients make X-rays more preferable over CT [9]. It takes much time and can lead to erroneous reports when the X-rays are examined manually by radiological experts [10]. This problem can be resolved by analyzing the X-ray automatically by using the machine/deep learning models. In recent times, deep learning techniques have been favorites among researchers to diagnose diseases in the field of medical imaging [11]. These techniques can extract the image features automatically without any manual involvement [12] which makes them suitable for the classification process of COVID-19 imaging patterns.

This paper proposes a metaheuristic-based deep COVID-19 screening model for X-ray images. The modified AlexNet architecture is used for feature extraction and classification of the input images. Strength Pareto evolutionary algorithm-II (SPEA-II) is used to tune the hyperparameters of modified AlexNet. The proposed model is tested in a four-class (i.e., COVID-19, tuberculosis, pneumonia, or healthy) dataset. Finally, the comparisons are drawn among the existing and the proposed models.

The remaining structure of the paper is as follows. Section 2 describes the related work in the field. The proposed work for detecting COVID-19 using chest X-rays is presented in Section 3. Section 4 discusses the experimental results and discussions. The proposed work is concluded in Section 5.

2. Literature Review

In the last few months, several deep learning techniques have been rigorously used for the classification of chest X-rays for COVID-19 diagnosis. Among these techniques, convolutional neural networks (CNNs) and transfer learning have been explored a lot. Hemdan et al. [13] proposed an automatic framework named “COVIDX-Net” to identify the COVID-19 infection in chest X-rays. The proposed model used seven deep learning architectures, out of which DenseNet201 and VGG19 achieved 90% accuracy. The authors used only fifty chest X-rays to test the proposed model. In [14], deep learning architectures are studied by Luz et al., and the efficiency of the proposed model is presented using the COVIDx dataset. The accuracy of 93.9% with 96.8% sensitivity is achieved by the Flat EfficientNet model. Ozturk et al. [15] developed DarkCovidNet, an automatic model for detecting COVID-19 using chest X-rays. The model is trained using 125 chest images and provided 98.08% accuracy with binary cases and 87.02% accuracy with multiclass cases. The use of a limited number of COVID-19-infected chest X-ray images for training and validation purposes is the main drawback of this model. Basu and Mitra [16] proposed a model for identifying abnormality caused by COVID-19 in chest X-rays. This model is based on transfer learning; they used Gradient Class Activation Map for extracting features from X-ray images. Validation is also done with the help of the NIH chest X-ray dataset. Results achieved are also promising with an overall accuracy of 95.3%. Das et al. [17] proposed a model for the detection of COVID-19 infection with the help of X-ray images. In this work, the developed model is based on the deep learning technique. The experimental results show an overall classification accuracy of 97.40%.

Tuncer et al. [18] proposed a model to identify the COVID-19 pattern from the X-ray images. In this, features are extracted with Residual Exemplar Local Binary Pattern (ResExLBP). Feature selection is done with iterative ReliefF. In this work, a total of 321 chest X-ray images are used to achieve the classification accuracy of 99% with an SVM classifier. Wang and Wong [19] implemented a COVID-Net framework for identifying coronavirus infection. The proposed framework reports the classification accuracy as 92.4% for normal, pneumonia+ve, and COVID+ve classes, which are better than VGG19 and ResNet-50 architecture. Toğaçar et al. [20] converted the original X-rays of COVID-19 patients into a useful structured dataset with the help of fuzzy color technique; then, an image stacking technique has been used to create a stacked dataset. In this, the classification accuracy of MobileNetV2 and SqueezeNet is 98.25% and 97.81%, respectively.

Apostolopoulos and Mpesiana [21] proposed a COVID-19 identification system. In this work, five pretrained deep learning architectures were used to develop the system for the processing of chest X-ray images. VGG19, MobileNet, Inception, Xception, and Inception_ResNet_V2 are the pretrained deep learning architectures. Classification accuracy is also very good for a binary class as compared with multiclass. Mahmud et al. [22] developed an automatic system CovXNet for COVID-19. The proposed system detects the COVID-19 patterns from chest X-rays. A total of 915 X-ray images were used to validate the model. The proposed system is optimized with the help of a stacking algorithm. Classification is also done with binary and three classes with an accuracy of 97.4% and 89.6%, respectively. In Narin et al. [23], the work performance of three pretrained deep learning architectures is analyzed for COVID-19. ResNet50, Inception_V3, and Inception-ResNet_V2 were executed and concluded that ResNet50 is the best among all with 98% accuracy. Shelke et al. [24] proposed a diagnosis model for COVID-19 based on chest X-ray images. In this work, 22 X-ray images were used to calculate the classification accuracy and it is 98.9%.

Rahimzadeh and Attar [25] proposed a hybridized model for COVID-19 by combining Xception and ResNet50_V2 models. A dataset of 6054 X-ray images was used, and 91.4% accuracy has been achieved. In Chouhan et al. [26], an ensemble approach has been proposed for AlexNet, DenseNet121, Inception_V3, ResNet18, and GoogleNet architectures. These pretrained deep learning architectures show good accuracy for COVID-19 pattern identification. Abbas et al. [27] developed a DeTraC model for predicting COVID-19 with the help of 105 chest X-ray images. CNN model has been used for deep feature extraction. Also, class decomposition is implemented to extract the local structures. 95.12% classification accuracy is achieved with Gradient descent. Das et al. [28] used an open-source chest X-ray dataset for the identification of COVID-19. A model is implemented using the InceptionNet model and achieved promising results. From the above literature, it has been found that the existing model suffers from the overfitting and hyperparameter-tuning issue [29].

3. Proposed Methodology

This section discusses the proposed work. CNN is discussed followed by modified AlexNet architecture and SPEA-II-based hyperparameter- tuning approach.

3.1. Convolutional Neural Network (CNN)

CNN emulates the human brain's functioning. The layers in CNN work like layers of the human brain. It can be termed as a deep learning neural network [30, 31]. CNN has proven its efficacy in pattern recognition, face recognition, and other image processing applications. Different layers in the CNN process the input image [32]. In the initial convolution layer, the input image is fed; then, different layers of the proposed architecture extract the features. In the convolutional layer, the input image goes through different filters; then, the output of this layer is passed as input to the next layer called the maximum pooling layer which removes the unwanted pixels [33].

In this paper, AlexNet architecture is used for CNN because it is computationally better to use the AlexNet architecture to address the complexities than Conv-Net, Le-Net, ResNet, and other architectures [27]. Initially, a set of images are assigned to the first layer of AlexNet. Hidden layers apply multiple filters to extract the features. Finally, the last layer is used for the classification process [34].

3.2. AlexNet Architecture

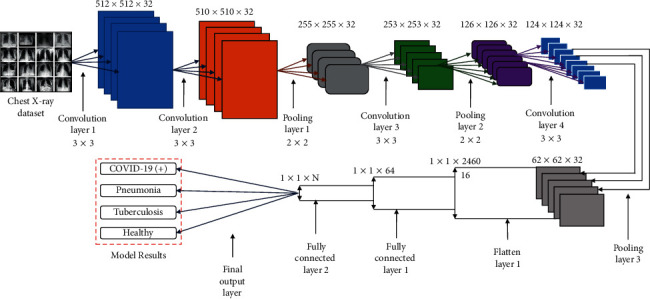

As stated earlier, AlexNet is better than the other available architectures in terms of efficiency and computational ability. These are also used extensively to cope up with the problems in the process of image classification. This paper uses the modified AlexNet architecture [35] for image classification. Figure 1 shows the detailed architecture used for image classification.

Figure 1.

Modified AlexNet Architecture used for COVID-19 classification.

The implementation of the modified architecture is comprised of the following steps:

Step 1: initially, the input image is resized to 259 × 259 pixels representing the length and breadth. The depth is represented by three color channels.

Step 2: next operation carried out in the convolutional layer computed the output of neurons by performing the scalar product of the image's small portions with their respective weights. This operation is iterated along length and breadth.

Step 3: then, the ReLU layer employs an activation function that works element-wise. It also incorporates the nonlinearity in the system and applies the function due to which the negative activation is replaced by 0.

Step 4: the decimation operation is performed at the pooling layer which reduces the samples along with the spatial coordinates.

Step 5: at last, the prediction is given by a fully connected (FC) layer based on a class score of each image. For each prediction class, the probability score is computed and the class with the maximum probability score is considered to be the predicted class.

3.3. Strength Pareto Evolutionary Algorithm (SPEA)

To optimize CNN, SPEA-II [36] is used in this work. The idea of SPEA [37] was introduced in 1999. But to understand SPEA-II, we need to understand the working of SPEA. In SPEA, Strength Pareto depicts how much the solutions are close to the first rank. The nondominated solutions or a set of Pareto optimal solutions are identified and preserved with SPEA. A set containing all Pareto optimal solutions is called Pareto optimal set, and it contains the best nondominated solutions. For each solution, two parameters named Strength Pareto (S) and fitness (F) are considered. The Strength Pareto is represented as

| (1) |

where S(i) represents the Strength Pareto of individual i. NP represents the size of the population and np is the number of individual vectors that are dominated by individual i or having equal strength as i. These dominated individuals possess less strength than the nondominated solutions. The second parameter, i.e., fitness, is represented as [38]

| (2) |

The fitness of an individual a is the addition of Strength Pareto values of all individuals which dominate or equal individual a. Hence, the solution with a lower value of fitness is assumed to be better [38]. Consider G as the maximum number of generations and g as the iteration number. The steps involved in the optimization process of SPEA are described as follows.

Step 1: the population is initialized and an empty external set is created for Pareto optimal solutions.

Step 2: the size of Pareto optimal is defined; if it exceeds that limit, then based on the average linkage-based hierarchical clustering method, Pareto sets are deleted and brought back to a manageable size. This clustering technique combines the adjacent clusters iteratively until the desired number of groups is obtained [38].

Step 3: next, the fitness of the population and external Pareto optimal set is calculated [39].

Step 4: in this step, binary tournament selection is implemented by combining the individuals in an external set and the population. Then, two individuals are chosen randomly and one having better fitness is moved to the mating pool. In the mating pool, crossover operation and mutation operations are performed so that a new population is obtained for the next iteration.

Step 5: a new population is assumed to have better individuals than the previous one generated by perturbation and crossover.

Step 6: increase g as g=g+1 and check for the termination condition. If the termination condition is not satisfied, then go to Step 2; else, represent the archive members as Pareto optimal set [39].

3.4. SPEA-II

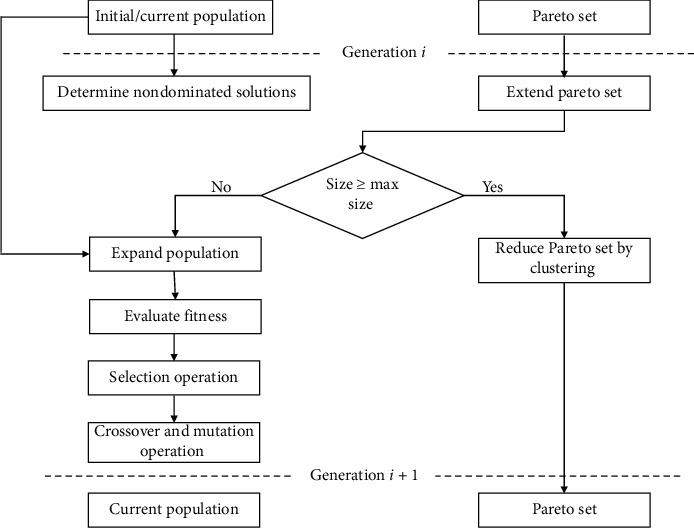

Figure 2 shows the ith generation of SPEA-II. Having Tg (as the population at gth iteration), (as the archive population at gth iteration), G (as the max number of generations), and as archive size, the steps of the optimization process followed by SPEA-II are as follows [36].

-

Step 1

(initialization): initialize an empty archive and an initial population T0 and set g = 0.

-

Step 2(fitness calculation): in this step, the fitness of the population and the archive set individuals are calculated. The strength S(i) of individual i in population and the archive is calculated as

(3) Here, “+” represents the multiset union, “∧” represents the AND operation, and “>” represents the Pareto dominance relation.

- Fitness F(i) for SPEA-II is calculated using raw fitness P(i) and density Q(i) of an individual as

(4) - where

(5) (6) The individuals having the same raw fitness values are distinguished by calculating their individual density using the K-nearest neighbor method as shown in equation (6). In equation (6), the objective space distance among ith and jth nearest neighbors is represented by σij , where .

-

Step 3(selection): this operation copies the nondominated solutions from Tg and to . The following truncation operator is used to reduce if its size exceeds the limit of :

(7) Else is filled with dominated individuals from Tg and . i ≤ da denotes that i individual dominates a [40].

-

Step 4

: in this step, binary tournament selection is implemented by creating a mating pool. In the mating pool, crossover and mutation operations are performed on the individuals selected from through tournament selection so that a new population Tg+1 is obtained for the next iteration.

-

Step 5

: increase g as g=g+1, and if the termination condition g ≥ G is not satisfied, then go to Step 2; else, represent the archive members as Pareto optimal set [36].

Figure 2.

i th generation of SPEA-II.

4. Performance Analysis

In this paper, MATLAB 2020b online servers with 64-bit, 8-core, and 32 GB RAM are utilized to evaluate the effectiveness of the proposed and the competitive models. The information about the used dataset can be found in [41].

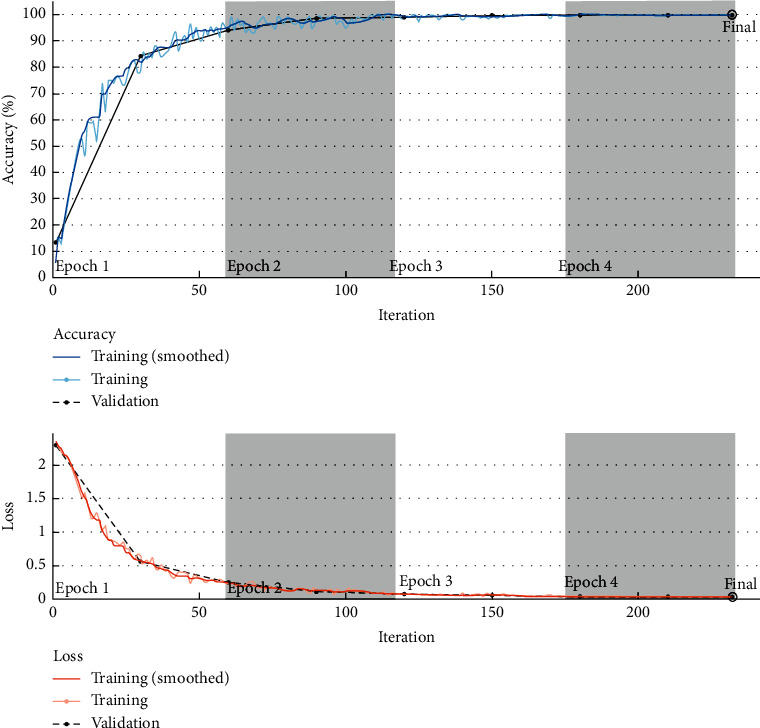

Figure 3 shows the accuracy and loss analysis of the proposed automated diagnosis model. It shows that the proposed diagnosis model achieves significantly better training accuracy and lesser loss value. The proposed model shows a significantly good convergence speed. The proposed model achieves 100% training accuracy during the 150th iteration. The validation accuracy of 99.26% indicates that the proposed model does not suffer from the overfitting issue.

Figure 3.

Accuracy and loss analysis of the proposed COVID-19 classification model.

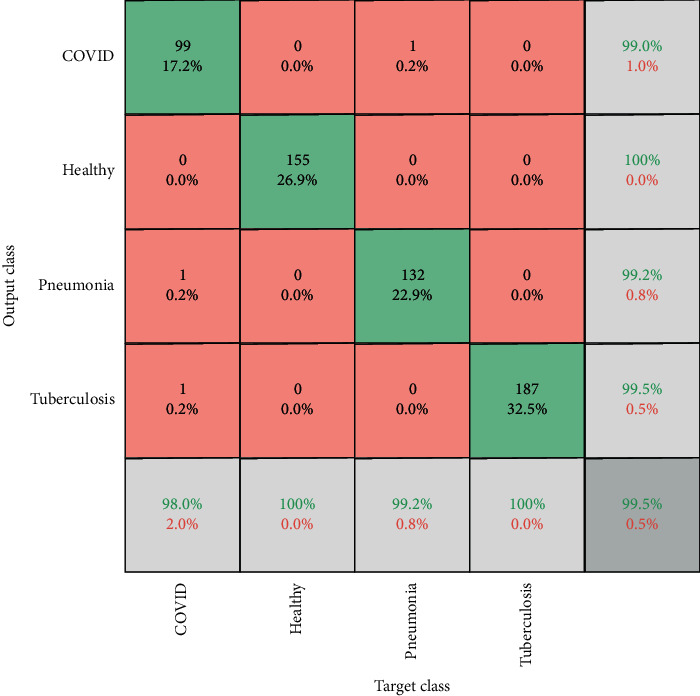

Figure 4 shows the confusion matrix analysis of the proposed metaheuristic-based automated diagnosis model. It depicts that the proposed model achieves significantly good accuracy of 98.55%, 100%, 99.2%, and 99.75%, for COVID-19, healthy, pneumonia, and tuberculosis subjects, respectively. Overall, the proposed model achieves 99.5% training accuracy.

Figure 4.

Confusion matrix analysis of the proposed metaheuristic-based deep learning model on a four-class dataset.

Tables 1 and 2 depict the training and validation analysis of the proposed and the existing SPEA-II-based deep transfer learning models on a four-class chest X-ray dataset. The lower difference among Table 1 and 2 values indicates that the SPEA-II-based deep transfer learning models do not suffer much from the overfitting issues. Overall, the proposed model outperforms the competitive SPEA-II-based deep transfer learning models on validation data in terms of accuracy, F-measure, sensitivity, specificity, and area under the curve by 1.23%, 1.18%, 1.26, 1.6%, and 1.13%, respectively.

Table 1.

Training analysis of the metaheuristic-based deep learning models.

| Models | Accuracy | F-measure | Sensitivity | Specificity | Area under the curve |

|---|---|---|---|---|---|

| SPEA-II-based VGG19 | 0.97227 | 0.97623 | 0.97565 | 0.97292 | 0.97427 |

| SPEA-II-based VGG16 | 0.99127 | 0.98302 | 0.98269 | 0.99143 | 0.98709 |

| SPEA-II-based ResNet50 | 0.98601 | 0.99151 | 0.99121 | 0.98648 | 0.98880 |

| SPEA-II-based AlexNet | 0.99304 | 0.99660 | 0.99651 | 0.99323 | 0.99484 |

| SPEA-II-based ResNet-34 | 0.98786 | 0.99830 | 0.99824 | 0.98823 | 0.99313 |

| SPEA-II-based GoogleNet | 0.98952 | 0.98641 | 0.98608 | 0.98977 | 0.98795 |

| SPEA-II-based InceptionNet | 0.99825 | 0.98981 | 0.98961 | 0.99828 | 0.99397 |

| SPEA-II-based DenseNet201 | 0.98257 | 0.98981 | 0.98947 | 0.98313 | 0.98624 |

| SPEA-II-based Xception | 0.99475 | 0.94906 | 0.94991 | 0.99466 | 0.97157 |

| Proposed SPEA-II-based model | 0.99976 | 0.99890 | 0.99976 | 0.99890 | 0.99883 |

Table 2.

Validation analysis of the metaheuristic-based deep learning models.

| Models | Accuracy | F-measure | Sensitivity | Specificity | Area under the curve |

|---|---|---|---|---|---|

| SPEA-II-based VGG19 | 0.97735 | 0.97623 | 0.97565 | 0.97789 | 0.97678 |

| SPEA-II-based VGG16 | 0.98611 | 0.98302 | 0.98269 | 0.98637 | 0.98454 |

| SPEA-II-based ResNet50 | 0.97577 | 0.99151 | 0.99121 | 0.97658 | 0.98371 |

| SPEA-II-based AlexNet | 0.99304 | 0.99660 | 0.99651 | 0.99323 | 0.99484 |

| SPEA-II-based ResNet-34 | 0.97923 | 0.99830 | 0.99823 | 0.98843 | 0.98886 |

| SPEA-II-based GoogleNet | 0.98437 | 0.98641 | 0.98608 | 0.98474 | 0.98540 |

| SPEA-II-based InceptionNet | 0.99651 | 0.98981 | 0.98961 | 0.99658 | 0.99312 |

| SPEA-II-based DenseNet201 | 0.97409 | 0.98981 | 0.98947 | 0.97491 | 0.98202 |

| SPEA-II-based Xception | 0.98784 | 0.94906 | 0.94991 | 0.98763 | 0.96824 |

| Proposed SPEA-II based model | 0.99130 | 0.99490 | 0.99476 | 0.99154 | 0.99312 |

Table 3 shows the comparison among the existing and proposed SPEA-II-based automated diagnosis models in terms of accuracy. It clearly indicates that the proposed SPEA-II-based automated diagnosis model outperforms the existing models.

Table 3.

Accuracy analysis among the proposed SPEA-II-based automated diagnosis model and the existing models.

| Model name | Accuracy |

|---|---|

| CNN [16] | 95.3% |

| MobileNetV2 and SqueezeNet [20] | 98.25% and 97.81% |

| LBP [18] | 99% |

| CNN [17] | 97.4% |

| COVID-Net [19] | 92.4% |

| CovXNet [22] | 97.4% for binary and 89.6% for three classes |

| VGG19 [21] | 98.75% for binary and 93.48% for multiclass cases |

| Xception and ResNet50_V2 [25] | 91.4% |

| ResNet50 [23] | 98% |

| DeTraC [27] | 95.12% |

| Ensemble model [26] | 96.4% |

| Truncated InceptionNet [28] | 97.92% |

| Proposed model | 99.13% |

5. Conclusion

In this paper, a metaheuristic-based deep COVID-19 screening model was proposed for X-ray images. The modified AlexNet architecture was utilized for feature extraction and classification of the input images. Strength Pareto evolutionary algorithm-II (SPEA-II) is used to tune the hyperparameters of modified AlexNet. The proposed model has been tested on a four-class (i.e., COVID-19, tuberculosis, pneumonia, or healthy) dataset. Finally, comparisons were drawn between the existing and the proposed models. Extensive experimental results reveal that the proposed model outperforms the competitive COVID-19 classification models. Overall, the proposed model outperforms the competitive SPEA-II-based deep transfer learning models on validation data in terms of accuracy, F-measure, sensitivity, specificity, and area under the curve by 1.23%, 1.18%, 1.26, 1.6%, and 1.13%, respectively. [42]

Data Availability

Data will be made available upon request.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Ooi G. C., Khong P. L., Müller N. L., et al. Severe acute respiratory syndrome: temporal lung changes at thin-section CT in 30 patients. Radiology. 2004;230(3):836–844. doi: 10.1148/radiol.2303030853. [DOI] [PubMed] [Google Scholar]

- 2.Wong K. T., Antonio G. E., Hui D. S. C., et al. Severe acute respiratory syndrome: radiographic appearances and pattern of progression in 138 patients. Radiology. 2003;228(2):401–406. doi: 10.1148/radiol.2282030593. [DOI] [PubMed] [Google Scholar]

- 3.Xie X., Li X., Wan S., Gong Y. Mining X-Ray Images of SARS Patients. Data Mining; 2006; Springer, Berlin, Germany. pp. 282–294. [DOI] [Google Scholar]

- 4.Oh Y., Park S., Ye J. C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans Med Imaging. 2020;39(8) doi: 10.1109/tmi.2020.2993291. [DOI] [PubMed] [Google Scholar]

- 5. COVID-19 Symptoms (2020), https://www.who.int/health-topics/coronavirus#tab=tab_3.

- 6.Loey M., Smarandache F., Khalifa N. E. Within the lack of chest COVID-19 X-ray dataset: a novel detection model based on GAN and deep transfer learning. Symmetry. 2020;12(4):p. 651. doi: 10.3390/sym12040651. [DOI] [Google Scholar]

- 7.Corman V. M., Landt O., Kaiser M., et al. Detection of 2019 novel coronavirus (2019-nCoV) by real-time RT-PCR. Eurosurveillance. 2020;25(3) doi: 10.2807/1560-7917.es.2020.25.3.2000045.2000045 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Xie X., Zhong Z., Zhao W., Zheng C., Wang F., Liu J. Chest CT for typical 2019-nCoV pneumonia: relationship to negative RT-PCR testing. Radiology. 2020;1 doi: 10.1148/radiol.2020200343.200343 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Yu X., Kang C., Guttery D. S., Kadry S., Chen Y., Zhang Y. D. ResNet-SCDA-50 for breast abnormality classification. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2020;18:p. 1. doi: 10.1109/tcbb.2020.2986544. [DOI] [PubMed] [Google Scholar]

- 10.Cellina M., Orsi M., Toluian T., Valenti Pittino C., Oliva G. False negative chest X-rays in patients affected by COVID-19 pneumonia and corresponding chest CT findings. Radiography. 2020;26(3):e189–e194. doi: 10.1016/j.radi.2020.04.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Shen D., Wu G., Suk H.-I. Deep learning in medical image analysis. Annual Review of Biomedical Engineering. 2017;19(1):221–248. doi: 10.1146/annurev-bioeng-071516-044442. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Wang H., Xia Y. ChestNet: a deep neural network for classification of thoracic diseases on chest radiography. 2018. https://arxiv.org/ftp/arxiv/papers/1807/1807.03058.pdf. [DOI] [PubMed]

- 13.Hemdan E., Shouman M., Karar M. COVIDX-Net: a framework of deep learning classifiers to diagnose COVID-19 in X-ray images. 2020. https://arxiv.org/abs/2003.11055.

- 14.Luz E. J. S., Silva P. L., Silva R., Silva L., Moreira G., Menotti D. Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. 2020. https://arxiv.org/abs/2004.05717.

- 15.Ozturk T., Talo M., Yildirim E. A., Baloglu U. B., Yildirim O., Acharya U. R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Computers in Biology and Medicine. 2020;121 doi: 10.1016/j.compbiomed.2020.103792.103792 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Basu S., Mitra S. Deep learning for screening COVID-19 using chest X-ray images. 2020. https://arxiv.org/abs/2004.10507.

- 17.Das N. N., Kumar N., Kaur M., Kumar V., Singh D. Automated deep transfer learning-based approach for detection of COVID-19 infection in chest X-rays. IRBM. 2020 doi: 10.1016/j.irbm.2020.07.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Tuncer T., Dogan S., Ozyurt F. An automated residual exemplar local binary pattern and iterative ReliefF based corona detection method using lung X-ray image. Chemometrics and Intelligent Laboratory Systems. 2020;203 doi: 10.1016/j.chemolab.2020.104054.104054 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Wang L., Wong A. COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. 2020. https://arxiv.org/abs/2003.09871. [DOI] [PMC free article] [PubMed]

- 20.Toğaçar M., Ergen B., Cömert Z. COVID-19 detection using deep learning models to exploit Social Mimic Optimization and structured chest X-ray images using fuzzy color and stacking approaches. Computers in Biology and Medicine. 2020;121 doi: 10.1016/j.compbiomed.2020.103805.103805 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Apostolopoulos I. D., Mpesiana T. A. Covid-19: automatic detection from x-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine. 2020;43:635–640. doi: 10.1007/s13246-020-00865-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Mahmud T., Rahman M. A., Fattah S. A. CovXNet: a multi-dilation convolutional neural network for automatic COVID-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Computers in Biology and Medicine. 2020;122 doi: 10.1016/j.compbiomed.2020.103869.103869 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Narin A., Kaya C., Pamuk Z. Automatic detection of coronavirus disease (COVID-19) Using X-Ray Images and deep convolutional neural networks. 2020. https://arxiv.org/abs/2003.10849. [DOI] [PMC free article] [PubMed]

- 24.Shelke A., Inamdar M., Shah V., et al. Chest X-ray classification using deep learning for automated COVID-19 screening. Radiol Imaging. 2020 doi: 10.1101/2020.06.21.20136.598. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Rahimzadeh M., Attar A. A modified deep convolutional neural network for detecting COVID-19 and pneumonia from chest X-ray images based on the concatenation of Xception and ResNet50V2. Informatics in Medicine Unlocked. 2020;19 doi: 10.1016/j.imu.2020.100360.100360 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Chouhan V., Singh S. K., Khamparia A., et al. A novel transfer learning based approach for pneumonia detection in chest X-ray images. Applied Sciences. 2020;10(2):p. 559. doi: 10.3390/app10020559. [DOI] [Google Scholar]

- 27.Abbas A., Abdelsamea M. M., Gaber M. M. Classification of COVID-19 in chest X-Ray images using DeTraC deep convolutional neural network. 2020. https://arxiv.org/abs/2003.13815. [DOI] [PMC free article] [PubMed]

- 28.Das D., Santosh K. C., Pal U. Truncated inception net: COVID-19 outbreak screening using chest X-rays. Physical and Engineering Sciences in Medicine. 2020;43(3):915–925. doi: 10.1007/s13246-020-00888-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Basavegowda H. S., Dagnew G. Deep learning approach for microarray cancer data classification. CAAI Transactions on Intelligence Technology. 2020;5(1):22–33. doi: 10.1049/trit.2019.0028. [DOI] [Google Scholar]

- 30.Ghosh S., Shivakumara P., Roy P., Pal U., Lu T. Graphology based handwritten character analysis for human behaviour identification. CAAI Transactions on Intelligence Technology. 2020;5(1):55–65. doi: 10.1049/trit.2019.0051. [DOI] [Google Scholar]

- 31.Gupta B., Tiwari M., Singh Lamba S. Visibility improvement and mass segmentation of mammogram images using quantile separated histogram equalisation with local contrast enhancement. CAAI Transactions on Intelligence Technology. 2019;4(2):73–79. doi: 10.1049/trit.2018.1006. [DOI] [Google Scholar]

- 32.Osterland S., Weber J. Analytical analysis of single-stage pressure relief valves. International Journal of Hydromechatronics. 2019;2(1):32–53. doi: 10.1504/ijhm.2019.098951. [DOI] [Google Scholar]

- 33.Wang R., Yu H., Wang G., Zhang G., Wang W. Study on the dynamic and static characteristics of gas static thrust bearing with micro-hole restrictors. International Journal of Hydromechatronics. 2019;2(3):189–202. doi: 10.1504/ijhm.2019.102893. [DOI] [Google Scholar]

- 34.Wiens T. Engine speed reduction for hydraulic machinery using predictive algorithms. International Journal of Hydromechatronics. 2019;2(1):16–31. doi: 10.1504/ijhm.2019.098949. [DOI] [Google Scholar]

- 35.Shanthi T., Sabeenian R. S. Modified Alexnet architecture for classification of diabetic retinopathy images. Computers & Electrical Engineering. 2019;76:56–64. doi: 10.1016/j.compeleceng.2019.03.004. [DOI] [Google Scholar]

- 36.Zitzler E., Laumanns M., Thiele L. SPEA2: Improving the Strength Pareto Evolutionary Algorithm TIK-Report, 103.Computer Engineering and Networks Laboratory (TIK) Vol. 76. Zurich: Department of Electrical Engineering, Swiss Federal Institute of Technology (ETH), Zurich ETH Zentrum; 2001. pp. 56–64. [Google Scholar]

- 37.Zitzler E., Thiele L. Multiobjective evolutionary algorithms: a comparative case study and the strength Pareto approach. IEEE Transactions on Evolutionary Computation. 1999;3(4):257–271. doi: 10.1109/4235.797969. [DOI] [Google Scholar]

- 38.Koduvilarpatti T. Strength Pareto evolutionary algorithm based multi-objective optimization for shortest path routing problem in computer networks. Journal of Computer Science. 2011;7(1):17–26. [Google Scholar]

- 39.Zitzler E. Evolutionary Algorithms for Multiobjective Optimization: Methods and Applications. Vol. 63. Ithaca, NY, USA: Shaker; 1999. [Google Scholar]

- 40.Sheng W., Liu Y., Meng X., Zhang T. An improved strength Pareto evolutionary algorithm 2 with application to the optimization of distributed generations. Computers & Mathematics with Applications. 2012;64(5):944–955. doi: 10.1016/j.camwa.2012.01.063. [DOI] [Google Scholar]

- 41.Gianchandani N., Jaiswal A., Singh D., Kumar V., Kaur M. Rapid COVID-19 diagnosis using ensemble deep transfer learning models from chest radiographic images. Journal of Ambient Intelligence and Humanized Computing. 2020:1–13. doi: 10.1007/s12652-020-02669-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Yilmaz S., Ivanov K., Levine S. Application of genetic algorithm to optimize burnable poison placement in pressurized water reactors. Proceedings of the 7th Annual Conference on Genetic and Evolutionary Computation; June 2005; Washington, NJ, USA. pp. 1477–1483. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Data will be made available upon request.