Abstract

Cardiovascular disease (CVD) is the leading cause of death worldwide, causing over 17 million deaths per year, which outpaces global cancer mortality rates. Despite these sobering statistics, most bioinformatics and computational biology research and funding to date has been concentrated predominantly on cancer research, with a relatively modest footprint in CVD. In this paper, we review the existing literary landscape and critically assess the unmet need to further develop an emerging field at the multidisciplinary interface of bioinformatics and precision cardiovascular medicine, which we refer to as ‘cardioinformatics’.

Keywords: cardiovascular disease, bioinformatics, cardiology, computational biology

Introduction

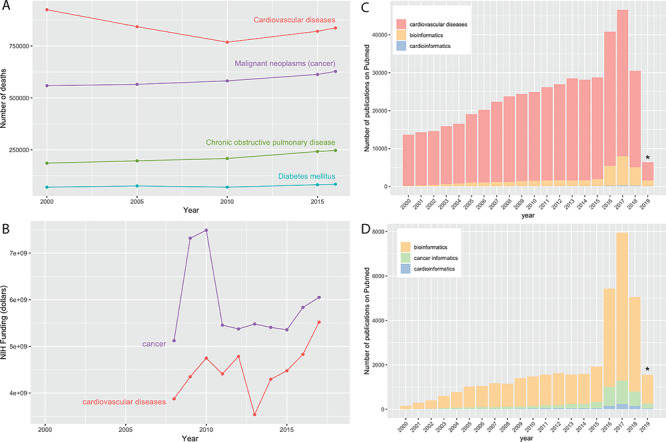

According to the World Health Organization, ischemic heart disease and stroke have remained the top two global killers in the past 15 years. The Global Burden of Diseases, Injuries, and Risk Factors Study shows that heart disease is still the dominant cause of death globally for both genders [1], with a projection that by 2030, almost half of the adult population will have a CVD diagnosis [2, 3]. In the United States, cardiovascular diseases (CVDs) have been the leading cause of death by non-communicable diseases, consistently surpassing cancer for the past many decades (Figure 1A). However, federal National Institutes of Health (NIH) funding on CVD research has consistently been less than that on cancer research, at least half a billion dollars annually since 2008 (Figure 1B). Meanwhile, there has been a 12.5% increase in the global number of deaths from CVD in the past decade [4].

Figure 1.

The status of CVD research. (A) Number of deaths by non-communicable diseases in the United States and (B) funding by the NIH for research on cancer and CVDs. (C) PubMed queries reveal a large body of CVD research, out of which only a small percentage involve bioinformatics. (D) Relative to the total pool of bioinformatics papers (in any field), there are far more cancer papers that utilize bioinformatics methods than CVD papers that utilize such methods.  Since all the queries are based on the manual MeSH catalog, more recent tallies will lag behind the true volume of publication.

Since all the queries are based on the manual MeSH catalog, more recent tallies will lag behind the true volume of publication.

Research in CVD has steadily increased since the year 2000, as measured by the body of publications indexed in PubMed over this time (Figure 1C). In 2017 alone, there were more than 40 000 primary research (non-review) articles classified with the subject heading ‘cardiovascular disease’, defined according to the Medical Subject Heading (MeSH) terms (Figure 1C). However, the share of bioinformatics research has remained modest among these CVD outputs at least relative to comparable work done in cancer biology (Figure 1D). For example, multi-omics data integration reveals novel disease pathways and therapeutic targets, but its implementation in CVD research areas like cardiovascular (CV) calcification is failing to keep pace with other research fields, such as oncology [5]. While bioinformatics is at the center of precision medicine [6] and CV research is involved in several existing precision medicine initiatives [7, 8], the field of cardioinformatics is still in its early days with ample opportunities to benefit from cutting-edge data science techniques and machine learning (ML) methodologies, as has been the case in precision oncology. Even now, the application of ML is already being recognized as an indispensable component of the practice of cardiology in the future [9, 10] and, therefore, given the availability of increasingly performant ML implementations [11], cardioinformatics is better positioned to tackle domain-specific research questions by developing clinical applications to enhance compute-intensive tasks such as those found in medical imaging, CVD risk prediction modeling, among other active research areas. For instance, current methods for CV calcification imaging are mostly limited to advanced calcification and miss clinically relevant early microcalcifications, creating an unmet need for implementation of advanced imaging tools and artificial intelligence to improve diagnostics and risk assessment [5].

In general, efficient implementations of advanced computational algorithms that optimize for time, cost and accuracy measures across broad domains of biological data science, such as single-cell sequencing [12] (e.g. to investigate cellular heterogeneity in transcription [13]) or long-read mapping [14] (e.g. to reconstruct full-length isoform transcripts in high resolution [13]), will find increasingly more adoption in CVD research throughout the next few years as journals begin gearing up for the release of special issues dedicated exclusively to performance benchmarking of new and existing software tools. In addition, the availability of open-access benchmarking data and guidelines to evaluate ML methods across a broad range of application areas including biomedical studies, signal processing and image classification will catalyze the precipitation of the most appropriate bioinformatics software tools for any given research task [15, 16]. Taken together, programmatic need for bioinformatics benchmarking and awareness of state-of-the-art tools for performing CVD research will bridge across multiple areas of expertise (e.g. single-cell sequencing technologies, long-read mapping, 3D genome visualization, etc.), making cardioinformatics research a truly multidisciplinary initiative for dissecting the molecular mechanisms behind complex CVD traits.

The American Heart Association (AHA) Institute for Precision Cardiovascular Medicine recently partnered with Amazon Web Services to provide a variety of grant funding opportunities for testing and refining artificial intelligence (AI) and ML algorithms using healthcare system data and multiple longitudinal data sources to fund research that improves our understanding of all CVD data related to precision medicine. Therefore, we expect that grant funding initiatives such as these will gradually begin narrowing the gap between cardioinformatics and cancer research in terms of the availability of improved computational tools, infrastructure and analysis resources. Some recent positive trends in this direction include large-scale infrastructure and knowledge portal development [17–20] for working with CVD data, as well as population-wide multi-omics initiatives such as the NHLBI Trans-Omics for Precision Medicine (TOPMed) Consortium [21] for integrating whole-genome sequencing (WGS) and other -omics data (e.g. metabolic profiles, protein and RNA expression patterns) with molecular, behavioral, imaging, environmental and clinical data. In this review, we highlight these contemporary opportunities and perspectives for CVD genomic and precision medicine research, introduce the bountiful resources available and propose ways to advance this field further by promoting a culture steeped in computation vis-à-vis modern bioinformatics and computational biology methodologies.

The review is structured as follows: an overview of the current informatics landscape is provided in The democratization of data and the rise of knowledge bases. To explore what has been learned about CVDs, we reviewed an extensive body of CVD research, pivoting around genetics. Emerging from this survey is a central theme of the diseases’ enormous complexity, which is elaborated in the section called Complexity of CVDs. Despite decades of applying and extending statistical methods to study CVD, our knowledge of the diseases barely extends beyond genetic associations into causal, mechanistic insights. Given the exciting expansion of biological datasets, the advances of knowledge bases and the current status of CVD research, we then propose three areas where bioinformaticians and CVD researchers may want to prioritize in pushing this field forward, in The challenges of cardioinformatics.

The democratization of data and the rise of knowledge bases

The past few years have seen a substantial rise in the availability of computational resources and infrastructure that provide access to aggregate genetic data and genomic summary results to facilitate rapid and open sharing of individual level data and summary statistics pertinent to various biological diseases and data types. One of the early pioneers of web-based knowledge portals has been a Memorial Sloan Kettering Cancer Center resource called cBioPortal [22, 23], which provides intuitive visualization and analysis of large-scale cancer genomics datasets from large consortium efforts such as TCGA [24] and TARGET [25] as well as publications from individual labs. Other major players in the cancer knowledge base arena include the National Cancer Institute’s Genomic Data Commons (GDC) Portal [26, 27], which provides full download and access to all raw data (e.g. mRNA expression files, full segmented copy number variant [CNV] files, etc.) generated by TCGA and TARGET. In addition, resources such as the Broad Institute’s Single Cell Portal [28] provide an unprecedented view into the biology of different diseases, including cancers like glioblastoma, at the single-cell sequencing level.

More recently, the Knowledge Portal Framework, an infrastructure sponsored by the Accelerating Medicines Partnership and developed at the Broad Institute, has empowered a variety of disease-focused portals, including those for type II diabetes [29], amyotrophic lateral sclerosis [30], sleep disorders [31], CV [32] and cerebrovascular diseases [33]. The purpose of these resources is to aggregate and store statistical data for hundreds of millions of genetic variants and organize them to be rapidly queried and visualized by biologists, statistical geneticists, pharmaceutical researchers and clinicians. Other such knowledge bases focused on exploring large-scale genetic association data in the context of, for instance, drug/treatment targets include the OpenTargets initiative [25], which is a public–private venture that generates evidence on the validity of therapeutic targets based on genome-scale experiments and analysis. Another public–private partnership—the Accelerating Medicines Partnership-Alzheimer’s Disease (AMP-AD) Target Discovery and Preclinical Validation Project—has developed an AMP-AD Knowledge Portal to help researchers identify potential drug targets to accelerate pre-competitive Alzheimer’s disease treatment and prevention [34]. Interactive, web-based tools such as the Agora platform [35] bring together both AMP-AD analyses and OpenTargets knowledge under one umbrella to help explore and ultimately assist the validation of early AD candidate drug targets.

In addition to these various portals anchored on the results of population genetic association studies, CVD knowledge bases such as HeartBioPortal [17] have begun organizing and integrating the large volume of publicly available gene expression data with genetic association content, motivated by the stimulus that transcriptomic data provide powerful insights into the effects of genetic variation on gene expression and alternative splicing in both health and disease. Other knowledge portals such as COPaKB [36] and large-scale initiatives such as HeartBD2K [37] have taken a parallel focus on CVD proteomics datasets [38]. Such integrative multilevel efforts to dissect the molecular mechanisms behind complex disease traits now also extend beyond academia into biotech startup companies, non-profits and other initiatives such as SVAI, Quiltomics, Omicsoft, Sage Bionetworks, Omics Data Automation, Occamzrazor, NextBio, BenevolentAI, Insitro, Researchably and others—several of which have recently been effectively integrated into the workflow of larger biotech companies such as Illumina and Qiagen. Parallel to these academic and industry initiatives, several government-led genomic sequencing programs to collect a nation’s data have appeared over the years, setting the stage for centralized databases serving disease prevention, health management and discovery. Among those programs are the recently completed 100K Genomes Project in the UK [39], the ongoing 100K Wellness Pioneer Project in China [40], NIH’s All of Us Research Program [41] and the Department of Veterans Affairs Million Veteran Program [42] in the United States, many of which contain vast quantities of population-wide race/ethnic group-specific CVD data. Most recently, the All of Us program released a public data commons browser [43] to explore the prevalence of specific conditions, drug exposures and other clinically relevant factors on a demographically diverse cohort of participants, including populations historically underrepresented in biomedical research. The data in the All of Us Data browser include many CVD phenotypes and come from participant electronic health records (EHRs) and from survey responses (e.g. on basic demographics, overall health and lifestyle) as well as physical measurements (e.g. blood pressure, heart rate, height, weight, waist circumference and hip circumference) taken at the time the participants enroll in the All of Us program.

Complexity of CVDs

The number of genetic actors

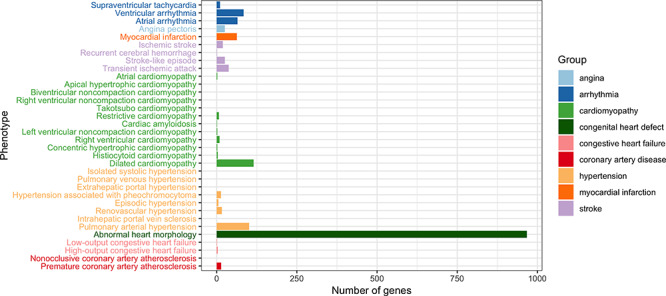

There is a certain genetic component in all major categories of heart disease (Figure 2). The increase in genome-wide association studies (GWASs) has led to the associations of more and more genetic variants to human traits and diseases [44], fueling the identification of hundreds of novel drug targets and the development of polygenic risk scores that may help improve the ability to predict a person’s pre-disposition to various CV ailments [45–49] and facilitate early and preventative care [50]. Although CVD is significantly broad and encompasses diseases related to blood vessels, the myocardium, heart valves, the conduction system and developmental abnormalities, there are only a few CV disorders that can be attributed to a single pathogenic gene (as covered in detail within a recent review [2]). Although GWAS is, by definition, designed to implicate a single pathogenic gene, or a limited number of pathogenic genes, Leopold and Loscalzo [2] present a cogent argument against the theory of a single causal pathogenic gene/gene product as a mediator of CVD phenotypes, even in cases of certain classic Mendelian disorders. Nevertheless, discovering new rare and common variants that may control individual drug responses in different race/ethnic populations may elucidate not only disease mechanisms but also improve clinical trial design whereby drug candidates can be tested in more targeted subpopulations, in which drug efficacy is not masked by the inclusion of predicted nonresponders [51]. To this end, enriching clinical trial selection and enrollment is one of the target outcomes of precision medicine [2]. This is motivated by, for example, case studies of CV pathologies that are prominently characterized by biomarkers that do not reveal the underlying complexity of the disease or its etiology. For instance, although atherosclerosis is strongly clinically associated with elevated low-density lipoprotein levels, the underlying biology is more complex, as suggested by the clinical failure of evacetrapib despite significant effects on low-density lipoprotein [52]. Likewise, since clinical trials tend to focus on the mean response to an intervention instead of examining variability in response, current therapies for clinical indications like essential hypertension are still unsatisfactory because most clinical trials generally examine outcome effects as the sample mean blood pressure is decreased, not personalized differential treatment approaches tailored to patients’ individual hypertension profiles [52]. As a result, it is estimated that 44% of patients with essential hypertension were unable to achieve blood pressure control despite pharmacological therapy [52, 53].

Figure 2.

The number of genes associated with CVD. CVD is defined to include all phenotypes under the term ‘Abnormality of the cardiovascular system’ (HP:0001626) in the Human Phenotype Ontology [248]. Annotations of each phenotype was pooled from OMIM [249], Orphanet [250] and DECIPHER [251].

Predictably, over time the number of variants found associated with (any given) disease has increased and, in most cases, gone beyond a few implicated genes that could be described in a single-page table or diagram. Dilated cardiomyopathy (DCM), a common cause of heart transplantation [54], is a vivid example of how causal variants and their corresponding genes were discovered over the years. In a recent review [54], 16 disease-causing genes were compiled, along with an additional 41 putative genes. Meanwhile, the NHGRI-EBI GWAS Catalog [55] and annotations on Human Phenotype Ontology [56] suggest a larger number of genes associated with this condition, 69 genes and 115 genes, respectively. Clinical application has been keeping up, with a typical commercial gene panel for DCM genetic testing covering 50 genes on average, and 111 in total [57]. Although these genes were discovered via different approaches, the catalog of DCM-associated loci kept expanding. Similarly for coronary artery disease (CAD), additional loci have been associated with the disease almost every year since 2007, bringing the total number of loci associated with CAD to over 150 [58]. Since it is reasonably expected that when more genes are involved in a disease, the individual effect exerted by each gene will be small; these constantly expanding gene panels suggest that the common mutations in a single gene are not likely to capture substantial disease risk for most cases that are polygenic. From a research perspective, these findings imply that the quest of pinpointing causal variants is getting progressively more challenging, because testing the variant–phenotype association on small-effect variations requires a much larger number of samples for sufficient power [44], or critically different methods of statistical testing and inference. Using atherosclerosis as an example, Cranley and MacRae [59] argue that the slow progress on disease mechanisms comes not from incomplete genotyping to identify associated variants, but rather from the inability to draw causal relationships between identified variants (e.g. 9p21) and disease pathways [51]. Specifically, although SNPs in this region were identified by several independent GWAS, and each risk allele was associated with a 29% increased risk of CVD, these SNPs are in noncoding regions where the nearest genes (CDKN2B, CDKN2A) are >100 Kb away, and the causality between these genes and susceptibility to atherosclerosis has not yet been ascertained [60–62]. In general, many genomic variants implicated in GWAS occur in intervening regions with no immediate connections to known coding genes or biochemical pathways and, therefore, studies using ATAC-seq and other NGS techniques (e.g. RNA-seq, Hi-C, etc.) are linking loci identified by GWAS to epigenetic changes such as enhancer–promoter interactions [51]. In addition, large numbers of GWAS variants are now known to function as expression quantitative trait loci (eQTL), meaning that they regulate the expression level of transcripts (as measured, e.g. by RNA-seq), whereas splice quantitative trait loci regulate the splice ratio of transcript isoforms [51], highlighting how the transcriptome can offer a dynamic view of the functions of genetic variants in response to various acute and cumulative exposures including genetic, metabolic and environmental mediators. Finally, as large-scale data become more readily available for population-level estimation of many genetic variants with low allele frequencies, the high penetrance of many previously labeled ‘pathogenic’ rare variants (minor allele frequency < 0.1%) has been questioned [63]. In other words, genomic sequencing data from large population-level cohorts is uncovering many of the same variants previously annotated as pathogenic mutations [63, 64], prompting the need for variant reclassification and the conclusion that some genes reported to cause inherited heart disease are likely spurious [65]. Alternatively, it can also happen that ostensibly causal genetic variants found in family studies have no related phenotype in the population-level setting [66], highlighting some of the general challenges in attributing causality and understanding disease mechanism at the level of the individual patient [65]. In general, although GWAS studies have been successful in identifying genetic variation implicated in CVDs, they provide little or no molecular evidence of gene causality [67]. These observations open up a small window into the complicated and dynamic landscape of human disease genetics.

Besides the increasing difficulty of discovering these variations, modeling their effects poses another set of challenges. With a potential interaction between every pair of genomic features, be they genes or regulatory sequences, the number of such interactions increases quadratically with the number of actors, leading to the combinatorial explosion of states that a biological system can assume (theoretical calculation:  elements leads to

elements leads to  , i.e.

, i.e.  interactions). The plethora of variants associated with a disease or risk of disease do not promise a quick understanding of pathobiological mechanisms, as the functional consequences of a majority of these variants remain unknown. Among the most understood are PCSK9 [68], ANGPTL4 [69, 70] and APOC3 [71] on which association tests and sequencing have been combined to ascertain the linkage to CAD risk, translating to potential vascular protective drugs. The methodology of these studies are still under the influence of mainstream CVD research, i.e. revolving around genotype–phenotype association testing. This top-down approach, i.e. phenotype to gene to variant, has certain limits in its power, requiring more and more samples for less frequent and less penetrant alleles while leaving gaps in mechanistic understanding. Bottom-up approaches in which variations are systematically introduced into a DNA sequence (and their functional consequences are characterized in vitro) will complement the current understanding of these diseases. As exemplified in the novel assays enabled by state-of-the-art experimental techniques and computational processing, this approach has demonstrated utility in cancer variant classification [72], foreshadowing similar progress in CVD research.

interactions). The plethora of variants associated with a disease or risk of disease do not promise a quick understanding of pathobiological mechanisms, as the functional consequences of a majority of these variants remain unknown. Among the most understood are PCSK9 [68], ANGPTL4 [69, 70] and APOC3 [71] on which association tests and sequencing have been combined to ascertain the linkage to CAD risk, translating to potential vascular protective drugs. The methodology of these studies are still under the influence of mainstream CVD research, i.e. revolving around genotype–phenotype association testing. This top-down approach, i.e. phenotype to gene to variant, has certain limits in its power, requiring more and more samples for less frequent and less penetrant alleles while leaving gaps in mechanistic understanding. Bottom-up approaches in which variations are systematically introduced into a DNA sequence (and their functional consequences are characterized in vitro) will complement the current understanding of these diseases. As exemplified in the novel assays enabled by state-of-the-art experimental techniques and computational processing, this approach has demonstrated utility in cancer variant classification [72], foreshadowing similar progress in CVD research.

In addition to the loci that have been directly associated with CVD, a large number of genes or regulatory elements may contribute significantly to CVD risks in an indirect manner, due to highly interconnected biological pathways. For instance, independent research in aging has unraveled the intertwined relationship between heart disease and longevity pathways [73]. With age being the most important factor in conferring CVD risk [74], it is likely that these longevity genes will be involved in future analyses of CVD genetics. The genetic scope of CVD may be enlarged even further to include most of the genome, under the recently proposed omnigenic model for complex traits, in which most heritability is explained by peripheral genes outside of the core pathways [75]. Such expansion calls for a paradigm shift from additive effects of multiple genes to the interactions between them, from the physical genes to the ‘eigen-genes’ that represent biologically functional modules [76].

Such a paradigm shift is, in fact, only part of the potential answer to the long-standing puzzle of missing heritability in CVD as well as other complex diseases [77]. Heritability  , in the ‘broad sense’, is the proportion of phenotypic variance that can be explained by genetic factors, while the ‘narrow sense’ heritability

, in the ‘broad sense’, is the proportion of phenotypic variance that can be explained by genetic factors, while the ‘narrow sense’ heritability  is the proportion attributable to ‘additive’ genetic factors [77]. If all the heritability has been accounted for, the squared correlation between the observed and the predicted phenotype should be equal to

is the proportion attributable to ‘additive’ genetic factors [77]. If all the heritability has been accounted for, the squared correlation between the observed and the predicted phenotype should be equal to  (or

(or  ). The increasing number of loci associated with complex traits still leave a large gap between predicted and observed phenotypes, prompting different strategies to account for the missing heritability. One of the more obvious causes of missing heritability relates to the limited ascertainment of the total pool of rare variants in humans. Even among rare causal variants identified to date, associations with disease has likely been under-appreciated due to insufficient study power to detect modest effects on risk. One addresses this issue by collecting more samples among diverse study populations [44, 63] and improving statistical tests [78, 79]. Even so, conventional models of phenotype prediction have relied almost exclusively on the additive effects of genetic factors, hence can only explain narrow-sense heritability

). The increasing number of loci associated with complex traits still leave a large gap between predicted and observed phenotypes, prompting different strategies to account for the missing heritability. One of the more obvious causes of missing heritability relates to the limited ascertainment of the total pool of rare variants in humans. Even among rare causal variants identified to date, associations with disease has likely been under-appreciated due to insufficient study power to detect modest effects on risk. One addresses this issue by collecting more samples among diverse study populations [44, 63] and improving statistical tests [78, 79]. Even so, conventional models of phenotype prediction have relied almost exclusively on the additive effects of genetic factors, hence can only explain narrow-sense heritability  at best. In addition, alterations outside of DNA sequences have been found to be heritable, suggesting another part of the puzzle relies on epigenetics. Thus, to advance our understanding of complex diseases such as CVD requires moving beyond the exome and genome, as discussed in the next section.

at best. In addition, alterations outside of DNA sequences have been found to be heritable, suggesting another part of the puzzle relies on epigenetics. Thus, to advance our understanding of complex diseases such as CVD requires moving beyond the exome and genome, as discussed in the next section.

The diversity of actors

From SNPs to structural variations

Genome-wide association studies have been predominantly conducted on single-nucleotide polymorphisms (SNPs) thanks to the availability of easy-to-produce SNP microarrays. Such technologies have clearly enriched our knowledge base of the effect of single nucleotide variants, while leaving the effect of structural variations (SV) poorly understood. There are now 660 million SNPs documented in the dbSNP database [80], compared to 4.6 million SVs in the DGVa (Database of Genomic Variants archive [81], which also includes studies annotated by the NCBI-hosted database of structural variants, dbVar [82]). SV databases such as dbVar and DGVa are in fact storing each study–publication individually instead of cataloging SVs into data entries. Although the current knowledge base of SVs is not sufficient to create reference entries of SV, the map of SV from 1000 Genomes Project [83] has enabled further studies of the role of SV in cardiac diseases, suggesting the potential impact of SV on the transcriptional regulation of cardiac genes expressed in the heart [84]. As envisioned, SVs might be one of the promising areas to look for the missing heritability in CVD [85]. In fact, the relative lack of SV investigation in CVD has been recognized as one of the key issues that confound the attribution of causality in linking genetic variants to CVD phenotypes [65]. To this end, ongoing projects like TOPMed contain a Structural Variant Working Group to call CNVs within TOPMed, and they have begun incorporating large-scale multi-ancestry studies spanning diverse types of sequencing data from both European and non-European race/ethnic groups. Most recently, the gnomAD browser [63] added 500 000 structural variants from 15 000 genomes, with full VCF and BED files available for download [86].

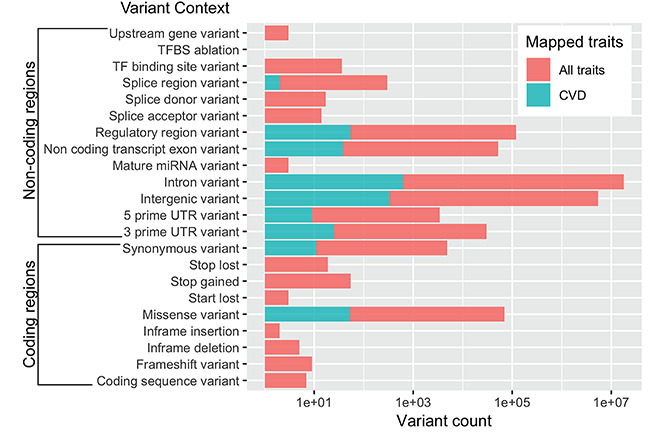

From coding to noncoding regions

As array-based genotyping was gradually replaced by next-generation sequencing, the cost of sequencing an exome, i.e. the protein-coding part of a genome, became much more affordable and enabled the collection of more than 60 000 exomes [63]. Using this dataset, Walsh and colleagues [64] found that many ‘pathogenic’ genetic variants associated with various cardiomyopathies are equally common in clinical cases as in the control population. Genes that were consistently included on genetic testing panels for DCM such as MYBPC3, MYH6, SCN5A, etc. turned out to be less penetrant than previously thought, in consideration of their frequency in the control population. The rationale for prioritizing the sequencing of exome over that of the entire genome, besides the lower cost, was a regularly cited statement that the exome harbors 85% of disease-causing variants [87] which turned out to be an outdated estimate from 1995. In our own survey of the NHGRI-EBI GWAS Catalog [55], a large fraction of variants tend to occur in non-protein coding regions such as intronic, intergenic and splice junctions (Figure 3). The distribution of CVD-associated variants is similar to that of variants associated with all traits. Previous studies also asserted the prevalence of regulatory regions among variants associated with cardiometabolic risk [88], as well as many other complex traits [89]. As an unprecedented amount of WGS data become available from large-scale genomic projects such as The 1000 Genomes Project [90], UK10K [91], The 100,000 Genomes Project [39], The 100K Wellness Pioneer Project in China [40], All of Us Research Program [41], TOPMed [21] and CCDG [92], we are poised to learn more about this ‘dark matter’ in the human genome and how it works in complex diseases.

Figure 3.

Distribution of SNPs that have been associated with a phenotypic trait. The associations are downloaded from NHGRI-EBI GWAS Catalog in which only those with P-value  were retained.

were retained.

Beyond genetics: epigenetics and gene–environment interplay

CV risks can be conferred through heritable changes in gene expression without alterations in the underlying DNA sequence. These epigenetic processes traditionally involve DNA methylation, a wide range of histone modifications including acetylation, methylation, phosphorylation, ubiquitylation, sumoylation and biotinylation, and are now encompassing a looselydefined group of processes mediated by long noncoding RNAs (lncRNAs) and microRNAs (miRNAs). Dysregulation in epigenetic processes has been associated with the pathogenesis of cancer and many other diseases. To date, epigenetic mechanisms have been demonstrated to be involved in a variety of CVDs and conditions [93–97]. For instance, early differential epigenomic analysis, albeit on a limited number of samples, established differentiating features in DNA methylation and histone H3 methylation between control and failing hearts [98]. Likewise, abnormal expression and activity of histone deacetylases (HDACs) have been linked to cardiac defects, heart disease and cardiac development [99–102]. For example, HDAC9 is highly expressed in cardiac muscle, and one of the targets of HDAC9 is the transcription factor MEF2, which has been implicated in cardiac hypertrophy [103]. Following these early findings, epigenome-wide association studies have proposed a number of DNA methylation sites associated with blood lipid [104], body mass index [105, 106], heart failure [107] and heart attack history [108]. In addition, alterations in chromatin structure have been shown to induce heart failure [109].

As more lncRNAs were discovered and characterized, the prevalence of these molecules in CV biology also emerged. At least 22 lncRNAs were reportedly dysregulated in CVDs including CAD, myocardial infarction, cardiac hypertrophy and atherosclerosis, affecting a wide range of molecular, cellular and physiological processes [110, 111]. Due to low relative abundance levels and highly tissue-specific expression patterns, lncRNAs remain challenging to study. Some of the functions of lncRNA that have been recognized include imprinting, scaffolding, enhancer activity and molecular sponges. These actions mark the presence of lncRNAs in many CV processes such as cardiac differentiation, macrophage activation and sarcomere development [112]. With 107 039 lncRNAs detected in the human genome so far (reported by LNCipedia [113], as of November 2018), more lncRNAs are likely to be implicated in CV biology in the future, hence promising potential therapeutic targets. In this regard, there is increasing evidence that circulating miRNAs can serve as potential prognostic and diagnostic biomarkers for the prevention and treatment of CVDs [114], since they are critical regulators of CV function and play important roles in almost all aspects of CV biology [115–118] (for historical perspective, Azuaje and colleagues [119] reviewed some of the first CVD biomarkers discovered through integrative omics approaches). For example, miRNAs associated with the diagnosis and prognosis of heart failure, acute myocardial infarction, pulmonary hypertension and arrhythmia are reviewed by Zhou and colleagues [114]. Nevertheless, challenges remain: for example, for a miRNA to be considered a potential therapeutic target or diagnostic marker of CVD, it should be predominantly expressed in cardiac tissue and/or be essential for heart development, function or repair of heart-specific damage (e.g. miR-1, miR133a, miR-208a/b and miR-499) [120, 121], while also normalizing for the fact that miRNA expression levels are often affected by non-cardiac conditions (e.g. cancer, infection, drug use, etc.) and other co-morbidities. However, given the utility of miRNAs in both animal models and human clinic trials for cancer treatment [122–125], miRNA-based therapeutics for the treatment of CVD remain a promising area of research.

As epigenetic processes include various molecular and cellular events, the experimental assays for mapping of the epigenome are accordingly diverse. DNA methylation profiling can be done with methylation-sensitive restriction enzymes, bisulfite sequencing or immunoprecipitation with antibodies against methylated-cytosine [126]. Histone modifications can be profiled by immunoprecipitation with antibodies specific to the modified histone of interest, essentially requiring a ChIP experiment for each of the histone modifications one wants to interrogate [127]. Meanwhile, the noncoding RNA transcripts can be profiled with variations of RNA-seq experiments that are optimized for the target fraction of RNA. Such diversity entails significant difficulty in comprehensive profiling of the epigenome in a single experimental assay, stressing the need for re-collection and re-analysis of dispersed datasets for a more complete multi-omics picture. As epigenetic alterations have been found to be responsive to environmental cues throughout life, the epigenome lays an important bridge between the genetic makeup of an organism and its phenotype by helping to explain the gene–environment interplay. For example, environmental factors have been known for decades to play critical roles in conferring CV risk. Framingham-based risk scores [128], which include variables that can be intervened upon by lifestyle habits (smoking, blood cholesterol, blood pressure, diabetes), have guided clinical practices [129] and shown to perform well in predicting CV risk in many populations [130, 131]. The importance of a healthy lifestyle (absence of obesity, no current tobacco use, a healthy diet, regular physical activity) cannot be understated for CVD risk reduction, and it has been shown to serve as an environmental resilience factor and modify genetic risk of CVD [2]. In fact, over the past 50 years, historical progress towards the eradication of CVD has been achieved primarily through the adoption of lifestyle modifications, including dietary, tobacco and exercise interventions [2], including changes to public health policy (e.g. secondhand smoke legislation) and other health measures. In a recent study of 55 685 people stratified according to a polygenic risk score, it was found that individuals with a high genetic risk of CAD had a 46% reduction in the relative risk of coronary events if they had a healthy lifestyle, compared to individuals who did not [132]. However, the relative performance of phenotype-based risk scores and the genotype-based counterpart is highly variable depending on specific populations and practices in designing score components. There exist lines of evidence favoring both phenotypic variables [133] and genotypic ones in predicting disease risk [131, 134–136]. Clearly, there remains a gap in understanding gene–environment interaction that can now be studied at the molecular level, thanks to advances in experimental techniques to measure the exposome, i.e. all environmental factors/exposures throughout life that influence disease, including an individual’s diet, pollutants and infections [137]. With recent development of wearable devices to collect real-time data in a non-intrusive manner, it is now possible to monitor the exposome for its dynamic compositions of chemical compounds and micro-organisms [138, 139] as well as monitor early identifiers of CVD progression [140, 141] and disease diagnosis [142]. In general, the emergence of mobile health devices and sensors is now ushering in a new era of streaming data collection relevant to CVD metrics at the individual-based level, e.g. an individual’s blood pressure, heart rhythm, oxygen saturation, brain waves, air quality, radiation, among others [143]. Such devices will enable the collection of longitudinal personal omics profiles across different demographics, ultimately not only helping to detect health–disease transitions based on molecular and physiological metrics but also measuring interactions of environment and health outcomes that inform individualized health data [143]. Being among complex traits that are heavily influenced by environmental factors, CVD research is especially well positioned to benefit from these advances. For instance, Cranley and MacRae mention in a recent review [59] how studying the nutritional exposome (e.g. quantified images, purchase data, modern supply chain tracking of food) is likely to identify new triggers of coronary heart disease (CHD) and other disorders across populations. One interesting population–scale application we envision is monitoring the nutritional exposome (e.g. with respect to the temporal trajectory of atherosclerosis) through social media timeline photos (e.g. many Facebook/Instagram users consistently post food photos of their meals over a timespan of several years), which could potentially be tagged via AI/ML image classification algorithms that ultimately track dietary or lifestyle habits in a long-term longitudinal fashion. Therefore, one area of potentially transformational investigative impact could be the integration of social media activity with data streaming from wearable devices as a way to monitor the exposome. Clearly, such large-scale initiatives directly benefit from public–private partnerships between academia and industry, as has been the case for OpenTargets [25], which unites the academic efforts of EMBL-EBI and the Wellcome Sanger Institute with the commercial efforts of Sanofi, GlaxoSmithKline, Takeda and other biopharmaceutical companies. Likewise, the One Brave Idea initiative [144] has brought together the AHA, Verily, Astrazeneca and Quest Diagnostics to collectively work towards detecting the earliest stages of CHD, how it develops and how it can be stopped from leading to heart attacks and strokes. The objective of One Brave Idea centers on measuring biology early enough to define health and its maintenance rather than just disease and to do so longitudinally in a way that enables passive capture of disease trajectories [59].

The challenges of cardioinformatics

Research in CVD faces unique challenges due to many peculiarities of these diseases. One such idiosyncrasy is time-scale, e.g. atherosclerotic plaques and other CV risk factors build up over an extended period of time (often many decades), which puts CV phenotypes on a complex and continuous spectrum of transition from health to disease, from disease onset to progression. In contrast to diseases like cancer, which are characterized by rapid progression often with a clearly delineated before and after-disease state (e.g. stark mutational profile differences due to somatic hypermutation), CV pathologies such as CAD develop over an extended period of decades, beginning with atherosclerosis and manifesting variably along a spectrum from asymptomatic to stable ischemic heart disease, acute coronary syndrome and sudden cardiac death [52]. In general, the transition from one CVD (e.g. hypertension) to another (e.g. atherosclerosis), which may gradually morph into another CVD (e.g. CAD), which may or may not ultimately lead to a clinical episode like myocardial infarction or stroke, all over the time-scale of several (or more) decades poses its own set of unique informatics challenges. Monitoring the complex disease etiology of such a temporal progression influenced by a combination of genetics (omics profiles), environment (socioeconomics—e.g. zip code can often be as or more important than genetic code at predicting CVD risk [145, 146]) and lifestyle [smoking, diet (e.g. lipids, alcohol), etc.] is a very computationally challenging task and calls for new innovative data integration approaches for risk stratification and surveillance at both individual and population levels across different race/ethnic groups. To complicate matters further, CV pathologies frequently present as co-morbid or multi-morbid with other disease phenotypes such as diabetes, cancer, obesity and metabolic syndrome and rheumatologic disease [52]. Innovative systems biology/medicine approaches to increase the understanding of the multifactorial, complex underpinnings of CVD promise to enhance CVD risk assessment and pave the way to tailored therapies [147]. One active area of research to address these issues involves deeper phenotyping to enable better clinical phenomapping—the stratification of different CVDs into etiologically distinct subtypes, such that it becomes possible to define disease throughout its temporal trajectory, thereby allowing the measurement of fundamental underlying traits such as subclinical vascular abnormalities before they evolve into the classical syndrome (i.e. the full-blown clinical indication/manifestation of disease) [59]. In general, parsing phenotypes in this way allows for a finer, more granular approach to CVD management and prevention that can facilitate precision subtyping of pre-symptomatic and at-risk individuals from symptomatic ones in order to stratify patients for optimized care delivery [52]. Other challenges of cardioinformatics, particularly at the level of tailoring individualized CVD treatments and predicting patient outcomes, are highlighted in some recent reviews [148–152].

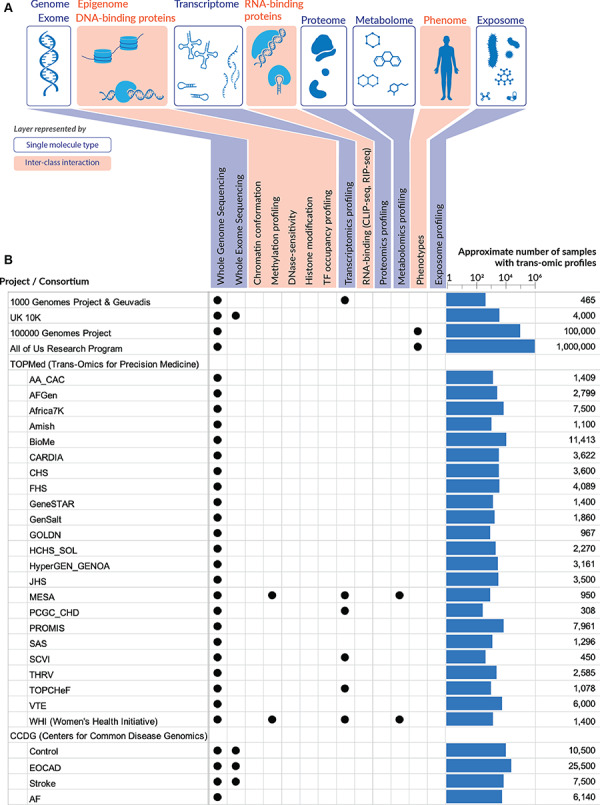

As illustrated in the previous section, the complexity of CVDs calls for pushing research beyond traditional boundaries. Such expansion implies the inclusion of various data modalities described above, such as genome sequences, DNA-methylation profiles, RNA expression profiles, protein expression profiles, metabolic profiles, etc. (Figure 5) within computational analysis workflows. For instance, the presence of even a single metabolite circulating in the blood can strongly predict myocardial infarction risk on top of clinical models [153]. Leon-Mimila and colleagues discuss in a recent review [67] the unmet need for more metabolomics and metagenomics approaches to identify biomarkers with potential clinical applicability in CVD studies. For instance, some bacterial species are associated with risk of CAD and plasma metabolites, e.g. the bacteria Veillonella is associated with chronic heart failure and is also inversely correlated with known CV protective metabolites such as niacin, cinnamic acid and orotic acid [154]. Such correlation between changes in metabolites and gut microbiome associated with chronic heart failure may also potentially be observed in other CVD phenotypes in the future, inviting exploration of new research avenues in this currently underexplored area. In general, circulating small molecules comprise not only endogenous species encoded by the genome but also various xenobiotics from the ‘envirome’, including ingested nutrients, pollutants and other particulate matter such as volatile organic compounds, heavy metals and air pollutants [51, 155, 156]. This complexity extends further to proteomics, where mass spectrometry methods have identified post-translational modifications such as citrullination and S-nitrosylation as direct modulators of CV biology [157], highlighting the direct role of organic chemistry [158] in conferring CVD risk. In general, these data modalities often represent different classes of biological molecules as well as their interactions (Figure 5A). Computational workflows relevant to CV medicine have been proposed [159], clearly illustrating how CVD research can benefit from existing computing resources, from cloud-computing infrastructures to analytic methods for metadata, search and indexing. Likewise, modular data science architectures for supporting CV investigations have been illustrated [17, 160]. Due to the sheer amount of data obtained from CVD research, ranging from medical records to medical images and high-throughput omics profiles, challenges related to data management and analysis that are generic to many fields become even more pressing for cardioinformatics. While benefiting from two decades of research in bioinformatics, there remain significant challenges that can be addressed to accelerate CVD research. From our own perspective, we suggest three particularly pertinent areas to prioritize cardioinformatics research: data sharing/security, multi-omics analysis and augmented intelligence.

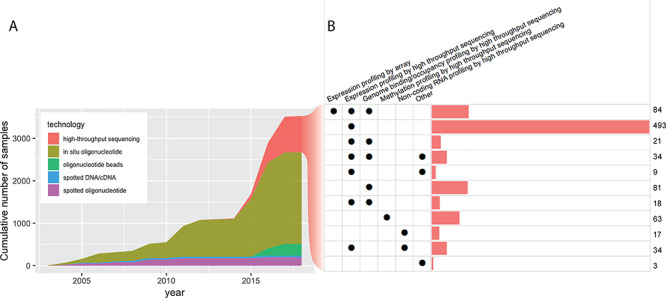

Figure 4.

Molecular assays on GEO. (A) The cumulative number of molecular assays (i.e. unique combinations of biosample, study and platform) deposited in GEO by CV research. (B) Breakdown of high-throughput sequencing assays by the type of study. Expression profiling by high-throughput sequencing, i.e. mRNA-seq assays, are often coupled with another profiling technique, for example, to provide functional read-out of transcription factor binding profiled by ChIP-seq. Note that to avoid excessive over-counting of irrelevant samples such as those from plants or unrelated model organisms, we only counted samples deposited with a PubMed ID pointing to a CV study. Surveys were done on the GEOmetadb database [176] updated on 17 November 2018.

To share or not to share

Data sharing is believed to help scientific advances, thus benefiting everyone [161]. The sharing of personal health and medical data, however, comes with the risk of compromising a person’s privacy and subjecting them to discrimination [161–163]. The current data governance practices employ several administrative measures in the hope of minimizing the risk of exposing the data to adversity, or bad intentions. Taking the process of dbGaP data requests as an example: to access 658 305 records of genotype–phenotype data (Table 1) potentially relevant to future biomedical studies, a researcher first needs to browse these datasets, determine whether each dataset is consented for its purpose, obtain IRB approval if necessary, then file a request, prepare the facility, implement the security measures and transfer the data upon approval. Although the data users are advised, for example, to ‘avoid placing data on mobile devices’ and ‘destroy [the data] if they are no longer used or needed’, the only guarantee to such compliance is the vigilant mindset of every researcher involved in the data behind the project(s). In addition, different datasets are associated with different types of consents, dictating what purposes are permitted (e.g. general research, disease-specific research or biomedical research). Therefore, data users are responsible for obtaining the IRB approval compatible with these consents. These regulatory requirements have heightened the barrier to data access without robust mechanisms to enforce data protection nor to revoke the access when necessary. To add to these challenges, before filing a request, one needs to dive into the metadata of individual studies and decide which datasets are useful for the target research. Important information about a dataset such as the list of phenotypic variables are often vastly different from study to study and cannot be filtered against. In addition to those parameters of a study design, researchers need to be aware of the various types of consent forms applied to different datasets, many times within a single study. This procedure to obtain data access is currently applied for all controlled-access data in dbGaP, adding a significant administrative burden to biomedical researchers.

Table 1.

The subject count aggregated from studies deposited in dbGaP, consented for General Research Use (GRU)

| CVD | All | |

|---|---|---|

| 16s rRNA (NGS) | 0 | 92 |

| CNV Genotypes | 0 | 48 972 |

| Chromatin (NGS) | 0 | 139 |

| Genomic Sequence Amplicon (NGS) | 0 | 8 |

| Methylation (CpG) | 0 | 657 |

| Methylome sequencing | 0 | 152 |

| QTL Results | 0 | 281 |

| RNA Seq (NGS) | 333 | 1498 |

| SNP Genotypes (Array) | 6658 | 113 597 |

| SNP Genotypes (NGS) | 4277 | 11 786 |

| SNP Genotypes (PCR) | 0 | 10 |

| SNP Genotypes (imputed) | 0 | 29 693 |

| SNP/CNV Genotypes (NGS) | 0 | 936 |

| SNP/CNV Genotypes (imputed) | 0 | 9291 |

| SNV (.MAF) | 0 | 2 |

| SNV Aggregate (.MAF) | 0 | 570 |

| Targeted Genome (NGS) | 0 | 9918 |

| Whole Exome (NGS) | 5518 | 12 771 |

| Whole Genome (NGS) | 0 | 1245 |

| mRNA Expression (Array) | 0 | 798 |

| miRNA (NGS) | 0 | 228 |

| Total subject count in data consented for GRU | 16 786 | 242 644 |

| All consent groups | 584 884 | Unknown |

NGS: Next-generation sequencing, MAF: Minor Allele Frequency, QTL: Quantitative Trait Loci, SNV: Single-nucleotide variant

As a pioneering effort towards more accessible biomedical data, the AHA’s Precision Medicine Platform [18] has greatly simplified this process by streamlining the search, request and transfer of data. Datasets deposited on this platform were harmonized such that users can query for data across multiple studies by some common parameters, selectively request access to the relevant data and perform analyses on the cloud-based workspace. The platform has lifted significant burden off of data users by having them file one request for multiple datasets, and the data owners, being aware and responsible for complying with the consents on their data, will decide whether access can be granted or not. The cloud-based workspace also allows data to be transferred and analyzed in a controlled environment that can be ensured to comply with regulatory standards. The risk of intellectual property being compromised remains, for the data, once transferred, cannot be withdrawn nor prevented from being copied. As recognized by the authors, the platform is ‘only as good as the researchers make it’ [18]. More secure modes of data sharing have been explored, forming a spectrum of varying balance between analytic power and data protection. ViPAR [164] supports on-memory analysis of pooled data that is transferred to a central system, avoiding the permanent storage of sensitive data outside of the original sites. In all of the platforms above, a strong system for registering users as well as applying sanction measures are critical to enforce data usage agreements and deter malicious intent. Nevertheless, there still remains significant risk associated with data transfer and data protection at the user end. A couple of solutions have been proposed to further reduce the risks and responsibilities associated with direct access to sensitive data. For example, PRINCESS [165] is designed to perform statistical tests within an enclave hosted on a trusted server, in a stream of small data segments (8000 SNPs at a time). On the other end of the spectrum, DataSHIELD [166, 167] and COINSTAC [168] aim to allow data to be analyzed without moving out of the owners’ facility. Current implementations have shown that a variety of analytic tasks such as summary statistics, histograms, generalized linear models (DataSHIELD) and gradient descent (COINSTAC) can be performed in a distributed manner to achieve equally accurate results compared to the physically pooled counterpart and, more importantly, without disclosure of sensitive or personally identifiable information. The increasing prevalence of cloud computing platforms in scientific research [169] implies that forward-looking solutions should be able to work on these cloud environments.

Besides controlled-access data, a large amount of publicly available human data such as RNA-seq, ChIP-seq, Hi-C, etc. results are freely accessible with no restriction. Without genomic sequences, genotype or phenotype data, the processed output of these assays are deemed anonymous and void of sensitive information. However, recent studies have shown that information leakage is still possible, subjecting individuals to linking attacks that may reveal their identity [170, 171]. With millions of human genomes and thousands of other omics profiles on the not-so-distant horizon, a large fraction of which comes from CVD research programs (Figure 5), it is critical that cardioinformatics researchers pioneer the applications of these security measures, to ensure scientific advances do not compromise human rights to privacy and non-discrimination.

Multi-omics data ocean

The explosion of biological data is manifested in the growth of databases, consortium efforts, repositories, as well as the amount of raw and summary-level data hosted in these warehouses. High-throughput technologies are now available for characterizing and quantifying all major classes of biological molecules including DNA, RNA and protein (Figure 5A), leading to the creation of centralized repositories such as the Gene Expression Omnibus (GEO) [172] for gene expression data, dbGaP [173] for genotypes and phenotypes, ProteomeXchange [174, 175] for proteomics, MetabolomeXchange for metabolomics, among others. GEO, one of the most popular repositories for functional genomics data, has accumulated more than 100 000 datasets [176]. Among these, CVD publications have contributed more than 2000 microarray-based experiments, and about 900 high-throughput sequencing experiments for various purposes (Figure 4). The amount of data potentially reusable for CVD research may be even larger, when taking into account studies that did not focus on CVD but generated a decent number of assays on relevant biospecimens (e.g. heart, blood or blood vessels) such as ENCODE [177] and the Roadmap Epigenomics initiatives [178]. Likewise in dbGaP, where human genotype–phenotype data are deposited, CVD-related research has contributed data on 658 305 subjects, only 16 786 (2.9%) of whom had consented for the data to be employed for general research, leaving a large amount of data locked in field-specific or disease-specific studies (Table 1) (see Supplementary Data for a comprehensive list of CVD studies deposited in dbGaP). An extensive compilation of human genotype–phenotype databases is given in a recent review [179]. In addition to the central repositories for established and popular experimental methods, smaller databases with narrower focus are also budding. For instance, chromatin structure data from 3C, 4C, 5C and Hi-C experiments have been collected in dedicated databases such as 3CDB [180] and 4DGenome [181]. Also, noncoding RNAs are being added into databases such as lncRNAdb [182], NONCODE [183] and LNCipedia [113].

Figure 5.

Multi-omics data. (A) The multiple layers of omics data that are now accessible to researchers. Genome/exome, transcriptome, proteome, metabolome, as well as the microbiome and chemical compounds in the exposome can be profiled by assays on a single class of molecules (DNA, RNA, proteins or small molecules), while the other layers depend on the ability to capture DNA–protein or RNA–protein interactions. The phenome is less well defined as phenotypic measures vary greatly from physical measurements to laboratory tests, from descriptive to quantitative traits. Sources of comprehensive phenotypic data comparable to the other omics can be obtained, for example, from EHRs. Beyond the genome, omics datasets become highly complicated, due to the variation across tissues and cell types. (B) Large omics datasets that are (or will be) available for CVD research. For each dataset, the number of samples being assayed across multiple omics are indicated on the right. This number is often smaller than the total number of samples/participants in a given project, because not every sample is run on multiple assays. Sources are provided in Supplementary Data.

Such abundance and diversity of data promises valuable insights once the data are aggregated across studies within a given omics domain (e.g. RNA-seq), or across multiple omics domains (e.g. RNA-seq, ChIP-seq, ATAC-seq, etc.). Efforts to aggregate genomic data (both individual and summary-level statistics) have resulted in valuable collections such as The Cancer Genome Atlas [24] for genomics and functional genomics data in cancer, or ExAC and gnomAD for exome and genome sequencing data [63]. For instance, aggregated exomes/genomes such as ExAC/gnomAD have been a valuable resource for estimating the allele frequencies of the general population as well as within various race/ethnic groups. Although the need for data integration has been identified within the field of CVD research, resulting in some CVD-focused databases dating back to 2015 (Table 2), many of these resources are either discontinued, not well maintained or remain in a primitive stage where database queries are delivered in plain texts and hyperlinks that require substantial efforts to synthesize new integrative insights. With the upcoming wave of trans-omics data spanning diverse populations and sequencing types (Figure 5B), data harmonization will become a more pressing problem. Generic solutions have started to be proposed, e.g. Biochat for GEO metadata [184] or OmicsDI for diverse datasets spanning genomics, transcriptomics, proteomics and metabolomics [185], and are promising for facilitating data integration in specialty fields like CVD.

Table 2.

List of available resources for cardioinformatics research

| Type | Name | URL | Ref |

|---|---|---|---|

| Knowledge portal | Cardiovascular Disease Knowledge Portal | http://www.broadcvdi.org/ | [10] |

| Knowledge portal | Cerebrovascular Disease Knowledge Portal | http://www.cerebrovascularportal.org/ | [2] |

| Knowledge portal | HeartBioPortal | https://heartbioportal.com/ | [5] |

| Analytics platform | AHA Precision Medicine Platform | https://precision.heart.org/ | [4] |

| Analytics platform | DataSTAGE | https://datastage.io/ | In planning |

| Database | HGDB (Heart Gene Database) | http://www.hgdb.ir/ | [7] |

| Database | In-Cardiome (Integrated Cardiome Database) | http://www.tri-incardiome.org/ | [9] |

| Database | Cardio/Vascular Disease Database | http://www.padb.org/cvd/ | [3] |

| Database | CardioGenBase | Discontinued | [11] |

| Review paper | Cloud computing for genomic data analysis and collaboration | [6] | |

| Review paper | Human genotype–phenotype databases: aims, challenges and opportunities | [1] | |

| Review paper | Methods of integrating data to uncover genotype–phenotype interactions | [8] | |

Resource types are categorized as follows: Database: integrated datasets, harmonized and built into a single, searchable database. Query results are usually presented as table of text and hyperlinks. Knowledge portal: integrated datasets, harmonized and built into a single, searchable database. Query results are usually visualized with charts tailored for the biological data and insights. Analytics platform: computing system, usually comes with access to diverse datasets, allowing users to perform various analyses on the hosted data.

While the issues above are generic for all types of research aiming to reuse public data, we believe CVD research benefits even more by expanding beyond traditional methods. Most use of high-throughput data in CVD research has been largely limited to the very first layer of omics data (Figure 5A), i.e. genome/exome. Whole-exome and whole-genome sequencing data have been slowly incorporated into conventional GWAS, bringing more ascertainment to earlier findings [68–71]. When deeper phenotype data such as blood lipid tests and diagnosis (ICD) codes became available, phenome-wide association studies emerged [186], triggering a new line of biomedical research that coupled EHRs with omics data, enabling powerful analyses, as discussed by [187, 188] and exemplified by [189, 190].

Besides existing data, new recent research programs have started to put more focus on high-throughput assays that result in a comprehensive cross-section of biological molecules (DNA, RNA and protein) and their interactions. For instance, the Multi-Ethnic Study of Atherosclerosis (MESA) medical research study [191] within TOPMed includes WGS, RNA-seq, metabolomics, proteomics and methylomics data across a variety of multi-ethnic communities (white, Hispanic, African-American and Asian). Specifically, MESA investigates the characteristics of subclinical CVD (disease detected non-invasively before it has produced clinical signs and symptoms) and the risk factors that predict progression to clinically overt CVD or progression of the subclinical disease. Some traditional CVD risk factors include hyperlipidemia, hypertension, diabetes mellitus, metabolic syndrome and chronic kidney disease [2]. Likewise, TOPMed is generating a second modest size multi-omic resource involving RNA-seq, metabolomics and methylomics in a subset of participants of the Women’s Health Initiative (WHI) study [192] who have undergone WGS already. Figure 5 highlights the large datasets that are (or will be) available for CV research. It is clear that assays for DNA sequences, including WGS and whole-exome sequencing, are still dominant among these studies. However, a modest number of multi-omics experiments are planned to be assayed for transcriptome, methylome and metabolome, as in the MESA and WHI studies. The availability of these datasets, especially at the individual-level, is critical to correlate the variations across multiple omics and bridge the gap from genotype to phenotype. In general, the analysis of these trans-omics datasets is a fascinating problem—although the computational approaches envisioned from the early days of gene expression profiling, i.e. differential gene expression analysis, co-expression analysis and gene clustering with subsequent identification of enriched biological pathways [193] can still bring fruitful analysis [194], cardioinformatics is clearly steering towards the integration of multiple omics layers (Figure 5). Although the potential of data integration had been recognized as early as a decade ago [195], leading to the development of many data integration strategies [196] such as gene expression and summary-level associations of SNPs and phenotypes from GWAS studies, only recently have these successful data integration strategies begun to emerge in CVD research [197]. Such approaches, usually referred to as transcriptome-wide association studies, are now adopted more widely [198]. In contrast to the relatively recent surge of interest in data integration methodologies, systems biology approaches in CVD have existed since at least the mid-2000s [199–201] and continue to pose challenging questions and present interesting results. For instance, modern systems-level approaches that leverage network analysis methods suggest that covariation between molecules (modeled as the reorganization of network nodes and edges) can be more instructive than the differential expression of individual markers, e.g. for conceptualizing molecular changes that occur in the emergence of high glucose levels in the prediabetic state [51, 202]. Since diabetes is a major risk factor for atherosclerosis, re-casting such physiological phenomena in a new light as tipping points and bifurcations of a network with multiple alternative stable states addresses a blind spot of the disease-oriented paradigm of clinical research and practice, which by definition precludes detailed knowledge about early presentations in subclinical populations [51]. One well-known success story of early detection of a subclinical prediabetic state was the result of a longitudinal multi-omics study that monitored the transcriptome, proteome and metabolome of a single individual over 14 months, ultimately helping the individual avoid the clinical indication (diabetes) by early adjustment in diet [203]. Other successful examples of multi-omics studies conducted on longitudinal personal omics profiles in single individuals to provide constant monitoring and preventative intervention include the MyConnectome study [204], P100 Wellness study [205] and the Personalized Nutrition study [206], which were covered in a recent editorial [51] in the context of CVD-related traits such as blood pressure, QT interval, postprandial glycemic response, etc. In general, computationally integrating diverse stores of data such as physiological and environmental information with other omics layers such as genomes, metabolomes and microbiomes can help identify subclinical imbalances or elevated disease risk in otherwise healthy individuals [51], heralding in an era of preventative healthcare/medicine. Historically, although the first draft of the human genome project had brought a lot of hope and excitement about potential advancements in the diagnosis and treatment of cardiac diseases—such as the ability to identify disease genes within the associated loci, to improve risk estimation based on more precise genotypes, or to personalize the prediction of drug effects on a patient [207]—it seems that a collection of the first population-scale ‘drafts’ of the whole ‘multi-ome’ will ultimately be required for gaining a deeper understanding of the phenotypic manifestations of CVD across different race/ethnic groups. A recent cardiac hypertrophy study in mice [208] highlighted the utility of conducting multi-omics investigations for discovering additional disease gene candidates not apparent from studying each omics data type separately in the context of CVD pathogenesis. In humans, large-scale studies such as the MyHeartCounts study [140] and a global physical activity study of nearly 800 000 individuals across 111 countries [209] demonstrated the feasibility of consenting and engaging large populations using smartphone technology, suggesting the potential to create a similar population-wide intercontinental network for multi-omics participation in the near future. For a recent comprehensive review detailing the success stories of multi-omics studies in CVD, see Leon-Mimila et al. [67]. All in all, the ability to combine data from every omics layer depicted in Figure 5, either at the summary level or the individual level, opens up ample opportunities for cardioinformatics to expand and augment the understanding of CVD etiology.

Augmented intelligence to advance cardiology

As alluded to in the Introduction, AI and ML will play an increasingly important role in cardioinformatics research. Recent trends in this direction include studies for cardiac arrhythmia detection [210, 211], heart failure prediction and classification [141, 212, 213], CV risk stratification [214], individualized treatment effect estimates from clinical trial data [215], among various other active CVD-related research areas [206, 216–218] that utilize ML techniques ranging from deep learning [210, 213, 219–224], class imbalance learning [225, 226], active learning [227], probabilistic graphical models [228, 229] and other areas. An emerging area of AI/ML applications ripe for early adoption is the field of augmented and virtual reality (AR/VR), specifically its applications to cardiology. For instance, surgeons at Children’s Mercy Kansas City, a hospital in Missouri, have been exploring the use of augmented reality to view CT scans of patients’ hearts before an operation to understand patient-specific blood vessel anatomy in different chambers of the heart (e.g. right atrium or left ventricle), ultimately facilitating a safer, more informed surgical intervention/procedure [230]. Other emerging applications of AR/VR in CV medicine include advances for education, pre-procedural planning, intraprocedural visualization and patient rehabilitation [231].

Nevertheless, in an era of big data analytics to improve CV care [232] and an accelerated adoption of ML methodologies to facilitate these objectives, the role of human experts may turn out to be even more indispensable. Among tasks that still require considerable human judgment include understanding and processing of free text data as well as recognizing visual patterns, especially corner/edge cases (e.g. in CVD medical imaging [233, 234]) that may be missed by algorithms trained on conventional datasets. As with other areas, CV research requires expert-level domain knowledge to make the best use of ML applications, for instance in properly labeling CVD data or harmonizing it across epidemiological cohorts. Without a doubt, domain expertise in cardiology is by no means a tractable problem at scale, exemplified by the insurmountable pile of CV publications accumulating over the years (Figure 1C) and the intrinsic difficulty in keeping up with this momentum. With active research in text-mining and natural language processing (NLP), the goal is to liberate human researchers from the time-consuming tasks associated with reading new CVD literature and making sense of free texts in metadata and publications at scale, including tables, figures and charts. Recently, a novel text-mining NLP-based approach was used to analyze over 1 million literature abstracts to uncover novel extracellular matrix functions, pathways and molecular relationships implicated across six CVDs [235]. Since different subdomains in biomedical literature vary along many linguistic dimensions, making text-mining systems that perform well on one subdomain is not guaranteed to perform well on another [184, 236, 237]; we believe that development of cardioNLP algorithms and dedicated large-scale comprehensively labeled CVD training datasets will be essential for progress in tasks such as harmonized patient-data meta analyses in CV precision medicine. To this end, we envision a framework like the Kipoi model zoo for genomics [238] but with a focus on CVD knowledge (both text and data) that can be used to train ML models in cardioinformatics research.

Recognition of visual patterns, on the other hand, remains a powerful human faculty that needs to be fully exploited rather than entirely replaced with automation. With the rise of various visualization techniques across diverse biological data types [239, 240], it will be an exciting challenge for cardioinformatics researchers to leverage them for an integrative representation of heterogeneous data layers towards extracting deeper CVD insights. In addition, the visualization of many experimental assays and biological processes remains a significant challenge, e.g. visualizing alternative splicing events [241, 242], fast implementations for biological heatmaps [243] or interactive matrices for chromatin conformation data from Hi-C experiments [244, 245]. Moving into the clinical setting, gearing up towards large-scale precision medicine will entail the requirement of providing more comprehensive and integrated data, in a more comprehensible manner to assist clinicians. To this end, visualization technology and software design will be critical in improving CVD biomedical software, e.g. for designing robust clinical decision support systems or validating prediction models for critical care outcomes. For instance, by integrating multiple measures of clinical trajectories together with NLP of clinical free text notes from EHR data, more accurate prediction of critical care outcomes were observed among patients in intensive care units across three major hospital systems [246]. Such studies suggest that automated algorithms, particularly those using unstructured data from notes and other sources, can augment clinical research and quality improvement initiatives.

Closing remarks

As we further our quest to understand the genetics and molecular biology of heart disease, many complex clinical CVD indications and pathophenotypes have become too nuanced for traditional computational approaches. Reflecting on the current body of knowledge, we recognize that many aspects of this complexity can be addressed with more (and improved) computational methods, as has been the case for bioinformatics tools and their impact on cancer genomics research. But bioinformatics techniques for conducting CVD studies will require progressively more sophisticated strategies for identifying and monitoring elevated disease risk and intermediary molecular endophenotypes (including CVD-related risk factors and quantitative traits) as CVD poses an unprecedented and unique set of computational challenges with respect to clinical phenomapping and the large-scale integration of multiple diverse sources of population-level biomedical data for understanding the progression of a subclinical imbalance into the clinical manifestation of the disease itself. In this review, we discussed some of the important works evolving at the multidisciplinary interface of bioinformatics and cardiology and advocate for shining a brighter spotlight on cardioinformatics as an emerging field, in its own right. We suggest some future insights based on our understanding of historical perspectives and ongoing work in current CVD research and welcome feedback and ideas from the broader scientific community.

Methods

PubMed queries

Queries are all based on MeSH terms. For reproducibility purposes, the exact queries for each category in Figure 1 are listed below:

Cardiovascular disease: ”cardiovascular diseases”[MeSH Terms]

Cancer: (*cancer[MeSH Terms])

Bioinformatics: bioinformatics[MeSH Terms] OR genomics[MeSH Terms]

Cardioinformatics: (bioinformatics[MeSH Terms] OR genomics[MeSH Terms]) AND (”cardiovascular diseases”[MeSH Terms])

Cancer informatics: (bioinformatics[MeSH Terms] OR genomics[MeSH Terms]) AND (*cancer[MeSH Terms])

All queries were appended with a filtering term to reduce the count of non-primary research items. The filtering term is

AND (hasabstract[text] AND English[lang]))) NOT ((‘autobiography’[Publication Type] OR ‘biography’[Publication Type] OR ‘corrected and republished article’[Publication Type] OR ‘duplicate publication’[Publication Type] OR ‘electronic supplementary materials’[Publication Type] OR ‘interactive tutorial’[Publication Type] OR ‘interview’[Publication Type] OR ‘lectures’[Publication Type] OR ‘legal cases’[Publication Type] OR ‘legislation’[Publication Type] OR ‘meta analysis’[Publication Type] OR ‘news’[Publication Type] OR ‘newspaper article’[Publication Type] OR ‘patient education handout’[Publication Type] OR ‘published erratum’[Publication Type] OR ‘retracted publication’[Publication Type] OR ‘retraction of publication’[Publication Type] OR ‘review’[Publication Type] OR ‘scientific integrity review’[Publication Type] OR ‘support of research’[Publication Type] OR ‘video audio media’[Publication Type] OR ‘webcasts’[Publication Type])).

The MeSH Database entry for ‘cardiovascular disease’ includes many types of CV abnormalities that may occur in organs outside the immediate circulatory system.

Research funding statistics

Data on research funding were provided by the NIH, via the NIH Research Portfolio Online Reporting Tool [247], as a table of awards for each fiscal year and research category. Categorization was done by NIH starting in 2008, through the Research, Condition, and Disease Categorization system. To calculate the research funding for cancer and CVDs, we retrieved tables of awards for all related categories, removed duplicate entries and summed up all the amounts of awards greater than $100.

For ‘Cancer’ funding, relevant categories are Brain Cancer, Breast Cancer, Cancer, Cancer Genomics, Cervical Cancer, Colo-Rectal Cancer, HPV and/or Cervical Cancer Vaccines, Liver Cancer, Lung Cancer, Lymphoma, Neuroblastoma, Ovarian Cancer, Pancreatic Cancer, Pediatric Cancer, Prostate Cancer, Uterine Cancer, Vaginal Cancer.

For ‘Cardiovascular disease’ funding, relevant categories are Aging, Cardiovascular, Cerebrovascular, Congenital Heart Disease, Heart Disease, Heart Disease - Coronary Heart Disease, Hypertension, Pediatric Cardiomyopathy, Stroke.