Introduction

Phase 3 clinical trials are widely recognized as the highest level of evidence to support a particular intervention or therapy in the medical literature. The ability to draw clinically meaningful conclusions from these studies depends greatly on the transparency with which they are reported. To this end, careful assessment of the trial protocol (in tandem with the trial results) is essential to maintaining the integrity of the trial design and endpoints, the peer review process, and the interpretation of the trial by an oncologic audience.

Over three decades of evidence have exposed a lack of transparency in clinical trial reporting, highlighted by insufficient access to trial protocols and potential discrepancies between protocols and published results.[1–3] Lack of protocol availability may affect both internal and external validity, limiting the interpretability and generalizability of results.[4] Because published reports cannot always incorporate all relevant aspects of study design, certain medical journals have begun requiring protocols for publication, which readers can then access freely. Smaller studies have characterized protocol availability and protocol-publication concordance, but only for select subgroups of trials, generally those published in a handful of prominent journals.[5,6] Discordance between protocols and publications may represent selective reporting, thereby potentially biasing trial results. Furthermore, prior studies suggest that protocols are often incomplete;[7,8] however, the extent of protocol redaction and incompleteness (and its implications for selective reporting) has yet to be studied.

To determine the extent of selective reporting in oncologic clinical trials, we sought to comprehensively characterize protocol availability and protocol-publication concordance across phase 3 cancer clinical trials, assessing the implications of protocol availability and completeness for transparent reporting of results. The objectives of this study were to (1) determine protocol availability across all phase 3 randomized cancer trials, (2) assess published protocols for completeness, and (3) compare protocols with published reports, focusing specifically on the association of protocol completeness with protocol-publication concordance.

Material and Methods

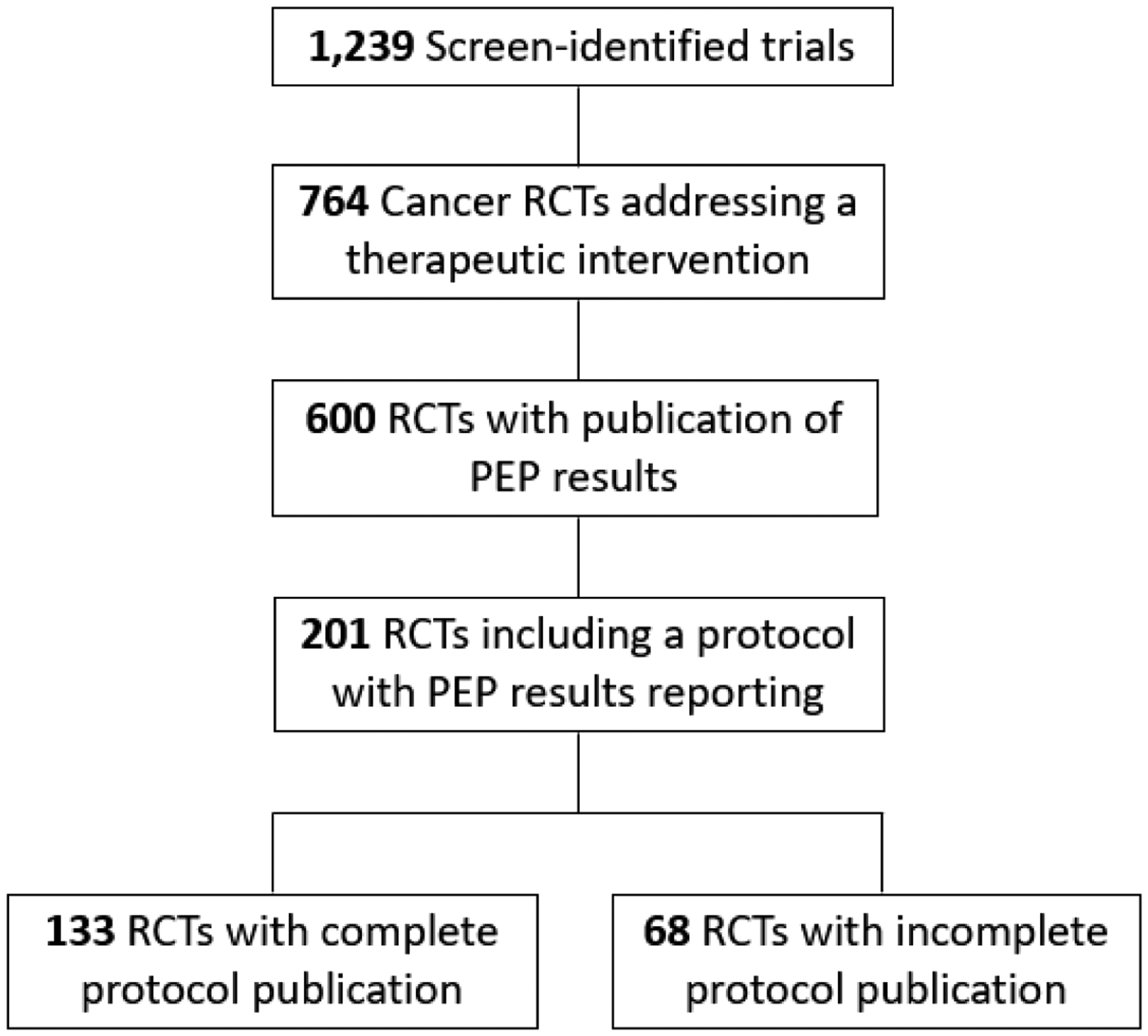

We queried ClinicalTrials.gov to identify all registered, cancer-specific, phase 3 randomized trials. Search criteria were: terms, ‘cancer’; study type, ‘all studies’; status, excluding ‘not yet recruiting’; phase, phase 3; and study results, ‘with results.’[9] Of 1,239 trials identified on screening, 764 were eligible as cancer-specific, multi-arm phase 3 randomized trials addressing a therapeutic intervention. We excluded trials without a publication reporting their primary endpoint (PEP) results (n=164), leaving 600 trials for analysis. Publications with PEP results were identified directly through links on the ClinicalTrials.gov website and through independent PubMed searches of NCT clinical trial identifiers. Each remaining trial was analyzed based on the first peer-reviewed publication of its PEP results and assessed for inclusion of a complete protocol (CP). A protocol was counted as published if it was included as a supplemental file to its respective publication or was directly linked or referenced in the manuscript. Protocols were considered incomplete/redacted if the text pertaining to trial design, inclusion/exclusion criteria, treatment/intervention guidelines, randomization schema, and/or statistical analyses was missing or ‘inked out.’
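The inclusion cascade and the completeness rule above can be written down as a small classifier, which makes explicit that a single missing or redacted key section is enough to mark a protocol incomplete. This is only an illustrative sketch: the record type `ProtocolRecord`, its fields, and the section labels are hypothetical names chosen here, and the source does not describe any software used for protocol abstraction.

```python
from dataclasses import dataclass, field

# Screening counts reported in the Methods (Figure 1).
SCREENED, ELIGIBLE, NO_PEP_PUBLICATION = 1239, 764, 164
ANALYZED = ELIGIBLE - NO_PEP_PUBLICATION  # trials analyzed

# The five key protocol sections named in the Methods (labels are hypothetical).
KEY_SECTIONS = (
    "trial design",
    "inclusion/exclusion criteria",
    "treatment/intervention guidelines",
    "randomization schema",
    "statistical analyses",
)


@dataclass
class ProtocolRecord:
    """Hypothetical abstraction record for one trial's first PEP publication."""
    protocol_available: bool  # supplement, or directly linked/referenced in the manuscript
    sections_present: set = field(default_factory=set)
    sections_redacted: set = field(default_factory=set)  # e.g., 'inked out' text


def classify_protocol(rec: ProtocolRecord) -> str:
    """Return 'none', 'incomplete/redacted', or 'complete'."""
    if not rec.protocol_available:
        return "none"
    for section in KEY_SECTIONS:
        # Any missing or redacted key section makes the protocol incomplete.
        if section not in rec.sections_present or section in rec.sections_redacted:
            return "incomplete/redacted"
    return "complete"


full = ProtocolRecord(True, set(KEY_SECTIONS))
redacted = ProtocolRecord(True, set(KEY_SECTIONS), {"randomization schema"})
print(ANALYZED)                     # 600
print(classify_protocol(full))      # complete
print(classify_protocol(redacted))  # incomplete/redacted
```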

To further understand the impact of incomplete protocol reporting on data interpretation, we assessed concordance between protocols and their associated publications regarding (1) the defined PEP (i.e., whether the PEP in the protocol was the same as that reported in the publication), and (2) any pre-specified subgroup analyses of PEP results. Subgroup analyses of the PEP were considered pre-specified if the protocol defined the endpoint and statistical methods for all reported stratifying factors.[10] Trials that pre-specified analyses for all subgroups were considered pre-specified even if not all subgroups were reported in the publication. Conversely, trials that pre-specified analyses for some, but not all, subgroups were not considered pre-specified.
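The two concordance rules above reduce to set comparisons: PEP concordance is set equality, and subgroup pre-specification requires every reported stratifying factor to appear among the protocol-defined analyses, so reporting fewer subgroups than planned still counts as pre-specified. The sketch below assumes each trial's factors and endpoints have already been abstracted into sets of labels; the function names and example factor labels are hypothetical.

```python
def pep_concordant(protocol_peps: set, published_peps: set) -> bool:
    """PEP concordance: the publication reports the same PEP(s) the protocol defines."""
    return protocol_peps == published_peps


def subgroups_prespecified(reported: set, protocol_defined: set) -> bool:
    """Pre-specified only if the protocol defined endpoint and methods for EVERY
    reported stratifying factor; covering some but not all does not count."""
    return bool(reported) and reported <= protocol_defined


def selectively_reported(reported: set, protocol_defined: set) -> bool:
    """Pre-specified trials that reported some, but not all, protocol-defined subgroups."""
    return subgroups_prespecified(reported, protocol_defined) and reported < protocol_defined


# Hypothetical example: the protocol defines three subgroup factors.
protocol = {"age", "sex", "performance status"}
print(subgroups_prespecified({"age", "sex"}, protocol))     # True
print(selectively_reported({"age", "sex"}, protocol))       # True
print(subgroups_prespecified({"age", "region"}, protocol))  # False
```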

To assess factors related to CP publication, chi-squared tests were conducted to identify univariate associations between trial-related factors and protocol publication; the relationship between publication year and protocol publication was assessed via binary logistic regression. Trial-related factors displaying univariate associations with protocol publication (p<0.05) were then entered into a multiple binary logistic regression model to assess their independent effects on CP publication. Statistical analysis was conducted in SPSS Statistics, version 26 (IBM Corporation, Armonk, NY).
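The univariate tests are Pearson chi-squared tests on tables of CP publication (yes/no) by group, computed in SPSS. As a dependency-free cross-check, the sketch below implements the Pearson statistic without continuity correction for a 2 × k table and obtains the upper-tail probability from the closed forms for integer degrees of freedom (Abramowitz and Stegun 26.4.4 and 26.4.5). It is not the authors' code, the function names are hypothetical, and it does not cover the logistic regression models.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """P(X >= x) for a chi-squared variable with integer df (closed forms, no SciPy)."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: two-sided normal tail at sqrt(x) plus a finite correction series.
    chi = math.sqrt(x)
    density = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    series, term = 0.0, chi
    for r in range(1, (df - 1) // 2 + 1):
        series += term  # adds chi^(2r-1) / (1*3*...*(2r-1))
        term *= x / (2 * r + 1)
    return math.erfc(chi / math.sqrt(2)) + 2 * density * series


def pearson_chi2_2xk(with_cp: list, totals: list) -> tuple:
    """Pearson chi-squared test (no continuity correction) for CP yes/no across k groups.

    with_cp[i] of totals[i] trials in group i published a complete protocol.
    Returns (statistic, degrees of freedom, p-value).
    """
    pooled = sum(with_cp) / sum(totals)  # CP rate expected under no association
    stat = 0.0
    for a, n in zip(with_cp, totals):
        for observed, expected in ((a, n * pooled), (n - a, n * (1 - pooled))):
            stat += (observed - expected) ** 2 / expected
    df = len(totals) - 1
    return stat, df, chi2_sf(stat, df)


# Counts from Table 1.
_, _, p_coop = pearson_chi2_2xk([55, 78], [186, 414])                    # cooperative group yes/no
_, _, p_modality = pearson_chi2_2xk([110, 7, 2, 14], [471, 14, 7, 108])  # treatment modality
print(round(p_coop, 3), round(p_modality, 3))  # 0.003 0.007
```

With the Table 1 counts, this reproduces the reported univariate P-values for cooperative group status and treatment modality; SciPy's `chi2_contingency` would be the usual library route, but the closed forms keep the sketch self-contained.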

Results

The 600 included trials had a total combined enrollment of 429,056 patients (Figure 1). Of these trials, 133 (22%) published a CP, while an additional 68 (11%) published an incomplete or redacted protocol. Of the 201 trials publishing any protocol, 114 published final protocols only, 20 published final protocols with a summary of amendments, and 67 published original and final protocols with a summary of amendments. Higher rates of CP publication were identified among cooperative group trials (30% vs 19%, P=0.003; Table 1). Table 1 highlights other factors associated with CP publication, including treatment modality and disease site. Multiple binary logistic regression modeling revealed independent effects of cooperative group status (P=0.001), publication year (P<0.001), and treatment modality (P=0.008), but not disease site (P=0.85), on CP reporting. Notably, CP reporting has improved since 2010; no trial published before 2010 included a CP.
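The protocol counts in this paragraph can be reconciled with a short tally: the complete/incomplete split and the protocol-version split both sum to the same 201 protocol-publishing trials, and the unrounded percentages correspond to the rounded figures quoted in the text and Discussion. This is arithmetic on the reported counts only; the variable names are illustrative.

```python
TOTAL = 600
complete, incomplete = 133, 68  # CP vs incomplete/redacted protocol
final_only, final_with_summary, original_final_summary = 114, 20, 67

any_protocol = complete + incomplete
# Both breakdowns must describe the same set of protocol-publishing trials.
assert any_protocol == final_only + final_with_summary + original_final_summary == 201


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


print(pct(complete, TOTAL))           # 22.2 (CP)
print(pct(incomplete, TOTAL))         # 11.3 (incomplete/redacted)
print(pct(any_protocol, TOTAL))       # 33.5 (any protocol)
print(pct(incomplete, any_protocol))  # 33.8 (redacted share among protocol publishers)
print(pct(final_with_summary + original_final_summary, any_protocol))  # 43.3 (summary of amendments)
```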

Figure 1.

Flow Diagram of Clinical Trial Screening, Inclusion, and Results.

Table 1: Factors Associated with Complete Protocol Publication

| Trial characteristic | Category | Complete protocols published / total | Percentage | P-value (a) |
|---|---|---|---|---|
| All trials | | 133/600 | 22.2% | |
| Cooperative group trial | Yes | 55/186 | 29.6% | P=0.003 |
| | No | 78/414 | 18.8% | |
| Industry funding of trial | Yes | 103/470 | 21.9% | P=0.78 |
| | No | 30/130 | 23.1% | |
| Publication year (b) | 2003–2009 | 0/58 | 0% | P<0.001 |
| | 2010–2012 | 32/145 | 22.1% | |
| | 2013–2015 | 69/255 | 27.1% | |
| | 2016–2018 | 32/142 | 22.5% | |
| Disease site (c) | Breast | 22/105 | 21.0% | P=0.001 |
| | Gastrointestinal | 12/76 | 15.8% | |
| | Genitourinary | 22/68 | 32.4% | |
| | Hematologic | 24/119 | 20.2% | |
| | Thoracic | 15/88 | 17.0% | |
| | Other | 38/144 | 26.4% | |
| Treatment modality (d) | Systemic therapy | 110/471 | 23.4% | P=0.007 |
| | Radiotherapy | 7/14 | 50.0% | |
| | Surgery | 2/7 | 28.6% | |
| | Supportive care | 14/108 | 13.0% | |
| PEP met | Yes | 76/302 | 25.2% | P=0.07 |
| | No | 55/289 | 19.0% | |

(a) P-values reflect Pearson’s chi-squared tests for all factors except publication year (binary logistic regression analysis by year).

(b) Model uses publication year as a continuous variable.

(c) Limited to trials with a defined single disease site. “Other” includes trials of other disease sites and multiple disease sites.

(d) Primary intervention as part of the randomization. Systemic therapy includes cytotoxic chemotherapy, targeted systemic agents, and similar, with primary endpoint aimed at improved disease-related outcomes. Supportive care trials aimed to reduce disease- or treatment-related toxicity.

Abbreviations: PEP, primary endpoint.

Nearly all trials that published a protocol (198/201) reported the same PEP(s) as defined in the associated protocol; two trials reported protocol-defined co-PEPs as secondary endpoints, and a third trial did not specify in the publication which endpoint was the PEP. Fewer than half of all protocol-reporting trials (43%, 87/201) published a summary of amendments to the original protocol; of these trials, 5% made changes to their PEP(s).

PEP subgroup analyses were reported in the publication for most trials (82%, 164/201), but were pre-specified in only 43% (71/164) of these. Notably, subgroup pre-specification was more common among trials reporting complete versus incomplete protocols (50% vs 31%, respectively; P=0.02). Only 10% (9/93) of trials that did not pre-specify subgroup analyses acknowledged this in the published report. Moreover, 20% (19/93) of these trials presented their subgroup analyses as pre-specified in the publication, even though the corresponding protocols made no mention of such analyses. With respect to selective reporting, 23% (16/71) of trials with pre-specified subgroup analyses reported some, but not all, subgroups. Two trials pre-specified subgroup analyses but did not report any in the publication of PEP results (recognizing that these analyses may be reported at a later date). Lastly, trials that included a summary of amendments with their final protocols were significantly more likely to pre-specify subgroup analyses than trials with final protocols only (72% vs 44%, P<0.001).
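Because the percentages in this paragraph rest on shifting denominators (201 protocol-publishing trials, 164 reporting subgroups, 93 without and 71 with pre-specification), it may help to lay the cascade out explicitly. The counts are those reported above; the dictionary layout and labels are only an illustrative choice.

```python
# (numerator, denominator) pairs taken from the reported subgroup results.
not_prespecified = 164 - 71  # trials reporting subgroups without pre-specification
cascade = {
    "reported PEP subgroup analyses": (164, 201),
    "pre-specified (of those reporting)": (71, 164),
    "acknowledged lack of pre-specification": (9, not_prespecified),
    "presented as pre-specified without protocol support": (19, not_prespecified),
    "pre-specified but reported only some subgroups": (16, 71),
}

for label, (num, den) in cascade.items():
    print(f"{label}: {num}/{den} = {100 * num / den:.1f}%")
```

Printed to one decimal, these are 81.6%, 43.3%, 9.7%, 20.4%, and 22.5%, which round to the whole-number percentages in the text.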

Only 8 of the 96 journals (8.3%) reporting PEP results published any protocols (either CPs or incomplete/redacted protocols; Supplemental Table 1). Trials that published protocols appeared in journals with higher impact factors (Mann-Whitney U, P=0.002). These were typically general medical and oncology-specific journals. For these journals, there appeared to be an inflection point in time, after which including protocols with published reports became more common.
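The impact-factor comparison relies on the Mann-Whitney U test. To illustrate what that test computes, the sketch below ranks the pooled values (ties receive midranks), forms U for the first sample, and converts it to a two-sided P-value with the large-sample normal approximation. Two simplifications are assumptions of this sketch rather than the study's SPSS settings: the variance is not tie-corrected, and no continuity correction is applied. No study data are reproduced here.

```python
import math


def mann_whitney_u(x: list, y: list) -> tuple:
    """Two-sided Mann-Whitney U test (normal approximation, midranks for ties).

    Returns (U for sample x, two-sided p-value). Simplification: the variance
    is not adjusted for ties and no continuity correction is used.
    """
    # Pool values, remembering which sample (0 = x, 1 = y) each came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        i = j + 1
    n1, n2 = len(x), len(y)
    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    z = (u_x - n1 * n2 / 2) / math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return u_x, math.erfc(abs(z) / math.sqrt(2))
```

In this setting it would be called as `mann_whitney_u(if_with_protocol, if_without_protocol)`, where both hypothetical lists hold the journal impact factor for each trial in the respective group.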

Discussion

Transparent reporting of phase 3 cancer clinical trial results is essential to maintain confidence in high-level evidence. Reporting the complete trial protocol is an effective measure to ensure such transparency but has been understudied to date. The present study demonstrates that only about one-third (33.5%, 201/600) of phase 3 cancer trials publish any protocol documentation when reporting PEP results. Even among these, rates of protocol redaction or incomplete protocol publication are high (34%, 68/201). Furthermore, this study identified a lack of concordance between clinical trial protocols and publications with respect to subgroup analyses. This finding is consistent with prior studies demonstrating marked discrepancies in aspects including eligibility criteria, statistical analysis, subgroup analyses, and outcomes.[2,3,8,11,12] Taken together, these data may serve as an important benchmark for future assessments of the transparent reporting of clinical trial protocols as an adjunct to full publications.

The finding that only 8 of 96 journals published any protocols suggests that the trends observed in the present series are largely driven by a few journals and do not reflect a ‘systemic cultural change’ in transparent protocol reporting over time. Although the improving trend in protocol publication is important, the fact that most journals still do not require or encourage protocol publication may serve as a ‘call to action’ to standardize this reporting across all journals (similar to other standardized trial reporting mechanisms, e.g., a trial profile or the CONSORT criteria).[13] One such example is the Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT 2013) guidelines, which identify 33 items for inclusion in every protocol.[7] Furthermore, journals should discourage redaction (e.g., ‘inking out’ or omitting sections) of substantive portions of clinical trial protocols.

The Department of Health and Human Services final rule on ‘Clinical Trials Registration and Results Information Submission’ (42 CFR 11) requires all clinical trials registering with ClinicalTrials.gov to include a protocol and statistical analysis plan beginning January 18, 2017.[14] The rule also mandates that updated protocols be submitted within 30 days after any amendments are made. Such requirements are vitally important; however, we contend that CP inclusion with published reports is similarly critical for transparency. While our study includes only trials registered with ClinicalTrials.gov, a recent study showed that as many as 39% of trials may not be prospectively registered and that such trials often display higher levels of selective reporting.[15]

Interestingly, the majority of trials that published protocols in this analysis (114/201) published only the final protocol (i.e., without a summary of amendments). One may question whether doing so constitutes ‘transparent reporting’: characterizing the nature and degree of amendments (e.g., to trial design, inclusion/exclusion criteria, and endpoints) is important for critically examining the validity and reliability of the trial and its conclusions, the same rationale that motivates reporting the full CP. For this reason, many cooperative groups (e.g., NRG Oncology) require inclusion of the amendments to each protocol iteration, but the same may not be true of other multi-institutional phase 3 trials, particularly industry-sponsored trials.

Limitations of this study center on the availability of the relevant data. Trials were assessed for protocols based on the first peer-reviewed publication of PEP results only; this study may therefore underestimate the extent of protocol publication if protocols were published in later or separate reports. While protocols were examined for missing sections or clear redaction, the number of incomplete protocols may have been underestimated, as more subtle alterations or omissions may not have been identified. Furthermore, more than half of published protocols (57%, 114/201) did not include a summary of amendments, making it difficult to assess changes in pre-specified PEPs or subgroups.

In summary, CP publication is associated with improved protocol-to-publication concordance, reflecting the use of properly pre-specified statistical methodology and less selective reporting. Selective reporting of subgroup analyses increases the risk of false-positive associations that are unlikely to be replicated. Given the continued infrequency of CP publication and its implications for transparency, CP publication should be encouraged by both medical journals and clinical trialists when results are reported.

Supplementary Material

Funding:

Outside of the submitted work, Dr. Fuller received funding and salary support from the National Institutes of Health (NIH), including: the National Institute of Dental and Craniofacial Research Establishing Outcome Measures Award (1R01DE025248/R56DE025248) and an Academic Industrial Partnership Grant (R01DE028290); NCI Early Phase Clinical Trials in Imaging and Image-Guided Interventions Program (1R01CA218148); an NIH/NCI Cancer Center Support Grant (CCSG) Pilot Research Program Award from the UT MD Anderson CCSG Radiation Oncology and Cancer Imaging Program (P30CA016672); and an NIH/NCI Head and Neck Specialized Programs of Research Excellence (SPORE) Developmental Research Program Award (P50 CA097007). Dr. Fuller received funding and salary support unrelated to this project from: National Science Foundation (NSF), Division of Mathematical Sciences, Joint NIH/NSF Initiative on Quantitative Approaches to Biomedical Big Data (QuBBD) Grant (NSF 1557679); NSF Division of Civil, Mechanical, and Manufacturing Innovation (CMMI) standard grant (NSF 1933369); a National Institute of Biomedical Imaging and Bioengineering (NIBIB) Research Education Programs for Residents and Clinical Fellows Grant (R25EB025787-01); and the NIH Big Data to Knowledge (BD2K) Program of the National Cancer Institute (NCI) Early Stage Development of Technologies in Biomedical Computing, Informatics, and Big Data Science Award (1R01CA214825). Dr. Fuller received direct infrastructure support provided by the multidisciplinary Stiefel Oropharyngeal Research Fund of the University of Texas MD Anderson Cancer Center Charles and Daneen Stiefel Center for Head and Neck Cancer, the Cancer Center Support Grant (P30CA016672), and the MD Anderson Program in Image-guided Cancer Therapy. Dr. Fuller has received direct industry grant support, honoraria, and travel funding from Elekta AB.

Footnotes

Data availability: Data were obtained from publicly available sources, including ClinicalTrials.gov and the journal publications themselves. Data may be available upon reasonable request.

Financial Disclosures / Conflicts of Interest: The authors report no financial disclosures or conflicts of interest related to this work.

References

1. Siegel JP. Editorial review of protocols for clinical trials. N Engl J Med. 1990 Nov 8;323(19):1355.

2. Chan AW, Hróbjartsson A, Haahr MT, et al. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004 May 26;291(20):2457–65.

3. Al-Marzouki S, Roberts I, Evans S, et al. Selective reporting in clinical trials: analysis of trial protocols accepted by The Lancet. Lancet. 2008 Jul 19;372(9634):201.

4. Chan AW, Hróbjartsson A. Promoting public access to clinical trial protocols: challenges and recommendations. Trials. 2018 Feb 17;19(1):116.

5. Zhang S, Liang F, Li W. Comparison between publicly accessible publications, registries, and protocols of phase III trials indicated persistence of selective outcome reporting. J Clin Epidemiol. 2017 Nov;91:87–94.

6. Perlmutter AS, Tran VT, Dechartres A, et al. Statistical controversies in clinical research: comparison of primary outcomes in protocols, public clinical-trial registries and publications: the example of oncology trials. Ann Oncol. 2017 Apr 1;28(4):688–695.

7. Chan AW, Tetzlaff JM, Altman DG, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013 Feb 5;158(3):200–7.

8. Pildal J, Chan AW, Hróbjartsson A, et al. Comparison of descriptions of allocation concealment in trial protocols and the published reports: cohort study. BMJ. 2005 May 7;330(7499):1049.

9. Ludmir EB, Mainwaring W, Lin TA, et al. Factors Associated With Age Disparities Among Cancer Clinical Trial Participants. JAMA Oncol. 2019 Jun 3.

10. Wang R, Lagakos SW, Ware JH, et al. Statistics in medicine--reporting of subgroup analyses in clinical trials. N Engl J Med. 2007 Nov 22;357(21):2189–94.

11. Chan AW, Hróbjartsson A, Jørgensen KJ, et al. Discrepancies in sample size calculations and data analyses reported in randomised trials: comparison of publications with protocols. BMJ. 2008 Dec 4;337:a2299.

12. Dwan K, Altman DG, Cresswell L, et al. Comparison of protocols and registry entries to published reports for randomised controlled trials. Cochrane Database Syst Rev. 2011 Jan 19;(1):MR000031.

13. Begg C, Cho M, Eastwood S, et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996 Aug 28;276(8):637–9.

14. Clinical Trials Registration and Results Information Submission. 42 CFR 11 (2016).

15. Chan AW, Pello A, Kitchen J, et al. Association of Trial Registration With Reporting of Primary Outcomes in Protocols and Publications. JAMA. 2017 Nov 7;318(17):1709–1711.
