Significance

Non–line-of-sight (NLOS) imaging can recover details of a hidden scene from indirect light that has scattered multiple times. Despite recent advances, NLOS imaging has been limited to short-range verifications. Here, both experimental and conceptual innovations yield hardware and software solutions that extend the range of NLOS imaging from meters to kilometers, about three orders of magnitude longer than previous experiments. The results will open avenues for the development of NLOS imaging techniques and relevant applications to real-world conditions.

Keywords: non–line-of-sight imaging, optical imaging, computational imaging, computer vision

Abstract

Non–line-of-sight (NLOS) imaging can reconstruct hidden objects from indirect light paths that scatter multiple times in the surrounding environment, which is of considerable interest in a wide range of applications. Whereas conventional imaging relies on direct line-of-sight light transport to recover visible objects, NLOS imaging typically exploits the information encoded in the time-of-flight of the scattered photons. Despite recent advances, NLOS imaging has remained at short-range realizations, limited by the heavy loss and the spatial mixing due to the multiple diffuse reflections. Here, both experimental and conceptual innovations yield hardware and software solutions to increase the standoff distance of NLOS imaging from meter to kilometer range, which is about three orders of magnitude longer than previous experiments. In hardware, we develop a high-efficiency, low-noise NLOS imaging system at near-infrared wavelength based on a dual-telescope confocal optical design. In software, we adopt a convex optimizer, equipped with a tailored spatial–temporal kernel expressed as a three-dimensional matrix, to mitigate the effect of the spatial–temporal broadening over long standoffs. Together, these enable our demonstration of NLOS imaging and real-time tracking of hidden objects over a distance of 1.43 km. The results will open avenues for the development of NLOS imaging techniques and relevant applications to real-world conditions.

In recent years, the problem of imaging scenes that are hidden from the camera’s direct line of sight (LOS), referred to as non–line-of-sight (NLOS) imaging (1–3), has attracted growing interest (4–14). NLOS imaging has the potential to be transformative in important and diverse applications such as medicine, robotics, manufacturing, and scientific imaging. In contrast to conventional LOS imaging (15–17), the diffuse nature of light reflected from typical surfaces in NLOS imaging leads to mixing of spatial information in the collected light, seemingly precluding useful scene reconstruction. To address these issues, the NLOS imaging techniques demonstrated so far include transient imaging (4–8, 10, 11, 18), speckle correlations (9, 19), acoustic echoes (20, 21), intensity imaging (14), confocal imaging (22), occlusion-based imaging (23–27), wave-propagation transformation (28, 29), Fermat paths (30), and so forth (1–3). With few exceptions (14, 23–27), most of the techniques that reconstruct NLOS scenes rely on high-resolution time-resolved detectors and use the information encoded in the time-of-flight (TOF) of photons that scatter multiple times. Using such techniques, NLOS tracking of the positions of moving objects has also been demonstrated (12, 13). Despite this remarkable progress, NLOS imaging has been limited to short-range implementations, where the light path of the direct LOS is typically in the range of a few meters (1–3). Our interest is to demonstrate NLOS imaging over long ranges (i.e., kilometers), thus pushing its applications to real-life scenarios such as transportation, defense, and public safety and security.

The major obstacles to extending NLOS imaging to long ranges are signal strength, background noise, and optical divergence. Due to the three-bounce reflections and the long standoffs, the attenuation in long-range NLOS imaging is huge. Also, the weak back-reflected signal is mixed with ambient light, leading to a poor signal-to-noise ratio (SNR). Sunlight contributes ambient noise, and backscattering from the near-field atmosphere also introduces high noise. Moreover, unlike long-range LOS imaging (31, 32), the signal detected at each raster-scanning point in NLOS imaging contains light reflected by all parts of the hidden scene. Consequently, it is more difficult to tolerate low SNR in NLOS imaging than in LOS imaging. In addition, the optical divergence over long range introduces a strong temporal broadening of the received optical pulses. Such broadening renders the idealizations of virtual sources and virtual detectors in previous short-standoff NLOS imaging experiments (19, 22, 28–30) inapplicable. Furthermore, previous NLOS experiments typically required high-precision timing measurements at picosecond scale, as obtained from a streak camera (4–6) or a single-photon avalanche diode (SPAD) detector (8, 10–13, 22, 28–30), to recover hidden scenes. However, the temporal broadening in long-range situations can contribute timing jitter on the order of nanoseconds (SI Appendix, Fig. S4). Lastly, long-range NLOS imaging needs a higher scanning accuracy, which affects the resolution of the reconstruction results. All of these issues prevent useful NLOS reconstructions over long standoffs.

We develop both hardware and software solutions to realize long-range NLOS imaging. First, we construct an NLOS imaging system operating at a near-infrared wavelength, which has the advantages (as compared to visible light) of low atmospheric loss, low solar background, eye safety (33), and invisibility (SI Appendix, Fig. S2). Second, operating in the near-infrared requires previously undescribed detection techniques, since the conventional Si SPAD does not work at this wavelength. To this end, we develop a fully integrated InGaAs/InP negative-feedback SPAD, which is specially designed for accurate light-detection and ranging applications (34). Third, we develop a high-efficiency optical receiver by employing a telescope with high coating efficiency and a single-photon detector with a large photosensitive surface. The collection efficiency is 4.5 times higher than in previous experiments (SI Appendix, Table S1). Fourth, we adopt a dual-telescope optical design for the confocal system (22) to reduce the backscattering noise, thus enhancing the SNR. The dual-telescope design separates the illumination from the detection and removes the need for a beam splitter, thus allowing high illumination power and the optimization of receiver optics for high collection efficiency. Fifth, we optimize the system design to balance the collection efficiency and the temporal resolution to realize high-resolution imaging (SI Appendix). We achieve fine-precision scanning with 46× and 28× magnification, where the scanning accuracy is as fine as 9 μrad. Finally, we derive a forward model and a tailored deconvolution algorithm that includes the effects of temporal and spatial broadening in long-range conditions. With these efforts, we demonstrate NLOS imaging and tracking over a range up to 1.43 km at centimeter resolution. The achieved range is about three orders of magnitude longer than previous experiments (1, 2, 4–14, 18, 19, 22–30) (SI Appendix, Table S1).

NLOS Imaging Setup

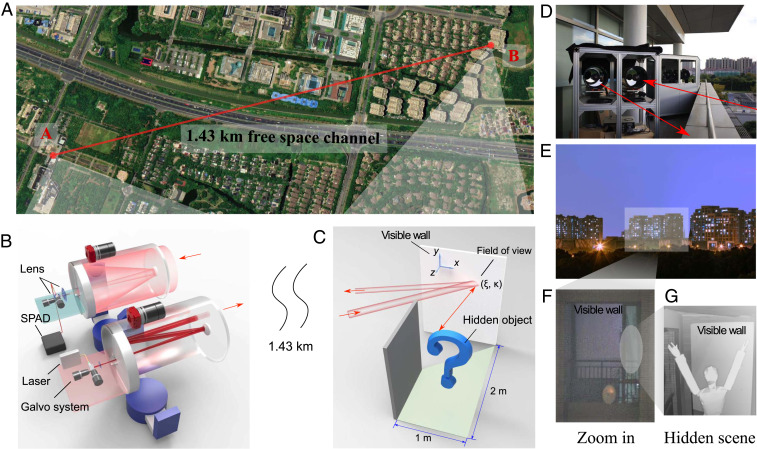

We implement NLOS imaging in the field, over an urban environment in the city of Shanghai. Fig. 1A shows an aerial view of the topology of the experiment. The imaging system is placed at location A ( N, E), facing the hidden scene located at B ( N, E) over a 1.43-km (determined by laser ranging measurement) free-space link. Note that this standoff distance is close to the maximal achievable range of our optical system (SI Appendix, Fig. S13). The hidden scene is an apartment on the 11th floor in a residential area with a size of about 2 m × 1 m (Fig. 1E), where the objects are hidden from the field-of-view (FOV) of the NLOS imaging system, except for the visible wall (Fig. 1F).

Fig. 1.

Long-range NLOS imaging experiment. (A) An aerial schematic of the NLOS imaging experiment over a 1.43-km free-space link (the standoff distance), in which the setup is placed at A and the hidden scene is placed at B. (The geographic image is from Google Earth, © 2020 Google.) (B) The optical setup of the NLOS imaging system, which consists of two synchronized telescopes for the transmitter and receiver. A laser followed by a galvo system and lenses is used for transmitting light pulses, while an InGaAs/InP SPAD together with a galvo system is used for collecting and detecting photons and recording their TOF information. (C) Schematic of the hidden scene in a room with dimensions of 2 m × 1 m. (D) An actual photograph of the NLOS imaging setup. (E and F) Zoomed-out and zoomed-in photographs of the hidden scene taken at location A, where only the visible wall can be seen. (G) Photograph of the hidden object, taken in the room located at B.

The optical NLOS imaging setup, shown in Fig. 1B, utilizes commercial off-the-shelf devices that operate at room temperature: the source is a standard fiber laser, and the detector is a compact InGaAs/InP single-photon avalanche diode (SPAD). This shows its potential for real-life applications. In particular, the whole system is operated at 1,550.2 nm, and it adopts a dual-telescope confocal optical design with two identical Schmidt–Cassegrain optical telescopes, each of which has a diameter of mm and a focal length of m. In the transmitter, pulsed light is generated by a fiber laser (BKtel HFL-300am) with a pulse width of 500 ps, an average power of 300 mW, and a repetition frequency of 1 MHz. The pulses pass through an 11-mm collimator, a two-axis raster-scanning galvo mirror, and an optical lens with 30-mm focal length. Then, the pulses go into the transmitting telescope and are sent out over the free-space link to the visible wall at location B. For the optical receiver, diffuse photons are collected by the receiving telescope and guided to a lens followed by the receiving galvo mirror. Then, the photons are coupled to a multimode fiber and directed to a homemade, compact, multimode fiber-coupled, free-running InGaAs/InP SPAD detector (34), which has 20% system detection efficiency, 210-ps timing jitter, and 2.8 K dark count rate. The photon-detection signals from the SPAD are recorded and time tagged by a time-correlated single-photon counting module with a time resolution of 32 ps.

To achieve a high-efficiency, low-noise NLOS system, we implement several optimized optical designs. In the receiving system, we utilize conversion lenses with focal lengths of 60 and 100 mm to magnify the equivalent aperture angle of the telescope so that it matches the multimode fiber, which has a core diameter of 62.5 μm and NA = 0.22 (SI Appendix). Such a design not only achieves a high coupling efficiency but also reduces the background noise from stray light. The total system efficiency, including the transmission efficiency of lenses and galvo mirrors (40%), the fiber coupling efficiency (50%), and the SPAD detector efficiency (20%), is 4%. Meanwhile, the 62.5-μm fiber ensures a proper FOV of 14 cm (at the 1.43-km relay wall) per pixel for high-resolution NLOS imaging. Moreover, the galvo system is placed between the two lenses so that the rotation angle of the galvo can be well determined by the lens and the telescope.
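The efficiency budget quoted above is a product of stage efficiencies, and the per-pixel FOV can be expressed as an angle seen from the telescope. The following is a minimal sketch of that arithmetic; the variable names are illustrative, and treating the stages as independent multiplicative losses is an assumption of the sketch.

```python
# Stage efficiencies as quoted in the text (fractions, not percent).
optics_transmission = 0.40   # lenses and galvo mirrors
fiber_coupling = 0.50        # coupling into the multimode fiber
detector_efficiency = 0.20   # InGaAs/InP SPAD

# Assumption: the stages act as independent multiplicative losses.
total_efficiency = optics_transmission * fiber_coupling * detector_efficiency

# Angular size of the 14-cm per-pixel FOV seen from 1.43 km
# (small-angle approximation: angle = size / range).
fov_diameter_m = 0.14
standoff_m = 1430.0
fov_angle_urad = fov_diameter_m / standoff_m * 1e6

print(f"total efficiency: {total_efficiency:.1%}")
print(f"per-pixel FOV angle: {fov_angle_urad:.1f} urad")
```

The product reproduces the 4% figure, and the FOV corresponds to an angle of roughly 100 μrad at the receiver.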

In long-range conditions, a standard coaxial design of the confocal NLOS imaging system (22) will present high background noise due to the direct backscatter. When the illumination light passes through the system’s internal mirrors and telescope, it will produce strong local backscattered noise due to their limited coating. This noise is much higher than the NLOS signal photons that travel back after three bounces. Besides, the near-field atmosphere will also contribute direct backscatter. To resolve these issues, we designed a dual-telescope optical system, where the illuminator and the receiver utilize two different telescopes to remove the cross-talk between them. The dual-telescope system can also avoid the beam splitter (22), which allows the use of high illumination power and optimization of the receiver optics for high collection efficiency. The two telescopes are carefully matched and automatically synchronized for each raster-scanning position. Furthermore, the central positions of each illuminating point and receiving FOV, projected on the visible wall, are set to be slightly separated in order to reduce the effect of first-bounce light. This separation is controlled at 9.8 cm, which is much smaller than the distance between the hidden object and the visible wall (2 m); so the spherical geometry resulting from coaxial alignment is an accurate approximation.
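To see why a 9.8-cm separation barely disturbs the confocal (coaxial) geometry, one can compare the true two-leg path with the idealized round trip from the midpoint. The sketch below does this for a single hidden point; the coordinate layout and the function name `bistatic_excess_path` are assumptions made for illustration.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def bistatic_excess_path(separation_m, depth_m, lateral_m=0.0):
    """Extra path length when illumination and detection spots are separated.

    Geometry (an assumption of this sketch): both spots lie on the wall plane,
    placed symmetrically about the origin along x; the hidden point sits at
    lateral offset `lateral_m` along x and at distance `depth_m` from the wall.
    """
    half = separation_m / 2.0
    to_laser_spot = math.hypot(lateral_m + half, depth_m)
    to_detector_spot = math.hypot(lateral_m - half, depth_m)
    confocal = 2.0 * math.hypot(lateral_m, depth_m)  # idealized coaxial round trip
    return to_laser_spot + to_detector_spot - confocal

excess_m = bistatic_excess_path(separation_m=0.098, depth_m=2.0)
excess_ps = excess_m / C * 1e12
print(f"excess path: {excess_m * 1e3:.2f} mm, i.e. {excess_ps:.1f} ps")
```

For a point 2 m behind the wall the excess path is on the order of a millimeter, which corresponds to a few picoseconds and is well below the 32-ps time resolution of the counting module.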

For the choices of the FOVs of the transmitting and receiving systems, there is a tradeoff between the collection efficiency and the system timing jitter. In our experiment, we perform an optimization to choose both FOVs at a diameter of 14 cm projected on the visible wall, where the receiving FOV matches the numerical aperture and the core diameter of the multimode fiber (SI Appendix). The transmitting and receiving telescopes raster-scan a grid with a total area of 0.85 m × 0.85 m on the visible wall. The distance between the central positions of two neighboring scan points is set to be 1.3 cm. This is achieved by designing the two optical telescope systems to magnify the rotation angle of the two galvo systems.
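The scan spacing on the wall translates directly into the angular step that the galvo and telescope must resolve. A short sketch of the conversion, using only the distances given in the text and the small-angle approximation (variable names are illustrative):

```python
standoff_m = 1430.0        # free-space link length
scan_width_m = 0.85        # side of the scanned square on the visible wall
point_spacing_m = 0.013    # distance between neighboring scan points

# Small-angle approximation: angle = arc length / range.
angular_step_urad = point_spacing_m / standoff_m * 1e6
scan_angle_mrad = scan_width_m / standoff_m * 1e3

print(f"angular step between scan points: {angular_step_urad:.2f} urad")
print(f"full scan angle: {scan_angle_mrad:.3f} mrad")
```

The step of about 9 μrad is consistent with the scanning accuracy quoted in the introduction.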

Forward Model and Reconstruction Algorithm

Inspired by confocal NLOS imaging based on the light-cone transform (LCT) (22), we derive an image formation algorithm that is tailored for long-range outdoor conditions. The forward model takes two additional parameters into consideration: 1) the system’s time jitter σ_t, which includes time-broadening contributions (35) from the laser pulse width, the detector’s jitter, the optical divergence, etc.; and 2) the standard deviation (SD) σ_xy of the receiver’s FOV projected onto the visible wall. Similar to most NLOS imaging methods, our model makes the following assumptions: the visible wall and the hidden object are both Lambertian, the influence of the angle of the Lambertian scattering can be ignored, and photons that bounce more than three times can be ignored.
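As a rough illustration of how the contributions to the time jitter combine, the sketch below adds the instrument terms quoted in the setup description in quadrature and compares them with the 1.1-ns width reported in the results. Treating every term as an independent Gaussian full width at half-maximum is an assumption of this sketch, not a statement from the text.

```python
import math

FWHM_PER_SIGMA = 2.0 * math.sqrt(2.0 * math.log(2.0))  # about 2.355 for a Gaussian

# Values quoted in the text, all treated here as Gaussian FWHMs (an assumption).
laser_pulse_ps = 500.0
detector_jitter_ps = 210.0
tcspc_bin_ps = 32.0
measured_total_ps = 1100.0  # three-bounce return, quoted as 1.1 ns

# Independent Gaussian contributions add in quadrature.
instrument_ps = math.sqrt(laser_pulse_ps**2 + detector_jitter_ps**2 + tcspc_bin_ps**2)

# The remainder is attributed to optical divergence and any other broadening.
residual_ps = math.sqrt(measured_total_ps**2 - instrument_ps**2)

# Gaussian standard deviation corresponding to the measured FWHM.
sigma_t_ps = measured_total_ps / FWHM_PER_SIGMA

print(f"instrument FWHM: {instrument_ps:.0f} ps")
print(f"residual FWHM:   {residual_ps:.0f} ps")
print(f"sigma_t:         {sigma_t_ps:.0f} ps")
```

Under these assumptions the instrument alone accounts for roughly half of the measured width, so the remaining broadening is of comparable or larger size.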

Forward Model.

A topology of the image formation is shown in SI Appendix, Fig. S1. We consider a confocal NLOS imaging system where the receiver’s and transmitter’s FOVs overlap. We assume that the laser and the detector raster-scan an N × N grid at the same points. Let (x′, y′) denote the scanning points on the visible wall and (x, y, z) denote the patch on the object with reflectivity ρ(x, y, z). The detection rate τ(x′, y′, t) as a function of time t for each grid position is a three-dimensional (3D) signal modeled as

| [1] |

where

Here, r denotes the distance between the scanning point on the visible wall and the point on the hidden object; g_t is the temporal distribution of the whole transceiver system, modeled by a Gaussian function with SD σ_t; g_xy describes the spatial distribution of the receiver’s FOV projected on the visible wall, which can be modeled by a two-dimensional (2D) Gaussian distribution with the spatial size (SD) of σ_xy; ∗_xy represents convolution with respect to the spatial variables; and ∗_t represents convolution with respect to the time variable. Note that several scalar factors are absorbed into ρ, such as the detection efficiency and normalization factors for g_t and g_xy.
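For orientation, the confocal light-cone model of ref. 22 can be combined with the two broadening kernels described in this section as follows. This is a sketch consistent with the definitions in the text, not a verbatim restatement of Eq. 1; the symbols τ (detection rate), ρ (reflectivity), r (wall-to-object distance), g_t and g_xy (temporal and spatial Gaussian kernels), and σ_t and σ_xy (their SDs) are notation assumed here, and c is the speed of light.

```latex
\begin{align}
\tau(x', y', t) &= g_{xy} \ast_{xy} \, g_{t} \ast_{t}
  \iiint_{\Omega} \frac{1}{r^{4}}\, \rho(x, y, z)\,
  \delta\!\left(2r - tc\right) \mathrm{d}x\, \mathrm{d}y\, \mathrm{d}z, \\
r &= \sqrt{(x'-x)^{2} + (y'-y)^{2} + z^{2}}, \\
g_{t}(t) &\propto \exp\!\left(-\frac{t^{2}}{2\sigma_{t}^{2}}\right), \qquad
g_{xy}(x', y') \propto \exp\!\left(-\frac{x'^{2} + y'^{2}}{2\sigma_{xy}^{2}}\right).
\end{align}
```

Without the two convolutions, the first line reduces to the confocal image formation model of ref. 22.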

According to the properties of the Dirac delta function and the change of variables z = √u and v = (tc/2)² adopted in the LCT model (22), Eq. 1 can be rewritten as

| [2] |

where

Upon discretizing time (raster-scan points are already discrete), the pair of convolutions in Eq. 2 has a discrete representation, G ∗ Aρ. Here, G is a 3D spatiotemporal matrix representing the facula (light-spot) intensity distribution on the visible wall and the system jitter distribution in the temporal domain; A models the light-cone transform in confocal NLOS imaging. From the theory of photodetection, the photons detected by the SPAD follow an inhomogeneous Poisson distribution. Therefore, the image formation model of this long-range confocal NLOS imaging is

| [3] |

where τ represents the discretized measured photon counts, indexed by scanning point and discrete histogram time bin; G ∗ Aρ encodes the discretized version of the image formation in Eq. 1; ρ is the distribution of the hidden object’s reflectivity; and B represents the dark counts of the detector and the background noise.
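The measurement model described here (spatiotemporal blurring followed by Poisson detection with a background term) can be simulated in a few lines. The sketch below applies only the broadening kernel to an already-computed ideal transient volume; it does not implement the light-cone transform, and the function names, the separable Gaussian kernel, and all numerical values are illustrative assumptions.

```python
import numpy as np

def gaussian_kernel_1d(sigma, radius):
    """Normalized 1D Gaussian sampled on integer offsets -radius..radius."""
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    return kernel / kernel.sum()

def blur_along_axis(volume, kernel, axis):
    """Convolve a 3D array with a 1D kernel along one axis.

    Assumes the kernel is shorter than the axis, so 'same' keeps the shape.
    """
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="same"), axis, volume)

def simulate_counts(ideal_rate, sigma_xy_px, sigma_t_bins, background, rng):
    """Blur an ideal (x, y, t) rate volume in space and time, then draw Poisson counts."""
    k_xy = gaussian_kernel_1d(sigma_xy_px, radius=int(3 * sigma_xy_px) + 1)
    k_t = gaussian_kernel_1d(sigma_t_bins, radius=int(3 * sigma_t_bins) + 1)
    rate = blur_along_axis(ideal_rate, k_xy, axis=0)   # spatial blur, x
    rate = blur_along_axis(rate, k_xy, axis=1)         # spatial blur, y
    rate = blur_along_axis(rate, k_t, axis=2)          # temporal jitter
    counts = rng.poisson(rate + background)            # background and dark counts
    return counts, rate

rng = np.random.default_rng(0)
ideal = np.zeros((16, 16, 64))
ideal[8, 8, 32] = 500.0   # a single bright voxel, purely illustrative
counts, rate = simulate_counts(ideal, sigma_xy_px=1.5, sigma_t_bins=3.0,
                               background=0.05, rng=rng)
print(counts.shape, int(counts.sum()))
```

Because a 2D isotropic Gaussian is separable, the 3D kernel is applied here as three 1D convolutions, which keeps the sketch short.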

Reconstruction.

The goal of imaging reconstruction is to estimate ρ, which contains both intensity and depth information, from the measurement data τ. To reconstruct ρ, we describe the inverse problem as a convex optimization. Let L(ρ) denote the negative log-likelihood data-fidelity function from Eq. 3. The computation of the regularized maximum-likelihood estimate can be written as the convex optimization problem

| [4] |

Here, the constraint ρ ≥ 0 comes from the nonnegativity of the reflectivity, and the total variation (TV) is exploited as the regularizer, where λ is the regularization parameter.
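A regularized objective of this kind (Poisson negative log-likelihood plus a TV penalty under a nonnegativity constraint) can be written compactly. The following is a minimal sketch under stated assumptions: the anisotropic form of TV, the generic `forward` callable standing in for the forward operator, and all function names are choices made here for illustration.

```python
import numpy as np

def poisson_nll(rate, counts):
    """Poisson negative log-likelihood up to a constant: sum(rate - counts * log(rate))."""
    rate = np.asarray(rate, dtype=float)
    return float(np.sum(rate - counts * np.log(rate)))

def total_variation_3d(volume):
    """Anisotropic TV: sum of absolute forward differences along each axis."""
    return float(sum(np.abs(np.diff(volume, axis=a)).sum()
                     for a in range(volume.ndim)))

def objective(rho, counts, forward, background, lam):
    """Regularized objective for a nonnegative reflectivity volume rho."""
    if np.any(rho < 0):
        return float("inf")            # enforce the constraint rho >= 0
    rate = forward(rho) + background   # the rate must stay positive for log()
    return poisson_nll(rate, counts) + lam * total_variation_3d(rho)

# Tiny usage example with an identity forward operator (illustrative only).
rho = np.ones((2, 2, 2))
counts = np.ones((2, 2, 2))
value = objective(rho, counts, forward=lambda x: x, background=0.0, lam=0.1)
print(value)
```

Returning infinity outside the feasible set is one simple way to encode the constraint; a solver would normally handle it by projection instead.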

We develop an iterative 3D deconvolution algorithm, modified from Sparse Poisson Intensity Reconstruction Algorithms–Theory and Practice (SPIRAL-TAP) (36), to obtain an approximate solution of the inverse problem in Eq. 4. This NLOS problem is different from the problems addressed by standard LOS algorithms (37, 38). Our reconstruction algorithm, SPIRAL3D, employs sequential quadratic approximations to the log-likelihood objective function. SPIRAL3D extends the conventional SPIRAL-TAP from a 2D matrix to a 3D matrix. That is, it performs the iterative optimization directly on 3D matrices that include both the spatial and temporal information, in order to match the convolutional operators in the forward model of Eqs. 1, 2, and 3 (39). The convergence of this iteration method has been proved in ref. 36.
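To give a feel for this family of solvers, the sketch below minimizes a Poisson negative log-likelihood under a nonnegativity constraint using projected gradient steps with backtracking. It is deliberately simplified and is not SPIRAL3D: it omits the TV regularizer and the step-size rules of SPIRAL-TAP, works on a 1D signal, and uses a small dense blur matrix `K` as a stand-in forward operator. All names and values are assumptions for illustration.

```python
import numpy as np

def nll(x, K, y, b):
    """Poisson negative log-likelihood (up to a constant) for rate K @ x + b."""
    rate = K @ x + b
    return float(np.sum(rate - y * np.log(rate)))

def projected_gradient(K, y, b, iters=200, step=1.0):
    """Minimize the Poisson NLL over x >= 0 with backtracking projected gradient steps."""
    x = np.full(K.shape[1], max(y.mean(), 1e-3))   # flat nonnegative start
    f = nll(x, K, y, b)
    for _ in range(iters):
        grad = K.T @ (1.0 - y / (K @ x + b))
        t = step
        while t > 1e-12:
            candidate = np.maximum(x - t * grad, 0.0)   # projection onto x >= 0
            f_new = nll(candidate, K, y, b)
            if f_new <= f:
                break                                   # accept this step
            t *= 0.5                                    # otherwise shrink the step
        else:
            break                                       # no improving step found
        x, f = candidate, f_new
    return x

rng = np.random.default_rng(1)
n = 40
idx = np.arange(n)
K = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / 2.0) ** 2)  # Gaussian blur matrix
K /= K.sum(axis=0, keepdims=True)
truth = np.zeros(n)
truth[12], truth[25] = 200.0, 120.0
b = 0.5                                   # positive background keeps log() finite
y = rng.poisson(K @ truth + b)

x0 = np.full(n, max(y.mean(), 1e-3))
x_hat = projected_gradient(K, y, b)
print(nll(x0, K, y, b), nll(x_hat, K, y, b))
```

Each accepted step cannot increase the objective, so the final value is never worse than the starting one; adding a TV term would replace the simple projection with a TV-denoising subproblem.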

NLOS Imaging Results

In the long-range case shown in Fig. 1, the temporal resolution of received photons after three bounces is characterized to be a full width at half-maximum of 1.1 ns in good weather conditions (SI Appendix, Fig. S4). In the experiment, we observe that the total attenuation for the third bounce is 160 dB over the 1.43-km link, when a typical hidden object (0.6 m × 0.4 m size) is placed about 1 m away from the visible wall. On average, for each emitted pulse, the received mean photon numbers for the first and third bounce are about 0.048 and 0.0003. During data collection, for each raster-scanning point, we normally operate the system with an exposure time of 2 s, where we send out laser pulses with a total number of photons and collect 674 returned third-bounce photon counts. The SNR for the third bounce is about 2.0. The data are mainly collected at night, because of relatively stable temperature and humidity (SI Appendix, Fig. S3). We also perform measurements in daylight. By using proper spectral filters, we observe similar SNRs (SI Appendix, Fig. S12). For each scanning position, a photon-counting histogram mainly consists of two peaks in the temporal domain (see a typical sequence in SI Appendix, Fig. S5). The first peak records the photons reflected by the visible wall (first bounce), and the second peak records the photons through three bounces (third bounce). The time difference between first-bounce and third-bounce photons is extracted as the signal for NLOS image reconstructions.
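The figures in this paragraph can be cross-checked with an order-of-magnitude photon budget. The sketch below assumes that the full 300-mW average power leaves the transmitter and that the 160-dB attenuation applies to the whole path from emission to detection; both are assumptions of this estimate, and the variable names are illustrative.

```python
H = 6.62607015e-34      # Planck constant, J s
C = 299_792_458.0       # speed of light in vacuum, m/s

wavelength_m = 1550.2e-9
average_power_w = 0.300
repetition_hz = 1.0e6
exposure_s = 2.0
attenuation_db = 160.0

photon_energy_j = H * C / wavelength_m
photons_per_pulse = average_power_w / repetition_hz / photon_energy_j
pulses = repetition_hz * exposure_s
emitted_photons = photons_per_pulse * pulses

# Expected third-bounce photons per pulse and per exposure after 160 dB of loss.
returned_per_pulse = photons_per_pulse * 10 ** (-attenuation_db / 10)
returned_total = returned_per_pulse * pulses

print(f"photons per pulse:   {photons_per_pulse:.2e}")
print(f"photons per 2 s:     {emitted_photons:.2e}")
print(f"returned per pulse:  {returned_per_pulse:.1e}")
print(f"returned per 2 s:    {returned_total:.0f}")
```

The estimate gives a few times 10⁻⁴ returned photons per pulse and several hundred counts per exposure, the same order of magnitude as the 0.0003 photons per pulse and 674 counts reported above.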

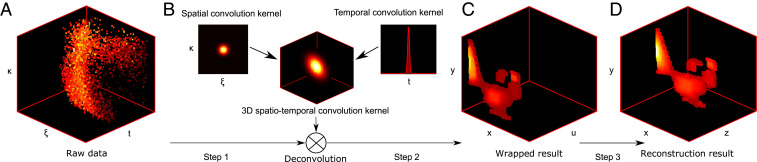

Fig. 2 illustrates the process of the reconstruction for the hidden mannequin. The process generally involves three steps: 1) setting the raw data as the initial value of the iteration; 2) applying the spatiotemporal kernel to solve the 3D deconvolution with the SPIRAL3D solver; and 3) transforming the coordinate along depth from u to z (with z = √u, as in the LCT model (22)) to recover ρ, the reflectivity of the hidden scene. With these three steps, the shape and albedo of the hidden mannequin can be reconstructed successfully.
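The last step, the change of depth coordinate, amounts to resampling the reconstructed volume along its last axis. The sketch below uses the substitution z = √u from the light-cone transform of ref. 22 and linear interpolation; whether the authors' code interpolates in this way is not stated, and the function name and grids are assumptions.

```python
import numpy as np

def resample_u_to_z(volume_u, u_grid, z_grid):
    """Resample the last axis of a volume from a grid in u = z**2 to depths z.

    volume_u: array of shape (nx, ny, nu) sampled at increasing u_grid values.
    Returns an array of shape (nx, ny, len(z_grid)).
    """
    u_query = np.asarray(z_grid, dtype=float) ** 2
    return np.apply_along_axis(
        lambda column: np.interp(u_query, u_grid, column), -1, volume_u)

# Illustrative check: a volume whose value equals u becomes z**2 after resampling.
u_grid = np.linspace(0.0, 4.0, 401)
volume_u = np.broadcast_to(u_grid, (2, 2, u_grid.size)).copy()
z_grid = np.linspace(0.0, 2.0, 21)
volume_z = resample_u_to_z(volume_u, u_grid, z_grid)
print(volume_z.shape)
```

A uniform grid in u is denser in z at large depth than near the wall, which is why the resampling is needed before the volume can be displayed in metric depth.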

Fig. 2.

The reconstruction procedure of our approach. (A) The raw-data measurements are resampled along the time axis. (B) The spatiotemporal kernel is applied to the 3D raw data to solve the deconvolution with the SPIRAL3D solver. (C) Transforming the coordinate along depth from u to z to recover the hidden scene. (D) The final reconstructed result.

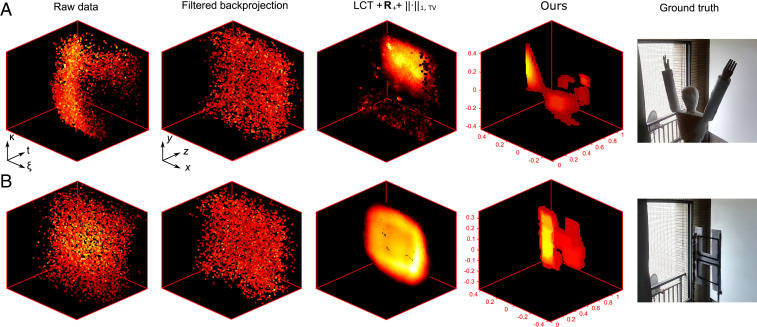

Fig. 3 shows the results for two distinct hidden objects, which are computed with different types of NLOS reconstruction approaches, including filtered back-projection with a 3D Laplacian of Gaussian filter (40), LCT with an iterative solver based on the Alternating Direction Method of Multipliers (ADMM) (22), and the algorithm proposed herein. The proposed algorithm recovers the fine features of the hidden objects, allowing the hidden scenes to be accurately identified. The other approaches, however, fail in this regard. These results clearly demonstrate that the proposed algorithm outperforms state-of-the-art approaches for NLOS reconstruction of hidden targets in outdoor and long-range conditions. In the experiment, we can resolve two hidden objects of cm apart, which is better than the approximate resolution bound (SI Appendix). SI Appendix, Figs. S6 and S10 show additional results such as 3D scenes that contain multiple objects with different depths. For a longer distance, the optical divergence introduces larger spatial–temporal broadening, which will deteriorate the accuracy of the reconstructed image (SI Appendix, Fig. S14).

Fig. 3.

Comparison of the reconstructed results with different approaches. The NLOS measurements are computed by filtered back-projection with a 3D Laplacian of Gaussian filter (40), LCT with an iterative ADMM-based solver (22), and our proposed algorithm. (A) The reconstructed results for the hidden scene of mannequin. (B) The reconstructed results for the hidden scene of letter H.

NLOS Tracking Results

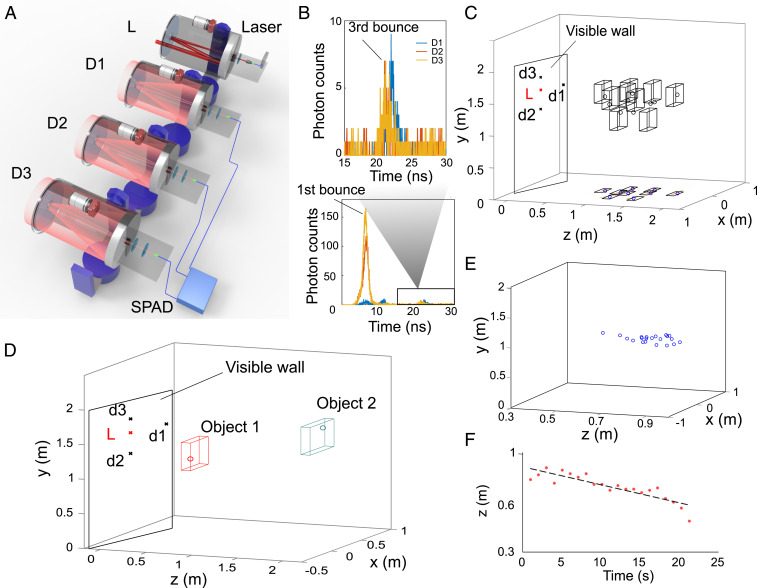

We perform experiments to test NLOS tracking of the positions of hidden objects in real time over 1.43 km. Obtaining the position of hidden objects is sufficient for a number of applications, and earlier remarkable experiments have proved the feasibility of NLOS tracking up to 50 m (12, 13). As shown in Fig. 4A, our experiment is realized with three receiver telescopes simultaneously observing three distinct positions D1, D2, and D3, while a transmitter telescope transmits laser pulses at position L on the visible wall. These four positions on the visible wall are optimized to achieve high-resolution detection of the hidden objects (Fig. 4 C and D). The collected photons from the three receiver telescopes are coupled to multimode fibers and detection systems, which include three-channel InGaAs/InP SPADs and time-to-digital converters (TDCs). By detecting the TOF information of the photons reflected by the hidden object, one can retrieve both the coordinates and the speed of the target. Following refs. 12 and 13, we use the Gaussian model to correct the time jitter and adopt the maximum-likelihood estimation method for ellipsoid curves.
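The geometric core of this localization can be illustrated without noise. Each TOF measurement fixes the path length from L to the object to one detector spot, which places the object on an ellipsoid with foci L and D_i; with all four spots on the wall plane, the three ellipsoids can be intersected by a single linear solve. The sketch below shows this closed-form variant, which is a swapped-in simplification and not the maximum-likelihood estimator with a Gaussian jitter model used in the experiment; the function name and the example coordinates are hypothetical.

```python
import numpy as np

def locate_from_path_lengths(laser_xy, detectors_xy, path_lengths):
    """Noise-free localization of a hidden point from three path lengths.

    laser_xy: (2,) illuminated spot L on the wall plane z = 0.
    detectors_xy: (3, 2) observed spots D_i on the same plane.
    path_lengths: (3,) values s_i = |L - p| + |p - D_i| in meters
        (for example, c times the measured delay of the three-bounce return).
    Returns p = (x, y, z) with z >= 0, i.e. on the hidden side of the wall.
    """
    L = np.asarray(laser_xy, dtype=float)
    D = np.asarray(detectors_xy, dtype=float)
    s = np.asarray(path_lengths, dtype=float)
    # Unknowns (x, y, a) with a = |L - p|. Subtracting the sphere equations
    # |p - L|^2 = a^2 and |p - D_i|^2 = (s_i - a)^2 gives the linear rows
    # 2 (D_i - L) . (x, y) - 2 s_i a = |D_i|^2 - |L|^2 - s_i^2.
    A = np.column_stack([2.0 * (D - L), -2.0 * s])
    rhs = np.sum(D**2, axis=1) - np.sum(L**2) - s**2
    x, y, a = np.linalg.solve(A, rhs)
    z_squared = a**2 - (x - L[0])**2 - (y - L[1])**2
    return np.array([x, y, np.sqrt(max(z_squared, 0.0))])

# Hypothetical geometry in meters, for illustration only.
laser = np.array([0.0, 0.0])
detectors = np.array([[0.3, 0.0], [0.0, 0.3], [-0.3, -0.2]])
target = np.array([0.2, 0.1, 1.0])

wall_points = np.column_stack([detectors, np.zeros(3)])
s = (np.linalg.norm(target - np.append(laser, 0.0))
     + np.linalg.norm(target - wall_points, axis=1))
estimate = locate_from_path_lengths(laser, detectors, s)
print(estimate)
```

With timing jitter the three ellipsoids no longer meet in a point, which is why a probabilistic estimate such as the joint probability density discussed in this section is used in practice.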

Fig. 4.

NLOS real-time detecting and tracking experiment over 1.43 km. (A) The setup includes one telescope and a pulsed laser for transmitting light (L) and three telescopes and SPADs for detecting light (D1, D2, D3). (B) A representative raw measurement contains the first- and third-bounce photon counts. (C) Detection of the various positions for a single hidden object. (D) Detection of the positions for two simultaneous hidden objects. (E) The real-time tracking for a hidden moving object at a frame rate of 1 Hz. (F) The real-time tracking speed for a hidden moving object, where the retrieved speed is 1.28 cm/s, which matches the actual speed of 1.25 cm/s.

The results of NLOS detection and tracking are shown in Fig. 4. We implement the detection of a single hidden object at different positions (Fig. 4C) and the detection of two hidden objects (Fig. 4D). In the experiment, we show the ability to retrieve hidden objects at different hidden distances to the visible wall. The agreement between the joint probability density function and the object’s true position shows that our system is able to locate a stationary person up to 1.8 m. We perform 10 measurements for each position of the hidden object and observe that the SD is [10, 10, 2.5] cm and the error (or accuracy) between the mean value and the actual position is [7, 8, 3] cm, at the hidden distance of 1 m. These results are comparable to previous experiments (12, 13). Furthermore, we demonstrate real-time tracking (Fig. 4 E and F) of a moving object at a frame rate of 1 Hz. Fig. 4E shows the retrieved positions of the moving object at different times. The retrieved speed is 1.28 cm/s according to the fitting result in Fig. 4F, which matches the actual speed of the hidden object of 1.25 cm/s. These results demonstrate the potential for NLOS detection and tracking in real time over a long range.
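Retrieving a speed from tracked positions is a straight-line fit of position against time. The sketch below shows the fit on synthetic, noise-free positions generated at the 1-Hz frame rate; the data are hypothetical and serve only to illustrate the procedure, not to reproduce Fig. 4F.

```python
import numpy as np

frame_rate_hz = 1.0
times_s = np.arange(20) / frame_rate_hz

# Hypothetical positions along the direction of motion (cm), for illustration only.
positions_cm = 30.0 + 1.25 * times_s

# np.polyfit returns coefficients from the highest degree down: slope, then intercept.
speed_cm_per_s, offset_cm = np.polyfit(times_s, positions_cm, deg=1)
print(f"fitted speed: {speed_cm_per_s:.2f} cm/s")
```

With measured positions, the scatter of the individual frames around the fitted line determines how closely the fitted slope matches the true speed.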

Discussion

In summary, we demonstrate in this work NLOS imaging and tracking of a hidden scene at long ranges up to 1.43 km. The computed images allow for NLOS target recognition and identification at low light levels and at an invisible, eye-safe wavelength. (It is noted that our aim is to extend the standoff distance of NLOS techniques while the scale of the hidden scene is similar to the previous work at room scale.) In the future, advanced devices such as femtosecond lasers, high-efficiency superconducting detectors (41), and SPAD array detectors (42) can be used to boost the imaging resolution, increase the imaging speed, and extend the scale of the hidden scene. Atmospheric turbulence will be another consideration when going toward longer ranges. Overall, the proposed high-efficiency confocal NLOS imaging/tracking system, noise-suppression method, and long-range-tailored reconstruction algorithm open opportunities for long-range, low-power NLOS imaging, which is important for moving NLOS imaging techniques from short-range validations to outdoor, real-world applications.

Materials and Methods

Supporting Details.

In SI Appendix, we provide more details on both hardware and software. For hardware, we mainly discuss the optimized system design and some calibrations; for software, we include a more detailed forward model and some simulation results. Full information regarding materials and methods is available in SI Appendix.

Acknowledgments

This work was supported by National Key Research and Development Plan of China Grant (2018YFB0504300), National Natural Science Foundation of China Grants (61771443 and 62031024), the Shanghai Municipal Science and Technology Major Project (2019SHZDZX01), the Anhui Initiative in Quantum Information Technologies, the Chinese Academy of Sciences, Shanghai Science and Technology Development Funds (18JC1414700), Fundamental Research Funds for the Central Universities (WK2340000083), and Key-Area Research and Development Program of Guangdong Province Grant (2020B0303020001).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2024468118/-/DCSupplemental.

Data Availability

All data and processing code supporting the findings of this study have been deposited in GitHub (https://github.com/quantum-inspired-lidar/NLOS_imaging_over_1.43km).

References

- 1. Altmann Y., et al., Quantum-inspired computational imaging. Science 361, eaat2298 (2018).

- 2. Maeda T., Satat G., Swedish T., Sinha L., Raskar R., Recent advances in imaging around corners. arXiv:1910.05613 (12 October 2019).

- 3. Faccio D., Velten A., Wetzstein G., Non-line-of-sight imaging. Nat. Rev. Phys. 2, 318–327 (2020).

- 4. Kirmani A., Hutchison T., Davis J., Raskar R., “Looking around the corner using transient imaging” in Proceedings of the 12th IEEE International Conference on Computer Vision (IEEE, 2009), pp. 159–166.

- 5. Velten A., et al., Recovering three-dimensional shape around a corner using ultrafast time-of-flight imaging. Nat. Commun. 3, 745 (2012).

- 6. Gupta O., Willwacher T., Velten A., Veeraraghavan A., Raskar R., Reconstruction of hidden 3D shapes using diffuse reflections. Opt. Express 20, 19096–19108 (2012).

- 7. Heide F., Xiao L., Heidrich W., Hullin M. B., “Diffuse mirrors: 3D reconstruction from diffuse indirect illumination using inexpensive time-of-flight sensors” in Proc. IEEE Conf. Computer Vision and Pattern Recognition (IEEE, 2014), pp. 3222–3229.

- 8. Laurenzis M., Velten A., Nonline-of-sight laser gated viewing of scattered photons. Opt. Eng. 53, 023102 (2014).

- 9. Katz O., Heidmann P., Fink M., Gigan S., Non-invasive single-shot imaging through scattering layers and around corners via speckle correlations. Nat. Photon. 8, 784 (2014).

- 10. Laurenzis M., Klein J., Bacher E., Metzger N., Multiple-return single-photon counting of light in flight and sensing of non-line-of-sight objects at shortwave infrared wavelengths. Opt. Lett. 40, 4815–4818 (2015).

- 11. Buttafava M., Zeman J., Tosi A., Eliceiri K., Velten A., Non-line-of-sight imaging using a time-gated single photon avalanche diode. Opt. Express 23, 20997–21011 (2015).

- 12. Gariepy G., Tonolini F., Henderson R., Leach J., Faccio D., Detection and tracking of moving objects hidden from view. Nat. Photon. 10, 23 (2016).

- 13. Chan S., Warburton R. E., Gariepy G., Leach J., Faccio D., Non-line-of-sight tracking of people at long range. Opt. Express 25, 10109–10117 (2017).

- 14. Klein J., Peters C., Martín J., Laurenzis M., Hullin M. B., Tracking objects outside the line of sight using 2D intensity images. Sci. Rep. 6, 32491 (2016).

- 15. Sun B., et al., 3D computational imaging with single-pixel detectors. Science 340, 844–847 (2013).

- 16. Kirmani A., et al., First-photon imaging. Science 343, 58–61 (2014).

- 17. Shin D., et al., Photon-efficient imaging with a single-photon camera. Nat. Commun. 7, 12046 (2016).

- 18. Heide F., et al., Non-line-of-sight imaging with partial occluders and surface normals. ACM Trans. Graph. 38, 22 (2019).

- 19. Metzler C. A., et al., Deep-inverse correlography: Toward real-time high-resolution non-line-of-sight imaging. Optica 7, 63–71 (2020).

- 20. Dokmanić I., Parhizkar R., Walther A., Lu Y. M., Vetterli M., Acoustic echoes reveal room shape. Proc. Natl. Acad. Sci. U.S.A. 110, 12186–12191 (2013).

- 21. Lindell D. B., Wetzstein G., Koltun V., “Acoustic non-line-of-sight imaging” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (IEEE, 2019), pp. 6780–6789.

- 22. O’Toole M., Lindell D. B., Wetzstein G., Confocal non-line-of-sight imaging based on the light-cone transform. Nature 555, 338 (2018).

- 23. Bouman K. L., et al., “Turning corners into cameras: Principles and methods” in Proceedings of the 23rd IEEE International Conference on Computer Vision (IEEE, 2017), pp. 2270–2278.

- 24. Baradad M., et al., “Inferring light fields from shadows” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2018), pp. 6267–6275.

- 25. Xu F., et al., Revealing hidden scenes by photon-efficient occlusion-based opportunistic active imaging. Opt. Express 26, 9945–9962 (2018).

- 26. Saunders C., Murray-Bruce J., Goyal V. K., Computational periscopy with an ordinary digital camera. Nature 565, 472–475 (2019).

- 27. Seidel S. W., et al., “Corner occluder computational periscopy: Estimating a hidden scene from a single photograph” in Proceedings of the IEEE International Conference on Computational Photography (IEEE, 2019), pp. 25–33.

- 28. Liu X., et al., Non-line-of-sight imaging using phasor-field virtual wave optics. Nature 572, 620–623 (2019).

- 29. Lindell D. B., Wetzstein G., O’Toole M., Wave-based non-line-of-sight imaging using fast f–k migration. ACM Trans. Graph. 38, 1–13 (2019).

- 30. Xin S., et al., “A theory of Fermat paths for non-line-of-sight shape reconstruction” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2019), pp. 6800–6809.

- 31. Pawlikowska A. M., Halimi A., Lamb R. A., Buller G. S., Single-photon three-dimensional imaging at up to 10 kilometers range. Opt. Express 25, 11919–11931 (2017).

- 32. Li Z. P., et al., Super-resolution single-photon imaging at 8.2 kilometers. Opt. Express 28, 4076–4087 (2020).

- 33. Thomas R. J., et al., A procedure for multiple-pulse maximum permissible exposure determination under the Z136.1-2000 American National Standard for Safe Use of Lasers. J. Laser Appl. 13, 134–140 (2001).

- 34. Yu C., et al., Fully integrated free-running InGaAs/InP single-photon detector for accurate lidar applications. Opt. Express 25, 14611–14620 (2017).

- 35. O’Toole M., et al., “Reconstructing transient images from single-photon sensors” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2017), pp. 1539–1547.

- 36. Harmany Z. T., Marcia R. F., Willett R. M., This is SPIRAL-TAP: Sparse Poisson intensity reconstruction algorithms–theory and practice. IEEE Trans. Image Process. 21, 1084–1096 (2011).

- 37. Shin D., Kirmani A., Goyal V. K., Shapiro J. H., Photon-efficient computational 3-D and reflectivity imaging with single-photon detectors. IEEE Trans. Comput. Imag. 1, 112–125 (2015).

- 38. Tachella J., et al., Bayesian 3D reconstruction of complex scenes from single-photon lidar data. SIAM J. Imag. Sci. 12, 521–550 (2019).

- 39. Shin D., Shapiro J. H., Goyal V. K., “Photon-efficient super-resolution laser radar” in Proceedings of SPIE: Wavelets and Sparsity XVII, Lu Y. M., Van De Ville D., Papadakis M., Eds. (International Society for Optics and Photonics, 2017), vol. 10394, p. 1039409.

- 40. Laurenzis M., Velten A., Feature selection and back-projection algorithms for nonline-of-sight laser–gated viewing. J. Electron. Imag. 23, 063003 (2014).

- 41. Hadfield R. H., Single-photon detectors for optical quantum information applications. Nat. Photon. 3, 696 (2009).

- 42. Zappa F., Tisa S., Tosi A., Cova S., Principles and features of single-photon avalanche diode arrays. Sens. Actuators A Phys. 140, 103–112 (2007).
