Abstract

A picture-naming task and ERPs were used to investigate effects of iconicity and visual alignment between signs and pictures in American Sign Language (ASL). For iconic signs, half the pictures visually overlapped with phonological features of the sign (e.g., the fingers of CAT align with a picture of a cat with prominent whiskers), while half did not (whiskers are not shown). Iconic signs were produced numerically faster than non-iconic signs and were associated with larger N400 amplitudes, akin to concreteness effects. Pictures aligned with iconic signs were named faster than non-aligned pictures and elicited a reduced N400 amplitude. No behavioral effects were observed for the control group (English speakers). We conclude that sensory-motoric semantic features are represented more robustly for iconic than non-iconic signs (eliciting a concreteness-like N400 effect) and that visual overlap between pictures and the phonological form of iconic signs facilitates lexical retrieval (eliciting a reduced N400).

Keywords: Iconicity, ERPs, American Sign Language, N400, picture-naming

Psycholinguistic models of language processing typically separate semantic and phonological levels of representation (e.g., Dell & O’Seaghdha, 1992; Levelt et al., 1999). However, iconic lexical items, in which there is a motivated relationship between phonological form and meaning, challenge the degree of separation between phonology and semantics. Historically, iconic words in spoken languages were considered to be rare and limited to onomatopoeia (e.g., Assaneo et al., 2011). More recently, it has become clear that iconicity is more pervasive in spoken languages than previously thought (e.g., Imai et al., 2015; Perniss et al., 2010; Perry et al., 2015). For example, ideophones depict sensory images and are found in many of the world’s languages (see Dingemanse, 2012 for review). In addition, a recent analysis of over 4,000 languages revealed that sound and meaning were not completely independent, and unrelated languages often use the same sounds for specific referents, e.g., words for tongue tend to contain an l sound and words related to smallness tend to contain an i vowel (Blasi et al., 2016). In contrast to spoken languages, sign languages have long been known to exhibit iconicity throughout the lexicon. Iconic signs may be more prevalent than iconic words because of the affordances of visible, manual articulators that allow the creation of iconic expressions that depict objects and human actions, movements, locations, and shapes (e.g., Taub, 2001).

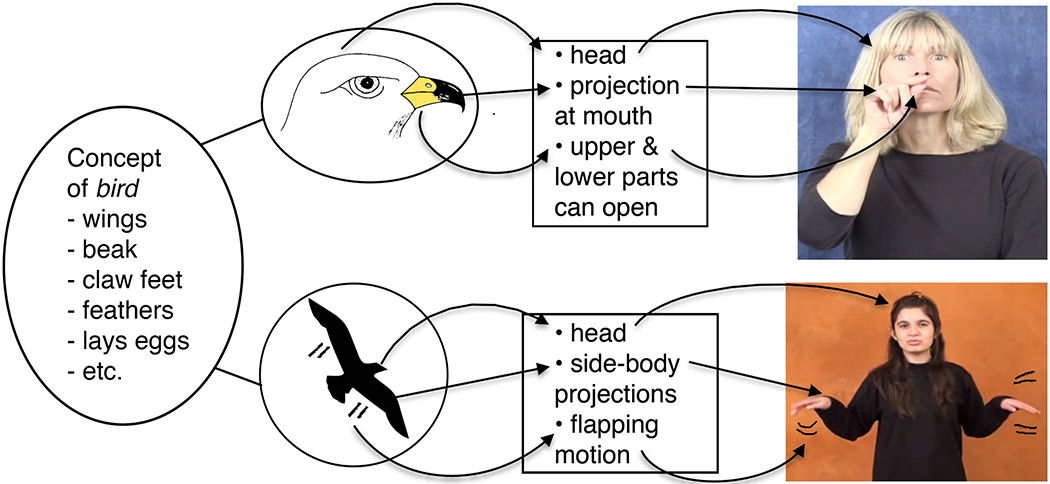

Emmorey (2014) has proposed that iconicity should be viewed as a structured mapping between two mental representations, a conceptual representation and a phonological representation. This proposal draws heavily on the structure-mapping theory proposed by Gentner and colleagues (Gentner, 1983; Gentner & Markman, 1997) and the analogue-building model of linguistic iconicity proposed by Taub (2001). Taub (2001) argued that the cognitive process of comparison is key to the concept of iconicity, and Gentner (1983) provides evidence that comparison processes crucially involve creating structured correspondences between two mental representations. Figure 1 illustrates how Taub’s model applies to the iconic signs denoting ‘bird’ in American Sign Language (ASL) and Turkish Sign Language (TİD). These two unrelated sign languages exhibit different iconic mappings because different representations have been selected to represent the concept ‘bird’. In ASL, the representative image highlights the bird’s beak, and this aspect of the image can be mapped to the thumb and index finger of the phonological form; whereas in TİD, the bird’s wings are prominent in the selected image and can be mapped to the two hands. We refer to this type of structured mapping between an image and a phonological form as ‘alignment.’ In this study we used a picture-naming task to investigate the effects on lexical retrieval of both sign iconicity (comparing iconic vs. non-iconic signs) and picture-sign alignment (comparing pictures that are aligned vs. non-aligned to a targeted iconic sign).

Figure 1.

Illustration of the analogue-building process proposed by Taub (2001) for the iconic signs for ‘bird’ in American Sign Language (top) and Turkish Sign Language (bottom). Adapted from Emmorey (2014).

There is growing evidence that iconicity may play a role in lexical retrieval and production for signers, but whether these effects are strategic or reflect lexical processing effects is unclear. Vinson, Thompson, Skinner, and Vigliocco (2015) found that picture-naming times in British Sign Language (BSL) were faster for iconic than non-iconic signs, but this effect was limited to signs that were rated as having a later age of acquisition. Vinson et al. (2015) speculated that iconicity may not have facilitated retrieval of early-acquired signs because a) their study may not have included a sufficient range of early-acquired signs or b) iconicity may only facilitate naming when there is some degree of difficulty in lexical retrieval, as occurs for late-acquired signs. However, Vinson et al. (2015) did not include a control condition in which non-signers named the same pictures in English. Such a control would help confirm that the observed effects were due to sign iconicity and not to uncontrolled aspects of the different pictures that were used to elicit iconic and non-iconic signs. Navarrete et al. (2017) carried out just such a control analysis with 70 of the 92 pictures used by Vinson et al. (2015) for which spoken naming latencies were available in Szekely et al. (2004). The authors found that pictures with iconic signs were named faster than those with non-iconic signs by non-signing speakers, highlighting the need for this type of control.

Navarrete et al. (2017) also found effects of iconicity on picture naming for Italian Sign Language (LIS). This study used a picture-picture interference task to investigate whether picture distractors with iconic signs interfere less with naming target pictures. The authors hypothesized that iconic signs become activated more quickly and robustly than non-iconic signs because iconic signs receive additional activation from the perceptual and action-related features that they encode. Navarrete et al. argued that if iconic distractor signs become activated more quickly, then they can be discarded more quickly as possible responses, which leads to faster responses when naming target pictures. Previous studies using this paradigm report a similar effect with lexical frequency; that is, target pictures were named faster when the distractor pictures had more frequent names (e.g., Dhooge & Hartsuiker, 2010; Miozzo & Caramazza, 2003). Two experiments confirmed Navarrete et al.’s prediction: naming times were faster when distractor pictures had iconic signs compared to non-iconic signs, and this effect held only for LIS signers and not for speakers performing the same task in Italian. In addition, a third experiment found that pictures with iconic signs were named faster than those with non-iconic signs for LIS signers, but not for Italian speakers. Pretato, Peressotti, Bertone, and Navarrete (2018) found similar effects of iconicity on picture-naming times for hearing LIS signers.

These studies suggest that iconic signs may be easier to retrieve than non-iconic signs during production, but response times reflect only the cumulative outcome of all processing stages. Event-related potentials (ERPs) can track the time course of processing and reveal whether effects of iconicity occur during early picture processing, during lexical access, or at a later stage. Baus and Costa (2015) used ERPs and picture naming to investigate the effects of iconicity and frequency on lexical retrieval in hearing bimodal bilinguals fluent in Catalan Sign Language (CSL) and Spanish/Catalan. Naming latencies were faster for pictures with iconic signs (particularly for low frequency items), and this effect was only observed when the bilinguals named the pictures in CSL and not in Spanish/Catalan. Baus and Costa found a very early effect of iconicity in a 70-140ms time window after picture onset: pictures named with iconic signs elicited a more positive response than those named with non-iconic signs, and this effect was only observed when naming pictures in CSL. Within the N400 time window (350-500ms), an effect of iconicity was again observed (greater positivity for iconic than non-iconic signs), but only for low frequency signs. In a later time window (550-750ms), an interaction between iconicity and frequency was again observed, but now the effect of iconicity was only observed for high frequency signs. However, the same iconicity and frequency interactions were also observed when bilinguals named the pictures in Spanish/Catalan, which makes the iconicity effects difficult to interpret. In addition, the majority of the hearing bilinguals (23/26) learned CSL as a late L2 and had been exposed to CSL for an average of only 2.6 years. Iconicity is known to play a larger role in second compared to first language acquisition (for review see Ortega et al., 2017). Thus, it is unclear whether the observed iconicity effects occur only when a sign language has been recently learned by hearing adults.

In the present study, we investigated the possible effects of iconicity on ERPs when highly proficient deaf signers name pictures in ASL. Of particular interest is the N400 ERP component, which has been shown to be sensitive to lexico-semantic processes (for review see Kutas & Federmeier, 2011). There are several possible hypotheses for how iconicity could modulate the N400 response. One possibility is that iconicity might pattern like lexical frequency, such that iconic signs are activated more rapidly and more easily compared to non-iconic signs. In this case, we should observe a smaller N400 (less negativity) for iconic than non-iconic signs, just as high frequency words elicit faster response times and smaller N400 amplitudes compared to low frequency words (Kutas & Federmeier, 2011). For picture naming, ERP effects of lexical frequency (greater positivity for low frequency words) have also been found to emerge 150-200ms after picture onset for speech production (Strijkers, Costa, & Thierry, 2010; see also Baus, Strijkers, & Costa, 2013, for typed naming). Strijkers et al. (2010) argued that this early frequency modulation reflects lexical access, rather than conceptual processing or phonological retrieval.

Another possibility is that iconicity might pattern more like concreteness because iconic signs more strongly encode perceptual and action features of the concepts they denote compared to non-iconic signs. Concrete words are typically responded to faster than abstract words and also elicit a larger N400 (greater negativity) compared to abstract words (e.g., Holcomb et al., 1999; Kounios & Holcomb, 1994; van Elk et al., 2010). One explanation for the larger N400 amplitude for concrete words is that they have stronger and denser associative semantic links than abstract words, and concrete words may activate more sensory-motor information (see Barber et al., 2013).

In the present study, we also investigated the possible effect of alignment between a picture and the targeted iconic sign. Two previous studies have manipulated alignment in a picture-sign matching task with ASL signers (Thompson, Vinson, & Vigliocco, 2009) and BSL signers (Vinson et al., 2015). In these studies, participants judged whether a picture and a sign matched, and the alignment between the form of the iconic sign and the picture was manipulated. For example, the iconic ASL sign BIRD depicts a bird’s beak (see Figure 1), and an aligned picture would show a prominent bird’s beak (e.g., top picture in Figure 1), whereas a non-aligned picture would show a bird in flight where the beak is not salient (e.g., bottom picture in Figure 1) (see also Figure 2 below). Both Thompson et al. (2009) and Vinson et al. (2015) found that native signers had faster RTs for iconic signs preceded by aligned than non-aligned pictures, whereas speakers performing the task with audio-visual English words showed no RT difference between the two picture-alignment conditions. We investigated whether picture alignment affects naming latencies and ERPs for iconic signs. We hypothesized that picture alignment makes signs easier to retrieve due to the visual mapping between the picture and the form of the iconic sign. Therefore, we predicted faster naming times and a reduced N400 amplitude for iconic signs in the aligned picture condition compared to the non-aligned picture condition.

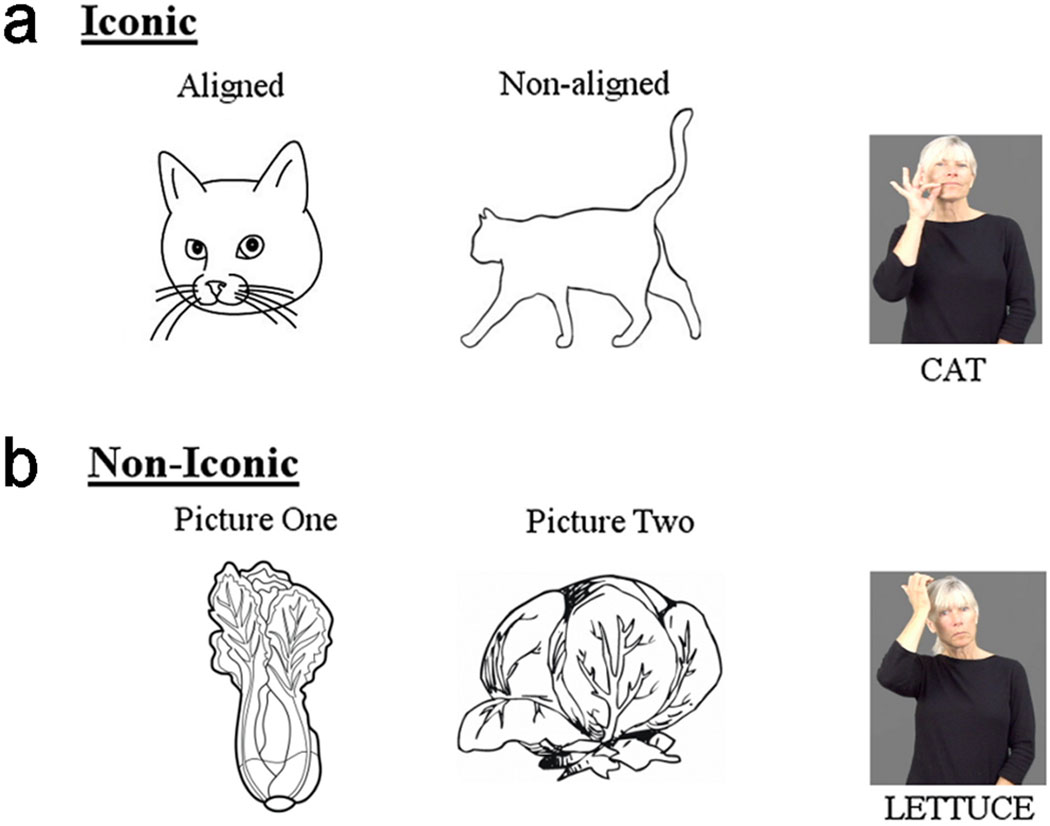

Figure 2.

Example of stimuli illustrating an iconic and a non-iconic target sign, as well as the aligned and non-aligned pictures for the iconic target sign. Pictures for non-iconic target signs cannot be classified as aligned or non-aligned because there is no clear mapping between form and meaning for these signs.

In sum, the present study constitutes a two-part analysis of both iconicity and structured alignment between a picture and a sign. In our investigation, we explored 1) whether deaf ASL signers would be faster to name pictures using iconic signs than non-iconic signs and 2) whether for iconic signs, naming latencies are faster when the picture is aligned vs. non-aligned to the target sign. In addition, we investigated whether iconicity and/or picture alignment impacted the N400 response for sign retrieval and production.

Methods

Participants.

Twenty-three deaf signers were included in our analyses (11 female; mean age = 33.65 years, SD = 6.11 years). Thirteen were native signers born into deaf signing families and ten were early ASL signers, exposed to ASL before age 6 years (mean age of ASL exposure = 2.9 years, SD = 1.76 years)1. All participants had normal or corrected-to-normal vision and had no history of any language, reading, or neurological disorders. Two participants were left-handed. Two additional participants were tested but excluded from the analyses: one due to a large number of skipped trials (“don’t know” responses), and the other due to an excessive number of artifacts in the ERP recording.

Control participants were 27 monolingual English speakers (13 female; mean age = 30.95 years, SD = 8.97 years) who had normal or corrected-to-normal vision and had no history of any language, reading, or neurological disorders. Two participants were left-handed, matching the number of left-handers in the deaf group. Control participants had no exposure to ASL beyond knowing a few signs or the fingerspelled alphabet. These participants underwent the same experimental procedures as the deaf participants, but due to extensive speech-related ERP artifacts, only their reaction times were analyzed.

All participants received monetary compensation in return for participation. Informed consent was obtained from all participants in accordance with the Institutional Review Board at San Diego State University.

Materials.

Stimuli consisted of digitized black-on-white line drawings. A total of 176 different pictures were presented, representing 88 different concepts. Half of the 176 pictures (88) were named with iconic signs and half (88) were named with non-iconic signs. Descriptive statistics for the ASL signs are given in Table 1, and the English glosses for the ASL signs are listed in the Appendix. Iconicity ratings and subjective frequency ratings were retrieved from the ASL-LEX database (Caselli et al., 2017; Sevcikova Sehyr, Caselli, Cohen-Goldberg, & Emmorey, submitted), and videos of all targeted ASL signs can be found in this database (http://asl-lex.org). For this database, hearing non-signers rated sign iconicity on a scale from 1 (not iconic at all) to 7 (very iconic). Signs were considered iconic and included in the present study if they received a rating of 3.7 or higher, while non-iconic signs were included if they received a rating of 2.5 or lower. Iconic and non-iconic signs were matched for ASL frequency based on ratings from ASL-LEX; deaf signers rated the frequency of ASL signs on a scale from 1 (very infrequent in everyday conversation) to 7 (very frequent in everyday conversation). Iconic and non-iconic signs were also matched for the number of two-handed signs and for phonological neighborhood density (PND) using the Maximum PND measure from ASL-LEX. Age of acquisition (AoA) norms are not available for ASL; therefore, as a proxy, we matched the English translations of the iconic and non-iconic signs using English AoA norms from Kuperman et al. (2012). Both the iconic and non-iconic sign translations were early-acquired (see Table 1), suggesting that the ASL signs in the present study were all acquired early (only two items had an AoA above age 7.0 years).

Table 1.

Descriptive statistics for the ASL signs.

| | Iconicity M (SD) | Frequency M (SD) | # of two-handed signs | PND M (SD) | AoA (in years) M (SD) |
|---|---|---|---|---|---|
| Iconic signs | 5.31 (0.94) | 3.90 (1.24) | 16 | 37.95 (27.02) | 4.67 (1.11) |
| Non-Iconic signs | 1.86 (0.39) | 4.17 (1.11) | 17 | 31.23 (28.25) | 4.66 (1.22) |

Note: PND = phonological neighborhood density, AoA = Age of Acquisition.
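The iconicity-based inclusion criteria can be sketched as a simple classification step. This is a minimal, hypothetical illustration: the function names and the example items and ratings are invented, and it assumes only the two cut-offs reported above (iconic if the ASL-LEX rating is 3.7 or higher, non-iconic if 2.5 or lower, on the 1-7 scale). The additional matching on frequency, handedness, PND, and AoA is not shown.

```python
from typing import Dict, List, Optional, Tuple

# Cut-offs on the 1-7 ASL-LEX iconicity scale described in the Materials section.
ICONIC_MIN = 3.7
NON_ICONIC_MAX = 2.5


def classify_sign(rating: float) -> Optional[str]:
    """Return 'iconic', 'non-iconic', or None for intermediate ratings."""
    if rating >= ICONIC_MIN:
        return "iconic"
    if rating <= NON_ICONIC_MAX:
        return "non-iconic"
    return None  # between the cut-offs: not eligible as a target sign


def split_candidates(ratings: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Split hypothetical candidate glosses into iconic and non-iconic sets."""
    iconic: List[str] = []
    non_iconic: List[str] = []
    for gloss, rating in sorted(ratings.items()):
        label = classify_sign(rating)
        if label == "iconic":
            iconic.append(gloss)
        elif label == "non-iconic":
            non_iconic.append(gloss)
    return iconic, non_iconic


# Invented placeholder items and ratings, for illustration only.
example = {"ITEM-1": 5.6, "ITEM-2": 1.4, "ITEM-3": 3.0, "ITEM-4": 3.7, "ITEM-5": 2.5}
iconic_items, non_iconic_items = split_candidates(example)
print(iconic_items, non_iconic_items)
```

Items rated between the two cut-offs (here ITEM-3) fall into neither set, which keeps the two conditions clearly separated on iconicity.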

Of the 88 pictures that were named with iconic signs, 44 pictures were aligned with the ASL sign and 44 pictures were non-aligned with the same target ASL sign (see Figure 2A). The other 88 pictures for non-iconic signs represented 44 different concepts, each of which was depicted by two different pictures (see Figure 2B). Each participant saw all 176 pictures, and the order of the aligned and non-aligned pictures (iconic signs) was counterbalanced across participants in two pseudorandom lists; similarly for the non-iconic signs, the order of picture 1 and picture 2 was counterbalanced within these two lists.
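The two-list counterbalancing can be illustrated with a short sketch. The exact list construction is not reported, so the design below is an assumption: each concept's two pictures are split across the two halves of a session, and the second list swaps the halves so that every pair appears in the opposite order. Names such as `make_counterbalanced_lists` are hypothetical, and the plain shuffle stands in for whatever pseudorandomization constraints were actually used.

```python
import random
from typing import List, Sequence, Tuple

# Each entry: (concept, picture_1, picture_2). For iconic targets these could be
# the aligned and non-aligned pictures; for non-iconic targets, picture 1 and 2.
Concept = Tuple[str, str, str]


def make_counterbalanced_lists(
    concepts: Sequence[Concept], seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Build two lists in which each concept's pictures appear in opposite orders."""
    rng = random.Random(seed)
    first_half: List[str] = []
    second_half: List[str] = []
    for i, (_concept, pic_1, pic_2) in enumerate(concepts):
        # Alternate which picture of the pair goes into the first half.
        if i % 2 == 0:
            first_half.append(pic_1)
            second_half.append(pic_2)
        else:
            first_half.append(pic_2)
            second_half.append(pic_1)
    # Plain shuffling within each half; real pseudorandom lists usually add
    # constraints (e.g., limits on consecutive trials from one condition).
    rng.shuffle(first_half)
    rng.shuffle(second_half)
    list_1 = first_half + second_half
    list_2 = second_half + first_half  # swapping halves reverses every pair
    return list_1, list_2


# Hypothetical concepts and picture file names, for illustration only.
concepts = [(f"C{i}", f"C{i}-pic1", f"C{i}-pic2") for i in range(4)]
list_1, list_2 = make_counterbalanced_lists(concepts, seed=1)
```

Every picture appears exactly once in each list, and for every concept the picture seen first in one list is seen second in the other.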

Because two different pictures were used for each concept, there are inevitable differences between the two pictures. In particular, we may have inadvertently selected pictures such that one was a more prototypical example of the concept than the other, which would lead to easier recognition and faster naming times. To avoid this potential confound, thirty MTurk workers rated all the pictures for prototypicality through an online Qualtrics survey. Each rater was given the English word and was asked to mentally imagine the concept. When they had a mental image in mind, they clicked “Continue” and were shown a target picture. They then rated on a 5-point scale how well the picture represented their mental image for that concept, where 1 was a ‘poor match’ and 5 was a ‘very close match’. Prototypicality ratings were matched across conditions: ratings did not differ between pictures targeting iconic signs (mean = 4.50, SD = 0.27) and pictures targeting non-iconic signs (mean = 4.53, SD = 0.23), p = .22. Prototypicality ratings also did not differ between aligned pictures for iconic signs (mean = 4.67, SD = 0.33) and non-aligned pictures (mean = 4.50, SD = 0.33), p = .24.

Procedure.

Each trial began with text advising the signer to prepare for the next trial by pressing and holding down the spacebar (with their dominant hand) on a keyboard placed in the participant’s lap. As soon as the spacebar was pressed, a fixation cross appeared in the center of the screen for 800ms, followed by a 200ms blank screen and then the to-be-named picture, which remained on screen until the spacebar was released. The release of the spacebar marked the response onset, i.e., the beginning of signing. Reaction times were calculated as the time elapsed from picture onset to spacebar release. After signing, the participant again saw the text asking them to press the spacebar. Participants were told that they could blink and move before replacing their hand on the keyboard or during the longer blink breaks that occurred about every fifteen trials. Participants were also given two self-timed breaks during the study, during which they could rest for as long as they wished before resuming. Participants were instructed to name each picture as quickly and accurately as possible. They were also asked to use minimal mouth and facial movements while signing in order to avoid artifacts associated with facial muscle movements in the ERPs. If a participant did not know what the picture represented or the sign to name it, they were instructed to respond with the sign DON’T-KNOW, thereby skipping the trial.

For hearing participants, each trial began with a purple fixation cross appearing in the center of the screen for 800ms, followed by a gray fixation cross for 800ms. The second fixation cross was followed by a 200ms blank screen and then the to-be-named picture, which remained on screen for 300ms. A blank screen was then presented until the picture was named aloud, after which the experimenter pressed a key to begin the next trial. Headphones worn by the participants included a microphone that recorded the naming response. The time elapsed between picture onset and speech onset was used as the measure of reaction time. Participants were told that they could blink during the purple fixation cross, during the longer blink breaks that occurred about every fifteen trials, and during the self-timed breaks. As with the deaf participants, the hearing participants were instructed to name the pictures as quickly and accurately as possible, and to tell the experimenter if they did not recognize the picture or could not name it. As noted above, because speech artifacts overlapped temporally with the components of interest, the ERP data from the hearing participants could not be analyzed.

To familiarize all participants with the task, a practice set of 15 pictures that were not included in the experimental list was given before the experiment. Participants were not shown the experimental pictures prior to the experiment.

EEG recording and analysis.

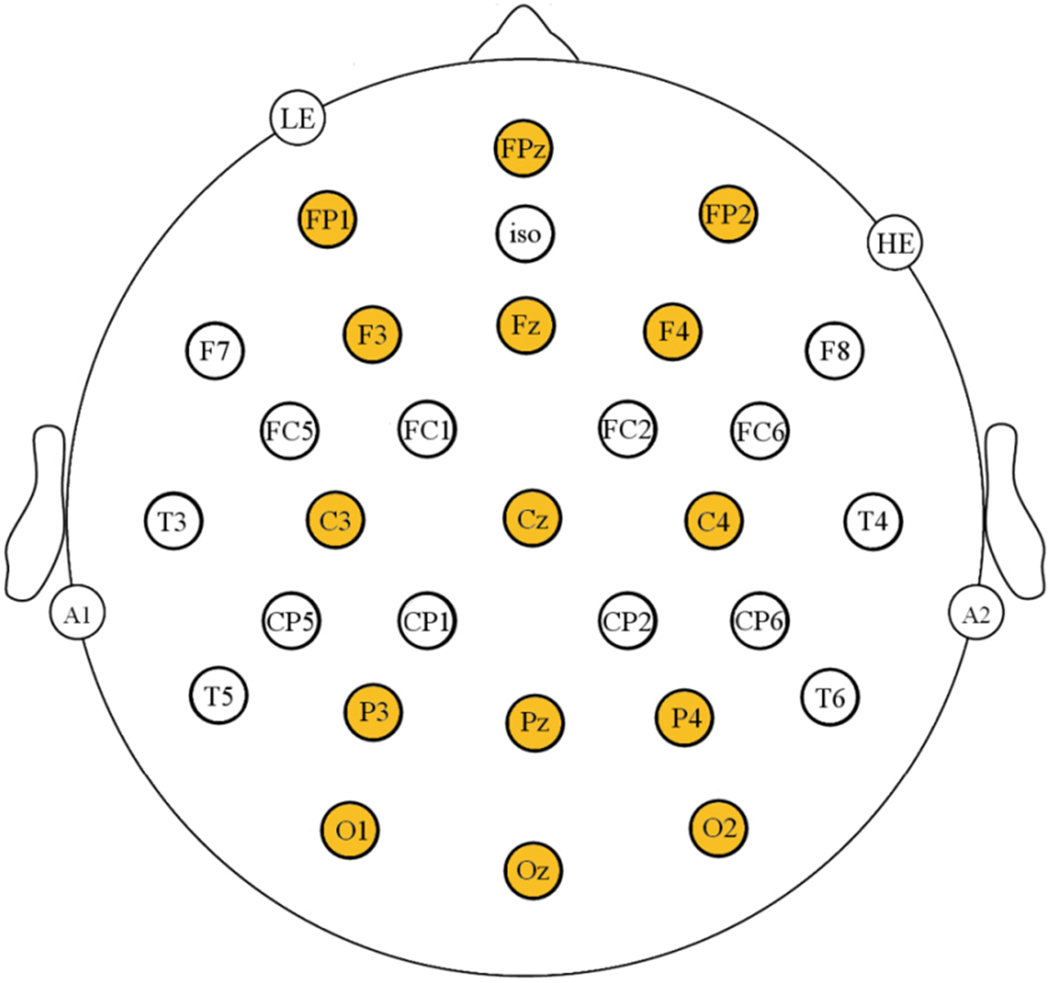

Participants wore an elastic cap (Electro-Cap) with 29 active electrodes (see Figure 3 for an illustration of the electrode montage). An electrode placed on their left mastoid served as a reference during the recording and for analyses. Recordings from electrodes located below the left eye and on the outer canthus of the right eye were used to identify and reject trials with blinks, horizontal eye movements, and other artifacts. Using saline gel (Electro-Gel), all mastoid, eye and scalp electrode impedances were maintained below 2.5 kΩ. EEG was amplified with SynAmpsRT amplifiers (Neuroscan-Compumedics) with a bandpass of DC to 100 Hz and was sampled continuously at 500 Hz.

Figure 3.

Electrode montage used in this study. Highlighted sites were used in the analysis.

ERPs were time-locked offline to the onset of the picture, with a 100ms pre-stimulus baseline. Trials contaminated with artifact were excluded from analysis, as were trials with reaction times shorter than 300ms or longer than 2.5 standard deviations above the individual participant’s mean RT.
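The RT-based exclusion rule can be written as a per-participant filter. This is a sketch under stated assumptions: the function name `rt_keep_mask` is hypothetical, the mean and sample standard deviation are assumed to be computed over all of a participant's trials before any exclusion, and artifact rejection (a separate criterion) is not modeled.

```python
import statistics
from typing import List, Sequence


def rt_keep_mask(
    rts_ms: Sequence[float], min_rt_ms: float = 300.0, sd_cutoff: float = 2.5
) -> List[bool]:
    """Flag one participant's trials to keep under the RT criteria.

    Assumes the mean and (sample) standard deviation are computed over all
    of that participant's trials before any exclusion.
    """
    mean_rt = statistics.mean(rts_ms)
    sd_rt = statistics.stdev(rts_ms)
    upper_ms = mean_rt + sd_cutoff * sd_rt
    # Keep trials that are neither too fast nor more than 2.5 SD above the mean.
    return [min_rt_ms <= rt <= upper_ms for rt in rts_ms]


# Invented RTs (ms) for a single participant, for illustration only.
rts = [250, 700, 750, 800, 820, 780, 760, 790, 810, 770, 740, 5000]
keep = rt_keep_mask(rts)
kept_rts = [rt for rt, k in zip(rts, keep) if k]
```

Here the 250ms trial fails the lower bound and the 5000ms trial exceeds the mean plus 2.5 SD, so ten trials remain.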

Mean amplitude was calculated for the N400 window (300-600ms after picture presentation).2 The selection of this window was based on visual inspection of the grand means, which showed an N400 component with activity continuing to 600ms after picture presentation. For this time window, two repeated-measures ANOVAs were conducted. The first ANOVA included the factors Iconicity (two levels: Iconic, Non-Iconic), Laterality (three levels: Left, Midline, Right), and Anteriority (five levels: FP, F, C, P3, O). The second ANOVA was conducted only on the iconic sign targets and included the factors Picture Alignment (two levels: Aligned, Non-Aligned), Laterality (three levels), and Anteriority (five levels). These ANOVAs were used to assess the effects of iconicity and alignment on sign production during the N400 window.
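The mean-amplitude measure can be illustrated for a single electrode and epoch. This is a minimal sketch assuming the parameters reported above (500 Hz sampling, a 100ms pre-stimulus baseline, a 300-600ms window) and treating the window as half-open, [300, 600); the function names and the synthetic epoch are hypothetical. Because baseline subtraction and averaging are both linear, applying the measure to a condition's averaged ERP gives the same value as averaging the per-trial values.

```python
from typing import List, Sequence

SAMPLING_RATE_HZ = 500   # EEG sampling rate reported above
EPOCH_START_MS = -100.0  # epochs begin at the 100 ms pre-stimulus baseline


def sample_time_ms(index: int) -> float:
    """Time of a sample relative to picture onset (2 ms steps at 500 Hz)."""
    return EPOCH_START_MS + index * 1000.0 / SAMPLING_RATE_HZ


def baseline_correct(epoch_uv: Sequence[float]) -> List[float]:
    """Subtract the mean of the pre-stimulus samples from every sample."""
    baseline = [v for i, v in enumerate(epoch_uv) if sample_time_ms(i) < 0.0]
    offset = sum(baseline) / len(baseline)
    return [v - offset for v in epoch_uv]


def window_mean_amplitude(
    epoch_uv: Sequence[float], start_ms: float = 300.0, end_ms: float = 600.0
) -> float:
    """Mean baseline-corrected amplitude in the half-open window [start_ms, end_ms)."""
    corrected = baseline_correct(epoch_uv)
    window = [
        v for i, v in enumerate(corrected) if start_ms <= sample_time_ms(i) < end_ms
    ]
    return sum(window) / len(window)


# Synthetic single-electrode epoch (-100 to 798 ms): flat at 2 uV, with a
# -3 uV deflection between 300 and 600 ms.
epoch = [(-3.0 if 300.0 <= sample_time_ms(i) < 600.0 else 2.0) for i in range(450)]
n400 = window_mean_amplitude(epoch)  # -5.0 uV relative to the baseline
```

In the design described above, one such value per electrode site and condition would feed the Laterality x Anteriority factors of the ANOVA.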

Image Complexity Analysis

During the early stages of behavioral analysis, it became apparent that some images elicited consistently slower response latencies. Because the pictures were controlled for prototypicality and the other variables described above, picture complexity was explored as a potential previously unidentified variable. To assess this variable, all pictures were processed with MATLAB’s entropy function, which returns a single numerical value representing the complexity of an image. With this measure, an image of entirely white pixels receives a low complexity score, whereas an image with a lot of detail receives a high complexity score. Higher image complexity scores may be associated with more effortful recognition, and therefore with longer reaction times and altered brain responses. Four pairs of pictures targeting iconic signs were determined to have unusually different entropy values between the aligned and non-aligned pictures, and these pairs were excluded from all analyses. For matching purposes, the four most dissimilar pairs in the non-iconic condition were also excluded.
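The entropy measure and the pair-screening step can be sketched in plain Python. The entropy follows the definition MATLAB documents for its entropy function, -sum(p .* log2(p)) over the normalized grey-level histogram, written here in the equivalent form sum(p * log2(1/p)) for an 8-bit image given as nested lists. The screening rule (rank pairs by absolute entropy difference and take the top k) is an assumption based on the phrase "four most dissimilar pairs"; the function names and toy images are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def image_entropy(pixels: Sequence[Sequence[int]]) -> float:
    """Shannon entropy (bits) of the grey-level histogram of an 8-bit image."""
    flat = [p for row in pixels for p in row]
    n = len(flat)
    counts = Counter(flat)  # only non-empty histogram bins are stored
    # sum of p * log2(1/p) equals -sum of p * log2(p) over non-empty bins
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def most_dissimilar_pairs(
    pair_entropies: Dict[str, Tuple[float, float]], k: int
) -> List[str]:
    """Return the k picture pairs with the largest absolute entropy difference."""
    ranked = sorted(
        pair_entropies,
        key=lambda pid: abs(pair_entropies[pid][0] - pair_entropies[pid][1]),
        reverse=True,
    )
    return ranked[:k]


white = [[255] * 4 for _ in range(4)]        # uniform image: minimal entropy
half = [[0, 0, 255, 255] for _ in range(4)]  # half black, half white
```

A uniform image scores 0 bits and the half-black, half-white image scores 1 bit; a detailed line drawing with many grey levels would score higher.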

Results

Iconic vs. non-iconic sign production

For deaf signers, naming latencies and accuracy, as well as ERPs, for the 80 pictures targeting iconic signs were compared to the 80 pictures targeting non-iconic signs. As a control, naming latencies and accuracy for English speakers responding to the same pictures were also compared. Trials with RTs shorter than 300ms or longer than 2.5 standard deviations above the individual participant’s mean RT were excluded from analysis (3% of the data). Trials with incorrect responses (7 trials or 4%, on average) were excluded from both the ERP and the naming latency analyses.

Naming accuracy.

Mean error rates were similar for the signing participants and the English-speaking participants (3% and 5% of all trials, respectively), and neither group showed a significant difference in accuracy between iconic and non-iconic trials (p = .23 and p = .43, respectively).

Naming latencies.

The mean overall naming latencies for ASL and English were similar (M = 806ms, SD = 167 and M = 827ms, SD = 109, respectively). However, we conducted separate analyses for manual (ASL) and vocal (English) naming latencies because of potential articulatory differences between response types (see Emmorey et al., 2012). To compare naming latencies between iconic and non-iconic signs, we used a linear mixed effects model for each group, with items and participants as random intercepts and iconicity, sign frequency, picture prototypicality, and picture complexity as fixed effects. For signing participants, a marginal main effect of iconicity was found, t = −0.502, p = 0.055, such that iconic signs (M = 798ms, SD = 165) were produced faster than non-iconic signs (M = 830ms, SD = 160). For the English speakers, the production of English words with iconic ASL translations (M = 824ms, SD = 109) vs. non-iconic translations (M = 834ms, SD = 113) yielded no significant effect of iconicity, t = −1.283, p = 0.14.
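For concreteness, the model described above can be written out as follows. This is our reading of the description, not a reported specification: participants are indexed by i and picture items by j, the coding of each predictor is not reported and is an assumption here, and no interaction terms are included because none are mentioned.

$$
\mathrm{RT}_{ij} = \beta_0 + \beta_1\,\mathrm{Iconicity}_j + \beta_2\,\mathrm{Frequency}_j + \beta_3\,\mathrm{Prototypicality}_j + \beta_4\,\mathrm{Complexity}_j + u_i + w_j + \varepsilon_{ij}
$$

$$
u_i \sim \mathcal{N}(0, \sigma_u^2), \qquad w_j \sim \mathcal{N}(0, \sigma_w^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

Under this reading, the t statistics reported for iconicity would test $\beta_1$, and the alignment analysis would replace $\mathrm{Iconicity}_j$ with a categorical $\mathrm{Alignment}_j$ term while keeping the same random-intercept structure.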

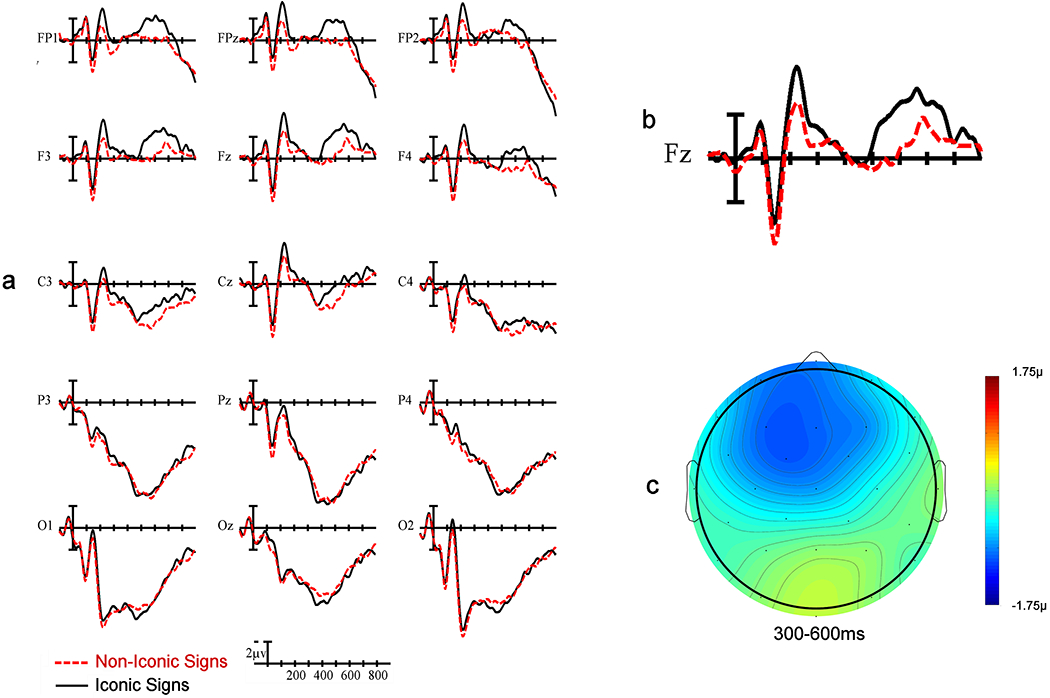

Electrophysiological effects of iconicity.

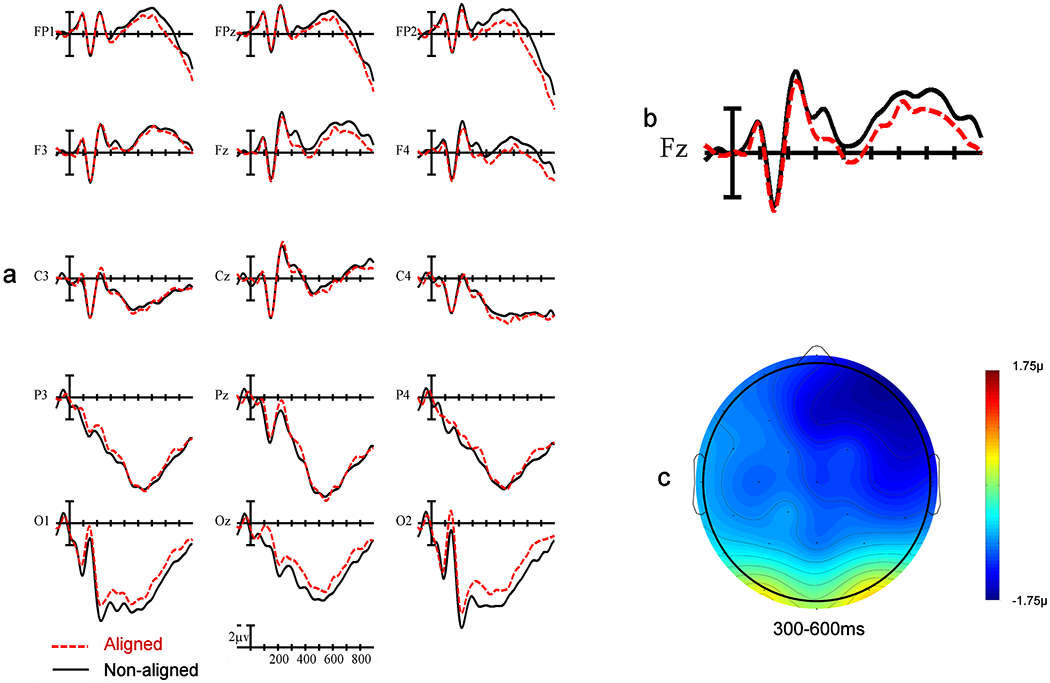

There was no main effect of Iconicity, F(1,24) = 0.78, p = .39, but there was a significant Iconicity x Laterality interaction, F(1,24) = 5.89, p = .009, reflecting greater left-sided negativity for iconic than non-iconic signs across the N400 window. Additionally, production of iconic signs elicited greater negativities at frontal electrode sites, as evidenced by an Iconicity x Anteriority interaction, F(1,24) = 4.76, p = .02 (see Figure 4).

Figure 4.

a) ERP results from the fifteen electrode sites included in analysis, b) single-site recording from the frontal central (FZ) electrode site, c) voltage map illustrating the greater frontal negativity for iconic compared to non-iconic signs in the N400 window.

Effects of picture alignment for iconic signs

To test the effect of alignment, we compared naming times and ERPs for the pictures that were visually aligned with the features of the target sign (N = 40) and the pictures that were non-aligned with the target sign (N = 40). Again, as a control, naming latencies and accuracy were compared for speakers naming the same pictures in English.

Naming accuracy.

There was no significant difference in accuracy between aligned and non-aligned pictures for either ASL signers or English speakers (p = .19 and p = .14, respectively).

Naming latencies.

A linear mixed effects model with the same structure as in the iconicity comparison was used, with alignment included as a categorical variable in place of iconicity. For signers, there was a significant main effect of alignment, t = 2.46, p = .02: aligned pictures were named significantly faster than non-aligned pictures (M = 767ms, SD = 155 and M = 817ms, SD = 180, respectively). This effect was not found for English speakers, t = −0.92, p = .35, as aligned and non-aligned pictures had similar reaction times (M = 827ms, SD = 109 and M = 820ms, SD = 108, respectively).

Electrophysiological effects of alignment.

There was no main effect of Alignment, F(1,24)= 0.5, p = .83, but there was a significant interaction between Alignment x Anteriority, F(1,24)= 5.68, p = .01. Pictures in the non-aligned condition generated a larger negativity at frontal electrode sites compared to pictures in the aligned condition. In addition, there was a three-way interaction between Alignment x Anteriority x Laterality, F(2,48)= 3.87, p = .003. The effect of alignment was strongest for the frontal electrodes over the right hemisphere (see Figure 5).

Figure 5.

a) ERP recordings from the fifteen electrode sites included in analysis, b) single-site recording from the frontal central (FZ) electrode site, c) voltage map illustrating greater frontal right-sided negativity for pictures in the non-aligned condition compared to the aligned condition in the N400 window.

Discussion

The goals of this study were to investigate a) whether sign iconicity facilitates lexical retrieval and/or modulates the N400 ERP component during picture naming and b) whether picture-sign alignment (shared visual features between a picture and an iconic sign) speeds naming latencies and/or modulates the N400 response. We found behavioral and electrophysiological effects for both types of manipulations, and we address these findings separately below.

Iconic vs. non-iconic sign production

Iconic signs were produced marginally faster than non-iconic signs, whereas English speakers showed no effect of the iconicity manipulation when producing spoken English translations. These findings are consistent with several previous studies of iconicity and picture naming in different sign languages (Baus & Costa, 2015; Vinson et al., 2015; Navarrete et al., 2017; Pretato et al., 2017). One possible explanation for these findings is that the link between semantic features and phonological representations for iconic signs facilitates production compared to non-iconic signs. Another possible (not mutually exclusive) explanation, suggested by Navarrete et al. (2017), is that the semantic features depicted by iconic signs are encoded more robustly at the semantic level, which could facilitate lexical retrieval and thus speed naming times for iconic signs.

The electrophysiological results revealed that production of iconic signs elicited a more negative response than production of non-iconic signs, and this effect was strongest over frontal sites. Because behavioral facilitation is typically associated with a reduced N400 amplitude, this pattern suggests that a different mechanism accounts for the faster naming latencies for iconic signs. Compared to abstract words, concrete words are typically responded to faster and also elicit a larger N400 (e.g., Holcomb, Kounios, Anderson, & West, 1999; Kounios & Holcomb, 1994; van Elk et al., 2010). Several studies have established that this effect is generally anterior in distribution, resulting in greater negativities over frontal sites for concrete words (Kounios & Holcomb, 1994; Holcomb et al., 1999; Barber et al., 2013). The greater negativity for concrete than abstract words has been interpreted as the result of the simultaneous activation of both sensory-motoric conceptual features and linguistic features for concrete words. In the present study, the iconic signs all depicted specific perceptual and/or motoric features of the concepts they denoted (e.g., the form of an object; how an object is held), while the non-iconic signs did not depict any sensory-motoric features. If, as suggested by Navarrete et al. (2017), the semantic features depicted by iconic signs are more robustly represented and activated, then this could give rise to greater activation of sensory-motoric features for iconic than for non-iconic signs, which would result in greater frontal negativity for iconic signs. Thus, although both iconic and non-iconic signs referred to concrete, picturable concepts, the sensory-motoric semantic features of iconic signs may be more robustly represented, which would both facilitate lexical retrieval and elicit a larger negativity with a frontal distribution.

Effects of picture alignment for iconic signs

For iconic signs, we manipulated the structural alignment between the signs and pictures and found that naming times were faster when the iconic features of the sign and the picture were aligned than when they were not. This result is consistent with comprehension studies of picture-sign matching, which found that structural alignment facilitated the matching of signs and pictures (Thompson et al., 2009; Vinson et al., 2015). Crucially, there was no behavioral effect of alignment for our control group of English speakers.

Naming facilitation for iconic signs in the aligned vs. non-aligned picture condition was associated with a reduction in N400 amplitude for aligned pictures. This reduction suggests less effortful retrieval as a result of priming between the picture and the sign form. Both the reduced N400 and the faster reaction times to aligned pictures indicate that overlap between the picture and the sign form facilitates lexical retrieval and production. The priming effect in the N400 window was strongest over frontal, right-hemisphere sites. We hypothesize that this distribution may be associated with form processing in ASL, based on a similar distribution for implicit ASL phonological priming reported by Meade, Midgley, Sehyr, Holcomb, and Emmorey (2017). In that study, deaf signers made semantic relatedness judgements on pairs of English words whose ASL translations were either phonologically related or unrelated (all pairs in the experimental condition were semantically unrelated). Phonologically related signs were visually similar, sharing the same location, handshape, and/or movement. Target words in the ASL phonologically related trials elicited smaller N400 amplitudes than targets in the unrelated trials. Importantly, this effect was strongest over right frontal electrode sites and was interpreted as evidence for implicit phonological priming in ASL. Because the alignment effect in the present study has a similar distribution, the phonological form of the sign and the corresponding visual features of the picture may be implicitly co-activated in a similar manner, resulting in the facilitatory priming effect.
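An N400 reduction of this kind is commonly quantified as a difference in mean amplitude within a fixed post-stimulus window. The sketch below illustrates only that arithmetic for a single electrode site; it is a hypothetical example, not the analysis used in this study (which compared conditions with ANOVAs across electrode sites). The 300–500 ms window, the function names `mean_amplitude` and `alignment_effect`, and all voltage values are illustrative assumptions.

```python
from statistics import fmean


def mean_amplitude(times_ms, voltages_uv, window_ms):
    """Mean voltage (in microvolts) of the samples whose time falls in the window.

    times_ms and voltages_uv are parallel sequences for one averaged waveform
    at one electrode site; window_ms is an inclusive (start, end) pair.
    """
    start, end = window_ms
    inside = [v for t, v in zip(times_ms, voltages_uv) if start <= t <= end]
    if not inside:
        raise ValueError("no samples fall inside the window")
    return fmean(inside)


def alignment_effect(times_ms, aligned_uv, non_aligned_uv, window_ms=(300, 500)):
    """Aligned minus non-aligned mean amplitude in the window.

    A positive value means the aligned condition is less negative,
    i.e., a reduced N400. The default window is an assumed, typical
    N400 window, not necessarily the one used in the study.
    """
    return (mean_amplitude(times_ms, aligned_uv, window_ms)
            - mean_amplitude(times_ms, non_aligned_uv, window_ms))


# Hypothetical waveforms sampled every 100 ms from 0 to 800 ms.
times = list(range(0, 900, 100))
aligned = [0.0, 0.5, 0.0, -1.0, -2.0, -1.0, 0.0, 0.0, 0.0]
non_aligned = [0.0, 0.5, 0.0, -2.0, -4.0, -2.0, 0.0, 0.0, 0.0]

print(round(alignment_effect(times, aligned, non_aligned), 2))  # prints 1.33
```

Reusing `mean_amplitude` with a later window would give the analogous comparison for the sustained negativity discussed in connection with Figure 6; in real data the window and electrode sites would follow the study's own analysis plan.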

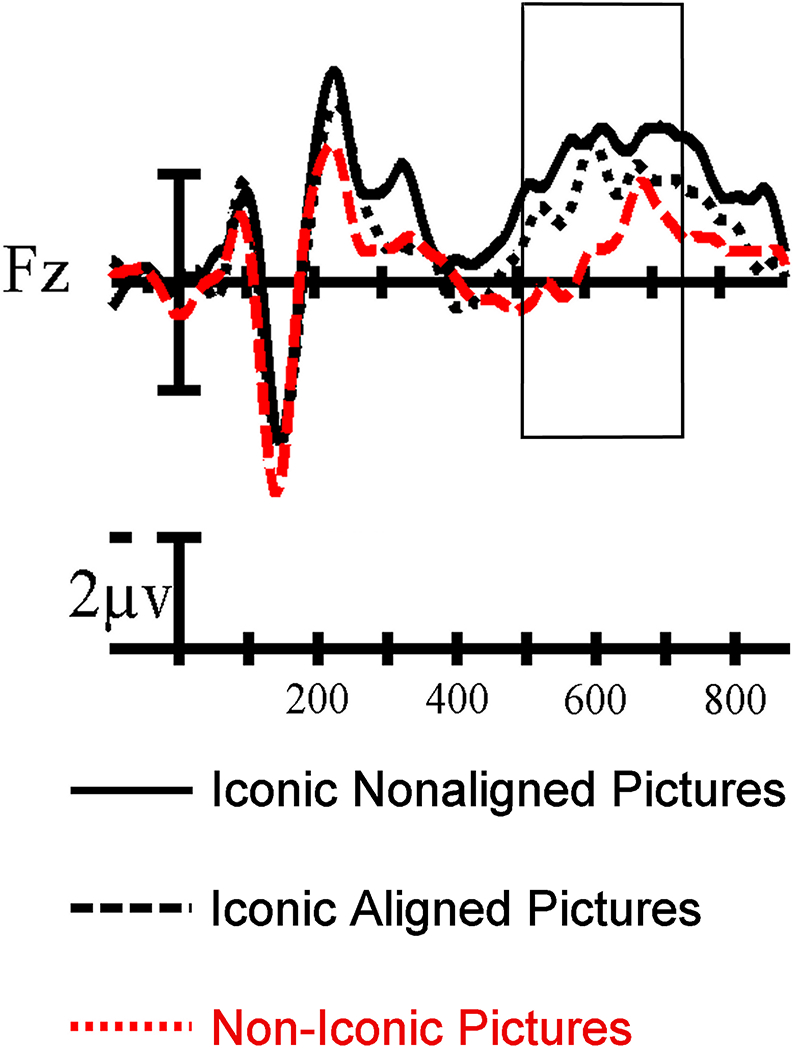

To explore the relationship between alignment (iconic signs with aligned vs. non-aligned pictures) and iconicity (iconic vs. non-iconic signs), we visually inspected the ERPs for all three conditions. As shown in Figure 6, production of iconic signs was associated with a more negative amplitude than production of non-iconic signs, regardless of picture alignment.³ Thus, the priming effect for iconic signs in the aligned condition does not override the negativity associated with the concreteness-like effect for iconic signs. In addition, both effects extend beyond the typical N400 epoch and remain visible 700 ms after stimulus presentation.

Figure 6.

Single-site ERP recording comparing the iconic (non-aligned and aligned, shown separately) and non-iconic conditions. The proposed N700 effect is highlighted by the box.

We suggest that the late concreteness-like effect for iconic signs may reflect the N700 component, which has been proposed to index the resources required to form a mental image of a concrete concept (Barber et al., 2013; West & Holcomb, 2000). In a sentence reading task, West and Holcomb (2000) found greater frontal negativity on trials where participants had to generate an image of the final word to decide whether the concept was imageable than on trials where participants read the sentence and answered questions that did not involve imagery (e.g., about the spelling of the final word). This imageability effect began around the typical N400 epoch and remained visible more than 700 ms after stimulus presentation. West and Holcomb proposed that this extended negativity may reflect activation of the perceptual features of a concept during mental image generation. Although both iconic and non-iconic signs in the present study referred to imageable concepts, iconic signs may facilitate image generation because they depict imageable features of the concept. Our finding that production of iconic signs is associated with greater negativity in the N700 time window may therefore reflect the greater imageability of iconic than non-iconic signs. Greater negativities in both the N400 and N700 time windows for iconic signs may indicate greater activation of the semantic and perceptual features that these signs depict and that the picture-naming task emphasizes.

Conclusions

Signed languages, unlike most spoken languages, exhibit iconicity that is widespread across the lexicon, providing an opportunity to investigate the impact of form-meaning mapping on lexical retrieval. The present study replicates the facilitatory effect of iconicity on sign production (faster naming times), particularly when the signs are structurally aligned with the picture being named. The electrophysiological results revealed that retrieval of iconic signs elicited greater frontal negativities than retrieval of non-iconic signs, which we interpreted as reflecting greater activation of sensory-motor semantic features for iconic signs. The amplitude of this effect was reduced for iconic signs in the picture-aligned condition, which we interpreted as a priming effect arising from the form overlap between the semantic features depicted in the picture and in the iconic sign.

The targeted iconic signs in this study demonstrated multiple types of iconicity, including iconicity based on perceptual features (such as the handshape of the sign mimicking the shape of the bird’s beak in Figure 1) and iconicity based on the way the object is handled or held (such as the handshape of the sign mimicking how an ice cream cone is held near the mouth). The perceptually iconic signs made up 77% of the target signs (39/44), while the handling type signs constituted only 23% of the target signs (5/44). Unfortunately, the number of handling iconic signs is too small for a separate analysis. However, the distinction between these two types of signs is worth exploring in future work because studies have suggested cross-linguistic differences in the distribution of these sign types (Padden et al., 2013), and object manipulability has been shown to speed picture-naming times (Lorenzoni, Peressotti, & Navarrete, 2018; Salmon, Matheson, & McMullen, 2014). In addition, children may acquire signs with perceptual iconicity later than those with motoric iconicity (Caselli & Pyers, 2020; Tolar et al., 2008), and these two sign types also give rise to different cross-modal priming effects (Ortega & Morgan, 2015).

While the present study cannot explore how perceptual and motor iconicity interact with alignment because of the small number of handling signs, it is possible that different forms of iconicity lead to different degrees or kinds of behavioral facilitation and/or EEG modulation. For perceptually iconic signs, iconicity involves a structured overlap between the features of an object and the phonology of the sign, resulting in a wide variety of possible handshapes. When the picture is non-aligned, it does not depict physical features that are easily mapped onto the phonological features of the iconic sign. In contrast, handling signs require the signer to imagine interacting with the pictured object and to configure the hands as if manipulating it. Pictures of objects do not show the hands, so alignment for handling signs does not rely on a clear overlap between phonological features (e.g., handshape) and visual features of the object. This difference in the nature of picture-sign alignment could influence performance in a picture-naming task; for example, the reaction-time benefit and/or the electrophysiological priming may be strongest for perceptually iconic signs. Future research that deliberately compares perceptual iconicity with other forms of iconicity may reveal whether these different types of structural overlap affect lexical retrieval and/or sign production in different ways.

Supplementary Material

Acknowledgements

We would like to thank Lucinda O’Grady Farnady, Stephanie Osmond, and the researchers at the Laboratory for Language and Cognitive Neuroscience and at the NeuroCognitionLab for help running this study. This work was supported by the National Institute on Deafness and Other Communication Disorders under Grant R01 DC010997. We are also grateful to the participants who took part in this study.

Footnotes

When analyzed separately, native and early signers exhibited the same pattern of behavioral and ERP results, indicating that our findings were not driven by the native signers.

Given the results of Baus and Costa (2015), we compared ERPs for iconic and non-iconic signs in the 70–140 ms window after picture onset. There were no significant effects of iconicity in this early time window (all ps > .1).

An ANOVA revealed a significant difference between non-iconic and iconic aligned conditions, with a three-way interaction with anteriority and laterality, F(8,176) = 3.29, p = .02; the difference was strongest over frontal right-hemisphere sites. An ANOVA also revealed a significant difference between the non-iconic and the iconic non-aligned conditions, with an interaction between condition and anteriority, F(4,88) = 8.97, p = .002; the difference was strongest in the frontal sites.

References

- Assaneo MF, Nichols JI, & Trevisan MA (2011). The Anatomy of Onomatopoeia. PLOS ONE, 6(12), e28317. 10.1371/journal.pone.0028317

- Barber HA, Otten LJ, Kousta S-T, & Vigliocco G (2013). Concreteness in word processing: ERP and behavioral effects in a lexical decision task. Brain and Language, 125(1), 47–53. 10.1016/j.bandl.2013.01.005

- Baus C, & Costa A (2015). On the temporal dynamics of sign production: An ERP study in Catalan Sign Language (LSC). Brain Research, 1609, 40–53. 10.1016/j.brainres.2015.03.013

- Blasi DE, Wichmann S, Hammarström H, Stadler PF, & Christiansen MH (2016). Sound–meaning association biases evidenced across thousands of languages. Proceedings of the National Academy of Sciences, 113(39), 10818–10823. 10.1073/pnas.1605782113

- Caselli NK, & Pyers JE (2020). Degree and not type of iconicity affects sign language vocabulary acquisition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 46(1), 127–139. 10.1037/xlm0000713

- Caselli NK, Sehyr ZS, Cohen-Goldberg AM, & Emmorey K (2017). ASL-LEX: A lexical database of American Sign Language. Behavior Research Methods, 49(2), 784–801. 10.3758/s13428-016-0742-0

- Dell GS, & O’Seaghdha PG (1992). Stages of lexical access in language production. Cognition, 42(1), 287–314. 10.1016/0010-0277(92)90046-K

- Dingemanse M (2012). Advances in the Cross-Linguistic Study of Ideophones. Language and Linguistics Compass, 6(10), 654–672. 10.1002/lnc3.361

- Emmorey K (2014). Iconicity as structure mapping. Phil. Trans. R. Soc. B, 369(1651), 20130301. 10.1098/rstb.2013.0301

- Emmorey K, Petrich JAF, & Gollan TH (2012). Bilingual processing of ASL–English code-blends: The consequences of accessing two lexical representations simultaneously. Journal of Memory and Language, 67(1), 199–210. 10.1016/j.jml.2012.04.005

- Gentner D (1983). Structure-mapping: A theoretical framework for analogy. Cognitive Science, 7(2), 155–170. 10.1016/S0364-0213(83)80009-3

- Gentner D, & Markman AB (1997). Structure mapping in analogy and similarity. American Psychologist, 52(1), 45–56. 10.1037/0003-066X.52.1.45

- Holcomb PJ, Kounios J, Anderson JE, & West WC (1999). Dual-coding, context-availability, and concreteness effects in sentence comprehension: An electrophysiological investigation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25(3), 721–742. 10.1037/0278-7393.25.3.721

- Imai M, Miyazaki M, Yeung HH, Hidaka S, Kantartzis K, Okada H, & Kita S (2015). Sound Symbolism Facilitates Word Learning in 14-Month-Olds. PLOS ONE, 10(2), e0116494. 10.1371/journal.pone.0116494

- Kounios J, & Holcomb PJ (1994). Concreteness effects in semantic processing: ERP evidence supporting dual-coding theory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 20(4), 804–823. 10.1037/0278-7393.20.4.804

- Kuperman V, Stadthagen-Gonzalez H, & Brysbaert M (2012). Age-of-acquisition ratings for 30,000 English words. Behavior Research Methods, 44(4), 978–990. 10.3758/s13428-012-0210-4

- Kutas M, & Federmeier KD (2011). Thirty years and counting: Finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology, 62, 621–647. 10.1146/annurev.psych.093008.131123

- Levelt WJM, Roelofs A, & Meyer AS (1999). A theory of lexical access in speech production. Behavioral and Brain Sciences, 22(1), 1–38. 10.1017/S0140525X99001776

- Lorenzoni A, Peressotti F, & Navarrete E (2018). The Manipulability Effect in Object Naming. Journal of Cognition, 1(1). 10.5334/joc.30

- Meade G, Midgley KJ, Sevcikova Sehyr Z, Holcomb PJ, & Emmorey K (2017). Implicit co-activation of American Sign Language in deaf readers: An ERP study. Brain and Language, 170, 50–61. 10.1016/j.bandl.2017.03.004

- Miozzo M, & Caramazza A (2003). When more is less: A counterintuitive effect of distractor frequency in the picture-word interference paradigm. Journal of Experimental Psychology: General, 132(2), 228.

- Navarrete E, Peressotti F, Lerose L, & Miozzo M (2017). Activation cascading in sign production. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(2), 302–318. 10.1037/xlm0000312

- Ortega G, & Morgan G (2015). The effect of iconicity in the mental lexicon of hearing non-signers and proficient signers: Evidence of cross-modal priming. Language, Cognition and Neuroscience, 30(5), 574–585. 10.1080/23273798.2014.959533

- Ortega G, Sümer B, & Özyürek A (2017). Type of iconicity matters in the vocabulary development of signing children. Developmental Psychology, 53(1), 89–99. 10.1037/dev0000161

- Padden CA, Meir I, Hwang S-O, Lepic R, Seegers S, & Sampson T (2013). Patterned iconicity in sign language lexicons. Gesture, 13(3), 287–308. 10.1075/gest.13.3.03pad

- Perniss P, Thompson RL, & Vigliocco G (2010). Iconicity as a General Property of Language: Evidence from Spoken and Signed Languages. Frontiers in Psychology, 1. 10.3389/fpsyg.2010.00227

- Perry LK, Perlman M, & Lupyan G (2015). Iconicity in English and Spanish and Its Relation to Lexical Category and Age of Acquisition. PLOS ONE, 10(9), e0137147. 10.1371/journal.pone.0137147

- Pretato E, Peressotti F, Bertone C, & Navarrete E (2018). The iconicity advantage in sign production: The case of bimodal bilinguals. Second Language Research, 34(4), 449–462. 10.1177/0267658317744009

- Salmon JP, Matheson HE, & McMullen PA (2014). Slow categorization but fast naming for photographs of manipulable objects. Visual Cognition, 22(2), 141–172. 10.1080/13506285.2014.887042

- Sevcikova Sehyr Z, Caselli N, Cohen-Goldberg A, & Emmorey K (submitted). The ASL-LEX 2.0 project: A database of lexical and phonological properties for 2,723 signs in American Sign Language.

- Strijkers K, Baus C, Runnqvist E, FitzPatrick I, & Costa A (2013). The temporal dynamics of first versus second language production. Brain and Language, 127(1), 6–11. 10.1016/j.bandl.2013.07.008

- Strijkers K, Costa A, & Thierry G (2010). Tracking Lexical Access in Speech Production: Electrophysiological Correlates of Word Frequency and Cognate Effects. Cerebral Cortex, 20(4), 912–928. 10.1093/cercor/bhp153

- Szekely A, Jacobsen T, D’Amico S, Devescovi A, Andonova E, Herron D, Lu CC, Pechmann T, Pléh C, Wicha N, Federmeier K, Gerdjikova I, Gutierrez G, Hung D, Hsu J, Iyer G, Kohnert K, Mehotcheva T, Orozco-Figueroa A, … Bates E (2004). A new on-line resource for psycholinguistic studies. Journal of Memory and Language, 51(2), 247–250. 10.1016/j.jml.2004.03.002

- Taub SF (2001). Language from the Body: Iconicity and Metaphor in American Sign Language. Cambridge University Press.

- Thompson RL, Vinson DP, & Vigliocco G (2009). The Link Between Form and Meaning in American Sign Language: Lexical Processing Effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(2), 550–557. 10.1037/a0014547

- Tolar TD, Lederberg AR, Gokhale S, & Tomasello M (2008). The Development of the Ability to Recognize the Meaning of Iconic Signs. The Journal of Deaf Studies and Deaf Education, 13(2), 225–240. 10.1093/deafed/enm045

- van Elk M, van Schie HT, & Bekkering H (2010). The N400-concreteness effect reflects the retrieval of semantic information during the preparation of meaningful actions. Biological Psychology, 85(1), 134–142. 10.1016/j.biopsycho.2010.06.004

- Vinson D, Thompson RL, Skinner R, & Vigliocco G (2015). A faster path between meaning and form? Iconicity facilitates sign recognition and production in British Sign Language. Journal of Memory and Language, 82(Supplement C), 56–85. 10.1016/j.jml.2015.03.002

- West WC, & Holcomb PJ (2000). Imaginal, Semantic, and Surface-Level Processing of Concrete and Abstract Words: An Electrophysiological Investigation. Journal of Cognitive Neuroscience, 12(6), 1024–1037. 10.1162/08989290051137558
