Abstract

Background

Medical chart documentation is an essential skill acquired in a clinical clerkship (CC). However, the utility of medical chart writing simulations as a component of the objective structured clinical examination (OSCE) has not been sufficiently evaluated. In this study, medical chart documentation in several clinical simulation settings was performed as part of the OSCE, and its correlation with CC performance was evaluated.

Methods

We created a clinical situation video and images involving the acquisition of informed consent, cardiopulmonary resuscitation, and diagnostic imaging in the emergency department, and assessed medical chart documentation performance by medical students as part of the OSCE. Evaluations were conducted using an original checklist (0–10 points). We also analyzed the correlation between medical chart documentation OSCE scores and CC performance of 120 medical students who performed their CC in 2019 as 5th year students and took the Post-CC OSCE in 2020 as 6th year students.

Results

Of the OSCE components, scores for the acquisition of informed consent and resuscitation showed significant correlations with CC performance (P<0.001 for each). In contrast, scores for diagnostic imaging showed a slightly positive, but non-significant, correlation with CC performance (P = 0.107). Overall scores for OSCE showed a significant correlation with CC performance (P<0.001).

Conclusion

We conducted a correlation analysis of CC performance and the quality of medical chart documentation in a simulation setting. Our results suggest that medical chart documentation could serve as an alternative component of the OSCE.

Introduction

A strong medical education program values, above all else, enabling students from the undergraduate through the postgraduate level to hone their skills so that they become trusted healthcare professionals [1, 2]. To this end, medical educators must establish an effective clinical training curriculum for undergraduate students in medical school that allows them to move seamlessly into their basic skill training as postgraduates [3].

In Japan, clinical training takes the form of a clinical clerkship (CC) [4], which differs from conventional observation-based clinical training. A CC student is a member of the medical team and participates in actual medical practice and care with supervising doctors [5]. Because students are allowed to perform a certain range of medical procedures under the guidance and monitoring of a teaching doctor [6, 7], they are able to acquire practical clinical skills. Students are required to acquire basic physical examination and precise medical chart documentation skills, as well as the ability to present at conferences. Clinical training in diagnoses and treatment for CC students follows a curriculum that is determined by each hospital department [8].

Physical examinations and medical chart documentation are among the most important skills learned in a CC [9]. Medical chart documentation is essential not only from legal and insurance (e.g., providing evidence for insurance) perspectives, but also for cultivating clinical abilities such as making a differential diagnosis [9].

In 2005, with the intent to ensure basic clinical competency in medical students, the Common Achievement Test Organization (CATO) was established as a third party and introduced the objective structured clinical examination (OSCE) and computer-based testing (CBT) to evaluate basic medical knowledge [10]. Both the OSCE and CBT are required for the Association of Japanese Medical Colleges to recognize a medical student as a ‘student doctor.’ The Pre-CC OSCE (conducted prior to the CC) evaluates basic clinical competency. In 2020, the Post-CC OSCE, which evaluates clinical competency after CC, was introduced as well. A strong performance on this examination is required to graduate from medical schools in Japan.

While the above tests are used to evaluate clinical competencies such as medical interviews and physical assessments, only a few tests offer a comprehensive evaluation of a student’s medical chart documentation skills after CC completion [11, 12]. Furthermore, no study to date has examined correlations between medical chart documentation performance and CC performance. Therefore, we created a medical chart documentation OSCE and assessed its relationship with CC performance in the context of medical education in Japan. In this study, we developed three simulation-based medical chart documentation OSCE components for Post-CC students and analyzed correlations between their performance on the medical chart documentation OSCE and CC.

Methods

Ethical considerations

This study was approved by the Research Ethics Committee of Osaka Medical College (No. 2806-1). Verbal informed consent was obtained from students by a medical teacher, and clerks witnessed the process. All students were informed about the nature and purpose of the study, and anonymity was guaranteed. Students were also informed that they could withdraw from the study if they notified the investigator within a week after taking the OSCE. We also emphasized that withdrawing from the study would not influence their academic outcomes in any way. There were no minors in the study population, since all 6th year medical students in Japan are aged >23 years.

Settings

As is the case for most medical schools in Japan, Osaka Medical College requires its students to take the Pre-CC OSCE and CBT in their 4th year, before they enter the CC in their 5th and 6th years. The Pre-CC OSCE included the following seven basic clinical components: medical interview, chest examination, abdominal examination, head and neck examination, neurological examination, basic clinical technique, and emergency response.

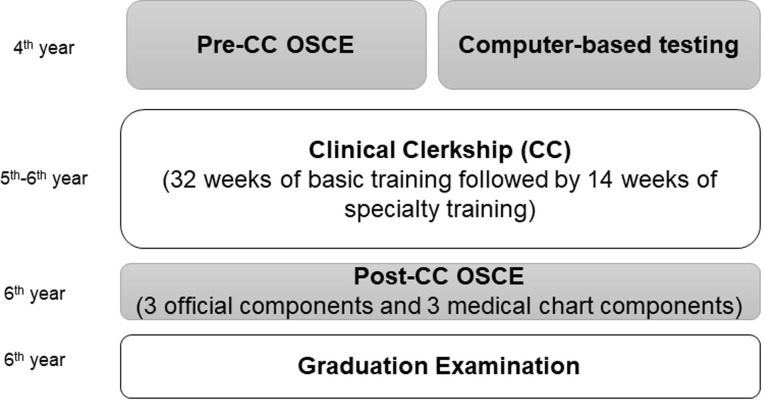

In 2020, the Post-CC OSCE was introduced as a way to evaluate the clinical skills cultivated in CC (Fig 1). The Post-CC OSCE comprises three official mandatory components provided by the CATO in Japan and three original components created by each medical college. Each medical school must perform the three official mandatory components and at least three original components. Mandatory components include a medical interview, physical examination, and presentation. We considered medical chart documentation to be an essential skill for medical students and developed three medical chart simulation components as original components to include in the Post-CC OSCE.

Fig 1. Timeline of medical student curriculum as related to the Objective Structured Clinical Examination (OSCE) and Clinical Clerkship (CC).

In 2020, we assessed only the original components of the Post-CC OSCE due to the COVID-19 pandemic.

Study population

We recruited 120 students of Osaka Medical College who were in their 5th year in 2019 and 6th year in 2020. Repeat-year students in the 2019 curriculum year were excluded because the evaluators of student performance are generally not the same every year.

Study measures

Post-CC OSCE original components

All 120 medical students were tested on the 3 original components of informed consent, resuscitation, and diagnostic imaging, as discussed below.

The first original component involved documenting the content of a five-minute video in which medical doctors and nurses go through the process of obtaining informed consent from various patients. We created four scenarios: surgical explanation, palliative care introduction, general anesthesia, and emergency response. One of these was randomly selected for the Post-CC OSCE [13]. After watching the video twice, students were given 10 minutes to document, as free text on paper, whatever information they felt was relevant, using the standard format they were taught in CC training. With regard to the content of informed consent, they were instructed to document essential information such as patient condition, treatments, risks, and questions from the patient.

The second original component involved documenting the content of a five-minute video on a case requiring resuscitation. In the video, medical doctors used manikins to perform advanced life support for an adult patient and basic life support for a pediatric patient [14]. One of three available resuscitation scenarios was randomly selected and used for the Post-CC OSCE. After watching the video twice, students were given 10 minutes to document relevant information in the manner described above for the first original component. They were also instructed to document essential information about each situation or each treatment during resuscitation.

The third original component involved diagnostic imaging in the emergency department. We created a five-minute clinical presentation video in which age, sex, height and weight, past and current medical history, symptoms, and physical assessment were first presented and followed by several images (scenarios) of conditions requiring an emergency response (brain hemorrhage, tension pneumothorax, and cardiac tamponade) [15]. One of these scenarios was selected randomly for the Post-CC OSCE. Here too, students watched the video and images twice and were given 10 minutes to document essential information regarding the patient, differential diagnosis, and treatment.

Medical chart evaluation using checklists

Student performance was evaluated by double-blinded examiners using original checklists (0–10 points) (Table 1). These checklists were developed by 3 clinical educators (authors NK, FT, and RK) with practical experience in evaluating the quality of student documentation. In developing the checklists, essential elements of documentation were determined by consensus and also by referring to previous studies [16, 17]. To ensure consistency, all checklist evaluations were performed by one teacher (author NK), who was a Certified Healthcare Simulation Educator and a Japan Society for Medical Education Certified Medical Education Specialist.

Table 1. Medical chart documentation checklist in each component.

| Item | Component 1: Informed consent | Component 2: Resuscitation | Component 3: Diagnostic imaging |
|---|---|---|---|
| 1 | Date, place | Date, place | Date, place |
| 2 | Patient or family name | Patient name | Patient name |
| 3 | Explainer, Attender | Present history | Present history |
| 4 | Patient condition | Electric treatment | Disease name |
| 5 | Content of medical procedure | Drug treatment | Image findings |
| 6 | Alternative description | Type of cardiac arrest | Left or Right |
| 7 | Complications | Differential diagnosis | Initial treatment |
| 8 | Understanding by patient or family | Communication with medical staff members | Differential diagnosis |
| 9 | Question and answer | Time course | Time course |
| 10 | Organized | Organized | Organized |
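The checklist scoring in Table 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the text gives a 0–10 range for ten items, so the sketch assumes one point per item that the rater judges to be present, with no partial credit. The function name `score_chart` and the example chart are hypothetical and are not taken from the study.

```python
# Component 1 checklist items, copied from Table 1.
INFORMED_CONSENT_ITEMS = (
    "Date, place",
    "Patient or family name",
    "Explainer, Attender",
    "Patient condition",
    "Content of medical procedure",
    "Alternative description",
    "Complications",
    "Understanding by patient or family",
    "Question and answer",
    "Organized",
)


def score_chart(items_documented, checklist=INFORMED_CONSENT_ITEMS):
    """Return a 0-10 score, assuming one point per checklist item marked present."""
    documented = set(items_documented)
    unknown = documented - set(checklist)
    if unknown:
        raise ValueError(f"not on checklist: {sorted(unknown)}")
    return len(documented)


# Hypothetical rater judgement for one student's chart (not study data).
documented = ["Date, place", "Patient condition", "Complications", "Organized"]
score = score_chart(documented)
print(score)
```

Under the same assumption, summing the three component scores would give the 0–30 total reported in the Results.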

CC content and evaluation

During their 5th year, medical students enter into a basic CC, in which they participate in the CC for all clinical departments of the hospital over the course of 32 weeks. Each CC spans roughly 1–2 weeks in duration. Once the basic CC is completed, students must then select a discipline they wish to study for 14 weeks from the end of their 5th year to early in their 6th year (Fig 1).

During each CC, supervising (teaching) doctors of each department evaluate the clinical skills of students using an evaluation sheet based on the mini-clinical evaluation exercise (mini-CEX) and direct observation of procedural skills (DOPS) [18, 19]. This assessment comprises a 5-point evaluation sheet with 16 items (80%), subjective evaluation by the organizer of each department (10%), and a written report (10%).
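The 80/10/10 weighting can be illustrated with a small Python sketch. The text does not say how the three parts are combined, so the sketch makes explicit assumptions: each of the 16 items is rated 1–5 and the ratings are summed (maximum 16 × 5 = 80), and the organizer evaluation and the written report are each scored 0–10. The function name `composite_cc_score` is hypothetical.

```python
N_ITEMS = 16         # items on the evaluation sheet (from the text)
MAX_ITEM_RATING = 5  # 5-point scale (from the text); a 1-5 range is assumed


def composite_cc_score(item_ratings, organizer_points, report_points):
    """Combine the three parts into a 0-100 score.

    Assumption: item ratings are summed (max 80), and the organizer
    evaluation and written report are each scored 0-10.
    """
    if len(item_ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item ratings")
    if any(not 1 <= r <= MAX_ITEM_RATING for r in item_ratings):
        raise ValueError("item ratings must be between 1 and 5")
    for points in (organizer_points, report_points):
        if not 0 <= points <= 10:
            raise ValueError("organizer and report points must be between 0 and 10")
    return sum(item_ratings) + organizer_points + report_points


# Hypothetical student: every item rated 4, organizer 8/10, report 7/10.
example_score = composite_cc_score([4] * N_ITEMS, 8, 7)  # 64 + 8 + 7 = 79
print(example_score)
```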

Scores for each CC are collected by the medical education center and used to calculate an average score. In this study, we focused on the basic CC (32 weeks) score, since all medical students follow the same curriculum during their basic CC [20].

Statistical analysis

Statistical analysis was performed using JMP® 11 (SAS Institute Inc., Cary, NC, USA). Results were compared using Pearson’s correlation test. Data are presented as mean ± SD. P < 0.05 was considered statistically significant.
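For readers without access to JMP, the same quantities can be sketched in plain Python. This is an illustration only: the score lists below are hypothetical rather than study data, the helper `pearson_r` is our own name, and the sample (n − 1) standard deviation is an assumption because the text does not state which SD was used.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length with n >= 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical scores for five students (illustrative only).
osce_total = [18, 20, 22, 24, 26]        # OSCE total, 0-30
cc_score = [75.0, 77.0, 78.0, 80.0, 81.0]  # averaged CC score

mean_osce = statistics.mean(osce_total)
sd_osce = statistics.stdev(osce_total)   # sample SD (assumed)
r = pearson_r(osce_total, cc_score)
print(f"OSCE total: {mean_osce:.1f} ± {sd_osce:.1f}; r = {r:.3f}")
```

The P value reported by a statistics package additionally requires the t distribution with n − 2 degrees of freedom, which is omitted here to keep the sketch dependency-free.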

Patient and public involvement

Neither patients nor the public were involved in the design, execution, reporting, or dissemination of this study.

Results

The Post-CC OSCE was performed on July 7, 2020. Due to the COVID-19 pandemic, we were unable to perform the mandatory components of CATO; however, we were able to perform our three original medical chart documentation components. None of the medical students withdrew from the study.

For the 2020 Post-CC OSCE, we selected informed consent for general anesthesia as Component 1, adult advanced life support as Component 2, and diagnostic imaging for cardiac tamponade as Component 3. We analyzed data from 120 medical students who took the Post-CC OSCE in 2020 and who had undergone the basic CC in 2019. Medical students scored between 70% and 90% on each component (Table 2).

Table 2. Medical student scores for Clinical Clerkship (CC) and Post-CC Objective Structured Clinical Examination (OSCE).

| | Component 1: Informed consent (0–10) | Component 2: Resuscitation (0–10) | Component 3: Diagnostic imaging (0–10) | Total Score (0–30) | Clinical Clerkship |
|---|---|---|---|---|---|
| Average | 7.2 | 7.2 | 7.4 | 22.0 | 78.3 |
| SD | 1.4 | 1.2 | 1.1 | 2.2 | 2.5 |

Correlations between clinical competency scores and medical chart documentation scores of the OSCE are shown in Table 3. With regard to OSCE components, the acquisition of informed consent and resuscitation showed significant correlations with a student’s CC performance (P<0.001 for each), and diagnostic imaging showed a slightly positive, but non-significant, correlation (P = 0.107). When all OSCE scores were combined, the correlation was statistically significant (P<0.001).

Table 3. Correlations between medical chart documentation scores from the Objective Structured Clinical Examination (OSCE) and Clinical Clerkship (CC).

| | Component 1: Informed consent | Component 2: Resuscitation | Component 3: Diagnostic imaging | Total Score |
|---|---|---|---|---|
| R | 0.813 | 0.729 | 0.148 | 0.749 |
| Co-efficient | 0.661 | 0.532 | 0.013 | 0.561 |
| P | <0.001 | <0.001 | 0.107 | <0.001 |

*P<0.05.
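As a rough consistency check on Table 3, and assuming the usual t-test for a Pearson correlation (the Methods name only "Pearson's correlation test"), the test statistic for a correlation $R$ across $n$ students is

$$
t = R\sqrt{\frac{n-2}{1-R^{2}}}, \qquad \text{with } n-2 \text{ degrees of freedom.}
$$

For Component 3, with $R = 0.148$ and $n = 120$:

$$
t = 0.148\sqrt{\frac{118}{1-0.148^{2}}} \approx 0.148 \times 10.98 \approx 1.63 .
$$

This is below the two-sided 5% critical value of about 1.98 for 118 degrees of freedom, in line with the non-significant result reported for diagnostic imaging. For Component 1, $R = 0.813$ gives $t \approx 15.2$, far above that threshold.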

Discussion

One main objective of most medical schools worldwide is providing students with an education that prepares them to transition seamlessly from the stage of knowledge acquisition to performing practical skills in clinical settings. Accordingly, medical education programs aim to produce competent graduates who can record patient history through medical interviews, conduct a comprehensive physical examination, and perform medical chart documentation. Of these competencies, medical chart documentation skills are considered to be very important [20, 21].

Patient interviews and medical chart documentation are essential skills, and accurate documentation and evaluation allow medical students to collect the information required for appropriate diagnosis and treatment during their CC [22, 23]. In the clinical setting, it is not rare to miss abnormal physical findings or to perform a critical evaluation incorrectly. Incorrect medical chart documentation can lead to diagnostic errors, which can result in an adverse outcome for the patient. If medical students are to acquire clinical competency and become trustworthy healthcare professionals, then they must be taught and expected to perform both technical and non-technical skills at a very high level [24].

Medical students must practice medical chart documentation before moving on to post-graduate training. In their CC rotations, medical students are expected to be active participants in the healthcare team, interviewing patients, documenting complaints and findings, and serving as patient advocates by communicating patient issues to the team. In these ways, students facilitate information sharing among healthcare team members [25]. Students also acquire competency by practicing and receiving feedback on their clinical skills, including the ability to fill out medical charts and reason through diagnostic and therapeutic plans [26]. The medical record is a critical resource for promoting clinical competency among medical students because it provides access to patient information and educational resources. It also serves as an important venue for assessing medical student competencies. In fact, medical record review is one of the assessment tools described in the Accreditation Council for Graduate Medical Education toolbox for guiding competency assessment in the six core domains [27, 28].

In our study, CC performance showed a strong and significant correlation with OSCE scores on the informed consent and resuscitation components, but no significant correlation with scores on the emergency diagnostic imaging component. This may be attributed to the fact that, during their CC, medical students regularly participate in situations involving the acquisition of informed consent, and advanced life support training is becoming common in simulation training during the CC. In contrast, medical students are less likely to have direct clinical experience with emergency diagnostic imaging. Accordingly, the inclusion of emergency diagnostic imaging using a problem-based learning simulation in the curriculum may be warranted. Because our medical chart documentation OSCE does not require human-to-human contact, it may also be effective for summative evaluation during pandemic situations such as COVID-19.

This study has several limitations worth noting. First, we summarized OSCE and CC performance as single summative scores, even though medical students rotate through many subject areas and are assessed on many skills. It would be more relevant to evaluate correlations with validated measures of documentation quality or with future (rather than past) performance. Furthermore, we evaluated overall scores for the CC rather than those for individual components. Assessing correlations among various evaluation aspects during CC may further clarify relationships with performance on the medical chart documentation OSCE. Second, we developed the original checklists based on previous studies, and one teacher performed all evaluations. It will be important to validate the checklists to ensure their generalizability and to have multiple evaluators perform the evaluations. Third, all documentation by students was made on paper because we believe paper documentation to be a fundamental skill. However, given the spread of electronic medical records, it will be important to include electronic medical chart documentation scenarios in the future. Finally, as the data came from a single institution, our findings may not be generalizable to other medical schools. However, we believe that our results can be applied to most other Japanese medical schools, as they all follow the main core curriculum provided by the Ministry of Education.

In this study, we performed a correlation analysis of student performance on CC with the quality of medical chart documentation in a simulation setting. Our results suggest that medical chart documentation could serve as an alternative component of the OSCE.

Supporting information

(XLSX)

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

Financial support for the study was provided by Osaka Medical College, which had no role in study design, data collection and analysis, publication decisions, or manuscript preparation.

References

- 1. Ellaway RH, Graves L, Cummings BA. Dimensions of integration, continuity and longitudinality in clinical clerkships. Med Educ. 2016;50:912–921. doi:10.1111/medu.13038

- 2. Hudson JN, Poncelet AN, Weston KM, et al. Longitudinal integrated clerkships. Med Teach. 2017;39:7–13. doi:10.1080/0142159X.2017.1245855

- 3. Takahashi N, Aomatsu M, Saiki T, et al. Listen to the outpatient: qualitative explanatory study on medical students’ recognition of outpatients’ narratives in combined ambulatory clerkship and peer role-play. BMC Med Educ. 2018;18:229. doi:10.1186/s12909-018-1336-6

- 4. Dong T, Zahn C, Saguil A, et al. The Associations Between Clerkship Objective Structured Clinical Examination (OSCE) Grades and Subsequent Performance. Teach Learn Med. 2017;29:280–285. doi:10.1080/10401334.2017.1279057

- 5. Durning SJ, Artino AR, Pangaro LN, van der Vleuten C, Schuwirth L. Context and clinical reasoning: understanding the situation from the perspective of the expert’s voice. Med Educ. 2011;45:927–38. doi:10.1111/j.1365-2923.2011.04053.x

- 6. Murata K, Sakuma M, Seki S, et al. Public attitudes toward practice by medical students: a nationwide survey in Japan. Teach Learn Med. 2014;26:335–343. doi:10.1080/10401334.2014.945030

- 7. Durning SJ, Artino AR, Pangaro LN, et al. Redefining context in the clinical encounter: implications for research and training in medical education. Acad Med. 2010;85:894–901. doi:10.1097/ACM.0b013e3181d7427c

- 8. Friedman E, Sainte M, Fallar R. Taking note of the perceived value and impact of medical student chart documentation on education and patient care. Acad Med. 2010;85:1440–4. doi:10.1097/ACM.0b013e3181eac1e0

- 9. Tagawa M, Imanaka H. Reflection and self-directed and group learning improve OSCE scores. Clin Teach. 2010;7:266–70. doi:10.1111/j.1743-498X.2010.00377.x

- 10. Dong T, Saguil A, Artino AR Jr, et al. Relationship between OSCE scores and other typical medical school performance indicators: a 5-year cohort study. Mil Med. 2012;177:44–6. doi:10.7205/milmed-d-12-00237

- 11. Hoonpongsimanont W, Velarde I, Gilani C, et al. Assessing medical student documentation using simulated charts in emergency medicine. BMC Med Educ. 2018;18:203. doi:10.1186/s12909-018-1314-z

- 12. Olvet DM, Wackett A, Crichlow S, et al. Analysis of a Near Peer Tutoring Program to Improve Medical Students’ Note Writing Skills. Teach Learn Med. 2020 Feb 24:1–9. doi:10.1080/10401334.2020.1730182. Online ahead of print.

- 13. Ishikawa H, Hashimoto H, Kinoshita M, et al. Evaluating medical students’ non-verbal communication during the objective structured clinical examination. Med Educ. 2006;40:1180–7. doi:10.1111/j.1365-2929.2006.02628.x

- 14. Neumar RW, Shuster M, Callaway CW, et al. Part 1: Executive Summary: 2015 American Heart Association Guidelines Update for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. Circulation. 2015;132:S315–67. doi:10.1161/CIR.0000000000000252

- 15. Edgerley S, McKaigney C, Boyne D, et al. Impact of Night Shifts on Emergency Medicine Resident Resuscitation Performance. Resuscitation. 2018;127:26–30. doi:10.1016/j.resuscitation.2018.03.019

- 16. Dreimüller N, Schenkel S, Stoll M, et al. Development of a checklist for evaluating psychiatric reports. BMC Med Educ. 2019;19:121. doi:10.1186/s12909-019-1559-1

- 17. Park SG, Park KH. Correlation between nonverbal communication and objective structured clinical examination score in medical students. Korean J Med Educ. 2018;30:199–208. doi:10.3946/kjme.2018.94

- 18. Lörwald AC, Lahner FM, Mooser B, et al. Influences on the implementation of Mini-CEX and DOPS for postgraduate medical trainees’ learning: A grounded theory study. Med Teach. 2019;41:448–456. doi:10.1080/0142159X.2018.1497784

- 19. Lörwald AC, Lahner FM, Nouns ZM, et al. The educational impact of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) and its association with implementation: A systematic review and meta-analysis. PLoS One. 2018;13:e0198009. doi:10.1371/journal.pone.0198009

- 20. Komasawa N, Terasaki F, Nakano T, et al. Relationship between objective skill clinical examination and clinical clerkship performance in Japanese medical students. PLoS One. 2020;15:e0230792. doi:10.1371/journal.pone.0230792

- 21. Schiekirka S, Reinhardt D, Heim S, et al. Student perceptions of evaluation in undergraduate medical education: a qualitative study from one medical school. BMC Med Educ. 2012;12:45. doi:10.1186/1472-6920-12-45

- 22. Bowen JL. Educational strategies to promote clinical diagnostic reasoning. N Engl J Med. 2006;355:2217–2225. doi:10.1056/NEJMra054782

- 23. Nomura S, Tanigawa N, Kinoshita Y, et al. Trialing a new clinical clerkship record in Japanese clinical training. Adv Med Educ Pract. 2015;6:563–5. doi:10.2147/AMEP.S90295

- 24. Shikino K, Ikusaka M, Ohira Y, et al. Influence of predicting the diagnosis from history on the accuracy of physical examination. Adv Med Educ Pract. 2015;6:143–8. doi:10.2147/AMEP.S77315

- 25. Duffy FD, Gordon GH, Whelan G, et al. Assessing competence in communication and interpersonal skills: The Kalamazoo 2 report. Acad Med. 2004;79:495–507. doi:10.1097/00001888-200406000-00002

- 26. Mukohara K, Kitamura K, Wakabayashi H, et al. Evaluation of a communication skills seminar for students in a Japanese medical school: a non-randomized controlled study. BMC Med Educ. 2004;4:24. doi:10.1186/1472-6920-4-24

- 27. Accreditation Council for Graduate Medical Education; American Board of Medical Specialties. Toolbox of Assessment Methods. Available at: http://www.acgme.org/Outcome/assess/Toolbox.pdf. Accessed July 17, 2020.

- 28. Accreditation Council for Graduate Medical Education. Common Program Requirements: General Competencies. Available at: http://www.acgme.org/outcome/comp/GeneralCompetenciesStandards21307.pdf. Accessed July 15, 2020.
