Abstract

In many applications such as copy number variant (CNV) detection, the goal is to identify short segments on which the observations have different means or medians from the background. Those segments are usually short and hidden in a long sequence, and hence are very challenging to find. In this paper, we study a super scalable short segment (4S) detection algorithm. This nonparametric method detects segments by clustering the locations where the observations exceed a threshold. It is computationally efficient and does not rely on the Gaussian noise assumption. Moreover, we develop a framework to assign significance levels to detected segments. We demonstrate the advantages of our proposed method through theoretical, simulation, and real data studies.

Keywords: copy number variation, inference, nonparametric method, signal detection

1. Introduction

A chromosome copy number variant (CNV) is a type of structural variation with abnormal copy number changes involving DNA fragments [6,5]. CNVs result in gains or losses in the genome, thereby interfering with downstream functions of the DNA contents. Accounting for a substantial amount of genetic variation, CNVs are considered a risk factor for human diseases. Over the past decade, advances in genomic technologies have revealed that CNVs underlie many human diseases, including autism [15], cancer [4], schizophrenia [3], and major depressive disorder [13]. It is therefore fundamental to develop fast and accurate CNV detection tools.

A variety of statistical tools have been developed to discover structural changes in CNV data during the last 20 years. Popular algorithms include circular binary segmentation [14], the fused LASSO [16], likelihood ratio selection [10], and the screening and ranking algorithm [12]. Other change-point detection tools, such as wild binary segmentation [8] and the simultaneous multiscale change-point estimator [7], can also be applied to CNV data. See [11] for a recent review of modern change-point analysis techniques. A majority of existing methods are based on the Gaussian assumption, although quantile normalization [18] or local median transformation [2] can be used for normalization. Computational complexity is also a concern for some existing methods, as modern technologies produce extraordinarily big data. In spite of some fast algorithms [17], few algorithms are known to possess both computational efficiency and a solid theoretical foundation. Moreover, with only a few exceptions [9,7], the existing methods focus on detection and do not offer statistical inference. For these reasons, we develop a fast nonparametric method for CNV detection with a theoretical foundation and the opportunity to conduct statistical inference.

In this paper, we model the CNVs as short segments with nonzero height parameters, which are sparsely hidden in a long sequence. The goal is to identify those segments with high probability and, moreover, to assess the significance levels of detected segments. In particular, we propose a scalable nonparametric algorithm for short segment detection. It depends only on the ranks of the absolute values of the measurements and hence requires minimal assumptions on the noise distribution. A short segment may be present when a large enough number of observations on a short segment exceed a certain threshold; for instance, 8 out of 10 observations on a segment are larger than the 99th percentile of the data. Following this idea, we implement a super scalable short segment (4S) detection algorithm that clusters the points into a segment when such a phenomenon occurs. The advantages of our method are fourfold. First, this nonparametric method requires minimal assumptions on the noise distribution. Second, it is super fast, as the core algorithm requires only O(n) operations to analyze a sequence of n measurements. In particular, it takes less than 2 seconds for our R code to analyze 272 sequences, each with about 34,000 measurements. Third, we establish a non-asymptotic theory to ensure the detection of all signal segments. Last but not least, our method can compute the significance level of each detected segment and offers a convenient approach to statistical inference.

2. Method

2.1. Notations and the main idea

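Let $X_1, \ldots, X_n$ be a sequence of random variables such that

$$X_j = \mu_j + \varepsilon_j, \qquad j = 1, \ldots, n, \qquad (1)$$

where the height parameter vector μ = (μ1, …, μn)⊤ is sparse and $\varepsilon_1, \ldots, \varepsilon_n$ are random noises with median 0. Moreover, we assume that the nonzero entries of μ are supported in the union of K disjoint intervals, so that

$$\operatorname{supp}(\mu) = \bigcup_{k=1}^{K} I_k, \qquad I_k = [\ell_k, r_k].$$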

Here we assume that $\ell_k \le r_k$ for all k and $r_k + 1 < \ell_{k+1}$ for k < K. Note that such a representation of $\operatorname{supp}(\mu)$ is unique, and it is used throughout this paper for $\mathcal{I} = \{I_1, \ldots, I_K\}$ or its estimator $\hat{\mathcal{I}}$. For convenience and without confusion, when referring to a set of locations, we use the interval $[\ell_k, r_k]$ to denote the set of integers $\{\ell_k, \ell_k + 1, \ldots, r_k\}$. We call those intervals segments. In particular, a signal segment is a segment on which the height parameter is a nonzero constant. Let μI denote the subvector of μ restricted to I ⊂ {1, …, n}. We denote by $|A|$ the cardinality of a set $A$. In particular, $|I_k| = r_k - \ell_k + 1$ for $I_k = [\ell_k, r_k]$. The symbols 0 and 1 denote the vectors (0, …, 0)⊤ and (1, …, 1)⊤, respectively.

Naturally, a primary goal for model (1) is to identify the set of signal segments $\mathcal{I}$. Moreover, although this is rarely done, it is useful to assign a significance level to each of the detected segments. In this paper, we study both estimation and related inference problems for segment detection. Our strategy is to cluster “putative locations” using spatial information. In particular, we consider the set of positions $\mathcal{J}_c = \{j : |X_j| > c\}$, where the observations exceed a threshold c > 0 in absolute value. Intuitively, for a properly chosen c and some segment $I$, if $|I \cap \mathcal{J}_c|$ is big enough compared with $|I|$, it is likely that $\mu_I \neq \mathbf{0}$.

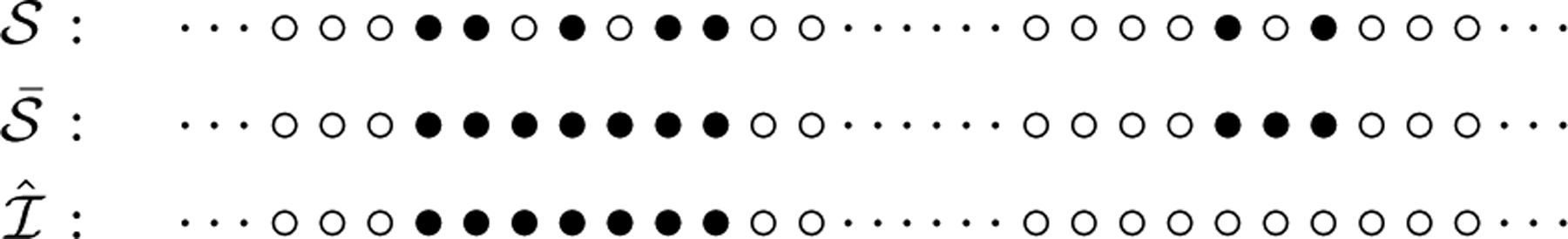

To illustrate the idea, we consider a game of ball painting. Suppose that we start with n white balls in a row, and paint the jth ball black if |Xj| > c. Let m be the total number of black balls, which is much smaller than n. If we observe a few black balls crowded in a short segment, e.g., ‘segment 1’ illustrated in Figure 1, it is plausible that the height parameter is not zero on that segment. Our proposed algorithm can easily identify such segments. It may also happen that a neighborhood contains only a single black ball, e.g., ‘segment 2’ in Figure 1. Then we may not have strong evidence against μ = 0. To put this intuition into a sound theoretical framework, it is imperative to evaluate the significance of each pattern. In fact, given the numbers of white and black balls in a short segment, we can calculate how likely a certain pattern is to appear in a sequence of length n with m black balls when the white and black balls are placed at random. We develop a framework of inference based on this idea in Section 2.3.

Fig. 1:

Segment 1 and segment 2.

2.2. Algorithm

To estimate $\mathcal{I}$, we propose a super scalable short segment (4S) detection algorithm, described as follows.

Step 1: thresholding. Define $\mathcal{J}_c = \{j : |X_j| > c\}$. That is, we collect the positions where the absolute values of the observations exceed a threshold.

Step 2: completion. Construct the completion set $\bar{\mathcal{J}}_{c,d}$ by the criterion that $j \in \bar{\mathcal{J}}_{c,d}$ if and only if there exist $j_1, j_2 \in \mathcal{J}_c$ with $j_1 \le j_2 \le j_1 + d + 1$ such that $j_1 \le j \le j_2$. That is, we add the whole segment $[j_1, j_2]$ into the completion set if the gap between $j_1$ and $j_2$ is small enough.

Write $\bar{\mathcal{J}}_{c,d} = \bigcup_{k} \hat{I}_k$, where $\hat{I}_k = [\hat{\ell}_k, \hat{r}_k]$ with $\hat{r}_k + 1 < \hat{\ell}_{k+1}$. Note that this decomposition is unique.

Step 3: clean up. We delete $\hat{I}_k$ from $\bar{\mathcal{J}}_{c,d}$ if $|\hat{I}_k| \le h$, and obtain our final estimator $\hat{\mathcal{I}}_{c,d,h}$, the collection of the remaining segments. That is, we ignore the segments that are too short to be considered.

The whole procedure depends on three parameters, c, d, and h. The choice of c is crucial and depends on the application, whereas d and h are more flexible because false positives can be screened using the significance levels defined later. We may drop the subscripts and simply write $\mathcal{J}$, $\bar{\mathcal{J}}$ and $\hat{\mathcal{I}}$ when the sets obtained from the three steps above for given c, d and h are clear from the context. Figure 2 illustrates our procedure, where the location set obtained in each step is indicated by the positions of black balls.

Fig. 2:

An illustration of the three steps in the 4S algorithm with d = h = 3. Top row: the black balls indicate the locations where the observations have absolute values larger than a threshold. Middle row: the small gaps of length ≤ d between black balls are filled in with black balls. Bottom row: the segment of black balls with length ≤ h is deleted.
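The three steps of Section 2.2 can be sketched in a few lines of Python. This is a minimal illustration, not the authors' SSSS package: positions are 0-based, segments are returned as closed intervals (start, end), and the completion step links two black balls when at most d white balls lie between them (j2 − j1 ≤ d + 1), following the criterion stated in Step 2. The function name `four_s` is hypothetical.

```python
from typing import List, Sequence, Tuple

Segment = Tuple[int, int]  # closed interval [start, end] of 0-based positions


def four_s(x: Sequence[float], c: float, d: int, h: int) -> List[Segment]:
    """Hypothetical sketch of the three 4S steps (not the SSSS package)."""
    # Step 1 (thresholding): positions of the black balls, |X_j| > c.
    black = [j for j, xj in enumerate(x) if abs(xj) > c]
    if not black:
        return []

    # Step 2 (completion): consecutive black balls j1 < j2 stay in the same
    # segment when at most d white balls lie between them (j2 - j1 <= d + 1).
    segments: List[Segment] = []
    start = prev = black[0]
    for j in black[1:]:
        if j - prev > d + 1:  # gap too long: close the current segment
            segments.append((start, prev))
            start = j
        prev = j
    segments.append((start, prev))

    # Step 3 (clean-up): drop segments whose length is at most h.
    return [(a, b) for a, b in segments if b - a + 1 > h]
```

On the toy sequence `[0, 0, 3, 0, 3, 3, 0, 0, 0, 0, 0, 3, 0, 0, 0, 0, 0, 0, 3, 3, 3, 0]` with c = 2, d = 1 and h = 1, the sketch returns `[(2, 5), (18, 20)]`: the gap at position 3 is filled in, and the isolated black ball at position 11 is removed in the clean-up step.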

2.3. Theory: consistency and inference

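Our goal is to identify the set of signal segments $\mathcal{I}$ with false positive control. Here we say that $I_k \in \mathcal{I}$ is identified by an estimator $\hat{\mathcal{I}}$ if there is a unique $\hat{I} \in \hat{\mathcal{I}}$ such that $\hat{I} \cap I_k \neq \emptyset$, and $\hat{I} \cap I_j = \emptyset$ for all $I_j \in \mathcal{I}$ with j ≠ k. Such an $\hat{I}$ is a true positive. We say that $\mathcal{I}$ is identified by $\hat{\mathcal{I}}$ if every $I_k \in \mathcal{I}$ is identified by $\hat{\mathcal{I}}$. That is, there is a one-to-one correspondence between $\mathcal{I}$ and a subset of $\hat{\mathcal{I}}$, and the K pairs under this correspondence are the only pairs with nonempty intersection among all segments in $\mathcal{I}$ and $\hat{\mathcal{I}}$. See Figure 3 for an illustration of the definition.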

Fig. 3:

An illustration of the relationship between signal segments (*) and four estimators (•). $\mathcal{I}$ consists of two signal segments I1 and I2. Both I1 and I2 are successfully identified by $\hat{\mathcal{I}}_1$. I1 is also identified by $\hat{\mathcal{I}}_2$ and $\hat{\mathcal{I}}_3$. $\hat{\mathcal{I}}_2$ has one true positive (left) and one false positive (right). $\hat{\mathcal{I}}_3$ has one true positive (left) and two false positives (middle and right). $\hat{\mathcal{I}}_4$ has one false positive.
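The identification rule translates into a small counting routine, which is also the natural way to tally true and false positives in simulations. This is a sketch under two assumptions: segments are closed integer intervals, and every detected segment that is not a true positive counts as a false positive. The function names are hypothetical.

```python
from typing import List, Tuple

Segment = Tuple[int, int]  # closed interval [start, end] of integer positions


def overlaps(a: Segment, b: Segment) -> bool:
    """True if the closed intervals a and b share at least one position."""
    return a[0] <= b[1] and b[0] <= a[1]


def count_tp_fp(signals: List[Segment], detected: List[Segment]) -> Tuple[int, int]:
    """Count true and false positives under the identification rule."""
    tp = 0
    for s in signals:
        hits = [e for e in detected if overlaps(e, s)]
        # s is identified if exactly one detected segment meets it and that
        # detected segment meets no other signal segment.
        if len(hits) == 1 and sum(overlaps(hits[0], t) for t in signals) == 1:
            tp += 1
    # Assumption: every detected segment that is not a true positive is a false positive.
    return tp, len(detected) - tp
```

For example, with signal segments (10, 20) and (50, 60), a single detected segment (5, 55) meets both of them and is therefore counted as one false positive and no true positive.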

In our three-step procedure, the first two steps produce an estimator and the last step aims to delete obvious false positives. Our theory proceeds in two main steps. First, we characterize the non-asymptotic probability that the first two steps produce an estimator that successfully identifies $\mathcal{I}$. To identify $\mathcal{I}$, two conditions must hold. Condition one is that, after step 1, the black balls are dense enough on Ik so that they do not split into two or more segments in step 2. Condition two is that, in the gap between Ik and Ik+1, the black balls are sparse enough so that the black balls on Ik and Ik+1 do not connect into one big segment. Theorem 1 bounds the probabilities of these two conditions for all k.

Second, we develop a framework of inference to control false positives. As a rough control, Theorem 2 gives an upper bound on the expected number of false positives when all segments of length one are deleted in step 3. In general, after step 2, it is not optimal to judge how likely a detected segment is to be a false positive only by its length. Therefore, for each segment in $\hat{\mathcal{I}}$, we check its original color pattern from step 1 and calculate a p-value of this pattern under the null hypothesis μ = 0. This assigns a significance level to each detected segment, which helps control false positives. Because the exact p-values are difficult to find, Lemma 2 offers a reasonable approximation.

To facilitate theoretical analysis, we assume in this subsection that $\varepsilon_1, \ldots, \varepsilon_n$ are independent and identically distributed (IID) noises with median 0. Moreover, εj has a continuous density function f that is symmetric about 0. Under this assumption, when μ = 0 the black balls are randomly distributed for an arbitrary threshold c.

Now we investigate when a signal segment can be detected by our algorithm. Let F be the cumulative distribution function corresponding to the noise density f. We use fα = F−1(α) to denote the α-percentile. Suppose that there is a segment I such that |I| = L, μI = ν1 and $\mu_{H \setminus I} = \mathbf{0}$, where H is a segment containing I such that Ic ⋂ H is the union of two segments, each of length D. Without loss of generality, we assume that ν = fα > 0, i.e., α > 0.5. For a threshold 0 < c = fβ ≤ ν, let us continue our game of ball painting and focus on this segment and its neighborhood. Recall that we paint the ball at position j black if and only if |Xj| > c = fβ. The following two events together ensure that the segment I is identified by our method:

$$\mathcal{E}_1 = \{\text{on } I, \text{ there is no sub-segment of } \min\{d, L\} \text{ consecutive white balls}\},$$

$$\mathcal{E}_2 = \{\text{each of the two segments of } I^c \cap H \text{ contains } d \text{ consecutive white balls}\}.$$

Event $\mathcal{E}_1$ ensures that this segment is detected as a whole segment, while event $\mathcal{E}_2$ controls the total length of the detected segment and makes sure that the detected segments are separated from each other. Together, $\mathcal{E}_1$ and $\mathcal{E}_2$ guarantee that our algorithm identifies a segment $\hat{I}$ such that $\hat{I} \cap I \neq \emptyset$ and $\hat{I} \cap I' = \emptyset$ for any other signal segment I′. The following lemma gives non-asymptotic bounds for $P(\mathcal{E}_1^c)$ and $P(\mathcal{E}_2^c)$.

Lemma 1

Let H ⊃ I be two segments such that μI = ν1, $\mu_{H \setminus I} = \mathbf{0}$ and |I| = L, and suppose that Ic ⋂ H is the union of two segments, each of length D. Let β′ = 2β − 1. For 0 < c = fβ ≤ ν and d > 0, after the thresholding and completion steps, we have

and

Let νmin = mink |νk| be the minimal signal strength among all Ik’s, let Lmin = mink |Ik| and Lmax = maxk |Ik| be the minimal and maximal lengths of signal segments, respectively, and let $D_{\min} = \min_k (\ell_{k+1} - r_k - 1)$ be the minimal gap between two signal segments. Define βmin = F(νmin), so that $\nu_{\min} = f_{\beta_{\min}}$. Let . Taking into account all signal segments in $\mathcal{I}$, the theorem below gives a lower bound on the probability of identifying $\mathcal{I}$ after the first two steps.

Theorem 1

With , d > 0 and h = 0, can identify all signal segments in with probability at least

| (2) |

Corollary 1

The probability (2) goes to 1 asymptotically if log K+log Lmax ≪ min {d, Lmin} → ∞ and log K ≪ Dmin /d → ∞ as n → ∞.

Although Theorem 1 gives a theoretical guarantee that all signal segments are recovered with high probability, there may be some false positives. As an ad hoc remedy, we may take h = 2 or 3 to eliminate some obvious false positives. This clean-up step is simple and helpful for deleting isolated black balls. The theorem below gives an upper bound on the number of false positives with the conservative choice h = 1.

Theorem 2

Assume μ = 0 and Then with h = 1.

The expected number of false positive segments can be well controlled if both m/n and d are small. Next, we illustrate how to assess the significance levels of the segments detected by our method, which helps control false positives. Recall our estimator $\hat{\mathcal{I}} = \{\hat{I}_1, \ldots, \hat{I}_{\hat{K}}\}$, where $\hat{I}_k = [\hat{\ell}_k, \hat{r}_k]$. For each $\hat{I}_k$, let $s_k = |\hat{I}_k|$ be its length, $t_k$ the number of black balls on $\hat{I}_k$ after step 1, and m the total number of black balls. Now consider n balls in a row with m black and n − m white balls. Let $A_k$ be the event that there exists a segment of length sk in which at least tk balls are black, in a sequence of n balls with m black ones. The p-value of $\hat{I}_k$ can be defined as the probability of $A_k$ when the balls are randomly placed. This p-value can effectively control false positives. However, it is challenging to find an exact formula for the p-value. The lemma below gives an upper bound for $P(A_k)$.

Lemma 2

$$P(A_k) \le m\, P(Y \ge t_k - 1),$$

where Y follows a hypergeometric distribution with total population size n − 1, number of success states m − 1, and number of draws sk − 1.

This upper bound serves as a conservative approximation of the p-value and is useful for eliminating false positives.
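The bound in Lemma 2 is cheap to evaluate exactly with binomial coefficients, and for small cases it can be checked against a direct simulation of the ball-placement event: m black balls placed uniformly at random among n positions, asking whether some window of s consecutive positions holds at least t of them. The sketch below assumes the bound has the union-bound form m · P(Y ≥ t − 1) from the proof of Lemma 2 and caps it at 1 so that it reads as a conservative p-value. It uses only the Python standard library, and all function names are hypothetical.

```python
import random
from math import comb


def hypergeom_tail(k: int, pop: int, succ: int, draws: int) -> float:
    """P(Y >= k) for Y ~ Hypergeometric(pop, succ, draws)."""
    hi = min(succ, draws)
    num = sum(comb(succ, y) * comb(pop - succ, draws - y) for y in range(max(k, 0), hi + 1))
    return num / comb(pop, draws)


def lemma2_pvalue(n: int, m: int, s: int, t: int) -> float:
    """Upper bound m * P(Y >= t - 1), Y ~ Hypergeometric(n - 1, m - 1, s - 1), capped at 1."""
    return min(1.0, m * hypergeom_tail(t - 1, n - 1, m - 1, s - 1))


def has_dense_window(black, n: int, s: int, t: int) -> bool:
    """True if some window of s consecutive positions (s <= n) holds >= t black balls."""
    is_black = [0] * n
    for j in black:
        is_black[j] = 1
    count = sum(is_black[:s])  # black balls in the first window
    if count >= t:
        return True
    for i in range(s, n):  # slide the window one position to the right
        count += is_black[i] - is_black[i - s]
        if count >= t:
            return True
    return False


def mc_pvalue(n: int, m: int, s: int, t: int, reps: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the exact probability under random placement."""
    rng = random.Random(seed)
    hits = sum(has_dense_window(rng.sample(range(n), m), n, s, t) for _ in range(reps))
    return hits / reps
```

For n = 10 positions and m = 2 black balls, an adjacent pair (s = t = 2) has exact probability 9/45 = 0.2 (nine adjacent pairs among 45 placements), while `lemma2_pvalue(10, 2, 2, 2)` returns 2/9 ≈ 0.222, a slightly conservative upper bound.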

2.4. Implementation

Our proposed method is nonparametric and depends only on the ranks of the absolute measurements $|X_1|, \ldots, |X_n|$. For a fixed triplet (c, d, h), it typically needs fewer than 3n operations to determine $\hat{\mathcal{I}}$ when $m/n$ is small, say, less than 0.1. We need 2n operations to compare each measurement with the threshold to determine the thresholded set $\mathcal{J}_c$ of step 1. Let w = (w1, …, wm)⊤ be the vector of locations in $\mathcal{J}_c$ in ascending order. In the completion step, we compare Δw = (w2 − w1, …, wm − wm−1)⊤ with a threshold. In particular, we declare that wi and wi+1 belong to different segments if and only if wi+1 − wi > d. Let $i_1 < \cdots < i_q$ be the indices i such that $w_{i+1} - w_i > d$. Then the completion set consists of the segments $[w_1, w_{i_1}], [w_{i_1+1}, w_{i_2}], \ldots, [w_{i_q+1}, w_m]$. We record only the start and end points of each segment. In the deletion step, we delete a segment if its length is not greater than h. The total number of operations can be kept within 2n + 10m.
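The bookkeeping described above maps directly onto array operations. The sketch below is a hypothetical NumPy version: it forms w and Δw, breaks a segment wherever w_{i+1} − w_i > d as stated in this subsection, and stores only start and end points, so the work after thresholding is proportional to m. Positions are 0-based and the function name is an assumption.

```python
import numpy as np


def four_s_vectorized(x, c: float, d: int, h: int) -> np.ndarray:
    """Return a (K, 2) array of [start, end] positions (0-based, inclusive)."""
    x = np.asarray(x, dtype=float)
    w = np.flatnonzero(np.abs(x) > c)  # black-ball locations, ascending
    if w.size == 0:
        return np.empty((0, 2), dtype=int)
    breaks = np.flatnonzero(np.diff(w) > d)  # indices i with w[i+1] - w[i] > d
    starts = np.concatenate(([w[0]], w[breaks + 1]))
    ends = np.concatenate((w[breaks], [w[-1]]))
    keep = ends - starts + 1 > h  # deletion step: drop segments of length <= h
    return np.column_stack((starts[keep], ends[keep]))
```

On the toy sequence `[0, 0, 3, 0, 3, 3, 0, 0, 0, 0, 0, 3, 0, 0, 0, 0, 0, 0, 3, 3, 3, 0]` with c = 2, d = 2 and h = 1, it returns `[[2, 5], [18, 20]]`.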

The choice of threshold c is crucial to the 4S algorithm and may need to be determined on a case-by-case basis. Here we offer a general guideline for parameter selection. Recall that for the Gaussian model, the signal strength of a segment with length L and height ν = δσ is usually measured by S = δ²L; see, e.g., Table 1 in [11]. Consider two segments with the same overall signal strength S, one with large δ and small L (type A) and the other with small δ and large L (type B). It is usually not equally easy for an algorithm of complexity O(n) to detect both of them. Indeed, for many segment detection algorithms, it is tricky to balance the power to detect these two types of segments. Intuitively, the threshold parameter c controls this tradeoff in our method. A higher threshold may be more powerful in detecting type A segments but less powerful in detecting type B segments, and vice versa. In practice, we may choose the threshold as a certain sample percentile of the absolute values of the observations based on a pre-specified preference. For example, if we know that the signal segments have relatively large height parameters but can be as short as 5 data points, then, with a fixed n, we can find the largest m such that $m\,P(Y \ge 4) \le p$, where Y is the hypergeometric variable of Lemma 2 with s = t = 5, and choose the percentile as $\alpha = 1 - m/n$. That is, we want to guarantee that a segment of 5 consecutive black balls is significant enough to stand out. In another scenario, our preference might be longer segments with possibly lower heights. Then we may choose a threshold to include segments of length 10 with at least 6 black balls. In general, such m (or α) can easily be determined given n, s, t and p by finding the largest m with $m\,P(Y \ge t - 1) \le p$. We illustrate in Figure 4 the relationship between log n and the selected percentile α for (s, t) = (5, 5) and (10, 6), with p = 0.05 and 0.1. Because our main goal in this paper is to identify short segments, we prefer a large threshold such as the 95th sample percentile. An even larger threshold can be used to identify shorter segments, and a smaller threshold can be used to detect longer segments with lower heights.
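The percentile rule can be computed by a direct search over m. The sketch assumes that “significant enough” means the Lemma 2 bound m · P(Y ≥ t − 1), with Y ~ Hypergeometric(n − 1, m − 1, s − 1), is at most p, and that the threshold is then the 100(1 − m/n)th sample percentile of the |Xj|. Because the bound increases with m, the search stops at the first m that violates it. The function names are hypothetical.

```python
from math import comb
from typing import Tuple


def hypergeom_tail(k: int, pop: int, succ: int, draws: int) -> float:
    """P(Y >= k) for Y ~ Hypergeometric(pop, succ, draws)."""
    hi = min(succ, draws)
    num = sum(comb(succ, y) * comb(pop - succ, draws - y) for y in range(max(k, 0), hi + 1))
    return num / comb(pop, draws)


def choose_threshold_percentile(n: int, s: int, t: int, p: float) -> Tuple[int, float]:
    """Largest m with m * P(Y >= t - 1) <= p, and the matching percentile alpha = 1 - m/n."""
    best = 0
    for m in range(1, n + 1):
        if m * hypergeom_tail(t - 1, n - 1, m - 1, s - 1) > p:
            break  # the bound is increasing in m, so no larger m can qualify
        best = m
    return best, 1.0 - best / n
```

For n = 10,000, s = t = 5 and p = 0.05, the search stops at m of roughly 870, i.e. a selected percentile close to 0.91; the threshold c would then be taken as that sample percentile of the absolute observations.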

Fig. 4:

Selected percentile versus log n for (a) s = t = 5, p = 0.05 and 0.10; (b) s = 10, t = 6, p = 0.05 and 0.10.

3. Numerical Studies

3.1. Simulated data

We use simulation studies to evaluate the performance of our method in terms of the average numbers of true positives (TP) and false positives (FP) in identifying signal segments. Recall that, by our definition, a detected segment is a true positive if it intersects only one signal segment $I \in \mathcal{I}$ and it is the only segment in $\hat{\mathcal{I}}$ that intersects I.

In Example 1, we show the effectiveness of our inference framework on the false positive control of the 4S algorithm by a null model. As suggested by Figure 4, we choose the 95th percentile of absolute values of the observations as the threshold c. We set d = 9 and h = 3. We use various p-value thresholds for false positive control and compare them with a vanilla version of 4S, which is the one without p-value control.

Example 1 (Null Model)

We generate a sequence based on model (1) with n = 10,000 and μ = 0. We consider three scenarios for the error distribution. In the first two scenarios, $\varepsilon_1, \ldots, \varepsilon_n$ are IID from N(0, 1) and t3, respectively. In the last scenario, $\varepsilon_1, \ldots, \varepsilon_n$ are marginally N(0, 1) and jointly follow an autoregressive (AR) model with autocorrelation 0.2.
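The three noise scenarios can be generated as below. This is a hypothetical NumPy sketch; in particular, it assumes that “autocorrelation 0.2” refers to a stationary AR(1) process with lag-one coefficient 0.2, with innovations scaled so that each εj is marginally N(0, 1).

```python
import numpy as np


def null_noise(n: int, kind: str, rng: np.random.Generator, rho: float = 0.2) -> np.ndarray:
    """Noise for the null model: 'normal', 't3', or 'ar1' (N(0, 1) marginals)."""
    if kind == "normal":
        return rng.standard_normal(n)
    if kind == "t3":
        return rng.standard_t(3, size=n)
    if kind == "ar1":
        z = rng.standard_normal(n)
        eps = np.empty(n)
        eps[0] = z[0]  # start in the stationary N(0, 1) distribution
        innovation_sd = np.sqrt(1.0 - rho**2)  # keeps Var(eps_j) = 1 for all j
        for j in range(1, n):
            eps[j] = rho * eps[j - 1] + innovation_sd * z[j]
        return eps
    raise ValueError(f"unknown noise kind: {kind!r}")
```

With μ = 0 the observations are the noise itself, and the 4S threshold c is the 95th sample percentile of their absolute values, e.g. `np.quantile(np.abs(x), 0.95)`, which is close to 1.96 in the Gaussian case.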

Since μ = 0 in this example, all detected segments are FPs. We report the average numbers of FPs for three versions of 4S (Vanilla, p = 0.05 and p = 0.1) over 100 replicates in Table 1. Our inference framework effectively controls the number of FPs.

Table 1:

Average number of FPs for the null model

| Error distribution | 4S (Vanilla) | 4S (p=0.05) | 4S (p=0.1) |
|---|---|---|---|
| N(0,1) | 102.38 | 0.03 | 0.13 |
| t3 | 101.68 | 0.12 | 0.26 |
| AR(1) | 100.39 | 0.10 | 0.33 |

In Example 2, we compare 4S with three algorithms CBS [14], LRS [10] and WBS [8]. The CBS and WBS methods, implemented by R packages DNAcopy and wbs respectively, give a segmentation of the sequence which consists of a set of all segments rather than only the signal segments. In order to include their results for comparison, we ignore the long segments (with length greater than 100) detected by CBS or WBS, which decreases their false positives. For LRS, we set the maximum length of signal segments as 50.

Example 2

We generate a sequence based on model (1) with n = 10,000. There are 5 signal segments with lengths 8, 16, 24, 32 and 40, respectively. We use the same error distributions as in Example 1. We consider two levels of the height parameter for different signal strengths. In particular, we set the height ν as the 99th and 97th percentiles of the marginal error distribution in two scenarios, labeled S1 and S2. For the standard normal error, the height values are 2.326 and 1.881, respectively.
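A mean vector for Example 2 can be built as follows. The segment lengths and the height rule come from the text; the positions of the five segments are not stated, so the evenly spaced placement below is a hypothetical choice. Heights are computed here only for the Gaussian case, using the standard library's `statistics.NormalDist`.

```python
from statistics import NormalDist
from typing import List, Tuple

import numpy as np

LENGTHS = (8, 16, 24, 32, 40)


def example2_mean(
    n: int = 10_000, height: float = NormalDist().inv_cdf(0.99)
) -> Tuple[np.ndarray, List[Tuple[int, int]]]:
    """Return (mu, segments) with five signal segments of the given height."""
    mu = np.zeros(n)
    segments = []
    for k, length in enumerate(LENGTHS):
        center = (k + 1) * n // (len(LENGTHS) + 1)  # hypothetical: evenly spaced centers
        start = center - length // 2
        mu[start:start + length] = height
        segments.append((start, start + length - 1))  # closed interval, 0-based
    return mu, segments
```

S1 corresponds to the default height `NormalDist().inv_cdf(0.99)` ≈ 2.326, and S2 to `example2_mean(height=NormalDist().inv_cdf(0.97))` with height ≈ 1.881, matching the values quoted above; adding noise from any of the three scenarios gives the observations.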

The threshold c used for 4S is the 95th sample percentile of the absolute values, which is around the 97.5th percentile of the error distribution, e.g., around 1.96 for the Gaussian case. Therefore, the true height is greater than c in S1 but lower than c in S2. The average numbers of TPs and FPs are reported in Tables 2 and 3.

Table 2:

Average number of TPs

| S1 | 4S (p=0.05) | 4S (p=0.1) | 4S (p=0.5) | CBS | LRS | WBS |
|---|---|---|---|---|---|---|
| N(0,1) | 4.41 | 4.58 | 4.73 | 4.89 | 4.41 | 4.53 |
| t3 | 4.95 | 4.98 | 4.99 | 2.16 | 4.41 | 4.52 |
| AR(1) | 4.40 | 4.53 | 4.65 | 4.68 | 4.41 | 4.53 |

| S2 | 4S (p=0.05) | 4S (p=0.1) | 4S (p=0.5) | CBS | LRS | WBS |
|---|---|---|---|---|---|---|
| N(0,1) | 3.77 | 3.94 | 4.20 | 4.59 | 3.76 | 3.94 |
| t3 | 3.34 | 3.47 | 3.73 | 0.51 | 3.75 | 3.93 |
| AR(1) | 3.75 | 3.94 | 4.09 | 4.43 | 3.76 | 3.95 |

Table 3:

Average number of FPs

| S1 | 4S (p=0.05) | 4S (p=0.1) | 4S (p=0.5) | CBS | LRS | WBS |
|---|---|---|---|---|---|---|
| N(0,1) | 0.02 | 0.05 | 0.29 | 0.10 | 0.05 | 0.14 |
| t3 | 0.04 | 0.09 | 0.36 | 0.13 | 0.45 | 0.36 |
| AR(1) | 0.05 | 0.14 | 0.44 | 1.99 | 0.05 | 0.14 |

| S2 | 4S (p=0.05) | 4S (p=0.1) | 4S (p=0.5) | CBS | LRS | WBS |
|---|---|---|---|---|---|---|
| N(0,1) | 0.02 | 0.08 | 0.40 | 0.16 | 0.09 | 0.22 |
| t3 | 0.10 | 0.18 | 0.63 | 0.07 | 0.49 | 0.44 |
| AR(1) | 0.09 | 0.22 | 0.58 | 1.84 | 0.09 | 0.23 |

Overall, CBS performs the best in the IID Gaussian case, but it suffers from low power in the heavy-tailed case and high FPs in the correlated case. LRS and WBS perform reasonably well, with slightly higher FPs in the heavy-tailed case. The 4S methods are more robust against the error type. When the noise is Gaussian and the signal strength is weak, 4S is slightly less powerful than the methods based on the Gaussian assumption. In terms of computation time (Table 4), 4S is about 100 times faster than all other methods.

Table 4:

Computation time (in seconds) to complete 300 sequences in S1 for each method.

| Method | 4S | CBS | LRS | WBS |
|---|---|---|---|---|
| Time (s) | 0.83 | 115.52 | 108.72 | 86.17 |

3.2. Real data example

We applied the 4S method to the 272 individuals from the HapMap project. In particular, we applied 4S (with p-value thresholds 0.05 and 0.5) to the log R ratio (LRR) sequence of chromosome 1, which consists of 33,991 measurements for each subject. We compared 4S with CBS, which has been a benchmark method in CNV detection. Note that CBS produces a segmentation of the sequence rather than the CNV segments directly. Therefore, we focused only on the short segments (fewer than 100 data points) detected by CBS, because the long segments had means close to zero and are not likely to be CNVs. Most of these short segments were separated, but a very small portion of them were adjacent, as CBS sometimes tends to over-segment the sequence. Therefore, we merged two short segments detected by CBS if they were next to each other.

The 4S algorithm is extremely fast. It took less than 2 seconds (on a desktop with a 3.6 GHz Intel Core i7 CPU and 16 GB memory) to process all 272 sequences, including p-values for all detected segments. The CBS algorithm is reasonably fast but much slower than ours. Table 5 lists the total number of detected CNVs, the average length of CNVs, and the computation time for all methods.

Table 5:

Real data results: total number of detected CNVs, average length of CNVs, and computation time for all methods.

| | 4S (p=0.05) | 4S (p=0.5) | CBS |
|---|---|---|---|
| Number of CNVs | 2832 | 3141 | 2962 |
| Average length | 28.60 | 27.70 | 23.15 |
| Computation time (s) | 1.86 | 1.93 | 230.18 |

Overall, the segment detection results were very similar. We further compared the segments detected by the two algorithms, i.e., 4S with threshold p = 0.05 and CBS, and found 2753 segments in common. Here a common segment means a pair of segments, one detected by each algorithm, that overlap each other but do not overlap any other detected segments. For these common segments, we calculated a similarity measure, called affinity in [1], defined as follows.

$$\rho(I, I') = \frac{|I \cap I'|}{\sqrt{|I|\,|I'|}}. \qquad (3)$$

ρ(I, I′) = 1 if two segments I and I′ are the same and ρ(I, I′) = 0 if they do not overlap. We found that the average value of this similarity measure is 0.9290 among 2753 pairs.
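The matching and similarity computations can be sketched as follows. The sketch assumes the affinity of [1] for closed integer intervals, ρ(I, I′) = |I ∩ I′| / √(|I| · |I′|), and pairs a segment from one method with a segment from the other only when each overlaps the other and nothing else from the opposite list. Function names are hypothetical.

```python
from math import sqrt
from typing import List, Tuple

Segment = Tuple[int, int]  # closed interval [start, end]


def overlaps(a: Segment, b: Segment) -> bool:
    """True if the closed intervals a and b share at least one position."""
    return a[0] <= b[1] and b[0] <= a[1]


def affinity(a: Segment, b: Segment) -> float:
    """|a ∩ b| / sqrt(|a| |b|): 1 for identical intervals, 0 for disjoint ones."""
    inter = max(0, min(a[1], b[1]) - max(a[0], b[0]) + 1)
    return inter / sqrt((a[1] - a[0] + 1) * (b[1] - b[0] + 1))


def common_pairs(seg_a: List[Segment], seg_b: List[Segment]) -> List[Tuple[Segment, Segment]]:
    """Pairs (a, b) that overlap each other and no other segment from the opposite list."""
    pairs = []
    for a in seg_a:
        hits = [b for b in seg_b if overlaps(a, b)]
        if len(hits) == 1 and sum(overlaps(hits[0], a2) for a2 in seg_a) == 1:
            pairs.append((a, hits[0]))
    return pairs
```

The average affinity over the common pairs is then `sum(affinity(a, b) for a, b in pairs) / len(pairs)`. For instance, (1, 4) and (3, 6) share 2 positions and have affinity 2/√16 = 0.5.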

Figure 5 presents the histogram of affinity among the 2753 pairs of segments detected by both 4S and CBS. We can see that 87.76% of those pairs have affinity values larger than 0.8. We further divided the detected segments into three groups: those detected by both methods (group 1); those detected only by the 4S method with p = 0.05 (group 2); and those detected only by CBS (group 3). For each detected segment, we calculated its length and the sample mean of the measurements on the segment. Figure 6 displays the scatter plots of sample means versus lengths for all the segments in the three groups. Most segments in group 1 carry relatively strong signals, so it is not surprising that they were detected by both algorithms. Groups 2 and 3 are much smaller than group 1. In particular, we found that most segments in group 3 (i.e., those detected only by CBS) are very short, consisting of only 2 or 3 data points. Those segments are not significant in our inference framework unless we set a very high threshold c in step 1. Some segments in group 2 (i.e., those detected only by the 4S method) have relatively small sample means, which explains why they were not detected by CBS. Some of these segments might be true positives whose sample means are affected by outliers. Overall, the 4S and CBS methods gave similar results. The segments detected by only one method may be false positives, or true positives with weak signal strengths.

Fig. 5:

Histogram of affinity, a similarity measure defined in (3), among 2753 pairs of commonly detected CNVs by 4S and CBS. Affinity equals 1 if two detected CNVs are identical, and equals 0 if two detected CNVs do not overlap.

Fig. 6:

Scatter plots of sample means versus lengths for detected segments in three groups. Group 1: segments detected by both methods; Group 2: segments detected by only 4S method with p = 0.05; Group 3: segments detected by only CBS.

4. Discussion

We proposed a scalable nonparametric algorithm for segment detection and applied it to real data for CNV detection. Two main advantages of the 4S algorithm are its computational efficiency and its independence from the normal error assumption. We introduced an inference framework to assign significance levels to all detected segments. Our numerical studies demonstrated that our algorithm was much faster than CBS, performed similarly to CBS under the normality assumption, and performed better when the normality assumption was violated. Although our inference framework depends on the assumption of IID noise, our numerical experiments suggested that our algorithm works well under weakly correlated noise. Hence, the proposed method is faster than CBS and more robust against non-normal noise. Overall, the 4S algorithm is a safe and fast alternative to CBS, which has been a benchmark method in CNV studies.

In the literature, two popular classes of change-point models are used to study CNV-related problems. The first assumes only a piecewise constant median/mean structure. The second assumes, in addition, a baseline, which reflects the background information or normal status of the data. Quite often, it assumes that the abnormal parts, called signal segments in our paper, are sparse. For the first approach, the goal is to identify the change points. In contrast, the second approach emphasizes segment detection rather than change-point detection. The difference is subtle for estimation but might become remarkable for inference. For example, it is technically difficult to define a ‘true positive’ in the context of change-point detection [9], but it is easier to define related concepts for segment detection, as we did in this paper. Roughly speaking, the first approach is more general, and the second is more specific and suitable for modeling certain CNV data, e.g., SNP array data. In particular, the 4S algorithm aims to solve change-point models in the second class. It can be applied to any data sequence with a baseline. When the baseline mean/median is unknown, we suggest that the data first be centered by the estimated mean/median. Our method cannot be applied to data without a baseline. Besides change-point models, there are other approaches to studying CNVs, such as hidden Markov models [17]. Due to space limits, we restricted our comparison to methods based on change-point models and implemented in R packages.

Most segment detection algorithms involve one or more tuning parameters whose values are critical to the results. In the study of segment detection, there are two trade-offs that researchers should consider when choosing algorithms and their parameters. The first is the usual trade-off between type I and type II errors, which can sometimes be tricky but is well known. The second is more delicate and quite unique. For a signal segment, both its height and its length determine the signal strength. Therefore, segments with weak but detectable signals can be roughly divided into two categories: those with small length (type A) and those with small height (type B). Typically, a method may detect type A segments more powerfully, but type B segments less powerfully, than another method. For the proposed 4S algorithm, choosing a larger threshold parameter in step 1 makes the algorithm more powerful in detecting short, high signal segments (type A), and vice versa. The 4S algorithm can easily be tuned to maximize the power of detecting a certain type of signal segment. We may also try different thresholding levels in data analysis to detect different types of segments. In general, the choice of the parameters depends on the research goals and on the balance between the two trade-offs mentioned above.

There are various platforms and technologies which produce data for CNV detection. Besides the SNP array data studied in this work, read depth data from next generation sequencing (NGS) technologies are often used in CNV studies. As one referee pointed out, the speed of 4S algorithm would be an advantage when applied to read depth data from whole genome sequencing. This is a wonderful research direction that we will investigate next.

An R package, SSSS, implementing our proposed method can be downloaded from https://publichealth.yale.edu/c2s2/software.

Acknowledgement

The authors are grateful to two referees, an associate editor, and the editor for their helpful comments.

Funding

The authors are partially supported by NSF grants DMS-1722691 (Niu and Hao), CCF-1740858 (Hao), DMS-1722562 (Xiao), DMS-1722544 (Zhang), Simons Foundation 524432 (Hao), University of Arizona faculty seed grant (Niu), and NIH grants R01DA016750, 1R01HG010171, and R01MH116527 (Zhang).

5. Appendix

Proof of Lemma 1.

Let I be the interval of integers [ℓ, r] with r − ℓ + 1 = L. For each Xi, i ∈ I, the probability that the ball at i is white is $\pi = P(|X_i| \le c) \le P(\varepsilon_i \le c - \nu) \le 1/2$, as ν ≥ c and f is symmetric. For the case d ≥ L, it is trivial to bound the probability that all L balls on I are white, as it equals $\pi^L \le 2^{-L}$. Now let us consider the case d < L. Let $B_i$, i ∈ I, be the event that the first segment of d consecutive white balls starts from position i. Then

Therefore, and . Note that π is a constant depending on f, ν and c, so a sharper bound than for π may be used to bound if more information is available.

Let us consider the segment [r + 1, r + D] on the right hand side of I. implies that there is at least one black ball in each of the segments [r+1, r+d], [r+d+1, r+2d], etc. Note that Xi ~ F on these segments so the probability of white ball at i is P(|Xi| ≤ c = fβ) = β′ = 2β − 1. Consider all segments of length d on the right side of I. The probability that all these segments contain at least one black ball is . Therefore, . □

Proof of Theorem 1.

For , let Lk = |Ik| and Dk be the gap between Ik and Ik+1. Define = {on Ik, there does not exist a sub-segment of min{d, Lk} consecutive white balls} = {there are d consecutive white balls on Dk}.

Note that all segments in are identified under event . By Lemma 1, or , which can be bounded by . Moreover, . The conclusion follows from the Bonferroni inequality. □

Proof of Theorem 2.

Let be the locations of black balls after step 1. Note that ji and ji+1 will be connected in step 2 if and only if ji+1 − ji ≤ d. We aim to count the number of segments with at least 2 consecutive black balls after step 2, as all isolated black balls will be eliminated in step 3. Such a segment starts at ji only if ji+1 − ji ≤ d, so the total number of such segments is at most |{i : ji+1 − ji ≤ d}|. Let Zi be a Bernoulli random variable with Zi = 1 if and only if ji+1 − ji ≤ d, for i = 1, …, m. When μ = 0, all black balls are randomly distributed. P(Zi = 0), i.e., the probability that all balls in the d positions following ji are white, is . Therefore, . □
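The expression for P(Zi = 0) did not survive in this version of the proof. As a sketch, assume that when μ = 0 the m black balls occupy a uniformly random subset of the n positions, which holds conditionally on m when the observations are i.i.d. A stars-and-bars argument then gives E|{i : ji+1 − ji ≤ d}| = (m − 1)(1 − C(n − d, m)/C(n, m)) exactly, boundary effects included, and by the counting argument above this is an upper bound on the expected number of spurious segments. The helper names are hypothetical, and the brute-force function only checks the formula on small cases.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def expected_short_gaps(n: int, m: int, d: int) -> Fraction:
    """Exact E|{i : j_{i+1} - j_i <= d}| for m black balls placed uniformly at random in n positions."""
    if m < 2:
        return Fraction(0)
    # The n - m white balls form m + 1 runs (before, between, and after the black balls).
    # All such compositions are equally likely, so a given interior run has >= d whites
    # with probability C(n - d, m) / C(n, m).
    p_long_gap = Fraction(comb(n - d, m), comb(n, m)) if n >= d else Fraction(0)
    # j_{i+1} - j_i <= d exactly when the interior run between them has <= d - 1 whites.
    return (m - 1) * (1 - p_long_gap)


def brute_force_short_gaps(n: int, m: int, d: int) -> Fraction:
    """Average the same count over all C(n, m) placements (small n only)."""
    total = short = 0
    for pos in combinations(range(n), m):
        total += 1
        short += sum(1 for a, b in zip(pos, pos[1:]) if b - a <= d)
    return Fraction(short, total)


print(expected_short_gaps(10, 4, 2))     # 2
print(brute_force_short_gaps(10, 4, 2))  # 2
```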

Proof of Lemma 2.

We drop the subscript k in as it is irrelevant to the derivation below. Under the assumption that m black balls are randomly assigned to n positions, at the position of a black ball we calculate the probability that there are at least t − 1 black balls in the next s − 1 positions. Let Y be the count of black balls in those s − 1 positions. Y follows a hypergeometric distribution with total population size n − 1, number of success states m − 1, and number of draws s − 1. Therefore, as there are m black balls. □
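Lemma 2 reduces to a hypergeometric tail probability. The Python sketch below computes P(Y ≥ t − 1) exactly for the distribution stated above (population n − 1, m − 1 successes, s − 1 draws) and multiplies it by m, capped at 1. The multiplication is our reading of the final step "as there are m black balls", i.e., a union bound over the possible starting black balls; the formula itself is missing from this version, so `segment_pvalue_bound` is a hypothetical helper rather than the paper's expression.

```python
from fractions import Fraction
from math import comb


def hypergeom_tail(N: int, K: int, draws: int, k_min: int) -> Fraction:
    """P(Y >= k_min) for Y ~ Hypergeometric(population N, K successes, `draws` draws)."""
    total = comb(N, draws)
    # Sum P(Y = y) * total over the admissible values y >= k_min.
    hits = sum(comb(K, y) * comb(N - K, draws - y)
               for y in range(max(k_min, 0), min(K, draws) + 1))
    return Fraction(hits, total)


def segment_pvalue_bound(n: int, m: int, s: int, t: int) -> Fraction:
    """Assumed union bound: min(1, m * P(Y >= t - 1)) with Y as in Lemma 2."""
    p = hypergeom_tail(n - 1, m - 1, s - 1, t - 1)
    return min(Fraction(1), m * p)


print(hypergeom_tail(10, 4, 3, 1))  # 5/6
print(segment_pvalue_bound(101, 3, 5, 3))
```

Exact rational arithmetic with `Fraction` avoids underflow in the very small tail probabilities that arise for long sequences; for large n, a floating-point implementation of the same sum would be faster.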

Contributor Information

Feifei Xiao, Department of Epidemiology and Biostatistics, University of South Carolina, Columbia, SC 29201.

Heping Zhang, Department of Biostatistics, Yale School of Public Health, New Haven, CT 06510.

References

1. Arias-Castro E, Donoho DL, Huo X: Near-optimal detection of geometric objects by fast multiscale methods. IEEE Transactions on Information Theory 51(7), 2402–2425 (2005)

2. Cai TT, Jeng XJ, Li H: Robust detection and identification of sparse segments in ultrahigh dimensional data analysis. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 74(5), 773–797 (2012)

3. Castellani CA, Awamleh Z, Melka MG, O’Reilly RL, Singh SM: Copy number variation distribution in six monozygotic twin pairs discordant for schizophrenia. Twin Research and Human Genetics 17(2), 108–120 (2014)

4. Fanale D, Iovanna JL, Calvo EL, Berthezene P, Belleau P, Dagorn JC, Ancona C, Catania G, D’alia P, Galvano A, et al.: Analysis of germline gene copy number variants of patients with sporadic pancreatic adenocarcinoma reveals specific variations. Oncology 85(5), 306–311 (2013)

5. Feuk L, Carson AR, Scherer SW: Structural variation in the human genome. Nature Reviews Genetics 7(2), 85–97 (2006)

6. Freeman JL, Perry GH, Feuk L, Redon R, McCarroll SA, Altshuler DM, Aburatani H, Jones KW, Tyler-Smith C, Hurles ME, et al.: Copy number variation: new insights in genome diversity. Genome Research 16(8), 949–961 (2006)

7. Frick K, Munk A, Sieling H: Multiscale change point inference. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 76(3), 495–580 (2014)

8. Fryzlewicz P: Wild binary segmentation for multiple change-point detection. The Annals of Statistics 42(6), 2243–2281 (2014)

9. Hao N, Niu YS, Zhang H: Multiple change-point detection via a screening and ranking algorithm. Statistica Sinica 23, 1553–1572 (2013)

10. Jeng XJ, Cai TT, Li H: Optimal sparse segment identification with application in copy number variation analysis. Journal of the American Statistical Association 105(491), 1156–1166 (2010)

11. Niu YS, Hao N, Zhang H: Multiple change-point detection: A selective overview. Statistical Science 31(4), 611–623 (2016). DOI 10.1214/16-STS587

12. Niu YS, Zhang H: The screening and ranking algorithm to detect DNA copy number variations. The Annals of Applied Statistics 6(3), 1306–1326 (2012)

13. O’Dushlaine C, Ripke S, Ruderfer DM, Hamilton SP, Fava M, Iosifescu DV, Kohane IS, Churchill SE, Castro VM, Clements CC, et al.: Rare copy number variation in treatment-resistant major depressive disorder. Biological Psychiatry 76(7), 536–541 (2014)

14. Olshen AB, Venkatraman E, Lucito R, Wigler M: Circular binary segmentation for the analysis of array-based DNA copy number data. Biostatistics 5(4), 557–572 (2004)

15. Pinto D, Pagnamenta AT, Klei L, Anney R, Merico D, Regan R, Conroy J, Magalhaes TR, Correia C, Abrahams BS, et al.: Functional impact of global rare copy number variation in autism spectrum disorders. Nature 466(7304), 368–372 (2010)

16. Tibshirani R, Wang P: Spatial smoothing and hot spot detection for CGH data using the fused lasso. Biostatistics 9(1), 18–29 (2008)

17. Wang K, Li M, Hadley D, Liu R, Glessner J, Grant SF, Hakonarson H, Bucan M: PennCNV: an integrated hidden Markov model designed for high-resolution copy number variation detection in whole-genome SNP genotyping data. Genome Research 17(11), 1665–1674 (2007)

18. Xiao F, Min X, Zhang H: Modified screening and ranking algorithm for copy number variation detection. Bioinformatics, btu850 (2014)