The readability of 20 patient education articles found on the ASNR Web site was evaluated using 10 quantitative readability scales and compared with those found on the Web site of the Society of Neurointerventional Surgery. The authors concluded that the patient education resources on both Web sites failed to meet the guidelines of the National Institutes of Health and American Medical Association. Members of the public may fail to fully understand these resources and would benefit from revisions that result in more comprehensible information cast in simpler language.

Abstract

BACKGROUND AND PURPOSE:

The ubiquitous use of the Internet by the public in an attempt to better understand their health care requires on-line resources to be written at an appropriate level to maximize comprehension for the average user. The National Institutes of Health and the American Medical Association recommend that on-line patient education resources be written at a third-to-seventh grade level. We evaluated the readability of the patient education resources provided on the Web site of the American Society of Neuroradiology (http://www.asnr.org/patientinfo/).

MATERIALS AND METHODS:

All patient education material from the ASNR Web site and the Society of Neurointerventional Surgery Web site was downloaded and evaluated with the computer software Readability Studio Professional Edition by using 10 quantitative readability scales: the Flesch Reading Ease, Flesch-Kincaid Grade Level, Simple Measure of Gobbledygook, Coleman-Liau Index, Gunning Fog Index, New Dale-Chall, FORCAST Formula, Fry Graph, Raygor Reading Estimate, and New Fog Count. An unpaired t test was used to compare the readability level of resources available on the American Society of Neuroradiology and the Society of Neurointerventional Surgery Web sites.

RESULTS:

The 20 individual patient education articles were written at a 13.9 ± 1.4 grade level with only 5% written at <11th grade level. There was no statistical difference between the level of readability of the resources on the American Society of Neuroradiology and Society of Neurointerventional Surgery Web sites.

CONCLUSIONS:

The patient education resources on these Web sites fail to meet the guidelines of the National Institutes of Health and American Medical Association. Members of the public may fail to fully understand these resources and would benefit from revisions that result in more comprehensible information cast in simpler language.

Easy accessibility and a seemingly unlimited supply of information on the Internet have made it a frequently accessed resource by the public. In fact, almost 80% of Americans are regularly on-line and up to 80% of them consult the Internet for information on health-related topics.1,2 Patients and their families are most apt to seek Internet materials about a new diagnosis, the side effects of medications, a diagnostic or therapeutic procedure, or other treatment options.3 The Internet is often accessed both before and after an initial visit to a health care provider. Perhaps not surprising, patients tend to value the Internet information. In one study, it was reported as the second most important resource, superseded in value only by consulting information coming directly from the physician.4 As a result of the known importance of on-line health care information, many organizations have published Internet-based resources pitched specifically to patients.

However, the delivery of health care–related information does not by itself mean that the patient or his or her family will comprehend it. The American Medical Association has noted that the average American reads at only an eighth grade level, while those enrolled in Medicaid read at an even lower fifth grade level.5 Limited health literacy, in particular, can be a barrier to care, leaving patients with inadequate knowledge for making informed health care decisions.6 Several studies have shown that limited health literacy is associated with poor understanding of relatively simple instructions.7–9 These studies found that of those with limited health literacy, 26% did not know when their next appointment was scheduled, 42% did not understand what was meant by “take medication on an empty stomach,” and 86% did not understand the Medicaid application rights and responsibilities section.7–9 A 2003 report from the American Medical Association (AMA) that evaluated the health literacy of adults found that those who did not graduate high school, those older than 65 years of age, Hispanic adults, black adults, those who did not speak English before starting school, those without medical insurance, those with disabilities, and prison inmates were more likely to have below-basic health literacy.10 In an effort to broaden the reach of patient education materials, the AMA and the National Institutes of Health have recommended that they be written at a third-to-seventh grade level.5,11

Despite these guidelines, many of the Web sites of several national physician organizations, including medical, surgical, and subspecialty fields, have provided texts at a level too complex for most of the public to comprehend.12–23 Recent reports that evaluate the readability of patient education resources on radiology Web sites, sponsored by major organizations such as the Radiological Society of North America, the American College of Radiology, the Society of Interventional Radiology, and the Cardiovascular and Interventional Radiologic Society of Europe, demonstrated that the material offered to the public is written at a level well above the AMA and the National Institutes of Health recommendations.24,25

In this study, we investigated the level of readability of all patient education resources on the American Society of Neuroradiology (ASNR) Web site by using a variety of quantitative readability-assessment scales. Additionally, we analyzed patient education resources from the Society of Neurointerventional Surgery (SNIS) Web site because the ASNR patient education Web site has links directly to the SNIS site.

Materials and Methods

In September 2013, all patient education material available on the ASNR (http://www.asnr.org/patientinfo/) and SNIS (http://snisonline.org/patient-center) Web sites was downloaded into Microsoft Word (Microsoft Corporation, Redmond, Washington). Copyright information, references, and images were removed from the text. The ASNR Web site had 17 articles, which were subdivided into 3 categories: neuroradiology, procedures, and conditions. The patient education on the SNIS Web site was directly referenced from the ASNR Web site and included 3 additional articles. All 20 articles were individually analyzed for their level of readability with 10 different quantitative readability scales by using the software program Readability Studio Professional Edition, Version 2012.1 (Oleander Software, Vandalia, Ohio). The readability scales included the Flesch Reading Ease (FRE),26 Flesch-Kincaid Grade Level (FKGL),27 Simple Measure of Gobbledygook (SMOG),28 Coleman-Liau Index (CLI),29 Gunning Fog Index (GFI),30 New Dale-Chall (NDC),31 FORCAST Formula,32 Fry Graph,33 Raygor Reading Estimate (RRE),34 and the New Fog Count (NFC).27 The FRE readability scale reports scores from 0 to 100, with higher scores indicating more readable text (Table 1). The 9 additional readability scales each report a number that corresponds to an academic grade level (On-line Table).

Table 1:

The FRE readability scale scoring system

| FRE Score | Readability |
|---|---|
| 0–30 | Very difficult |
| 30–50 | Difficult |
| 50–60 | Fairly difficult |
| 60–70 | Standard |
| 70–80 | Fairly easy |
| 80–90 | Easy |
| 90–100 | Very easy |
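As an illustration of how two of these scales turn text into a score, the sketch below applies the published Flesch Reading Ease and Flesch-Kincaid Grade Level formulas and maps an FRE score onto the bands of Table 1. It is not the method used by Readability Studio: the syllable counter is a crude vowel-group heuristic, the function names are hypothetical, and treating each band's lower bound as inclusive is an assumption made to resolve the shared boundaries in Table 1.

```python
import re

def count_syllables(word: str) -> int:
    """Rough vowel-group heuristic (an assumption, not the study software's method)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing silent "e" as non-syllabic, except in "-le" endings.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def text_counts(text: str) -> tuple:
    """Return (sentences, words, syllables) for a plain-text passage."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return len(sentences), len(words), syllables

def flesch_reading_ease(sentences: int, words: int, syllables: int) -> float:
    # FRE = 206.835 - 1.015 * (words/sentence) - 84.6 * (syllables/word)
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)

def flesch_kincaid_grade(sentences: int, words: int, syllables: int) -> float:
    # FKGL = 0.39 * (words/sentence) + 11.8 * (syllables/word) - 15.59
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59

# Table 1 bands, checked from the easiest downward; lower bounds are
# assumed inclusive because the table's ranges share their endpoints.
FRE_BANDS = [
    (90, "Very easy"),
    (80, "Easy"),
    (70, "Fairly easy"),
    (60, "Standard"),
    (50, "Fairly difficult"),
    (30, "Difficult"),
    (0, "Very difficult"),
]

def fre_category(score: float) -> str:
    for lower_bound, label in FRE_BANDS:
        if score >= lower_bound:
            return label
    return "Very difficult"  # scores below 0 are possible for very dense text

if __name__ == "__main__":
    counts = text_counts("The scan is safe. It takes ten minutes.")
    print(counts, fre_category(flesch_reading_ease(*counts)))
```

Under these assumptions, the collective FRE score of 34.9 reported in the Results falls in the "Difficult" band.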

The 9 readability scales that report scores corresponding to an academic grade level were compared with a 1-way ANOVA test. A Tukey honestly significant difference post hoc analysis was conducted for all ANOVA results with P < .05. Additionally, the patient education material available on the ASNR and SNIS Web sites was compared by using an unpaired t test. Statistical analysis was performed by using OriginPro (OriginLab, Northampton, Massachusetts).
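The two tests named above can be sketched in a few lines of plain Python. This is a minimal illustration, not the OriginPro procedure used in the study: the function names are hypothetical, the data are toy values rather than Table 2, the t statistic assumes the pooled-variance (Student) form because the text does not say whether equal variances were assumed, and P values are omitted since they require the F and t distributions.

```python
from math import sqrt
from statistics import mean, variance

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

def unpaired_t(a, b):
    """Return (t, df) for a pooled-variance unpaired t test (assumed form)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t_stat = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t_stat, na + nb - 2

if __name__ == "__main__":
    # Toy data only: three hypothetical "scales" scored on three texts.
    toy = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
    print(one_way_anova(toy))
    print(unpaired_t(toy[0], toy[1]))
    # With 9 scales and 20 articles per scale, the degrees of freedom are
    # (9 - 1, 9 * 20 - 9) = (8, 171).
    print((9 - 1, 9 * 20 - 9))
```

The degrees-of-freedom arithmetic in the last line shows where a result of the form F(8,171) comes from when 9 scales are each applied to 20 articles.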

Results

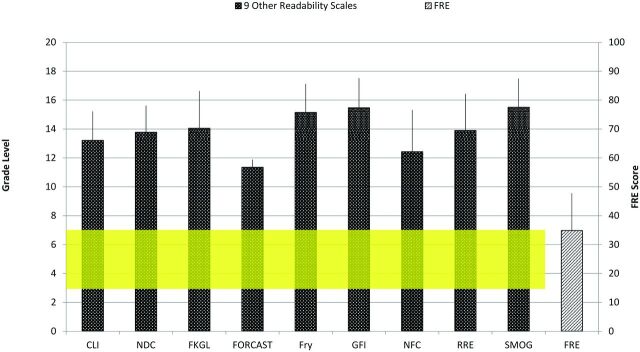

The FRE found that collectively, the 20 articles were written at a difficult (30–50) level, given its score of 34.9 ± 12.9 (Fig 1 and Table 2). The 9 other readability scales (CLI, NDC, FKGL, FORCAST Formula, Fry Graph, GFI, NFC, RRE, and SMOG) determined the readability of all 20 articles to be at the 13.9 ± 1.4 grade level. Individually, the 9 scales found the level of readability to range from the 11.4 (FORCAST Formula) grade level to the 15.5 (both the GFI and SMOG) grade level (Fig 1 and Table 2). Only one (5%) of the articles, which was on MRI, was written below the 11th grade level, and it was still difficult text to understand with a readability score at the 10.3 grade level (Fig 1 and Table 2). According to assessments using the NFC, CLI, NDC, and RRE scales, the material was written at the 12.4 ± 2.9, 13.2 ± 2.0, 13.8 ± 1.8, and 13.9 ± 2.5 grade levels, respectively (Fig 1 and Table 2). Analysis with the FKGL and Fry Graph revealed the patient education material to be even more difficult, with scores at the 14.1 ± 2.6 and 15.2 ± 2.0 grade levels, respectively (Fig 1 and Table 2). Only 3 articles (15%) were written at a level appropriate for viewers who had some level of high school education (10.3, 11.2, and 12.0 grade levels) (Table 2). Most of the articles, 65% (13/20), were written for a reader with some level of college education (12.3–15.0 grade levels), while 20% (4/20) were written for someone with a graduate level of education (16.2–16.9 grade levels) (Table 2). There was no statistical difference between the patient education materials found on the ASNR Web site and those provided by the SNIS Web site (Fig 2).

Fig 1.

The readability of all the articles as measured individually by the 10 readability scales. The 9 other readability scales include the CLI, NDC, FKGL, FORCAST Formula, Fry Graph, GFI, NFC, RRE, and SMOG; and their grade level is measured on the left y-axis (Grade Level). The FRE score is measured on the right y-axis (FRE Score). The yellow area corresponds to the grade level recommendations from the National Institutes of Health and the AMA. FRE scores of 0–30 indicate the patient education resources are very difficult, 30–50 are difficult, 50–60 are fairly difficult, 60–70 are standard, 70–80 are fairly easy, 80–90 are easy, and 90–100 are very easy.

Table 2:

The level of readability of each patient education article as measured by the 10 individual readability assessment scales

| Document | Web Site | FRE | CLI | NDC | FKGL | FORCAST | Fry | GFI | NFC | RRE | SMOG | Avg | SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| About neurointerventions | SNIS | 22 | 15.8 | 16 | 18.6 | 11.8 | 16 | 19 | 19 | 17 | 19 | 16.9 | 2.4 |
| Acute stroke | SNIS | 50 | 11.6 | 14 | 12.4 | 10.2 | 12 | 13.8 | 11.9 | 13 | 14.5 | 12.6 | 1.4 |
| Aneurysms | SNIS | 41 | 13.1 | 14 | 13 | 11.3 | 15 | 15.5 | 11.9 | 13 | 15.3 | 13.6 | 1.5 |
| Alzheimer disease | ASNR | 33 | 13.3 | 14 | 14.6 | 11.7 | 16 | 16.2 | 14.1 | 17 | 16 | 14.8 | 1.7 |
| Brain tumor | ASNR | 39 | 12.2 | 14 | 14 | 11.1 | 14 | 15.2 | 13.3 | 12 | 15.1 | 13.4 | 1.4 |
| CT | ASNR | 44 | 11.3 | 11.5 | 11.7 | 11.2 | 14 | 13.9 | 10.1 | 11 | 13.7 | 12.0 | 1.4 |
| Headache | ASNR | 29 | 14.2 | 14 | 14.9 | 11.8 | 17 | 15.7 | 11.6 | 17 | 15.6 | 14.6 | 2.0 |
| Hearing loss | ASNR | 51 | 10.8 | 11.5 | 11.3 | 10.3 | 11 | 12.5 | 9.7 | 11 | 13.1 | 11.2 | 1.0 |
| Is CT safe for my child? | ASNR | 25 | 12.9 | 16 | 18 | 11.3 | 17 | 14 | 11.4 | 13 | 18.1 | 14.6 | 2.7 |
| MR angiography | ASNR | 43 | 12.3 | 11.5 | 11.1 | 11.2 | 16 | 14.2 | 9.1 | 12 | 13.5 | 12.3 | 2.0 |
| MRI | ASNR | 54 | 10.2 | 9.5 | 9.8 | 10.8 | 11 | 11.7 | 8.5 | 9 | 12 | 10.3 | 1.2 |
| Multiple sclerosis | ASNR | 29 | 13.5 | 16 | 16.9 | 11.4 | 16 | 19 | 18.9 | 17 | 17.6 | 16.3 | 2.5 |
| Myelography | ASNR | 40 | 12.4 | 14 | 13.7 | 11.3 | 14 | 16.1 | 14.4 | 13 | 15.1 | 13.8 | 1.4 |
| Percutaneous vertebroplasty | ASNR | 41 | 13.5 | 14 | 11.7 | 11.6 | 17 | 13.4 | 8.9 | 13 | 13.2 | 12.9 | 2.2 |
| Should I be concerned about radiation? | ASNR | 16 | 15.1 | 16 | 16.1 | 12.2 | 17 | 19 | 15.4 | 17 | 17.9 | 16.2 | 1.9 |
| Sinusitis | ASNR | 36 | 12.1 | 14 | 13.9 | 11.3 | 16 | 15.7 | 13.3 | 13 | 15.6 | 13.9 | 1.6 |
| Stroke | ASNR | 42 | 12.8 | 11.5 | 12.4 | 11 | 14 | 14.5 | 11.2 | 13 | 14.3 | 12.7 | 1.3 |
| Traumatic brain injury and concussion | ASNR | 37 | 12.6 | 14 | 13.2 | 11.4 | 16 | 16.2 | 12.3 | 13 | 15.3 | 13.8 | 1.7 |
| What is neuroradiology? | ASNR | 0 | 19 | 16 | 19 | 12.3 | 17 | 16.6 | 11.6 | 17 | 19 | 16.4 | 2.8 |
| Why subspecialty medicine? | ASNR | 25 | 15.6 | 14 | 14.7 | 11.8 | 17 | 17.1 | 11.9 | 17 | 16.1 | 15.0 | 2.1 |

Note:—Avg indicates average; FORCAST, FORCAST Formula; Fry, Fry Graph.
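The summary figures quoted in the Results can be re-derived from the Avg column of Table 2. The short sketch below does so; the variable names are hypothetical, and the cut-offs separating high school, college, and graduate levels (12.0 and 16.0) are an assumption inferred from the grade ranges quoted in the text rather than thresholds stated by the authors.

```python
from statistics import mean

# Average grade level per article, copied from the Avg column of Table 2.
avg_grade = {
    "About neurointerventions": 16.9,
    "Acute stroke": 12.6,
    "Aneurysms": 13.6,
    "Alzheimer disease": 14.8,
    "Brain tumor": 13.4,
    "CT": 12.0,
    "Headache": 14.6,
    "Hearing loss": 11.2,
    "Is CT safe for my child?": 14.6,
    "MR angiography": 12.3,
    "MRI": 10.3,
    "Multiple sclerosis": 16.3,
    "Myelography": 13.8,
    "Percutaneous vertebroplasty": 12.9,
    "Should I be concerned about radiation?": 16.2,
    "Sinusitis": 13.9,
    "Stroke": 12.7,
    "Traumatic brain injury and concussion": 13.8,
    "What is neuroradiology?": 16.4,
    "Why subspecialty medicine?": 15.0,
}

overall_mean = mean(list(avg_grade.values()))

# Assumed cut-offs: up to grade 12.0 is high school, 16.0 and above is graduate.
high_school = [t for t, g in avg_grade.items() if g <= 12.0]
college = [t for t, g in avg_grade.items() if 12.0 < g < 16.0]
graduate = [t for t, g in avg_grade.items() if g >= 16.0]
below_11th = [t for t, g in avg_grade.items() if g < 11.0]

if __name__ == "__main__":
    total = len(avg_grade)
    print(f"Mean grade level: {overall_mean:.1f}")
    for label, group in [("High school", high_school),
                         ("College", college),
                         ("Graduate", graduate)]:
        print(f"{label}: {len(group)}/{total} ({100 * len(group) / total:.0f}%)")
    print("Below 11th grade:", below_11th)
```

With these cut-offs the counts come out as 3, 13, and 4 of the 20 articles, matching the 15%, 65%, and 20% reported, and MRI is the only article below the 11th grade level.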

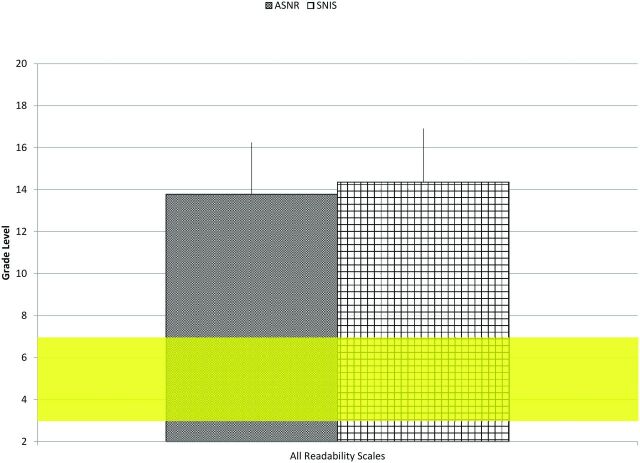

Fig 2.

The readability of all articles from both the ASNR and SNIS patient education Web sites as measured by the 9 readability scales that correspond to academic grade level (CLI, NDC, FKGL, FORCAST Formula, Fry Graph, GFI, NFC, RRE, and SMOG). The yellow area corresponds to the grade level recommendations from the National Institutes of Health and the AMA.

There was no statistical difference (P = .69) between the level of difficulty of the patient education material on the ASNR and SNIS Web sites as assessed by the FRE readability scale. Furthermore, there was no difference (P = .27) between these Web sites as assessed by the 9 other readability scales. The 1-way ANOVA demonstrated a statistical difference in the average readability reported among the 9 readability scales, F(8,171) = 8.59, P = .0001. The Tukey honestly significant difference post hoc analysis found significant differences between the CLI (13.2 ± 2.0 grade level) and both the GFI (15.5 ± 2.1 grade level) and SMOG (15.5 ± 2.0 grade level) scales. It also found differences between the FORCAST Formula (11.4 ± 0.5 grade level) and each of the Fry Graph (15.2 ± 2.0 grade level), GFI (15.5 ± 2.1 grade level), NDC (13.8 ± 1.8 grade level), RRE (13.9 ± 2.5 grade level), SMOG (15.5 ± 2.0 grade level), and FKGL (14.1 ± 2.6 grade level) scales. Last, it found differences between the NFC (12.4 ± 2.9 grade level) and each of the GFI (15.5 ± 2.1 grade level), SMOG (15.5 ± 2.0 grade level), and Fry Graph (15.2 ± 2.0 grade level) scales. Despite these statistical differences among some of the readability scales, all patient education resources available on the ASNR and SNIS sites were still written at advanced levels above the National Institutes of Health and AMA recommendations.

Discussion

Each of the patient education resources from the ASNR and SNIS Web sites failed to meet the AMA and the National Institutes of Health guidelines recommending a seventh grade level of readability. This finding held true regardless of the readability scale used. Furthermore, the readability assessment scales determined that the overwhelming majority, 85%, of the material was written at the college level or higher. The complex nature of the textual narratives provided by the ASNR and SNIS will likely hinder widespread understanding of the material by the public. Their narrative complexity may prevent effective transmission of health care information. These discrepancies in content versus intent are not a new phenomenon inasmuch as other Web sites have been shown to have a disjunction between the level of complexity of their patient education materials and the ability of the average reader to understand them.13–15,20,23–25 A recent article from JAMA Internal Medicine revealed that 16 major national physician organizations had Web sites that presented patient education materials above the AMA and National Institutes of Health guidelines.22 That study did note that one organization had marginally acceptable levels of readability of their resources (American Academy of Family Physicians), meeting the AMA and the National Institutes of Health guidelines on some but not all readability scales.22

Approximately 70% of patients have said that the on-line information they review has impacted their health care decisions.35 In an effort to better help these patients make informed health care decisions, it is critical to revise current on-line resources so they are in accord with the capabilities of the consumers of the material. The AMA, National Institutes of Health, and Centers for Disease Control and Prevention have developed instructional guidelines on various ways to compose patient education narratives at or below a seventh grade level.5,11,36 Web site developers may also benefit from consulting organizations such as the Institute for Healthcare Improvement (www.ihi.org) when developing patient education resources for the Internet.

Specific suggestions for improvement include the following: 1) Simple identifying methods, such as using bold type to emphasize major terms and categories, can facilitate patient comprehension.37 2) Using a font size between 12 and 14 points and avoiding all capital letters, italics, and nontraditional fonts can augment patient use of the Web site material.38 3) Avoiding the use of medical terminology, unless absolutely necessary, could improve patient comprehension as well.39 4) A major tool is the use of videos. A study on the implementation of videos for patients with breast cancer undergoing surgery showed that those who viewed educational videos, despite low education levels, lack of insurance, unemployment, and cultural diversity, were still able to score >80% on questionnaires.40 When constructing these videos, one should also tailor the message to patients by including an introduction addressing the purpose of the video and how it can personally assist in their decision-making processes.41 5) Furthermore, the use of stories and pictures, which are often more memorable than statistics and recommendations, can help alleviate patient fears and address their emotional states.42 Recent studies have demonstrated that pictorial aids enhance a patient's recall, comprehension, and adherence to treatments.43 For individuals who perhaps understand the basic information and desire more advanced resources, links to scientific information could be provided.44

There are important limitations of this study. While the textual materials on the ASNR and SNIS Web sites are written at a high reading grade level, there are likely other factors that contribute to understanding of patient education materials. Perhaps most important, as mentioned above, the use of images and videos can enhance the material and likely improve the reader's comprehension.40–42 The effect of multimedia on patient comprehension needs to be further studied. Additionally, terminology related to neuroradiology is often complex, which may lead to unavoidably high readability scores. Nevertheless, it is still imperative to address the complexity of the current text to improve its potential for patient appreciation. Goals for future work include evaluation of real patients to determine their understanding and comprehension of such resources.

Conclusions

Patient education resources available on the ASNR and SNIS Web sites are written well above the AMA and National Institutes of Health guidelines. Such information should be revised to the third-to-seventh grade reading levels. To achieve greater comprehension by the average Internet viewer, modification of this material into a simpler, easier-to-read format would expand the population who could benefit from the information related to neuroradiology, its diagnostic procedures, and common conditions in which neuroradiology plays a vital role.

ABBREVIATIONS:

- AMA: American Medical Association
- ASNR: American Society of Neuroradiology
- CLI: Coleman-Liau Index
- FKGL: Flesch-Kincaid Grade Level
- FRE: Flesch Reading Ease
- GFI: Gunning Fog Index
- NDC: New Dale-Chall
- NFC: New Fog Count
- RRE: Raygor Reading Estimate
- SMOG: Simple Measure of Gobbledygook
- SNIS: Society of Neurointerventional Surgery

Footnotes

An abstract for this paper was accepted at: Annual Meeting of the American Society of Neuroradiology, May 17–22, 2014, Montreal, Canada, under the title “Online patient resources—the readability of neuroradiology-based education materials.”

References

1. Fox S. The Social Life of Health Information, 2011. http://www.pewinternet.org/~/media/Files/Reports/2011/PIP_Social_Life_of_Health_Info.pdf. Accessed September 1, 2013
2. Internet & American Life Project. Demographics of internet users. Pew Research Center; 2011. http://pewinternet.org/~/media/Files/Reports/2012/PIP_Digital_differences_041312.pdf. Accessed September 1, 2013
3. Fox S, Rainie L. Vital Decisions. Pew Internet & American Life Project; 2002. http://www.pewinternet.org/Reports/2002/Vital-Decisions-A-Pew-Internet-Health-Report.aspx. Accessed September 1, 2013
4. Diaz JA, Griffith RA, Ng JJ, et al. Patients' use of the Internet for medical information. J Gen Intern Med 2002;17:180–85
5. Weis BD. Health Literacy: A Manual for Clinicians. Chicago: American Medical Association, American Medical Foundation; 2003
6. Kutner M, Greenberg E, Jin Y, et al. The Health Literacy of America's Adults: Results From the 2003 National Assessment of Adult Literacy (NCES 2006-483). Washington, DC: National Center for Education Statistics; 2006
7. Williams MV, Parker RM, Baker DW, et al. Inadequate functional health literacy among patients at two public hospitals. JAMA 1995;274:1677–82
8. Baker D, Parker R, Williams MV, et al. The health care experience of patients with low literacy. Arch Fam Med 1996;5:329–34
9. Potter L, Martin C. Health Literacy Fact Sheets. Lawrenceville, New Jersey: Center for Health Care Strategies, 2013. http://www.chcs.org/usr_doc/CHCS_Health_Literacy_Fact_Sheets_2013.pdf. Accessed August 18, 2013
10. White S. Assessing the Nation's Health Literacy: Key Concepts and Findings of the National Assessment of Adult Literacy (NAAL). Chicago: AMA Foundation; 2008
11. National Institutes of Health. How to Write Easy-to-Read Health Materials. http://www.nlm.nih.gov/medlineplus/etr.html. Accessed July 1, 2013
12. Colaco M, Svider PF, Agarwal N, et al. Readability assessment of online urology patient education materials. J Urol 2013;189:1048–52
13. Eloy JA, Li S, Kasabwala K, et al. Readability assessment of patient education materials on major otolaryngology association Websites. Otolaryngol Head Neck Surg 2012;147:848–54
14. Kasabwala K, Agarwal N, Hansberry DR, et al. Readability assessment of patient education materials from the American Academy of Otolaryngology-Head and Neck Surgery Foundation. Otolaryngol Head Neck Surg 2012;147:466–71
15. Misra P, Agarwal N, Kasabwala K, et al. Readability analysis of healthcare-oriented education resources from the American Academy of Facial Plastic and Reconstructive Surgery (AAFPRS). Laryngoscope 2013;123:90–96
16. Misra P, Kasabwala K, Agarwal N, et al. Readability analysis of internet-based patient information regarding skull base tumors. J Neurooncol 2012;109:573–80
17. Svider PF, Agarwal N, Choudhry OJ, et al. Readability assessment of online patient education materials from academic otolaryngology-head and neck surgery departments. Am J Otolaryngol 2013;34:31–35
18. Kasabwala K, Misra P, Hansberry DR, et al. Readability assessment of the American Rhinologic Society patient education materials. Int Forum Allergy Rhinol 2013;3:325–33
19. Agarwal N, Sarris C, Hansberry DR, et al. Quality of patient education materials for rehabilitation after neurological surgery. NeuroRehabilitation 2013;32:817–21
20. Agarwal N, Chaudhari A, Hansberry DR, et al. A comparative analysis of neurosurgical online education materials to assess patient comprehension. J Clin Neurosci 2013;20:1357–61
21. Hansberry DR, Suresh R, Agarwal N, et al. Quality assessment of online patient education resources for peripheral neuropathy. J Peripher Nerv Syst 2013;18:44–47
22. Agarwal N, Hansberry DR, Sabourin V, et al. A comparative analysis of the quality of patient education materials from medical specialties. JAMA Intern Med 2013;173:1257–59
23. Hansberry DR, Agarwal N, Shah R, et al. Analysis of the readability of patient education materials from surgical subspecialties. Laryngoscope 2014;124:405–12
24. Hansberry DR, John A, John E, et al. A critical review of the readability of online patient education resources from RadiologyInfo.org. AJR Am J Roentgenol 2014;202:566–75
25. Hansberry DR, Kraus C, Agarwal N, et al. Health literacy in vascular and interventional radiology: a comparative analysis of online patient education resources. Cardiovasc Intervent Radiol 2014. January 31. [Epub ahead of print]
26. Flesch R. A new readability yardstick. J Appl Psychol 1948;32:221–33
27. Kincaid EH. The Medicare program: exploring federal health care policy. N C Med J 1992;53:596–601
28. McLaughlin GH. SMOG grading: a new readability formula. Journal of Reading 1969;12:639–46
29. Coleman M, Liau TL. A computer readability formula designed for machine scoring. J Appl Psychol 1975;60:283–84
30. Gunning R. The Technique of Clear Writing. New York: McGraw-Hill; 1952
31. Chall JS. Readability Revisited: The new Dale-Chall Readability Formula. Cambridge, Massachusetts: Brookline Books; 1995
32. Caylor JS, Sticht TG, Fox LC, et al. Methodologies for Determining Reading Requirements of Military Occupational Specialties: Tech Report No. 73–5. Human Resources Research Organization. Defense Technical Information Center: Alexandria, Virginia; 1973
33. Fry E. A readability formula that saves time. Journal of Reading 1968;11:513–16, 575–78
34. Raygor AL. The Raygor Readability Estimate: a quick and easy way to determine difficulty. In: Pearson PD, ed. Reading: Theory Research and Practice. Clemson, South Carolina: National Reading Conference; 1977:259–63
35. Penson RT, Benson RC, Parles K, et al. Virtual connections: internet health care. Oncologist 2002;7:555–68
36. US Department of Health and Human Services. Simply Put: A Guide for Creating Easy-to-Understand Materials. 3rd ed. Atlanta: Centers for Disease Control and Prevention; 2009
37. Aliu O, Chung KC. Readability of ASPS and ASAPS educational web sites: an analysis of consumer impact. Plast Reconstr Surg 2010;125:1271–78
38. Badarudeen S, Sabharwal S. Assessing readability of patient education materials: current role in orthopaedics. Clin Orthop Relat Res 2010;468:2572–80
39. Greywoode J, Bluman E, Spiegel J, et al. Readability analysis of patient information on the American Academy of Otolaryngology-Head and Neck Surgery website. Otolaryngol Head Neck Surg 2009;141:555–58
40. Bouton ME, Shirah GR, Nodora J, et al. Implementation of educational video improves patient understanding of basic breast cancer concepts in an undereducated county hospital population. J Surg Oncol 2012;105:48–54
41. Doak CC, Doak LG, Friedell GH, et al. Improving comprehension for cancer patients with low literacy skills: strategies for clinicians. CA Cancer J Clin 1998;48:151–62
42. Davis TC, Williams MV, Marin E, et al. Health literacy and cancer communication. CA Cancer J Clin 2002;52:134–49
43. Katz MG, Kripalani S, Weiss BD. Use of pictorial aids in medication instructions: a review of the literature. Am J Health Syst Pharm 2006;63:2391–97
44. Friedman DB, Hoffman-Goetz L. A systematic review of readability and comprehension instruments used for print and web-based cancer information. Health Educ Behav 2006;33:352–73
