Abstract

Mobile health (mHealth) holds considerable promise as a way to give people greater control of their health information, privacy, and sharing in the context of HIV research and clinical services. The purpose of this study was to determine the feasibility of an mHealth research application from the perspective of three stakeholder groups involved in an HIV clinical trial in Jakarta, Indonesia: (a) incarcerated people living with HIV (PLWH), (b) research assistants (RAs), and (c) research investigators. Incarcerated PLWH (n = 150) recruited from two large all-male prisons completed questionnaires, including questions about mHealth acceptability, on an mHealth survey application built on a proprietary data collection software development platform. RAs who administered questionnaires (n = 8) rated the usability of the software application using the system usability scale (SUS) and open-ended questions. Research investigators (n = 2) completed in-depth interviews, which were coded and analyzed using the technology acceptance model (TAM) as a conceptual framework. Over 90% of incarcerated PLWH felt the mHealth application offered adequate comfort, privacy, and accuracy in recording their responses. RAs’ SUS scores ranged from 60 to 90 (M = 76.25 on a 0–100 scale); they found the mHealth survey application challenging to learn but highly satisfying. Compared with paper-based data collection, researchers felt that electronic data collection improved the accuracy and efficiency of data collection and made it possible to monitor data collection remotely and in real time. The researchers perceived the learnability of the application as acceptable, although learning it required self-instruction.

Keywords: feasibility, HIV, mHealth, technology acceptance, prisoners, usability

1 |. INTRODUCTION

Information technology has brought important changes to health care, including a wide variety of technologies to support research and clinical decision-making. Mobile health (mHealth) refers to the use of mobile and wireless devices in health care and health research. mHealth applications have the potential to influence how researchers, healthcare providers, and consumers gather and access information and make healthcare decisions (Agarwal et al., 2016; Gagnon, Ngangue, Payne-Gagnon, & Desmartis, 2016; Pham et al., 2019) and can play a role in the prevention and treatment of chronic and communicable diseases (Peiris, Praveen, Johnson, & Mogulluru, 2014), including HIV (Burrus et al., 2018; Catalani, Philbrick, Fraser, Mechael, & Israelski, 2013; Schnall, Bakken, Rojas, Travers, & Carballo-Dieguez, 2015). In the context of the global AIDS pandemic, mHealth applications have been used to monitor disease outbreaks, collect sensitive behavioral information, and deliver behavioral interventions to people living with HIV (PLWH), including text messages sent via mobile phones to increase medication adherence (Comulada et al., 2019; Pop-Eleches et al., 2011).

Two populations of special interest when considering the adoption of mHealth are people who inject drugs (PWID) and prisoners (Krishnan & Cravero, 2017). Globally, criminalization of drug use has resulted in high rates of incarceration among socially and medically vulnerable populations, including people living with HIV and drug dependence (Altice et al., 2016). An estimated 3.8% of the world’s prison population are PLWH (Dolan et al., 2016), and one third are drug dependent (Fazel, Yoon, & Hayes, 2017). Prison overcrowding leads to unsafe and unhealthy conditions that fuel the spread of tuberculosis and other opportunistic infections (Kamarulzaman et al., 2016), and health services are often inadequate to address the high burden of communicable and non-communicable diseases among prisoners (Bick et al., 2016). Consequently, health outcomes in prison populations are especially poor. In recent meta-analyses of studies from mostly high-income countries, just over half of PLWH (54.6%) achieved adequate antiretroviral therapy (ART) adherence during detention (Uthman, Oladimeji, & Nduka, 2017), and receipt of ART and virologic suppression fell to 29% and 21%, respectively, after prison release (Iroh, Mayo, & Nijhawan, 2015). In Indonesia, poor adherence to ART and discontinuation of treatment after prison release contribute to high rates of post-release mortality (238 deaths per 1,000 person-years; Culbert et al., 2017) and to the emergence of drug-resistant HIV among PWID. In a recent three-country study, drug resistance was highest (24%) in the Indonesian cohort, and PWID with a history of incarceration were 6 times more likely to have HIV drug resistance (Palumbo et al., 2018). Because most prisoners eventually return to the community, failure to adequately treat HIV and addiction in prison populations is one of the most important factors contributing to HIV transmission in global “hot spots” such as Eastern Europe and Southeast Asia (Altice et al., 2016; Culbert, Pillai, et al., 2016).

mHealth interventions show considerable promise for helping to address the challenges of providing HIV services to PLWH who are transitioning from prison to the community. Behavioral interventions have used mHealth to provide counseling and case management services to improve linkage to care and reduce HIV transmission after prison release (Kurth et al., 2014; Spaulding et al., 2018). Yet most of this evidence comes from high-income countries, where access to and support for mHealth technologies may be greater and cultural attitudes toward the use of technology likely differ. Consequently, there is a need to evaluate the acceptability and feasibility of mHealth in lower-resource settings as a potential conduit to improving health outcomes for prisoners with HIV.

Indonesia is a lower middle-income country with high HIV prevalence in prisons, universal healthcare, and a large and expanding telecommunications market. This combination of factors makes Indonesia an important setting in which to investigate mHealth as a strategy for linking PLWH in prison to health services after prison release. As a first step toward adopting mHealth for research and service provision, we examined the feasibility of an mHealth survey application for collecting sensitive behavioral health information from soon-to-be-released PLWH in Indonesia. Results from this study may be useful for evaluating the practicality of mHealth as a means to improve health outcomes in a socially and medically vulnerable population.

1.2 |. Objective

The purpose of this study was to evaluate the acceptability and feasibility of an mHealth survey application from the perspectives of three different stakeholder groups: (a) incarcerated persons living with HIV, (b) research assistants (RAs), and (c) research investigators.

1.3 |. Conceptual Framework

Our assessment builds on the technology acceptance model (TAM), one of the most widely used frameworks in technology adoption (Venkatesh & Bala, 2008). The TAM is based upon the theory of reasoned action, a conceptual framework used by scientists to predict and explain human behavior (Fishbein & Ajzen, 1975) and extended with the TAM to predict and explain technology adoption (Davis Jr, 1986). The TAM theorizes that technology acceptance is influenced by perceived utility (i.e., whether or not the technology provides the needed features) and perceived usability (i.e., ease of use as measured by learnability, efficiency, memorability, error prevention and satisfaction; Nielsen, 1993). In turn, technology acceptance influences actual technology use.

Holden and Karsh (2010) reviewed the use of the TAM in health care and found that the model predicts technology acceptance quite well, but they recommended adding contextualizing variables that match the characteristics of the setting, the end users (i.e., people who use the technology), and the technology. Other researchers have adapted the TAM to include the characteristics and needs of specific types of end users in a given project (Campbell et al., 2017). In this study, we assessed technology feasibility from the perspectives of three different types of end users: (a) the researchers who seek to evaluate the use of the mHealth application for future research on intervention efficacy, (b) the incarcerated PLWH who would use mHealth in the future, and (c) the RAs who would use the technology in the current study. In addition to the TAM’s core concepts of utility and usability, we added two concepts: cost, a key feasibility consideration for researchers and practitioners in lower-resource settings; and acceptability to the study participants, a broad concept in health services research that focuses on the affective attitudes, experiences, burden, and ethical consequences for end users or intended beneficiaries (Sekhon, Cartwright, & Francis, 2017).

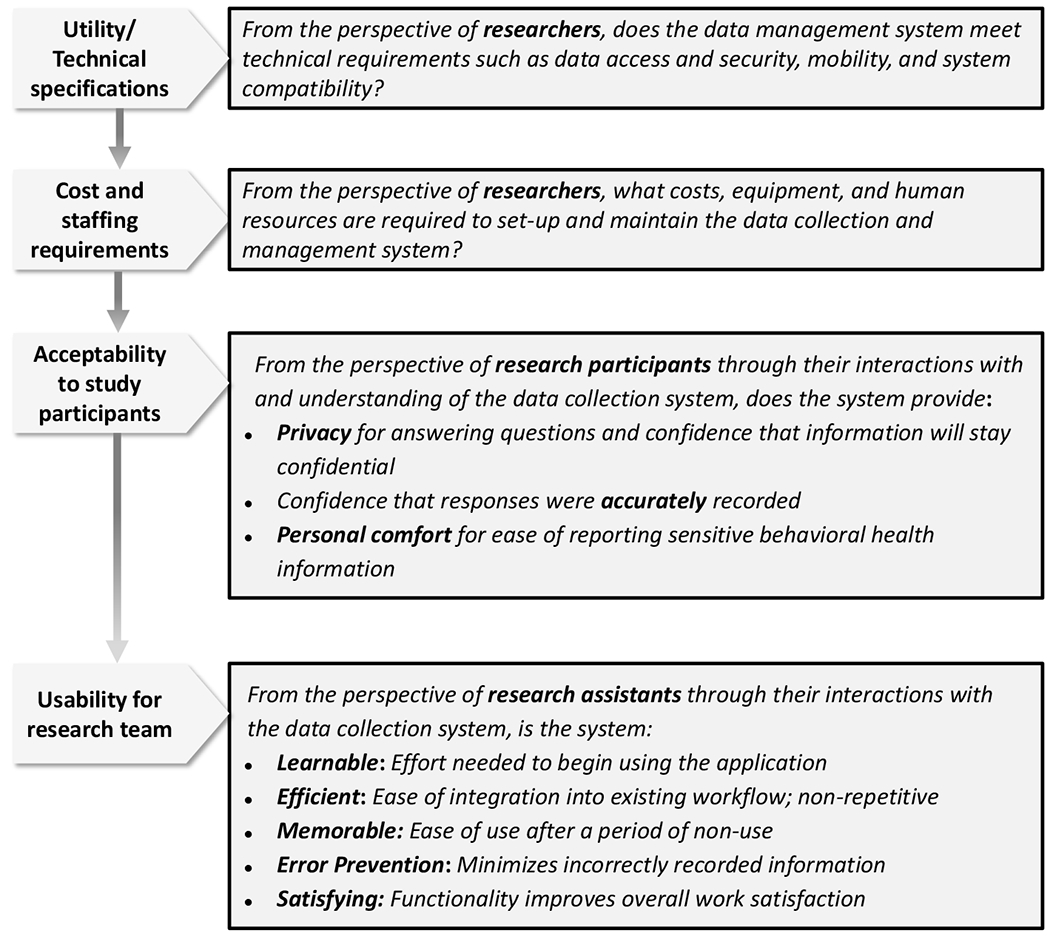

The determination of feasibility, defined for this project as utility, cost, acceptability, and usability, occurred in a stepwise process (see Figure 1). First, utility to the researchers was assessed, after which cost was evaluated. If the cost to train, use, and maintain the technology was considered low, patient acceptability was assessed in terms of privacy, accuracy, and confidentiality, the key concepts needed to establish whether the mHealth survey application would be acceptable for use in future studies. Finally, if the mHealth survey application was deemed acceptable to PLWH in prison, usability was assessed from the perspective of the study’s RAs.

Figure 1.

Evaluating the feasibility of an mHealth research application from the perspectives of multiple users
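The stepwise process described above works like a sequence of gates: each stage is assessed only if the previous one passes. The Python sketch below illustrates that ordering. It is a minimal illustration under stated assumptions: the function name and stage keys are hypothetical, and each stage is reduced to a single yes/no judgment, whereas the study assessed each stage qualitatively or descriptively and did not define numeric pass/fail cut-offs.

```python
from typing import Callable, Optional

# Stage order as described in the text: utility -> cost -> acceptability -> usability.
STAGE_ORDER = ("utility", "cost", "acceptability", "usability")


def assess_feasibility(
    assessors: dict[str, Callable[[], bool]]
) -> tuple[bool, Optional[str]]:
    """Run each stage's (hypothetical) assessment in order, stopping at the first failure.

    Returns (True, None) if every stage passes, otherwise (False, name_of_failed_stage).
    """
    for stage in STAGE_ORDER:
        if not assessors[stage]():  # this stage is assessed only now
            return False, stage     # stop; later stages are never assessed
    return True, None


if __name__ == "__main__":
    # Hypothetical judgments: the application is useful but judged too costly.
    judgments = {
        "utility": lambda: True,
        "cost": lambda: False,
        "acceptability": lambda: True,
        "usability": lambda: True,
    }
    print(assess_feasibility(judgments))  # (False, 'cost')
```

Passing each stage as a callable, rather than as a precomputed value, keeps the sketch faithful to the idea that later stages are not evaluated at all once an earlier one fails.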

1.4 |. Application Development and Technical Specifications

In 2014, researchers developed and deployed an mHealth data collection tool using a proprietary web-based data collection and management platform with a strong evidence base (Chatfield, Javetski, Fletcher, & Lesh, 2015). Like other electronic data collection and management systems, the platform selected for this study allowed users to build and customize applications for research and data collection purposes; it also provided additional data security, offline capability, and case management features that might facilitate data collection and offer a higher level of research protection. Based on preliminary feedback from incarcerated PLWH and RAs that the mHealth data collection tool met their needs with minimal technical problems or privacy concerns, researchers revised the mHealth application and deployed it on handheld tablets to collect data in an HIV clinical trial in Indonesia. Here, we present an analysis of feasibility data obtained in the HIV clinical trial from three stakeholder groups: (a) study participants enrolled in the clinical trial, (b) RAs who used the mHealth application to gather participant information, and (c) research investigators who designed the mHealth application and supervised data collection. Throughout this paper, we use “mHealth” to refer to the data collection and management system as well as the software and Android tablet devices that were used to administer questionnaires to study participants.

2 |. METHODS

Here, we describe an evaluation of an electronic data collection and management tool (hereafter referred to as the ‘mHealth survey application’) that was used for data collection and management in a research study involving incarcerated PLWH enrolled in an HIV clinical trial in Indonesia (ClinicalTrials.gov registration number NCT03397576), as well as the RAs and investigators implementing that trial. Details of the clinical trial (parent study) design have been described previously (Culbert, Earnshaw, & Levy, 2019). Given the numerous ethical and methodological challenges of bio-behavioral research with prison populations (Azbel et al., 2016), an evaluation of the tools and technologies through which researchers and study participants interact with and understand one another may help to advance research in this area. Embedded within the clinical trial, therefore, was an evaluation of the initial acceptability of the mHealth survey application. This assessment used data collected at three time points from three participant groups: study participants in the HIV clinical trial (n = 150), RAs who were trained to use the mHealth survey application as data collectors (n = 8), and research investigators (n = 2) involved in the mHealth survey design and data management. Each of the three stakeholder groups engaged differently with the data collection application and contributed a distinct perspective on its acceptability for future use. The research was approved by Institutional Review Boards in the United States and Indonesia.

2.1 |. Study Populations and Settings

Study participants recruited for the HIV clinical trial were Indonesian male citizens who had been diagnosed with HIV, were aware of their HIV-positive status, and were incarcerated in one of two large prisons in Jakarta, Indonesia. Characteristics of the study population and setting have been described previously. Briefly, Indonesia has the 8th largest prison population worldwide (Walmsley, 2019). Prisons in Indonesia are extremely overcrowded (174% overcapacity). HIV prevalence is estimated at 1.1% among male prisoners nationwide (Blogg, Utomo, Silitonga, Hidayati, & Sattler, 2014) but varies widely, with higher prevalence (6.5%) in specialized narcotics prisons that house persons charged with drug-related offenses (Directorate of Corrections, 2012). Many PLWH in prison were first diagnosed and offered treatment in prison. Under Indonesian guidelines, all participants were eligible for antiretroviral therapy (ART) at no cost.

Graduates of professional nursing programs were recruited as RAs to conduct research activities involving participants and to administer informed consent and study questionnaires. RAs participated in a 2-week training that covered research ethics, study protocols, and instruction and role play in using handheld computer tablets to administer study questionnaires.

Research investigators included the study’s co-author and principal investigator (GJC) who designed and supervised the clinical trial and a research consultant (CC), also co-author, who assisted with the development and deployment of the mHealth survey application. Both investigators were involved in developing the study’s research questionnaires and the mHealth survey application. By including investigators as analysts and informants of initial acceptability, we self-reflexively consider our role in shaping the lessons learned from this study and allow the reader to do the same. Decisions about what was important to the researchers are presented alongside information about acceptability gathered from study participants and RAs to provide transparency and facilitate critique and comparison.

2.3 |. Data Collection and Study Measures

2.3.1 |. Incarcerated People Living with HIV

During informed consent, RAs explained the use of electronic data collection and the rules governing how and where the data would be stored and used. After obtaining informed consent, RAs administered a set of study questionnaires to gather information about participants’ demographic, clinical, and behavioral characteristics, including sensitive and personal questions about HIV and substance use. To minimize the possibility of coercion or breaches of privacy during the initial interview in prison, RAs administered questionnaires in private, comfortable rooms within the prison clinic, using handheld Android computer tablets to display questions and record responses. Participants were given the option of reading and responding to the questionnaires themselves or having an RA read questions and response options aloud and record their responses. After completing the study questionnaire, participants were asked to respond to four Likert-type items as measures of initial acceptability: (a) their level of comfort using a tablet to record their answers, (b) agreement that the tablet provided adequate privacy for questions and responses, (c) confidence that the information they shared would remain confidential, and (d) confidence that their responses were accurately recorded. Research investigators developed these four items based on their previous research in these prison settings and a review of the acceptability measurement literature, most of which does not address the unique concerns of people in prison. Although not exhaustive, these four dimensions of initial acceptability (privacy, confidentiality, overall comfort, and accuracy) were considered important measures of participant approval precisely because these protections are often lacking in prisons and likely influence whether people in these settings trust and accept researchers and clinicians, their technologies, and their interventions (Lazzarone & Altice, 2000; Culbert et al., 2019).

2.3.2 |. Research Assistants (RAs)

RAs had varying levels of experience using the mHealth survey application (i.e., the electronic data collection tool), but all had received training and had at least 3 months of daily use. During the first two years of the clinical trial, the mHealth survey application was field tested in a variety of settings, including prisons, urban neighborhood or street settings, and remote villages. Each RA had an average caseload of 15 study participants, for whom they were responsible for tracking and follow-up data collection after release from prison. Thus, RAs continuously interacted with the data collection software to locate participants for study visits, update participant contact information, and record participant responses to study questionnaires.

As part of our evaluation, we asked RAs to retrospectively assess their experiences using the mHealth survey application. This evaluation consisted of a two-part usability survey. Part one was the system usability scale (SUS), developed by John Brooke (1996) and translated into Bahasa Indonesia (Sharfina & Santoso, 2016). The SUS focuses on two of Nielsen’s usability attributes (learnability and satisfaction) (Nielsen, 1993; Sousa et al., 2015), is quick to administer, is freely available, performs well psychometrically (coefficient alphas .70–.92; Lewis, 1995; Lewis & Sauro, 2009), and is widely accepted as a reliable means of testing the usability of both hardware and software (Lewis & Sauro, 2009). Based on multiple research projects over 30 years, a score ≥ 68 is considered above average (Brooke, 2013). In part two, we asked three open-ended questions to assess usability attributes not included in the SUS: (a) Please comment on how easy or hard it was to use the mHealth survey application after a period of not using it (memorability); (b) What difficulties, if any, did you encounter while using the mHealth survey application to collect data? (error prevention and recovery); and (c) How has the mHealth survey application facilitated your overall workflow? (efficiency). Items were translated into Bahasa Indonesia using a direct forward translation approach (Behling & Law, 2000) and checked for accuracy by an experienced Indonesian language instructor. The RAs completed the usability survey with pen and paper. Answers were translated into English prior to analysis.

2.3.3 |. Research Investigators

Co-authors GJC and CC were interviewed separately by co-author KDL, an expert in usability who was not involved in the parent study. Over a 10-day period in spring 2018, KDL conducted one-on-one, asynchronous, semi-structured interviews with the principal investigator (GJC) and co-investigator (CC) using a shared online document (Google Docs™), following methods adapted from Hershberger and Kavanaugh’s (2017) email interview approach. The interviewer entered two questions into each investigator’s Google Docs™ document and sent an email notifying the recipient that the questions were ready. The principal investigator and co-investigator entered their responses into their separate Google Docs™ documents and emailed KDL when they had completed the questions. Follow-up questions were entered into the same document in groups of 2–4 questions, either based on the responses or continuing sequentially through the items in the interview guide.

Each interview began with questions about the research investigator’s role on the team, experiences with data collection, and goals for the new data collection methods. The TAM was then used as a heuristic to frame the interview questions around the concepts of utility and usability, using Nielsen’s (1993) definition of usability, which includes the following attributes: learnability, memorability, efficiency, error prevention, and satisfaction. Clarifying questions were added as needed, and each interview consisted of 12–14 questions.

2.4 |. Analysis

2.4.1 |. Incarcerated People Living with HIV

Descriptive statistics (means, percentages) were used to summarize participant responses to the four questions in the acceptability questionnaire.

2.4.2 |. RAs

SUS items are rated on a 5-point scale from 1 (strongly disagree) to 5 (strongly agree). Because the SUS has both positively and negatively worded items, we report per-item means and standard deviations on the raw scale; for negatively worded items, lower scores (disagreement) therefore indicate higher usability. Next, we used the method described by Brooke (1996) to convert each item to a positive direction in order to create a summative score for the entire scale. For each positively worded item, we subtracted 1 from the raw score, and for each negatively worded item, we subtracted the raw score from 5. This conversion places every item on a 0–4 scale on which higher scores indicate higher usability, so that disagreeing with a negatively worded item yields a higher score. To compute the summative SUS score across all 10 items, we multiplied the mean converted score of each item by 2.5, rescaling it from 0–4 to 0–10, and then summed across the 10 items to derive a total score ranging from 0 to 100.
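The scoring steps above can be expressed compactly in code. The Python sketch below assumes each respondent’s answers are stored as a list of ten integers from 1 to 5 in SUS item order; the function names are hypothetical. It also shows why averaging the converted item means and then summing them, as described above, gives the same value as averaging respondents’ individual SUS scores: both operations are linear. The threshold of 68 is the benchmark cited in the text (Brooke, 2013).

```python
from statistics import mean

# In the SUS, odd-numbered items are positively worded and even-numbered items negatively worded.
POSITIVE_ITEMS = {1, 3, 5, 7, 9}
ABOVE_AVERAGE_THRESHOLD = 68  # benchmark cited in the text (Brooke, 2013)


def converted_items(responses: list[int]) -> list[int]:
    """Convert one respondent's ten raw answers (1-5) to 0-4, where higher = more usable."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected 10 answers, each an integer from 1 to 5")
    return [
        raw - 1 if item in POSITIVE_ITEMS else 5 - raw  # positive: score - 1; negative: 5 - score
        for item, raw in enumerate(responses, start=1)
    ]


def sus_score(responses: list[int]) -> float:
    """Single-respondent SUS score: each converted item x 2.5, summed (0-100)."""
    return sum(c * 2.5 for c in converted_items(responses))


def group_sus(all_responses: list[list[int]]) -> float:
    """Mean converted score per item, x 2.5, summed over the 10 items.

    By linearity this equals mean(sus_score(r) for r in all_responses).
    """
    per_item = zip(*(converted_items(r) for r in all_responses))
    return sum(mean(item_scores) * 2.5 for item_scores in per_item)


def above_average(score: float) -> bool:
    return score >= ABOVE_AVERAGE_THRESHOLD
```

For instance, a respondent who strongly agrees with every positively worded item and strongly disagrees with every negatively worded item scores 100, whereas a respondent who answers 3 (neutral) throughout scores 50.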

For each usability attribute included in the open-ended questions (memorability, errors, and efficiency), KDL coded responses as positive, negative, or mixed. Responses that did not address the usability attribute in the question were coded as “did not focus,” and responses that could not be coded this way were given new codes inductively. To establish the trustworthiness (Lincoln & Guba, 1985) of the coding decisions, a second author (VS) applied the coding schema to the responses. Given the small amount of data, each author independently coded all responses. Interrater agreement was 90% after one round; the remaining 10% of coding decisions were resolved by consensus.
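The interrater agreement reported above is simple percent agreement: the share of responses to which both coders assigned the same code. A minimal Python sketch follows; the function names are hypothetical, and the code labels in the usage example are illustrative only, not the study’s data. Simple percent agreement does not adjust for agreement expected by chance.

```python
def percent_agreement(coder_a: list[str], coder_b: list[str]) -> float:
    """Percentage of responses to which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of responses")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


def disagreements(coder_a: list[str], coder_b: list[str]) -> list[int]:
    """Indices of responses coded differently (candidates for consensus discussion)."""
    return [i for i, (a, b) in enumerate(zip(coder_a, coder_b)) if a != b]


if __name__ == "__main__":
    # Hypothetical codes for ten responses; not the study's data.
    first_coder = ["positive"] * 5 + ["negative"] * 3 + ["mixed", "did not focus"]
    second_coder = ["positive"] * 5 + ["negative"] * 3 + ["mixed", "mixed"]
    print(percent_agreement(first_coder, second_coder))  # 90.0
    print(disagreements(first_coder, second_coder))      # [9]
```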

2.4.3 |. Research Investigators

We used directed content analysis procedures (Hsieh & Shannon, 2005) to conduct the analysis. Content analysis is a widely used qualitative approach for interpreting meaning from text. Directed content analysis begins with a set of deductive codes based on existing knowledge, frameworks, or theories (Hsieh & Shannon, 2005); interview content that cannot be coded using the deductively derived coding schema is coded inductively. In this study, the original coding schema was developed based on the TAM’s central constructs of usefulness and usability, as well as cost. Usability was operationalized in the coding schema based on Nielsen’s (1993) five usability attributes: learnability, efficiency, memorability, error prevention, and satisfaction (see Figure 1). Interviews were organized and coded using Dedoose software (Version 7.0.23; SocioCultural Research Consultants).

Analysis began with KDL reading each interview in its entirety without taking notes. During a second reading, excerpts related to usefulness and usability attributes were marked and codes were assigned. Coding decisions were reviewed and revised iteratively using constant comparative methods. Codes were then examined, and the relationships identified among them were synthesized into themes. To establish the trustworthiness (Lincoln & Guba, 1985) of the coding decisions, a second author also not involved in the parent study (VS) applied the coding dictionary to 10% of the interviews in rounds until acceptable agreement was achieved (round 1, 80%; round 2, 94%). The remaining 6% of coding decisions were resolved by consensus.

3 |. RESULTS

3.1 |. Incarcerated People Living with HIV

Participant responses to the mHealth acceptability survey are shown in Table 1. Most participants (92.1%) were comfortable or very comfortable using a tablet to record their responses. Likewise, most participants agreed or strongly agreed that the tablet provided adequate privacy and confidentiality and were confident that their responses were accurately recorded.

Table 1.

Acceptability of an electronic mHealth survey application for data collection by incarcerated people living with HIV (N = 150)

| Acceptability concept and question | Response, n (%) | | | |
|---|---|---|---|---|
| | Very comfortable | Comfortable | Neutral | Uncomfortable |
| Overall Comfort: How comfortable were you answering survey questions using a tablet to answer questions? | 38 (25.0) | 102 (67.1) | 11 (7.2) | 0 (0.0) |
| | Strongly agree | Agree | Neutral | Disagree |
| Privacy: The tablet provided privacy for answering questions. | 29 (19.1) | 111 (73.0) | 11 (7.2) | 0 (0.0) |
| Confidentiality: I’m confident that information stored will remain confidential. | 32 (21.1) | 107 (70.4) | 12 (7.9) | 0 (0.0) |
| Response Accuracy: I’m confident that the tablet accurately recorded my responses. | 20 (13.2) | 114 (84.9) | 2 (1.3) | 0 (0.0) |

3.2 |. Research Assistants

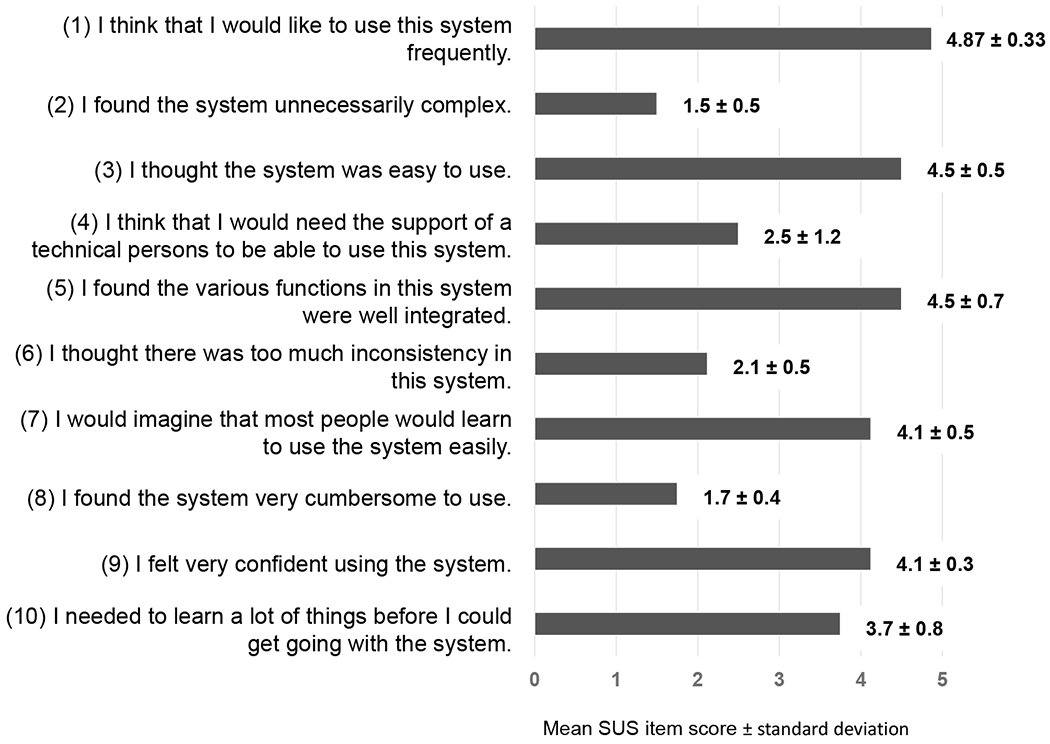

The raw per-item SUS scores are shown in Figure 2. Mean scores for the positively worded items (1, 3, 5, 7, and 9) ranged from 4.1 to 4.87, and mean scores for the negatively worded items (2, 4, 6, 8, and 10) ranged from 1.5 to 3.7. The summative SUS score (converted item means multiplied by 2.5 and summed) was 76.25 on a 0–100 scale. In the open-ended responses, all eight RAs commented positively about efficiency and workflow. Comments included “Makes it easier because data is entered directly without re-entering like paper data” and “[the mHealth survey application] has made data collection more practical.” Most of the RAs (7/8) responded to the open-ended question about memorability, but only one RA focused on memorability in their response. This RA noted that it was difficult to remember how to use the mHealth survey application after a period of nonuse: “Easy to use. But if you don’t use [it] for a long time, you must adjust.” Six of the 8 RAs responded to the question about errors and problems. Two indicated that they did not encounter problems when using the mHealth survey application, and 4 noted occasional problems, including battery life and technical issues with the tablets.

Figure 2.

Mean scores and standard deviations for individual SUS items (n=8)

3.3 |. Research Investigators

The semi-structured, asynchronous interviews conducted in Google Docs™ consisted of 12–14 questions, were completed in 4 or 5 turns (a turn being one cycle of interviewer questions and interviewee responses), contained 1,481–1,906 words, and took about 30 minutes of total question-answering time for each investigator. Although the interviews included questions about utility, cost, and all five usability attributes, the concepts with the most coded content were utility (23), learnability (12), error prevention (9), and satisfaction (4), with minimal discussion of memorability and efficiency. Overall, the research investigators were satisfied with the mHealth survey application. The coded content was synthesized into four themes related to decision-making about remote digital data collection in both research and health care practice.

Theme 1: Utility is the most important factor to investigators when considering digital data collection.

The investigators had a defined list of features required for the mHealth survey application (see Table 2). The application’s features surpassed the investigators’ requirements and opened new possibilities for the research. One investigator summarized this as follows: “The technology has opened new possibilities; it has influenced how I design studies” and “In general, I would say that the system offers much more than what we currently do with it. In other words, we are mostly limited by our imagination at this stage.”

Table 2.

Required features for the mHealth survey application

| Technical requirement | Description |
|---|---|
| Data security | Complete HIPAA compliance on mobile devices and servers. Software must be fully supported in order to comply with high standards of data stewardship. |
| Longitudinal case tracking | Software data model allows for repeated submissions to a participant’s case file. |
| Mobility | Data are stored in a cloud-based storage system that allows access from multiple devices. |
| Offline capability | Offline capability prevents workflow disruptions during data collection in prisons and other settings without internet connectivity. |
| Skip logic | Skip logic is pre-coded into the survey, maximizing efficiency and eliminating missing data. |
| Bilingual deployment | Complete translation in both Bahasa Indonesia and English. Provides a common technical language for talking about data collection. |
| Compatibility with analytic software | Software easily and accurately exports to Excel for analysis. Allows researchers working internationally to securely share information. |
| Remote quality assurance | Software has a dashboard that allows investigators to view and generate data reports remotely. |
| Flexibility | Software can be deployed in a variety of field settings, including prisons, and has offline capabilities enabling queries and modifications to the survey to be made accurately and efficiently. |

Theme 2: Error prevention was a major motivator of the decision to adopt the application.

This theme emerged from descriptions of the time and effort required to address non-response items (missing data) in the paper-based method of data collection. This priority was addressed by the skip-logic feature listed among the technical requirements for the mHealth survey application in Table 2.
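Skip logic of the kind listed in Table 2 can be sketched as follows. This is a generic illustration, not the survey platform’s actual implementation or API: the `Question` class, the `administer` function, and the question IDs and wording are hypothetical. Each question carries a condition on earlier answers; questions whose condition is false are recorded as not applicable, and every displayed question requires a response, so a blank in the final record reflects a deliberate skip rather than a missed item.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Question:
    qid: str
    text: str
    # Condition on earlier answers; the question is displayed only if it returns True.
    show_if: Callable[[dict], bool] = lambda answers: True


def administer(questions: list[Question],
               ask: Callable[[Question], str]) -> dict[str, Optional[str]]:
    """Present questions in order, applying skip logic.

    Displayed questions must be answered; skipped questions are stored as None,
    meaning "not applicable" rather than "missing".
    """
    answers: dict[str, Optional[str]] = {}
    for q in questions:
        if not q.show_if(answers):
            answers[q.qid] = None  # skipped by design
            continue
        response = ask(q)
        if not response:
            raise ValueError(f"{q.qid} requires a response before continuing")
        answers[q.qid] = response
    return answers


if __name__ == "__main__":
    survey = [
        Question("Q1", "Have you ever injected drugs? (yes/no)"),
        Question("Q2", "How many days did you inject in the past 30 days?",
                 show_if=lambda a: a.get("Q1") == "yes"),
    ]
    scripted = {"Q1": "no", "Q2": "5"}  # simulated participant answers
    print(administer(survey, lambda q: scripted[q.qid]))  # {'Q1': 'no', 'Q2': None}
```

Distinguishing "skipped by design" (None) from an unanswered displayed item (an error) is what allows skip logic to reduce missing data rather than merely shorten the survey.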

Theme 3: Despite high satisfaction, the application was not intuitive and was somewhat time consuming to learn.

This was evident in the number of self-led tutorials described. The investigators’ overall satisfaction may have been related to low expectations for intuitive design. This was expressed by one investigator:

I can imagine that another user - someone who prefers learning through text or video and going at their own pace - would find the existing learning tools adequate.

There are a number of supports for people who are learning to build forms and manage data in the mHealth, including self-guided video tutorials, a user-community blog, and help tools. I have been very pleasantly surprised how quickly and easily I can have my questions addressed by finding the information. That said, I prefer to learn from people.

Theme 4: The cost of the software affected its usefulness.

The application has 4 pricing options. As expected, higher-priced options come with more features and more support for learning and troubleshooting the software. The research investigators in this project chose the lowest-cost version of the application but surmised that moving to a higher price category would have improved overall usability.

4 |. DISCUSSION

In this study, we found that an mHealth survey application operating on password-protected tablets provided a feasible alternative to paper-based data collection for an international research team gathering HIV-related behavioral health information in prisons in Indonesia. Specifically, incarcerated men living with HIV in Indonesia found this method of sharing sensitive behavioral information to be acceptable. In addition, the mHealth survey application was deemed useful by the RAs who helped collect the sensitive health information and by the research investigators who planned the study and developed the mHealth survey application. We adapted the TAM for use in this feasibility study by including technical specifications, cost, and staffing requirements from a researcher perspective, as well as acceptability to the study participants. Although feasibility is most often determined by the specific research context, we believe these additions may be useful to researchers operating under cost constraints who seek to introduce technology for use by participants and data collectors.

Although this is not the first study to use an mHealth application with PLWH (Brown, Krishnan, Ranjit, Marcus, & Altice, 2020; Castonguay et al., 2020), we believe it is the first to demonstrate the acceptability of collecting sensitive HIV-related behavioral health and treatment information from incarcerated PLWH. Research with communities affected by HIV and incarceration raises serious ethical and methodological concerns, including whether data collection methods provide adequate privacy and confidentiality for participants to be open and truthful in their responses (Azbel et al., 2016). People in prison are disproportionately affected by stigma, criminalization, and human rights concerns, which can lead to under-reporting of sex and drug use behaviors. At the outset of this project, we were concerned that electronic data collection might interfere with researchers’ ability to establish an open dialogue with participants, which is essential for building trust and rapport and for ensuring that participants, many of whom have low health literacy, accurately understand questions and response options. Paper-based data collection that requires paper shuffling or reduces eye contact can make a researcher seem distracted and prevent the researcher from listening carefully and responding appropriately, both of which are obstacles to administering research questionnaires effectively. Although electronic data collection made it difficult for RAs to go back and change responses that a participant later corrected, the format did not appear to reduce participants’ trust or their belief that their responses were accurately recorded in the survey application.

We were pleased to learn that the majority of PLWH in our sample were comfortable with electronic data capture and believed that the mHealth survey application provided adequate privacy, confidentiality, and accuracy in recording their responses. Accuracy is especially important because participants who suspect that their responses are being mishandled or mis-recorded are likely to be less forthright in their answers or to refuse participation altogether. Our findings suggest that incarcerated PLWH feel secure responding to surveys electronically and that electronic surveys, when administered in a private room by a research staff member who is not prison personnel, are conducive to sharing honest and reliable information. Previous studies showed high reliability of self-reported HIV risk data even before the widespread adoption of electronic data collection (Needle et al., 1995). Self-administered computer-assisted questionnaires (e.g., ACASI; Brown et al., 2013) reduce social desirability bias and may increase self-reporting of drug and sex risk behaviors (Adebajo et al., 2014); however, some individuals still require assistance to complete ACASI. In addition, some non-mHealth digital data collection platforms require users to have access to expensive, difficult-to-use equipment and a set of core technical competencies to operate it. Meanwhile, newer information and communication technologies like the one used in this study are already being used with prison populations in the United States, including as an innovative approach to linking individuals to care and improving HIV viral suppression after release (Kurth et al., 2013; Kuo et al., 2019). Compared with some computerized self-administered questionnaires, mHealth data collection leverages the ubiquity of mobile devices and eases both data collection and data transfer.

RAs were key to locating study participants and building trust with them, and they are essential to maintaining confidentiality and data integrity. Their experience with the data collection system, particularly its mobility and ease of use during field operations without immediate access to supervisors, coworkers, or support technicians, was of primary concern when evaluating feasibility. We were pleased to see that the mean total SUS score (76.25) was about 8 points above 68, the widely accepted benchmark for “above average” usability (Brooke, 2013; Lewis & Sauro, 2018), and about 19 points above the average SUS score of 57 reported for other commercially available software used in research (Kortum & Bangor, 2013), indicating a high level of overall satisfaction with the mHealth survey application.
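To make the benchmark comparison concrete, the sketch below applies the standard SUS scoring rule (Brooke, 1996): each of the ten items is rated from 1 to 5; odd-numbered, positively worded items contribute the response minus 1; even-numbered, negatively worded items contribute 5 minus the response; and the summed contributions (0 to 40) are multiplied by 2.5 to give a score from 0 to 100. The function names and the single example response set are hypothetical illustrations, not the study's data or analysis code; only the mean of 76.25 and the benchmarks of 68 and 57 come from the text.

```python
"""Minimal SUS scoring sketch (Brooke, 1996); helper names are hypothetical."""

ABOVE_AVERAGE_BENCHMARK = 68.0  # widely used "above average" threshold
RESEARCH_SOFTWARE_MEAN = 57.0   # comparison figure cited from Kortum & Bangor (2013)


def sus_score(responses: list[int]) -> float:
    """Return the 0-100 SUS score for ten item responses on a 1-5 scale."""
    if len(responses) != 10 or any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses, each from 1 to 5")
    raw = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            raw += response - 1  # odd items are positively worded
        else:
            raw += 5 - response  # even items are negatively worded
    return raw * 2.5  # rescale the 0-40 raw sum to 0-100


def gap_to_benchmarks(mean_score: float) -> dict[str, float]:
    """Points by which a mean SUS score exceeds each benchmark."""
    return {
        "above_average_68": mean_score - ABOVE_AVERAGE_BENCHMARK,
        "research_software_57": mean_score - RESEARCH_SOFTWARE_MEAN,
    }


if __name__ == "__main__":
    # Hypothetical single respondent, not study data.
    print(sus_score([5, 2, 4, 2, 4, 2, 4, 2, 5, 3]))  # 77.5
    # Mean SUS score reported for the RAs in this study.
    print(gap_to_benchmarks(76.25))  # {'above_average_68': 8.25, 'research_software_57': 19.25}
```

Because the 0 to 100 result is a rescaled sum rather than a percentage, it is best interpreted against benchmarks such as those cited above.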

Despite high overall satisfaction scores, responses to the SUS items and the open-ended item revealed some weaknesses in learnability, memorability, and error prevention. Some RAs found the system unnecessarily complex. Because RAs may not have formal technology backgrounds, we recommend training some RAs specifically to troubleshoot the software.

Nevertheless, most RAs found that the mHealth survey application improved the efficiency of their workflow compared with their experiences with paper-based data collection (e.g., hospital charting or data collection in previous studies), and “I think I would like to use this system frequently” was the highest-rated SUS item. We also note that mobile phone penetration in Indonesia is high (120 mobile cellular subscriptions per 100 people; World Bank, n.d.), and the RAs’ comfort and familiarity with the application were likely influenced by the ubiquity of these devices. Earlier studies using TAM found that utility was a significant determinant of intention to use technology in health care settings, whereas usability was not (Hu, Chau, Liu Sheng, & Tam, 1999; Zhang, Cocosila, & Archer, 2010). This substantiates the need, recognized by many other usability scientists (Dwivedi et al., 2019; Lee, Kozar, & Larsen, 2003; Wu, Chen, & Lin, 2007), to add variables that predict perceived usability to the most parsimonious version of TAM.

From the investigators’ perspective, the mHealth survey application provided substantial utility, including major programmatic and financial efficiencies. Previously, a project of this scale would have required an investigator to remain onsite (internationally, in this case) to provide constant support to the research team. With the application, investigators were able to monitor data collection remotely, make necessary adjustments to the survey translation, improve the data model, and add user profiles.

Like our RAs and the participants in earlier technology acceptance studies (Hu et al., 1999; Zhang, Cocosila, & Archer, 2010), our investigators gave more weight to the utility of the software application than to its limitations in learnability and error prevention when deciding whether to adopt the mHealth survey application. The application met the researchers’ expectations for usability yet required a significant investment of time to become proficient in designing and troubleshooting it. The system was felt to reduce data entry errors but presented challenges for editing data and revising questionnaires after the initial deployment. Over time, however, the mHealth application proved very reliable and, from the researchers’ perspective, valuable as an approach to data collection.

Evaluations of the usability of software applications that support investigators’ roles in data collection and analysis are rare. Nonetheless, we believe usability is an important consideration for investigators. Research on recreational and consumer-focused software applications has found that usability, along with enjoyment, is a major predictor of technology acceptance (Naqvi, Chandio, Soomro, & Abbasi, 2018; Wang & Goh, 2017). Our findings agree with those of Chau and Hu (2001), who found that utility is more important than usability in professionals’ decisions about software applications that support their work.

5 |. STUDY LIMITATIONS AND FUTURE RESEARCH

This feasibility study has limitations. First, social desirability may have influenced participants’ responses to the acceptability questionnaire despite assurances that their responses would have no bearing on study participation. Second, although we measured acceptability in a relatively large sample of incarcerated PLWH and a representative sample of RAs and research investigators, the number of research team members was small, so these findings may not generalize to other research teams. Third, qualitative data from the single open-ended survey item were insufficient to examine the learnability and memorability issues identified on the SUS. Fourth, having one study author interview other members of the team may have introduced bias; however, the authors had not worked together previously, neither served in a supervisory role for the other, and the interviews were conducted separately. We also note that the interviews uncovered both positive and negative findings about the mHealth survey application.

Future studies should include larger numbers of each category of end users, or stakeholders, of the mHealth survey application to improve generalizability. In addition, mHealth studies in vulnerable populations, such as prisoners, should follow a systematic strategy to establish efficacy and scalability (Krishnan & Cravero, 2017).

6 |. CONCLUSION

This study demonstrated that mHealth data collection systems can be implemented in low-resource settings without compromising functionality. This is especially valuable for global health research, where geographic distance and differences in the availability of information technology present challenges for research integrity. Additionally, our study demonstrated that prisoners with HIV find it acceptable to report private information using an mHealth data collection system.

Acknowledgements

We thank study participants for generously sharing their time. We thank research staff at Universitas Indonesia and the Directorate General of Corrections, Ministry of Law and Human Rights, Republic of Indonesia. Thanks also to Tissa A. Putri and Rhami Hartati-Aoyama for translation assistance.

Funding Information

This study was supported with an award from the National Institute on Drug Abuse (K23DA041988) to GJC.

Conflicts of Interest

Claire Cravero was previously employed by Dimagi Inc., which created and supports the CommCare software. The CommCare software was used to design and deploy the application used in this study.

References

- Adebajo S, Obianwu O, Eluwa G, Vu L, Oginni A, Tun W, Sheehy M, Ahonsi B, Bashorun A, Idogho O, & Karlyn A (2014). Comparison of audio computer assisted self-interview and face-to-face interview methods in eliciting HIV-related risks among men who have sex with men and men who inject drugs in Nigeria. PLOS ONE, 9(1), Article e81981. https://doi.org/10.1371/journal.pone.0081981

- Agarwal S, LeFevre AE, Lee J, L’Engle K, Mehl G, Sinha C, & Labrique A (2016). Guidelines for reporting of health interventions using mobile phones: Mobile health (mHealth) evidence reporting and assessment (mERA) checklist. British Medical Journal, 352, Article i1174. https://doi.org/10.1136/bmj.i1174

- Altice FL, Azbel L, Stone J, Brooks-Pollock E, Smyrnov P, Dvoriak S, Taxman FS, El-Bassel N, Martin NK, Booth R, Stöver H, Dolan K, & Vickerman P (2016). The perfect storm: Incarceration and the high-risk environment perpetuating transmission of HIV, hepatitis C virus, and tuberculosis in Eastern Europe and Central Asia. The Lancet, 388(10050), 1228–1248. https://doi.org/10.1016/S0140-6736(16)30856-X

- Azbel L, Grishaev Y, Wickersham JA, Chernova O, Dvoryak S, Polonsky M, & Altice FL (2016). Trials and tribulations of conducting bio-behavioral surveys in prisons: Implementation science and lessons from Ukraine. International Journal of Prisoner Health, 12(2), 78–87. https://doi.org/10.1108/IJPH-10-2014-0041

- Behling O, & Law KS (2000). Translating questionnaires and other research instruments: Problems and solutions. Sage Publications.

- Bick J, Culbert G, Al-Darraji HA, Koh C, Pillai V, Kamarulzaman A, & Altice FL (2016). Healthcare resources are inadequate to address the burden of illness among HIV-infected male prisoners in Malaysia. International Journal of Prisoner Health, 12(4), 253–269. https://doi.org/10.1108/ijph-06-2016-0017

- Blogg S, Utomo B, Silitonga N, Ayu N Hidayati D, & Sattler G (2014). Indonesian national inmate bio-behavioral survey for HIV and syphilis prevalence and risk behaviors in prisons and detention centers, 2010. SAGE Open, 4(1), 2158244013518924.

- Brooke J (1996). SUS: A quick and dirty usability scale. In Jordan PW, Thomas B, Weerdmeester BA, & McClelland IL (Eds.), Usability evaluation in industry (pp. 189–194). Taylor & Francis.

- Brooke J (2013). SUS: A retrospective. Journal of Usability Studies, 8(2), 29–40. Retrieved from https://uxpajournal.org/sus-a-retrospective/

- Brown JL, Swartzendruber A, & DiClemente RJ (2013). Application of audio computer-assisted self-interviews to collect self-reported health data: An overview. Caries Research, 47(Suppl. 1), 40–45. https://doi.org/10.1159/000351827

- Brown S-E, Krishnan A, Ranjit YS, Marcus R, & Altice FL (2020). Assessing mobile health feasibility and acceptability among HIV-infected cocaine users and their healthcare providers: Guidance for implementing an intervention. mHealth, 6, 4. https://doi.org/10.21037/mhealth.2019.09.12

- Burrus O, Gupta C, Ortiz A, Zulkiewicz B, Furberg R, Uhrig J, Harshbarger C, & Lewis MA (2018). Principles for developing innovative HIV digital health interventions: The case of positive health check. Medical Care, 56(9), 756–760. https://doi.org/10.1097/mlr.0000000000000957

- Campbell JI, Aturinda I, Mwesigwa E, Burns B, Santorino D, Haberer JE, Bangsberg DR, Holden RJ, Ware NC, & Siedner MJ (2017). The technology acceptance model for resource-limited settings (TAM-RLS): A novel framework for mobile health interventions targeted to low-literacy end-users in resource-limited settings. AIDS and Behavior, 21(11), 3129–3140. https://doi.org/10.1007/s10461-017-1765-y

- Castonguay BJU, Cressman AE, Kuo I, Patrick R, Trezza C, Cates A, Olsen H, Peterson J, Kurth A, Bazerman LB, & Beckwith CG (2020). The implementation of a text messaging intervention to improve HIV continuum of care outcomes among persons recently released from correctional facilities: Randomized controlled trial. JMIR mHealth and uHealth, 8(2), e16220. https://doi.org/10.2196/16220

- Catalani C, Philbrick W, Fraser H, Mechael P, & Israelski DM (2013). mHealth for HIV treatment & prevention: A systematic review of the literature. The Open AIDS Journal, 7, 17–41. https://doi.org/10.2174/1874613620130812003

- Chatfield A, Javetski G, Fletcher A, & Lesh N (2015). The CommCare evidence base. Retrieved from https://lib.digitalsquare.io/handle/123456789/77309

- Chau PYK, & Hu PJ-H (2001). Information technology acceptance by individual professionals: A model comparison approach. Decision Sciences, 32(4), 699–719. https://doi.org/10.1111/j.1540-5915.2001.tb00978.x

- Comulada WS, Wynn A, van Rooyen H, Barnabas RV, Eashwari R, & van Heerden A (2019). Using mHealth to deliver a home-based testing and counseling program to improve linkage to care and ART adherence in rural South Africa. Prevention Science, 20(1), 126–136. https://doi.org/10.1007/s11121-018-0950-1

- Culbert GJ, Bazazi AR, Waluyo A, Murni A, Muchransyah AP, Iriyanti M, Finnahari, Polonsky M, Levy J, & Altice FL (2016). The influence of medication attitudes on utilization of antiretroviral therapy (ART) in Indonesian prisons. AIDS and Behavior, 20(5), 1026–1038. https://doi.org/10.1007/s10461-015-1198-4

- Culbert GJ, Crawford FW, Murni A, Waluyo A, Bazazi AR, Sahar J, & Altice FL (2017). Predictors of mortality within prison and after release among persons living with HIV in Indonesia. Research and Reports in Tropical Medicine, 8, 25–35. https://doi.org/10.2147/rrtm.s126131

- Culbert GJ, Earnshaw VA, & Levy JA (2019). Ethical challenges of HIV partner notification in prisons. Journal of the International Association of Providers of AIDS Care, 18, 2325958219880582.

- Culbert GJ, Pillai V, Bick J, Al-Darraji HA, Wickersham JA, Wegman MP, Bazazi AR, Ferro E, Copenhaver M, Kamarulzaman A, & Altice FL (2016). Confronting the HIV, tuberculosis, addiction, and incarceration syndemic in Southeast Asia: Lessons learned from Malaysia. Journal of Neuroimmune Pharmacology, 11(3), 446–455. https://doi.org/10.1007/s11481-016-9676-7

- Culbert GJ, Waluyo A, Wang M, Putri TA, Bazazi AR, & Altice FL (2019). Adherence to antiretroviral therapy among incarcerated persons with HIV: Associations with methadone and perceived safety. AIDS and Behavior, 23(8), 2048–2058. https://doi.org/10.1007/s10461-018-2344-6

- Davis FD Jr (1986). A technology acceptance model for empirically testing new end-user information systems: Theory and results [Doctoral dissertation, Massachusetts Institute of Technology]. DSpace@MIT. http://hdl.handle.net/1721.1/15192

- Directorate of Corrections (2012). HIV and HCV prevalence and risk behavior study in Indonesian narcotics prisons. Jakarta: Directorate of Corrections, Ministry of Justice and Human Rights, Republic of Indonesia.

- Dolan K, Wirtz AL, Moazen B, Ndeffo-mbah M, Galvani A, Kinner SA, Courtney R, McKee M, Amon JJ, Maher L, Hellard M, Beyrer C, & Altice FL (2016). Global burden of HIV, viral hepatitis, and tuberculosis in prisoners and detainees. The Lancet, 388(10049), 1089–1102. https://doi.org/10.1016/s0140-6736(16)30466-4

- Dwivedi YK, Rana NP, Jeyaraj A, Clement M, & Williams MD (2019). Re-examining the unified theory of acceptance and use of technology (UTAUT): Towards a revised theoretical model. Information Systems Frontiers, 21(3), 719–734. https://doi.org/10.1007/s10796-017-9774-y

- Fazel S, Yoon IA, & Hayes AJ (2017). Substance use disorders in prisoners: An updated systematic review and meta-regression of prevalence studies in recently incarcerated men and women. Addiction, 112(10), 1725–1739. https://doi.org/10.1111/add.13877

- Fishbein M, & Ajzen I (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Addison-Wesley.

- Gagnon M-P, Ngangue P, Payne-Gagnon J, & Desmartis M (2016). m-Health adoption by healthcare professionals: A systematic review. Journal of the American Medical Informatics Association, 23(1), 212–220. https://doi.org/10.1093/jamia/ocv052

- Hershberger PE, & Kavanaugh K (2017). Comparing appropriateness and equivalence of email interviews to phone interviews in qualitative research on reproductive decisions. Applied Nursing Research, 37, 50–54. https://doi.org/10.1016/j.apnr.2017.07.005

- Holden RJ, & Karsh B-T (2010). The technology acceptance model: Its past and its future in health care. Journal of Biomedical Informatics, 43(1), 159–172. https://doi.org/10.1016/j.jbi.2009.07.002

- Hsieh H-F, & Shannon SE (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288. https://doi.org/10.1177/1049732305276687

- Hu PJ, Chau PYK, Liu Sheng OR, & Tam KY (1999). Examining the technology acceptance model using physician acceptance of telemedicine technology. Journal of Management Information Systems, 16(2), 91–112. https://doi.org/10.1080/07421222.1999.11518247

- Iroh PA, Mayo H, & Nijhawan AE (2015). The HIV care cascade before, during, and after incarceration: A systematic review and data synthesis. American Journal of Public Health, 105(7), e1–e12. https://doi.org/10.2105/AJPH.2015.302635

- Izenberg JM, Bachireddy C, Wickersham JA, Soule M, Kiriazova T, Dvoriak S, & Altice FL (2014). Within-prison drug injection among HIV-infected Ukrainian prisoners: Prevalence and correlates of an extremely high-risk behaviour. International Journal of Drug Policy, 25(5), 845–852. https://doi.org/10.1016/j.drugpo.2014.02.010

- Kamarulzaman A, Reid SE, Schwitters A, Wiessing L, El-Bassel N, Dolan K, Moazen B, Wirtz AL, Verster A, & Altice FL (2016). Prevention of transmission of HIV, hepatitis B virus, hepatitis C virus, and tuberculosis in prisoners. The Lancet, 388(10049), 1115–1126. https://doi.org/10.1016/s0140-6736(16)30769-3

- Kortum PT, & Bangor A (2013). Usability ratings for everyday products measured with the system usability scale. International Journal of Human-Computer Interaction, 29(2), 67–76. https://doi.org/10.1080/10447318.2012.681221

- Krishnan A, & Cravero C (2017). A multipronged evidence-based approach to implement mHealth for underserved HIV-infected populations. Mobile Media & Communication, 5(2), 194–211. https://doi.org/10.1177/2050157917692390

- Kuo I, Liu T, Patrick R, Trezza C, Bazerman L, Uhrig Castonguay BJ, Peterson J, Kurth A, & Beckwith CG (2019). Use of an mHealth intervention to improve engagement in HIV community-based care among persons recently released from a correctional facility in Washington, DC: A pilot study. AIDS and Behavior, 23(4), 1016–1031. https://doi.org/10.1007/s10461-018-02389-1

- Kurth A, Kuo I, Peterson J, Azikiwe N, Bazerman L, Cates A, & Beckwith CG (2013). Information and communication technology to link criminal justice reentrants to HIV care in the community. AIDS Research and Treatment, Article 547381, 1–6. https://doi.org/10.1155/2013/547381

- Kurth AE, Spielberg F, Cleland CM, Lambdin B, Bangsberg DR, Frick PA, Severynen AO, Clausen M, Norman RG, Lockhart D, Simoni JM, & Holmes KK (2014). Computerized counseling reduces HIV-1 viral load and sexual transmission risk: Findings from a randomized controlled trial. Journal of Acquired Immune Deficiency Syndromes, 65(5), 611–620. https://doi.org/10.1097/QAI.0000000000000100

- Lazzarini Z, & Altice FL (2000). A review of the legal and ethical issues for the conduct of HIV-related research in prisons. AIDS Public Policy Journal, 15(3–4), 105–135.

- Lee Y, Kozar KA, & Larsen KRT (2003). The technology acceptance model: Past, present, and future. Communications of the Association for Information Systems, 12(1), 752–780. https://doi.org/10.17705/1CAIS.01250

- Lewis JR (1995). IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. International Journal of Human-Computer Interaction, 7(1), 57–78. https://doi.org/10.1080/10447319509526110

- Lewis JR, & Sauro J (2009). The factor structure of the system usability scale. In Kurosu M (Ed.), Human centered design (Lecture Notes in Computer Science, pp. 94–103). Springer. https://doi.org/10.1007/978-3-642-02806-9_12

- Lewis JR, & Sauro J (2018). Item benchmarks for the system usability scale. Journal of Usability Studies, 13(3), 158–167.

- Lincoln YS, & Guba EG (1985). Naturalistic inquiry. Sage Publications.

- Naqvi HF, Chandio FH, Soomro AF, & Abbasi MS (2018). Software as a service acceptance model: A user-centric perspective in cloud computing context. 2018 IEEE 5th International Conference on Engineering Technologies and Applied Sciences (ICETAS), 1–4. Institute of Electrical and Electronics Engineers. https://doi.org/10.1109/icetas.2018.8629200

- Needle R, Fisher DG, Weatherby N, Chitwood D, Brown B, Cesari H, Booth R, Williams ML, Watters J, Andersen M, & Braunstein M (1995). Reliability of self-reported HIV risk behaviors of drug users. Psychology of Addictive Behaviors, 9(4), 242–250. https://doi.org/10.1037/0893-164X.9.4.242

- Nielsen J (1993). Usability engineering. Morgan Kaufmann.

- Palumbo PJ, Zhang Y, Fogel JM, Guo X, Clarke W, Breaud A, Richardson P, Piwowar-Manning E, Hart S, Hamilton EL, & Hoa NT (2019). HIV drug resistance in persons who inject drugs enrolled in an HIV prevention trial in Indonesia, Ukraine, and Vietnam: HPTN 074. PLOS ONE, 14(10), e0223829.

- Peiris D, Praveen D, Johnson C, & Mogulluru K (2014). Use of mHealth systems and tools for non-communicable diseases in low- and middle-income countries: A systematic review. Journal of Cardiovascular Translational Research, 7(8), 677–691. https://doi.org/10.1007/s12265-014-9581-5

- Pham Q, Graham G, Carrion C, Morita PP, Seto E, Stinson JN, & Cafazzo JA (2019). A library of analytic indicators to evaluate effective engagement with consumer mHealth apps for chronic conditions: Scoping review. JMIR mHealth and uHealth, 7(1), e11941. https://doi.org/10.2196/11941

- Pop-Eleches C, Thirumurthy H, Habyarimana JP, Zivin JG, Goldstein MP, De Walque D, MacKeen L, Haberer J, Kimaiyo S, & Sidle J (2011). Mobile phone technologies improve adherence to antiretroviral treatment in a resource-limited setting: A randomized controlled trial of text message reminders. AIDS, 25(6), 825–834. https://doi.org/10.1097/QAD.0b013e32834380c1

- Schnall R, Bakken S, Rojas M, Travers J, & Carballo-Dieguez A (2015). mHealth technology as a persuasive tool for treatment, care and management of persons living with HIV. AIDS and Behavior, 19(2), 81–89. https://doi.org/10.1007/s10461-014-0984-8

- Sekhon M, Cartwright M, & Francis JJ (2017). Acceptability of healthcare interventions: An overview of reviews and development of a theoretical framework. BMC Health Services Research, 17(1), 1–3. https://doi.org/10.1186/s12913-017-2031-8

- Sharfina Z, & Santoso HB (2016). An Indonesian adaptation of the system usability scale (SUS). 2016 International Conference on Advanced Computer Science and Information Systems (ICACSIS), 145–148. Institute of Electrical and Electronics Engineers. https://doi.org/10.1109/ICACSIS.2016.7872776

- Sousa VE, & Lopez KD (2017). Towards usable E-Health: A systematic review of usability questionnaires. Applied Clinical Informatics, 8(2), 470–490.

- Spaulding AC, Drobeniuc A, Frew PM, Lemon TL, Anderson EJ, Cerwonka C, Bowden C, Freshley J, & del Rio C (2018). Jail, an unappreciated medical home: Assessing the feasibility of a strengths-based case management intervention to improve the care retention of HIV-infected persons once released from jail. PLOS ONE, 13(3), Article e0191643. https://doi.org/10.1371/journal.pone.0191643

- Uthman OA, Oladimeji O, & Nduka C (2017). Adherence to antiretroviral therapy among HIV-infected prisoners: A systematic review and meta-analysis. AIDS Care, 29(4), 489–497. https://doi.org/10.1080/09540121.2016.1223799

- Venkatesh V, & Bala H (2008). Technology acceptance model 3 and a research agenda on interventions. Decision Sciences, 39(2), 273–315. https://doi.org/10.1111/j.1540-5915.2008.00192.x

- Walmsley R (2018). World prison population list (12th ed.). Retrieved December 1, 2020, from http://www.prisonstudies.org/research-publications

- Wang X, & Goh DH-L (2017). Video game acceptance: A meta-analysis of the extended technology acceptance model. Cyberpsychology, Behavior, and Social Networking, 20(11), 662–671. https://doi.org/10.1089/cyber.2017.0086

- World Bank (n.d.). Mobile cellular subscriptions (per 100 people). International Telecommunication Union (ITU) World Telecommunication/ICT Indicators Database. Retrieved September 8, 2020, from https://data.worldbank.org/indicator/IT.CEL.SETS.P2?locations=ID

- Wu J-H, Chen Y-C, & Lin L-M (2007). Empirical evaluation of the revised end user computing acceptance model. Computers in Human Behavior, 23(1), 162–174. https://doi.org/10.1016/j.chb.2004.04.003

- Zhang H, Cocosila M, & Archer N (2010). Factors of adoption of mobile information technology by homecare nurses: A technology acceptance model 2 approach. CIN: Computers, Informatics, Nursing, 28(1), 49–56. https://doi.org/10.1097/ncn.0b013e3181c0474a