Abstract

As a part of a larger interdisciplinary project on Shakespeare sonnets’ reception (1, 2), the present study analyzed the eye movement behavior of participants reading three of the 154 sonnets as a function of seven lexical features extracted via Quantitative Narrative Analysis (QNA). Using a machine learning-based predictive modeling approach, five ‘surface’ features (word length, orthographic neighborhood density, word frequency, orthographic dissimilarity and sonority score) were detected as important predictors of total reading time and fixation probability in poetry reading. The fact that one phonological feature, i.e., sonority score, also played a role is in line with current theorizing on poetry reading. Our approach opens new ways for future eye movement research on reading poetic texts and other complex literary materials (3).

Keywords: Literary reading, eye movements, eye tracking, QNA, predictive modeling

Introduction

When was the last time you read a poem, or a piece of literature? The answer of many people might well be ‘today’ or ‘yesterday’. Even though reading literature may no longer count among the essential activities of people’s leisure time, it still has a significant number of benefits in promoting, for example, general and cross-cultural education, social cognition or cognitive development (4, 5, 6, 7). However, within the fields of reading and eye tracking research, single words or single sentences from non-literary materials appear to be the most extensively investigated text materials (8, 9, 10). Although psycholinguistic features, e.g., word length or word frequency, work differently in a connected text context (11, 12, 13), empirical research using natural materials like narrative texts or poems is quite rare, and the majority of studies on literary works are confined to text-based qualitative aspects (e.g., ‘close reading’). Reading research seems to have difficulty opening itself to empirical studies focusing on more natural and ecologically valid reading acts, as recently admonished by several researchers (14, 15, 13).

With the present study, we aim to explore which and how psycholinguistic features influence literary reading (e.g., some famous poems) by analyzing participants’ eye movement behavior which provides a valid measure of moment-to-moment comprehension processes (16, 17). To achieve our objective, we faced two major challenges: dissecting the complex literary works into measurable and testable features and applying computational methods which can handle the intercorrelated psycholinguistic features and the nonlinear relationship between them and reading behavior. In the following sections, we expound the two challenges separately, and at the end put forward our hypotheses.

Quantitative Narrative Analysis (QNA)

As we all know, natural texts mostly show a high level of complexity. They are built of single words that can be characterized by more than 50 lexical and sublexical features influencing their processing in single-word recognition tasks (18). The actual amount of these (or other) lexical features influencing eye movement parameters in natural reading of literary texts is a wide-open empirical question. These complex units then are combined to larger units like phrases, sentences, stanzas or paragraphs which again are characterized by an overabundance of text features (14, 19) including a great variety of rhetorical devices (20). While it is far from easy to qualitatively describe all these features—as evidenced by extensive debates on e.g., the classification of metaphors and similes (21)—, the challenge to quantify relevant text features properly is even greater and still in its beginnings. To start empirical investigations using (more) natural and complex materials, appropriate models and methods are necessary to handle the plethora of text and/or reader features and their multiple (nonlinear) interactions. On the modeling side, the Neurocognitive Poetics Model of literary reading (NCPM) (22, 14, 23 ,24, 25, 26) is a first theoretical account offering predictions about the relationship between different kinds of text features and reader responses, e.g., in eye tracking studies using natural text materials (27, 28). On the methods side, inspired by the NCPM, our group has been working for quite some time on different QNA approaches. In contrast to qualitative analysis, these try to quantitatively describe a maximum of the psycholinguistic features of complex natural verbal materials, as impressively demonstrated using the example of the 154 Shakespeare sonnets (1). 
Additionally, this approach proposes advanced tools for computing both cognitive and affective-aesthetic features potentially influencing reader responses at all three levels of observation, i.e., the experiential (e.g., questionnaires and ratings (29, 30, 31, 32, 1, 33, 34, 35)), the behavioral (e.g., eye movements(2)), and the neuronal (36).

Shakespeare’s sonnets are a particularly challenging and fascinating stimulus material for QNA and count among the most aesthetically successful or popular pieces of verbal art in the world. Facilitating QNA, most of them have the same structure and rhythmic pattern, typically decasyllabic 14-liners in iambic pentameter with three quatrains and a concluding couplet, making them well suited as research materials. They have been the object of countless essays by literary critics and of theoretical scientific studies (37, 38, 39). Furthermore, all 154 sonnets have been extensively ‘QNA-ed’ in our previous work yielding precise predictions concerning e.g., eye movement data (1). Finally, to our knowledge, none of the previous studies on reading literary texts or poems (40, 41, 32, 28, 42, 43, 44) examined eye movement behavior during the reading of Shakespeare sonnets.

Since it is not possible to identify all relevant features characterizing a natural text [e.g., over 50 features mentioned for single word recognition (18) or over 100 features computed for the corpus of Shakespeare sonnets (1)], nearly all empirical studies we know of, e.g., those using eye tracking (45, 46, 47, 48, 49, 10), tested only a few selected features while ignoring the others without giving explicit reasons for this neglect. Thus, for the present study about the influence of basic psycholinguistic features we decided to start relatively simple by concentrating on a set of seven easily computable (sub)lexical surface features combining well established and less tested ones. We excluded complex inter- and supralexical features (e.g., surprisal, syntactic simplicity), as well as any features that cannot be computed via QNA (e.g., age-of-acquisition, metaphoricity). The resulting set of surface features consists of two standard features used in many eye movement studies (word length, word frequency), three standard features from word recognition studies that are much less used in the eye movement field (orthographic neighborhood density, higher frequent neighbors, and orthographic dissimilarity), and two phonological features theoretically playing a role in poetry reading (consonant vowel quotient, sonority score). In the following paragraphs, we further explain these features and summarize their effects, if available, observed in eye tracking studies using single sentences or short nonliterary texts.

In eye tracking studies of reading non-literary texts it is widely acknowledged that longer and low frequency words attract longer total reading time (sum of all fixations on the target word) and more fixations (50, 51, 52 ,53). Apart from these two basic surface features, a wealth of research also found effects of orthographic neighborhood density (number of words that can be created by changing a single letter of a target word (54), e.g., bat, fat, and cab are neighbors of cat) in word recognition and reading tasks (55). While effects of orthographic neighborhood density are usually facilitative, the presence of higher frequent neighbors in the hypothetical mental lexicon inhibits processing of a target word (56, 57, 58). However, there are no clear conclusions as to the effects of both features on eye movements in reading (25). Furthermore, using the Levenshtein distance metric, we can also compute an additional orthographic dissimilarity index for all words, going beyond the standard operationalization based on words of the same length. As far as we know, systematic effects of the above features on eye movements in the reading of poetry have not been reported so far.
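The two orthographic measures described above can be illustrated with a minimal sketch. It assumes a tiny hypothetical lexicon (`toy_lexicon`) in place of a real reference corpus, and the function names are ours for illustration only; it is not the QNA implementation used in this study.

```python
def is_orthographic_neighbor(a, b):
    """True if both words have the same length and differ in exactly one letter."""
    return len(a) == len(b) and sum(x != y for x, y in zip(a, b)) == 1


def neighborhood_density(target, lexicon):
    """Number of words in the lexicon that are orthographic neighbors of target."""
    return sum(is_orthographic_neighbor(target, word) for word in lexicon)


def levenshtein(a, b):
    """Minimum number of single-letter insertions, deletions or substitutions."""
    prev = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        curr = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def mean_dissimilarity(target, lexicon):
    """Mean Levenshtein distance from target to all other words in the lexicon."""
    others = [word for word in lexicon if word != target]
    return sum(levenshtein(target, word) for word in others) / len(others)


# Hypothetical toy lexicon; a real analysis would use a large reference corpus.
toy_lexicon = ["cat", "bat", "fat", "cab", "cart", "dog"]

print(neighborhood_density("cat", toy_lexicon))  # 3 (bat, fat, cab)
print(levenshtein("cat", "cart"))                # 1 (one inserted letter)
print(mean_dissimilarity("cat", toy_lexicon))
```

Unlike the neighbor count, the Levenshtein-based measure also registers words of a different length, such as `cart`.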

Most people will agree with the statement that poetry is an artful combination of sound and meaning (21). While the above features are basically ‘orthographic’, the effects of sublexical and lexical phonological features that have been found in a variety of silent reading studies (59, 60, 61, 62, 63, 64, 23, 3, 65, 66) and the wide use of phonetic rhetorical devices in poetic language lead us to also include two phonological features: the consonant vowel quotient and the sonority score. The consonant vowel quotient is a simple proxy for the pronounceability of a word, which hypothetically is related to its ease of automatic phonological recoding (67). To quantify the acoustic energy or loudness of a sound, called sonority (68), we used the sonority score, a simplified index based on the sonority hierarchy of English phonemes, which allows estimating the degree of distance from the optimal syllable structure (69). It was previously applied in the study of aphasia (70) and has recently been proposed as an important feature influencing the subjective beauty of words (29). There is evidence that consonant status and sonority play a role in silent reading (71, 72), especially of poetic texts (73). Neither feature has been examined in literary reading studies using eye tracking.

Non-linear Interactive Models and Predictive Modeling

With the help of QNA, we can quantify psycholinguistic features and predict reader responses successfully (34). However, we still need to tackle the second challenge: within and between the disciplines involved in reading research there is a tacit consensus that all these psycholinguistic features influence the reading and interpretation of literary texts in a highly interactive and nonlinear way (14, 23, 34, 21). Kliegl et al. (74) already pointed out that using standard accounts like hierarchical regressions is not a solution for handling intercorrelated predictors and the nonlinear relationship between predictors and reading behavior. Consequently, we must look for appropriate tools to tackle these problems. One option is offered by recent developments e.g., in the fields of bioinformatics (75), ecology (76, 77), geology and risk analysis (78, 79), quantitative sociolinguistics (80, 81), epidemiology (82), neurocognitive poetics (29, 19, 33, 34, 1), fMRI data analysis (83) or applied reading research (84, 85) highlighting the application of machine learning tools like neural nets or bootstrap forests to predictive modeling accounts of big data sets with complex interactions and intercorrelations. Moreover, as an alternative and complement to the traditional ‘explanation approach’ of experimental psychology, machine learning principles and techniques can also help psychology become a more predictive and explorative science (86, 87). Thanks to such computational methods, tackling the challenge of analyzing human cognition, emotion or eye movement behavior in rich naturalistic settings (88) has become a viable option, especially as concerns literary reading (89, 90, 26).

For the present study, two non-linear interactive models, i.e., neural nets and bootstrap forests, were compared with one general linear model (standard least squares regression), to find out which approach optimally predicted relevant eye movement parameters during the reading and experiencing of poetry. The neural net model is a multi-layer perceptron which can predict one or more response variables using a flexible function of the input variables. It has the ability to implicitly detect all possible (nonlinear) interactions between predictor variables and a number of other advantages over regression models when dealing with complex stimulus-response environments (82). Bootstrap forests predict a response by averaging the predicted response values across many decision trees. Each tree is grown on a bootstrap sample of the training data (91). Both the non-linear interactive models and the general linear model were evaluated in a predictive modeling approach comparing a goodness of fit index (R2) for training and validation sets.
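The bootstrap forest principle (many trees, each grown on a bootstrap sample, with predictions averaged) can be sketched in a few lines. This is a deliberately simplified illustration, not the JMP implementation used in this study: the tree depth, minimum node size, number of trees and the step-shaped toy data are all assumptions made for the example.

```python
import random
from statistics import mean


def sse(values):
    """Sum of squared deviations from the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, targets):
    """Return the (feature, threshold) pair with the lowest summed SSE, or None."""
    best, best_error = None, float("inf")
    for f in range(len(rows[0])):
        # Skipping the smallest value guarantees that both sides are non-empty.
        for threshold in sorted({r[f] for r in rows})[1:]:
            left = [t for r, t in zip(rows, targets) if r[f] < threshold]
            right = [t for r, t in zip(rows, targets) if r[f] >= threshold]
            error = sse(left) + sse(right)
            if error < best_error:
                best, best_error = (f, threshold), error
    return best


def grow_tree(rows, targets, depth=0, max_depth=3, min_size=5):
    """Grow a small regression tree; a leaf is simply the mean target value."""
    if depth >= max_depth or len(rows) < 2 * min_size:
        return mean(targets)
    split = best_split(rows, targets)
    if split is None:
        return mean(targets)
    f, threshold = split
    left = [i for i, r in enumerate(rows) if r[f] < threshold]
    right = [i for i, r in enumerate(rows) if r[f] >= threshold]
    return (f, threshold,
            grow_tree([rows[i] for i in left], [targets[i] for i in left],
                      depth + 1, max_depth, min_size),
            grow_tree([rows[i] for i in right], [targets[i] for i in right],
                      depth + 1, max_depth, min_size))


def predict_tree(tree, row):
    """Follow the splits until a leaf value is reached."""
    while isinstance(tree, tuple):
        f, threshold, left, right = tree
        tree = left if row[f] < threshold else right
    return tree


def grow_forest(rows, targets, n_trees=25, seed=0):
    """Grow each tree on a bootstrap sample (n rows drawn with replacement)."""
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        sample = [rng.randrange(n) for _ in range(n)]
        forest.append(grow_tree([rows[i] for i in sample],
                                [targets[i] for i in sample]))
    return forest


def predict_forest(forest, row):
    """Average the predictions of all trees."""
    return mean(predict_tree(tree, row) for tree in forest)


# Hypothetical non-linear toy data: a step function of a single predictor.
rows = [(i / 100,) for i in range(100)]
targets = [1 if r[0] < 0.5 else 3 for r in rows]
forest = grow_forest(rows, targets)
print(predict_forest(forest, (0.2,)), predict_forest(forest, (0.8,)))
```

A straight line cannot reproduce such a step, whereas the averaged trees recover it, which is the kind of non-linearity the two machine learning models are meant to capture.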

Taken together, in the context of our QNA-based predictive modeling approach, the present study is a minimalistic first attempt at introducing an already considerably more complex way of analyzing eye movements in reading poetic texts. We focused on potential effects of seven simple ‘surface’ features (word length, word frequency, orthographic neighborhood density, higher frequency neighbors, orthographic dissimilarity index, consonant vowel quotient, and sonority score) on three eye movement parameters (first fixation duration, total reading time and fixation probability).

Hypotheses

Since non-linear interactive models can deal with complex interactions and detect hidden structures in complex data sets (92), we proposed that they would outperform the general linear model and produce satisfactory model fits for both the training and validation sets.

Based on previous eye tracking studies and existent models of eye movement control (48, 93, 94, 46, 49), we assumed that word length and word frequency play a key role in accounting for variance in total reading time and fixation probability, i.e., longer and low frequency words should attract longer total reading time and higher fixation probability also in poetry reading.

On account of the facilitative effect of orthographic neighborhood density and the inhibitory effect of higher frequency neighbors in the above mentioned word recognition studies, we also expected words with many (lower frequency) orthographic neighbors to produce shorter total reading time and lower fixation probability than low orthographic neighborhood density words and words with higher frequency neighbors. Similarly, we hypothesized that higher orthographic dissimilarity of a word (as a proxy for its orthographic salience) would increase its total reading time and fixation probability.

As concerns the two phonological features, consonant vowel quotient and sonority score, our hypothesis was that words with a high consonant vowel quotient (as a proxy for hindered phonological processing) and sonority score (as a proxy for increased aesthetic potential) require a more exigent processing (95, 71, 96) and thus would attract longer reading time and higher fixation probability. All effects were assumed to be smaller or non-significant for first fixation durations which usually reflect fast and automatic reading behavior less influenced by lexical parameters (97, 8).

Methods

Participants

Fifteen native English speakers (five female; Mage = 31.5 years, SDage = 14.1, age range: 18–68 years) were recruited via an announcement released at Freie Universität Berlin. All participants had normal or corrected-to-normal vision. They were naive to the purposes of the experiment and were not trained scholars of literature or poetry. Participants gave their informed, written consent before commencing the experiment and either received course credit or volunteered freely. This study was conducted in line with the standards of the ethics committee of the Department of Education and Psychology at Freie Universität Berlin.

Apparatus

Participants’ eye movements were recorded with a sampling rate of 1000 Hz, using a remote SR Research EyeLink 1000 desktop-mount eye tracker (SR Research Ltd., Mississauga, Ontario, Canada). Stimulus presentation was controlled by Eyelink Experiment Builder software (version 1.10.1630, https://www.sr-research.com/experiment-builder). Stimuli were presented on a 19-inch LCD monitor with a refresh rate of 60 Hz and a resolution of 1,024 × 768 pixels. A chin-and-head rest was used to minimize head movements. The distance from the participant’s eyes to the stimulus monitor was approximately 50 cm. We only tracked the right eye. Each tracking session was initialized by a standard 9-point calibration and validation procedure to ensure a spatial resolution error of less than 0.5° of visual angle.

Design and Stimuli

The three sonnets chosen from the Shakespeare corpus of 154 sonnets were: Sonnets 27 (‘Weary with toil…’), 60 (‘Like as the waves…’) and 66 (‘Tired with all these…’). The choice was made by an interdisciplinary team of experts taking into account the considerable poetic quality and representativeness of the motifs not only within the Shakespeare sonnet corpus but also within European poetry. The motifs are: love as tension between body and soul (sonnet 27), death as related to time and soul (sonnet 60) and social evils during the period Shakespeare lived in (sonnet 66). All three have the same metrical and rhythmical structure as most Shakespeare sonnets (see Introduction). Inspired by our previous QNA study on Shakespeare sonnets (1), we conducted a fine-grained lexical analysis of all words used in the present three sonnets, summarized in Table 1. A Pearson chi-square test indicated no significant differences in the distribution of the four main word classes between the three sonnets (χ2 = 6.31, df = 6, p = .39). We therefore collapsed the data across all sonnets to increase statistical power for predictive modeling.

Table 1.

Number of Words per Category within Each Sonnet and within all Three Sonnets

| Sonnet | Closed-class, count [%] | Adj./Adv., count [%] | N., count [%] | V., count [%] | Total |
|---|---|---|---|---|---|
| 27 | 49 [44.14] | 20 [18.02] | 28 [25.23] | 14 [12.61] | 111 |
| 60 | 48 [44.44] | 12 [11.11] | 30 [27.78] | 18 [16.67] | 108 |
| 66 | 33 [36.26] | 20 [21.98] | 21 [23.08] | 17 [18.68] | 91 |
| Total | 130 [41.94] | 52 [16.77] | 79 [25.48] | 49 [15.81] | 310 |

Note. Closed-class refers to the category of function words; Adj./ Adv. refers to adjective or adverb; N. refers to noun; V. refers to verb; % is the percentage of each word category within each sonnet or within all three sonnets.
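The chi-square test on the word class distribution can be retraced from the counts in Table 1. The sketch below is a plain re-computation under the assumption that a standard Pearson test of independence on the 3 × 4 table was used; the helper names are ours, and the closed-form p-value shortcut is valid only for an even number of degrees of freedom.

```python
import math

# Observed counts from Table 1 (rows: sonnets 27, 60, 66;
# columns: closed-class, adjective/adverb, noun, verb).
table = [
    [49, 20, 28, 14],
    [48, 12, 30, 18],
    [33, 20, 21, 17],
]


def chi_square_independence(observed):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, count in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (count - expected) ** 2 / expected
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return chi2, df


def chi2_sf_even_df(x, df):
    """Upper-tail probability of the chi-square distribution (even df only)."""
    if df % 2 != 0:
        raise ValueError("this closed form requires an even df")
    half = x / 2
    return math.exp(-half) * sum(half ** k / math.factorial(k)
                                 for k in range(df // 2))


chi2, df = chi_square_independence(table)
p_value = chi2_sf_even_df(chi2, df)
print(round(chi2, 2), df, round(p_value, 2))
```

With these counts the statistic comes out at roughly 6.3 with df = 6 and p ≈ .39, in line with the values reported in the text up to rounding.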

Procedure

The experiment was conducted in a dimly lit and sound-attenuated room. The data acquisition for each sonnet was split in two parts: a first initial reading of the sonnet with eye tracking and a following paper-pencil memory test accompanied by several rating questions and marking tasks.

For the initial reading participants were instructed to “read each sonnet attentively and naturally” for their own understanding. Prior to the onset of the sonnet on a given trial, participants were presented with a black dot fixation marker (0.6° of visual angle) to the left of the left-side boundary of the first word in line 1; the distance between the marker and the first word was 4.6°. The sonnet was presented automatically as soon as participants fixated this marker. Participants read the sonnets at their own reading speed. They could go back and forth as often as they wanted within a maximum time window of two minutes. Thirteen participants stopped reading before this deadline. To achieve a certain level of ecological validity, all sonnets were presented left-aligned in the center of the monitor (distance: 8.0° from the left margin of the screen) in a variable-width font (Arial) with a 22-point letter size (approximately 4.5 × 6.5 mm, 0.5 × 0.7 degrees of visual angle). In order to facilitate accurate eye tracking, 1.5-line spacing was used.

For the second part of data acquisition, participants went to another desk to work on the paper-pencil tasks, which we developed in close cooperation with literature scholars. Our questionnaire had altogether 18 closed- and open-ended questions concerning memory, topic identification, attention, understanding and emotional reactions. It also included three marking tasks where participants had to indicate unknown words, key words and the most beautiful line of the poem (the rating results will be reported elsewhere by the ‘humanities’ section of our interdisciplinary team). After answering the questionnaire for the first sonnet, participants continued with reading the second sonnet in front of the eye tracker and so on. The order of the three sonnets was counterbalanced across participants. In order to make the reading of the first sonnet comparable to the reading of the latter two, participants became acquainted with the questionnaire before the initial reading of the first sonnet.

At the beginning and end of the experiment, we used an English translation of the German multidimensional mood questionnaire (MDBF) (98) to evaluate the participants’ mood state. This questionnaire assesses three bipolar dimensions of subjective feeling (depressed vs. elevated, calmness vs. restlessness, sleepiness vs. wakefulness) on a 7-point rating scale. The results showed that our participants were in a neutral mood of calmness and slight sleepiness. Simple t-tests comparing the mood ratings at the beginning and the end of the experiment indicated no significant mood changes (all t(14)s < 1). Thus, reading sonnets did not induce longer-lasting changes in the global dimensions assessed by the MDBF.

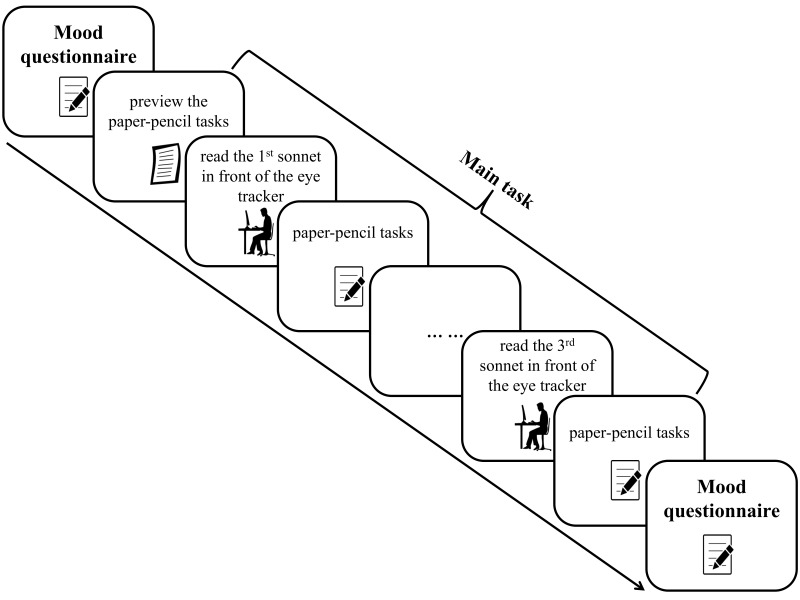

Altogether, the experiment took about 40 minutes (see Figure 1 for an illustration of the procedure).

Figure 1.

The Procedure of the Experiment. An English translation of the German multidimensional mood questionnaire (MDBF) (98) was presented to the participants before and after the main tasks to evaluate whether sonnets reading induced longer-lasting changes in participants’ mood state. The data acquisition for each sonnet was split in two parts: first initial reading of the sonnet with eye tracking and the following paper-pencil tasks. After answering the questionnaire for the first sonnet, participants continued with reading the second sonnet in front of the eye tracker and so on. The order of the three sonnets was counterbalanced across participants. In order to make the reading of the first sonnet comparable to the reading of the latter two, participants became acquainted with a questionnaire example before the initial reading of the first sonnet.

Data Analysis

Psycholinguistic features. All seven psycholinguistic features were computed for all unique words (word types; 205 words, as words appearing several times in the texts have identical feature values) in the three sonnets, using the Gutenberg Literary English Corpus (GLEC) (99) as reference: word length (wl) is the number of letters per word; word frequency (logf) is the log-transformed number of occurrences of the word; orthographic neighborhood density (on) is the number of words of the same length as the target word differing by one letter; higher frequent neighbors (hfn) is the number of orthographic neighbors with higher word frequency than the target word; orthographic dissimilarity index (odc) is the target word’s mean Levenshtein distance from all other words in the corpus, a metric that generalizes the on measure to words of different lengths; consonant vowel quotient (cvq) is the quotient of consonants and vowels in one word; sonority score (sonscore) is the sum of the sonority ranks of the word’s phonemes divided by the square root of wl (the sonority hierarchy of English phonemes yields 10 ranks: [a] > [e o] > [i u j w] > [ɾ] > [l] > [m n ŋ] > [z v] > [f θ s] > [b d ɡ] > [p t k]) (69, 34). For example, in our three sonnets, ART received a sonscore of (10×1 [a] + 7×1 [r] + 1×1 [t]) / SQRT(3) = 18 / SQRT(3) = 10.39.
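The sonority score and the consonant vowel quotient can be written down as a short sketch. It assumes that a phonemic transcription is supplied by hand, that plain `r` and `g` are treated like [ɾ] and [ɡ], and that cvq is counted over letters with a, e, i, o, u as vowels; none of these choices is specified in the text, so they are illustrative only.

```python
import math

# Sonority hierarchy from the text, from rank 10 ([a]) down to rank 1 ([p t k]).
SONORITY_GROUPS = [
    ["a"], ["e", "o"], ["i", "u", "j", "w"], ["ɾ", "r"], ["l"],
    ["m", "n", "ŋ"], ["z", "v"], ["f", "θ", "s"], ["b", "d", "ɡ", "g"],
    ["p", "t", "k"],
]
SONORITY_RANK = {
    phoneme: 10 - index
    for index, group in enumerate(SONORITY_GROUPS)
    for phoneme in group
}


def sonority_score(word, phonemes):
    """Sum of the phonemes' sonority ranks divided by the square root of word length."""
    total = sum(SONORITY_RANK[p] for p in phonemes)
    return total / math.sqrt(len(word))


def consonant_vowel_quotient(word, vowels="aeiou"):
    """Letter-based quotient of consonants to vowels (an assumption, see lead-in)."""
    n_vowels = sum(ch in vowels for ch in word.lower())
    n_consonants = sum(ch.isalpha() and ch not in vowels for ch in word.lower())
    if n_vowels == 0:
        return None  # undefined for words without a vowel letter
    return n_consonants / n_vowels


# Worked example from the text: ART = (10 + 7 + 1) / sqrt(3).
print(round(sonority_score("art", ["a", "r", "t"]), 2))  # 10.39
print(consonant_vowel_quotient("art"))                   # 2.0
```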

The correlations between our seven features are given in Table 2. There were several significant correlations (e.g., wl & on, r = -.81, p < .0001), which is why machine learning tools that can handle intercorrelated predictors are useful in literary text reading studies.

Table 2.

Correlations between Seven QNA Features

| Variables | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1. wl | − | | | | | | |
| 2. logf | -.75 | − | | | | | |
| 3. on | -.81 | .68 | − | | | | |
| 4. hfn | -.31 | .00 | .36 | − | | | |
| 5. odc | .74 | -.48 | -.39 | -.18 | − | | |
| 6. cvq | .19 | -.10 | -.24 | -.05 | .10 | − | |
| 7. sonscore | .72 | -.55 | -.57 | -.28 | .62 | .00 | − |

Eye tracking parameters. Raw data were pre-processed using the EyeLink Data Viewer (https://www.sr-research.com/data-viewer/)1. Rectangular areas of interest (AOI) were defined automatically for each word; their centers were coincident with the center of each word. For the upcoming analysis we first calculated for each word, participant and sonnet the first fixation duration (duration of first fixation on the target word) as a measure of word identification, gaze duration (the sum of all fixations on the target word during first pass), re-reading time (sum of fixations on the target word after first pass), and the total reading time (sum of all fixations on the target word) as a measure of general comprehension difficulty (100). In a next step we aggregated the data over all participants to obtain the mean values for each word within each sonnet. For this aggregation skipped words were treated as missing values (skipping rate: M = .13, SD = .04). The amount of skipping was taken into account by calculating the fixation probability for each word. Words fixated by all participants, like ‘captain’ (sonnet 66), ‘cruel’ (sonnet 60) or ‘quiet’ (sonnet 27) had a probability of 100%. Words fixated by only one or two participants like ‘to’ (sonnet 27), ‘in’ (sonnet 60), or ‘I’ (sonnet 27) had fixation probabilities below 20%. In total, over 40% of the words had a fixation probability of 100% leading to a highly asymmetric distribution. Due to the fact that our psycholinguistic features do not differ for the same word occurring at different positions within a poem all eye tracking measures were aggregated again across sonnets. For all words appearing twice or more often within all three sonnets data were collapsed into a general mean.
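The two aggregation steps described above (first over participants, then over repeated occurrences of a word) can be sketched as follows. The reading times are invented toy values for 15 hypothetical participants, `None` marks a skipped word, and the function names are ours.

```python
from statistics import mean


def aggregate_over_participants(times):
    """Mean reading time over fixating participants plus the fixation probability.

    times: one value per participant in ms; None means the word was skipped
    and is treated as a missing value for the mean.
    """
    fixated = [t for t in times if t is not None]
    fixation_probability = len(fixated) / len(times)
    mean_time = mean(fixated) if fixated else None
    return mean_time, fixation_probability


def collapse_occurrences(occurrences):
    """Collapse several occurrences of the same word into one general mean."""
    times = [t for t, _ in occurrences if t is not None]
    probabilities = [p for _, p in occurrences]
    return (mean(times) if times else None), mean(probabilities)


# Invented toy data: a content word fixated by everyone and a function word
# fixated by only two of the 15 participants.
quiet = [410, 380, 520, 450, 390, 610, 300, 480, 350, 420, 560, 330, 470, 400, 440]
to = [180, None, None, None, 220, None, None, None, None, None,
      None, None, None, None, None]

print(aggregate_over_participants(quiet))  # probability 1.0
print(aggregate_over_participants(to))     # mean 200, probability 2/15
```

A word fixated by two of 15 participants thus has a fixation probability of about 13%, which matches the "below 20%" range mentioned for words such as ‘to’.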

Before running the three different models we calculated the correlations between the five aggregated eye tracking parameters. Because gaze duration had a high correlation with first fixation duration (r = .56, p < .0001) and total reading time (r = .73, p < .0001), and re-reading time (labeled regression time in Table 3) had a high correlation with total reading time (r = .97, p < .0001), we only chose first fixation duration, total reading time and fixation probability as response parameters in the predictive modeling analyses (see Table 3).

Table 3.

Correlations between Five Common Eye-movement Parameters used in Reading Research

| Variables | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 1. First fixation duration | − | | | | |
| 2. Gaze duration | .56 | − | | | |
| 3. Total reading time | .30 | .73 | − | | |
| 4. Fixation probability | .13 | .31 | .48 | − | |
| 5. Regression time | .16 | .53 | .97 | .47 | − |

Predictive modeling. JMP 14 Pro (https://www.jmp.com/en_us/software/predictive-analytics-software.html) was used to run all statistical analyses2. The values of all variables (seven predictors and three eye movement parameters) were standardized before modeling. To counter possible overfitting, for all three models we used a cross-validation procedure using 90% of the data as training set and the remaining 10% as validation set3. Given the intrinsic probabilistic nature of two of the models and the limited sample size (N = 205 words, i.e., about 20 in the validation sets), predictive modeling results varied across repeated runs, depending on which words were selected as training or validation subset. Therefore, the procedure was repeated 1000 times and the model fit scores were averaged (77).
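The validation logic can be illustrated with a minimal sketch: standardize the variables, split the words at random into 90% training and 10% validation data, fit on the training part, compute R2 on both parts, and average over many repetitions. We read the procedure as repeated random hold-out splits, which is an assumption; the sketch uses a one-predictor least squares fit and synthetic data instead of JMP and the real word-level data.

```python
import random
from statistics import mean, pstdev


def standardize(values):
    """z-transform (population SD; the exact variant used in JMP is not stated)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def fit_line(x, y):
    """Least squares slope and intercept for a single predictor."""
    mx, my = mean(x), mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx


def r_squared(observed, predicted):
    """1 - SS_res / SS_tot; negative when the model is worse than the subset mean."""
    m = mean(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - m) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


def repeated_holdout(x, y, n_iter=1000, val_share=0.10, seed=0):
    """Average training and validation R2 over repeated random 90/10 splits."""
    rng = random.Random(seed)
    indices = list(range(len(x)))
    n_val = round(len(x) * val_share)  # 205 words give about 20 validation words
    scores = {"train": [], "val": []}
    for _ in range(n_iter):
        rng.shuffle(indices)
        subsets = {"val": indices[:n_val], "train": indices[n_val:]}
        slope, intercept = fit_line([x[i] for i in subsets["train"]],
                                    [y[i] for i in subsets["train"]])
        for name, subset in subsets.items():
            predicted = [slope * x[i] + intercept for i in subset]
            scores[name].append(r_squared([y[i] for i in subset], predicted))
    return {f"{name}_{stat}": func(values)
            for name, values in scores.items()
            for stat, func in (("mean", mean), ("sd", pstdev))}


# Synthetic stand-in for 205 words: one predictor with a linear effect plus noise.
data_rng = random.Random(1)
x = [data_rng.gauss(0, 1) for _ in range(205)]
y = [0.7 * xi + data_rng.gauss(0, 0.7) for xi in x]
result = repeated_holdout(standardize(x), standardize(y))
print(result)
```

Because each validation set contains only about 20 words, validation R2 fluctuates far more across splits than training R2 and can become negative when predictions are worse than the validation mean.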

When the model fits of non-linear interactive tools (i.e., neural nets, bootstrap forests) were acceptable (R2 > .30; low SD), feature importances (FIs) were calculated. FI is a term used in machine learning (https://scikit-learn.org/stable/modules/feature_selection.html). FIs were computed as the total effect of each predictor assessed by the dependent resampled inputs option of the JMP 14 Pro software. The total effect is an index quantified by sensitivity analysis reflecting the relative contribution of a feature both alone and in combination with other features (for details, see (79)). This measure is interpreted as an ordinal value on a scale of 0 to 1, with FI values > .10 considered ‘important’ (75). To make our results more comparable with previous work, we also tested the effects of ‘important predictors’ (FIs > .10) in simple linear regressions, again using the cross-validation procedure (90%/10% split) repeated 1000 times, although the intercorrelations between the predictors were not eliminated. If the general linear model, i.e., standard least squares regression, achieved an acceptable model fit as described above, we would report the mean parameter estimates across the 1000 iterations instead of FIs and simple regression results.

We repeated the described analytical procedure for all three eye tracking parameters separately.

Results

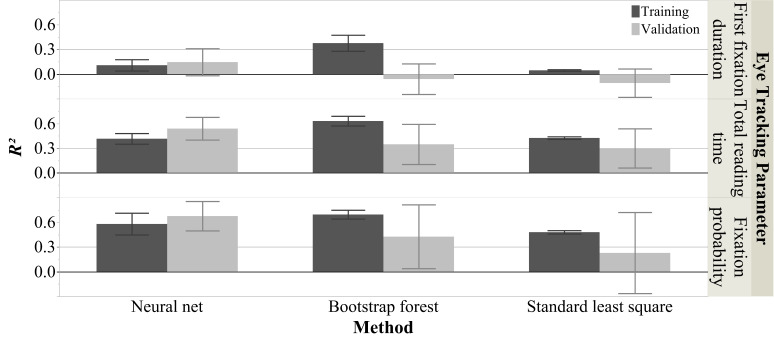

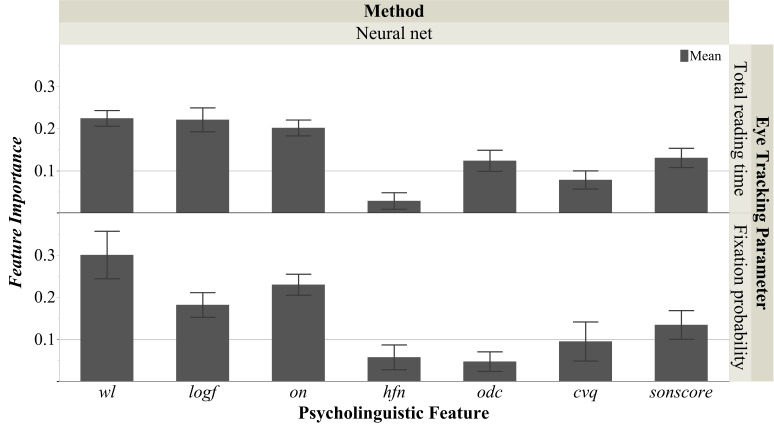

Figure 2 shows the overall mean R2s (averaged across 1000 iterations) for the three eye tracking parameters for both the training and validation sets using all three modeling approaches. Figure 3 shows the seven FIs for the optimal non-linear interactive approach. Below we present our results for each of the three eye tracking parameters in turn. At the end of the results section we also report the effects of ‘important predictors’ (FI > .10) in simple linear regressions.

Figure 2.

Model Fits of Different Measure Groups via Different Modeling Methods. This figure shows the mean R2s from 1000 iterations for three eye tracking parameters for both the training and validation sets using all three modeling approaches. Each error bar is constructed using 1 standard deviation from the mean.

Figure 3.

Feature Importances for Total Reading Time and Fixation Probability. Figure 3 shows the feature importances (FIs) for the neural net model. The FIs were calculated by using the dependent resampled inputs option and mean total effects of 1000 iterations. The total effect is an index quantified by sensitivity analysis, which reflects the relative contribution of that feature both alone and in combination with other features (for details, see (79)). All seven psycholinguistic features were computed for all unique words (word types; 205 words, as words appearing several times in the texts have identical feature values) in the three sonnets, using the Gutenberg Literary English Corpus (GLEC) (99) as reference: wl was the number of letters per word; logf was the log-transformed word frequency; on was the number of words of the same length as the target differing by one letter; hfn was the number of orthographic neighbors with higher word frequency than the target word; odc was the target word’s mean Levenshtein distance from all other words in the corpus; cvq was the quotient of consonants and vowels in one word; sonscore was a simplified index based on the sonority hierarchy of English phonemes which yields 10 ranks (69, 34). Each error bar is constructed using 1 standard deviation from the mean. (Note that, because of the bad model fits (see Figure 2), the FIs in explaining first fixation duration were excluded from this figure.)

Mean First Fixation Duration

Figure 2 shows that while in the training set (train) the bootstrap forests model’s fit was satisfactory (mean R2train = .38, SD = .10), it did not generalize to the validation set (val) at all (mean R2val = -.10, SD = .19). The neural nets model and standard least squares regression also showed poor fits for both the training set (neural nets: mean R2train = .11, SD = .07; standard least squares: mean R2train = .05, SD = .01) and the validation set (neural nets: mean R2val = .15, SD = .16; standard least squares: mean R2val = -.10, SD = .17). Thus, none of the three models seemed appropriate for predicting first fixation durations during poetry reading (at least not in the present text-reader context). Given the poor model fits, FIs were not calculated.

Mean Total Reading Time

As illustrated in Figure 2, all three model fits in the training set were good (neural nets: mean R2train = .42, SD = .07; bootstrap forests: mean R2train = .63, SD = .06; standard least squares: mean R2train = .43, SD = .02). However, only the neural net model performed well for both the training and validation sets (mean R2val = .54, SD = .14), while bootstrap forests’ and standard least squares regression’s fits in the validation set were smaller and had higher standard deviations (bootstrap forests: mean R2val = .35, SD = .25; standard least squares: mean R2val = .30, SD = .24).

The FI analysis of the optimal neural nets model, shown in Figure 3, suggests that two of the seven features were of minor importance (FIs for hfn and cvq were < .10), the rest being important: wl (.23), logf (.22), and on (.20) turned out to be vital predictors, followed by two other less important ones: sonscore (.13) and odc (.12).
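To make the notion of a total-effect importance more concrete, the following Python sketch estimates total effects for a toy function with a commonly used Monte Carlo estimator (often attributed to Jansen). It is only an illustration: it assumes independent, uniformly distributed inputs, whereas the analysis reported here used a dependent resampled inputs option, and the toy model, the function names and the sample size are all hypothetical.

```python
import random
from statistics import pvariance


def total_effects(model, n_inputs, n_samples=20000, seed=0):
    """Monte Carlo estimate of the total-effect index of each input.

    Assumes independent inputs drawn uniformly from [0, 1).
    """
    rng = random.Random(seed)
    a = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    b = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    y_a = [model(row) for row in a]
    var_y = pvariance(y_a)
    effects = []
    for i in range(n_inputs):
        sq_diff = 0.0
        for row_a, row_b, y in zip(a, b, y_a):
            mixed = list(row_a)
            mixed[i] = row_b[i]  # resample input i only, keep the others fixed
            sq_diff += (y - model(mixed)) ** 2
        # Half the mean squared change in output, relative to output variance.
        effects.append(sq_diff / (2 * n_samples * var_y))
    return effects


def toy_model(x):
    """Hypothetical stand-in for a fitted model: x[2] has no influence."""
    return x[0] + 2.0 * x[1] + 0.0 * x[2]


effects = total_effects(toy_model, n_inputs=3)
print([round(e, 2) for e in effects])
```

For this additive toy function the analytic total effects are 0.2, 0.8 and 0, so the estimates should land close to those values. For a fitted neural net, `model` would be replaced by the network's prediction function, and interactions between features would then contribute to each feature's total effect.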

Fixation Probability

Similar to total reading time, for fixation probability Figure 2 also shows that the fits for the training set of all three models were good (neural nets: mean R2train = .58, SD = .13; bootstrap forests: mean R2train = .70, SD = .05; standard least squares: mean R2train = .48, SD = .02). Again, only the neural nets performed well for both the training and validation sets (mean R2val = .68, SD = .18), while the model fits in the validation sets of bootstrap forests and standard least squares regression were insufficient (bootstrap forests: mean R2val = .43, SD = .39; standard least squares: mean R2val = .23, SD = .49).

For the neural nets model, the FIs shown in Figure 3 indicate that only four predictors were of importance: wl (.30) > on (.23) > logf (.18) > sonscore (.14) (FIs for odc, hfn and cvq were < .10).

Simple linear regressions

Simple linear regression results indicate that words with longer wl (total reading time: mean R2train = .37, SD = .02; mean R2val = .29, SD = .27; fixation probability: mean R2train = .33, SD = .01; mean R2val = .14, SD = .75), lower logf (total reading time: mean R2train = .36, SD = .02; mean R2val = .25, SD = .26; fixation probability: mean R2train = .27, SD = .02; mean R2val = .06, SD = .66) and smaller on (total reading time: mean R2train = .26, SD = .01; mean R2val = .18, SD = .23; fixation probability: mean R2train = .33, SD = .02; mean R2val = .09, SD = .73) had longer total reading times and a higher fixation probability. Words with higher odc (total reading time: mean R2train = .17, SD = .02; mean R2val = .07, SD = .26) attracted longer total reading times. The linear relationship between sonscore and the two eye movement parameters was positive (total reading time: mean R2train = .19, SD = .01; mean R2val = .11, SD = .20; fixation probability: mean R2train = .15, SD = .001; mean R2val = .02, SD = .41).
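The pattern of stable training fits and highly variable validation fits can be reproduced with a small simulation. The Python sketch below fits a simple linear regression on repeated random training/validation splits and reports the mean and SD of R2 for both sets. The data are synthetic, and the 10% hold-out proportion, the function names and the simulated effect size are assumptions made for illustration; they do not reproduce the study's data or its exact resampling scheme.

```python
import random
from statistics import mean, stdev


def fit_line(xs, ys):
    """Ordinary least squares for one predictor; returns (slope, intercept)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def r_squared(xs, ys, slope, intercept):
    """1 - SS_res / SS_tot on the given data; can be negative on held-out data."""
    my = mean(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


def repeated_holdout(xs, ys, n_iter=1000, val_fraction=0.1, seed=0):
    """Mean and SD of training and validation R2 over random splits."""
    rng = random.Random(seed)
    idx = list(range(len(xs)))
    n_val = max(2, round(val_fraction * len(xs)))
    train_scores, val_scores = [], []
    for _ in range(n_iter):
        rng.shuffle(idx)
        val, train = idx[:n_val], idx[n_val:]
        x_tr, y_tr = [xs[i] for i in train], [ys[i] for i in train]
        x_va, y_va = [xs[i] for i in val], [ys[i] for i in val]
        slope, intercept = fit_line(x_tr, y_tr)
        train_scores.append(r_squared(x_tr, y_tr, slope, intercept))
        val_scores.append(r_squared(x_va, y_va, slope, intercept))
    return (mean(train_scores), stdev(train_scores)), (mean(val_scores), stdev(val_scores))


# Synthetic example: 205 "words" whose reading time grows with word length.
data_rng = random.Random(1)
word_length = [data_rng.randint(1, 10) for _ in range(205)]
reading_time = [150 + 30 * wl + data_rng.gauss(0, 60) for wl in word_length]

(train_mean, train_sd), (val_mean, val_sd) = repeated_holdout(word_length, reading_time)
print(f"train: mean R2 = {train_mean:.2f}, SD = {train_sd:.2f}")
print(f"val:   mean R2 = {val_mean:.2f}, SD = {val_sd:.2f}")
```

Because each validation set in this sketch contains only about 20 words, its R2 varies far more from split to split than the training R2, which is the same qualitative pattern as the large validation SDs reported above.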

Discussion

Following up on earlier proposals (1), this study aimed to identify psycholinguistic surface features that shape eye movement behavior while reading Shakespeare sonnets by using a combination of QNA and predictive modeling techniques. Since understanding what happens while readers read poetry is a very complex task, a major challenge of Neurocognitive Poetics is to develop appropriate tools facilitating this task (23), in particular new combined computational QNA and machine learning tools (29, 33, 34). A wealth of text features can be quantified via QNA and their likely nonlinear interactive effects can best be analyzed with state-of-the-art predictive modeling techniques which can produce results largely differing from standard general linear model analyses (81, 86). Such techniques can deal with complex interactions difficult to model in a mixed-effects logistic framework (80) and detect hidden structure in complex data sets, e.g., by recursively scanning and (re-)combining variables (92).

Our results are consistent with current theoretical discussions that highlight the strong predictive performance of non-linear interactive models (86, 87): both non-linear interactive models outperformed the general linear model with higher model fits (mean R2) in the training sets. In the validation sets, the general linear model again performed poorly. Of the two non-linear interactive models, bootstrap forests produced higher mean R2 in the training sets but did not generalize well to the validation sets (high SD). The poor performance of the general linear model suggests that there are relatively large low-order (e.g., two-way) interactions or other nonlinearities that the non-linear interactive models implicitly captured but that regression did not (101, 86). The good cross-validated performance of our neural nets together with the FI analysis offers a considerable heuristic potential for generating hypotheses that can be tested in subsequent experimental designs. Thus, our results suggest that five out of seven surface features (word length, word frequency, orthographic neighborhood density, sonority score, and orthographic dissimilarity index) are important predictors of mean total reading time, while four (all previous ones minus orthographic dissimilarity index) are important for fixation probability, at least in the context of classical poetry.

In line with previous studies, the results from simple linear regressions indicate that longer words with lower word frequency and smaller orthographic neighborhood density attract longer total reading times and have a higher fixation probability (50, 51, 52, 53, 55). Words with higher orthographic dissimilarity attract longer total reading times. Moreover, a higher sonority of a word increased both its total reading time and fixation probability, which is a new finding in poetry reading studies.

Our findings confirm those of previous studies in that longer and low-frequency words tend to be fixated more often and longer (50, 51, 52, 53), but also suggest other important predictors, at least for the reading of poetry: words high in orthographic neighborhood density attract fewer fixations and shorter total reading times, supporting the facilitative effect hypothesis of Andrews (102, 103). Additionally, words which were more orthographically dissimilar (i.e., more salient) also attracted longer total reading times. The results concerning the feature higher-frequency neighbors are inconclusive across the three models, which may be due to the fact that in our texts target words had relatively small higher-frequency neighbors values (M = .62, SD = 1.24). The effect of this feature requires further investigation using different texts.

Our results also support the hypothesis that, through a process of more or less unconscious phonological recoding (63, 66), text sonority may play a role in reading poetic texts: a higher sonority of a word increased both its total reading time and fixation probability. Although replications (e.g., in studies with experimental designs) are required before any conclusions can be drawn, we propose that readers tend to have a more intensive phonological recoding during poetry reading (73).

In sum, we take our results as first encouraging evidence that QNA in combination with predictive modeling can be usefully applied to the study of eye tracking behavior in reading complex literary texts. We are also confident that in future studies with bigger samples (i.e., more and longer texts, more readers) and extended feature sets (including interlexical and supralexical ones (23)) better generalization performance will be obtained. Here we focused on a few relatively simple QNA-based lexical surface features, but in future studies we will also use computable semantic and syntactic features at the sentence or paragraph levels, as well as predictors related to aesthetic aspects (19).

Limitations and Outlook

A first obvious limitation of the present analyses is the focus on (sub)lexical surface features. There is little doubt that other sublexical, lexico-semantic, as well as complex interlexical and supralexical features (e.g., syntactic complexity) also affect eye tracking parameters during literary reading and, in fact, the multilevel hypothesis of the NCPM (empirically supported by behavioral, peripheral-physiological and neuronal data) predicts just that (36, 32). However, for this first study with a relatively small sample size, we felt that using these seven features, several of which are novel to the field of eye tracking in reading, already made things complicated enough. We think that the present five ‘important’ features will also play a role in future extended predictive modeling studies including other features, but this is of course an open empirical question. We are currently working on extending the present research to other lexical and inter/supra-lexical features including qualitative ones like metaphoricity (104), but including more features also requires extending sample sizes (i.e., more/longer texts and more participants), a costly enterprise.

Another issue concerns the fact that word repetition or position was not included in the present analyses (i.e., data for words appearing several times in the texts were averaged). In contrast to the immediacy assumption of Just and Carpenter (50), parafoveal preview effects as predicted by current eye movement control models indicate that both spatial and temporal eye tracking parameters are affected by factors other than the features of the fixated word (for a review, see (9, 46)). Moreover, since Just and Carpenter’s study (50), it is known that words at line beginnings or ends have a special status. This should also be true for rhyming words at line ends in sonnets or similar poem forms. While we think that our averaging procedure might have added some noise to our data without invalidating them, future studies should definitely have a closer look at word position and repetition effects in poetry reading.

Another limitation is the relatively small sample size of our study. In all, only 15 participants read only three Shakespeare sonnets with only 205 words. Even though we used predictive modeling with 1000 iterations, our findings require replication and extension. However, our goal in this study was to reach out to bridge the gap between text based qualitative analyses (dominant in the humanities) and empirical research on literature reading. In the future, we need to check the validity of our findings with larger samples and the generalizability to other literary works.

In sum, with all caution due to the limitations of this first exploratory study, the present results offer the perspective that some psycholinguistic features so far unused in (or unknown to) the ‘eye tracking in reading community’, in particular orthographic neighborhood density and sonority score, could be important predictors to be looked at more closely in future research. Whether they are specific to the current selection of three sonnets or of more general interest is a valid open research issue not only for neurocognitive poetics but also for research on eye movements in reading in general.

Ethics and Conflict of Interest

We declare that the contents of the article are in agreement with the ethics described in http://biblio.unibe.ch/portale/elibrary/BOP/jemr/ethics.html and that there is no conflict of interest regarding the publication of this paper.

Acknowledgements

We wish to thank Giordano, D., Gambino, R., Pulvirenti, G., Mangen, A., Papp-Zipernovszky, O., Abramo, F., Schuster, S., and Schmidtke, D. for providing and discussing some ideas in this project. We also thank Tilk, S. for helping to carry out the experiment. The authors would like to acknowledge networking support by COST Action IS1404 E-READ. Xue, S. would like to thank the China Scholarship Council for supporting her PhD study at Freie Universität Berlin.

Footnotes

Firstly, if the fixations on a line drifted away from that line, we corrected them to the right position. Secondly, fixation durations of less than 80 ms were merged with nearby fixations (if the distance between them was less than one degree) or removed from further analysis.
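The second cleaning step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure actually implemented: it assumes that a short fixation is merged into the nearer of its two temporally adjacent long fixations, that the merged fixation keeps the long fixation's position and receives the summed duration, and that positions are already expressed in degrees of visual angle. The `Fixation` class and the function name are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Fixation:
    x: float         # horizontal position in degrees of visual angle
    y: float         # vertical position in degrees of visual angle
    duration: float  # fixation duration in ms


def clean_fixations(fixations, min_duration=80.0, max_distance=1.0):
    """Merge short fixations into a close adjacent fixation, else drop them."""
    result = [Fixation(f.x, f.y, f.duration) for f in fixations]  # work on copies
    keep = [f.duration >= min_duration for f in fixations]
    for i, f in enumerate(fixations):
        if keep[i]:
            continue
        # Candidate targets: the previous and next fixation, if long enough
        # and closer than max_distance (assumption about what "nearby" means).
        candidates = []
        for j in (i - 1, i + 1):
            if 0 <= j < len(fixations) and keep[j]:
                dist = hypot(f.x - fixations[j].x, f.y - fixations[j].y)
                if dist < max_distance:
                    candidates.append((dist, j))
        if candidates:
            _, nearest = min(candidates)
            result[nearest].duration += f.duration
    return [r for r, k in zip(result, keep) if k]


raw = [
    Fixation(1.0, 0.0, 200),
    Fixation(1.4, 0.0, 60),   # short and close to the first fixation: merged
    Fixation(5.0, 0.0, 250),
    Fixation(9.0, 0.0, 50),   # short and far from both neighbors: removed
    Fixation(12.0, 0.0, 180),
]
cleaned = clean_fixations(raw)
print([f.duration for f in cleaned])
```

In this toy sequence the 60 ms fixation is absorbed by the first fixation, while the isolated 50 ms fixation is discarded, leaving three fixations.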

Based on the results of pilot and related work (e.g., (35)), for the neural nets model we used the following parameter set: one hidden layer with 3 nodes, hyperbolic tangent (TanH) activation function; number of boosting models = 10, learning rate = 0.1; number of tours = 10. For the bootstrap forests model, we used the default set: number of trees in the forest = 100, number of terms sampled per split = 1, minimum/maximum splits per tree = 10/2000, minimum size split = 5, except that we defined the max number of terms = 3. For standard least squares regression analysis, we only specified the seven fixed effects (wl, logf, on, hfn, odc, cvq, and sonscore) and predicted each eye tracking parameter using the same seven predictors (emphasis option: effect leverage).

Using a 70/30% training/test cross-validation decreased model fits, probably due to the limited sample size.

References

- Abramo F, Gambino R, Pulvirenti G, Xue S, Sylvester T, Mangen A, et al. A qualitative-quantitative analysis of Shakespeare’s sonnet 60.

- Andrews, S. (1989). Frequency and Neighborhood Effects on Lexical Access: Activation or Search? Journal of Experimental Psychology. Learning, Memory, and Cognition, 15(5), 802–814. 10.1037/0278-7393.15.5.802

- Andrews, S. (1992). Frequency and neighborhood effects on lexical access: Lexical similarity or orthographic redundancy? Journal of Experimental Psychology. Learning, Memory, and Cognition, 18(2), 234–254. 10.1037/0278-7393.18.2.234

- Andrews, S. (1997). The effect of orthographic similarity on lexical retrieval: Resolving neighborhood conflicts. Psychonomic Bulletin & Review, 4(4), 439–461. 10.3758/BF03214334

- Aryani, A., Conrad, M., Schmidtke, D., & Jacobs, A. (2018a). Why ‘piss’ is ruder than ‘pee’? The role of sound in affective meaning making. PLoS One, 13(6), e0198430. 10.1371/journal.pone.0198430

- Aryani, A., Hsu, C. T., & Jacobs, A. M. (2018b). The sound of words evokes affective brain responses. Brain Sciences, 8(6), 94. 10.3390/brainsci8060094

- Aryani, A., Kraxenberger, M., Ullrich, S., Jacobs, A. M., & Conrad, M. (2016). Measuring the basic affective tone of poems via phonological saliency and iconicity. Psychology of Aesthetics, Creativity, and the Arts, 10(2), 191–204.

- Aryani, A., Jacobs, A. M., & Conrad, M. (2013). Extracting salient sublexical units from written texts: “Emophon,” a corpus-based approach to phonological iconicity. Frontiers in Psychology, 4(OCT), 654. 10.3389/fpsyg.2013.00654

- Berent, I. (2013). The phonological mind. Trends in Cognitive Sciences, 17(7), 319–327. 10.1016/j.tics.2013.05.004

- Boston, M. F., Hale, J., Kliegl, R., Patil, U., & Vasishth, S. (2008). Parsing costs as predictors of reading difficulty: An evaluation using the Potsdam Sentence Corpus. Journal of Eye Movement Research, 2(1), 1–12.

- Braun, M., Hutzler, F., Ziegler, J. C., Dambacher, M., & Jacobs, A. M. (2009). Pseudohomophone effects provide evidence of early lexico-phonological processing in visual word recognition. Human Brain Mapping, 30(7), 1977–1989. 10.1002/hbm.20643

- Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32. 10.1023/A:1010933404324

- Carrol, G., & Conklin, K. (2014). Getting your wires crossed: Evidence for fast processing of L1 idioms in an L2. Bilingualism: Language and Cognition, 17(4), 784–797. 10.1017/S1366728913000795

- Cichy, R. M., & Kaiser, D. (2019). Deep Neural Networks as Scientific Models. Trends in Cognitive Sciences, 23(4), 305–317. 10.1016/j.tics.2019.01.009

- Cichy, R. M., Khosla, A., Pantazis, D., & Oliva, A. (2017). Dynamics of scene representations in the human brain revealed by magnetoencephalography and deep neural networks. NeuroImage, 153, 346–358. 10.1016/j.neuroimage.2016.03.063

- Clements, G. N. (1990). The role of the sonority cycle in core syllabification (pp. 283–333). Papers in Laboratory Phonology. 10.1017/CBO9780511627736.017

- Clifton C, Staub A, Rayner K. Eye movements in reading words and sentences. Eye Movements: A Window on Mind and Brain. Amsterdam, Netherlands: Elsevier; 2007. p. 341–71. 10.1016/B978-008044980-7/50017-3

- Coltheart, M., Davelaar, E., Jonasson, T., & Besner, D. (1977). Access to the internal lexicon. Attention and Performance, VI, 535–555.

- Dixon, P., & Bortolussi, M. (2015). Measuring Literary Experience: Comment on Jacobs. Scientific Study of Literature, 5(2), 178–182. 10.1075/ssol.5.2.03dix

- Engbert, R., Nuthmann, A., Richter, E. M., & Kliegl, R. (2005). SWIFT: A dynamical model of saccade generation during reading. Psychological Review, 112(4), 777–813. 10.1037/0033-295X.112.4.777

- Graf, R., Nagler, M., & Jacobs, A. M. (2005). Faktorenanalyse von 57 Variablen der visuellen Worterkennung. Zeitschrift für Psychol. J Psychol., 213(4), 205–218.

- Grainger, J., O’Regan, J. K., Jacobs, A. M., & Segui, J. (1989). On the role of competing word units in visual word recognition: The neighborhood frequency effect. Perception & Psychophysics, 45(3), 189–195. 10.3758/BF03210696

- Grainger, J., & Jacobs, A. M. (1996). Orthographic processing in visual word recognition: A multiple read-out model. Psychological Review, 103(3), 518–565. 10.1037/0033-295X.103.3.518

- Hastie, T., Tibshirani, R., & Friedman, J. (2009). Unsupervised Learning. In The Elements of Statistical Learning (pp. 485–585). New York, NY: Springer. 10.1007/978-0-387-84858-7_14

- Hsu, C. T., Jacobs, A. M., Citron, F. M. M., & Conrad, M. (2015). The emotion potential of words and passages in reading Harry Potter – An fMRI study. Brain and Language, 142, 96–114. 10.1016/j.bandl.2015.01.011

- Hyönä, J., & Hujanen, H. (1997). Effects of Case Marking and Word Order on Sentence Parsing in Finnish: An Eye Fixation Analysis. Q J Exp Psychol Sect A., 50(4), 841–858. 10.1080/713755738

- Inhoff, A. W., & Rayner, K. (1986). Parafoveal word processing during eye fixations in reading: Effects of word frequency. Perception & Psychophysics, 40(6), 431–439. 10.3758/BF03208203

- Jacobs, A. M. (2011). Neurokognitive Poetik: Elemente eines Modells des literarischen Lesens [Neurocognitive poetics: Elements of a model of literary reading]. In Schrott R. & Jacobs A. M. (Eds.), Gehirn und Gedicht: Wie wir unsere Wirklichkeiten konstruieren [Brain and Poetry: How We Construct Our Realities] (pp. 492–520). Munich: Carl Hanser.

- Jacobs, A. M. (2015a). Neurocognitive poetics: Methods and models for investigating the neuronal and cognitive-affective bases of literature reception. Frontiers in Human Neuroscience, 9, 186. 10.3389/fnhum.2015.00186

- Jacobs, A. M. (2015b). Towards a neurocognitive poetics model of literary reading. In Cognitive neuroscience of natural language use (pp. 135–159). New York, NY, US: Cambridge University Press. 10.1017/CBO9781107323667.007

- Jacobs, A. M. (2015c). The scientific study of literary experience: Sampling the state of the art. Scientific Study of Literature, 5(2), 139–170. 10.1075/ssol.5.2.01jac

- Jacobs, A. M. (2017). Quantifying the Beauty of Words: A Neurocognitive Poetics Perspective. Frontiers in Human Neuroscience, 11, 622. 10.3389/fnhum.2017.00622

- Jacobs, A. M. (2018a). The Gutenberg English Poetry Corpus: Exemplary Quantitative Narrative Analyses. Front Digit Humanit., 5, 5. 10.3389/fdigh.2018.00005

- Jacobs, A. M. (2018b). (Neuro-)Cognitive poetics and computational stylistics. Scientific Study of Literature, 8(1), 165–208. 10.1075/ssol.18002.jac

- Jacobs, A. M., & Kinder, A. (2017). “The Brain Is the Prisoner of Thought”: A Machine-Learning Assisted Quantitative Narrative Analysis of Literary Metaphors for Use in Neurocognitive Poetics. Metaphor and Symbol, 32(3), 139–160. 10.1080/10926488.2017.1338015

- Jacobs, A. M., & Kinder, A. (2018). What makes a metaphor literary? Answers from two computational studies. Metaphor and Symbol, 33(2), 85–100. 10.1080/10926488.2018.1434943

- Jacobs, A. M., & Lüdtke, J. (2017). Immersion into narrative and poetic worlds. Narrat Absorpt., 27, 69–96. 10.1075/lal.27.05jac

- Jacobs, A. M., & Willems, R. M. (2018). The fictive brain: Neuro-cognitive correlates of engagement in literature. Review of General Psychology, 22(2), 147–160. 10.1037/gpr0000106

- Jacobs, A., Hofmann, M. J., & Kinder, A. (2016a). On elementary affective decisions: To like or not to like, that is the question. Frontiers in Psychology, 7, 1836. 10.3389/fpsyg.2016.01836

- Jacobs, A. M., Lüdtke, J., Aryani, A., Meyer-Sickendieck, B., & Conrad, M. (2016b). Mood-empathic and aesthetic responses in poetry reception: A model-guided, multilevel, multimethod approach. Scientific Study of Literature, 6(1), 87–130. 10.1075/ssol.6.1.06jac

- Jacobs, A. M., Rey, A., Ziegler, J. C., & Grainger, J. (1998). MROM-p: An interactive activation, multiple readout model of orthographic and phonological processes in visual word recognition. In Localist connectionist approaches to human cognition (pp. 147–188). Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers.

- Jacobs, A. M., Schuster, S., Xue, S., & Lüdtke, J. (2017). What’s in the brain that ink may character….: A quantitative narrative analysis of Shakespeare’s 154 sonnets for use in neurocognitive poetics. Scientific Study of Literature, 7(1), 4–51. 10.1075/ssol.7.1.02jac

- Jacobs, A. M., Võ, M. L. H., Briesemeister, B. B., Conrad, M., Hofmann, M. J., Kuchinke, L., et al. Braun, M. (2015). 10 years of BAWLing into affective and aesthetic processes in reading: What are the echoes? Frontiers in Psychology, 6, 714. 10.3389/fpsyg.2015.00714

- Jakobson, R., & Jones, L. G. (1970). Shakespeare’s verbal art in Th’expence of spirit (p. 32). Walter de Gruyter. 10.1515/9783110889673

- Just, M. A., & Carpenter, P. A. (1980). A theory of reading: From eye fixations to comprehension. Psychological Review, 87(4), 329–354. 10.1037/0033-295X.87.4.329

- Kidd DC, Castano E. Reading Literary Fiction Improves Theory of Mind. Science. 2013;342(6156):377–380.

- Kliegl, R., Olson, R. K., & Davidson, B. J. (1982). Regression analyses as a tool for studying reading processes: Comment on Just and Carpenter’s eye fixation theory. Memory & Cognition, 10(3), 287–296. 10.3758/BF03197640

- Klitz, T. S., Legge, G. E., & Tjan, B. S. (2000). Saccade Planning in Reading With Central Scotomas: Comparison of Human and Ideal Performance. Reading as a Perceptual Process (pp. 667–682). Amsterdam, Netherlands: North-Holland/Elsevier Science Publishers.

- Koopman, E. M. (2016). Effects of “literariness” on emotions and on empathy and reflection after reading. Psychology of Aesthetics, Creativity, and the Arts, 10(1), 82–98. 10.1037/aca0000041

- Kraxenberger, M. (2017). On Sound-Emotion Associations in Poetry. Freie Universität Berlin.

- Kuperman, V., Dambacher, M., Nuthmann, A., & Kliegl, R. (2010). The effect of word position on eye-movements in sentence and paragraph reading. Quarterly Journal of Experimental Psychology, 63(9), 1838–1857. 10.1080/17470211003602412

- Kuperman, V., Drieghe, D., Keuleers, E., & Brysbaert, M. (2013). How strongly do word reading times and lexical decision times correlate? Combining data from eye movement corpora and megastudies. Quarterly Journal of Experimental Psychology, 66(3), 563–580. 10.1080/17470218.2012.658820

- Ladefoged P. A Course in Phonetics. Fort Worth: Harcourt Brace Jovanovich College Publishers; 1993.

- Lappi, O. (2015). Eye Tracking in the Wild: The Good, the Bad and the Ugly. Journal of Eye Movement Research, 8(5).

- Lausberg, H. (1960). Handbuch der literarischen Rhetorik: Eine Grundlegung der Literaturwissenschaft. Hueber.

- Lauwereyns, J., & d’Ydewalle, G. (1996). Knowledge acquisition in poetry criticism: The expert’s eye movements as an information tool. International Journal of Human-Computer Studies, 45(1), 1–18. 10.1006/ijhc.1996.0039

- LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436–444. 10.1038/nature14539

- Lee, H.-W., Rayner, K., & Pollatsek, A. (2001). The relative contribution of consonants and vowels to word identification during reading. Journal of Memory and Language, 44(2), 189–205. 10.1006/jmla.2000.2725

- Leech, G. N. (1969). A linguistic guide to English poetry. London, United Kingdom: Longman.

- Legge, G. E., Klitz, T. S., & Tjan, B. S. (1997). Mr. Chips: An ideal-observer model of reading. Psychological Review, 104(3), 524–553. 10.1037/0033-295X.104.3.524

- Lou, Y., Liu, Y., Kaakinen, J. K., & Li, X. (2017). Using support vector machines to identify literacy skills: Evidence from eye movements. Behavior Research Methods, 49(3), 887–895. 10.3758/s13428-016-0748-7

- Maïonchi-Pino, N., de Cara, B., Écalle, J., & Magnan, A. (2012). Are French dyslexic children sensitive to consonant sonority in segmentation strategies? Preliminary evidence from a letter detection task. Research in Developmental Disabilities, 33(1), 12–23. 10.1016/j.ridd.2011.07.045

- Maïonchi-Pino N, de Cara B, Magnan A, Ecalle J. Roles of consonant status and sonority in printed syllable processing: Evidence from illusory conjunction and audio-visual recognition tasks in French adults. Curr Psychol Lett Behav Brain Cogn. 2008;24(2).

- Manel, S., Dias, J. M., Buckton, S. T., & Ormerod, S. J. (1999). Alternative methods for predicting species distribution: An illustration with Himalayan river birds. Journal of Applied Ecology, 36(5), 734–747. 10.1046/j.1365-2664.1999.00440.x

- Marr, M. J. (2018). Overtaking the Creases: Introduction to Francis Mechner’s “A Behavioral and Biological Analysis of Aesthetics.”. The Psychological Record, 68(3), 285–286. 10.1007/s40732-018-0307-y

- Matsuki, K., Kuperman, V., & Van Dyke, J. A. (2016). The Random Forests statistical technique: An examination of its value for the study of reading. Scientific Studies of Reading, 20(1), 20–33. 10.1080/10888438.2015.1107073

- Mueller, H., Geyer, T., Günther, F., Kacian, J., & Pierides, S. (2017). Reading English-Language Haiku: Processes of Meaning Construction Revealed by Eye Movements. Journal of Eye Movement Research, 10(1).

- Nefeslioglu, H. A., Gokceoglu, C., & Sonmez, H. (2008). An assessment on the use of logistic regression and artificial neural networks with different sampling strategies for the preparation of landslide susceptibility maps. Engineering Geology, 97(3–4), 171–191. 10.1016/j.enggeo.2008.01.004

- Nicklas, P., & Jacobs, A. M. (2017). Rhetoric, Neurocognitive Poetics, and the Aesthetics of Adaptation. Poetics Today, 38(2), 393–412. 10.1215/03335372-3869311

- Perea, M., & Pollatsek, A. (1998). The effects of neighborhood frequency in reading and lexical decision. Journal of Experimental Psychology. Human Perception and Performance, 24(3), 767–779. 10.1037/0096-1523.24.3.767

- Pynte, J., New, B., & Kennedy, A. (2008). A multiple regression analysis of syntactic and semantic influences in reading normal text. Journal of Eye Movement Research, 2(1).

- Radach, R., & Kennedy, A. (2013). Eye movements in reading: Some theoretical context. Quarterly Journal of Experimental Psychology, 66(3), 429–452. 10.1080/17470218.2012.750676

- Radach, R., Huestegge, L., & Reilly, R. (2008). The role of global top-down factors in local eye-movement control in reading. Psychological Research, 72(6), 675–688. 10.1007/s00426-008-0173-3

- Raney GE, Rayner K. Word frequency effects and eye movements during two readings of a text. Can J Exp Psychol Can Psychol expérimentale. 1995;49(2):151–73. 10.1037/1196-1961.49.2.151

- Rayner, K. (1998). Eye movements in reading and information processing: 20 years of research. Psychological Bulletin, 124(3), 372–422. 10.1037/0033-2909.124.3.372

- Rayner, K. (2009). The 35th Sir Frederick Bartlett Lecture: Eye movements and attention in reading, scene perception, and visual search. Quarterly Journal of Experimental Psychology, 62(8), 1457–1506. 10.1080/17470210902816461

- Rayner, K., Chace, K. H., Slattery, T. J., & Ashby, J. (2006). Eye Movements as Reflections of Comprehension Processes in Reading. Scientific Studies of Reading, 10(3), 241–255. 10.1207/s1532799xssr1003_3

- Rayner, K., & Pollatsek, A. (2006). Eye-Movement Control in Reading. Handbook of Psycholinguistics (2nd ed., pp. 613–657). Academic Press.

- Rayner, K., Binder, K. S., Ashby, J., & Pollatsek, A. (2001). Eye movement control in reading: Word predictability has little influence on initial landing positions in words. Vision Research, 41(7), 943–954. 10.1016/S0042-6989(00)00310-2

- Reichle, E. D., Rayner, K., & Pollatsek, A. (2003). The E-Z reader model of eye-movement control in reading: Comparisons to other models. Behavioral and Brain Sciences, 26(4), 445–476. 10.1017/S0140525X03000104

- Reilly, R. G., & Radach, R. (2006). Some empirical tests of an interactive activation model of eye movement control in reading. Cognitive Systems Research, 7(1), 34–55. 10.1016/j.cogsys.2005.07.006

- Saltelli, A. (2002). Sensitivity analysis for importance assessment. Risk Analysis, 22(3), 579–590. 10.1111/0272-4332.00040

- Samur, D., Tops, M., & Koole, S. L. (2018). Does a single session of reading literary fiction prime enhanced mentalising performance? Four replication experiments of Kidd and Castano (2013). Cognition and Emotion, 32(1), 130–144. 10.1080/02699931.2017.1279591

- Schmidtke, D. S., Conrad, M., & Jacobs, A. M. (2014). Phonological iconicity. Frontiers in Psychology, 5, 80. 10.3389/fpsyg.2014.00080

- Schrott R, Jacobs AM. Gehirn und Gedicht: Wie wir unsere Wirklichkeiten konstruieren (Brain and Poetry: How We Construct Our Realities). München, Germany: Hanser; 2011.

- Simonton, D. K. (1989). Shakespeare’s Sonnets: A Case of and for Single-Case Historiometry. Journal of Personality, 57(3), 695–721. 10.1111/j.1467-6494.1989.tb00568.x

- Stenneken, P., Bastiaanse, R., Huber, W., & Jacobs, A. M. (2005). Syllable structure and sonority in language inventory and aphasic neologisms. Brain and Language, 95(2), 280–292. 10.1016/j.bandl.2005.01.013

- Steyer, R., Schwenkmezger, P., Notz, P., & Eid, M. (1997). Der Mehrdimensionale Befindlichkeitsfragebogen (MDBF). Göttingen: Hogrefe.

- Strobl, C., Malley, J., & Tutz, G. (2009). An introduction to recursive partitioning: Rationale, application, and characteristics of classification and regression trees, bagging, and random forests. Psychological Methods, 14(4), 323–348. 10.1037/a0016973

- Sun, F., Morita, M., & Stark, L. W. (1985). Comparative patterns of reading eye movement in Chinese and English. Perception & Psychophysics, 37(6), 502–506. 10.3758/BF03204913

- Tagliamonte, S. A., & Baayen, R. H. (2012). Models, forests, and trees of York English: Was/were variation as a case study for statistical practice. Language Variation and Change, 24(2), 135–178. 10.1017/S0954394512000129

- Tu, J. V. (1996). Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. Journal of Clinical Epidemiology, 49(11), 1225–1231. 10.1016/S0895-4356(96)00002-9

- Ullrich, S., Aryani, A., Kraxenberger, M., Jacobs, A. M., & Conrad, M. (2017). On the Relation between the General Affective Meaning and the Basic Sublexical, Lexical, and Inter-lexical Features of Poetic Texts: A Case Study Using 57 Poems of H. M. Enzensberger. Frontiers in Psychology, 7, 2073. 10.3389/fpsyg.2016.02073

- Van den Hoven, E., Hartung, F., Burke, M., & Willems, R. M. (2016). Individual Differences in Sensitivity to Style During Literary Reading: Insights from Eye-Tracking. Collabra, 2(1), 25. 10.1525/collabra.39

- van Halteren, H., Baayen, H., Tweedie, F., Haverkort, M., & Neijt, A. (2005). New Machine Learning Methods Demonstrate the Existence of a Human Stylome. Journal of Quantitative Linguistics, 12(1), 65–77. 10.1080/09296170500055350

- Vendler, H. (1997). The art of Shakespeare’s sonnets. Belknap Press of Harvard University Press.

- Wallot, S., Hollis, G., & van Rooij, M. (2013). Connected Text Reading and Differences in Text Reading Fluency in Adult Readers (K. Paterson, Ed.). PLoS One, 8(8), e71914. 10.1371/journal.pone.0071914

- Were, K., Bui, D. T., Dick, Ø. B., & Singh, B. R. (2015). A comparative assessment of support vector regression, artificial neural networks, and random forests for predicting and mapping soil organic carbon stocks across an Afromontane landscape. Ecological Indicators, 52, 394–403. 10.1016/j.ecolind.2014.12.028

- Willems, R. M. (2015). Cognitive Neuroscience of Natural Language Use. Cambridge: Cambridge University Press. 10.1017/CBO9781107323667

- Willems, R. M., & Jacobs, A. M. (2016). Caring About Dostoyevsky: The Untapped Potential of Studying Literature. Trends in Cognitive Sciences, 20(4), 243–245. 10.1016/j.tics.2015.12.009

- Williams, C. C., Perea, M., Pollatsek, A., & Rayner, K. (2006). Previewing the neighborhood: The role of orthographic neighbors as parafoveal previews in reading. Journal of Experimental Psychology: Human Perception and Performance, 32(4), 1072–1082. 10.1037/0096-1523.32.4.1072

- Xue, S., Giordano, D., Lüdtke, J., Gambino, R., Pulvirenti, G., Spampinato, C., et al. (2017). Weary with toil, I haste me to my bed: Eye tracking Shakespeare sonnets. Wuppertal, Germany.

- Yarkoni, T., & Westfall, J. (2017). Choosing Prediction Over Explanation in Psychology: Lessons From Machine Learning. Perspectives on Psychological Science, 12(6), 1100–1122. 10.1177/1745691617693393

- Ziegler, J. C., & Jacobs, A. M. (1995). Phonological Information Provides Early Sources of Constraint in the Processing of Letter Strings. Journal of Memory and Language, 34(5), 567–593. 10.1006/jmla.1995.1026