Abstract

The use of air sensor technology is increasing worldwide for a variety of applications; however, data quality varies significantly. The United States Environmental Protection Agency held a workshop in July 2019 to deliberate possible performance targets for air sensors measuring particles with aerodynamic diameters of 10 μm or less (PM10), nitrogen dioxide (NO2), carbon monoxide (CO), and sulfur dioxide (SO2). These performance targets were discussed from the perspective of non-regulatory applications, with the sensors operating primarily in a stationary mode in outdoor environments. Attendees included representatives from multiple levels of government, sensor developers, environmental nonprofits, international organizations, and academia. The workshop addressed the current lack of sensor technology requirements, discussed fit-for-purpose data quality needs, and debated transparency issues. This paper highlights the purpose and key outcomes of the workshop. While more information on the performance and applications of sensors is available than in past years, the performance metrics, or parameters used to describe data quality, vary among study reports, and clearer, more consistent approaches for evaluating sensor performance are needed. Organizations worldwide are increasingly considering, or are in the process of developing, sensor performance targets and testing protocols. Workshop participants suggested that these new guidelines are highly desirable, would help improve data quality, and would give users more confidence in their data. Given the wide variety of sensor uses and user backgrounds, as well as varied sensor design features (e.g., communication approaches, data tools, processing/adjustment algorithms, and calibration procedures), the need for transparency was a key workshop theme.
Suggestions for increasing transparency included documenting and sharing testing and performance data, detailing best practices, and sharing data processing and correction approaches.

Keywords: Air sensors, Performance targets, PM10, NO2, CO, SO2

1. Introduction

A growing class of miniaturized, direct-reading air monitoring devices is being used for different purposes by a wide variety of individuals and groups (Williams et al., 2019; Karagulian et al., 2019; Lewis et al., 2018; Kumar et al., 2015). Varying descriptors are used for these devices, which sometimes leads to confusion. Examples include “low cost air sensors”, “air sensor devices”, and “air quality sensors”. Sometimes the internal sensing component is referred to as a “sensor”, while the integrated product, including an enclosure, microprocessor, and other components, may also be referred to as a “sensor” (Morawska et al., 2018). The term “low cost” is also debated, as different end users may disagree on what price point would be considered “low” and whether data management costs should be included in the accounting (Morawska et al., 2018; Rai et al., 2017). In this paper, we use the term “air sensor” or “sensor” to describe the integrated products that are driving a worldwide expansion in the number of measurement locations and a significant increase in air quality data collection.

Current applications of air sensors range from basic environmental education and awareness (e.g., informational data collection, classroom or community educational activities) to more complex decision-making (e.g., where to locate a regulatory monitor, when to go outdoors for exercise) (Williams et al., 2014, 2019; Morawska et al., 2018; Lewis et al., 2018; Rai et al., 2017). Data quality needs differ by end use. It is widely recognized that the data quality of air sensor measurements varies or is simply not well characterized (Clements et al., 2019; Lewis et al., 2018; Woodall et al., 2017). Sensor technology is developing rapidly, and questions about sensor performance for various applications need to be addressed. To facilitate discussion on these topics, the United States Environmental Protection Agency (US EPA) held the first “Deliberating Performance Targets for Air Quality Sensors Workshop” in June 2018 in Research Triangle Park, North Carolina, USA to discuss air sensor performance targets for non-regulatory monitoring applications. This workshop focused on fine particulate matter (PM2.5) and ozone (O3) sensors because more technical information and better technological approaches existed for those pollutants at that time. The workshop sought perspectives from a variety of stakeholders on issues related to sensor performance targets and test protocols. Major findings from the workshop, summarized in Williams et al. (2019), were that sensor performance metrics reported in the literature are highly variable and that the lack of performance targets leads to uncertainty in data quality and reduces confidence in using the data for any given purpose. Similar conclusions have been reached in recent literature.
For example, a review of the performance of air sensors pointed out the difficulty in summarizing performance evaluations and the potential for misrepresenting findings due to the lack of uniform data quality reporting metrics (Karagulian et al., 2019). Many organizations, including the European Union (EU) (Gerboles, 2019), ASTM International (formerly known as the American Society for Testing and Materials), and the US EPA (Williams et al., 2019), are considering sensor performance targets; however, there is still no standardized approach for assessing air sensor performance.

Since the June 2018 workshop, the use of air sensors has grown significantly (Kilaru et al., 2020). At the current pace, air sensor use will likely continue to expand into many different disciplines, making clear and consistent performance information even more important. To build on the 2018 workshop, expand the discussion to other pollutants, and continue discussions on air sensor performance targets, the “EPA’s Second Workshop on Deliberating Performance Targets for Air Quality Sensors” was held on July 16, 2019 in Durham, North Carolina, USA (www.epa.gov/air-research/deliberating-performance-targets-air-quality-sensors-workshops). The purpose of the second workshop was to solicit individual stakeholder views on non-regulatory performance targets for air sensors, with a focus on sensors measuring particulate matter (PM) with aerodynamic diameters of 10 μm or less (PM10), nitrogen dioxide (NO2), carbon monoxide (CO), and sulfur dioxide (SO2). The US EPA framed the workshop by communicating its intention to publish recommended performance targets, and the workshop also included perspectives from other organizations actively developing or contemplating air sensor performance standards. Therefore, both “targets” and “standards” were discussed: the former is a recommended and testable performance metric that may guide the improvement of technology; the latter refers to a nominally achievable air sensor performance metric that is often connected with a certification program. Through on-site and webinar discussions, participants discussed a range of technical issues related to establishing performance targets for air sensor technologies. Topics included:

The state of technology with respect to air sensor performance metrics reported in the literature,

Important performance metrics and reasons to establish performance targets,

Merits of the adoption of a single set of performance targets for all non-regulatory purposes versus a tiered approach for different applications, and

Lessons learned from other countries about choices or trade-offs made or debated in establishing targets for measurement technologies.

2. Approach

The “EPA’s Second Workshop on Deliberating Performance Targets for Air Quality Sensors” was modeled after the first workshop in 2018. There was a one-day public workshop (held on July 16, 2019) followed by a one-day closed meeting (held on July 17, 2019) attended by invited Subject Matter Experts (SMEs) and US EPA staff. Details on the meeting formats are described in the next sections.

2.1. Public workshop

The public workshop was attended both in person (107 participants total, including 40 federal and 67 non-federal) and via webinar (267 participants). The US EPA provided a summary of the outcomes from the 2018 workshop and results from a supplemental literature search on the state of the science of PM10, NO2, SO2, and CO sensors. Short presentations were given by the SMEs on important performance considerations for air sensors and/or what they deemed the key parameters for evaluating sensor performance. The following topics were covered:

Local agency perspectives on sensors – How “good” does sensor data need to be to help address air quality concerns?

Sensors in scientific research – What are the most important data quality objectives for scientific research?

Voluntary consensus standards – How might the National Institute of Standards and Technology (NIST) use performance standards to inform future sensor standards?

European Union (EU) perspective on sensor standards – What factors were used to develop EU standards?

Following each presentation, attendees were able to ask clarifying questions. Questions that were not addressed were posted on a ‘parking lot’ board and then introduced for further discussion later in the meeting. In addition, a session was included in which air sensor manufacturer representatives shared their perspectives on how market drivers could improve air sensor performance. Manufacturers from the registration list were contacted before the workshop about the opportunity to share their insights; those who participated were asked to speak about what they saw as the most critical data quality parameters for evaluating air sensors and what guidance from the US EPA would help them achieve air sensor performance targets. The final workshop session was an open discussion of data quality perspectives on air sensors. Presentations from the public workshop are available on the US EPA Air Sensor Toolbox website (https://www.epa.gov/air-sensor-toolbox).

2.2. Closed meeting

The purpose of the closed meeting was to further discuss workshop take-aways, performance targets, and testing protocols to inform the development of this paper. The SMEs, shown in Table S1, represented a variety of organizations to provide a balanced perspective on the discussion topics. SMEs shared their experience and knowledge of air sensors, championed discussion points and ‘parking lot’ questions from the public workshop, and debated sensor performance metrics and their target values. The meeting included the following:

Report out and discussion on the main take-aways from the public meeting,

Small group discussions and report out on performance metrics, targets, and testing considerations for each pollutant,

Discussion on similarities and differences in performance parameters and/or targets for different pollutants or applications,

Open discussion on all meeting topics.

Toward the end of the closed meeting, the group decided to hold a follow-up conference call to further discuss online and offline modes of operation of air sensors, as well as of reference monitors, during testing. This was conducted as two similar calls facilitated by the US EPA, with SMEs joining based on their availability.

3. Results

3.1. Summary of peer-reviewed literature discussing performance of PM10, NO2, CO, and SO2 sensors

As an update to the literature review in support of US EPA’s June 2018 workshop (Williams et al., 2018), the US EPA reported on a supplemental literature search to inform the development of air sensor performance targets and testing protocols for non-regulatory supplemental and informational monitoring applications (Kilaru et al., 2020). Database searches were conducted using EBSCO Environment Complete, Web of Science, Science Citation Index, ProQuest – Science, and Google Scholar, covering the period from Jan 1, 2017 to Mar 31, 2019. The initial search found 289 resources, which were screened to exclude resources previously identified in the 2018 report and those related to sensor technology development, mobile and indoor sensor applications, and remote sensing applications. A total of 42 new resources remained and were grouped into one or more of the following categories for additional analysis: 1) performance assessments, evaluations, or specifications, 2) testing methodologies and protocols, 3) initial and on-going calibration, 4) analyzing and interpreting sensor data, and 5) best practices. Resources from the first and third categories were combined, as these were considered the most useful for informing sensor performance targets and test protocols. Table 1 summarizes findings from sensor performance evaluations by pollutant and reflects an aggregate of sensor models/manufacturers. The data represent hourly averaged sensor measurements and reflect out-of-the-box performance (i.e., sensor data not adjusted by further field and/or laboratory calibration beyond that already conducted by the manufacturer). Air sensor performance was determined by comparing sensor measurements to measurements from reference instrument(s).
The reference instruments were typically those used for regulatory monitoring purposes [e.g., Ambient Air Quality EU Directive (2008/50/EC); US EPA Federal Reference Method (FRM) and Federal Equivalent Method (FEM)]. However, one study used a non-regulatory instrument (Cavaliere et al., 2018). Collocation approaches varied and included either locating a sensor at an existing regulatory monitoring site containing reference instruments, using data from a nearby/closest regulatory monitoring station, or temporarily installing reference instruments at the testing location (Table S2). Across the studies, field deployment timeframes ranged from 2 weeks to 1 year and covered many different seasons and climates.

Table 1.

Summary of field performance evaluations for air sensors based on 1-h averaged data. R2 = coefficient of determination (from linear regression), r = Pearson correlation coefficient, and NA = not available.

| Pollutant (units) | Study | No. of air sensors | Slope | Intercept | R2 | r |
|---|---|---|---|---|---|---|
| PM10 (μg/m3) | Borrego et al. (2018)^a | 5 total (2 sets identical, 1 different) | NA | NA | 0.02–0.61 | 0.14–0.78 |
| | Cavaliere et al. (2018)^b,c | 1 total | NA | NA | 0.84 | NA |
| | Feinberg et al. (2018)^d | 3 total (all identical) | 0.12–0.54 | −1.06 – 2.98 | NA | 0.20–0.68 |
| | Mukherjee et al. (2017)^e | 3 total (all identical) | 0.12–0.20 | 2.83–5.36 | 0.81–0.84 | NA |
| | Penza et al. (2017)^f | 3 total (all identical) | NA | NA | NA | NA |
| NO2 (ppbv) | Borrego et al. (2018)^a | 6 total (all different) | NA | NA | 0.09–0.86 | 0.10–0.93 |
| | Feinberg et al. (2018)^d | 3 total (all identical) | 0.65–0.67 | −15 – 10 | NA | 0.84–0.87 |
| | Masey et al. (2018)^g | 2 total (all identical) | NA | NA | 0.33–0.92 | NA |
| | Munir et al. (2019)^h | 10 total (all identical) | NA | NA | NA | 0.60–0.99 |
| | Schneider et al. (2017)^i | 24 total (all identical) | NA | NA | NA | 0.21–0.72 |
| CO (ppmv) | Borrego et al. (2018)^a | 4 total (all different) | NA | NA | 0.03–0.78 | 0.18–0.88 |
| | Munir et al. (2019)^h | 10 total (all identical) | NA | NA | NA | 0.35–0.98 |
| SO2 (ppbv) | Borrego et al. (2018)^a | 2 total (all different) | NA | NA | 0.01–0.63 | 0.11–0.80 |

^a Study conducted in Aveiro, Portugal from Oct 14, 2014 to Oct 27, 2014 (~2 weeks).

^b Study conducted in Florence, Italy from Nov 1, 2016 to Apr 15, 2017 (~5.5 months).

^c Results based on 24-h averaged data.

^d Study conducted in Denver, Colorado, USA from Sep 2015 to Mar 2016 (~6 months).

^e Study conducted in Cuyama Valley, California, USA from Apr 14, 2016 to Jul 6, 2016 (~3 months).

^f Study conducted in Bari, Italy from Jan 2016 to Dec 2016 (~12 months); the study reported only the mean absolute error (MAE), shown in Table S2.

^g Study conducted in Glasgow, United Kingdom from Nov 2015 to May 2016 (~6 months; intermittent deployment).

^h Study conducted in Sheffield, United Kingdom from Oct 2016 to Sep 2017 (~12 months).

^i Study conducted in Oslo, Norway from Apr 13, 2015 to Jun 24, 2015 (~2.5 months).

The performance evaluations showed a range of results and reported a variety of parameters describing sensor performance (additional results are shown in Table S2). Only one study identified in the new literature search conducted both a field and a laboratory evaluation (Cavaliere et al., 2018). The laboratory evaluation included one PM10 sensor and reported an R2 of 0.67 and a root mean square error (RMSE) of 4.5 μg/m3 (based on 2-min averaged data).
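Because the studies in Table 1 report different subsets of metrics, computing all of them from the same collocated data set would make comparisons more consistent. The sketch below assumes paired, time-matched hourly sensor and reference values with missing hours already removed. It returns the slope and intercept of an ordinary least-squares regression of sensor on reference, R2, Pearson r, and the RMSE and MAE of the raw sensor-minus-reference differences. The function name `performance_metrics` and the choice to regress sensor on reference are illustrative assumptions, not a protocol endorsed by the workshop.

```python
import math
from statistics import fmean


def performance_metrics(sensor, reference):
    """Hypothetical helper: compare paired, time-matched sensor and reference values."""
    if len(sensor) != len(reference) or len(sensor) < 3:
        raise ValueError("need at least 3 paired observations")
    xm, ym = fmean(reference), fmean(sensor)
    sxx = sum((x - xm) ** 2 for x in reference)
    syy = sum((y - ym) ** 2 for y in sensor)
    sxy = sum((x - xm) * (y - ym) for x, y in zip(reference, sensor))
    slope = sxy / sxx                   # OLS slope, sensor regressed on reference
    intercept = ym - slope * xm
    r = sxy / math.sqrt(sxx * syy)      # Pearson correlation coefficient
    # Errors are raw sensor-minus-reference differences, not regression residuals.
    errors = [y - x for x, y in zip(reference, sensor)]
    rmse = math.sqrt(fmean(e ** 2 for e in errors))
    mae = fmean(abs(e) for e in errors)
    return {"slope": slope, "intercept": intercept, "r": r,
            "R2": r ** 2,               # equals r squared for simple linear regression
            "RMSE": rmse, "MAE": mae}
```

Reporting every metric from one routine avoids the situation in Table 1 where one study gives only R2, another only r, and another only MAE.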

The sensor evaluations used calibration procedures both at initial deployment and during field deployment. Most of the resources focused on initial calibrations, which typically included temperature (T) and relative humidity (RH) adjustments. Various techniques were used to correct sensor field data, including but not limited to simple linear regression (Borrego et al., 2018; Cavaliere et al., 2018; Feinberg et al., 2018; Munir et al., 2019; Masey et al., 2018), multiple linear regression (Munir et al., 2019), and machine learning (Borrego et al., 2018). Because calibration approaches vary, it is important to document in detail the modeling approach used to fit sensor measurements to reference data and the calibration conditions (such as concentration, T, and RH ranges), so that users can judge how well the calibration generalizes to other cases. Further, greater consistency in performance metrics would make results easier to compare across studies.
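As an illustration of the multiple linear regression approach, the sketch below fits reference = b0 + b1·sensor + b2·T + b3·RH over a collocation period and applies the fitted coefficients to new data. The model form and the function names `fit_mlr_correction` and `apply_mlr_correction` are assumptions for illustration; the cited studies used a variety of models. In line with the emphasis on documenting calibration conditions, the fit also returns the concentration, T, and RH ranges it was trained on.

```python
import numpy as np


def fit_mlr_correction(sensor, temp, rh, reference):
    """Hypothetical helper: fit reference ~ b0 + b1*sensor + b2*T + b3*RH by OLS."""
    sensor, temp, rh, reference = (np.asarray(a, dtype=float)
                                   for a in (sensor, temp, rh, reference))
    # Design matrix: intercept column, raw sensor signal, temperature, humidity.
    X = np.column_stack([np.ones_like(sensor), sensor, temp, rh])
    coefs, *_ = np.linalg.lstsq(X, reference, rcond=None)
    # Record the calibration conditions so users can judge generalizability.
    conditions = {
        "sensor_range": (sensor.min(), sensor.max()),
        "T_range": (temp.min(), temp.max()),
        "RH_range": (rh.min(), rh.max()),
    }
    return coefs, conditions


def apply_mlr_correction(coefs, sensor, temp, rh):
    """Apply previously fitted coefficients to new raw sensor data."""
    sensor, temp, rh = (np.asarray(a, dtype=float) for a in (sensor, temp, rh))
    return coefs[0] + coefs[1] * sensor + coefs[2] * temp + coefs[3] * rh
```

Applying the correction outside the recorded T, RH, or concentration ranges is an extrapolation, which is one reason the calibration conditions are worth publishing alongside the coefficients.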

3.2. Voluntary consensus standards and organizations considering performance standards for air sensors

A discussion on voluntary consensus standards was led by Gordon Gillerman (NIST) (Gillerman, 2019). Gillerman pointed out that voluntary standards can provide common methods that make results comparable, which is important for purchasing and application decisions. As air sensor technologies evolve, voluntary standards can keep pace with technology improvements more efficiently. Gillerman noted that the use of voluntary consensus standards is supported by federal regulatory policy, namely the National Technology Transfer and Advancement Act (NTTAA; www.nist.gov/standardsgov/national-technology-transfer-and-advancement-act-1995). The NTTAA requires federal agencies and departments to use voluntary consensus standards where they exist and meet their mission needs, rather than developing new government-unique standards. Furthermore, the NTTAA encourages the federal government and the private sector to cooperate in developing standards that can advance technology commercialization and innovation. Gillerman suggested that, because the market is global, sensor standards should be harmonized and should ideally avoid mutually exclusive technical requirements that could force a manufacturer to produce multiple products to meet various standards. Gillerman also stressed the importance of conformity assessment, which provides confidence that products perform as expected and helps manage risks associated with nonconforming products (e.g., making decisions based on poor-quality sensor data).

Several organizations, including ASTM International, the National Institute for Occupational Safety and Health (NIOSH), and the EU, are either developing or have developed performance standards or guidance documentation for air sensor technologies. ASTM International, through its air quality subcommittee D22.03, is developing an international standard to evaluate ambient, outdoor air sensors and other sensor-based instruments (ASTM WK64899). The proposed standard will outline a protocol for laboratory and field testing of sensors and set criteria to assess the performance of air quality sensors. These practices are intended to address the lack of information about key performance metrics of sensor technologies, such as linearity, repeatability, sensitivity, and cross interferences, relative to instruments of well-characterized reliability such as those used by government agencies. Similarly, in response to increased use of sensors for occupational safety and health applications, NIOSH established the NIOSH Center for Direct Reading and Sensor Technologies (NCDRST; www.cdc.gov/niosh/topics/drst/default.html) to coordinate research and develop guidance documents (including validation and performance characteristics) on direct reading and sensor technologies. Additionally, the EU is continuing efforts to develop sensor standards for monitoring air pollutants in ambient air at fixed sites. During the workshop, updates on these activities were provided by Dr. Michel Gerboles (European Commission Joint Research Centre) (Gerboles, 2019). The goal of the EU’s activities is to determine whether sensors can meet prescribed data quality objectives via a three-tiered system ranging from regulatory quality (established in the EU Directive 2008/50/EC) (European Union, 2008) to less restrictive, informal measurements.
These efforts are anticipated to provide a protocol describing specific performance requirements and test methods for sensors under set laboratory and field conditions. Performance metrics being considered to classify sensors include repeatability, response time, R2 and other measures of agreement, slope and intercept of a regression line, RMSE, long term drift, cross sensitivities, impacts of T and RH, and measurement uncertainty.
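Two of the metrics listed above, long-term drift and repeatability, can be illustrated with simple calculations. In this sketch, drift is estimated as the linear trend (units per day) in the sensor-minus-reference difference over a deployment, and between-unit repeatability as the pooled standard deviation across identical collocated sensors at each time step. Both definitions and both function names are simplified assumptions for illustration; the EU protocol under development may define these metrics differently.

```python
import math
from statistics import fmean, stdev


def drift_per_day(days, sensor, reference):
    """Hypothetical helper: OLS slope of (sensor - reference) versus elapsed days."""
    diffs = [s - r for s, r in zip(sensor, reference)]
    tm, dm = fmean(days), fmean(diffs)
    num = sum((t - tm) * (d - dm) for t, d in zip(days, diffs))
    den = sum((t - tm) ** 2 for t in days)
    return num / den


def between_unit_sd(readings_by_time):
    """Hypothetical helper: pooled SD across identical units.

    Each item holds the simultaneous readings from all units at one time step;
    the result is the square root of the mean per-time-step sample variance.
    """
    variances = [stdev(units) ** 2 for units in readings_by_time if len(units) > 1]
    return math.sqrt(fmean(variances))
```

A drift near zero and a small between-unit SD relative to typical concentrations would suggest stable, repeatable units; what counts as "small" is exactly the kind of target the workshop deliberated.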

3.3. Considerations for non-regulatory monitoring and associated measurement performance targets

In recent years, both the types of users and the envisioned applications of air sensors have expanded. Air quality professionals (researchers, air quality agencies) consider air sensors a potential new method to meet their monitoring objectives. Meanwhile, new participants in air monitoring include many groups (e.g., school districts, municipalities) concerned about air quality but who may not have extensive technical backgrounds in air quality monitoring. Understanding the expected data quality of air sensor measurements under real-world conditions is critical for appropriate selection and use. Workshop discussions on performance target considerations for non-regulatory, ambient monitoring applications of sensors for PM10, NO2, CO, and SO2 are summarized in the next sections. It should be noted that while this discussion focuses primarily on ambient, outdoor, fixed-site applications, sensors are commonly used and marketed for a variety of other applications, including mobile monitoring and human exposure and health studies (outdoor, indoor, and personal monitoring), which have unique issues and testing considerations. These additional applications and related issues are acknowledged; however, they are not discussed in detail in this paper.

3.3.1. PM10

Common uses for PM10 sensors include characterizing ambient air, near-source environments, ‘hotspots’ (locations with significantly higher pollutant levels), fire plumes, and dust sources (e.g., storms, construction, agriculture). Further, PM10 data from a network of sensors could inform longer-term monitoring network design decisions. PM10 sensors are also useful for characterizing combined exposure to coarse particles (also called PM10-2.5, defined as PM with aerodynamic diameters between 2.5 and 10 μm) and fine particles (also called PM2.5, defined as PM with aerodynamic diameters of 2.5 μm or less).

Several measurement issues related to PM10 were discussed during the workshop. First, currently available PM10 sensors do not appropriately sample coarse particles and therefore do not truly represent PM10. Sampling coarse particles typically requires a sufficiently high flow rate to ensure that the larger, heavier particles are drawn into and characterized by the device. If the flow rate is insufficient, the device may under-sample large particles. Existing PM10 sensor technologies often use small fans or pumps that produce low flow rates and therefore do not collect representative PM10 data. Additionally, because larger particles are more subject to inertial and gravitational losses, a sensor's efficiency in collecting and measuring them can be affected by features such as the orientation of the sampling train (e.g., horizontal or vertical), the shape and size of the sampling inlet, and bends within the sample flow path.

One may expect more rapid changes in the airborne concentration of PM10 than of PM2.5 because coarse particles have a shorter atmospheric residence time than fine particles (Seinfeld and Pandis, 1998). This may lead a user to look at the shortest reporting interval available from the sensor (seconds to minutes). However, there is often a trade-off between the reporting interval and the detection limit: a shorter interval captures fewer particles, so the error in the reported concentration may be higher. For applications in which results are to be compared with a 24-h (midnight to midnight) regulatory standard, longer reporting intervals for PM10 sensors are encouraged. As with sensors for other pollutants, PM10 sensors may have challenges with high-RH environments and calibration lifetime, and may need to be tested in multiple environments with different PM10 to PM2.5 ratios to better understand their performance and their ability to measure the coarse particle fraction separately from fine particles. For example, during dust storms in the desert Southwest of the US, the ratio of PM10 to PM2.5 is known to be substantially higher than on days with light to negligible winds. Additionally, the extreme wind speeds typically associated with dust storms can bias PM10 measurements, as high winds can overpower low flow rates and prevent the sampling of blowing dust. Given these measurement issues, the overall uncertainty in the performance of existing PM10 sensors is expected to be greater than that of PM2.5 sensors.
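When results are to be compared with a 24-h (midnight to midnight) standard, high-time-resolution sensor readings can be aggregated into calendar-day means. The sketch below assumes timestamped readings already in local standard time; the function name `daily_means` and the 75% completeness threshold are hypothetical choices for illustration, not values set by the workshop. Days with too few readings are reported as `None` rather than averaged, so that an incomplete day is not mistaken for a representative one.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, Iterable, Optional, Tuple


def daily_means(
    readings: Iterable[Tuple[datetime, float]],
    expected_per_day: int,
    min_completeness: float = 0.75,  # hypothetical threshold, for illustration only
) -> Dict[date, Optional[float]]:
    """Midnight-to-midnight means from (timestamp, value) pairs.

    Days whose reading count falls below min_completeness * expected_per_day
    are reported as None instead of being averaged.
    """
    by_day = defaultdict(list)
    for ts, value in readings:
        by_day[ts.date()].append(value)  # group by calendar day
    result: Dict[date, Optional[float]] = {}
    for day, values in sorted(by_day.items()):
        if len(values) >= min_completeness * expected_per_day:
            result[day] = sum(values) / len(values)
        else:
            result[day] = None
    return result
```

For 1-min sensor data, `expected_per_day` would be 1440; for hourly data, 24.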

Beyond measurement issues, considerations for designing studies for certain PM10 applications were discussed. For example, PM10 sensors might be used to understand exposure to coarse and fine PM and related health outcomes. In some locations, only PM10 data may be publicly available (i.e., no PM2.5 data). In these cases, the available data would be considered representative of both the coarse and fine PM fractions. Not having both PM10 and PM2.5 data can be problematic, since fine and coarse PM are known to have different sources and can have different health outcomes. In areas with PM2.5 data, the difference between PM10 and PM2.5 can readily be interpreted as coarse particles (i.e., PM10-2.5). However, in areas with only PM10 data, it may not be obvious whether exposures were due to fine or coarse particles. When using PM10 sensors, the added value of collocated PM2.5 sensors should be considered.
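The by-difference estimate of coarse PM is simple arithmetic on collocated, time-matched PM10 and PM2.5 values. The sketch below assumes both series share the same averaging interval and units (μg/m3). The function name is hypothetical, and flagging negative differences (which can arise from measurement error when coarse PM is low) as `None` rather than clipping them to zero is an illustrative choice, not a workshop recommendation.

```python
from typing import List, Optional, Sequence


def coarse_by_difference(pm10: Sequence[float],
                         pm25: Sequence[float]) -> List[Optional[float]]:
    """Hypothetical helper: PM10-2.5 = PM10 - PM2.5 for time-matched pairs."""
    if len(pm10) != len(pm25):
        raise ValueError("PM10 and PM2.5 series must be time-matched")
    coarse: List[Optional[float]] = []
    for p10, p25 in zip(pm10, pm25):
        diff = p10 - p25
        # Negative values are flagged as None, not clipped to zero.
        coarse.append(diff if diff >= 0 else None)
    return coarse
```

Flagging rather than clipping keeps the number of physically implausible pairs visible, which is itself a useful data quality indicator.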

3.3.2. NO2

There is a desire to use NO2 sensors with relatively high time resolution (e.g., 1-min averaged data) in a variety of applications, including general ambient air quality characterization, along fence lines, near roads, and as part of mobile monitoring efforts. Workshop participants noted that current NO2 sensor performance is quite variable. Issues with existing NO2 sensors include uncertainty about how long the calibration remains valid over the life of the instrument and about declines in sensor performance over time. Ideally, these performance traits should be thoroughly tested by the manufacturer. Testing would likely need to expose the sensor to a variety of pollutant concentrations under differing T, RH, and multi-pollutant mixtures, and could be conducted in both the field and the laboratory. The discussion of interferences addressed O3; oxides of nitrogen (NOx, which might correspond more to fresh combustion emissions) versus NO2 specifically (which might correlate more with aged combustion emissions); and the possibility that other pollutants or specific mixtures could affect NO2 readings. The ability for a user to calibrate or “tune” the sensor was considered a potentially ideal feature. The concept of a calibration or operational setting tailored to the sensor application was discussed as an approach to developing reliable, long-lasting NO2 sensor calibration capabilities.

Hysteresis is also a common problem for NO2 sensors. Hysteresis occurs when a sensor's response depends on its recent history, so that the same change in conditions does not always produce the same response. For instance, the sensor may respond one way while concentrations increase but then respond differently when concentrations decrease. Longer averaging intervals may give greater confidence in the data by averaging together readings that hysteresis has biased positively and negatively. However, this approach may not be feasible for high-time-resolution applications. Vendor testing could also be a means to understand and address hysteresis, possibly identifying environmental and exposure conditions that exacerbate the effect.
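One simple way to look for hysteresis in collocated data is to compare sensor error during periods when the reference concentration is rising with periods when it is falling. The sketch below assumes time-ordered, paired sensor and reference series; the function name and the rising/falling classification by consecutive reference values are illustrative assumptions. A persistent difference in mean error between the two groups is consistent with hysteresis, although response lag or changing T and RH can produce a similar pattern.

```python
from statistics import fmean


def rising_vs_falling_bias(sensor, reference):
    """Hypothetical helper: mean sensor-minus-reference error when the reference
    is rising versus falling, based on consecutive time steps."""
    rising, falling = [], []
    for i in range(1, len(reference)):
        error = sensor[i] - reference[i]
        if reference[i] > reference[i - 1]:
            rising.append(error)
        elif reference[i] < reference[i - 1]:
            falling.append(error)
        # Steps with no change in the reference are skipped.
    if not rising or not falling:
        raise ValueError("need both rising and falling periods")
    return fmean(rising), fmean(falling)
```

For example, a sensor that reads low while concentrations climb and high while they fall would return a negative first value and a positive second value.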

Workshop participants suggested that a concentration range from single-digit ppbv up to several hundred ppbv would be sufficient for all applications discussed. NO2 sensor development needs to focus on accuracy and stability over the long term, or lifespan, of the sensor. It was also suggested that sensors with greater inherent uncertainty might benefit from complementary on-board measurements of influential environmental factors such as T, RH, and possibly other pollutants. These complementary measurements might be used to support NO2 data quality and possible on-board calibration processes, or even real-time or post-collection data analysis and corrections.

3.3.3. CO

CO sensors have long been used for safety purposes in occupational settings and in homes. Other desired uses include monitoring CO levels in general ambient settings, near roads, in near-source applications, and indoors. For all applications, workshop participants noted that real-time data were desired and that simultaneous T and RH readings were necessary for assessing artifacts. For applications focused on understanding sources and/or emission factors, simultaneous measurement of CO2 and/or non-methane hydrocarbons (NMHC) may be useful.

One challenge unique to CO sensors is performing well in both low- and high-concentration environments, since CO levels can vary widely with the setting. For example, occupational CO concentrations can be high, upwards of 300 ppmv (ATSDR, 2012). On the other hand, ambient CO levels can be low, such as those encountered in the US, which are typically under 3 ppmv (annual average) (US EPA, 2019) and well below the US National Ambient Air Quality Standards (NAAQS). When ambient CO levels are low, it may be challenging to compare CO sensors to regulatory monitors in field tests: regulatory monitors designed for very low CO concentrations have detection limits below 0.04 ppmv, whereas CO sensor detection limits often start at 0.05 ppmv and go higher. Workshop participants noted that CO sensors need to be tested in both low (ambient) and high (wildfire, near-source, other) CO concentration environments to understand sensor performance. In addition, both field and laboratory testing may be necessary to capture more extreme conditions.
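When ambient concentrations are low, a quick screen of candidate collocation data can indicate whether a field comparison will be informative. The sketch below computes the fraction of reference observations at or above a sensor's stated detection limit. The function name is hypothetical, and the 0.05 ppmv default simply mirrors the lower end of the CO sensor detection limits mentioned above. If only a small fraction of reference values exceed the detection limit, most of the comparison falls in a range the sensor cannot resolve, which points toward laboratory testing or higher-concentration sites.

```python
from typing import Sequence


def fraction_above_lod(reference_ppmv: Sequence[float],
                       sensor_lod_ppmv: float = 0.05) -> float:
    """Hypothetical helper: share of reference observations >= sensor detection limit."""
    if not reference_ppmv:
        raise ValueError("no reference observations")
    above = sum(1 for c in reference_ppmv if c >= sensor_lod_ppmv)
    return above / len(reference_ppmv)
```

The same screen applies to SO2, where many regulatory sites record frequent non-detects.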

Workshop participants also noted that factory calibrations of CO sensors are generally stable over the lifetime of the sensor but can be inaccurate for a given application if the concentration range selected for calibration does not match the range encountered in use. The lifespan of CO sensors is also not well understood.

3.3.4. SO2

Workshop attendees discussed that the primary use for SO2 sensors is in source-oriented air quality assessments. Example applications include stationary source characterization, hotspot evaluation (e.g., characterization of air quality from natural pollution events such as volcanoes, or leaks from refineries or industrial operations), airborne surveillance of stationary and mobile sources (including plume chasing and sniffing), saturation studies (deploying many sensors to determine concentration gradients or simply the presence or absence of peak concentrations), and model evaluation. Workshop participants agreed that SO2 sensors are not as widely available as sensors for other pollutants and there is limited information related to testing and applications of SO2 sensors.

Considering all the possible applications of SO2 sensors, sensor development needs to focus on performance and accuracy across a wide dynamic range of concentrations. SO2 sensors are expected to experience large shifts in concentration over very short time spans (e.g., measurements targeting plumes or aboard mobile platforms). This requires sensors with little to no hysteresis that can generate highly time-resolved data. Users may desire data as frequent as 1 s averages, although longer averaging times (tens of seconds to minutes) would be preferred for most applications. Workshop attendees also noted that sensor performance needs to account for interferences, such as hydrocarbons, and that on-board complementary measurements of T, RH, and possibly interfering compounds (e.g., water, O3, NOx) would be useful. A key testing limitation discussed was the typically low ambient SO2 concentration in places such as the US; many regulatory monitoring sites have a high frequency of non-detects, which may limit the ability to compare sensors against them. Given that end-user applications of SO2 sensors are likely to emphasize near-source impacts, laboratory or selective field testing may be needed to address performance, hysteresis, wide dynamic measurement range, and durability in harsh conditions such as those that could be experienced near emission sources, aboard mobile platforms, and inside plumes.
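Hysteresis can be screened in a laboratory ramp test by stepping the reference concentration up and then back down through the same setpoints and comparing the two responses. The sketch below assumes the falling-leg readings have already been re-ordered to line up with the rising-leg setpoints; the function name and the numbers are hypothetical.

```python
def max_hysteresis(setpoints, rising, falling):
    """Return (setpoint, gap): the setpoint with the largest absolute difference
    between rising-leg and falling-leg sensor readings, and that difference.
    `falling` must be ordered to match `setpoints`, like `rising`."""
    if not (len(setpoints) == len(rising) == len(falling)):
        raise ValueError("inputs must have equal length")
    gaps = [abs(r - f) for r, f in zip(rising, falling)]
    worst = max(range(len(gaps)), key=gaps.__getitem__)
    return setpoints[worst], gaps[worst]

# Hypothetical SO2 ramp (ppbv): reference setpoints and sensor readings per leg.
setpoints = [0, 50, 100, 200]
rising = [2, 45, 96, 198]
falling = [9, 58, 108, 198]
worst_setpoint, worst_gap = max_hysteresis(setpoints, rising, falling)
```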

3.3.5. Similarities and differences across PM10, NO2, CO, and SO2

Common to all sensor types is a desire to measure pollutant concentrations in ambient air and near sources, find hotspots, understand concentration gradients at high spatial and temporal resolution, and determine where regulatory monitors should be placed for longer-term monitoring and/or health protection needs. Concerns common to all pollutant types are the influence of T and RH on sensor performance and the variability in sensor performance due to different pollution levels and mixtures. Sensor performance testing protocols need to be conducted under variable T and RH conditions and in a variety of pollutant mixtures that may be encountered in the real world. These testing parameters and environments should be well documented and reported.

SMEs discussed the value of field vs. laboratory testing for the different pollutants. For PM10, while laboratory tests can provide specific information as it relates to situations that a sensor may encounter (e.g., extreme weather, rapid changes in concentrations, T, RH), SMEs noted that it is difficult to accurately recreate the ambient mix of particle sizes and types within a laboratory environment. Thus, field testing is of high value. For example, there are several parts of the US in which coarse particle concentrations are much lower than fine particle concentrations making it difficult to determine how efficiently larger particle sizes are measured. It is therefore important that field tests be conducted in environments where the ratio of fine to coarse particle concentrations span the real-world range of conditions. Additional laboratory testing provides an opportunity to understand how a sensor responds to extreme concentrations which are more rarely encountered in the field (e.g., haboob dust storms in the Southwest of the US), but are of great interest for certain users, and for which sampling efficiency can be tested based on particle size, wind speed, and inlet orientation. For gas-phase sensors, field testing is important to check sensor response under highly variable T and RH conditions, and with realistic mixtures of co-pollutants. However, field tests for CO and SO2 may be hindered in clean environments where ambient concentrations are frequently far below regulatory standards (e.g., in the US) and below the detection limit of the regulatory monitors. Laboratory tests provide means whereby gas sensors can be tested for response to a wider range of pollutant concentrations, specific interfering pollutants, and to step changes in target pollutant concentrations to check for lag time and hysteresis.
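For the step-change tests mentioned above, lag can be summarized as a response time such as t90, the time for the reading to cover 90% of the change between the pre-step and post-step levels. A minimal sketch, assuming the step occurs at the first timestamp and that the baseline and final levels are known from the reference; the 90% criterion, the helper name, and the readings are illustrative choices.

```python
def response_time_t90(times_s, readings, baseline, final):
    """Seconds from the step (assumed at times_s[0]) until the sensor first covers
    90% of the change from `baseline` to `final`; None if never reached.
    Works for both rising and falling steps."""
    threshold = baseline + 0.9 * (final - baseline)
    rising = final >= baseline
    for t, r in zip(times_s, readings):
        reached = r >= threshold if rising else r <= threshold
        if reached:
            return t - times_s[0]
    return None

# Hypothetical 5 s readings after an upward step from 0 to 100 ppbv.
t90_up = response_time_t90([0, 5, 10, 15, 20, 25, 30],
                           [0, 20, 45, 70, 88, 95, 99], baseline=0, final=100)
# Hypothetical readings after a downward step from 100 to 0 ppbv.
t90_down = response_time_t90([0, 5, 10, 15, 20],
                             [100, 60, 30, 12, 8], baseline=100, final=0)
```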

Table 2 summarizes some sensor use cases and testing considerations that are specific to each pollutant. At present, the average lifespan of a sensor and the applicable calibration lifetime vary by pollutant. For instance, a PM sensor may have a long lifetime in a low-concentration environment but likely needs to be calibrated at least once every season. Currently available NO2 sensors generally have short lifespans and short calibration lifetimes. Users would like manufacturers to describe calibration needs for various deployment types (e.g., outdoor vs. indoor vs. personal monitoring, or stationary vs. mobile) and would like performance testing to verify those recommendations. Ultimately, users want sensors with long lifespans, calibration lifetimes of a year or more, and manufacturer-facilitated diagnostic monitoring, alerting/flagging, and automated calibration.

Table 2.

Summary of air sensors use cases and testing considerations by pollutant.

| | PM10 | NO2 | CO | SO2 |
|---|---|---|---|---|
| Desired Uses | Fires, plumes, dust storms | Fence line, near roads, mobile monitoring | Combustion, occupational, indoors | Plume identification, plume sniffing |
| Desired Measurement Range | 2–1000 μg/m3 | 1 to >100 ppbv | 0.04 to 3 ppmv (ambient) or up to 300 ppmv (ambient, source, occupational) | 1 ppbv (ambient) or up to ppmv (source) |
| Expected Interferents or Other Causes of Bias | Variation in ratio of fine/coarse particles, RH, sampling loss of coarse particles | T, RH, O3 | T, RH | NOx, hydrocarbons, T, RH |
| Field Testing | Variation in RH, nominally variation in ratio of fine to coarse particles over time or through multiple test sites | Ambient and near-source locations | High and low concentration regimes | High variability of concentrations in near-source plumes |
| Laboratory Testing | Test high PM concentrations, response to variable size distribution, sampling efficiency including effects of orientation and wind | Test for wider variety of interferents and for hysteresis | Test wider concentration range and variety of interferents | Test wider concentration range and for hysteresis |
| Complementary Measurements | PM2.5, T, RH | T, RH, O3, other pollutants | T, RH | T, RH, hydrocarbons |

3.4. Considerations for performance targets

Supporting the development and improvement of air sensor technologies through the establishment of performance targets was of shared interest among the workshop participants. The SMEs were in general agreement that performance targets would positively impact the marketplace and likely help improve data quality across the domain. The creation of performance targets would give sensor manufacturers a consistent set of performance parameters (e.g., precision, accuracy) and associated target values to determine how well their air sensors perform. Air sensor customers could also use performance targets along with other considerations to assess the ability of a given sensor to meet their specific monitoring needs. Additionally, the SMEs suggested that some users have become more interested in collecting actionable data that can support policy change or legal action, and as a result, information on sensor performance is needed for justification. The goal of performance targets overall is to support the collection of consistent air sensor performance information and allow customers to better distinguish which technology suits their needs. The next sections summarize key considerations for sensor performance targets that were discussed during the workshop.

3.4.1. Single vs. multi-tiered approach

During the US EPA’s first workshop, attendees discussed the development of a single set of performance targets for all non-regulatory purposes versus a tiered approach for different air sensor applications. Perspectives varied on the issue as summarized in Williams et al. (2019). During the second workshop, multiple SMEs expressed a desire for a tiered system to reflect the different levels of data quality needed for various end use applications. A tiered approach could address the entire spectrum of sensor technologies for supplemental and informational monitoring as well as meeting the needs of various consumers of information (e.g., academics, general public, municipalities). While some SMEs thought a tiered approach would be useful, others stated that interim performance targets for a single tier would allow for an assessment of how well targets drive data quality to a certain goal and would be useful in determining whether targets should evolve over time to include more tiers. For one or multiple tiers, discussion further delved into the details of: 1) how many performance parameters to include, 2) what level to set each performance parameter so that it is neither too stringent nor too lenient, 3) how the division between field and laboratory testing should be structured, and 4) consideration of cost and burden on the manufacturer.

3.4.2. Technology agnostic sensor targets

To embody a technology agnostic philosophy, performance targets would be grounded in the data quality needs of measurement applications rather than defined by how well current air sensors perform. Performance targets based on measurement needs could therefore be applied to any air monitoring technology intended for non-regulatory monitoring. Regarding specific technology agnostic performance targets, opinions differed on stringent versus more relaxed performance criteria. For example, should air sensors only need a minimal ability to detect pollution changes (e.g., significant shifts in pollution levels related to an emissions event), or should they be required to have higher sensitivity to resolve ambient concentration variability? Given the voluntary nature of air sensor targets, SMEs felt that if the targets were too stringent, manufacturers might not use them, thereby minimizing their overall impact. On the other hand, setting a very minimal requirement would neither foster product improvement and innovation nor allow users to differentiate between well and poorly performing sensors. Furthermore, SMEs were of the opinion that air sensor target levels should not approach those outlined in the US EPA’s FRM/FEM program (US EPA, 40 CFR Part 50; US EPA, 40 CFR Part 53), which is designed for determining compliance with the US NAAQS. Given the existing FRM/FEM program, a technology agnostic approach could take the form of a tiered system: the FRM/FEM program would represent the highest tier of performance, and sensor performance targets would represent a non-regulatory, lower tier of performance that could accommodate the current air sensor market.

3.4.3. Scope of technology

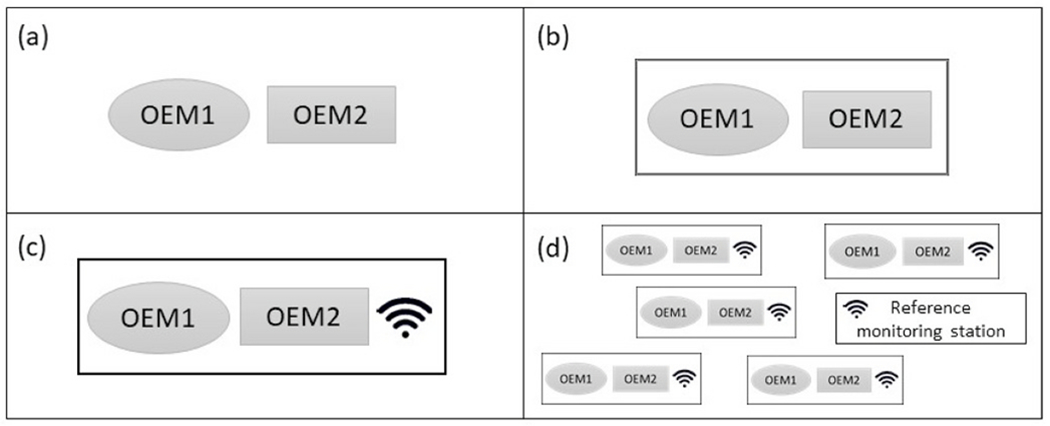

The design of air sensor technology is evolving quickly. The core of the instrumentation [original equipment manufacturer (OEM) sensors] has changed more gradually over time, with the underlying pollutant measurement principles generally remaining the same. For example, to date, all direct-reading PM sensors on the market use an optical measurement method in which either individual particles or groups of particles are detected by their interaction with a light source. Beyond the OEM sensors, the integration of sensors into working devices and the methods of data management have developed rapidly in recent years. During the workshop, participants discussed how the same OEM sensors are often used in different commercial air sensor technologies, and that the full engineering of the commercial monitoring technology and its data handling need to be considered in the development of performance targets and testing protocols. The states of technology discussed ranged from the OEM sensor to network-enabled devices (Fig. 1).

Fig. 1.

Variety of commercially available sensor technologies (adapted from Hagler, 2019). Where: a) represents the OEM sensors in their original form; b) represents offline, integrated OEM sensors in an enclosure with ancillary components (e.g., power, microprocessor); c) represents integrated OEM sensors with added data connectivity, but where the device does not interact with external data sources; and d) represents integrated OEM sensors where data are managed as a network and external data (e.g., reference monitor) may be used to adjust the sensor data for any individual device.

Associated with these technology states is variety in how the data are managed and processed. This emerging variety motivated recommendations on specific terminology for the scientific community to describe the resulting data from sensors and their associated processing levels (Schneider et al., 2019). Linking Fig. 1 with the processing levels from Schneider et al. (2019), the OEM-only components in Fig. 1a would produce data of Level-0 (raw signals produced by sensor system) or Level-1 (estimated measurements combining Level-0 data with basic physical principles or pre-established calibrations); integrated devices in Fig. 1b–c may produce data of Level-1 or Level-2A (estimated measurements combining Level-0 data with data from onboard artifact-correcting sensors); and technology represented in Fig. 1d may produce data of Level-2B (estimated measurements combining Level-0 data with data from nearby reference monitors or other external data to correct for artifacts) or Level-3 (estimated measurements that include other data unrelated to the measurement principle).
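The correspondence described above can be encoded as a small lookup table, for example to tag each tested device with the processing levels its data may carry. This is a hypothetical sketch: the level descriptions paraphrase Schneider et al. (2019) as summarized in this paragraph, the panel keys refer to Fig. 1 panels a to d, and the names `DataLevel`, `LEVELS_BY_PANEL`, and `may_use_external_data` are illustrative.

```python
from enum import Enum

class DataLevel(Enum):
    """Processing levels as summarized in the text (after Schneider et al., 2019)."""
    L0 = "raw signals produced by the sensor system"
    L1 = "Level-0 plus basic physical principles or pre-established calibrations"
    L2A = "Level-0 plus data from onboard artifact-correcting sensors"
    L2B = "Level-0 plus nearby reference monitor or other external data"
    L3 = "includes other data unrelated to the measurement principle"

# Levels that each technology state in Fig. 1 (panels a-d) may produce.
LEVELS_BY_PANEL = {
    "a": {DataLevel.L0, DataLevel.L1},
    "b": {DataLevel.L1, DataLevel.L2A},
    "c": {DataLevel.L1, DataLevel.L2A},
    "d": {DataLevel.L2B, DataLevel.L3},
}

def may_use_external_data(panel):
    """True if data from this technology state may be adjusted with external data."""
    return bool(LEVELS_BY_PANEL[panel] & {DataLevel.L2B, DataLevel.L3})
```

Devices for which this returns True are the ones for which the online/offline testing questions discussed below matter most.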

During the workshop discussions, the majority opinion was that end users would not benefit from sensor performance targets and test protocols applied solely to OEM sensors (Fig. 1a). This view was based on experience showing that the engineering of the integrated device and the way its data are managed can result in varying performance for identical OEM sensors. Therefore, air sensor performance targets should focus on the integrated sensor device. SMEs debated the state of technology and its implications for sensor testing, as well as what demands a specific testing protocol may place on the technology design. For example, one point of debate was “out-of-the-box” testing versus allowing a device a “training” period through collocation with a reference monitor in the testing environment. The former approach was described as valuable to customers who do not have access to reference monitoring stations and would use the technology as is after purchase, such as the many members of the public throughout the US who use sensor devices. However, the countering opinion was that the device-maker’s instructions should be followed for appropriate use: if the instructions require a training period prior to use, incorporating it would align with the intended use of the technology, and the test results would demonstrate the optimal performance of the technology.

A related topic of debate focused on the connectivity of devices during testing and the development of calibration equations, as well as the web-accessibility of the reference monitor data used as a benchmark for field tests. Four scenarios were discussed (Table 3), with varying combinations of data connectivity for the tested device and/or reference monitor. “Online” is shorthand indicating that the data are transmitted (e.g., via WiFi, Bluetooth, cellular modem) to an off-site location and are subsequently accessible for use in data processing algorithms. “Offline” implies that the data are not transmitted off-site and are unavailable for use in off-site data processing algorithms. A sensor device could have both online and offline operating modes, with “offline” testing meaning that the data transmission mode is not used during testing. For a reference monitor, “offline” indicates that only the tester has access to the reference data, in contrast to many regulatory monitoring stations whose hourly data are publicly available.

Table 3.

Summary of testing scenarios for developing calibration algorithms, benefits (B) and risks (R) of various testing protocols in terms of the representativeness of the test results, and compatibility with technology on the market (TD = tested device; Ref = reference monitor; AQI = Air Quality Index).

| Description | Scenario A: TD offline; Ref offline | Scenario B: TD offline; Ref online | Scenario C: TD online; Ref offline | Scenario D: TD online; Ref online |
|---|---|---|---|---|
| Test results demonstrate how TD performs in isolation. | B | B | | |
| TD is “blind” to the Ref. | B | B | B | |
| May encourage technology to include offline mode of use valuable for some applications. | B | B | | |
| Allows Ref data to be used for other purposes (e.g., AQI communication). | | B | | B |
| Approach compatible for all sensor devices on the market, in terms of allowable forms of data transmission and access. | | | B | B |
| Test results may not represent real-world performance for some applications (e.g., remote areas) if TD incorporates external data. | | | R | R |
| Potential over-the-air updates to the TD or other forms of performance modification, such as firmware updates, during testing. | | | R | R |
| Potential data privacy or security threat. | | | R | R |
| Possibility that the Ref is incorporated into TD data adjustment algorithms. | | | | R |

Workshop SMEs discussed their perspectives on the various scenarios described in Table 3. SMEs noted that numerous applications need the sensor technology to operate in offline mode (e.g., in remote areas or when onboard back-up data are required), but that requiring offline mode for all sensor technologies may be misaligned with future technology development and drive up cost for some existing sensors on the market. Another experience discussed involved the evaluation of many sensors in a mode similar to Scenario C, in which some sensor developers declined to have their technology tested upon discovering that the reference monitor data would not be web-accessible, which might affect their results. Data privacy, security, and integrity were also noted as points of concern for sensors reporting to manufacturers’ servers, especially following the publication by Luo et al. (2018), which showed an example of hacking and altering data that were accessible only via a device manufacturer’s server. Overall, SMEs had common views regarding the various risks and benefits of Scenarios A-D but diverged on which testing scenario was optimal and whether multiple testing approaches should be considered.

3.4.4. Testing considerations

Numerous testing considerations for evaluating the performance of air sensors were discussed during the workshop. Commonly, air sensor performance is evaluated against a reference monitor or against known test concentrations of particulate or gas-phase standards. The choice of reference monitor is important and can affect the sensor performance statistics. Workshop attendees noted that it may be advantageous to leverage existing regulatory air monitoring network instruments such as US EPA’s FRM/FEM monitors. These methods specify not only particular instruments but also operation and quality assurance procedures to ensure high-quality data. One consideration discussed is that the FRM/FEM program is designed to determine attainment of the NAAQS, which have specific averaging times, whereas many sensor users want exposure information below the current standards and over shorter time intervals (minutes to hours). The averaging interval of measurements can affect the sensor performance statistics. For example, comparisons at shorter time intervals may be affected by noise in some reference methods, especially at low concentrations. Many of the SMEs suggested hourly comparisons for ambient data.
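Because the averaging interval affects the statistics, sensor and reference data are normally aggregated to a common interval before comparison. A minimal sketch for hourly comparisons, assuming 1-min data that start on the hour and an illustrative completeness rule of at least 75% valid minutes (45 of 60) per hour; the threshold and the function name are assumptions, not a workshop recommendation.

```python
import numpy as np

def hourly_means(minute_values, min_fraction=0.75):
    """Average 1-min data into hourly means. NaN marks a missing minute; hours
    with fewer than min_fraction * 60 valid minutes are returned as NaN."""
    x = np.asarray(minute_values, dtype=float)
    if x.size % 60:
        raise ValueError("expected a whole number of hours of 1-min data")
    hours = x.reshape(-1, 60)
    n_valid = np.isfinite(hours).sum(axis=1)
    totals = np.where(np.isfinite(hours), hours, 0.0).sum(axis=1)
    means = np.full(hours.shape[0], np.nan)
    enough = n_valid >= min_fraction * 60
    means[enough] = totals[enough] / n_valid[enough]
    return means

# Three hypothetical hours: complete, half missing, and 50 of 60 minutes valid.
minutes = np.concatenate([
    np.full(60, 10.0),
    np.r_[np.full(30, 20.0), np.full(30, np.nan)],
    np.r_[np.full(50, 5.0), np.full(10, np.nan)],
])
hourly = hourly_means(minutes)   # [10.0, nan, 5.0]
```

The same function would be applied to both the sensor and the reference record so that the resulting statistics compare like with like.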

Another consideration discussed was how the term “collocation” of a sensor and reference monitor is interpreted when evaluating sensor performance. Some of the SMEs thought a collocation should only be considered valid at very close distances of 2–4 m. Evaluating “collocated” data at longer distances may overstate error because of true spatial variability. Minimizing collocation distance is particularly important for PM10 because it is typically locally generated and has a shorter atmospheric residence time than PM2.5. Related to the points discussed in Section 3.4.3, sensors that are online may use data from sources external to the sensor, which may include nearby reference monitor data. Matching or comparing a sensor’s data to a reference monitor located farther away risks adjusting or eliminating true spatial variability in pollutant concentrations between the sensor location and the reference monitor location. In addition, using collocated or nearby reference data creates uncertainty about real-world performance, where the distance to other reference monitors and to localized sources will vary.

Attendees also discussed evaluating the precision between devices, in other words, whether the data produced by identical devices are consistent. Some users are interested in this evaluation because it is known that identical sensor devices, with the same measurement principle and electronics, can produce vastly different data. These differences could be reduced by manufacturers and advanced users applying testing methods from voluntary consensus standards and publicizing the results for the benefit of other users. Transparency about “out-of-the-box” variation among units sold by a manufacturer is important to end users with little expertise. Manufacturers might consider selling calibration batches of sensors to eliminate the need for users to calibrate individual sensors.
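One way to quantify consistency among identical devices is the spread of their readings at each time step, pooled over the test. The sketch below reports a pooled standard deviation and a coefficient of variation. This is one reasonable formulation among several, assuming time-aligned data from identical units and excluding time steps where any unit is missing; the function name and readings are hypothetical.

```python
import numpy as np

def inter_device_precision(data):
    """Return (pooled SD, CV in %) across identical units.
    data: rows = time steps, columns = units; rows with any NaN are dropped."""
    x = np.asarray(data, dtype=float)
    x = x[np.all(np.isfinite(x), axis=1)]
    if x.shape[0] == 0 or x.shape[1] < 2:
        raise ValueError("need at least one complete time step and two units")
    per_step_sd = x.std(axis=1, ddof=1)             # spread across units at each step
    pooled_sd = np.sqrt(np.mean(per_step_sd ** 2))  # root-mean-square of those SDs
    cv_pct = 100.0 * pooled_sd / x.mean()
    return pooled_sd, cv_pct

# Hypothetical readings from three identical PM10 units (ug/m3) at four times.
readings = [[10, 12, 14],
            [20, 22, 24],
            [15, np.nan, 16],   # dropped: one unit missing
            [30, 32, 34]]
sd, cv = inter_device_precision(readings)
```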

Workshop attendees discussed the importance of testing sensors over the wide range of pollutant concentrations and environmental conditions that will be experienced during sensor use. These include a relevant concentration range of the pollutant of interest and relevant concentration ranges of any interferent or cross-sensitive pollutants. Different technologies have different interferents and influences, which may need to be considered for testing to be most informative of real-world performance. For example, electrochemical sensors may have different interferents than metal oxide sensors for the same pollutant; also, the interferents of electrochemical sensors differ depending on the pollutant. In addition, the testing should include relevant ranges and combinations of T and RH. If performance testing is focused on outdoor US monitoring, this parameter space would be defined by environmental conditions and pollutant concentrations across the US and across seasons. Not only the range of T and RH but also their rate of change may be important to consider when designing tests, as rapidly changing T and RH, such as those associated with weather fronts, can cause large errors in electrochemical and potentially other sensor types.
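To document whether a test captured rapid T and RH changes (and whether sensor errors cluster around them), testers could flag intervals where the rate of change exceeds a chosen threshold. A sketch, assuming timestamps in hours and a hypothetical threshold supplied by the tester; no specific threshold was proposed at the workshop, and the function name is illustrative.

```python
def flag_rapid_changes(times_h, values, max_rate_per_h):
    """Indices i where |values[i] - values[i-1]| / (times_h[i] - times_h[i-1])
    exceeds max_rate_per_h (e.g., % RH per hour or deg C per hour)."""
    flagged = []
    for i in range(1, len(values)):
        dt = times_h[i] - times_h[i - 1]
        if dt <= 0:
            raise ValueError("times must be strictly increasing")
        if abs(values[i] - values[i - 1]) / dt > max_rate_per_h:
            flagged.append(i)
    return flagged

# Hypothetical hourly RH (%) through a frontal passage, 10 %/h threshold.
rh_flags = flag_rapid_changes([0, 1, 2, 3, 4], [40, 42, 60, 61, 45], 10)
```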

The appropriate test concentration ranges are dependent on the desired sensor application. Most of the workshop discussions focused on ambient outdoor use in the US. If performance targets focus on typical outdoor US concentrations, it was unclear if sensor testing ranges should include high concentration events like wildfires. In addition, SMEs expressed an interest in evaluating realistic exposures for indoor, personal, and international outdoor concentrations, and the performance in mobile monitoring settings to further expand the utility of performance testing. These applications would have additional testing considerations including higher time resolution performance evaluations for mobile applications, different concentration ranges for international applications, and different pollutant mixtures and particle compositions for indoor and personal use. Depending on the pollutant, field or lab tests, or a combination of both, may be required to achieve the desired combination of concentrations and conditions for outdoor use. Additional testing would likely be needed if the targeted environments and use cases were expanded beyond US outdoor, ambient conditions. In any case, transparency regarding ranges of pollutants, T, and RH for which the sensor was tested is important information to assess performance.

SMEs noted that a combination of field and laboratory tests is likely needed to meet the desired range of testing conditions. Field tests may need to cover a range of locations and seasons to adequately capture pollutant concentration ranges (target and interferent pollutants) and environmental conditions across the US. Laboratory testing can mimic certain field conditions; however, it cannot replicate all possible conditions. Achieving a representative particle mix, dispersion, and sampling for PM10 in a laboratory might be particularly challenging. However, some performance parameters, such as response time and detection limit, may be more challenging to evaluate in the field and may be good candidates for laboratory evaluations. Laboratory evaluations may be used as a pre-test to determine whether further field testing and more extensive lab testing should occur, as is done by the EU (Gerboles, 2019). The necessity and extent of laboratory and field testing vary by pollutant. Field testing in multiple seasons (high O3 and low O3) was recommended for NO2. Field testing in multiple seasons and locations with a range of meteorology and particle compositions was recommended for PM10. Since SO2 sensors are primarily used to detect short-term SO2 episodes, it will be challenging for field tests under typically low ambient concentrations to capture an adequate concentration range; laboratory testing is likely required. CO may also require laboratory testing, since ambient concentrations are often low (e.g., in the US) and are sometimes below the detection limits of the regulatory monitors.

It was also discussed that the length of testing is important and should likely be specified, because drift, sensor aging, sensor lifespan, and calibration lifespan are important issues for end users. This poses a challenge for testing, as there are advantages to releasing test results as quickly as possible because sensor hardware and software versions change frequently. The lifespan of the device and of its calibration could be determined using a combination of field and laboratory tests, in which sensors are periodically tested in the laboratory after longer periods of field testing (e.g., a month or more) to determine drift, aging, and lifespan.
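A simple drift estimate, assuming the reference is trustworthy over the whole test, is the slope of the sensor-minus-reference difference against elapsed time. The sketch below expresses it per 30 days; the scaling, the function name, and the data are illustrative, and, as noted later in this section, a trend found this way can be confounded with seasonal bias.

```python
import numpy as np

def drift_per_30_days(elapsed_days, sensor, reference):
    """Linear trend of (sensor - reference) versus time, in concentration units per 30 days."""
    diff = np.asarray(sensor, dtype=float) - np.asarray(reference, dtype=float)
    slope, _intercept = np.polyfit(np.asarray(elapsed_days, dtype=float), diff, 1)
    return 30.0 * slope

# Hypothetical daily means over 90 days: a flat reference, a sensor creeping upward.
days = np.arange(0, 91, 10)
ref = np.full(days.shape, 20.0)
sensor = ref + 0.1 * days                      # +0.1 ppbv per day of drift
drift = drift_per_30_days(days, sensor, ref)   # about 3.0 ppbv per 30 days
```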

As shared during the workshop, manufacturers have different expectations as to what a user will do with the sensor before deploying it. This may include local calibration against a reference monitor or gas calibration, which may not be accessible to many sensor users. It was also noted that there is some uncertainty as to what should be done both before a sensor is evaluated and before a sensor is deployed in a study. There may be tiered use cases for sensors with different expectations of how much the user will do upon receiving the sensor (i.e., nothing, basic field calibration, extensive evaluation). If a manufacturer provides performance statistics for a corrected measurement, that information may not be clear to less experienced users.

3.4.5. Testing parameters to report

Throughout the workshop there was much discussion of general sensor performance metrics including accuracy, precision, repeatability, bias, error, linearity, lifespan, specificity, and completeness. Testing parameters and performance statistics are not always calculated, used, and interpreted in the same way. It is important that metrics are calculated consistently for performance to be comparable across studies. There are several considerations including the air quality data distribution (normal or non-normal distributions), the error in the reference method and the sensor, and time resolution of the data that will affect the testing parameters reported. SMEs were interested in comparing the sensor measurements to reference measurements to understand accuracy and comparing measurements from multiple, duplicate sensors to understand precision across sensors. Additionally, some SMEs believed this information would be particularly helpful in educating users on the capability of sensors and appropriate applications. During the workshop, the need for standards of transparency was emphasized.

Workshop discussions also noted the importance of consistently reporting the following conditions under which any test was conducted: 1) whether the test was performed in a laboratory or in the field; 2) the concentration range for a given pollutant, including the range for any known interferents; 3) environmental conditions, including T and RH; and 4) other sensor-specific parameters if the method depends on them (e.g., flow rate for particle sensors). Additionally, for comparative testing involving reference instruments, some SMEs recommended reporting the make and model of the reference instrument, as well as the exact distance between the sensor and the reference instrument.
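The reporting items listed above map naturally onto a structured record that could accompany each test result. The class and field names below are hypothetical and not part of any existing reporting standard; `missing_items` simply lists recommended entries that were left blank.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Range = Tuple[float, float]

@dataclass
class SensorTestRecord:
    setting: str                                    # 1) "laboratory" or "field"
    pollutant: str
    units: str
    concentration_range: Range                      # 2) target pollutant range
    interferent_ranges: Dict[str, Range] = field(default_factory=dict)
    temperature_range_c: Optional[Range] = None     # 3) environmental conditions
    rh_range_pct: Optional[Range] = None
    sensor_specific: Dict[str, float] = field(default_factory=dict)  # 4) e.g., flow rate
    reference_make_model: Optional[str] = None
    reference_distance_m: Optional[float] = None

    def __post_init__(self):
        if self.setting not in ("laboratory", "field"):
            raise ValueError("setting must be 'laboratory' or 'field'")

    def missing_items(self) -> List[str]:
        """Recommended items that were not reported."""
        missing = [name for name in ("temperature_range_c", "rh_range_pct")
                   if getattr(self, name) is None]
        if self.reference_make_model and self.reference_distance_m is None:
            missing.append("reference_distance_m")
        return missing

record = SensorTestRecord(setting="field", pollutant="NO2", units="ppbv",
                          concentration_range=(2.0, 85.0),
                          temperature_range_c=(-5.0, 32.0),
                          reference_make_model="(reference analyzer make/model)")
```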

Linearity, as measured by either r or R2, is a common metric for evaluating performance. However, r and R2 may be insufficient to characterize sensor performance because these statistics are strongly influenced by the concentration range and by outliers. If the overall concentration range in which the sensors are tested is limited, this can affect the linear regression (namely the slope, intercept, and R2 values). A single high-concentration point can drive a strong correlation that would not exist if that point were removed; likewise, the relationship can be degraded by one bad outlier. Slope and intercept are often used as a correction factor for sensors; however, they may be challenging to interpret if correlations are low. In addition, data may not be normally distributed. Sensor data could show a high R2 value that indicates good agreement, yet the data could be inaccurate.
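Both pitfalls described here are easy to reproduce with made-up numbers: a single high point can manufacture a strong correlation, and a sensor with a large multiplicative and additive bias can still show an R2 of 1. The sketch computes R2 for a simple linear fit (equal to r squared); the function name and all data are invented.

```python
def r_squared(x, y):
    """Coefficient of determination of a simple linear fit of y on x (= Pearson r squared)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)

reference = [10, 11, 12, 13, 14]
sensor = [12, 10, 13, 11, 12]
r2_without = r_squared(reference, sensor)                # about 0.02
r2_with = r_squared(reference + [100], sensor + [100])   # about 0.998

# Perfectly correlated but inaccurate: every reading is off by (value + 10).
biased = [2 * v + 10 for v in reference]
r2_biased = r_squared(reference, biased)                 # 1.0
mean_bias = sum(b - r for b, r in zip(biased, reference)) / len(reference)  # 22.0
```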

There are several ways to calculate the measurement error in terms of both absolute (e.g., μg/m3, ppbv) and relative error (in percent). Mean absolute error (MAE) and mean bias error (MBE) are often used. RMSE, also referred to as the root mean square deviation (RMSD), is also commonly used. RMSE/RMSD penalizes outliers and larger deviations in a data set. The RMSE can be normalized by dividing by the average concentration, resulting in the normalized root mean square error (NRMSE). Less commonly used metrics include average relative percentage deviation (ARPD) (Hannigan, 2019) and measurement uncertainty (Gerboles, 2019). In some cases, it may be ideal to report these performance parameters over multiple concentration ranges or environmental conditions if the sensor performs much differently under different conditions. Many SMEs suggested that RMSE may better represent measurement error because it captures large deviations in measured concentrations.
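The absolute and relative error metrics named above can be computed as follows. This sketch assumes paired, time-aligned sensor and reference values and normalizes RMSE by the mean reference concentration; other normalizations (e.g., by range) are also used, so the convention should be stated with any reported value. The function name and data are invented, and the single large deviation shows why RMSE exceeds MAE when errors are uneven.

```python
import math

def error_metrics(sensor, reference):
    """MBE, MAE, RMSE (same units as the data) and NRMSE (% of mean reference)."""
    if len(sensor) != len(reference) or not sensor:
        raise ValueError("need equal-length, non-empty paired data")
    n = len(sensor)
    d = [s - r for s, r in zip(sensor, reference)]   # signed errors
    mbe = sum(d) / n                                  # mean bias error
    mae = sum(abs(e) for e in d) / n                  # mean absolute error
    rmse = math.sqrt(sum(e * e for e in d) / n)       # root mean square error
    nrmse_pct = 100.0 * rmse / (sum(reference) / n)
    return {"MBE": mbe, "MAE": mae, "RMSE": rmse, "NRMSE_pct": nrmse_pct}

metrics = error_metrics(sensor=[12, 18, 33, 48], reference=[10, 20, 30, 40])
# {'MBE': 2.75, 'MAE': 3.75, 'RMSE': 4.5, 'NRMSE_pct': 18.0}
```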

Several other parameters were discussed in less detail. Response time may be important to report for high-time-resolution applications, because reporting at intervals shorter than the response time can introduce error. Drift is also important to report over longer deployments, although it may be confounded by seasonal bias. Lifetime, measurement consistency, and data completeness are also important to determine and report. Limits of detection (both lower and upper) may also be important, since users may need this information to determine how well suited a device is for their application. Data processing methods, such as data cleaning or other treatments (e.g., removing outliers, adjustments based on a device's limit of detection), need to be clearly reported. Some SMEs noted the importance of reporting sensor performance after corrections have been made following field or laboratory testing. It is commonly recommended that sensors be calibrated, either with known pollutant concentrations or through field collocation, before being deployed for projects. Consistent reporting and calculation of these parameters would allow for a meaningful comparison among sensor devices.
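Two of these parameters lend themselves to simple calculations. The sketch below assumes data completeness is the percentage of expected records that were reported and valid, and estimates drift as the least-squares slope of daily mean bias (sensor minus reference) against deployment day. All numbers are hypothetical, and, as noted above, a slope computed this way cannot by itself separate true drift from seasonal effects.

```python
def data_completeness_pct(n_valid, n_expected):
    """Percentage of expected records that were actually reported and valid."""
    return 100.0 * n_valid / n_expected

def drift_per_day(days, daily_mean_bias):
    """Least-squares slope of daily mean bias versus deployment day.

    Units are (concentration units) per day. A nonzero slope may reflect
    drift, seasonal changes in T/RH or interferents, or both.
    """
    n = len(days)
    md = sum(days) / n
    mb = sum(daily_mean_bias) / n
    num = sum((d - md) * (b - mb) for d, b in zip(days, daily_mean_bias))
    den = sum((d - md) ** 2 for d in days)
    return num / den

# Hypothetical 30-day deployment reporting 1-minute averages.
expected_records = 30 * 24 * 60
completeness = data_completeness_pct(41040, expected_records)

# Hypothetical daily mean bias (ppb) that grows by 0.05 ppb per day.
days = list(range(10))
bias = [1.0 + 0.05 * d for d in days]
drift = drift_per_day(days, bias)

print(f"completeness = {completeness:.1f} %, drift = {drift:.3f} ppb/day")
```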

3.4.6. User perspective on usability, data handling, and best practices

Presentations from the SMEs, as well as questions and comments from workshop attendees, highlighted a common need to know more about the usability features of a sensor prior to purchase. At present, few manufacturers provide a comprehensive list of sensor features or state what they consider appropriate use cases for their device(s). As a result, buyers spend considerable time researching options and holding lengthy conversations with manufacturers. Users would like this information to be presented routinely, in a common format, and readily accessible on product websites and specification (spec) sheets. Price transparency is also important to consumers. Publicly displayed pricing would help buyers quickly prioritize options that fit budget constraints while giving confidence that the costs are fair. In today’s market, manufacturers offer a variety of buying options (e.g., buy, lease), sometimes separate the costs of equipment and data hosting (e.g., a one-time purchase followed by monthly cloud service charges), and even offer “data as a service” options (e.g., the company retains ownership of the equipment and users pay for access to the data). Prospective users need clear information about these up-front and recurring costs, as well as about the total cost of ownership (e.g., cellular communication charges, calibration services, component replacement, refurbishment, bulk discounts) and data ownership.
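Comparing buying options requires adding one-time and recurring costs over the planned deployment period. A minimal sketch follows; every cost item and amount is hypothetical and chosen only to show the arithmetic, and real comparisons would add items such as refurbishment or bulk discounts.

```python
def total_cost_of_ownership(one_time, monthly, months):
    """Sum one-time costs plus recurring monthly costs over a deployment period.

    one_time and monthly map a cost item name to its amount.
    """
    return sum(one_time.values()) + months * sum(monthly.values())

# All amounts are hypothetical and for illustration only.
purchase_option = total_cost_of_ownership(
    one_time={"sensor": 250.0, "replacement_sensing_element": 80.0},
    monthly={"cloud_data_hosting": 10.0, "cellular_plan": 5.0},
    months=24,
)
data_as_a_service = total_cost_of_ownership(
    one_time={},
    monthly={"data_subscription": 30.0},
    months=24,
)
print(f"purchase: {purchase_option:.2f}, data as a service: {data_as_a_service:.2f}")
```

Under these assumed prices the purchase option is cheaper over 24 months, but the ranking can reverse for shorter deployments, which is why itemized, publicly listed costs matter.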

Sensor manufacturers currently offer a variety of power configurations, including plug-in, solar, and battery options. This basic information is often cataloged on websites or spec sheets, but additional information would assist users. For instance, expected battery life under specific deployment conditions or configurations is extremely helpful. Users often adapt sensors to meet project needs, including converting from plug-in to solar or battery power and/or incorporating several sensors into one package. In these cases, power consumption information would be helpful and would likely reduce trial and error. Ideally, manufacturers would offer a variety of power options.
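Power consumption figures make battery-life estimates straightforward. The sketch below assumes a sensor that alternates between an active measurement/transmission phase and a low-power sleep phase, and applies a hypothetical derating factor for temperature and battery aging. The currents, timings, capacity, and derating value are illustrative assumptions, not specifications of any device.

```python
def average_current_ma(active_ma, sleep_ma, active_s, period_s):
    """Duty-cycle-weighted average current draw in mA."""
    duty = active_s / period_s
    return active_ma * duty + sleep_ma * (1.0 - duty)

def battery_life_days(capacity_mah, avg_current_ma, derating=0.8):
    """Estimated runtime in days; derating is an assumed usable-capacity fraction."""
    return capacity_mah * derating / avg_current_ma / 24.0

# Hypothetical device: 120 mA for 30 s out of every 300 s, 2 mA while sleeping.
avg = average_current_ma(active_ma=120.0, sleep_ma=2.0, active_s=30.0, period_s=300.0)
days = battery_life_days(capacity_mah=10000.0, avg_current_ma=avg)
print(f"average current = {avg:.1f} mA, estimated life = {days:.1f} days")
```

Because the active phase dominates the average, lengthening the reporting period is often the most effective lever on battery life.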

In terms of data storage and communications, today’s market includes sensors that solely store data on board for later download (e.g., memory card), communicate by direct wired connection, communicate wirelessly to customer-owned or manufacturer-owned central servers using various protocols [e.g., WiFi, Bluetooth, low-power wide-area networks such as LoRa, cellular, satellite connection], or offer multiple options or hybrid approaches. This breadth of options matches the variety of situations in which sensors are currently used (e.g., remote locations, cities, homes, wearables). Basic information about the communication option employed is not always cataloged on websites or spec sheets, yet it is vital for choosing a sensor. For example, users have had trouble connecting some WiFi-enabled sensors to public networks, to networks featuring splash-page authentication or advanced security, or to networks operating at 5 GHz. Users of cellular-enabled sensors have reported trouble when the carrier network for which the sensor was designed (e.g., Verizon, AT&T, T-Mobile) is not available in their area. In recent years, manufacturers have made significant advances in communication strategies, anticipating that users will wish to deploy sensors in more situations than previously imagined. However, buyers still need manufacturers to disclose all known limitations so that, together, they can decide whether the sensors can be used successfully when deployed. Lastly, users desire more information about flexibility in setting the data communication rate, especially for communication schemes in which that choice influences operating costs (e.g., cellular or satellite data).
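The link between reporting interval and metered data costs is simple arithmetic. The sketch assumes a fixed message size per transmission (the 200-byte figure is hypothetical and stands in for payload plus protocol overhead) and a 30-day month.

```python
def monthly_data_mb(bytes_per_message, interval_s, days=30):
    """Approximate monthly data volume in MB for a fixed reporting interval."""
    messages = days * 86400 / interval_s
    return messages * bytes_per_message / 1e6

# Hypothetical 200-byte message sent every minute versus every 10 minutes.
every_minute = monthly_data_mb(bytes_per_message=200, interval_s=60)
every_ten_minutes = monthly_data_mb(bytes_per_message=200, interval_s=600)
print(f"{every_minute:.3f} MB/month vs {every_ten_minutes:.3f} MB/month")
```

A tenfold longer interval gives a tenfold smaller volume, so a configurable communication rate can translate directly into lower cellular or satellite charges.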

Unless a manufacturer is highlighting its user interface or data tools, few provide details about how users will access the data from their sensor. At present, there is little conformity among manufacturers in the choice of file format (e.g., .csv, .txt, JSON) or data access method (e.g., display only, internal storage, cloud interface, proprietary software, subscription service, direct connection, external data logger). At a minimum, manufacturers should disclose their data access and ownership plan. Further, they should consider how limiting a requirement for proprietary software or a specific operating system may be, how users may wish to combine the sensor data with other data sources, and which existing data management systems and data visualization tools users may wish to use. Movement toward a more standardized data file type and format would significantly aid those working to integrate data from several sensor types into databases and/or data analysis and visualization tools. Metadata is also an important component that should not be overlooked.
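As one illustration of a self-describing output, the sketch below writes sensor records to CSV with a fixed column order and units embedded in the column names, plus a JSON metadata sidecar. The column names, metadata fields, and values are hypothetical placeholders, not a published or workshop-endorsed standard.

```python
import csv
import io
import json

# Hypothetical column names that carry their units; not a published standard.
COLUMNS = ["timestamp_utc", "pm10_ugm3", "no2_ppb", "temp_c", "rh_pct"]

# Hypothetical metadata sidecar describing how the values were produced.
metadata = {
    "device_model": "example-model",   # placeholder, not a real product
    "firmware_version": "0.0.0",       # placeholder
    "averaging_interval_s": 60,
    "time_zone": "UTC",
    "corrections_applied": "none",
    "units": {"pm10_ugm3": "ug/m3", "no2_ppb": "ppb", "temp_c": "degC", "rh_pct": "%"},
}

records = [
    {"timestamp_utc": "2019-07-01T00:00:00Z", "pm10_ugm3": 18.2, "no2_ppb": 11.4,
     "temp_c": 24.1, "rh_pct": 61.0},
    {"timestamp_utc": "2019-07-01T00:01:00Z", "pm10_ugm3": 17.9, "no2_ppb": 11.9,
     "temp_c": 24.0, "rh_pct": 61.5},
]

def to_csv(rows, columns):
    """Serialize rows to CSV with a fixed, documented column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

csv_text = to_csv(records, COLUMNS)
metadata_text = json.dumps(metadata, indent=2)
print(csv_text)
print(metadata_text)
```

Recording firmware version and applied corrections in the metadata also helps users detect when an update has changed the output.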

Several SMEs noted that there are still issues with data files, including inconsistent measurement definitions, non-descriptive file formats and column names, missing or inaccurate units and column headers, formatting abnormalities, and missing or clearly erroneous data. These inconsistencies are difficult for any user but are especially problematic when the data are ingested by software for analysis and visualization. Firmware updates sometimes change data outputs without notice, requiring users to rework existing data handling procedures. Each of these issues presents significant challenges for users and ideally would be addressed by manufacturers.
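Until such issues are resolved upstream, users often screen files before ingestion. The sketch below checks for required columns and flags missing, non-numeric, or implausible values in one column. The column names and the plausibility bounds are hypothetical assumptions; sentinel values such as -999 are a common convention for missing data but vary by manufacturer.

```python
import csv
import io
import math

REQUIRED_COLUMNS = {"timestamp_utc", "no2_ppb"}  # hypothetical schema
NO2_PLAUSIBLE_PPB = (0.0, 2000.0)                # hypothetical plausibility bounds

def validate_no2(text):
    """Return (line_number, problem) pairs for NO2 values that fail basic checks.

    Raises ValueError if a required column is absent from the header.
    """
    reader = csv.DictReader(io.StringIO(text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing required columns: {sorted(missing)}")
    lo, hi = NO2_PLAUSIBLE_PPB
    problems = []
    # Line 1 is the header, so the first data row is line 2
    # (assumes no quoted fields spanning several lines).
    for line_no, row in enumerate(reader, start=2):
        raw = (row.get("no2_ppb") or "").strip()
        try:
            value = float(raw)
        except ValueError:
            value = math.nan  # empty or non-numeric entry
        if math.isnan(value):
            problems.append((line_no, "missing or non-numeric"))
        elif not lo <= value <= hi:
            problems.append((line_no, "outside plausible range"))
    return problems

sample = (
    "timestamp_utc,no2_ppb\n"
    "2019-07-01T00:00:00Z,12.5\n"
    "2019-07-01T00:01:00Z,\n"
    "2019-07-01T00:02:00Z,-999\n"
    "2019-07-01T00:03:00Z,NaN\n"
)
print(validate_no2(sample))
```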

In practice, sensors are operated by all kinds of users, including community members. Participants discussed the importance of ease-of-use features and of understanding what level of knowledge is needed to operate a sensor properly. Such features might include manuals and training videos, status indicators for power and data transmission, alarms and notifications, automated quality control review and flagging of data, and ‘smart’ calibrations that remove the need for data manipulation by the user (although this could also present issues if the user is unaware that the data have been adjusted). Manufacturers should also consider the potential injury risk posed to an operator by electrical, optical, or thermal hazards and might also consider UL certification of the design. Sensors are more usable if they “play well” with other sensors by sharing communication resources, not interfering with other signals, having compatible data formats, etc. Finally, for the privacy and safety of the user (e.g., a user does not want their location publicly disclosed, or pollution emitters and sensor users/operators may be in potential conflict), data recordings and displays should offer privacy settings, options to ‘blur’ locations, or encrypted communication protocols for online data.
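One simple way to ‘blur’ a location is to publish the center of a coarse grid cell instead of the exact coordinates. The sketch assumes a 0.01° grid (roughly 1.1 km in latitude; the east-west spacing shrinks with latitude); the cell size and example coordinates are hypothetical. Grid snapping alone may not protect privacy if only one sensor exists in a sparsely populated cell.

```python
import math

def blur_location(lat, lon, cell_deg=0.01):
    """Snap a coordinate to the center of a cell_deg x cell_deg grid cell.

    Every point inside the same cell maps to the same published location,
    so the reported point is at most half a cell away in each axis.
    """
    def snap(value):
        return (math.floor(value / cell_deg) + 0.5) * cell_deg
    return snap(lat), snap(lon)

# Two hypothetical nearby sensor sites fall in the same cell.
site_a = blur_location(35.7796, -78.6382)
site_b = blur_location(35.7712, -78.6331)
print(site_a, site_b)
```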

At present, sensors often do not produce data that are directly comparable to those from ambient regulatory monitors. Thus, sensor data are often adjusted to improve comparability. Sometimes the adjustments are relatively simple (e.g., simple linear regression), and sometimes they are labor-intensive (e.g., complicated models, machine learning). Traditionally, determining what adjustments are needed has required users to conduct a field collocation near regulatory monitors and to apply the resulting adjustment to the data themselves. This can be a difficult task for users who may not have access to regulatory monitors (e.g., in developing countries or remote locations, or individuals) or the technical skills needed to create, validate, and apply complicated models. In recent years, manufacturers have sought to set themselves apart by automating some data adjustments, developing new data adjustment procedures that yield better out-of-the-box sensor performance, and offering functionality to incorporate a collocation-based adjustment that enhances performance further. A wide variety of methodologies exist, and most are considered proprietary. Some workshop attendees voiced interest in full disclosure of this proprietary information. Others understood manufacturers’ desire to keep innovations proprietary but asked for more transparency to help them better understand performance implications. At a minimum, users suggested that manufacturers should disclose that an algorithm is being used, the test conditions or range of situations it covers, and the artifact the algorithm is intended to address. A summary of all the user needs discussed related to air sensors is provided in Table 4.
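The simplest collocation-based adjustment mentioned above can be sketched as an ordinary least-squares fit of reference concentrations on sensor readings, which is then applied to later sensor data. The collocation values are hypothetical, and this single-variable model is only one of many possible approaches (others add T, RH, or nonlinear terms).

```python
def fit_linear_correction(sensor, reference):
    """OLS fit of reference = intercept + slope * sensor from collocation data."""
    n = len(sensor)
    ms, mr = sum(sensor) / n, sum(reference) / n
    sxx = sum((s - ms) ** 2 for s in sensor)
    slope = sum((s - ms) * (r - mr) for s, r in zip(sensor, reference)) / sxx
    return slope, mr - slope * ms

def apply_correction(values, slope, intercept):
    """Apply the fitted linear correction to new sensor readings."""
    return [intercept + slope * v for v in values]

# Hypothetical collocation period (ppb): the sensor reads 1.25 * reference + 5.
reference = [10.0, 20.0, 30.0, 40.0]
sensor = [17.5, 30.0, 42.5, 55.0]
slope, intercept = fit_linear_correction(sensor, reference)

# Correct readings from a later deployment period.
corrected = apply_correction([30.0, 42.5], slope, intercept)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}, corrected = {corrected}")
```

Reporting the fitted coefficients together with the concentration, T, and RH ranges covered during collocation tells users where the correction was tested and where it would be an extrapolation.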

Table 4.

Summary of user needs associated with air sensors.

| Category | Specific User Need | Why is this important for a user? |
|---|---|---|
| Price | Publicly list the cost of the sensor, data hosting services, and data access services. | • Helps users determine if the sensor fits within their budget. • Makes users fully aware of additional costs beyond the sensor itself. • Helps users know if costs are one-time or recurring. |
| | Publicly list purchasing options (e.g., buy, lease, rent). | • Assists users in choosing a purchase option that fits within their budget. |
| Power | Detailed information about the expected battery life of a sensor. Detailed information about the power consumption of a sensor. | • Informs users of potential deployment limitations. • Informs users who may wish to independently change the power configuration or combine many sensors into one unit. |
| Data communication | Basic information about communication options and network compatibilities. | • Helps users select an appropriate sensor for the infrastructure in their deployment area. • Helps users understand if additional equipment costs may be needed to successfully operate a sensor. |
| Data access and ownership | Detailed information about how data can be accessed from a sensor and who owns the data. | • Helps users understand how to access sensor data (e.g., private, public, manufacturer site). • Helps users select a sensor that meets their data ownership requirements, security needs, or access preferences. • Makes users aware of additional costs beyond the sensor itself. |
| Data storage | Information on how much data can be stored, and for how long, either on a device or on the manufacturer’s data hosting site. | • Sets expectations for sensor deployment and data retrieval, both short-term and long-term. |
| Data format | Information on the file format of data (e.g., .csv, .txt). | • Helps users determine if additional software programs or technical expertise are needed to access data. |
| Ease-of-use features | Level of knowledge needed to properly and safely operate and maintain a sensor. | • Assists users in selecting an appropriate sensor. • Helps users determine if additional technical expertise is needed on the project team. |
| General operating conditions | Information about limits of detection (upper and lower) and expected ranges of T, RH, and pollutant concentrations for operating a device. | • Helps users understand which sensor fits their sampling goals. • Sets expectations for sensor deployment. |
| Privacy | Information about whether data are public or private and whether the sensor location can be hidden or blurred. | • Allows users to determine if a sensor meets privacy needs. |
| Device lifespan | Detailed information about the expected lifetime of the device and any conditions that affect the estimate. | • Allows users to plan for device/sensor replacement or maintenance. • Helps users understand what conditions to monitor in order to estimate the device lifespan. |
| Calibration | Detailed information about how to calibrate a sensor and how frequently calibration is needed. | • Assists users in collecting quality data. • Gives users an approach for improving sensor data. |
| Sensor precision | Information about the variability in measurements among multiple copies of the same sensor. | • Gives users an indication of whether a data adjustment equation can be applied to a batch of sensors or needs to be applied to individual sensors. |
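The sensor precision need in Table 4 can be quantified from several units of the same model operated side by side. The sketch below computes a pooled within-timestamp standard deviation across units and expresses it as a coefficient of variation relative to the overall mean. This is one reasonable formulation chosen for illustration, not necessarily the formula used in any particular testing protocol, and the readings are hypothetical.

```python
import math

def intersensor_precision(readings):
    """Pooled SD and CV (%) among collocated units of the same sensor model.

    readings[t][u] is the value reported by unit u at timestamp t; every
    timestamp must have the same number of units (at least two).
    """
    n_units = len(readings[0])
    if n_units < 2 or any(len(row) != n_units for row in readings):
        raise ValueError("need >= 2 units reporting at every timestamp")
    sum_sq = 0.0
    total = 0.0
    for row in readings:
        row_mean = sum(row) / n_units
        sum_sq += sum((v - row_mean) ** 2 for v in row)  # spread among units at time t
        total += sum(row)
    dof = len(readings) * (n_units - 1)  # pooled within-timestamp degrees of freedom
    sd = math.sqrt(sum_sq / dof)
    cv = 100.0 * sd / (total / (len(readings) * n_units))
    return sd, cv

# Hypothetical: three collocated units reporting at two timestamps (ppb).
readings = [[10.0, 12.0, 11.0], [20.0, 22.0, 21.0]]
sd, cv = intersensor_precision(readings)
print(f"SD = {sd:.2f} ppb, CV = {cv:.2f} %")
```

A small CV suggests a single adjustment equation may serve a whole batch, whereas a large CV points toward unit-specific corrections.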