Abstract

Life cycle interpretation is the fourth and last phase of life cycle assessment (LCA). Being a “pivot” phase linking all other phases and the conclusions and recommendations from an LCA study, it represents a challenging task for practitioners, who lack harmonized guidelines that are sufficiently complete, detailed, and practical to conduct its different steps effectively. Here, we aim to bridge this gap. We review available literature describing the life cycle interpretation phase, including standards, LCA books, technical reports, and relevant scientific literature. On this basis, we evaluate and clarify the definition and purposes of the interpretation phase and propose an array of methods supporting its conduct in LCA practice. We propose reorganizing the five steps of interpretation defined in ISO 14040–44 around a framework that offers a more pragmatic approach to interpretation. It orders the steps as follows: (i) completeness check, (ii) consistency check, (iii) sensitivity check, (iv) identification of significant issues, and (v) conclusions, limitations, and recommendations. We provide toolboxes consisting of methods and procedures supporting the analyses, computations, points to evaluate or check, and reflective processes for each of these steps. All methods are succinctly discussed, with references to further details of their applications. This proposed framework, substantiated with a large variety of methods, is envisioned to help LCA practitioners increase the relevance of their interpretation and the soundness of their conclusions and recommendations. It is a first step toward a more comprehensive and harmonized LCA practice to improve the reliability and credibility of LCA studies.

Keywords: completeness and consistency checks, decision-making, ISO, life cycle assessment (LCA), result evaluation, uncertainty and sensitivity analysis

1 |. INTRODUCTION

According to ISO 14040–44 standards (ISO, 2006a, 2006b) and assuming a single iteration of the LCA study, life cycle interpretation is the fourth LCA phase, after the definition of the goal and scope of the study, the life cycle inventory (LCI) analysis, and the life cycle impact assessment (LCIA). It is defined as the “final phase of the LCA procedure, in which the results of an LCI or an LCIA, or both, are summarized and discussed as a basis for conclusions, recommendations and decision-making in accordance with the goal and scope definition” (ISO, 2006a, 2006b). General requirements and guidance are provided in the ISO standards and reference documents such as the ILCD Handbook (EC-JRC, 2010). These have been further described and sometimes complemented in several LCA textbooks and scientific papers.

However, these often fail to provide a comprehensive and detailed picture of the interpretation phase, focusing on single aspects or lacking specificity in their guidance. Hence, there is a lack of complete and detailed procedures to conduct the different steps within life cycle interpretation effectively. How to proceed and which methods to apply are left for LCA practitioners to decide, without recourse to any explicit context. Better understanding and application of the interpretation phase could thus prevent situations where (i) practitioners overlook important aspects of their results, (ii) the reliability of the results is insufficiently understood, leading to inappropriate conclusions, and/or (iii) the comparability of the results of LCA studies ends up compromised because some key steps are not systematically performed.

In this study, we therefore review available guidelines and methods for life cycle interpretation and use them to derive a consolidated definition as well as to provide a scientific basis for harmonized procedures and detailed guidance supporting interpretation of LCA results. For each of the interpretation steps, we give an overview of some possible methods and approaches, presented in the form of a toolbox associated with recommendations. The target group is the broad community of LCA practitioners, from beginners to experts. This work has been performed within the Life Cycle Initiative’s flagship project on “Global guidance for life cycle impact assessment,” as part of the task force addressing cross-cutting issues (UN Environment – Life Cycle Initiative, 2019; Verones et al., 2017). The current review and its results are an essential part of the project scope because life cycle interpretation is a central phase within the LCA methodology, iteratively connecting all phases while also being the final link between the outcome of the LCIA phase and the decisions undertaken by the stakeholders of the LCA study. As part of the internal discussion in the project, it also became evident that the interpretation phase in LCA is vaguer than the other phases. This reinforces the relevance of providing recommended practice and of showing the range of methods applicable in LCA studies, as a first step toward harmonized procedures.

The focus of the paper is on the interpretation phase and therefore does not address detailed steps related to the LCIA phase, such as normalization and weighting, which have already been reviewed elsewhere, for example, in Pizzol et al. (2017). After describing the review methodology (Section 2), the main findings are presented in two main sections: Section 3 provides a consolidated definition of the interpretation phase and details the identification and organization of the methodological steps to perform it; Section 4 presents the overview of methods, procedures, and approaches within each of these steps along with some recommendations. Although we refer to these overviews as toolboxes, the purpose of this section is to provide a scientific overview of methods, thus only summarizing their key features and pointing out existing literature for more detailed documentation on their application.

2 |. REVIEW METHODOLOGY

2.1 |. Selection of literature

The review focuses on reference documents and published LCA textbooks identified through the knowledge of the author team, since only these address the life cycle interpretation phase in a relatively comprehensive manner, for example, through dedicated book chapters. With few exceptions, such as Skone (2000), scientific papers and reports typically have other focuses than just interpretation and thus only touch upon the topic (e.g., Finnveden et al., 2009; Guinée et al., 2011) or have a narrow focus on specific aspects of interpretation, like uncertainty analysis (e.g., Heijungs & Kleijn, 2001; Mendoza Beltran et al., 2018). A full systematic review of all LCA studies to evaluate their conduct of interpretation is outside the scope of this review. Additional literature, including relevant technical reports and key scientific publications, is identified using the Google Scholar search engine (http://scholar.google.com/), with the keywords “life cycle assessment interpretation” and “LCA interpretation.”

2.2 |. Critical review

We critically reviewed each identified literature source in Table 1 and compiled proposed definitions of the interpretation phase allowing us to develop a consolidated definition. In order to prevent contradictions with the ISO standards, this consolidated definition uses the ISO definition as a starting point and complements it with relevant points from the reviewed sources. From each of the sources, we extracted (i) steps recommended (following ISO or not), (ii) potential focus on specific steps, and (iii) methods, procedures, or approaches proposed or recommended. A few complementary methods useful for interpretation, albeit not commonly used in LCA studies, were also added based on the authors’ knowledge and experience in LCA.

TABLE 1.

Reviewed LCA reference documents, books, reports, and other relevant literature for life cycle interpretation definition and methodology

| Documents (references) | Type | Inclusion of definitions of interpretation phase? | Brief description of content (relevant to interpretation) |
|---|---|---|---|
| ISO Standards 14040–44 (ISO, 2000, 2006a, 2006b) | Reference documents | Yes | Provides overarching guidance and requirements, including provision of the step-wise approach |
| ILCD Handbook - LCA Detailed Guidance (EC-JRC 2010) | Reference document | Yes | Provides overarching guidance and requirements, including provision of the step-wise approach |
| Barthel, Fava, James, Hardwick, and Khan (2017) | Reference document | No | Detailed guidance to perform hotspot analysis studies. Includes eight steps for study conduct, including three of them (steps 4–6) relating to interpretation and validation of the results. Focus on hotspot analysis and identification of significant impacts |
| EC (2018a, 2018b) | Reference documents | No | Environmental Footprint Category Rules (PEFCRs) guidance document providing detailed procedures and specifications for performing assessments in different sectors, including methods for hotspot analysis and identification of significant impacts |
| Vigon et al. (1994) | Book | No (dated pre-ISO-14040) | Addresses lifecycle inventory analysis, including model development and evaluation of data issues (quality, specificity, etc.) |
| Ciambrone (1997) | Book | No (dated pre-ISO-14040) | Includes a succinct section dedicated to interpretation of LCA results, as part of the life cycle framework |
| Wenzel, Hauschild, and Alting (1997) | Book | No (dated pre-ISO-14040) | Describes need and method to conduct interpretation of results together with uncertainty and sensitivity analysis |
| Guinée (2002) | Book | Yes | Includes dedicated chapters on interpretation from practical to methodological point of view, including step-wise approach and detailed guidelines for applying specific methods |
| Heijungs and Suh (2002) | Book | Yes | Strong focus on inventory analysis with a subchapter dedicated to interpretation, describing several methods to perform quantitative uncertainty analysis |
| Baumann and Tillman (2004) | Book | Yes | Includes dedicated chapter on interpretation and presentation of results, differentiating quantitative and qualitative interpretation and testing of result robustness |
| Horne, Grant, and Verghese (2009) | Book | No | Reflections on LCA practice, briefly touching upon interpretation of results as part of LCA framework presentation (LCA methodological guidance being outside scope of the book) |
| Curran (2012) | Book | Yes | Describes definition and overarching methodological guidance for interpretation of LCIA results |
| Klopffer and Grahl (2014) | Book | Yes | Includes dedicated chapter on interpretation and critical review, detailing a number of mathematical and non-numerical methods, supported by an illustrative case study |
| Matthews, Hendrickson, and Matthews (2016) | Book | Yes | Includes chapters dedicated to specific methods or groups of methods supporting interpretation, for example, uncertainty assessment, structural path analysis, etc. |
| Jolliet, Saadé-Sbeih, Shaked, Jolliet, and Crettaz (2016) | Book | Yes | Includes dedicated chapter on interpretation, detailing several steps and aspects to check and evaluate, including a detailed part on quality control and uncertainty assessments |
| Hauschild, Rosenbaum, and Olsen (2018a) | Book | Yes | Includes dedicated chapters on interpretation (Hauschild et al., 2018b), uncertainty and sensitivity analysis (Rosenbaum, Georgiadis, & Fantke, 2018), and practical cookbook (Hauschild & Bjørn, 2018) |
| Ciroth (2017) | Book chapter | Yes | Describes the interactions between goal and scope definition phase and interpretation phase succinctly. Part of Curran (2017), detailing goal and scope definition phase |
| Zamagni et al. (2008) | Technical report | No | Provides generic guidance to critical review of LCA studies, including a chapter on interpretation |
| Zampori et al. (2016) | Technical report | No | Provides practical guidance for conducting interpretation with examples and case studies |
| Skone (2000) | Scientific paper | Yes | Discusses the concept of life cycle interpretation, and steps to carry it out |
| Heijungs and Kleijn (2001) | Scientific paper | No | Focus on the numerical approaches in interpretation (contribution analysis, perturbation analysis, etc.) |
| Finnveden et al. (2009) | Scientific paper | No | Touches upon the topic when presenting an overview of LCA; strong focus on uncertainty analysis |
| Guinée et al. (2011) | Scientific paper | No | Touches upon the topic when presenting an overview of LCA |
| Weidema (2019) | Scientific paper | No | Provides detailed procedure and guidance for performing the consistency check |

3 |. CONSOLIDATED DEFINITION AND RECOMMENDED STEPS OF THE LIFE CYCLE INTERPRETATION PHASE

3.1 |. Sources providing interpretation guidance and definition

The main publications identified that address life cycle interpretation are listed in Table 1. These include reference documents, that is, mainly the ISO 14040/44 standards (ISO, 2006a, 2006b) and ILCD Handbook detailed guidance for LCA (EC-JRC, 2010), all published LCA textbooks at the time of the study (first half of 2018), as well as relevant technical reports and scientific publications. The definitions of life cycle interpretation thus found in reference documents and most LCA textbooks all rely on the ISO standards, sometimes taken as such, sometimes reformulated.

Our consolidated definition is as follows: “Life cycle interpretation is the LCA phase, which quantitatively and qualitatively identifies, checks, and evaluates the outcomes of the life cycle inventory (LCI) and the life cycle impact assessment (LCIA) steps, concerning the goal and scope definition. It aims to provide robust conclusions and recommendations to the target audience of the study (e.g., decision-maker, who needs to select among several scrutinized options) while allowing for a suitable communication of the results. This phase is iterative as it enables pinpointing improvement potentials in the other LCA phases, thus offering cues to increase consistency and completeness of the LCA study in the next iteration. The interpretation phase does not encompass communication of the results to stakeholders, although it can be considered a preparation step for communication.”

The above definition builds on, and remains compliant with, the definitions provided in the ISO 14043:2000 (focused on life cycle interpretation and later fed into ISO 14044) and ISO 14044:2006 standards (ISO, 2000, 2006b). However, it adds more emphasis on the quantitative and qualitative character of the identification, check, and evaluation of the LCI and/or LCIA results concerning other phases, including the goal of the study. It stresses the iterative nature of the interpretation phase, which can be regarded as a “pivot phase,” with LCA practitioners potentially being sent back to refine the goal, scope, LCI or LCIA phases. Finally, the definition distinguishes interpretation from the communication of the results, although the target audience and the possibility for appropriate communication of the results should be integrated into the interpretation phase.

3.2 |. Recommended steps for conducting life cycle interpretation

To conduct the interpretation phase, ISO 14044:2006 recommends, first, the identification of significant issues, that is, implications of the methods used and assumptions made on the results (e.g., allocation rules, system boundaries, and impact categories, models, and indicators used, value choices), significant impact categories and significant contributions from life cycle stages to LCI and LCIA results (ISO, 2006b). The identification of significant issues is iteratively linked to the evaluation of the results, where three different checks should then be performed: completeness check, sensitivity check, and consistency check (ISO, 2006b). Following this iterative process between the identification of significant issues and the evaluation checks, conclusions, limitations, and recommendations from the case study are drawn.

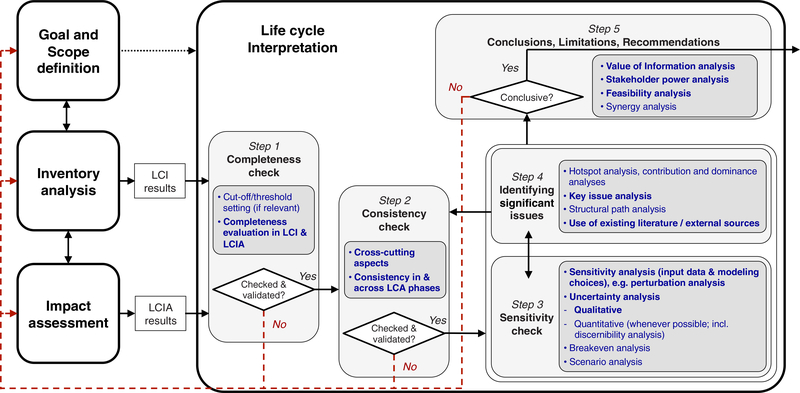

These major methodological steps remain part of our recommendations for performing life cycle interpretation, as illustrated in Figure 1. The flowchart depicts our proposed sequence of the five methodological steps associated with specific methods and procedures applicable within each; these different methods and procedures are introduced and developed in Section 4. In comparison with the ISO 14044:2006 standard, we split the evaluation step into the completeness, consistency, and sensitivity checks, respectively, thus rendering five steps instead of three. Building on this more granular structure, we suggest a notable reordering, in which the completeness and consistency checks are conducted before the sensitivity check and the identification of significant issues (third and fourth steps, which are conducted jointly). This reordering is motivated by pragmatism: LCA practitioners first need to evaluate whether the study has been done with a sufficient level of completeness and consistency, that is, whether the results are associated with sufficient representativeness and reliability to be valid and ready for further interpretation. Otherwise, LCA practitioners need to take a step back and review the goal and scope definition, LCI, and/or LCIA phases.

FIGURE 1.

Overarching framework proposed to perform life cycle interpretation. The five main steps are highlighted in light gray boxes; proposed methods and procedures are summarized in the dark gray boxes. Methods and procedures in bold blue text are considered part of minimum requirements for the conduct of interpretation. The black dotted arrow indicates the provision of inputs/information from the goal and scope definition phase to the interpretation process. Iterative nature of the interpretation phase with other phases is highlighted by the dark red-dashed arrows

In compliance with ISO 14044:2006, the uncertainty analysis is included as part of the sensitivity check, which has a general purpose of understanding the relationships between model input and output, and thus integrates both sensitivity and uncertainty analyses. We also added a link from the identification of significant issues (Step 4) back to the consistency check (Step 2) to acknowledge that the identification of significant issues might involve aggregations and value choices that need a consistency check before proceeding with Steps 3 and 4 (this contributes to the iterative nature of the interpretation steps). The conclusions, recommendations and limitations of the study are kept as a final step (Step 5) in the methodology, although we advocate therein, through the loop to the other phases in Figure 1 (red dashed arrows), that the practitioner assesses whether or not the study is conclusive before actually drawing the conclusions and recommendations (see details in Section 4.5).

As visible in Figure 1, we designate some methods and procedures as recommended minimum requirements for each of the five interpretation steps, while other methods may be relevant on a case-by-case basis. Although completeness and consistency of the LCA study should be guaranteed when conducting the study in the first place, the completeness and consistency checks (Steps 1 and 2) are the steps where practitioners demonstrate and document transparently that this is the case, and hence validate their LCA conduct, ensuring that the obtained results are indeed ready for interpretation. We therefore recommend the procedures to do so as mandatory steps. As part of the sensitivity check, the recommended minimum requirement is a sensitivity analysis and a qualitative uncertainty analysis (aligned with previous recommendations, e.g., Igos, Benetto, Meyer, Baustert, & Othoniel, 2019). Several generic methods, which are regarded as major facilitators to identify significant issues (Step 4) and structure the interpretation of results to reach sound and relevant conclusions and recommendations (Step 5), are also proposed as mandatory practice for LCA practitioners.

4 |. METHODS AND DETAILED GUIDANCE FOR EACH INTERPRETATION STEP

A large variety of quantitative and qualitative methods have been proposed and can be used within each of the methodological steps, that is, completeness check, consistency check, sensitivity check, identification of significant issues, and conclusions and recommendations. These methods are briefly described and discussed for each of these steps in the “toolboxes” of Sections 4.1–4.5, which include additional references for further details on their applications.

4.1 |. Toolbox for performing completeness check

According to ISO 14040, the “completeness check” is “the process of verifying whether information from the phases of a life cycle assessment is sufficient for reaching conclusions following the goal and scope definition” (ISO, 2006a). Completeness checks can be performed by applying both quantitative and qualitative approaches and can be quantified as a percentage of flow that is measured or estimated (ISO 14044; ISO, 2006b). Performing a completeness check is inherently paradoxical, as it requires gauging the degree of completeness while the absolute 100% value cannot be known. Therefore, there is a need to approximate the 100% true value and define what degree of completeness is sufficient for the context of a study.

According to ISO 14044, the objective of the completeness check is to ensure that all relevant information and data needed for the interpretation are available and complete. If any relevant information is missing or incomplete, the necessity of such information for satisfying the goal and scope of the LCA shall be considered, recorded and justified. In cases where the missing information is considered unnecessary, the reason for this should be justified and transparently reported. However, if any relevant information, considered necessary for determining the significant issues, is missing or incomplete, the preceding LCI and/or LCIA phases should be revisited or the goal and scope definition should be adjusted. It is important to note that some completeness checks are not always straightforward and can be challenging for LCA practitioners, who often rely on existing guidance. Such situations may, therefore, call for assistance or review from experts to check the completeness of system boundaries, data, parameters, and methods used in the different phases of the studies.

A completeness check consists of the following aspects: (i) completeness check of inventory (Section 4.1.2); and (ii) completeness of the impact assessment, including impact indicators and characterization factors (Section 4.1.3). In addition, the completeness of the procedures and documentation should be checked (see detailed guidance in ISO 14040, Guinée, 2002, or Wenzel et al., 1997). The completeness should be evaluated concerning how the study is defined in the goal and scope phase, that is, which activities are to be included within the system boundaries and which impact categories should be covered. A particularly challenging aspect of the scope definition for practitioners is the definition of cut-off criteria and its relation to completeness evaluation; this point is clarified in Section 4.1.1.

4.1.1 |. Clarifying the definition and relevance of cut-off criteria

ISO 14040 includes an option for defining cut-off criteria, defined as “specifications of the amount of material or energy flow or the level of environmental significance associated with unit processes or product system to be excluded from a study” (ISO, 2006a). According to this standard, the cut-off criteria, for example, 95% or 90% completeness, are quantified as a percentage of flow that is measured or estimated and can be based on mass, energy content, environmental impact or cost. However, to know what 95% or 90% completeness is, one would need to have an estimate of what 100% is, and if this information is known, there would thus be no reason to “cut off” the 5% or 10%. Therefore, the “cut-off” should be regarded as a “data collection threshold,” below which any further data collection would be unnecessary due to the negligible contributions from these data, and below which the LCA practitioner therefore retains the existing data. Often the data that are below that threshold (i.e., retained in their existing low quality) belong to the following two types: (i) data for which it is disproportionately difficult to obtain better data quality, and (ii) missing specific data for which an estimate (e.g., with a proxy) is regarded as giving sufficient data quality. It should be noted that even the 100% estimate might be incomplete due to ignorance or lack of awareness of the occurrence of elementary flows or impacts. The completeness check can seek to assess the latter by using other data sources to arrive at alternative 100% estimates for comparison.
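As an illustration of the data collection threshold described above, the following minimal Python sketch ranks flows by their estimated contribution and flags those whose cumulative share stays within a chosen threshold. The function name `flows_below_threshold`, the 5% threshold, and the screening values are hypothetical and are not taken from ISO 14040–44. Contributions are assumed to be non-negative and expressed in a single unit, and the total is only an approximation of the unknown 100% value.

```python
def flows_below_threshold(contributions, threshold=0.05):
    """Flag the smallest flows whose cumulative share stays within `threshold`.

    contributions: dict mapping flow name -> estimated contribution
                   (non-negative, all in the same unit; an assumption of this sketch).
    Returns (list of flagged flow names, their cumulative share of the total).
    """
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("estimated total must be positive")

    flagged, cumulative = [], 0.0
    # Walk from the smallest contributor upward, accumulating shares.
    for name, value in sorted(contributions.items(), key=lambda kv: kv[1]):
        share = value / total
        if cumulative + share > threshold:
            break
        cumulative += share
        flagged.append(name)
    return flagged, cumulative


# Hypothetical screening estimates (e.g., kg CO2-eq per functional unit).
screening = {
    "steel": 60.0,
    "electricity": 30.0,
    "transport": 6.0,
    "packaging": 3.0,
    "lubricant": 1.0,
}
low_priority, share = flows_below_threshold(screening, threshold=0.05)
print(low_priority, round(share, 3))
```

With these illustrative values, the two smallest flows together account for about 4% of the estimated total and would be retained with their existing data quality, while further data collection would focus on the remaining flows. Repeating the calculation with an alternative estimate of the total is one way to probe how sensitive the flagged set is to the assumed 100% value.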

4.1.2 |. Completeness check of the life cycle inventory analysis phase

The completeness check of the LCI phase is performed to determine:

(i) Completeness of LCI unit process coverage and system modeling;

(ii) Completeness of intermediate and elementary flow coverage;

(iii) Approaches to identify and deal with missing or incomplete information/data;

(iv) Completeness check requirements for comparative assertions.

These four aspects are described below.

Completeness of LCI unit process coverage and system modeling

For process coverage, all relevant processes in the system should be covered. Therefore, LCA practitioners need to check whether all relevant unit processes have been consistently included in the product system, that is, whether the relevant product properties have been consistently traced up to the system boundary with the environment (for attributional studies) or whether all relevant consequences of the studied decision(s) are traced through the affected unit processes to the system boundary with the environment (for consequential studies).

Such evaluation should be aligned with the information reported in the scope definition phase (i.e., delimitation of the system boundaries), where it is recommended to illustrate processes that are included and excluded with a system boundary diagram (Bjørn et al., 2018; Jolliet et al., 2016). Excluded processes need to be justified and their influences on the degree of incompleteness of the study should be addressed transparently as part of the completeness check (EC-JRC, 2010). Use of literature or past studies that provide quantified impacts of the excluded processes can support such verification and should be used to justify the exclusion, when available. The use of input–output modeling for building the LCI can also help identify potentially important elements of the system that have been left out (Mattila, 2018). Alternative techniques have also been proposed to estimate influence of missing information such as the use of algorithms, like the similarity-based approach by Hou, Cai, and Xu (2018) or the FineChem approach for chemical production proposed by Wernet, Hellweg, Fischer, Papadokonstantakis, and Hungerbühler (2008) and Wernet, Papadokonstantakis, Hellweg, and Hungerbühler (2009).

Completeness of intermediate and elementary flow coverage

The overarching recommendation is to perform mass and energy balances of input and output flows to check for completeness and/or potential errors. Conducting a comparison analysis with similar studies is a useful approach to identify potential data gaps (Jolliet et al., 2016). In addition to checking individual mass and energy flows, environmental relevance based on preselected impact categories with or without normalization and weighting (e.g., carbon footprint or weighted single score) must be used to quantify the degree and sufficiency of completeness.
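A mass balance check of this kind can be sketched in a few lines of Python. The example below is only illustrative: the function names, the 2% tolerance, and the unit process data are assumptions made for the sketch, and an energy balance would follow the same pattern with energy contents instead of masses.

```python
def mass_balance_gap(inputs, outputs):
    """Relative gap between total input and total output mass of a unit process.

    inputs, outputs: dicts mapping flow name -> mass (same unit, e.g., kg).
    A positive gap means that some output mass is unaccounted for.
    """
    total_in = sum(inputs.values())
    total_out = sum(outputs.values())
    if total_in <= 0:
        raise ValueError("total input mass must be positive")
    return (total_in - total_out) / total_in


def balance_is_closed(inputs, outputs, tolerance=0.02):
    """Return (closed?, gap); `tolerance` is a study-specific choice, not a standard value."""
    gap = mass_balance_gap(inputs, outputs)
    return abs(gap) <= tolerance, gap


# Hypothetical unit process, all masses in kg per functional unit.
process_inputs = {"raw material": 100.0, "water": 20.0}
process_outputs = {"product": 90.0, "wastewater": 18.0, "solid waste": 6.0}

closed, gap = balance_is_closed(process_inputs, process_outputs, tolerance=0.02)
print(closed, round(gap, 3))
```

In this hypothetical case, 5% of the input mass does not reappear in the outputs, which exceeds the assumed tolerance and would point to a missing emission, waste flow, or data error to investigate.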

Approaches for identifying and dealing with missing or incomplete information/data

When missing information has been identified, for example, type and quantity of initially missing flow data or element composition and energy balance of flows, it should be approximately quantified. To do so, estimations can be performed using mass/energy balance checks, sufficiently similar processes for filling in missing inventory data, knowledge from expert judgment, or legal provisions (e.g., emission thresholds for related processes or industries, with additional expert adjustments to increase their accuracy whenever possible). For addressing inventory data gaps, LCI data sets of similar goods or services, or average LCI data sets of the group of goods or services to which the respective intermediate flow belongs, can be used (EC-JRC, 2010). Expert judgment based on process understanding is another means of evaluating data gaps and incompleteness in the study. It is recognized that incomplete modeling (e.g., waste management) is often a weakness identified during a completeness check (EC-JRC, 2010).

Completeness of comparative assertions

As an ISO requirement for comparative studies, cut-off criteria shall always include mass and energy flows as well as environmental impact (see also Section 4.1.1). Furthermore, the completeness level of each system should be evaluated to ensure comparability across the analyzed systems and fairness in their assessments. An example is illustrated in ISO 14044 (see table B.9; ISO, 2006b).

4.1.3 |. Completeness check of the life cycle impact assessment phase

The completeness check of the impact assessment phase involves the following evaluations:

(i) Completeness of the covered impact categories in the selected LCIA method(s) relative to the range of impacts potentially occurring;

(ii) Completeness of the models for addressing each specific impact (e.g., impact pathway coverage);

(iii) Completeness of the elementary flows concerning a specific model, for example, how many elementary flows of a certain impact have a corresponding characterization factor or how many relevant flows with characterization factors are captured in inventory flow data.

Regarding point (i), to conduct the completeness check of all relevant impact categories, a taxonomy has been proposed (Bare & Gloria, 2008). It guides practitioners in selecting impacts and impact categories for inclusion within the goal and scope phase and it can be used iteratively when evaluating the completeness of the impact assessment. This assessment supports transparency in a study where some impact categories are missing in the selected LCIA methods, hence unveiling so-called “known unknowns” (Grieger, Hansen, & Baun, 2009; Pawson, Wong, & Owen, 2011).

Concerning point (ii), addressing whether a model is complete enough in covering a given impact requires a specific domain expertise, for example, by model developers. Ideally, an LCIA model should be accompanied by information concerning how robust the model for an impact category is (Bare & Gloria, 2006). For example, the ILCD-LCIA methodology provided different levels of recommendations for each impact category (i.e., level I, II, III, interim), referring to the robustness of the underlying models and completeness of the characterization factors (EC-JRC, 2011; Hauschild et al., 2013). Such level of recommendations could be extended to normalization factors (e.g., evaluation of the normalization coverage for ILCD-LCIA by Sala, Benini, Mancini, & Pant, 2015). The level of recommendations could be reported within a characterization model, for example, for distinguishing robustness of characterization factors (e.g., the interim characterization factors (CFs) in the USEtox model; Rosenbaum et al., 2008).

Even though endpoint modeling recently has improved with the development of globally differentiated LCIA methodologies like Impact World+ (Bulle et al., 2019), ReCiPe 2016 (Huijbregts et al., 2017), and LC-Impact (Verones et al., 2019a), the cause-effect chains are relatively similar to traditional ones and several impact pathways remain uncovered, thus making indicators at midpoint level more comprehensive in such cases (Bare, Hofstetter, Pennington, & Udo de Haes, 2000). For example, when an evaluation is done at the endpoint or damage level for stratospheric ozone depletion, there is a risk that only a subset of damages is included within the evaluation (e.g., cataracts and skin cancer cases) while other damages may be omitted, for example, crop damages and damages to human-made materials, which are not covered in current LCIA methodologies (e.g., Impact World+, Bulle et al., 2019; ReCiPe 2016, Huijbregts et al., 2017; or LC-Impact, Verones et al., 2019a). When the effects are not certain, they may not always be included in the damage modeling, either because of a lack of data or because certain choices are made whether or not to include them, for example, via value choices (note that recent LCIA methodologies have proposed several sets of CFs to address such issues, e.g., LC-Impact or Impact World+).

Incompleteness issues may arise due to a mismatch of flows (i.e., point (iii)) in the LCI and/or the characterization models. In some cases, characterization factors are available, but corresponding inventory items are not (Section 4.1.2). In other cases, inventory flows may be available without corresponding characterization factors, and thus cannot be characterized with the selected models (e.g., inclusion of precursors for secondary particulate matter like NOx or SOx in some LCIA methods). Finally, a third case may occur, especially with toxicity-related impact categories, where an inventory flow (known or unknown) might not be estimated quantitatively, nor be associated with characterization factors in any selected LCIA method. Missing but relevant LCIA characterization factors and (intermediate or elementary) flows should, therefore, be considered with quantitative estimation wherever possible. For LCA practitioners, the potential solution to overcome these limitations is to conduct sensitivity analysis with different choices of LCIA methods. In the long run, this limitation should be addressed by LCI database developers and LCIA method developers together.

4.1.4 |. Iteration of the completeness check

During the interpretation phase, the completeness of inventory and impact assessment are evaluated concerning the goal and scope definition (Sections 4.1.2 and 4.1.3). In the case of insufficient completeness, the inventory analysis or impact assessment phases should be revisited iteratively to increase the degree of completeness by focusing on life cycle stages and processes, which are assumed or deduced to be the most contributing ones when compared to total obtained impacts. The iterative nature of this exercise is important in order to ensure that emphasis is not improperly placed on processes or life cycle stages that appear to contribute largely, only because important parts of the system are not captured in the assessment.

4.2 |. Consistency check

A detailed procedure for performing the consistency check is available in a spinoff article to this work (Weidema, 2019), to which the reader is referred for comprehensive step-by-step guidance. A condensed summary of the key aspects is presented in the subsections below, including cross-cutting aspects (Section 4.2.1) and points related to specific LCA phases (Sections 4.2.2–4.2.5). It shall be kept in mind that, like the completeness check, the consistency check is an iterative process (Figure 1, red dashed arrows), where the LCA practitioner can revisit and correct identified inconsistencies. However, a lack of resources may impede such adjustments, and the practitioner should then account for the remaining inconsistencies when elaborating conclusions, limitations, and recommendations.

4.2.1 |. Cross-cutting issues for checking

Several cross-cutting issues should be checked in the conduct of the LCA. These include (i) ensuring that the LCA is performed following any standard that it claims to be in accordance with (with disclosure of any potential conflicts between ISO 14044 and other standards used); (ii) ensuring consistency in use of LCA terms and definitions to avoid misunderstandings and improve the communication (post-interpretation) and reproducibility of the LCA study; (iii) ensuring in both LCI and LCIA phases the consistency of considered reference systems, defined as the status-quo (or business as usual) situations, to which the analyzed system would be compared to allow a calculation of the specific contribution of the analyzed product system; (iv) ensuring that the use of aggregation in LCI and LCIA phases is justified and compliant with the goals of the study and the stated conclusions and recommendations; and (v) critically checking the value choices made in the study (e.g., functional unit definition, selection of aggregation techniques), including evaluating if they are necessary and, if so, their potential influence on the results.

4.2.2 |. Consistency with the goal and scope definition

Within the goal definition, it is important to make sure the LCA performed is consistent with the intended application of the study, so that it can support the decision(s) to be made. This applies to both the choice of modeling and data in the LCA study. Key aspects for checking include the definition of the functional unit, the selection of impact categories and associated LCIA methods, and the system boundaries (see details on each in Weidema, 2019). These aspects should be made consistent with the goal and the scope of the project and its intended application. For example, using an attributional modeling approach to inform a decision-maker on the future consequences of choosing one or another product alternative would be highly inconsistent. Regarding data requirements, for example, geographical, temporal, and technological scopes, consistency between foreground and background systems within the system boundaries, and the connection between LCI and LCIA, should be carefully checked.

4.2.3 |. Consistency in the LCI phase

In addition to checking the consistency across the goal and scope definition and LCI phases (Sections 4.2.1 and 4.2.2), evaluation should be performed within the system model and collected data themselves. Besides being compliant with the requirements specified in the scope definition, it is important to ensure that no overlaps of unit processes exist in the model and the data used are all sufficiently relevant for the identified system regarding the geographical, temporal, and technological scopes of the analyzed system. The latter should be addressed by flagging the data with uncertainty estimations, with emphasis on tracking data of lower quality (which can then support the sensitivity check; see Section 4.3). Calculation procedures need to be aligned across the entire LCI phase, and even beyond, to all LCA phases (e.g., same procedures to be applied to all systems analyzed). To enable the run of these checks, LCA practitioners, however, must keep track of all data and modeling characteristics (data sources, data specificities, uncertainties, modeling and calculation procedures, assumptions, etc.) in a structured and transparent manner.

4.2.4 |. Consistency in the LCIA phase

In addition to checking the consistency of the cross-cutting issues, such as reference systems and aggregation (Section 4.2.1), and the impact assessment methods defined in the goal and scope (Section 4.2.2), the consistency check should include the sufficiency of the matching of the elementary flows in the LCI and LCIA phases so that all significant elementary flows from the LCI are indeed included in the relevant impact characterization models of the LCIA. It is particularly important to check for overlaps or gaps between the activities that are covered as unit processes in the LCI and the activities that are covered as environmental mechanisms in the LCIA. Similar to the consistency check for the LCI phase (Section 4.2.3), the consistency check for the LCIA phase should include the geographical and temporal relevance of the LCIA data used. A serious inconsistency can for instance occur if applying a normalization reference calculated with a different modeling approach than the one used for the LCI of the analyzed product system (Pizzol et al., 2017).

4.2.5 |. Consistency in the interpretation phase

The interpretation phase itself requires a mostly qualitative consistency check, looking for instance, at how results are presented and at the strengths and limitations of the applied interpretation methods and techniques. Consistency in the identification of the significant issues is particularly required (explaining the arrow in Figure 1 from “Identification of significant issues” back to “Consistency check”), where the consistency check shall ensure that aggregated data are not applied to draw conclusions at a more disaggregated level than the data allow. In general, it is important to check the link to the goal and scope definition and the relation to the strengths and limitations of the LCA methodology application (characterized in the completeness, consistency, and sensitivity checks).

4.3 |. Toolbox for performing sensitivity check

According to ISO 14044, the sensitivity check is the process of verifying the relevance of the information obtained from a sensitivity analysis for reaching the conclusions and giving recommendations (ISO, 2006b). The aim of the sensitivity check is, therefore, to assess and enhance the robustness of the study’s final results and conclusions, by determining how the conclusions of the study may be affected by uncertainties, such as those related to the LCI data, LCI modeling, LCIA methods, or to the calculation of category indicator results, to name a few. Situations where a sensitivity analysis fails to show significant differences between studied alternatives do not lead to the conclusion that no differences exist, just that these are not significant (ISO, 2006b). It is worth noting that the planning, preparation, and execution of sensitivity and uncertainty analyses should be considered parts of the inventory and/or impact assessment phases, whereas the sensitivity check, as well as the evaluation of its results, is an integral part of the interpretation phase (ISO, 2006b).

Based on the literature mentioned in the above review, we highlight the following key methods from which the information can be verified as part of the sensitivity check: (i) sensitivity analysis of input data and modeling choices; (ii) uncertainty analysis and discernibility analysis; (iii) breakeven analysis; and (iv) scenario analysis. These methods are briefly introduced in the following subsections. For a comprehensive synopsis of respective methods, we advise further readings via the references provided under each subsection.

4.3.1 |. Sensitivity analysis for input data and modeling choices

In general, sensitivity analysis can be defined as the procedure in which the values of variables are changed to verify the consequent effects on the output values. Following ISO 14044, sensitivity analysis “tries to determine the influence of variations in assumptions, methods and data on the results” (ISO, 2006b). Thereby a sensitivity analysis allows, in a comprehensive way, to determine and document changes in the results due to altered input data/parameters (Klöpffer & Grahl, 2014). Sensitivity analyses can be performed in the LCI and/or LCIA phases. According to ISO 14044, a sensitivity analysis is valuable for the following: rules for allocation, system boundary definition, assumptions concerning data, selection of impact categories, assignment of inventory results to impact categories (classification), calculation of category indicators (characterization), normalized data, weighted data, weighting method, and data quality.

As indicated by Groen, Bokkers, Heijungs, and de Boer (2017), different methods for conducting a sensitivity analysis exist. One of the most prominent methods is the perturbation analysis, which was introduced as the marginal analysis by Heijungs (1994), and examines the deviation in the outputs due to uniform, arbitrarily chosen, small (marginal) perturbations in the input data/parameters (Heijungs & Kleijn, 2001). It follows a one-at-a-time approach, which takes a subset of input data/parameters, which are altered one at a time, and examines the influence on the results due to this change. Thus, without any prior information of the actual uncertainty of each parameter, an LCA-practitioner gains knowledge of which input data/parameter may potentially entail large deviations. Heijungs and Kleijn (2001) mention two main purposes of perturbation analysis. The first purpose is to identify those input data/parameters, for which inaccuracy leads to relatively high changes in the results, as opposed to those, which, even with large uncertainty, do not change the results noticeably. Consequently, an LCA-practitioner learns to focus further collection of uncertainty information on the identified sensitive input data/parameters, thus going back to targeted aspects within the scope definition and/or LCI phases (part of the iterative conduct of the sensitivity check and the identification of significant issues; see Figure 1). The second purpose of the perturbation analysis is application-driven, as the information gained from identifying sensitive input data/parameters can be used to support product and process development, for example, prioritization of (re)designing those components of the systems that matter to the sensitivity of the environmental performances of the systems. In addition to the above-described one-at-a-time alteration of input parameters, a global sensitivity analysis should be considered. 
It applies the same small perturbation of all input parameters in one comprehensive calculation, resulting in a complete ranking of all parameters by their sensitivity (Groen et al., 2017).
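
As a minimal illustration of the one-at-a-time perturbation approach described above, the following Python sketch perturbs each input parameter of a toy model by 1% and reports the ratio of relative output change to relative input change. The model, the parameter names, and all values are hypothetical and are not taken from any of the cited studies; the ratio used here is one common way of expressing sensitivity, not necessarily the exact formulation of Heijungs and Kleijn (2001).

```python
# One-at-a-time perturbation analysis on a toy LCA model.
# All parameter names and values are hypothetical, for illustration only.

def impact(params):
    """Toy climate-change score (kg CO2-eq per functional unit)."""
    electricity = params["electricity_kwh"] * params["grid_factor"]
    transport = params["transport_tkm"] * params["truck_factor"]
    return electricity + transport + params["direct_emissions"]


def perturbation_analysis(model, params, relative_step=0.01):
    """Return {parameter: sensitivity ratio}, i.e., the relative output change
    divided by the relative input change, perturbing one parameter at a time."""
    baseline = model(params)
    ratios = {}
    for name, value in params.items():
        perturbed = dict(params)          # copy so other parameters stay fixed
        perturbed[name] = value * (1.0 + relative_step)
        ratios[name] = ((model(perturbed) - baseline) / baseline) / relative_step
    return ratios


baseline_params = {
    "electricity_kwh": 10.0,   # kWh per functional unit
    "grid_factor": 0.5,        # kg CO2-eq per kWh
    "transport_tkm": 20.0,     # tonne-km per functional unit
    "truck_factor": 0.1,       # kg CO2-eq per tonne-km
    "direct_emissions": 1.0,   # kg CO2-eq per functional unit
}

ratios = perturbation_analysis(impact, baseline_params)
# Rank parameters from most to least sensitive.
ranking = sorted(ratios, key=lambda name: abs(ratios[name]), reverse=True)
for name in ranking:
    print(f"{name}: {ratios[name]:.3f}")
```

A ratio close to 1 means that a 1% change in the input shifts the result by about 1%, whereas a ratio close to 0 flags a parameter whose inaccuracy hardly affects the result. No information on the actual uncertainty of the parameters is needed at this stage.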

It should be noted that, while LCA practitioners investigate how impact results change in response to a change in input, these changes must be related to the goal of the study. For example, in a comparative study, a sensitivity analysis may identify a highly sensitive parameter, meaning that this parameter greatly influences the impact results. This does not necessarily change the conclusions, however, if all impact results co-vary and the ranking of the alternatives is left unaffected.

For further readings about the theoretical background and application of the methods for sensitivity analysis, including calculation procedures, we recommend the publications by Baumann and Tillmann (2004), Cellura, Longo, and Mistretta (2011), Groen et al. (2017), Heijungs (1994), Heijungs and Suh (2002), Markwardt and Wellenreuther (2016), Rosenbaum et al. (2018), and Wei et al. (2015).

4.3.2 |. Uncertainty analysis and discernibility analysis

Many input parameters to an LCA study are associated with uncertainties. Uncertainty can be defined as “the discrepancy between a measured or calculated quantity and the true value of that quantity,” (Finnveden et al., 2009) or, in other words, as “the degree to which the quantity under study may be off from the truth,” which is then reflected by degree of confidence or probability of occurrence (Rosenbaum et al., 2018). Huijbregts (1998) distinguishes the following types of uncertainty: parameter uncertainty, model uncertainty, uncertainty due to choices, spatial and temporal variability, and variability between objects/sources and humans.

The uncertainty analysis studies the influence of the totality of all those individual uncertainties on the final results, that is, how they propagate through the modeling and impact assessment to result in overall uncertainty of the impact results (e.g., Finnveden et al., 2009; Heijungs & Kleijn, 2001). Such analysis can be used to evaluate the reliability of the study and the potential need to go back to the scope definition or LCI analysis phases if too large and influential uncertainties need to be addressed. It can also be used to meet the goal of the study robustly, for example, quantifying the distinction between two compared alternatives or discerning their impact scores. The latter is often termed “discernibility analysis” (when used quantitatively, see below; Heijungs & Kleijn, 2001). Both quantitative and qualitative methods exist, although there are several research needs and challenges left to address to systematize comprehensive uncertainty analysis in LCA studies (see review of methods in Igos et al., 2019).

Quantitative methods

The most widely used method for performing quantitative uncertainty analyses is the Monte Carlo simulation approach (Heijungs & Kleijn, 2001; Rosenbaum et al., 2018), although a large variety of efficient analytic error propagation approaches, often based on first-order approximations, are also used with the limitation of only yielding accurate results when higher order terms or covariance terms are small (Groen & Heijungs, 2017; Heijungs, 1994; Heijungs & Suh, 2002; Hong, Shaked, Rosenbaum, & Jolliet, 2010; Imbeault-Tétrault, Jolliet, Deschênes, & Rosenbaum, 2013). The Monte Carlo simulation approach is nowadays available in some LCA software, although its runs do not yet cover all parts of an LCA (i.e., LCI, LCIA, model uncertainties). The uncertainty of each input parameter is represented by a probability distribution with a mean value, a standard deviation, and a distribution shape. The LCA calculation is then conducted a large number of times (i.e., iterations), each time taking a different sample of values of the input parameters from their defined probability distributions (Heijungs & Kleijn, 2001). The resulting impact scores are thus expressed as probability distributions instead of single values. Co-variance of the uncertainties within as well as between compared alternatives might occur in comparative studies. In such a situation, LCA practitioners should consider how the overall uncertainty of the comparisons is impacted by such co-varied uncertainties in the alternatives (Groen & Heijungs, 2017; Huijbregts, 1998). Likewise, co-variance of the uncertainties may take place in single-product studies, and their influence on the study results should then be considered too. When methods, data, and software capacity are unavailable for performing comprehensive assessments of covariance, LCA practitioners are advised to either qualitatively discuss identified co-variance or explicitly indicate its exclusion in the overall uncertainty evaluation. 
Several methods and procedures have been proposed over the years to quantitatively assess uncertainties primarily in the context of comparative studies, for example, the probabilistic scenario-aware analysis developed by Gregory, Olivetti, and Kirchain (2016) or the review of performances of five uncertainty statistics methods, as described in Mendoza Beltran et al. (2018) (see also overview of methods in Igos et al., 2019). However, important gaps remain both in terms of data (unquantified uncertainty sources, particularly in the LCIA phase), harmonization in the methods and approaches to apply, and in building user-friendly software to integrate them (Igos et al., 2019).
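
To make the Monte Carlo procedure and its use for discernibility more concrete, the sketch below propagates parameter uncertainty through a toy comparison of two alternatives. It is only an illustration under stated assumptions: the distributions, their parameters, and the variable names are hypothetical, and the shared background parameter is sampled once per iteration so that its uncertainty co-varies between the alternatives, which is one simple way of respecting the co-variance discussed above.

```python
import random
import statistics

# Monte Carlo propagation for two hypothetical alternatives A and B.
# All distributions and values are assumptions chosen for illustration.


def sample_scores(rng):
    """Draw one Monte Carlo sample of the impact scores of both alternatives."""
    # Shared background parameter: sampled once per iteration so that its
    # uncertainty co-varies between the two alternatives.
    grid_factor = rng.lognormvariate(-0.7, 0.2)    # kg CO2-eq per kWh
    # Alternative-specific foreground parameters (kWh per functional unit).
    electricity_a = rng.normalvariate(10.0, 1.0)
    electricity_b = rng.normalvariate(11.0, 1.0)
    return electricity_a * grid_factor, electricity_b * grid_factor


def monte_carlo(n_iterations=5000, seed=42):
    """Repeat the calculation n_iterations times with freshly sampled inputs."""
    rng = random.Random(seed)
    return [sample_scores(rng) for _ in range(n_iterations)]


samples = monte_carlo()
scores_a = [a for a, _ in samples]
scores_b = [b for _, b in samples]

# Discernibility: share of iterations in which A scores lower than B,
# evaluated pairwise within each iteration.
share_a_lower = sum(a < b for a, b in samples) / len(samples)

print(f"A: mean {statistics.mean(scores_a):.2f}, sd {statistics.stdev(scores_a):.2f}")
print(f"B: mean {statistics.mean(scores_b):.2f}, sd {statistics.stdev(scores_b):.2f}")
print(f"Share of runs with A < B: {share_a_lower:.2f}")
```

Comparing the alternatives within each iteration, rather than comparing two independently generated output distributions, prevents the shared uncertainty of the background parameter from masking a difference between the alternatives.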

Qualitative methods

Particularly when quantitative methods cannot be applied for characterizing overall uncertainty (e.g., due to lack of information or LCA software restrictions), LCA practitioners should qualitatively discuss the uncertainties underlying their modeling and how these can influence the associated results and conclusions (in addition to sensitivity analysis). They should critically evaluate the data sources, data quality and data representativeness, with a focus on the data behind the most contributing processes, substances, or life cycle stages. It is therefore recommended to draw on a prior contribution analysis to direct the discussion of the overall uncertainty in the study to the key drivers of the impacts. Such qualitative consideration of the study uncertainties should be linked iteratively to the completeness, consistency, and sensitivity checks (Sections 4.1 and 4.2).

4.3.3 |. Breakeven analysis

Breakeven analysis is a method used to calibrate uncertainty related to decisions between two or more alternatives, by determining which input values lead to the same output for the alternatives. It has been used as a deterministic technique in project budgeting to evaluate the cost of choosing different alternatives, considering how the revenues and costs are affected by changes in the project parameters (Dean, 1948). The point at which the costs and benefits of an alternative are equal is called “breakeven point,” and characterizes the input values that will lead to a breakeven result.

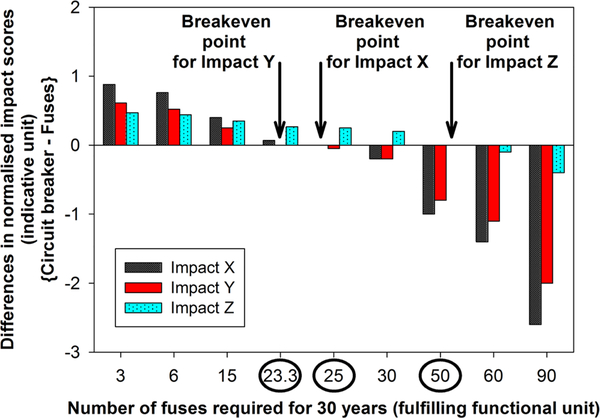

However, the concept of breakeven value is not limited to economic applications. In more general terms, a breakeven analysis allows finding the breakeven point for any parameter of two alternatives, namely the point, at which the contribution of the input values will lead to the same output for both alternatives. A breakeven point could thus be calculated to support the choice of a specific recycling scenario, as opposed to the use of virgin materials (e.g., recycled vs. virgin polymers), by quantifying the requirements (e.g., percentage substitution ratio) for the recycling scenario to be environmentally preferable compared to the use of primary resources (Rajendran et al., 2013). Another example is the use of breakeven analysis to estimate breakeven transportation distances, beyond which waste transportation could lead to more environmental impacts for one municipal solid waste management system, for example, recycling, over another, for example, incineration with energy recovery (Merrild, Larsen, & Christensen, 2012). Trade-offs between different impact categories can be analyzed with a breakeven analysis. An illustrative example is provided in Figure 2.

FIGURE 2.

Illustrative example of breakeven analysis with the case of circuit breaker versus fuse systems (functional unit: to control an average current of 1 A and shut down when the limit of 6 A is exceeded or in case of short circuits for 30 years). The tested parameter in the analysis is the number of breakdowns per year (translated into the number of fuses over 30 years, as represented on the x-axis), for which the circuit breaker becomes more beneficial than fuses (breakeven points circled on the x-axis). The fictional dataset pertaining to the illustrative graph is available in the Supporting Information.

Such applications of breakeven analysis in environmental LCA studies could, therefore, bring valuable support to decision-makers, and could be considered in ad hoc situations by LCA practitioners. However, weighting schemes may be needed to solve issues associated with trade-offs among impact categories and develop the most appropriate environmental strategy.
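
For impact scores that vary linearly with the tested parameter, the breakeven point can be obtained in closed form. The sketch below illustrates this for a circuit breaker versus fuse comparison in a single impact category. The function name and all numbers are hypothetical; they are not the fictional dataset of the Supporting Information.

```python
def breakeven_point(intercept_a, slope_a, intercept_b, slope_b):
    """Parameter value at which two linear impact functions are equal.

    Each alternative is modeled as impact = intercept + slope * parameter.
    Returns None when the lines are parallel (no single breakeven point).
    """
    if slope_a == slope_b:
        return None
    return (intercept_b - intercept_a) / (slope_a - slope_b)


# Hypothetical impact scores (kg CO2-eq over 30 years), for illustration only.
fuse_holder = 0.2        # fixed impact of the fuse holder
per_fuse = 0.05          # additional impact of each replacement fuse
circuit_breaker = 1.2    # fixed impact of the circuit breaker, no replacements

n_fuses = breakeven_point(fuse_holder, per_fuse, circuit_breaker, 0.0)
print(f"Breakeven at about {n_fuses:.0f} fuses over 30 years")
```

Repeating the calculation for each impact category generally yields a different breakeven value per category, which is where the trade-offs mentioned above become visible.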

4.3.4 |. Scenario analysis

Scenario analysis is a method of forecasting and analyzing the possible future outcomes (short, medium, or long-term) resulting from decisions taken and/or influence from external factors. It is an important auxiliary method for sensitivity check to verify whether the assumptions made are relevant and/or valid under different scenario conditions, and to ensure these assumptions are well documented and transparent (Refsgaard, van der Sluijs, Hojberg, & Vanrolleghem, 2007). According to Börjeson, Höjer, Dreborg, Ekvall, and Finnveden (2006), scenarios can be classified into three types, depending on what is to be analyzed, and the time scale of the prediction: (i) predictive scenarios, (ii) explorative scenarios, and (iii) normative scenarios.

Before applying scenarios, an LCA practitioner should check what the main questions to be answered are:

What is likely to happen? leading to the use of predictive scenarios, that is, extrapolated forecasting or what-if scenarios;

What can happen? leading to the use of explorative scenarios, that is, external or strategic scenarios, depending on whether external factors, beyond the control of the decision-maker (e.g., changing policies), are included, or strategic actions taken within the system are included, such as compliance with policy instruments (Hoejer et al., 2008);

How can a well-defined target be reached? leading to the use of normative scenarios. One example of a normative scenario method is back-casting, in which one defines a desirable future scenario, and, from that, identifies actions and strategies that will lead to that scenario. That is, for example, applied when defining the input data for the background system in LCI.

Different methods may be applied to define these scenario types, and they are explained in detail, for example, in Weidema et al. (2004) and Hoejer et al. (2008).

4.4 |. Toolbox for identifying significant issues

The methods for identification of significant issues address two different groups of issues: issues associated with the conduct of the study that may alter its validity or reliability, and issues related to the study results and how they answer the goal of the study. The first group is mainly addressed via comparisons to previous studies (Section 4.4.4), while the second group is addressed with the techniques described in Sections 4.4.1–4.4.3. Significant issues can be identified and characterized qualitatively and/or quantitatively. As indicated in Figure 1, the identification of significant issues is an iterative process interlinked with several other interpretation steps, particularly the sensitivity check, where both feed into each other (e.g., EC-JRC, 2010; Hauschild, Bonou, & Olsen, 2018b; ISO, 2006a, 2006b).

4.4.1 |. Hotspot analysis, contribution analysis and dominance analysis

Barthel, Fava, James, Hardwick, and Khan (2017) define a hotspot as a “life cycle stage whose contribution to the impact category is greater than the even distribution of that impact across the life cycle stages.” We extend this definition to other levels of analysis than just life cycle stages and define hotspots as life cycle stage(s), process(es), and flow(s) that contribute largely to one or several impact categories. The identification of hotspots, therefore, consists of tracking the origins and causes of the largest impacts of the study.

A “large contribution” can relate to life cycle stages or processes or flows that have larger contributions to an impact category than the average contribution from all sources of that impact (expanded from the definition in Barthel et al., 2017). This average contribution is inherently dependent on the level of aggregation in the impact sources, for example, whether the total impact is provided for four life cycle stages versus for 10 processes versus detailed into 100 processes. Hence, it is important to ensure in the consistency check that no hotspot is overlooked because of a too high or a too low level of aggregation. It is noteworthy that practitioners should not rule out hotspots that are limited to only one impact category as that specific impact may be a significant impact (as determined by contribution to damage categories, single scores, or specific stakeholder interests). Hotspot identification can be performed using the characterized impact results alone and taking the whole spectrum of assessed impact categories into consideration. Methods like contribution analyses and dominance analyses can assist in this effort.

Contribution analysis refers to the translation of the impact results into distributions showing the share of impacts assigned to the different life cycle stages, processes or substances (Guinée, 2002; Heijungs & Kleijn, 2001; Heijungs & Suh, 2002; see ISO, 2006b, Annex B). These distributions are specific to each impact category. Dominance analysis (DA) expands the contribution analysis with statistical tools or other techniques (e.g., ranking) like the DA method described in Kraha, Turner, Nimon, Reichwein Zientek, and Henson (2012) that relies on pairwise comparisons of variables (e.g., processes) in their ability to explain the results (with consideration of collinearity between variables). To perform contribution and dominance analyses, practitioners need to keep track of the life cycle stages, processes, and substances contributing to the different impacts; most LCA software has features allowing for this and can display the contributions graphically as stacked bar charts summing to 100% for each impact category.
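
The contribution analysis and the "greater than the even distribution" criterion for hotspots can be sketched in a few lines of Python. The life cycle stages and scores below are hypothetical, and the sketch assumes that all contributions are non-negative (credits from avoided burdens would require separate handling).

```python
def contribution_shares(impacts_by_source):
    """Share of the total impact assigned to each source (stage, process, flow)."""
    total = sum(impacts_by_source.values())
    return {source: value / total for source, value in impacts_by_source.items()}


def hotspots(impacts_by_source):
    """Sources whose share exceeds the even distribution across all sources."""
    shares = contribution_shares(impacts_by_source)
    even_share = 1.0 / len(shares)
    return [source for source, share in shares.items() if share > even_share]


# Hypothetical climate-change results (kg CO2-eq) for four life cycle stages.
climate_change = {
    "raw materials": 4.0,
    "manufacturing": 1.5,
    "use": 3.5,
    "end of life": 1.0,
}

shares = contribution_shares(climate_change)
flagged = hotspots(climate_change)
for stage, share in shares.items():
    marker = " (hotspot)" if stage in flagged else ""
    print(f"{stage}: {share:.0%}{marker}")
```

Because the even share equals one divided by the number of sources, the same system will flag different hotspots when it is split into 4 stages or into 100 processes, which is why the level of aggregation needs to be checked.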

4.4.2 |. Key issue analysis

Heijungs (1996) defines key issues as “those aspects of an LCA which need more detailed research to reach more robust conclusions.” Key issue analysis is thereby a part of the iterative process of LCA, providing guidance on which aspects to focus in the next iteration. Key issue analysis is the combination of uncertainty analysis and perturbation analysis; a detailed mathematical description of the method can be found in Heijungs and Suh (2002). By explaining the output uncertainty caused by the uncertainty of specific input parameters, it aims to find uncertain parameters, for which the result of the study is sensitive (Heijungs & Suh, 2002). Heijungs (1996) categorizes parameters into three sets: (i) a key issue (e.g., uncertain and highly contributing parameters), (ii) a non-key issue (e.g., parameters which are certain and show little contribution), and (iii) a possible key issue. Although the analysis itself belongs to the sensitivity check, the ensuing categorization of the issues is an integral part of the identification of significant issues; hence, it is listed in this latter interpretation step. Most issues are typically considered as “possible key issues” (Heijungs & Suh, 2002), and they, therefore, need to be considered cautiously, particularly as some of them might call for a new iteration to refine the LCA study (red dotted arrows in Figure 1). Proven key issues are the areas on which practitioners should concentrate in elaborating the conclusions of the study and/or outlining a new iteration of the study (Heijungs, 1996).
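
The following sketch illustrates the idea of combining sensitivity and uncertainty information to sort parameters into the three sets. It is a simplified illustration and not the formulation of Heijungs and Suh (2002): it assumes independent parameters, uses a first-order approximation in which each parameter contributes (sensitivity ratio × coefficient of variation)² to the relative output variance, and relies on arbitrary thresholds. All parameter names and values are hypothetical.

```python
def classify(sensitivity, relative_uncertainty,
             sensitivity_threshold=0.3, uncertainty_threshold=0.2):
    """Sort a parameter into one of three sets (thresholds are arbitrary)."""
    sensitive = abs(sensitivity) >= sensitivity_threshold
    uncertain = relative_uncertainty >= uncertainty_threshold
    if sensitive and uncertain:
        return "key issue"
    if not sensitive and not uncertain:
        return "non-key issue"
    return "possible key issue"


# Hypothetical inputs: (sensitivity ratio, coefficient of variation).
parameters = {
    "grid_factor": (0.625, 0.30),
    "electricity_kwh": (0.625, 0.05),
    "truck_factor": (0.25, 0.40),
    "direct_emissions": (0.125, 0.05),
}

# First-order contribution of each parameter to the relative output variance,
# assuming independent parameters (no covariance terms).
terms = {name: (s * cv) ** 2 for name, (s, cv) in parameters.items()}
total = sum(terms.values())
variance_shares = {name: term / total for name, term in terms.items()}
categories = {name: classify(s, cv) for name, (s, cv) in parameters.items()}

for name in sorted(variance_shares, key=variance_shares.get, reverse=True):
    print(f"{name}: {variance_shares[name]:.1%} of output variance, {categories[name]}")
```

In this toy case, the parameter that is both sensitive and uncertain dominates the output variance, whereas a parameter that is only sensitive or only uncertain ends up as a possible key issue that may deserve attention in a further iteration.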

4.4.3 |. Structural path analysis

Structural path analysis (Defourny & Thorbecke, 1984) supplements the identification of the hotspots (Section 4.4.1), with a focus on identifying the detailed upstream value chains of the system under study that contribute the most to the results. It thus provides a more detailed understanding of the structure of the product/service system and is a particularly useful support for policy analysis as well as for identifying market-based improvement options. Structural path analysis can be complemented with structural decomposition analysis, as exemplified by Wood and Lenzen (2009), aiming to identify the key driving factors for changes in an economy.

In practice, structural path analysis utilizes the fact that an LCI result (R) can be expressed as a power series expansion of the technology matrix (A)—see Equation (1):

$$R = B(I - A)^{-1}y = B\left(I + A + A^{2} + A^{3} + \dots\right)y \qquad (1)$$

where B is the matrix of elementary flows, I is the identity matrix, and y is the final demand vector representing the functional unit. This allows the isolation of the contribution to R from each tier {$A$, $A^{2}$, $A^{3}$, etc.} of each single value chain (i.e., path).
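
The path-wise reading of this power series can be sketched as follows, assuming the usual form R = B(I − A)⁻¹y in which entry A[i][j] is the input from process i required per unit output of process j. The three-process system, its coefficients, and the function name are hypothetical; B is reduced to a single elementary flow to keep the example short.

```python
# Structural path enumeration on a hypothetical three-process system.
A = [[0.0, 0.2, 0.0],   # A[i][j]: input from process i per unit output of j
     [0.1, 0.0, 0.3],
     [0.0, 0.1, 0.0]]
B = [1.0, 2.0, 0.5]     # emission of one elementary flow per unit process output
y = [1.0, 0.0, 0.0]     # final demand: one unit of process 0


def enumerate_paths(B, A, y, max_tier):
    """Return (path, contribution) for every supply-chain path up to max_tier.

    A path (j0, j1, ..., jk) starts at a process with final demand and follows
    inputs upstream; its contribution is B[jk] times the product of the
    A coefficients along the path times y[j0].
    """
    n = len(y)
    results = []
    frontier = [((j,), y[j]) for j in range(n) if y[j] != 0.0]
    for _ in range(max_tier + 1):
        next_frontier = []
        for path, amount in frontier:
            last = path[-1]
            results.append((path, B[last] * amount))
            for i in range(n):
                if A[i][last] != 0.0:
                    next_frontier.append((path + (i,), A[i][last] * amount))
        frontier = next_frontier
    return results


paths = enumerate_paths(B, A, y, max_tier=10)
paths.sort(key=lambda item: item[1], reverse=True)
for path, contribution in paths[:5]:
    print(" -> ".join(str(p) for p in path), f"{contribution:.4f}")
print(f"Sum over enumerated paths: {sum(c for _, c in paths):.6f}")
```

Summing all paths of the same length gives the contribution of one tier, and the sum over all tiers approaches the total R as long as the series converges. In realistic systems the number of paths grows quickly with the tier, so enumeration is usually truncated by a contribution threshold.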

4.4.4 |. Use of existing literature

Literature sources with a similar study goal (case studies or meta-analyses) can serve as a useful benchmark for evaluating and comparing the obtained environmental impact results of the analyzed system. However, LCA practitioners then need to carefully check the comparability of the studies, for example, the potential differences in modeling choices and assumptions or impact assessment methods.

The existing literature can additionally be used to check the plausibility of the study results and to identify issues related to the conduct of the assessment. It thus contributes to performing an anomaly assessment, as recommended in ISO 14044 (ISO, 2006b), although such use of the literature can be regarded as belonging to the consistency check (Section 4.2). Results of an LCA study that largely deviate from previous studies made on the same object and with similar scoping of the system should thus prompt the practitioner to go back to the scope definition, inventory, and impact assessment phases of the study and look for potential sources of errors or else for well-justified discrepancies (Guinée, 2002). Likewise, comparisons with alternative literature sources can also feed into the completeness check as they can inform on the completeness of the performed study as opposed to that of previous studies, which may have been associated with similar or different data gaps (Section 4.1).

Literature sources can take the form of past LCA studies or meta-analyses conducted from reviews of all LCA studies made in a specific domain (i.e., statistical analysis of harmonized LCIA results from the pool of selected studies). Scientific literature and gray literature should, therefore, be reviewed by LCA practitioners, although caution should be applied to only consider studies of sufficient quality and transparency.

Another means of checking the plausibility of results via external sources is the use of an external normalization reference, which can provide insights into whether or not the results are off-target (Laurent & Hauschild, 2015). The use of per-capita normalization references, as available in literature (e.g., Laurent, Lautier, Rosenbaum, Olsen, & Hauschild, 2011; Laurent, Olsen, & Hauschild, 2011; Sala et al., 2015) and various LCA software, can thus help appraise the magnitude of the results relative to a common situation and identify order-of-magnitude errors (Laurent & Hauschild, 2015; Pizzol et al., 2017).
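One possible way to operationalize such a check is sketched below. Impact scores are divided by per-capita normalization references to obtain person-equivalents, and categories whose normalized score lies far from the rest of the profile are flagged for inspection. The flagging rule (distance from the median in orders of magnitude), the threshold, and all numerical values are assumptions made for illustration; they are not taken from the cited normalization sets.

```python
import math
import statistics


def person_equivalents(scores, references):
    """Divide each impact score by its per-capita normalization reference."""
    return {cat: scores[cat] / references[cat] for cat in scores}


def flag_outliers(normalized, max_orders=2.0):
    """Flag categories at least `max_orders` orders of magnitude away from
    the median normalized score (hypothetical heuristic; needs positive values)."""
    logs = {cat: math.log10(value) for cat, value in normalized.items()}
    center = statistics.median(logs.values())
    return [cat for cat, lg in logs.items() if abs(lg - center) >= max_orders]


# Hypothetical impact scores per functional unit (illustrative units).
scores = {"climate change": 2.4e3, "acidification": 1.1e1, "eutrophication": 9.0e3}
# Hypothetical per-capita annual references in the same units.
references = {"climate change": 8.0e3, "acidification": 5.0e1, "eutrophication": 3.0e1}

pe = person_equivalents(scores, references)
flagged = flag_outliers(pe)
```

A flagged category is not necessarily an error, since some product systems genuinely dominate a single impact category. The flag only indicates where the inventory data, units, and characterization factors deserve a second look.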

4.5 |. Toolbox for drawing conclusions and recommendations

After the significant issues have been identified, and the results checked for their consistency, completeness, and sensitivity, the LCA practitioner moves to the step aiming at drawing conclusions and recommendations. It is an important step, in which s/he reflects on the goal of the study and attempts to provide answers to the initial research questions that should support the decisions to be made, thereby “closing the loop” of the LCA study.

The LCA practitioner must first assess whether or not the study is conclusive (Figure 1). In our proposed framework, the question is intended for the practitioner to evaluate whether there is sufficient basis from the previous steps in the interpretation phase to address the initial research questions defined in the goal of the study. If this is not the case, the practitioner should iterate the study unless there are grounds to conclude that no further refinements would enable answering the questions. In the latter case, the practitioner should conclude on the inconclusive nature of the study, give a detailed account of limitations, and provide recommendations that could help resolve the deadlock in future works. If the study is deemed conclusive, conclusions and recommendations should be provided to the decision-makers and stakeholders.

In the subsequent sections, four methods that can support this step of the interpretation are highlighted: stakeholder power analysis, value of information (VoI) analysis, feasibility analysis, and synergy analysis.

4.5.1 |. Stakeholder power analysis

Stakeholder power analysis (Mayers, 2005) is an identification of the key actors or stakeholders in a system or decision context and an assessment of their interests in and influence on that system or decision. As such, the stakeholder power analysis is one possible implementation of the “influence analysis” recommended in ISO 14044 (ISO, 2006b, Clause B.2.3) under the heading of “identification of significant issues.” The core part of the stakeholder power analysis is an identification of each stakeholder group in terms of (i) its position concerning the outcome of a recommended decision to address an environmental issue, classified into those who obtain an overall benefit from the decision (“winners”) and those who are detrimentally impacted (“losers”); and (ii) its power to influence a decision and/or its implementation and/or consequences.

Depending on its position within this two-dimensional grid (Table 2), a stakeholder group can be expected to support or work against a recommended decision and its implementation. Based on the power analysis, a strategic recommendation can be made on how to address the concerns of each stakeholder group and to facilitate the implementation of the recommended decision. The result of a power analysis can be a modification of the originally recommended decision.

TABLE 2.

Four general strategies for managing stakeholder relations. Extracted from step 5 in Mayers (2005)

| Stakeholder power/position | Winner | Loser |
|---|---|---|
| High power | Collaborate with | Mitigate impacts on; defend against |
| Low power | Involve, build capacity, and secure interests | Monitor or ignore |

A situation with high-power winners and low-power losers is simple to address, but a situation with high-power losers and low-power winners requires a more sophisticated strategy. If the stakeholders that benefit from a recommended decision (“winners”) have low power, they may not be able to implement the decision against the high-power interests that will lose from the decision. Therefore, an implementation strategy may require building the capacity and securing the interests of the low-power winners so that they can defend their interests versus the high-power losers. To reach a social consensus that includes the losers, it may be necessary to partly and/or temporarily compensate them for their losses, even when this reduces the social value of the decision.
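The grid in Table 2 can be expressed as a simple lookup, as in the sketch below. The strategy texts are those of Table 2; the function names, the stakeholder groups, and the rule used to detect the more demanding situation (at least one high-power loser together with at least one low-power winner) are hypothetical and reflect one reading of the paragraph above.

```python
# Strategies from Table 2, keyed by (power, position).
STRATEGIES = {
    ("high", "winner"): "Collaborate with",
    ("high", "loser"): "Mitigate impacts on; defend against",
    ("low", "winner"): "Involve, build capacity, and secure interests",
    ("low", "loser"): "Monitor or ignore",
}


def strategy_for(power, position):
    """Return the general strategy for a stakeholder group."""
    return STRATEGIES[(power, position)]


def needs_sophisticated_strategy(stakeholders):
    """True if high-power losers coexist with low-power winners
    (hypothetical rule for the situation described in the text)."""
    cells = set(stakeholders.values())
    return ("high", "loser") in cells and ("low", "winner") in cells


# Hypothetical stakeholder groups, for illustration only.
stakeholders = {
    "incumbent producers": ("high", "loser"),
    "small-scale suppliers": ("low", "winner"),
    "local residents": ("low", "loser"),
}

plan = {name: strategy_for(*cell) for name, cell in stakeholders.items()}
difficult = needs_sophisticated_strategy(stakeholders)
```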

4.5.2 |. Value of information analysis

Value of information (VoI) analysis compares the costs of reducing the uncertainty of a decision through additional information with the costs of false negative or positive outcomes when only relying on the already available, more uncertain information. The focus is thus on uncertainties that can alter the LCA results from recommending one option to recommending another option. Although there is no convincing published example of VoI use within LCA, a succinct description is provided below, building on the introduction and use of VoI within health economics, as described by Wilson (2015).

The costs of the additional data collection and handling should be expressed relative to the gained uncertainty reduction, which can then be translated into probabilities of changes in decision outcomes. It may be relevant to include the costs of waiting for the additional data, especially in situations where the postponement of a decision implies important risks. The latter can be seen as a practical implementation of the precautionary principle. In situations where the uncertainty of the current information is caused by willful ignorance, the costs should include reputational risk (the cost of dealing with dissatisfied stakeholders and the potential losses of market share). It may often be relevant to include the business-as-usual or 0-decision as one of the decision options, to quantify the baseline cost of non-action. Note that the VoI of additional information is zero if that information cannot change the decision.

The outcome of a VoI analysis is either that information is sufficient for a decision (when additional information is costlier than the cost of false negative or positive outcomes) or an identification of the most significant data to collect (those that are most cost-effective to collect, that is, have the highest reduction in cost of false negative or positive outcomes per cost of additional data). Therefore, while the VoI analysis per se may be positioned in the step of identifying significant issues, it can directly feed into the conclusion step of the result interpretation (hence its current positioning) by providing useful insights into the need and relevance for further iterations (red arrows in Figure 1).
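The logic of this outcome can be illustrated with a deliberately simplified sketch. It assumes a single decision with a known probability of being wrong, a single monetary loss if it is wrong, and candidate data sets that each lower that probability at a given cost. The function name, the data set names, and all numbers are hypothetical; a real VoI analysis (e.g., as in health economics) would work with full probability distributions rather than point estimates.

```python
def rank_data_collection(p_wrong_now, loss_if_wrong, candidates):
    """Rank candidate data sets by reduction in expected error cost per unit cost.

    candidates: iterable of (name, cost, p_wrong_after) with cost > 0.
    Candidates whose benefit does not exceed their cost are dropped.
    An empty result means the available information is sufficient.
    """
    baseline = p_wrong_now * loss_if_wrong  # expected cost of a wrong decision today
    ranked = []
    for name, cost, p_wrong_after in candidates:
        benefit = baseline - p_wrong_after * loss_if_wrong
        if benefit > cost:
            ranked.append((name, benefit / cost))
    ranked.sort(key=lambda item: item[1], reverse=True)
    return ranked


# Hypothetical example: 30% chance of recommending the wrong option,
# with a loss of 100,000 monetary units if that happens.
candidates = [
    ("primary electricity data", 5_000, 0.10),
    ("regionalized characterization factors", 12_000, 0.20),
    ("supplier survey", 2_000, 0.25),
]
ranking = rank_data_collection(0.30, 100_000, candidates)
```

In this illustration, the second candidate is dropped because its cost exceeds the reduction in expected error cost it would bring, and the remaining two are ordered by cost-effectiveness.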

4.5.3 |. Feasibility analysis

A feasibility analysis seeks to identify the most cost-effective implementation of a decision. It will typically consider the following aspects to arrive at an overall recommendation for and planning of the implementation:

- Parallel autonomous developments that could make the implementation unnecessary or simpler,

- Links to existing procedures and existing or new informational or management tools that can facilitate implementation,

- Technical, resource, legal, normative, cultural, financing, and scheduling constraints and barriers to implementation, and ways to overcome these,

- Needs for and experiences from pilot implementation,

- Possible incentives required for implementation,

- Needs for monitoring to ensure implementation,

- Scalability of the solutions, and

- Total costs of different options for effective implementation.

An example of a simple feasibility analysis for an LCA study is provided in Chapter 6.2 of Weidema, Wesnæs, Hermansen, Kristensen, and Halberg (2008).

4.5.4 |. Synergy analysis

The implementation of an improvement option may cause the improvement potential of other improvement options to increase (a synergic influence) or decrease (a dysergic influence). When a study investigates several improvement options, each of these should be analyzed for possible synergic or dysergic influences, and the most important of these should be quantified and their impact on the overall improvement potentials reported. An illustration of a comprehensive synergy analysis is provided in Weidema et al. (2008, Chapter 4.7.2), describing two cases of improvement options for meat and dairy products in Europe, as summarized below:

Case of synergy: The reduction in nitrate emissions from intensively grown cereal crops resulting from the introduction of catch crops (improvement option 1) will increase by 43% if currently extensively grown cereal areas are intensified (improvement option 2), since the latter increases the area under intensified growing practice that will benefit from the introduction of catch crops.

Case of dysergy: Ammonia emissions from manure are reduced both by optimized protein feeding (improvement option 3) and by reduction in the pH of liquid manure (improvement option 4). Since it is the same ammonia emissions that are targeted by both improvement options, the combined effects from implementing both options are smaller than the sum of the reductions from a separate implementation of either of the two options.
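The two cases can be mimicked numerically with the sketch below, which measures how much the improvement potential of one option changes once another option is implemented. The 43% figure comes from the synergy case above; the absolute quantities, the reduction fractions for ammonia, and the assumption that the two ammonia measures act multiplicatively on the remaining emissions are hypothetical and are used only to show the calculation.

```python
def relative_influence(potential_alone, potential_given_other):
    """Relative change in an option's improvement potential caused by another option."""
    return (potential_given_other - potential_alone) / potential_alone


def classify(influence, tol=1e-9):
    """Label an influence as synergic (> 0), dysergic (< 0), or independent."""
    if influence > tol:
        return "synergic"
    if influence < -tol:
        return "dysergic"
    return "independent"


# Synergy case: catch crops avoid a hypothetical 1000 units of nitrate alone,
# and 43% more once the intensively grown area has been extended (option 2).
synergy = relative_influence(1000.0, 1000.0 * 1.43)

# Dysergy case (assumed multiplicative effects): option 3 removes 20% of the
# ammonia emissions and option 4 removes 30% of whatever emissions remain.
r3, r4 = 0.20, 0.30
option4_alone = r4                # fraction of baseline removed by option 4 alone
option4_after_3 = (1 - r3) * r4   # fraction of baseline removed after option 3
dysergy = relative_influence(option4_alone, option4_after_3)

combined = 1 - (1 - r3) * (1 - r4)  # combined reduction, smaller than r3 + r4
```

Under these assumptions, the combined reduction (44% of baseline emissions) is smaller than the sum of the separate reductions (50%), which is the pattern described in the dysergy case.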

5 |. CONCLUSIONS AND OUTLOOK

Through a comprehensive review of existing literature, particularly LCA standards and books, the definition, purposes, and steps of the life cycle interpretation phase have been evaluated and revisited in this study. To help LCA practitioners interpret their LCA results in a comprehensive and harmonized way, a framework repositioning the five main interpretation steps is proposed and recommended for use in future practices. We complemented it with an operational toolbox that includes step-wise procedures and methods applicable within each of the five steps. Some of these methods have been in use for many years, while others have not been used extensively within LCA and need to be picked up by LCA practitioners.

This article presents, for the first time, an overview of the various challenges and the inherent complexity of the interpretation phase, which is often performed only rudimentarily in published studies. Our review and step-wise framework can form the basis of detailed and systematic guidance on interpretation, providing additional focus on facilitating practical implementation of methods and procedures that practitioners may perceive as difficult to apply. We recommend a follow-up study to evaluate the effectiveness of our guidance and to identify potential remaining challenges. In such a study, the transitions between the different steps and the conduct of specific methods in future LCA case studies using the framework should be assessed to pinpoint possible needs for refinements and method development. A few of the proposed methods, in particular those related to uncertainty assessment, require additional research to make them fully operational. Recent and ongoing studies primarily focus on developing new methods for evaluating uncertainty of LCA results and on filling gaps in providing uncertainty estimates for LCIA methods (Verones et al., 2019b; Wender, Prado, Fantke, Ravikumar, & Seager, 2018). Research is, however, still needed to bridge remaining gaps and gather the developed methods through a review and consensus-building process to eventually reach recommended guidance for systematic and quantitative evaluation of uncertainties in LCA studies.

ACKNOWLEDGMENTS

The authors thank Anders Bjørn and Pradip Kalbar for their support in the review of the documents reporting on methodological aspects and guidance about life cycle interpretation.

Footnotes

CONFLICT OF INTEREST

The authors declare no conflict of interest.

DISCLAIMER

The views expressed in this article are those of the authors and do not necessarily represent the views or policies of the organizations to which they belong. The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of the UNEP/SETAC Life Cycle Initiative concerning the legal status of any country, territory, city, or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. Moreover, the views expressed do not necessarily represent the decision or the state policy of the UNEP/SETAC Life Cycle Initiative, nor does citing of trade names or commercial processes constitute an endorsement. Although a U.S. EPA employee contributed to this article, the research presented was not performed or funded by and was not subject to U.S. EPA’s quality system requirements. Consequently, the views, interpretations, and conclusions expressed in the article are solely those of the authors and do not necessarily reflect or represent U.S. EPA’s views or policies.

SUPPORTING INFORMATION

Additional supporting information may be found online in the Supporting Information section at the end of the article.

REFERENCES

- Bare JC, & Gloria TP (2006). Critical analysis of the mathematical relationships and comprehensiveness of life cycle impact assessment approaches. Environmental Science & Technology, 40, 1104–1113.

- Bare JC, & Gloria TP (2008). Environmental impact assessment taxonomy providing comprehensive coverage of midpoints, endpoints, damages, and areas of protection. Journal of Cleaner Production, 16, 1021–1035.