Abstract

The dopamine system has been implicated in decision-making, particularly when associated with effortful behavior. We examined acute optogenetic stimulation of dopamine cells in the ventral tegmental area (VTA) as mice engaged in an effort-based decision-making task. Tyrosine hydroxylase-Cre mice were injected with Cre-dependent ChR2 or eYFP control virus in the VTA. While eYFP control mice showed effortful discounting, stimulation of dopamine cells in ChR2 mice disrupted effort-based decision-making by reducing choice toward the lever associated with a preferred outcome and greater effort. Surprisingly, disruptions in effortful discounting were also observed in subsequent test sessions conducted in the absence of optogenetic stimulation; however, during these sessions ChR2 mice displayed enhanced high choice responding across trial blocks. These findings suggest increases in VTA dopamine cell activity can disrupt effort-based decision-making in distinct ways dependent on the timing of optogenetic stimulation.

The neurotransmitter dopamine has been implicated in a wide range of learning and motivational processes including effort-based decision-making. At present, many studies investigating dopamine's role in this form of decision-making have found a dichotomous pattern of results in which receptor antagonism (Robles and Johnson 2017; Bryce and Floresco 2019) or depletion (Cousins and Salamone 1994; Mingote et al. 2005) of dopamine results in a biasing of behaviors away from more preferred rewards associated with increased effort. Conversely, dopamine stimulation (Bardgett et al. 2009; Wardle et al. 2011) or transgenic overexpression of dopamine receptors (Trifilieff et al. 2013) tends to invigorate responding for outcomes associated with higher effort. While much has been learned, a limiting factor with these approaches is that they lack temporal specificity, thus constraining resultant interpretations. We used optogenetics to determine the effect of acute ventral tegmental area (VTA) dopamine stimulation at the timepoint of decision-making. Such an approach allows for a focus strictly on the role of dopamine in evaluating choices with different cost–benefit outcomes, without the potentially confounding factor of consistently altered dopamine signaling throughout the decision-making test.

Twenty-six mice expressing Cre recombinase under the control of the tyrosine-hydroxylase promoter were produced via outbreeding with wild-type C57BL/6J mice (Jackson Laboratory) under the auspices of the Michigan State University Institutional Animal Care and Use Committee. Mice were housed by sex, up to five per cage presurgery. At 10 wk of age, mice underwent stereotaxic surgery in which 0.5 µL of either AAV5-Ef1α-DIO-ChR2-eYFP (n = 10 males, n = 8 females) or AAV5-Ef1α-DIO-eYFP (n = 5 males, n = 3 females; Vector Biolabs) was infused unilaterally at the level of the VTA (AP −3.08, ML ±0.6, DV −4.5 mm) in a manner counterbalanced for hemisphere. Optic fiber ferrule tips (200-µm core, 4.1 mm; Thorlabs) were implanted dorsal (≈0.3 mm) to the injection site and affixed with dental acrylic (Lang Dental Manufacturing Co.). Mice were given 4 wk postsurgery to recover and to allow for sufficient viral transfection. During this time they were singly housed, and single housing continued for the duration of the experiment in order to avoid ferrule tip damage.

Following recovery, mice were food-deprived to 90% of their free-feeding weight by limiting access to a single daily portion of lab chow. Subsequently, mice were trained in operant chambers (Med Associates) where, in separate sessions, responses on one lever led to 50-µL delivery of a preferred high value reinforcer (e.g., left lever → orange-flavored 20% sucrose), whereas responses on a different lever resulted in delivery of the lower value outcome (e.g., right lever → grape-flavored 5% sucrose). Apart from reversal testing, these lever contingencies remained fixed throughout and were counterbalanced across viral groups. Training continued until mice reached a criterion of 25 responses for each lever in a single session. Next, mice were trained on the effort-based decision-making task, during which they were tethered but received no optical stimulation. These ∼1-h sessions were divided into four blocks consisting of 10 trials each and were conducted once per day for 10–12 d. Within each block, mice first received four forced trials (two low effort and two high effort, randomly ordered) in which only one lever was extended. Subsequently, mice received six choice trials where both levers extended simultaneously. Across blocks, a single press on the low effort, low choice lever led to the delivery of the low value reinforcer (i.e., fixed-ratio 1; FR-1). Alternatively, responses on the high choice lever led to the delivery of the high value reinforcer, which gradually required more responses to acquire across blocks: block 1 = FR-1, block 2 = FR-5, block 3 = FR-20, and block 4 = FR-40. For choice trials, the first response during block 1 trials led to the retraction of both levers, whereas during blocks 2–4 the first response on the high choice lever led to the retraction of the low choice lever. Trials and blocks were separated by a fixed 60-sec interval, and if mice failed to perform a lever response within 60 sec during a trial, the trial was scored as an omission.
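The block structure described above can be sketched in a few lines of Python. This is only an illustration of the schedule as stated in the text, not the Med Associates program used in the study; the names `HIGH_LEVER_FR`, `LOW_LEVER_FR`, `N_CHOICE_TRIALS`, and `build_session` are hypothetical.

```python
import random

# Fixed-ratio requirement on the high choice lever in each block (from the text).
HIGH_LEVER_FR = {1: 1, 2: 5, 3: 20, 4: 40}
LOW_LEVER_FR = 1       # the low choice lever stays at FR-1 in every block
N_CHOICE_TRIALS = 6    # choice trials that follow the four forced trials


def build_session(seed=None):
    """Return the ordered list of trials for one four-block session."""
    rng = random.Random(seed)
    trials = []
    for block, high_fr in HIGH_LEVER_FR.items():
        # Four forced trials: two per lever, in random order, one lever extended.
        forced = ["low", "low", "high", "high"]
        rng.shuffle(forced)
        for lever in forced:
            trials.append({"block": block, "type": "forced",
                           "levers": (lever,),
                           "high_fr": high_fr, "low_fr": LOW_LEVER_FR})
        # Six choice trials: both levers extended simultaneously.
        for _ in range(N_CHOICE_TRIALS):
            trials.append({"block": block, "type": "choice",
                           "levers": ("low", "high"),
                           "high_fr": high_fr, "low_fr": LOW_LEVER_FR})
    return trials


session = build_session(seed=0)
```

Under this reading, each session contains 40 trials, of which 24 are choice trials, and only the high choice lever requirement changes across blocks.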

Following training, mice underwent two optogenetic testing days. During these stimulation sessions, light intensity was initially calibrated to emit ≈20 mW from the tip of the optical fiber. A 1-sec train of optogenetic stimulation of VTA dopamine cells (473 nm, 5-msec pulses at 20 Hz) began 5 sec prior to each choice trial and was delivered via a waveform generator (Agilent Technologies) integrated into the Med Associates apparatus. Subsequently, half the mice from each viral condition received one poststimulation test, whereas the other half received two poststimulation tests. Finally, in order to control for any potentially confounding effects of dopamine stimulation on contingency learning, a small cohort of mice (n = 4) received additional training wherein response-outcome contingencies were reversed (i.e., the low effort lever always yielded 20% sucrose under FR-1, while the high effort lever yielded 5% sucrose under increasing effort requirements). Following ten sessions of training, these mice received optogenetic testing as described above, albeit with the new response-outcome contingencies.
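To make the stimulation parameters concrete, the following Python sketch lays out the pulse timing implied by the description (a 1-sec train of 5-msec pulses at 20 Hz, beginning 5 sec before each choice trial). It is a timing illustration only and does not reproduce the waveform generator configuration; the constant and function names are hypothetical.

```python
TRAIN_DURATION_S = 1.0   # 1-sec stimulation train
PULSE_FREQ_HZ = 20.0     # 20 Hz
PULSE_WIDTH_S = 0.005    # 5-msec pulses
TRAIN_LEAD_S = 5.0       # train begins 5 sec before the choice trial


def pulse_train(trial_start_s=0.0):
    """Return (onset, offset) times in seconds for each light pulse."""
    n_pulses = round(TRAIN_DURATION_S * PULSE_FREQ_HZ)
    period_s = 1.0 / PULSE_FREQ_HZ
    train_start_s = trial_start_s - TRAIN_LEAD_S
    return [(train_start_s + i * period_s,
             train_start_s + i * period_s + PULSE_WIDTH_S)
            for i in range(n_pulses)]


train = pulse_train()
light_on_s = sum(off - on for on, off in train)   # total illumination time
duty_cycle = light_on_s / TRAIN_DURATION_S        # fraction of the train with light on
```

With these parameters each train contains 20 pulses, for about 0.1 sec of light in total (a 10% duty cycle). Read literally, the train ends roughly 4 sec before the levers extend.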

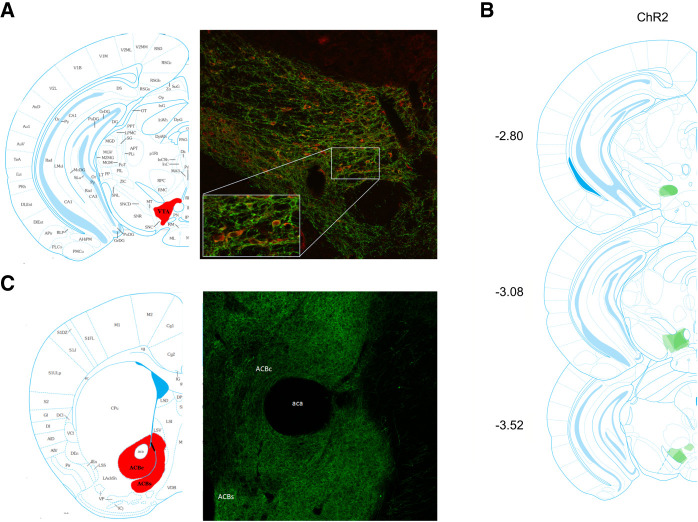

Upon conclusion of testing, mice received an intraperitoneal injection of sodium pentobarbital (100 mg/kg). Subsequently, they were perfused transcardially with 0.9% saline followed by 4% cold paraformaldehyde (Sigma-Aldrich) in 0.1 M phosphate buffer (PB). Brains were extracted and postfixed in a 12% sucrose, 4% paraformaldehyde solution for ≈24 h at 4°C. Brains were then sliced at 30 µm using a freezing microtome and stained using a mouse anti-tyrosine-hydroxylase primary (MilliporeSigma MAB318) in addition to a rabbit-anti-GFP primary (1:1000; MilliporeSigma MAB318) with donkey anti-rabbit-488 (1:500; Invitrogen A21206) and donkey-anti-mouse-568 (1:500; Invitrogen A10037) corresponding to ChR2 and TH positive cells, respectively. Imaging of the VTA (Fig. 1A) was carried out using a Nikon A1 laser-scanning confocal microscope (Nikon Instruments, Inc.). Viral targeting and colocalization were scored qualitatively by separate raters who were blind to viral conditions (Fig. 1B). On completion, n = 2 males and n = 2 females from group ChR2 were excluded from analysis due to poor targeting.

Figure 1.

(A) Representative photomicrograph showing immunohistochemical verification of Cre-dependent ChR2 (green) in tyrosine-hydroxylase positive cells (red) in VTA. (B) Quantified eYFP expression of ChR2 in VTA is displayed where light shading represents minimal spread and darker shading represents maximal spread of viral expression at each level. (C) Dense viral transfection was noted in terminals in nucleus accumbens. (ACBs) Nucleus accumbens shell, (ACBc) nucleus accumbens core, (aca) anterior commissure.

For each trial block, the percentage choice on the high choice lever (with omissions excluded) was averaged across the final two training sessions (prestimulation), the optogenetic test sessions (stimulation), and the subsequent nonstimulated session(s) (poststimulation). These data were subjected to a three-way repeated measures ANOVA with a between-subjects variable of virus (ChR2 vs. eYFP) and within-subjects variables of session type (prestimulation, stimulation, and poststimulation) and block (1–4). Follow-up virus × block ANOVAs were carried out for each session type separately, with tests of simple main effects used to determine the nature of any significant interactions. Post-hoc Bonferroni correction to control for multiple comparisons was used to determine significant main effects of either block or session. Finally, due to a lack of variance in a number of the test blocks and nonuniform distribution, analysis of the omission and contingency-reversal data was conducted using the Wilcoxon matched pairs test. The α level for significance was 0.05 and all analyses were conducted using Statistica (Statsoft).
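The dependent measure can be illustrated with a short Python sketch. It assumes each choice trial is coded as `"high"`, `"low"`, or `"omit"`; that coding, the function names, and the handling of a block in which every choice trial was omitted (returned as `None` and skipped when averaging) are assumptions made here for illustration, not details reported in the study.

```python
def percent_high_choice(choices):
    """Percentage of completed choice trials made on the high choice lever.

    `choices` holds one block's choice trials coded "high", "low", or "omit".
    Omitted trials are excluded from the denominator. Returns None when every
    trial was omitted (an assumption; the text does not cover this case).
    """
    completed = [c for c in choices if c != "omit"]
    if not completed:
        return None
    return 100.0 * completed.count("high") / len(completed)


def mean_over_sessions(values):
    """Average a block's percentage across sessions, skipping None entries."""
    usable = [v for v in values if v is not None]
    return sum(usable) / len(usable) if usable else None


# Example: block 3 from two hypothetical prestimulation sessions.
session_a = percent_high_choice(["high", "high", "low", "omit", "high", "low"])
session_b = percent_high_choice(["high", "high", "high", "high", "low", "omit"])
prestim_block3 = mean_over_sessions([session_a, session_b])
```

In the example, the first session yields 3 of 5 completed trials on the high choice lever and the second yields 4 of 5, so omissions shrink the denominator rather than counting against either lever.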

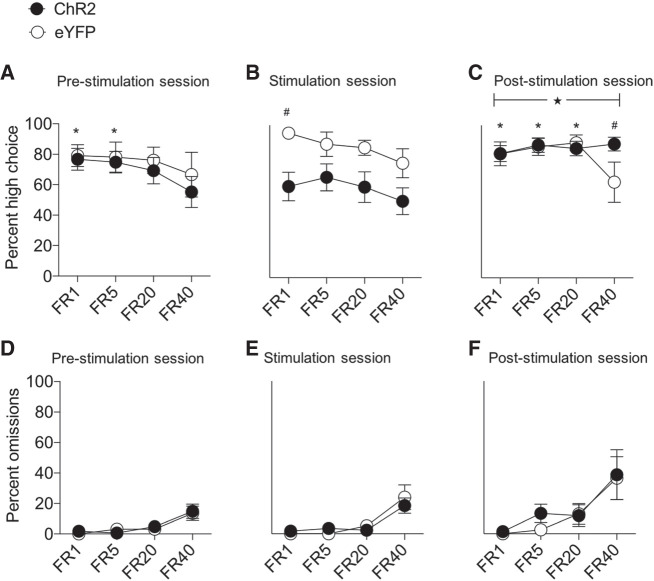

The data of primary interest are depicted in Figure 2. For the choice test data (Fig. 2A–C), analyses revealed a significant virus × session × block interaction (F(6,120) = 2.38, P < 0.05). Subsequent virus × block ANOVA for the prestimulation session (Fig. 2A) revealed a main effect of block only (F(3,60) = 3.58, P = 0.01) due to significant discounting of the high effort reward in all mice on block 4 relative to blocks 1 and 2 (Ps ≤ 0.02). By comparison, optogenetic stimulation of DA cells significantly disrupted choice test responding (Fig. 2B). ANOVA revealed a main effect of virus (F(1,20) = 5.12, P < 0.05) and block (F(3,60) = 3.55, P = 0.01). Planned comparisons revealed a significant reduction in high choice responses in ChR2 mice relative to eYFP controls on block 1 (F(1,20) = 7.51, P = 0.01) and a tendency for group differences on blocks 3 and 4 (smallest F-value; block 4, F(1,20) = 3.28, P = 0.08). This difference in performance between the groups could not be attributed to nonspecific effects of laser stimulation on motoric action as omissions (Fig. 2E) were comparable across trial blocks (largest Z-value; block 2, Z = 0.54, P = 0.58). Strikingly, in the sessions following optogenetic testing, ChR2 mice displayed disruptions in effortful discounting as they continued performing on the high choice lever irrespective of increases in the effort required to obtain reward (Fig. 2C). ANOVA revealed a significant block × virus interaction (F(3,60) = 4.66, P = 0.005) due in part to a significant reduction in high choice responses on block 4 in eYFP relative to ChR2 mice (F(1,20) = 4.72, P < 0.05). Moreover, eYFP mice discounted the 20% sucrose reinforcer as the amount of effort increased to FR-40 relative to performance during all other trial blocks (smallest F-value; block 1 vs. 4, F(1,20) = 4.54, P < 0.05). In contrast, ChR2 mice displayed comparable responding for the high choice lever across all trial blocks (Fs < 1).
These effects also did not reflect generalized motoric disruptions as omissions across trial blocks were comparable in ChR2 and eYFP mice (largest Z-value; block 2, Z = 0.98, P = 0.32) (Fig. 2F). Finally, as the effects of optogenetic stimulation in ChR2 animals were present in the first block (Fig. 2B) we wanted to confirm that laser activation in this group did not disrupt the capacity of mice to encode and/or retrieve lever contingencies (Fig. 3). Analyses revealed that irrespective of dopamine VTA stimulation, mice continued to bias their choice performance toward the low choice lever, which at this stage of testing led to delivery of the preferred higher value reinforcer (largest Z-value; block 3, Z = 1.60, P = 0.1).
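As a consistency check on the degrees of freedom reported above, the short Python sketch below computes the effect and error degrees of freedom for a mixed design with one between-subjects factor. It assumes conventional, sphericity-uncorrected degrees of freedom and a final sample of 22 mice (18 ChR2 and 8 eYFP injected, minus the 4 ChR2 exclusions); the function name is hypothetical and the snippet is not part of the original Statistica analysis.

```python
from math import prod


def mixed_anova_df(n_subjects, n_groups, within_levels=()):
    """Return (effect_df, error_df) for the group x within-factor interaction.

    With no within-subject factors this reduces to the between-subjects
    group effect. Assumes uncorrected (sphericity-assumed) degrees of freedom.
    """
    within_df = prod(k - 1 for k in within_levels)   # empty product is 1
    effect_df = (n_groups - 1) * within_df
    error_df = (n_subjects - n_groups) * within_df
    return effect_df, error_df


n_mice = (10 + 8 - 4) + (5 + 3)   # ChR2 after exclusions plus eYFP

three_way = mixed_anova_df(n_mice, 2, (3, 4))   # virus x session x block
virus_by_block = mixed_anova_df(n_mice, 2, (4,))  # virus x block, one session type
virus_only = mixed_anova_df(n_mice, 2)          # between-subjects virus effect
```

These values correspond to the F(6,120), F(3,60), and F(1,20) terms in the text. Because there are only two viral groups, the block main effect shares the same (3, 60) degrees of freedom as the virus × block interaction.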

Figure 2.

Optogenetic stimulation of VTA dopamine cells disrupts effort-based decision-making. (A) In the prestimulation decision-making test sessions prior to optogenetic stimulation, both ChR2 and eYFP mice displayed a comparable pattern of responding by reducing their high choice lever responses during the final block of FR-40 trials. Post-hoc Bonferroni contrasts revealed a significant reduction in high choice lever responding in block 4 relative to blocks 1 ([*] P < 0.01) and 2 ([*] P = 0.02). (B) Optogenetic stimulation in ChR2 mice led to a reduced preference for the high choice lever across trial blocks. (#) Significant group difference during block 1 (P = 0.01). (C) In the effort-based decision-making tests following optogenetic stimulation, poststimulation performance reflected a disruption in effortful discounting in ChR2 mice as they persistently preferred the high choice lever across trial blocks. (★) Significant virus × block interaction (P = 0.005), (#) significant group differences during block 4 (P < 0.05), (*) significant reduction in high choice responses for eYFP block 4 trials relative to blocks 1 (P = 0.05), 2 (P = 0.003), and 3 (P < 0.001). (D–F) Omissions generally increased during FR-40 trials across prestimulation (D), stimulation (E), and poststimulation (F) test sessions, though importantly no group differences were noted (Ps > 0.17).

Figure 3.

Following reversal of the lever contingencies such that the low effort lever always produced the preferred high value outcome, ChR2 mice continually maintained their preference for the low effort lever irrespective of laser stimulation or trial block.

Previous studies have shown that dopamine depletion or antagonism reduces performance following increases in the effort required to obtain reward (Salamone et al. 1991; Nowend et al. 2001; Mingote et al. 2005; Bardgett et al. 2009; Robles and Johnson 2017), whereas facilitations in dopamine signaling bias performance toward effortful actions (Bardgett et al. 2009; Wardle et al. 2011; Trifilieff et al. 2013). These findings suggest dopamine plays an integral role in decision-making when options differ in their costs and are broadly consistent with the sensorimotor activational hypothesis (Salamone et al. 2007), which posits that dopamine promotes action generation and the prolongation of effortful behavior. However, a limitation of these studies is their reliance on perturbations of dopamine function over relatively protracted timeframes.

In the current study, when acute optogenetic stimulation of dopamine cells transiently preceded effort-based decision-making, mice displayed a general reduction in choice performance for the high effort lever. These findings are in contrast to the vast majority of decision-making studies that indicate increases in dopamine promote responding for more effortful actions (Bardgett et al. 2009; Wardle et al. 2011; Trifilieff et al. 2013). Nevertheless, several findings suggest nuances in this relationship. For instance, peripheral injections of amphetamine in rats significantly decreased lever responding for preferred food pellets (Cousins and Salamone 1994) and reduced preference for a high value food outcome as the amount of effort required to obtain it increased (Floresco et al. 2008). Similarly, administration of the D2/D3 receptor agonist quinpirole in rats (Depoortere et al. 1996), or transgenic overexpression of postsynaptic striatal dopamine D2 (Drew et al. 2007) or D3 (Simpson et al. 2014) receptors, produces progressive ratio deficits. Moreover, D2 receptor overexpression also reduced the willingness to work for palatable food in a cost–benefit decision-making task (Filla et al. 2018). More targeted pharmacological approaches in rats likewise reveal a similar pattern of performance, whereby administration of a D2/D3 receptor agonist (but not D3 agonism alone) in the nucleus accumbens reduced choice responding for the high effort lever during effort-based decision-making (Bryce and Floresco 2019). Given that ChR2 injected into the VTA was trafficked to a number of targets including the nucleus accumbens (Fig. 1C), mesostriatal modulation may underlie the disruptions in effort-based decision-making observed during stimulation (Fig. 2B).
Notably, the influence of optogenetic stimulation on choice behavior persisted beyond optogenetic testing such that in the poststimulation sessions (i.e., when the laser was not activated), ChR2 mice displayed a persistent preference for the high choice lever that was not dampened by increases in effort (Fig. 2C). This disruption in effortful discounting is similar to the preponderance of pharmacological and transgenic findings in which dopamine stimulation or receptor overexpression augments responding for higher effort outcomes (Bardgett et al. 2009; Wardle et al. 2011; Trifilieff et al. 2013).

Our findings raise important questions regarding the role and consequences of acute mesencephalic dopamine cell stimulation on effort-based decision-making. Unlike past studies, acute stimulation of VTA dopamine cell activity reduced preference for the high value outcome even when effort costs were equated (i.e., during FR-1 block trials; Fig. 2B). This suggests optogenetic stimulation may have disrupted the reinforcing efficacy of the higher value reinforcer, and/or the animals’ capacity to discriminate between lever contingencies. However, this interpretation is unlikely given that when mice were tested under conditions where the higher value outcome was continually paired with low effort, mice maintained their preference for the larger preferred reward across trial blocks independent of VTA stimulation (Fig. 3). In addition, findings from either the stimulation or poststimulation tests are unlikely to reflect either baseline group differences in performance (Fig. 2A; Supplemental Fig. 1), or gross changes in motoric output and motivation, as omissions were comparable across all test days (Fig. 2D–F). Beyond these more prosaic interpretations, it is worthwhile considering that in addition to its activational effects, dopamine acts as a value-based prediction error signal critical for reward learning (Schultz 1997; Waelti et al. 2001; Bayer and Glimcher 2005; Pessiglione et al. 2006; Bayer et al. 2007; Steinberg et al. 2014; Eshel et al. 2016). Accordingly, in the optogenetic stimulation sessions decision-making performance might be influenced by disruptions in associative mechanisms controlled by reward prediction error signals. Furthermore, more recent studies suggest dopamine transients can be uncoupled from model-free reinforcement value signals to encode associative information that is computationally detailed in nature (e.g., its sensory features; Gardner et al. 2018; Sharpe et al. 2019).
With this in mind, the current findings also suggest that dopamine functions beyond a pure value-based signal, to include recall of past, and/or anticipation of detailed upcoming contingencies, which may bias performance toward actions that overall have the highest immediacy of reinforcer delivery. This could serve to direct actions toward the low effort lever where execution of a single lever press consistently results in immediate reward delivery even under cost–benefit conditions where larger rewards may be available. Alternatively, the pattern of responding during laser activation may be an artifact of optogenetic stimulation of VTA dopamine transients, whereby endogenous phasic dopamine release that supports effortful action when produced by extension of the levers may have been attenuated as a result of the preceding optogenetic stimulation (e.g., via an insufficient refractory period). Regarding poststimulation performance, our findings suggest that prior optogenetic stimulation subsequently enhanced responding for more rewarding outcomes when mice were tested without acute dopamine cell activation. Perhaps in the absence of prior dopamine cell activation, ChR2 mice were in an attenuated reward state during the poststimulation decision-making task and compensated for this by enhancing their reward seeking for the highest value reward present (i.e., 20% sucrose). Moreover, these findings should be considered when developing future experimental designs, given that the longevity of poststimulation disruptions in effortful discounting is currently unknown.

While our study provides important insight into the role of VTA dopamine transients in effort-based decision-making, a number of caveats should be acknowledged. As suggested above, this study would benefit from optical stimulation at different time points during the choice test. In addition, VTA dopamine efferents target numerous striatal (nucleus accumbens core and shell), limbic (basolateral amygdala) and prefrontal (orbitofrontal cortex, anterior cingulate cortex) sites that are implicated in decision-making (Cousins et al. 1996; Winstanley et al. 2004; Floresco and Ghods-Sharifi 2007; Hauber and Sommer 2009; Walton et al. 2009). Thus, photostimulation of axonal terminals in these downstream targets will provide additional insight into the circuit-level effects of dopamine cell stimulation on effortful discounting. It should also be recognized that our use of TH-Cre mice to control dopamine cell function is somewhat confounded by expression of the transgene in nondopamine cells within the mesencephalon (Lammel et al. 2015; Stuber et al. 2015); consequently, future approaches should use DAT-Cre lines where ectopic expression is minimal. Finally, a number of relevant measures, including latencies for deliberation time, were not recorded.

Effort-based decision-making impairments have been noted in numerous settings including in patients with depression, schizophrenia and autism (Damiano et al. 2012; Treadway et al. 2012, 2015; Green et al. 2015), along with obese individuals (Mata et al. 2017). Although reduced dopamine transmission has generally been thought to underlie these disruptions, results from our study add to a smaller yet important body of work indicating that aberrant elevations in dopamine signaling may impair cost/benefit decision-making. They are also consistent with contemporary accounts that indicate a more complex computational role for dopamine transients, which may include promoting action selection toward more immediate reward delivery. Surprisingly, the effects of acute dopamine stimulation endure beyond the stimulation test sessions, altering decision-making performance and invigorating reward-seeking for higher value and effortful rewards.

Acknowledgments

Funding for this study was supported in part by National Institutes of Health grant R01DK111475 to A.W.J.

Footnotes

[Supplemental material is available for this article.]

Article is online at http://www.learnmem.org/cgi/doi/10.1101/lm.053082.120.

References

- Bardgett ME, Depenbrock M, Downs N, Points M, Green L. 2009. Dopamine modulates effort-based decision making in rats. Behav Neurosci 123: 242. doi:10.1037/a0014625

- Bayer HM, Glimcher PW. 2005. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron 47: 129–141. doi:10.1016/j.neuron.2005.05.020

- Bayer HM, Lau B, Glimcher PW. 2007. Statistics of midbrain dopamine neuron spike trains in the awake primate. J Neurophysiol 98: 1428–1439. doi:10.1152/jn.01140.2006

- Bryce CA, Floresco SB. 2019. Alterations in effort-related decision-making induced by stimulation of dopamine D1, D2, D3, and corticotropin-releasing factor receptors in nucleus accumbens subregions. Psychopharmacology (Berl) 236: 2699–2712. doi:10.1007/s00213-019-05244-w

- Cousins MS, Salamone JD. 1994. Nucleus accumbens dopamine depletions in rats affect relative response allocation in a novel cost/benefit procedure. Pharmacol Biochem Behav 49: 85–91. doi:10.1016/0091-3057(94)90460-X

- Cousins MS, Atherton A, Turner L, Salamone JD. 1996. Nucleus accumbens dopamine depletions alter relative response allocation in a T-maze cost/benefit task. Behav Brain Res 74: 189–197. doi:10.1016/0166-4328(95)00151-4

- Damiano CR, Aloi J, Treadway M, Bodfish JW, Dichter GS. 2012. Adults with autism spectrum disorders exhibit decreased sensitivity to reward parameters when making effort-based decisions. J Neurodev Disord 4: 13. doi:10.1186/1866-1955-4-13

- Depoortere R, Perrault GH, Sanger DJ. 1996. Behavioural effects in the rat of the putative dopamine D3 receptor agonist 7-OH-DPAT: comparison with quinpirole and apomorphine. Psychopharmacology (Berl) 124: 231–240. doi:10.1007/BF02246662

- Drew MR, Simpson EH, Kellendonk C, Herzberg WG, Lipatova O, Fairhurst S, Kandel E, Malapani C, Balsam PD. 2007. Transient overexpression of striatal D2 receptors impairs operant motivation and interval timing. J Neurosci 27: 7731–7739. doi:10.1523/JNEUROSCI.1736-07.2007

- Eshel N, Tian J, Bukwich M, Uchida N. 2016. Dopamine neurons share common response function for reward prediction error. Nat Neurosci 19: 479–486. doi:10.1038/nn.4239

- Filla I, Bailey MR, Schipani E, Winiger V, Mezias C, Balsam PD, Simpson EH. 2018. Striatal dopamine D2 receptors regulate effort but not value-based decision making and alter the dopaminergic encoding of cost. Neuropsychopharmacology 43: 2180–2189. doi:10.1038/s41386-018-0159-9

- Floresco SB, Ghods-Sharifi S. 2007. Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb Cortex 17: 251–260. doi:10.1093/cercor/bhj143

- Floresco SB, Onge JRS, Ghods-Sharifi S, Winstanley CA. 2008. Cortico-limbic-striatal circuits subserving different forms of cost-benefit decision making. Cogn Affect Behav Neurosci 8: 375–389. doi:10.3758/CABN.8.4.375

- Gardner MP, Schoenbaum G, Gershman SJ. 2018. Rethinking dopamine as generalized prediction error. Proc R Soc B 285: 20181645. doi:10.1098/rspb.2018.1645

- Green MF, Horan WP, Barch DM, Gold JM. 2015. Effort-based decision making: a novel approach for assessing motivation in schizophrenia. Schizophr Bull 41: 1035–1044. doi:10.1093/schbul/sbv071

- Hauber W, Sommer S. 2009. Prefrontostriatal circuitry regulates effort-related decision making. Cereb Cortex 19: 2240–2247. doi:10.1093/cercor/bhn241

- Lammel S, Steinberg EE, Földy C, Wall NR, Beier K, Luo L, Malenka RC. 2015. Diversity of transgenic mouse models for selective targeting of midbrain dopamine neurons. Neuron 85: 429–438. doi:10.1016/j.neuron.2014.12.036

- Mata J, Dallacker M, Hertwig R. 2017. Social nature of eating could explain missing link between food insecurity and childhood obesity. Behav Brain Sci 40: e122. doi:10.1017/S0140525X16001473

- Mingote S, Weber SM, Ishiwari K, Correa M, Salamone JD. 2005. Ratio and time requirements on operant schedules: effort-related effects of nucleus accumbens dopamine depletions. Eur J Neurosci 21: 1749–1757. doi:10.1111/j.1460-9568.2005.03972.x

- Nowend KL, Arizzi M, Carlson BB, Salamone JD. 2001. D1 or D2 antagonism in nucleus accumbens core or dorsomedial shell suppresses lever pressing for food but leads to compensatory increases in chow consumption. Pharmacol Biochem Behav 69: 373–382. doi:10.1016/S0091-3057(01)00524-X

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. 2006. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature 442: 1042–1045. doi:10.1038/nature05051

- Robles CF, Johnson AW. 2017. Disruptions in effort-based decision-making and consummatory behavior following antagonism of the dopamine D2 receptor. Behav Brain Res 320: 431–439. doi:10.1016/j.bbr.2016.10.043

- Salamone JD, Steinpreis RE, McCullough LD, Smith P, Grebel D, Mahan K. 1991. Haloperidol and nucleus accumbens dopamine depletion suppress lever pressing for food but increase free food consumption in a novel food choice procedure. Psychopharmacology 104: 515–521. doi:10.1007/BF02245659

- Salamone JD, Correa M, Farrar A, Mingote SM. 2007. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology 191: 461–482. doi:10.1007/s00213-006-0668-9

- Schultz W. 1997. Dopamine neurons and their role in reward mechanisms. Curr Opin Neurobiol 7: 191–197. doi:10.1016/S0959-4388(97)80007-4

- Sharpe MJ, Batchelor HM, Mueller LE, Chang CY, Maes EJ, Niv Y, Schoenbaum G. 2019. Dopamine transients delivered in learning contexts do not act as model-free prediction errors. bioRxiv doi:10.1101/574541

- Simpson EH, Winiger V, Biezonski DK, Haq I, Kandel ER, Kellendonk C. 2014. Selective overexpression of dopamine D3 receptors in the striatum disrupts motivation but not cognition. Biol Psychiatry 76: 823–831. doi:10.1016/j.biopsych.2013.11.023

- Steinberg EE, Boivin JR, Saunders BT, Witten IB, Deisseroth K, Janak PH. 2014. Positive reinforcement mediated by midbrain dopamine neurons requires D1 and D2 receptor activation in the nucleus accumbens. PLoS One 9: e94771. doi:10.1371/journal.pone.0094771

- Stuber GD, Stamatakis AM, Kantak PA. 2015. Considerations when using cre-driver rodent lines for studying ventral tegmental area circuitry. Neuron 85: 439–445. doi:10.1016/j.neuron.2014.12.034

- Treadway MT, Bossaller NA, Shelton RC, Zald DH. 2012. Effort-based decision-making in major depressive disorder: a translational model of motivational anhedonia. J Abnorm Psychol 121: 553. doi:10.1037/a0028813

- Treadway MT, Peterman JS, Zald DH, Park S. 2015. Impaired effort allocation in patients with schizophrenia. Schizophr Res 161: 382–385. doi:10.1016/j.schres.2014.11.024

- Trifilieff P, Feng B, Urizar E, Winiger V, Ward RD, Taylor KM, Martinez D, Moore H, Balsam PD, Simpson EH, et al. 2013. Increasing dopamine D2 receptor expression in the adult nucleus accumbens enhances motivation. Mol Psychiatry 18: 1025–1033. doi:10.1038/mp.2013.57

- Waelti P, Dickinson A, Schultz W. 2001. Dopamine responses comply with basic assumptions of formal learning theory. Nature 412: 43–48. doi:10.1038/35083500

- Walton ME, Groves J, Jennings KA, Croxson PL, Sharp T, Rushworth MF, Bannerman DM. 2009. Comparing the role of the anterior cingulate cortex and 6-hydroxydopamine nucleus accumbens lesions on operant effort-based decision making. Eur J Neurosci 29: 1678–1691. doi:10.1111/j.1460-9568.2009.06726.x

- Wardle MC, Treadway MT, Mayo LM, Zald DH, de Wit H. 2011. Amping up effort: effects of d-amphetamine on human effort-based decision-making. J Neurosci 31: 16597–16602. doi:10.1523/JNEUROSCI.4387-11.2011

- Winstanley CA, Theobald DE, Cardinal RN, Robbins TW. 2004. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci 24: 4718–4722. doi:10.1523/JNEUROSCI.5606-03.2004
