Abstract

A global pandemic has significantly impacted the ability to conduct diagnostic evaluations for autism spectrum disorder (ASD). In the wake of the coronavirus, autism centers and providers had to quickly implement innovative diagnostic processes so that they could continue to serve patient needs while minimizing the spread of the virus. The International Collaborative for Diagnostic Evaluation of Autism (IDEA) is a grassroots organization that came together to discuss standards of care during the pandemic and to provide a forum in which providers communicated decisions. This white paper provides examples of how different centers adjusted their standard approaches to diagnostic evaluations for ASD during the pandemic and offers insight to other centers facing similar challenges.

Keywords: COVID-19, Autism, Diagnostic evaluations

Introduction

Autism spectrum disorder (ASD) is one of the most prevalent neurodevelopmental disorders, characterized by social and communication deficits and the presence of restricted and repetitive behaviors. Making an autism diagnosis can be difficult due to many factors, including symptom heterogeneity, developmental factors such as cognitive and language abilities, and co-occurring conditions. Best practices in ASD diagnostic evaluations must consider multiple areas of functioning. A comprehensive evaluation, at minimum, includes a clinical interview with caregivers to gather information about the child’s medical and developmental history and current concerns, as well as standardized observation of and interaction with the child by an experienced clinician. In addition, assessment of cognitive, language, and adaptive abilities is often needed for differential diagnosis and to understand developmental strengths and weaknesses. Traditionally, the evaluation, from interview to feedback, has been conducted in person with families. In-person assessments are especially important because standardized ASD evaluations require interaction between the examiner and child (e.g., play-based activities, conversations), manipulation of physical materials, and observation of the child’s social skills and repetitive behaviors (e.g., asking for help when needed, engaging in reciprocal play, giving others a turn to speak, appropriately maintaining a conversation).

A global pandemic, however, has necessitated drastic changes to how we conduct diagnostic evaluations, prompting clinicians to consider and implement innovative diagnostic processes to adapt and serve patient needs. When stay-at-home orders were issued in March 2020 in response to the COVID-19 pandemic in the U.S., providers in many states began using video-conferencing platforms to continue meeting the needs of our patients while minimizing close contact between people. Since then, in an effort to maintain social distancing and adhere to federal guidelines, a mix of video-conferencing and in-person visits has been implemented. However, in-person assessments remain drastically reduced and altered as providers work to conduct them in as safe and socially distanced a manner as possible. Although some literature shows promising results for telehealth assessments in ASD (e.g., Corona Hine et al., 2020; Smith et al., 2017), telehealth assessments need further study to determine how they compare to traditional in-person assessments. It is reasonable to assume that the psychometric properties of diagnostic measures, such as sensitivity, specificity, and score validity, may change considerably in the context of telehealth.

The Big IDEA: A Venue for Building Consensus on Best Practices in Telehealth for ASDs

Shortly after the effects of the COVID-19 pandemic began to be felt, autism organizations (e.g., Autism Speaks, Autism Science Foundation, Autism Society) started offering relevant information and resources to support individuals with ASD and their families, educators, and service providers. With the sudden loss of professional help, evidence-based tools to help families through the crisis became readily available through these organizations (e.g., tips and ideas for helping children cope with disrupted routines, strategies to manage increased challenging behaviors, guidance on preparing for successful telehealth visits, and help with getting children with ASD to wear masks).

Furthermore, representatives of autism centers across the nation began meeting remotely to discuss how their centers were responding to the needs of patients during these unprecedented times. The International Collaborative for Diagnostic Evaluation of Autism (IDEA) is a grassroots organization that originated at the University of Missouri, Columbia, and quickly grew to include service providers from 91 centers across seven countries. The group came together to discuss how to provide care for patients during the pandemic, given concerns about safety, the lack of strong empirical evidence for telehealth assessments across the age span, the lack of provider training in telehealth assessments, the possibility of increasing disparities in care, the concern of providing a diagnosis when no treatment options might be available, and the lack of telehealth measures suitable for a best-practice assessment. At the start of the pandemic, there were no guidelines for conducting ASD diagnostic evaluations during COVID-19, and IDEA meetings became a forum in which centers and providers across the nation discussed standards of care, communicated decisions, and exchanged ideas on how to respond to the unprecedented situation.

This paper is intended to summarize some of these discussions and provide examples of how different centers adjusted their standard approaches of delivering services. It is not intended to be a comprehensive review of telehealth services for individuals with ASD. Currently, as many states are facing a resurgence with rising COVID-19 cases, all centers continue to monitor and respond to the rapidly changing COVID-19 situation. Processes that are followed by some centers may be beneficial to others as they act and adjust. This commentary aims to describe how three autism centers that were part of IDEA, representing diverse geographical and catchment areas, have responded to the pandemic. Though the following does not represent all of the providers and centers that participate in IDEA, the themes and solutions are representative of many. The goal is to provide insight to other centers as they go through similar challenges and to make recommendations as to how diagnostic evaluations for ASD can and should be continued during this time.

Contributing Sites

Three autism centers that were actively involved in IDEA agreed to describe in more detail the practices they have implemented for diagnostic evaluations since the start of the pandemic: Marcus Autism Center Diagnostic Services Program, University of Minnesota Autism and Neurodevelopment Clinic (UMN-AND), and Thompson Autism Center at CHOC Children’s (TACC). As noted, many of the other participating centers followed similar procedures; thus, there was considerable consensus on the various approaches that were implemented.

Marcus Autism Center is a not-for-profit clinical, science, and training organization and a wholly owned subsidiary of Children’s Healthcare of Atlanta (CHOA). Marcus is also the Division of Autism and Related Disorders in the Department of Pediatrics, Emory University School of Medicine. Before the COVID-19 pandemic, the Marcus diagnostics clinic saw approximately 2500 patients per year, including both research characterizations and clinical diagnostic rule-outs. One of the Center’s missions is to decrease the time from first concern to diagnosis; thus, 85% of the clinical assessment program’s patients are under 5 years of age. Assessments follow either a one-day model, in which young toddlers are seen by a multidisciplinary team, or a two-day model, in which a diagnostic interview and testing are completed on separate days for preschool and school-age children.

The University of Minnesota Autism and Neurodevelopment Clinic (UMN-AND) is a specialty clinic within MHealth Fairview University of Minnesota Masonic Children’s Hospital. Prior to COVID-19, approximately 400 diagnostic evaluations were performed annually, and 80 to 100 patients received individual or group therapy. The focus of UMN-AND’s evaluation arm is on implementing comprehensive, evidence-based practices in the diagnosis of ASD, prioritizing young children and individuals with complex presentations. Evaluations typically involve two visits, with developmental testing completed at the first visit and diagnostic evaluation, feedback, and discussion of recommendations at the second. Care coordination and referrals are provided after the initial diagnosis, and patients are followed over time as their needs change with development and with participation in intervention.

The Thompson Autism Center at CHOC Children’s (TACC) is one of the newest autism centers in Orange County, CA. The center was designed to provide diagnostic and therapy services to expand the region’s capacity to serve children with ASD and their families. The assessment clinic currently has two clinic pathways: confirmatory and comprehensive. Patients triaged as “high-risk” for ASD are referred to the confirmatory clinic, which, in the interest of efficiency and the quickest route to care, does not involve comprehensive testing. All other cases (e.g., psychosocially or medically complex presentations, preschool/school-aged children) are referred to the comprehensive clinic, which allows for more comprehensive testing across different domains (e.g., Psychology, Neurology/Developmental Pediatrics, Occupational Therapy, Speech/Language).

Telehealth Diagnostic Evaluations

Triage and Preparation

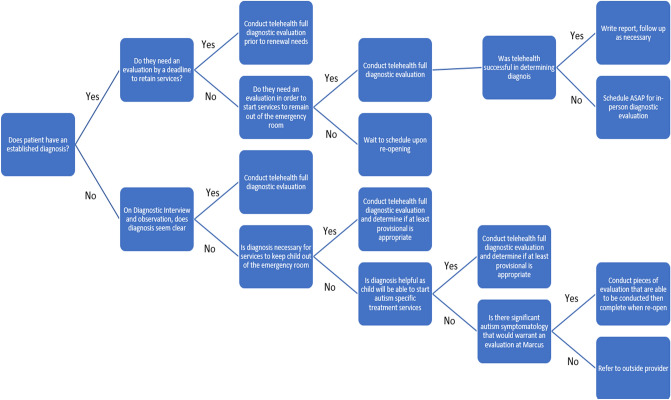

Identifying which patients would be appropriate for a telehealth assessment was a high initial priority and a topic of much consideration, particularly before each center reopened for in-person assessments. It was agreed that a triage system was necessary to identify patients appropriate for telehealth and to prioritize patients considered “urgent.” Definitions of “urgent” varied across centers depending on the nature of their existing programs but generally gave consideration to new patients (e.g., young age, high severity of problems) and follow-up patients who required re-evaluations for service maintenance (see Fig. 1 for a sample triage tree). Marcus Autism Center and UMN-AND decided to prioritize (1) patients without a previous autism diagnosis who needed a diagnosis to access additional services (e.g., young children, children with more significant needs) and (2) patients who had an autism diagnosis but were experiencing additional complexities that would affect care decisions.

Fig. 1.

Sample triage tree to identify patients appropriate for diagnostic evaluation via telehealth
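The two prioritization rules used by Marcus Autism Center and UMN-AND, combined with the follow-up contact about family interest and technology described next, can be written as a small decision function. This is a minimal sketch under stated assumptions, not any center’s actual triage tree: the `Referral` fields, the bucket names, and the order of the checks are hypothetical simplifications, and real triage also weighed factors such as age and severity that are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Referral:
    # Hypothetical intake fields; not taken from any center's actual intake form.
    has_asd_diagnosis: bool
    needs_diagnosis_for_services: bool
    has_new_complexities: bool
    wants_telehealth: bool
    has_video_access: bool


def triage(r: Referral) -> str:
    """Return a hypothetical scheduling bucket for one referral."""
    # Priority rule (1): no prior diagnosis, but one is needed to access services.
    needs_new_dx = not r.has_asd_diagnosis and r.needs_diagnosis_for_services
    # Priority rule (2): existing diagnosis plus complexities affecting care decisions.
    complex_followup = r.has_asd_diagnosis and r.has_new_complexities
    urgent = needs_new_dx or complex_followup

    # Non-urgent families, and urgent families who prefer to wait for an
    # in-person visit, are still contacted for safety checks, referrals, and resources.
    if not urgent or not r.wants_telehealth:
        return "defer_with_outreach"
    # Urgent and willing, but lacking the required technology: offer support first.
    if not r.has_video_access:
        return "telehealth_after_tech_support"
    return "telehealth"
```

Keeping the rules as two named booleans makes it easy to see which criterion made a referral urgent, which mirrors how the triage tree separates new-diagnosis cases from complex follow-ups.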

Families were then contacted to assess whether they were interested in participating in telehealth services and whether they had the required technology. Before telehealth assessments, it was crucial to communicate changes in access to services, set clear expectations for the appointment, provide information on how best to prepare for telehealth appointments (e.g., how to set up a space in the home, what types of toys/activities to have readily available), and provide technical support if needed. Patients who did not have high urgency or who wished to wait to be seen in person were also contacted to conduct any needed safety assessments, provide referrals, and offer resources.

Diagnostic Interview via Telehealth

As the pandemic unfolded and infection rates varied across regions, centers were able to offer in-person services to differing degrees. Because many centers are still unable to offer in-person services, the practice of conducting diagnostic interviews via telehealth has continued for many of them. For all three sites, continuing to conduct diagnostic interviews via telehealth has reduced the number of times families must come to the center to complete the evaluation. Given patient and clinician satisfaction with this approach, and provided that insurance companies continue to cover telehealth, it may remain a preferred option even after the pandemic is over.

Across the three centers (and many centers within the IDEA consortium), the diagnostic interview included consenting families electronically to participate in telehealth services delivered via a video-based platform and then either conducting an interview based on the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5) or completing the Autism Diagnostic Interview-Revised (ADI-R; Rutter et al., 2003) to assess autism symptomatology. In addition, many providers conducted a brief observation of the child to record a mental status exam and aid with initial triage. Translation services in the family’s native language have also been made available. Translators join the same video platform link as families and are visible during appointments, just as clinicians are.

Telehealth Behavioral Assessment

At many sites, a single telehealth visit was conducted by a psychologist. At UMN-AND, all patients triaged as high urgency were booked for two telehealth visits: one with a psychometrist and one with a licensed psychologist. In planning developmental testing, the psychologist attempted to assess as many domains as possible, selecting measures based on the child’s age, the availability of a tool for telehealth administration, and the child’s ability to respond to a telehealth testing format. The psychologist also selected parent-completed checklists to gather information on co-occurring emotional and behavioral issues (ASD-specific checklists were de-emphasized due to their limited sensitivity and specificity for new patients). Whether the assessment took place over one visit or several, measures and procedures were chosen with the intention of replicating in-person assessment visits as closely as possible. Essentially, measures of developmental or cognitive abilities, adaptive behavior, and specific diagnostic measures were all attempted.

To obtain direct observation of diagnostic symptomatology, psychologists at UMN-AND conducted a structured observation of a parent–child interaction or, for older children and adults, a structured interaction with the psychologist. Psychologists completed available trainings on the TELE-ASD-PEDS (Corona Hine et al., 2020), the Systematic Observation of Red Flags of Autism Spectrum Disorder (SORF; Wetherby et al., 2004), and the Childhood Autism Rating Scale, Second Edition (CARS-2; Schopler et al., 2010). In preparation for the return to in-person visits, psychologists also trained on the Brief Observation of Symptoms of Autism (BOSA; Lord et al., 2020). When the TELE-ASD-PEDS, CARS-2, or SORF was administered, it was scored per standardized instructions. For children whose age or language level was inappropriate for the TELE-ASD-PEDS or SORF, a non-standardized structured observation was performed. Table 1 illustrates a sample menu of parent–child interaction activities completed during a 30- to 45-min observation. Activities were selected to provide a balance of structured and unstructured activities, of interactive (e.g., ball play, doing a puzzle) and independent activities, and of play-based activities (make-believe together and alone), language-based activities (shared book reading, show-and-tell), and daily routines (e.g., clean-up, snack). Instructions for the observation were sent to families ahead of their appointment, indicating that they could choose toys and activities similar to those in Table 1 to complete during the session. During the observation, the psychologists coached the parent on when to switch activities and when to interact or sit back, so that both interactive and some independent play could be observed. For children who were verbally fluent or using flexible phrase speech, some activities were conducted with the child and examiner together.
In the show-and-tell activity, the child picked two toys and talked about them to the examiner, who asked questions and attempted to build a conversation. Verbally fluent children and adults were also asked interview questions from the ADOS-2 (Lord et al., 2012) or the BOSA (Lord et al., 2020) to gather information on their social understanding and to spark conversation. The examiner took notes to capture qualitative information. To introduce some standardization, TACC prepared a toy kit that parents could use to administer the TELE-ASD-PEDS. The toy kit included a toy phone, ball, cars, bubbles, stuffed animal, stickers, dinosaur toys, snack container, and toy utensils. Parents were scheduled to pick up the kit before their telehealth appointment. Table 2 lists some of the instruments being used via telehealth.

Table 1.

Sample menu of activities for structured observations of parent–child interactions over telehealth

| Minimally verbal menu of activities (less than phrase speech) | |
|---|---|
| Play with toys • Stuffed animal and feeding set • Vehicles and people figures • Blocks • Play dough | Pretend play together • Stuffed animals and feeding set • Vehicles and people figures • Dolls or action figures • Farm set or doll house |
| Play with people • Bubbles • Balloons • Ball game (tossing a ball gently back and forth) | Table (or floor) play together • Puzzle • Coloring • Stacking blocks or putting them in a container |
| Joint attention • Instruct parents to point to something at a distance as in ADOS-RJA | Response to name • Instruct parents to try calling child’s name up to 4 times • If no response, instruct to do or say something without touching • If no response, instruct to touch |
| Meals and snacks • Pick two kinds of foods and have Tupperware containers available with lids to put them in (e.g., grapes and goldfish crackers) | |
| **Phrases menu of activities** | |
| Play with toys • Stuffed animal and feeding set • Vehicles and people figures • Blocks • Play dough | Pretend play together • Stuffed animals and feeding set • Vehicles and people figures • Dolls or action figures • Farm set or doll house |
| Play with people • Bubbles • Balloons • Ball game (tossing a ball gently back and forth) | Table (or floor) play together • Puzzle • Coloring • Stacking blocks or putting them in a container |
| Joint attention • Instruct parents to point to something at a distance as in ADOS-RJA | Response to name • Instruct parents to try calling child’s name up to 4 times • If no response, instruct to do or say something without touching • If no response, instruct to touch |
| Meals and snacks • Pick two kinds of foods and have Tupperware containers available with lids to put them in (e.g., grapes and goldfish crackers) | Book sharing • Pick 1–2 picture books that your child likes and look at the books together • Encourage parent to comment and ask questions to build interaction around the book |
| Show and tell • Have your child pick 1 favorite toy or item to tell the parent about | |
| **Verbally fluent menu of activities, ages 4 to adolescent** | |
| Book sharing • Pick 1–2 picture books that your child likes and look at the books together | Show and tell • Have your child pick 1 favorite toy or item to tell the examiner about • Also pick a more neutral item: something your child likes but is not a super strong interest (e.g., toy or something they drew or made for school) |
| Pretend play alone (as appropriate for age) • Action figures or animal figurines, doll set • Vehicles and people figures • Legos (pretending) | Pretend play together (as appropriate for age) • Action figures or animal figurines, doll set • Vehicles and people figures • Legos (pretending) |
| Table (or floor) play together • Puzzle • Coloring/art • Legos (building) • Board game | Conversation attempts up to 3x |
| BOSA interview questions to build conversation (select ~3) | Module 3 interview questions |
| **Verbally fluent menu of activities, adolescent and adult** | |
| BOSA interview questions to build conversation (select ~4) | Module 4 interview questions |
| Conversation attempts up to 3x | Show and tell • Have your child pick 1 favorite item, movie, or book to tell the examiner about • Also pick a more neutral item: something your child likes but is not a super strong interest (e.g., something they built or did for school) |

Table 2.

Instruments available for diagnostic evaluation of autism via telehealth

| Instrument | Age range | Description |
|---|---|---|
| Brief Observation of Symptoms of Autism (BOSA) | BOSA-MV (any age, minimally verbal); BOSA-PSYF (any age using flexible phrase speech, or verbally fluent children under ages 6–8); BOSA-F1 (verbally fluent children ages 6–8 through 10); BOSA-FS (verbally fluent children ages 11 and up through adults) | A brief 15-min observation tool of parent/caregiver–child interaction designed for use by those who are trained and experienced with the ADOS-2. The BOSA uses activities adapted from the ADOS-2 and the Brief Observation of Social Communication Change (BOSCC) (https://www.semel.ucla.edu/autism/bosa-training). At this time, no BOSA data have been analyzed; preliminary data analyses based on the ADOS are available. |
| Childhood Autism Rating Scale, Second Edition (CARS-2-ST) | < 6 years of age; 6 and older with IQ below 80 or not verbally fluent | A 15-item rating scale used to identify children with ASD based on a single source of information. Its use via telehealth has not been validated. Possible activities for a telehealth assessment include: free play to observe functional, pretend, or repetitive play; the parent setting up a “ready, set, go” routine; the parent engaging in a social routine; responding to the parent calling the child’s name; looking at a family vacation picture to engage in a conversation; and playing tic-tac-toe with the parent to look for simple turn-taking and motor skills. |
| Childhood Autism Rating Scale, Second Edition, High Functioning (CARS-2-HF) | 6 years of age or older; IQ of 80 or above; fluent, spontaneous speech | A 15-item rating scale used to identify individuals with ASD based on any combination of direct observation of and interview with the individual, interview with caregivers, review of records, and questionnaires for caregivers. Its use via telehealth has not been validated. Possible activities for a telehealth assessment include: asking about both positive and negative events; talking about pictures of people in everyday situations; asking Sally-Anne task questions; interviewing about feelings and emotional regulation; asking questions regarding central coherence (e.g., the Birthday Story developed by Carol Gray [1996]); and having the parent engage in simple games (e.g., tic-tac-toe). |
| Systematic Observation of Red Flags of ASD (SORF) | 18–24 months | An observational measure designed to detect red flags for ASD in toddlers. The Home Observation is designed to collect a naturalistic observation in the home during 6 different everyday activities over an hour. Preliminary data support the utility of the SORF as a valid measure of current ASD symptoms based on a home observation (Dow et al., 2020); replication studies are needed. |
| TELE-ASD-PEDS | < 36 months | A telehealth assessment tool designed for providers with expertise in ASD assessment to guide a caregiver through play-based tasks (e.g., toy play, responding to social bids, requesting items, “ready-set-go” play, physical play, and ignore) so that the provider can watch for the presence of ASD symptoms. Not appropriate for children with flexible phrase speech. Initial data support the diagnostic accuracy of the TELE-ASD-PEDS when implemented in a laboratory setting (Corona Weitlauf et al., 2020); its use in home settings is not yet validated. Additional research on the TELE-ASD-PEDS is in progress. |
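The age, IQ, and language criteria that Table 2 gives for choosing between the two CARS-2 forms reduce to a short rule. The sketch below encodes only those stated eligibility criteria; the function name `cars2_form` and the choice to raise an error when no IQ estimate is available for a fluent child aged 6 or older are assumptions, and, as the table notes, neither form has been validated for telehealth use.

```python
from typing import Optional


def cars2_form(age_years: float, verbally_fluent: bool, iq: Optional[float] = None) -> str:
    """Pick a CARS-2 form from the Table 2 criteria (hypothetical helper)."""
    # Under age 6, the standard form applies regardless of IQ or language.
    if age_years < 6:
        return "CARS-2-ST"
    # Age 6+ without fluent, spontaneous speech: standard form.
    if not verbally_fluent:
        return "CARS-2-ST"
    # Age 6+ and fluent: the choice hinges on the IQ threshold of 80.
    if iq is None:
        raise ValueError("an IQ estimate is needed to choose a form for a fluent child aged 6+")
    return "CARS-2-HF" if iq >= 80 else "CARS-2-ST"
```

Checking language before IQ means an IQ estimate is only required in the one case where it actually decides between the forms.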

At UMN-AND, after the observation, psychologists completed the OSU Autism Rating Scale-DSM-5 (OARS-5, 2005) to document ASD symptoms seen during the structured observation and their level of frequency or impact on the child. The OARS-5 presents each DSM-5 symptom criterion, and the examiner rates the presence and severity of each symptom on a scale of 0 to 3, where 0 means no evidence of the symptom and 3 indicates a significant deficit occurring at a high frequency. UMN-AND also added an option for “not applicable” for each symptom to note when there was no opportunity to observe a particular symptom (e.g., difficulties with peer interactions). The OARS-5 was completed to organize the data collected through the observation and to facilitate diagnostic decision-making; no cutoffs were set for determining diagnosis, and no scores were reported to parents or in the final report.
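The OARS-5 workflow described above (a 0 to 3 rating per DSM-5 criterion, plus UMN-AND’s added “not applicable” option, used to organize observations rather than to apply cutoffs) can be sketched as a small data-organizing function. The criterion labels A1–A3 and B1–B4 follow the DSM-5 ASD criteria, but mapping them one-to-one onto OARS-5 items, the group names, and the use of `None` for “not applicable” are assumptions; the function deliberately computes no total score or diagnostic threshold.

```python
from typing import Dict, List, Optional

# Assumed item labels keyed to DSM-5 ASD criteria A1-A3 and B1-B4.
DSM5_CRITERIA = ["A1", "A2", "A3", "B1", "B2", "B3", "B4"]


def organize_ratings(ratings: Dict[str, Optional[int]]) -> Dict[str, List[str]]:
    """Group criteria by rating; None means no opportunity to observe (N/A)."""
    groups: Dict[str, List[str]] = {"not_observed": [], "no_evidence": [], "present": []}
    for criterion in DSM5_CRITERIA:
        if criterion not in ratings:
            raise KeyError(f"missing rating for {criterion}")
        score = ratings[criterion]
        if score is None:
            groups["not_observed"].append(criterion)
        elif score not in (0, 1, 2, 3):
            raise ValueError(f"{criterion}: rating must be 0-3 or None, got {score!r}")
        elif score == 0:
            groups["no_evidence"].append(criterion)
        else:
            groups["present"].append(criterion)
    # List observed symptoms from most to least severe; the sort is stable,
    # so ties keep DSM-5 order. No cutoff or total score is computed.
    groups["present"].sort(key=lambda c: -ratings[c])
    return groups
```

Separating “not observed” from “no evidence” preserves the distinction UMN-AND added: a missing opportunity (e.g., no peers present) is not the same as an observed absence of the symptom.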

At all centers, additional measures conducted via telehealth to obtain related developmental and adaptive information included assessments of adaptive functioning via parent interview (e.g., Vineland Adaptive Behavior Scales-Third Edition [VABS-3; Sparrow et al., 2016]) or rating scale (e.g., Adaptive Behavior Assessment System-Third Edition [ABAS-3; Harrison & Oakland, 2015]), and parent and/or teacher rating scales completed via Q-Global (e.g., Behavior Assessment System for Children-Third Edition [BASC-3; Reynolds & Kamphaus, 2015]). In some states (e.g., Georgia), insurers require specific tests before children can access evidence-based behavioral therapies (e.g., ABA). Clinicians therefore also considered these requirements when assessing whether a patient was appropriate for telehealth assessment.

Cognitive/developmental level was estimated via parent interview measures (e.g., Developmental Profile-Third Edition [DP-3; Alpern, 2007]) or, with caution, via intelligence tests available for online administration, such as the Wechsler Intelligence Scale for Children, Fifth Edition (WISC-V; Wechsler, 2014). At UMN-AND, the WISC-V and the Wechsler Adult Intelligence Scale, Fourth Edition (WAIS-IV; Wechsler, 2008) were given regularly to patients age 6 and up who were able to participate via teleconference and whose skills were likely to fall within the ranges appropriate for these measures. Many of these patients had been seen in person at the UMN-AND clinic in the past, and to date, anecdotal comparisons indicated that scores obtained via telehealth evaluation were largely consistent with past testing. Direct cognitive testing for children under age 6 or with significant developmental impairments was not possible via telehealth, which presented diagnostic challenges. About 7 months into the pandemic, UMN-AND received COVID-19 relief funds from the state to provide telehealth test kits to families. The kits contained materials for administering a standardized developmental measure, the Developmental Assessment of Young Children, Second Edition (DAYC-2; Voress & Maddox, 2013). The DAYC-2 can be scored based on observed behaviors and/or parent report, and providers gave parents instructions and coaching over telehealth on how to present the materials to test the child’s skills. This was helpful in differentiating autism from global developmental delay in young children and also allowed us to refine treatment recommendations based on the child’s strengths and weaknesses.

Feedback to Families

At some sites, the telehealth-based feedback followed immediately after a break where the psychologist prepared for feedback. Other sites scheduled a follow-up feedback session. At all sites, the psychologist would make a determination about whether enough information was available to arrive at a diagnosis regarding autism or whether in-person testing was needed to reach that conclusion, and this was shared with families at the feedback sessions. Regardless, results of the assessment as well as detailed recommendations were provided, including treatment/intervention recommendations, referrals to treatment providers, referrals to other medical providers, and recommended behavioral and learning strategies to be implemented at home or in school (e.g., toileting, sleep hygiene, positive behavior intervention supports). Clinic or care coordination staff at all sites collected and collated materials for newly diagnosed families, organized by age, that could be shared with parents as an initial packet of information, enabling families to get started with finding services and communicating the new diagnosis with others in their lives. These materials were also helpful to have present during the feedback to provide visuals to describe what autism is and what services might be beneficial. When autism was ruled out, the various centers still provided treatment recommendations and further information similar to what they would do in an in-person session. After the visits, the psychologist wrote a detailed report with appropriate caveats and cautions regarding the use of telehealth methods.

Regardless of whether a diagnosis of ASD could be determined, UMN-AND and TACC also asked families to return for an in-person visit if they had been unable to complete developmental and cognitive testing important to diagnosis and treatment planning. For younger children and children with co-occurring intellectual and developmental disabilities, this often meant returning for in-person cognitive and language testing. Older children and adults with cognitive skills outside the range of intellectual disability could often complete telehealth administration of a Wechsler intellectual battery and language measures, although UMN-AND repeatedly cautioned about the limits of current knowledge regarding the validity of these tools in a telehealth setting. Any patient for whom there was doubt about the validity of a telehealth measure was asked to return for in-person retesting, and those scores were not included in the report. At TACC, a general and neurological examination was also considered a requirement for a complete evaluation and was scheduled as part of the in-person visit, at which confirmatory testing could also be performed.

Hybrid Model

When stay-at-home orders were initially issued, 100% of diagnostic evaluations, including the interview, assessment, and feedback, were provided via telehealth. More recently, centers have begun implementing a hybrid model (e.g., intake sessions conducted via telehealth, testing done in person, feedback done in person or via telehealth) to continue limiting contact as much as possible while still seeing those who need in-person testing. Many centers, including TACC, formed a task force to develop a comprehensive process for maintaining safe practices and mitigating exposure risks during in-person visits. The task force created a detailed report outlining recommendations for building safety, health screening, visitor restrictions, infection control/cleaning, and related areas. At TACC, patients who need to be seen in person are scheduled for COVID-19 testing the day before their appointment. The test is performed with the patient in their car at a drive-through testing site in the hospital parking lot. A temperature is taken, and the test is done by nasopharyngeal (NP) swab. If the patient cannot tolerate an NP swab, specimens are collected from alternative sites (i.e., anterior nasal or oropharyngeal [OP] specimens). At Marcus and UMN-AND, screening questions and a temperature check were done on-site on the day of the appointment, but no direct testing for coronavirus was conducted. At all centers, additional safety measures include screening caregivers attending visits, limiting the number of caregivers, and requiring family members to wear masks. For children, the mask requirement depended on their age and developmental level. Clinicians were also required to wear masks and face shields, and, where appropriate and feasible, plexiglass shields could be placed between the examiner and child during developmental testing.

The hybrid model allows developmental or cognitive testing to be conducted in person, and thus increases diagnostic certainty by not relying solely on telehealth, while also decreasing the risk of infection by minimizing contact and shortening visits. It also allows more people to participate, given that many sites began limiting the number of visitors who could accompany a patient. Test materials were specifically chosen so that no one would need to remove a mask. For example, balloon and snack activities, which were part of in-person assessments before COVID-19 restrictions were imposed, were discontinued. For cognitive testing, unsharpened pencils were used as pointers to reduce touching of stimulus books, plastic sheet protectors were used with stimulus book pages, and materials that could be laminated were laminated. Examiners, caregivers, and, when possible, children wore face masks, and examiners also wore face shields. Although these modifications reduce the standardization of the assessment, we expect them to have only a minor impact on its validity, though this remains to be tested. Furthermore, all testing materials and areas were cleaned after each use. Items that cannot be cleaned (e.g., Play-Doh) were replaced after each use, and cloth items in testing kits (e.g., blankets) were washed after each use. Table 3 summarizes how the pandemic impacted our ability to complete in-person ASD diagnostic evaluations and how we modified “standard” procedures to meet current guidelines for reducing the spread of COVID-19.

Table 3.

Suggestions for modifying standard ASD diagnostic evaluation procedures during COVID-19

| Evaluation segment | Pre-COVID-19 practice | Limitations during COVID-19 | Suggestions |
|---|---|---|---|
| Interview | Semi-structured or unstructured interview; standardized measure (e.g., ADI-R) | Standardized measures (e.g., ADI-R) have not been validated over telehealth | Using a structured interview (e.g., ADI-R) over telehealth to gather qualitative information to support diagnosis |
| Observation and interaction | Standardized measures (e.g., ADOS-2, CARS-2) | Requires in-person testing; lack of opportunity for the examiner to put “presses” on the child; most measures have not been validated with PPE or over telehealth | Using alternatives such as the TELE-ASD-PEDS, SORF, or BOSA via telehealth*; conducting a structured observation (such as described in Table 1) and using it to score the CARS-2*; completing the ADOS-2 activities with PPE in clinic and using that observation to score the CARS-2; completing the ADOS-2 activities with PPE in clinic and using that observation to develop qualitative observations to support diagnosis. *Variability in parent comfort and fidelity in carrying out different levels of presses for each assessment may be a limitation |
| Additional testing | Standardized cognitive testing (e.g., WISC-V, Mullen Scales of Early Learning [MSEL; Mullen, 1995], Differential Ability Scales-II [DAS-II; Elliott, 2007]) | Standardized measures not validated with PPE or over telehealth | Administering cognitive tests online, if possible; postponing cognitive testing in some cases where adaptive/language skills can be assessed using other measures (e.g., Vineland-II); completing cognitive testing with PPE in clinic |
| Feedback | Semi-structured or unstructured format in person | In-person may be preferred | Completing feedback via telehealth; providing handouts on recommendations via email |

Data Collection Tools for Evaluation of Telehealth Methods

In the absence of empirical validation of telehealth diagnostic evaluations, several centers began collecting data on the feasibility and validity of their telehealth procedures, both to guide adjustments to those procedures and to help inform the field about the uses and limits of telehealth. For example, after each visit, UMN-AND psychologists rated their best estimate of whether the child was on the autism spectrum and then rated their certainty on a 5-point scale. They also rated items capturing feasibility, including audio-visual quality and its impact on results, quality of caregiver report, perceived caregiver comfort level with telehealth, and the presence of distractions, interruptions, and/or child dysregulation that may have impacted results. They plan to analyze this information to identify patterns related to whether a patient can be diagnosed via telehealth, diagnostic certainty, and potential disparities in access and feasibility for those with reduced access to internet tools. Similarly, TACC and Cincinnati Children’s Hospital Medical Center developed a survey to assess patient satisfaction, perceived effectiveness and efficiency, patient preferences, and the technical quality of telehealth services. These surveys were de-identified using a random code and sent via the REDCap survey function.

Although all centers are still collecting data, there are some preliminary data on patient/family satisfaction with the evaluation process. At Marcus, patients/families completed an electronic survey 3 months after the diagnostic assessment, aimed at gathering information about the patient experience, including patient/family understanding of the child's diagnosis and treatment plan, access to recommended interventions, and satisfaction with the assessment received. Surveys for assessments completed at Marcus between May and July 2020 were used for analyses. Patients seen during this period who completed the survey (N = 30) participated in the hybrid model, which consisted of a telehealth diagnostic interview, an in-person assessment following COVID-19 protocols (i.e., with personal protective equipment [PPE] worn by all adults and by some children depending on age and compliance), and either in-person or telehealth feedback. Overall, most families indicated being “Extremely Satisfied” (n = 20) or “Satisfied” (n = 8) with their experience, and two families responded “Neutral.” None of the families who completed the survey indicated that they were “Dissatisfied” or “Very Dissatisfied.” Additionally, all families who completed the survey indicated that they would “recommend” the site to other families seeking a diagnostic assessment. This is a small sample, so further information about the satisfaction of families completing diagnostic assessments during COVID-19 needs to be collected before conclusions can be drawn. In addition, this survey was designed to collect general satisfaction data and did not include specific prompts that might have provided more detailed information about families’ perceptions of, and satisfaction with, telehealth or in-person assessment with PPE.
That being said, the survey did collect data about access to intervention during COVID-19, providing some limited understanding of how these COVID-19-adapted assessments affected families. Within this same group of respondents, most families (n = 21) were able to access at least some of the recommended interventions 3 months after diagnosis. From a list of interventions provided, respondents indicated all of the interventions they were able to access. Among those who responded to this question (n = 20), 10 families were able to access a “medical follow-up” appointment (e.g., psychiatrist, developmental pediatrician), nine families were able to access autism-specific treatments such as Applied Behavior Analysis, eight families were able to access “school services” (i.e., enrolled in special education services with an Individualized Education Plan), seven families were able to access “speech therapy” in the community, five families were able to access “occupational therapy” in the community, three families were able to access “individual therapy” (e.g., meeting with a psychologist or social worker in the community), three families were able to access a “social skills group,” and two families were able to access a “parent support group.” Most families (n = 15) indicated that these interventions were delivered via telehealth. It is important to note that these data are likely affected by dynamic environmental factors (e.g., presence and perceptions of local mandates, viral spread in communities) and family-specific conditions (e.g., state- or insurance-imposed requirements for specific tests). Much more information must be gathered nationally and globally to fully understand the barriers the pandemic has imposed on access to ASD diagnostic assessment and intervention for children and families.

Billing

The Centers for Medicare and Medicaid Services (CMS) issued a range of waivers expanding care during COVID-19, including the provision of telemedicine. These waivers were originally released on March 30, 2020, but were applied retroactively to March 1, 2020. Under the waivers, telehealth visits are considered the same as in-person visits and are paid at the same rate. Billing is contingent on the use of approved telecommunications systems, which include interactive audio and video platforms that permit real-time communication. Effective April 30, 2020, however, CMS updated its policy to include reimbursement for services provided by audio-only telephone when videoconferencing platforms are unavailable.

A range of private insurance payers have followed CMS guidelines and expanded psychological services to include telehealth. Ultimately, reimbursement is contingent on state law and an individual’s insurance plan. As such, discussion of billing parameters continues to be an important part of the informed consent process between a clinic and a potential patient. Psychologists and the administrative staff supporting them must review each patient’s individual benefits and communicate them before services begin. Billing considerations should be factored into the decision of whether to pursue telemedicine evaluations or to postpone until in-person services resume.

It is important to consider that, for many payers, the expansion of telemedicine benefits is temporary. Clinics are advised to continually monitor telemedicine policies when planning future services, especially when they may need to request insurance authorization for follow-up in-person visits at a later time. It is uncertain whether insurance plans will authorize in-person psychological testing if telemedicine has been billed within the same calendar year. Some states have waived the requirement that the provider be licensed in the same state as the family, which is important given that some families have left their home state to stay with relatives or at seasonal homes to avoid densely populated areas.

Conclusion/Future Directions

Collaboration through the IDEA consortium has shown that member centers have prioritized ASD evaluations in different ways during the pandemic. Prioritization may depend on the availability of staff and equipment, policies that may be sensitive to local versus institutional needs and limitations, and the goal of the ASD evaluations (e.g., research, re-evaluations, new evaluations).

Using telehealth has led many clinicians to recognize what may have been missing by not evaluating a child’s behavior in the home environment. Telehealth has also shown many advantages during the COVID-19 pandemic, most notably increased access for families. For certain patients, a diagnosis can be made during the telehealth visit; however, the limits of our current knowledge about the validity of these tools in a telehealth setting should be kept in mind.

In addition to ensuring access to timely evaluations during the pandemic, it is imperative that centers and clinicians provide helpful and supportive resources and recommendations, especially for newly diagnosed families. During the pandemic, this often means updating resource databases to include information about who is providing in-person services and prioritizing access to respite resources for families who have had to take on more responsibility for schooling and behavior management. Services may not be accessible to individuals newly diagnosed with ASD, and providing a diagnosis when treatments are not available may lead to more frustration and anxiety for some families. While this is not a reason to avoid giving diagnoses as early as possible, it does mean that additional ongoing support and counseling may be needed in these instances.

Future opportunities include evaluating the effectiveness of nascent tools for telehealth evaluation of ASD, developing novel tools and instruments for assessing ASD remotely, and conducting studies that compare their accuracy with that of standard assessment tools. Experience with telehealth evaluations also creates an opportunity to study whether ‘hybrid’ models, which add a telehealth component to our standard evaluations, might improve diagnostic accuracy and/or needs assessment even after the current pandemic passes. Many providers noted during the IDEA discussions that providing telehealth services will change how they evaluate patients in the future, improving patient care and access: a “silver lining” of the pandemic.

As with any white paper designed to address unexpected and unheralded changes in the way we deliver care to the children, adolescents, and families we serve, much of the information contained here is based on expert opinion, anecdotal or case experience, and conclusions reached through group discussion and agreement. As a result, the recommendations contained here should be viewed as an initial attempt to assess the current landscape and to relay information in a timely manner to other clinicians about how different centers are handling assessment of ASD within the limitations imposed by the COVID-19 pandemic. This paper is not meant to represent best practices or to serve as recommendations for a standard of care. Rather, it serves as a waypoint while further studies establish evidence-based standards and practices for care, and as a guide to help clinicians provide some level of service during these unprecedented times. As we have learned, it is important to communicate during uncertain times so that we can learn from each other, adopting, adapting, and improving upon the experiences of others.

Acknowledgments

The authors wish to thank all of the providers and centers that participate in the International Collaborative for Diagnostic Evaluation of Autism (IDEA).

Author Contributions

All authors jointly contributed to conceptualize this manuscript. JJ, SPW, ANE, SHK, CK, JTM, and SMK drafted the initial manuscript. All authors critically reviewed and revised the manuscript. All authors read and approved the final manuscript.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Jina Jang, Email: jina.jang@choc.org

Stormi Pulver White, Email: stormi.pulver.white@emory.edu

Amy N. Esler, Email: esle0007@umn.edu

So Hyun Kim, Email: sok2015@med.cornell.edu

Cheryl Klaiman, Email: cheryl.klaiman@emory.edu

Jonathan T. Megerian, Email: JMegerian@choc.org

Amy Morse, Email: amorse@choc.org

Cy Nadler, Email: cnadler@cmh.edu

Stephen M. Kanne, Email: smk4004@med.cornell.edu

References

- Alpern G. Developmental profile 3. Western Psychological Services; 2007.

- Corona L, Hine J, Nicholson A, Stone C, Swanson A, Wade J, Wagner L, Weitlauf A, Warren Z. TELE-ASD-PEDS: A telemedicine-based ASD evaluation tool for toddlers and young children. Vanderbilt University Medical Center; 2020a. https://vkc.vumc.org/vkc/triad/tele-asd-peds

- Corona LL, Weitlauf AS, Hine J, Berman A, Miceli A, Nicholson A, Warren Z. Parent perceptions of caregiver-mediated telemedicine tools for assessing autism risk in toddlers. Journal of Autism and Developmental Disorders. 2020;51:476–486. doi: 10.1007/s10803-020-04554-9.

- Dow D, Day TN, Kutta TJ, Nottke C, Wetherby AM. Screening for autism spectrum disorder in a naturalistic home setting using the systematic observation of red flags (SORF) at 18–24 months. Autism Research. 2020;13:122–133. doi: 10.1002/aur.2226.

- Elliott CD. Differential ability scales. 2nd ed. Harcourt Assessment; 2007.

- Gray C. Gray's guide to neurotypical behavior: Appreciating the challenge we present to people with autistic spectrum disorders/part 1: Cognition and communication. The Morning News. 1996:10–14.

- Harrison PL, Oakland T. Adaptive behavior assessment system. 3rd ed. Western Psychological Services; 2015.

- Lord C, Dow D, Holbrook A, Kim SH. Brief observation of symptoms of autism (BOSA). Western Psychological Services; 2020.

- Lord C, Rutter M, DiLavore PC, Risi S, Gotham K, Bishop S. Autism diagnostic observation schedule, second edition (ADOS-2). Western Psychological Services; 2012.

- Mullen EM. Mullen scales of early learning: AGS edition. Minneapolis, MN: Pearson (AGS); 1995.

- Reynolds CR, Kamphaus RW. Behavior assessment system for children, third edition manual. American Guidance Service; 2015.

- Rutter M, Le Couteur A, Lord C. ADI-R autism diagnostic interview revised manual. Western Psychological Services; 2003.

- Schopler E, Van Bourgondien ME, Wellman GJ, Love SR. The childhood autism rating scale, second edition (CARS2). Western Psychological Services; 2010.

- Smith CJ, Rozga A, Matthews N, Oberleitner R, Nazneen N, Abowd G. Investigating the accuracy of a novel telehealth diagnostic approach for autism spectrum disorder. Psychological Assessment. 2017;29:245–252. doi: 10.1037/pas0000317.

- Sparrow SS, Cicchetti DV, Saulnier CA. Vineland adaptive behavior scales, third edition (Vineland-3). Pearson; 2016.

- The Ohio State University (OSU) Research Unit on Pediatric Psychopharmacology. OSU Autism Rating Scale (OARS; adapted for SEED) and Clinical Global Impression (CGI; adapted for SEED). 2005. Retrieved 30 Aug 2020 from http://psychmed.osu.edu/resources.htm

- Voress JK, Maddox T. Developmental assessment of young children, second edition (DAYC-2). PRO-ED; 2013.

- Wechsler D. Wechsler adult intelligence scale. 4th ed. Psychological Corporation; 2008.

- Wechsler D. Wechsler intelligence scale for children, fifth edition. Pearson; 2014.

- Wetherby A, Woods J, Allen L, Cleary J, Dickinson H, Lord C. Early indicators of autism spectrum disorders in the second year of life. Journal of Autism and Developmental Disorders. 2004;34:473–493. doi: 10.1007/s10803-004-2544-y.