Abstract

Brain-computer interfaces (BCIs) record brain activity and translate the information into useful control signals. They can be used to restore function to people with paralysis by controlling end effectors such as computer cursors and robotic limbs. Communication neural prostheses are BCIs that control user interfaces on computers or mobile devices. Here we demonstrate a communication prosthesis by simulating a typing task with two rhesus macaques implanted with electrode arrays. The monkeys used two of the highest known performing BCI decoders to type out words and sentences when prompted one symbol/letter at a time. On average, Monkeys J and L achieved typing rates of 10.0 and 7.2 words per minute (wpm), respectively, copying text from a newspaper article using a velocity-only two-dimensional BCI decoder with dwell-based symbol selection. With a BCI decoder that also featured a discrete click for key selection, typing rates increased to 12.0 and 7.8 wpm. These represent the highest known achieved communication rates using a BCI. We then quantified the relationship between bitrate and typing rate and found it approximately linear: typing rate in wpm is nearly three times bitrate in bits per second. We also compared the metrics of achieved bitrate and information transfer rate and discuss their applicability to real-world typing scenarios. Although this study cannot model the impact of the cognitive load of word and sentence planning, the findings here demonstrate the feasibility of BCIs as communication interfaces and represent an upper bound on the expected achieved typing rate for a given BCI throughput.

I. Introduction

One potential application of brain-computer interfaces (BCIs) is the restoration of communication for people with movement disabilities. The goal of such systems is to communicate text quickly and efficiently. Healthy individuals type text via a keyboard through discrete selections of keys using multiple fingers nearly simultaneously. This is a relatively high throughput task, and even the highest performing BCI algorithms are several times too slow to sustain such data rates [1–3]. One alternative approach is to use a neurally-driven cursor on a virtual keyboard, akin to using a computer mouse or finger on a mobile computing device. In this manner, the interface has fewer degrees of freedom (e.g., two-dimensional movement and optionally a single discrete click), and operates with lower data rates. This approach has demonstrated feasibility in both preclinical [2–8] and early clinical [9–14] studies. The current highest sustained throughput achieved via a BCI has been with primate studies and achieves around 4–6 bits per second [2, 3, 15]. Although bitrate is an important measure of achieved performance, for a communication BCI, a more relevant measure is typing rate. However, the relationship between bitrate and typing rate is not well understood. The aim of this study was to conduct typing task experiments in non-human primates using the highest known performing BCIs and to quantify the resulting typing rate. Although this experiment is limited because monkeys do not possess a written language, such a study would provide an upper limit to the expected effective typing rate with a given level of BCI throughput.

II. Methods

Two sets of experiments were conducted in this study. They differed in the neural decoder and the resulting method of selection. The first experiment, termed dwell typing, involved only cursor velocity control [7], while the second, called click typing, included both cursor velocity control and a discrete click [3]. The aim in both experiments was to communicate prompted text as quickly and accurately as possible.

Experimental setup

Monkey protocol and behavior

All procedures and experiments were approved by the Stanford University Institutional Animal Care and Use Committee (IACUC). Experiments were conducted with two adult male rhesus macaques (L and J), implanted with 96-channel Utah electrode arrays (Blackrock Microsystems, Salt Lake City, UT) using standard neurosurgical techniques. Electrode arrays were implanted in arm motor regions of primary motor cortex (M1) and dorsal premotor cortex (PMd), as estimated visually from local anatomical landmarks as previously described [1]. Monkey L was implanted with a single array at the M1/PMd border on 2008-01-22. Monkey J had two arrays implanted on 2009-08-24, one in M1 and the other in PMd. All available channels were used from both monkeys. Monkeys had been previously trained to make point-to-point reaches in a 2D plane with a virtual cursor controlled by the contralateral arm or by a neurally-driven decoder, as diagrammed in Figure 1a. They showed no evidence of learning on the task during these studies. The arm ipsilateral to the implanted hemisphere was gently restrained at all times during these experiments. The contralateral arm was left unbound and typically continued to move even during neurally-controlled sessions. This approach was preferred because it minimized any behavioral, and thus neural, differences between the training set (arm control sessions) and the testing set (neural prosthesis sessions). This animal model was selected because we believe it more closely mimics the neural state a human user would employ when controlling a neural prosthesis in a clinical setting [16]. This model has previously demonstrated comparable performance to the dual arm-restrained animal model, where the contralateral arm is additionally restrained [7].

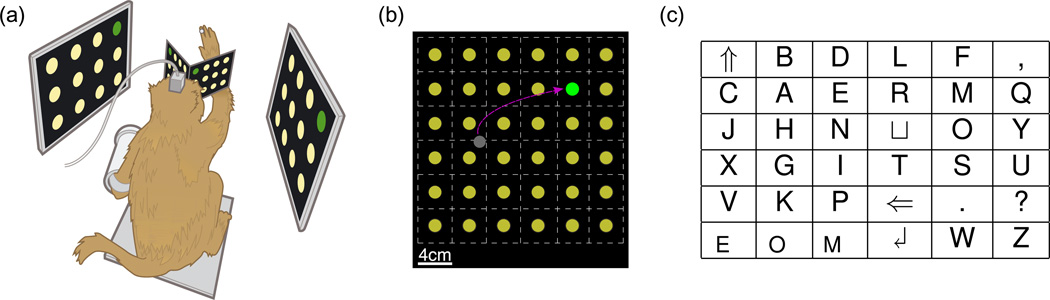

Fig. 1. Experimental Setup.

a Diagram of monkey performing typing task using experimental neuroelectrophysiology rig. b Diagram of virtual typing task. Workspace was divided into a 6 × 6 grid of 4 cm square targets. Gray cursor was under control of monkey. Monkeys were presented with yellow targets and a green prompted target to navigate to. Dashed lines denote the acquisition region boundaries, which were not shown to the monkey. c Layout of the 6 × 6 keyboard. Special keys were: ⇑ (shift), ⊔ (space), ⇐ (delete), ↲ (return), and EOM (bottom left), a triplet of keys to select in sequence to note “end of message”.

Experimental hardware

The virtual cursor and grid of targets were presented in a 3D environment (MSMS, MDDF, USC, Los Angeles, CA) [17] as previously reported [2]. Hand position was measured with an infrared reflective bead tracking system (Polaris, Northern Digital, Ontario, Canada) polling the acquisition region at 60 Hz. Neural data were processed by the Cerebus recording system (Blackrock Microsystems, Salt Lake City, UT) and made available to the behavioral control system within 5 ± 1 ms. Spike counts were collected by applying a single negative threshold, set to −4.5 times the root-mean-square voltage of the spike band of each neural channel, using the Cerebus software’s thresholding algorithm [18]. Behavioral control and neural decode were run on separate PCs using the Simulink Real-Time/xPC platform (Mathworks, Natick, MA) with communication latencies of 3 ms. This system enabled millisecond-timing precision for all computations. Visual presentation was provided via two LCD monitors with refresh rates of 120 Hz, yielding frame updates within 7 ± 4 ms. Two mirrors, set up as a Wheatstone stereograph, visually fused the monitors into a single 3D percept for the monkey, although all cursor motion was constrained to two dimensions [19]. Datasets are referenced by monkey initial and date; for example, a dataset from Monkey J is labeled JYYYYMMDD.
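The thresholding rule described above can be illustrated with a short sketch. It is not the Cerebus algorithm itself: the function name, the bin length in samples, and the convention of counting only downward crossings are assumptions made for illustration.

```python
import math

def count_threshold_crossings(voltage, samples_per_bin, rms_multiplier=-4.5):
    """Count downward threshold crossings per bin on one channel.

    The threshold is rms_multiplier times the RMS of the supplied
    spike-band samples. Binning by a fixed sample count is an assumption.
    """
    rms = math.sqrt(sum(v * v for v in voltage) / len(voltage))
    threshold = rms_multiplier * rms
    n_bins = len(voltage) // samples_per_bin
    counts = [0] * n_bins
    for i in range(1, len(voltage)):
        # A crossing is a sample below threshold whose predecessor was not.
        crossed = voltage[i] < threshold and voltage[i - 1] >= threshold
        bin_index = i // samples_per_bin
        if crossed and bin_index < n_bins:
            counts[bin_index] += 1
    return threshold, counts

# Synthetic trace: flat baseline with two large negative deflections.
trace = [0.0] * 100
trace[10] = -10.0
trace[60] = -10.0
threshold, counts = count_threshold_crossings(trace, samples_per_bin=50)
```

Per-bin counts of this kind are the sort of input a decoder would consume; the bin width used in the study is not stated in this section.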

Decoder design

Both of the decoders used in this study have been previously described and demonstrated [3, 7], but are briefly described below.

ReFIT Kalman Filter

The cursor movement decoder used in this task was the Recalibrated Feedback Intention-Trained Kalman Filter (ReFIT-KF) [7, 20]. The ReFIT-KF algorithm uses a two-step training protocol that corrects the errors made during an initial block of closed-loop control to arrive at a final high-performing decoder. The ReFIT-KF has demonstrated the highest sustained throughput [2] and longest sustainable performance [15] of any single BCI decoder to date.

To build the decoder used in this study, 500 center-out-and-back trials were performed under a hand-controlled cursor to targets in eight directions at a 12 cm radius, as previously reported [7]. This initial dataset was used to build a first-pass decoder, which was then tested online to collect a second training set for which the kinematics were corrected, yielding the final decoder that was used for the remainder of the experimental day.

HMM Click Decoder

A hidden Markov model (HMM) was used to decode the intention to click during the second set of experiments. Details of this decoder were described in a prior study [3]. A click decoder avoids the dwell time needed to select targets in a cursor-movement-only BCI, yielding performance gains of up to 50%. Running in parallel, the ReFIT-KF and the HMM achieved the highest throughput of any BCI under any control modality. In general, the HMM is trained by separating the hand-controlled reaches into two states: move and stop. The stop state is the period of time where the cursor is dwelling over the target. These labelled states are used to build the transition model, and the corresponding neural time bins are used to estimate the emissions model. Together, the two decoders enable two-dimensional cursor control and a click signal, akin to using a conventional computer mouse.
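As a minimal sketch of the two-state idea described above, the following code estimates a transition matrix from a labelled move/stop sequence and performs one forward (filtering) update. The emission model is deliberately left out: per-state likelihoods are passed in as numbers, because the form of the emissions model is described in [3] rather than here. All function names and the integer state labels are assumptions.

```python
MOVE, STOP = 0, 1  # hypothetical integer labels for the two states

def estimate_transition_matrix(states, n_states=2):
    """Maximum-likelihood transition probabilities from a labelled sequence."""
    counts = [[0] * n_states for _ in range(n_states)]
    for prev, nxt in zip(states[:-1], states[1:]):
        counts[prev][nxt] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # Fall back to a uniform row if no transition out of a state was seen.
        matrix.append([c / total if total else 1.0 / n_states for c in row])
    return matrix

def forward_step(prior, transition, likelihood):
    """One HMM forward update: predict with the transition model,
    weight by the emission likelihood of the current neural bin,
    then normalise (assumes at least one likelihood is non-zero)."""
    n = len(prior)
    predicted = [sum(prior[i] * transition[i][j] for i in range(n))
                 for j in range(n)]
    unnormalised = [predicted[j] * likelihood[j] for j in range(n)]
    total = sum(unnormalised)
    return [u / total for u in unnormalised]

labels = [MOVE, MOVE, MOVE, STOP, STOP, MOVE]
A = estimate_transition_matrix(labels)
posterior = forward_step([1.0, 0.0], A, likelihood=[0.2, 0.8])
```

How the posterior over the stop state is converted into a click (for example, by thresholding) is not specified in this section and is not modelled in the sketch.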

Typing task

The typing task was set up in a grid layout, similar to that used in a prior study that optimized keyboard-like tasks [2]. The workspace was a 6×6 grid with 4×4 cm acceptance regions, where letters and symbols were assigned to specific “keys” as shown in Figure 1b. The arrangement of letters was derived from a Metropolis keyboard layout [21, 22] as shown in Figure 1c. The monkeys were not shown this mapping and saw only yellow dots in a grid layout. However, the prompting of these targets was done in a specific sequence as dictated by the text to be copied. The prompted target was lit in green, and the monkey’s goal was to navigate the cursor to that green target and select it while avoiding selection of a yellow target. Monkeys were rewarded with a liquid reward for each successful trial. Selection of a yellow target was considered an error and resulted in a failed trial and no liquid reward. Every error had to be corrected by selecting the “delete key” before the text sequence resumed, just as on a conventional keyboard. In this fashion, the task simulated the typing of words and sentences that a human participant would perform.
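The prompting rule described above (prompt the next character of the text unless an uncorrected error is outstanding, in which case prompt delete) can be sketched as follows. The function names, the `<DEL>` token, and the list-based buffer are illustrative assumptions, not the study's task code.

```python
DELETE = "<DEL>"  # hypothetical token standing in for the delete key

def next_prompt(target_text, typed):
    """Return the key to prompt next, or None once the text is complete."""
    # Length of the longest prefix of the typed buffer that matches the target.
    correct = 0
    while (correct < len(typed) and correct < len(target_text)
           and typed[correct] == target_text[correct]):
        correct += 1
    if len(typed) > correct:
        return DELETE  # outstanding errors must be removed first
    if correct == len(target_text):
        return None
    return target_text[correct]

def apply_selection(typed, key):
    """Update the typed buffer after a key is selected."""
    if key == DELETE:
        return typed[:-1]
    return typed + [key]

typed = []
typed = apply_selection(typed, "x")   # wrong key selected
prompt_after_error = next_prompt("hi", typed)   # delete is prompted
typed = apply_selection(typed, DELETE)
prompt_after_fix = next_prompt("hi", typed)     # back to the first letter
```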

There were two methods for selecting a letter, depending on which experiment was being conducted. In the dwell typing experiment, with only the ReFIT-KF and 2D cursor control, targets were selected by dwelling on them for the required 450 ms hold time. In the click typing experiment, the HMM click decoder controlled symbol selection by detecting the intention to select the target under the cursor.
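A minimal sketch of dwell-based selection on the 6×6 grid of 4 cm keys with a 450 ms hold time is given below. The coordinate origin at a workspace corner, the sample format, and the rule that the hold timer restarts whenever the cursor enters a different key are assumptions for illustration.

```python
def key_under_cursor(x_cm, y_cm, key_size_cm=4.0, grid=6):
    """Map a cursor position to a (row, col) key, or None if off the grid."""
    col = int(x_cm // key_size_cm)
    row = int(y_cm // key_size_cm)
    if 0 <= row < grid and 0 <= col < grid:
        return row, col
    return None

def first_dwell_selection(samples, hold_ms=450.0, key_size_cm=4.0, grid=6):
    """Return (time_ms, key) for the first key held for hold_ms, else None.

    samples is an iterable of (time_ms, x_cm, y_cm) tuples in time order.
    """
    current_key, entered_at = None, None
    for t_ms, x_cm, y_cm in samples:
        key = key_under_cursor(x_cm, y_cm, key_size_cm, grid)
        if key != current_key:
            current_key, entered_at = key, t_ms  # restart the hold timer
        elif key is not None and t_ms - entered_at >= hold_ms:
            return t_ms, key
    return None

# Cursor parked inside one key, sampled every 50 ms.
parked = [(t, 5.0, 5.0) for t in range(0, 600, 50)]
selection = first_dwell_selection(parked)
```

In the click typing experiment this hold timer would be replaced by the click decoder's output, which is why removing the dwell period can raise the selection rate.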

The passages for the two experiments were different and were selected from articles in the New York Times (dwell typing [23] and click typing [24]). For the purpose of this study, capitalization and punctuation were ignored. These experiments were repeated on more than one day, but only one representative day from each control modality is shown for each monkey. From the monkeys’ points of view, these experiments were effectively identical to prior experiments where only bitrate was measured [2, 3, 15], differing only in that targets were prompted in a non-random fashion. Those three prior studies contained over a million trials between them and confer significant confidence in the tasks and decoding paradigms, so for clarity and simplicity of presentation we elected here to show only a single representative day of typing tasks under each decoding modality.

Metrics

There are three relevant metrics in this study. Each will be discussed briefly below.

Typing rate

Typing rate is the primary measure used in this study and the most intuitive because of its familiarity and applicability. Conventional typing rate is measured as the effective characters per minute transmitted when copying text. These approaches have varying ways to account for uncorrected errors (e.g., a misspelled word). However, we did not utilize any of these in this study as we continually prompted the delete key until all errors were corrected before resuming. Thus, the stream of text transmitted was always correct, except for the most recent sequence of errors.

Typing rate can be described via the following equation:

$$T = \frac{S_c - S_i}{5\,t}$$

where $T$ represents typing rate in words per minute, $S_c$ represents the correct number of symbols/keys transmitted (including spaces and deletes), $S_i$ is the number of incorrect symbols/keys transmitted (that would then have to be deleted), and $t$ is the elapsed time in minutes. The division by five is the convention used to convert characters (including spaces) per minute into words per minute [25]. Note that this measure is independent of information theoretic influences such as dictionary size and channel code. Any coding method can be used so long as the output is meaningful English, though the most common method used is the single-symbol channel coded QWERTY keyboard.
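As a worked illustration of this definition, the sketch below assumes the rate takes the form of net correct symbols (correct minus incorrect) divided by five characters per word and by the elapsed time in minutes. The function name and the example counts are hypothetical.

```python
def typing_rate_wpm(correct, incorrect, elapsed_min, chars_per_word=5):
    """Typing rate in words per minute from symbol counts.

    correct and incorrect are counts of transmitted symbols; elapsed_min
    is the elapsed time in minutes (assumed unit for a per-minute rate).
    """
    if elapsed_min <= 0:
        raise ValueError("elapsed_min must be positive")
    return (correct - incorrect) / (chars_per_word * elapsed_min)

# Hypothetical session: 550 correct and 50 incorrect selections in 10 minutes.
rate = typing_rate_wpm(correct=550, incorrect=50, elapsed_min=10)  # 10.0 wpm
```

Each incorrect symbol costs twice: it adds nothing to the text, and the delete that removes it is counted as a correct selection that only cancels the error.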

Achieved bitrate

Achieved bitrate represents the effective throughput of the system under a single-symbol channel code, previously described in detail [2]. A single-symbol channel code is the most conventional form of communication channel used by people when they type (i.e., a QWERTY keyboard). Although multi-symbol channel codes are more efficient and resistant to errors and considered best practice in electronic communication channels, they are not practical or easy for people to use. In a single-symbol channel coded keyboard, the delete button is used to correct errors one symbol/letter at a time. The equation for achieved bitrate under this coding scheme is below:

$$B = \frac{\log_2(N - 1)\,\max(S_c - S_i,\ 0)}{t}$$

where $B$ is the achieved bitrate in bits per second (bps) and $N$ is the number of selectable symbols on the interface (including the delete key). The other three parameters are the same as for typing rate: $S_c$ is the correct number of symbols, $S_i$ is the number of incorrect symbols, and $t$ is the elapsed time, here measured in seconds. The $N - 1$ term accounts for the reduction in dictionary size by one because the delete key does not produce a symbol, but instead is a marker which edits the stream of transmitted symbols. The max function is necessary because bitrate cannot be negative, and this demonstrates that a 50% success rate (i.e., where $S_c = S_i$) conveys no net information. This metric has been previously derived in a slightly different, but mathematically equivalent form termed “practical bit rate” in an EEG-based BCI study [26].
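The definition above can be expressed as a short function. This is a sketch assuming the form log2(N − 1) times the net correct symbols, floored at zero, divided by elapsed seconds; the function name and example numbers are hypothetical.

```python
import math

def achieved_bitrate_bps(n_symbols, correct, incorrect, elapsed_s):
    """Achieved bitrate in bits per second under a single-symbol code.

    n_symbols counts every selectable key including delete, so each net
    correct symbol carries log2(n_symbols - 1) bits.
    """
    if n_symbols < 3:
        raise ValueError("need at least two symbols plus a delete key")
    net_symbols = max(correct - incorrect, 0)  # bitrate cannot be negative
    return math.log2(n_symbols - 1) * net_symbols / elapsed_s

# Hypothetical run on a 5-key interface: 10 correct, 0 incorrect, 10 s.
rate = achieved_bitrate_bps(n_symbols=5, correct=10, incorrect=0, elapsed_s=10)
```

With equal numbers of correct and incorrect selections the function returns zero, matching the statement that a 50% success rate conveys no net information.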

Information transfer rate

The most popular metric in the field of BCIs is information transfer rate (ITR), first introduced in 1998 [27]. It is a measure of channel capacity and thus always overestimates the throughput of the communication channel. Further, it is not a practical measure because of the many assumptions it makes [28], including infinite length channel codes. The equation for information transfer rate is shown below:

$$\mathrm{ITR} = \left[\log_2 N + P \log_2 P + (1 - P)\log_2\!\left(\frac{1 - P}{N - 1}\right)\right]\frac{S_c + S_i}{t}$$

where ITR is the information transfer rate in bps, $N$ is the number of selectable symbols, $P$ is the probability of correctly selecting a symbol (i.e., $P = S_c / (S_c + S_i)$), and $t$ is the elapsed time. Comparisons between ITR and achieved bitrate appear towards the end of the text.
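For comparison with achieved bitrate, the ITR definition can be sketched as below. The code assumes the standard form from [27]: bits per selection of log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)), multiplied by the total number of selections per second. The function name is hypothetical, and the P = 0 and P = 1 edge cases are handled by dropping the terms that vanish in the limit.

```python
import math

def information_transfer_rate_bps(n_symbols, correct, incorrect, elapsed_s):
    """Information transfer rate in bits per second."""
    total = correct + incorrect
    p = correct / total  # empirical probability of a correct selection
    bits = math.log2(n_symbols)
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_symbols - 1))
    return bits * total / elapsed_s

# At chance accuracy (P = 1/N) the rate is zero; at P = 1 every
# selection carries log2(N) bits.
at_chance = information_transfer_rate_bps(4, correct=25, incorrect=75, elapsed_s=100)
perfect = information_transfer_rate_bps(4, correct=100, incorrect=0, elapsed_s=100)
```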

III. Results

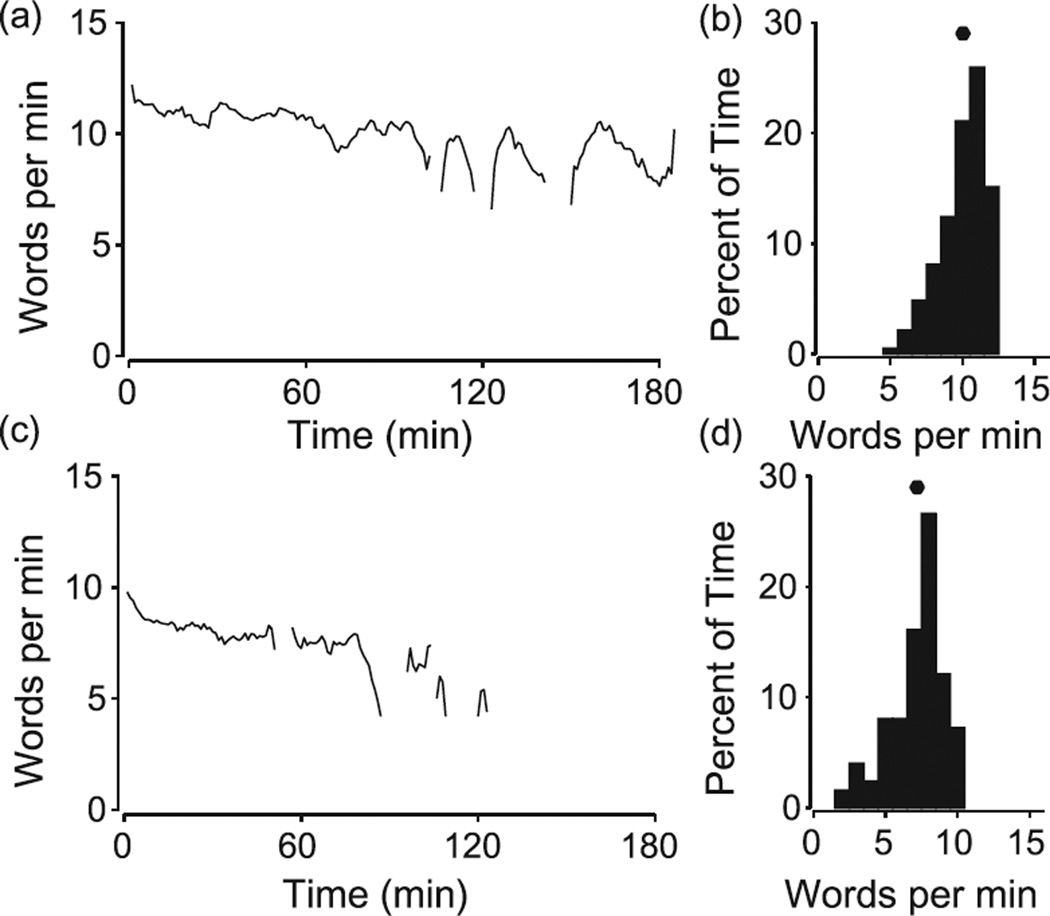

Both the dwell and click typing experiments were successful in simulating typing under the varying control modalities. Performance on the dwell typing experiment with both monkeys is shown in Figure 2. Monkey J achieved an average rate of 10.0 words per minute (wpm), completing the prompted article in about three hours. Monkey L achieved an average of 7.2 wpm and completed about two thirds of the article in two hours before voluntarily stopping. Part of the reason for Monkey L’s lower performance is that he has lower overall behavioral motivation and BCI throughput than Monkey J. Figure 3 shows a still frame from Supplementary Video 1, an example of Monkey J using the BCI to transmit text in the dwell typing experiment.

Fig. 2. Dwell typing performance.

a Plot of dwell typing experiment of Monkey J. Discontinuities seen after 100 minutes are when the monkey took voluntary pauses. Data from dataset J20111021. b Histogram of dwell typing performance from the same dataset in Monkey J. Dot at top notes the average typing rate of 10.0 wpm. c Same plot as in panel a, but for Monkey L, from dataset L20111103. d Same plot as in panel b, but for Monkey L, with an average typing rate of 7.2 wpm.

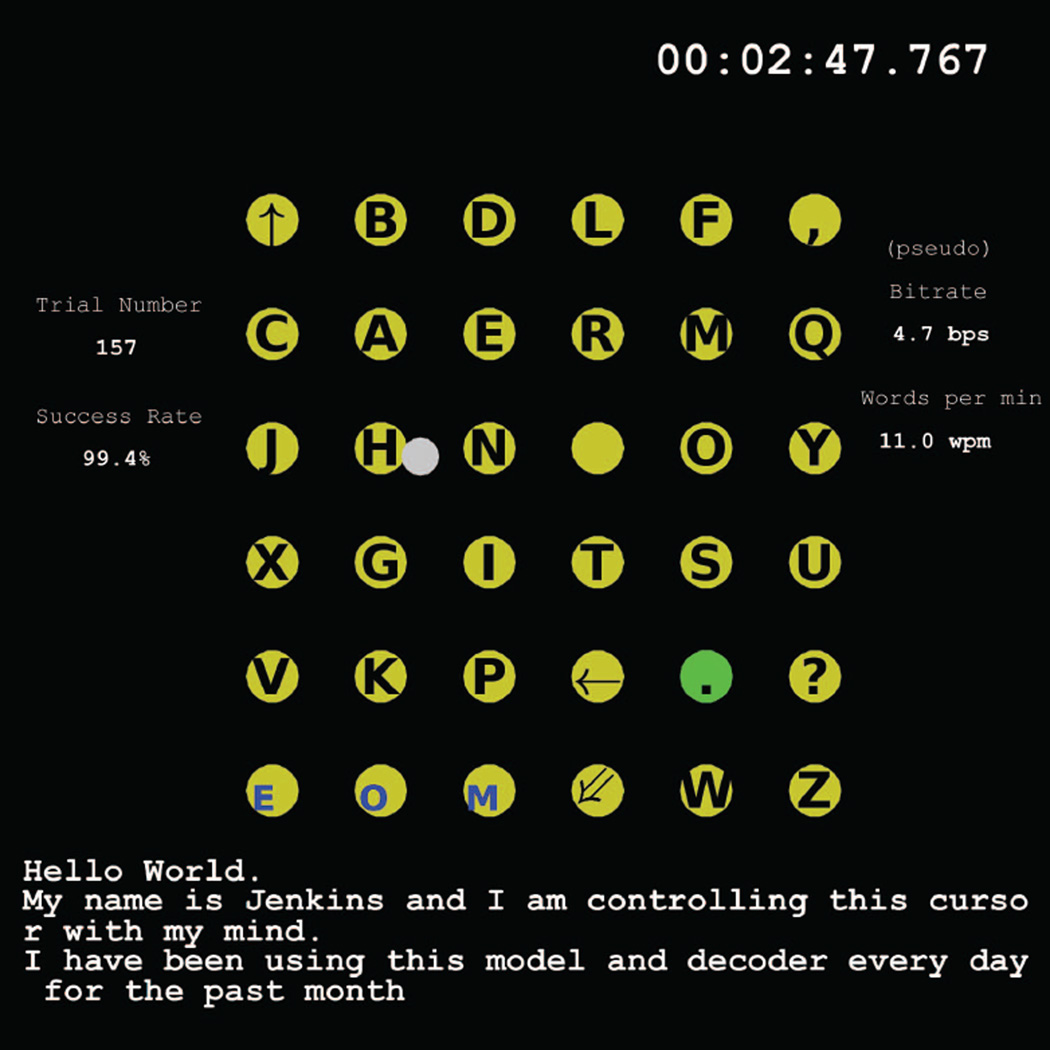

Fig. 3. Example of dwell typing.

Still frame from Supplementary Video 1 showing performance of the dwell typing task by Monkey J. Data are from J20111014. The monkey saw only yellow and green dots; letters were overlaid in post-processing. The particular decoder used in this video had been held constant for over thirty days from a prior study [15]. The trial shown in this frame is trial 157, where the period key is prompted.

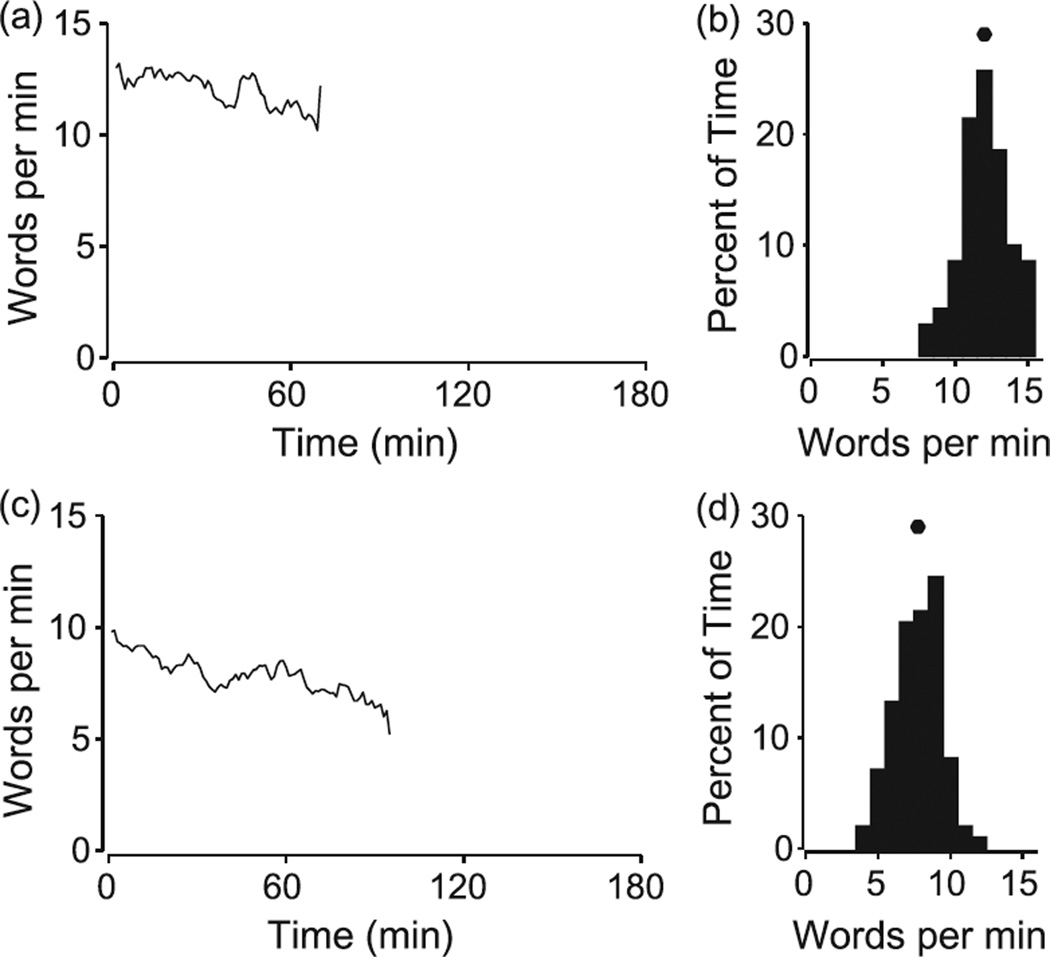

Performance on the click typing experiment with both monkeys is shown in Figure 4. Monkey J achieved an average rate of 12.0 wpm, completing all but the last two paragraphs of the article in just over an hour. Monkey L achieved an average rate of 7.8 wpm in just over an hour and a half, stopping at the sentence prior to where Monkey J stopped. As the click typing experiment is faster paced and more strenuous, the monkeys (Monkey J in particular) are less willing to perform the click typing task for as long [3]. Example videos of Monkey J using the BCI to transmit text under the click typing experiment appear as Supplementary Videos 2 and 3.

Fig. 4. Click typing performance.

a Plot of click typing experiment of Monkey J. Data from dataset J20120411. b Histogram of click typing performance from the same dataset in Monkey J. Dot at top notes the average typing rate of 12.0 wpm. c Same plot as in panel a, but for Monkey L, from dataset L20120501. d Same plot as in panel b, but for Monkey L, with an average typing rate of 7.8 wpm.

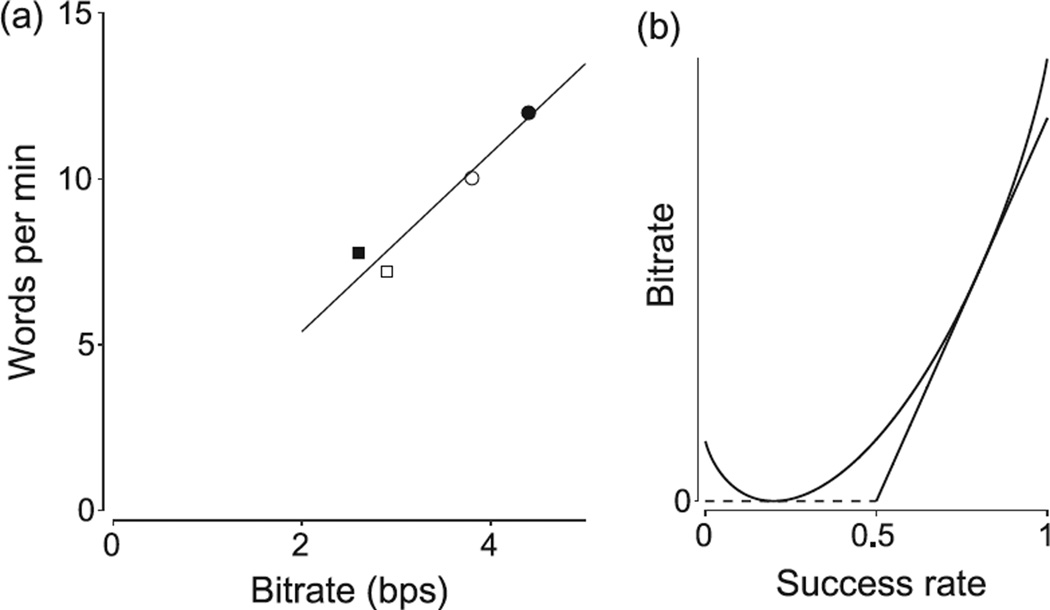

Combining these datasets with data collected from these same monkeys using the same decoding methods from earlier studies [2, 3, 15], we related typing rate to achieved bitrate, shown in Figure 5a. This plot shows the average typing rates and bitrates achieved by both monkeys in both experiments. A regression line fit to these data (with an assumed intercept at the origin) yielded a slope of 2.69 (95% CI: 2.43–2.96). Thus, the relationship between typing rate $T$ in words per minute and achieved bitrate $B$ in bps can be approximated by the following equation:

$$T \approx 2.7\,B$$

Fig. 5. Metrics comparison.

a Plot of typing rate vs achieved bitrate. The achieved bitrate measures are from prior studies [2, 3, 15]. Circles denote Monkey J. Squares denote Monkey L. Hollow markers denote the dwell typing experiment. Filled markers denote the click typing experiment. The regressed line is of the form T = 2.7 · B. b Plot of achieved bitrate and ITR as a function of success rate. The y-axis does not have ticks because only the relationship of the lines is important in this plot and absolute bitrate varies as a function of dictionary size. Achieved bitrate is the piecewise linear line and ITR is the curved line. Note that the ITR curve is always greater than or equal to the achieved bitrate line. This plot is for N = 5. Intercept points are at $P = 1/N$ (chance rate) and $P = (N - 1)/N$.
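A regression line constrained to pass through the origin has a closed-form slope, the sum of the products x·y divided by the sum of x². The sketch below shows that calculation; the function name is hypothetical and the points are synthetic placeholders chosen to lie on a known line, not the study's measurements.

```python
def slope_through_origin(x_values, y_values):
    """Least-squares slope for the model y = slope * x (no intercept)."""
    if len(x_values) != len(y_values) or not x_values:
        raise ValueError("need two equal-length, non-empty sequences")
    sum_xy = sum(x * y for x, y in zip(x_values, y_values))
    sum_xx = sum(x * x for x in x_values)
    return sum_xy / sum_xx

# Synthetic (bitrate in bps, typing rate in wpm) pairs on the line y = 2.5 x.
bitrates = [1.0, 2.0, 3.0]
typing_rates = [2.5, 5.0, 7.5]
slope = slope_through_origin(bitrates, typing_rates)
```

Forcing the intercept to zero encodes the assumption that zero bitrate implies zero typing rate, which is why a single slope is enough to summarize the relationship.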

Finally, we mathematically modeled the relationship between achieved bitrate and information transfer rate. For a dictionary size of five, this relationship is plotted in Figure 5b. The precise shape of the ITR curve and the slope of the achieved bitrate line vary with dictionary size, but the general features do not change for all tasks with N > 2. Consistent with the notion that ITR is a measure of capacity, we see that it is never lower than achieved bitrate regardless of the BCI communication accuracy (i.e., success rate). The ITR curve touches zero at the chance level: $P = 1/N$. However, achieved bitrate is zero at all values at or below 50% success rate, reflecting the fact that no symbols are effectively transmitted at these success rates because of the constant need to select the delete key. The other point at which achieved bitrate and ITR are equivalent is when the success rate is $P = (N - 1)/N$. Note that the relationship to ITR is not one-to-one for success rates at or below 50%. This implies that a single measurement of ITR cannot well predict typing rates at low success rates.
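The comparison can be checked numerically on a per-selection basis. The sketch below assumes that dividing both metrics by the selection rate gives log2(N − 1)·max(2P − 1, 0) bits per selection for achieved bitrate, and log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) for ITR. Function names are hypothetical.

```python
import math

def achieved_bits_per_selection(p, n_symbols):
    """Net bits per selection under the single-symbol code with delete."""
    return math.log2(n_symbols - 1) * max(2.0 * p - 1.0, 0.0)

def itr_bits_per_selection(p, n_symbols):
    """ITR bits per selection, with vanishing terms dropped at p = 0 or 1."""
    bits = math.log2(n_symbols)
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_symbols - 1))
    return bits

N = 5
success_rates = [i / 100.0 for i in range(101)]
# Gap between the two curves at each success rate; it should never be negative.
gaps = [itr_bits_per_selection(p, N) - achieved_bits_per_selection(p, N)
        for p in success_rates]
smallest_gap = min(gaps)
gap_at_chance = itr_bits_per_selection(1.0 / N, N)
gap_at_touch = (itr_bits_per_selection((N - 1) / N, N)
                - achieved_bits_per_selection((N - 1) / N, N))
```

For N = 5 the two curves meet at success rates of 0.2 (both zero) and 0.8, and the gap is non-negative everywhere else, in line with the description of Figure 5b.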

IV. Discussion

This study demonstrates the feasibility of BCIs as communication devices for transmitting text. To our knowledge, the typing rates achieved in this study represent the highest of any BCI under any control modality. We also found that text can be communicated uninterrupted for hours via a single BCI decoder without any changes. The click typing experiment was conducted 2.5 (Monkey J) and 4 (Monkey L) years after surgical implantation, serving as an additional existence proof that these electrode arrays can facilitate high-performing BCI control for years. We note that this study is limited by the use of monkeys who were only acquiring prompted targets. The results presented here do not account for the cognitive load of word and sentence formation that a person would encounter. However, for a copy typing task, as is commonly used for measuring typing performance, this may be a close approximation. At the least, this study provides an upper limit for the expected typing rate for a given achieved bitrate. A more accurate measure of the relationship between achieved bitrate and effective typing rate would best be obtained from human participants with comparable high-performing BCIs. Nevertheless, this study builds additional evidence towards the promise of BCIs for building neural prostheses for people with motor disabilities.

Acknowledgments

We thank C Pandarinath for his discussions on information theoretic metrics and the relationship between achieved bitrate and ITR; M Risch, J Aguayo, S Kang, W Kalkus, C Sherman, and E Morgan for surgical assistance and veterinary care; S Eisensee, B Davis, and E Castaneda for administrative support; D Haven and B Oskotsky for information technology support.

Funding

This work was supported by the Stanford Medical Scholars Program, Howard Hughes Medical Institute Medical Research Fellows Program, Paul and Daisy Soros Fellowship, Stanford Medical Scientist Training Program (PN); National Science Foundation Graduate Research Fellowships (JCK); Christopher and Dana Reeve Paralysis Foundation (SIR and KVS); and the following to KVS: Burroughs Wellcome Fund Career Awards in the Biomedical Sciences, Defense Advanced Research Projects Agency Revolutionizing Prosthetics 2009 N66001-06-C-8005 and Reorganization and Plasticity to Accelerate Injury Recovery N66001-10-C-2010, US National Institutes of Health National Institute of Neurological Disorders and Stroke Collaborative Research in Computational Neuroscience Grant R01-NS054283 and Bioengineering Research Grant R01-NS064318 and Transformative Research Award T-R01NS076460, US National Institutes of Health National Institute on Deafness and Other Communication Disorders R01-DC014034, and US National Institutes of Health Office of the Director EUREKA Award R01-NS066311 and Pioneer Award 8DP1HD075623.

Biographies

Paul Nuyujukian (S05-M13) received the B.S. degree in cybernetics from the University of California, Los Angeles in 2006. He received the M.S. and Ph.D. degrees in bioengineering and the M.D. degree from Stanford University in 2011, 2012, and 2014, respectively. He is currently a postdoctoral scholar in the departments of Neurosurgery and Electrical Engineering at Stanford University. His research interests include the development and clinical translation of brain-machine interfaces for neural prostheses and other neurological conditions.

Jonathan C. Kao (S13) received the B.S. and M.S. degrees in electrical engineering from Stanford University in 2010. He is currently pursuing his Ph.D. degree in electrical engineering at Stanford University. His research interests include algorithms for neural prosthetic control, neural dynamical systems modeling, and the development of clinically viable neural prostheses.

Stephen I. Ryu received the B.S. and M.S. degrees in electrical engineering from Stanford University, Stanford, CA, in 1994 and 1995, respectively, and the M.D. degree from the University of California at San Diego, La Jolla in 1999. He was a postdoctoral fellow at Stanford in Neurobiology and Electrical Engineering from 2002–2006. He completed neurosurgical residency and fellowship training at Stanford University in 2006. He was on faculty as an Assistant Professor of Neurosurgery at Stanford University until 2009. Since 2009, he has been a Consulting Professor of Electrical Engineering at Stanford University. His neurosurgical clinical practice is with the Palo Alto Medical Foundation in Palo Alto, CA. His research interests include brain-machine interfaces, neural prosthetics, minimally invasive neurosurgery, and stereotactic radiosurgery.

Krishna V. Shenoy (S87-M01-SM06) received the B.S. degree in electrical engineering from U.C. Irvine in 1990, and the M.S. and Ph.D. degrees in electrical engineering from MIT, Cambridge in 1992 and 1995, respectively. He was a Neurobiology Postdoctoral Fellow at Caltech from 1995 to 2001 and then joined Stanford University where he is currently a Professor in the Departments of Electrical Engineering, Bioengineering, and Neurobiology, and in the Bio-X and Neurosciences Programs. He is also with the Stanford Neurosciences Institute and is a Howard Hughes Medical Institute Investigator. His research interests include computational motor neurophysiology and neural prosthetic system design. He is the director of the Neural Prosthetic Systems Laboratory and co-director of the Neural Prosthetics Translational Laboratory at Stanford University. Dr. Shenoy was a recipient of the 1996 Hertz Foundation Doctoral Thesis Prize, a Burroughs Wellcome Fund Career Award in the Biomedical Sciences, an Alfred P. Sloan Research Fellowship, a McKnight Endowment Fund in Neuroscience Technological Innovations in Neurosciences Award, a 2009 National Institutes of Health Director’s Pioneer Award, the 2010 Stanford University Postdoctoral Mentoring Award, and the 2013 Distinguished Alumnus Award from the Henry Samueli School of Engineering at U.C. Irvine.

Footnotes

Author Contributions

PN was responsible for designing and conducting experiments, data analysis, and manuscript writeup. JCK assisted in conducting experiments and manuscript review. SIR was responsible for surgical implantation and assisted in manuscript review. KVS was involved in all aspects of experimentation, data review, and manuscript writeup.

Contributor Information

Paul Nuyujukian, Neurosurgery Department, the Electrical Engineering Department, the Bioengineering Department, and Stanford Neurosciences Institute, Stanford University, Stanford, CA 94305 USA.

Jonathan C. Kao, Electrical Engineering Department, Stanford University, Stanford, CA 94305 USA.

Stephen I. Ryu, Electrical Engineering Department, Stanford University, Stanford, CA 94305 USA and also with Palo Alto Medical Foundation, Palo Alto, CA 94550 USA.

Krishna V. Shenoy, Electrical Engineering Department, the Bioengineering Department, the Neurobiology Department, and Stanford Neurosciences Institute, Stanford University, Stanford, CA 94305 USA and also with Howard Hughes Medical Institute, Chevy Chase, MD 20815 USA.

References

- 1. Santhanam G, Ryu SI, Yu BM, Afshar A, Shenoy KV. A high-performance brain-computer interface. Nature. 2006 Jul;442(7099):195–198. doi: 10.1038/nature04968.

- 2. Nuyujukian P, Fan JM, Kao JC, Ryu SI, Shenoy KV. A high-performance keyboard neural prosthesis enabled by task optimization. IEEE Trans Biomed Eng. 2015 Jan;62(1):21–29. doi: 10.1109/TBME.2014.2354697.

- 3. Kao JC, Nuyujukian P, Ryu SI, Shenoy KV. A high-performance neural prosthesis incorporating discrete state selection with hidden Markov models. IEEE Trans Biomed Eng. In press. doi: 10.1109/TBME.2016.2582691.

- 4. Serruya M, Hatsopoulos N, Paninski L, Fellows M, Donoghue J. Instant neural control of a movement signal. Nature. 2002;416:141–142. doi: 10.1038/416141a.

- 5. Taylor DM, Tillery SIH, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002 Jun;296(5574):1829–1832. doi: 10.1126/science.1070291.

- 6. Carmena JM, Lebedev MA, Crist RE, O’Doherty JE, Santucci DM, Dimitrov DF, Patil PG, Henriquez CS, Nicolelis MAL. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biol. 2003 Nov;1(2):E42. doi: 10.1371/journal.pbio.0000042.

- 7. Gilja V, Nuyujukian P, Chestek CA, Cunningham JP, Yu BM, Fan JM, Kao JC, Ryu SI, Shenoy KV. A high-performance neural prosthesis enabled by control algorithm design. Nature Neuroscience. 2012 Nov;15:1752–1757. doi: 10.1038/nn.3265.

- 8. Kao JC, Nuyujukian P, Ryu SI, Churchland MM, Cunningham JP, Shenoy KV. Single-trial dynamics of motor cortex and their applications to brain-machine interfaces. Nat Commun. 2015;6:7759. doi: 10.1038/ncomms8759.

- 9. Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006 Jul;442:164–171. doi: 10.1038/nature04970.

- 10. Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P, Donoghue JP. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012 May;485(7398):372–375. doi: 10.1038/nature11076.

- 11. Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJC, Velliste M, Boninger ML, Schwartz AB. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet. 2013 Feb;381(9866):557–564. doi: 10.1016/S0140-6736(12)61816-9.

- 12. Aflalo T, Kellis S, Klaes C, Lee B, Shi Y, Pejsa K, Shanfield K, Hayes-Jackson S, Aisen M, Heck C, Liu C, Andersen RA. Neurophysiology. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human. Science. 2015 May;348(6237):906–910. doi: 10.1126/science.aaa5417.

- 13. Gilja V, Pandarinath C, Blabe CH, Nuyujukian P, Simeral JD, Sarma AA, Sorice BL, Perge JA, Jarosiewicz B, Hochberg LR, Shenoy KV, Henderson JM. Clinical translation of a high-performance neural prosthesis. Nat Med. 2015 Oct;21(10):1142–1145. doi: 10.1038/nm.3953.

- 14. Jarosiewicz B, Sarma AA, Bacher D, Masse NY, Simeral JD, Sorice B, Oakley EM, Blabe C, Pandarinath C, Gilja V, Cash SS, Eskandar EN, Friehs G, Henderson JM, Shenoy KV, Donoghue JP, Hochberg LR. Virtual typing by people with tetraplegia using a self-calibrating intracortical brain-computer interface. Sci Transl Med. 2015 Nov;7(313):313ra179. doi: 10.1126/scitranslmed.aac7328.

- 15. Nuyujukian P, Kao JC, Fan JM, Stavisky SD, Ryu SI, Shenoy KV. Performance sustaining intracortical neural prostheses. Journal of Neural Engineering. 2014;11(6):066003. doi: 10.1088/1741-2560/11/6/066003. [Online]. Available: http://stacks.iop.org/1741-2552/11/i=6/a=066003.

- 16. Nuyujukian P, Fan JM, Gilja V, Kalanithi PS, Chestek CA, Shenoy KV. Monkey models for brain-machine interfaces: the need for maintaining diversity. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:1301–1305. doi: 10.1109/IEMBS.2011.6090306.

- 17. Davoodi R, Loeb GE. Real-time animation software for customized training to use motor prosthetic systems. IEEE Trans Neural Syst Rehabil Eng. 2012 Mar;20(2):134–142. doi: 10.1109/TNSRE.2011.2178864.

- 18. Chestek CA, Gilja V, Nuyujukian P, Foster JD, Fan JM, Kaufman MT, Churchland MM, Rivera-Alvidrez Z, Cunningham JP, Ryu SI, Shenoy KV. Long-term stability of neural prosthetic control signals from silicon cortical arrays in rhesus macaque motor cortex. J Neural Eng. 2011 Aug;8(4):045005. doi: 10.1088/1741-2560/8/4/045005.

- 19. Cunningham JP, Nuyujukian P, Gilja V, Chestek CA, Ryu SI, Shenoy KV. A closed-loop human simulator for investigating the role of feedback control in brain-machine interfaces. J Neurophysiol. 2011 Apr;105(4):1932–1949. doi: 10.1152/jn.00503.2010.

- 20. Fan JM, Nuyujukian P, Kao JC, Chestek CA, Ryu SI, Shenoy KV. Intention estimation in brain-machine interfaces. J Neural Eng. 2014 Feb;11(1):016004. doi: 10.1088/1741-2560/11/1/016004.

- 21. Zhai S, Hunter M, Smith BA. The Metropolis keyboard: an exploration of quantitative techniques for virtual keyboard design. Proceedings of the 13th Annual ACM Symposium on User Interface Software and Technology. 2000 Nov; pp. 119–128.

- 22. Zhai S, Hunter M, Smith BA. Performance optimization of virtual keyboards. Human-Computer Interaction. 2002;17(2–3):229–269. [Online]. Available: http://www.tandfonline.com/doi/abs/10.1080/07370024.2002.9667315.

- 23. Perez-Pena R. Two top suitors are emerging for new graduate school of engineering. The New York Times. 2011 Oct:A19. [Online]. Available: http://www.nytimes.com/2011/10/17/nyregion/stanford-and-cornell-favored-for-ny-engineering-school.html.

- 24. Markoff J. Seeking robots to go where first responders can’t. The New York Times. 2012 Apr:D2. [Online]. Available: http://www.nytimes.com/2012/04/10/science/pentagon-contest-to-develop-robots-to-work-in-disaster-areas.html?r = 0.

- 25. Yamada H. A historical study of typewriters and typing methods: from the position of planning Japanese parallels. Journal of Information Processing. 1980 Feb;2(4):175–202.

- 26. Townsend G, LaPallo BK, Boulay CB, Krusienski DJ, Frye GE, Hauser CK, Schwartz NE, Vaughan TM, Wolpaw JR, Sellers EW. A novel P300-based brain-computer interface stimulus presentation paradigm: moving beyond rows and columns. Clin Neurophysiol. 2010 Jul;121(7):1109–1120. doi: 10.1016/j.clinph.2010.01.030.

- 27. Wolpaw JR, Ramoser H, McFarland DJ, Pfurtscheller G. EEG-based communication: improved accuracy by response verification. IEEE Transactions on Rehabilitation Engineering. 1998;6:326–333. doi: 10.1109/86.712231.

- 28. Yuan P, Gao X, Allison B, Wang Y, Bin G, Gao S. A study of the existing problems of estimating the information transfer rate in online brain–computer interfaces. Journal of Neural Engineering. 2013;10(2):026014. doi: 10.1088/1741-2560/10/2/026014. [Online]. Available: http://stacks.iop.org/1741-2552/10/i=2/a=026014.