Abstract

The von Neumann entropy, named after John von Neumann, is an extension of the classical concept of entropy to the field of quantum mechanics. From a numerical perspective, von Neumann entropy can be computed simply by computing all eigenvalues of a density matrix, an operation that could be prohibitively expensive for large-scale density matrices. We present and analyze three randomized algorithms to approximate von Neumann entropy of real density matrices: our algorithms leverage recent developments in the Randomized Numerical Linear Algebra (RandNLA) literature, such as randomized trace estimators, provable bounds for the power method, and the use of random projections to approximate the eigenvalues of a matrix. All three algorithms come with provable accuracy guarantees and our experimental evaluations support our theoretical findings showing considerable speedup with small loss in accuracy.

Keywords: von Neumann entropy, randomized algorithms, randNLA, Taylor polynomials, Chebyshev polynomials, random projections

I. Introduction

Entropy is a fundamental quantity in many areas of science and engineering. The von Neumann entropy, named after John von Neumann, is an extension of classical entropy concepts to the field of quantum mechanics. Its foundations can be traced to von Neumann’s work on Mathematische Grundlagen der Quantenmechanik.¹ In his work, von Neumann introduced the notion of a density matrix, which facilitated the extension of the tools of classical statistical mechanics to the quantum domain in order to develop a theory of quantum mechanics.

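From a mathematical perspective (see Section I-A for details), the real density matrix $\mathbf{R}$ is a symmetric positive semidefinite matrix in $\mathbb{R}^{n \times n}$ with unit trace. Let $p_i$, $i = 1 \ldots n$, be the eigenvalues of $\mathbf{R}$ in decreasing order; then, the entropy of $\mathbf{R}$ is defined as²

$$\mathcal{H}(\mathbf{R}) = -\sum_{i=1}^{n} p_i \ln p_i. \tag{1}$$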

The above definition is a proper extension of both the Gibbs entropy and the Shannon entropy to the quantum case. It implies an obvious algorithm to compute $\mathcal{H}(\mathbf{R})$ by computing the eigendecomposition of $\mathbf{R}$; known algorithms for this task can be prohibitively expensive for large values of n, particularly when the matrix becomes dense [1]. For example, [2] describes an entangled two-photon state generated by spontaneous parametric down-conversion, which can result in a sparse and banded density matrix with $n \approx 10^8$.
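For reference, the exact computation implied by eqn. (1) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the helper that generates a test matrix, and the eigenvalue cutoff `tol` are all introduced here for illustration. The dense eigensolver it relies on has cubic cost in n, which is the cost the randomized algorithms in this paper aim to avoid.

```python
import numpy as np

def von_neumann_entropy_exact(R, tol=1e-15):
    """Exact entropy -sum_i p_i ln p_i computed from all eigenvalues of R."""
    p = np.linalg.eigvalsh(R)      # R is symmetric, so eigvalsh applies
    p = p[p > tol]                 # convention 0 ln 0 = 0 (tol is an assumed cutoff)
    return float(-np.sum(p * np.log(p)))

def random_density_matrix(n, rng):
    """Hypothetical helper: random symmetric PSD matrix scaled to unit trace."""
    G = rng.standard_normal((n, n))
    R = G @ G.T
    return R / np.trace(R)

rng = np.random.default_rng(0)
R = random_density_matrix(50, rng)
H_exact = von_neumann_entropy_exact(R)
```

As a sanity check, the maximally mixed state `np.eye(n) / n` has entropy ln n, the largest value any n-by-n density matrix can attain.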

Motivated by the high computational cost, we seek numerical algorithms that approximate the von Neumann entropy of large density matrices, i.e., symmetric positive definite matrices with unit trace, faster than the trivial approach. Our algorithms build upon recent developments in the field of Randomized Numerical Linear Algebra (RandNLA), an interdisciplinary research area that exploits randomization as a computational resource to develop improved algorithms for large-scale linear algebra problems. Indeed, our work lies at the intersection of RandNLA and information theory, delivering novel randomized linear algebra algorithms and related quality-of-approximation results for a fundamental information-theoretic metric.

A. Background

We focus on finite-dimensional function (state) spaces. In this setting, the density matrix R represents the statistical mixture of k ≤ n pure states, and has the form

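$$\mathbf{R} = \sum_{i=1}^{k} p_i |\psi_i\rangle\langle\psi_i| = \sum_{i=1}^{k} p_i \psi_i \psi_i^T. \tag{2}$$

The vectors $\psi_i \in \mathbb{R}^n$ for $i = 1 \ldots k$ represent the k ≤ n pure states and can be assumed to be pairwise orthogonal and normal, while the $p_i$’s correspond to the probability of each state and satisfy $p_i > 0$ and $\sum_{i=1}^{k} p_i = 1$. From a linear algebraic perspective, eqn. (2) can be rewritten as

$$\mathbf{R} = \Psi \Sigma_p \Psi^T, \tag{3}$$

where $\Psi \in \mathbb{R}^{n \times k}$ is the matrix whose columns are the vectors $\psi_i$ and $\Sigma_p \in \mathbb{R}^{k \times k}$ is a diagonal matrix whose entries are the (positive) $p_i$’s. Given our assumptions for $\psi_i$, $\Psi^T\Psi = \mathbf{I}$; also $\mathbf{R}$ is symmetric positive semidefinite with its eigenvalues equal to $p_i$ and corresponding left/right singular vectors equal to the $\psi_i$’s; and $\mathrm{tr}(\mathbf{R}) = \sum_{i=1}^{k} p_i = 1$. Notice that eqn. (3) essentially reveals the (thin) Singular Value Decomposition (SVD) [1] of $\mathbf{R}$. The von Neumann entropy of $\mathbf{R}$, denoted by $\mathcal{H}(\mathbf{R})$, is equal to (see also eqn. (1))

$$\mathcal{H}(\mathbf{R}) = -\sum_{i=1}^{k} p_i \ln p_i = -\mathrm{tr}(\mathbf{R} \ln \mathbf{R}). \tag{4}$$

The second equality follows from the definition of matrix functions [3]. More precisely, we overload notation and consider the full SVD of $\mathbf{R}$, namely $\mathbf{R} = \Psi\Sigma_p\Psi^T$, where $\Psi \in \mathbb{R}^{n \times n}$ is an orthogonal matrix whose top k columns correspond to the k pure states and the bottom n − k columns are chosen so that $\Psi\Psi^T = \Psi^T\Psi = \mathbf{I}_n$. Here $\Sigma_p$ is a diagonal matrix whose bottom n − k diagonal entries are set to zero. Let h(x) = x ln x for any x > 0 and let h(0) = 0. Then, using the cyclical property of the trace and the definition of h(x),

$$-\mathrm{tr}(h(\mathbf{R})) = -\mathrm{tr}(\Psi h(\Sigma_p) \Psi^T) = -\mathrm{tr}(h(\Sigma_p)) = -\sum_{i=1}^{n} h(p_i) = -\sum_{i=1}^{k} p_i \ln p_i = \mathcal{H}(\mathbf{R}). \tag{5}$$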

B. Trace estimators

The following lemma appeared in [4] and is immediate from Theorem 5.2 in [5]. It implies an algorithm to approximate the trace of any symmetric positive semidefinite matrix A by computing inner products of the matrix with Gaussian random vectors.

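Lemma 1. Let $\mathbf{A} \in \mathbb{R}^{n \times n}$ be a symmetric positive semi-definite matrix, let 0 < ϵ < 1 be an accuracy parameter, and let 0 < δ < 1 be a failure probability. If $\mathbf{g}_1, \ldots, \mathbf{g}_s \in \mathbb{R}^n$ are independent random standard Gaussian vectors, then, for $s = \lceil 20 \ln(2/\delta)/\epsilon^2 \rceil$, with probability at least 1 − δ,

$$\left| \mathrm{tr}(\mathbf{A}) - \frac{1}{s}\sum_{i=1}^{s} \mathbf{g}_i^T \mathbf{A} \mathbf{g}_i \right| \le \epsilon \cdot \mathrm{tr}(\mathbf{A}).$$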
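The estimator described by Lemma 1 averages the quadratic forms of the matrix over Gaussian probe vectors. The following NumPy sketch illustrates it under stated assumptions: the function name and the dense test matrix are hypothetical, and all probes are stored at once for brevity, which a large-scale implementation would likely avoid.

```python
import math
import numpy as np

def gaussian_trace_estimate(A, eps, delta, rng):
    """Estimate tr(A) for symmetric PSD A with s = ceil(20 ln(2/delta) / eps^2) probes."""
    n = A.shape[0]
    s = math.ceil(20 * math.log(2 / delta) / eps**2)
    G = rng.standard_normal((n, s))            # columns are g_1, ..., g_s
    # sum_i g_i^T A g_i, computed for all probes at once, then averaged
    return float(np.sum(G * (A @ G)) / s), s

rng = np.random.default_rng(1)
B = rng.standard_normal((30, 30))
A = B @ B.T                                    # symmetric positive semidefinite test matrix
estimate, s = gaussian_trace_estimate(A, eps=0.2, delta=0.1, rng=rng)
```

Only products of the matrix with vectors are needed, so the same idea applies to matrices that are available only implicitly, such as polynomials of a sparse matrix.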

C. Our contributions

We present and analyze three randomized algorithms to approximate the von Neumann entropy of density matrices. The first two algorithms (Sections II and III) leverage two different polynomial approximations of the matrix function $\mathcal{H}(\mathbf{R}) = -\mathrm{tr}(\mathbf{R} \ln \mathbf{R})$: the first approximation uses a Taylor series expansion, while the second approximation uses Chebyshev polynomials. Both algorithms return, with high probability, relative-error approximations to the true entropy of the input density matrix, under certain assumptions. More specifically, in both cases, we need to assume that the input density matrix has n non-zero eigenvalues, or, equivalently, that the probabilities $p_i$, $i = 1 \ldots n$, corresponding to the underlying n pure states are non-zero. The running time of both algorithms is proportional to the sparsity of the input density matrix and depends (see Theorems 2 and 4 for precise statements) on, roughly, the ratio of the largest to the smallest probability $p_1/p_n$ (recall that the smallest probability is assumed to be non-zero), as well as the desired accuracy.

The third algorithm (Section V) is fundamentally different, if not orthogonal, to the previous two approaches. It leverages the power of random projections [6], [7] to approximate numerical linear algebra quantities, such as the eigenvalues of a matrix. Assuming that the density matrix R has exactly k ≪ n non-zero eigenvalues, i.e., there are k pure states with non-zero probabilities $p_i$, $i = 1 \ldots k$, the proposed algorithm returns, with high probability, relative error approximations to all k probabilities $p_i$. This, in turn, implies an additive-relative error approximation to the entropy of the density matrix, which, under a mild assumption on the true entropy of the density matrix, becomes a relative error approximation (see Theorem 10 for a precise statement). The running time of the algorithm is again proportional to the sparsity of the density matrix and depends on the target accuracy, but, unlike the previous two algorithms, does not depend on any function of the $p_i$.

From a technical perspective, the theoretical analysis of the first two algorithms combines polynomial approximations of matrix functions, using either Taylor series or Chebyshev polynomials, with randomized trace estimators. A provably accurate variant of the power method is used to estimate the largest probability $p_1$. If this estimate is significantly smaller than one, it can improve the running times of the proposed algorithms (see discussion after Theorem 2). The third algorithm leverages a powerful, multiplicative matrix perturbation result that first appeared in [8]. Our work in Section V is a novel application of this inequality to derive bounds for RandNLA algorithms.

Finally, in Section VI, we present a detailed evaluation of our algorithms on synthetic density matrices of various sizes, most of which were generated using Matlab’s QETLAB toolbox [9]. For some of the larger matrices that were used in our evaluations, the exact computation of the entropy takes hours, whereas our algorithms return approximations with relative errors well below 0.5% in only a few minutes.

D. Prior work

The first non-trivial algorithm to approximate the von Neumann entropy of a density matrix appeared in [2]. Their approach is essentially the same as our approach in Section III. Indeed, our algorithm in Section III was inspired by their approach. However, our analysis is somewhat different, leveraging a provably accurate variant of the power method, as well as provably accurate trace estimators to derive a relative error approximation to the entropy of a density matrix, under appropriate assumptions. A detailed, technical comparison between our results in Section III and the work of [2] is deferred to Section III-C.

Independently and in parallel with our work, [10] presented a multipoint interpolation algorithm (building upon [11]) to compute a relative error approximation for the entropy of a real matrix with bounded condition number. The proposed running time of Theorem 35 of [10] does not depend on the condition number of the input matrix (i.e., the ratio of the largest to the smallest probability), which is a clear advantage in the case of ill-conditioned matrices. However, the dependence of the algorithm of Theorem 35 of [10] on terms like $(\log n/\epsilon)^6$, or on a term involving nnz(A) (where nnz(A) represents the number of non-zero elements of the matrix A), could blow up the running time of the proposed algorithm for reasonably conditioned matrices.

We also note the recent work in [4], which used Taylor approximations to matrix functions to estimate the log determinant of symmetric positive definite matrices (see also Section 1.2 of [4] for an overview of prior work on approximating matrix functions via Taylor series). The work of [12] used a Chebyshev polynomial approximation to estimate the log determinant of a matrix and is reminiscent of our approach in Section III and, of course, the work of [2].

We conclude this section by noting that our algorithms use two tools (described, for the sake of completeness, in the Appendix) that appeared in prior work. The first tool is the power method, with a provable analysis that first appeared in [13]. The second tool is a provably accurate trace estimation algorithm for symmetric positive semidefinite matrices that appeared in [5].

II. An approach via Taylor series

Our first approach to approximate the von Neumann entropy of a density matrix uses a Taylor series expansion to approximate the logarithm of a matrix, combined with a relative-error trace estimator for symmetric positive semi-definite matrices and the power method to upper bound the largest singular value of a matrix.

A. Algorithm and Main Theorem

Our main result is an analysis of Algorithm 1 (see below) that guarantees a relative error approximation to the entropy of the density matrix R, under the assumption that R has n pure states with 0 < ℓ ≤ $p_i$ for all $i = 1 \ldots n$. The following theorem is our main quality-of-approximation result for Algorithm 1.

Algorithm 1.

A Taylor series approach to estimate the entropy.

| 1: | INPUT: $\mathbf{R} \in \mathbb{R}^{n \times n}$, accuracy parameter ε > 0, failure probability δ, and integer m > 0. |

| 2: | Compute , the estimate of the largest eigenvalue of R, p1, using Algorithm 8 (see Appendix) with and . |

| 3: | Set . |

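| 4: | Set $s = \lceil 20 \ln(2/\delta)/\varepsilon^2 \rceil$. |

| 5: | Let $\mathbf{g}_1, \ldots, \mathbf{g}_s \in \mathbb{R}^n$ be i.i.d. random Gaussian vectors. |

| 6: | OUTPUT: return $\widehat{\mathcal{H}}(\mathbf{R}) = \ln u^{-1} + \frac{1}{s}\sum_{i=1}^{s}\sum_{k=1}^{m} \frac{\mathbf{g}_i^T \mathbf{R}(\mathbf{I}_n - u^{-1}\mathbf{R})^k \mathbf{g}_i}{k}$. |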

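Theorem 2. Let R be a density matrix such that all probabilities $p_i$, $i = 1 \ldots n$ satisfy 0 < ℓ ≤ $p_i$. Let u be computed as in Algorithm 1 and let $\widehat{\mathcal{H}}(\mathbf{R})$ be the output of Algorithm 1 on inputs R, m, and ϵ < 1. Then, with probability at least 1 − 2δ,

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le 2\epsilon\, \mathcal{H}(\mathbf{R})$$

by setting $m = \lceil (u/\ell) \ln(1/\epsilon) \rceil$. The algorithm runs in time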

A few remarks are necessary to better understand the above theorem. First, ℓ could be set to $p_n$, the smallest of the probabilities corresponding to the n pure states of the density matrix R. Second, u in Algorithm 1 could simply be set to one, which would avoid calling Algorithm 8 to estimate $p_1$ and compute u. However, if $p_1$ is small, then u could be significantly smaller than one, thus reducing the running time of Algorithm 1, which depends on the ratio u/ℓ. Third, ideally, if both $p_1$ and $p_n$ were used instead of u and ℓ, respectively, the running time of the algorithm would scale with the ratio $p_1/p_n$.
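The Taylor-series estimator analyzed in Theorem 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the upper bound `u` is supplied by the caller and defaults to one (which the second remark above permits), so the power-method step of Algorithm 8 is not reproduced; the lower bound `ell` is assumed to be known; and the function name and the dense test matrix are hypothetical.

```python
import math
import numpy as np

def entropy_taylor(R, ell, eps, delta, u=1.0, rng=None):
    """Taylor-series entropy estimate; u must upper bound the eigenvalues of R."""
    rng = np.random.default_rng() if rng is None else rng
    n = R.shape[0]
    m = math.ceil((u / ell) * math.log(1.0 / eps))      # truncation degree
    s = math.ceil(20 * math.log(2 / delta) / eps**2)    # number of Gaussian probes
    G = rng.standard_normal((n, s))
    V = G.copy()             # V holds C^k G with C = I - R/u (k = 0 initially)
    W = R @ V                # W holds R C^k G
    acc = 0.0
    for k in range(1, m + 1):
        V = V - W / u        # C^k G = C^(k-1) G - (R C^(k-1) G) / u
        W = R @ V
        acc += np.sum(G * W) / k    # sum_i g_i^T R C^k g_i / k
    return math.log(1.0 / u) + acc / s

# Small test matrix with known eigenvalues p_i (assumed setup for illustration).
rng = np.random.default_rng(2)
p = np.linspace(1.0, 2.0, 20)
p /= p.sum()
Q, _ = np.linalg.qr(rng.standard_normal((20, 20)))
R = (Q * p) @ Q.T                                  # R = Q diag(p) Q^T
H_exact = float(-np.sum(p * np.log(p)))
H_hat = entropy_taylor(R, ell=p.min(), eps=0.1, delta=0.1, rng=rng)
```

The loop touches R only through matrix products, one per polynomial degree, which is why the cost scales with the number of non-zeros of R times m and s.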

B. Proof of Theorem 2

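We now prove Theorem 2, which analyzes the performance of Algorithm 1. Our first lemma presents a simple expression for $\mathcal{H}(\mathbf{R})$ using a Taylor series expansion.

Lemma 3. Let $\mathbf{R} \in \mathbb{R}^{n \times n}$ be a symmetric positive definite matrix with unit trace and whose eigenvalues lie in the interval [ℓ, u], for some 0 < ℓ ≤ u ≤ 1. Then,

$$\mathcal{H}(\mathbf{R}) = \ln u^{-1} + \sum_{k=1}^{\infty} \frac{\mathrm{tr}\left(\mathbf{R}(\mathbf{I}_n - u^{-1}\mathbf{R})^k\right)}{k}.$$

Proof: From the definition of the von Neumann entropy and a Taylor expansion,

$$\mathcal{H}(\mathbf{R}) = -\mathrm{tr}(\mathbf{R} \ln \mathbf{R}) = -\mathrm{tr}\left(\mathbf{R} \ln(u \cdot u^{-1}\mathbf{R})\right) = -\mathrm{tr}((\ln u)\mathbf{R}) - \mathrm{tr}\left(\mathbf{R} \ln(\mathbf{I}_n - (\mathbf{I}_n - u^{-1}\mathbf{R}))\right) = \ln u^{-1} + \sum_{k=1}^{\infty} \frac{\mathrm{tr}\left(\mathbf{R}(\mathbf{I}_n - u^{-1}\mathbf{R})^k\right)}{k}. \tag{6}$$

Eqn. (6) follows since R has unit trace and from a Taylor expansion: indeed, $\ln(\mathbf{I}_n - \mathbf{A}) = -\sum_{k=1}^{\infty} \mathbf{A}^k/k$ for a symmetric matrix A whose eigenvalues are all in the interval (−1, 1). We note that the eigenvalues of $\mathbf{I}_n - u^{-1}\mathbf{R}$ are in the interval [0, 1 − (ℓ/u)], whose upper bound is strictly less than one since, by our assumptions, ℓ/u > 0.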
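The series of Lemma 3 can be checked numerically on the eigenvalues alone, since the trace of R(I − R/u)^k equals the sum of p_i (1 − p_i/u)^k. The snippet below is a small sanity check under stated assumptions: the function name, the example probabilities, and the value of u are chosen here for illustration.

```python
import numpy as np

def entropy_series(p, u, num_terms):
    """ln(1/u) + sum_{k=1}^{num_terms} sum_i p_i (1 - p_i/u)^k / k."""
    c = 1.0 - p / u                                   # eigenvalues of C = I - R/u
    k = np.arange(1, num_terms + 1)[:, None]          # column of exponents
    return float(np.log(1.0 / u) + np.sum(p * c**k / k))

p = np.array([0.5, 0.3, 0.2])                         # eigenvalues of a density matrix
u = 0.6                                               # any upper bound on max(p), at most 1
H_exact = float(-np.sum(p * np.log(p)))
H_series = entropy_series(p, u, num_terms=200)
```

With the largest eigenvalue of C equal to 2/3 here, 200 terms already agree with the exact entropy to machine precision; the convergence slows as ℓ/u shrinks.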

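We now proceed to prove Theorem 2. We will condition our analysis on Algorithm 8 being successful, which happens with probability at least 1 − δ. In this case, $u$ is an upper bound for all probabilities $p_i$. For notational convenience, set $\mathbf{C} = \mathbf{I}_n - u^{-1}\mathbf{R}$. We start by manipulating $\Delta = |\widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R})|$ as follows:

$$\Delta = \left| \sum_{k=1}^{m} \frac{1}{k} \cdot \frac{1}{s}\sum_{i=1}^{s} \mathbf{g}_i^T \mathbf{R}\mathbf{C}^k \mathbf{g}_i - \sum_{k=1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k} \right| \le \underbrace{\left| \frac{1}{s}\sum_{i=1}^{s} \mathbf{g}_i^T \left( \sum_{k=1}^{m} \frac{\mathbf{R}\mathbf{C}^k}{k} \right) \mathbf{g}_i - \mathrm{tr}\left( \sum_{k=1}^{m} \frac{\mathbf{R}\mathbf{C}^k}{k} \right) \right|}_{\Delta_1} + \underbrace{\left| \sum_{k=m+1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k} \right|}_{\Delta_2}.$$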

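We now bound the two terms Δ1 and Δ2 separately. We start with Δ1: the idea is to apply Lemma 1 on the matrix $\sum_{k=1}^{m} \mathbf{R}\mathbf{C}^k/k$ with $s = \lceil 20 \ln(2/\delta)/\epsilon^2 \rceil$. Hence, with probability at least 1 − δ:

$$\Delta_1 \le \epsilon \cdot \mathrm{tr}\left( \sum_{k=1}^{m} \frac{\mathbf{R}\mathbf{C}^k}{k} \right) \le \epsilon \cdot \sum_{k=1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k}. \tag{7}$$

A subtle point in applying Lemma 1 is that the matrix $\sum_{k=1}^{m} \mathbf{R}\mathbf{C}^k/k$ must be symmetric positive semidefinite. To prove this, let the SVD of R be $\mathbf{R} = \Psi\Sigma_p\Psi^T$, where all three matrices are in $\mathbb{R}^{n \times n}$ and the diagonal entries of $\Sigma_p$ are in the interval [ℓ, u]. Then, it is easy to see that $\mathbf{C} = \mathbf{I}_n - u^{-1}\mathbf{R} = \Psi(\mathbf{I}_n - u^{-1}\Sigma_p)\Psi^T$ and $\mathbf{R}\mathbf{C}^k = \Psi\Sigma_p(\mathbf{I}_n - u^{-1}\Sigma_p)^k\Psi^T$, where the diagonal entries of $\mathbf{I}_n - u^{-1}\Sigma_p$ are non-negative, since the largest entry in $\Sigma_p$ is upper bounded by u. This proves that $\mathbf{R}\mathbf{C}^k$ is symmetric positive semidefinite for any k, a fact which will be useful throughout the proof. Now,

$$\sum_{k=1}^{m} \frac{\mathbf{R}\mathbf{C}^k}{k} = \Psi \left( \Sigma_p \sum_{k=1}^{m} \frac{(\mathbf{I}_n - u^{-1}\Sigma_p)^k}{k} \right) \Psi^T,$$

which shows that the matrix of interest is symmetric positive semidefinite. Additionally, since $\mathbf{R}\mathbf{C}^k$ is symmetric positive semidefinite, its trace is non-negative, which proves the second inequality in eqn. (7) as well.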

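We proceed to bound Δ2 as follows:

$$\Delta_2 = \left| \sum_{k=m+1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k} \right| = \left| \sum_{k=m+1}^{\infty} \frac{\mathrm{tr}(\mathbf{C}^m \mathbf{C}^{k-m}\mathbf{R})}{k} \right| \le \left| \sum_{k=m+1}^{\infty} \|\mathbf{C}^m\|_2 \cdot \frac{\mathrm{tr}(\mathbf{C}^{k-m}\mathbf{R})}{k} \right| \tag{8}$$

$$= \|\mathbf{C}^m\|_2 \cdot \left| \sum_{k=m+1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^{k-m})}{k} \right| \le \|\mathbf{C}^m\|_2 \cdot \left| \sum_{k=1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k} \right| \tag{9}$$

$$\le \left( 1 - \frac{\ell}{u} \right)^m \sum_{k=1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k}. \tag{10}$$

To prove eqn. (8), we used von Neumann’s trace inequality.³ Eqn. (8) now follows since $\mathbf{C}^{k-m}\mathbf{R}$ is symmetric positive semidefinite.⁴ To prove eqn. (9), we used the fact that $\mathrm{tr}(\mathbf{R}\mathbf{C}^k)/k \ge 0$ for any k ≥ 1. Finally, to prove eqn. (10), we used the fact that $\|\mathbf{C}\|_2 = \|\mathbf{I}_n - u^{-1}\Sigma_p\|_2 \le 1 - \ell/u$ since the smallest entry in $\Sigma_p$ is at least ℓ by our assumptions. We also removed unnecessary absolute values since $\mathrm{tr}(\mathbf{R}\mathbf{C}^k)/k$ is non-negative for any positive integer k.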

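Combining the bounds for Δ1 and Δ2 gives

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le \left( \epsilon + \left( 1 - \frac{\ell}{u} \right)^m \right) \sum_{k=1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k}.$$

We have already proven in Lemma 3 that

$$\sum_{k=1}^{\infty} \frac{\mathrm{tr}(\mathbf{R}\mathbf{C}^k)}{k} = \mathcal{H}(\mathbf{R}) - \ln u^{-1} \le \mathcal{H}(\mathbf{R}),$$

where the last inequality follows since u ≤ 1. Collecting our results, we get

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le \left( \epsilon + \left( 1 - \frac{\ell}{u} \right)^m \right) \mathcal{H}(\mathbf{R}).$$

Setting

$$m = \left\lceil \frac{u}{\ell} \ln \frac{1}{\epsilon} \right\rceil$$

and using $(1 - x^{-1})^x \le e^{-1}$ (x ≥ 1), guarantees that $(1 - \ell/u)^m \le \epsilon$ and concludes the proof of the theorem. We note that the failure probability of the algorithm is at most 2δ (the sum of the failure probabilities of the power method and the trace estimation algorithm).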
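The choice of the truncation degree in the proof can be checked directly. The helper below is a small sketch (its name is hypothetical) that computes m as the ceiling of (u/ℓ) ln(1/ϵ) and lets one confirm that the tail factor (1 − ℓ/u)^m does not exceed ϵ.

```python
import math

def taylor_degree(ell, u, eps):
    """Truncation degree m = ceil((u / ell) * ln(1 / eps)) for 0 < ell <= u <= 1."""
    return math.ceil((u / ell) * math.log(1.0 / eps))

# Example: eigenvalues between ell = 0.01 and u = 0.5, target accuracy eps = 0.05.
m = taylor_degree(0.01, 0.5, 0.05)
tail_factor = (1.0 - 0.01 / 0.5) ** m
```

The degree grows linearly in u/ℓ, which is the source of the dependence on the ratio of the largest to the smallest probability in the running time.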

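Finally, we discuss the running time of Algorithm 1, which is equal to $O(m \cdot s \cdot \mathrm{nnz}(\mathbf{R}))$. Since $m = O\left( \frac{u}{\ell} \ln \frac{1}{\epsilon} \right)$ and $s = O\left( \frac{\ln(1/\delta)}{\epsilon^2} \right)$, the running time becomes (after accounting for the running time of Algorithm 8)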

III. An approach via Chebyshev polynomials

Our second approach is to use a Chebyshev polynomial-based approximation scheme to estimate the entropy of a density matrix. Our approach follows the work of [2], but our analysis uses the trace estimators of [5] and Algorithm 8 and its analysis. Importantly, we present conditions under which the proposed approach is competitive with the approach of Section II.

A. Algorithm and Main Theorem

The proposed algorithm leverages the fact that the von Neumann entropy of a density matrix R is equal to the (negative) trace of the matrix function R ln R and approximates the function R ln R by a sum of Chebyshev polynomials; then, the trace of the resulting matrix is estimated using the trace estimator of [5].

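Let $f_m(x) = \sum_{w=0}^{m} \alpha_w T_w(x)$ with $\alpha_0 = \frac{u}{2}\left( \ln\frac{u}{4} + 1 \right)$, $\alpha_1 = \frac{u}{4}\left( 2\ln\frac{u}{4} + 3 \right)$, and $\alpha_w = \frac{(-1)^w u}{w^3 - w}$ for w ≥ 2. Let $T_w(x) = \cos\left( w \cdot \arccos((2/u)x - 1) \right)$ and x ∈ [0, u] be the Chebyshev polynomials of the first kind for any integer w > 0. Algorithm 2 computes u (an upper bound estimate for the largest probability $p_1$ of the density matrix R) and then computes $f_m(\mathbf{R})$ and estimates its trace. We note that the computation can be done efficiently using Clenshaw’s algorithm; see Appendix C for the well-known approach.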

Algorithm 2.

A Chebyshev polynomial-based approach to estimate the entropy.

| 1: | INPUT: $\mathbf{R} \in \mathbb{R}^{n \times n}$, accuracy parameter ε > 0, failure probability δ, and integer m > 0. |

| 2: | Compute , the estimate of the largest eigenvalue of R, p1, using Algorithm 8 (see Appendix) with and . |

| 3: | Set . |

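| 4: | Set $s = \lceil 20 \ln(2/\delta)/\varepsilon^2 \rceil$. |

| 5: | Let $\mathbf{g}_1, \ldots, \mathbf{g}_s \in \mathbb{R}^n$ be i.i.d. random Gaussian vectors. |

| 6: | OUTPUT: $\widehat{\mathcal{H}}(\mathbf{R}) = -\frac{1}{s}\sum_{i=1}^{s} \mathbf{g}_i^T f_m(\mathbf{R}) \mathbf{g}_i$. |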

Our main result is an analysis of Algorithm 2 that guarantees a relative error approximation to the entropy of the density matrix R, under the assumption that R has n pure states with 0 < ℓ ≤ $p_i$ for all $i = 1 \ldots n$. The following theorem is our main quality-of-approximation result for Algorithm 2.

Theorem 4. Let R be a density matrix such that all probabilities $p_i$, $i = 1 \ldots n$ satisfy 0 < ℓ ≤ $p_i$. Let u be computed as in Algorithm 1 and let $\widehat{\mathcal{H}}(\mathbf{R})$ be the output of Algorithm 2 on inputs R, m, and ϵ < 1. Then, with probability at least 1 − 2δ,

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le 3\epsilon\, \mathcal{H}(\mathbf{R})$$

by setting m as in eqn. (15). The algorithm runs in time

The similarities between Theorems 2 and 4 are obvious: same assumptions and directly comparable accuracy guarantees. The only difference is in the running times: the Taylor series approach has a milder dependency on ϵ, while the Chebyshev-based approximation has a milder dependency on the ratio u/ℓ, which controls the behavior of the probabilities $p_i$. However, for small values of ℓ (ℓ → 0),

Thus, the Chebyshev-based approximation has a milder dependency on u but not necessarily ℓ when compared to the Taylor-series approach. We also note that the discussion following Theorem 2 is again applicable here.
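The Chebyshev-based estimator can be sketched along the same lines as the Taylor one. The code below is an illustration under stated assumptions rather than the authors' implementation: it uses the coefficients of the Chebyshev expansion of x ln x on [0, u] (constants u/2 (ln(u/4) + 1), u/4 (2 ln(u/4) + 3), and (−1)^w u/(w³ − w) for w ≥ 2), applies the plain three-term recurrence to the probe vectors instead of Clenshaw's algorithm, takes `u` from the caller (default one) instead of running Algorithm 8, and uses hypothetical function names.

```python
import math
import numpy as np

def chebyshev_coefficients(u, m):
    """Coefficients alpha_0..alpha_m of the expansion of x ln x on [0, u] (m >= 1)."""
    alpha = np.empty(m + 1)
    alpha[0] = (u / 2) * (math.log(u / 4) + 1)
    alpha[1] = (u / 4) * (2 * math.log(u / 4) + 3)
    w = np.arange(2, m + 1)
    alpha[2:] = (-1.0) ** w * u / (w**3 - w)
    return alpha

def entropy_chebyshev(R, m, eps, delta, u=1.0, rng=None):
    """Estimate -tr(f_m(R)) with Gaussian probes; u must upper bound the eigenvalues."""
    rng = np.random.default_rng() if rng is None else rng
    n = R.shape[0]
    s = math.ceil(20 * math.log(2 / delta) / eps**2)
    alpha = chebyshev_coefficients(u, m)
    G = rng.standard_normal((n, s))
    T_prev = G                                  # T_0(B) G, where B = (2/u) R - I
    T_curr = (2.0 / u) * (R @ G) - G            # T_1(B) G
    acc = alpha[0] * np.sum(G * T_prev) + alpha[1] * np.sum(G * T_curr)
    for w in range(2, m + 1):
        # T_w(B) G = 2 B T_{w-1}(B) G - T_{w-2}(B) G
        T_next = 2.0 * ((2.0 / u) * (R @ T_curr) - T_curr) - T_prev
        acc += alpha[w] * np.sum(G * T_next)
        T_prev, T_curr = T_curr, T_next
    return -acc / s

# Small test matrix with known eigenvalues (assumed setup for illustration).
rng = np.random.default_rng(4)
p = np.linspace(1.0, 2.0, 20)
p /= p.sum()
Q, _ = np.linalg.qr(rng.standard_normal((20, 20)))
R = (Q * p) @ Q.T
H_exact = float(-np.sum(p * np.log(p)))
H_hat = entropy_chebyshev(R, m=40, eps=0.1, delta=0.1, rng=rng)
```

As with the Taylor variant, each polynomial degree costs one product with R per probe vector; the degree m is a free parameter here and should be chosen as the analysis prescribes.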

B. Proof of Theorem 4

We will condition our analysis on Algorithm 8 being successful, which happens with probability at least 1 − δ. In this case, $u$ is an upper bound for all probabilities $p_i$. We now recall (from Section I-A) the definition of the function h(x) = x ln x for any real x ∈ (0, 1], with h(0) = 0. Let $\mathbf{R} = \Psi\Sigma_p\Psi^T$ be the density matrix, where both $\Sigma_p$ and $\Psi$ are matrices in $\mathbb{R}^{n \times n}$. Notice that the diagonal entries of $\Sigma_p$ are the $p_i$’s and they satisfy 0 < ℓ ≤ $p_i$ ≤ u ≤ 1 for all $i = 1 \ldots n$.

Using the definitions of matrix functions from [3], we can now define $h(\mathbf{R}) = \Psi h(\Sigma_p)\Psi^T$, where $h(\Sigma_p)$ is a diagonal matrix in $\mathbb{R}^{n \times n}$ with entries equal to $h(p_i)$ for all $i = 1 \ldots n$. We now restate Proposition 3.1 from [2] in the context of our work, using our notation.

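Lemma 5. The function h(x) in the interval [0, u] can be approximated by

$$f_m(x) = \sum_{w=0}^{m} \alpha_w T_w(x),$$

where $\alpha_0 = \frac{u}{2}\left( \ln\frac{u}{4} + 1 \right)$, $\alpha_1 = \frac{u}{4}\left( 2\ln\frac{u}{4} + 3 \right)$, and $\alpha_w = \frac{(-1)^w u}{w^3 - w}$ for w ≥ 2. For any m ≥ 1,

$$|h(x) - f_m(x)| \le \frac{u}{2m(m+1)}$$

for x ∈ [0, u].

In the above, $T_w(x) = \cos\left( w \cdot \arccos((2/u)x - 1) \right)$ for any integer w ≥ 0 and x ∈ [0, u]. Notice that the function (2/u)x − 1 essentially maps the interval [0, u], which is the interval of interest for the function h(x), to [−1, 1], which is the interval over which Chebyshev polynomials are commonly defined. The above lemma exploits the fact that the Chebyshev polynomials form an orthogonal basis for the space of functions over the interval [−1, 1].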
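The scalar approximation of Lemma 5 is easy to test numerically. The sketch below evaluates the truncated expansion with Clenshaw's recurrence and compares it against x ln x on a grid. It assumes the coefficients u/2 (ln(u/4) + 1), u/4 (2 ln(u/4) + 3), and (−1)^w u/(w³ − w), and the uniform error bound u/(2m(m+1)), which follows from summing the magnitudes of the discarded coefficients; the function names and the grid are hypothetical.

```python
import numpy as np

def chebyshev_coefficients(u, m):
    """Coefficients alpha_0..alpha_m of the expansion of x ln x on [0, u] (m >= 1)."""
    alpha = np.empty(m + 1)
    alpha[0] = (u / 2) * (np.log(u / 4) + 1)
    alpha[1] = (u / 4) * (2 * np.log(u / 4) + 3)
    w = np.arange(2, m + 1)
    alpha[2:] = (-1.0) ** w * u / (w**3 - w)
    return alpha

def f_m(x, u, m):
    """Evaluate sum_w alpha_w T_w((2/u) x - 1) with Clenshaw's recurrence."""
    alpha = chebyshev_coefficients(u, m)
    t = 2.0 * np.asarray(x, dtype=float) / u - 1.0    # map [0, u] to [-1, 1]
    b1 = np.zeros_like(t)
    b2 = np.zeros_like(t)
    for w in range(m, 0, -1):
        b1, b2 = alpha[w] + 2.0 * t * b1 - b2, b1     # b_w = alpha_w + 2 t b_{w+1} - b_{w+2}
    return alpha[0] + t * b1 - b2

u, m = 0.8, 10
x = np.linspace(0.0, u, 1001)
h = np.zeros_like(x)
h[1:] = x[1:] * np.log(x[1:])                          # h(0) = 0 by convention
max_error = float(np.max(np.abs(h - f_m(x, u, m))))
bound = u / (2 * m * (m + 1))
```

The worst case occurs at x = 0, where every discarded term has the same sign, so the bound is attained there up to rounding.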

We now move on to approximate the entropy using the function fm(x). First,

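$$\mathrm{tr}(-f_m(\mathbf{R})) = -\mathrm{tr}\left( \Psi f_m(\Sigma_p) \Psi^T \right) = -\sum_{i=1}^{n} f_m(p_i). \tag{11}$$

Recall from Section I-A that $\mathcal{H}(\mathbf{R}) = -\sum_{i=1}^{n} h(p_i)$. We can now bound the difference between $\mathrm{tr}(-f_m(\mathbf{R}))$ and $\mathcal{H}(\mathbf{R})$. Indeed,

$$\left| \mathrm{tr}(-f_m(\mathbf{R})) - \mathcal{H}(\mathbf{R}) \right| = \left| \sum_{i=1}^{n} \left( h(p_i) - f_m(p_i) \right) \right| \le \sum_{i=1}^{n} \left| h(p_i) - f_m(p_i) \right| \le \frac{nu}{2m(m+1)}. \tag{12}$$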

The last inequality follows by the final bound in Lemma 5, since all pi’s are in the interval [0, u].

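Recall that we also assumed that all $p_i$’s are lower-bounded by ℓ > 0 and thus

$$\mathcal{H}(\mathbf{R}) = \sum_{i=1}^{n} p_i \ln\frac{1}{p_i} \ge \sum_{i=1}^{n} p_i \ln\frac{1}{1 - \ell} = \ln\frac{1}{1 - \ell}. \tag{13}$$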

We note that the upper bound on the pis follows since the smallest pi is at least ℓ > 0 and thus the largest pi cannot exceed 1 − ℓ < 1. We note that we cannot use the upper bound u in the above formula, since u could be equal to one; 1 − ℓ is always strictly less than one but it cannot be a priori computed (and thus cannot be used in Algorithm 2), since ℓ is not a priori known.

We can now restate the bound of eqn. (12) as follows:

| (14) |

where the last inequality follows by setting

| (15) |

Next, we argue that the matrix $-f_m(\mathbf{R})$ is symmetric positive semidefinite (under our assumptions) and thus one can apply Lemma 1 to estimate its trace. We note that

$$-f_m(\mathbf{R}) = \Psi \left( -f_m(\Sigma_p) \right) \Psi^T,$$

which trivially proves the symmetry of −fm(R) and also shows that its eigenvalues are equal to −fm(pi) for all i = 1 … n. We now bound

where the inequalities follow from Lemma 5 and our choice for m from eqn. (15). This inequality holds for all i = 1 … n and implies that

using our upper (1 − ℓ < 1) and lower (ℓ > 0) bounds on the pis. Now ϵ ≤ 1 proves that −fm(pi) are non-negative for all i = 1 … n and thus −fm(R) is a symmetric positive semidefinite matrix; it follows that its trace is also non-negative.

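We can now apply the trace estimator of Lemma 1 to get

$$\left| \frac{1}{s}\sum_{i=1}^{s} \mathbf{g}_i^T \left( -f_m(\mathbf{R}) \right) \mathbf{g}_i - \mathrm{tr}(-f_m(\mathbf{R})) \right| \le \epsilon \cdot \mathrm{tr}(-f_m(\mathbf{R})). \tag{16}$$

For the above bound to hold, we need to set

$$s = \left\lceil 20 \ln(2/\delta)/\epsilon^2 \right\rceil. \tag{17}$$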

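We now conclude as follows:

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le \left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathrm{tr}(-f_m(\mathbf{R})) \right| + \left| \mathrm{tr}(-f_m(\mathbf{R})) - \mathcal{H}(\mathbf{R}) \right| \le \epsilon\, \mathrm{tr}(-f_m(\mathbf{R})) + \epsilon\, \mathcal{H}(\mathbf{R}) \le \epsilon(1 + \epsilon)\mathcal{H}(\mathbf{R}) + \epsilon\, \mathcal{H}(\mathbf{R}) \le 3\epsilon\, \mathcal{H}(\mathbf{R}).$$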

The first inequality follows by adding and subtracting −tr(fm(R)) and using sub-additivity of the absolute value; the second inequality follows by eqns. (14) and (16); the third inequality follows again by eqn. (14); and the last inequality follows by using ϵ ≤ 1.

We note that the failure probability of the algorithm is at most 2δ (the sum of the failure probabilities of the power method and the trace estimation algorithm). Finally, we discuss the running time of Algorithm 2, which is equal to $O(m \cdot s \cdot \mathrm{nnz}(\mathbf{R}))$. Using the values for m and s from eqns. (15) and (17), the running time becomes (after accounting for the running time of Algorithm 8)

C. A comparison with the results of [2]

The work of [2] culminates in the error bounds described in Theorem 4.3 (and the ensuing discussion). In our parlance, [2] first derives the error bound of eqn. (12). It is worth emphasizing that the bound of eqn. (12) holds even if the pis are not necessarily strictly positive, as assumed by Theorem 4: the bound holds even if some of the pis are equal to zero.

Unfortunately, without imposing a lower bound assumption on the $p_i$’s it is difficult to get a meaningful error bound and an efficient algorithm. Indeed, the error implied by eqn. (12) (without any assumption on the $p_i$’s) necessitates setting m to at least $\Omega(\sqrt{n})$ (perhaps up to a logarithmic factor, as we will discuss shortly). To understand this, note that the entropy of the density matrix R ranges between zero and ln k, where k is the rank of the matrix R, i.e., the number of non-zero $p_i$’s. Clearly, k ≤ n and thus ln n is an upper bound for $\mathcal{H}(\mathbf{R})$. Notice that if $\mathcal{H}(\mathbf{R})$ is smaller than $n/(2m^2)$, the error bound of eqn. (12) does not even guarantee that the resulting approximation will be positive, which is, of course, meaningless as an approximation to the entropy.

In order to guarantee a relative error bound of the form $\left| \mathrm{tr}(-f_m(\mathbf{R})) - \mathcal{H}(\mathbf{R}) \right| \le \epsilon\, \mathcal{H}(\mathbf{R})$ via eqn. (12), we need to set m to be at least

$$\sqrt{\frac{n}{2\epsilon\, \mathcal{H}(\mathbf{R})}}, \tag{18}$$

which even for “large” values of $\mathcal{H}(\mathbf{R})$ (i.e., values close to the upper bound ln n) still implies that m is $\Omega\left( \sqrt{n/(\epsilon \ln n)} \right)$. Even with such a large value for m, we are still not done: we need an efficient trace estimation procedure for the matrix $-f_m(\mathbf{R})$. While this matrix is always symmetric, it is not necessarily positive or negative semi-definite (unless additional assumptions are imposed on the $p_i$’s, like we did in Theorem 4).

IV. Approaches for Hermitian Density Matrices

Hermitian, instead of symmetric, positive definite matrices frequently arise in quantum mechanics. The analyses of Sections II and III focus on real density matrices; we now briefly discuss how they can be extended to Hermitian density matrices. Recall that both approaches follow the same algorithmic scheme. First, the dominant eigenvalue of the density matrix is estimated via the power method; a trace estimation follows using Gaussian trace estimators on either the truncated Taylor expansion of a suitable matrix function or on a Chebyshev polynomial approximation of the same matrix function. Interestingly, the Taylor expansions, as well as the Chebyshev polynomial approximations, both work when the input matrix is complex. However, the estimation of the dominant eigenvalue of R poses a theoretical difficulty: to the best of our knowledge, there is no known bound for the accuracy of the power method in the case where R is complex. Lemma 14 guarantees relative error approximations to the dominant eigenvalue of real matrices, but we are not aware of any provable relative error bound for the complex case. To avoid this issue we will be using one as a (loose) upper bound for the dominant eigenvalue.

The crucial step in order to guarantee relative error approximations to the entropy of a Hermitian positive definite matrix is to guarantee relative error approximations for the trace of a Hermitian positive definite matrix. Lemma 1 assumes symmetric positive semi-definite matrices; we now prove that the same lemma can be applied on Hermitian positive definite matrices to achieve the same guarantees.

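Theorem 6. Every Hermitian matrix $\mathbf{A} \in \mathbb{C}^{n \times n}$ can be expressed as

$$\mathbf{A} = \mathbf{B} + i\mathbf{C}, \tag{19}$$

where $\mathbf{B} \in \mathbb{R}^{n \times n}$ is symmetric and $\mathbf{C} \in \mathbb{R}^{n \times n}$ is anti-symmetric (or skew-symmetric). If $\mathbf{A}$ is positive semi-definite, then B is also positive semi-definite.

Proof: Write $\mathbf{A} = \mathbf{B} + i\mathbf{C}$ with B and C real; since A equals its conjugate transpose, $\mathbf{B} = \mathbf{B}^T$ and $\mathbf{C} = -\mathbf{C}^T$. For any real vector x, $\mathbf{x}^T\mathbf{C}\mathbf{x} = 0$ because C is skew-symmetric, so $\mathbf{x}^T\mathbf{B}\mathbf{x} = \mathbf{x}^T\mathbf{A}\mathbf{x} \ge 0$ whenever A is positive semi-definite.

Theorem 7. The trace of a Hermitian matrix $\mathbf{A}$ expressed as in eqn. (19) is equal to the trace of its real part:

$$\mathrm{tr}(\mathbf{A}) = \mathrm{tr}(\mathbf{B}).$$

Proof: Using $\mathrm{tr}(\mathbf{A}) = \mathrm{tr}(\mathbf{A}^T)$, it is easy to see that

$$\mathrm{tr}(\mathbf{A}) = \mathrm{tr}(\mathbf{B}) + i\, \mathrm{tr}(\mathbf{C}) = \mathrm{tr}(\mathbf{B}).$$

The last equality follows because C is skew-symmetric: $\mathrm{tr}(\mathbf{C}) = \mathrm{tr}(\mathbf{C}^T) = -\mathrm{tr}(\mathbf{C})$, which forces $\mathrm{tr}(\mathbf{C}) = 0$ (equivalently, every diagonal entry of a real skew-symmetric matrix is zero).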

In words, Theorem 7 states that the trace of a Hermitian matrix equals the trace of its real part. Similarly, Theorem 6 states that the real part of a Hermitian positive semi-definite matrix is symmetric positive semi-definite. Combining both theorems, we conclude that we can estimate the trace of a Hermitian positive definite matrix up to relative error, using the Gaussian trace estimator of Lemma 1 on its real part. Therefore, both approaches generalize to Hermitian positive definite matrices using one as an upper bound instead of u for the dominant eigenvalue. Algorithms 3 and 4 are modified versions of Algorithms 1 and 2 respectively that work on Hermitian inputs (the function Re(·) returns the real part of its argument in an entry-wise manner).
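The two facts above can be illustrated numerically: the real part of a Hermitian positive semi-definite matrix is symmetric positive semi-definite, its imaginary part is skew-symmetric with zero trace, and the Gaussian estimator can therefore be applied to the real part. The snippet is a sketch under stated assumptions; the test matrix and all variable names are hypothetical.

```python
import math
import numpy as np

rng = np.random.default_rng(3)
n = 40
Z = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
A = Z @ Z.conj().T                  # Hermitian positive semidefinite test matrix
B, C = A.real, A.imag               # A = B + iC, with B symmetric and C skew-symmetric

eps, delta = 0.2, 0.1
s = math.ceil(20 * math.log(2 / delta) / eps**2)
G = rng.standard_normal((n, s))     # real Gaussian probes
# Gaussian trace estimator applied to the real part Re(A) = B
trace_estimate = float(np.sum(G * (B @ G)) / s)
```

With real probes, the quadratic form of A and the quadratic form of its real part coincide, since the skew-symmetric part contributes nothing.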

Algorithm 3.

A Taylor series approach to estimate the entropy.

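| 1: | INPUT: $\mathbf{R} \in \mathbb{C}^{n \times n}$, accuracy parameter ε > 0, failure probability δ, and integer m > 0. |

| 2: | Set $s = \lceil 20 \ln(2/\delta)/\varepsilon^2 \rceil$. |

| 3: | Let $\mathbf{g}_1, \ldots, \mathbf{g}_s \in \mathbb{R}^n$ be i.i.d. random Gaussian vectors. |

| 4: | OUTPUT: return $\widehat{\mathcal{H}}(\mathbf{R}) = \frac{1}{s}\sum_{i=1}^{s}\sum_{k=1}^{m} \frac{\mathbf{g}_i^T\, \mathrm{Re}\left( \mathbf{R}(\mathbf{I}_n - \mathbf{R})^k \right) \mathbf{g}_i}{k}$. |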

Algorithm 4.

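A Chebyshev polynomial-based approach to estimate the entropy.

| 1: | INPUT: $\mathbf{R} \in \mathbb{C}^{n \times n}$, accuracy parameter ε > 0, failure probability δ, and integer m > 0. |

| 2: | Set $s = \lceil 20 \ln(2/\delta)/\varepsilon^2 \rceil$. |

| 3: | Let $\mathbf{g}_1, \ldots, \mathbf{g}_s \in \mathbb{R}^n$ be i.i.d. random Gaussian vectors. |

| 4: | OUTPUT: $\widehat{\mathcal{H}}(\mathbf{R}) = -\frac{1}{s}\sum_{i=1}^{s} \mathbf{g}_i^T\, \mathrm{Re}\left( f_m(\mathbf{R}) \right) \mathbf{g}_i$. |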

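Theorems 8 and 9 are our main quality-of-approximation results for Algorithms 3 and 4.

Theorem 8. Let R be a complex density matrix such that all probabilities $p_i$, $i = 1 \ldots n$ satisfy 0 < ℓ ≤ $p_i$. Let $\widehat{\mathcal{H}}(\mathbf{R})$ be the output of Algorithm 3 on inputs R, m, and ϵ < 1. Then, with probability at least 1 − δ,

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le 2\epsilon\, \mathcal{H}(\mathbf{R})$$

by setting $m = \lceil (1/\ell) \ln(1/\epsilon) \rceil$. The algorithm runs in time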

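Theorem 9. Let R be a complex density matrix such that all probabilities $p_i$, $i = 1 \ldots n$ satisfy 0 < ℓ ≤ $p_i$. Let $\widehat{\mathcal{H}}(\mathbf{R})$ be the output of Algorithm 4 on inputs R, m, and ϵ < 1. Then, with probability at least 1 − δ,

$$\left| \widehat{\mathcal{H}}(\mathbf{R}) - \mathcal{H}(\mathbf{R}) \right| \le 3\epsilon\, \mathcal{H}(\mathbf{R})$$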

by setting . The algorithm runs in time

V. An approach via random projection matrices

Finally, we focus on perhaps the most interesting special case: the setting where at most k (out of n, with k ≪ n) of the probabilities $p_i$ of the density matrix R of eqn. (2) are non-zero. In this setting, we prove that elegant random-projection-based techniques achieve relative error approximations to all probabilities $p_i$, $i = 1 \ldots k$. The running time of the proposed approach depends on the particular random projection that is used and can be made to depend on the sparsity of the input matrix.

A. Algorithm and Main Theorem

The proposed algorithm uses a random projection matrix Π to create a “sketch” of R in order to approximate the pis. In words, Algorithm 5 creates a sketch of the input matrix R by post-multiplying R by a random projection matrix; this is a well-known approach from the RandNLA literature (see [6] for details). Assuming that R has rank at most k, which is equivalent to assuming that at most k of the probabilities pi in eqn. (2) are non-zero (e.g., the system underlying the density matrix R has at most k pure states), then the rank of RΠ is also at most k. In this setting, Algorithm 5 returns the non-zero singular values of RΠ as approximations to the pi, i = 1 … k.

Algorithm 5.

Approximating the entropy via random projection matrices

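| 1: | INPUT: Integer n (dimensions of matrix R) and integer k (with rank of R at most k ≪ n, see eqn. (2)). |

| 2: | Construct the random projection matrix $\Pi \in \mathbb{R}^{n \times s}$ (see Section V-B for details on Π and s). |

| 3: | Compute $\tilde{\mathbf{R}} = \mathbf{R}\Pi \in \mathbb{R}^{n \times s}$. |

| 4: | Compute and return the (at most) k non-zero singular values of $\tilde{\mathbf{R}}$, denoted by $\tilde{p}_i$, $i = 1 \ldots k$. |

| 5: | OUTPUT: $\tilde{p}_i$, $i = 1 \ldots k$ and $\widehat{\mathcal{H}}(\mathbf{R}) = \sum_{i=1}^{k} \tilde{p}_i \ln\frac{1}{\tilde{p}_i}$. |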

The following theorem is our main quality-of-approximation result for Algorithm 5.

Theorem 10. Let R be a density matrix with at most k ≪ n non-zero probabilities and let ϵ < 1/2 be an accuracy parameter. Then, with probability at least 0.9, the output of Algorithm 5 satisfies

$$\left| p_i^2 - \tilde{p}_i^2 \right| \le \epsilon\, p_i^2$$

for all $i = 1 \ldots k$. Additionally,

Algorithm 5 (combined with Algorithm 7 below) runs in time

Comparing the above result with Theorems 2 and 4, we note that the above theorem does not necessitate imposing any constraints on the probabilities $p_i$, $i = 1 \ldots k$. Instead, it suffices to have k non-zero probabilities. The final result is an additive-relative error approximation to the entropy of R (as opposed to the relative error approximations of Theorems 2 and 4); under a mild assumption on the true entropy of R, the above bound becomes a true relative error approximation.⁵
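The sketching approach of this section can be illustrated end to end on a small low-rank density matrix. The code below is a sketch under stated assumptions, not the authors' implementation: it uses a dense, explicitly formed input-sparsity transform (one random ±1 entry per row) as the projection Π, picks the sketch size `s` by hand rather than from the theory, and uses hypothetical function and variable names.

```python
import numpy as np

def entropy_via_sketch(R, k, s, rng):
    """Approximate the top-k eigenvalues of R by the singular values of R @ Pi."""
    n = R.shape[0]
    bucket = rng.integers(0, s, size=n)          # column index of the non-zero in each row
    sign = rng.choice([-1.0, 1.0], size=n)       # random sign of that non-zero
    Pi = np.zeros((n, s))
    Pi[np.arange(n), bucket] = sign              # dense here only for readability
    sketch = R @ Pi                              # n x s sketch of R
    p_tilde = np.linalg.svd(sketch, compute_uv=False)[:k]
    H_hat = float(np.sum(p_tilde * np.log(1.0 / p_tilde)))
    return p_tilde, H_hat

# Rank-2 density matrix with known probabilities (assumed test setup).
rng = np.random.default_rng(6)
n, k, s = 1000, 2, 400
p = np.array([0.7, 0.3])
Q, _ = np.linalg.qr(rng.standard_normal((n, k)))   # orthonormal pure states
R = (Q * p) @ Q.T
p_tilde, H_hat = entropy_via_sketch(R, k, s, rng)
relative_errors = np.abs(p_tilde - p) / p
```

Only the s columns of the sketch enter the singular value computation, so the expensive dense step is on an n-by-s matrix instead of an n-by-n one.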

B. Two constructions for the random projection matrix

We now discuss two constructions for the matrix Π and we cite two bounds regarding these constructions from prior work that will be useful in our analysis. The first construction is the subsampled Hadamard Transform, a simplification of the Fast Johnson-Lindenstrauss Transform of [14]; see [15], [16] for details. We do note that even though it appears that Algorithm 7 is always better than Algorithm 6 (at least in terms of their respective theoretical running times), both algorithms are worth evaluating experimentally: in particular, prior work [17] has reported that Algorithm 6 often outperforms Algorithm 7 in terms of empirical accuracy and running time when the input matrix is dense, as is often the case in our setting. Therefore, we choose to present results (theoretical and empirical) for both well-known constructions of Π (Algorithms 6 and 7).

Algorithm 6.

The subsampled Randomized Hadamard Transform

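| 1: | INPUT: integers n, s > 0 with s ≪ n. |

| 2: | Let S be an empty matrix. |

| 3: | For t = 1, …, s (i.i.d. trials with replacement) select uniformly at random an integer from {1, 2, …, n}. |

| 4: | If i is selected, then append the column vector $\mathbf{e}_i$ to S, where $\mathbf{e}_i \in \mathbb{R}^n$ is the i-th canonical vector. |

| 5: | Let $\mathbf{H} \in \mathbb{R}^{n \times n}$ be the normalized Hadamard transform matrix. |

| 6: | Let $\mathbf{D} \in \mathbb{R}^{n \times n}$ be a diagonal matrix with $\mathbf{D}_{ii} = +1$ with probability 1/2 and $\mathbf{D}_{ii} = -1$ with probability 1/2. |

| 7: | OUTPUT: $\Pi = \mathbf{D}\mathbf{H}\mathbf{S} \in \mathbb{R}^{n \times s}$. |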
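A direct, unoptimized construction of the subsampled randomized Hadamard transform can be sketched as follows. The assumptions are stated plainly: n is taken to be a power of two, the Hadamard matrix is formed densely via Sylvester's construction (a fast transform would be used in practice), no rescaling is applied to the output, and the function names are hypothetical.

```python
import numpy as np

def hadamard(n):
    """Normalized n x n Hadamard matrix via Sylvester's construction (n a power of 2)."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of 2")
    H = np.ones((1, 1))
    while H.shape[0] < n:
        H = np.block([[H, H], [H, -H]])
    return H / np.sqrt(n)

def srht(n, s, rng):
    """Pi = D H S: random signs (D), Hadamard transform (H), s sampled columns (S)."""
    signs = rng.choice([-1.0, 1.0], size=n)      # diagonal of D
    cols = rng.integers(0, n, size=s)            # i.i.d. uniform indices, with replacement
    return signs[:, None] * hadamard(n)[:, cols]

rng = np.random.default_rng(5)
Pi = srht(64, 16, rng)
```

Every entry of the result has magnitude 1/√n, which is the flattening property that makes uniform column sampling effective.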

The following result has appeared in [7], [15], [16].

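Lemma 11. Let $\mathbf{U} \in \mathbb{R}^{n \times k}$ such that $\mathbf{U}^T\mathbf{U} = \mathbf{I}_k$ and let $\Pi \in \mathbb{R}^{n \times s}$ be constructed by Algorithm 6. Then, with probability at least 0.9,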

by setting .

Our second construction is the input sparsity transform of [18]. This major breakthrough was further analyzed in [19], [20] and we present the following result from [19, Appendix A1].

Lemma 12. Let $U \in \mathbb{R}^{n \times k}$ be such that $U^T U = I_k$ and let $\Pi \in \mathbb{R}^{n \times s}$ be constructed by Algorithm 7. Then, with probability at least 0.9,

by setting .

We refer the interested reader to [20] for improved analyses of Algorithm 7 and its variants.

Algorithm 7.

An input-sparsity transform

| 1: | INPUT: integers n, s > 0 with s ⪡ n. |

| 2: | Let S be an empty matrix. |

| 3: | For t = 1, …, n (i.i.d. trials with replacement) select uniformly at random an integer from {1, 2, …, s}. |

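| 4: | If i is selected, then append the row vector $e_i^T$ to S, where $e_i \in \mathbb{R}^s$ is the i-th canonical vector. |

| 5: | Let $D \in \mathbb{R}^{n \times n}$ be a diagonal matrix with $D_{tt} = +1$ with probability 1/2 and $D_{tt} = -1$ with probability 1/2. |

| 6: | OUTPUT: $\Pi = DS \in \mathbb{R}^{n \times s}$. |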
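Algorithm 7 can be illustrated with the following NumPy sketch. The function names are hypothetical and the diagonal of D is assumed to hold independent random signs. The sketch builds Π explicitly and also shows how the product RΠ can be accumulated without forming Π, which is where the input-sparsity running time comes from.

```python
import numpy as np

def draw_transform(n, s, rng):
    """One bucket in {0, ..., s-1} and one random sign for each of the n rows."""
    buckets = rng.integers(0, s, size=n)
    signs = rng.choice([-1.0, 1.0], size=n)   # diagonal of D (assumed random signs)
    return buckets, signs

def dense_pi(buckets, signs, s):
    """Explicit n x s matrix D S: row t has the single nonzero signs[t] in column buckets[t]."""
    n = len(buckets)
    Pi = np.zeros((n, s))
    Pi[np.arange(n), buckets] = signs
    return Pi

def sketch_columns(R, buckets, signs, s):
    """Compute R @ Pi by adding each signed column of R into its bucket."""
    out_t = np.zeros((s, R.shape[0]))
    np.add.at(out_t, buckets, (R * signs).T)  # unbuffered add handles repeated buckets
    return out_t.T

rng = np.random.default_rng(0)
n, s = 200, 20
buckets, signs = draw_transform(n, s, rng)
Pi = dense_pi(buckets, signs, s)
R = rng.standard_normal((n, n))
RPi = sketch_columns(R, buckets, signs, s)
```

Each column of R is touched exactly once, so the cost of forming RΠ is proportional to the number of non-zero entries of R.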

C. Proof of Theorem 10

At the heart of the proof of Theorem 10 lies the following perturbation bound from [8] (Theorem 2.3).

Theorem 13. Let DAD be a symmetric positive definite matrix such that D is a diagonal matrix and Aii = 1 for all i. Let DED be a perturbation matrix such that ‖E‖2 < λmin(A). Let $\lambda_i$ be the i-th eigenvalue of DAD and let $\lambda_i'$ be the i-th eigenvalue of D(A + E)D. Then, for all i,

We note that λmin(A) in the above theorem is a real, strictly positive number6. Now consider the matrix $R\Pi\Pi^T R^T$; we will use the above theorem to argue that its singular values are good approximations to the singular values of the matrix $RR^T$. Recall that $R = \Psi\Sigma_p\Psi^T$ where Ψ has orthonormal columns. Note that the eigenvalues of $RR^T = \Psi\Sigma_p^2\Psi^T$ are equal to the eigenvalues of the matrix $\Sigma_p^2$; similarly, the eigenvalues of $\Psi\Sigma_p\Psi^T\Pi\Pi^T\Psi\Sigma_p\Psi^T$ are equal to the eigenvalues of $\Sigma_p\Psi^T\Pi\Pi^T\Psi\Sigma_p$. Thus, we can compare the matrices $\Sigma_p I_k \Sigma_p$ and $\Sigma_p\Psi^T\Pi\Pi^T\Psi\Sigma_p$.

In the parlance of Theorem 13, $D = \Sigma_p$, $A = I_k$, and $E = \Psi^T\Pi\Pi^T\Psi - I_k$. Applying either Lemma 11 (after rescaling the matrix Π) or Lemma 12, we immediately get that $\|E\|_2 \le \epsilon < 1$ with probability at least 0.9. Since $\lambda_{\min}(I_k) = 1$, the assumption of Theorem 13 is satisfied. We note that the eigenvalues of $\Sigma_p I_k \Sigma_p$ are equal to $p_i^2$ for i = 1 … k (all positive, which guarantees that the matrix $\Sigma_p I_k \Sigma_p$ is symmetric positive definite, as mandated by Theorem 13) and the eigenvalues of $\Sigma_p\Psi^T\Pi\Pi^T\Psi\Sigma_p$ are equal to $\tilde{p}_i^2$, where $\tilde{p}_i$ are the singular values of $\Sigma_p\Psi^T\Pi$. (Note that these are exactly equal to the outputs returned by Algorithm 5, since the singular values of $\Sigma_p\Psi^T\Pi$ are equal to the singular values of $\Psi\Sigma_p\Psi^T\Pi = R\Pi$.) Thus, we can conclude:

| $\lvert p_i^2 - \tilde{p}_i^2 \rvert \le \epsilon\, p_i^2$ for all $i = 1, \ldots, k$ | (20) |

The above result guarantees that all the probabilities pi can be approximated up to relative error using Algorithm 5. We now investigate the implications of the above bound for approximating the von Neumann entropy of R. Indeed,

In the second to last inequality we used 1/(1 − ϵ) ≤ 1 + 2ϵ for any ϵ ≤ 1/2 and in the last inequality we used ln(1 + 2ϵ) ≤ 2ϵ for ϵ ∈ (0, 1/2). Similarly, we can prove that:
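For completeness, the two elementary inequalities invoked above can be checked directly. The short derivation below is a sketch that uses only the stated range of ϵ and the standard bound ln(1 + x) ≤ x for x > −1.

```latex
\begin{align*}
\frac{1}{1-\epsilon} \le 1 + 2\epsilon
  &\iff 1 \le (1 + 2\epsilon)(1 - \epsilon) = 1 + \epsilon - 2\epsilon^2
     && \text{(multiply by } 1 - \epsilon > 0\text{)} \\
  &\iff \epsilon\,(1 - 2\epsilon) \ge 0,
     && \text{which holds for } 0 \le \epsilon \le 1/2, \\
\ln(1 + 2\epsilon) \le 2\epsilon
  &\quad \text{follows from } \ln(1 + x) \le x \text{ with } x = 2\epsilon.
\end{align*}
```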

Combining, we get

We conclude by discussing the running time of Algorithm 5. Theoretically, the best choice is to combine the matrix Π from Algorithm 7 with Algorithm 5, which results in a running time

D. The Hermitian case

The above approach via random projections critically depends on Lemmas 11 and 12, which, to the best of our knowledge, have only been proven for the real case. These results are typically proven using matrix concentration inequalities, which are well-explored for sums of random real matrices but less explored for sums of random complex matrices. We leave it as an open problem to extend the theoretical analysis of our approach to the Hermitian case.

VI. Experiments

In this section we report experimental results in order to demonstrate the practical efficiency of our algorithms. We show that our algorithms are both numerically accurate and computationally efficient. Our algorithms were implemented in Matlab R2016a on a compute node with two 10-core Intel Xeon-E5 processors (2.60GHz) and 512 GB of RAM.

We generated random density matrices, for most of which we used the QETLAB Matlab toolbox [9] to derive (real-valued) density matrices of size 5,000 × 5,000, on which most of our extensive evaluations were run. We also tested our methods on a much larger 30,000 × 30,000 density matrix, which was close to the largest matrix that Matlab would allow us to load. We used the function RandomDensityMatrix of QETLAB and the Haar measure; we also experimented with the Bures measure to generate random matrices, but we did not observe any qualitative differences worth reporting. Recall that exactly computing the von Neumann entropy using eqn. (1) presumes knowledge of the entire spectrum of the matrix; to compute all singular values of a matrix we used the svd function of Matlab. The accuracy of our proposed approximation algorithms was evaluated by measuring the relative error; wall-clock times were reported in order to quantify the speedup that our approximation algorithms were able to achieve.
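The exact baseline and the error metric described above can be sketched as follows. The experiments used Matlab; this NumPy version is only an illustration, the function names are hypothetical, and the natural logarithm is assumed (matching the ln terms used in the proof of Theorem 10).

```python
import numpy as np

def von_neumann_entropy(R, tol=1e-12):
    """Entropy -sum_i p_i ln p_i from the full spectrum of a symmetric PSD matrix R."""
    p = np.linalg.eigvalsh(R)      # all eigenvalues; cubic cost in n
    p = p[p > tol]                 # (numerically) zero eigenvalues contribute nothing
    return float(-np.sum(p * np.log(p)))

def relative_error(exact, approx):
    """Relative error of an approximate entropy value."""
    return abs(exact - approx) / abs(exact)

n = 8
mixed = np.eye(n) / n              # maximally mixed state: entropy ln n
pure = np.zeros((n, n))
pure[0, 0] = 1.0                   # pure state: entropy 0
```

For a symmetric positive semidefinite matrix the singular values and the eigenvalues coincide, so an eigenvalue routine and an SVD routine give the same spectrum here.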

A. Empirical results for the Taylor and Chebyshev approximation algorithms

We start by reporting results on the Taylor and Chebyshev approximation algorithms, which have two sources of error: the number of terms that are retained in either the Taylor series expansion or the Chebyshev polynomial approximation and the trace estimation that is used in both approximation algorithms. We will separately evaluate the accuracy loss that is contributed by each source of error in order to understand the behavior of the proposed approximation algorithms.
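The trace-estimation source of error can be isolated with a small experiment. The sketch below is a generic Gaussian trace estimator, assuming the usual form (1/s) Σ gᵀAg with standard Gaussian vectors g; the function name and the toy matrix are illustrative and are not taken from Algorithms 1 and 2.

```python
import numpy as np

def gaussian_trace_estimate(matvec, n, s, rng):
    """Average of g^T (A g) over s standard Gaussian vectors g; unbiased for tr(A)."""
    G = rng.standard_normal((n, s))     # one Gaussian vector per column
    AG = matvec(G)                      # only products with A are needed
    return float(np.sum(G * AG) / s)

rng = np.random.default_rng(0)
n = 50
A = np.diag(np.full(n, 1.0 / n))        # toy density matrix with trace 1
estimate = gaussian_trace_estimate(lambda X: A @ X, n, s=500, rng=rng)
```

Because the estimator only touches A through matrix-vector products, the same routine can be applied to a polynomial in the density matrix without ever forming that polynomial explicitly.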

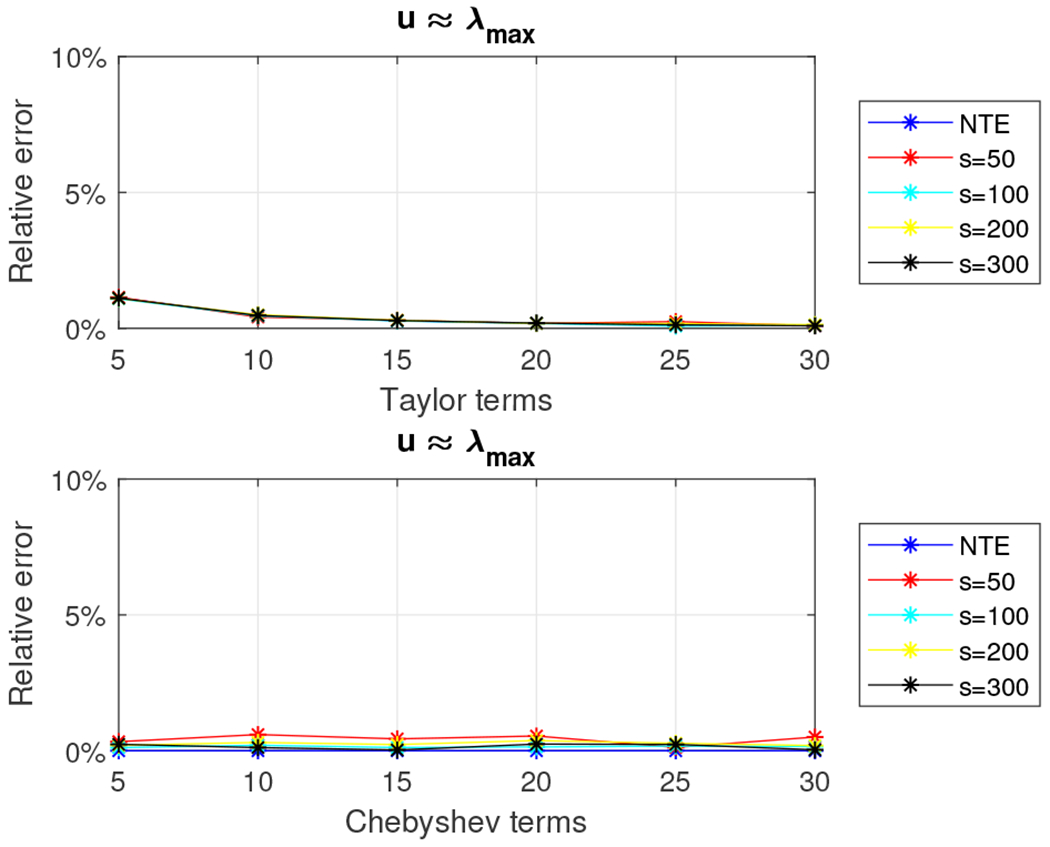

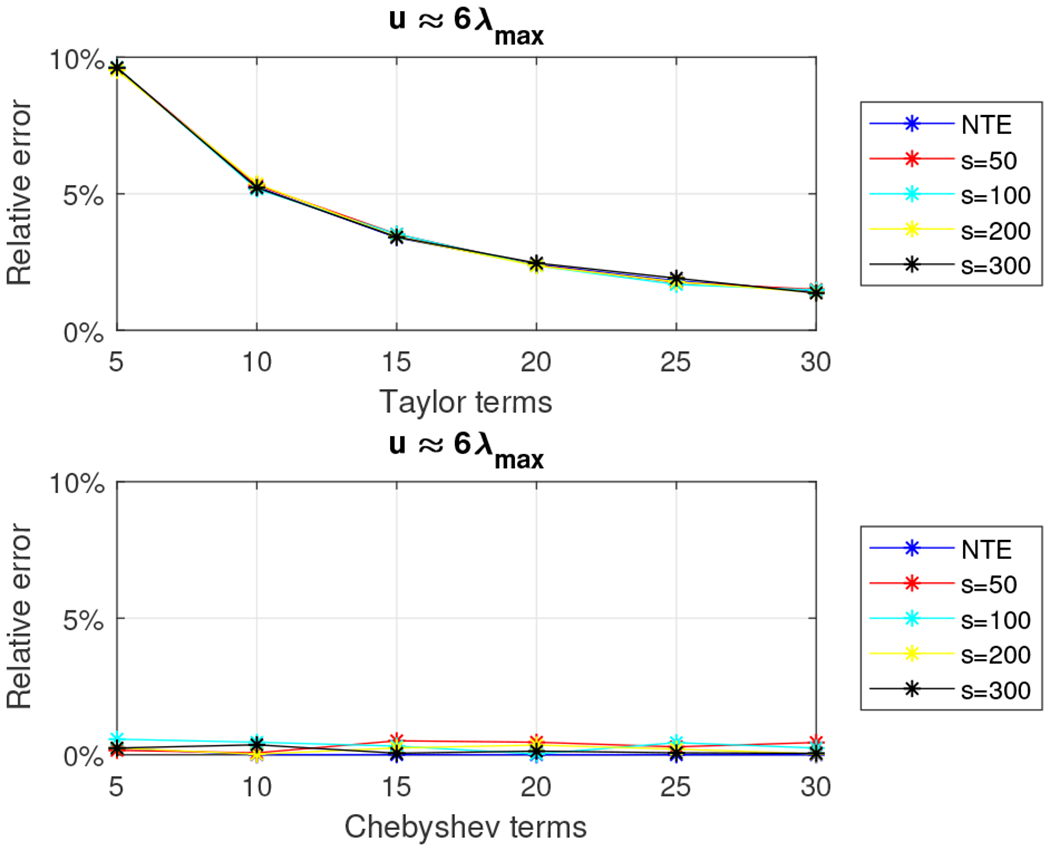

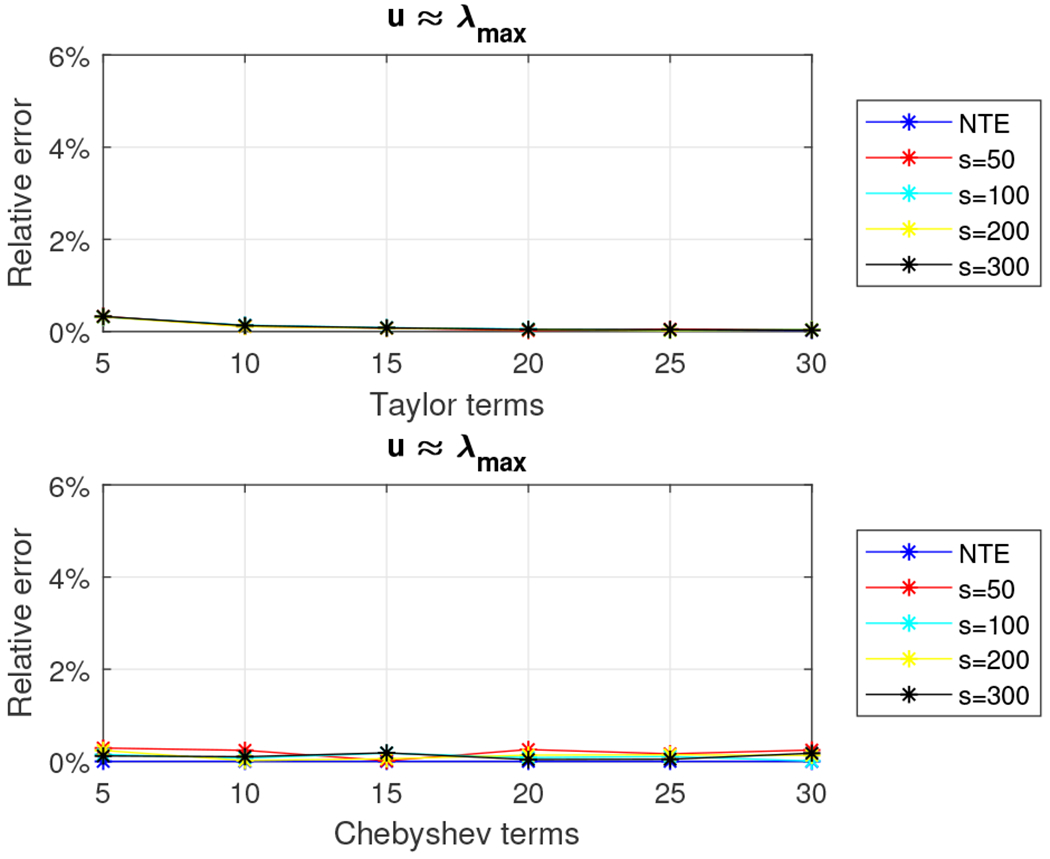

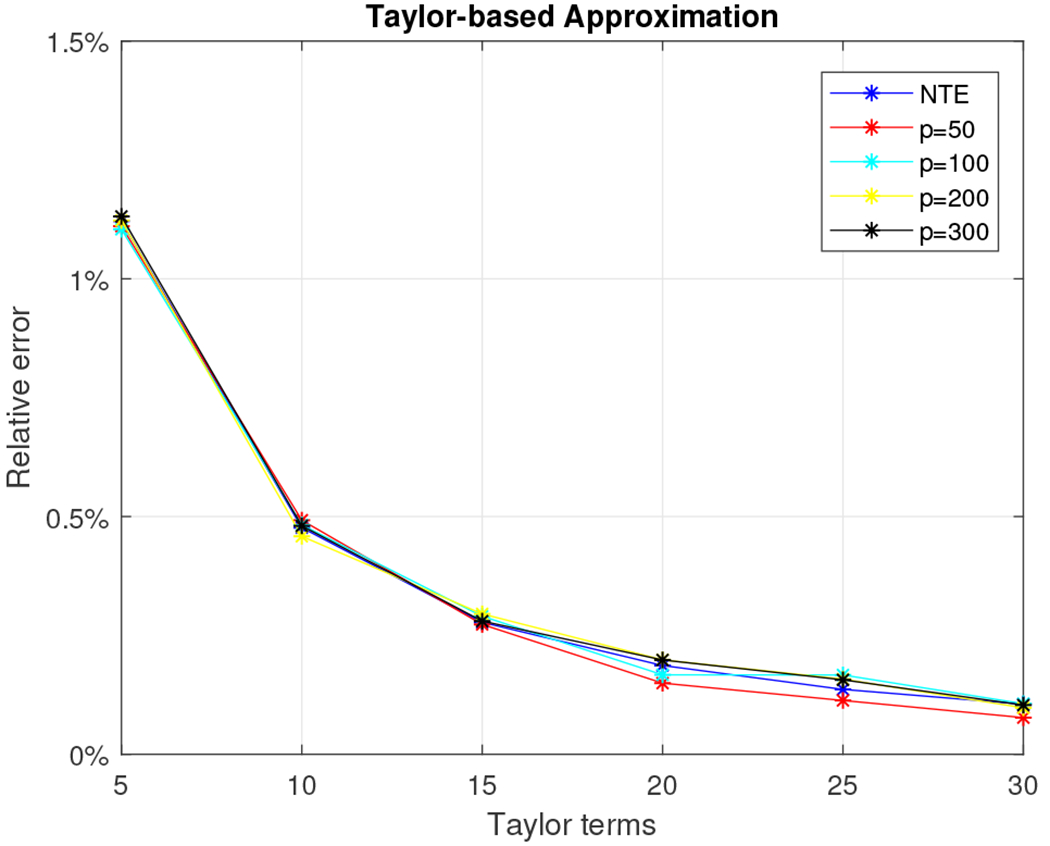

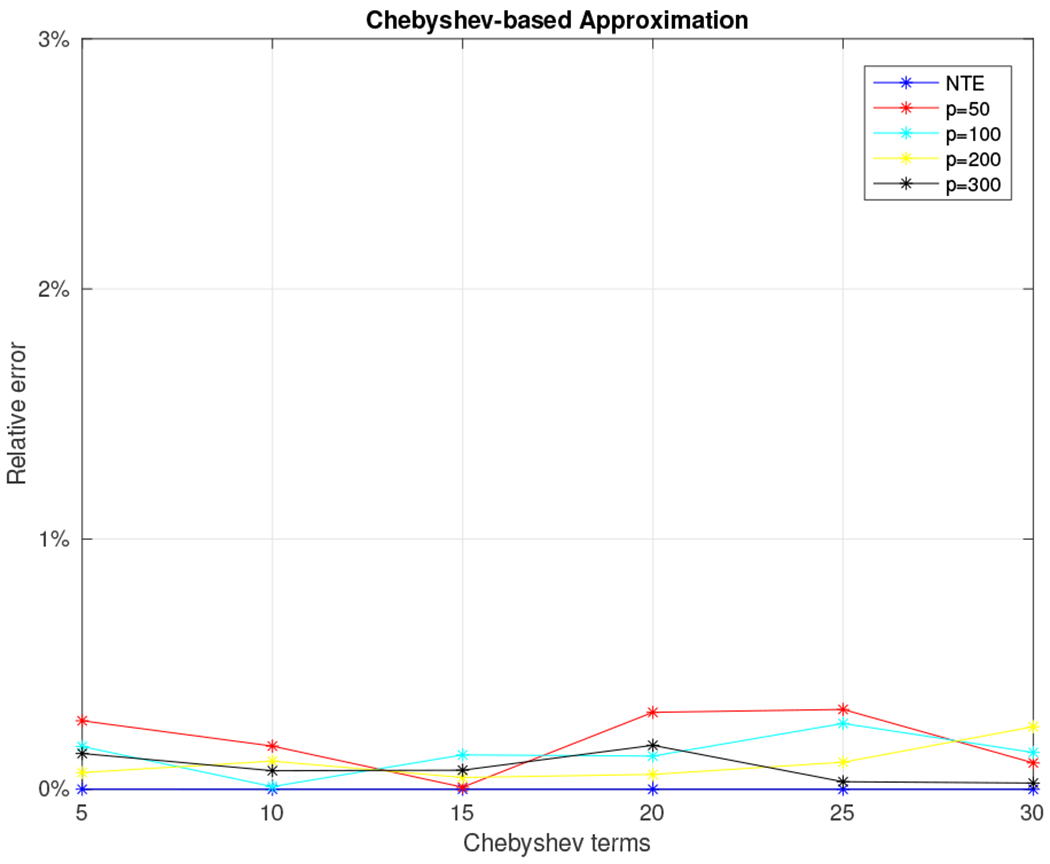

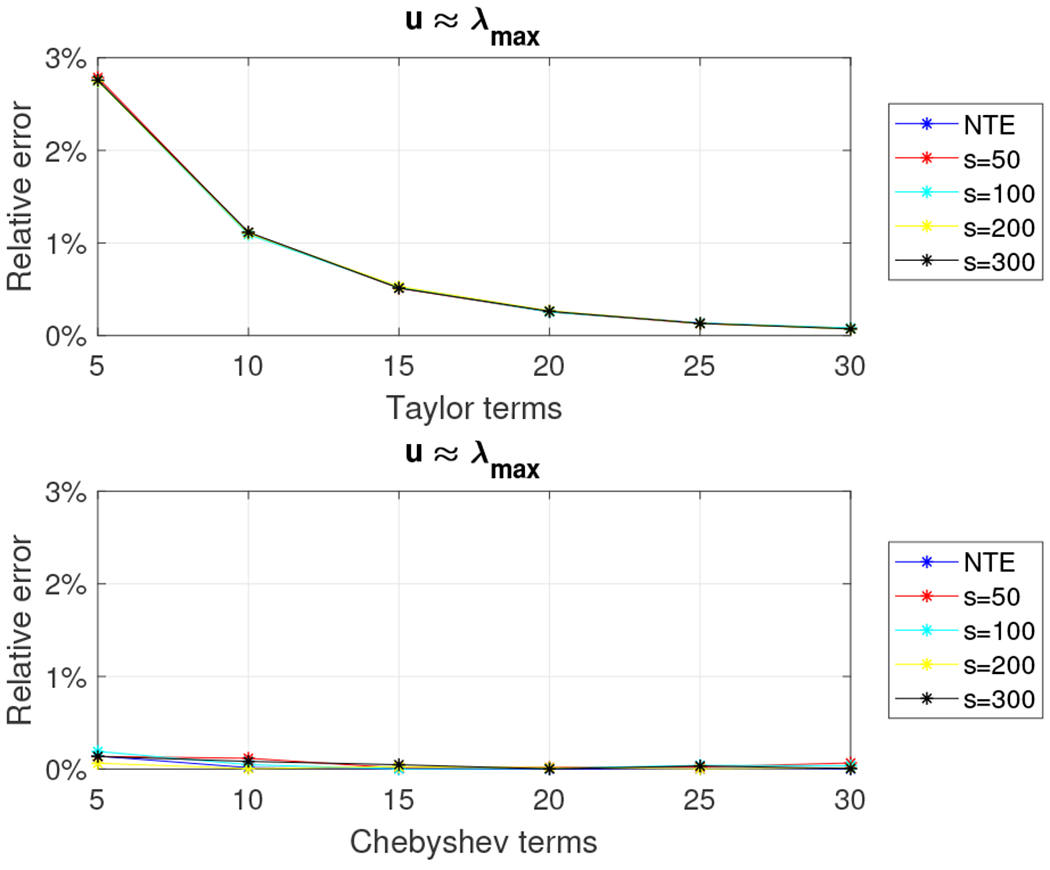

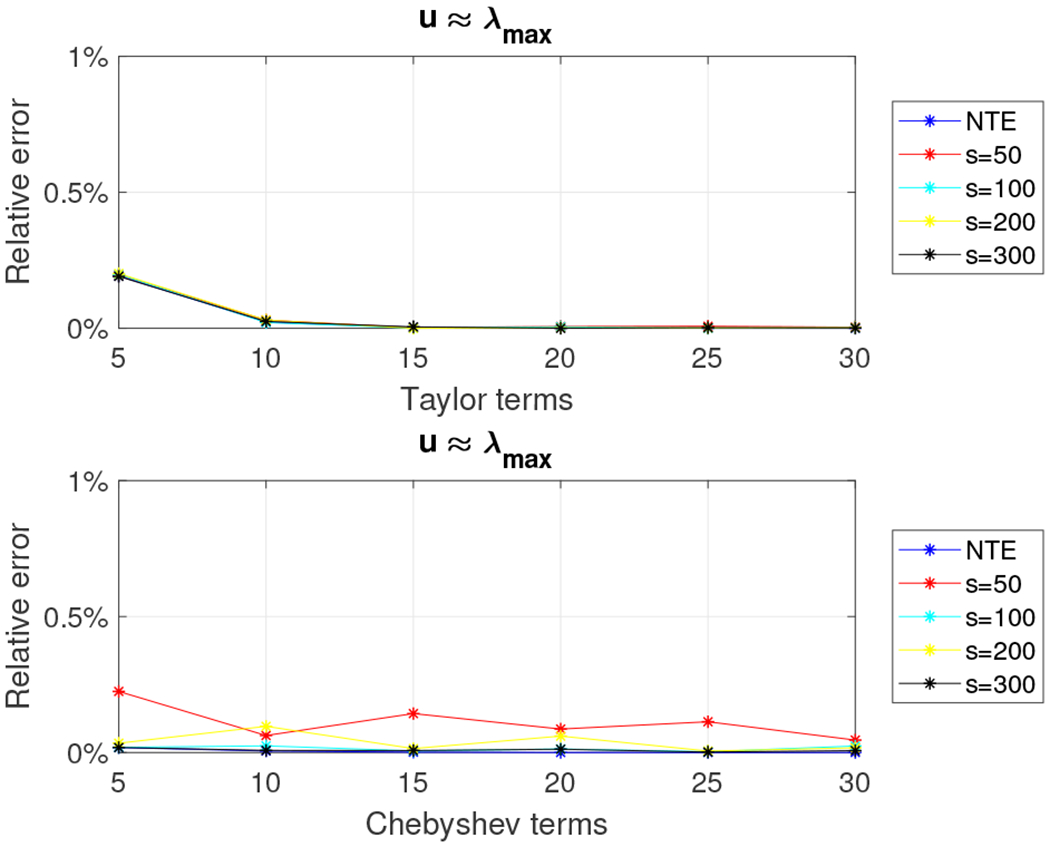

Consider a 5,000 × 5,000 random density matrix and let m (the number of terms retained in the Taylor series approximation or the degree of the polynomial used in the Chebyshev polynomial approximation) range between five and 30 in increments of five. Let s, the number of random Gaussian vectors used to estimate the trace, take values in {50, 100, 200, 300}. Recall that our error bounds for Algorithms 1 and 2 depend on u, an estimate for the largest eigenvalue of the density matrix. We used the power method to estimate the largest eigenvalue (let be the estimate) and we set u to and . Figures 1 and 2 show the relative error (out of 100%) for all combinations of m, s, and u for the Taylor and Chebyshev approximation algorithms. We also report the error when no trace estimation (NTE) is used in order to highlight that most of the accuracy loss is due to the Taylor/Chebyshev approximation and not the trace estimation.

Fig. 1.

Relative error for 5,000 × 5,000 density matrix using the Taylor and the Chebyshev approximation algorithms with .

Fig. 2.

Relative error for 5,000 × 5,000 density matrix using the Taylor and the Chebyshev approximation algorithms with .

We observe that the relative error is always small, typically close to 1-2%, for any choice of the parameters s, m, and u. The Chebyshev algorithm returns better approximations when u is an overestimate for λmax, while the two algorithms are comparable (in terms of accuracy) when u is very close to λmax, which agrees with our theoretical results. We also note that estimating the largest eigenvalue incurs minimal computational cost (less than one second). The NTE line (no trace estimation) in the plots serves as a lower bound for the relative error. Finally, we note that computing the exact von Neumann entropy took approximately 1.5 minutes for matrices of this size.

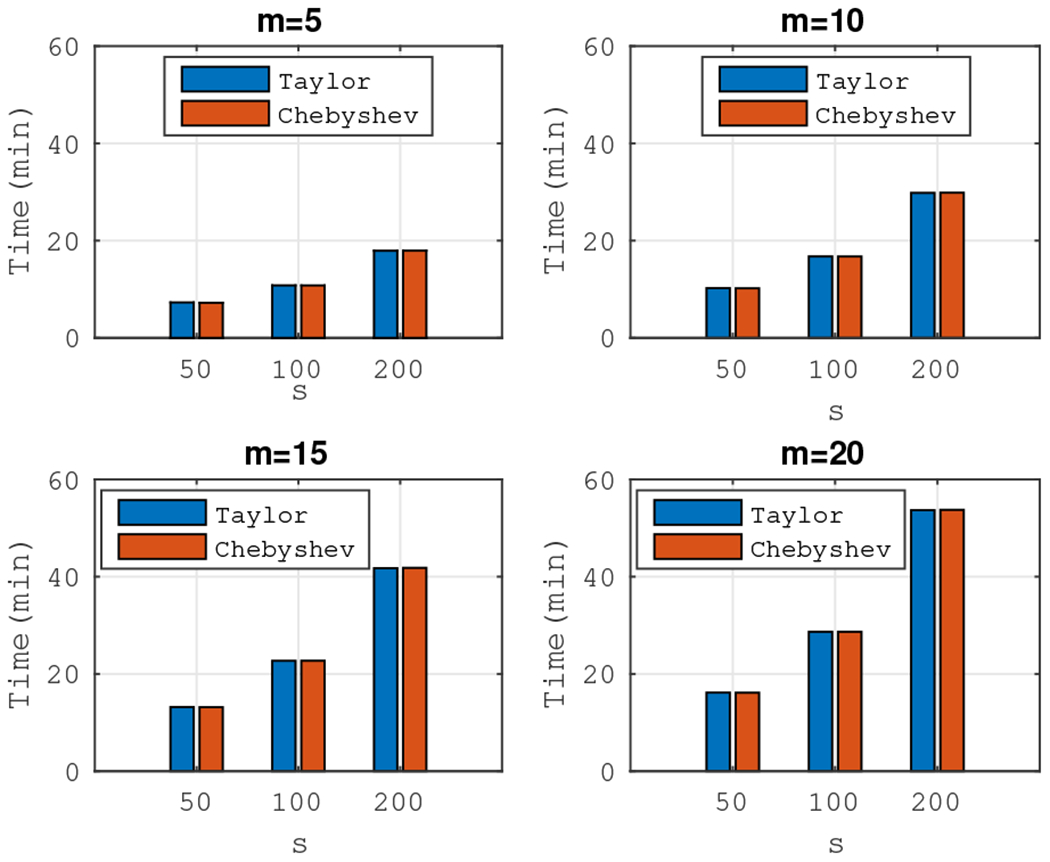

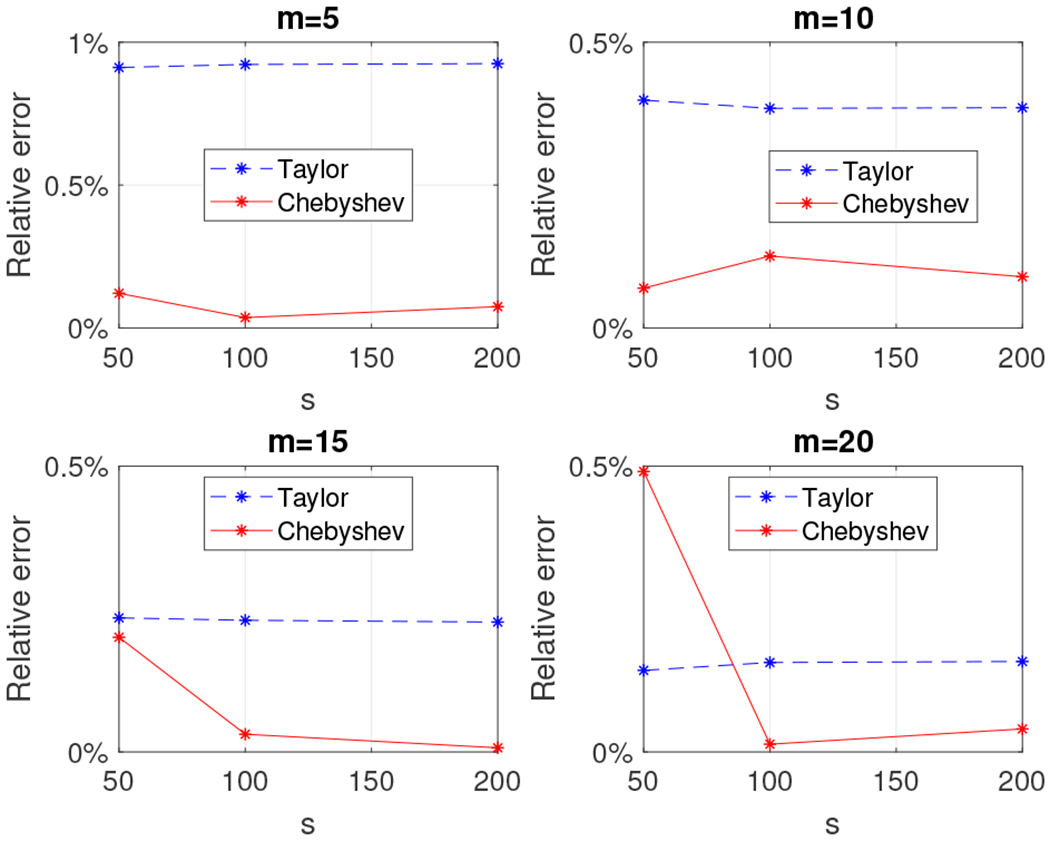

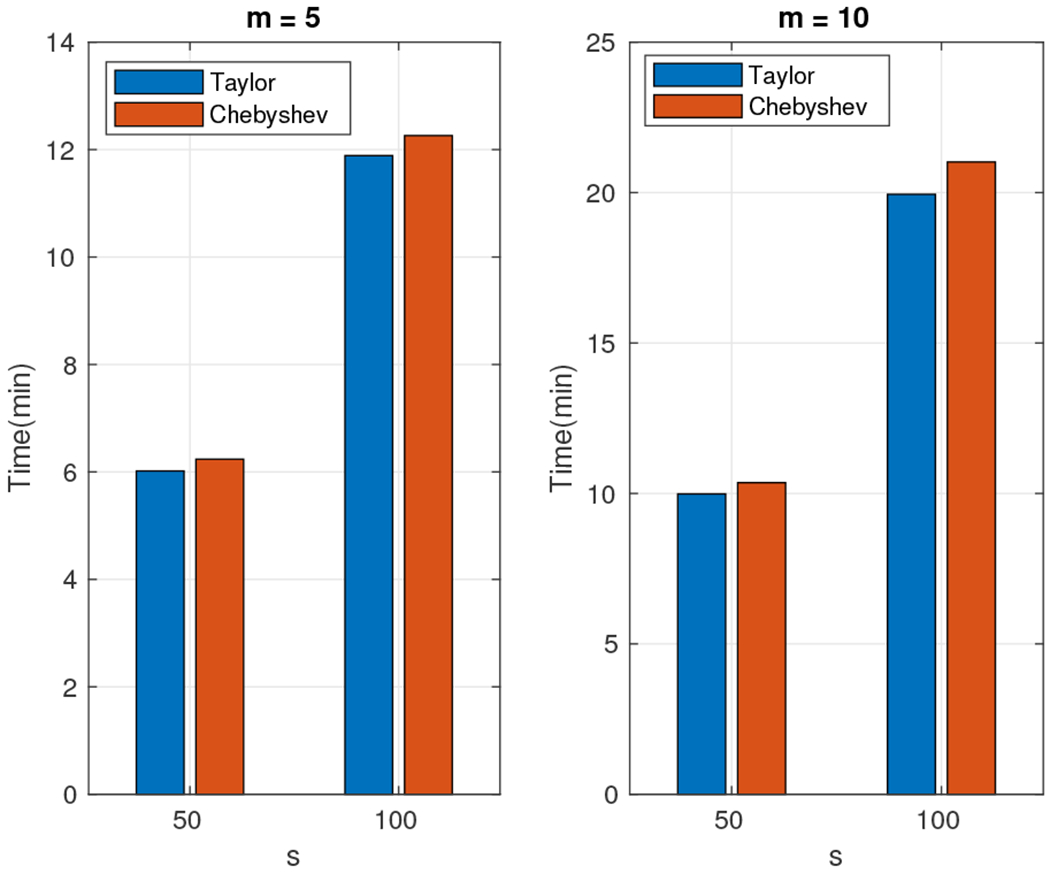

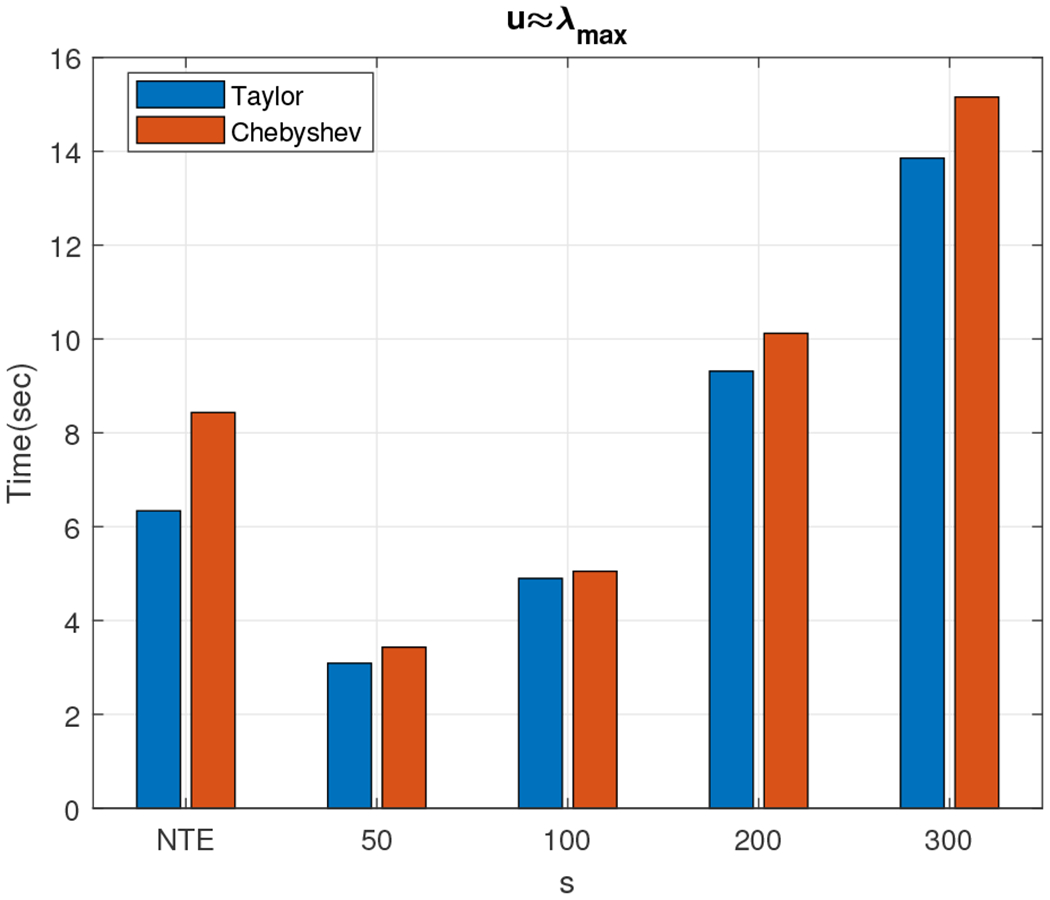

The second dataset that we experimented with was a much larger density matrix of size 30,000 × 30,000. This matrix was the largest matrix for which the memory was sufficient to perform operations like the full SVD. Notice that since the increase in the matrix size is six-fold compared to the previous one and the running time of the SVD grows cubically with the input size, we expect the running time to compute the exact SVD to be roughly $6^3 \cdot 90$ seconds, which is approximately 5.4 hours; indeed, the exact computation of the von Neumann entropy took approximately 5.6 hours. We evaluated both the Taylor and the Chebyshev approximation schemes by setting the parameters m and s to take values in the sets {5, 10, 15, 20} and {50, 100, 200}, respectively. The parameter u was set to , where the latter value was computed using the power method, which took approximately 3.6 minutes. We report the wall-clock running times and relative error (out of 100%) in Figures 5 and 4.
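The back-of-the-envelope prediction above is easy to reproduce. The snippet below is just the arithmetic, assuming a purely cubic scaling and the 90-second baseline reported for the 5,000 × 5,000 matrix; the variable names are illustrative.

```python
small_n, large_n = 5_000, 30_000
small_seconds = 90.0                       # exact computation time for the smaller matrix

growth = (large_n / small_n) ** 3          # six-fold size increase, cubic cost: 216x
predicted_hours = growth * small_seconds / 3600.0   # 19,440 seconds, i.e. 5.4 hours
```

The prediction of 5.4 hours is within a few percent of the measured 5.6 hours, which suggests that the dense SVD dominates the cost of the exact computation.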

Fig. 5.

Wall-clock times: Taylor approximation (blue) and Chebyshev approximation (red) for . Exact computation needed approximately 5.6 hours.

Fig. 4.

Relative error for 30,000 × 30,000 density matrix using the Taylor and the Chebyshev approximation algorithms with .

We observe that the relative error is always less than 1% for both methods, with the Chebyshev approximation almost always yielding slightly better results. Note that our Chebyshev-polynomial-based approximation algorithm significantly outperformed the exact computation: e.g., for m = 5 and s = 50, our estimate was computed in less than ten minutes and achieved less than 0.2% relative error.

The third dataset we experimented with was the tridiagonal matrix from [12, Section 5.1]:

| (21) |

This matrix is the coefficient matrix of the discretized one-dimensional Poisson equation:

defined in the interval [0, 1] with Dirichlet boundary conditions v(0) = v(1) = 0. We normalize A by dividing it by its trace in order to make it a density matrix. Consider the 5,000 × 5,000 normalized matrix A and let m (the number of terms retained in the Taylor series approximation or the degree of the polynomial used in the Chebyshev polynomial approximation) range between five and 30 in increments of five. Let s, the number of random Gaussian vectors used for estimating the trace, be set to 50, 100, 200, or 300. We used the formula

| (22) |

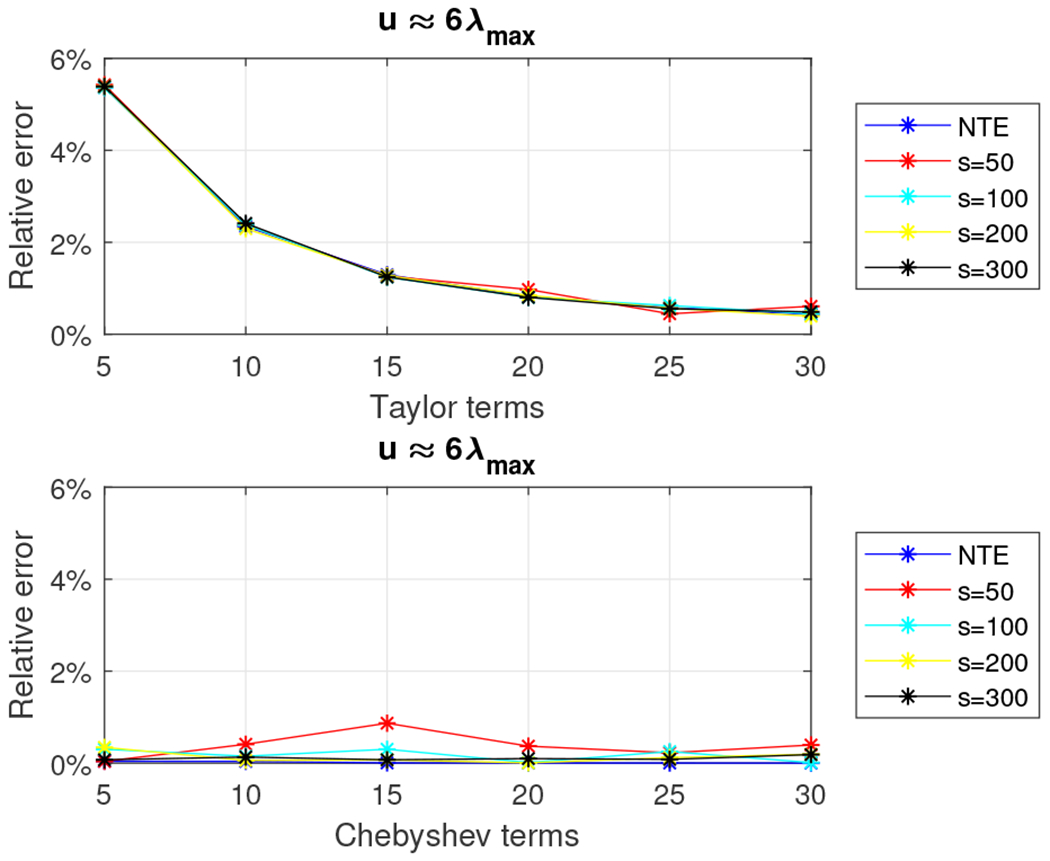

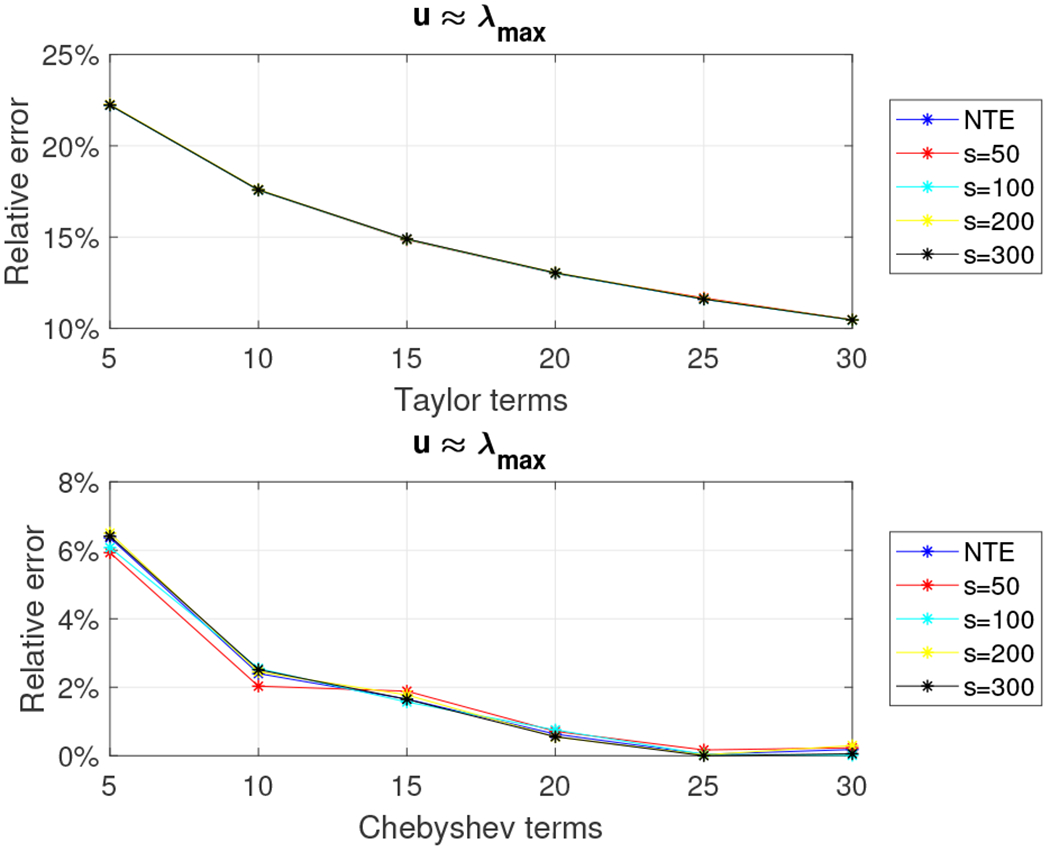

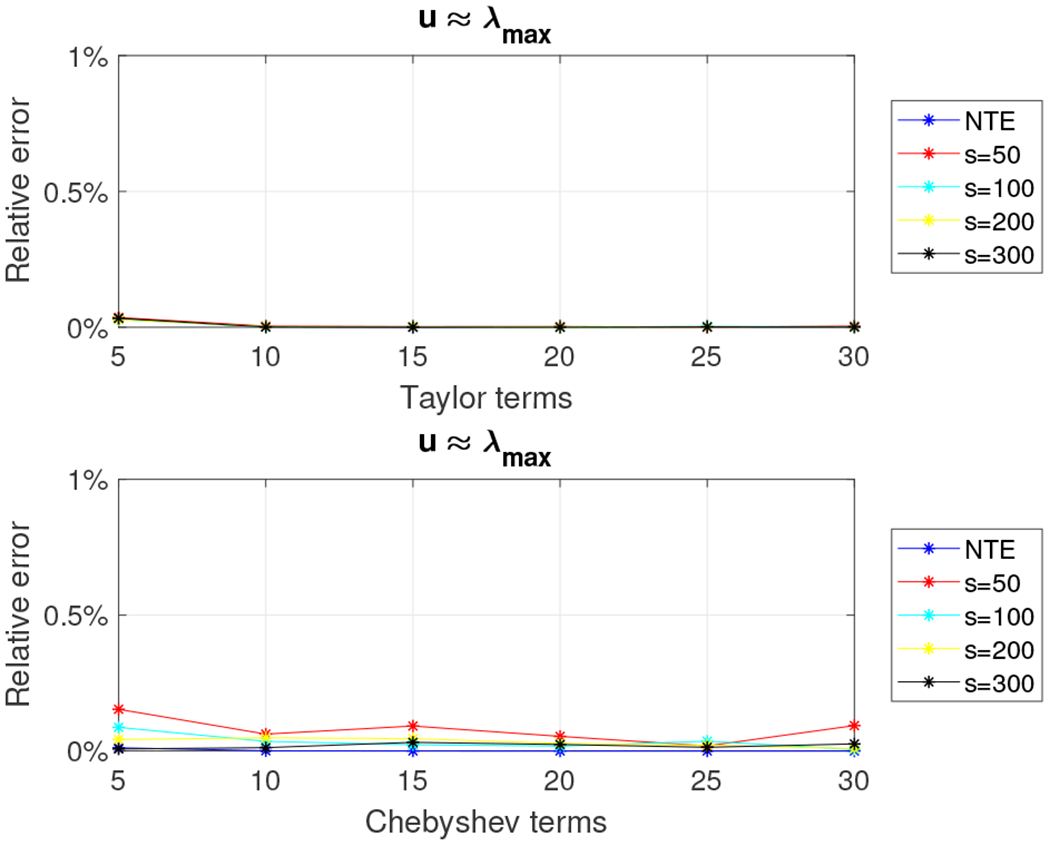

to compute the eigenvalues of A (after normalization) and we set u to λmax and 6λmax. Figures 6 and 7 show the relative error (out of 100%) for all combinations of m, s, and u for the Taylor and Chebyshev approximation algorithms. We also report the error when no trace estimation (NTE) is used.
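The closed-form route to the exact entropy can be sketched as follows. The code assumes that A is the standard second-difference matrix tridiag(−1, 2, −1), whose eigenvalues are 2 − 2cos(iπ/(n + 1)) for i = 1, …, n; both the matrix and the function names are assumptions made for illustration, and the natural logarithm is used.

```python
import math

def poisson_eigenvalues(n):
    """Eigenvalues of the n x n matrix tridiag(-1, 2, -1) (assumed form of A)."""
    return [2.0 - 2.0 * math.cos(i * math.pi / (n + 1)) for i in range(1, n + 1)]

def normalized_entropy(n):
    """Entropy of A / tr(A), computed from the closed-form spectrum in O(n) time."""
    lam = poisson_eigenvalues(n)
    trace = math.fsum(lam)                 # equals 2n for this matrix
    probs = [x / trace for x in lam]       # eigenvalues of the density matrix
    return -math.fsum(p * math.log(p) for p in probs)

exact_entropy = normalized_entropy(5000)
```

Since no eigendecomposition is needed, the same function provides a reference value for sizes at which a dense SVD is out of reach.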

Fig. 6.

Relative error for 5,000 × 5,000 tridiagonal density matrix using the Taylor and the Chebyshev approximation algorithms with u = λmax.

Fig. 7.

Relative error for 5,000 × 5,000 tridiagonal density matrix using the Taylor and the Chebyshev approximation algorithms with u = 6λmax.

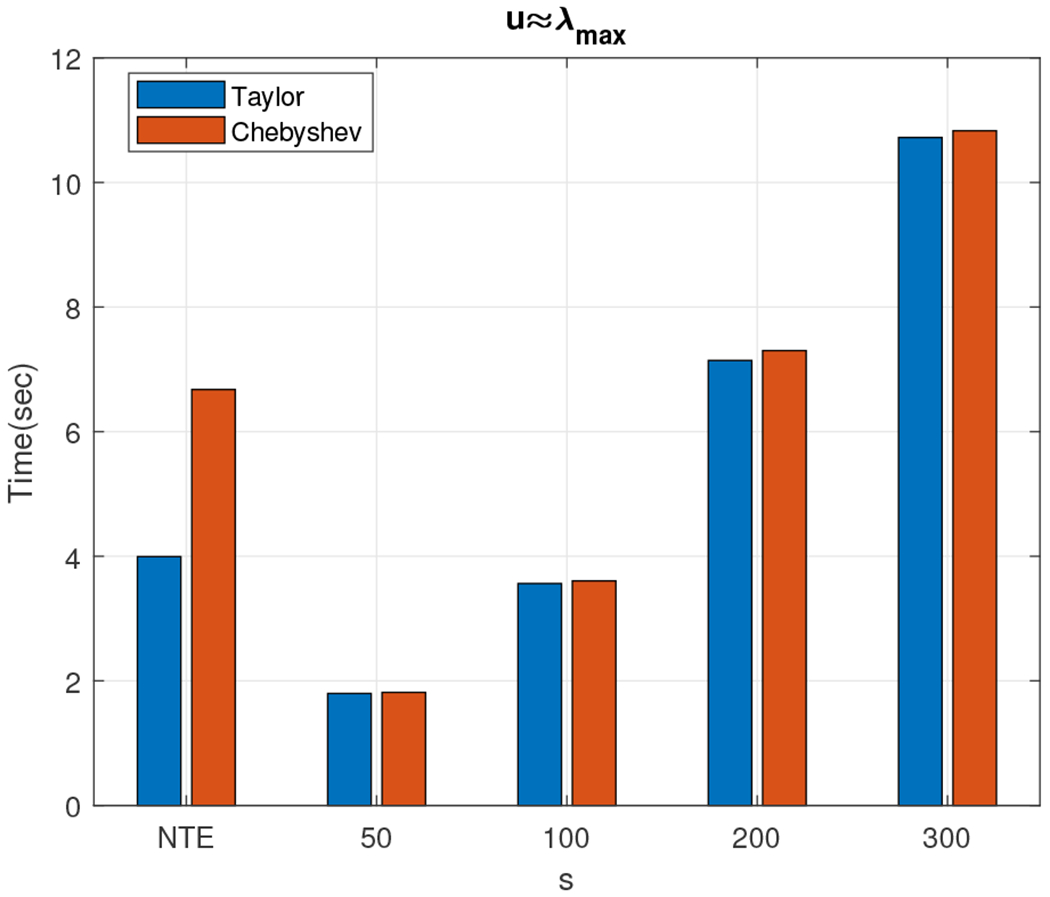

We observe that the relative error is higher than the one observed for the 5,000 × 5,000 random density matrix. We report wall-clock running times in Figure 8. The Chebyshev-polynomial-based algorithm returns better approximations for all choices of the parameters and, in most cases, is faster than the Taylor-polynomial-based algorithm. For example, for m = 5, s = 50 and u = λmax, our estimate was computed in about two seconds and achieved less than 0.5% relative error.

Fig. 8.

Wall-clock times: Taylor approximation (blue) and Chebyshev approximation (red) for m = 5. Exact computation needed approximately 30 seconds.

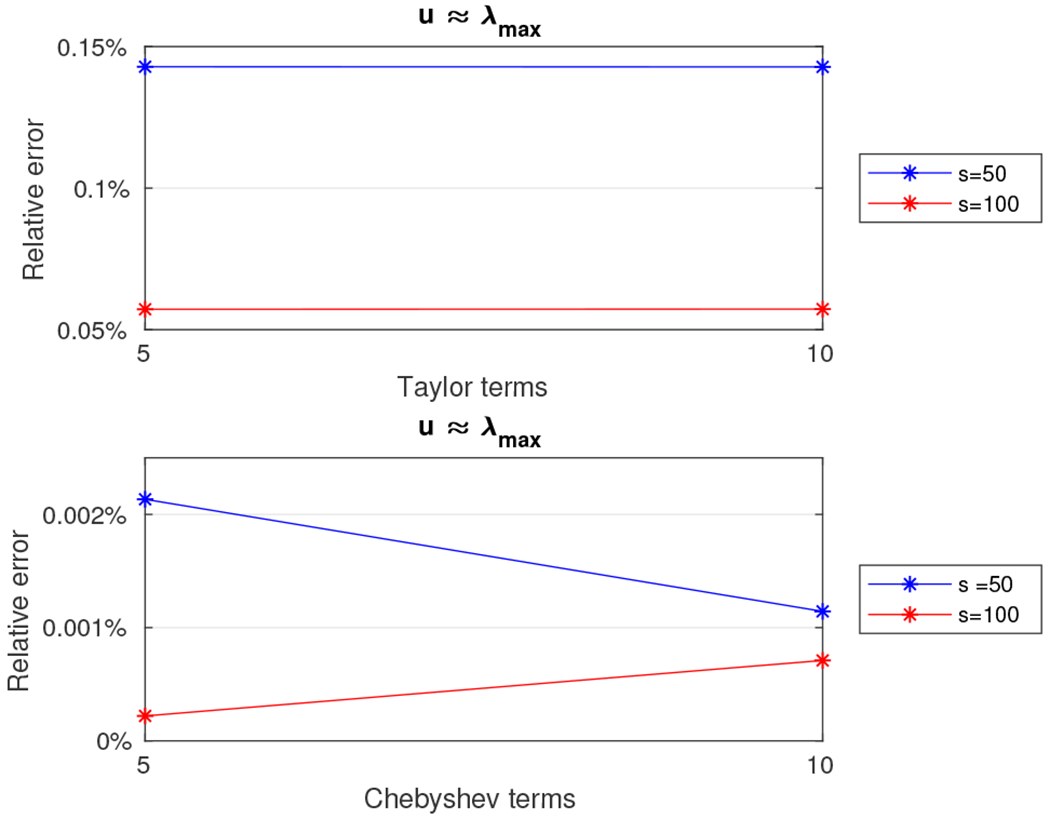

We further considered a $10^8 \times 10^8$ tridiagonal matrix of the form of eqn. (21). Although an exact computation of the singular values of A is not feasible (at least with our computational resources), such a computation is not necessary since eqn. (22) provides a closed formula for its eigenvalues and, thus, its entropy. Let m (the number of terms retained in the Taylor series approximation or the degree of the polynomial used in the Chebyshev polynomial approximation) be equal to five or ten and let s, the number of random Gaussian vectors used to estimate the trace, be equal to 50 or 100. Figures 9 and 10 show the relative error (out of 100%) and the runtime, respectively, for all combinations of m and s for both the Taylor and Chebyshev approximation algorithms. We observe that in both cases we estimated the entropy in less than ten minutes with a relative error below 0.15%.

Fig. 9.

Relative error for the $10^8 \times 10^8$ tridiagonal density matrix using the Taylor and the Chebyshev approximation algorithms with u = λmax.

Fig. 10.

Wall-clock times: Taylor approximation (blue) and Chebyshev approximation (red) for the $10^8 \times 10^8$ tridiagonal density matrix. Exact computation using the Singular Value Decomposition was infeasible using our computational resources.

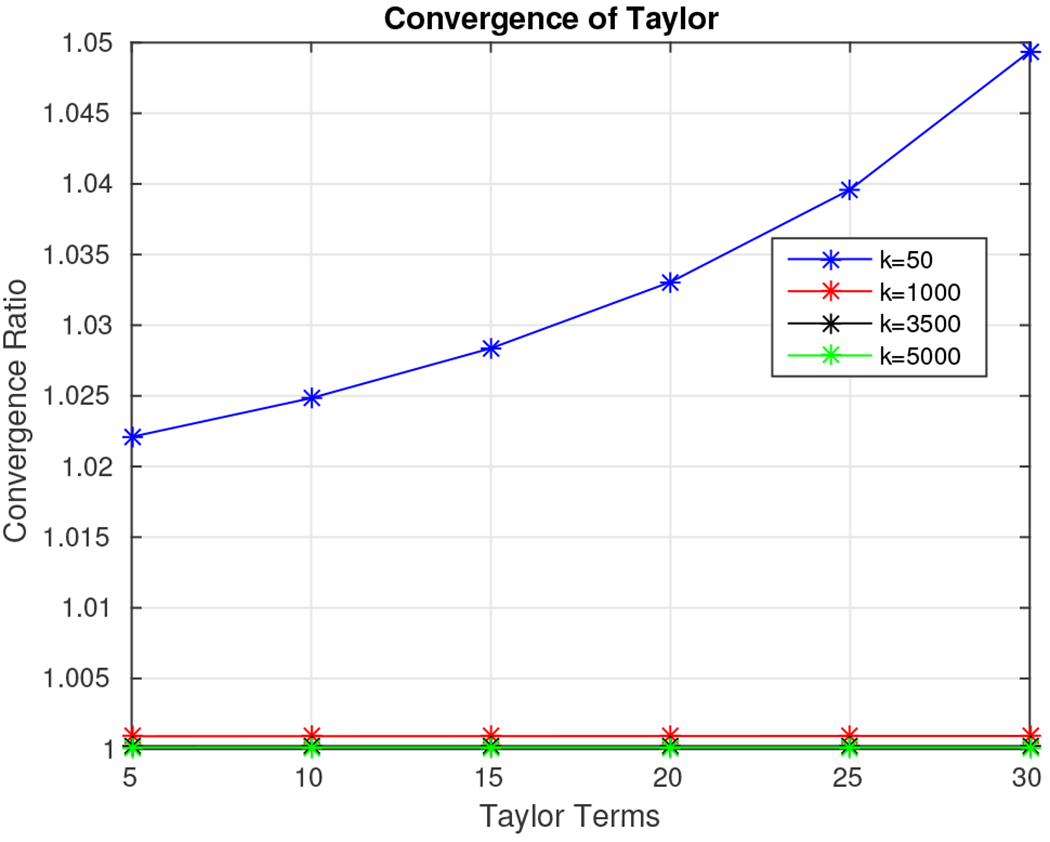

The fourth dataset we experimented with includes 5,000 × 5,000 density matrices whose top-k eigenvalues follow a linear decay and whose remaining 5,000 − k eigenvalues follow a uniform distribution. Let k, the number of eigenvalues that follow the linear decay, take values in the set {50, 1000, 3500, 5000}. Let m, the number of terms retained in the Taylor series approximation or the degree of the polynomial used in the Chebyshev polynomial approximation, range between five and 30 in increments of five. Let s, the number of random Gaussian vectors used to estimate the trace, take values in {50, 100, 200, 300}. The estimate of the largest eigenvalue u is set to . Figures 11 to 14 show the relative error (out of 100%) for all combinations of k, m, s, and u for the Taylor and Chebyshev approximation algorithms.

Fig. 11.

Relative error for 5,000 × 5,000 density matrix with the top-50 eigenvalues decaying linearly using the Taylor and the Chebyshev approximation algorithms with u = λmax.

Fig. 14.

Relative error for 5,000 × 5,000 density matrix with the top-5000 eigenvalues decaying linearly using the Taylor and the Chebyshev approximation algorithms with u = λmax.

We observe that the relative error decreases as k increases. It is worth noting that when k = 3,500 and k = 5,000 the Taylor-polynomial-based algorithm returns a better relative error approximation than the Chebyshev-polynomial-based algorithm. In the latter case we observe that the relative error of the Taylor-based algorithm is almost zero. This observation has a simple explanation. Figure 15 shows the distribution of the eigenvalues in the four cases we examine. We observe that for k = 50 the eigenvalues are spread in the interval ($10^{-2}$, $10^{-4}$); for k = 1,000 the eigenvalues are spread in the interval ($10^{-3}$, $10^{-4}$); while for k = 3,500 or k = 5,000 the eigenvalues are of order $10^{-4}$. It is well known that the Taylor polynomial returns highly accurate approximations when it is computed on values lying inside the open disk centered at a specific value u, which, in our case, is the approximation to the dominant eigenvalue. The radius of the disk is roughly $r = \lambda_{m+1}/\lambda_m$, where m is the degree of the Taylor polynomial. If r ≤ 1 then the Taylor polynomial converges; otherwise it diverges. Figure 16 shows the convergence rate for various values of k. We observe that for k = 50 the polynomial diverges, which leads to increased errors for the Taylor-based approximation algorithm (reported error close to 23%). In all other cases, the convergence rate is close to one, resulting in negligible impact on the overall error.

Fig. 15.

Eigenvalue distribution of 5,000 × 5,000 density matrices with the top-k = {50, 1000, 3500, 5000} eigenvalues decaying linearly and the remaining ones (5,000 − k) following a uniform distribution.

Fig. 16.

Convergence radius of the Taylor polynomial for the 5,000 × 5,000 density matrices with the top-k = {50, 1000, 3500, 5000} eigenvalues decaying linearly and the remaining ones (5,000 − k) following a uniform distribution.

In all four cases, the Chebyshev-polynomial-based algorithm performs better than or similarly to the Taylor-polynomial-based algorithm. It is worth noting that when the majority of the eigenvalues are clustered around the smallest eigenvalue, more than 30 polynomial terms need to be retained in order to achieve a relative error similar to the one observed for the QETLAB random density matrices, which increases the computational time of our algorithms. The increase of the computational time as well as the increased relative error can be justified by the large condition number that these matrices have (recall that for both approximation algorithms the running time depends on the approximate condition number u/l). As an example, for k = 50, the condition number is on the order of hundreds, which is significantly larger than the roughly constant condition number when k = 5,000.

B. Empirical Results for the Hermitian Case

Our last dataset is a random 5,000 × 5,000 complex density matrix generated using the QETLAB Matlab toolbox. We used the function RandomDensityMatrix of QETLAB and the Haar measure. Let m (the number of terms retained in the Taylor series approximation or the degree of the polynomial used in the Chebyshev polynomial approximation) range between five and 30 in increments of five. Let s, the number of random Gaussian vectors used to estimate the trace, take values in {50, 100, 200, 300}. Figures 17 and 18 show the relative error (out of 100%) for all combinations of m, s, and u for the Taylor-based and Chebyshev-based approximation algorithms, respectively.

Fig. 17.

Relative error for 5,000 × 5,000 density matrix using the Taylor approximation algorithm.

Fig. 18.

Relative error for 5,000 × 5,000 density matrix using the Chebyshev approximation algorithm.

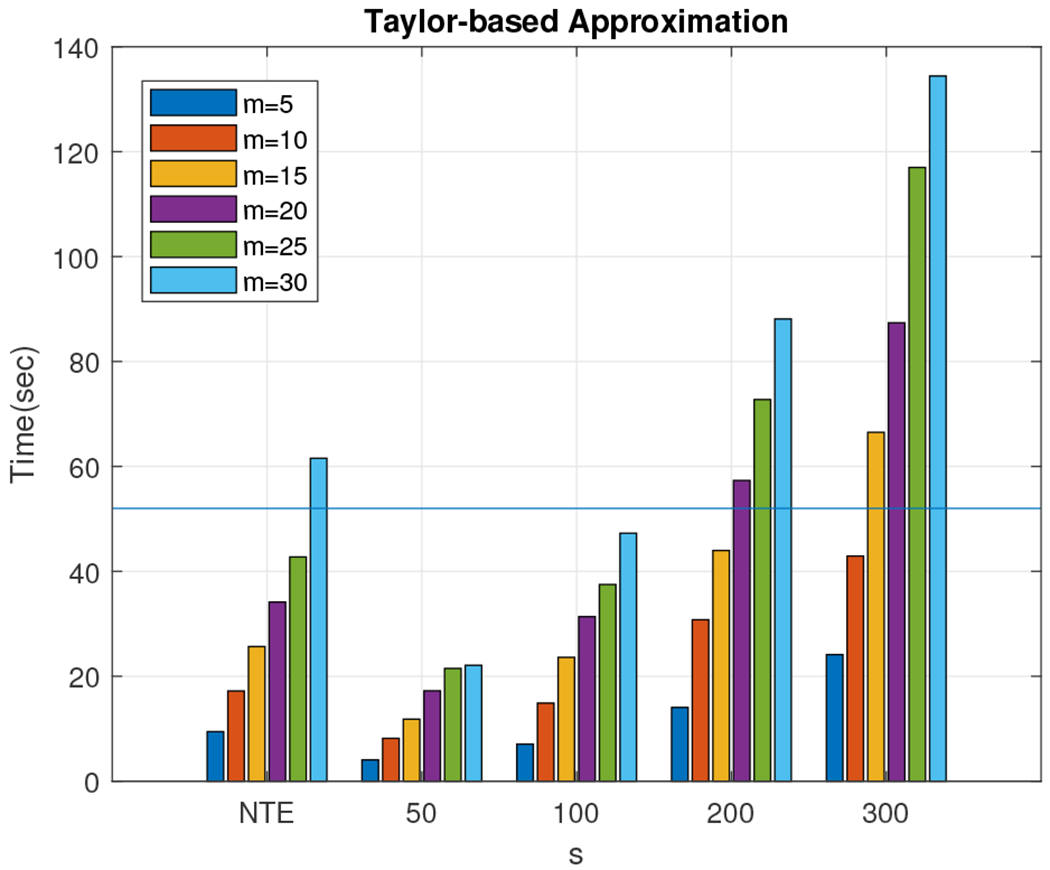

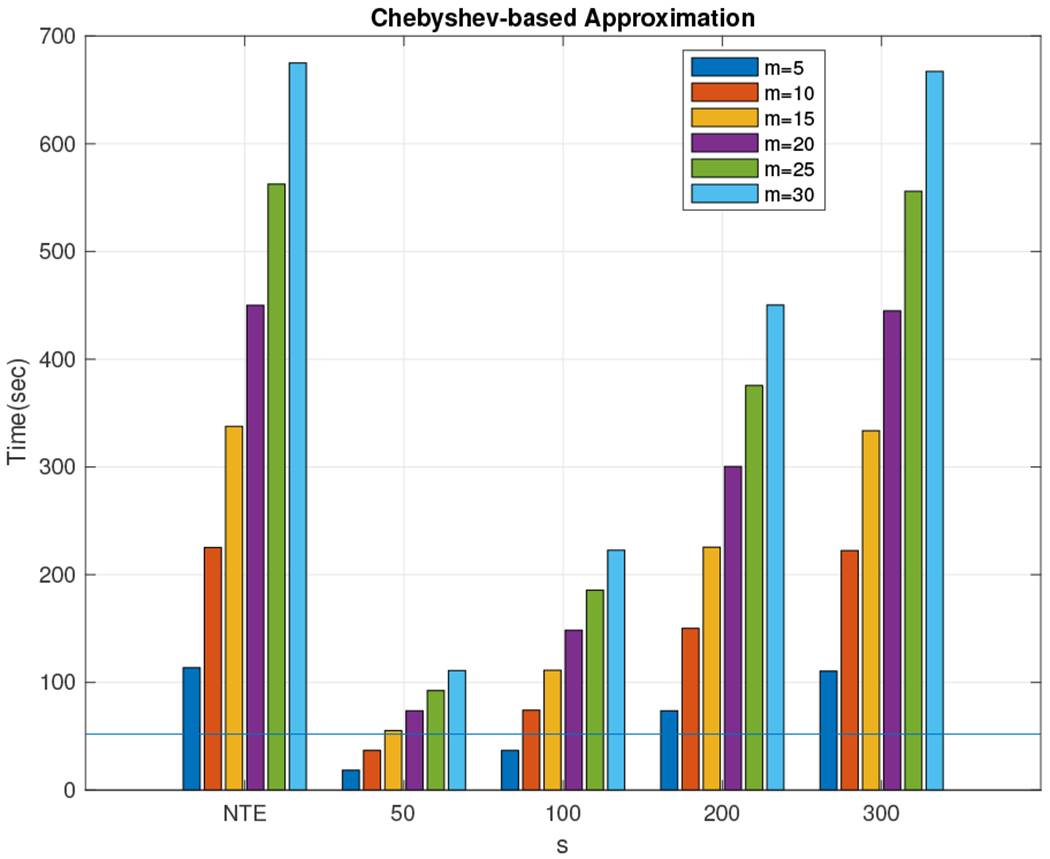

We observe that the relative error is always small, typically below 1%, for any choice of the parameters s and m. The NTE line (no trace estimation) in the plots serves as a lower bound for the relative error. We note that computing the exact von Neumann entropy took approximately 52 seconds for matrices of this size. Finally, our algorithm seems to outperform exact computation of the von Neumann entropy by approximating it in about ten seconds (for the Taylor-based approach) with a relative error of 0.5% using 100 random Gaussian vectors and retaining ten Taylor terms (see Fig. 19) or in about 18 seconds (for the Chebyshev-based approach) with a relative error of 0.2% using 50 random Gaussian vectors and five Chebyshev polynomials (see Fig. 20).

Fig. 19.

Time (in seconds) to run the Taylor-based algorithm for the 5,000 × 5,000 density matrix for all combinations of m and s. Exactly computing the von Neumann entropy took approximately 52 seconds, designated by the straight horizontal line in the figure.

Fig. 20.

Time (in seconds) to run the Chebyshev-based algorithm for the 5,000 × 5,000 density matrix for all combinations of m and s. Exactly computing the von Neumann entropy took approximately 52 seconds, designated by the straight horizontal line in the figure.

C. Empirical results for the random projection approximation algorithms

In order to evaluate our third algorithm, we generated low-rank random density matrices (recall that the algorithm of Section V works only for density matrices of rank k with k ⪡ n). Additionally, in order to evaluate the subsampled randomized Hadamard transform and avoid padding with all-zero rows, we focused on values of n (the number of rows and columns of the density matrix) that are powers of two. Finally, we also evaluated a simpler random projection matrix, namely the Gaussian random matrix, whose entries are all Gaussian random variables with zero mean and unit variance.

We generated low-rank random density matrices with exponentially (using the QETLAB Matlab toolbox) and linearly decaying eigenvalues. The sizes of the density matrices we tested were 4,096 × 4,096 and 16,384 × 16,384. We also generated much larger 30,000 × 30,000 random matrices on which we only experimented with the Gaussian random projection matrix.

We computed all the non-zero singular values of a matrix using the svds function of Matlab in order to take advantage of the fact that the target density matrix has low rank. The accuracy of our proposed approximation algorithms was evaluated by measuring the relative error; wall-clock times were reported in order to quantify the speedup that our approximation algorithms were able to achieve.
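The random projection pipeline evaluated in this subsection can be sketched in NumPy as follows. This is a minimal illustration, not the Matlab code behind the reported numbers: the function names are hypothetical, the test matrix has linearly decaying eigenvalues, and the Gaussian matrix is scaled by 1/√s (an assumption, made so that the singular values of RΠ are on the same scale as the eigenvalues of R).

```python
import numpy as np

def low_rank_density_matrix(n, k, rng):
    """Rank-k density matrix with linearly decaying eigenvalues and unit trace."""
    Q, _ = np.linalg.qr(rng.standard_normal((n, k)))   # n x k orthonormal basis
    p = np.arange(k, 0, -1, dtype=float)
    p /= p.sum()
    return (Q * p) @ Q.T, p

def entropy(p, tol=1e-12):
    """-sum p ln p over the numerically non-zero entries of p."""
    p = p[p > tol]
    return float(-np.sum(p * np.log(p)))

def projected_entropy(R, s, rng):
    """Entropy computed from the singular values of R @ Pi, Pi an n x s Gaussian matrix."""
    n = R.shape[0]
    Pi = rng.standard_normal((n, s)) / np.sqrt(s)      # 1/sqrt(s) scaling is an assumption
    p_tilde = np.linalg.svd(R @ Pi, compute_uv=False)  # approximate eigenvalues of R
    return entropy(p_tilde)

rng = np.random.default_rng(0)
R, p = low_rank_density_matrix(n=512, k=5, rng=rng)
exact = entropy(p)
approx = projected_entropy(R, s=400, rng=rng)
rel_err = abs(exact - approx) / exact
```

The only expensive steps are the product RΠ and the SVD of an n × s matrix, both of which are much cheaper than a full eigendecomposition when s ⪡ n.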

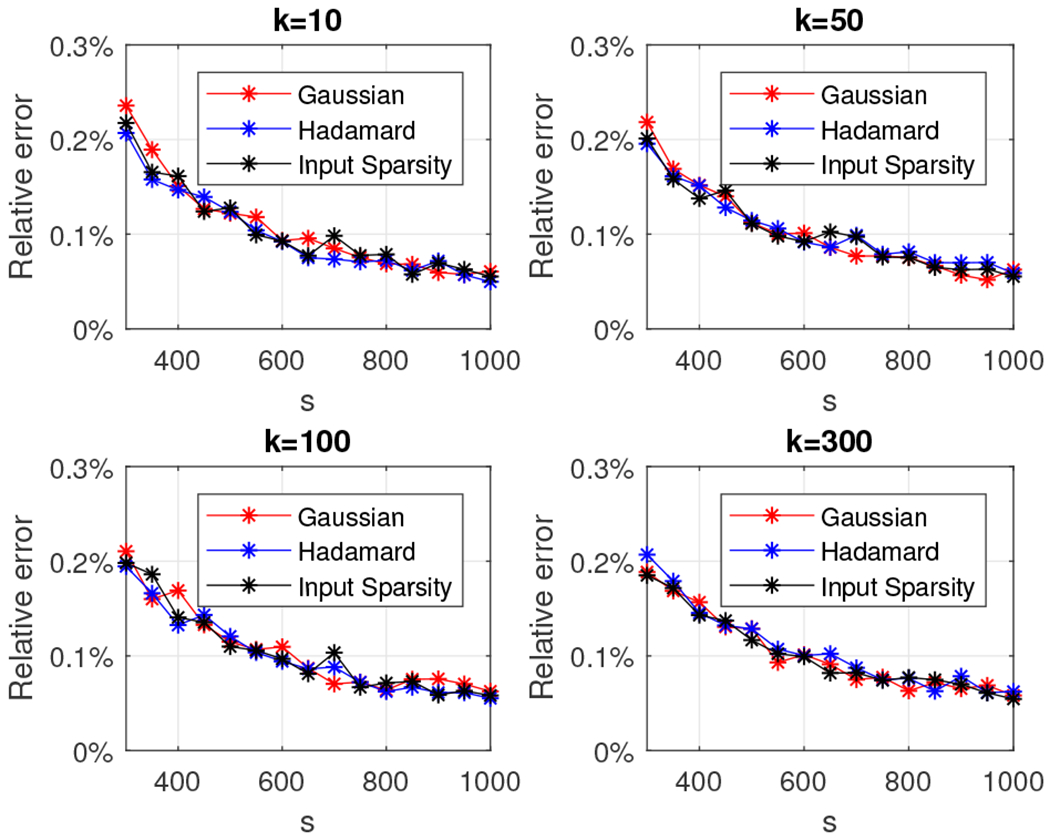

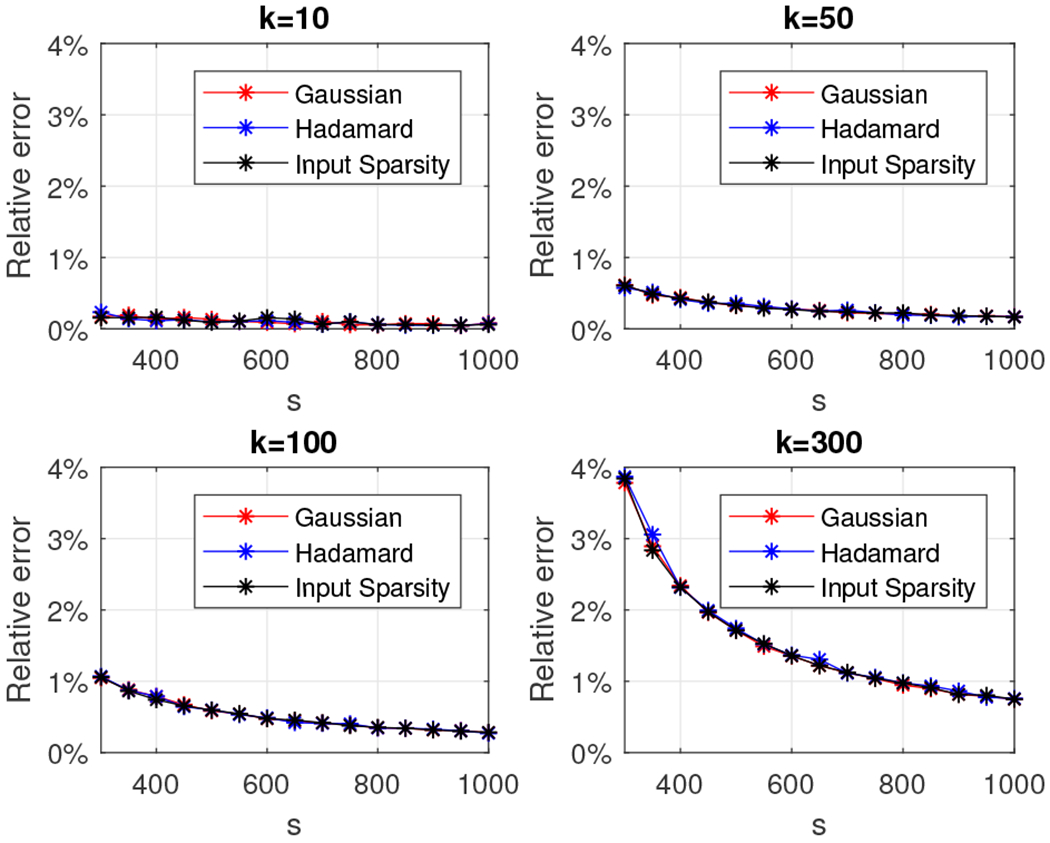

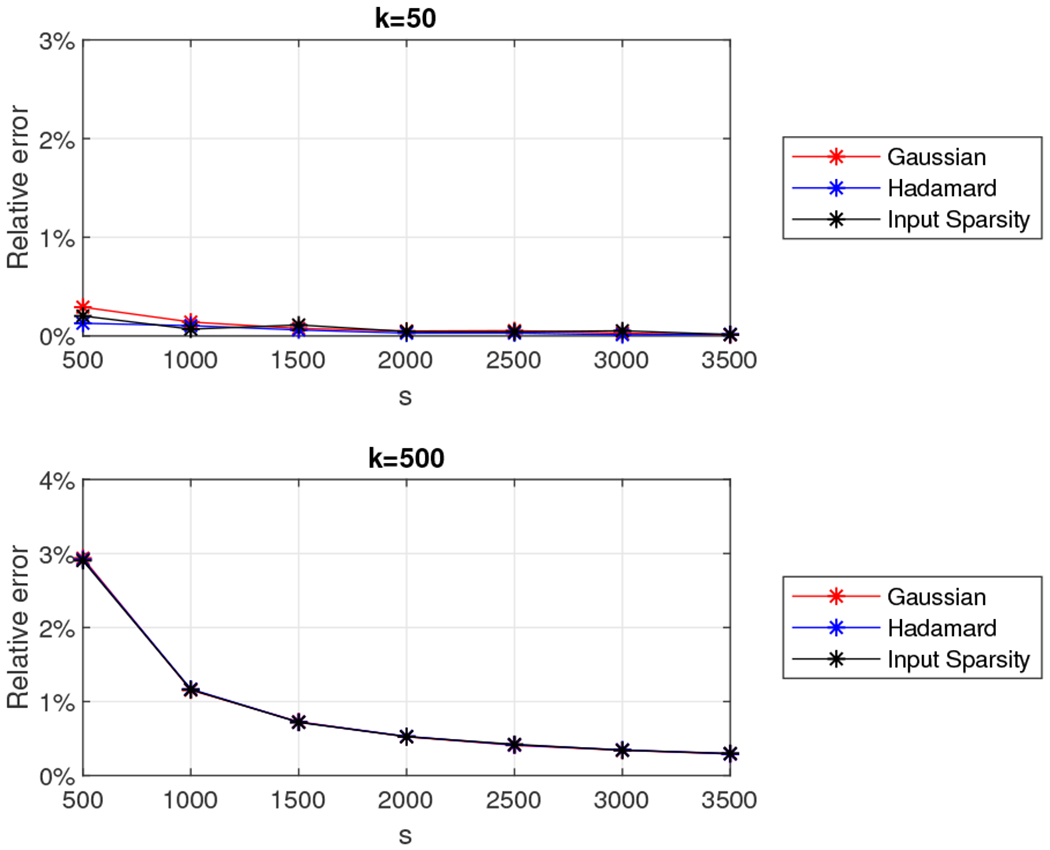

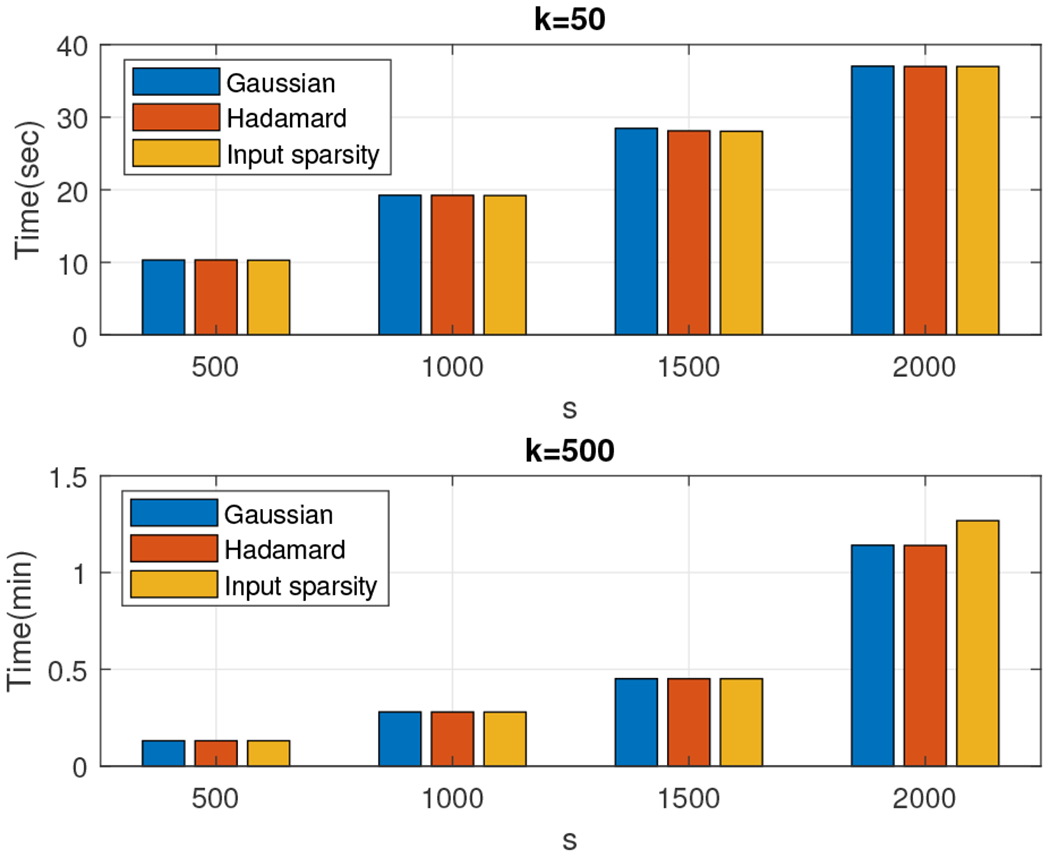

We start by reporting results for Algorithm 5 using the Gaussian, the subsampled randomized Hadamard transform (Algorithm 6), and the input-sparsity transform (Algorithm 7) random projection matrices. Consider the 4,096 × 4,096 low-rank density matrices and let k, the rank of the matrix, be 10, 50, 100, and 300. Let s, the number of columns of the random projection matrix, range from 50 to 1,000 in increments of 50. Figures 21 and 22 depict the relative error (out of 100%) for all combinations of k and s. We also report the wall-clock running times for values of s between 300 and 450 in Figure 23.

Fig. 21.

Relative error for the 4,096 × 4,096 rank-k density matrix with exponentially decaying eigenvalues using Algorithm 5 with the Gaussian (red), the subsampled randomized Hadamard transform (blue), and the input sparsity transform (black) random projection matrices.

Fig. 22.

Relative error for the 4,096 × 4,096 rank-k density matrix with linearly decaying eigenvalues using Algorithm 5 with the Gaussian (red), the subsampled randomized Hadamard transform (blue), and the input sparsity transform (black) random projection matrices.

Fig. 23.

Wall-clock times: Algorithm 5 on 4,096 × 4,096 random matrices, with the Gaussian (blue), the subsampled randomized Hadamard transform (red) and the input sparsity transform (orange) projection matrices. The exact entropy was computed in 1.5 seconds for the rank-10 approximation, in eight seconds for the rank-50 approximation, in 15 seconds for the rank-100 approximation, and in one minute for the rank-300 approximation.

We observe that in the case of the random matrix with exponentially decaying eigenvalues and for all algorithms the relative error is under 0.3% for any choice of the parameters k and s and, as expected, decreases as the dimension of the projection space s grows larger. Interestingly, all three random projection matrices returned essentially identical accuracies and very comparable wall-clock running time results. This observation is due to the fact that for all choices of k, after scaling the matrix to unit trace, the only eigenvalues that were numerically non-zero were the 10 dominant ones.

In the case of the random matrix with linearly decaying eigenvalues (and for all algorithms) the relative error increases as the rank of the matrix increases and decreases as the size of the random projection matrix increases. This is expected: as the rank of the matrix increases, a larger random projection space is needed to capture the “energy” of the matrix. Indeed, we observe that for all values of k, setting s = 1,000 guarantees a relative error under 1%. Similarly, for k = 10, the relative error is under 0.3% for any choice of s.

The running time depends not only on the size of the matrix, but also on its rank, e.g. for k = 100 and s = 450, our approximation was computed in about 2.5 seconds, whereas for k = 300 and s = 450, it was computed in less than one second. Considering, for example, the case of k = 300 exponentially decaying eigenvalues, we observe that for s = 400 we achieve relative error below 0.15% and a speedup of over 60 times compared to the exact computation. Finally, it is observed that all three algorithms returned very comparable wall-clock running time results. This observation could be due to the fact that matrix multiplication is heavily optimized in Matlab and therefore the theoretical advantages of the Hadamard transform did not manifest themselves in practice.

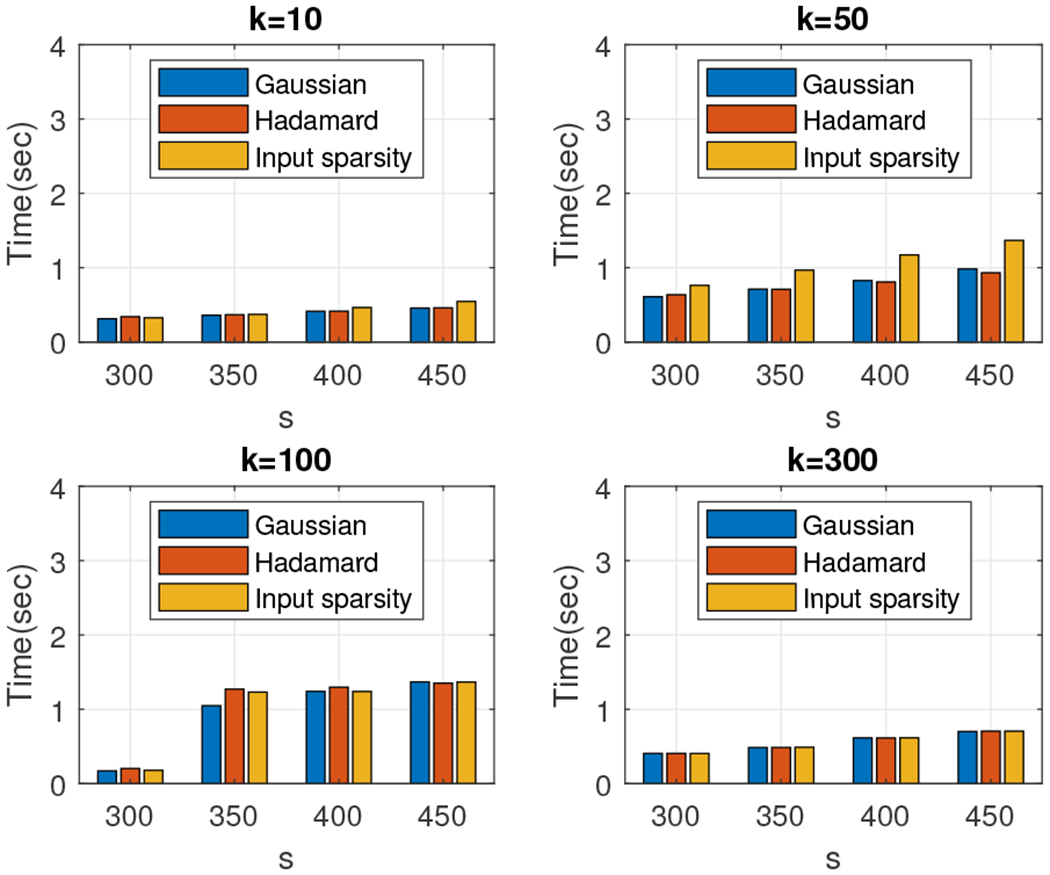

The second dataset we experimented with was a 16,384 × 16,384 low rank density matrix. We set k = 50 and k = 500 and we let s take values in the set {500, 1000, 1500, …, 3000, 3500}. We report the relative error (out of 100%) for all combinations of k and s in Figure 24 for the matrix with exponentially decaying eigenvalues and in Figure 25 for the matrix with linearly decaying eigenvalues. We also report the wall-clock running times for s between 500 and 2,000 in Figure 26. We observe that the relative error is typically around 1% for both types of matrices, with running times ranging between ten seconds and four minutes, significantly outperforming the exact entropy computation which took approximately 3.6 minutes for the rank 50 approximation and 20 minutes for the rank 500 approximation.

Fig. 24.

Relative error for the 16,384 × 16,384 rank-k density matrix with exponentially decaying eigenvalues using Algorithm 5 with the Gaussian (red), the subsampled randomized Hadamard transform (blue), and the input sparsity transform (black) random projection matrices.

Fig. 25.

Relative error for the 16,384 × 16,384 rank-k density matrix with linearly decaying eigenvalues using Algorithm 5 with the Gaussian (red), the subsampled randomized Hadamard transform (blue), and the input sparsity transform (black) random projection matrices.

Fig. 26.

Wall-clock times: Algorithm 5 with the Gaussian (blue), the subsampled randomized Hadamard transform (red) and the input sparsity transform (orange) projection matrices. The exact entropy was computed in 1.6 minutes for the rank 50 approximation and in 20 minutes for the rank 500 approximation.

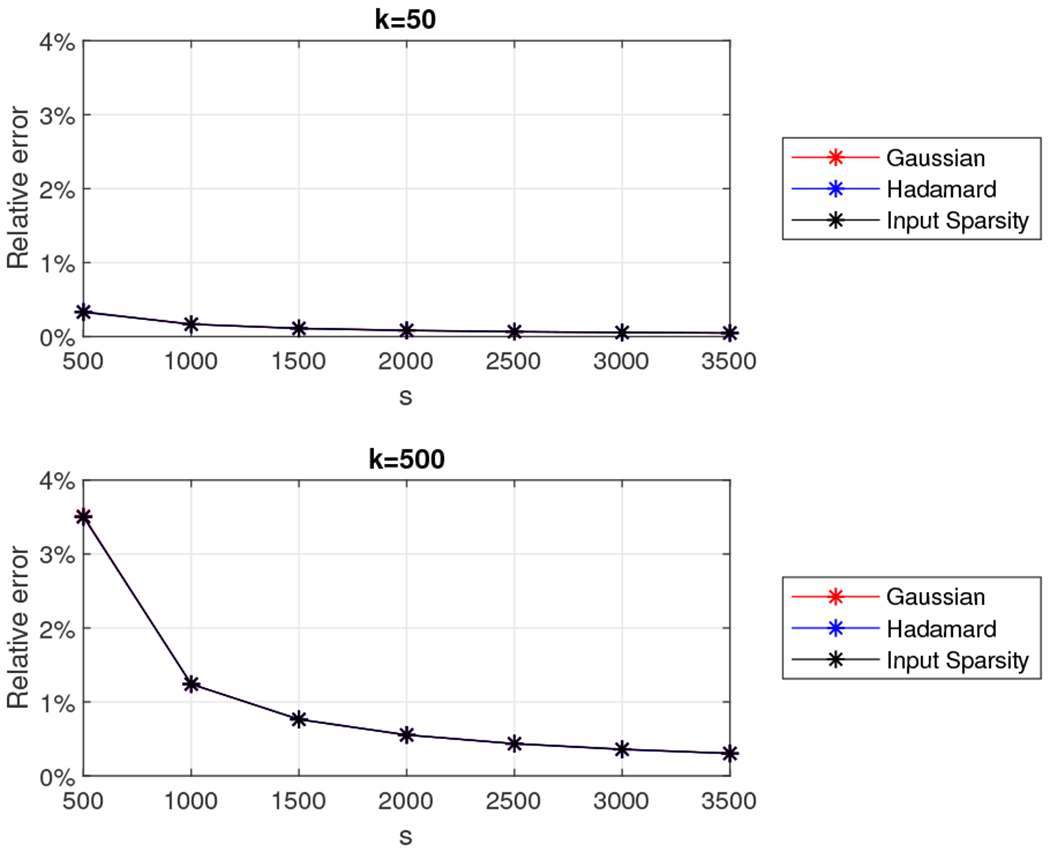

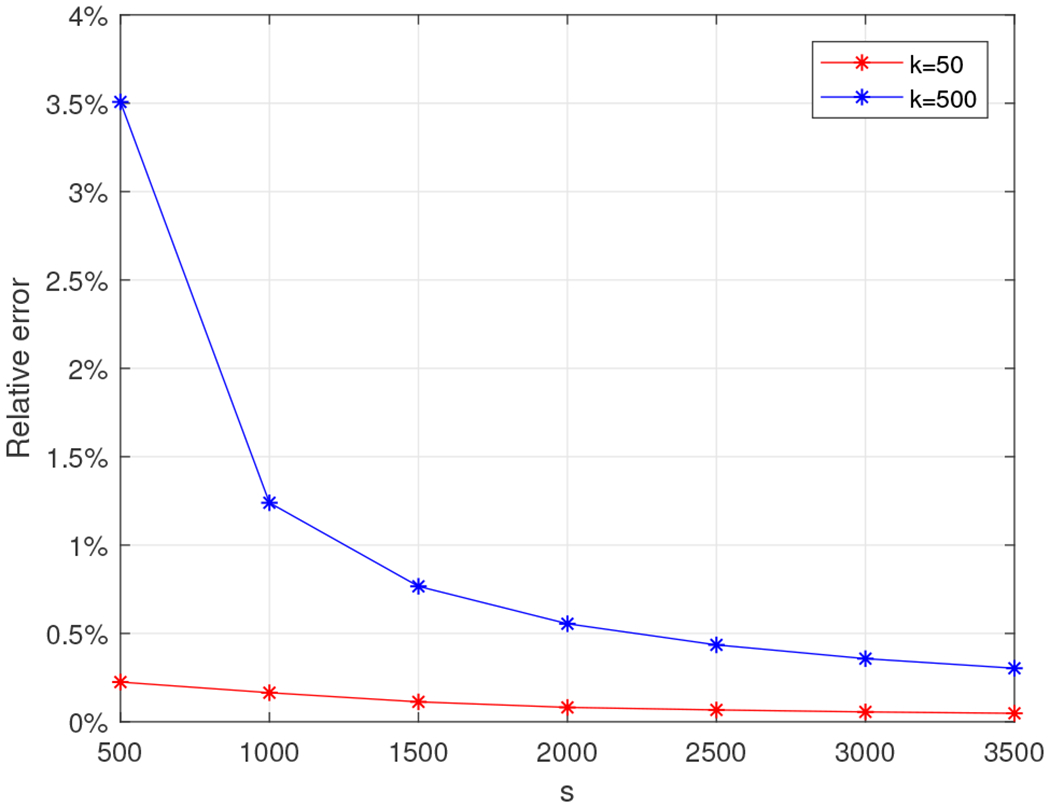

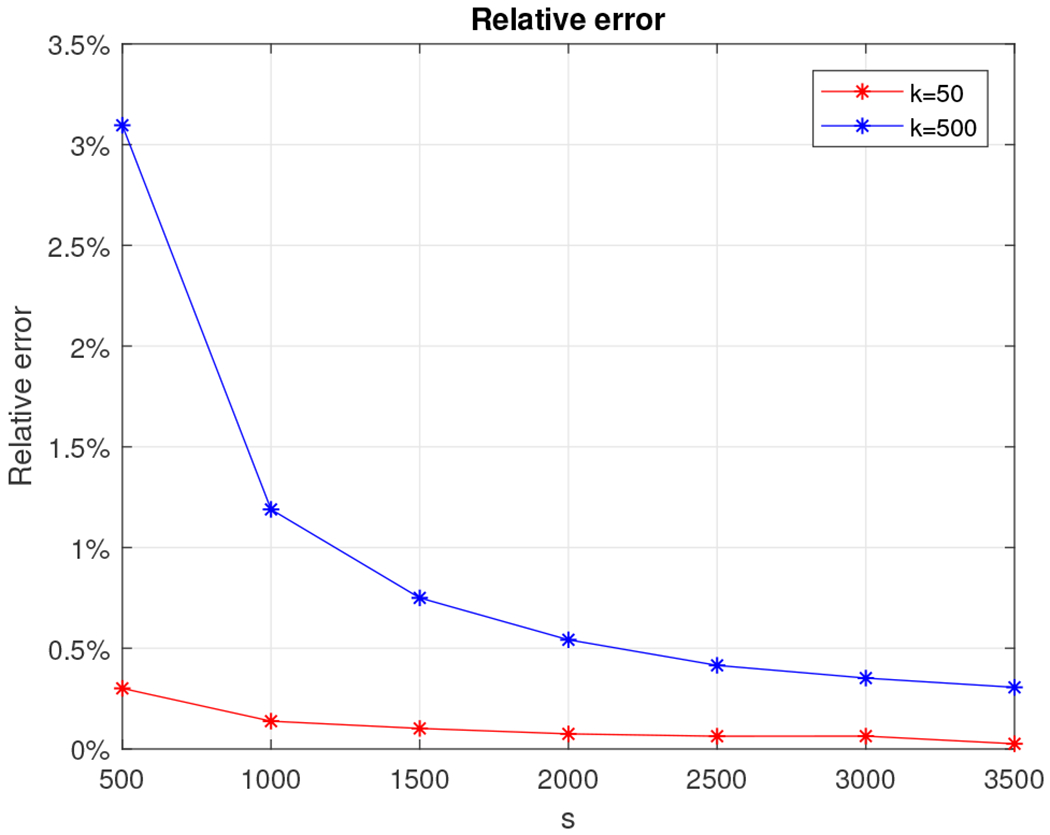

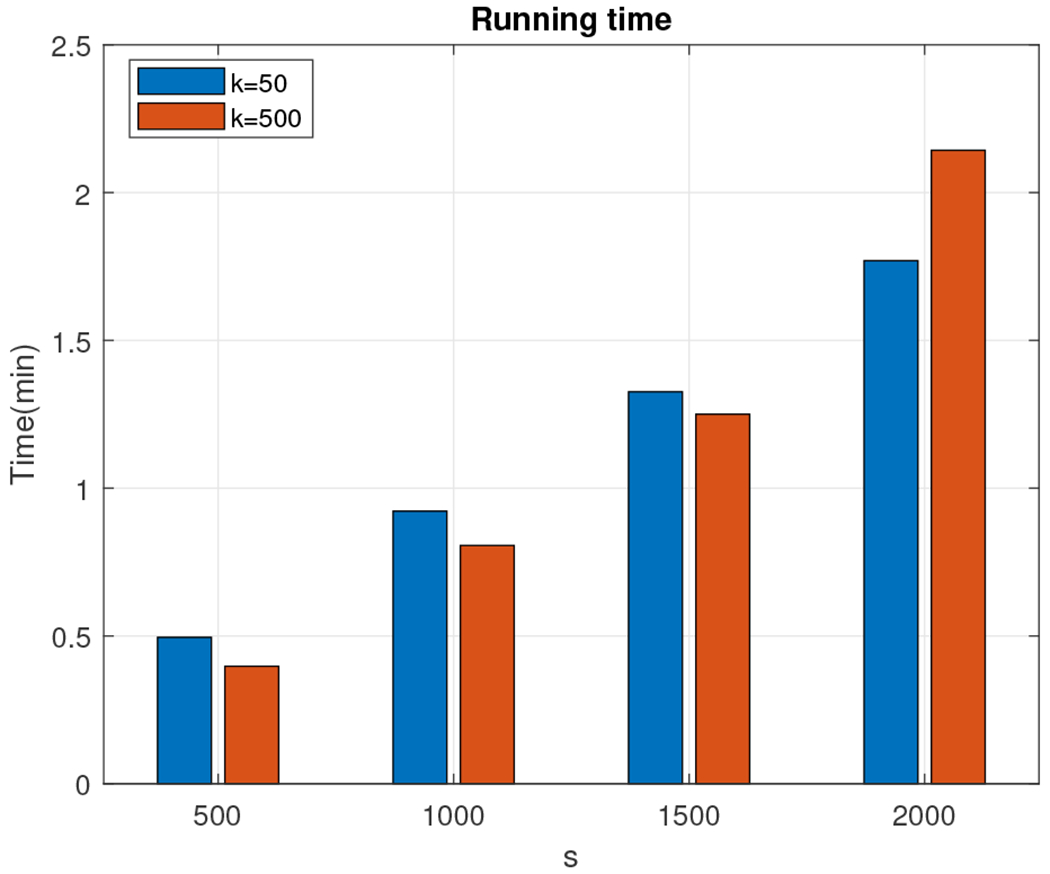

The last dataset we experimented with was a 30,000 × 30,000 low-rank density matrix on which we ran Algorithm 5 using a Gaussian random projection matrix. We set k = 50 and k = 500 and we let s take values in the set {500, 1000, 1500, …, 3000, 3500}. We report the relative error (out of 100%) for all combinations of k and s in Figure 27 for the matrix with exponentially decaying eigenvalues and in Figure 28 for the matrix with linearly decaying eigenvalues. We also report the wall-clock running times for s ranging between 500 and 2,000 in Figure 29. We observe that the relative error is typically around 1% for both types of matrices, with the running times ranging between 30 seconds and two minutes, outperforming the exact entropy computation, which took six minutes for the rank-50 approximation and one hour for the rank-500 approximation.

Fig. 27.

Relative error for the 30,000 × 30,000 rank-k density matrix with exponentially decaying eigenvalues using Algorithm 5 with the Gaussian random projection matrix for k = 50 (red) and for k = 500 (blue).

Fig. 28.

Relative error for the 30,000 × 30,000 rank-k density matrix with linearly decaying eigenvalues using Algorithm 5 with the Gaussian random projection matrix for k = 50 (red) and for k = 500 (blue).

Fig. 29.

Wall-clock times: rank-50 approximation (blue) and rank-500 approximation (red). Exact computation needed about six minutes and one hour respectively.

VII. Conclusions and open problems

We presented and analyzed three randomized algorithms to approximate the von Neumann entropy of density matrices. Our algorithms leverage recent developments in the RandNLA literature: randomized trace estimators, provable bounds for the power method, the use of random projections to approximate the singular values of a matrix, etc. All three algorithms come with provable accuracy guarantees under assumptions on the spectrum of the density matrix. Empirical evaluations on 30,000 × 30,000 synthetic density matrices support our theoretical findings and demonstrate that we can efficiently approximate the von Neumann entropy in a few minutes with minimal loss in accuracy, whereas the exact computation takes over 5.5 hours.

An interesting open problem would be to consider the estimation of the cross entropy. The cross entropy is a measure between two probability distributions and is particularly important in information theory. Algebraically, it can be defined as $-\mathrm{Tr}(S \log R)$, where $R$ and $S$ are density matrices with a full set of pure states. One can further extend our polynomial-based approaches using the Taylor expansion or the Chebyshev polynomials to approximate the matrix Γ = S log R. The case where both or one of the density matrices have an incomplete set of pure states is an open problem: if R is low-rank, then our first two approaches would not work for the reasons discussed in Section V. However, if the only low-rank matrix is S, then our first two approaches would still work: S only appears in the trace estimation part, and having eigenvalues equal to zero does not affect the positive semi-definiteness of Γ. When R is of low rank, one might be able to use our random projection approaches to reduce its dimensionality and/or the dimensionality of S.

The most important open problem is to relax (or eliminate) the assumptions associated with our three key technical results without sacrificing our running time guarantees. It would be critical to understand whether our assumptions are, for example, necessary to achieve relative error approximations and either provide algorithmic results that relax or eliminate our assumptions or provide matching lower bounds and counterexamples.

Fig. 3.

Time (in seconds) to run the approximate algorithms for the 5,000 × 5,000 density matrix for m = 5. Exactly computing the von Neumann entropy took approximately 90 seconds.

Fig. 12.

Relative error for 5,000 × 5,000 density matrix with the top-1000 eigenvalues decaying linearly using the Taylor and the Chebyshev approximation algorithms with u = λmax.

Fig. 13.

Relative error for 5,000 × 5,000 density matrix with the top-3500 eigenvalues decaying linearly using the Taylor and the Chebyshev approximation algorithms with u = λmax.

Acknowledgment

The authors would like to thank the editor for numerous useful suggestions that significantly improved the presentation of our work, especially in the Hermitian case.

PD and EK were supported by NSF IIS-1319280 and IIS-1661760. WS and AG were supported by the NSF Center for Science of Information (CSoI) Grant CCF-0939370 and by NSF CCF-1524312 and NIH 1U01CA198941-01.

Biographies

Eugenia-Maria Kontopoulou is a Ph.D. candidate with the Computer Science Department at Purdue University. She earned her B. Eng. and M. Eng. from the Computer Science and Informatics Department of University of Patras Greece in 2012. Her current interests lie in the areas of (Randomized) Numerical Linear Algebra with a focus on designing and implementing randomized algorithms for the solution of linear algebraic problems in large-scale data applications.

Gregory Dexter is a current senior at Purdue University majoring in honors statistics and mathematics. He is broadly interested in artificial intelligence and hopes to pursue a PhD focused in this area.

Wojciech Szpankowski is Saul Rosen Distinguished Professor of Computer Science at Purdue University where he teaches and conducts research in analysis of algorithms, information theory, analytic combinatorics, data science, random structures, and stability problems of distributed systems. He held several Visiting Professor/Scholar positions, including McGill University, INRIA, France, Stanford, Hewlett-Packard Labs, Universite de Versailles, University of Canterbury, New Zealand, Ecole Polytechnique, France, the Newton Institute, Cambridge, UK, ETH, Zurich, and Gdansk University of Technology, Poland. He is a Fellow of IEEE, and the Erskine Fellow. In 2010 he received the Humboldt Research Award, in 2015 the Inaugural Arden L. Bement Jr. Award, and in 2020 he was the recipient of the Flajolet Lecture Prize. He published two books: “Average Case Analysis of Algorithms on Sequences”, John Wiley & Sons, 2001, and “Analytic Pattern Matching: From DNA to Twitter”, Cambridge, 2015. In 2008 he launched the interdisciplinary Institute for Science of Information, and in 2010 he became the Director of the newly established NSF Science and Technology Center for Science of Information.

Ananth Grama is the Samuel Conte Professor of Computer Science at Purdue University. His research interests include parallel and distributed computing, large-scale data analytics, and applications in life sciences. Grama received a Ph.D. in computer science from the University of Minnesota. He is a recipient of the National Science Foundation CAREER award and the Purdue University Faculty Scholar Award. Grama is a Fellow of the American Association for the Advancement of Sciences and a Distinguished Alumnus of the University of Minnesota. He chaired the Bio-data Management and Analysis (BDMA) Study Section of the National Institutes of Health from 2012 to 2014. Contact him at ayg@purdue.edu.

Petros Drineas is a Professor at the Computer Science Department of Purdue University. He earned a PhD in Computer Science from Yale University in 2003 and a BS in Computer Engineering and Informatics from the University of Patras, Greece, in 1997. From 2003 until 2016, Prof. Drineas was an Assistant (until 2009) and then an Associate Professor at Rensselaer Polytechnic Institute. His research interests lie in the design and analysis of randomized algorithms for linear algebraic problems, as well as their applications to the analysis of modern, massive datasets, with a particular emphasis on the analysis of population genetics data.

Appendix A. The power method

We consider the well-known power method to estimate the largest eigenvalue of a matrix. In our context, we will use the power method to estimate the largest probability $p_1$ (the largest eigenvalue) of a density matrix R.

Algorithm 8 requires $O(qt(n + \mathrm{nnz}(A)))$ arithmetic operations to compute $\tilde{p}_1$. The following lemma appeared in [4], building upon [13].

Lemma 14. Let $\tilde{p}_1$ be the output of Algorithm 8 with q = ⌈4.82 log(1/δ)⌉ and t = ⌈log √(4n)⌉. Then, with probability at least 1 − δ,

| $\tfrac{1}{6}\,p_1 \le \tilde{p}_1 \le p_1.$ |

Algorithm 8.

Power method repeated q times.

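| • INPUT: SPD matrix $A \in \mathbb{R}^{n \times n}$, integers q, t > 0. |

| • For j = 1, …, q |

| 1) Pick uniformly at random a vector $x_0^j \in \{+1, -1\}^n$. |

| 2) For i = 1, …, t |

| – $x_i^j = A\,x_{i-1}^j$. |

| 3) Compute: $\tilde{p}_1^j = \dfrac{(x_t^j)^\top A\,x_t^j}{(x_t^j)^\top x_t^j}$. |

| • OUTPUT: $\tilde{p}_1 = \max_{j=1,\dots,q} \tilde{p}_1^j$. |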

The running time of Algorithm 8 is $O((n + \mathrm{nnz}(A)) \log(n) \log(1/\delta))$.
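As an illustration, the repeated power method can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the helper names `matvec`, `dot`, and `power_method` are hypothetical, the starting vectors are assumed to have independent random ±1 entries, and each iterate is rescaled to unit length (which leaves the Rayleigh quotient unchanged) to avoid underflow on matrices with small eigenvalues. Plain lists keep the example dependency-free; a numerical library would be the natural choice in practice.

```python
import math
import random


def matvec(A, x):
    """Dense matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def dot(x, y):
    """Inner product of two equally long vectors."""
    return sum(a * b for a, b in zip(x, y))


def power_method(A, q, t, rng=None):
    """Estimate the largest eigenvalue of a symmetric PSD matrix A.

    Runs q independent power iterations of t steps each and returns the
    largest Rayleigh quotient observed.
    """
    rng = rng or random.Random()
    n = len(A)
    best = 0.0  # Rayleigh quotients of a PSD matrix are non-negative
    for _ in range(q):
        # Assumption of this sketch: random sign vector as the start.
        x = [rng.choice((-1.0, 1.0)) for _ in range(n)]
        for _ in range(t):
            x = matvec(A, x)
            norm = math.sqrt(dot(x, x))
            if norm == 0.0:
                break  # x fell into the null space of A
            # Rescaling does not change the Rayleigh quotient.
            x = [xi / norm for xi in x]
        xx = dot(x, x)
        if xx > 0.0:
            best = max(best, dot(x, matvec(A, x)) / xx)
    return best


# Hypothetical 3 x 3 density matrix (symmetric PSD, unit trace).
R = [[0.5, 0.1, 0.0],
     [0.1, 0.3, 0.0],
     [0.0, 0.0, 0.2]]
delta = 0.05
q = math.ceil(4.82 * math.log(1.0 / delta))  # natural log assumed here
estimate = power_method(R, q=q, t=20, rng=random.Random(0))
print(estimate)
```

Up to floating-point rounding, the returned value never exceeds the largest eigenvalue, because every Rayleigh quotient of a symmetric matrix is bounded above by it; repeating the iteration q times only guards against an unlucky starting vector.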

Appendix B. The Clenshaw Algorithm

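We briefly sketch Clenshaw’s algorithm to evaluate Chebyshev polynomials with matrix inputs. Clenshaw’s algorithm is a recursive approach with base cases $b_{m+2}(x) = b_{m+1}(x) = 0$ and the recursive step (for k = m, m − 1, …, 0):

| $b_k(x) = \alpha_k + 2x\,b_{k+1}(x) - b_{k+2}(x).$ | (23) |

(See Section III for the definition of $\alpha_k$.) Then,

| $f_m(x) = \tfrac{1}{2}\left(\alpha_0 + b_0(x) - b_2(x)\right).$ | (24) |

Using the mapping $x \to 2(x/u) - 1$, eqn. (23) becomes

| $b_k(x) = \alpha_k + 2\left(\tfrac{2}{u}x - 1\right) b_{k+1}(x) - b_{k+2}(x).$ | (25) |

In the matrix case, we substitute x by a matrix. Therefore, the base cases are $B_{m+2}(R) = B_{m+1}(R) = 0$ and the recursive step is

| $B_k(R) = \alpha_k I_n + 2\left(\tfrac{2}{u}R - I_n\right) B_{k+1}(R) - B_{k+2}(R)$ | (26) |

for k = m, m − 1, …, 0. The final sum is

| $f_m(R) = \tfrac{1}{2}\left(\alpha_0 I_n + B_0(R) - B_2(R)\right).$ | (27) |

Using the matrix version of Clenshaw’s algorithm, we can now rewrite the trace estimation $g^\top f_m(R)\,g$ as follows. First, we right-multiply eqn. (26) by g,

| $y_k = \alpha_k g + 2\left(\tfrac{2}{u}R - I_n\right) y_{k+1} - y_{k+2}.$ | (28) |

Eqn. (28) follows by substituting $y_i = B_i(R)\,g$. Multiplying the base cases by g, we get $y_{m+2} = y_{m+1} = 0$ and the final sum becomes

| $g^\top f_m(R)\,g = \tfrac{1}{2}\left(\alpha_0\, g^\top g + g^\top (y_0 - y_2)\right).$ | (29) |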

Algorithm 9 summarizes all the above.

Algorithm 9.

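Clenshaw’s algorithm to compute $g^\top f_m(R)\,g$.

| 1: | INPUT: $\alpha_i$, i = 0, …, m, $R \in \mathbb{R}^{n \times n}$, $g \in \mathbb{R}^{n}$ |

| 2: | Set $y_{m+2} = y_{m+1} = 0$ |

| 3: | for k = m, m − 1, …, 0 do |

| 4: | $y_k = \alpha_k g + \tfrac{4}{u} R\,y_{k+1} - 2\,y_{k+1} - y_{k+2}$ |

| 5: | end for |

| 6: | OUTPUT: $g^\top f_m(R)\,g = \tfrac{1}{2}\left(\alpha_0\, g^\top g + g^\top (y_0 - y_2)\right)$ |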
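The vector form of Clenshaw’s recurrence can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions rather than the authors' code: it assumes the standard Clenshaw recurrence for a Chebyshev sum $\sum_{k=0}^{m} \alpha_k T_k(\cdot)$ evaluated at the shifted matrix $(2/u)R - I_n$, the function names are hypothetical, and the coefficients in the usage example are arbitrary placeholders, not the Chebyshev coefficients of the entropy function. Only matrix-vector products with R are needed, so no matrix polynomial is ever formed explicitly.

```python
def matvec(A, x):
    """Dense matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def dot(x, y):
    """Inner product of two equally long vectors."""
    return sum(a * b for a, b in zip(x, y))


def clenshaw_quadratic_form(alpha, R, g, u=1.0):
    """Return g^T f_m(R) g for f_m = sum_k alpha[k] * T_k((2/u) R - I).

    alpha : coefficients alpha_0, ..., alpha_m
    R     : symmetric matrix as a list of rows
    g     : vector of length n
    u     : upper bound on the eigenvalues of R used in the shift
    """
    m = len(alpha) - 1
    n = len(g)
    zero = [0.0] * n
    # y[m+1] and y[m+2] are the zero base cases; entries are replaced,
    # never mutated in place, so sharing `zero` is safe.
    y = [zero] * (m + 3)
    for k in range(m, -1, -1):
        Ry = matvec(R, y[k + 1])
        # y_k = alpha_k g + 2((2/u) R - I) y_{k+1} - y_{k+2}
        y[k] = [alpha[k] * g[i] + (4.0 / u) * Ry[i]
                - 2.0 * y[k + 1][i] - y[k + 2][i]
                for i in range(n)]
    return 0.5 * (alpha[0] * dot(g, g) + dot(g, y[0]) - dot(g, y[2]))


# Hypothetical inputs: a diagonal density matrix and placeholder coefficients.
R = [[0.5, 0.0, 0.0],
     [0.0, 0.3, 0.0],
     [0.0, 0.0, 0.2]]
g = [1.0, 1.0, 1.0]
alpha = [1.0, 2.0, 3.0]
print(clenshaw_quadratic_form(alpha, R, g, u=1.0))
```

The sketch stores every $y_k$ for readability; since the recurrence only looks two steps back, keeping three vectors at a time would reduce the memory footprint to O(n).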

Footnotes

This paper was presented in part at the 2018 IEEE International Symposium on Information Theory.

Originally published in German in 1932; published in English under the title Mathematical Foundations of Quantum Mechanics in 1955

R is symmetric positive semidefinite and thus all its eigenvalues are non-negative. If pi is equal to zero we set pi ln pi to zero as well.

Indeed, for any two matrices A and B, tr(AB) ≤ ∑i σi(A)σi(B), where σi(A) (respectively σi(B)) denotes the i-th singular value of A (respectively B). Let ‖·‖2 denote the induced 2-norm (spectral norm) of a matrix; then ‖A‖2 = σ1(A), its largest singular value. Since each singular value of A is upper bounded by σ1(A), we obtain tr(AB) ≤ ‖A‖2 ∑i σi(B); if B is symmetric positive semidefinite, then ∑i σi(B) = tr(B).

This can be proven using an argument similar to the one used to prove eqn. (7).

Recall that the von Neumann entropy of R ranges between zero and ln k.

This follows from the fact that A is a symmetric positive definite matrix and the inequality 0 ≤ ‖E‖2 < λmin(A).

References

- [1] Golub GH and Van Loan CF, Matrix Computations (3rd Ed.). Baltimore, MD, USA: Johns Hopkins University Press, 1996.

- [2] Wihler TP, Bessire B, and Stefanov A, “Computing the Entropy of a Large Matrix,” Journal of Physics A: Mathematical and Theoretical, vol. 47, no. 24, p. 245201, 2014.

- [3] Higham NJ, Functions of Matrices: Theory and Computation. Philadelphia, PA, USA: Society for Industrial and Applied Mathematics, 2008.

- [4] Boutsidis C, Drineas P, Kambadur P, Kontopoulou E-M, and Zouzias A, “A Randomized Algorithm for Approximating the Log Determinant of a Symmetric Positive Definite Matrix,” Linear Algebra and its Applications, vol. 533, pp. 95–117, 2017.

- [5] Avron H and Toledo S, “Randomized Algorithms for Estimating the Trace of an Implicit Symmetric Positive Semi-definite Matrix,” Journal of the ACM, vol. 58, no. 2, p. 8, 2011.

- [6] Drineas P and Mahoney MW, “RandNLA: Randomized Numerical Linear Algebra,” Communications of the ACM, vol. 59, no. 6, pp. 80–90, 2016.

- [7] Woodruff DP, “Sketching as a Tool for Numerical Linear Algebra,” Foundations and Trends in Theoretical Computer Science, vol. 10, no. 1-2, pp. 1–157, 2014.

- [8] Demmel J and Veselic K, “Jacobi’s Method is more Accurate than QR,” SIAM Journal on Matrix Analysis and Applications, vol. 13, no. 4, pp. 1204–1245, 1992.

- [9] Johnston N, “QETLAB: A Matlab toolbox for quantum entanglement, version 0.9,” http://qetlab.com, 2016.

- [10] Musco C, Netrapalli P, Sidford A, Ubaru S, and Woodruff DP, “Spectrum Approximation Beyond Fast Matrix Multiplication: Algorithms and Hardness,” 2018. [Online]. Available: http://arxiv.org/abs/1704.04163

- [11] Harvey NJ, Nelson J, and Onak K, “Sketching and streaming entropy via approximation theory,” in IEEE Annual Symposium on Foundations of Computer Science, 2008, pp. 489–498.

- [12] Han I, Malioutov D, and Shin J, “Large-scale Log-determinant Computation through Stochastic Chebyshev Expansions,” Proceedings of the 32nd International Conference on Machine Learning, vol. 37, pp. 908–917, 2015.

- [13] Trevisan L, “Graph Partitioning and Expanders,” 2011, handout 7.

- [14] Ailon N and Chazelle B, “The Fast Johnson–Lindenstrauss Transform and Approximate Nearest Neighbors,” SIAM Journal on Computing, vol. 39, no. 1, pp. 302–322, 2009.

- [15] Drineas P, Mahoney MW, Muthukrishnan S, and Sarlós T, “Faster Least Squares Approximation,” Numerische Mathematik, vol. 117, pp. 219–249, 2011.

- [16] Tropp JA, “Improved Analysis of the Subsampled Randomized Hadamard Transform,” Advances in Adaptive Data Analysis, vol. 03, no. 01, p. 8, 2010.

- [17] Paul S, Boutsidis C, Magdon-Ismail M, and Drineas P, “Random projections and support vector machines,” in Proceedings of the 16th International Conference on Artificial Intelligence and Statistics, 2013.

- [18] Clarkson KL and Woodruff DP, “Low Rank Approximation and Regression in Input Sparsity Time,” in Proceedings of the 45th annual ACM Symposium on Theory of Computing. ACM Press, 2013, pp. 81–90.

- [19] Meng X and Mahoney MW, “Low-distortion Subspace Embeddings in Input-sparsity Time and Applications to Robust Linear Regression,” in Proceedings of the 45th annual ACM Symposium on Theory of Computing, 2013, pp. 91–100.

- [20] Nelson J and Nguyễn HL, “OSNAP: Faster Numerical Linear Algebra Algorithms via Sparser Subspace Embeddings,” in Proceedings of the 46th annual IEEE Symposium on Foundations of Computer Science, 2013.