Abstract

Motivated by the structure reconstruction problem in single-particle cryo-electron microscopy, we consider the multi-target detection model, where multiple copies of a target signal occur at unknown locations in a long measurement, further corrupted by additive Gaussian noise. At low noise levels, one can easily detect the signal occurrences and estimate the signal by averaging. However, in the presence of high noise, which is the focus of this paper, detection is impossible. Here, we propose two approaches—autocorrelation analysis and an approximate expectation maximization algorithm—to reconstruct the signal without the need to detect signal occurrences in the measurement. In particular, our methods apply to an arbitrary spacing distribution of signal occurrences. We demonstrate reconstructions with synthetic data and empirically show that the sample complexity of both methods scales as SNR−3 in the low SNR regime.

Keywords: autocorrelation analysis, expectation maximization, frequency marching, cryo-EM, blind deconvolution

I. Introduction

We consider the multi-target detection (MTD) problem [10] to estimate a signal from a long, noisy measurement

| (1) |

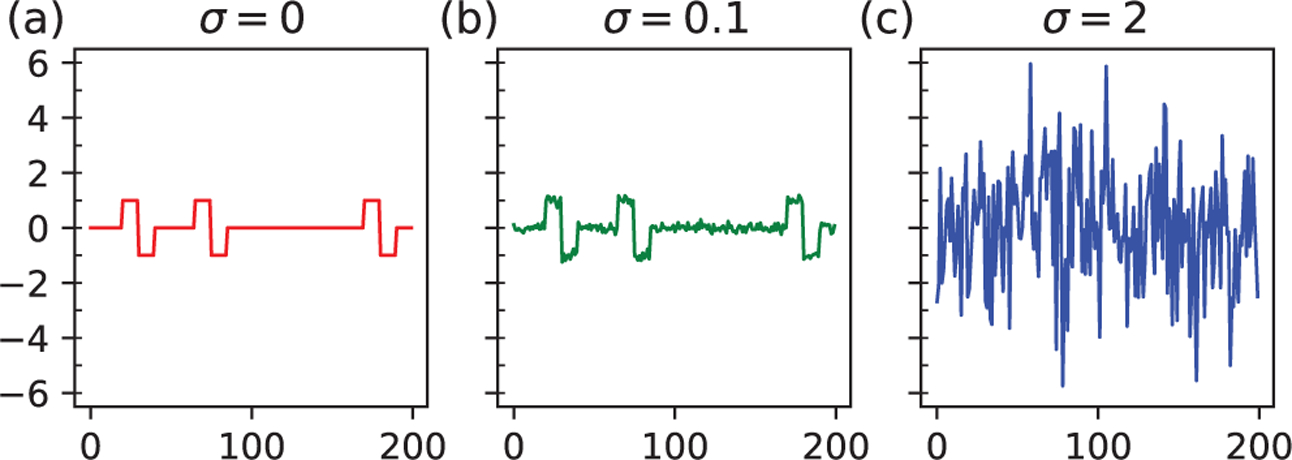

where is the linear convolution of an unknown binary sequence s ∈ {0, 1}N−L+1 with the signal, further corrupted by additive white Gaussian noise with zero mean and variance σ2, and we assume L ≪ N. Both x and s are treated as deterministic variables. The signal length L and the noise variance σ2 are assumed to be known. The non-zero entries of s indicate the starting positions of the signal occurrences in y. We require the signal occurrences not to overlap, so consecutive non-zero entries of s are separated by at least L positions. Figure 1 gives an example of the measurement y that contains three signal occurrences at different noise levels.

Fig. 1.

An example of a measurement in the MTD model corrupted by additive Gaussian noise with (a) σ =0, (b) σ =0.1 (high SNR regime) and (c) σ =2 (low SNR regime).

MTD belongs to the wider class of blind deconvolution problems [25], which have applications in astronomy [24], [33], microscopy [28], [32], system identification [1], and motion deblurring [17], [26], among others. The main difference is that we are only interested in estimating the signal x: we treat s as a nuisance variable, while most of the literature on blind deconvolution aims to estimate both x and s. This distinction allows us to estimate the signal at higher noise levels, which are usually not addressed in the literature on blind deconvolution, specifically because s cannot be accurately estimated in such regimes. We give a theoretical argument for this claim below, and corroborate it with numerical experiments in Section IV.

This high-noise MTD model has been studied in [9], [10] under the assumption that the signal occurrences either are well separated or follow a Poisson distribution. In applications, however, the signal occurrences in the measurement might be arbitrarily close to each other. In this study, we extend the framework of [9], [10] to allow an arbitrary spacing distribution of signal occurrences by simultaneously estimating the signal and the distance distribution between consecutive signal occurrences.

The solution of the MTD problem is straightforward at high signal-to-noise ratio (SNR), such as the case shown in Figure 1(b). The signal can be estimated by first detecting the signal occurrences and then averaging. In the low SNR regime however, this simple method becomes problematic due to the difficulty of detection, as illustrated by Figure 1(c). More than difficulty, reliable detection becomes impossible beyond a critical noise level. This can be understood from [9, Proposition 3.1], which we reproduce here (the proof is based on the Neyman–Pearson lemma).

Proposition 1:

Consider two known vectors θ0 = x and θ1 = 0 in and a random variable η taking value 0 or 1 with equal probability. We observe the random vector with the following distribution:

For any deterministic estimator of η,

that is, the probability of success for the best deterministic estimator of η is no better than a random guess in the limit of σ → ∞.

In our context, this simple fact can be interpreted as follows: even if we know the true signal x and an oracle provides windows of length L in the observation y which, with equal probability, contain either the noisy signal or pure noise, deciding whether a given window contains one or the other cannot be done significantly better than a random chance decision at high noise level. Results similar in spirit (but distinct in mathematical tools) are derived in [3].

In this work, we suggest to circumvent the estimation of s by estimating the signal x directly. Specifically, we propose two different approaches—autocorrelation analysis and an approximate version of the expectation maximization (EM) algorithm [18]. Since these methods do not require estimating s, Proposition 1 does not limit their performance in the low SNR regime.

Autocorrelation analysis relates the autocorrelations calculated from the noisy measurement to the signal. The signal is then estimated by fitting the autocorrelations through least squares. This approach is efficient as it requires only one pass over the data to calculate the autocorrelations; this is of particular importance as the data size grows.

In the second approach, the approximate EM algorithm, the signal is reconstructed by iteratively maximizing the data likelihood, which marginalizes over s; importantly, it does not estimate it explicitly. In contrast to autocorrelation analysis, the approximate EM algorithm scans through the whole dataset in each iteration, and hence requires much longer computational time.

In this study, we demonstrate the reconstruction of the underlying signal from the noisy measurement using the two proposed approaches. Our numerical experiments show that the approximate EM algorithm provides slightly more accurate estimates of the signal in the low SNR regime, whereas autocorrelation analysis is considerably faster, especially at low SNR. It is empirically shown that the sample complexity of both approaches scales as SNR−3 at low SNR, with details discussed later in the text. In the high SNR regime, the sample complexity of both methods scales as SNR−1, the same as the sample complexity of the simple method that estimates the signal by first detecting the signal occurrences and then averaging.

The main contributions of this work are as follows.

We formalize MTD for an arbitrary signal spacing distribution, making it a more realistic model for applications.

We propose two algorithms to solve the MTD problem. In particular, our algorithm based on autocorrelations illustrates why, to recover x, we need not estimate all of s, but rather only a concise summary of it; and why it is possible, in principle at least, to solve this problem for arbitrary noise levels (given a sufficiently long observation). For our second algorithm, we note that the popular EM method is intractable, but we show how to implement an approximation of it, which performs well in practice.

For both algorithms, we design a coarse-to-fine multi-resolution scheme to alleviate issues pertaining to non-convexity. This is related to the ideas of frequency marching which are often used in cryo-electron microscopy (cryo-EM) [6], [34].

II. Autocorrelation analysis

In what follows, we discuss autocorrelations of both the signal x (of length L) and the measurement y (of length N). To keep notation general, we here consider a sequence z of length m, and define its autocorrelations of order q = 1, 2, … for any integer shifts l1, l2, …, lq−1 as

where z is zero-padded for indices out of the range [0, m−1]. We have m = L when z represents the signal x and m = N when z represents the measurement y. Since the autocorrelations only depend on the differences of the integer shifts and are invariant under any permutation of the shifts, for the second- and third-order autocorrelations we have the symmetries

| (2) |

For applications of higher-order autocorrelations and their symmetries, see for example [4], [22], [31], [35].

In this section, we describe how the autocorrelations of the noisy measurement y are related to the underlying signal x. These relations are later used to estimate x without the need to identify the locations of signal occurrences, which are nuisance variables and difficult, if not impossible, to determine reliably at high noise levels. For completeness, we include a brief discussion of the special case where the signals are well separated and refer the reader to [9], [10] for details. The generalization to an arbitrary signal spacing distribution follows.

A. Well-separated signals

The signals are said to be well-separated when the consecutive non-zero entries of s are separated by at least 2L − 1 positions. Under this condition, the autocorrelations of y with integer shifts l1, l2, …, lq−1 within the range [−(L−1), L−1] are unaffected by the relative positions of signal occurrences. As a result, these autocorrelations of y provide fairly direct information about those of x, and therefore about x itself.

Due to the presence of Gaussian noise, the entries of y are stochastic. Taking the expectations of the first three autocorrelations of y with respect to the distribution of Gaussian noise, we obtain these relations (see Appendix A):

| (3) |

| (4) |

| (5) |

where 0 ≤ l < L and 0 ≤ l1 ≤ l2 < L. Here, ρ0 = ML/N denotes the signal density, where M is the number of signal occurrences in y. We assume M grows with N at the constant rate ρ0/L. The delta functions, defined by δ[0] = 1 and δ[l ≠ 0] = 0, are due to the autocorrelations of the white Gaussian noise. As indicated by the studies in phase retrieval [7], [12], [15], in general a 1D signal x cannot be uniquely determined by its first two autocorrelations. It is thus necessary (and generically sufficient [10]) to include the third-order autocorrelations to uniquely determine x.

The expectations of the autocorrelations of y are estimated by averaging over the given noisy measurement. This data reduction requires only one pass over the data, which is a great computational advantage as the data size grows. As shown in Appendix A, for a given signal x, the average over the noisy entries of y gives the relations:

where η1, η2[l] and η3[l1, l2] are random variables with zero mean and variances , , and respectively. For autocorrelations of order q, the standard deviations scale as at high noise levels. Therefore, we need in order for the calculated from the noisy measurement to be a good estimator for , and thus to establish a reliable relation with the signal x such as (3)–(5). Since the SNR is proportional to σ−2, the sample complexity therefore scales as SNR−q. We also expect the error of the reconstructed signal to depend on the errors of the highest-order autocorrelations used in the analysis at high noise levels.

We estimate the signal density ρ0 and signal x by fitting the first three autocorrelations of y via non-linear least squares:

| (6) |

The weights are chosen as the inverse of the number of terms in the respective sums. Since the autocorrelations have symmetries, as indicated in (2), the summations above are restricted to the non-redundant shifts. Due to the errors in estimating with , we expect the root-mean-square error of the reconstructed signal ,

to scale as σ3 in the low SNR regime.

We mention that there exist alternative methods to fit the observed autocorrelations. One possibility is to reformulate the problem as a tensor sensing problem: the autocorrelations are then linear functions of an (L + 1) × (L + 1) × (L + 1) tensor. Such an approach has been proven to be effective for the related problems of multi-reference alignment [5], [29] and phase retrieval [16]. Similar formulations are also found in tensor optimization [2], [30]. Nevertheless, this method requires lifting the original problem to a higher-dimensional space, which increases the computational burden and scales poorly with the dimension of the problem. In contrast, our least-squares formulation operates in the ambient dimension of the problem and thus might be applicable to high-dimensional setups.

B. Arbitrary spacing distribution

The condition of well-separated signals can be further relaxed to allow arbitrary spacing distribution by assuming that the signal occurrences in any subset of y follow the same spatial distribution. To this end, we define the pair separation function as follows.

Definition 1:

For a given binary sequence s identifying M starting positions of signal occurrences (that is, ), the pair separation function ξ[l] is defined as

| (7) |

where sk indicates the index of the kth non-zero entry of the sequence s. It is not hard to see that .

In particular, we force ξ[0] = ⋯ = ξ[L − 1] = 0 since we exclude overlapping occurrences; then ξ[L] is the fraction of pairs of consecutive signal occurrences that occur right next to each other (no spacing at all), ξ[L + 1] is the fraction of pairs of consecutive signal occurrences that occur with one signal-free entry in between them, etc.

In contrast to the well-separated model, autocorrelations of y may now involve correlating distinct occurrences of x, which may be in various relative positions. The crucial observation is that these autocorrelations depend only indirectly on s, namely, through L − 1 entries of the unknown ξ, which have much smaller dimensions than s. As shown in Appendix A, for a given signal x, the autocorrelations of the given measurement y gives the relations:

| (8) |

| (9) |

| (10) |

where 0 ≤ l < L and 0 ≤ l1 ≤ l2 < L. The random variables , and have zero mean and variances , and respectively. Note that (8)–(10) reduce to (3)–(5) when ξ[L] = ξ[L+1] = ··· = ξ[2L−2] = 0, as required by the condition of well-separated signals. Expressions (8)–(10) further simplify upon defining

| (11) |

After calculating the first three autocorrelations of y from the noisy measurement, we estimate the signal x and the parameters ρ0 and ρ1 by fitting the autocorrelations of y through the non-linear least squares

| (12) |

Numerically, we find that this problem can often be solved, meaning that, even though s cannot be estimated, we can still estimate x and the summarizing statistics ρ0 and ρ1. As discussed in Section II-A, the RMSE of the reconstructed signal is expected to scale as σ3 in the low SNR regime owing to the errors in estimating with .

C. Frequency marching

To minimize the least squares in (6) and (12), we use the trust-regions method in Manopt [13] over the product of the Euclidean manifold with the constraints that and are positive. However, as the least squares problems are inherently non-convex, we observe that the iterates of the trust-regions method used for minimization are liable to stagnate in local minima. To alleviate this issue, we adopt the frequency marching scheme [6] in our optimization. The idea behind frequency marching is based on the following heuristics:

The coarse-grained (low-resolution) version of the original problem has a smoother optimization landscape so that it is empirically more likely for the iterates of the optimization algorithm to reach the global optimum of the coarse-grained problem.

Intuitively, the global optimum of the original problem can be reached more easily by following the path along the global optima of a series of coarse-grained problems with incremental resolution.

Our goal is to guide the iterates of the optimization algorithm to reach the global optimum of the original problem by successively solving the coarse-grained problems, which are warm-started with the solution from the previous stage.

The coarse-grained problems are characterized by the order of the Fourier series, , used to express the low-resolution approximate by

| (13) |

where l = 0, 1, …, L−1. Instead of the entries of x, the least squares are minimized with respect to the Fourier coefficients in our frequency marching scheme. The order nmax is related to the spatial resolution by Nyquist rate:

The spatial resolution subdivides the signal x into L′ = L/Δx units and represents the “step size” of the shifts for the coarse-grained autocorrelations.

We define the coarse-grained autocorrelations of of order q for integer shifts l1, l2, …, lq−1 as

where ⌊·⌉ rounds the argument to the nearest integer. The coarse-grained autocorrelations of y are given by sub-sampling the original autocorrelations calculated from the full measurement. With b[l] denoting the bin centered at lΔx, where l = 0, 1, …, L′−1, we estimate the coarse-grained autocorrelations⌊ of y by

where 0 ≤ l < L′, 0 ≤ l1 ≤ l2 < L′, and B2 and B3 represent the number of terms in the respective sums.

Following the discussion in Section II-B, we relate the first three autocorrelations of y to those of , as defined in (13), by

The autocorrelations are related by approximation instead of equality to reflect the errors due to the low-resolution approximation. Above, we define as the product of the signal density ρ0 and the coarse-grained pair separation function:

| (14) |

where is defined as

| (15) |

In each stage of frequency marching, we estimate the Fourier coefficients, which are related to through (13), and the parameters ρ0, by fitting the coarse-grained autocorrelations of y through the non-linear least squares

Our frequency marching scheme increments the order nmax from 1 to ⌊L/2⌋, and the computed solution of each stage is used to initialize optimization in the next stage.

III. Expectation maximization

In this section, as an alternative to the autocorrelations approach, we describe an approximate EM algorithm to address both the cases of well-separated signals and arbitrary spacing distribution. A frequency marching scheme is also designed to help the iterates of the EM algorithm converge to the global maximum of the data likelihood.

A. Well-separated signals

Given the measurement y that follows the MTD model (1), the maximum marginal likelihood estimator (MMLE) for the signal x is the maximizer of the likelihood function p(y|x). Within the EM framework [18], the nuisance variable s is treated as a random variable drawn from some distribution under the condition of non-overlapping signal occurrences. The EM algorithm estimates the MMLE by iteratively applying the expectation (E) and maximization (M) steps. Specifically, given the current signal estimate xk, the E-step constructs the expected log-likelihood function

where the summation runs over all admissible configurations of the binary sequence s. The signal estimate is then updated in the M-step by maximizing Q(x|xk) with respect to x. The major drawback of this approach is that the number of admissible configurations for s grows exponentially with the problem size. Therefore, the direct application of the EM algorithm is computationally intractable, even for very short measurements.

In our framework of the approximate EM algorithm, we first partition the measurement y into Nd = N/L non-overlapping segments, each of length L, and denote the mth segment by ym. Overall, the signal can occur in 2L−1 different ways when it is present in a segment. The signal is estimated by the maximizer of the approximate likelihood function

| (16) |

where we ignore the dependencies between segments. Our approximate EM algorithm works by applying the EM algorithm to estimate the MMLE of (16), without any prior on the signal. As we will see in Section IV, the validity of the approximation is corroborated by the results of our numerical experiments.

Depending on the position of signal occurrences, the segment ym can be modeled by

Here, Z first zero-pads L entries to the left of x, and circularly shifts the zero-padded sequence by lm positions, that is,

where lm = 0, 1, …, 2L − 1 and is treated as a random variable. The operator C then crops the first L entries of the circularly shifted sequence, which are further corrupted by additive white Gaussian noise. In this generative model, lm = 0 represents no signal occurrence in ym, and lm = 1, …, 2L−1 enumerate the 2L − 1 different ways a signal can appear in a segment.

In the E-step, our algorithm constructs the expected log-likelihood function

| (17) |

given the current signal estimate xk, where

| (18) |

with the normalization . From Bayes’ rule, we have

| (19) |

which is the normalized likelihood function p(ym|l, xk), weighted by the prior distribution p(l|xk). In general, the prior distribution p(l|xk) is independent of the model xk and can be estimated simultaneously with the signal.

Denoting the prior distribution p(l) by α[l], we rewrite (17) as (up to an irrelevant constant)

We note that the dependence of Q(x, α|xk, αk) on the current prior estimates αk lies in p(l|ym, xk) through (19). The M-step updates the signal estimate and the priors by maximizing Q(x, α|xk, αk) under the constraint that the priors lie on the simplex Δ2L:

| (20) |

As shown in Appendix B, we obtain the update rules

| (21) |

where 0 ≤ j < L, and

| (22) |

where 0 ≤ l < 2L. We repeat the iterations of the EM algorithm until the estimates stop improving, as judged within some tolerance.

B. Arbitrary spacing distribution

We extend the approximate EM approach to address arbitrary spacing distribution of signal occurrences by reformulating the probability model: each segment ym can now contain up to two signal occurrences. In this case, the two signals can appear in L(L − 1)/2 different combinations in a segment, which is explicitly modeled by

where and . Given the signal estimate xk and the shifts , the likelihood function p(ym|l1, l2, xk) can be written as

| (23) |

and we have the normalization condition

Incorporating the terms with two signal occurrences, the E-step constructs the expected log-likelihood function as

| (24) |

where the prior p(l1, l2) is denoted by α[l1, l2].

Under the assumption that the signal occurrences in any subset of y follow the same spatial distribution, we can parametrize the priors with the pair separation function ξ (see Definition 1). Recall that (M − 1)ξ[l] is the number of pairs of consecutive signal occurrences whose starting positions are separated by exactly l positions. The priors α[l1, l2] can be related to the probability that two signal occurrences appear in the combination specified by (l1, l2) in a segment of length L selected from the measurement y, which is estimated by

| (25) |

where L < l1 < 2L and 0 < l2 ≤ l1 −L. Here, (M −1)ξ[l1 −l2] is the number of segments that realize the configuration of signal occurrences specified by (l1, l2), and N − L + 1 indicates the total number of segment choices. The priors α[l] can similarly be related to the probability that a signal occurs in the way specified by l in a segment of length L:

| (26) |

where 0 < l ≤ L. An interesting observation is that the number of signal occurrences M can be estimated by

since the signal occurrences are required not to overlap. The value of the prior α[0] is determined by the normalization

| (27) |

From (25), (26) and (27), we see that the priors are uniquely specified by the positive parameters α[0], α[1], ρ1[0], ρ1[1], …, ρ1[L−2], with ρ1 defined in (11). Therefore, the normalization (27) can be rewritten as

| (28) |

In the special case of well-separated signals, where ξ[i] = 0 for L ≤ i ≤ 2L − 2, we have α[1] = α[2] = ⋯ = α[2L − 1] and the normalization α[0] + (2L − 1)α[1] = 1.

In the M-step, we update the signal estimate and the parameters α[0], α[1], ρ1 by maximizing Q(x, α|xk, αk) under the constraint that (28) is satisfied:

| (29) |

As shown in (24), Q(x, α|xk, αk) is additively separable for x and α, so the constrained maximization (29) can be reached by maximizing Q(x, α|xk, αk) with respect to x and the parameters α[0], α[1], ρ1 separately. Maximizing Q(x, α|xk, αk) with respect to x yields the update rule (see Appendix C for derivation)

| (30) |

where 0 ≤ j < L. To update the parameters α[0], α[1], ρ1, we note that the function Q(x, α|xk, αk) is concave with respect to these parameters and the constraint (28) forms a compact convex set. Therefore, the constrained maximization is achieved using the Frank-Wolfe algorithm [20].

C. Frequency marching

Because the iterates of the EM algorithm are not guaranteed to converge to the maximum likelihood, we develop a frequency marching scheme to help the iterates converge to the global maximum. Recall that frequency marching converts the original optimization problem into a series of coarse-grained problems with gradually increasing resolution. The coarse-grained version of the EM algorithmis characterized by the spatial resolution

where nmax = 1, 2, …, ⌊(L + 1)/2⌋. This spatial resolution models the signal shifts in the coarse-grained problem, with the corresponding expected likelihood function given by

where L′ = L/Δx. This likelihood function is equal to the original one shown in (24) restricted to the rounded shifts, so the signal can be updated with (30) by ignoring the terms with irrelevant shifts.

Mimicking (25) and (26), we construct the expressions for the priors in the coarse-grained problem as

where L′ < l1 < 2L′, 0 < l2 ≤ l1 − L′, 0 < l ≤ L′ and is defined in (15). Since the priors are uniquely specified by the positive parameters , , , , …, , with defined in (14), we can update the priors by maximizing with respect to these parameters under the constraint that the parameters lie on the simplex defined by

Incrementing from nmax = 1 to nmax = ⌊(L + 1)/2⌋, the estimated signal and priors in each stage of frequency marching are used to initialize the optimization problem in the next stage.

IV. Numerical Experiments

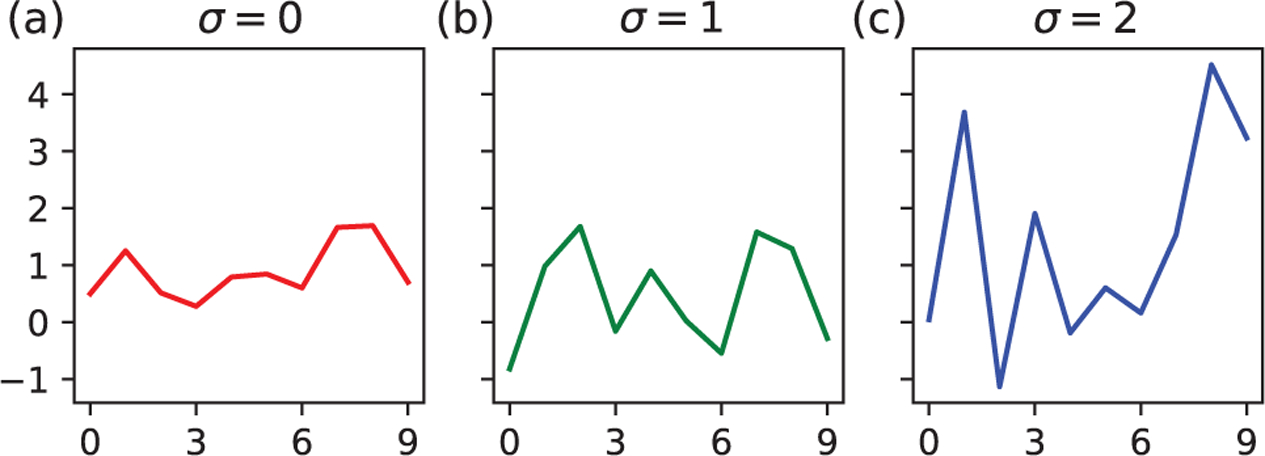

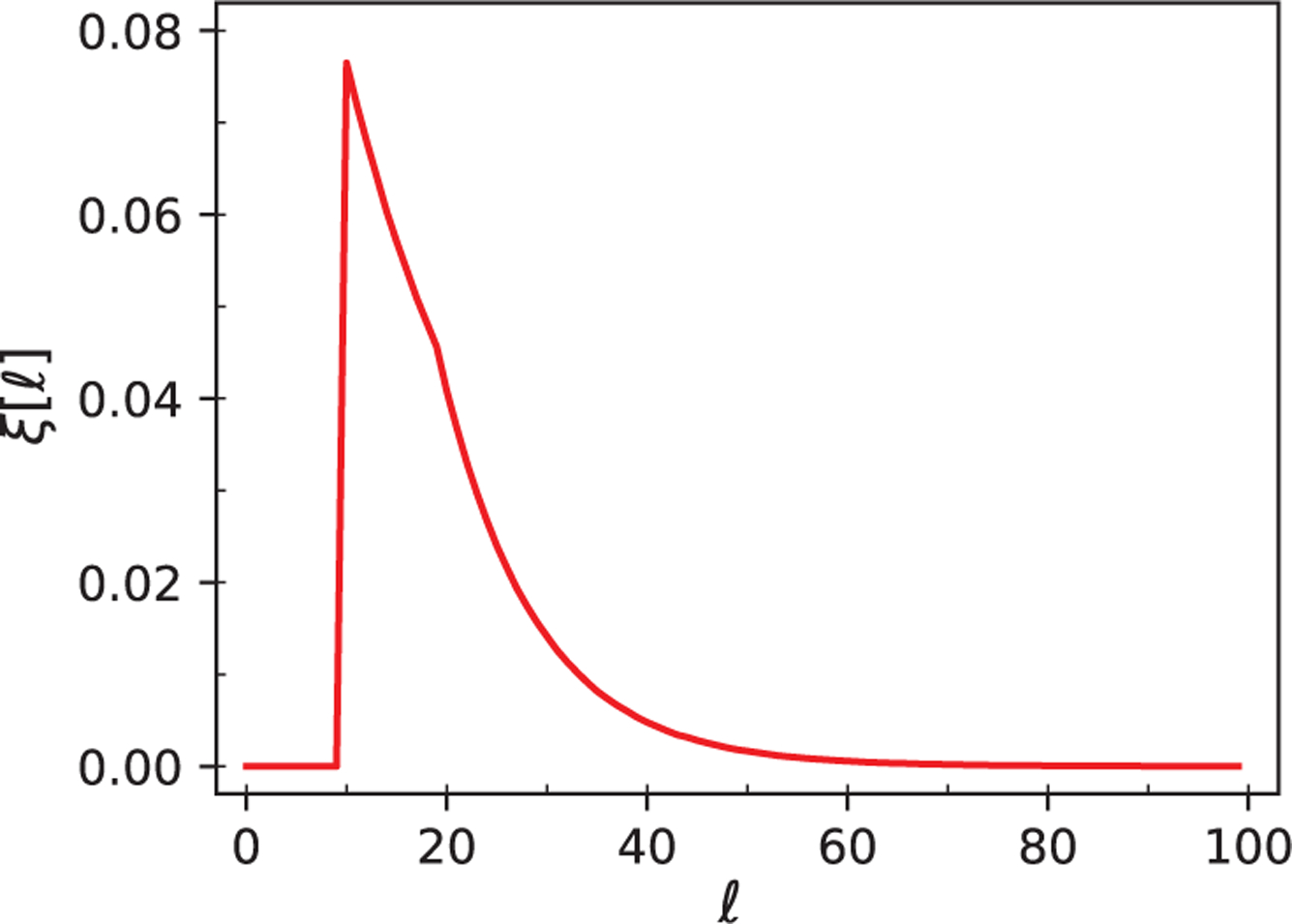

This section describes the construction of our synthetic data and the performance of the two proposed methods.1 A measurement is generated by first sampling M integers from [0, N −L], with the constraint that any two samples differ by at least L+W entries, for some integer W. We use W = L−1 for the case of well-separated signals, while W = 0 is chosen to test our methods for arbitrary spacing distribution of signal occurrences. The sampling is done by generating the random integers uniformly one by one, rejecting any integer that would violate the constraint with respect to previously accepted samples. The M integers indicate the starting positions of the signal occurrences, which are recorded as the non-zero entries of the binary sequence s. The noisy measurement y is given by the linear convolution of s with the signal x, with each entry of y further corrupted by additive white Gaussian noise of zero mean and variance σ2. Our synthetic data all have length N = 106, and are constructed with the signal shown in Figure 2(a), which has length L = 10. Also shown in Figures 2(b) and 2(c) are examples of the corrupted signals by different levels of noise. The number of signal occurrences M is adjusted so that the signal density ρ0 = ML/N is equal to 0.3 and 0.5 for the cases of well-separated signals and arbitrary spacing distribution respectively. Figure 3 shows the resulting pair separation function for our test case of arbitrary spacing distribution.

Fig. 2.

(a) The signal used to generate our synthetic data, with the ℓ2 norm scaled to . (b), (c) Signals corrupted by additive white Gaussian noise with zero mean and standard deviations σ =1 and σ =2.

Fig. 3.

Pair separation function to test arbitrary signal spacing distributions.

For both cases of signal spacing distribution, we reconstruct the signal from synthetic data using the two proposed methods with the frequency marching scheme over a wide range of σ. When the frequency marching scheme is not implemented, we observe that the algorithms usually suffer from large errors due to the non-convexity of the problems. A total of 20 instances of synthetic data are generated for each value of σ, and each instance is solved by the two methods using the frequency marching schemes. For our autocorrelation analysis methods, we run the optimization with 10 different randoinitializations and retain the one that minimizes the cost function, as defined in (6) or (12). For the EM approach, we also use 10 random initializations and retain the solution with the maximum data likelihood. Given the signal estimate xk, we approximate the data likelihood for the cases of well-separated signals and arbitrary spacing distribution by

and

respectively. This is the same approximation we use to formulate the EM algorithm.

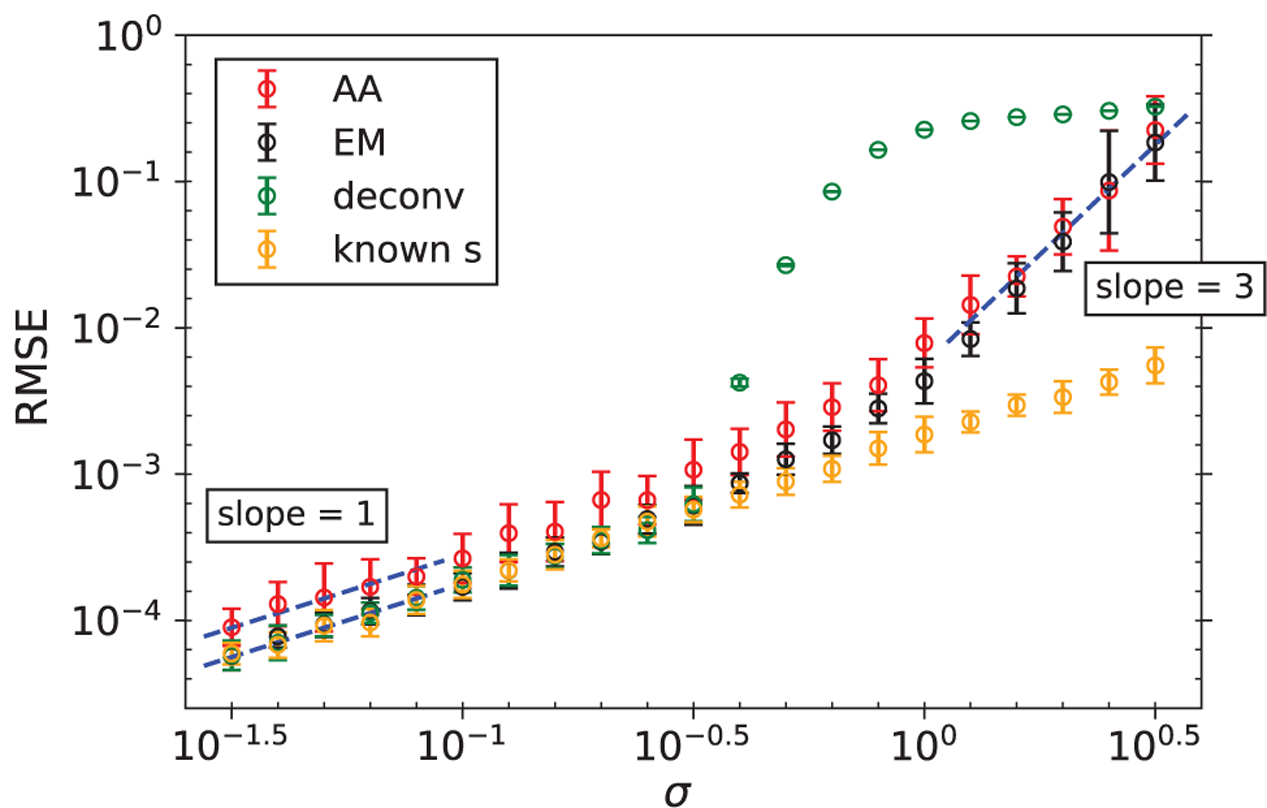

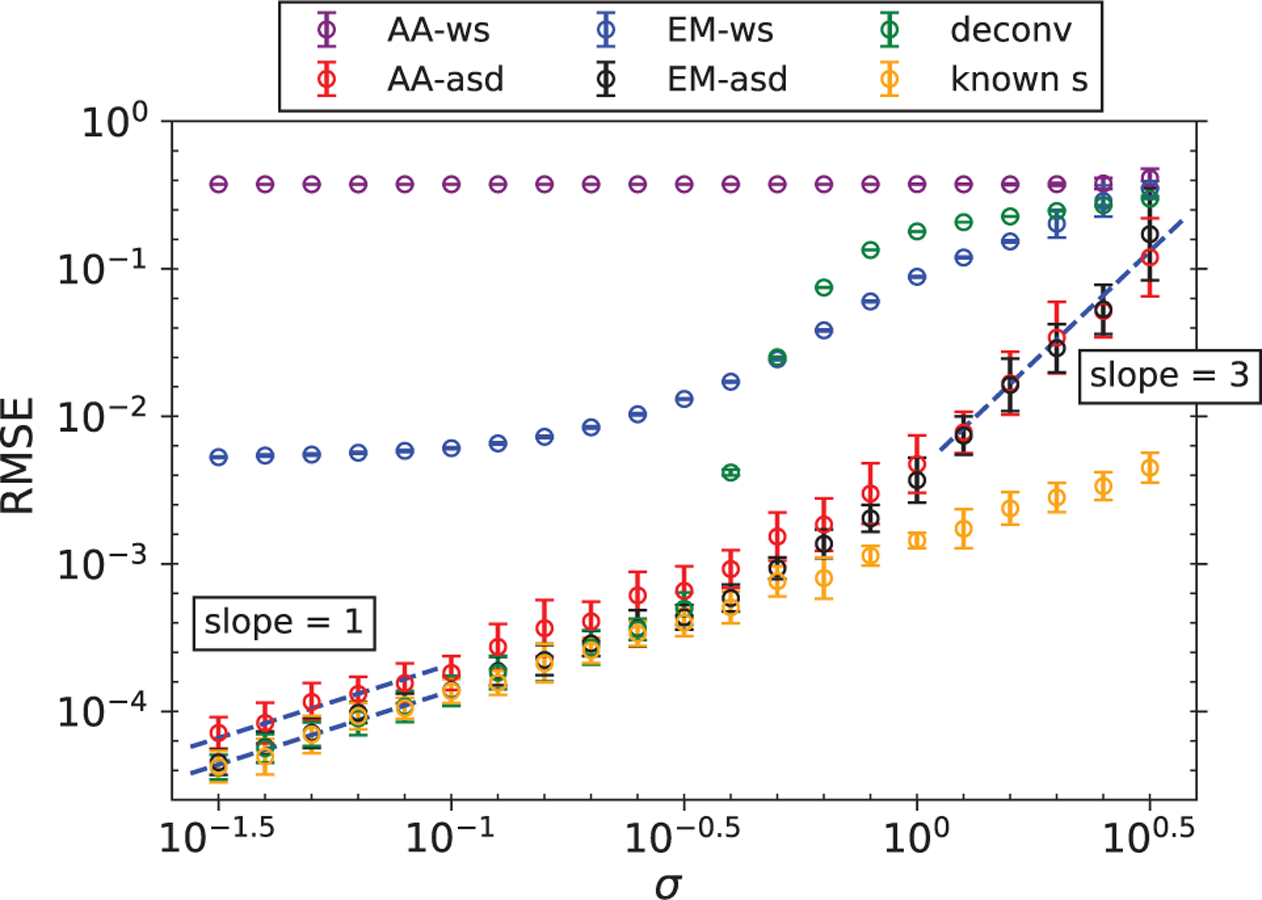

The RMSEs of the reconstructions for the case of well-separated signals are shown in Figure 4. As predicted in Section II-A, the RMSE for autocorrelation analysis scales in proportion to σ at high SNR, and to σ3 at low SNR. Interestingly, the EM algorithm exhibits the same behavior, which empirically shows that the two approaches share the same scaling of sample complexity in the two extremes of noise levels. Figure 5(a) and 5(b) show an instance of the signals reconstructed from data generated with σ = 100.3 and σ = 100.5 respectively. The same scaling is not observed for the reconstructed signal density ρ0 (not shown), although the relative errors are generally well below a few percents even in the noisiest cases.

Fig. 4.

The RMSEs of the reconstructed signal from data generated with well-separated occurrences. We show performance of our autocorrelation analysis (AA) and of expectation-maximization (EM). Also shown are the RMSEs of the signal estimated by the oracle-based deconvolution algorithm ‘deconv’ described in Section IV and the RMSEs of the estimated signal when the binary sequence s is known.

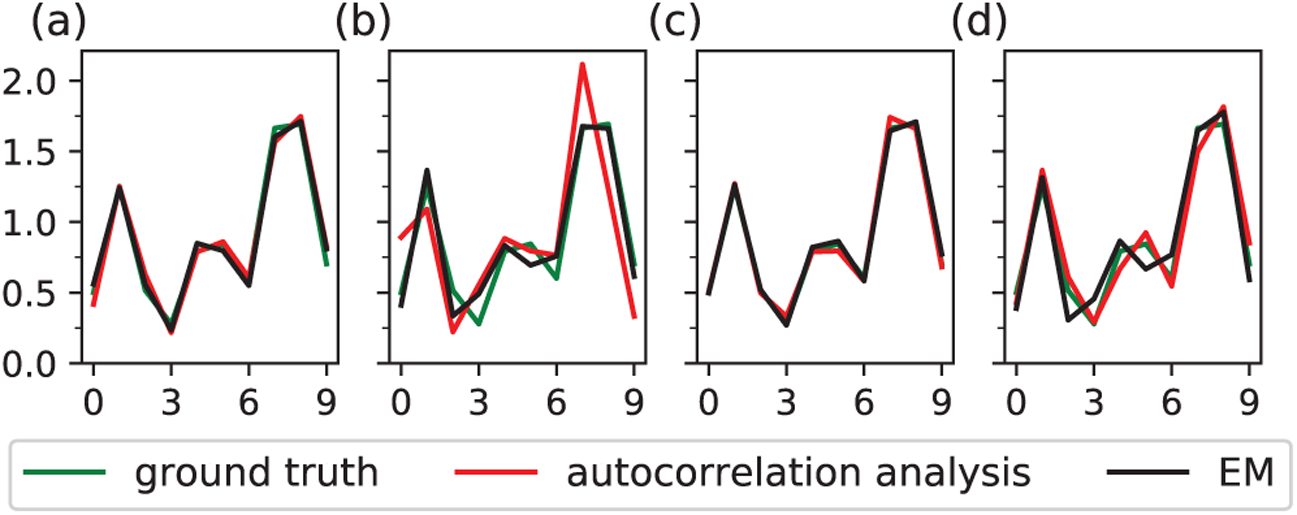

Fig. 5.

(a), (b) An instance of the reconstructed signals from data generated with well-separated occurrences for σ =100.3 and σ =100.5 respectively. (c), (d) An instance of the reconstructed signals from data generated with an arbitrary spacing distribution for σ =100.3 and σ =100.5 respectively.

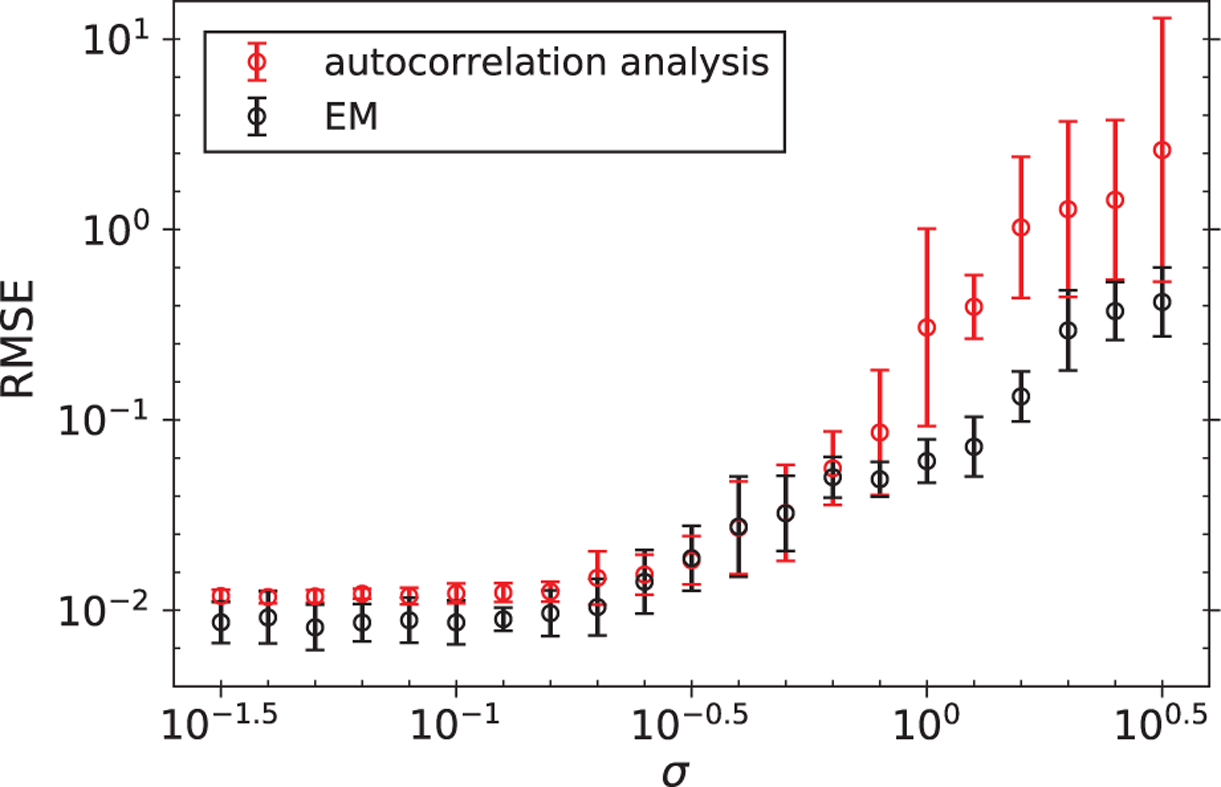

Figure 6 shows the RMSEs of the reconstructed signal for an arbitrary spacing distribution displayed in Figure 3. As a comparison, we also run the estimation algorithms under the (incorrect) assumption of well-separated signals on the same datasets. We first see that autocorrelation analysis assuming well-separated signals obtains poor reconstruction at all noise levels. Although the EM approach that assumes well-separated signals produces more accurate estimates, the nearly constant RMSEs at high SNR indicate the systematic error due to model misspecification. By contrast, the algorithms that assume an arbitrary spacing distribution achieve much better reconstruction, and the resulting RMSEs have the same scaling behaviors at the two extremes of SNR as shown in Figure 4. An instance of the signals reconstructed from data generated with σ = 100.3 and σ = 100.5 are shown in Figure 5(c) and 5(d) respectively.

Fig. 6.

The RMSEs of the reconstructed signal from data generated with an arbitrary spacing distribution. We show performance of our autocorrelation analysis (AA) and of expectation-maximization (EM), both under the (incorrect) assumptions of well-separated signals (ws) and the (correct) arbitrary spacing distribution model (asd). Also shown are the RMSEs of the signal estimated by the oracle-based deconvolution algorithm ‘deconv’ described in Section IV and the RMSEs of the estimated signal when the binary sequence s is known.

One of the premises of this paper is that, at high noise levels, detection-based methods are destined to fail because detection cannot be done reliably. To support this theoretical argument further, in Figures 4 and 6 we display the performance of the following oracle, named ‘deconv’. This method is given a strictly simpler task to solve: it must estimate x given the observation y, the noise level σ and the number of signal occurrences M, as well as the ℓ2-distance between the ground truth x and every single window of length L in the observation y. Precisely, the oracle has access to the vector defined for i = 0, …, N − L by

The vector z provides this estimator the advantage of finding the likely locations of signal occurrences. If the norm of x is known, this oracle advantage is equivalent to knowledge of the cross-correlation between x and y. Then, the oracle-based estimator ‘deconv’ proceeds as follows: it selects the index i corresponding to the lowest value in z, excludes other indices too close to i as prescribed by the signal separation model under consideration, and repeats this procedure M times to produce an estimator of s: the M starting positions of signal occurrences in y. The signal x is then estimated by averaging the M selected segments of y, effectively deconvolving y by the estimator of s. To our point: despite the unfair oracle advantage, this estimator is unable to estimate x to a competitive accuracy when the noise level is large.

Also plotted in Figures 4 and 6 are the RMSEs of the estimated signal when the binary sequence s is known. In this case, the RMSEs scale as σ and serve as the lower bounds of any algorithm. We can see that, at the low noise levels, our approximate EM algorithm performs nearly as well as the case when s is known.

Figure 7 shows the RMSEs of the reconstructed values of ρ1 for the experiments “AA-asd” and “EM-asd” shown in Figure 6. Although our methods are not able to reconstruct ρ1 to high precision, the results shown in Figure 6 indicate the necessity to include the pair separation function in the model to achieve good signal reconstruction.

Fig. 7.

The (relative) RMSEs of the reconstructed values of ρ1 as defined by (11), for the case of arbitrary spacing distribution.

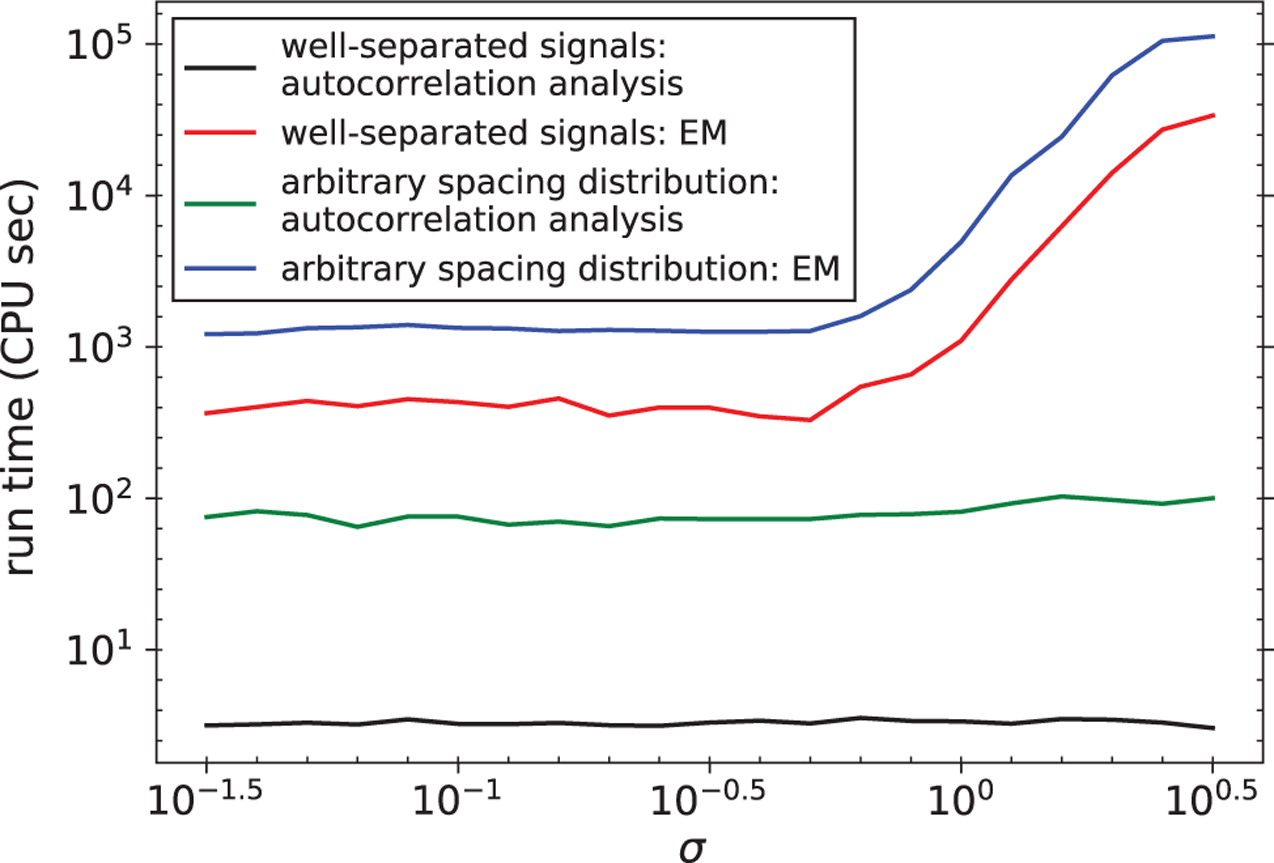

Figure 8 shows the average computation time for the main methods in Figures 4 and 6. EM is slower because it cross-correlates all the observed segments with the signal estimate in each iteration. This issue becomes especially prominent in the case of arbitrary spacing distribution. On the other hand, autocorrelation analysis requires shorter computation time by summarizing the data as autocorrelation statistics with one pass over the data. We note that the distinct computational speeds for autocorrelation analysis and the EM algorithm is also observed in the related problem of multi-reference alignment [8], [14]. The difference in run time and the similar reconstruction quality of the two methods at low SNR make autocorrelation analysis the preferred approach for large datasets.

Fig. 8.

The average run time to solve an instance of synthetic data for the two methods in both cases of signal spacing distribution.

V. Discussion

In this study, we have presented two approaches—autocorrelation analysis and an approximate EM algorithm—to tackle the MTD problem without the need to detect the positions of the underlying signal occurrences. By incorporating the notion of pair separation function, we generalize the solution of the MTD problem from the special case of well-separated signals to allow an arbitrary spacing distribution of signal occurrence. It is empirically shown that the two methods have the same scaling of sample complexity in the two extremes of noise levels, in particular, SNR−3 at high noise level. Since the optimizations in both methods are non-convex, computing schemes based on frequency marching are designed to help the iterates converge to the global optimum.

Our study of the MTD model is primarily motivated by the goal to reconstruct the structures of small biomolecules using cryo-EM [11], [21]. In a cryo-EM experiment, individual copies of the target biomolecule are dispersed at unknown 2D locations and 3D orientations in a thin layer of vitreous ice, from which 2D tomographic projection images are produced by an electron microscope. To minimize the irreversible structural damage, it is necessary to keep the electron dose low. As a result, the projection images are considerably noisy, and high-resolution structure estimation requires averaging over a large number of noisy projections. In particular, as the size of the molecule gets smaller, the SNR of the data drops correspondingly.

Currently, the analysis workflow of cryo-EM data is roughly divided into two steps: The first step, known as particle picking, detects the locations of the biomolecules in the noisy projection images. The 3D structure of the biomolecule is reconstructed in the second step from the unoriented particle projections. When the sizes of biomolecules get smaller, however, reliable detection of their positions becomes challenging, which in turn hampers successful particle picking [3], [9]. It is estimated from first principles that, in order to obtain a 3 Å resolution reconstruction, the particle size should be at least 45 Å so that the particle occurrences can be accurately detected [23]. The results of [9], [10] and this work suggest that it is possible to bypass the need to detect particle positions but still reconstruct the structure at high resolution.

A key feature of cryo-EM that the MTD model fails to capture is that the “signals” are actually 2D projections of the underlying biomolecules at random 3D orientations, and the orientation distribution is usually non-uniform and unknown. This distinction makes the direct application of our approximate EM algorithm prohibitive, because each observed window now needs to cross-correlate with model projections with all possible 2D in-plane translations and 3D orientations, let alone the case where multiple copies of biomolecules may appear in a window. Further approximations such as representing the data and model projections in low-dimensional subspaces [19], [27] seem necessary to push this approach forward. We note that the use of frequency marching in the MTD problem is also related to this idea: the signal is mapped to low-dimensional subspaces with growing dimensions specified by nmax.

On the other hand, the autocorrelations calculated from the noisy measurements in cryo-EM involve averages over both the 3D orientations and 2D in-plane translations. It was pointed out that the 3D structure reconstruction problem by fitting the first three autocorrelations of a biomolecule might be ill-conditioned [9], so information from higher-order autocorrelations seems required. We also expect the cross-terms between 2D projections of different copies to further complicate the reconstruction problem. The resolution of these technical challenges may help extend the use of cryo-EM to smaller biomolecules that are currently believed insurmountable, and is the subject of our ongoing studies.

Acknowledgment

We would like to thank Ayelet Heimowitz, Joe Kileel, Eitan Levin and Amit Moscovich for stimulating discussions and the anonymous reviewers for their valuable comments.

The research is supported in parts by Award Number R01GM090200 from the NIGMS, FA9550-17-1-0291 from AFOSR, Simons Foundation Math+X Investigator Award, the Moore Foundation Data-Driven Discovery Investigator Award, and NSF BIGDATA Award IIS-1837992. NB is partially supported by NSF award DMS-1719558.

Appendix A

Derivations of Relations (3)–(5) and (8)–(10)

With the noisy measurement y defined in (1), we express its first order autocorrelation as

Taking the expectation of with respect to the distribution of Gaussian noise, we obtain

with variance . We note that this expression applies to both the cases of well-separated signals and arbitrary spacing distribution.

The second order autocorrelation of y with shifts l = 0, 1, …, L − 1 can be written as

| (31) |

where denotes the second order autocorrelation of the linear convolution s * x with shifts l, and sk indicates the index of the kth non-zero entry of the sequence s. Taking the expectation of with respect to the distribution of Gaussian noise, we have

The term that scales as in (31) contributes to the variance by , while the terms that scale as contribute to the variance by . Since we assume that M grows with N at a constant rate, the resulting variance scales as .

For well-separated signals,

and we obtain the relation in (4). For arbitrary spacing distribution, we need to include the correlation terms between consecutive signal occurrences:

which gives the relation in (9). The quantity ξ[j] denotes the number of consecutive 1’s that are separated by exactly j entries in the sequence s, divided by M − 1 so that ∑j ξ[j]=1.

As for the third order autocorrelation of y with shifts 0 ≤ l1 ≤ l2 < L, we have

| (32) |

where denotes the third order autocorrelation of the linear convolution s * x with shifts (l1, l2), and x is zero-padded for indices out of the range [0, L − 1].

Taking the expectation of with respect to the distribution of Gaussian noise, we obtain

As for the variance, the term that scales as in (32) contributes to the variance by , while the terms that scale as contribute to the variance by . Since we assume that M grows with N at a constant rate, the resulting variance scales as .

For well-separated signals, we have

and we obtain the relation in (5). By including the correlations between consecutive signal occurrences, we expand for the case of arbitrary spacing distribution as

which gives the relation in (10).

Appendix B

Derivation of (21) and (22)

The constrained maximization in (20) can be achieved with the unconstrained maximization of the Lagrangian

where λ denotes the Lagrange multiplier. We note that the constraints in (20) involve the inequalities that the priors are non-negative. Such constrained maximization in general cannot be achieved by maximizing the Lagrangian, for the inequalities might be violated. As we will see later, however, these inequalities are automatically satisfied at the computed maximum (or local maximum) of the Lagrangian, which justifies this approach.

Since Q(x, α|xk, αk) is additively separable for x and α, we maximize with respect to x and α separately. At the maximum of , we have

| (33) |

where 0 ≤ j < L. With p(ym|l, x) give by (18), we can write

| (34) |

Substituting this expression into (33), we obtain

Rearranging the terms gives the update rule shown in (21).

In order to update the priors, we maximize with respect to α:

where 0 ≤ l < 2L. We thus obtain the update rule for α as

and we can immediately solve λ = Nd from the normalization .

Appendix C

Derivation of (30)

As shown in (24), Q(x, α|xk, αk) is additively separable for x and α. Moreover, the constraint (28) only involves the values of the parameters α[0], α[1], ρ1, so the maximization of Q(x, α|xk, αk) is unconstrained on x. At the maximum of Q(x, α|xk, αk), we have

| (35) |

where 0 ≤ j < L. With p(ym|l1, l2, xk) given by (23), we obtain

| (36) |

Substituting (34) and (36) into (35), we obtain

Rearranging the terms, we obtain the update rule in (30).

Footnotes

The code for all experiments is publicly available at https://github.com/tl578/multi-target-detection.

References

- [1].Abed-Meraim K, Qiu W, and Hua Y, “Blind system identification, “ Proceedings of the IEEE, vol. 85, no. 8, pp. 1310–1322, 1997. [Google Scholar]

- [2].Aura A, Carrillo R, Thiran J-P, and Wiaux Y, “Tensor optimization for optical-interferometric imaging, “ Monthly Notices of the Royal Astronomical Society, vol. 437, no. 3, pp. 2083–2091, 2013. [Google Scholar]

- [3].Aguerrebere C, Delbracio M, Bartesaghi A, and Sapiro G, “Fundamental limits in multi-image alignment, “ IEEE Transactions on Signal Processing, vol. 64, no. 21, pp. 5707–5722, 2016. [Google Scholar]

- [4].Aizenbud Y, Landa B, and Shkolnisky Y, “Rank-one multi-reference factor analysis, “ arXiv:1905.12442, 2019. [Google Scholar]

- [5].Bandeira AS, Blum-Smith B, Kileel J, Perry A, Weed J, and Wein AS, “Estimation under group actions: recovering orbits from invariants, “ arXiv:1712.10163, 2017. [Google Scholar]

- [6].Barnett A, Greengard L, Pataki A, and Spivak M, “Rapid solution of the cryo-EM reconstruction problem by frequency marching, “ SIAM Journal on Imaging Sciences, vol. 10, no. 3, pp. 1170–1195, 2017. [Google Scholar]

- [7].Bates RHT, “Fourier phase problems are uniquely solvable in more than one dimension. I: underlying theory, “ Optik vol. 61, pp. 247–262, 1982. [Google Scholar]

- [8].Bendory T, Boumal N, Ma C, Zhao Z and Singer A, “Bispectrum inversion with application to multireference alignment, “ IEEE Transactions on Signal Processing, vol. 66, no. 4, pp. 1037–1050, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Bendory T, Boumal N, Leeb W, Levin E, and Singer A, “Toward single particle reconstruction without particle picking: Breaking the detection limit, “ arXiv:1810.00226, 2018. [Google Scholar]

- [10].Bendory T, Boumal N, Leeb W, Levin E, and Singer A, “Multi-target detection with application to cryo-electron microscopy, “ Inverse Problems, 2019. [Google Scholar]

- [11].Bendory T, Bartesaghi A, and Singer A, “Single-particle cryo-electron microscopy: Mathematical theory, computational challenges, and opportunities, “ arXiv:1908.00574, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Beinert R and Plonka G. “Ambiguities in one-dimensional discrete phase retrieval from Fourier magnitudes, “ Journal of Fourier Analysis and Applications, vol. 21, no. 6, pp. 1169–1198, 2015. [Google Scholar]

- [13].Boumal N, Mishra B, Absil P-A, and Sepulchre R, “Manopt, a Matlab toolbox for optimization on manifolds.” Journal of Machine Learning Research, vol. 15, pp. 1455–1459, 2014. [Google Scholar]

- [14].Boumal N, Bendory T, Lederman RR, and Singer A, “Heterogeneous multireference alignment: A single pass approach, “ 2018 52nd Annual Conference on Information Sciences and Systems (CISS), pp. 1–6, 2018. [Google Scholar]

- [15].Bruck YM and Sodin LG, “On the ambiguity of the image reconstruction problem, “ Optics Communications, vol. 30, no. 3, pp. 304–308, 1979. [Google Scholar]

- [16].Candes EJ, Strohmer T, and Voroninski V, “Phaselift: Exact and stable signal recovery from magnitude measurements via convex programming.” Communications on Pure and Applied Mathematics, vol. 66, no. 8, pp. 1241–1274, 2013. [Google Scholar]

- [17].Cho S and Lee S, “Fast motion deblurring, “ ACM Transactions on Graphics, vol. 28, no. 5, 2009. [Google Scholar]

- [18].Dempster A, Laird N, and Rubin D, “Maximum likelihood from incomplete data via the EM algorithm, “ Journal of the Royal Statistical Society, Series B, vol. 39, no. 1, pp. 1–38, 1977. [Google Scholar]

- [19].Dvornek NC, Sigworth FJ, and Tagare HD, “SubspaceEM: A fast maximum-a-posteriori algorithm for cryo-EM single particle reconstruction, “ Journal of Structural Biology, vol. 190, no. 2, pp. 200–214, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Frank M and Wolfe P, “An algorithm for quadratic programming, “ Naval Research Logistics Quarterly, vol. 3, pp. 95–110, 1956. [Google Scholar]

- [21].Frank J, “Three-dimensional electron microscopy of macromolecular assemblies: Visualization of biological molecules in their native state, “ Oxford University Press, 2006. [Google Scholar]

- [22].Giannakis GB and Mendel JM, “Identification of nonminimum phase systems using higher order statistics, “ IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 37, no. 3, pp. 360–377, 1989. [Google Scholar]

- [23].Henderson R, “The potential and limitations of neutrons, electrons and X-rays for atomic resolution microscopy of unstained biological molecules, “ Quarterly Reviews of Biophysics, vol. 28, no. 2, pp. 171–193, 1995. [DOI] [PubMed] [Google Scholar]

- [24].Jefferies SM and Christou JC, “Restoration of astronomical images by iterative blind deconvolution, “ Astrophysical Journal vol. 415, pp. 862–874, 1993. [Google Scholar]

- [25].Kondur D and Hatzinakos D, “Blind image deconvolution, “ IEEE Signal Processing Magazine, vol. 13, no. 3, pp. 43–64, 1996. [Google Scholar]

- [26].Levin A, “Blind motion deblurring using image statistics, “ Advances in Neural Information Processing Systems, 2006. [Google Scholar]

- [27].Landa B and Shkolnisky Y, “The steerable graph Laplacian and its application to filtering image datasets, “ SIAM Journal on Imaging Sciences, vol. 11, no. 4, pp. 2254–2304, 2018. [Google Scholar]

- [28].McNally JG, Karpova T, Cooper J, and Conchello JA, “Three-dimensional imaging by deconvolution microscopy, “ Methods, vol. 19, no. 3, pp. 373–385, 1999. [DOI] [PubMed] [Google Scholar]

- [29].Perry A, Weed J, Bandeira A, Rigollet P, and Singer A, “The sample complexity of multi-reference alignment.” SIAM Journal on Mathematics of Data Science, vol. 1, no. 3, pp.497–517, 2019. [Google Scholar]

- [30].Holger R, and. Stojanac, “Tensor theta norms and low rank recovery.” arXiv:1505.05175, 2015. [Google Scholar]

- [31].Sadler BM and Giannakis GB, “Shift- and rotation-invariant object reconstruction using the bispectrum, “ Journal of the Optical Society of America A, vol. 9, no. 1, pp. 57–69, 1992. [Google Scholar]

- [32].Sarder P and Nehorai A, “Deconvolution methods for 3-D fluorescence microscopy images, “ IEEE Signal Processing Magazine, vol. 23, no. 3, pp. 32–45, 2006. [Google Scholar]

- [33].Schulz TJ, “Multi-frame blind deconvolution of astronomical images, “ Journal of the Optical Society of America A, vol. 10, no. 5, pp. 1064–1073, 1993. [Google Scholar]

- [34].Scheres SHW, “RELION: Implementation of a Bayesian approach to cryo-EM structure determination, “ Journal of Structural Biology, vol. 180, no. 3, pp. 519–530, 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [35].Swami A, Giannakis GB, and Mendel J, “Linear modeling of multidimensional non-gaussian processes using cumulants, “ Multi-dimensional Systems and Signal Processing, vol. 1, no. 1, pp. 11–37, 1990. [Google Scholar]