Abstract

BACKGROUND AND PURPOSE: Language dominance research using functional neuroimaging has made important contributions to clinical applications. Nevertheless, although recent neuroimaging studies have demonstrated right-lateralized activation during human voice perception, the influence of voice perception on the determination of language dominance has not been adequately studied. We aimed to clarify language dominance for lexical-semantic processing in the temporal cortices by taking human voice perception into account.

METHODS: Thirty normal right-handed subjects were scanned with functional MR imaging while listening to sentences (SEN), reverse sentences (rSEN), and identifiable nonvocal sounds (SND). We investigated cerebral activation and the distribution of individual Laterality Index values under 3 contrasts: rSEN-SND, SEN-SND, and SEN-rSEN.

RESULTS: The rSEN-SND contrast, which includes human voice perception, revealed right-lateralized activation in the anterior temporal cortices. Both the SEN-SND and SEN-rSEN contrasts, which include lexical-semantic processing, showed left-lateralized activation in the inferior and middle frontal gyri and the middle temporal gyrus. The SEN-rSEN contrast, which excludes the influence of human voice perception, showed no temporal activation in the right hemisphere. Symmetrical or right-lateralized activation was observed in the temporal cortices in 22 of 27 subjects (81.4%) under the rSEN-SND contrast. Although 9 of 27 subjects (33.3%) showed symmetrical or right-lateralized activation in the temporal cortices under the SEN-SND contrast, all 9 of these subjects showed left-lateralized activation under the SEN-rSEN contrast.

CONCLUSION: Our results demonstrated that right-lateralized activation by human voice perception could mask left-lateralized activation by lexical-semantic processing. This finding suggests that the influence of human voice perception should be adequately taken into account when language dominance is determined.

Research on language dominance using functional neuroimaging makes an important contribution to clinical applications. Evaluation of the language area facilitates efficient surgery for brain tumors or intractable epilepsy by preventing over-resection of the language area.1–5 Although language function is temporarily impaired during the procedure, the Wada test,6 ie, the intracarotid amobarbital procedure, has gained wide acceptance as the standard method for determining language dominance. Recently, noninvasive examinations for evaluating the language area, including functional MR imaging (fMRI) and functional transcranial Doppler sonography (fTCD), have been developed as alternatives.1,4,7–10 fMRI is especially useful for accurately evaluating cerebral activity because of its high spatial resolution.

Previous fMRI studies of language dominance demonstrated that investigating different aspects of language processing helped to assess the laterality of activation, which is useful for surgical planning.1,9,11,12 Most fMRI studies use expressive language tasks such as reading5,13–16 or verbal fluency tasks14,17–19 to examine language dominance. Passive auditory comprehension tasks are also useful for determining language dominance because they allow frontotemporal activation by lexical-semantic processing to be investigated.14,20–24 Recent fMRI studies have demonstrated more widespread frontotemporal activation during sentence processing.25,26 Thus, it is important to use a variety of tasks when determining language dominance.

Recent fMRI studies have indicated that the left temporal cortex plays a role in semantic processing,27–30 whereas the right temporal cortex is associated with human voice perception.31–33 The human voice is an essential medium for conveying language, as well as information that modifies language, such as intonation.34 If cerebral activation resulting from human voice perception is right hemisphere-dominant, the influence of human voice perception needs to be considered when hemispheric dominance for language is determined. In fact, fMRI studies of cerebral laterality for language have reported right hemisphere dominance for language in right-handed subjects (ie, atypical lateralization).5,35,36 Such atypical lateralization could in fact be attributable to right hemisphere dominance for human voice perception. Therefore, to reveal language dominance in each subject, it is essential to investigate cerebral activation resulting from human voice perception as well as from lexical-semantic processing.

In the present study, by examining the effect of general sounds, language comprehension, and human voice perception in a single session of fMRI scanning, we aimed to clarify language dominance for lexical-semantic processing in the temporal cortices while considering human voice perception.

Materials and Methods

Subjects

Thirty healthy right-handed subjects (Edinburgh Handedness Inventory score, mean ± SD, 91.2 ± 10.7),37 15 women and 15 men, participated in the study (mean age, 32.6 years; SD, 7.1 years). All volunteers were native speakers of Japanese, and none had any history of neurologic disorders. None of the subjects was consuming alcohol or taking medication at the time, and none had a history of psychiatric disorder, significant physical illness, head injury, neurologic disorder, or alcohol or drug dependency. All subjects underwent MR imaging to rule out cerebral anatomic abnormalities. After a complete explanation of the study, written informed consent was obtained from all subjects, and the study was approved by the Ethics Committee.

Experimental Design

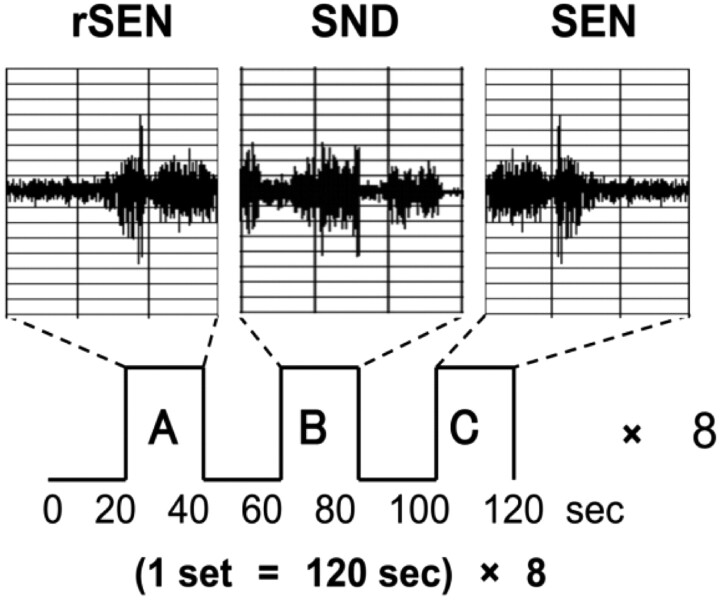

In a single session, 3 types of stimuli were presented: forward-played sentences (SEN); reverse sentences (rSEN; ie, the same sentences played in reverse); and identifiable nonvocal sounds (SND). Each stimulus lasted 20 seconds, and rSEN, SND, and SEN were played in sequence. Before each sound, the subjects heard 20 seconds of silence through the headphones (rest condition). Each set, consisting of the 3 sound conditions and their rest conditions, lasted 120 seconds. One session consisted of 8 sets, for a total scanning time of 960 seconds (Fig 1). The complex sounds of a shower, a washing machine, a bell, and a computer printer were used as the identifiable nonvocal sounds for the SND condition; each lasted 20 seconds and contained tonal fluctuation. The sentences of each set represented a single topic, and one session consisted of 4 topics, each presented twice in random order. Each topic was expressed in 1 or 2 compound sentences consisting of 6–7 phrases. Because these sentences used conjunctional phrases or long adjuncts, each subject was required to comprehend complex situations and understand the connections between the phrases or sentences (Appendix 1). The sentence material included the linguistic section of the Wechsler Memory Scale–Revised, translated into Japanese.
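The timing arithmetic above can be checked with a short Python sketch. It assumes that each 120-second set runs rest, rSEN, rest, SND, rest, SEN (the text states that each sound is preceded by 20 seconds of rest and that the sounds are played in the order rSEN, SND, SEN) and uses the 4-second TR reported under fMRI Acquisition; the function names are illustrative, not part of the original analysis.

```python
BLOCK_S = 20                                                # every block lasts 20 s
SET_ORDER = ["rest", "rSEN", "rest", "SND", "rest", "SEN"]  # assumed within-set order
N_SETS = 8
TR_S = 4                                                    # repetition time (s)


def block_schedule(n_sets=N_SETS, order=SET_ORDER, block_s=BLOCK_S):
    """List of (onset_s, condition) for every block in the session."""
    schedule = []
    onset = 0
    for _ in range(n_sets):
        for condition in order:
            schedule.append((onset, condition))
            onset += block_s
    return schedule


def volume_labels(schedule, tr_s=TR_S, block_s=BLOCK_S):
    """Condition active at the start of each volume (blocks are contiguous)."""
    total_s = len(schedule) * block_s
    return [schedule[(k * tr_s) // block_s][1] for k in range(total_s // tr_s)]


schedule = block_schedule()
labels = volume_labels(schedule)
print(len(schedule) * BLOCK_S, len(labels))        # 960 240
print(labels.count("SEN"), labels.count("rest"))   # 40 120
```

With 5 volumes per 20-second block, each condition block contributes 5 scans, which is consistent with the 240 volumes acquired.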

Fig. 1.

A, reverse sentences (rSEN); B, identifiable nonvocal sounds (SND); C, sentences (SEN).

We used reverse sentences as the human voice condition. Reverse sentences have the same frequency spectrum as forward sentences (Fig 1) and retain the character of the human voice. A neuropsychological study has demonstrated that speech played in reverse for more than 200 ms loses its meaning.38 In addition, previous fMRI studies have used reversed speech as a nonsemantic vocal sound.39–41 However, subjects listening to reversed words or phrases might guess their meaning.39 Therefore, instead of reversed words or phrases, we used reverse sentences long enough to preclude guessing their meaning as the nonsemantic vocal sounds. We performed a preliminary study with 30 subjects different from those participating in the fMRI study. These 30 subjects listened to 2 reverse sentences without being told in advance that the sounds were reverse sentences. First, the subjects listened to reverse sentences spoken by a male voice and then to those spoken by a female voice, each for 20 seconds. After they had listened to these 2 sounds, we asked them about the human voice, intonation, contents of the sentences, and whether the voice was male or female (Appendix 2). All subjects perceived the reverse sentences as “human voice,” “a sound having intonation,” “nonsemantic information,” and “a sound that can be identified as either a male or female voice.”

Instruments Used for the Presentation of Stimuli

The stimuli were presented with Media Studio Pro (version 6.0; Ulead Systems, Taiwan) running under Windows 98. Subjects listened to the sound stimuli through headphones attached to an air-conduction sound delivery system (Commancer X6, MR imaging Audio System; Resonance Technology Inc, Los Angeles, Calif). The average sound pressure level of the stimuli was kept at 80 dB at the output of the audio system.

fMRI Acquisition

Images were acquired with a 1.5T Signa system (General Electric, Milwaukee, Wis). A total of 240 functional volumes were acquired with a T2*-weighted gradient-echo echo-planar imaging sequence sensitive to blood oxygenation level–dependent contrast. Each volume consisted of 40 contiguous transaxial sections with a section thickness of 3 mm, covering almost the whole brain (flip angle, 90°; echo time [TE], 50 ms; repetition time [TR], 4 seconds; matrix, 64 × 64; field of view, 24 × 24 cm).

Behavioral Data

To ensure that the subjects actively participated in the fMRI study, we conducted a postscan session in which each subject was asked a series of questions about the contents of each condition (rSEN, SND, SEN). For the rSEN condition, the subjects were asked 3 questions: whether they could recognize the sound as a voice, whether they could understand its contents, and whether they could identify the voice as male or female. For the SEN condition, the questionnaire consisted of 4 questions about the situations described in the sentences and 4 questions about the proper nouns used in the sentences. For the SND condition, each subject was asked to name the sound stimuli.

Image Processing

Data analysis was performed with the statistical parametric mapping software SPM99 (Wellcome Department of Cognitive Neurology, London, UK), running on MATLAB (MathWorks, Natick, Mass). All volumes were realigned to the first volume of each session to correct for subject motion and were spatially normalized to the standard space defined by the Montreal Neurological Institute (MNI) template. After normalization, all scans had a final resolution of 3 × 3 × 3 mm³. Functional images were spatially smoothed with a 3D isotropic Gaussian kernel (full width at half maximum, 8 mm). Low-frequency noise was removed by applying a high-pass filter (cutoff period, 80 seconds) to the fMRI time series at each voxel. A temporal smoothing function was applied to the fMRI time series to enhance the temporal signal intensity-to-noise ratio. The significance of hemodynamic changes in each condition was examined with the general linear model, using boxcar functions convolved with a hemodynamic response function. Statistical parametric maps of the t statistic for each contrast were calculated on a voxel-by-voxel basis. The t values were then transformed to the unit normal distribution, yielding z scores.
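The modeling step described above (a boxcar convolved with a hemodynamic response function and fitted with the general linear model) can be illustrated with a minimal Python sketch for one voxel and a single regressor. It assumes a double-gamma HRF with gamma shapes 6 and 16 and an undershoot ratio of 1/6, which resembles but does not reproduce SPM99's canonical HRF, and it omits the high-pass filter, temporal smoothing, the other condition regressors, and the t-to-z conversion. The SEN block position within each set follows an assumed order of rest, rSEN, rest, SND, rest, SEN; all names are hypothetical.

```python
import math

TR_S = 4                     # repetition time (s), from the acquisition parameters
SET_S = 120                  # length of one set (s)
N_SETS = 8
SEN_ON, SEN_OFF = 100, 120   # assumed SEN block position within each set (s)


def gamma_pdf(t, shape):
    """Unit-scale gamma density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def hrf(t):
    """Double-gamma HRF resembling SPM's canonical shape (assumed parameters)."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def sen_regressor():
    """SEN boxcar at 1-s resolution, convolved with the HRF, sampled once per TR."""
    n_s = SET_S * N_SETS
    box = [1.0 if SEN_ON <= s % SET_S < SEN_OFF else 0.0 for s in range(n_s)]
    kernel = [hrf(k) for k in range(33)]  # HRF sampled from 0 to 32 s
    conv = [sum(box[i - k] * h for k, h in enumerate(kernel) if i >= k)
            for i in range(n_s)]
    return conv[::TR_S]


def fit_single_regressor(x, y):
    """Ordinary least squares for y = beta * x + c (one regressor plus a constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    beta = sxy / sxx
    return beta, my - beta * mx


x = sen_regressor()
y = [2.0 * v + 1.0 for v in x]  # toy, noise-free "voxel" time series
beta, c = fit_single_regressor(x, y)
print(len(x), round(beta, 6), round(c, 6))  # 240 2.0 1.0
```

In a full model, each condition would have its own convolved regressor, and a contrast such as SEN-rSEN would compare the corresponding parameter estimates.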

Statistical Analysis

Group analysis was performed on the data of all 30 subjects with a random effect model on a voxel-by-voxel basis. The 3 conditions (rSEN, SND, and SEN) were each represented by a separate explanatory variable. Each explanatory variable was convolved with the standard hemodynamic response function of SPM99 to account for the hemodynamic response lag. The t statistics were calculated for the contrasts among the 3 conditions.
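In a random effect analysis, each subject contributes one contrast value per voxel, and the group effect is tested with a 1-sample t test across subjects. The Python sketch below shows that computation on toy data; it is a simplified illustration rather than SPM99's implementation, and the input values and function names are hypothetical.

```python
import math
import statistics


def one_sample_t(values):
    """t statistic and degrees of freedom for H0: mean contrast == 0."""
    n = len(values)
    mean = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return mean / se, n - 1


def voxelwise_t(contrast_maps):
    """contrast_maps: one list of voxel contrast values per subject (same voxel order)."""
    return [one_sample_t(list(voxel))[0] for voxel in zip(*contrast_maps)]


# Hypothetical contrast values: 5 subjects x 2 voxels.
maps = [[1.0, 0.5], [2.0, -0.5], [3.0, 0.5], [4.0, -0.5], [5.0, 0.0]]
print(voxelwise_t(maps))  # voxel 1: t = 3 * sqrt(2), about 4.24 (4 df); voxel 2: t = 0.0
```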

Cognitive Systems

To assess condition-specific effects, we used the contrasts of reverse sentences minus identifiable sounds (rSEN-SND), sentences minus identifiable sounds (SEN-SND), and sentences minus reverse sentences (SEN-rSEN). As shown in Table 1, the rSEN-SND contrast was assumed to reflect human voice perception, the SEN-SND contrast to reflect both lexical-semantic processing and human voice perception, and the SEN-rSEN contrast to reflect lexical-semantic processing.

Table 1.

Cognitive systems involved in listening to SEN, rSEN, and SND

| | SND | rSEN | SEN | rSEN-SND | SEN-SND | SEN-rSEN |
|---|---|---|---|---|---|---|
| Lexical-semantic processing | − | − | + | − | + | + |
| Human voice perception | − | + | + | + | + | − |
| Tonal fluctuation | + | + | + | − | − | − |
| Attention | + | + | + | − | − | − |
| Primary auditory processing | + | + | + | − | − | − |

Note:—SND indicates nonvocal sounds; rSEN, reverse sentences; SEN, sentences. SND, rSEN, and SEN are in the context of subjects scanned by functional MR imaging while listening to these sounds.
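The subtraction logic of Table 1 can be expressed as a short Python sketch: each condition is coded by the presence (1) or absence (0) of each cognitive component, and a contrast keeps only the components present in the first condition but absent from the second. This assumes pure insertion, ie, that components add without interacting, which is the usual premise of cognitive subtraction rather than something the data test; the names are illustrative.

```python
# Cognitive components per condition, transcribed from Table 1 (1 = present).
COMPONENTS = ["lexical-semantic", "voice", "tonal fluctuation", "attention",
              "primary auditory"]
CONDITIONS = {
    "SND":  [0, 0, 1, 1, 1],
    "rSEN": [0, 1, 1, 1, 1],
    "SEN":  [1, 1, 1, 1, 1],
}


def isolated_components(minuend, subtrahend):
    """Components that survive the subtraction minuend - subtrahend."""
    diff = [a - b for a, b in zip(CONDITIONS[minuend], CONDITIONS[subtrahend])]
    return [name for name, d in zip(COMPONENTS, diff) if d > 0]


for a, b in [("rSEN", "SND"), ("SEN", "SND"), ("SEN", "rSEN")]:
    print(f"{a}-{b}:", isolated_components(a, b))
# rSEN-SND: ['voice']
# SEN-SND: ['lexical-semantic', 'voice']
# SEN-rSEN: ['lexical-semantic']
```

In terms of weights on the condition regressors ordered (rSEN, SND, SEN), the 3 contrasts correspond to [1, -1, 0], [0, -1, 1], and [-1, 0, 1].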

A random effects model that estimates the error variance for each condition across subjects was implemented for the group analysis. The contrast images obtained from the single-subject analyses were entered into the group analysis, and a 1-sample t test was applied to determine group activation for each effect. Significant clusters of activation were determined by using the conjoint expected probability distribution of the height and extent of z scores, with height and extent thresholds. Coordinates of activation were converted from MNI coordinates to Talairach and Tournoux coordinates42 by using the mni2tal algorithm (http://www.mrc-cbu.cam.ac.uk/Imaging/Common/mnispace.shtml).
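The mni2tal conversion is a piecewise linear transform, with separate matrices for points above and below the anterior commissure plane (z ≥ 0 and z < 0). The Python sketch below uses the coefficients as we recall them from Matthew Brett's widely circulated mni2tal.m script distributed at the URL above; treat the numbers as an assumption to verify against that script before use.

```python
# Coefficients recalled from mni2tal.m (assumption: verify against the original script).
UPPER = ((0.9900, 0.0, 0.0),      # used for MNI z >= 0
         (0.0, 0.9688, 0.0460),
         (0.0, -0.0485, 0.9189))
LOWER = ((0.9900, 0.0, 0.0),      # used for MNI z < 0
         (0.0, 0.9688, 0.0420),
         (0.0, -0.0485, 0.8390))


def mni2tal(x, y, z):
    """Approximate MNI -> Talairach conversion for one point (in mm)."""
    m = UPPER if z >= 0 else LOWER
    p = (x, y, z)
    return tuple(sum(m[r][c] * p[c] for c in range(3)) for r in range(3))


print(mni2tal(-45, 9, 45))  # approximately (-44.55, 10.79, 40.91)
```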

Laterality Index

To investigate cerebral laterality in the temporal cortices, we used the Laterality Index (LI),1,4 calculated as LI = [VL − VR]/[VL + VR] × 100 (range, −100 ≤ LI ≤ 100), where VL and VR are the numbers of voxels counted in the left and right hemispheres, respectively. LI > 20 indicates left hemisphere dominance, −20 ≤ LI ≤ 20 indicates symmetrical activation, and LI < −20 indicates right hemisphere dominance.
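The LI formula and the ±20 cutoffs translate directly into code. The Python sketch below is illustrative (the function names are hypothetical); applied to the group voxel counts reported in the Results, it reproduces the LI values given there.

```python
def laterality_index(v_left, v_right):
    """LI = (VL - VR) / (VL + VR) * 100, bounded by -100 and 100."""
    total = v_left + v_right
    if total == 0:
        raise ValueError("LI is undefined when no voxels are counted")
    return (v_left - v_right) / total * 100.0


def dominance(li, cutoff=20.0):
    """Classify LI: > 20 left, < -20 right, otherwise symmetrical."""
    if li > cutoff:
        return "left"
    if li < -cutoff:
        return "right"
    return "symmetrical"


# Group voxel counts (L, R) reported in the Results for each contrast.
for name, vl, vr in [("rSEN-SND", 136, 232), ("SEN-SND", 213, 136), ("SEN-rSEN", 178, 0)]:
    li = laterality_index(vl, vr)
    print(name, round(li, 1), dominance(li))
# rSEN-SND -26.1 right
# SEN-SND 22.1 left
# SEN-rSEN 100.0 left
```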

In the present study, activation of the temporal cortices was examined with a localized mask previously created from a whole-brain T1 MR imaging template scanned at 1-mm intervals. To calculate the LI, the total number of voxels was fixed for all subjects. When cerebral activation under each contrast was analyzed in each subject, the t value of each voxel in the bilateral temporal cortices was obtained from the subject's spmT file, and the t values were sorted in descending order. From the pooled t values of the left and right hemispheres, the 400 voxels with the highest t values were selected. We set the number of extracted voxels (VL + VR) at 400, which corresponds closely to 60% of the temporal cortical activation under the rSEN-SND contrast (threshold P < .001, random effect model, cluster-level corrected; VL = 283 voxels, VR = 382 voxels, VL + VR = 665, and [VL + VR] × 0.6 = [283 + 382] × 0.6 = 399). Language dominance was then determined from the distribution of these 400 voxels between the 2 hemispheres.
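The fixed-count procedure above (pool the temporal t values of both hemispheres, keep the 400 largest, and compute LI from how many fall on each side) can be sketched in Python as follows. The toy t values are hypothetical, and ties at the cutoff are resolved by sort order, a detail the text does not specify.

```python
def top_n_laterality(t_left, t_right, n=400):
    """Return (VL, VR, LI) for the n highest t values pooled across hemispheres."""
    pooled = [(t, "L") for t in t_left] + [(t, "R") for t in t_right]
    # Sort by t value only; Python's stable sort keeps left voxels first on ties.
    pooled.sort(key=lambda item: item[0], reverse=True)
    top = pooled[:n]
    v_left = sum(1 for _, side in top if side == "L")
    v_right = len(top) - v_left
    li = (v_left - v_right) / (v_left + v_right) * 100.0
    return v_left, v_right, li


# Choice of n: about 60% of the rSEN-SND temporal activation (283 + 382 voxels).
print(round(0.6 * (283 + 382)))  # 399; the text uses 400

# Toy example with n = 4.
print(top_n_laterality([5.0, 4.0, 3.0], [6.0, 2.0, 1.0], n=4))  # (3, 1, 50.0)
```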

Results

Among the subjects, the mean rate of correct answers to the questionnaire was 96.8 ± 6.5% for the SEN condition, 97.2 ± 8.0% for the rSEN condition, and 94.4 ± 10.6% for the SND condition (post hoc P > .05). In the subsequent analyses, we discarded the data if either of the performance rates of the questionnaire was 2 SD or more below the mean (approximately 75% for both conditions). This procedure removed 2 women and 1 man from the original group of subjects. Therefore, 27 subjects were investigated (13 women and 14 men).

Group Analysis

Group analysis was performed to investigate cerebral activation of the following 3 contrasts: rSEN-SND, SEN-SND, and SEN-rSEN.

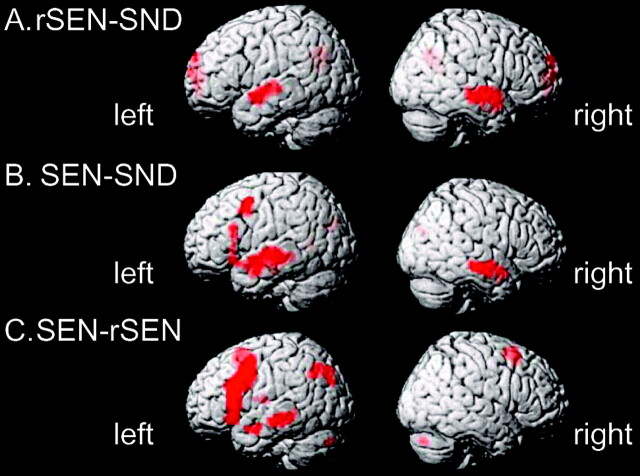

Under the rSEN-SND contrast, which includes human voice perception, cerebral activation was demonstrated in the temporal cortices. The activated regions were localized along the bilateral superior temporal sulcus (STS) and the bilateral middle temporal gyrus (MTG) (z > 4.16; P < .00005, random effect model uncorrected, extent threshold >10 voxels) (Fig 2A and Table 2). At this threshold, activation of the temporal cortices was right hemisphere-dominant (LI = −26.1 [< −20], L = 136 voxels, R = 232 voxels). The activation peaks in the left and right hemispheres were found in slightly different locations. Activation of the left temporal cortex was observed in the anterior and central portions along the upper bank of the STS, whereas activation of the right temporal cortex was centered on the anterior temporal cortex along the STS and on the anterior and central portions of the MTG. The activated region of the right temporal cortex extended further anteriorly than that of the left temporal cortex.

Fig. 2.

Activated areas revealed by the contrasts. rSEN-SND (A), SEN-SND (B), and SEN-rSEN (C) with a statistical threshold of P < .00005 (random effect model uncorrected; extent threshold at 10 voxels). SEN, sentences; rSEN, reverse sentences; SND, identifiable nonvocal sounds.

Table 2.

Peak coordinates (x, y, z) and their z values of cerebral activation under three contrasts (rSEN-SND, SEN-SND, SEN-rSEN)

| Brain region | x | y | z | z Value |
|---|---|---|---|---|
| Frontal cortices | | | | |
| MFG BA6 | −45 | 9 | 45 | 6.29 |
| MFG BA6 | −45 | 9 | 45 | 8.29 |
| IFG, operculum BA9/46 | −54 | 21 | 21 | 4.54 |
| IFG, operculum BA9/46 | −54 | 21 | 27 | 8.38 |
| IFG, triangular BA45 | −54 | 21 | 0 | 4.38 |
| IFG, triangular BA45 | −54 | 24 | 3 | 6.45 |
| SFG BA6 | −3 | 15 | 60 | 6.61 |
| Temporal cortices | | | | |
| Anterior STS BA22 | −51 | −3 | −6 | 6.28 |
| Anterior STS BA22 | 51 | 3 | −3 | 6.16 |
| Anterior STS BA22 | −57 | 0 | −9 | 6.68 |
| Anterior MTG BA21 | −51 | 0 | −12 | 4.93 |
| Anterior MTG BA21 | 51 | 0 | −9 | 6.83 |
| Anterior MTG BA21 | −57 | −3 | −18 | 6.64 |
| Anterior MTG BA21 | 63 | −12 | −18 | 6.42 |
| Anterior MTG BA21 | −54 | 0 | −21 | 5.90 |
| Central STS BA22 | −51 | −9 | −3 | 6.14 |
| Central STS BA22 | 51 | −6 | −3 | 6.34 |
| Central STS BA22 | −54 | −12 | 0 | 4.86 |
| Central MTG BA21 | 54 | −12 | −9 | 6.83 |
| Central MTG BA21 | −57 | −15 | −15 | 4.83 |
| Central MTG BA21 | 61 | −21 | −9 | 4.82 |
| Central MTG BA21 | −57 | −27 | −12 | 6.69 |
| Heschl’s gyrus BA41 | 54 | −21 | 12 | 4.16 |
| Posterior STS BA22 | −48 | −21 | 3 | 4.68 |
| Posterior MTG BA21 | 45 | −18 | −9 | 4.68 |
| Posterior MTG BA21 | −54 | −33 | −9 | 4.90 |
| Posterior MTG BA21 | −54 | −33 | −6 | 6.38 |
| Parietal cortices | | | | |
| Precuneus BA19 | −33 | −78 | 36 | 5.75 |
| Cerebellum | 9 | −78 | −33 | 6.16 |

Note:—SND indicates identifiable nonvocal sounds; rSEN, reverse sentences; SEN, sentences. SND, rSEN, and SEN are in the context of subjects scanned by functional MR imaging while listening to these sounds. MFG indicates middle frontal gyrus; IFG, inferior frontal gyrus; SFG, superior frontal gyrus; STS, superior temporal sulcus; MTG, middle temporal gyrus. Activation differences were considered significant at height threshold (P < .00005, random effect model uncorrected) and extent threshold (10 voxels).

The SEN-SND contrast demonstrated cerebral activation in the left frontal cortex, the left parietal cortex, and the bilateral temporal cortices (z > 4.16; P < .00005, random effect model uncorrected, extent threshold >10 voxels) (Fig 2B and Table 2). Frontal activation was most prominent in the left inferior frontal gyrus (IFG) and the left middle frontal gyrus (MFG); in the left IFG, activation was observed in the anterior triangular and opercular portions. Temporal activation was left hemisphere-dominant (LI = 22.1 [> 20], L = 213 voxels, R = 136 voxels), with the peaks along the upper bank of the STS and in the MTG. Parietal activation was observed in the inferior parietal cortex, including the left angular gyrus and the left precuneus.

The SEN-rSEN contrast showed cerebral activation in the left hemisphere in all regions except the cerebellum, which showed right-lateralized activation (z > 4.16; P < .00005, random effect model uncorrected, extent threshold >10 voxels) (Fig 2C and Table 2). Frontal activation was observed in the left MFG and in the triangular and opercular portions of the left IFG; the latter extended further toward the central sulcus than the activated area under the SEN-SND contrast. Additional activation was found in the medial portion of the left superior frontal gyrus (SFG) along the longitudinal fissure. In the temporal cortices, only the left MTG was activated (LI = 100 [> 20], L = 178 voxels, R = 0 voxels). In the parietal cortices, activation was observed around the left inferior parietal cortex, including the precuneus, the left posterior parietal cortex, and the angular gyrus. All of these results (activation under the rSEN-SND, SEN-SND, and SEN-rSEN contrasts) were also significant at the cluster level in the temporal cortices (random effect model, cluster-level corrected, P < .001), in addition to being significant on a voxel-by-voxel basis (random effect model uncorrected).

Sex differences between the groups were examined with a 2-sample t test, but no significant differences in cerebral activation were observed under any of the 3 contrasts (rSEN-SND, SEN-SND, and SEN-rSEN), even at a lower threshold of P < .005 (random effect model uncorrected). Furthermore, mean LI did not differ significantly between the male and female subgroups (rSEN-SND, Mann-Whitney U = 89.5, P = .801; SEN-SND, Mann-Whitney U = 78.0, P = .550; SEN-rSEN, Mann-Whitney U = 84.5, P = .756).

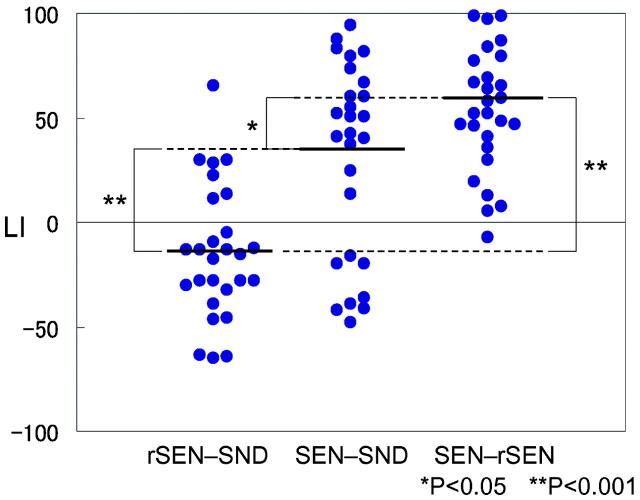

Individual Variability in LI under the 3 Contrasts

Figure 3 shows the LI distribution of temporal activation under the rSEN-SND, SEN-SND, and SEN-rSEN contrasts. The mean ± SD LI under the 3 contrasts was −14.6 ± 6.1, 30.6 ± 8.9, and 56.2 ± 5.6, respectively. One-way analysis of variance (ANOVA) showed that individual LI in the temporal cortices differed significantly among the 3 contrasts (F (2, 78) = 26.28, P < .001). Post hoc Bonferroni comparisons showed significant differences between all pairs of contrasts: rSEN-SND versus SEN-SND, P < .001; rSEN-SND versus SEN-rSEN, P < .001; and SEN-SND versus SEN-rSEN, P = .036. These results showed that LI differed significantly among the 3 contrasts and that left hemisphere dominance was greatest under the SEN-rSEN contrast.
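For reference, the one-way ANOVA F statistic can be computed as in the Python sketch below, which treats the LI values under the 3 contrasts as independent groups. With 3 groups of 27 subjects, the degrees of freedom are (3 − 1, 81 − 3) = (2, 78), matching those reported above. The toy data are hypothetical, and the sketch omits the P value and the Bonferroni comparisons.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


print(one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)
```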

Fig. 3.

LI distribution of temporal activation under the rSEN-SND, SEN-SND, and SEN-rSEN contrasts. The bold line shows the mean LI under each contrast. One-way ANOVA and post hoc Bonferroni comparisons showed that individual LI in the temporal cortices differed significantly among the 3 contrasts (ANOVA: F (2, 78) = 26.28, P < .001; Bonferroni: P < .05). *, P < .05; **, P < .001.

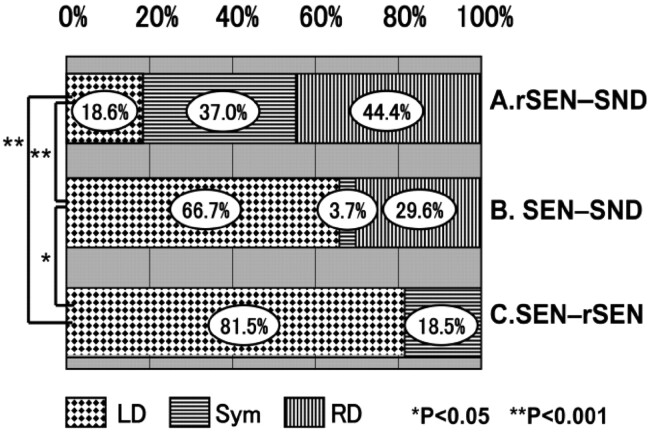

Fig 4 shows the individual variability of LI in the temporal cortices. Under the rSEN-SND contrast, 44.4% of the subjects exhibited right hemisphere dominance, 37.0% were symmetrical, and 18.6% showed left hemisphere dominance. In contrast, under the SEN-SND contrast, 66.7% of the subjects exhibited left hemisphere dominance, 3.7% were symmetrical, and 29.6% showed right hemisphere dominance. Under the SEN-rSEN contrast, 81.5% of the subjects exhibited left hemisphere dominance and 18.5% were symmetrical. Although 9 of 27 subjects (33.3%) showed symmetrical or right-lateralized activation under the SEN-SND contrast, all 9 of these subjects showed left-lateralized activation under the SEN-rSEN contrast.

Fig. 4.

Individual variability of LI in the temporal cortices. Symmetrical or right-lateralized activation was observed in the temporal cortices in 22 of 27 subjects (81.4%) under the rSEN-SND contrast. Although 9 of 27 subjects (33.3%) showed symmetrical or right-lateralized activation in the temporal cortices under the SEN-SND contrast, all 9 of these subjects showed left hemisphere dominance under the SEN-rSEN contrast. *, P < .05; **, P < .001.

The proportion of subjects showing left hemisphere dominance in the temporal cortices was significantly lower under the rSEN-SND contrast (18.6%) than under the SEN-SND (66.7%) and SEN-rSEN (81.5%) contrasts (rSEN-SND versus SEN-SND, χ2 = 47.00, P < .001; rSEN-SND versus SEN-rSEN, χ2 = 77.73, P < .001). The proportion of left-lateralized subjects in the temporal cortices also differed significantly between the SEN-SND and SEN-rSEN contrasts (χ2 = 4.56 [Yates’s adjustment], P = .033) (Fig 4).

Discussion

In the present study, we used an fMRI protocol to reveal the general trend of language dominance in the temporal cortices by examining lexical-semantic processing and human voice perception within a single session. Temporal activation under the rSEN-SND contrast, which includes human voice perception, was right hemisphere-dominant, whereas temporal activation under the SEN-SND and SEN-rSEN contrasts, which include lexical-semantic processing, was left hemisphere-dominant. All of the subjects who were symmetrical or right hemisphere-dominant under the SEN-SND contrast showed left-lateralized activation under the SEN-rSEN contrast.

Cerebral Laterality in Human Voice Perception

We confirmed that reverse sentences could be perceived as “human voice” and “nonsemantic information” in our pilot study (see “Appendix 2” and “Experimental Design” in “Materials and Methods”). By using the contrast of rSEN-SND, we investigated the cerebral activation of human voice perception.

In our group analysis, right-lateralized activation by human voice perception was observed in the anterior portion of the STS and MTG. According to fMRI studies of human voice perception, the right anterior temporal cortex is significantly activated when subjects listen to syllables spoken by different voices compared with syllables spoken by a single voice.43 The right anterior temporal cortex is therefore believed to play a role in perceiving subtle differences in timbre. Previous fMRI studies have also implicated the right anterior temporal cortex in the recognition of prosody and specific pitch.44–47 Taken together with these findings, our results suggest that the right anterior temporal cortex is activated by the perception of the characteristic sounds that make up the human voice.

fMRI studies of human voice perception have mainly reported group analyses,33,48,49 and few have reported single-subject analyses.32 Although LI was not estimated, a previous fMRI study of human voice perception in 8 subjects found that 4 were right hemisphere-dominant, 2 were symmetrical, and the remaining 2 were left hemisphere-dominant.32 In the present study, cerebral laterality under the rSEN-SND contrast showed right hemisphere dominance in 44.4% of the subjects and symmetrical activation in 37.0%. Moreover, the proportion of subjects showing left hemisphere dominance was significantly lower under the rSEN-SND contrast (18.6%) than under the SEN-SND (66.7%) and SEN-rSEN (81.5%) contrasts. These results indicate that, for human voice perception, most people are symmetrical or right hemisphere-dominant, whereas relatively few are left hemisphere-dominant.

Cerebral Laterality in Lexical-Semantic Processing

In our study, cerebral activation showed left hemisphere dominance in the frontotemporal region under both the SEN-SND and SEN-rSEN contrasts. Recent neuroimaging studies have investigated language dominance by using reading tasks,14,50–53 verbal fluency tasks,54,55 or auditory comprehension tasks,14,28 but, to our knowledge, the influence of human voice perception has not been adequately taken into consideration in determining language dominance.

Some fMRI studies have demonstrated right hemisphere dominance for language in right-handed subjects, that is, atypical lateralization.4,5,36,56–58 Although atypical lateralization has mainly been reported for frontal activation during word production tasks, an fMRI study using a story-listening task demonstrated atypical lateralization in the temporal cortices.59 In that study, the right hemisphere dominance in the temporal cortices could have been influenced by human voice perception.

Under the SEN-SND contrast, 66.7% of subjects were left hemisphere-dominant and 29.6% were right hemisphere-dominant. In contrast, under the SEN-rSEN contrast, 81.5% of subjects were left hemisphere-dominant and none was right hemisphere-dominant. This difference in language dominance may be attributed to the difference in the cognitive functions involved in the 2 contrasts. Activation under the SEN-SND contrast reflects both lexical-semantic processing and human voice perception. In contrast, because the subjects recognized the reverse sentences of the rSEN condition as human voice, activation under the SEN-rSEN contrast can be regarded as reflecting lexical-semantic processing more than human voice perception. Therefore, our results suggest that taking the cerebral activation of human voice perception into account could provide a better way of investigating the cerebral laterality of language.

Conclusion

The present study demonstrated right-lateralized activation in the temporal cortices by human voice perception. In contrast, lexical-semantic processing produced left-lateralized activation in the frontotemporal region and inferior parietal cortex. Although 9 of 27 subjects (33.3%) were symmetrical or right hemisphere-dominant under the SEN-SND contrast, all 9 of these subjects showed left-lateralized activation under the SEN-rSEN contrast. Our results demonstrated that right-lateralized activation by human voice perception could mask left-lateralized activation by lexical-semantic processing. These findings suggest that the influence of human voice perception should be adequately taken into account when determining language dominance.

Appendix 1: Contents of the Sentences

We used the following sentences in the task.

Ms. Keiko Ueda, who lives in Kitakyushu city and works as a licensed cook at a company cafeteria, notified the police near the station that 56,000 yen was stolen when she was mugged at Odouri last night.

Last night, when Mr. Ichiro Sato was driving a 10-ton truck full of eggs along the road to Yokohama, near the mouth of the Tama River the axle of the truck broke, and the truck slipped off the road and was buried in a ditch.

These days, “Casual Day,” on which businessmen work in plain clothes without a tie, has become established, but the apparel business has developed and is marketing a “Dressed Up Monday Campaign” that advertises “Let’s be smartly dressed in a suit every Monday.”

Today, the designs of Northern Europe have become increasingly popular, and a cultural event showing a collection of Swedish designs, music and images, etc., called “Swedish style 2001,” will be held at various locations in Tokyo.

Appendix 2: Contents of the Questions in the Pilot Study Questionnaire

Please answer the following questions after listening to the 2 sounds.

What did you recognize these sounds as? Please circle the appropriate answer.

1. Human voice

2. Animal sound

3. Machine sound

4. Environmental sound

If you circled no. 1, please answer the following questions.

What did you recognize the first sound as?

Male voice

Female voice

What did you recognize the second sound as?

Male voice

Female voice

Did you recognize these sounds as having intonation?

Yes / No

Did you recognize a message from these sounds?

Yes / No

Acknowledgments

The staff of the Section of Biofunctional Informatics, Graduate School of Medicine, Tokyo Medical and Dental University, and of Asai Hospital are gratefully acknowledged. We are indebted to Prof. J. Patrick Barron of the International Medical Communications Center of Tokyo Medical University for his review of this manuscript.

Footnotes

This work was supported by a Grant-in-Aid for Scientific Research from the Japanese Ministry of Education, Culture, Sports, Science and Technology (11B-3), a research grant for nervous and mental disorders (14B-3), and a Health and Labor Sciences Research Grant for Research on Psychiatric and Neurologic Diseases and Mental Health (H15-kokoro-03) from the Japanese Ministry of Health, Labor and Welfare.

References

1. Springer JA, Binder JR, Hammeke TA, et al. Language dominance in neurologically normal and epilepsy subjects: a functional MRI study. Brain 1999;122(Pt 11):2033–46

2. Gleissner U, Helmstaedter C, Elger CE. Memory reorganization in adult brain: observations in three patients with temporal lobe epilepsy. Epilepsy Res 2002;48:229–34

3. Spreer J, Quiske A, Altenmuller DM, et al. Unsuspected atypical hemispheric dominance for language as determined by fMRI. Epilepsia 2001;42:957–59

4. Szaflarski JP, Binder JR, Possing ET, et al. Language lateralization in left-handed and ambidextrous people: fMRI data. Neurology 2002;59:238–44

5. Sabbah P, Chassoux F, Leveque C, et al. Functional MR imaging in assessment of language dominance in epileptic patients. Neuroimage 2003;18:460–67

6. Wada J, Rasmussen T. Intracarotid injection of sodium amytal for the lateralization of cerebral speech dominance. J Neurosurg 1960;17:266–82

7. Spreer J, Arnold S, Quiske A, et al. Determination of hemisphere dominance for language: comparison of frontal and temporal fMRI activation with intracarotid Amytal testing. Neuroradiology 2002;44:467–74

8. Knecht S, Deppe M, Drager B, et al. Language lateralization in healthy right-handers. Brain 2000;123(Pt 1):74–81

9. Gaillard WD, Balsamo L, et al. Language dominance in partial epilepsy patients identified with an fMRI reading task. Neurology 2002;59:256–65

10. Binder JR, Frost JA, Hammeke TA, et al. Human brain language areas identified by functional magnetic resonance imaging. J Neurosci 1997;17:353–62

11. Kim H, Yi S, Son EI, et al. Lateralization of epileptic foci by neuropsychological testing in mesial temporal lobe epilepsy. Neuropsychology 2004;18:141–51

12. Lehericy S, Cohen L, Bazin B, et al. Functional MR evaluation of temporal and frontal language dominance compared with the Wada test. Neurology 2000;54:1625–33

13. Saygin AP, Wilson SM, Dronkers NF, et al. Action comprehension in aphasia: linguistic and non-linguistic deficits and their lesion correlates. Neuropsychologia 2004;42:1788–804

14. Gaillard WD, Balsamo L, Xu B, et al. fMRI language task panel improves determination of language dominance. Neurology 2004;63:1403–08

15. Maeda K, Yasuda H, Haneda M, et al. Braille alexia during visual hallucination in a blind man with selective calcarine atrophy. Psychiatry Clin Neurosci 2003;57:227–29

16. Canevini MP, Vignoli A, Sgro V, et al. Symptomatic epilepsy with facial myoclonus triggered by language. Epileptic Disord 2001;3:143–46

17. Annoni JM, Khateb A, Gramigna S, et al. Chronic cognitive impairment following laterothalamic infarcts: a study of 9 cases. Arch Neurol 2003;60:1439–43

18. Gaillard WD, Hertz-Pannier L, Mott SH, et al. Functional anatomy of cognitive development: fMRI of verbal fluency in children and adults. Neurology 2000;54:180–85

19. Weiss EM, Hofer A, Golaszewski S, et al. Brain activation patterns during a verbal fluency test: a functional MRI study in healthy volunteers and patients with schizophrenia. Schizophr Res 2004;70:287–91

20. Pouratian N, Bookheimer SY, Rex DE, et al. Utility of preoperative functional magnetic resonance imaging for identifying language cortices in patients with vascular malformations. Neurosurg Focus 2002;13:e4

21. Sakai KL, Tatsuno Y, Suzuki K, et al. Sign and speech: amodal commonality in left hemisphere dominance for comprehension of sentences. Brain 2005;128:1407–17

22. Sadato N, Yamada H, Okada T, et al. Age-dependent plasticity in the superior temporal sulcus in deaf humans: a functional MRI study. BMC Neurosci 2004;5:56

23. Just MA, Newman SD, Keller TA, et al. Imagery in sentence comprehension: an fMRI study. Neuroimage 2004;21:112–24

24. Schlosser MJ, Luby M, Spencer DD, et al. Comparative localization of auditory comprehension by using functional magnetic resonance imaging and cortical stimulation. J Neurosurg 1999;91:626–35

25. Humphries C, Willard K, Buchsbaum B, et al. Role of anterior temporal cortex in auditory sentence comprehension: an fMRI study. Neuroreport 2001;12:1749–52

26. Friederici AD. Towards a neural basis of auditory sentence processing. Trends Cogn Sci 2002;6:78–84

27. Binder JR, Frost JA, Hammeke TA, et al. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex 2000;10:512–28

28. Michael EB, Keller TA, Carpenter PA, et al. fMRI investigation of sentence comprehension by eye and by ear: modality fingerprints on cognitive processes. Hum Brain Mapp 2001;13:239–52

29. Kotz SA, Cappa SF, von Cramon DY, et al. Modulation of the lexical-semantic network by auditory semantic priming: an event-related functional MRI study. Neuroimage 2002;17:1761–72

30. James TW, Gauthier I. Auditory and action semantic features activate sensory-specific perceptual brain regions. Curr Biol 2003;13:1792–96

31. von Kriegstein K, Eger E, Kleinschmidt A, et al. Modulation of neural responses to speech by directing attention to voices or verbal content. Brain Res Cogn Brain Res 2003;17:48–55

32. Belin P, Zatorre RJ, Ahad P. Human temporal-lobe response to vocal sounds. Brain Res Cogn Brain Res 2002;13:17–26

33. Belin P, Zatorre RJ, Lafaille P, et al. Voice-selective areas in human auditory cortex. Nature 2000;403:309–12

34. Belin P, Fecteau S, Bedard C. Thinking the voice: neural correlates of voice perception. Trends Cogn Sci 2004;8:129–35

35. Knecht S, Drager B, Floel A, et al. Behavioural relevance of atypical language lateralization in healthy subjects. Brain 2001;124:1657–65

36. Knecht S, Jansen A, Frank A, et al. How atypical is atypical language dominance? Neuroimage 2003;18:917–27

37. Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 1971;9:97–113

38. Saberi K, Perrott DR. Cognitive restoration of reversed speech. Nature 1999;398:760

39. Burton MW, Noll DC, Small SL. The anatomy of auditory word processing: individual variability. Brain Lang 2001;77:119–31

40. Howard D, Patterson K, Wise R, et al. The cortical localization of the lexicons. Positron emission tomography evidence. Brain 1992;115:1769–82

41. Price CJ, Wise RJ, Warburton EA, et al. Hearing and saying. The functional neuro-anatomy of auditory word processing. Brain 1996;119:919–31

42. Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain: three dimensional proportional system. New York: Thieme Medical; 1988

43. Belin P, Zatorre RJ. Adaptation to speaker’s voice in right anterior temporal lobe. Neuroreport 2003;14:2105–09

44. Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn Sci 2002;6:37–46

45. Zatorre RJ, Evans AC, Meyer E, et al. Lateralization of phonetic and pitch discrimination in speech processing. Science 1992;256:846–49

46. Heim S, Opitz B, Muller K, et al. Phonological processing during language production: fMRI evidence for a shared production-comprehension network. Brain Res Cogn Brain Res 2003;16:285–96

47. Binder JR, Rao SM, Hammeke TA, et al. Effects of stimulus rate on signal response during functional magnetic resonance imaging of auditory cortex. Brain Res Cogn Brain Res 1994;2:31–38

48. Mitchell RL, Elliott R, Barry M, et al. The neural response to emotional prosody, as revealed by functional magnetic resonance imaging. Neuropsychologia 2003;41:1410–21

49. Stevens AA. Dissociating the cortical basis of memory for voices, words and tones. Brain Res Cogn Brain Res 2004;18:162–71

50. Cohen L, Jobert A, Le Bihan D, et al. Distinct unimodal and multimodal regions for word processing in the left temporal cortex. Neuroimage 2004;23:1256–70

51. Lee KM. Functional MRI comparison between reading ideographic and phonographic scripts of one language. Brain Lang 2004;91:245–51

52. Tan LH, Liu HL, Perfetti CA, et al. The neural system underlying Chinese logograph reading. Neuroimage 2001;13:836–46

53. Hund-Georgiadis M, Lex U, von Cramon DY. Language dominance assessment by means of fMRI: contributions from task design, performance, and stimulus modality. J Magn Reson Imaging 2001;13:668–75

54. Thivard L, Hombrouck J, du Montcel ST, et al. Productive and perceptive language reorganization in temporal lobe epilepsy. Neuroimage 2005;24:841–51

55. Schlosser R, Hutchinson M, Joseffer S, et al. Functional magnetic resonance imaging of human brain activity in a verbal fluency task. J Neurol Neurosurg Psychiatry 1998;64:492–98

56. Vingerhoets G, Deblaere K, Backes WH, et al. Lessons for neuropsychology from functional MRI in patients with epilepsy. Epilepsy Behav 2004;5 Suppl 1:S81–89

57. Rutten GJ, Ramsey NF, van Rijen PC, et al. FMRI-determined language lateralization in patients with unilateral or mixed language dominance according to the Wada test. Neuroimage 2002;17:447–60

58. Hund-Georgiadis M, Zysset S, Weih K, et al. Crossed nonaphasia in a dextral with left hemispheric lesions: a functional magnetic resonance imaging study of mirrored brain organization. Stroke 2001;32:2703–07

59. Jayakar P, Bernal B, Santiago Medina L, et al. False lateralization of language cortex on functional MRI after a cluster of focal seizures. Neurology 2002;58:490–92