Abstract

Virtual reality (VR) provides immersive visualization that has proved useful in a variety of medical applications. Currently, however, no free open-source software platform exists that provides comprehensive support for translational clinical researchers in prototyping experimental VR scenarios for training, planning, or guiding medical interventions. By integrating VR functions into 3D Slicer, an established medical image analysis and visualization platform, SlicerVR enables a virtual reality experience with a single click. It provides functions to navigate and manipulate the virtual scene, as well as various settings to reduce motion sickness. SlicerVR allows for a shared collaborative VR experience, both locally and remotely. We present illustrative scenarios created with SlicerVR in a wide spectrum of applications, including echocardiography, neurosurgery, spine surgery, brachytherapy, intervention training, and personalized patient education. SlicerVR is freely available under a BSD-type license as an extension to 3D Slicer, and it had been downloaded over 7,800 times at the time of writing.

Index Terms: Medical treatment, Open source software, Telemedicine, Virtual reality

I. Introduction

Virtual reality (VR) has promised to revolutionize various fields of industry and research for many years. Virtual reality is a fully immersive technique that allows the user to experience realistic scenes with complete control over the visual and auditory sensations; in VR, the real world is fully replaced by the virtual content. Only recently, however, have the necessary hardware components reached a level of maturity at which VR techniques can be applied efficiently and, at last, VR hardware has become available to wide audiences [1].

Many areas of medicine have seen the benefits of virtual reality visualization and interaction. Since the pedagogic and educational advantages of virtual environments have been established [2], numerous training applications using VR-based simulators have been developed and successfully evaluated. It has been shown that VR training reduces surgical times and improves performance in interventions in neurosurgery [3][4] and urology [5], as well as in applications in endoscopy [6], laparoscopy [7], and dentistry [8]. In addition to training interventionalists, VR simulation can also be used for patient education [9–12] and rehabilitation [13][14]. Another major area where VR techniques have been found beneficial is surgical planning, where a patient-specific approach promises reduced surgical times and improved outcomes. Virtual planning software has been developed and evaluated for tumor resection [15–17], brachytherapy [18], radiofrequency ablation [19], and radiation therapy [20].

Peer-reviewed literature suggests that the applicability of VR in medical research is well established. VR techniques have become significant in the clinical industry as well: the U.S. Food and Drug Administration (FDA) organizes workshops on medical virtual reality [21], and VR is frequently featured among the trending topics of the annual meetings of the Radiological Society of North America (RSNA) [22][23]. The annual growth rate of the virtual and augmented reality market in healthcare is estimated at between 16% and 36% for the 2019–2026 period [24–26].

Established platforms exist for the development of VR software, such as Unity3D¹ and Unreal Engine². These, however, have been created and optimized for the main driver of the recent extended reality boom: the video game industry. Besides the entertainment sector, various research fields and industries make use of these platforms, such as manufacturing, education, and the military. Although each of these fields has its own specialized requirements for data and workflow management, visualization, and interaction, the extra software components for those can be added to the base platform with reasonable overhead. In addition, all the above-mentioned areas deal exclusively with “geometrical objects”. This means that the objects presented to the user in the scene are all represented the same way (as polygonal meshes), and interaction typically consists of moving them manually.

The medical use cases, however, share a large set of complex software features that are not present in the available platforms: DICOM [27] data management, segmentation, registration, connections to medical hardware, and common evaluation strategies. Moreover, the objects these features involve are “medical objects”: data that are created, managed, and stored in various ways, such as annotations, treatment plans, transformations, or volumetric images. Previous work in medical research involving virtual reality has aspired to reuse existing components as much as possible, but integrating the steps into a complete workflow has proved to be difficult. Either the development burden is greater than otherwise necessary [28], or the resulting software is rather heterogeneous [29][30]. Integration difficulties are aggravated by the use of different technologies: for example, the C# scripting environment of Unity3D hinders integration with systems written in C/C++, which is still the standard in the medical imaging and robotics industry. This problem either prevents the creation of streamlined, integrated workflows or restricts the flexibility to deviate from the predefined steps (or, as seen in most of the works cited above, a combination of these two).

3D Slicer [31] is an open-source medical image visualization and analysis platform that covers generic medical image computing functions and offers a large number of extensions supporting specialized fields such as image-guided therapy [32], radiation therapy [33], diffusion tractography [34], chest imaging [35], and extended DICOM support [36]. The 3D Slicer community [37] has been developing these features in a robust, maintainable, and flexible way for over two decades, so it is reasonable to add virtual reality support to this platform in order to leverage its powerful medical feature set and thus enable complex medical workflows with minimal additional effort.

Beyond the functions supporting medical data and procedures, medical applications also have different requirements for virtual reality visualization. A major difference is the heavy use of volume rendering, a 3D image visualization technique that the gaming industry also uses, for example to render particle clouds such as fog and fire, but that medical imaging uses in a more demanding and complex way. Volume rendering visualizes large image volumes, such as computed tomography (CT) or magnetic resonance (MR) images, in 3D, and applies complex transfer functions and custom shader programs (not available in the platforms used in gaming) to produce a rendering that allows quick and unambiguous interpretation of the detailed medical data.
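To make the notion of a transfer function concrete: it maps each voxel value to an optical property such as opacity. The sketch below shows its simplest form, a piecewise-linear opacity curve over CT Hounsfield units (HU). The control points are hypothetical example values, not a 3D Slicer preset; real transfer functions also map color and often use gradient magnitude as well.

```python
from bisect import bisect_right


def make_opacity_transfer_function(points):
    """Build a piecewise-linear opacity transfer function.

    points: list of (value, opacity) control points sorted by value,
    e.g. CT intensities in Hounsfield units (HU).
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]

    def opacity(value):
        # Clamp outside the control-point range
        if value <= xs[0]:
            return ys[0]
        if value >= xs[-1]:
            return ys[-1]
        # Find the segment [xs[i-1], xs[i]] that contains the value
        i = bisect_right(xs, value)
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (value - x0) / (x1 - x0)

    return opacity


# Hypothetical "bone-like" curve: soft tissue transparent, dense bone opaque
bone_opacity = make_opacity_transfer_function(
    [(-1000, 0.0), (150, 0.0), (400, 0.8), (3000, 0.8)]
)
```

During ray casting, such a function is evaluated for every sample along every ray, which is one reason medical volume rendering is demanding.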

Another difference is the limited visual context when showing medical data. In most cases, there is no floor, sky, or other cues typically present in virtual reality, because the sole focus is the patient data itself. The method of interacting with objects in the scene is also different. Given this distinct set of VR requirements, creating a medically focused platform that can be directly applied to a wide range of use cases is justified.

In this paper, which is an extended version of work published in [38], we report the current state, as well as the established and future medical use cases of the free, open-source SlicerVR platform.

II. Materials and Methods

SlicerVR (www.SlicerVR.org) is a comprehensive virtual reality software toolkit that provides immersive virtual reality visualization [39], exploration [40], and navigation for image-guided therapy training and planning, taking advantage of the functionality of the 3D Slicer ecosystem. SlicerVR is designed to provide one-button virtual reality integration in a well-established medical research platform: rather than targeting specific applications or tasks, the toolkit aims to endow 3D Slicer with seamless yet comprehensive VR capabilities. Any rendered scene showing and providing access to medical objects can simply be transferred to the head-mounted display (HMD) and manipulated using the VR controllers, without any programming or configuration steps.

The SlicerVR toolkit can be installed from the 3D Slicer Extension Manager. After installation, a module named “Virtual Reality” containing VR-related settings appears in the module menu, along with toolbar buttons for frequent operations such as showing the scene in virtual reality. After the user has populated the 3D Slicer scene to be viewed, VR rendering can be launched by pressing a single button. Because both renderings share a single scene, the immersive virtual reality view and the desktop (i.e., conventional monitor-and-mouse) view can be manipulated simultaneously. As a consequence, in contrast to typical “virtual exploration” environments, all modifications made in the virtual scene are propagated back to 3D Slicer. This shared-scene approach makes it possible, for example, to edit a therapy plan in VR and then apply it without any further steps.
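The shared-scene approach relies on both views rendering the same data objects and being notified when those objects change. 3D Slicer's MRML scene implements this with VTK observer events; the toy sketch below mimics only the idea in plain Python. The class and method names are hypothetical and are not the MRML API.

```python
class SceneNode:
    """A data object (e.g. a therapy plan) shared by all views."""

    def __init__(self, name):
        self.name = name
        self.properties = {}
        self._observers = []

    def add_observer(self, callback):
        self._observers.append(callback)

    def set_property(self, key, value):
        self.properties[key] = value
        # Notify every observing view, regardless of which view made the change
        for callback in self._observers:
            callback(self, key, value)


class View:
    """Stands in for a renderer: the desktop 3D view or the VR view."""

    def __init__(self, label):
        self.label = label
        self.updates = []

    def on_node_modified(self, node, key, value):
        # A real view would re-render here; this sketch only records the update
        self.updates.append((node.name, key, value))


plan = SceneNode("TherapyPlan")
desktop, vr = View("desktop"), View("VR")
for view in (desktop, vr):
    plan.add_observer(view.on_node_modified)

# An edit made in VR immediately reaches the desktop view as well
plan.set_property("target", (12.0, -3.5, 40.0))
```

Because there is only one copy of the data, no export or synchronization step is needed when the user takes off the headset.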

A. Visualization and navigation

The greatest advantage of virtual reality is the intuitive, stereoscopic visualization it offers. Its intuitiveness lies in the fact that the point of view can be changed by moving one’s head, so objects can be looked at from different angles just as in the real world. Natural head movement also gives a better sense of depth, complementing the depth cue provided by the stereoscopic rendering. Each eye receives its own rendering of the virtual scene from a camera placed at that eye’s position, which provides a natural feel when looking at the scene.
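The stereo camera placement can be sketched geometrically: each eye camera sits half an interpupillary distance (IPD) to the left or right of the head position. In SlicerVR the per-eye transforms are, we assume, supplied by the headset runtime through OpenVR; this sketch only illustrates the geometry, and the 64 mm IPD is an assumed typical adult value.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def eye_positions(head_position, view_direction, view_up, ipd=0.064):
    """Return (left_eye, right_eye) positions in meters.

    Each eye is offset by half the IPD along the head's right vector.
    """
    forward = normalize(view_direction)
    right = normalize(cross(forward, view_up))
    half = ipd / 2.0
    left_eye = tuple(p - half * r for p, r in zip(head_position, right))
    right_eye = tuple(p + half * r for p, r in zip(head_position, right))
    return left_eye, right_eye


# Head at 1.7 m height looking along -z with +y up: the eyes differ only in x
left, right = eye_positions((0.0, 1.7, 0.0), (0.0, 0.0, -1.0), (0.0, 1.0, 0.0))
```

The scene is rendered once from each of the two positions, which is why every VR frame costs at least two full renderings.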

Navigating within the scene is also possible using the VR controllers. The user can fly in the pointing direction or in the opposite direction by pressing the button corresponding to the desired direction (forward or backward). The flight speed can be changed on the VR module’s user interface (UI). An advanced but intuitive view operation, which we call pinch 3D, allows the user to manipulate the virtual world itself using the two controllers. The user presses a button on both controllers, which selects two points in the virtual world. As the user moves the two controllers while keeping the buttons pressed, the world is rotated, scaled, and translated at the same time.
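The text does not specify exactly how the two grabbed points determine the world transform. One natural construction, assumed here, is a similarity transform: a uniform scale from the ratio of the controller separations, the shortest-arc rotation taking the old inter-controller vector to the new one, and a translation that keeps the first grabbed point under the first controller. The function names are hypothetical, and SlicerVR/VTK may compute pinch 3D differently.

```python
import math


def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def mul(v, s): return tuple(x * s for x in v)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def norm(v): return math.sqrt(dot(v, v))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(v, axis, angle):
    """Rodrigues' formula: rotate v about a unit axis by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return add(add(mul(v, c), mul(cross(axis, v), s)),
               mul(axis, dot(axis, v) * (1.0 - c)))


def pinch_transform(p1, p2, q1, q2):
    """Return a function mapping world points so that the grabbed points p1, p2
    follow the controllers to q1, q2. Two points leave the twist about the
    p1-p2 axis undetermined; this sketch applies no twist."""
    u, w = sub(p2, p1), sub(q2, q1)
    scale = norm(w) / norm(u)
    axis = cross(u, w)
    angle = math.atan2(norm(axis), dot(u, w))  # angle between u and w
    if norm(axis) < 1e-12:
        # u and w are (anti)parallel: any axis perpendicular to u works
        helper = (0.0, 1.0, 0.0) if abs(u[0]) >= 0.9 * norm(u) else (1.0, 0.0, 0.0)
        axis = cross(u, helper)
    axis = mul(axis, 1.0 / norm(axis))

    def apply(x):
        return add(q1, mul(rotate(sub(x, p1), axis, angle), scale))

    return apply


# Controllers move apart to twice the distance and swing by 90 degrees
move = pinch_transform((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 1.0), (1.0, 3.0, 1.0))
```

Applying `move` to every point of the scene (in practice, to a single world transform) makes the world appear to stretch and turn between the user's hands.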

The third option for navigating the scene is to set the desired view in the 3D viewer on the desktop and press the “Set virtual reality view to match reference view” button on the toolbar or in the VR module. The VR cameras are then computed from the single-view reference camera and propagated to the HMD, thus “jumping” to the desired position and viewing direction. Navigation within a virtual medical scene differs from traditional VR applications in that the only defined objects are the medical data (images, segmentations, plans), and no environmental context is explicitly defined. This means that users cannot rely on a floor or sky being present at all times to orient themselves, so it is easier to lose one’s bearings. The option to set the camera from a traditional desktop view is thus important and useful. At the same time, the lack of visual anchors also enables certain functions such as pinch 3D.

Additional display options exist in SlicerVR to fine-tune the visual experience. These include two-sided lighting, which determines whether the sides of objects not facing the light sources are lit, and back lights, which activate additional light sources. It is also possible to toggle the visibility of the models representing the physical devices that control the user’s VR environment and experience. Such devices are the VR controllers in the user’s hands and the external tracking devices used by certain VR systems (e.g., the lighthouse base stations of the HTC Vive or the external sensors of the Oculus Rift) to track the position of the HMD and controllers.

B. Motion sickness

In highly immersive environments such as VR, many users experience discomfort, such as nausea or headache, after extended use or upon receiving inconsistent sensory stimuli [41–43]. One of the major factors contributing to motion sickness is the frame rate experienced within the virtual environment. The frame rate needs to be kept as high and as constant as possible to increase the comfort of the wearer. Medical applications often need to visualize large 3D images via volume rendering, or complex surface models containing tens or hundreds of thousands of triangles. Rendering these complex datasets pushes the limits of even the most modern hardware, especially since they need to be rendered multiple times: in the 3D view and as intersections in the 2D slice views on the desktop (depending on the selected layout), plus for the two VR cameras. Thus, it is critical to ensure that the virtual scene is rendered as smoothly as possible on the available hardware.

SlicerVR offers three mechanisms to keep the rendering frame rate high, allowing a prolonged, comfortable stay in the virtual world. The update rate option sets a desired frame rate, which is enforced by lowering the volume rendering quality to the highest level that still achieves this frame rate. The motion sensitivity setting enables a temporary reduction of volume rendering quality when head motion is detected. At the default value of zero, motion is never detected, and at high values even slight motion triggers the quality change. These two options implement progressive rendering, i.e., the adjustment of rendering parameters based on user actions. The third option, the “Optimize scene for virtual reality” toolbar button, performs a one-time optimization of the rendering settings of the current scene to increase performance. It switches volume rendering to the graphics card (GPU) and turns off 2D slice intersection visibility for all existing surface models and segmentations, because calculating the intersections is computationally heavy. The optimization also turns off backface culling for all surface models so that the user sees surfaces even when moving inside an object. Although this last setting is not related to performance, it enhances the in-VR experience.
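The two progressive-rendering options can be sketched as a small feedback rule. This is not SlicerVR's code. We assume that the quality knob is the ray-casting sample distance (VTK's GPU ray-cast volume mapper exposes such a setting) and that render time is roughly inversely proportional to it. The clamp limits, the sensitivity-to-threshold mapping, and all function names are hypothetical.

```python
MIN_DISTANCE, MAX_DISTANCE = 0.5, 8.0  # hypothetical sample-distance limits


def adapt_sample_distance(current, last_frame_ms, target_fps):
    """Update-rate option: scale the sample distance so the next frame fits
    the time budget (larger distance -> fewer samples -> faster, coarser)."""
    budget_ms = 1000.0 / target_fps
    proposed = current * (last_frame_ms / budget_ms)
    return max(MIN_DISTANCE, min(MAX_DISTANCE, proposed))


def motion_detected(head_speed, sensitivity):
    """Motion-sensitivity option: 0 never detects motion; larger values
    lower the detection threshold."""
    if sensitivity <= 0:
        return False
    threshold = 1.0 / sensitivity  # hypothetical mapping to head speed in m/s
    return head_speed > threshold


def choose_sample_distance(current, last_frame_ms, target_fps,
                           head_speed, sensitivity, motion_factor=2.0):
    distance = adapt_sample_distance(current, last_frame_ms, target_fps)
    if motion_detected(head_speed, sensitivity):
        # Temporarily coarser rendering while the head is moving
        distance = min(MAX_DISTANCE, distance * motion_factor)
    return distance
```

For example, at a 90 fps target the budget is about 11.1 ms per frame; a 22.2 ms frame doubles the sample distance for the next frame.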

Rendering performance is not the only consideration in preventing motion sickness. The degree of control is another important factor influencing comfort. Movements that occur without the user’s explicit command, especially if high acceleration is involved, cause sensory conflict that may increase discomfort and lead to motion sickness symptoms. Every mode of motion in SlicerVR is initiated by the user and involves no acceleration, in order to minimize sources of unease in the virtual environment.
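The controller-driven flight described in the navigation section can be sketched as a per-frame update that honors both rules: nothing moves unless the user holds a button, and the speed is constant rather than gradually ramped up. The function name and parameters are hypothetical; the speed would correspond to the value set on the VR module's UI.

```python
import math


def fly_step(position, pointing_direction, speed, dt,
             forward_pressed, backward_pressed):
    """Advance the viewpoint by one frame of flight.

    The viewpoint moves at a constant `speed` (scene units per second)
    while a button is held and stops when it is released; there is no
    gradual acceleration phase, and no motion without a button press.
    """
    if forward_pressed == backward_pressed:  # neither (or both) pressed
        return tuple(position)
    sign = 1.0 if forward_pressed else -1.0
    length = math.sqrt(sum(c * c for c in pointing_direction))
    return tuple(p + sign * speed * dt * c / length
                 for p, c in zip(position, pointing_direction))
```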

C. Interaction

For many use cases it is essential to be able to interact with objects in the virtual scene. For example, in therapy planning the target needs to be defined and the device movements planned, and in education the instructor may want to make annotations. In SlicerVR, objects can be manipulated intuitively using the controllers. An object can be grabbed by moving the active point of either the left or the right controller inside it and pressing the trigger button. The active point is a defined position on the controller, which can optionally be indicated by a colored dot. The user can rotate and move the grabbed object and let go of it by releasing the button.

The state of in-VR manipulation is stored separately in an interaction transform in the application, which is automatically created at the top of the transformation tree of the manipulated object. The in-VR interaction can thus be reverted by simply resetting the interaction transform. Manipulation can be disabled by turning off a setting called selectable on any object that the user wants to keep static. This is useful, for example, when there are objects inside other objects (such as tools or structures inside the body), and the object picking logic should ignore those that are not to be moved.
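The interaction transform can be illustrated with a minimal transformation tree. In the sketch below, an object's world pose is the product of all transforms from the root of its tree down to the object; inserting an extra transform at the root captures all in-VR motion, and setting it back to identity reverts that motion without touching the planned pose underneath. The classes and the `interaction_transform_for` helper are hypothetical, not the SlicerVR or MRML API.

```python
def identity():
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translation(x, y, z):
    m = identity()
    m[0][3], m[1][3], m[2][3] = x, y, z
    return m


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


class TransformNode:
    """A node in a transformation tree; its matrix maps into the parent frame."""

    def __init__(self, name, matrix=None, parent=None):
        self.name = name
        self.matrix = matrix if matrix is not None else identity()
        self.parent = parent

    def to_world(self):
        m = self.matrix
        node = self.parent
        while node is not None:
            m = matmul(node.matrix, m)  # apply each ancestor's transform
            node = node.parent
        return m


def interaction_transform_for(node):
    """Return the interaction transform at the top of the node's tree,
    creating it on first use (hypothetical helper)."""
    top = node
    while top.parent is not None:
        top = top.parent
    if top.name == "VRInteraction":
        return top
    interaction = TransformNode("VRInteraction")
    top.parent = interaction
    return interaction


plan = TransformNode("PlannedPose", translation(10.0, 0.0, 0.0))
screw = TransformNode("Screw", parent=plan)
interaction = interaction_transform_for(screw)
interaction.matrix = translation(0.0, 5.0, 0.0)  # the user drags the screw in VR
```

Resetting `interaction.matrix` to `identity()` returns the screw to its planned pose, which is the revert behavior described above.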

The capability of 3D Slicer to reuse transforms in transformation trees enables SlicerVR to attach objects to manipulated objects or to a controller. A 2D slice view can be made movable by attaching it to a “handle” object. When the user moves the handle around, the slice plane moves rigidly along with it, thus slicing through an anatomical image such as a CT. Similarly, by attaching a surgical tool model to the controller transform, moving the surgical tool directly with the user’s hand can be simulated.
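The handle-driven slice can be sketched as follows: the slice plane is defined once by an origin and a normal in the handle's coordinate frame, and whenever the handle moves, the plane is recomputed by applying the handle's rigid transform. Points receive rotation and translation, while the normal (a direction) receives rotation only. A tool attached to a controller follows its pose in the same way. All names here are hypothetical.

```python
import math


def rigid_z(angle_deg, t):
    """4x4 rigid transform: rotation about z by angle_deg, then translation t."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def transform_point(m, p):
    # Points get both rotation and translation
    return tuple(sum(m[i][k] * p[k] for k in range(3)) + m[i][3] for i in range(3))


def transform_direction(m, v):
    # Directions such as plane normals get rotation only (valid for rigid m)
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def slice_plane_in_world(handle_to_world, local_origin, local_normal):
    """Plane defined in the handle's frame, expressed in world coordinates."""
    return (transform_point(handle_to_world, local_origin),
            transform_direction(handle_to_world, local_normal))


# The user turns the handle by 90 degrees and moves it to (1, 2, 3)
origin, normal = slice_plane_in_world(rigid_z(90.0, (1.0, 2.0, 3.0)),
                                      (0.0, 0.0, 0.0), (1.0, 0.0, 0.0))
```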

Finally, any function in 3D Slicer or its extensions can be accessed in the virtual environment via the virtual widget, which the user invokes by pressing the menu button. The widget appears in front of the user, and a laser pointer attached to one of the controllers can be used as a regular mouse. The virtual widget initially shows the home widget, which contains the basic VR settings and the registered application widgets, listed as icons at the bottom. Clicking (i.e., shooting with the trigger button) one of the icons replaces the home widget with the corresponding application widget, which has an additional back button in the corner for returning home. Application widgets can be added as plugins, which provides a flexible and extensible way to make any feature accessible from within VR, so that the user can perform every step without taking off the HMD. The currently implemented widgets are the Data widget and the Segment Editor widget. The Data widget shows the loaded data objects and allows changing their visibility, opacity, etc. The Segment Editor widget is a simplified version of the 3D Slicer module of the same name, which provides image segmentation features. The widget contains the editing tools that are applicable in VR, and it is useful for making annotations for education or collaboration, or for performing actual image segmentation tasks, for example correcting errors in automatic segmentation, which is difficult in a regular monitor-and-mouse setting and is expected to be considerably faster and more convenient in VR. Additional application widgets may be implemented for advanced volume rendering parameters, registration, or changing therapy plan settings. The virtual widget feature is not yet fully implemented, so it is not included in the SlicerVR release at the time of writing.
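The plugin mechanism of the virtual widget can be modeled as a simple registry: each application widget registers a name and a builder, the home widget lists the registered names as icons, and the back button returns to the home widget. The class and method names below are hypothetical and only illustrate the navigation logic, not SlicerVR's actual implementation.

```python
from typing import Callable, Dict, List


class VirtualWidget:
    """Toy model of the in-VR widget: a home page plus pluggable
    application widgets."""

    HOME = "Home"

    def __init__(self) -> None:
        self._builders: Dict[str, Callable[[], str]] = {}
        self.current = self.HOME

    def register(self, name: str, builder: Callable[[], str]) -> None:
        # A plugin only needs to provide a name and a way to build its panel
        self._builders[name] = builder

    def icons(self) -> List[str]:
        # Shown as icons at the bottom of the home widget, in registration order
        return list(self._builders)

    def click_icon(self, name: str) -> str:
        self.current = name
        return self._builders[name]()

    def back(self) -> None:
        self.current = self.HOME


widget = VirtualWidget()
widget.register("Data", lambda: "list of loaded data objects")
widget.register("Segment Editor", lambda: "VR-applicable segmentation tools")
panel = widget.click_icon("Data")
```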

D. Virtual collaboration

Since VR technology makes it possible to experience a synthetic reality, it also makes it possible to place multiple people in the same reality, sharing the exact same experience and allowing them to collaborate. SlicerVR achieves such collaboration by loading the same medical scene and connecting the application instances over the network. Transformations describing the poses of the HMDs and the controllers, as well as of the shared objects in the virtual scene, are transmitted between the participating instances. As a consequence, avatars of the other participants, including head and hands, can be shown in real time while the virtual scene is manipulated together.

In our feasibility tests (see the reference in the caption of Fig. 5), no significant performance drop was perceived during collaboration compared to viewing the scene individually. Collaboration requires no additional resources apart from rendering the avatars (if enabled), and there is no noticeable latency, because only lightweight transforms of the existing objects are exchanged.
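To see how lightweight these transforms are, consider packing one pose as an OpenIGTLink TRANSFORM message, the protocol SlicerVR uses for synchronization. The sketch below assumes the version 1 layout as we understand it: a 58-byte big-endian header (version, type name, device name, timestamp, body size, CRC) followed by twelve float32 values. For brevity, the timestamp and CRC are left at 0; a real sender, such as the OpenIGTLink library, fills in the CRC-64 of the body. The device name is a hypothetical example.

```python
import struct


def pack_igtl_transform(device_name, matrix):
    """Pack a 4x4 rigid transform as an OpenIGTLink (version 1) TRANSFORM
    message. Simplification: timestamp and CRC fields are left at 0."""
    # Body: 12 big-endian float32 values, the 3x3 rotation column by column
    # (R11, R21, R31, R12, ...) followed by the translation (TX, TY, TZ)
    rotation = [matrix[r][c] for c in range(3) for r in range(3)]
    translation = [matrix[r][3] for r in range(3)]
    body = struct.pack(">12f", *(rotation + translation))
    # Header: version, type name, device name, timestamp, body size, CRC
    header = struct.pack(">H12s20sQQQ", 1, b"TRANSFORM",
                         device_name.encode("ascii"), 0, len(body), 0)
    return header + body


head_pose = [[1.0, 0.0, 0.0, 0.1],
             [0.0, 1.0, 0.0, 1.6],
             [0.0, 0.0, 1.0, -0.3],
             [0.0, 0.0, 0.0, 1.0]]
message = pack_igtl_transform("HeadsetToWorld", head_pose)
```

Each message is 106 bytes (58 header plus 48 body). Even if the head and both controllers were sent at every frame of a 90 Hz headset, this would amount to about 3 × 106 × 90 ≈ 28.6 kB/s, far below typical network capacity.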

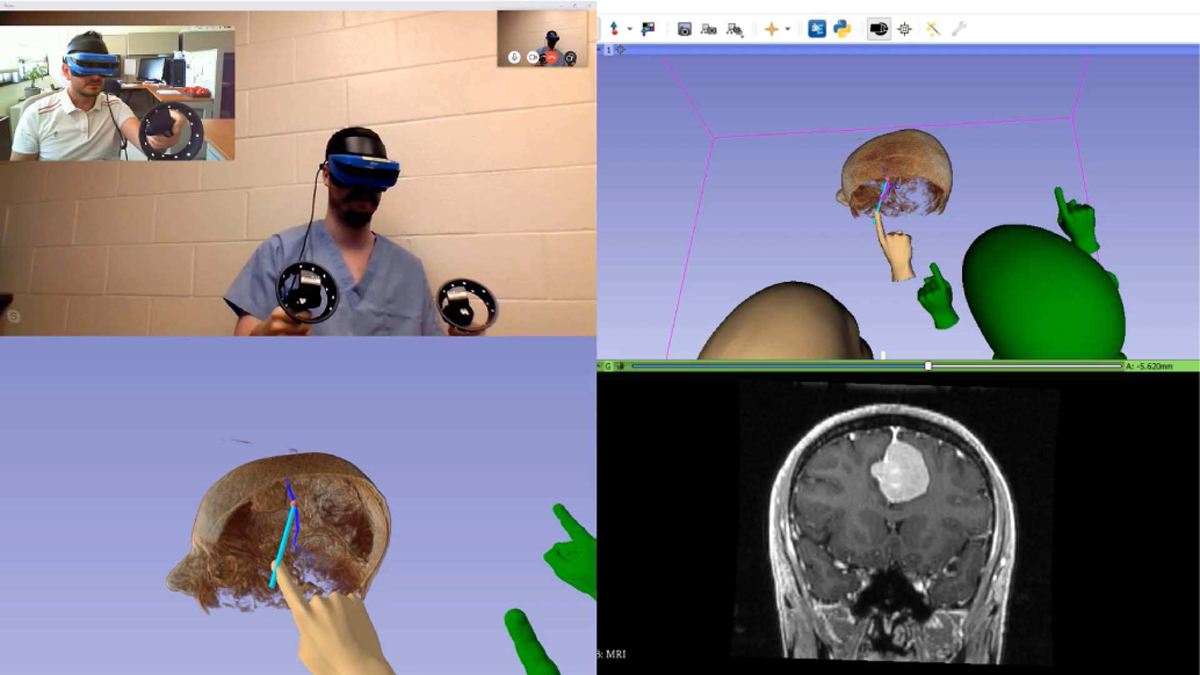

Fig. 5.

Collaborative brain surgery in virtual reality using real-time tractography. Top left: Video of the local (blue headset) and remote (black headset) participant. Bottom left: VR mirror image (local). Top right: 3D view of the SlicerVR instance (local). Bottom right: coronal slice in the position of the tractography seed. Introduction video: https://youtu.be/rG9ST6xv6vg

Sharing the virtual scene enables novel cooperative experiences. Medical professionals connected through SlicerVR may plan interventions together or jointly evaluate medical images and findings for diagnosis. A doctor can explain the planned operation to the patient in an intuitive way, informing them of the possible complications and offering them choices (i.e., patient education). Broadcasting (one-to-many) scenarios are also possible, in which an instructor explains anatomy or an intervention to a group of students, who can observe the scene from a distance, angle, and magnification of their choice.

E. Architecture

SlicerVR is an extension to 3D Slicer; as a consequence, the 3D Slicer factory machine downloads and builds the source code from the public repository³, runs the automated regression tests, and then publishes the packaged extension every night (starting at midnight Eastern Time). The package appears in the Extension Manager, a 3D Slicer component that offers extensions to users for single-click installation.

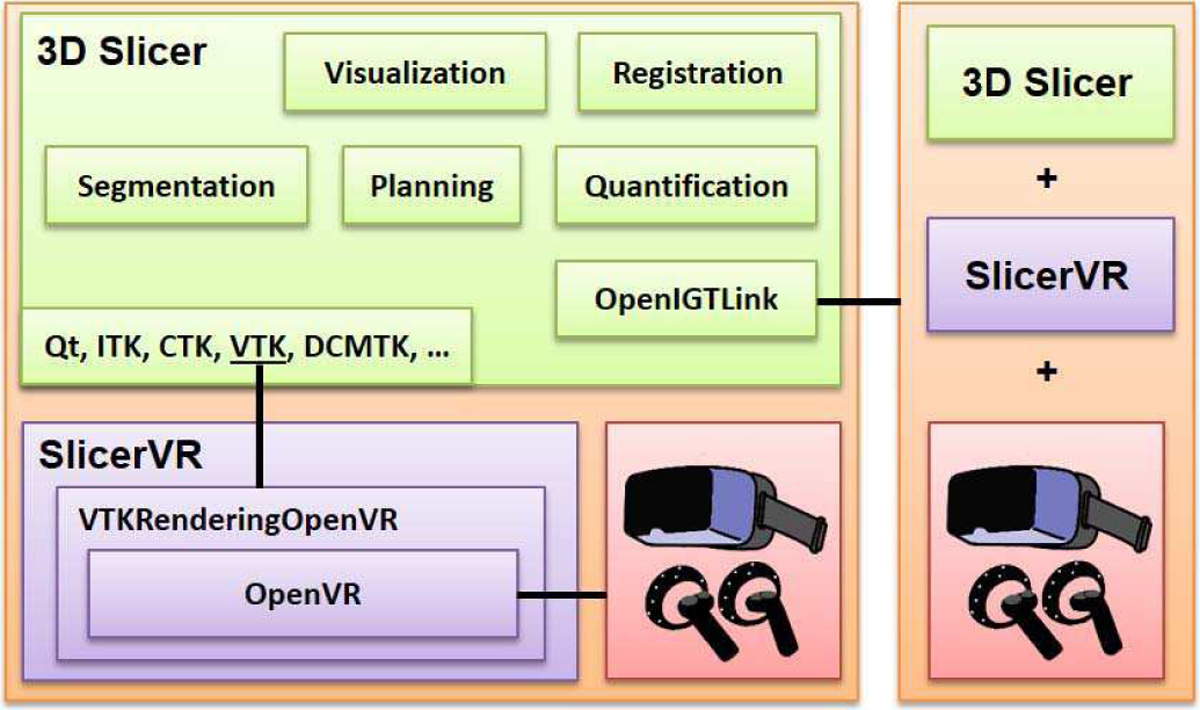

The SlicerVR build uses a dedicated external module of the Visualization Toolkit (VTK) [44] implemented for virtual reality visualization and interaction. This module, called VTKRenderingOpenVR, builds on the OpenVR software development kit, which provides hardware abstraction. Thus, SlicerVR supports all the head-mounted displays that OpenVR supports, such as the HTC Vive, the Oculus Rift, and all Windows Mixed Reality headsets. The connection between SlicerVR and the hardware is provided via the OpenVR interface within SteamVR, a Windows-based virtual reality system. Thus, although 3D Slicer supports several operating systems, SlicerVR is currently only available on the Windows platform. In a virtual collaboration session, the internal state of the 3D Slicer instance running on each workstation is synchronized via an OpenIGTLink [45] connection. The architecture is shown in detail in Fig. 1.

Fig. 1.

SlicerVR architecture overview. Left side shows a detailed SlicerVR system with 3D Slicer and the VR hardware. The right side is a similar system connecting to the other via OpenIGTLink.

III. Results

SlicerVR is freely available for 3D Slicer versions 4.10 and later. It can be downloaded from the Extension Manager with a single click. The number of downloads exceeds 7,800 at the time of writing.

Several of the use cases outlined in the above sections have been realized and assessed, demonstrating the feasibility of using SlicerVR in training and clinical settings. Each of these scenarios was created without any programming, using only components of SlicerVR and the 3D Slicer ecosystem.

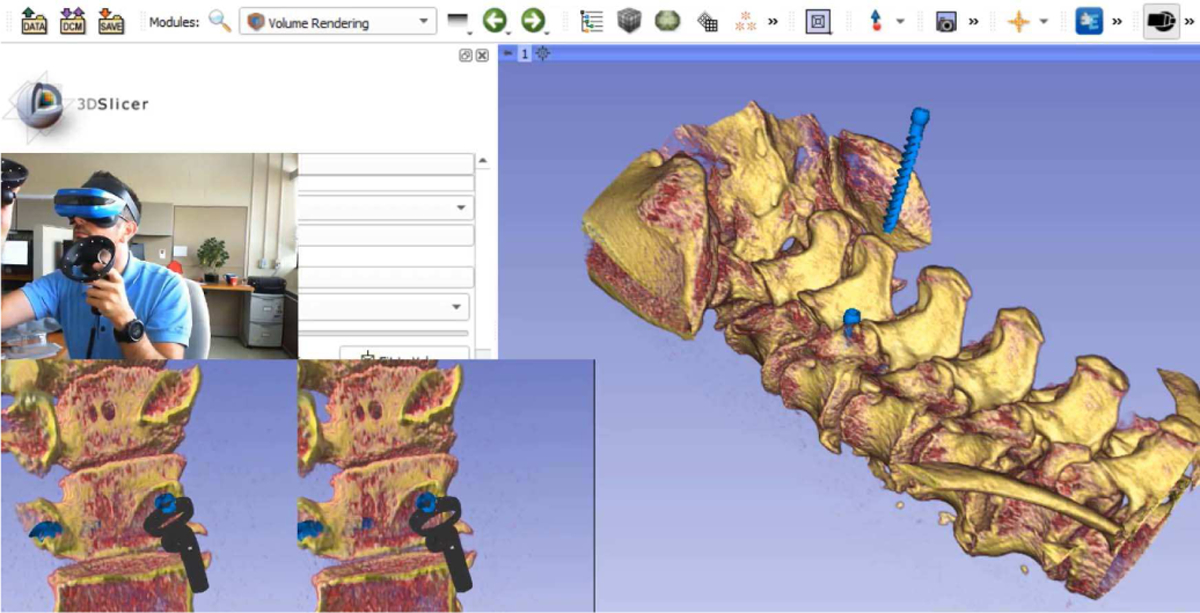

A. Pedicle screw placement planning

One large group of use cases is treatment planning, many of which involve planning the position of one or more implants in the patient’s body. Since the anatomy of each patient is different, and the disease develops differently in each of them, it is challenging to plan the target location of the devices efficiently and accurately on a 2D monitor.

A common procedure of this kind is spinal stabilization, which involves inserting numerous screws into the spinal column. Accurate planning of the screw positions is crucial, but manual planning is time-consuming [46]. VR may improve the accuracy and shorten the duration of planning, thanks to the intuitive stereoscopic display and natural hand interaction offered by the technology.

The scene used for the feasibility study contained a volume rendering of the spine CT or the segmented vertebral surfaces, along with multiple screw models. The spine anatomy data was set to be static (selectable setting off), and the screws to be movable. The steps to prepare the scene:

1. Start 3D Slicer. Make sure the SlicerVR extension is installed via the Extension Manager.

2. Load the spine CT using the DICOM module or by drag-and-drop.

3. Prepare the spine visualization, in one of two ways:

   a. Segment the vertebrae in the Segment Editor module to provide a surface mesh visualization. The vertebrae will be hollow, and rendering will be faster. A tutorial is available online.⁴

   b. Use the Volume Rendering module to perform real-time 3D rendering of the CT. A CT transfer function needs to be selected and adjusted. This takes more resources than displaying surfaces, but the rendering provides more anatomical detail.

4. Disable VR interactions for the spine in the Data module: right-click the segmentation (if option 3a was chosen) or the CT (otherwise) and select Toggle selectable.

5. Load the screw model file (e.g., STL) by drag-and-drop.

6. Replicate as many screws as necessary for planning: right-click the screw model in the Data module and select Clone as many times as needed.

7. Start VR by clicking the Show scene in virtual reality button on the toolbar.

The screws were initially placed near the spine, all at the same location, serving as a sort of quiver from which the user can take the next screw. The workflow was as follows:

1. Take a screw from the stack of screws and move it close to the area of the next insertion.

2. Adjust the view using the pinch 3D operation to focus on the insertion area (shift and magnify).

3. Perform the initial insertion of the screw.

4. Move the head back and forth, thus “looking into” the spine along the path of the screw, and make small adjustments to the placement as needed (see Fig. 2).

5. Zoom out and start the next placement with step 1.

Fig. 2.

SlicerVR pedicle screw insertion planning use case. Background: 3D Slicer with a single 3D view showing the spine volume rendering and the screws. Bottom left: VR mirror (image transmitted to each eye of the wearer). Middle left: User with the headset and controllers, placing the current screw. Introduction video: https://youtu.be/F_UBoE4FaoY

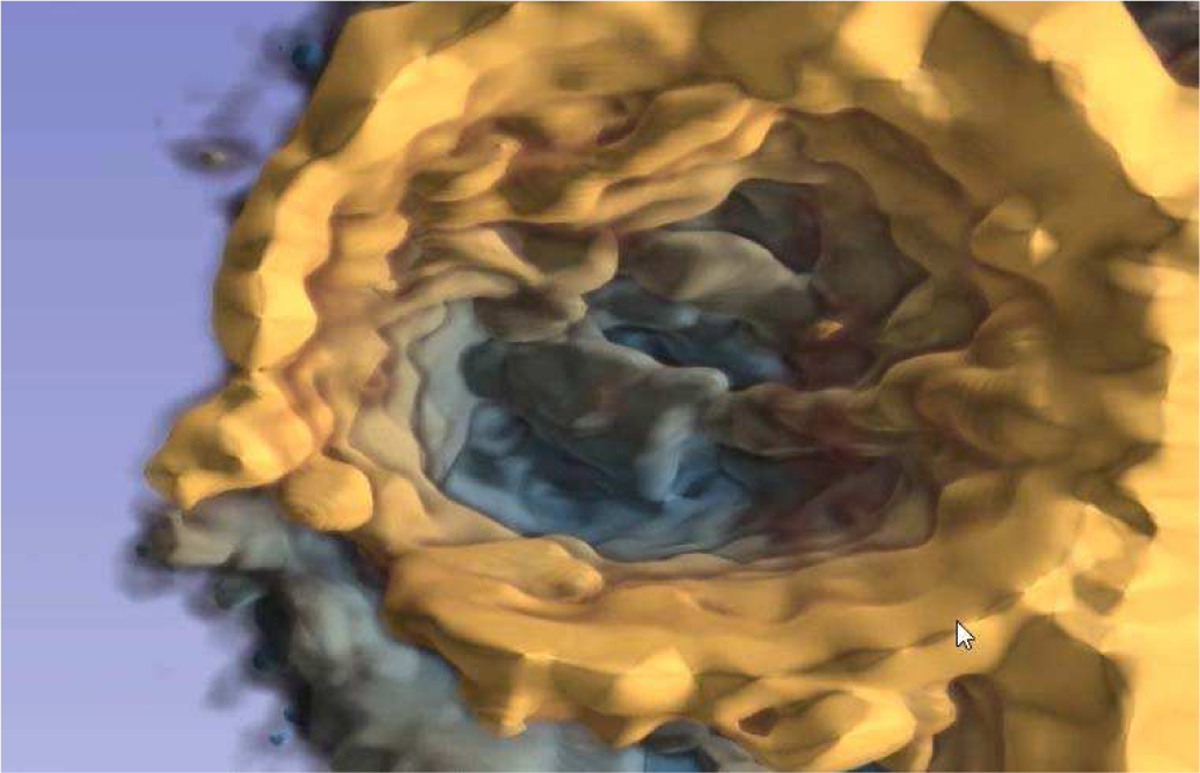

B. Visualization of cardiac tomographic and echocardiographic images

As medical imaging techniques become more complex, the image data become more difficult to interpret using traditional visualization methods. Time-resolved 3D cardiac image data are such a complex modality, for which VR is expected to provide a more efficient and intuitive method of exploration [39]. 3D Slicer provides functions to import the DICOM 3D echocardiography (3DE) format and to manage the time series as a sequence. The beating heart can thus be visualized in virtual reality through volume rendering. Depth perception in the noisy ultrasound data is enhanced by depth-encoded rendering, in which the color hue changes with the distance from the viewer. This rendering setting is illustrated in Fig. 3.

Fig. 3.

Depth-encoded cardiac 3D ultrasound. Depth perception is enhanced by coloring the data from yellow to blue with increased depth.
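The depth-encoded coloring can be sketched as a function of the distance from the viewer. Following Fig. 3, near structures are yellow and far structures are blue; interpolating the HSV hue linearly between the two (rather than blending RGB values, which would pass through gray) is an assumption of this sketch, not necessarily the color map SlicerVR uses.

```python
import colorsys


def depth_encoded_color(depth, near, far):
    """Map distance from the viewer to an RGB color whose hue shifts from
    yellow (at `near`) to blue (at `far`)."""
    t = (depth - near) / (far - near)
    t = max(0.0, min(1.0, t))  # clamp to the [near, far] range
    yellow_hue, blue_hue = 60.0 / 360.0, 240.0 / 360.0
    hue = yellow_hue + t * (blue_hue - yellow_hue)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


near_color = depth_encoded_color(0.2, 0.2, 0.6)  # front of the volume
mid_color = depth_encoded_color(0.4, 0.2, 0.6)   # halfway: a green hue
far_color = depth_encoded_color(0.9, 0.2, 0.6)   # beyond far: clamped to blue
```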

C. Brachytherapy catheter identification

Besides data that are complex by nature (i.e., in dimensionality, noise, or modality), visualization is also challenging for traditional image modalities when the imaged objects themselves are complex. One such scenario is visualizing brachytherapy catheters. Brachytherapy is a radiation therapy technique in which the radiation is delivered by radioactive seeds placed inside the body. High-dose-rate brachytherapy uses implanted catheters in which a highly radioactive seed moves in and out. Planning the seed dwell positions is only possible after the inserted catheters have been identified.

The catheters, however, are often difficult to follow because of their high number and proximity, and because they may cross each other (see Fig. 4). The stereoscopic visualization of virtual reality provides an environment that aids in following the catheters. Viewing the catheters in a true 3D view, with the ability to change the point of view naturally, and applying basic assumptions (e.g., that the catheters do not take sharp turns) enables the operator to label the catheters effectively.
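The "no sharp turns" assumption can also be expressed as a simple geometric check on a labeled catheter path: compute the bending angle between consecutive path segments and flag the path if any angle exceeds a limit, which could indicate that the labeling jumped onto a crossing catheter. The operator applies this assumption visually in VR; the sketch below and its 30-degree limit are hypothetical and only make the assumption explicit.

```python
import math


def max_turn_angle_deg(points):
    """Largest bending angle (degrees) between consecutive segments of a
    catheter path given as a list of 3D points."""
    worst = 0.0
    for a, b, c in zip(points, points[1:], points[2:]):
        u = [b[i] - a[i] for i in range(3)]
        v = [c[i] - b[i] for i in range(3)]
        lu, lv = math.hypot(*u), math.hypot(*v)
        if lu == 0.0 or lv == 0.0:
            continue  # skip duplicate points
        cos_angle = sum(x * y for x, y in zip(u, v)) / (lu * lv)
        cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
        worst = max(worst, math.degrees(math.acos(cos_angle)))
    return worst


def is_plausible_catheter(points, max_turn_deg=30.0):
    """Hypothetical check: reject a path that bends more sharply than
    max_turn_deg anywhere."""
    return max_turn_angle_deg(points) <= max_turn_deg
```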

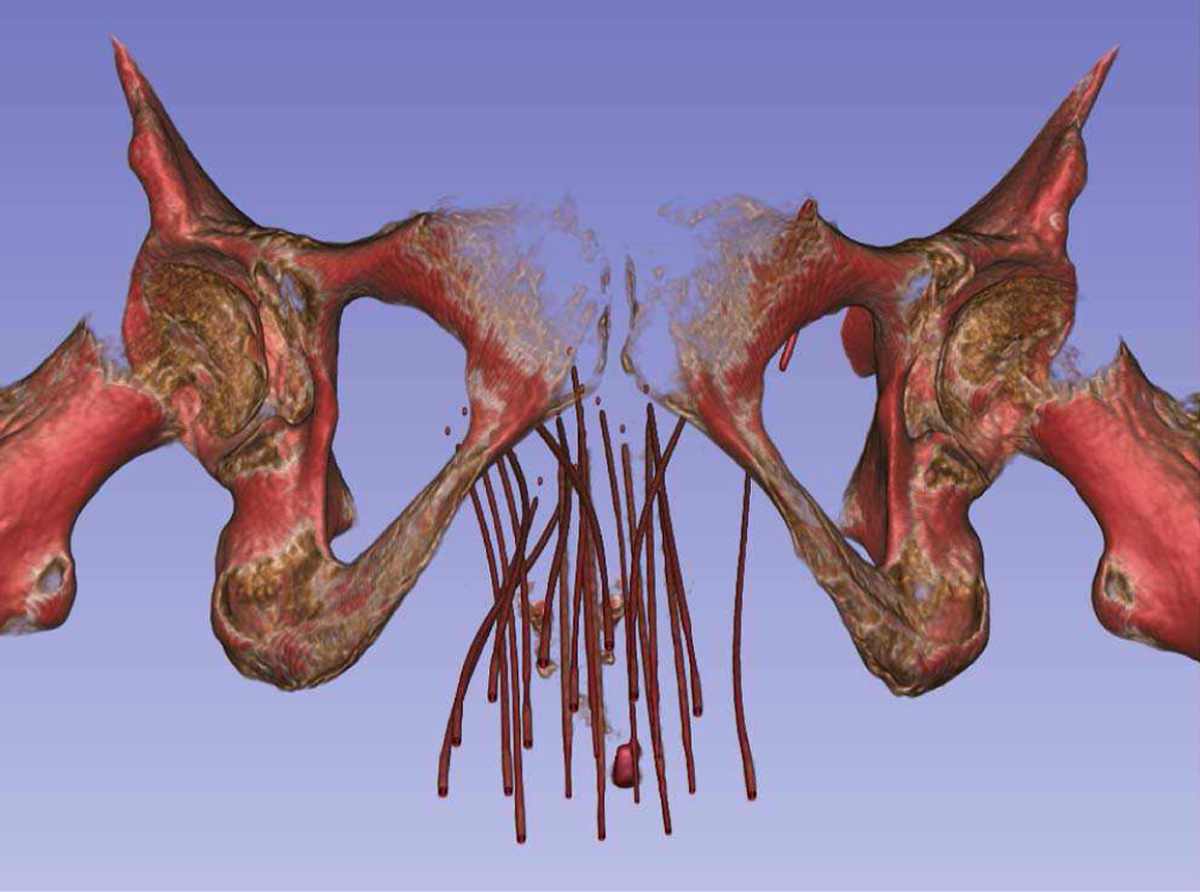

Fig. 4.

Gynecological brachytherapy catheters in a volume rendered CT. It can be seen that many catheters are inserted in a small region, close to each other.

D. Collaborative surgery planning

Remote virtual collaboration in medicine is an interesting and relatively unexplored aspect of virtual reality [47]. Early implementations of such systems [48][49] show promising results. However, at present there is no open-source software system that allows arbitrary medical scenarios to be set up for virtual collaboration.

As described in Sections II-D and II-E, SlicerVR is capable of synchronizing the virtual scene across different 3D Slicer instances, thus producing a collaborative experience. In our experiment, we chose the use case of brain surgery planning, in which two users explore a patient’s brain imaging data and discuss the best surgical approach (Fig. 5). The data consisted of two MRI images: a T1-weighted image showing the anatomy, and a diffusion tensor image containing information about the nerve tracts. The anatomical image was visualized via volume rendering, and the diffusion image served as input for tractography analysis using the SlicerDMRI extension [34]. The tractography analysis generated a set of nerve tracts likely passing through a point of interest, visualized as surface models of the calculated tracts. The point of interest was defined by the tip of a needle, whose position was also shared in the collaborative scene.

When either participant moved the needle using a VR controller (as described in Section II-C), the tractography analysis was executed in real time, and the tracts passing through the needle tip were visualized for both participants. Thus, they were able to discuss the surgical approach based on which brain functions were connected to a certain point in the brain. The avatars were useful in various ways. The hand models used in this scenario had their index fingers extended so that they could be used as pointers, and the participants could use them to direct each other’s attention. The head avatars, in turn, let each participant know from what perspective and magnification the other person saw the scene, and thus whether they could see the object of discussion. In our setup, we used generic video conferencing software for voice communication.

E. Needle insertion training

Performing interventions requires a set of special skills that may only be acquired through practice, such as hand-eye coordination and spatial awareness. Traditionally, medical students and residents had access to a few rounds of practice on cadavers before having to continue practicing on the patients themselves. In the past few decades, various simulators appeared on the market, both physical and virtual.

Needle insertion is a commonplace intervention in today’s clinic: biopsies, catheterizations, drug injections, etc. Many of the more difficult needle insertions are performed under ultrasound guidance, which, in addition to the generic skills (i.e., knowledge of the local anatomy, the steps of the procedure, and the risks and emergency techniques), requires the ability to interpret the live ultrasound image and the motor skills to manipulate the needle and the ultrasound probe simultaneously. The 3D Slicer ecosystem offers a wide variety of tools that can help create training scenarios for needle insertions.

The SlicerIGT extension for image-guided procedures [32], together with the PLUS ultrasound research toolkit [50], which offers hardware abstraction for image acquisition and position tracking devices, provides software support for connecting to image guidance systems and for visualizing, recording, and managing the data stream. The PerkTutor extension [51] allows analyzing the recording of a practice session and calculating metrics for the quantitative evaluation of the trainee’s skills.
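To illustrate what such quantitative metrics look like, the sketch below computes two simple ones from a recorded needle-tip trajectory: elapsed time and total path length (shorter, more direct paths generally indicate better control). This is not PerkTutor's implementation; the function name and the recording format, a list of time-stamped positions in millimeters, are assumptions.

```python
import math


def tool_path_metrics(samples):
    """Compute simple skill metrics from a recorded needle-tip trajectory.

    samples: list of (time_s, (x, y, z)) tuples with positions in mm,
    ordered by time. Returns (elapsed_time_s, path_length_mm).
    """
    if len(samples) < 2:
        return 0.0, 0.0
    path_length = sum(math.dist(p0, p1)
                      for (_, p0), (_, p1) in zip(samples, samples[1:]))
    elapsed = samples[-1][0] - samples[0][0]
    return elapsed, path_length


recording = [(0.0, (0.0, 0.0, 0.0)),
             (1.0, (3.0, 4.0, 0.0)),
             (2.5, (3.0, 4.0, 12.0))]
elapsed_s, length_mm = tool_path_metrics(recording)  # 2.5 s and 17.0 mm
```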

F. Personalized patient education

The main purpose of personalized patient education is to support the emotional and physical needs of patients while providing them with sufficient information to understand their health status or to make a decision about their treatment. Patient-specific virtual reality visualizations present medical information in a manner that is easier to understand than verbal explanations, drawings, or 2D images, which can help patients undergoing a procedure to reduce their anxiety or to make better-informed decisions. Such personalized visualizations are also called “digital twin” applications.

By leveraging the interoperability and visualization features of 3D Slicer, the patient’s personal medical data can be presented in a way that is easy for the patient to interpret and easy for the clinician to walk the patient through. The one-button virtual reality access provided by SlicerVR allows seamless switching to the fully 3D environment, providing the patient with an immersive experience while looking at their own anatomy.

IV. Discussion

The presented method is a software toolkit providing seamless yet comprehensive virtual reality capabilities to the 3D Slicer medical software platform. Rather than a solution to a specific problem, the main outcome of this work is an engineering contribution that makes it possible for others to use VR in a wide range of medical scenarios with a single button click. Given the documented need for virtual reality functions to address medical problems, as presented in the introduction, we created a free, open-source tool that opens the way for other translational researchers to introduce VR to specific clinical or educational situations.

Rather than implementing problem-specific VR visualization contexts, in which generic geometrical objects are given functions that can be interpreted in their medical context, SlicerVR adds virtual reality features to a mature software platform that was created to manage, visualize, and quantify medical objects. We can illustrate this difference through the example use case of pedicle screw insertion described in section III/A. In a generic VR development platform, the pedicle screws can only be represented as screw-shaped static objects, with minimal flexibility to experiment with the medical context of the interaction. This limitation can be overcome, for example, by adding the necessary software code for importing and exporting treatment plans, and the resulting metrics could be computed later in a spreadsheet. SlicerVR, however, can manage the treatment plan as a medical object, importing and exporting it in a way that is directly compatible with the clinical navigation system. Then, in the quantitative analysis of the planning or navigation step, SlicerVR directly reports the total motion of a pedicle screw with respect to the vertebra. Thus, the greatest advantage of using SlicerVR is that it relieves the medical application developer from the tedious implementation work of connecting VR interaction on a geometrical object to a medical object.
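
Reporting screw motion "with respect to the vertebra" amounts to expressing the screw pose in the vertebra coordinate system before comparing two time points, so that motion of the whole spine is not counted as screw motion. The sketch below is a hypothetical, dependency-free illustration of that change of frames; it assumes both poses are given as 4×4 homogeneous rigid transforms (object-to-world, e.g. RAS), the helper names are illustrative, and it does not reproduce SlicerVR's actual implementation.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # 4x4 homogeneous rigid transform, row-major


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(t: Matrix) -> Matrix:
    """Invert a rigid transform: the rotation becomes R^T, the translation -R^T p."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    rows = [r_t[i] + [-sum(r_t[i][k] * p[k] for k in range(3))] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]


def translation(x: float, y: float, z: float) -> Matrix:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_z(degrees: float) -> Matrix:
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def screw_in_vertebra(vertebra_to_world: Matrix, screw_to_world: Matrix) -> Matrix:
    """Express the screw pose in the vertebra coordinate system."""
    return matmul(rigid_inverse(vertebra_to_world), screw_to_world)


def relative_motion(before: Matrix, after: Matrix) -> Tuple[float, float]:
    """Translation (mm) and rotation angle (degrees) between two screw-in-vertebra poses."""
    delta = matmul(rigid_inverse(before), after)
    shift = math.sqrt(sum(delta[i][3] ** 2 for i in range(3)))
    trace = delta[0][0] + delta[1][1] + delta[2][2]
    # Clamp the cosine to [-1, 1] to guard against floating-point round-off
    angle = math.degrees(math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0))))
    return shift, angle


# The whole vertebra shifts by 10 mm; the screw additionally moves 2 mm and turns 90 degrees.
before = screw_in_vertebra(translation(0, 0, 0), translation(5, 0, 0))
after = screw_in_vertebra(translation(10, 0, 0),
                          matmul(translation(17, 0, 0), rotation_z(90)))
shift, angle = relative_motion(before, after)
print(round(shift, 6), round(angle, 6))  # → 2.0 90.0
```

The 10 mm displacement shared by the vertebra and the screw cancels out, so only the 2 mm and 90° motion of the screw relative to the bone is reported.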

In order to extend the number of supported use cases and improve user-friendliness, several features will be added in the future. The virtual widgets described in section II/C, of which the Home, Data, and Segment Editor widgets are currently implemented, need to be fully integrated in SlicerVR and complemented with additional widgets such as volume rendering settings, landmark registration, or sequence management (to play, pause, or record sequences, etc.). Interaction with objects can be further improved by adding two-handed object manipulation, since it is sometimes necessary to change the size of objects in addition to their pose.
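
Two-handed scaling of this kind is commonly derived from the change in distance between the two controllers. The following sketch is a hypothetical illustration of that idea, not an existing SlicerVR feature: the function names are invented, controller positions are assumed to be plain 3D tuples, and only uniform scaling about the controllers' midpoint is shown (a rotation derived from the change in the controller-to-controller direction is omitted).

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def two_hand_scale(left_start: Vec3, right_start: Vec3,
                   left_now: Vec3, right_now: Vec3) -> float:
    """Uniform scale factor from the change in distance between the two controllers."""
    start = math.dist(left_start, right_start)
    if start == 0.0:
        return 1.0  # degenerate grab: keep the current size
    return math.dist(left_now, right_now) / start


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    return tuple((p + q) / 2.0 for p, q in zip(a, b))


def scale_about(points: Sequence[Vec3], center: Vec3, factor: float) -> List[Vec3]:
    """Scale points uniformly about a fixed center, e.g. the controllers' midpoint."""
    return [tuple(c + factor * (p - c) for p, c in zip(point, center))
            for point in points]


# Controllers (in metres) are pulled apart from 0.25 m to 0.5 m: the object doubles in size.
factor = two_hand_scale((0.0, 0.0, 0.0), (0.25, 0.0, 0.0),
                        (-0.125, 0.0, 0.0), (0.375, 0.0, 0.0))
center = midpoint((-0.125, 0.0, 0.0), (0.375, 0.0, 0.0))
print(factor, center)  # → 2.0 (0.125, 0.0, 0.0)
print(scale_about([(0.25, 0.0, 0.0)], center, factor))  # → [(0.375, 0.0, 0.0)]
```

Scaling about the midpoint keeps the part of the object between the user's hands in place, which is one plausible design choice; scaling about the object's own center would be an alternative.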

Currently, there is no quick and intuitive way for users to orient themselves. Although it is possible to set the camera from the desktop view, there is no “I’m lost” function, a feature that has been requested by the community. This could be implemented as a quick-reset function that moves the user to a position from which the entire loaded dataset is visible from a reasonable distance.
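
A quick-reset function of this kind could compute a viewpoint from the bounding box of the loaded data. The sketch below is a hypothetical illustration, not part of SlicerVR: it assumes RAS coordinates and a symmetric field of view `fov_deg` (the 90° default is a placeholder, not a property of any specific headset). Because the head pose is tracked, a VR application would presumably apply the result through the transform between physical and virtual space rather than by moving the camera directly.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def reset_view(bounds_min: Vec3, bounds_max: Vec3, fov_deg: float = 90.0,
               view_dir: Vec3 = (0.0, 1.0, 0.0),
               margin: float = 1.1) -> Tuple[Vec3, Vec3]:
    """Return (viewer_position, focal_point) from which the whole box is visible.

    view_dir points from the data towards the viewer; the default places the
    viewer anterior to the data, assuming RAS coordinates.
    """
    center = tuple((lo + hi) / 2.0 for lo, hi in zip(bounds_min, bounds_max))
    # Radius of the sphere enclosing the bounding box
    radius = math.dist(bounds_min, bounds_max) / 2.0
    # The sphere fits in a view cone of half-angle fov/2 when sin(fov/2) = radius / distance
    distance = margin * radius / math.sin(math.radians(fov_deg) / 2.0)
    norm = math.sqrt(sum(d * d for d in view_dir))
    position = tuple(c + distance * d / norm for c, d in zip(center, view_dir))
    return position, center


position, focal_point = reset_view((-100.0, -100.0, -100.0), (100.0, 100.0, 100.0))
print(focal_point)  # → (0.0, 0.0, 0.0)
```

Using the bounding sphere rather than the box itself makes the result independent of the viewing direction, at the cost of a slightly larger distance than strictly necessary.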

Another difficulty related to self-orientation is judging distances. Despite stereoscopic vision, it is very difficult to decide whether the viewer is far from large objects or close to small objects. This may be a problem when using the pinch 3D function, because the user may change the magnification of the scene so that the objects become very large and the user ends up very far from them. Since the flying speed remains constant, it may then seem that the fly function does not work. Providing a floor of finite and constant size could help. Since there is no information about the actual floor that was present when the data were acquired, the virtual floor needs to be placed based on assumptions. A safe assumption is that the floor lies in the inferior direction from the medical data, as defined by their coordinate system. The floor may show a grid to illustrate distances and may be semi-transparent so that any object passing through it remains visible.
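
The floor placement rule above can be sketched in a few lines. This is a hypothetical helper, not SlicerVR code: it assumes the scene bounds are given in RAS coordinates (where +z points superior), and the default floor size and grid spacing are arbitrary placeholders. Rendering details such as semi-transparency are left to the visualization layer.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def floor_grid(bounds_min: Vec3, bounds_max: Vec3, size_mm: float = 2000.0,
               spacing_mm: float = 100.0,
               gap_mm: float = 0.0) -> Tuple[float, List[float], List[float]]:
    """Place a square grid of fixed physical size below the data.

    Assumes RAS coordinates, so the floor is placed at the smallest z of the
    bounding box (minus an optional gap), centered under the data.
    Returns (floor height, x positions of grid lines, y positions of grid lines).
    """
    height = bounds_min[2] - gap_mm
    cx = (bounds_min[0] + bounds_max[0]) / 2.0
    cy = (bounds_min[1] + bounds_max[1]) / 2.0
    cells = int(size_mm // spacing_mm)  # number of grid cells per side
    half = cells * spacing_mm / 2.0
    xs = [cx - half + i * spacing_mm for i in range(cells + 1)]
    ys = [cy - half + i * spacing_mm for i in range(cells + 1)]
    return height, xs, ys


height, xs, ys = floor_grid((-50.0, -50.0, -200.0), (50.0, 50.0, 0.0),
                            size_mm=1000.0, spacing_mm=250.0)
print(height, xs)  # → -200.0 [-500.0, -250.0, 0.0, 250.0, 500.0]
```

Because the grid spacing is fixed in physical units, the apparent size of the cells gives the user a cue about the current magnification of the scene.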

One more feature that may help navigation is a “turntable” type rotation around the scene, since it is often useful to be able to walk around the objects. This could be implemented quite simply, by finding the inferior-superior central axis of the bounding box of the visible scene and moving the cameras around this axis.
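
The turntable rotation described above reduces to rotating the viewpoint about a vertical axis. The sketch below is a hypothetical illustration under stated assumptions (RAS coordinates, the axis passing through the center of the visible scene's bounding box, and a single viewer position standing in for the stereo camera pair); it is not SlicerVR code.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def turntable_step(viewer: Vec3, bounds_min: Vec3, bounds_max: Vec3,
                   angle_deg: float) -> Vec3:
    """Rotate the viewer position about the inferior-superior (z) axis that
    passes through the center of the scene bounding box; height is unchanged.

    Assumes RAS coordinates; positive angles rotate counterclockwise when
    viewed from the superior side.
    """
    cx = (bounds_min[0] + bounds_max[0]) / 2.0
    cy = (bounds_min[1] + bounds_max[1]) / 2.0
    a = math.radians(angle_deg)
    dx, dy = viewer[0] - cx, viewer[1] - cy
    return (cx + dx * math.cos(a) - dy * math.sin(a),
            cy + dx * math.sin(a) + dy * math.cos(a),
            viewer[2])


# Walk a quarter turn around a scene centered at the origin.
x, y, z = turntable_step((0.0, 500.0, 0.0), (-100.0, -100.0, -100.0),
                         (100.0, 100.0, 100.0), 90.0)
print(round(x, 6), round(y, 6), z)  # → -500.0 0.0 0.0
```

Calling this step repeatedly with a small angle per frame would produce a continuous walk-around while the focal point stays at the box center. With a head-mounted display, both eye cameras follow the tracked head, so in practice the rotation would presumably be applied to the transform between the physical and virtual spaces.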

Many of the latest VR devices are equipped with video cameras, mainly for self-tracking. Merged reality is a technique that uses such VR hardware in an augmented setting, by capturing the wearer’s surroundings and projecting them onto the virtual scene. Mixed reality is considered a promising intra-operative modality because it provides direct visual access to the surgical site [52][53]. It is conceivable that, as latency decreases thanks to rapid technical advancements, merged reality approaches will also appear for intra-operative guidance. Thus, supporting merged reality in SlicerVR could open the way for a wide range of novel and affordable applications in interventional navigation. By projecting the stereoscopic video feed in the background of the virtual scene, the user would be able to see the real world augmented with projected information, such as the planned needle trajectory or a segmented target. Proof-of-concept work on this topic has been carried out using SlicerVR, and its integration into the toolkit is planned for the near future.

V. Conclusion

This article describes an open-source virtual reality toolkit fully integrated into one of the most widely used medical image visualization and analysis software platforms. The importance of the tight connection to a medical platform lies in the possibility of launching virtual reality with a single click from any diagnostic, planning, training, or navigation scenario, and in the access to the multitude of medical applications already supported by the platform. We expect that, with VR support added to a large set of established medical functions, development will shift away from adding such features to generic VR platforms, thus fostering innovation by enabling rapid prototyping of medical virtual reality applications.

Acknowledgment

This work was partially funded by the Southeastern Ontario Academic Medical Association (SEAMO), Educational Innovation and Research Fund. Gabor Fichtinger is supported as a Canada Research Chair in Computer-Integrated Surgery. This work was funded, in part, by NIH/NIBIB and NIH/NIGMS (via grant 1R01EB021396-01A1). Initial development was carried out by Kitware Inc.


Biographies

Csaba Pinter received the M.Sc. degree in Computer Science from the University of Szeged, Hungary, in 2006, and the Ph.D. degree, also in Computer Science, from Queen’s University, Canada, in 2019. He is a research software engineer at Queen’s University. He spent several years in industry, working on projects in medical image computing, before turning to open-source development. He participated in the creation of the PLUS toolkit. He is an active contributor to 3D Slicer, the owner of the SlicerRT toolkit for radiation therapy, and one of the main contributors to SlicerVR. His main interest is the design of innovative medical applications in the fields of image-guided interventions, radiation therapy, and virtual reality.

Andras Lasso is a senior research engineer in the Laboratory for Percutaneous Surgery, specializing in translational research and system development for minimally invasive interventions. He is a lead contributor to open-source software packages including 3D Slicer and the PLUS toolkit, which are used by academic groups and companies worldwide. Prior to joining the lab at Queen’s University, he worked for 9 years at GE Healthcare, developing advanced image analysis, fusion, and guidance applications for interventional medical devices.

Saleh Choueib was born in Hamilton, Ontario, Canada, in 1997. He is currently pursuing a Bachelor’s degree in Computer Science with Honours in Biomedical Computing at Queen’s University, Kingston, Ontario, Canada, and is expected to graduate in April 2020. His academic aspirations include completing an M.S. degree in biomedical computing by 2022.

Since May 2017, he has worked as a researcher and software developer intern at the Laboratory for Percutaneous Surgery, Queen’s University. His research interests include image-guided intervention, computer-assisted therapies, and the use of virtual reality as an instrument for medical training and procedural planning.

Mark Asselin received the BComp (Hons) degree in Biomedical Computation from Queen’s University (Kingston, ON, Canada) in 2019. He is currently pursuing the MSc degree in Computing at Queen’s University and is a visiting researcher at the Austrian Center for Medical Innovation and Technology. His research interests lie at the intersection of medicine and computing, including image guidance techniques and approaches for intra-operative tissue analysis.

Jean-Christophe Fillion-Robin received a B.S. in Computer Science from the University Claude Bernard of Lyon in 2003 and an M.S. in Electrical Engineering and Information Processing in 2008 from the ESCPE Lyon (France). His Master’s thesis was carried out from 2007 to 2008 at the Swiss Federal Institute of Technology of Lausanne (EPFL, Switzerland). Since 2009, Jean-Christophe has worked at Kitware, Inc.’s branch office in Chapel Hill, North Carolina, where he is a Principal Engineer leading the development of commercial applications based on “3D Slicer”, a medical imaging platform built with C++ and Python.

Jean-Baptiste Vimort received his master’s degree in 2017 from the Ecole supérieure de Chimie Physique Electronique de Lyon with a major in Computer Science, focusing on image processing algorithms. After an internship at the University of Michigan, he took part in Kitware Inc.’s project to create the hardware interface of the SlicerVR virtual reality research toolkit, based on OpenVR.

Ken Martin received bachelor’s degrees in Physics and Computer Science from Rensselaer in 1990. He then joined General Electric Corporate Research and Development as a member of the Software Technology Program. During this program, Dr. Martin completed his master’s degree in Electrical & Computer Systems Engineering at Rensselaer with a thesis on abnormality detection for cutting tool fault prediction. In 1998, Dr. Martin completed his Ph.D. in Computer Science with his thesis titled “Image Guided Borescope Tip Pose Determination.”

He is a co-founder of Kitware and currently serves as a Distinguished Engineer. Dr. Martin has made contributions to the fields of visualization and software architecture, including a number of peer-reviewed papers and patents. He is co-author of The Visualization Toolkit: An Object Oriented Approach to Computer Graphics and was co-developer and lead architect of the Visualization Toolkit (www.vtk.org), a comprehensive visualization package. He is also a co-author and developer of CMake (www.cmake.org), a popular and widely adopted build system tool.

Matthew A. Jolley received the bachelor’s degree in chemistry from Stanford in 1998, and the M.D. degree from the University of Washington in 2003. He is an Attending Physician of Pediatric Cardiology (Imaging) and Pediatric Cardiac Anesthesia with the Children’s Hospital of Philadelphia (CHOP) and an Assistant Professor with the Perelman School of Medicine, University of Pennsylvania. He leads a laboratory at CHOP focused on the visualization and quantification of congenitally abnormal heart structures using 3D imaging, with the long-term goal of delivering personalized structural modeling to inform optimal interventions in an individual patient. His group is active in the development of pediatric cardiac focused, 3D Slicer-based applications, including SlicerVR-based modeling.

Gabor Fichtinger (IEEE M’04, S’2012, F’2016) received the doctoral degree in computer science from the Technical University of Budapest, Budapest, Hungary, in 1990. He is a Professor and Canada Research Chair in Computer-Integrated Surgery at Queen’s University, Canada, where he directs the Percutaneous Surgery Laboratory. His research and teaching specialize in computational imaging and robotic guidance for surgery and medical interventions, focusing on the diagnosis and therapy of cancer and musculoskeletal conditions.

Footnotes

Contributor Information

Csaba Pinter, Laboratory for Percutaneous Surgery, Queen’s University, Kingston, Canada.

Andras Lasso, Laboratory for Percutaneous Surgery, Queen’s University, Kingston, Canada.

Saleh Choueib, Laboratory for Percutaneous Surgery, Queen’s University, Kingston, Canada.

Mark Asselin, Laboratory for Percutaneous Surgery, Queen’s University, Kingston, Canada.

Jean-Christophe Fillion-Robin, Kitware Incorporated, Carrboro, North Carolina, USA.

Jean-Baptiste Vimort, Kitware Incorporated, Carrboro, North Carolina, USA.

Ken Martin, Kitware Incorporated, Carrboro, North Carolina, USA.

Matthew A. Jolley, Children’s Hospital of Philadelphia, Philadelphia, PA 19104 USA; Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA 19104 USA.

Gabor Fichtinger, Laboratory for Percutaneous Surgery, Queen’s University, Kingston, Canada.

References

- [1] Rubin P, “The inside story of Oculus Rift and how virtual reality became reality,” Wired, May 20, 2014. Available at: https://www.wired.com/2014/05/oculus-rift-4/. Accessed October 14, 2019.

- [2] Dalgarno B, & Lee MJW (2010). “What are the learning affordances of 3-D virtual environments?” British Journal of Educational Technology, 41(1), 10–32. 10.1111/j.1467-8535.2009.01038.x

- [3] Heredia-Pérez SA, Harada K, Padilla-Castañeda MA, Marques-Marinho M, Márquez-Flores JA, & Mitsuishi M (2019). “Virtual reality simulation of robotic transsphenoidal brain tumor resection: Evaluating dynamic motion scaling in a master-slave system”. International Journal of Medical Robotics and Computer Assisted Surgery, 15(1), 1–13. 10.1002/rcs.1953

- [4] Dardick J, Allen S, Scoco A, Zampolin RL, & Altschul DJ (2019). “Virtual reality simulation of neuroendovascular intervention improves procedure speed in a cohort of trainees”, 10(184), 8–11. 10.25259/SNI

- [5] Schulz GB, Grimm T, Buchner A, Jokisch F, Casuscelli J, Kretschmer A, …Karl A (2019). “Validation of a High-End Virtual Reality Simulator for Training Transurethral Resection of Bladder Tumors”. Journal of Surgical Education, 76(2), 568–577. 10.1016/j.jsurg.2018.08.001

- [6] Bhushan S, Anandasabapathy S, & Shukla R (2018). “Use of Augmented Reality and Virtual Reality Technologies in Endoscopic Training”. Clinical Gastroenterology and Hepatology, 16(11), 1688–1691. 10.1016/j.cgh.2018.08.021

- [7] Nagendran M, Gurusamy KS, Aggarwal R, Loizidou M, & Davidson BR (2013). “Virtual reality training for surgical trainees in laparoscopic surgery”. Cochrane Database of Systematic Reviews, 2013(8). 10.1002/14651858.CD006575.pub3

- [8] Roy E, Bakr MM, & George R (2017). “The need for virtual reality simulators in dental education: A review”. Saudi Dental Journal, 29(2), 41–47. 10.1016/j.sdentj.2017.02.001

- [9] Jimenez YA, Cumming S, Wang W, Stuart K, Thwaites DI, & Lewis SJ (2018). “Patient education using virtual reality increases knowledge and positive experience for breast cancer patients undergoing radiation therapy”. Supportive Care in Cancer, 26(8), 2879–2888. 10.1007/s00520-018-4114-4

- [10] Pandrangi VC, Gaston B, Appelbaum NP, Albuquerque FC Jr, Levy MM, & Larson RA (2019). “The application of virtual reality in patient education”. Annals of Vascular Surgery, 59, 184–189.

- [11] Ernstoff N, Cuervo J, Carneiro F, Dalmacio I, Deshpande A, Sussman DA, …& Feldman PA (2018). “Breaking Down Barriers: Using Virtual Reality to Enhance Patient Education and Improve Colorectal Cancer Screening: 254”. American Journal of Gastroenterology, 113, S146.

- [12] Johnson K, Liszewski B, Dawdy K, Lai Y, & McGuffin M (2020). “Learning in 360 Degrees: A Pilot Study on the Use of Virtual Reality for Radiation Therapy Patient Education”. Journal of Medical Imaging and Radiation Sciences.

- [13] Hartney JH, Rosenthal SN, Kirkpatrick AM, Skinner JM, Hughes J, & Orlosky J (2019, March). “Revisiting Virtual Reality for Practical Use in Therapy: Patient Satisfaction in Outpatient Rehabilitation”. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 960–961). IEEE.

- [14] Rutkowski S, Rutkowska A, Kiper P, Jastrzebski D, Racheniuk H, Turolla A, …& Casaburi R (2020). “Virtual Reality Rehabilitation in Patients with Chronic Obstructive Pulmonary Disease: A Randomized Controlled Trial”. International Journal of Chronic Obstructive Pulmonary Disease, 15, 117.

- [15] Frajhof L, Borges J, Hoffmann E, Lopes J, & Haddad R (2018). “Virtual reality, mixed reality and augmented reality in surgical planning for video or robotically assisted thoracoscopic anatomic resections for treatment of lung cancer”. Journal of Visualized Surgery, 4, 143. 10.21037/jovs.2018.06.02

- [16] Shirk JD, Kwan L, & Saigal C (2019). “The Use of 3-Dimensional, Virtual Reality Models for Surgical Planning of Robotic Partial Nephrectomy”. Urology, 125, 92–97. 10.1016/j.urology.2018.12.026

- [17] Guerriero L, Quero G, Diana M, Soler L, Agnus V, Marescaux J, & Corcione F (2018). “Virtual reality exploration and planning for precision colorectal surgery”. Diseases of the Colon and Rectum, 61(6), 719–723. 10.1097/DCR.0000000000001077

- [18] Zhou Z, Jiang S, Yang Z, & Zhou L (2018). “Personalized planning and training system for brachytherapy based on virtual reality”. Virtual Reality, 1–15. 10.1007/s10055-018-0350-7

- [19] Villard C, Soler L, & Gangi A (2008). “Radiofrequency ablation of hepatic tumors: Simulation, planning, and contribution of virtual reality and haptics”. Computer Methods in Biomechanics and Biomedical Engineering, 8(4), 215–227. 10.1080/10255840500289988

- [20] Glaser S, Warfel B, Price J, Sinacore J, & Albuquerque K (2012). “Effectiveness of virtual reality simulation software in radiotherapy treatment planning involving non-coplanar beams with partial breast irradiation as a model”. Technology in Cancer Research and Treatment, 11(5), 409–414. 10.7785/tcrt.2012.500256

- [21].FDA to host workshop on medical applications of AR, VR, AuntMinnie.com, January 23, 2020. Accessed on February 12, 2020. [Online]. Available: https://www.auntminnie.com/index.aspx?sec=sup&sub=adv&pag=dis&ItemID=127955

- [22].Ridley EL, Madden Yee K, Kim A, Forrest W, and Casey B, Top 5 trends from RSNA 2017 in Chicago, AuntMinnie.com, December 13, 2017. Accessed on February 12, 2020. [Online]. Available: https://www.auntminnie.com/index.aspx?sec=rca&sub=rsna_2017&pag=dis&ItemID=119393

- [23].Allyn J, RSNA 2019 Trending Topics, RSNA.org, October 25, 2019. Accessed on February 12, 2020. [Online]. Available: https://www.rsna.org/en/news/2019/October/RSNA-2019-Trending-Topics-Intro

- [24].Virtual Reality in Healthcare Market By Technology (Full Immersive Virtual Reality, Non-Immersive Virtual Reality and SemiImmersive Virtual Reality), By Component (Hardware and Software), By Application And Segment Forecasts, 2016–2026, Reports and Data, March, 2019. Accessed on February 12, 2020. [Online]. Available: https://www.reportsanddata.com/report-detail/virtual-realityin-healthcare-market

- [25].Global $10.82 Billion Healthcare Augmented Reality and Virtual Reality Market Trend Forecast and Growth Opportunity to 2026, Research and Markets, December 17, 2019. Accessed on February 12, 2020. [Online]. Available: https://www.businesswire.com/news/home/20191217005455/en/Global-10.82-Billion-Healthcare-Augmented-Reality-Virtual

- [26] Augmented Reality (AR) & Virtual Reality (VR) in Healthcare Market Size to grow extensively with 29.1% CAGR by 2026, AmericaNewsHour, December 23, 2019. Accessed on February 12, 2020. [Online]. Available: https://www.marketwatch.com/press-release/augmented-reality-ar-virtual-reality-vr-in-healthcare-market-size-to-grow-extensively-with-291-cagr-by-2026-2019-12-23

- [27] Mildenberger P, Eichelberg M, & Martin E (2002). “Introduction to the DICOM standard”. European Radiology, 12(4), 920–927. 10.1007/s003300101100

- [28] Williams JK (2019). “A method for viewing and interacting with medical volumes in virtual reality”. M.S. thesis, Iowa State Univ., Ames, IA, USA.

- [29] Fiederer LDJ, Alwanni H, Volker M, Schnell O, Beck J, & Ball T (2019). “A Research Framework for Virtual-Reality Neurosurgery Based on Open-Source Tools”. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 922–924). IEEE. 10.1109/VR.2019.8797710

- [30] Ntourakis D, Memeo R, Soler L, Marescaux J, Mutter D, & Pessaux P (2016). “Augmented Reality Guidance for the Resection of Missing Colorectal Liver Metastases: An Initial Experience”. World Journal of Surgery, 40(2), 419–426. 10.1007/s00268-015-3229-8

- [31] Fedorov A, Beichel R, Kalpathy-Cramer J, Finet J, Fillion-Robin J-C, Pujol S, …Kikinis R (2012). “3D Slicer as an image computing platform for the Quantitative Imaging Network”. Magnetic Resonance Imaging, 30(9), 1323–1341. 10.1016/j.mri.2012.05.001

- [32] Ungi T, Lasso A, & Fichtinger G (2016). “Open-source platforms for navigated image-guided interventions”. Medical Image Analysis, 33, 181–186. 10.1016/j.media.2016.06.011

- [33] Pinter C, Lasso A, Wang A, Jaffray D, & Fichtinger G (2012). “SlicerRT: radiation therapy research toolkit for 3D Slicer”. Medical Physics, 39(10), 6332–6338. 10.1118/1.4754659

- [34] Norton I, Essayed WI, Zhang F, Pujol S, Yarmarkovich A, Golby AJ, …O’Donnell LJ (2017). “SlicerDMRI: Open source diffusion MRI software for brain cancer research”. Cancer Research, 77(21), e101–e103. 10.1158/0008-5472.CAN-17-0332

- [35] Yip SSF, Parmar C, Blezek D, Estepar RSJ, Pieper S, Kim J, & Aerts HJWL (2017). “Application of the 3D Slicer chest imaging platform segmentation algorithm for large lung nodule delineation”. PLoS ONE, 12(6), 1–17. 10.1371/journal.pone.0178944

- [36] Herz C, Fillion-Robin J-C, Onken M, Riesmeier J, Lasso A, Pinter C, …Fedorov A (2017). “dcmqi: An Open Source Library for Standardized Communication of Quantitative Image Analysis Results Using DICOM”. Cancer Research, 77(21), e87–e90. 10.1158/0008-5472.CAN-17-0336

- [37] Kapur T, Pieper S, Fedorov A, Fillion-Robin J-C, Halle M, O’Donnell L, …Kikinis R (2016). “Increasing the impact of medical image computing using community-based open-access hackathons: The NA-MIC and 3D Slicer experience”. Medical Image Analysis, 33. 10.1016/j.media.2016.06.035

- [38] Pinter C, Lasso A, Asselin M, Fillion-Robin J-C, Vimort J-B, Martin K, & Fichtinger G (2019). “SlicerVR for image-guided therapy planning in immersive virtual reality”. In The 12th Hamlyn Symposium on Medical Robotics, 23–26 June 2019, Imperial College, London, UK (pp. 91–92).

- [39] Lasso A, Nam HH, Dinh PV, Pinter C, Fillion-Robin J-C, Pieper S, …Jolley MA (2018). “Interaction with Volume-Rendered Three-Dimensional Echocardiographic Images in Virtual Reality”. Journal of the American Society of Echocardiography, 6–8. 10.1016/j.echo.2018.06.011

- [40] Choueib S, Pinter C, Vimort J-B, Lasso A, Fillion-Robin J-C, Martin K, & Fichtinger G (2019). “Evaluation of 3D Slicer as a medical virtual reality visualization platform”. In Medical Imaging 2019: Image-Guided Procedures, Robotic Interventions, and Modeling, vol. 10951, p. 1095113. International Society for Optics and Photonics. 10.1117/12.2513053

- [41] Porcino TM, Clua E, Trevisan D, Vasconcelos CN, & Valente L (2017). “Minimizing cyber sickness in head mounted display systems: Design guidelines and applications”. 2017 IEEE 5th International Conference on Serious Games and Applications for Health, SeGAH 2017, 1–6. 10.1109/SeGAH.2017.7939283

- [42] Lu D, & Mattiasson J (2013). “How does Head Mounted Displays affect users’ expressed sense of in-game enjoyment”. DivaPortal.Org. Retrieved from http://www.divaportal.org/smash/get/diva2:632858/FULLTEXT01.pdf

- [43] Wilson ML (2016). “The Effect of Varying Latency in a Head-Mounted Display on Task Performance and Motion Sickness”. M.S. thesis, Clemson University, Clemson, SC, USA.

- [44] Schroeder WJ, Lorensen B, & Martin K (2004). “The visualization toolkit: an object-oriented approach to 3D graphics”. Kitware, Inc.

- [45] Tokuda J, Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, …& Kapur T (2009). “OpenIGTLink: an open network protocol for image-guided therapy environment”. The International Journal of Medical Robotics and Computer Assisted Surgery, 5(4), 423–434.

- [46] Knez D, Mohar J, Cirman RJ, Likar B, Pernuš F, & Vrtovec T (2018). “Variability analysis of manual and computer-assisted preoperative thoracic pedicle screw placement planning”. Spine, 43(21), 1487–1495. 10.1097/BRS.0000000000002659

- [47] Muhammad Nur Affendy N, & Ajune Wanis I (2019). “A Review on Collaborative Learning Environment across Virtual and Augmented Reality Technology”. IOP Conference Series: Materials Science and Engineering, 551, 012050. 10.1088/1757-899x/551/1/012050

- [48] Dech F, Jonathan C, & Silverstein MD (2002). “Rigorous exploration of medical data in collaborative virtual reality applications”. Proceedings of the International Conference on Information Visualisation, 2002-January, 32–38. 10.1109/IV.2002.1028753

- [49] Lee J. Der, Lan TY, Liu LC, Lee ST, Wu CT, & Yang B (2007). “A remote virtual-surgery training and teaching system”. Proceedings of 3DTV-CON, 2, 0–3. 10.1109/3DTV.2007.4379441

- [50] Lasso A, Heffter T, Rankin A, Pinter C, Ungi T, & Fichtinger G (2014). “PLUS: Open-Source Toolkit for Ultrasound-Guided Intervention Systems”. IEEE Transactions on Biomedical Engineering, 61(10), 2527–2537. 10.1109/TBME.2014.2322864

- [51] Ungi T, Sargent D, Moult E, Lasso A, Pinter C, McGraw RC, & Fichtinger G (2012). “Perk Tutor: An open-source training platform for ultrasound-guided needle insertions”. IEEE Transactions on Biomedical Engineering, 59(12), 3475–3481. 10.1109/TBME.2012.2219307

- [52] Tepper OM, Rudy HL, Lefkowitz A, Weimer KA, Marks SM, Stern CS, & Garfein ES (2017). “Mixed reality with HoloLens: Where virtual reality meets augmented reality in the operating room”. Plastic and Reconstructive Surgery, 140(5), 1066–1070. 10.1097/PRS.0000000000003802

- [53] Groves L, Li N, Peters TM, & Chen ECS (2019). “Towards a Mixed-Reality First Person Point of View Needle Navigation System”. Medical Image Computing and Computer Assisted Intervention – MICCAI 2019 (Vol. 1). Springer International Publishing. 10.1007/978-3-030-32254-0_28