Abstract

Here we characterize the establishment of joint attention in hearing parent-deaf child dyads and hearing parent-hearing child dyads. The deaf children were candidates for cochlear implantation who had not yet been implanted and who had no exposure to formal manual communication (e.g., American Sign Language). Because many parents whose deaf children undergo early cochlear implant surgery do not themselves know a visual language, these dyads do not share a formal communication system based in a common sensory modality before the child’s implantation. Joint attention episodes were identified during free play between hearing parents and their hearing children (N = 4) and hearing parents and their deaf children (N = 4). Attentional episode types included successful parent-initiated joint attention, unsuccessful parent-initiated joint attention, passive attention, successful child-initiated joint attention, and unsuccessful child-initiated joint attention. Group differences emerged in the proportion of time spent in successful and unsuccessful parent-initiated joint attention, parent passive attention, and successful child-initiated joint attention. These findings highlight joint attention as an indicator of early communicative efficacy in parent-child interaction for different child populations. We discuss the active role parents and children play in communication, regardless of their hearing status.

Keywords: joint attention, multimodal cue integration, language learning

I. INTRODUCTION

Much of social engagement in humans occurs in the form of joint attention, which consists of two people simultaneously focusing on an object or event while maintaining social awareness of one another (Markus et al., 2000). A crucial component of joint attention is the sharing of one’s attention with another person, a phenomenon that has been referred to as “shared intentionality” (Tomasello, 1995; Tomasello & Carpenter, 2007). Humans are not born able to participate in joint attention, but a typically developing infant will begin to acquire the ability to engage in spontaneous joint attention by the end of the first year of life (Carpenter, Nagell, & Tomasello, 1998). Precursors to joint attention, such as following another person’s eye gaze, emerge fairly early in development and become more proficient over time. For example, three- to six-month-olds correctly followed an adult’s gaze towards a puppet 73 percent of the time (D’Entremont, Hains, & Muir, 1997). Consistent with this trajectory of increasing proficiency, 11- to 14-month-old infants have been shown to follow another person’s eye gaze more often than did two- to four-month-olds (Scaife & Bruner, 1975). Overall, infants’ gaze following and gaze switching increase linearly between nine and 18 months of age (Mundy et al., 2007), forming the basis for the increasingly mature joint attention abilities that emerge during this developmental period.

Joint attention can be divided into two sub-types: the initiation of joint attention, in which one participant in a dyad tries to direct the other’s attention to an object of mutual interest, and the response to another’s bid for joint attention, in which one participant responds to the other’s attempts to engage his or her attention by following the other’s point or gaze, by verbalizing to the other, or by changing the affective response towards the object of mutual interest (Mundy & Newell, 2007; Mundy et al., 2007). Some researchers have argued that the ability to initiate joint attention marks the beginning of formalized intentional communication in humans and may therefore be a more appropriate developmental milestone to track than the ability to respond to bids for joint attention (Brinck, 2001). Nonetheless, both forms are critical components of communication, and the development of both underlies a child’s trajectory towards formal (i.e., symbolic) communication.

In addition to being identified as unique to human communication, joint attention has emerged as an important indicator of possible developmental delay. One notable population in which joint attention is delayed and/or absent is children with Autism Spectrum Disorder (ASD) (Bean & Eigsti, 2012). For example, children with ASD demonstrated less joint attention than typically developing children at 24 months (Naber et al., 2008). And although by 42 months children with ASD display basic joint attention behaviors (e.g., pointing, gaze following) at levels similar to those of age-matched typically developing children, they continue to show deficiencies in more advanced joint attention behaviors (e.g., checking between other and object, following another’s pointing) relative to typically developing children (Naber et al., 2008). Given that these advanced behaviors are crucial to the development of mature communication (Akhtar & Gernsbacher, 2008), subtle deficits within the various behavioral manifestations of joint attention may serve as an important potential indicator of developmental delay. Consequently, identifying such indicators in different populations of children has become an active area of research.

One population of children for whom the development of joint attention may be compromised, and about whom relatively little is known, is deaf and hard-of-hearing children, particularly those of hearing parents. In a deaf child-hearing parent dyad, the child has limited-to-no access to the auditory modality, even though spoken language is the parent’s primary form of communication. This is the situation of the majority of children born deaf. Early deafness can have genetic origins, but it can also result from neonatal exposure to ototoxic medications such as aminoglycoside antibiotics or loop diuretics, noise exposure, hyperbilirubinaemia, cytomegalovirus exposure, and hypoxia (Cristobal & Oghalai, 2008). Deafness at birth occurs in one to two of every 1,000 infants (Nikolopoulos & Vlastarakos, 2010); in the United States, the rate is estimated to be two to three of every 1,000 infants (National Institute of Health, 2010). Importantly, nine out of every ten deaf infants born in the US are born to hearing families (National Institute of Health, 2010). Hearing parents of deaf children can learn sign language and use it to communicate with their child, and many do. But many more opt for their child to receive assistive technology such as a cochlear implant, a device that bypasses the hair cells of the inner ear to stimulate the auditory nerve directly, thus providing the sensation of hearing (Yawn et al., 2015). Hearing through a cochlear implant differs from normal hearing, however, and it takes time for children to learn language from this somewhat degraded signal (Sevy et al., 2010 and references therein). Although learning outcomes remain quite variable among pediatric implant users, the consensus in the research community is that the earlier deaf children are implanted, the more robust their language abilities will be (Bruijnzeel et al., 2016). 
Despite this, differences in the time to the child’s diagnosis, the length of the parental decision-making process, and the logistics of qualifying for the procedure itself all factor into the amount of time that passes before the deaf child has access to spoken language (Saliba et al., 2016; Sevy et al., 2010).

These and other factors contribute to differences in how long hearing parents and their deaf child go without a common sensory modality to support transmission of a formal communication system. Moreover, there is limited information available to hearing parents regarding how to communicate with their children during the pre-implant period, particularly if they opt not to use sign language. Finally, there is very little data on what parents actually do during their child’s implant candidacy period (Depowski et al., 2015).

One line of research that can inform our understanding of how mismatched modalities of formal communication in parent-child dyads may influence interaction uses the Still Face Paradigm. In this paradigm, the mother interacts with her infant normally but, at specific time intervals indicated by the researcher, maintains a stoic, unemotive face regardless of the child’s behavior (Cohn & Tronick, 1983). Although the paradigm was originally developed as a tool to mimic the decreased affect displayed by mothers with depression (Cohn & Tronick, 1983), researchers have since used it to probe how parent interaction influences child emotion and cognition (Mesman, van IJzendoorn, & Bakermans-Kranenburg, 2009). In the case of deaf and hard-of-hearing children, it has revealed that hearing mothers of these children use the vocal modality (that is, speech) to re-engage their infants at the completion of the Still Face Paradigm, and do so more than deaf mothers in deaf parent-deaf child dyads (Koester, Karkowski, & Traci, 1998), despite the children not having access to audition. No differences emerged between the deaf and hearing mothers in relative use of the visual or tactile modalities to re-engage their infants. However, in other work, researchers observed that over a nine-month period (during which children went from nine to 18 months of age), deaf mothers used the visual modality more than hearing mothers to re-engage their deaf children after the Still Face Paradigm, while hearing mothers of deaf children continued to rely primarily on the auditory modality (Koester, 2001).

However, there is at least some evidence that hearing mothers change how they interact with their deaf children, presumably in an effort to accommodate their children’s lack of access to sound. For example, during free interaction sessions without toys, in which mothers interacted with their children as they normally would, hearing mothers of deaf children used vocal games accompanied by exaggerated gestures (e.g., while singing ‘The Itsy Bitsy Spider’) more than did hearing mothers of hearing children (Koester, Brooks, & Karkowski, 1998). Moreover, hearing mothers of deaf children are more likely than hearing mothers of hearing children to position objects in the child’s line of sight (visual field), as well as to tap on, touch, or point at the objects (Waxman & Spencer, 1997). In other words, there is evidence that hearing parents of deaf children use different forms of interaction to engage their children’s attention than hearing parents of hearing children do, though research on this question is limited.

While the previously mentioned studies are informative, relatively few have examined how either deaf or hearing parents of deaf children establish a mutual focus of attention (i.e., joint attention) when interacting with their children. In an important early study comparing hearing parents’ communication with their deaf or hearing toddlers (Prezbindowski, Adamson, & Lederberg, 1998), researchers found that deaf children were engaged in joint attention by parents much less often than their hearing peers. The authors hypothesized that the hearing parents tried to engage their deaf children by using oral language (i.e., the auditory modality), which was ineffective, resulting in the deaf children and their parents spending more time in a more rudimentary form of joint attention (i.e., coordinated) compared to hearing children, who spent more time in a more advanced form of joint attention (i.e., symbol-infused). In another study, success rates of both child- and parent-initiated joint attention bids in hearing parent-deaf child dyads were compared to those of hearing parent-hearing child dyads. Results indicated that success rates of maternal-initiated bids for joint attention were lower in hearing parent-deaf child dyads than in hearing parent-hearing child dyads (Nowakowski, Tasker, & Schmidt, 2009), although no difference emerged between dyad types for child-initiated joint attention episodes. Additional research has demonstrated that dyads consisting of hearing parents-hearing children or hearing parents-deaf children with a cochlear implant both engaged in more instances of joint attention than did dyads of hearing parents-deaf children without a cochlear implant (Tasker, Nowakowski, & Schmidt, 2010).

As should be clear, results on the development of joint attention in deaf and hard-of-hearing children are mixed. Much of the variability stems from methodological differences across studies (e.g., ages of the children included, use of sign language or not, presence or absence of a cochlear implant, presence or absence of language delay). Given the connection between the early abilities that support development of joint attention and subsequent language learning (e.g., Morales, Mundy, & Rojas, 1998), this is an important issue to pursue in more detail.

The current study is an exploratory examination of differences in the amount of successful joint attention in dyads of hearing parents and their deaf children versus dyads of hearing parents and their hearing children. First, we have operationalized joint attention coding based on a careful review of the literature. Second, in contrast to previous joint attention studies in which children were almost exclusively under the age of two years (cf., Prezbindowski, Adamson, & Lederberg, 1998), we included children whose ages ranged from 18 months to three years to capture differences between dyad types at different ages. Expanding the age range for deaf children is motivated by recent research indicating increases in gaze shifting in deaf children (a crucial component of joint attention) up to three-and-a-half years of age (Lieberman, Hatrak, & Mayberry, 2011, 2014). In short, our focus here is to quantify and compare the success and failure rates of attempts to establish joint attention by both parents and children across hearing-hearing and hearing-deaf dyads.

II. METHOD

A. Participants

Four severely to profoundly deaf children (all female), aged 18.2 to 36.7 months (M = 26.83, SD = 7.78), and their hearing parents (all female) participated in the study. In addition, four hearing children (all female), aged 18.3 to 36.7 months (M = 26.85, SD = 7.72), and their hearing parents (all female) participated. The children in the two dyad types were age-matched. Each dyad was recruited via the National Institutes of Health website or through local recruitment in either the Southwestern or Northeastern United States. All parents were Caucasian, and two of the deaf children were identified as Caucasian, Hispanic/Latino. All but one parent had at least a high school education.

B. Materials

A set of attractive toys (a ball, a set of large blocks, a set of stacking cups, tableware, a tower of stacking rings, and toy cars) was positioned in the room used for the free-play session. This session was part of the children’s regular visit to their speech-language pathologist (for the deaf children) or to the Husky Pup Language Lab at the University of Connecticut (for the typically developing children). The parent was instructed to play with her child as she would at home. Each play session lasted at least five minutes (M = 464.23 s, SD = 154.35 s) and was video-recorded. Clinical videos were shared with researchers at the University of Connecticut using REDCap electronic data capture tools (Harris et al., 2009). REDCap (Research Electronic Data Capture) is a secure, web-based application designed to support data capture for research studies.

III. CODING PROCEDURE

Videos were coded using ELAN (Wittenburg et al., 2006), language annotation software developed at the Max Planck Institute for Psycholinguistics (The Language Archive, Nijmegen, The Netherlands). ELAN (http://tla.mpi.nl/tools/tla-tools/elan/) is free of charge and supports video analysis with coding across modalities. Each play session was evaluated in ELAN for episodes of successful or failed joint attention. Our coding criteria for joint attention were based on the work of Tek (2010), which was guided by the criteria of Roos, McDuffie, Weismer, and Gernsbacher (2008) and Mundy and Acra (2006); Tek’s (2010) protocol adapted these researchers’ joint attention coding for use with children with Autism Spectrum Disorder. Analyses were conducted using ELAN, SPSS, and Microsoft Excel.

A. Video Processing

Videos were first reviewed for clarity and for visual capture of both parent and child throughout the play session. Videos were then edited in Adobe Premiere Pro so that each began at the start of the play session, defined as the first video frame in which the testing room door was completely closed, such that the parent and child were alone together. The time from the start to the end of the session was the baseline length for that play session. The videos were then reviewed for intervals during which behavior was uncodeable (e.g., the child or parent went out of view); a participant’s face had to be out of view for at least five seconds for the interval to be marked as uncodeable. The uncodeable time for each video was subtracted from that video’s baseline length, yielding the total codeable time for each dyad’s play session. Uncodeable time never exceeded 5% of the overall video length.
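The codeable-time calculation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure actually used (which was carried out in ELAN and Excel): times are in seconds from the session start, out-of-view intervals are assumed not to overlap, and the names `Interval` and `codeable_length` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

# A face had to be out of view for at least this long to count as uncodeable.
MIN_UNCODEABLE_S = 5.0


@dataclass
class Interval:
    """Hypothetical out-of-view interval, in seconds from session start."""
    start: float
    end: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def codeable_length(baseline_s: float, out_of_view: List[Interval]) -> Tuple[float, float]:
    """Return (codeable session length, uncodeable fraction of the baseline).

    Only out-of-view intervals lasting at least MIN_UNCODEABLE_S are subtracted.
    Assumption: intervals do not overlap, so their durations can simply be summed.
    """
    uncodeable = sum(iv.duration for iv in out_of_view if iv.duration >= MIN_UNCODEABLE_S)
    return baseline_s - uncodeable, uncodeable / baseline_s


# Hypothetical session: a 3 s gap (too short, ignored) and a 10 s gap (subtracted).
length, fraction = codeable_length(480.0, [Interval(100.0, 103.0), Interval(200.0, 210.0)])
print(length, round(fraction, 3))
```

Checking that the returned fraction stays below 0.05 would correspond to the observation that uncodeable time never exceeded 5% of a video.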

B. Coding Criteria

Five possible types of joint attention episodes were coded for:

Successful, parent-initiated joint attention: Such an episode included the parent making a bid for attention to her child in any manner, including pointing, switching her gaze between the object and the child, touching or tapping the child with either her hand or an object, moving her hand in the child’s visual field, and/or using vocal speech or sign language to attract the child’s attention. The bid had to be responded to by the child. This could include the child pointing at the object, switching gaze between the object and the parent, tapping or touching the parent, waving in the parent’s line of sight, and/or using vocal speech or sign language to refer to the object. Such an episode could also occur if a parent shifted the child’s attention from one object to another using any of these techniques.

Unsuccessful, parent-initiated joint attention: The parent attempted to engage in joint attention with the child using the means outlined above (e.g., pointing, gaze switching between child and object, touching the child, waving, and/or using speech/sign), but the child did not respond to the parent’s bid.

Passive attention: Such an episode consisted of the parent trying to merge into the child’s stream of attention without succeeding. In passive attention, the child initiates the interaction with the object; in a failed parent-initiated bid, by contrast, the parent attempts to initiate interaction with an object.

Successful, child-initiated joint attention: In this type of episode, a child obtained the parent’s attention using pointing, gaze switching between the parent and the object, touching or tapping the parent, waving in the parent’s line of sight, and/or using speech/sign. Such a bid had to be responded to by the parent likewise using pointing, gaze switching, tapping, waving, and/or speech/sign.

Unsuccessful, child-initiated joint attention: In this type of episode, the child used pointing, gaze switching, touching or tapping the parent, waving in her line of sight, and/or speech/sign in an attempt to engage the parent in joint attention. In this case, however, the parent did not respond to the child’s bid.
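One way to make the five coding categories explicit is as a decision rule over three judgments a coder makes for each bid: who initiated it, whether the partner responded with one of the listed behaviors, and, for an unanswered parent bid, whether the parent was joining an object the child had already chosen. The sketch below is a hypothetical encoding, not the authors’ coding software; the behavior labels and the function name `classify` are illustrative, and treating every answered parent bid as successful (even when the parent joined the child’s object) is our assumption.

```python
from enum import Enum

# Hypothetical labels for the behaviors that count as a bid or a response.
BID_BEHAVIORS = frozenset({"point", "gaze_switch", "touch_or_tap", "wave", "speech_or_sign"})


class Episode(Enum):
    PARENT_SUCCESS = "successful, parent-initiated joint attention"
    PARENT_FAIL = "unsuccessful, parent-initiated joint attention"
    PASSIVE = "passive attention"
    CHILD_SUCCESS = "successful, child-initiated joint attention"
    CHILD_FAIL = "unsuccessful, child-initiated joint attention"


def classify(initiator: str, bid: set, response: set, child_chose_object: bool = False) -> Episode:
    """Map one coded bid to an episode type (hypothetical helper).

    initiator: "parent" or "child"
    bid, response: sets of labels from BID_BEHAVIORS (response may be empty)
    child_chose_object: True if the child was already engaged with the object
    """
    if not bid or not bid <= BID_BEHAVIORS:
        raise ValueError("a bid must use at least one recognized behavior")
    responded = bool(response & BID_BEHAVIORS)
    if initiator == "child":
        return Episode.CHILD_SUCCESS if responded else Episode.CHILD_FAIL
    if initiator == "parent":
        if responded:
            return Episode.PARENT_SUCCESS
        # An unanswered parent bid on the child's own object is passive attention.
        return Episode.PASSIVE if child_chose_object else Episode.PARENT_FAIL
    raise ValueError("initiator must be 'parent' or 'child'")
```

For example, `classify("parent", {"touch_or_tap"}, set(), child_chose_object=True)` yields passive attention, whereas the same unanswered bid toward a new object yields an unsuccessful parent-initiated episode.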

C. Coding in ELAN and Extracting Data for Analysis

Despite broad agreement about the importance of joint attention in development, there is less agreement on how to define joint attention and operationalize its coding. Based on a collective examination of the primary empirical work on this topic (see Abney, Smith, & Yu, 2017 for a review), we coded joint attention in ELAN using a five-second “rule of engagement.” This reflects our characterization of joint attention as an episode of engagement rather than a momentary state; the five-second window is the rounded midpoint of the time windows used by different researchers in the field, which range from 3 to 6.5 seconds ((3 + 6.5) / 2 = 4.75 seconds). Practically speaking, this meant that, after interacting with an object, a member of the dyad had five seconds to begin to engage with the other member of the dyad for the interaction to be considered part of the same episode. A five-second rule of disengagement was also used: when neither participant engaged in joint attention behavior for five seconds, the joint attention episode was coded as terminated.
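The two five-second rules can be approximated as a single gap criterion over time-stamped joint attention behaviors: a behavior that begins within five seconds of the end of the previous one extends the current episode, and a longer gap closes it. The sketch below is our simplification rather than the ELAN procedure; the function name `segment_episodes`, the (start, end) input format in seconds, and the treatment of a gap of exactly five seconds as still within the window are assumptions. The window itself is computed as the rounded midpoint of the 3 to 6.5 second range.

```python
from typing import Iterable, List, Tuple

# Rounded midpoint of the 3 s to 6.5 s windows used in prior work: round(4.75) == 5.
WINDOW_S = round((3.0 + 6.5) / 2)


def segment_episodes(
    behaviors: Iterable[Tuple[float, float]], window: float = WINDOW_S
) -> List[Tuple[float, float]]:
    """Group (start, end) behavior intervals into episodes separated by gaps longer than window."""
    episodes: List[List[float]] = []
    for start, end in sorted(behaviors):
        if episodes and start - episodes[-1][1] <= window:
            # Engagement resumed within the window: extend the current episode.
            episodes[-1][1] = max(episodes[-1][1], end)
        else:
            # First behavior, or the disengagement window elapsed: open a new episode.
            episodes.append([start, end])
    return [(s, e) for s, e in episodes]


print(segment_episodes([(0, 2), (4, 6), (12, 13), (17.5, 20)]))  # [(0, 6), (12, 20)]
```

Here the 2-second gap between the first two behaviors keeps them in one episode, the 6-second gap before the third behavior ends that episode, and the 4.5-second gap before the last behavior extends the second episode.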

Using the “View Annotation Statistics” function in ELAN, codes were compiled across videos. Because each episode type was assigned a separate tier in ELAN, total times could then be extracted for each episode type. Inter-observer reliability (n = 3) was > 90% agreement across the different measures. Data were analyzed as described below.
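The per-tier totals reported by ELAN’s “View Annotation Statistics” can also be recomputed from exported annotations, for example as a cross-check. This is a hedged sketch: it assumes annotations have already been exported as (tier, start, end) rows with times in seconds, and the tier names shown are hypothetical rather than those used in this study.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tier_totals(annotations: Iterable[Tuple[str, float, float]]) -> Dict[str, float]:
    """Sum annotation durations (end - start) within each tier (one tier per episode type)."""
    totals: Dict[str, float] = defaultdict(float)
    for tier, start, end in annotations:
        if end < start:
            raise ValueError(f"annotation on tier {tier!r} ends before it starts")
        totals[tier] += end - start
    return dict(totals)


# Hypothetical exported rows: two parent-initiated successes and one passive episode.
rows = [
    ("parent_success", 10.0, 25.0),
    ("parent_success", 40.0, 52.5),
    ("passive", 60.0, 70.0),
]
print(tier_totals(rows))  # {'parent_success': 27.5, 'passive': 10.0}
```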

D. Analyses and Results

Occurrences of unsuccessful, child-initiated bids for attention were rare; this variable was therefore excluded from subsequent analyses. Thus, four metrics were considered for this study: parent-initiated successful bids for joint attention, parent-initiated unsuccessful bids for joint attention, passive attention, and child-initiated successful bids for joint attention. Given small differences in the lengths of the dyads’ free play sessions, the proportion of total session length spent in each selected attention type was used as the time metric. This was determined by extracting the total time each dyad spent in each episode type and dividing it by that dyad’s total session length. Uncodeable time periods (< 5%) were excluded from this calculation. So, for example, total time spent in parent-initiated, successful bids for joint attention was divided by total codeable session length.
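The proportion metric amounts to dividing each episode type’s total time by the dyad’s codeable session length. A minimal sketch, assuming totals have already been summed per episode type in seconds; the dictionary keys and the function name `episode_proportions` are hypothetical, and the rarely observed unsuccessful child-initiated type is simply left out of the analyzed set, as described above.

```python
from typing import Dict

# Episode types retained for analysis; unsuccessful child-initiated bids were too rare.
ANALYZED_TYPES = ("parent_success", "parent_fail", "passive", "child_success")


def episode_proportions(totals_s: Dict[str, float], codeable_s: float) -> Dict[str, float]:
    """Proportion of codeable session time spent in each analyzed episode type."""
    if codeable_s <= 0:
        raise ValueError("codeable session length must be positive")
    # Types with no coded episodes contribute a proportion of 0.
    return {t: totals_s.get(t, 0.0) / codeable_s for t in ANALYZED_TYPES}


# Hypothetical dyad: 480 s of codeable time; the "child_fail" total is ignored.
print(episode_proportions({"parent_success": 120.0, "passive": 60.0, "child_fail": 2.0}, 480.0))
```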

Mann-Whitney U-tests were used to assess differences between dyad types because the measures were not normally distributed. Unlike a t-test, this non-parametric test is computed from the ranks of the pooled scores rather than from their raw values and means; it is therefore more robust to outliers and heavy-tailed (i.e., non-normal) distributions, as in these data. The box plots in all figures show medians rather than means and display the data on which the Mann-Whitney U-tests were based.
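To show what the U statistic is computed from, the sketch below pools two groups’ proportions, ranks them (assigning tied values the average of their ranks), and converts the first group’s rank sum into U. It is a plain-Python illustration rather than the SPSS procedure used here, the function names are hypothetical, and it computes U only; a p-value additionally requires the exact or approximate null distribution of U, which library routines such as SciPy’s `scipy.stats.mannwhitneyu` provide.

```python
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks of values, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for x, U for y); the two always sum to len(x) * len(y)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    r1 = sum(ranks[:n1])  # rank sum of the first group
    u1 = r1 - n1 * (n1 + 1) / 2
    return u1, n1 * n2 - u1
```

With four dyads per group, U ranges from 0 to 16; which of the two complementary values a package reports varies, so the pair is returned here.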

The first comparison was made to determine whether differences existed between hearing parent-hearing child dyads and hearing parent-deaf child dyads in the total proportion of time spent in parent-initiated successful bids for attention. Hearing parent-deaf child dyads spent a significantly lower proportion of time in parent-initiated, successful bids for joint attention than hearing parent-hearing child dyads, U = 11.5, p < .05 (see Figure 1).

Figure 1.

Total proportion of time dyads spent in successful, parent-initiated joint attention by child’s hearing status.

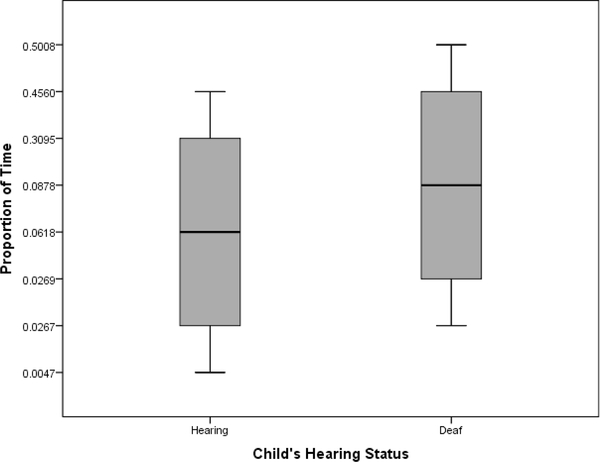

Differences also existed between dyad types in the total proportion of time spent in parent-initiated unsuccessful bids for joint attention. Hearing parent-deaf child dyads spent a significantly higher proportion of time in parent-initiated unsuccessful bids for attention than hearing parent-hearing child dyads, U = 5.5, p < .05 (Figure 2).

Figure 2.

Total proportion of time dyads spent in unsuccessful, parent-initiated joint attention by child’s hearing status.

Dyad types differed in the total proportion of time parents spent attending passively to what the child was doing. Hearing parent-deaf child dyads spent a significantly higher proportion of time in passive attention episodes than hearing parent-hearing child dyads, U = 5, p < .05 (Figure 3).

Figure 3.

Total proportion of time dyads spent with parent in passive attention by child’s hearing status.

Finally, dyad types differed in proportion of time spent in successful, child-initiated joint attention episodes. Hearing parent-deaf child dyads spent a significantly lower proportion of time in successful, child-initiated joint attention episodes than hearing parent-hearing child dyads, U = 11, p < .05 (Figure 4).

Figure 4.

Total proportion of time dyads spent in successful, child-initiated joint attention by child’s hearing status.

E. Discussion

Results indicated significant differences between groups for all four constructs analyzed. Unsuccessful, child-initiated joint attention was not analyzed because relatively few such episodes occurred in either dyad type.

First, it is important to consider caregivers’ contributions to joint attention. During the free play sessions, hearing parent-hearing child dyads were engaged in successful, parent-initiated joint attention for a significantly greater proportion of time than hearing parent-deaf child dyads. Conversely, hearing parent-deaf child dyads were engaged in unsuccessful, parent-initiated joint attention episodes for a significantly greater proportion of time than hearing parent-hearing child dyads. Hearing mothers have been found to use the auditory modality to engage their children, regardless of the child’s hearing status (Koester & Lahti-Harper, 2010). Given that the deaf children in this study had no access to the auditory modality, this tendency could explain the differences in the proportion of time spent in successful and unsuccessful parent-initiated joint attention: if a deaf child cannot perceive the communicative modality used (i.e., spoken language), the child cannot be expected to respond to bids for attention made in that modality. Little research exists on the role of modality in how hearing parent-deaf child dyads establish joint attention, and more is needed. However, there is some evidence that hearing mothers of deaf children do accommodate their children’s hearing status by attempting to use visual and tactile modalities to engage their child (Traci & Koester, 2003; Koester, Brooks, & Karkowski, 1998; Koester, 2001). Similarly, during free play sessions with nine-, 12-, and 18-month-old infants, hearing mothers of deaf infants tend to move objects into the child’s line of sight or touch or point to objects (i.e., use the tactile and visual modalities) more than mothers in hearing parent-hearing child dyads (Waxman & Spencer, 1997). 
If this is the case, then one might expect to see similar, or perhaps even greater, levels of joint attention in those hearing parent-deaf child dyads in which parents use such accommodating techniques to gain their deaf children’s attention.

Differences in parent accommodation of a child’s hearing status may also explain the difference in the amount of passive attention across dyad types: hearing parents of deaf children may be trying very hard to accommodate their children’s hearing loss but not succeeding, and at some point give up. Our results are consistent with this interpretation.

It is also important to consider the child’s role in joint attention. Although limited research has examined the role of the deaf child in parent-child joint attention success, the available work has demonstrated that deaf children learn to accommodate their parents’ hearing status in this domain (Lieberman et al., 2011, 2014). The findings of the present study suggest that more work is needed to assess the role the deaf child plays in responding to and initiating joint attention with a hearing parent; the significant difference we observed between dyad types for successful, child-initiated joint attention points to this issue. The variability in both parent and child engagement is illustrated by one hearing parent-deaf child dyad (36-month-old child), which displayed the highest levels of both successful, parent-initiated and successful, child-initiated joint attention among the hearing parent-deaf child dyads. While this may be due to the child’s relatively advanced age, which gave the parent and child time to develop ways of communicating without a formal system in place, both the parent and the child worked actively to engage with one another. Clearly, much more data is needed to understand how factors such as age and interaction history contribute to joint attention.

While findings presented here are exploratory in nature and should be interpreted with caution given our small sample size, our approach further establishes a means by which specific interactive behaviors produced by participants in a dyad, regardless of hearing status, might be tracked over time. In particular, given increasing evidence of the association between joint attention and successful language development (see Morales et al., 1998), understanding the influence of parent accommodation of deaf children’s unique communication needs, whether or not they are candidates for cochlear implantation, is important. Future research should consider additional factors, beyond child age and hearing status, which may contribute to differences in communication between members of hearing parent-deaf child dyads. These include: etiology of hearing loss, family socio-economic status, family language background, parental sensitivity, and parental stress levels (see Oghalai et al., 2012) and their effects on parents’ successful (and failed) efforts to establish joint attention.

Recent findings regarding ASD and joint attention demonstrate a strong relationship between joint attention and language development in children with communication difficulties (see Tasker et al., 2010; Tek, 2010). These findings suggest that joint attention supports language development indirectly, above and beyond formal (i.e., symbolic) language input. Given unequal access to information in the auditory modality in hearing parent-deaf child dyads, therapeutic approaches that emphasize the establishment and maintenance of joint attention in these dyads may facilitate the child’s subsequent language development (either while learning a signed language or awaiting a cochlear implant). Likewise, given the possibility of establishing joint attention via non-auditory means (e.g., in the visual or haptic modalities) (Akhtar & Gernsbacher, 2008), meaningful communication can take place between hearing parents and deaf children even without formal language. Finally, although this is an exploratory study, the observations reported here highlight the utility of moving beyond standardized measures to obtain rich, ecologically valid data on parent-child interactions. For example, as noted, a high rate of successful joint attention was observed in one of the four hearing parent-deaf child dyads; critically, this dyad also had a high rate of failed bids for joint attention. In this case, the parent’s high rate of unsuccessful attempts to establish joint attention may be interpreted as a measure of parent effort. Future studies should consider, on a broader scale, what the different metrics used in joint attention research actually measure.

The present study lends support for tracking the use of rich multisensory data from parent-child interactions. Examination of such information stands to inform our understanding of the various factors underlying communicative success across a wide range of child populations. With regard to deaf infants and children who are candidates for cochlear implantation, it will be important to establish how much the behavior of the parents is driven by knowing that their child will receive a cochlear implant and whether they are trying to emphasize aural/oral behavior rather than building up visual/tactile communication (as compared to similar dyads who might not be pursuing implants). Moreover, how these two groups compare after the deaf children receive cochlear implants will be important to track in future research. For example, do the dyads learn quickly how to establish joint attention once the child can hear parents’ vocal attempts to capture their attention? Or is joint attention something that must be developed early or children will be permanently delayed in this aspect of language acquisition? And how do various other factors influence this? Such findings will help inform therapists and clinicians on how they might advise parents of deaf children to interact with their child, whether the child is a candidate for cochlear implantation or not.

ACKNOWLEDGMENT

We thank the parents and children for participating in our study and the funding agencies for their support. Support was provided by National Institutes of Health grants R56 DC010164 and R01 DC010075, the National Institute on Deafness and Other Communication Disorders, and The Dana Foundation.

Contributor Information

Heather Bortfeld, Psychological Sciences and Cognitive & Information Sciences, University of California, Merced, Merced, CA USA.

John Oghalai, Tina and Rick Caruso Department of Otolaryngology – Head and Neck Surgery, Keck School of Medicine at the University of Southern California, Los Angeles, CA USA.

REFERENCES

- Akhtar N, & Gernsbacher M (2008). On privileging the role of gaze in infant social cognition. Child Development Perspectives, 2, 59–65.

- Bean JL, & Eigsti I-M (2012). Assessment of joint attention in school-age children and adolescents. Research in Autism Spectrum Disorders, 6, 1304–1310.

- Brinck I (2001). Attention and the evolution of intentional communication. Pragmatics & Cognition, 9(2), 259–277.

- Bruijnzeel H, Draaisma K, van Grootel R, Stegeman I, Topsakal V, & Grolman W (2016). Systematic review on surgical outcomes and hearing preservation for cochlear implantation in children and adults. Otolaryngology–Head and Neck Surgery, 154(4), 586–596.

- Carpenter M, Nagell K, & Tomasello M (1998). Social cognition, joint attention, and communicative competence from 9 to 15 months of age. Monographs of the Society for Research in Child Development, 63, 1–174.

- Cohn JF, & Tronick EZ (1983). Three-month-old infants’ reaction to simulated maternal depression. Child Development, 54, 185–193.

- Cristobal R, & Oghalai JS (2008). Hearing loss in children with very low birth weight: Current review of epidemiology and pathophysiology. Archives of Disease in Childhood. Fetal and Neonatal Edition, 93, F462–F468.

- D’Entremont BB, Hains SJ, & Muir DW (1997). A demonstration of gaze following in 3- to 6-month-olds. Infant Behavior & Development, 20, 569–572.

- Depowski N, Abaya H, Oghalai JS, & Bortfeld H (2015). Modality use in joint attention between hearing parents and deaf children. Frontiers in Psychology, 6, 1556.

- Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, & Conde JG (2009). Research electronic data capture (REDCap): A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics, 42(2), 377–381.

- Koester LS, Karkowski AM, & Traci MA (1998). How do deaf and hearing mothers regain eye contact when their infants look away? American Annals of the Deaf, 143, 5–13.

- Koester LS (2001). Nonverbal communication between deaf and hearing infants and their parents: A decade of research. Hrvatska Revija Za Rehabilitacijska Istraživanja, 37, 61–76.

- Koester LS, Brooks LR, & Karkowski AM (1998). A comparison of the vocal patterns of deaf and hearing mother-infant dyads during face-to-face interactions. Journal of Deaf Studies and Deaf Education, 3, 290–301.

- Koester LS, Brooks L, & Traci MA (2000). Tactile contact by deaf and hearing mothers during face-to-face interactions with their infants. Journal of Deaf Studies and Deaf Education, 5, 127–139.

- Koester LS, & Lahti-Harper E (2010). Mother-infant hearing status and intuitive parenting behaviors during the first 18 months. American Annals of the Deaf, 155, 5–18.

- Lieberman A, Hatrak M, & Mayberry RI (2011). The development of eye gaze control for linguistic input in deaf children. Proceedings of the 35th Annual Boston University Conference on Language Development (Vol. 0108, pp. 391–404).

- Lieberman A, Hatrak M, & Mayberry RI (2014). Learning to look for language: Development of joint attention in young deaf children. Language Learning and Development, 10, 9–35.

- Markus J, Mundy P, Morales M, Delgado CF, & Yale M (2000). Individual differences in infant skills as predictors of child–caregiver joint attention and language. Social Development, 9, 302–315.

- Mesman J, van IJzendoorn MH, & Bakermans-Kranenburg MJ (2009). The many faces of the Still-Face Paradigm: A review and meta-analysis. Developmental Review, 29, 120–162.

- Morales M, Mundy P, & Rojas J (1998). Following the direction of gaze and language development in 6-month-olds. Infant Behavior and Development, 21, 373–377.

- Mundy PC, & Acra C (2006). Joint attention, social engagement, and the development of social competence. In Marshall PJ, & Fox NA (Eds.), The development of social engagement: Neurobiological perspectives (pp. 81–117). New York, NY: Oxford University Press.

- Mundy P, Block J, Delgado C, Pomares Y, Van Hecke AV, & Parlade MV (2007). Individual differences and the development of joint attention in infancy. Child Development, 78, 938–954.

- Mundy P, & Newell L (2007). Attention, joint attention, and social cognition. Current Directions in Psychological Science, 16, 269–274.

- Naber FBA, Bakermans-Kranenburg MJ, van IJzendoorn MH, Dietz C, van Daalen E, Swinkels SHN, Buitelaar JK, & van Engeland H (2008). Joint attention development in toddlers with autism. European Child & Adolescent Psychiatry, 17, 143–152.

- National Institutes of Health (2010). Quick Statistics. Retrieved November 3, 2012, from http://www.nidcd.nih.gov/health/statistics/Pages/quick.aspx.

- Nikolopoulos TP, & Vlastarakos PV (2010). Treating options for deaf children. Early Human Development, 86, 669–674.

- Nowakowski ME, Tasker SL, & Schmidt LA (2009). Establishment of joint attention in dyads involving hearing mothers of deaf and hearing children, and its relation to adaptive social behavior. American Annals of the Deaf, 154, 15–29.

- Oghalai JS, Caudle SE, Bentley B, Abaya H, Lin J, Baker D, Emery C, Bortfeld H, & Winzelberg J (2012). Cognitive outcomes and familial stress after cochlear implantation in deaf children with and without developmental delays. Otology & Neurotology, 33, 947–956.

- Prezbindowski AK, Adamson LB, & Lederberg AR (1998). Joint attention in deaf and hearing 22-month-old children and their hearing mothers. Journal of Applied Developmental Psychology, 19, 377–387.

- Roos EM, McDuffie AS, Ellis Weismer S, & Gernsbacher M (2008). A comparison of contexts for assessing joint attention in toddlers on the autism spectrum. Autism, 12, 275–291.

- Saliba J, Bortfeld H, Levitin DJ, & Oghalai JS (2016). Functional near-infrared spectroscopy for neuroimaging in cochlear implant recipients. Hearing Research, 338, 64–75.

- Scaife M, & Bruner J (1975). The capacity for joint visual attention in the infant. Nature, 253, 265–266.

- Sevy A, Bortfeld H, Huppert T, Beauchamp M, Tonini R, & Oghalai J (2010). Neuroimaging with near-infrared spectroscopy demonstrates speech-evoked activity in the auditory cortex of deaf children following cochlear implantation. Hearing Research, 270, 39–47.

- Tasker SL, Nowakowski ME, & Schmidt LA (2010). Joint attention and social competence in deaf children with cochlear implants. Journal of Developmental and Physical Disabilities, 22, 509–532.

- Tek S (2011). A longitudinal analysis of joint attention and language development in young children with autism spectrum disorders. Dissertation Abstracts International, 71, 7126.

- Tomasello M (1995). Joint attention as social cognition. In Moore C, & Dunham PJ (Eds.), Joint attention: Its origins and role in development (pp. 103–130). Hillsdale, NJ: Lawrence Erlbaum Associates.

- Tomasello M, & Carpenter M (2007). Shared intentionality. Developmental Science, 10, 121–125.

- Traci M, & Koester LS (2003). Parent-infant interactions: A transactional approach to understanding the development of deaf infants. In Marschark M, & Spencer PE (Eds.), Oxford handbook of deaf studies, language, and education (pp. 190–202).

- Waxman RP, & Spencer PE (1997). What mothers do to support infant visual attention: Sensitivities to age and hearing status. Journal of Deaf Studies and Deaf Education, 2, 104–114.

- Wittenburg P, Brugman H, Russel A, Klassmann A, & Sloetjes H (2006). ELAN: A professional framework for multimodality research. In Proceedings of LREC 2006, Fifth International Conference on Language Resources and Evaluation.

- Yawn R, Hunter J, Sweeney A, & Bennett M (2015). Cochlear implantation: A biomechanical prosthesis for hearing loss. F1000 Prime Reports, 7, 45.