Abstract

In humans, the occipital middle-temporal region (hMT+/V5) specializes in the processing of visual motion, while the planum temporale (hPT) specializes in auditory motion processing. It has been hypothesized that these regions might communicate directly to achieve fast and optimal exchange of multisensory motion information. Here we investigated, for the first time in humans (male and female), the presence of direct white matter connections between visual and auditory motion-selective regions using a combined fMRI and diffusion MRI approach. We found evidence supporting the potential existence of direct white matter connections between individually and functionally defined hMT+/V5 and hPT. We show that projections between hMT+/V5 and hPT do not overlap with large white matter bundles, such as the inferior longitudinal fasciculus and the inferior frontal occipital fasciculus. Moreover, we did not find evidence suggesting the presence of projections between the fusiform face area and hPT, supporting the functional specificity of hMT+/V5–hPT connections. Finally, the potential presence of hMT+/V5–hPT connections was corroborated in a large sample of participants (n = 114) from the human connectome project. Together, this study provides a first indication for potential direct occipitotemporal projections between hMT+/V5 and hPT, which may support the exchange of motion information between functionally specialized auditory and visual regions.

SIGNIFICANCE STATEMENT Perceiving and integrating moving signals across the senses is arguably one of the most important perceptual skills for the survival of living organisms. To create a unified representation of movement, the brain must integrate motion information from separate senses. Our study provides support for the potential existence of direct connections between motion-selective regions in the occipital/visual (hMT+/V5) and temporal/auditory (hPT) cortices in humans. This connection could represent the structural scaffolding for the rapid and optimal exchange and integration of multisensory motion information. These findings suggest the existence of computationally specific pathways that allow information flow between areas that share a similar computational goal.

Keywords: hMT+/V5, motion processing, multisensory, planum temporale, tractography

Introduction

Perceiving motion across the senses is arguably one of the most important perceptual skills for the survival of living organisms. Single-cell recordings in primates (Dubner and Zeki, 1971) and functional neuroimaging studies in humans (Zeki et al., 1991) demonstrated that the middle temporal cortex (hereafter, area hMT+/V5) specializes in the processing of visual motion. One hallmark feature of this region is that it contains cortical columns that are preferentially tuned to a specific direction/axis of visual motion (Maunsell and Van Essen, 1983; Rezk et al., 2020). When the function of this region is disrupted, either by brain damage or by transcranial magnetic stimulation, the perception of visual motion direction is selectively impaired (Zihl et al., 1983, 1991; Matthews et al., 2001). Although less research has been dedicated to the neural substrates of auditory motion, the human planum temporale (hPT), in the superior temporal gyrus, is thought to specialize in the processing of moving sounds (Baumgart et al., 1999; Warren et al., 2002). Like hMT+/V5, hPT shows an axis-of-motion organization (Battal et al., 2019).

In everyday life, moving events are often perceived simultaneously across vision and audition. Psychophysical studies have shown the automaticity and perceptual nature of audiovisual motion perception (Kitagawa and Ichihara, 2002; Soto-Faraco et al., 2002; Vroomen and De Gelder, 2003). Classical models of movement perception suggest that visual and auditory motion inputs are first processed separately in sensory-specific brain regions and then integrated in multisensory convergence zones (e.g., intraparietal area) (Bremmer et al., 2001; Macaluso and Driver, 2005). This hierarchical model has been recently challenged by studies suggesting that the integration of auditory and visual motion information can occur within regions typically considered unisensory (Ghazanfar and Schroeder, 2006; Sadaghiani et al., 2009; von Saldern and Noppeney, 2013; Ferraro et al., 2020). In particular, in addition to its well-documented role for visual motion, hMT+/V5 has been found to respond preferentially to auditory motion (Poirier et al., 2005) and to contain information about auditory motion directions (Dormal et al., 2016) using a similar representational format in vision and audition (Rezk et al., 2020).

However, how the visual and auditory motion systems communicate is still debated. Although it was initially suggested that audiovisual motion signals in occipital or temporal regions could rely on feedback projections from multimodal areas (Driver and Spence, 2000; Soto-Faraco et al., 2004), an alternative hypothesis posits the existence of direct connections between motion-selective regions in the occipital and temporal cortices (Rezk et al., 2020). This hypothesis is supported by human studies showing increased connectivity between occipital and temporal motion-selective areas during the processing of moving information (Lewis and Noppeney, 2010; Dormal et al., 2016), as well as by primate tracer studies showing monosynaptic connections between MT, the nonhuman primate equivalent of area hMT+/V5, and motion-sensitive areas in the temporal lobe (Ungerleider and Desimone, 1986; Boussaoud et al., 1990; Palmer and Rosa, 2006a), caudal portions of the auditory parabelt (Palmer and Rosa, 2006a), and middle lateral belt regions (Majka et al., 2019). This latter area is considered part of the hPT equivalent in macaques (Poirier et al., 2017). In humans, however, the potential existence of direct anatomic connections between auditory and visual motion-selective regions remains unexplored.

In our study, we provide a first indication of the potential presence of direct anatomic connections between hMT+/V5 and hPT in humans using a combined functional and diffusion-weighted MRI approach. To overcome the difficulty of localizing hMT+/V5 and hPT from anatomic landmarks alone (Westbury et al., 1999; Dumoulin et al., 2000), each participant first completed a visual and an auditory fMRI motion localizer so that these areas could be localized functionally in each individual. A series of control tractography analyses, as well as a replication study in a large sample from the Human Connectome Project (HCP), support the specificity and reliability of our results.

Materials and Methods

Dataset 1: Trento

Sixteen participants (6 women; mean age ± SD, 30.6 ± 5.1 years; range, 20-40 years) were scanned at the Center for Mind/Brain Sciences of the University of Trento using a Bruker BioSpin MedSpec 4T MR scanner equipped with an 8-channel transmit and receive head coil. The study was approved by the Committee for Research Ethics of the University of Trento. All participants gave informed consent in agreement with the ethical principles for medical research involving human subjects (Declaration of Helsinki, World Medical Association) and the Italian Law on individual privacy (D.l. 196/2003). One participant was excluded from the analysis because of excessive movement during the auditory motion localizer task.

Experimental design

Functional visual motion localizer experiment

A visual motion localizer experiment was implemented to localize hMT+/V5. Visual stimuli were back-projected via a liquid crystal projector (OC EMP 7900, Epson Nagano), positioned at the back of the scanner, onto a screen (width, 42 cm; frame rate, 60 Hz; resolution, 1024 × 768 pixels; mean luminance, 109 cd/m2) and viewed via a mirror mounted on the head coil at a distance of 134 cm. Stimuli were random-dot patterns within a circular aperture (radius 4°), consisting of radially moving or static dots (moving and static conditions, respectively), with a central fixation cross (Huk et al., 2002). A total of 120 white dots (0.1° in diameter) were displayed on a gray background, moving at 4°/s. In all conditions, each dot had a limited lifetime of 0.2 s. Limited-lifetime dots were used to ensure that the global direction of motion could only be determined by integrating local signals over a larger summation field rather than by following a single dot (Bex et al., 2003). Additionally, limited-lifetime dots allowed the use of control flickering (as opposed to purely static) stimuli. Stimuli were presented for 16 s, followed by a 6 s rest period. Within motion blocks, the dots alternated between inward (contracting) and outward (expanding) motion once per second. Because the localizer aimed to localize the global hMT+/V5 complex (e.g., MT and MST regions), the static block was composed of dots maintaining their position throughout the block to prevent flicker-like motion (A. T. Smith et al., 2006). The localizer consisted of 14 alternating blocks of moving and static dots (7 each) and lasted a total of 6 min 40 s (160 volumes). To maintain participants' attention and to minimize eye movements during the run, participants were instructed to detect a color change (from black to red) of the central fixation cross (0.03°) by pressing the response button with the right index finger. Three of the 16 participants completed a slightly modified version of this visual motion localizer, described previously (Rezk et al., 2020).
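The stimulus sizes and speeds above are given in degrees of visual angle. As a worked example of the viewing geometry, the sketch below converts them to screen pixels and frames. It assumes that the 42 cm screen width corresponds to the full 1024-pixel horizontal resolution and that the 0.2 s lifetime is quantized to whole 60 Hz frames; neither detail is stated in the text.

```python
import math

SCREEN_WIDTH_CM = 42.0      # projection screen width
SCREEN_WIDTH_PX = 1024      # horizontal resolution (assumed to span the full width)
VIEWING_DISTANCE_CM = 134.0
FRAME_RATE_HZ = 60.0
TR_S = 2.5                  # fMRI repetition time (from the EPI parameters)
N_VOLUMES = 160

def pixels_per_degree(width_cm, width_px, distance_cm):
    """Pixels subtended by 1 degree of visual angle at the screen centre."""
    cm_per_degree = 2.0 * distance_cm * math.tan(math.radians(0.5))
    return cm_per_degree * width_px / width_cm

ppd = pixels_per_degree(SCREEN_WIDTH_CM, SCREEN_WIDTH_PX, VIEWING_DISTANCE_CM)
aperture_radius_px = 4.0 * ppd                  # 4 deg aperture radius
dot_diameter_px = 0.1 * ppd                     # 0.1 deg dots
speed_px_per_frame = 4.0 * ppd / FRAME_RATE_HZ  # 4 deg/s
lifetime_frames = round(0.2 * FRAME_RATE_HZ)    # 0.2 s limited lifetime
run_duration_s = N_VOLUMES * TR_S               # 160 volumes at TR = 2.5 s

print(f"{ppd:.1f} px/deg; aperture radius {aperture_radius_px:.0f} px; "
      f"dot {dot_diameter_px:.1f} px; {speed_px_per_frame:.2f} px/frame; "
      f"lifetime {lifetime_frames} frames; run {run_duration_s:.0f} s")
```

Under these assumptions one degree spans about 57 pixels, each dot survives 12 frames, and 160 volumes at TR = 2.5 s give the stated 6 min 40 s (400 s).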

Functional auditory motion localizer experiment

To localize hPT, we implemented an auditory motion localizer. Previous studies have demonstrated that, in addition to hPT, a region within the middle temporal cortex anterior to hMT+/V5 (called hMTa) (Rezk et al., 2020) is also selectively recruited for the processing of moving sounds (Poirier et al., 2005; Saenz et al., 2008; Battal et al., 2019; Rezk et al., 2020). hMTa was identified individually using the auditory motion localizer data and used as an exclusion mask at later stages, to avoid confounding hPT–hMT+/V5 connections with hPT–hMTa connections. To create an externalized, ecological sensation of sound location and motion inside the MRI scanner, we made individual in-ear stereo recordings in a semi-anechoic room outside the MRI scanner, using 30 loudspeakers on the horizontal and vertical planes mounted on two semicircular wooden structures. Participants were seated in the center of the apparatus with their head on a chinrest, such that the speakers on the horizontal and vertical planes were equally distant from the participants' ears. Sound stimuli consisted of 1250 ms pink noise (50 ms rise/fall time). In the motion condition, the pink noise moved in leftward, rightward, upward, or downward directions. In the static condition, the same pink noise was presented at one of four locations: left, right, up, or down. These recordings were then replayed to the blindfolded participants inside the MRI scanner via MR-compatible closed-ear headphones (frequency response, 500 Hz to 10 kHz; Serene Sound, Resonance Technology). In each run, participants were presented with eight auditory categories (four motion and four static) in random order using a block design. Each category was presented for 15 s (12 repetitions of the 1250 ms sound, no interstimulus interval), followed by a 7 s gap for indicating the corresponding direction/location in space and 8 s of silence (total interblock interval, 15 s). Participants completed a total of 12 runs, each lasting 4 min 10 s. A more detailed description can be found elsewhere (Battal et al., 2019). As for the visual modality, three participants completed a slightly modified version of this auditory motion localizer, consisting of one long run of 13 motion blocks and 13 static blocks (587.5 s in total) (for more details, see Rezk et al., 2020).
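The block timing can be checked with a short schedule generator. This is a hypothetical sketch: the category labels are ours, the randomization is a plain shuffle, and the text does not say how the remaining 10 s of each 4 min 10 s run (250 s versus 8 × 30 s = 240 s of blocks) were used, so that period is left out.

```python
import random

SOUND_S = 1.25          # one 1250 ms pink-noise stimulus
REPS = 12               # back-to-back repetitions per block (no interstimulus interval)
RESPONSE_GAP_S = 7.0    # gap for indicating direction/location
SILENCE_S = 8.0

CATEGORIES = ([f"motion_{d}" for d in ("leftward", "rightward", "upward", "downward")]
              + [f"static_{d}" for d in ("left", "right", "up", "down")])

def make_run(seed=None):
    """(onset_s, category) for one run: the 8 categories once each, in random order."""
    rng = random.Random(seed)
    order = CATEGORIES[:]
    rng.shuffle(order)
    block_s = SOUND_S * REPS                        # 15 s of sound
    cycle_s = block_s + RESPONSE_GAP_S + SILENCE_S  # 30 s per category
    return [(i * cycle_s, category) for i, category in enumerate(order)]

run = make_run(seed=1)
for onset, category in run:
    print(f"{onset:5.1f} s  {category}")
```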

Image acquisition

Four imaging datasets were acquired from each participant: an fMRI visual motion localizer, an fMRI auditory motion localizer, diffusion-weighted MR images, and structural T1-weighted images. Participants were instructed to lie still during acquisition, and foam padding was used to minimize scanner noise and head movement.

fMRI sequences

Functional images were acquired with a T2*-weighted gradient-echo echoplanar imaging (EPI) sequence. Acquisition parameters were as follows: TR, 2500 ms; TE, 26 ms; flip angle, 73°; FOV, 192 mm; matrix size, 64 × 64; and voxel size, 3 × 3 × 3 mm3. A total of 39 slices were acquired in ascending (feet-to-head) interleaved order with no slice gap. The first three scans of each run were discarded to allow for steady-state magnetization. Before each EPI run, we performed an additional scan to measure the point-spread function of the acquired sequence, including fat saturation, which was used to correct the distortions expected with high-field imaging (Zeng and Constable, 2002).

Diffusion MRI (dMRI)

Whole-brain diffusion-weighted images were acquired using a single-refocused EPI sequence (TR = 7100 ms, TE = 99 ms, image matrix = 112 × 112, FOV = 100 × 100 mm2, voxel size 2.29 mm isotropic). Ten volumes without diffusion weighting (b0 images) and 60 diffusion-weighted volumes with a b value of 1500 s/mm2 were acquired. By using a large number of directions and 10 repetitions of the baseline images at high magnetic field strength, we aimed to improve the signal-to-noise ratio and reduce implausible tracking results (Fillard et al., 2011).

Structural (T1) images

To provide detailed anatomy, a total of 176 axial slices were acquired with a T1-weighted MP-RAGE sequence covering the whole brain. The imaging parameters were as follows: TR, 2700 ms; TE, 4.18 ms; flip angle, 7°; voxel size, 1 mm isotropic; FOV, 256 × 224 mm; and inversion time, 1020 ms (Papinutto and Jovicich, 2008).

Image processing

Definition of ROIs using functional data

Functional volumes from the localizer experiments were used to define regions responding preferentially to motion (hMT+/V5 for vision; hPT and hMTa for audition). For the preprocessing and analysis of functional data, we used SPM8 (Wellcome Department of Imaging Neuroscience, London), implemented in MATLAB 2016b (The MathWorks). Preprocessing of functional data included realignment of the functional time series with second-degree B-spline interpolation, coregistration of functional and anatomic data, and spatial smoothing (Gaussian kernel, 6 mm FWHM). Visual and auditory motion localizer experiments were analyzed separately, and the ROIs in each experiment were localized both (1) in each subject individually and (2) at the group level. The rationale for defining group-level ROIs was twofold: (1) it allowed us to assess the reproducibility of the connection using subject-specific versus group-level ROIs; and (2) it allowed us to use the group coordinates in other datasets where the individual localization of hMT+/V5 or hPT is not possible, such as the HCP dataset (Dataset 2; see below).
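SPM specifies smoothing kernels by their full width at half maximum (FWHM); the corresponding Gaussian standard deviation is sigma = FWHM / (2·sqrt(2·ln 2)) ≈ FWHM / 2.355, so the 6 mm kernel has sigma ≈ 2.55 mm, or about 0.85 voxels at 3 mm. The separable NumPy sketch below illustrates this; it is not SPM's own spm_smooth implementation, and its zero-padded borders are our simplification.

```python
import math
import numpy as np

def fwhm_to_sigma(fwhm_mm):
    """Gaussian standard deviation for a given full width at half maximum."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def gaussian_kernel_1d(sigma_vox, truncate=4.0):
    """Normalized 1D Gaussian kernel (sigma in voxels), truncated at 4 sigma."""
    radius = int(math.ceil(truncate * sigma_vox))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma_vox) ** 2)
    return k / k.sum()

def smooth_volume(vol, fwhm_mm, voxel_mm):
    """Separable Gaussian smoothing of a 3D volume (zero padding at the borders)."""
    out = np.asarray(vol, dtype=float)
    for axis, size_mm in enumerate(voxel_mm):
        kernel = gaussian_kernel_1d(fwhm_to_sigma(fwhm_mm) / size_mm)
        out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), axis, out)
    return out

sigma_mm = fwhm_to_sigma(6.0)                  # about 2.55 mm
vol = np.zeros((21, 21, 21))
vol[10, 10, 10] = 1.0                          # a single "active" voxel
smoothed = smooth_volume(vol, 6.0, (3.0, 3.0, 3.0))
print(f"sigma = {sigma_mm:.3f} mm, total signal = {smoothed.sum():.6f}")
```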

hMT+/V5 coordinates definition from visual motion localizer

Individually defined hMT+/V5

Following the preprocessing steps, we obtained BOLD activity related to the visual motion and visual static blocks. For each subject, we computed statistical comparisons with a fixed-effects GLM. The GLM was fitted for every voxel, with the visual motion and visual static conditions as regressors of interest and the six head motion parameters derived from realignment of the functional volumes (three translation and three rotation parameters) as regressors of no interest. Each regressor was modeled with a boxcar function and convolved with the canonical HRF. A high-pass filter of 128 s was used to remove low-frequency signal drifts. Brain regions that responded preferentially to the moving visual stimuli were identified for every subject individually by contrasting the visual motion and visual static conditions [Visual Motion > Visual Static]. Subject-specific hMT+/V5 coordinates were defined by identifying voxels near the intersection of the ascending limb of the inferior temporal sulcus and the lateral occipital sulcus (Dumoulin et al., 2000) that responded significantly more to motion than to static conditions; a lenient threshold of p < 0.01 uncorrected was used so that this peak could be localized in every participant.
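The first-level model can be sketched in NumPy as ordinary least squares on a design matrix built from HRF-convolved boxcars, the six realignment parameters, cosine drift regressors implementing the 128 s high-pass filter, and a constant. This is a simplified stand-in for SPM8, not its implementation: SPM additionally models serial autocorrelations, and the block onsets, head-motion values, and simulated voxel below are all hypothetical.

```python
import math
import numpy as np

TR = 2.5        # s, from the EPI parameters
N_VOLS = 160    # volumes in the visual localizer run

def canonical_hrf(tr, duration=32.0):
    """Double-gamma HRF shaped like SPM's canonical HRF (response gamma shape 6,
    undershoot gamma shape 16, undershoot ratio 1/6), sampled every tr seconds."""
    t = np.arange(0.0, duration, tr)
    def gamma_pdf(x, shape):
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def block_regressor(onsets_s, duration_s, hrf, tr=TR, n_vols=N_VOLS):
    """Boxcar (1 during blocks) convolved with the HRF."""
    frame_times = np.arange(n_vols) * tr
    box = np.zeros(n_vols)
    for onset in onsets_s:
        box[(frame_times >= onset) & (frame_times < onset + duration_s)] = 1.0
    return np.convolve(box, hrf)[:n_vols]

def dct_drifts(n_vols=N_VOLS, tr=TR, cutoff_s=128.0):
    """Cosine regressors with periods longer than cutoff_s (the high-pass filter)."""
    k = int(2 * n_vols * tr / cutoff_s + 1)
    n = np.arange(n_vols)
    return np.column_stack([np.cos(np.pi * (2 * n + 1) * j / (2 * n_vols)) for j in range(1, k)])

def contrast_t(y, X, c):
    """OLS fit of y on X and t statistic for contrast vector c."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - rank)
    c = np.asarray(c, dtype=float)
    se = math.sqrt(sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c))
    return float(c @ beta) / se

hrf = canonical_hrf(TR)
motion = block_regressor(np.arange(0, 308, 44), 16.0, hrf)    # hypothetical onsets
static = block_regressor(np.arange(22, 308, 44), 16.0, hrf)
rng = np.random.default_rng(0)
realign = rng.normal(scale=0.1, size=(N_VOLS, 6))             # stand-in for 6 motion parameters
X = np.column_stack([motion, static, realign, dct_drifts(), np.ones(N_VOLS)])

y = 2.0 * motion + 0.5 * static + rng.normal(scale=0.5, size=N_VOLS)   # simulated voxel
c = np.zeros(X.shape[1])
c[0], c[1] = 1.0, -1.0                                        # [Visual Motion > Visual Static]
t_value = contrast_t(y, X, c)
print(f"t = {t_value:.2f} (df = {N_VOLS - X.shape[1]})")
```

Including the cosine regressors in the design is equivalent, for an OLS fit, to high-pass filtering both the data and the design before estimation.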

Group-level hMT+/V5

The preprocessing of the functional data for the group-level analysis additionally included the spatial normalization of anatomic and functional data to the MNI template, with resampling of the structural and functional data to an isotropic 2 mm resolution. The individual [Visual Motion > Visual Static] contrast images were further smoothed with an 8 mm FWHM kernel and entered into a random-effects model for the second-level analysis, consisting of a one-sample t test against 0. Group-level hMT+/V5 coordinates were defined by identifying voxels near the intersection of the ascending limb of the inferior temporal sulcus and the lateral occipital sulcus (Dumoulin et al., 2000) that responded significantly more to motion than to static conditions, using family-wise error correction for multiple comparisons at p < 0.05.
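At the second level, each participant contributes one contrast value per voxel, and the random-effects test is a one-sample t test of these values against zero. A minimal per-voxel sketch with the standard library follows; the values are hypothetical, and family-wise error correction across voxels (which SPM derives from random field theory) is not shown.

```python
import math
import statistics

def one_sample_t(values, mu0=0.0):
    """t = (mean - mu0) / (sd / sqrt(n)) with df = n - 1 (sample SD, n - 1 denominator)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two participants")
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - mu0) / se, n - 1

# Hypothetical [Visual Motion > Visual Static] estimates at one voxel
t, df = one_sample_t([1.0, 2.0, 3.0, 4.0, 5.0])
print(f"t({df}) = {t:.3f}")   # t(4) = 4.243
```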

hPT and hMTa coordinates definition from auditory motion localizer

Individually defined hPT and hMTa

For the auditory motion localizer, the same preprocessing as for the visual motion localizer was applied. The GLM included eight regressors for the motion and static conditions (four motion directions, four sound source locations) and six movement parameters (three translation and three rotation parameters) as regressors of no interest. Each regressor was modeled with a boxcar function, convolved with the canonical HRF, and filtered with a high-pass filter of 128 s. Brain regions responding preferentially to the moving sounds were identified for every subject individually by contrasting all motion conditions with all static conditions [Auditory Motion > Auditory Static]. Individual hPT coordinates were defined at the peaks in the superior temporal gyrus that lay posterior to Heschl's gyrus and responded significantly more to motion than to static sounds. Individual hMTa coordinates were defined at the peaks in the middle temporal cortex, anterior to hMT+/V5, that responded significantly more to motion than to static sounds. We used a lenient threshold of p < 0.01 uncorrected in the individual [Auditory Motion > Auditory Static] contrast to be able to localize these regions in each participant.
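The auditory contrast and the peak selection can be sketched as below. The contrast weights assume the regressor order four motion, four static, six nuisance; the search mask standing in for "superior temporal gyrus posterior to Heschl's gyrus" is hypothetical; and the one-sided p < 0.01 threshold is approximated with the normal quantile (about 2.33), which is close to the t quantile at the large degrees of freedom of a 12-run localizer.

```python
import numpy as np
from statistics import NormalDist

def auditory_contrast(n_nuisance=6):
    """[Auditory Motion > Auditory Static]: +1 for 4 motion, -1 for 4 static, 0 for nuisance."""
    return np.array([1.0] * 4 + [-1.0] * 4 + [0.0] * n_nuisance)

def peak_in_mask(t_map, search_mask, t_threshold):
    """Index of the maximum t inside search_mask, or None if it does not exceed t_threshold."""
    masked = np.where(search_mask, t_map, -np.inf)
    idx = np.unravel_index(np.argmax(masked), t_map.shape)
    if masked[idx] <= t_threshold:
        return None
    return tuple(int(i) for i in idx)

t_thr = NormalDist().inv_cdf(0.99)   # one-sided p < 0.01, normal approximation

# Toy t map: the stronger peak lies outside the hypothetical search mask
t_map = np.zeros((10, 10, 10))
t_map[2, 3, 4] = 5.0
t_map[7, 7, 7] = 8.0
mask = np.zeros(t_map.shape, dtype=bool)
mask[:5, :5, :5] = True
print(peak_in_mask(t_map, mask, t_thr))   # (2, 3, 4)
```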

Group-level hPT and hMTa

For hPT and hMTa defined at the group level, an analogous procedure to the one conducted for the visual motion localizer was used.

Fusiform face area (FFA) coordinate definition from previous literature

To define the FFA, we relied on face-preferential group coordinates from a previous study from our laboratory (Benetti et al., 2017) for the right [44, −50, −16] and the left [−42, −52, −20] hemispheres (in MNI space).
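Group coordinates such as these are given in millimetres; locating them in an image requires that image's voxel-to-world affine. A sketch, assuming the affine of FSL's 2 mm MNI152 template (in practice, read it from the image header, for example with nibabel); the subsequent warp to each participant's native space with ANTs is not reproduced here.

```python
import numpy as np

# Assumed voxel-to-MNI affine of FSL's MNI152_T1_2mm template
MNI152_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])

def mm_to_voxel(xyz_mm, affine=MNI152_2MM_AFFINE):
    """Nearest voxel index (i, j, k) for a world coordinate given in mm."""
    ijk = np.linalg.inv(affine) @ np.append(np.asarray(xyz_mm, dtype=float), 1.0)
    return tuple(int(v) for v in np.rint(ijk[:3]))

FFA_RIGHT = (44, -50, -16)
FFA_LEFT = (-42, -52, -20)
print(mm_to_voxel(FFA_RIGHT), mm_to_voxel(FFA_LEFT))   # (23, 38, 28) (66, 37, 26)
```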

dMRI preprocessing

Data preprocessing was implemented in MRtrix 3.0 (Tournier et al., 2012) (www.mrtrix.org) and in FSL 5.0.9 (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/FSL). Briefly, data were denoised (Veraart et al., 2016), Gibbs ringing artifacts were removed, and the data were corrected for eddy current distortions and head motion (Andersson and Sotiropoulos, 2016) and for low-frequency B1 field inhomogeneities (Tustison et al., 2010). Spatial resolution was up-sampled by a factor of 2 in all three dimensions using cubic B-spline interpolation, to a voxel size of 1.15 mm isotropic, and intensity normalization across subjects was performed by deriving scale factors from the median intensity in select voxels of white matter, gray matter, and CSF in b = 0 s/mm2 images, then applying these across each subject image (Raffelt et al., 2012b). This step normalizes the median white matter b = 0 intensity (i.e., of the non–diffusion-weighted image) across participants so that the proportion of one tissue type within a voxel does not influence the diffusion-weighted signal in another. The diffusion data were nonlinearly registered to the T1-weighted structural images using the antsRegistrationSyNQuick script available in ANTs (Avants et al., 2008), with the brain-extracted T1 image defined as the fixed image and the up-sampled FA map (1 × 1 × 1 mm3) and the up-sampled b0 volume as the moving images. We segmented maps for white matter, gray matter, CSF, and subcortical nuclei using the FAST algorithm in FSL (Zhang et al., 2001). This information was combined to form a five-tissue-type image to be used for anatomically constrained tractography in MRtrix3 (R. E. Smith et al., 2012). These maps were used to estimate tissue-specific response functions (i.e., the signal expected for a voxel containing a single, coherently oriented fiber bundle) for gray matter, white matter, and CSF using multishell multitissue constrained spherical deconvolution (CSD) (Jeurissen et al., 2014).
Fiber orientation distribution (FOD) functions were then estimated using the response function coefficients averaged across subjects, ensuring that subsequent differences in FOD amplitude reflect only differences in the diffusion-weighted signal. By applying multishell multitissue CSD to our single-shell data, we benefited from the hard non-negativity constraint, which has been observed to lead to more robust outcomes (Jeurissen et al., 2014). Spatial correspondence between participants was achieved by generating a group-specific population template with an iterative registration and averaging approach using FOD images from all participants (Raffelt et al., 2011). That is, each subject's FOD image was registered to the template using the FOD-guided nonlinear registration available in MRtrix (Raffelt et al., 2012a). These registrations were required to transform the seed and target regions from native diffusion space to the template diffusion space, where tractography was conducted.
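The preprocessing and FOD steps map onto standard MRtrix3 commands. The sketch below only assembles and prints the command lines (pass dry_run=False to execute them); file names, the phase-encoding direction, and the exact choice of commands are our assumptions rather than the authors' scripts. Intensity normalization, the ANTs registration, and population-template construction are omitted, and the T1 image is assumed to be already aligned with the diffusion data. Because MRtrix's msmt_csd can generally resolve only as many tissues as there are distinct b-values, this single-shell sketch (b = 0 and 1500 s/mm2) fits WM and CSF only.

```python
import shlex
import subprocess

def subject_commands(sub, dwi, t1_in_dwi_space):
    """Per-subject MRtrix3 commands (hypothetical file names)."""
    return [
        ["dwidenoise", dwi, f"{sub}_den.mif"],
        ["mrdegibbs", f"{sub}_den.mif", f"{sub}_deg.mif"],
        ["dwifslpreproc", f"{sub}_deg.mif", f"{sub}_pre.mif", "-rpe_none", "-pe_dir", "AP"],
        ["dwibiascorrect", "ants", f"{sub}_pre.mif", f"{sub}_unb.mif"],
        ["mrgrid", f"{sub}_unb.mif", "regrid", f"{sub}_up.mif", "-voxel", "1.15"],
        ["5ttgen", "fsl", t1_in_dwi_space, f"{sub}_5tt.mif"],
        ["dwi2response", "msmt_5tt", f"{sub}_up.mif", f"{sub}_5tt.mif",
         f"{sub}_wm.txt", f"{sub}_gm.txt", f"{sub}_csf.txt"],
    ]

def group_commands(subjects):
    """Average response functions across subjects, then estimate FODs with them."""
    cmds = [["responsemean", *[f"{s}_{tissue}.txt" for s in subjects], f"group_{tissue}.txt"]
            for tissue in ("wm", "csf")]
    for s in subjects:
        cmds.append(["dwi2fod", "msmt_csd", f"{s}_up.mif",
                     "group_wm.txt", f"{s}_wmfod.mif",
                     "group_csf.txt", f"{s}_csf.mif"])
    return cmds

def run(cmds, dry_run=True):
    for cmd in cmds:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)

subjects = ["sub01", "sub02"]
cmds = [c for s in subjects for c in subject_commands(s, f"{s}_dwi.mif", f"{s}_T1_dwi.mif")]
cmds += group_commands(subjects)
run(cmds)
```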

Preparation of ROIs for tractography

Individually defined hMT+/V5, hPT, and hMTa

We transformed individually defined coordinates from the native structural space to the native diffusion space with the antsApplyTransforms command in ANTs, using the (in this case inverse) warps calculated in the previous nonlinear registration. The reconstruction of white matter connections between functionally defined regions is particularly challenging because these regions are likely to encompass portions of gray matter, where fiber orientations are ill-defined (Whittingstall et al., 2014; Jeurissen et al., 2019). Therefore, once in native diffusion space, the peak coordinates were moved to the closest white matter voxel (fractional anisotropy > 0.25) (Blank et al., 2011; Benetti et al., 2018), and a sphere of 5 mm radius was centered there. To ensure that tracking was done from white matter voxels only, we masked the sphere with individual white matter masks. Last, ROIs were transformed from native diffusion space to template diffusion space, where tractography was conducted.
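The ROI construction can be sketched in NumPy on voxel grids already in native diffusion space. Distances are measured in millimetres using the voxel size, ties between equally close white matter voxels are broken by array order, and in the toy example the white matter mask is simply FA > 0.25; these are our assumptions, and the coordinate transforms themselves (antsApplyTransforms) are not shown.

```python
import numpy as np

def nearest_wm_voxel(peak_ijk, fa, voxel_mm, fa_threshold=0.25):
    """Voxel with FA above threshold that is closest (in mm) to the functional peak."""
    candidates = np.argwhere(fa > fa_threshold)
    if len(candidates) == 0:
        raise ValueError("no voxel exceeds the FA threshold")
    dist = np.linalg.norm((candidates - np.asarray(peak_ijk)) * np.asarray(voxel_mm), axis=1)
    return tuple(int(v) for v in candidates[np.argmin(dist)])

def sphere_mask(center_ijk, shape, voxel_mm, radius_mm=5.0):
    """Boolean sphere of radius_mm (in mm) around center_ijk."""
    grid = np.indices(shape).reshape(3, -1).T
    dist = np.linalg.norm((grid - np.asarray(center_ijk)) * np.asarray(voxel_mm), axis=1)
    return (dist <= radius_mm).reshape(shape)

def make_roi(peak_ijk, fa, wm_mask, voxel_mm, radius_mm=5.0):
    """Move the peak to the nearest white matter voxel, grow a sphere, keep white matter only."""
    center = nearest_wm_voxel(peak_ijk, fa, voxel_mm)
    return sphere_mask(center, fa.shape, voxel_mm, radius_mm) & wm_mask

voxel = (1.15, 1.15, 1.15)              # up-sampled diffusion voxel size
fa = np.zeros((20, 20, 20))
fa[10:, :, :] = 0.5                     # toy white matter slab
wm_mask = fa > 0.25
roi = make_roi((5, 10, 10), fa, wm_mask, voxel)   # peak lies in "gray matter"
print(nearest_wm_voxel((5, 10, 10), fa, voxel), int(roi.sum()), "voxels")
```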

Group-level hMT+/V5, hPT, hMTa, and FFA

First, we computed the warps between the standard MNI space and the native structural space of each participant by nonlinearly registering each participant's T1-weighted structural image to the MNI-152 standard-space T1-template image with the antsRegistrationSyNQuick script in ANTs. This registration used the MNI-152 T1-template image as the fixed image and each subject's native T1 image as the moving image. Note that the structural T1 images had first been reoriented with the fslreorient2std command in FSL so that they matched the orientation of the MNI-152 T1-template image. Using the inverse of these registrations, we transformed the group peak coordinates from the standard MNI space to the native structural space of each participant. Once the coordinates were in native structural space, we followed the same procedure described for the individually defined coordinates.

Probabilistic tractography

Probabilistic tractography was conducted between three pairs of ROIs: (1) individually defined hMT+/V5 and hPT, (2) group-level hMT+/V5 and hPT, and (3) group-level hPT and FFA. Tractography was conducted using each subject's individual FOD image after it had been transformed and reoriented to template diffusion space. We selected hMT+/V5 and hPT as inclusion regions for tractography, based on their preferential response to visual and auditory motion, respectively. To prevent hPT from connecting with regions in the vicinity of hMT+/V5 that respond preferentially to auditory, but not visual, motion, hMTa (identified in the auditory motion localizer task) was used as an exclusion mask for connections between hMT+/V5 and hPT. hMT+/V5 masks were mutually exclusive with hMTa masks, both at the individual and at the group level. In this way, we avoided reconstructing tracts between motion-selective regions that respond preferentially to the auditory modality.

For each pair of ROIs, we computed tractography in symmetric mode (i.e., seeding from one ROI and targeting the other, and vice versa). We then merged the results by pooling together the reconstructed streamlines. We used two tracking algorithms in MRtrix (iFOD2 and Null Distribution2). The former is a conventional tracking algorithm, whereas the latter reconstructs streamlines by random tracking. The iFOD2 algorithm (second-order integration over FODs) is a probabilistic algorithm that uses a Bayesian approach to account for more than one fiber orientation within each voxel and takes as input an FOD image represented in the spherical harmonic basis. Candidate streamline paths are drawn based on short curved “arcs” of a circle of fixed length (the step size), tangent to the current direction of tracking at the current point, rather than by stepping along straight-line segments (Tournier et al., 2010). The Null Distribution2 algorithm does not use any image information relating to fiber orientations. It reconstructs connections from random orientation samples, which makes it possible to identify voxels where the diffusion data provide more evidence of connection than expected from random tracking (Morris et al., 2008). As random tracking relies on the same seed and target regions as the iFOD2 algorithm, the number of reconstructed streamlines can be compared directly between the two tracking algorithms. We used the following fiber-tracking parameters: 5000 randomly placed seeds per voxel in the ROI, a step length of 0.6 mm, an FOD amplitude cutoff of 0.05, and a maximum angle of 45° between successive steps. We applied the anatomically constrained variant of this algorithm (ACT) (Tournier et al., 2010, 2012), whereby each participant's five-tissue-type segmented T1 image provided biologically realistic priors for streamline generation, reducing the likelihood of false positives (R. E. Smith et al., 2012).
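The symmetric tracking runs can be expressed as MRtrix3 tckgen calls, with iFOD2 and Nulldist2 (the MRtrix name of the Null Distribution2 algorithm) sharing identical seeds, inclusion/exclusion regions, and ACT image. The sketch only builds the command lines; file names are hypothetical, and how tracking terminates with per-voxel seeding (the interplay of tckgen's -select and -seeds options) should be checked against the documentation of the MRtrix version used.

```python
import shlex

SEEDS_PER_VOXEL = "5000"

def tckgen_cmd(algorithm, fod, seed_roi, target_roi, act_5tt, out, exclude_roi=None):
    """One tckgen run: seed in seed_roi, keep streamlines that reach target_roi."""
    cmd = ["tckgen", fod, out,
           "-algorithm", algorithm,                  # "iFOD2" or "Nulldist2"
           "-act", act_5tt,                          # anatomically constrained tractography
           "-seed_random_per_voxel", seed_roi, SEEDS_PER_VOXEL,
           "-include", target_roi,
           "-step", "0.6", "-cutoff", "0.05", "-angle", "45"]
    if exclude_roi is not None:
        cmd += ["-exclude", exclude_roi]             # e.g., hMTa for hMT+/V5-hPT tracking
    return cmd

def symmetric_tracking(algorithm, fod, roi_a, roi_b, act_5tt, prefix, exclude_roi=None):
    """Seed A -> target B, seed B -> target A, merge both files, then count streamlines."""
    a_to_b = f"{prefix}_{algorithm}_a2b.tck"
    b_to_a = f"{prefix}_{algorithm}_b2a.tck"
    merged = f"{prefix}_{algorithm}.tck"
    return [
        tckgen_cmd(algorithm, fod, roi_a, roi_b, act_5tt, a_to_b, exclude_roi),
        tckgen_cmd(algorithm, fod, roi_b, roi_a, act_5tt, b_to_a, exclude_roi),
        ["tckedit", a_to_b, b_to_a, merged],
        ["tckinfo", merged, "-count"],
    ]

for alg in ("iFOD2", "Nulldist2"):
    for cmd in symmetric_tracking(alg, "sub01_fod_template.mif", "hMT_L.mif", "hPT_L.mif",
                                  "sub01_5tt_template.mif", "sub01_L", exclude_roi="hMTa_L.mif"):
        print(shlex.join(cmd))
```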
The set of reconstructed connections was refined by removing streamlines whose length was more than 3 SDs above the mean streamline length or whose position was more than 3 SDs away from the mean position, following a procedure similar to previous studies, although the exact parameters vary across studies (Yeatman et al., 2012; Takemura et al., 2016). To calculate a streamline's distance from the core of the tract, we resampled each streamline to 100 equidistant nodes and treated the spread of coordinates at each node as a multivariate Gaussian. The tract's core was calculated as the mean of the fibers' x, y, and z coordinates at each node.
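A NumPy sketch of this cleaning step follows. We assume that "position more than 3 SDs from the mean" means a Mahalanobis distance above 3 from the node-wise mean (the core) at any node, in the spirit of the procedure of Yeatman et al. (2012); we also flip streamlines to a common orientation before comparing nodes, a step not described in the text but needed when streamlines from the two seeding directions are pooled.

```python
import numpy as np

def streamline_length(sl):
    return float(np.linalg.norm(np.diff(sl, axis=0), axis=1).sum())

def resample(sl, n_nodes=100):
    """Resample a (k, 3) polyline to n_nodes points equally spaced along its arc length."""
    seg = np.linalg.norm(np.diff(sl, axis=0), axis=1)
    s = np.concatenate([[0.0], np.cumsum(seg)])
    targets = np.linspace(0.0, s[-1], n_nodes)
    return np.column_stack([np.interp(targets, s, sl[:, d]) for d in range(3)])

def orient_like(sl, ref):
    """Reverse sl if its start lies closer to the end of ref than to the start of ref."""
    if np.linalg.norm(sl[0] - ref[0]) > np.linalg.norm(sl[0] - ref[-1]):
        return sl[::-1]
    return sl

def clean_bundle(streamlines, n_nodes=100, max_sd=3.0):
    """Boolean keep-mask: drop streamlines > max_sd SDs longer than the mean length, or
    with a Mahalanobis distance > max_sd from the tract core at any node."""
    ref = np.asarray(streamlines[0], dtype=float)
    sls = [orient_like(np.asarray(sl, dtype=float), ref) for sl in streamlines]
    lengths = np.array([streamline_length(sl) for sl in sls])
    too_long = lengths > lengths.mean() + max_sd * lengths.std()
    nodes = np.stack([resample(sl, n_nodes) for sl in sls])   # (n_streamlines, n_nodes, 3)
    far = np.zeros(len(sls), dtype=bool)
    for i in range(n_nodes):
        pts = nodes[:, i, :]
        dev = pts - pts.mean(axis=0)                            # offset from the core
        cov_inv = np.linalg.pinv(np.cov(pts, rowvar=False))
        d2 = np.einsum("ij,jk,ik->i", dev, cov_inv, dev)
        far |= np.sqrt(np.maximum(d2, 0.0)) > max_sd
    return ~(too_long | far)

# Toy bundle: 25 parallel 50 mm streamlines on a grid, plus one stray streamline
x = np.linspace(0.0, 50.0, 11)
bundle = [np.column_stack([x, np.full_like(x, y), np.full_like(x, z)])
          for y in range(-2, 3) for z in range(-2, 3)]
bundle.append(np.column_stack([x, np.full_like(x, 30.0), np.zeros_like(x)]))
keep = clean_bundle(bundle)
print(int(keep.sum()), "of", len(bundle), "streamlines kept")
```

The pseudo-inverse handles nodes where a coordinate has no spread across streamlines, which would make the ordinary covariance inverse undefined.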

Statistical and data analysis

Testing the presence of connections between ROIs

We independently tested the presence of (1) individually defined hMT+/V5–hPT connections, (2) group-level hMT+/V5–hPT connections, and (3) group-level hPT–FFA connections. There is no consensus on the statistical thresholding of probabilistic tractography, but previous studies have considered a connection reliable at the individual level when it had a minimum of 10 streamlines (Makuuchi et al., 2009; Blank et al., 2011; Müller-Axt et al., 2017; Benetti et al., 2018; Tschentscher et al., 2019). However, setting the same absolute threshold for different connections does not take into account that the probability of connections drops exponentially with the distance between the seed and target regions (Markov et al., 2013), nor the difficulty of separating real from false connections (Iturria-Medina et al., 2011). To take these factors into account, we compared the number of streamlines reconstructed by random tracking (Null Distribution2 algorithm) with those generated by conventional tracking (iFOD2 algorithm) (Morris et al., 2008; McFadyen et al., 2019). Since both algorithms conduct tractography using the same seed and target regions, we can directly compare the number of reconstructed streamlines between them without correcting for possible differences in the distance between the seed and target regions or in their sizes (Morris et al., 2008).

As in previous studies (Müller-Axt et al., 2017; Tschentscher et al., 2019), we calculated the logarithm of the number of streamlines [log(streamlines)] to increase the likelihood of obtaining a normal distribution, which was tested with the Shapiro–Wilk test in RStudio (Allaire, 2012) before parametric statistics were applied. The log-transformed numbers of streamlines were compared using two-sided paired t tests. To control for unreliable connections, we calculated the group mean and SD of the log-transformed number of streamlines for each connection and discarded participants whose values were >3 SDs away from the group mean for the respective connection. Connections were only considered reliable when the number of streamlines reconstructed with the iFOD2 algorithm was higher than that obtained with the Null Distribution2 algorithm. Significance was thresholded at p = 0.05, Bonferroni-corrected for multiple comparisons (three connections × two hemispheres), that is, p = 0.05/6 ≈ 0.008.
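The per-connection comparison can be sketched with the standard library. We assume that a participant flagged as an outlier for either algorithm is dropped from the paired comparison and that streamline counts are strictly positive (the text does not say how zero counts, whose logarithm is undefined, were handled). Two-sided p-values would then come from the t distribution with n − 1 degrees of freedom, for example via scipy.stats.t.sf, and the Shapiro–Wilk check is available as scipy.stats.shapiro; neither is reproduced here.

```python
import math
import statistics

ALPHA = 0.05 / 6   # Bonferroni: three connections x two hemispheres (about 0.008)

def log_counts(counts):
    if any(c <= 0 for c in counts):
        raise ValueError("log(streamlines) is undefined for zero counts")
    return [math.log(c) for c in counts]

def within_sd(values, max_sd=3.0):
    """True for participants whose value lies within max_sd SDs of the group mean."""
    mean, sd = statistics.fmean(values), statistics.stdev(values)
    return [abs(v - mean) <= max_sd * sd for v in values]

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n)), n - 1

def compare_ifod2_vs_null(ifod2_counts, null_counts):
    """Log-transform, drop outliers (either algorithm), then paired t on the rest."""
    a, b = log_counts(ifod2_counts), log_counts(null_counts)
    keep = [ka and kb for ka, kb in zip(within_sd(a), within_sd(b))]
    a = [v for v, k in zip(a, keep) if k]
    b = [v for v, k in zip(b, keep) if k]
    return paired_t(a, b)

# Hypothetical streamline counts for five participants
t, df = compare_ifod2_vs_null([120, 80, 200, 150, 95], [30, 25, 60, 40, 20])
print(f"t({df}) = {t:.2f}; Bonferroni alpha = {ALPHA:.4f}")
```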

In cases in which we found no difference between the number of streamlines derived from the null distribution and the number generated with the iFOD2 algorithm, the results were additionally tested with Bayesian analyses (see, e.g., Ly et al., 2016). This analysis was based on the t values obtained with the t tests described above and on a Cauchy prior on effect size, centered on zero (scale = 0.707), under the alternative hypothesis. The resulting Bayes factor (BF) quantifies the evidence for the alternative relative to the null hypothesis; low BF values (<1) indicate that the data support the null hypothesis of no effect. The Bayesian analysis was performed using JASP (JASP Team 2019).
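For reference, a default Bayesian paired t test of this kind can be computed from t and n with the JZS Bayes factor of Rouder et al. (2009): under the alternative, the effect size has a Cauchy(0, r) prior, written as a normal prior with variance g mixed over an inverse-gamma(1/2, r²/2) density on g. The pure-Python sketch below integrates over g numerically on a logarithmic grid; it is our illustration, not JASP's code, and should agree with JASP's default (r = 0.707) only up to integration error.

```python
import math

def jzs_bf10(t, n, r=0.707, log_g_min=-20.0, log_g_max=20.0, steps=8000):
    """JZS Bayes factor BF10 for a one-sample or paired t test with n observations
    (pairs). Under H1, delta ~ N(0, g) with g ~ InvGamma(1/2, r^2/2), i.e. delta ~
    Cauchy(0, r). The integral over g uses the trapezoid rule in log(g)."""
    nu = n - 1
    null_lik = (1.0 + t * t / nu) ** (-(nu + 1) / 2)
    h = (log_g_max - log_g_min) / steps
    total = 0.0
    for i in range(steps + 1):
        g = math.exp(log_g_min + i * h)
        prior = r / math.sqrt(2 * math.pi) * g ** -1.5 * math.exp(-r * r / (2 * g))
        alt_lik = (1 + n * g) ** -0.5 * (1 + t * t / ((1 + n * g) * nu)) ** (-(nu + 1) / 2)
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * alt_lik * prior * g   # the extra g is the Jacobian dg = g d(log g)
    return total * h / null_lik

for t_obs in (0.0, 1.0, 3.0):
    print(t_obs, round(jzs_bf10(t_obs, n=15), 3))
```

Values below 1 favor the null hypothesis; their reciprocal (BF01) expresses how many times more likely the data are under the null than under the alternative.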

Overlap of hMT+/V5–hPT connections with the inferior frontal occipital fasciculus (IFOF) and inferior longitudinal fasciculus (ILF)

To assess whether hMT+/V5–hPT connections followed the same trajectory as the ILF or the IFOF, two major white matter bundles that connect the occipital lobe with the temporal and frontal lobes, respectively (Dejerine, 1895; Catani et al., 2003), we computed the spatial overlap between these bundles. The Dice similarity coefficient (DSC) (Dice, 1945) was used to evaluate the overlap of hMT+/V5–hPT connections with the ILF and the IFOF, in each participant and hemisphere separately. The DSC measures the spatial overlap between Regions A and B and is defined as DSC(A,B) = 2|A∩B|/(|A| + |B|), where ∩ denotes the intersection and |·| the number of voxels in a region. We calculated the DSC of hMT+/V5–hPT connections and the ILF using as Region A the binarized tract-density images of hMT+/V5–hPT connections in the template diffusion space. Region B represents the ILF, dissected in our population template. To extract the ILF, a tractogram was generated using whole-brain probabilistic tractography on the population template (Mito et al., 2018). Ten million streamlines were first generated and subsequently filtered to 2 million streamlines using the SIFT (spherical-deconvolution informed filtering of tractograms) algorithm (R. E. Smith et al., 2013) to reduce reconstruction biases. Then, we used the two-ROI approach to dissect the ILF (Wakana et al., 2007). The same procedure was applied to determine the overlap of hMT+/V5–hPT connections and the IFOF.
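The DSC on binarized masks takes a few lines of NumPy. The sketch assumes both masks are already on the same template-space voxel grid (for example, tract-density images produced with MRtrix's tckmap and thresholded at zero); returning NaN when both masks are empty is our convention.

```python
import numpy as np

def dice(a, b):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) of two binary masks."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = int(a.sum() + b.sum())
    if total == 0:
        return float("nan")               # overlap undefined for two empty masks
    return 2.0 * int(np.logical_and(a, b).sum()) / total

# Toy tract-density images (hypothetical values), binarized at > 0
tdi_connection = np.zeros((4, 4, 4))
tdi_connection[0, :, :2] = 3.0
tdi_ilf = np.zeros((4, 4, 4))
tdi_ilf[0, :, 1:3] = 7.0
print(dice(tdi_connection > 0, tdi_ilf > 0))   # 0.5
```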

Testing whether hMT+/V5–hPT connections are different when relying on individual or group-level hMT+/V5 and hPT

We analyzed the impact that group-level localization of hMT+/V5 and hPT (compared with individually localized regions) had on the reconstruction of hMT+/V5–hPT connections, as we aimed (1) to investigate the reproducibility of the connections under these two approaches to defining the ROIs, and (2) to assess the potential presence of hMT+/V5–hPT connections in Dataset 2 (HCP), where individual definition of hMT+/V5 and hPT is not possible. We first investigated whether hMT+/V5–hPT tracts could be reconstructed when the location of the ROIs was derived from group-averaged functional data, as described in Testing the presence of hMT+/V5–hPT connections. Then, we assessed the effect of group-level localization of hMT+/V5 and hPT (compared with individually localized ROIs) on hMT+/V5–hPT connections by comparing the connectivity index (CI) between tracts derived from individual and group functional data.

As the number of streamlines connecting two regions strongly depends on the number of voxels in the seed and target masks, and as we conducted the probabilistic tracking in symmetric mode (from the seed to the target and from the target to the seed), the CI was determined by the number of streamlines from the seed that reached the target divided by the product of the number of sample streamlines generated in each seed/target voxel (5000) and the number of voxels in the respective seed/target mask (Müller-Axt et al., 2017; Tschentscher et al., 2019). To increase the likelihood of obtaining a normal distribution, log-transformed values were computed (Müller-Axt et al., 2017; Tschentscher et al., 2019) as follows:

$$\mathrm{CI} = \frac{\log(\text{number of streamlines from the seed reaching the target})}{\log(5000 \times \text{number of voxels in the seed mask})}$$
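Assuming the CI takes the log-ratio form implied by the description above (log of the number of streamlines reaching the target over log of 5000 × the number of seed voxels), it can be computed as below. The function name is hypothetical, and because the text does not specify how the two tracking directions of the symmetric mode were combined, the sketch handles a single seed-to-target direction.

```python
import math

SAMPLES_PER_VOXEL = 5000  # sample streamlines per seed voxel, as stated in the text


def connectivity_index(n_streamlines: int, n_seed_voxels: int,
                       samples_per_voxel: int = SAMPLES_PER_VOXEL) -> float:
    """Log-ratio connectivity index for one seed-to-target direction.

    Assumed form: log(streamlines reaching the target) divided by
    log(total streamlines generated from the seed mask).
    """
    if n_streamlines < 1 or n_seed_voxels < 1:
        raise ValueError("counts must be positive for the log transform")
    generated = samples_per_voxel * n_seed_voxels
    return math.log(n_streamlines) / math.log(generated)


# Hypothetical example: 400 streamlines reaching the target from a 300-voxel seed.
print(round(connectivity_index(400, 300), 3))  # 0.421
```

With this form the CI is bounded between 0 (a single streamline) and 1 (every generated streamline reaches the target), which makes values comparable across masks of different sizes.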

Following previous studies (Müller-Axt et al., 2017; Tschentscher et al., 2019), subjects whose CI was ≥3 SDs away from the group mean were considered outliers. CIs of connections derived from individual and group-level ROIs were compared using two-sided paired t tests, after testing for normality using the Shapiro–Wilk test. The significance threshold was set at p = 0.025 to account for the two comparisons (right and left hemisphere).
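The outlier rule and paired comparison can be sketched with SciPy as follows. Several details are assumptions not fixed by the text: the 3-SD rule is applied to each condition and a subject is dropped from both conditions if it is an outlier in either, the Shapiro–Wilk test is run on the paired differences, and the helper name `compare_ci` is hypothetical.

```python
import numpy as np
from scipy import stats

ALPHA = 0.025  # per-hemisphere significance threshold stated in the text


def compare_ci(ci_individual, ci_group, sd_cutoff=3.0):
    """Paired comparison of CIs from individual vs group-level ROIs."""
    x = np.asarray(ci_individual, dtype=float)
    y = np.asarray(ci_group, dtype=float)

    # Flag subjects whose CI is >= sd_cutoff SDs from the group mean in
    # either condition, and drop them from both (assumption).
    keep = np.ones(x.shape, dtype=bool)
    for values in (x, y):
        z = np.abs(values - values.mean()) / values.std(ddof=1)
        keep &= z < sd_cutoff
    x, y = x[keep], y[keep]

    # Normality check on the paired differences, then a two-sided paired t test.
    _, shapiro_p = stats.shapiro(x - y)
    t_stat, p_value = stats.ttest_rel(x, y)
    return {
        "n": int(keep.sum()),
        "shapiro_p": float(shapiro_p),
        "t": float(t_stat),
        "p": float(p_value),
        "significant": bool(p_value < ALPHA),
    }
```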

As the CI is highly influenced by the distance between the seed and target regions, we assessed differences in hMT+/V5–hPT distance between individual and group-level definitions of the ROIs. Distance was defined as the Euclidean distance between hMT+/V5 and hPT coordinates in each subject. Distance values were normally distributed after a log transformation and were compared using two-sided paired t tests. If differences in distance were found, the distance-corrected CI was calculated by replacing the number of streamlines with the product of the distance and the number of streamlines (d*streamlines). Similar approaches have been used in other studies to account for the effect of distance on the number of streamlines generated between two regions (Tomassini et al., 2007; Eickhoff et al., 2010; Blank et al., 2011).
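The distance measure and the distance-corrected CI can be sketched as follows, again assuming the log-ratio form of the CI (log streamlines over log of 5000 × seed voxels). Function names and the toy coordinates are hypothetical; the paired comparison of log distances mirrors the two-sided paired t tests described in the text.

```python
import math

import numpy as np
from scipy import stats


def euclidean_distance(coord_a, coord_b) -> float:
    """Euclidean distance (mm) between two peak coordinates (x, y, z)."""
    diff = np.asarray(coord_a, dtype=float) - np.asarray(coord_b, dtype=float)
    return float(np.linalg.norm(diff))


def distance_corrected_ci(n_streamlines, distance_mm, n_seed_voxels,
                          samples_per_voxel=5000):
    """CI with the streamline count replaced by distance * streamlines."""
    numerator = math.log(distance_mm * n_streamlines)
    return numerator / math.log(samples_per_voxel * n_seed_voxels)


def compare_log_distances(dist_individual, dist_group):
    """Two-sided paired t test on log-transformed distances."""
    return stats.ttest_rel(np.log(dist_individual), np.log(dist_group))


print(euclidean_distance((0, 0, 0), (3, 4, 12)))  # 13.0
```

Under this form, the correction adds log(distance) to the numerator, so subjects whose seed and target lie farther apart are no longer penalized for the lower streamline counts expected at longer distances.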

Dataset 2: HCP

We investigated the reproducibility of hMT+/V5–hPT connections using an independent dMRI dataset with a large number of participants. Minimally processed dMRI data from the new subjects (n = 236) in the HCP S1200 release were used (Van Essen et al., 2013) (https://www.humanconnectome.org/study/hcp-young-adult/document/1200-subjects-data-release). Participants who did not complete the diffusion imaging protocol (n = 51), had positive drug/alcohol tests (n = 19), or had abnormal vision (n = 1) were excluded from the analysis. Because of the high number of siblings in the HCP sample, which might spuriously decrease the variance given their structural and functional similarity, we selected only unrelated participants, keeping one member of each family (of the 86 siblings, 47 were excluded). The selection resulted in 114 healthy participants (50 women; mean age ± SD, 28.6 ± 3.7 years; range, 22-36 years).
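Keeping one member per family amounts to a single pass over (subject, family) pairs. In this sketch the first listed member of each family is retained; which member was actually kept is not stated in the text, and the function name and identifiers are hypothetical.

```python
from typing import Iterable, List, Tuple


def one_per_family(subjects: Iterable[Tuple[str, str]]) -> List[str]:
    """Return subject IDs, keeping only the first subject seen per family."""
    seen_families = set()
    kept = []
    for subject_id, family_id in subjects:
        if family_id not in seen_families:
            seen_families.add(family_id)
            kept.append(subject_id)
    return kept


# Hypothetical IDs: sub-01 and sub-02 are siblings in family A.
print(one_per_family([("sub-01", "A"), ("sub-02", "A"), ("sub-03", "B")]))
```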

Image acquisition and processing

The minimally processed structural data included T1-weighted high-resolution MP-RAGE images (voxel size = 0.7 mm isotropic) corrected for gradient distortions and low-frequency field inhomogeneities. The diffusion data consisted of a multishell acquisition (b-values = 1000, 2000, 3000 s/mm²) with 90 diffusion directions per shell, at a spatial resolution of 1.25 mm isotropic. Minimal processing included intensity normalization across runs, EPI distortion correction, and eddy current/motion correction. Further details on image acquisition can be found elsewhere (https://www.humanconnectome.org/storage/app/media/documentation/s1200/HCP_S1200_Release_Appendix_I.pdf).

The preprocessing of the diffusion images in this dataset is highly similar to the processing of images in Dataset 1. The only difference in the processing of data arises from the multishell nature of the acquisition as opposed to the single-shell acquisition of Dataset 1. Given the sample size, we selected a subset of 60 participants (approximately half of the total sample) to create a representative population template and white matter mask (McFadyen et al., 2019). We used this template to normalize the white matter intensity of all 114 participants' dMRI volumes.

Preparation of ROIs and probabilistic tractography

Individual definition of hMT+/V5 and hPT was not possible, since the HCP scanning protocol does not include any motion localizer experiment. Hence, inclusion regions for tractography were derived from group-level hMT+/V5 and hPT, as described in Dataset 1. The ROIs were transformed to template diffusion space, where we conducted tractography using the same procedure used in Dataset 1.

Statistical analysis: testing the presence of hMT+/V5–hPT connections

We assessed the potential presence of connections between group-level hMT+/V5 and hPT as described in Dataset 1.

Results

Location of individually defined and group-level hMT+/V5 and hPT

Group-level coordinates for hMT+/V5 were located at MNI coordinates (44, −70, −4) and (−50, −70, −2) for the right and left hemispheres, respectively, which is consistent with reported MNI coordinates for this region (Watson et al., 1993), and lie within the V5 mask of the Juelich histologic atlas available in FSL (Eickhoff et al., 2005) (see Fig. 1A). Subject-specific hMT+/V5 coordinates were on average 7 ± 3 mm (mean ± SD) and 10 ± 4 mm away from the group maxima in the right and left hemisphere, respectively, consistent with the reported interindividual variability in the location of this region (Dumoulin et al., 2000).

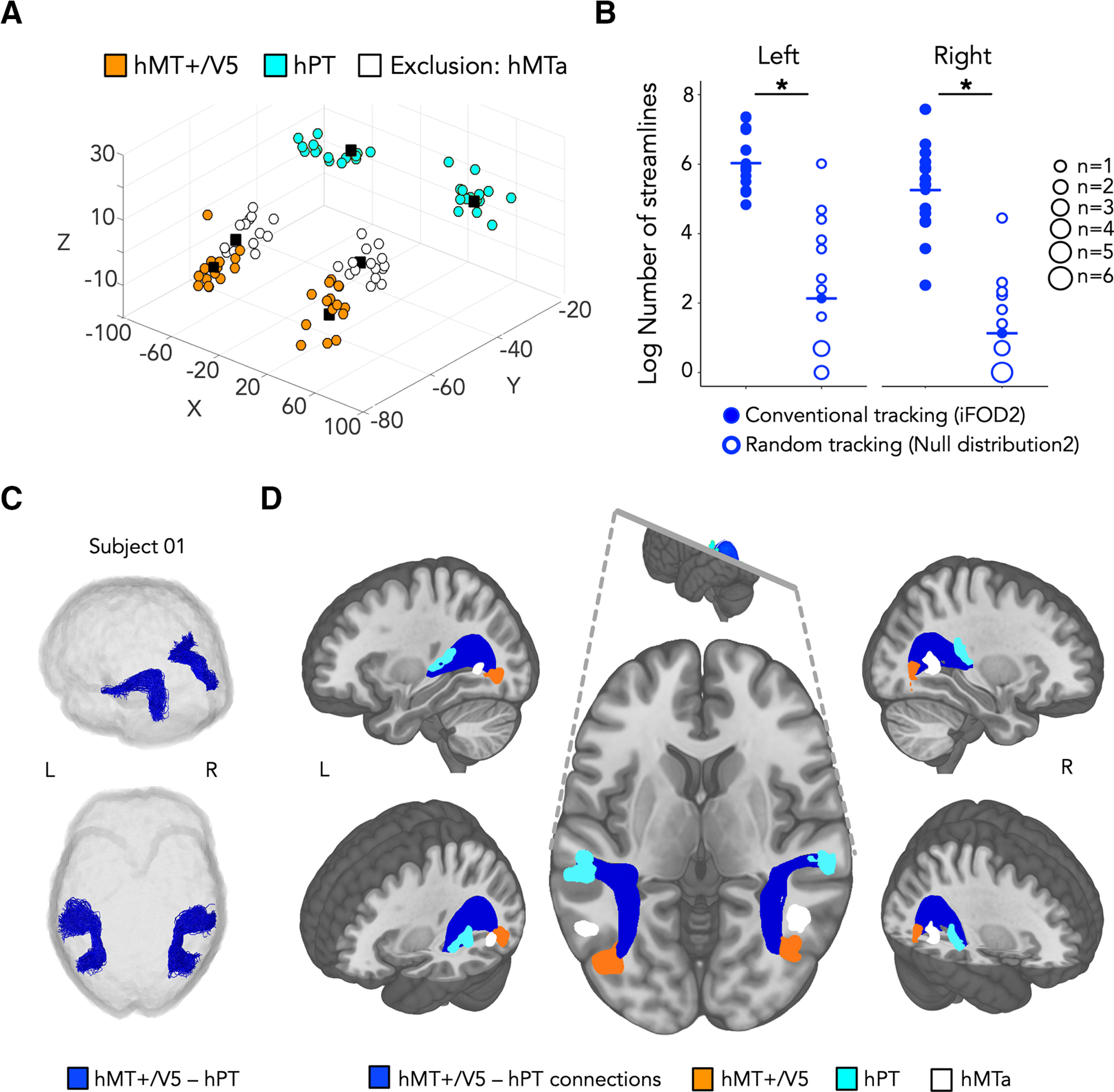

Figure 1.

A, 3D scatterplot representing subject-specific hMT+/V5 (orange), hPT (light blue), and exclusion mask (hMTa, white) in MNI coordinates. Black squares represent the group-level peak coordinates for the same regions. B, Balloon plot represents the log-transformed number of streamlines reconstructed for hMT+/V5–hPT connections. Streamlines are reconstructed by conventional tracking (iFOD2 algorithm, represented by filled circles) and random tracking (Null distribution2 algorithm, represented by empty circles). The iFOD algorithm relies on FODs, whereas the streamlines generated with the Null distribution algorithm rely on random orientation samples where no information relating to fiber orientations is used. *Significant difference. C, Resulting tractography reconstruction for hMT+/V5–hPT connections (dark blue) for 1 representative subject. D, Group-averaged structural pathways between hMT+/V5 and hPT (dark blue). Orange represents inclusion region hMT+/V5. Light blue represents inclusion region hPT. White represents exclusion region hMTa. Individual connectivity maps were binarized, overlaid, and are shown at a threshold of >9 subjects. Inclusion regions followed the same procedure and are shown at a threshold of >2 subjects. Results are shown on the T1 MNI-152 template. R, Right; L, left.

Group-level hPT coordinates were located at MNI coordinates (64, −34, 13) and (−44, −32, 12) for the right and left hemisphere, respectively, which is consistent with reported MNI coordinates for this region (Warren et al., 2002), and lie within the planum temporale mask of the Harvard–Oxford atlas available in FSL (Desikan et al., 2006). Individually defined hPT coordinates were on average 9 ± 5 mm and 15 ± 4 mm away from the group maxima for the right and left hemisphere, respectively, which is also consistent with reported interindividual variability in the location of hPT (Battal et al., 2019).

Group-level hMTa peak coordinates were located at MNI coordinates (42, −60, 6) and (−62, −60, −2) for the right and left hemisphere, respectively, which is consistent with previously reported MNI coordinates for this region (Dormal et al., 2016; Battal et al., 2019; Rezk et al., 2020). Individually defined hMTa coordinates were on average 6 ± 2 mm and 12 ± 6 mm away from the group maxima for the right and left hemisphere, respectively.

The respective locations of individually defined coordinates for hMT+/V5, hPT and exclusion region hMTa are illustrated in Figure 1A.

Testing the presence of individually defined hMT+/V5–hPT connections

For hMT+/V5–hPT connections that relied on individual hMT+/V5 and hPT, the percentage of streamlines rejected based on aberrant length or position was 3.9 ± 3.9 (mean ± SD) for the right and 6.3 ± 4.3 for the left hemisphere. The number of streamlines in all participants was within 3 SDs of the group mean. For the right hemisphere, the number of reconstructed streamlines was significantly above chance: conventional tracking (iFOD2) produced a higher streamline count than random tracking (Null distribution) (log streamlines[iFOD2] = 5.2 ± 1.2, log streamlines[Null distribution] = 1.1 ± 1.3, paired t test, t(14) = 9.7, p = 1e-7, d = 2.5). Similar results were obtained for the left hemisphere (log streamlines[iFOD2] = 6.0 ± 0.9, log streamlines [Null distribution] = 2.1 ± 2.0, paired t test, t(14) = 8.3, p = 8e-7, d = 2.1). The distribution of the number of streamlines generated with each algorithm can be seen in Figure 1B. Tractography reconstruction for hMT+/V5–hPT connections in a representative subject is illustrated in Figure 1C, and group-averaged tracts are shown in Figure 1D.
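The effect sizes reported for these paired comparisons are numerically consistent with Cohen's d_z, the mean of the paired differences divided by their SD, which satisfies t = d_z × √n (e.g., 9.7/√15 ≈ 2.5 and 8.3/√15 ≈ 2.1). This is our reading of the reported numbers rather than a formula stated by the authors; the sketch below computes both quantities, and the helper names are hypothetical.

```python
import math

import numpy as np
from scipy import stats


def paired_t_and_dz(a, b):
    """Paired t test plus Cohen's d_z for the paired differences a - b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    diff = a - b
    t_stat, p_value = stats.ttest_rel(a, b)
    d_z = diff.mean() / diff.std(ddof=1)  # standardized mean difference
    return float(t_stat), float(p_value), float(d_z)


def dz_from_t(t_stat: float, n: int) -> float:
    """Recover d_z from a reported paired t value and number of pairs."""
    return t_stat / math.sqrt(n)
```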

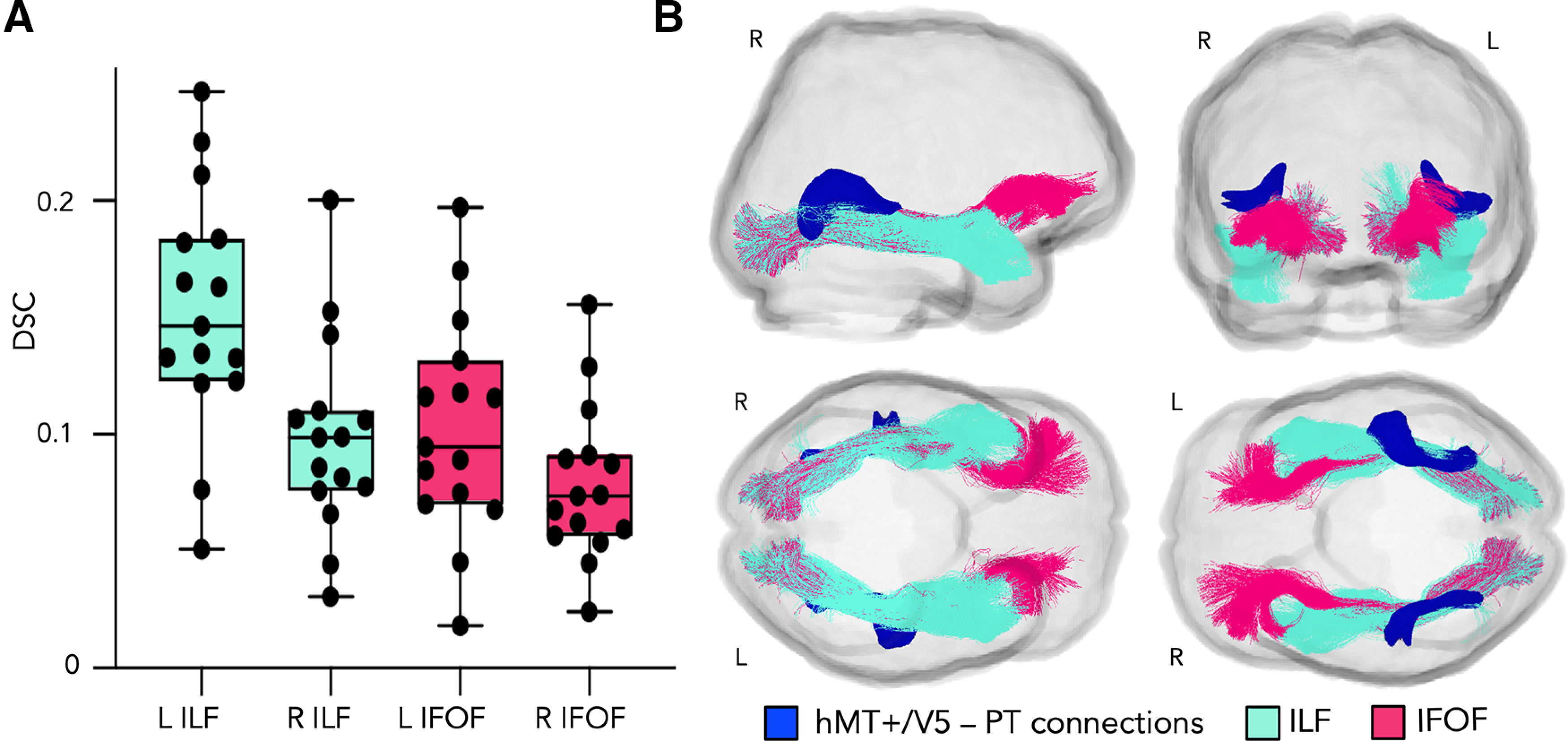

Overlap of hMT+/V5–hPT connections with the IFOF and ILF

The DSC was used to evaluate the spatial overlap between hMT+/V5–hPT connections, as reflected by the binarized individual tract-density images, and each of the ILF and IFOF. For the overlap between hMT+/V5–hPT connections and the ILF, the DSC was 0.153 ± 0.053 (mean ± SD) in the left hemisphere and 0.099 ± 0.043 in the right hemisphere (see Fig. 2A). For the overlap between hMT+/V5–hPT connections and the IFOF, the DSC was 0.103 ± 0.047 (mean ± SD) in the left and 0.078 ± 0.034 in the right hemisphere. For illustrative purposes, the position of hMT+/V5–hPT connections (sum of binarized individual tract-density images, thresholded at >9 subjects) relative to the ILF and the IFOF is shown in Figure 2B.

Figure 2.

A, Boxplots represent DSCs between individual hMT+/V5–hPT connections and the ILF (turquoise) and the IFOF (pink). Dots represent individual DSC values. R, Right; L, left. B, Position of hMT+/V5–hPT connections (dark blue) relative to the left ILF (turquoise) and the left IFOF (pink). Sagittal view from the right hemisphere, coronal view from the anterior part of the brain, and axial view from the inferior (bottom left) and superior (bottom right) parts of the brain. ILF and IFOF dissected in our population template following the two-ROI approach described by Wakana et al. (2007). hMT+/V5–hPT connections shown at a threshold of >9 subjects. Results are shown on the template diffusion space. R, Right; L, left.

Are hMT+/V5–hPT connections different depending on individual or group-level hMT+/V5 and hPT?

The effect of relying on group-averaged functional data, rather than individual activity maps, to determine the location of hMT+/V5 and hPT was assessed with two analyses. First, we investigated whether hMT+/V5–hPT connections could be reconstructed when the location of the ROIs was derived from group-averaged functional data. We then compared the CI between the tracts derived from group-level or subject-level functional data.

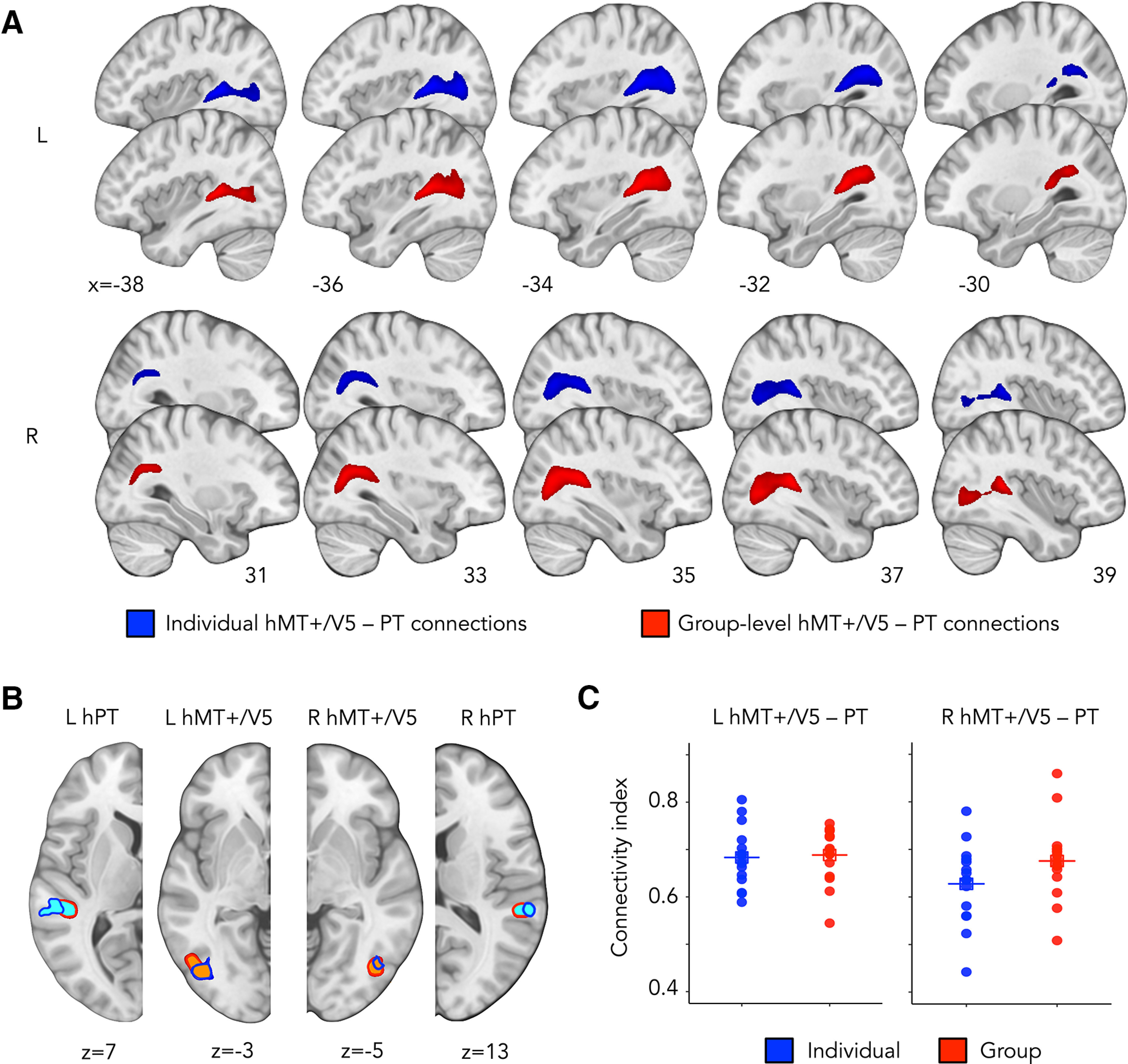

Testing the presence of group-level hMT+/V5–hPT connections

The percentage of streamlines rejected based on aberrant length or position was 5.0 ± 4.3 (mean ± SD) for the right and 9.4 ± 2.7 for the left hemisphere. The number of streamlines in all participants was within 3 SDs of the group mean. hMT+/V5–hPT connections derived from group-level hMT+/V5 and hPT were reconstructed above chance, suggesting that the dMRI data produced meaningful streamlines between hMT+/V5 and hPT. For right hMT+/V5–hPT connections, the log-transformed number of streamlines reconstructed with the iFOD2 algorithm (mean ± SD, 6.2 ± 1.3) was significantly higher than that reconstructed by random tracking (mean ± SD, 2.3 ± 1.1) (paired t test, t(14) = 11.1, p = 2e-8, d = 2.9). Similar results were obtained for left hMT+/V5–hPT connections (log streamlines[iFOD2] = 6.2 ± 0.9, log streamlines [Null distribution] = 4.4 ± 0.8, paired t test, t(14) = 6.9, p = 8e-6, d = 1.8). hMT+/V5–hPT connections derived from group-level or subject-level functional data are shown in Figure 3A. The overlap between hMT+/V5 and hPT regions derived from group-level or subject-level functional data is shown in Figure 3B.

Figure 3.

A, Group-averaged structural pathways between hMT+/V5 and hPT for connections derived from individual ROIs (dark blue) and group-level ROIs (red). Connections are shown at a threshold of >9 subjects. Results are depicted on the T1 MNI-152 template. R, Right; L, left. B, Group-averaged hMT+/V5 (orange) and hPT (light blue) derived from individual ROIs (outline in dark blue) and group-level ROIs (outline in red). ROIs are shown at a threshold of >2 subjects. R, Right; L, left. C, Distance-corrected CI for right and left hMT+/V5–hPT connections derived from individual (dark blue) or group-level (red) hMT+/V5 and hPT. Horizontal lines indicate mean values. Individual connectivity maps were binarized and overlaid. R, Right; L, left.

CI

For right hMT+/V5–hPT connections, the CI for tracts derived from individual ROIs (mean ± SD, 0.37 ± 0.09) was lower than that obtained from group-level ROIs (mean ± SD, 0.42 ± 0.09) (paired t test, t(14) = 2.77, p = 0.01, d = 0.7). For left hMT+/V5–hPT connections, the CI did not differ between tracts relying on subject-specific or group-level ROIs (CI [subject-specific ROIs] = 0.42 ± 0.06, CI [group-level ROIs] = 0.44 ± 0.06, paired t test, t(14) = 0.87, p = 0.4, d = 0.2).

To control for possible differences in seed-target distance that could drive differences in the CI, we compared the distance between hMT+/V5 and hPT for individual and group-level ROIs. For both right and left hMT+/V5–hPT connections, the distance between hMT+/V5 and hPT was larger for individually defined ROIs than for group-level ROIs (right hemisphere: log Distance [subject-specific ROIs] = 3.71 ± 0.11, log Distance [group-level ROIs] = 3.60 ± 0.08, paired t test, t(14) = −2.93, p = 0.01, d = 0.8; left hemisphere: log Distance [subject-specific ROIs] = 3.71 ± 0.20, log Distance [group-level ROIs] = 3.57 ± 0.07, paired t test, t(14) = −2.81, p = 0.01, d = 0.7). Hence, we computed the distance-corrected CI, replacing the number of streamlines by the product of the number of streamlines and the distance between hMT+/V5 and hPT. Distance-corrected CIs did not differ significantly between individual and group-level ROIs in either hemisphere at the threshold of p = 0.025 (right hemisphere: CI [subject-specific ROIs] = 0.63 ± 0.08, CI [group-level ROIs] = 0.68 ± 0.08, paired t test, t(14) = 2.5, p = 0.03, d = 0.6; left hemisphere: CI [subject-specific ROIs] = 0.68 ± 0.06, CI [group-level ROIs] = 0.68 ± 0.06, paired t test, t(14) = 0.28, p = 0.8, d = 0.07). This suggests that the lower CI observed for individual ROIs compared with group-level ROIs in the right hemisphere was likely due to the larger distance between individually defined hMT+/V5 and hPT. The distribution of the distance-corrected CI for tracts derived from subject-specific and group-level hMT+/V5 and hPT can be seen in Figure 3C.

Testing the presence of group-level FFA–hPT connections

The percentage of streamlines discarded based on the length or position criteria was (mean ± SD) 9.2 ± 2.6 for the right and 10.2 ± 3.2 for the left hemisphere. All participants were included in the analysis, as there were no outliers in the number of streamlines. In contrast to hMT+/V5–hPT connections, we did not find evidence suggesting the potential existence of FFA–hPT connections. For the right hemisphere, the number of streamlines derived from a null distribution did not differ from the number of streamlines generated with the iFOD algorithm (log streamlines[iFOD2] = 5.0 ± 1.5, log streamlines [Null distribution] = 5.1 ± 1.1, paired t test, t(14) = −0.6, p = 0.6, d = 0.2). This absence of difference was further assessed with the BF, where values below 1 indicate evidence in favor of the null hypothesis of no effect. In accordance with the previous results, the BF was 0.307, meaning that the data were about 3.3 times more likely under the null hypothesis than under the alternative hypothesis.

For the left hemisphere, the number of streamlines derived from a null distribution was higher than the number of streamlines obtained with the iFOD algorithm, the opposite of the pattern observed for hMT+/V5–hPT connections (log streamlines[iFOD2] = 5.0 ± 1.2, log streamlines [Null distribution] = 6.4 ± 1.0, paired t test, t(14) = −6.9, p = 7e-6, d = 1.8). Thus, the diffusion data provide no evidence for FFA–hPT connections beyond what would be expected from random tracking.

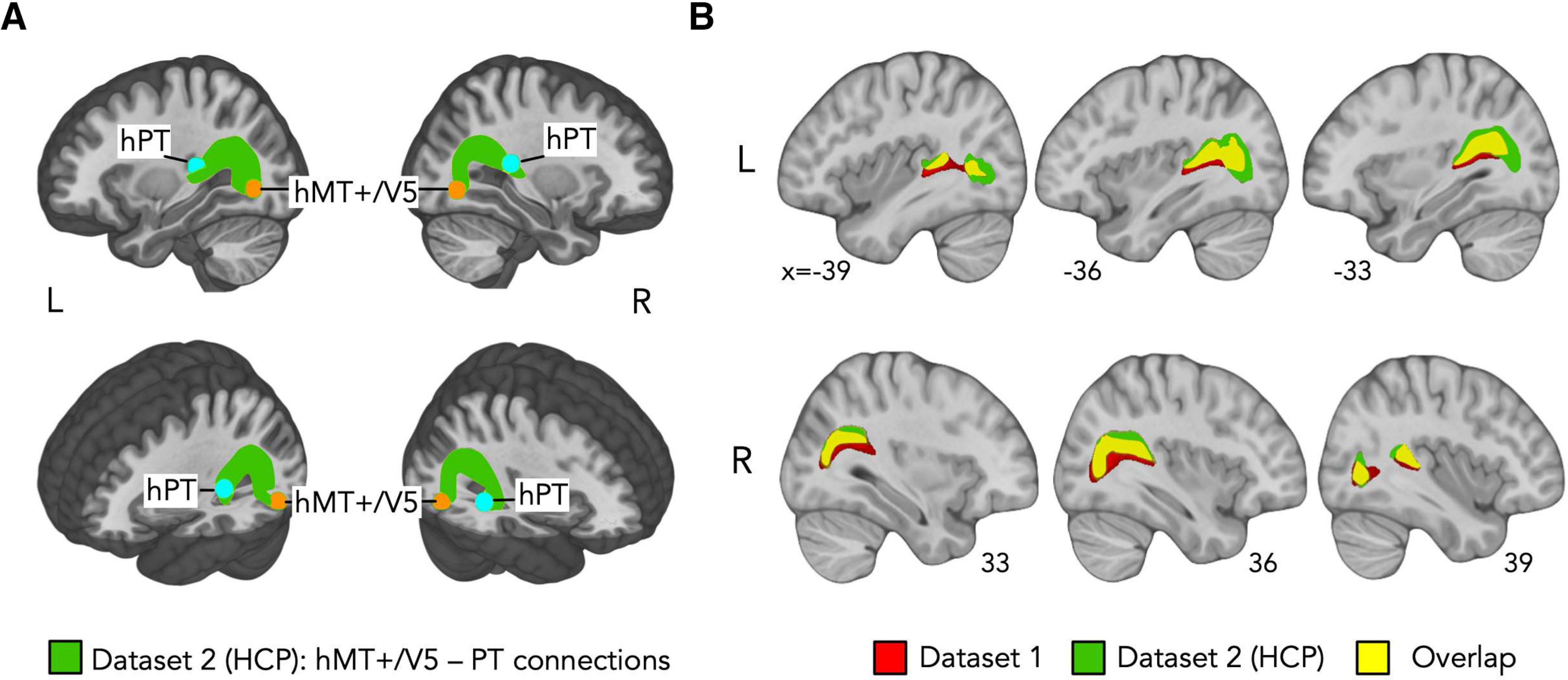

Replication in Dataset 2: testing the presence of hMT+/V5–hPT connections

To evaluate the consistency of the hMT+/V5–hPT connections obtained in Dataset 1, the potential existence of hMT+/V5–hPT projections was also investigated in the HCP dataset (Dataset 2). This dataset allowed us to test the reproducibility of our findings with a larger sample (compared with Dataset 1) and different acquisition parameters: whereas single-shell diffusion data were acquired in Dataset 1, multishell diffusion data were acquired in Dataset 2. For the reconstruction of hMT+/V5–hPT tracts in this dataset, we relied on the group-level hMT+/V5 and hPT described in Dataset 1. We again found evidence supporting the potential existence of hMT+/V5–hPT connections above chance level. For right hMT+/V5–hPT connections, the percentage of streamlines rejected based on aberrant length or position was 3.4 ± 4.2 (mean ± SD). The log-transformed number of streamlines was not normally distributed (Shapiro–Wilk test, p = 1e-7). Hence, in addition to a paired t test, we also assessed differences using the nonparametric Wilcoxon signed-rank test. After removing 3 outlier participants whose number of streamlines was >3 SD away from the group mean, the log-transformed number of streamlines reconstructed with the iFOD2 algorithm (mean ± SD, 1.92 ± 1.86) was significantly higher than that obtained with random tracking (mean ± SD, 0.04 ± 0.17) (paired t test, t(110) = 10.9, p < 2.2e-16, d = 1.0; Wilcoxon test, Z = −7.47, p = 8e-14, n = 111). For left hMT+/V5–hPT connections, the percentage of streamlines discarded based on aberrant length or position was 5.2 ± 4.9 (mean ± SD). The log-transformed number of streamlines was normally distributed, and streamlines were reconstructed above chance level after rejecting 1 participant because of an aberrant number of streamlines (log streamlines[iFOD2] = 2.94 ± 2.27, log streamlines [Null distribution] = 2.16 ± 1.64, paired t test, t(113) = 4.4, p = 2e-5, d = 0.4). Group-averaged tracts for this dataset can be seen in Figure 4A, and the overlap between hMT+/V5–hPT connections from Dataset 1 and Dataset 2 is shown in Figure 4B.
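Combining a paired t test with a nonparametric test when normality is violated, as done for the right hemisphere here, can be sketched with SciPy. Assumptions: normality is checked on the paired differences, the nonparametric test is the Wilcoxon signed-rank test, and the helper name is hypothetical. Note that `scipy.stats.wilcoxon` reports the signed-rank statistic W by default rather than the Z value given in the text.

```python
import numpy as np
from scipy import stats


def paired_compare_with_fallback(ifod2, null, normality_alpha=0.05):
    """Paired t test; add a Wilcoxon signed-rank test if differences look non-normal."""
    x = np.asarray(ifod2, dtype=float)
    y = np.asarray(null, dtype=float)
    _, shapiro_p = stats.shapiro(x - y)
    t_res = stats.ttest_rel(x, y)
    result = {
        "shapiro_p": float(shapiro_p),
        "t": float(t_res.statistic),
        "p_t": float(t_res.pvalue),
    }
    if shapiro_p < normality_alpha:
        w_res = stats.wilcoxon(x, y)  # two-sided by default
        result["W"] = float(w_res.statistic)
        result["p_wilcoxon"] = float(w_res.pvalue)
    return result
```

Reporting both tests, as the authors did, shows that the conclusion does not hinge on the normality assumption of the t test.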

Figure 4.

A, Group-averaged structural pathways between hMT+/V5 and hPT (green) in Dataset 2 (HCP). Orange represents inclusion region hMT+/V5. Light blue represents inclusion region hPT. Individual connectivity maps were binarized, overlaid, and are shown at a threshold of >9 subjects. Results are shown on the T1 MNI-152 template. R, Right; L, left. B, Overlap (yellow) of hMT+/V5–hPT connections (from group-level ROIs) derived from Dataset 1 (red) and Dataset 2 (green). Results are shown on the T1 MNI-152 template. R, Right; L, left.

Discussion

Visual and auditory motion signals interact to create a unified perception of motion. Ambiguous audiovisual stimuli or stimuli moving in opposite directions across the senses can lead to erroneous motion perception (Kitagawa and Ichihara, 2002; Soto-Faraco et al., 2002), whereas congruent audiovisual moving stimuli have been shown to enhance the processing of directional motion (Meyer et al., 2005; Alink et al., 2008; Sadaghiani et al., 2009; Lewis and Noppeney, 2010). But what neural architecture supports the integration of multisensory motion information? Classical hierarchical models of information processing in the brain suggest that visual and auditory motion inputs are first processed separately in sensory-selective regions and then integrated in multisensory convergence zones, such as the posterior parietal and premotor cortices (Bremmer et al., 2001). In contrast to this hierarchical view, moving sounds have been found to modulate the spike-count rate of neurons responding to visual motion (Barraclough et al., 2005) and to influence the BOLD response of hMT+/V5 (Alink et al., 2008; Sadaghiani et al., 2009; Scheef et al., 2009; Lewis and Noppeney, 2010). Indeed, moving sounds activate the anterior portion of MT+/V5 in humans and macaques (Poirier et al., 2005, 2017; Rezk et al., 2020), and planes of auditory motion are successfully encoded in the distributed activity of hMT+/V5 (Dormal et al., 2016). Importantly, motion directions in the visual modality can be predicted from the patterns elicited by auditory directions in hMT+/V5, and vice versa, demonstrating a partially shared representation of motion direction across the senses in hMT+/V5 (Rezk et al., 2020).

It has been proposed that the presence of auditory motion signals in hMT+/V5 results from feedback connections from multisensory convergence zones, such as the posterior parietal cortex (Macaluso and Driver, 2005). However, the exclusive role of feedback connections in the integration of audiovisual motion in regions typically conceived as unisensory has been challenged by studies showing audiovisual interactions as early as 40 ms after stimulus presentation (Giard and Peronnet, 1999; Molholm et al., 2002; Ferraro et al., 2020). Moreover, tracer studies in animals have demonstrated the existence of direct monosynaptic connections between primary auditory and visual regions (Falchier et al., 2002; Rockland and Ojima, 2003). In agreement with our findings, previous macaque studies have revealed monosynaptic connections between area MT, the equivalent of hMT+/V5 in nonhuman primates, and regions in the temporal lobe assumed to be sensitive to auditory motion (Ungerleider and Desimone, 1986; Boussaoud et al., 1990; Palmer and Rosa, 2006a,b; Majka et al., 2019). Our evidence for potential direct projections between hMT+/V5 and hPT is therefore consistent with these findings, which suggest that sensory information might be directly exchanged at a much lower level of the information processing hierarchy than previously thought (Bavelier and Neville, 2002; Foxe and Schroeder, 2005; Murray et al., 2016).

How does the presence of direct connections between hMT+/V5 and hPT complement the demonstrated multisensory integration of moving signals in the parietal cortex (Bremmer et al., 2001)? A recent fMRI study revealed that multisensory interactions in primary and association cortices are governed by distinct computational principles (Rohe and Noppeney, 2016). Although multisensory integration was observed in both early (including extrastriate visual regions and PT) and associative (parietal) regions of the brain, only parietal cortices weighted signals by their reliability and task relevance. Extending these observations to motion processing, we speculate that hMT+/V5 and hPT might be involved in coregistering directional audiovisual moving signals, while the parietal cortex might be involved in weighting the reliability of each sensory signal for optimal (Bayesian) inference of the direction of motion (Rohe and Noppeney, 2015). Similarly, previous studies (Von Kriegstein et al., 2005; Blank et al., 2011; Schall et al., 2013; Benetti et al., 2018) found direct connections between the FFA, a functionally selective region in the occipital cortex preferentially responding to faces (Kanwisher et al., 1997), and regions in the middle temporal gyrus selective to vocal sounds. The presence of those direct connections may also complement the existence of polymodal regions in the superior temporal sulcus supporting face-voice integration (Beauchamp et al., 2004; Davies-Thompson et al., 2019).

To test the specificity of these connections, we also investigated the potential presence of projections between hPT and the FFA. The choice of FFA as a control region was motivated by three main reasons. First, the distance between FFA and hPT is similar to the distance between hMT+/V5 and hPT. Because the distance between ROIs affects probabilistic tractography outcomes (Tomassini et al., 2007; Jbabdi and Johansen-Berg, 2011; Saygin et al., 2011; Wang et al., 2017), connections spanning similar distances are subject to similar distance effects. Second, like hMT+/V5, FFA is a higher-order functional region responding selectively to a specific class of stimuli (faces) while being part of a separate functional stream (ventral for FFA, dorsal for hMT+/V5). Last, because FFA and hMT+/V5 are physically separated, potential FFA–hPT and hMT+/V5–hPT fibers could be well distinguished. In accordance with a specific role of hMT+/V5–hPT connections in motion processing, we did not find evidence suggesting the presence of connections between FFA and hPT. We further investigated the specificity of the hMT+/V5–hPT connections and showed that they do not follow the same trajectory as either the ILF or the IFOF. Together, our results suggest that direct connections only emerge between temporal and occipital regions that share a similar computational goal (in our study, the processing of motion information), since these regions need a fast and optimal transfer of redundant perceptual information across the senses.

The intrinsic preferential connectivity between functionally related brain regions, such as hMT+/V5 and hPT for motion, could provide the structural scaffolding for the subsequent development of these areas in a process of interactive specialization (Johnson, 2011). Evolution may have coded in our genome a pattern of connectivity between functionally related regions across the senses, for instance those involved in motion perception, to facilitate the emergence of functional networks dedicated to specific perceptual or cognitive processes and the exchange of computationally related multisensory information. Indeed, developmental studies show that young infants (Bremner et al., 2011) and even newborns (Orioli et al., 2018) can detect motion congruency across the senses. Interestingly, these intrinsic connections between sensory systems devoted to the processing of moving information may constrain the expression of crossmodal plasticity in cases of early sensory deprivation. Several studies have indeed reported that early blind individuals preferentially recruit an area consistent with the location of hMT+/V5 for the processing of moving sounds (Poirier et al., 2006; Wolbers et al., 2011; Dormal et al., 2016). Likewise, hPT is preferentially recruited by visual motion in early deafness (Bavelier et al., 2001; Shiell et al., 2016; Retter et al., 2019). In this context, crossmodal reorganization would rely on occipitotemporal motion-selective connections that are also present in people without sensory deprivation (Pascual-Leone et al., 2005; Collignon et al., 2013), such as the potential hMT+/V5–hPT connections reported in the present study.

Although dMRI can support the presence of white matter bundles, which are reconstructed by following pathways of high diffusivity (Catani et al., 2003), the reconstruction of streamlines from dMRI is an imperfect attempt to capture the full complexity of the millions of densely packed axons that form a bundle (Rheault et al., 2020a,b). As such, diffusion imaging in humans cannot be considered definitive proof of the existence of an anatomic pathway in the brain (Maier-Hein et al., 2017). As for all studies involving dMRI data, postmortem tracer studies could help overcome the inherent constraints of dMRI, providing direct evidence for the existence of a white matter connection as well as information on the directionality of the connections and finer spatial details (Ungerleider et al., 2008; Banno et al., 2011; Grimaldi et al., 2016).

In conclusion, using a combined fMRI and dMRI approach, our findings provide a first indication of the potential existence of direct white matter connections in humans between visual and auditory motion-selective regions. This connection could represent the structural scaffolding for the rapid exchange and optimal integration of visual and auditory motion information (Lewis and Noppeney, 2010). This finding has important implications for our understanding of how sensory information is shared across the senses, suggesting the potential existence of computationally specific pathways that allow information flow between areas traditionally conceived as unisensory, in addition to the bottom-up/top-down integration of sensory signals to/from multisensory convergence areas.

Footnotes

This work was supported by the European Research Council Starting Grant MADVIS Project 337573, Belgian Excellence of Science Program from the FWO and FRS-FNRS Project 30991544, and a “mandat d'impulsion scientifique” from the FRS-FNRS to O.C. Computational resources have been provided by the supercomputing facilities of the Université catholique de Louvain and the Consortium des Équipements de Calcul Intensif en Fédération Wallonie Bruxelles funded by the Fond de la Recherche Scientifique de Belgique (FRS-FNRS) under convention 2.5020.11 and by the Walloon Region. A.G.-A. was supported by the Wallonie Bruxelles International Excellence Fellowship and the FSR Incoming PostDoc Fellowship by Université Catholique de Louvain. O.C. is a research associate and M.R. a doctoral fellow supported by the Fond National de la Recherche Scientifique de Belgique (FRS-FNRS).

The authors declare no competing financial interests.

References

- Alink A, Singer W, Muckli L (2008) Capture of auditory motion by vision is represented by an activation shift from auditory to visual motion cortex. J Neurosci 28:331–340. 10.1523/JNEUROSCI.2980-07.2008

- Allaire J (2012) RStudio: integrated development environment for R. Boston, MA, 770, 394.

- Andersson JL, Sotiropoulos SN (2016) An integrated approach to correction for off-resonance effects and subject movement in diffusion MR imaging. Neuroimage 125:1063–1078. 10.1016/j.neuroimage.2015.10.019

- Avants BB, Epstein CL, Grossman M, Gee JC (2008) Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med Image Anal 12:26–41.

- Banno T, Ichinohe N, Rockland KS, Komatsu H (2011) Reciprocal connectivity of identified color-processing modules in the monkey inferior temporal cortex. Cereb Cortex 21:1295–1310.

- Barraclough NE, Xiao D, Baker CI, Oram MW, Perrett DI (2005) Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. J Cogn Neurosci 17:377–391. 10.1162/0898929053279586

- Battal C, Rezk M, Mattioni S, Vadlamudi J, Collignon O (2019) Representation of auditory motion directions and sound source locations in the human planum temporale. J Neurosci 39:2208–2220. 10.1523/JNEUROSCI.2289-18.2018

- Baumgart F, Gaschler-Markefski B, Woldorff MG, Heinze HJ, Scheich H (1999) A movement-sensitive area in auditory cortex. Nature 400:724–726. 10.1038/23390

- Bavelier D, Neville HJ (2002) Cross-modal plasticity: where and how? Nat Rev Neurosci 3:443–452. 10.1038/nrn848

- Bavelier D, Brozinsky C, Tomann A, Mitchell T, Neville H, Liu G (2001) Impact of early deafness and early exposure to sign language on the cerebral organization for motion processing. J Neurosci 21:8931–8942.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A (2004) Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nat Neurosci 7:1190–1192. 10.1038/nn1333

- Benetti S, Novello L, Maffei C, Rabini G, Jovicich J, Collignon O (2018) White matter connectivity between occipital and temporal regions involved in face and voice processing in hearing and early deaf individuals. Neuroimage 179:263–274. 10.1016/j.neuroimage.2018.06.044

- Benetti S, van Ackeren MJ, Rabini G, Zonca J, Foa V, Baruffaldi F, Rezk M, Pavani F, Rossion B, Collignon O (2017) Functional selectivity for face processing in the temporal voice area of early deaf individuals. Proc Natl Acad Sci USA 114:E6437–E6446. 10.1073/pnas.1618287114

- Bex PJ, Simmers AJ, Dakin SC (2003) Grouping local directional signals into moving contours. Vision Res 43:2141–2153. 10.1016/s0042-6989(03)00329-8

- Blank H, Anwander A, von Kriegstein K (2011) Direct structural connections between voice- and face-recognition areas. J Neurosci 31:12906–12915. 10.1523/JNEUROSCI.2091-11.2011

- Boussaoud D, Ungerleider LG, Desimone R (1990) Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol 296:462–495. 10.1002/cne.902960311

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann KP, Zilles K, Fink GR (2001) Polymodal motion processing in posterior parietal and premotor cortex. Neuron 29:287–296. 10.1016/S0896-6273(01)00198-2

- Bremner JG, Slater AM, Johnson SP, Mason UC, Spring J, Bremner ME (2011) Two- to eight-month-old infants' perception of dynamic auditory-visual spatial colocation. Child Dev 82:1210–1223.

- Catani M, Jones DK, Donato R, Ffytche DH (2003) Occipitotemporal connections in the human brain. Brain 126:2093–2107. 10.1093/brain/awg203

- Collignon O, Dormal G, Lepore F (2013) Building the brain in the dark: functional and specific crossmodal reorganization in the occipital cortex of blind individuals. In: Plasticity in sensory systems. New York: Cambridge University Press.

- Davies-Thompson J, Elli GV, Rezk M, Benetti S, van Ackeren M, Collignon O (2019) Hierarchical brain network for face and voice integration of emotion expression. Cereb Cortex 29:3590–3605.

- Dejerine J (1895) Anatomie des Centres Nerveux. Paris: Rueff et Cie.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, Albert MS, Killiany RJ (2006) An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31:968–980. 10.1016/j.neuroimage.2006.01.021

- Dice LR (1945) Measures of the amount of ecologic association between species. Ecology 26:297–302. 10.2307/1932409

- Dormal G, Rezk M, Yakobov E, Lepore F, Collignon O (2016) Auditory motion in the sighted and blind: early visual deprivation triggers a large-scale imbalance between auditory and “visual” brain regions. Neuroimage 134:630–644. 10.1016/j.neuroimage.2016.04.027

- Driver J, Spence C (2000) Multisensory perception: beyond modularity and convergence. Curr Biol 10:R731–R735.

- Dubner R, Zeki SM (1971) Response properties and receptive fields of cells in an anatomically defined region of the superior temporal sulcus in the monkey. Brain Res 35:528–532. 10.1016/0006-8993(71)90494-X

- Dumoulin SO, Bittar RG, Kabani NJ, Baker CL Jr, Le Goualher G, Pike GB, Evans AC (2000) A new anatomical landmark for reliable identification of human area V5/MT: a quantitative analysis of sulcal patterning. Cereb Cortex 10:454–463. 10.1093/cercor/10.5.454

- Eickhoff S, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K (2005) A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage 25:1325–1335. 10.1016/j.neuroimage.2004.12.034

- Eickhoff S, Jbabdi S, Caspers S, Laird AR, Fox PT, Zilles K, Behrens TE (2010) Anatomical and functional connectivity of cytoarchitectonic areas within the human parietal operculum. J Neurosci 30:6409–6421. 10.1523/JNEUROSCI.5664-09.2010

- Falchier A, Clavagnier S, Barone P, Kennedy H (2002) Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci 22:5749–5759.

- Ferraro S, Van Ackeren MJ, Mai R, Tassi L, Cardinale F, Nigri A, Bruzzone MG, D'Incerti L, Hartmann T, Weisz N, Collignon O (2020) Stereotactic electroencephalography in humans reveals multisensory signal in early visual and auditory cortices. Cortex 126:253–264. 10.1016/j.cortex.2019.12.032

- Fillard P, Descoteaux M, Goh A, Gouttard S, Jeurissen B, Malcolm J, Ramirez-Manzanares A, Reisert M, Sakaie K, Tensaouti F, Yo T, Mangin JF, Poupon C (2011) Quantitative evaluation of 10 tractography algorithms on a realistic diffusion MR phantom. Neuroimage 56:220–234. 10.1016/j.neuroimage.2011.01.032

- Foxe JJ, Schroeder CE (2005) The case for feedforward multisensory convergence during early cortical processing. Neuroreport 16:419–423.

- Ghazanfar AA, Schroeder CE (2006) Is neocortex essentially multisensory? Trends Cogn Sci 10:278–285.

- Giard MH, Peronnet F (1999) Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J Cogn Neurosci 11:473–490.

- Grimaldi P, Saleem KS, Tsao D (2016) Anatomical connections of the functionally defined “face patches” in the macaque monkey. Neuron 90:1325–1342. 10.1016/j.neuron.2016.05.009

- Huk AC, Dougherty RF, Heeger DJ (2002) Retinotopy and functional subdivision of human areas MT and MST. J Neurosci 22:7195–7205. 10.1523/JNEUROSCI.22-16-07195.2002

- Iturria-Medina Y, Fernández AP, Morris DM, Canales-Rodríguez EJ, Haroon HA, Pentón LG, Augath M, García LG, Logothetis N, Parker JM, Melie-García L (2011) Brain hemispheric structural efficiency and interconnectivity rightward asymmetry in human and nonhuman primates. Cereb Cortex 21:56–67.

- Jbabdi S, Johansen-Berg H (2011) Tractography: where do we go from here? Brain Connect 1:169–183.

- Jeurissen B, Tournier JD, Dhollander T, Connelly A, Sijbers J (2014) Multi-tissue constrained spherical deconvolution for improved analysis of multishell diffusion MRI data. Neuroimage 103:411–426. 10.1016/j.neuroimage.2014.07.061

- Jeurissen B, Descoteaux M, Mori S, Leemans A (2019) Diffusion MRI fiber tractography of the brain. NMR Biomed 32:e3785. 10.1002/nbm.3785

- Johnson MH (2011) Interactive specialization: a domain-general framework for human functional brain development? Dev Cogn Neurosci 1:7–21.

- Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17:4302–4311.

- Kitagawa N, Ichihara S (2002) Hearing visual motion in depth. Nature 416:172–174. 10.1038/416172a

- Lewis R, Noppeney U (2010) Audiovisual synchrony improves motion discrimination via enhanced connectivity between early visual and auditory areas. J Neurosci 30:12329–12339. 10.1523/JNEUROSCI.5745-09.2010

- Ly A, Verhagen J, Wagenmakers EJ (2016) Harold Jeffreys's default Bayes factor hypothesis tests: explanation, extension, and application in psychology. J Math Psychol 72:19–32.

- Macaluso E, Driver J (2005) Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci 28:264–271. 10.1016/j.tins.2005.03.008

- Maier-Hein KH, Neher PF, Houde JC, Côté MA, Garyfallidis E, Zhong J, Chamberland M, Yeh FC, Lin YC, Ji Q, Reddick WE, Glass JO, Chen DQ, Feng Y, Gao C, Wu Y, Ma J, He R, Li Q, Westin CF, et al. (2017) The challenge of mapping the human connectome based on diffusion tractography. Nat Commun 8:1349. 10.1038/s41467-017-01285-x

- Majka P, Rosa MG, Bai S, Chan JM, Huo BX, Jermakow N, Lin MK, Takahashi YS, Wolkowicz IH, Worthy KH, Rajan R, Reser DH, Wójcik DK, Okano H, Mitra PP (2019) Unidirectional monosynaptic connections from auditory areas to the primary visual cortex in the marmoset monkey. Brain Struct Funct 224:111–131. 10.1007/s00429-018-1764-4

- Makuuchi M, Bahlmann J, Anwander A, Friederici AD (2009) Segregating the core computational faculty of human language from working memory. Proc Natl Acad Sci USA 106:8362–8367. 10.1073/pnas.0810928106

- Markov NT, Ercsey-Ravasz M, Van Essen DC, Knoblauch K, Toroczkai Z, Kennedy H (2013) Cortical high-density counterstream architectures. Science 342:1238406. 10.1126/science.1238406

- Matthews N, Luber B, Qian N, Lisanby SH (2001) Transcranial magnetic stimulation differentially affects speed and direction judgments. Exp Brain Res 140:397–406. 10.1007/s002210100837

- Maunsell JH, Van Essen DC (1983) The connections of the middle temporal visual area (MT) and their relationship to a cortical hierarchy in the macaque monkey. J Neurosci 3:2563–2586. 10.1523/JNEUROSCI.03-12-02563.1983

- McFadyen J, Mattingley JB, Garrido MI (2019) An afferent white matter pathway from the pulvinar to the amygdala facilitates fear recognition. Elife 8:1–51. 10.7554/eLife.40766

- Meyer GF, Wuerger SM, Röhrbein F, Zetzsche C (2005) Low-level integration of auditory and visual motion signals requires spatial co-localisation. Exp Brain Res 166:538–547. 10.1007/s00221-005-2394-7

- Mito R, Raffelt D, Dhollander T, Vaughan DN, Tournier JD, Salvado O, Brodtmann A, Rowe CC, Villemagne VL, Connelly A (2018) Fibre-specific white matter reductions in Alzheimer's disease and mild cognitive impairment. Brain 141:888–902. 10.1093/brain/awx355

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ (2002) Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res 14:115–128. 10.1016/S0926-6410(02)00066-6

- Morris DM, Embleton KV, Parker GJ (2008) Probabilistic fibre tracking: differentiation of connections from chance events. Neuroimage 42:1329–1339. 10.1016/j.neuroimage.2008.06.012

- Müller-Axt C, Anwander A, von Kriegstein K (2017) Altered structural connectivity of the left visual thalamus in developmental dyslexia. Curr Biol 27:3692–3698.e4. 10.1016/j.cub.2017.10.034

- Murray MM, Thelen A, Thut G, Romei V, Martuzzi R, Matusz PJ (2016) The multisensory function of the human primary visual cortex. Neuropsychologia 83:161–169. 10.1016/j.neuropsychologia.2015.08.011

- Orioli G, Bremner AJ, Farroni T (2018) Multisensory perception of looming and receding objects in human newborns. Curr Biol 28:R1294–R1295.

- Palmer S, Rosa M (2006a) Quantitative analysis of the corticocortical projections to the middle temporal area in the marmoset monkey: evolutionary and functional implications. Cereb Cortex 16:1361–1375. 10.1093/cercor/bhj078

- Palmer S, Rosa M (2006b) A distinct anatomical network of cortical areas for analysis of motion in far peripheral vision. Eur J Neurosci 24:2389–2405. 10.1111/j.1460-9568.2006.05113.x