Abstract

Pathological images are easily accessible data with the potential of prognostic biomarkers. Moreover, integration of heterogeneous data types from multi-modality, such as pathological image and gene expression data, is invaluable to help predicting cancer patient survival. However, the analytical challenges are significant. Here, we take the hepatocellular carcinoma (HCC) pathological image features extracted by CellProfiler, and apply them as the input for Cox-nnet, a neural network-based prognosis prediction model. We compare this model with the conventional Cox proportional hazards (Cox-PH) model, CoxBoost, Random Survival Forests and DeepSurv, using C-index and log-rank P-values. The results show that Cox-nnet is significantly more accurate than Cox-PH and Random Survival Forests models and comparable with CoxBoost and DeepSurv models, on pathological image features. Further, to integrate pathological image and gene expression data of the same patients, we innovatively construct a two-stage Cox-nnet model, and compare it with another complex neural-network model called PAGE-Net. The two-stage Cox-nnet complex model combining histopathology image and transcriptomic RNA-seq data achieves much better prognosis prediction, with a median C-index of 0.75 and log-rank P-value of 6e−7 in the testing datasets, compared to PAGE-Net (median C-index of 0.68 and log-rank P-value of 0.03). Imaging features present additional predictive information to gene expression features, as the combined model is more accurate than the model with gene expression alone (median C-index 0.70). Pathological image features are correlated with gene expression, as genes correlated to top imaging features present known associations with HCC patient survival and morphogenesis of liver tissue. This work proposes two-stage Cox-nnet, a new class of biologically relevant and interpretable models, to integrate multiple types of heterogenous data for survival prediction.

INTRODUCTION

Prognosis prediction is important for providing effective disease monitoring and management. Various biomaterials have been proposed as potential biomarkers to predict patient survival. Among them, hematoxylin and eosin (H&E) stained histopathological images are very attractive materials to extract biomarker features. Compared to genomic materials, such as RNA-seq transcriptomics, these images are much more easily accessible and cheaper to obtain, through processing archived formalin-fixed paraffin-embedded (FFPE) blocks. In H&E staining, the hematoxylin is oxidized into phematein, a basic dye that stains acidic (basophilic) tissue components (ribosomes, nuclei and rough endoplasmic reticulum) into darker purple color, whereas acidic eosin dye stains other protein structures of the tissue (stroma, cytoplasm and muscle fibers) into a pink color. As patients' survival information is retrospectively available in electronic medical record data and FFPE blocks are routinely collected clinically, the histopathology images can be generated and used for highly valuable and predictive prognosis models.

Previously, we developed a neural-network model called Cox-nnet to predict patient survival, using transcriptomic data (1). Cox-nnet is an alternative to the conventional methods, such as Cox proportional hazards (Cox-PH) methods with LASSO or ridge penalization. We have demonstrated that Cox-nnet is more optimized for survival prediction from high-throughput gene expression data, with comparable or better performance than other conventional methods, including Cox-PH, Random Survival Forests (2) and CoxBoost (3). Moreover, Cox-nnet reveals much richer biological information, at both the pathway and gene levels, through analyzing the survival-related ‘surrogate features’ represented as the hidden layer nodes in Cox-nnet. A few other neural network-based models were also proposed around the same time as Cox-nnet, such as DeepSurv (4). It remains to be explored whether Cox-nnet can take input features from other data types that are less biologically intuitive than genomic data, such as histopathology imaging data. It is also important to benchmark Cox-nnet with the other above-mentioned methods. Moreover, some neural network-based models were reported to handle multi-modal data (5). For example, PAGE-Net is a complex neural-network model that has a convolutional neural network (CNN) layer followed by pooling and a genomic model involved in transformation of the gene layer to pathway layer (5). The genomic neural network portion is followed by two hidden layers, the latter of which is combined with the image neural-network model to predict glioblastoma patient survival. It is therefore of interest to test whether a model built upon Cox-nnet, using pre-extracted, biologically informative features, can combine multiple types of data, e.g. imaging and genomic data, and if so, how well it performs relative to other models, such as PAGE-Net.

In this study, we extend Cox-nnet to take up pathological image features extracted from imaging processing tool CellProfiler (6), and compare the predictive performance of Cox-nnet relative to Cox proportional hazards model, CoxBoost and Random Survival Forests which we had compared before on genomic data, as well as the state-of-the-art method DeepSurv (4). Moreover, we propose a new type of two-stage complex Cox-nnet model, which combines the hidden node features from multiple first-stage Cox-nnet models, and then use these combined features as the input nodes to train a second-stage Cox-nnet model. We apply these models on The Cancer Genome Atlas (TCGA) hepatocellular carcinoma (HCC), which we had previously gained domain experience on (7,8). HCC is the most prevalent type of liver cancer that accounts for 70–90% of all liver cancer cases. It is a devastating disease with poor prognosis, where the 5-year survival rate is only 12% (9), and the prognosis prediction becomes very challenging due to the high level of heterogeneity in HCC as well as the complex etiological factors. Limited treatment strategies in HCC relative to other cancers, also impose an urgent need to develop tools for patient survival prediction. Further, we also evaluate the performance of two-stage Cox-nnet with PAGE-Net on integrating pathological images and gene expression data of HCC, and show that Cox-nnet achieves higher accuracy in testing data.

MATERIALS AND METHODS

Datasets

The histopathology images and their associated clinical information are downloaded from TCGA. A total of 384 liver tumor images are collected. Among them, 322 samples are clearly identified with tumor regions by pathology inspection. Among these samples, 290 have gene expression RNA-seq data, and thus are selected for pathology–gene expression integrated prognosis prediction. The gene expression RNA-seq dataset is also downloaded from TCGA; each feature is normalized into RPKM using the function ProcessRNASeqData by TCGA-Assembler (10).

Tumor image preprocessing

For each FFPE image stained with H&E, two pathologists at the University of Michigan provide us with the Region of Interest (tumor regions). The tumor regions are then extracted using Aperio software ImageScope (11). To reduce computational complexities, each extracted tumor region is divided into non-overlapping 1000 by 1000 pixel tiles. The density of each tile is computed as the summation of red, green and blue values, and 10 tiles with the highest density are selected for further feature extraction, following the guideline of others (12). To ensure that the quantitative features are measured under the same scale, the red, green and blue values are rescaled for each image. Image #128 with the standard background color (patient barcode: TCGA-DD-A73D) is selected as the reference image for the others to be normalized against. The means of red, green and blue values of the reference image are computed and the rest of the images are normalized by the scaling factors of the means of red, green and blue values relative to those of the reference image.

Feature extraction from the images

CellProfiler is used for feature extraction (13). Images are first preprocessed by ‘UnmixColors’ module to H&E stains for further analysis. ‘IdentifyPrimaryObject’ module is used to detect unrelated tissue folds, which are then removed by ‘MaskImage’ module to increase the accuracy for detection of tumor cells. Nuclei of tumor cells are then identified by ‘IdentifyPrimaryObject’ module again with parameters set by Otsu algorithm. The identified nuclei objects are utilized by ‘IdentifySecondaryObject’ module to detect the cell body objects and cytoplasm objects that surround the nuclei. Related biological features are computed from the detected objects, by a series of feature extraction modules, including ‘MeasureGranularity’, ‘MeasureObjectSizeShape’, ‘MeasureObjectIntensity’, ‘MeasureObjectIntensityDistribution’, ‘MeasureTexture’, ‘MeaureImageAreaOccupied’, ‘MeasureCorrelation’, ‘MeasureImageIntensity’ and ‘MeasureObjectNeighbors’. To aggregate the features from the primary and secondary objects, the related summary statistics (mean, median, standard deviation and quartiles) are then calculated to summarize data from object level to image level, yielding 2429 features in total. Each patient is represented by 10 images, and the median of each feature is selected to represent the patient's image biological feature.

Survival prediction models

Cox-nnet model

The Cox-nnet model is implemented in the Python package named Cox-nnet (1). Current implementation of Cox-nnet is a fully connected, two-layer neural-network model, with a hidden layer and an output layer for Cox regression. A drop-out rate of 0.7 is applied to the hidden layer to avoid overfitting. The size of the hidden layer is equal to the square root of the size of the input layer. We use a hold-out method by randomly splitting the dataset to 80% training set and 20% testing set, using train_test_split function from Sklearn package. We use grid search and 5-fold cross-validation to optimize the hyperparameters for the deep learning model on the selected training set. We train the model with a learning rate of 0.01 for 500 epochs with no mini-batch applied, and then evaluate the model on testing data. The procedure is repeated 20 times to assess the average performance. More details about Cox-nnet are described in (1).

Cox proportional hazards model

Since the number of features produced by CellProfiler exceeds the sample size, an elastic-net Cox proportional hazards model is built to select features and compute the prognostic index (PI) (14). Function cv.glmnet in the Glmnet R package is used to perform cross-validation to select the tuning parameter lambda. The parameter alpha that controls the trade-off between the quadratic penalty and linear penalty is selected by grid search. Same hold-out setting is employed by training the model using 80% randomly selected data and evaluating the model on the remaining 20% testing set. The procedure is repeated 20 times to assess the mean accuracy of the model.

CoxBoost model

CoxBoost is a modified version of Cox-PH model, but is especially suited for data with a large number of predictors (3). As an iterative gradient boosting method, CoxBoost divides the parameters into individual partitions. It first selects the partition that leads to the largest improvement in the penalized partial log likelihood, then selects other blocks in subsequent iterations and refits the parameters by maximizing the penalized partial log likelihood.

Random Survival Forests model

Random Survival Forests is a non-linear tree-based ensemble method (15). Each tree in the forest is fitted on bootstrapped data, with nodes split by maximizing the log-rank statistics. A patient's cumulative hazard is then calculated as the averaged cumulated hazard over all trees in the ensemble. We implement RSF using R package ‘randomForestSRC’ (16), where the hyperparameters, such as node size or the number of trees, are optimized by random search.

DeepSurv model

DeepSurv is a deep learning generalization of the Cox proportional hazards model, for predicting a patient's risk of failure (4). DeepSurv is implemented in Python using Theano and Lasagne, with hyperparameters optimized by random search over box constraints. The optimal DeepSurv architecture for our imaging data is determined by its built-in random hyperparameter search, consisting of two hidden layers with 38 nodes and 0.07 dropout for each. We train DeepSurv model on imaging data with a learning rate of 1e-5 and a learning rate decay of 6e-4 for 10,000 epochs.

Two-stage Cox-nnet model

The two-stage Cox-nnet model has two phases, as indicated in the name. For the first stage, we construct two separate Cox-nnet models in parallel, one for the image data and the other one for gene expression data. For each model, we optimize the hyperparameters using grid search under 5-fold cross-validation, as described earlier. In the second stage, we extract and combine the nodes of the hidden layer from each Cox-nnet model as the new input features to train a new Cox-nnet model. We construct and evaluate the second stage Cox-nnet model with the same parameter-optimization strategy as in the first stage.

PAGE-Net model

It is another neural-network method that can combine imaging and genomics (e.g. gene expression) information to predict patient survival (5). The imaging prediction module is very complex, with a patch-wide pre-trained CNN layer followed by pooling them together for another neural network. The pre-trained CNN of PAGE-Net consists of an input layer, three pairs of dilated convolutional layers (a kernel size of 5 by 5, 50 feature maps and a dilation rate of 2) and a max-pooling layer of 2 by 2 size. The sequential layers are followed by a flatten layer and a fully connected layer. The size of patches for training CNN is 256 by 256. After CNN, PAGE-Net consists of one gene layer (4675 nodes), one pathway layer (659 nodes), two hidden layers (100 nodes; 30 nodes), one pathology layer (30 nodes) and a cox output layer. Drop-out rates of 0.7, 0.5, 0.3 are applied to two hidden layers and pathology hidden layer respectively. We train PAGE-Net with a learning rate of 1.5e-6 and a batch size of 32 for 10,000 epochs. The hyperparameters are optimized by grid search. It is noteworthy that PAGE-Net has prolonged running time in CNN pre-training and feature pooling steps, therefore we only repeat the training on the integrative layer 20 times.

Model evaluation

Similar to the previous studies (1,7–8), we also use concordance index (C-index) and log-rank P-value as the metrics to evaluate model accuracy. C-index signifies the fraction of all pairs of individuals whose predicted survival times are correctly ordered and is based on Harrell's C statistics. The equation is as follows:

|

where  is the predicted risk score, T is the ‘time-to-event’ response, d is an auxiliary variable such that d = 1 if event is observed and d = 0 if patient is censored. A C-index of 1 means the model fits the survival data perfectly, whereas a score around 0.50 means randomness. In practice, a C-index around 0.70 indicates a good model. As both Cox-nnet and Cox-PH models quantify the patient's prognosis by log hazard ratios, we use the predicted median hazard ratios to stratify patients into two risk groups (high versus low survival risk groups). We also compute the log-rank P-values to test if two Kaplan–Meier survival curves produced by the dichotomized patients are significantly different, similar to earlier reports (7,14,17–20).

is the predicted risk score, T is the ‘time-to-event’ response, d is an auxiliary variable such that d = 1 if event is observed and d = 0 if patient is censored. A C-index of 1 means the model fits the survival data perfectly, whereas a score around 0.50 means randomness. In practice, a C-index around 0.70 indicates a good model. As both Cox-nnet and Cox-PH models quantify the patient's prognosis by log hazard ratios, we use the predicted median hazard ratios to stratify patients into two risk groups (high versus low survival risk groups). We also compute the log-rank P-values to test if two Kaplan–Meier survival curves produced by the dichotomized patients are significantly different, similar to earlier reports (7,14,17–20).

Feature evaluation

The input feature importance scores are calculated by drop-out method. The values of a variable are set to its mean and the log likelihood of the model is recalculated. The difference between the original log likelihood and the new log likelihood is considered as feature importance (21). We select 100 features with the highest importance scores from Cox-nnet for association analysis between pathology image and gene expression features. We regress each of the top 10 image features (y) on each of the top 50 gene expression features (x), and use the R-square statistics with significant P-value (<0.05) as the correlation metric.

Code availability

The code for two-stage Cox-nnet, including model training, hidden layer integration and feature importance calculation are available on Github: https://github.com/lanagarmire/two-stage-cox-nnet.

RESULTS

Overview of Cox-nnet model on pathological image data

In this study, we test if pathological images can be used to predict cancer patients. The initial task is to extract image features that can be used as the input for the predictive models. As described in the ‘Materials and Methods’ section, pathological images of 322 TCGA HCC patients are individually annotated with tumor contents by pathologists, before being subject to a series of processing steps. The tumor regions of these images then undergo segmentation, and the top 10 tiles (as described in ‘Materials and Method’ section) out of 1000 by 1000 tiles are used to represent each patient. These tiles are next normalized for RGB coloring against a common reference sample, and 2429 image features of different categories are extracted by CellProfiler. Summary statistics (mean, median, standard deviation and quartiles) are calculated for each image feature, and the median values of them over 10 tiles are used as the input imaging features for survival prediction.

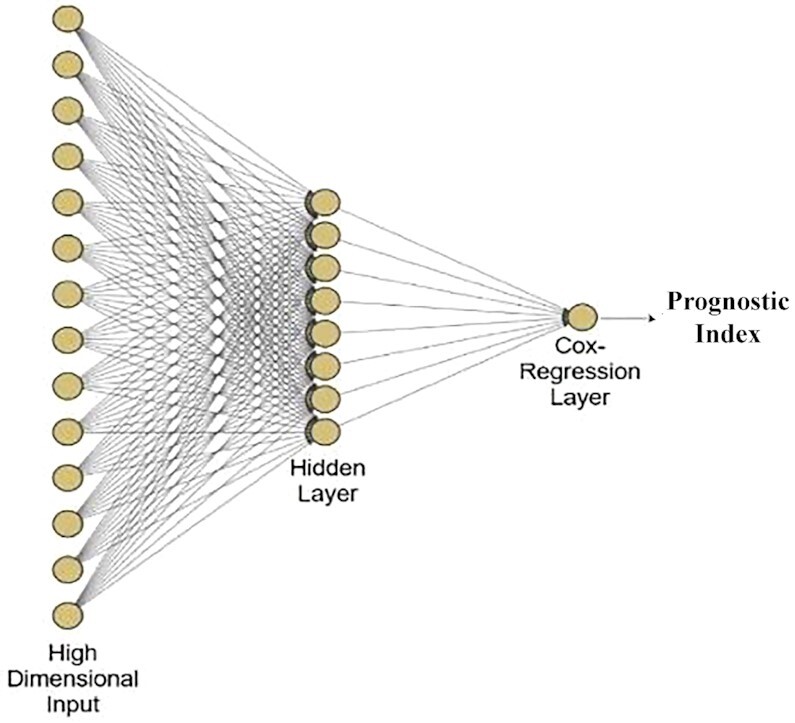

We apply these imaging features on Cox-nnet, a neural network-based prognosis prediction method previously developed by our group. The architecture of Cox-nnet is shown in Figure 1. Briefly, Cox-nnet is composed of one input layer, one fully connected hidden layer and one output ‘proportional hazards’ layer. We use 5-fold cross-validation (CV) to find the optimal regularization parameters. Based on our previous results on RNA-seq transcriptomics, we use dropout as the regularization method.

Figure 1.

The architectures of Cox-nnet model: the sketch of Cox-nnet model for prognosis prediction, based on a single data type.

Comparison of prognosis prediction among Cox-nnet and other methods on pathology imaging data

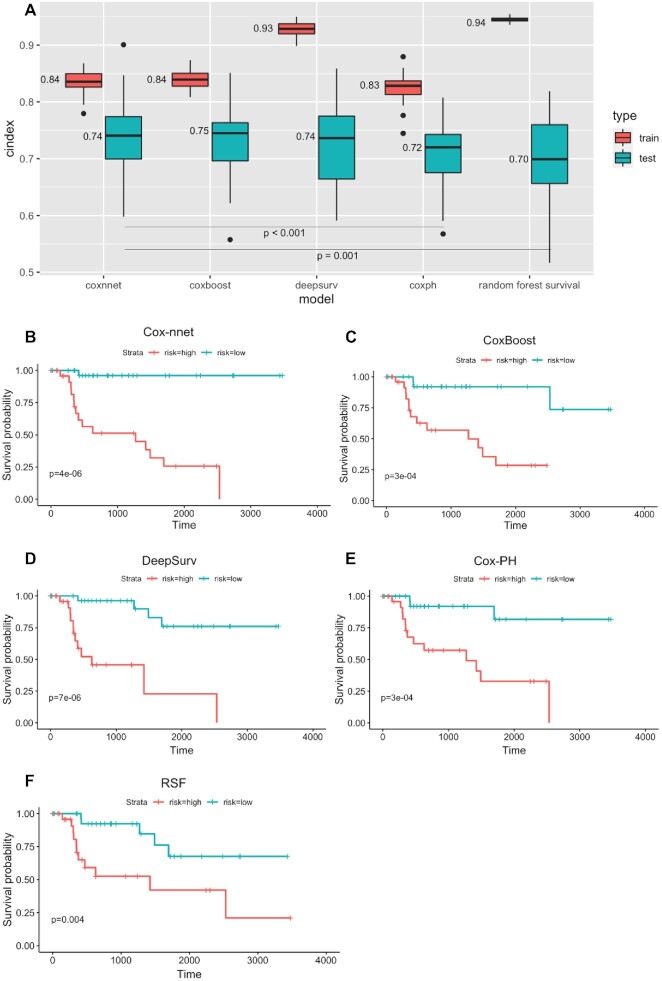

To evaluate the results on pathology image data, we compare Cox-nnet with three other models which were benchmarked before using genomics data: Cox-PH model, CoxBoost and Random Survival Forests (3), as well as another state-of-the-art neural network-based method DeepSurv (Figure 2). We use two accuracy metrics to evaluate the performance of models in comparison: C-index and log-rank P-values. C-index measures the fraction of all pairs of individuals whose predicted survival times are correctly ordered by the model. The higher C-index, the more accurate the prognosis model is. As shown in Figure 2A, on the testing datasets, the median C-index score from Cox-nnet (0.74) is significantly higher (P < 0.001) than both Cox-PH (0.72) and Random Survival Forests (0.70). Moreover, Cox-nnet achieves a comparable C-index score with other two models, CoxBoost (0.75, P = 0.10) and DeepSurv (0.74, P = 0.19). In particular, Cox-nnet yields more stable results than DeepSurv, as shown by the smaller variations in the C-index scores in different test sets. Additionally, we dichotomize survival risks using the median score of predicted PI from each model. We then use log-rank P-value to show the survival difference between the Kaplan–Meier survival curves of high versus low survival risk groups in a typical run (Figure 2B–F and Supplementary Figure S3A–E). In the training dataset, Cox-nnet achieves the third highest log-rank P-value of 1e-12 (Supplementary Figure S3A), after those of DeepSurv (3e-19) and RSF (2e-21). Whereas in the testing dataset, Cox-nnet achieves the highest log-rank P-value of 4e-6, better than those of Cox-PH (3e-4), DeepSurv (7e-6), CoxBoost (3e-4) and Random Survival Forests (0.004).

Figure 2.

Comparison of prognosis prediction among different models using pathology imaging data. (A) C-index results on training and testing datasets. (B–F) Kaplan–Meier survival curves on testing datasets using different methods. (B) Cox-nnet (C) CoxBoost (D) DeepSurv (E) Cox-PH (F) Random Survival Forests (RSF).

We next investigate the top 100 image features according to Cox-nnet ranking (Supplementary Figure S1). Interestingly, the most frequent features are those involved in textures of the image, accounting for 48% of raw input features. Intensity and area/shape parameters make up the second and third highest categories, with 18% and 15% features. Density, on the other hand, is less important (3%). It is also worth noting that among the 47 features selected by the conventional Cox-PH model, 70% are also found in the top 100 features selected by Cox-nnet, showing the connections between the two models.

Two-stage Cox-nnet model to predict prognosis on combined histopathology imaging and gene expression RNA-seq data

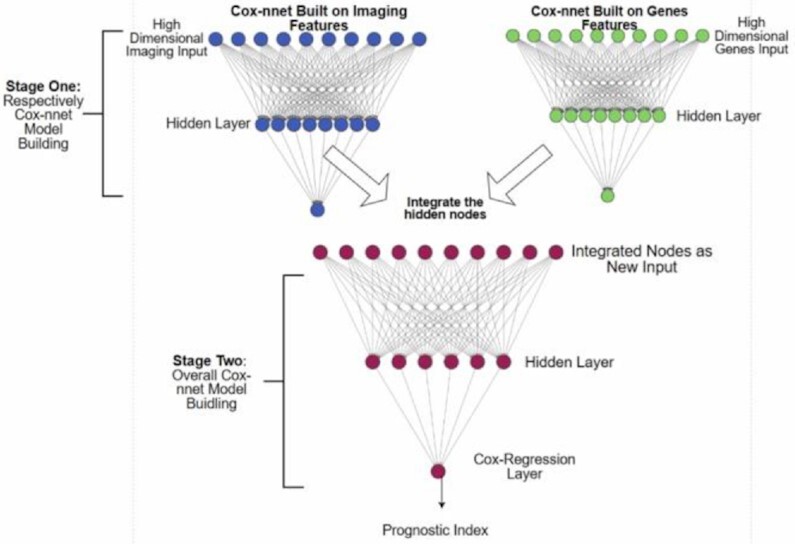

Multi-modal and multi-type data integration is challenging, particularly for survival prediction. We next ask if we can utilize Cox-nnet framework for such purpose, exemplified by pathology imaging and gene expression RNA-seq based survival prediction. Toward this, we propose a two-stage Cox-nnet complex model, inspired by other two-stage models in genomics fields (22–24). The two-stage Cox-nnet model is depicted in Figure 3. For the first stage, we construct two Cox-nnet models in parallel, using the image data and gene expression data of HCC, respectively. For each model, we optimize the hyperparameters by grid search under 5-fold cross-validation. Then we extract and combine the nodes of the hidden layer from each Cox-nnet model as the new input features for the second-stage Cox-nnet model. We construct and evaluate the second-stage Cox-nnet model with the same parameter-optimization strategy as in the first stage.

Figure 3.

The architectures of two-stage Cox-nnet. The first stage builds individual Cox-nnet models for each data type. The second stage combines the hidden nodes from the first stage Cox-nnet models as the input, and builds a new Cox-nnet model.

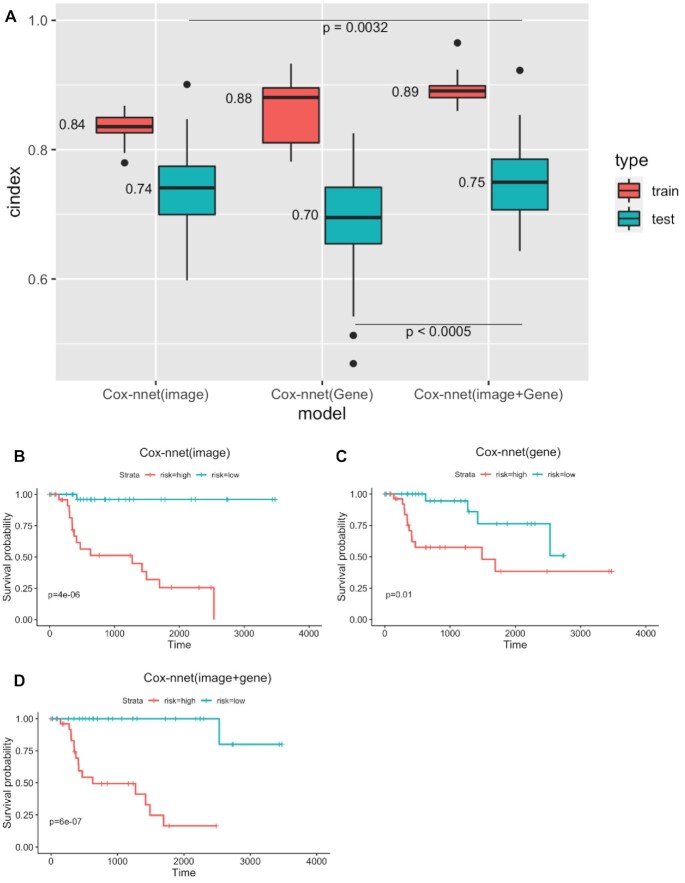

As shown in Figure 4A, the resulting two-stage Cox-nnet model yields very good performance, judging by the C-index values. The median C-index scores for the training and testing sets are 0.89 and 0.75, respectively. These C-index values are significantly improved, compared to the Cox-nnet models that are built on either imaging (described earlier) or gene expression RNA-seq data alone. For example, on the testing datasets, the median C-index score from two-stage Cox-nnet (0.75) is significantly higher (P < 0.0005) than that from the Cox-nnet model built on gene expression data (0.70). It is also significantly higher (P < 0.005) than that from the Cox-nnet model built on image data (0.74). The superior predictive performance of the two-stage Cox-nnet model is also confirmed by the log-rank P-values in the Kaplan–Meier survival curves (Figure 4B–D). It achieves a log-rank P-value of 6e-7 in testing data (Figure 4D), higher than the Cox-nnet models based on pathological image data (Figure 4B) or gene expression RNA-seq data (Figure 4C), which have log-rank P-values of 4e-6 and 0.01, respectively.

Figure 4.

Comparison of Cox-nnet prognosis prediction using pathology imaging, gene expression and the combination of the two data types. (A) C-index results on training and testing datasets. (B–D) Kaplan–Meier survival curves on testing datasets. (B) Cox-nnet model on imaging data only. (C) Cox-nnet model on gene expression data only. (D) two-stage Cox-nnet model combining imaging and gene expression data.

Comparing two-stage Cox-nnet model with another imaging and gene expression-based prognosis model

We compare two-stage Cox-nnet with PAGE-Net, another neural-network method that combines imaging and genomics information to predict patient survival (5). The imaging prediction module of PAGE-Net is very complex, with a patch-wide pre-trained CNN layer followed by pooling them together for another neural network. The genomics model involves transformation of gene layer to pathway layer, and then followed by two hidden layers, the latter of which is combined with the image neural network to predict patient survival. For a fair comparison, we use the same image and gene inputs for PAGE-Net and Cox-nnet. The data are randomly split into 80% training and 20% testing. The training set for PAGE-Net is further split into 90% training and 10% validation, which is used for early stopping. The whole experiment is repeated 20 times with different train-test splits.

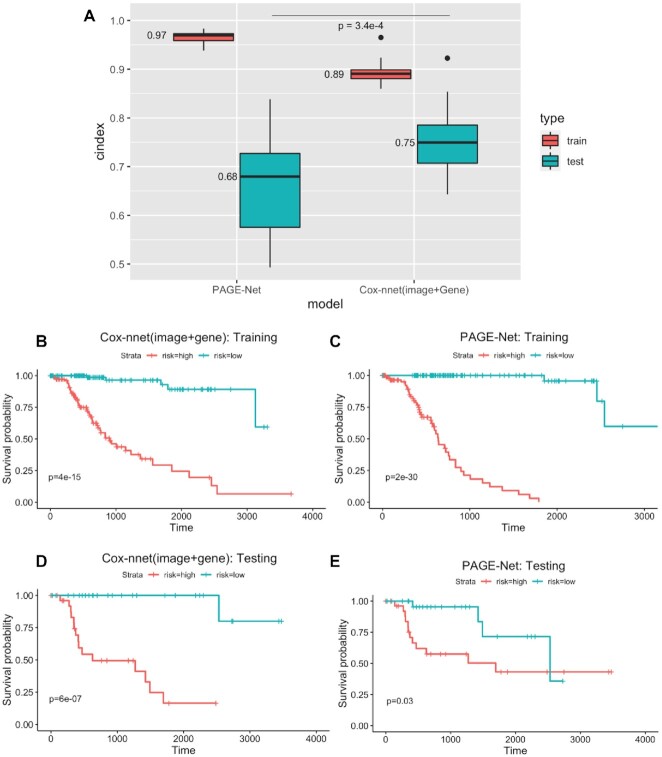

As shown in Figure 5A, on the testing datasets, the median C-index score of 0.75 from the two-stage Cox-nnet model is significantly higher (P-value < 3.4e-4) than that of PAGE-Net (0.68). The C-index values from the PAGE-Net model are much more variable (less stable), compared to those from two-stage Cox-nnet model. Moreover, PAGE-Net model appears to have an overfitting issue: the median C-index score of PAGE-Net model on the training set is very high (0.97), however, its predictability on hold-out testing data is much poorer (0.68). Extensive running time is another concern for PAGE-Net. On Graphic Processing Unit (GPUs) of Nvidia V100-PCIE with 16GB of memory each, it takes over a week to pre-train CNN and extract image features from 290 samples, prohibiting its practical use. The Kaplan–Meier survival differences using median PI as the threshold confirm the observations by C-index (Figure 5B–E). On testing data, two-stage Cox-nnet achieves a much better log-rank P-value of 6e-7 (Figure 5D), compared to that of 0.03 for PAGE-Net prediction (Figure 5E), even though Cox-nnet has a lower log-rank P-value of 4e-15 in the training data (Figure 5B), compared to the value of 2e-30 of PAGE-Net (Figure 5C).

Figure 5.

Comparison of two-stage Cox-nnet and PAGE-Net, based on combined pathological images and gene expression. (A) C-index of the two methods on training (red) and testing (blue) datasets, on 20 repetitions. (B–E) Kaplan–Meier survival curves resulting from the Cox-nnet (B and D) and PAGE-Net model (C and E) using training and testing datasets, respectively.

Relationship between histology and gene expression features in the two-stage Cox-nnet model

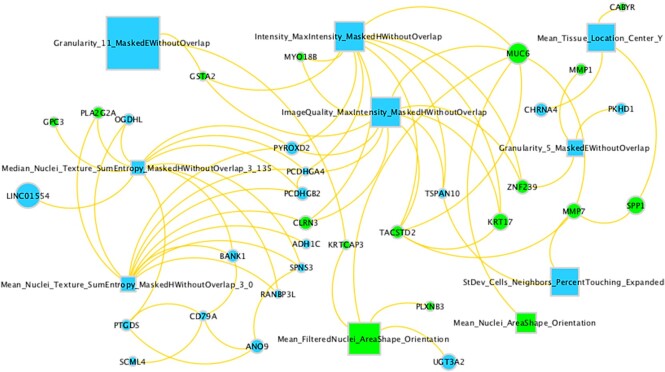

We also investigate the correlations between the top imaging features and top RNA-seq gene expression features. These top features are determined by the ranks of their feature scores (see ‘Materials and Methods’ section). The top 10 images and their Cox-nnet importance scores are also listed in Supplementary Table S1. We then use the bipartite graph to show the image-gene pairs with Pearson's correlations > 0.1 and P-value < 0.05, among the top 10 histopathology features and top 50 gene expression features (Figure 6). Top genes associated with top 10 histopathology features include long intergenic non-protein coding RNA 1554 (LINC01554), mucin 6 (MUC6), keratin 17 (KRT17), matrix metalloproteinase 7 (MMP7), secreted phosphoprotein 1 (SPP1) and myosin XVIIIB (MYO18B). Gene Set Enrichment (GSEA) analysis on top 1000 genes correlated to each image feature shows that image feature correlated genes are significantly (false discovery rate, FDR < 25% as recommended) enriched in pathways-in-cancer (normalized enrichment score, NES = 1.41, FDR = 0.18) and focal-adhesion (NES = 1.22, FDR = 0.21) pathways, which are upregulated in HCC patients with poor prognosis. Pathways-in-cancer is a pan-pathway that covers multiple important cancer-related signaling pathways, such as PI3-AKT signaling, MAPK signaling and p53 signaling. Focal-adhesion pathway includes genes involved in cell-matrix adhesion, which is important for cell motility, proliferation, differentiation and survival. These two pathways play important roles in the survival of HCC patients (25).

Figure 6.

Relationship between top imaging and gene features. Rectangle nodes are image features and circle nodes are gene features. Node sizes are proportional to importance scores from Cox-nnet. Two gene nodes are connected only if their correlation is > 0.5; an image node and a gene node are connected only if their correlation is > 0.1 (P-value < 0.05). Green nodes represent features with positive coefficients (hazard ratio) in univariate Cox-PH regression, indicating worse prognosis. Blue nodes represent features with negative coefficients (hazard ratio) in univariate Cox-PH regression, indicating protection against bad prognosis.

DISCUSSION

Driven by the objective to build a uniform workframe to integrate multi-modal and multi-type data to predict patient survival, we extend Cox-nnet model, a neural network-based survival prediction method, on pathology imaging and transcriptomic data. Using TCGA HCC pathology images as the example, we demonstrate that Cox-nnet is more robust and accurate at predicting survival, compared to Cox-PH, the standard method that was also the second best method in the original RNA-seq transcriptomic study (1). We also demonstrate that Cox-nnet achieves better or comparable performance compared to other methods, including DeepSurv, CoxBoost and Random Survival Forests. Moreover, we propose a new two-stage complex Cox-nnet model to integrate imaging and RNA-seq transcriptomic data, and showcase its superior accuracy on HCC patient survival prediction, compared to another neural network method: PAGE-Net. The two-stage Cox-nnet model combines the transformed, hidden node features from the first-stage Cox-nnet models for imaging or gene expression RNA-seq data, respectively, and uses these combined hidden features as the new inputs to train a second-stage Cox-nnet model.

Rather than using CNN models that are more complex, such as PAGE-Net, we utilize a less complex but more biologically interpretable approach, where we extract imaging features defined by the tool CellProfiler. These features are then used as input nodes in relatively simple, two-layer neural network models. Hidden features extracted from each Cox-nnet model can then be combined flexibly to build new Cox-nnet models. On the other hand, PAGE-Net uses a pre-trained CNN for images and a gene-pathway layer to handle gene expression data. Despite great efforts, image features extracted by CNN in PAGE-Net are not easily interpretable and the model appears to overfit given the small sample size. PAGE-Net also requires very long training time. The significantly higher predictive performance of two-stage Cox-nnet model argues for the advantages to use a relatively simple neural network model with input nodes of biological relevance, such as those extracted by image processing tools and gene expression input features.

Besides the interpretability of histopathology image features themselves, correlation analysis between top gene features and top image features identifies the genes known to be related to survival of HCC patients or morphology of the tissue, such as LINC01554, MUC6, MMP7, KRT17, MYO18B and SPP1. LINC01554 is a long non-coding RNA that is downregulated in HCC and its expression corresponds to good survival of HCC patients previously (26). MUC6 is a mucin protein that participates in the remodeling of the ductal plate in the liver (27), which was also involved in the carcinogenesis of HCC (28). MMP7, also known as matrilysin, is an enzyme that breaks down extracellular matrix by degrading macromolecules, including casein, type I, II, IV and V gelatins, fibronectin and proteoglycan (29). MMP7 participates in the remodeling of extracellular matrix (30) and impacts the morphology of liver tissue (31), which may explain its link to histopathology features. MMP7 expression was also shown association to poor prognosis in patients with HCC (32). KRT17 serves as an oncogene and a predictor of poor survival in HCC patients (33). MYO18B is a myosin family gene that promotes HCC progression by activating PI3K/AKT/mTOR signaling pathway. Overexpression of MYO18B predicted poor survival of HCC patients (34). SPP1 functions as an enhancer of cell growth in HCC. The 5-year overall survival rate generated from 364 HCC cases demonstrated a poor survival of the patients with relatively higher SPP1 expression (35).

In summary, we extend the previous Cox-nnet model to process pathological imaging data, and propose a new class of two-stage Cox-nnet neural-network model that creatively addresses the general challenge of multi-modal data integration for patient survival prediction. Using input imaging features extracted from CellProfiler, Cox-nnet models are biologically interpretable. Some image features are also correlated with genes of known HCC relevance, enhancing their biological interpretability. Since the proposed two-stage Cox-nnet is generic, we would like to extend this methodology to other types of cancers in the future, including those in TCGA consortium.

DATA AVAILABILITY

The code for two-stage Cox-nnet, including model training, hidden layer integration and feature importance calculation are available on Github (https://github.com/lanagarmire/two-stage-cox-nnet).

Supplementary Material

ACKNOWLEDGEMENTS

We thank previous Garmire group members Dr Fadhl Alakwaa, Dr Olivier Poirion and Dr Travers Ching for the discussions.

Author contributions: L.X.G. envisioned the project, supervised the study and wrote the majority of the manuscript. Z.Z. and Z.J. performed modeling and data analysis, with help from B.H. and N.H. M.W. and E.Y.C. assisted with the tumor section labeling of all the images.

Contributor Information

Zhucheng Zhan, School of Science and Engineering, Chinese University of Hong Kong, Shenzhen Campus, Shenzhen 518172, P.R. China.

Zheng Jing, Department of Applied Statistics, University of Michigan, Ann Arbor, MI 48104, USA.

Bing He, Department of Computational Medicine and Bioinformatics, University of Michigan, Ann Arbor, MI 48104, USA.

Noshad Hosseini, Department of Computational Medicine and Bioinformatics, University of Michigan, Ann Arbor, MI 48104, USA.

Maria Westerhoff, Department of Pathology, University of Michigan, Ann Arbor, MI 48104, USA.

Eun-Young Choi, Department of Pathology, University of Michigan, Ann Arbor, MI 48104, USA.

Lana X Garmire, Department of Computational Medicine and Bioinformatics, University of Michigan, Ann Arbor, MI 48104, USA.

SUPPLEMENTARY DATA

Supplementary Data are available at NARGAB Online.

FUNDING

NIEHS [K01ES025434 to L.X.G.]; NLM [R01 LM012373, R01 LM012907 to L.X.G.]; NICHD [R01 HD084633 to L.X.G.].

Conflict of interest statement. None declared.

REFERENCES

- 1. Ching T., Zhu X., Garmire L.X. Cox-nnet: an artificial neural network method for prognosis prediction of high-throughput omics data. PLoS Comput. Biol. 2018; 14:e1006076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Ishwaran H., Lu M. Random survival forests. Wiley StatsRef: Statistics Reference Online. 2019; 1–13. [Google Scholar]

- 3. De Bin R. Boosting in Cox regression: a comparison between the likelihood-based and the model-based approaches with focus on the R-packages CoxBoost and mboost. Comput. Stat. 2016; 31:513–531. [Google Scholar]

- 4. Katzman J.L., Shaham U., Cloninger A., Bates J., Jiang T., Kluger Y. DeepSurv: personalized treatment recommender system using a Cox proportional hazards deep neural network. BMC Med. Res. Methodol. 2018; 18:24–35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Hao J., Kosaraju S.C., Tsaku N.Z., Song D.H., Kang M. PAGE-Net: interpretable and integrative deep learning for survival analysis using histopathological images and genomic data. Pac. Symp. Biocomput. 2020; 25:355–366. [PubMed] [Google Scholar]

- 6. McQuin C., Goodman A., Chernyshev V., Kamentsky L., Cimini B.A., Karhohs K.W., Doan M., Ding L., Rafelski S.M., Thirstrup D. et al. CellProfiler 3.0: next-generation image processing for biology. PLoS Biol. 2018; 16:e2005970. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Chaudhary K., Poirion O.B., Lu L., Garmire L.X. Deep learning-based multi-omics integration robustly predicts survival in liver cancer. Clin. Cancer Res. 2018; 24:1248–1259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Chaudhary K., Poirion O.B., Lu L., Huang S., Ching T., Garmire L.X. Multi-modal meta-analysis of 1494 hepatocellular carcinoma samples reveals vast impacts of consensus driver genes on phenotypes. Clin. Cancer Res. 2019; 25:463–472. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Khalaf N., Ying J., Mittal S., Temple S., Kanwal F., Davila J., El-Serag H.B. Natural history of untreated hepatocellular carcinoma in a US cohort and the role of cancer surveillance. Clin. Gastroenterol. Hepatol. 2017; 15:273–281. [DOI] [PubMed] [Google Scholar]

- 10. Zhu Y., Qiu P., Ji Y. TCGA-Assembler: open-source software for retrieving and processing TCGA data. Nat. Methods. 2014; 11:599–600. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Marinaccio C., Ribatti D. A simple method of image analysis to estimate CAM vascularization by APERIO ImageScope software. Int. J. Dev. Biol. 2015; 59:217–219. [DOI] [PubMed] [Google Scholar]

- 12. Yu K-H., Zhang C., Berry G.J., Altman R.B., Ré C., Rubin D.L., Snyder M. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat. Commun. 2016; 7:12474–12483. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Kamentsky L., Jones T.R., Fraser A., Bray M-A., Logan D.J., Madden K.L., Ljosa V., Rueden C., Eliceiri K.W., Carpenter A.E. Improved structure, function and compatibility for CellProfiler: modular high-throughput image analysis software. Bioinformatics. 2011; 27:1179–1180. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Huang S., Yee C., Ching T., Yu H., Garmire L.X. A novel model to combine clinical and pathway-based transcriptomic information for the prognosis prediction of breast cancer. PLoS Comput. Biol. 2014; 10:e1003851. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Ishwaran H., Kogalur U.B., Blackstone E.H., Lauer M.S. Random survival forests. Ann. Appl. Stat. 2008; 2:841–860. [Google Scholar]

- 16. Ishwaran H., Kogalur U.B. Fast unified random forests for survival, regression, and classification (RF-SRC). R package version. 2019; 2:1–113. [Google Scholar]

- 17. Chaudhary K., Poirion O.B., Lu L., Huang S., Ching T., Garmire L.X. Multimodal meta-analysis of 1,494 hepatocellular carcinoma samples reveals significant impact of consensus driver genes on phenotypes. Clin. Cancer Res. 2019; 25:463–472. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Huang S., Chong N., Lewis N.E., Jia W., Xie G., Garmire L.X. Novel personalized pathway-based metabolomics models reveal key metabolic pathways for breast cancer diagnosis. Genome Med. 2016; 8:34–37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Fang X., Liu Y., Ren Z., Du Y., Huang Q., Garmire L.X. Lilikoi V2.0: a deep-learning enabled, personalized pathway-based R package for diagnosis and prognosis predictions using metabolomics data. Gigascience. 2021; 10:giaa162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Poirion O.B., Chaudhary K., Huang S., Garmire L.X. Multi-omics-based pan-cancer prognosis prediction using an ensemble of deep-learning and machine-learning models. Genetic and Genomic Medicine. 2019; medRxiv doi:25 October 2019, Preprint: not peer reviewed 10.1101/19010082. [DOI] [PMC free article] [PubMed]

- 21. Bengio Y., Boulanger-Lewandowski N., Pascanu R. Advances in optimizing recurrent networks. 2013 IEEE International Conference on Acoustics, Speech and Signal Processing. 2013; Vancouver, BC: IEEE; 8624–8628. [Google Scholar]

- 22. Schulz-Streeck T., Ogutu J.O., Piepho H-P. Comparisons of single-stage and two-stage approaches to genomic selection. Theor. Appl. Genet. 2013; 126:69–82. [DOI] [PubMed] [Google Scholar]

- 23. Wei R., De Vivo I., Huang S., Zhu X., Risch H., Moore J.H., Yu H., Garmire L.X. Meta-dimensional data integration identifies critical pathways for susceptibility, tumorigenesis and progression of endometrial cancer. Oncotarget. 2016; 7:55249–55263. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Pinu F.R., Beale D.J., Paten A.M., Kouremenos K., Swarup S., Schirra H.J., Wishart D. Systems biology and multi-omics integration: viewpoints from the metabolomics research community. Metabolites. 2019; 9:76–106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Ding X-X., Zhu Q-G., Zhang S-M., Guan L., Li T., Zhang L., Wang S.Y., Ren W.L., Chen X.M., Zhao J. et al. Precision medicine for hepatocellular carcinoma: driver mutations and targeted therapy. Oncotarget. 2017; 8:55715–55730. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Ding Y., Sun Z., Zhang S., Chen Y., Zhou B., Li G., Sun Q., Zhou D., Ge Y., Yan S. et al. Down-regulation of long non-coding RNA LINC01554 in hepatocellular cancer and its clinical significance. J. Cancer. 2020; 11:3369–3374. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27. Terada T. Human fetal ductal plate revisited: II. MUC1, MUC5AC, and MUC6 are expressed in human fetal ductal plate and MUC1 is expressed also in remodeling ductal plate, remodeled ductal plate and mature bile ducts of human fetal livers. Int. J. Clin. Exp. Pathol. 2013; 6:571–585. [PMC free article] [PubMed] [Google Scholar]

- 28. Kasprzak A., Adamek A. Mucins: the old, the new and the promising factors in hepatobiliary carcinogenesis. Int. J. Mol. Sci. 2019; 20:1288–1317. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29. Yokoyama Y., Grünebach F., Schmidt S.M., Heine A., Häntschel M., Stevanovic S., Rammensee H.G., Brossart P. Matrilysin (MMP-7) is a novel broadly expressed tumor antigen recognized by antigen-specific T cells. Clin. Cancer Res. 2008; 14:5503–5511. [DOI] [PubMed] [Google Scholar]

- 30. Benyon R.C., Arthur M.J. Extracellular matrix degradation and the role of hepatic stellate cells. Semin. Liver Dis. 2001; 21:373–384. [DOI] [PubMed] [Google Scholar]

- 31. Huang C-C., Chuang J-H., Chou M-H., Wu C-L., Chen C-M., Wang C-C., Chen Y.S., Chen C.L., Tai M.H. Matrilysin (MMP-7) is a major matrix metalloproteinase upregulated in biliary atresia-associated liver fibrosis. Mod. Pathol. 2005; 18:941–950. [DOI] [PubMed] [Google Scholar]

- 32. Rong W., Zhang Y., Yang L., Feng L., Wei B., Wu F., Wang L., Gao Y., Cheng S., Wu J. et al. Post-surgical resection prognostic value of combined OPN, MMP7, and PSG9 plasma biomarkers in hepatocellular carcinoma. Front. Med. 2019; 13:250–258. [DOI] [PubMed] [Google Scholar]

- 33. Chen Y., Chen J., Tian Z-.C., Zhou D-.H., Ji R., Ge S-.J., Liu Z.X., Huang W. KRT17 serves as an oncogene and a predictor of poor survival in hepatocellular carcinoma patients. 2020; Research Square doi:03 December 2020, Preprint: not peer reviewed 10.21203/rs.3.rs-117723/v1. [DOI]

- 34. Zhang Z., Zhu J., Huang Y., Li W., Cheng H. MYO18B promotes hepatocellular carcinoma progression by activating PI3K/AKT/mTOR signaling pathway. Diagn. Pathol. 2018; 13:85–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Wang J., Hao F., Fei X., Chen Y. SPP1 functions as an enhancer of cell growth in hepatocellular carcinoma targeted by miR-181c. Am. J. Transl. Res. 2019; 11:6924–6937. [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

The code for two-stage Cox-nnet, including model training, hidden layer integration and feature importance calculation are available on Github (https://github.com/lanagarmire/two-stage-cox-nnet).