Abstract

Memories for episodes are temporally structured. Cognitive models derived from list-learning experiments attribute this structure to the retrieval of temporal context information that indicates when a memory occurred. These models predict key features of memory recall, such as the strong tendency to retrieve studied items in the order in which they were first encountered. Can such models explain ecological memory behaviors, such as eye movements during encoding and retrieval of complex visual stimuli? We tested predictions from retrieved-context models using three datasets involving recognition memory and free viewing of complex scenes. Subjects reinstated sequences of eye movements from one scene-viewing episode to the next. Moreover, sequence reinstatement decayed over time and was associated with successful memory. We observed memory-driven reinstatement even after accounting for intrinsic scene properties that produced consistent eye movements. These findings confirm predictions of retrieved-context models, suggesting retrieval of temporal context influences complex behaviors generated during naturalistic memory experiences.

Introduction

Memory processing occurs as individuals interact with complex environments across space and time (Eichenbaum, 2017). However, theoretical accounts of memory mostly derive from experiments in which participants are passive recipients of information. These paradigms severely restrict opportunities for interaction with sensory information (Voss, Bridge, Cohen, & Walker, 2017). When individuals are allowed to interact with sensory stimuli, memory-related brain structures such as the hippocampus and connected neuromodulatory centers are upregulated (Bridge, Cohen, & Voss, 2017; Murty, DuBrow, & Davachi, 2015; Voss, Gonsalves, Federmeier, Tranel, & Cohen, 2011; Voss, Warren, et al., 2011; Yebra et al., 2019), indicating that such interaction could impact the functional properties of memory processing. Thus, the validity of current models of memory derived from passive-format experiments in describing the cognitive and neural events that support ecological memory functions remains unclear. Here, we tested whether retrieved-context models of episodic memory (Howard & Kahana, 2002; Lohnas, Polyn, & Kahana, 2015; Polyn, Norman, & Kahana, 2009; Sederberg, Howard, & Kahana, 2008) can be used to accurately describe the patterns of eye movements that individuals make as they explore and retrieve information from complex visual scenes.

Retrieved-context models such as the temporal context model (Howard & Kahana, 2002) provide a mechanistic account of how a slowly evolving representation of recent experience (i.e., temporal context) is used to retrieve information from memory. For example, in free-recall tasks subjects study a list of words and later attempt to recall studied items from memory without any experimenter-imposed constraints on the order in which items must be recalled (Fig. 1a). Despite the lack of temporal constraints, subjects nonetheless demonstrate robust contiguity effects in nearly all list-learning experiments (Healey, Long, & Kahana, 2019; Kahana, 1996), whereby items studied nearby in time are likely to be recalled together (Fig. 1b). According to retrieved-context models, a representation of temporal context becomes associated with each item as it is studied. During memory retrieval, successful retrieval of an item is accompanied by reinstatement of its associated temporal context. This contextual reinstatement then serves as a cue to recall other items that were studied close in time and therefore that share similar temporal context.

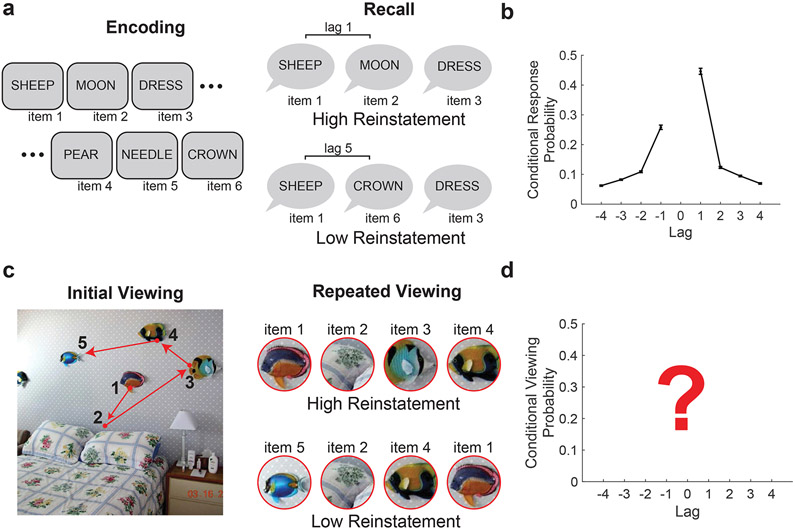

Figure 1. Temporal contiguity in free recall and scene viewing.

a, Free-recall task. Subjects study a sequence of visually presented words. Following encoding, subjects are prompted with a cue to recall as many items as possible from memory in any order. Responses are typically vocalized or written. b, Temporal contiguity in free recall. Curves depict the conditional response probability as a function of lag (lag-CRP). The plot shows the probability of recalling an item presented before (negative lags) or after (positive lags) the most recently recalled item. Data are from Experiment 1 of the Penn Electrophysiology of Encoding and Retrieval Study (Healey & Kahana, 2016). Error bars denote SEM. c, Fixations serialize visual input during scene viewing. During visual exploration of a scene, the sequence of fixations provides sequential input of visual information for memory encoding. During repeated viewing, temporal context reinstatement results in contiguity of eye movements across viewing episodes. d, Hypothesized contiguity effects during visual exploration.

Retrieved-context models provide a parsimonious account of contiguity effects that are highly robust, persisting for recall of memories across both short (within a list) and long (across lists) timescales (Howard, Youker, & Venkatadass, 2008), extending to days for real-world memories (Uitvlugt & Healey, 2019). The processes that produce contiguity in recall also accurately describe recognition behavior (Healey & Kahana, 2016), where retrieval of temporal context facilitates recognition of temporally adjacent probes (Schwartz, Howard, Jing, & Kahana, 2005). Retrieved-context models also account for the pervasive finding that contiguity is asymmetrical (Healey et al., 2019), with forward-going transitions (i.e., recalling items that were presented after the just-recalled item in the study list) more prevalent than backward-going transitions that oppose the order in which items were initially studied. The forward asymmetry is predicted because item-related information integrates into context and persists with the passage of time during encoding. As a result, context states that follow the encoding of an item are more similar to the context retrieved by that item than are preceding states, which do not contain information about the encoded stimulus. Because items encoded in similar contexts are favored during the retrieval process, memories are typically recalled with a forward bias (Howard & Kahana, 2002). Although retrieved-context models provide powerful descriptions of behavior and have been strongly associated with memory processing by key regions such as the hippocampus (Howard, Viskontas, Shankar, & Fried, 2012; Kragel, Morton, & Polyn, 2015), it is unclear how well they account for memory function outside of list-learning experiments, when self-guided exploration determines what is encoded.
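The forward asymmetry described above can be illustrated with a minimal sketch of context drift. This is a simplified toy, not the fitted model from the cited papers: it assumes orthonormal item inputs, a fixed drift rate `beta`, binding of each item to the context state that already contains that item, and a hypothetical blend weight `w_item` between the reinstated study context (a symmetric cue) and the item's own input pattern (a forward-only cue). The names `study_contexts` and `cue_similarity` are illustrative.

```python
import numpy as np

def study_contexts(n_items, beta):
    """Return the context state after each studied item (rows = serial positions)."""
    rho = np.sqrt(1.0 - beta ** 2)      # keeps context at unit length for orthonormal inputs
    dim = n_items + 1
    c = np.zeros(dim)
    c[0] = 1.0                          # pre-list context, orthogonal to all items
    contexts = []
    for i in range(n_items):
        item_input = np.zeros(dim)
        item_input[i + 1] = 1.0         # assumption: each item has its own orthogonal input
        c = rho * c + beta * item_input # slow drift plus the new item's contribution
        contexts.append(c.copy())
    return np.array(contexts)

def cue_similarity(contexts, recalled, w_item=0.5):
    """Similarity of the retrieved-context cue to every study context."""
    item_input = np.zeros(contexts.shape[1])
    item_input[recalled + 1] = 1.0
    # Assumed blend: study context (symmetric) + item input (only in later states)
    cue = (1.0 - w_item) * contexts[recalled] + w_item * item_input
    return contexts @ cue

contexts = study_contexts(n_items=7, beta=0.5)
sims = cue_similarity(contexts, recalled=3)
# Neighbors are favored over remote items, and the following item (index 4)
# is cued more strongly than the preceding item (index 2).
print(sims[2], sims[4], sims[5])
```

In this toy, the item-input component is absent from every context state that preceded the item, which is why the cue favors later positions while the drifting study context alone would cue both directions equally.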

A potential reconciliation of retrieved-context models with ecological memory function can be achieved if one assumes that active sampling behaviors, such as saccadic eye movements, serve to discretize interactions with the environment into individual memoranda (Zelinsky & Loschky, 2005). This assumption is grounded in the physiology of the visual system. Because visual acuity falls off away from the fovea (Weymouth, 1958), sequential sampling is necessary for detailed scene processing (Buswell, 1935; Yarbus, 1967). During saccades, inputs from the retina are suppressed (Matin, 1974; Thiele, Henning, Kubischik, & Hoffmann, 2002), disrupting neuronal activity in early visual cortex during fixations (Brunet et al., 2015). While higher order memory processes maintain visuospatial information across these eye movements (Hollingworth, Richard, & Luck, 2008), visual sampling is highly sequential in nature. That is, the viewing behaviors that individuals use to interact with the environment turn complex visual inputs into ordered lists of information because saccades sample discrete locations in a specific order (Fig. 1c).

An influential study by Noton and Stark (Noton & Stark, 1971b) demonstrated that sequences of eye movements, or scanpaths, are reinstated with repeated viewing of stimulus arrays. Extending these findings to complex natural scenes, it has been shown that subjects make refixations to the same locations (Foulsham & Kingstone, 2013) and have similar scanpaths across repeated presentations (Wynn et al., 2016). Scanpath reinstatement (Noton & Stark, 1971a) could be governed by the same properties that are described by retrieved-context theories of episodic memory (Howard & Kahana, 2002; Lohnas et al., 2015; Polyn et al., 2009; Sederberg et al., 2008). That is, viewing a familiar scene triggers retrieval of temporal context present during initial viewing, leading to the recapitulation of the sequence of fixations made during encoding. Thus, although many theories of visual attention suggest eye movements are driven by low-level features (Itti & Koch, 2000) and by cognitive factors including meaning (Henderson & Hayes, 2017), global scene features (Torralba, Oliva, Castelhano, & Henderson, 2006), and current goals (Castelhano, Mack, & Henderson, 2009; Einhäuser, Rutishauser, & Koch, 2008; Rothkopf, Ballard, & Hayhoe, 2007), we propose that a major contribution of memory to viewing behavior (Althoff & Cohen, 1999; Wynn, Shen, & Ryan, 2019) derives from the reinstatement of temporal context. Accordingly, temporal context models predict that eye movements during retrieval should proceed in a sequence similar to the order in which they were encoded (producing scanpath reinstatement (Noton & Stark, 1971a)), and that this reinstatement should share the properties of verbal free recall of word lists, including forward asymmetry and prevalence across multiple timescales.

We sought to determine whether episodic memory processes captured by retrieved-context models can account for viewing behavior during repeated viewing of complex scenes. If reinstatement of temporal context facilitates recognition of familiar scenes, the aforementioned contiguity, forward-asymmetry, and long-range contiguity effects should manifest in the sequence of visual fixations that subjects make to previously viewed scene content (Fig. 1d). We therefore re-examined eye-tracking data from three previous experiments that involved free viewing of repeated scenes. As a control analysis, we tested whether eye movements driven by stimulus properties contributed to scanpath reinstatement. By controlling for eye movements made by other subjects viewing the same scene, we accounted for the general tendency to view salient as well as semantically meaningful information in particular sequences. In doing so, we are able to provide evidence that reinstatement of temporal context explains the similarity of scanpaths made to repeated scenes.

Method

Study 1.

The FIne-GRained Image Memorability (FIGRIM) dataset was initially collected by Bylinskii, Isola, Bainbridge, Torralba, and Oliva (2015) to examine the influence of intrinsic and extrinsic factors on whether novel images will be subsequently remembered or forgotten.

To examine the influence of temporal context on memory for individual scenes, we reanalyzed the eye-tracking data collected during a continuous recognition task utilizing 630 target images from the FIGRIM stimulus set. Images were displayed at 1000 × 1000 px. From each of 7 randomly chosen scene categories, 50% of images were randomly chosen as targets (i.e., repeated images) and the remaining images were used as lures.

40 subjects (24 females) participated in the study, each viewing randomly selected images (M = 14.1, SD = 1.2 participants per image). In each session, a participant was presented a sequence of approximately 1000 stimuli. The sequence of stimuli contained 210 targets (presented three times) that were separated by 50 to 60 filler images. Images were presented for a duration of 2 s, followed by a forced-choice response to indicate whether the image was repeated. Subjects received verbal feedback for a duration of 0.3 s, followed by presentation of a blank screen for 0.2 s and a fixation cross for 0.4 s.

Eye tracking was conducted using an Eyelink 1000 desktop system (SR Research, Ontario, Canada) with a sampling rate of 500 Hz. Images were presented on a 19-inch CRT monitor with a resolution of 1280 × 1024 pixels, 22 inches from the chinrest mount. Images subtended 30° of visual angle. A 9-point calibration and validation procedure was performed at the beginning of the experiment. Drift checks were performed throughout the experiment, allowing for recalibration where appropriate.
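Because the fixation-matching threshold used in the eye movement analysis is expressed in degrees of visual angle, it can help to see how the Study 1 display geometry maps degrees onto pixels. The following is a rough sketch, assuming the 1000 px image width corresponds to the stated 30° and that the image is centered on the line of sight; the function names are illustrative and not part of the original analysis.

```python
import math

def px_per_degree(image_px, image_deg):
    """Linear (small-angle) approximation of pixels per degree."""
    return image_px / image_deg

def px_for_angle(angle_deg, image_px, image_deg):
    """Tangent-based width in pixels of a centered angle, given the image extent."""
    # Viewing distance expressed in pixel units, implied by the image spanning image_deg
    dist_px = (image_px / 2.0) / math.tan(math.radians(image_deg / 2.0))
    return 2.0 * dist_px * math.tan(math.radians(angle_deg / 2.0))

linear_2deg = 2.0 * px_per_degree(1000, 30)   # about 67 px under the linear approximation
exact_2deg = px_for_angle(2.0, 1000, 30)      # about 65 px at the screen center
print(round(linear_2deg, 1), round(exact_2deg, 1))
```

Under these assumptions, a 2° radius corresponds to roughly 65 to 67 px in Study 1; the other studies used different displays and viewing distances, so the same calculation would need their own geometry.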

Study 2.

This study by Kaspar and König (2011a) examined the interaction between individual motivation and free-viewing behavior using repeated presentation of complex stimuli. 45 university students (33 females) provided informed consent and participated in the study.

48 total images were selected from four categories: nature, urban, fractal, and pink noise. Images were presented at a resolution of 1280 × 960 px on a 21” CRT Monitor at 80 cm with a refresh rate of 85 Hz. Five blocks of 48 images were presented to each subject while eye movements were recorded. Each block contained all 48 unique images, presented in a pseudorandom order for a duration of 6 s. Subjects had no task demands other than to “observe the images as you want.” There was a 5-minute break after the third block to avoid fatigue.

Eye tracking was conducted using an Eyelink II (SR Research, Ontario, Canada) with a sampling rate of 500 Hz. A 13-point calibration and validation was performed at the beginning of each experimental session. Following stimulus presentation, a fixation dot appeared in the center of the screen to detect drifts in eye tracking. Recalibration was performed when errors exceeded 1°.

Study 3.

This study (Kaspar & König, 2011b) follows up on the observation from Study 2 that eye movements on repeated urban scenes are increasingly uncoupled from low-level image features, relative to other types of scenes. This experiment examined the dependence of this effect on image complexity. 35 university students (28 females) provided informed consent and participated in the study.

The stimulus pool contained 30 images depicting urban scenes of high, medium, or low complexity (10 scenes per level). High complexity scenes depicted urban settings at a broad scale, such as multiple houses or cityscapes. Medium complexity scenes depicted local arrangements such as individual homes or storefronts. Low complexity scenes depicted closeups of urban locations, such as individual benches. Images were presented at a resolution of 2560 × 1600 px on a 30” Apple Cinema HD Display (Apple, California, USA) at 80 cm with a refresh rate of 60 Hz. As in Study 2, stimuli were displayed for a duration of 6 s, with all stimuli presented in pseudorandom order within each block. Due to the smaller stimulus pool, there was no break between any of the 5 presentation blocks.

Eye movement analysis.

To measure temporal reinstatement during repeated presentation of scenes, we extended contiguity analysis typically applied in the domain of free recall (Howard & Kahana, 1999; Kahana, 1996) to sequences of fixations. During the initial viewing of novel scenes, an encoding sequence was serialized from all fixations. Contiguity during retrieval was measured by computing the probability of viewing a region of the scene, conditional on the previous fixation occurring at a specific lag in the encoding sequence (i.e., the lag-CVP). Individual fixations at retrieval were matched to the encoding sequences using a nearest neighbor approach (using Euclidean distance), with a threshold of 2° of visual angle. That is, each retrieval fixation was numbered according to the nearest fixation in the encoding sequence within the threshold. The transition distances (lags) in the retrieval sequence were then computed based on this relabeled sequence, excluding transitions involving novel locations within the scene. We additionally assessed the probability of repeating fixations to the same region (lag 0). To measure asymmetry in the lag-CVP, we computed the difference between the probabilities of forward- and backward-going transitions.
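The matching and lag computation described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the original analysis code: fixation coordinates are assumed to already be in degrees of visual angle, ties in distance go to the earlier encoding fixation, and the denominator for each lag counts every encoding position reachable from the current one (the text does not specify how available transitions were counted). The names `label_fixations` and `lag_cvp` are hypothetical.

```python
import math
from collections import defaultdict

def label_fixations(encoding, retrieval, threshold):
    """Label each retrieval fixation with the index of the nearest encoding
    fixation within `threshold`, or None if the location is novel."""
    labels = []
    for rx, ry in retrieval:
        best_pos, best_dist = None, math.inf
        for pos, (ex, ey) in enumerate(encoding):
            dist = math.hypot(rx - ex, ry - ey)
            if dist < best_dist:
                best_pos, best_dist = pos, dist
        labels.append(best_pos if best_dist <= threshold else None)
    return labels

def lag_cvp(encoding, retrieval, threshold=2.0, max_lag=4):
    """Conditional viewing probability as a function of encoding lag."""
    labels = label_fixations(encoding, retrieval, threshold)
    actual, possible = defaultdict(int), defaultdict(int)
    for prev, nxt in zip(labels, labels[1:]):
        if prev is None or nxt is None:
            continue                      # skip transitions involving novel locations
        for pos in range(len(encoding)):
            possible[pos - prev] += 1     # assumed: every encoding position is available
        actual[nxt - prev] += 1
    return {lag: actual[lag] / possible[lag]
            for lag in range(-max_lag, max_lag + 1) if possible[lag] > 0}

# Toy scanpaths: the repeat viewing follows the encoding order, visits one
# novel location, and ends with a repeated fixation (lag 0).
encoding = [(0, 0), (10, 0), (20, 0), (30, 0)]
retrieval = [(0.5, 0), (10.2, 0.1), (50, 50), (20, 0), (30.4, 0), (30.1, 0)]
cvp = lag_cvp(encoding, retrieval)
asymmetry = cvp[1] - cvp[-1]              # forward minus backward at lag one
print(cvp[1], cvp[0], asymmetry)
```

In the toy example, both eligible opportunities for a lag +1 transition are taken, so the lag +1 probability is 1.0, while one of three opportunities for a repeat is taken at lag 0.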

Stimulus-driven control analysis.

We developed a control procedure to rule out the possibility that scanpath reinstatement was driven by stimulus features (e.g., low-level visual properties or semantic content) rather than memory, per se. For each subject, we computed a standardized measure of conditional viewing probability (CVPZ) using a bootstrapping procedure (n = 1000). Rather than using the scanpath from a prior viewing episode from that subject, we randomly sampled scanpaths from other subjects viewing the same scene (matched for repetition number and memory outcome). Raw CVP values were standardized using the mean and standard deviation of these distributions. The mean of these distributions provided a measure of stimulus-driven reinstatement.
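A minimal sketch of this standardization is given below. It assumes a caller-supplied function `cvp_fn(encoding_scanpath, retrieval_scanpath)` that returns a single CVP value (for example, the lag +1 probability), and it assumes that each bootstrap sample draws one other-subject scanpath per scene and averages over scenes; the exact resampling scheme is not spelled out in the text. The names `cvp_z`, `retrievals`, and `donor_pool` are hypothetical, and matching donors on repetition number and memory outcome is left to the caller.

```python
import random
import statistics

def cvp_z(raw_cvp, retrievals, donor_pool, cvp_fn, n_boot=1000, seed=0):
    """Standardize a subject's raw CVP against a stimulus-driven null.

    retrievals: dict mapping scene -> this subject's repeat-viewing scanpath
    donor_pool: dict mapping scene -> list of other subjects' scanpaths
    Returns (z, null_mean); null_mean estimates stimulus-driven reinstatement.
    """
    rng = random.Random(seed)
    null = []
    for _ in range(n_boot):
        per_scene = [cvp_fn(rng.choice(donor_pool[scene]), retrievals[scene])
                     for scene in retrievals]
        null.append(statistics.fmean(per_scene))
    null_mean = statistics.fmean(null)
    null_sd = statistics.stdev(null)
    z = (raw_cvp - null_mean) / null_sd if null_sd > 0 else float("nan")
    return z, null_mean
```

A positive standardized value indicates that following one's own earlier scanpath is more likely than following the scanpaths that other viewers produced for the same scene.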

Statistics.

We performed repeated measures ANOVAs to examine the effects of scene category and lag on temporal reinstatement, with post hoc t-tests used to examine the direction of effects. In all cases where multiple tests were conducted, Bonferroni correction was used to control for multiple comparisons. Unless otherwise noted, we used two-tailed tests.

We used a generalized linear mixed-effects model to investigate the influence of scene memorability and stimulus-driven reinstatement on overall scanpath reinstatement. For each scene, we predicted scanpath reinstatement (the number of lag +1 transitions, out of the total possible number of lag +1 transitions). We included fixed effects of scene memorability (d’ for each scene, measured in an independent group of subjects, see Bylinskii et al. (2015)) and stimulus-driven reinstatement (i.e., the average lag +1 CVP for each scene, only considering fixation sequences that were consistent across subjects) to predict overall scanpath reinstatement, using a binomial link function. In addition to these fixed effects, the effects of memorability and stimulus-driven reinstatement were grouped by subject (random effects). We tested the significance of fixed effects using a parametric bootstrap technique (Halekoh & Højsgaard, 2014). We computed the likelihood ratio test statistic comparing the full model to a reduced model without the fixed effect of interest. Under the hypothesis that the reduced model was true, we generated a distribution of the test statistic to serve as a reference distribution. P-values for significance testing were computed as the fraction of test statistics in the reference distribution that were greater than the observed value.
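The logic of the parametric bootstrap can be sketched on a deliberately simplified model. The example below is an assumption-laden toy, not the reported analysis: it drops the subject-level random effects, uses a single predictor, and fits a binomial model with a logit link by Newton-Raphson. It only illustrates the three steps named above: compute the observed likelihood ratio statistic, simulate data from the fitted reduced model, and take the fraction of simulated statistics that exceed the observed one. All function names are illustrative.

```python
import numpy as np

def fit_binomial_logit(X, k, n, n_iter=50):
    """Newton-Raphson fit of a binomial logit model; returns (beta, log-likelihood)."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (k - n * p)
        hess = (X * (n * p * (1.0 - p))[:, None]).T @ X
        step = np.linalg.solve(hess, grad)
        beta = beta + step
        if np.max(np.abs(step)) < 1e-10:
            break
    p = np.clip(1.0 / (1.0 + np.exp(-X @ beta)), 1e-12, 1.0 - 1e-12)
    loglik = np.sum(k * np.log(p) + (n - k) * np.log(1.0 - p))
    return beta, loglik

def lrt_statistic(x, k, n):
    """Likelihood ratio statistic: full (intercept + x) versus reduced (intercept only)."""
    X_full = np.column_stack([np.ones(len(x)), x])
    X_red = np.ones((len(x), 1))
    _, ll_full = fit_binomial_logit(X_full, k, n)
    beta_red, ll_red = fit_binomial_logit(X_red, k, n)
    return 2.0 * (ll_full - ll_red), beta_red

def parametric_bootstrap_p(x, k, n, n_sim=500, seed=0):
    """Observed statistic and bootstrap p-value under the reduced model."""
    rng = np.random.default_rng(seed)
    observed, beta_red = lrt_statistic(x, k, n)
    p_red = 1.0 / (1.0 + np.exp(-beta_red[0]))
    # Simulate counts from the reduced model and refit both models each time
    reference = np.array([lrt_statistic(x, rng.binomial(n, p_red), n)[0]
                          for _ in range(n_sim)])
    return observed, float(np.mean(reference > observed))
```

Here `x` would hold a scene-level predictor such as memorability, `k` the number of lag +1 transitions, and `n` the number of possible lag +1 transitions per scene. With random effects in the model, the same procedure applies, but both fits and the simulation step must come from the mixed model.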

Results

In Study 1, subjects performed a continuous recognition task in which target scenes were repeated multiple times. We analyzed the relation between scanpath reinstatement and recognition memory. In Studies 2 and 3, subjects performed a free-viewing task in which scenes were repeated five times. Scenes derived from different semantic categories, and we tested whether the forward asymmetry was influenced by semantic content within a given scene. Further, we utilized the multiple repeated presentations of each scene to examine long-range contiguity effects. Together, these eye-tracking experiments allowed us to test multiple predictions of retrieved-context theory in an ecological setting with free-viewing formats that were very different from the list-learning experiments with predetermined memoranda order used to develop the theory.

Study 1

We analyzed the FIGRIM dataset provided by Bylinskii and colleagues (Bylinskii et al., 2015). This study examined eye movements collected while 40 subjects viewed scenes that were repeated three times within a continuous recognition task (Figure 2a). As previously reported, subjects recognized 75.8% (SD = 14.4%) of repeated scenes and false alarmed to 5.2% (SD = 7.4%) of novel scenes on average, demonstrating highly accurate memory.

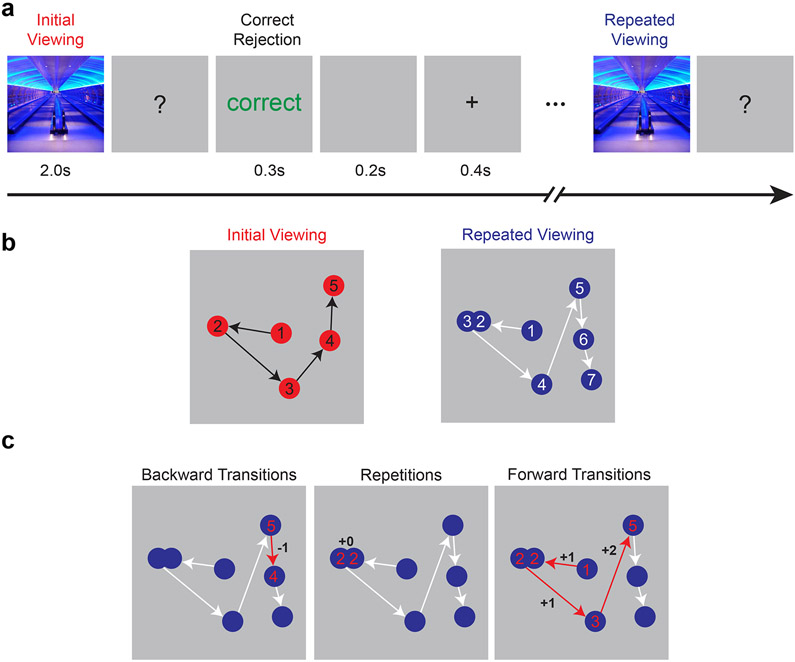

Figure 2. Measuring temporal context reinstatement during repeated scene viewing.

a, FIGRIM task schematic. Subjects judged scene novelty in a continuous recognition task. Target scenes were presented three times in each sequence. b, Example fixation sequences are displayed for initial and repeated viewing of a scene. c, Contiguity of viewing across episodes is assessed by identifying transitions during repeated viewing that were present in the initial encoding sequence. Backward transitions (negative lags), repetitions (zero lag), and forward transitions (positive lags) from the repeated viewing sequence are highlighted with red arrows. The serial positions of fixations based on the encoding sequence are labeled in red.

We first examined the contiguity of fixation sequences across repeated presentations of individual scenes (see Figure 2b for a hypothetical example). We treated fixations made during the viewing of a scene as an ordered sequence. Fixations during a subsequent presentation of the same scene were labeled according to whether they occurred at locations viewed during the previous viewing episode or at previously unexplored regions of the scene. Analysis of correspondence between these two sequences allowed us to assess, during repeated viewing, the probability of viewing a scene location conditional on having just viewed a location that appeared previously or subsequently in the original sequence (i.e., the lag conditional viewing probability, lag-CVP). We analyzed three types of repeated viewing: backward transitions (negative lags) that occur in the opposite order of previous viewing (Fig. 2c, left), repeated fixations to the same previously viewed location (zero lags; Fig. 2c, middle), and forward transitions (positive lags) that occur in the same direction as prior experience (Fig. 2c, right).

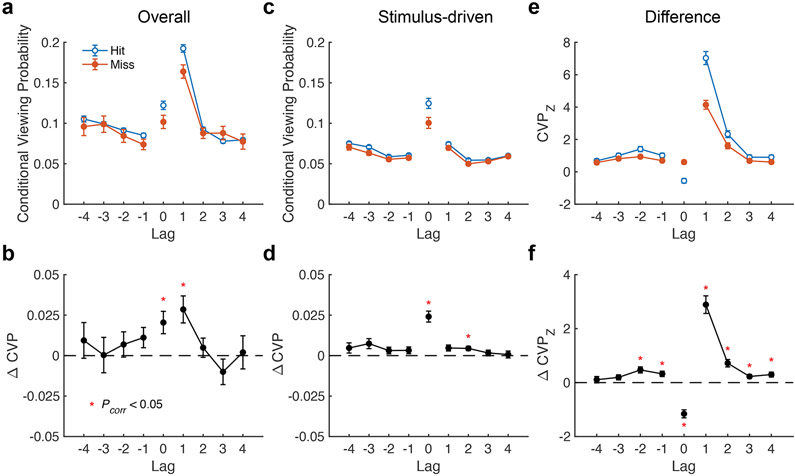

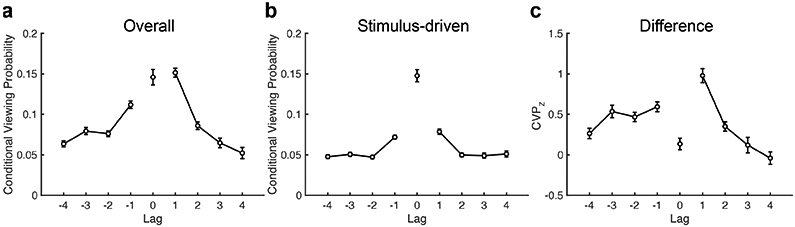

Retrieved-context models predict that the lag-CVP should be dominated by positive lag-one repeats (see Fig. 1b). Figure 3a shows the lag-CVP averaged separately for recognition hits and misses. As predicted, both lag-CVP curves exhibited a contiguity effect with forward asymmetry. In addition, the lag-CVP predicted whether a repeated scene was accurately recognized. Lag-CVP varied significantly by memory success (F(1, 63)=7.5, p=0.008, ηp²=0.11), by lag (F(3, 189)=45.3, p < 0.0001, ηp²=0.42), by forward versus reverse direction (F(1, 63)=23.3, p < 0.0001, ηp²=0.32), and by the interaction of lag and direction (F(3, 189)=82.7, p < 0.0001, ηp²=0.57). No other interactions were significant (all F < 2.1, p > 0.10). Planned post-hoc comparisons (Fig. 3b) found that lag-CVP was greater for hits than misses for both forward lag+1 fixations (t(66)=3.4, p=0.001, g=0.52) and repeat fixations (lag 0, t(66) = 3.0, p = 0.004, g = 0.36). These findings demonstrate reinstatement of scanpaths with forward asymmetry and link this viewing effect to better memory performance, as predicted by scanpath theory and retrieved-context models.

Figure 3. Scanpath reinstatement predicts scene recognition.

a, The curves show the probability of making a saccade to a previously viewed location as a function of lag in the initial viewing sequence for recognition hits and misses. b, The plot depicts the difference in conditional viewing probability (CVP) between hits and misses. c, Curves depict stimulus-driven scanpath transitions, based on fixations made by different subjects to the same scene. d, The plot shows repeated fixations to stimulus-driven locations predict scene recognition. e, The plot shows the comparison between overall and stimulus-driven transition probabilities, revealing memory-driven effects. f, The line depicts the difference in the memory-driven CVP for recognition hits and misses. Differences that remain significant after Bonferroni correction are denoted with a red asterisk. Error bars denote SEM.

Although we interpret these results as evidence for a retrieved-context account of scanpath reinstatement, it is possible that some stimulus features were both highly memorable (Bainbridge, Isola, & Oliva, 2013) and also caused stereotyped eye movements that contributed to the lag-CVP. If this were the case, then the difference in the lag-CVP for hits versus misses could reflect stimulus qualities rather than an influence from contextual memory reinstatement. To assess this alternative hypothesis, we performed several control analyses.

First, we examined scanpath contiguity for scenes that differed in the elapsed time between repeated presentations. Temporal context is believed to be scale-invariant (Howard, Shankar, Aue, & Criss, 2015), and therefore retrieved-context theory predicts contiguity across long and short timescales (Howard et al., 2008). In addition to the observed contiguity over short time scales (i.e., within individual fixation sequences), we would expect greater scanpath reinstatement for viewing events that occur closer together in time due to the similarity of their temporal context. In contrast, stimulus-driven influences on memorability and eye movements should not vary with elapsed time between scene presentations because such features remain constant irrespective of time. Consistent with the retrieved-context predictions, we found that the probability of making a forward lag-one transition (i.e., scanpath reinstatement) was greater for short (initial and second, second and third viewing) delays (M =0.20, SE =0.005) than between the initial and third viewing (M =0.18, SE =0.005), t(66)=5.6, p < 0.0001, g =0.41.

To further test the alternative account that influences from perceptual as well as semantic features of scenes could explain scanpath reinstatement, we computed an alternative measure of stimulus-driven reinstatement. Rather than using a subject’s fixations from a previous viewing, this measure uses sequences of eye movements made by different subjects viewing the same scene (matched for presentation number and memory outcome). If the observed scanpath reinstatement were primarily due to stimulus-driven effects, one would expect highly similar patterns of reinstatement in this control analysis relative to the main analysis. Figure 3c depicts the estimated stimulus-driven reinstatement effects, averaged over bootstrap samples (n = 1000) from each subject. We examined the influence of memory on scanpath reinstatement by comparing true reinstatement effects (i.e., based on scanpaths from the same subject) to the bootstrap distribution (Fig. 3e). This revealed strong main effects of memory (F(1, 64)=81.5, p < 0.0001, ηp² = 0.56), lag (F(3, 192) = 227.8, p < 0.0001, ηp² = 0.78) and direction (F(1, 64) = 211.1, p < 0.0001, ηp² = 0.77). We observed a significant three-way interaction (F(3, 192)=61.1, p < 0.0001, ηp²=0.49), as both lag and memory robustly interacted in the forward direction (F(3, 192)=70.8, p < 0.0001, ηp²=0.53), with a small effect in the reverse direction (F(3, 192)=4.1, p=0.008, ηp²=0.06). Pairwise comparisons indicated that accurate scene recognition was associated with greater memory-driven reinstatement (Fig. 3f), suggesting that the observed scanpath reinstatement was not driven by stimulus properties alone.

Compared to stimulus-driven viewing, repeated viewing of a previously viewed location (lag 0) was associated with impaired memory performance (Fig. 3f; t(66)=−9.4, p < 0.0001, g =−1.3). Given the relatively short viewing time for each scene (2.0 s), it is likely that this difference indicates viewing of salient parts of the scene (that generally attract fixations across subjects) that were not attended during initial viewing. As a result, repeated inspection of these locations would cause the subject to incorrectly identify the scene as novel. Consistent with this explanation, repeated viewing of the same location (fixations within 2° of visual angle) was more likely when fixating on novel (M=0.22, SE=0.01) compared to previously viewed (M =0.09, SE =0.006) locations, t(66)= 18.4, p < 0.0001, g = 1.9.

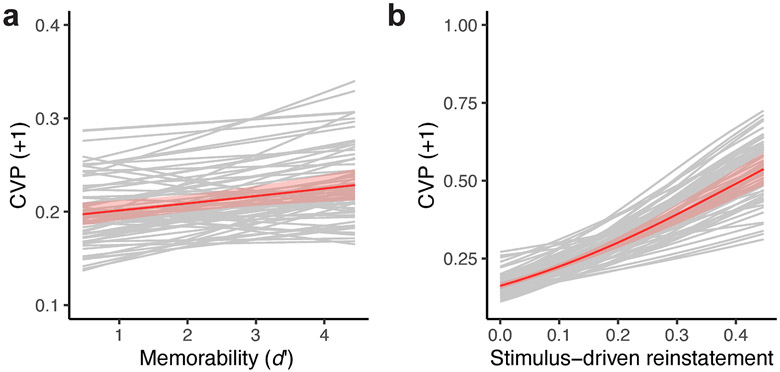

It is also possible that lapses in sustained attention could produce the observed relation between scanpath reinstatement and memory success, as failure to attend could produce both poor memory and disruptions in viewing behavior. To test this possibility, we examined the relation between scanpath reinstatement (the CVP for +1 lags) and the memorability of each scene as measured by d' in an independent set of subjects. By only evaluating the lag-CVP for trials with successful scene recognition at the group level, differences in reinstatement would be unlikely to have resulted from lapses in attention for a given subject. We also controlled for stimulus-driven reinstatement (the CVP for +1 lags), to account for intrinsic properties of each scene that explain memorability (Bainbridge et al., 2013; Bylinskii et al., 2015; Khosla, Raju, Torralba, & Oliva, 2015).

Group-level analysis revealed consistent within-subject effects of memorability on scanpath reinstatement (Fig. 4a, χ²(1) = 7.3, parametric bootstrap p = 0.007). In addition, we found strong evidence for stimulus-driven reinstatement explaining scene-level variability in scanpath reinstatement (Fig. 4b, χ²(1) = 84.4, parametric bootstrap p = 0.006). These findings suggest that in addition to consistent stimulus-driven fixation sequences, the memorability of a scene is determined by its ability to reinstate previous episodic contexts. As an attentional account relating scanpath reinstatement to scene recognition does not predict this relationship, these findings provide additional evidence that reinstatement of temporal context guides visual exploration and scene recognition during repeated viewing.

Figure 4. Scanpath reinstatement accounts for variability in scene memorability.

a, Grey lines depict the predicted effect of memorability (d’) on the conditional viewing probability (CVP) for a forward lag of one (i.e., scanpath reinstatement) for individual subjects. At the group level, depicted in red, we found a positive relation between the memorability of a scene and scanpath reinstatement. b, Variability in scanpath reinstatement explained by stimulus-driven viewing. In the same format as a, line plots depict the relation between stimulus-driven reinstatement and overall scanpath reinstatement. Shaded regions indicate SEM.

Despite the strong forward contiguity effect in this study, the lag-CVP (Fig. 3a) also demonstrated an anticontiguity effect in the backward direction (F(3, 198)=19.2, p < 0.0001, ηp²=0.23), in contrast to contiguity commonly observed in free recall (Fig. 1b). This anticontiguity effect more closely resembles serial recall behavior, where short backward transitions occur infrequently as they reflect repetition errors. Retrieved-context theory makes an important distinction between forward and backward transitions. During memory search, retrieval of temporal context provides a symmetric cue for memories encoded before or after the retrieved temporal state (Howard & Kahana, 2002). When acontextual item representations cue memory, they provide a forward cue for memories encoded after the retrieved item. Forward chaining through previous scanpaths (e.g., through asymmetric associations between items) could accurately explain the observed patterns of viewing, without the need for temporal context retrieval.

To determine whether this was the case, we focused our lag-CVP analysis on fixations where subjects first jumped forward in their viewing patterns (e.g., position 1 followed by position 4), excluding fixations to previously viewed locations. If forward-going associations determine the next location, viewing should continue along the initial viewing sequence. Alternatively, viewing could return to the skipped locations, similar to “fill-in” errors observed in serial recall (Farrell, Hurlstone, & Lewandowsky, 2013; Farrell & Lewandowsky, 2004; Osth & Dennis, 2015). When focusing on fixations following forward jumps, the lag-CVP exhibited significant contiguity (F(3, 189) = 108.8, p < 0.0001, ηp² = 0.63) and asymmetry (F(1, 63) = 4.3, p=0.04, ηp²=0.07) effects (Fig. 5a). We found significant contiguity effects in both the forward (F(3, 189)=64.9, p < 0.0001, ηp²=0.51) and backward (F(3, 189)=30.9, p < 0.0001, ηp²=0.33) directions, with greater contiguity in the forward direction (F(3, 189)= 12.9, p < 0.0001, ηp² = 0.17).

Figure 5. Scanpath reinstatement following forward jumps.

a, The plot shows the lag-CVP, after making a forward jump (lag > 1) during repeated viewing. b, The curve depicts the lag-CVP as in a, but for stimulus-driven eye movements (i.e., fixation sequences that are consistent across subjects). c, The curve indicates the standardized CVP (CVPZ, adjusted for stimulus-driven viewing) following forward jumps in the fixation sequence. Error bars denote SEM.

These results indicate that scanpaths during repeated viewing are biased in the forward direction, even when earlier locations in the sequence have not yet been viewed. This forward asymmetry was specific, however, to short lags (lags 1 and 2, t(63)=7.6, p < 0.0001, g = 0.95) with a backward asymmetry for longer lags (lags 3 and 4, t(63)=−2.6, p=0.01, g=−0.32). We did not find evidence that the asymmetry was attributable to stimulus-driven viewing (Fig. 5b), which gave rise to a significant contiguity effect (F (3, 198) = 43.3, p < 0.0001, ηp2 = 0.39), but effects of direction (F(1, 66)=2.3, p=0.13, ηp2=0.03) and interaction between contiguity and direction (F(3, 198)=1.0, p=0.40, ηp2=0.01) failed to reach significance. Adjusting the original lag-CVP for stimulus-driven viewing did not change these effects (Fig. 5c). We still observed significant contiguity effects in the forward (F (3, 189) = 37.5, p < 0.0001, ηp2 = 0.37) and backward (F (3, 189) = 4.0, p = 0.009, ηp2 = 0.06) directions. The direction of the asymmetry effect varied with lag, with forward asymmetry for short lags (lags 1 and 2, t(63)=2.2, p=0.03, g =0.28) and backward asymmetry for long lags (lags 3 and 4, t(63)=−5.5, p < 0.0001, g=−0.69). Taken together, these results indicate that the absence of backward contiguity resulted from the tendency to avoid returning to recently viewed locations. This effect could reflect inhibition of return (Posner & Cohen, 1984), which prevents revisiting of recently viewed locations. When locations at negative lags were not recently viewed, the lag-CVP more prominently displayed the contiguity effect as observed in free recall (Fig. 1b).
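
The adjustment for stimulus-driven viewing (CVPZ in Fig. 5c) can be pictured as standardizing a subject's observed transition probability against values obtained from fixation sequences that are consistent across subjects. The Python sketch below shows one simple way to express such a standardization. It is an assumption for illustration only: the use of a z-score against cross-subject values, the function name `standardized_cvp`, and the example numbers are hypothetical and are not taken from the analysis reported here.

```python
from statistics import mean, stdev


def standardized_cvp(observed, cross_subject_values):
    """Z-score an observed probability against cross-subject values.

    `cross_subject_values` stands in for a stimulus-driven estimate at the
    same lag (hypothetical input). Returns None when the cross-subject
    values have no variability, because the z-score is then undefined.
    """
    mu = mean(cross_subject_values)
    sd = stdev(cross_subject_values)
    if sd == 0:
        return None
    return (observed - mu) / sd


# Made-up numbers: an observed lag +1 probability of 0.30 compared with
# stimulus-driven estimates of 0.10, 0.12, and 0.14.
z = standardized_cvp(0.30, [0.10, 0.12, 0.14])
print(round(z, 2))  # 9.0
```

A positive standardized value under this scheme would indicate reinstatement beyond what consistent, scene-driven viewing predicts at that lag.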

Study 2

We analyzed the dataset collected by Kaspar and König (2011a). It provides eye-tracking measurements during a free-viewing task with repeated scenes belonging to different categories, including urban, nature, fractal, and pink-noise scenes (Figure 6a). Because each scene was repeated five times across experimental blocks, this experiment is ideally suited to test long-range contiguity effects predicted by retrieved-context theory. In addition, retrieved-context theory predicts that the amount of pre-experimental exposure to scene content would influence the magnitude of the forward asymmetry (Howard & Kahana, 2002). This can be tested because the four scene categories contain different amounts of semantic content, with urban and nature scenes including content with pre-experimental familiarity versus fractal and pink-noise scenes relatively devoid of such content (although certain fractals could be assigned semantic meaning (Voss & Paller, 2009)).

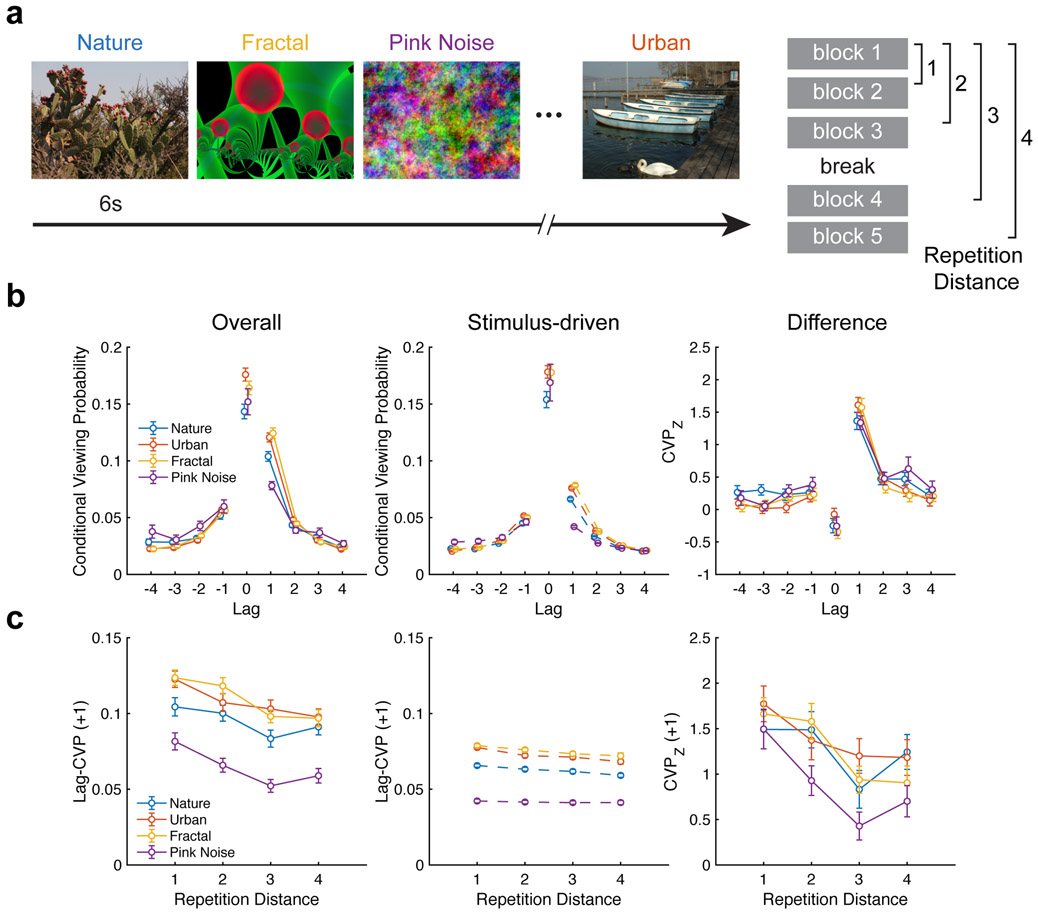

Figure 6. Influence of semantic content on scanpath reinstatement.

a, Experimental design. All scenes were presented in pseudorandom order within a block, with scenes repeated across blocks. b, Left, lag-CVP curves are plotted for each scene category, demonstrating contiguity and forward asymmetry. The forward asymmetry is reduced for repeated viewing of pink noise. Middle, stimulus-driven lag-CVP curves exhibit reduced scanpath reinstatement. Right, differences in overall and stimulus-driven lag-CVP curves reveal a consistent scanpath reinstatement effect. c, Left, line plots depict the decreasing probability of scanpath reinstatement as a function of repetition distance. Middle, the plot depicts greatly reduced magnitude of stimulus-driven CVP curves. Right, difference curves reveal the presence of long-range contiguity after accounting for stimulus effects.

As predicted, we observed a contiguity effect across all scene categories (F(3, 132)=419.2, p < 0.0001, ηp2=0.91), with no evidence of an effect of category (F(3, 132)=0.3, p=0.86, ηp2=0.01), but a significant interaction between the two factors (F(9, 396)=15.3, ηp2=0.26, p < 0.0001), predicted by retrieved-context theory to be driven by differences in the forward asymmetry (Figure 6b). To test this prediction, we computed an asymmetry measure (the difference in forward and backward probability) and found it to vary by lag (F(3, 132)=135.6, p < 0.0001, ηp2=0.75) and category (F(3, 132)=23.16, p < 0.0001, ηp2=0.34), with a significant interaction between lag and category (F(9, 396)=9.1, p < 0.0001, ηp2=0.17). Planned post-hoc tests indicated significant differences in lag-one asymmetry between pink noise and urban (t(44)=4.5, p < 0.0001, g=0.89), nature (t(44)=6.1, p < 0.0001, g = 1.27), and fractal (t(44)=7.8, p < 0.0001, g=1.32) scenes. These findings are consistent with the prediction that pre-experimental exposure to scene content increases scanpath reinstatement.
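
The asymmetry measure described above is the difference between forward and backward transition probabilities at each absolute lag. A minimal Python sketch follows. The dictionary representation of a lag-CVP curve (signed lag mapped to probability), the function name `asymmetry_by_lag`, and the example values are assumptions made for illustration.

```python
def asymmetry_by_lag(cvp, max_lag=4):
    """Forward minus backward probability at each absolute lag.

    `cvp` maps signed lags (e.g., -4..-1 and 1..4) to probabilities.
    Lags missing in either direction are skipped.
    """
    return {
        lag: cvp[lag] - cvp[-lag]
        for lag in range(1, max_lag + 1)
        if lag in cvp and -lag in cvp
    }


# Made-up curve with a forward bias at lag 1 and near symmetry at lag 2.
curve = {-2: 0.08, -1: 0.10, 1: 0.30, 2: 0.09}
asym = asymmetry_by_lag(curve)
print({lag: round(value, 2) for lag, value in asym.items()})  # {1: 0.2, 2: 0.01}
```

Positive values indicate forward asymmetry and negative values indicate backward asymmetry, so the lag-one entry corresponds to the quantity compared across scene categories in the post-hoc tests.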

As was the case for our analysis of Study 1, it is possible that scanpath reinstatement was stimulus-driven rather than memory-driven. Therefore, we computed stimulus-driven reinstatement effects (Figure 6b, middle panel) that exhibited contiguity, with a significant main effect of lag (F (3, 132) = 1555.4, p < 0.0001, ηp2=0.97) and scene category (F(3, 132)=20.2, p < 0.0001, ηp2=0.31), and a significant interaction between lag and category (F(9, 396)=199.4, p < 0.0001, ηp2=0.81). When comparing the overall pattern of contiguity to stimulus-driven effects (Fig. 6b, right panel), the influence of scene category was greatly reduced. Indeed, while the effect of lag on transition probability was still present (F(3, 132)=52.2, p < 0.0001, ηp2=0.54), there was no longer a main effect of category (F (3, 132) = 1.6, p=0.18, ηp2=0.04) or an interaction between category and lag (F(9, 396)=1.14, p=0.33, ηp2=0.03). These findings confirm the influence of scene semantics on the forward asymmetry, which combines with memory-driven scanpath reinstatement to produce the overall contiguity effect.

In addition to accounting for category-level differences in contiguity, stimulus-driven reinstatement produced forward asymmetry (Fig. 6b, middle panel), with significant main effects of lag (F(3, 132)=308.3, p< 0.0001, ηp2=0.88) and category (F (3, 132) = 44.7, p < 0.0001, ηp2=0.50), with strong evidence for an interaction between the two (F(9, 396)=80.5, p < 0.0001, ηp2=0.65). Indeed, planned post-hoc comparisons identified significant differences between pink noise and all other categories including nature (t(44)=9.4, p < 0.0001, g=1.67), urban (t(44)= 11.0, p < 0.0001, g=2.09), and fractal (t(44)= 11.0, p < 0.0001, g=2.16) scenes. These results show that stimulus-driven effects drive eye movements in predictable sequences, contributing to the forward asymmetry observed during repeated viewing.

After accounting for the stimulus-driven reinstatement, category-level differences in the asymmetry effect were reduced (Fig. 6b, right panel). We no longer found significant variation by category (F(3, 132)=1.1, p=0.35, ηp2=0.02); however, we still observed an effect of lag (F(3, 132)=51.6, p < 0.0001, ηp2=0.54) and an interaction of category by lag (F(9, 396)=1.98, p=0.04, ηp2=0.04). Pairwise comparisons of the asymmetry effect between pink noise and each other category no longer survived correction for multiple comparisons (nature, t(44)=0.65, p=0.52, g=0.15; urban, t(44)=2.39, p=0.02, g=0.48; fractal, t(44)=2.3, p=0.03, g=0.39). As such, category-level differences in the forward asymmetry can be primarily attributed to stimulus-driven fixation sequences that are reliable across subjects. Yet memory-driven scanpath reinstatement was present across all scene categories. These findings are somewhat counter to retrieved-context theory, which would predict more robust interactions between temporal context and semantic knowledge during encoding to produce the forward asymmetry. Instead, they suggest that the influence of memory on scanpath reinstatement was relatively constant across scene categories, which differed in the extent that their perceptual or conceptual properties influenced contiguity effects.

Retrieved-context models predict that upon repeated viewing of a scene, scanpaths from recent viewing experiences are more likely to be reinstated. To test this prediction, we examined how scanpath reinstatement changed over multiple scene repetitions. That is, we tested for long-range contiguity effects across multiple presentations of the same scene. The left panel of Figure 6c depicts the average probability of making a forward lag-one transition for different repetition distances. We found the probability of making a forward transition varied with category (F(3, 132)=48.1, p < 0.0001, ηp2=0.52) and repetition distance (F(3, 132)=23.3, p < 0.0001, ηp2=0.35). We found no evidence for an interaction between these two factors (F(9, 396)=0.9, p=0.49, ηp2=0.02). Consistent with the results of Study 1, these findings suggest that subjects are more likely to reinstate scanpaths made during the most recent presentation of a scene, which is consistent with a memory interpretation of scanpath reinstatement.
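
The repetition-distance analysis can be sketched as follows. For every pair of viewings of the same scene, the sketch computes how often a transition in the later viewing repeats a forward lag-one transition from the earlier viewing, and then averages these rates by the number of blocks separating the two viewings. This is a simplified illustration, not the procedure used here: it assumes fixations have been discretized into region labels, it reports a raw rate rather than a conditional probability, and the function names are hypothetical.

```python
from collections import defaultdict


def forward_lag1_rate(earlier, later):
    """Fraction of transitions in `later` that repeat an adjacent
    (forward lag-one) pair from `earlier`. Returns None if `later`
    contains no transitions."""
    earlier_pairs = set(zip(earlier, earlier[1:]))
    transitions = list(zip(later, later[1:]))
    if not transitions:
        return None
    return sum(t in earlier_pairs for t in transitions) / len(transitions)


def reinstatement_by_distance(viewings):
    """Average forward lag-one rate for each repetition distance.

    `viewings` holds one fixation sequence per block, in presentation
    order, for a single scene (region labels are hypothetical).
    """
    rates = defaultdict(list)
    for i in range(len(viewings)):
        for j in range(i + 1, len(viewings)):
            rate = forward_lag1_rate(viewings[i], viewings[j])
            if rate is not None:
                rates[j - i].append(rate)
    return {dist: sum(vals) / len(vals) for dist, vals in sorted(rates.items())}


# Three made-up viewings of one scene.
viewings = [["A", "B", "C", "D"], ["A", "B", "C", "E"], ["A", "B", "D", "E"]]
print(reinstatement_by_distance(viewings))
```

With five presentations per scene, as in this dataset, the same pairing yields repetition distances of one to four blocks, and a decline in the averaged rate with distance would correspond to the long-range contiguity effect.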

Are these long-range contiguity effects explained by stimulus-driven reinstatement? We re-examined long-range contiguity effects using our measure of stimulus-driven reinstatement (Fig. 6c, middle). In contrast to the forward asymmetry, long-range contiguity effects (i.e., decreases in contiguity with repetition distance) were smaller when measured from stimulus-driven reinstatement. We found reduced effects of repetition distance on reinstatement (F(3, 132)=18.9, p < 0.0001, ηp2=0.3), with a robust main effect of category (F(3, 132)=383.4, p < 0.0001, ηp2=0.90) and a modest interaction between the two factors (F (9, 396) = 2.3, p = 0.02, ηp2 = 0.05). After controlling for the effect of stimulus-driven reinstatement (Fig. 6c, right), there was only a weak effect of category (F (3, 132) = 3.9, p = 0.01, ηp2 = 0.08). The effect of repetition distance remained (F(3, 132)=17.5, p < 0.0001, ηp2=0.28), with no evidence for an interaction (F (9, 396) = 1.0, p = 0.46, ηp2 = 0.02). These findings provide additional evidence for reinstatement of temporal context as a mechanism to guide eye movements during free viewing of repeated scenes.

Study 3

We analyzed data from Kaspar and König (2011b). This study originally examined the influence of scene complexity on the ability of low-level features to predict gaze allocation during repeated viewing of urban scenes. The experimental procedure followed that of Study 2, with repeated presentation of 10 urban scenes of low, medium, and high complexity (Figure 7a) across 5 blocks. Here, we use this study as an additional, independent test of the role of retrieved temporal context in gaze allocation during repeated viewing. We repeat the same analysis procedure as in Study 2 to examine predictions of the retrieved-context model regarding the forward asymmetry and long-range contiguity in scanpath reinstatement. We predicted that because medium-complexity scenes have greater ecological validity and pre-experimental exposure (Kaspar & König, 2011b), fixation sequences during repeated viewing of these scenes would produce the greatest forward asymmetry. As in the analyses described above, we additionally examined the extent to which reinstatement could be accounted for by stimulus-driven, rather than memory-driven, processes.

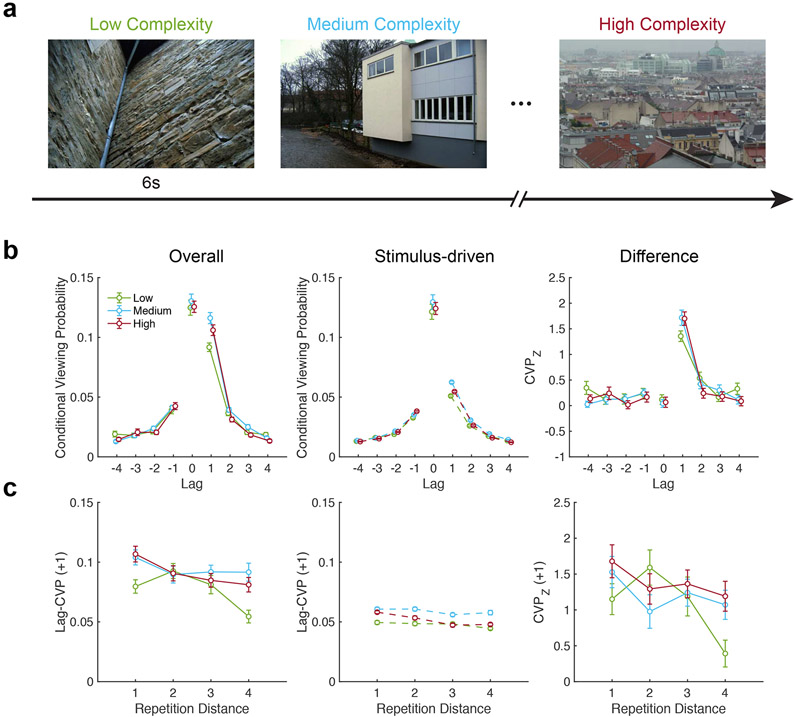

Figure 7. Influence of scene complexity on scanpath reinstatement.

a, Experimental design. Urban scenes of low, medium, and high complexity were repeated across five blocks. b, Left, lag-CVP curves are plotted for each level of visual complexity, demonstrating contiguity and forward asymmetry. Middle, stimulus-driven CVP curves reveal the effects of scene semantics on scanpath reinstatement. Right, z-scored lag-CVP curves show the remaining effects of episodic memory after adjusting for stimulus-driven effects on scanpaths. c, Left, line plots depict the decreasing probability of reinstating the scanpath as a function of repetition distance. Middle, lines depict a reduced long-range contiguity effect generated by stimulus properties. Right, the curves depict the adjusted long-range contiguity effect, which is preserved after controlling for scanpath reinstatement that is observed across subjects.

We observed both contiguity and forward-asymmetry effects in fixation sequences made in this study (Fig. 7b, left). Analysis of fixation probabilities to previously viewed locations revealed main effects of lag (F(3, 99)=541.5, p < 0.0001, ηp2=0.94) and scene complexity (F(2, 66)=5.5, p=0.006, ηp2=0.14), with a significant interaction between lag and complexity (F (6, 198) = 7.1, p < 0.0001, ηp2=0.18). We further assessed whether asymmetry varied with scene complexity and found significant main effects of complexity (F(2, 66)=9.4, p=0.0003, ηp2=0.22) and lag (F(3, 99)=230.9, p < 0.0001, ηp2=0.88), with interaction between these two factors (F(6, 198)=2.5, p=0.02, ηp2=0.07). We evaluated differences in asymmetry between levels of scene complexity, and found differences between medium- and low-complexity scenes (t(33)=3.2, p=0.003, g=0.73) but not between high- and low-complexity scenes (t(33) = 1.8, p = 0.08, g = 0.38). We also did not find strong evidence for differences in the forward asymmetry between medium and high levels of complexity (t(33)=1.8, p=0.08, g=0.41). These findings suggest that differences in scanpath reinstatement do not directly follow scene complexity and may be driven by scene context or meaning.

As in Study 2, stimulus-driven lag-CVP curves indicated both contiguity and asymmetry effects (Fig. 7b, middle). The lag-CVP varied with lag (F(3, 99)=2987.8, p < 0.0001, ηp2=0.99) and complexity (F(2, 66)=39.9, p < 0.0001, ηp2=0.55) with significant interaction of the two (F(6, 198)=28.7, p < 0.0001, ηp2=0.46). The stimulus-driven forward asymmetry varied with scene complexity (F(2, 66)=37.5, p < 0.0001, ηp2=0.53) and lag (F(3, 99)=557.8, p < 0.0001, ηp2=0.94), with interaction between the two (F (6, 198) = 13.6, p < 0.0001, ηp2=0.29). Our estimates of reinstatement caused by stimulus-driven factors revealed pairwise differences in the forward asymmetry across levels of scene complexity. We found medium-complexity scenes had the largest forward asymmetry, significantly greater than high-complexity (t(33) = 10.0, p < 0.0001, g = 2.02) and low-complexity (t(33) = 6.5, p < 0.0001, g = 1.34) scenes. Low-complexity scenes produced forward asymmetries of similar magnitude to high-complexity scenes (t(33) = 1.3, p = 0.2, g = 0.31). These findings suggest that stimulus properties of scenes with the most ecological validity (i.e., pre-experimental exposure and associated semantic knowledge) account for category-level differences in the tendency to reinstate scanpaths.

After controlling for stimulus-driven viewing patterns (Fig. 7b, right), differences in the forward asymmetry related to scene complexity were no longer present (F (2, 66) = 1.1, p = 0.33, ηp2 = 0.03), although the asymmetry still varied with lag (F(3, 99)=65.4, p < 0.0001, ηp2=0.66), reflecting a memory-driven reinstatement effect. We found no evidence for an interaction between these two factors (F(6, 198)=1.1, p=0.39, ηp2=0.03). The overall contiguity effect persisted after accounting for stimulus-driven reinstatement. We found a strong main effect of lag (F(3, 99)=63.3, p < 0.0001, ηp2=0.66), no evidence for an effect of scene complexity (F(2, 66)=0.7, p=0.51, ηp2=0.02), and significant interaction of complexity with lag (F(6, 198)=2.9, p=0.01, ηp2=0.08). These findings replicate the results from Study 2 and reveal unique contributions of stimulus- and memory-driven processes to visual exploration under free viewing.

In our final test of a context-based account of scanpath reinstatement, we examined whether contiguity decreased with the distance between scene repetitions. Consistent with a role of temporal context in driving scanpaths during repeated viewing, we observed an effect of repetition distance on gaze reinstatement (Fig. 7c, left), with greater transition probabilities for more recent encounters. We found reinstatement varied with repetition distance (F(3, 99)=6.8, p=0.0003, ηp2=0.17) and complexity (F(2, 66)=6.7, p=0.002, ηp2=0.17), with significant interaction between the two (F(6, 198)=2.9, p=0.01, ηp2=0.08). As in Study 2, this effect could not be explained by stimulus-driven reinstatement (Fig. 7c, middle). Despite reduced long-range contiguity effects produced by stimulus-driven reinstatement, we still observed reliable effects of repetition distance (F(3, 99)=12.2, p < 0.0001, ηp2=0.27) and scene complexity (F(2, 66)=51.2, p < 0.0001, ηp2=0.61), as well as interaction between these factors (F(6, 198) = 3.5, p = 0.003, ηp2 = 0.10).

After controlling for stimulus-driven fixations (Fig. 7c, right), we still found evidence for a long-range contiguity effect. Scanpath reinstatement was influenced by repetition distance (F(3, 99)=4.3, p=0.007, ηp2=0.12), but not scene complexity (F(2, 66) = 1.5, p=0.24, ηp2=0.04), with interaction between these two factors (F(6, 198)=2.4, p=0.03, ηp2=0.07). These results replicate the long-range contiguity effects found in Study 2 and provide further evidence that a representation of temporal context influences how individuals explore scenes.
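
The partial eta-squared values reported with each F statistic in these analyses can be cross-checked from the F value and its degrees of freedom, using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short Python sketch below applies this identity to three statistics reported above. The function name `partial_eta_squared` is our own label for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its
    degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Statistics reported in the text: F(3, 198)=19.2, F(3, 99)=541.5,
# and F(3, 99)=4.3.
print(round(partial_eta_squared(19.2, 3, 198), 2))   # 0.23
print(round(partial_eta_squared(541.5, 3, 99), 2))   # 0.94
print(round(partial_eta_squared(4.3, 3, 99), 2))     # 0.12
```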

Discussion

We found that multiple predictions of retrieved-context models of episodic memory (Howard & Kahana, 2002; Lohnas et al., 2015; Polyn et al., 2009; Sederberg et al., 2008) apply to viewing behavior during the repetition of complex scenes. Scanpaths demonstrated strong contiguity effects with forward asymmetry. These forward-asymmetrical contiguity effects predicted memory performance, including accurate recognition responses and variability in memorability across scenes. Moreover, as would be expected for a memory signal, the contiguity effects decayed with increasing delay between consecutive presentations of the same scene. Finally, contiguity effects increased in magnitude with knowledge of scene semantics. All of these properties are predicted by retrieved-context models, developed from list-learning experiments that preclude interaction with or free exploration of memoranda. We therefore conclude that visual exploration of repeated scenes is guided by the same cognitive systems that support episodic memory function.

Our findings highlight scanpath contiguity as a relevant measure of experience-dependent changes in visual exploration. It is well established that prior viewing of a scene (Ryan, Althoff, Whitlow, & Cohen, 2000; Ryan, Hannula, & Cohen, 2007; Smith, Hopkins, & Squire, 2006; Smith & Squire, 2008) or face (Althoff & Cohen, 1999) influences visual exploration. Repeated viewing produces fewer fixations that are longer in duration, focusing fixations to a smaller viewing area. Such repeated viewing effects are thought to depend on the hippocampus particularly when they reflect successful relational binding, such as when scene content must be appreciated from multiple viewpoints (Olsen et al., 2016) or when viewing reflects appreciation that relationships among objects have changed across repetitions (Ryan et al., 2000; Smith et al., 2006; Smith & Squire, 2008, 2018). These studies provide consensus regarding the role of the hippocampus in rapidly binding the elements of a scene (Cohen et al., 1999; Davachi, 2006; Olsen, Moses, Riggs, & Ryan, 2012). However, there is a great deal of controversy regarding whether relational viewing effects can occur without awareness of memory retrieval (Hannula & Ranganath, 2009; Ryan et al., 2000), or whether such viewing effects reflect typical expressions of explicit memory that support recognition judgments (Smith & Squire, 2008, 2018; Urgolites, Smith, & Squire, 2018).

It is noteworthy that we observed scanpath reinstatement even without accurate recognition (i.e., on miss trials) in Study 1. This could indicate scanpath reinstatement may occur without memory awareness. However, reinstatement was significantly greater with accurate recognition (i.e., on hit trials) and therefore likely reflected explicit memory. It is possible that explicit memory occurred even on miss trials, but below the threshold required to endorse the scene as old. In addition, retrieval of temporal context may lead to scanpath reinstatement, but failure in recognition or shifts in attention to previously unexplored regions of the scene could still cause subjects to endorse a scene as novel. This behavior suggests that retrieval of temporal context contributes to scene recognition by guiding fixations to previously viewed locations in the encoding sequence. If recognition decisions are based upon evidence accumulated throughout viewing, scenes with greater scanpath reinstatement would likely generate aware recognition because fixations are focused to previously viewed content. Future studies using more nuanced subjective estimates of memory strength (e.g., Ramey, Yonelinas, and Henderson 2019) could be used to help determine whether the influence of temporal context on visual exploration occurs with or without awareness of memory retrieval.

The forward-asymmetrical contiguity effects provide evidence in support of the scanpath theory of scene retrieval (Noton & Stark, 1971b). Previous experiments have shown that repeated viewing of scenes leads to increased scanpath similarity (Foulsham & Underwood, 2008). However, scanpath similarity has typically been assessed using metrics of the aggregate distance between visual fixations during repeated versus initial viewing (Foulsham & Underwood, 2008; Le Meur & Baccino, 2013; Underwood, Foulsham, & Humphrey, 2009; Wynn et al., 2016). These measures do not directly assess the order of visual fixations and therefore have not identified temporal reinstatement. Thus, our finding that fixation sequences are recapitulated during repeated viewing provides novel support for scanpath theory, and recent models of gaze reinstatement (Wynn et al., 2019) that suggest eye movements play a functional role in retrieval by reinstating the spatial and temporal context present during initial encoding. Determining whether retrieval of temporal context plays a causal role in scanpath reinstatement (e.g., via stimulation of hippocampal circuits involved in temporal reinstatement (Kragel et al., 2015)) and understanding the behaviors that trigger retrieval of temporal context during exploration are important topics for future research.

Our results highlight the role of memory in controlling viewing behavior. Despite the success of salience-based models in explaining viewing (Itti & Koch, 2001), many models have demonstrated the need to account for top-down influences of higher-order cognitive processing such as semantic meaning (Henderson & Hayes, 2017), task goals (Borji & Itti, 2014; Castelhano et al., 2009; Henderson, Shinkareva, Wang, Luke, & Olejarczyk, 2013), and scene context (Torralba et al., 2006). Notably, we found that when we controlled for fixation sequences that were generated from low-level visual properties and scene content (i.e., fixation sequences that were consistent across individuals), robust forward-asymmetrical contiguity effects remained. Thus, this pattern rules out the possibility that additional processes account for the observed scanpath reinstatement, such as guidance of early fixations by semantic gist of the scene (Castelhano & Henderson, 2007). In addition, scanpath initiation from central fixation to the first fixation, often described as an automatic or bottom-up process (Anderson, Ort, Kruijne, Meeter, & Donk, 2015; Einhäuser et al., 2008; Foulsham & Underwood, 2008; Mackay, Cerf, & Koch, 2012; Parkhurst, Law, & Niebur, 2002), is controlled for by this analysis. While this approach rules out the influence of low-level factors that are consistent across subjects, individual differences in stimulus-driven viewing could produce spurious memory effects. However, individual differences cannot explain the increase in temporal reinstatement during correct recognition, or recency effects observed across multiple viewings of the same scene (i.e., because memory varies with lag but, presumably, scene-specific idiosyncratic viewing preferences would not). Taken together, our findings suggest that retrieved-context models contain the computational mechanisms necessary to help explain the influence of memory on visual exploration, which is a critical component of any complete model of how individuals visually explore complex scenes.

Although most scanpath reinstatement effects that we report were memory-related and not merely stimulus-driven, the effects of scene content and pre-experimental familiarity on scanpath reinstatement were primarily stimulus-driven. That is, differences in the forward asymmetry between scenes of different categories in Study 2 and Study 3 were markedly reduced after controlling for stimulus-driven fixation sequences that were common across subjects, and therefore were not memory related. However, according to retrieved-context theory, retrieving the semantic meaning of presented words (i.e., pre-experimental context, with words that occur in similar contexts having similar representations (Howard & Kahana, 2002; Polyn et al., 2009)) during memory search is necessary to produce forward asymmetry in recall.

Without this pre-experimental knowledge, contiguity would be symmetrical in nature. After accounting for stimulus-driven viewing, the magnitude of the forward asymmetry was comparable across scenes of different content, including those with no pre-experimental familiarity (i.e., pink noise in Study 2). One possible explanation for this apparent discrepancy is the difference in external retrieval cues across these two paradigms. During scene recognition, visual inputs provide multiple sensory cues for viewing whereas there is an absence of external cues during free recall. Therefore, pre-experimental knowledge of scene semantics might guide eye movements from one meaningful scene feature to the next without the need for episodic retrieval. Alternatively, it is possible that our approach for controlling stimulus-driven eye movements was overly conservative, given that some fixation sequences might have been guided by episodic retrieval yet been attributed to stimulus properties if they were of a typical pattern for a given scene. In either case, our findings in total demonstrate that familiarity with scene content produces consistent fixation sequences across repeated viewing and suggest that memory yields recapitulation of fixation sequences beyond what would be expected given only scene content.

Scanpath contiguity was robust across three independent datasets that varied in a number of key factors, including whether they involved an overt recognition memory task (Study 1) versus free viewing (Studies 2 and 3). Thus, the predictions of retrieved-context models for eye-movement behavior are highly reproducible and robust to even major differences in experimental demands. However, differences between these studies provide insight into the factors that may influence temporal context retrieval. In Study 1, the lag-CVP curve showed an anticontiguity effect in the backward direction. In contrast, Studies 2 and 3 revealed expected contiguity effects, irrespective of direction. Both electrical stimulation of the entorhinal cortex (Goyal et al., 2018) and medial temporal lobe amnesia (Palombo, Di Lascio, Howard, & Verfaellie, 2019) disrupt backward transitions, suggesting intact hippocampal function is necessary to retrieve temporal context. Reduced backward contiguity in Study 1 may reflect viewing driven by items within the scene more so than retrieved temporal context. Alternatively, the influence of retrieved context may be at odds with attentional processes that promote exploration of the scene (Klein & MacInnes, 1999), inhibiting saccades to previously viewed locations. After examining the lag-CVP following forward jumps in fixation sequences, we found contiguity in both forward and backward directions, indicating guidance of fixations by temporal context.

Comparing contiguity effects observed in the present work with those observed in free recall suggests that temporal context may play a greater role in recall organization than visual exploration. Specifically, the probability of lag 1 transitions was nearly three times greater in free recall (Fig. 1b) than scene viewing. While it is tempting to interpret these differences as evidence for a greater role of temporal context in recall, there are several factors that complicate this comparison. In free recall, no sensory information competes with memory to recall items and influence contiguity. During repeated viewing, on the other hand, previously unexplored content can become the focus of attention, driving down reinstatement. With this in mind, it is worth considering whether scanpath reinstatement depends upon visual inputs or occurs during recall-like behaviors such as mental imagery. Previous studies (Brandt & Stark, 1997; Laeng & Teodorescu, 2002) have shown that scanpaths made during initial viewing are recapitulated during the mental imagery of the encoded content. This reinstatement predicted the accuracy of the recalled image, and when eye movements during imagery were restricted (i.e., central fixation was required) memory performance suffered. While the functional relevance of eye movements at retrieval has been challenged (Johansson, Holsanova, Dewhurst, & Holmqvist, 2012), it is clear that scanpath reinstatement can occur in the absence of sensory inputs. Just as retrieved temporal context can explain behavioral performance on recall and recognition tasks alike (Healey & Kahana, 2016), our findings suggest it can account for scanpath reinstatement during scene recognition and imagery alike.

Findings from Study 1 highlight an important factor in determining the memorability of scenes: the ability to accurately reinstate a previously encoded sequence of eye movements. Memorability of a scene is determined by multiple factors, including intrinsic factors such as contextual distinctiveness (Bylinskii et al., 2015), and extrinsic factors such as the typicality of eye movements made during viewing (Bylinskii et al., 2015; Khosla et al., 2015). Prior studies of scene recognition (Schwartz et al., 2005) and verbal free recall (Sederberg, Miller, Howard, & Kahana, 2010) have shown that temporal contiguity effects between items predict successful memory performance. Experiments of this nature typically examine temporal associations between sequentially presented stimuli, limiting the ability to observe temporal contiguity at faster timescales. In contrast, eye movements provide assessment of contiguity at faster timescales. Given contiguity effects in verbal free recall are disrupted with fast presentation rates (Toro-Serey, Bright, Wyble, & Howard, 2019), it is possible that cycles of the theta rhythm (4-8 Hz) are responsible for generating contiguity.

Memory-related eye movements during successful encoding (Jutras, Fries, & Buffalo, 2013) and retrieval (Kragel et al., 2020) are phase-locked to hippocampal theta, suggesting this may be the case. Our results provide behavioral evidence that the same cognitive systems that provide episodic structure to our memories do so irrespective of the manner in which memory is encoded and expressed. That is, just as the temporal context model can describe the dynamics of verbal free recall after the passive encoding of word lists, it can describe the repetition of complex patterns of visual fixations that are made as individuals encode complex visual stimuli under free-viewing conditions. A stronger test of this hypothesis requires examining the neural systems that support context reinstatement during repeated viewing. Investigations using functional MRI (Hsieh, Gruber, Jenkins, & Ranganath, 2014; Kragel et al., 2015), intracranial EEG (Manning, Polyn, Baltuch, Litt, & Kahana, 2011) and single unit recordings (Folkerts, Rutishauser, & Howard, 2018; Howard et al., 2012) suggest that the hippocampus and surrounding entorhinal and parahippocampal regions are critical for the reinstatement of temporal context in list-learning experiments. Characterizing the involvement of these brain structures in gaze control during scene recognition is critical to understanding how scanpath reinstatement occurs. Such data could account for involvement of the hippocampus in memory-guided eye movements (Kragel et al., 2020; Voss et al., 2017), with retrieved-context theory providing a link between seemingly disparate verbal recall and visual exploration behaviors.

In addition to facilitating accurate scene recognition, retrieval of temporal context could explain behaviors where memory interacts with oculomotor control. For example, it has been shown that overlap in visual exploration of configurally similar scenes is associated with an increased sense of familiarity and hippocampal activity (Ryals, Wang, Polnaszek, & Voss, 2015). Thus, viewing a perceptually similar scene may trigger episodic retrieval of prior viewing, leading to scanpath reinstatement. Reinstatement driven by perceptual similarity does not always lead to accurate memory performance. When visual information is degraded or incomplete, individuals are likely to falsely endorse a perceptually similar lure scene as old when gaze is similarly allocated across the two viewing experiences (Wynn, Ryan, & Buchsbaum, 2020). These findings suggest that episodic memory systems generate predictions of where to direct eye movements based on prior experience, especially when sensory information is insufficient to accomplish current goals.

We have provided evidence that retrieval of temporal context accounts for exploratory behavior as individuals interact with a previously viewed scene. These findings highlight the contributions of episodic memory systems to ongoing, exploratory behavior. Episodic memory systems that support temporal context thus may play a role in a broader array of cognitive functions than typically appreciated. Even though we have focused on eye tracking as a tool to monitor episodic memory function (Hannula et al., 2010), any temporally extended environmental action could be biased by memory-based predictions. Although future work is required to understand the neural basis of scanpath reinstatement (cf. Bone et al., 2018) and its similarity to episodic recall, we show that even the simple act of exploring a visual scene produces nuanced behavioral signatures predicted by retrieved-context models of episodic memory.

Context

This work represents a new direction in our ongoing efforts to understand how episodic memory operates during visual exploration. We demonstrate that visual exploration of the environment is influenced by the same processes that provide temporal organization to our memories. These findings help explain the functional relevance of eye movements during retrieval, allowing memory-guided exploration to support recognition of past experiences. From a practical standpoint, scanpath reinstatement has the potential to serve as a tool to understand memory dysfunction when explicit memory is impaired and difficult to study. Further, it may be useful to measure changes in memory function following modulation of hippocampal networks. As a behavioral target, contiguity effects in eye movements will allow computational models to consider visual exploration at fixation-level time scales in a way that has not previously been possible.

Acknowledgments

This work was supported by National Institute of Neurological Disorders and Stroke grant T32NS047987.

Footnotes

Three subjects were excluded from this analysis because long-range transitions were not possible after forward jumps.

References

- Althoff RR, & Cohen NJ (1999). Eye-movement-based memory effect: a reprocessing effect in face perception. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25 (4), 997.

- Anderson NC, Ort E, Kruijne W, Meeter M, & Donk M (2015). It depends on when you look at it: Salience influences eye movements in natural scene viewing and search early in time. Journal of Vision, 15 (5), 9–9.

- Bainbridge WA, Isola P, & Oliva A (2013). The intrinsic memorability of face photographs. Journal of Experimental Psychology: General, 142 (4), 1323–1334. doi: 10.1037/a0033872

- Bone MB, St-Laurent M, Dang C, McQuiggan DA, Ryan JD, & Buchsbaum BR (2018). Eye movement reinstatement and neural reactivation during mental imagery. Cerebral Cortex, 29 (3), 1075–1089.

- Borji A, & Itti L (2014). Defending Yarbus: Eye movements reveal observers’ task. Journal of Vision, 14 (3), 29–29.

- Brandt SA, & Stark LW (1997). Spontaneous eye movements during visual imagery reflect the content of the visual scene. Journal of Cognitive Neuroscience, 9 (1), 27–38.

- Bridge DJ, Cohen NJ, & Voss JL (2017). Distinct hippocampal versus frontoparietal network contributions to retrieval and memory-guided exploration. Journal of Cognitive Neuroscience, 29 (8), 1324–1338.

- Brunet N, Bosman CA, Roberts M, Oostenveld R, Womelsdorf T, De Weerd P, & Fries P (2015). Visual cortical gamma-band activity during free viewing of natural images. Cerebral Cortex, 25 (4), 918–926.

- Buswell GT (1935). How people look at pictures: a study of the psychology and perception in art.

- Bylinskii Z, Isola P, Bainbridge C, Torralba A, & Oliva A (2015). Intrinsic and extrinsic effects on image memorability. Vision Research, 116, 165–78. doi: 10.1016/j.visres.2015.03.005

- Castelhano MS, & Henderson JM (2007). Initial scene representations facilitate eye movement guidance in visual search. Journal of Experimental Psychology: Human Perception and Performance, 33 (4), 753.

- Castelhano MS, Mack ML, & Henderson JM (2009). Viewing task influences eye movement control during active scene perception. Journal of Vision, 9 (3), 6–6.

- Cohen NJ, Ryan J, Hunt C, Romine L, Wszalek T, & Nash C (1999). Hippocampal system and declarative (relational) memory: summarizing the data from functional neuroimaging studies. Hippocampus, 9 (1), 83–98.

- Davachi L (2006). Item, context and relational episodic encoding in humans. Current Opinion in Neurobiology, 16 (6), 693–700.

- Eichenbaum H (2017). On the integration of space, time, and memory. Neuron, 95 (5), 1007–1018.

- Einhäuser W, Rutishauser U, & Koch C (2008). Task-demands can immediately reverse the effects of sensory-driven saliency in complex visual stimuli. Journal of Vision, 8 (2), 2–2.

- Farrell S, Hurlstone MJ, & Lewandowsky S (2013). Sequential dependencies in recall of sequences: Filling in the blanks. Memory & Cognition, 41 (6), 938–952.

- Farrell S, & Lewandowsky S (2004). Modelling transposition latencies: Constraints for theories of serial order memory. Journal of Memory and Language, 51 (1), 115–135.

- Folkerts S, Rutishauser U, & Howard MW (2018). Human episodic memory retrieval is accompanied by a neural contiguity effect. Journal of Neuroscience, 38 (17), 4200–4211.

- Foulsham T, & Kingstone A (2013). Fixation-dependent memory for natural scenes: An experimental test of scanpath theory. Journal of Experimental Psychology: General, 142 (1), 41.

- Foulsham T, & Underwood G (2008). What can saliency models predict about eye movements? Spatial and sequential aspects of fixations during encoding and recognition. Journal of Vision, 8 (2), 6–6.

- Goyal A, Miller J, Watrous AJ, Lee SA, Coffey T, Sperling MR, … others (2018). Electrical stimulation in hippocampus and entorhinal cortex impairs spatial and temporal memory. Journal of Neuroscience, 38 (19), 4471–4481.

- Halekoh U, & Højsgaard S (2014). A Kenward-Roger approximation and parametric bootstrap methods for tests in linear mixed models – the R package pbkrtest. Journal of Statistical Software, 59 (9), 1–30.

- Hannula DE, Althoff RR, Warren DE, Riggs L, Cohen NJ, & Ryan JD (2010). Worth a glance: using eye movements to investigate the cognitive neuroscience of memory. Frontiers in Human Neuroscience, 4, 166.

- Hannula DE, & Ranganath C (2009). The eyes have it: hippocampal activity predicts expression of memory in eye movements. Neuron, 63 (5), 592–599.

- Healey MK, & Kahana MJ (2016). A four-component model of age-related memory change. Psychological Review, 123 (1), 23.

- Healey MK, Long NM, & Kahana MJ (2019). Contiguity in episodic memory. Psychonomic Bulletin & Review, 26 (3), 699–720.

- Henderson JM, & Hayes TR (2017). Meaning-based guidance of attention in scenes as revealed by meaning maps. Nature Human Behaviour, 1 (10), 743–747. doi: 10.1038/s41562-017-0208-0

- Henderson JM, Shinkareva SV, Wang J, Luke SG, & Olejarczyk J (2013). Predicting cognitive state from eye movements. PloS One, 8 (5), e64937.

- Hollingworth A, Richard AM, & Luck SJ (2008). Understanding the function of visual short-term memory: transsaccadic memory, object correspondence, and gaze correction. Journal of Experimental Psychology: General, 137 (1), 163.

- Howard MW, & Kahana MJ (1999). Contextual variability and serial position effects in free recall. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25 (4), 923–41.

- Howard MW, & Kahana MJ (2002). A distributed representation of temporal context. Journal of Mathematical Psychology, 46 (3), 269–299. doi: 10.1006/jmps.2001.1388

- Howard MW, Shankar KH, Aue WR, & Criss AH (2015). A distributed representation of internal time. Psychological Review, 122 (1), 24–53. doi: 10.1037/a0037840

- Howard MW, Viskontas IV, Shankar KH, & Fried I (2012). Ensembles of human MTL neurons “jump back in time” in response to a repeated stimulus. Hippocampus, 22 (9), 1833–1847.

- Howard MW, Youker TE, & Venkatadass VS (2008). The persistence of memory: Contiguity effects across hundreds of seconds. Psychonomic Bulletin & Review, 15 (1), 58–63.

- Hsieh L-T, Gruber MJ, Jenkins LJ, & Ranganath C (2014). Hippocampal activity patterns carry information about objects in temporal context. Neuron, 81 (5), 1165–1178.

- Itti L, & Koch C (2000). A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Research, 40 (10–12), 1489–506.

- Itti L, & Koch C (2001). Computational modelling of visual attention. Nature Reviews Neuroscience, 2 (3), 194.

- Johansson R, Holsanova J, Dewhurst R, & Holmqvist K (2012). Eye movements during scene recollection have a functional role, but they are not reinstatements of those produced during encoding. Journal of Experimental Psychology: Human Perception and Performance, 38 (5), 1289.

- Jutras MJ, Fries P, & Buffalo EA (2013). Oscillatory activity in the monkey hippocampus during visual exploration and memory formation. Proceedings of the National Academy of Sciences, 110 (32), 13144–13149.

- Kahana MJ (1996). Associative retrieval processes in free recall. Memory & Cognition, 24 (1), 103–109. doi: 10.3758/Bf03197276

- Kaspar K, & König P (2011a). Overt attention and context factors: the impact of repeated presentations, image type, and individual motivation. PLoS One, 6 (7), e21719. doi: 10.1371/journal.pone.0021719

- Kaspar K, & König P (2011b). Viewing behavior and the impact of low-level image properties across repeated presentations of complex scenes. Journal of Vision, 11 (13), 26. doi: 10.1167/11.13.26

- Khosla A, Raju AS, Torralba A, & Oliva A (2015). Understanding and predicting image memorability at a large scale. In Proceedings of the IEEE international conference on computer vision (pp. 2390–2398).

- Klein RM, & MacInnes WJ (1999). Inhibition of return is a foraging facilitator in visual search. Psychological Science, 10 (4), 346–352.

- Kragel JE, Morton NW, & Polyn SM (2015). Neural activity in the medial temporal lobe reveals the fidelity of mental time travel. Journal of Neuroscience, 35 (7), 2914–2926.

- Kragel JE, VanHaerents S, Templer JW, Schuele S, Rosenow JM, Nilakantan AS, & Bridge DJ (2020). Hippocampal theta coordinates memory processing during visual exploration. eLife, 9.

- Laeng B, & Teodorescu DS (2002). Eye scanpaths during visual imagery reenact those of perception of the same visual scene. Cognitive Science, 26 (2), 207–231. doi: 10.1016/S0364-0213(01)00065-9

- Le Meur O, & Baccino T (2013). Methods for comparing scanpaths and saliency maps: strengths and weaknesses. Behavior Research Methods, 45 (1), 251–266.

- Lohnas LJ, Polyn SM, & Kahana MJ (2015). Expanding the scope of memory search: Modeling intralist and interlist effects in free recall. Psychological Review, 122 (2), 337.

- Mackay M, Cerf M, & Koch C (2012). Evidence for two distinct mechanisms directing gaze in natural scenes. Journal of Vision, 12 (4), 9–9.

- Manning JR, Polyn SM, Baltuch GH, Litt B, & Kahana MJ (2011). Oscillatory patterns in temporal lobe reveal context reinstatement during memory search. Proceedings of the National Academy of Sciences, 108 (31), 12893–12897.