Abstract

Objective

Facial masks are an essential personal protective measure to fight the COVID-19 (coronavirus disease) pandemic. However, the mask adoption rate in the United States is still less than optimal. This study aims to understand the beliefs held by individuals who oppose the use of facial masks, and the evidence that they use to support these beliefs, to inform the development of targeted public health communication strategies.

Materials and Methods

We analyzed a total of 771 268 U.S.-based tweets posted between January and October 2020. We developed machine learning classifiers to identify and categorize relevant tweets, followed by a qualitative content analysis of a subset of the tweets to understand the rationale of those who opposed mask wearing.

Results

We identified 267 152 tweets that contained personal opinions about wearing facial masks to prevent the spread of COVID-19. While the majority of the tweets supported mask wearing, the proportion of anti-mask tweets stayed constant at about a 10% level throughout the study period. Common reasons for opposition included physical discomfort and negative effects, lack of effectiveness, and being unnecessary or inappropriate for certain people or under certain circumstances. The opposing tweets were significantly less likely to cite external sources of information such as public health agencies’ websites to support the arguments.

Conclusions

Combining machine learning and qualitative content analysis is an effective strategy for identifying public attitudes toward mask wearing and the reasons for opposition. The results may inform better communication strategies to improve the public perception of wearing masks and, in particular, to specifically address common anti-mask beliefs.

Keywords: social media [L01.178.751], Natural Language Processing [L01.224.050.375.580], machine learning [G17.035.250.500], public health [H02.403.720], health communication [L01.143.350], coronavirus [B04.820.504.540.150], masks [E07.325.877.500], personal protective equipment [E07.700.560]

INTRODUCTION

The coronavirus disease 2019 (COVID-19) pandemic has caused significant morbidity and mortality across the globe. On December 28, 2020, there were 441 861 new confirmed cases worldwide, with 145 959 of these being in the United States.1,2 While scientific research has advanced our knowledge of the disease, new therapeutic treatments have been developed and vaccines are now approved and available, widespread adoption of individual protective behaviors, such as wearing personal protective equipment and practicing social distancing, remains crucial to reducing the spread of COVID-19.2 Despite a handful of studies questioning the effectiveness of community mask wearing,3,4 it has been shown that facial masks, even if homemade, can lead to a decrease in mortality by more than 20% if worn by more than 80% of community members.5

The benefits of facial masks can only be realized when most people wear them, which is known as universal mask use.6 While several countries (eg, Singapore, South Korea, China) have achieved this goal,7 promoting widespread mask wearing in the United States has encountered substantial obstacles. Besides cultural norms dictating that only the sick wear masks, the anti-mask opinion held by some government officials, and shifting positions by U.S. and international public health authorities, have added confusion to the debate. In particular, the U.S. Centers for Disease Control and Prevention (CDC) initially recommended against public mask wearing on February 27, 2020.8 However, on April 3, the CDC reversed its position to instead recommend universal mask use.9 Then, on April 6, the World Health Organization (WHO) issued an advisory stating that healthy individuals do not need to wear masks, directly contradicting the CDC’s recommendation.10 Further, there has been significant variation across state and county health departments on mask-wearing policies, and the debate on whether or not there should be a national mask mandate in the United States remains unsettled.11

While surveys by The New York Times, the Pew Research Center, and the CDC reported a relatively high self-reported mask use rate in the United States (59%, 65%, and 74.1%, respectively), the actual adoption rate is questionable. For example, in the same survey conducted by the Pew Research Center, only 44% of the participants reported that members in their communities were actually wearing masks all or most of the time when in public, suggesting that social desirability bias may be affecting self-reported rates.9 Further, all currently available surveys used simple yes/no questions on mask wearing without soliciting the rationales behind the opposing opinions7,9,12; only a handful of news outlets and advocacy groups have provided excerpts from the public or conjectured on why some people refused to wear masks.13–16 Finally, most of the existing surveys were carried out at sporadic times for cross-sectional analysis in limited geographic areas. Continuous monitoring of public perception across the country is rare, leaving a gap in our understanding of how public attitudes toward mask wearing have evolved since the beginning of the pandemic.7,9,12

To address these gaps, we analyzed a large Twitter dataset collected in the United States from January to October 2020 to answer the following research questions (RQs):

1. (a) What is the general public’s attitude toward mask wearing in the United States?

(b) How has the general public’s attitude changed over time as the pandemic progressed?

2. Among those expressing an anti-mask opinion, what are their concerns or justifications?

3. What is the external source of information shared to support the pro- or anti-mask arguments?

Based on the results of available conventional surveys, we hypothesize that the general public’s attitude toward facial masking expressed through tweets would be generally positive, even though unfavorable viewpoints would not be uncommon. Further, this attitude would have shifted over time as a result of changing CDC guidelines and local mask-wearing policies and how such policies are enforced. We also hypothesize that anti-mask tweets would be less likely to cite external sources of information especially from public health authorities.

MATERIALS AND METHODS

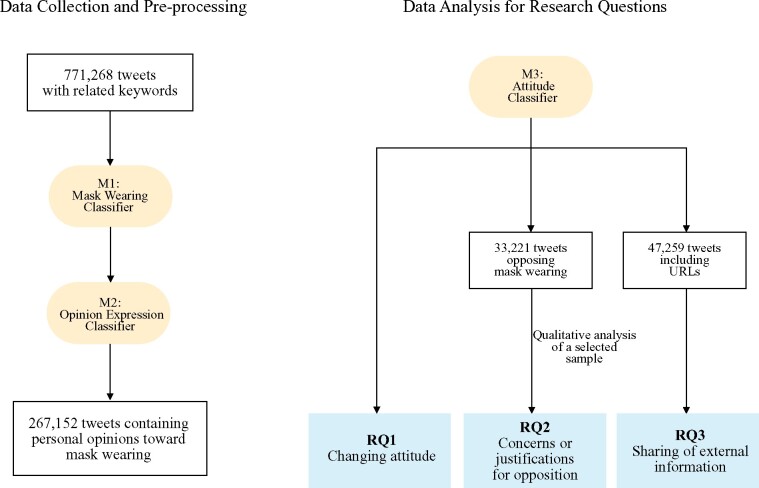

We used a computer-aided qualitative analysis approach that combines machine learning and qualitative content analysis. To answer RQ1 (changing attitude), we trained a machine learning classifier to label personal opinions regarding mask wearing and to examine its evolution over time. To answer RQ2 (concerns or justifications for opposition), we conducted an in-depth qualitative content analysis of a random set of anti-mask tweets to examine the common beliefs held by those who opposed mask wearing, as well as the reasoning behind such beliefs. To answer RQ3 (sharing of external information), we quantitatively analyzed the external evidence cited in the tweets, eg, by calculating the proportion from public health authorities such as WHO and the CDC. The overall analytical flow is exhibited in Figure 1. To protect the privacy of individuals, we paraphrased all tweets presented in the following sections, instead of directly quoting the original tweets.

Figure 1.

Method flowchart. RQ: research question.

Data collection and preprocessing

Retrieving tweets

As this study concerns the public attitudes toward mask wearing in the United States, only those geocoded tweets falling into the coordinates of {-173.847656, 17.644022, -65.390625, 70.377854}, which approximately represents the continental United States, were included. Geocodes are the main source of information that researchers could use to determine user locations. While user profiles are available through the Twitter API, we did not opt to use them for inferring geolocations because users’ locations specified at the point of registration may not match their locations when relevant tweets were posted. Therefore, only the geolocations attached to tweets meeting our criteria were used. Based on the estimate provided by Twitter, approximately 1% to 2% of Twitter users opt to allow their geolocation information to be tracked.17
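The geographic inclusion rule above can be sketched as a simple bounding-box test. This is a minimal illustration, not the authors' code: it assumes the four values are ordered as (west longitude, south latitude, east longitude, north latitude), and the tweet records and the `in_bbox` helper are hypothetical.

```python
# Bounding box from the text, read as (west_lon, south_lat, east_lon, north_lat).
# The ordering is an assumption inferred from the values themselves.
US_BBOX = (-173.847656, 17.644022, -65.390625, 70.377854)


def in_bbox(lon: float, lat: float, bbox=US_BBOX) -> bool:
    """Return True if the point (lon, lat) lies inside the bounding box."""
    west, south, east, north = bbox
    return west <= lon <= east and south <= lat <= north


# Hypothetical tweet records; only the coordinates matter for this filter.
tweets = [
    {"id": 1, "lon": -118.2437, "lat": 34.0522},  # Los Angeles
    {"id": 2, "lon": -0.1276, "lat": 51.5072},    # London
]

# Keep only the tweets whose geocode falls inside the box.
kept = [t["id"] for t in tweets if in_bbox(t["lon"], t["lat"])]
print(kept)
```

A rectangular box is a coarse filter, so points in neighboring countries that fall inside the rectangle would also pass this check.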

The data collection of this study covered a 10-month period between January 1 and November 1, 2020. It was conducted using the official Twitter API version 1.1, which continuously retrieves all tweets meeting the search criteria from a stream of general tweets that are located in the United States provided by Twitter.18 The keywords used, detailed in Table 1, were developed based on a manual review of sample tweets and an examination of the search terms employed in prior research.19

Table 1.

Search keywords

| “mask” OR “face cover” OR “cloth cover” OR “face cloth” OR “mouth cover” OR “nose cover” OR “facial cover” OR “nose cloth” OR “eye cloth” OR “mouth cloth” |
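As a rough illustration of how the keyword query in Table 1 behaves, the sketch below applies the same terms as a case-insensitive substring match. The Twitter API's own matching rules may differ, so the `matches_keywords` helper is an assumption made for illustration only.

```python
import re

# Search terms copied from Table 1.
KEYWORDS = [
    "mask", "face cover", "cloth cover", "face cloth", "mouth cover",
    "nose cover", "facial cover", "nose cloth", "eye cloth", "mouth cloth",
]

# One alternation pattern; substring matching means "masks" and
# "face covering" also match (an assumption, not the API's documented rule).
KEYWORD_RE = re.compile("|".join(re.escape(k) for k in KEYWORDS), re.IGNORECASE)


def matches_keywords(text: str) -> bool:
    """Return True if any Table 1 keyword occurs in the text."""
    return KEYWORD_RE.search(text) is not None


print(matches_keywords("Wear a MASK please"))
print(matches_keywords("The government has been masking the fact"))
print(matches_keywords("Stay home and wash your hands"))
```

The second call shows why keyword retrieval alone is noisy: a figurative use of "masking" still matches, which is the kind of irrelevant content that later filtering has to remove.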

Further filtering

Social media data retrieved through keyword search may contain a substantial amount of irrelevant content, eg, “face cloth” may refer to a facial washcloth for makeup removal.20 To remove such noise, we manually analyzed a random set of tweets to examine the characteristics of irrelevant posts (a total of 200 tweets were reviewed; saturation was achieved after coding about 70 tweets). The results show that the majority of such tweets fall into the following 2 categories: (1) those related to mask manufacturing or product advertisements or that referred to other meanings of the word mask (eg, “The government has been masking the fact that it is a failure”); and (2) those pertinent to mask wearing that did not express any personal attitude (eg, tweets that merely shared a URL with no personal opinions explicitly stated), or whose attitude is difficult to discern from the content of the tweet (eg, “Should you wear a mask?? #COVID #facemask #comfortmask. Read this blog: URL”).

To remove such tweets from further analyses, we developed 2 models using supervised machine learning. Model 1 (mask wearing classifier) is a text classifier for determining whether a tweet is related to mask wearing in the COVID-19 context; and Model 2 (opinion expression classifier) determines whether a tweet contained personal opinions. To train these models, we annotated a total of 1000 tweets through the following 2 steps. First, 2 authors (L.H. and C.H.) separately coded a random sample of 200 tweets to calibrate the annotation. The interrater agreement ratio was 0.93; differences were resolved in consensus development meetings. Then, the same 2 authors independently coded an additional random set of 800 tweets. To prepare for text classification, all tweets were preprocessed by, eg, lowercasing, removal of punctuation and hashtags, and stemming. Next, for each model, words were represented as 100-dimensional vectors trained by the word2vec algorithm.21 To address the issue of imbalance between relevant and irrelevant tweets in the training data, we used the oversampling strategy as specified by Hilario et al.22 Then, we tested several commonly used machine learning models including support vector machine, XGBoost, and long short-term memory (LSTM) network. LSTM achieved the highest F1 score under 10-fold cross validation and was selected for further analyses. LSTM, due to its ability to account for sequential information and order dependencies, is particularly suited for handling data such as time series and natural language. Extant literature has demonstrated the predictive power of LSTM on natural language processing (NLP) tasks such as document classification and sentiment analysis.23 We added a dropout layer (rate = 0.3) to avoid overfitting, a common technique used in the literature that randomly removes units of neural networks.24
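The preprocessing and class-balancing steps described above can be sketched as follows. This is a simplified illustration under stated assumptions: hashtags are removed as whole tokens, stemming is omitted because it needs an external library, and plain random oversampling stands in for the strategy of Hilario et al, whose details are not given here. The function names are hypothetical.

```python
import random
import re
import string


def preprocess(text):
    """Lowercase, drop hashtags (assumed: the whole token), strip punctuation."""
    text = text.lower()
    text = re.sub(r"#\w+", " ", text)
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())  # collapse repeated whitespace


def oversample(texts, labels, seed=0):
    """Duplicate randomly chosen minority-class examples until classes are balanced."""
    rng = random.Random(seed)
    by_label = {}
    for text, label in zip(texts, labels):
        by_label.setdefault(label, []).append(text)
    target = max(len(items) for items in by_label.values())
    out_texts, out_labels = [], []
    for label, items in by_label.items():
        # Draw extra copies with replacement to reach the majority-class size.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels


clean = preprocess("Should you wear a mask?? #COVID #facemask")
balanced_texts, balanced_labels = oversample(["a", "b", "c", "d"], [1, 0, 0, 0])
print(clean)
print(balanced_labels.count(0), balanced_labels.count(1))
```

When oversampling is combined with cross validation, it is typically applied only to the training portion of each fold, so that duplicated examples do not leak into the held-out data.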

To identify tweets posted by social bots (ie, programmed Twitter accounts that generate posts automatically),25 we first applied Botometer,26,27 a well-established social bot detection tool that has been widely used by researchers and organizations such as the Pew Research Center.28 Following Rauchfleisch and Kaiser’s recommendation, we used a random sample of 500 distinct users from our dataset to validate the tool’s performance.29 Two authors (L.H. and C.H.) manually reviewed these users’ profiles (eg, profile picture, description, account creation time, number of followers), tweeting history (eg, number of tweets, retweets, and likes), and interactions with other users (eg, commenting on others’ tweets), in order to determine if a user was a social bot or not. The interrater reliability was 100% when calibrating based on 100 users’ data; none of these users were determined to be social bots. We then annotated the remaining 400 users; none of them were determined to be social bots either. In contrast, Botometer labeled 29 (5.8%) users as social bots. However, based on manual review, many of these users were simply hyperactive tweeters. Their online activities did exhibit the normal behavior of human users, eg, the content that they posted did not appear to be automatically authored and they participated in active interactions with other Twitter users. Removing these users could thus result in systematic biases in our analysis of the data.

Data analyses

RQ1a: Attitude

To classify public opinions toward mask wearing, we first applied the sentiment analysis approach, which has been commonly used in the literature to study attitudes expressed in social media data.30 We tested 4 off-the-shelf sentiment analysis tools that have been most commonly used: VADER (Valence Aware Dictionary for sEntiment Reasoning),31 TextBlob,32 Stanford NLP,33 and Linguistic Inquiry and Word Count (LIWC).30,34,35 We manually annotated the sentiment of 500 random tweets and compared the results to the outputs of these tools. We found that none of these off-the-shelf tools was able to produce accurate attitude classifications, at least not in our study context. The results produced by VADER, TextBlob, Stanford NLP, and LIWC achieved low F1 scores of 59%, 57.4%, 58.6%, and 51.9%, respectively. This is likely because of domain transferability issues, ie, such tools are often trained with text corpora from nonhealthcare domains such as movie reviews. Also, in our study, the polarity of the sentiment is often not consistent with the mask wearing attitude expressed. For example, users tend to use strong negative tones such as “Fuck. Mask On!” to encourage others to wear masks; the off-the-shelf sentiment analysis tools labeled the sentiment of such tweets as negative, even though the tweet was in fact in favor of mask wearing.

Thus, we developed a specialized machine learning model (model 3 [attitude classifier]) instead, using the same approach adopted in model 1 and model 2. This produced an LSTM text classifier for attitudes, trained based on 500 annotated tweets.

RQ1b: Evolution of attitude

Further, to investigate the temporal trend of public attitudes toward mask wearing, we grouped tweets by week and calculated the percentage of tweets expressing support for, or opposition to, mask wearing on a week-to-week basis. We also analyzed word frequencies based on term frequency-inverse document frequency to assess changes in commonly discussed topics related to mask wearing over time.
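The week-by-week aggregation described above can be sketched as below. The example assumes ISO calendar weeks and a simple record layout of (date, label); both are illustrative choices, and `weekly_opposition_share` is a hypothetical helper rather than the study's code.

```python
from collections import defaultdict
from datetime import date


def weekly_opposition_share(records):
    """records: iterable of (date, label) pairs, label being 'pro' or 'anti'.

    Returns {(iso_year, iso_week): percentage of tweets labeled 'anti'}.
    """
    counts = defaultdict(lambda: {"pro": 0, "anti": 0})
    for day, label in records:
        iso = day.isocalendar()  # (ISO year, ISO week number, ISO weekday)
        counts[(iso[0], iso[1])][label] += 1
    return {
        week: 100.0 * c["anti"] / (c["pro"] + c["anti"])
        for week, c in sorted(counts.items())
    }


# Hypothetical labeled tweets spanning two consecutive weeks in April 2020.
sample = [
    (date(2020, 4, 6), "pro"),
    (date(2020, 4, 7), "pro"),
    (date(2020, 4, 8), "pro"),
    (date(2020, 4, 9), "anti"),
    (date(2020, 4, 13), "pro"),
    (date(2020, 4, 14), "anti"),
]
shares = weekly_opposition_share(sample)
print(shares)
```

Reporting a share rather than a raw count keeps the trend comparable across weeks with very different tweet volumes.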

RQ2: Concerns or justifications for opposition

We conducted a manual qualitative content analysis on a random set of tweets posted by distinct users in order to answer RQ2 (“among those expressing an anti-mask opinion, what are their concerns or justifications”). We did not opt to use a computational approach for this RQ because the concerns expressed in tweets were so heterogeneous and subtle that they were challenging for a machine to classify. For example, the following tweet, “Where I live in Los Angeles—You can’t get groceries without a mask… In reality, very few are sick in CA. 250 out of 40 million have died, most with preexisting health issues,” implies that coronavirus does not cause many casualties and masks are not necessary; hence, the attitude expressed in this tweet was opposing mask wearing. This level of natural language understanding is difficult for currently available lexicon-based tools or machine learning models to achieve, especially on short texts such as tweets.36 We therefore decided to qualitatively analyze such concerns and justifications for opposing mask wearing. We used grounded theory to code the data.37 Using this method, we first randomly selected 100 tweets for open coding to generate a set of initial codes (eg, perceived physical harm and discomfort). Differences were resolved through consensus development research meetings, which produced a final set of codes for coding the rest of the data. During the open coding, saturation was achieved after coding approximately 70 tweets.

Based on the finalized codebook (provided in Supplementary Appendix 1), 2 authors (L.H. and C.H.) independently coded 100 randomly selected tweets to calibrate coding. The interrater reliability was 0.87. Then, each of them separately coded an additional set of 200 randomly selected tweets. Thus, in total, 500 tweets were coded and analyzed to answer this RQ.

RQ3: Sharing of external information

In this analysis, we extracted all external URLs embedded in the tweets (eg, websites, images, or videos). We then calculated and compared the proportion of pro- and anti-mask tweets that cited external sources of information. Then, we analyzed the nature of such external information, for example, whether the information originating from public health authorities such as the WHO, the CDC, and state- or county-level health departments (based on the URLs [eg, “cdc,” “who,” “.gov”, “.int”]) was cited differently between the pro- and anti-mask groups.
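The URL-based source check described above can be sketched as follows. The matching rules here are an assumption built from the cues named in the text ("cdc", "who", ".gov", ".int"); the exact rules used in the study are not specified, and both helper functions are hypothetical. Tweets typically carry shortened links, so this sketch assumes the URLs have already been expanded.

```python
import re
from urllib.parse import urlparse

# Simple pattern for http(s) links embedded in tweet text.
URL_RE = re.compile(r"https?://\S+")


def extract_urls(text):
    """Return all http(s) URLs found in the text."""
    return URL_RE.findall(text)


def is_public_health_authority(url):
    """Heuristic: .gov or .int host, or 'cdc' / 'who' as a hostname label."""
    host = (urlparse(url).hostname or "").lower()
    labels = host.split(".")
    return (
        host.endswith(".gov")
        or host.endswith(".int")
        or "cdc" in labels
        or "who" in labels
    )


urls = extract_urls("Read this https://www.cdc.gov/x now")
print(urls)
print(is_public_health_authority("https://coronavirus.ohio.gov/"))
print(is_public_health_authority("https://www.youtube.com/watch?v=x"))
```

Matching on whole hostname labels avoids false hits on unrelated hosts that merely contain the letters "who". The ".gov" rule also admits agencies unrelated to health, so a manual check of matched domains would still be advisable.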

We further conducted a drill-down analysis by manually reviewing a random set of 100 anti-mask tweets that contained external links. The objective was to specifically investigate what types of external information were used to support the anti-mask attitude. To do this, we read each of these tweets and followed the external links to review and analyze the source information cited (eg, news articles, journal papers, and videos and images).

RESULTS

A total of 771 268 tweets met our inclusion criteria. Model 1 (mask wearing classifier) achieved an F1 score of 84.48% under 10-fold cross validation. After applying this model, 463 369 tweets that were not relevant to mask wearing were removed. The remaining 307 899 tweets were then analyzed using model 2 (opinion expression classifier), the purpose of which was to exclude tweets that did not express a personal opinion or whose opinion was difficult to discern. Model 2 achieved an F1 score of 86.38% under 10-fold cross validation. This model further removed 40 747 irrelevant tweets, leaving a total of 267 152 tweets used in the subsequent model 3 (attitude classifier) analysis. Model 3 achieved an F1 score of 90.16% under 10-fold cross validation.
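The filtering counts reported above can be checked with a few lines of arithmetic. The variable names are illustrative; the numbers are taken directly from the text.

```python
# Counts reported in the text.
total = 771_268           # tweets meeting the inclusion criteria
removed_model1 = 463_369  # not relevant to mask wearing (model 1)
removed_model2 = 40_747   # no discernible personal opinion (model 2)

# Tweets remaining after each filtering stage.
after_model1 = total - removed_model1
after_model2 = after_model1 - removed_model2

print(after_model1)  # 307899
print(after_model2)  # 267152
print(f"{100 * after_model2 / total:.2f}% of retrieved tweets reached model 3")
```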

Descriptive analysis

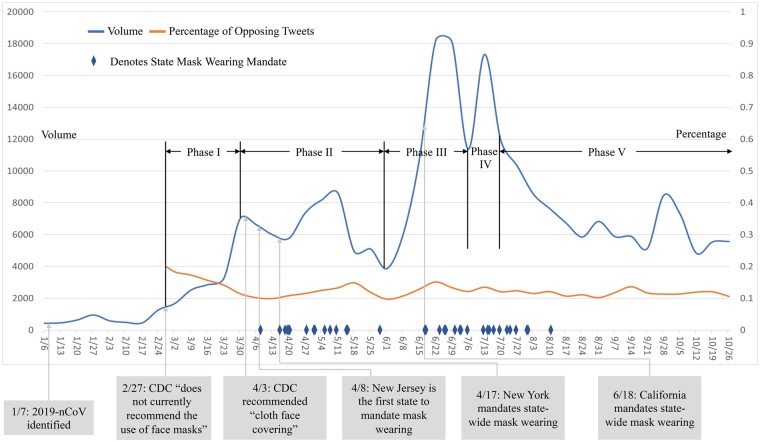

Figure 2 exhibits the temporal trend of relevant tweets from January 1 to November 1, 2020. Several distinct phases can be observed. Phase I started on February 27, around the time when a statement was issued that the CDC “does not currently recommend the use of face masks,”9 and ended around March 30, representing a gradual increase in the volume of relevant Twitter discussions. Phase II lasted until around June 1, representing continued public interest in the subject with some fluctuations in tweet volume. A sharp increase in the number of relevant tweets followed, starting around June 2 and lasting approximately 1 month until July 6 (phase III), which may be associated with public reactions toward facial mask mandates by large states such as California. This phase was followed by another dramatic increase in relevant tweet volume (phase IV), leading toward a second peak around July 13, before returning to the phase II level around July 20. The last phase, phase V, showed a downward trend in relevant tweet volume until the end of October, when the data collection for this study stopped.

Figure 2.

Temporal change of tweet volume and attitude. CDC: Centers for Disease Control and Prevention.

Table 2 reports the results of a word frequency analysis for examining the evolution of commonly discussed topics across these 5 distinct phases. Initially, in phase I (2/27-3/30), the discussions focused on symptoms of COVID-19 and its mechanisms of transmission. In phase II (3/31-6/1), frequently appearing topics included daily experience coping with the pandemic and the reactions to the recommendation of wearing facial masks by the CDC. Frustration and distress can be observed in phase III (6/2-7/6), expressing strong sentiments toward mask wearing. This could be associated with many circumstantial factors such as isolation, mask mandates, and a series of protests that broke out across the United States. The facial mask discussions continued in phase IV (7/7-7/20), and in phase V (7/21-10/31), moved on to focus on school reopening and the U.S. general election.

Table 2.

Evolution of frequently discussed topics over time

| Phase | Frequently observed words | Topic |
|---|---|---|
| Phase I (2/27-3/30) | symptoms, coughing, sneeze | Symptom |
| | droplets, mouth, touch, skin, airborne, respiratory, spreading | Mechanism of transmission |
| | protection, sanitizer, hand, washing, soap, water, sleep, eye, face, wash, clean, mask, scarf | Best practices for personal protection |
| Phase II (3/31-6/1) | walking, breath, grocery, outside, seeing, wear, people, place, public, stores, shopping, car, talking, shop | Daily experience during the pandemic |
| | CDC, cloth, mask, bandana, mask, wearing, apart, home, covering, homemade, protect, quarantine | CDC recommendation on facial mask wearing |
| Phase III (6/2-7/6) | fucking, dumb, stupid, wrong, bad, fuck, hate, damn, hard, selfish, lives, risk | Distress with strong sentiments |
| Phase IV (7/7-7/20) | people, asthma, mask, mandate, science, enough, children, folks, mandatory, life, rights, never, shut, refuse | Continued discussion on a variety of topics |
| Phase V (7/21-10/31) | kids, family, wear, school, safe, first, day | School reopening |
| | vote, trump, tested, distancing | U.S. general election |

CDC: Centers for Disease Control and Prevention.

RQ1a: Attitude

Overall, during the 10-month period, 87.56% of the relevant tweets (233 931) expressed a supportive attitude toward mask wearing, whereas 12.44% (33 221) opposed it; the latter is shown as the orange line in Figure 2. This finding confirms our hypothesis that pro-mask tweets would outnumber anti-mask tweets; although the latter were not uncommon. Note that in Figure 2, we only plotted data from February 27 onward, as the number of relevant tweets at the initial stage of the pandemic was very small.

RQ1b: Evolution of attitude

As Figure 2 shows, initially, when the CDC recommended against the use of facial masks, the proportion of opposing tweets was around 20%. This was followed by a gradual decrease, and around the time when the CDC began to recommend mask wearing (April 3), the proportion of opposing tweets had dropped to about 10%. Between February and October, there were a number of bursts in the volume of opposing tweets, all of which appear to be associated with state-level mask mandates. The overall opposing rate nonetheless remained around 10% to 15% throughout the study period.

RQ2: Concerns and justifications for opposition

The qualitative content analysis of a sample of opposing tweets revealed 6 major categories of concerns or justifications for opposing facial masks: (1) physical discomfort or negative effects (30.6%), (2) lack of effectiveness (27.4%), (3) unnecessary or inappropriate for certain people or under certain circumstances (17%), (4) political beliefs (12.2%), (5) lack of mask-wearing culture (9.6%), and (6) coronavirus not a serious threat (3.2%). Table 3 provides more details on each of these categories.

Table 3.

Major categories of concerns or justifications for opposing mask wearing (examples paraphrased to protect confidentiality)

| Category | Description | Example | Proportion (N = 500) |
|---|---|---|---|
| Physical discomfort or negative effects | Perception or experience of discomfort or negative effects as a result of mask wearing such as rash, acne, shortness of breath, or fainting; or beliefs that wearing a mask would cause damage to the immune system. | “It is mandatory to wear a mask at work at my very physical job will cause restrictions to airflow, making it tough to breathe. Is CDC correct or city?!!!! WTF” | 30.6% |
| Lack of effectiveness | Beliefs that mask wearing is not effective as it claims to be, or is not always effective (eg, if not properly worn), or that there are other better alternatives. | “Non-medical face mask made of clothes are not useful for COVID-19—NAFDAC warns URL” | 27.4% |
| Unnecessary or inappropriate for certain people or under certain circumstances | Beliefs that healthy individuals, children, and/or those with certain health conditions should not wear masks, or that masks are not necessary outdoors or when social distancing is practiced. | | 17% |
| Political beliefs | Beliefs that mandatory mask-wearing policies infringe upon personal liberty, or that those mask mandates are politicized and are manipulation tactics by certain politicians and special interest groups. | | 12.2% |
| Lack of mask-wearing culture | The negative connotations associated with mask wearing such as being odd-looking, “unAmerican,” criminal resembling, or reflective of panic and fear. | “I have always been made uncomfortable by someone wearing a surgical mask. Masks make me feel uneasy. This alone is enough to keep me inside. Well done, CDC.” | 9.6% |
| Coronavirus not a serious threat | Coronavirus is not a serious threat, or not as serious as what the government suggests, and thus widespread mask wearing is an overreaction. | “During flu season you run into many people who have been exposed to the flu without knowing it and in turn expose you and we are not wearing a mask all flu season! Coronavirus is not a new virus, it is just a new strand!” | 3.2% |

As shown in Table 3, the most common concerns expressed in anti-mask tweets are related to “physical discomfort or negative effects” (30.6%). These included difficulty breathing, a sweaty face, foggy glasses, and skin ailments (eg, rash and acne). A substantial proportion of the opposing tweets also argued that facial masks were not effective in preventing the spread of coronavirus (27.4%), because the particles carrying the virus were too small and ordinary cloth coverings would not be able to stop them from penetrating through. The next notable category is beliefs that facial masks were “unnecessary or inappropriate for certain people or under certain circumstances” (17%), such as the belief that only those infected with the virus needed to wear masks, or that masks were only useful in settings in which social distancing was not possible. Additional reasons for the anti-mask attitude included “political beliefs” (12.2%), postulating that mask mandates were unconstitutional, infringed upon personal liberty, and were primarily an attempt by politicians or the government to control the thinking and behavior of the people; “lack of mask-wearing culture” (9.6%), eg, wearing a mask is associated with panic and fear; and “coronavirus not a serious threat” (3.2%), which involved beliefs that the threat of coronavirus had been intentionally overstated.

RQ3: Sharing of external information

Nearly one-fifth of the relevant tweets (17.69%) included external information (eg, websites, images, or videos) to support the pro- or anti-mask arguments. Commonly used sources of external information included other tweets (31 619), Instagram images (10 742), YouTube videos (705), the CDC website (189), The New York Times (134), and CNN.com (134). Table 4 shows a comparison between the pro- and the anti-mask groups. Those who opposed mask wearing were much less likely to include external information in their tweets than those who supported it. This difference is statistically significant (P < .05). Further, the opposing group was significantly less likely to use external information from public health authorities such as the CDC, WHO, and state- or county-level health departments (P < .05). These findings confirm our hypothesis that anti-mask tweets would be less likely to cite external sources of information, especially from public health authorities.

Table 4.

Comparison of use of external information among pro- vs anti-mask tweets

| Source | Pro-Mask Tweets (%) | Anti-Mask Tweets (%) |
|---|---|---|
| Other tweets | 12.96 | 3.88 |
| Instagram | 4.48 | 0.75 |
| YouTube | 0.28 | 0.16 |
| CDC website | 0.073 | 0.054 |
| The New York Times | 0.055 | 0.039 |
| Websites of local public health agencies (eg, coronavirus.ohio.gov) | 0.093 | 0.03 |
| Information sourced from any public health authority | 0.178 | 0.093 |
| Total | 19.35 | 5.98 |

CDC: Centers for Disease Control and Prevention.
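The text does not state which significance test produced the reported P values. As one plausible illustration, the sketch below runs a two-proportion z-test on the "Total" row of Table 4. The test choice is an assumption, and the citing counts are approximations reconstructed from the rounded percentages and the group sizes reported under RQ1a, not figures from the study.

```python
import math


def two_proportion_z(x1, n1, x2, n2):
    """Two-sided z-test for the difference between two proportions."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided P value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Group sizes reported in the text for pro- and anti-mask tweets.
n_pro, n_anti = 233_931, 33_221
# Approximate counts of tweets citing external information, rebuilt from
# the rounded percentages in Table 4 (an assumption, not reported counts).
x_pro = round(n_pro * 0.1935)
x_anti = round(n_anti * 0.0598)

z, p = two_proportion_z(x_pro, n_pro, x_anti, n_anti)
print(f"z = {z:.1f}, significant at .05: {p < 0.05}")
```

With samples of this size, even small differences in proportions reach statistical significance, so the size of the gap (19.35% vs 5.98%) is arguably more informative than the P value itself.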

Based on the qualitative content analysis that we conducted on a subset of the relevant tweets, we performed a drill-down analysis of the external supporting evidence cited in tweets that opposed mask wearing. The results show that the opposing tweets often cited user-created YouTube videos arguing against mask wearing (eg, “COVID-19 MASK REFURBISHMENT,”38) and news articles from conservative news media (eg, Newsmax). While opposing tweets were less likely to cite information disseminated by public health authorities, a few articles published in prestigious medical journals were prominently featured, such as the perspective article “Universal Masking in Hospitals in the Covid-19 Era” published on May 21 in The New England Journal of Medicine stating that, “We know that wearing a mask outside health care facilities offers little, if any, protection from infection.”39 Further, the opposing tweets commonly referenced health experts within their social circles to support the anti-mask arguments, eg, “A family member of mine is a dr of infectious diseases. Surgical mask is 8-10 microns a n95 mask is 4 microns filter size the covid virius is .6 - 1 micron meaning the mask filter is 4-10x bigger then the virius is Covid can enter the body through your eyes and ears But we dont cover those why?”

DISCUSSION

In this study, we analyzed public opinions expressed on Twitter regarding whether or not to wear facial masks to help prevent the spread of coronavirus. We studied a total of 771 268 tweets collected from January 1 to November 1, 2020 using an analytical strategy that combined qualitative content analysis for understanding opinions expressed in subtle human language and machine learning for scalability. The results show that while the overall volume of mask-related tweets fluctuated according to real-world events (eg, WHO/CDC recommendations and mask mandates), the proportion of anti-mask tweets stayed constant at approximately 10%. The top 3 reasons for opposing public mask wearing were physical discomfort and negative effects, lack of effectiveness, and being unnecessary or inappropriate for certain people or under certain circumstances. The results also show that anti-mask tweets were significantly less likely to use external sources of information to support the arguments, particularly information from public health authorities such as WHO and the CDC.

While there has been a large body of literature analyzing social media data to understand public opinions toward controversial health-related issues, many studies simply applied off-the-shelf sentiment analyzers, equating the sentiment of an expression with the attitude it conveys,40,41 which may lead to incorrect interpretation of the data. Such studies may also suffer from poor domain transferability of the existing sentiment analysis tools, as most of them were developed in nonhealth domains (eg, movie reviews).30 In this article, instead of using the off-the-shelf tools, we developed a comprehensive computational pipeline that included multiple machine learning models trained on human-annotated data. These models helped us improve the relevance of data retrieved by keyword search by excluding tweets that were not related to the mask-wearing behavior or did not contain personal opinions. These models also helped us achieve more accurate classification of the pro- or anti-mask attitude expressed.

To the best of our knowledge, no studies to date have used social media data to investigate the public’s attitude toward mask wearing, except for 1 medRxiv article that used unsupervised topic modeling to look at the general discussion trends related to use of facial masks.19 Available conventional surveys reported only on respondents’ pro- vs anti-mask stances.7,9,12 In contrast, our study was able to examine the reasoning of those who were against mask wearing. Further, our study was able to capture the evolution of attitudes over time to reveal the public’s reactions to the constantly changing public health recommendations and local, regional, and national mask mandates. Capturing such longitudinal trends can be cost-prohibitive with the conventional survey method. Despite these advantages, use of social media data such as tweets has several limitations. First, the only way to reliably identify tweets posted by users in the United States is to use geocodes, which may introduce self-selection bias, as not all Twitter users opt to turn on geotracking. Further, the dominance of active/vocal users on Twitter, and on social media platforms more generally, may introduce additional self-selection biases in the data.

The results of this study have several implications for researchers, public health practitioners, and policymakers. First, methodologically, we found that retrieving tweets relevant to mask wearing in the context of the pandemic requires careful consideration. Initially, we followed the common practice used in prior research (eg, Sanders et al)19 of searching for such tweets by including COVID-19–related keywords in addition to mask-related keywords. We found that doing this would result in a loss of nearly 50% of the relevant data, because many tweets relevant to COVID-19 did not explicitly use any word related to COVID-19, given its prevalence in public discourse. Further, as mentioned earlier, we found that commonly used off-the-shelf sentiment analyzers (eg, VADER, LIWC, Stanford NLP, TextBlob) failed to produce accurate sentiment classifications, or the sentiments identified were not in accordance with the attitudes expressed. Therefore, we suggest that future studies thoroughly compare existing computational tools and, if needed, train specialized, domain-specific tools to ensure the validity of study results.
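
The retrieval issue described above can be illustrated with a minimal sketch. The keyword patterns and example tweets below are hypothetical and are not the search terms or data used in this study; the sketch only shows how one might measure the share of mask-related tweets that survives when a COVID-19–related keyword is also required.

```python
import re

# Hypothetical keyword patterns for illustration only;
# these are NOT the study's actual search terms.
MASK_TERMS = re.compile(r"\b(?:masks?|face coverings?|n95)\b", re.IGNORECASE)
COVID_TERMS = re.compile(r"\b(?:covid(?:-?19)?|coronavirus|pandemic)\b", re.IGNORECASE)


def mask_only(text: str) -> bool:
    """True if the tweet mentions any mask-related keyword."""
    return bool(MASK_TERMS.search(text))


def mask_and_covid(text: str) -> bool:
    """True if the tweet mentions both a mask keyword and a COVID-19 keyword."""
    return mask_only(text) and bool(COVID_TERMS.search(text))


def retained_fraction(tweets: list[str]) -> float:
    """Share of mask-keyword tweets kept when a COVID-19 keyword is also required."""
    broad = [t for t in tweets if mask_only(t)]
    strict = [t for t in broad if mask_and_covid(t)]
    return len(strict) / len(broad) if broad else 0.0


# Made-up example tweets (assumption, not study data).
SAMPLE_TWEETS = [
    "Wearing a mask at the grocery store is not that hard.",
    "Masks slow the spread of COVID-19, please wear one.",
    "I can't breathe in this face covering.",
    "Coronavirus cases are rising again in my county.",
]

fraction = retained_fraction(SAMPLE_TWEETS)
```

In this toy sample, 3 tweets match a mask keyword but only 1 of them also names COVID-19, so the stricter query keeps one third of the mask-related tweets. Running the same comparison on a manually annotated sample is one way to estimate how much relevant data a stricter query discards.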

Second, our data analysis revealed several distinct phases of mask-related discussions on Twitter, which closely aligned with real-world events such as shifting recommendations from public health authorities, mask mandates issued at the state level, and other contemporary events such as school reopening and the U.S. general election. This finding is consistent with previous studies41,42 and suggests that social media can be used as a reliable source of information for continuously monitoring changing public attitudes in response to major social, political, and public health events. It may inform the design and implementation of a social media–based real-time dashboard to monitor and track public opinions toward important health-related issues, so that health communication strategies could be more targeted and thus more effective.
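
As a minimal sketch of the kind of aggregation such a dashboard might perform, the example below computes the monthly share of anti-mask tweets from already-classified records. The record format, the label names ("pro" and "anti"), and the sample data are assumptions made for illustration; they do not reproduce this study's pipeline or results.

```python
from collections import Counter
from datetime import date


def monthly_anti_mask_share(records):
    """Return {(year, month): share of tweets labeled "anti"}.

    `records` is an iterable of (posting_date, label) pairs, where label is
    "pro" or "anti" (hypothetical labels produced by a stance classifier).
    """
    totals, anti = Counter(), Counter()
    for day, label in records:
        key = (day.year, day.month)
        totals[key] += 1
        if label == "anti":
            anti[key] += 1
    # Sort keys so the result is ordered chronologically.
    return {key: anti[key] / totals[key] for key in sorted(totals)}


# Made-up classified tweets (assumption, not study data).
SAMPLE_RECORDS = [
    (date(2020, 4, 3), "pro"),
    (date(2020, 4, 10), "pro"),
    (date(2020, 4, 18), "anti"),
    (date(2020, 4, 25), "pro"),
    (date(2020, 7, 2), "pro"),
    (date(2020, 7, 15), "anti"),
]

shares = monthly_anti_mask_share(SAMPLE_RECORDS)
```

Tracking a proportion rather than a raw count separates changes in attitude from changes in overall tweet volume, which, as noted above, fluctuated with real-world events.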

Third, our analysis also revealed common reasons underlying the anti-mask opinions, eg, perceived physical damage and discomfort. While information addressing some of these concerns was available on public health authority websites43 and was disseminated through traditional news media,44 it appears that such information had not become highly visible on social media. Therefore, public health authorities may consider finding more creative and engaging ways to provide public education and combat misinformation on social media platforms, eg, by creating entertaining short videos on YouTube or TikTok to show how to make facial masks and how to wear them properly.45

Further, some users opposed mask wearing because they believed that it was not an effective measure for preventing the spread of the virus and it was unnecessary for healthy individuals. These stances were indeed supported by authoritative bodies such as the CDC and the U.S. Surgeon General at the early stage of the pandemic. However, many of these tweets were posted in June and July, yet the CDC had reversed its position on public mask wearing in April. This finding indicates that certain segments of the U.S. population might not be aware of, or refuse to believe in, new recommendations from the CDC, and that earlier, contradicting recommendations might have a long-lasting impact. This means that public health experts and officials must clearly communicate about scientific uncertainty, reasons for specific recommendations, and the possibility that recommendations could change as more evidence emerges. In addition, public health experts should continue to engage the public so that new scientific findings, conclusions, and recommendations are immediately delivered to all members of the public.

Last, it is also interesting to note that while political beliefs are thought to be the key reason for anti-mask opinions,7 they were not the most often cited reason in the anti-mask tweets we analyzed. Instead, such tweets emphasized physical discomfort and negative effects, as well as lack of effectiveness. However, based on our data, we are unable to determine whether these reasons had ties to political beliefs that were not made explicit in these tweets.

The findings of this study provide several insights for developing better public health communication strategies to convey the benefits of wearing facial masks and using other protective measures, as well as to combat misinformation. First, transparency is paramount in public health communication on sensitive and/or controversial issues.46 This is particularly true for politically charged debates such as mask wearing. As our data show, misinformation about the lack of effectiveness of using facial masks was widespread, and many opposing opinions reflected strong political beliefs that mandatory mask wearing had been used as a tool by certain political groups to control and manipulate the public. For example, recently, the state officials of California refused to disclose the data and reasoning for the state’s decision to lift the stay-at-home order, stating that “they rely on a very complex set of measurements that would confuse and potentially mislead the public if they were made public.”47 This lack of transparency, whether intentional or unintentional, will likely lead to loss of public trust and incubate conspiracy theories. Second, given its complexity, the efficacy of community mask wearing in preventing the spread of SARS-CoV-2 (severe acute respiratory syndrome coronavirus 2) is difficult to prove definitively using scientific methods. As a result, the general public tends to cherry-pick the results aligned with their beliefs, or they use cautionary language commonly used in study limitation sections, eg, “the results of this study may not be generalizable,” as proof of lack of evidence. As our data show, some sentences from a handful of studies published in high-impact journals (eg, The New England Journal of Medicine) were taken out of context and shared widely among those holding anti-mask beliefs.
Therefore, in trying times such as this, public health officials need to put extraordinary effort into educating the public on how to properly interpret the findings reported in scientific studies,48 in addition to proactively addressing misinformation that may result from inconclusive research findings. Third, policy changes are often inevitable as new scientific evidence emerges. The rationale for such changes must be well articulated to the public. Public health officials should also not hesitate to admit mistakes that they might have made at the early stage of this unprecedented global pandemic due to the lack of information and uncertainties in decision making. Last, public health agencies should develop a means to constantly monitor the public’s opinions, particularly those circulated through social media, so that they can adjust their communication strategies in a timely manner in response to the viral spread of misinformation, misinterpretation, or misbeliefs.

Future work should develop more effective machine learning classifiers to facilitate opinion mining using social media data, tweets in particular, which are often short and informal, so that automatic and continuous extraction and monitoring of public opinions are possible. In addition, future work should include more diverse social media platforms representing different types of user groups and different interaction modalities and use qualitative approaches such as interviews and focus groups to obtain a more in-depth understanding of why certain segments of the population have a strong attitude against mask wearing, rather than relying solely on their publicly available social media posts.

CONCLUSION

Public mask wearing, while believed to be an essential personal protection measure to contain the COVID-19 pandemic, has provoked significant controversies in the United States. Through an analysis of a large Twitter dataset using a combination of qualitative content analysis and machine learning approaches, this study classified the public’s attitude toward mask wearing and the evolution of this attitude over time. The results show that while most tweets were pro-mask, opposing opinions were not uncommon, and the proportion stayed rather constant throughout the pandemic to date. Common reasons for the anti-mask attitude included physical discomfort and negative effects, lack of effectiveness, and being unnecessary or inappropriate for certain people or under certain circumstances. Based on these findings, we recommend that public health agencies improve their communication strategies to better convey to the public the benefits of mask wearing and to combat misinformation. Such strategies may include increasing transparency in data and reasoning, not being afraid to admit mistakes that might have been made at the early stage of the pandemic due to the lack of information, and educating the public on how to properly interpret inconclusive or conflicting findings from scientific studies.

FUNDING

This work was supported in part by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through grant UL1TR001414. The work of Dr. Chen Li, Qiushi Bai, and Yicong Huang is supported by the Orange County Health Care Agency and NSF RAPID award 2027254. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or the Orange County Health Care Agency.

AUTHOR CONTRIBUTIONS

LH, CH, and YC designed the study. LH annotated data, designed the coding scheme, and drafted the manuscript. CH annotated data, built machine learning models, and drafted a significant portion of the manuscript. TLR resolved disagreements during qualitative analysis, assisted in the literature review, and revised the manuscript. KZ contributed to the study design and revised the manuscript. YH, QB, and CL collected the data and provided feedback on the manuscript. All authors have read and approved the final draft of the manuscript.

CONFLICT OF INTEREST STATEMENT

The authors have no competing interests to declare.

DATA AVAILABILITY STATEMENT

The data analyzed in this paper were retrieved using the Twitter API and can be made available upon request, provided that proper data use agreements are established with the research team and with Twitter (San Francisco, CA).

REFERENCES

- 1. Centers for Disease Control and Prevention. COVID-19 Cases, Deaths, and Trends in the US. CDC COVID Data Tracker. https://covid.cdc.gov/covid-data-tracker. Accessed December 23, 2020.

- 2. WHO Coronavirus Disease (COVID-19) Dashboard. https://covid19.who.int. Accessed December 28, 2020.

- 3. Bundgaard H, Bundgaard JS, Raaschou-Pedersen DET, et al. Effectiveness of adding a mask recommendation to other public health measures to prevent SARS-CoV-2 infection in Danish mask wearers. Ann Intern Med 2020; 174: 335–43. doi:10.7326/M20-6817.

- 4. National Academies of Sciences, Engineering, and Medicine. Rapid Expert Consultation on the Effectiveness of Fabric Masks for the COVID-19 Pandemic (April 8, 2020). Washington, DC: National Academies Press; 2020.

- 5. Eikenberry SE, Mancuso M, Iboi E, et al. To mask or not to mask: Modeling the potential for face mask use by the general public to curtail the COVID-19 pandemic. Infect Dis Model 2020; 5: 293–308.

- 6. Sunjaya AP, Jenkins C. Rationale for universal face masks in public against COVID‐19. Respirology 2020; 25 (7): 678–9.

- 7. Katz J, Sanger-Katz M, Quealy K. A detailed map of who is wearing masks in the U.S. The New York Times. https://www.nytimes.com/interactive/2020/07/17/upshot/coronavirus-face-mask-map.html. 2020. Accessed September 6, 2020.

- 8. Centers for Disease Control and Prevention. Transcript for CDC Telebriefing: CDC Update on novel coronavirus. 2020. https://www.cdc.gov/media/releases/2020/t0212-cdc-telebriefing-transcript.html. Accessed December 1, 2020.

- 9. Centers for Disease Control and Prevention. COVID-19: Considerations for Wearing Masks. https://www.cdc.gov/coronavirus/2019-ncov/prevent-getting-sick/cloth-face-cover-guidance.html. Accessed September 28, 2020.

- 10. World Health Organization. Advice on the use of masks in the context of COVID-19: interim guidance, 6 April 2020. https://apps.who.int/iris/handle/10665/331693. Accessed November 9, 2020.

- 11. Richards JW. Biden’s National Mask Mandate is Absurd and Despotic. Discovery Institute. 2020. https://www.discovery.org/a/bidens-mask-mandate/. Accessed December 6, 2020.

- 12. Pew Research Center. Most Americans say they regularly wore a mask in stores in the past month; fewer see others doing it. https://www.pewresearch.org/fact-tank/2020/06/23/most-americans-say-they-regularly-wore-a-mask-in-stores-in-the-past-month-fewer-see-others-doing-it/. Accessed August 2, 2020.

- 13. Advisory Board. Why some Americans won’t wear face masks, in their own words. http://www.advisory.com/daily-briefing/2020/06/19/mask-wearing. Accessed August 2, 2020.

- 14. Health.com. Why Do Some People Refuse to Wear a Face Mask in Public? https://www.health.com/condition/infectious-diseases/coronavirus/face-mask-refuse-to-wear-one-but-why. Accessed August 2, 2020.

- 15. Jarry J. Why Some People Choose Not to Wear a Mask. McGill Office for Science and Society. https://www.mcgill.ca/oss/article/covid-19-health/why-some-people-choose-not-wear-mask. Accessed November 7, 2020.

- 16. Siemaszko C. Here’s why some people are not wearing masks during the coronavirus crisis. NBC News. https://www.nbcnews.com/news/us-news/here-s-why-some-people-are-not-wearing-masks-during-n1200701. Accessed November 7, 2020.

- 17. Tweet geospatial metadata. https://developer.twitter.com/en/docs/tutorials/tweet-geo-metadata. Accessed February 1, 2021.

- 18. Twitter. Twitter API Standard v1.1. https://developer.twitter.com/en/docs/twitter-api/v1. Accessed December 31, 2020.

- 19. Sanders A, White R, Severson L, et al. Unmasking the conversation on masks: Natural language processing for topical sentiment analysis of COVID-19 Twitter discourse. medRxiv, doi: https://www.medrxiv.org/content/10.1101/2020.08.28.20183863v3, 20 Mar 2021, preprint: not peer reviewed.

- 20. Kim Y, Huang J, Emery S. Garbage in, garbage out: data collection, quality assessment and reporting standards for social media data use in health research, infodemiology and digital disease detection. J Med Internet Res 2016; 18 (2): e41. doi:10.2196/jmir.4738

- 21. Mikolov T, Sutskever I, Chen K, Corrado GS, Dean J. Distributed representations of words and phrases and their compositionality. In: Burges CJC, Bottou L, Welling M, Ghahramani Z, Weinberger KQ, eds. Advances in Neural Information Processing Systems 26. Red Hook, NY: Curran Associates; 2013: 3111–9. http://papers.nips.cc/paper/5021-distributed-representations-of-words-and-phrases-and-their-compositionality.pdf

- 22. Hilario AF, López SG, Galar M, Prati RC, Krawczyk B, Herrera F. Learning from Imbalanced Data Sets. Berlin, Germany: Springer International; 2018.

- 23. Wang Y, Huang M, Zhu X, Zhao L. Attention-based LSTM for Aspect-level Sentiment Classification. In: Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing. Stroudsburg, PA: Association for Computational Linguistics; 2016: 606–15.

- 24. Gal Y, Ghahramani Z. A theoretically grounded application of dropout in recurrent neural networks. In: Proceedings of the 30th International Conference on Neural Information Processing Systems; 2016; 29: 1027–35.

- 25. Ferrara E, Varol O, Davis C, Menczer F, Flammini A. The rise of social bots. Commun ACM 2016; 59 (7): 96–104.

- 26. GitHub - IUNetSci/botometer-python: A Python API for Botometer by OSoMe. https://github.com/IUNetSci/botometer-python. Accessed February 6, 2021.

- 27. Yang K-C, Varol O, Davis CA, Ferrara E, Flammini A, Menczer F. Arming the public with artificial intelligence to counter social bots. Hum Behav Emerg Tech 2019; 1 (1): 48–61.

- 28. Pew Research Center. How we identified bots on Twitter. Pew Research Center. https://www.pewresearch.org/fact-tank/2018/04/19/qa-how-pew-research-center-identified-bots-on-twitter/. Accessed February 24, 2021.

- 29. Rauchfleisch A, Kaiser J. The false positive problem of automatic bot detection in social science research. PLoS One 2020; 15 (10): e0241045.

- 30. He L, Yin T, Hu Z, Chen Y, Hanauer DA, Zheng K. Developing a standardized protocol for computational sentiment analysis research using health-related social media data. J Am Med Inform Assoc 2020 Dec 22 [E-pub ahead of print]. doi:10.1093/jamia/ocaa298.

- 31. Hutto CJ, Gilbert E V. A parsimonious rule-based model for sentiment analysis of social media text. In: Eighth International AAAI Conference on Weblogs and Social Media. 2014. https://www.aaai.org/ocs/index.php/ICWSM/ICWSM14/paper/view/8109. Accessed July 15, 2019.

- 32. TextBlob: Simplified Text Processing. TextBlob 0.15.2 documentation. https://textblob.readthedocs.io/en/dev/index.html. Accessed March 30, 2019.

- 33. Manning C, Surdeanu M, Bauer J, Finkel J, Bethard S, McClosky D. The Stanford CoreNLP Natural Language Processing Toolkit. In: Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations. Stroudsburg, PA: Association for Computational Linguistics; 2014: 55–60. doi:10.3115/v1/P14-5010

- 34. Tausczik YR, Pennebaker JW. The psychological meaning of words: LIWC and computerized text analysis methods. J Lang Soc Psychol 2010; 29 (1): 24–54.

- 35. He L, Zheng K. How do general-purpose sentiment analyzers perform when applied to health-related online social media data? Stud Health Technol Inform 2019; 264: 1208–12. doi:10.3233/SHTI190418

- 36. Tang J, Meng Z, Nguyen X, Mei Q, Zhang M. Understanding the Limiting Factors of Topic Modeling via Posterior Contraction Analysis. In: Proceedings of the 31st International Conference on Machine Learning; 2014; 32: I-190–8.

- 37. Corbin J, Strauss A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks, CA: Sage; 2014.

- 38. COVID-19 mask refurbishment. https://www.youtube.com/watch?v=LrXLqXydVIo. Accessed December 22, 2020.

- 39. Klompas M, Morris CA, Sinclair J, Pearson M, Shenoy ES. Universal masking in hospitals in the Covid-19 era. N Engl J Med 2020; 382: e63.

- 40. Davis MA, Zheng K, Liu Y, Levy H. Public response to Obamacare on Twitter. J Med Internet Res 2017; 19 (5): e167.

- 41. Du J, Xu J, Song H-Y, Tao C. Leveraging machine learning-based approaches to assess human papillomavirus vaccination sentiment trends with Twitter data. BMC Med Inform Decis Mak 2017; 17 (Suppl 2): 69.

- 42. Gui X, Wang Y, Kou Y, et al. Understanding the patterns of health information dissemination on social media during the Zika outbreak. AMIA Annu Symposium Proc 2017; 2017: 820–9.

- 43. Centers for Disease Control and Prevention. Your guide to masks. https://www.cdc.gov/coronavirus/2019-ncov/prevent-getting-sick/about-face-coverings.html. Accessed February 13, 2021.

- 44. Godoy M. Why some people don’t wear masks. NPR.org. https://www.npr.org/2020/07/01/886299211/why-some-people-dont-wear-masks. Accessed February 13, 2021.

- 45. Hosie R. Dentist on TikTok shows how to make face mask fit better. Insider. https://www.insider.com/doctor-tiktok-shows-how-to-make-face-mask-fit-better-2020-7. Accessed February 12, 2021.

- 46. French PE. Enhancing the legitimacy of local government pandemic influenza planning through transparency and public engagement. Public Admin Rev 2011; 71 (2): 253–64.

- 47. Thompson D. It’s a secret: California keeps key virus data from public. ABC News. https://abcnews.go.com/Technology/wireStory/secret-california-key-virus-data-public-75432129. Accessed February 13, 2021.

- 48. Gruber-Miller S. Fact check: New England journal article taken out of context, didn’t bash face masks. USA Today. https://www.usatoday.com/story/news/factcheck/2020/07/22/fact-check-new-england-journal-medicine-article-face-masks-coronavirus-covid-19-spread/5454384002/. Accessed February 25, 2021.
