Abstract

Eye contact established by a human partner has been shown to affect various cognitive processes of the receiver. However, little is known about humans’ responses to eye contact established by a humanoid robot. Here, we aimed at examining humans’ oscillatory brain response to eye contact with a humanoid robot. Eye contact (or lack thereof) was embedded in a gaze-cueing task and preceded the phase of gaze-related attentional orienting. In addition to examining the effect of eye contact on the recipient, we also tested its impact on gaze-cueing effects (GCEs). Results showed that participants rated eye contact as more engaging and responded with higher desynchronization of alpha-band activity in left fronto-central and central electrode clusters when the robot established eye contact with them, compared to no eye contact condition. However, eye contact did not modulate GCEs. The results are interpreted in terms of the functional roles involved in alpha central rhythms (potentially interpretable also as mu rhythm), including joint attention and engagement in social interaction.

Keywords: eye contact, joint attention, interactive gaze, gaze cueing, human–robot interaction

Introduction

Eye contact occurs when gaze of two agents is directed to one another (Emery, 2000). It is one of the most important social signals communicating the intention to engage in an interaction (Kleinke, 1986). The impact of eye contact on human cognition has been long investigated using behavioural and electrophysiological measures and has been shown to affect a wide range of cognitive processes and states, including arousal, memory, action and attention (for reviews on behavioural measures, see Macrae et al., 2002; Senju and Johnson, 2009; Hamilton, 2016; Hietanen, 2018). It has been shown that seeing another person’s direct gaze modulates oscillatory electroencephalogram (EEG) activity in frequency ranges centred around 10 Hz, namely alpha and mu rhythms (Gale et al., 1972, 1975; Hietanen et al., 2008; Pönkänen et al., 2011; Wang et al., 2011; Hoehl et al., 2014; Dikker et al., 2017; Leong et al., 2017; Prinsen et al., 2017; Prinsen and Alaerts, 2020).

Alpha rhythm was first described in parieto-occipital regions and is known to be primarily modulated by visual inputs (Adrian and Matthews, 1934), while its suppression also reflects arousal and attention mechanisms (for reviews, see Ward, 2003; Foxe and Snyder, 2011). For example, alpha desynchronization occurs under conditions of high arousal and/or increased attentiveness. Related to modulation of eye contact effect on alpha power, studies in the 1970s showed that a live direct gaze induced alpha suppression at occipital electrodes compared to an averted gaze (Gale et al., 1972, 1975). The authors explained their results in terms of higher arousal in face-to-face contact compared to an averted gaze. Moreover, more recent studies have shown that a live direct gaze from a social partner elicited left-sided asymmetry in anterior alpha cortical activity (associated with activation of the approach system and positive emotion; Harmon-Jones, 2003; Davidson, 2004; Van Honk and Schutter, 2006), whereas a live averted gaze has been related to weaker left-sided or stronger right-sided asymmetry in alpha cortical activity (associated with avoidance system and negative emotion; Harmon-Jones, 2003; Davidson, 2004; Van Honk and Schutter, 2006; Hietanen et al., 2008; Pönkänen et al., 2011). Additionally, eye contact can modulate inter-brain EEG synchronized activity in alpha frequency range both in infants (Leong et al., 2017) and in adults (Dikker et al., 2017). Furthermore, a reduction of alpha power has been reported for other instances involving a mechanism of sharing attention, i.e. joint attention. For example, Lachat and colleagues found that the power of alpha rhythm was reduced in left centro-parieto-occipital electrodes (11–13 Hz) during joint attention periods (looking at the same object) compared to no-joint attention periods (looking at different objects) (Lachat et al., 2012).

On the other hand, rolandic mu rhythm, which was first described by Gastaut (1952), occurs in the same frequency band as the alpha rhythm but is topographically centred over the sensorimotor regions of the brain (i.e. electrode positions C3, Cz and C4). Thus, the two rhythms can be mainly distinguished by their topographic activation. Mu rhythm was first associated with the execution of a motor activity (Pfurtscheller and Berghold, 1989) but subsequently also with action observation and imagination. More specifically, mu oscillations are reduced during movement execution/observation/imagination as compared to a condition of no movement (Pfurtscheller and Berghold, 1989; Pineda et al., 2000; Perry and Bentin, 2009). Related to eye contact, research has shown a link between eye contact and interpersonal motor resonance, indicating that the mirroring of observed movements is enhanced when accompanied by mutual eye contact between actor and observer (Wang et al., 2011; Prinsen et al., 2017). Importantly, Prinsen and Alaerts (2020) found mu rhythm suppression when movement observation was accompanied by direct compared to averted gaze. Furthermore, a reduction of mu power has been observed for joint attention both in adults (Lachat et al., 2012) and in infants (Hoehl et al., 2014). Specifically for infants, mu desynchronization occurred only when the adult had engaged in eye contact with them prior to looking at the object (Hoehl et al., 2014).

The abovementioned electrophysiological studies involved humans as interaction partners to investigate the effect of eye contact (Senju and Johnson, 2009) on oscillatory activity of the brain. Real humans as interactive partners in lab-based protocols increase the ecological validity and naturalness of the interaction and evoke mechanisms of social cognition closer to real-life interactions, relative to 2D stimuli presented on the screen. Indeed, recent studies have shown that more dynamic and naturalistic gaze cue stimuli do not necessarily reveal the same pattern of results compared to static screen-based stimuli (Risko et al., 2016). However, involving humans as interactive agents can impose limitations to the replicability of results, since there are various aspects of the interaction that are difficult to control and can eventually alter participants’ reaction to these processes, for instance natural human variability in repetition of the same movement over many trials (Chevalier et al., 2019). Recently, it has been suggested that embodied humanoid agents can address the challenges of naturalistic approaches in social cognition, providing, on one hand, higher ecological validity, as compared to screen-based observational studies with 2D stimuli and, on the other hand, better experimental control relative to human–human interaction studies (Wykowska et al., 2016; Wiese et al., 2017; Chevalier et al., 2019; Schellen and Wykowska, 2019). Regarding ecological validity, it has been shown that robots that are embodied increase social presence (Jung and Lee, 2004). Additionally, an embodied agent can impact on interaction differently than a virtual representation of the same agent, as shown in various contexts, e.g. better temporal coordination, facilitation in learning, increased persuasiveness (Bartneck, 2003; Kose-Bagci et al., 2009; Leyzberg et al., 2012; Li, 2015). 
Furthermore, humanoid agents allow for interactive paradigms requiring manipulation of objects in the environment and joint actions (Admoni et al., 2014; Ciardo et al., 2020). Regarding experimental control, humanoids can repeat specific behaviours in the exact same manner over many trials. Moreover, humanoids allow for tapping onto specific cognitive mechanisms, since their movements can be decomposed into individual elements, known as ‘modularity of control’, and allow for studying their separate or combined contribution on the mechanism of interest (Sciutti et al., 2015).

Research examining the effect of eye contact exhibited by a robotic agent has mainly focused on subjective evaluations of the robot or the quality of human–robot interaction (Imai et al., 2002; Ito et al., 2004; Yonezawa et al., 2007; Satake et al., 2009; Choi et al., 2013). For example, it has been found that people are sensitive to a robot’s gaze, i.e. they perceive a robot’s gaze directed towards them but not when it is directed to a person sitting nearby (Imai et al., 2002). Additionally, a robot exhibiting eye contact improves its social evaluation, attribution of intentionality and engagement (Ito et al., 2004; Yonezawa et al., 2007; Kompatsiari et al., 2017, 2018a, 2019a). Moreover, the effect and subjective perception of eye contact can be mediated by the content of conversation (Choi et al., 2013). However, these results have been based on subjective reports, which require conscious awareness of examined mechanisms or phenomena, and are easily affected by biases, such as the social desirability effect (Humm and Humm, 1944). Furthermore, explicit subjective measures cannot unveil certain cognitive mechanisms that are often automatic and implicit. Recently, Kompatsiari and colleagues, using objective measures, showed that eye contact exhibited by iCub humanoid robot (Metta et al., 2010) elicited a higher degree of ‘attentional engagement’ (Kompatsiari et al., 2019b). In more detail, eye contact engaged participants’ attention to iCub’s face by attracting longer fixations to the face, compared to no eye contact. Furthermore, when the robot established eye contact with the participants before shifting its gaze to a potential target location, it engaged them in joint attention. On the contrary, joint attention was not elicited when the robot did not establish eye contact (Kompatsiari et al., 2018a).

Aim of the study

Here, we aimed at investigating human brain responses to eye contact established by a humanoid robot in a face-to-face interaction. The eye contact was embedded in a joint attention protocol based on the traditional gaze-cueing paradigm (Friesen and Kingstone, 1998). The typical behavioural finding of gaze-cueing paradigms is that reaction times in target detection or discrimination are faster for validly compared to invalidly cued targets (validity effect), reflecting a gaze-cueing effect (GCE). Additionally, a neural signature underlying the validity effect has been identified, i.e. earlier and larger P1/N1 components of event-related potentials (ERPs) have been reported as an ERP index of attentional focus (Mangun et al., 1993) and the GCE specifically (Wykowska et al., 2014; Perez-Osorio et al., 2017). To translate the gaze-cueing paradigm to a human–robot interaction protocol, a 3-D gaze/head-cueing paradigm was employed, in which the iCub humanoid robot was positioned between two computer screens, where target letters would appear. Most importantly for the purposes of this study, the gaze contact of the robot was manipulated prior to directional gaze cue. In one condition, iCub looked towards participants’ eyes, presumably established eye contact with them, and then looked at one of the lateral screens. In the other condition, the robot avoided the human’s gaze by looking down without establishing eye contact before looking towards one of the lateral screens.

We hypothesized that if eye contact with a humanoid robot has an impact on oscillatory neural activity, it might modulate the alpha/mu frequency range, as observed in the case of eye contact with another human (Gale et al., 1972, 1975; Hietanen et al., 2008; Pönkänen et al., 2011; Wang et al., 2011; Lachat et al., 2012; Hoehl et al., 2014; Dikker et al., 2017; Leong et al., 2017; Prinsen et al., 2017; Prinsen and Alaerts, 2020). More specifically, we expected a reduction of alpha power during the period of establishing eye contact with the iCub humanoid robot compared to when iCub avoided eye contact by looking downwards. Moreover, similarly to previous studies, we hypothesized that participants would rate the eye contact condition as more engaging than the no eye contact condition (Kompatsiari et al., 2017, 2018a, 2019a). As the focus of this paper is on neural activity related to eye contact with a humanoid robot, the analysis and results of GCEs related to target presentation (both at behavioural and neural level) are reported in Supplementary Material (see also Kompatsiari et al., 2018b).

Methods

Participants

A sample size of 10 participants was calculated to be sufficient for the alpha synchronization analysis during periods of eye contact/no eye contact, based on an a priori power analysis (Faul et al., 2007) using a paired-samples test, the effect size (d = 1.29) of a previous study investigating alpha desynchronization in a similar joint-attention paradigm (Lachat et al., 2012), an alpha error equal to 0.05 and a power level of 0.95. The effect size was based on the F value (F = 12.21) of the main effect of the condition attention (joint attention/no joint attention) in the study of Lachat et al. (2012). Additionally, a sample size of 21 participants was calculated to be sufficient for the validity effect, using the effect size (d = 0.7) of a similar study (Wykowska et al., 2015), an alpha error equal to 0.05 and a power level of 0.85. Based on these analyses, and in order to account for data sets that might need to be excluded due to poor data quality, we recruited and tested 24 healthy right-handed participants (mean age = 26.16 ± 4.02 years, 16 women) in total. All participants had normal or corrected-to-normal vision and provided written informed consent before enrolment in the study. At the end of the experiment, participants were reimbursed for their participation and were debriefed about the purpose of the study. The study was conducted at the Istituto Italiano di Tecnologia (IIT), Genova, and it was approved by the local ethical committee (Comitato Etico Regione Liguria).
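As a rough cross-check of the sample sizes above, the calculation can be sketched with a normal approximation plus a small-sample correction term. This is not the exact noncentral-t computation performed by the power analysis software; the function name, the two-sided test and the correction term are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def paired_sample_size(d, alpha=0.05, power=0.95):
    """Approximate n for a two-sided paired-samples t-test.

    Normal approximation with an added z_alpha^2 / 2 term that
    compensates for using z instead of t quantiles (an approximation,
    not the exact noncentral-t solution).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n = ((z_alpha + z_power) / d) ** 2 + z_alpha ** 2 / 2
    return math.ceil(n)


# Alpha-band analysis: d = 1.29, power = 0.95
n_alpha = paired_sample_size(1.29, alpha=0.05, power=0.95)
# Validity effect: d = 0.7, power = 0.85
n_validity = paired_sample_size(0.70, alpha=0.05, power=0.85)
print(n_alpha, n_validity)
```

Under these assumptions the approximation yields 10 and 21 participants, in line with the values reported above.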

Stimuli and apparatus

The experiment was carried out in an isolated and noise-attenuated room. Participants and iCub were seated at the opposite sides of a desk at a distance of 125 cm. Participants’ and iCub’s eyes were aligned at 122 cm from the floor. iCub’s gaze shifts were always combined with a head movement. The gaze was directed to five different locations in space: (i) rest—towards a position between the desk and participants’ upper body, (ii) eye contact—towards participants’ eyes or no eye contact—towards the table, (iii) left—towards the target on the left screen or right—towards the target on the right screen. The coordinates of these positions were predetermined in order to ensure that the amplitude of the gaze shifts was the same for both gaze conditions: eye contact/no eye contact. More specifically, the height of robot’s gaze (z-coordinate) in eye contact/no eye contact was equally distanced from rest and left/right positions.

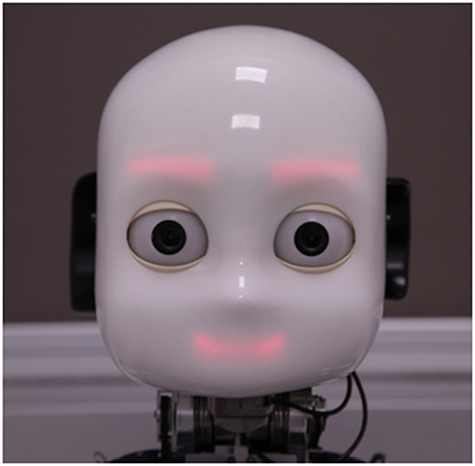

iCub and algorithms

iCub is a full humanoid robot. It has physically embodied 3D mechanical eyes with 3 degrees of freedom (tilt, vergence and version) and 3 additional degrees of freedom in the neck (roll, pitch and yaw) (Metta et al., 2010), see Figure 1. The contrast between the black and the white part of iCub’s eyes is similar to the contrast in human eyes (black iris and white sclera), thereby adding realism to eye contact and/or gaze-mediated orienting of attention. In order to control the movement of the iCub, we used the iCub middleware Yet Another Robot Platform (YARP) (Metta et al., 2006), which is a multi-platform open-source framework. The movement of the eyes and the neck of iCub were controlled by the YARP Gaze Interface, iKinGazeCtrl (Roncone et al., 2016), which allows the control of iCub’s gaze through independent movement of the neck and eyes in a biologically inspired way. The vergence of the robot’s eyes was set to 5° and maintained constant. The trajectory time for the movement of eyes and neck was set to 200 and 400 ms, respectively.

Fig. 1.

iCub robot.

Procedure

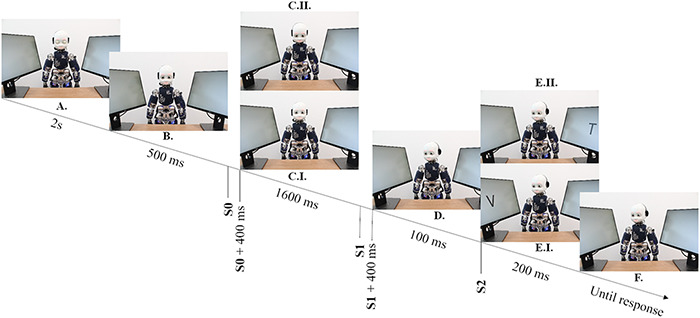

The experiment lasted for about 1 h. It consisted of 20 blocks, pseudo-randomly assigned to eye contact or no eye contact condition. The order of the blocks was counterbalanced across participants so that half of the participants experienced a sequence starting with an eye contact block (Sequence Type A), while the other half experienced the exact opposite sequence starting with a no eye contact gaze block (Sequence Type B). Each block consisted of 16 trials. Regarding trial sequence, at the beginning of every trial the robot had its eyes closed for 2 s, see Figure 2A. Afterwards, it opened its eyes for 500 ms (see Figure 2B) and looked either towards the participant’s eyes (in eye contact blocks, see Figure 2C.I) or downwards (in no eye contact blocks, see Figure 2C.II). This phase lasted for 2 s. The duration of this phase includes the robot movement that lasted for 400 ms, equivalent to the neck trajectory time. Subsequently, the robot looked to the left or right screen, see Figure 2D. The letter target appeared on the same (valid trial, see Figure 2E.I) or opposite screen (invalid trial, see Figure 2E.II), 500 ms after the initiation of the robot’s gaze shift. Thus, our gaze-cueing procedure involved a stimulus-onset asynchrony of 500 ms. Gaze direction was uninformative with respect to target location (i.e. cue-target validity = 50%). Target identity (T, V), target location (left or right screen) and gaze direction (left or right screen) were counterbalanced and randomly selected within each block. Participants were instructed to keep their eyes fixated on the robot’s face and discriminate the target as fast as possible by pressing the letter ‘V’ or ‘T’ depending on the target identity, see Figure 2F. Keeping the fixation on the face of the robot was the best strategy to perform the task. Indeed, if participants had moved the eyes towards one of the screens, they would have likely missed the target in the case it was presented on the opposite one. 
The experimenter monitored whether participants fixated their gaze on the iCub face, as instructed, through cameras located in iCub eyeballs. Half of the participants pressed the key ‘V’ for the V stimulus with their right hand, and the key ‘T’ for the T stimulus with their left hand (stimulus-response mapping 1). The other half were instructed to respond with the opposite configuration (stimulus-response mapping 2). In order to reduce fatigue, a pause of around 10 min was programmed after half of the experiment, while short self-paced breaks were allowed at the end of every block. Additionally, at the end of each block, participants were requested to respond to the following question: ‘How much did you feel engaged with the robot (1–10)’.
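The block and trial structure described above can be sketched as follows. The sketch assumes that the 20 blocks are split evenly between the two gaze conditions and that each of the eight combinations of target identity, target location and gaze direction occurs twice per block; neither detail is stated explicitly, and all names are hypothetical.

```python
import itertools
import random

SIDES = ("left", "right")


def make_block(condition, rng):
    """Return 16 shuffled trials: 2 targets x 2 target sides x 2 gaze sides, twice."""
    trials = [
        {
            "condition": condition,
            "target": target,
            "target_side": target_side,
            "gaze_side": gaze_side,
            "valid": target_side == gaze_side,  # cue-target validity = 50%
        }
        for _ in range(2)
        for target, target_side, gaze_side in itertools.product("TV", SIDES, SIDES)
    ]
    rng.shuffle(trials)
    return trials


def make_sequences(rng, n_blocks=20):
    """Sequence A starts with eye contact; Sequence B is its exact opposite."""
    half = n_blocks // 2
    rest = ["eye_contact"] * (half - 1) + ["no_eye_contact"] * half
    rng.shuffle(rest)
    seq_a = ["eye_contact"] + rest
    seq_b = ["no_eye_contact" if c == "eye_contact" else "eye_contact" for c in seq_a]
    return seq_a, seq_b


rng = random.Random(1)  # fixed seed only to make the sketch reproducible
seq_a, seq_b = make_sequences(rng)
first_block = make_block(seq_a[0], rng)
```

Crossing the three factors fully within a block is what makes gaze direction uninformative: exactly half of the trials in every block are valid.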

Fig. 2.

Trial Sequence. iCub has its eyes closed for 2s (A). Then, it opens its eyes, keeping its head at the same position (B). After 500 ms, iCub initiates its movement towards participants’ eyes (eye contact, C.I) or downwards (no eye contact, C.II). S0 represents the EEG trigger related to the initiation of the robot movement towards the establishment of eye contact. The (no) eye contact is established 400 ms following S0 trigger. The robot remains in this position for another 1600 ms. Then, iCub turns its head laterally to gaze towards a potential target location (D). S1 represents the EEG trigger related to the initiation of the robot’s movement towards the lateral position. The lateral movement is completed within 400 ms following the S1 trigger. iCub remains in the lateral position with blank screens for another 100 ms. Then, the target letter appears randomly on one of the screens for 200 ms (valid trial: E.I, invalid trial: E.II). S2 represents the EEG trigger related to the target appearance on the screen. A participant (not shown) identifies the target by pressing the response button (T or V), (F).

EEG data recording

EEG was recorded from 64 electrode sites of an active electrode system using Ag–AgCl electrodes, at a sampling rate of 500 Hz (ActiCap, Brain Products, GmbH, Munich, Germany). Bipolar horizontal and vertical electro-oculogram (EOG) activity was recorded from the outer canthi of the eyes and from above and below the observer’s left eye, respectively. All electrodes were referenced to FCz. Electrode impedances were kept below 10 kΩ throughout the experimental procedure. EEG activity was amplified with BrainAmp amplifiers (Brain Products, GmbH) using a band-pass filter of 0.1–250 Hz.

Analysis

For the analysis of GCEs, related to target presentation, please see Supplementary Material.

Eye contact-related effects

Time frequency analysis of the epoch during gaze contact.

EEG data were analysed using MATLAB® version R2017a (The Mathworks Inc., 2017) and customised scripts as well as the EEGLAB (Delorme and Makeig, 2004) and FieldTrip toolboxes (Oostenveld et al., 2011). The data were down-sampled to 250 Hz, while a band-pass filter (1–100 Hz) and a notch filter (50 Hz) were applied to restrict the signal to the frequencies of interest and to remove the power line noise. The signal was re-referenced to the common average of all electrodes (Dien, 1998). The epoch of interest consisted of the actual eye contact/no eye contact phase, but we also included the time period of robot movement towards the establishment of eye contact and the period of robot movement towards the lateral shift after the end of the gaze contact period. For this analysis, we denote as time t = 0 s the event in which iCub started moving its head towards establishing eye contact or not (EEG trigger S0, see Figure 2). Data were subsequently segmented into epochs (i.e. trials) of 5 s length, including 2.5 s before and after S0. Each trial was baseline-corrected by removing the values averaged over a period of 500 ms (from −1.5 to −1.0 s before S0), during which iCub had its eyes closed, see Figure 2A. The specific baseline window was chosen in order to ensure a relatively large task-irrelevant window without any robot movement and with eyes closed. The removal of trials with large artefacts (i.e. muscle movements and electric artefacts) and bad channels was first performed manually by visual inspection. Removal of adjacent electrodes was restricted to electrodes easily susceptible to noise and outside the focus of the current analysis (fronto-temporal: FT9, FT7 or FT8, FT10; frontal: Fp1, AF7, AF3, or Fp2, AF4, AF8). On average, 6.18 ± 3.06 electrodes were removed and interpolated afterwards across both gaze conditions. The mean number of artefact-free trials was similar across conditions and equal to 144.42 ± 5.54 trials (out of 160) on average.
The remaining artefacts, i.e. muscular activity, ocular activity and bridges, were removed by applying independent component analysis (ICA). The number of removed ICA components was similar across gaze conditions and equal to 23.5 ± 6.88 on average. After the artefact removal, noisy channels were spatially interpolated.
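To make the epoch timing concrete, the mapping between latencies relative to S0 and sample indices at 250 Hz, together with the subtraction of the mean of the −1.5 to −1.0 s window, can be sketched for a single channel. This is an illustrative stand-in rather than the toolbox routines used in the study; the function names are hypothetical.

```python
SFREQ = 250          # Hz, sampling rate after down-sampling
EPOCH_START = -2.5   # s, epoch onset relative to trigger S0


def to_sample(t, epoch_start=EPOCH_START, sfreq=SFREQ):
    """Convert a latency in seconds (relative to S0) to a sample index."""
    return round((t - epoch_start) * sfreq)


def baseline_correct(epoch, t_start=-1.5, t_end=-1.0):
    """Subtract the mean of the baseline window from every sample of one channel."""
    i0, i1 = to_sample(t_start), to_sample(t_end)
    baseline = sum(epoch[i0:i1]) / (i1 - i0)
    return [value - baseline for value in epoch]


# A 5 s epoch holds 5 * 250 = 1250 samples; S0 falls at index 625.
epoch = [5.0] * (5 * SFREQ)
for i in range(to_sample(-1.5), to_sample(-1.0)):
    epoch[i] = 3.0   # toy signal level while the robot's eyes are closed
corrected = baseline_correct(epoch)
```

In this toy epoch the baseline window maps to samples 250 to 374, so the corrected signal is zero inside the window and expresses every other sample as a change relative to the eyes-closed period.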

All trials were averaged for each condition across participants and the butterfly plots were used to inspect for potential ERPs during the period of interest. No ERPs were observed in this period. Time-frequency representations (TFRs) of oscillatory power changes were computed separately for each condition (eye contact and no eye contact). Time-frequency power spectra were estimated using Morlet wavelet analysis based on varying cycles to allow for high spectral resolution in lower frequencies (3.5 cycles at the lowest considered frequency: 2 Hz) and high temporal resolution for higher frequencies (18 cycles at the highest considered frequency: 60 Hz). Time steps were set to 10 ms, while frequency steps were set to 1 Hz (Oostenveld et al., 2011). An absolute baseline correction for each trial was performed by subtracting the average oscillatory activity of the −1.5 to −1.0 s period (Premoli et al., 2017) where iCub had its eyes closed. This baseline correction was used to avoid any task-unrelated time-frequency activity. Subsequently, TFRs were averaged across trials per experimental condition. In the end, TFRs were cropped to the phase of interest (S0: 0 to 2.5 s) in order to include the oscillatory activity of the whole gaze dynamics, i.e. the period towards the establishment of eye contact/no eye contact (S0: 0 s to 400 ms), the actual period of eye contact/no eye contact gaze (400 ms to 2.0 s) and the period of robot’s gaze shift following both conditions until target appearance (S1: 2.0 to 2.5 s).
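The trade-off controlled by the number of wavelet cycles can be illustrated numerically. The sketch below assumes that the number of cycles increases linearly with frequency between the two reported end points (3.5 cycles at 2 Hz, 18 cycles at 60 Hz); the actual spacing is not stated in the text. It uses the standard Morlet relations, in which the temporal standard deviation is cycles / (2πf) and the spectral standard deviation is f / cycles.

```python
import math


def n_cycles(freq, f_min=2.0, f_max=60.0, c_min=3.5, c_max=18.0):
    """Number of wavelet cycles at `freq`, interpolated linearly (assumption)."""
    frac = (freq - f_min) / (f_max - f_min)
    return c_min + frac * (c_max - c_min)


def wavelet_resolution(freq):
    """Return (temporal s.d. in seconds, spectral s.d. in Hz) of a Morlet wavelet."""
    cycles = n_cycles(freq)
    sigma_t = cycles / (2 * math.pi * freq)
    sigma_f = freq / cycles
    return sigma_t, sigma_f


for f in (2.0, 10.0, 60.0):
    sigma_t, sigma_f = wavelet_resolution(f)
    print(f"{f:5.1f} Hz: {n_cycles(f):4.1f} cycles, "
          f"sigma_t = {sigma_t * 1000:6.1f} ms, sigma_f = {sigma_f:4.2f} Hz")
```

Because the product of the two standard deviations is fixed at 1 / (2π), any gain in temporal precision at a given frequency is paid for in spectral precision, and vice versa.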

Data were averaged to calculate power within alpha frequency band, i.e. 8–12 Hz. In this frequency range, spatio-temporal data across conditions were compared by performing non-parametric cluster-based permutation analyses (using a Monte-Carlo method based on paired t-statistics) (Maris and Oostenveld, 2007). Samples between gaze conditions with a t-value larger than an a priori threshold of P < 0.05 were clustered in connected sets on the basis of temporal and spatial adjacency. Cluster-level statistics were calculated by taking the sum of the t-values. Subsequently, comparisons were performed for the maximum values of summed t-values. The reference distribution of cluster-level t-values (using maximum of the summed values) was approximated using a permutation test (i.e. randomising data across conditions and re-running the statistical test N = 1500 times). Clusters comprising a minimum of two electrodes were considered statistically significant at an alpha level of 0.05 if <5% of the permutations used to construct the reference distribution yielded a maximum cluster-level statistic larger than the cluster-level value observed in the original data.
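The logic of the cluster-based permutation test can be sketched in a simplified form. The version below clusters over time only (one channel), whereas the analysis described above clusters over both time and electrodes; it is a didactic stand-in, not the toolbox implementation. Condition labels are permuted within participants by flipping the sign of each participant's paired difference, the critical t-value is passed in by the caller, and all function names are hypothetical.

```python
import math
import random


def paired_t(diffs):
    """t-statistic of the mean paired difference against zero."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if var == 0.0:
        return 0.0  # degenerate sample, treated as no evidence (assumption)
    return mean / math.sqrt(var / n)


def max_cluster_stat(diff_matrix, t_crit):
    """Largest |summed t| over runs of adjacent supra-threshold samples of equal sign.

    diff_matrix[i][k] is the condition difference of participant i at sample k.
    """
    n_samples = len(diff_matrix[0])
    t_vals = [paired_t([subj[k] for subj in diff_matrix]) for k in range(n_samples)]
    best, run, run_sign = 0.0, 0.0, 0
    for t in t_vals:
        sign = (t > t_crit) - (t < -t_crit)
        if sign != 0 and sign == run_sign:
            run += t                      # extend the current cluster
        elif sign != 0:
            run, run_sign = t, sign       # start a new cluster
        else:
            run, run_sign = 0.0, 0        # sub-threshold sample ends the cluster
        best = max(best, abs(run))
    return best


def cluster_permutation_p(diff_matrix, t_crit, n_perm=1500, seed=0):
    """Proportion of permutations whose maximum cluster statistic exceeds the observed one."""
    rng = random.Random(seed)
    observed = max_cluster_stat(diff_matrix, t_crit)
    larger = 0
    for _ in range(n_perm):
        flipped = []
        for subj in diff_matrix:
            s = rng.choice((-1, 1))       # swap condition labels for this participant
            flipped.append([s * d for d in subj])
        if max_cluster_stat(flipped, t_crit) > observed:
            larger += 1
    return larger / n_perm
```

For 24 participants, a two-sided sample-level threshold of P < 0.05 corresponds to a critical value of about 2.07 (23 degrees of freedom). Comparing only the maximum cluster statistic per permutation is what controls the family-wise error rate across all samples.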

Exploratory analysis on alpha asymmetry during gaze contact.

In addition to the general oscillatory activity during the period of eye contact/no eye contact, alpha asymmetry at frontal sites was assessed. More specifically, asymmetry values in alpha range were calculated for electrode pairs at frontal sites (F8/F7, F4/F3) by subtracting the ln-transformed power density values for the left site from that for the right site (Allen et al., 2004; Coan and Allen, 2003; Koslov et al., 2011; Papousek et al., 2012, 2013).
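The asymmetry index defined above reduces to a difference of natural logarithms, as in the following minimal sketch. The function name and the example power values are hypothetical.

```python
import math


def alpha_asymmetry(power_left, power_right):
    """ln(right) - ln(left) alpha power for a homologous electrode pair."""
    return math.log(power_right) - math.log(power_left)


# Example for one participant and the F4/F3 pair (made-up power values)
index_f4_f3 = alpha_asymmetry(power_left=2.0, power_right=2.5)
```

Because alpha power is conventionally taken to be inversely related to cortical activity, a positive index (more alpha power on the right) is usually read as relatively greater left-sided activity.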

Engagement ratings.

Median ratings for social engagement were analysed using a Wilcoxon signed-rank test in order to compute the statistical difference between eye contact vs. no eye contact blocks.
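For illustration, the Wilcoxon signed-rank test on paired ratings can be sketched with the large-sample normal approximation. The sketch omits the tie and continuity corrections that statistical packages typically apply, so its output will not match such software exactly when ratings are tied; the function names are hypothetical.

```python
import math
from statistics import NormalDist


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Return (Z, two-sided p) for paired samples; requires at least one non-zero difference."""
    diffs = [a - b for a, b in zip(x, y) if a != b]   # zero differences are dropped
    n = len(diffs)
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)                          # smaller rank sum, so Z <= 0
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean_w) / sd_w
    p = 2 * NormalDist().cdf(z)
    return z, p
```

Here `x` and `y` would hold each participant's median rating in the eye contact and no eye contact blocks, respectively. Basing Z on the smaller rank sum always yields a non-positive value, which is the sign convention of the Z statistic reported in the Results.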

Results

For the results of GCEs, related to target presentation, please see Supplementary Material.

Eye contact-related effects

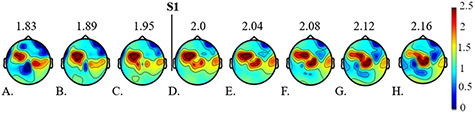

Time frequency analysis of the epoch during gaze contact.

The analysis of alpha-band activity showed that participants responded with higher desynchronization in alpha activity in eye contact compared to no eye contact condition in a left fronto-central and a central cluster (corrected for multiple comparisons P = 0.035) (Cohen’s d = 0.49) during the time window 1.83–2.19 s, see Figure 3. This time window corresponded to different phases of the experiment, i.e. eye contact/no eye contact condition during the time period: t = 1.83–2.0 s and the robot’s gaze shift towards a lateral position (left or right) for the time period t = 2.01–2.19 s. Data were further averaged across the abovementioned temporal bins, and the spatial data across conditions were compared by performing non-parametric cluster-based permutation analyses (using a Monte-Carlo method based on paired t-statistics) (Maris and Oostenveld, 2007). During gaze condition period (eye contact/no eye contact), the cluster of the electrodes was located in a left fronto-central position, multiple comparisons corrected P = 0.01 (Cohen’s d = 0.62), with a significant cluster of four electrodes: FC5, FC1, C3 and FC3 (see Figure 3A-C). Data were further averaged over the above-mentioned temporal period and the significant electrodes and were then submitted to Bayesian paired-sample t-test. The estimated Bayes factor (alternative/null) suggested that the data were 7.07 times more likely to occur under the alternative hypothesis, providing thus substantial evidence for this hypothesis. During the robot’s gaze shift, the cluster of the electrodes was located in a more central position, multiple comparisons corrected P = 0.008 (Cohen’s d = 0.64), with a significant cluster of six electrodes: FC1, CP2, FC3, C1, CPz and C2 (see Figure 3D-H). Data were further averaged over the above-mentioned time period and the significant electrodes and were submitted to Bayesian paired-sample t-test. 
The estimated Bayes factor (alternative/null) suggested that the data were 9.07 times more likely to occur under the alternative hypothesis, providing thus substantial evidence for this hypothesis.

Fig. 3.

Scalp topographies of statistically significant clusters between gaze conditions (no eye contact and eye contact) in alpha range band, 8–12 Hz: depicted time range between t = 1.832 to t = 2.16 s, relative to the initiation of the robot movement towards the eye/no eye contact. The vertical line S1 indicates the initiation of the robot’s movement towards the lateral position (t = 2 s). The topographies are depicted every 60 ms before S1 (3A–3C) and every 40 ms after S1 (3D–3H).

Exploratory analysis on alpha asymmetry during gaze contact.

Alpha asymmetry values were assessed for the F3/F4 and F7/F8 electrode pairs. Values did not differ either for F3/F4 electrodes: t (1, 23) = 1.22, P = 0.23 (Mno eye contact = 0.13, s.d. = 0.27; Meye contact = 0.05, s.d. = 0.33) or for F7/F8 electrodes: t (1, 23) = –0.59, P = 0.56 (Mno eye contact = –0.02, s.d. = 0.58; Meye contact = 0.07, s.d. = 0.44). The data were also examined by estimating a Bayes factor using Bayesian information criteria (Wagenmakers, 2007), comparing the fit of the data under the null hypothesis and the alternative hypothesis. Regarding the F3/F4 electrodes, the estimated Bayes factor (null/alternative) suggested that the data were 2.41 times more likely to occur under the null hypothesis. A similar pattern was found for F7/F8 electrodes, where the estimated Bayes factor (null/alternative) suggested that the data were 3.9 times more likely to occur under the null hypothesis.

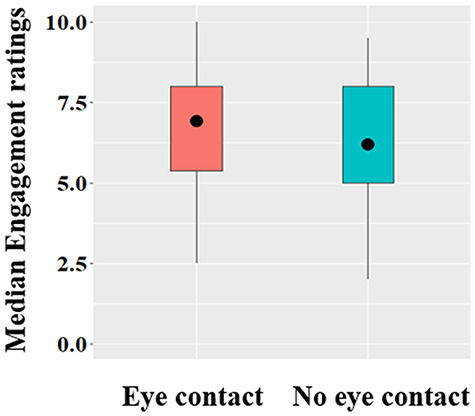

Engagement ratings.

Participants rated the eye contact condition as more engaging than the no eye contact condition, Z = –2.0, P = 0.045 (Meye contact = 6.92, s.d. = 1.74, Mno eye contact = 6.21, s.d. = 2.1). The means of the median ratings across gaze conditions are presented in Figure 4.

Fig. 4.

Median engagement ratings across gaze conditions. The circle represents the mean of the data. The ends of the whiskers represent the lowest and highest data points within 1.5 interquartile ranges of the lower and upper quartiles, respectively.

Discussion

The aim of the present study was to investigate how eye contact with a humanoid robot affects participants’ oscillatory neural activity and subjective experience of engagement. To this end, we designed an interactive paradigm where iCub humanoid robot either established eye contact with the participants or not. The modulation of eye contact was embedded in a joint attention paradigm. Along with the effects related to eye contact, we examined the impact of eye contact on GCE both on behaviour and neural activity (see Supplementary Material).

Eye contact phase (before the onset of robot’s directional gaze)

Participants responded with higher desynchronization of alpha-band activity when the robot established eye contact with them, compared to the no eye contact condition. This effect was prominent in a left fronto-central cluster of electrodes, during approximately the last 200 ms of the gaze manipulation phase. Although the effect size of our result is smaller than the effect size the study was powered to detect, a Bayesian approach showed substantial evidence in favour of our effect, namely that alpha-band activity is more desynchronized in the eye contact compared to the no eye contact condition. Alpha asymmetry analysis at frontal sites did not reveal any significant differences between the two hemispheres, thereby suggesting that the weaker left-sided activation was not related to the typical pattern of alpha asymmetry involved in the withdrawal motivation system (Hietanen et al., 2008; Pönkänen et al., 2011). The results can be interpreted in line with recent studies that have found left-sided activity related to instances of sharing attention. For example, Lachat et al. (2012) found a suppression of alpha and mu frequency band oscillatory activities in the left centro-parieto-occipital electrodes during joint attention periods. Similarly, in a live joint attention fMRI study, Saito et al. (2010) reported that gaze following elicited activation in the left intraparietal sulcus. Thus, the activation of a sharing attention mechanism induced by the establishment of eye contact might explain the left lateralization of our results, although without source localisation, we cannot draw strong conclusions regarding the exact neural sites corresponding to the observed topography.

The desynchronization of a frequency band in the alpha range at left fronto-lateral and central sites could also be explained in terms of the functional roles that have been proposed for central mu rhythms. Mu rhythm suppression was originally associated with action mirroring and activation of the human mirror system (Muthukumaraswamy and Johnson, 2004; Perry and Bentin, 2009). However, more recently, it has also been shown to be modulated by processes involving a mechanism of sharing attention, i.e. joint attention (Lachat et al., 2012). Interestingly for the purposes of this study, mu suppression has also been associated with the degree of engagement in social interactions (Oberman et al., 2007; Perry et al., 2011) and the value associated with an action (Brown et al., 2013). For example, Oberman et al. (2007) showed that mu desynchronization was modulated by the degree of involvement of participants in a computerized ball throwing game: the more a participant was involved (i.e. received the ball from the on-screen players), the more mu oscillatory activity was reduced. Additionally, Perry et al. (2011) found a similar result with participants viewing or playing a game of Rock–Paper–Scissors. Mu rhythm has also been shown to be modulated by the value associated with an action, i.e. observing a rewarding action suppressed the mu rhythm compared to a punishing or neutral action (Brown et al., 2013). In line with these findings, our results suggest that eye contact elicited greater engagement of the participants in the social interaction than the no eye contact condition.

The impact of eye contact on mu desynchronization was paralleled by the subjective experience of engagement, which was rated higher in eye contact blocks than in no eye contact blocks. Similar results have been obtained in previous studies, in which participants rated eye contact as more engaging than no eye contact (Kompatsiari et al., 2018a, 2019a). In addition, participants attributed a higher level of human likeness to the robot in the eye contact than in the no eye contact condition (Kompatsiari et al., 2019a). Furthermore, results on the likeability scale of the Godspeed questionnaire (Kompatsiari et al., 2019a, Exp. 2) showed that participants liked the robot more when it established eye contact than when it did not. These factors might have contributed to the increased level of engagement towards the robot exhibiting eye contact. Moreover, the engaging and/or rewarding effect of eye contact and other gaze contingent behaviours is also supported by neuroimaging studies in humans (Kampe et al., 2001; Schilbach et al., 2010). Apart from an increased feeling of engagement/reward associated with eye contact, an increase in ‘attentional’ engagement has also been reported (delayed attentional disengagement from a human face: Senju and Hasegawa, 2005; looking longer at human faces with direct gaze than at faces with averted gaze: Wieser et al., 2009; Palanica and Itier, 2012; decreased peak velocity: Dalmaso et al., 2017; longer fixations at iCub’s face during the eye contact compared to the no eye contact condition: Kompatsiari et al., 2019b).

Oscillatory activity during iCub’s shift

Regarding oscillatory activity during iCub’s head/eyes shift, we also found an alpha/mu desynchronization in the eye contact compared to the no eye contact condition in a fronto-centro-parietal cluster of electrodes during the first 200 ms of the movement. Although one cannot exclude the possibility that this might have been a carry-over effect from the eye contact phase, it is quite plausible that the effect is linked to mu activity, rather than alpha, as studies have shown that mu suppression occurs not only when engaging in motor activity (Gastaut, 1952), but also while observing actions executed by someone else (e.g. Gastaut, 1952; Muthukumaraswamy et al., 2004b) or even imagining performing an action (Pfurtscheller et al., 2008). A recent meta-analysis further supports the idea that mu desynchronization occurs during both action execution and observation (Fox et al., 2016). Interestingly, it has been shown that the mirror neuron system also responds to robotic actions (Gazzola et al., 2007; for a review, see Wykowska et al., 2016). Here, it can be argued that the engagement of participants with the robot during eye contact might have resulted in a difficulty in ‘disengaging’ from the task-irrelevant information (i.e. the head/eyes direction) and thus increased the subsequent action ‘mirroring’ of the robot’s head turning. Indeed, a decrease in mu activity has been reported during periods of joint attention (looking at the same object) in both adults and infants (Hoehl et al., 2014; Lachat et al., 2012). Along a similar line, at the behavioural level, Bristow et al. (2007) showed that a face with direct gaze attracted attention covertly and facilitated joint attention (compared to an averted gaze) by enabling a better discrimination of the subsequent gaze shift. The results of the present study support this argument, since the establishment of eye contact might have enhanced processing of the robot’s subsequent actions compared to no eye contact, as reflected in reduced mu activity during the period of the robot’s head/eyes shift in the eye contact condition.

Humanoid-based protocols to investigate gaze-related mechanisms

The current research has implications both for social cognition and for human–robot interaction research. Regarding social cognition research, the present findings provide the first evidence that eye contact with a humanoid robot modulates humans’ oscillatory brain activity in the same frequency range as in the case of human eye contact, thereby suggesting that the robot’s gaze might be perceived as a meaningful social signal. Results can be interpreted in terms of the functional roles involved in alpha and mu rhythms, associated with a mechanism of sharing attention (alpha rhythm) and increased engagement in a social interaction (mu rhythm). However, as no direct comparison has been made between a human and a robot agent in the exact same experimental paradigm, the interpretation of these effects in terms of socio-cognitive mechanisms cannot be definitive and remains rather speculative. Regarding human–robot interaction research, the present gaze-cueing study provides an example of how a well-studied paradigm of cognitive science can be implemented in a human–robot interaction set-up using objective neuroscientific methods. The current findings show that objective measures can target specific cognitive mechanisms that are at stake during the interaction but are not necessarily accessible to conscious awareness. This approach can shed light on the design of robots capable of evoking mechanisms of human social cognition, potentially improving the quality of human–robot interaction. For example, on the one hand, increased attention and engagement in the human–robot interaction (during the establishment of eye contact) might be beneficial when a robot has to sustain our attention (e.g. a teaching assistant robot). On the other hand, when the robot has to perform a joint task with another person (e.g. cooking a meal together), eye contact might be counterproductive by delaying the shifting of attention to crucial locations in space.

In sum, the present results are informative not only for research in the area of social neuroscience but also for scientific domains of robotics and human–robot interaction.

Acknowledgements

The authors would like to thank Jairo Perez-Osorio and Davide de Tommaso for programming the experiment and Jairo Perez-Osorio and Francesca Ciardo for assisting in data collection and discussing the results.

Contributor Information

Kyveli Kompatsiari, Italian Institute of Technology, Social Cognition in Human-Robot Interaction (S4HRI), Genova 16152, Italy.

Francesco Bossi, IMT School for Advanced Studies, Lucca 55100, Italy.

Agnieszka Wykowska, Italian Institute of Technology, Social Cognition in Human-Robot Interaction (S4HRI), Genova 16152, Italy.

Funding

This work has received support from the European Research Council under the European Union’s Horizon 2020 research and innovation programme, ERC Starting Grant ERC-2016-StG-715058, awarded to Agnieszka Wykowska. The content of this paper is the sole responsibility of the authors. The European Commission or its services cannot be held responsible for any use that may be made of the information it contains.

Author Contributions

K.K. conceived, designed and performed the experiments, analysed the data, discussed and interpreted the results and wrote the manuscript. F.B. analysed the data and discussed and interpreted the results. A.W. conceived the experiments, discussed and interpreted the results and revised, commented and edited the manuscript. All authors reviewed the manuscript.

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest

None declared.

References

- Adrian, E.D., Matthews, B.H. (1934). The Berger rhythm: potential changes from the occipital lobes in man. Brain, 57(4), 355–85.

- Admoni, H., Dragan, A., Srinivasa, S.S., Scassellati, B. (2014). Deliberate delays during robot-to-human handovers improve compliance with gaze communication. In: Proceedings of the 2014 ACM/IEEE International Conference on Human-robot Interaction Bielefeld, March, IEEE, 49–56.

- Allen, J.J.B., Coan, J.A., Nazarian, M. (2004). Issues and assumptions on the road from raw signals to metrics of frontal EEG asymmetry in emotion. Biological Psychology, 67, 183–218.

- Baron-Cohen, S. (1995). Mindblindness: An Essay on Autism and Theory of Mind. Boston: MIT Press/Bradford Books.

- Bartneck, C. (2003). Interacting with an embodied emotional character. In: Proceedings of the 2003 International Conference on Designing pleasurable products and interfaces Pittsburgh, June, ACM, 55–60.

- Bristow, D., Rees, G., Frith, C.D. (2007). Social interaction modifies neural response to gaze shifts. Social Cognitive and Affective Neuroscience, 2(1), 52–61.

- Brown, E.C., Wiersema, J.R., Pourtois, G., Brüne, M. (2013). Modulation of motor cortex activity when observing rewarding and punishing actions. Neuropsychologia, 51(1), 52–8.

- Chevalier, P., Kompatsiari, K., Ciardo, F., Wykowska, A. (2019). Examining joint attention with the use of humanoid robots—a new approach to study fundamental mechanisms of social cognition. Psychonomic Bulletin and Review, 27, 217–236.

- Choi, J.J., Kim, Y., Kwak, S.S. (2013). Have you ever lied?: the impacts of gaze avoidance on people’s perception of a robot. In: 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI) Tokyo, March, IEEE, 105–106.

- Ciardo, F., Beyer, F., De Tommaso, D., Wykowska, A. (2020). Attribution of intentional agency towards robots reduces one’s own sense of agency. Cognition, 194, 104109.

- Coan, J.A., Allen, J.J.B. (2003). Varieties of emotional experience during voluntary emotional facial expressions. Annals of the New York Academy of Sciences, 1000, 375–9. doi: 10.1196/annals.1280.034

- Dalmaso, M., Castelli, L., Galfano, G. (2017). Attention holding elicited by direct-gaze faces is reflected in saccadic peak velocity. Experimental Brain Research, 235, 3319–32. doi: 10.1007/s00221-017-5059-4

- Davidson, R.J. (2004). What does the prefrontal cortex “do” in affect: perspectives on frontal EEG asymmetry research. Biological Psychology, 67, 219–33.

- Delorme, A., Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

- Dien, J. (1998). Issues in the application of the average reference: review, critiques, and recommendations. Behavior Research Methods, Instruments and Computers, 30(1), 34–43. doi: 10.3758/BF03209414

- Dikker, S., Wan, L., Davidesco, I., et al. (2017). Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Current Biology, 27(9), 1375–80.

- Emery, N.J. (2000). The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews, 24(6), 581–604.

- Faul, F., Erdfelder, E., Lang, A., Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191.

- Friesen, C.K., Kingstone, A. (1998). The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin and Review, 5(3), 490–5.

- Fox, N.A., Bakermans-Kranenburg, M.J., Yoo, K.H., et al. (2016). Assessing human mirror activity with EEG mu rhythm: a meta-analysis. Psychological Bulletin, 142(3), 291.

- Foxe, J.J., Snyder, A.C. (2011). The role of alpha-band brain oscillations as a sensory suppression mechanism during selective attention. Frontiers in Psychology, 2, 154.

- Gale, A., Lucas, B., Nissim, R., Harpham, B. (1972). Some EEG correlates of face-to-face contact. British Journal of Social and Clinical Psychology, 11(4), 326–32.

- Gale, A., Spratt, G., Chapman, A.J., Smallbone, A. (1975). EEG correlates of eye contact and interpersonal distance. Biological Psychology, 3(4), 237–45.

- Gastaut, H. (1952). Electrocorticographic study of the reactivity of rolandic rhythm. Revue Neurologique, 87(2), 176–82.

- Gazzola, V., Rizzolatti, G., Wicker, B., Keysers, C. (2007). The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage, 35(4), 1674–84.

- Hamilton, A.F.C. (2016). Gazing at me: the importance of social meaning in understanding direct-gaze cues. Philosophical Transactions of the Royal Society B, 371(1686). doi: 10.1098/rstb.2015.0080

- Harmon-Jones, E. (2003). Clarifying the emotive functions of asymmetrical frontal cortical activity. Psychophysiology, 40, 838–48.

- Hietanen, J.K., Leppänen, J.M., Peltola, M.J., Linna-aho, K., Ruuhiala, H.J. (2008). Seeing direct and averted gaze activates the approach–avoidance motivational brain systems. Neuropsychologia, 46(9), 2423–30.

- Hietanen, J.K. (2018). Affective eye contact: an integrative review. Frontiers in Psychology, 9, 1587. doi: 10.3389/fpsyg.2018.01587

- Hoehl, S., Michel, C., Reid, V.M., Parise, E., Striano, T. (2014). Eye contact during live social interaction modulates infants’ oscillatory brain activity. Social Neuroscience, 9(3), 300–8.

- Humm, D.G., Humm, K.A. (1944). Validity of the Humm-Wadsworth Temperament Scale: with consideration of the effects of subjects’ response-bias. Journal of Psychology, 18(1), 55–64.

- Imai, M., Kanda, T., Ono, T., Ishiguro, H., Mase, K. (2002). Robot mediated round table: analysis of the effect of robot’s gaze. In: Proceedings 11th IEEE International Workshop on Robot and Human Interactive Communication Berlin, September, IEEE, 411–6.

- Ito, A., Hayakawa, S., Terada, T. (2004). Why robots need body for mind communication-an attempt of eye-contact between human and robot. In: RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No. 04TH8759) Kurashiki, September, IEEE, 473–8.

- Jung, Y., Lee, K.M. (2004). Effects of physical embodiment on social presence of social robots. In: Proceedings of PRESENCE Valencia, October, ISPR, 80–7.

- Kampe, K.K.W., Frith, C.D., Dolan, R.J., Frith, U. (2001). Psychology: reward value of attractiveness and gaze. Nature, 413(6856), 589.

- Kleinke, C.L. (1986). Gaze and eye contact: a research review. Psychological Bulletin, 100(1), 78.

- Kompatsiari, K., Tikhanoff, V., Ciardo, F., Metta, G., Wykowska, A. (2017). The importance of mutual gaze in human-robot interaction. In: International Conference on Social Robotics, November, Cham, Springer, 443–52.

- Kompatsiari, K., Ciardo, F., Tikhanoff, V., Metta, G., Wykowska, A. (2018a). On the role of eye contact in gaze cueing. Scientific Reports, 8(1), 17842.

- Kompatsiari, K., Pérez-Osorio, J., De Tommaso, D., Metta, G., Wykowska, A. (2018b). Neuroscientifically-grounded research for improved human-robot interaction. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October, IEEE, 3403–8.

- Kompatsiari, K., Ciardo, F., Tikhanoff, V., Metta, G., Wykowska, A. (2019a). It’s in the eyes: the engaging role of eye contact in HRI. International Journal of Social Robotics, 1–11. doi: 10.1007/s12369-019-00565-4

- Kompatsiari, K., Ciardo, F., De Tommaso, D., Wykowska, A. (2019b). Measuring engagement elicited by eye contact in Human-Robot Interaction. In: 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) Macau, November, IEEE, 6979–85.

- Kose-Bagci, H., Ferrari, E., Dautenhahn, K., Syrdal, D.S., Nehaniv, C.L. (2009). Effects of embodiment and gestures on social interaction in drumming games with a humanoid robot. Advanced Robotics, 23(14), 1951–96.

- Koslov, K., Mendes, W.B., Pajtas, P.E., Pizzagalli, D.A. (2011). Asymmetry in resting intracortical activity as a buffer to social threat. Psychological Science, 22, 641–9. doi: 10.1177/0956797611403156

- Lachat, F., Hugueville, L., Lemaréchal, J.-D., Conty, L., George, N. (2012). Oscillatory brain correlates of live joint attention: a dual-EEG study. Frontiers in Human Neuroscience, 6, 156.

- Leong, V., Byrne, E., Clackson, K., Georgieva, S., Lam, S., Wass, S. (2017). Speaker gaze increases information coupling between infant and adult brains. Proceedings of the National Academy of Sciences, 114(50), 13290–5.

- Leyzberg, D., Spaulding, S., Toneva, M., Scassellati, B. (2012). The physical presence of a robot tutor increases cognitive learning gains. Proceedings of the Annual Meeting of the Cognitive Science Society, 34, 34.

- Li, J. (2015). The benefit of being physically present: a survey of experimental works comparing copresent robots, telepresent robots and virtual agents. International Journal of Human-Computer Studies, 77, 23–37.

- Macrae, C.N., Hood, B.M., Milne, A.B., Rowe, A.C., Mason, M.F. (2002). Are you looking at me? Eye gaze and person perception. Psychological Science, 13(5), 460–4.

- Mangun, G.R., Hillyard, S.A., Luck, S.J. (1993). Electrocortical substrates of visual selective attention. Attention and Performance XIV, 14, 219.

- Maris, E., Oostenveld, R. (2007). Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods, 164(1), 177–90.

- Metta, G., Fitzpatrick, P., Natale, L. (2006). YARP: yet another robot platform. International Journal of Advanced Robotic Systems, 3(1), 8.

- Metta, G., Natale, L., Nori, F., et al. (2010). The iCub humanoid robot: an open-systems platform for research in cognitive development. Neural Networks, 23(8-9), 1125–34.

- Muthukumaraswamy, S.D., Johnson, B.W. (2004). Primary motor cortex activation during action observation revealed by wavelet analysis of the EEG. Clinical Neurophysiology, 115(8), 1760–6.

- Muthukumaraswamy, S.D., Johnson, B.W., McNair, N.A. (2004b). Mu rhythm modulation during observation of an object-directed grasp. Cognitive Brain Research, 19(2), 195–201.

- Oberman, L.M., Pineda, J.A., Ramachandran, V.S. (2007). The human mirror neuron system: a link between action observation and social skills. Social Cognitive and Affective Neuroscience, 2(1), 62–6.

- Oostenveld, R., Fries, P., Maris, E., Schoffelen, J.-M. (2011). FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience, 156869. doi: 10.1155/2011/156869

- Palanica, A., Itier, R.J. (2012). Attention capture by direct gaze is robust to context and task demands. Journal of Nonverbal Behavior, 36(2), 123–34.

- Papousek, I., Reiser, E.M., Schulter, G., et al. (2013). Serotonin transporter genotype (5-HTTLPR) and electrocortical responses indicating the sensitivity to negative emotional cues. Emotion, 13, 1173–81. doi: 10.1037/a0033997

- Papousek, I., Reiser, E.M., Weber, B., Freudenthaler, H.H., Schulter, G. (2012). Frontal brain asymmetry and affective flexibility in an emotional contagion paradigm. Psychophysiology, 49, 489–98. doi: 10.1111/j.1469-8986.2011.01324.x

- Perez-Osorio, J., Müller, H.J., Wykowska, A. (2017). Expectations regarding action sequences modulate electrophysiological correlates of the gaze-cueing effect. Psychophysiology, 54(7), 942–54.

- Perry, A., Bentin, S. (2009). Mirror activity in the human brain while observing hand movements: a comparison between EEG desynchronization in the μ-range and previous fMRI results. Brain Research, 1282, 126–32.

- Perry, A., Stein, L., Bentin, S. (2011). Motor and attentional mechanisms involved in social interaction—evidence from mu and alpha EEG suppression. Neuroimage, 58(3), 895–904.

- Pfurtscheller, G., Berghold, A. (1989). Patterns of cortical activation during planning of voluntary movement. Electroencephalography and Clinical Neurophysiology, 72(3), 250–8.

- Pfurtscheller, G., Scherer, R., Müller-Putz, G.R., Lopes da Silva, F.H. (2008). Short-lived brain state after cued motor imagery in naive subjects. European Journal of Neuroscience, 28(7), 1419–26.

- Pineda, J.A., Allison, B.Z., Vankov, A. (2000). The effects of self-movement, observation, and imagination on μ rhythms and readiness potentials (RP’s): toward a brain-computer interface (BCI). IEEE Transactions on Rehabilitation Engineering, 8(2), 219–22.

- Pönkänen, L.M., Peltola, M.J., Hietanen, J.K. (2011). The observer observed: frontal EEG asymmetry and autonomic responses differentiate between another person’s direct and averted gaze when the face is seen live. International Journal of Psychophysiology, 82(2), 180–7.

- Premoli, I., Bergmann, T.O., Fecchio, M., et al. (2017). The impact of GABAergic drugs on TMS-induced brain oscillations in human motor cortex. Neuroimage, 163, 1–12. doi: 10.1016/j.neuroimage.2017.09.023

- Prinsen, J., Bernaerts, S., Wang, Y., et al. (2017). Direct eye contact enhances mirroring of others’ movements: a transcranial magnetic stimulation study. Neuropsychologia, 95, 111–8.

- Prinsen, J., Alaerts, K. (2020). Enhanced mirroring upon mutual gaze: multimodal evidence from TMS-assessed corticospinal excitability and the EEG mu rhythm. Scientific Reports, 10(1), 20449.

- Risko, E.F., Richardson, D.C., Kingstone, A. (2016). Breaking the fourth wall of cognitive science: real-world social attention and the dual function of gaze. Current Directions in Psychological Science, 25(1), 70–4.

- Roncone, A., Pattacini, U., Metta, G., Natale, L.A. (2016). Cartesian 6-DoF gaze controller for Humanoid Robots. Proceedings of Robotics: Science and Systems, Ann Arbor, October, RSS, 2016. doi: 10.15607/rss.2016.xii.022

- Satake, S., Kanda, T., Glas, D.F., Imai, M., Ishiguro, H., Hagita, N. (2009). How to approach humans?: strategies for social robots to initiate interaction. In: Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction California, March, ACM, 109–116.

- Saito, D.N., Tanabe, H.C., Izuma, K., et al. (2010). “Stay tuned”: inter-individual neural synchronization during mutual gaze and joint attention. Frontiers in Integrative Neuroscience, 4, 127.

- Schellen, E., Wykowska, A. (2019). Intentional mindset toward robots—open questions and methodological challenges. Frontiers in Robotics and AI, 5, 139.

- Schilbach, L., Wilms, M., Eickhoff, S.B., et al. (2010). Minds made for sharing: initiating joint attention recruits reward-related neurocircuitry. Journal of Cognitive Neuroscience, 22(12), 2702–15.

- Sciutti, A., Ansuini, C., Becchio, C., Sandini, G. (2015). Investigating the ability to read others’ intentions using humanoid robots. Frontiers in Psychology, 6, 1362.

- Senju, A., Hasegawa, T. (2005). Direct gaze captures visuospatial attention. Visual Cognition, 12(1), 127–44.

- Senju, A., Johnson, M. (2009). The eye contact effect: mechanisms and development. Trends in Cognitive Sciences, 13(3), 16.

- Van Honk, J., Schutter, D.J.L.G. (2006). From affective valence to motivational direction: the frontal asymmetry of emotion revised. Psychological Science, 17, 963–5.

- Wagenmakers, E.-J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bulletin and Review, 14(5), 779–804. doi: 10.3758/BF03194105

- Wang, Y., Ramsey, R., Hamilton, A.F.D.C. (2011). The control of mimicry by eye contact is mediated by medial prefrontal cortex. Journal of Neuroscience, 31(33), 12001–10.

- Ward, L.M. (2003). Synchronous neural oscillations and cognitive processes. Trends in Cognitive Sciences, 7(12), 553–9.

- Wiese, E., Metta, G., Wykowska, A. (2017). Robots as intentional agents: using neuroscientific methods to make robots appear more social. Frontiers in Psychology, 8, 1663.

- Wieser, M.J., Pauli, P., Alpers, G.W., Mühlberger, A. (2009). Is eye to eye contact really threatening and avoided in social anxiety?—an eye-tracking and psychophysiology study. Journal of Anxiety Disorders, 23(1), 93–103.

- Wykowska, A., Wiese, E., Prosser, A., Müller, H.J., Hamed, S.B. (2014). Beliefs about the minds of others influence how we process sensory information. PLoS One, 9(4), e94339.

- Wykowska, A., Chaminade, T., Cheng, G. (2016). Embodied artificial agents for understanding human social cognition. Philosophical Transactions of the Royal Society B, 371(1693), 20150375.

- Wykowska, A., Kajopoulos, J., Ramirez-Amaro, K., Cheng, G. (2015). Autistic traits and sensitivity to human-like features of robot behavior. Interaction Studies, 16(2), 219–48.

- Yonezawa, T., Yamazoe, H., Utsumi, A., Abe, S. (2007). Gaze-communicative behavior of stuffed-toy robot with joint attention and eye contact based on ambient gaze-tracking. In: Proceedings of the International Conference on Multimodal Interfaces (ICMI), November, Nagoya, Japan, ACM Press, 140–5.
