Abstract

In 2020, the COVID‐19 pandemic led to the suspension of the annual Summer Internship at the American Center for Reproductive Medicine (ACRM). To transition it to an online format, an inaugural 6‐week 2020 ACRM Online Mentorship Program was developed, focusing on five core pillars of andrology research: scientific writing, scientific methodology, understanding of plagiarism, soft skills development and basic andrology knowledge. This study aims to determine mentee developmental outcomes based on student surveys and to discuss these within the context of the relevant teaching and learning methodology. The mentorship was structured around scientific writing projects established by the team using a student‐centred approach, with one‐on‐one expert mentorship supported by weekly formative assessments. In addition, weekly online meetings were held, comprising expert lectures, formative assessments and social engagement. Data were collected through final assessments and mentee surveys on mentorship outcomes. Mentees (n = 28) reported a significant (p ≤ .001) improvement in all criteria related to the five core pillars. These results indicate that the aims of the online mentorship program were achieved through a unique and adaptive online educational model, and that this model proved effective as an innovative, structured educational experience during the COVID‐19 crisis.

Keywords: andrology, goals, plagiarism, research internship, scientific writing

1. INTRODUCTION

The COVID‐19 pandemic has had a profound health, social, economic and educational impact on humanity. By causing significant restrictions on, or suspensions of, regular in‐person teaching, it has forced educators to shift to online teaching. Online learning is considered a valuable, innovative and more flexible approach that can make the teaching–learning process more student‐centered (Dhawan, 2020). Efficient use of digital platforms (such as Zoom, WebEx, Google Meet and Skype) has opened up opportunities for mentors to engage with medical trainees in these challenging times and to provide virtual training in scientific research and writing skills (Almarzooq et al., 2020). In a meta‐analysis of online learning studies prepared for the U.S. Department of Education, Means et al. found that students who engaged in online learning performed modestly better, on average, than students receiving face‐to‐face instruction (Means et al., 2010).

Scientific literacy aims to foster the creation and dissemination of knowledge through critical thinking (Klucevsek, 2017). Reading and writing activities are essential components for establishing scientific literacy in trainees (Baker & Saul, 1994). Smart tutoring strategies need to be further explored to engage students in the most authentic research experiences and to train them fully in the profession (Hunter et al., 2007; Klucevsek, 2017). To further connect research scientists and clinicians, and to develop scientific‐analytical and writing skills, the American Center for Reproductive Medicine (ACRM) established a Summer Internship program in 2008, which has been held annually since then. Its broad goals are to give students insight into the dynamics of research and medical practice; to expose trainees, physicians and residents to scientific research; to provide an opportunity to translate the information gained in lectures and hands‐on practical work into potentially publishable articles; to promote the role of research in improving patient care; and to encourage the development of physician–researchers or scientists. The program also focuses on developing soft skills, including professionalism, time management, communication and public speaking (Durairajanayagam et al., 2015; Kashou et al., 2016). Training is based on lectures and bench research relevant to human reproduction, infertility and urology, together with training in laboratory diagnostic techniques, data collection and scientific writing (Kashou et al., 2016). In its first six years (2008–2014), the ACRM Summer Internship trained 114 interns, predominantly female (64%), including undergraduate/pre‐medical students (71.1%), medical students (19.3%), post‐graduate students (7%) and medical doctors (2.6%) (Kashou et al., 2016).
A survey of the 2014 cohort reported that 88% of participants found the course beneficial and worth the time and effort invested by faculty and interns alike (Durairajanayagam et al., 2015). Over the years, the internship has evolved to meet the interns' needs. In 2018 and 2019, the internship included lectures (~17 hr), scientific writing workshops (~18 hr), biostatistics (~13 hr), practical training (~17 hr), scientific writing practice (~22 hr), scientific presentation training (~21 hr), dedicated one‐on‐one mentor training (~14 hr) and self‐study (~148 hr), totalling 270 hr, equivalent to six US credits. Furthermore, many publications have emerged from projects initially developed with interns at the summer internship (Agarwal et al., 2018; Bui et al., 2018; Henkel et al., 2019).

The COVID‐19 pandemic of 2020 drastically changed educational systems at all levels, demanding rapid adaptation to remote learning. During the pandemic, the ACRM Summer Internship Program also had to switch from its pre‐planned ‘face‐to‐face’ model to an entirely online mode to maintain its continuity and provide scientific training to prospective scientists and clinicians. The program was designed to allow promising candidates from all over the globe to participate in this renowned reproductive medicine research training. This study aims to determine the developmental outcomes of mentees in the inaugural ACRM Online Mentorship Program based on student surveys and outcomes, and to discuss these within the context of the relevant teaching and learning methodology and the broad traditional principles of the annual ACRM Summer Internship Program.

2. METHODOLOGY

2.1. Mentorship overview

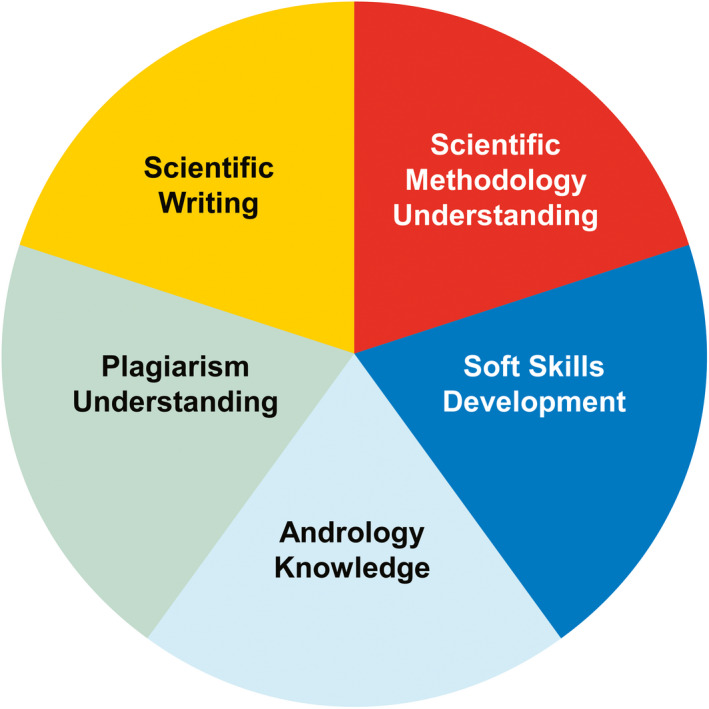

To transition the program to an online format, the ACRM expanded its team of collaborators and international partners. A mentorship team of educational and clinical experts (n = 18) was assembled as mentors, including experts in andrology, urology and male infertility from Egypt, Iran, Italy, Malaysia, Qatar, Romania, Saudi Arabia, South Africa and the USA. The team comprised clinicians and scientists with expertise in clinical practice, scientific publishing and teaching programs, and a proven track record of publications in high‐ranking journals. It therefore brought appropriate clinical, research and teaching experience from diverse backgrounds, ensuring a range of viewpoints and contributions to all aspects of the mentorship. Under the guidance of the ACRM management, this team developed an innovative teaching structure built around five core educational pillars (Figure 1, Table S1). Training was structured around scientific writing projects, together with a weekly lecture series (Virtual Colloquium Meetings, VCMs) and associated formative assessments.

FIGURE 1.

Five core outcomes of the ACRM Online Mentorship program

The central teaching strategy was based on a ‘one student, one mentor’ philosophy. Accordingly, each student was guided by one mentor and, in some cases, by additional co‐mentors throughout the entire mentorship program. Mentors conducted multiple‐choice question (MCQ) tests, weekly online meetings and tutorials; set home assignments; and provided positive, encouraging comments as well as constructive feedback with specific suggestions for improvement in each of the areas evaluated.

2.2. Scientific writing projects

Each mentor proposed several scientific writing projects for the 2020 Online Mentorship Program based on their area of interest and the availability of data collected through ethically approved projects. Mentors submitted each project as a written synopsis, which was then presented orally, discussed and evaluated in an online meeting organized by the ACRM management.

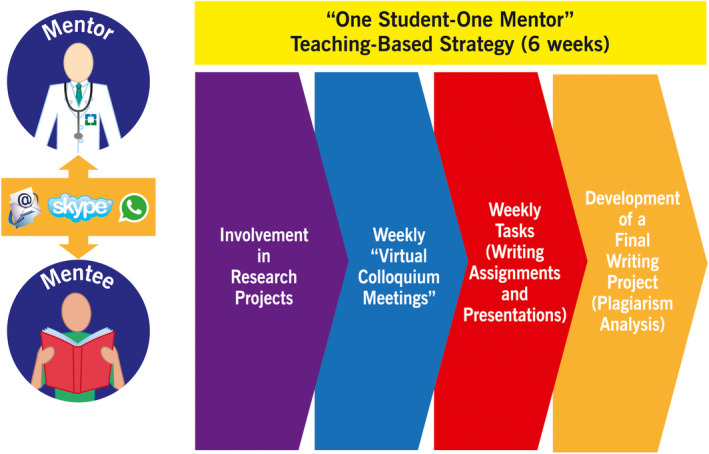

The core structure for developing scientific literacy over the 6 weeks was to mentor students in writing a scientific manuscript in a standardized publication format. This took place through direct, regular one‐on‐one online meetings between mentees and mentors on suitable online platforms. Within this format, structured scientific writing assignments and presentations were conducted and evaluated each week, with written and oral feedback. The weekly focus tasks built towards a final writing project, evaluated after the 6‐week mentorship concluded, which comprised abstract, introduction, methodology, results, discussion and conclusion sections, as well as relevant figures, tables and references. Scientific literacy development covered focused and systematic literature database searching, application of data analysis, critical analytic skills, scientific writing skills, referencing, and speaking and presentation skills. Weekly written submissions underwent plagiarism analysis through similarity index reporting, and discussions on plagiarism and how to avoid it were among the central features of the training program. In addition, soft skills, including punctuality, attention to detail, initiative, critical thinking, self‐organisation and effective communication, were integrated into the program, discussed and evaluated by the mentors through regular online engagements. Figure 2 summarizes the general structure of the mentorship program.

FIGURE 2.

Schematic representation of the 2020 Online Mentorship program

2.3. Mentees’ selection

Prospective mentees who applied to the mentorship program went through a round of online interviews with the Program Director and the Chief Coordinator of the Online Mentorship program, which screened their qualifications and interest in participating. They were then asked to undertake self‐directed study of the Handbook of Andrology (Andrology, 2010), followed by an online MCQ test based on this reading. Mentees who scored at least 70% were included in the mentorship program following this screening process. The available scientific writing projects were described to the mentees during an online meeting, and the mentees were asked to rank all the projects offered according to their own interest (from 1, very interested, to 18, not interested). Through this matching process, mentees were matched with the writing topic of most interest. Mentee compliance throughout the course was monitored through a weekly logbook, which each mentee submitted to their mentor; the mentor approved it against the assignments he/she had set and then submitted it to the course administration.
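The matching algorithm itself is not specified above. As an illustration only, one simple way to turn such rankings into assignments is a greedy serial assignment, sketched below in Python. It assumes two hypothetical parameters not described in the study: a maximum number of mentees per project (`capacity`) and an order in which mentees are processed (`priority`, for example by entry MCQ score). It is not presented as the procedure the ACRM used.

```python
from typing import Dict, List


def match_mentees(
    rankings: Dict[str, List[str]],
    capacity: Dict[str, int],
    priority: List[str],
) -> Dict[str, str]:
    """Greedy rank-based matching (hypothetical illustration).

    rankings: mentee -> project IDs ordered from rank 1 (most interested) down.
    capacity: project -> maximum number of mentees (assumed parameter).
    priority: order in which mentees choose (assumed tie-breaking rule).
    Mentees whose ranked projects are all full are left unassigned.
    """
    remaining = dict(capacity)
    assignment: Dict[str, str] = {}
    for mentee in priority:
        for project in rankings[mentee]:
            if remaining.get(project, 0) > 0:
                assignment[mentee] = project  # highest-ranked project with a free place
                remaining[project] -= 1
                break
    return assignment


# Hypothetical example: three mentees, three single-place projects.
rankings = {
    "mentee_a": ["P2", "P1", "P3"],
    "mentee_b": ["P2", "P3", "P1"],
    "mentee_c": ["P1", "P2", "P3"],
}
capacity = {"P1": 1, "P2": 1, "P3": 1}
print(match_mentees(rankings, capacity, ["mentee_a", "mentee_b", "mentee_c"]))
# {'mentee_a': 'P2', 'mentee_b': 'P3', 'mentee_c': 'P1'}
```

A greedy rule is easy to explain to applicants, but the outcome depends on the processing order; a stable-matching approach would be an alternative if projects also ranked mentees.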

2.4. Virtual Colloquium Meetings

During the 6 weeks of the 2020 Online Mentorship Program, the mentees attended weekly VCMs as part of the program's teaching and learning strategy. Each virtual meeting included lectures, formative assessments, a ‘meet the mentors and the mentees’ session and recognition of the top mentees based on that week's performance.

Each week, the VCM included lectures by experts from the mentorship team and by external guest experts, for a total of 14 virtual lectures over the 6 weeks. Lecture topics spanned the central pillars of the mentorship, including male infertility (such as its evaluation and management), research methodology and its application, plagiarism in scientific writing, and public speaking and scientific presentations. Before each colloquium, the presenting lecturer submitted their PowerPoint presentation and MCQ test to the ACRM, which checked their quality and the length and structure of the test. After the lecture, the mentees completed the MCQ test, and the results were immediately aggregated, shared and discussed by the experts. Tests were administered using Google Forms (https://www.google.com/forms/about/). These assessments aimed primarily to reinforce the key information presented and to enhance the learning experience.

A social interactive program followed the formal lectures and assessments, in which selected mentors and mentees shared snippets of their personal and professional lives, interests and hobbies. Across the six VCMs, all mentees and mentors had an opportunity to showcase themselves. Furthermore, the top students were recognized for their performance in three scoring categories: lowest similarity index for weekly writing assignments, highest MCQ test scores and soft skills.

2.5. Assessments

Assessment is a fundamental part of any educational program and is defined as ‘the systematic collection and analysis of information to improve student learning’ (Stassen et al., 2001). In the current online mentorship, regular assessments were conducted throughout the course, from enrolment to exit from the program. These assessments fall into four groups: formative assessment, final assessment (presentation and writing assignments), soft skills assessment and surveys (weekly and final exit surveys). The online survey was reviewed and approved by the Institutional Review Board (IRB) of Cleveland Clinic (IRB # 20‐1013).

2.6. Formative assessments

The first formative assessment tool was the set of online MCQ tests based on the 14 lectures delivered at the weekly VCMs, as described above. The second was the mentors' weekly assessment of the scientific writing submitted by the mentees, using standardized scoring forms created in Google Forms (https://www.google.com/forms/about/) that evaluated different parts of the manuscript. This weekly evaluation tracked each mentee's progress and improvement in scientific writing. In addition, the submitted writing was reviewed using the Track Changes (review) function in Microsoft Word, with constructive feedback provided within 48 hr of submission. This feedback was reinforced by verbal discussion during the regular mentee–mentor online meetings.

2.7. Final assessment

The final assessment was designed to reflect the students’ overall progress throughout the course, based on the final submission of a scientific manuscript and a presentation of the research in an online forum. Accordingly, each mentee was assessed after the mentorship on both a written submission and an oral presentation.

2.8. Assessment of scientific writing

The final written projects were evaluated in three formats, with each submission assessed and reviewed independently by 14 selected mentors (assessors). The first format was summative grading using a structured quantitative rubric, which the mentorship team constructed and reviewed at the start of the mentorship and aligned with the structured format prescribed for the mentees' final written submission. The rubric consisted of two sections. Section I assessed the manuscript structure, covering the abstract, introduction, methodology, results, discussion, conclusion, references, tables and figures; each part was assessed against best‐practice guidelines for scientific writing and scored in detail, as objectively as possible. Section II assessed the overall quality of the manuscript, including writing quality and style. The second format provided qualitative, constructive feedback from each assessor through the Microsoft Word review function. The third format was a standardized online grading report in Google Forms, completed independently by each assessor and providing both quantitative and qualitative feedback. It consisted of 23 questions evaluating the writing project with regard to novelty, strengths and weaknesses, and readiness for publication, and included open‐ended questions in which the assessor wrote his/her review comments. The results of all these assessment forms were given to the mentees at the end of the mentorship, and the mentees then had the opportunity to discuss the comments with their mentors. The mentees subsequently revised their manuscripts in preparation for publication.

2.9. Assessment of final presentations

At the end of the mentorship, each mentee was asked to prepare a presentation on the writing project they had been working on. The presentations were delivered online to all mentees, mentors and guests in three dedicated sessions, allowing each mentee a 10‐min presentation followed by a 5‐min discussion with the audience. The internal and external experts present assessed each mentee using a structured online rubric (via Google Forms), covering knowledge of the subject and presentation skills, including the organization and quality of the slides, delivery style and adherence to the allocated time.

2.10. Soft skills

Mentors assessed soft skills directly each week using a standardized report in Google Forms, covering the mentee's communication and organization skills, attention to detail, punctuality, critical thinking and initiative, as well as their general attitude. This assessment informed mentees about their strengths and weaknesses in professional life, helping them identify which skills needed improvement and which were strengths they could pass on to others, supporting both personal success and teamwork. Data for this study were collected through the exit survey described below.

2.11. Surveys

2.11.1. Weekly surveys

Throughout the 6‐week program, both mentors and mentees were asked to fill in surveys assessing the teaching and learning progress of the program. This helped the program administration address any deficiencies promptly and deliver the online mentorship in the best possible way. Weekly mentee and mentor surveys were conducted anonymously online using Google Forms and were tailored to general feedback as well as to specific weekly topics and amendments. Based on this feedback, the mentorship team met regularly to discuss progress, challenges and adaptation to the mentees' needs during the program. Mentee feedback was also provided anonymously through mentee representatives, and mentees were encouraged to connect online and discuss the program.

2.11.2. Exit survey

At the end of the ACRM Online Mentorship Program, mentees completed a self‐reported exit survey through a structured online questionnaire in Google Forms. Participation was voluntary and anonymous, and informed consent was obtained.

The first set of questions covered mentee feedback on ‘Online Engagement with the Mentors’. Mentees were asked whether they strongly agreed, agreed, were neutral or disagreed with the following statements about their mentors: (a) my mentor was an effective guide; (b) my online meetings were clear and organized; (c) my mentor stimulated my interest in the writing project; (d) my mentor provided me with constructive feedback; and (e) my mentor was readily available and helpful.

The second set of questions included mentee feedback on the VCMs. Mentees were asked to rate these meetings as either poor, fair, good, very good or excellent. This included (a) lectures by ACRM or guest speakers, (b) MCQ assessment to improve learning, (c) ‘Get to Know Your Mentor’ sessions and (d) ‘Meet the Mentee’ sessions.

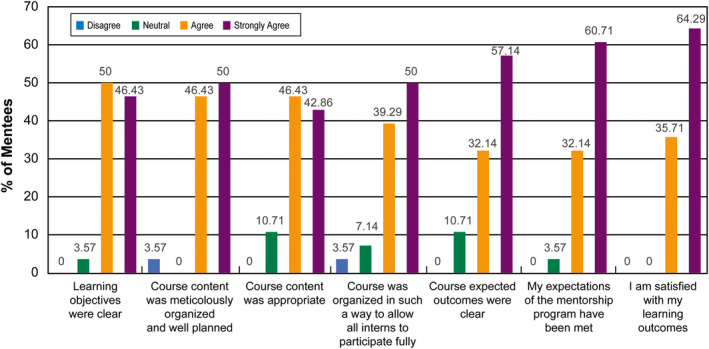

The third set of questions covered mentee feedback on the ‘Course Content’. Mentees were asked whether they strongly agreed, agreed, were neutral or disagreed with the following statements about the course content: (a) learning objectives were clear; (b) course content was meticulously organized and well planned; (c) course content was appropriate; (d) the course was structured to allow all mentees to participate fully; (e) the expected course outcomes were clear; (f) the expectations of the mentorship program have been met; and (g) I am satisfied with my learning outcomes.

The fourth set of questions comprised a pre‐ and post‐analysis of the central pillars of the online mentorship. This included the following: (a) scientific writing skills; (b) understanding of the scientific methodologies; (c) understanding of plagiarism; (d) soft skill development; and (e) knowledge in Andrology. Mentees were asked to rate statements based on their self‐reported knowledge pre‐ and post‐mentorship under these sections as poor, fair, good, very good or excellent. These were converted into a numerical scale of 1 (poor), 2 (fair), 3 (good), 4 (very good) and 5 (excellent) to statistically compare pre‐ and post‐mentorship differences.
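The conversion of rating labels to the 1–5 scale described above amounts to a simple lookup. The Python sketch below shows it; the names `LIKERT_SCALE` and `to_numeric` and the example responses are hypothetical and are not taken from the study's survey export.

```python
from typing import Iterable, List

# Rating labels used in the pre/post items, mapped to the 1-5 scale described above.
LIKERT_SCALE = {"poor": 1, "fair": 2, "good": 3, "very good": 4, "excellent": 5}


def to_numeric(responses: Iterable[str]) -> List[int]:
    """Convert text ratings to 1-5 scores, ignoring case and surrounding whitespace.

    Raises KeyError for any label outside the five-point scale.
    """
    return [LIKERT_SCALE[r.strip().lower()] for r in responses]


# Hypothetical responses for one item (not study data).
pre = to_numeric(["Fair", "good", "Poor"])
post = to_numeric(["Very good", "Excellent ", "Good"])
print(pre, post)  # [2, 3, 1] [4, 5, 3]
```

Treating the labels as equally spaced integers is what makes pre/post comparison possible; because the scale is ordinal, rank-based tests are a natural fit for these scores.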

2.12. Statistical analysis

Statistical analysis was performed using MedCalc statistical software version 19.1 (MedCalc Software). Descriptive statistics of the mentee cohort are presented as percentages of the included cohort and/or mean ± SD and median (interquartile range, IQR). The Kolmogorov–Smirnov test was used to assess whether the variables were normally distributed. Because the data were not normally distributed, the Wilcoxon test was used to compare the mentees' pre‐ and post‐mentorship self‐reported ratings. A p‐value of less than .05 was considered statistically significant.
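For readers who want to see how such a pre/post comparison works, the following is a minimal Python sketch of a paired, two‐sided Wilcoxon signed‐rank test using the large‐sample normal approximation with a tie correction. It assumes that each mentee's pre‐ and post‐mentorship ratings form a pair and that pairs with a missing rating or no change are discarded. It is not MedCalc's implementation, which may use exact p‐values or other conventions for ties and zero differences, so its p‐values may differ slightly. The function name `wilcoxon_signed_rank` and the example ratings are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def wilcoxon_signed_rank(
    pre: Sequence[Optional[float]], post: Sequence[Optional[float]]
) -> Tuple[float, float, float]:
    """Two-sided Wilcoxon signed-rank test (normal approximation, tie-corrected).

    Returns (W_plus, z, p). Pairs with a missing rating (None) or a zero
    difference are discarded before ranking.
    """
    diffs = [
        b - a
        for a, b in zip(pre, post)
        if a is not None and b is not None and b != a
    ]
    n = len(diffs)
    if n == 0:
        raise ValueError("no non-zero paired differences")

    # Rank |differences| from smallest to largest, giving ties their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied |differences|
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j + 2) / 2  # positions i..j hold 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    # W+ is the sum of ranks of positive differences (post > pre).
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p


# Hypothetical 1-5 ratings for eight mentees (not study data).
pre = [2, 3, 2, 1, 3, 2, 2, 3]
post = [4, 4, 3, 3, 4, 4, 3, 4]
w_plus, z, p = wilcoxon_signed_rank(pre, post)
print(f"W+ = {w_plus}, z = {z:.2f}, p = {p:.4f}")
```

With heavily tied five‐point ratings the tie correction matters; for a definitive reanalysis, the original software or an established library routine such as SciPy's `scipy.stats.wilcoxon` would be preferable to this sketch.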

3. RESULTS

3.1. Mentee cohort characteristics

A total of 28 mentees attended the 2020 Online Mentorship at the ACRM. The cohort's descriptive statistics are summarized in Table 1. Of these, 21 mentees were American, and seven were international mentees from India (n = 4), Iran (n = 2) and Algeria (n = 1). There was a higher proportion of females (78.6%) than males (21.4%), and of undergraduate students (75%) than graduate (10.7%) and Ph.D. (14.3%) students. Several online tools were used to facilitate communication between mentors and mentees, according to the mentees' preferences: WhatsApp (32.1%), Google Meet (28.6%), Skype (17.9%), e‐mail (14.3%) and Zoom (7.1%). During the online mentorship, each mentee was guided by a mentor and worked on a writing project: a systematic review (53.6%), an original study (25.0%) or a meta‐analysis (21.4%). Andrology Handbook MCQ scores had a mean of 79.8 ± 8.78% (median [IQR]: 82.0% [79.0–86.0]). Final presentation scores had a mean of 89.8 ± 4.47% (median [IQR]: 91.0% [88.7–92.4]), and final scientific writing scores had a mean of 69.4 ± 7.58% (median [IQR]: 70.9% [45.3–77.1]). Most manuscripts required major revision (59.2%), mostly because the discussion of the results was too condensed or lacked depth. A further 33.1% required minor revision and 2.5% were considered ready for submission in their current form, while 5.2% were considered not appropriate for revision.

TABLE 1.

Descriptive statistics of the 2020 ACRM Online Mentorship Program mentee cohort

| Category | Sub‐category (n) | Frequency or Mean ± SD [Median (IQR)] |
|---|---|---|
| Gender | Male (n = 6) | 21.4% |
| | Female (n = 22) | 78.6% |
| Nationality | American (n = 21) | 75% |
| | Indian (n = 4) | 14% |
| | Iranian (n = 2) | 7% |
| | Algerian (n = 1) | 4% |
| Education Level | Undergraduate (n = 21) | 75% |
| | Graduate (n = 3) | 10.7% |
| | PhD (n = 4) | 14.3% |
| Mentee Most Convenient Communication Platforms | WhatsApp (n = 9) | 32.1% |
| | Google Meet (n = 8) | 28.6% |
| | Skype (n = 5) | 17.9% |
| | E‐Mail (n = 4) | 14.3% |
| | Zoom (n = 2) | 7.1% |
| Type of Scientific Study | Original study (n = 7) | 25.0% |
| | Systematic review (n = 15) | 53.6% |
| | Meta‐analysis (n = 6) | 21.4% |
| Andrology Handbook Entry MCQ Result (%) | n = 28 | 79.8 ± 8.78 [82 (79.0–86.0)] |
| Final Presentation Outcomes (%) | n = 28 | 89.8 ± 4.47 [91.0 (88.7–92.4)] |
| Final Scientific Writing Outcome (%) | n = 28 | 69.4 ± 7.58 [70.95 (45.3–77.1)] |
| Publication Ready Manuscripts | Ready in current format | 2.5% |
| | Requires minor revision | 33.1% |
| | Requires major revision | 59.2% |
| | Not appropriate for publication | 5.2% |

Abbreviations: IQR, interquartile range; SD, standard deviation.
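The percentages and summary statistics in Table 1 follow from simple arithmetic on counts and scores. The Python sketch below recomputes the gender and education‐level percentages from the counts reported for the cohort (n = 28) and shows one way to produce a ‘mean ± SD [median (IQR)]’ summary with the standard `statistics` module. The scores passed to `describe` are hypothetical, and quartile conventions differ between software packages, so IQRs computed this way may not match MedCalc's output exactly.

```python
import statistics
from typing import Dict, Sequence


def percentages(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each category's share of the total as a percentage rounded to 1 decimal."""
    total = sum(counts.values())
    return {category: round(100 * n / total, 1) for category, n in counts.items()}


def describe(values: Sequence[float]) -> str:
    """Format values as 'mean ± SD [median (Q1–Q3)]' using the sample SD."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    # 'inclusive' quartiles; other packages may use a different interpolation rule.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return f"{mean:.1f} ± {sd:.2f} [{median:.1f} ({q1:.1f}–{q3:.1f})]"


# Counts reported in Table 1 (n = 28).
print(percentages({"Male": 6, "Female": 22}))
print(percentages({"Undergraduate": 21, "Graduate": 3, "PhD": 4}))
# Hypothetical scores, not study data.
print(describe([70, 75, 80, 85, 90]))
```

Rounding each share independently, as here, can make a column sum to 99.9% or 100.1%; that is expected and does not indicate an error in the counts.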

This article is being made freely available through PubMed Central as part of the COVID-19 public health emergency response. It can be used for unrestricted research re-use and analysis in any form or by any means with acknowledgement of the original source, for the duration of the public health emergency.

3.2. Exit survey

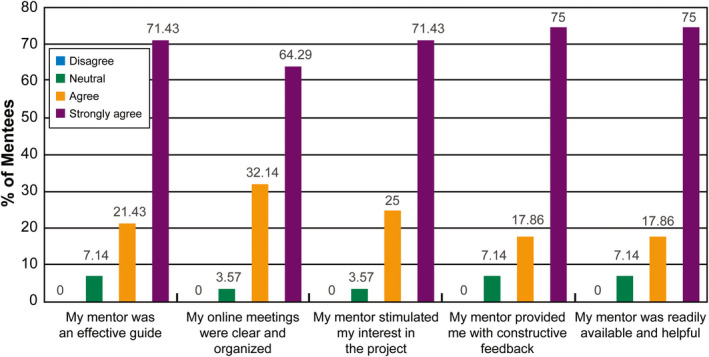

3.2.1. Online engagement with mentors

Regular online engagement with mentors was a central component of the online mentorship. All mentees evaluated this engagement, and the results are summarized in Figure 3. The majority of mentees agreed with each statement: my mentor was an effective guide (92.9%), online meetings were clear and organized (96.4%), mentors stimulated interest in the assigned projects (96.4%), mentors provided prompt and constructive feedback (92.9%), and mentors were always available and helpful (92.9%).

FIGURE 3.

Mentees’ feedback on the online engagement with their mentors during the 6‐week Online Mentorship program

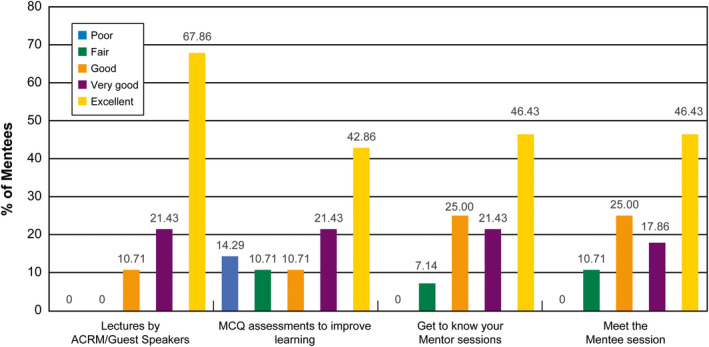

3.2.2. Virtual colloquium meetings

The VCM survey feedback is summarized in Figure 4. The majority of mentees rated each of the following as excellent or very good: lectures by ACRM or guest speakers (67.8% and 21.4%, respectively), MCQ assessments to improve learning (42.8% and 21.4%, respectively), ‘Get to Know Your Mentor’ sessions (46.4% and 21.4%, respectively) and ‘Meet the Mentee’ sessions (46.4% and 17.9%, respectively).

FIGURE 4.

Mentees’ feedback on the Virtual Colloquium Meetings during the 6‐week Online Mentorship program

3.2.3. Course content

The mentees' responses on the course content are summarized in Figure 5. The majority of mentees strongly agreed or agreed with each statement: learning objectives were clear (50.0% and 46.4%, respectively), the course content was meticulously organized and well planned (50.0% and 46.4%, respectively), course workload was appropriate (42.8% and 46.4%, respectively), mentees were able to participate fully in the program (50.0% and 39.3%, respectively), course outcomes were clear (57.1% and 32.1%, respectively), my expectations of the course have been met (60.1% and 32.1%, respectively), and I am satisfied with my learning outcomes (64.3% and 35.7%, respectively).

FIGURE 5.

Mentees’ feedback on the course content during the 6‐week Online Mentorship program

3.3. Pre‐ and post‐mentorship improvements

Mentees were asked to self‐evaluate their skills in scientific writing, their understanding of scientific methodology and plagiarism, their soft skills and their basic andrology knowledge at the beginning of the course and in the exit survey, reporting their perceived skills in several aspects of each outcome. Overall, the results showed a significant improvement in all five core outcomes, as summarized in Table 2. For scientific writing, there was a significant (p < .001) self‐reported improvement in writing ability, grammar and punctuation, use of appropriate terminology, ability to search for and identify appropriate articles for scientific writing and ability to choose correct references (Table 2). For scientific methodology, there was a significant (p < .001) self‐reported improvement in understanding of the research process, of the application of PICO (population, intervention, control and outcomes) in research methodology, of observational studies, of clinical trials (experimental studies), of systematic reviews and meta‐analyses and of the aims of a research study in quantitative research (Table 2). In addition, there was a significant (p ≤ .001) self‐reported improvement in understanding of plagiarism in scientific writing, of the application of the similarity index in anti‐plagiarism software, of plagiarism as a misrepresentation of another person’s work and of the fact that plagiarism can be considered a criminal offence, as well as in the ability to recognize and avoid unintentional plagiarism in one's own writing (Table 2). For soft skill development, there was a significant (p < .001) self‐reported improvement in punctuality and attendance, initiative, attention to detail, critical thinking, ability to self‐organize and effective communication (Table 2).
For mentee andrology knowledge, there was a significant (p < .001) self‐reported improvement for mentee understanding of the causes of male infertility, the semen analysis, assisted reproduction techniques, the role of the urological surgeon in the management of male infertility, the role of empirical medical treatments in male infertility, the importance of sperm DNA fragmentation in male infertility and the importance of oxidative stress in male infertility (Table 2).

TABLE 2.

Pre and post self‐reporting of the 2020 ACRM Online Mentorship Program mentees

| Item | Pre n | Pre Mean ± SD | Pre Median (IQR) | Post n | Post Mean ± SD | Post Median (IQR) | p‐value |
|---|---|---|---|---|---|---|---|
| **Mentee scientific writing** | | | | | | | |
| My writing ability | 26 | 2.6 ± 0.7 | 3 (2–3) | 28 | 3.7 ± 0.7 | 4 (3–4) | <.0001 |
| My grammar and punctuation | 28 | 2.9 ± 1.0 | 3 (2–4) | 27 | 4.0 ± 0.8 | 4 (4–4) | <.0001 |
| My use of appropriate terminology | 28 | 2.4 ± 0.8 | 2.4 (2–3) | 28 | 3.8 ± 0.9 | 4 (3–4) | <.0001 |
| My ability to search and identify appropriate articles for scientific writing | 28 | 2.5 ± 1.0 | 2 (2–3) | 28 | 4.3 ± 0.6 | 4 (4–5) | <.0001 |
| My ability to choose correct references | 27 | 2.7 ± 1.0 | 3 (2–3) | 28 | 4.1 ± 0.8 | 4 (3.75–5) | <.0001 |
| **Scientific methodology understanding** | | | | | | | |
| My understanding of the research process | 28 | 2.3 ± 0.8 | 2.25 (1.75–3) | 28 | 4.1 ± 0.7 | 4 (4–5) | <.0001 |
| My understanding of the application of PICO in research methodology | 27 | 1.5 ± 0.7 | 1 (1–2) | 28 | 3.5 ± 1.1 | 4 (3–4) | <.0001 |
| My understanding of observational studies | 28 | 2.4 ± 0.8 | 3 (2–3) | 28 | 4.0 ± 0.9 | 4 (3.72–5) | <.0001 |
| My understanding of clinical trials (experimental studies) | 28 | 2.4 ± 0.8 | 3 (2–3) | 28 | 3.9 ± 0.9 | 4 (3.72–5) | <.0001 |
| My understanding of systematic reviews | 28 | 1.8 ± 1.0 | 1 (1–3) | 28 | 4.1 ± 0.9 | 4 (3.75–5) | <.0001 |
| My understanding of meta‐analysis | 28 | 1.8 ± 0.9 | 1 (1–3) | 28 | 4.0 ± 1.0 | 4 (3–5) | <.0001 |
| My understanding of the aims of a research study in quantitative research | 28 | 2.1 ± 0.9 | 2 (1–3) | 28 | 4.1 ± 0.8 | 4 (3.75–5) | <.0001 |
| **Plagiarism understanding** | | | | | | | |
| My understanding of plagiarism in scientific writing | 28 | 3.5 ± 1.2 | 3.5 (3–4) | 28 | 4.5 ± 0.6 | 5 (4–5) | .0001 |
| My understanding of the application of the similarity index in anti‐plagiarism software | 28 | 3.1 ± 1.2 | 3 (2.5–4) | 28 | 4.4 ± 0.6 | 4.5 (4–5) | <.0001 |
| My understanding of plagiarism as a misrepresentation of another person’s work | 27 | 3.8 ± 1.3 | 4 (3–5) | 28 | 4.5 ± 0.6 | 5 (4–5) | .0005 |
| My understanding that plagiarism can be considered a criminal offence | 28 | 4.0 ± 1.2 | 4 (3–5) | 28 | 4.5 ± 0.6 | 5 (5–5) | .0010 |
| My ability to recognize and avoid unintentional plagiarism in my writing | 28 | 3.4 ± 1.2 | 3.5 (3–4) | 28 | 4.4 ± 0.7 | 5 (4–5) | <.0001 |
| **Soft skill development** | | | | | | | |
| My punctuality and attendance | 28 | 3.6 ± 1.1 | 4 (3–4) | 28 | 4.3 ± 0.8 | 4 (4–5) | .0001 |
| My initiative | 28 | 3.5 ± 1.1 | 4 (3–4) | 28 | 4.4 ± 0.8 | 5 (4–5) | <.0001 |
| My attention to detail | 28 | 3.5 ± 1.1 | 3 (3–4) | 28 | 4.4 ± 0.8 | 5 (4–5) | <.0001 |
| My critical thinking | 27 | 3.5 ± 0.9 | 3 (3–4) | 28 | 4.4 ± 0.7 | 4 (4–5) | <.0001 |
| My ability to self‐organize | 27 | 3.6 ± 1.3 | 4 (3–4.75) | 28 | 4.3 ± 0.9 | 4 (4–5) | .0001 |
| My effective communication | 28 | 3.5 ± 1.0 | 3 (3–4) | 28 | 4.4 ± 0.7 | 4 (4–5) | <.0001 |
| **Andrology knowledge** | | | | | | | |
| My understanding of the causes of male infertility | 28 | 1.9 ± 1.1 | 1 (1–3) | 28 | 4.1 ± 0.9 | 4 (3.5–5) | <.0001 |
| My understanding of the semen analysis | 28 | 1.8 ± 1.0 | 1 (1–3) | 28 | 4.0 ± 0.9 | 4 (3–5) | <.0001 |
| My understanding of assisted reproduction techniques | 28 | 1.8 ± 0.9 | 1.5 (1–3) | 28 | 3.8 ± 0.9 | 4 (3–4.5) | <.0001 |
| The role of the urological surgeon in the management of male infertility | 28 | 1.5 ± 0.7 | 1 (1–2) | 28 | 3.5 ± 0.8 | 3.5 (3–4) | <.0001 |
| The role of empirical medical treatments in male infertility | 28 | 1.7 ± 0.9 | 1 (1–2.5) | 28 | 3.7 ± 0.9 | 4 (3–4) | <.0001 |
| The importance of sperm DNA fragmentation in male infertility | 28 | 1.7 ± 1.0 | 1 (1–2.5) | 28 | 3.7 ± 0.8 | 4 (3–4) | <.0001 |
| The importance of oxidative stress in male infertility | 28 | 1.7 ± 1.0 | 1 (1–2) | 28 | 3.7 ± 0.8 | 4 (3–4) | <.0001 |

Measured on a scale of 1–5 (1 = poor, 5 = excellent).

Abbreviations: IQR, interquartile range; SD, standard deviation.
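The following Python sketch shows how each row's summary columns can be derived. It computes n, mean ± SD and median (IQR) for one survey item scored 1–5. It rests on several assumptions. The ratings are hypothetical (not the study data), and `summarize_likert` and `format_row` are illustrative helper names. The SD is the sample SD, and the quartiles use `statistics.quantiles(..., method="inclusive")`. This section does not state which quartile method the table uses, and other methods can give different non‐integer quartiles. It also does not describe the significance test behind the p values, so that test is not reproduced.

```python
import statistics


# Hypothetical helper: summarise one survey item rated on a 1-5 scale.
def summarize_likert(scores):
    """Return n, mean, sample SD, median and (Q1, Q3) for 1-5 ratings."""
    if len(scores) < 2:
        raise ValueError("need at least two ratings to compute an SD")
    if any(s not in (1, 2, 3, 4, 5) for s in scores):
        raise ValueError("ratings must be integers from 1 to 5")
    # 'inclusive' = linear interpolation between order statistics; this is an
    # assumption, since the study's quartile method is not specified.
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return {
        "n": len(scores),
        "mean": statistics.mean(scores),
        "sd": statistics.stdev(scores),  # sample (n - 1) standard deviation
        "median": statistics.median(scores),
        "iqr": (q1, q3),
    }


def format_row(label, s):
    """Format a summary in the table's 'n | Mean ± SD | Median (IQR)' layout."""
    return (
        f"{label} | {s['n']} | {s['mean']:.1f} ± {s['sd']:.1f} | "
        f"{s['median']:g} ({s['iqr'][0]:g}–{s['iqr'][1]:g})"
    )


if __name__ == "__main__":
    pre = [2, 3, 3, 2, 4, 3, 1, 3]  # hypothetical pre-mentorship ratings
    print(format_row("Hypothetical item", summarize_likert(pre)))
    # prints: Hypothetical item | 8 | 2.6 ± 0.9 | 3 (2–3)
```

Reporting the median and IQR alongside the mean and SD is a common choice for ordinal Likert data, where the mean alone can be misleading.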


4. DISCUSSION

The inaugural Online Summer Mentorship course hosted by the ACRM is an innovative, dynamic and result‐oriented program that aims to train early‐career investigators, researchers and medical professionals in reproductive medicine and related research areas. It was modelled on the annual face‐to‐face Summer Internship that the ACRM has hosted since 2008 (Durairajanayagam et al., 2015). The mentorship was entirely online and free of charge to mentees who met the selection criteria. It was well received and included international mentees and mentors. A team of 18 international experts chosen by the ACRM management planned the program and carefully customized its content. The virtual platform overcame the constraints of time and distance, which made it possible to select an international team of eminent mentors to deliver a world‐class program consistent with the reputation of the ACRM training program. The principles of this online program were similar to those of the past 12 annual Summer Internships, which were offered at the ACRM as face‐to‐face theory and practical training. The content covered the physiology and pathophysiology of reproductive medicine and clinical andrology, quantitative research methodology and statistics, the art and science of writing scientific articles and presenting research in PowerPoint format. It also included the development and assessment of soft skills and of plagiarism awareness (Durairajanayagam et al., 2015). Unfortunately, a significant drawback of the online format compared with the annual face‐to‐face training program was the lack of practical and laboratory training in andrological diagnostics and bench research skills.

4.1. Provision of clear learning objectives and outcomes

Clear learning objectives are important for any educational activity. They emphasize the purpose of learning, aid subsequent study planning and clarify the intended goals or outcomes. They also allow objective evaluation against clearly defined milestones and elicit student‐led academic discussion. This consideration was central to the planning and delivery of the mentorship and is reflected in the student feedback. In the exit survey, mentees reported that the course content and learning objectives were clear and that the content was meticulously organized and well planned. They also reported that they were able to participate fully and meet the learning outcomes. This enabled mentees to set appropriate goals with their mentors for weekly teaching activities and assessments. Goal setting is a powerful educational tool. It builds on students' strengths to address their weaknesses and allows mentors to provide focused advice and support that ultimately help students achieve the intended goals (Johnson & Graham, 1990). Day and Tosey (2011) argue that setting educational goals can ‘direct students’ attention to completing tasks, can motivate them to greater effort in performing tasks that move them towards achieving goals’ (Day & Tosey, 2011). Evidence indicates that goal setting has a significant positive impact on student performance. Dotson (2016) assessed 328 students who served as their own controls. Compared with an earlier period without pre‐set goals, their performance improved significantly after clear objectives were set (Dotson, 2016). Another study assessed the impact of goal‐directed education on 147 students of English language. Participants given clear objectives at the beginning of the learning activity performed better than a control group (Idowu et al., 2014).

4.2. Constructive alignment and the meddler in the middle

Constructive alignment, as put forward by Biggs (1996, 1999), is the alignment of teaching and learning activities, including content, teaching methodology and assessments, grounded in constructivist learning theory (Biggs, 1996; Biggs & Tang, 1999). This online mentorship attempted a deliberate alignment between learning activities, assessments and outcomes. Design‐focused evaluation was also integrated into the core teaching strategy, and it included student feedback on the alignment between learning outcomes and teaching methods (Smith, 2008). Closely associated with constructive alignment is the need to let students do the learning themselves (a student‐centred approach). Students then engage autonomously and collaboratively with the material through work‐based activities inside and outside the classroom (Morrison, 2014). This contrasts with a teacher‐centred approach, the so‐called ‘sage on the stage’, in which lectures are the core teaching methodology (Morrison, 2014). A student‐centred approach, the so‐called ‘guide on the side’, is a progression in three stages. It moves from ‘transmitting information’ (teacher‐focused) through ‘concept acquisition’ (teacher–learner interaction) to conceptual development (student‐focused) (Morrison, 2014; Trigwell et al., 1994). According to Morrison (2014), this requires students (mentees) to shift their roles and responsibilities in the process. They must also distinguish between ‘information’ and ‘knowledge’, the latter described as information placed in context, with a purpose and relevance (Morrison, 2014).

However, both approaches have benefits and risks. The concept of the ‘meddler in the middle’ represents an active, intervention‐based pedagogy in which the student (mentee) and teacher (mentor) are mutually involved in assembling knowledge (McWilliam, 2009). A learning partnership is created between teacher and student. Within it, ‘meddling’ concerns what content is considered important, how engagement with that content should take place and how it should be assessed (McWilliam, 2009). As the name implies, the meddler sits somewhere between a ‘sage’ and a ‘facilitator’. This middle position was a goal for the core teaching methodology and constructive alignment of the online mentorship.

These principles were central to the design of the online mentorship. Its teaching model focused on one‐on‐one mentorship, predominantly through writing exercises, research methodology techniques, data analysis and prompt, regular feedback. The VCM events were further aligned with this model as a teaching and learning methodology. They provided more general training in andrology, reproductive medicine, research methodology and plagiarism to support the five core outcomes of the mentorship.

4.3. Feedback in teaching and learning

Feedback to students is the subject of ongoing, often contentious and even confusing discussion within higher education (Boud & Molloy, 2013b). There is a general concern in higher education that learners do not receive enough feedback. A contrasting view holds that teachers put much effort into feedback that is then largely ignored or not used to improve learning. Feedback on teaching tasks, activities and assessments is therefore important for learning outcomes, but it needs to be made more effective (Boud & Molloy, 2013a). Online learning in the digital age can enhance feedback without changing its fundamental nature as a tool for learning and formative assessment. This is well suited to the so‐called Millennial Generation (born between 1982 and 1994) and to Generation Z (born between 1994 and the early 2000s). Generation Z, which is relevant to the mentee cohort, is highly connected and networked, and the online mentorship used this as an advantage (Williams et al., 2012).

In this context, mentees received immediate summary feedback on the online MCQ results after each VCM lecture. The lecturer then discussed each question in the context of overall mentee performance. Online technology (Google Forms) provided the tool for this rapid review of mentee performance as part of a formative feedback strategy. Furthermore, the one‐on‐one engagement provided prompt and regular written and verbal feedback on weekly student assignments. This was combined with an authentic learning approach, built on authentic tasks: scientific writing and reading scientific literature found through database searches. Such an approach is associated with a more accurate measure of student performance and outcomes (Herrington & Herrington, 2006). Importantly, the concept of sustainable feedback gave mentees the opportunity to drive their own learning with guidance and thereby to generate feedback on their own work. This is proposed to provide learning outcomes beyond the original task(s) and to reduce any false expectations that the mentorship cannot deliver (Boud & Molloy, 2013b).
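The per‐question summary described above can be sketched in a few lines. This is a hypothetical Python illustration and is neither the Google Forms implementation nor its API. It assumes the quiz responses have already been exported as a mapping from each mentee to the options they chose, and the names `mcq_summary`, `answer_key` and `responses` are illustrative. For each question it reports the percentage correct and the most frequently chosen option, which can help a lecturer discuss common misconceptions. It also reports each mentee's total score.

```python
from collections import Counter


def mcq_summary(answer_key, responses):
    """Summarise one quiz for formative feedback (hypothetical helper).

    answer_key: {question_id: correct_option}, e.g. {"Q1": "B"}
    responses:  {mentee_id: {question_id: chosen_option}}
    Returns (per_question, scores).
    """
    if not responses:
        raise ValueError("no responses to summarise")
    per_question = {}
    for question, correct in answer_key.items():
        # Unanswered questions are recorded as None and count as incorrect.
        chosen = [answers.get(question) for answers in responses.values()]
        counts = Counter(chosen)
        per_question[question] = {
            "percent_correct": 100.0 * counts[correct] / len(chosen),
            "most_common": counts.most_common(1)[0][0],
        }
    # Each mentee's score is the number of questions answered correctly.
    scores = {
        mentee: sum(answers.get(q) == correct for q, correct in answer_key.items())
        for mentee, answers in responses.items()
    }
    return per_question, scores


if __name__ == "__main__":
    key = {"Q1": "B", "Q2": "D"}
    answers = {
        "mentee_1": {"Q1": "B", "Q2": "A"},
        "mentee_2": {"Q1": "B", "Q2": "D"},
        "mentee_3": {"Q1": "C", "Q2": "A"},
    }
    per_question, scores = mcq_summary(key, answers)
    for q, info in per_question.items():
        print(f"{q}: {info['percent_correct']:.0f}% correct, "
              f"most common answer {info['most_common']}")
    print(scores)
```

Showing the most common answer next to the percentage correct points the discussion at a shared wrong option (here "A" for Q2). A low score alone would not reveal that.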

To be effective, feedback needs to be frequent and timely and to provide sufficient detail. It must be aligned with the purpose of the task and its outcomes and written in language that students can easily understand. It should also guide students towards improvement by being placed exactly where concerns arise, for example through document review formats. Its focus should be on learning and on improvement in relation to future tasks (Gibbs & Simpson, 2005). These were central principles of the mentorship methodology, and the survey results suggest that the feedback strategy had a significant positive impact on student learning and outcomes. Written feedback that was clear and specific to each mentee, underpinned by a frequent and rapid feedback approach, provided a unique one‐on‐one opportunity that is associated with improved outcomes.

4.4. Breaking the power dynamics

A variety of factors, such as gender, religion and socio‐political issues, might influence the learning outcomes of mentees during online mentorship (Zaidi, Verstegen, Naqvi, Morahan, et al., 2016). Amongst these factors, a healthy mentor–mentee relationship is one of the essential factors for achieving optimal learning (Chan et al., 2017). An imbalance of power in this relationship might adversely affect the learning outcomes of the online mentorship program (Chan et al., 2017). The mentorship aimed to reduce any such imbalance, develop an optimal mentor–mentee relationship and foster cultural interaction (Zaidi, Verstegen, Naqvi, Dornan, et al., 2016). To this end, mentors and mentees were introduced each week at the VCM through the ‘Get to Know Your Mentor’ and ‘Meet the Mentee’ sessions, respectively. The mentors shared pictorial introductions of their family, personal and professional interests, academic and research environment, conference and leisure trips, etc. Afterwards, a student representative asked the mentor questions about the presentation on behalf of all mentees. Similarly, mentees shared information about their family, friends, academic interests, achievements, holiday trips, etc. and answered an ACRM mentor's questions about their presentation. Student feedback showed that these sessions were generally rated as excellent. This suggests that they contributed to a sense of inclusion and reduced power dynamics, improving the mentee learning process and outcomes.

4.5. Plagiarism

Appropriate knowledge of research ethics is a fundamental part of academic teaching. Students must know that plagiarism represents cheating and dishonesty and can be punishable by law. However, they must first receive proper training in research writing. This should be provided through continuous education, starting with proper language and grammar and research ethics, and followed by repeated practice on writing projects with an emphasis on citation and referencing. The word plagiarism derives from the Latin words ‘plaga’ (a hunting net) and ‘plagiārius’ (kidnapper). Plagiarism can be defined as using or publishing another author’s original data without proper or sufficient credit to the original author and trying to pass it off as one’s own (Gasparyan et al., 2017; U.S. Department of Health and Human Services, n.d.). It is a very serious form of ethical misconduct and can be viewed as a criminal act. Sanctions range from rejection or retraction of the plagiarized manuscript to temporary or permanent banning of the plagiarist from publication, together with public shaming (Wittmaack, 2005).

Plagiarism is more prevalent amongst junior researchers, especially undergraduates in non‐English‐speaking areas (Park, 2003). The main reason is a lack of proper education in writing. This includes the absence of undergraduate courses on plagiarism and poor English that limits the ability to paraphrase. In some countries, the education system also encourages copying and suppresses creativity (Chaurasia, 2016; Kokkinaki et al., 2015). Proper education in research ethics and the legality of authorship has been reported to reduce plagiarism significantly (Chaurasia, 2016).

The mentorship therefore focused on plagiarism through interactive lectures, assessments and the application of the similarity index to all written assignments each week. When writing a manuscript or thesis, some researchers simply copy and paste complete sentences or paragraphs from other papers, sometimes without even citing the original paper. The exit survey results support the mentors' feedback that the similarity index of writing assignments improved significantly (i.e., similarity scores fell). This happened as mentees learned about plagiarism through an active learning process and appropriate, regular feedback.
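Commercial anti‐plagiarism software computes its similarity index by proprietary matching against large text databases. The sketch below is therefore only a toy illustration of the idea and is not the tool used in the mentorship. It assumes the index is the percentage of a submission's word trigrams (runs of three consecutive words) that also occur in any supplied source text. The function `similarity_index` and the choice of trigrams are hypothetical. A lower value after revision corresponds to the improvement described above.

```python
import re


def word_ngrams(text, n=3):
    """Set of lower-cased word n-grams (hypothetical tokenisation)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity_index(submission, sources, n=3):
    """Percentage of the submission's word n-grams found in any source text.

    A toy stand-in for a commercial similarity index, not its actual method.
    """
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0
    src = set().union(*(word_ngrams(s, n) for s in sources))
    return 100.0 * len(sub & src) / len(sub)


if __name__ == "__main__":
    source = "Oxidative stress is a major cause of male infertility."
    copied = source
    partly_copied = "Oxidative stress is a major cause of sperm damage."
    paraphrased = "Reactive oxygen species can impair sperm function."
    for label, text in [("copied", copied), ("partly copied", partly_copied),
                        ("paraphrased", paraphrased)]:
        print(f"{label}: {similarity_index(text, [source]):.1f}%")
```

Word trigrams are used here because a trigram rarely matches by chance, yet a single changed word breaks only the few trigrams that contain it. Real tools add steps such as excluding quotations and references, which this sketch omits.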

4.6. Soft skills development and professional conduct

Besides theoretical knowledge, hands‐on bench research, scientific writing and mentorship, soft skills represent one of the five pillars of a successful mentorship (Durairajanayagam et al., 2015). This mentorship aimed to prepare mentees for their future working environment. It therefore evaluated soft skills such as professionalism, time management, organization and punctuality, teamwork, participation in lectures and presentations, curiosity, critical thinking, effective communication and the ability to abide by policies. The online mentorship drew attention to the importance of soft skills for both health‐care professionals and enrolled students. The results suggest that mentees became aware of the importance of soft skills, as they reported clear progress between the pre‐mentorship and post‐mentorship evaluations. Besides professional skills, mentees were trained in life values and interpersonal skills (Kashou et al., 2016), which are increasingly necessary today.

5. LIMITATIONS

Online learning is not free of disadvantages. Some researchers argue that interaction and timely feedback are often absent from online instruction (El‐Tigi & Branch, 1997; Olson & Wisher, 2002). It is also widely recognized that online courses experience much higher attrition rates than classroom‐based courses (El‐Tigi & Branch, 1997; Merisotis & Phipps, 1999; Olson & Wisher, 2002). However, there was no dropout in our program. In addition, working with the technology requires specialized skills, and sound and video production is often less than broadcast quality (Kerka, 1996). Students must also show greater initiative, as there is less supervision than in a classroom environment, and they risk social isolation (Kerka, 1996). Subjects that require direct hands‐on or practical training are also compromised. Most significantly, online teaching and learning is more demanding for both lecturers and students. Lecturers need to adapt to presenting in a virtual environment, and students must be more self‐disciplined and self‐driven to achieve. Finally, the interpretation of the current results may be affected by the limited number of mentees (n = 28) and by the self‐reported nature of the evaluation. However, several measures were put in place to reduce the risk of bias and strengthen the study findings.

Face validity of the final survey instrument was addressed by constructing relevant, reasonable and unambiguous questions directed towards specific aims. Multiple authors revised these questions until they were approved (Taherdoost, 2016). These authors were highly qualified and experienced experts in both research and teaching (Kember & Leung, 2008). The survey questions were specific to students attending the program, who had similar backgrounds in the scientific/medical field. Although the survey was anonymous and not obligatory, the response rate was 100%. This reduced sampling error and avoided nonresponse bias (Krumpal, 2013). Surveys of this type often show social desirability bias, but this bias mostly affects sensitive topics such as alcohol, sex and racism, amongst others. Computer‐assisted, anonymous administration on a topic of relatively low sensitivity reduces it (Krumpal, 2013). Because there was no dropout during this online course, the cohort included complete results for all students, which strengthens the data (Wladis & Samuels, 2016). Thus, despite some minor limitations, the questionnaire can be considered well designed and gathered useful and accurate information.

6. CONCLUSIONS

These results illustrate that the aims of the online mentorship were achieved through three means: an innovative and adaptive online educational model, collaborative development of clear learning outcomes and guidelines, and effective online planning with the mentors. The teaching structure focused on development through the application of the scientific process and scientific writing, under frequent one‐on‐one guidance. Furthermore, mentees received regular, real‐time written and/or verbal feedback, an established and effective learning tool. Clear and immediate feedback on lecture assessments and discussions further entrenched a formative assessment model, which is considered highly effective in teaching and learning. The result was a facilitated educational process rather than a lecture‐based format, and facilitated learning has also been shown to produce better educational outcomes. This innovative model has proven effective as an educational response during the ongoing COVID‐19 crisis.

Supporting information

Table S1

ACKNOWLEDGEMENT

The research conducted for this article was supported by the American Center for Reproductive Medicine, Cleveland Clinic.

Agarwal A, Leisegang K, Panner Selvam MK, et al. An online educational model in andrology for student training in the art of scientific writing in the COVID‐19 pandemic. Andrologia. 2021;53:e13961. 10.1111/and.13961

Contributor Information

Ashok Agarwal, Email: agarwaa@ccf.org.

Shubhadeep Roychoudhury, Email: shubhadeep1@gmail.com.

DATA AVAILABILITY STATEMENT

The authors confirm that the data supporting the findings of this study are available within the article.

References

- Agarwal, A., Rana, M., Qiu, E., AlBunni, H., Bui, A. D., & Henkel, R. (2018). Role of oxidative stress, infection and inflammation in male infertility. Andrologia, 50(11), e13126. 10.1111/and.13126

- Almarzooq, Z. I., Lopes, M., & Kochar, A. (2020). Virtual learning during the COVID‐19 pandemic. Journal of the American College of Cardiology, 75(20), 2635–2638. 10.1016/j.jacc.2020.04.015

- Baker, L., & Saul, W. (1994). Considering science and language arts connections: A study of teacher cognition. Journal of Research in Science Teaching, 31(9), 1023–1037. 10.1002/tea.3660310913

- Biggs, J. (1996). Enhancing teaching through constructive alignment. Higher Education, 32(3), 347–364.

- Biggs, J. (1999). Teaching for quality learning at university. SRHE & Open University Press.

- Biggs, J., & Tang, C. (1999). Teaching for quality learning at university. Open University.

- Boud, D., & Molloy, E. (2013a). Feedback in higher and professional education: Understanding it and doing it well. Routledge.

- Boud, D., & Molloy, E. (2013b). Rethinking models of feedback for learning: The challenge of design. Assessment & Evaluation in Higher Education, 38(6), 698–712. 10.1080/02602938.2012.691462

- Bui, A. D., Sharma, R., Henkel, R., & Agarwal, A. (2018). Reactive oxygen species impact on sperm DNA and its role in male infertility. Andrologia, 50(8), e13012. 10.1111/and.13012

- Chan, Z. C., Tong, C. W., & Henderson, S. (2017). Power dynamics in the student‐teacher relationship in clinical settings. Nurse Education Today, 49, 174–179. 10.1016/j.nedt.2016.11.026

- Chaurasia, A. (2016). Stop teaching Indians to copy and paste. Nature, 534(7609), 591. 10.1038/534591a

- Day, T., & Tosey, P. (2011). Beyond SMART? A new framework for goal setting. The Curriculum Journal, 22(4), 515–534. 10.1080/09585176.2011.627213

- Dhawan, S. (2020). Online learning: A panacea in the time of COVID‐19 crisis. Journal of Educational Technology Systems, 49(1), 5–22. 10.1177/0047239520934018

- Dotson, R. (2016). Goal setting to increase student academic performance. Journal of School Administration Research and Development, 1(1), 45–46.

- Durairajanayagam, D., Kashou, A. H., Tatagari, S., Vitale, J., Cirenza, C., & Agarwal, A. (2015). Cleveland Clinic's summer research program in reproductive medicine: An inside look at the class of 2014. Medical Education Online, 20, 29517. 10.3402/meo.v20.29517

- El‐Tigi, M., & Branch, R. M. (1997). Designing for interaction, learner control, and feedback during web‐based learning. Educational Technology, 37(3), 23–29.

- Gasparyan, A. Y., Nurmashev, B., Seksenbayev, B., Trukhachev, V. I., Kostyukova, E. I., & Kitas, G. D. (2017). Plagiarism in the context of education and evolving detection strategies. Journal of Korean Medical Science, 32(8), 1220–1227. 10.3346/jkms.2017.32.8.1220

- Gibbs, G., & Simpson, C. (2005). Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education, (1), 3–31.

- Handbook of andrology (2nd ed.). (2010). The American Society of Andrology. https://www.andrologysociety.org/wp-content/uploads/2019/08/Handbook-of-Andrology-Second-Edition-English.pdf

- U.S. Department of Health and Human Services, Office of Research Integrity. (n.d.). ORI policy on plagiarism. https://ori.hhs.gov/ori-policy-plagiarism

- Henkel, R., Sandhu, I. S., & Agarwal, A. (2019). The excessive use of antioxidant therapy: A possible cause of male infertility? Andrologia, 51(1), e13162. 10.1111/and.13162

- Herrington, A., & Herrington, J. (2006). What is an authentic learning environment? In A. Herrington & J. Herrington (Eds.), Authentic learning environments in higher education (pp. 1–13). Information Science Publishing.

- Hunter, A.‐B., Laursen, S. L., & Seymour, E. (2007). Becoming a scientist: The role of undergraduate research in students' cognitive, personal, and professional development. Science Education, 91(1), 36–74. 10.1002/sce.20173

- Idowu, A., Chibuzoh, I., & Madueke, I. (2014). Effects of goal‐setting skills on students’ academic performance in English language in Enugu Nigeria. Journal of New Approaches in Educational Research (NAER Journal), 3(2), 93–99.

- Johnson, L. A., & Graham, S. (1990). Goal setting and its application with exceptional learners. Preventing School Failure: Alternative Education for Children and Youth, 34(4), 4–8. 10.1080/1045988X.1990.9944567

- Kashou, A., Durairajanayagam, D., & Agarwal, A. (2016). Insights into an award‐winning summer internship program: The first six years. World Journal of Men's Health, 34(1), 9–19. 10.5534/wjmh.2016.34.1.9

- Kember, D., & Leung, D. Y. P. (2008). Establishing the validity and reliability of course evaluation questionnaires. Assessment & Evaluation in Higher Education, 33(4), 341–353. 10.1080/02602930701563070

- Kerka, S. (1996). Journal writing and adult learning. ERIC Clearinghouse on Adult, Career, and Vocational Education, Columbus, OH. https://files.eric.ed.gov/fulltext/ED399413.pdf

- Klucevsek, K. M. (2017). The intersection of information and science literacy. Communications in Information Literacy, 11(2), 7.

- Kokkinaki, A. I., Demoliou, C., & Iakovidou, M. (2015). Students’ perceptions of plagiarism and relevant policies in Cyprus. International Journal for Educational Integrity, 11(1), 3. 10.1007/s40979-015-0001-7

- Krumpal, I. (2013). Determinants of social desirability bias in sensitive surveys: A literature review. Quality & Quantity, 47(4), 2025–2047. 10.1007/s11135-011-9640-9

- McWilliam, E. (2009). Teaching for creativity: From sage to guide to meddler. Asia Pacific Journal of Education, 29(3), 281–293. 10.1080/02188790903092787

- Means, B., Toyama, Y., Murphy, R., Bakia, M., & Jones, K. (2010). Evaluation of evidence‐based practices in online learning: A meta‐analysis and review of online learning studies. U.S. Department of Education. https://www.ed.gov/about/offices/list/opepd/ppss/reports.html

- Merisotis, J. P., & Phipps, R. A. (1999). What's the difference? Outcomes of distance vs. traditional classroom‐based learning. Change: The Magazine of Higher Learning, 31(3), 12–17. 10.1080/00091389909602685

- Morrison, C. D. (2014). From ‘sage on the stage’ to ‘guide on the side’: A good start. International Journal for the Scholarship of Teaching and Learning, 8(1). 10.20429/ijsotl.2014.080104

- Morrison, C. D. (2013). From ‘sage on the stage’ to ‘guide on the side’: A good start. International Journal for the Scholarship of Teaching and Learning, 8(1).

- Olson, T., & Wisher, R. A. (2002). The effectiveness of web‐based instruction: An initial inquiry. The International Review of Research in Open and Distributed Learning, 3(2), 1–17. 10.19173/irrodl.v3i2.103

- Park, C. (2003). In other (people's) words: Plagiarism by university students–literature and lessons. Assessment & Evaluation in Higher Education, 28(5), 471–488. 10.1080/02602930301677

- Smith, C. (2008). Design‐focused evaluation. Assessment & Evaluation in Higher Education, 33(6), 631–645. 10.1080/02602930701772762

- Stassen, M. L., Doherty, K., & Poe, M. (2001). Course‐based review and assessment: Methods for understanding student learning. Office of Academic Planning & Assessment, University of Massachusetts Amherst.

- Taherdoost, H. (2016). Validity and reliability of the research instrument; how to test the validation of a questionnaire/survey in a research. International Journal of Academic Research in Management (IJARM), 5, 28–36. 10.2139/ssrn.3205040

- Trigwell, K., Prosser, M., & Taylor, P. (1994). Qualitative differences in approaches to teaching first year university science. Higher Education, 27(1), 75–84. 10.1007/BF01383761

- Williams, B., Brown, T., & Benson, R. (2012). Feedback in the digital environment. In D. Boud & E. Molloy (Eds.), Feedback in higher and professional education: Understanding it and doing it well (pp. 125–139). Taylor & Francis Group.

- Wittmaack, K. (2005). Penalties plus high‐quality review to fight plagiarism. Nature, 436(7047), 24. 10.1038/436024d

- Wladis, C., & Samuels, J. (2016). Do online readiness surveys do what they claim? Validity, reliability, and subsequent student enrollment decisions. Computers & Education, 98, 39–56. 10.1016/j.compedu.2016.03.001

- Zaidi, Z., Verstegen, D., Naqvi, R., Dornan, T., & Morahan, P. (2016). Identity text: An educational intervention to foster cultural interaction. Medical Education Online, 21, 33135. 10.3402/meo.v21.33135

- Zaidi, Z., Verstegen, D., Naqvi, R., Morahan, P., & Dornan, T. (2016). Gender, religion, and sociopolitical issues in cross‐cultural online education. Advances in Health Sciences Education, 21(2), 287–301. 10.1007/s10459-015-9631-z
