Abstract.

Purpose: Automatic and consistent meningioma segmentation in T1-weighted magnetic resonance (MR) imaging volumes, together with the corresponding volumetric assessment, is useful for diagnosis, treatment planning, and evaluation of tumor growth. We optimized segmentation performance and processing speed using a large number of meningiomas, including both surgically treated tumors and untreated tumors followed at the outpatient clinic.

Approach: We studied two different three-dimensional (3D) neural network architectures: (i) a simple encoder-decoder similar to a 3D U-Net, and (ii) a lightweight multi-scale architecture [Pulmonary Lobe Segmentation Network (PLS-Net)]. In addition, we studied the impact of different training schemes. For the validation studies, we used 698 T1-weighted MR volumes from St. Olav University Hospital, Trondheim, Norway. The models were evaluated in terms of detection accuracy, segmentation accuracy, and training/inference speed.

Results: While both architectures reached a similar average Dice score of 70%, the PLS-Net was more accurate, with an F1-score of up to 88%. The highest accuracy was achieved for the largest meningiomas. In terms of speed, the PLS-Net architecture tended to converge in about 50 h, whereas 130 h were necessary for the U-Net. Inference with PLS-Net takes less than a second on GPU and about 15 s on CPU.

Conclusions: Overall, with the use of mixed precision training, it was possible to train competitive segmentation models in a relatively short amount of time using the lightweight PLS-Net architecture. Future work should focus on the segmentation of small meningiomas () to increase the clinical relevance for automatic and early diagnosis and for estimating growth speed.

Keywords: three-dimensional segmentation, deep learning, meningioma, magnetic resonance imaging, clinical diagnosis

1. Introduction

Arising from the arachnoid cap cells on the outer surface of the meninges, meningiomas are the second most common primary brain tumor after gliomas and account for approximately one-third of all central nervous system tumors.1 With the increasing use of neuroimaging for checkups and precautionary diagnostics, incidental meningiomas are found more often.2 Magnetic resonance imaging (MRI), adopted as the first routine examination, represents the gold standard for diagnosis and for planning the optimal treatment strategy (i.e., surgery or conservative management).3,4 While several different MR sequences may be used for meningioma imaging, tumor diameters and volumes are measured on the contrast-enhanced T1-weighted sequence. Systematic and consistent segmentation of brain tumors is of utmost importance for accurate monitoring of growth and for guiding treatment decisions. Since meningiomas are typically slow-growing tumors, early detection and systematic monitoring of growth over time could improve clinical decision making and patient outcome.5 Manual segmentation by radiologists, often performed slice by slice, is too time consuming to be part of the daily clinical routine.
Tumor volume, and thus growth, is therefore usually assessed from manual measurements of tumor diameters, resulting in considerable inter- and intra-rater variability6 and only rough measures for growth evaluation.7 Automatic segmentation of pathology from MR images has been an active area of research for several decades and has made considerable progress with recent advances in deep learning-based methods.8,9 Nevertheless, brain tumor segmentation remains challenging due to the large variability in appearance, shape, structure, and location.10 Problems may also arise from the MRI volumes themselves, which can vary in resolution, suffer from intensity inhomogeneity,11,12 or exhibit different intensity ranges even for the same sequence and scanner. Gliomas, especially low-grade ones, are considered the most difficult brain tumors to segment in MRI, since they are often diffuse, poorly contrasted, and tentacle-like in structure. Conversely, typical meningiomas are sharply circumscribed with strong contrast enhancement. However, smaller meningiomas may resemble other contrast-enhancing structures such as blood vessels (in intensity, shape, and size), particularly at the base of the brain, making them challenging to detect automatically. In this study, we focus on automatic meningioma segmentation using solely T1-weighted MRI volumes from both surgically treated patients and untreated patients followed at the outpatient clinic, with the goal of obtaining a method able to segment meningiomas of all types and sizes.

1.1. State-of-the-Art

As described in a recent review,13 brain tumor segmentation methods can be classified into three categories based on the level of user interaction: manual, semi-automatic, and fully automatic. In this study, we restrict the scope to fully automatic methods, specifically those based on deep learning. Historically, a large majority of brain tumor segmentation studies have been carried out using the multimodal brain tumor image segmentation (BRATS) challenge dataset, which only contains glioma images.14 Brain tumor segmentation can be approached in 2D, where each axial image (slice) of the original 3D MRI volume is processed sequentially. Havaei et al.15 proposed a two-pathway convolutional neural network (CNN) architecture combining local and global information, arguing that the prediction for a given pixel should be influenced both by its immediate local neighborhood and by a larger context such as its overall position in the brain. Using the BRATS dataset for their experiments, they also proposed to use a combination of all available MRI modalities as input. Zhao et al.16 proposed to train CNNs and recurrent neural networks using image patches and slices along the three acquisition planes (i.e., axial, coronal, and sagittal) and to fuse the predictions with a voting-based strategy. Both methods were benchmarked on the BRATS dataset and reached up to 80% to 85% in terms of Dice coefficient and sensitivity/specificity. Many other studies have relied on image- or patch-based techniques in an attempt to handle large MRI volumes efficiently.17–19 However, methods based on features obtained from image patches or across planes generally achieve lower performance than methods using features extracted from the entire 3D volume, either directly or through a slabbing process (i.e., using a set of consecutive slices).
Simple 3D CNN architectures,20,21 multi-scale approaches,22,23 and ensembles of multiple CNNs24 have been explored. While these achieve better segmentation performance, are more robust to hyper-parameters, and generalize better, the 3D nature of MRI volumes still poses memory and computation challenges, even on high-end GPUs.

While the availability of the BRATS dataset has triggered a large amount of work on glioma segmentation, meningioma segmentation has been less studied, resulting in a scarce body of work. More traditional machine learning methods (e.g., SVM and graph cut) have been used for multi-modal (T1c and T2f) and multi-class (core tumor and edema) segmentation.6 While the reported performances are promising, the validation was carried out on a dataset of only 15 patients. More recently, Laukamp et al.25,26 used the DeepMedic architecture and framework,22 operating patch-wise in 3D, on their own multi-modal dataset. Using both T1-weighted contrast-enhanced and fluid-attenuated inversion recovery (FLAIR) sequences, they reported segmentation performance for the contrast-enhancing tumor volume and for the total lesion including surrounding edema. While the reported results reached above 90% Dice score for the former, the validation group consisted of only 56 patients, and a second 3D fully connected network was used as post-processing to remove false positives. In addition, they investigated heavy preprocessing techniques such as atlas registration and skull stripping in combination with resampling and normalization. Pereira et al.17 documented the effectiveness of preprocessing steps such as normalization, and of data augmentation techniques, especially rotation, for brain tumor segmentation. Common limitations of previous meningioma segmentation studies include the relatively small datasets used and the lack of thorough validation, such as k-fold cross-validation, to demonstrate generalization. In addition, processing a 3D MRI volume as a whole, without resorting to a slab- or patch-wise solution, has barely been investigated. More generally, CNN architecture development trends toward ever larger and deeper 3D networks, even more so when ensembling strategies are considered.
As a consequence, training and inference are becoming extremely computationally intensive, potentially prohibiting their use in clinical settings with limited time and access only to regular computers.

In this paper, our two main contributions are: (i) the study of a lightweight 3D architecture, and (ii) a set of validation studies on the largest meningioma dataset reported so far, showcasing potential for clinical use. With the former, a full T1-weighted MRI volume can be used as input, and false positive reduction is embedded end-to-end, removing the need for post-processing cleaning. For the latter, a cross-validation over 698 patients is performed, featuring MR acquisitions with a wide range of slice thicknesses and tumor characteristics (i.e., volume and location) from both the hospital and the outpatient clinic, showing good performance in a broad clinical context.

2. Data

For this study, we used a dataset of 698 Gd-enhanced T1-weighted MRI volumes acquired on 1.5 or 3 Tesla scanners at one of the seven hospitals in the geographical catchment region of the Department of Neurosurgery at St. Olavs University Hospital, Trondheim, Norway, between 2006 and 2015. All patients were 18 years or older with radiologically or histopathologically confirmed meningioma. Of those, 324 patients underwent surgery to remove the meningioma, while the remaining 374 patients were followed at the outpatient clinic. Overall, MRI volume dimensions covered voxels and the voxel sizes ranged between . All the meningiomas were manually delineated by an expert using 3D Slicer,27 and two examples are provided in Fig. 1. Given the wide range in voxel sizes, especially in the z-dimension (slice thickness), we further split our dataset in two. The first subset (DS1) consisted of the 600 high-quality MRIs with a slice thickness of at most 2 mm, whereas the second subset (DS2) consisted of all 698 MRIs, including the 98 images with a considerably larger slice thickness. Overall, the meningiomas had a volume ranging .

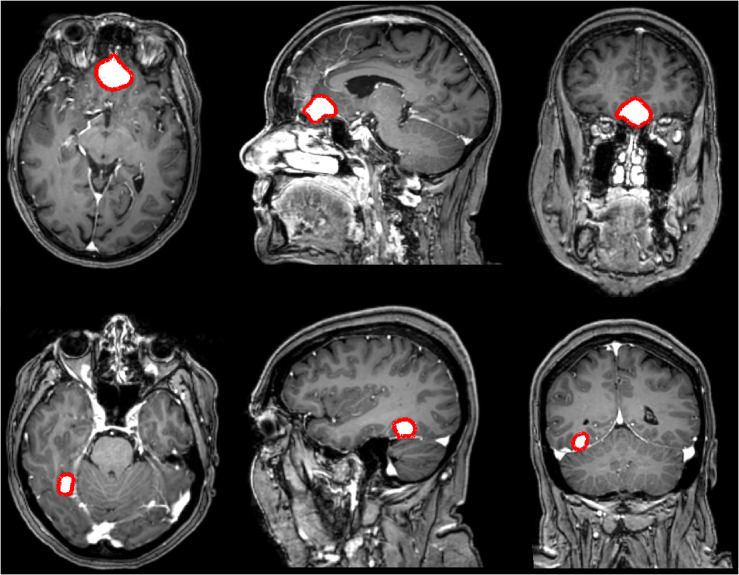

Fig. 1.

Illustrations of the manually annotated meningiomas in the dataset (in red). Each row represents a different patient, and the columns show the axial, coronal, and sagittal views, respectively.

We analyzed the differences between the two groups of meningiomas. The volume of the surgically resected meningiomas was on average larger () than that of the untreated meningiomas followed at the outpatient clinic (). A -test showed a statistically significant association () between treatment strategy and tumor volume: meningiomas of patients followed at the outpatient clinic are significantly smaller, making them more difficult to identify. In contrast, no statistically significant association () was found between treatment strategy and poor image resolution. There were 50 MRIs with poor resolution for patients followed at the outpatient clinic and 48 MRIs for patients who underwent surgery.

3. Methods

First, in Sec. 3.1, we explain our rationale for selecting the architectures and deep learning frameworks. Then, in Sec. 3.2, we introduce the different preprocessing steps that can be applied. Finally, we present the selected training strategies for the two architectures in Sec. 3.3.

3.1. Architectures and Frameworks

In early studies using fully convolutional architectures, the original 3D MRI volumes had to be split into 2D patches or slices that were processed independently and sequentially, because of insufficient GPU memory. While this reduced memory use, the lack of global information about the 3D relationships between voxels was detrimental to the overall performance. Advances in GPU design and increased memory capacity have since made research on 3D neural network architectures mainstream. For semantic segmentation, encoder-decoder architectures have been favored, especially since the emergence of the U-Net,28 followed by the 3D U-Net.29 Many U-Net variants have been studied in 2D and 3D for medical image segmentation over the past years, and this architecture can be considered a strong baseline.30–32 In this study, we implemented an architecture close to the original 3D U-Net, shown in Fig. 2. When working with 3D images, preprocessing is needed so that the model and data fit in GPU memory. Typical solutions are to downsample the input volume, to subdivide it into slabs that are processed sequentially, or to reduce the batch size to 1, which can result in poor convergence. Mini-batch sizes between 2 and 32 have been shown to improve generalization performance.33
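To make the memory argument concrete, the arithmetic below estimates the size of a single activation tensor for one convolutional layer. It is a minimal sketch: the volume shape (192 × 256 × 256 voxels) is a hypothetical example, the 8 filters mirror the first entry of the U-Net filter list in Sec. 3.3.1, and float32 storage (4 bytes per value) is assumed. A real training run stores many such tensors plus their gradients, so actual usage is far higher.

```python
# Back-of-the-envelope activation memory for a 3D convolutional layer.
# All shapes are hypothetical examples, not measurements from the study.

GIB = 1024 ** 3


def feature_map_bytes(batch, channels, depth, height, width, bytes_per_value=4):
    """Bytes needed to store one activation tensor of shape (B, C, D, H, W)."""
    return batch * channels * depth * height * width * bytes_per_value


# Whole volume (192 x 256 x 256 voxels), batch of 8, first layer with 8 filters.
full_volume = feature_map_bytes(batch=8, channels=8, depth=192, height=256, width=256)

# Same layer applied to a 32-slice slab instead of the whole volume.
slab = feature_map_bytes(batch=8, channels=8, depth=32, height=256, width=256)

print(f"whole volume: {full_volume / GIB:.2f} GiB for one feature map")  # 3.00 GiB
print(f"32-slice slab: {slab / GIB:.2f} GiB for one feature map")        # 0.50 GiB
```

Memory grows linearly with depth, which is why slabbing or downsampling is the usual escape hatch when the whole volume does not fit.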

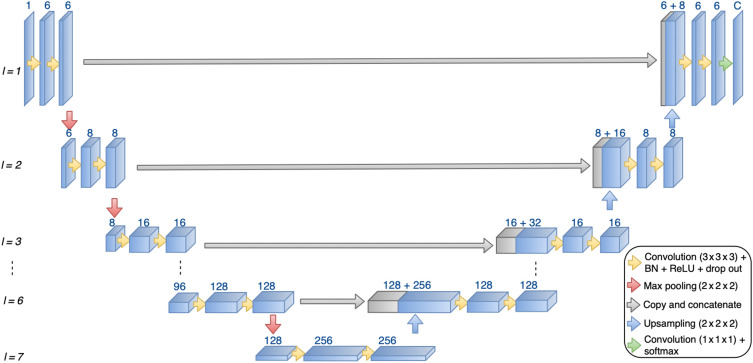

Fig. 2.

3D U-Net architecture used in this study. The number of layers (l) and number of filters for each layer can vary based on input sample resolution.

To take full advantage of the high-resolution MRI volumes as input, multi-scale encoder-decoder architectures have been proposed. Initially designed for the segmentation of lung lobes in computed tomography volumes, the Pulmonary Lobe Segmentation Network (PLS-Net) architecture is based on three insights: efficiency, multi-scale feature representation, and high-resolution 3D input/output.34 The core components are (i) depthwise separable convolutions making the model lightweight and computationally efficient, (ii) dilated residual dense blocks to capture wide-range and multi-scale context features, and (iii) an input reinforcement scheme to maintain spatial information after downsampling layers. We have implemented the architecture as described in the original paper, and an illustration is shown in Fig. 3.
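The parameter savings from depthwise separable convolutions, the first PLS-Net component listed above, can be checked with simple arithmetic. The sketch below compares a standard 3 × 3 × 3 convolution with its depthwise separable counterpart (one 3 × 3 × 3 filter per input channel followed by a 1 × 1 × 1 pointwise convolution). The 64-channel layer is a hypothetical example; the actual PLS-Net layers may use different channel counts and bias settings.

```python
def conv3d_params(c_in, c_out, k=3, bias=True):
    """Parameters of a standard 3D convolution with a k x k x k kernel."""
    return c_in * c_out * k ** 3 + (c_out if bias else 0)


def separable_conv3d_params(c_in, c_out, k=3, bias=True):
    """Depthwise k x k x k convolution (one filter per channel) + 1 x 1 x 1 pointwise convolution."""
    depthwise = c_in * k ** 3 + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


standard = conv3d_params(64, 64)             # 110656
separable = separable_conv3d_params(64, 64)  # 5952
print(standard, separable, round(standard / separable, 1))  # 110656 5952 18.6
```

For this layer, the separable version needs roughly 19 times fewer parameters, in line with the small overall parameter count of PLS-Net reported in Sec. 5.3.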

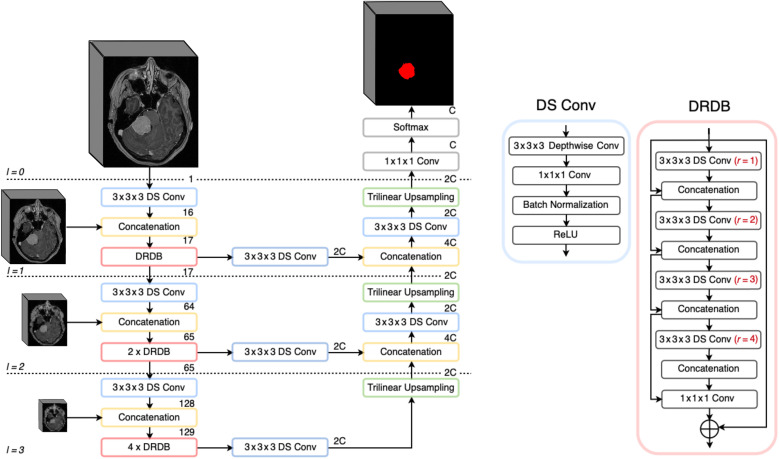

Fig. 3.

PLS-Net architecture used in this study, kept identical to the one described in the original paper.34

To address the issue of limited GPU memory, recent advances have enabled the use of mixed precision rather than full precision computation for training neural networks. Mixed precision consists of using full precision (i.e., float32) for a few key layers (e.g., the loss layer) while reducing most other layers to half precision (i.e., float16). Training therefore requires less memory, since half-precision values take half the storage, and data transfers are faster, while math-intensive and memory-limited operations are sped up. These benefits typically come at no accuracy cost compared with full precision training. Not all combinations of deep learning frameworks and GPU architectures fully support mixed precision training. Given our hardware and the original description of the PLS-Net implementation, PyTorch35 was favored for the mixed precision experiments. For the remaining experiments, run in full precision, we used TensorFlow,36 which is our default deep learning framework.
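The numerical side of mixed precision can be illustrated with NumPy alone. The sketch below shows that float16 halves storage relative to float32 and that very small gradient values underflow to zero in float16. Mixed precision tools such as NVIDIA Apex Amp typically counter this with loss scaling: the loss is multiplied by a constant before the half-precision backward pass, and the gradients are divided by the same constant in float32 before updating the float32 master weights. The gradient value and the scale factor of 1024 are arbitrary examples; this is an illustration, not the training code used in the study.

```python
import numpy as np

# Storage per value: float16 uses half the bytes of float32.
print(np.dtype(np.float16).itemsize, np.dtype(np.float32).itemsize)  # 2 4

grad = 1e-8                       # a small gradient value (example)
print(np.float32(grad))           # representable in float32
print(np.float16(grad))           # underflows to 0.0 (smallest float16 subnormal is ~6e-8)

# Loss scaling: scale before the float16 backward pass ...
scale = 1024.0
grad_fp16 = np.float16(grad * scale)          # ~1.0e-5, representable in float16
# ... and unscale in float32 before the weight update.
recovered = np.float32(grad_fp16) / np.float32(scale)
print(recovered)                              # close to 1e-8 again
```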

3.2. Preprocessing

To maximize and standardize the information input to the neural network, we propose a series of independent preprocessing steps for generating the training samples:

- N4 bias correction using the implementation from the Advanced Normalization Tools (ANTs) ecosystem.37
- Resampling to a uniform and isotropic spacing of 1 mm using NiBabel, with a spline interpolation of order 1.
- Cropping the volumes as tightly as possible around the patient’s head by discarding the 20% lowest intensity values (background noise) and identifying the largest remaining region. This is less restrictive and faster to perform than skull stripping.
- Volume resizing to a specific shape dependent on the study/architecture using spline interpolation of order 1. When resizing based on an axial slice resolution, the new depth value is automatically inferred.
- Finally, either normalization of the intensity to the range [0, 1] (S) or zero-mean standardization (ZM).
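A minimal sketch of the cropping, resampling, resizing, and normalization steps is given below, using NumPy and SciPy. It is an illustration under stated assumptions rather than the study's implementation: `scipy.ndimage.zoom` with `order=1` stands in for the NiBabel-based resampling, the 20% cut is interpreted as a threshold at the 20th intensity percentile, the depth in `resize_axial` is assumed to scale by the same factor as the first in-plane axis, and volumes are assumed to be stored with the slice axis (z) last. N4 bias correction is omitted because it relies on ANTs. All function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def crop_to_head(volume, discard_fraction=0.2):
    """Crop to the bounding box of the largest region left after removing low intensities."""
    threshold = np.percentile(volume, discard_fraction * 100)
    mask = volume > threshold                     # drop background noise
    labels, n_regions = ndimage.label(mask)       # connected components
    if n_regions == 0:
        return volume
    sizes = ndimage.sum(mask, labels, index=np.arange(1, n_regions + 1))
    largest = int(np.argmax(sizes)) + 1           # label of the largest region
    bbox = ndimage.find_objects((labels == largest).astype(int))[0]
    return volume[bbox]


def resample_isotropic(volume, spacing, new_spacing=1.0):
    """Resample to isotropic voxels with linear (order-1 spline) interpolation."""
    factors = [s / new_spacing for s in spacing]
    return ndimage.zoom(volume, factors, order=1)


def resize_axial(volume, axial_shape):
    """Resize in-plane to axial_shape; depth scaled by the first in-plane factor (assumption)."""
    fx = axial_shape[0] / volume.shape[0]
    fy = axial_shape[1] / volume.shape[1]
    return ndimage.zoom(volume, (fx, fy, fx), order=1)


def normalize_s(volume):
    """(S) Min-max scaling of intensities to [0, 1]."""
    vmin, vmax = volume.min(), volume.max()
    return (volume - vmin) / (vmax - vmin)


def standardize_zm(volume):
    """(ZM) Zero-mean, unit-variance standardization."""
    return (volume - volume.mean()) / volume.std()


# Usage on synthetic data.
rng = np.random.default_rng(0)
head = np.zeros((40, 40, 40))
head[10:30, 12:28, 5:35] = rng.uniform(0.5, 1.0, size=(20, 16, 30))
head[0, 0, 0] = 1.0                               # isolated voxel, not the largest region
print(crop_to_head(head).shape)                   # (20, 16, 30)

thick = rng.uniform(size=(64, 64, 20))            # 5 mm slices, 1 mm in-plane
iso = resample_isotropic(thick, spacing=(1.0, 1.0, 5.0))
print(iso.shape)                                  # (64, 64, 100): each slice stretched 5x
print(resize_axial(iso, (128, 128)).shape)        # (128, 128, 200)
```

The resampling example also shows why thick-slice scans are demanding: a 5 mm acquisition is stretched by a factor of five along z before the network sees it.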

3.3. Training Strategies

Given the large range and inhomogeneous distribution of meningioma volumes, the baseline sampling strategy was to populate each fold with a similar volume distribution. We therefore split the meningiomas into three equally populated bins and randomly sampled from these bins to generate the cross-validation folds. All models were trained from scratch using the Adam optimizer with an initial learning rate of , the class-average Dice as loss function, and training was stopped after 30 consecutive epochs without validation loss improvement. All U-Net models were trained with a batch size of eight using full precision in TensorFlow, while all PLS-Net models were trained with a batch size of four using mixed precision in PyTorch. We used a classical data augmentation approach in which each of the following transforms was applied to each input sample with a probability of 50%: horizontal and vertical flipping, random rotation in the range , translation up to 10% of the axis dimension, zoom between [80,120]%, and perspective transform with a scale within [0.0, 0.1]. We defined two sets of augmentation methods: a set of transforms with proven efficacy (Augm1), and a set including more complex transformations for experimentation (Augm2). The former includes only the common flipping, rotation, and translation transforms, whereas the latter includes all the above-mentioned transformations.
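The volume-stratified fold assignment can be sketched as follows. It is a minimal illustration, assuming that tumor volumes are available as a 1D array and that, after shuffling, the patients of each volume bin are dealt round-robin across the folds; the function name, the synthetic volumes, and the seeds are hypothetical, and the exact sampling used in the study may differ.

```python
import numpy as np


def stratified_folds(volumes, n_folds=5, n_bins=3, seed=0):
    """Return a fold index per patient so that each fold has a similar volume distribution."""
    volumes = np.asarray(volumes, dtype=float)
    rng = np.random.default_rng(seed)
    order = np.argsort(volumes)                        # patients sorted by tumor volume
    folds = np.empty(len(volumes), dtype=int)
    for volume_bin in np.array_split(order, n_bins):   # equally populated bins
        shuffled = rng.permutation(volume_bin)
        folds[shuffled] = np.arange(len(shuffled)) % n_folds  # deal patients round-robin
    return folds


# Usage with synthetic, skewed volumes for 698 patients.
volumes = np.random.default_rng(1).lognormal(mean=1.0, sigma=1.2, size=698)
folds = stratified_folds(volumes)
print(np.bincount(folds))  # [141 141 140 138 138]
```

Sorting before binning spreads small, medium, and large tumors evenly over the folds, which matters here because small meningiomas are the hardest cases.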

3.3.1. U-Net

As training strategy, we specifically investigated the impact of different sampling patterns in addition to the augmentation approach described above. Each patient’s MRI volume was split into a collection of training samples (slabs) of 32 slices along the z-axis. The stride parameter is the number of slices between the starting positions of two consecutive slabs, which therefore share 32 minus the stride slices (i.e., an overlap of 24 slices for a stride of 8). Since some meningiomas are tiny, we also investigated balancing the ratio of negative to positive samples for each MRI volume. Random negative slabs were removed when the ratio was exceeded, but no positive slab was ever excluded, purposely making the selection a non-bijective function. All MRI volumes were resized to an axial resolution of , with the third dimension adjusted dynamically following Eq. (1). For the architecture design, we used seven layers with [8, 16, 32, 64, 128, 256, 256] filters, and all spatial dropout rates were set to 0.1. The different configurations are summarized in Table 1.

| (1) |

Table 1.

Overview of the different training strategies for the U-Net architecture.

| Configuration | Stride | Neg/Pos ratio | Norm. | Augm. | Resolution |
|---|---|---|---|---|---|
| Cfg1 | 8 | None | S | Augm1 | |
| Cfg2 | 8 | 2 | S | Augm1 | |
| Cfg3 | 16 | 2 | S | Augm1 | |
| Cfg4 | 8 | 1 | S | Augm1 | |
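The slab sampling described for the U-Net can be sketched as below. It is a minimal sketch under assumptions: the slice axis is last, a slab counts as positive if it contains at least one tumor voxel, the negative-to-positive ratio is enforced as an upper bound by randomly dropping negative slabs (positives are always kept), and trailing slices that do not fill a complete slab are simply ignored. The function name and seed are hypothetical.

```python
import numpy as np


def make_slabs(volume, mask, slab_depth=32, stride=8, neg_pos_ratio=None, seed=0):
    """Split a volume and its mask into slabs along z and balance negatives vs. positives."""
    depth = volume.shape[-1]
    positives, negatives = [], []
    # Consecutive slabs start `stride` slices apart and share slab_depth - stride slices.
    for z in range(0, max(depth - slab_depth, 0) + 1, stride):
        sample = (volume[..., z:z + slab_depth], mask[..., z:z + slab_depth])
        (positives if sample[1].any() else negatives).append(sample)
    if neg_pos_ratio is not None:
        max_neg = int(neg_pos_ratio * len(positives))
        if len(negatives) > max_neg:             # drop random negatives, never positives
            rng = np.random.default_rng(seed)
            keep = np.sort(rng.choice(len(negatives), size=max_neg, replace=False))
            negatives = [negatives[i] for i in keep]
    return positives, negatives


# Usage: a 96-slice volume with a small tumor on slices 40-43.
volume = np.zeros((16, 16, 96))
mask = np.zeros_like(volume, dtype=bool)
mask[6:9, 6:9, 40:44] = True
pos, neg = make_slabs(volume, mask, stride=8)
print(len(pos), len(neg))                        # 4 5
pos, neg = make_slabs(volume, mask, stride=8, neg_pos_ratio=1)
print(len(pos), len(neg))                        # 4 4
```

A larger stride (16, as in Cfg3) yields fewer, less redundant slabs, which is one way the training sample counts in Table 6 shrink.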

3.3.2. PLS-Net

We used exactly the same architecture, number of layers, and kernel sizes as presented in the original paper. Our only design choice was to keep a fixed input size of , while we focused on different preprocessing and data augmentation aspects, summarized in Table 2.

Table 2.

Overview of the different training strategies for the PLS-Net architecture.

| Configuration | Data | Bias Cor. | Norm. | Augm1 | Augm2 | Resolution |
|---|---|---|---|---|---|---|
| Cfg1 | DS1 | False | S | True | False | |
| Cfg2 | DS1 | True | S | True | False | |
| Cfg3 | DS1 | False | ZM | True | False | |
| Cfg4 | DS1 | False | S | True | True | |
| Cfg5 | DS2 | False | S | True | True | |

4. Validation Studies

In this work, we aim to maximize voxel-wise segmentation and instance detection performance. In addition, we investigate the ability of the models to generalize for realistic use in a clinical setting. Unless specified otherwise, we followed a five-fold cross-validation approach whereby, at every iteration, three folds were used for training, one for validation, and one for testing.
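One way to rotate the roles of the five folds is sketched below. It assumes, as a hypothetical choice not stated in the text, that at iteration k fold k is used for testing and fold k + 1 (modulo 5) for validation, with the remaining three folds used for training; this guarantees that every patient is tested exactly once.

```python
import numpy as np


def cv_iteration(fold_of_patient, k, n_folds=5):
    """Train/validation/test patient indices for cross-validation iteration k."""
    folds = np.asarray(fold_of_patient)
    val_fold = (k + 1) % n_folds
    test = np.flatnonzero(folds == k)
    val = np.flatnonzero(folds == val_fold)
    train = np.flatnonzero((folds != k) & (folds != val_fold))
    return train, val, test


# Usage: 20 patients spread over 5 folds.
fold_of_patient = np.arange(20) % 5
for k in range(5):
    train, val, test = cv_iteration(fold_of_patient, k)
    print(k, len(train), len(val), len(test))   # k 12 4 4
```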

4.1. Measurements

To quantify performance, we used: (i) the Dice score, (ii) the F1-score, and (iii) the training/inference speed. The Dice score, reported in percentage (%), assesses the quality of the voxel-wise segmentation by measuring how well a detection overlaps with the corresponding manual ground truth. The F1-score, reported in %, is the harmonic mean of recall and precision. Finally, the training speed (in ), the inference speed IS (in ms), and the test speed TS (in ) to process one MRI are reported.
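For reference, the two accuracy measures can be written in a few lines of NumPy, along with a class-average soft Dice loss of the kind named in Sec. 3.3. This is a minimal sketch: returning 100% when both masks are empty and the smoothing term `eps` in the loss are common conventions assumed here, not details taken from the study, and the function names are hypothetical.

```python
import numpy as np


def dice_percent(pred, gt):
    """Dice score (%) between two binary masks: 2 * |A and B| / (|A| + |B|)."""
    pred, gt = np.asarray(pred, dtype=bool), np.asarray(gt, dtype=bool)
    denom = pred.sum() + gt.sum()
    if denom == 0:
        return 100.0  # both masks empty: counted as perfect agreement (convention)
    return 200.0 * np.logical_and(pred, gt).sum() / denom


def f1_score(recall, precision):
    """Harmonic mean of recall and precision."""
    return 0.0 if recall + precision == 0 else 2 * recall * precision / (recall + precision)


def class_average_soft_dice_loss(probs, onehot, eps=1e-6):
    """1 minus the mean over classes of the soft Dice; inputs have shape (classes, ...)."""
    axes = tuple(range(1, probs.ndim))           # sum over all spatial axes
    intersection = (probs * onehot).sum(axis=axes)
    denom = probs.sum(axis=axes) + onehot.sum(axis=axes)
    return 1.0 - ((2 * intersection + eps) / (denom + eps)).mean()


# Usage: two 32-voxel masks sharing 16 voxels.
a = np.zeros((4, 4, 4), dtype=bool)
b = np.zeros_like(a)
a[:2] = True
b[1:3] = True
print(dice_percent(a, b))                        # 50.0
print(round(f1_score(0.8, 0.9), 4))              # 0.8471
```

Averaging the soft Dice over the background and tumor classes keeps the loss informative even when the tumor occupies only a tiny fraction of the volume.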

4.2. Metrics

For the segmentation task, the Dice score is computed between the ground truth and a binary version of the probability map generated by a trained model. The binary version is computed for ten different, equally spaced probability thresholds (PT) in the range [0, 1]. For the detection task, the same range of PTs is used to generate the binary results. A detection threshold (DT), taken from the list [0, 0.25, 0.50, 0.75], is used to decide at the patient level whether the meningioma has been sufficiently segmented to be considered a true positive; otherwise, it is discarded. The Dice score computed over true positives only is reported as Dice-TP. In case of multifocal meningiomas, a connected components approach coupled with a pairing strategy was employed to compute the recall and precision values. Pooled estimates, computed from each fold’s results, are reported for each measurement.38 Measurements are reported as mean, as mean and standard deviation, or as mean and percentile confidence interval. If not stated otherwise, a significance level of 5% was used when calculating confidence intervals.
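The evaluation protocol can be sketched as follows for a single-lesion patient, together with one common way of pooling per-fold means and standard deviations. This is a hedged illustration: the ten thresholds 0.0, 0.1, ..., 0.9, binarization with a strict `>` comparison, counting a detection as a true positive when its Dice exceeds DT, and the pooled-variance formula are assumptions (the pooling method of the cited reference may differ), and the multifocal pairing step is not shown. Function names are hypothetical.

```python
import numpy as np


def dice(pred, gt):
    """Dice score in [0, 1] between two binary masks."""
    denom = pred.sum() + gt.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, gt).sum() / denom


def evaluate_patient(prob_map, gt, thresholds, dt):
    """Map each probability threshold (PT) to (Dice, detected-as-true-positive)."""
    results = {}
    for pt in thresholds:
        d = dice(prob_map > pt, gt)
        results[float(pt)] = (float(d), bool(d > dt))
    return results


def pooled_mean_std(means, stds, counts):
    """Combine per-fold means and sample SDs (ddof=1) into an overall mean and SD."""
    means, stds, counts = (np.asarray(x, dtype=float) for x in (means, stds, counts))
    n = counts.sum()
    mean = (counts * means).sum() / n
    # Within-fold plus between-fold sums of squares.
    ss = ((counts - 1) * stds ** 2 + counts * (means - mean) ** 2).sum()
    return mean, np.sqrt(ss / (n - 1))


thresholds = np.round(np.linspace(0.0, 0.9, 10), 1)   # assumed PT grid

# Usage: a cubic "tumor" predicted with probability 0.7 everywhere inside it.
gt = np.zeros((8, 8, 8), dtype=bool)
gt[2:6, 2:6, 2:6] = True
prob_map = gt * 0.7
res = evaluate_patient(prob_map, gt, thresholds, dt=0.25)
print(res[0.5], res[0.7])  # (1.0, True) (0.0, False)
```

With this pooling, the combined standard deviation equals the one computed over all patients at once, so fold sizes that differ slightly do not bias the reported spread.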

1. Optimization study: Performances using the different training configurations reported in Tables 1 and 2 are studied. For U-Net, results are reported after training on the first fold only, given the time required to train one model.
2. Speed versus segmentation accuracy: This study aims to assess which of the two architectures achieves the best overall performance considering all measurements, using the best configurations identified in the previous study.
3. Impact of dataset quality and variability: Models trained with the best PLS-Net configuration were used for inference on the 98 low-resolution MRI volumes, and the results were averaged. A direct comparison is made on the high-resolution and low-resolution images with models trained on the whole dataset (PLS-Cfg5).
4. Ground-truth quality: To assess the quality of the manual annotations, all performed by a single expert, we performed an inter-annotator variability study. A random subset of 30 MRI volumes, 20 high-resolution and 10 low-resolution, was given to a second expert for annotation, and differences were computed using the Dice score.

5. Results

5.1. Implementation Details

Results were obtained using an HP desktop with an Intel Xeon CPU @ 3.70 GHz, 62.5 GiB of RAM, an NVIDIA Quadro P5000 GPU (16 GB), and a regular hard drive. The implementation was done on Ubuntu 18.04, using Python 3.6, TensorFlow v1.13.1, and PyTorch Lightning v0.7.3 with PyTorch v1.3 as back-end. For further training speed-up, all PLS-Net models were trained using the benchmark flag and Amp optimization level 2 (FP16 training with FP32 batch normalization and FP32 master weights). All data augmentation methods came from the imgaug Python library.39

5.2. Optimization Study

Results obtained for the U-Net configurations are reported in Table 3, and those for the PLS-Net architecture in Table 4. With an optimized distribution of positive and negative training samples, U-Net performs similarly to PLS-Net in terms of Dice. The highest precision is achieved with the first U-Net configuration, which was to be expected since all negative samples are kept. However, the best F1-score obtained with the U-Net architecture is much lower than with the PLS-Net architecture. The slabbing strategy generates more false positives, since local image features alone struggle to differentiate a small meningioma from other anatomical structures such as blood vessels. The different PLS-Net training configurations provide comparable results across the board. The average Dice-TP reaches up to 87%, indicating good segmentation quality when a meningioma is detected. Considering the F1-score as the most important measurement for diagnostic use in clinical practice, UNet-cfg2 and PLS-cfg4 are the two best configurations.

Table 3.

Segmentation performances obtained with the different U-Net architecture configurations, over the first fold only.

| Cfg | PT | DT | Dice | Dice-TP | Recall | Precision | F1 |
|---|---|---|---|---|---|---|---|
| Cfg1 | 0.6 | 0.5 | | 77.13 | 73.55 | 81.06 | |
| Cfg2 | 0.6 | 0.25 | | 77.78 | 81.82 | 77.76 | |
| Cfg3 | 0.4 | 0.25 | | 76.27 | 84.29 | 69.63 | |
| Cfg4 | 0.6 | 0.5 | | 76.34 | 81.82 | 71.55 | |

Note: Bold values are used to highlight the best/key results.

Table 4.

Segmentation performances obtained with the different PLS-Net architecture configurations, averaged across all folds.

| Cfg | PT | DT | Dice | Dice-TP | Recall | Precision | F1 |
|---|---|---|---|---|---|---|---|
| Cfg1 | 0.5 | 0.5 | | | | | |
| Cfg2 | 0.6 | 0.5 | | | | | |
| Cfg3 | 0.6 | 0.5 | | | | | |
| Cfg4 | 0.5 | 0.25 | | | | | |

Note: Bold values are used to highlight the best/key results.

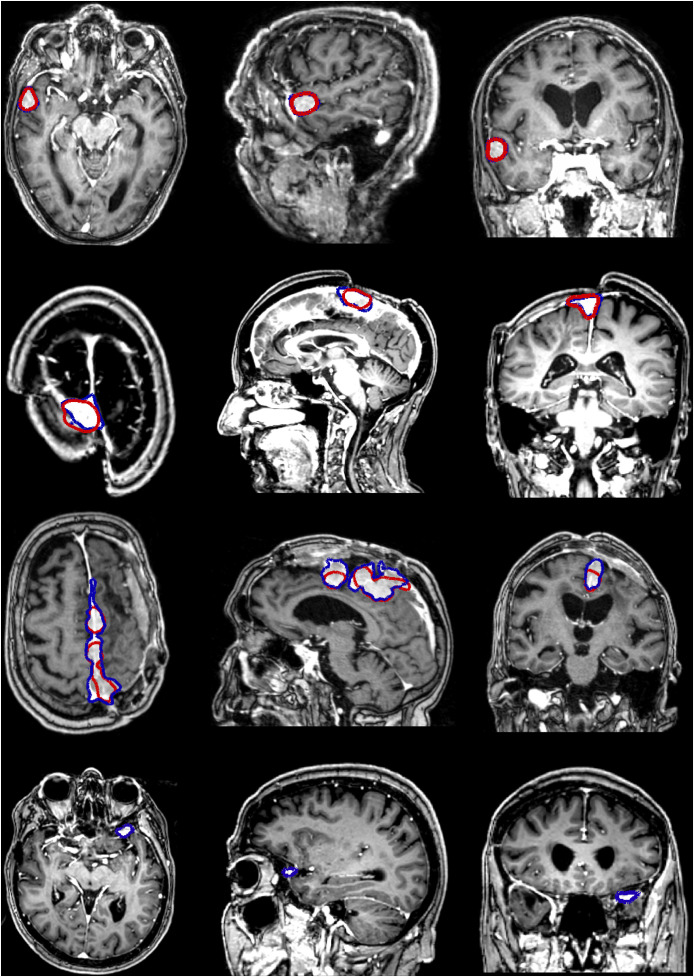

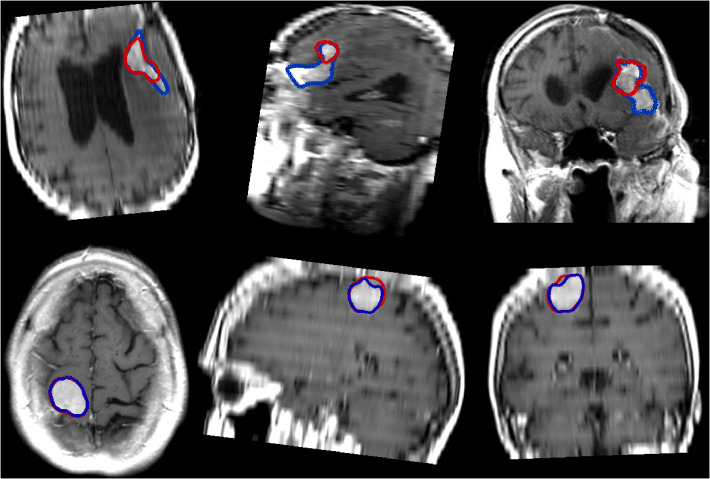

Some examples, obtained with the PLS-cfg4 model, are shown in Fig. 4 where the ground truth is indicated in blue and the obtained segmentation is indicated in red. For the patients featured in the first two rows, the segmentation is almost perfect, whereas for the third patient the whole extent of the meningioma is not fully segmented. In the last case, the meningioma is both relatively small and located right behind the eye socket, and as such has not been detected at all.

Fig. 4.

Illustrations of segmentation results using the PLS-cfg4 model, each row representing a different patient. The ground truth for the meningioma is shown in blue, whereas the automatic segmentation is shown in red.

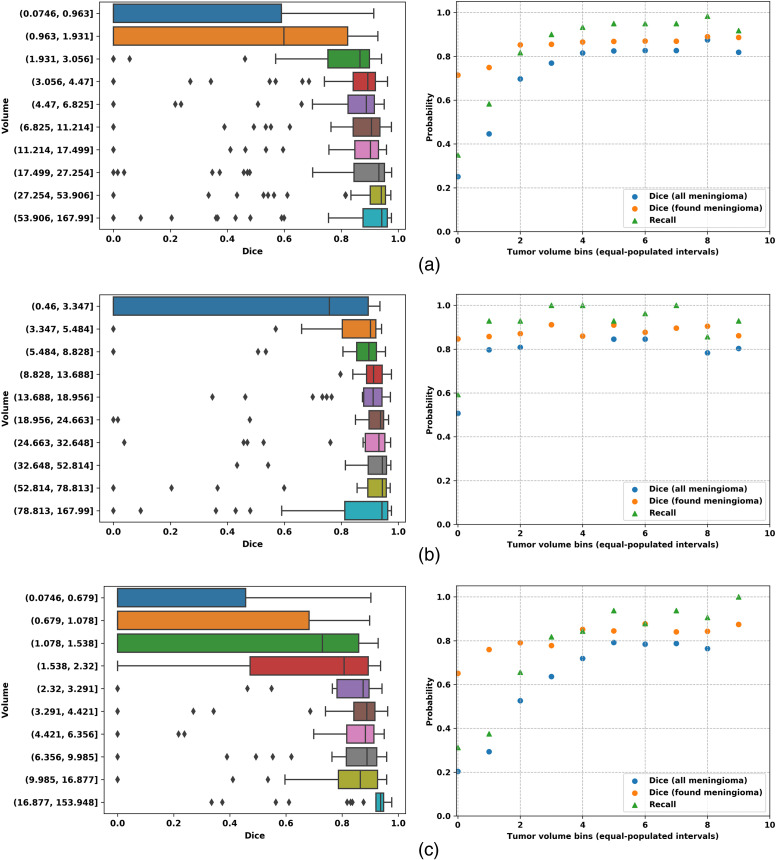

When considering the origin of the data (i.e., hospital or outpatient clinic), reported in Table 5, performance appears to be better for surgically treated tumors, reaching an F1-score higher than 90% with the PLS-Net architecture. Conversely, more meningiomas from the outpatient clinic are left undetected and thus unsegmented, explaining the lower recall and average Dice score. Since meningiomas of patients followed at the outpatient clinic are significantly smaller than surgically treated meningiomas, we further analyzed the relationship between treatment strategy and tumor volume, as shown in Fig. 5. Small meningiomas () are challenging to segment and are either not segmented or poorly segmented, with at best a 50% Dice score. For larger meningiomas (), the Dice score reaches 90%, whether surgically resected or followed at the outpatient clinic. Segmentation performance is heavily impacted by meningioma volume, and probably also by the larger variability in tumor location within the brain for smaller meningiomas. This indicates that designing better sampling strategies for training is of utmost importance to obtain a robust and generic model.

Table 5.

Best segmentation performances based on the treatment strategy: surgery or follow-up at the outpatient clinic.

| Cfg | Origin | Dice | Dice-TP | Recall | Precision | F1 |
|---|---|---|---|---|---|---|
| UNet-cfg2 | Hospital | | | | | |
| | Clinic | | | | | |
| PLS-cfg4 | Hospital | | | | | |
| | Clinic | | | | | |

Fig. 5.

(a) Overall; (b) hospital; and (c) outpatient clinic results for PLS-cfg4. The first column shows Dice performance as a function of tumor volume, and the second column shows Dice, Dice-TP, and recall as a function of tumor volume. Meningiomas were grouped into ten equally populated bins based on tumor volume.

5.3. Speed versus Segmentation Accuracy

On average, convergence is achieved much faster with the PLS-Net architecture () than with U-Net (130 h) when training is allowed to run until the stopping criterion is met. Competitive models with a validation loss below 0.2 can even be generated in a shorter time using the PLS-Net architecture (). A summary of the training time and convergence speed is given in Table 6. Inference is fast with both architectures, making both usable in practice. On average with the U-Net architecture, the inference speed is of for a total processing time of . With this architecture, the MRI volume is split into non-overlapping slabs that are processed sequentially. For the PLS-Net architecture, the inference speed is lowered to for a total processing time of . The small number of trainable parameters of PLS-Net (0.251 M) also makes it usable on low-end computers equipped only with a CPU. In comparison, our U-Net architecture has 14.75 M trainable parameters, roughly 59 times more. When running on CPU only, the total processing time of a new MRI with the PLS-Net architecture increases to .
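Slab-wise inference for the U-Net can be sketched as below: the volume is cut into non-overlapping slabs along z, each slab is passed to the model in turn, and the outputs are concatenated back. The zero-padding of the last incomplete slab and the `predict_slab` callable (a hypothetical stand-in for a trained model) are assumptions made for this illustration.

```python
import numpy as np


def predict_by_slabs(volume, predict_slab, slab_depth=32):
    """Apply predict_slab to consecutive non-overlapping slabs along the last axis."""
    depth = volume.shape[-1]
    n_slabs = -(-depth // slab_depth)                      # ceiling division
    pad = n_slabs * slab_depth - depth
    padding = [(0, 0)] * (volume.ndim - 1) + [(0, pad)]   # zero-pad only along z
    padded = np.pad(volume, padding)
    output = np.empty(padded.shape, dtype=float)
    for i in range(n_slabs):                               # processed sequentially
        z = slice(i * slab_depth, (i + 1) * slab_depth)
        output[..., z] = predict_slab(padded[..., z])
    return output[..., :depth]                             # drop the padded slices


# Usage with a dummy "model" that thresholds intensities.
calls = []


def dummy_model(slab):
    calls.append(slab.shape)
    return (slab > 0.5).astype(float)


volume = np.random.default_rng(0).uniform(size=(8, 8, 70))
prediction = predict_by_slabs(volume, dummy_model)
print(prediction.shape, len(calls))                       # (8, 8, 70) 3
```

Because each slab is seen in isolation, the model cannot use context beyond 32 slices, which is the limitation contrasted with whole-volume PLS-Net inference.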

Table 6.

Training time results for the different U-Net and PLS-Net configurations. Results are averaged across the five folds when possible.

| Cfg | # samples | Epoch time (s) | Best epoch | Train time (h) |
|---|---|---|---|---|
| UNet-Cfg1 | 19 684 | 5 800 | 79 | 127.28 |
| UNet-Cfg2 | 14 617 | 3 990 | | |
| UNet-Cfg3 | 7 359 | 2 120 | 153 | 90.1 |
| UNet-Cfg4 | 10 321 | 2 860 | 105 | 83.42 |
| PLS-Cfg1 | 600 | 1 920 | | |
| PLS-Cfg2 | 600 | 1 920 | | |
| PLS-Cfg3 | 600 | 1 920 | | |
| PLS-Cfg4 | 600 | 1 920 | | |
| PLS-Cfg5 | 698 | 2 220 | | |

5.4. Impact of Input Resolution

Segmentation performance for the high- and low-resolution images is summarized in Table 7. Only minor differences across all performance metrics can be seen, whether the low-resolution images are used during training or left aside. Selecting only the high-resolution images for training, coupled with advanced data augmentation methods, makes the trained models robust to the extreme image stretching that occurs when resizing an MRI with, for example, an original slice thickness of 5 mm. Figure 6 shows segmentation results on low-resolution images.

Table 7.

Performance analysis when including the images with a slice thickness .

| Resolution | Cfg | Dice | Dice-TP | Recall | Precision | F1 |
|---|---|---|---|---|---|---|
| High | PLS-cfg4 | | | | | |
| | PLS-cfg5 | | | | | |
| Low | PLS-cfg4 | | | | | |
| | PLS-cfg5 | | | | | |

Fig. 6.

Illustrations of segmentation results on images with poor resolution using the PLS-cfg4 model, each row representing a different patient. The ground truth for the meningioma is shown in blue, whereas the automatic segmentation is shown in red.

5.5. Ground Truth Quality

Between the two experts, the segmentations match with an average Dice score of 89.1 [86.3, 92], indicating strong similarity. The Dice score was higher for the high-resolution scans, 92 [89.8, 94.2], than for the low-resolution ones, 83.4 [75.8, 91]. However, the overlapping confidence intervals do not indicate a significant difference between the annotators with respect to image resolution. We also found no difference in model performance when evaluated against either ground truth. This indicates that the initial ground truth is sufficient for training good segmentation models.

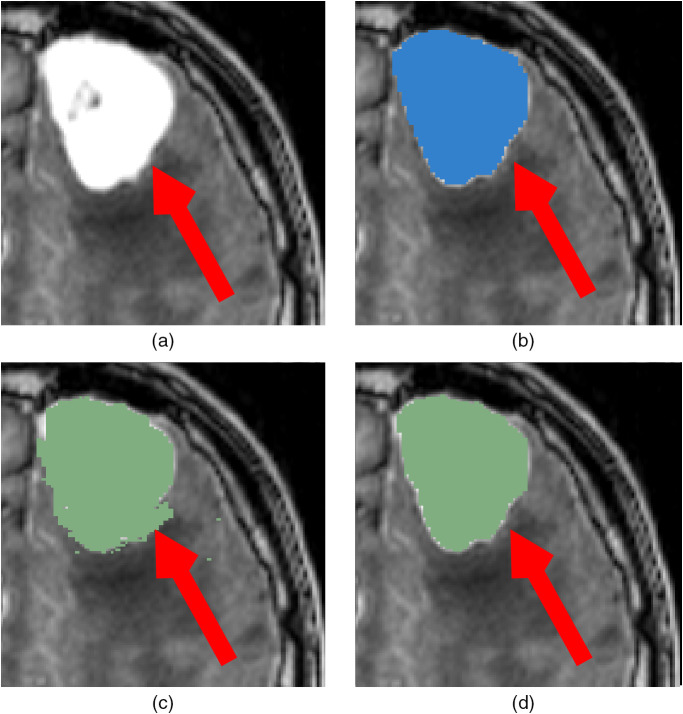

The ground truths were not originally created in a purely manual fashion, but rather with the assistance of semi-automatic methods from 3D Slicer for time-efficiency purposes. As a result, some noise was identified in the ground truth, as shown in Fig. 7. Such noise does not appear detrimental, since our models seem robust to it and do not generate small artifacts; nevertheless, cleaning the ground truth should lead to a slightly better model and could increase the obtained Dice score by a few percent.

Fig. 7.

Illustration of noise in the ground truth from the use of 3D Slicer, indicated by a red arrow. (a) Original image; (b) prediction with PLS-Cfg4; (c) ground truth used for training; (d) fully manual ground truth from a second expert.
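A common way to remove small fragments of the kind shown in Fig. 7 is to discard connected components below a size threshold. The sketch below does this with a plain breadth-first search over 6-connected voxels so that it depends only on NumPy; the function name and the `min_voxels` threshold are hypothetical, and this is not the procedure used to create the ground truth in this study. In practice, a library routine such as `scipy.ndimage.label` would typically replace the hand-written search.

```python
from collections import deque

import numpy as np


def remove_small_components(mask, min_voxels):
    """Drop 6-connected components smaller than `min_voxels` from a 3D binary mask."""
    mask = mask.astype(bool)
    visited = np.zeros_like(mask)
    cleaned = np.zeros_like(mask)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    for start in zip(*np.nonzero(mask)):
        if visited[start]:
            continue
        # Breadth-first search collecting one connected component.
        component = [start]
        visited[start] = True
        queue = deque([start])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in offsets:
                nb = (z + dz, y + dy, x + dx)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not visited[nb]:
                    visited[nb] = True
                    component.append(nb)
                    queue.append(nb)
        # Keep the component only if it is large enough.
        if len(component) >= min_voxels:
            for voxel in component:
                cleaned[voxel] = True
    return cleaned


gt = np.zeros((5, 5, 5), dtype=np.uint8)
gt[1:4, 1:4, 1:4] = 1  # 27-voxel "tumor"
gt[0, 4, 4] = 1        # isolated 1-voxel fragment (noise)
clean = remove_small_components(gt, min_voxels=10)
print(int(gt.sum()), int(clean.sum()))  # 28 27
```

A fixed threshold could also delete genuinely small meningiomas, so any such automatic cleaning would still need manual review.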

6. Discussion

The dataset used in this study is larger than any dataset previously described in a meningioma segmentation paper. The MRI investigations were performed using multiple scanners in seven different hospitals, which reduces potential biases and helps prevent overfitting. In addition, the smaller meningiomas from the outpatient clinic exhibit a wider range of sizes and locations in the brain, which enables our models to be more robust. The slight noise identified in the ground truth, caused by the use of external software to facilitate the annotation task, is a minor inconvenience that should be corrected. Noise aside, the manual annotations from both experts matched closely, with an average Dice score of 89.1, which supports the overall quality of our dataset.

Overall, the PLS-Net architecture provides the best performance without any additional effort or adjustment. For the U-Net architecture, smarter training schemes must be implemented; they provide a clear speed-up with no impact on segmentation performance. Nevertheless, because of the slabbing strategy and the resulting lack of global information, reaching the same performance as with PLS-Net seems unachievable: within a local slab, part of a meningioma may look similar to other hyperintense structures, which makes the network struggle. The PLS-Net architecture can leverage the entire 3D MRI volume at once and thereby bypasses the need for a postprocessing refinement step in which a second model removes false positives. In the future, with increasing access to medical data, such training schemes would need to become even more complex, with no clear benefit until GPUs have enough memory to fit high-resolution MRI volumes together with deep architectures.

While a batch size of 4 does not prevent the PLS-Net architecture from reaching an optimum during training, the batch normalization layers are not optimally put to use with so few samples per batch, which may partly explain the difference between the validation loss and the actual results. This discrepancy may also originate from the Dice score not being computed on exactly the same images: the differences in resolution, spacing, and extent between a preprocessed MRI volume and its original version can cause variations in the Dice score, even when it is computed using the exact same ground truth and detection. The trained models are also robust to the ground-truth noise, since their predictions do not exhibit the same patterns of small fragments. Nevertheless, cleaning the ground truth is important to generate better models, since the loss function is based on the Dice score.
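Because the loss is based on the Dice score, noisy ground-truth voxels enter the training objective directly. The NumPy sketch below uses a soft Dice loss, a common differentiable formulation; the exact loss definition and the smoothing term `eps` are assumptions here. It shows that a prediction matching the tumor perfectly is still penalized when the target contains a few spurious voxels.

```python
import numpy as np


def soft_dice_loss(probs, target, eps=1e-6):
    """1 - soft Dice between predicted probabilities and a binary target."""
    probs = probs.astype(np.float64).ravel()
    target = target.astype(np.float64).ravel()
    intersection = np.sum(probs * target)
    denom = np.sum(probs) + np.sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


target = np.zeros((8, 8, 8))
target[2:6, 2:6, 2:6] = 1       # 64-voxel tumor
noisy = target.copy()
noisy[0, 0, 0:4] = 1            # 4 spurious ground-truth voxels
clean_pred = target.copy()      # prediction that ignores the noise

print(round(soft_dice_loss(clean_pred, target), 4))  # 0.0
print(round(soft_dice_loss(clean_pred, noisy), 4))   # 0.0303
```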

Considering the trade-off between model complexity, memory consumption, and training/inference speed, the PLS-Net architecture is clearly superior for the task of single-class segmentation. While meningiomas can appear in a large variety of shapes and sizes, their location in the brain is also important. Such information can only be captured by processing the entire MRI volume at once and is partly lost when using a slabbing scheme. In addition, given that only one class is to be segmented, the large number of trainable parameters in U-Net is superfluous. The limitations of PLS-Net would likely become apparent if multiple classes were to be segmented. Compared with the U-Net architecture, the lightweight PLS-Net architecture proves better both in terms of segmentation performance and in terms of training and inference speed. Reducing the training time tenfold is especially relevant given the growth in data collection and the need to retrain models on a regular basis. Different training schemes and data augmentation techniques can also be investigated in a relatively short amount of time.
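To illustrate why a slabbing scheme loses global context, the sketch below splits a volume into overlapping slabs along its last axis, as a slab-based model would receive it. The function name, the slab depth of 32 slices, and the stride of 16 are illustrative assumptions, not the settings of the U-Net configurations.

```python
import numpy as np


def extract_slabs(volume, depth, stride):
    """Split `volume` into overlapping slabs of `depth` slices along the last axis.

    Returns (start_index, slab) pairs. The last slab is shifted back so the final
    slices are always covered; a volume thinner than `depth` is returned whole.
    """
    n = volume.shape[-1]
    if n <= depth:
        return [(0, volume)]
    starts = list(range(0, n - depth + 1, stride))
    if starts[-1] != n - depth:
        starts.append(n - depth)  # cover the tail of the volume
    return [(s, volume[..., s:s + depth]) for s in starts]


volume = np.zeros((64, 64, 100), dtype=np.float32)  # toy volume with 100 slices
slabs = extract_slabs(volume, depth=32, stride=16)
print([start for start, _ in slabs])  # [0, 16, 32, 48, 64, 68]
```

Each slab contains only 32 of the 100 slices, so the network cannot relate what it sees to the rest of the brain, whereas a whole-volume model such as PLS-Net receives all slices at once.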

In addition to the choice of neural network architecture, using mixed precision during training played an essential role in drastically reducing the training time. Given the reduced memory footprint, higher-resolution input samples or larger batch sizes can be investigated. Since small meningiomas are often missed, increasing the input resolution should help the network find smaller objects. The downside would be a longer training time, and potentially convergence difficulties if the batch size had to be lowered all the way to 1. Increasing the variability or the proportion of small meningiomas in the training set might also steer the network in the right direction. Lastly, hard example mining could be a potential alternative after careful analysis of the training samples. In any case, using mixed precision by default appears to be a promising strategy for many applications.
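Two effects of mixed precision are illustrated in the NumPy sketch below: float16 tensors need half the memory of float32 ones, and very small gradient values underflow to zero in float16 unless the loss (and hence the gradients) is scaled up first. This only illustrates the numerical principle and is not the training code used here; the tensor shape and the scale factor of 1024 are arbitrary assumptions. Frameworks automate the scaling, for example PyTorch's `torch.cuda.amp.GradScaler` or Keras' `tf.keras.mixed_precision.LossScaleOptimizer`, which adjust the scale dynamically.

```python
import numpy as np

# Memory: a float16 tensor needs half the bytes of the same float32 tensor.
activations32 = np.zeros((1, 8, 64, 64, 32), dtype=np.float32)
activations16 = activations32.astype(np.float16)
print(activations32.nbytes // activations16.nbytes)  # 2

# Underflow: a tiny gradient value is not representable in float16.
grad = 1e-8
print(np.float16(grad))  # 0.0

# Loss scaling: scale up before casting to float16, then unscale in float32.
scale = 1024.0
scaled16 = np.float16(grad * scale)  # now representable (as a float16 subnormal)
recovered = np.float32(scaled16) / np.float32(scale)
print(f"{recovered:.3e}")  # 1.001e-08
```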

Compared to previous studies, similar results are obtained using only one MR sequence and without heavy preprocessing (i.e., bias correction, registration to MNI space, and skull stripping). Within a lightweight framework and with a shallow multi-scale model, a new patient's MRI can be processed in at most 2 min on CPU, which makes the approach attractive for routine clinical use.

In this study, directly benchmarking our models' performance against state-of-the-art results was not possible owing to the lack of a publicly available meningioma dataset. Most previous brain tumor segmentation studies have used the BRATS challenge dataset, which contains only glioma patients. The few studies focusing on meningioma segmentation used considerably smaller datasets, with at most 56 patients in the test set, and these datasets are not openly accessible. Nevertheless, our dataset is on par in size with the BRATS challenge dataset, which as of 2018 contains 542 patients overall, including a fixed test set of 191 patients. In addition, we report our results using five-fold cross-validation, which provides better insight into the models' reproducibility, robustness, and capacity to generalize than a single split of the dataset into training, validation, and test sets.
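For reference, a patient-level five-fold split can be sketched as follows: every patient appears in exactly one test fold, and the remaining folds form the training data. The shuffling with a fixed seed, the function name, and the patient identifiers are assumptions; any validation subset or stratification used in the actual experiments is not reproduced here.

```python
import numpy as np


def kfold_patient_splits(patient_ids, k=5, seed=0):
    """Yield (train_ids, test_ids) for k folds; each patient is tested exactly once."""
    ids = np.array(patient_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(ids)                   # random patient order (assumed)
    folds = np.array_split(ids, k)     # fold sizes differ by at most one
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, test


patients = [f"P{i:03d}" for i in range(698)]  # hypothetical patient identifiers
for fold, (train, test) in enumerate(kfold_patient_splits(patients, k=5)):
    print(fold, len(train), len(test))
```

Keeping the split at the patient level ensures that no patient's data contributes to both training and testing within a fold.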

By including MRI volumes from both the hospital and the outpatient clinic in our dataset, we have showcased our method's potential even on the smaller and more challenging cases. As many meningiomas are never surgically removed but rather followed over time, there is a need for early tumor detection and for consistent volume and growth estimation, where such a method could be of clear benefit. In addition, our dataset features MRI volumes of highly varying quality, with slice thicknesses in the range [0.6, 7.0] mm. The results of our fourth study showed that similar performance could be reached for high- and low-resolution MRI volumes. By lifting restrictions on MRI acquisition quality or origin, and requiring only a T1-weighted sequence, our proposed approach is suitable for use in a broader clinical setting.

7. Conclusion

In this paper, we investigated the task of meningioma segmentation in T1-weighted MRI volumes. We considered two different fully convolutional neural network architectures: U-Net and PLS-Net. The lightweight PLS-Net architecture achieves high segmentation performance while offering very competitive training and processing speed. Using a multi-scale architecture and leveraging the whole MRI volume at once mostly improves the F1-score, which is beneficial for automatic diagnosis. Smarter data balancing and training schemes have also proven necessary to improve performance. In future work, improved multi-scale architectures specifically tailored to such tasks should be explored, but improvements could also come from better data analysis and clustering.

Acknowledgments

This work was funded by the Norwegian National Advisory Unit for Ultrasound and Image-Guided Therapy (usigt.org).

Biography

Biographies of the authors are not available.

Disclosures

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Informed consent was obtained from all individual participants included in the study.

Contributor Information

David Bouget, Email: david.bouget@sintef.no.

André Pedersen, Email: andre.pedersen@sintef.no.

Sayied Abdol Mohieb Hosainey, Email: s.a.m.h@live.no.

Johanna Vanel, Email: johanna.vanel@sintef.no.

Ole Solheim, Email: ole.solheim@ntnu.no.

Ingerid Reinertsen, Email: Ingerid.Reinertsen@sintef.no.

References

1. Ostrom Q. T., et al., “CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2012–2016,” Neuro Oncol. 21(Suppl. 5), v1–v100 (2019). 10.1093/neuonc/noz150

2. Spasic M., et al., “Incidental meningiomas: management in the neuroimaging era,” Neurosurg. Clin. 27(2), 229–238 (2016). 10.1016/j.nec.2015.11.012

3. Goldbrunner R., et al., “EANO guidelines for the diagnosis and treatment of meningiomas,” Lancet Oncol. 17(9), e383–e391 (2016). 10.1016/S1470-2045(16)30321-7

4. Kunimatsu A., et al., “Variants of meningiomas: a review of imaging findings and clinical features,” Jpn. J. Radiol. 34(7), 459–469 (2016). 10.1007/s11604-016-0550-6

5. Fountain D. M., et al., “Volumetric growth rates of meningioma and its correlation with histological diagnosis and clinical outcome: a systematic review,” Acta Neurochir. 159(3), 435–445 (2017). 10.1007/s00701-016-3071-2

6. Binaghi E., Pedoia V., Balbi S., “Collection and fuzzy estimation of truth labels in glial tumour segmentation studies,” Comput. Methods Biomech. Biomed. Eng. Imaging Visualization 4(3-4), 214–228 (2016). 10.1080/21681163.2014.947006

7. Berntsen E. M., et al., “Volumetric segmentation of glioblastoma progression compared to bidimensional products and clinical radiological reports,” Acta Neurochir. 162(2), 379–387 (2020). 10.1007/s00701-019-04110-0

8. Bauer S., et al., “A survey of MRI-based medical image analysis for brain tumor studies,” Phys. Med. Biol. 58(13), R97 (2013). 10.1088/0031-9155/58/13/R97

9. Ueda D., Shimazaki A., Miki Y., “Technical and clinical overview of deep learning in radiology,” Jpn. J. Radiol. 37(1), 15–33 (2019). 10.1007/s11604-018-0795-3

10. Watts J., et al., “Magnetic resonance imaging of meningiomas: a pictorial review,” Insights Imaging 5(1), 113–122 (2014). 10.1007/s13244-013-0302-4

11. Nyúl L. G., Udupa J. K., Zhang X., “New variants of a method of MRI scale standardization,” IEEE Trans. Med. Imaging 19(2), 143–150 (2000). 10.1109/42.836373

12. Tustison N. J., et al., “N4ITK: improved N3 bias correction,” IEEE Trans. Med. Imaging 29(6), 1310–1320 (2010). 10.1109/TMI.2010.2046908

13. Işın A., Direkoğlu C., Şah M., “Review of MRI-based brain tumor image segmentation using deep learning methods,” Procedia Comput. Sci. 102, 317–324 (2016). 10.1016/j.procs.2016.09.407

14. Menze B. H., et al., “The multimodal brain tumor image segmentation benchmark (BRATS),” IEEE Trans. Med. Imaging 34(10), 1993–2024 (2015). 10.1109/TMI.2014.2377694

15. Havaei M., et al., “Brain tumor segmentation with deep neural networks,” Med. Image Anal. 35, 18–31 (2017). 10.1016/j.media.2016.05.004

16. Zhao X., et al., “A deep learning model integrating FCNNs and CRFs for brain tumor segmentation,” Med. Image Anal. 43, 98–111 (2018). 10.1016/j.media.2017.10.002

17. Pereira S., et al., “Brain tumor segmentation using convolutional neural networks in MRI images,” IEEE Trans. Med. Imaging 35(5), 1240–1251 (2016). 10.1109/TMI.2016.2538465

18. Dvorak P., Menze B., “Structured prediction with convolutional neural networks for multimodal brain tumor segmentation,” in Proc. Multimodal Brain Tumor Image Segmentation Challenge, pp. 13–24 (2015).

19. Zikic D., et al., “Segmentation of brain tumor tissues with convolutional neural networks,” in Proc. MICCAI-BRATS, pp. 36–39 (2014).

20. Myronenko A., “3D MRI brain tumor segmentation using autoencoder regularization,” Lect. Notes Comput. Sci. 11384, 311–320 (2018). 10.1007/978-3-030-11726-9_28

21. Isensee F., et al., “No new-Net,” Lect. Notes Comput. Sci. 11384, 234–244 (2018). 10.1007/978-3-030-11726-9_21

22. Kamnitsas K., et al., “Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation,” Med. Image Anal. 36, 61–78 (2017). 10.1016/j.media.2016.10.004

23. Xu Y., et al., “Multi-scale masked 3-D U-Net for brain tumor segmentation,” Lect. Notes Comput. Sci. 11384, 222–233 (2018). 10.1007/978-3-030-11726-9_20

24. Feng X., et al., “Brain tumor segmentation using an ensemble of 3D U-Nets and overall survival prediction using radiomic features,” Front. Comput. Neurosci. 14, 25 (2020). 10.3389/fncom.2020.00025

25. Laukamp K. R., et al., “Fully automated detection and segmentation of meningiomas using deep learning on routine multiparametric MRI,” Eur. Radiol. 29(1), 124–132 (2019). 10.1007/s00330-018-5595-8

26. Laukamp K. R., et al., “Automated meningioma segmentation in multiparametric MRI,” Clin. Neuroradiol. 1–10 (2020). 10.1007/s00062-020-00884-4

27. Pieper S., Halle M., Kikinis R., “3D Slicer,” in 2nd IEEE Int. Symp. Biomed. Imaging: Nano to Macro, IEEE, pp. 632–635 (2004). 10.1109/ISBI.2004.1398617

28. Ronneberger O., Fischer P., Brox T., “U-Net: convolutional networks for biomedical image segmentation,” Lect. Notes Comput. Sci. 9351, 234–241 (2015). 10.1007/978-3-319-24574-4_28

29. Çiçek Ö., et al., “3D U-Net: learning dense volumetric segmentation from sparse annotation,” Lect. Notes Comput. Sci. 9901, 424–432 (2016). 10.1007/978-3-319-46723-8_49

30. Zhou Z., et al., “UNet++: a nested U-Net architecture for medical image segmentation,” Lect. Notes Comput. Sci. 11045, 3–11 (2018). 10.1007/978-3-030-00889-5_1

31. Alom M. Z., et al., “Recurrent residual convolutional neural network based on U-Net (R2U-Net) for medical image segmentation,” arXiv:1802.06955 (2018).

32. Isensee F., et al., “nnU-Net: self-adapting framework for U-Net-based medical image segmentation,” in Bildverarbeitung für die Medizin, Handels H., et al., Eds., Informatik aktuell, Springer Vieweg, Wiesbaden, Germany (2019).

33. Masters D., Luschi C., “Revisiting small batch training for deep neural networks,” arXiv:1804.07612 (2018).

34. Lee H., et al., “Efficient 3D fully convolutional networks for pulmonary lobe segmentation in CT images,” arXiv:1909.07474 (2019).

35. Paszke A., et al., “PyTorch: an imperative style, high-performance deep learning library,” in Adv. Neural Inf. Process. Syst., pp. 8024–8035 (2019).

36. Abadi M., et al., “TensorFlow: a system for large-scale machine learning,” in 12th USENIX Symp. Oper. Syst. Des. and Implement., pp. 265–283 (2016).

37. Avants B. B., Tustison N., Song G., “Advanced normalization tools (ANTs),” Insight J. 2(365), 1–35 (2009).

38. Killeen P. R., “An alternative to null-hypothesis significance tests,” Psychol. Sci. 16(5), 345–353 (2005). 10.1111/j.0956-7976.2005.01538.x

39. Jung A. B., et al., “imgaug,” 2020, https://github.com/aleju/imgaug (accessed 1 February 2020).