Abstract

Recent studies have demonstrated prominent and widespread movement-related signals in the brain of head-fixed mice, even in primary sensory areas. However, it is still unknown what role these signals play in sensory processing. Why are these sensory areas “contaminated” by movement signals? During natural behavior, animals actively acquire sensory information as they move through the environment and use this information to guide ongoing actions. In this context, movement-related signals could allow sensory systems to predict self-induced sensory changes and extract additional information about the environment. In this review we summarize recent findings on the presence of movement-related signals in sensory areas, and discuss how their study in the context of natural freely-moving behaviors could advance models of sensory processing.

Keywords: active sensation, ethology, sensory physiology, cortical processing

Sensory processing and movement

Neuroscientists seek to understand the functions performed by brain regions and their constituent neural circuits. Historically, lesion and stimulation studies revealed clear delineations between brain regions, allowing their assignment to specific sensory, motor, or cognitive functions [1,2]. This “localization of function” framework has motivated highly specific, reductionist experimental paradigms in sensory systems, wherein experiments are designed to isolate and dissect the parameters encoded by the brain region of interest. For example, experiments in primary visual cortex traditionally employed a narrow range of purely visual stimuli, such as bars and gratings, to study orientation selectivity [3,4]. These studies are often based on a feedforward model of sensory processing, where successive brain regions in a sensory pathway encode progressively more elaborate features of the sensory scene. After sensory processing is complete, the output is transmitted to cognitive and motor areas to drive specific behaviors. It is now widely recognized that this standard feedforward model is an oversimplification, since it overlooks important components such as top-down feedback and various forms of contextual modulation [5]. Despite the growing recognition of these complexities, many experimental paradigms in sensory research still involve restricting or averaging out the subject’s movements and reducing stimulus complexity, in order to isolate processing of specific input features. This reductionist approach has undeniable advantages, including more precise stimulus control and greater amenability to neural recordings. However, isolating sensory stimulation from movement may present an important limitation if sensory and motor processing are not cleanly segregated in the brain.

Recent studies, particularly those in which a restrained animal’s movements are carefully monitored, are eroding the model of clearly delineated sensory versus motor processing in the brain. Even regions considered primary sensory areas have been shown to encode prominent correlates of movement, including locomotion, location, goal-directed actions, and spontaneous body movements. What computational role might these movement correlates play in sensory processing, particularly in primary sensory areas? Under natural conditions, sensory processing often occurs in the context of ongoing movements, which are disrupted in head-fixed preparations; this makes the resulting movement-related signals difficult to interpret. Freely moving ethological paradigms in which animals can naturally interact with their sensory environment to extract relevant information may therefore help clarify the role of movement-related signals in sensory computations. Recent advances in tracking an animal’s sensory input and motor output have made such ethological paradigms more accessible to rigorous sensory neuroscience. In this review, we summarize recent findings of movement-related signals in sensory areas, focusing primarily on experiments in mice but drawing upon examples from other species where relevant. We discuss the potential contributions of these movement-related signals to sensory processing, and describe experimental approaches to understanding sensory function in freely moving animals performing natural behavior.

Recent findings on movement-related signals in sensory regions

Historically in sensory physiology experiments, parameterized stimuli are presented either to a passively observing subject or to a subject trained to report perception of a specific stimulus parameter. Neural responses are analyzed in relation to either the sensory input or the subject’s response in a task. This approach has led to major advances in understanding how sensory information is encoded in the brain, and how information leading to a decision is computed [6–8]. While this approach is powerful in isolating the individual signals under investigation, the constrained nature of the tasks, stimuli, and behavioral readouts results in many other signals being eliminated or averaged out. Indeed, it is common to treat any variability that is not related to either the stimulus or the decision as “noise” (for example, when quantifying “noise correlations”), implying that it represents background activity arising from the network. However, it is now clear that much of this variability is in fact due to specific signals, such as movement, that were not previously measured. Here we provide a brief overview of recent findings (Figure 1), some of which have also been reviewed elsewhere [9–12], before focusing on their implications for sensory computations used in natural behavior.

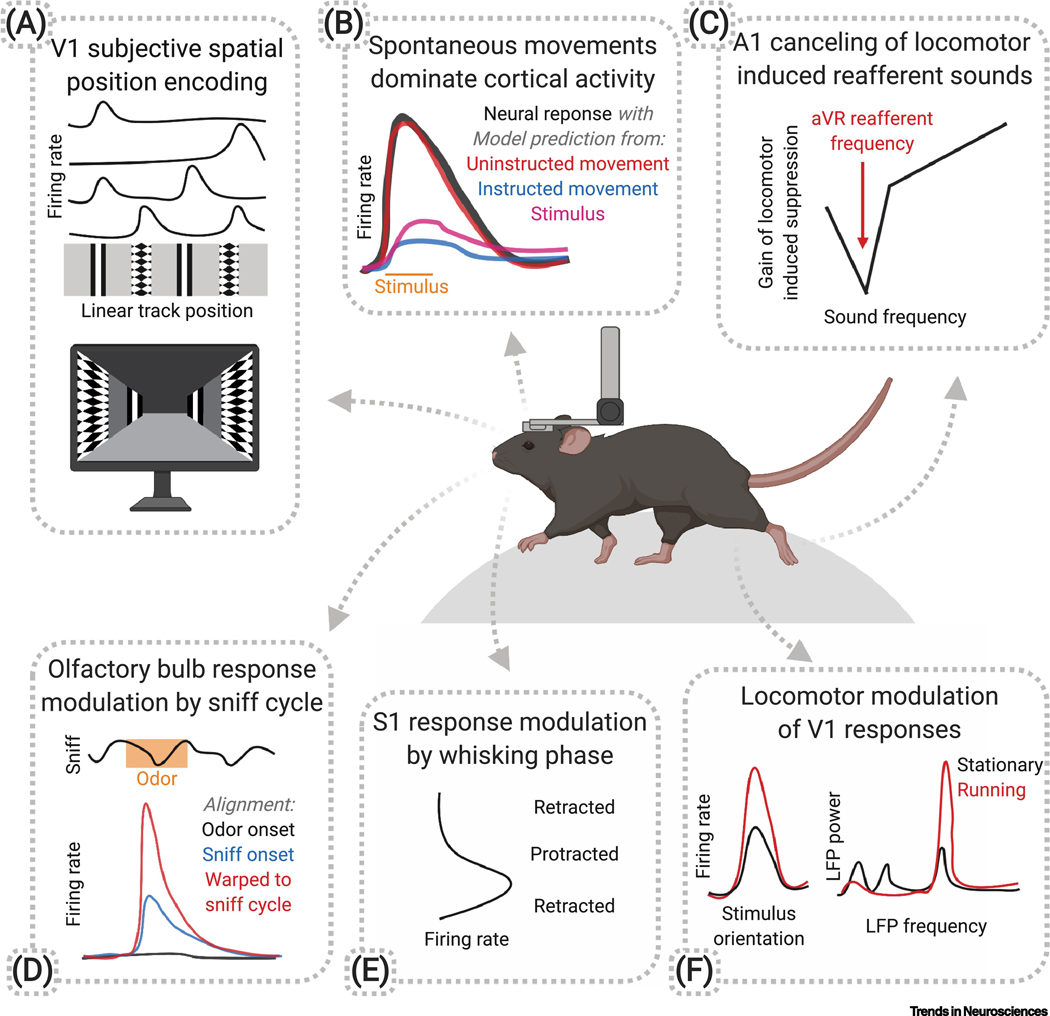

Figure 1. Overview of studies revealing movement-related signals in sensory areas in rodents.

A) A subset of mouse V1 neurons respond to a visual feature at a single location despite its repeated presence along a linear track, suggesting place cell-like encoding. Based on [25]; see also [24]. B) Movement-modulated activity in mouse primary sensory cortical neurons is often best explained by models incorporating uninstructed movements during a task, rather than instructed movements or stimulus presentations. Based on [27]. C) Linking the presence of a single tone to locomotion using auditory virtual reality (aVR) in mice leads to M1-mediated suppression of locomotor gain modulation in A1 at that specific frequency. Based on [23]. D) Aligning the responses of mouse olfactory bulb mitral/tufted cells to odorant onset obscures robust responses that become apparent when spikes are warped to match the phase of the sniff cycle. Based on [62]. E) The magnitude of rat S1 responses to whisker contacts depends on the phase within the whisking cycle. Based on [33]. F) Locomotion increases the gain of mouse V1 responses without affecting orientation tuning, and shifts the local field potential toward higher frequencies. Based on [14].

The advent of recordings in awake head-fixed mice moving on a treadmill [13] led to a series of studies demonstrating movement-related signals in sensory areas. These findings were made possible by allowing mice to make body movements such as locomotion, and by systematically correlating a range of behavioral parameters with neural activity. A number of studies have now shown that spontaneous locomotion strongly modulates activity in visual and auditory cortex. In visual cortex, locomotion generally increases visual responses via changes in gain [14] and spatial integration [15]. In addition, a significant fraction of cells encode movement speed directly [16], or encode a mismatch between movement and visual flow when recorded in closed-loop virtual reality (VR) [17]. While most prominent in cortex, considerably smaller changes are also found in the lateral geniculate nucleus (LGN) [18] and superior colliculus [19]. In contrast to these increases in response, locomotion generally decreases sensory responses in auditory cortex [20–22], which has been linked to the need to filter out self-generated noise [23]. Furthermore, beyond simple modulation of response magnitude, some movement-related signals in sensory cortices reflect the animal’s location within the environment, the cumulative result of its movements. For instance, recent studies using VR demonstrated responses in V1 that resemble hippocampal place cells [24,25] and represent rewarded locations independent of visual input [26].

A pair of recent studies [27,28] revealed that movement-related signals are more widespread in the brain than previously realized, and correspond to more than just locomotion and location. Both studies took advantage of high-throughput recording methods to measure the activity of large numbers of neurons across widespread brain regions, as well as detailed quantification of body movements, including high-resolution video of the face. Stringer et al. [28] examined activity during passive presentation of visual stimuli to head-fixed mice. Like other studies, they found that neural responses to repeated stimuli were highly variable; however, this variability could be partially explained by a model accounting for behavioral variables including locomotor speed and arousal (as measured by pupil diameter), along with dimensionality-reduced measurements from the facial video. Although some of these variables are themselves correlated, no single factor could account for the contributions of all of these measures, suggesting that visual cortex contains a high-dimensional representation of body movements and behavioral state. Indeed, even specific facial twitches were represented in neural activity.

Musall et al. [27] performed a related analysis in the context of an auditory/visual discrimination task. They observed that even during performance of a highly trained task, the animals made frequent task-irrelevant “spontaneous” movements. While the sensory input and accompanying behavioral response explained a significant fraction of neural activity when averaged across trials, the uninstructed spontaneous movements explained much of the trial-to-trial variance and, overall, more of the total activity than task-related variables did. As the authors note, “much of the single-trial activity that is often assumed to be noise or to reflect random network fluctuations is instead related to uninstructed movements.” Similar to Stringer et al. [28], these movements ranged from facial twitches to paw movements and licking. Together, these two studies demonstrated that a rich array of movement signals is represented across cortical areas, from primary sensory areas to regions associated with cognition and motor output.
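To make the logic of these analyses concrete, the sketch below compares how much single-trial variance a linear model explains when it uses only the stimulus versus the stimulus plus behavioral variables. It is a minimal illustration on simulated data, assuming a linear relationship and a handful of hypothetical regressors (`running`, `pupil`, `face_pcs`); it is not the model used by Stringer et al. [28] or Musall et al. [27], which incorporated far richer sets of behavioral variables.

```python
import numpy as np

def cv_variance_explained(X, y, n_folds=5):
    """Cross-validated fraction of variance in y explained by a linear model of X."""
    n = len(y)
    folds = np.array_split(np.arange(n), n_folds)
    pred = np.empty(n)
    for test_idx in folds:
        train = np.ones(n, dtype=bool)
        train[test_idx] = False
        # Fit least-squares weights (with an intercept column) on the training trials only
        X_train = np.column_stack([np.ones(train.sum()), X[train]])
        w, *_ = np.linalg.lstsq(X_train, y[train], rcond=None)
        X_test = np.column_stack([np.ones(len(test_idx)), X[test_idx]])
        pred[test_idx] = X_test @ w
    return 1 - np.sum((y - pred) ** 2) / np.sum((y - y.mean()) ** 2)

rng = np.random.default_rng(0)
n_trials = 500
stimulus = rng.integers(0, 2, n_trials).astype(float)  # stimulus present / absent
running = rng.gamma(2.0, 5.0, n_trials)                 # hypothetical locomotor speed
pupil = rng.normal(0, 1, n_trials)                      # hypothetical arousal proxy
face_pcs = rng.normal(0, 1, (n_trials, 3))              # hypothetical facial-video components

# Simulated single-trial response: weak stimulus drive, larger behavioral drive, plus noise
y = (1.0 * stimulus + 0.3 * running + 0.8 * pupil
     + face_pcs @ np.array([1.0, -0.7, 0.5]) + rng.normal(0, 1, n_trials))

r2_stim = cv_variance_explained(stimulus[:, None], y)
r2_full = cv_variance_explained(np.column_stack([stimulus, running, pupil, face_pcs]), y)
print(f"stimulus only: {r2_stim:.2f}, stimulus + behavior: {r2_full:.2f}")
```

Cross-validation matters here: with many behavioral regressors, variance explained on the training trials alone would be inflated.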

Information about locomotion and facial movements may seem out of place in some primary sensory areas, but in fact, movements are essential to obtaining sensory input in many sensory systems, and corresponding neural correlates have been found. For example, sniffing controls the exposure of olfactory sensory neurons to odorant molecules, and drives synchronous neural activity in the olfactory bulb even in the absence of odor stimulation [29–31]. Likewise, the whisk cycle entrains activity in many areas of the somatosensory system [32,33]. Further, whisking and touch signals are integrated via sensorimotor loops at multiple points along the somatosensory pathway, from brainstem to cortex [34]. During investigatory behavior, rodents synchronize cycles of sniffing, whisking, nose movement, and three-dimensional head rotation, thus moving the sensory organs of the head in synchrony [35–37]. Consequently, these cyclic sampling movements drive activity in widespread brain areas [38,39], similar to the locomotion and facial movement-related signals described above. Finally, head movements are important for sampling the visual scene, and head orientation relative to the environment is critical during navigation. Accordingly, recent studies have shown neural signals for head rotation in mouse primary visual cortex, in both head-fixed mice on a rotating platform [40] and freely moving animals [41].

In addition to movement, many studies measuring neural activity in primary sensory areas have also found correlates of other non-sensory variables, including arousal [20,42,43], reward [44], and thirst [45]. In fact, the effects of locomotion are closely coupled with arousal [42]. However, in this review we focus on correlates of movement per se, and their implications for sensory processing.

Movement signals are clearly prominent in the rodent brain, but are they similarly present in humans and other primates? In humans, respiration has been shown to drive activity and entrain oscillations in olfactory regions, amygdala, and hippocampus [46,47]. People on treadmills or stationary bikes show small but significant changes in the amplitude of visually evoked EEG signals [48,49], although in some cases shifts in spatial integration are opposite to those in mice [48]. Interestingly, human subjects experience a sensation of accelerated self-motion after running on a treadmill, which is dependent on vision and specific to the direction of motion on and off the treadmill [50]. Furthermore, freely moving humans show increased sensitivity to stimuli in the peripheral visual field when walking [51]. This is particularly striking given that the periphery plays a major role in the control of navigation [52], and suggests that movement modulates processing for particular behavioral goals. As in mice, human auditory cortex shows a decreased response to sounds resulting from one’s own actions [53]. These studies suggest there are at least some parallels between modulation of sensory processing by movement in humans and mice.

More direct comparisons to mouse studies could be made using single-unit electrophysiology in non-human primates, for example during locomotion on a treadmill; however, such studies have not yet been performed. Nevertheless, it is clear that when constraints on movement in primate experiments are released, non-sensory signals are present in visual cortex. For example, allowing an animal to move its eyes rather than fixate reveals modulation of visual responses by eye movement and gaze direction in V1 [54–56]. Even very small fixational eye movements, or “microsaccades,” strongly modulate the activity of visual cortical neurons [57–59]. It remains to be determined whether other movement-related signals are as dominant in primates as they are in rodents; these diverse signals may be more prevalent in higher, rather than primary, sensory areas of primates, given the more extensive elaboration of primate cortical sensory areas.

Collectively, these findings suggest that a significant component of activity in sensory cortices reflects multiple aspects of movement across species. The next step is to determine how these movement signals facilitate computations that are necessary for creating and acting on representations of the world.

Computational benefits of movement signals for sensory processing

Animals are constantly moving, whether locomoting through the environment, performing sampling movements to acquire sensory information, or at least breathing. These movements shape the statistics of sensory input in ways that can either impede or benefit sensory inference, and movement-related signals could provide the information needed to address both.

Movement poses an impediment to sensory processing by complicating even the most basic sensory computations that would be trivial for a stationary observer. For example, movement of the head and eyes transforms a static visual scene into a complicated and dynamic retinal input, dependent on the location of the eyes and direction of gaze at each moment. Simple computations, such as determining the orientation of a stationary visual feature, therefore become non-trivial. Similarly in the olfactory system, active sniffing behavior imposes variation on how odorants interact with receptors [60–62]. Consequently, judging something as seemingly simple as external odor concentration becomes confounded by self-generated stimulus dynamics. The brain may resolve these ambiguities by integrating information about eye movements or sniff cycles with sensory data. In the olfactory bulb, for example, information about the sniff waveform present in the “spontaneous” activity [29] is integrated with information about the inhaled odors. Using such an integrative mechanism, the olfactory system could account for the effect of variable sniffing rate and amplitude [63,64]. To make accurate inferences about the external environment, the brain must generally correct for where and how the sensors are moving, and movement-related signals could enable sensory areas to compute such corrections.
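One common way to combine spike times with the sniff cycle, as in the phase warping illustrated in Figure 1D, is to express each spike as a phase between consecutive inhalation onsets. The function below is a minimal sketch that assumes inhalation onsets have already been detected and that phase advances linearly within each sniff; the name `sniff_phase` is hypothetical, and this is not necessarily the procedure used in [62].

```python
import numpy as np

def sniff_phase(spike_times, inhalation_onsets):
    """Map each spike time to a phase in [0, 2*pi) of the sniff cycle containing it.

    Phase is linearly interpolated between consecutive inhalation onsets, so sniffs
    of different durations are stretched onto a common cycle. Spikes before the
    first onset or after the last onset get NaN (their cycle is incomplete).
    """
    spikes = np.asarray(spike_times, dtype=float)
    onsets = np.asarray(inhalation_onsets, dtype=float)
    # Index of the last onset at or before each spike
    idx = np.searchsorted(onsets, spikes, side="right") - 1
    phase = np.full(spikes.shape, np.nan)
    valid = (idx >= 0) & (idx < len(onsets) - 1)
    start = onsets[idx[valid]]
    end = onsets[idx[valid] + 1]
    phase[valid] = 2 * np.pi * (spikes[valid] - start) / (end - start)
    return phase
```

For example, `sniff_phase([0.05, 0.35], [0.0, 0.2, 0.5])` places the first spike a quarter of the way through a 200 ms sniff (π/2) and the second halfway through a 300 ms sniff (π), so responses can be compared across sniffs of different durations.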

Movement can also benefit sensory processing. At a fundamental level, some modalities generally require movement to receive stimulation, as when using a hand to palpate an object or inhaling odorant molecules. Movement allows an animal to selectively gain information about the environment, as when the eyes and head move to survey a visual scene. In addition to facilitating the acquisition of new sensory input, movement generates dynamics from which important features of sensory scenes can be extracted, a view advocated for instance by J. J. Gibson (Box 1). In vision, for example, self-generated optic flow provides a strong cue to estimate one’s motion through the environment, as well as the positions of objects in the environment [65]. In particular, movement can facilitate estimation of the distance to an object based on visual looming or motion parallax, or the relative locations of objects based on the resulting pattern of occlusion. These self-motion-based cues distinguish real-world movement, or virtual reality settings, from watching an image on a screen: the visual scene responds in predictable ways as the subject moves through the environment. Additionally, the flow of images that appears as one moves around an object, together with knowledge of self-motion, allows identification of its three-dimensional properties [66]. Other sensory modalities also have specific cues that are generated by movement. In olfaction, the direction towards an odor source can be determined based on temporal gradients that arise as the nose moves through an odor plume, often during strategic sampling movements [67–69]. Likewise, the texture of an object can be deduced from the pattern of sensory input that arises as a hand (or whisker) moves across a surface [70,71]. Finally, in auditory processing, the external structures of the head and ears shape the amplitude and spectra of incoming sounds, providing cues to sound location [72] that depend on the positioning of the head and ears.
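The motion parallax cue mentioned above has a simple geometric form. For an observer translating at speed v, a stationary point at distance d whose direction makes an angle θ with the direction of travel has a bearing that changes at rate ω = v sin(θ) / d, so d = v sin(θ) / ω. Any rotation of the head shifts all bearings by the same, distance-independent amount, which is one reason a self-motion signal is needed to use this cue. The sketch below assumes a flat, two-dimensional world, pure body translation plus head rotation, and a hypothetical helper name.

```python
import numpy as np

def distance_from_parallax(speed, bearing, bearing_rate_in_head, head_rotation_rate=0.0):
    """Distance to a stationary point from motion parallax (2-D, hypothetical helper).

    speed: translation speed of the body (e.g. cm/s)
    bearing: angle (rad) between the direction of travel and the point
    bearing_rate_in_head: rate of change (rad/s) of the point's direction seen from the head
    head_rotation_rate: head rotation rate (rad/s), counterclockwise positive

    Head rotation shifts every bearing equally regardless of distance, so it is added
    back before applying rate = speed * sin(bearing) / distance.
    """
    translational_rate = bearing_rate_in_head + head_rotation_rate
    return speed * np.sin(bearing) / translational_rate

# A mouse runs at 20 cm/s past an object directly to its side (bearing = 90 deg) while
# turning its head at 0.5 rad/s; the object then moves at 0.5 rad/s in head coordinates.
with_correction = distance_from_parallax(20.0, np.pi / 2, 0.5, head_rotation_rate=0.5)
without_correction = distance_from_parallax(20.0, np.pi / 2, 0.5)
```

Ignoring the head rotation doubles the distance estimate in this example (40 cm instead of 20 cm), illustrating why knowledge of self-motion matters for this computation.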

Box 1. Gibson and the role of self-motion in sensory perception.

Perception is often treated as a problem of constructing veridical representations of the environment from the information received by the sensory organs. For example, a three-dimensional visual scene must be “recovered” from a pair of two-dimensional retinal images. James Gibson argued that it makes sense to instead think about perception as a problem of obtaining relevant information from interactions with the environment. In particular, Gibson proposed that features of the environment have “affordances”, which are defined by the potential interaction between the organism and that surface or object [66]. For a mouse, the ground affords walking, but a river does not. These interactions, present in natural behaviors, thus provide an important basis for studying sensory processing.

Gibson also emphasized that perception is not a passive process: for example, the retina is rotated and translated through space by the eyes, head, and body at the behest of the organism in order to seek out information about the environment. Gibson defined sensory systems as extending beyond their respective primary sensory organs; in this view, the legs are part of the visual system [66]. Perception therefore entails determining both what is out there in the world and how it relates to the organism. This requires “co-perception” of the environment and the self: “To perceive is to be aware of the surfaces of the environment and of oneself in it.” [66]. This goal could be achieved by integrating sensory input from the receptors with the motor efference and sensory reafference signals that accompany active exploration. The need for this integration may explain why motor signals are incorporated in early sensory areas of the brain. Therefore, investigating how movement-related signals contribute to sensory processing may benefit from incorporating Gibson’s ideas when developing experimental paradigms where animals can freely explore their environment: “Perceiving gets wider and finer and longer and richer and fuller as the observer explores the environment.” [66]

Extracting all these types of information generally requires knowledge of the corresponding movements. One influential framework for understanding the relationship of sensation and movement comes from the “reafference principle” [73]. This framework is motivated by a fundamental ambiguity of sensory input: self-generated stimulation (“reafference”) must be distinguished from stimulation driven by the outside world (“exafference”). These can be teased apart using signals about self-generated motor commands, such as efference copy or corollary discharge. A more modern refinement of the same concept is the “forward model”, which converts a motor command into a prediction of its sensory consequences [74,75]. Biological implementations of forward models have been observed in diverse neural systems. Crickets inhibit auditory afferents and interneurons during singing [76]. In electric fish, corollary discharges play multiple roles, cancelling reafferent input in one brain region [77] while facilitating readout of sensory input in another [78]. In mice, feedback from anterior cingulate and secondary motor cortex into primary visual cortex carries signals that can predict the consequence of motor actions on visual flow [79], and similar signals cancel self-generated sounds in auditory cortex [23]. In non-human primates, an oculomotor pathway from superior colliculus to frontal eye field (via the mediodorsal thalamus) carries corollary discharge signals that are essential for executing accurate sequential eye movements [80].
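The reafference principle can be illustrated with a toy linear forward model: learn how an efference copy predicts self-generated input, subtract the prediction, and inspect what remains. The sketch below assumes a fixed, instantaneous linear gain from motor command to sensor and uses simulated data; biological forward models are learned online and are generally delayed and nonlinear, so this illustrates the principle rather than any specific circuit.

```python
import numpy as np

rng = np.random.default_rng(1)
T = 2000
motor_command = rng.normal(0, 1, T)      # efference copy of a (simulated) motor command
external = np.zeros(T)
external[[500, 1200, 1800]] = 3.0        # sparse events caused by the outside world (exafference)
true_gain = 2.5                          # how strongly self-motion drives the sensor (reafference)
sensory_input = true_gain * motor_command + external + rng.normal(0, 0.1, T)

# Forward model: a least-squares gain mapping the efference copy onto the sensory input
# (fit on the whole recording here; a nervous system would have to learn it online)
gain_hat = np.dot(motor_command, sensory_input) / np.dot(motor_command, motor_command)
predicted_reafference = gain_hat * motor_command
residual = sensory_input - predicted_reafference  # approximately exafference + noise

threshold = 1.5
detected_raw = np.flatnonzero(sensory_input > threshold)  # dominated by self-generated input
detected_residual = np.flatnonzero(residual > threshold)  # recovers the external events
```

Thresholding the raw input flags many self-generated fluctuations as events, whereas the residual after subtracting the prediction isolates the three external ones.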

Another framework of relevance in this context is predictive coding, wherein the brain continuously constructs an internal model of the external world that is used to encode predictions of future sensory input [81,82]. Predictive coding can largely overlap with forward models under some definitions, but can be differentiated in models where predictive signals are subtracted from bottom-up sensory input in order to encode error signals in dedicated neural populations. Like standard feature encoding neurons, these populations would be tuned to specific features but respond to differences in expected and actual presence of these features [81,82]. The possibility that large movement-related signals in sensory cortices of head-fixed animals might correspond to activity in prediction-error neurons due to a lack of expected sensory change is particularly intriguing.
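The hypothesis in the preceding paragraph can be stated as a toy calculation. Suppose error units compare actual visual flow with flow predicted from a head-rotation command, with separate units for positive and negative mismatch (as proposed in some predictive-processing accounts). If the animal moves freely, the prediction is met and the error is zero; if the head is fixed, the actual flow is zero and the error tracks the attempted movement. The sketch assumes a perfect, linear prediction and a single rotational flow variable; all names are hypothetical.

```python
import numpy as np

def prediction_error(actual_flow, predicted_flow):
    """Split the mismatch into two rectified channels (positive and negative error units)."""
    diff = np.asarray(actual_flow, float) - np.asarray(predicted_flow, float)
    return np.maximum(diff, 0.0), np.maximum(-diff, 0.0)

rng = np.random.default_rng(2)
attempted_turn = rng.normal(0, 1, 1000)  # hypothetical head-rotation commands (arbitrary units)
predicted = -attempted_turn              # expected flow: the scene moves opposite to the head

# Freely moving: the head turns, so the visual flow matches the prediction
free_pos, free_neg = prediction_error(actual_flow=-attempted_turn, predicted_flow=predicted)
# Head-fixed: the turn is attempted but the visual scene does not move
fixed_pos, fixed_neg = prediction_error(actual_flow=np.zeros_like(predicted), predicted_flow=predicted)
```

In this caricature, error units in the head-fixed case would look like movement-related signals, since their summed activity equals the magnitude of the attempted turn.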

This rich body of work, across a range of species, demonstrates that movement has the potential to provide important information for sensory processing. We discuss next ways in which experimental studies may address how movement signals are integrated into sensory computations during natural behavior.

Studying movement signals in natural behavior

The incorporation of self-motion signals into sensory computations may be difficult to understand from experiments using head fixation and simple stimuli. In these paradigms, self-induced stimulus dynamics are limited by design. For example, when a head-fixed animal tries to rotate its head, the world does not rotate in the expected direction, and vestibular signals are absent. Even in virtual reality, the transformation from movement on a treadmill to rendering of the visual input is unnatural, in part because it is typically only coupled to locomotion (often along a single dimension). Furthermore, even freely moving operant tasks rarely capture the natural sensory consequences of the animal’s ethological movement repertoire [83], and thus may not engage the same circuitry as natural behavior [84,85]. Based on these considerations, elucidating the role of movement signals in sensory circuits could greatly benefit from studying them in naturalistic, freely moving paradigms.

While there are many features to consider in designing more naturalistic experiments [85–87], here we focus on what we consider to be the most critical components for understanding sensory processing: allowing the animal to move freely and extract ethologically relevant features from a rich sensory environment. Critically, a carefully designed approach will be required to overcome the difficulties that experimenters using physical restraint originally sought to avoid. Such experiments require determining the sensory input by monitoring external stimuli and sampling behavior, which is now feasible due to technological and conceptual advances. Just as experiments in awake head-fixed animals were a tremendous advance over anesthetized recordings, experiments in freely moving animals performing natural behaviors have the potential to significantly expand our understanding of perception.

Ethological paradigms for sensory physiology

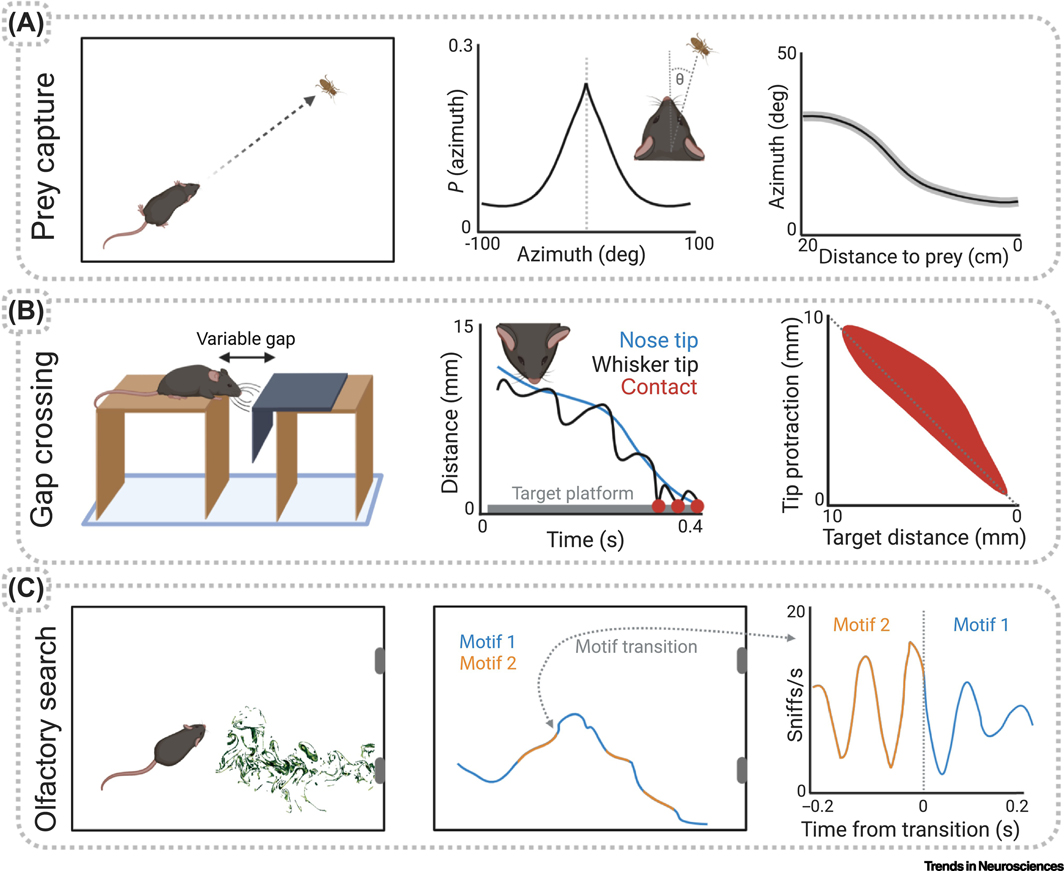

Systems neuroscience has seen a recent surge in the adaptation of ethological behaviors to quantifiable laboratory paradigms [85–87]. These tasks span a number of learned and innate behaviors, and often require the use of one or more sensory modalities to perform motor actions and make decisions. For example, laboratory mice will readily pursue and capture insect prey [88], providing a paradigm that engages vision for detection and orienting towards a target as the mouse moves through its environment (Figure 2A). To determine the distance across a gap, mice perform carefully controlled whisker movements that can provide a mismatch signal between the expected and actual distance [89] (Figure 2B). In olfactory search tasks, mice sample relative odor concentrations to determine the location of an odor source (Figure 2C). Even classic laboratory paradigms can provide a naturalistic context for studying sensory processing, such as the Morris water maze, where mice must find the remembered location of a hidden platform based on visual landmarks around the arena [90]. Although generally used as a test of learning and memory, it is also inherently a vision task, as it is based on the fact that animals naturally use the visual scene to build a representation of the world and their location within it.

Figure 2. Examples of freely moving ethological paradigms for the study of movement-related signals in sensory processing.

A) Laboratory mice reliably pursue and capture cricket prey after only a short habituation period, showing a consistently narrow range of head angles relative to the cricket that decrease as the mouse approaches the cricket. Modified from [88]. B) Mice spontaneously cross a gap by first judging the target platform distance with their whiskers. The incorporation of a short-latency variable gap showed that whisker protractions match the expected, rather than actual, position of the platform, and thus each whisking phase encodes a mismatch signal. Modified from [89]. C) Mice rapidly learn to report the location of an odor plume source. Unsupervised computational techniques parse complex trajectories into a sequence of movement motifs. Many of these motifs synchronize to the sniff cycle with a precision of tens of milliseconds [134].

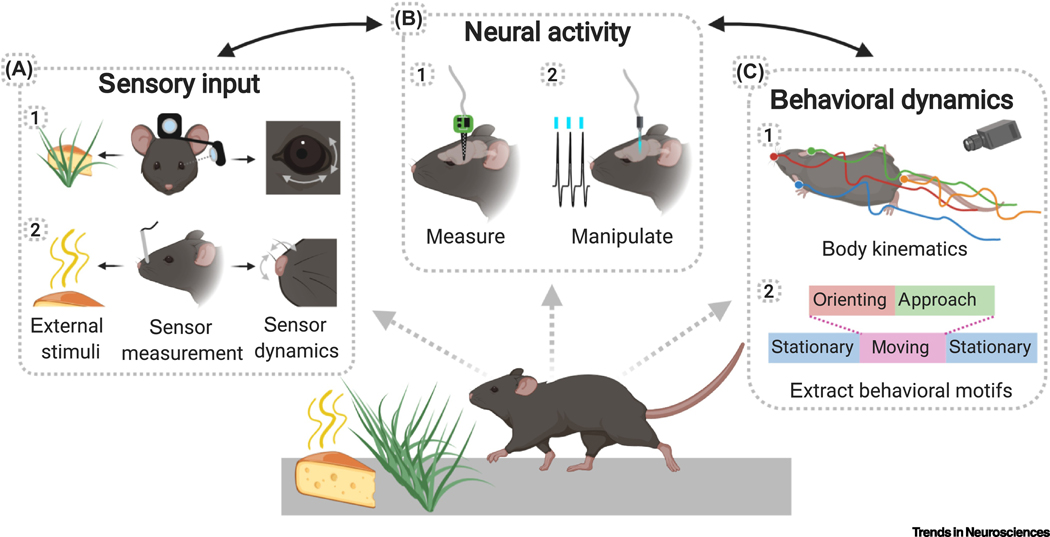

Together, the existing repertoire of ethological sensory paradigms, along with the continuing development of new paradigms, now provides researchers with ample options for studying different sensory modalities. However, to use these ethological paradigms to understand sensory systems, the experimenter must address several challenges, including measuring the animal’s behavioral repertoire, determining the sensory input, and monitoring and manipulating neural activity (Figure 3, Key Figure). We next discuss advances that facilitate each of these in the context of complex freely-moving tasks.

Figure 3, Key Figure. Experimental techniques for investigating sensory processing during freely moving natural behavior.

A) (1) Miniature head-mounted cameras facing outward and at the eye can measure the visual scene and eye movements to estimate the current visual input. (2) Chemical sensors and/or fluid dynamic modeling can indicate the spatiotemporal distribution of odor concentration, intranasal temperature or pressure measurement can reveal sniffing, and video tracking or head mounted accelerometers can capture active sampling movements. B) Concurrent measurement of neural activity with microelectrodes (1) or miniaturized microscopes permits alignment of neural data with known sensor dynamics and sensory inputs. Optogenetic and chemogenetic protein expression in defined subpopulations (2) allows identification of neuron types in electrophysiology recordings, or manipulation of circuits to test hypotheses about movement-related inputs to sensory areas. C) Continuous behavioral monitoring with markerless video tracking (1) permits alignment of neural and sensory data with body movements. Supervised and unsupervised analyses reveal substructures in behavior (2) that further refine analysis of the relationship between neural activity and sensory input.

Measuring and analyzing the behavioral repertoire

A key distinction between trial-based head-fixed paradigms and natural behaviors is that the latter do not always have a specific decision point or “correct” answer: animals continuously integrate sensory and motor signals while performing a sequence of actions [91]. By using natural behavior in the laboratory, one can analyze what animals do at each step during the behavior and relate that to sensory input, motor output, and eventually neural activity. In addition to providing a richer readout, this approach is much more closely aligned with how sensory input drives our actions in the real world. For instance, when looking for an entrance, we do not typically stop and “decide” where the door is, but rather walk toward it in a continuous path, without clear-cut decision points and without the “errors” of running into adjacent walls. However, although this continuous mode of behavior is typical of natural settings, it raises important challenges in an experimental context, particularly for measuring and analyzing behavioral output.

Capturing the richness of natural behavior requires highly precise quantification of an animal’s movement in the environment. A number of machine learning-based tools now permit automated tracking of many points on the animal’s body with high spatial and temporal resolution without the need for physical markers, providing a far more detailed characterization of movement kinematics with dramatically less effort than previously required [92–95]. Also, miniaturized motion-tracking devices such as accelerometers can be mounted on the body to reconstruct the dynamics of movements [36,96]. These tools produce datasets that are richer, and leave fewer variables unmeasured, than those obtained using more traditional techniques, and these datasets can ultimately be aligned with neural recordings to examine neural processing during naturalistic behavior.
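As an example of a variable derived from such tracking, the angle of a target relative to the head (as plotted for prey capture in Figure 2A) can be computed from tracked keypoints. The sketch below assumes per-frame x, y coordinates for the nose, both ears, and the target from a markerless tracker; the keypoint names are hypothetical, and defining head direction as the vector from the ear midpoint to the nose is one common choice rather than a standard.

```python
import numpy as np

def head_angle_to_target(nose, left_ear, right_ear, target):
    """Signed azimuth (rad) of a target relative to head direction, one value per frame.

    All inputs are (n_frames, 2) arrays of x, y positions in a common coordinate frame.
    Head direction is the vector from the midpoint between the ears to the nose.
    With y increasing upward, positive angles mean the target lies counterclockwise
    (to the left) of the head direction; image coordinates with y pointing down flip the sign.
    """
    nose, left_ear, right_ear, target = (
        np.asarray(p, dtype=float) for p in (nose, left_ear, right_ear, target)
    )
    head_center = (left_ear + right_ear) / 2
    head_vec = nose - head_center
    target_vec = target - head_center
    head_dir = np.arctan2(head_vec[:, 1], head_vec[:, 0])
    target_dir = np.arctan2(target_vec[:, 1], target_vec[:, 0])
    # Wrap the difference into (-pi, pi] so that, e.g., 179 deg vs -179 deg gives 2 deg, not -358 deg
    return np.angle(np.exp(1j * (target_dir - head_dir)))
```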

Likewise, recent advances in analyzing these rich behavioral readouts can help tame the complexity of ethological paradigms. When an animal moves differently in every trial, or when a paradigm is not trial-based, the appropriate behavioral metrics are not obvious, let alone the best features to align with neural activity. Fortunately, the metrics no longer need to be the experimenter’s best guess, but can instead be derived from careful analysis of continuous data. For example, both supervised and unsupervised analysis of video tracking data allow continuous behavior to be parsed into discrete motifs or events, resulting in a quantitative model of behavior based on the probabilities of transitions between them [97–99]. In mice, this approach has revealed consistent ‘behavioral syllables’ underlying spontaneous open-field behavior that are directly related to neural activity [100,101]. These techniques represent a critical advance over manually scoring behaviors into subjectively defined categories, establishing quantitative models of ongoing actions as a step toward understanding their neural representations.
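Once frames have been assigned to motifs, the transition structure of behavior can be summarized as a matrix of empirical transition probabilities. The sketch below assumes that integer motif labels per video frame are already available (from any supervised or unsupervised method) and collapses repeated labels so that the matrix describes switches between motifs; more complete approaches estimate segmentation and transition structure jointly within a probabilistic model, which this simple counting does not attempt. The function name is hypothetical.

```python
import numpy as np

def transition_matrix(labels, n_states):
    """Empirical first-order transition probabilities between behavioral motifs.

    labels: per-frame integer motif labels in [0, n_states). Consecutive repeats are
    collapsed first, so the matrix describes switches between motifs rather than
    how long each motif lasts.
    """
    labels = np.asarray(labels)
    # Keep a frame only if its label differs from the previous frame's label
    keep = np.r_[True, labels[1:] != labels[:-1]]
    seq = labels[keep]
    counts = np.zeros((n_states, n_states))
    np.add.at(counts, (seq[:-1], seq[1:]), 1)
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows for motifs that are never left stay all-zero instead of dividing by zero
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
```

For the label sequence 0, 0, 1, 1, 1, 2, 0, 0, 1, 2, 2, the collapsed sequence is 0, 1, 2, 0, 1, 2, so each motif transitions deterministically to the next one and the matrix is a cyclic permutation.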

Measurement and control of the sensory input

Perhaps the greatest difficulty for studying sensory processing in freely moving behavior is measurement and control of the stimulus. When an animal freely explores the environment, the experimenter loses both knowledge and control of how the sensory organs are stimulated. For example, the retina of a head-fixed mouse can be stimulated in a controlled way with many repetitions of parameterized images, but a freely moving mouse can look wherever it pleases, so knowing or controlling the image on the retina becomes challenging. Fortunately, these difficulties can be overcome with recent advances in experimental techniques.

One way to quantify the stimulus reaching the animal is to directly measure it at the sensory organ. The miniaturization of cameras allows one to capture the subject’s point of view [102] and eye position [41,103] to recover the visual stimulus on the retina. Whisker movements and deflections from contact with objects can be measured during active whisking behavior in freely moving animals [89,104]. Inhalation patterns underlying active olfactory search in freely moving animals can be measured using cannulas or thermistors implanted in the nasal passage [61,67]. In addition to physically measuring the sensory organs, the stimulus could be inferred by recording activity in the animal’s primary sensory neurons, e.g. through calcium imaging of olfactory sensory neurons, or recording with a retinal mesh [105]. Thus, tools now exist to quantitatively characterize primary sensory organs and the activity of primary sensory neurons during freely moving behavior.
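
As an example of turning a raw sensor trace into a behavioral variable, the sketch below marks putative inhalation onsets in a sampled respiration signal, such as one recorded with an implanted thermistor. It assumes, as a labeled simplification, that the trace is already filtered and that inhalation drives the mean-subtracted signal downward; the actual sign convention and preprocessing depend on the sensor and amplifier. The function name and the `min_interval` guard are hypothetical.

```python
import numpy as np

def inhalation_onsets(signal, fs, min_interval=0.05):
    """Return times (s) of putative inhalation onsets in a respiration trace.

    Assumes inhalation pushes the mean-subtracted signal below zero, so onsets
    are taken as downward zero crossings. Crossings closer than min_interval (s)
    to the previously accepted onset are discarded as likely noise.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    # sample index where x goes from >= 0 to < 0
    crossings = np.flatnonzero((x[:-1] >= 0) & (x[1:] < 0)) + 1
    onsets = []
    for idx in crossings:
        t = idx / fs
        if not onsets or t - onsets[-1] >= min_interval:
            onsets.append(t)
    return np.array(onsets)
```

Onset times like these can then serve as the reference for sniff-aligned analyses of neural activity, with instantaneous sniff frequency given by the inverse of successive onset intervals.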

Delivering stimuli in closed loop with the animal’s behavior can improve the experimenter’s control of the sensory scene. One benefit is the ability to present repeated trials of a parameterized stimulus, which can be achieved by monitoring the animal’s position and presenting the stimulus conditioned on the animal’s position or movement, either in a one-parameter open-loop system [106] or in a closed-loop system that creates a real-world-like environment [107,108]. Such approaches have recently been implemented in freely moving mice and rats [109,110]. Because losing control of the stimulus can be a significant limitation in adopting freely moving paradigms, there is a crucial need to further develop technologies that help overcome these barriers.
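
The sketch below illustrates the core logic of position-conditioned stimulus delivery under simple assumptions: a tracker reports the animal’s (x, y) position once per frame, the stimulus is triggered when the animal enters a circular zone, and a refractory period spaces out repeated trials. `ZoneTrigger` is a hypothetical helper, not part of any cited system; a real setup would also have to account for the latency between tracking and stimulus presentation.

```python
import math
from dataclasses import dataclass, field
from typing import Tuple

@dataclass
class ZoneTrigger:
    """Frame-by-frame decision of whether to present a stimulus (hypothetical)."""
    center: Tuple[float, float]   # zone center, in the tracker's units
    radius: float                 # zone radius, same units
    refractory: float             # minimum time (s) between stimulus onsets
    _inside: bool = field(default=False, init=False, repr=False)
    _last_fire: float = field(default=-math.inf, init=False, repr=False)

    def update(self, t: float, x: float, y: float) -> bool:
        """Return True if a stimulus should start at time t."""
        inside = math.hypot(x - self.center[0], y - self.center[1]) <= self.radius
        entered = inside and not self._inside   # fire only on zone entry
        self._inside = inside
        if entered and t - self._last_fire >= self.refractory:
            self._last_fire = t
            return True
        return False
```

With this design, an entry during the refractory period is ignored, and the animal must leave and re-enter the zone to trigger the next trial, which yields discrete, comparable stimulus presentations rather than continuous re-triggering while the animal lingers.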

Measuring neural activity during naturalistic behavior

There is a long history of neurophysiological recordings in freely moving rodents, particularly in the context of studying the neural basis of navigation [111,112]. However, freely moving experiments have traditionally required sacrificing some of the experimental accessibility and high-density recording techniques available in head-fixed paradigms. Intermediate options that isolate key movement parameters represent significant steps forward, such as head-fixed recording systems that allow rotational head movements [113], or virtual reality systems that have revealed sensory coding for optic flow, predictive coding, and location coding in V1 [24–26,114]. These approaches provide an important counterpart to completely unrestrained ones, owing to their increased experimental control and accessibility for recording.

Fortunately, recent technological advances have significantly reduced the traditional trade-off between natural movement and neural recording capacity, making freely moving recordings both more powerful and more broadly accessible. While these advances have been reviewed elsewhere [12,115,116], we highlight several that offer advantages specifically for ethological sensory paradigms.

Among the most common tools used to measure neural activity are electrophysiological recordings and imaging of genetically encoded calcium or voltage indicators. A fundamental challenge in optimizing these systems for naturally behaving animals is maximizing the number of recorded neurons while minimizing the impact of the hardware on the animal. Neuropixels probes have significantly increased the number of channels available for electrophysiological recording, and they have been successfully used in freely moving animals [117]. Advances in miniscope design have increased imaging resolution as well as flexibility in targeting regions of interest and adjusting focal depth [115], and freely moving two-photon imaging systems are emerging [118]. However, improvements in recording techniques also create new challenges for data acquisition, including maintaining chronic implants over long periods and maximizing data transfer capacity while minimizing the movement resistance caused by tethering. For the latter, automated commutators relieve some of the burden [119], and advances in battery technology and power efficiency will likely enable fully wireless designs [120]. Long-term viability of chronically implanted electrodes is a concern shared with head-fixed preparations and remains an area of active research. These concerns are being addressed by probes designed to reduce insertion damage [121], by probes that move with the brain and more closely mimic its modulus of elasticity [122], and by novel coatings that are biocompatible and maintain low impedance over time [123]. These advances allow unprecedented measurements of neural activity in naturally behaving animals.
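
The data transfer constraint can be made concrete with back-of-the-envelope arithmetic: the uncompressed rate is the product of channel count, sampling rate, and bits per sample. The numbers below are illustrative assumptions (384 channels sampled at 30 kHz and stored as 16-bit samples), roughly the scale of a high-density probe, not a specification of any particular device.

```python
def raw_data_rate(n_channels, sample_rate_hz, bits_per_sample):
    """Uncompressed data rate in megabits per second (1 Mbit = 1e6 bits)."""
    return n_channels * sample_rate_hz * bits_per_sample / 1e6

# Illustrative assumptions only: 384 channels, 30 kHz, 16-bit samples.
rate = raw_data_rate(384, 30_000, 16)
print(f"{rate:.2f} Mbit/s, {rate / 8:.2f} MB/s, {rate / 8 * 3600 / 1000:.1f} GB per hour")
# prints: 184.32 Mbit/s, 23.04 MB/s, 82.9 GB per hour
```

Rates of this order indicate the bandwidth that a tether or a wireless link must sustain continuously during a freely moving session.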

Experimenter control of neural activity

Finally, the ability to modulate the activity of genetically- and anatomically-defined neural populations in freely moving animals permits testing the roles of these circuits in sensory-guided behaviors. Optogenetic techniques afford multiple advantages in studying neural circuits. For example, neurons can be identified during electrophysiological recordings using channelrhodopsin expressed in genetically-defined cell types (e.g., using Cre-recombinase lines) or antero/retrograde viral techniques [124]. Furthermore, a variety of optogenetic tools can be used to excite or inhibit specific neuronal populations [125] in a closed-loop manner contingent on the current state of the animal’s behavior or ongoing neural activity measured using electrophysiology [126,127]. The rapid timescale of optogenetic perturbations offers advantages in some contexts, but can also lead to artificial and potentially confounding effects on neural activity and behavior, particularly during bulk stimulation [128,129]. Chemogenetic techniques provide a complementary approach, operating on longer timescales and similarly allowing manipulation of activity in defined neural populations, tagged using genetic tools [130].
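
Optogenetic identification of recorded units typically relies on spikes that follow brief light pulses reliably and at short latency. A minimal sketch of those two metrics is shown below, assuming sorted spike times for one unit and a list of pulse onset times. The 6 ms window is an illustrative assumption, `optotag_metrics` is a hypothetical name, and published analyses usually add further criteria, such as comparison with spontaneous firing and waveform similarity.

```python
import numpy as np

def optotag_metrics(spike_times, pulse_times, window=0.006):
    """Reliability and latency of spikes evoked by brief light pulses.

    spike_times : sorted spike times (s) of one unit
    pulse_times : light-pulse onset times (s)
    window      : response window after each pulse (s); an assumed value
    Returns (fraction of pulses followed by a spike within the window,
             median first-spike latency over responsive pulses, or nan).
    """
    spikes = np.asarray(spike_times, dtype=float)
    latencies = []
    for p in pulse_times:
        # index of the first spike at or after the pulse onset
        i = np.searchsorted(spikes, p, side="left")
        if i < spikes.size and spikes[i] - p <= window:
            latencies.append(spikes[i] - p)
    reliability = len(latencies) / len(pulse_times) if len(pulse_times) else 0.0
    median_latency = float(np.median(latencies)) if latencies else float("nan")
    return reliability, median_latency
```

A directly light-activated neuron would be expected to show high reliability and short, consistent latencies, whereas longer or more variable latencies may indicate indirect, synaptically driven responses.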

Concluding remarks and future perspectives

Based on the advances described above, sensory neuroscience is now in a position to address a number of significant questions surrounding the role of movement-related signals in sensory computations during natural behavior (see Outstanding Questions). In addition to explaining how these computations are achieved, uncovering the role of movement signals may help address some broader unsolved questions in sensory processing. Olshausen and Field [4] raised the prospect that, due to sampling biases and other issues, our understanding of neural activity, even in areas as extensively studied as V1, may account for only a small fraction of the activity in these brain regions. It is now clear that once subjects are allowed to move, a significant fraction of neural activity in sensory regions reflects the movement-related signals themselves, as well as other non-sensory signals. It seems likely that additional non-sensory signals will be revealed as natural behavioral patterns are engaged. Additionally, a number of “non-canonical” response properties have been observed but are often overlooked in standard models [131]. Experiments in mouse V1, for instance, revealed “suppressed-by-contrast” neurons that respond strongly to locomotion but non-selectively reduce their firing in the presence of visual contrast [14]. While this type of coding may not serve an obvious role in passive vision, it could be instrumental during active exploration, for example in supporting motion parallax by combining self-movement and temporal contrast signals with opposite signs.
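
To illustrate why pairing self-motion signals with visual signals could help with motion parallax, consider the standard geometry for a stationary point viewed by an observer translating at speed v, under the simplifying assumption that there is no head or eye rotation. If the point lies at distance d and at angle θ from the direction of travel, its angular velocity ω on the retina, and hence its distance, are

$$\omega = \frac{v \sin\theta}{d} \qquad\Longrightarrow\qquad d = \frac{v \sin\theta}{\omega}.$$

Retinal motion alone confounds speed and distance, since doubling both leaves ω unchanged; an internal signal reporting self-motion speed v removes this ambiguity.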

Outstanding Questions.

How will findings from head-fixed paradigms relate to freely-moving conditions? Will the nature of movement signals be different in free movement? And will models of basic sensory processing, such as the statistics and structure of receptive fields, still hold true under dynamic conditions of movement?

What is the range of computations that movement-related signals are used for? Are movement-related signals in primary sensory areas required principally for cancelling the effects of self-motion, or are they used to extract more complex features from the sensory input?

What are the microcircuits involved in processing movement-related signals? Do specific cell types integrate this information into the circuit, or perhaps use these signals to gate or modify ongoing sensory processing?

How are movement signals specialized across brain regions? Do the types of signals vary according to sensory modality, and between primary and higher sensory cortical areas? Alternatively, are the same signals broadcast globally?

How will findings in rodents relate to those in other animals? The types of computations that movement is useful for may depend on constraints such as the sensory ecology and behavioral repertoire of different species. Furthermore, differences in brain architecture across species may also dictate where along the sensory processing hierarchy these signals are integrated.

A broader view of sensory processing that takes into account movement and the interactions with the environment may also provide insight into the neural circuitry for fundamental computations that the brain performs. For example, it has been proposed that a major function of cortical circuits is linking together feedforward processing with contextual signals [132]. Although this is often discussed in the context of associative learning, movement itself is an important contextual signal, and this same circuit could provide a mechanism for linking together movements with feedforward visual processing. Likewise, predictive coding has been proposed as a canonical computation [82,133], and as discussed in previous sections, is likely to be engaged continuously as an animal moves through a physical environment in order to guide sensory inference.

It has become increasingly clear that the brain integrates information about movement with information about sensory receptor activation early and often. Yet how we use our senses to guide actions, even seemingly simple ones such as walking through a crowded room without bumping into objects, remains poorly understood relative to simple perceptual discriminations such as judging the orientation of a grating. This disparity is largely due to the reductionist constraints of traditional sensory experiments, in which movement is prevented or limited. Designing experimental paradigms that allow animals to interact freely with the environment will help reveal how the brain works not just within the laboratory, but under real, ecological conditions.

Highlights.

Real-world behavior requires integrating sensory input with movement signals to both compensate for sensor motion and form stable internal representations of the external world.

Recent findings in animal models demonstrate that even in primary sensory areas, a significant fraction of neural activity corresponds to movement of the animal.

The roles that many of these movement-related signals play in the neural computations underlying sensory processing are unknown.

The use of paradigms involving freely moving animals engaged in natural behavior could help reveal the roles of movement signals and lead to the development of more ecologically relevant models of sensory processing.

Acknowledgments

We would like to thank members of the Niell and Smear labs for helpful discussions and feedback. We also thank Drs. Jianhua Cang, Santiago Jaramillo, Daniel O’Connor, and Michael Wehr, as well as the editor and two anonymous reviewers, for critical reading of the manuscript. This work was supported by NIH grants F32EY027696 (PRLP), F32MH118724 (MAB), R21NS104935 (MCS) and R34NS111669 (CMN). Figures were created using Biorender.com.


References

- 1.Penfield W and Boldrey E. (1937) Somatic Motor And Sensory Representation In The Cerebral Cortex Of Man As Studied By Electrical Stimulation. Brain 60, 389–443 [Google Scholar]

- 2.Henschen SE (1893) On The Visual Path And Centre. Brain 16, 170–180 [Google Scholar]

- 3.Rust NC and Movshon JA (2005) In praise of artifice. Nat. Neurosci 8, 1647–1650 [DOI] [PubMed] [Google Scholar]

- 4.Olshausen BA and Field DJ (2005) How close are we to understanding V1? Neural Comput. 17, 1665–1699 [DOI] [PubMed] [Google Scholar]

- 5.Churchland Patricia S., Ramachandran Vilayanur S., and Sejnowski Terrence J. (1994) A critique of pure vision. In Large-scale neuronal theories of the brain (Koch Christof And Joel, ed), pp. 23, MIT Press [Google Scholar]

- 6.Parker AJ and Newsome WT (1998) Sense and the single neuron: probing the physiology of perception. Annu. Rev. Neurosci 21, 227–277 [DOI] [PubMed] [Google Scholar]

- 7.Carandini M and Churchland AK (2013) Probing perceptual decisions in rodents. Nat. Neurosci 16, 824–831 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Gold JI and Shadlen MN (2007) The neural basis of decision making. Annu. Rev. Neurosci 30, 535–574 [DOI] [PubMed] [Google Scholar]

- 9.Khan AG and Hofer SB (2018) Contextual signals in visual cortex. Curr. Opin. Neurobiol 52, 131–138 [DOI] [PubMed] [Google Scholar]

- 10.Pakan JM et al. (2018) Action and learning shape the activity of neuronal circuits in the visual cortex. Curr. Opin. Neurobiol 52, 88–97 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Schneider DM and Mooney R. (2018) How Movement Modulates Hearing. Annu. Rev. Neurosci 41, 553–572 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Händel BF and Schölvinck ML (2019) The brain during free movement - What can we learn from the animal model. Brain Res. 1716, 3–15 [DOI] [PubMed] [Google Scholar]

- 13.Dombeck DA et al. (2007) Imaging large-scale neural activity with cellular resolution in awake, mobile mice. Neuron 56, 43–57 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Niell CM and Stryker MP (2010) Modulation of visual responses by behavioral state in mouse visual cortex. Neuron 65, 472–479 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Ayaz A et al. (2013) Locomotion Controls Spatial Integration in Mouse Visual Cortex. Current Biology 23, 890–894 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Saleem AB et al. (2013) Integration of visual motion and locomotion in mouse visual cortex. Nat. Neurosci 16, 1864–1869 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Keller GB et al. (2012) Sensorimotor mismatch signals in primary visual cortex of the behaving mouse. Neuron 74, 809–815 [DOI] [PubMed] [Google Scholar]

- 18.Erisken S et al. (2014) Effects of locomotion extend throughout the mouse early visual system. Curr. Biol 24, 2899–2907 [DOI] [PubMed] [Google Scholar]

- 19.Savier EL et al. (2019) Effects of Locomotion on Visual Responses in the Mouse Superior Colliculus. J. Neurosci 39, 9360–9368 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.McGinley MJ et al. (2015) Cortical Membrane Potential Signature of Optimal States for Sensory Signal Detection. Neuron 87, 179–192 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Zhou M et al. (2014) Scaling down of balanced excitation and inhibition by active behavioral states in auditory cortex. Nat. Neurosci 17, 841–850 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Schneider DM et al. (2014) A synaptic and circuit basis for corollary discharge in the auditory cortex. Nature 513, 189–194 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Schneider DM et al. (2018) A cortical filter that learns to suppress the acoustic consequences of movement. Nature 561, 391–395 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Fiser A et al. (2016) Experience-dependent spatial expectations in mouse visual cortex. Nat. Neurosci 19, 1658–1664 [DOI] [PubMed] [Google Scholar]

- 25.Saleem AB et al. (2018) Coherent encoding of subjective spatial position in visual cortex and hippocampus. Nature 562, 124–127 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Pakan JMP et al. (2018) The Impact of Visual Cues, Reward, and Motor Feedback on the Representation of Behaviorally Relevant Spatial Locations in Primary Visual Cortex. Cell Rep. 24, 2521–2528 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Musall S et al. (2019) Single-trial neural dynamics are dominated by richly varied movements. Nat. Neurosci 22, 1677–1686 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Stringer C et al. (2019) Spontaneous behaviors drive multidimensional, brainwide activity. Science 364, 255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Adrian ED (1942) Olfactory reactions in the brain of the hedgehog. J. Physiol 100, 459–473 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Macrides F and Chorover SL (1972) Olfactory bulb units: activity correlated with inhalation cycles and odor quality. Science 175, 84–87 [DOI] [PubMed] [Google Scholar]

- 31.Wachowiak M. (2011) All in a sniff: olfaction as a model for active sensing. Neuron 71, 962–973 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Szwed M et al. (2003) Encoding of vibrissal active touch. Neuron 40, 621–630 [DOI] [PubMed] [Google Scholar]

- 33.Curtis JC and Kleinfeld D. (2009) Phase-to-rate transformations encode touch in cortical neurons of a scanning sensorimotor system. Nat. Neurosci 12, 492–501 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Kleinfeld D et al. (2006) Active sensation: insights from the rodent vibrissa sensorimotor system. Curr. Opin. Neurobiol 16, 435–444 [DOI] [PubMed] [Google Scholar]

- 35.Moore JD et al. (2013) Hierarchy of orofacial rhythms revealed through whisking and breathing. Nature 497, 205–210 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Kurnikova A et al. (2017) Coordination of Orofacial Motor Actions into Exploratory Behavior by Rat. Curr. Biol 27, 688–696 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Ranade S et al. (2013) Multiple modes of phase locking between sniffing and whisking during active exploration. J. Neurosci 33, 8250–8256 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Karalis N and Sirota A. (2018) Breathing coordinates limbic network dynamics underlying memory consolidation. bioRxiv DOI: 10.1101/392530 [DOI] [Google Scholar]

- 39.Tort ABL et al. (2018) Respiration-Entrained Brain Rhythms Are Global but Often Overlooked. Trends Neurosci. 41, 186–197 [DOI] [PubMed] [Google Scholar]

- 40.Vélez-Fort M et al. (2018) A Circuit for Integration of Head- and Visual-Motion Signals in Layer 6 of Mouse Primary Visual Cortex. Neuron 98, 179–191.e6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Meyer AF et al. (2018) A Head-Mounted Camera System Integrates Detailed Behavioral Monitoring with Multichannel Electrophysiology in Freely Moving Mice. Neuron 100, 46–60.e7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Vinck M et al. (2015) Arousal and locomotion make distinct contributions to cortical activity patterns and visual encoding. Neuron 86, 740–754 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Reimer J et al. (2014) Pupil fluctuations track fast switching of cortical states during quiet wakefulness. Neuron 84, 355–362 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Shuler MG and Bear MF (2006) Reward timing in the primary visual cortex. Science 311, 1606–1609 [DOI] [PubMed] [Google Scholar]

- 45.Allen WE et al. (2019) Thirst regulates motivated behavior through modulation of brainwide neural population dynamics. Science 364, 253. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Sobel N et al. (1998) Sniffing and smelling: separate subsystems in the human olfactory cortex. Nature 392, 282–286 [DOI] [PubMed] [Google Scholar]

- 47.Zelano C et al. (2016) Nasal Respiration Entrains Human Limbic Oscillations and Modulates Cognitive Function. J. Neurosci 36, 12448–12467 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Benjamin AV et al. (2018) The Effect of Locomotion on Early Visual Contrast Processing in Humans. J. Neurosci 38, 3050–3059 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Bullock T et al. (2017) Acute Exercise Modulates Feature-selective Responses in Human Cortex. J. Cogn. Neurosci 29, 605–618 [DOI] [PubMed] [Google Scholar]

- 50.Pelah A and Barlow HB (1996) Visual illusion from running. Nature 381, 283. [DOI] [PubMed] [Google Scholar]

- 51.Cao L and Händel B. (2019) Walking enhances peripheral visual processing in humans. PLoS Biol. 17, e3000511 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Warren WH, Jr et al. (2001) Optic flow is used to control human walking. Nat. Neurosci 4, 213–216 [DOI] [PubMed] [Google Scholar]

- 53.Martikainen MH et al. (2005) Suppressed responses to self-triggered sounds in the human auditory cortex. Cereb. Cortex 15, 299–302 [DOI] [PubMed] [Google Scholar]

- 54.Morris AP and Krekelberg B. (2019) A Stable Visual World in Primate Primary Visual Cortex. Curr. Biol 29, 1471–1480.e6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.McFarland JM et al. (2015) Saccadic modulation of stimulus processing in primary visual cortex. Nat. Commun 6, 8110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Weyand TG and Malpeli JG (1993) Responses of neurons in primary visual cortex are modulated by eye position. J. Neurophysiol 69, 2258–2260 [DOI] [PubMed] [Google Scholar]

- 57.Gur M et al. (1997) Response variability of neurons in primary visual cortex (V1) of alert monkeys. J. Neurosci 17, 2914–2920 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Leopold DA and Logothetis NK (1998) Microsaccades differentially modulate neural activity in the striate and extrastriate visual cortex. Exp. Brain Res 123, 341–345 [DOI] [PubMed] [Google Scholar]

- 59.Snodderly DM et al. (2001) Selective activation of visual cortex neurons by fixational eye movements: implications for neural coding. Vis. Neurosci 18, 259–277 [DOI] [PubMed] [Google Scholar]

- 60.Carey RM et al. (2009) Temporal structure of receptor neuron input to the olfactory bulb imaged in behaving rats. J. Neurophysiol 101, 1073–1088 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Verhagen JV et al. (2007) Sniffing controls an adaptive filter of sensory input to the olfactory bulb. Nat. Neurosci 10, 631–639 [DOI] [PubMed] [Google Scholar]

- 62.Shusterman R et al. (2011) Precise olfactory responses tile the sniff cycle. Nat. Neurosci 14, 1039–1044 [DOI] [PubMed] [Google Scholar]

- 63.Shusterman R et al. (2018) Sniff Invariant Odor Coding. eNeuro 5, [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Jordan R et al. (2018) Sniffing Fast: Paradoxical Effects on Odor Concentration Discrimination at the Levels of Olfactory Bulb Output and Behavior. eNeuro 5, [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Lee DN (1980) The optic flow field: the foundation of vision. Philos. Trans. R. Soc. Lond. B Biol. Sci 290, 169–179 [DOI] [PubMed] [Google Scholar]

- 66.Gibson JJ (1979) Ecological approach to visual perception. Houghton, Mifflin and Company. [Google Scholar]

- 67.Khan AG et al. (2012) Rats track odour trails accurately using a multi-layered strategy with near-optimal sampling. Nat. Commun 3, 703. [DOI] [PubMed] [Google Scholar]

- 68.Catania KC (2013) Stereo and serial sniffing guide navigation to an odour source in a mammal. Nat. Commun 4, 1441. [DOI] [PubMed] [Google Scholar]

- 69.Jones PW and Urban NN (2018) Mice follow odor trails using stereo olfactory cues and rapid sniff to sniff comparisons. bioRxiv DOI: 10.1101/293746 [DOI] [Google Scholar]

- 70.Isett BR et al. (2018) Slip-Based Coding of Local Shape and Texture in Mouse S1. Neuron 97, 418–433.e5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Gibson JJ (1962) Observations on active touch. Psychol. Rev 69, 477–491 [DOI] [PubMed] [Google Scholar]

- 72.Hebrank J and Wright D. (1974) Spectral cues used in the localization of sound sources on the median plane. J. Acoust. Soc. Am 56, 1829–1834 [DOI] [PubMed] [Google Scholar]

- 73.von Holst E and Mittelstaedt H. (1950) Das Reafferenzprinzip. Naturwissenschaften, 37, 464–476 [Google Scholar]

- 74.Wolpert DM et al. (1995) An internal model for sensorimotor integration. Science 269, 1880–1882 [DOI] [PubMed] [Google Scholar]

- 75.Webb B. (2004) Neural mechanisms for prediction: do insects have forward models? Trends in Neurosciences 27, 278–282 [DOI] [PubMed] [Google Scholar]

- 76.Poulet JFA and Hedwig B. (2002) A corollary discharge maintains auditory sensitivity during sound production. Nature 418, 872–876 [DOI] [PubMed] [Google Scholar]

- 77.Bell CC (1989) Sensory coding and corollary discharge effects in mormyrid electric fish. J. Exp. Biol 146, 229–253 [DOI] [PubMed] [Google Scholar]

- 78.Hall C et al. (1995) Behavioral evidence of a latency code for stimulus intensity in mormyrid electric fish. Journal of Comparative Physiology A, 177 [Google Scholar]

- 79.Leinweber M et al. (2017) A Sensorimotor Circuit in Mouse Cortex for Visual Flow Predictions. Neuron 96, 1204. [DOI] [PubMed] [Google Scholar]

- 80.Sommer MA and Wurtz RH (2002) A pathway in primate brain for internal monitoring of movements. Science 296, 1480–1482 [DOI] [PubMed] [Google Scholar]

- 81.Rao RP and Ballard DH (1999) Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci 2, 79–87 [DOI] [PubMed] [Google Scholar]

- 82.Keller GB and Mrsic-Flogel TD (2018) Predictive Processing: A Canonical Cortical Computation. Neuron 100, 424–435 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Ahissar E and Assa E. (2016) Perception as a closed-loop convergence process. Elife 5, e12830. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Krakauer JW et al. (2017) Neuroscience Needs Behavior: Correcting a Reductionist Bias. Neuron 93, 480–490 [DOI] [PubMed] [Google Scholar]

- 85.Gomez-Marin A and Ghazanfar AA (2019) The Life of Behavior. Neuron 104, 25–36 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Juavinett AL et al. (2018) Decision-making behaviors: weighing ethology, complexity, and sensorimotor compatibility. Curr. Opin. Neurobiol 49, 42–50 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Datta SR et al. (2019) Computational Neuroethology: A Call to Action. Neuron 104, 11–24 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Hoy JL et al. (2016) Vision Drives Accurate Approach Behavior during Prey Capture in Laboratory Mice. Curr. Biol 26, 3046–3052 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Voigts J et al. (2015) Tactile object localization by anticipatory whisker motion. J. Neurophysiol 113, 620–632 [DOI] [PubMed] [Google Scholar]

- 90.Morris RGM (1981) Spatial localization does not require the presence of local cues. Learning and Motivation 12, 239–260 [Google Scholar]

- 91.Huk A et al. (2018) Beyond Trial-Based Paradigms: Continuous Behavior, Ongoing Neural Activity, and Natural Stimuli. J. Neurosci 38, 7551–7558 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Mathis A et al. (2018) DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci 21, 1281–1289 [DOI] [PubMed] [Google Scholar]

- 93.Arac A et al. (2019) DeepBehavior: A Deep Learning Toolbox for Automated Analysis of Animal and Human Behavior Imaging Data. Front. Syst. Neurosci 13, 20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Pereira TD et al. (2019) Fast animal pose estimation using deep neural networks. Nat. Methods 16, 117–125 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Graving JM et al. (2019) DeepPoseKit, a software toolkit for fast and robust animal pose estimation using deep learning. Elife 8, e47994 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 96.Venkatraman S et al. (2010) Investigating neural correlates of behavior in freely behaving rodents using inertial sensors. J. Neurophysiol 104, 569–575 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Calhoun AJ et al. (2019) Unsupervised identification of the internal states that shape natural behavior. Nat. Neurosci 22, 2040–2049 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 98.Mearns DS et al. (2020) Deconstructing Hunting Behavior Reveals a Tightly Coupled Stimulus-Response Loop. Curr. Biol 30, 54–69.e9 [DOI] [PubMed] [Google Scholar]

- 99.Johnson RE et al. (2020) Probabilistic Models of Larval Zebrafish Behavior Reveal Structure on Many Scales. Curr. Biol 30, 70–82.e4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Markowitz JE et al. (2018) The Striatum Organizes 3D Behavior via Moment-to-Moment Action Selection. Cell 174, 44–58.e17 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 101.Wiltschko AB et al. (2015) Mapping Sub-Second Structure in Mouse Behavior. Neuron 88, 1121–1135 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.Froudarakis E et al. (2014) Population code in mouse V1 facilitates readout of natural scenes through increased sparseness. Nat. Neurosci 17, 851–857 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Wallace DJ et al. (2013) Rats maintain an overhead binocular field at the expense of constant fusion. Nature 498, 65–69 [DOI] [PubMed] [Google Scholar]

- 104.Ferezou I et al. (2006) Visualizing the cortical representation of whisker touch: voltage-sensitive dye imaging in freely moving mice. Neuron 50, 617–629 [DOI] [PubMed] [Google Scholar]

- 105.Hong G et al. (2018) A method for single-neuron chronic recording from the retina in awake mice. Science 360, 1447–1451 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 106.Fry SN et al. (2008) TrackFly: virtual reality for a behavioral system analysis in free-flying fruit flies. J. Neurosci. Methods 171, 110–117 [DOI] [PubMed] [Google Scholar]

- 107.Fry SN et al. (2004) Context-dependent stimulus presentation to freely moving animals in 3D. Journal of Neuroscience Methods 135, 149–157 [DOI] [PubMed] [Google Scholar]

- 108.Schulze A et al. (2015) Dynamical feature extraction at the sensory periphery guides chemotaxis. Elife 4, e06694 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 109.Del Grosso NA et al. (2017) Virtual Reality system for freely-moving rodents. bioRxiv DOI: 10.1101/161232 [DOI] [Google Scholar]

- 110.Stowers JR et al. (2017) Virtual reality for freely moving animals. Nat. Methods 14, 995–1002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 111.O’Keefe J and Dostrovsky J. (1971) The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 34, 171–175 [DOI] [PubMed] [Google Scholar]

- 112.Hafting T et al. (2005) Microstructure of a spatial map in the entorhinal cortex. Nature, 436, 801–806 [DOI] [PubMed] [Google Scholar]

- 113.Voigts J and Harnett MT (2020) Somatic and Dendritic Encoding of Spatial Variables in Retrosplenial Cortex Differs during 2D Navigation. Neuron 105, 237–245.e4

- 114.Leinweber M et al. (2017) A Sensorimotor Circuit in Mouse Cortex for Visual Flow Predictions. Neuron 96, 1204

- 115.Aharoni D et al. (2019) All the light that we can see: a new era in miniaturized microscopy. Nat. Methods 16, 11–13

- 116.Wallace DJ and Kerr JND (2019) Circuit interrogation in freely moving animals. Nat. Methods 16, 9–11

- 117.Juavinett AL et al. (2019) Chronically implanted Neuropixels probes enable high-yield recordings in freely moving mice. eLife 8, e47188

- 118.Ozbay BN et al. (2018) Three dimensional two-photon brain imaging in freely moving mice using a miniature fiber coupled microscope with active axial-scanning. Sci. Rep. 8, 8108

- 119.Fee MS and Leonardo A (2001) Miniature motorized microdrive and commutator system for chronic neural recording in small animals. J. Neurosci. Methods 112, 83–94

- 120.Gutruf P and Rogers JA (2018) Implantable, wireless device platforms for neuroscience research. Curr. Opin. Neurobiol. 50, 42–49

- 121.Ferro MD and Melosh NA (2018) Electronic and Ionic Materials for Neurointerfaces. Adv. Funct. Mater. 28, 1704335

- 122.Chen R et al. (2017) Neural recording and modulation technologies. Nat. Rev. Mater. 2, 1–16

- 123.Cogan SF (2008) Neural Stimulation and Recording Electrodes. Annu. Rev. Biomed. Eng. 10, 275–309

- 124.Lima SQ et al. (2009) PINP: A New Method of Tagging Neuronal Populations for Identification during In Vivo Electrophysiological Recording. PLoS ONE 4, e6099

- 125.Yizhar O et al. (2011) Optogenetics in neural systems. Neuron 71, 9–34

- 126.Armstrong C et al. (2013) Closed-loop optogenetic intervention in mice. Nat. Protoc. 8, 1475–1493

- 127.Grosenick L et al. (2015) Closed-loop and activity-guided optogenetic control. Neuron 86, 106–139

- 128.Hong YK et al. (2018) Sensation, movement and learning in the absence of barrel cortex. Nature 561, 542–546

- 129.O’Sullivan C et al. (2019) Auditory Cortex Contributes to Discrimination of Pure Tones. eNeuro 6

- 130.Roth BL (2016) DREADDs for Neuroscientists. Neuron 89, 683–694

- 131.Masland RH and Martin PR (2007) The unsolved mystery of vision. Curr. Biol. 17, R577–R582

- 132.Larkum M (2013) A cellular mechanism for cortical associations: an organizing principle for the cerebral cortex. Trends Neurosci. 36, 141–151

- 133.Bastos AM et al. (2012) Canonical microcircuits for predictive coding. Neuron 76, 695–711

- 134.Findley TM et al. (2020) Sniff-synchronized, gradient-guided olfactory search by freely-moving mice. bioRxiv DOI: 10.1101/2020.04.29.069252