Abstract

The Integrated Information Theory (IIT) of consciousness starts from essential phenomenological properties, which are then translated into postulates that any physical system must satisfy in order to specify the physical substrate of consciousness. We recently introduced an information measure (Barbosa et al., 2020) that captures three postulates of IIT—existence, intrinsicality and information—and is unique. Here we show that the new measure also satisfies the remaining postulates of IIT—integration and exclusion—and create the framework that identifies maximally irreducible mechanisms. These mechanisms can then form maximally irreducible systems, which in turn will specify the physical substrate of conscious experience.

Keywords: causation, consciousness, intrinsic, existence

1. Introduction

Integrated information theory (IIT; [1,2,3]) identifies the essential properties of consciousness and postulates that a physical system accounting for it—the physical substrate of consciousness (PSC)—must exhibit these same properties in physical terms. Briefly, IIT starts from the existence of one’s own consciousness, which is immediate and indubitable. The theory then identifies five essential phenomenal properties that are immediate, indubitable and true of every conceivable experience, namely intrinsicality, composition, information, integration and exclusion. These phenomenal properties, called axioms, are translated into essential physical properties of the PSC, called postulates. The postulates are conceptualized in terms of cause–effect power and given a mathematical formulation in order to make testable predictions and allow for inferences and explanations.

So far, the mathematical formulation employed well-established measures of information, such as Kullback–Leibler divergence (KLD) [4] or earth mover’s distance (EMD) [3]. Ultimately, however, IIT requires a measure that is based on the postulates of the theory and is unique, because the quantity and quality of consciousness are what they are and cannot vary with the measure chosen. Recently, we introduced an information measure, called intrinsic difference [5], which captures three postulates of IIT—existence, intrinsicality and information—and is unique. Our primary goal here is to explore the remaining postulates of IIT—composition, integration and exclusion—in light of this unique measure, focusing on the assessment of integrated information for the mechanisms of a system. In doing so, we will also revisit the way of performing partitions.

The plan of the paper is as follows. In Section 2, we briefly introduce the axioms and postulates of IIT; in Section 3, we introduce the mathematical framework for measuring integrated information based on intrinsic difference (ID), which satisfies the postulates of IIT and is unique; in Section 4, we explore the behavior of the measure in several examples; and in Section 5, we discuss the connection between the new framework, previous versions of IIT and future developments.

2. Axioms and Postulates

This section summarizes the axioms of IIT and the corresponding postulates. For a complete description of the axioms and their motivation, the reader should consult [2,3,6].

Briefly, the zeroth axiom, existence, says that experience exists, immediately and indubitably. The zeroth postulate requires that the PSC must exist in physical terms. The PSC is assumed to be a system of interconnected units, such as a network of neurons. Physical existence is taken to mean that the units of the system must be able to be causally affected by or causally affect other units (take and make a difference). To demonstrate that a unit has a potential cause, one can observe whether the unit’s state can be caused by manipulating its input, while to demonstrate that a unit has a potential effect one can manipulate the state of the unit and observe if it causes the state of some other unit [7].

The first axiom, intrinsicality, says that experience is subjective, existing from the intrinsic perspective of the subject of experience. The corresponding postulate requires that a PSC has potential causes and effects within itself.

The second axiom, composition, says that experience is structured, being composed of phenomenal distinctions bound by phenomenal relations. The corresponding postulate requires that a PSC, too, must be structured, being composed of causal distinctions specified by subsets of units (mechanisms) over subsets of units (cause and effect purviews) and of causal relations that bind together causes and effects overlapping over the same units. A purview is thus a subset of units whose states are constrained by another subset of units, the mechanism, in its particular state. The set of all causal distinctions and relations within a system composes its cause–effect structure.

The third axiom, information, says that experience is specific, being the particular way it is, rather than generic. The corresponding postulate states that a PSC must specify a cause–effect structure composed of distinctions and relations that specify particular cause and effect states.

The fourth axiom, integration, says that experience is unified, in that it cannot be subdivided into parts that are experienced separately. The corresponding postulate states that a PSC must specify a cause–effect structure that is unified, being irreducible to the cause–effect structures specified by causally independent subsystems. Integrated information (Φ) is a measure of the irreducibility of the cause–effect structure specified by a system [8]. The degree to which a system is irreducible can be interpreted as a measure of its existence. Mechanism integrated information (φ) is an analogous measure that quantifies the existence of a mechanism within a system. Only mechanisms that exist within a system (φ > 0) contribute to its cause–effect structure.

Finally, the exclusion axiom says that experience is definite, in that it contains what it contains, neither less nor more. The corresponding postulate states that the cause–effect structure specified by a PSC should be definite: it must specify a definite set of distinctions and relations over a definite set of units, neither less nor more. The PSC and its associated cause–effect structure are given by the set of units for which the value of Φ is maximal, with its distinctions and relations corresponding to maxima of φ. According to IIT, then, a system is a PSC if it is a maximum of integrated information, meaning that it has higher integrated information than any overlapping systems [3,9]. Moreover, the cause–effect structure specified by the PSC is identical to the subjective quality of the experience [10].

3. Theory

We first describe the process for measuring the integrated information (φ) of a mechanism based on the postulates of IIT. In order to contribute to experience, a mechanism must satisfy the postulates described in Section 2 (note that mechanisms cannot be compositional because, as components of the cause–effect structure, they cannot have components themselves). We then present some theoretical developments related to partitioning a mechanism in order to assess integration and to measuring the difference between probability distributions for quantifying intrinsic information. The subsequent process of measuring the integrated information of the system (Φ) will be discussed elsewhere.

3.1. Mechanism Integrated Information

Our starting point is a stochastic system with state space and current state (Figure 1a). The system is constituted of n random variables that represent the units of a physical system and has a transition probability function

| (1) |

which describes how the system updates its state (see Appendix A.1 for details). The goal is to define the integrated information of a mechanism in a state based on the postulates of IIT. To this end, we will develop a difference measure which quantifies how much a mechanism M in state constrains the state of a purview, a set of units , compared to a partition

| (2) |

of the mechanism and purview into k independent parts (Figure 1b). As we evaluate the IIT postulates step by step, we will provide mathematical definitions for the required quantities, introduce constraints on and eventually arrive at a unique measure. Since potential causes of are always inputs to M, and potential effects of are always outputs of M, we will omit the corresponding update indices (, t, ) unless necessary.

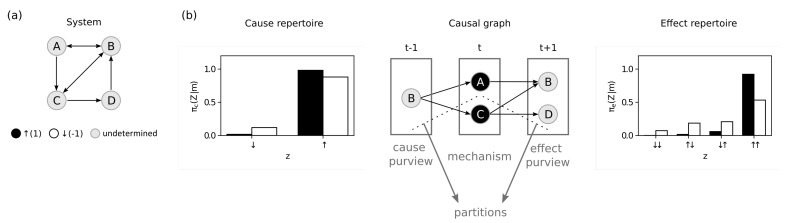

Figure 1.

Theory. (a) System S with four random variables. (b) Example of a mechanism in state constraining a cause purview and an effect purview . Dashed lines show the partitions. The bar plots show the probability distributions, that is the cause repertoire (left) and effect repertoire (right). The black bars show the probabilities when the mechanism is constraining the purview, and the white bars show the probabilities after partitioning the mechanism.

3.1.1. Existence

For a mechanism to exist in a physical sense, it must be possible for something to change its state, and it must be able to change the state of something (it has potential causes and effects). To evaluate these potential causes and effects, we define the cause repertoire (see Equation (A2)) and the effect repertoire (see Equation (A1)), which describe how m constrains the potential input or output states of respectively (Figure 1b) [3,11,12,13].

The cause and effect repertoires are probability distributions derived from the system’s transition probability function (Equation (1)) by conditioning on the state of the mechanism and causally marginalizing the variables outside the purview (). Causal marginalization is also used to remove any contributions to the repertoire from units outside the mechanism (). In this way, we capture the constraints due to the mechanism in its state and nothing else. Note that the cause and effect repertoires generally differ from the corresponding conditional probability distributions.

Having introduced cause and effect repertoires, we can write the difference

where corresponds to the partitioned effect repertoire (see Equation (A3)) in which certain connections from M to Z are severed (causally marginalized). When there is no change after the partition, we require that

The same analysis holds for causes, replacing with in the definition of . Unless otherwise specified, in what follows we focus on effects.

3.1.2. Intrinsicality

The intrinsicality postulate states that, from the intrinsic perspective of the mechanism over a purview Z, the effect repertoire is set and has to be taken as is. This means that, given the purview units and their connections to the mechanism, the constraints due to the mechanism are defined by how all its units at a particular state m at t constrain all units in the effect purview at and cause purview at . For example, if the mechanism fully constrains all of its purview units except for one unit which remains fully unconstrained, the mechanism cannot just ignore the unconstrained unit or optimize its overall constraints by giving more weight to some states than others in the effect repertoire. For this reason, the intrinsicality postulate should make the difference measure D between the partitioned and unpartitioned repertoire sensitive to a tradeoff between “expansion” and “dilution”: the measure should increase if the purview includes more units that are highly constrained by the mechanism but decrease if the purview includes units that are weakly constrained. The mathematical formulation of this requirement is given in Section 3.3.

3.1.3. Information

The information postulate states that a mechanism M, by being in its particular state m, must have a specific effect, which means that it must specify a particular effect state z over the purview Z. The effect state should be the one for which m makes the most difference. To that end, we require a difference measure of the form

such that the difference D between effect repertoires is evaluated as the maximum of the absolute value of some function f that is assessed for particular states. The function f is one of the main developments of the current work and is discussed in Section 3.3.

3.1.4. Integration

The integration postulate states that a mechanism must be unitary, being irreducible to independent parts. By comparing the effect repertoire against the partitioned repertoire , we can assess how much of a difference the partition makes to the effect of m. To quantify how irreducible m’s effect is on Z, one must compare all possible partitioned repertoires to the unpartitioned effect repertoire. In other words, one must evaluate each possible partition . Of all partitions, we define the minimum information partition (MIP)

which is the one that makes the least difference to the effect. The intrinsic integrated effect information (or integrated effect information for short) of the mechanism M in state m about a purview Z is then defined as

If the integrated effect information is zero, there is a partition of the candidate mechanism that does not make a difference, which means that the candidate mechanism is reducible.

3.1.5. Exclusion

The exclusion postulate states that a mechanism must be definite: it must specify a definite effect over a definite set of units. That is, a mechanism must be about a maximally irreducible purview

which maximizes integrated effect information and is in the effect state

The purview is then used to define the integrated effect information of the mechanism M

Returning to the existence postulate, a mechanism must have both a cause and an effect. By an analogous process using cause repertoires instead of effect repertoires , we can define the integrated cause information of m

and the integrated information of the mechanism

| (3) |

Thus, if a candidate mechanism M in state m is reducible over every purview on either the cause or the effect side, its integrated information is zero and M does not contribute to experience. Otherwise, it is irreducible and forms a mechanism within the system. As such, it specifies a distinction

which links its maximally irreducible cause with its maximally irreducible effect, for , and . While a mechanism always specifies a unique φ value, due to symmetries in the system it is possible that there are multiple equivalent solutions for or . We expect such “ties” to be exceedingly rare in physical systems with variable connection strengths as well as a certain amount of indeterminism, and we outline possible solutions to resolve “ties” in the discussion (Section 5).
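The three steps described in Sections 3.1.4 and 3.1.5 (minimize over partitions, maximize over purviews, then combine cause and effect) can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the difference measure uses the product form of selectivity and informativeness described in Section 3.3 with a base-2 logarithm, taking the minimum of the cause and effect sides is an assumption consistent with the requirement that a mechanism reducible on either side does not exist, and all repertoires, purview names and partition labels (`"complete"`, `"cut_C"`) are made-up toy values rather than outputs of causal marginalization.

```python
import math

def intrinsic_difference(p, q):
    """Assumed form: max over states of p(x) * |log2(p(x) / q(x))|.

    Requires q(x) > 0 wherever p(x) > 0 (true for the toy data below).
    """
    return max(
        (pi * abs(math.log2(pi / qi)) for pi, qi in zip(p, q) if pi > 0),
        default=0.0,
    )

def phi_over_purview(repertoire, partitioned):
    """Integration: the MIP is the partition that makes the least difference."""
    scores = {name: intrinsic_difference(repertoire, q)
              for name, q in partitioned.items()}
    mip = min(scores, key=scores.get)
    return scores[mip], mip

def phi_one_side(candidates):
    """Exclusion: keep the purview whose integrated information is maximal."""
    results = {purview: phi_over_purview(rep, parts)
               for purview, (rep, parts) in candidates.items()}
    best = max(results, key=lambda purview: results[purview][0])
    return results[best][0], best, results[best][1]

# Hypothetical toy data: purview -> (unpartitioned repertoire,
#                                    {partition label: partitioned repertoire})
effect_candidates = {
    "B": ([0.9, 0.1], {"complete": [0.5, 0.5]}),
    "BC": ([0.81, 0.09, 0.09, 0.01],
           {"complete": [0.25, 0.25, 0.25, 0.25],
            "cut_C": [0.45, 0.45, 0.05, 0.05]}),
}
cause_candidates = {"A": ([0.7, 0.3], {"complete": [0.5, 0.5]})}

phi_effect, effect_purview, effect_mip = phi_one_side(effect_candidates)
phi_cause, cause_purview, cause_mip = phi_one_side(cause_candidates)

# Assumption: the mechanism must be irreducible on both sides, so take the minimum.
phi = min(phi_cause, phi_effect)
print(effect_purview, effect_mip, round(phi_effect, 3), round(phi_cause, 3), round(phi, 3))
```

In this toy example the larger purview `"BC"` loses to `"B"` because its least damaging partition leaves most of the repertoire intact, which is the sense in which the purview is chosen by its MIP rather than by its complete partition.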

3.2. Disintegrating Partitions

According to the integration postulate, a mechanism can only exist from the intrinsic perspective of a system if it is irreducible, meaning that any partition of the mechanism would make a difference to its potential cause or effect. Accordingly, computing the integrated information of a mechanism requires partitioning the mechanism and assessing the difference between partitioned and unpartitioned repertoires. In this section we give additional mathematical details and theoretical considerations for how to partition a mechanism together with its purview Z.

Generally, a partition of a mechanism M and a purview Z is a set of parts as defined in Equation (2), with some restrictions on . The partition "cuts apart" the mechanism, severing any connections from to (). We use causal marginalization (see Appendix A) to remove any causal power has over () and compute a partitioned repertoire. Practically, it is as though we do not condition on the state of when we consider . Before describing the restrictions on we will look at a few examples to highlight the conceptual issues. First, consider a third-order mechanism with the same units (as inputs or outputs) in the corresponding third-order purview . A standard example of a partition of this mechanism is

which cuts units away from unit . Now consider the situation where we would like to additionally cut in the purview away from in the mechanism. This partition can be represented as

This example raises the issue of whether to allow the empty set as part of a partition. The question is not only conceptual but also practical: in a situation where and have opposite effects (e.g., excitatory and inhibitory connections), such a partition may turn out to be the MIP (see Section 4.2 for an example). Here, the mechanism is always partitioned together with a purview subset.

While the definition of should include partitions such as above, this raises additional issues. Consider the partition

In , the set of all mechanism units is contained in one part. Should such a partition count as "cutting apart" the mechanism? The same problem arises for partitions of first-order mechanisms. Consider, for example, with purview and partition

A first-order mechanism should be considered completely irreducible by definition, yet for the proposed partition only a small fraction of its constraint is considered integrated information: while may constrain A, B, and C, only its constraints over C would be evaluated by . A similar argument applies to , which would only allow us to evaluate the constraint of the mechanism on C, not the entire purview . In sum, and should not be permissible partitions by the integration postulate. The set of mechanism units may not remain integrated over a purview subset once a partition is applied.

Based on the above argument, we propose a set of disintegrating partitions

| (4) |

such that for each : is a partition of M and is a partition of Z but allows the empty set to be used as a part. Moreover, if the mechanism is not partitioned into at least two parts, then the mechanism must be cut away from the entire purview.

In summary, the above definition of possible partitions ensures that the mechanism set must be divided into at least two parts, except for the special case where one part contains the whole mechanism but no units in the purview (complete partition, ). This special partition can be interpreted as “destroying” the whole mechanism at once and observing the impact its absence has on the purview.
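The restrictions summarized above can be written as a small validity check. The sketch below is an assumption-laden illustration: it encodes only the rules stated in the prose (mechanism parts and purview parts are each disjoint and exhaustive, empty sets are allowed as parts, and a part containing the whole mechanism must have an empty purview part), plus two conventions of our own that are flagged in the comments. The function name `is_disintegrating` and the example partitions are hypothetical.

```python
def is_disintegrating(parts, mechanism, purview):
    """Check a candidate partition, given as a list of (M_i, Z_i) pairs."""
    mechanism, purview = frozenset(mechanism), frozenset(purview)
    parts = [(frozenset(m), frozenset(z)) for m, z in parts]

    # Assumption: a partition needs at least two parts to cut anything.
    if len(parts) < 2:
        return False
    # Assumption: a part that is empty on both sides is treated as degenerate.
    if any(not m and not z for m, z in parts):
        return False

    # Each unit must appear in exactly one mechanism part / purview part.
    m_units = [u for m, _ in parts for u in m]
    z_units = [u for _, z in parts for u in z]
    if len(m_units) != len(mechanism) or set(m_units) != mechanism:
        return False
    if len(z_units) != len(purview) or set(z_units) != purview:
        return False

    # If one part holds the whole mechanism, it must be cut from the entire purview.
    return all(not z for m, z in parts if m == mechanism)

# Hypothetical examples for a mechanism and purview over units A, B, C.
standard = [("AB", "AB"), ("C", "C")]             # ordinary bipartition
with_empty = [("AB", "A"), ("C", "C"), ("", "B")]  # empty mechanism part is allowed
whole_kept = [("ABC", "AB"), ("", "C")]            # whole mechanism stays integrated
complete = [("ABC", ""), ("", "ABC")]              # complete partition

for name, psi in [("standard", standard), ("with_empty", with_empty),
                  ("whole_kept", whole_kept), ("complete", complete)]:
    print(name, is_disintegrating(psi, "ABC", "ABC"))
```

A checker is used here instead of an enumerator because it keeps the rules visible one by one; enumerating all valid partitions would additionally require generating set partitions of both M and Z.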

3.3. Intrinsic Difference (ID)

In this section we define the measure D, which quantifies the difference between the unpartitioned and partitioned repertoires specified by a mechanism and thus plays an important role in measuring integrated information. We propose a set of properties that D should satisfy based on the postulates of IIT described above, and then identify the unique measure that satisfies them.

Our desired properties are described in terms of discrete probability distributions and . Generally, represents the cause or effect repertoire of a mechanism , while represents the partitioned repertoire .

The first property, causality, captures the requirement for physical existence (Section 3.1.1) that a mechanism has a potential cause and effect,

| (5) |

The interpretation is that the integrated information m specifies about Z is only zero if the unpartitioned and partitioned repertoires are identical. In other words, by being in state m, the mechanism M does not constrain the potential state of Z above its partition into independent parts.

The second property, intrinsicality, captures the requirement that physical existence must be assessed from the perspective of the mechanism itself (Section 3.1.2). The idea is that information should be measured from the intrinsic perspective of the candidate mechanism M in state m, which determines the potential state of the purview Z by itself, independent of external observers. In other words, the constraint m has over Z must depend only on their units and connections. In contrast, traditional information measures were conceived to quantify the amount of signal transmitted across a channel between a sender and a receiver from an extrinsic perspective, typically that of a channel designer who has the ability to optimize the channel’s capacity. This can be done by adjusting the mapping between the states of M and Z through encoders and decoders to reduce indeterminism in the signal transmission. However, such a remapping would require more than just the units and connections present in M and Z, thus violating intrinsicality [5].

The intrinsicality property is defined based on the behavior of the difference measure when distributions are extended by adding units to the purview or increasing the number of possible states of a unit [14]. A distribution is extended by a distribution to create a new distribution , where ⊗ is the Kronecker product. When a fully selective distribution (one where an outcome occurs with probability one) is extended by another fully selective distribution, the measure should increase additively (expansion). However, if a distribution is extended by a fully undetermined distribution (one where all n outcomes are equally likely), then the measure should decrease by a factor of n (dilution). For expansion, suppose and are fully selective distributions, then for any and we have

| (6) |

For dilution, suppose and are fully undetermined distributions, then for any , we have

| (7) |

Together, Equations (6) and (7) define the intrinsicality property.

The final property, specificity, requires that physical existence must be about a specific purview state (Section 3.1.3),

| (8) |

The function defines the difference between two probability distributions at a specific state of the purview. The mechanism is defined based on the state that maximizes its difference within the system.

Previous work employed similar properties to quantify intrinsic information but used a version of the specificity property that did not include the absolute value [5]. In that work, the goal was to compute the intrinsic information of a communication channel, with an implicit assumption that the source is sending a specific message. In that context, a signal is only informative if it increases the probability of receiving the correct message. Here we are interested in integrated information within the context of the postulates of IIT as a means to quantify existence, which requires causes and effects. A mechanism can be seen as having an effect (or cause) whether it increases or decreases the probability of a specific state.

Together, the three properties (causality, specificity, and intrinsicality) characterize a unique measure, the intrinsic difference, for measuring the integrated information of a mechanism. Note that while the causality (Equation (5)) and expansion (Equation (6)) properties are traditionally required by information measures (see [15]), here we also require dilution (Equation (7)) and specificity (Equation (8)). While the maximum operation present in specificity in order to select one specific purview state seems to us uncontroversial, one may argue that the dilution factor in Equation (7) is somewhat arbitrary. However, note that if specificity requires that information is specific to one state, then after adding a fully undetermined distribution of size n to the purview, the amount of causal power measured by the function f in that state will invariably be divided by n. We therefore believe that the dilution factor must necessarily be 1/n, at least in this particular case.

Theorem 1.

If D satisfies the causality, intrinsicality, and specificity properties, then

where

The full mathematical statement of the theorem and its proof are presented in Appendix B. For the rest of the manuscript we assume without loss of generality. Here, our main interest is using ID to quantify the difference between unpartitioned and partitioned cause or effect repertoires when assessing the integrated information of a mechanism,

One can interpret the integrated information as being composed of two terms. First, the informativeness

which reflects the difference in Hartley information contained in state z before and after the partition. Second, the selectivity

which reflects the likelihood of the cause or effect. Together, the two terms can be interpreted as the density of information for a particular state [5].
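To make the two-term interpretation concrete, the following Python sketch evaluates a difference measure of the form "selectivity times informativeness, maximized over states" and checks the expansion and dilution behavior described earlier in this section. It is a sketch under assumptions, not a reproduction of the theorem: the base-2 logarithm, the unit scaling constant, the conventions for zero probabilities, and the helper names `intrinsic_difference` and `kron` are all choices made for illustration.

```python
import math

def intrinsic_difference(p, q):
    """Return (value, state index) maximizing p(x) * |log2(p(x) / q(x))|."""
    best_value, best_state = 0.0, None
    for state, (pi, qi) in enumerate(zip(p, q)):
        if pi == 0.0:
            continue  # convention: a state with zero probability contributes nothing
        if qi == 0.0:
            return math.inf, state  # convention: unbounded difference
        informativeness = abs(math.log2(pi / qi))
        selectivity = pi
        value = selectivity * informativeness
        if value > best_value:
            best_value, best_state = value, state
    return best_value, best_state

def kron(a, b):
    """Kronecker product of two distributions given as flat lists."""
    return [x * y for x in a for y in b]

selective = [1.0, 0.0]     # fully selective: one outcome has probability one
undetermined = [0.5, 0.5]  # fully undetermined over n = 2 outcomes

base, _ = intrinsic_difference(selective, undetermined)

# Expansion: extending a fully selective distribution by another one adds the values.
expanded, _ = intrinsic_difference(kron(selective, selective),
                                   kron(undetermined, undetermined))

# Dilution: extending by a fully undetermined distribution divides the value by n.
diluted, _ = intrinsic_difference(kron(selective, undetermined),
                                  kron(undetermined, undetermined))

print(base, expanded, diluted)
```

With these toy distributions the expanded value is the sum of the two individual values and the diluted value is the base value divided by n = 2, matching the two halves of the intrinsicality property.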

4. Methods and Results

Throughout this section we investigate each step necessary to compute , the integrated information of a mechanism M in state m. To this end, we construct systems S formed by units that are either ↑ (1) or ↓ (−1) at time t with probability of being ↑ defined by (Figure 2a)

| (9) |

for all , where are the units that input to Y. Besides the sum of the input states, the function depends on two parameters: defines a bias towards being ↑ () or ↓ (), while defines how deterministic unit A is. For , the unit turns ↑ or ↓ with equal probability (fully undetermined), while for it turns ↑ whenever the sum of the inputs is greater than the threshold , and turns ↓ otherwise (fully selective; Figure 2a). This way, means that the unit is fully constrained by the inputs (deterministic), means the unit is partially constrained, and means the unit is only weakly constrained, etc. Unless otherwise specified, in the following we focus on investigating effect purviews.

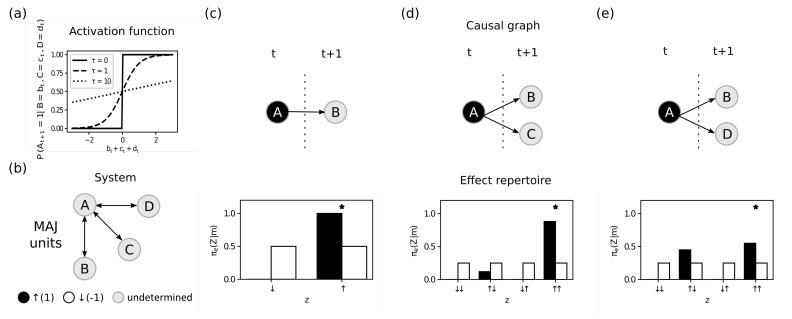

Figure 2.

Intrinsicality. (a) Activation functions without bias () and different levels of constraint (, and ). (b) System S analyzed in this figure. The remaining panels show on top the causal graph of the mechanism at state constraining different output purviews and on the bottom the probability distributions of the purviews (effect repertoires). The black bars show the probabilities when the mechanism is constraining the purview, and the white bars show the unconstrained probabilities after the complete partition . The “*” indicates the state selected by the maximum operation in the intrinsic difference (ID) function. (c) The mechanism fully constrains the unit B in the purview (), resulting in state defining the amount of intrinsic information in the mechanism as . (d) After adding a slightly undetermined unit () to the purview (), the intrinsic information increases to . The new maximum state () has now much higher informativeness () but only slightly lower selectivity (), resulting in expansion. (e) When instead of C, we add the very undetermined unit D to the purview (), the new purview () has a new maximum state () with marginally higher informativeness () and very low selectivity (), resulting in dilution.

4.1. Intrinsic Information

We start by investigating the role of intrinsicality in computing the integrated information of a mechanism. To this end, we will compare for various mechanism-purview pairs, which evaluates the ID over a complete partition

of mechanism M and purview units Z, leaving the purview fully unconstrained after the partition (in this case, the partitioned repertoires are equivalent to the unconstrained repertoires defined in Equation (A5) and Equation (A4)). Intrinsicality requires that the ID must increase additively when fully constrained units are added to the purview (expansion, Equation (6)) and decrease exponentially when fully unconstrained units are added to the purview (dilution, Equation (7)). We define the system S depicted in Figure 2b to investigate the expansion and dilution of a mechanism over different purviews . Next, we fix the mechanism M in state and measure the ID of this mechanism over effect purviews with varying levels of indeterminism but a fixed threshold (partially deterministic majority gates).

First consider the purview with a fully constrained unit (), such that (Figure 2c)

Now consider the same mechanism over a larger purview , which has an additional, partially constrained unit C (). This purview has a larger repertoire of possible states, resulting in a larger difference between partitioned and unpartitioned probabilities of one state (high informativeness). At the same time, the probability of this state is still very high in absolute terms (high selectivity). Thus, the ID of m over is higher than over alone (Figure 2d):

The higher value for reflects the expansion that occurs whenever informativeness increases while selectivity is still high. Notice that the expansion here is subadditive since the new unit is constrained but not fully constrained (or fully selective).

Finally, consider another purview , where D is only weakly constrained (). While the new purview has a state where informativeness is marginally higher than before, selectivity is much lower (the state has much lower probability). For this reason, is lower for than for the smaller purview , reflecting dilution (Figure 2e):

Notice that dilution here is not exactly a factor of 2 since the new unit is weakly constrained by the mechanism but not fully unconstrained.

Next we investigate the role of the information postulate, which requires that the mechanism must be specific, meaning that a mechanism must both be in a specific state and specify an effect state (or a cause state) of a specific purview. Consider the system in Figure 3a where we focus on a high-order mechanism with four units over a purview with three units . The threshold and amount of indeterminism of the purview units are fixed: and , which makes the purview units function like partially deterministic AND gates. We show not only that the mechanism can be more or less informative depending on its state but also that the specific purview state selected by the ID measure depends both on the probability of the state and on how much the state is constrained by the mechanism.

Figure 3.

Information. (a) System S analyzed in this figure. All units have and (partially deterministic AND gates). The remaining panels show on the left the time unfolded graph of the mechanism constraining different output purviews and on the right the probability distribution of the purview (effect repertoires). The black bars show the probabilities when the mechanism is constraining the purview, and the white bars show the unconstrained probabilities after the complete partition. The “*” indicates the state selected by the maximum operation in the ID function. (b) The mechanism at state . The purview state is not only the most constrained by the mechanism (high informativeness) but also very dense (high selectivity). As a result, it has intrinsic information higher than all other states in the purview and defines the intrinsic information of the mechanism as 0.27. (c) If we change the mechanism state to , the probability of observing the purview state is now smaller than chance. However, this probability is still very different from chance and therefore very constrained by the mechanism (high informativeness). At the same time, the state is still very dense, meaning it has a probability of happening much higher than all other states (high selectivity). Together, they define the intrinsic information of the state, which is higher than the intrinsic information of all other states in the purview, defining the intrinsic information of the mechanism as 0.08.

When the state of the mechanism is (Figure 3b), the most informative state in the purview is since all units are more likely to be turned ↓ than they are after partitioning (high informativeness), and at the same time this state still has high probability (high selectivity). Out of all states, maximizes informativeness and selectivity in combination, resulting in

A different scenario arises if we change the state of the mechanism to (Figure 3c). In this mechanism state the constrained probability of is lower than the probability after partitioning. However, the mechanism is informative because the probabilities are different. At the same time, the state still has high probability while being constrained by the mechanism . Together, the product of the informativeness and selectivity is higher for the purview state than for any other state, resulting in

Although it may be counterintuitive to identify an effect state whose probability is decreased by the mechanism, it highlights an important feature of intrinsic information: it balances informativeness and selectivity. Informativeness is about constraint, meaning how much the probability of observing a given state in the purview changes due to being constrained by the mechanism. At the same time, selectivity is about probability density at a given state, meaning that this constraint is only relevant if the state is realized by the purview. If the mechanism is informative while increasing selectivity, then there is no tension between the two. However, whenever the mechanism decreases the probability of a state, there is a tension between how informative and how selective that state is. As long as together the product of informativeness and selectivity of a state (in this case ) is higher than all other states, it is selected by the maximum operation in the ID function and thus determines the intrinsic information of the mechanism.
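The tension between informativeness and selectivity can be illustrated with a toy calculation. The sketch below assumes the product form of the measure (probability times the absolute base-2 log ratio) and uses invented repertoires over five states; none of the numbers come from the system in Figure 3. The helper name `id_terms` is hypothetical.

```python
import math

def id_terms(p, q):
    """Per-state (state, informativeness, selectivity, product).

    Assumes p(x) > 0 and q(x) > 0 for every state, as in the toy data below.
    """
    rows = []
    for state, (pi, qi) in enumerate(zip(p, q)):
        informativeness = abs(math.log2(pi / qi))  # how much the probability changed
        selectivity = pi                           # how likely the state still is
        rows.append((state, informativeness, selectivity, pi * informativeness))
    return rows

# Hypothetical repertoires: the mechanism lowers the probability of state 0,
# yet state 0 remains the most probable state.
partitioned = [0.80, 0.05, 0.05, 0.05, 0.05]
constrained = [0.40, 0.15, 0.15, 0.15, 0.15]

rows = id_terms(constrained, partitioned)
best = max(rows, key=lambda row: row[3])

for state, info, sel, value in rows:
    print(state, round(info, 3), round(sel, 3), round(value, 3))
print("selected state:", best[0])
```

Here the other states are individually more informative (their probability triples), but state 0 is selected because its probability was halved while it remained far more probable than any alternative. Without the absolute value in the measure, state 0 would contribute a negative term and could not be selected.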

4.2. Integrated Information

The integration postulate of IIT requires that mechanisms be integrated or irreducible to parts. In this section we use the system defined in Figure 4a, with and for all units, to investigate how mechanisms are impacted by different partitions. We compute the ID between the intact and all possible partitioned effect repertoires to measure the impact of each partition . We identify the partition with lowest ID as the MIP of the candidate mechanism over a purview.
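As a rough sketch of this search, the snippet below scores a set of partitioned repertoires against the intact repertoire and returns the partition with the lowest ID. It assumes the ID has the form max over states of p·|log2(p/q)|; the partition labels and all probabilities are hypothetical placeholders rather than values from Figure 4.

```python
import math


def intrinsic_difference(p, q):
    """Assumed ID form: max over states of p_s * |log2(p_s / q_s)|."""
    return max(
        (p_s * abs(math.log2(p_s / q_s)) for p_s, q_s in zip(p, q) if p_s > 0),
        default=0.0,
    )


def minimum_information_partition(intact, partitioned):
    """Return (label, ID) of the partition whose repertoire is closest to intact.

    `partitioned` maps a partition label to its partitioned repertoire.
    """
    scores = {
        label: intrinsic_difference(intact, repertoire)
        for label, repertoire in partitioned.items()
    }
    mip = min(scores, key=scores.get)
    return mip, scores[mip]


# Hypothetical intact repertoire and three hypothetical partitions.
intact = [0.7, 0.1, 0.1, 0.1]
partitioned = {
    "complete": [0.25, 0.25, 0.25, 0.25],  # mechanism cut from whole purview
    "cut_1": [0.7, 0.1, 0.1, 0.1],         # this cut changes nothing
    "cut_2": [0.5, 0.2, 0.2, 0.1],
}

mip, phi = minimum_information_partition(intact, partitioned)
print(mip, phi)
```

Here the complete partition yields a positive ID, but one of the other cuts leaves the repertoire unchanged, so the minimum is zero and the candidate would be reducible.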

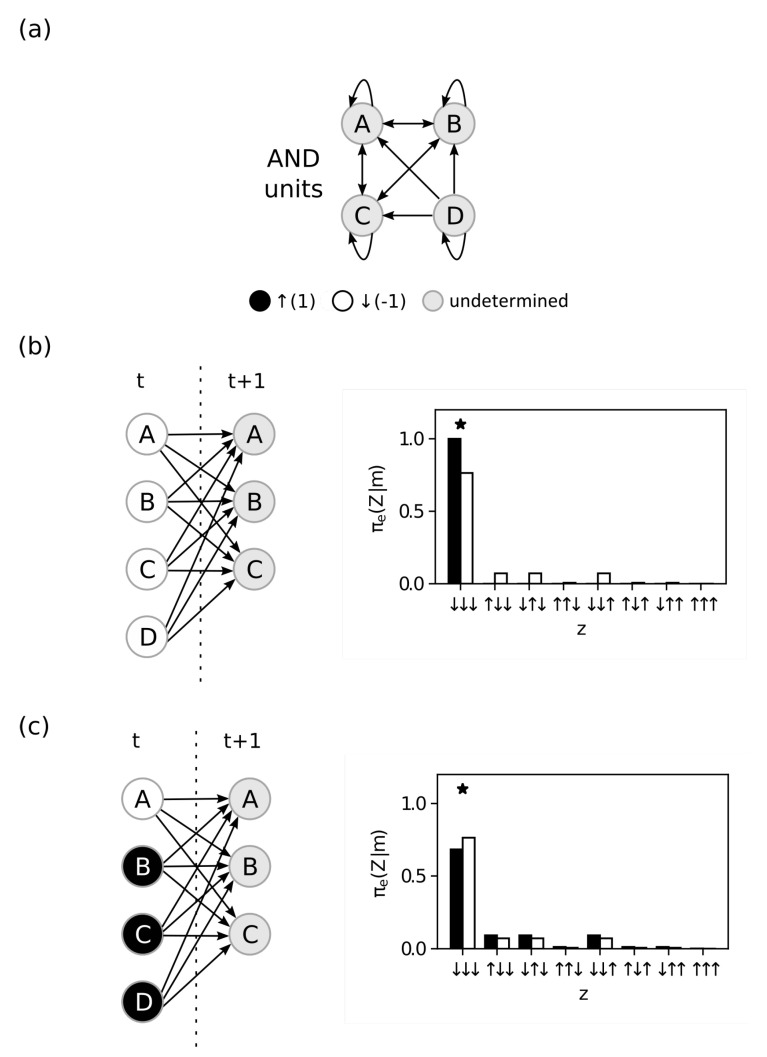

Figure 4.

Integration. (a) System S analyzed in this figure and in Figure 5. All units have and (partially deterministic MAJORITY gates). The remaining panels show on the top the time-unfolded graph of different mechanisms constraining different output purviews and on the bottom the probability distributions (effect repertoires). The black bars show the probabilities when the mechanism is constraining the purview, and the white bars show the partitioned probabilities. The “*” indicates the state selected by the maximum operation in the ID function. (b) The mechanism in state constraining the purview . While the complete partition has nonzero intrinsic information, the mechanism is clearly not integrated, as revealed by the MIP partition , resulting in zero integrated information. (c) The mechanism in state constraining the purview . The partition has less intrinsic information than any other partition, i.e., it is the MIP of this mechanism, and it defines the integrated information as 0.36. (d) The mechanism in state constraining the purview . The tri-partition is the MIP and it shows that the mechanism is not integrated, i.e., the mechanism has zero integrated information.

First, when considering the mechanism over the purview , the complete partition (partitioning the entire mechanism away from the entire purview) assigns a positive value

However, if we try the partition

we find that the candidate mechanism is not integrated (as is obvious after inspecting Figure 4b):

We conclude that this candidate mechanism does not exist within the system over this purview.

Next, we consider the candidate mechanism over the purview . We observe that the partition

defines an intrinsic difference

which is smaller than the one assigned by the complete partition ,

Although the ID over is smaller than that over the complete partition, this information is not zero. Moreover, the partition yields an ID value that is smaller than that of any other partition . In this case, we say that is the MIP, and that the candidate mechanism has integrated effect information (Figure 4c):

Finally, for the candidate mechanism over the purview , any partition that does not include the empty set as a part in leads to nonzero ID. However, if we allow the empty set for (as discussed in Section 3.2), the candidate mechanism is reducible because disintegrating it with the partition

makes no difference to the purview states, resulting in

This occurs since B and D have opposite effects over the purview unit E, and by cutting both inputs to E we avoid changing the repertoire. Therefore, does not exist as a mechanism over the purview
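The cancellation can be reproduced with a deliberately simple toy unit. The sketch below is an assumption-laden illustration, not the MAJORITY-gate system of Figure 4: a hypothetical unit E is pushed on by B and pushed off by D with equal strength, cut inputs are replaced by a uniform distribution, and the ID is assumed to be max over states of p·|log2(p/q)|.

```python
import math


def intrinsic_difference(p, q):
    """Assumed ID form: max over states of p_s * |log2(p_s / q_s)|."""
    return max(
        (p_s * abs(math.log2(p_s / q_s)) for p_s, q_s in zip(p, q) if p_s > 0),
        default=0.0,
    )


def p_e_on(b, d):
    """Hypothetical unit E: B raises and D lowers P(E = on) by the same amount."""
    return 0.5 + 0.3 * (b - d)


def repertoire_of_e(b=None, d=None):
    """[P(E=off), P(E=on)], averaging uniformly over any input left as None."""
    b_values = (0, 1) if b is None else (b,)
    d_values = (0, 1) if d is None else (d,)
    probs = [p_e_on(bv, dv) for bv in b_values for dv in d_values]
    p_on = sum(probs) / len(probs)
    return [1.0 - p_on, p_on]


intact = repertoire_of_e(b=1, d=1)   # both inputs intact and on
cut_b_only = repertoire_of_e(d=1)    # only the input from B is cut
cut_both = repertoire_of_e()         # both inputs are cut

print(intrinsic_difference(intact, cut_b_only))  # nonzero
print(intrinsic_difference(intact, cut_both))    # approximately zero
```

Cutting a single input shifts the repertoire of E, whereas cutting both inputs at once restores the intact distribution, which is why only a partition that pairs E with the empty set reveals the reducibility.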

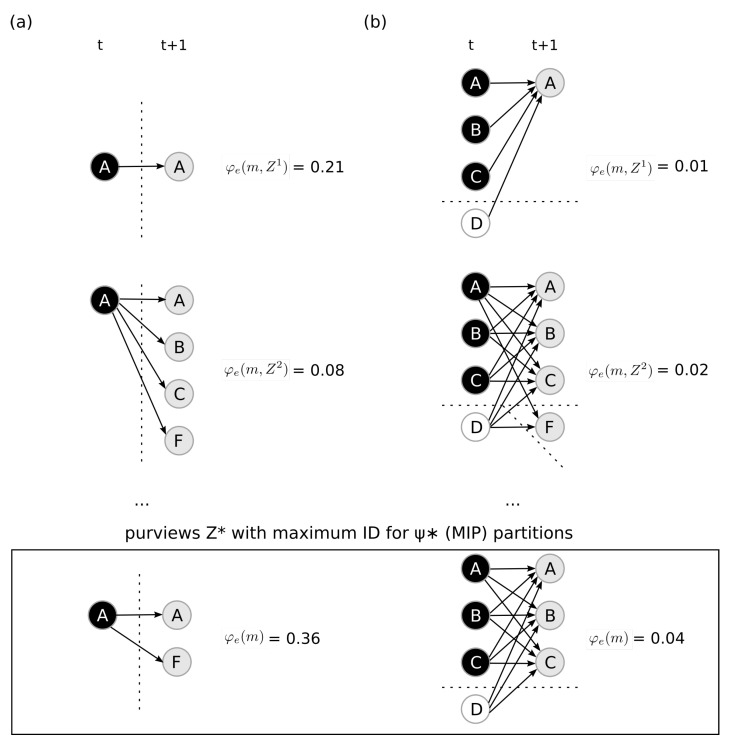

4.3. Maximal Integrated Information

The last postulate we investigate is exclusion, which dictates that mechanisms are defined over a definite purview, the one over which the mechanism is maximally irreducible (has maximal integrated effect information). Using the system defined in Figure 4a, we investigate two candidate mechanisms. First, we study the candidate mechanism , similar to the one in Figure 2. Since is first order (constituted of one unit), there is only one possible partition (the complete partition)

After computing for all possible purviews , we find that the mechanism has maximum integrated effect information over the purview , thus according to Equation (3) we have

Next, similarly to Figure 3, we investigate the candidate mechanism . After computing over all possible purviews in the system () and over all possible partitions for each purview (), we find that the mechanism has maximum integrated effect information over the purview , with partition

and that

Finally, IIT requires that mechanisms have both causes and effects within the system. We perform an analogous process using the cause repertoire (see Equation (A2) and Figure 6) to identify the maximally irreducible cause purview . We find that with MIP

and integrated cause information

Figure 6.

Integrated cause information. (a) Causal graph of mechanism at state constraining the purview , which has the maximum integrated information of all and defines the mechanism integrated information. (b) The black bars show the probabilities when the mechanism is constraining the purview (cause repertoire), and the white bars show the probabilities after the partition (partitioned cause repertoire). The “*” indicates the state selected by the maximum operation in the ID function and defines .

Since has an irreducible cause () and effect (), we say that the mechanism exists within the system with integrated information

This means that, given the system S, the mechanism in state specifies the distinction

5. Discussion

Mechanism integrated information is a measure of the intrinsic cause–effect power of a mechanism within a system. It reflects how much a mechanism as a whole (above and beyond its parts) constrains the units in its cause and effect purview. We characterize three properties of information based on the postulates of IIT: causality, intrinsicality, and specificity, and demonstrate that there is a unique measure (ID) that satisfies these properties. Notably, intrinsicality requires that information increases when expanding a purview with a fully constrained unit (expansion) but decreases when expanding a purview with a fully unconstrained unit (dilution). In situations with partial constraint, finding a unique measure gives us a principled way to balance expansion and dilution.
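Expansion and dilution can be checked numerically on a binary toy purview. The sketch assumes the ID form max over states of p·|log2(p/q)| and compares a single fully constrained unit against chance, then adds either a second fully constrained unit or a fully unconstrained one. The helper names `joint` and `intrinsic_difference` are ours.

```python
import math
from itertools import product


def intrinsic_difference(p, q):
    """Assumed ID form: max over states of p_s * |log2(p_s / q_s)|."""
    return max(
        (p_s * abs(math.log2(p_s / q_s)) for p_s, q_s in zip(p, q) if p_s > 0),
        default=0.0,
    )


def joint(*units):
    """Joint distribution over independent units (product of marginals)."""
    return [math.prod(ps) for ps in product(*units)]


constrained = [1.0, 0.0]  # unit fully determined by the mechanism
chance = [0.5, 0.5]       # unit at its unconstrained distribution

base = intrinsic_difference(joint(constrained), joint(chance))
expanded = intrinsic_difference(
    joint(constrained, constrained), joint(chance, chance)
)
diluted = intrinsic_difference(
    joint(constrained, chance), joint(chance, chance)
)

print(base, expanded, diluted)
```

Under these assumptions the value grows from 1 to 2 bits when a fully constrained unit is added and drops from 1 to 0.5 bits when a fully unconstrained unit is added.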

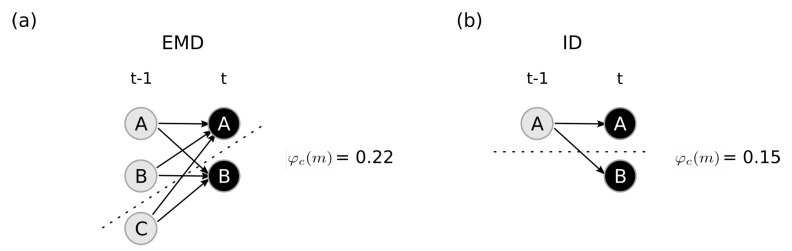

Early versions of IIT used the KLD to measure the difference between probability distributions [4,16]. The KLD was a practical solution given its unique mathematical properties and ubiquity in information theory; however, there was no principled reason to select it over any other measure. In [3], the KLD was replaced by the EMD, which was an initial attempt to capture the idea of relations among distinctions. The more two distinctions overlap in their purview units and states, the smaller the EMD distance between them; this distance was used as the ground distance to compute the system integrated information (). This aspect of the EMD is now encompassed by including relations as an explicit part of the cause–effect structure, defined in a way that is consistent with the postulates of IIT [10]. The new intrinsic difference measure is the first principled measure based on properties derived from the postulates of IIT. Importantly, ID is shown to be the unique measure that satisfies the three properties—causality, intrinsicality and specificity—the KLD and EMD measures do not satisfy intrinsicality or specificity. See Appendix C for an example of how the different measures change the purview with maximum integrated information.
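One way to see the difference for the KLD is through dilution. Because the KLD is additive over independent units, appending a fully unconstrained unit to a purview contributes nothing and leaves the KLD unchanged, whereas a measure satisfying the dilution property should decrease. The sketch below contrasts the two on a toy binary purview; it assumes the ID form max over states of p·|log2(p/q)| and does not cover the EMD.

```python
import math
from itertools import product


def intrinsic_difference(p, q):
    """Assumed ID form: max over states of p_s * |log2(p_s / q_s)|."""
    return max(
        (p_s * abs(math.log2(p_s / q_s)) for p_s, q_s in zip(p, q) if p_s > 0),
        default=0.0,
    )


def kld(p, q):
    """Kullback-Leibler divergence in bits, with 0 * log(0) taken as 0."""
    return sum(
        p_s * math.log2(p_s / q_s) for p_s, q_s in zip(p, q) if p_s > 0
    )


def joint(*units):
    """Joint distribution over independent units (product of marginals)."""
    return [math.prod(ps) for ps in product(*units)]


constrained = [1.0, 0.0]
chance = [0.5, 0.5]

p_small, q_small = joint(constrained), joint(chance)
p_diluted, q_diluted = joint(constrained, chance), joint(chance, chance)

kld_small, kld_diluted = kld(p_small, q_small), kld(p_diluted, q_diluted)
id_small = intrinsic_difference(p_small, q_small)
id_diluted = intrinsic_difference(p_diluted, q_diluted)

print(kld_small, kld_diluted)  # unchanged by the unconstrained unit
print(id_small, id_diluted)    # decreases under dilution
```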

Furthermore, we define a set of possible partitions of a mechanism and its purview (), which ensures that the mechanism is destroyed (“disintegrated”) after the partition operation is applied. Previous formulations of mechanism integrated information restricted the set of all possible partitions to bipartitions of a mechanism and its purview but allowed for partitions that do not qualify as “disintegrating” the mechanism (for example, cutting away a single purview unit) [3]. For most mechanisms the minimum information partition still partitions the mechanism in two parts; exceptions tend to occur if multiple inputs to the same unit counteract each other. The requirement for disintegrating partitions is more consequential, especially for first-order mechanisms (those composed of a single unit). Without this restriction, the MIP of a first-order mechanism would always be to cut away its weakest purview unit, and the integrated information of the mechanism would then be equal to the information the mechanism specifies about its least constrained purview unit. With the disintegrating partitions, a first-order mechanism must be cut away from its entire purview, reflecting the notion that everything that a first-order mechanism does is irreducible (since it is unified).

The particular partition that yields the minimum ID between partitioned and unpartitioned repertoires defines the integrated information of a mechanism over a purview. The balance between expansion and dilution, together with the set of possible partitions, allows us to find the purviews and with maximum integrated cause and effect information. Moreover, the ID measure identifies the specific cause state and effect state that maximize the mechanism’s integrated cause and effect information. Finally, the overall integrated information of a mechanism M in state m is the minimum between its integrated cause and effect information: .
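The nesting of these three operations (minimum over partitions, maximum over purviews, minimum between cause and effect) can be sketched as follows. The code assumes the ID form max over states of p·|log2(p/q)|; the purview labels, repertoires and function names are hypothetical, and the enumeration of admissible partitions is taken as given.

```python
import math


def intrinsic_difference(p, q):
    """Assumed ID form: max over states of p_s * |log2(p_s / q_s)|."""
    return max(
        (p_s * abs(math.log2(p_s / q_s)) for p_s, q_s in zip(p, q) if p_s > 0),
        default=0.0,
    )


def phi_over_purview(intact, partitioned_repertoires):
    """Integration: minimum ID across the admissible partitions."""
    return min(intrinsic_difference(intact, r) for r in partitioned_repertoires)


def maximal_purview(candidates):
    """Exclusion: purview with maximum integrated information.

    `candidates` maps a purview label to (intact, [partitioned repertoires]).
    """
    scores = {
        purview: phi_over_purview(intact, parts)
        for purview, (intact, parts) in candidates.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]


def mechanism_phi(cause_candidates, effect_candidates):
    """Overall value: minimum of integrated cause and effect information."""
    _, phi_cause = maximal_purview(cause_candidates)
    _, phi_effect = maximal_purview(effect_candidates)
    return min(phi_cause, phi_effect)


# Hypothetical candidate purviews with hypothetical repertoires.
effect_candidates = {
    "Z1": ([0.9, 0.1], [[0.5, 0.5]]),
    "Z1Z2": (
        [0.8, 0.1, 0.05, 0.05],
        [[0.25, 0.25, 0.25, 0.25], [0.45, 0.45, 0.05, 0.05]],
    ),
}
cause_candidates = {"Z3": ([0.7, 0.3], [[0.5, 0.5]])}

best_effect, phi_effect = maximal_purview(effect_candidates)
phi = mechanism_phi(cause_candidates, effect_candidates)
print(best_effect, round(phi_effect, 3), round(phi, 3))
```

In this toy case the larger effect purview has a high ID under the complete partition but a weaker partition exists, so the smaller purview wins the maximization; the cause side is weaker still and therefore sets the overall value.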

Mechanisms that exist within a system () specify a distinction (a cause and effect) for the system, and the set of all distinctions and the relations among them define the cause–effect structure of the system [10]. As mentioned above (Section 3.1.5), it is in principle possible that there are multiple solutions for or for a given mechanism m in degenerate systems with symmetries in connectivity and functionality (but note that is uniquely defined). However, by the exclusion postulate, distinctions within the cause–effect structure of a conscious system should specify a definite cause and effect, which means that they should specify a definite cause and effect purview in a specific state. As also argued in [17], distinctions that are underdetermined should thus not be included in the cause–effect structure until the tie between purviews or states can be resolved. In physical systems that evolve in time with a certain amount of variability and indeterminism, ties are likely short lived and may typically resolve on a faster scale than the temporal scale of experience.
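A tie between purviews can be detected with a simple check, sketched below. The numerical tolerance and the function name are our assumptions, and the values are placeholders; the sketch only flags the tie and does not attempt to resolve it.

```python
def maximal_purviews(phi_by_purview, tolerance=1e-12):
    """Return all purviews whose integrated information ties for the maximum.

    `tolerance` is an assumed numerical threshold for treating values as equal.
    """
    best = max(phi_by_purview.values())
    return sorted(
        purview
        for purview, value in phi_by_purview.items()
        if abs(value - best) <= tolerance
    )


# Hypothetical values of integrated information over three purviews.
phi_by_purview = {"Z1": 0.20, "Z2": 0.36, "Z3": 0.36}

tied = maximal_purviews(phi_by_purview)
is_underdetermined = len(tied) > 1
print(tied, is_underdetermined)
```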

The principles and arguments applied to mechanism information will need to be extended to relation integrated information and system integrated information, laying the groundwork for an updated 4.0 version of the theory. Relations describe how causes and effects overlap in the cause–effect structure, by being over the same units and specifying the same state. Like distinctions, relations exist within the cause–effect structure, and their existence is quantified by an analogous notion of relation integrated information (). Similarly, the intrinsic existence of a candidate system and its cause–effect structure as a PSC with an experience is quantified by system integrated information (). Both and measure the difference made by “cutting apart” the object (relation or system) according to its . As a measure of existence, the difference measures used for and must also satisfy the causality, intrinsicality and specificity properties. In the case of , the expansion and dilution properties will need to be adapted to the combinatorial nature of the measure, since adding a single unit to a PSC doubles the number of potential distinctions.

According to IIT, a system is a PSC if its cause–effect structure is maximally irreducible (it is a maximum of system integrated information, ). Moreover, if a system is a PSC, then its subjective experience is identical to its cause–effect structure [3]. Since the quantity and quality of consciousness are what they are, the cause–effect structure cannot vary arbitrarily with the chosen measure of intrinsic information. For this reason, a measure of intrinsic information that is based on the postulates and is unique is a critical requirement of the theory.

Acknowledgments

We would like to thank Shuntaro Sasai, Andrew Haun, William Mayner, Graham Findlay and Matteo Grasso for their helpful comments.

Appendix A. Cause and Effect Repertoires

The cause and the effect repertoire can be derived from the system defined in Equation (1). The random variables define the system state space , where × is the Cartesian product of the individual state spaces. We also require that the random variables are conditionally independent

that the transitions are time invariant

and that the probabilities are well-defined for all possible states

Given the former definitions, the stochastic system S corresponds to a causal network where [12,18,19].

We use uppercase letters as parameters of the probability function to define probability distributions, e.g., , and the operators ∑ and ∏ are applied to each state independently.

Appendix A.1. Causal Marginalization

Given a mechanism in a state and a purview , causal marginalization serves to remove any contributions to the repertoire of states that are outside the mechanism M and purview Z. Explicitly, given the set , we define the effect repertoire of a single unit as

Note that, for causal marginalization, we impose a uniform distribution as . This ensures that the repertoire captures the constraints due to the mechanism alone and not to whatever external factors might bias the variables in W to one state or another.
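A minimal sketch of this step for a single binary purview unit is given below. It averages the unit's transition probability over all states of the outside units W, each weighted equally. The noisy AND gate, its noise level and the function names are hypothetical choices made for illustration.

```python
from itertools import product


def effect_repertoire_single_unit(p_on, mechanism_state, n_outside):
    """[P(off), P(on)] of one purview unit given the mechanism state.

    `p_on(m, w)` is the probability that the unit is on given mechanism
    state `m` and outside state `w`. The outside units are averaged under
    a uniform distribution, so only the mechanism constrains the result.
    """
    outside_states = list(product((0, 1), repeat=n_outside))
    p = sum(p_on(mechanism_state, w) for w in outside_states) / len(outside_states)
    return [1.0 - p, p]


def noisy_and(m, w):
    """Hypothetical unit: noisy AND of one mechanism unit and one outside unit."""
    return 0.9 if (m[0] == 1 and w[0] == 1) else 0.1


rep_on = effect_repertoire_single_unit(noisy_and, mechanism_state=(1,), n_outside=1)
rep_off = effect_repertoire_single_unit(noisy_and, mechanism_state=(0,), n_outside=1)
print(rep_on, rep_off)
```

With the mechanism unit on, the outcome still depends on the outside unit, so the repertoire sits at chance; with the mechanism unit off, the purview unit is held mostly off regardless of W.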

Given the set , the cause repertoire for a single unit , using Bayes’ rule, is

where again we impose the uniform distributions as , , and .

Note that the transition probability function not only contains dependencies of on but also correlations between the variables in Z due to common inputs from units in W, which should not be counted as constraints due to . To discount such correlations, we define the effect repertoire over a set Z of r units as the product of the effect repertoires over individual units

| (A1) |

where ⨂ is the Kronecker product of the probability distributions. In the same manner, given that the mechanism M has q units , we define the cause repertoire of Z as

| (A2) |
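The product construction can be sketched in a few lines. The snippet builds the repertoire over a set of units as the Kronecker product of single-unit repertoires and contrasts it with a hypothetical joint distribution in which two units are perfectly correlated through a common outside input. The example distributions and helper names are ours.

```python
from functools import reduce


def kron(p, q):
    """Kronecker product of two probability vectors."""
    return [a * b for a in p for b in q]


def product_repertoire(unit_repertoires):
    """Repertoire over several units as the product of single-unit repertoires."""
    return reduce(kron, unit_repertoires)


# Hypothetical case: two purview units both copy an outside unit W.
# Their joint transition probabilities are perfectly correlated ...
correlated_joint = [0.5, 0.0, 0.0, 0.5]  # states 00, 01, 10, 11
# ... but each unit on its own is at chance with respect to the mechanism.
unit_repertoires = [[0.5, 0.5], [0.5, 0.5]]

repertoire = product_repertoire(unit_repertoires)
print(repertoire)
```

The product assigns equal probability to all four states, so the correlation induced by the common input W is not counted as a constraint due to the mechanism.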

Appendix A.2. Partitioned Repertoires

Given a partition constituted of k parts (see Equation (4)), we can define the partitioned repertoire

| (A3) |

with . In the case of , corresponds to an unconstrained effect repertoire

| (A4) |

which follows from Equation (A1) and cause repertoire

| (A5) |

which follows from Equation (A2).

Appendix B. Full Statement and Proof of Theorem 1

We now give the full statement and proof of Theorem 1, demonstrating the uniqueness of the function f (see also [15,20]). We start with some preliminary definitions:

For each , we further define

Using these definitions, we further define the following properties.

- Property I: Causality. Let . The difference is defined as , such that

- Property II: Intrinsicality. Let and . Then

(a) expansion:

(b) dilution:

where and from Property I.

- Property III: Specificity. The difference must be state-specific, meaning there exists such that for all we have , where , and . More precisely, we define

where f is continuous on K, analytic on and is analytic on J.

The following lemma allows the analytic extension of real analytic functions.

Lemma A1

(See Proposition 1.2.3 in [21]). If f and g are real analytic functions on an open interval and if there is a sequence of distinct points with such that

then

Corollary A1

(See Corollary 1.2.6 in [21]). If f and g are analytic functions on an open interval U and if there is an open interval such that

then

The following lemma shows that a strict maximum over continuous functions, each evaluated at fixed points, must hold for an open interval around such fixed points.

Lemma A2.

Let be continuous functions, where , fix . If there exists such that for ,

then there exists such that

for all and for all .

We now provide the solution to a functional equation similar to the Pexider logarithmic equation [22].

Lemma A3.

Let be analytic functions on J. Suppose the functional equation

holds for all , where . Then there exists such that

Proof.

First, for some suppose that there exists such that and

is a strict maximum. Then by Lemma A2 there exists such that

| (A6) |

Second, if there does not exist such that and is a strict maximum, then we set , and so that Equation (A6) holds since for all such that . Next, define . Suppose that there exists such that , and for some ,

is a strict maximum. Then by Lemma A2 there exists such that

| (A7) |

Finally, if there does not exist such that and is a strict maximum, then we set and so that Equation (A7) holds since for all such that . Let and define , then

Moreover, it follows that one of the following options must be true

Since the functions are analytic on J and therefore twice differentiable, then

Integrating with respect to x yields

for and for all where . Since f is analytic on J and since , by Corollary A1, we can extend such that

□

Lemma A4.

If satisfies properties I and III for some , then

Proof.

Let . By properties I and III

| (A8) |

□

Theorem A1.

Let for some and where D satisfies properties I, II and III. Then

| (A9) |

where for some ,

| (A10) |

Proof of Theorem A1.

First we show that the function in Equation (A10) satisfies properties I, II and III. To see that the function satisfies Property I, notice that for each where , since , then there exists such that

and for each ,

To see that it satisfies Property II.a, for each and for each

Similarly by Property II.b notice that for each

It is clear that the function f in Equation (A10) satisfies Property III.

The remaining part of the proof is divided into two steps:

Step 1. First we show that under four assumptions, f satisfies properties I, II and III iff .

Step 2. Next we show that if any of our assumptions is violated, then no suitable f exists.

Verification of Step 1. We apply Property II.a with for some where . We then have

By Property III, the following identity holds

| (A11) |

Our first assumption (AS1) states that there exists some such that is a strict maximum. By Lemma A2, there exists a such that

| (A12) |

for all . Further, by Lemma A3 there exists such that

and since by Property III f is continuous, the application of Lemma A4 yields

i.e., for , we have

| (A13) |

Now applying Property II.b for , , for all and for each , we have

and by Property III

| (A14) |

for all . Our second assumption (AS2) states that there exists such that . For some , we have

Further, by Lemma A2 and Equation (A13), there exists such that

Plugging this result back into Equation (A14) yields

| (A15) |

Our third assumption (AS3) states that is never a strict maximum in Equation (A15), so that for some , we have

Let and let . Then by Equation (A15)

where . By Corollary A1, we can extend to J

| (A16) |

where . Let and let , then . By Property II.b for , , and , we have

and by Property III

By Equation (A16), we have

Since , this yields

for some . Then we have that for the sequence

| (A17) |

for all , where . Our fourth and last assumption (AS4) is that is a strict maximum only for a finite number of . More specifically, let

Then (AS4) states that where, by convention, if . Let if , else there exists such that . Define . Then for a fixed , there exists such that

for some . By Lemma A2, there exists such that

By Equation (A17), for , we have

| (A18) |

where the sign b was absorbed by the constant . By Corollary A1, for a fixed , we can extend to J, i.e.,

For a fixed , since by Property III f is continuous, we have

By Lemma A1, we can uniquely extend to J such that

Since this is true for all , we have that

Note that violates Property I since for some and , we have

By Property III, f is continuous in K and the following limits exist for all :

Consequently,

Verification of Step 2. Up to this point we have shown that Equation (A10) not only defines a function which satisfies properties I, II and III, but it also defines the only function which satisfies properties I, II and III for given the following assumptions

- AS1: such that is a strict maximum in Equation (A11),

- AS2: such that in Equation (A14),

- AS3: is never a strict maximum in Equation (A15),

- AS4: .

All that is left to prove the theorem is to show that violating any of these assumptions also violates some property. First assume that (AS1), (AS2) and (AS3) are true but (AS4) is violated, i.e., for . Let , and let be the sequence of ordered elements in such that . Then by Equation (A17), for all , there exists such that

By Lemma A2, for a fixed , there exists such that

Since this holds for all , we have

Similarly to Equation (A18), using the sequence instead of the sequence , this result can be extended to , i.e.,

| (A19) |

Applying Property II.b to , , with yields

Further, by Property III

However this contradicts Equation (A19) since

Next we assume that (AS1) and (AS2) are true but (AS3) is violated, meaning that there exists such that is a strict maximum in Equation (A15), i.e.,

for some . For some , we have

By Lemma A2, there exists such that

By Corollary A1, we can extend to , i.e.,

However, this implies that f is discontinuous and violates Property III since

We now assume that AS1 is true but AS2 is violated, i.e.,

For some and , by Equation (A11) and by Equation (A13)

| (A20) |

Let and let , then

Therefore, is constant and

Plugging this back into Equation (A20) we see that , and for some , there exists such that

By Lemma A2, there exists such that

and by Corollary A1

However this is a contradiction since by Equation (A13), for and , we have

Note that violates Property I since for any and , we have

Finally, if AS1 is violated then there does not exist such that is a strict maximum in Equation (A11). Hence, for all , there exists such that

If is a strict maximum, then by Lemma A2 there exists such that

| (A21) |

for all . If there does not exist such that is a strict maximum, then there must exist such that Equation (A21) holds for all . In both cases, by Lemma A3 there exists such that

However, this implies that f is discontinuous since

even though by Lemma A4. □

Appendix C. Comparison between ID and EMD

Since the different information measures satisfy different properties, the distinctions that exist in a given system may be different depending on the information measure used to compute integrated information. Here, using the same system used in Figure 5, we provide an example where the cause purview with maximum integrated information is larger when using the EMD measure (Figure A1a) when compared to the same mechanism when using the ID measure (Figure A1b).

Figure 5.

Exclusion. Causal graphs of different mechanisms constraining different purviews. The system S used in these examples is the same as in Figure 4a. Each line shows the mechanism M constraining different purviews Z. (a) The mechanism at state . The bottom line shows the purview with maximum integrated effect information and the MIP is the complete partition. (b) The mechanism at state . The bottom line is the purview with maximum integrated effect information and the MIP is .

Figure A1.

Comparison between earth mover’s distance (EMD) and ID. Using the same system S used in Figure 4a, we find the cause purview with maximum integrated information for the mechanism in state , which is larger when using the EMD measure (a) when compared to the ID measure (b). The integrated information when using the EMD measure is also larger than the ID measure.

Author Contributions

Conceptualization, L.S.B., W.M., L.A. and G.T.; software, L.S.B.; investigation, L.S.B., W.M. and L.A.; writing—original draft preparation, L.S.B. and W.M.; writing—review and editing, L.S.B., L.A., W.M. and G.T.; visualization, L.S.B.; supervision, G.T.; project administration, G.T.; funding acquisition, G.T. All authors have read and agreed to the published version of the manuscript.

Funding

This project was made possible through the support of a grant from Templeton World Charity Foundation, Inc. (#TWCF0216) and by the Tiny Blue Dot Foundation (UW 133AAG3451). The opinions expressed in this publication are those of the authors and do not necessarily reflect the views of Templeton World Charity Foundation, Inc. and Tiny Blue Dot Foundation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Albantakis L. Integrated information theory. In: Overgaard M., Mogensen J., Kirkeby-Hinrup A., editors. Beyond Neural Correlates of Consciousness. Routledge; London, UK: 2020. pp. 87–103.

- 2. Tononi G., Boly M., Massimini M., Koch C. Integrated information theory: From consciousness to its physical substrate. Nat. Rev. Neurosci. 2016;17:450–461. doi: 10.1038/nrn.2016.44.

- 3. Oizumi M., Albantakis L., Tononi G. From the Phenomenology to the Mechanisms of Consciousness: Integrated Information Theory 3.0. PLoS Comput. Biol. 2014;10:e1003588. doi: 10.1371/journal.pcbi.1003588.

- 4. Balduzzi D., Tononi G. Integrated information in discrete dynamical systems: Motivation and theoretical framework. PLoS Comput. Biol. 2008;4:e1000091. doi: 10.1371/journal.pcbi.1000091.

- 5. Barbosa L., Marshall W., Streipert S., Albantakis L., Tononi G. A measure for intrinsic information. Sci. Rep. 2020;10:1–9. doi: 10.1038/s41598-020-75943-4.

- 6. Tononi G. The Blackwell Companion to Consciousness. John Wiley & Sons, Ltd.; Hoboken, NJ, USA: 2017. The Integrated Information Theory of Consciousness; pp. 243–256.

- 7. Albantakis L., Marshall W., Hoel E., Tononi G. What caused what? A quantitative account of actual causation using dynamical causal networks. Entropy. 2019;21:459. doi: 10.3390/e21050459.

- 8. Tononi G. Consciousness as integrated information: A provisional manifesto. Biol. Bull. 2008;215:216–242. doi: 10.2307/25470707.

- 9. Marshall W., Albantakis L., Tononi G. Black-boxing and cause-effect power. PLoS Comput. Biol. 2018;14:e1006114. doi: 10.1371/journal.pcbi.1006114.

- 10. Haun A., Tononi G. Why Does Space Feel the Way it Does? Towards a Principled Account of Spatial Experience. Entropy. 2019;21:1160.

- 11. Albantakis L., Tononi G. The Intrinsic Cause-Effect Power of Discrete Dynamical Systems—From Elementary Cellular Automata to Adapting Animats. Entropy. 2015;17:5472–5502. doi: 10.3390/e17085472.

- 12. Albantakis L., Tononi G. Causal Composition: Structural Differences among Dynamically Equivalent Systems. Entropy. 2019;21:989. doi: 10.3390/e21100989.

- 13. Marshall W., Gomez-Ramirez J., Tononi G. Integrated Information and State Differentiation. Conscious. Res. 2016;7:926. doi: 10.3389/fpsyg.2016.00926.

- 14. Gomez J.D., Mayner W.G.P., Beheler-Amass M., Tononi G., Albantakis L. Computing Integrated Information (Φ) in Discrete Dynamical Systems with Multi-Valued Elements. Entropy. 2021;23:6. doi: 10.3390/e23010006.

- 15. Csiszár I. Axiomatic Characterizations of Information Measures. Entropy. 2008;10:261–273. doi: 10.3390/e10030261.

- 16. Tononi G. An information integration theory of consciousness. BMC Neurosci. 2004;5:42. doi: 10.1186/1471-2202-5-42.

- 17. Kyumin M. Exclusion and Underdetermined Qualia. Entropy. 2019;21:405. doi: 10.3390/e21040405.

- 18. Pearl J. Causality. Cambridge University Press; Cambridge, UK: 2009.

- 19. Janzing D., Balduzzi D., Grosse-Wentrup M., Schölkopf B. Quantifying causal influences. Ann. Stat. 2013;41:2324–2358. doi: 10.1214/13-AOS1145.

- 20. Ebanks B., Sahoo P., Sander W. Characterizations of Information Measures. World Scientific; Singapore: 1998.

- 21. Krantz S.G., Parks H.R. A Primer of Real Analytic Functions. 2nd ed. Birkhäuser Advanced Texts Basler Lehrbücher; Birkhäuser; Basel, Switzerland: 2002.

- 22. Aczél J. Lectures on Functional Equations and Their Applications. Dover Publications; Mineola, NY, USA: 2006.
