Abstract

Objective:

Most seizure forecasting algorithms have relied on features specific to electroencephalographic recordings. Environmental and physiological factors, such as weather and sleep, have long been suspected to affect brain activity and seizure occurrence but have not been fully explored as prior information for seizure forecasts in a patient-specific analysis. The study aimed to quantify whether sleep, weather, and temporal factors (time of day, day of week, and lunar phase) can provide predictive prior probabilities that may be used to improve seizure forecasts.

Methods:

This study performed post hoc analysis on data from eight patients with a total of 12.2 years of continuous intracranial electroencephalographic recordings (average = 1.5 years, range = 1.0–2.1 years), originally collected in a prospective trial. Patients also had sleep scoring and location-specific weather data. Histograms of future seizure likelihood were generated for each feature. The predictive utility of individual features was measured using a Bayesian approach to combine different features into an overall forecast of seizure likelihood. Performance of different feature combinations was compared using the area under the receiver operating characteristic curve. Performance evaluation was pseudoprospective.

Results:

For the eight patients studied, seizures could be predicted above chance accuracy using sleep (five patients), weather (two patients), and temporal features (six patients). Forecasts using combined features performed significantly better than chance in six patients. For four of these patients, combined forecasts outperformed any individual feature.

Significance:

Environmental and physiological data, including sleep, weather, and temporal features, provide significant predictive information on upcoming seizures. Although forecasts did not perform as well as algorithms that use invasive intracranial electroencephalography, the results were significantly above chance. Complementary signal features derived from an individual’s historic seizure records may provide useful prior information to augment traditional seizure detection or forecasting algorithms. Importantly, many predictive features used in this study can be measured noninvasively.

Keywords: circadian, forecasting, seizure, sleep, weather

1 |. INTRODUCTION

One of the most debilitating aspects of living with epilepsy is the unpredictability of seizures.1,2 Seizure forecasting may reduce the burden of uncertainty and improve quality of life for people with epilepsy.3 With accurate seizure forecasting, people with epilepsy could take precautions when seizure risk is high and participate in a wider range of activities when seizure risk is low. Previous work has shown that seizure prediction is possible4–6 but that seizure forecasting algorithms need to be patient-specific.7 Most seizure prediction studies used very few seizures per patient,8–10 making it difficult to develop patient-specific algorithms. On the other hand, generic algorithms fail to generalize to new data, making them unreliable for individual patients.11

Seizure prediction generally relies on intracranial electroencephalography (iEEG), with features such as power spectrum,12–14 synchronicity,15–17 et cetera. Such features are typically fed into machine learning algorithms with varying degrees of complexity.18,19 Results can be made more robust when multiple algorithms and features are combined.5 The reliance upon iEEG requires invasive surgery, which may deter many potential users of a forecasting device.

People with epilepsy often report factors that correlate with seizure occurrence, such as sleep deprivation, stress, and weather.20 Numerous measurements that may not require iEEG have been proposed for use in seizure forecasting, including cortisol levels, heart rate, weather, sleep, et cetera.2 Forecasting using these factors is gaining interest. However, studies investigating their utility remain limited, partly due to lack of data. Retrospective analysis of existing data is limited by the number of seizures per patient and the types of measurements recorded. Some features can be inferred retrospectively from existing iEEG (sleep patterns, circadian rhythms) or other sources, such as historical weather data.

This study investigates the utility of different environmental and physiological factors for seizure forecasting: sleep, temporal features (clock and calendar time), and weather. Continuous long-term EEG recordings4 were used to establish the relationship between these factors and seizure occurrence at a patient-specific level, enabling seizure forecasting to be compared for different feature types. Recent studies have shown the forecasting potential of sleep, weather, and temporal factors. Patient-specific circadian and multiday cycles of seizure occurrence are well established.21–23 Temperature, humidity, and pressure may also correlate with seizure occurrence,24 although no patient-specific analysis has yet been performed. Sleep deprivation has long been considered to increase seizure frequency,25,26 although this has recently been contradicted27 and the subtleties of the relationship between sleep and seizures are not fully understood.

Given that forecasting performance can be improved with multivariate models, it is well worth investigating the predictive power of combining information from complementary signals. To combine features into a single forecast, machine learning algorithms are commonly used. These algorithms often have many parameters, making them effective at finding patterns in multivariate data. However, this approach requires a large volume of data, meaning hundreds of seizures may be needed for an effective forecaster to be developed. In contrast, a Bayesian approach that combines forecasts from several factors is simple and flexible.28 Inputs can be changed without the need to retrain the whole algorithm. The Bayesian approach may also require fewer samples than machine learning approaches if the relationship between each feature and seizure occurrence is learned independently.

Many studies have aimed to predict, rather than forecast, seizures. Seizure prediction is binary, because the goal is to predict whether a seizure will or will not occur. This approach assumes that all seizures have a detectable preictal state29 and attempts to identify this state. However, when evaluating external risk factors, it is not preictal states that are detected, but potential variations in seizure likelihood caused by these risk factors, thus implying a proictal state.30 In the proictal state, seizures are more likely but not certain, and a probabilistic forecasting approach is suitable.31

2 |. MATERIALS AND METHODS

2.1 |. Dataset

Eight of 15 patients from the NeuroVista seizure prediction clinical trial were analyzed in this study.4 The Human Research Ethics Committees of the participating institutes approved the NeuroVista trial and subsequent use of the data. All patients gave written informed consent before participation. Patient 3 was excluded due to high levels of signal dropout. Patients 2, 4, 5, 7, 12, and 14 were excluded because they had fewer than 50 seizures (lead and nonlead seizures during .5–2.0 years of recording). This cutoff was chosen to minimize unreliable seizure likelihood priors generated from patients with relatively few seizures. Patient demographics are shown in Table S2. iEEG recordings lasted for an average of 1.5 years per patient (range = 1.0–2.1), with an average of 248 seizures per patient (range = 52–475). To allow for initial postsurgical instability of iEEG and seizure behaviors, only data from 100 days after implantation were used.32 Events that were not clinically confirmed or electrographically similar to clinically confirmed events were excluded. Nonlead seizures, defined as seizures that followed another seizure by less than 5 h, were also excluded to avoid potential confounding effects from an increased seizure chance during seizure clusters.
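The lead-seizure rule above can be sketched as follows. This is a minimal illustration, not the trial's own code; it assumes the 5-h gap is measured from the immediately preceding seizure (lead or nonlead), which the text does not state explicitly. The function name `lead_seizures` is hypothetical.

```python
from datetime import datetime, timedelta

def lead_seizures(onsets, min_gap=timedelta(hours=5)):
    """Keep seizures that do not follow another seizure by less than min_gap."""
    leads = []
    previous = None
    for onset in sorted(onsets):
        # Assumption: the gap is taken from the immediately preceding
        # seizure, whether or not that seizure was itself a lead seizure.
        if previous is None or onset - previous >= min_gap:
            leads.append(onset)
        previous = onset
    return leads

example = [
    datetime(2011, 1, 1, 8, 0),
    datetime(2011, 1, 1, 10, 0),   # 2 h after the previous seizure
    datetime(2011, 1, 1, 14, 30),  # 4.5 h after the previous seizure
    datetime(2011, 1, 2, 9, 0),
]
leads = lead_seizures(example)
```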

Table S1 shows the different features that were considered. To account for signal dropout, histograms for each feature were inspected, and samples were removed where values lay outside of expected ranges. For example, time since waking was sometimes greater than 24 h due to overnight signal loss.

2.2 |. Sleep

The iEEG data were automatically labeled by classifiers trained according to American Academy of Sleep Medicine 2012 methodology into rapid eye movement (REM) sleep, stage 1 sleep, stage 2 sleep, stage 3 sleep, or awake. The iEEG data were scanned for artifacts using the algorithm of Nejedly et al.33 Each epoch that was not detected as artifactual was assigned into sleep categories based on methods adapted from Kremen et al.34 and Gerla et al.35 This method was previously validated on a set of iEEG data with concurrent polysomnography and gold standard sleep scoring according to American Academy of Sleep Medicine 2012 rules and yielded an average accuracy of 94% with a Cohen kappa of .87.34 Sleep scoring was performed on a representative electrode or median of all electrodes driven by expert selection and reviewer judgment during manual review. For each patient, 9 days at equidistant positions throughout the dataset were manually sleep-wake scored and used to train a patient-specific classifier. The trained classifier was then deployed in a daily manner, scoring 24-h sections at a time. As a postprocessing step, days with insufficient or noisy data were classified as unknown if more than 50% of data in a day were missing. Subsequently, each 30-s epoch where more than 50% of data were missing was set to unknown class.

From the classified data, nine objective sleep features were derived: current brain state, hours asleep, hours awake, hours in REM sleep, hours in stage 1 sleep, hours in stage 2 sleep, hours in stage 3 sleep, number of sleep stage transitions, and time since waking. All (except time since waking and current brain state) were calculated from the preceding 24 h. Time since waking was calculated from the previous sleep sample (this includes both night sleep and naps). Where current brain state was classified as unknown, the previous known state (within 1 h) was used.
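As an illustration of how such features might be derived from a sequence of 30-s epoch labels, consider the sketch below. The label names (`"REM"`, `"N1"`, `"N2"`, `"N3"`, `"AWAKE"`, `"UNKNOWN"`), the function names, and the definition of a transition as any change between consecutive known epochs are assumptions made for this example only. Current brain state is omitted because it is simply the most recent known label.

```python
from collections import Counter

EPOCH_SECONDS = 30
EPOCHS_PER_DAY = 24 * 3600 // EPOCH_SECONDS  # 2880 epochs in 24 h
SLEEP_STAGES = ("REM", "N1", "N2", "N3")     # hypothetical label names

def hours(n_epochs):
    return n_epochs * EPOCH_SECONDS / 3600

def time_since_waking(labels):
    """Hours since the most recent sleep epoch; zero while asleep."""
    for elapsed, label in enumerate(reversed(labels)):
        if label in SLEEP_STAGES:
            return hours(elapsed)
    return None  # no sleep epoch in the available history

def sleep_features(labels):
    """labels: one stage per 30-s epoch, oldest first, ending at the sample time."""
    window = labels[-EPOCHS_PER_DAY:]  # preceding 24 h
    counts = Counter(window)
    known = [label for label in window if label != "UNKNOWN"]
    features = {f"hours_{stage}": hours(counts[stage]) for stage in SLEEP_STAGES}
    features["hours_asleep"] = hours(sum(counts[s] for s in SLEEP_STAGES))
    features["hours_awake"] = hours(counts["AWAKE"])
    # Assumption: a transition is any label change between consecutive known epochs.
    features["transitions"] = sum(a != b for a, b in zip(known, known[1:]))
    features["time_since_waking"] = time_since_waking(labels)
    return features

# Example: 8 h of stage 2 sleep followed by 16 h awake.
features = sleep_features(["N2"] * 960 + ["AWAKE"] * 1920)
```

Because unknown epochs are counted in neither total, hours asleep and hours awake need not sum to 24 in this sketch.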

Hours asleep and hours awake were not complementary because of iEEG signal dropout (median loss per patient = .125–7.125 h per day; see Figure S4). Dropout will misrepresent seizure likelihoods if not accounted for. Samples were weighted according to the proportion of data lost. Thus, seizure likelihood for the jth percentile bin of the ith feature was calculated as

| (1) |

where $f_{i,j}$ represents the $i$th feature value in the $j$th percentile bin, $n$ is the number of samples, $d_k$ represents dropout in the $k$th sample, $b_{i,j,k}$ represents the $i$th feature value of the $k$th sample that fell within the $j$th percentile bin, and $s_k$ represents a seizure occurring in the $k$th sample.
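One form consistent with these definitions, offered here only as an assumption, is a weighted ratio $P(s \mid f_{i,j}) = \sum_{k=1}^{n} (1 - d_k)\, b_{i,j,k}\, s_k \,/\, \sum_{k=1}^{n} (1 - d_k)\, b_{i,j,k}$, where $d_k$ is taken to be the fraction of sample $k$ lost to dropout and $b_{i,j,k}$ is treated as a 0/1 indicator of bin membership. The sketch below implements that assumed form; the function name is hypothetical.

```python
def weighted_likelihood(in_bin, seizure, dropout):
    """Dropout-weighted seizure likelihood for one percentile bin.

    in_bin[k]  : 1 if sample k falls in the bin, else 0 (assumed indicator)
    seizure[k] : 1 if sample k is ictal, else 0
    dropout[k] : assumed fraction of sample k lost to signal dropout (0 to 1)
    """
    numerator = sum((1 - d) * b * s for b, s, d in zip(in_bin, seizure, dropout))
    denominator = sum((1 - d) * b for b, d in zip(in_bin, dropout))
    return numerator / denominator if denominator > 0 else float("nan")

# Three samples fall in the bin; two of them have lost half their data.
likelihood = weighted_likelihood(
    in_bin=[1, 1, 1, 0],
    seizure=[1, 0, 0, 1],
    dropout=[0.0, 0.5, 0.5, 0.0],
)
```

Under this weighting, a sample with complete data counts fully and a sample with total dropout contributes nothing to either sum.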

2.3 |. Weather

Hourly weather data were provided by the Australian Bureau of Meteorology. Temperature, humidity, wind speed, pressure, and rainfall data were collected from the local weather station closest to each patient’s home. Patients stayed near their home locations for the duration of the trial.

Maximum temperatures, minimum temperatures, and pressure ranges were based on the 24 h preceding the sample time. All other features used values associated with the hour preceding the sample time. Rain measures simply reflected whether there was any rainfall, because most hours had no rain.
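The feature definitions above can be sketched from a list of hourly records. The dictionary keys (`temp`, `humidity`, `wind`, `pressure`, `rain_mm`) are hypothetical field names rather than the Bureau of Meteorology's schema; the output names follow the abbreviations used in the Figure 4 caption where available.

```python
def weather_features(hourly):
    """hourly: one dict per hour, oldest first; the last entry is the hour
    preceding the sample time. Keys are hypothetical field names."""
    current = hourly[-1]
    last_day = hourly[-24:]  # the 24 h preceding the sample time
    temps = [h["temp"] for h in last_day]
    pressures = [h["pressure"] for h in last_day]
    return {
        "temp": current["temp"],
        "tempMax": max(temps),
        "tempMin": min(temps),
        "humid": current["humidity"],
        "wind": current["wind"],
        "press": current["pressure"],
        "pressRan": max(pressures) - min(pressures),
        # Rain is reduced to presence or absence, as most hours have none.
        "rain": current["rain_mm"] > 0,
    }

records = [
    {"temp": 10 + i, "humidity": 50, "wind": 5,
     "pressure": 1000 + (i % 5), "rain_mm": 0.0}
    for i in range(30)
]
records[-1]["rain_mm"] = 0.2
weather = weather_features(records)
```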

2.4 |. Temporal features

The times of seizures were binned according to hour of the day using 24-bin histograms. Similarly, weekday information was binned into the seven days of the week. To investigate monthly cycles, the lunar cycle was used, as it is constant in length, in contrast to calendar months. Some evidence suggests that the lunar cycle can affect sleep quality36 and so may also affect seizure likelihood.
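A minimal sketch of the three temporal features follows. The lunar phase is approximated from the mean synodic month (about 29.53 days) and a reference new moon on 6 January 2000 at 18:14 UTC; this approximation and the function name are assumptions of the example, not necessarily the method used in the study.

```python
from datetime import datetime, timedelta, timezone

SYNODIC_DAYS = 29.530588853  # mean length of the lunar cycle in days
REF_NEW_MOON = datetime(2000, 1, 6, 18, 14, tzinfo=timezone.utc)

def temporal_features(t):
    """t: timezone-aware datetime in the patient's local time zone."""
    days = (t - REF_NEW_MOON).total_seconds() / 86400
    return {
        "hour": t.hour,           # 0-23, for the 24-bin histogram
        "weekday": t.weekday(),   # 0 = Monday ... 6 = Sunday
        "lunar_phase": (days % SYNODIC_DAYS) / SYNODIC_DAYS,  # 0 = new moon
    }

at_new_moon = temporal_features(REF_NEW_MOON)
half_cycle = temporal_features(REF_NEW_MOON + timedelta(days=SYNODIC_DAYS / 2))
```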

2.5 |. Forecasting seizure likelihood

Training and testing sets were allocated chronologically for pseudoprospective analysis. The first 50% of seizures were allocated to the training set and the remaining 50% of seizures to the testing set to ensure the widest possible range of weather in both sets.

The training and testing sets were segmented into consecutive 10-min samples. These samples were aligned to the beginning of each set and not to seizure onset. Samples that contained the onset of a seizure were labeled “ictal,” and all other samples were labeled “interictal.” Each sample was considered a potential seizure occurrence period (SOP), and thus the SOP duration was 10 min. SOP was chosen to be 10 min because that is preferred by patients.37 A 10-min warning/intervention time was also used, matching median patient preference.38
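The split and segmentation steps can be sketched as below. The function names are hypothetical, and the handling of an odd seizure count (the extra seizure goes to the testing set) is an assumption, as the text does not specify it.

```python
from datetime import datetime, timedelta

def split_by_seizures(onsets):
    """Chronological split: first half of seizures for training, rest for testing."""
    ordered = sorted(onsets)
    half = len(ordered) // 2  # assumption: an odd seizure goes to the testing set
    return ordered[:half], ordered[half:]

def label_samples(start, end, onsets, width=timedelta(minutes=10)):
    """Cut [start, end) into consecutive samples aligned to start and label
    each sample that contains a seizure onset as ictal."""
    n_samples = (end - start) // width
    labels = ["interictal"] * n_samples
    for onset in onsets:
        k = (onset - start) // width  # index of the sample containing the onset
        if 0 <= k < n_samples:
            labels[k] = "ictal"
    return labels

start = datetime(2011, 1, 1, 0, 0)
labels = label_samples(
    start,
    start + timedelta(hours=1),
    [start + timedelta(minutes=25), start + timedelta(minutes=59)],
)
train, test = split_by_seizures([start + timedelta(days=d) for d in range(5)])
```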

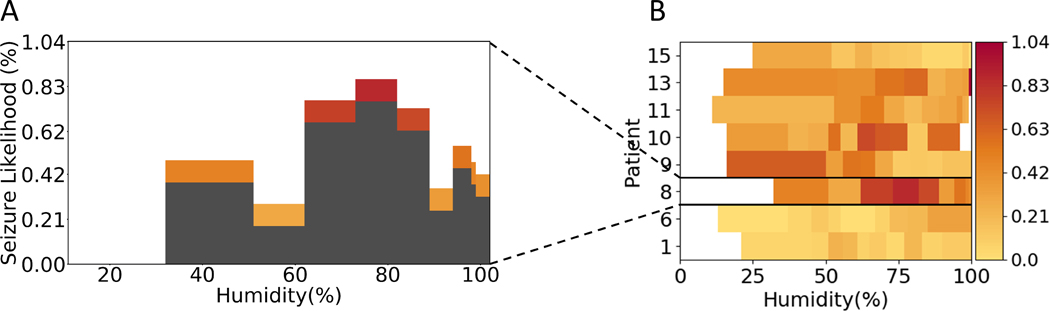

For each feature, histograms of seizure likelihood were generated (see Figure 1 and Figures S1–S3). Bin edges were defined by percentile values such that each bin contained 10% of the samples. Percentiles were used to ensure that each bin represented an equal number of samples, avoiding more extreme deviations of likelihood that arise when calculated using a bin with few samples.

FIGURE 1.

Example of a seizure likelihood histogram and heatmap. (A) Seizure likelihood across a range of humidity levels for Patient 8. Each histogram bin represents 10% of all samples, and so they are not of consistent width. Both the height and color represent seizure likelihood. (B) Histograms for each patient are converted into a row of the heatmap, keeping the color representation and width shown in the histograms

For each sample in the test set, the forecast was given by the training set seizure likelihood associated with the sample’s weather, sleep, or temporal feature value.
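The histogram and lookup steps might look like the following sketch. It omits the dropout weighting for brevity, and the function names and the choice of edge positions are assumptions of this example. For features with many tied values (such as binary rain occurrence), equal occupancy of the bins cannot be guaranteed.

```python
from bisect import bisect_right

def percentile_edges(values, n_bins=10):
    """Interior bin edges chosen so each bin holds about 1/n_bins of the samples."""
    ordered = sorted(values)
    return [ordered[(len(ordered) * q) // n_bins] for q in range(1, n_bins)]

def bin_index(value, edges):
    # Values outside the training range fall into the first or last bin.
    return bisect_right(edges, value)

def fit_histogram(values, ictal, n_bins=10):
    """Seizure likelihood per percentile bin, learned from the training set."""
    edges = percentile_edges(values, n_bins)
    counts = [0] * n_bins
    seizures = [0] * n_bins
    for value, is_ictal in zip(values, ictal):
        j = bin_index(value, edges)
        counts[j] += 1
        seizures[j] += is_ictal
    likelihood = [s / c if c else 0.0 for s, c in zip(seizures, counts)]
    return edges, likelihood

def forecast(value, edges, likelihood):
    """Forecast for a test sample: the training likelihood of its bin."""
    return likelihood[bin_index(value, edges)]

train_values = list(range(100))
train_ictal = [1 if v >= 90 else 0 for v in train_values]
edges, likelihood = fit_histogram(train_values, train_ictal)
```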

2.6 |. Combining forecasts

Forecasts from individual features were combined using the naïve Bayesian equation,

| (2) |

where $l$ is the forecasted seizure likelihood given all included features, $s$ is a seizure, $s'$ is the absence of a seizure, $i$ is the current feature, $j_i$ is the percentile bin of the $i$th feature, and $f_{i,j_i}$ represents that the $i$th feature’s value was within the $j_i$th percentile bin.

Derivation of this equation is provided in Appendix S1. This equation assumes independence between all factors. This assumption is likely false, but the naïve Bayesian method has been shown to work as an effective model even without independent variables.39 Not relying on this assumption requires the calculation of a multivariate probability distribution, which was not feasible given the number of seizures and factors considered.
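A naïve Bayes combination of per-feature likelihoods can be sketched as below. This is the standard formulation under conditional independence, written in log-odds form: each feature shifts the prior log-odds by the difference between its own log-odds and the prior's. It is not necessarily the exact algebraic form of Equation 2, and the clipping of probabilities away from 0 and 1 is an assumption added to keep the logarithm finite.

```python
import math

def _logit(p, eps=1e-9):
    # Assumption: clip to avoid infinite log-odds for empty or saturated bins.
    p = min(max(p, eps), 1 - eps)
    return math.log(p / (1 - p))

def combine_forecasts(prior, posteriors):
    """Naive Bayes combination of per-feature seizure likelihoods.

    prior      : P(s), the baseline seizure probability per sample
    posteriors : P(s | f_i) for each feature i, read from its histogram
    """
    prior_logit = _logit(prior)
    log_odds = prior_logit + sum(_logit(p) - prior_logit for p in posteriors)
    return 1 / (1 + math.exp(-log_odds))

single = combine_forecasts(0.01, [0.02])          # one feature: unchanged
neutral = combine_forecasts(0.01, [0.01, 0.01])   # uninformative features
raised = combine_forecasts(0.01, [0.02, 0.02])    # two agreeing features
```

Because each input is handled independently, a feature can be added or removed without refitting the others.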

2.7 |. Measuring performance

Many of the standard metrics of performance for seizure prediction, including sensitivity, false positive rate, and time in warning, do not apply for probabilistic forecasting, because the binary distinction of true and false depends upon a set warning threshold. This issue was circumvented by producing receiver operator characteristic (ROC) curves, converting the forecasted probability to one or zero across varying thresholds. The area under the curve (AUC) of the ROC curve is useful as a single-value measure of forecasting performance.5 AUC assumes that discriminative thresholds are applied to a forecast to classify into either low or high risk. Other metrics that consider a continuous probability rather than a binary warning state22 may be more appropriate to compare the clinical utility of seizure forecasts. The aim of this work was to evaluate the relative capability of sleep, weather, and temporal features to discriminate proictal (i.e., high risk) states from background (low risk) states linked to these features.
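Sweeping every threshold is equivalent to a rank statistic: the AUC is the probability that a randomly chosen ictal sample receives a higher forecast than a randomly chosen interictal sample, with ties counted as one half. The sketch below uses that rank-based form; the function name is hypothetical. Tie handling matters here because histogram-based forecasts take only a small number of distinct values.

```python
def roc_auc(forecasts, labels):
    """AUC from average ranks (equivalent to the Mann-Whitney U statistic).

    forecasts : forecasted seizure likelihood per sample
    labels    : 1 for ictal samples, 0 for interictal samples
    """
    order = sorted(range(len(forecasts)), key=lambda k: forecasts[k])
    ranks = [0.0] * len(forecasts)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and forecasts[order[j + 1]] == forecasts[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # tied samples share their mean 1-based rank
        for k in order[i:j + 1]:
            ranks[k] = average_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum = sum(r for r, y in zip(ranks, labels) if y)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)

perfect = roc_auc([0.1, 0.2, 0.3, 0.4], [0, 0, 1, 1])
inverted = roc_auc([0.1, 0.2, 0.3, 0.4], [1, 1, 0, 0])
uninformative = roc_auc([0.5, 0.5, 0.5, 0.5], [0, 1, 0, 1])
```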

Samples in the hour following an ictal sample were considered postictal and so were not included when determining forecasting performance. Forecasters were considered to perform significantly better than chance if the AUC confidence interval did not contain .50 (the average value with surrogate time series forecasts). AUC confidence interval was calculated using the Hanley-McNeil method40 (α = .05 with Bonferroni correction). For details on the methodology for testing significance and surrogate time series, see Appendix S2.
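The significance check can be illustrated with the Hanley-McNeil standard error, $SE = \sqrt{[A(1-A) + (n_p - 1)(Q_1 - A^2) + (n_n - 1)(Q_2 - A^2)] / (n_p n_n)}$ with $Q_1 = A/(2-A)$ and $Q_2 = 2A^2/(1+A)$, where $A$ is the AUC and $n_p$, $n_n$ are the numbers of ictal and interictal samples. The sketch assumes a two-sided normal interval and a Bonferroni factor of four (giving the corrected α of .0125 reported with Table 1); the sample counts in the example are illustrative, not values from the study.

```python
import math
from statistics import NormalDist

def hanley_mcneil_ci(auc, n_pos, n_neg, alpha=0.05, n_comparisons=4):
    """Confidence interval for an AUC using the Hanley-McNeil standard error.

    Assumptions: two-sided normal interval; Bonferroni correction divides
    alpha by n_comparisons.
    """
    q1 = auc / (2 - auc)
    q2 = 2 * auc ** 2 / (1 + auc)
    variance = (
        auc * (1 - auc)
        + (n_pos - 1) * (q1 - auc ** 2)
        + (n_neg - 1) * (q2 - auc ** 2)
    ) / (n_pos * n_neg)
    z = NormalDist().inv_cdf(1 - (alpha / n_comparisons) / 2)
    half_width = z * math.sqrt(variance)
    return auc - half_width, auc + half_width

def better_than_chance(auc, n_pos, n_neg):
    lower, _ = hanley_mcneil_ci(auc, n_pos, n_neg)
    return lower > 0.5

# Illustrative counts only.
strong = hanley_mcneil_ci(0.747, n_pos=150, n_neg=40000)
weak = hanley_mcneil_ci(0.531, n_pos=37, n_neg=46000)
```

With few ictal samples the interval widens considerably, so a modest AUC cannot be distinguished from chance.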

3 |. RESULTS

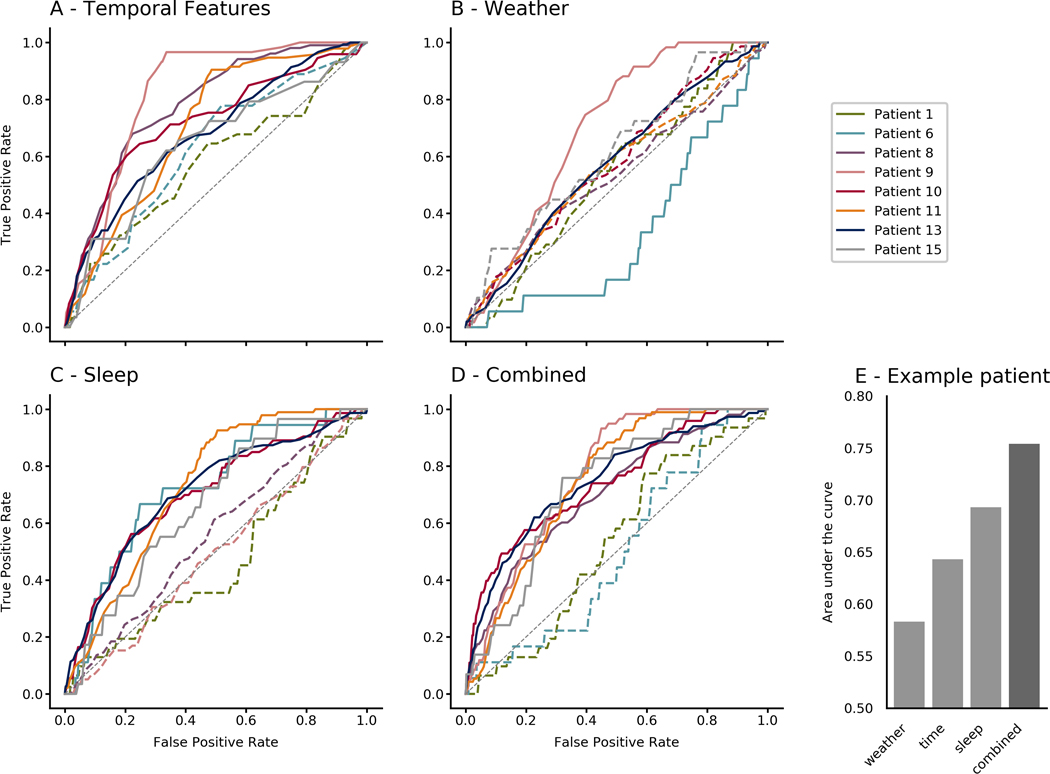

In the following sections, the term feature group refers to a collection of features (e.g., sleep features). Figure 2 shows ROC curves for each patient in each of the feature groups (sleep, weather, temporal) and all feature groups combined. AUC values of the ROC curves are shown in Table 1. Six of eight patients had an AUC significantly greater than the chance level of .5 when combining all feature groups (average = .684, range = .503–.770). For four of these six patients, combining all feature groups gave better results than forecasting with any one feature group. Temporal features performed better than chance for six patients, sleep features for five patients, and weather features for two patients. Using only features that performed better than chance in the training set did not alter average test set performance (average AUC = .687, range .498–.783). Per patient, the combined forecast outperformed the best performing individual feature group in four of the eight patients (p = .0495, paired t-test).

FIGURE 2.

Receiver operator characteristic curves for all patients using (A) combined temporal features, (B) combined weather features, (C) combined sleep features, and (D) all feature groups combined. (E) Area under the curve values for Patient 15, exemplifying how performance can improve when features are combined

TABLE 1.

Test set area under the curve scores across patients and feature groups

| Patient | Seizures | p(Sz) | Sleep | Weather | Temporal | Combined |
|---|---|---|---|---|---|---|
| 1 | 74 | .000801 | .465 (p = .487) | .538 (p = .47) | .578<sup>b</sup> (p = .15) | .531 (p = .55) |
| 6 | 39 | .000794 | .722<sup>a,b</sup> (p = .001) | .336<sup>a</sup> (p = .003) | .625 (p = .077) | .503 (p = .96) |
| 8 | 224 | .00339 | .555 (p = .062) | .538 (p = .20) | .780<sup>a,b</sup> (p < .001) | .702<sup>a</sup> (p < .001) |
| 9 | 146 | .00343 | .492 (p = .82) | .698<sup>a</sup> (p < .001) | .818<sup>a,b</sup> (p < .001) | .770<sup>a</sup> (p < .001) |
| 10 | 168 | .00427 | .701<sup>a</sup> (p < .001) | .578 (p = .027) | .721<sup>a</sup> (p < .001) | .743<sup>a,b</sup> (p < .001) |
| 11 | 212 | .00237 | .728<sup>a</sup> (p < .001) | .558 (p = .059) | .704<sup>a</sup> (p < .001) | .747<sup>a,b</sup> (p < .001) |
| 13 | 305 | .00351 | .709<sup>a</sup> (p < .001) | .576<sup>a</sup> (p = .002) | .680<sup>a</sup> (p < .001) | .747<sup>a,b</sup> (p < .001) |
| 15 | 58 | .00110 | .664<sup>a</sup> (p = .003) | .616 (p = .037) | .644<sup>a</sup> (p < .001) | .731<sup>a,b</sup> (p < .001) |

Abbreviation: p(Sz), probability of seizure in a 10-min sample, calculated from the combined train and test data.

<sup>a</sup>Chance performance (.5) is not within the confidence interval for the area under the curve (α = .0125 after Bonferroni correction).

<sup>b</sup>Best performance for each patient.

3.1 |. Forecasting with sleep features

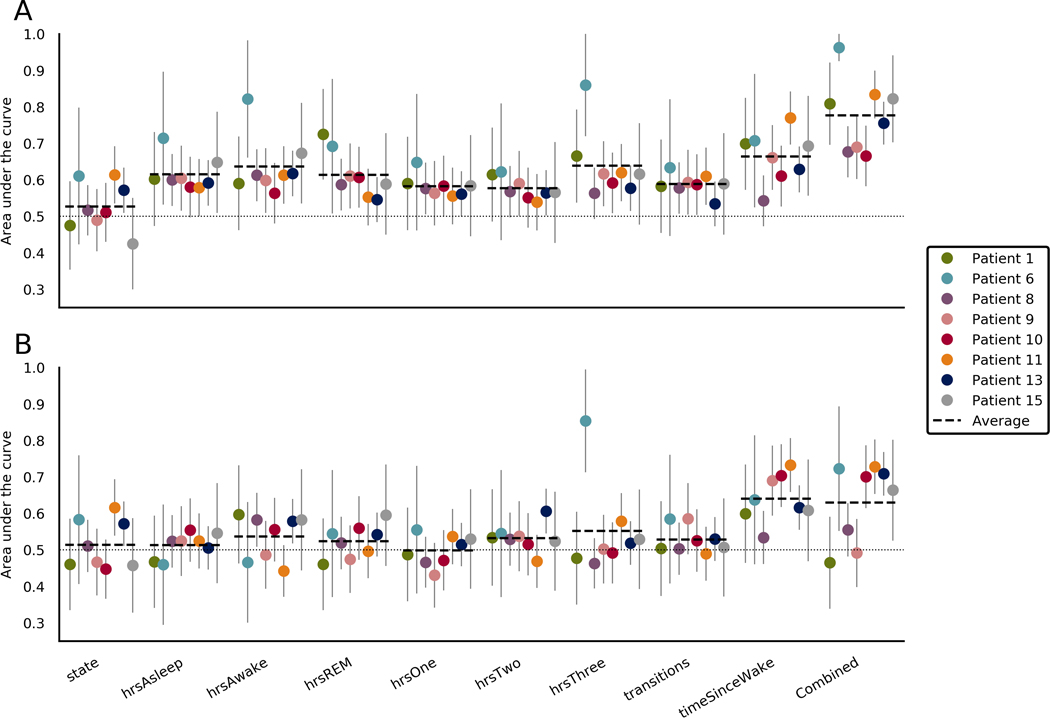

Figure 3 shows forecasting performance derived from objective measures of sleep (sleep features) in all patients. Forecasting with all sleep features combined performed better than chance for five of eight patients, with an average of .630 (range = .465–.728) across all patients. Forecasting with the number of hours asleep, hours in REM sleep, hours in stage 1 sleep, or the number of sleep stage transitions did not perform better than chance for any patient. Forecasting with the number of hours awake performed better than chance for two patients. Current state, hours in stage 2, and hours in stage 3 sleep performed better than chance in one patient. Time since waking proved the most useful sleep feature, with better than chance forecasting in four patients.

FIGURE 3.

Area under the curve (AUC) scores when forecasting using sleep features in the (A) training set and (B) testing set. Each section represents a different sleep feature. The final section shows scores when the forecasts from all sleep features were combined. Average lines indicate the average for that feature across patients. Chance performance is .5, as indicated by the dotted line. Error bars represent the upper limits of the confidence interval of each AUC score. REM, rapid eye movement sleep

3.2 |. Forecasting with weather features

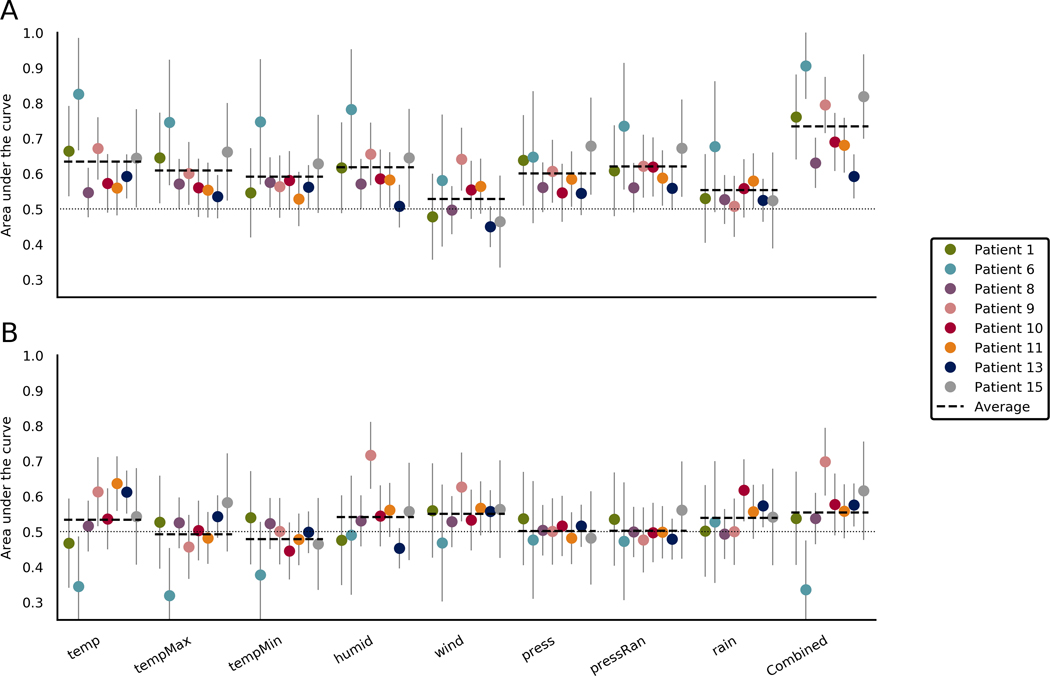

Figure 4 shows forecasting performance for the weather features in all patients. Forecasting with combined weather features performed better than chance for two of eight patients, with an average of .555 (range = .336–.698) across all patients. Forecasting with maximum temperature, minimum temperature, pressure, and pressure range did not perform better than chance for any patient. Forecasting with humidity and wind speed performed better than chance for Patient 9 only. Forecasting with rainfall performed better than chance for two patients. Forecasting with temperature at the time of the sample performed better than chance for three patients. For Patient 6, temperature, maximum temperature, and combining all weather features performed significantly worse than chance. This likely occurred because the low seizure count produced a seizure likelihood distribution that was not representative of the patient’s susceptibility to weather features.

FIGURE 4.

Area under the curve (AUC) scores when forecasting using weather features in the (A) training set and (B) testing set. Each section represents a different weather feature. The final section shows scores when the forecasts from all weather features were combined. Average lines indicate the average for that feature across patients. Chance performance is .5, as indicated by the dotted line. Error bars represent the upper limits of the confidence interval of each AUC score. Combined, rain occurrence and all features combined; humid, humidity; press, pressure; pressRan, pressure range; temp, current temperature; tempMax, maximum daily temperature; tempMin, minimum daily temperature; wind, wind speed

3.3 |. Forecasting with temporal features

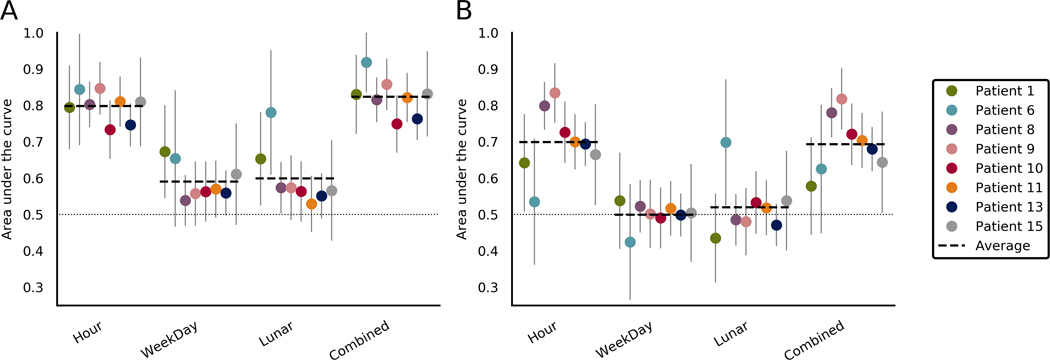

Figure 5 shows forecasting performance for the temporal features in all patients. Forecasting with all temporal features combined performed better than chance for six of eight patients, with an average AUC of .694 (range = .578–.818) across all patients. Forecasting with the hour of the day performed better than chance for seven patients, averaging .699 (range = .535–.834) across all patients. Lunar phase performed better than chance in only Patient 6, and day of the week did not perform better than chance for any patient.

FIGURE 5.

Comparison of area under the curve (AUC) scores when forecasting using temporal features in the (A) training set and (B) testing set. Each section represents a different temporal feature. The final section shows scores when the forecasts from all temporal features were combined. Average lines indicate the average for that feature across patients. Chance performance is .5, as indicated by the dotted line. Error bars represent the upper limits of the confidence interval of each AUC score

For patients where both time of day and time since waking performed better than chance, forecasting with these two features combined outperformed forecasts with either feature individually (mean improvement = .016, p = .012; see Table S5).

4 |. DISCUSSION

This study showed the predictive power of environmental and physiological features that may be complementary to EEG, opening a new direction to search for effective factors in seizure forecasting. The sleep, weather, and temporal feature groups all performed better than chance in different patients. No measure performed better than chance across all patients, further highlighting the patient-specific nature of seizure forecasting.6,7 The combined forecast outperformed all individual feature groups in four of the eight patients.

Combining only features that performed above chance on training set data did not alter performance compared with using all features from all feature groups. This is likely because training set performance could not reliably predict test set performance. To validate forecasting performance of some features, dozens if not hundreds of seizures may need to be recorded over at least 2 years (to account for a full seasonal cycle in each set). The advantage of a Bayesian approach is that a weighted combination can be used so that even uncertain or weak prior information may contribute to improving an overall forecast. Therefore, patients may still derive early benefits from these features. We expect that performance would gradually improve as more data are collected and longer cycles are fully captured.

Patient 6 performed well in the training set but poorly in the test set, including an AUC significantly worse than chance when using weather. This reversal of the predictive power is likely due to seasonal changes in weather data. For example, susceptibility to hot days may have been missed in the training set because hot days only occurred in the test set. Patient 6 also had the fewest lead seizures (39) in the study. It is likely that Patient 6 did not have enough seizures to reliably generate seizure likelihood distributions that were representative of the true distributions, leading to unreliable performance. This effect is likely present in all patients, causing training set performance to be an unreliable indicator of test set performance. Therefore, using the training set to select features should be avoided. A real-world implementation of these prior features could initially use all features from all feature groups and only alter feature sets when enough data are available to robustly compare performance.

4.1 |. Sleep

Time since waking was the best performing sleep feature (average AUC = .649, range = .487–.769). Time since waking is related to time of day (average AUC = .699, range = .535–.834), but it was not clear which more directly influenced seizure likelihood. Where both were useful individually, the combined forecast outperformed both features, indicating that the two features have distinct utility to some degree.

For people with an irregular sleep schedule (see Figure S6), time since waking may be more informative than time of day. Time since waking has a value of zero while the subject is asleep and so also encodes current brain state (sleep vs. awake), which may contribute to its performance. Further research is needed to explore the relationship between sleep, time, and seizures.

Current brain state performed better than chance in only one patient. Current brain state does not consider multiple samples like the other sleep features and therefore is more heavily impacted by unknown sleep state, which may have led to this result.

For all patients, most sleep features other than time since waking did not perform better than chance. This was unexpected, as sleep deprivation is associated with increased seizure frequency,25,26 although this has recently been contradicted,27 and the relationship is less clear when sleep deprivation is milder.41,42

Although smaller day-to-day variations in sleep may modulate seizure likelihood, our results indicate that they might not be useful as seizure forecasting features. The duration of stage 2 and stage 3 sleep was predictive of seizures for one patient, which aligns with previous findings that epileptic activity is mainly facilitated by non-REM sleep.43

Signal loss and the resulting unknown vigilance state limit the conclusions we can make from these data (see Appendix S4). This has been partially accounted for by weighting samples according to signal loss (see Equation 1), although the effects of lost data cannot be fully counteracted. Patients 1 and 9 had large parts of the night classified as unknown (see Figure S5), which might explain their poorer performance using sleep features.

In this study, sleep features were measured by iEEG; however, some of these sleep features may eventually be measured noninvasively. Further technological improvements are necessary before noninvasive devices, such as wearables, can measure sleep stages with accuracy comparable to gold standard polysomnography. Interestingly, the most useful sleep feature (i.e., time since waking) also requires the least precision and so may be utilized without an invasive device.

4.2 |. Weather

Weather features showed the worst forecasting performance, with only two patients showing better than chance performance when combining features and only seven of 64 patient–feature pairings showing significant results. Considering that patients were likely indoors for much of the study, it is promising that weather was even slightly predictive of seizures. Patient-localized sensors may improve performance of features such as temperature, pressure, and humidity. For most people with epilepsy, weather may simply not be a factor that contributes to seizure likelihood, as only two of eight patients showed a positive result. For others, the effect was small, and so weather will likely play a minor role in forecasting, although it is still potentially useful.

Current temperature, humidity, windspeed, and rainfall all performed better than chance in at least one patient, but pressure, pressure range, maximum temperature, and minimum temperature did not. Changes in atmospheric pressure can affect seizure rate,44 although perhaps higher temporal resolution is required for pressure change to have utility.

The results indicate that current weather information was more useful than recent weather information. High humidity was predictive of increased seizure likelihood in a previous study of an in-patient population,24 and the current results (see Figure S2) support that this effect is also true for individual patients.

4.3 |. Temporal features

Time of day was the most reliable of all individual features, with all but Patient 6 performing better than chance. This is not surprising, as time of day paces the endogenous circadian cycle, which is known to influence seizure timing.45 It may also be because time of day is a useful proxy for both weather conditions and wakefulness. Given the strength of circadian features,22,23 it could be argued that time of day should simply be used alone, without weather or sleep data. This choice may be correct for some patients, because weather and sleep may not significantly affect their seizure likelihood, although many patients are likely to be interested in understanding their personal seizure risk factors in addition to receiving a “black-box” forecast. Furthermore, the current results show that selecting a single feature or a few features is generally less reliable than using combined forecasts.

Only Patient 6 performed better than chance with lunar cycles, and no patient showed significant performance with weekly cycles. Patient-specific multidien cycles from iEEG have been shown,23 including in these patients,46 and have produced robust prior probabilities.47 However, patient-specific cycles may not always be known, and so temporal cycles were restricted to fixed calendar-based cycles. Misalignment between patient-specific and calendar cycles likely led to the poor performance of multidien temporal features shown. The mismatch in timescales between forecaster and data may also reduce performance.

Using time since last seizure is perhaps an obvious candidate for a temporal feature, especially given that seizures occur in bursts. However, the feature was omitted, as only lead seizures were considered, which could undervalue the feature’s utility.

4.4 |. Forecasting

The Bayesian method of combining forecasts falsely assumes independence between each of the features being combined. Ideally, a multivariate likelihood distribution would be generated to account for the complex relationships between features. This would require an impractical number of seizures, with a further increase in numbers required with each additional feature. By assuming independence, the number of seizures needed becomes feasible while still producing useful forecasts. This study cannot determine whether the tested features are independent from each other or features not tested such as patient-specific multidien cycles.

The number of seizures remains a limiting factor on whether forecasting in this framework is achievable. As seen with Patient 6, when too few seizures were used to train the forecaster, performance was unreliable. This was largely due to overfitting to the training set. Overfitting could have been reduced by using fewer histogram bins. However, with fewer bins it becomes harder to observe changes in seizure likelihood due to extreme conditions.

Ten feature bins were used as a trade-off between reliability and sensitivity to extreme events. The effects of extreme events were still likely diluted due to their rarity. Some people may be highly susceptible to seizures in extreme scenarios (such as no sleep in 24 hours or a 45°C day), but these scenarios were not observed frequently enough during the recording period. For these rare but potentially high-risk scenarios, an alternative method for determining seizure probability, such as conditioning on the likelihood of rare events or even using population-wide histograms, may be more appropriate. Further research should investigate metrics for quantifying seizure probability under extreme scenarios.
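The trade-off between bin count and rare extreme conditions can be made concrete with a small sketch. It is an illustration under stated assumptions, not the study's code: the function name `binned_seizure_likelihood`, the use of equal-width bins, and the toy temperature data are all hypothetical. The function returns, for each bin, the fraction of observations that were followed by a seizure, together with the number of observations behind that fraction.

```python
def binned_seizure_likelihood(values, seizure_flags, n_bins=10):
    """Estimate seizure likelihood per equal-width bin of one feature.

    Hypothetical helper. Returns (likelihood, totals), where likelihood[i] is
    the fraction of observations in bin i that were followed by a seizure
    (None for an empty bin) and totals[i] is the number of observations.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("feature values must span a non-zero range")
    width = (hi - lo) / n_bins

    totals = [0] * n_bins
    seizures = [0] * n_bins
    for value, flag in zip(values, seizure_flags):
        # Clamp so that the maximum value falls in the last bin.
        idx = min(int((value - lo) / width), n_bins - 1)
        totals[idx] += 1
        seizures[idx] += int(flag)

    likelihood = [s / t if t else None for s, t in zip(seizures, totals)]
    return likelihood, totals


# Toy data: nine mild days without a seizure and one 45 degree day with one.
temps = [15, 17, 18, 20, 21, 22, 23, 24, 25, 45]
flags = [False] * 9 + [True]
likelihood, totals = binned_seizure_likelihood(temps, flags)
for i, (p, n) in enumerate(zip(likelihood, totals)):
    print(i, n, p)
```

In the toy data the hottest bin rests on a single observation, so its estimated likelihood is unreliable, and the bins between the mild and extreme temperatures are empty. Fewer bins would merge the extreme day with milder ones and hide its effect, which is the dilution described above.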

Some people have multiple and distinct seizure populations distinguished by seizure length.48,49 It is possible that these populations react differently to external factors, and therefore accounting for different seizure populations could improve forecasting performance. In addition, if seizure populations can be distinguished, external factors could enable not only a forecast of when a seizure occurs, but also the anticipated severity of the seizure.

This paper developed seizure forecasts from three groups of features that could be measured noninvasively. Although the set of possible features that was explored was limited by the available data, there are likely to be more features that will prove beneficial for seizure forecasting, such as heart rate,50,51 cortisol levels, alcohol consumption, or medication compliance.2 As more features are explored, more patients may see significant performance improvements.

5 |. CONCLUSION

This study builds on our previous work to show the potential power of combining new environmental and behavioral features. Although EEG signal features will likely continue to outperform auxiliary signals in seizure forecasting, the presented results provide complementary features that can be used in addition to invasively measured features or when such recordings are not available. Ultimately, it is our hope that a better understanding of patient-specific risk factors is a step toward making seizure forecasting a clinical reality for people with epilepsy.

Supplementary Material

Key Points.

- For some patients, weather, sleep, and temporal features contain significant weak-to-strong predictive information about upcoming seizures

- Seizure likelihood distributions varied between individuals, and the best performing feature combinations were highly patient-specific

- Features combined using a Bayesian approach provide forecasts that outperformed single features

ACKNOWLEDGMENTS

The authors acknowledge the support of the National Health and Medical Research Council, project grant ID 1065638. Daniel E. Payne acknowledges the support of a Melbourne Research Scholarship, the University of Melbourne, and Mentone Grammar. Vaclav Kremen and Vaclav Gerla were partially supported by Institutional Resources of Czech Technical University in Prague. Vaclav Kremen and Gregory A. Worrell were supported by the National Institutes of Health under grant R01 NS09288203 and grant UH2/UH3NS95495.

Funding information

Mentone Grammar, Grant/Award Number: Mentone Grammar Foundation Award; National Institutes of Health, Grant/Award Number: R01 NS09288203 and UH2/UH3NS95495; National Health and Medical Research Council, Grant/ Award Number: 1065638; The University of Melbourne, Grant/Award Number: Melbourne Research Scholarship

Footnotes

ETHICAL PUBLICATION STATEMENT

We confirm that we have read the Journal’s position on issues involved in ethical publication and affirm that this report is consistent with those guidelines.

DATA AVAILABILITY STATEMENT

The NeuroVista Kaggle competition and seizure data are accessible online via https://www.epilepsyecosystem.org.

SUPPORTING INFORMATION

Additional supporting information may be found online in the Supporting Information section.

CONFLICT OF INTEREST

None of the authors has any conflict of interest to disclose.

REFERENCES

- 1. Epilepsy Foundation. Ei2 community survey. 2016. https://www.epilepsy.com/sites/core/files/atoms/files/community-survey-report-2016%20V2.pdf. Accessed 14 Dec 2018.

- 2. Dumanis SB, French JA, Bernard C, Worrell GA, Fureman BE. Seizure forecasting from idea to reality. Outcomes of the My Seizure Gauge Epilepsy Innovation Institute workshop. eNeuro. 2017;2:1–5.

- 3. Fisher RS, Vickrey BG, Gibson P, Hermann B, Penovich P, Scherer A, et al. The impact of epilepsy from the patient’s perspective I. Descriptions and subjective perceptions. Epilepsy Res. 2000;41:39–51.

- 4. Cook MJ, O’Brien TJ, Berkovic SF, Murphy M, Morokoff A, Fabinyi G, et al. Prediction of seizure likelihood with a long-term, implanted seizure advisory system in patients with drug-resistant epilepsy: a first-in-man study. Lancet Neurol. 2013;12(6):563–71.

- 5. Kuhlmann L, Karoly P, Freestone DR, Brinkmann BH, Temko A, Barachant A, et al. Epilepsyecosystem.org: crowd-sourcing reproducible seizure prediction with long-term human intracranial EEG. Brain. 2018;141:2619–30.

- 6. Kuhlmann L, Lehnertz K, Richardson MP, Schelter B, Zaveri HP. Seizure prediction - ready for a new era. Nat Rev Neurol. 2018;14(10):618–30.

- 7. Freestone DR, Karoly PJ, Cook MJ. A forward-looking review of seizure prediction. Curr Opin Neurol. 2017;30(2):1.

- 8. Bedeeuzzaman M, Fathima T, Khan YU, Farooq O. Seizure prediction using statistical dispersion measures of intracranial EEG. Biomed Signal Process Control. 2013;10:338–41.

- 9. Costa RP, Oliveira P, Rodrigues G, Leitão B, Dourado A. Epileptic seizure classification using neural networks with 14 features. In: Lovrek I, Howlett RJ, Jain LC, editors. Knowledge-based intelligent information and engineering systems: 12th international conference, KES 2008, Zagreb, Croatia, September 3–5, 2008, proceedings, part II. Berlin, Heidelberg, Germany: Springer; 2008. p. 281–8.

- 10. Winterhalder M, Schelter B, Maiwald T, Brandt A, Schad A, Schulze-Bonhage A, et al. Spatio-temporal patient-individual assessment of synchronization changes for epileptic seizure prediction. Clin Neurophysiol. 2006;117(11):2399–413.

- 11. Mormann F, Andrzejak RG, Elger CE, Lehnertz K. Seizure prediction: the long and winding road. Brain. 2007;130:314–33.

- 12. Park Y, Luo L, Parhi KK, Netoff T. Seizure prediction with spectral power of EEG using cost-sensitive support vector machines. Epilepsia. 2011;52(10):1761–70.

- 13. Le Van Quyen M, Soss J, Navarro V, Robertson R, Chavez M, Baulac M, et al. Preictal state identification by synchronization changes in long-term intracranial EEG recordings. Clin Neurophysiol. 2005;116(3):559–68.

- 14. Ghaderyan P, Abbasi A, Sedaaghi MH. An efficient seizure prediction method using KNN-based undersampling and linear frequency measures. J Neurosci Methods. 2014;232:134–42.

- 15. Schelter B, Feldwisch-Drentrup H, Ihle M, Schulze-Bonhage A, Timmer J. Seizure prediction in epilepsy: from circadian concepts via probabilistic forecasting to statistical evaluation. Annu Int Conf IEEE Eng Med Biol Soc. 2011;2011:1624–7.

- 16. Parvez MZ, Paul M. Seizure prediction using undulated global and local features. IEEE Trans Biomed Eng. 2017;64(1):208–17.

- 17. Kuhlmann L, Freestone D, Lai A, Burkitt AN, Fuller K, Grayden DB, et al. Patient-specific bivariate-synchrony-based seizure prediction for short prediction horizons. Epilepsy Res. 2010;91(2–3):214–31.

- 18. Mirowski P, Madhavan D, LeCun Y, Kuzniecky R. Classification of patterns of EEG synchronization for seizure prediction. Clin Neurophysiol. 2009;120(11):1927–40.

- 19. Kiral-Kornek I, Roy S, Nurse E, Mashford B, Karoly P, Carroll T, et al. Epileptic seizure prediction using big data and deep learning: toward a mobile system. EBioMedicine. 2017;27:103–11.

- 20. Spalt J, Langbauer G, Boltzmann L. Subjective perception of seizure precipitants: results of a questionnaire study. Seizure. 1998;7:391–5.

- 21. Bercel NA. The periodic features of some seizure states. Ann N Y Acad Sci. 1964;117:555–63.

- 22. Karoly PJ, Ung H, Grayden DB, Kuhlmann L, Leyde K, Cook MJ, et al. The circadian profile of epilepsy improves seizure forecasting. Brain. 2017;140(8):2169–82.

- 23. Baud MO, Kleen JK, Mirro EA, Andrechak JC, King-Stephens D, Chang EF, et al. Multi-day rhythms modulate seizure risk in epilepsy. Nat Commun. 2018;9:1–10.

- 24. Rakers F, Walther M, Schiffner R, Rupprecht S, Rasche M, Kockler M, et al. Weather as a risk factor for epileptic seizures: a case-crossover study. Epilepsia. 2017;58(7):1287–95.

- 25. Ellingson RJ, Wilken K, Bennett DR. Efficacy of sleep deprivation as an activation procedure in epilepsy patients. J Clin Neurophysiol. 1984;1(1):83–101.

- 26. Foldvary-Schaefer N, Grigg-Damberger M. Sleep and epilepsy: what we know, don’t know, and need to know. J Clin Neurophysiol. 2006;23(1):4–20.

- 27. Rossi KC, Joe J, Makhija M, Goldenholz DM. Insufficient sleep, electroencephalogram activation, and seizure risk: re-evaluating the evidence. Ann Neurol. 2020;87(6):798–806.

- 28. Russell SJ, Norvig P. Bayes rule and its use. In: Hirsch M, editor. Artificial intelligence: a modern approach. 3rd ed. Hoboken, NJ: Pearson; 2010. p. 495–9.

- 29. Freestone DR, Karoly PJ, Peterson ADH, Kuhlmann L, Lai A, Goodarzy F, et al. Seizure prediction: science fiction or soon to become reality? Curr Neurol Neurosci Rep. 2015;15:73.

- 30. Baud MO, Proix T, Rao VR, Schindler K. Chance and risk in epilepsy. Curr Opin Neurol. 2020;33(2):163–72.

- 31. Litt B, Lehnertz K. Seizure prediction and the preseizure period. Curr Opin Neurol. 2002;15(2):173–7.

- 32. Ung H, Baldassano SN, Bink H, Krieger AM, Williams S, Vitale F, et al. Intracranial EEG fluctuates over months after implanting electrodes in human brain. J Neural Eng. 2017;14(5):056011.

- 33. Nejedly P, Kremen V, Sladky V, Cimbalnik J, Klimes P, Plesinger F, et al. Exploiting graphoelements and convolutional neural networks with long short term memory for classification of the human electroencephalogram. Sci Rep. 2019;9(1):2–10.

- 34. Kremen V, Brinkmann BH, Van Gompel JJ, Stead M, St Louis EK, Worrell GA, et al. Automated unsupervised behavioral state classification using intracranial electrophysiology. J Neural Eng. 2019;16(2):1–8.

- 35. Gerla V, Kremen V, Macas M, Dudysova D, Mladek A, Sos P, et al. Iterative expert-in-the-loop classification of sleep PSG recordings using a hierarchical clustering. J Neurosci Methods. 2019;317:61–70.

- 36. Cajochen C, Altanay-Ekici S, Münch M, Frey S, Knoblauch V, Wirz-Justice A. Evidence that the lunar cycle influences human sleep. Curr Biol. 2013;23:1485–8.

- 37. Schulze-Bonhage A, Sales F, Wagner K, Teotonio R, Carius A, Schelle A, et al. Views of patients with epilepsy on seizure prediction devices. Epilepsy Behav. 2010;18(4):388–96.

- 38. Arthurs S, Zaveri HP, Frei MG, Osorio I. Patient and care-giver perspectives on seizure prediction. Epilepsy Behav. 2010;19(3):474–7.

- 39. Domingos P, Pazzani M. Beyond independence: conditions for the optimality of the simple Bayesian classifier. In: Saitta L, editor. Machine learning 1996 international conference: proceedings of the thirteenth international conference. San Francisco, CA: Morgan Kaufmann; 1996. p. 1–8.

- 40. Hanley JA, McNeil BJ. A method of comparing the areas under receiver operating characteristic curves derived from the same cases. Radiology. 1983;148(3):839–43.

- 41. Cobabe MM, Sessler DI, Nowacki AS, O’Rourke C, Andrews N, Foldvary-Schaefer N. Impact of sleep duration on seizure frequency in adults with epilepsy: a sleep diary study. Epilepsy Behav. 2015;43:143–8.

- 42. Samsonsen C, Sand T, Bråthen G, Helde G, Brodtkorb E. The impact of sleep loss on the facilitation of seizures: a prospective case-crossover study. Epilepsy Res. 2016;127:260–6.

- 43. Frauscher B, von Ellenrieder N, Ferrari-Marinho T, Avoli M, Dubeau F, Gotman J, et al. Facilitation of epileptic activity during sleep is mediated by high amplitude slow waves. Brain. 2015;138:1629–41.

- 44. Doherty MJ, Youn C, Gwinn RP, Haltiner AM. Atmospheric pressure and seizure frequency in the epilepsy unit: preliminary observations. Epilepsia. 2007;48(9):1764–7.

- 45. Quigg M, Straume M. Dual epileptic foci in a single patient express distinct temporal patterns dependent on limbic versus nonlimbic brain location. Ann Neurol. 2001;48(1):117–20.

- 46. Karoly PJ, Goldenholz DM, Freestone DR, Moss RE, Grayden DB, Theodore WH, et al. Circadian and circaseptan rhythms in human epilepsy: a retrospective cohort study. Lancet Neurol. 2018;17(11):977–85.

- 47. Maturana MI, Meisel C, Dell K, Karoly PJ, D’Souza W, Grayden DB, et al. Critical slowing as a biomarker for seizure susceptibility. Nat Commun. 2020;11:2172.

- 48. Cook MJ, Karoly PJ, Freestone DR, Himes D, Leyde K, Berkovic S, et al. Human focal seizures are characterized by populations of fixed duration and interval. Epilepsia. 2016;57(3):359–68.

- 49. Payne DE, Karoly PJ, Freestone DR, Boston R, D’Souza W, Nurse E, et al. Postictal suppression and seizure durations: a patient-specific, long-term iEEG analysis. Epilepsia. 2018;59(5):1027–36.

- 50. Kerem DH, Geva AB. Forecasting epilepsy from the heart rate signal. Med Biol Eng Comput. 2005;43:230–9.

- 51. Fujiwara K, Miyajima M, Yamakawa T, Abe E, Suzuki Y, Sawada Y, et al. Epileptic seizure prediction based on multivariate statistical process control of heart rate variability features. IEEE Trans Biomed Eng. 2015;63(6):1321–32.
