Keynotes and oral presentations MEMTAB2020

List of abstracts (in order by programme schedule)

I1: Introductory extended abstract: MEMTAB2020

Jan Y Verbakel1,2, Ann Van den Bruel1 and Ben Van Calster3,4

1Department of Public Health and Primary Care, KU Leuven, Leuven, Belgium; 2Nuffield Department of Primary Care Health Sciences, University of Oxford, UK; 3Department of Development and Regeneration, KU Leuven, Leuven, Belgium; 4Department of Biomedical Data Sciences, Leiden University Medical Center, Leiden, the Netherlands

Keynote session on PATH statement

This supplement contains the conference abstracts accepted at the MEMTAB2020 virtual symposium, hosted from Leuven, Belgium, focussing on Methods for Evaluation of Medical prediction Models, Tests And Biomarkers.

The MEMTAB2020 symposium was hosted by Professor Ben Van Calster, Professor Ann Van den Bruel, Professor Jan Verbakel and the University of Leuven's EPI-Centre, part of the Department of Public Health and Primary Care.

The international MEMTAB symposium attracts researchers, healthcare workers, policy makers and manufacturers actively involved in the development, evaluation or regulation of tests, (bio) markers, models, tools, apps, devices or any other modality used for the purpose of diagnosis, prognosis, risk stratification or (disease or therapy) monitoring.

Rapid technological progress, coupled with the significant methodological complexities involved in developing, evaluating and implementing tests, markers, models or devices, creates formidable challenges. Yet these challenges are matched by unique opportunities, while the wide array of involved subdisciplines creates an exciting milieu for the generation of new ideas and directions. The symposium aims to provide a forum for disseminating knowledge at the forefront of current research, and for stimulating dialogue that will propel future thought and endeavours to tackle the methodological and practical complexities facing the medical diagnostic, prognostic and monitoring field today. The virtual MEMTAB2020 event was specifically aimed at bringing together researchers from the diverse reaches of test evaluation, from in vitro test developers, industry and regulatory representatives, through methodologists, guideline developers and practising clinicians, in the hope of improving current understanding through knowledge exchange, and forging our diverse experiences and perspectives to delineate the future direction of diagnostic test research. In this respect, it is the only conference in the world that provides a platform dedicated to the investigation of medical tests, markers, models and other devices used for diagnosis, prognosis and monitoring.

This year’s symposium focussed on the following conference themes:

How to develop and apply prediction models and diagnostic tests

High-dimensional data and genetic prediction

Machine learning for evaluation of diagnostic tests, markers and prediction models

Impact studies for diagnostic tests, markers and prediction models (including low resource settings)

Systematic review and meta-analysis (including individual participant data)

Big data, electronic health records, dynamic prediction

How to quantify overdiagnosis

With over 135 delegates and 88 accepted abstracts, we believe we were able to offer a very strong programme.

It was our great pleasure to host this year’s symposium, and we are looking forward to meeting you again at the next MEMTAB symposium!

Overview of the different committees

(listed alphabetically)

Conference Chairs: Ben Van Calster, Ann Van den Bruel, Jan Y Verbakel

Local organizing Committee:

Niel Hens, University of Antwerp and Hasselt University

Ben Van Calster, KU Leuven and LUMC

Ann Van den Bruel, KU Leuven

Jan Y Verbakel, KU Leuven and University of Oxford

Scientific Committee:

Gary Collins, University of Oxford

Jon Deeks, University of Birmingham

Nandini Dendukuri, McGill University

Niel Hens, University of Antwerp and Hasselt University

Lotty Hooft, UMC Utrecht

Mariska Leeflang, AMC Amsterdam

Richard Riley, Keele University

Yemisi Takwoingi, University of Birmingham

Maarten van Smeden, LUMC

Laure Wynants, KU Leuven and Maastricht University

Ben Van Calster, KU Leuven and LUMC

Ann Van den Bruel, KU Leuven

Jan Y Verbakel, KU Leuven and University of Oxford

Session chair: Ben Van Calster

1. Using Group Data for Individual Patients: The Predictive Approaches to Treatment Effect Heterogeneity (PATH) Statement

David M Kent1, David van Klaveren1,2, Jessica K. Paulus1 and Ewout Steyerberg2,3 for the PATH Group

1Predictive Analytics and Comparative Effectiveness Center, Tufts Medical Center, Boston, MA, USA; 2Department of Public Health, Erasmus University Medical Center, Rotterdam, The Netherlands; 3Department of Biomedical Data Sciences, Leiden University Medical Center, Leiden, The Netherlands

Correspondence: David M Kent

Background: Evidence-based medicine (EBM) relies on forecasting for an individual patient using the frequency of outcomes in groups of similar patients (i.e., a reference class) under alternative treatments. Despite a widespread belief that individuals respond differently to the same treatment, EBM has traditionally stressed the reference class of the whole trial population, in part because conventional (one-variable-at-a-time) subgroup analyses have well-known limitations.

We aimed to provide guidance for “predictive” approaches to heterogeneous treatment effects (HTE), which can provide patient-centered estimates of outcome risks under treatment alternatives, taking into account multiple relevant patient attributes simultaneously.

Methods: We used 1) a systematic literature review; 2) simulations to characterize potential problems with predictive HTE analysis; and 3) a deliberative process engaging a technical expert panel to develop guidance.

Results: We found various limitations of conventional subgroup analysis in contrast to various advantages of predictive approaches. The latter span two broad classes: 1) Risk modeling, where patient subgroups are formed according to their risk of an outcome (usually the primary study outcome), exploiting the mathematical dependency of treatment effects on the control event rate; and 2) Effect modeling, where patients are disaggregated by a model developed directly from randomized trial data to predict treatment effects (i.e., contrasting outcome risks under two treatment conditions). Unlike risk modeling, effect modeling is “unblinded” to treatment assignment, allowing the inclusion of treatment-by-covariate interaction terms. We review strengths and limitations of these approaches and summarize recommendations in the PATH Statement.
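The dependency of the absolute treatment effect on the control event rate, which risk modeling exploits, can be illustrated with a short sketch. It assumes a constant odds ratio across patients; the function name and all numerical values are illustrative assumptions and are not taken from the PATH Statement.

```python
def absolute_benefit(control_risk: float, odds_ratio: float) -> float:
    """Absolute risk reduction implied by a constant odds ratio.

    control_risk: outcome risk without treatment (0 < risk < 1).
    odds_ratio: treatment odds ratio, assumed constant across patients.
    """
    control_odds = control_risk / (1.0 - control_risk)
    treated_odds = control_odds * odds_ratio
    treated_risk = treated_odds / (1.0 + treated_odds)
    return control_risk - treated_risk


# Hypothetical baseline risks and odds ratio, for illustration only.
for risk in (0.02, 0.10, 0.30):
    benefit = absolute_benefit(risk, 0.8)
    print(f"control risk {risk:.2f}: absolute benefit {benefit:.4f}")
```

Under these assumptions, the same relative effect translates into a larger absolute benefit for patients at higher baseline risk over the range shown.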

Conclusions: While positive RCT results provide strong evidence that an intervention works for at least some patients, clinicians need to understand how a patient’s multiple characteristics combine to influence their potential treatment benefit. Revision and refinement of the PATH guidance supporting that goal is anticipated as experience with these novel methods grows.

Keywords: PATH statement, prediction, heterogeneity of treatment effect, personalized medicine

2. Estimating heterogeneity of treatment effect by risk modeling

Ewout W. Steyerberg1,2, David M Kent3, David van Klaveren1,3

1Department of Biomedical Data Sciences, Leiden University Medical Center, Leiden, The Netherlands; 2Department of Public Health, Erasmus University Medical Center, Rotterdam, The Netherlands; 3Predictive Analytics and Comparative Effectiveness Center, Tufts Medical Center, Boston, MA, USA

Correspondence: Ewout W. Steyerberg

Background: Heterogeneity of treatment effect refers to the nonrandom variation in the magnitude of the absolute treatment effect ('benefit') across levels of covariates. For randomized controlled trials (RCTs), the PATH (Predictive Approaches to Treatment effect Heterogeneity) Statement suggests 2 categories of predictive HTE approaches: “risk modeling” approaches, which combine a multivariable model with a constant relative effect of treatment, and “effect modeling” approaches, which include interactions between treatment and baseline covariates[1].

We aimed to assess practical challenges in deriving estimates of absolute benefit based on risk modeling.

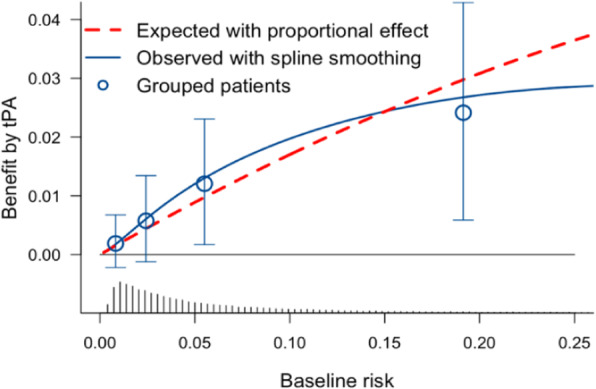

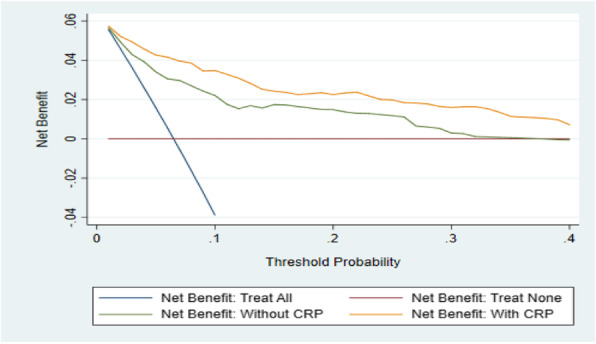

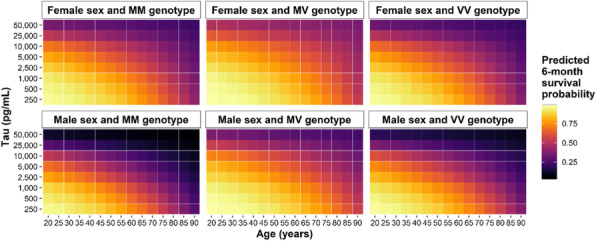

Methods & Results: We re-analyzed data from 30,510 patients with an acute myocardial infarction, as enrolled in the GUSTO-I trial[2]. The average mortality was 6.3% with tPA and 7.3% with streptokinase, or an average benefit of 1.0% (p<.001). A multivariable logistic regression model included 6 predictors of 30-day mortality, which occurred in 2128 patients. The model provided a linear predictor (or risk score) that discriminated well between low risk and high risk patients, with an area under the ROC curve of 0.82. The benefit of tPA over streptokinase treatment increased from 0.2% to 2.4% for the lowest to the highest risk quarter (Figure 1). Proportionality of the treatment effect across predictors was not rejected in tests of interaction (overall test: p=0.30). Continuous benefit was estimated by subtracting estimated risk under either treatment with a spline transformation of the linear predictor (Figure 1). Sensitivity analyses showed similar results for different specifications of the risk model or the continuous benefit modeling. Exploratory one at a time subgroup analyses showed consistent relative effects of treatment.
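A stratified comparison of this kind can be sketched as follows. This is a minimal illustration, not the authors' analysis: it takes the risk score as given rather than fitting a logistic regression model, and the function name and the tiny data set are hypothetical.

```python
def benefit_by_risk_quarter(records):
    """Observed absolute benefit (control minus treated event rate) per risk quarter.

    records: list of (risk_score, treated, died) tuples; treated and died are booleans.
    Returns four values, ordered from the lowest to the highest risk quarter.
    Each quarter must contain patients from both treatment arms.
    """
    ordered = sorted(records, key=lambda r: r[0])
    n = len(ordered)
    benefits = []
    for q in range(4):
        group = ordered[q * n // 4:(q + 1) * n // 4]
        treated = [died for _, is_treated, died in group if is_treated]
        control = [died for _, is_treated, died in group if not is_treated]
        benefits.append(sum(control) / len(control) - sum(treated) / len(treated))
    return benefits


# Hypothetical data: per risk score, 10 control and 10 treated patients
# with the stated numbers of deaths (control_deaths, treated_deaths).
records = []
for score, control_deaths, treated_deaths in [(1, 1, 1), (2, 2, 1), (3, 3, 2), (4, 5, 3)]:
    for i in range(10):
        records.append((score, False, i < control_deaths))
        records.append((score, True, i < treated_deaths))

print(benefit_by_risk_quarter(records))
```

In a real trial the quarters would be defined on the linear predictor of the fitted risk model, and uncertainty in each quarter-specific benefit would also need to be reported.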

Conclusions: Risk modeling should become part of the primary analysis of RCTs. One-at-a-time subgroup analyses should be relegated to secondary analyses when assessing heterogeneity of treatment effect.

Fig. 1 (abstract 2).

Benefit of treatment by tPA compared to streptokinase in the GUSTO-I trial

Keywords: Heterogeneity of treatment effect, regression model, spline functions

References

[1] David M. Kent, Jessica K. Paulus, David van Klaveren, et al. The Predictive Approaches to Treatment effect Heterogeneity (PATH) Statement. Ann Intern Med. 2020;172:35-45.

Linked contributed talks on predicting treatment response

Session chair: Ben Van Calster

3. Application of the PATH Statement: Predicting Treatment Benefit of Heart Bypass Surgery versus Coronary Stenting

David van Klaveren1,2, Kuniaki Takahashi3, Ewout W. Steyerberg1,4, David M. Kent2, Patrick W. Serruys5

1Department of Public Health, Erasmus University Medical Center, Rotterdam, The Netherlands; 2Predictive Analytics and Comparative Effectiveness Center, Tufts Medical Center, Boston, MA, USA; 3Department of Cardiology, Academic Medical Center, University of Amsterdam, The Netherlands; 4Department of Biomedical Data Sciences, Leiden University Medical Center, Leiden, The Netherlands; 5Department of Cardiology, National University of Ireland, Galway, Ireland

Correspondence: David van Klaveren

Background: The Syntax Score II (SSII) was proposed to predict treatment benefit, i.e. the difference in 4-year mortality when treating complex Coronary Artery Disease patients with heart bypass surgery rather than coronary stenting. Between 4 and 10 years post-procedure, SSII has shown good predictive performance for mortality, but not for the treatment benefit of surgery versus stenting.

We aimed to develop a new SSII (SSII-2020) for predicting the treatment benefit of surgery versus stenting over a 10-year horizon.

Methods: Following the recently published PATH statement, we first used Cox regression in the SYNTAX trial data (n=1,800) to develop a clinical prognostic index (PI) for mortality over a 10-year horizon, blinded to treatment assignment. Second, we fitted a Cox model which included the treatment, the PI and 2 pre-specified effect-modifiers based on prior evidence: type of disease (Left Main Disease [LMD] or 3-Vessel Disease [3VD]), and anatomical disease complexity (SYNTAX Score [SS]). In a cross-validation, we assessed the ability of SSII-2020 to predict the absolute mortality difference between surgery and stenting.

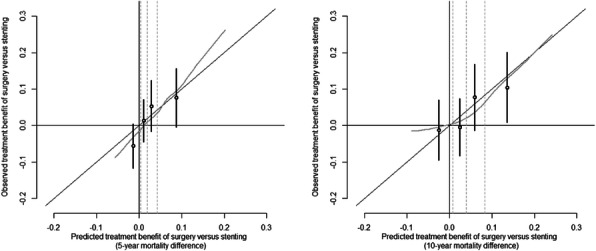

Results: The PI consisted of 7 clinical predictors of mortality. SSII-2020 included the PI, treatment and 2 significant treatment interactions: surgery was on average beneficial for 3VD patients (HR 0.66; 95%CI 0.52-0.84), but not for LMD patients (HR 1.17; 95%CI 0.77-1.36; p-for-interaction 0.02), and the disease complexity only influenced mortality risk when patients were treated with stenting (HR per 10 SS points 1.17; 95%CI 1.06-1.30; p-for-interaction 0.05). In both treatment arms, SSII-2020 discriminated well (c-index 0.73) and was well calibrated for mortality risk. In contrast with SSII, SSII-2020 was well calibrated for treatment benefit both at 5 and 10 years post-procedure (Figure 1).

Conclusions: The newly developed SSII-2020 is able to predict the treatment benefit of heart bypass surgery versus coronary stenting over a 10-year horizon. External validation will be undertaken.

Fig. 1 (abstract 3).

Calibration plots for benefit of treatment with surgery versus stenting at 5 years (left panel) and 10 years (right panel)

Keywords: PATH statement, prediction, heterogeneity of treatment effect, personalized medicine

4. Individual participant data meta-analysis to examine treatment-covariate interactions: statistical recommendations for conduct and planning

Richard D. Riley1, Thomas Debray2, David Fisher3, Miriam Hattle1, Nadine Marlin4, Jeroen Hoogland2, Francois Gueyffier5, Jan A. Staessen6, Jiguang Wang7, Karel G.M. Moons2, Johannes B. Reitsma2, Joie Ensor1

1Centre for Prognosis Research, School of Primary, Community and Social Care, Keele University, UK; 2Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, Utrecht, The Netherlands; 3MRC Clinical Trials Unit, Institute of Clinical Trials & Methodology, Faculty of Population Health Sciences, University College London, London, UK; 4Blizard Institute, Barts and The London School of Medicine and Dentistry, Queen Mary University of London, London, UK; 5Inserm, CIC201, Lyon, France; 6Studies Coordinating Centre, Research Unit Hypertension and Cardiovascular Epidemiology, KU Leuven Department of Cardiovascular Sciences, Leuven, Belgium; 7Centre for Epidemiological Studies and Clinical Trials, Ruijin Hospital, Shanghai Jiaotong University School of Medicine, Shanghai, China

Correspondence: Richard D. Riley

Background: Personalised healthcare often requires the use of treatment-covariate interactions, which occur when a treatment effect (e.g. measured as a mean difference, odds ratio, hazard ratio) changes across values of a participant-level covariate (e.g. age, biomarker). Single randomised trials do not usually have sufficient power to detect genuine treatment-covariate interactions, which motivates the sharing of individual participant data (IPD) from multiple trials for meta-analysis. However, IPD meta-analyses are time consuming and statistically challenging.

We aimed to provide statistical recommendations for conducting and planning an IPD meta-analysis of randomised trials to examine treatment-covariate interactions.

Methods: Drawing on our collective experience, we identify five key lessons to improve statistical analysis, and two key recommendations to improve planning IPD meta-analysis projects. Real IPD meta-analysis examples are used to substantiate the issues.

Results: For conduct, we recommend: (i) interactions should be estimated directly, and not by calculating differences in meta-analysis results for subgroups; (ii) interaction estimates should be based solely on within-study information; (iii) continuous covariates and outcomes should be analysed on their continuous scale; (iv) non-linear relationships should be examined for continuous covariates, using a multivariate meta-analysis; and (v) translation into clinical practice requires individualised treatment effect prediction. For planning, the decision to initiate an IPD meta-analysis should (a) not be based on between-study heterogeneity in the overall treatment effect; and (b) consider the potential power of an IPD meta-analysis conditional on characteristics of studies promising their IPD.
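Recommendations (i) and (ii) can be illustrated with a two-stage sketch in which the interaction is first estimated within each trial and the trial-specific estimates are then pooled. The sketch below covers only the pooling step and uses fixed-effect inverse-variance weighting as a simplifying assumption; the function name and the numbers are hypothetical, and this is not presented as the authors' recommended model.

```python
import math


def pool_within_trial_interactions(estimates, standard_errors):
    """Fixed-effect inverse-variance pooling of per-trial interaction estimates.

    estimates: interaction coefficients (e.g. change in log hazard ratio per
               unit of covariate), each estimated within a single trial.
    standard_errors: the corresponding standard errors.
    Returns (pooled_estimate, pooled_standard_error).
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * b for w, b in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical within-trial interaction estimates from three trials.
pooled, pooled_se = pool_within_trial_interactions(
    estimates=[0.10, 0.30, 0.20],
    standard_errors=[0.20, 0.20, 0.10],
)
print(f"pooled interaction {pooled:.3f} (SE {pooled_se:.3f})")
```

Because each input is estimated inside one trial, the pooled value uses within-study information only and is not contaminated by differences between trials in average covariate values.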

Conclusions: We hope our recommendations improve the planning and conduct of IPD meta-analyses to examine treatment-covariate interactions, to help flag when the approach is worthwhile and to ensure more robust results.[1]

Keywords: IPD meta-analysis, effect modifier, treatment-covariate interaction, subgroup

References

[1] Riley RD, et al. IPD meta-analysis to examine interactions between treatment effect and participant-level covariates: statistical recommendations for conduct and planning. Stat Med (submitted)

Contributed session on diagnostic tests

Session chair: Nandini Dendukuri

5. Test and Treat Superiority Plot: estimating threshold performance for developers of tests for treatment response

Neil Hawkins1, Janet Bouttell1, Andrew Briggs2, Dmitry Pomonomarev3

1Health Economics and Health Technology Assessment, Institute of Health and Wellbeing, 1 Lilybank Gardens, Glasgow, Scotland; 2Department of Health Services Research and Policy, London School of Hygiene and Tropical Medicine, London, UK; 3Meshalkin National Medical Centre, Novosibirsk, Russian Federation

Correspondence: Neil Hawkins; Janet Bouttell

Background: It is useful for developers of diagnostic technologies to know how accurate a test predicting response to a treatment would need to be in order for a “test and treat” strategy to produce superior clinical outcomes to a ‘treat all’ strategy.

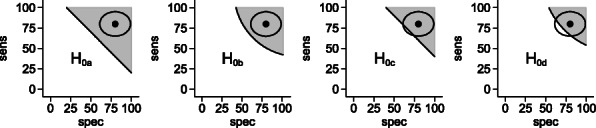

This study explored the derivation of a set of sensitivity and specificity values that define the threshold for clinical superiority for a test and treat strategy.

Methods: Taking the scenario of a test that predicts response to treatment A with a given sensitivity and specificity but does not predict the response to an alternative treatment (B), we developed a mathematical model that determined the threshold sensitivity and specificity required for a strategy of test and treat with “A” if positive or “B” if negative to outperform a treat-all strategy with either treatment A or B.

Results: We demonstrated that an estimate of the odds ratio of response between treatments is sufficient to determine a set of threshold sensitivities and specificities for clinical superiority of the test and treat strategy. However, if the absolute probability of response is known for one of the treatments, the net clinical benefit of the test can be estimated as a function of sensitivity and specificity. Using a hypothetical test of response to hormone treatment compared to chemotherapy in ovarian cancer, we demonstrate in a Shiny App the “Test and Treat Superiority Plot”, which illustrates the threshold performance necessary for a test of treatment response to outperform a treat all strategy given only the odds ratio between two treatments.

Conclusions: This model and plot can be used to distinguish promising candidate diagnostics from those unlikely to have clinical value. The plot also indicates how the relative importance of sensitivity and specificity varies as a function of the relative treatment effect.

Keywords: Diagnostic tests, sensitivity and specificity, test development

6. Unblinded sample size re-estimation for diagnostic accuracy studies

Antonia Zapf1, Annika Hoyer2

1Department of Medical Biometry and Epidemiology, University Medical Center Hamburg-Eppendorf, Hamburg, Germany; 2German Diabetes Center, Leibniz Center for Diabetes Research at Heinrich Heine University Düsseldorf, Institute for Biometrics and Epidemiology, Düsseldorf, Germany

Correspondence: Antonia Zapf

Background: In diagnostic accuracy studies, sensitivity and specificity are recommended as co-primary endpoints. For the sample size calculation, assumptions about the expected sensitivity and specificity of the index test as well as the minimal acceptable diagnostic accuracy or the expected diagnostic accuracy of the comparator test have to be made. However, the assumptions from previous studies are often uncertain.[1] As an example for the talk we chose the study from Yan et al., where the estimated sensitivity was 75.8%, whereas the authors expected 91%.[2]

Methods: Because of this uncertainty, it is essential to develop methods for sample size re-estimation in diagnostic accuracy trials. While such adaptive designs are standard in interventional trials, they are uncommon in diagnostic trials.[3] Known approaches from interventional trials cannot be applied to diagnostic accuracy studies or have to be modified, in particular because diagnostic accuracy trials have two co-primary endpoints, sensitivity and specificity.

Results: In this talk we propose an approach for an unblinded sample size re-estimation in diagnostic accuracy studies. We can show that with the adaptive design the type-one error is maintained and the desired power is achieved. Furthermore, the results of the example study are presented.

Conclusion: Using unblinded sample size re-estimation, diagnostic accuracy studies can be made more efficient.

Keywords: Diagnostic accuracy, adaptive design, unblinded interim analysis

References

[1] AW. Rutjes, JB. Reitsma, M. Di Nisio, N. Smidt, JC. van Rijn, PM. Bossuyt. CMAJ, 174(4) 2006, 469-476.

[2] L. Yan, S. Tang, Y. Yang, X. Shi, Y. Ge, W. Sun, Y. Liu, X. Hao, X. Gui, H. Yin, Y. He, Q. Zhang. Medicine (Baltimore), 95(4) 2016, e2597.

[3] A. Zapf, M. Stark, O. Gerke, C. Ehret, N. Benda, P. Bossuyt, J. Deeks, J. Reitsma, T. Alonzo, T. Friede. Stat Med [Epub ahead of print] 2019.

7. An alternative method for presenting risk of bias assessments in systematic review of accuracy studies

Yasaman Vali1, Jenny Lee1, Patrick M. Bossuyt1, Mohammad Hadi Zafarmand1

1Department of Clinical Epidemiology, Biostatistics & Bioinformatics, Amsterdam UMC, Amsterdam, The Netherlands

Correspondence: Yasaman Vali

Background: Systematic reviews include primary studies that differ in sample sizes, with larger studies contributing more to the meta-analysis. At present, study size is not considered in Risk of Bias evaluations.

We aimed to develop an alternative way to present the contribution of individual studies to the total body of evidence on diagnostic accuracy, in terms of risk of bias and concerns about applicability, one that takes the effective sample size into account.

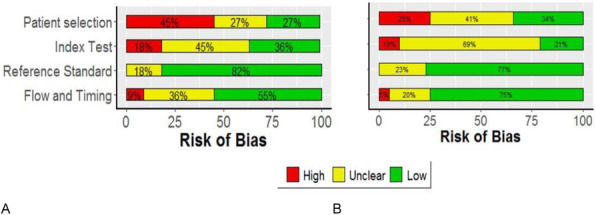

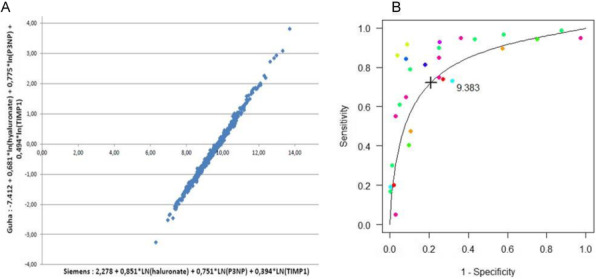

Methods: We used the results of a systematic review of diagnostic accuracy studies of the Enhanced Liver Fibrosis (ELF) test for diagnosing liver fibrosis among non-alcoholic fatty liver disease patients. We assessed the 11 studies identified from our systematic search of five databases with the QUADAS-2 (Quality Assessment of Diagnostic Accuracy Studies) tool. We first used number of studies to show the proportion of studies at low, unclear and high risk of bias. We then developed an alternative version of the graph, which relies on the proportion of the total sample size of studies at different levels of risk of bias.

Results: The risk of bias levels for each domain of the QUADAS-2 checklist changed after replacing the number of studies with the relative sample sizes of the individual studies. For instance, the risk of bias in the patient selection domain was high in 45% of the studies, and low and unclear in 27% of studies each (Figure 1A). The alternative graph using the sample sizes showed 25%, 41% and 34% of the included population at high, unclear and low risk of bias, respectively (Figure 1B).
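The difference between the two presentations can be sketched as a small calculation. The sample sizes below are hypothetical and are not the sizes of the reviewed studies; only the number of studies per level (5 high, 3 unclear, 3 low) mirrors the patient selection domain described above. The function name is an assumption for illustration.

```python
from collections import defaultdict


def risk_of_bias_shares(studies):
    """Share of each risk-of-bias level by study count and by sample size.

    studies: list of (sample_size, level) tuples, level in {"low", "unclear", "high"}.
    Returns two dicts mapping level -> proportion.
    """
    count = defaultdict(int)
    size = defaultdict(int)
    for n, level in studies:
        count[level] += 1
        size[level] += n
    total_n = sum(size.values())
    by_count = {level: c / len(studies) for level, c in count.items()}
    by_size = {level: s / total_n for level, s in size.items()}
    return by_count, by_size


# Hypothetical sample sizes for 11 studies in one QUADAS-2 domain.
studies = [(50, "high")] * 5 + [(150, "unclear")] * 3 + [(120, "low")] * 3
by_count, by_size = risk_of_bias_shares(studies)
for level in ("high", "unclear", "low"):
    print(f"{level}: {by_count[level]:.0%} of studies, {by_size[level]:.0%} of participants")
```

When the studies at high risk of bias are small, as in this made-up example, their share of the evidence base is lower by sample size than by study count.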

Conclusion: A fair representation of the risk of bias and concerns about applicability in the available body of evidence from diagnostic accuracy studies should be based on the total sample size, not on the number of studies.

Fig. 1 (abstract 7).

Risk of bias assessment results based on (A) proportion of included studies in the systematic review; (B) proportion of included patients in the systematic review

Keywords: Meta-analysis, accuracy studies, risk of bias assessment

8. Major depression classification based on different diagnostic interviews: A synthesis of individual participant data meta-analyses

Yin Wu1,2,3, Brooke Levis1,2,4, John P. A. Ioannidis5, Andrea Benedetti2,6, Brett D Thombs1,2,3, and the DEPRESsion Screening Data (DEPRESSD) Collaboration7

1Lady Davis Institute for Medical Research, Jewish General Hospital, Montréal, Québec, Canada; 2Department of Epidemiology, Biostatistics and Occupational Health, McGill University, Montréal, Québec, Canada; 3Department of Psychiatry, McGill University, Montréal, Québec, Canada; 4Centre for Prognosis Research, School of Primary, Community and Social Care, Keele University, Staffordshire, UK; 5Departments of Medicine, Health Research and Policy, Biomedical Data Science, and Statistics, Stanford University, Stanford, CA, USA; 6Respiratory Epidemiology and Clinical Research Unit, McGill University Health Centre, Montréal, Québec, Canada; 7McGill University, Montréal, Québec, Canada

Correspondence: Yin Wu

Background: Three previous individual participant data meta-analyses (IPDMAs) found that, compared to the semi-structured Structured Clinical Interview for DSM (SCID), the Composite International Diagnostic Interview (CIDI) and Mini International Neuropsychiatric Interview (MINI) tended to misclassify major depression.

We aimed to synthesize results from the three studies to compare the performance of the most commonly used diagnostic interviews for major depression classification: the SCID, CIDI, and MINI; and to determine whether (1) the probability of major depression classification based on the CIDI and MINI differs from the probability based on the SCID and (2) any differences are associated with depressive symptom levels.

Methods: We updated the Patient Health Questionnaire-9 IPDMA database, and standardised screening tool scores in all three databases. We re-analysed by fitting binomial generalized linear mixed models to compare odds of major depression classification across interviews, controlling for screening tool scores and participant characteristics, with and without an interaction term between interview and screening score. We synthesised results from these IPDMAs by estimating pooled adjusted odds ratios (aORs) for each interview and for interactions of each interview with screening scores using random effects meta-analysis.

Results: In total, 69,405 participants (7,574 [11%] with major depression) from 212 studies were included. The MINI (74 studies, 25,749 participants, 11% major depression) classified major depression more often than the SCID (108 studies, 21,953 participants, 14% major depression; aOR [95% CI] = 1.45 [1.11-1.92]). As screening scores increased, odds of major depression classification increased less for the CIDI (30 studies, 21,703 participants, 7% major depression) than the SCID (interaction aOR [95% CI] = 0.64 [0.52-0.80]).

Conclusions: Compared to the SCID, the MINI classifies major depression more often and the CIDI is less responsive to increases in symptom levels, regardless of measure of depressive symptom severity. Findings from research studies using MINI or CIDI should be cautiously interpreted.

Keywords: Depressive disorders, diagnostic interviews, individual participant data meta-analysis, major depression

9. What makes a good cancer biomarker? Developing a consensus

Katerina-Vanessa Savva1, Melody Ni1, George B. Hanna1, and Christopher J. Peters1

1Department of Surgery and Cancer, Imperial College London, London, UK

Correspondence: Katerina-Vanessa Savva

Background: Although a large number of resources have been invested in biomarker (BM) discovery, for both prognostic and diagnostic purposes, very few of those BMs have been clinically adopted. In an attempt to bridge the gap between BM discovery and clinical use, our previous study has developed and retrospectively validated a checklist comprised of 125 characteristics associated with cancer BM clinical implementation. Despite validation, complexity in implementing the full checklist might present a barrier. Therefore, this study aims to generate a user-friendly and concise consensus statement with literature-reported attributes associated with successful BM implementation.

Methods: A checklist of BM attributes was created using Medline and Embase databases according to PRISMA guidelines. A qualitative approach was applied to validate the list utilising semi-structured interviews (n=32). Thematic analysis was conducted until thematic saturation was achieved. Upon completion of literature review and interviews, a 3-phase online Delphi-Survey was designed aiming to develop a consensus document. The participants involved were grouped based on their expertise: clinicians, academics, patient and industry representatives.

Results: The 125 previously identified attributes retrieved from literature and reporting guidelines were included in the checklist. Thematic analysis of the interviews validated the characteristics listed in the checklist. The most commonly occurring theme focused on clinical utility. Interestingly, different groups focused on different themes, emphasising the importance of participants’ diverse backgrounds. Specifically, the themes most commonly raised by clinicians and laboratory personnel fell under clinical utility, whereas the recurrent themes among patient representatives and industry personnel focused on clinical and analytical validity, respectively.

Conclusions: This study generated a validated checklist with literature-reported attributes linked with successful BM implementation. Upon completion of the Delphi-survey, a consensus statement will be generated which could be used to i) detect BMs with the highest potential of being clinically implemented and ii) shape how BM studies are designed and performed.

Keywords: Biomarkers, clinical implementation, checklist, Delphi survey, qualitative research

10. Developing Target Product Profiles for medical tests: a methodology review

Paola Cocco1, Anam Ayaz-Shah2, Michael Paul Messenger3, Robert Michael West4, Bethany Shinkins1

1Test Evaluation Group, Academic Unit of Health Economics, University of Leeds, Leeds, UK; 2Academic Unit of Primary Care, Leeds Institute for Health Sciences, University of Leeds, Leeds, UK; 3Centre for Personalised Health and Medicine, University of Leeds, Leeds, UK; 4Leeds Institute for Health Sciences, University of Leeds, Leeds, UK

Correspondence: Paola Cocco

Background: A Target Product Profile (TPP) is a strategic document which describes the necessary characteristics of an innovative product to address an unmet clinical need. TPPs present manufacturers with valuable information for designing ‘fit for purpose’ tests. To our knowledge, there is no formal guidance as to best practice methods for developing a TPP specific to medical tests.

We aimed to review and summarise the methods currently used to develop TPPs for medical tests and identify the test characteristics commonly reported.

Methods: We conducted a methodology systematic review of TPPs for medical tests. Database and website searches were carried out in November 2018. TPPs written in English for any medical test were included. Test characteristics were clustered into commonly recognized themes.

Results: Forty-four studies were identified, all of which focused on diagnostic tests for infectious diseases. Three core decision-making phases for developing TPPs were identified: scoping, drafting and consensus-building. Consultations with experts and the literature mostly informed the scoping and drafting of TPPs. All TPPs provided information on unmet clinical need and desirable test analytical performance, and the majority specified clinical validity characteristics. Few TPPs described specifications for clinical utility, and none included cost-effectiveness.

Conclusions: Based on our descriptive summary of the methods implemented, we have identified a commonly used framework that could be beneficial for anyone interested in drafting a TPP for a medical test. We also highlighted some key weaknesses, including the quality of the information sources underpinning TPPs and failure to consider test characteristics relating to clinical utility and cost-effectiveness. This review provides some recommendations for further methodological research on the development of TPPs for medical tests. This work would also help to inform the development of a formal guideline on how to draft TPPs for medical tests.

Keywords: Medical test, target product profile, TPP, quality by design, diagnostic, test characteristic

11. Nonparametric Limits of Agreement for small to moderate sample sizes - a simulation study

Maria E Frey1, Hans Christian Petersen2, Oke Gerke3

1Department of Toxicology, Charles River Laboratories Copenhagen A/S, Hestehavevej 36 A, 4623 Lille Skensved, Denmark; 2Department of Mathematics and Computer Science, University of Southern Denmark, Campusvej 55, 5230 Odense M, Denmark; 3Department of Clinical Research, University of Southern Denmark & Department of Nuclear Medicine, Odense University Hospital, Kløvervænget 47, 5000 Odense C, Denmark

Correspondence: Oke Gerke

Background: The assessment of agreement in method comparison and observer variability analysis on quantitative measurements is often done with Bland-Altman Limits of Agreement (BA LoA) for which the paired differences are implicitly assumed to follow a Normal distribution. Whenever this assumption does not hold, the respective 2.5% and 97.5% percentiles are often assessed by simple quantile estimation.

Sample, subsampling, and Kernel quantile estimators as well as other methods for quantile estimation have been proposed in the literature and were compared in this simulation study.

Methods: Given sample sizes between 30 and 150 and different distributions of the paired differences (Normal; Normal with 1%, 2%, and 5% outliers; Exponential; Lognormal), the performance of 14 estimators in generating prediction intervals for one newly generated observation was evaluated by their respective coverage probability.

Results: For n=30, the simplest sample quantile estimator (smallest and largest observation as estimates for the 2.5% and 97.5% percentiles) outperformed all other estimators. For sample sizes of n=50, 80, 100, and 150, only one other sample quantile estimator (a weighted average of two order statistics) complied with the nominal 95% level in all distributional scenarios. The Harrell-Davis subsampling estimator and estimators of the Sfakianakis-Verginis type achieved at least 95% coverage for all investigated distributions for sample sizes of at least n=80, apart from the Exponential distribution (at least 94%).

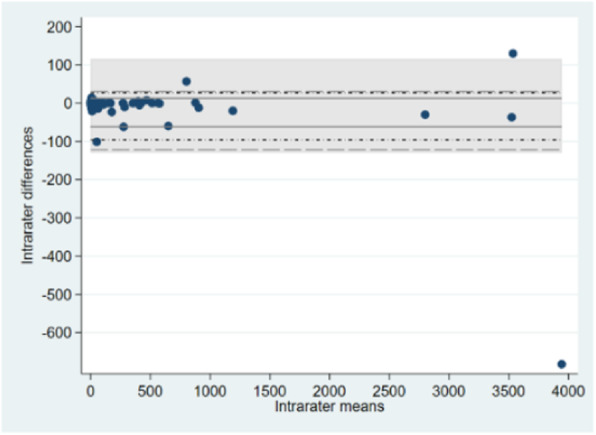

Conclusions: Simple sample quantile estimators based on one and two order statistics can be used for deriving nonparametric Limits of Agreement. For sample sizes exceeding 80 observations, more advanced quantile estimators of the Harrell-Davis and Sfakianakis-Verginis types that make use of all observed differences are equally applicable, but may be considered intuitively more appealing than simple sample quantile estimators that are based on only two observations per quantile (Figure 1).

Fig. 1 (abstract 11).

A sample quantile estimator (weighted average of two order statistics; solid), Harrell-Davis subsampling estimator (short dashes and dots), and an estimator of Sfakianakis-Verginis type (long dashes) contrasted with classical BA LoA (shaded area); n=129.
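As a rough illustration of the contrast drawn in this abstract, the sketch below computes classical BA LoA (mean ± 1.96 SD) alongside nonparametric limits taken as the empirical 2.5% and 97.5% sample quantiles. The interpolation rule used here (a weighted average of two adjacent order statistics at position (n − 1)p) is an assumption chosen for simplicity and is not necessarily any of the 14 estimators studied; the function names are hypothetical.

```python
import math
import statistics


def bland_altman_loa(diffs):
    """Classical limits of agreement: mean +/- 1.96 * SD of the paired differences."""
    mean = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    return mean - 1.96 * sd, mean + 1.96 * sd


def sample_quantile(sorted_values, p):
    """Weighted average of two adjacent order statistics.

    Assumption: the quantile position is h = (n - 1) * p, with linear
    interpolation between the order statistics on either side of h.
    """
    n = len(sorted_values)
    h = (n - 1) * p
    lower = math.floor(h)
    upper = min(lower + 1, n - 1)
    weight = h - lower
    return (1 - weight) * sorted_values[lower] + weight * sorted_values[upper]


def nonparametric_loa(diffs):
    """Nonparametric limits: empirical 2.5% and 97.5% quantiles of the differences."""
    ordered = sorted(diffs)
    return sample_quantile(ordered, 0.025), sample_quantile(ordered, 0.975)
```

For skewed paired differences the two nonparametric limits need not be symmetric around the mean, whereas the classical limits always are.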

Keywords: Agreement, Bland-Altman plot, coverage, prediction, quantile estimation, repeatability, reproducibility

Contributed session on prediction models

Session chair: Laure Wynants

12. QUADAS-C: a tool for assessing risk of bias in comparative diagnostic accuracy studies

Bada Yang1, Penny Whiting2, Clare Davenport3,4, Jonathan Deeks3,4, Christopher Hyde5, Susan Mallett3, Yemisi Takwoingi3,4 and Mariska Leeflang1 for the QUADAS-C group

1Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam UMC, University of Amsterdam, Meibergdreef 9, 1105AZ, Amsterdam, The Netherlands; 2Population Health Sciences, Bristol Medical School, Canynge Hall, 39 Whatley Road, Bristol BS8 2PS, UK; 3Test Evaluation Research Group, Institute of Applied Health Research, University of Birmingham, Edgbaston, Birmingham, B15 2TT, UK; 4NIHR Birmingham Biomedical Research Centre, University Hospitals Birmingham NHS Foundation Trust and University of Birmingham, Birmingham, UK; 5Exeter Test Group, Institute of Health Research, College of Medicine and Health, University of Exeter, Exeter, UK

Correspondence: Bada Yang

Background: Comparative diagnostic test accuracy studies assess the accuracy of multiple tests in the same study and compare their accuracy. While these studies have the potential to yield reliable evidence regarding comparative accuracy, shortcomings in the design, conduct and analysis may bias their results. The currently recommended quality assessment tool for diagnostic accuracy studies, QUADAS-2, is not designed for the assessment of test comparisons.

We developed QUADAS-C as an extension to QUADAS-2 to assess the risk of bias in comparative diagnostic test accuracy studies.

Methods: Through a four-round Delphi study involving 24 international experts in test evaluation and a face-to-face consensus meeting, we developed a draft version of QUADAS-C which will undergo piloting in ongoing systematic reviews of comparative diagnostic test accuracy.

Results: QUADAS-C retains the four-domain structure of QUADAS-2 (patient selection, index test, reference standard, flow and timing) and adds questions to each QUADAS-2 domain. A risk of bias judgement for comparative accuracy requires a risk of bias judgement for each test (QUADAS-2), and additional criteria specific to test comparisons. Examples of such additional criteria include whether patients either received all index tests or were randomized to index tests, and whether index tests were interpreted blinded to other index tests.

Conclusions: QUADAS-C will be useful for systematic reviews of diagnostic test accuracy addressing comparative accuracy questions. Furthermore, researchers may use this tool to identify and avoid risk of bias when designing a comparative diagnostic test accuracy study. Currently a draft version of QUADAS-C is being piloted and the tool will be finalized by the time of the conference.

Keywords: Diagnostic accuracy, bias, test comparison, methodology, systematic review

13. Minimum sample size for external validation of a clinical prediction model with a continuous outcome

Archer L1, Snell KIE1, Ensor J1, Hudda M2, Collins GS3, Riley RD1

1Centre for Prognosis Research, School of Primary, Community and Social Care, Keele University, Staffordshire, UK; 2Population Health Research Institute, St George’s, University of London, London, UK; 3Centre for Statistics in Medicine, Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford, UK

Correspondence: Archer L

Background: Once a clinical prediction model has been developed, its predictive performance should be examined in new data, independent of the data used for model development. This process is known as external validation. Many current external validation studies suffer from small sample sizes and, consequently, imprecise estimates of a model’s predictive performance.

To address this, in our talk we propose methods to determine the minimum sample size needed for external validation of a clinical prediction model with a continuous outcome.

Methods: Four criteria are proposed, that target precise estimates of (i) R2 (the proportion of variance explained), (ii) calibration-in-the-large (agreement between predicted and observed outcome values on average), (iii) calibration slope (agreement between predicted and observed values across the range of predicted values), and (iv) the variance of observed outcome values. Closed-form sample size solutions are derived for each criterion, which require the user to specify anticipated values of the model’s performance (in particular R2) and the outcome variance.

Results: The sample size formulae require the user to specify their desired precision for each performance estimate, whilst also making assumptions about the anticipated distribution of predicted values and the expected model performance in the validation study. For the latter, a sensible starting point is to base values on those reported in the model development study, assuming the target population is similar. The largest sample size required to meet all four criteria is the recommended minimum sample size needed in the external validation dataset. We illustrate the proposed methods on a case-study predicting fat-free mass in children, with the criteria suggesting that a sample size of at least 234 participants is needed.
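The "largest sample size across criteria" rule can be sketched as follows. This is an illustration only: it covers two of the four criteria, and the expressions used are textbook large-sample approximations assumed here (var(R2) ≈ 4R2(1 − R2)2/n, and the standard error of a mean residual with residual variance taken as outcome variance × (1 − R2)), not necessarily the closed-form solutions derived by the authors. All function and parameter names are hypothetical.

```python
import math


def n_for_r2(r2, target_se):
    """Unrounded n for which SE(R2) is about target_se.

    Assumption: var(R2) ~ 4 * R2 * (1 - R2)^2 / n (large-sample approximation).
    """
    return 4.0 * r2 * (1.0 - r2) ** 2 / target_se ** 2


def n_for_citl(outcome_var, r2, target_se):
    """Unrounded n for which the SE of calibration-in-the-large is about target_se.

    Assumption: residual variance = outcome_var * (1 - R2), so the mean
    difference between observed and predicted values has SE sqrt(residual variance / n).
    """
    return outcome_var * (1.0 - r2) / target_se ** 2


def minimum_sample_size(r2, outcome_var, se_r2, se_citl):
    """Return the largest per-criterion sample size, plus the per-criterion values."""
    per_criterion = {
        "r2": math.ceil(n_for_r2(r2, se_r2)),
        "citl": math.ceil(n_for_citl(outcome_var, r2, se_citl)),
    }
    return max(per_criterion.values()), per_criterion
```

The anticipated R2 and outcome variance would typically be taken from the model development study, as the abstract suggests, and the target standard errors reflect the precision the user wants.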

Conclusion: We recommend that researchers consider the minimum sample size required to precisely estimate key predictive performance measures, before commencing external validation of a prediction model for a continuous outcome.

Keywords: Sample size, external validation, prediction model, continuous outcome

14. A systematic review of clinical prediction models developed using machine learning methods in Oncology

Paula Dhiman1, Jie Ma1, Benjamin Speich1, Garrett Bullock2, Constanza Andaur-Navarro3, Shona Kirtley1, Ben Van Calster4, Richard Riley5, Karel G Moons3, Gary S Collins1

1Centre for Statistics in Medicine, University of Oxford, Oxford, UK; 2Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford, UK; 3Julius Center, UMC Utrecht, Utrecht University, Utrecht, The Netherlands; 4Department of Development and Regeneration, KU Leuven, Leuven, Belgium; 5School of Primary, Community and Social Care, University of Keele, Keele, UK

Correspondence: Paula Dhiman

Background: Clinical prediction models (CPMs) are of great interest to Oncology clinicians, who can use past and current patient characteristics to inform current and future health status. However, systematic reviews show that CPMs are often developed and validated using inappropriate methodology and are poorly reported. Application of Machine Learning (ML) methods to develop CPMs has risen considerably, and ML is often portrayed as offering many advantages over traditional statistical methods, especially when using ‘big’, non-linear and high-dimensional data. However, poor methodology and reporting continue to be barriers to their clinical use. To improve usability of ML-CPMs, it is important to evaluate their methodological quality and adherence to reporting guidelines for prediction modelling.

We aimed to evaluate methodological conduct and reporting of author-defined ML-CPM studies within Oncology.

Methods: We conducted a systematic review of Oncology ML-CPMs published in 2019 using MEDLINE and Embase. We excluded studies using imaging or lab-based data. We extracted data on study design, outcome, sample size, ML methodology, and items for risk of bias[1]. The primary outcome was adherence to prediction modelling reporting guidelines[2].

Results: We identified 2922 publications and excluded 2843 based on the eligibility criteria, extracting data from the remaining 79 publications. Preliminary results show poor reporting and methodological conduct. Studies used inefficient validation methods (e.g., split-sample) and did not adequately address missing data. Sample size was not reported for most studies, and discrimination was emphasised over calibration. Studies were at increased risk of overfitting, leading to optimistic performance measures for their models.

Conclusions: Reporting and methodological conduct of Oncology ML-CPMs need to be improved. Caution is needed when interpreting ML-CPMs as performance may be over-optimistic.

Keywords: Machine learning, prediction modelling, reporting

References

[1] Wolff RF, Moons KGM, et al, Annals of Internal Medicine, 170 2019, 51-58.

[2] Heus P, Damen JAAG, et al, BMJ Open, 9 2019, e025611.

15. Causal interpretation of clinical prediction models: When, why and how

Matthew Sperrin1, Lijing Lin1, David Jenkins1, Niels Peek1

1Health e-Research Centre, Division of Informatics, Imaging and Data Science, University of Manchester, UK

Correspondence: Matthew Sperrin

Background: When developing models for prediction, neither the parameters of the model, nor the output predictions, have any causal interpretations. For pure prediction this is perfectly acceptable. However, prediction models are commonly interpreted in a causal manner - for example by altering inputs to the model to demonstrate hypothetical impact of an intervention. This can lead to biased causal effects being inferred, and thus misinformed decision making.

We aimed to collect examples of use of prediction models in a causal manner in practice, and to identify and interpret literature that provides methods for enriching prediction models with causal interpretations.

Methods: We systematically reviewed literature to identify methods for prediction models with causal interpretations, by adapting a scoping review framework, and considering the interaction of prediction modelling keywords, and causal inference keywords. We included papers where methods are developed or applied that undertake prediction enriched with causal inference methods; specifically allowing for some assessment of the causal impact of an intervention on predicted risk.

Results: There were two broad categories of approach identified: 1) enriching prediction models with externally estimated causal effects, such as from meta-analyses of clinical trials; and 2) estimating both a prediction model, and causal effects, from observational data. The latter category included methods such as marginal structural models and g-estimation, embedded within both statistical and machine learning frameworks.

Conclusions: There is a need for prediction models that allow for 'counterfactual prediction': i.e. estimating risk of outcomes under different hypothetical interventions, to support decision making. Methods exist but require development, particularly when triangulating data from different sources (e.g. observational data and randomised controlled trials). Techniques are also required to validate such models.

Keywords: Causal, counterfactual, prediction, model

16. Risk prediction with discrete ordinal outcomes; calibration and the impact of the proportional odds assumption

Michael Edlinger1,2, Maarten van Smeden3,4, Hannes F Alber5,6, Ewout W Steyerberg7, Ben Van Calster1,7

1Department of Development and Regeneration, KU Leuven, Leuven, Belgium; 2Department of Medical Statistics, Informatics, and Health Economy, Medical University Innsbruck, Innsbruck, Austria; 3Julius Center for Health Science and Primary Care, University Medical Center Utrecht, Utrecht, the Netherlands; 4Department of Clinical Epidemiology, Leiden University Medical Center, Leiden, the Netherlands; 5Department of Internal Medicine and Cardiology, Klinikum Klagenfurt am Wörthersee, Klagenfurt, Austria; 6Karl Landsteiner Institute for Interdisciplinary Science, Rehabilitation Centre, Münster, Austria; 7Department of Biomedical Data Sciences, Leiden University Medical Center, Leiden, the Netherlands

Correspondence: Michael Edlinger

Background: When evaluating the performance of risk prediction models, calibration is often underappreciated. There is little research on calibration for discrete ordinal outcomes.

We aimed to compare calibration measures for risk models that predict a discrete ordinal outcome (typically 3 to 6 categories), and to investigate the impact of assuming proportional odds on risk estimates and calibration.

Methods: We studied multinomial logistic, cumulative logit, adjacent category logit, continuation ratio logit, and stereotype logistic models. To assess calibration, we investigated calibration intercepts and slopes for every outcome level, for every dichotomised version of the outcome, and for every linear predictor (i.e. algorithm-specific calibration). Finally, we used the estimated calibration index as a single-number metric, and constructed calibration plots. We used large sample simulations to study the behaviour of the logistic models in terms of risk estimates, and small sample simulations to study overfitting. As a case study, we used data from 4,888 symptomatic patients to predict the degree of coronary artery disease (five levels, from no disease to three-vessel disease).

Results: Models assuming proportional odds easily resulted in incorrect risk estimates. Calibration slopes for specific outcome levels or for dichotomised outcomes often deviated from unity, even on the development data. Non-proportional odds models, however, suffered more from overfitting, because these models require more parameters. Algorithm-specific calibration for proportional odds models presumes that the proportional odds assumption holds, and therefore did not fully evaluate calibration.

Conclusions: Deviations from the proportional odds assumption can result in poor risk estimates and calibration. Therefore, non-proportional odds models are generally recommended for risk prediction, although larger sample sizes are needed.

Keywords: Prediction, calibration, ordinal outcome, proportional odds

17. Recovering the full equation of an incompletely reported logistic regression model

Toshihiko Takada1, Chris van Lieshout1, Jeroen Hoogland1, Ewoud Schuit1, Johannes B Reitsma1

1Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, Utrecht University, Universiteitsweg 100, 3584 CG Utrecht, The Netherlands

Correspondence: Toshihiko Takada

Background: Reporting of clinical prediction models has been shown to be poor with information on the intercept often missing. To allow application of a model for individualized risk prediction, information on the intercept is essential.

We aimed to evaluate possible methods to estimate an unreported intercept of a logistic regression model.

Methods: Using existing data, we developed a logistic regression model with 6 predictors to predict the risk of operative delivery in pregnant women. We considered 4 scenarios which did not report the intercept, but in which different information was available: i) web calculator, ii) nomogram, iii) coefficients/odds ratios, and iv) scoring table (i.e., three simplified categories and corresponding predicted probabilities). In scenarios i) and ii), the coefficient for each predictor was estimated by assessing the change in predicted probabilities that occurred with a change in that particular predictor. Then, the intercept was estimated by calculating the differences between the predicted probability and the estimated predictor coefficients. In scenario iii), the intercept was estimated based on the assumption that the mean risk estimated by the model would be close to the observed incidence of the outcome in a patient who had the mean value for each predictor. In scenario iv), the intercept was estimated from the association between score categories and corresponding predicted probabilities.
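A minimal sketch of scenarios i) and iii) is given below, assuming the reported model is a standard logistic regression that is linear on the logit scale. The `calculator` argument stands in for a web calculator that returns a predicted probability for a set of predictor values; it, the predictor names and the function names are hypothetical and not taken from the study.

```python
import math


def logit(p):
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def recover_from_calculator(calculator, reference, step=1.0):
    """Scenario i): probe a black-box calculator to recover coefficients and intercept.

    Each coefficient is the change in the logit of the predicted probability
    when one predictor is moved by `step` from the reference profile; the
    intercept is what remains of the reference logit after subtracting the
    contributions of all predictors.
    """
    base = logit(calculator(reference))
    coefs = {}
    for name in reference:
        probe = dict(reference)
        probe[name] += step
        coefs[name] = (logit(calculator(probe)) - base) / step
    intercept = base - sum(coefs[k] * reference[k] for k in reference)
    return intercept, coefs


def intercept_from_incidence(incidence, coefs, predictor_means):
    """Scenario iii): assume a patient with mean predictor values has a
    predicted risk equal to the observed incidence of the outcome."""
    return logit(incidence) - sum(coefs[k] * predictor_means[k] for k in coefs)
```

The first function is exact only when the calculator displays probabilities without rounding; rounded output limits the precision of the recovered values. The second rests on an approximation, since the risk at the mean predictor values generally differs from the mean risk.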

Results: Among 5667 laboring women, 1590 (28.1%) had an operative delivery. While the true value of the intercept was -9.563, the estimated intercept in each scenario was -9.552, -9.580, -9.308, and -8.940, respectively.

Conclusion: In scenarios i) and ii) where detailed information of predicted probability is available, the unreported intercept can be accurately estimated. On the other hand, the estimation of the intercept could be unstable when only coefficients/odds ratios or simple scoring and corresponding predicted probabilities are reported.

Keywords: Intercept, prediction model, logistic regression

18. AI phone apps for skin cancer: Reviewing the evidence, regulations, marketing, plus what happened next

Jon Deeks1,2, Jac Dinnes1,2, Karoline Freeman1,3, Naomi Chuchu1,4, Sue E Bayliss1, Rubeta N Matin5, Abhilash Jain6,7, Yemisi Takwoingi1,2, Fiona Walter8, Hywel Williams9

1Test Evaluation Research Group, University of Birmingham, Birmingham B30 1UZ UK; 2NIHR Biomedical Research Centre, University Hospitals Birmingham NHS Foundation Trust and University of Birmingham, Birmingham UK; 3Warwick Medical School, University of Warwick, Coventry, UK; 4London School of Hygiene and Tropical Medicine, London UK; 5Department of Dermatology, Churchill Hospital, Oxford UK; 6Nuffield Department of Orthopaedics, Rheumatology and Musculoskeletal Sciences, University of Oxford, Oxford UK; 7Department of Plastic and Reconstructive Surgery, Imperial College Healthcare NHS Trust, St Mary's Hospital, London UK; 8Department of Public Health and Primary Care, University of Cambridge, Cambridge UK; 9Centre for Evidence Based Dermatology, University of Nottingham, Nottingham UK

Correspondence: Jon Deeks

Background: AI-based smartphone diagnostic apps can empower app users to make risk assessments and diagnoses. Apps for assessing suspicious moles for skin cancer are being implemented in health systems in the UK and elsewhere.

As a case study of AI, we examined the validity and findings of studies of AI smartphone apps to assess suspicious lesions for skin cancer, and assessed regulatory and NHS implementation processes.

Methods: We searched eight major databases and registers, including accuracy studies of any design. Reference standards included histological diagnosis and follow-up, or expert assessment. QUADAS-2 assessments summarised weaknesses in the evidence. Consequently, we investigated how evidence is used by the MHRA and the NHS.

Results: Nine small studies evaluating six smartphone apps were found; two further studies have been provided since. QUADAS-2 assessments showed studies at high risk of bias with selective recruitment, unevaluable images, and differential verification. Applicability concerns included recruitment in secondary care clinics; clinicians performing lesion selection and image acquisition; and commercial confidentiality, name changes, and no version identifiers preventing app identification. Sensitivity estimates improved over time, but specificity remained below 80%. Two apps have CE marks as Class 1 devices. The Intended Use Statements state apps should not be used instead of medical assessments. NHS marketing material did not report specificity values, implied favorable comparisons with health professionals, and was contrary to the Intended Use Statement. The NHS AI implementation process does not require a formal independent assessment of evidence.

Conclusions: Evidence does not support use of smartphone apps to detect melanoma. Current marketing of one app is contrary to its authorisation, and there is serious risk of harm to the public as a result. We have reported our findings to the NHS and the regulators and will update on what happened next.

Keywords: AI, apps, evidence, regulation

19. TRIPOD-CLUSTER: reporting of prediction model studies in IPD-MA, EHR and other clustered datasets

Thomas P. A. Debray1, Kym Snell2, Ben van Calster3, Gary S. Collins4, Richard D. Riley2, Johannes B. Reitsma1, Doug G. Altman4, Karel G. Moons1

1Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, Utrecht University, The Netherlands; 2Research Institute for Primary Care and Health Sciences, Keele University, Staffordshire, UK; 3Department of Development and Regeneration, KU Leuven, Leuven, Belgium; 4Centre for Statistics in Medicine, Nuffield Department of Orthopaedics, Rheumatology & Musculoskeletal Sciences, Botnar Research Centre, University of Oxford, Oxford, UK

Correspondence: Thomas P. A. Debray

Background: The TRIPOD (Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis) Statement is a widely acknowledged guideline for the reporting of studies developing, validating, or updating a prediction model. With increasing availability of large datasets (or “big data”) from electronic health records and from individual participant data (IPD) meta-analysis, authors face novel opportunities and challenges for conducting and reporting their prediction model research. In particular, when prediction model development and validation studies include participants from multiple clusters such as multiple centers or studies, prediction models may generalize better across multiple centers, settings and populations. However, differences in participant case-mix (e.g. in participant eligibility criteria), in variable definitions and in data quality may also lead to substantial variation in model performance. Accordingly, prediction model studies that are based on large clustered datasets need to be sufficiently reported to determine whether a developed or validated prediction model is fit for purpose.

Methods: We describe the rationale and development process of an extension of TRIPOD focusing on studies aimed at developing, validating or updating a prediction model that use large clustered datasets with IPD from multiple studies or datasets with data combined from multiple centers, regions or countries.

Results: We pay specific attention to new items (N=10) and those TRIPOD items that required adjustment (N=20) due to the fact that participants are clustered within studies, practices, regions or countries.

Conclusions: The rationale for each item is discussed, along with examples of good reporting and why transparent reporting is important, with a view to assessing risk of bias and clinical usefulness of the developed or validated prediction model.

Keywords: Prediction, model, reporting, meta-analysis, individual participant data

Invited talk: Rudi Pauwels

Session chair: Ann Van den Bruel

20. High Impact Pandemics: From Crisis to Preparedness

Rudi Pauwels1

1Praesens Foundation, Zemst, Belgium

Background: Given the long history of outbreaks and pandemics, and the increase in their frequency and diversity during the past decades, it was only a matter of time before a pandemic such as the ongoing Covid-19 pandemic occurred. A number of human and non-human related factors are converging and driving these outbreaks.

The causative viral agent, SARS-CoV-2, spread around the world in a matter of weeks, facilitated by transmission via the respiratory airways, including by people who do not display symptoms.

The world still had to react predominantly in crisis mode, exposing gaps in pandemic preparedness on multiple fronts. It is a reminder that a problem in one part of the world can rapidly become a problem in every part of the world, where its impact can be felt beyond the medical and public health levels.

Methods: Therefore, we call for a quantum change in the world’s approach, preparedness and response to pandemics, some of which pose existential threats to society. Proper preparation should include an international re-evaluation of the role of basic healthcare around the world. The growing threat of future ‘unseen’ enemies requires the adoption of a fundamentally new mindset, one with a longer time horizon and new technological tools, including more advanced diagnostics that can be deployed locally, generate high-quality data rapidly and be mass produced.

Results: In order to respond better to future threats, we should invest in and develop pan-viral therapies and more vaccine platforms that can deliver solutions much more rapidly.

Conclusions: The Praesens Foundation and its partners are developing new technologies to assist in better surveillance of, and rapid response to, outbreaks and pandemics. This will be illustrated by a new mobile laboratory example.

Keywords: Covid-19, pandemics, outbreaks, diagnostics, mobile labs

Keynote: Cecile Janssens

Session chair: Jan Y. Verbakel

21. Risk Prediction using Polygenic Scores: What's the State of the Evidence?

A. Cecile J.W. Janssens1

1Department of Epidemiology, Rollins School of Public Health, Emory University, Atlanta GA, USA

Background: Polygenic scores have become the standard for quantifying genetic liability in the prediction of disease risks.

Methods: Polygenic scores are generally constructed as weighted sum scores of risk alleles using effect sizes from genome-wide association studies as their weights.
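The weighted sum described here can be written out in a few lines. This is a minimal sketch: the SNP identifiers are placeholders, allele dosages are assumed to be coded as 0, 1 or 2 copies of the risk allele, and the weights are assumed to be per-allele effect sizes (for example log odds ratios) from a genome-wide association study.

```python
def polygenic_score(dosages, weights):
    """Weighted sum of risk-allele dosages for one individual.

    dosages: mapping SNP id -> number of risk alleles carried (0, 1 or 2).
    weights: mapping SNP id -> per-allele effect size used as the weight.
    """
    missing = [snp for snp in weights if snp not in dosages]
    if missing:
        # Refuse to silently treat an ungenotyped SNP as zero risk alleles.
        raise ValueError(f"no genotype for: {', '.join(sorted(missing))}")
    return sum(weights[snp] * dosages[snp] for snp in weights)
```

Raising an error for missing genotypes is a design choice made for this sketch; in practice missing SNPs are often imputed or dropped from the score.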

Results: The construction of polygenic scores is being improved with more appropriate selection of independent single-nucleotide polymorphisms and optimized estimation of their weights, but other aspects of the research methods receive little attention. Polygenic prediction research is primarily done in large convenience samples, with no questions asked about the relevance of the data, and, hence, the relevance of the evidence that is being gathered.

Conclusion: In this lecture, I will review 15 years of polygenic risk research and discuss lessons learned, lessons not learned, and promising directions for future research.

Keywords: Prediction, model, polygenic scores, evidence, genome-wide association studies

Contributed session on diagnostic tests

Session chair: Mariska Leeflang

22. A framework to evaluate proposals to change a screening test

Sian Taylor-Phillips1,2, Lavinia Ferrante di Ruffano2, Julia Geppert1, Aileen Clarke1, Chris Hyde3, Russ Harris4, Patrick Bossuyt5, Jon Deeks1

1Warwick Medical School, University of Warwick, Coventry, UK; 2Institute of Applied Health Research, University of Birmingham, Birmingham, UK; 3College of Medicine and Health, University of Exeter, Exeter, UK; 4University of North Carolina, North Carolina, USA; 5Department of Clinical Epidemiology and Biostatistics, University of Amsterdam, The Netherlands

Correspondence: Sian Taylor-Phillips

Background: Screening programmes are evaluated using randomised controlled trials with lengthy follow-up to morbidity, mortality and overdiagnosis. The fast pace of advances in technology means these trials are often based on outdated screening tests. A framework is needed to guide how to evaluate proposed changes to screening tests.

We aimed to develop a practical framework to evaluate proposed changes to screening tests in established screening programmes, by synthesis of existing methods and development of new theory.

Methods: We identified published frameworks for the evaluation of tests and screening programmes (n=64), and existing methods for evaluating or comparing screening tests published on websites of national screening organisations from 16 countries. We extracted principles relevant to evaluation of screening tests. We then searched the same websites for reviews evaluating changes to screening tests (n=484). We analyzed the pathways through which these changes to screening tests affected downstream health, and used these to adapt and extend our framework.

Results: We did not find an existing framework specifically designed for evaluation of screening tests across screening programmes. Our proposed framework describes the pathways through which changing a screening test can affect downstream health. Some of these pathways are already included in test evaluation frameworks, e.g. test failures, test accuracy and incidental findings. Some are specific to screening, such as overdiagnosis. We recommend study designs to evaluate these pathways, and recommend a stepwise approach to ensure proportionate review, with the most intensive evaluation required when there is a change to spectrum of disease detected.

Conclusions: We present a draft framework for evaluating changes in screening tests. This framework adapts principles from diagnostic test frameworks to the unique challenges of evaluating screening tests, including the complexity in estimating the benefit of earlier detection following screen detection, and associated overdiagnosis.

Keywords: Screening, test, systematic review

23. Bias in diagnostic accuracy estimates due to data-driven cutoff selection: a simulation study

Brooke Levis1,2,3, Parash Mani Bhandari1,2, Dipika Neupane1,2, Brett D Thombs1,2, Andrea Benedetti2,4, and the DEPRESsion Screening Data (DEPRESSD) PHQ Collaboration

1Lady Davis Institute for Medical Research, Jewish General Hospital, Montréal, Québec, Canada; 2Department of Epidemiology, Biostatistics and Occupational Health, McGill University, Montréal, Québec, Canada; 3Centre for Prognosis Research, School of Primary, Community and Social Care, Keele University, Staffordshire, UK; 4Respiratory Epidemiology and Clinical Research Unit, McGill University Health Centre, Montréal, Québec, Canada

Correspondence: Brooke Levis

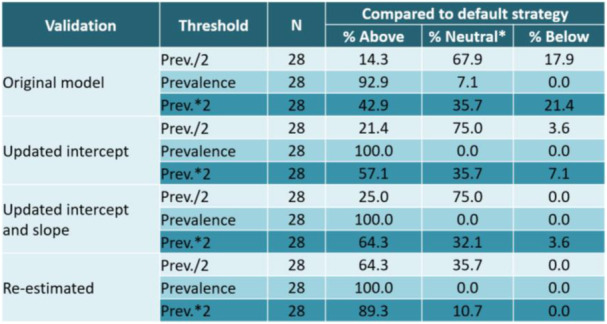

Background: Diagnostic accuracy studies with small numbers of cases often use data-driven methods to simultaneously identify an optimal cutoff and estimate its accuracy. When data-driven optimal cutoffs diverge from standard or commonly used cutoffs, authors sometimes argue that sample characteristics influence accuracy and thus different optimal cutoffs are needed for particular population subgroups.

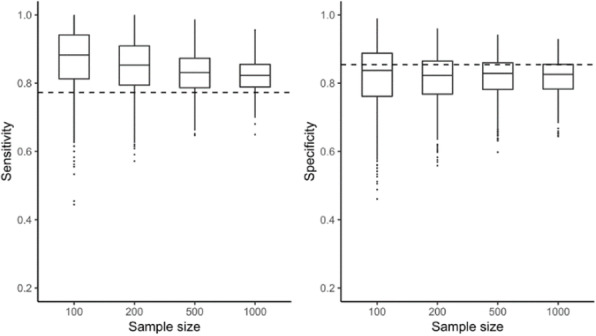

We aimed to explore variability in optimal cutoffs identified and diagnostic accuracy estimates from samples of different sizes and quantify bias in accuracy estimates for data-driven optimal cutoffs, using real participant data on Patient Health Questionnaire-9 (PHQ-9) diagnostic accuracy.

Methods: We conducted a simulation study using data from an individual participant data meta-analysis (IPDMA) of PHQ-9 diagnostic accuracy (N studies = 58, N participants = 17,436, N cases = 2,322). For each sample size of 100, 200, 500 and 1000 participants, 1000 samples were drawn with replacement from the IPDMA database (Figure 1). Optimal cutoffs (based on Youden’s J) and their accuracy estimates were compared to accuracy estimates for the standard and optimal cutoff of ≥ 10 in the full IPDMA database.
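The resampling procedure described in the Methods can be sketched roughly as follows. This is an illustrative sketch only, not the authors' code: the score distributions, the 13% prevalence, the number of resamples, and the helper names `sens_spec`, `youden_optimal_cutoff` and `simulate` are all assumptions, and the data are synthetic rather than the DEPRESSD IPDMA data. The reference cutoff here is taken as the Youden-optimal cutoff in the synthetic full dataset.

```python
import numpy as np

def sens_spec(scores, is_case, cutoff):
    """Sensitivity and specificity of the rule `score >= cutoff`."""
    positive = scores >= cutoff
    sensitivity = positive[is_case].mean()
    specificity = (~positive[~is_case]).mean()
    return sensitivity, specificity

def youden_optimal_cutoff(scores, is_case, cutoffs):
    """Cutoff maximising Youden's J = sensitivity + specificity - 1
    (the lowest such cutoff is returned when there are ties)."""
    j_values = [sum(sens_spec(scores, is_case, c)) - 1 for c in cutoffs]
    return cutoffs[int(np.argmax(j_values))]

def simulate(scores, is_case, sample_size, n_samples, cutoffs, rng):
    """Draw samples with replacement; for each one, record the data-driven
    optimal cutoff and the apparent sensitivity/specificity at that cutoff."""
    results = []
    for _ in range(n_samples):
        idx = rng.integers(0, len(scores), size=sample_size)
        s, y = scores[idx], is_case[idx]
        if y.all() or not y.any():  # need at least one case and one non-case
            continue
        c = youden_optimal_cutoff(s, y, cutoffs)
        results.append((c, *sens_spec(s, y, c)))
    return np.array(results)

# Synthetic stand-in for a full database (assumed distributions, scores 0-27).
rng = np.random.default_rng(2020)
n = 20_000
is_case = rng.random(n) < 0.13
scores = np.where(is_case, rng.binomial(27, 0.50, n), rng.binomial(27, 0.20, n))
cutoffs = list(range(1, 28))

ref_cutoff = youden_optimal_cutoff(scores, is_case, cutoffs)
ref_sens, ref_spec = sens_spec(scores, is_case, ref_cutoff)

for size in (100, 200, 500, 1000):
    res = simulate(scores, is_case, size, 200, cutoffs, rng)
    print(
        f"n={size}: cutoffs {int(res[:, 0].min())}-{int(res[:, 0].max())}, "
        f"mean sensitivity bias {res[:, 1].mean() - ref_sens:+.3f}, "
        f"mean specificity bias {res[:, 2].mean() - ref_spec:+.3f}"
    )
```

Because the cutoff is chosen and evaluated on the same sample, the apparent accuracy at the sample-specific cutoff can be compared directly against the accuracy of the reference cutoff in the full dataset, which is the comparison the abstract reports.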

Results: Optimal cutoffs ranged from 3-19, 5-14, 5-13, and 6-12 in samples of 100, 200, 500, and 1000 participants, respectively. Compared to estimates for a cutoff of ≥ 10 in the full IPDMA database, sensitivity was overestimated by 10%, 8%, 6% and 5% in samples of 100, 200, 500, and 1000 participants, respectively. Specificity was underestimated by 4% across sample sizes.

Conclusions: Using data-driven methods to select optimal cutoffs in small samples leads to large variability in optimal cutoffs identified and exaggerated accuracy estimates, although cutoff variability and sensitivity exaggeration reduce as sample size increases. Researchers should report accuracy estimates for all cutoffs rather than just study-specific optimal cutoffs. Differences in accuracy and optimal cutoffs seen in small studies may be due to the small sample sizes rather than participant characteristics.

Fig. 1 (abstract 23).

Bias in accuracy results for samples of 100, 200, 500 and 1000 participants

Keywords: Diagnostic test accuracy, bias, individual participant data meta-analysis

24. Graphical Enhancements to Summary Receiver Operating Characteristic Plots to Facilitate Diagnostic Test Accuracy Meta-Analysis

Amit Patel1,2, Nicola J Cooper2, Suzanne C Freeman2, Alex J Sutton2

1Cancer Research UK Clinical Trials Unit, Institute of Cancer and Genomic Sciences, College of Medical and Dental Sciences, University of Birmingham, Birmingham, UK; 2Biostatistics Research Group, Department of Health Sciences, University of Leicester, Leicester, UK

Correspondence: Suzanne C Freeman

Background: Results of diagnostic test accuracy (DTA) meta-analyses are often presented in two ways: i) forest plots displaying meta-analysis results for sensitivity and specificity separately, and ii) Summary Receiver Operating Characteristic (SROC) curves to provide a global summary of test performance. However, other relevant information on included studies is often not presented graphically and in the context of the results.

We aimed to develop graphical enhancements to SROC plots to address shortcomings in the current guidance on graphical presentation of DTA meta-analysis results.

Methods: A critical review of guidelines for conducting DTA systematic reviews and meta-analyses was conducted to establish and critique current recommendations for best practice for producing plots. New plots addressing shortcomings identified in the review were devised and implemented in MetaDTA [1].

Results: Two primary shortcomings were identified: i) lack of incorporation of quality assessment results into the main analysis; and ii) ambiguity in how the contribution of individual studies to the meta-analysis is represented on SROC curves. In response, two novel graphical displays were developed: i) a quality assessment enhanced SROC plot, which displays the results from individual studies in the meta-analysis using glyphs to simultaneously represent the multiple dimensions of quality assessed using QUADAS-2; and ii) a percentage study weights enhanced SROC plot, which accurately portrays the percentage contribution each study makes to both sensitivity and specificity simultaneously using ellipses.

Conclusions: The proposed enhanced SROC curves facilitate the exploration of DTA data, leading to a deeper understanding of the primary studies including identifying reasons for between-study heterogeneity and why specific study results may be divergent. Both plots can easily be produced in the free online interactive application, MetaDTA [1].

Keywords: Diagnostic test accuracy, meta-analysis, visualisation

References

[1] Freeman et al. Development of an interactive web-based tool to conduct and interrogate meta-analysis of diagnostic test accuracy studies: MetaDTA. BMC Med Res Methodol, 2019;19:81

25. Network meta-analysis of cerebrospinal fluid and blood biomarkers for the diagnosis of sporadic Creutzfeldt-Jakob disease

Nicole Rübsamen1, Stephanie Pape1, André Karch1

1Institute for Epidemiology and Social Medicine, University of Münster, Domagkstraße 3, 48149 Münster, Germany

Correspondence: Nicole Rübsamen

Background: Several biomarkers have been proposed for the diagnosis of sporadic Creutzfeldt-Jakob disease (sCJD), the most prevalent form of human prion disease.

We identified and evaluated all relevant diagnostic studies for the biomarker-based differential diagnosis (using serum or cerebrospinal fluid biomarkers) of sCJD, and combined direct and indirect evidence from these studies in a network meta-analysis.

Methods: We systematically searched Medline (via PubMed), Embase, and the Cochrane Library. To be eligible, studies had to include the established diagnostic criteria of sCJD and established diagnostic criteria for other forms of dementia as reference standard. The studies had to provide sufficient information to construct the 2×2 contingency table (i.e., false and true positives and negatives). We registered the study protocol with PROSPERO, number CRD42019118830. Risk of bias was assessed with the QUADAS-2 tool. We used a bivariate model to conduct meta-analyses of individual biomarkers and to estimate the between-study variability in logit sensitivity and specificity. To investigate sources of heterogeneity, we performed subgroup analyses based on QUADAS-2 quality and clinical criteria. We used a Bayesian beta-binomial analysis of variance model for the network meta-analysis.
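As a rough illustration of the quantities involved, the sketch below derives sensitivity and specificity, and their logits, from a single 2×2 table. The counts are hypothetical and not taken from any included study, the function name `accuracy_from_2x2` is an assumption, and the 0.5 continuity correction for zero cells is a common convention that the abstract does not mention.

```python
import math

def accuracy_from_2x2(tp, fp, fn, tn, correction=0.5):
    """Sensitivity, specificity and their logits from a 2x2 table.

    `correction` is added to every cell only when a cell is zero, so that the
    logits stay finite (an assumed convention, not part of the abstract).
    """
    if min(tp, fp, fn, tn) == 0:
        tp, fp, fn, tn = (x + correction for x in (tp, fp, fn, tn))
    sensitivity = tp / (tp + fn)  # true positives among the diseased
    specificity = tn / (tn + fp)  # true negatives among the non-diseased

    def logit(p):
        return math.log(p / (1 - p))

    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "logit_sensitivity": logit(sensitivity),
        "logit_specificity": logit(specificity),
    }

# Hypothetical study: 90 true positives, 20 false positives,
# 10 false negatives, 80 true negatives.
print(accuracy_from_2x2(tp=90, fp=20, fn=10, tn=80))
```

In a bivariate meta-analysis, the pair of logit-transformed values from each study is what is pooled, with the between-study variability estimated on that scale.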

Results: We included eleven studies, which investigated 14-3-3 (n=11), NSE (n=1), RT-QuIC (n=3), S100B (n=3), and tau (n=9). Heterogeneity was high in the meta-analyses of individual biomarkers and differed depending on the level of certainty of sCJD diagnosis. In the network meta-analysis, 14-3-3 was the most sensitive but among the least specific tests, while RT-QuIC was the most specific though among the least sensitive tests.

Conclusions: Our work shows the weaknesses of previous diagnostic accuracy studies. Subgroup analyses will reveal if our results depend on methodological quality of the studies or clinical criteria of the patients.

Keywords: Blood, cerebrospinal fluid, neurodegeneration, diagnosis, sporadic Creutzfeldt-Jakob disease

26. The potential for seamless designs in traditional diagnostic research?

Werner Vach1, Eric Bibiza-Freiwald2, Oke Gerke3, Tim Friede4, Patrick M Bossuyt5, Antonia Zapf2

1Department of Clinical Research, University of Basel, Schanzenstrasse 55, CH-4031 Basel, Switzerland; 2Institute of Medical Biometry and Epidemiology, University Medical Center Hamburg-Eppendorf, Martinistraße 52, D-20246 Hamburg, Germany; 3Department of Nuclear Medicine, Odense University Hospital & Department of Clinical Research, University of Southern Denmark, J. B. Kløvervænget 47, DK-5000 Odense C, Denmark; 4Department of Medical Statistics, University Medical Center Goettingen, Humboldtallee 32, D-37073 Goettingen, Germany; 5Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam University Medical Centers, Postbus 22660, NL-1100 DD Amsterdam, The Netherlands

Correspondence: Werner Vach

Background: New diagnostic tests to identify a well-established disease state have to undergo a series of scientific studies from test construction until finally demonstrating a societal impact. Traditionally, these studies are performed with substantial time gaps in between. Seamless designs allow us to combine a sequence of studies in one protocol and may hence accelerate this process.

We performed a systematic investigation of the potential of seamless designs in diagnostic research.

Methods: We summarized the major study types in diagnostic research and identified their basic characteristics with respect to applying seamless designs. This information was used to identify major hurdles and opportunities for seamless designs.

Results: Eleven major study types were identified. The following basic characteristics were identified: type of recruitment (case-control vs population-based), application of a reference standard, inclusion of a comparator, paired or unpaired application of a comparator, assessment of patient-relevant outcomes, and possibility for blinding of test results. Two basic hurdles could be identified: 1) Accuracy studies are hard to combine with post-accuracy studies, as the former are required to justify the latter and as application of a reference test in outcome studies is a threat to the study’s integrity. 2) Questions that can be clarified by other study designs should be clarified before performing a randomized diagnostic study. However, there is substantial potential for seamless designs, since all steps from test construction to the comparison with the current standard can be combined in one protocol. This may include a switch from case-control to population-based recruitment as well as a switch from a single-arm study to a comparative accuracy study. In addition, change-in-management studies can be combined with an outcome study in discordant pairs. Examples from the literature illustrate the feasibility of both approaches.

Conclusions: There is a potential for seamless designs in diagnostic research.

Keywords: Test construction studies, accuracy studies, randomized diagnostic studies, seamless design, blinding

27. Meta-analysis of diagnostic test accuracy using multivariate probit models

Enzo Cerullo1, Hayley Jones2, Terry Quinn3, Nicola Cooper1, Alex Sutton1

1NIHR Complex Reviews Support Unit, Department of Health Sciences, University of Leicester, Leicester, UK; 2Population Health Sciences, Bristol Medical School, University of Bristol, Bristol, UK; 3NIHR Complex Reviews Support Unit, Institute of Cardiovascular and Medical Sciences, University of Glasgow, Glasgow, UK

Correspondence: Enzo Cerullo

Background: Multivariate probit models are used to analyze correlated ordinal data. In the context of diagnostic test accuracy without a gold standard test, their use has been more limited. Multivariate probit models have been used for the analysis of dichotomous and categorical (>1 threshold) diagnostic tests in a single study, and for the meta-analysis of dichotomous tests.

We aimed to (i) develop a model for the meta-analysis of multiple binary and categorical diagnostic tests without a gold standard; (ii) extend the model to enable estimation of joint test accuracy.

Methods: We extended previously proposed multivariate probit models for the meta-analysis of diagnostic test accuracy, modelling the conditional within-study correlations between tests. Dichotomous tests use binary multivariate probit likelihoods and categorical tests use ordered likelihoods. We also showed how the model can be extended to estimate joint test accuracy, to meta-analyse studies which report accuracy at distinct thresholds, and how to incorporate priors for the 'gold standard' tests based on inter-rater agreement information. We fitted the models using Stan, which uses a state-of-the-art Hamiltonian Monte Carlo algorithm.
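To make the latent-variable idea concrete, the sketch below simulates two dichotomous tests within a single disease class using a bivariate probit data-generating process. It is not the authors' Stan model and involves no meta-analysis or estimation; the latent means, the correlation of 0.6, and the function names `phi` and `simulate_two_tests` are assumptions chosen purely for illustration.

```python
import math
import numpy as np

def phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def simulate_two_tests(mu, rho, n, rng):
    """Simulate two dichotomous tests in diseased individuals.

    Each test is positive when its latent normal variable exceeds zero, so
    the sensitivity of test j is phi(mu[j]); `rho` is the latent correlation
    capturing conditional dependence between the tests.
    """
    cov = np.array([[1.0, rho], [rho, 1.0]])
    latent = rng.multivariate_normal(mean=mu, cov=cov, size=n)
    return latent > 0  # boolean array of shape (n, 2)

rng = np.random.default_rng(27)
mu = [1.0, 0.5]  # assumed latent means in the diseased class
results = simulate_two_tests(mu, rho=0.6, n=200_000, rng=rng)

sens = results.mean(axis=0)           # marginal sensitivity of each test
joint = results.all(axis=1).mean()    # both tests positive
print("marginal sensitivities:", sens, "expected:", [phi(m) for m in mu])
print("joint sensitivity (both positive):", joint)
print("product under conditional independence:", sens[0] * sens[1])
```

With a positive latent correlation, the proportion of diseased individuals testing positive on both tests exceeds the product of the marginal sensitivities, which is why the within-study correlation matters when estimating joint test accuracy.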

Results: We applied the methods to a dataset in which studies evaluated the accuracy of tests for deep vein thrombosis, where studies included two dichotomous tests and one categorical test. We compared our results to the original study, which assumed a perfect reference test. In Stan, we found estimation to be very slow for meta-analyses which contained large studies with sparse data. We discuss these computational issues and possible ways to improve scalability by making use of recently proposed algorithms, such as calibrated data augmentation Gibbs sampling.

Conclusions: We developed a model for the meta-analysis of multiple categorical diagnostic tests without a gold standard. Unlike latent class models, multivariate probit models can be extended to tackle a variety of problems without having to inappropriately simplify or discard data.

Keywords: Meta-Analysis, diagnostic, test, accuracy, probit, imperfect, gold, reference, thresholds, interrater, agreement

Contributed session on prediction models

Session chair: Maarten Van Smeden

28. Performance of Heart Failure Clinical Prediction Models: A Systematic External Validation Study

Jenica N. Upshaw1,2, Jason Nelson1, Benjamin Koethe1, Jinny G. Park1, Hannah McGinnes1, Benjamin S. Wessler1,2, Ben Van Calster PhD3, David van Klaveren PhD1,4, Ewout Steyerberg PhD4, David M. Kent MD MS1