Abstract

Pitch is an essential cue that allows the auditory system to distinguish between sound sources. Pitch cues are less useful when listeners are not able to discriminate different pitches between the two ears, a problem encountered by listeners with hearing impairment (HI). Many listeners with HI will fuse the pitch of two dichotically presented tones over a larger range of interaural frequency disparities, i.e., have a broader fusion range, than listeners with normal hearing (NH). One potential explanation for broader fusion in listeners with HI is that hearing aids stimulate at high sound levels. The present study investigated effects of overall sound levels on pitch fusion in listeners with NH. It was hypothesized that if sound level increased, then fusion range would increase. Fusion ranges were measured by presenting a fixed frequency tone to a reference ear simultaneously with a variable frequency tone to the opposite ear and finding the range of frequencies that were fused with the reference frequency. No significant effects of sound level (comfortable level ± 15 decibels) on fusion range were found, even when tested within the range of levels where some listeners with HI show large fusion ranges. Results suggest that increased sound level does not explain increased fusion range in listeners with HI and imply that other factors associated with hearing loss might play a larger role.

Keywords: binaural, pitch, fusion, sound level

1. INTRODUCTION

Listeners with bilateral hearing loss perform worse on speech reception tasks in noisy environments relative to listeners with normal hearing (NH; Festen and Plomp, 1990). One reason that listeners with hearing impairment (HI) struggle in noise is that they have limited access to cues that are used to perceptually segregate sound sources. Pitch is one such example of an important sound source segregation cue (e.g., Darwin, 1997).

Recent findings show that many listeners with HI have poor simultaneous frequency discrimination across ears (Reiss et al., 2017). That is, these listeners are unable to distinguish pitches despite a large interaural frequency disparity, instead fusing the pitches into a single pitch percept in a phenomenon called binaural pitch fusion (Perrott and Barry, 1969; van den Brink et al., 1976). The factors that contribute to binaural pitch fusion differences between listeners with NH and HI remain elusive despite evidence that peculiarities in pitch perception might have negative impacts for listeners with HI.

1.1. Binaural Pitch Fusion

Frequency discrimination thresholds for simultaneously presented sounds differ from sequentially presented sounds. Even listeners with NH will fuse the pitch of two pure tones presented simultaneously to separate ears over a larger frequency disparity than can be discriminated when presented sequentially (Odenthal, 1963, 1961; Perrott and Barry, 1969; Reiss et al., 2017; Thurlow and Bernstein, 1957; Thurlow and Elfner, 1959; van den Brink et al., 1976). Results from these types of experiments are referred to as “binaural pitch fusion”. In this case, the word “binaural” only indicates that fusion occurs between the two ears and does not necessarily imply any role of the cues encoded by binaural nuclei in the brainstem (i.e., the lateral and medial superior olive). Binaural pitch fusion can be measured by presenting a pure tone of fixed reference frequency to a reference ear while a tone with a different (henceforth referred to as “variable”) frequency is presented to the contralateral ear. Listeners are then asked to indicate whether the tones presented to the two ears are fused together into one pitch or perceived as two separate pitches. The frequency range over which the variable tone fuses with the reference tone is referred to as the fusion range.

Interestingly, listeners with HI who use bilateral hearing aids often fuse pitch over a much larger frequency disparity relative to listeners with NH (up to 4 octaves; Reiss et al., 2017). Listeners with HI who use a hearing aid and cochlear implant can have similarly broad fusion ranges (Reiss et al., 2014). Taken together, results from listeners that use hearing aids and/or cochlear implants suggest that binaural pitch fusion is less selective in these groups. Less selectivity to frequency disparity for tones presented simultaneously across the ears suggests that the efficacy of pitch as a cue to segregate sound sources could be compromised in these clinical populations.

To further complicate the problem, little is known about how stimulus variables affect binaural pitch fusion in listeners with NH. Findings from early work in listeners with NH indicate that the fusion range increases as the frequency of the reference tone increases (Odenthal, 1963, 1961; Perrott and Barry, 1969; Thurlow and Bernstein, 1957; Thurlow and Elfner, 1959; van den Brink et al., 1976). Early investigations of pitch perception suggested that the pitch of pure tones presented monaurally or diotically might change with level, the seminal example being a study by Stevens (1935). Motivated by these early studies, using a slightly different paradigm, Thurlow and Bernstein (1957) showed that simultaneous frequency discrimination (akin to fusion range) remains constant over relatively low levels of stimulation (10–50 decibels sensation level). However, it is possible that fusion range changes when higher intensities are used.

1.2. Goal and Hypothesis

Since hearing aids increase sound level in order to increase audibility, one possible contributor to increased fusion range in listeners with HI is higher sound presentation levels. Increasing sound level broadens the auditory filter widths (e.g., 30 to 80 decibels sound pressure level, Glasberg and Moore, 2000; 16 to 65 decibels noise spectrum level, Pick, 1980). Further, as sound level increases from 10 to 70 decibels sensation level, the frequency range over which contralateral masking occurs also increases (i.e., central masking, or when pure tone maskers are presented to the opposite ear; Zwislocki, 1971). Thus, increases in area of excitation and central masking could reflect mechanisms that lead to a broadening of fusion range at moderate to high sound levels. In listeners with NH, unlike in listeners with HI, sound level can be manipulated over a large range without impacting audibility. Accordingly, the purpose of the present study was to determine whether increasing sound level from moderate to high levels (approximately 57 to 87 decibels sound pressure level) leads to broader binaural pitch fusion in listeners with NH. It was hypothesized that as the overall level was increased, the fusion range would also increase.

2. METHODS

2.1. Listeners

Six adults with NH (four female; age 21–29 years) participated in the present study. All listeners had pure tone thresholds at or below 20 decibels hearing level (dB HL) for frequencies with octave spacing between 0.25 and 6 kHz. Data from nine additional listeners (five female; age 35–77) with HI who used bilateral hearing aids were included in portions of these analyses. Averaged audiograms for both groups are plotted in Fig. 1. Listeners completed informed consent and a Mini-Mental State Exam with a minimum criterion score of 27 to confirm that they had no substantial cognitive impairment (Folstein et al., 1975). Listener NH82 was an author on this paper. All but one listener with NH (NH80) indicated that they had at least 10 years of musical experience. Listeners HI16, HI22, HI37, and HI39 had no previous musical training. Listener HI41 had two to four years of musical training on one or more instruments. Listeners HI25, HI38, HI40, and HI45 had at least 10 years of musical training on one or more instruments. Three listeners with NH (NH76, NH80, and NH85) reported experiencing tinnitus. All methods employed in this experiment were approved by the Institutional Review Board of Oregon Health and Science University.

Fig. 1.

Mean pure-tone detection thresholds. The x-axis represents the frequency being tested in kHz. The y-axis represents absolute detection threshold in dB hearing level (HL), where circles represent means and error bars represent one standard deviation above or below the mean. The left and right panel correspond to the left and right ear, respectively. Listeners with NH are shown in black and listeners with HI are shown in grey. One listener (HI37) was not tested at 1.5 kHz, so the mean and error bars on 1.5 kHz reflect the other eight listeners.

2.2. Stimuli and Equipment

Stimuli were pure tones of varying frequency presented through Sennheiser HD-25 headphones in a double-walled, sound-attenuating booth. All tones had 10-ms onset and offset ramps. Stimuli were created in MATLAB (version R2010b; MathWorks, Natick, MA), generated with an ESI (Leonberg, Germany) Juli sound card, and attenuated with a Tucker-Davis Technologies (TDT; Alachua, FL) programmable attenuator. Two separately grounded attenuators, mixers, and headphone channels were used to prevent any cross talk that could result in within-ear acoustic beats from small differences in frequency for listeners with NH.
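As a rough illustration of the stimulus described above, the sketch below generates a pure tone with 10-ms onset and offset ramps. The raised-cosine ramp shape, the 44.1-kHz sampling rate, and the function name `ramped_tone` are assumptions made for illustration; the text specifies only the ramp duration.

```python
import math

def ramped_tone(freq_hz, dur_s, ramp_s=0.010, fs=44100):
    """Return samples of a pure tone with onset/offset ramps.

    Assumptions (not stated in the text): raised-cosine ramps, fs = 44.1 kHz.
    """
    n = int(round(dur_s * fs))
    n_ramp = int(round(ramp_s * fs))
    samples = []
    for i in range(n):
        if i < n_ramp:
            # Onset ramp: gain rises smoothly from 0 toward 1.
            gain = 0.5 * (1 - math.cos(math.pi * i / n_ramp))
        elif i >= n - n_ramp:
            # Offset ramp: mirror image of the onset ramp.
            gain = 0.5 * (1 - math.cos(math.pi * (n - 1 - i) / n_ramp))
        else:
            gain = 1.0
        samples.append(gain * math.sin(2 * math.pi * freq_hz * i / fs))
    return samples

# 2-kHz reference tone with the 1500-ms duration used in the fusion task.
tone = ramped_tone(2000.0, 1.5)
```

In a dichotic presentation, one such tone would be routed to each ear on separate channels, with the comparison ear receiving a different frequency.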

2.3. Procedures

Prior to binaural pitch fusion measurements, it was important to ensure that listeners did not use other binaural cues to make their judgment on each trial. The upper limit for detection of binaural beats and interaural phase differences in humans with NH is commonly thought to be below 1.5 kHz (Grose and Mamo, 2010; Perrott and Nelson, 1969), though the presence of binaural beats has been reported as high as 3 kHz (Wever, 1949). Most literature agrees that the limit for binaural beats is approximately 1.5 kHz, and Wever (1949) used an uncommon definition of binaural beats (“rough character”); nevertheless, we felt it important to rule out the usefulness of interaural phase with the present experimental setup. The absence of sensitivity to interaural phase differences (i.e., the cues used in binaural beat detection) in the present study was confirmed by measuring the upper frequency limit for interaural phase difference detection (79% threshold; Grose and Mamo, 2010). It is worth noting that 79% is a relatively conservative threshold, though the results using this task have been consistent with previous literature suggesting that interaural phase is not a useful cue above 1.5 kHz.

A three-interval, three-alternative forced choice (3I-3AFC) procedure was used. The stimulus was an 800-ms pure tone that was 100% amplitude-modulated at 5 Hz, with modulation starting in 3/4 π phase. In the two standard intervals, the tone was binaurally in-phase. In the target interval, the tone alternated between in-phase and out-of-phase across sequential envelope modulation periods, with the phase adjustments made during zero-voltage points in the envelope. The interval containing the phase-inverted stimulus was randomized among the three intervals, and listeners were asked to pick the interval that was different. A three-up, one-down adaptive procedure with a total of 10 reversals was used, in which the carrier frequency of the tone was adapted across trials. The interaural phase difference threshold was computed as the geometric mean of the last eight reversals. The interaural phase difference thresholds were confirmed to be below the 2-kHz reference frequency for all listeners.
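The threshold computation can be sketched as follows: a geometric mean of the last eight reversal frequencies from a 10-reversal track. The reversal values below are hypothetical and the function name is illustrative only.

```python
import math

def threshold_from_reversals(reversal_freqs_hz, n_last=8):
    """Geometric mean (Hz) of the last n_last reversal frequencies."""
    last = reversal_freqs_hz[-n_last:]
    # Averaging in the log domain and exponentiating gives the geometric mean.
    return math.exp(sum(math.log(f) for f in last) / len(last))

# Hypothetical carrier frequencies (Hz) at the 10 reversals of one track.
reversals = [500, 1000, 800, 1200, 900, 1300, 1000, 1250, 1050, 1200]
threshold = threshold_from_reversals(reversals)

# The check applied in the text: threshold must lie below the 2-kHz reference.
below_reference = threshold < 2000.0
```

A geometric rather than arithmetic mean is a natural choice when the adapted variable is frequency, since frequency steps are usually perceived on a ratio scale.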

The fusion task employed in the present study was similar to that of Reiss et al. (2014). Pitch fusion was measured using a one-interval, two-alternative forced-choice (1I-2AFC) task where listeners were instructed to choose whether they heard one or two pitches. Stimuli in the dichotic pitch fusion task were played simultaneously for 1500 ms. The stimulus played to the reference ear was a tone fixed at 2 kHz, and the stimulus played to the comparison ear was a tone of variable frequency. Listeners were given the following instructions: “Listen for whether you hear one or two distinct pitches, not changes in loudness or sound quality, such as dissonance.” Listeners were also presented with examples of shifting sound quality by presenting two pure tones with frequency disparities of 25–50 Hz simultaneously to both ears, within the typical fusion range. A method of constant stimuli was used. The variable frequency was selected pseudo-randomly out of 17 logarithmically spaced frequencies (e.g., 1/64 or 1/32 octave steps) centered around 2 kHz. The logarithmic spacing and frequency range were determined individually for each listener based on the results of a tutorial and practice trials at a comfortable level. The comparison frequency range (and corresponding logarithmic frequency spacing) was selected so that fusion occurred for approximately half of the variable comparison frequencies. Each frequency was tested six times for a total of 102 trials per trial block.
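The trial structure described above can be illustrated with a short sketch that builds 17 logarithmically spaced comparison frequencies centered on 2 kHz and a shuffled block of 102 trials. The 1/32-octave step is one of the example spacings from the text; the shuffling scheme, seed, and function names are assumptions, since the text says only that selection was pseudo-random.

```python
import random

def comparison_frequencies(center_hz=2000.0, step_octaves=1 / 32, n=17):
    """n log-spaced frequencies (Hz), symmetric in octaves around center_hz."""
    half = (n - 1) // 2
    return [center_hz * 2 ** (k * step_octaves) for k in range(-half, half + 1)]

def trial_block(freqs, reps=6, seed=0):
    """Each frequency repeated reps times, in pseudo-random order."""
    trials = [f for f in freqs for _ in range(reps)]
    random.Random(seed).shuffle(trials)  # assumed randomization scheme
    return trials

freqs = comparison_frequencies()
block = trial_block(freqs)  # 17 frequencies x 6 repetitions = 102 trials
```

With 1/32-octave steps the 17 frequencies span a quarter octave on either side of 2 kHz; a listener with a broader fusion range would need a coarser spacing to keep roughly half of the frequencies fused.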

For the fusion task, tones at different frequencies should ideally be matched in loudness. Hence, prior to fusion range measurements, equal loudness contours were estimated using a loudness balancing procedure for individual listeners. Loudness balancing was completed at each level (soft, comfortable, and loud) for listeners with NH, and at a comfortable level for listeners with HI. Loudness balancing was conducted using a method of adjustment in each listener for frequencies ranging from 0.125–4 kHz. Some listeners with HI completed loudness balancing for a wider range of frequencies from 0.125–8 kHz.

Three loudness levels were determined for listeners with NH: soft, comfortable, and loud. The reference level was set as 70 dB SPL(C) for the 2-kHz tone in the reference ear and was adjusted if the listener did not perceive 70 dB SPL(C) as comfortable. Levels at all other frequencies in both ears were initialized at 70 dB SPL(C) and adjusted to match the 2-kHz tone in the reference ear. Loudness was adjusted for each frequency during sequential presentations within or across ears by the experimenter based on listener feedback.

The “soft” levels were initially set for 1.00, 1.25, 1.50, 2.00, 3.00, and 4.00 kHz by decreasing the comfortable sound levels by 15 dB. Sound levels were checked for equal loudness at these frequencies and minor adjustments were made, if necessary, by the experimenter based on listener feedback. The same procedure was followed to adjust the intensity to the “loud” level, except the comfortable sound levels were increased by 15 dB. For both soft and loud levels, the loudness balance was only measured from 1–4 kHz since frequencies outside of this range were not presented for the NH listeners in the present experiment. The order of loudness conditions was varied across listeners. Two trial blocks per loudness condition were collected for each listener. The resulting equal loudness functions were used to present all stimuli at equal loudness using linear interpolation.
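The interpolation step can be sketched as below. The levels in `balanced` are hypothetical, and interpolating along a linear (rather than logarithmic) frequency axis is an assumption; the text states only that linear interpolation was used.

```python
def interpolate_level(freq_khz, balanced):
    """Linearly interpolate a presentation level (dB SPL) at freq_khz.

    balanced maps measured frequencies (kHz) to loudness-balanced levels.
    Assumption: interpolation is linear in frequency, not log frequency.
    """
    points = sorted(balanced.items())
    if not points[0][0] <= freq_khz <= points[-1][0]:
        raise ValueError("frequency outside the loudness-balanced range")
    for (f0, l0), (f1, l1) in zip(points, points[1:]):
        if f0 <= freq_khz <= f1:
            return l0 + (l1 - l0) * (freq_khz - f0) / (f1 - f0)

# Hypothetical loudness-balanced levels at the six measured frequencies.
balanced = {1.0: 72.0, 1.25: 71.0, 1.5: 70.0, 2.0: 70.0, 3.0: 68.0, 4.0: 66.0}
level = interpolate_level(2.5, balanced)  # halfway between the 2- and 3-kHz levels
```

Raising an error outside the measured range mirrors the statement that frequencies outside 1–4 kHz were not presented to listeners with NH.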

2.4. Analysis

The proportion of “one sound” responses at each variable frequency was combined and plotted for both trial blocks to form a fusion function (for example, see Fig. 2). Data were fit with the four-parameter asymmetric Gaussian function in Eq. 1:

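$$y_i = \alpha \, \exp\!\left(-\frac{(x_i - \mu)^2}{2\sigma^2}\right) \Phi\!\left(\beta \, \frac{x_i - \mu}{\sigma}\right) \tag{1}$$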

Fig. 2.

Example fusion function. The blue symbols and solid line represent data gathered from one participant over two experimental blocks. The x-axis represents variable frequencies that were presented in the comparison ear while 2 kHz was presented at the same time to the reference ear. The y-axis represents the proportion of the time that listener NH79 perceived that frequency combination as one pitch. The dotted, black line represents a skewed Gaussian fit to the data (see Eq. 1; values of α, μ, σ, and β were fitted at 1.5, 1914, 235.2, and 1.364, respectively). The fusion range is calculated from the point at which the skewed Gaussian crosses the 0.5 line and is shown by the double-ended purple arrow.

where y_i is the proportion of “one sound” responses for the ith comparison frequency, α varies the maximum of the bell curve, x_i is the ith comparison frequency (in Hz), μ varies the center of the bell curve, σ varies the width of the bell curve, Φ is the cumulative standard normal function, and β varies skewness. The fusion range was calculated as the frequency range between the lower and upper 50% crossings of the fusion function and is indicated by the double-ended arrow in Fig. 2. The error bounds for fusion ranges were estimated in a simulation study as described in the Appendix.
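To make the fusion range calculation concrete, the sketch below evaluates an asymmetric Gaussian and measures the distance between its 50% crossings. It assumes that Eq. 1 has the skew-normal-like form y = α·exp(−(x − μ)²/(2σ²))·Φ(β(x − μ)/σ), which is inferred from the parameter descriptions above rather than confirmed. The grid scan and function names are illustrative choices, not the authors' method.

```python
import math

def std_normal_cdf(z):
    """Cumulative standard normal function, Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def fusion_function(x, alpha, mu, sigma, beta):
    """Assumed form of Eq. 1: scaled Gaussian times a skewing normal CDF."""
    z = (x - mu) / sigma
    return alpha * math.exp(-0.5 * z * z) * std_normal_cdf(beta * z)

def fusion_range(alpha, mu, sigma, beta, lo=1000.0, hi=4000.0, step=0.5):
    """Width (Hz) of the region where the curve is at or above 0.5.

    Uses a simple grid scan; returns 0.0 if the curve never reaches 0.5.
    """
    n = int((hi - lo) / step)
    above = [lo + i * step for i in range(n + 1)
             if fusion_function(lo + i * step, alpha, mu, sigma, beta) >= 0.5]
    return above[-1] - above[0] if above else 0.0

# Parameters reported for listener NH79 (comfortable and loud conditions).
range_comfortable = fusion_range(1.5, 1914.0, 235.2, 1.364)
range_loud = fusion_range(0.538, 1861.0, 300.3, 3.723)
```

Under this assumed form, the loud-condition parameters for NH79 produce a curve whose peak stays below 0.5, so the scan returns a fusion range of zero.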

3. RESULTS

3.1. Loudness-Balanced Levels

Loudness balancing results at the comfortable level are presented for each listener with NH in Fig. 3(A). For comparison, loudness-balanced levels at comfortable level from listeners with HI are plotted in Fig. 3(B). Loudness-balanced levels from listeners with HI reflect unaided presentation levels rather than hearing aid gain. Listeners with HI were tested over headphones to minimize variation due to differences in hearing aid processing between individuals. Three different levels were determined by listeners with NH: soft, comfortable, and loud, corresponding to means of 57, 71, and 87 dB SPL(C), respectively, at the reference frequency (2 kHz) in the reference ear. Thus, while soft and loud levels at each frequency were initiated at ±15 dB from the comfortable level, several listeners reduced/increased the levels of the soft, comfortable, and loud sounds to achieve the desired loudness. This includes the reference frequency (2 kHz) in the reference ear. From Fig. 3 it is also clear that larger differences in level were required to achieve equal loudness across ears for listeners with HI compared to those with NH.

Fig. 3.

Loudness balance levels for listeners with (A) NH or (B) HI. The x-axis represents the frequency being presented in kHz. The y-axis represents the level in dB SPL(C) at which listeners perceived a pure tone as comfortable. Blue crosses and red circles represent the left and right ear, respectively. Each panel corresponds to a different listener whose subject code is given in the top-left corner. Because not every listener perceived 70 dB SPL(C) as comfortable for the 2 kHz tone, level was sometimes adjusted above or below 70 dB. The left ear was the reference ear for listeners NH77, NH79, NH80, and NH82. The age of listeners with HI is given below their subject code.

3.2. Fusion Range

Fusion ranges as a function of sound level are shown for all listeners with NH in Fig. 4A. Changes in fusion range with sound level varied widely across individuals with respect to magnitude and direction. Accordingly, there was no significant change in fusion range over the range of sound levels according to a Friedman non-parametric, repeated-measures test (χ²(2) = 1.000, p = .607). A non-parametric test was used since data were highly variable and there was no obvious distribution to the fusion ranges. Due to the small sample size, it would be inappropriate to test data or residuals for normality. This analysis was repeated excluding listener NH79, who had a fusion range of zero in the loud condition, and still yielded no significant effect of sound level (χ²(2) = 1.600, p = .449). Recall that one of the listeners, NH82, is an author on the paper. Their fusion ranges were neither the smallest nor largest, and showed virtually no effect of level, consistent with the results for the other participants. Thus, it appears that NH82 did not bias the results in any way that was not representative of other participants.
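For readers unfamiliar with the Friedman test, the sketch below computes the statistic from within-listener ranks and converts a chi-square value with 2 degrees of freedom to a p-value, for which the upper-tail probability is exactly exp(−χ²/2). The fusion ranges in `hypothetical` are invented so that the rank sums reproduce a statistic of 1.000; they are not the study's data, and the function assumes no tied values.

```python
import math

def friedman_chi2(data):
    """Friedman statistic for rows = listeners, columns = conditions.

    Assumes no ties within a row (no tie correction is applied).
    """
    n, k = len(data), len(data[0])
    rank_sums = [0.0] * k
    for row in data:
        # Rank the k conditions within each listener (1 = smallest).
        order = sorted(range(k), key=lambda j: row[j])
        for rank, j in enumerate(order, start=1):
            rank_sums[j] += rank
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)

def chi2_sf_df2(x):
    """Upper-tail probability of a chi-square variable with 2 df."""
    return math.exp(-x / 2.0)

# Hypothetical fusion ranges (Hz) in soft, comfortable, and loud conditions.
hypothetical = [
    [100, 200, 300], [110, 210, 310], [200, 100, 300],
    [210, 110, 310], [200, 300, 100], [300, 200, 100],
]
chi2 = friedman_chi2(hypothetical)

# p-values implied by the statistics reported in the text.
p_all = chi2_sf_df2(1.000)
p_excluding_nh79 = chi2_sf_df2(1.600)
```

Because the test uses only within-listener ranks, a fusion range of zero poses no problem for the statistic itself, which is one reason a rank-based test suits these data.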

Fig. 4.

Fusion range by sound level. The x-axis represents sound level. The y-axis represents the fusion range in kHz. (A) The closed, black squares and error bars represent the mean plus or minus one standard deviation. Standard deviations were calculated on log-transformed fusion ranges and then converted back to kHz. Individual data are shown in blue for each of the six listeners with NH whose subject codes are given in the legend. (B) The closed, black circles represent the fusion range for each listener whose subject code is given in the top-left corner of each panel. Offset to the right is a boxplot of the distribution of 17 fusion range estimates, each calculated by removing one data point from the fusion function. Each box represents the median and interquartile range. Whiskers represent the maximum and minimum fusion range estimate.
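The log-domain summary described in the caption can be sketched as follows. The input values are hypothetical, and computing the central value in the log domain as well (i.e., a geometric mean) is an assumption; the caption states only that standard deviations were calculated on log-transformed fusion ranges.

```python
import math

def log_mean_sd_bounds(values):
    """Return (lower, center, upper) in the original units.

    The mean and sample SD are computed on log-transformed values and
    converted back, so the bounds are asymmetric around the center.
    All values must be positive, since log(0) is undefined.
    """
    logs = [math.log(v) for v in values]
    n = len(logs)
    mean = sum(logs) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in logs) / (n - 1))
    return math.exp(mean - sd), math.exp(mean), math.exp(mean + sd)

# Hypothetical fusion ranges in kHz.
lower, center, upper = log_mean_sd_bounds([0.1, 0.2, 0.4])
```

Error bars computed this way are symmetric on a logarithmic axis and asymmetric on a linear one.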

Given the lack of effect of level, we wanted to establish reasonable bounds around fusion range estimates to determine what magnitude of effect could be detected using this task. This could be done in at least two different ways, by evaluating: (1) the statistical properties of the fusion function under ideal circumstances, and (2) the influence of particular data points observed for each individual listener. Accordingly, we devised two different strategies for each of these analyses. The first is presented in the Appendix. It shows a simulation study suggesting that, under ideal circumstances where data from listeners are only affected by variability associated with sampling error, the task is able to detect a change in fusion range of 25% with 99% confidence. Accordingly, under ideal circumstances, a difference of 50% fusion range between two of the loudness conditions would be needed to detect an effect of level (since there are two different estimates of fusion range involved). The second is presented in Fig. 4B. Each panel shows results from different listeners, where the boxplot shows the distribution of bootstrapped fusion range estimates with one data point removed from the fusion function (resulting in 17 estimates). The results in Fig. 4B suggest that the fusion range estimates were robust against deviations of a single data point (or variable frequency). In Table I, we also included 95% confidence intervals for each parameter in the fusion function across listeners and sound levels.
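The leave-one-out analysis shown in Fig. 4B can be illustrated with a simplified sketch. To stay self-contained, it replaces the asymmetric Gaussian fit with linearly interpolated 50% crossings, which is a stand-in and not the authors' estimator. The proportions below are hypothetical.

```python
def crossing_range(freqs, props, criterion=0.5):
    """Fusion range (Hz) between the first and last interpolated crossings."""
    pairs = list(zip(freqs, props))
    crossings = []
    for (f0, p0), (f1, p1) in zip(pairs, pairs[1:]):
        # A sign change around the criterion marks a crossing.
        if (p0 - criterion) * (p1 - criterion) < 0:
            crossings.append(f0 + (criterion - p0) * (f1 - f0) / (p1 - p0))
    return crossings[-1] - crossings[0] if len(crossings) >= 2 else 0.0

def leave_one_out(freqs, props):
    """One fusion range estimate per omitted comparison frequency."""
    return [
        crossing_range(freqs[:i] + freqs[i + 1:], props[:i] + props[i + 1:])
        for i in range(len(freqs))
    ]

# 17 comparison frequencies in 1/32-octave steps around 2 kHz.
freqs = [2000.0 * 2 ** ((i - 8) / 32) for i in range(17)]
# Hypothetical proportions of "one sound" responses out of 12 trials each.
counts = [0, 0, 0, 0, 2, 4, 9, 12, 12, 12, 12, 10, 8, 4, 1, 0, 0]
props = [c / 12 for c in counts]

full_estimate = crossing_range(freqs, props)
estimates = leave_one_out(freqs, props)  # 17 estimates, one per removed point
```

A narrow spread of the 17 estimates around the full-data estimate indicates that no single comparison frequency drives the result, which is the property the boxplots in Fig. 4B are meant to convey.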

Table I.

Fusion function parameter estimates and 95% confidence intervals. Each row represents a different listener with NH whose subject code is indicated in the left-most column. Any parameter estimate with an asterisk indicates that the estimate was fixed at a computational bound, and that it was not possible to compute a confidence interval.

| | Soft | | | | Comfortable | | | | Loud | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Subject ID | α | μ | σ | β | α | μ | σ | β | α | μ | σ | β |
| NH76 | 1.365 ± 0.295 | 1892 ± 36 | 356.7 ± 92.6 | 4.001 ± 2.955 | 1.499 ± 0.801 | 1955 ± 76 | 183.2 ± 110.3 | 1.844 ± 3.286 | 1.266 ± 0.192 | 1919 ± 31 | 477.7 ± 108.2 | 4.070 ± 2.078 |
| NH77 | 1.180 ± 221.950 | 1906 ± 3296000 | 183.7 ± 34540.0 | −0.013 ± 44990.000 | 1.500* | 1886 ± 38 | 173.9 ± 27.8 | 0.984 ± 0.843 | 0.902 ± 0.466 | 1828 ± 109 | 278.8 ± 151.0 | 1.989 ± 3.470 |
| NH79 | 1.496 ± 0.268 | 1863 ± 22 | 198.0 ± 38.2 | 2.547 ± 1.449 | 1.500* | 1914 ± 25 | 235.2 ± 23.7 | 1.364 ± 0.536 | 0.538 ± 0.257 | 1861 ± 72 | 300.3 ± 160.3 | 3.723 ± 6.001 |
| NH80 | 1.111 ± 0.140 | 1595 ± 40 | 1586.0 ± 460.0 | 17.820 ± 13.473 | 1.240 ± 0.106 | 1712 ± 32 | 912.5 ± 93.6 | 5.243 ± 1.638 | 1.335 ± 0.337 | 1855 ± 113 | 970.3 ± 349.6 | 3.611 ± 2.936 |
| NH82 | 1.500* | 1846 ± 32 | 246.8 ± 33.3 | 1.536 ± 0.755 | 1.500* | 1886 ± 71 | 207.7 ± 31.2 | 0.549 ± 1.010 | 1.500* | 1819 ± 28 | 275.3 ± 33.6 | 1.925 ± 0.780 |
| NH85 | 1.500 ± 0.441 | 1908 ± 52 | 224.3 ± 69.6 | 1.921 ± 1.871 | 1.467 ± 0.828 | 1931 ± 103 | 197.4 ± 115.8 | 1.568 ± 3.193 | 0.956 ± 0.253 | 1870 ± 38 | 276.7 ± 81.5 | 3.390 ± 2.970 |

To further investigate whether increasing level is associated with increasing fusion range, we compared previously published fusion ranges of listeners with HI against listeners with NH in the present experiment. Data from listeners with HI were re-fitted using the asymmetric Gaussian function described in Section 2.4. Fusion ranges from nine listeners with HI are shown for comparison with the fusion ranges of listeners with NH in the loud condition in Fig. 5. Listener NH79 was excluded from Fig. 5 since their fusion range was 0 Hz in the loud condition, which implies an infinitely small value on the y-axis, and is therefore impossible to show in a correlation plot. Results from listeners with HI were previously published in Oh et al. (2019), Oh and Reiss (2018), Oh and Reiss (2017), and Reiss et al. (2017).

Fig. 5.

Fusion range by level across listeners with NH or HI. The x-axis represents the mean level between ears in dB SPL(C) presented to each ear at the reference frequency. The y-axis represents the fusion range in kHz. Listeners with NH in the loud condition are shown in blue and listeners with HI in the comfortable condition are shown in black, with each shape representing one individual whose subject codes are given in the legend.

Each point in Fig. 5 reflects the fusion range and loud or comfortable level (at 2 kHz) for listeners with NH and HI, respectively. Results from listeners HI25, HI38, and HI40 were based on the average of two different fusion range estimates where the left and right ear were each used as the reference ear, while all others only used one reference ear and fusion function. The results indicate that fusion ranges tended to be larger for listeners with HI compared to NH, as in previous experiments with listeners who use bilateral hearing aids (Oh et al., 2019; Reiss et al., 2017, 2016). Only 4/9 of the HI data points indicate fusion ranges at or below the greatest NH fusion range. Moreover, while the NH data in Fig. 5 imply a correlation between fusion range and level, when combined with the greater amount of HI data, the results do not support this correlation. In particular, listeners HI16 and HI41 were presented stimuli at lower levels than those used for listeners with NH (at 2 kHz), yet had greater fusion ranges.

4. DISCUSSION

The present experiment investigated whether sound level affected the interaural frequency disparity over which two pure tones are heard as one pitch (fusion range). It was hypothesized that if increased sound level contributes to the larger fusion ranges observed in previous studies in listeners with HI who use hearing aids compared to NH (Oh et al., 2019; Reiss et al., 2017, 2016), then fusion range would also increase for higher sound levels in listeners with NH. The results of the present experiment contradict this hypothesis and suggest that increases in sound level alone do not lead to consistent increases in fusion range. This finding has important implications for listeners that use hearing aids, as these devices increase sound levels to those used in these experiments and above. The results of this experiment imply that sound level is not a likely contributor to the larger fusion range observed in listeners with HI.

4.1. Binaural Pitch Fusion

4.1.1. Significance

Listeners with NH exhibit a small amount of frequency-to-pitch mismatch between the ears (diplacusis; e.g., Albers and Wilson, 1968; van den Brink et al., 1976), meaning that the auditory system must be able to account for differences between the ears to form one coherent pitch percept. One strategy clearly used by the auditory system is to “group” frequency and temporal information with a common onset time into one perceptual image (Bregman, 1990; Darwin, 1997; Shinn-Cunningham, 2008), and it has been proposed by van den Brink et al. (1976) that binaural fusion normally accommodates diplacusis such that only one coherent sound is heard for small interaural pitch differences. With sufficient differences in frequency between the ears, the NH auditory system will perceive separate pitches for pure tones (Perrott and Barry, 1969; Reiss et al., 2017; Thurlow and Elfner, 1959; van den Brink et al., 1976). In listeners with HI, however, binaural pitch fusion tends to be broader such that even tones with large pitch differences are fused across ears (Reiss et al., 2017, 2014).

Together with interaural mismatches due to dead regions, broad binaural fusion can lead to fusion of mismatched spectral information. That is, listeners with HI can show patterns of binaural vowel perception that do not correspond to the vowel perception in either ear, such as an averaging of vowel perception between the ears (Reiss et al., 2016). Broad fusion is also correlated with reduced release from masking due to voice-pitch differences in multi-talker listening environments, potentially because the different voices are fused together instead of segregated as they normally would be on the basis of pitch (Oh et al., 2019). While voice pitch is thought to be conveyed by different cues than place-pitch, referring to the changes in place-of-stimulation along the basilar membrane thought to be involved with pitch perception of high frequency tones (for review, see Oxenham, 2008), preliminary findings suggest that abnormal fusion of dichotic tones is correlated with abnormal fusion of dichotic vowels at different fundamental frequencies, and thus fusion of voices of different pitch in HI listeners (Reiss et al., 2018). Such speech fusion leads to spectral averaging of different vowels similar to that demonstrated for consonants (Cutting, 1976). Thus, it is critical to understand the factors underlying abnormal broad fusion in HI listeners.

It is worth mentioning that additional interaural spectral differences may arise when two sounds are presented from different horizontal and vertical locations. When this situation occurs for similar sounds, the difference in horizontal location leads to differences in the level of the sound source in each ear due to attenuation from the head and body, and differences in both vertical and horizontal location lead to differences in spectral content in each ear due to filtering from the pinnae. Thus, the introduction of interaural spectral differences (like those manipulated in the present experiment) reflects the types of changes to sounds that occur due to differences in sound source location.

4.1.2. Relationship with Level

The present study endeavored to explore one factor, sound level, that differs between listeners with HI who use hearing aids and listeners with NH. At moderate to high levels of stimulation in the present study (57–87 dB SPL(C) on average at 2 kHz), there was no consistent change in fusion range for listeners with NH (Fig. 4). Further, when data from listeners with HI were included at similar or higher levels of stimulation, there was no apparent relationship between fusion range and sound level. These results are in agreement with those of Thurlow and Bernstein (1957) at low to moderate levels (10–50 dB sensation level) in listeners with NH. The comparison of listeners with NH against listeners with HI suggests that their fusion ranges may be larger due to factors other than level. This conclusion was reached since there was no systematic trend with level within listeners, and fusion ranges tended to be larger for listeners with HI, though there was overlap for four listeners in each group (Fig. 5). Lending further support to our conclusion, HI16 and HI41 were tested at levels lower than and similar to those of NH listeners, respectively, but had very broad fusion ranges. Together, our results and those of Thurlow and Bernstein (1957) suggest that overall sound level does not affect fusion range. However, it is worth mentioning that introducing interaural level differences (ILDs) shifts the pitch of a fused dichotic tone pair, an average of the two original pitches, toward that of the louder ear (van den Brink et al., 1976).

Whether ILDs affect fusion range requires further investigation and has important implications for listeners with HI and clinicians, as spurious ILDs can be introduced by uncoordinated hearing aids (Brown et al., 2016; Musa-Shufani et al., 2006) as well as with features such as automatic gain control. Loudness balancing and coordinated hearing aids could play an important role in binaural frequency selectivity since loudness perception can differ considerably across the two ears, especially in cases where listeners have asymmetric hearing loss.

4.2. Pitch Perception and Level

Very early studies in pitch perception using pure tones suggested that there were large effects of level on pitch (e.g., Stevens, 1935). However, later studies showed much smaller effects, with pitch changing by as little as 1% at middle frequencies (1–2 kHz) and by a maximum of 5% at lower or higher frequencies, and with large amounts of variability across listeners (Terhardt, 1974; Verschuure and van Meeteren, 1975). Similarly, changing sound level has no consistent effect on diplacusis in listeners with NH (Burns, 1982) or HI (Burns and Turner, 1986). However, in contrast to the previous results, it should be noted that increasing sensation levels from slightly above threshold (5–20 dB) has been shown to increase difference limens (worsen performance) in diplacusis-like tasks for listeners with NH (Pikler and Harris, 1955). If changes in diplacusis with sound level were responsible for changes in fusion range, then one would expect fusion range to vary consistently at low sensation levels. The results of Thurlow and Bernstein (1957) do not support this expectation.

4.3. Large Binaural Fusion Ranges with HI

The auditory periphery is very different in listeners with sensorineural HI compared to those with NH. For example, HI can affect auditory nerve fiber density (Nadol, 1997; Spoendlin and Schrott, 1989) and the cochlea can contain dead regions (Moore, 2004). Additionally, the shape of auditory filters obtained from forward masking is altered in listeners with HI (Glasberg and Moore, 1986). These peripheral decrements may lead to larger diplacusis and mismatches in place-of-stimulation between ears for listeners with sensorineural hearing loss. For listeners with hearing aids, these mismatches occur due to the effects of deafness that result in changes in the auditory periphery. For listeners with cochlear implants, sound processing strategies often introduce place-of-stimulation mismatches by allocating acoustic frequencies to electrodes independent of where the electrodes are actually located. The results of the present study suggest that an increase in overall level, which broadens the pattern of stimulation in the auditory periphery, does not explain the large fusion ranges measured in listeners with sensorineural HI. Studies comparing listeners with conductive and sensorineural HI might shed additional light on the mechanisms contributing to larger fusion ranges in listeners with sensorineural HI.

The hypothesis in the present study supposed that increasing sound level would lead to increasing fusion range based upon an increase in the area of excitation in the auditory periphery. This hypothesis is not supported by the data from the present study. Increasing level has a very predictable effect on peripheral excitation patterns (i.e., an increase in the area of excitation). In contrast, fusion ranges in listeners with NH did not show increases that would be consistent with these level-dependent increases in peripheral excitation, and some HI listeners had much broader fusion at lower or similar sound levels. These findings suggest that broad binaural pitch fusion may arise either from broader peripheral activity caused by hearing loss, such as loss of outer hair cells, or at a more central locus.

Previous reports have shown that extensive musical training in listeners with and without HI is associated with improved pitch discrimination (e.g., Başkent and Gaudrain, 2016; Oxenham et al., 2003). Our results do not show any consistent trend with musical training in listeners with HI or NH (Fig. 5). Two of the listeners with HI who had the largest fusion ranges also had 10 or more years of musical training (HI25 and HI45). It is possible that the present study was underpowered to detect an effect of musical training, as it was not designed to do so. However, given that fusion ranges are considerably larger than frequency discrimination thresholds, the lack of association with musical training could reflect a mechanism different from the one associated with improved frequency discrimination in musicians. That is, while both pitch fusion and frequency discrimination may be affected by resolution of frequency coding in the auditory periphery, pitch fusion may also involve a central component.

The high inter-subject variability in listeners with NH suggests some contribution of central auditory processing. One study of electrophysiological recordings from the auditory cortex of the cat showed sensitivity of neurons to large interaural frequency differences (Mendelson, 1992), which may provide one putative, central source of binaural high-frequency comparison. Broadly speaking, it is thought that binaural pitch processing involves peripheral and central mechanisms (Plack et al., 2014).

4.4. Limitations

There were several limitations to the present study. A general limitation that should be noted is that binaural fusion measurements are subjective in nature, as in any experiment that seeks to measure a perceptual attribute where there is no “correct” answer. Pitch matching experiments are similarly subjective. This differs from objective experiments that involve threshold measurements or discrimination, where there is a correct answer. The same problem is noted in the localization (i.e., precedence effect) literature in a review by Litovsky et al. (1999) that characterizes the benefits of each type of measurement.

While there have been attempts to develop objective measures of fusion (Fiedler et al., 2011), there are no known measures that have been verified independently by multiple laboratories. In discrimination experiments where tones of varying frequency are presented dichotically and compared against a diotic reference, and subjects are asked which interval had the dichotic tone, discrimination is possible even in the presence of fusion due to pitch averaging across the two ears, providing a pitch cue according to which intervals are different (Oh and Reiss, 2017; van den Brink et al., 1976). Discrimination tasks against a diotic reference could also be completed by attending to one ear when the dichotic tone pair is not fused. One alternative approach has been to use a 1I-5AFC binaural pitch fusion task, where listeners were asked to indicate whether one or two pitches were heard, and if two pitches, which ear contained the higher pitch (Reiss et al., 2017). While this task worked well for listeners with HI, listeners with NH made pitch confusions across the two ears for closely spaced frequencies so that it was not possible to provide useful feedback. One additional alternative was provided by Anderson et al. (2019) in a related experimental paradigm where listeners judged whether the same or different amplitude modulation rates were presented to different ears using sinusoidally amplitude-modulated tones. They used a 1I-2AFC task like the one in the present study, except that they provided visual feedback on whether amplitude modulation rates were in fact the same or different. This task still resulted in large inter-subject variability and high task difficulty, suggesting that this type of task is not suitable to measure binaural pitch fusion.

Another limitation was that a small data set was collected. Several additional pilot experiments were conducted showing no effect of sound level, and the authors found it wasteful to continue to collect data indicating that there was a null effect. To address this concern, we completed a simulation study (see Appendix) with many parameterizations of fusion functions. The results of the simulation study suggest that the fusion functions from our listeners were adequately sampled to detect an effect of sound level if it increased or decreased fusion range by 50% (in Hz or octaves) between two loudness conditions under ideal circumstances. For reference, the average fusion range was 478% greater for listeners with HI (average fusion range of 2.60 kHz) compared to NH (average fusion range in the loud condition of 0.45 kHz). Fusion range also changes according to the frequency in the reference ear (Odenthal, 1963, 1961; Perrott and Barry, 1969; Thurlow and Bernstein, 1957; Thurlow and Elfner, 1959; van den Brink et al., 1976). For a further point of comparison, while the effect of reference frequency is listener-dependent, in listeners with NH, fusion range increases on the order of 100–300% as reference frequency is increased from 1.00 to 2.00 kHz.
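As a quick check of the arithmetic behind the 478% figure, the relative difference between the two group averages quoted above can be computed directly. This is only a sketch; the function and variable names are illustrative and do not come from the study's analysis code.

```python
def percent_greater(value, baseline):
    """Percent by which `value` exceeds `baseline`."""
    return (value - baseline) / baseline * 100.0


hi_avg_khz = 2.60  # average fusion range, listeners with HI (from the text)
nh_avg_khz = 0.45  # average fusion range, listeners with NH, loud condition (from the text)

difference = percent_greater(hi_avg_khz, nh_avg_khz)
print(round(difference))  # prints 478
```

On the same scale, the 50% change that the simulation study was designed to detect is roughly an order of magnitude smaller than the difference between groups.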

An additional limitation to the present study is that fusion functions are notoriously variable across listeners with NH (Odenthal, 1963; Perrott and Barry, 1969; Reiss et al., 2017; van den Brink et al., 1976). Across the sound levels tested in listeners with NH, the fusion ranges typically remained on a smaller scale (0.15–0.50 kHz; 7.5–25.0%) than the fusion ranges seen in listeners with HI (0.31–6.23 kHz; 15.5–311.5%). The largest fusion range from listeners with NH in this study was 1.25 kHz (62.3%) from NH80. The next highest fusion range from other listeners was 0.49 kHz (24.5%) from NH76. The present study used a within-subjects design to address the considerable variability typically observed across listeners. Thus, if there was an effect of level, we would have expected to see consistent trends within individuals.
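The percentages quoted above appear to express each fusion range relative to the 2 kHz reference frequency. The short sketch below reproduces that conversion for the reported group extremes; the helper name and the labels are hypothetical, and the reading of the percentages as "range divided by reference frequency" is an assumption inferred from the numbers.

```python
REFERENCE_KHZ = 2.0  # reference-ear frequency in the present study


def range_as_percent(fusion_range_khz, reference_khz=REFERENCE_KHZ):
    """Express a fusion range (kHz) as a percentage of the reference frequency."""
    return fusion_range_khz / reference_khz * 100.0


reported = [
    ("NH smallest", 0.15),
    ("NH largest (typical)", 0.50),
    ("HI smallest", 0.31),
    ("HI largest", 6.23),
]
for label, khz in reported:
    print(f"{label}: {khz:.2f} kHz = {range_as_percent(khz):.1f}% of reference")
```

Small mismatches against the text, such as 62.3% for the 1.25 kHz range of NH80, would be expected if the percentages were computed from unrounded fusion ranges.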

Finally, the listeners with NH who participated in the present study were younger on average than listeners with HI in previous studies of fusion range (Oh et al., 2019; Oh and Reiss, 2018, 2017; Reiss et al., 2017). Aging may affect binaural pitch fusion; this possibility has not been explored in the literature. However, it should be noted that in the listeners with HI shown here, some of the broadest fusion ranges were seen in three of the youngest subjects (HI16, HI22, and HI45), suggesting that aging alone did not explain extremely broad fusion.

It is unlikely that listeners used binaural beats (i.e., interaural phase differences) to make their decisions in the binaural pitch fusion task. Firstly, all listeners had limited sensitivity to interaural phase above 1.5 kHz, below the test frequency of 2 kHz, when tested using the task from Grose and Mamo (2010). Next, while binaural beats have varying definitions, from perceived roughness to a lateralizable percept (Licklider et al., 1950), most studies suggest that binaural beats are no longer audible above 1.5 kHz (Perrott and Nelson, 1969).

4.5. Summary

The present study investigated the effects of sound level on the range of frequency discrepancies for which listeners with NH perceive one pitch when one pure tone is presented to each ear (i.e., fusion range). Our results showed no effect of sound level within listeners with NH, smaller fusion ranges compared against listeners with HI when tested at similar or higher levels, and no apparent correlation between sound level and fusion range across listeners, suggesting that other factors associated with hearing loss lead to large fusion ranges for listeners with HI.

HIGHLIGHTS

- Hearing loss is associated with less binaural frequency selectivity

- Sound level does not change binaural frequency selectivity

- Binaural frequency selectivity is likely affected by other deafness-related factors

ACKNOWLEDGEMENTS

This work was supported by the National Institutes of Health grant numbers R01 DC013307 and P30 DC005983 from the National Institute on Deafness and Other Communication Disorders.

APPENDIX

Fusion range in the present study was computed from a function relating percent of fusion to comparison frequency. Seventeen comparison frequencies with 12 observations per point (e.g., Fig. 2) were collected in two blocks with six observations per point. It is not obvious from this method of analysis how much error could reasonably be expected in estimates of fusion range. Thus, a simulation study was completed to estimate an error bound for fusion range estimates.

Fusion functions observed in the experiment were assumed to be samples of some “true” fusion function. Fig. A. 1 shows 27 examples of different fusion functions determined by parameterizations of Eq. 1 given in the figure legend. All fusion functions in the simulation study spanned one octave from 1.414 to 2.828 kHz. Within each panel of Fig. A. 1, one parameter was varied while all other parameters were held constant. Panel A adjusts the center frequency of the fusion function, panel B adjusts the width of the fusion function, and panel C adjusts skewness. These parameters were chosen to attempt to span most of the range in which fusion ranges could fall, with similar fusion ranges observed in the experiment for all listeners with NH except NH80. Fig. A. 1 shows that many different fusion functions can be generated using the formula in Eq. 1. In the simulation study, all possible combinations of the parameter values shown in the three panels were tested (nine values from each of Fig. A. 1(A), A. 1(B), and A. 1(C)), resulting in a total of 9 × 9 × 9 = 729 parameter combinations. From the 729 parameterizations, or true fusion functions, 79 unique, true fusion ranges were possible. Only 79 unique fusion ranges occurred because shifting the fusion center (as in Fig. A. 1(A)) does not change the fusion range, which leaves 81 combinations of width and skew, and two of these combinations produced fusion ranges identical to those of other combinations. For each of the true fusion functions, fusion range was estimated from 1000 simulations using 12 repetitions per point drawn from a binomial distribution Bin(n=12,p) whose parameter p was determined by the true fusion function. During simulations it was assumed that testing was completed using a range of 1.414 to 2.828 kHz. In other words, it was assumed that testing was completed such that the variable comparison frequencies spanned the “true” fusion function.
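The sampling procedure described above can be sketched for a single parameterization as follows. This is a minimal illustration under several stated assumptions, not the simulation code used in the study: Eq. 1 is not reproduced here, so `fusion_prob` is a stand-in (a scaled Gaussian clipped at 1, without skew) with a hypothetical width of 150 Hz. The comparison frequencies are assumed to be log-spaced across the octave, and the fusion range estimator (the span of frequencies fused at or above a 50% criterion) is also an assumption.

```python
import math
import random


def fusion_prob(freq_hz, alpha=2.0, mu=2000.0, sigma=150.0):
    """Stand-in 'true' fusion function (NOT Eq. 1): scaled Gaussian clipped at 1."""
    return min(1.0, alpha * math.exp(-((freq_hz - mu) ** 2) / (2.0 * sigma ** 2)))


def comparison_freqs(n_points=17, low_hz=1414.0, octaves=1.0):
    """Comparison frequencies, assumed log-spaced across the tested octave."""
    return [low_hz * 2.0 ** (octaves * i / (n_points - 1)) for i in range(n_points)]


def fusion_range(freqs, proportions, criterion=0.5):
    """Assumed estimator: span (Hz) of frequencies fused at or above the criterion."""
    fused = [f for f, p in zip(freqs, proportions) if p >= criterion]
    return max(fused) - min(fused) if fused else 0.0


def simulate(n_sims=1000, n_reps=12, seed=0):
    """Return the grid-based true range and n_sims estimated ranges."""
    rng = random.Random(seed)
    freqs = comparison_freqs()
    true_p = [fusion_prob(f) for f in freqs]
    true_range = fusion_range(freqs, true_p)
    estimates = []
    for _ in range(n_sims):
        # One simulated run: a Bin(n_reps, p) draw at every comparison frequency,
        # converted to an observed proportion of "fused" responses.
        observed = [
            sum(rng.random() < p for _ in range(n_reps)) / n_reps for p in true_p
        ]
        estimates.append(fusion_range(freqs, observed))
    return true_range, estimates


true_range, estimates = simulate()
mean_estimate = sum(estimates) / len(estimates)
print(f"true range: {true_range:.0f} Hz, mean estimate: {mean_estimate:.0f} Hz")
```

Repeating this loop over all 729 parameterizations, with 1000 simulations each, would give the 729,000 simulated fusion range estimates described in the text.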

Results from 729,000 simulations are plotted in Fig. A. 2. A 99% confidence interval for the error of fusion range estimates was computed based on simulations. All fusion range estimates that were 5% or lower (i.e., fusion range estimates at or near 0 Hz) were discarded from the confidence interval calculation (see paragraph below), resulting in 501,109 remaining fusion range estimates. The confidence interval for fusion range estimate errors was −0.3% (biased toward lower values) ± 24.5%. Thus, bounds in Fig. A. 2 were set at 25% based on the error estimates, covering a 99% confidence interval.
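One way such an interval could be obtained from simulated estimates is sketched below. The text does not state how the 99% interval was computed, so the percentile approach here is an assumption, as is the reading of the exclusion rule (estimates at or below 5% of the true fusion range are dropped). The function name is hypothetical.

```python
import statistics


def error_interval_99(true_ranges, estimated_ranges, floor=0.05):
    """Center and half-width of a 99% percentile interval on signed relative errors.

    Estimates at or below `floor` times the true range are excluded
    (an assumed reading of the "5% or lower" rule in the text).
    """
    errors = [
        (est - true) / true
        for true, est in zip(true_ranges, estimated_ranges)
        if est > floor * true
    ]
    # 199 cut points dividing the data into 200 equal-probability groups:
    # the first is the 0.5th percentile and the last is the 99.5th percentile.
    cuts = statistics.quantiles(errors, n=200, method="inclusive")
    low, high = cuts[0], cuts[-1]
    return (low + high) / 2.0, (high - low) / 2.0


# Toy data: a true range of 100 Hz with estimates spread evenly from 80 to 120 Hz.
true_ranges = [100.0] * 1001
estimated_ranges = [80.0 + 0.04 * i for i in range(1001)]
center, half_width = error_interval_99(true_ranges, estimated_ranges)
print(f"{center * 100:.1f}% ± {half_width * 100:.1f}%")
```

A center slightly below zero, as reported in the text, would indicate that estimates tend to fall marginally under the true fusion range.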

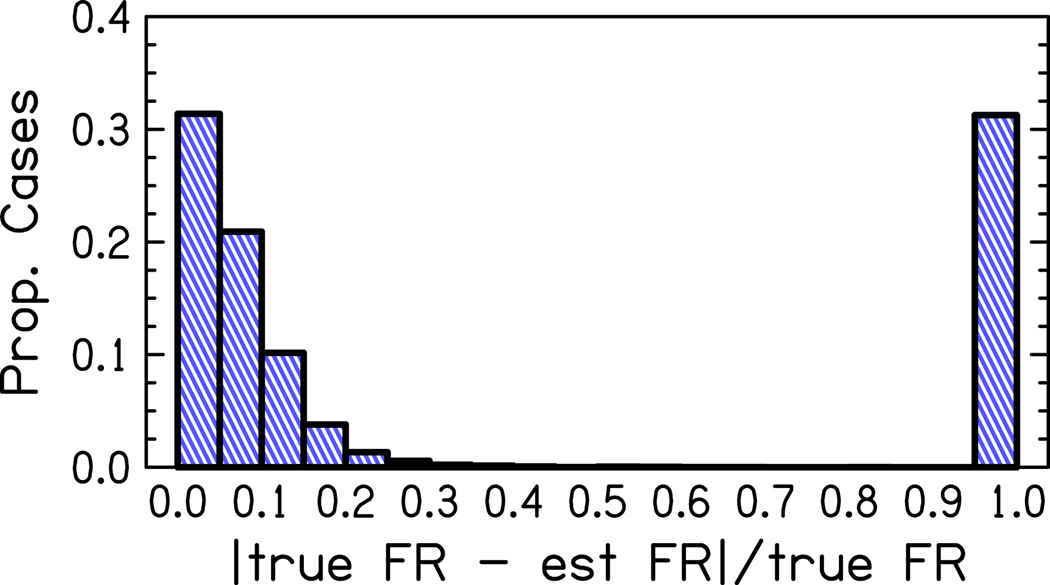

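The large size of the dataset makes it difficult to see how many observations are focused at any particular value in Fig. A. 2. Thus, the following is plotted in the relative frequency histogram in Fig. A. 3:

$$\text{error} = \frac{\left|\text{estimated fusion range} - \text{true fusion range}\right|}{\text{true fusion range}} \tag{2}$$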

where |t| indicates the absolute value of some variable t. A large number of cases equal to one occur because many observations, particularly for the smallest true fusion ranges, were estimated to be zero. Fig. A. 2 and A. 3 suggest that if sound level increased or decreased fusion range by 50% between two loudness conditions (i.e., with an error tolerance of 25% each), an effect should have been discernable in any of the listeners with NH that participated in the present study, so long as fusion ranges were not estimated to be zero. In the present experiment where data were pooled across blocks, the fusion range of listener NH79 in the loudest condition was estimated to be zero. No other experimental fusion ranges were estimated as zero.
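The error measure and the binning described for Fig. A. 3 can be sketched as follows. The error is taken to be the absolute difference between estimated and true fusion range, expressed as a proportion of the true fusion range, which is consistent with an estimate of zero giving an error of one. Placing any error above one in the final bin is an assumption of this sketch, and the function names are illustrative.

```python
def relative_error(true_range, estimated_range):
    """Absolute error as a proportion of the true fusion range (true_range > 0)."""
    return abs(estimated_range - true_range) / true_range


def relative_frequency_histogram(errors, n_bins=20):
    """Relative frequencies over [0, 1] in bins of width 1 / n_bins.

    Each bin includes its lower edge and excludes its upper edge, except the
    final bin, which is closed at 1.0. Errors above 1.0 are also counted in
    the final bin (an assumption of this sketch).
    """
    counts = [0] * n_bins
    for err in errors:
        index = min(int(err * n_bins), n_bins - 1)
        counts[index] += 1
    total = len(errors)
    return [count / total for count in counts]


# Toy example: a true range of 400 Hz with four simulated estimates,
# two of which were estimated to be zero.
errors = [relative_error(400.0, est) for est in (400.0, 390.0, 0.0, 0.0)]
histogram = relative_frequency_histogram(errors)
print(histogram[0], histogram[-1])  # prints 0.5 0.5
```

In this toy example, the two zero estimates land in the final bin, which mirrors the cluster of cases equal to one noted above.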

Fig. A.1.

Simulated example fusion functions. The x-axis represents the variable frequency presented to the comparison ear in kHz. The y-axis represents the proportion of fusion. Each curve (color and pattern) represents a different parameterization of Eq. 1 according to the figure legend. Values of α, σ, and β, representing height, width, and skew, were fixed at 2, 432, and 0, respectively, in (A). Values of α, μ, and β, representing height, frequency offset, and skew, were fixed at 2, 2000, and 0, respectively, in (B). Values of μ and σ, representing frequency offset and width, were fixed at 2000 and 432, respectively, in (C).

Fig. A.2.

Correlation between true and estimated fusion range. The x-axis represents the true fusion range calculated from the curve used to simulate experimentally observed fusion functions in kHz. The y-axis represents the estimated fusion range in kHz. The solid black line represents perfect estimation of fusion range. The dashed lines represent 25% above and below the true fusion range (based on 99% confidence intervals for error of fusion range estimates).

Fig. A.3.

Relative frequency histogram for fusion range errors. The x-axis represents the amount of error in the fusion range estimate (expressed in proportion relative to the true fusion range). The y-axis represents the relative frequency of that error in fusion range estimates from the simulations, which sum to one. Each bin of the histogram is defined to include the lower value and exclude the upper value, e.g., bin 1 represents [0,0.05), except for the final bin which represents [0.95,1.0].

Footnotes

Author Contributions

Sean R. Anderson: Conceptualization, methodology, software, formal analysis, investigation, resources, data curation, writing – original draft, visualization. Bess Glickman: Methodology, validation, investigation, resources, data curation, writing – review & editing. Yonghee Oh: Conceptualization, methodology, software, formal analysis, investigation, writing – review & editing, supervision. Lina A. J. Reiss: Conceptualization, methodology, software, formal analysis, investigation, writing – review & editing, supervision.

REFERENCES

- Albers GD, Wilson WH, 1968. Diplacusis. I. Historical review. Arch. Otol 87, 601–603. 10.1001/archotol.1968.00760060603009

- Anderson SR, Kan A, Litovsky RY, 2019. Asymmetric temporal envelope encoding: Implications for within- and across-ear envelope comparison. J. Acoust. Soc. Am 146, 1189–1206. 10.1121/1.5121423

- Başkent D, Gaudrain E, 2016. Musician advantage for speech-on-speech perception. J. Acoust. Soc. Am 139, EL51–EL56. 10.1121/1.4942628

- Bregman AS, 1990. Auditory Scene Analysis: The Perceptual Organization of Sound. Bradford Books, MIT Press, Cambridge, MA. 10.1121/1.408434

- Brown AD, Rodriguez FA, Portnuff CDF, Goupell MJ, Tollin DJ, 2016. Time-Varying Distortions of Binaural Information by Bilateral Hearing Aids: Effects of Nonlinear Frequency Compression. Trend. Hear 20, 1–15. 10.1177/2331216516668303

- Burns EM, 1982. Pure-tone pitch anomalies. I. Pitch-intensity effects and diplacusis in normal ears. J. Acoust. Soc. Am 72, 1394–1402. 10.1121/1.388445

- Burns EM, Turner C, 1986. Pure-tone pitch anomalies. II. Pitch-intensity effects and diplacusis in impaired ears. J. Acoust. Soc. Am 79, 1530–1540. 10.1121/1.393679

- Cutting JE, 1976. Auditory and linguistic processes in speech perception: Inferences from six fusions in dichotic listening. Psych. Rev 83, 114–140. 10.1037/0033-295X.83.2.114

- Darwin CJ, 1997. Auditory Grouping. Trends Cogn. Sci. 1, 327–333. 10.1016/b978-012505626-7/50013-3

- Festen JM, Plomp R, 1990. Effects of fluctuating noise and interfering speech on the speech-reception threshold for impaired and normal hearing. J. Acoust. Soc. Am 88, 1725–1736. 10.1121/1.400247

- Fiedler A, Schröter H, Seibold VC, Ulrich R, 2011. The influence of dichotical fusion on the redundant signals effect, localization performance, and the mismatch negativity. Cogn Affect Behav Neurosci 11, 68–84. 10.3758/s13415-010-0013-y

- Folstein MF, Folstein SE, McHugh PR, 1975. “Mini-mental state” a practical method for grading the cognitive state of patients for the clinician. J. Psychiat. Res 12, 189–198. 10.1016/0022-3956(75)90026-6

- Glasberg BR, Moore BCJ, 2000. Frequency selectivity as a function of level and frequency measured with uniformly exciting notched noise. J. Acoust. Soc. Am 108, 2318–2328. 10.1121/1.1315291

- Glasberg BR, Moore BCJ, 1986. Auditory filter shapes in subjects with unilateral and bilateral cochlear impairments. J. Acoust. Soc. Am 79, 1020–1033. 10.1121/1.393374

- Grose JH, Mamo SK, 2010. Processing of temporal fine structure as a function of age. Ear Hear. 31, 755–760. 10.1097/AUD.0b013e3181e627e7

- Licklider JCR, Webster JC, Hedlun JM, 1950. On the frequency limits of binaural beats. J. Acoust. Soc. Am 22, 468–473. 10.1121/1.1906629

- Litovsky RY, Colburn HS, Yost WA, Guzman SJ, 1999. The precedence effect. J. Acoust. Soc. Am 106, 1633–1654. 10.1016/0378-5955(83)90002-3

- Mendelson JR, 1992. Neural selectivity for interaural frequency disparity in cat primary auditory cortex. Hear. Res 58, 47–56. 10.1016/0378-5955(92)90007-a

- Moore BCJ, 2004. Dead Regions in the Cochlea: Conceptual Foundations, Diagnosis, and Clinical Applications. Ear Hear. 25, 98–116. 10.1097/01.AUD.0000120359.49711.D7

- Musa-Shufani S, Walger M, von Wedel H, Meister H, 2006. Influence of dynamic compression on directional hearing in the horizontal plane. Ear Hear. 27, 279–285. 10.1097/01.aud.0000215972.68797.5e

- Nadol JJ, 1997. Patterns of neural degeneration in the human cochlea and auditory nerve: Implications for cochlear implantation. Otolaryngol. Head Neck Surg. 117, 220–228. 10.1016/S0194-5998(97)70178-5

- Odenthal DW, 1963. Perception and neural representation of simultaneous dichotic pure tone stimuli. Acta. Physiol. Pharmacol. Neerl 12, 453–496.

- Odenthal DW, 1961. Simultaneous dichotic frequency discrimination. J. Acoust. Soc. Am 33, 357–358. 10.1121/1.1908661

- Oh Y, Hartling CL, Srinivasan NK, Eddolls M, Diedesch AC, Gallun FJ, Reiss LAJ, 2019. Broad binaural fusion impairs segregation of speech based on voice pitch differences in a ‘cocktail party’ environment. BioRxiv 1–39. 10.1101/805309

- Oh Y, Reiss LAJ, 2018. Binaural Pitch Fusion: Effects of Amplitude Modulation. Trend. Hear 22, 1–12. 10.1177/2331216518788972

- Oh Y, Reiss LAJ, 2017. Binaural pitch fusion: Pitch averaging and dominance in hearing-impaired listeners with broad fusion. J. Acoust. Soc. Am 142, 780–791. 10.1121/1.4997190

- Oxenham AJ, 2008. Pitch Perception and Auditory Stream Segregation: Implications for Hearing Loss and Cochlear Implants. Trends Amplif. 12, 316–331. 10.1177/1084713808325881

- Oxenham AJ, Fligor BJ, Mason CR, Kidd G, 2003. Informational masking and musical training. J. Acoust. Soc. Am 114, 1543–1549. 10.1121/1.1598197

- Perrott DR, Barry SH, 1969. Binaural fusion. J. Aud. Res 3, 263–269.

- Perrott DR, Nelson MA, 1969. Limits for the Detection of Binaural Beats. J. Acoust. Soc. Am 46, 1477–1481. 10.1121/1.1911890

- Pick GF, 1980. Level dependence of psychophysical frequency resolution and auditory filter shape. J. Acoust. Soc. Am 68, 1085–1095. 10.1121/1.384979

- Pikler AG, Harris JD, 1955. Channels of reception in pitch discrimination. J. Acoust. Soc. Am 27, 124–131. 10.1121/1.1907472

- Plack CJ, Barker D, Hall DA, 2014. Pitch coding and pitch processing in the human brain. Hear. Res 307, 53–64. 10.1016/j.heares.2013.07.020

- Reiss LAJ, Eggleston JL, Walker EP, Oh Y, 2016. Two Ears Are Not Always Better than One: Mandatory Vowel Fusion Across Spectrally Mismatched Ears in Hearing-Impaired Listeners. J. Assoc. Res. Otolaryngol 17, 341–356. 10.1007/s10162-016-0570-z

- Reiss LAJ, Ito RA, Eggleston JL, Wozny DR, 2014. Abnormal binaural spectral integration in cochlear implant users. J. Assoc. Res. Otolaryngol 15, 235–248. 10.1007/s10162-013-0434-8

- Reiss LAJ, Shayman CS, Walker EP, Bennett KO, Fowler JR, Hartling CL, Glickman B, Lasarev MR, Oh Y, 2017. Binaural pitch fusion: Comparison of normal-hearing and hearing-impaired listeners. J. Acoust. Soc. Am 141, 1909–1920. 10.1121/1.4978009

- Reiss LAJ, Simmons S, Anderson L, Molis MR, 2018. Effects of broad binaural fusion and hearing loss on dichotic concurrent vowel identification [Abstract]. J. Acoust. Soc. Am 143, 1942.

- Shinn-Cunningham BG, 2008. Object-based auditory and visual attention. Trends Cogn. Sci. 12, 182–186. 10.1016/j.tics.2008.02.003

- Spoendlin H, Schrott A, 1989. Analysis of the human auditory nerve. Hear. Res 43, 25–38. 10.1016/0378-5955(89)90056-7

- Stevens SS, 1935. The relation of pitch to intensity. J. Acoust. Soc. Am 6, 150–154. 10.1121/1.1915715

- Terhardt E, 1974. Pitch of pure tones: Its relation to intensity, in: Zwicker E, Terhardt E (Eds.), Facts and Models in Hearing. Springer, Berlin, Germany, pp. 353–360. 10.1007/978-3-642-65902-7_46

- Thurlow WR, Bernstein S, 1957. Simultaneous two-tone pitch discrimination. J. Acoust. Soc. Am 29, 515–519. 10.1121/1.1908946

- Thurlow WR, Elfner LF, 1959. Pure-tone cross-ear localization effects. J. Acoust. Soc. Am 31, 1606–1608. 10.1121/1.1907666

- van den Brink G, Sintnicolaas K, van Stam WS, 1976. Dichotic pitch fusion. J. Acoust. Soc. Am 59, 1471–1476. 10.1121/1.380989

- Verschuure J, van Meeteren AA, 1975. The effect of intensity on pitch. Acta Acust. 32, 33–44.

- Wever EG, 1949. The products of tonal interaction, in: Theory of Hearing. Wiley, Hoboken, NJ, pp. 375–398.

- Zwislocki JJ, 1971. A Theory of Central Auditory Masking and Its Partial Validation. J. Acoust. Soc. Am 52, 644–659. 10.1121/1.1913154