Abstract

Introduction

During the COVID‐19 pandemic the Association of American Medical Colleges recommended that medical students not be involved with in‐person patient care or teaching, necessitating alternative learning opportunities. In response, we developed a telesimulation education platform, TeleSimBox. We hypothesized that this remote simulation platform would be feasible and acceptable for faculty use and would be perceived as an effective method for medical student education.

Methods

Twenty‐one telesimulations were conducted with students and educators at four U.S. medical schools. Sessions were run by cofacilitator dyads with four to 10 clerkship‐level students per session. Facilitators were provided training materials. User‐perceived effectiveness and acceptability were evaluated via descriptive analysis of survey responses to the Modified Simulation Effectiveness Tool (SET‐M), Net Promoter Score (NPS), and Likert‐scale questions.

Results

Approximately one‐quarter of students and all facilitators completed surveys. Users perceived that the sessions were effective in teaching medical knowledge and teamwork, though less effective for family communication and skills. Users perceived that the telesimulations were comparable to other distance learning and to in‐person simulation. The tool was overall positively promoted.

Conclusion

Users overall positively scored our medical student telesimulation tool on the SET‐M objectives and promoted the experience to colleagues on the NPS. The next steps are to further optimize the tool.

Keywords: COVID‐19 educational innovations, medical student education, simulation‐based medical education, telesimulation

INTRODUCTION

The necessary strict social isolation regulations put in place in response to the coronavirus disease 2019 (COVID‐19) pandemic restricted in‐person educational opportunities for medical students. 1 Medical associations, including the Accreditation Council for Graduate Medical Education and the American Medical Association, recommended avoiding in‐person learning during this time as a safety measure. 2 , 3 In response, medical school clerkship directors expressed the need for easy‐to‐use, promptly available educational tools, including simulation exercises, suited to the remote education environment. 4

Modalities to provide synchronous, active learning drills with participants in different locations have been defined as tele‐, remote, distance, virtual, mental, and online simulation. 5 Remote simulation is defined as “simulation performed with either the facilitator, learners or both in an offsite location separate from other members to complete educational or assessment activities.” 6 , 7 As the field of screen‐based simulation has grown over the past 20 years, 8 educators have demonstrated the ability to leverage telecommunication resources to overcome access barriers and reach distant learner groups in the areas of surgical, procedural, and resuscitation training. 9 , 10 , 11 , 12 Teledebriefing 13 and telementoring, 14 in which a facilitator connects remotely to a simulated drill to observe it and lead a postdrill reflection, are effective ways to expand the reach of a simulation center. Practical guidelines to support this process have recently been published in response to the growing need imposed by the pandemic. 15 In light of the demonstrated success of remote simulation in overcoming barriers to in‐person training, the Society for Simulation in Healthcare (SSH) called for “the use of remote simulation as a replacement for clinical hours for students currently enrolled in health sciences professions during the public health crisis caused by COVID‐19.” 16 We responded by creating a telesimulation platform available for use as Free Open Access Medical Education (FOAMed), defined as “a collection of open access medical education resources created to augment traditionally published educational materials such as textbooks and journals,” 17 and conducted a prospective observational study to evaluate the perceived efficacy, acceptability, and feasibility of the educational tool with medical student learners and faculty.

METHODS

Intervention

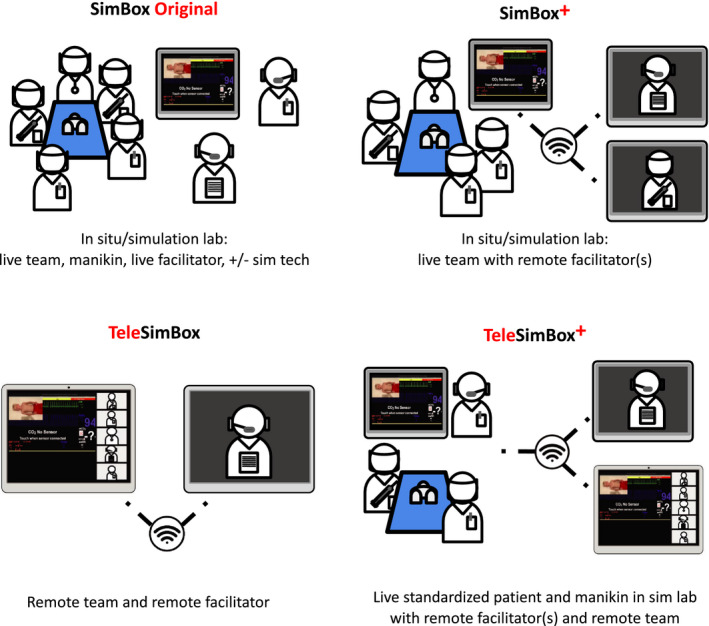

The American College of Emergency Physicians (ACEP) SimBox was originally developed by an interdisciplinary, multi‐institutional team of medical providers and simulationists in 2017. 18 Rooted in the theoretical models of simulation‐based adult and experiential learning, 19 , 20 the creators sought to provide a tool for community practitioners to facilitate their own resuscitation drills without the need for prior simulation training or costly resources. The SimBox provides the recipe and essential ingredients needed for educators to run their own simulation drill. In the months prior to the COVID‐19 pandemic, SimBox core team members piloted remotely facilitating the drills with rural emergency department providers, laying the groundwork for further teleadaptations. With the strict COVID‐19 restrictions on in‐person learning, we expanded the SimBox to include the TeleSimBox for use across the simulation distance spectrum (all in‐person, all remote, all learners in‐person around the mannikin with cofacilitator dyad remote, and a hybrid of in‐person and remote participants and facilitators). We respectively termed these iterations SimBox Original, TeleSimBox, SimBox+, and TeleSimBox+ (see Figure 1). For this particular study, we used only the TeleSimBox format, with all learners and all facilitators connecting remotely and no physical mannikin. Experts in medical education, simulation, and pediatric emergency medicine developed and published cases for the medical student learner, free and openly available on the Web. Each peer‐reviewed TeleSimBox case bundle published on the website includes a facilitator guide comprising how‐to, prebrief, scenario, and debrief guides; a checklist; debriefing prompts; and resources curated to highlight the case‐specific learning objectives.

FIGURE 1.

Telesimulation pictograph. Courtesy of Maybelle Kou, MD

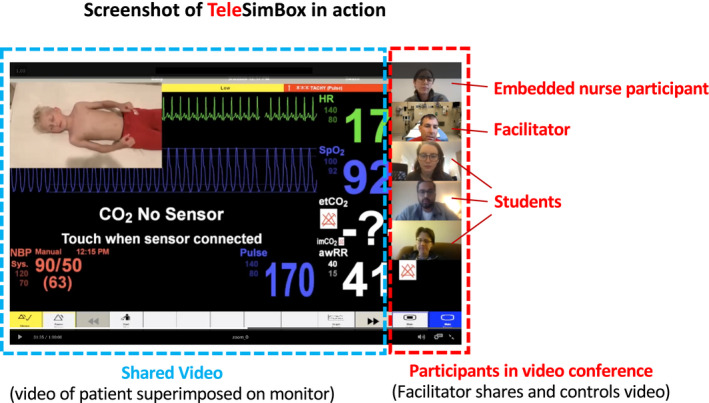

The TeleSimBox is designed for anyone with access to the Internet to facilitate a complete remote simulated experience. The platform is run by the faculty/facilitator, who plays a streaming YouTube video of the patient and a monitor that trends vital signs over time but does not change in response to interventions. The facilitator can simply pause or rewind the streaming video to slow the vital sign progression if the participants do not address the key medical objectives for the case. However, the video does not have embedded branch points to respond to different proposed participant actions. We term this concept the “sim on rails” approach. This differentiates the TeleSimBox from traditional simulation with a handler who responds to participants’ actions, which requires higher costs, technologists, and more advanced training of facilitators. The facilitator guide has a legend linking time with vital signs as they appear on the video. The TeleSimBox works best if run with two cofacilitators. One facilitator takes the lead to give the prebrief, control the YouTube video, play the role of the parent, and conclude the session to move on to the debrief. The second facilitator plays the role of the embedded nurse participant who serves as the eyes and ears of the simulation, responding to requests and tasks proposed by the participants. Both facilitators are able to lead the debrief together or assign one to take the lead. To run the simulation, the facilitator simply shares his or her screen with the streaming video with the participants and manipulates the scenario by starting, pausing, or rewinding the video stream in response to participants’ stated actions. For example, if the participants meet all case learning objectives in a timely fashion, the vital sign trend on the video stream needs no manipulation. If the participants omit an intervention, for example, do not apply oxygen or do not give an antiepileptic medication, the facilitator can rewind the video to delay the progression in vital signs on the video stream (see Figure 2).
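The facilitator guide's time-to-vital-sign legend and the rewind mechanic can be pictured with a small lookup sketch. This is only an illustration under stated assumptions: the timestamps, vital sign values, and the function names `vitals_at` and `rewind_target` are hypothetical and are not taken from any TeleSimBox facilitator guide.

```python
from bisect import bisect_right

# Hypothetical legend: (video time in seconds, vital signs shown from that time on).
# Placeholder values for illustration only, not from a real facilitator guide.
LEGEND = [
    (0, {"HR": 140, "SpO2": 96}),
    (60, {"HR": 155, "SpO2": 92}),
    (120, {"HR": 165, "SpO2": 88}),
    (180, {"HR": 170, "SpO2": 84}),
]
TIMES = [time for time, _ in LEGEND]


def vitals_at(seconds: float) -> dict:
    """Return the vital signs displayed at a given video timestamp."""
    if seconds < 0:
        raise ValueError("timestamp must be non-negative")
    # Find the last legend entry that starts at or before the timestamp.
    index = bisect_right(TIMES, seconds) - 1
    return LEGEND[index][1]


def rewind_target(current_seconds: float) -> int:
    """Return the start of the previous legend entry (or 0).

    Rewinding to this point delays the scripted vital sign progression,
    which is the only manipulation a linear "sim on rails" video allows.
    """
    index = bisect_right(TIMES, current_seconds) - 1
    return TIMES[max(index - 1, 0)]
```

Because the video is linear, the sketch has no branch points: the only state is the current timestamp, and the facilitator's choices reduce to playing on, pausing, or jumping back to an earlier legend entry.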

FIGURE 2.

Screenshot of simulation session

Study design, participants, and sampling size

This was a prospective observational study designed to trial the novel TeleSimBox educational platform with a convenience sample of medical students and faculty. Twenty‐one telesimulation drills were conducted over 3 months (April–June 2020) with approximately 30 faculty and 300 medical students recruited via peer networks across four U.S. medical schools (University of Pennsylvania, Yale University, University of Washington, and the Medical College of Wisconsin). Each drill was run by cofacilitator dyads, with medical student groups ranging in size from four to 10 learners per remote session. Sessions were conducted via a secured telecommunication platform, such as Zoom or Webex. Facilitators were provided suggested training materials for the case of pediatric seizure, all free and openly available on the website. Participants were medical students in their third and fourth clerkship years from each respective facilitator's institution. We did not advise any specific didactic preparation for students.

Data collection and analysis

Following the sessions, users (both medical student learners and faculty) were asked to evaluate the perceived educational effectiveness of the prebrief, scenario, and debrief; their willingness to recommend the tool to colleagues; and how the tool compared to other distance learning and to in‐person simulation. The survey compiled questions from the Modified Simulation Effectiveness Tool (SET‐M), 21 Likert‐scale questions developed by the authors, and Net Promoter Scores (NPS). 22 The SET‐M is used to evaluate the learner's perception of the effectiveness of the simulation in the prebrief, learning, confidence, and debrief. The survey is based on three sets of standards: the International Association for Clinical Simulation and Learning, Standards of Best Practice, Quality and Safety Education for Nurses, and Essentials of Baccalaureate Education for Professional Nursing Practice; it is validated for use with both nurses and medical students. We added 3‐point Likert‐scale questions to assess perceived effectiveness on comfort responding to an acute care scenario, medical knowledge, psychomotor skills, teamwork/communication, family‐centered care, and comparison to other distance learning modalities and in‐person simulation. We used the 3‐point Likert scale to stay consistent with the 3‐point SET‐M. The NPS is a score widely used in the service industry and is used here as an overarching measure of user satisfaction. NPS responders are categorized as “promoters” (would definitely recommend the tool), “passives” (generally happy, but would not actively promote), and “detractors” (would actively discourage others from the experience). The overall score is calculated as the percentage of promoters minus the percentage of detractors (excluding the passives). Scores range from –100 (everyone is a detractor) to +100 (everyone is a promoter). In industry, a positive score is well regarded, and scores over 50 are thought to highlight good performance. Finally, open‐ended responses were thematically coded, 23 revealing insights into the overall acceptability of the tool and how to optimize logistics to enhance the educational experience in terms of participant group dynamics and technical aspects of telecommunication. Institutional review board–exempt status was obtained from the Yale School of Medicine.
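The NPS arithmetic described above can be sketched in a few lines. This is a minimal illustration, not the authors' analysis code: the function name `net_promoter_score` is hypothetical, and it assumes the usual convention that passives count toward the total number of respondents while contributing to neither percentage.

```python
def net_promoter_score(promoters: int, passives: int, detractors: int) -> float:
    """Return the NPS: percentage of promoters minus percentage of detractors."""
    total = promoters + passives + detractors
    if total == 0:
        raise ValueError("at least one response is required")
    # Assumption: passives are part of the denominator but are otherwise
    # left out of the subtraction.
    return 100.0 * (promoters - detractors) / total


# Boundary cases matching the range described in the text.
all_promoters = net_promoter_score(promoters=10, passives=0, detractors=0)   # 100.0
all_detractors = net_promoter_score(promoters=0, passives=0, detractors=10)  # -100.0
```

Any category counts passed to this function in practice would come from the survey data; none are reported in the text, so no study values are reproduced here.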

RESULTS

Demographics

A total of 67/300 (22%) of the medical students and 29/30 (97%) of the facilitators completed the posttelesimulation surveys. Of the 29 facilitators who responded, there were 28 physicians (six residents, eight fellows, 14 faculty) and one nurse simulation educator. All worked at moderate to high pediatric volume (>50 children per day) academic children's hospitals with residency and fellowship training programs. This was a trained group of simulation facilitators: most (26/29, 90%) had completed a simulation facilitation/debriefing course, and 12 of 29 (41%) had facilitated more than 20 simulations in the prior year. For this project all of the facilitator dyads included at least one senior, experienced faculty member.

The 67 medical student participants who responded to the survey expressed plans to pursue specialties in internal medicine (15/67, 22%), pediatrics (15/67, 22%), surgery (6/67, 9%), neurology (1/67, 2%), med‐peds (2/67, 3%), family medicine (1/67, 2%), and other (27/67, 40%). The range of clerkship and simulation experience of the participants was broad. One‐quarter had completed an emergency medicine clerkship, 20% had completed their pediatrics clerkship, and only one had completed a pediatric subinternship. Most (64/67, 96%) had participated in few (<10) simulations prior to the session, 39/67 (58%) had participated in some sort of “tele‐virtual/remote simulations” and 15/67 (22%) had participated in “in‐person pediatric simulations.”

Perceived effectiveness

In the SET‐M survey responses, students overall responded positively to the prebriefing, learning, confidence and debriefing. The vast majority of the participants felt that the prebrief was beneficial to learning (46/67 [69%] strongly agreed, 21/67 [31%] somewhat agreed, none disagreed) and increased confidence (37/67 [55%] strongly agreed, 28/67 [42%] somewhat agreed, 2/67 [3%] disagreed).

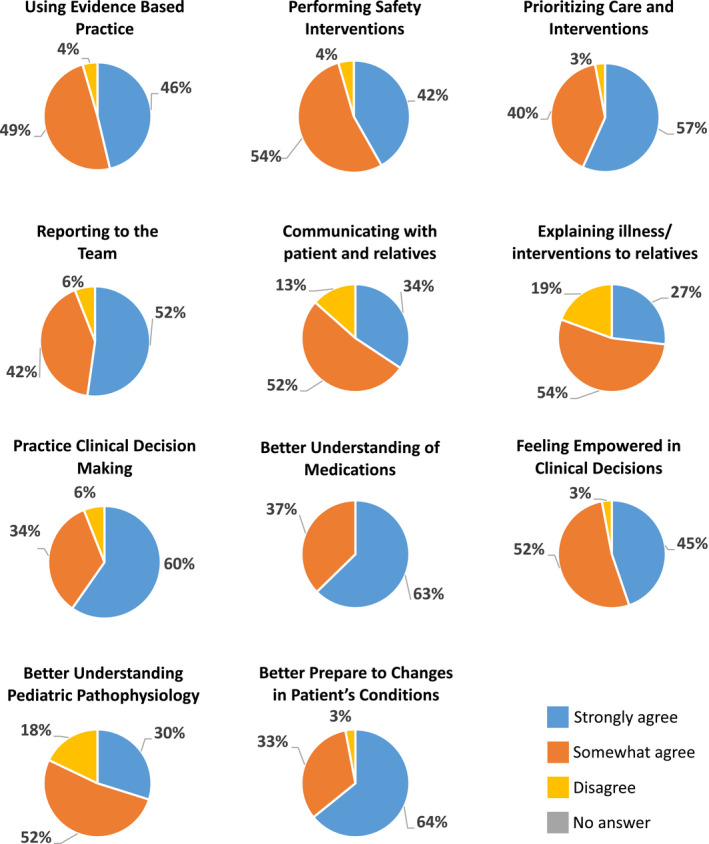

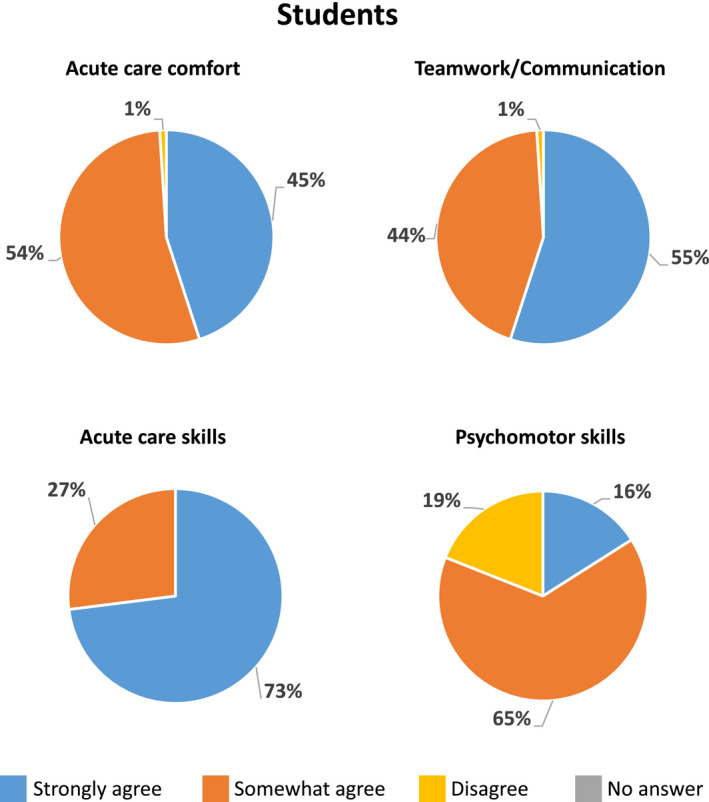

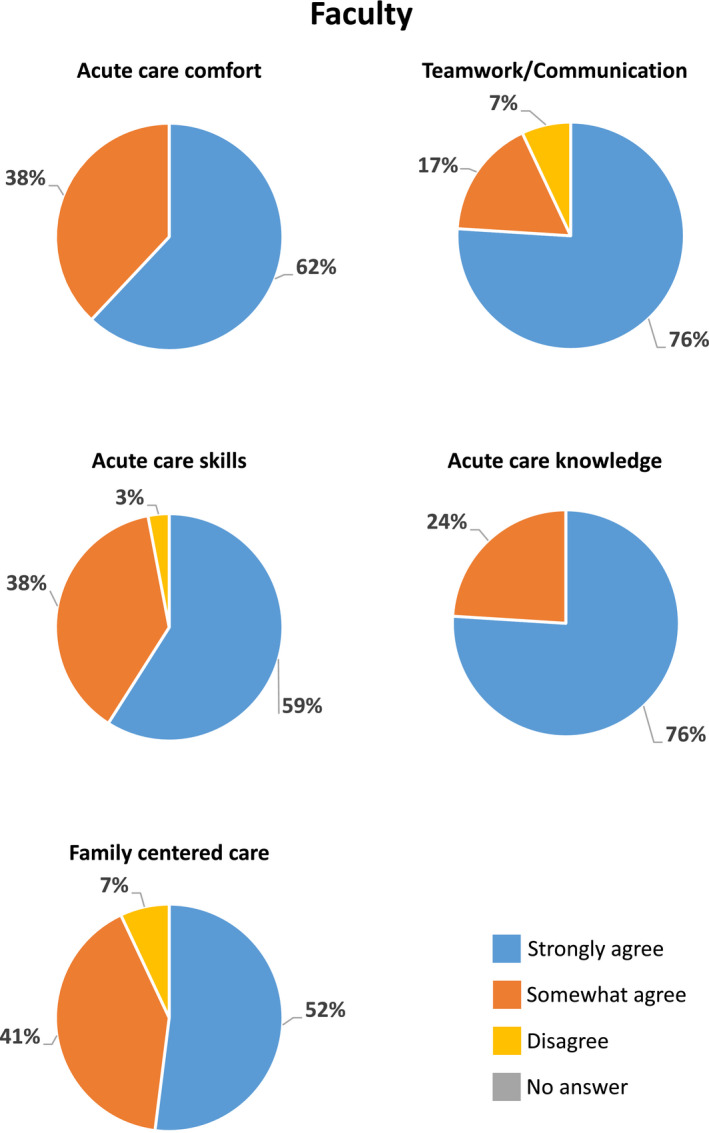

The scenario was perceived to improve confidence in using evidence‐based practice, foster safety, prioritize care and interventions, practice clinical decision making skills, understand medications, feel empowered to make clinical decisions, respond to a change in the patient’s condition and improve communication within the team (see Figure 3). Scores were somewhat lower in the realm of communication with family members and better understanding of pathophysiology. Students perceived the tool to be efficacious in improving acute care comfort and skills and teamwork/communication; however, students responded less positively when asked if the tool was efficacious in teaching psychomotor skills (see Figure 4). One student responded in an open‐ended answer: “This sim was effective for helping us learn about the importance of different communication strategies (e.g., closing the loop during emergency situations) and the roles of different team members during emergencies” (P10). Facilitators responded positively to the 3‐point Likert‐scale questions on the tool's perceived effectiveness in teaching acute care comfort, teamwork/communication, family‐centered care, acute care knowledge, and skills (see Figure 5).

FIGURE 3.

Student perception of the ability of the simulations to achieve specific learning competencies, Modified Simulation Effectiveness Tool (SET‐M)

FIGURE 4.

Student perception of the ability of the simulations to achieve specific learning competencies, 3‐point Likert‐scale questions

FIGURE 5.

Faculty perception of the ability of the simulations to achieve specific learning competencies, 3‐point Likert‐scale questions

Participants responded that the debrief provided a constructive evaluation of the simulation (58/67 [87%] strongly agreed, 9/67 [13%] somewhat agreed, none disagreed), the opportunity to self‐reflect on performance (56/67 [84%] strongly agreed, 10/67 [15%] somewhat agreed, 1/67 [1%] disagreed), helped with clinical judgment (52/67 [78%] strongly agreed, 14/67 [21%] somewhat agreed, 1/67 [1%] disagreed), allowed space to verbalize feelings before focusing on the scenario (54/67 [81%] strongly agreed, 12/67 [18%] somewhat agreed, 1/67 [1%] disagreed), and overall contributed to learning (57/67 [85%] strongly agreed, 10/67 [15%] somewhat agreed, none disagreed).
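The count-and-percentage pairs reported for each item follow from a simple tally of 3‐point responses. The sketch below reproduces the first debrief item above (58 strongly agreed, 9 somewhat agreed, none disagreed, out of 67); the function name `summarize_likert` and the response labels are assumptions chosen for illustration, not the survey's actual coding.

```python
from collections import Counter

# Assumed labels for the 3-point scale, mirroring the wording used in the text.
LEVELS = ("strongly agreed", "somewhat agreed", "disagreed")


def summarize_likert(responses: list) -> dict:
    """Map each level to (count, percentage rounded to a whole number)."""
    if not responses:
        raise ValueError("at least one response is required")
    counts = Counter(responses)
    total = len(responses)
    return {
        level: (counts[level], round(100 * counts[level] / total))
        for level in LEVELS
    }


# Responses reconstructed from the reported counts for the item
# "the debrief provided a constructive evaluation of the simulation".
responses = ["strongly agreed"] * 58 + ["somewhat agreed"] * 9
summary = summarize_likert(responses)
print(summary)
# {'strongly agreed': (58, 87), 'somewhat agreed': (9, 13), 'disagreed': (0, 0)}
```

Rounding to whole percentages is why some reported items sum to 99% or 101% rather than exactly 100%.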

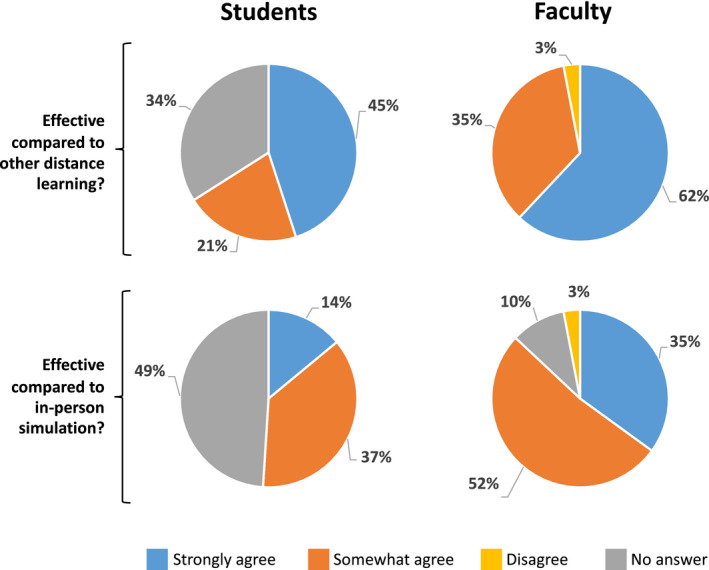

The overall facilitator NPS was +66 and the student NPS was +41. In addition, facilitators either somewhat agreed (8/29 [28%]) or strongly agreed (20/29 [69%]) that the tool was easy to learn how to use (one did not answer). All medical student learner respondents felt that the facilitators worked well together (51/69 [74%] strongly agreed, 2/69 [3%] somewhat agreed, 16 no answer) and that having a cofacilitator enhanced their learning during the sessions (45/69 [67%] strongly agreed, 8/69 [12%] somewhat agreed, 16 no answer). Nearly all facilitators and two‐thirds of the students perceived the tool to be comparable to other distance learning (e.g., online lectures and discussions). One student responded: “I would sign up for any simulations offered. I think it is a much more engaging and informative format than lectures” (P3). When asked if the telesimulation was effective compared to in‐person simulation, approximately 90% of facilitators and one‐half of the students either somewhat or strongly agreed (see Figure 6). Of note, nearly half of the students did not submit an answer to this question, likely because fewer than a quarter of the students had previously participated in an in‐person simulation.

FIGURE 6.

Comparison of telesimulation to other distance learning modalities and in‐person simulation

Feasibility, acceptability, learner logistics, communication, and technical aspects

All of the remote simulation sessions were completed on schedule without technical issues, highlighting the feasibility of the project. Several themes emerged in the free‐response section of the surveys that were shared among facilitators and participants on the topics of acceptability, learner logistics, communication, and technical aspects of this telesimulation platform as an educational tool.

Overall, both facilitators and participants felt the telesimulation tool was an acceptable education tool. For example, facilitators commented: “Overall I think the simulation went really well. The content worked well on Zoom and participants reported that it felt like a physical simulation” (F1); “Sim well done and good learning experience” (F2). Students remarked: “This was a really great session, I appreciated the opportunity to hear from the preceptors about how they would make treatment decisions and pathophysiology” (P4); “I really enjoyed it and learned a ton” (P13); “Virtual simulation can be tricky to implement but I thought it was really well done in this case!” (P2).

Respondents commented on learner logistics, specifically recommending limiting the number of participants. One student commented: “Having fewer learners in the session would allow everyone to participate. We had some students "observing" because there weren't enough roles for them to be able to participate” (P5). One facilitator provided a possible solution for groups that are larger than the optimal number: “With large numbers, add roles, that is, leader/airway/survey/historian/and person who looks things up on the internet and the rest as observers assigned to watch specifically for teamwork/communication, patient communication and medical/clinical knowledge, take notes, and contribute in debrief” (F7). Medical students are relatively naïve to simulation and resuscitation drills; therefore, it was suggested to take the time in the prebrief to give a thorough orientation to team roles. One student commented: “It would be helpful to perhaps spend a little more time at the beginning reviewing the responsibilities of each team member (e.g., more instruction/review of the components of the primary survey)” (P8). Facilitators also observed the need to assess the learners’ experience prior to starting the simulation, as third‐ and fourth‐year medical students bring varying experience in simulation and clinical time. “Ask participant initially with a show of hands experience to give a sense of how in depth to go with prebrief/explanation of things and consider having more experienced participants be lead/roles to set up for success and all get more out of sim (1) who has done Peds or EM rotation, (2) ever seen or participated in a resuscitation, or (3) ever participated in a simulation” (F8).

Several respondents expressed that it felt uncomfortable to communicate on web conferencing platforms such as Zoom because they sometimes talked over each other. One participant commented: “It was challenging having the parent–provider interactions occurring at the same time as the conversations by the managing members of the team … people were talking over one another and it was hard to keep track of what was being said” (F16). Another participant highlighted the struggle of achieving the learning objective of family‐centered communication via the lead facilitator playing the parent and the participant assigned to the history taking in the telecommunication environment, offering the suggestion to utilize virtual break‐out rooms for this purpose in the future: “I think practicing family‐centered care might not be the best task to practice with telesimulation—it was hard to not talk over each other. Break out rooms?” (F15). To optimize communication, participants appreciated having the option of calling a “timeout” in the setting of confusion and using the chat box: “It worked well to have the timeouts and utilize the chat box” (F14). The option of taking a timeout and using the chat box was presented by every facilitator during the prebrief as an attempt to set the stage for smoother communication and troubleshooting in the telecommunication arena. Cofacilitators felt that they were able to communicate well with one another outside of Zoom or Webex via text or WhatsApp during the simulations. One facilitator highlighted the importance of the cofacilitators understanding ahead of time the specific learning objectives for the case: “Good to understand specific endpoint/learning objective for the learner (i.e., if OK to just say ‘give benzo’ don't keep pushing for specific med/dose/route if novice learner” (F19).

For the technical aspects of the simulation, participants appreciated having a simulation structure including the prebrief, scenario, and debrief guides. One facilitator commented: “Really appreciate the timeline given in the facilitator guide, helped to move the video backward and forward depending on interventions completed” (F23). To overcome Internet issues, it was useful to have the scenario guide written out as a back‐up: “The video was a bit choppy/hard to hear … this was overcome by verbally giving prompts” (F26). Several users (both participants and facilitators) suggested that having more sophisticated/interactive video capabilities to respond to participants’ requests and allowing visualization of physiologic changes to interventions would enhance the fidelity. “Consider making ways to make video more engaging/reactive to participant sim: One video is the image—change the participant still/video image;” (F30) “Improve video to include interventions” (F27). “The linear, non‐responsive nature of the video components [was challenging] … [This] is unfortunately a limitation of the format of the session. I am not sure of the feasibility of creating a premade simulation such as this with ‘branch points’ or other forms of response to the interventions initiated by the team … That said, for a relatively simple case like seizure, it worked for our group, as there were not a lot of different roads to go down and our team generally identified and performed the proper steps in the expected order” (F32).

DISCUSSION

The ACEP TeleSimBox is feasible to use and is perceived to be an effective alternative to in‐person simulation that users would promote to their colleagues. An important implication is that the tool can be used to effectively reach trainees in rural or more resource‐limited settings; for example, it can be used by students on away rotations from their primary hospital site as well as for provider outreach to community affiliates.

It is not surprising that both facilitators and participants expressed that the sessions were more conducive to practicing teamwork/communication skills, medical knowledge, and situational comfort and less conducive to hands‐on psychomotor skills practice and family‐centered care in this environment. Strategies to optimize the family‐centered communication via telecommunication platforms include communicating in the chat box, setting up a breakout room, or adding a standardized patient for the role (rather than having the lead facilitator also play the parent). The learning objectives for this seizure case designed for medical students are focused on medical knowledge and teamwork/communication over specific skills practice. Future cases can be written to achieve psychomotor skill competencies, because this is an area that has proven to be effectively taught with this didactic method. 24 To do so, the video content could be adapted to focus on the technicalities of the skill set‐up and process. For example, the video could include airway and breathing maneuvers, intravenous or intraosseous access techniques, or various ways to administer a medication. We have not trialed this concept with the SimBox to date.

The NPS was higher for the facilitators (+66) than for the students (+41). It is impossible to know if those who did not complete the survey would recommend the tool as is or not. Despite this, a positive score in industry is well regarded. Of these student respondents (only 22% of the entire learner cohort), many requested more sophisticated video changing capabilities, specifically via branch points reflecting user interventions. While a core aim of this project is to create a low‐technology, easy‐to‐use telesimulation tool (represented here with a simple “sim on rails” YouTube video), we acknowledge the limitations of this style. We encourage interdisciplinary collaboration between medical and information technology professionals to optimize low‐cost, easy‐to‐produce, and easy‐to‐use telesimulation platforms in the future.

LIMITATIONS

This study is limited by a small convenience sample size and low student survey response rate. The respondents completed surveys on a strictly voluntary and anonymous basis with fewer than a quarter of the medical student group responding to the postsession survey. Given that students are routinely asked to evaluate sessions, this may be due to survey fatigue, and/or facilitators may not have been consistent in asking students to complete the surveys. Nonetheless, this is a significant limitation of this study because it is impossible to infer whether nonresponders felt strongly one way or another about the exercise. The participants were overall quite new to simulation‐based education; 96% responded that they had participated in fewer than 10 simulations ever and only 22% overall had ever participated in a single prior in‐person simulation. This could explain the lower response rate to the particular question comparing in‐person to telesimulation.

Our study participants were volunteer recruits and some faculty were required by their respective institutions to include larger groups of students in each teaching session. For this reason, the learner size of each group varied between four and 10 participants. To adapt, we attempted to crowd control by limiting the actual participants to four and asking the remaining to actively observe and contribute in the debrief. While we were able to review qualitative responses by these participants about their experience, we did not compare the survey results between the large and small cohorts.

We limited the evaluation of the simulation to user perceptions of effectiveness and did not directly evaluate the impact on knowledge acquisition or communication skills. Pre‐ and posttests and/or videotaping with subsequent review are ways to enhance the evaluation in the future.

The facilitators were expected to have completed a train‐the‐trainer program that included reading a guide, watching a recorded telesimulation sample session, and communicating with the project lead for clarifications prior to running the drills. Not all facilitators completed this presimulation work; therefore, inconsistent facilitator preparation is a limitation of this study. Given that nearly all of the facilitators were previously trained in simulation facilitation, they may not have felt the need to review the prework. Future studies are needed to explore how novice facilitators feel about running the drills with only the prework provided with the ACEP TeleSimBox platform to assess feasibility for use with this group.

CONCLUSIONS

This telesimulation education platform implemented during the COVID‐19 pandemic with medical students was feasible, perceived to be effective in teaching specific learning objectives, and comparable to other distance learning modalities and in‐person simulation and was positively recommended by both student and faculty users. As we move further into the COVID‐19 and postpandemic world, telesimulation tools must be studied and iteratively improved to maximize usability, accessibility, and educational effectiveness beyond user perception.

PRACTICE POINTS

Telesimulation case developed, trialed, and evaluated for use with medical students and faculty

TeleSimBox is no/low cost and easily replicable

This “sim on rails” tool is feasible to use, acceptable, and effective at teaching medical knowledge and teamwork/communication skills

Learners and facilitators positively promote the tool to colleagues

CONFLICT OF INTEREST

The authors report no declarations of interest.

ACKNOWLEDGMENTS

The authors acknowledge the ACEP SimBox core team; data and figure assistance from Zeus Antonello, PhD; and the many facilitators who volunteered their time to facilitate the sessions, including: Megan Schultz, MD, Abigail Schuh, MD, and Jean Pearce, MD.

Sanseau E, Lavoie M, Tay K-Y, et al. TeleSimBox: A perceived effective alternative for experiential learning for medical student education with social distancing requirements. AEM Educ Train. 2021;5:e10590. 10.1002/aet2.10590

REFERENCES

- 1. Ahmed H, Allaf M, Elghazaly H. COVID‐19 and medical education. Lancet Infect Dis. 2020;20(7):777‐778.

- 2. UPDATED: Coronavirus (COVID‐19) and ACGME Site Visits, Educational Activities, and Other Meetings [Internet]. ACGME. 2020. Accessed February 3, 2021. https://www.acgme.org/Newsroom/Newsroom-Details/ArticleID/10072/UPDATED-Coronavirus-COVID-19-and-ACGME-Site-Visits-Educational-Activities-and-Other-Meetings

- 3. COVID‐19 FAQs: Guidance for Medical Students. American Medical Association. 2020. Accessed February 3, 2021. https://www.ama-assn.org/residents-students/medical-school-life/covid-19-faqs-guidance-medical-students

- 4. Ashokka B, Ong SY, Tay KH, Loh NH, Gee CF, Samarasekera DD. Coordinated responses of academic medical centres to pandemics: sustaining medical education during COVID‐19. Med Teach. 2020;42(7):762‐771.

- 5. Healthcare Simulation Dictionary. c2021. Accessed February 3, 2021. https://www.ssih.org/dictionary

- 6. Laurent DA, Niazi AU, Cunningham MS, et al. A valid and reliable assessment tool for remote simulation‐based ultrasound‐guided regional anesthesia. Reg Anesth Pain Med. 2014;39(6):496‐501.

- 7. Shao M, Kashyap R, Niven A, et al. Feasibility of an international remote simulation training program in critical care delivery: a pilot study. Mayo Clin Proc Innov Qual Outcomes. 2018;2(3):229‐233.

- 8. Ventre KM, Schwid HA. Computer and web based simulators. In: Levine AI, DeMaria S, Schwartz AD, Sim AJ, editors. The Comprehensive Textbook of Healthcare Simulation. New York, NY: Springer; 2013:191–208.

- 9. von Lubitz D, Carrasco B, Gabbrielli F, et al. Multiplatform high fidelity human patient simulation environment in an ultra‐long distance setting. Paper presented at: 10th Americas Conference on Information Systems, AMCIS 2004, New York, NY, USA, August 6‐8, 2004.

- 10. Henao Ó, Escallón J, Green J, et al. Fundamentals of laparoscopic surgery in Colombia using telesimulation: an effective educational tool for distance learning. Biomed Rev Inst Nac Salud. 2013;33(1):107‐114.

- 11. Austin E, Landes M, Meshkat N. Telesimulation in emergency medicine: connecting Canadian faculty to Ethiopian residents to provide procedural training. Paper presented at: 3rd Annual Sunnybrook Education Conference: Technology Enhanced Learning; October 17, 2014; Toronto, Ontario, Canada.

- 12. Burckett‐St.Laurent DA, Cunningham MS, Abbas S, Chan VW, Okrainec A, Niazi AU. Teaching ultrasound‐guided regional anesthesia remotely: a feasibility study. Acta Anaesthesiol Scand. 2016;60(7):995‐1002.

- 13. Teledebriefing in Medical Simulation. StatPearls Publishing. 2019. Accessed February 3, 2021. https://europepmc.org/article/NBK/nbk546584

- 14. Gross IT, Whitfill T, Auzina L, Auerbach M, Balmaks R. Telementoring for remote simulation instructor training and faculty development using telesimulation. BMJ Simul Technol Enhanc Learn. 2021;7:61‐65.

- 15. Cheng A, Kolbe M, Grant V, et al. A practical guide to virtual debriefings: communities of inquiry perspective. Adv Simul. 2020;5(1):18.

- 16. Position Statement on Use of Virtual Simulation during the Pandemic. Society for Simulation in Healthcare. 2020. Accessed February 3, 2021. https://www.ssih.org/Portals/48/2020/INACSL_SSH%20Position%20Paper.pdf

- 17. Open Access Publishing and Resources: Free Open Access Meducation (FOAM). Georgetown University Dahlgren Memorial Library. c2021. Accessed February 3, 2021. https://guides.dml.georgetown.edu/openaccess/FOAM

- 18. TeleSimBox. SIMBOX Creative Team. c2017‐2020. Accessed February 3, 2021. https://www.acepsim.com

- 19. Zigmont JJ, Kappus LJ, Sudikoff SN. Theoretical foundations of learning through simulation. Semin Perinatol. 2011;35(2):47‐51.

- 20. Taylor DC, Hamdy H. Adult learning theories: implications for learning and teaching in medical education: AMEE Guide No. 83. Med Teach. 2013;35(11):e1561–e1572.

- 21. Leighton K, Ravert P, Mudra V, Macintosh C. Updating the simulation effectiveness tool: item modifications and reevaluation of psychometric properties. Nurs Educ Perspect. 2015;36(5):317‐323.

- 22. Hamilton DF, Lane JV, Gaston P, et al. Assessing treatment outcomes using a single question. Bone Jt J. 2014;96‐B(5):622–628.

- 23. Braun V, Clarke V. Using thematic analysis in psychology. Qual Res Psychol. 2006;3(2):77‐101.

- 24. Mikrogianakis A, Kam A, Silver S, et al. Telesimulation: an innovative and effective tool for teaching novel intraosseous insertion techniques in developing countries. Acad Emerg Med. 2011;18(4):420‐427.