Abstract

Objectives:

The National Academy of Medicine identified diagnostic error as a pressing public health concern and defined failure to effectively communicate the diagnosis to patients as a diagnostic error. Leveraging Patient’s Experience to improve Diagnosis (LEAPED) is a new program for measuring patient-reported diagnostic error. As a first step, we sought to assess the feasibility of using LEAPED after emergency department (ED) discharge.

Methods:

We deployed LEAPED using a cohort design at three EDs within one academic health system. We enrolled 59 patients after ED discharge and queried them about their health status and their understanding of the explanation for their health problems at 2 weeks, 1 month, and 3 months. We measured response rates and demographic/clinical predictors of patient uptake of LEAPED.

Results:

Of those enrolled (n=59), 90% (n=53) responded to the 2-week post-ED discharge questionnaire (1- and 3-month follow-up ongoing). Of the six non-responders, one had died and three were hospitalized at two weeks. The mean age was 50 years (SD 16), and 64% were female; 53% were white and 41% were black. Over a fifth (23%) reported that they were not given an explanation of their health problem on leaving the ED, and of those, a fourth (25%) did not understand what next steps to take after leaving the ED.

Conclusions:

Patient uptake of LEAPED was high, suggesting that patient report may be a feasible method of evaluating the effectiveness of diagnostic communication to patients, though further testing in a broader patient population is essential. Future research should determine whether LEAPED yields important insights into the quality and safety of diagnostic care.

Keywords: diagnostic error, measurement, patient-reported outcome measures, patient engagement

Introduction

Despite the urgent need to address diagnostic quality and safety, diagnostic error remains a largely unmeasured area of patient safety [1]. The National Academy of Medicine (NAM) defines diagnostic error as the failure to establish an accurate and timely explanation of the patient’s health problems or the failure to communicate that explanation to the patient [1]. Effective communication implies that patients both receive and understand the diagnostic message [2]. Efforts to measure diagnostic error have centered principally on triggered chart reviews [3] or epidemiologic analysis of administrative data [4]. These methods rely on clinical documentation, which typically does not record whether the patient understood their diagnosis and often lacks information from outside institutions or providers when follow-up occurs elsewhere [4].

Engaging patients is a potentially powerful but under-explored approach to measuring diagnostic errors. Previous studies have found that patients can identify when they have experienced diagnostic errors [5, 6]. Furthermore, patients know what happened with their diagnosis even if follow-up occurs at a different institution. A study of patients’ perceptions of errors found that 13% of individuals reported receiving a misdiagnosis from a provider, and that the majority of those individuals (74%) changed providers following perceived harm from misdiagnosis and reported a reluctance to notify the previous provider of the mistake [6]. Finally, because the NAM definition counts a failure to give patients an adequate explanation of their health problem(s) as a diagnostic error, the only way to confidently ascertain this aspect of error is to ask patients or their family members directly about their understanding of the diagnosis.

This pilot study seeks to develop and implement a patient-centered measurement system, Leveraging Patient’s Experience to improve Diagnosis (LEAPED). LEAPED is our proposed method of measuring diagnostic error by systematically seeking patient feedback on their diagnoses and health status following emergency department (ED) discharge. We sought to test the hypothesis that LEAPED could provide a feasible measure of diagnostic error. This pilot study deployed the program and determined the extent and predictors of patient uptake of LEAPED. This manuscript reports on the feasibility of the approach and on patient-reported event rates; future manuscripts will report on the clinical validity of patient-reported diagnostic errors.

Materials and methods

Design and setting

To test LEAPED, we used a longitudinal cohort study design at three EDs within a single academic health system in the Mid-Atlantic. Patients consented to complete questionnaires at 2 weeks, 1 month, and 3 months following ED discharge. The Institutional Review Board approved this study (JHM IRB00202800).

Inclusion criteria

People aged 18 and older who were seen at the ED within the past seven days with one or more common chief complaints (chest pain, upper back pain, abdominal pain, shortness of breath/cough, dizziness, and headache) and one or more chronic conditions (hypertension, diabetes, history of stroke, arthritis, cancer, heart disease, osteoporosis, depression, and/or chronic obstructive lung disease) were eligible to join the study.

Recruitment and data collection procedures

Participants were recruited from June 2019 through September 2019 using two methods: in-person approach in the ED and patient portal messaging. In-person recruitment involved screening the patient’s triage note to confirm eligibility and obtaining permission from the ED provider to approach the patient. Patient portal messaging involved first creating a computable phenotype that identified eligible patients in the electronic medical record based on the inclusion criteria [14]. The computable phenotype allowed us to create a workbench report in Epic Hyperspace 2019 (Verona, WI) from which bulk messages could be sent to eligible patients. Patients recruited through patient portal messaging answered screening questions on their eligibility, and the study team also confirmed their eligibility through electronic medical record review. All participants completed questionnaires through REDCap (Research Electronic Data Capture) tools hosted at Johns Hopkins University [7, 8]. Depending on patient preference, links to the follow-up questionnaires were emailed or texted, or the questionnaire was completed over the phone with a research assistant. Patients received a $10 gift card for each completed questionnaire.

Measurement of patient-reported diagnostic error

Patient-reported diagnostic errors were divided into two categories based on the NAM definition: 1) failure to establish an accurate and timely explanation of the patient’s health problems, or 2) failure to communicate that explanation to the patient [1]. The questionnaires were therefore designed to elicit 1) whether the patient experienced previously undiagnosed health problems following discharge, and 2) whether the patient understood the health problems documented in their recent ED visit (if not, a “failure to communicate” diagnostic error). The questionnaires for the 2-week, 1-month, and 3-month follow-ups are provided in the Supplementary Material.

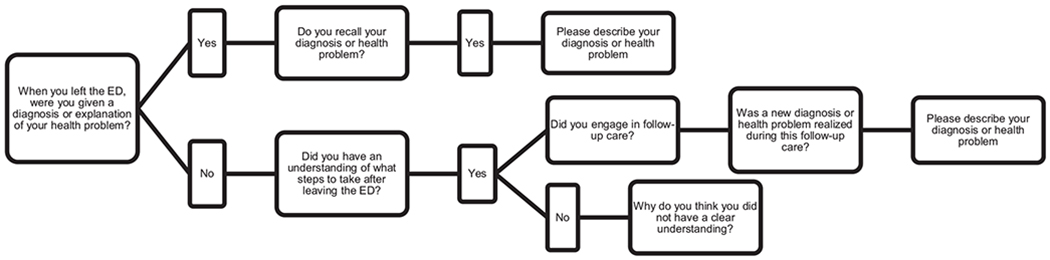

The questionnaire, which was built in collaboration with patient advocates, is designed to capture diagnostic errors in lay language. It was also built with the understanding that the ED is often not where a patient receives a final diagnosis, and that patients may need follow-up care in a non-emergency setting to receive a more complete explanation of their problem. Figure 1 depicts the branching logic used in the questionnaire. The first question is: “When you left the ED, were you given a diagnosis or explanation of your health problem?” If the participant responded “Yes,” they were asked whether they recalled their diagnosis or health problem. If the participant responded “No,” they were asked whether they had an understanding of what steps to take after leaving the ED. A response was classified as a “failure to communicate” diagnostic error if the participant reported either (1) that they did not recall their diagnosis or explanation of their health problem, or (2) that they were not given a diagnosis or explanation of their health problem and did not have an understanding of what steps to take after leaving the ED.
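The classification rule above can be written as a small function. This is a minimal sketch, assuming each answer is coded as a boolean and that questions skipped by the branching logic are passed as `None`; the function and argument names are hypothetical and are not taken from the LEAPED instrument or its REDCap export.

```python
from typing import Optional


def is_communication_failure(
    given_explanation: bool,
    recalled_explanation: Optional[bool] = None,
    understood_next_steps: Optional[bool] = None,
) -> bool:
    """Return True if a response meets the 'failure to communicate' rule.

    Hypothetical coding: answers are booleans; a question skipped by the
    branching logic is passed as None.
    """
    if given_explanation:
        # "Yes" branch: an error only if the patient does not recall the explanation.
        if recalled_explanation is None:
            raise ValueError("recall answer required when an explanation was given")
        return not recalled_explanation
    # "No" branch: an error only if the patient also lacks an understanding of next steps.
    if understood_next_steps is None:
        raise ValueError("next-steps answer required when no explanation was given")
    return not understood_next_steps


# One example response for each path through the branching logic.
print(is_communication_failure(True, recalled_explanation=True))     # False
print(is_communication_failure(True, recalled_explanation=False))    # True
print(is_communication_failure(False, understood_next_steps=True))   # False
print(is_communication_failure(False, understood_next_steps=False))  # True
```

Raising an error when the branch-specific answer is missing keeps incomplete records from being silently counted as "no error".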

Figure 1:

Flowchart of branching logic used in the questionnaire.

This figure depicts the branching logic used in the first question of the questionnaire. The branching logic was used because, particularly in the emergency department setting, patients are commonly referred for follow-up testing in an outpatient setting to confirm a diagnosis, so it is important that the questionnaire captures this clinically appropriate alternative to receiving a diagnosis in the emergency department. Participants answer three additional questions regardless of their response to the first question. These concern their health status and any new or additional health problems identified by a caregiver or healthcare provider outside of follow-up care; see the Supplementary Material for the full questionnaire.

Participants were also questioned about new diagnoses or health problems recognized after discharge, either by other providers or by the participant or their caregiver, and whether their health status stayed the same, improved, or worsened. In this pilot study, we focused only on the feasibility of collecting this information; validation of new diagnoses or health problems as diagnostic errors (e.g., relatedness to the prior visit) will occur in future studies.

Other variables

Sociodemographic data were the key independent variables examined in this study. Sex, age, race, ethnicity, employment, and education level were assessed in the baseline questionnaire. Chronic conditions (hypertension, diabetes, stroke, dementia, arthritis, cancer, heart disease, osteoporosis, healthy, depression, chronic obstructive lung disease and associated conditions, other [specify]) were collected via patient self-report.

Feasibility was assessed by patient uptake, with a threshold of at least 80% of enrolled patients responding to follow-up questionnaires.

Statistical methods

We used Stata 16.0 for all analyses. We applied the sample size formula for pilot studies proposed by Viechtbauer and colleagues to determine that 59 participants were needed to detect, with 95% confidence, at least one occurrence of a potential study design problem (e.g., misinterpretation of questionnaire items) with a prevalence of 0.05 [7]. We calculated descriptive statistics for all variables included in the analysis.
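The sample size can be reproduced from the Viechtbauer formula, n = ln(1 − γ) / ln(1 − π), where π is the per-participant probability that a given problem occurs and γ is the desired probability of observing it at least once. The sketch below assumes that is the form of the formula applied; the function name is illustrative.

```python
import math


def pilot_sample_size(problem_prevalence: float, confidence: float) -> int:
    """Smallest n with 1 - (1 - prevalence)**n >= confidence, i.e. the problem
    is observed in at least one participant with the requested probability."""
    if not (0 < problem_prevalence < 1 and 0 < confidence < 1):
        raise ValueError("prevalence and confidence must lie strictly between 0 and 1")
    n = math.log(1 - confidence) / math.log(1 - problem_prevalence)
    return math.ceil(n)  # round up: a fractional participant cannot be enrolled


print(pilot_sample_size(0.05, 0.95))  # 59 (unrounded value is about 58.4)
```

Rounding up matters here: with 58 participants the probability of seeing a 5%-prevalence problem at least once is just under 95%, while with 59 it is just over.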

Results

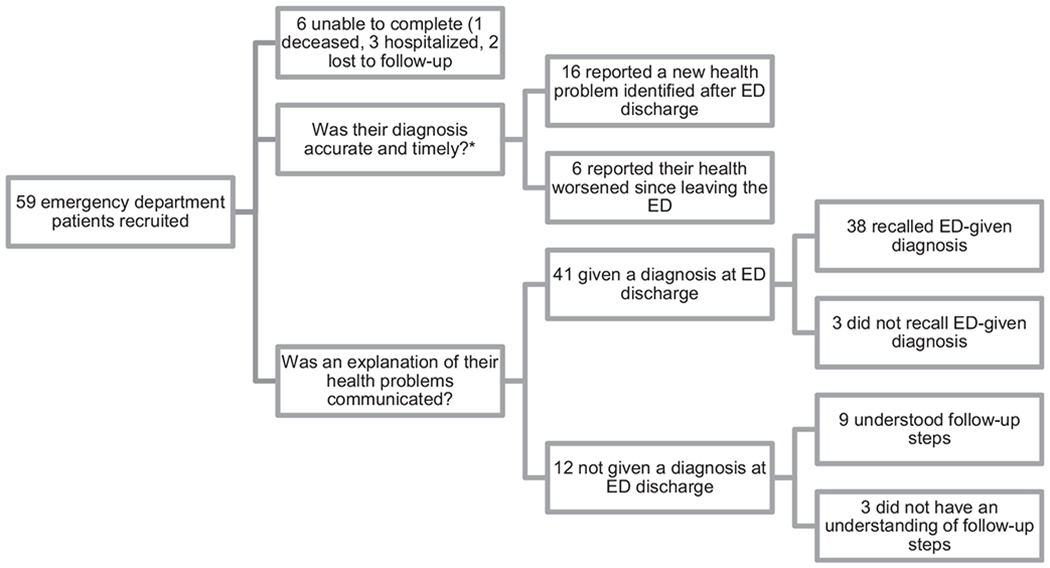

Of the 517 patients identified and messaged through the patient portal, 9% (n=45) consented to participate. Of the 17 people approached in the ED, 82% (n=14) consented; the three who declined cited fatigue (n=2) and being “too long without water or food” (n=1) as reasons. Key sample characteristics by recruitment method are summarized in Table 1. The racial breakdown was 53% white, 41% black, 4% Asian, 1% American Indian, and 1% Pacific Islander, and 2% of participants were Hispanic/Latino. The majority of participants (64%) were female, and the median age was 48.6 years (IQR 36.75–60.25, range 21–83). Of those enrolled (n=59), 90% (n=53) responded to the 2-week post-ED discharge questionnaire (1- and 3-month follow-up ongoing). Of the six non-responders at two weeks, three were hospitalized, one had died, and two were lost to follow-up (Figure 2).
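The recruitment and uptake percentages follow directly from the reported counts. The short script below recomputes them and checks the 2-week response rate against the 80% feasibility threshold described in the Methods; it is an illustrative check only, and the variable names are hypothetical.

```python
# Counts reported in the Results; the threshold is the Methods' feasibility criterion.
FEASIBILITY_THRESHOLD = 0.80

portal_messaged, portal_consented = 517, 45
ed_approached, ed_consented = 17, 14
enrolled = portal_consented + ed_consented  # 59
responded_2wk = 53


def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


print(f"Portal consent rate: {pct(portal_consented, portal_messaged)}%")  # 8.7%
print(f"ED consent rate: {pct(ed_consented, ed_approached)}%")            # 82.4%
print(f"2-week response rate: {pct(responded_2wk, enrolled)}%")           # 89.8%
print("Meets threshold:", responded_2wk / enrolled >= FEASIBILITY_THRESHOLD)  # True
```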

Table 1:

Sample characteristics by recruitment method (n=59).

| Characteristic | Recruited by patient portal (n=45) | Recruited in person (n=14) | Overall (n=59) |
|---|---|---|---|
| Sex, n (%) | | | |
| Men | 14 (31) | 7 (50) | 21 (36) |
| Women | 31 (69) | 7 (50) | 38 (64) |
| Age in years, mean (SD) | 47 (14.75) | 57.14 (17.38) | 49.88 (15.96) |
| Education level, n (%) | | | |
| GED, or high school or less | 4 (9) | 6 (42) | 10 (17) |
| Some college or Associate’s degree | 16 (36) | 1 (7) | 17 (29) |
| Bachelor’s degree | 13 (29) | 2 (14) | 15 (25) |
| Graduate school | 12 (27) | 5 (36) | 17 (29) |
| Race, n (%) | | | |
| Black | 15 (33) | 9 (57) | 24 (41) |
| White | 26 (58) | 5 (36) | 31 (53) |
| Other | 3 (7) | 1 (7) | 4 (6) |
| Employment, n (%) | | | |
| Employed | 29 (64) | 6 (43) | 35 (59) |
| Unemployed | 8 (18) | 2 (14) | 10 (17) |
| Retired | 8 (18) | 6 (43) | 14 (24) |

Figure 2:

Two-week follow-up results from Leveraging Patient’s Experience to improve Diagnosis (LEAPED) pilot study.

*Patient-reported diagnoses were not validated with administrative data in this pilot study; such validation is planned in future studies. LEAPED includes questions about understanding of “follow-up steps” because it is not always possible to reach a diagnosis in the emergency department (ED), and in these cases it is important to know that the patient understood the next steps.

In total, 11% (n=6/53) had a “communication failure” diagnostic error. Over a fifth (23%) reported they were not given an explanation of their health problem on leaving the ED, and of those, a fourth (25%) did not have an understanding of what steps to take after leaving the ED (Table 2). Of the 77% who were given a diagnosis or explanation of their health problems, 7% did not recall the diagnosis or explanation.
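Because the two branches of the first question are mutually exclusive, the overall communication-failure count is simply the sum of the two error cells in Table 2. A minimal arithmetic check using the reported counts (variable names are illustrative):

```python
# Counts taken from Table 2 (2-week responders, n=53); names are illustrative.
n_responded = 53
given_yes, given_no = 41, 12
did_not_recall = 3  # "Yes" branch: given an explanation but did not recall it
no_next_steps = 3   # "No" branch: not given an explanation and no understanding of next steps

# The branches do not overlap, so the error counts can be added directly.
communication_failures = did_not_recall + no_next_steps

print(f"Not given an explanation: {given_no / n_responded:.0%}")                      # 23%
print(f"No next steps, among those not given one: {no_next_steps / given_no:.0%}")    # 25%
print(f"Did not recall, among those given one: {did_not_recall / given_yes:.0%}")     # 7%
print(f"Communication failure: {communication_failures}/{n_responded} "
      f"= {communication_failures / n_responded:.0%}")                                # 6/53 = 11%
```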

Table 2:

Patient-reported 2-week follow-up responses (n=53)ᵃ.

| Question | n (%) |
|---|---|
| Given a diagnosis or explanation of health problem at emergency department discharge | |
| Yes | 41 (77) |
| No | 12 (23) |
| Recalled the diagnosis or explanation given (n=41) | |
| Yes | 38 (93) |
| No | 3 (7) |
| Reported understanding follow-up directions given at discharge (n=12)ᵇ | |
| Yes | 9 (75) |
| No | 3 (25) |
| Health status | |
| Improved | 39 (74) |
| Worsened | 6 (11) |
| Stayed the same | 8 (15) |
| New health problem identified by a health care provider | |
| Yes | 10 (19) |
| No | 43 (81) |
| New health problem identified by patient or caregiver | |
| Yes | 6 (11) |
| No | 47 (89) |

ᵃOverall n=59; the six participants unable to complete the 2-week questionnaire were excluded from the 2-week analyses.

ᵇParticipants were asked this question only if they reported that they did not receive a diagnosis or explanation of their health problem at discharge.

In total, 30% (n=16/53) reported that a new health problem was identified after ED discharge: 19% reported that a health care provider identified the new problem, and 11% reported that they or their caregiver identified it. A minority of participants reported worsening health status (11%) or no change (15%), while the majority had improved in the interval (74%) (Table 2). Only 41% (n=24/59) reported improved health, no communication failure diagnostic error, and no newly identified health problems.

Discussion

In this study, among patients who agreed to participate, 2-week completion of the LEAPED questionnaire was high (90%), loss to follow-up was low (4%), and the measured rates of communication failure diagnostic error (11%) and new health problems (30%) were substantial. Taken together, these results suggest that patient self-report may be a feasible method of assessing diagnostic error, though further testing in a broader patient population is essential.

Our results are consistent with prior literature. Multiple studies have found that patients are able to identify medication errors in the electronic medical record [9, 10]. Our findings suggest that the same could feasibly be done for diagnosis, though identifying when a diagnostic error occurs is both highly complex [11, 12] and personal to clinicians [1]. A study of patients diagnosed with heart failure, pneumonia, and acute coronary syndrome found that only 60% of patients accurately described their diagnosis in postdischarge interviews [13], in line with our finding that a sizable minority of patients do not fully “get the message” about their diagnosis. Our sample likely reflects a more activated population because most participants were recruited through the patient portal; in addition, we did not assess whether participants accurately described their diagnosis, only whether they could recall it or understood follow-up steps.

Limitations and potential solutions in future LEAPED iterations

Generalizability is limited in this small convenience sample: only 9% of those approached electronically agreed to participate, and two enrolled patients were lost to follow-up. Sicker patients refused participation or could not complete LEAPED questionnaires, creating a risk of ascertainment bias in the absence of complementary methods of case review or follow-up.

Patient portal recruitment inherently introduces selection bias [14]. Importantly for this study, patient portal users are more likely than the average patient to be engaged with their healthcare system, and thus more likely to respond with updates on their health status. As clinical research operations increasingly move online wherever possible, it is important to ensure that the recruited sample remains representative of the target population. Using postal mail in tandem with the patient portal may be one step toward a more generalizable sample in future iterations [14–16].

Based on what we learned in this pilot, the questionnaire could be improved in several ways in future iterations. We questioned participants about understanding their diagnosis only indirectly, by asking whether they could both recall and describe it. In future iterations, we will directly ask patients “Do you understand your diagnosis?” and ask further questions about whether they perceived that they received the information needed to understand it. We also asked only participants who did not receive a diagnosis whether they received follow-up steps, even though it is not uncommon for patients to receive a diagnosis along with follow-up steps to confirm it. Going forward, we will ask all participants about follow-up steps, rather than limiting this question to participants who did not receive a diagnosis.

Some components of the questionnaire were written above the recommended sixth-grade reading level. For example, we used the term “caregiver” without defining it. We have since revised the questionnaire to explain that “caregiver” should be interpreted broadly, to include family, friends, care partners, or healthcare providers. We will also simplify questions and deliberately seek feedback from the broader, non-medical population to ensure readability.

We ask participants about new or additional health problems without anchoring the question to the symptom that originally brought them to the emergency department. This is intentional: we do not expect patients to link new health problems to their original emergency department visit, because even expert chart reviews to identify preventable diagnostic errors have poor inter-rater reliability [17]. In future work, we will validate reported harms from diagnostic errors that have a clinically plausible link between the symptom and the disease, following the Symptom-Disease Pair Analysis of Diagnostic Error (SPADE) model [4].

Conclusions

Currently, there are no routine methods to measure and monitor diagnostic errors in clinical practice. Our results suggest that asking patients directly to assess diagnostic errors is likely feasible. The next step is to assess the accuracy of patient-reported diagnoses. Understanding that accuracy will critically inform health science regardless of the outcome. If patients are highly accurate at identifying their diagnoses, this establishes that patients are an excellent source of truth on their own diagnoses and can provide key feedback to identify and solve diagnostic errors; if patients are inaccurate, this establishes the need for increased efforts to educate and empower patients to understand their diagnoses. Future research should seek to establish the reliability of assessing communication failure diagnostic errors and the clinical validity of newly reported health problems as a proxy for additional NAM-defined diagnostic errors.

Supplementary Material

Acknowledgments

Research funding: U.S. Department of Health and Human Services, National Institutes of Health, National Center for Advancing Translational Sciences, Institute of Clinical and Translational Research/Institutional Career Development Core/KL2 TR0030. U.S. Department of Health and Human Services, National Institutes of Health, National Institute of Nursing Research Hopkins Center to Promote Resilience in Persons and families living with multiple chronic conditions (the PROMOTE Center), P30NR019083.

Competing interests: David Newman-Toker was supported by the Armstrong Institute Center for Diagnostic Excellence, Johns Hopkins University School of Medicine.

Footnotes

Informed consent: Informed consent was obtained from all individuals included in this study.

Ethical approval: The Institutional Review Board approved this study (JHM IRB00202800).

Supplementary Material: The online version of this article offers supplementary material (https://doi.org/10.1515/dx-2020-0014).

Contributor Information

Kelly T. Gleason, School of Nursing, Johns Hopkins University, 525 N Wolfe Street, Baltimore, MD, 21205, USA.

Susan Peterson, School of Medicine, Johns Hopkins University, Baltimore, MD, USA.

Cheryl R. Dennison Himmelfarb, School of Nursing, Johns Hopkins University, Baltimore, MD, USA; School of Medicine, Johns Hopkins University, Baltimore, MD, USA.

Mariel Villanueva, School of Nursing, Johns Hopkins University, Baltimore, MD, USA.

Taylor Wynn, School of Nursing, Johns Hopkins University, Baltimore, MD, USA.

Paula Bondal, School of Nursing, Johns Hopkins University, Baltimore, MD, USA.

Daniel Berg, Minneapolis, MN, USA.

Welcome Jerde, Minneapolis, MN, USA.

David Newman-Toker, School of Medicine, Johns Hopkins University, Baltimore, MD, USA.

References

- 1. National Academy of Medicine. Improving diagnosis in health care. Washington, DC: National Academies Press; 2015. Available from: https://iom.nationalacademies.org/Reports/2015/Improving-Diagnosis-in-Healthcare.aspx [Accessed 25 Jun 2020].

- 2. Berger ZD, Brito JP, Ospina NS, Kannan S, Hinson JS, Hess EP, et al. Patient centred diagnosis: sharing diagnostic decisions with patients in clinical practice. BMJ (Clin Res Ed) 2017;359:j4218.

- 3. Murphy DR, Meyer AND, Sittig DF, Meeks DW, Thomas EJ, Singh H. Application of electronic trigger tools to identify targets for improving diagnostic safety. BMJ Qual Saf 2019;28:151–9.

- 4. Liberman AL, Newman-Toker DE. Symptom-Disease Pair Analysis of Diagnostic Error (SPADE): a conceptual framework and methodological approach for unearthing misdiagnosis-related harms using big data. BMJ Qual Saf 2018;27:557–66.

- 5. Ward K, Armitage G. Can patients report patient safety incidents in a hospital setting? A systematic review. BMJ Qual Saf 2012;21:685–700.

- 6. Kistler CE, Walter LC, Mitchell CM, Sloane PD. Patient perceptions of mistakes in ambulatory care. Arch Intern Med 2010;170:1480–7.

- 7. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)-a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377–81.

- 8. Harris PA, Taylor R, Minor BL, Elliott V, Fernandez M, O’Neal L, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inform 2019;95:103208.

- 9. Otte-Trojel T, Rundall TG, De Bont A, Van De Klundert J, Reed ME. The organizational dynamics enabling patient portal impacts upon organizational performance and patient health: a qualitative study of Kaiser Permanente. BMC Health Serv Res 2015;15. doi:10.1186/s12913-015-1208-2.

- 10. Mold F, de Lusignan S, Sheikh A, Majeed A, Wyatt JC, Quinn T, et al. Patients’ online access to their electronic health records and linked online services: a systematic review in primary care. Br J Gen Pract 2015;65:e141–51.

- 11. Singh H, Meyer AND, Thomas EJ. The frequency of diagnostic errors in outpatient care: estimations from three large observational studies involving US adult populations. BMJ Qual Saf 2014;23:727–31.

- 12. Graber ML. The incidence of diagnostic error in medicine. BMJ Qual Saf 2013;22:ii21–7.

- 13. Horwitz LI, Moriarty JP, Chen C, Fogerty RL, Brewster UC, Kanade S, et al. Quality of discharge practices and patient understanding at an academic medical center. JAMA Intern Med 2013;173:1715–22.

- 14. Miller HN, Gleason KT, Juraschek SP, Plante TB, Lewis-Land C, Woods B, et al. Electronic medical record-based cohort selection and direct-to-patient, targeted recruitment: early efficacy and lessons learned. J Am Med Inform Assoc 2019. doi:10.1093/jamia/ocz168.

- 15. Powell KR. Patient-perceived facilitators of and barriers to electronic portal use. CIN Comput Inform Nurs 2017;35:565–73.

- 16. Antonio MG, Petrovskaya O, Lau F. Is research on patient portals attuned to health equity? A scoping review. J Am Med Inform Assoc 2019;26:871–83.

- 17. Singh H, Sittig DF. Were my diagnosis and treatment correct? No news is not necessarily good news. J Gen Intern Med 2014;29:1087–9.
