Abstract

Emotion in daily life is often expressed in a multimodal fashion. Consequently, emotional information from one modality can influence processing in another. In a previous fMRI study we assessed the neural correlates of audio–visual integration and found that activity in the left amygdala is significantly attenuated when a neutral stimulus is paired with an emotional one compared to conditions where emotional stimuli were present in both channels. Here we used dynamic causal modelling to investigate the effective connectivity in the neuronal network underlying this emotion presence congruence effect. Our results provided strong evidence in favor of a model family whose members differ only in their interhemispheric interactions. All winning models share connections from bilateral fusiform gyrus (FFG) into the left amygdala and a non-linear modulatory influence of bilateral posterior superior temporal sulcus (pSTS) on these connections. This result indicates that the pSTS not only integrates multimodal information from visual and auditory regions (as reflected in our model by significant feed-forward connections) but also gates the influence of the sensory information on the left amygdala, leading to attenuation of amygdala activity when a neutral stimulus is integrated. Moreover, we found a significant lateralization of the FFG due to stronger driving input by the stimuli (faces) into the right hemisphere, whereas such lateralization was not present for sound-driven input into the superior temporal gyrus.

In summary, our data provide further evidence for a rightward lateralization of the FFG and in particular for a key role of the pSTS in the integration and gating of audio–visual emotional information.

Keywords: fMRI, DCM, Incongruence, Amygdala, pSTS, Audiovisual

Introduction

The ability to accurately extract emotional information from facial and vocal expressions is an important part of social functioning. In everyday life, however, we are rarely confronted with information from only one sensory channel. Rather, emotional processing is most of the time based on multimodal inputs, for instance seeing a happy face and concurrently hearing laughter. Consequently, processed unimodal inputs from different channels have to be integrated into a coherent construct of emotional perception.

Studies of audiovisual integration have predominantly assessed the neural correlates of audiovisual speech perception (Beauchamp et al., 2010; Benoit et al., 2010; Calvert et al., 2000; Sekiyama et al., 2003) as well as object and action processing (Beauchamp et al., 2004; James et al., 2011; Taylor et al., 2006). Yet, some authors have also investigated the integration of emotional information (Hagan et al., 2009; Kreifelts et al., 2007, 2009; Pourtois et al., 2005; Robins et al., 2009). Independent of the different stimuli and experimental designs, all of these studies identified the posterior superior temporal sulcus (pSTS)/middle temporal gyrus (MTG) as a key region for audiovisual integration. Although some authors could not replicate this finding (Hocking and Price, 2008; Olson et al., 2002), other studies assessing effective connectivity (Kreifelts et al., 2007) and using TMS (Beauchamp et al., 2010) further support the role of the pSTS in the processing and integration of multimodal stimuli. For instance, Kreifelts et al. (2007) report increased effective connectivity from FFG and STG to ipsilateral pSTS in multimodal compared to unimodal conditions.

Unimodal emotional processing, in turn, has been extensively studied, with a high proportion of studies reporting amygdala activation for both visual and auditory emotion processing (Belin et al., 2004; Fecteau et al., 2007; Gur et al., 2002; Habel et al., 2007; Phillips et al., 1998; Sabatinelli et al., 2011; Sander and Scheich, 2001). Crossmodally, Dolan et al. (2001) and Klasen et al. (2011) used an emotional audiovisual paradigm and found greater activity in the amygdala in emotionally congruent compared to incongruent conditions. In a recent fMRI study investigating audiovisual emotional integration, we differentiated between two types of incongruence processing: incongruence of emotional valence and incongruence of emotion presence (Müller et al., 2011). While incongruence of emotional valence refers to the pairing of two emotional stimuli which differ in valence (e.g., a positive sound such as laughter and a negative visual stimulus such as a fearful face), incongruence of emotion presence is defined as the presentation of an emotional stimulus in one channel and a neutral one in the other (e.g., an emotional sound such as a laugh or scream and a neutral visual stimulus such as a face showing no emotional expression). While no effect in the amygdala could be observed for (in)congruence of emotional valence, we found a statistically significant effect for the congruence of emotion presence. In particular, activity in the left amygdala was significantly higher in conditions where an emotional stimulus was presented in both channels (emotion presence congruence) compared to those conditions where an emotional stimulus in one modality was paired with a neutral stimulus in the other channel (Müller et al., 2011). This result indicates that, independent of crossmodal valence congruency, left amygdala activity is elevated when an emotional signal is not paired with neutral information.

This emotion presence congruence (EPC) effect may be understood as differential additive and competitive effects on amygdala activity in audiovisual integration. Emotional stimuli in both channels, whether congruent or incongruent in valence, both cause amygdala activation, resulting in summation of activity. In contrast, an emotional stimulus paired with a neutral one leads to competition, and the neutral information in one channel may counteract activation by the emotional stimulus in the other modality. This result indicates that the amygdala signals not only the presence of emotional information but also the absence of neutral information, suggesting a more general role of the amygdala in the evaluation of social relevance (Adolphs, 2010). However, the neural interactions within the network that would provide a mechanistic understanding of this signal in the amygdala cannot be uncovered by conventional fMRI analysis. Using Dynamic Causal Modelling (DCM), the present study therefore aims to investigate the effective connectivity between uni- and multimodal sensory regions contributing to the modulation of left amygdala activity by EPC. In total 48 models, reflecting different neurobiological hypotheses, were varied along three factors: i) presence or absence of interhemispheric connections, ii) region projecting into the left amygdala and iii) modulation of the effective connectivity towards the left amygdala. Seven regions were included in the models: bilateral fusiform (FFG) and superior temporal gyri (STG) as face- and voice-sensitive areas, respectively (Belin et al., 2000; Gainotti, 2011; Grill-Spector et al., 2004; Kanwisher et al., 1997; Kanwisher and Yovel, 2006), as well as bilateral pSTS because of its role in audiovisual processing (Beauchamp et al., 2004, 2010; Calvert et al., 2000; Hagan et al., 2009; James et al., 2011; Kreifelts et al., 2007, 2009). Finally, the left amygdala was included, as the aim of the present study is to investigate the neural interactions which lead to the EPC effect found in that region.

Methods

Subjects

The present effective connectivity modeling was based on an fMRI experiment investigating audiovisual emotion integration (Müller et al., 2011). Six participants of the original sample of 35 subjects were excluded from the DCM analysis due to missing activation on the single-subject level in one or more regions assessed by the dynamic causal models, leaving 29 subjects for the DCM. Two subjects were excluded after the modeling, as their model parameters were strongly deviant (more than two standard deviations from the group mean, leading to violations of the normality assumption). In order to avoid any bias by these subjects, all group analyses were based on the remaining 27 subjects. All participants had normal or corrected-to-normal vision and were right-handed as confirmed by the Edinburgh Inventory (Oldfield, 1971). No subject had a history of any neurological or psychiatric disorder, including substance abuse. Informed consent for the study, which was approved by the ethics committee of the School of Medicine of the RWTH Aachen University, was given by every participant.

Stimuli and procedure

A detailed description of the stimulus material is reported in Müller et al. (2011). In brief, we used a total of 10 (5 male and 5 female) different faces, each showing three different expressions (happy, neutral, fear). In addition, 10 different neutral faces blurred with a mosaic filter were included as masks. The auditory stimuli were neutral (yawning) or emotional sounds like laughing (happy) or screaming (fear), with 10 sounds for each category (5 male and 5 female in each case).

In total 180 audiovisual stimulus pairs were presented. Every trial started with the presentation of a sound concurrently with a blurred neutral face. After 1000 ms the blurred face was replaced by the target stimulus (neutral or emotional face), which was presented together with the ongoing sound for another 500 ms. Each pair was hence presented for 1500 ms. The subjects were asked to ignore the sound and to rate the presented face on an eight-point rating scale from extremely fearful to extremely happy. Between trials a blank screen was presented for 4000–6000 ms (uniformly jittered) before the onset of the next trial. All stimuli were presented with the software Presentation 14.2 (http://www.neurobs.com/) and responses were given manually using an MR-compatible response pad.

fMRI data acquisition and pre-processing

Images were acquired on a Siemens Trio 3T whole-body scanner (Erlangen, Germany) in the Research Center Jülich using blood-oxygen-level-dependent (BOLD) contrast [gradient-echo EPI pulse sequence, TR = 2.2 s, in-plane resolution = 3.1 × 3.1 mm, 36 axial slices (3.1 mm thickness)] covering the entire brain. To allow for magnetic field saturation, four dummy images were collected prior to image acquisition and discarded before further processing. Images were analyzed using SPM5 (www.fil.ion.ucl.ac.uk/spm). The data were pre-processed using head movement correction, spatial normalization to the MNI single subject space, resampling at 2 × 2 × 2 mm³ voxel size and smoothing using an 8 mm FWHM Gaussian kernel (for a detailed description see Müller et al., 2011).

Statistical analysis (GLM)

The imaging data were analyzed using a General Linear Model. Each experimental condition (“fearful face+scream”, “fearful face+yawn”, “fearful face+laugh”, “neutral face+scream”, “neutral face+yawn”, “neutral face+laugh”, “happy face+scream”, “happy face+yawn”, “happy face+laugh”) and the (manual) response were separately modeled by a boxcar reference vector convolved with a canonical hemodynamic response function and its first-order temporal derivative. Simple main effects for each of the nine experimental conditions and the response were computed for every subject and then fed to a second-level group analysis using an ANOVA (factor: condition; blocking factor: subject) employing a random-effects model. By applying appropriate linear contrasts to the ANOVA parameter estimates, simple main effects for each condition (versus baseline) as well as comparisons between the conditions were tested. The resulting SPM(T) maps were then thresholded at p<.05, corrected for multiple comparisons by controlling the family-wise error (FWE) rate according to the theory of Gaussian random fields (Worsley et al., 1996). Ensuing activations were anatomically localized using version 1.6b of the SPM Anatomy toolbox (Eickhoff et al., 2005, 2006, 2007; www.fz-juelich.de/ime/spm_anatomy_toolbox).
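The regressor construction described above can be illustrated with a minimal sketch. It assumes a common double-gamma approximation of the canonical hemodynamic response function; the shape parameters, the sampling step and the two onsets are illustrative assumptions and are not taken from the study or from SPM5.

```python
import math

def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF at time t (seconds). Parameter values are assumed."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under

def boxcar(onsets, duration, n_samples, dt):
    """1.0 while a stimulus is on, 0.0 otherwise, sampled every dt seconds."""
    return [1.0 if any(on <= i * dt < on + duration for on in onsets) else 0.0
            for i in range(n_samples)]

def convolve(signal, kernel, dt):
    """Causal discrete convolution, scaled by the sampling step."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k in range(min(i + 1, len(kernel))):
            acc += signal[i - k] * kernel[k]
        out.append(acc * dt)
    return out

def temporal_derivative(x, dt):
    """First-order finite difference of a sampled function."""
    return [0.0] + [(x[i] - x[i - 1]) / dt for i in range(1, len(x))]

dt = 0.1                                    # sampling step in seconds (assumed)
hrf = [double_gamma_hrf(i * dt) for i in range(320)]   # 32 s kernel
hrf_derivative = temporal_derivative(hrf, dt)

# Hypothetical onsets (seconds) of one condition; each pair lasted 1.5 s.
box = boxcar(onsets=[2.0, 20.0], duration=1.5, n_samples=600, dt=dt)
regressor = convolve(box, hrf, dt)
derivative_regressor = convolve(box, hrf_derivative, dt)
```

Each condition and the manual response would contribute one such pair of columns to the design matrix.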

Regions of Interest for DCM

The aim of this study was to assess the effective connectivity within the network underlying the emotion presence congruence effect (Müller et al., 2011). Regions of Interest (ROIs) were selected based on the group analysis (coordinates: see Supplement Table 1) of the previous fMRI experiment. In total seven regions showing robust activation in the fMRI analysis were included in the models. Bilateral FFG and STG were included as they might comprise face- and voice-selective areas, respectively, involved in early unimodal sensory processing (Belin et al., 2000; Gainotti, 2011; Grill-Spector et al., 2004; Kanwisher et al., 1997; Kanwisher and Yovel, 2006) and bilateral pSTS because of its role in audiovisual integration (Beauchamp et al., 2004, 2010; Calvert et al., 2000; Hagan et al., 2009; James et al., 2011; Kreifelts et al., 2007, 2009). The coordinates of these six regions were determined by the conjunction over all nine conditions, as auditory and visual processing as well as audiovisual integration should take place in every condition. Moreover, as the seventh region, the left amygdala was included in the models, as the purpose of this study was to assess the EPC effect found in this region. As the EPC effect was not found bilaterally in our GLM analysis, only the left amygdala was included in the models. In contrast to the other six areas, the coordinates of this region were defined by the congruence of emotion presence contrast (fear+scream, fear+laugh, happy+scream, happy+laugh>fear+yawn, neutral+scream, neutral+laugh, happy+yawn). It should be pointed out that, except for the amygdala, which is defined by the EPC effect, all other regions included in the analysis were defined by a conjunction over all conditions. This implies that the experimental design, lacking unimodal conditions, did not allow us to directly extract areas involved in voice, face and multimodal processing. Therefore the current DCM analysis was limited to hypothetically assigning functions to regions which are well known from previous research to be involved in these processes (Beauchamp et al., 2004, 2010; Belin et al., 2000; Calvert et al., 2000; Gainotti, 2011; Grill-Spector et al., 2004; Kanwisher et al., 1997; Kanwisher and Yovel, 2006; Kreifelts et al., 2007, 2009). On the other hand, it should be noted that the group peak maxima of the pSTS fit very well with the coordinates reported in studies of multimodal integration (Beauchamp et al., 2004; Calvert et al., 2000; Kreifelts et al., 2007, 2009), which warrants the assumption that the chosen ROI covers the multisensory integration area in the pSTS.
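As an illustration of the congruence of emotion presence contrast named above, the following sketch derives contrast weights over the nine conditions. The weight magnitude of 0.25 and the helper names are assumptions made for this example; the text only specifies which conditions enter each side of the contrast.

```python
FACES = ["fear", "neutral", "happy"]
SOUNDS = ["scream", "yawn", "laugh"]

def is_emotional(stimulus):
    """Neutral faces and yawns are the neutral stimuli of the design."""
    return stimulus not in ("neutral", "yawn")

def epc_weight(face, sound):
    """Positive weight if both channels are emotional, negative if exactly one is."""
    n_emotional = int(is_emotional(face)) + int(is_emotional(sound))
    if n_emotional == 2:
        return 0.25      # assumed scaling
    if n_emotional == 1:
        return -0.25     # assumed scaling
    return 0.0           # neutral face + yawn does not enter the contrast

epc_contrast = {f"{face}+{sound}": epc_weight(face, sound)
                for face in FACES for sound in SOUNDS}

for condition, weight in epc_contrast.items():
    print(f"{condition:15s} {weight:+.2f}")
```

The four fully emotional pairs are weighted against the four mixed pairs, while the fully neutral pair receives a weight of zero, matching the contrast listed in the text.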

For each subject the individual maximum was identified for each of the seven areas. This was done by identifying the local maximum (p<0.05 uncorrected; cf. Eickhoff et al., 2009; Grefkes et al., 2008; Mechelli et al., 2005) of the respective contrast which was closest to the coordinates of the group analysis. For each region a time series was extracted as the first principal component of the 15 most significant voxels in the target contrast. Only voxels located within a radius of 4 mm around the individual peak coordinates were taken into account. The extracted time series were then adjusted for the effect of interest (Cieslik et al., 2011). This procedure was used to ensure that the number of significant voxels is kept constant over subjects and regions (Cieslik et al., 2011; Rehme et al., 2011; Wang et al., 2011).
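The voxel selection step can be sketched as follows. The function name, the peak coordinate and the toy statistic values are hypothetical; the sketch covers only the selection of the 15 most significant voxels within 4 mm of a peak and omits the subsequent principal component extraction.

```python
import math

def select_roi_voxels(voxels, peak, radius_mm=4.0, n_voxels=15):
    """Return the n_voxels with the highest statistic within radius_mm of peak.

    voxels: list of ((x, y, z) in mm, statistic) tuples.
    """
    in_sphere = [(xyz, stat) for xyz, stat in voxels
                 if math.dist(xyz, peak) <= radius_mm]
    in_sphere.sort(key=lambda voxel: voxel[1], reverse=True)
    return in_sphere[:n_voxels]

# Toy data: a 2 mm grid around a hypothetical individual peak, with a
# statistic that simply falls off with distance from that peak.
peak = (-20, -4, -18)
voxels = []
for dx in range(-6, 7, 2):
    for dy in range(-6, 7, 2):
        for dz in range(-6, 7, 2):
            xyz = (peak[0] + dx, peak[1] + dy, peak[2] + dz)
            voxels.append((xyz, 10.0 - math.dist(xyz, peak)))

selected = select_roi_voxels(voxels, peak)
print(len(selected), "voxels selected")
```

On a 2 mm grid a 4 mm sphere contains 33 voxels, so fixing the count at 15 keeps the ROI size constant across subjects and regions.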

Dynamic Causal Modelling

Tested models

All three sounds and all three faces served as driving inputs into the models. In contrast to the GLM analysis, where every condition included a face-sound pair, stimuli were separated for the DCM analysis. This means that the input functions for the DCM do not reflect the experimental conditions but rather the individual design elements (fear, neutral, happy, scream, yawn, and laugh). Sounds were modeled as driving inputs on bilateral STG and faces as driving inputs on bilateral FFG. We modeled our inputs as events with their real duration of 1500 ms for sounds and 500 ms for faces.
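The separation of face-sound pairs into individual design elements can be sketched as below. The trial onsets and the function name are hypothetical; the durations and the 1000 ms delay between sound and face onset follow the timing described in the procedure.

```python
SOUND_DURATION = 1.5   # seconds, sounds were present throughout the trial
FACE_DURATION = 0.5    # seconds, target face shown for the final 500 ms
FACE_DELAY = 1.0       # seconds between sound onset and target face onset

# Each input drives the bilateral sensory region named here.
TARGET_REGION = {"fear": "FFG", "neutral": "FFG", "happy": "FFG",
                 "scream": "STG", "yawn": "STG", "laugh": "STG"}

def split_into_inputs(trials):
    """Turn (trial_onset_s, face, sound) trials into six input functions.

    Returns a dict mapping each design element to a list of
    (onset_s, duration_s) events.
    """
    inputs = {name: [] for name in TARGET_REGION}
    for onset, face, sound in trials:
        inputs[sound].append((onset, SOUND_DURATION))
        inputs[face].append((onset + FACE_DELAY, FACE_DURATION))
    return inputs

# Hypothetical trial list, for illustration only.
trials = [(0.0, "fear", "yawn"), (6.5, "happy", "laugh"), (13.0, "neutral", "scream")]
inputs = split_into_inputs(trials)
for name, events in inputs.items():
    print(name, "->", TARGET_REGION[name], events)
```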

In total 48 different models were tested for the effective connectivity within the network spanned by the seven regions outlined above (bilateral fusiform gyrus, bilateral posterior superior temporal sulcus, bilateral superior temporal gyrus and left amygdala). All models shared intrinsic (endogenous) connections from the FFG and STG on each hemisphere to the respective ipsilateral pSTS. These projections were based on the consistent reports of audiovisual (emotional) integration in the pSTS (Kreifelts et al., 2007, 2009; Robins et al., 2009).

The 48 models then varied along three different factors. The first two factors were varied systematically across models, whereas the third factor varied depending on the levels of factor two (Table 1). The three different factors were:

Table 1:

Model space consisted of 48 different models which were varied systematically along factors 1 and 2, whereas factor 3 was varied depending on the level of factor 2. Letters in brackets refer to the level of the respective factor as labeled in the main text.

| Model | Interhemispheric connections (factor 1) | Projection into amygdala from (factor 2) | Modulation by (factor 3) |
|---|---|---|---|
| 1 | FFG, STG, pSTS (a) | Left pSTS (f) | No modulation (c) |
| 2 | FFG, STG, pSTS (a) | Left pSTS (f) | EPC (a) |
| 3 | FFG, STG, pSTS (a) | Right pSTS (e) | No modulation (c) |
| 4 | FFG, STG, pSTS (a) | Right pSTS (e) | EPC (a) |
| 5 | FFG, STG, pSTS (a) | Bilateral pSTS (d) | No modulation (c) |
| 6 | FFG, STG, pSTS (a) | Bilateral pSTS (d) | EPC (a) |
| 7 | FFG, STG, pSTS (a) | STG (b) | No modulation (c) |
| 8 | FFG, STG, pSTS (a) | STG (b) | EPC (a) |
| 9 | FFG, STG, pSTS (a) | FFG (a) | No modulation (c) |
| 10 | FFG, STG, pSTS (a) | FFG (a) | EPC (a) |
| 11 | FFG, STG, pSTS (a) | FFG (a) | pSTS (d2) |
| 12 | FFG, STG, pSTS (a) | FFG (a) | STG (d1) |
| 13 | FFG, STG, pSTS (a) | FFG, STG (c) | No modulation (c) |
| 14 | FFG, STG, pSTS (a) | FFG, STG (c) | EPC (a) |
| 15 | FFG, STG, pSTS (a) | FFG, STG (c) | STG connection by EPC (b1) |
| 16 | FFG, STG, pSTS (a) | FFG, STG (c) | FFG connection by EPC (b2) |
| 17 | None (c) | Left pSTS (f) | No modulation (c) |
| 18 | None (c) | Left pSTS (f) | EPC (a) |
| 19 | None (c) | Right pSTS (e) | No modulation (c) |
| 20 | None (c) | Right pSTS (e) | EPC (a) |
| 21 | None (c) | Bilateral pSTS (d) | No modulation (c) |
| 22 | None (c) | Bilateral pSTS (d) | EPC (a) |
| 23 | None (c) | STG (b) | No modulation (c) |
| 24 | None (c) | STG (b) | EPC (a) |
| 25 | None (c) | FFG (a) | No modulation (c) |
| 26 | None (c) | FFG (a) | EPC (a) |
| 27 | None (c) | FFG (a) | pSTS (d2) |
| 28 | None (c) | FFG (a) | STG (d1) |
| 29 | None (c) | FFG, STG (c) | No modulation (c) |
| 30 | None (c) | FFG, STG (c) | EPC (a) |
| 31 | None (c) | FFG, STG (c) | STG connection by EPC (b1) |
| 32 | None (c) | FFG, STG (c) | FFG connection by EPC (b2) |
| 33 | FFG, STG (b) | Left pSTS (f) | No modulation (c) |
| 34 | FFG, STG (b) | Left pSTS (f) | EPC (a) |
| 35 | FFG, STG (b) | Right pSTS (e) | No modulation (c) |
| 36 | FFG, STG (b) | Right pSTS (e) | EPC (a) |
| 37 | FFG, STG (b) | Bilateral pSTS (d) | No modulation (c) |
| 38 | FFG, STG (b) | Bilateral pSTS (d) | EPC (a) |
| 39 | FFG, STG (b) | STG (b) | No modulation (c) |
| 40 | FFG, STG (b) | STG (b) | EPC (a) |
| 41 | FFG, STG (b) | FFG (a) | No modulation (c) |
| 42 | FFG, STG (b) | FFG (a) | EPC (a) |
| 43 | FFG, STG (b) | FFG (a) | pSTS (d2) |
| 44 | FFG, STG (b) | FFG (a) | STG (d1) |
| 45 | FFG, STG (b) | FFG, STG (c) | No modulation (c) |
| 46 | FFG, STG (b) | FFG, STG (c) | EPC (a) |
| 47 | FFG, STG (b) | FFG, STG (c) | STG connection by EPC (b1) |
| 48 | FFG, STG (b) | FFG, STG (c) | FFG connection by EPC (b2) |
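The structure of the model space in Table 1 can be reproduced with a short enumeration. This is a sketch for checking the factorial layout (3 levels of factor 1 times 16 admissible combinations of factors 2 and 3); the variable names are illustrative, and the ordering is chosen to match the model numbers in the table.

```python
NO_MOD = "No modulation (c)"
EPC = "EPC (a)"

# Factor 1, in the order in which the table lists the three blocks of 16 models.
INTERHEMISPHERIC = ["FFG, STG, pSTS (a)", "None (c)", "FFG, STG (b)"]

# Factor 3 depends on the level of factor 2.
MODULATIONS_BY_PROJECTION = {
    "Left pSTS (f)": [NO_MOD, EPC],
    "Right pSTS (e)": [NO_MOD, EPC],
    "Bilateral pSTS (d)": [NO_MOD, EPC],
    "STG (b)": [NO_MOD, EPC],
    "FFG (a)": [NO_MOD, EPC, "pSTS (d2)", "STG (d1)"],
    "FFG, STG (c)": [NO_MOD, EPC,
                     "STG connection by EPC (b1)",
                     "FFG connection by EPC (b2)"],
}

models = {}
number = 1
for inter in INTERHEMISPHERIC:
    for projection, modulations in MODULATIONS_BY_PROJECTION.items():
        for modulation in modulations:
            models[number] = (inter, projection, modulation)
            number += 1

print(len(models), "models")
for winner in (11, 27, 43):
    print(winner, models[winner])
```

Models 11, 27 and 43 share the same levels of factors 2 and 3 and differ only in factor 1.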

1. The presence or absence of interhemispheric connections between right and left FFG, STG and pSTS, respectively, was varied systematically to assess the influence of transcallosal interactions on the models. Studies in the macaque and in humans have shown that most of the visual (Clarke and Miklossy, 1990; Van Essen et al., 1982) and auditory regions (Ban et al., 1984; Hackett et al., 1999; Pandya and Rosene, 1993) project to homotopic areas of the other hemisphere. Three different alternatives were modeled for this factor to test if and which transcallosal interactions contribute to the EPC effect in the amygdala:

- (a) As anatomical interhemispheric connections in auditory and visual areas exist, the first model family assumed that connections between all three regions (FFG, STG, pSTS) are functionally relevant.

- (b) Alternatively, we modeled the scenario that only regions of unimodal processing, namely FFG and STG (but not the polymodal and potentially lateralized pSTS), feature reciprocal interhemispheric connections (Hackett et al., 1999; Van Essen et al., 1982).

- (c) The last level of this factor represented the absence of (functionally relevant) transcallosal interactions. It should be noted that this is not in contrast to the well-established anatomical connections between the respective areas. Rather, it reflects the hypothesis that effective connectivity between homotopic areas is not relevant for the model.

2. Secondly, we modeled several different hypotheses about the projections into the left amygdala. Literature concerning amygdala connections in the macaque reports that the amygdala receives direct input from auditory and visual areas (McDonald, 1998; Yukie, 2002). Furthermore, studies of effective connectivity in face processing support connections from FFG to the amygdala (Fairhall and Ishai, 2007; Herrington et al., 2011). However, how the amygdala is influenced when information from two different sensory channels is received is as yet unknown. The present study therefore assessed which of these (anatomical) connections to the amygdala are relevant with respect to the EPC effect. To this end, six different options were modeled:

The first three possibilities assumed that the input into the amygdala originates from an early stage of stimulus processing by modeling input into the left amygdala only from unimodal sensory cortices:

- (a) Only bilateral FFG has a direct influence on amygdala activity.

- (b) Only bilateral STG directly affects amygdala activity.

- (c) Both bilateral FFG and STG influence amygdala activity.

In contrast, the remaining three options reflected the assumption that projections to the amygdala arise from later stages of processing, hypothesizing a direct connection of the pSTS to the left amygdala. As studies of audiovisual integration report inconsistent results concerning the (bi)lateralization of the pSTS (see Hein and Knight, 2008) we modeled three different options:

- (d) Bilateral pSTS projects directly into the amygdala.

- (e) Only right pSTS directly affects amygdala activity.

- (f) Only left pSTS has an influence on amygdala activity.

3. Finally, the modulation of effective connectivity towards the left amygdala potentially underlying the EPC effect varied between models. As the aim of this study is to investigate the neuronal mechanism of the EPC effect in the amygdala, this factor is the most important one in our model. Importantly, this factor had to vary as a function of the amygdala connection in factor 2:

- (a) This alternative entailed a context-dependent modulation by EPC, varying across all levels of factor 2. This means that EPC (precisely, emotional information in both channels) modulates the feed-forward connection from the region defined by factor 2 into the left amygdala. Conceptually, such context-dependent modulation may be understood as the influence of regions not explicitly included in the models on these connections.

- (b) When the level of factor 2 entailed projections from both STG and FFG to the amygdala, additional models tested for a contextual modulation of only one of them by EPC, which reflects the hypothesis that, even though both areas project into the amygdala, EPC only influences one of these connections. This means that both areas influence activity of the amygdala based on their unimodal information, but EPC additionally affects the strength of one of these projections. That is, both regions project into the amygdala but information from these two unisensory connections is not sufficient to explain the EPC effect, suggesting that other areas, not included in the model, modulate one of these connections:

  - (b1) Direct context modulation of the STG-amygdala connection only.

  - (b2) Direct context modulation of the FFG-amygdala connection only.

- (c) Absence of a direct context modulation by EPC, again varying across all levels of factor 2. This alternative assumed that activity and interactions of the regions included in the model can explain the EPC effect by themselves.

- (d) When, for factor 2 (cf. above), an FFG-amygdala connection was modeled (option a), the model could include non-linear modulations by:

  - (d1) Bilateral STG.

  - (d2) Bilateral pSTS.

Models from these families thus assumed that the EPC effect can be explained by a gating of FFG-to-amygdala connectivity by the current state of either the STG or the pSTS. We only modeled the modulation of the FFG-amygdala connection due to our experimental design (timing and task). (Unattended) sounds were always presented first whereas presentation of (attended) faces occurred after 1000 ms. Therefore only sounds (or the integration of sounds and faces), but not faces, may act as context which gates the processing of future stimuli. Consequently areas driven or influenced by sound information (context) may potentially modulate a connection forwarding face information but not vice versa.
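For orientation, the three kinds of influence distinguished above (intrinsic connections, context-dependent modulation by an input such as EPC, and non-linear gating by a region's activity) correspond to separate terms of the neuronal state equation generally used in non-linear DCM. The notation below follows the standard DCM literature and is not taken from the present text:

$$
\frac{dx}{dt} = \Big( A + \sum_{i} u_i \, B^{(i)} + \sum_{j} x_j \, D^{(j)} \Big)\, x + C\,u
$$

Here $x$ is the vector of neuronal states of the modeled regions, $u$ the vector of inputs, $A$ the intrinsic (endogenous) connections, $B^{(i)}$ the change in connectivity induced by input $u_i$ (context-dependent modulation), $D^{(j)}$ the change in connectivity induced by the activity $x_j$ of region $j$ (non-linear modulation) and $C$ the driving inputs. Under this reading, a gating of the FFG-to-amygdala connection by the pSTS would appear as a non-zero entry of $D^{(\mathrm{pSTS})}$ at the position of that connection.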

Model selection and inference

Here we used DCM8 as implemented in SPM8, not the conceptually modified version DCM10. This means that our input functions were not mean-centered and that intrinsic and modulatory effects are completely additive.

To identify the most likely generative model for the observed data among the 48 different models, we used a random-effects Bayesian Model Selection (BMS) procedure (Stephan et al., 2009) in which all 48 models were tested against each other. This Bayesian method treats the model as a random variable and estimates the parameters of a Dirichlet distribution which describes the probabilities of all models being considered. It also allows computing the exceedance probability, i.e. the probability that one model is more likely than any other model tested.
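The notion of an exceedance probability can be made concrete with a small sampling sketch. It assumes that the Dirichlet parameters have already been estimated; the estimation itself is not shown, and the parameter values and function name below are hypothetical.

```python
import random

def exceedance_probabilities(alpha, n_samples=20000, seed=0):
    """Monte Carlo estimate of P(model k is the most frequent model).

    alpha: Dirichlet parameters, one per model. A Dirichlet sample is a set
    of independent Gamma(alpha_k, 1) draws divided by their sum; the division
    is skipped because it does not change which model has the largest value.
    """
    rng = random.Random(seed)
    wins = [0] * len(alpha)
    for _ in range(n_samples):
        draws = [rng.gammavariate(a, 1.0) for a in alpha]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]

# Hypothetical Dirichlet parameters for three competing models.
alpha = [12.0, 3.0, 2.0]
xp = exceedance_probabilities(alpha)
for k, p in enumerate(xp, start=1):
    print(f"model {k}: exceedance probability ~ {p:.3f}")
```

The exceedance probabilities sum to one across models, so they should be read relative to the size of the model space being compared.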

For confirmation and comparability with previous DCM analyses, fixed-effects model comparison was also performed. To test if the coupling parameters were consistently expressed across subjects, the connectivity parameters (driving inputs, intrinsic connections, context-dependent modulations and non-linear influences) of the model with the highest exceedance probability were then entered into a second-level analysis by means of one-sample t-tests. Connections were considered statistically significant if they passed a threshold of p<0.05 (Bonferroni corrected for multiple comparisons across all tested connections). Finally, a MANOVA was calculated to test lateralization and emotion differences of the driving inputs using SPSS 19.0.

Results

Bayesian Model Selection

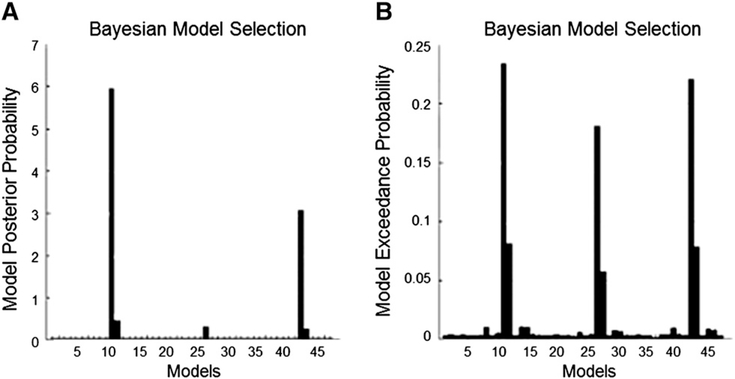

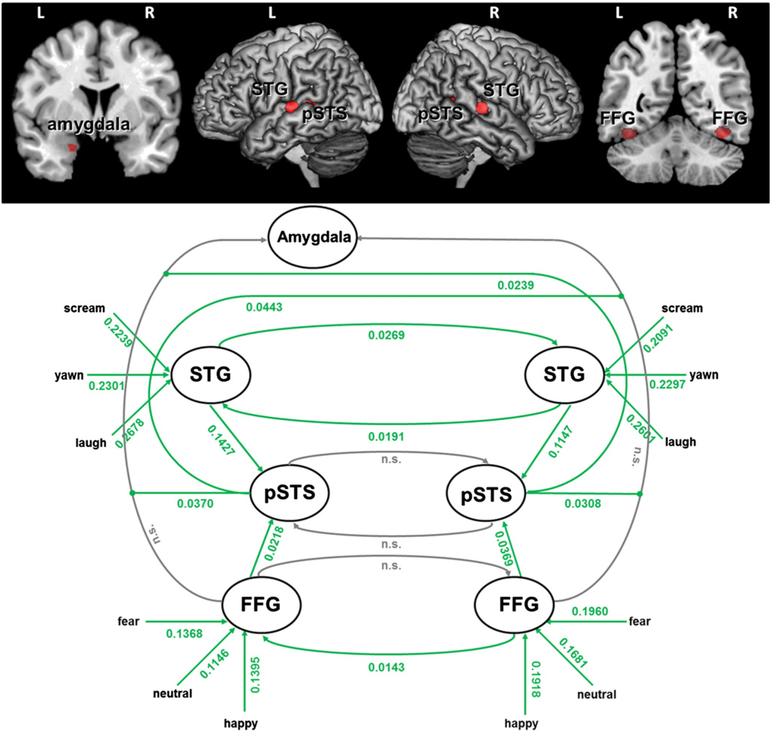

Both random-effects and fixed-effects model selection provide evidence for a small group of models as the most likely generative models for the observed data. While in both procedures models 11 and 43 receive very high evidence, the random-effects procedure additionally supports model 27 (Fig. 1). Importantly, these three models differ only in the presence or absence of interhemispheric connections between FFG, STG and pSTS, with model 11 entailing reciprocal interhemispheric connections between all three regions, model 27 assuming no transcallosal connections and model 43 modeling only reciprocal connections between FFG and STG. Apart from these differences, all other aspects of the models are identical. That is, all three most likely models reflect that a) bilateral FFG projects into the left amygdala and that b) these connections are modulated by bilateral pSTS (Fig. 2).

Fig. 1.

Results of the fixed (A) and random (B) Bayesian Model Selection procedures with both favoring three models. Whereas in A the winners are models 11 and 43, B also favors model 27. All three models differ only in the presence and absence of interhemispheric connections.

Fig. 2.

Regions included in the models and intrinsic and modulatory influences of model 11. All significant (green) coupling parameters are positive indicating facilitating connections. Connections in gray are not significant.

In the following the most likely model (model 11) will be described in detail, whereas information about the model with the second highest probability in both selection processes (model 43) can be found in the supplement (Supplement Fig. 1).

Driving inputs

All types of faces (neutral, happy, fearful) significantly influence bilateral FFG activity, whereas all sounds (yawn, laugh, scream) have a significant effect on bilateral STG. A MANOVA testing “side” (left/right) and “emotion” (positive/negative/neutral) effects of the driving activity on FFG and STG (dependent variables) reveals significant main effects of “side” (F(2,25) = 6.86, p<.05) and of “emotion” (F(4,23) = 5.672, p<.05) and a non-significant interaction (F(4,23) = 0.447, n.s.). The univariate comparisons show that the effect of “side” is only significant for the (visual) FFG (F(1,26) = 14.21, p<.05) but not for the (auditory) STG (F(1,26) = 0.15, n.s.), whereas the effect of “emotion” is significant for both regions (FFG: F(2,52) = 5.578, p<.05; STG: F(2,52) = 9.326, p<.05). The significant effect of “side” in the FFG reflects a stronger drive of faces on right compared to left FFG activity. Post-hoc comparison of the factor emotion reveals that happy faces have a significantly stronger influence on FFG activity than neutral faces, whereas fearful faces show a trend for a greater driving effect than neutral ones. For sounds, laughs drive STG activity significantly more than yawns and screams, whereas yawns and screams do not differ significantly.

Intrinsic connections and modulatory influences

Fig. 2 shows the intrinsic connections as well as the modulatory influences for the model with the highest probability (model 11). All connections, except left pSTS→right pSTS, right pSTS→left pSTS, right FFG→left FFG, left FFG→left amygdala and right FFG→left amygdala, differ significantly from zero (p<.05; for means see Fig. 2). Given that the three models that receive the highest probabilities only differ in the presence or absence of interhemispheric connections, it should be emphasized that the parameters for some of the interhemispheric connections included in model 11 were not significant across subjects (Fig. 2). That is, including these connections in the model still resulted in a high model posterior, although the respective parameters are not consistently (across subjects) different from zero. In contrast, all other intrinsic connections are constant over all three models, with similar parameters and significance levels in all of them. This means that, apart from the transcallosal connections, the parameters of the remaining network are highly consistent within this model family. Moreover, Fig. 2 demonstrates that all coupling parameters are positive, pointing to a network in which all connections are facilitating.

With respect to the modulation of effective connectivity towards the left amygdala, our models do not support a context modulation by EPC but rather a non-linear modulation of FFG to left amygdala connectivity by the pSTS. This modulation by the pSTS is again positive, indicating a facilitating modulation (Fig. 2). That is, activation within the pSTS gates influences from the FFG towards the amygdala in a non-linear fashion.

Discussion

We here used Dynamic Causal Modelling (DCM) in order to gain mechanistic insight into the effective connectivity underlying the emotion presence congruence (EPC) effect found in cross-modal processing of affective stimuli (Müller et al., 2011). This effect represents attenuation of activity in the left amygdala when a neutral stimulus in one sensory channel is paired with an emotional stimulus in another modality. Our results provide evidence for a model family differing only in the presence or absence of homotopic interhemispheric interactions. All three winning models entail a feed-forward connection of bilateral fusiform gyrus (FFG) to the left amygdala which is modulated by activity of bilateral posterior superior temporal sulcus (pSTS). As the pSTS integrates information from the FFG and superior temporal gyrus (STG) as evidenced by the respective feed-forward connections from these unisensory areas, this result indicates that unisensory information is combined into a multi-sensory context, which then gates the feed-forward of information into the amygdala and thus provides for the EPC effect.

Interhemispheric connections

Our model selection does not clearly support one single model but rather favors a model family. These three models are identical on two of the factors that define our model space, sharing the same amygdala driving and modulatory effects, and differ only in the presence or absence of interhemispheric connections between FFG, STG and pSTS. Apart from the transcallosal interactions, all other parameters are highly consistent across all models of the family. In summary, we did not observe clear evidence for only one model but rather for three models differing in their interhemispheric projections, and found that some of the connections by which the three models of the winning family differed are not significantly expressed. This indicates that interhemispheric interactions may contribute to the modeled network but do not seem to be consistent across subjects.

Lateralization

The present DCM analysis revealed that activity of the FFG in both hemispheres is significantly driven by faces, but analyzing these driving effects in detail demonstrated that the drive on FFG is significantly stronger on the right. This result thus further supports the view of a right-sided dominance for face processing (Dien, 2009; Ishai et al., 2005; Morris et al., 2007; Rhodes et al., 2004). As the interaction between side and emotion was not significant, this lateralization seems not to be driven by asymmetries in emotion processing but rather reflects a general right-sided dominance for faces. A functional lateralization similar to that for face processing has also been suggested for the auditory domain (Belin et al., 2004). Even though there are a few studies dealing with the processing of human sounds other than speech (Belin et al., 2000; Dietrich et al., 2007; Fecteau et al., 2007; Meyer et al., 2005; Sander and Scheich, 2001), there is no clear conclusion about lateralized processing of these types of sounds. Challenging a possible lateralization similar to that found for the FFG, our DCM analysis indicates that, unlike faces, sounds do not show any lateralization effects on STG activity.

When further evaluating influences of stimulus category, our results corroborated previous findings (Fusar-Poli et al., 2009) by demonstrating a sensitivity of the FFG for emotional faces, with a significantly stronger drive on FFG activity by happy compared to neutral faces and a trend towards a stronger drive by fearful than by neutral faces. In contrast, positive and negative human vocalizations differ in the drive they have on STG activity. In particular, only laughs have a stronger drive on bilateral STG activity compared to yawns (and screams), whereas screams have the same effect as yawns. This contrasts with results of Fecteau et al. (2007) reporting greater STG activation for both negative and positive vocalizations. Similarly, greater responsiveness of the STG has been found for positive and negative compared to neutral prosody (Ethofer et al., 2006a; Wiethoff et al., 2008). Wiethoff et al. (2008) further demonstrated that the stronger activity in right STG in response to emotional prosody was abolished when correcting for emotional arousal and different acoustic parameters. Sander and Scheich (2001) also interpret their result of stronger auditory cortex activation in response to laughs as compared to cries in terms of differences in spectrotemporal characteristics and loudness. Therefore our results may indicate that the STG has a stronger sensitivity to positive emotions, but could more parsimoniously also reflect acoustic differences inherent in the stimulus material.

The role of the pSTS

In our daily life we often receive signals from different sensory channels. This information is processed in distinct unimodal sensory areas. Nevertheless, in some way and at some level of processing this information must interact or be integrated in order to enable an individual to adequately respond to a unified percept arising from multimodal stimulation. According to Wallace et al. (2004) integration may take place in multisensory convergence zones which may be located anatomically between unimodal zones. Because of its anatomical location between the visual and auditory system, the pSTS is a likely candidate for an area of audiovisual integration. The results of the present DCM analysis, indicating significant input into the pSTS from the STG and the FFG, are well in line with this proposed multimodal role of the pSTS. Moreover, previous studies using speech (Beauchamp et al., 2010; Calvert et al., 2000; Sekiyama et al., 2003) or non-speech stimuli (Beauchamp et al., 2004; James et al., 2011) of non-emotional (Beauchamp et al., 2004, 2010; Calvert et al., 2000; Sekiyama et al., 2003) or emotional (Kreifelts et al., 2007, 2009, 2010; Robins et al., 2009) nature have also indicated a major role of the pSTS in audiovisual integration.

Kreifelts et al. (2007) further explored the mechanism of audiovisual emotional integration by assessing the effective connectivity between FFG, STG and pSTS. The authors reported an increase in effective connectivity of FFG and STG with ipsilateral pSTS in bimodal compared to unimodal conditions. We expand these results by showing that the pSTS not only integrates but also gates audiovisual information. When considering the crucial role of this region, it has to be noted that in our favored model the (parameters of the) intrinsic connection between FFG and left amygdala did not become significant, indicating that the parameters are not consistently expressed over subjects. Intrinsic connectivity parameters reflect effective connectivity independent of any context or nonlinear modulation. The fact that the intrinsic connection between FFG and left amygdala is not significant indicates that this connection is not consistently facilitating or inhibiting over subjects, even though its inclusion in the model was supported by the Bayesian model selection procedure. This further highlights the crucial role of the pSTS, as the FFG to amygdala connectivity is significantly modulated by the pSTS, which thereupon causes the observed EPC effect in the amygdala. That is, it is in particular through the modulation by the pSTS that the feed-forward from the FFG to the amygdala becomes relevant for the EPC effect.

Therefore we would suggest a hierarchically modulated system of sensory processing with the pSTS acting as a higher-level integration center, receiving and integrating input from sensory-specific regions, namely FFG and STG. This higher-level multisensory center thereupon further gates the feed-forward of information from the FFG into (at least) the amygdala. More precisely, based on the result of the integration process, the pSTS may modulate what the FFG forwards to the left amygdala. That is, multimodal context shapes the strength of FFG to left amygdala connectivity and therefore the affective outcome.
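The proposed gating can be made concrete with a toy simulation. The sketch below is not the DCM fitted in this study: it assumes a single amygdala state whose input from the FFG is scaled by an effective coupling `a + d * x_psts`, with a self-decay term for stability. The function name, all parameter values and the fixed activity levels are hypothetical choices for illustration.

```python
def simulate_amygdala(x_ffg, x_psts, a=0.0, d=0.5, self_decay=1.0,
                      dt=0.01, steps=2000):
    """Euler-integrate dx/dt = -self_decay * x + (a + d * x_psts) * x_ffg.

    x_ffg and x_psts are held constant, so the state settles at
    (a + d * x_psts) * x_ffg / self_decay.
    """
    x_amy = 0.0
    for _ in range(steps):
        # pSTS activity scales how strongly FFG activity reaches the amygdala.
        effective_coupling = a + d * x_psts
        x_amy += dt * (-self_decay * x_amy + effective_coupling * x_ffg)
    return x_amy


# Same FFG activity, two arbitrary levels of pSTS activity.
weak_gate = simulate_amygdala(x_ffg=1.0, x_psts=0.2)
strong_gate = simulate_amygdala(x_ffg=1.0, x_psts=1.0)
print(weak_gate, strong_gate)
```

With the intrinsic term `a` set to zero, which loosely mirrors the non-significant intrinsic FFG to amygdala connection, the amygdala response in this toy depends entirely on the gate: identical FFG activity yields a smaller response when pSTS activity is lower.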

This model also fits with the results of Ethofer et al. (2006b), who reported increased effective connectivity between right FFG and left amygdala during rating of facial expressions while hearing a fearful compared to a happy voice. Based on our results, this increase in effective connectivity might be explained by modulation by the pSTS, which may enhance the feed-forward projection into the amygdala when a fearful voice is integrated.

Parallels to audiovisual speech integration

A great proportion of studies in audiovisual integration have focused primarily on the integration of speech signals (Beauchamp et al., 2010; Benoit et al., 2010; Calvert et al., 2000; Sekiyama et al., 2003). One phenomenon studied in this context is the McGurk effect (McGurk and MacDonald, 1976), which describes an illusion where the auditory perception of a syllable is altered by concurrently presenting a face forming a different syllable. Beauchamp et al. (2010) recently demonstrated with TMS that the McGurk effect does not occur when the pSTS is temporarily disrupted. Nath and Beauchamp (2012) further report a correlation of pSTS activation with the likelihood of perceiving the McGurk illusion. These results emphasize the crucial role of the pSTS in the integration of speech and visual information, demonstrating that when the pSTS does not integrate stimuli from both modalities, visual information cannot influence the unimodal auditory signal. The respective (auditory) signal is thereupon forwarded to higher processing areas without modulation and the McGurk illusion is not perceived. This view is in line with our model, supporting the idea that, based on the integration of sound and visual input, the pSTS modulates the unimodal signal which is forwarded to higher processing areas (in our case the amygdala).

Together with the findings of Beauchamp et al. (2010), our result suggests that the mechanism in audiovisual integration is similar for non-emotional speech and emotional non-speech signals: the unimodal signal which is fed forward to higher-level processing areas is modulated depending on the top-down context which is generated by multisensory integration areas, in particular the pSTS. This means that the pSTS, which represents integrated information from unimodal auditory and visual areas, shapes the responses in earlier unisensory areas as well as their connections to other brain regions and hence potentially the outcome (perception) of sensory processing.

Together, our model and previous results thus suggest that the function of the pSTS may be conceptualized as holding a key role in various forms of multimodal processing such as speech or emotional integration and exerting modulatory influences on unisensory cortices and their forward connections. Evidently, these properties would implicate a crucial role of the pSTS in the perception of multimodal stimuli that goes far beyond simple integration.

Neurobiological implementation

Finally, one has to consider how the established model may be implemented on the neural level or, more colloquially, where and how the modulation of the FFG to amygdala connectivity takes place. Biophysiologically, modulation may occur via different mechanisms of short-term plasticity (Stephan et al., 2008) such as synaptic depression or facilitation (Abbott and Regehr, 2004). Given that DCM does not imply that a significant connection in a model is a “direct connection” (Stephan et al., 2010), we would suggest that this modulation operates via relays or loops. Therefore it may be that the pSTS acts via relay areas like, for example, the thalamus. More precisely, this suggests that pSTS and the FFG (or a collateral from earlier visual processes) project into the thalamus, which thereupon further influences amygdala activity. This fits with the finding of Kreifelts et al. (2007), who report, besides pSTS activation, multimodal effects in the right thalamus. Effective connectivity analysis in that paper further demonstrated that the right thalamus was significantly influenced by ipsilateral FFG. Furthermore, Hunter et al. (2010) reported modulation of effective connectivity between auditory cortex and thalamus by visual information. Transferred to our model, this could possibly indicate that the pSTS may allow the FFG to modulate thalamic activity, which thereupon may contribute to activation in the amygdala.

Conclusion

In summary, our models provide support for a right-hemispheric dominance of the FFG in response to faces regardless of emotional content, whereas no lateralization effect could be found for sound-driven STG activity. Moreover, the present study demonstrates that the pSTS not only plays a crucial role in audiovisual integration but also gates the influence that bilateral FFG exerts over the left amygdala. Therefore it is suggested that the pSTS acts as a higher-level multisensory integration center creating a more abstract neural code from bottom-up driven information, which thereupon modulates information transfer into the amygdala in a top-down manner.

Acknowledgment

This study was supported by the Deutsche Forschungsgemeinschaft (DFG, IRTG 1328), by the Human Brain Project (R01-MH074457-01A1), and the Helmholtz Initiative on systems biology (The Human Brain Model).

Appendix A. Supplementary material

Supplementary data to this article can be found online at doi:10.1016/j.neuroimage.2011.12.007.

References

- Abbott LF, Regehr WG, 2004. Synaptic computation. Nature 431, 796–803.

- Adolphs R, 2010. What does the amygdala contribute to social cognition? Ann. N. Y. Acad. Sci. 1191, 42–61.

- Ban T, Naito J, Kawamura K, 1984. Commissural afferents to the cortex surrounding the posterior part of the superior temporal sulcus in the monkey. Neurosci. Lett. 49, 57–61.

- Beauchamp MS, Lee KE, Argall BD, Martin A, 2004. Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41, 809–823.

- Beauchamp MS, Nath AR, Pasalar S, 2010. fMRI-Guided transcranial magnetic stimulation reveals that the superior temporal sulcus is a cortical locus of the McGurk effect. J. Neurosci. 30, 2414–2417.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B, 2000. Voice-selective areas in human auditory cortex. Nature 403, 309–312.

- Belin P, Fecteau S, Bedard C, 2004. Thinking the voice: neural correlates of voice perception. Trends Cogn. Sci. 8, 129–135.

- Benoit MM, Raij T, Lin FH, Jaaskelainen IP, Stufflebeam S, 2010. Primary and multisensory cortical activity is correlated with audiovisual percepts. Hum. Brain Mapp. 31, 526–538.

- Calvert GA, Campbell R, Brammer MJ, 2000. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr. Biol. 10, 649–657.

- Cieslik EC, Zilles K, Grefkes C, Eickhoff SB, 2011. Dynamic interactions in the fronto-parietal network during a manual stimulus–response compatibility task. NeuroImage 58 (3), 860–869.

- Clarke S, Miklossy J, 1990. Occipital cortex in man: organization of callosal connections, related myelo- and cytoarchitecture, and putative boundaries of functional visual areas. J. Comp. Neurol. 298, 188–214.

- Dien J, 2009. A tale of two recognition systems: implications of the fusiform face area and the visual word form area for lateralized object recognition models. Neuropsychologia 47, 1–16.

- Dietrich S, Hertrich I, Alter K, Ischebeck A, Ackermann H, 2007. Semiotic aspects of human nonverbal vocalizations: a functional imaging study. Neuroreport 18, 1891–1894.

- Dolan RJ, Morris JS, de Gelder B, 2001. Crossmodal binding of fear in voice and face. Proc. Natl. Acad. Sci. U. S. A. 98, 10006–10010.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K, 2005. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage 25, 1325–1335.

- Eickhoff SB, Heim S, Zilles K, Amunts K, 2006. Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. NeuroImage 32, 570–582.

- Eickhoff SB, Paus T, Caspers S, Grosbras MH, Evans AC, Zilles K, Amunts K, 2007. Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. NeuroImage 36, 511–521.

- Eickhoff SB, Heim S, Zilles K, Amunts K, 2009. A systems perspective on the effective connectivity of overt speech production. Philos. Transact. A Math. Phys. Eng. Sci. 367, 2399–2421.

- Ethofer T, Anders S, Wiethoff S, Erb M, Herbert C, Saur R, Grodd W, Wildgruber D, 2006a. Effects of prosodic emotional intensity on activation of associative auditory cortex. Neuroreport 17, 249–253.

- Ethofer T, Pourtois G, Wildgruber D, 2006b. Investigating audiovisual integration of emotional signals in the human brain. Prog. Brain Res. 156, 345–361.

- Fairhall SL, Ishai A, 2007. Effective connectivity within the distributed cortical network for face perception. Cereb. Cortex 17, 2400–2406.

- Fecteau S, Belin P, Joanette Y, Armony JL, 2007. Amygdala responses to nonlinguistic emotional vocalizations. NeuroImage 36, 480–487.

- Fusar-Poli P, Placentino A, Carletti F, Allen P, Landi P, Abbamonte M, Barale F, Perez J, McGuire P, Politi PL, 2009. Laterality effect on emotional faces processing: ALE meta-analysis of evidence. Neurosci. Lett. 452, 262–267.

- Gainotti G, 2011. What the study of voice recognition in normal subjects and brain-damaged patients tells us about models of familiar people recognition. Neuropsychologia 49, 2273–2282.

- Grefkes C, Eickhoff SB, Nowak DA, Dafotakis M, Fink GR, 2008. Dynamic intra- and interhemispheric interactions during unilateral and bilateral hand movements assessed with fMRI and DCM. NeuroImage 41, 1382–1394.

- Grill-Spector K, Knouf N, Kanwisher N, 2004. The fusiform face area subserves face perception, not generic within-category identification. Nat. Neurosci. 7, 555–562.

- Gur RC, Schroeder L, Turner T, McGrath C, Chan RM, Turetsky BI, Alsop D, Maldjian J, Gur RE, 2002. Brain activation during facial emotion processing. NeuroImage 16, 651–662.

- Habel U, Windischberger C, Derntl B, Robinson S, Kryspin-Exner I, Gur RC, Moser E, 2007. Amygdala activation and facial expressions: explicit emotion discrimination versus implicit emotion processing. Neuropsychologia 45, 2369–2377.

- Hackett TA, Stepniewska I, Kaas JH, 1999. Callosal connections of the parabelt auditory cortex in macaque monkeys. Eur. J. Neurosci. 11, 856–866.

- Hagan CC, Woods W, Johnson S, Calder AJ, Green GG, Young AW, 2009. MEG demonstrates a supra-additive response to facial and vocal emotion in the right superior temporal sulcus. Proc. Natl. Acad. Sci. U. S. A. 106, 20010–20015.

- Hein G, Knight RT, 2008. Superior temporal sulcus—it’s my area: or is it? J. Cogn. Neurosci. 20, 2125–2136.

- Herrington JD, Taylor JM, Grupe DW, Curby KM, Schultz RT, 2011. Bidirectional communication between amygdala and fusiform gyrus during facial recognition. NeuroImage 56, 2348–2355.

- Hocking J, Price CJ, 2008. The role of the posterior superior temporal sulcus in audiovisual processing. Cereb. Cortex 18, 2439–2449.

- Hunter MD, Eickhoff SB, Pheasant RJ, Douglas MJ, Watts GR, Farrow TF, Hyland D, Kang J, Wilkinson ID, Horoshenkov KV, Woodruff PW, 2010. The state of tranquility: subjective perception is shaped by contextual modulation of auditory connectivity. NeuroImage 53, 611–618.

- Ishai A, Schmidt CF, Boesiger P, 2005. Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93.

- James TW, Vanderklok RM, Stevenson RA, James KH, 2011. Multisensory perception of action in posterior temporal and parietal cortices. Neuropsychologia 49, 108–114.

- Kanwisher N, Yovel G, 2006. The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128.

- Kanwisher N, McDermott J, Chun MM, 1997. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

- Klasen M, Kenworthy CA, Mathiak KA, Kircher TT, Mathiak K, 2011. Supramodal representation of emotions. J. Neurosci. 31, 13635–13643.

- Kreifelts B, Ethofer T, Grodd W, Erb M, Wildgruber D, 2007. Audiovisual integration of emotional signals in voice and face: an event-related fMRI study. NeuroImage 37, 1445–1456.

- Kreifelts B, Ethofer T, Shiozawa T, Grodd W, Wildgruber D, 2009. Cerebral representation of non-verbal emotional perception: fMRI reveals audiovisual integration area between voice- and face-sensitive regions in the superior temporal sulcus. Neuropsychologia 47, 3059–3066.

- Kreifelts B, Ethofer T, Huberle E, Grodd W, Wildgruber D, 2010. Association of trait emotional intelligence and individual fMRI-activation patterns during the perception of social signals from voice and face. Hum. Brain Mapp. 31, 979–991.

- McDonald AJ, 1998. Cortical pathways to the mammalian amygdala. Prog. Neurobiol. 55, 257–332.

- McGurk H, MacDonald J, 1976. Hearing lips and seeing voices. Nature 264, 746–748.

- Mechelli A, Crinion JT, Long S, Friston KJ, Lambon Ralph MA, Patterson K, McClelland JL, Price CJ, 2005. Dissociating reading processes on the basis of neuronal interactions. J. Cogn. Neurosci. 17, 1753–1765.

- Meyer M, Zysset S, von Cramon DY, Alter K, 2005. Distinct fMRI responses to laughter, speech, and sounds along the human peri-sylvian cortex. Brain Res. Cogn. Brain Res. 24, 291–306.

- Morris JP, Pelphrey KA, McCarthy G, 2007. Face processing without awareness in the right fusiform gyrus. Neuropsychologia 45, 3087–3091.

- Müller VI, Habel U, Derntl B, Schneider F, Zilles K, Turetsky BI, Eickhoff SB, 2011. Incongruence effects in crossmodal emotional integration. NeuroImage 54, 2257–2266.

- Nath AR, Beauchamp MS, 2012. A neural basis for interindividual differences in the McGurk effect, a multisensory speech illusion. NeuroImage 59 (1), 781–787.

- Oldfield RC, 1971. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113.

- Olson IR, Gatenby JC, Gore JC, 2002. A comparison of bound and unbound audiovisual information processing in the human cerebral cortex. Brain Res. Cogn. Brain Res. 14, 129–138.

- Pandya DN, Rosene DL, 1993. Laminar termination patterns of thalamic, callosal, and association afferents in the primary auditory area of the rhesus monkey. Exp. Neurol. 119, 220–234.

- Phillips ML, Young AW, Scott SK, Calder AJ, Andrew C, Giampietro V, Williams SC, Bullmore ET, Brammer M, Gray JA, 1998. Neural responses to facial and vocal expressions of fear and disgust. Proc. Biol. Sci. 265, 1809–1817.

- Pourtois G, de Gelder B, Bol A, Crommelinck M, 2005. Perception of facial expressions and voices and of their combination in the human brain. Cortex 41, 49–59.

- Rehme AK, Eickhoff SB, Wang LE, Fink GR, Grefkes C, 2011. Dynamic causal modeling of cortical activity from the acute to the chronic stage after stroke. NeuroImage 55, 1147–1158.

- Rhodes G, Byatt G, Michie PT, Puce A, 2004. Is the fusiform face area specialized for faces, individuation, or expert individuation? J. Cogn. Neurosci. 16, 189–203.

- Robins DL, Hunyadi E, Schultz RT, 2009. Superior temporal activation in response to dynamic audio-visual emotional cues. Brain Cogn. 69, 269–278.

- Sabatinelli D, Fortune EE, Li Q, Siddiqui A, Krafft C, Oliver WT, Beck S, Jeffries J, 2011. Emotional perception: meta-analyses of face and natural scene processing. NeuroImage 54, 2524–2533.

- Sander K, Scheich H, 2001. Auditory perception of laughing and crying activates human amygdala regardless of attentional state. Brain Res. Cogn. Brain Res. 12, 181–198.

- Sekiyama K, Kanno I, Miura S, Sugita Y, 2003. Auditory-visual speech perception examined by fMRI and PET. Neurosci. Res. 47, 277–287.

- Stephan KE, Kasper L, Harrison LM, Daunizeau J, den Ouden HE, Breakspear M, Friston KJ, 2008. Nonlinear dynamic causal models for fMRI. NeuroImage 42, 649–662.

- Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ, 2009. Bayesian model selection for group studies. NeuroImage 46, 1004–1017.

- Stephan KE, Penny WD, Moran RJ, den Ouden HE, Daunizeau J, Friston KJ, 2010. Ten simple rules for dynamic causal modeling. NeuroImage 49, 3099–3109.

- Taylor KI, Moss HE, Stamatakis EA, Tyler LK, 2006. Binding crossmodal object features in perirhinal cortex. Proc. Natl. Acad. Sci. U. S. A. 103, 8239–8244.

- Van Essen DC, Newsome WT, Bixby JL, 1982. The pattern of interhemispheric connections and its relationship to extrastriate visual areas in the macaque monkey. J. Neurosci. 2, 265–283.

- Wallace MT, Ramachandran R, Stein BE, 2004. A revised view of sensory cortical parcellation. Proc. Natl. Acad. Sci. U. S. A. 101, 2167–2172.

- Wang LE, Fink GR, Diekhoff S, Rehme AK, Eickhoff SB, Grefkes C, 2011. Noradrenergic enhancement improves motor network connectivity in stroke patients. Ann. Neurol. 69, 375–388.

- Wiethoff S, Wildgruber D, Kreifelts B, Becker H, Herbert C, Grodd W, Ethofer T, 2008. Cerebral processing of emotional prosody—influence of acoustic parameters and arousal. NeuroImage 39, 885–893.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC, 1996. A unified statistical approach for determining significant signals in images of cerebral activation. Hum. Brain Mapp. 4, 58–73.

- Yukie M, 2002. Connections between the amygdala and auditory cortical areas in the macaque monkey. Neurosci. Res. 42, 219–229.
