Abstract

The National Center for Education Statistics’ (NCES) longitudinal student surveys have long been exceptionally useful for many purposes. Despite their many virtues, however, these surveys cannot be used to monitor trends at short time intervals, they do not allow for flexible changes to survey content, they cannot generally be used to infer policy effects, they are not useful for international comparisons, and they are of limited value to local stakeholders. NCES should consider doing to its longitudinal student surveys what the Census Bureau did to the decennial census long form and what NORC has long done for the General Social Survey: Move to annual rotating panels and allow outside investigators to field (and fund) supplemental topical modules. NCES should also continue to work with the research community to explore new survey content areas and modes of observation, improve the quality of spatial measures, and pursue record linkage to administrative data.

Keywords: longitudinal surveys, data infrastructure, National Center for Education Statistics, quantitative methodology

Data from longitudinal surveys tracking children and adolescents over periods of decades or longer have proven extremely useful for developing and testing theories about the student-, school-, family-, and neighborhood-level factors that are associated with the development of educational outcomes over the course of young people’s early lives. These outcomes include academic achievement (i.e., test scores), schooling transitions (e.g., completing high school, entering college), and early career development (e.g., entering science, technology, engineering, and mathematics [STEM] fields). Population-based studies also promote understanding of gender, racial/ethnic, and socioeconomic inequalities in these processes.

With several prominent exceptions (e.g., the National Longitudinal Surveys of Youth, the National Longitudinal Study of Adolescent Health, the Beginning School Study), U.S. longitudinal surveys have been run by the National Center for Education Statistics (NCES). NCES’s longitudinal surveys of students typically feature a large random sample of students selected from within a random sample of schools; often they also feature oversamples of strategically chosen population groups or school types. Some surveys begin in early childhood, some in middle school, and some later. In all cases, students are followed periodically for a decade or so. Most projects feature surveys of students, their parents, and school personnel, and many are supplemented with transcripts and other school records. Most also make available private or restricted versions of the data that feature, among other things, more precise geography and more detailed information.

NCES’s collection of longitudinal student surveys has long been an enormously valuable resource for academic and applied research on education. As a nation, we are fortunate to have them, and even maintaining the status quo would be valuable. But while giving full credit to the remarkable utility of NCES’s longitudinal surveys of students, I argue that the designs of these surveys are not as useful for some analytic purposes. In no particular order, these include the following:

Routine monitoring. NCES’s longitudinal surveys describe populations of students in cross-section only at infrequent intervals (and then typically only in one grade). For example, the population of American high school sophomores can be described (after weighting) in cross-section in 1980 (from High School & Beyond), 1990 (from the National Education Longitudinal Study of 1988), 2002 (from the Education Longitudinal Study of 2002), and 2010 (from the High School Longitudinal Study of 2009). Thus these surveys are not very useful for frequent and ongoing monitoring of trends in educational outcomes (e.g., grade retention or college enrollment rates) or related issues (e.g., school safety or course offerings in science). To be fair, NCES has other surveys for ongoing monitoring (for example, the Crime and Safety Surveys, the Fast Response Survey System, and the National Household Education Survey). However, data from these cross-sectional surveys are more topically specialized and thus less easily connected to other variables or issues. In general, the decade-or-so spacing of the longitudinal study cohorts facilitates analyses of only very broad, long-term trends.

Inferring policy effects. Academic and applied researchers devote considerable effort to assessing the causal impact of education policy interventions. In some cases, they employ randomized controlled trials; in others, they use strong quasiexperimental methods (e.g., regression discontinuity designs or instrumental variable analyses). Rarely can compelling causal conclusions about education policy effects be made using purely observational data, such as that available from NCES’s longitudinal student surveys.

Flexibility and innovation. Because they are fielded only every decade or so, NCES’s longitudinal student surveys cannot quickly adapt to study new topical domains or to deploy innovative survey methodologies. Even if the innovative content suggested by Moore (In press), Espelage (In press), and Muller (In press) were incorporated immediately into ongoing NCES surveys, the educational landscape and survey technology are likely to change dramatically in the decade before the next cohort study could be fielded.

International comparisons. NCES’s longitudinal student surveys are rarely used to compare the United States to other countries with respect to educational processes or outcomes. This stems, in part, from the timing, frequency, and cross-sectional representativeness issues described above. Again, NCES participates in other (cross-sectional) survey programs—notably the Program for International Student Assessment and the Trends in International Mathematics and Science Study—that serve some of those purposes for limited topical areas. However, these cross-sectional surveys collect relatively little background or contextual information and are thus much more limited in their capacity to facilitate research on the correlates and consequences of educational achievements and attainments.

Local utility. The data from NCES’s longitudinal student surveys are extremely useful for academic and applied researchers interested in national-level issues, but they are not now designed to be especially useful for teachers, schools, districts, or states. The samples are not state representative, there is now no room to field questions of special interest to local stakeholders (e.g., principals, school boards), and the typical design does not facilitate within-school or within-district cohort comparisons over time. American schools are constantly innovating and facing new challenges, but they often lack the capacity to collect and analyze data that might inform decision making; NCES’s longitudinal surveys do not currently help them in this regard. On a practical level, this weakness limits NCES’s ability to gain the cooperation of busy schools and their personnel.

Beyond these issues, since the NCES longitudinal studies were first initiated, the technological, fiscal, policy, and demographic contexts in which they are conducted have shifted in ways that have not always been easy to address. The student population has become more ethnically and linguistically diverse, and the landscape of educational institutions has also become more varied (e.g., with the growth of charter schools, home schooling, and for-profit colleges). New modes of survey administration are now more common (e.g., Internet surveys, experience sampling methods, video and audio methods), but greater effort is required to generate satisfactory response rates and high-quality data. As argued by Dynarski (2014), there are now exciting possibilities for linking traditional survey data to administrative record data. Overlaying all of these new opportunities and challenges, the fiscal and policy environment around NCES’s surveys has changed; surveys now need to be more efficiently executed, their traditional content areas touch on hot-button issues (e.g., school finance, segregation, achievement gaps), and the political environment around public education has become even more contentious. Every one of these issues has immediate implications for how NCES conducts its longitudinal surveys.

NCES may elect to go forward into the future with only minor modifications to its longitudinal student surveys, incorporating some new content domains and expanding data collection modalities around the edges (e.g., by linking to some administrative records and diversifying survey modes). However, to overcome the several basic limitations listed above and to expand their utility for academic and applied researchers despite a changing demographic, technological, and policy landscape, NCES might instead elect to pursue a bolder course.

Below, I outline a model for the future of NCES’s longitudinal student surveys that builds on their traditional strengths and overcomes many of their current limitations by borrowing good ideas from other ongoing survey programs. Although I end by countering this bolder vision with a dose of realism, I contend that following the lead of other survey programs would allow NCES to produce more broadly useful data for relatively little additional expense or burden. As I note below, my “bolder vision” would be expensive to implement in its entirety. However, I suggest that the value of the resulting data would offset the added expense.

Bolder Vision 1: Do to NCES Longitudinal Student Surveys What the U.S. Census Bureau Did to the Census Long Form

Until the early 2000s, the U.S. Census Bureau faced many of the same challenges that now face NCES. Every 10 years, the Census Bureau conducted the cross-sectional enumeration of all Americans, as mandated by the U.S. Constitution, primarily via the “short form” of the census, which collected only very basic demographic information about each person. Since 1970, it had also administered a “long form” to about one in six randomly selected households at each enumeration. The decennial long form collected considerably more information about each household’s composition, dwelling, and finances and about each household member’s demographic attributes, educational enrollment and attainments, language use, migration history, citizenship status, military service, labor force activities, income, and more. Data from the long form, released as a public-use microdata sample (PUMS) file that included information on about one in every 20 Americans, proved enormously useful for academic research, policy enforcement and program administration, local governance and planning, and business and marketing.

However—and like NCES’s longitudinal student surveys—data from the long form were limited in important respects. Because the long form was (like the short form) administered only once every 10 years, it was imperfect for routine monitoring. The PUMS data facilitated only very general and long-term analyses of trends in things like poverty rates, racial/ethnic diversity, home ownership rates, and rates of residential movement to and from urban cores. This limited the usefulness of long-form data for academic research, policy analysis, local planning, and marketing research. Local governments were forced to wait a decade for updated information about things like transportation patterns, residents’ age distributions, and neighborhood-level poverty statistics. Likewise, businesses were forced to wait a decade for updated information about the median income of neighborhoods in which they hoped to open new stores or about the median education of potential employees in a locale. Also because of the decade that passed between administrations of the long form, it was difficult for the Census Bureau to be flexible and responsive with respect to survey content or modes of administration.

Despite heated debate and vocal concerns by data user communities, the 2000 U.S. Census was the last with both short and long forms. Beginning in 2000, the Census Bureau fielded the annual, cross-sectional American Community Survey (ACS); the ACS replaced the long form. Once it was fully implemented in 2005, the ACS gathered largely the same information as the long form did but for about one in 100 Americans each year. By design, ACS data—now available annually—can be pooled across individual years to produce small-area estimates (i.e., descriptions of local communities). Local governments or businesses can, for example, use pooled 5-year ACS files that include as many observations as the long-form data—but a new 5-year ACS file is released every year. Although the content of the ACS has grown over time and is the subject of intense debate, the core survey items have remained largely unchanged over time. As compared to the long form, the ACS allows for annual monitoring; because it is administered annually, the content and design can be updated as circumstances change and as new opportunities arise. Although many academic and applied researchers resisted the demise of the decennial long form at the time the ACS was launched, few would opt to switch back now that the benefits of the ACS have been realized.

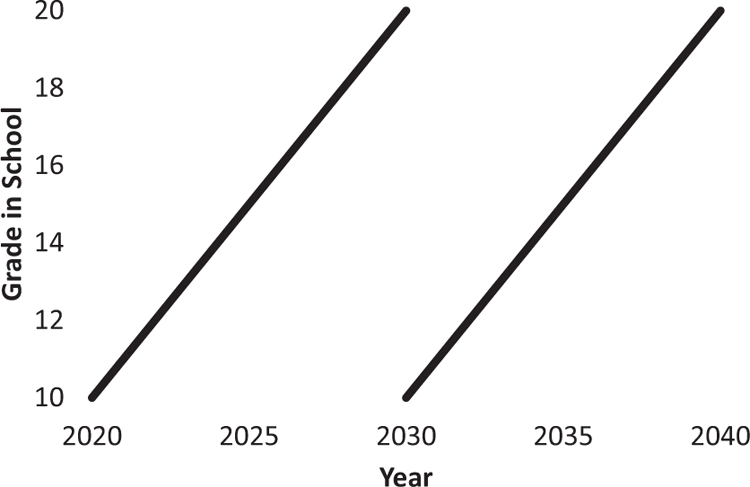

What would it look like if NCES did to its longitudinal surveys what the Census Bureau did to the long form? In Figure 1, I depict the current situation as it pertains to high school surveys; I might have drawn parallel figures for early- or middle-grade surveys. In the figure, the x-axis is calendar year and the y-axis is grade in school; the lines represent panels of students followed (just for example) from Grade 10 through “Grade” 20 (or 4 years beyond when students typically complete bachelor’s degrees). As depicted in the figure, if things proceed as they have in the past, NCES will likely field a new longitudinal 10th-grade cohort in about 2020 and another in 2030 (and so forth). They will be followed until just beyond the typical years of formal schooling. For the sake of argument, assume that each new cohort includes 30,000 tenth graders sampled from within 1,000 high schools.

FIGURE 1.

Current model: Field a new longitudinal survey every decade. Each line represents a new longitudinal cohort. For example, the leftmost line represents a cohort first observed as 10th graders in 2020 and followed until they were 8 years past 12th grade in 2030. Assume that each new longitudinal cohort includes 30,000 students sampled from within 1,000 schools.

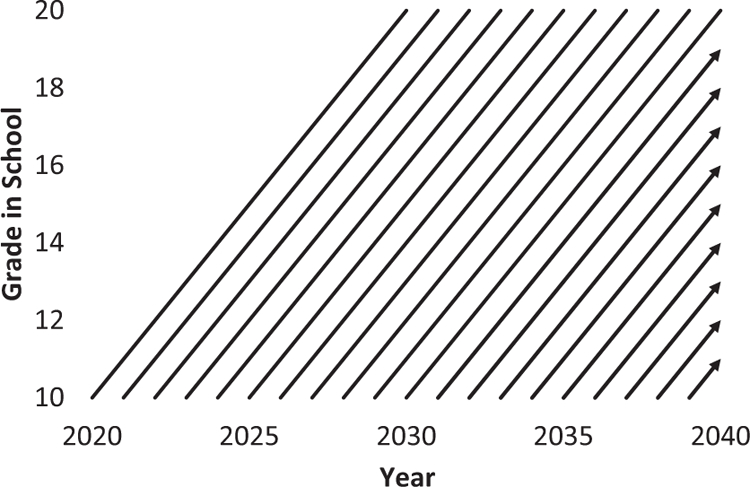

In Figure 2, I present an alternative model. Instead of enrolling a new cohort of 30,000 tenth-grade students in 1,000 schools every 10 years, NCES might enroll a smaller cohort every year. Under this model, every year NCES might enroll 200 new sampled schools. Schools selected to participate would do so for 5 consecutive years and would contribute 30 new sampled 10th graders in each of those years. All 10th graders would then be followed for 10 years, with interviews in Grades 10 and 12 and 2, 4, 6, and 8 years after most graduate high school. Under this model, in any calendar year, one in five schools would be in its first year of participation, one in five schools would be in its second year of participation, and so forth. The result of this design would be that in any calendar year, 180,000 young people from 1,000 different high schools would be participating in the study. As with the ACS, analysts could also pool data from consecutive years to yield larger samples of particular student groups or to generalize to the state, district, or school level. Below, I discuss the virtues of this new design.

FIGURE 2.

Proposed model: Field a new, smaller longitudinal survey every year. Each line represents a new longitudinal cohort. For example, the leftmost line represents a cohort first observed as 10th graders in 2020 and followed until they were 8 years past 12th grade in 2030. Assume that each year, 200 new schools enter the study; that each school is in the study for 5 consecutive years; and that each school contributes 30 new students per year. This would mean that every year the study would include 180,000 people from 1,000 schools.
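The arithmetic behind the proposed design can be checked with a short calculation. The sketch below is illustrative only: it takes the parameter values from the text, reads the 180,000 figure as the number of people due for an interview in a given calendar year, and assumes (hypothetically) that the design starts in 2020 with no attrition or nonresponse. The function names are my own.

```python
# Illustrative steady-state arithmetic for the proposed rotating-panel design.
# Parameter values come from the text; attrition and nonresponse are ignored
# (an assumption), and the 2020 start year is hypothetical.

NEW_SCHOOLS_PER_YEAR = 200      # schools entering the study each year
YEARS_PER_SCHOOL = 5            # consecutive years each school participates
NEW_STUDENTS_PER_SCHOOL = 30    # new 10th graders per school per year
# Interview waves, in years since Grade 10: Grades 10 and 12, then 2, 4, 6,
# and 8 years after Grade 12.
WAVE_OFFSETS = (0, 2, 4, 6, 8, 10)


def cohort_size(cohort_year, start_year=2020):
    """New 10th graders enrolled in cohort_year, allowing for ramp-up."""
    if cohort_year < start_year:
        return 0
    # Number of school entry classes still active in cohort_year.
    active_entry_classes = min(cohort_year - start_year + 1, YEARS_PER_SCHOOL)
    return active_entry_classes * NEW_SCHOOLS_PER_YEAR * NEW_STUDENTS_PER_SCHOOL


def interviewed_in_year(year, start_year=2020):
    """People due for an interview in a calendar year, summed over cohorts."""
    return sum(cohort_size(year - offset, start_year) for offset in WAVE_OFFSETS)


if __name__ == "__main__":
    print("Active schools in steady state:", NEW_SCHOOLS_PER_YEAR * YEARS_PER_SCHOOL)
    print("New 10th graders per year in steady state:", cohort_size(2040))
    print("Interviewed in 2020 (first year):", interviewed_in_year(2020))
    print("Interviewed in 2040 (steady state):", interviewed_in_year(2040))
```

Under these assumptions, 1,000 schools are active in any steady-state year, each annual cohort has 30,000 members, and six cohorts are due for an interview in any given year, which reproduces the 180,000 figure.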

A downside of this design would obviously be its cost: It would involve interviewing many more individuals each year (although part of the cost could be recovered by folding other NCES cross-sectional data collection efforts into the redesigned longitudinal studies). There are a number of ways to modify this basic design to reduce cost while retaining its basic strengths. Schools could participate every other year for 5 years (in Years 1, 3, and 5); NCES could sample just a subset of students for longitudinal follow-up after high school and/or college (as it did, for example, with High School & Beyond); instead of surveying individuals every other year for 10 years, one or more of the post–high school follow-ups could be dropped (perhaps for samples of students); or new schools might be sampled to enter the survey every other year instead of every year. However, in the end, this design would cost more.
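To give a rough sense of how much these modifications might reduce the interviewing burden, the sketch below compares steady-state interview counts for the basic design and two of the variants mentioned above. It is a back-of-the-envelope illustration, not a cost model: it assumes that the number of interviews per year is a reasonable proxy for cost, ignores attrition, and uses a hypothetical function name.

```python
# Steady-state interviews per year under the basic design and two variants.
# Interview counts are used as a rough proxy for cost (an assumption).

def interviews_per_year(new_schools_per_year=200,
                        enrolling_years_per_school=5,
                        new_students_per_school=30,
                        waves_per_student=6):
    """Interviews due in a typical calendar year once the design is in steady state."""
    new_students_per_year = (new_schools_per_year
                             * enrolling_years_per_school
                             * new_students_per_school)
    return new_students_per_year * waves_per_student


# Basic design: schools enroll new 10th graders in each of 5 years; 6 waves each.
baseline = interviews_per_year()

# Variant: schools participate only in Years 1, 3, and 5 (3 enrolling years).
alternate_years = interviews_per_year(enrolling_years_per_school=3)

# Variant: one post-high school follow-up is dropped (5 waves instead of 6).
fewer_waves = interviews_per_year(waves_per_student=5)

if __name__ == "__main__":
    print("Basic design:", baseline)
    print("Schools participate in Years 1, 3, 5:", alternate_years)
    print("One follow-up dropped:", fewer_waves)
```

Subsampling students for follow-up or admitting new schools only every other year could be explored the same way by changing the relevant parameter.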

Bolder Vision 2: Do to NCES Longitudinal Student Surveys What NORC Has Long Done for the U.S. General Social Survey (GSS)

The GSS is an ongoing study of non-institutionalized adults in the United States. It has been administered annually (1972–1993) or biennially (1994 onward) since 1972 by NORC (formerly the National Opinion Research Center) at the University of Chicago. The core GSS questionnaire touches on a variety of social and political issues, including abortion, intergroup tolerance, crime and punishment, government spending, social mobility, civil liberties, religion, and women’s rights (to name just a few). The GSS has long been a foundational data resource in the social sciences; it is especially useful for studying long-term trends in things like attitudes about social issues, political views, and social mobility.

An important design feature of the GSS is that each year, outside investigators and research teams are allowed to compete to field supplemental topical survey modules (for which they also procure funding). Supplemental modules range in length from a single question to 15-minute batteries of questions; most are closed-ended survey items, but others use less traditional designs. In recent years, the GSS has included supplemental modules on topics like firearms, aging, cell phones, clergy sexual contact, and how people met their spouses.

If NCES’s longitudinal student surveys were administered annually or every other year, they could—like the GSS—feature both a core set of survey items that are fielded every year and supplemental items that vary from year to year; items could differ across levels of schooling (i.e., 10th grade, 2 years beyond 10th grade, etc.) and/or could be administered on the full sample or just a subset of respondents. Like the GSS, NCES could allow outside investigators—including academic researchers, other government agencies, and state or local education agencies—to compete for (and pay for, with grants or other funds) supplements that feature new content or innovative modes of administration.

On a small scale, schools or districts could fund supplemental items for students in their jurisdiction, or they could opt to field NCES surveys for a denser sample of students; the data might then not be released as part of the main data file. With 5 consecutive years of participation (as described above), a district could monitor trends in topics of special interest to it. On a larger scale, it would be possible to test new content modules (such as the innovative measures of school context, bullying, or immigrants’ experiences proposed by Muller [2014], Espelage [2014], and Rumbaut [2014], respectively) or new modes of survey administration, such as video analysis of classroom instruction as proposed by Grossman (2014).

New content modules could also be coordinated with the adoption of new policies to better observe the impact of those policies. Indeed, one could use this flexible design to implement some of the quasiexperimental and experimental designs described by Cook (2014). For example, imagine that a research team obtained funds to evaluate the efficacy of a dropout prevention program. The team could implement the program among 10th graders in 20 schools chosen at random from among the 200 selected to participate in the NCES longitudinal student surveys program in a given year; 20 other schools selected to participate in the longitudinal surveys program could be selected as controls. If the program were implemented in schools’ 3rd year (out of 5 years) of participating in the longitudinal surveys program, the evaluators could make both cross-cohort and between-school comparisons to assess the program’s effectiveness.
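The school selection step in this example can be sketched in a few lines. The code below is a minimal illustration under stated assumptions: the school identifiers are hypothetical placeholders, both the program schools and the control schools are drawn at random from the same entering group of 200, and a fixed seed is used only so that the draw can be reproduced.

```python
import random


def assign_schools(school_ids, n_treatment=20, n_control=20, seed=0):
    """Randomly draw disjoint treatment and control groups from one entry class.

    school_ids are hypothetical identifiers for the schools that entered the
    survey program in a given year.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    # sample() draws without replacement and returns schools in random order,
    # so splitting the draw in two yields two random, non-overlapping groups.
    drawn = rng.sample(list(school_ids), n_treatment + n_control)
    return {
        "treatment": sorted(drawn[:n_treatment]),
        "control": sorted(drawn[n_treatment:]),
    }


if __name__ == "__main__":
    entering_schools = [f"school_{i:03d}" for i in range(200)]  # placeholder IDs
    groups = assign_schools(entering_schools)
    print(len(groups["treatment"]), "program schools;",
          len(groups["control"]), "control schools")
```

A real evaluation would likely stratify or block the draw (for example, by state or school size) rather than use a simple random draw; that refinement is omitted here.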

Advantages of the Proposed Model

If NCES were to adopt these two fundamental design changes, many of the current limitations of its longitudinal student surveys would be ameliorated, at least to some extent. The data from the surveys would continue to serve the many valuable functions that they now serve (although in some instances researchers may need to pool across incoming cohorts).

Routine monitoring. Once implemented, the data from the new longitudinal student surveys would include, each year, representative cross-sections of 10th graders, of students 2 years beyond 10th grade, and so forth. It would thus be possible to monitor educational trends from year to year; indeed, NCES may be able to use the newly configured longitudinal studies to do what is now accomplished by (for example) the Crime and Safety Surveys or the Fast Response Survey System. Unlike those surveys, however, the new data could be used to describe trends over time in longitudinal processes (e.g., trends over time in the association between 10th-grade student attributes and college enrollment).

Inferring policy effects. As noted above, with 5 consecutive years of participation by schools and with a flexible design that accommodates supplemental modules funded by outside investigators, the newly configured longitudinal studies program could serve as a vehicle to study the efficacy of various educational interventions. There would be limits, of course, to what could be done using relatively small samples.

Flexibility and innovation. An annual design would allow NCES to modify the content of the longitudinal student surveys as circumstances warrant. Of course, to facilitate monitoring and analyses of trends over time, it would be important for there to be some stability in core survey content. However, as educational issues emerge or evolve, new questions could be added to the core set of items and supplemental topical modules could be fielded.

International comparisons. The flexible new design would also facilitate cross-national comparison. The GSS, for example, now routinely fields topical modules as part of the International Social Survey Program; the same modules are fielded in GSS-like surveys in other countries. NCES could adopt a similar approach, fielding supplemental topical modules that replicate those fielded in other countries.

Local utility. A major logistical challenge that NCES faces in fielding its longitudinal student surveys is gaining the cooperation of schools. As things now stand, participation brings few tangible benefits to the schools, the districts, or their personnel. However, a flexible and annually administered survey in which schools participate for 5 consecutive years makes it possible to produce data that are much more useful for local stakeholders. For example, schools or districts might field short topical modules on issues of local interest that piggyback on NCES’s instruments. They might develop their own content, or more likely, they might field modules of questions developed by others for this purpose (e.g., modules of questions about school choice or instructional technology developed by private companies or social service agencies and made available to districts free of charge). Schools and districts might also increase local sample density to improve the reliability of local-level estimates. This would allow them to reliably describe local trends over 5 years in many educational processes and outcomes (e.g., attitudes among 10th graders, rates of college going). These data would have practical local utility, but they might also help to gain cooperation from schools and districts.

Less Bold, but Still Useful: Steps That NCES Can (Also) Take to Improve the Longitudinal Student Surveys

The two “bolder vision” design changes above may or may not be feasible to implement. My view is that together, they would make the data dramatically more useful. However, the design changes would mean some net additional expense. Whether or not the “bolder vision” above can be implemented, there are a number of other incremental steps that NCES could take to improve the utility of the longitudinal student surveys. All of the ideas below could be implemented instead of or in addition to the larger changes I have suggested above.

Measurement Innovations

NCES should continue its ongoing dialogue with experts in various fields to understand what measures could be added to longitudinal student surveys in order to maximize their utility and impact. Espelage (In press) makes a strong case for expanding and improving the surveys’ measures of school violence, bullying, sexual harassment, and the social and institutional contexts in which they occur; without high-quality data, it will be difficult to effectively address these problems. Moore and colleagues (In press) argue on various grounds that NCES ought to include more complete measures of physical health, development, and safety; psychological and emotional development; social development and behavior; cognitive development and approaches to learning; and relationships. They also demonstrated the need for obtaining multiple measures of these things, sometimes from multiple reporters and/or at multiple points in time. Secada (2014) noted the need to better and more completely assess STEM knowledge and training; this would include better measurement of STEM knowledge obtained outside of schools and further development of concepts related to learning trajectories. Muller (In press) argued for improving and expanding the way that NCES measures school context and actors’ positions within those contexts; this may include, among other things, better measurement of social networks and obtaining information from multiple actors. Finally, Rumbaut (2014) made a case for better ascertaining the perspectives of immigrant students.

It is difficult to say whether these are the most pressing topical areas in need of greater attention in future NCES longitudinal student surveys; I will leave it to others to establish those priorities. However, it is clear that NCES must continue its deliberative and collaborative process of constantly updating and improving the content of its longitudinal surveys.

New Modes of Observation

Likewise, NCES should continue to consider the value of nontraditional methods for obtaining important information about students and the contexts in which they are educated. Grossman (2014) has demonstrated the potential for measuring dynamic aspects of instructional practices via video methods. Others have made the case for deploying modern techniques for assessing students’ social networks (Muller, 2014) and for the value of “experience sampling” methods. I would add that the pervasiveness of cell phones in American high schools creates possibilities for understanding social networks, exposure to digital information, and various spatial issues; it would also seem to make mobile electronic survey administration more feasible. Again, I will leave it to others to weigh the costs and benefits of these various methodologies.

State Representative Samples

The sampling strategies employed in most NCES longitudinal student surveys do not currently permit researchers to generalize to the state level (except for 10 states in the High School Longitudinal Study of 2009). This limits the utility of the data for state education agencies and probably limits NCES’s ability to gain cooperation from state and local gatekeepers. About 1,000 schools typically participate in NCES’s longitudinal surveys, and about 1,000 would be participating at any one point in time under the “bolder vision” I outlined above. Producing state-representative samples would therefore mean drawing stratified random samples of (on average) about 20 schools from within each state. This certainly limits the potential to produce efficient state-level estimates using single years of data. However, under the “bolder vision” above, one could pool schools within states across years to produce reliable state-level estimates. The ACS works in exactly this way: To produce local area estimates, ACS users must pool across 3 or 5 years of data.
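A rough calculation shows how pooling across years would build up state-level samples. The sketch below is illustrative only: it assumes 50 states with schools allocated equally across them (a real design would presumably allocate in proportion to enrollment or by strata), steady-state operation of the rotating design, and no attrition. The function name and output keys are hypothetical.

```python
# Average per-state sample sizes when pooling consecutive years of the
# rotating design. Equal allocation across 50 states is an assumption.

def pooled_state_sample(years_pooled,
                        n_states=50,
                        active_schools=1000,
                        new_schools_per_year=200,
                        new_students_per_school=30):
    """Average per-state counts after pooling `years_pooled` consecutive years."""
    schools_per_state = active_schools / n_states            # about 20 at any time
    new_schools_per_state = new_schools_per_year / n_states  # about 4 enter per year
    # Each additional pooled year adds only that year's newly entering schools.
    distinct_schools = schools_per_state + new_schools_per_state * (years_pooled - 1)
    # Every active school contributes a fresh group of 10th graders each year.
    tenth_graders = schools_per_state * new_students_per_school * years_pooled
    return {"distinct_schools": distinct_schools, "tenth_graders": tenth_graders}


if __name__ == "__main__":
    for k in (1, 3, 5):
        print(k, "year(s) pooled:", pooled_state_sample(k))
```

Under these assumptions, a single year yields about 20 schools and 600 baseline 10th graders per state, whereas pooling 5 years yields about 36 distinct schools and 3,000 baseline 10th graders.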

Spatial Data

In publicly released data files, NCES now provides limited geographic and neighborhood contextual information about the places in which schools are located. Versions of data files available via restricted-use agreements include much more geographic detail. However, these spatial measures are frequently tied to ZIP codes or census tracts. NCES should instead invest in spatial measures that are tied to schools’ catchment areas; see, for example, the School Attendance Boundary Information System (http://www.sabinsdata.org/), which provides aggregate census data and geographic information system–compatible boundary files for school attendance areas for selected years. For most applications, catchment areas are more meaningful geographic units than other administrative boundaries.

Beyond better measures of the neighborhoods in which schools are situated, NCES should produce spatial measures describing the places in which students reside. In an era of school choice and charter schools, many students live in neighborhoods whose attributes are quite different from the neighborhood around the school that they attend. Measures of students’ neighborhoods beyond what is now available (which is often just residential ZIP codes) would be extremely useful for understanding the role of social, economic, and other contextual factors in shaping educational outcomes.

Administrative Record Linkage

As articulated by Dynarski (2014), there are numerous advantages to linking NCES’s longitudinal student surveys to existing administrative data. There are now few practical or technological barriers to linking to records from state longitudinal data systems (for information about school progress, curriculum and course work, and school and peer contexts), the National Student Clearinghouse (for completion and enrollment information), the National Postsecondary Student Aid Study (for financial aid information), the Common Core of Data (for information about schools and school districts), the Internal Revenue Service and Social Security Administration (for information about parents’ and young adults’ labor force activities and incomes), the ACS (for information about spatial contexts as described above), the National Death Index (for information about students’ and their parents’ deaths), and others. The current barriers (real or perceived) to linking to these and other administrative data are primarily legal and political. There are reasonable concerns about privacy and data sharing, and issues of communication and jurisdiction make it difficult for federal agencies to cooperate. However, the payoff to large-scale record linkage can be enormous; vast amounts of new high-quality information can be obtained. As noted by Dynarski, there are models in place for how linkage and data sharing might happen, including the model provided by the Census Bureau.

NCES should aggressively pursue large-scale administrative record linkage to as many administrative data resources as possible. The resulting data should be made available in highly restricted form, for example, through Census Research Data Centers (which include data from many federal agencies outside of the U.S. Department of Commerce). I second Dynarski’s (2014) recommendations that NCES should (a) support researcher-initiated requests to link records from longitudinal student surveys to other data sources and (b) explore ways to make linked microdata more widely available to researchers, particularly by looking to successful initiatives at the Census Bureau.
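For readers less familiar with record linkage, the basic operation can be illustrated with a minimal sketch. It assumes the simplest case, in which survey and administrative records share an exact identifier; it does not describe any procedure used by NCES or the Census Bureau. The field names (`student_id`, `grade10_math`, `enrolled_college`) and the records themselves are hypothetical and invented for illustration only.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def link_records(survey: List[Record], admin: List[Record],
                 key: str = "student_id") -> List[Record]:
    """Attach administrative fields to each survey record that shares `key`.

    Every survey record is kept (a left join). A boolean `linked` flag
    marks whether an administrative match was found.
    """
    # Index the administrative file by the shared identifier.
    admin_by_key = {row[key]: row for row in admin}

    linked_rows = []
    for row in survey:
        merged = dict(row)  # copy so the input survey record is not modified
        match = admin_by_key.get(row[key])
        if match is not None:
            # Copy over every administrative field except the identifier.
            merged.update({k: v for k, v in match.items() if k != key})
        merged["linked"] = match is not None
        linked_rows.append(merged)
    return linked_rows


def match_rate(linked_rows: List[Record]) -> float:
    """Share of survey records for which an administrative match was found."""
    if not linked_rows:
        return 0.0
    return sum(r["linked"] for r in linked_rows) / len(linked_rows)


# Hypothetical toy data: three survey respondents, two administrative records.
survey = [
    {"student_id": "A1", "grade10_math": 52},
    {"student_id": "B2", "grade10_math": 61},
    {"student_id": "C3", "grade10_math": 47},
]
admin = [
    {"student_id": "A1", "enrolled_college": True},
    {"student_id": "C3", "enrolled_college": False},
]

linked = link_records(survey, admin)
rate = match_rate(linked)
```

In this toy example, two of the three survey records find a match. In practice, exact shared identifiers are often unavailable, and linkage typically relies on probabilistic matching of names, birth dates, and addresses carried out inside a secure environment. The unmatched cases then raise their own questions about selective linkage.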

Conclusion

NCES’s longitudinal student surveys have historically been extremely useful for describing educational processes and outcomes and for developing and testing ideas about how educational outcomes are shaped by individual, family, organizational, and other contextual factors. However, as now implemented, their utility is limited in a number of important respects. They cannot be used to monitor trends at short time intervals, they do not allow for flexible changes to survey content, they cannot generally be used to make inferences about policy or other treatment effects, they are not especially useful for making international comparisons, and they are of limited practical value to local stakeholders. NCES should do for its longitudinal student surveys what the Census Bureau did for the census long form and what NORC has done with the GSS: Move to annual rotating panels and allow outside investigators to field (and fund) supplemental topical modules. I outlined the virtues of this design using the example of longitudinal surveys that begin in 10th grade. However, the design I propose could begin with young people of any grade or age; I might just as well have demonstrated the utility of this design using kindergartners as the baseline. My intuition (and it is just that) is that the optimal strategy would be to have a baseline survey in kindergarten or preschool and another in eighth or ninth grade; the former cohorts could be followed through the transition to high school, and the latter could be followed beyond the typical ages of college completion.

Along the way, and regardless of whether the broader design changes that I describe can be made, NCES should continue to work with the research community to explore new survey content areas and modes of observation, move to state-representative samples, improve the quality of the spatial data it provides about both school catchment areas and students’ residential neighborhoods, and pursue large-scale record linkage to numerous administrative data sources. These measures would vastly expand the utility of these already valuable data resources.

The broader design changes that I propose would be expensive to implement. Above, I outline some ways that the design might be scaled back a bit to reduce the added costs. It might also be possible to realize savings by folding ongoing surveys, like the Crime and Safety Surveys, the Fast Response Survey System, and/or the National Household Education Surveys, into the proposed design. However, the proposed design would nonetheless be expensive. I contend that the additional utility of the data that would result from this design for multiple stakeholders would make the additional costs more than worth it.

Although my comments and suggestions have been mainly critical in tone, the fact is that we are lucky to have the current NCES longitudinal student surveys program. The data that this program has produced have long been enormously valuable. It would certainly not be a bad outcome if NCES were simply to go forward with the same basic design it has long used, continuing to make relatively minor modifications to survey content, sampling strategy, and survey mode. However, in my view, this would be a missed opportunity.

Acknowledgments

This paper was initially prepared for the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, held on November 5–6, 2013, in Washington, DC. This paper was supported with funding from the Department of Education Institute of Education Sciences under grant # R305U120002. This paper reflects the views of the author and not necessarily those of the U.S. Department of Education. I am very grateful to workshop and American Educational Research Association session participants for providing helpful comments and suggestions on presentations that culminated in this paper and to three anonymous AERA Open reviewers for their constructive feedback. However, errors and omissions are solely my responsibility.

Author

JOHN ROBERT WARREN is a professor of sociology at the University of Minnesota and Training Director of the Minnesota Population Center. He does work in education policy, health disparities, and historical demography; is co-leading the 2013–2015 follow-up of the High School & Beyond cohorts; and is editor of Sociology of Education.

References

- Cook T (2014, November). Quasi-experiments with longitudinal survey data. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Dynarski S (2014, November). Building better longitudinal surveys (on the cheap) through links to administrative data. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Espelage DL (2014, November). Using NCES surveys to understand school violence and bullying. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Grossman P (2014, November). Collecting evidence of instruction with video and observation data in NCES surveys. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Moore KA, Lippman L, & Ryberg R (2014, November). Improving outcome measures other than achievement. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Muller C (2014, November). New tools for measuring context. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Rumbaut RG (2014, November). Using NCES surveys to understand the experiences of immigrant-origin students. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.

- Secada WG (2014, November). Implications of evolving notions in STEM education for longitudinal data gathering. Paper presented at the National Academy of Education’s Workshop to Examine Current and Potential Uses of NCES Longitudinal Surveys by the Education Research Community, Washington, DC.