Abstract

Medical imaging plays a significant role in clinical applications such as early detection, monitoring, diagnosis, and treatment evaluation of various medical conditions. A grounding in the principles and implementation of artificial neural networks and deep learning is essential for understanding medical image analysis in computer vision. The Deep Learning Approach (DLA) to medical image analysis has emerged as a fast-growing research field and is widely used in medical imaging to detect the presence or absence of disease. This paper presents the development of artificial neural networks and a comprehensive analysis of DLA, which delivers promising medical imaging applications. Most DLA implementations concentrate on X-ray images, computerized tomography, mammography images, and digital histopathology images. The paper provides a systematic review of articles on the classification, detection, and segmentation of medical images based on DLA, and is intended to guide researchers toward appropriate changes in medical image analysis based on DLA.

Keywords: Deep learning, Convolutional neural networks, Medical images, Segmentation, Classification, Detection

Introduction

In the health care system, there has been a dramatic increase in demand for medical imaging services, e.g., radiography, endoscopy, Computed Tomography (CT), Mammography (MG), ultrasound, Magnetic Resonance Imaging (MRI), Magnetic Resonance Angiography (MRA), nuclear medicine imaging, Positron Emission Tomography (PET), and pathological tests. Moreover, medical images are often challenging and time-consuming to analyze, a problem compounded by the shortage of radiologists.

Artificial Intelligence (AI) can address these problems. Machine Learning (ML) is an application of AI in which systems function without being explicitly programmed: they learn from data and make predictions or decisions based on past data. ML uses three learning approaches, namely supervised learning, unsupervised learning, and semi-supervised learning. Traditional ML techniques involve feature extraction, and selecting suitable features for a specific problem requires a domain expert. Deep Learning (DL) techniques address this feature-selection problem. DL is a part of ML that can automatically extract essential features from raw input data [88]. The concept of DL algorithms was derived from cognitive and information theories. In general, DL has two properties: (1) multiple processing layers that can learn distinct features of data through multiple levels of abstraction, and (2) unsupervised or supervised learning of feature representations on each layer. A large number of recent review papers have highlighted the capabilities of advanced DLA in medical fields such as MRI [8], radiology [96], cardiology [11], and neurology [155].

Different forms of DLA were borrowed from the field of computer vision and applied to specific medical image analysis tasks. Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) are examples of supervised DL algorithms. Unsupervised learning algorithms have also been studied in medical image analysis; these include Deep Belief Networks (DBNs), Restricted Boltzmann Machines (RBMs), autoencoders, and Generative Adversarial Networks (GANs) [84]. DLA is generally applied to detect an abnormality and to classify a specific type of disease. When DLA is applied to medical images, CNNs are well suited for classification, segmentation, object detection, registration, and other tasks [29, 44]. A CNN is an artificial visual neural network structure, based on the convolution operation, that is used for medical image pattern recognition. Deep learning applications in medical images are visualized in Fig. 1.

Fig. 1.

a X-ray image with pulmonary masses [121] b CT image with lung nodule [82] c Digitized histopathological tissue image [132]

Neural networks

History of neural networks

The study of artificial neural networks and deep learning derives from the ambition to create a computer system that simulates the human brain [33]. In the early 1940s, the neurophysiologist Warren McCulloch and the mathematician Walter Pitts [97] developed a primitive neural network based on what was then known about biological structure. In 1949, the book “The Organization of Behavior” [100] was the first to describe the process of updating synaptic weights, which is now referred to as the Hebbian Learning Rule. In 1958, Frank Rosenblatt’s [127] landmark paper defined the structure of the neural network called the perceptron for the binary classification task.

In 1962, Widrow [172] introduced a device called the Adaptive Linear Neuron (ADALINE) and implemented the design in hardware. The limitations of perceptrons were emphasized by Minsky and Papert (1969) [98]. The concept of the backward propagation of errors for training purposes was discussed by Werbos (1974) [171]. In 1979, Fukushima [38] designed an artificial neural network called the Neocognitron, with multiple pooling and convolution layers. In 1989, Yann LeCun [71] combined CNNs with backpropagation to effectively perform the automated recognition of handwritten digits. One of the most important breakthroughs in deep learning occurred in 2006, when Hinton et al. [9] implemented the Deep Belief Network, with several layers of Restricted Boltzmann Machines, greedily training one layer at a time in an unsupervised fashion. Figure 2 shows important advancements in the history of neural networks that led to the deep learning era.

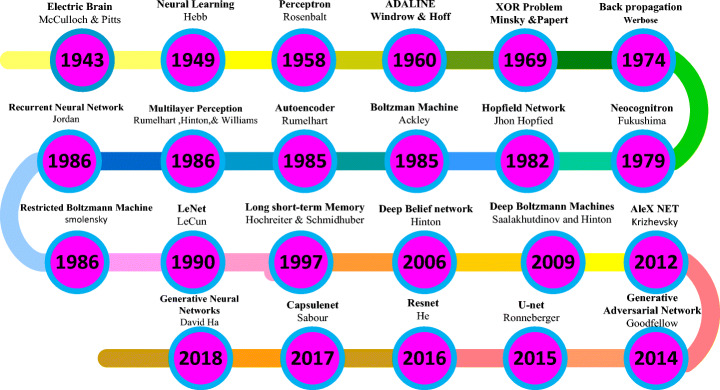

Fig. 2.

Demonstrations of significant developments in the history of neural networks [33, 134]

Artificial neural networks

Artificial Neural Networks (ANN) form the basis for most of the DLA. ANN is a computational model structure that has some performance characteristics similar to biological neural networks. ANN comprises simple processing units called neurons or nodes that are interconnected by weighted links. A biological neuron can be described mathematically in Eq. (1). Figure 3 shows the simplest artificial neural model known as the perceptron.

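$$y = f\left( \sum_{i=1}^{n} w_i x_i + b \right) = f\left( \mathbf{w}^{\top} \mathbf{x} + b \right) \tag{1}$$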

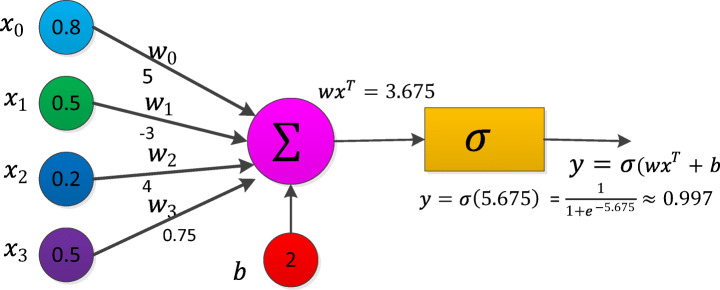

Fig. 3.

Perceptron [77]

Let $\mathbf{x} = (x_1, \ldots, x_n)$ be the vector of inputs (features), $\mathbf{w} = (w_1, \ldots, w_n)$ be the weight vector, $b$ be the bias, $f$ be the non-linear activation (sigmoid) function, and $y$ be the scalar output of a node. Constructing a neural network requires three elements: (i) the pattern of connections between the neurons (architecture), (ii) the method of determining the weights on the connections (training or learning), and (iii) the activation function. Depending on the pattern of connections between nodes, neural networks are mainly classified into two types, viz., Feed-Forward Neural Networks (FFNN) and Recurrent Neural Networks (RNN) [42]. An FFNN must form a directed acyclic graph, whereas in an RNN the connections between nodes form a directed cycle. An FFNN consists of one or more layers, each comprising one or more nodes. The first layer is the input layer and the last layer is the output layer; the layers between them are called hidden layers. FFNNs are used for supervised [140] learning tasks such as classification and regression.
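As a minimal sketch of the single-node computation in Eq. (1), assuming a sigmoid activation, the snippet below evaluates one neuron on made-up inputs. The function names and the numeric values are illustrative assumptions, not taken from the reviewed literature.

```python
import math


def sigmoid(a: float) -> float:
    """Logistic sigmoid activation f(a) = 1 / (1 + e^(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def neuron_output(x, w, b):
    """Output y = f(w . x + b) of a single node, following Eq. (1)."""
    net = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(net)


# Illustrative (made-up) inputs, weights, and bias.
x = [1.0, 0.0, 1.0]
w = [0.5, -0.3, -0.5]
b = 0.0

# The weighted sum is 0.5 - 0.5 = 0, so the sigmoid returns 0.5.
y = neuron_output(x, w, b)
```

Changing any weight shifts the weighted sum and therefore moves the output away from 0.5, which is the quantity that training adjusts.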

Training a neural network with Backpropagation (BP)

In neural networks, the learning process is modeled as an iterative optimization of the weights to minimize a loss function. Based on network performance on a set of examples belonging to the training set, the weights are modified. The training procedure consists of a forward phase and a backward phase. For neural network training, an activation function is selected for forward propagation, and BP training is used to change the weights. The BP algorithm helps a multilayer FFNN to learn input-output mappings from training samples [16]. Forward propagation and backpropagation are explained for a neural network with one hidden layer in the following algorithm.

The backpropagation algorithm for a neural network with one hidden layer is as follows:

1. Initialize all weights to small random values.

2. While the stopping condition is false, do steps 3 through 10.

3. For each training pair (x1, y1), …, (xn, yn), do steps 4 through 9.

Feed-forward propagation:

4. Each input unit (Xi, i = 1, 2, …, n) receives the input signal xi and sends this signal to all hidden units in the layer above.

5. Each hidden unit (Zj, j = 1, …, p) computes its net input $z_{\text{in},j} = b_j + \sum_{i=1}^{n} x_i w_{ij}$, applies the activation function $z_j = f(z_{\text{in},j})$, and transmits the result to the output units.

6. Each output unit (Yk, k = 1, …, m) computes its net input $y_{\text{in},k} = b_k + \sum_{j=1}^{p} z_j w_{jk}$ and its activation $y_k = f(y_{\text{in},k})$.

Backpropagation:

7. For the input training pattern (x1, x2, …, xn) with corresponding output pattern (y1, y2, …, ym), let (t1, t2, …, tm) be the target pattern. Each output neuron computes its error term $\delta_k = (t_k - y_k) f'(y_{\text{in},k})$.

8. Each hidden neuron computes its error term δj from the δk of the output neurons obtained in the previous step: $\delta_j = f'(z_{\text{in},j}) \sum_{k=1}^{m} \delta_k w_{jk}$.

9. Update the weights and biases using the following formulas, where η is the learning rate.

   Each output unit (Yk, k = 1, 2, …, m) updates its weights (j = 1, …, p) and bias:

   wjk(new) = wjk(old) + ηδkzj; bk(new) = bk(old) + ηδk

   Each hidden unit (Zj, j = 1, 2, …, p) updates its weights (i = 1, …, n) and bias:

   wij(new) = wij(old) + ηδjxi; bj(new) = bj(old) + ηδj

10. Test the stopping condition.
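The steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes sigmoid activations in both layers, a fixed number of epochs as the stopping condition, and an XOR toy dataset. The dictionary keys (`w_ih`, `b_h`, `w_ho`, `b_o`), the learning rate, and the layer sizes are hypothetical choices made for the example.

```python
import math
import random


def f(a):
    """Sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-a))


def f_prime_from_output(out):
    """Sigmoid derivative f'(a), written in terms of out = f(a)."""
    return out * (1.0 - out)


def init_network(n, p, m, rng):
    """Step 1: small random weights. w_ih[i][j]: input i -> hidden j; w_ho[j][k]: hidden j -> output k."""
    def small():
        return rng.uniform(-0.5, 0.5)
    return {
        "w_ih": [[small() for _ in range(p)] for _ in range(n)],
        "b_h": [small() for _ in range(p)],
        "w_ho": [[small() for _ in range(m)] for _ in range(p)],
        "b_o": [small() for _ in range(m)],
    }


def forward(net, x):
    """Steps 4-6: return hidden activations z and output activations y."""
    p, m = len(net["b_h"]), len(net["b_o"])
    z = [f(net["b_h"][j] + sum(x[i] * net["w_ih"][i][j] for i in range(len(x))))
         for j in range(p)]
    y = [f(net["b_o"][k] + sum(z[j] * net["w_ho"][j][k] for j in range(p)))
         for k in range(m)]
    return z, y


def train_step(net, x, t, eta):
    """Steps 4-9 for one training pair; returns the squared error before the update."""
    z, y = forward(net, x)
    # Step 7: output error terms.
    delta_k = [(t[k] - y[k]) * f_prime_from_output(y[k]) for k in range(len(y))]
    # Step 8: hidden error terms, computed with the output weights before they change.
    delta_j = [f_prime_from_output(z[j]) *
               sum(delta_k[k] * net["w_ho"][j][k] for k in range(len(y)))
               for j in range(len(z))]
    # Step 9: weight and bias updates.
    for j in range(len(z)):
        for k in range(len(y)):
            net["w_ho"][j][k] += eta * delta_k[k] * z[j]
    for k in range(len(y)):
        net["b_o"][k] += eta * delta_k[k]
    for i in range(len(x)):
        for j in range(len(z)):
            net["w_ih"][i][j] += eta * delta_j[j] * x[i]
    for j in range(len(z)):
        net["b_h"][j] += eta * delta_j[j]
    return sum((t[k] - y[k]) ** 2 for k in range(len(y)))


# Steps 2, 3, and 10: loop over the training pairs for a fixed number of epochs.
rng = random.Random(0)
net = init_network(n=2, p=3, m=1, rng=rng)
data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
        ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])]  # XOR, illustrative only
for epoch in range(2000):
    epoch_error = sum(train_step(net, x, t, eta=0.5) for x, t in data)
```

Updating after every training pair, as done here, corresponds to the per-pattern loop in step 3; a batch variant would accumulate the updates over all pairs before applying them.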

Activation function

The activation function is the mechanism by which artificial neurons process and transfer information [42]. Various types of activation functions can be used in neural networks, depending on the characteristics of the application. Activation functions are non-linear and, in most cases, continuously differentiable. Differentiability is important mainly when training a neural network with the gradient descent method. Some widely used activation functions are listed in Table 1.

Table 1.

Activation functions

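| Function name | Function equation | Function derivative |
|---|---|---|
| Sigmoid [86] | $f(x) = \frac{1}{1 + e^{-x}}$ | $f'(x) = f(x)(1 - f(x))$ |
| Hyperbolic tangent [87] | $f(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$ | $f'(x) = 1 - f(x)^{2}$ |
| Soft sign activation | $f(x) = \frac{x}{1 + \lvert x \rvert}$ | $f'(x) = \frac{1}{(1 + \lvert x \rvert)^{2}}$ |
| Rectified Linear Unit (ReLU) [68, 104] | $f(x) = \max(0, x)$ | $f'(x) = 1$ if $x > 0$, $0$ otherwise |
| Leaky Rectified Linear Unit (leaky ReLU) [94] | $f(x) = x$ if $x > 0$, $\alpha x$ otherwise | $f'(x) = 1$ if $x > 0$, $\alpha$ otherwise |
| Parameterized Rectified Linear Unit (PReLU) [47] | PReLU is the same as leaky ReLU. The difference is that $\alpha$ can be learned from training data via backpropagation | $f'(x) = 1$ if $x > 0$, $\alpha$ otherwise |
| Randomized Leaky Rectified Linear Unit [180] | $f(x) = x$ if $x > 0$, $\alpha x$ otherwise, with $\alpha$ drawn from a uniform distribution during training | $f'(x) = 1$ if $x > 0$, $\alpha$ otherwise |
| Soft plus [32] | $f(x) = \ln(1 + e^{x})$ | $f'(x) = \frac{1}{1 + e^{-x}}$ |
| Exponential Linear Unit (ELU) [24, 137] | $f(x) = x$ if $x > 0$, $\alpha(e^{x} - 1)$ otherwise | $f'(x) = 1$ if $x > 0$, $f(x) + \alpha$ otherwise |
| Scaled Exponential Linear Unit (SELU) [67] | $f(x) = \lambda x$ if $x > 0$, $\lambda \alpha (e^{x} - 1)$ otherwise | $f'(x) = \lambda$ if $x > 0$, $\lambda \alpha e^{x}$ otherwise |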
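Because gradient descent relies on the derivative of the activation function, it can be useful to check an analytic derivative against a finite-difference estimate. The sketch below does this for several of the functions named in Table 1, using their standard textbook definitions. The constants `LEAK` and `ELU_ALPHA`, the test points, and the step size are illustrative assumptions.

```python
import math

LEAK = 0.01       # illustrative leaky-ReLU slope, not a value from the text
ELU_ALPHA = 1.0   # illustrative ELU scale, not a value from the text


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Each entry maps a name to (function, analytic derivative).
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda x: sigmoid(x) * (1.0 - sigmoid(x))),
    "tanh": (math.tanh, lambda x: 1.0 - math.tanh(x) ** 2),
    "softsign": (lambda x: x / (1.0 + abs(x)),
                 lambda x: 1.0 / (1.0 + abs(x)) ** 2),
    "relu": (lambda x: max(0.0, x), lambda x: 1.0 if x > 0 else 0.0),
    "leaky_relu": (lambda x: x if x > 0 else LEAK * x,
                   lambda x: 1.0 if x > 0 else LEAK),
    "softplus": (lambda x: math.log(1.0 + math.exp(x)), sigmoid),
    "elu": (lambda x: x if x > 0 else ELU_ALPHA * (math.exp(x) - 1.0),
            lambda x: 1.0 if x > 0 else ELU_ALPHA * math.exp(x)),
}


def max_derivative_error(points, h=1e-6):
    """Largest gap between the analytic and central-difference derivative per function."""
    errors = {}
    for name, (fn, dfn) in ACTIVATIONS.items():
        errors[name] = max(
            abs((fn(x + h) - fn(x - h)) / (2.0 * h) - dfn(x)) for x in points
        )
    return errors


# The points avoid x = 0, where ReLU-type functions are not differentiable.
errors = max_derivative_error([-2.0, -0.5, 0.5, 2.0])
```

The check deliberately skips the origin: the piecewise-linear functions have a kink there, which is why implementations simply pick one of the two one-sided slopes at x = 0.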

Deep learning

Deep learning is a subset of the machine learning field which deals with the development of deep neural networks inspired by biological neural networks in the human brain.

Autoencoder

The Autoencoder (AE) [128] is a deep learning model that exemplifies the principle of unsupervised representation learning, as depicted in Fig. 4a. An AE is useful when the input data contain far more unlabelled than labelled samples. An AE encodes the input x into a lower-dimensional hidden representation, which is then decoded, through one hidden layer, into an approximate reconstruction of x.

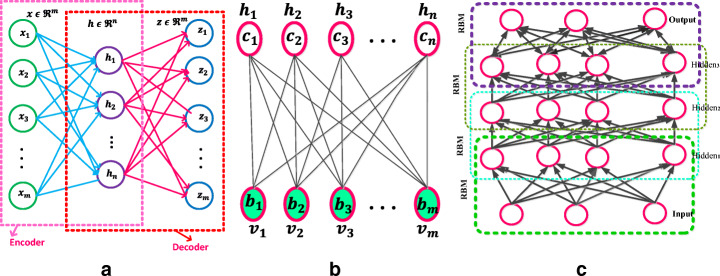

Fig. 4.

a Autoencoder [187] b Restricted Boltzmann Machine with n hidden and m visible units [88] c Deep Belief Networks [88]

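A basic AE consists of three main steps:

Encode: Convert the input vector $x \in \mathbb{R}^{m}$ into the hidden layer $h \in \mathbb{R}^{n}$ by $h = f(wx + b)$, where $w \in \mathbb{R}^{n \times m}$ and $b \in \mathbb{R}^{n}$; m and n are the dimensions of the input vector and of the hidden state, respectively. The dimension of the hidden layer h should be smaller than that of x, and f is an activation function.

Decode: Based on the above h, reconstruct the input as the vector z by $z = f'(w'h + b')$, where $w' \in \mathbb{R}^{m \times n}$ and $b' \in \mathbb{R}^{m}$. Here f′ is the same as the above activation function.

Calculate the square error: $L_{recons}(x, z) = \lVert x - z \rVert^{2}$, which is the reconstruction error cost function. The reconstruction error is minimized by optimizing the cost function (2)

$$\min_{w,\, b,\, w',\, b'} \; \lVert x - f'\left( w' f(wx + b) + b' \right) \rVert^{2} \tag{2}$$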
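The encode, decode, and error steps can be traced on a tiny example. The sketch below assumes sigmoid activations for both f and f′ and uses hand-picked weights with m = 4 inputs and n = 2 hidden units. All numeric values and function names are illustrative assumptions, and no training is performed.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def affine(W, x, b):
    """Compute W x + b for a matrix W (list of rows) and vectors x and b."""
    return [sum(W[r][c] * x[c] for c in range(len(x))) + b[r] for r in range(len(b))]


def encode(x, W, b):
    """h = f(W x + b): map the m-dimensional input to the n-dimensional hidden layer."""
    return [sigmoid(a) for a in affine(W, x, b)]


def decode(h, W_dec, b_dec):
    """z = f'(W' h + b'): map the hidden layer back to m dimensions."""
    return [sigmoid(a) for a in affine(W_dec, h, b_dec)]


def reconstruction_loss(x, z):
    """Squared error ||x - z||^2 between the input and its reconstruction."""
    return sum((xi - zi) ** 2 for xi, zi in zip(x, z))


# Hand-picked illustrative parameters: W is n x m = 2 x 4, W_dec is m x n = 4 x 2.
x = [1.0, 0.0, 1.0, 0.0]
W = [[0.5, -0.5, 0.5, -0.5], [0.1, 0.2, 0.3, 0.4]]
b = [0.0, 0.0]
W_dec = [[1.0, 0.0], [-1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
b_dec = [0.0, 0.0, 0.0, 0.0]

h = encode(x, W, b)
z = decode(h, W_dec, b_dec)
loss = reconstruction_loss(x, z)
```

Training would adjust `W`, `b`, `W_dec`, and `b_dec` to reduce `loss` over the dataset, for example with the backpropagation procedure described earlier.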

Another unsupervised representation-learning model is the Stacked Autoencoder (SAE). An SAE comprises autoencoder layers stacked on top of each other, where the output of each layer is wired to the input of the next layer. The Denoising Autoencoder (DAE) was introduced by Vincent et al. [159]; it is trained to reconstruct the input from a version corrupted with random noise. The Variational Autoencoder (VAE) [66] modifies the encoder so that the latent vector space used to represent the images follows a unit Gaussian distribution. This model has two losses: a mean squared error and the Kullback-Leibler divergence loss, which measures how closely the latent variable matches the unit Gaussian distribution. Sparse autoencoders [106] and variational autoencoders have applications in unsupervised and semi-supervised learning and in segmentation.

Restricted Boltzmann machine

A Restricted Boltzmann Machine (RBM) is a Markov Random Field (MRF) associated with a two-layer undirected probabilistic generative model, as shown in Fig. 4b. An RBM contains visible (input) units v and hidden (output) units h. A significant feature of this model is that there are no direct connections between any two visible units or between any two hidden units. In binary RBMs, the random variables (v, h) take values (v, h) ∈ {0, 1}^(m + n). Like the general Boltzmann machine [50], the RBM is an energy-based model. The energy of the state {v, h} is defined as (3)

$$E(v, h) = -\sum_{i=1}^{n} \sum_{j=1}^{m} w_{ij} h_i v_j - \sum_{j=1}^{m} b_j v_j - \sum_{i=1}^{n} c_i h_i \tag{3}$$

where vj and hi are the binary states of visible unit j ∈ {1, 2, …, m} and hidden unit i ∈ {1, 2, …, n}, bj and ci are the biases of the visible and hidden units, and wij is the symmetric interaction term between the units vj and hi. The joint probability of (v, h) is given by the Gibbs distribution in Eq. (4)

$$p(v, h) = \frac{1}{Z} e^{-E(v, h)} \tag{4}$$

Z is a “partition function” obtained by summing over all possible pairs of visible v and hidden h vectors (5).

$$Z = \sum_{v, h} e^{-E(v, h)} \tag{5}$$

Because there are no connections within a layer, the conditional distributions p(h| v) and p(v| h) factorize as (6)

$$p(h \mid v) = \prod_{i=1}^{n} p(h_i \mid v), \qquad p(v \mid h) = \prod_{j=1}^{m} p(v_j \mid h) \tag{6}$$

For a binary RBM, the conditional distributions of the visible and hidden units are given by (7) and (8)

$$p(v_j = 1 \mid h) = \sigma\left( \sum_{i=1}^{n} w_{ij} h_i + b_j \right) \tag{7}$$

$$p(h_i = 1 \mid v) = \sigma\left( \sum_{j=1}^{m} w_{ij} v_j + c_i \right) \tag{8}$$

where σ(·) is the sigmoid function.

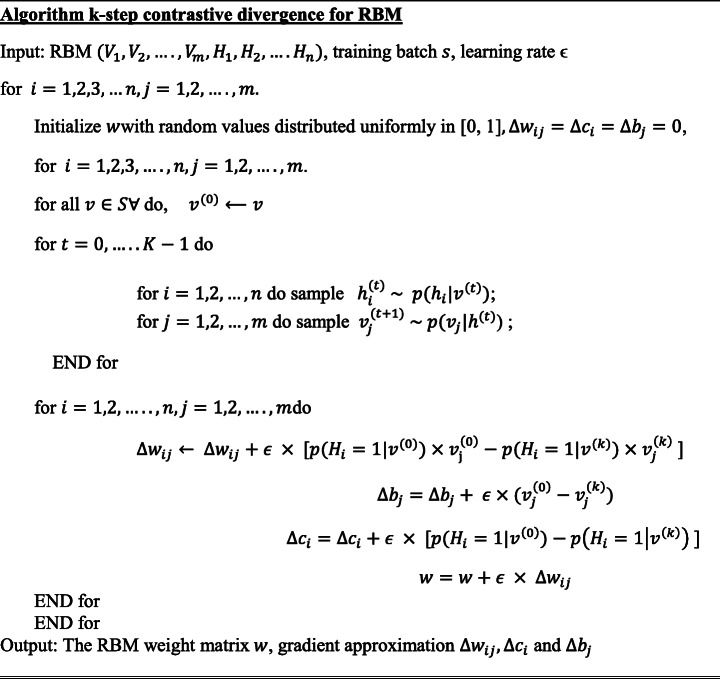

The RBM parameters (wij, bj, ci) are efficiently estimated using the contrastive divergence learning method [150]. A batch version of k-step contrastive divergence learning (CD-k) is described in [36].
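For intuition, the sketch below shows one common formulation of single-step contrastive divergence (CD-1) for a tiny binary RBM, applied to one training vector at a time. It is an assumption-laden illustration and not necessarily the exact batch algorithm of [36]: the layer sizes, learning rate, training vector, and function names are all hypothetical.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def hidden_probs(v, W, c):
    """p(h_i = 1 | v) = sigmoid(c_i + sum_j w_ij v_j); W[i][j] links hidden i and visible j."""
    return [sigmoid(c[i] + sum(W[i][j] * v[j] for j in range(len(v))))
            for i in range(len(c))]


def visible_probs(h, W, b):
    """p(v_j = 1 | h) = sigmoid(b_j + sum_i w_ij h_i)."""
    return [sigmoid(b[j] + sum(W[i][j] * h[i] for i in range(len(h))))
            for j in range(len(b))]


def sample(probs, rng):
    """Draw independent Bernoulli samples with the given success probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(v0, W, b, c, eta, rng):
    """One CD-1 step on a single binary training vector v0 (updates W, b, c in place)."""
    ph0 = hidden_probs(v0, W, c)                  # positive phase
    h0 = sample(ph0, rng)
    v1 = sample(visible_probs(h0, W, b), rng)     # one Gibbs step back to the visible layer
    ph1 = hidden_probs(v1, W, c)                  # negative phase
    for i in range(len(c)):
        for j in range(len(b)):
            W[i][j] += eta * (ph0[i] * v0[j] - ph1[i] * v1[j])
        c[i] += eta * (ph0[i] - ph1[i])
    for j in range(len(b)):
        b[j] += eta * (v0[j] - v1[j])


rng = random.Random(0)
W = [[0.0] * 3 for _ in range(2)]   # 2 hidden units, 3 visible units (illustrative sizes)
b, c = [0.0] * 3, [0.0] * 2
for _ in range(100):
    cd1_update([1, 0, 1], W, b, c, eta=0.1, rng=rng)
```

CD-k repeats the Gibbs step k times before computing the negative phase; k = 1 is often used in practice because it is cheap and usually gives a useful learning signal.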


Deep belief networks

The Deep Belief Network (DBN) proposed by Hinton et al. [51] is a non-convolutional model that can extract features and learn a deep hierarchical representation of training data. DBNs are generative models constructed by stacking multiple RBMs. A DBN is a hybrid model: the top two layers form an undirected RBM, and the remaining layers form a directed generative model. A DBN has one visible layer v and a series of hidden layers h(1), h(2), …, h(l), as shown in Fig. 4c. The DBN models the joint distribution between the observed units v and the l hidden layers h(k) (k = 1, …, l) as (9)

$$P(v, h^{(1)}, \ldots, h^{(l)}) = \left( \prod_{k=0}^{l-2} P(h^{(k)} \mid h^{(k+1)}) \right) P(h^{(l-1)}, h^{(l)}) \tag{9}$$

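where v = h(0) and P(h(k)| h(k + 1)) is the conditional distribution (10) of layer k given the units of layer k + 1

$$P(h^{(k)} \mid h^{(k+1)}) = \prod_{i} P(h_i^{(k)} \mid h^{(k+1)}), \qquad P(h_i^{(k)} = 1 \mid h^{(k+1)}) = \sigma\left( b_i^{(k)} + \sum_{j} W_{ij}^{(k+1)} h_j^{(k+1)} \right) \tag{10}$$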

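A DBN has l weight matrices W(1), …, W(l) and l + 1 bias vectors b(0), …, b(l). P(h(l), h(l − 1)) is the joint distribution of the top-level RBM (11).

$$P(h^{(l)}, h^{(l-1)}) \propto \exp\left( b^{(l)\top} h^{(l)} + b^{(l-1)\top} h^{(l-1)} + h^{(l-1)\top} W^{(l)} h^{(l)} \right) \tag{11}$$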

The probability distribution of DBN is given by Eq. (12)

| 12 |

Convolutional neural networks (CNN)

Among neural networks, CNNs are a distinct family of deep learning models and the major artificial visual network for identifying patterns in medical images. The CNN family is primarily inspired by knowledge of the animal visual cortex [55, 116]. The major problem with a fully connected feed-forward neural network is that, even for shallow architectures, the number of connections may be very high, which makes such networks impractical for image applications. A CNN reduces the number of parameters, which allows a network to be deeper with fewer parameters.

CNNs are designed around three architectural ideas: shared weights, local receptive fields, and spatial sub-sampling [70]. The essential element of a CNN is the processing of unstructured data through the convolution operation. Convolving the input signal x(t) with the filter signal h(t) creates an output signal y(t) that may reveal more information than the input signal itself. The 1-D convolution of discrete signals x(t) and h(t) is (13)

$$y(t) = x(t) * h(t) = \sum_{\tau=-\infty}^{\infty} x(\tau)\, h(t - \tau) \tag{13}$$

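A digital image x(n1, n2) is a 2-D discrete signal. The convolution of images x(n1, n2) and h(n1, n2) is (14)

$$y(n_1, n_2) = x(n_1, n_2) * h(n_1, n_2) = \sum_{k_1=-\infty}^{\infty} \sum_{k_2=-\infty}^{\infty} x(k_1, k_2)\, h(n_1 - k_1, n_2 - k_2) \tag{14}$$

where 0 ≤ n1 ≤ M − 1, 0 ≤ n2 ≤ N − 1.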

The function of the convolution layer is to detect local features x^l from the input feature maps x^(l − 1) using kernels k^l through the convolution operation (∗), i.e., x^(l − 1) ∗ k^l. This convolution operation is repeated for every convolutional layer and is followed by a non-linear transform (15)

$$x_n^{l} = f\left( \sum_{m \in M^{l-1}} x_m^{l-1} * k_{mn}^{l} + b_n^{l} \right) \tag{15}$$

where $k_{mn}^{l}$ represents the weights between feature map m at layer l − 1 and feature map n at layer l, $x_m^{l-1}$ is the m-th feature map of layer l − 1, $x_n^{l}$ is the n-th feature map of layer l, $b_n^{l}$ is the bias parameter, f(·) is the non-linear activation function, and $M^{l-1}$ denotes the set of feature maps at layer l − 1. A CNN significantly reduces the number of parameters compared with a fully connected neural network because of local connectivity and weight sharing. The depth, zero-padding, and stride are three hyperparameters that control the volume of the convolution layer output.
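To make the 2-D convolution and the role of padding and stride concrete, the sketch below computes a direct convolution over the fully overlapping ("valid") positions of a small image and evaluates the commonly used output-size relation (W − F + 2P)/S + 1. That relation and the function names are assumptions added for illustration; they are standard but not stated in the text above.

```python
def conv2d_valid(x, h):
    """2-D discrete convolution (kernel flipped), keeping only fully overlapping positions."""
    rows, cols = len(x), len(x[0])
    k_rows, k_cols = len(h), len(h[0])
    out = []
    for n1 in range(k_rows - 1, rows):
        out_row = []
        for n2 in range(k_cols - 1, cols):
            total = 0
            for k1 in range(k_rows):
                for k2 in range(k_cols):
                    # Same term as in the 2-D convolution sum, with the roles of
                    # the two signals swapped (convolution is commutative).
                    total += h[k1][k2] * x[n1 - k1][n2 - k2]
            out_row.append(total)
        out.append(out_row)
    return out


def conv_output_size(input_size, kernel_size, padding=0, stride=1):
    """Spatial output size of a convolution layer: (W - F + 2P) // S + 1."""
    return (input_size - kernel_size + 2 * padding) // stride + 1


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]

feature_map = conv2d_valid(image, kernel)   # [[4, 4], [4, 4]]
size_no_pad = conv_output_size(32, 5)       # 28
size_same = conv_output_size(224, 3, padding=1, stride=1)  # 224
```

Note that many deep learning libraries implement the convolution layer as cross-correlation, that is, without flipping the kernel; because the kernel weights are learned, the two conventions are equivalent in practice.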

A pooling layer comes after the convolutional layer to subsample the feature maps. The goal of the pooling layers is to achieve spatial invariance by reducing the spatial dimension of the feature maps passed to the next convolution layer. Max pooling and average pooling are the two pooling operations most commonly used for downsampling. Let the size of the pooling region be M × M and let the elements in the pooling region be xj = (x1, x2, …, xM × M); the output after pooling is xi. Max pooling and average pooling are described by Eqs. (16) and (17).

$$x_i = \max_{1 \le j \le M \times M} x_j \tag{16}$$

$$x_i = \frac{1}{M \times M} \sum_{j=1}^{M \times M} x_j \tag{17}$$
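As a small worked example of Eqs. (16) and (17), the sketch below applies non-overlapping 2 × 2 max and average pooling to a 4 × 4 feature map. The feature-map values and the function name are made up for illustration, and the stride is assumed to equal the pooling size.

```python
def pool2d(fmap, size, mode="max"):
    """Non-overlapping size x size pooling over a 2-D feature map (stride = size)."""
    out = []
    for r in range(0, len(fmap) - size + 1, size):
        out_row = []
        for c in range(0, len(fmap[0]) - size + 1, size):
            region = [fmap[r + i][c + j] for i in range(size) for j in range(size)]
            if mode == "max":
                out_row.append(max(region))
            else:
                out_row.append(sum(region) / len(region))
        out.append(out_row)
    return out


fmap = [[1, 3, 2, 4],
        [5, 6, 1, 2],
        [7, 2, 9, 1],
        [3, 4, 5, 6]]

max_pooled = pool2d(fmap, 2, mode="max")   # [[6, 4], [7, 9]]
avg_pooled = pool2d(fmap, 2, mode="avg")   # [[3.75, 2.25], [4.0, 5.25]]
```

Both operations halve each spatial dimension here; max pooling keeps the strongest response in each region, while average pooling smooths it.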

The max-pooling method chooses the most dominant invariant feature in a pooling region, whereas the average pooling method takes the average of all the features in the pooling area. Thus, max pooling retains texture information and can lead to faster convergence, while average pooling tends to preserve background information [133]. Spatial pyramid pooling [48], stochastic pooling [175], Def-pooling [109], multi-activation pooling [189], and detail-preserving pooling [130] are other pooling techniques in the literature. A fully connected layer is used at the end of the CNN model. Fully connected layers behave like a traditional neural network [174]. The input to this layer is a vector of numbers (the output of the pooling layer), and the output is an N-dimensional vector, where N is the number of classes. After the pooling layers, the feature maps of the previous layer are flattened and connected to the fully connected layers.

The first successful CNN, the seven-layer LeNet-5, was developed by Yann LeCun in 1990 for handwritten digit recognition. Krizhevsky et al. [68] proposed AlexNet, a deep convolutional neural network composed of 5 convolutional and 3 fully connected layers. AlexNet replaced the sigmoid activation function with the ReLU activation function to make model training easier.

K. Simonyan and A. Zisserman introduced VGG-16 [143], which has 13 convolutional and 3 fully connected layers. The Visual Geometry Group (VGG) released a series of CNNs: VGG-11, VGG-13, VGG-16, and VGG-19. The main intention of the VGG group was to understand how the depth of convolutional networks affects the accuracy of image classification and recognition models. The largest variant, VGG-19, has 16 convolutional layers and 3 fully connected layers, whereas the smallest, VGG-11, has 8 convolutional layers and 3 fully connected layers. The last three fully connected layers are the same across the VGG variants.

Szegedy et al. [151] proposed GoogLeNet, an image classification network consisting of 22 layers. The main idea behind GoogLeNet is the introduction of inception layers, each of which convolves its input in parallel using different filter sizes. Kaiming He et al. [49] proposed the ResNet architecture, which has 33 convolutional layers and one fully connected layer. Many models adopted the principle of using multiple hidden layers and extremely deep neural networks, but it was then realized that such models suffer from the vanishing or exploding gradient problem. To mitigate the vanishing gradient problem, skip layers (shortcut connections) were introduced. DenseNet, developed by Huang et al. [54], consists of several dense blocks and transition blocks, the latter placed between two adjacent dense blocks. Each layer in a dense block consists of three operations: batch normalization, followed by a ReLU and a 3 × 3 convolution. The transition blocks are made of batch normalization, 1 × 1 convolution, and average pooling.

Compared to state-of-the-art handcrafted feature detectors, CNNs are an efficient technique for detecting the features of an object and achieving good classification performance. A drawback of CNNs is that the spatial relationships, size, perspective, and orientation of features are not taken into account. To overcome the loss of information caused by the pooling operation in CNNs, Capsule Networks (CapsNet) are used to retain spatial information and the most significant features [129]. A special type of neuron, called a capsule, can efficiently detect distinct information. The capsule network consists of four main components: matrix multiplication, scalar weighting of the input, the dynamic routing algorithm, and the squashing function.

Recurrent neural networks (RNN)

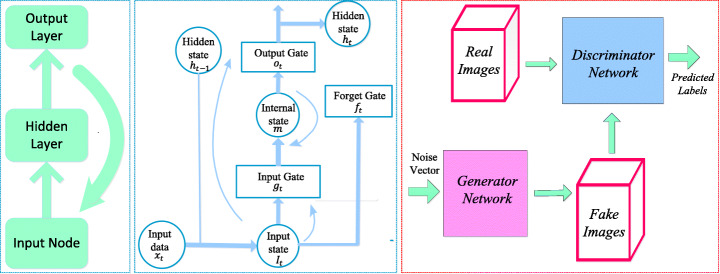

RNNs are a class of neural networks used for processing sequential data. The structure of the RNN shown in Fig. 5a is like that of an FFNN; the difference is that recurrent connections are introduced among the hidden nodes. In a generic RNN model at time t, the recurrently connected hidden unit ht receives input activation from the present data xt and from the previous hidden state ht − 1. The output yt is calculated from the hidden state ht. This can be represented by Eqs. (18) and (19):

Fig. 5.

a Recurrent Neural Networks [163] b Long Short-Term Memory [163] c Generative Adversarial Networks [64]

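$$h_t = f\left( w_{hx} x_t + w_{hh} h_{t-1} + b_h \right) \tag{18}$$

$$y_t = f\left( w_{yh} h_t + b_y \right) \tag{19}$$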

Here f is a non-linear activation function, whx is the weight matrix between the input and hidden layers, whh is the matrix of recurrent weights between the hidden layer and itself, wyh is the weight matrix between the hidden and output layers, and bh and by are biases that allow each node to learn an offset. Although the RNN is a simple and efficient model, in practice it is difficult to train properly. The Real-Time Recurrent Learning (RTRL) algorithm [173] and Back Propagation Through Time (BPTT) [170] are used to train RNNs. Training with these methods frequently fails because of the vanishing (multiplication of many small values) or exploding (multiplication of many large values) gradient problem [10, 112]. Hochreiter and Schmidhuber (1997) designed a new RNN model named Long Short-Term Memory (LSTM) that overcomes these error backflow problems with the aid of a specially designed memory cell [52]. Figure 5b shows an LSTM cell, which is typically configured with three gates: an input gate gt, a forget gate ft, and an output gate ot. These gates add information to or remove information from the cell.
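The vanishing-gradient effect mentioned above can be reproduced with a scalar RNN. The sketch below assumes f = tanh and a single hidden unit, and computes the derivative of the final hidden state with respect to the initial one as a product of per-step factors. The weights, the input sequence, and the function names are illustrative assumptions.

```python
import math


def rnn_forward(xs, w_hx, w_hh, b_h, h0=0.0):
    """Scalar hidden-state recurrence with f = tanh; returns the hidden states h_1..h_T."""
    hs, h = [], h0
    for x in xs:
        h = math.tanh(w_hx * x + w_hh * h + b_h)
        hs.append(h)
    return hs


def grad_hT_wrt_h0(hs, w_hh):
    """d h_T / d h_0 as the chain-rule product of w_hh * (1 - h_t^2) over all steps."""
    g = 1.0
    for h in hs:
        g *= w_hh * (1.0 - h * h)
    return g


# Illustrative setup: constant input, recurrent weight below 1 in magnitude.
w_hx, w_hh, b_h = 1.0, 0.5, 0.0
grad_short = grad_hT_wrt_h0(rnn_forward([1.0] * 5, w_hx, w_hh, b_h), w_hh)
grad_long = grad_hT_wrt_h0(rnn_forward([1.0] * 50, w_hx, w_hh, b_h), w_hh)
```

Each factor has magnitude at most 0.5 here, so the gradient across 50 steps is bounded by 0.5 to the power 50, which is why early inputs barely influence the weight updates in long sequences.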

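An LSTM can be represented with the following Eqs. (20) to (25), where σ(·) is the sigmoid function, ⊙ denotes element-wise multiplication, and ct is the state of the memory cell:

$$g_t = \sigma\left( w_{gx} x_t + w_{gh} h_{t-1} + b_g \right) \tag{20}$$

$$f_t = \sigma\left( w_{fx} x_t + w_{fh} h_{t-1} + b_f \right) \tag{21}$$

$$o_t = \sigma\left( w_{ox} x_t + w_{oh} h_{t-1} + b_o \right) \tag{22}$$

$$\tilde{c}_t = \tanh\left( w_{cx} x_t + w_{ch} h_{t-1} + b_c \right) \tag{23}$$

$$c_t = f_t \odot c_{t-1} + g_t \odot \tilde{c}_t \tag{24}$$

$$h_t = o_t \odot \tanh(c_t) \tag{25}$$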
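A single step of the standard LSTM cell update can be sketched with scalars as follows. This is a minimal illustration assuming one hidden unit and the usual sigmoid and tanh non-linearities. The parameter names (`w_gx`, `w_gh`, `b_g`, and so on) and their values are hypothetical and chosen only to mirror the gate names gt, ft, and ot used in the text.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def lstm_step(x_t, h_prev, c_prev, p):
    """One scalar LSTM step; p maps names such as 'w_gx', 'w_gh', 'b_g' to floats."""
    def pre(name):
        # Pre-activation shared by all gates: input term + recurrent term + bias.
        return p["w_" + name + "x"] * x_t + p["w_" + name + "h"] * h_prev + p["b_" + name]

    g_t = sigmoid(pre("g"))        # input gate
    f_t = sigmoid(pre("f"))        # forget gate
    o_t = sigmoid(pre("o"))        # output gate
    c_cand = math.tanh(pre("c"))   # candidate cell content
    c_t = f_t * c_prev + g_t * c_cand
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t


# Illustrative parameter values, not taken from any cited model.
params = {
    "w_gx": 0.5, "w_gh": 0.1, "b_g": 0.0,
    "w_fx": 0.3, "w_fh": 0.2, "b_f": 1.0,
    "w_ox": 0.4, "w_oh": 0.1, "b_o": 0.0,
    "w_cx": 0.6, "w_ch": 0.2, "b_c": 0.0,
}

h, c = 0.0, 0.0
for x_t in [1.0, 0.5, -0.5]:
    h, c = lstm_step(x_t, h, c, params)
```

Because the cell state is updated additively (scaled by the forget gate) rather than through repeated squashing, gradients can flow across many time steps more easily than in the plain RNN.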

Generative adversarial networks (GAN)

In the field of deep learning, one class of deep generative models is the Generative Adversarial Network (GAN), introduced by Goodfellow et al. [43]. GANs are neural networks that can generate synthetic images that closely imitate the original images. In the GAN shown in Fig. 5c, there are two neural networks, namely a generator and a discriminator, which are trained simultaneously. The generator G generates counterfeit data samples that aim to “fool” the discriminator D, while the discriminator attempts to correctly distinguish the true samples from the false ones. In mathematical terms, D and G play a two-player minimax game with the cost function (26) [64].

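$$\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}\left[ \log D(x) \right] + \mathbb{E}_{z \sim p_z(z)}\left[ \log\left( 1 - D(G(z)) \right) \right] \tag{26}$$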

Here x represents the original image and z is a noise vector of random numbers; pdata(x) and pz(z) are the probability distributions of x and z, respectively. D(x) represents the probability that x comes from the actual data distribution pdata(x) rather than from the generated data, and 1 − D(G(z)) is the probability that a sample G(z), generated from noise z drawn from pz(z), is judged to be generated. The expectation over x from the real data distribution pdata is written $\mathbb{E}_{x \sim p_{data}(x)}$, and the expectation over z sampled from the noise distribution is written $\mathbb{E}_{z \sim p_z(z)}$. The goal of training is to maximize this objective for the discriminator, while the training objective for the generator is to minimize the term log(1 − D(G(z))). The most common uses of GANs in medical image analysis are data augmentation (generating new data) and image-to-image translation [107]. Trustworthiness of the generated data, unstable training, and the evaluation of generated data are three major drawbacks of GANs that might hinder their acceptance in the medical community [183].
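The two-player objective can be estimated from discriminator outputs on a batch of real and generated samples. The sketch below assumes the standard GAN value function, E[log D(x)] + E[log(1 − D(G(z)))], and uses made-up discriminator probabilities; the function name and all numbers are illustrative assumptions.

```python
import math


def value_function(d_real, d_fake):
    """Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples.
    d_fake: discriminator outputs D(G(z)) on generated samples.
    """
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term


# A confident discriminator: high scores on real data, low scores on generated data.
v_strong_d = value_function([0.9, 0.95, 0.85], [0.1, 0.05, 0.2])

# A discriminator that cannot tell the two apart outputs 0.5 everywhere.
v_confused_d = value_function([0.5, 0.5], [0.5, 0.5])
```

When the discriminator outputs 0.5 for every sample, the estimate equals −log 4, which is the value of the objective when the generated distribution matches the data distribution; a discriminator that separates the two well pushes the value toward 0.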

U-net

Ronneberger et al. [126] proposed the CNN-based U-Net architecture for the segmentation of biomedical image data. The architecture consists of a contracting path (left side) to capture context and a symmetric expansive path (right side) that enables precise localization. U-Net is a generalized DLA used for quantification tasks such as cell detection and shape measurement in medical image data [34].

Software frameworks

Several software frameworks are available for implementing DLA, and they are regularly updated as new approaches and ideas are created. DLA encapsulates many levels of mathematical principles based on probability, linear algebra, calculus, and numerical computation. Deep learning frameworks include Theano, TensorFlow, Caffe, CNTK, Torch, Neon, pylearn, etc. [138]. Globally, Python is probably the most commonly used programming language for DL. PyTorch and TensorFlow were the most widely used libraries for research in 2019. Table 2 compares various deep learning frameworks by core language and supported interface languages.

Table 2.

Comparison of various Deep Learning Frameworks

| Framework | Core Language | Interface provided | Link |
|---|---|---|---|
| Caffe [61] | C++ | Python, MATLAB, C++ | http://caffe.berkeleyvision.org/ |
| CNTK [186] | C++ | C++, Python, BrainScript | https://github.com/Microsoft/CNTK |
| Chainer | – | Python | http://chainer.org/ |
| DL4j | Java | Java, Python, Scala | https://deeplearning4j.org/ |
| MXNet | C++ | Python, R, Scala, Perl, Julia, C++, etc. | https://github.com/dmlc/mxnet |
| MatConvNet [158] | – | MATLAB | http://www.vlfeat.org/matconvnet/ |
| TensorFlow [1] | C++ | – | https://www.tensorflow.org/ |
| Theano [6, 153] | Python | Python | http://deeplearning.net/software/theano/ |
| Torch [25] | Lua | – | http://torch.ch/ |

Use of deep learning in medical imaging

X-ray image

Chest radiography is widely used in diagnosis to detect heart pathologies and lung diseases such as tuberculosis, atelectasis, consolidation, pleural effusion, pneumothorax, and hyper cardiac inflation. X-ray imaging is accessible, affordable, and delivers a relatively low radiation dose, which makes it a powerful tool for mass screening [14]. Table 3 presents a description of the DL methods used for X-ray image analysis.

Table 3.

An overview of the DLA for the study of X-ray images

| Reference | Dataset | Method | Application | Metrics |
|---|---|---|---|---|
| Lo et al. 1995 [89] | – | CNN | Two-layer CNN, each with twelve 5 × 5 filters, for lung nodule detection | ROC |
| S. Hwang et al. 2016 [57] | KIT, MC, and Shenzhen | Deep CNN | The first deep CNN-based tuberculosis screening system with a transfer learning technique | AUC |
| Rajpurkar et al. 2017 [122] | ChestX-ray14 | CNN | Pneumonia detection from chest X-ray images using CheXNet, a 121-layer CNN | F1 score |
| Lopes & Valiati 2017 [91] | Shenzhen and Montgomery | CNN | Comparative analysis of pre-trained CNNs as feature extractors for tuberculosis detection | Accuracy, ROC |
| Mittal et al. 2018 [99] | JSRT | LF-SegNet | Segmentation of lung fields from CXR images using a fully convolutional encoder-decoder network | Accuracy |
| E.J. Hwang et al. 2019 [58] | 57,481 CXR images | CNN | Deep learning-based automatic detection (DLAD) algorithm for tuberculosis detection on CXR | ROC |
| Souza et al. 2019 [148] | Montgomery | CNN | Segmentation of lungs in CXR for detection and diagnosis of pulmonary diseases using two CNN architectures | Dice coefficient |
| Hooda et al. [53] | Shenzhen, Montgomery, Belarus, JSRT | CNN | An ensemble of three pre-trained architectures (ResNet, AlexNet, and GoogLeNet) for TB detection | Accuracy, ROC |
| Xu et al. 2019 [181] | ChestX-ray14 | CNN, CXNet-m1 | A hierarchical CNN structure for a new network, CXNet-m1, to detect anomalies in chest X-ray images | Accuracy, F1-score, AUC |
| Murphy et al. 2019 [103] | 5565 CXR images | – | Evaluation of the deep learning-based CAD4TB software | ROC |
| Rajaraman and Antani 2020 [119] | RSNA, Pediatric pneumonia, and Indiana | CNN | An ensemble of modality-specific deep learning models for tuberculosis (TB) detection from CXR | Accuracy, AUC, CI |
| Capizzi et al. 2020 [15] | Open data set from radiologykey.com | PNN | A fuzzy system combined with a neural network to detect low-contrast nodules | Accuracy |
| Abbas et al. 2020 [2] | 196 X-ray images | CNN | Classification of COVID-19 CXR images using Decompose, Transfer, and Compose (DeTraC) | Accuracy, SN, SP |
| Basu et al. 2020 [7] | 225 COVID-19 CXR images | CNN | Domain Extension Transfer Learning (DETL) method for the screening of COVID-19 from CXR images | Accuracy |
| Wang & Wong 2020 [165] | 13,975 X-ray images | CNN | COVID-Net, a deep convolutional neural network design for the detection of COVID-19 cases | Accuracy, SN, PPV |
| Ozturk et al. 2020 [110] | 127 X-ray images | CNN | Deep learning-based DarkCovidNet model to detect and classify COVID-19 cases from X-ray images | Accuracy |
| Loey et al. 2020 [90] | 306 X-ray images | AlexNet, GoogLeNet, ResNet18 | A GAN with deep transfer learning for COVID-19 detection in limited CXR images | Accuracy |
| Apostolopoulos & Mpesiana 2020 [3] | 1427 X-ray images | CNN | Transfer learning-based CNN architectures for the detection of COVID-19 | Accuracy, SN, SP |

S. Hwang et al. [57] proposed the first deep CNN-based tuberculosis screening system using a transfer learning technique. Rajaraman et al. [119] proposed modality-specific ensemble learning for the detection of abnormalities in chest X-rays (CXRs). The predictions of the individual models are combined using various ensemble techniques to minimize prediction variance, and class-selective relevance mapping (CRM) is used to visualize the abnormal regions in the CXR images. Loey et al. [90] proposed a GAN with deep transfer learning for COVID-19 detection in CXR images; the GAN was used to generate additional CXR images because few COVID-19 images were available. Waheed et al. [160] proposed CovidGAN, a model based on the Auxiliary Classifier Generative Adversarial Network (ACGAN), to produce synthetic CXR images for COVID-19 detection. S. Rajaraman and S. Antani [120] introduced weakly labeled data augmentation to enlarge the training dataset and improve COVID-19 detection performance in CXR images.
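As an illustration of how ensemble techniques can combine the predictions of several models, the following minimal Python sketch shows two common strategies, probability averaging (soft voting) and majority voting (hard voting). It is not the procedure of any cited paper; the three probability vectors are hypothetical outputs of three CXR classifiers for a single image.

```python
from collections import Counter


def average_probabilities(model_probs):
    """Soft voting: average the per-class probabilities of several models."""
    n_models = len(model_probs)
    n_classes = len(model_probs[0])
    return [sum(p[c] for p in model_probs) / n_models for c in range(n_classes)]


def majority_vote(model_probs):
    """Hard voting: each model votes for its most probable class."""
    votes = [max(range(len(p)), key=p.__getitem__) for p in model_probs]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical outputs of three classifiers: [P(normal), P(abnormal)]
probs = [[0.40, 0.60], [0.55, 0.45], [0.20, 0.80]]

averaged = average_probabilities(probs)
predicted_class = max(range(len(averaged)), key=averaged.__getitem__)
voted_class = majority_vote(probs)
```

Averaging tends to smooth out the errors of individual models, which is one way an ensemble can reduce prediction variance.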

Computerized tomography (CT)

CT uses computers and rotating X-ray equipment to create cross-sectional images of the body. CT scans show the soft tissues, blood vessels, and bones in different parts of the body. CT has a high detection ability, reveals small lesions, and provides a more detailed assessment. CT examinations are frequently used for pulmonary nodule identification [93]. The detection of malignant pulmonary nodules is fundamental to the early diagnosis of lung cancer [102, 142]. Table 4 summarizes the latest deep learning developments in the study of CT image analysis.

Table 4.

A review of articles that use DL techniques for the analysis of CT images

| Reference | Dataset | Method | Application | Metrics |
|---|---|---|---|---|
| Van Ginneken 2015 [157] | LIDC (865 CT scans) | CNN | Nodule detection in chest CT with pre-trained CNN models from orthogonal patches around the candidate | FROC |
| Li et al. 2016 [74] | LIDC database | CNN | Nodule classification with 2D CNN that processes small patches around a nodule | SN, FP/exam, Accuracy |
| Setio et al. 2016 [136] | LIDC-IDRI, ANODE09 | Multi-view ConvNet | CNN-based algorithms for pulmonary nodule detection with 9 patches per candidate | Sensitivity, FROC |
| Shin et al. 2016 [141] | ILD dataset | CNN | Interstitial lung disease (ILD) classification and lymph node (LN) detection using transfer learning-based CNNs | AUC |
| Qiang, Yan et al. 2017 [117] | Independent dataset | Deep SDAE-ELM | Discriminative features of nodules in CT and PET images are combined using the fusion method for classification of nodules | SN, SP, AUC |
| Onishi Y et al. 2019 [108] | Independent dataset | CNN | CNN trained by Wasserstein GAN for pulmonary nodule classification | SN, SP, AUC, Accuracy |
| Li et al. 2018 [75] | 2017 LiTS, 3DIRCADb dataset | H-DenseUNet | H-DenseUNet for tumor and liver segmentation from CT volume | DICE |
| Pezeshk et al. 2018 [114] | LIDC | 3DFCN and 3DCNN | 3DFCN is used for nodule candidate generation and 3D CNN for reducing the false-positive rate | FROC |
| Balagourouchetty et al. 2019 [5] | 634 liver CT images | GoogLeNet based FCNet Classifier | Liver lesion classification using GoogLeNet based ensemble FCNet classifier | Accuracy, ROC |
| Y. Wang et al. 2019 [166] | Independent dataset | Faster RCNN and ResNet | Intelligent Imaging Layout System (IILS) for the detection and classification of pulmonary nodules | SN, SP, AUC, Accuracy |
| Pang et al. 2020 [111] | Shandong Provincial Hospital | CNN (DenseNet) | Classification of lung cancer type from CT images using the DenseNet network | Accuracy |
| Masood et al. 2020 [95] | LIDC | mRFCN | Lung nodule classification and detection using mRFCN based automated decision support system | SN, SP, AUC, Accuracy |
| Zhao and Zeng 2019 [190] | KiTS19 challenge | 3D-UNet | Multi-scale supervised 3D U-Net to simultaneously segment kidney and kidney tumors from CT images | DICE, Recall, Accuracy, Precision |
| Fan et al. 2020 [35] | COVID-19 infection dataset | Inf-Net | COVID-19 lung CT infection segmentation network | DICE, SN, SP, MAE |
| Li et al. 2020 [79] | 4356 Chest CT images | COVNet | COVID-19 detection neural network (COVNet) used for the recognition of COVID-19 from volumetric chest CT exams | AUC, SN, SP |

AUC: area under ROC curve; FROC: area under the Free-Response ROC Curve; SN: sensitivity; SP: specificity; MAE: mean absolute error; LIDC: Lung Image Database Consortium; LIDC-IDRI: Lung Image Database Consortium-Image Database Resource Initiative.
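Because AUC is the most frequently reported metric in these tables, a minimal Python sketch of one way to compute it may be helpful. It uses the rank-based interpretation of AUC (the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half). The labels and scores are hypothetical and do not come from any of the reviewed studies.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Hypothetical ground truth (1 = nodule present) and classifier scores
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
auc = roc_auc(labels, scores)
```

This pairwise form is quadratic in the number of cases, which is acceptable for a sketch; a sorting-based formulation would normally be preferred for large test sets.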

Li et al. [74] proposed a deep CNN for the detection of three types of nodules: semisolid, solid, and ground-glass opacity. Balagourouchetty et al. [5] proposed a GoogLeNet-based ensemble FCNet classifier for liver lesion classification; for feature extraction, the basic GoogLeNet architecture is altered with three modifications. Masood et al. [95] proposed the multidimensional Region-based Fully Convolutional Network (mRFCN) for lung nodule detection and classification and achieved a classification accuracy of 97.91%. In lung nodule detection, future work lies in detecting micronodules (less than 3 mm) without loss of sensitivity and accuracy. Zhao and Zeng [190] proposed a DLA based on a multi-scale supervised 3D U-Net (MSS U-Net) to automatically segment kidneys and kidney tumors from CT images. During the present pandemic, Fan et al. [35] and Li et al. [79] used deep learning-based techniques for COVID-19 detection from CT images.

Mammography (MG)

Breast cancer is one of the world’s leading causes of cancer death among women. MG is a reliable tool and the most common modality for early detection of breast cancer. MG is a low-dose X-ray imaging method used to visualize the breast structure for the detection of breast diseases [40]. Detecting breast cancer on screening mammograms is a difficult image classification task because the tumors constitute a small part of the actual breast image. Analyzing breast lesions from MG involves three steps: detection, segmentation, and classification [139].

The automatic classification and detection of masses at an early stage in MG remains an active research topic. Over the past decade, DLA has made significant progress on breast cancer detection and classification. Table 5 summarizes the latest DLA developments in the study of mammogram image analysis.

Table 5.

Summary of DLA for MG image analysis

| Reference | Dataset | Method | Application | Metrics |
|---|---|---|---|---|
| Sahiner et al. 1996 [131] | Manually extracted ROIs from 168 mammograms | CNN | CNN for classification of masses and normal tissue on MG | ROC, TP, FP |
| Fonseca et al. 2015 [37] | – | CNN | CNN for feature extraction in combination with an SVM as a classifier for breast density estimation | Accuracy |
| Huych et al. 2016 [56] | 607 Digital MG images (219 breast lesions) | CNN | Pre-trained CNN models (MG-CNN) for mass classification | AUC |
| Wang et al. 2017 [161] | 840 standard screening FFDMs | Deep CNN | Detection of cardiovascular disease based on vessel calcification | FROC |
| Geras et al. 2017 [41] | 129,208 screening mammogram images | MV-CNN | Multi-view deep CNN for breast cancer screening and the effect of image resolution on the prediction accuracy | Accuracy, ROC, TP, FP |
| Zhang et al. 2017 [188] | 3000 MG images | CNN | Data augmentation and transfer learning methods with a CNN for classification | ROC |
| Wu et al. 2017 [177] | 200,000 Breast cancer screening exams | DCN | Deep CNN for breast density classification | AUC |
| Kyono et al. 2018 [69] | Private dataset of 8162 patients | MAMMO-CNN | MAMMO is a novel multi-view CNN with multi-task learning (MTL), a clinical decision support system capable of triaging MG | Accuracy |
| Lehman et al. [72] | 41,479 Mammogram images | ResNet-18 | Deep learning-based CNN for mammographic breast density classification | Accuracy |
| Kim et al. 2018 [65] | 29,107 Digital MG (24,765 normal cases and 4339 cancer cases) | DIB-MG | DIB-MG is a weakly supervised learning method that learns radiologic features without any human annotations | SN, SP, Accuracy |
| Ribli et al. 2018 [124] | DDSM (2620), INbreast (115), Private database | Faster R-CNN, VGG16 | CNN detects and classifies malignant or benign lesions on MG images | AUC |
| Chougrad et al. 2018 [23] | MIAS, DDSM, INbreast, BCDR | VGG16, ResNet50, Inceptionv3 | Transfer learning and fine-tuning strategy based CNN to classify MG mass lesions | AUC, Accuracy |
| Karthik et al. 2018 [63] | WBCD | DNN-RFS | Deep neural network (DNN) as a classifier model for breast cancer data | Accuracy, Precision, SP, SN, F-score |
| Cai et al. 2019 [13] | 990 MG images, 540 malignant masses, and 450 benign lesions | DCNN | Deep CNN for microcalcification discrimination for breast cancer screening | Accuracy, Precision, SP, AUC, SN |
| Wu et al. 2019 [176] | 1,000,000 images | DCNN | CNN-based breast cancer screening classifier | AUC |
| Conant et al. 2019 [26] | 12,000 cases, including 4000 biopsy-proven cancers | DCNN | Deep CNN based system detected soft tissue and calcific lesions in the DBT images | AUC |
| Rodriguez-Ruiz et al. 2019 [125] | 9000 Cancer cases and 180,000 normal cases Radiologists | DCNN | CNN based CAD system | AUC |
| Ionescu et al. 2019 [59] | Private data set | CNN | Breast density estimation and risk scoring | – |

MIAS: Mammographic Image Analysis Society dataset; DDSM: Digital Database for Screening Mammography; BI-RADS: Breast Imaging Reporting and Data System; WBCD: Wisconsin Breast Cancer Dataset; DIB-MG: data-driven imaging biomarker in mammography; FFDMs: Full-Field Digital Mammograms; MAMMO: Man and Machine Mammography Oracle; FROC: Free response receiver operating characteristic analysis; SN: sensitivity; SP: specificity.

Fonseca et al. [37] proposed a breast composition classification according to the ACR standard, using a CNN for feature extraction. Wang et al. [161] proposed a twelve-layer CNN to detect breast arterial calcifications (BACs) in mammogram images for risk assessment of coronary artery disease. Ribli et al. [124] developed a CAD system based on Faster R-CNN for the detection and classification of benign and malignant lesions on a mammogram image without any human involvement. Wu et al. [176] presented a deep CNN trained and evaluated on over 1,000,000 mammogram images for breast cancer screening exam classification. Conant et al. [26] developed a deep CNN-based AI system to detect calcified lesions and soft tissue in digital breast tomosynthesis (DBT) images. Kang et al. [62] introduced the fuzzy fully connected layer (FFCL) architecture, which fuses fuzzy rules with a traditional CNN for semantic BI-RADS scoring. The proposed FFCL framework achieved superior results in BI-RADS scoring for both triple and multi-class classifications.

Histopathology

Histopathology is the study of human tissue mounted on glass slides and examined under a microscope to identify diseases such as kidney cancer, lung cancer, and breast cancer. Staining is used in histopathology to visualize and highlight specific parts of the tissue [45]. For example, Hematoxylin and Eosin (H&E) staining gives a dark purple color to the nucleus and a pink color to other structures. The H&E stain has played a key role in the diagnosis and grading of cancer and other pathologies over the last century. The most recent imaging modality is digital pathology.

Deep learning is emerging as an effective method in the analysis of histopathology images, including nucleus detection, image classification, cell segmentation, and tissue segmentation [178]. Tables 6 and 7 summarize the latest deep learning developments in pathology. In digital pathology image analysis, the latest development is the introduction of whole slide imaging (WSI), which allows glass slides with stained tissue sections to be digitized at high resolution. Dimitriou et al. [30] reviewed the challenges of analyzing multi-gigabyte WSI images when building deep learning models. A. Serag et al. [135] discuss different public “Grand Challenges” that have driven innovations using DLA in computational pathology.

Table 6.

Summary of articles using DLA for digital pathology image - Organ segmentation

| Reference | Staining/Image modality | Method | Application | Dataset | Metrics |
|---|---|---|---|---|---|
| Ronneberger et al. 2015 [126] | EM | U-net architecture with deformation augmentation | Segmentation of neuronal structures, cell segmentation | ISBI cell tracking challenge 2014 and 2015 | Warping, Rand, Pixel Error |
| Song et al. 2016 [147] | Pap, H & E | Multi-scale CNN model | Segmentation of cervical cells in Pap smear images | ISBI 2015 Challenge, Shenzhen University (SZU) Dataset | Dice coefficient |
| Xing et al. 2016 [179] | IHC, H & E | CNN and sparse shape model | Nuclei segmentation | Private set containing brain tumor (31), pancreatic NET (22), breast cancer (35) images | – |
| Chen et al. 2017 [19] | H & E | Multi-task learning framework with contour-aware FCN model for instance segmentation | Deep contour-aware CNN segmentation of colon glands | GlaS challenge (165 images), MICCAI2015 nucleus segmentation challenge (33 images) | Dice coefficient |
| Van Eycke et al. 2018 [156] | H & E | Integration of DCAN, UNet, and ResNet models | Segmentation of glandular epithelium in H & E and IHC staining images | GlaS challenge (165 images) and a private set containing colorectal tissue microarray images | F1-score, object Dice coefficient |
| Liang et al. 2018 [81] | H & E | Patch-based FCN + iterative learning approach | First application of deep learning to gastric tumor segmentation | 2017 China Big Data and AI challenge (1900 images) | Mean IoU, mean accuracy |
| Qu et al. 2019 [118] | H & E | FCN trained with perceptual loss | Jointly classifies and segments various types of nuclei from histopathology images | 40 tissue images of lung adenocarcinoma (private set) | F1 score, Dice coefficient, accuracy |
| Pinckaers and Litjens 2019 [115] | H & E | Incorporating NODE in U-Net to allow an adaptive receptive field | Segmentation of colon glands | GlaS challenge (165 images) | Object Dice, F1 score |
| Gadermayr et al. 2019 [39] | Stain agnostic | CycleGAN + UNet segmentation | Multi-domain unsupervised segmentation of objects of interest in WSIs | 23 PAS, 6 AFOG, 6 Col3 & 6 CD31 WSIs | F1 score |
| Sun et al. 2019 [149] | H & E | Multi-scale modules and specific convolutional operations | Deep learning architecture for gastric cancer segmentation | 500 pathological images of gastric areas, with cancerous regions | – |

Table 7.

Summary of articles using DLA for digital pathology image - Detection and classification of disease

| Reference | Staining/image modality | Method | Application | Data set |
|---|---|---|---|---|
| Xu et al. 2016 [182] | H&E | Stacked sparse autoencoders | Nucleus detection from breast cancer histopathology images | 537 H&E images from Case Western Reserve University |
| Coudray et al. 2018 [27] | H&E | Patch-based Inception-V3 model | Classification of lung cancer histopathology images into LUAD, LUSC, or normal lung tissue | FFPE sections (140 s), Frozen sections (98 s), and lung biopsies (102 s) |
| Song et al. 2018 [146] | H&E | Deep autoencoder | Simultaneous detection and classification of cells in bone marrow histology images | – |
| Yi et al. 2018 [184] | H&E | FCN | Microvessel prediction in H&E stained pathology images | Images of 38 lung adenocarcinoma (ADC) patients |
| Bulten and Litjens 2018 [12] | H&E, IHC | Self-clustering convolutional adversarial autoencoders | Classification of the prostate into tumor vs non-tumor | 94 registered WSIs from Radboud University Medical Center |
| Valkonen et al. 2019 [154] | ER, PR, Ki-67 | Fine-tuning partially pre-trained CNN network | Recognition of epithelial cells in breast cancers stained for ER, PR, and Ki-67 | Digital Pan CK (152 – invasive breast cancer images) |
| Wei et al. 2019 [169] | H&E | ResNet-18 based patch classifier | Classification of histologic subtypes on lung adenocarcinoma | 143 WSIs private set |
| Wang et al. 2019 [167] | H&E | Patch-based FCN and context-aware block selection + feature aggregation strategy | Lung cancer image classification | Private (939 WSIs), TCGA (500 WSIs) |
| Li et al. 2019 [80] | H&E | FCN trained with a concentric loss on weakly annotated centroid label | Mitosis detection in breast histopathology images | ICPR12 (50 images), ICPR14 (1696 images), AMIDA13 (606 images), TUPAC16 (107 images) |
| Tabibu et al. 2019 [152] | H&E | Pre-trained ResNet based patch classifier | Classification of Renal Cell Carcinoma subtypes and survival prediction | TCGA (2,093 WSIs) |
| Lin et al. 2019 [83] | H&E | Fast ScanNet: FCN based model | Automatic detection of breast cancer metastases from whole-slide images | 2016 Camelyon Grand Challenge (400 WSIs) |

NODE: Neural Ordinary Differential Equations; IoU: mean Intersection over Union coefficient
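The Dice coefficient and IoU that appear in the Metrics column of Table 6 both measure the overlap between a predicted mask A and a reference mask B: Dice = 2|A∩B| / (|A| + |B|) and IoU = |A∩B| / |A∪B|. The following minimal Python sketch computes both for binary masks stored as nested lists; the two small masks are hypothetical, and treating two empty masks as a perfect match is an assumed convention.

```python
def dice_and_iou(pred, target):
    """Dice coefficient and IoU for two binary masks (nested lists of 0/1)."""
    p = [v for row in pred for v in row]
    t = [v for row in target for v in row]
    if len(p) != len(t):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for a, b in zip(p, t) if a and b)
    pred_area = sum(1 for a in p if a)
    target_area = sum(1 for b in t if b)
    union = pred_area + target_area - intersection
    # Assumed convention: two empty masks count as a perfect match.
    dice = 2 * intersection / (pred_area + target_area) if union else 1.0
    iou = intersection / union if union else 1.0
    return dice, iou


# Hypothetical 2x3 predicted and reference masks
pred_mask = [[1, 1, 0],
             [0, 1, 0]]
true_mask = [[1, 0, 0],
             [0, 1, 1]]
dice, iou = dice_and_iou(pred_mask, true_mask)
```

For the same pair of masks Dice is never smaller than IoU, so the two metrics should not be compared directly across papers.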

Other images

Endoscopy is the insertion of a long, solid tube directly into the body, without surgery, for the detailed visual examination of an internal organ or tissue. Endoscopy is beneficial in studying several systems inside the human body, such as the gastrointestinal tract, the respiratory tract, the urinary tract, and the female reproductive tract [60, 101]. Du et al. [31] reviewed the applications of deep learning in the analysis of gastrointestinal endoscopy images. Wireless capsule endoscopy (WCE) is a revolutionary device for direct, painless, and non-invasive inspection of the gastrointestinal (GI) tract for detecting and diagnosing GI diseases (ulcer, bleeding). Soffer et al. [145] performed a systematic analysis of the existing literature on the implementation of deep learning in WCE. The first deep learning-based framework for the detection of hookworm in WCE images was proposed by He et al. [46]. It integrates two CNNs, one for edge extraction and one for hookworm classification. Since tubular structures are crucial elements for hookworm detection, the edge extraction network was used for tubular region detection. Yoon et al. [185] developed a CNN model for early gastric cancer (EGC) identification and prediction of invasion depth. The depth of tumor invasion in EGC is a significant factor in deciding the method of treatment. To classify endoscopic images as EGC or non-EGC, the authors employed a VGG-16 model. Nakagawa et al. [105] applied a CNN-based DL technique to enhance the diagnostic assessment of oesophageal wall invasion using endoscopy. J. Choi et al. [22] discuss the future aspects of DL in endoscopy.

Positron Emission Tomography (PET) is a nuclear imaging tool in which particular radioactive tracers are injected to visualize molecular-level activities within tissues. T. Wang et al. [168] reviewed applications of machine learning in PET attenuation correction (PET AC) and low-count PET reconstruction. The authors discussed the advantages of deep learning over machine learning in the applications of PET images. A. J. Reader et al. [123] reviewed how deep learning can be used for the reconstruction of PET images, either directly or as a part of traditional reconstruction methods.

Discussion

The primary purpose of this paper is to review numerous publications in the field of deep learning applications in medical images. Classification, detection, and segmentation are essential tasks in medical image processing [144]. For specific deep learning tasks in medical applications, the training of deep neural networks needs a lot of labeled data. In the medical field, however, datasets with thousands of labeled images are often not available. This issue is alleviated by a technique called transfer learning. Two transfer learning approaches are popular and widely applied: using a pre-trained network as a fixed feature extractor, and fine-tuning a pre-trained network. In the classification task, deep learning models are used to classify images into two or more classes. In the detection task, deep learning models identify tumors and organs in medical images. In the segmentation task, deep learning models try to segment the region of interest in medical images for processing.
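The fixed-feature-extractor form of transfer learning can be sketched in a few lines of plain Python. This is a toy illustration under strong assumptions, not a real pre-trained network: `FROZEN` stands in for weights learned on a large source dataset and is never updated, while only a small logistic-regression head is trained on a tiny, hypothetical labeled target dataset. In the fine-tuning variant, the frozen weights would also be updated, typically with a small learning rate.

```python
import math


def extract_features(x, frozen_weights):
    """Frozen layer standing in for a pre-trained network: linear map + ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in frozen_weights]


def train_head(features, labels, lr=0.5, epochs=200):
    """Train only a logistic-regression head on top of the fixed features."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            grad = p - y  # derivative of the log loss with respect to z
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(x, frozen_weights, w, b):
    """Classify one input with the frozen extractor and the trained head."""
    f = extract_features(x, frozen_weights)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0


# Hypothetical "pre-trained" weights and a tiny labeled target dataset
FROZEN = [[1.0, -1.0], [-1.0, 1.0]]
X = [[2.0, 0.5], [1.5, 0.0], [0.2, 1.8], [0.0, 1.0]]
Y = [1, 1, 0, 0]

features = [extract_features(x, FROZEN) for x in X]
head_w, head_b = train_head(features, Y)
predictions = [predict(x, FROZEN, head_w, head_b) for x in X]
```

Because only the head is trained, the number of learned parameters stays small, which is the reason this approach suits small labeled medical datasets.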

Segmentation

Deep learning has been widely used for medical image segmentation, and several articles have been published documenting its progress in the area. Segmentation of breast tissue using deep learning alone has been successfully implemented [104]. Xing et al. [179] used a CNN to acquire the initial shape of the nucleus and then isolated the actual nucleus using a deformable model. Qu et al. [118] suggested a deep learning approach that could segment individual nuclei and classify them as tumor, lymphocyte, or stroma nuclei. Pinckaers and Litjens [115] show on a colon gland segmentation dataset (GlaS) that Neural Ordinary Differential Equations (NODE) can be used within the U-Net framework to get better segmentation results. Sun et al. [149] developed a deep learning architecture for gastric cancer segmentation that shows the advantage of utilizing multi-scale modules and specific convolution operations together. U-Net, shown in Fig. 6, is the most commonly used network for segmentation.

Fig. 6.

U-Net architecture for segmentation, comprising encoder (downsampling) and decoder (upsampling) sections [135]
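To make the encoder and decoder structure concrete, the short Python sketch below traces how the number of channels and the spatial size change through a U-Net-style network. It is a simplified sketch under stated assumptions: padded convolutions that preserve size, 2×2 pooling, 2× upsampling, four levels, and 64 base channels. The input size of 256 is a hypothetical choice, and implementations that use unpadded convolutions produce smaller feature maps.

```python
def unet_shapes(size=256, base_channels=64, depth=4):
    """Trace (stage, channels, spatial size) through a U-Net-style network.

    Assumes size-preserving convolutions, 2x2 pooling in the encoder,
    and 2x upsampling in the decoder.
    """
    if size % (2 ** depth) != 0:
        raise ValueError("size must be divisible by 2**depth")
    shapes = []
    channels, spatial = base_channels, size
    for level in range(depth):                 # encoder: downsampling path
        shapes.append((f"enc{level}", channels, spatial))
        channels, spatial = channels * 2, spatial // 2
    shapes.append(("bottleneck", channels, spatial))
    for level in reversed(range(depth)):       # decoder: upsampling path
        channels, spatial = channels // 2, spatial * 2
        shapes.append((f"dec{level}", channels, spatial))
    return shapes


shapes = unet_shapes()
for stage, channels, spatial in shapes:
    print(f"{stage:>10}: {channels:4d} channels, {spatial}x{spatial}")
```

Each decoder stage has the same shape as the encoder stage at the same level, which is what allows the skip connections to concatenate encoder features with decoder features.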

Detection

The main challenge posed by lesion detection methods is that they can produce many false positives while missing a substantial proportion of true positives. Deep learning methods have been applied to tuberculosis detection in [53, 57, 58, 91, 119]. Pulmonary nodule detection using deep learning has been successfully applied in [82, 108, 136, 157].
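The trade-off between true positives and false positives is what FROC analysis summarizes: each score threshold yields one operating point, given by the sensitivity and the average number of false positives per image. The minimal Python sketch below computes such a point. The detections are hypothetical, and the sketch assumes that each true lesion is matched by at most one detection.

```python
def froc_point(detections, n_lesions, n_images, threshold):
    """Return (sensitivity, false positives per image) at a score threshold.

    detections: list of (score, is_true_positive) pooled over all images.
    Assumes each true lesion is matched by at most one detection.
    """
    kept = [(score, hit) for score, hit in detections if score >= threshold]
    true_positives = sum(1 for _, hit in kept if hit)
    false_positives = len(kept) - true_positives
    return true_positives / n_lesions, false_positives / n_images


# Hypothetical detector output on 2 images containing 4 lesions in total
detections = [(0.95, True), (0.90, False), (0.80, True),
              (0.60, False), (0.55, True), (0.30, False)]

strict = froc_point(detections, n_lesions=4, n_images=2, threshold=0.85)
lenient = froc_point(detections, n_lesions=4, n_images=2, threshold=0.50)
```

Lowering the threshold raises the sensitivity but also the number of false positives per image, which is the behavior described above.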

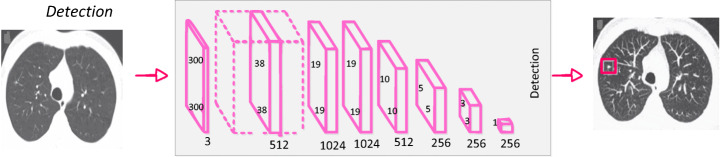

Shin et al. [141] discussed the effect of pre-trained CNN architectures and transfer learning on the identification of enlarged thoracoabdominal lymph nodes and the diagnosis of interstitial lung disease on CT scans, and found transfer learning to be helpful even though natural images differ from medical images. Litjens et al. [85] introduced a CNN for the identification of prostate cancer in biopsy specimens and of breast cancer metastasis in sentinel lymph nodes. The CNN has four convolution layers for feature extraction and three classification layers. Ribli et al. [124] proposed a Faster R-CNN model that detects mammography lesions and classifies them as benign or malignant; it finished second in the Digital Mammography DREAM Challenge. Figure 7 shows the VGG architecture for detection.

Fig. 7.

CNN architecture for detection [144]

An object detection framework for medical images named Clustering CNN (CLU-CNNs) was proposed by Z. Li et al. [76]. CLU-CNNs use Agglomerative Nesting Clustering Filtering (ANCF) and BN-IN Net to reduce the computational cost of processing medical images. Image saliency detection aims at locating the most eye-catching regions in a given scene [21, 78]. It also serves as a pre-processing tool in different applications, including video saliency detection [17, 18], object recognition, and object tracking [20]. Saliency maps are a commonly used tool for determining which areas of the input image are most important to the prediction of a trained CNN [92]. N. T. Arun et al. [4] evaluated the performance of several popular saliency methods on the RSNA Pneumonia Detection dataset and found that GradCAM was sensitive to the model parameters and model architecture.

Classification

In classification tasks, deep learning techniques based on CNN have seen several advancements. The success of CNN in image classification has led researchers to investigate its usefulness as a diagnostic method for identifying and characterizing pulmonary nodules in CT images. The classification of lung nodules using deep learning [74, 108, 117, 141] has also been successfully implemented.

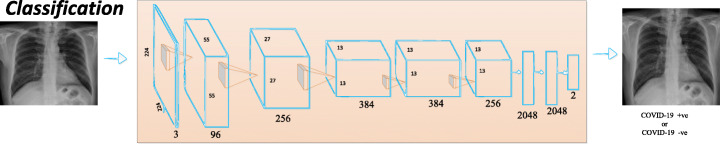

Breast parenchymal density is an important indicator of the risk of breast cancer. DL algorithms used for density assessment can significantly reduce the burden on the radiologist. Breast density classification using DL has been successfully implemented [37, 59, 72, 177]. Ionescu et al. [59] introduced a CNN-based method to predict the Visual Analog Score (VAS) for breast density estimation. Figure 8 shows the AlexNet architecture for classification.

Fig. 8.

CNN architecture for classification [144]

Alcoholism or alcohol use disorder (AUD) has effects on the brain, and the structure of the brain can be observed using neuroimaging. S. H. Wang et al. [162] proposed a 10-layer CNN for the AUD problem using dropout, batch normalization, and PReLU techniques. The model obtained a sensitivity of 97.73%, a specificity of 97.69%, and an accuracy of 97.71%. Cerebral microbleeds (CMBs) are small chronic brain hemorrhages that can result in cognitive impairment, long-term disability, and neurologic dysfunction. Therefore, early-stage identification of CMBs for prompt treatment is essential. S. Wang et al. [164] proposed a transfer learning-based DenseNet to detect CMBs. The DenseNet-based model attained an accuracy of 97.71% (Fig. 8).
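Sensitivity, specificity, and accuracy, as reported above, are all derived from the four counts of a binary confusion matrix. The following minimal Python sketch shows the standard definitions together with precision (PPV) and the F1-score. The counts are hypothetical and are not taken from any of the cited studies; the function assumes that none of the denominators is zero.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts.

    Assumes every denominator is non-zero.
    """
    sensitivity = tp / (tp + fn)            # SN, also called recall
    specificity = tn / (tn + fp)            # SP
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)              # PPV
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical test set: 100 diseased and 100 healthy cases
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
```

On imbalanced test sets accuracy alone can be misleading, which is one reason the reviewed studies usually report sensitivity and specificity alongside it.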

Limitations and challenges

The application of deep learning algorithms to medical imaging is fascinating, but many challenges are slowing its progress. One limitation to the adoption of DL in medical image analysis is the inconsistency of the data itself (resolution, contrast, signal-to-noise), typically caused by procedures in clinical practice [113]. The non-standardized acquisition of medical images is another limitation. The need for comprehensive medical image annotations also limits the applicability of deep learning. The major challenge is limited data: compared to other datasets, the sharing of medical data is incredibly complicated. Medical data privacy is both a sociological and a technological issue that needs to be addressed from both viewpoints. Building a DLA requires a large amount of annotated data, and annotating medical images is another major challenge. Labeling medical images requires radiologists’ domain knowledge, so annotating an adequate amount of medical data is time-consuming. Semi-supervised learning could be used to combine the existing labeled data with the vast amount of unlabeled data and so alleviate the issue of “limited labeled data”. Another way to address “data scarcity” is to develop few-shot learning algorithms that need a considerably smaller amount of data. Despite the successes of DL technology, many restrictions and obstacles remain in the medical field. Whether DL can reduce medical costs, increase medical efficiency, and improve patient satisfaction has not yet been adequately verified. It is therefore necessary to demonstrate the efficacy of deep learning methods in clinical trials and to develop guidelines for the medical image analysis applications of deep learning.

Conclusion and future directions

Medical imaging is a source of the information necessary for clinical decisions. This paper discusses new algorithms and strategies in the area of deep learning. This brief introduction to DLA in medical image analysis has two objectives. The first is to introduce the field of deep learning and the associated theory. The second is to provide a general overview of medical image analysis using DLA. The paper began with the history of neural networks since 1940 and ended with recent breakthroughs of DL algorithms in medical applications. Several supervised and unsupervised DL algorithms were discussed first, including auto-encoders, recurrent networks, CNNs, and restricted Boltzmann machines (RBMs). Several optimization techniques and frameworks in this area, including Caffe, TensorFlow, Theano, and PyTorch, were also discussed. After that, the most successful DL methods were reviewed in various medical image applications, including classification, detection, and segmentation. Applications of the RBM network are rarely published in the medical image analysis literature. In classification and detection, CNN-based models have achieved good results and are most commonly used. Several existing solutions to medical challenges are available. However, there are still several issues in medical image processing that need to be addressed with deep learning. Many of the current DL implementations are supervised algorithms, while deep learning is slowly moving to unsupervised and semi-supervised learning to manage real-world data without manual human labels.

DLA can support clinical decisions for next-generation radiologists. DLA can automate the radiologist workflow and facilitate decision-making for inexperienced radiologists. DLA is intended to aid physicians by automatically identifying and classifying lesions to provide a more precise diagnosis. DLA can help physicians to minimize medical errors and increase medical efficiency in medical image analysis. DL-based automated diagnosis from medical images is expected to be widely used for patient treatment in the next few decades. Therefore, physicians and scientists should seek the best ways to provide better care to the patient with the help of DLA. A potential direction for future research in medical image analysis is the design of deep neural network architectures. Improvements in the design of network structures have a direct impact on medical image analysis. Manual design of a DL model structure requires rich knowledge; hence neural architecture search will probably replace manual design [73]. The design of various activation functions is also a meaningful future research direction. Radiation therapy is crucial for cancer treatment, and different medical imaging modalities play a critical role in treatment planning. Radiomics is defined as the extraction of high-throughput features from medical images [28]. In the future, deep learning analysis of radiomics will be a promising tool in clinical research for clinical diagnosis, drug development, and treatment selection for cancer patients. Due to limited annotated medical data, unsupervised, weakly supervised, and reinforcement learning methods are the emerging research areas in DL for medical image analysis. Overall, deep learning, a new and fast-growing field, offers various obstacles as well as opportunities and solutions for a range of medical image applications.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Muralikrishna Puttagunta, Email: murali93940@gmail.com.

S. Ravi, Email: sravicite@gmail.com

References

- 1.Abadi M et al. (2016) TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems, [Online]. Available: http://arxiv.org/abs/1603.04467.

- 2.Abbas A, Abdelsamea MM, Gaber MM (2020) Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network, pp. 1–9, [Online]. Available: http://arxiv.org/abs/2003.13815.

- 3.Apostolopoulos ID, Mpesiana TA (2020) Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks, Phys Eng Sci Med, no. 0123456789, pp. 1–6, DOI: 10.1007/s13246-020-00865-4.

- 4.Arun NT et al. (2020) Assessing the validity of saliency maps for abnormality localization in medical imaging, pp. 1–5, [Online]. Available: http://arxiv.org/abs/2006.00063.

- 5.Balagourouchetty L, Pragatheeswaran JK, Pottakkat B, G R (2019) GoogLeNet based ensemble FCNet classifier for focal liver lesion diagnosis, IEEE J Biomed Heal Inf, vol. 2194, no. c, pp. 1–1, DOI: 10.1109/jbhi.2019.2942774.

- 6.Bastien F et al. (2012) Theano: new features and speed improvements, pp. 1–10, [Online]. Available: http://arxiv.org/abs/1211.5590.

- 7.Basu S, Mitra S, Saha N (2020) Deep Learning for Screening COVID-19 using Chest X-Ray Images, pp. 1–6, [Online]. Available: http://arxiv.org/abs/2004.10507.

- 8.Bauer S, Wiest R, Nolte LP, Reyes M. A survey of MRI-based medical image analysis for brain tumor studies. Phys Med Biol. 2013;58(13):1–44. doi: 10.1088/0031-9155/58/13/R97.

- 9.Bengio Y, Lamblin P, Popovici D, Larochelle H. The 19th International Conference on Neural Information Processing Systems (NIPS’06) 2006. Greedy layer-wise training of deep networks; pp. 153–160.

- 10.Bengio Y, Simard P, Frasconi P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans Neural Netw. 1994;5(2):157–166. doi: 10.1109/72.279181.

- 11.Bizopoulos P, Koutsouris D. Deep learning in cardiology. IEEE Rev Biomed Eng. 2019;12(c):168–193. doi: 10.1109/RBME.2018.2885714.

- 12.Bulten W, Litjens G (2018) Unsupervised Prostate Cancer Detection on H&E using Convolutional Adversarial Autoencoders, [Online]. Available: http://arxiv.org/abs/1804.07098.

- 13.Cai H et al. (2019) Breast Microcalcification Diagnosis Using Deep Convolutional Neural Network from Digital Mammograms, Comput Math Methods Med, vol. 2019, DOI: 10.1155/2019/2717454.

- 14.Candemir S, Rajaraman S, Thoma G, Antani S. 2018 IEEE Life Sciences Conference (LSC) 2018. Deep learning for grading cardiomegaly severity in chest x-rays: an investigation; pp. 109–113.

- 15.Capizzi G, Lo Sciuto G, Napoli C, Połap D (2020) Small Lung Nodules Detection based on Fuzzy-Logic and Probabilistic Neural Network with Bio-inspired Reinforcement Learning, IEEE Trans Fuzzy Syst, vol. PP, no. XX, p. 1. 10.1109/TFUZZ.2019.2952831.

- 16.Chen DS, Jain RC. A robust back propagation learning algorithm for function approximation. IEEE Trans Neural Netw. 1994;5(3):467–479. doi: 10.1109/72.286917.

- 17.Chen C, Li S, Qin H, Pan Z, Yang G. Bilevel feature learning for video saliency detection. IEEE Trans Multimed. 2018;20(12):3324–3336. doi: 10.1109/TMM.2018.2839523.

- 18.Chen C, Li S, Wang Y, Qin H, Hao A. Video saliency detection via spatial-temporal fusion and low-rank coherency diffusion. IEEE Trans Image Process. 2017;26(7):3156–3170. doi: 10.1109/TIP.2017.2670143.

- 19.Chen H, Qi X, Yu L, Dou Q, Qin J, Heng PA. DCAN: deep contour-aware networks for object instance segmentation from histology images. Med Image Anal. 2017;36:135–146. doi: 10.1016/j.media.2016.11.004.

- 20.Chen C, Wang G, Peng C, Zhang X, Qin H. Improved robust video saliency detection based on long-term spatial-temporal information. IEEE Trans Image Process. 2020;29:1090–1100. doi: 10.1109/TIP.2019.2934350.

- 21.Chen C, Wei J, Peng C, Zhang W, Qin H. Improved saliency detection in RGB-D images using two-phase depth estimation and selective deep fusion. IEEE Trans Image Process. 2020;29:4296–4307. doi: 10.1109/TIP.2020.2968250.

- 22.Choi J, Shin K, Jung J, Bae HJ, Kim DH, Byeon JS, Kim N. Convolutional neural network technology in endoscopic imaging: artificial intelligence for endoscopy. Clin Endosc. 2020;53(2):117–126. doi: 10.5946/ce.2020.054.

- 23.Chougrad H, Zouaki H, Alheyane O. Deep convolutional neural networks for breast cancer screening. Comput Methods Prog Biomed. 2018;157:19–30. doi: 10.1016/j.cmpb.2018.01.011.

- 24.Clevert DA, Unterthiner T, Hochreiter S. 4th International Conference on Learning Representations, ICLR 2016. 2016. Fast and accurate deep network learning by exponential linear units (ELUs); pp. 1–14.

- 25.Collobert R, Kavukcuoglu K, Farabet C (2011) Torch7: A matlab-like environment for machine learning, BigLearn, NIPS Work, pp. 1–6, [Online]. Available: http://infoscience.epfl.ch/record/192376/files/Collobert_NIPSWORKSHOP_2011.pdf.

- 26.Conant EF, et al. Improving Accuracy and Efficiency with Concurrent Use of Artificial Intelligence for Digital Breast Tomosynthesis. Radiol Artif Intell. 2019;1(4):e180096. doi: 10.1148/ryai.2019180096.

- 27.Coudray N, Ocampo PS, Sakellaropoulos T, Narula N, Snuderl M, Fenyö D, Moreira AL, Razavian N, Tsirigos A. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat Med. 2018;24(10):1559–1567. doi: 10.1038/s41591-018-0177-5.

- 28.Dercle L, Henry T, Carré A, Paragios N, Deutsch E, Robert C (2020) Reinventing radiation therapy with machine learning and imaging bio-markers (radiomics): State-of-the-art, challenges and perspectives, Methods, no. May, pp. 0–1, DOI: 10.1016/j.ymeth.2020.07.003.

- 29.Dhillon A, Verma GK (2019) Convolutional neural network: a review of models, methodologies, and applications to object detection, Prog Artif Intell, DOI: 10.1007/s13748-019-00203-0.

- 30.Dimitriou N, Arandjelović O, Caie PD. Deep Learning for Whole Slide Image Analysis: An Overview. Front Med. 2019;6(November):1–7. doi: 10.3389/fmed.2019.00264.

- 31.Du W, et al. Review on the applications of deep learning in the analysis of gastrointestinal endoscopy images. IEEE Access. 2019;7:142053–142069. doi: 10.1109/ACCESS.2019.2944676.

- 32.Dugas C, Bengio Y, Bélisle F, Nadeau C, Garcia R. 13th International Conference on Neural Information Processing Systems (NIPS’00) 2000. Incorporating second-order functional knowledge for better option pricing; pp. 451–457.

- 33.Eberhart RC, Dobbins RW. Early neural network development history: the age of Camelot. IEEE Eng Med Biol Mag. 1990;9(3):15–18. doi: 10.1109/51.59207.

- 34.Falk T, Mai D, Bensch R, Çiçek Ö, Abdulkadir A, Marrakchi Y, Böhm A, Deubner J, Jäckel Z, Seiwald K, Dovzhenko A, Tietz O, Dal Bosco C, Walsh S, Saltukoglu D, Tay TL, Prinz M, Palme K, Simons M, Diester I, Brox T, Ronneberger O. U-net: deep learning for cell counting, detection, and morphometry. Nat Methods. 2019;16(1):67–70. doi: 10.1038/s41592-018-0261-2.

- 35.Fan D-P et al. (2020) Inf-Net: Automatic COVID-19 Lung Infection Segmentation from CT Scans, pp. 1–10, [Online]. Available: http://arxiv.org/abs/2004.14133.

- 36.Fischer A, Igel C. Training restricted Boltzmann machines: an introduction. Pattern Recogn. 2014;47(1):25–39. doi: 10.1016/j.patcog.2013.05.025.

- 37.Fonseca P, et al. Automatic breast density classification using a convolutional neural network architecture search procedure. Med Imaging 2015 Comput Diagnosis. 2015;9414(c):941428. doi: 10.1117/12.2081576.

- 38.Fukushima K. Neocognitron: a self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol Cybern. 1980;36(4):193–202. doi: 10.1007/BF00344251.

- 39.Gadermayr M, Gupta L, Appel V, Boor P, Klinkhammer BM, Merhof D. Generative adversarial networks for facilitating stain-independent supervised and unsupervised segmentation: a study on kidney histology. IEEE Trans Med Imaging. 2019;38(10):2293–2302. doi: 10.1109/TMI.2019.2899364.

- 40.Gardezi SJS, Elazab A, Lei B, Wang T. Breast cancer detection and diagnosis using mammographic data: systematic review. J Med Internet Res. 2019;21(7):1–22. doi: 10.2196/14464.

- 41.Geras KJ et al. (2017) High-Resolution Breast Cancer Screening with Multi-View Deep Convolutional Neural Networks, pp. 1–9, [Online]. Available: http://arxiv.org/abs/1703.07047.

- 42.Goodfellow I, Bengio Y, Courville A (2016) “Deep learning,” DOI: 10.1038/nmeth.3707

- 43.Goodfellow IJ, et al. Generative adversarial nets. Adv Neural Inf Process Syst. 2014;3(January):2672–2680.

- 44.Greenspan H, Van Ginneken B, Summers RM. Guest editorial deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans Med Imaging. 2016;35(5):1153–1159. doi: 10.1109/TMI.2016.2553401.

- 45.Gurcan MN, Boucheron LE, Can A, Madabhushi A, Rajpoot NM, Yener B. Histopathological image analysis: a review. IEEE Rev Biomed Eng. 2009;2:147–171. doi: 10.1109/RBME.2009.2034865.

- 46.He JY, Wu X, Jiang YG, Peng Q, Jain R. Hookworm detection in wireless capsule endoscopy images with deep learning. IEEE Trans Image Process. 2018;27(5):2379–2392. doi: 10.1109/TIP.2018.2801119.

- 47.He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: Surpassing human-level performance on imagenet classification, Proc IEEE Int Conf Comput Vis, vol. 2015 Inter, pp. 1026–1034, DOI: 10.1109/ICCV.2015.123.

- 48.He K, Zhang X, Ren S, Sun J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans Pattern Anal Mach Intell. 2015;37(9):1904–1916. doi: 10.1109/TPAMI.2015.2389824.

- 49.He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. Proc IEEE Comput Soc Conf Comput Vis Pattern Recognit. 2016;2016(Decem):770–778. doi: 10.1109/CVPR.2016.90.

- 50.Hinton G (2014) Boltzmann Machines, Encycl Mach Learn Data Min, no. 1, pp. 1–7, DOI: 10.1007/978-1-4899-7502-7_31-1.

- 51.Hinton GE, Osindero S, Teh Y-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18:1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- 52.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- 53.Hooda R, Mittal A, Sofat S. Automated TB classification using ensemble of deep architectures. Multimed Tools Appl. 2019;78(22):31515–31532. doi: 10.1007/s11042-019-07984-5.

- 54.Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. Proc - 30th IEEE Conf Comput Vis Pattern Recognition, CVPR 2017. 2017;2017(Janua):2261–2269. doi: 10.1109/CVPR.2017.243.

- 55.Hubel DH, Wiesel TN. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J Physiol. 1962;160(1):106–154. doi: 10.1113/jphysiol.1962.sp006837.

- 56.Huynh BQ, Li H, Giger ML. Digital mammographic tumor classification using transfer learning from deep convolutional neural networks. J Med Imaging. 2016;3(3):034501. doi: 10.1117/1.jmi.3.3.034501.

- 57.Hwang S, Kim H-E, Jeong J, Kim H-J. A novel approach for tuberculosis screening based on deep convolutional neural networks. Med Imaging 2016 Comput Diagnosis. 2016;9785:97852W. doi: 10.1117/12.2216198.

- 58.Hwang EJ, Park S, Jin KN, Kim JI, Choi SY, Lee JH, Goo JM, Aum J, Yim JJ, Park CM, Deep Learning-Based Automatic Detection Algorithm Development and Evaluation Group. Kim DH, Woo W, Choi C, Hwang IP, Song YS, Lim L, Kim K, Wi JY, Oh SS, Kang MJ. Development and validation of a deep learning–based automatic detection algorithm for active pulmonary tuberculosis on chest radiographs. Clin Infect Dis. 2019;69(5):739–747. doi: 10.1093/cid/ciy967.

- 59.Ionescu GV, et al. Prediction of reader estimates of mammographic density using convolutional neural networks. J Med Imaging. 2019;6(03):1. doi: 10.1117/1.jmi.6.3.031405.

- 60.Jani KK, Srivastava R. A survey on medical image analysis in capsule endoscopy. Curr Med Imaging Rev. 2019;15(7):622–636. doi: 10.2174/1573405614666181102152434.

- 61.Jia Y et al. (2014) Caffe: Convolutional architecture for fast feature embedding, MM 2014 – Proc 2014 ACM Conf Multimed, pp. 675–678, DOI: 10.1145/2647868.2654889.

- 62.Kang C, Yu X, Wang SH, Guttery DS, Pandey HM, Tian Y, Zhang YD. A heuristic neural network structure relying on fuzzy logic for images scoring. IEEE Trans Fuzzy Syst. 2020;6706(c):1–1. doi: 10.1109/tfuzz.2020.2966163.

- 63.Karthik S, Srinivasa Perumal R, Chandra Mouli PVSSR (2018) Breast cancer classification using deep neural networks, Knowl Comput Its Appl Knowl Manip Process Tech Vol. 1, pp. 227–241, DOI: 10.1007/978-981-10-6680-1_12.

- 64.Kazeminia S et al. (2020) GANs for Medical Image Analysis, Artif Intell Med, p. 104262, DOI: 10.1016/j.jece.2020.104262.

- 65.Kim EK, Kim HE, Han K, Kang BJ, Sohn YM, Woo OH, Lee CW. Applying data-driven imaging biomarker in mammography for breast Cancer screening: preliminary study. Sci Rep. 2018;8(1):1–8. doi: 10.1038/s41598-018-21215-1.