Abstract

During the first wave of the global COVID-19 pandemic the clinical utility and indications for SARS-CoV-2 serological testing were not clearly defined. The urgency to deploy serological assays required rapid evaluation of their performance characteristics. We undertook an internal validation of a CE marked lateral flow immunoassay (LFIA) (SureScreen Diagnostics) using serum from SARS-CoV-2 RNA positive individuals and pre-pandemic samples. This was followed by the delivery of a same-day named patient SARS-CoV-2 serology service using LFIA on vetted referrals at a central London teaching hospital, with clinical interpretation of the result provided to the direct care team. Assay performance, source and nature of referrals, feasibility and clinical utility of the service, particularly benefit in clinical decision-making, were recorded. Sensitivity and specificity of the LFIA were 96.1% and 99.3% respectively. 113 tests were performed on 108 participants during a three-week pilot. 44% of participants (n = 48) had detectable antibodies. Three main indications were identified for serological testing: new acute presentations potentially triggered by recent COVID-19, e.g. pulmonary embolism (n = 5); potential missed diagnoses in the context of a recent COVID-19 compatible illness (n = 40); and making infection control or immunosuppression management decisions in persistently SARS-CoV-2 RNA PCR positive individuals (n = 6). We demonstrate acceptable performance characteristics, feasibility and clinical utility of using an LFIA that detects anti-spike antibodies to deliver a SARS-CoV-2 serology service in adults and children. Greatest benefit was seen where there is reasonable pre-test probability and results can be linked with clinical advice or intervention. Experience from this pilot can help inform the practicalities and benefits of rapidly implementing new tests such as LFIAs into clinical service as the pandemic evolves.

Introduction

Infection with SARS-CoV-2 stimulates a detectable antibody response in most people; however, the clinical utility of routine serological testing has been questioned [1, 2]. There has been uncertainty about what proportion of infected individuals produce serum antibodies, how long they persist, what role they have in diagnosis and whether their detection indicates protection against reinfection or disease manifestations upon re-exposure to the virus. These uncertainties, coupled with the fact that antibody testing for other respiratory viral infections is not standard practice and concerns regarding production and validation of rapidly developed new tests [1, 3], led to hesitancy in introducing them into widespread clinical practice.

From late May 2020 the UK government prioritised serological testing for NHS staff, reserving patient testing for those who were interested and undergoing other blood tests, with a requirement for written consent. By that time our virology department had received many enquiries from different specialties asking whether SARS-CoV-2 infection might be contributing to a patient's presentation despite negative or absent conventional PCR testing.

We had recently completed parallel validation of eight lateral flow immunoassay (LFIA) devices and two commercial ELISA platforms against an ELISA developed at King’s College London (KCL) measuring IgG, IgA and IgM against several SARS-CoV-2 antigens (nucleocapsid (N) and spike (S) proteins and the S receptor binding domain (RBD)). Viral neutralisation assays were also established alongside the in-house ELISA to correlate antibody titres with functional activity. Validation was initially performed on a cohort of patients presenting to Guy’s and St Thomas’ NHS Foundation Trust and showed that the accuracy of some of the lateral flow devices was comparable to our ELISA [4].

We therefore submitted a formal request to the hospital risk & assurance board sub-committee to provide a pilot clinical SARS-CoV-2 serology service for children and adults. Pilot approval was obtained on May 29th 2020 following review of protocols and laboratory data including a further pilot validation set reported here.

Methods

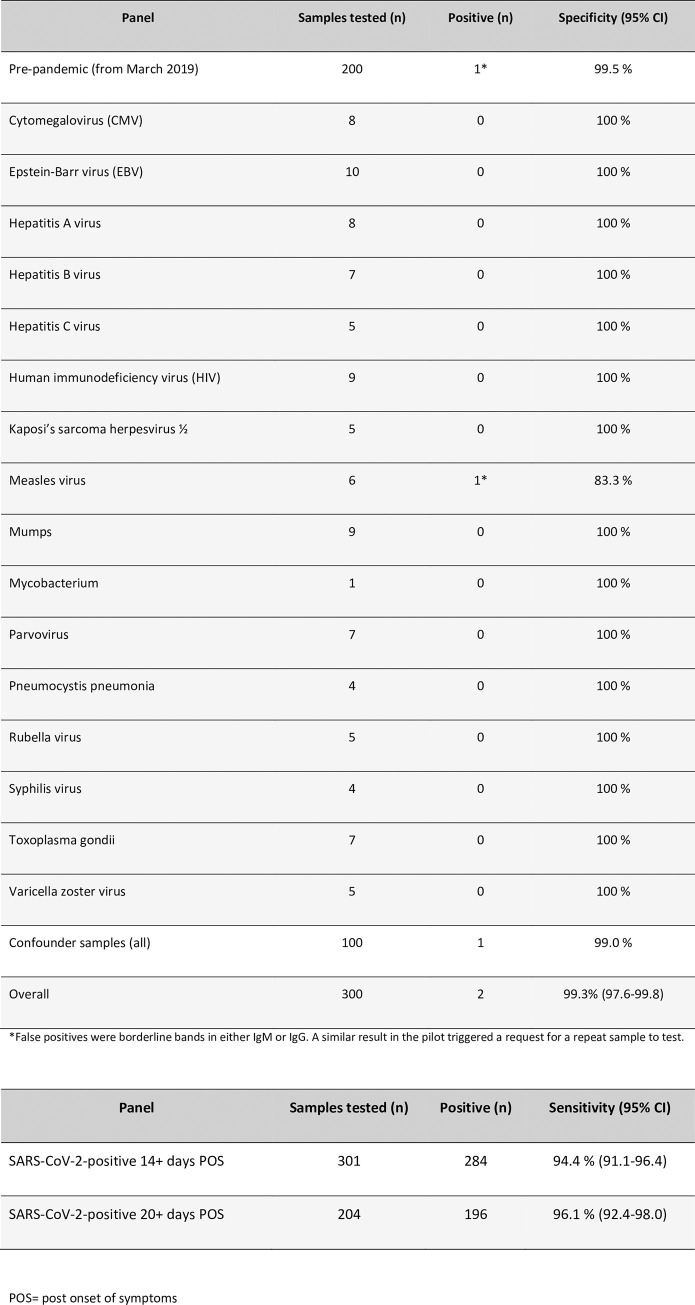

SureScreen Diagnostics LFIA validation

The CE marked SureScreen Diagnostics LFIA was selected for further validation based on results from previous head-to-head analyses [4], provision of additional proprietary information on the antigen target by the manufacturer and confidence in procurement. Tests were performed according to manufacturer’s instructions by two independent operators evaluating the result as negative (0: no visible band), borderline (0.5: visible band in ideal lighting conditions, unable to photograph/ scan), positive (1: visible band in all lighting conditions), strong positive (2: visible band at the intensity of the control band or 3: visible band of greater intensity than control line) (S1 Fig). Sensitivity and specificity experiments were performed to meet MHRA validation guidance published on 19th May 2020 [5]. Serum samples were obtained from SARS-CoV-2 RNA positive (AusDiagnostics) [6] patients taken 14 or more (n = 301) and 20 or more (n = 204) days post onset of symptoms (POS) and 300 pre-pandemic samples. This included 200 stored serum samples and a panel of 100 stored acute and convalescent confounder samples taken from individuals with EBV, CMV, HIV and a range of other viral, bacterial and fungal pathogens. 95% confidence intervals were determined using the Wilson/Brown Binomial test. Sera from individuals diagnosed with seasonal coronaviruses were not available for testing. The research reagent for anti-SARS-CoV-2 Ab (NIBSC 20/130) obtained from the National Institute for Biological Standards and Control (NIBSC), UK, was used as a positive control for reproducibility and limit of detection experiments (IgG only) [7].
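As an illustration of how a 95% confidence interval for a sensitivity or specificity estimate can be obtained, the sketch below implements the plain Wilson score interval. This is an assumption made for illustration: the Wilson/Brown method named above is a hybrid variant and may give slightly different limits for proportions close to 0 or 1. The function name `wilson_interval` and the example counts are hypothetical and are not study data.

```python
import math


def wilson_interval(successes: int, total: int, z: float = 1.959964) -> tuple:
    """Plain Wilson score interval for a binomial proportion.

    z = 1.959964 corresponds to a two-sided 95% interval.
    Note: this is the standard Wilson interval, not the Wilson/Brown hybrid.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= successes <= total:
        raise ValueError("successes must lie between 0 and total")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical counts for illustration only: 95 of 100 samples reactive.
low, high = wilson_interval(95, 100)
print(f"95% CI: {low:.3f} to {high:.3f}")
```

Unlike the simple normal approximation, the Wilson interval stays within 0 to 1 and behaves sensibly when the observed proportion is close to 100%, which is the usual situation for specificity estimates.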

Service delivery

Internal governance approval for service delivery was obtained based on the laboratory validation data, clinical oversight, confirmation of an ability to request and report tests on electronic systems, a review of risks and their mitigation and agreement to report back on completion of the pilot. Service commenced on 29th May 2020 and was delivered by scientists from the KCL Department of Infectious Diseases who had conducted all the LFIA validations. Tests were performed in and provided by the Guy’s and St Thomas’ Hospital Centre for Clinical Infection and Diagnostics Research (CIDR), located adjacent to hospital routine diagnostic virology and blood sciences laboratories on the St Thomas’ Hospital site.

Availability of the SARS-CoV-2 serology service was communicated through clinical networks, with requests vetted by the clinical virology team. Samples were requested through the routine laboratory testing route and serology was performed once daily, Monday to Friday. A positive band for either IgM or IgG (or a borderline band in both) was reported to the clinician as “antibodies detected”. Results were uploaded onto hospital electronic patient records as a scanned image of the lateral flow cassette with a written comment, alongside telephoning where appropriate. Differential detection of IgM and IgG was not considered as part of verbal or written advice. Repeat testing was recommended when there was a high index of clinical suspicion and no antibodies were detected, or when a borderline result in IgM or IgG was the only observed band (S2 Fig). A standard set of demographics, clinical information, and SARS-CoV-2 PCR results was recorded for each participant and stored in a clinical database (S3 Fig). Informal verbal or written feedback from clinicians about their views on utility was also recorded.
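The reporting rule described above can be sketched as a small decision function. This is only an illustration of the stated rule, not the software used by the service. The names `interpret_lfia` and `LfiaInterpretation` are hypothetical, and the function assumes a single agreed band score per isotype on the 0, 0.5, 1, 2, 3 scale; how the two operators' readings were reconciled is not specified here.

```python
from dataclasses import dataclass

BORDERLINE = 0.5  # band visible only in ideal lighting conditions
POSITIVE = 1.0    # scores of 1, 2 or 3 all count as a positive band


@dataclass(frozen=True)
class LfiaInterpretation:
    antibodies_detected: bool
    repeat_recommended: bool


def interpret_lfia(igm: float, igg: float,
                   high_clinical_suspicion: bool = False) -> LfiaInterpretation:
    """Apply the reporting rule to one agreed IgM score and one agreed IgG score."""
    # "Antibodies detected": a positive band for either isotype,
    # or a borderline band in both.
    detected = (
        igm >= POSITIVE
        or igg >= POSITIVE
        or (igm == BORDERLINE and igg == BORDERLINE)
    )
    # A borderline band in one isotype only is not reported as detected,
    # but prompts a recommendation to repeat the test.
    single_borderline = not detected and BORDERLINE in (igm, igg)
    repeat = single_borderline or (not detected and high_clinical_suspicion)
    return LfiaInterpretation(detected, repeat)


print(interpret_lfia(igm=0, igg=2))
print(interpret_lfia(igm=0.5, igg=0))
print(interpret_lfia(igm=0, igg=0, high_clinical_suspicion=True))
```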

ELISA

ELISA testing was performed on the 168 stored samples where sufficient sample was available from patients that had all severities of COVID-19 for comparison with the LFIA validation cohort (n = 301). All serum samples from the pilot service (where sufficient sample was available) were also batched for comparative testing at a time remote from clinical decision making.

High-binding ELISA plates (Corning, 3690) were coated with antigen (N, S) at 3 μg/mL (25 μL per well) in PBS. Wells were washed with PBS-T (PBS with 0.05% Tween-20) and then blocked with 100 μL 5% milk in PBS-T for 1 hr at room temperature. Wells were emptied and sera diluted at 1:50 in milk were added and incubated for 2 hr at room temperature. Control reagents included CR3009 (2 μg/mL), CR3022 (0.2 μg/mL), negative control plasma (1:25 dilution), positive control plasma (1:50) and blank wells. Wells were washed with PBS-T. Secondary antibody was added and incubated for 1 hr at room temperature. IgM was detected using goat-anti-human-IgM-HRP (1:1,000) (Sigma: A6907) and IgG was detected using goat-anti-human-Fc-AP (1:1,000) (Jackson: 109-055-043-JIR). Wells were washed with PBS-T and either Alkaline Phosphatase (AP) substrate (Sigma) was added and read at 405 nm (AP) or 1-step TMB substrate (Thermo Scientific) was added and quenched with 0.5 M H2SO4 before reading at 450 nm (HRP). Antibodies were considered detected if OD values were 4-fold or greater above background.
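The detection criterion in the last sentence can be written as a simple fold-over-background check. The sketch below is a minimal illustration: the function name `elisa_detected` is hypothetical, the OD readings are invented, and it assumes that "background" is a single OD value (for example, derived from blank or negative control wells), which is not specified in detail here.

```python
def elisa_detected(sample_od: float, background_od: float,
                   fold_cutoff: float = 4.0) -> bool:
    """Return True when the sample OD is at least fold_cutoff times the background OD."""
    if background_od <= 0:
        raise ValueError("background OD must be positive")
    return sample_od >= fold_cutoff * background_od


# Hypothetical OD readings for illustration only.
print(elisa_detected(sample_od=0.45, background_od=0.10))  # 4.5-fold above background
print(elisa_detected(sample_od=0.30, background_od=0.10))  # 3-fold above background
```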

Neutralising antibody assay

Neutralising antibody testing was performed on six patients (all in the infection control/ immunosuppression management group–Fig 3). Neutralisation assays were conducted as previously described [8]. Serial dilutions of serum samples were prepared with DMEM media and incubated with pseudotyped HIV virus incorporating the SARS-CoV-2 spike protein [9] for 1 hour at 37°C in 96-well plates. Next, HeLa cells stably expressing the ACE2 receptor (provided by Dr James Voss, The Scripps Research Institute) were added and the plates were left for 72 hours. Infection level was assessed in lysed cells with the Bright-Glo luciferase kit (Promega), using a Victor™ X3 multilabel reader (Perkin Elmer). ID50 for each serum was calculated using GraphPad Prism. Neutralisation titres were classified as low (50–200), moderate (201–500), high (501–2000), or potent (2001+).
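The titre bands above map directly onto a lookup function. The sketch below is illustrative only: the name `classify_id50` is hypothetical, non-integer values are assigned by treating each upper limit as inclusive, and the label returned for an ID50 below 50 is an assumption, since no category is defined for that range.

```python
def classify_id50(id50: float) -> str:
    """Map a neutralisation ID50 to the titre bands used in this study."""
    if id50 < 50:
        # No band is defined below 50; this label is an assumption.
        return "below lowest band"
    if id50 <= 200:
        return "low"
    if id50 <= 500:
        return "moderate"
    if id50 <= 2000:
        return "high"
    return "potent"


# ID50 values reported in the Results for the six persistently PCR positive individuals.
for titre in (277, 1135, 2333, 4130, 5164, 5248):
    print(titre, classify_id50(titre))
```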

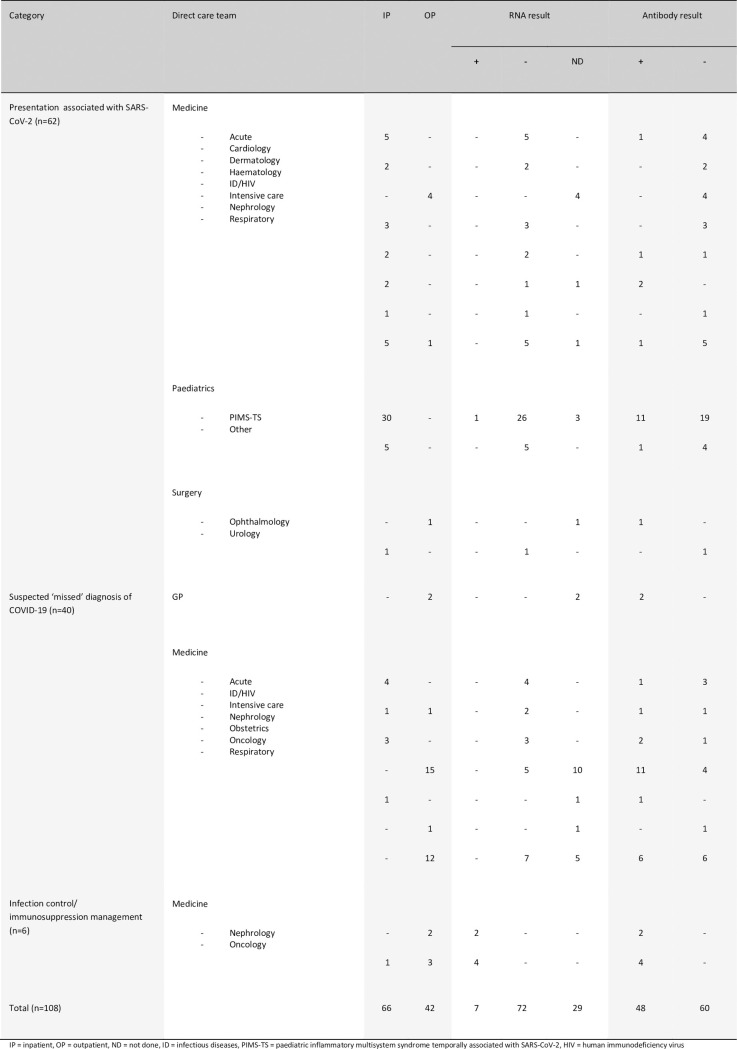

Fig 3. Referral characteristics and RNA results of individuals having SARS-CoV-2 serology testing performed during the pilot.

Patient and public involvement

Patients were not involved in the development of the study or its outcome measures, conduct of the research, or preparation of the manuscript.

Ethical approval

All work was performed in accordance with the UK Policy Framework for Health and Social Care Research, and approved by the Risk and Assurance Committee at Guy’s and St Thomas’ NHS Foundation Trust. Informed consent was not required from participants in this study, as per the guidelines set out in the UK Policy Framework for Health and Social Care Research and by the registration with, and express consent of, the host institution’s review board.

Results

LFIA validation was performed using serum samples from 301 PCR-confirmed SARS-CoV-2 positive individuals collected 14 or more days POS and 300 pre-pandemic serum samples including 100 (acute and convalescent) from patients with a range of other infections that could give rise to a false positive result (Fig 2A) and in accordance with published MHRA guidance at the time. A random selection of 168 (of the 301) samples from patients with a range of disease severities (and where sufficient sample for analysis was available) were compared head-to-head with an in-house ELISA for IgM and IgG to N, S and RBD (Fig 1). Sensitivity at 14 and 20 days or more POS was 94.4% and 96.1% respectively and specificity was 99.3% (Fig 2B). Limit of detection based on visual inspection of LFIA bands by two operators was determined using the NIBSC reference standard to a dilution of 1 in 500. This was consistent with the expected limit of detection of the NIBSC in-house assay (S4 Fig) [9].

Fig 2.

a: Testing of pre-pandemic samples from patients with other infectious diseases and known confounders to estimate specificity of the SureScreen lateral flow immunoassay. b: Sensitivity estimates of the SureScreen lateral flow immunoassay using serum samples obtained from SARS-CoV-2 PCR positive patients at 14 or more and 20 or more days post reported onset of symptoms. POS = post onset of symptoms.

Fig 1. Comparative assessment of 168 serum samples from SARS-CoV-2-infected individuals by ELISA and lateral flow immunoassay.

168 serum samples from individuals with confirmed SARS-CoV-2 infection were tested for the presence of antibody by ELISA to the full spike (S), receptor binding domain (RBD) and nucleocapsid (N), and by SureScreen lateral flow immunoassay. Detection of IgG is shown in the top panel, and IgM in the bottom panel. Samples are arranged according to days post onset of symptoms, ranging from 14 to 40 days. Results are displayed as a heatmap, with white indicating a negative result, and gradations of orange indicating the magnitude of response detected.

The pilot service commenced on May 29th 2020 and lasted 3 weeks. 48/108 (44%) participants had detectable IgG and/or IgM SARS-CoV-2 antibodies on their first serum sample, which was communicated to referring clinicians as “antibodies detected” (Fig 3). 38/48 (79%) had IgM and 47/48 (98%) had IgG bands. Five participants with a high index of suspicion but no detectable antibodies had a further serum sample tested at least one week after initial testing. All repeat samples had no detectable antibodies. Rationale for testing broadly fell into three referral categories. First, acute presentations with new symptoms potentially triggered by SARS-CoV-2 infection. This included suspected cases of Paediatric Inflammatory Multisystem Syndrome Temporally associated with SARS-CoV-2 (PIMS-TS) (n = 30), plus adults (n = 27) and children (n = 5) presenting with other clinical syndromes including thrombotic events such as strokes and pulmonary emboli (collectively called COVID-19 syndromes). Second, suspected “missed” diagnoses in individuals with a (recent) COVID-19 compatible illness who either never had an RNA test performed (n = 19) or in whom viral RNA was not detected in respiratory specimens (n = 21). Third, those for whom antibody detection made a significant contribution to decisions on infection control management or immunosuppressive treatment (n = 6).

Of 30 children with suspected PIMS-TS, 11 had detectable antibodies (37%). Reviewing the clinical history of the 19 with no detectable antibodies, seven had an alternate plausible diagnosis or did not fulfil PIMS-TS diagnostic criteria at the time of discharge, and 12 had ongoing high clinical suspicion of PIMS-TS. Two children (participants 018 and 034) had repeat testing at least seven days later; neither had detectable antibodies at this stage. For the remaining 32 PCR negative/ not done individuals presenting with a potential post-COVID syndrome, seven (21.9%) had antibodies detected. This included two with the diagnosis of pulmonary embolism (PE), one with a new diagnosis of interstitial lung disease (ILD), two with a hyperinflammatory syndrome (akin to PIMS-TS), one patient with a relapse of HSV encephalitis, and one patient with paracentral acute middle maculopathy.

40 individuals were tested to identify potential missed COVID-19 diagnoses comprising nine presenting to hospital with ongoing compatible symptoms but negative SARS-CoV-2 RNA tests, and 31 who had recovered from a recent compatible illness in the community, including 15 individuals with end-stage renal failure, who had been advised to shield, and 12 patients attending the respiratory led post-COVID clinic due to failure to return to their baseline level of function. Overall, 24/40 (60%) had detectable antibodies, including two patients admitted to ITU but with repeatedly negative PCR results on upper and lower respiratory sampling.

Of the six individuals with persistent SARS-CoV-2 RNA on nose and throat swabs tested to guide infection control or immunosuppression decisions, all had detectable antibodies on SureScreen LFIA and, when tested, moderate (n = 1, ID50 = 277), high (n = 1, ID50 = 1135), or potent (n = 4, ID50 = 2333, 4130, 5164, 5248) neutralising antibody titres. This implied, when considered with other factors such as time from first positive PCR test and threshold cycle for RNA detection, that they were no longer infectious and had a degree of protection from reinfection.

Serological testing was performed no earlier than 21 days post onset of symptoms (POS), up to approximately 90 days POS (where symptom onset data was available) for all participants.

When considering the combined (IgM and IgG) anti-spike ELISA data versus the SureScreen LFIA result there were 13 discrepant results. Reviewing the ELISA IgG anti-spike data only, there was greater concordance (four discrepant). The ELISA did not detect antibodies in two cases–participants 055 and 098 (where the SureScreen LFIA did), and in two cases antibodies were detected by the ELISA–participants 019 and 046 (where the SureScreen LFIA detected none). There was one individual (participant 045) with detectable anti-nucleocapsid IgG by ELISA who did not have anti-spike IgG (S3 Fig). The SureScreen LFIA did not detect antibodies in this participant–an expected result as the device only detects anti-spike antibodies. The IgM anti-nucleocapsid ELISA data have not been considered as previous work recognised the low specificity of this test [4]. ELISA testing was not performed on three participants due to lack of sample availability (participants 002, 036 and 096).

Discussion

This pilot SARS-CoV-2 serology service was introduced two months after the peak of acute UK COVID-19 admissions and provided results on 108 patients over a three-week period. It included a large number of children presenting with a new hyper-inflammatory, Kawasaki-like syndrome, termed PIMS-TS [10], to the on-site Evelina London Children’s Hospital that provides regional specialist services. 37% had antibodies detected, lower than previously reported [10, 11], potentially due to increased awareness and broadening of clinical evaluation criteria, supported by a number of children having this diagnosis removed from discharge coding.

Serology was particularly helpful in aiding diagnosis and management of what is an increasing range of assumed COVID-19 triggered conditions [12–17]. For example, antibodies were detected in two patients presenting with a PE that was therefore considered a provoked event, limiting the need for additional investigations and reducing the period of anticoagulation. Negative serology also helped to discount COVID-19 as a potential trigger for newly presenting conditions although, given the limitations of testing, it could not fully exclude it. These conditions included acquired haemophilia A and a range of unusual dermatological presentations e.g. ‘Covid toes’.

Detecting antibodies in patients with persistently positive SARS-CoV-2 PCR tests despite symptom resolution, a phenomenon reported elsewhere [18], enabled important decisions for infection control and immunosuppression. These decisions were supported by data that antibodies against spike protein (personal communication with SureScreen Diagnostics Ltd) correlate with neutralisation [19] and by published guidance that neutralisation can be used as a proxy for reduced risk of transmission [20, 21]. Since neutralising experiments are time-consuming and complex, rapid tests that detect antibodies against spike, such as the SureScreen LFIA and some, but not all, other technologies [22–24], are a practical alternative [25] when considered alongside other factors including timing from symptom onset, ongoing symptoms, and cycle threshold or take-off values of PCR results.

Strengths of this study include the extensive prior comparison of multiple technologies using a large panel of serum samples to inform choice and validation of the selected LFIA for clinical service. Results were also consistent with recommendations from a Cochrane review published after completion of our pilot, which suggested a benefit for serology to confirm a COVID-19 diagnosis in patients who did not have SARS-CoV-2 RNA testing performed, or who had a negative result despite an ongoing high index of clinical suspicion [3].

The service was also offered across the hospital to assess its broad potential clinical utility. With high pre-test probability (e.g. 45%), the positive predictive value (PPV) is 99.2%, with an acceptable negative predictive value (NPV) of 96.9%. However, it is of note that if testing were to be extended to a population where prevalence is low (e.g. 5%) the PPV falls to <90%. This reinforces the importance of providing serology for defined patient cohorts where the pre-test probability is high and the potential clinical utility is understood [26, 27].
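The dependence of predictive values on pre-test probability follows from Bayes' theorem and can be sketched as below. The function name `predictive_values` is hypothetical, and the inputs are the rounded sensitivity (96.1%) and specificity (99.3%) reported here, so the output may differ in the first decimal place from figures calculated on unrounded counts: with these inputs the sketch gives a PPV of about 99.1% and an NPV of about 96.9% at 45% prevalence, and a PPV of about 87.8% at 5% prevalence.

```python
def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> tuple:
    """Return (PPV, NPV) for a test applied at a given pre-test probability."""
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    # Expected fractions of the tested population in each cell of the 2x2 table.
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Rounded assay performance from this study at two illustrative prevalences.
for prevalence in (0.45, 0.05):
    ppv, npv = predictive_values(0.961, 0.993, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

Because false positives scale with the uninfected fraction of the tested population, even a specificity above 99% yields a noticeably lower PPV once prevalence drops to a few percent.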

The main limitation of this study is that it was performed at a single centre at a discrete time point in the COVID-19 pandemic. Since that time there have been many changes in the epidemiology of and approach to COVID-19 testing. Most countries are in the midst of a second wave and vaccination will change the utility and interpretation of antibody detection. PCR tests are also more widely available to patients in the community (pillar 2) and hospital laboratories have higher capacity and more rapid PCR tests (pillar 1), which could reduce the number of missed or delayed diagnoses. There are also more accurate laboratory serology technologies, including the ability to assess dynamic responses [28–30] alongside T cell assays [31, 32], which could reduce the utility of LFIA in many settings.

The discrepant ELISA and LFIA data illustrate the challenges of any single technology employed to detect specific antibodies induced in response to infection rather than cross-reactivity or anamnestic responses–particularly for IgM. Ten participants with no antibodies detected using SureScreen LFIA had low level anti-spike IgM antibodies detected by ELISA (but not IgG). At 21 or more days POS one would expect the vast majority of individuals to have seroconverted to IgG (only one study participant had IgM only identified on SureScreen LFIA). When taken into consideration with previous validation work using this ELISA [4], these results could merely represent non-specific reactivity. The explanation for the four SureScreen results that were discrepant with the ELISA anti-spike IgG would require further investigation including repeat sampling and testing using other technologies. All LFIA results in this pilot were communicated in the context of the clinical history and the decisions being made, and where limitations of serological results in general and these technologies in particular were understood. Technologies for confirmatory testing alongside participation in external quality assurance schemes would be required to extend delivery of such a service.

Nevertheless, LFIAs are quick (10-minute test), inexpensive and already used in diagnostic laboratories, for example in detecting pneumococcal and legionella urinary antigens. It is hard to predict where future clinical need for serology LFIAs might be. They could be developed further for deployment in settings with limited laboratory facilities, enabled by methods to collect capillary blood (although this will require further validation work), or used longitudinally to assess waning response to mass population vaccination campaigns where rapid high-volume longitudinal assessments might be required. There are also now technologies available for electronic reading of bands that would take away the subjectivity of reading band signal-strength by eye, which we recorded here in a semi-quantitative way by two independent observers. This experience may help inform the approach to reading and communicating SARS-CoV-2 antigen lateral flow devices that are now being used by healthcare staff, patients and public [33], although the significance of band strength for both antibody and antigen lateral flow assays still requires further investigation.

This study also represents the final phase of a translational research pathway completed in three months, from the basic science, through comparative evaluation, to this pilot service study. The diagnostic response to this pandemic will come under continued scrutiny and there are lessons to be learnt on the ability of diagnostic laboratories and translational research teams to work together in the response to rapidly emerging infections [34]. The strengths and limitations of conducting this study at this time therefore also provide useful data to inform discussion on the requirements of academia to respond in a pandemic setting.

Supporting information

Negative = 0: No visible band, borderline = 0.5: A visible band in ideal lighting conditions, positive = 1: A visible band in all lighting conditions, strong positive = 2: A visible band at the intensity of the control line or 3: A visible band of greater intensity than the control line. NB: Bands of 0.5 intensity are unable to be scanned/ photographed and therefore appear blank on the scanned image below.

(DOCX)

(DOCX)

(DOCX)

Defined dilutions of the NIBSC research reference reagent for anti-SARS-CoV-2 antibody (20/130) were tested in triplicate on the SureScreen LFIA. Results are displayed as a heat map, with white indicating a negative result and gradations of orange representing the magnitude of response detected.

(DOCX)

Acknowledgments

We are extremely grateful to all staff in Viapath Infection Sciences and Department of Infectious Diseases based at St Thomas’ Hospital who helped deliver this service.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

King’s Together Rapid COVID-19 Call awards to KJD, SJDN and RMN. MRC Discovery Award MC/PC/15068 to SJDN, KJD and MHM. National Institute for Health Research (NIHR) Biomedical Research Centre based at Guy's and St Thomas' NHS Foundation Trust and King's College London, programme of Infection and Immunity (RJ112/N027) to MHM and JE. BM was supported by an NIHR Academic Clinical Fellowship in Combined Infection Training. AWS and CG were supported by the MRC-KCL Doctoral Training Partnership in Biomedical Sciences (MR/N013700/1). GB was supported by the Wellcome Trust (106223/Z/14/Z to MHM). SA was supported by an MRC-KCL Doctoral Training Partnership in Biomedical Sciences industrial Collaborative Award in Science & Engineering (iCASE) in partnership with Orchard Therapeutics (MR/R015643/1). NK was supported by the Medical Research Council (MR/S023747/1 to MHM). SP, HDW and SJDN were supported by a Wellcome Trust Senior Fellowship (WT098049AIA). Fondation Dormeur, Vaduz for funding equipment (KJD). Development of SARS-CoV-2 reagents (RBD) was partially supported by the NIAID Centers of Excellence for Influenza Research and Surveillance (CEIRS) contract HHSN272201400008C. Viapath LLP provided support in the form of salaries for author JR, but did not have any additional role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript. The specific roles of this author are articulated in the ‘author contributions’ section.

References

- 1.Andersson M., Low N., French N., Greenhalgh T., Jeffery K., Brent A., et al. Rapid roll out of SARS-CoV-2 antibody testing-a concern. BMJ. 2020;369:m2420. doi:10.1136/bmj.m2420

- 2.Armstrong S. Why covid-19 antibody tests are not the game changer the UK government claims. BMJ. 2020;369:m2469. doi:10.1136/bmj.m2469

- 3.Deeks J. J., Dinnes J., Takwoingi Y., Davenport C., Spijker R., Taylor-Phillips S., et al. Antibody tests for identification of current and past infection with SARS-CoV-2. Cochrane Database Syst Rev. 2020;6:CD013652. doi:10.1002/14651858.CD013652

- 4.Pickering S., Betancor G., Galao R. P., Merrick B., Signell A. W., Wilson H. D., et al. Comparative assessment of multiple COVID-19 serological technologies supports continued evaluation of point-of-care lateral flow assays in hospital and community healthcare settings. PLoS Pathog. 2020;16(9):e1008817. doi:10.1371/journal.ppat.1008817

- 5.Medicines and Healthcare products Regulatory Agency (MHRA). Target Product Profile: antibody tests to help determine if people have recent infection to SARS-CoV-2: Version 2. 2020. Available from: https://www.gov.uk/government/publications/how-tests-and-testing-kits-for-coronavirus-covid-19-work/target-product-profile-antibody-tests-to-help-determine-if-people-have-recent-infection-to-sars-cov-2-version-2.

- 6.Public Health England. Rapid assessment of the AusDiagnostics Coronavirus Typing (8-well) assay for the detection of SARS-CoV-2 (COVID-19). 2020. Available from: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/889335/Rapid_assessment_AusDiagnostics_Coronavirus_Typing__8-well__assay_for_detection_of_SARS-CoV-2.pdf.

- 7.National Institute for Biological Standards and Control. Data Sheet: Research reagent for anti-SARS-CoV-2 Ab. NIBSC code 20/130. 2020.

- 8.Carter M. J., Fish M., Jennings A., Doores K. J., Wellman P., Seow J., et al. Peripheral immunophenotypes in children with multisystem inflammatory syndrome associated with SARS-CoV-2 infection. Nat Med. 2020;26(11):1701–7. doi:10.1038/s41591-020-1054-6

- 9.Thompson C., Grayson N., Paton R.S., Lourenco J., Penman B.S., Lee L., et al. Neutralising antibodies to SARS coronavirus 2 in Scottish blood donors—a pilot study of the value of serology to determine population exposure. medRxiv. 2020.

- 10.Perez-Toledo M., Faustini S. E., Jossi S. E., Shields A. M., Kanthimathinathan H. K., Allen J. D., et al. Serology confirms SARS-CoV-2 infection in PCR-negative children presenting with Paediatric Inflammatory Multi-System Syndrome. medRxiv. 2020. doi:10.1101/2020.06.05.20123117

- 11.Whittaker E., Bamford A., Kenny J., Kaforou M., Jones C. E., Shah P., et al. Clinical Characteristics of 58 Children With a Pediatric Inflammatory Multisystem Syndrome Temporally Associated With SARS-CoV-2. JAMA. 2020;324(3):259–69. doi:10.1001/jama.2020.10369

- 12.Franchini M., Glingani C., De Donno G., Casari S., Caruso B., Terenziani I., et al. The first case of acquired hemophilia A associated with SARS-CoV-2 infection. Am J Hematol. 2020;95(8):E197–E8. doi:10.1002/ajh.25865

- 13.Sala S., Peretto G., Gramegna M., Palmisano A., Villatore A., Vignale D., et al. Acute myocarditis presenting as a reverse Tako-Tsubo syndrome in a patient with SARS-CoV-2 respiratory infection. Eur Heart J. 2020;41(19):1861–2. doi:10.1093/eurheartj/ehaa286

- 14.British Thoracic Society. BTS Guidance on Venous Thromboembolic Disease in patients with COVID-19. 2020. Available from: https://www.brit-thoracic.org.uk/about-us/covid-19-information-for-the-respiratory-community/.

- 15.Barrios-Lopez J. M., Rego-Garcia I., Munoz Martinez C., Romero-Fabrega J. C., Rivero Rodriguez M., Ruiz Gimenez J. A., et al. Ischaemic stroke and SARS-CoV-2 infection: A causal or incidental association? Neurologia. 2020;35(5):295–302. 10.1016/j.nrl.2020.05.002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Besler M. S., Arslan H. Acute myocarditis associated with COVID-19 infection. Am J Emerg Med. 2020. 10.1016/j.ajem.2020.05.100 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Wollina U., Karadag A. S., Rowland-Payne C., Chiriac A., Lotti T. Cutaneous signs in COVID-19 patients: A review. Dermatol Ther. 2020:e13549. 10.1111/dth.13549 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wu J., Liu X., Liu J., Liao H., Long S., Zhou N., et al. Coronavirus Disease 2019 Test Results After Clinical Recovery and Hospital Discharge Among Patients in China. JAMA Netw Open. 2020;3(5):e209759. 10.1001/jamanetworkopen.2020.9759 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Luchsinger L. L., Ransegnola B., Jin D., Muecksch F., Weisblum Y., Bao W., et al. Serological Analysis of New York City COVID19 Convalescent Plasma Donors. medRxiv. 2020. 10.1101/2020.06.08.20124792

- 20.World Health Organisation. Criteria for releasing COVID-19 patients from isolation. 2020. Available from: https://www.who.int/news-room/commentaries/detail/criteria-for-releasing-covid-19-patients-from-isolation.

- 21.European Centre for Disease Prevention and Control. Novel coronavirus (SARS-CoV-2)—Discharge criteria for confirmed COVID-19 cases. 2020.

- 22.Tang M. S., Case J. B., Franks C. E., Chen R. E., Anderson N. W., Henderson J. P., et al. Association between SARS-CoV-2 neutralizing antibodies and commercial serological assays. Clin Chem. 2020. 10.1093/clinchem/hvaa211

- 23.Public Health England. Evaluation of the Abbott SARS-CoV-2 IgG for the detection of anti-SARS-CoV-2 antibodies. 2020. Available from: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/890566/Evaluation_of_Abbott_SARS_CoV_2_IgG_PHE.pdf.

- 24.Public Health England. Evaluation of Roche Elecsys Anti-Sars-CoV-2 serology assay for the detection of anti-SARS-CoV-2 antibodies. 2020. Available from: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/891598/Evaluation_of_Roche_Elecsys_anti_SARS_CoV_2_PHE_200610_v8.1_FINAL.pdf.

- 25.Premkumar L., Segovia-Chumbez B., Jadi R., Martinez D. R., Raut R., Markmann A., et al. The RBD Of The Spike Protein Of SARS-Group Coronaviruses Is A Highly Specific Target Of SARS-CoV-2 Antibodies But Not Other Pathogenic Human and Animal Coronavirus Antibodies. medRxiv. 2020.

- 26.Watson J., Richter A., Deeks J. Testing for SARS-CoV-2 antibodies. BMJ. 2020;370:m3325. 10.1136/bmj.m3325

- 27.Pallett S. J. C., Rayment M., Patel A., Fitzgerald-Smith S. A. M., Denny S. J., Charani E., et al. Point-of-care serological assays for delayed SARS-CoV-2 case identification among health-care workers in the UK: a prospective multicentre cohort study. Lancet Respir Med. 2020;8(9):885–94. 10.1016/S2213-2600(20)30315-5

- 28.Mlcochova P., Collier D., Ritchie A., Assennato S. M., Hosmillo M., Goel N., et al. Combined Point-of-Care Nucleic Acid and Antibody Testing for SARS-CoV-2 following Emergence of D614G Spike Variant. Cell Rep Med. 2020;1(6):100099. 10.1016/j.xcrm.2020.100099

- 29.Rosadas C., Randell P., Khan M., McClure M. O., Tedder R. S. Testing for responses to the wrong SARS-CoV-2 antigen? Lancet. 2020;396(10252):e23. 10.1016/S0140-6736(20)31830-4

- 30.Seow J., Graham C., Merrick B., Acors S., Pickering S., Steel K. J. A., et al. Longitudinal observation and decline of neutralizing antibody responses in the three months following SARS-CoV-2 infection in humans. Nat Microbiol. 2020;5(12):1598–607. 10.1038/s41564-020-00813-8

- 31.Sekine T., Perez-Potti A., Rivera-Ballesteros O., Strålin K., Gorin J-B., Olsson A., et al. Robust T Cell Immunity in Convalescent Individuals with Asymptomatic or Mild COVID-19. Cell. 2020. 10.1016/j.cell.2020.08.017

- 32.Peng Y., Mentzer A. J., Liu G., Yao X., Yin Z., Dong D., et al. Broad and strong memory CD4(+) and CD8(+) T cells induced by SARS-CoV-2 in UK convalescent individuals following COVID-19. Nat Immunol. 2020;21(11):1336–45. 10.1038/s41590-020-0782-6

- 33.Government HM. Community Testing—a guide for local delivery. 2020. Available from: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/939957/Community_rapid_testing_prospectus_FINAL_30-11.pdf.

- 34.The Academy of Medical Sciences. Lessons learnt: the role of and industry in the UK diagnostic testing response to COVID-19. 2020. Available from: https://acmedsci.ac.uk/file-download/23230740.