Abstract

Background

Early in the coronavirus disease 2019 (COVID-19) pandemic, there was minimal data to guide treatment, and we lacked understanding of how clinicians translated this limited evidence base for potential therapeutics to bedside care. Our objective was to systematically determine how emerging data about COVID-19 treatments was implemented by analyzing institutional treatment protocols.

Methods

Treatment protocols from North American healthcare facilities and recommendations from guideline-issuing bodies were collected. Qualitative data on treatment regimens and their applications were extracted using an adapted National Institutes of Health/US Food and Drug Administration experimental therapeutics framework. Structured data on risk factor and severity of illness scoring systems were extracted and analyzed using descriptive statistics.

Results

We extracted data from 105 independent protocols. Guideline-issuing organizations published recommendations after the initial peak of the pandemic in many regions and generally recommended clinical trial referral, with limited additional guidance. Facility-specific protocols favored offering some treatment (96.8%, N = 92 of 95), most commonly hydroxychloroquine (90.5%), followed by remdesivir and interleukin-6 inhibitors. Recommendation for clinical trial enrollment was limited largely to academic medical centers (19 of 52 vs 9 of 43 community/Veterans Affairs [VA]), which were more likely to have access to research studies. Other themes identified included urgent protocol development, plans for rapid updates, contradictory statements, and entirely missing sections, with section headings but no content other than “in process.”

Conclusions

In the COVID-19 pandemic, emerging information was rapidly implemented by institutions into clinical practice and, unlike recommendations from guideline-issuing bodies, heavily favored administering some form of therapy. Understanding how and why evidence is translated into clinical care is critical to improve processes for other emerging diseases.

Keywords: COVID-19, implementation science, treatment protocols

Early in the COVID-19 pandemic, institutions developed treatment protocols based on limited evidence. In this analysis of those protocols, we found marked heterogeneity in the use and application of treatments, seemingly governed by factors other than the quality of scientific evidence.

The emergence and rapid spread of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) led to an urgent push to develop and implement clinical treatment strategies to optimize outcomes for this new and frequently morbid disease [1]. However, early in the coronavirus disease 2019 (COVID-19) pandemic, there was limited human evidence to guide clinical decision making.

Clinical innovations are postulated to spread in a slow but predictable manner: first, preclinical studies, then human efficacy and effectiveness studies, and finally studies that focus on dissemination, implementation, and sustainability [2]. Under typical conditions, there is an average 17-year lag from the time an intervention is proven effective until its implementation [3]. Implementation frameworks highlight the importance of the external environment, organizational structure, innovation characteristics, and processes for facilitating the ultimate adoption of evidence-based treatment [4–6].

Although laboratory and anecdotal evidence were published and available to guide treatment options, major guideline-issuing bodies, including the Centers for Disease Control and Prevention (CDC) [7], the World Health Organization (WHO) [8, 9], the National Institutes of Health (NIH) [10], and the Infectious Diseases Society of America (IDSA) [11], were delayed in releasing guidelines relative to the start of the first surge in many regions in North America. Thus, although evidence from human clinical trials was forthcoming, healthcare systems across the world faced pressure to provide frontline providers with guidance, despite the limited evidence available to support those recommendations.

Evidence on how evolving data are rapidly disseminated and translated into clinical practice is lacking, but it is critical that we learn more about how this process occurs and what drives decision making for emerging diseases with a limited evidence base. Thus, the objective of this study was to develop and use a concurrent mixed methods approach to categorize, classify, and qualitatively and quantitatively analyze the variation present in these locally and rapidly developed treatment protocols in medical centers across North America.

METHODS

Overview

After obtaining institutional treatment protocols, we used a concurrent analysis mixed methods approach to collect quantitative and qualitative data in parallel [12]. This analytic approach is novel in its application to clinical documents; however, it is a standard mixed methods approach to data analysis that can yield insights into factors driving medical decision making and translation of evidence into practice.

Patient Consent Statement

This study did not include factors necessitating patient consent.

Protocol Identification, Collection, and Recruitment

To ensure diverse representation of institutional protocols across North America, we recruited protocols developed during the period from March 15, 2020 to May 15, 2020 using multiple strategies, including the following: (1) email from the Society for Healthcare Epidemiology of America (SHEA) Research Network [13] to the 74 participating institutions requesting protocol sharing (follow-up email was sent to the facilities that did not initially respond); (2) outreach to personal contacts; (3) postings on Twitter, a major social media platform, by the authors with requests for sharing of protocols (A.A., W.B.-E.); (4) requests for protocol sharing on major national listservs (the National Antimicrobial Stewardship listserv hosted by Northwestern and the VA Society of Infectious Diseases Providers [VASPID]); (5) request for protocol sharing posted to the IDSA Message Board with over 13 000 members active in the system; (6) Internet and intranet (Veterans Affairs [VA]) searches for COVID protocols; and (7) review of protocols posted by major national organizations, such as those on the AAMC website.

Inclusion/Exclusion Criteria

Inclusion criteria for protocols included (1) those from North American institutions that referenced guidance pertaining to the use and application of COVID-19 therapeutics for adults and (2) published guidance from major guideline-issuing bodies. Protocols without a section on therapeutics (eg, testing or infection control protocols only) and those pertaining to pediatric patients only were excluded. In some cases, city-wide treatment consortia with similar protocols were developed and were coded separately among members of the consortium, because they included individual site specifications. Centers that copied verbatim other sites’ protocols were not coded in duplicate. Protocols from affiliated institutions were included in data analysis under the parent institution, and exact duplicates of protocols were excluded. Guidelines from national and international organizations not originating from a specific institution (eg, CDC, WHO, NIH) were classified as “reference protocols.”

Data Extraction and Coding

Coding was completed by authors using a structured summary template to extract relevant qualitative and structured quantitative data (Supplementary Methods 1) [14]. Institutions were asked to submit copies of their protocols but did not fill out any survey or form. All protocols were coded by clinician investigators on the study team using a standardized template (W.B.-E., A.A., P.B.) after an initial quality assurance process to ensure interrater consistency. To ensure data quality, coded protocols were reviewed by a second clinician investigator; discrepancies were resolved through a third review. Final agreements were determined via discussion and consensus [15].

Codes were grouped according to type of treatment (eg, which medications selected), how treatments were applied (eg, to all patients with a confirmed test, to all patients with a suspected test, to high risk patients, etc), whether a disease severity index was used to guide decision making and what the disease severity index and/or risk stratification for severe/complicated COVID-19 infection scores were based on (eg, vital signs, laboratory findings, medical history), and whether laboratory results were included to guide decision making (eg, interleukin [IL]-6 level to guide tocilizumab use). Authors also collected and classified information about the facility that developed the specific institutional guideline (eg, size, academic vs community, VA vs non-VA, region etc), to identify facility factors associated with different treatment recommendations, access to various treatments and limited resources, and access to clinical trials.

Analysis

For qualitative variables, we developed an a priori coding frame from categories established by the NIH and the US Food and Drug Administration (FDA) (Supplementary Methods) [16]. We used this coding frame in a directed content approach for analysis of qualitative data, allowing for new codes to emerge [17]. The coded data were organized through matrix displays in Microsoft Excel [15]. These matrices were then used to compare and contrast evidence across the treatment protocols and between institutions to identify common themes.
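To make the idea of a matrix display concrete, the following Python sketch arranges coded protocols into a protocol-by-code grid and tallies codes by facility type. It is only an illustration: the study used Microsoft Excel, and the protocol identifiers, code names, and records shown here are hypothetical and are not study data.

```python
from collections import Counter

# Hypothetical coded records (NOT study data):
# (protocol id, facility type, set of codes assigned by the coders).
coded_protocols = [
    ("P01", "academic", {"recommends_treatment", "trial_enrollment", "living_document"}),
    ("P02", "VA", {"recommends_treatment", "resource_limitations"}),
    ("P03", "community", {"recommends_treatment", "contradictory_statements"}),
    ("P04", "academic", {"trial_enrollment", "living_document"}),
]

# Column order of the matrix: every code seen in any protocol, sorted by name.
codes = sorted({code for _, _, assigned in coded_protocols for code in assigned})

# Matrix display: one row per protocol, one column per code (1 = code present).
matrix = {
    protocol_id: [int(code in assigned) for code in codes]
    for protocol_id, _, assigned in coded_protocols
}

# Cross-case comparison: how often each code appears within each facility type.
by_facility = Counter(
    (facility, code)
    for _, facility, assigned in coded_protocols
    for code in assigned
)

print("protocol", *codes, sep="\t")
for protocol_id, row in matrix.items():
    print(protocol_id, *row, sep="\t")
```

Reading across a row shows which themes appear in a single protocol, whereas reading down a column shows how widely a theme is shared across protocols.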

Some variables were collected as numerical values (eg, age, obesity definition, laboratory cutoffs). For these quantitative variables, such as cutoffs for abnormal vital signs, body mass index (BMI), and laboratory results, we extracted structured numerical values from the protocols (eg, obesity defined as BMI >30) [18–20]. Quantitative variables were summarized using descriptive statistics and compared using t tests and Fisher’s exact tests, and the range of variability of these numerical variables was calculated.
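As an illustration of the kind of categorical comparison described here, the following Python sketch computes a two-sided Fisher’s exact test from first principles. The source does not state which software was used or which specific comparisons were tested, so the function name `fisher_exact_two_sided` and the choice of the trial-availability counts from Table 1 as example input are assumptions made for illustration only.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2

    def weight(x: int) -> int:
        # Unnormalised hypergeometric weight of the table whose top-left cell
        # is x, with all row and column totals held fixed.
        return comb(row1, x) * comb(row2, col1 - x)

    observed = weight(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum the weights of every table that is no more probable than the
    # observed one (integer arithmetic, so the comparison is exact).
    extreme = sum(
        w for w in (weight(x) for x in range(lowest, highest + 1)) if w <= observed
    )
    return extreme / comb(total, col1)


# Illustrative input (an assumption about which comparison to run):
# an open trial was available at 19 of 52 academic centers and at
# 9 of 43 community or VA facilities (2 of 12 plus 7 of 31 in Table 1).
p_value = fisher_exact_two_sided(19, 52 - 19, 9, 43 - 9)
print(f"two-sided p = {p_value:.3f}")
```

The exact test is a natural choice for tables like this one because several cells in the facility-type comparisons are small.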

RESULTS

Protocol Characteristics

Protocols (N = 168) representing 123 healthcare institutions, systems, or entities were collected (Supplementary Figure 1); of these, 105 met criteria for inclusion. Ten of the 105 were reference protocols from guideline-issuing bodies and were commonly cited in other protocols. Of the remaining 95 institutional protocols, 52 were from academic centers (ie, university-affiliated teaching hospitals), 12 were from community/private hospitals or healthcare systems, and 31 were from VA healthcare systems or networks (see Table 1 and Figure 1 for regional distribution). Protocols varied in data presentation: 46.3% included a reference list and 12.6% referenced another facility’s protocol (Table 1).

Table 1.

Protocol Characteristics and Quantitative Results

| Protocol Characteristics | Total | Academic | Community | VA |
|---|---|---|---|---|
| Total^a | 95 | 52 (54.7) | 12 (12.6) | 31 (32.6) |
| Region/Location (%) | | | | |
| Northeast | 26 (27.4) | 17 | 2 | 7 |
| Midwest | 15 (15.8) | 8 | 3 | 4 |
| South | 26 (27.4) | 15 | 2 | 9 |
| West | 24 (25.2) | 10 | 3 | 11 |
| Nationwide | 2 (2.1) | 0 | 2 | 0 |
| Canada | 2 (2.1) | 2 | 0 | 0 |
| Median beds (IQR) | - | 751 (527–890) | 429 (253–579) | 244 (144–304) |
| Median ICU beds (IQR) | - | 88 (67–120) | 1 (1–2) | 41 (14–56) |
| Average no. of facilities (range) | - | 5.8 (1–40) | 38.5 (1–186) | 1.5 (1–3) |
| SHEA Research Network sites | 24 | 20 | - | 4 |
| Protocol Presentation | | | | |
| Data presentation | | | | |
| Algorithm or flow chart | 41 (43.2) | - | - | - |
| Table | 62 (65.3) | - | - | - |
| Text | 58 (61.1) | - | - | - |
| Version Updates | | | | |
| 1 update | 30 | 19 | 0 | 11 |
| ≥2 updates | 12 | 8 | 0 | 4 |
| Resource limitations (% of subcategory total) | 32 (33.7) | 14 (26.9) | 3 (25.0) | 15 (48.4) |
| Has a reference list (% of subcategory total) | 44 (46.3) | 26 (50.0) | 6 (50.0) | 12 (38.7) |
| Refers to another site’s protocol (% of subcategory total) | 12 (12.6) | 3 (5.8) | 2 (16.7) | 7 (22.6) |
| Gatekeeping of Decision Making | | | | |
| Decision for treatment based on disposition (% of subcategory total) | 51 (53.7) | 27 (51.9) | 8 (66.7) | 16 (51.6) |
| Recommended ID consult (% of subcategory total) | 43 (45.3) | 27 (51.9) | 4 (33.3) | 12 (38.7) |
| Required ID approval/consult (% of subcategory total) | 49 (51.6) | 23 (44.2) | 4 (33.3) | 22 (71.0) |
| Antibiotic steward approval required (% of subcategory total) | 18 (18.9) | 7 (13.5) | 3 (25.0) | 8 (25.8) |
| Clinical Trial | | | | |
| Trial Available (% of subcategory total) | 28 (29.5) | 19 (36.5) | 2 (16.7) | 7 (22.6) |
| By Facility Size^b per Number of Beds (Trial Available/N Institutions in Size Category, %) | | | | |
| 1–99 | 0/4 (0.0) | - | - | - |
| 100–199 | 2/9 (22.2) | - | - | - |
| 200–499 | 7/30 (23.3) | - | - | - |
| 500–999 | 14/39 (35.9) | - | - | - |
| 1000+ | 4/9 (44.4) | - | - | - |
| By Top 20 Metropolis^c | | | | |
| Yes (% of subcategory total) | 13/26 (50.0) | 9 (56.3) | 0 (0.0) | 4 (44.4) |
| No (% of subcategory total) | 14/65 (21.5) | 10 (28.6) | 2 (22.2) | 2 (9.5) |
| Trial open for enrollment (%) | 22 (23.3) | 15 (15.8) | 2 (2.1) | 5 (5.3) |
| Recommended trial enrollment (%) | 43 (45.3) | 25 (48.1) | 5 (41.7) | 13 (41.9) |
| By Top 20 Metropolis | | | | |
| Yes (% of subcategory total) | 15 (57.7) | 10 (62.5) | 0 (0.0) | 5 (55.5) |
| No (% of subcategory total) | 25 (39.1) | 14 (38.9) | 4 (36.4) | 7 (31.8) |

Abbreviations: ICU, intensive care unit; ID, Infectious Diseases; IQR, interquartile range; VA, Veterans Affairs.

^a Only includes unique protocols from parent institutions; however, this does include different protocols from institutions with overlapping catchment areas.

^b Four protocols were from healthcare institutions where facility size and location in top 20 metropolis could not be categorized.

^c Largest 20 cities based on US Census Bureau 2019 population estimates.

Figure 1.

Map of distribution of facilities. This figure was created with the assistance of Google Maps, 2020. Blue, VA healthcare facilities; Yellow, Academic healthcare facilities; Orange, Community healthcare facilities.

Recommendations From Guideline-Issuing Bodies

The 10 reference protocols from guideline-issuing organizations, such as IDSA [11], NIH [10], CDC [7], and WHO [8, 9], were published during the period from March 21, 2020 to April 11, 2020 and encouraged clinical trial enrollment but did not offer specific recommendations about treatments administered outside of a clinical trial, citing a lack of high-quality evidence. An exception to this was the Thoracic Society Guideline (developed using a modified Delphi technique and an expert panel) that was released in March 2020 and included a statement of support for unproven therapies in critically ill patients [21].

Overarching Themes

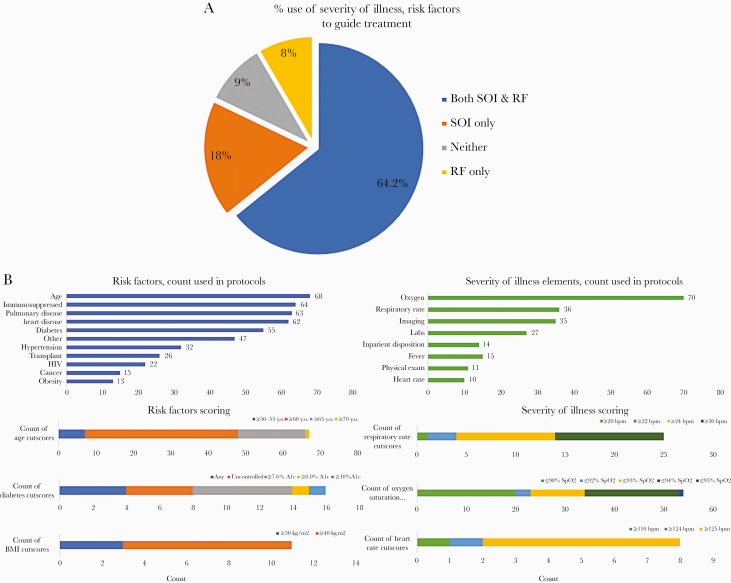

Two themes present throughout almost every institutional protocol were (1) a clear and strong suggestion to provide some form of treatment (92 of 95 protocols, 96.8%), particularly to patients with more severe illness (78 of 95 protocols, 82.1%) and to those with risk factors for progression to severe disease (69 of 95, 72.6%) (Figure 2), and (2) the desire to create a “standard” treatment for patients presenting to their institution when a broader standard of care did not exist. Acknowledging a potential for harm associated with unproven treatments, many institutional guidelines included detailed indications for safety monitoring, such as recommendations for electrocardiograms in patients receiving hydroxychloroquine and baseline human immunodeficiency virus testing before antiretrovirals.

Figure 2.

(a) Use of severity of illness (SOI) and risk factors (RF) criteria to guide treatment decisions (% of protocols), and (b) severity of illness and risk factor measures to guide therapeutic decisions. BPM, beats per minute.

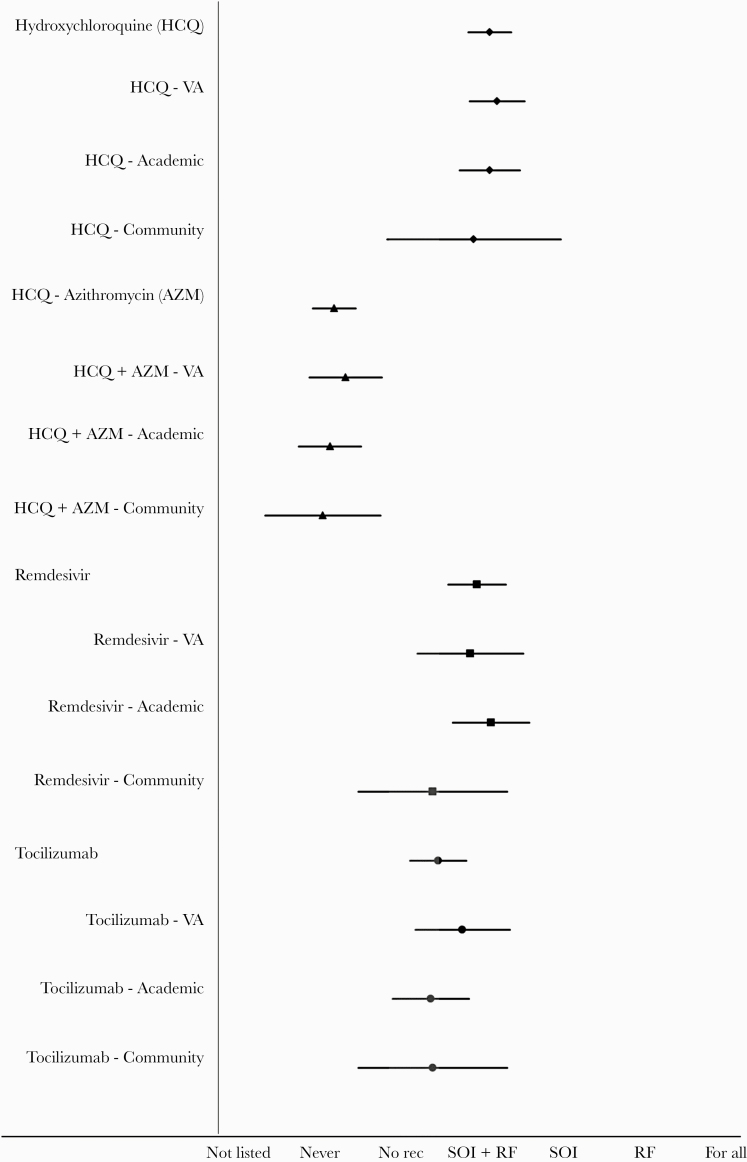

Treatments and Their Application

The most commonly recommended therapy within the included institutional protocols was hydroxychloroquine (Figure 3), cited in 90.5% of protocols. Of these, 80.0% recommended it in at least some circumstances, most frequently based on a locally developed risk factor and/or severity of illness scoring scheme. A total of 2.1% specifically recommended against its use. The combination of hydroxychloroquine and azithromycin was often specifically “not recommended.” Other commonly included treatments were remdesivir and IL-6 inhibitors, particularly among facilities with an open and enrolling clinical trial. Criteria for remdesivir use were typically based on a combination of risk factors and severity scores, due to the drug’s non-FDA-approved status and gatekeeping by specific industry-determined eligibility criteria for access (initially via a compassionate use mechanism and later through expanded access).

Figure 3.

Forest plot of coronavirus disease 2019 (COVID-19) therapeutics by strength of recommendation according to facility type. RF, risk factor; SOI, severity of illness; VA, Veterans Affairs.

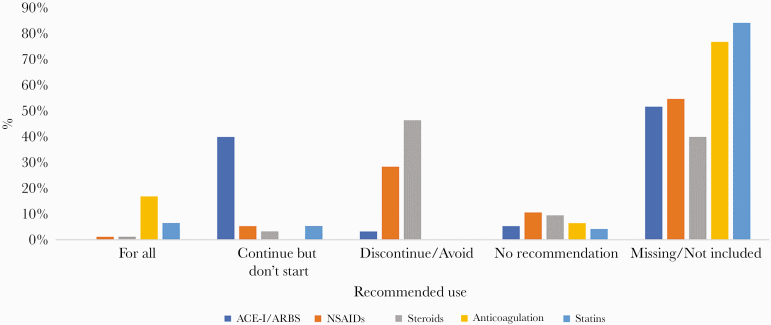

Ancillary treatments, such as steroids and nonsteroidal anti-inflammatory drugs (NSAIDs), were listed in 60.0% and 45.3% of protocols, respectively. Most commonly, these medications were listed with a recommendation against their use for COVID-19 treatment (46.3% and 28.4% of protocols) (Figure 4). Angiotensin-converting enzyme (ACE) inhibitors were also commonly included (48.4%), typically with a recommendation to “continue but not start.” Anticoagulation was included in 23.2%, often with a recommendation to administer standard prophylactic treatment doses.

Figure 4.

Ancillary treatments. ACE-I, angiotensin-converting enzyme inhibitor; ARB, angiotensin II receptor blocker; NSAID, nonsteroidal anti-inflammatory drug.

A total of 45.3% of protocols included a recommendation for infectious diseases consultation for all COVID-19 patients (Table 1). Approval of any COVID-19 medication was required in 55.8% (pharmacy approval in 18.9% and infectious diseases approval in 51.6%). Requirement for approval was highly correlated with status as an academic facility (Supplementary Figure 2).

Although the application of risk factors for severe/complicated COVID-19 disease and severity of illness scoring to guide treatment was a recurring theme, we found substantial interfacility variation in how these scores were constructed and applied (Figure 2). Almost all protocols listed heart and pulmonary disease as risk factors; immunosuppression was also often included, although this term was variably defined. Many protocols (31.5%) included various terms related to renal disease, including “chronic kidney disease,” “end stage renal disease,” or “kidney disease”; however, this latter term was often not further defined and lacked specific cutoffs, potentially creating challenges for application in clinical settings. A lack of standard definitions was also found for other variables, including “respiratory disease” and “heart disease.” For numerical variables, such as BMI cutoffs to define “obesity,” there was a similar lack of standardization.

Severity of illness was defined in many ways; however, some form of oxygenation status was included in almost all (89.7%). Disposition status (eg, inpatient floor vs intensive care unit, 17.9%) and respiratory rates were also frequently included (46.2%). Similar to findings with numerical variables used in risk factor scoring, there was wide variation in how these variables were defined. For example, the oxygen saturation cutoffs used to define moderate or severe disease spanned a wide range (eg, 90%–95%). Likewise, the respiratory rate used to define severe disease ranged from 20 to 30, encompassing a range of values that would all be consistent with clinical definitions of tachypnea.

Emerging Themes and Association With Facility Factors

A recommendation to enroll patients in a clinical trial was included in 45.3% (43 of 95) of institutional protocols, with an open trial available at the institution referenced in 29.5% (28 of 95 protocols). Clinical trial availability strongly correlated with institutional size and region (Supplementary Figure 3) and was more common among large academic institutions (36.5%, 19 of 52) versus community centers (16.7%, 2 of 12) and VA facilities (22.6%, 7 of 31). Clinical trials access was also higher in major metropolitan areas (50.0% in top 20 metropolises versus 21.5% in other areas) (Table 1, Supplementary Figures 3 and 4). Although some smaller facilities had academic affiliations and referenced trial availability at a parent institution, most institutions without strong academic ties did not have open and enrolling trials. Institutions without an open trial generally issued stronger recommendations for off-label therapeutics, despite explicit warnings included in many protocols that these agents were “not FDA-approved” for COVID-19.
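The proportions in this paragraph follow directly from the reported counts. As a simple check, the Python sketch below recomputes each percentage from its numerator and denominator; the dictionary labels and the helper name `percent` are illustrative choices, and only counts stated in the text and Table 1 are used.

```python
# (numerator, denominator) pairs exactly as reported in the text and Table 1.
reported = {
    "trial recommended, all protocols": (43, 95),
    "trial available, all protocols": (28, 95),
    "trial available, academic": (19, 52),
    "trial available, community": (2, 12),
    "trial available, VA": (7, 31),
    "trial available, top 20 metropolis": (13, 26),
    "trial available, other areas": (14, 65),
}


def percent(numerator: int, denominator: int) -> float:
    """Return the proportion as a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


percentages = {label: percent(n, d) for label, (n, d) in reported.items()}
for label, value in percentages.items():
    print(f"{label}: {value}%")
```

The metropolitan-area denominators (26 and 65) sum to 91 rather than 95 because, as noted in the Table 1 footnotes, four protocols could not be categorized by location.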

Many protocols highlighted a sense of urgency regarding the development and operationalization of treatment protocols and included statements about “frequent updates” and “do not print” given the “rapidly changing” nature of recommendations (Table 2). References to “living documents” being updated in real time and subject to change were common, including statements such as updates pending “further information becom[ing] available from CDC and WHO.” However, possibly as an extension of the speed of development and frequent changes, several protocols included ambiguous or contradictory recommendations, such as recommendations to administer a treatment in one section and cautionary statements recommending against the use of the same treatment in another section of the same document (Table 2). Another emergent theme was the interdisciplinary nature of many of the protocols, which in some cases included substantial coordination efforts across the entire healthcare system. Many were “jointly developed” with clinicians from several departments.

Table 2.

Emergent Themes From Institutional Protocols and Illustrative Examples

| Emergent Theme | Examples From Protocols |
|---|---|
| Protocol Development and Use | |
| Developed rapidly | “We built the first iteration of these guidelines “from the bottom up” in less than a week with the input of over 50 people. With the help of our readers, we expect to correct and revise as we as a society learn about COVID-19.” |
| Developed collaboratively | “This protocol was jointly-developed with input from clinicians across multiple departments.” |
| Discussion of updates/real-time changes | “This is a living document that will be updated in real time as more data emerge.” |
| Language Use in Protocols | |
| Cautionary | “Given the lack of clear evidence to support hydroxychloroquine, medical experts have asked clinicians to exercise caution and to consider the risk of the medication—notably the potential cardiac complications. Because the data is still unclear, there are several ongoing trials of hydroxychloroquine.” |
| Clinical ambiguity | Risk factors for severe disease: age ≥65 years, chronic cardiovascular, pulmonary, hepatic, renal, hematologic, or neurologic conditions, immunocompromised, pregnant women, residents of nursing homes or long-term care facilities. |
| | Symptomatic individuals who are older adults (age >65 years), immunocompromised state, chronic medical conditions (eg, diabetes, CAD, chronic lung, or kidney disease) |
| Urgency | “URGENT! Please circulate as widely as possible. It is crucial that every pulmonologist, every critical care doctor and nurse, every hospital administrator, every public health official receive this information immediately.” |
| Contradictory/conflicting recommendations | Lopinavir/ritonavir listed under “not recommended” treatments in main table, however, included criteria for use in table footnote. |
| Treatment/Care Delivery | |
| Strength of treatment recommendations | “Please note there are NO FDA approved treatment options for COVID-19. These medications are experimental in nature and utilization may change with newly discovered clinical trials and results.” |
| Recommendation to participate in a clinical trial | “[Site Name] is committed to participation in randomized controlled clinical trials to facilitate the generation of robust evidence concerning the effectiveness of products in treating COVID-19 and to appropriately delineate risk-vs-benefit assessments for various treatment strategies.” |
| Resource limitations as an element of decision making | “Consider available resources and pump availability when ordering.” |
| Integration of different clinical services/interdisciplinary care team management | “In a surge situation we will work with palliative care to provide palliative services for older patients.” |

Abbreviations: CAD, coronary artery disease; COVID-19, coronavirus disease 2019; FDA, US Food and Drug Administration.

Other notable emerging themes involved the inclusion of “warning” or cautionary statements that therapeutics recommended were not FDA-approved or evidence-based; however, how these cautionary statements were presented varied from inclusion at the beginning of the document in bolded, red font, to a footnote in small font at the bottom of a table (Table 2). Thus, there was strong variation in how visible cautionary statements were and in the probability that the cautionary statement would be digested. Similarly, several protocols included phrases like “Do not share” or “Do not distribute,” potentially conveying discomfort with recommending unproven therapies, yet also acknowledging some pressure to provide local guidance about management strategies. Recommendations were often justified using statements about deference to physicians’ “sound clinical judgement.”

Reference to “resource limitations” was a common theme, but this was more frequently found in VA facilities versus non-VA facilities and higher in the Northeast and Southern regions (Supplementary Figure 5). Protocols included phrasing such as “availability of medications may change” due to ongoing drug shortages or that medications were in “limited supply”; therefore, a “strategic utilization” approach was used in the protocol. Due to resource limitations, some institutions implemented crisis standards of care, resulting in variability in which patients were triaged to receive critical care. A small number of facilities developed palliative care treatment guidelines and order sets for patients with COVID-19 and included strategies for referring patients for palliative care consultation upon hospital presentation. Risk factors and severity of illness scores were also often applied as a means of triaging limited medication stockpiles to the patients at highest risk of disease progression and/or most severe disease.

DISCUSSION

Although there is typically a multiyear lag between preclinical evidence and dissemination and implementation, this postulated stepwise progression, from preclinical evidence to human studies and finally to dissemination and implementation, was upended for COVID-19 therapy [2, 22]. Driven by pressure to offer “something” to patients with few options, and often differing from later recommendations from national guideline-issuing bodies, almost every institution endorsed unproven treatments for at least some patients, particularly those with severe disease or risk factors for disease progression. Recommendations from international groups (eg, Lombardy Infectious Diseases Society), which heavily favored treatment, were available by March 2020. National guideline-issuing bodies, such as the CDC and the IDSA, did not release their recommendations until mid-to-late April 2020. Thus, guidelines from US national organizations were not available until after the initial surge in some regions, including the Northeastern United States. This may have contributed to our finding that facility protocols seemed to be more congruent with international recommendations, and advice from more experienced overseas colleagues, than with US national guidelines.

Challenges of confounding by indication were present in many of the early data released, for example, suggesting first benefit and then harm associated with hydroxychloroquine [23–25]. Hydroxychloroquine was later found in a meta-analysis of randomized trials and observational studies to be ineffective in reducing mortality [26].

Recognizing that not every question can be answered in a randomized clinical trial, we must find ways to improve the quality and reliability of observational studies to speed answers to questions about COVID-19 management. Incorporation of facility factors, such as treatment recommendations and severity of illness scores, provides a mechanism for including important confounding factors into these analyses. To this end, data matrices are available, so that critical facility factors can be incorporated into future observational studies to enhance their quality.

Implementation Science Frameworks postulate that major factors impacting the adoption of innovations include the external environment, organizational structure, and characteristics of the innovation—including the strength of the evidence supporting it, and the processes used [4, 5]. However, during the early pandemic, we found that the 2 constructs that most heavily influenced institutional recommendations were the external environment and provider and organizational factors. Strength of the underlying evidence played a relatively minor role in driving adoption of practices.

Preclinical data, observational data, and data extrapolated from other respiratory viruses, including Middle East respiratory syndrome (MERS) and SARS-CoV-1, led to the common recommendation for hydroxychloroquine and against the routine use of steroids as treatments for SARS-CoV-2 in many early treatment protocols. However, despite early enthusiasm about hydroxychloroquine, subsequent randomized trials found it to be ineffective [25, 27–30]. In contrast, human data extrapolated from other respiratory viral syndromes were found not to apply to COVID-19, and use of steroids for hospitalized patients with COVID-19 has now been widely adopted [31, 32]. These data demonstrate the importance of human clinical data to guide recommendations and the importance of viewing treatment guidelines for emerging diseases as “living documents” that will necessarily evolve.

Application of evidence-based data to guide decision making is critical; however, this must be balanced against the near-universal instinct of frontline clinicians to provide some form of treatment—even if human evidence is lacking. Several local protocols included in this study were widely disseminated, including integration into references in other guidelines and commonly used clinician resources such as UpToDate [33]. For medications with a long track record of safety, prescribing something may be preferable to clinicians when the alternative is to offer nothing to patients with a potentially fatal disease—and this feature of physician culture to favor “doing something” over “doing nothing” should be taken into consideration when developing recommendations about best practices for novel or rare diseases without a strong evidence basis to support one treatment strategy or another.

Despite the protocols being developed by discrete institutions, many of the same therapeutic agents (hydroxychloroquine, remdesivir) were included, potentially indicating that informal information sharing between physician peers is a key component of information dissemination, particularly when guideline-issuing bodies issue recommendations that may not be timely or even feasible for all sites.

Guideline-issuing bodies focused on suggestions for clinical trial enrollment [8–11]; however, access to clinical trials was primarily available at large, urban, academic medical centers with existing research infrastructure, and access at VA medical centers and community medical centers, particularly those in rural settings, was rare. Thus, smaller facilities, which on an individual basis have fewer patients but where the majority of clinical care is provided [34], typically did not have the option of offering treatment as part of a clinical trial. Consequently, these facilities faced the choice of offering their patients no treatment or developing protocols based on the best evidence available to them at the time, which is what many opted to do.

Our findings highlight the importance of innovating mechanisms to expand clinical trial access. Guideline-issuing bodies should recognize the unintended consequences of blanket recommendations about “only in the context of a clinical trial,” without coupling the recommendation with a mechanism to support access to experimental studies for a larger segment of the population. Potential strategies for improving clinical trial access include strategic decisions about availability made at a regional or national level and not by individual institutions or sponsors, which may promote disparities by favoring well-connected organizations with existing research infrastructure. As we move toward a Learning Healthcare Model [35], consideration should be given to technological innovations to support expansion of clinical trial access to patients receiving care at smaller, nonacademic institutions. This might include increasing use of embedded clinical trials within integrated electronic medical records systems [36, 37], a rapid referral mechanism to sister institutions that do have clinical trial availability, or pre-existing agreements about transfers if trial enrollment is indicated.

Limitations

We relied on informal networks, established societies, and listservs to gather protocols, which may have led to sampling bias. We also do not have data about how many institutions developed and implemented their own protocols. However, the protocols analyzed in this study represent a wide range of facility types and regions facing the brunt of the pandemic during the study period, thus partially mitigating this limitation. In addition, due to the rapidly evolving nature of the pandemic, different protocols were developed at different times, and thus some facilities may have had more data available to them when developing their initial protocol than others. We attempted to mitigate this limitation by collecting protocols developed during a relatively short window from the early part of the pandemic in North America, but we may not have fully accounted for these differences in timing. A final limitation is the potential for bias inherent in any qualitative analysis; however, we attempted to address this by using multiple coders and a coding check to ensure accuracy and reproducibility.

CONCLUSIONS

Although randomized controlled clinical trials will remain the gold standard for establishing treatment options for COVID-19, not every research question can or will be answered through these typically resource- and time-intensive study designs. Thus, it is critical that we find ways to enhance the quality of observational research to improve our understanding of COVID-19. Viewing treatment through the lens of implementation science, we may be able to enhance the quality of COVID-19 observational data by more fully accounting for patient and facility-level factors that may have driven clinical decision making.

Facing a novel pathogen with rapid spread and high mortality, and no high-quality evidence about effective treatment options, many centers generated institutional protocols to locally standardize care, operationalize decision making, and triage limited resources to the sickest patients. Recommendations were developed with minimal input from guideline-issuing bodies, which were slow to publish guidance and which focused on referrals to clinical trials, without addressing the impact of a blanket recommendation for “in the context of a clinical trial” on facilities with limited research infrastructure.

Data Sharing Statement

In line with the Open Science Framework [38], a deidentified version of the matrix display, including relevant implementation variables and clinical definitions stratified by facility, is publicly accessible through a data-sharing resource such that others may include these variables in their investigations. The data-sharing resource is available at: www.covidresourcecenter.com. Release of the institutional name associated with the random study number will be made available upon written request to the study authors.

Supplementary Data

Supplementary materials are available at Open Forum Infectious Diseases online. Consisting of data provided by the authors to benefit the reader, the posted materials are not copyedited and are the sole responsibility of the authors, so questions or comments should be addressed to the corresponding author.

Acknowledgments

This research would not have been possible without the help and support of the Society for Healthcare Epidemiology of America Research Network and the contributions and support of colleagues from around the country. We greatly appreciate their willingness to share their protocols to support this work. We also thank the VA Boston Center for Healthcare Organization and Implementation Research (CHOIR) for help and assistance with this project.

Disclaimer. The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government.

Financial support. This work was funded by the National Heart, Lung, and Blood Institute (Grant 1K12HL138049-01; to W. B.-E.). The VA Boston Center for Healthcare Organization and Implementation Research, a VA Health Services Research and Development Center of Innovation, also supported the work through personnel support.

Potential conflicts of interest. A. A. is an investigator for studies funded by Gilead Sciences, Roche/Genentech, Merck, Day Zero Diagnostics, and ViiV/GlaxoSmithKline. W. B.-E. is an investigator for studies funded by Gilead Sciences and paid directly to the institution. All authors have submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest. Conflicts that the editors consider relevant to the content of the manuscript have been disclosed.

References

- 1. Guan WJ, Ni ZY, Hu Y, et al.; China Medical Treatment Expert Group for Covid-19. Clinical characteristics of coronavirus disease 2019 in China. N Engl J Med 2020; 382:1708–20.

- 2. Pinnock H, Barwick M, Carpenter CR, et al.; StaRI Group. Standards for Reporting Implementation Studies (StaRI) Statement. BMJ 2017; 356:i6795.

- 3. Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: understanding time lags in translational research. J R Soc Med 2011; 104:510–20.

- 4. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009; 4:50.

- 5. Fisher ES, Shortell SM, Savitz LA. Implementation science: a potential catalyst for delivery system reform. JAMA 2016; 315:339–40.

- 6. Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients’ care. Lancet 2003; 362:1225–30.

- 7. Centers for Disease Control. Interim Clinical Guidance for Management of Patients with Confirmed Coronavirus Disease (COVID-19). Available at: https://www.cdc.gov/coronavirus/2019-ncov/hcp/clinical-guidance-management-patients.html. Accessed 9 September 2020.

- 8. World Health Organization. Clinical Management of severe acute respiratory Infection (SARI) when COVID-19 disease is suspected. Interim guidance. Available at: https://www.who.int/docs/default-source/coronaviruse/clinical-management-of-novel-cov.pdf. Accessed 9 September 2020.

- 9. World Health Organization. Clinical management of COVID-19. Interim guidance. Available at: https://www.who.int/publications/i/item/clinical-management-of-covid-19. Accessed 9 September 2020.

- 10. National Institutes of Health. COVID-19 Treatment Guidelines Panel. Coronavirus Disease 2019 (COVID-19) Treatment Guidelines. Available at: https://www.covid19treatmentguidelines.nih.gov/. Accessed 9 September 2020.

- 11. Bhimraj A, Morgan RL, Shumaker AH, et al. Infectious Diseases Society of America Guidelines on the Treatment and Management of Patients with COVID-19. Clin Infect Dis 2020. doi: 10.1093/cid/ciaa478.

- 12. Fetters MD, Curry LA, Creswell JW. Achieving integration in mixed methods designs-principles and practices. Health Serv Res 2013; 48:2134–56.

- 13. Safdar N, Anderson DJ, Braun BI, et al.; Research Committee of the Society for Healthcare Epidemiology of America. The evolving landscape of healthcare-associated infections: recent advances in prevention and a road map for research. Infect Control Hosp Epidemiol 2014; 35:480–93.

- 14. Hamilton A. Qualitative methods in rapid turn-around health services research. Health Services Research & Development Cyberseminar. VA Human Services Research and Development. Transcript and Recording, 11 December 2013. Available at: https://www.hsrd.research.va.gov/for_researchers/cyber_seminars/archives/video_archive.cfm?SessionID=780.

- 15. Huberman AM, Miles M, Saldana J. Qualitative Data Analysis: A Methods Sourcebook. Thousand Oaks, California, USA: SAGE Publications; 2014.

- 16. National Institutes of Health. Protocol Templates for Clinical Trials. Available at: https://grants.nih.gov/policy/clinical-trials/protocol-template.htm. Accessed 31 March 2020.

- 17. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res 2005; 15:1277–88.

- 18. Elwy AR, Wasan AD, Gillman AG, et al. Using formative evaluation methods to improve clinical implementation efforts: description and an example. Psychiatry Res 2020; 283:112532.

- 19. Elwy AR, Groessl EJ, Eisen SV, et al. A systematic scoping review of yoga intervention components and study quality. Am J Prev Med 2014; 47:220–32.

- 20. Elwy AR, Hart GJ, Hawkes S, Petticrew M. Effectiveness of interventions to prevent sexually transmitted infections and human immunodeficiency virus in heterosexual men: a systematic review. Arch Intern Med 2002; 162:1818–30.

- 21. Wilson K, Chotirmall S, Bai C, Rello J. COVID-19: interim guidance on management pending empirical evidence. Eur Respir Rev 2020; 29:200287.

- 22. Branch-Elliman W, Elwy AR, Monach P. Bringing new meaning to the term “adaptive trial”: challenges of conducting clinical research during the coronavirus disease 2019 pandemic and implications for implementation science. Open Forum Infect Dis 2020; 7:ofaa490.

- 23. Geleris J, Sun Y, Platt J, et al. Observational study of hydroxychloroquine in hospitalized patients with Covid-19. N Engl J Med 2020; 382:2411–8.

- 24. Gautret P, Lagier JC, Parola P, et al. Hydroxychloroquine and azithromycin as a treatment of COVID-19: results of an open-label non-randomized clinical trial. Int J Antimicrob Agents 2020; 56:105949. [Retracted]

- 25. Recovery Collaborative Group. Effect of hydroxychloroquine in hospitalized patients with Covid-19. N Engl J Med 2020; 383:2030–40.

- 26. Fiolet T, Guihur A, Rebeaud ME, Mulot M, Peiffer-Smadja N, Mahamat-Saleh Y. Effect of hydroxychloroquine with or without azithromycin on the mortality of coronavirus disease 2019 (COVID-19) patients: a systematic review and meta-analysis. Clin Microbiol Infect 2021; 27:19–27.

- 27. Boulware DR, Pullen MF, Bangdiwala AS, et al. A randomized trial of hydroxychloroquine as postexposure prophylaxis for Covid-19. N Engl J Med 2020; 383:517–25.

- 28. Gautret P, Lagier JC, Parola P, et al. Hydroxychloroquine and azithromycin as a treatment of COVID-19: results of an open-label non-randomized clinical trial. Int J Antimicrob Agents 2020; 56:105949. [Retracted]

- 29. Self WH, Semler MW, Leither LM, et al.; National Heart, Lung, and Blood Institute PETAL Clinical Trials Network. Effect of hydroxychloroquine on clinical status at 14 days in hospitalized patients with COVID-19: a randomized clinical trial. JAMA 2020; 324:2165–76.

- 30. Skipper CP, Pastick KA, Engen NW, et al. Hydroxychloroquine in nonhospitalized adults with early COVID-19: a randomized trial. Ann Intern Med 2020; 173:623–31.

- 31. Recovery Collaborative Group. Dexamethasone in hospitalized patients with Covid-19: preliminary report. N Engl J Med 2021; 384:693–704.

- 32. The WHO Rapid Evidence Appraisal for COVID-19 Therapies (REACT) Working Group. Association between administration of systemic corticosteroids and mortality among critically ill patients with COVID-19: a meta-analysis. JAMA 2020. doi: 10.1001/jama.2020.17023.

- 33. Kim A, Gandhi R. Coronavirus disease 2019 (COVID-19): management in hospitalized adults. In: UpToDate, Post TW (Ed), UpToDate, Waltham, MA. Accessed 9 September 2020. Available at: www.uptodate.com.

- 34. Dunn L, Becker S. 50 things to know about the hospital industry. Becker’s Hospital Review 2013. Available at: https://www.beckershospitalreview.com/hospital-management-administration/50-things-to-know-about-the-hospital-industry.html. Accessed 9 September 2020.

- 35. Chambers DA, Feero WG, Khoury MJ. Convergence of implementation science, precision medicine, and the learning health care system: a new model for biomedical research. JAMA 2016; 315:1941–2.

- 36. Fiore LD, Brophy M, Ferguson RE, et al. A point-of-care clinical trial comparing insulin administered using a sliding scale versus a weight-based regimen. Clin Trials 2011; 8:183–95.

- 37. Weinfurt KP, Hernandez AF, Coronado GD, et al. Pragmatic clinical trials embedded in healthcare systems: generalizable lessons from the NIH Collaboratory. BMC Med Res Methodol 2017; 17:144.

- 38. Foster ED, Deardorff A. Open science framework (OSF). JMLA 2017; 105:203.
