Abstract

Although identifying cell names in dense image stacks is critical in analyzing functional whole-brain data enabling comparison across experiments, unbiased identification is very difficult, and relies heavily on researchers’ experiences. Here, we present a probabilistic-graphical-model framework, CRF_ID, based on Conditional Random Fields, for unbiased and automated cell identification. CRF_ID focuses on maximizing intrinsic similarity between shapes. Compared to existing methods, CRF_ID achieves higher accuracy on simulated and ground-truth experimental datasets, and better robustness against challenging noise conditions common in experimental data. CRF_ID can further boost accuracy by building atlases from annotated data in highly computationally efficient manner, and by easily adding new features (e.g. from new strains). We demonstrate cell annotation in Caenorhabditis elegans images across strains, animal orientations, and tasks including gene-expression localization, multi-cellular and whole-brain functional imaging experiments. Together, these successes demonstrate that unbiased cell annotation can facilitate biological discovery, and this approach may be valuable to annotation tasks for other systems.

Research organism: C. elegans

Introduction

Annotation of anatomical structures at cellular resolution in large image sets is a common data analysis step in many studies in Caenorhabditis elegans such as gene expression pattern analysis (Long et al., 2009; Murray, 2008), lineage tracing (Bao et al., 2006), multi-cell calcium imaging and whole-brain imaging (Schrödel et al., 2013; Kato et al., 2015; Venkatachalam et al., 2016; Nguyen et al., 2016). It is necessary for cellular resolution comparison of data across animals, trials, and experimental conditions. Particularly in whole-brain functional imaging, meaningful interpretation of population activity critically depends on cell identities as they facilitate the incorporation of existing knowledge about the system (Kato et al., 2015). Cell identities are also needed for applying common statistical data analysis methods such as Principal Component Analysis, Tensor Component Analysis, demixed-Principal Component Analysis (Williams et al., 2018; Kobak et al., 2016) etc as data across experiments needs to be indexed and pooled by cell identities before applying these methods.

While accurate annotation of cell identities in images is critical, this task is difficult. Typically, the use of cell-specific markers as landmarks delivers good accuracy, but has the cost of having to engineer cell-specific reagents without interfering with phenotypes of interest, which is not guaranteed. Further, even with markers such as the recently developed impressive reagents in the NeuroPAL collection (Yemini et al., 2021), there is still a need to automate the cell identification process. In the absence of markers, cells are identified by comparing images to a reference atlas such as WormAtlas (Altun and Hall, 2009) and OpenWorm (Szigeti et al., 2014) atlas. However, there are severe limitations from both using reference atlas and the presence of noise in data. Reference atlases assume a static and often single view of the anatomy; in contrast, anatomical features vary across individuals. Moreover, due to variations in experimental conditions during acquisition such as exact resolution and orientation of animals, image data often do not match the static atlases, making manual cell identification extremely difficult if not infeasible. Separately, two kinds of noise are prevalent in data. First, individual-to-individual variability in cell positions compared to positions in atlas (position noise). Second, mismatch between number of cells in image and atlas (count noise). Count noise is primarily caused by variability in the expression levels of the reporter used to label cells across animals (i.e. mosaicism), incomplete coverage of promoter to label desired cells, and limits in the computational methods to detect cells. In each of these cases, fewer cells are detected in the images than cells in the atlas. Empirical data have shown that in normalized coordinates, a cell’s position can deviate from the atlas position by more than the cell’s distance to its tenth’ nearest neighbor in the image (Yemini et al., 2021; Toyoshima et al., 2016). Further, our data, as well as data from other labs, have shown that 30–50% of cells in atlases may be missing from images (Kato et al., 2015; Venkatachalam et al., 2016; Nguyen et al., 2016). As a result of the large position and count noise common in data, identifying densely packed cells in head ganglion images of C. elegans by manually comparing images to the atlas is extremely difficult, even for experienced researchers. Further, manual annotation is labor intensive. Therefore, there is a critical need for automated methods for cell identification.

Previous computational methods for cell identification in C. elegans images (Long et al., 2009; Long et al., 2008; Qu et al., 2011; Aerni et al., 2013) focused on identifying sparsely distributed cells with stereotypical positions in young larvae animals. Tools for identification of cells in whole-brain datasets, that is in dense head ganglion, do not exist. Further, previous methods (Long et al., 2009; Yemini et al., 2021; Qu et al., 2011; Aerni et al., 2013; Toyoshima, 2019; Scholz, 2018) do not explicitly address the challenges imposed by the presence of position and count noise in the data. All previous methods either are registration-based or formulate a linear assignment problem; objective functions in these methods minimize a first-order constraint such as the distances between cell-specific features in images and atlases. Thus, these methods maximize only extrinsic similarity (Bronstein et al., 2007) between images and atlas, which is highly sensitive to count noise, position noise, and pre-alignment of spaces in which the image and the atlas exist (i.e. orientations of animals in images and atlases). With the amount of position and count noise commonly observed in experimental data, registration-based methods produce large matching errors.

An alternative criterion proposed for topology-invariant matching of shapes is to maximize intrinsic similarity (Bronstein et al., 2007; Bronstein et al., 2009), orthogonal to extrinsic similarity. This approach has advantages because noise that affects extrinsic similarity does not necessarily imply worse intrinsic similarity. For instance, although cell positions in an image may deviate from their positions in the atlas (large extrinsic noise), geometrical relationships among them are largely maintained (low intrinsic noise). As a specific example, although absolute positions of the cell bodies of AIBL and RIML in an image may deviate greatly from their atlas positions, AIBL soma stays anterior to RIML soma. Therefore intrinsic similarity is more robust against noises, independent of the pre-alignment of spaces, and inherently captures dependencies between cell label assignments that registration methods do not consider.

To directly optimize for intrinsic similarity and dependencies between label assignments, we cast the cell annotation problem as a Structured Prediction Problem (Bakir, 2007; Nowozin, 2010; Caelli and Caetano, 2005; Kappes et al., 2015) and build a Conditional Random Fields (CRF) model (Lafferty et al., 2001) to solve it. The model directly optimizes cell-label dependencies by maximizing intrinsic and extrinsic similarities between images and atlases. One major advantage, as shown using both synthetic data with realistic properties (e.g. statistics from real data) and manually annotated experimental ground-truth datasets, is that CRF_ID achieves higher accuracy compared to existing methods. Further, CRF_ID outperforms existing methods in handling both position noise and count noise common in experimental data across all challenging noise levels.

To further improve accuracy, we took two approaches. First, we took advantage of spatially distributed (fluorescently labeled) landmark cells. These landmark cells act as additional constraints on the model, thus aiding in optimization, and helping in pre- as well post-prediction analysis. Second, we developed a methodology to build data-driven atlases that capture the statistics of the experimentally observed data for better prediction. We provide a set of computational tools for automatic and unbiased annotation of cell identities in fluorescence image data, and efficient building of data-driven atlases using fully or partially annotated image sets. We show the utility of our approach in several contexts: determining gene expression patterns with no prior expectations, tracking activities of multiple cells during calcium imaging, and identifying cells in whole-brain imaging videos. For the whole-brain imaging experiments, our annotation framework enabled us to analyze the simultaneously recorded response of C. elegans head ganglion to food stimulus and identify two distinct groups of cells whose activities correlated with distinct variables – food sensation and locomotion.

Results

Cell annotation formulation using structured prediction framework

Our automated cell annotation algorithm is formulated using Conditional Random Fields (CRF). CRF is a graphical model-based framework widely used for structured/relational learning tasks in Natural Language Processing and Computer Vision community (Bakir, 2007; Nowozin, 2010; Lafferty et al., 2001; Sutton and McCallum, 2010). The goal of structured learning tasks is to predict labels for structured objects such as graphs. In our neuron annotation problem, we assume that our starting point is a 3D image stack of the C. elegans head ganglion (Figure 1A(i)) in which neurons have already been detected (Figure 1A(ii)), either manually or by automated segmentation, and we want to match each neuronal cell body or nucleus to an identity label (a biological name). Hence, we have detected neuronal cell bodies that form the set of observed variables , and their 3D coordinates, . We also have a neuron atlas that provides a set of labels (biological names) of the neurons and positional relationships among them. Note that the number of neurons in the atlas is greater than the number of neurons detected in the image stack in all datasets, that is . The goal is to annotate a label to each neuron in the image stack. The problem is similar to structured labeling (Nowozin, 2010) since the labels to be assigned to neurons are dependent on each other. For example, if a certain neuron is assigned label AVAL, then the neurons that can be assigned label RMEL become restricted since only the cells anterior to AVAL can be assigned RMEL label.

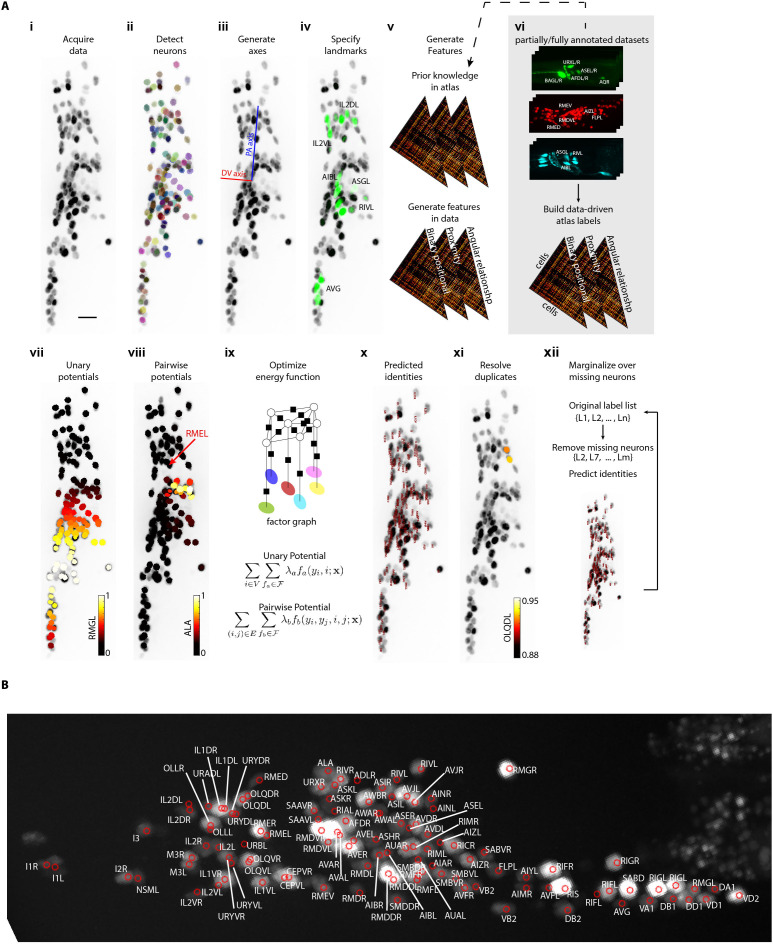

Figure 1. CRF_ID annotation framework automatically predicts cell identities in image stacks.

(A) Steps in CRF_ID framework applied to neuron imaging in C. elegans. (i) Max-projection of a 3D image stack showing head ganglion neurons whose biological names (identities) are to be determined. (ii) Automatically detected cells (Materials and methods) shown as overlaid colored regions on the raw image. (iii) Coordinate axes are generated automatically (Note S1). (iv) Identities of landmark cells if available are specified. (v) Unary and pairwise positional relationship features are calculated in data. These features are compared against same features in atlas. (vi) Atlas can be easily built from fully or partially annotated dataset from various sources using the tools provided with framework. (vii) An example of unary potentials showing the affinity of each cell taking the label RMGL. (viii) An example of dependencies encoded by pairwise potentials, showing the affinity of each cell taking the label ALA given the arrow-pointed cell is assigned the label RMEL. (ix) Identities are predicted by simultaneous optimization of all potentials such that assigned labels maximally preserve the empirical knowledge available from atlases. (x) Predicted identities. (xi) Duplicate assignment of labels is handled using a label consistency score calculated for each cell (Appendix 1–Extended methods S1). (xii) The process is repeated with different combinations of missing cells to marginalize over missing cells (Note S1). Finally, top candidate label list is generated for each cell. (B) An example of automatically predicted identities (top picks) for each cell.

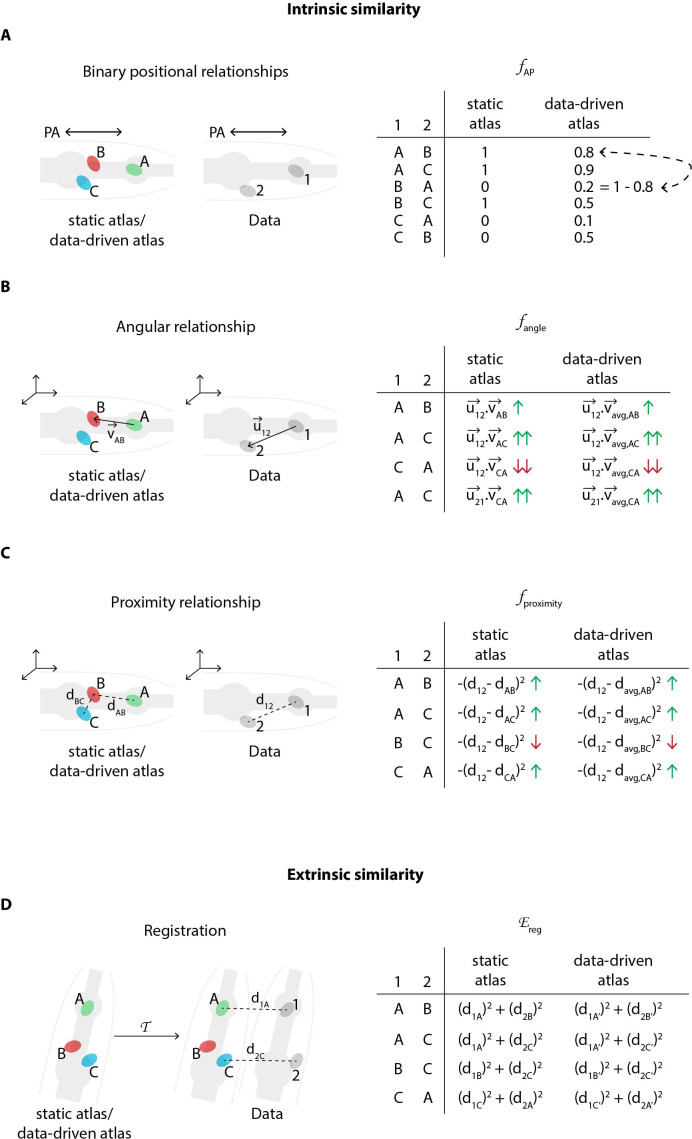

Figure 1—figure supplement 1. Schematic description of various features in the CRF model that relate to intrinsic similarity and extrinsic similarity.

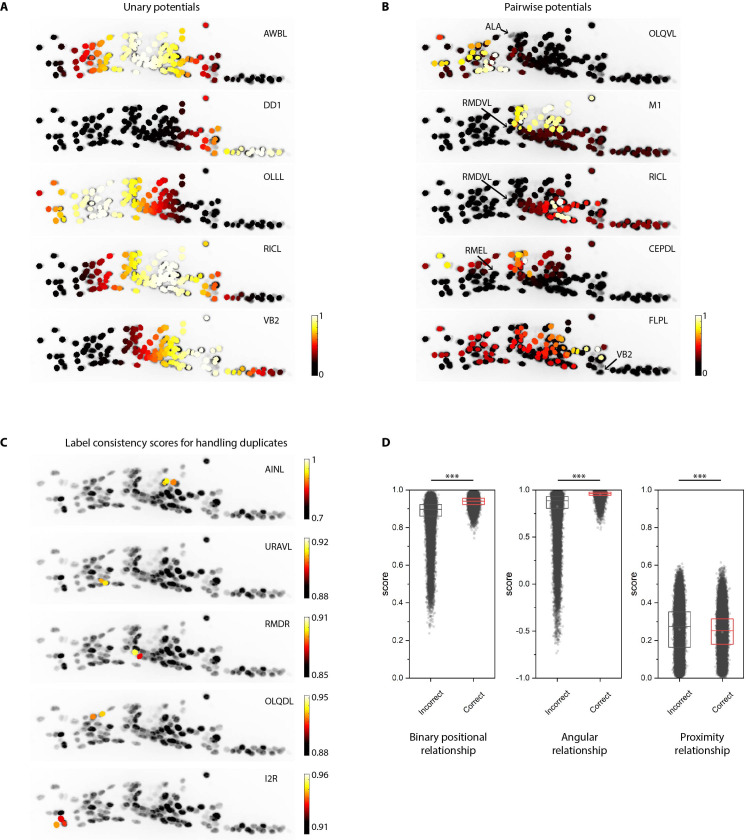

Figure 1—figure supplement 2. Additional examples of unary and pairwise potentials and label consistency scores calculated for each cell.

Figure 1—video 1. Identities predicted automatically by the CRF_ID framework in head ganglion stack.

We use CRF-based formulation to directly optimize for such dependencies and automatically assign names to each cell. Briefly, a node is associated with each observed variable (i.e. segmented neuron in image data) forming the set of variables in the model. Then, CRF models a conditional joint probability distribution over product space of labels assigned to given observations , where each and is a particular assignment of labels to . contains non-optimal and optimal assignments. In CRF, label dependencies among various nodes are encoded by the structure of an underlying undirected graph defined over nodes , where denotes the set of cliques in graph . With the underlying graph structure, the conditional joint probability distribution over full label space factorizes over cliques in , making it tractable to the model:

| (1) |

Here, is the normalization constant with and denotes clique potential if nodes in clique are assigned label . In our model, the fully connected graph structure considers only pairwise dependencies between every pair of neurons. Thus, the graph structure of our model becomes , with the node set containing nodes associated with each segmented neuron and pairwise edges between all nodes that form the edge set . The potential functions in our model are node-potentials and edge-potentials . These functions are parameterized with unary feature functions and pairwise feature functions , respectively:

| (2) |

| (3) |

where and are labels in atlas. Note, there are unary features and pairwise features with weights and respectively to define node and edge potentials.

While unary features account for extrinsic similarity and cell-specific features, pairwise features account for intrinsic similarity. To maximize accuracy, we encode pairwise dependencies between all pairs of cells in the form of several geometrical relationship features (Figure 1—figure supplement 1). Optimal identities of all neurons is obtained by maximizing the joint-distribution . This is equivalent to maximizing the following energy function.

| (4) |

Optimizing this energy function over fully connected graphs (more specifically graphs with loops) is known to be an NP-hard problem (Kohli et al., 2009). However, approximate inference algorithms are widely used in CRF community as they provide reasonable solutions. We implemented a popular method called Loopy Belief Propagation (Murphy et al., 1999) to infer the most probable labeling over all cells, as well as marginal distributions of label assignments for each cell.

The features used in the base version of the model are geometrical relationship features that ensure identities assigned to cells in image are consistent with the atlas in terms of satisfying pairwise geometrical relationships. These features include binary positional relationship feature, proximity relationship feature, and angular relationship feature (Figure 1—figure supplement 1, Appendix 1–Extended methods S1.2). All these features are a variant of the quadratic Gromov-Wasserstein distance used in matching metric spaces (Bronstein et al., 2009; Peyre et al., 2016) and shapes (Solomon et al., 2016; Mémoli, 2011). Briefly, binary positional relationship features encode that as an example, if cell is anterior, dorsal and to the right of cell in image stack, then identities assigned to these cells should satisfy these relationships in the atlas. Proximity relationship features ensure that if cell is spatially near to cell in image stack, then identities of spatially distant cells in atlas would not be assigned to these cells. Finally, angular relationships ensure that identities assigned to cells and should satisfy fine-scale directional relationships as well, and not just simple binary relationships. We show that the CRF model can be easily updated to include additional features such as cells with known identities (landmark cells) and fluorescent spectral codes of cells. We demonstrate this by incorporating landmark cells and spectral information of cells in the model and show improvement in accuracy.

A critical component for the success of automated cell identification methods is data-driven atlas. Static atlases such as OpenWorm atlas provide a single observation of positional relationships among cells. For instance, if cell RMEL is to the left of cell AVAL in OpenWorm atlas, then the model assumes that RMEL is to the left of AVAL with 100% probability. In contrast, in observed experimental data RMEL may be observed to be left of AVAL with 80% probability (e.g. in 8 out of 10 annotated experimental datasets). Thus, data-driven atlases relax the hard-coded constraint of 100% probability imposed by static atlas and accounts for the statistics that is observed experimentally for all positional relationship features (Figure 1—figure supplement 1). Note, data-driven atlas built in our framework is considerably different from those built by registration-based methods. While the latter atlases store probabilistic positions of cells, atlases built by our framework store only probabilistic pairwise positional relationship features among cells, thus more generalizable. We show that building such data-driven atlases is easy for our CRF model (Appendix 1–Extended methods S1.7). We demonstrate this by building several data-driven atlases from different data sources containing various features, showing considerable improvement in accuracy. Further, building data-driven atlases is computationally cheap in CRF_ID, requiring only simple averaging operations; thus, it is scalable to build atlases from large-scale annotated data that may become available in future.

Computational workflow for automatic cell identification

Our annotation framework consists of four major steps (Figure 1A; Appendix 1–Extended methods S1). First, cells are automatically detected in input image channels using a Gaussian Mixture-based segmentation method (see Materials and methods – Whole-brain data analysis). Cells with known identities (landmarks cells) are also detected in this step and their identities are specified. We designed the framework to be flexible on several fronts: (1) easily using manual segmentations of image channels or segmenting on the run; (2) integrating landmark information from any number of image channels; (3) specifying identities of landmark cells on the run or from existing fully or partially annotated files generated with other tools such as Vaa3D (Peng et al., 2010). In the second step, a head coordinate is generated by solving an optimization problem with considerations of the directional consistency of axes (see Appendix 1-Extended methods S1.3). With this coordinate system, we next define cell-specific features (unary potentials) and co-dependent features (pairwise potentials) in the data (Figure 1—figure supplement 2A,B). The base version of the model uses only pairwise relationship features for all pairs of cells, including binary positional relationships, angular relationship, and proximity relationship between cells in images (Figure 1—figure supplement 1). However, additional unary features such as landmarks and color information can be easily added in the model. By encoding these features among all pairs of cells, our fully connected CRF model accounts for label dependencies between each cell pair to maximize accuracy. The atlas used for prediction may be a standard atlas such as the OpenWorm (Szigeti et al., 2014) atlas or it can be easily built from fully or partially annotated datasets from various sources using the tools provided with our framework (see Appendix 1–Extended methods S1.7). In the third step, identities are automatically predicted for all cells by optimizing the CRF energy function consisting of unary and pairwise potentials, which in our formulation is equivalent to maximizing the intrinsic similarity between data and the atlas (see Appendix 1–Extended methods S1.4). Duplicate assignments are resolved by calculating a label-consistency score for each neuron, removing duplicate assignments with low scores (Figure 1—figure supplement 2C,D, see Appendix 1–Extended methods S1.5) and re-running the optimization. After the third step, the code outputs top predicted label for each cell. Next, an optional fourth step can be performed to account for missing neurons in image stack. In this step, full atlas is subsampled to remove fixed number of labels from atlas by either sampling uniformly or based on prior confidence values available on missing rate of labels in images (see Appendix 1–Extended methods S1.5). Subsampled atlas assumes that labels removed are missing from the image and thus ensures that those labels cannot be assigned to any cell in the image. The sampling procedure is repeated, and identities are predicted in each run. We perform these runs in parallel on computing clusters. Lastly, identities predicted across each run are pooled to generate top candidate identities for each cell (Figure 1B; Figure 1—video 1; see Appendix 1–Extended methods S1.6). Thus, there are two modes of running the framework – single-run mode that outputs only top label for each cell and parallel-run mode that outputs multiple top candidate labels for each cell. We make the software suite freely available at https://github.com/shiveshc/CRF_Cell_ID (Chaudhary, 2021; copy archived at swh:1:rev:aeeeb3f98039f4b9100c72d63de25f73354ec526).

Prediction accuracy is bound by position and count noise in data

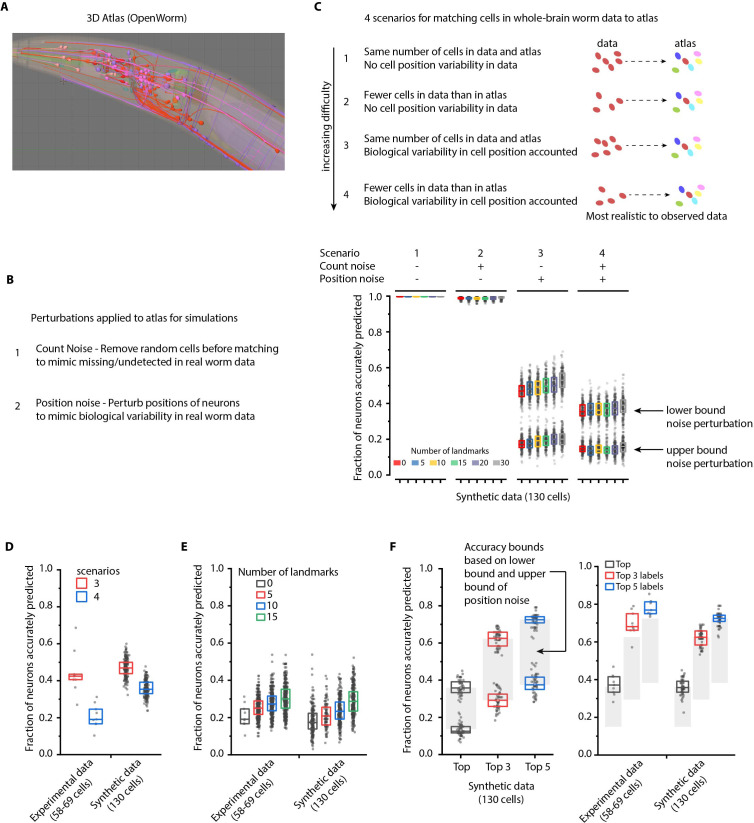

Given the broad utility of image annotation, we envision our workflow to apply to a variety of problems where experimental constraints and algorithm performance requirements may be diverse. For example, experimental data across different tasks inherently contains noise contributed by various sources in varying amounts that can affect annotation accuracy. These sources of noises include the following: (1) deviation between cell positions in images and positions in atlases, which is position noise, (2) smaller count of cells in images than number of cells in atlas due to missing cells in images, which is count noise, and (3) absence of cells with known identities, i.e. known landmarks. We set out to determine general principles of how these noises may affect cell identification accuracy across various tasks. We used two different kinds of data: synthetic data generated from OpenWorm 3D atlas (Szigeti et al., 2014; Figure 2—figure supplement 1A,B and Figure 2—figure supplement 2) and experimental data generated using NeuroPAL strains (Yemini et al., 2021), consisting of annotated ground-truth of nine animals with ~100 uniquely identified neurons (Figure 2—figure supplement 3). While experimental data enables the assessment of prediction accuracy in real scenarios, synthetic data enable us to tune the amount of noise contributed from various sources and dissect their effects on accuracy independently.

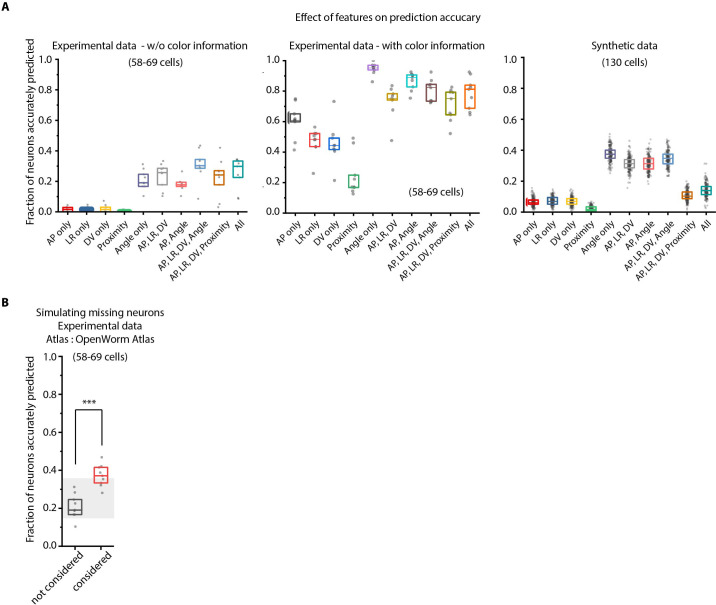

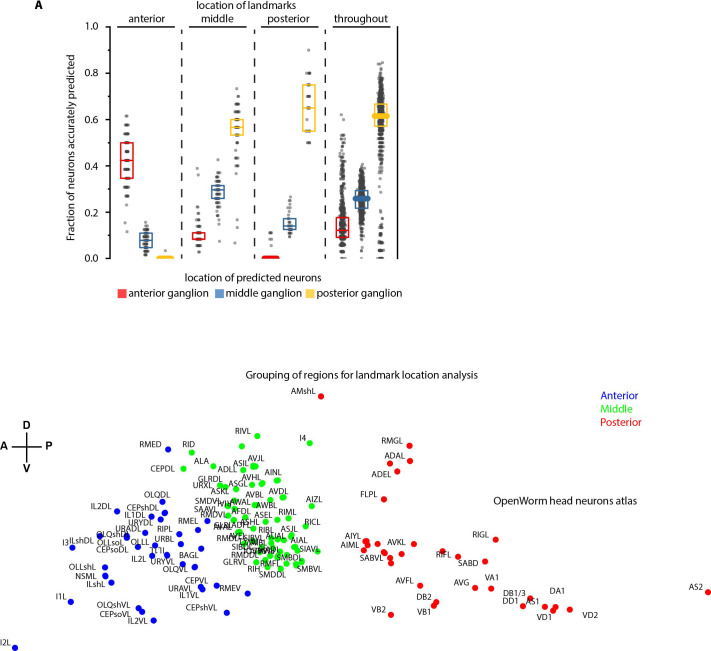

To assess the effects of position noise and count noise on prediction accuracy, we simulated four scenarios using the synthetic data (Figure 2—figure supplement 1C). In the absence of any noise, relative positional relationship features predicted neuron identities with perfect accuracy (scenario one in Figure 2—figure supplement 1C), thus demonstrating the suitability of co-dependent features and CRF_ID framework for the annotation task. We found that both position noise and count noise affect accuracy significantly (Figure 2—figure supplement 1C,D) with position noise having a larger effect (compare scenarios 1–2 with 3–4 in Figure 2—figure supplement 1C). As mentioned before, count noise is primarily caused by inefficiencies of either the reporter used to label cells or inaccuracies of the cell detection algorithm used, thus leading to fewer cells detected in the images than cells in atlases. Results on both synthetic data and real data show that 10–15% improvement in prediction accuracy can be attained by simply improving reagents and eliminating count noise (Figure 2—figure supplement 1D). Next, we tested the effect of landmarks (cells with known identities) on annotation accuracy (Figure 2—figure supplement 1E). We hypothesized that landmarks will improve accuracy by acting as additional constraints on the optimization while the algorithm searches for the optimal arrangement of labels for non-landmark cells. Indeed, we found, in both experimental data and synthetic data, randomly chosen landmarks increased prediction accuracy by ~10–15%. It is possible that strategic choices of landmarks could further improve accuracy.

Another advantage of simulations using synthetic data is that by quantifying accuracy across the application of extreme-case of empirically observed noises, they can be used to obtain expected accuracy bounds for real scenarios. We obtained such bounds (shown as gray regions in Figure 2—figure supplement 1F) based on observed position noise in experimental data (Figure 2—figure supplement 2). Notably, the prediction results for experimental data lay close to the estimated bounds using synthetic data (Figure 2—figure supplement 1F). Together, good agreement between results obtained on synthetic and experimental data suggest that the general trends uncovered using synthetic data of how various noises affect accuracy are applicable to experimental data.

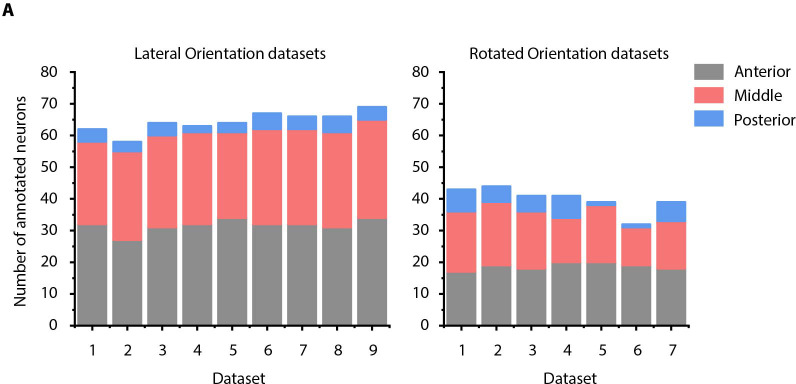

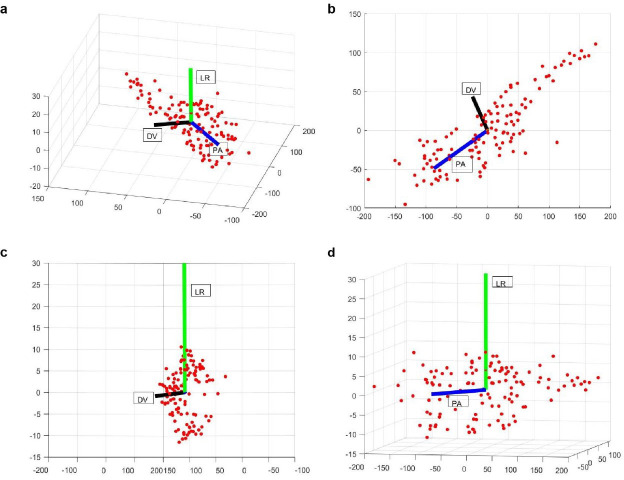

Next, with this knowledge, we tuned the features in the model, and we compared prediction accuracy for several combinations of positional relationship features. Among all co-dependent positional relationship features, the angular relationship feature by itself or when combined with PA, LR, and DV binary position relationship features performed best (Figure 2—figure supplement 4A). To account for missing cells, we developed a method that considers missing neurons as a latent state in the model (similar to hidden-state CRF Quattoni et al., 2007) and predicts identities by marginalizing over latent states (see Appendix 1–Extended methods S1.6). Compared to the base case that assumes all cells are present in data, simulating missing neurons significantly increased the prediction accuracy (Figure 2—figure supplement 4B) on experimental data.

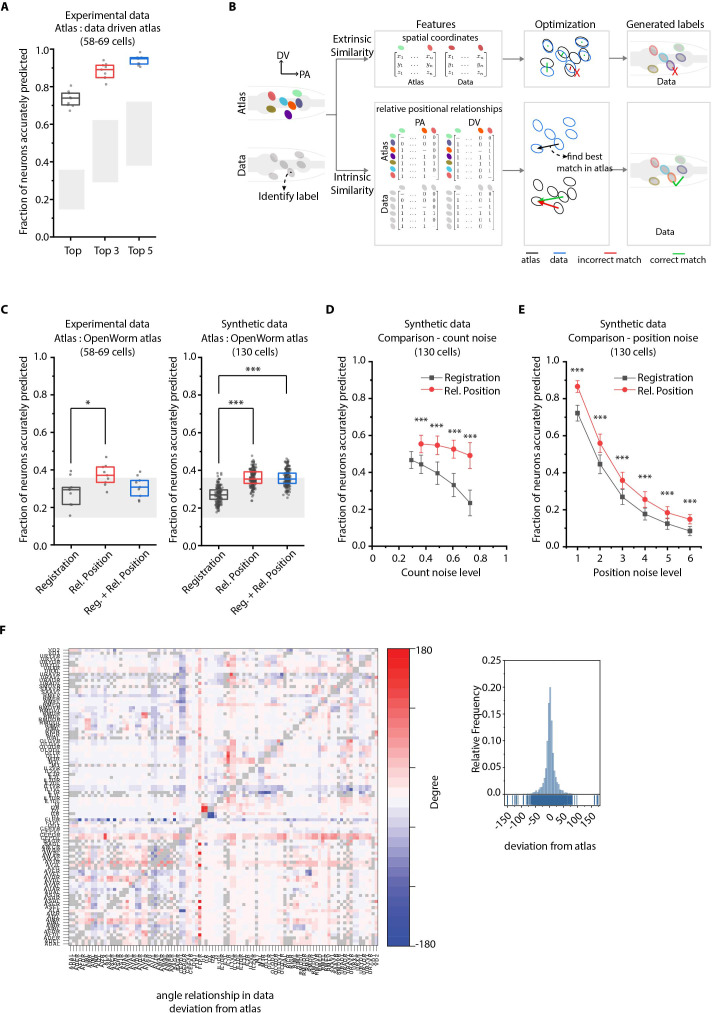

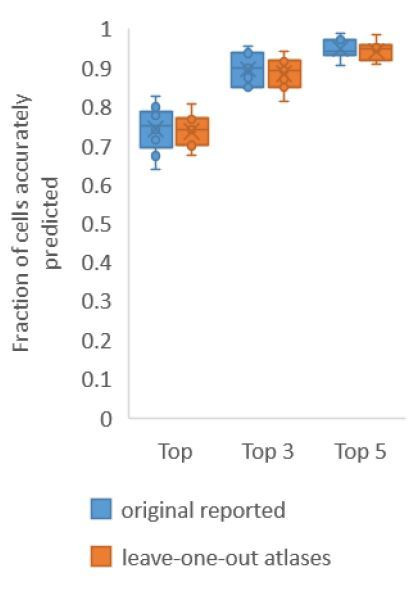

Identity assignment using intrinsic features in CRF_ID outperforms other methods

We next characterized the performance of our CRF_ID framework by predicting the identities of cells in manually annotated ground-truth datasets (Figure 2A). To specify prior knowledge, we built data-driven atlases combining positional information of cells from annotated ground-truth datasets and OpenWorm atlas (Appendix 1–Extended methods S1.7). To predict cell identities in each ground-truth dataset, separate leave-one-out atlases were built keeping the test dataset held out. Building such data-driven atlases for our framework is extremely computationally efficient, requiring simple averaging operations; thus, new atlases can be built from thousands of annotated images very quickly. With data-driven atlases (of only eight annotated set, one for each test dataset), 74% of cells were correctly identified by the top label prediction in the ground-truth data set, which exceeds the state of the art. Further, 88% and 94% of cells had true identities within the top 3 and the top 5 predicted labels, respectively (Figure 2A). Note that with using only positional relationship features in the data-driven atlas, this case is equivalent to predicting identities in experimental whole-brain datasets without color information. More importantly, automated annotation is unbiased because, in principle, the framework can combine manual annotations of cell identities of several users (possibly across labs) in the form of data-driven atlases and can predict identities such that positional relationships in the atlas are maximally preserved. Thus, automated annotation removes individual biases in annotating cells. Further, it greatly supports researchers with no prior experience.

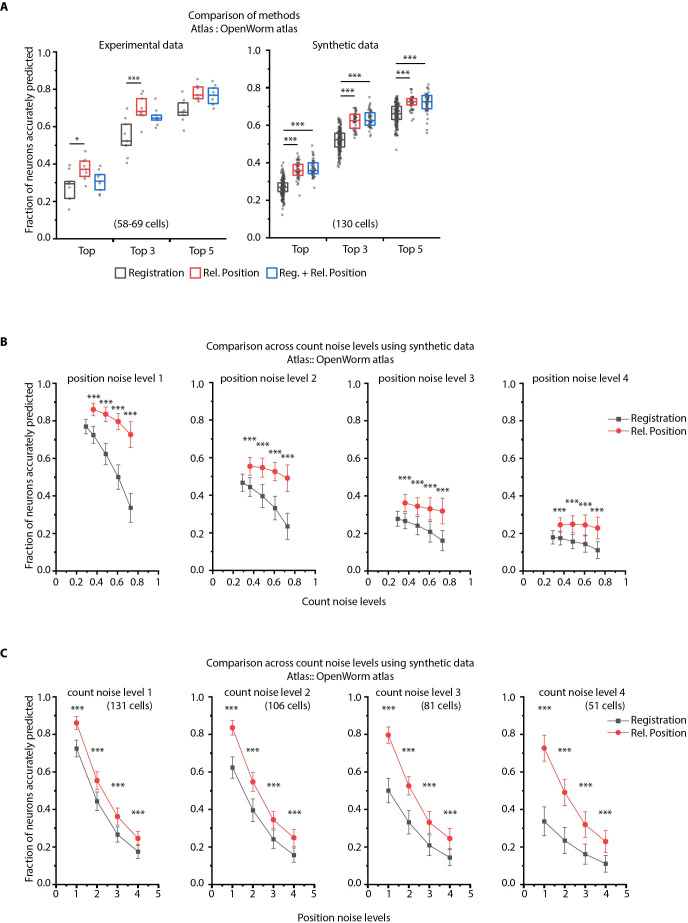

Figure 2. CRF_ID annotation framework outperforms other approaches.

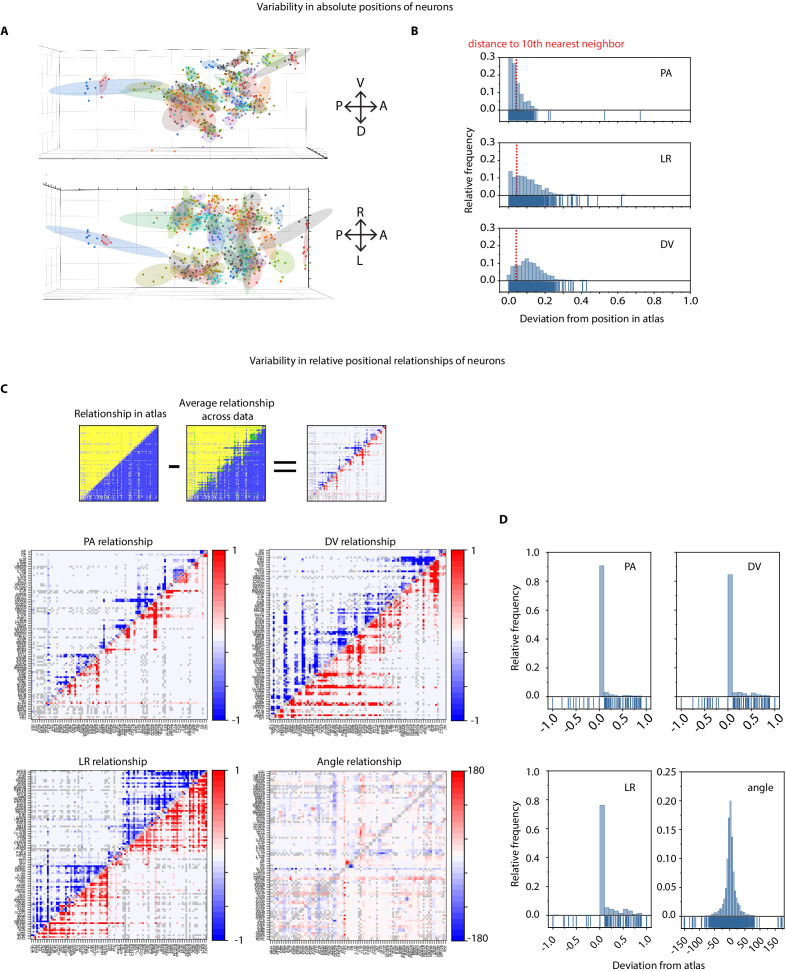

(A) CRF_ID framework achieves high prediction accuracy (average 73.5% for top labels) using data-driven atlases without using color information. Results shown for whole-brain experimental ground truth data (n = 9 animals). Prediction was performed using separate leave-one-out data-driven atlases built for each animal dataset with test dataset held out. Gray regions indicate bounds on prediction accuracy obtained using simulations on synthetic data (see Figure 2—figure supplement 1F). Experimental data comes from strain OH15495. Top, middle, and bottom lines in box plot indicate 75th percentile, median, and 25th percentile of data, respectively. (B) Schematic highlighting key difference between registration-based methods and our CRF_ID framework. (C) Prediction accuracy comparison across methods for ground truth experimental data (n = 9, *p<0.05, Bonferroni paired comparison test) and synthetic data (n = 190–200 runs for each method, ***p<0.001, Bonferroni paired comparison test). OpenWorm atlas was used for predictions. Accuracy results shown for top predicted labels. Experimental data comes from strain OH15495. For synthetic data, random but realistic levels of position and count noise applied in each run. Gray regions indicate bounds on prediction accuracy obtained using simulations on synthetic data (see Figure 2—figure supplement 1F). Top, middle, and bottom lines in box plot indicate 75th percentile, median, and 25th percentile of data, respectively. (D) Comparison of methods across count noise levels (defined as percentage of cells in atlas that are missing from data) using synthetic data. (n = 150–200 runs for Rel. Position for each noise level, n = ~1000 runs for Registration for each noise level, ***p<0.001, Bonferroni paired comparison test). OpenWorm atlas was used for prediction. Accuracy results shown for top predicted labels. For a fixed count noise level, random cells were set as missing in each run. Markers and error bars indicate mean ± standard deviation. (E) Comparison of methods across position noise levels using synthetic data. (n = 190–200 runs for each method for each noise level, ***p<0.001, Bonferroni paired comparison test). OpenWorm atlas was used for prediction. Accuracy results shown for top predicted labels. For a fixed position noise level, random position noise was applied to cells in each run. Different noise levels correspond to different variances of zero-mean gaussian noise added to positions of cells (see section Materials and methods – Generating synthetic data for framework tuning and comparison against other methods). Noise levels 3 and 6 correspond to the lower bound and upper bound noise levels shown in Figure 2—figure supplement 1F. Markers and error bars indicate mean ± standard deviation. (F) Pairwise positional relationships among cells are more consistent with OpenWorm atlas even though the absolute positions of cells vary across worms. (Left) average deviation of angular relationship measured in ground truth data (n = 9) from the angular relationship in static atlas. (Right) distribution of all deviations in left panel (total of 8516 relationships) is sparse and centered around 0 deviation, thus indicating angular relationships are consistent with atlas.

Figure 2—figure supplement 1. Performance characterization using synthetic data.

Figure 2—figure supplement 2. Method of applying position noise to the atlas to generate synthetic data.

Figure 2—figure supplement 3. Details of manually annotated experimental ground-truth datasets.

Figure 2—figure supplement 4. Model tuning/characterization – features selection and simulating missing cells.

Figure 2—figure supplement 5. CRF_ID framework with relative positional features outperforms registration method.

Figure 2—figure supplement 6. Variability in absolute positions of cells and relative positional features in experimental data compared to the static atlas.

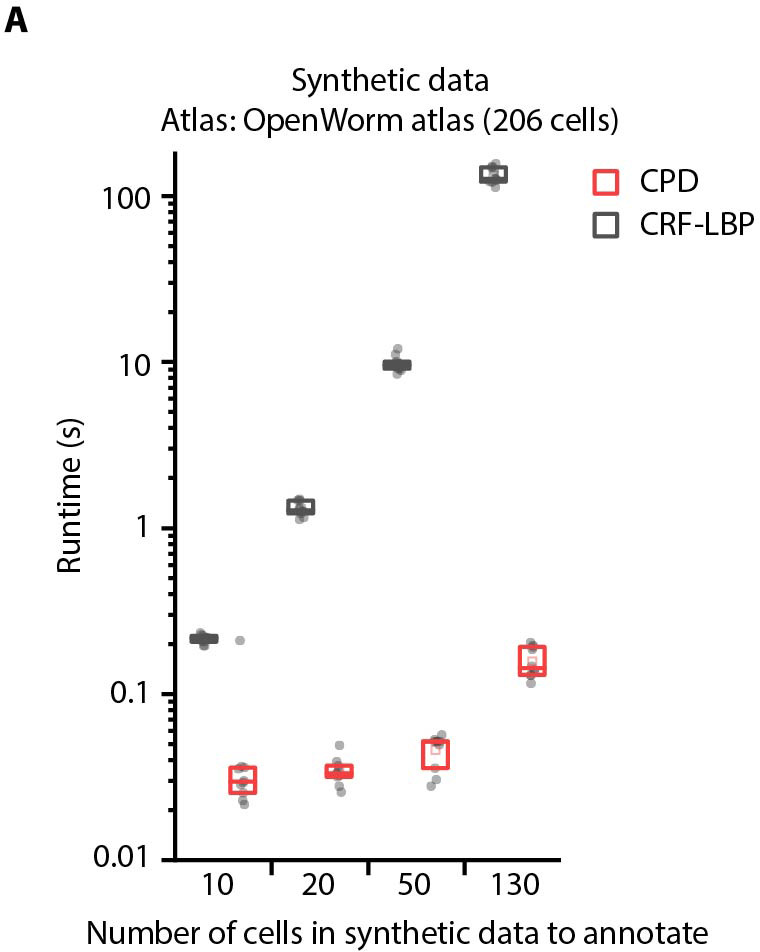

Figure 2—figure supplement 7. Comparison of optimization runtimes of CRF_ID framework with a registration method CPD (Myronenko and Song, 2010).

We next compared our method against registration-based methods popular for automatic cell annotation (Long et al., 2009; Long et al., 2008; Aerni et al., 2013; Toyoshima, 2019; Scholz, 2018) (see Appendix– S1.9 Registration methods do not consider intrinsic similarity features such as relative positional relationships and S2.1 Registration). For fair comparison across methods, all methods used OpenWorm atlas as reference for prediction. The major difference between our framework and previous methods is the use of intrinsic similarity compared to extrinsic similarities in previous methods in the annotation task (Figure 2B, Figure 1—figure supplement 1). Remarkably, for both experimental and synthetic data, CRF_ID using relative positional features performs the best (Figure 2C; Figure 2—figure supplement 5A). Note that the decrease in accuracy compared to Figure 2A here is due to using static OpenWorm atlas, further highlighting the importance of building data-driven atlases. Notably, CRF_ID outperforms registration-based method across all levels of count noise and position noise in data (Figure 2D,E; Figure 2—figure supplement 5B,C). The accuracy of registration-based methods falls rapidly with increasing count noise levels, whereas CRF_ID is highly robust, maintaining higher accuracy even when up to 75% of cells in atlas were missing from data. This has important practical implications as the amount of count noise observed in experimental data may vary significantly across reagents, imaging conditions etc. Further, neuron positions being highly variable across individual animals have been shown (Yemini et al., 2021), and confirmed by our datasets as well (Figure 2—figure supplement 6A). Because cell positions on average can deviate from their atlas position by more than the distance to their tenth nearest neighbor (Figure 2—figure supplement 6B), we expect that this variability introduces large matching errors in registration-based methods. In contrast, most pair-wise relationships are preserved despite the variability of absolute positions (Figure 2F; Figure 2—figure supplement 6C,D). Interestingly, a hybrid objective function that combines registration using absolute positions with relative position features in CRF_ID framework corrupts the annotation performance (Figure 2—figure supplement 5A), likely due to competing effects in the objective function. This again highlights the fact that higher accuracy is achieved by positional relationship features in CRF_ID method.

Next, to compare the computational efficiency of CRF_ID framework with that of registration based methods, we compared the optimization step runtimes of the single-run mode of CRF_ID framework with that of a popular registration method (Coherent Point Drift Myronenko and Song, 2010; Figure 2—figure supplement 7A). The computational speed of both methods scales with the number of cells to be annotated in images and the number of cells in the atlas. As expected, CRF_ID framework is computationally more expensive compared to CPD, because it optimizes both unary and pairwise potentials. Nonetheless, the optimization runtime of CRF_ID framework for multi-cell calcium imaging use-case (10–50 cells in image) is on the order of 0.1–10 s, on a desktop computer (see Materials and methods – Runtime comparison), when full head ganglion atlas (206 cells) is used for annotation. We emphasize that using full head ganglion atlas for cell identity annotation in whole-brain imaging is important because without prior knowledge of which cells are missing in images, full atlas provides unbiased opportunity to cells in images to take any label from the atlas. In contrast, if only a partial atlas or partially annotated data set is used as atlas, the labels absent in atlas will never get assigned to any cell in images, thus potentially biasing the annotation. In practice, faster runtimes can be achieved in multi-cell calcium imaging and whole-brain imaging case with the use of smaller atlases based on prior knowledge of cells expected in strains. Further, the multiple-run mode of CRF_ID framework can be parallelized using multiple CPU workers. Thus, higher accuracy compared to registration based methods combined with reasonable speeds makes CRF_ID favorable for cell annotation tasks.

Cell annotation in gene-expression pattern analysis

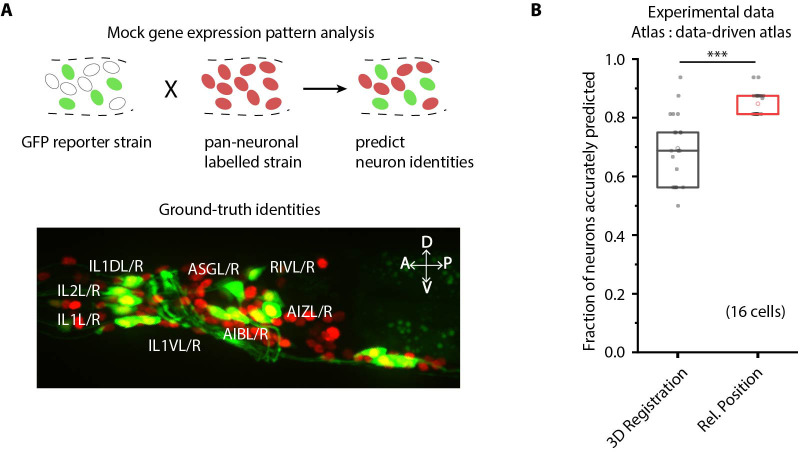

We next demonstrate the utility of our framework for gene-expression localization analyses, which is important for many problems, for example mapping the cellular atlas of neurotransmitters (Gendrel et al., 2016; Pereira et al., 2015), receptors (Vidal et al., 2018), and neuropeptides (Bentley et al., 2016). Conventional methods, for example screening a list of cell-specific marker lines that overlap in expression with the reporter, are laborious and scale poorly with the number of cells expressing the genes of interest and the number of new genes for which expression patterns are to be determined. Our cell annotation framework can considerably reduce manual efforts by generating a small list of candidate identities for each cell expressing the reporter. Subsequently, researchers can easily verify or prune the candidate list. To demonstrate this use case, we imaged a strain with multiple cells labeled with GFP and predicted candidate identities for each cell (Figure 3A). Determining cell identities in this case is difficult due to large count noise along with position noise: since the full list of labels in the atlas is much bigger than few cells in the reporter strain (equivalent to scenario four in Figure 2—figure supplement 1C). Thus, several degenerate (equally probable) solutions are possible. To avoid accuracy decrease in such cases, we directly predicted the candidate identities of all cells marked with pan-neuronal red fluorescent protein (RFP) using full whole-brain atlas and subsequently assessed the accuracy of only cells of interest, that is those marked with GFP. Our framework accurately generated a candidate list for cells across all datasets (n = 21 animals); 85% of cells had true identities within the top five labels chosen by the framework. In comparison, the candidate list generated by the registration method achieved only 61% accuracy (Figure 3B).

Figure 3. CRF_ID framework predicts identities for gene expression pattern analyses.

(A) (Top) Schematic showing a fluorescent reporter strain with GFP expressed in cells for which names need to be determined. Since no candidate labels are known a priori neurons labels are predicted for all cells marked with pan-neuronally expressed RFP using full whole-brain atlas. (Bottom) A proxy strain AML5 [rab-3p(prom1)::2xNLS::TagRFP; odr-2b::GFP] with pan-neuronal RFP and 19 cells labeled with GFP was used to assess prediction accuracy. (B) CRF_ID framework with relative position features outperforms registration method (n = 21 animals) (***p<0.001, Bonferroni paired comparison test). Accuracy shown for top five labels predicted by both methods. Experimental data comes from strain AML5. Top, middle, and bottom lines in box plot indicate 75th percentile, median, and 25th percentile of data, respectively.

Cell annotation in multi-cell functional imaging experiments

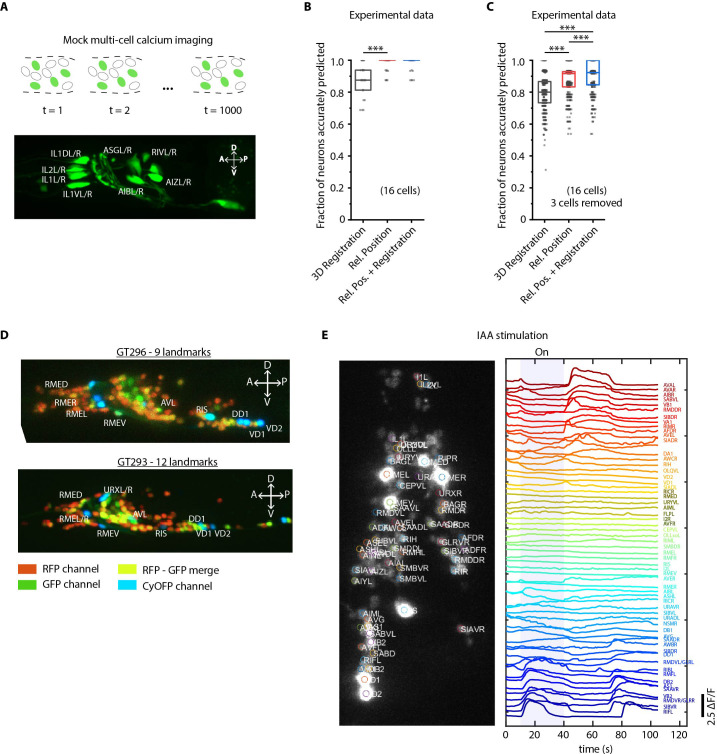

We next demonstrate the utility of our algorithm in another important application - annotating cell identities in multi-cell calcium functional imaging in vivo (Figure 4). Automation in this case dramatically reduces labor associated with cell annotation for many time points, across trials, animals, and experiments. We used a strain carrying GFP in multiple cells as a proxy for GCaMP-labeled strains for illustration purposes (Figure 4A). Given the known candidate list of labels that can be assigned (i.e. no count noise), the configurational space is small, which makes the task easy (similar to scenario three in Figure 2—figure supplement 1C). Indeed, our annotation framework identified neurons with high accuracy (98%, n = 35 animals). In comparison, the registration method predicted identities with lower accuracy (88%) even with the small label assignment space (Figure 4B). In reality, some neurons may be undetected in the data due to expression mosaicism or low-calcium transients thus adding count noise to data (equivalent to scenario 4 in Figure 2—figure supplement 1C). We thus simulated this case by randomly removing up to a third of total neurons from the images and predicting identities of remaining cells using the full label list (Figure 4C; Figure 4—figure supplement 1A). Even under these conditions, the accuracy of our method remains high (88%), significantly outperforming registration method (81%) (Figure 4—video 1). In practice, the performance can be further compensated for by using multiple frames from each video, which we are not doing here in the mock experiment.

Figure 4. Cell identity prediction in mock multi-cell calcium imaging experiments and landmark strain.

(A) (Top) schematic showing automatic identification of cells in multi-cell calcium imaging videos for high-throughput analysis. (Bottom) A mock strain with GFP-labeled cells was used as an illustration of GCaMP imaging. Only green channel of AML5 strain was used for this purpose. (B) CRF_ID framework outperforms registration method (n = 35 animals, ***p<0.001, Bonferroni paired comparison test). OpenWorm atlas was used for prediction. Accuracy results shown for top predicted labels. Experimental data comes from strain AML5 (only green channel used). Top, middle, and bottom lines in box plot indicate 75th percentile, median, and 25th percentile of data, respectively. (C) Prediction accuracy comparison for the case of missing cells in images (count noise). ***p<0.001, Bonferroni paired comparison test. Total n = 700 runs were performed across 35 animals for each method with 3 out 16 randomly selected cells removed in each run. For fair comparison, cells removed across methods were the same. OpenWorm atlas was used for prediction. Accuracy results shown for top predicted labels. Experimental data comes from strain AML5 (only green channel used). Top, middle, and bottom lines in box plot indicate 75th percentile, median, and 25th percentile of data, respectively. (D) Max-projection of 3D image stacks showing CyOFP labeled landmark cells in head ganglion (pseudo-colored as cyan): animals carrying [unc47p::NLS::CyOFP1::egl-13NLS] (GT296 strain) with nine landmarks (top), and animals carrying [unc-47p::NLS::CyOFP1::egl-13NLS; gcy-32p::NLS::CyOFP1::egl-13NLS] with 12 landmarks (bottom). (E) (Left) max-projection of a 3D image stack from whole-brain activity recording showing head ganglion cells and identities predicted by CRF_ID framework (Top labels). Animal is immobilized in a microfluidic device channel and IAA stimulus is applied to the nose tip. (Right) GCaMP6s activity traces extracted by tracking cells over time in the same 108 s recording and their corresponding identities. Blue shaded region shows IAA stimulation period. Experimental data comes from strain GT296.

Figure 4—figure supplement 1. Relative position features perform better than registration in handling missing cells in images.

Figure 4—figure supplement 2. Spatially distributed landmarks or landmarks in lateral ganglion perform best in supporting CRF_ID framework for predicting identities.

Figure 4—figure supplement 3. Microfluidic device used in chemical stimulation experiments and characterization.

Figure 4—video 1. Comparison between the CRF_ID framework and the registration method for predicting identities in case of missing cells.

To further facilitate annotation accuracy, we explored the utility of landmarks with known identities. Landmarks can also help in establishing a coordinate system in images and guiding post-prediction correction. Because the combinatorial space of potential landmarks is very large (~1014 for 10 landmarks out of ~200 cells in the head), we asked what properties landmarks should have. We found that landmarks distributed throughout the head or in lateral ganglion perform better in predicting identities of neurons in all regions of the brain (Figure 4—figure supplement 2; Materials and methods). As a test case, we developed strains with spatially distributed, sparse neuronal landmarks using CyOFP (see Material and methods - Construction of landmark strains), which by itself can assist researchers in manual cell identification tasks. When crossed with pan-neuronally expressing GCaMP/RFP reagents, the strains can be used for whole-brain imaging (Figure 4D) by using only two channels. This has two advantages: CyOFP can be imaged 'for free' while imaging GCaMP and RFP simultaneously, thus the landmarks providing a concurrent reference in all frames; this strategy also leaves other channels open for optogenetic manipulations and voltage imaging (Piatkevich et al., 2019; Piatkevich et al., 2018).

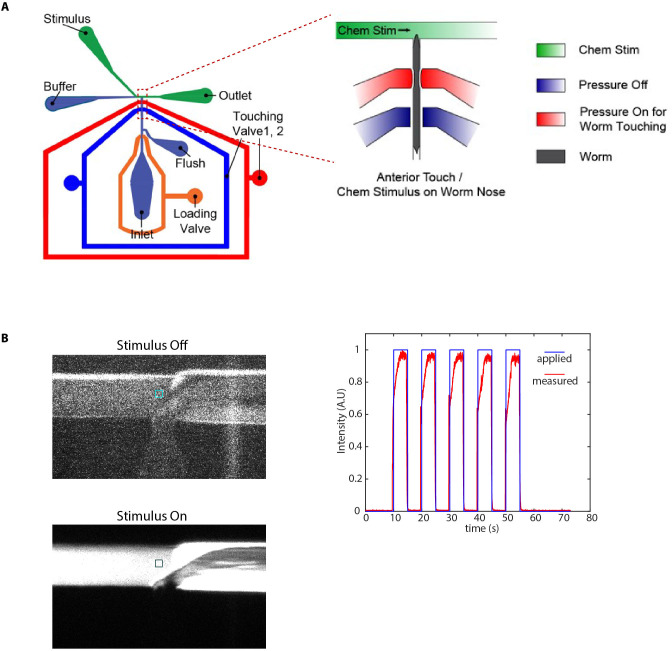

We next tested this strategy in a simple whole-brain imaging experiment. Isoamyl alcohol (IAA) is a well-known component of the bacterial metabolites that C. elegans senses and responds to Chalasani et al., 2007; L'Etoile and Bargmann, 2000; Bargmann et al., 1993. We recorded neuronal responses to a step-change in IAA concentration using a microfluidic system (Cho et al., 2020; Figure 4—figure supplement 3). We observed both odor-specific responses and spontaneous activities (Figure 4E). More importantly, neurons with algorithm-assigned identities demonstrate expected behavior. For instance, we identified the sensory neuron AWC, and detected an off-response to IAA, consistent with known AWC behavior. In addition, the predicted interneurons (e.g. AVA, RIB, and AIB) also demonstrate previously known activity patterns (Kato et al., 2015).

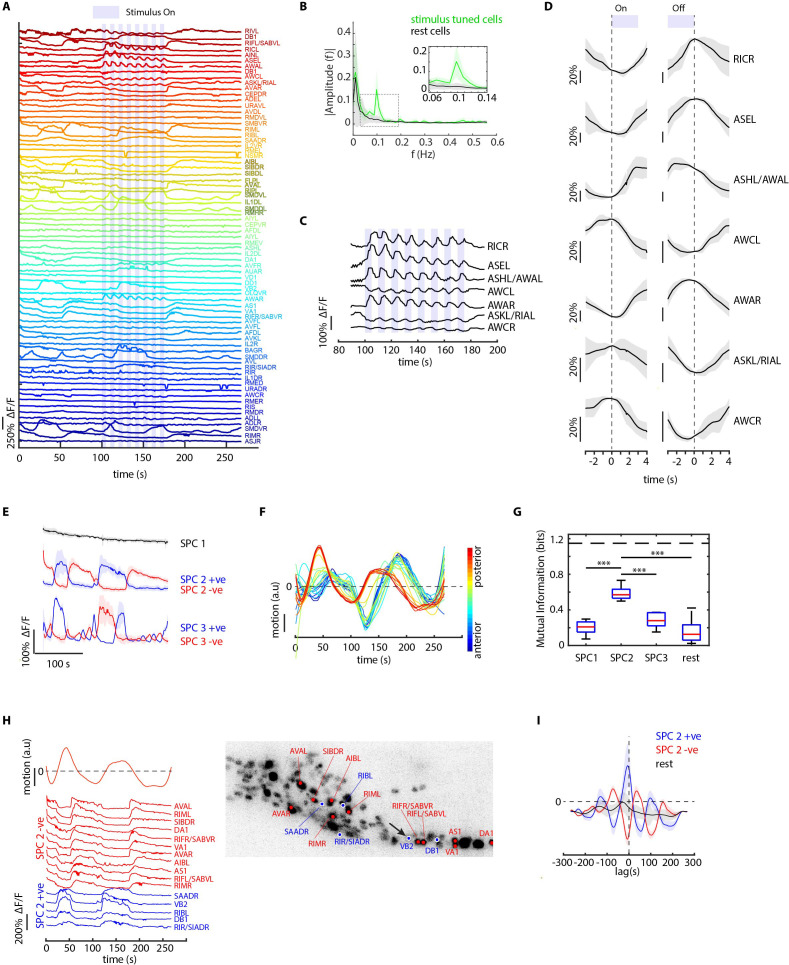

We also tested worms’ responses to periodic stimuli of a more complex and naturalistic input – supernatant of bacterial culture (Figure 5, Figure 5—video 1). A periodic input (5 s On and 5 s Off for eight cycles) entrains many neurons as expected, therefore allowing us to better separate the odor-elicited responses from spontaneous activities (Figure 5A). We generated the candidate identities for all recorded neurons (Figure 5—figure supplement 1A). Notably, several highly entrained neurons were identified as sensory neurons known to respond to food stimuli (Liu et al., 2019; Wakabayashi et al., 2009; Zaslaver et al., 2015; Figure 5C), some of which responded to the onset of the stimuli and some to the withdrawal of the stimuli (Figure 5D). The power spectrum of these neurons showed a strong frequency component at 0.1 Hz as expected (Figure 5B).

Figure 5. CRF_ID framework identifies neurons representing sensory and motor activities in whole-brain recording.

(A) GCaMP6s activity traces of 73 cells automatically tracked throughout a 278 s long whole-brain recording and the corresponding predicted identities (top labels). Periodic stimulus (5 sec-on – 5 sec-off) of bacteria (E. coli OP50) supernatant was applied starting at 100 s (shaded blue regions). Experimental data comes from strain GT296. (B) Power spectrum of neuron activity traces during the stimulation period for all cells. Cells entrained by 0.1 Hz periodic stimulus show significant amplitude for 0.1 Hz frequency component (green). (C) Activity traces of cells entrained by periodic stimulus shown for the stimulation period. Blue shaded regions indicate stimulus ON, unshaded region indicate stimulus OFF. Identities predicted by the framework are labeled. (D) Average ON and OFF responses of cells entrained by periodic stimulus across trials. The black line indicates mean and gray shading indicates ± s.e.m. (E) Average activities of neurons with significant non-zeros weights in the first three sparse principal components (SPCs). Activities within each component are stereotypical and different components show distinct temporal dynamics. Cells with positive weights (blue) and negative weights (red) in SPC2 and SPC3 showed anti-correlated activity. Out of the 67 non-stimulus-tuned cells, 19 had non-zero weights in SPC1, 16 cells had non-zero weights in SPC2, and 5 cells had non-zero weights in SPC3. SPC1, SPC2, and SPC3 weights of cells are shown in Figure 5—figure supplement 1. Shading indicates mean ± s.e.m of activity. (F) Velocity (motion/second) traces of cells along anterior-posterior (AP) axis (blue to red) show phase shift in velocity indicating motion in device shows signatures of wave propagation. (G) Cells with non-zero weights in SPC2 show high mutual information with worm velocity compared to cells grouped in other SPCs (*** denotes p<0.001, Bonferroni paired comparison test). Median (red line), 25th and 75th percentiles (box) and range (whiskers). Dashed line indicates entropy of velocity (maximum limit of mutual information between velocity and any random variable). Velocity of cell indicated by the black arrow in panel H right was used for mutual information analysis. (H) Activity traces of 16 cells (with significant non-zero weights) in SPC2 and corresponding identities predicted by the framework. Red traces for cells with negative weights in SPC2, blue traces for cells with positive weights in SPC2. Worm motion/second shown on top. (Right) max projection of 3D image stack showing head ganglion neurons and cells with positive weights (blue) and negative weights (red) in SPC2. Motion/second of cell indicated with arrow is shown in left panel. (I) Cross-correlation analysis between velocity and cells with non-zero weights in SPC2 shows a strong correlation between neuron activities and velocity. In comparison, other cells show low correlation. Velocity of cell indicated by arrow in panel H right was used for cross-correlation analysis.

Figure 5—figure supplement 1. Further analysis of data in periodic food stimulation and whole-brain imaging experiment.

Figure 5—video 1. Whole-brain functional imaging with bacteria supernatant stimulation.

Figure 5—video 2. Wave propagation in animal and correlation of neuron activities to worm motion.

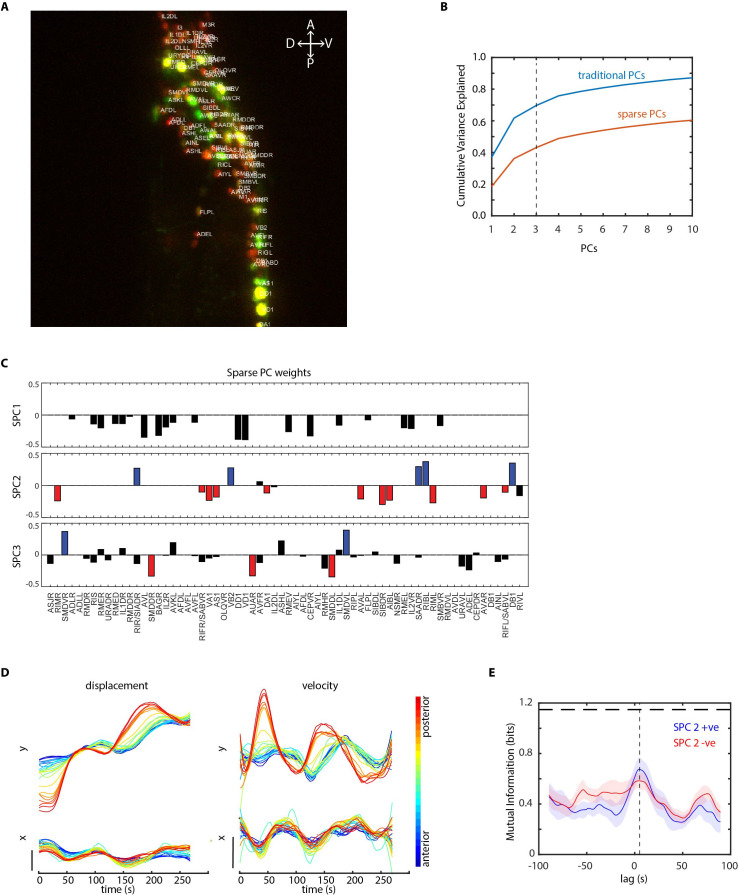

Next, to examine the latent dynamics in the whole-brain activities during the entire experiment, we used traditional Principal Component Analysis (PCA) and Sparse Principal Component Analysis (sPCA) (Zou et al., 2006). The overall dynamics are low-dimensional with top three traditional PCs capturing 70% of the variance (Figure 5—figure supplement 1B). In comparison, while the top 3 sparse PCs (SPCs) explain 43% of the variance in the data, they enable meaningful interpretation of the latent dynamics by eliminating mixing of activity profiles in PCs (Figure 5E). SPC1 shows a systematic decline of the signals, presumably related to photobleaching of the fluorophores; both SPC2 and SPC3 illustrate spontaneous activities with different temporal dynamics. With automatic annotation, we were able to identify cell classes belonging to each SPC (Figure 5—figure supplement 1C). We then analyzed the relationship between motion and neuron activities. In our microfluidic device, the animals are not fully immobilized. By tracking landmarks on the body; we observed propagating waves along the body (Figure 5F; Figure 5—figure supplement 1D, Figure 5—video 2). Interestingly, cells participating in SPC2 showed significantly higher mutual information with motion than any other component (Figure 5G). Examining the connection between activities of neurons that drive SPC2 and animal motion demonstrates that these neurons are indeed correlated or anti-correlated with the motion we detected (Figure 5H); notably, these neurons included several command interneurons such as AVA, RIM, and motor neurons such as VA and DA (Kato et al., 2015; Figure 5H). Cross-correlation analysis between motion and neuron activities showed that neurons are activated ahead of motion (Figure 5I); when a lag is added to the neuron activities, the mutual information of SPC2 neurons with motion is maximum at the same delay observed in the cross-correlation analysis (Figure 5—figure supplement 1E). These experiments together demonstrate the power of the approach, which enabled previously difficult simultaneous analyses of several sensory, inter-, and motor neurons’ activities to natural food stimulus. Thus, automatic identity prediction enabled meaningful interpretation of the whole-brain data.

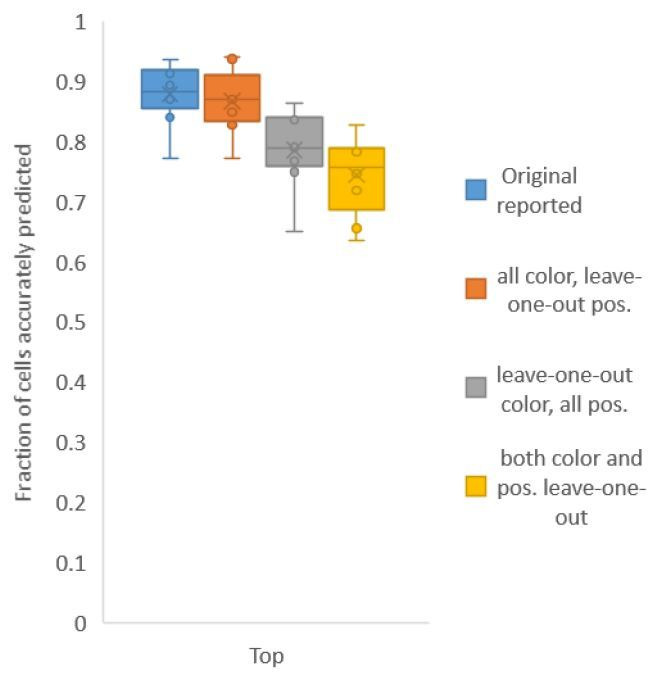

CRF framework is broadly applicable to wider conditions

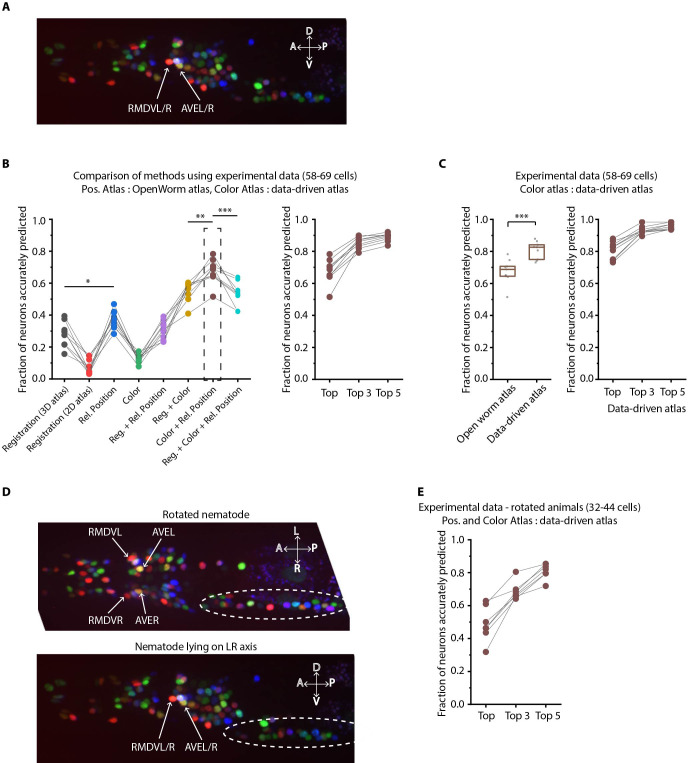

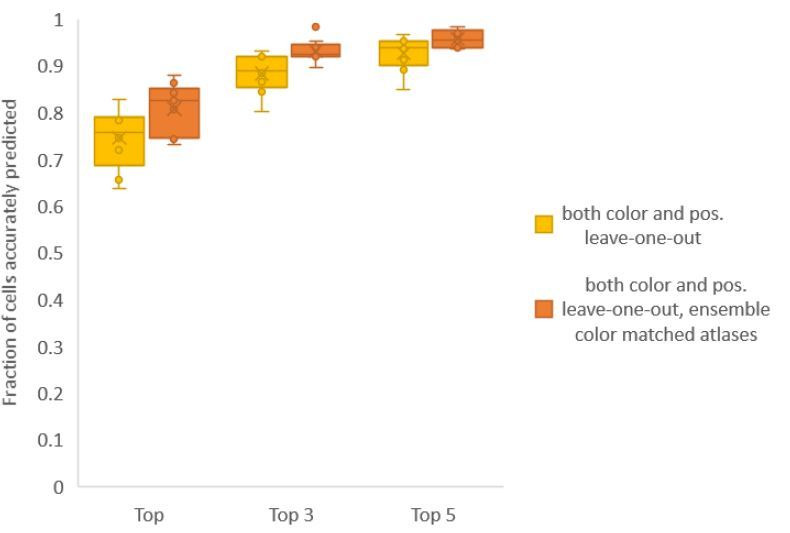

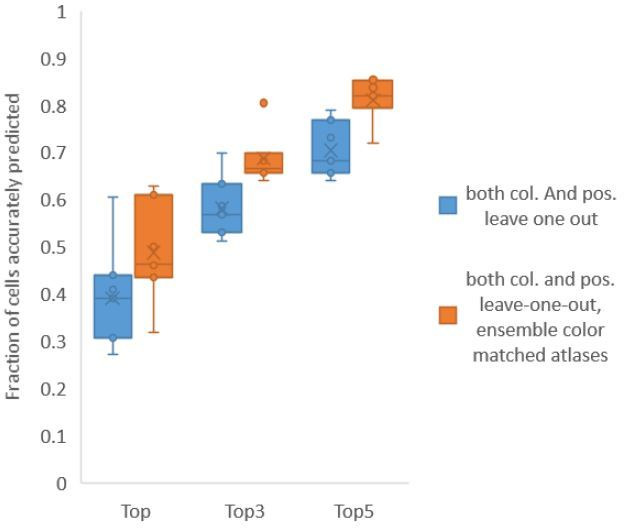

Another important advantage of the CRF_ID framework is its flexibility to incorporate additional information to improve the identification accuracy, by simply adding new terms in the objective function without disturbing the weights of existing features. Here we demonstrate this idea by using the recently developed NeuroPAL (Yemini et al., 2021) that provides a unique chromatic code to each neuron (Figure 6A). The chromatic code was included as a unary feature in the model (see Appendix 1–Extended methods S2.6). Using manually curated ground-truth data, we compared different methods. These methods included different orthogonal feature combinations, as used by previous approaches, thus providing insights into which features perform best in predicting cell identities (Figure 6B, see Appendix 1–Extended methods S2). For fair comparison across methods, static OpenWorm atlas was used across all methods. For methods that use color information, we built data-driven color atlases (Appendix 1–Extended methods S2.4) using all datasets except the test dataset: leave-one-out color atlases. Unsurprisingly, registration performs poorly (with or without color information); color alone is not sufficient, and color combined with spatial features improves the accuracy (whether registration or relative position is used). Notably, the best performing model uses relative position features in combination with color and without registration term (Figure 6B; Figure 6—figure supplement 1A), achieving 67.5% accuracy for the top-label prediction. Further, for 85.3% of the neurons, the true identity is within the top three labels.

Figure 6. Annotation framework is generalizable and compatible with different strains and imaging scenarios.

(A) A representative image (max-projection of 3D stack) of head ganglion neurons in NeuroPAL strain OH15495. (B) (Left) comparison of prediction accuracy for various methods that use different information. CRF_ID framework that combines relative position features along with color information performs best (n = 9 animals, *p<0.05, **p<0.01, ***p<0.001, Bonferroni paired comparison test). (Right) the best performing method predicts cell identities with high accuracy. OpenWorm static atlas was used for all methods. Color atlas was built using experimental data with test data held out. Ensemble of color atlases that combine two different color matching methods were used for prediction. Accuracy results shown for top predicted labels. Experimental data comes from strain OH15495. (C) (Left) annotation framework can easily incorporate information from annotated data in the form of data-driven atlas, which improves prediction accuracy (***p<0.001, Bonferroni paired comparison test). Prediction was performed using leave-one-out data-driven atlases for both positional relationship features and color. Accuracy shown for top predicted labels. Ensemble of color atlases that combine two different color matching methods were used for prediction. (Right) accuracy achieved by top, top 3, and top 5 labels. Experimental data comes from strain OH15495. Top, middle, and bottom lines in box plot indicate 75th percentile, median and 25th percentile of data, respectively. (D) An example image of head ganglion neurons in NeuroPAL strain for rotated animal (nematode lying on DV axis). In contrast, animal lying on the LR axis is shown below. The locations of RMDVL/R, AVEL/R cells in the two images are highlighted for contrasts. Dashed ellipses indicate positions of cells in retrovesicular ganglion, showing that the rotated animal is not rigidly rotated. Experimental data comes from strain OH15495. (E) Top-label prediction accuracies for non-rigidly rotated animal. n = 7 animals. Experimental data comes from strain OH15495 and OH15500. Prediction was performed using leave-one-out data-driven atlases for both positional relationship features and color. Accuracy shown for top predicted labels. Ensemble of color atlases that combine two different color matching methods were used for prediction.

Figure 6—figure supplement 1. Additional results on prediction performance of CRF_ID method on NeuroPAL data: comparison against registration method and utility of ensemble of color atlases.

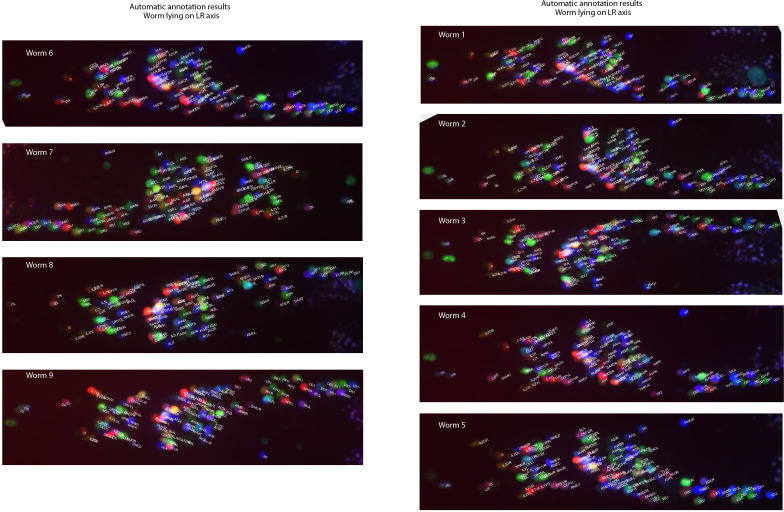

Figure 6—figure supplement 2. Example annotations predicted by the CRF_ID framework for animals imaged lying on the LR axis.

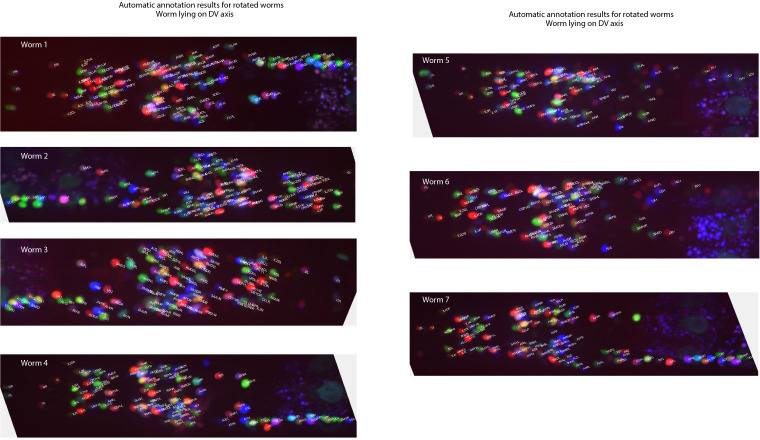

Figure 6—figure supplement 3. Example annotations predicted by the CRF_ID framework for animals twisted about the anterior-posterior axis (note the anterior and lateral ganglions show clear left-right separation whereas retrovesicular ganglion instead of being in the middle is more toward one of the left or right sides).

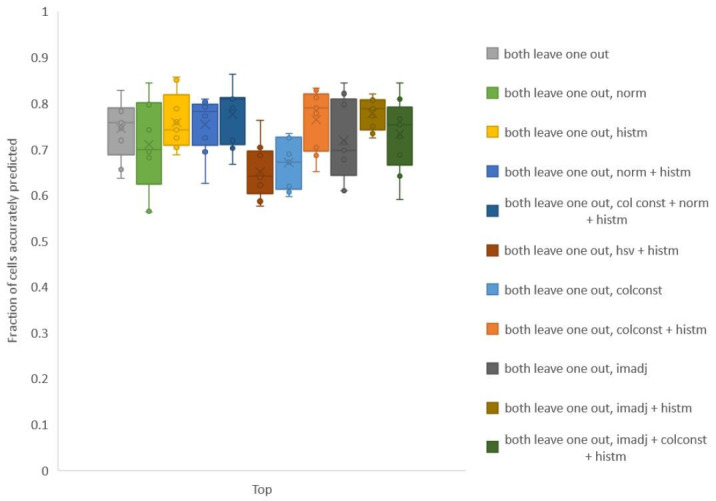

Next, to assess true potential of CRF_ID framework, instead of using OpenWorm atlas, we used data-driven positional relationship atlases, so that the predictions are now performed with data-driven atlases for both positional relationships and color. To test the generalizability of the method on unseen datasets, we compared the accuracy of CRF_ID framework across several kinds of data-driven atlases (Figure 6—figure supplement 1B). These included the following: (1) positional relationship and color atlases, which include information from all datasets including test dataset, (2) color information comes from all datasets and leave-one-out atlases for positional relationships built with test dataset held out, (3) positional relationship information, which comes from all datasets and leave-one-out color atlases, and (4) leave-one-out atlases for both positional relationships and color. The analysis revealed that accuracy falls more sharply with using color leave-one-out atlases compared to the leave-one-out positional relationship atlases. This implies that in the datasets used, positional relationship features are more consistent compared to color features. Thus, leave-one-out positional relationships atlases can represent positional relationships among cells in test datasets. Further, to assess the contribution of color information to prediction accuracy, we compared the accuracy of the case using both positional relationship and color leave-one-out atlases (Figure 6—figure supplement 1B last column) to the case where predictions were performed using only leave-one-out positional relationship atlases shown earlier in Figure 2A. We found that color contributed little to improving accuracy. This is because in the datasets used, the color variability in raw RGB values across animals is far greater than the position variability across animals; hence, the distribution of color features in the training data does not match the distribution of features in the test data. This could be due to inherent variations in fluorophore expressions across animals, or variations in imaging settings (i.e. exposure time of each channel, laser power etc.) across sessions. Thus, a naive approach of building color atlas by directly aggregating RGB values from training images contributed little to improving accuracy. The problem of mismatched feature distributions in test data compared to training data is commonly solved by domain adaptation methods in machine-learning community. We adopted a simple domain adaptation strategy for dealing with color discrepancies and developed a two-step method (Appendix 1–Extended methods S2.4). First, we aligned the distributions of RGB values in training datasets to the test dataset by several methods such as simple normalization of color channels, histogram matching color channels in training images to test data set, contrast and gamma adjustment of image channels, and transforming the color space of all images with color invariants (Finlayson et al., 1998; Figure 6—figure supplement 1C). Note that this alignment does not rely on cell identity information at all. These color alignment methods by themselves or in combination with other methods improved accuracy for some datasets but not all datasets. Second, we used an ensemble of leave-one-out color atlases for prediction, that is predictions were performed using multiple leave-one-out color atlases each built with a different color alignment technique. The ensemble, in comparison to single atlases, provides a combination of color features from aligned color distributions, thus improving accuracy. The two-step method improves accuracy by 6% over the naïve approach (Figure 6—figure supplement 1D). Overall, a significant improvement in the model accuracy was achieved by using data-driven atlas to account for biological variability in both the positional relationships and color (Figure 6C; Figure 6—figure supplement 2). Using the data-driven atlas, accuracy improved to 81% (top labels); more than 93% of the neurons have their true identities in the top three labels chosen by the model. We expect that more datasets for the atlas will continue to improve the accuracy.

Lastly, we show that our framework is equipped to work with realistic complex scenarios of animals imaged in different orientations, often not rigid rotations (Figure 6D). Identifying cells in these cases is challenging: manual annotation using the 2D-atlas (Altun and Hall, 2009) is not possible since it lacks left-right information; further, due to low-z sampling of image stacks, segmented positions of cells along z-axis are noisier. These challenges can be addressed by using the data-driven atlas. We manually annotated data collected for animals imaged with varying degrees of non-rigid rotations and built data-driven atlases for positional relationships and color. Further, we combined rotated animals’ atlas with the previous atlas built from animals imaged in lateral orientation to build a super atlas. With the test data held out in atlases, the prediction accuracy of top labels was 48.8%, and the accuracy was 68.7% for top three labels (Figure 6E ), which are reasonable for practical purposes. In this case too, aligning the color distributions in atlas to the test data set and using ensemble of color atlases with different alignment techniques helped to significantly improve accuracy over the naïve approach to build color atlases (Figure 6—figure supplement 1E).

Discussion

Annotating anatomical features and cellular identities in biological images are important tasks for many applications. Here, we demonstrated our CRF_ID framework is suitable for fluorescently labeled cells in 3D images for many applications. Using both ground-truth experimental data of whole-brain image stacks and synthetic data generated from an atlas, we showed that our framework is more accurate compared to existing approaches. We demonstrated using real examples how the pipeline can be used for analysis of gene expression pattern for instance, and for neuron identification from dense multi-cell or whole-brain imaging experiments. Further, our CRF_ID framework significantly speeds up the cell identification compared to manual labeling while reducing bias.

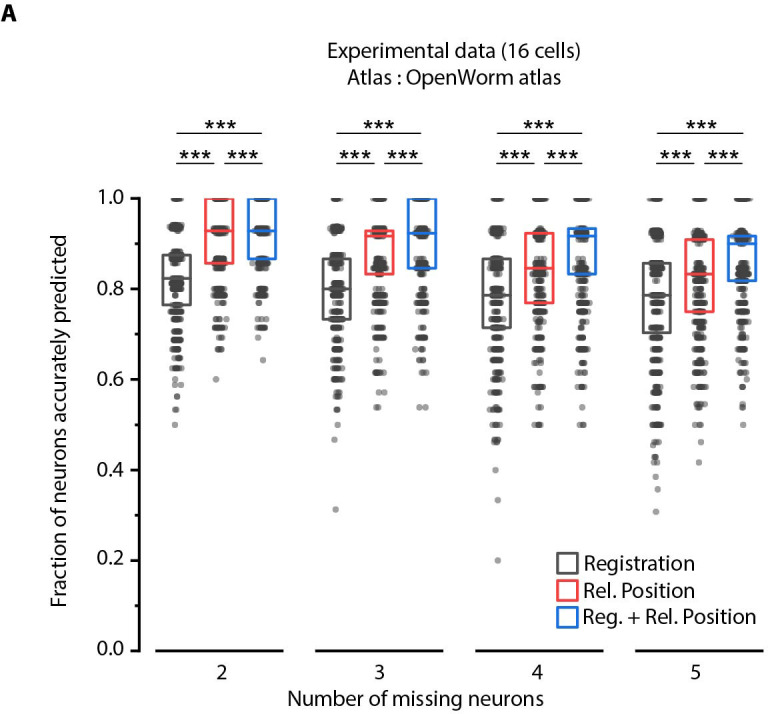

With the pipeline, we address several challenges. There is ample evidence that anatomy varies from individual to individual, and from condition to condition. This variability, or position noise, is a major source of roadblock in effectively applying previous methods to annotate the whole-brain recording data. Because our framework leverages intrinsic similarity (Bronstein et al., 2007), it performs better than registration methods in handling position noise (Figure 2E; Figure 2—figure supplement 5C). Further, CRF_ID formulation is more accurate in handling count noise that is cases of missing or undetectable cells in images (Figure 2D; Figure 4C; Figure 2—figure supplement 5B; Figure 4—figure supplement 1A), because the missing neurons do not upset the relationships among the detectable neurons in the CRF_ID formulation while missing neurons introduce large uncertainty in registration methods. Lastly, CRF_ID method predicts identities with sufficient accuracy for different postural orientations of the worms often seen in our microfluidic experiments. We expect that this superiority is maintained for any data that have relational information preserved, this is the case virtually in all biological samples where tissues are connected by matrix materials, such as in other whole-brain recordings or for registration of fixed tissues.

Building and using data-driven atlases in the pipeline is simple and yet highly effective. We expect that data from more animals, different orientations, age, and imaging techniques will further improve the generalizability. Since building such data-driven atlas for our framework requires only cheap mathematical operations (Appendix 1–Extended methods S1.7), incorporating more data is quite simple and easily scalable. In contrast, previous registration-based methods may require simultaneous or batch-wise registration of multiple images to one reference image; this would require solving multiple constrained regression problems on increasingly large data sets, thus rendering them computationally unscalable. Further, without systematic methodology of which image should be chosen as reference image, atlas gets biased toward the chosen reference image or by the order in which blocks of images are registered to the reference image. Tackling this challenge is an active field of research (Wang et al., 2008; Evangelidis et al., 2014). In comparison, in CRF method, atlas building is unbiased toward any image set because there is no concept of reference image. Additionally, atlas can be built from all images simultaneously because of the cheapness of mathematical operations.

Another major advantage of data-driven atlases in our framework is that the atlases can be built incrementally using partially or fully annotated datasets, for example using lines that label partial and distinct subsets of cells. In comparison, registration-based methods cannot build atlas from lines that label distinct subset of cells. This is because registration-based methods build probabilistic spatial atlases by first establishing correspondence among cells in images and subsequently registering images. However, this is not possible if the cells in different images do not have any overlapping cells or have very few overlapping cells. In comparison, atlases built in CRF_ID framework store probabilistic positional relationship features among cells observed within each image. Hence, correspondence between images is not required. Thus, in principle, CRF_ID framework can combine manually annotated data across different lines, generated by different researchers (and across labs) in the form of data-driven atlases. Automated annotation using such atlases removes individual biases in annotating cells. Further, it greatly supports researchers with no prior experiences with cell identification. We expect that using our framework, large-scale atlases can be built in the future with community contributed data.

Finally, a distinction of CRF_ID framework is its ability to build and annotate with complete atlases covering all cells. This is made possible by the efficient utilization of data, even from strains with non-overlapping cells. Annotating against a complete atlas is crucial because commonly in practice, no prior information is available on exactly which cells are missing from the images before annotation. Registration-based or unary potential-based methods are limited in building atlas by the availability of overlapping strains. Thus, in these methods, cells that are missing in the atlas can never be assigned to cells in images; hence these methods do not perform completely unbiased annotation. In comparison, CRF_ID framework uses a complete atlas to assign any possible label in the atlas to cells in the images, thus performing unbiased annotation, resulting in better handling of count noise in images.

CRF framework offers the flexibility of combining arbitrary unary features with arbitrary pairwise features for cell annotation task. We demonstrate the utility of such flexibility by combining color information of cells in NeuroPAL strain with positional relationship features and show higher accuracy compared to other methods. Our experiments show that in order to be able to utilize color information of cells in NeuroPAL for automatic annotation of cell identities, color consistency across animals needs to be maintained, either experimentally or by post hoc corrections. Experimentally, consistent protocol/imaging settings across sessions should be maintained as much as possible. Even with consistent protocol, color variation may exist due to inherent differences across animals in relative expressions of fluorophores that define the chromatic code of cells. This can be tackled by (1) collecting large volume of data to capture each cells’ full RGB variations and (2) using computational domain adaptation techniques. More advancement in image color transfer and domain adaptation techniques will further improve accuracy in future.

While we only considered pairwise features in the current formulation, feature functions with arbitrary dependency can be included in the model that may further improve prediction accuracy (Kohli et al., 2009; Najafi et al., 2014). Advances in structured energy minimization field (Kohli et al., 2009; Komodakis and Paragios, 2009; Krähenbühl and Koltun, 2011) will enable tackling the increased complexity of combinatorial optimization in these cases. Our workflow borrows techniques developed in metric object/shape matching literature for annotation in biological images. Log-linear parameterization in our framework makes the model a member of the exponential families (Wainwright and Jordan, 2007); thus, the objective function in our framework has interesting connections with max-entropy models and with the entropy-regularized optimal transport objective functions (Solomon et al., 2016; Nitzan et al., 2019). Therefore, improvements in computational speed can be achieved by borrowing fast optimization techniques for quadratic assignment problems developed in optimal transport literature. Advances in these fields will continue to improve the method development in image analysis.

We anticipate that by using our generalizable formulation, similar pipelines can be set up to annotate more image sets in other organisms and build atlases. Data in many anatomical annotation problems (e.g. brain atlas construction, registering images from different modalities, comparing animals or related species to one another for developmental studies) share a similar property, in that the anatomical features of interest maintain a cohesion from sample to sample. This underlining cohesion lends itself to the CRF framework. As we have shown, the pipeline is extremely flexible in incorporating new information. Thus, framework should be easily modifiable catering to the data demands in other organisms including features besides landmarks and spectral information such as cellular morphology and expected cellular activities (e.g. calcium transients). Because the only inputs to our framework are segmented anatomical regions in images and positional relationships among them, information already available in data across organisms (Robie et al., 2017; Kim et al., 2015; Chen et al., 2019; Ronneberger et al., 2012), the framework proposed here should be generally useful for many problems in model organisms such as Drosophila (Robie et al., 2017; Vaadia et al., 2019), zebrafish (Ronneberger et al., 2012), mammalian brains (Kim et al., 2015; Chen et al., 2019). Besides fluorescence, the pipeline should also be able to work with data from other modalities including EM, live imaging, and fluorescence imaging from cleared tissues.

Materials and methods

Reagents

For all experiments, animals were cultured using standard techniques (Stiernagle, 2006). A detailed list of strains used is provided below.

| Name | Genotype | Experiments | Reference |

|---|---|---|---|

| GT290 | aEx18[unc-47p::NLS::CyOFP1::egl-13NLS] | Strain with nine neuronal landmarks in head | This work |

| GT298 | aEx22[unc-47p::NLS::CyOFP1::egl-13NLS + gcy-32p::NLS::CyOFP1::egl-13NLS] | Strain with 12 neuronal landmarks in head | This work |

| AML32 | wtfIs5 [rab-3p::NLS::GCaMP6s + rab-3p::NLS::tagRFP] | Strain used to make whole-brain imaging strain with CyOFP labeled landmarks GT296 | Nguyen et al., 2016 |

| AML70 | wtfIs5 [rab-3p::NLS::GCaMP6s + rab-3p::NLS::tagRFP]; lite-1(ce314) X | Strain used to make whole-brain imaging strain with CyOFP labeled landmarks GT293 | Scholz, 2018 |

| KG1180 | lite-1(ce314) X | Strain used to make whole-brain imaging strain with CyOFP labeled landmarks GT296 | CGC |

| GT296 | wtfIs5 [rab-3p::NLS::GCaMP6s + rab-3p::NLS::tagRFP]; aEx18[unc-47p::NLS::CyOFP1::egl-13NLS]; lite-1(ce314) X | Strain used for whole-brain functional imaging experiments (Figures 4 and 5) and quantifying cell position variability. | This work |

| GT293 | wtfIs5 [rab-3p::NLS::GCaMP6s + rab-3p::NLS::tagRFP]; lite-1(ce314) X; aEx22[unc-47p::NLS::CyOFP1::egl-13NLS + gcy-32p::NLS::CyOFP1::egl-13NLS] | Strain used for quantifying cell position variability. | This work |

| AML 5 | otIs355 [rab-3p(prom1)::2xNLS::TagRFP] IV. kyIs51 [odr-2p::GFP + lin-15(+)] | Strain used for mock gene-expression pattern analysis and mock multi-cell calcium imaging experiments | Nguyen et al., 2016 |

| OH15495 | otIs696 [UPN::NLS::TagRFP-T + acr-5::NLS::mTagBFP2::H2B + flp-1::NLS::mTagBFP2::H2B + flp-6::NLS::mTagBFP2::H2B + flp-18::NLS::mTagBFP2::H2B + flp-19::NLS::mTagBFP2::H2B + flp-26::NLS::mTagBFP2::H2B + gcy-18::NLS::mTagBFP2::H2B + ggr-3::NLS::mTagBFP2::H2B + lim-4::NLS::mTagBFP2::H2B + pdfr-1::NLS::mTagBFP2::H2B + srab-20::NLS::mTagBFP2::H2B + unc-25::NLS::mTagBFP2::H2B + cho-1::NLS::CyOFP1::H2B + flp-13::NLS::CyOFP1::H2B + flp-20::NLS::CyOFP1::H2B + gcy-36::NLS::CyOFP1::H2B + gpa-1::NLS::CyOFP1::H2B + nlp-12::NLS::CyOFP1::H2B +nmr-1::NLS::CyOFP1::H2B + ocr-1::NLS::CyOFP1::H2B + osm-9::NLS::CyOFP1::H2B + srh-79::NLS::CyOFP1::H2B + sri-1::NLS::CyOFP1::H2B + srsx-3::NLS::CyOFP1::H2B + unc-8::NLS::CyOFP1::H2B + acr-2::NLS::mNeptune2.5 + ceh-2::NLS::mNeptune2.5 + dat-1::NLS::mNeptune2.5 + dhc-3::NLS::mNeptune2.5 + eat-4::NLS::mNeptune2.5 + flp-3::NLS::mNeptune2.5 + gcy-35::NLS::mNeptune2.5 + glr-1::NLS::mNeptune2.5 + gcy-21::NLS::CyOFP1::H2B::T2A::NLS::mTagBFP2::H2B + klp-6::NLS::mNeptune2.5::T2A::NLS::CyOFP1::H2B + lim-6::NLS::mNeptune2.5::T2A::NLS::CyOFP1::H2B + mbr-1::NLS::mNeptune2.5::T2A::NLS::mTagBFP2::H2B + mec-3::NLS::CyOFP1::H2B::T2A::NLS::mTagBFP2::H2B + odr-1::NLS::mNeptune2.5::T2A::NLS::mTagBFP2::H2B + srab-20::NLS::mNeptune2.5::T2A::NLS::mTagBFP2::H2B] | NeuroPAL strain demonstrating the ease of incorporating color information, and thus demonstrating generalizability | Yemini et al., 2021 |

| OH15500 | otIs672 [rab-3::NLS::GCaMP6s + arrd-4:NLS:::GCaMP6s]. otIs669 [UPN::NLS::TagRFP-T + acr-5::NLS::mTagBFP2::H2B + flp-1::NLS::mTagBFP2::H2B + flp-6::NLS::mTagBFP2::H2B + flp-18::NLS::mTagBFP2::H2B + flp-19::NLS::mTagBFP2::H2B + flp-26::NLS::mTagBFP2::H2B + gcy-18::NLS::mTagBFP2::H2B + ggr-3::NLS::mTagBFP2::H2B + lim-4::NLS::mTagBFP2::H2B + pdfr-1::NLS::mTagBFP2::H2B + srab-20::NLS::mTagBFP2::H2B + unc-25::NLS::mTagBFP2::H2B + cho-1::NLS::CyOFP1::H2B + flp-13::NLS::CyOFP1::H2B + flp-20::NLS::CyOFP1::H2B + gcy-36::NLS::CyOFP1::H2B + gpa-1::NLS::CyOFP1::H2B + nlp-12::NLS::CyOFP1::H2B + nmr-1::NLS::CyOFP1::H2B + ocr-1::NLS::CyOFP1::H2B + osm-9::NLS::CyOFP1::H2B + srh-79::NLS::CyOFP1::H2B + sri-1::NLS::CyOFP1::H2B + srsx-3::NLS::CyOFP1::H2B + unc-8::NLS::CyOFP1::H2B + acr-2::NLS::mNeptune2.5 + ceh-2::NLS::mNeptune2.5 + dat-1::NLS::mNeptune2.5 + dhc-3::NLS::mNeptune2.5 + eat-4::NLS::mNeptune2.5 + flp-3::NLS::mNeptune2.5 + gcy-35::NLS::mNeptune2.5 + glr-1::NLS::mNeptune2.5 + gcy-21::NLS::CyOFP1::H2B::T2A::NLS::mTagBFP2::H2B + klp-6::NLS::mNeptune2.5::T2A::NLS::CyOFP1::H2B + lim-6::NLS::mNeptune2.5::T2A::NLS::CyOFP1::H2B + mbr-1::NLS::mNeptune2.5::T2A::NLS::mTagBFP2::H2B + mec-3::NLS::CyOFP1::H2B::T2A::NLS::mTagBFP2::H2B + odr-1::NLS::mNeptune2.5::T2A::NLS::mTagBFP2::H2B + srab-20::NLS::mNeptune2.5::T2A::NLS::mTagBFP2::H2B] V | NeuroPAL strain demonstrating the ease of incorporating color information, and thus demonstrating generalizability | Yemini et al., 2021 |

Imaging

All imagings were performed using either a Perkin Elmer spinning disk confocal microscope (1.3 NA, 40x, oil objective) or Brucker Opterra II Swept field confocal microscope (0.75 NA, 40x, Plan Fluor air objective), with an EMCCD camera.

To acquire data used for framework validation and comparison against other methods (Figure 2), gene expression pattern analysis (Figure 3), multi-cell calcium imaging (Figure 4), imaging landmark strain (Figure 4) and NeuroPAL imaging (Figure 6), animals were synchronized to L4 stage and were imaged in an array microfluidic device (Lee et al., 2014). A single 3D stack was acquired with either 0.5 µm or 1 μm spacing between z-planes and 10 ms exposure time (except for NeuroPAL strain where exposure times of different channels were chosen based on the guidelines provided in NeuroPAL manuals Yemini et al., 2021).

Whole-brain functional recording data while providing chemical stimulus were acquired using a microfluidic device designed for applying chemical stimulation (Cho et al., 2020) to the nose-tip of the animal. Here, image stacks were acquired with 1 μm spacing between z-planes and 10 ms exposure for each z-plane. This enabled recording videos at 1.1 volumes/s while imaging two channels simultaneously (GCaMP and RFP). Animals were synchronized to Day-1 adult stage.

Generating synthetic data for framework tuning and comparison against other methods