Abstract

Background

The ictal examination is crucial for neuroanatomic localization of seizure onset, which informs medical and neurosurgical treatment of epilepsy. Substantial variation exists in ictal examination performance in epilepsy monitoring units (EMUs). We developed and implemented a standardized examination to facilitate rapid, reliable execution of all testing domains and adherence to patient safety maneuvers.

Methods

Following observation of examination performance, root cause analysis of barriers, and review of consensus guidelines, an ictal examination was developed and disseminated. In accordance with quality improvement methodology, revisions were enacted following the initial intervention, including differentiation between pathways for convulsive and nonconvulsive seizures. We evaluated ictal examination fidelity, efficiency, and EMU staff satisfaction before and after the intervention.

Results

The barriers to ictal examination performance that we identified were confusion regarding the examination protocol, inadequate education on the rationale for the examination and its components, and lack of awareness of patient-specific goals. Over an 18-month period, 100 ictal examinations were reviewed, 50 convulsive and 50 nonconvulsive. Ictal examination performance varied during the study period without sustained improvement for convulsive or nonconvulsive seizure examination. The new examination was faster to perform (0.8 vs 1.5 minutes). Postintervention, EMU staff expressed satisfaction with the examination, but many still did not understand why certain components were performed.

Conclusion

We identified key barriers to EMU ictal assessment and completed real-world testing of a standardized, streamlined ictal examination. We found it challenging to reliably change ictal examination performance in our EMU; further study of implementation is warranted.

The epilepsy monitoring unit (EMU) provides a unique environment to assess patients' seizures with video EEG for diagnosis, characterization, and/or presurgical evaluation.1 EEG provides electrographic data; however, clinico-electrographic correlation is key to interpretation. Clinical assessment during seizures allows neuroanatomic classification of seizure onset, which can inform pharmacologic and surgical treatment of epilepsy.

The ictal examination should consist of a focused neurologic evaluation to test domains including seizure aura, language, and motor function.2 Because a prolonged generalized seizure can lead to respiratory compromise and, rarely, death, safety maneuvers (i.e., turning the patients on their side and providing oxygen/suction) are also vital components of ictal assessment.3,4 Uniform assessment can be challenging as seizures occur with great heterogeneity; however, suboptimal performance may result in loss of important clinical data and potential safety risks.

Although EMU admissions are relatively safe and of high diagnostic yield, EMU care processes differ widely across institutions.4–7 Proposed EMU quality indicators highlight parameters including length of stay, seizure induction techniques, anti-epileptic drug withdrawal, safety precautions, and rescue medication administration. However, EMU practice guidelines remain underdeveloped.4–6 Furthermore, despite the International League Against Epilepsy (ILAE) 2016 consensus report on managing patients during seizures,2 few epilepsy centers use a standardized approach. Ictal examination content and performance fidelity are ripe for further investigation and optimization.8 The aim of our quality improvement initiative was to develop an ictal examination allowing rapid, reliable execution of all testing domains and adherence to patient safety maneuvers.

Methods

Baseline Condition

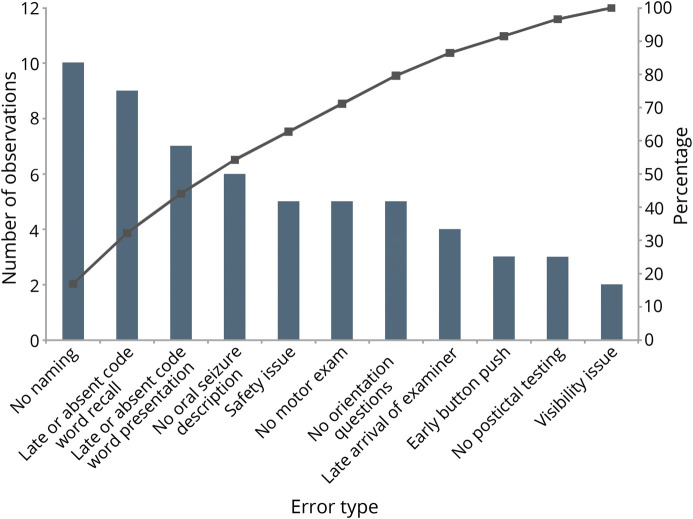

We assessed the current condition by reviewing a sample of ictal examination videos recorded by our EMU monitoring software Natus Neuroworks (Natus, Pleasanton, CA). We sampled 20 ictal examinations (10 convulsive and 10 nonconvulsive) that had been performed by nurses, EEG technicians, and physicians in our EMU over 2 months. Three neurologist reviewers used a rubric to tally errors and omissions. This rubric included the following considerations: safety issues (turning the patients on their side and providing oxygen and suction), visibility issues (uncovering the patient and ensuring that the patient is on camera), prioritization of pushing the alarm button over performing the ictal examination, late arrival of the examiner (more than 10 seconds after clinical onset), failure to narrate seizure semiology, no orientation questions (level of awareness), absent or late verbal memory testing presentation and recall (e.g., after a convulsion was already underway), no aphasia assessment (naming), no motor function testing, and no postictal assessment. The most common errors and omissions involved language testing (naming objects and verbal memory testing presentation), seizure semiology narration, motor function testing, and safety/visibility maneuvers (uncovering the patients, turning the patients on their side, and providing oxygen and suction) (figure 1). With a multidisciplinary team of neurologists, neurology house staff, nurses, and EEG technicians, we performed a root cause analysis of variations in performance of the ictal assessment. This analysis identified failure to understand the rationale for ictal testing as the most frequent issue precluding optimal testing.

Figure 1. Pareto Chart of Baseline Errors and Omissions in Ictal Examination Testing.

Types of errors are sorted across the x-axis in descending frequency. The left side y-axis displays counts of observations (bars), and the right side y-axis displays cumulative percentage (lines).
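
As a rough illustration of how a Pareto chart such as figure 1 is assembled, the sketch below sorts error categories by descending count and accumulates the cumulative percentage. The category labels are abbreviated from the rubric described above, and the counts are invented placeholders for illustration only; they are not the study's data.

```python
from itertools import accumulate

# Hypothetical tallies (NOT study data); labels abbreviated from the rubric.
error_counts = {
    "no naming test": 12,
    "no semiology narration": 9,
    "no motor testing": 6,
    "safety/visibility omitted": 3,
}

def pareto_table(counts):
    """Return (category, count, cumulative_percent) rows, largest count first."""
    ordered = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    total = sum(count for _, count in ordered)
    running = accumulate(count for _, count in ordered)
    return [
        (name, count, round(100 * cum / total, 1))
        for (name, count), cum in zip(ordered, running)
    ]

for name, count, cum_pct in pareto_table(error_counts):
    print(f"{name:28s} {count:3d} {cum_pct:6.1f}%")
```

The counts correspond to the bars (left y-axis) and the cumulative percentages to the line (right y-axis).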

Intervention

Drawing from consensus guidelines reported in the 2016 ILAE initiative to develop standardized comprehensive peri-ictal testing2 and applying our local process analysis, we developed a standardized, streamlined ictal examination that tailored a validated assessment to our needs. The development staff comprised 6 neurologists, 4 neurology house staff, 2 EEG techs, and 2 nurses. Before this initiative, we had a general ictal testing guideline that encompassed several key components, but this examination included redundancies, lacked rigorous postictal testing, and was not written to prioritize safety as explicitly (figure e-1, links.lww.com/CPJ/A167). We chose to shorten the battery by eliminating redundancies because the time needed to complete it generally exceeded the average seizure duration of 1–2 minutes. Our goals were to assess the performance fidelity of our examination (adapted from consensus guidelines) and to explore the challenges of implementing a standardized assessment. As safety must be the top priority in the EMU setting, we felt it impossible to describe a standardized approach to ictal assessment without integrating safety maneuvers. The components were safety/visibility maneuvers (turn the patients on their side, bring oxygen/suction, turn the light on, uncover the patients, and check the camera), assignment of a lead examiner among providers who have entered the room, seizure aura inquiry (“What do you feel right now?”), semiology narration (“Describe what you see out loud”), verbal memory testing (“Please repeat and remember purple elephant”), naming objects (list generated by the examiner), orientation questions (“What is your name? Where are you? What day is it?”), and motor assessment (“Raise your arms”). Postictal components were verbal memory testing (“Can you remember the words I gave you?”), seizure recollection (“When was your last seizure? What did you feel?”), and repetition of the naming, orientation, and motor prompts.

Over 2 months, we held training sessions for all staff rotating through the EMU in which the principles of semiology were reviewed, and staff practiced the new examination through role-playing exercises.9 Our EMU used dedicated nurses and EEG techs; staffing was stable throughout the course of this study. Ictal examination cue cards (“badge buddies”) were distributed, the new ictal examination was posted in every EMU patient room, and we initiated a practice of discussing patient-specific considerations with staff in daily rounds.

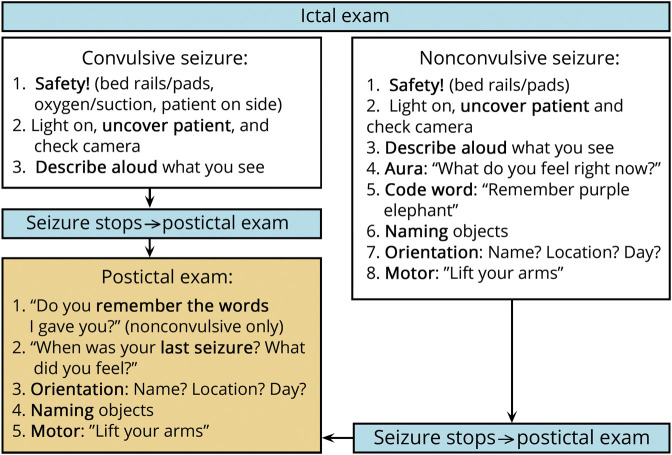

In accordance with quality improvement methodology, we continuously evaluated our intervention. An early revision to our protocol occurred after we observed that EMU staff found the protocol unclear when patients were already convulsing on responder arrival and rarely referred to the cue cards or posters. The pathway was revised to be specific to seizure type at 6 months into the intervention (figure 2). We also noted that patient-specific goals were poorly communicated. As a countermeasure, at the same time the revised pathway was disseminated, the EMU junior resident incorporated patient-specific considerations via a daily “infogram” in the electronic record that detailed how many seizures a patient had to date and what the focus of testing maneuvers should be going forward.

Figure 2. Ictal Examination.

To promote sustainability, training was repeated 1 year after the initial educational intervention for new house staff who would rotate in the EMU. The standardized ictal examination was also published on our health care system's internal platform for electronic dissemination of medical pathways.

Evaluation

At baseline, 1 month after intervention, and then every 3 months following the educational intervention, we sampled 20 ictal examinations performed in the EMU and video recorded by our monitoring software (Natus). At each time point, we scored a convenience sample of examinations of 10 convulsive and 10 nonconvulsive seizures. In keeping with quality improvement methodology, this convenience sampling was not meant to assess for a difference in pre- and post-intervention medians, but rather for a trend established over time. The convenience sample was intended to capture ictal assessments longitudinally: we filtered patients' files on Natus every 3 months to look at the previous 60-day time interval. Files containing seizures were often marked as such or as “event”; when a file we opened contained both an ictal and a postictal assessment, we included it. We took the first 10 consecutive convulsive and the first 10 consecutive nonconvulsive seizures we found in that time frame. We did not discriminate between events with and without electrographic correlate as rapidity, safety, and clinical assessment were the immediate priorities. Each examination was scored according to a rubric by at least 2 physician reviewers for quality assurance. The rubric for a convulsive seizure evaluated safety maneuvers, ensuring patient visibility, semiology narration, postictal aura inquiry, postictal language testing, and postictal motor testing. The rubric for a nonconvulsive seizure assessed ictal and postictal testing of seizure aura, code word administration and recall, motor ability, and language function. All components were equally weighted and only counted if performed. If physician reviewers disagreed in their scoring of a particular component, no credit was assigned for that component. We aimed to count only components of the ictal examination that were well captured and available for subsequent review by video, as that is how seizures are typically analyzed in the EMU setting.

No more than 3 ictal examinations of a given seizure type (convulsive vs nonconvulsive) per patient were used to avoid overrepresentation. Examinations were excluded if lorazepam was administered to the patient during or after the seizure, as this safety maneuver appropriately superseded the subsequent ictal examination. For successive seizures, the verbal memory testing prompt varied, with “purple elephant” used as an example.
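
The sampling rules described above can be sketched in code. This is a minimal illustration, not the procedure actually used (files were reviewed manually in Natus); the `Exam` record, its field names, and the `select_sample` helper are hypothetical, and the input is assumed to be already ordered by recording time within the 60-day window.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Exam:
    # Hypothetical record; field names are illustrative only.
    patient_id: str
    seizure_type: str              # "convulsive" or "nonconvulsive"
    lorazepam_given: bool          # rescue medication during or after the seizure
    has_ictal_and_postictal: bool  # both assessments present on video

def select_sample(exams, per_type=10, max_per_patient=3):
    """Take the first eligible exams of each type, capped per patient."""
    selected = {"convulsive": [], "nonconvulsive": []}
    per_patient = Counter()
    for exam in exams:  # assumed sorted chronologically
        bucket = selected.get(exam.seizure_type)
        if bucket is None or len(bucket) >= per_type:
            continue  # unknown type, or quota for this type already met
        if exam.lorazepam_given or not exam.has_ictal_and_postictal:
            continue  # exclusion rules
        key = (exam.patient_id, exam.seizure_type)
        if per_patient[key] >= max_per_patient:
            continue  # avoid overrepresenting one patient
        per_patient[key] += 1
        bucket.append(exam)
    return selected

# Toy input: five exams from patient A, one excluded exam, one nonconvulsive exam.
demo = (
    [Exam("A", "convulsive", False, True)] * 5
    + [Exam("B", "convulsive", True, True)]
    + [Exam("C", "nonconvulsive", False, True)]
)
sample = select_sample(demo)
print(len(sample["convulsive"]), len(sample["nonconvulsive"]))
```

In the toy input, patient A contributes only 3 of 5 convulsive examinations because of the per-patient cap, and patient B's examination is dropped because lorazepam was given.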

As balancing measures, we evaluated examination efficiency, specifically the time it took to perform the new examination compared with the preintervention examination, and EMU staff satisfaction and sense of competency with the new examination. These latter considerations were assessed by a survey of dedicated EMU EEG techs (3 total), dedicated EMU nurses (4 total), and the EMU floating nurse (1 at any given time) that assessed how strongly they agreed or disagreed with the following 5 statements: “I am able to perform the ictal examination faster than previously, I feel confident about my ability to perform the new ictal examination well, I believe that the ictal examination has improved patient safety in the EMU, I understand how to perform the components of the ictal examination, and I understand why we perform each component of the examination.”

Standard Protocol Approvals, Registrations, and Patient Consents

This project was reviewed by the University of Pennsylvania Institutional Review Board and was deemed to be quality improvement and exempt.

Data Availability

The anonymized data used for our study will be shared by request from any qualified investigator.

Results

The preintervention assessment occurred in April and May 2017, and the postintervention study was conducted from June 2017 to September 2018. In total, 100 ictal examinations were reviewed, 50 convulsive and 50 nonconvulsive. The unit of analysis for this study was the ictal and postictal examination, which was performed in a population of adult patients older than 21 years who were admitted to the EMU for differential diagnosis, characterization, or presurgical evaluation. We included assessments of both epileptic and nonepileptic seizures and did not specifically screen for level of cognitive function.

Convulsive Seizures

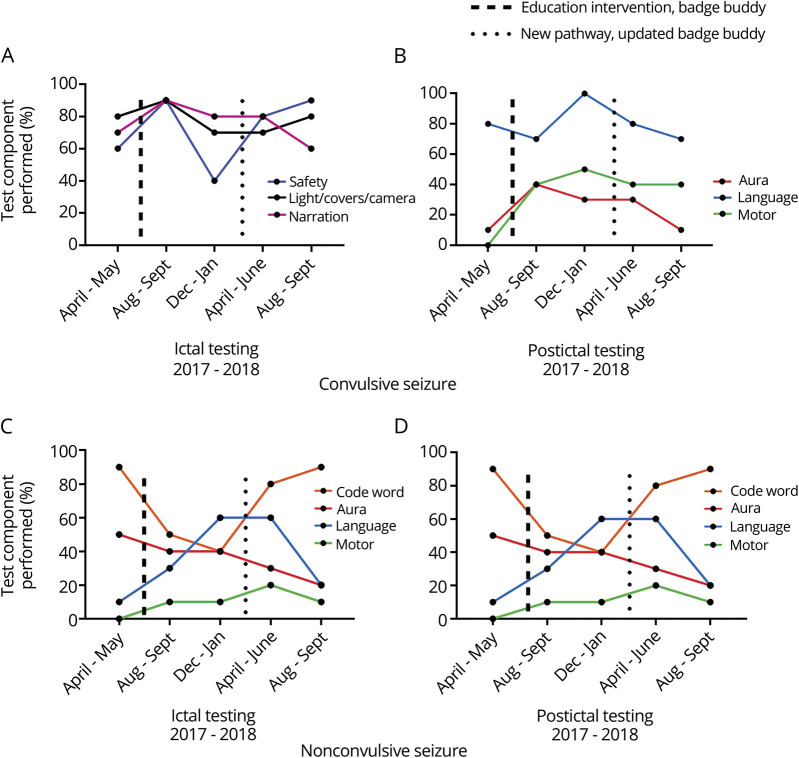

For a convulsive seizure, the scoring rubric prioritized safety maneuvers, improving patient visibility, narration of seizure semiology, and postictal testing (seizure aura inquiry, language ability, and motor function), as the assumption was that a patient would be unresponsive if already convulsing. Although a small improvement in adherence to ictal and postictal testing components was observed following the educational intervention, this result was not sustained (figure 3, A and B).

Figure 3. Ictal and Postictal Testing in Convulsive and Nonconvulsive Seizures.

(A) Ictal Testing Performance for Convulsive Seizures; (B) Postictal Testing Performance for Convulsive Seizures; (C) Ictal Testing Performance for Nonconvulsive Seizures; (D) Postictal Testing Performance for Nonconvulsive Seizures. One hundred examinations were sampled (April 2017 to September 2018). Each data point represents a 2-month time range in which 10 studies were sampled of each seizure type.

Nonconvulsive Seizures

If a patient was not convulsing, the standardized ictal examination instructed the responder to perform more detailed ictal testing (soliciting seizure aura, verbal memory testing, language evaluation, and motor testing), followed by postictal testing (seizure aura inquiry, verbal memory, language ability, and motor function). No sustained change was observed for ictal or postictal testing for nonconvulsive seizures (figure 3, C and D).
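
To make the scoring rule from the Evaluation section concrete, the sketch below encodes the two rubrics and the reviewer rule that a component earns credit only when every reviewer marks it as performed, so any disagreement yields no credit. The component labels and the split of the nonconvulsive rubric into 8 items are our reading of the text and should be treated as assumptions; `RUBRIC` and `score_exam` are hypothetical names.

```python
# Component lists paraphrased from the rubric description; the exact
# itemization (especially for nonconvulsive seizures) is an assumption.
RUBRIC = {
    "convulsive": [
        "safety maneuvers",
        "patient visibility",
        "semiology narration",
        "postictal aura inquiry",
        "postictal language testing",
        "postictal motor testing",
    ],
    "nonconvulsive": [
        "ictal aura inquiry",
        "ictal code word",
        "ictal motor",
        "ictal language",
        "postictal aura inquiry",
        "postictal code word recall",
        "postictal motor",
        "postictal language",
    ],
}

def score_exam(seizure_type, *reviews):
    """Return (fraction credited, credited components).

    Each review maps component name -> True if the reviewer saw it performed.
    Components are equally weighted; credit requires unanimous 'performed'.
    """
    if len(reviews) < 2:
        raise ValueError("at least 2 reviewers are required")
    components = RUBRIC[seizure_type]
    credited = [
        c for c in components
        if all(review.get(c, False) for review in reviews)
    ]
    return len(credited) / len(components), credited

reviewer_a = {"safety maneuvers": True, "patient visibility": True,
              "semiology narration": True}
reviewer_b = {"safety maneuvers": True, "patient visibility": True,
              "semiology narration": False}
fraction, credited = score_exam("convulsive", reviewer_a, reviewer_b)
print(f"{fraction:.2f}", credited)
```

Here the reviewers disagree on semiology narration, so only 2 of 6 components are credited. This conservative rule matches the stated preference to undercount rather than overcount component performance.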

Balancing Measures

The new examination took on average 46 seconds to administer compared with 1 minute 28 seconds required to administer the old examination (figure e-1, links.lww.com/CPJ/A167).
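
As a quick arithmetic check, the reported durations can be converted to minutes to confirm that they match the rounded figures in the abstract (0.8 vs 1.5 minutes). The relative reduction printed below is derived here from those two averages and is not a statistic reported by the study.

```python
new_exam_s = 46            # reported: 46 seconds
old_exam_s = 1 * 60 + 28   # reported: 1 minute 28 seconds

saved_s = old_exam_s - new_exam_s
relative_reduction = saved_s / old_exam_s  # derived, not reported in the study

print(f"new: {new_exam_s / 60:.1f} min, old: {old_exam_s / 60:.1f} min")
print(f"saved: {saved_s} s ({relative_reduction:.0%} shorter)")
```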

The 5-item survey was administered to EMU nurses and EEG techs 4 months after the second iteration of the ictal testing pathway had been disseminated to evaluate competency and satisfaction with the new examination. The majority of staff (7 of 8) felt that they had learned from this intervention, but 3 of 8 did not understand why certain elements of the test were performed even after education.

Discussion

In this study, we characterized barriers to ictal testing and implemented a uniform examination, which prioritized safety, efficiency, and testing of the eloquent cortex. Despite comprehensive root cause analysis and multiple cycles of intervention, we were unable to improve the fidelity of ictal examination testing in our EMU.

Our findings are consistent with multiple previous studies, which have shown that there is considerable variation in EMU practices.6,7,10 To date, prospective studies have assessed patients' ictal and postictal consciousness using both humans and artificial intelligence.11–13 There have been studies and consensus statements to articulate EMU patient safety guidelines10,14,15 and a feasibility study implementing an ictal testing battery developed by a European taskforce.2 Here, we present a rigorous assessment of ictal examination fidelity with consideration of the various portions of the examination across seizure types. Even at our level 4 epilepsy center, however, we found that key examination components continued to be underassessed despite multiple types of examination prompts. The barriers to performing a high quality ictal examination are multifactorial; a provider educational intervention alone is inadequate.

Before our intervention, EMU staff provided their expertise regarding obstacles to performing a “good” ictal examination in a root cause analysis: no standardized education as to how to perform the examination, no explanation of the rationale for the examination's components, emphasis on pressing the alarm button over assessing the patient, and difficulty recognizing a nonconvulsive seizure. As it has been observed that those best positioned to ensure EMU patient safety are nurses,16 we focused efforts on an educational intervention targeting the EMU nurses and EEG techs who would most often be administering the examination. However, on the postintervention staff survey, more than a third of respondents (3 of 8) did not understand why examination components were performed, suggesting that the educational intervention was either inadequate or needed more repetition for true penetrance.

Other barriers similarly remained unaddressed by the educational intervention and examination prompts. Examiner fatigue was a factor when a nurse took care of a patient with multiple nonconvulsive seizures daily. Anecdotally, nocturnal ictal examinations seemed more onerous to perform, given the absence of EEG technicians in the unit overnight. In addition, the existing workflow did not promote interdisciplinary collaboration: nurses were not typically part of EMU rounds and therefore relied on the resident, fellow, or attending to communicate with them afterward. Even after prompts for regular discussion of patient-specific goals on daily rounds, including an infogram in the electronic record specifying the focus of ictal testing for a particular patient, these initiatives were not preserved in the workflow longitudinally. EMU house staff and attendings rotate as frequently as weekly on the service, which may have contributed; EMU nursing staff is more consistent but not continuous.

Postictal testing represents a window during which patients' language and motor abilities may remain impaired and thus can offer important clinical information.10,11 Postictal testing was consistently inadequate in our study. Many patients receive IV lorazepam after prolonged or multiple convulsive seizures in our EMU; in fact, rescue medication administration represents an important EMU quality indicator to prevent adverse events such as status epilepticus.5 Perhaps postictal testing for convulsive seizures remained poorly assessed because staff associate a convulsive seizure with a prolonged duration of unresponsiveness, regardless of whether a benzodiazepine is administered. Postictal testing for nonconvulsive seizures similarly lagged behind compliance with other testing domains. After a seizure, staff were observed to be monitoring the EEG, assessing patient vitals, and reporting the ictus to other staff, but postictal testing was either incompletely performed or replaced by a conversation with the patient.

Finally, we recognize the intrinsic limitations associated with an educational intervention. Human fallibility means examinations will inevitably vary, even for the same performer, and educational interventions remain difficult to study objectively, given the heterogeneity of their design.17 On an inpatient ward, there is a continuous flow of education and “in-services” as well as fairly frequent staff turnover. Furthermore, with increasing demands on medical professionals' time and attention, changing provider behavior is a well-documented barrier in and of itself.18 These challenges certainly raise the question of using other modalities to initiate ictal testing. One example is an innovative automatic testing battery that was shown to cue at the appropriate times.13 Exploration of advanced technologies to improve ictal examination testing is exciting and promising. However, there are subtleties to the ictal assessment that are best observed in person and often are not visible or audible on video recording. As the bedside ictal examination remains integral to EMU patient safety and care, our field demands optimization of this traditional yet nonetheless crucial test with the goal of generalizability across epilepsy centers.

There are several limitations to this study. Our baseline data were collected at a time when discussion of ictal examination testing was underway; therefore, attention to the examination may have inflated baseline performance. In addition, some aspects of the baseline examination (e.g., verbal memory testing and language testing in the nonconvulsive seizure) were already reliably performed, and we anticipated a ceiling effect. We did not comprehensively sample examinations from each time point due to feasibility considerations, but instead reviewed a convenience sample in keeping with quality improvement methodology to evaluate for trend establishment over time. This convenience sample was selected by EEG techs who were not involved in the examination assessment. Although our observations identified types of deviations from the protocol, our measurement of magnitude may be inaccurate. In addition, some examination components were difficult to identify from video: we uniformly erred on the side of undercounting component performance. Seizure duration was not a requirement for inclusion of an ictal assessment, so this study did not address brief seizures that were not clinically detected or assessed. We did not collect data on the average time after seizure onset that testing started, which could affect patient and provider performance. Last, we did not assess the clinical utility of examination components for each ictal examination: the components that were not performed in a particular examination may not have had relevance for that particular patient and/or may have been appropriately excluded due to baseline neurologic deficits. This could be pursued further in subsequent iterations of the examination.

We envision a future, better version of this ictal assessment. We tailored the rubric from consensus guidelines2 for brevity, so once an initial seizure is evaluated, the ideal would be to customize it for the individual patient. One example might be including a question about verbal memory recall or command following for the convulsing patient in case it was a nonepileptic event. In addition, local input is critical to achieve culture change so that the examination is performed with high fidelity. Our EMU nurses cited lack of feedback about examination performance: a worthy consideration is therefore allocation of time and resources for personalized evaluation at regular intervals. Although our project did not substantially improve ictal assessment performance, we believe that our work enriches study in this area and will precipitate future innovation in ictal examination assessment.

We developed a standardized ictal examination protocol with the goals of ensuring that clinically relevant patient behavior was consistently assessed, decreasing the time required to administer the examination, increasing EEG tech and nursing confidence in their performance, and improving patient safety. The examination itself required several revisions based on staff feedback, and this study encompasses a year of ictal examination development. The ictal examination was not adopted in a widespread or sustained way at our institution, yet the examination content we developed provides an important foundation in the critical charge to create standardized, high-quality EMU care.

Footnotes

Editorial, page 95

Study Funding

No targeted funding reported.

Disclosure

S.S. O'Kula, L. Faillace, C.V. Kulick-Soper, and S. Reyes-Esteves report no disclosures. J. Raab is a consultant for NeuroPace. K.A. Davis is a consultant for UCB and Lundbeck; receives research support from Eisai; and is supported by NIH K23-NS073801 and by the Thornton Foundation. A. Kheder reports no disclosures. C.E. Hill is supported by the Mirowski Family Fund. Full disclosure form information provided by the authors is available with the full text of this article at Neurology.org/cp.

References

- 1. Shih JJ, Fountain NB, Herman ST, et al. Indications and methodology for video-electroencephalographic studies in the epilepsy monitoring unit. Epilepsia 2018;59:27–36.

- 2. Beniczky S, Neufeld M, Diehl B, et al. Testing patients during seizures: a European consensus procedure developed by a joint taskforce of the ILAE-Commission on European Affairs and the European Epilepsy Monitoring Unit Association. Epilepsia 2016;57:1363–1368.

- 3. Ryvlin P, Nashef L, Lhatoo SD, et al. Incidence and mechanisms of cardiorespiratory arrests in epilepsy monitoring units (MORTEMUS): a retrospective study. Lancet Neurol 2013;12:966–977.

- 4. Sauro KM, Macrodimitris S, Krassman C, et al. Quality indicators in an epilepsy monitoring unit. Epilepsy Behav 2014;33:7–11.

- 5. Sauro KM, Wiebe S, Macrodimitris S, Jetté N. Quality indicators for the adult epilepsy monitoring unit. Epilepsia 2016;57:1771–1778.

- 6. Sauro KM, Wiebe N, Macrodimitris S, Wiebe S, Lukmanji S, Jetté N. Quality and safety in adult epilepsy monitoring units: a systematic review and meta-analysis. Epilepsia 2016;57:1754–1770.

- 7. Fitzsimons M, Browne G, Kirker J, Staunton H. An international survey of long-term video/EEG services. J Clin Neurophysiol 2000;17:59–67.

- 8. Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci 2007;2:40.

- 9. Goldenberg D, Andrusyszyn M, Iwasiw C. The effect of classroom simulation on nursing students' self-efficacy related to health teaching. J Nurs Educ 2005;44:310–314.

- 10. Shafer P, Buelow JM, Noe KH, et al. A consensus-based approach to patient safety in epilepsy monitoring units: recommendations for preferred practices. Epilepsy Behav 2012;25:449–456.

- 11. Yang L, Shklyar I, Lee HW, et al. Impaired consciousness in epilepsy investigated by a prospective responsiveness in epilepsy scale (RES). Epilepsia 2012;53:437–447.

- 12. Bauerschmidt A, Koshkelashvili N, Ezeani CC, et al. Prospective assessment of ictal behavior using the revised Responsiveness in Epilepsy Scale (RES-II). Epilepsy Behav 2013;26:25–28.

- 13. Touloumes G, Morse E, Chen WC, et al. Human bedside evaluation versus automatic responsiveness testing in epilepsy (ARTiE). Epilepsia 2015;57:e28–e32.

- 14. Lee JW, Shah A. Safety in the EMU: reaching consensus. Epilepsy Curr 2013;13:107–109.

- 15. Spanaki MV, McCloskey C, Remedio V, et al. Developing a culture of safety in the epilepsy monitoring unit: a retrospective study of safety outcomes. Epilepsy Behav 2012;25:185–188.

- 16. Sanders PT, Cysyk BJ, Bare MA. Safety in long-term EEG video monitoring. J Neurosci Nurs 1996;28:305–313.

- 17. Reed D, Price EG, Windish DM, et al. Challenges in systematic reviews of educational intervention studies. Ann Intern Med 2005;142:1080–1089.

- 18. Grimshaw JM, Eccles MP, Walker AE, Thomas RE. Changing physicians' behavior: what works and thoughts on getting more things to work. J Contin Educ Health Prof 2003;22:237–243.
