Abstract

Objectives:

The motivation for this research is to determine whether a listening-while-balancing task would be sensitive enough to quantify listening effort in middle-aged adults. The premise behind this exploratory work is that postural control would decrease in challenging acoustic conditions, more so in middle-aged than in younger adults.

Design:

A dual-task paradigm was employed with speech understanding as one task and postural control as the other. For the speech perception task, participants listened to and repeated back sentences in the presence of other sentences or steady-state noise. Targets and maskers were presented in both spatially-coincident and spatially-separated conditions. The postural control task required participants to stand on a force platform either in normal stance (with feet approximately shoulder-width apart) or in tandem stance (with one foot behind the other). Participants also rated their subjective listening effort at the end of each block of trials.

Results:

Postural control was poorer for both groups of participants when the listening task was completed at a more adverse (vs. less adverse) signal-to-noise ratio. When participants were standing normally, postural control in dual-task conditions was negatively associated with degree of high-frequency hearing loss, with individuals who had higher pure-tone thresholds exhibiting poorer balance. Correlation analyses also indicated that reduced speech recognition ability was associated with poorer postural control in both single- and dual-task conditions. Middle-aged participants exhibited larger dual-task costs when the masker was speech, as compared to when it was noise. Individuals who reported expending greater effort on the listening task exhibited larger dual-task costs when in normal stance.

Conclusions:

Listening under challenging acoustic conditions can have a negative impact on postural control, more so in middle-aged than in younger adults. One explanation for this finding is that the increased effort required to successfully listen in adverse environments leaves fewer resources for maintaining balance, particularly as people age. These results provide preliminary support for using this type of ecologically-valid dual-task paradigm to quantify the costs associated with understanding speech in adverse acoustic environments.

Keywords: Aging, Balance, Hearing, Speech Perception

INTRODUCTION

Speech perception is typically quantified by how accurately participants can understand a target message. Although this yields valuable information about objective performance, other dimensions of speech understanding are also important to quantify. There is increasing interest in studying listening effort as a complement to measuring how well individuals can understand a message (the reader is referred to the special issue of Ear and Hearing (Volume 37, 2016) that summarizes information from the Eriksholm Workshop on this topic). One salient reason to measure listening effort is that some people who can accurately perceive a message may need to expend additional effort to do so. That increase in listening effort may have a cascading effect on individuals’ ability to perform other tasks while they are communicating.

There is a long history of using dual-task methods to quantify listening effort. The theoretical framework behind such studies is that dual-task costs (the extent to which performance on a task completed in isolation is negatively affected when individuals execute another task simultaneously) are caused by competition for resources; when one of the tasks is effortful, requiring a higher cognitive load, fewer resources remain for performing the other task (e.g., Broadbent 1958; Kahneman 1973).
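The sketch below makes the dual-task cost concept concrete by expressing cost as the percentage change from single-task to dual-task performance. This proportional formula is one common convention and is an assumption here. It is not necessarily the formula used in this study, and the helper name `dual_task_cost` is hypothetical. For measures where higher values mean better performance, the sign is flipped, so a positive cost always means the added task hurt performance.

```python
def dual_task_cost(single: float, dual: float, higher_is_worse: bool = True) -> float:
    """Percentage change from single- to dual-task performance (hypothetical helper).

    A positive result means performance was worse when the second task was added.
    Use higher_is_worse=True for measures such as sway area, and False for
    measures such as percent-correct speech recognition.
    """
    if single == 0:
        raise ValueError("single-task value must be non-zero")
    change = (dual - single) / single * 100.0
    return change if higher_is_worse else -change


# Illustrative numbers only (arbitrary units), not study data.
print(dual_task_cost(2.0, 2.5))                           # 25.0
print(dual_task_cost(80.0, 72.0, higher_is_worse=False))  # 10.0
```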

Many of the paradigms used in dual-task studies of listening effort incorporate somewhat artificial secondary tasks. For example, participants may be asked to complete a speech understanding test (the primary task) while also pressing a button in response to a visual stimulus (the secondary task). Listening effort is quantified by the difference in performance on the secondary task (e.g., increased button-press reaction time) when performing both tasks together as compared to when just completing the button-press task in isolation.

This article describes the results of a study that explored the feasibility and outcomes of using a dual-task paradigm that more closely resembles situations encountered in everyday life. Support for quantifying listening effort using ecologically-valid dual-task and multi-task paradigms can be found in a recent study by Devesse et al. (2020). In the present study, participants (groups of younger and middle-aged adults) listened to and repeated sentences in the presence of masking speech or noise while postural control (defined here as the ability to stand still) was measured. Two levels of difficulty were tested: (1) an easy postural condition, where participants stood normally with feet about shoulder-width apart; and (2) a more difficult postural condition, where participants stood with one foot behind the other (tandem stance).

We chose this particular paradigm because, given the potentially dire consequences that can occur when older adults fall (e.g., Burns et al. 2016), it is important to document how early aging (i.e., when individuals are in middle age) influences this combination of tasks. Postural control is generally thought of as automatic but can require some level of cognitive resources, especially under challenging conditions (see Boisgontier et al. 2013 for a review). There is strong evidence that postural control is negatively affected by both aging and age-related hearing loss (e.g., Crews & Campbell 2004; Viljanen et al. 2009; Lin & Ferrucci 2012; Li et al. 2013; Bruce et al. 2017; Thomas et al. 2018), with subtle changes in postural control arising by middle age (Ku et al. 2016; Park et al. 2016). A thorough review of this topic can be found in the recent publication by Agmon et al. (2017). Compared with younger adults, older adults appear to need to recruit additional cognitive and neural resources to maintain balance (Brown et al. 1999; Li & Lindenberger 2002; Deviterne et al. 2005; Boisgontier et al. 2013; Bruce et al. 2017). Critically, both postural sway (Maki et al. 1990) and hearing loss (Crews & Campbell 2004; Viljanen et al. 2009; Lin & Ferrucci 2012; Li et al. 2013) are associated with an increased risk of falls in older adults.

A modest amount of recent work has used dual-task listening paradigms that incorporate ecologically-valid motor control tasks. Most relevant to the current study are listening-while-walking (Lau et al. 2017; Nieborowska et al. 2019) and listening-while-balancing (Bruce et al. 2017; Carr et al. 2019) experiments. These studies generally found differences between older and younger adults in dual-task performance. Older adults with mild hearing loss exhibited greater dual-task costs than younger adults when pairing an auditory working memory task with a postural control task (Bruce et al. 2017). In a study that combined a virtual-reality street-crossing task with a measure of speech understanding in noise (Nieborowska et al. 2019), older and younger adults differed in how they allocated resources to the two tasks. In a balancing-while-listening task, older adults with and without subjective cognitive decline showed dual-task costs in both postural control and speech understanding (Carr et al. 2019). Conversely, Lau et al. (2017) used a paradigm similar to that of Nieborowska et al. (2019) and found only minimal dual-task costs in their two groups of participants (older adults with and without hearing loss).

In conventional dual-task measures of listening effort, participants are instructed to prioritize the listening task. When these more ecologically-valid dual tasks are used, however, it is typical not to instruct participants to put more emphasis on either task. A justification for this decision is that in real-life multitasking situations individuals must determine for themselves how to coordinate various tasks (see Fraser & Bherer 2013 for a discussion of this issue). Not instructing participants to emphasize one task over the other allows researchers to examine how individuals prioritize the two tasks. This approach has led to the consistent finding that older adults appear to prioritize safety (i.e., maintaining balance) over other tasks (e.g., Li et al. 2005; Bruce et al. 2017; Nieborowska et al. 2019), as demonstrated by smaller dual-task costs for the postural control task than for the other task. Regardless of the difference in instructions, interpretation still centers on the concept that the two tasks compete for resources: the more effortful one task is, the fewer resources remain to devote to the other task.

As stated above, we chose to study middle-aged adults in the present work. It is not uncommon for middle-aged people to report problems understanding speech in adverse listening situations. These individuals often are found to have normal or near-normal audiometric results. Research confirms this observation: middle-aged adults rate their self-perceived listening ability as poorer, on average, than would be predicted from their audiograms (Bainbridge & Wallhagen 2014; Helfer et al. 2017).

Why might this be so? Modest (i.e., clinically non-significant) increases in auditory thresholds often occur during middle age (e.g., Agrawal et al. 2008; Nash et al. 2011). Small elevations of high-frequency thresholds are associated with increased self-reported psychosocial problems (Bess et al. 1991). Changes in peripheral and central auditory function that do not affect pure-tone thresholds likely contribute to problems in complex listening environments. For example, temporal processing may be reduced in middle-aged (relative to younger) individuals (Muchnik et al. 1985; Snell & Frisina 2000; Babkoff et al. 2002; Lister et al. 2002; Grose et al. 2006; Humes et al. 2010; Leigh-Paffenroth & Elangovan 2011; Ozmeral et al. 2016). The effective utilization of temporal fine structure cues decreases by middle age (Grose & Mamo 2010; Füllgrabe 2013), which may lead to difficulty segregating sounds. Changes in how the middle-aged brain processes sounds have been noted in several studies using event-related potentials (Mager et al. 2005; Alain & McDonald 2007; Wambacq et al. 2009; Ruggles et al. 2012; Davis et al. 2013; Davis & Jerger 2014). There also is the possibility that a reduction in the number of auditory nerve fibers (e.g., Bharadwaj et al. 2015; Viana et al. 2015) in middle-aged adults leads to problems understanding speech in noisy situations.

Another likely reason for the discrepancy between middle-aged adults’ subjective listening experience and their performance on audiologic tests is that current clinical tests do not extend to measuring speech understanding in the conditions in which these individuals are reporting problems. Even clinical tests of speech understanding in noise do not tap into the types of situations that are most difficult for middle-aged adults: understanding one message in the presence of a small number of competing talkers. Research has confirmed that these kinds of environments are particularly challenging for middle-aged listeners (e.g., Wiley et al. 1998; Helfer & Vargo 2009; Cameron et al. 2011; Hannula et al. 2011; Glyde et al. 2013; Shinn-Cunningham et al. 2013; Başkent et al. 2014; Füllgrabe et al. 2015; Tremblay et al. 2015). In fact, several studies from our lab have found that middle-aged adults perform similarly to younger adults in the presence of noise maskers but behave more like older adults when the masker is understandable competing speech (Helfer & Freyman 2014; Helfer & Jesse 2015; Helfer et al. 2018). Finally, an additional potential reason for self-reported hearing problems in this population (and one that motivates the present study) is that even if middle-aged adults can understand speech adequately in challenging listening situations, they may need to expend more effort in doing so. Hence, increased effort or cognitive load may be a key reason why middle-aged adults who perform normally on clinical tests often report difficulty in day-to-day communication situations, and this increase in effort may be more apparent when they are listening in the presence of competing understandable speech messages vs. when the background consists of steady-state noise.

The present project was designed to explore the extent to which an ecologically-valid paradigm (listening while maintaining postural control) is sensitive to changes in the difficulty of the listening task, as well as how adequately this paradigm can index differences in listening effort between younger and middle-aged adults. We also were interested in determining the associations among age, high-frequency hearing loss, self-rated listening effort, and performance on the two tasks (speech perception and postural control) under both single- and dual-task conditions. Participants heard sentences in the presence of competing speech or noise while standing on a force platform in either normal stance (with feet approximately shoulder-width apart) or the more challenging tandem stance (with one foot behind the other). We proposed that postural control would be adversely affected as the listening situation became more difficult [i.e., when the signal-to-noise ratio (SNR) was poorer, or when there was no spatial separation between target and maskers], reflecting increasing cognitive load or effort. We further hypothesized that these postural control changes would be larger for middle-aged adults than for younger adults. This hypothesis is consistent with the idea that listening in challenging environments leads to a greater drain on cognitive resources for these individuals than for younger adults. We theorized that this increase in the influence of the listening task on postural control would be accompanied by an increase in self-rated listening effort. Finally, since prior research has indicated that middle-aged adults have particular difficulty in situations with competing talkers, we proposed that the differences in postural control and self-rated effort between age groups would be larger when the masker was competing speech than when it was steady-state noise.

MATERIALS AND METHODS

Participants

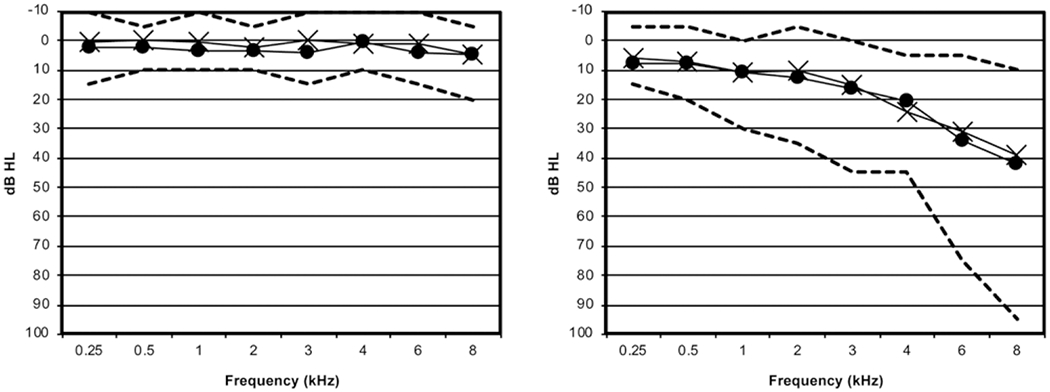

Two groups of listeners participated in this study (n = 16/group). Data from one participant in each group were eliminated due to experimental error; hence, results presented in this article are based on n = 15/group. One group consisted of younger adults (18 to 28 years, mean = 22 years) with normal hearing (pure-tone thresholds <20 dB HL from 250 to 8000 Hz bilaterally). The other group consisted of middle-aged adults (48 to 64 years, mean = 58 years). All but two of these participants had clinically normal hearing (pure-tone thresholds <30 dB HL) up to and including 4000 Hz. One participant had thresholds of 30 to 45 dB HL from 2000 to 4000 Hz, and the other had thresholds of 30 to 45 dB HL between 1000 and 4000 Hz. The mean high-frequency pure-tone average (HFPTA; the average threshold for 2000 to 6000 Hz pure tones) for the middle-aged group was 22 dB HL for the right ear and 19 dB HL for the left ear (range = 8 to 45 dB HL). Figure 1 shows audiometric data for both groups of participants. All listeners were native speakers of English with a negative history of otologic or neurologic disorder. In addition, all middle-aged participants scored >26 (out of 30) on the Montreal Cognitive Assessment (Nasreddine et al. 2005). All participants had normal tympanograms bilaterally on the test day. Although vestibular function was not explicitly assessed, each potential participant was thoroughly screened via an informal questionnaire and follow-up interview to eliminate individuals who reported any vestibular, neurological, or motor condition that might affect balance or hearing. All participants also had normal or corrected-to-normal vision.
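The better-ear high-frequency pure-tone average (beHFPTA) that appears in the correlation analyses can be computed as sketched below. The text defines HFPTA as the average threshold from 2000 to 6000 Hz but does not list the exact frequencies. The frequency set (2000, 3000, 4000, 6000 Hz) is therefore an assumption, as is taking the better ear to be the one with the lower HFPTA. The function names and thresholds are hypothetical.

```python
from statistics import mean

# ASSUMPTION: "2000 to 6000 Hz" is taken to mean these standard audiometric frequencies.
HF_FREQS_HZ = (2000, 3000, 4000, 6000)


def hfpta(thresholds_db_hl: dict[int, float]) -> float:
    """High-frequency pure-tone average (dB HL) for one ear."""
    return mean(thresholds_db_hl[f] for f in HF_FREQS_HZ)


def better_ear_hfpta(right: dict[int, float], left: dict[int, float]) -> float:
    """Better ear = the ear with the lower (better) HFPTA."""
    return min(hfpta(right), hfpta(left))


# Hypothetical thresholds for one listener (not study data).
right_ear = {250: 10, 500: 10, 1000: 15, 2000: 15, 3000: 20, 4000: 25, 6000: 30}
left_ear = {250: 5, 500: 10, 1000: 10, 2000: 10, 3000: 15, 4000: 20, 6000: 35}
print(hfpta(right_ear), hfpta(left_ear), better_ear_hfpta(right_ear, left_ear))
```

Only the high-frequency entries enter the average; the low-frequency thresholds in the example are ignored by design.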

Fig. 1.

Average audiograms for younger (left panel) and middle-aged (right panel) participants. Hatched marks represent the lowest and highest thresholds at each frequency.

Procedures

The listening task for this experiment required participants to repeat sentences from the TVM-Colors corpus (Helfer et al. 2016). These sentences consist of a name (Theo, Victor, or Michael; hence TVM) followed by a color-noun combination and an adjective (other than a color)-noun combination. The sentences are grammatically feasible but have low predictability. An example sentence is "Theo found the pink menu and the true item here," in which the four keywords (pink, menu, true, item) are used for scoring. As described in Helfer et al. (2016), sentences were recorded from three male and three female talkers. For the present study, the target talker was randomly selected from trial to trial, and the maskers (in competing speech conditions) were utterances from the two other same-sex talkers. At the beginning of each trial, the target sentence was cued by displaying the name corresponding to its initial word (Theo, Victor, or Michael) on a video monitor located in front of the participant. Participants were instructed to look at that monitor for the duration of each block of trials. Target sentences were presented at 67 dBA, as measured with a speech-shaped noise played at the equivalent root mean square level. Two spatial conditions were used: front-front-front (FFF), where all signals were played from a loudspeaker located at 0° azimuth and 1.3 m from the participant; and right-front-left (RFL), where the target was played from the front and the maskers were played from loudspeakers located 60° to the right and left, also at a distance of 1.3 m. The height of each of the three loudspeakers was adjusted to align with the ear height of each participant.

For competing speech conditions, maskers consisted of two TVM-Colors sentences (different from the target sentences). Utterances from one masking talker were presented from the left loudspeaker, and sentences from the other masking talker were presented from the right loudspeaker. Each masker sentence began at a random starting point within the sentence and continued to the end of that utterance. The beginning of the sentence was then appended, and the maskers continued until the target ended (Helfer et al. 2016). This looping of maskers was used because competing messages typically are not grammatically aligned with the message of interest. In noise-masking conditions, independent samples of steady-state speech-shaped noise were presented from each of these two loudspeakers. SNRs for this study were −6 dB and 0 dB, measured in root mean square and computed with reference to the total masker energy (so at 0 dB SNR, the combined level of the two maskers was equal to the level of the target). Trials were blocked by condition (2 SNRs × 2 masker types × 2 spatial conditions × 2 stance conditions, as described below), with each block presented twice. The order of the 32 blocks was randomized. There were eight sentences per block, allowing for 64 scorable words per condition (4 scorable words/sentence × 8 sentences/block × 2 blocks), with an average block duration of roughly 80 seconds. Participants were instructed to repeat back the target sentence at the end of each trial; their responses were audio-recorded and scored offline. At the end of each block of trials, participants rated their perceived listening effort on a scale of 1 (very little effort) to 10 (very high effort). This scale was displayed on the video monitor, and participants reported their self-rated effort verbally.

For all conditions, participants stood on a 40 × 60 cm piezoelectric force platform (Kistler Instruments Corporation, Amherst, NY). Data were collected in two stance conditions: normal, with feet approximately shoulder-width apart; and tandem, with one foot in front of the other. Participants removed their shoes before testing commenced. For consistency between blocks, participants selected and maintained a preferred tandem foot orientation (right foot in front or left foot in front), and both stances were outlined on the force platform. Participants were instructed to listen and then repeat back the target sentence while attempting to stand as still as possible. Baseline measures of 80 seconds of standing in quiet (i.e., single-task postural control conditions) were obtained for both normal and tandem stance at the start and end of the session. A custom LabVIEW program (National Instruments, Austin, TX), time-synchronized with the audio tasks, recorded the ground reaction forces from the force platform at an A/D conversion rate of 100 Hz.

Data Analysis

Postural control was defined as the center of pressure (CoP) excursion area measured from the force platform (Swanenburg et al. 2008; Duarte & Freitas 2010). A 95% confidence ellipse based on the anterior-posterior and medial-lateral displacements of the CoP was calculated from the ground reaction forces in a custom MATLAB script (MathWorks, Natick, MA). Before all calculations, the CoP data were low-pass filtered with a 10 Hz zero-lag Butterworth filter. Ground reaction forces were collected continuously across an entire block of eight trials. Prior evidence suggests that oral responses made during postural control tasks can negatively influence postural control (e.g., Huxhold et al. 2006; Fraizer & Mitra 2008), and the question of interest in this study was how listening and postural control interacted. We therefore analyzed data only for time intervals in which participants were listening, not those in which they were responding. To do so, time stamps were used to partition each block into its eight listening intervals and the corresponding response intervals. The CoP ellipse areas from the eight listening intervals in each block were averaged to produce a mean CoP ellipse area per block. To employ a similar trial length in single-task postural control trials (i.e., when participants were required to stand but not listen to stimuli), CoP values were excised from eight equally spaced 3.26-second intervals (the average length of time participants listened during each trial) distributed within the 80-second trial.
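The processing chain described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB script, and all function names are hypothetical. The filter order is not reported, so a 4th-order design is assumed. The placement of the single-task windows (first at the start of the record, last at its end) is also assumed. The ellipse area uses one common formulation: π × χ²(0.95, 2 df) × √(λ1·λ2), where λ1 and λ2 are the eigenvalues of the AP/ML covariance matrix. This formulation may differ in detail from the one the authors used.

```python
import numpy as np
from scipy.signal import butter, filtfilt

FS_HZ = 100.0        # A/D rate reported in the text
CUTOFF_HZ = 10.0     # low-pass cutoff reported in the text
FILTER_ORDER = 4     # ASSUMPTION: the filter order is not reported
# 95th percentile of a chi-square distribution with 2 df: -2 ln(0.05), about 5.991
CHI2_95_2DF = -2.0 * np.log(0.05)


def lowpass_zero_lag(cop: np.ndarray) -> np.ndarray:
    """Butterworth low-pass run forward and backward (filtfilt), so no phase lag.

    cop has shape (n_samples, 2): anterior-posterior and medial-lateral CoP.
    """
    b, a = butter(FILTER_ORDER, CUTOFF_HZ, btype="low", fs=FS_HZ)
    return filtfilt(b, a, cop, axis=0)


def ellipse_area_95(cop: np.ndarray) -> float:
    """Area of the 95% ellipse: pi * chi2 * sqrt(det(covariance of AP/ML))."""
    cov = np.cov(cop, rowvar=False)
    return float(np.pi * CHI2_95_2DF * np.sqrt(np.linalg.det(cov)))


def baseline_windows(n_samples: int, n_windows: int = 8, win_s: float = 3.26) -> list[slice]:
    """Equally spaced analysis windows for a single-task (quiet) recording.

    ASSUMPTION: the first window starts at the beginning of the recording
    and the last window ends at its end.
    """
    win = int(round(win_s * FS_HZ))
    starts = np.linspace(0, n_samples - win, n_windows).round().astype(int)
    return [slice(int(s), int(s) + win) for s in starts]


def mean_block_area(cop: np.ndarray, windows: list[slice]) -> float:
    """Filter the whole block first, then average the per-window ellipse areas."""
    filtered = lowpass_zero_lag(cop)
    return float(np.mean([ellipse_area_95(filtered[w]) for w in windows]))


# Usage on 80 s of synthetic sway (a random walk), not study data.
rng = np.random.default_rng(0)
quiet = np.cumsum(rng.standard_normal((8000, 2)), axis=0) * 0.01
windows = baseline_windows(len(quiet))
print(len(windows), windows[0], windows[-1])
print(mean_block_area(quiet, windows))
```

For dual-task blocks, the windows would instead come from the listening-interval time stamps. The same `mean_block_area` averaging then applies.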

Main and interaction effects were identified using multivariate analyses of variance (ANOVAs) for the speech recognition data (Table 1), the self-report ratings of effort (Table 3), the postural control data (Table 4), and the dual-task costs (Table 5). We conducted separate analyses for each SNR for the speech perception and self-rated effort data because there was little uncertainty regarding how a 6 dB change in SNR would affect both of these metrics. However, because we did not know how SNR would influence postural control, we did not separate the postural control data by SNR before analysis. Significant interactions (p < 0.05) that addressed our research questions were further analyzed with post hoc one-way ANOVAs or t-tests. We also examined effect sizes for participant group differences in speech recognition, postural control, and dual-task costs using Cohen’s d or Hedges’ g (Table 2). Finally, we conducted correlation analyses to illuminate the associations among age, high-frequency hearing loss, speech recognition, self-rated effort, and postural control (Table 6).
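The two effect-size measures named above can be computed as sketched below. The text does not specify which variant of Hedges’ g was used. This sketch assumes the common form: Cohen’s d computed with the pooled standard deviation, multiplied by the small-sample correction J = 1 − 3/(4(n1 + n2) − 9). The function names and the toy data are hypothetical.

```python
import numpy as np


def cohens_d(x, y) -> float:
    """Standardized mean difference using the pooled SD (n1 + n2 - 2 df)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * x.var(ddof=1) + (n2 - 1) * y.var(ddof=1)) / (n1 + n2 - 2)
    return float((x.mean() - y.mean()) / np.sqrt(pooled_var))


def hedges_g(x, y) -> float:
    """Cohen's d times the small-sample correction J = 1 - 3 / (4(n1 + n2) - 9)."""
    n = len(x) + len(y)
    return cohens_d(x, y) * (1 - 3 / (4 * n - 9))


# Toy values, not study data.
younger = [1.0, 2.0, 3.0]
middle_aged = [2.0, 3.0, 4.0]
print(cohens_d(middle_aged, younger), hedges_g(middle_aged, younger))  # 1.0 0.8
```

With 15 participants per group, as here, J = 1 − 3/111 ≈ 0.973, so g is only about 3% smaller than d.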

TABLE 1.

Summary of analyses of variance results for the speech perception data

| Speech Recognition | F (0 dB SNR) | p (0 dB SNR) | F (−6 dB SNR) | p (−6 dB SNR) |
|---|---|---|---|---|
| Stance | 0.05 | 0.822 | 0.49 | 0.492 |
| Stance × group | 0.72 | 0.404 | 0.24 | 0.630 |
| Masker | **84.99** | **<0.001** | **15.39** | **0.001** |
| Masker × group | 0.92 | 0.345 | 0.45 | 0.507 |
| Spatial | **243.57** | **<0.001** | **218.94** | **<0.001** |
| Spatial × group | 0.54 | 0.470 | **7.14** | **0.012** |
| Stance × masker | 0.28 | 0.603 | 0.28 | 0.601 |
| Stance × masker × group | 2.04 | 0.164 | **5.87** | **0.022** |
| Stance × spatial | 0.60 | 0.446 | 0.10 | 0.756 |
| Stance × spatial × group | 0.32 | 0.575 | 1.32 | 0.261 |
| Masker × spatial | **115.25** | **<0.001** | **292.74** | **<0.001** |
| Masker × spatial × group | 0.14 | 0.709 | 0.15 | 0.705 |
| Stance × masker × spatial | 3.20 | 0.084 | 0.19 | 0.663 |
| Stance × masker × spatial × group | 0.12 | 0.662 | 0.76 | 0.391 |
| Group | **5.08** | **0.032** | 2.99 | 0.095 |

Statistically significant results (p < 0.05) are in bold typeface.

TABLE 3.

Summary of analyses of variance results for the listening effort rating data

| Self-Assessed Effort | F (0 dB SNR) | p (0 dB SNR) | F (−6 dB SNR) | p (−6 dB SNR) |
|---|---|---|---|---|
| Stance | **8.42** | **0.007** | 1.36 | 0.253 |
| Stance × group | 0.66 | 0.425 | 1.60 | 0.216 |
| Masker | **36.81** | **0.001** | **4.44** | **0.044** |
| Masker × group | 0.01 | 0.968 | 0.12 | 0.728 |
| Spatial | **59.75** | **<0.001** | **51.12** | **<0.001** |
| Spatial × group | 0.66 | 0.423 | 1.75 | 0.197 |
| Stance × masker | 0.05 | 0.824 | 0.84 | 0.367 |
| Stance × masker × group | 0.26 | 0.617 | 0.37 | 0.546 |
| Stance × spatial | 3.52 | 0.071 | 0.01 | 0.936 |
| Stance × spatial × group | 0.02 | 0.890 | 0.00 | 1.000 |
| Masker × spatial | **38.23** | **<0.001** | **37.24** | **<0.001** |
| Masker × spatial × group | 0.01 | 0.957 | 3.71 | 0.064 |
| Stance × masker × spatial | 0.25 | 0.622 | 0.90 | 0.352 |
| Stance × masker × spatial × group | 1.13 | 0.298 | 0.44 | 0.513 |
| Group | 2.12 | 0.157 | 1.54 | 0.225 |

Statistically significant results (p < 0.05) are in bold typeface.

TABLE 4.

Summary of analyses of variance results for the postural control data

| Postural Control | F | p |
|---|---|---|
| Single-task postural control | | |
| Stance | **26.94** | **<0.001** |
| Stance × group | 0.18 | 0.679 |
| Group | 0.49 | 0.488 |
| Dual-task postural control | | |
| Spatial | 0.06 | 0.805 |
| Spatial × group | 0.26 | 0.616 |
| Masker | 3.03 | 0.094 |
| Masker × group | 3.73 | 0.065 |
| SNR | **9.32** | **0.005** |
| SNR × group | 0.85 | 0.366 |
| Stance | **35.03** | **<0.001** |
| Stance × group | 1.33 | 0.260 |
| Spatial × masker | 2.21 | 0.150 |
| Spatial × masker × group | 3.24 | 0.084 |
| Spatial × SNR | **5.71** | **0.025** |
| Spatial × SNR × group | 1.46 | 0.238 |
| Masker × SNR | 0.01 | 0.916 |
| Masker × SNR × group | 0.80 | 0.381 |
| Spatial × masker × SNR | 0.15 | 0.707 |
| Spatial × masker × SNR × group | 0.34 | 0.565 |
| Spatial × stance | 3.61 | 0.069 |
| Spatial × stance × group | 1.20 | 0.283 |
| Masker × stance | 0.25 | 0.620 |
| Masker × stance × group | 0.03 | 0.870 |
| Spatial × masker × stance | 0.19 | 0.666 |
| Spatial × masker × stance × group | 0.39 | 0.538 |
| SNR × stance | 1.60 | 0.217 |
| SNR × stance × group | 1.28 | 0.270 |
| Spatial × SNR × stance | 0.01 | 0.912 |
| Spatial × SNR × stance × group | 0.47 | 0.499 |
| Masker × SNR × stance | 0.35 | 0.561 |
| Masker × SNR × stance × group | 0.26 | 0.612 |
| Spatial × masker × SNR × stance | 0.55 | 0.467 |
| Spatial × masker × SNR × stance × group | 0.87 | 0.361 |
| Group | 3.98 | 0.057 |

Statistically significant results (p < 0.05) are in bold typeface.

TABLE 5.

Summary of ANOVA results for dual-task costs

| Dual-Task Costs | F | p |
|---|---|---|
| Stance | **5.71** | **0.025** |
| Stance × group | 0.86 | 0.363 |
| SNR | **8.59** | **0.007** |
| SNR × group | 0.97 | 0.336 |
| Spatial | 0.59 | 0.448 |
| Spatial × group | 0.04 | 0.849 |
| Masker | 1.09 | 0.308 |
| Masker × group | **5.83** | **0.024** |
| Stance × SNR | 0.03 | 0.872 |
| Stance × SNR × group | 0.41 | 0.527 |
| Stance × spatial | 2.74 | 0.111 |
| Stance × spatial × group | 0.00 | 0.974 |
| SNR × spatial | 1.65 | 0.211 |
| SNR × spatial × group | 0.00 | 0.993 |
| Stance × SNR × spatial | 0.92 | 0.346 |
| Stance × SNR × spatial × group | 0.01 | 0.955 |
| Stance × masker | 0.06 | 0.803 |
| Stance × masker × group | 0.59 | 0.452 |
| SNR × masker | 0.85 | 0.367 |
| SNR × masker × group | 1.13 | 0.299 |
| Stance × SNR × masker | 0.00 | 0.974 |
| Stance × SNR × masker × group | 0.89 | 0.356 |
| Spatial × masker | 1.93 | 0.178 |
| Spatial × masker × group | 3.68 | 0.067 |
| Stance × spatial × masker | 0.67 | 0.420 |
| Stance × spatial × masker × group | 1.49 | 0.234 |
| SNR × spatial × masker | 4.18 | 0.052 |
| SNR × spatial × masker × group | 2.06 | 0.164 |
| Stance × SNR × spatial × masker | **5.69** | **0.025** |
| Stance × SNR × spatial × masker × group | 0.02 | 0.893 |
| Group | 0.01 | 0.944 |

Statistically significant results (p < 0.05) are in bold typeface.

TABLE 2.

Effect size calculations for differences between the two participant groups in each condition for performance on the speech recognition task and dual-task postural control (CoP)

| Condition | Speech Recognition (Cohen’s d) | Postural Control (Hedges’ g) |
|---|---|---|
| Normal stance | | |
| RFL 0 speech | 1.06 | 0.46 |
| RFL −6 speech | 0.63 | 0.74 |
| RFL 0 noise | 0.52 | 0.63 |
| RFL −6 noise | 0.61 | 0.64 |
| FFF 0 speech | 0.47 | 0.80 |
| FFF −6 speech | 0.49 | 0.54 |
| FFF 0 noise | 0.32 | 0.63 |
| FFF −6 noise | 0.23 | 0.13 |
| Tandem stance | | |
| RFL 0 speech | 0.44 | 0.74 |
| RFL −6 speech | 0.91 | 0.45 |
| RFL 0 noise | 0.50 | 0.66 |
| RFL −6 noise | 1.12 | 0.94 |
| FFF 0 speech | 0.31 | 0.54 |
| FFF −6 speech | 0.16 | 0.95 |
| FFF 0 noise | 0.40 | 0.52 |
| FFF −6 noise | 0.19 | 0.39 |

Cohen’s d was used to calculate effect size for speech recognition scores. Hedges’ g was used to derive effect sizes for CoP data due to the large difference in variance between groups when postural control was measured in tandem stance. Condition is specified by spatial configuration (RFL = spatially-separated target and masker, FFF = spatially co-located target and masker); SNR (0 = 0 dB, −6 = −6 dB); masker type (speech or noise); and stance (normal or tandem). Effect sizes greater than 0.80 are considered large; effect sizes around 0.50 are considered medium; and effect sizes less than 0.40 are considered small.

TABLE 6.

Results of Pearson r and partial correlation analyses performed separately for data obtained in the two stance conditions

| Normal Stance: Pearson r | SpeechRec | Effort | CoPQ | CoPlisten | Cost |
|---|---|---|---|---|---|
| SpeechRec | — | −0.25 | −0.51* | −0.61* | −0.19 |
| Effort | | — | 0.21 | 0.38† | 0.38† |
| CoPQ | | | — | 0.81* | −0.17 |
| CoPlisten | | | | — | 0.35 |
| Cost | | | | | — |

| Normal Stance: Partial Correlation Controlling for beHFPTA | Age | SpeechRec | CoPQ | CoPlisten | Cost |
|---|---|---|---|---|---|
| Age | — | 0.10 | 0.15 | −0.17 | −0.47† |
| SpeechRec | | — | −0.39† | −0.49* | −0.08 |
| CoPQ | | | — | 0.77* | −0.31 |
| CoPlisten | | | | — | 0.27 |
| Cost | | | | | — |

| Normal Stance: Partial Correlation Controlling for Age | beHFPTA | SpeechRec | CoPQ | CoPlisten | Cost |
|---|---|---|---|---|---|
| beHFPTA | — | −0.38 | 0.15 | 0.43† | 0.51* |
| SpeechRec | | — | −0.43† | −0.58* | 0.25 |
| CoPQ | | | — | 0.79* | −0.15 |
| CoPlisten | | | | — | 0.39† |
| Cost | | | | | — |

| Tandem Stance: Pearson r | SpeechRec | Effort | CoPQ | CoPlisten | Cost |
|---|---|---|---|---|---|
| SpeechRec | — | −0.34 | 0.02 | −0.42† | −0.41† |
| Effort | | — | −0.19 | 0.14 | 0.23 |
| CoPQ | | | — | 0.46† | −0.64* |
| CoPlisten | | | | — | 0.11 |
| Cost | | | | | — |

| Tandem Stance: Partial Correlation Controlling for beHFPTA | Age | SpeechRec | CoPQ | CoPlisten | Cost |
|---|---|---|---|---|---|
| Age | — | −0.16 | 0.10 | −0.02 | −0.17 |
| SpeechRec | | — | 0.08 | −0.34 | −0.38 |
| CoPQ | | | — | 0.46† | −0.67* |
| CoPlisten | | | | — | 0.05 |
| Cost | | | | | — |

| Tandem Stance: Partial Correlation Controlling for Age | beHFPTA | SpeechRec | CoPQ | CoPlisten | Cost |
|---|---|---|---|---|---|
| beHFPTA | — | −0.04 | −0.01 | 0.32 | 0.23 |
| SpeechRec | | — | 0.10 | −0.34 | −0.42† |
| CoPQ | | | — | 0.44† | −0.65* |
| CoPlisten | | | | — | 0.11 |
| Cost | | | | | — |

Data for speech recognition (SpeechRec), effort ratings (Effort), postural control in dual-task conditions (CoPlisten), and dual-task costs (Cost) were averaged across masker type, spatial condition, and SNR.

*Significant at the 0.01 level.

†Significant at the 0.05 level.

beHFPTA, better-ear high-frequency pure-tone average; CoPQ, postural control in the baseline condition.

RESULTS

Speech Recognition

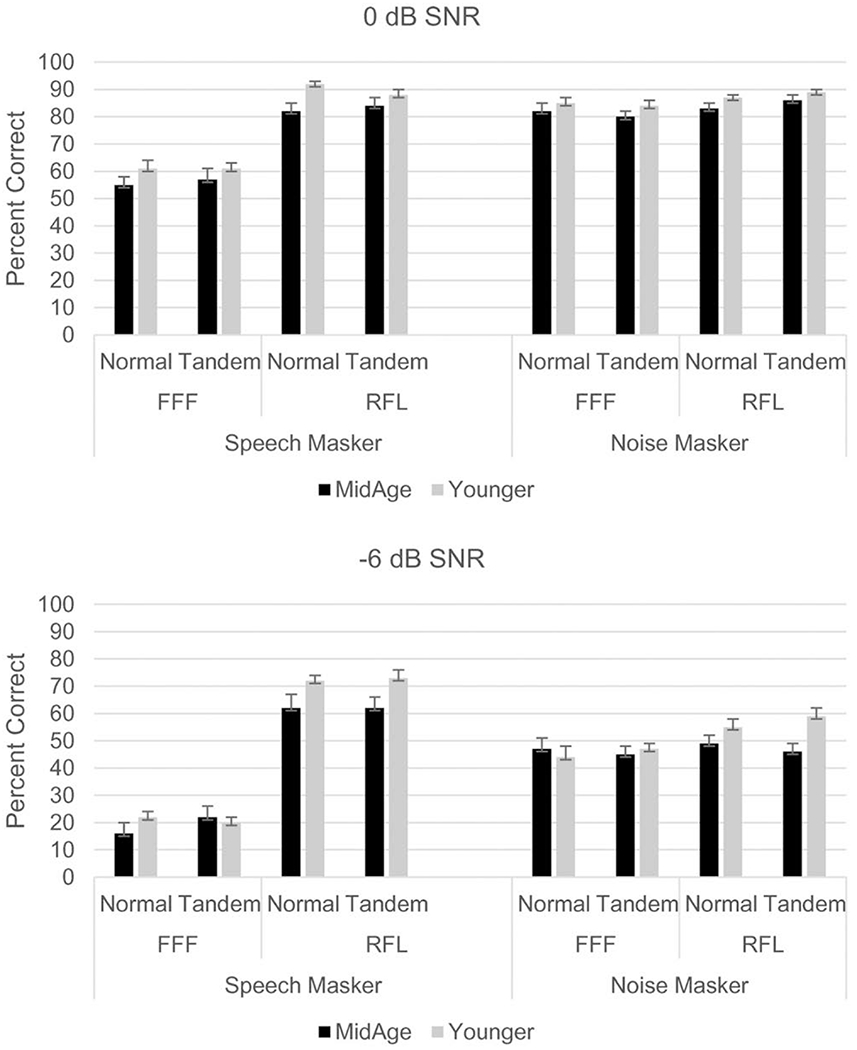

Percent-correct recognition of keywords was analyzed separately for data collected at the two SNRs. These data are shown in Figure 2 (0 dB SNR: upper panel; −6 dB SNR: lower panel). Across both groups, performance in the presence of speech maskers improved greatly with target-masker spatial separation (RFL versus FFF), a pattern that did not occur when the masker was steady-state noise. This result was expected: spatial release from masking with speech maskers was presumed to arise partly from momentary SNR advantages at either ear, created by head shadow in combination with masker fluctuations (Brungart & Iyer 2012). Listeners may also have benefited from a release from informational masking in this scenario. Neither of these advantages was expected with a continuous noise masker presented from both sides of the listener. Figure 2 also shows that the younger participants outperformed the middle-aged participants in 14 of the 16 conditions.

Fig. 2.

Percent-correct speech recognition data by participant group, listening condition, and stance. Upper panel = data collected at 0 dB SNR; bottom panel = data collected at −6 dB SNR. FFF = spatially-coincident target and masker; RFL = spatially separated target and masker. Error bars represent the standard error.

To analyze these observations, repeated-measures ANOVAs were conducted with stance (normal or tandem), masker (speech or noise), and spatial condition (FFF or RFL) as within-subjects factors and group as a between-subjects factor (Table 1). At 0 dB SNR, results indicated significant main effects of masker, spatial condition, and group, as well as a significant masker × spatial condition interaction. More robust differences between conditions were found at −6 dB SNR, with significant main effects of masker and spatial condition along with significant spatial × group, spatial × masker, and stance × masker × group interactions. Inspection of Figure 2 for the −6 dB SNR condition suggests that the significant spatial × group interaction arose because younger participants outperformed middle-aged participants in the RFL condition, whereas percent-correct scores were similar between groups in the FFF condition. The three-way stance × masker × group interaction was explored with post hoc one-way ANOVAs. Results indicated that the younger participants obtained higher scores than the middle-aged participants in two conditions: normal stance with speech as the masker (younger: mean = 61.66%; middle-aged: mean = 53.61%; p = 0.024) and tandem stance with noise as the masker (younger: mean = 69.59%; middle-aged: mean = 64.20%; p = 0.028).

To further explore how the two groups’ performance differed, effect size (Cohen’s d) was calculated for each condition, as shown in Table 2. Large effect sizes (Cohen’s d > 0.8) for the difference between younger and middle-aged groups were found in three conditions, all involving the spatially-separated RFL configuration: at −6 dB SNR for both noise and speech maskers in tandem stance, and at 0 dB SNR for speech maskers in normal stance. Moderate effect sizes (Cohen’s d = 0.4 to 0.6) were found for several other conditions, primarily those involving spatially-separated signals. Note, however, that in many conditions the numerical differences in percent-correct performance between groups were modest.
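To make the Table 2 statistic concrete, the sketch below computes Cohen’s d for two independent groups using the common pooled-standard-deviation form. It is a minimal sketch that assumes the pooled-SD variant; the text does not state which variant of d was used. The function name `cohens_d` and the example scores are hypothetical.

```python
import math
import statistics


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Cohen's d for two independent groups (assumed pooled-SD form).

    Positive values mean group_a has the higher mean.
    """
    n_a, n_b = len(group_a), len(group_b)
    # Sample variances (n - 1 denominator) for each group.
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    # Pooled standard deviation, weighting each variance by its degrees of freedom.
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


# Hypothetical percent-correct scores, not data from this study.
younger = [70.0, 75.0, 80.0]
middle_aged = [60.0, 65.0, 70.0]
print(cohens_d(younger, middle_aged))  # 2.0
```

Here both hypothetical groups have an SD of 5 percentage points, so a 10-point difference in means gives d = 2.0.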

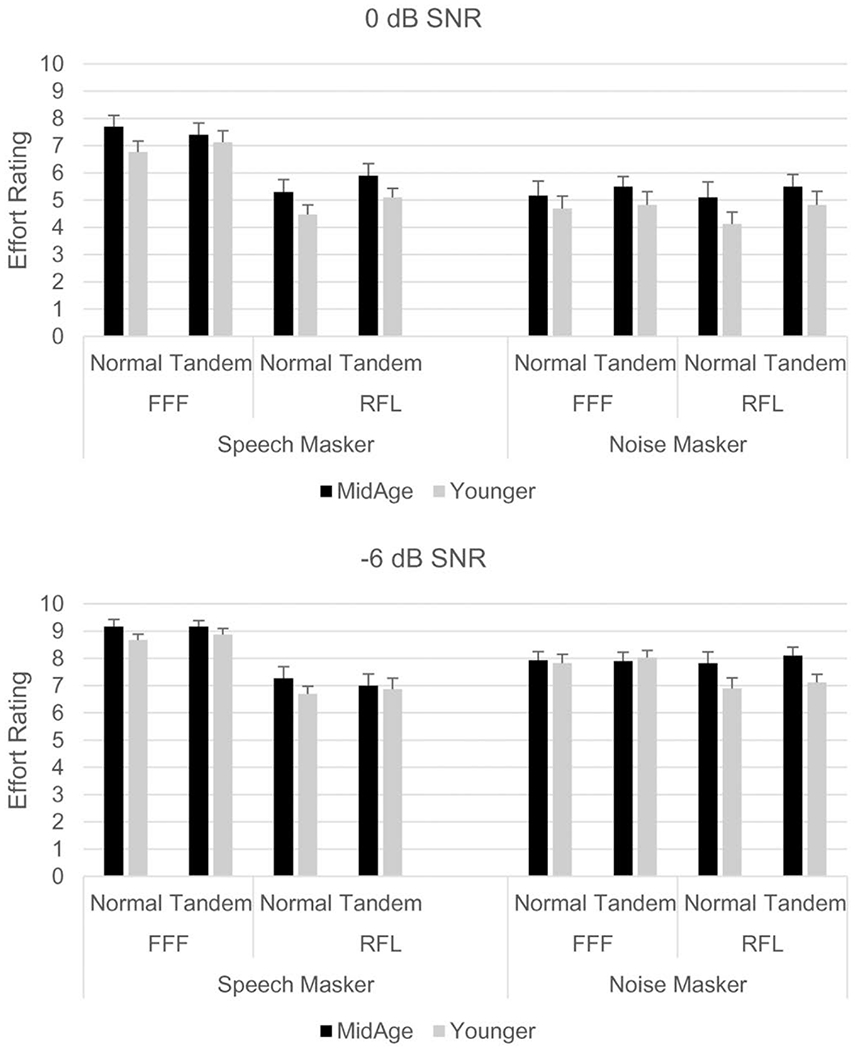

Self-Rated Listening Effort

Recall that participants were asked to rate the amount of effort expended on the listening task using a 10-point scale, with greater effort indicated by higher numbers. These effort ratings are shown in Figure 3, with 0 dB SNR in the top panel and −6 dB SNR in the bottom panel. Although effort ratings were higher at −6 dB SNR, a similar pattern was found across the two SNRs.

Fig. 3.

Listening effort ratings by participant group, acoustic condition, and stance. Higher ratings indicate greater perceived effort reported for task completion. Upper panel = data collected at 0 dB SNR; bottom panel = data collected at −6 dB SNR. FFF = spatially-coincident target and masker; RFL = spatially separated target and masker. Error bars represent the standard error.

As with the accuracy data, the effort ratings were analyzed separately at the two SNRs using repeated measures ANOVA (Table 3) with stance, masker, and spatial condition as within-subjects factors and group as a between-subjects factor [see Sullivan & Artino (2013) for justification for using parametric statistics to analyze Likert-scale data]. Analysis of the 0 dB SNR data indicated significant main effects for stance, masker, and spatial condition as well as a significant masker × spatial interaction. Similar results were found in the −6 dB SNR data, with significant main effects for masker and spatial condition, and a significant interaction between these two variables. Overall, the effort scores suggest that participants perceived the listening task to be more difficult when they were standing in the tandem stance versus normal stance at the easier 0 dB SNR (this was not observed at −6 dB SNR); when the masker was competing speech (versus steady-state noise); and when the speech masker was co-located (FFF) with the target (versus the spatially-separated RFL condition). Of note was the lack of significant differences between groups on self-rated listening effort.

Postural Control

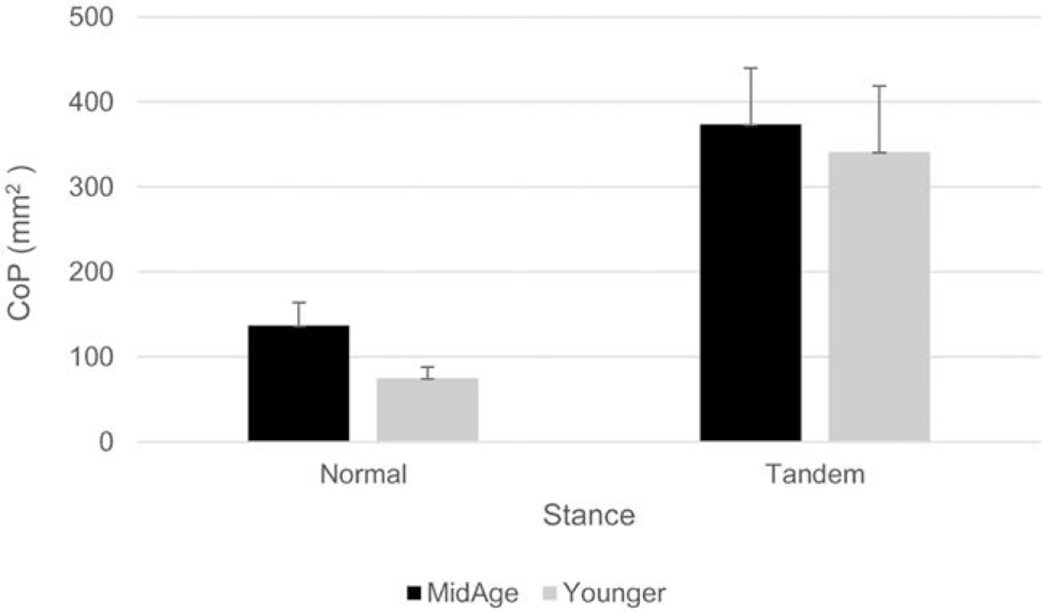

Baseline (Single-Task) Balance CoP

Baseline balance-only trials (i.e., standing in quiet) were conducted both before and after the dual-task trials. Results of the single-task balance-only trials, averaged across the pre- and post-experiment data collection periods, are shown in Figure 4. As expected, CoP values were higher for the more difficult tandem stance than for normal stance. A repeated-measures ANOVA with stance as a within-subjects variable and group as the between-subjects variable confirmed this observation (Table 4): the main effect of stance was significant, whereas neither the effect of group nor the stance × group interaction was significant.

Fig. 4.

Postural control (CoP in mm²) in single-task conditions for each listener group. Error bars represent the standard error.

Listening-While-Balancing (Dual-Task) CoP

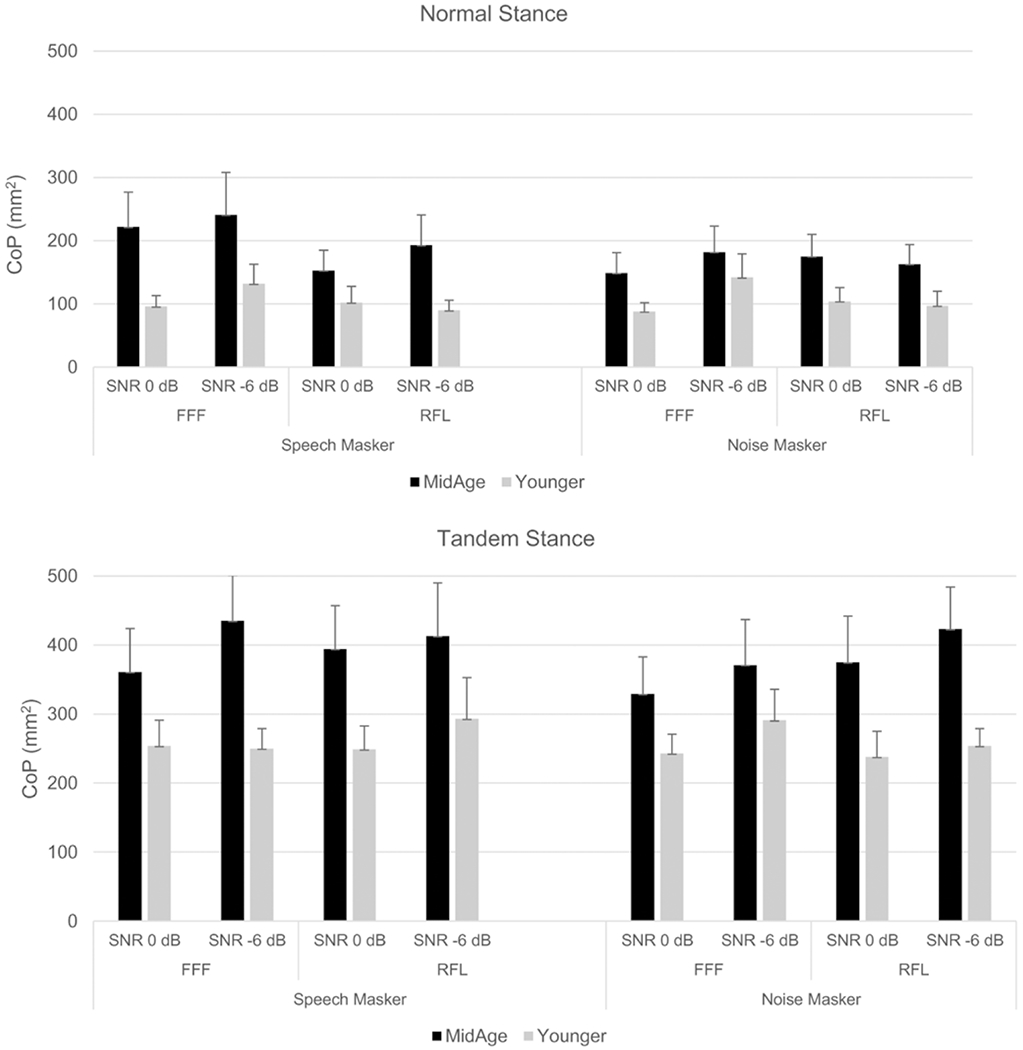

CoP data collected in each listening condition are shown separately for normal versus tandem stance conditions (Fig. 5). For both these conditions, the small differences between groups shown in the balance-only trials were amplified when participants were balancing while listening. In general, CoP was modulated by listening task difficulty, with more CoP excursion noted in more difficult acoustic conditions, especially in the normal stance condition.

Fig. 5.

Postural control (CoP in mm²) by participant group and listening condition during dual-task trials. Upper panel = participants standing in normal stance; bottom panel = participants standing in tandem stance. FFF = spatially-coincident target and masker; RFL = spatially separated target and masker. Error bars represent the standard error.

These observations were tested with a repeated-measures ANOVA with stance, masker type, spatial condition, and SNR as within-subjects factors and group as a between-subjects factor (Table 4). The main effects of SNR (0 dB SNR: mean CoP = 217.45 mm²; −6 dB SNR: mean CoP = 248.83 mm²) and stance (normal: mean CoP = 144.29 mm²; tandem: mean CoP = 317.02 mm²) were statistically significant. The main effect of group just missed statistical significance (p = 0.057; younger: mean CoP = 176.90 mm²; middle-aged: mean CoP = 285.03 mm²), as did the masker × group interaction (p = 0.065; mean CoP values: younger/speech masker 189.06 mm², younger/noise masker 174.10 mm²; middle-aged/speech masker 301.40 mm², middle-aged/noise masker 268.66 mm²).

To further explore the group variation shown in Figure 5, effect sizes were calculated to examine group differences by masker and spatial condition (Table 2). Because standard deviations were substantially larger for the middle-aged group, Hedges’ g was used to calculate effect size. This analysis demonstrated large group effect sizes for three conditions (speech masker: FFF at 0 dB SNR in normal stance and FFF at −6 dB SNR in tandem stance; noise masker: RFL at −6 dB SNR in tandem stance) and moderate effect sizes for many others. In all of these conditions, postural control was poorer in middle-aged adults than in younger adults.
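Hedges’ g rescales Cohen’s d by a small-sample correction factor J. The sketch below is a minimal illustration that assumes the widely used approximation J = 1 − 3/(4(n₁ + n₂) − 9) rather than the exact gamma-function form. It is not necessarily the computation behind Table 2, and the function names and CoP values are hypothetical.

```python
import math
import statistics


def pooled_sd(a: list[float], b: list[float]) -> float:
    """Pooled standard deviation of two independent samples."""
    n_a, n_b = len(a), len(b)
    return math.sqrt(
        ((n_a - 1) * statistics.variance(a) + (n_b - 1) * statistics.variance(b))
        / (n_a + n_b - 2)
    )


def hedges_g(a: list[float], b: list[float]) -> float:
    """Hedges' g: Cohen's d times an approximate small-sample correction."""
    d = (statistics.mean(a) - statistics.mean(b)) / pooled_sd(a, b)
    n_total = len(a) + len(b)
    correction = 1 - 3 / (4 * n_total - 9)  # approximation to the exact factor J
    return d * correction


# Hypothetical CoP areas in mm^2 (not study data): d = -2.0, J = 0.8, g = -1.6.
younger = [140.0, 145.0, 150.0]
middle_aged = [150.0, 155.0, 160.0]
print(round(hedges_g(younger, middle_aged), 3))  # -1.6
```

With 15 participants per group, as in the present study, J = 1 − 3/111 ≈ 0.973, so g is about 3% smaller in magnitude than d. Both statistics divide by the pooled standard deviation. The correction factor addresses small-sample bias.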

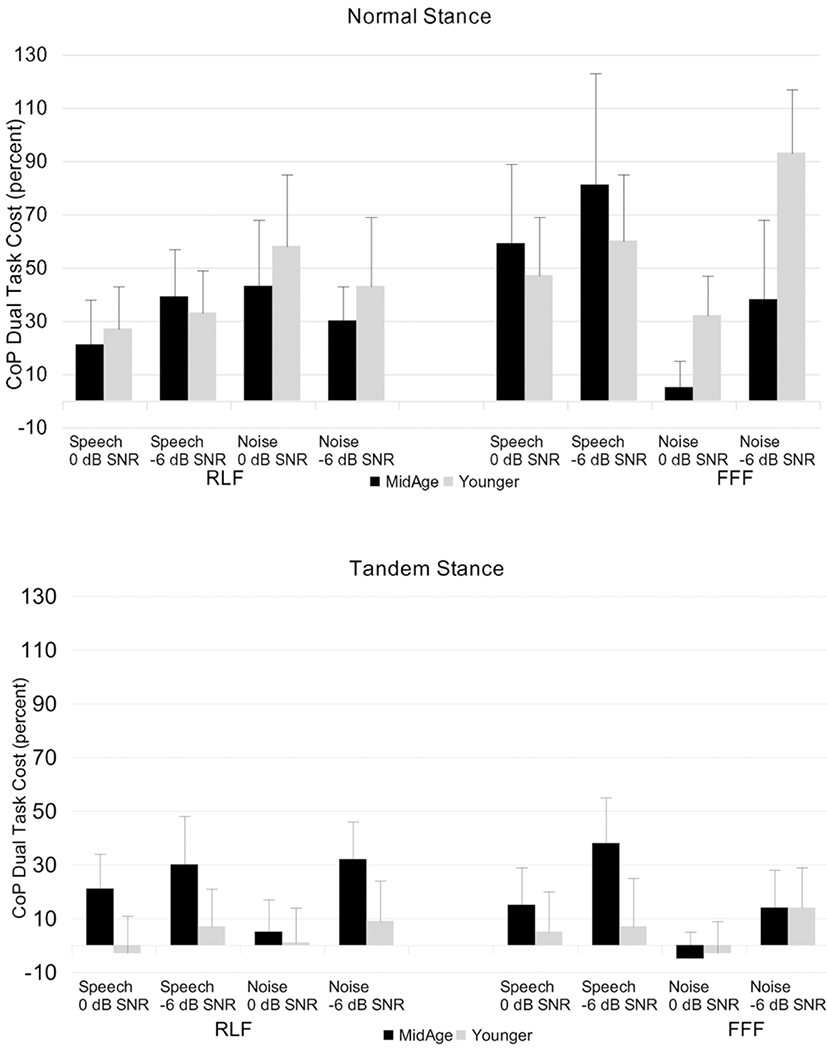

Dual-Task Costs

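Dual-task costs in postural control (i.e., the difference in postural control when participants were standing in quiet versus when they were standing while completing the speech perception task) were calculated for each condition using the following formula:

$$\text{Dual-task cost (\%)} = 100 \times \frac{\text{CoP}_{\text{dual}} - \text{CoP}_{\text{single}}}{\text{CoP}_{\text{single}}}$$

where CoP_dual is the CoP measured during the dual-task (listening-while-balancing) trials and CoP_single is the baseline (single-task) CoP measured while standing in quiet.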

These dual-task cost values can be seen in Figure 6. The dual-task cost data were analyzed using repeated-measures ANOVA with stance, SNR, spatial condition, and masker as within-subjects variables and group as the between-subjects variable (Table 5). This analysis found significant main effects for stance and SNR as well as a significant masker × group interaction and a significant four-way interaction of stance × SNR × spatial × masker. Observation of Figure 6 suggests that dual-task costs were, in general, larger for the normal stance condition than for the tandem stance condition, and were mostly larger at the more adverse −6 dB SNR versus 0 dB SNR. The influence of these factors on each other is clearly complex, making it challenging to interpret this interaction.

Fig. 6.

Dual-task costs in postural control, expressed as a percentage change from single-task postural control data [100 × (dual-task CoP − single-task CoP)/single-task CoP]. FFF = spatially-coincident target and masker; RFL = spatially separated target and masker. Upper panel = participants standing in normal stance; bottom panel = participants standing in tandem stance. Error bars represent the standard error.

The masker × group interaction was explored further via post hoc t-tests. For the middle-aged group, dual-task costs were significantly larger with the speech masker than with the noise masker (speech masker: mean = 38.88%; noise masker: mean = 20.15%; p = 0.02); this was not the case for younger participants (speech masker: mean = 29.27%; noise masker: mean = 26.66%; p = 0.40).

Correlation Analyses

We conducted a series of correlation analyses for several purposes. First, we wanted to account for the rather large inter-subject variability in our data (especially our postural control data). We were also interested in examining how postural control was related to speech recognition at the level of the individual participant. Finally, we sought to determine whether our two working metrics of listening effort (self-rated effort and postural control dual-task costs) were related. To this end, correlation analyses were completed on the data from both participant groups combined, separately for each stance, using Pearson r followed by partial correlations controlling separately for age and for better-ear HFPTA (the average of thresholds from 2 to 6 kHz). Variables in these analyses were percent-correct speech recognition, self-rated effort, single- and dual-task CoP values, and dual-task costs; the measures obtained during listening were averaged across all acoustic conditions (SNR, masker type, and spatial configuration). The results of these analyses are presented in Table 6.

For normal stance, percent-correct scores were significantly and negatively correlated with CoP in both single- and dual-task conditions: individuals with better speech recognition performance had smaller postural excursions. Self-rated effort was significantly associated with dual-task CoP and with dual-task costs, with individuals who gave higher effort ratings also exhibiting greater dual-task costs. When age was controlled, these significant associations persisted. Moreover, with age controlled, HFPTA was significantly correlated with postural control in the dual-task condition and with dual-task costs: individuals who had poorer hearing exhibited poorer postural control and larger dual-task costs. When HFPTA was controlled, speech recognition was still significantly associated with postural control in both single- and dual-task conditions. Age was correlated with dual-task costs, but in a perhaps unexpected direction: once hearing loss was accounted for, the older the participant, the smaller the dual-task costs.

When participants were in tandem stance, speech recognition was significantly related to postural control in dual-task, but not single-task, conditions, as well as to dual-task costs, with better speech recognition related to improved postural control. Here, self-rated effort was not significantly associated with dual-task costs. Partial correlations with HFPTA controlled showed no significant associations between age and any other variable. With age controlled, speech recognition remained significantly associated with dual-task costs, whereas HFPTA was not significantly correlated with any variable.

In sum, results of these correlation analyses suggest that degraded speech recognition ability in the adverse listening conditions tested in this study was associated with poorer postural control. Further, when participants were standing normally, greater high-frequency hearing loss was associated with both poorer postural control in dual-task conditions and greater dual-task costs.

DISCUSSION

The experiment described in this article was an exploratory study whose primary objective was to determine whether a task that combined listening with postural control would be sensitive to differences in performance between easy and hard listening conditions, and to differences between younger and middle-aged adults. We had proposed that postural control would be modulated by the difficulty of the listening task, on the premise that an increase in the cognitive load or effort required for the listening task would leave fewer cognitive resources to devote to the balance task. We further hypothesized that this effect would be larger for middle-aged adults, consistent with early age-related changes in postural control and/or speech perception.

Our results partially support our hypothesis that the difficulty of the listening task affects postural control. During dual-task conditions, postural control was poorer when the speech stimuli were presented at the more difficult −6 dB SNR than at 0 dB SNR. The effect of spatial configuration on postural control was less clear-cut. Although speech understanding in the presence of competing speech was better when the masker and target were spatially separated than when they were spatially coincident, postural control did not differ significantly between the two spatial conditions.

Dual-task costs derived from the data also suggest that postural control was influenced by the difficulty of the listening task. In most conditions, dual-task costs were larger at the more adverse SNR, and, when participants were standing normally, dual-task costs in most conditions were numerically larger for spatially-coincident than for spatially-separated speech maskers. This result is consistent with Gandemer et al. (2017), who found that postural control was better when sound was presented from three spatially-separated sources than from a single source. One caveat about the dual-task costs in the present study is that they were calculated using a baseline condition in which postural control was assessed while standing in quiet. It is known that sound can enhance balance relative to quiet, possibly because sound acts as an “anchor” or orienting signal (e.g., Easton et al. 1998; Dozza et al. 2011; Zhong & Yost 2013; Ross et al. 2016; Stevens et al. 2016; Vitkovic et al. 2016; Gandemer et al. 2017). Hence, the maskers might have provided this type of anchoring effect, partially counteracting any detrimental effects of the increased listening difficulty that they caused. We do see some indication of this phenomenon, especially in the data from younger participants in tandem stance, where CoP values were in some cases smaller in dual-task trials than in single-task trials.

Another issue related to our dual-task costs is that baseline postural control in tandem stance was, as expected, much poorer than when participants were standing normally. This complicates the comparison of dual-task costs between the two stance conditions, because we followed the conventional approach of expressing dual-task costs as a percentage change from baseline. Consequently, a given absolute increase in sway during dual-task trials produced a smaller percentage change in tandem stance than in normal stance. This should be taken into account when interpreting the differences in dual-task costs between the two stances reported in the present study.
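The baseline dependence described above follows directly from the percentage-change formula given in the Figure 6 caption. In the minimal sketch below, the baselines and dual-task values are hypothetical round numbers chosen for illustration, not study data. The same 50 mm² increase in CoP yields a 50% cost from a 100 mm² baseline but only a 20% cost from a 250 mm² baseline. The function name `dual_task_cost` is also hypothetical.

```python
def dual_task_cost(dual_cop: float, single_cop: float) -> float:
    """Dual-task cost as a percentage change from single-task (baseline) CoP.

    Positive values mean more sway while listening; negative values mean
    less sway than at baseline.
    """
    if single_cop <= 0:
        raise ValueError("baseline CoP must be positive")
    return 100.0 * (dual_cop - single_cop) / single_cop


# Hypothetical values in mm^2: identical 50 mm^2 increases in sway.
normal_cost = dual_task_cost(dual_cop=150.0, single_cop=100.0)
tandem_cost = dual_task_cost(dual_cop=300.0, single_cop=250.0)
print(normal_cost, tandem_cost)  # 50.0 20.0
```

A negative value would correspond to the pattern noted for some younger participants in tandem stance, where CoP during listening was smaller than in quiet.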

We had hypothesized that listening while maintaining postural control would be more detrimental to middle-aged adults than to younger adults. Indeed, the small difference in postural control between groups of younger and middle-aged participants when they were not performing the speech perception task (Fig. 4) increased dramatically in dual-task conditions (Fig. 5). Prior research has established that dual-task costs associated with performing cognitive tasks while balancing are larger for older than for younger adults (Shumway-Cook & Woollacott 2000; Deviterne et al. 2005; Huxhold et al. 2006; Gosselin & Gagné 2011; Granacher et al. 2011; Bruce et al. 2017). There is evidence to suggest that age effects in dual-task costs emerge in middle age when speech understanding is coupled with a memory task (Degeest et al. 2015; Cramer & Donai 2018). The significant masker × group interaction found for dual-task costs in the present study is consistent with this prior work.

Correlation analyses suggest that the differences between groups in postural control were influenced by high-frequency hearing loss. The more hearing loss, the greater the CoP excursion during dual-task trials when participants were in normal stance, even when age was controlled for statistically. Moreover, poorer speech understanding was associated with poorer postural control in both single- and dual-task conditions in normal stance. Results from at least two previous studies have similarly found a significant association between degree of hearing loss in older adults and postural sway measured during dual-task paradigms (Bruce et al. 2017; Thomas et al. 2018). Our results are also consistent with previous research demonstrating that hearing loss in older adults is associated with poorer postural control in general (e.g., Viljanen et al. 2009; Chen et al. 2015; Agmon et al. 2017; Thomas et al. 2018). The present study extends this finding to middle-aged adults with (for the most part) very mild degrees of hearing loss. Early aging (and the hearing loss that accompanies it) seems to influence postural control even in the naturalistic condition of individuals standing normally on a stable platform. The underpinnings of this effect have yet to be identified, but one possibility (given the current finding that reduced postural control is associated with degree of hearing loss) is subtle changes in the vestibular system that accompany age-related cochlear deterioration (e.g., Campos et al. 2018).

We had proposed that postural control would be more greatly compromised in the presence of competing speech maskers than in the presence of steady-state noise maskers, particularly for middle-aged adults. Prior work using a different type of secondary task (visual-motor tracking) found little difference in dual-task costs between two-talker maskers and steady-state noise for either younger or older participants (Desjardins & Doherty 2013). In the present study, examination of the effect sizes for group differences in dual-task postural control showed that, of the six conditions with the largest effect sizes, five involved competing speech as the masker. Moreover, post hoc analysis of the significant masker × group interaction in dual-task costs indicated that dual-task costs were significantly larger for speech maskers than for noise maskers in the middle-aged group, but not in the younger group. Collectively, these findings support the idea that middle-aged adults are more greatly affected by speech maskers than are younger individuals.

Participants rated how much listening effort they believed they expended in each condition. The effort ratings were roughly in line with percent-correct speech recognition performance: individuals perceived the listening task to be more effortful when the target and speech maskers were co-located than when they were spatially separated, and when the masker was speech than when it was noise. A spatial release from self-rated listening effort has been noted previously for young, normally-hearing participants (Rennies & Kidd 2018). At 0 dB SNR, participants also perceived the listening task to be more difficult when in tandem stance than when in normal stance. It could be that, even though they were instructed specifically to rate listening effort, the effort involved in standing in tandem stance influenced participants’ listening ratings [the reader is referred to Moore and Picou (2018) for data supporting the difficulty individuals may have in distinguishing effort from task performance].

One idea tested in this study was whether our balancing-while-listening paradigm was able to tap into listening effort. Recall that our premise was that when one task (in this case, speech understanding) was more difficult or effortful, fewer resources would be available for a second task (in the present study, standing still). Indeed, listening effort ratings obtained when participants were standing normally were correlated with both postural control in dual-task conditions and dual-task costs: individuals who perceived the listening task to be more difficult exhibited poorer postural control. This finding supports the idea that the balancing-while-listening paradigm may index listening effort.

It is thought that one potential benefit of measuring listening effort is that it might be sensitive to group differences even when speech recognition is close to or at ceiling levels of performance, which often can happen when testing at positive SNRs. Indeed, this pattern was observed in the present study. As an example, the two groups’ scores on the speech perception task were essentially equivalent and above 80% when the steady-state noise masker was presented at 0 dB SNR (Fig. 2). Single-task postural control also differed little between groups when they were in tandem stance (Fig. 4). Yet postural control in middle-aged participants was substantially poorer than in younger participants under dual-task conditions with steady-state noise as the masker, especially in tandem stance (Fig. 5). This result could be due to the speech perception task being more cognitively taxing (i.e., effortful) for the middle-aged participants than for the younger participants, even though performance in terms of percent-correct was very similar between groups.

As mentioned above, one substantial limitation of the present study is our choice to measure baseline (single-task) postural control in quiet, and how this might have influenced the results. In the present study, this single baseline condition was used to calculate dual-task costs. Because the presence of sound is known to influence postural control, future studies should carefully consider the acoustic conditions in which baseline postural control is measured. Another limitation of the current work is that the study may have been underpowered, especially considering the number of statistical comparisons that approached (but did not reach) statistical significance, although our n = 15 per group is in the range typical for this type of research (Lau et al. 2017; Carr et al. 2019; Nieborowska et al. 2019).

In summary, results of this exploratory study at least partially support the concept of using a balancing-while-listening task to measure listening effort. The task used in the present study is ecologically valid, as it is not uncommon for people to communicate in difficult acoustic environments while standing (e.g., at a cocktail party). Greater postural sway has been associated with increased risk of falls in older adults (Maki et al. 1990; Maylor & Wing 1996; Verghese et al. 2002; Bergland & Wyller 2004; Lajoie & Gallagher 2004), as has age-related hearing loss (Lin & Ferrucci 2012; Li et al. 2013; Jiam et al. 2016). Results described in this article suggest that even in middle age, individuals experience challenges to postural control and stability, and may be at higher risk for falls when they need to communicate in difficult environments.

ACKNOWLEDGMENTS

We thank Michael Rogers, Lincoln Dunn, and Mike Clauss for their assistance with this project.

This work was supported by NIH NIDCD R01 012057.

Footnotes

The authors have no conflicts of interest to disclose.

REFERENCES

- Agmon M, Lavie L, Doumas M (2017). The association between hearing loss, postural control, and mobility in older adults: A systematic review. J Am Acad Audiol, 28, 575–588. [DOI] [PubMed] [Google Scholar]

- Agrawal Y, Carey JP, Della Santina CC, et al. (2009). Disorders of balance and vestibular function in US adults: data from the National Health and Nutrition Examination Survey, 2001-2004. Arch Intern Med, 169, 938–944. [DOI] [PubMed] [Google Scholar]

- Alain C, & McDonald KL (2007). Age-related differences in neuromagnetic brain activity underlying concurrent sound perception. J Neurosci, 27, 1308–1314. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Babkoff H, Muchnik C, Ben-David N, et al. (2002). Mapping lateralization of click trains in younger and older populations. Hear Res, 165, 117–127. [DOI] [PubMed] [Google Scholar]

- Bainbridge KE, & Wallhagen MI (2014). Hearing loss in an aging American population: extent, impact, and management. Annu Rev Public Health, 35, 139–152. [DOI] [PubMed] [Google Scholar]

- Başkent D, van Engelshoven S, Galvin JJ III. (2014). Susceptibility to interference by music and speech maskers in middle-aged adults. J Acoust Soc Am, 135, EL147–EL153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bergland A, & Wyller TB (2004). Risk factors for serious fall related injury in elderly women living at home. Inj Prev, 10, 308–313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bess FH, Lichtenstein MJ and Logan SA (1991). Making hearing impairment relevant: Linkages with hearing disability and hearing handicap. Acta Otolaryng, suppl. 476, 226–232. [DOI] [PubMed] [Google Scholar]

- Bharadwaj HM, Masud S, Mehraei G, et al. (2015). Individual differences reveal correlates of hidden hearing deficits. J Neurosci, 35, 2161–2172. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boisgontier MP, Beets IA, Duysens J, et al. (2013). Age-related differences in attentional cost associated with postural dual tasks: increased recruitment of generic cognitive resources in older adults. Neurosci Biobehav Rev, 37, 1824–1837. [DOI] [PubMed] [Google Scholar]

- Burns ER, Stevens JA, Lee R (2016). The direct costs of fatal and non-fatal falls among older adults—United States. J Safety Res, 58, 99–103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Broadbent D (1958). Perception and Communication. London, England: Permagon Press. [Google Scholar]

- Brown LA, Shumway-Cook A, and Woollacott MH (2009). Attentional demands and postural recovery: the effects of aging. J Gerontol A Biol Sci Med Sci, 54, 165–171. [DOI] [PubMed] [Google Scholar]

- Bruce H, Aponte D, St-Onge N, et al. (2019). The effects of age and hearing loss on dual-task balance and listening. J Gerontol B Psychol Sci Soc Sci, 74, 275–283. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brungart DS, & Iyer N (2012). Better-ear glimpsing efficiency with symmetrically-placed interfering talkers. J Acoust Soc Am, 132, 2545–2556. [DOI] [PubMed] [Google Scholar]

- Cameron S, Glyde H, and Dillon H (2011). Listening in Spatialized Noise---Sentences Test (LiSN-S): Normative and retest reliability data for adolescents and adults up to 60 years of age. J Amer Acad Aud, 22, 697–709. [DOI] [PubMed] [Google Scholar]

- Campos J, Ramkhalawansingh R, Pichora-Fuller MK (2018). Hearing, self-motion perception, mobility, and aging. Hear Res, 369, 42–55. [DOI] [PubMed] [Google Scholar]

- Carr S, Pichora-Fuller MP, Li KZH et al. (2019). Multisensory, multi-tasking performance of older adults with and without subjective cognitive decline. Multisens Res, 1, 1–33. [DOI] [PubMed] [Google Scholar]

- Chen DS, Betz J, Yaffe K, et al. ; Health ABC Study. (2015). Association of hearing impairment with declines in physical functioning and the risk of disability in older adults. J Gerontol A Biol Sci Med Sci, 70, 654–661. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cramer JL, & Donai JJ (2019). Effects of signal bandwidth on listening effort in young- and middle-aged adults. Int J Audiol, 58, 116–122. [DOI] [PubMed] [Google Scholar]

- Crews JE, & Campbell VA (2004). Vision impairment and hearing loss among community-dwelling older Americans: implications for health and functioning. Am J Public Health, 94, 823–829. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis TM, & Jerger J (2014). The effect of middle age on the late positive component of the auditory event-related potential. J Am Acad Audiol, 25, 199–209. [DOI] [PubMed] [Google Scholar]

- Davis TM, Jerger J, Martin J (2013). Electrophysiological evidence of augmented interaural asymmetry in middle-aged listeners. J Am Acad Audiol, 24, 159–173. [DOI] [PubMed] [Google Scholar]

- Degeest S, Keppler H, Corthals P (2015). The effect of age on listening effort. J Speech Lang Hear Res, 58, 1592–1600. [DOI] [PubMed] [Google Scholar]

- Desjardins JL, & Doherty KA (2013). Age-related changes in listening effort for various types of masker noises. Ear Hear, 34, 261–272. [DOI] [PubMed] [Google Scholar]

- Devesse A, van Wieringen A, and Wouters J (2020). AVATAR assesses speech understanding and multitask costs in ecologically relevant listening situations. Ear Hear, 41, 521–531. [DOI] [PubMed] [Google Scholar]

- Deviterne D, Gauchard GC, Jamet M, et al. (2005). Added cognitive load through rotary auditory stimulation can improve the quality of postural control in the elderly. Brain Res Bull, 64, 487–492. [DOI] [PubMed] [Google Scholar]

- Dozza M, Chiari L, Peterka RJ et al. (2011). What is the most effective type of audio- biofeedback for postural motor learning? Gait Post, 34, 313–319. [DOI] [PubMed] [Google Scholar]

- Duarte M, & Freitas SM (2010). Revision of posturography based on force plate for balance evaluation. Rev Bras Fisioter, 14, 183–192. [PubMed] [Google Scholar]

- Easton RD, Greene AJ, DiZio P, et al. (1998). Auditory cues for orientation and postural control in sighted and congenitally blind people. Exp Brain Res, 118, 541–550. [DOI] [PubMed] [Google Scholar]

- Fraser S, & Bherer L (2013). Age-related decline in divided-attention: from theoretical lab research to practical real-life situations. Wiley Interdiscip Rev Cogn Sci, 4, 623–640. [DOI] [PubMed] [Google Scholar]

- Fraizer EV, & Mitra S (2008). Methodological and interpretive issues in posture-cognition dual-tasking in upright stance. Gait Posture, 27, 271–279. [DOI] [PubMed] [Google Scholar]

- Füllgrabe C, Moore BC, Stone MA (2014). Age-group differences in speech identification despite matched audiometrically normal hearing: contributions from auditory temporal processing and cognition. Front Aging Neurosci, 6, 347.

- Füllgrabe C (2013). Age-dependent changes in temporal-fine-structure processing in the absence of peripheral hearing loss. Am J Audiol, 22, 313–315.

- Gandemer L, Parseihian G, Kronland-Martinet R, et al. (2017). Spatial Cues Provided by Sound Improve Postural Stabilization: Evidence of a Spatial Auditory Map? Front Neurosci, 11, 357.

- Glyde H, Cameron S, Dillon H, et al. (2013). The effects of hearing impairment and aging on spatial processing. Ear Hear, 34, 15–28.

- Gosselin P, & Gagné JP (2011). Older adults expend more listening effort than young adults recognizing speech in noise. J Speech Lang Hear Res, 54, 944–958.

- Granacher U, Bridenbaugh SA, Muehlbauer T, et al. (2011). Age-related effects on postural control under multi-task conditions. Gerontology, 57, 247–255.

- Grose JH, Hall JW III, Buss E (2006). Temporal processing deficits in the pre-senescent auditory system. J Acoust Soc Am, 119, 2305–2315.

- Grose JH, & Mamo SK (2010). Processing of temporal fine structure as a function of age. Ear Hear, 31, 755–760.

- Hannula S, Bloigu R, Majamaa K, et al. (2011). Self-reported hearing problems among older adults: prevalence and comparison to measured hearing impairment. J Am Acad Audiol, 22, 550–559.

- Helfer KS, & Freyman RL (2014). Stimulus and listener factors affecting age-related changes in competing speech perception. J Acoust Soc Am, 136, 748–759.

- Helfer KS, Freyman RL, Merchant GR (2018). How repetition influences speech understanding by younger, middle-aged and older adults. Int J Audiol, 57, 695–702.

- Helfer KS, & Jesse A (2015). Lexical influences on competing speech perception in younger, middle-aged, and older adults. J Acoust Soc Am, 138, 363–376.

- Helfer KS, Merchant GR, Freyman RL (2016). Aging and the effect of target-masker alignment. J Acoust Soc Am, 140, 3844.

- Helfer KS, Merchant GR, Wasiuk PA (2017). Age-Related Changes in Objective and Subjective Speech Perception in Complex Listening Environments. J Speech Lang Hear Res, 60, 3009–3018.

- Helfer KS, & Vargo M (2009). Speech recognition and temporal processing in middle-aged women. J Am Acad Audiol, 20, 264–271.

- Humes LE, Kewley-Port D, Fogerty D, et al. (2010). Measures of hearing threshold and temporal processing across the adult lifespan. Hear Res, 264, 30–40.

- Huxhold O, Li SC, Schmiedek F, et al. (2006). Dual-tasking postural control: aging and the effects of cognitive demand in conjunction with focus of attention. Brain Res Bull, 69, 294–305.

- Jiam NT, Li C, Agrawal Y (2016). Hearing loss and falls: A systematic review and meta-analysis. Laryngoscope, 126, 2587–2596.

- Kahneman D (1973). Attention and Effort. Englewood Cliffs, New Jersey: Prentice-Hall.

- Ku PX, Abu Osman NA, Wan Abas WAB (2016). The limits of stability and muscle activity in middle-aged adults during static and dynamic stance. J Biomech, 49, 3943–3948.

- Lajoie Y, & Gallagher SP (2004). Predicting falls within the elderly community: comparison of postural sway, reaction time, the Berg balance scale and the Activities-specific Balance Confidence (ABC) scale for comparing fallers and non-fallers. Arch Gerontol Geriatr, 38, 11–26.

- Lau ST, Pichora-Fuller MK, Li KZ, et al. (2017). Effects of Hearing Loss on Dual-Task Performance in an Audiovisual Virtual Reality Simulation of Listening While Walking. J Am Acad Audiol, 27, 567–587.

- Leigh-Paffenroth ED, & Elangovan S (2011). Temporal processing in low-frequency channels: effects of age and hearing loss in middle-aged listeners. J Am Acad Audiol, 22, 393–404.

- Li KZ, & Lindenberger U (2002). Relations between aging sensory/sensorimotor and cognitive functions. Neurosci Biobehav Rev, 26, 777–783.

- Li L, Simonsick EM, Ferrucci L, et al. (2013). Hearing loss and gait speed among older adults in the United States. Gait Posture, 38, 25–29.

- Lin FR, & Ferrucci L (2012). Hearing loss and falls among older adults in the United States. Arch Intern Med, 172, 369–371.

- Lister J, Besing J, Koehnke J (2002). Effects of age and frequency disparity on gap discrimination. J Acoust Soc Am, 111, 2793–2800.

- Mager R, Falkenstein M, Stormer R, et al. (2005). Auditory distraction in young and middle-aged adults: a behavioural and event-related potential study. J Neural Transm, 112, 1165–1176.

- Maki BE, Holliday PJ, Fernie GR (1990). Aging and postural control. A comparison of spontaneous- and induced-sway balance tests. J Am Geriatr Soc, 38, 1–9.

- Maylor EA, & Wing AM (1996). Age differences in postural stability are increased by additional cognitive demands. J Gerontol B Psychol Sci Soc Sci, 51, P143–P154.

- Moore TM, & Picou EM (2018). A potential bias in subjective ratings of mental effort. J Speech Lang Hear Res, 61, 2405–2421.

- Muchnik C, Hildesheimer M, Rubinstein M, et al. (1985). Minimal time interval in auditory temporal resolution. J Aud Res, 25, 239–246.

- Nash SD, Cruickshanks KJ, Klein R, et al. (2011). The prevalence of hearing impairment and associated risk factors: the Beaver Dam Offspring Study. Arch Otolaryngol Head Neck Surg, 137, 432–439.

- Nasreddine ZS, Phillips NA, Bedirian V, et al. (2005). The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J Am Geriatr Soc, 53, 695–699.

- Nieborowska V, Lau ST, Campos J, et al. (2019). Effects of age on dual-task walking while listening. J Mot Behav, 51, 416–427.

- Ozmeral EJ, Eddins AC, Frisina DR Sr, et al. (2016). Large cross-sectional study of presbycusis reveals rapid progressive decline in auditory temporal acuity. Neurobiol Aging, 43, 72–78.

- Park JH, Mancini M, Carlson-Kuhta P, et al. (2016). Quantifying effects of age on balance and gait with inertial sensors in community dwelling healthy adults. Exp Gerontol, 85, 48–58.

- Rennies J, & Kidd G Jr. (2018). Benefit of binaural listening as revealed by speech intelligibility and listening effort. J Acoust Soc Am, 144, 2147.

- Ross JM, Will OJ, McGann Z, et al. (2016). Auditory white noise reduces age-related fluctuations in balance. Neurosci Lett, 630, 216–221.

- Ruggles D, Bharadwaj H, Shinn-Cunningham BG (2012). Why middle-aged listeners have trouble hearing in everyday settings. Curr Biol, 22, 1417–1422.

- Shinn-Cunningham B, Ruggles DR, Bharadwaj H (2013). How early aging and environment interact in everyday listening: from brainstem to behavior through modeling. Adv Exp Med Biol, 787, 501–510.

- Shumway-Cook A, & Woollacott M (2000). Attentional demands and postural control: the effect of sensory context. J Gerontol A Biol Sci Med Sci, 55, M10–M16.

- Snell KB, & Frisina DR (2000). Relationships among age-related differences in gap detection and word recognition. J Acoust Soc Am, 107, 1615–1626.

- Stevens MN, Barbour DL, Gronski MP, et al. (2016). Auditory contributions to maintaining balance. J Vestib Res, 26, 433–438.

- Sullivan GM, & Artino AR Jr. (2013). Analyzing and interpreting data from Likert-type scales. J Grad Med Educ, 5, 541–542.

- Swanenburg J, de Bruin ED, Favero K, et al. (2008). The reliability of postural balance measures in single and dual tasking in elderly fallers and non-fallers. BMC Musculoskelet Disord, 9, 162.

- Thomas E, Martines F, Bianco A, et al. (2018). Decreased postural control in people with moderate hearing loss. Medicine (Baltimore), 97, e0244.

- Tremblay KL, Pinto A, Fischer ME, et al. (2015). Self-reported hearing difficulties among adults with normal audiograms: The Beaver Dam Offspring Study. Ear Hear, 36, e290–e299.

- Verghese J, Buschke H, Viola L, et al. (2002). Validity of divided attention tasks in predicting falls in older individuals: A preliminary study. J Am Geriatr Soc, 50, 1572–1576.

- Viana LM, O’Malley JT, Burgess BJ, et al. (2015). Cochlear neuropathy in human presbycusis: Confocal analysis of hidden hearing loss in post-mortem tissue. Hear Res, 327, 78–88.

- Vitkovic J, Le C, Lee SL, et al. (2016). The contribution of hearing and hearing loss to balance control. Audiol Neurootol, 21, 195–202.

- Viljanen A, Kaprio J, Pyykkö I, et al. (2009). Hearing as a predictor of falls and postural balance in older female twins. J Gerontol A Biol Sci Med Sci, 64, 312–317.

- Wambacq IJ, Koehnke J, Besing J, et al. (2009). Processing interaural cues in sound segregation by young and middle-aged brains. J Am Acad Audiol, 20, 453–458.

- Wiley TL, Cruickshanks KJ, Nondahl DM, et al. (1998). Aging and word recognition in competing message. J Am Acad Audiol, 9, 191–198.

- Zhong X, & Yost WA (2013). Relationship between postural stability and spatial hearing. J Am Acad Audiol, 24, 782–788.