Summary

Quantifying gait parameters and ambulatory monitoring of changes in these parameters have become increasingly important in epidemiological and clinical studies. Using high-density accelerometry measurements, we propose adaptive empirical pattern transformation (ADEPT), a fast, scalable, and accurate method for segmentation of individual walking strides. ADEPT computes the covariance between a scaled and translated pattern function and the data, an idea similar to the continuous wavelet transform. The difference is that ADEPT uses a data-based pattern function, allows multiple pattern functions, can use other distances instead of the covariance, and does not require the pattern function to satisfy the wavelet admissibility condition. Compared to many existing approaches, ADEPT is designed to work with data collected at various body locations and is invariant to the direction of accelerometer axes relative to body orientation. The method is applied to and validated on accelerometry data collected during a […]-m outdoor walk of […] study participants wearing accelerometers on the wrist, hip, and both ankles. Additionally, all scripts and data needed to reproduce presented results are included in supplementary material available at Biostatistics online.

Keywords: ADEPT, Gait, Pattern segmentation, Physical activity, Walking, Wearable accelerometers

1. Introduction

The purpose of this article is to introduce and evaluate a new class of methods for automatic pattern segmentation from sub-second accelerometry data recordings. The problem was motivated by individual walking stride segmentation from continuous walking in large observational studies as well as in clinical trial settings. Obtaining individual strides from such data is important in scientific studies because it can provide a detailed estimation of walking characteristics, including the number of steps, cadence, acceleration, and pattern, as well as the variability of these characteristics within and between days. Such measurements have the potential to better characterize large populations (Studenski and others, 2011; Urbanek and others, 2017), identify disease onset, and describe the pathophysiological cascade of a particular disease or patterns of recovery after treatment. However, manual segmentation of individual strides from very large accelerometry data sets is difficult, can only be applied in short time windows, and is not scalable. Therefore, fast and reliable procedures for automatic detection of strides are needed.

1.1. Accelerometry data in physical activity monitoring

Technological advances led to an explosion in the popularity of wearable sensors in health research (Matthews and others, 2008; Healy and others, 2011; Schrack and others, 2014; Xiao and others, 2015; Karas and others, 2019). Modern wearable accelerometers typically collect  –

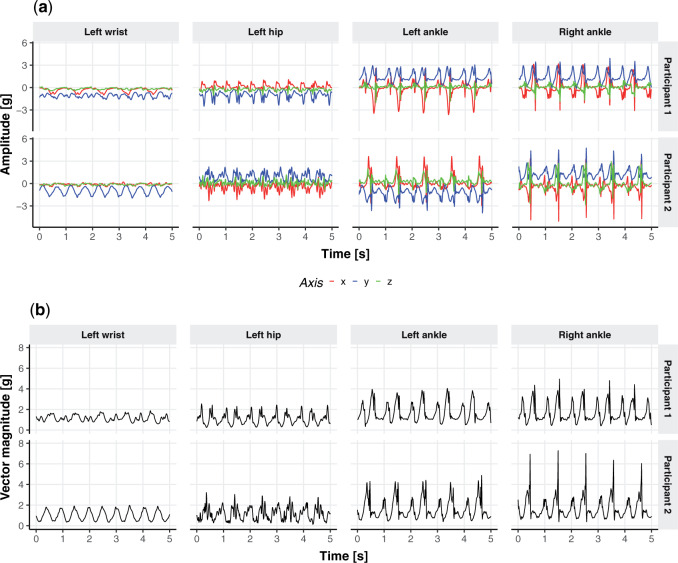

– observations per second along each of three orthogonal axes. To better understand the data, Figure 1a displays the three-dimensional time series of raw acceleration values collected from 5 s of walking for two different persons by sensors located at the four body locations (left wrist, left hip, left ankle, and right ankle). Each row of Figure 1a corresponds to one of two individuals, while each column corresponds to a body location: left wrist, left hip, left ankle, and right ankle, respectively. In the online version of the article, a different color (red, blue, and green) corresponds to one of the three orthogonal axes of the device: up/down, left/right, forward/backward in the device’s frame of reference. Figure 1b provides the acceleration vector magnitude (VM), computed as the square root of the sum of squares of the three acceleration values at each time point. The panels in Figure 1b correspond directly to the panels in Figure 1a. While the original data is three-dimensional, we will work with the one-dimensional VM time series, which is easier to handle and is sufficient for stride segmentation.

observations per second along each of three orthogonal axes. To better understand the data, Figure 1a displays the three-dimensional time series of raw acceleration values collected from 5 s of walking for two different persons by sensors located at the four body locations (left wrist, left hip, left ankle, and right ankle). Each row of Figure 1a corresponds to one of two individuals, while each column corresponds to a body location: left wrist, left hip, left ankle, and right ankle, respectively. In the online version of the article, a different color (red, blue, and green) corresponds to one of the three orthogonal axes of the device: up/down, left/right, forward/backward in the device’s frame of reference. Figure 1b provides the acceleration vector magnitude (VM), computed as the square root of the sum of squares of the three acceleration values at each time point. The panels in Figure 1b correspond directly to the panels in Figure 1a. While the original data is three-dimensional, we will work with the one-dimensional VM time series, which is easier to handle and is sufficient for stride segmentation.

Fig. 1.

(a) Three-dimensional acceleration time series from 5 s of walking for two different study participants (separate row panels) at four body locations: left wrist, left hip, left ankle, and right ankle (separate column panels). In the online version of the article, a different color (red, blue, and green) corresponds to one of the three orthogonal axes of the device. (b) Same as panel (a), but showing the vector magnitude, a one-dimensional summary of the three-dimensional time series.

1.2. Challenges in stride identification from accelerometry data

Figure 1a and b display clear repetitive patterns characterized by high-amplitude peaks in the data. While these patterns are relatively clear to a human observer, identifying them algorithmically is more complicated. The problem is challenging for several reasons. First, the duration and shape of the repetitive patterns vary substantially both within and between subjects. Second, there are multiple local maxima, and exact identification of the start and end of a stride may depend on the position of the device. Third, the device can move, which further affects the time-series characteristics. Fourth, the shape, duration, and intensity of the signal can change with the time of day and energy level of the movement. Thus, any method that is designed to work well and reproducibly will need to account for these challenges and provide evidence that it can work for different subjects in complex environments. Below we describe the intuition and the main components of our idea.

1.3. Pattern definition and recognition

This brings us to the question: “what is a pattern and what does it mean to find patterns in the data?” We propose that a pattern is a function, $f(\cdot)$, with mean zero, variance one, and compact support in the same domain as the data. For example, in our case, $f: \mathbb{R} \rightarrow \mathbb{R}$ because we are working with univariate time series, $f(t) = 0$ if $t \notin [0, 1]$, $\int_0^1 f(t)\,dt = 0$, and $\int_0^1 f^2(t)\,dt = 1$. The requirements that the mean of the function is zero and the variance is one are not necessary, but they keep pattern functions comparable. We also propose that finding a pattern is maximizing a distance (e.g., covariance) between this function, translated and scaled, and the original signal. More precisely, if the univariate VM time series is denoted by $x(t)$, then we are interested in the following covariance function

$$C(s, \tau) = \operatorname{cov}\left\{x(t),\, f\left(\frac{t - \tau}{s}\right)\right\}, \quad t \in [\tau, \tau + s]. \tag{1.1}$$

This is a simple formula, but it packs a powerful methodological punch that is worth watching again in slow motion. Note that $f\{(t - \tau)/s\}$ is the function $f(\cdot)$ translated by $\tau$ and scaled by $s$, and its domain is $[\tau, \tau + s]$. Moreover, the function $f\{(t - \tau)/s\}$ continues to have mean zero and variance one. Of course, we can use the correlation between the original signal and the translated and scaled patterns, which can be done by dividing by the standard deviation of the time series $x(t)$ in the interval $[\tau, \tau + s]$. We could use other distance measures including $L_1$, $L_2$, or any other distance between two vectors of the same length.
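On a sampling grid, Equation (1.1) reduces to a finite sum. The display below is a sketch of that discrete analogue under notation that is assumed here rather than taken from the original: $t_0 < \dots < t_{n-1}$ are the $n$ sample times that fall in $[\tau, \tau + s]$, and $\bar{x}_{s,\tau}$ and $\widehat{\sigma}_{s,\tau}$ are the mean and standard deviation of the signal over those samples.

$$\widehat{C}(s, \tau) = \frac{1}{n} \sum_{k=0}^{n-1} \left\{x(t_k) - \bar{x}_{s,\tau}\right\} f\left(\frac{t_k - \tau}{s}\right), \qquad \widehat{R}(s, \tau) = \frac{\widehat{C}(s, \tau)}{\widehat{\sigma}_{s,\tau}}$$

The ratio $\widehat{R}(s, \tau)$ is a correlation provided the sampled pattern values themselves have mean zero and variance one, which is one reason for standardizing the pattern after it is rescaled.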

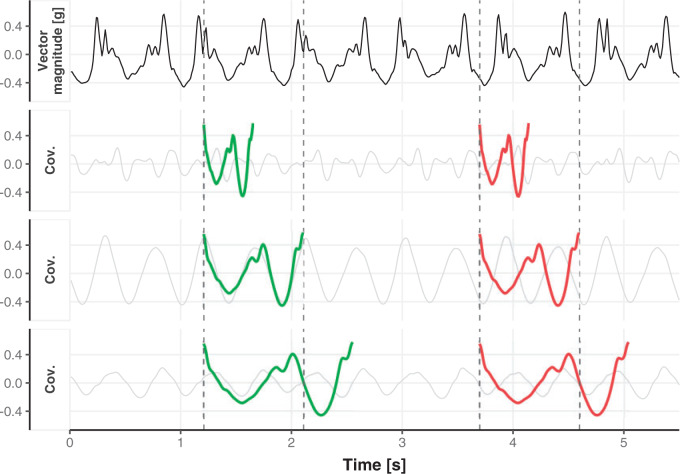

To visualize the translation and scaling operations on the data, Figure 2 displays four panels. The first panel displays 6 s of VM accelerometry data during walking for one person. Panels 2, 3, and 4 display the covariance between a pattern, scaled by a particular scale parameter $s$, and subsequent windows of VM. The reason the covariance function is continuous is that the translation of the pattern is continuous in the translation parameter $\tau$. For each scale parameter $s$, we display two instances of the translated scaled pattern; in the online version of the article, one appears in green and one in red. As one compares the scaled patterns across scaling parameters (panels 2, 3, and 4), it should become clear what the effect of scaling will be.

Fig. 2.

First horizontal panel: vector magnitude signal derived from accelerometry data of continuous walking. Three following horizontal panels: covariance (y-axis) between a stride pattern rescaled according to one of three scale parameters, respectively, and a VM signal window that corresponds to a particular translation parameter (x-axis) of the pattern. Within each of the three covariance panels, in the online version of the article, red and green colors denote a pattern of the same scale but different translation (location) parameter values.
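The covariance curves described for Figure 2 can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' implementation: the function names `sliding_covariance` and `best_translation_per_scale`, the argument `fs` (sampling frequency in Hz), and the use of linear interpolation to rescale the pattern are all choices made here for the example.

```python
import numpy as np


def sliding_covariance(x, pattern, s, fs):
    """Covariance between a pattern stretched to s seconds and every window of x.

    x       : 1-D vector magnitude signal sampled at fs Hz
    pattern : pattern values on an equally spaced grid over [0, 1]
    Returns one covariance value per translation (window start), in samples.
    """
    x = np.asarray(x, dtype=float)
    n = int(round(s * fs))  # window length in samples for this scale
    if n > len(x):
        raise ValueError("scaled pattern is longer than the signal")
    # Rescale the pattern to n samples, then re-standardize (mean 0, variance 1).
    scaled = np.interp(np.linspace(0.0, 1.0, n),
                       np.linspace(0.0, 1.0, len(pattern)), pattern)
    scaled = (scaled - scaled.mean()) / scaled.std()
    # Because scaled has mean zero, mean(window * scaled) is the covariance.
    return np.correlate(x, scaled, mode="valid") / n


def best_translation_per_scale(x, pattern, scales, fs):
    """For each scale, return (translation in seconds, maximal covariance)."""
    result = {}
    for s in scales:
        cov = sliding_covariance(x, pattern, s, fs)
        k = int(np.argmax(cov))
        result[s] = (k / fs, float(cov[k]))
    return result
```

Calling `best_translation_per_scale(vm, pattern, [0.8, 1.0, 1.2], fs)` on a hypothetical signal `vm` returns, for each of three scales, the window start that maximizes the covariance, which mirrors the three lower panels of the figure.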

1.4. Related literature

The transformation we have discussed above is widely used in the continuous wavelet transform (CWT) (Grossmann and Morlet, 1984), where the pattern $f$ is called the mother wavelet. In CWT, the pattern or mother wavelet function is fixed, with popular choices including the Haar (Haar, 1910), Daubechies (Daubechies, 1988), and Biorthogonal (Cohen and others, 1992) mother wavelets. In statistics, the discrete wavelet transform (DWT) is typically better known and allows only discrete scaling of the mother wavelet, $s = 2^{k}$, where $k$ is in the set of integers, $k \in \mathbb{Z}$. DWT and CWT have been successfully used in a variety of scientific studies that involve digital signal processing including respiratory patterns (Dupuis and Eugene, 2000), cardiac rhythms (Madeiro and others, 2012), electromyography (Phinyomark and others, 2011), electroencephalography (EEG) (Gadhoumi and others, 2012), blood pressure measurements (Li and others, 2013), DNA sequences (Jia and others, 2015), and electrocardiography (ECG) signals (Yochum and others, 2016).

Our proposal is related to the large literature on estimation of activity type in health studies using accelerometers. Bai and others (2012) defined movelets, a family of subsecond-level accelerometry data patterns representing different types of physical activities and used them for activity classification. This approach has been further expanded by He and others (2014) and Xiao and others (2016). Another part of the literature has focused on activity type classification using minute level accelerometry data (Pober and others, 2006; Staudenmayer and others, 2009; Attal and others, 2015). These approaches use a number of accelerometry data features combined with supervised classification algorithms. There are many published algorithms for the quantification of cumulative walking time and quality using sub-second accelerometry data. The most basic ones are based on thresholding the signal amplitude (Dijkstra and others, 2008; Weiss and others, 2013), zero-crossing analysis (Jayalath and Abhayasinghe, 2013), local periodicity estimators (Kavanagh and Menz, 2008), and wavelet analysis (Nyan and others, 2006). More recent approaches have focused on template matching (Soaz and Diepold, 2016) and Fourier transformations (Dirican and Aksoy, 2017; Kang and others, 2018; Urbanek and others, 2018).

Walking strides are often segmented based on landmark events within a stride (e.g., heel-strike, push-off, or swing) (Willemsen and others, 1990; Selles and others, 2005; McCamley and others, 2012; Wang and others, 2012; Godfrey and others, 2015). These approaches rely heavily on the location of the device relative to the body, device orientation, and the assumption that the device is not moving during a task. Therefore, they are not robust to observed changes in signals in quasi free-living environments and are less likely to extend to true free-living environments. Soaz and Diepold (2016) used template matching and clustering for step detection from data collected from a waist-worn accelerometer. Ying and others (2007) described an algorithm that uses a stride template derived dynamically from the data and proposed to use the auto-correlation of data segments for stride segmentation. Müller (2007) proposed dynamic time warping (DTW), where both the pattern and the signal are transformed non-linearly to obtain an optimal match in a pre-defined family of warping functions. Barth and others (2015) used multi-subsequence DTW to combine information from the different axes of the accelerometer and gyroscope. While the approaches by Ying and others (2007) and Barth and others (2015) can potentially be robust to sensor location and orientation, we were unable to obtain the associated software from the authors to compare our proposal with these approaches.

Here, we propose adaptive empirical pattern transformation (ADEPT), a fast, scalable, and accurate method for segmentation of individual walking strides. ADEPT computes the covariance between a scaled and translated pattern function and the data, an idea similar to the CWT. The difference is that ADEPT uses a data-based pattern function, allows multiple pattern functions, can use other distances instead of the covariance, and does not require the pattern function to satisfy the wavelet admissibility condition. For completeness of the presentation, a function $f$ satisfies the admissibility condition if $\int_{-\infty}^{\infty} |\hat{f}(\omega)|^2 / |\omega| \, d\omega < \infty$, where $\hat{f}$ denotes the Fourier transform of the function $f$. A square integrable function that satisfies the admissibility condition can be used to recover the original signal without loss of information (Sheng, 2000). Our approach does not require the complete recovery of the original signal, which allows us to use a broader class of functions for $f$. Compared to many existing approaches, ADEPT is designed to work with data collected at various body locations and is invariant to the direction of accelerometer axes relative to body orientation. We also provide open source software that implements the ADEPT method, and make available both the data set and reproducible analysis code used in the application example. Those resources are referenced in supplementary material available at Biostatistics online.

The manuscript is organized as follows. Section 2 describes the continuous walking experiment and introduces the notation and definitions. Sections 3 and 4 describe the two components of ADEPT. Section 5 presents the results of automatic segmentation of individual strides from accelerometry data collected in the experiment. Section 6 provides validation of the approach, and Section 7 contains the discussion.

2. Data, notation and definitions

2.1. Participants and data collection

Data were collected as a part of the study on identification of walking, stair climbing, and driving using wearable accelerometers, sponsored by the Indiana University CTSI grant and conducted at the Department of Biostatistics, Fairbanks School of Public Health at Indiana University. The study was led by Dr Jaroslaw Harezlak, assisted by Dr William Fadel and Dr Jacek Urbanek (coauthors on this article), and enrolled […] healthy participants between […] and […] years of age. In this article, we focus exclusively on the task of self-paced, undisturbed, outdoor walking on the sidewalk, even though the experiment contained additional tasks. During this task, participants were asked to walk outside on the pathway designed for the purpose of the study (indicated as a blue line on the map in Figure 8 in Appendix A of the supplementary material available at Biostatistics online). Each participant walked unaccompanied to maintain their own pace, while the supervising person recorded the starting and stopping time of the task. The length of the walking pathway was approximately […] feet and the duration of this task ranged between […] and […] min, depending on the pace of each participant. The pathway has smooth changes in elevation and no sharp turns, turnarounds, or physical obstacles. Each participant was equipped with four 3-axial ActiGraph GT3X+ (ActiGraph LLC, Pensacola, FL, USA) accelerometers located on the left wrist, left hip, and both ankles. Accelerometers collected the data along three orthogonal axes with a sampling frequency of […] Hz. Periods of walking were manually marked in the resulting dataset based on time markers generated during clapping. The study was approved by the IRB of Indiana University; all participants provided written informed consent.

2.2. Notation and definitions

We denote by $\{a_{i1}(t), a_{i2}(t), a_{i3}(t)\}$ the three-dimensional acceleration time series for subject $i$ at time $t$ corresponding to the three accelerometer device axes. For every time point, we define the VM $x_i(t) = \sqrt{a_{i1}^2(t) + a_{i2}^2(t) + a_{i3}^2(t)}$. Figure 1b provides an example of how the one-dimensional VM data are obtained from the three-dimensional accelerometry data displayed in Figure 1a. We propose to use the high-resolution one-dimensional VM data to conduct segmentation of individual strides. A stride is defined as a combination of two subsequent steps. We focus on strides because the information collected by an accelerometer about subsequent steps may be asymmetric due to device placement; for example, an accelerometer placed on the right hip will tend to record lower amplitude signals during a step with the left leg.
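As a minimal sketch of the VM definition above, the following Python function computes the vector magnitude from a three-column array of raw accelerations. The function name `vector_magnitude` and the array layout (one row per time point, one column per device axis) are assumptions made for this example.

```python
import numpy as np


def vector_magnitude(acc):
    """Vector magnitude of tri-axial acceleration.

    acc : array-like of shape (n_samples, 3), one column per device axis
    Returns an array of shape (n_samples,) holding the square root of the
    sum of squares of the three acceleration values at each time point.
    """
    acc = np.asarray(acc, dtype=float)
    return np.sqrt(np.sum(acc ** 2, axis=1))
```

Because the three axes enter only through a sum of squares, permuting the axes or flipping their signs leaves the result unchanged, which is the sense in which a VM-based analysis does not depend on the direction of the device axes.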

We denote by $\tau_{ij}$, $j = 1, \ldots, J_i$, the time when the $j$-th stride of the $i$-th subject is initiated, where $J_i$ is the total number of strides for subject $i$. We further define $T_{ij}$, $j = 1, \ldots, J_i$, to be the time duration of the $j$-th stride of subject $i$. For simplicity, we will express $T_{ij}$ in seconds. The $j$-th stride of the $i$-th subject is defined as $\{x_i(t): t \in [\tau_{ij}, \tau_{ij} + T_{ij}] \cap \mathcal{G}\}$, where $x_i(\cdot)$ is the VM function and $\mathcal{G}$ is a discrete equally-spaced grid of time points at which the measurements were collected. The parameters $J_i$, $\tau_{ij}$, and $T_{ij}$ are a priori unknown. For continuous walking, the beginning of the next stride is the end of the current stride, that is, $\tau_{i(j+1)}$ is the end of the $j$-th stride and the beginning of the $(j+1)$-th stride. The duration of a stride is hence given by $T_{ij} = \tau_{i(j+1)} - \tau_{ij}$, and the remaining unknown parameters are the $\tau_{ij}$’s, the times when strides are initiated. The notation provides the conceptual framework for partitioning the walking VM accelerometry data into adjacent strides. We also introduce the cadence of walking, which is the number of steps per second. Because a stride consists of two steps, cadence is related to the duration of the stride expressed in seconds, $T_{ij}$, as $\text{cadence} = 2 / T_{ij}$.

Figure 9 in Appendix A of the supplementary material available at Biostatistics online displays acceleration time series for two subsequent strides, where the beginning of each stride is marked by $\tau_{ij}$ and $\tau_{i(j+1)}$, respectively. The duration of the first stride, expressed in seconds, is $T_{ij}$. The beginning of the second stride is $\tau_{i(j+1)} = \tau_{ij} + T_{ij}$. In the figure, the first stride is initiated at […] and the second stride is initiated at […], yielding a duration of the first stride of […] s and a cadence estimate equal to […] steps per second for this segment of walking.
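The relations between stride start times, stride durations, and cadence can be illustrated with a short sketch. The function names and the example start times are hypothetical; the only facts used are that consecutive start times differ by the stride duration and that a stride contains two steps.

```python
import numpy as np


def stride_durations(stride_starts):
    """Durations (s) of consecutive strides from their start times (s).

    For continuous walking, the start of stride j + 1 is the end of stride j,
    so each duration is the difference between neighboring start times.
    """
    return np.diff(np.asarray(stride_starts, dtype=float))


def cadence(durations):
    """Cadence in steps per second: two steps per stride over its duration."""
    return 2.0 / np.asarray(durations, dtype=float)
```

For example, hypothetical start times of 0.0, 1.0, and 2.25 s give stride durations of 1.0 and 1.25 s and cadences of 2.0 and 1.6 steps per second.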

3. Empirical pattern estimation

As we described in Equation 1.1 of Section 1, we need to identify the pattern, $f$. Since we estimate the pattern from the data, we refer to the estimated $\hat{f}$ as an empirical pattern. For simplicity, we drop the hat notation and continue to denote the empirical pattern as $f$. The estimating process is relatively simple but requires some manual segmentation of patterns, which could be tedious. Figure 10a in Appendix A of the supplementary material available at Biostatistics online displays […] manually segmented strides; these strides represent a random subset from the […] strides we obtained from […] individuals, for an average of […] strides per person. The figure shows quite a bit of variation in the length of the stride, with some strides taking as little as […] s and some strides taking […] s or more. Some landmarks are de-synchronized across individuals; note, for example, the peak acceleration in the middle of the strides, which corresponds to the heel strike. To construct the empirical pattern we did something simple: (i) take every stride and stretch it or compress it to the interval $[0, 1]$; (ii) interpolate every stride on an equally-spaced grid; (iii) standardize every stride to have mean zero and variance one; (iv) average the standardized stride patterns; and (v) standardize the resulting average to have mean zero and variance one. Figure 10b in Appendix A of the supplementary material available at Biostatistics online displays the […] stretched, centered, and normalized strides (black lines) and the normalized mean of all […] such functions (red line). The red line represents the empirical pattern obtained from the data.
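Steps (i) to (v) above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the function names, the default grid size of 200 points, and the use of linear interpolation are choices made for this example.

```python
import numpy as np


def standardize(v):
    """Center and scale a vector to mean zero and variance one."""
    v = np.asarray(v, dtype=float)
    return (v - v.mean()) / v.std()


def empirical_pattern(strides, grid_size=200):
    """Estimate one empirical pattern from manually segmented strides.

    strides   : list of 1-D arrays, each holding the VM values of one stride
                (strides may differ in length)
    grid_size : number of equally spaced points on [0, 1] (assumed value)
    """
    grid = np.linspace(0.0, 1.0, grid_size)
    rescaled = []
    for stride in strides:
        stride = np.asarray(stride, dtype=float)
        # (i) map the stride's own time axis onto [0, 1]
        u = np.linspace(0.0, 1.0, len(stride))
        # (ii) interpolate on the common grid, (iii) standardize the stride
        rescaled.append(standardize(np.interp(grid, u, stride)))
    # (iv) average the standardized strides, (v) standardize the average
    return standardize(np.mean(rescaled, axis=0))
```

The final standardization in step (v) is needed because the average of standardized curves generally has variance below one whenever the curves are not perfectly aligned.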

If one empirical pattern is insufficient to capture the types of observed stride patterns, we propose to incorporate additional empirical patterns. Figure 11a in Appendix A of the supplementary material available at Biostatistics online displays the normalized strides, as described in Figure 10b in Appendix A of the supplementary material available at Biostatistics online, but clustered into two groups using the correlation similarity. Interestingly, the main difference between the two groups is the location of the peak acceleration in the middle of the stride (heel strike). The first group of strides (top panel) corresponds to a heel strike around […], while the second group corresponds to a heel strike around […]. Taking the averages of these two groups leads to two empirical patterns, $f_1$ and $f_2$ (shown as red lines). Note that these two empirical patterns are correlated but also subtly different. Such differences would be hard to estimate by simply stretching the patterns, as the scaling of the empirical patterns does. We further pushed the envelope by extracting three distinct groups of strides; the results are shown in Figure 11b in Appendix A of the supplementary material available at Biostatistics online. The results seem to be a further refinement of the two-group clustering, but it seems that we have hit a point of diminishing returns. In practice, one may need to use several patterns, especially when there are clearly defined subgroups (e.g., individuals who suffered a stroke).
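The text does not specify which clustering algorithm was used with the correlation similarity, so the sketch below substitutes a simple k-means-style procedure that assigns each standardized stride to the center it correlates with most. The function name `cluster_strides`, the deterministic initialization, and the iteration cap are all assumptions made for this illustration.

```python
import numpy as np


def cluster_strides(standardized, n_clusters=2, n_iter=50):
    """Group standardized strides by correlation with cluster centers.

    standardized : array of shape (n_strides, grid_size); every row has
                   mean zero and variance one
    Returns (labels, centers); each center is a standardized group average
    and can serve as one empirical pattern.
    """
    Z = np.asarray(standardized, dtype=float)
    n = Z.shape[1]
    # Deterministic start: the first stride, then repeatedly the stride that
    # is least correlated with the centers chosen so far.
    centers = [Z[0]]
    while len(centers) < n_clusters:
        corr_to_centers = np.max(Z @ np.array(centers).T / n, axis=1)
        centers.append(Z[np.argmin(corr_to_centers)])
    centers = np.array(centers)
    for _ in range(n_iter):
        # For standardized vectors, mean(z * c) is their correlation.
        labels = np.argmax(Z @ centers.T / n, axis=1)
        new_centers = centers.copy()
        for k in range(n_clusters):
            members = Z[labels == k]
            if len(members) > 0:  # keep the old center if a group is empty
                m = members.mean(axis=0)
                new_centers[k] = (m - m.mean()) / m.std()
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = np.argmax(Z @ centers.T / n, axis=1)
    return labels, centers
```

With `n_clusters=2`, the two returned centers play the role of the two empirical patterns; any standard clustering method that accepts a correlation-based dissimilarity could be used in place of this procedure.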

4. The maximization-tuning procedure for ADEPT

Once the empirical pattern is estimated, we propose a two-step procedure to segment strides. The first step consists of maximization of the covariance between the rescaled empirical pattern and the VM signal in various time windows. This provides a good idea about where the stride is localized, but it can miss the exact location by fractions of a second. The second step is designed to tune the stride segmentation to better match the beginning and end of a stride.

4.1. Vector magnitude smoothing

In the maximization-tuning procedure, the raw VM signal is smoothed using an unweighted moving window average. To understand the window size effect on smoothing, Figure 12 in Appendix A of the supplementary material available at Biostatistics online displays three horizontal panels. The first horizontal panel shows the VM data collected from the left wrist, left hip, and both ankles during 3 s of walking for one person. Panels 2 and 3 display the smoothed version of the VM signal using a moving window of length  equal to  (an example of moderate smoothing) and  s (an example of aggressive smoothing), respectively. The blue vertical lines indicate the local maxima of the VM smoothed signal with a window size of  s. For the first algorithm step (covariance maximization), we recommend moderate smoothing, which preserves the major features of the original signal. However, moderate smoothing may leave multiple local maxima at the beginning and end of a stride (note the wiggles in the proximity of the dominant peaks in the second row of panels). If this occurs, to avoid ambiguity in determining which data peak corresponds to the stride beginning/end, we recommend applying aggressive smoothing for the second step (tuning) to smooth over neighboring local maxima. The aggressive smoothing used in the tuning step may aid stride segmentation from wrist data in particular, where the stride beginning and end are less well-defined compared to data from the hip and ankle.
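
As an illustration of the smoothing step, the following Python sketch implements an unweighted, centered moving-window average with the window length given in seconds. The function name `moving_average`, the sampling frequency argument `fs`, and the truncation of the window at the signal edges are assumptions made for this example, not details taken from the article.

```python
def moving_average(x, window_s, fs):
    """Unweighted centered moving-window average.

    x        : list of VM samples
    window_s : window length in seconds
    fs       : sampling frequency in Hz (assumed known)
    """
    w = max(1, int(round(window_s * fs)))
    if w % 2 == 0:
        w += 1  # force an odd length so the window is centered on each sample
    half = w // 2
    n = len(x)
    out = []
    for i in range(n):
        # the window is truncated near the signal edges (an assumed convention)
        lo, hi = max(0, i - half), min(n, i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out
```

Calling the function twice with a shorter and a longer `window_s` gives the moderately and aggressively smoothed signals used in the two algorithm steps.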

4.2. Distance matrix computation

The first step of the procedure starts by calculating the covariance function,  , which was described in equation (1.1). This function depends on two parameters, the scale  and the location  , and will be calculated on a two-dimensional grid and stored as a matrix. Note that if  and  denote the minimum and maximum walking cadence, respectively, the duration of strides is between  and  . Therefore, we consider a grid of scaling factors,  , such that the rescaled versions of the pattern function  densely cover the interval  . Once the  pattern is scaled, we use linear interpolation to match the sampling points with those of the observed data. The location of stride patterns can be estimated by identifying the location  and scale  that maximize the covariance matrix.
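
The grid computation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the function names (`rescale`, `covariance`, `covariance_matrix`, `argmax_2d`), the sampling frequency `fs`, and the grid of candidate stride durations are hypothetical, and windows that run past the end of the signal are simply excluded.

```python
def rescale(pattern, n_out):
    """Linearly interpolate a pattern onto n_out equally spaced points (n_out >= 2)."""
    m = len(pattern)
    out = []
    for k in range(n_out):
        pos = k * (m - 1) / (n_out - 1)
        i = min(int(pos), m - 2)
        frac = pos - i
        out.append(pattern[i] * (1 - frac) + pattern[i + 1] * frac)
    return out


def covariance(a, b):
    """Sample covariance of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    return sum((u - ma) * (v - mb) for u, v in zip(a, b)) / (n - 1)


def covariance_matrix(vm, pattern, durations_s, fs):
    """Rows index candidate stride durations; columns index candidate start samples."""
    rows = []
    for d in durations_s:
        n = int(round(d * fs))
        scaled = rescale(pattern, n)
        row = []
        for t in range(len(vm)):
            window = vm[t:t + n]
            # incomplete windows get -inf so they can never be the maximum
            row.append(covariance(window, scaled) if len(window) == n else float("-inf"))
        rows.append(row)
    return rows


def argmax_2d(mat):
    """Return (row, column) of the largest entry."""
    best = max((v, i, j) for i, row in enumerate(mat) for j, v in enumerate(row))
    return best[1], best[2]
```

The row index returned by `argmax_2d` identifies the best-matching stride duration and the column index the estimated start sample. This brute-force version is quadratic in practice; an efficient implementation would vectorize the sliding covariance.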

Figure 13 in Appendix A of the supplementary material available at Biostatistics online provides the visualization of the ADEPT covariance matrix for a  s walking period for one individual; data are from the left ankle monitor. The x-axis corresponds to time (the  location parameter), while the y-axis corresponds to the stride duration (the  scaling parameter). The black dot indicates the point of the largest sample covariance, which is equal to  . This value is attained for an empirical pattern  rescaled to have a duration of  that starts at time  from the beginning of the recording. A quick visual inspection indicates that there are four different strides in this particular segment. So far, we have discussed the case when only one empirical pattern is available. When there are multiple empirical patterns  ,  , we first compute the sample covariance matrix  for each of them separately and then calculate  as the entry-wise maximum across the pattern-specific covariance matrices. Matrix  is then used throughout the segmentation procedure.
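
Combining several pattern-specific covariance matrices by an entry-wise maximum can be sketched in a few lines of Python. The function name `entrywise_max` is hypothetical, and the sketch assumes all matrices were computed on the same scale-by-location grid.

```python
def entrywise_max(matrices):
    """Entry-wise maximum across equally shaped matrices (lists of rows)."""
    return [[max(vals) for vals in zip(*rows)] for rows in zip(*matrices)]
```

For example, `entrywise_max([[[1, 5], [3, 0]], [[2, 4], [1, 7]]])` keeps, for each scale and location, the covariance of whichever pattern fits best at that grid point.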

4.3. Tuning procedure

The maximization of the covariance function provides an initial estimator of the stride location, but the estimation can be off by fractions of a second. This is due to the large fluctuations of the VM signal toward the beginning and end of the stride. Therefore, the exact location of the stride may be missed. Recall that the covariance maximization procedure identifies a start point,  , and the scaling parameter,  . The scaling parameter corresponds to a specific length,  , of a stride,  . Thus, the stride is estimated to be  for  . Once this is obtained we are interested in tuning both  and  .

To do this we focus on estimating the largest local maximum in a neighborhood of  . In general, the covariance maximization procedure does not ensure that  is a local maximum, and the closest local maximum may not be the largest local maximum in the signal. We start by building two neighborhoods of a fixed length, centered at  and  , respectively. The tuning procedure is simple: identify the local maximum of the (possibly smoothed) VM signal in each of these neighborhoods. This procedure is applied after each  -th stride location and duration are estimated using the covariance matrix.
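
A minimal Python sketch of the tuning step is given below. It assumes the stride start and length are expressed in samples, and it uses the largest value in each neighborhood as a stand-in for the largest local maximum (the two coincide unless the peak sits exactly on the neighborhood edge). The names `tune_boundary`, `tune_stride`, and `half_width` are hypothetical.

```python
def tune_boundary(vm, center, half_width):
    """Index of the largest (possibly smoothed) VM value within
    half_width samples of center, clipped to the signal range."""
    lo = max(0, center - half_width)
    hi = min(len(vm), center + half_width + 1)
    return max(range(lo, hi), key=lambda i: vm[i])


def tune_stride(vm, start, length, half_width):
    """Return tuned (start, end) sample indices for one stride.

    start, length : initial estimates from covariance maximization (samples)
    half_width    : half of the neighborhood length (samples)
    """
    end = start + length - 1
    return tune_boundary(vm, start, half_width), tune_boundary(vm, end, half_width)
```

Passing an aggressively smoothed signal as `vm` reduces the chance that a small spurious peak near the true heel strike is selected.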

The left panel in Figure 14 in Appendix A of the supplementary material available at Biostatistics online displays a small part of the raw VM signal collected from the left ankle (black line). The blue vertical lines indicate  and  , which were identified in the covariance maximization step; refer to Figure 13 in Appendix A of the supplementary material available at Biostatistics online. The blue shaded areas denote the symmetric neighborhoods of length  s around  and  , respectively. The right panel in Figure 14 in Appendix A of the supplementary material available at Biostatistics online displays the same raw (black line) and smooth (red line) VM signal with a moving average window of  s. The red vertical lines mark local maxima of the smoothed VM signal, which are at  and  . These values are only slightly different from the original ones, but they provide more reproducible estimates of the beginning and end of a stride. The maximization-tuning algorithm proceeds until all strides within the VM signal are identified.

5. Stride segmentation from raw accelerometry data

ADEPT was applied to automatically segment strides from accelerometry data collected from all 32 study participants. We focused on continuous walking periods and used all four sensor locations: left wrist, left hip, and both ankles. The procedure was applied separately to every sensor location; the algorithm smoothing and fine-tuning parameters were kept the same across sensor locations.

5.1. Estimation of the empirical patterns of strides

The procedure started with the manual segmentation of  strides from all 32 individuals ( strides per individual) conducted by a specialist in accelerometer signal processing (Dr Urbanek, a coauthor on this article). Strides were defined as segments of the data between one heel-strike peak and the subsequent heel-strike of the same leg. For manual segmentation, data from all body locations were synchronized manually to ensure that heel-strike peaks occur at the same time points in all four time series. This substantially reduced manual segmentation time but introduced some small errors, which are likely due to the clock drift across devices (Karas and others, 2019). Next, five 5-s data segments were chosen randomly for each individual, and a time index for the beginning and the end of each stride was manually marked using the  base function in the  statistical software. This was an extremely laborious process that took approximately  h and covered about  % of the walking data. Thus, manual segmentation is impractical even in small studies and impossible in moderately large studies. Moreover, manual segmentation has its own imperfections, which we address using ADEPT.

For each sensor location, we estimated two empirical patterns based on the  manually segmented strides; the sets of estimated patterns are shown in Figure 15 in Appendix A of the supplementary material available at Biostatistics online, where each location corresponds to a panel column and a color. For the left wrist and left ankle, the empirical patterns are clearly distinct; note the differences in pattern shape in the middle of the stride for the left wrist (first panel column) and in the size of the local maximum for the left ankle (third panel column). The empirical patterns for the right ankle (fourth panel column) differ in terms of the timing of the heel strike (local maximum in the middle of the stride) and intensity. These differences are most likely due to differences between individuals. The empirical patterns for the left hip (second panel column) are slightly different, especially at the beginning and end of the stride. They appear to be the same, but phase-shifted, which is most likely due to manual segmentation artifacts.

5.2. Stride segmentation

Once the empirical patterns were obtained, we estimated the location of strides by maximization of the covariance function (1.1) and fine-tuned the location estimation. For the scale parameter, we used a  -dimensional grid to ensure that the stride pattern function,  , densely covers the interval  expressed in seconds. We used a smoothing window of length  in the covariance maximization step,  in the fine-tuning step, and a window of length  s to search for the local maximum.

Table 1 in Appendix B of the supplementary material available at Biostatistics online summarizes the number of segmented strides per person grouped by sensor location. On average, we identified  strides per person from data collected at each location; the minimum, maximum, and three quartiles for the number of strides are close, but not identical, across sensor locations. Table 2 in Appendix B of the supplementary material available at Biostatistics online displays summaries of the estimated stride duration time (in seconds) grouped by sensor location; on average, the estimated stride duration time was between  and  s, with slight variations across sensor locations.

5.3. Cadence estimation

Once every individual stride is estimated, the walking cadence (number of steps per second) can be estimated as a function of time. More precisely, for a stride estimated to occur between  the cadence is estimated as  . Figure 16 in Appendix A of the supplementary material available at Biostatistics online provides these cadence estimates for the left ankle data. Each row (y-axis) corresponds to one person, and each column (x-axis) corresponds to a  second window of walking. More intense blue corresponds to lower cadence and more intense red corresponds to higher cadence. Rows are ordered according to the median cadence, with lower median cadence displayed higher. The median cadence was correlated ( ) with the average walking duration, with height ( ), and weight ( ).
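
A stride-level cadence calculation might look like the Python sketch below. The exact expression is not legible in this copy of the text, so the formula used here is an assumption derived from the definitions given: a stride spans two consecutive heel strikes of the same leg and therefore contains two steps, which gives a cadence of two divided by the stride duration. The function names are hypothetical.

```python
from statistics import median


def cadence_steps_per_s(start_s, end_s):
    """Cadence for one stride, assuming one stride equals two steps."""
    return 2.0 / (end_s - start_s)


def median_cadence(strides):
    """Median cadence over a list of (start_s, end_s) stride intervals."""
    return median(cadence_steps_per_s(s, e) for s, e in strides)
```

Evaluating `cadence_steps_per_s` at each estimated stride start time yields cadence as a function of time for one participant.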

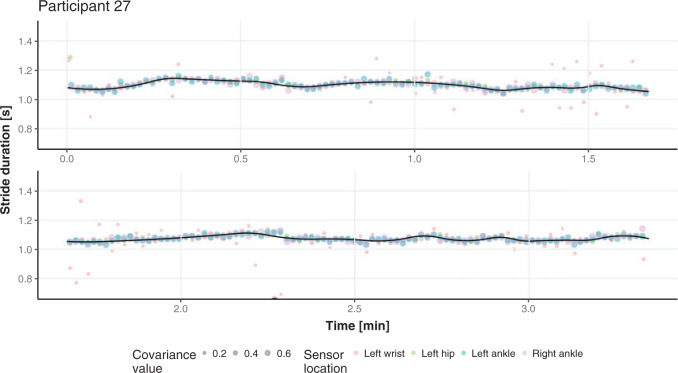

6. Segmentation validation

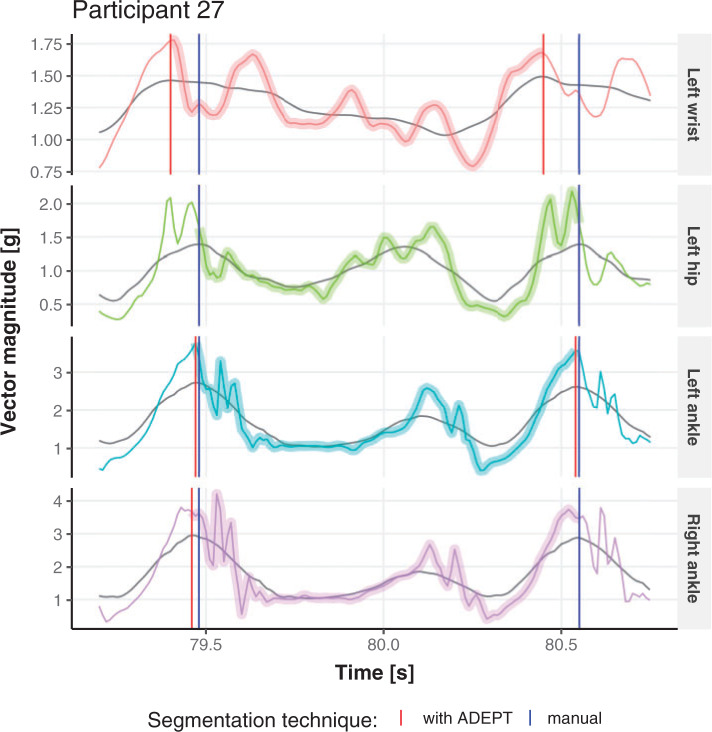

We do not have a true, “gold standard” segmentation of strides, which raises important challenges when it comes to validation. A good first step is to visually inspect the results and ensure that stride estimates are not completely misaligned with the data. We have conducted this visual inspection and could not find any substantial discrepancies. This process is hard to illustrate in an article, though Figure 4 provides a short snapshot of the process. A second visualization step is to compare the stride estimators obtained from different sensor locations. If strides are consistently estimated across body locations, at least the procedure is consistently right or wrong. For one person, Figure 3 provides the estimated stride duration (y-axis) as a function of the estimated start point of the stride for all four locations (shown as different color bubbles in the online version of the article). In the online version of the article, the color of the points corresponds to the sensor location: left wrist (red), left hip (green), left ankle (blue), and right ankle (purple). The size of the point corresponds to the covariance between the VM signal and the empirical pattern (see Section 4.2); the covariance values should be used only to compare estimates within the same sensor location. The black solid line is a nonparametric smoother for the dots across all four body locations. Figure 3 indicates that the stride duration and the beginning of the stride estimates are consistent across the four sensors for most of the walking experiment time; note that most dots are, in fact, overlapping. There are a few discrepancies, and they all correspond to the left wrist. This is important, as many current studies suggest using the non-dominant wrist location for accelerometers.

Fig. 4.

Short segments of the vector magnitude and its smoothed version (gray line) for Participant  . Stride segmentation using the manual (blue vertical lines) and ADEPT (red vertical lines) approaches is compared for four different body locations. The color version of the plot is available in the online version of the article.

Fig. 3.

Estimated start point (x-axis) and duration time (y-axis) of all estimated strides for one study participant across four sensor locations. Each point represents one estimated stride from a particular sensor location. The size of a point corresponds to the covariance value between the VM signal and the empirical pattern that yielded the stride estimate and, in the online version of the article, the color of a point denotes the sensor location. The black line represents a nonparametric regression fit curve.

6.1. Segmentation results consistency across sensor locations

Here, we formalize the idea suggested by Figure 3. The solid line provides a consensus estimator of the stride duration across body locations. Denote by  the estimated length of the  -th stride for subject  using the location  (here  because there are four body locations). Also denote by  the consensus estimator for the stride length; this was obtained by smoothing the data  , where  is the estimated time when stride  was initiated for subject  according to location  . This is done for a fixed  and by combining points over locations,  . We define the percent absolute deviation for stride  as  , which provides a measure of the error,  , relative to the signal,  . It could be viewed as a noise-to-signal ratio or an estimator of the coefficient of variation. By averaging these values over  we obtain an individual percent absolute deviation (iPAD) for each body location  , where  is the estimated number of strides for subject  at location  .
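
The deviation measure can be sketched in Python as follows. The exact formula is not legible in this copy of the text, so the expression below (absolute difference between the location-specific stride length and the consensus length, divided by the consensus length, times 100) is an assumption consistent with the verbal description of error relative to signal. The consensus values are taken as given inputs, since the choice of smoother is not specified here; all names are hypothetical.

```python
def percent_absolute_deviation(estimated, consensus):
    """Assumed form: 100 * |estimated - consensus| / consensus."""
    return 100.0 * abs(estimated - consensus) / consensus


def ipad(estimated_lengths, consensus_lengths):
    """Individual percent absolute deviation for one subject and one location.

    estimated_lengths : stride lengths (s) estimated at this location
    consensus_lengths : consensus stride lengths (s) evaluated at the same
                        stride start times, supplied by the caller
    """
    vals = [percent_absolute_deviation(e, c)
            for e, c in zip(estimated_lengths, consensus_lengths)]
    return sum(vals) / len(vals)
```

A small iPAD indicates that stride durations at a given location track the cross-location consensus closely.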

For subject  the iPAD for the left wrist, left hip, left ankle, and right ankle stride estimates were  %,  %,  %, and  %, respectively. The average iPAD across study participants was  %,  %,  %, and  %, respectively. All subject-specific iPAD values are shown in Table 3 in Appendix B of the supplementary material available at Biostatistics online. Results indicate that the errors are small relative to the signal for all body locations, though the left wrist has an error that is roughly three to four times larger than for the other locations.

6.2. Consistency between ADEPT and manual segmentation

Manual segmentations were used primarily to estimate location-specific empirical patterns. Once empirical patterns are estimated, we can re-estimate these strides using ADEPT. Thus, we can compare the results of the manual and ADEPT segmentations on all the  manually segmented strides. To do this, we randomly split the 32 study participants into four equally sized groups, eight participants in each. In each group, we used ADEPT to segment strides from data of the eight members of that group, using stride patterns estimated from manually segmented strides of the remaining 24 participants. We repeated the procedure for each of the four participant groups. For each manually segmented stride, we identified the closest ADEPT-segmented stride by minimizing the start point distance. For each matched pair, we computed the differences between the estimated start and end times, respectively.
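
The matching step can be illustrated with a short Python sketch. Strides are represented as `(start_s, end_s)` tuples in seconds; the function names and the sign convention (ADEPT minus manual) are assumptions made for this example.

```python
from statistics import mean, stdev


def match_strides(manual, adept):
    """For each manual stride, find the ADEPT stride with the closest start
    time and return the (start difference, end difference) pairs."""
    diffs = []
    for m_start, m_end in manual:
        a_start, a_end = min(adept, key=lambda s: abs(s[0] - m_start))
        diffs.append((a_start - m_start, a_end - m_end))
    return diffs


def summarize(values):
    """Mean and standard deviation of a list of differences (needs >= 2 values)."""
    return mean(values), stdev(values)
```

Applying `summarize` to the start differences and to the end differences separately gives mean (standard deviation) summaries of the kind reported for each sensor location.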

For example, for Participant  the average estimated stride duration was  s. The stride start differences had mean (standard deviation) equal to  ,  , and  (in seconds) for the left hip, left ankle, and right ankle, respectively. For the left wrist, the mean difference was  s, while the standard deviation was  s. Thus, the differences are roughly of the order of  – % of the length of the stride. A similar result can be observed across subjects. The empirical density distributions of these differences for all subjects combined are shown in Figure 17 in Appendix A of the supplementary material available at Biostatistics online. They indicate excellent agreement for all body locations, with slightly worse performance for the left wrist. The differences for all subjects combined are summarized in Table 4 in Appendix B of the supplementary material available at Biostatistics online. For comparison, in Table 5 in Appendix B of the supplementary material available at Biostatistics online, we included the summary of differences obtained when ADEPT uses stride patterns derived from data of all 32 study participants. Results are almost identical to the results obtained using the partition into four subgroups.

The differences between manual and ADEPT segmentations should not automatically be interpreted in favor of the manual segmentation. For example, Figure 4 compares four strides of Participant  , segmented with the manual (blue lines) and ADEPT (red lines) approaches using the validation procedure described above (the color version of the plot is available in the online version of the article). Each horizontal plot panel corresponds to a different accelerometry sensor location. Colored lines are the original VM signal, and the gray lines are the smoothed VM signals. The largest observed discrepancy between ADEPT and manual segmentation is for the left wrist, indicating a difference of  and  s between the estimated stride start and end points, respectively. However, visual inspection seems to indicate that ADEPT estimates the strides more accurately than the manual approach. For the other locations, the differences between manual and ADEPT segmentations are visually indistinguishable.

6.3. Visualization of the estimated stride patterns

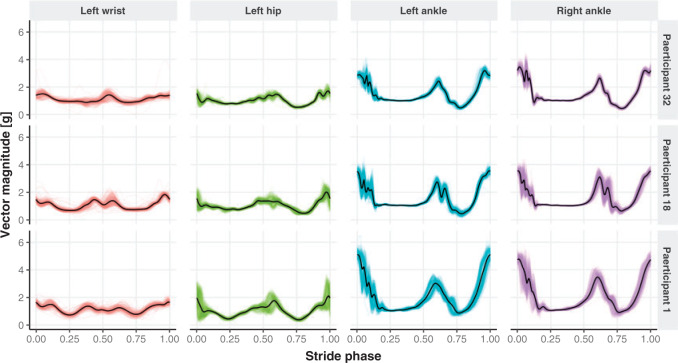

Visually inspecting results could be very difficult and subject to substantial observer bias and measurement error. Instead, we propose to conduct a parallel visualization of the time series, by jointly plotting the segmented strides. More precisely, for each study participant and location we registered the ADEPT-segmented strides to the […] interval using linear interpolation. Figure 5 displays these registered ADEPT-segmented strides for each location and three study participants. The color version of the plot is available in the online version of the article. Each stride is displayed as a line colored according to the location, while the solid black lines indicate the average of these strides. Participants […] and […] were selected because they had the highest and the lowest median walking cadence among the […] study participants, respectively. Participant […] was selected because he had the longest execution time of the walking component of the study. Study participant […] performed the walking exercise in the shortest time. Visual inspection of these results does not raise any particular red flag in terms of segmentation, though we could envision further quantifying the location- and subject-specific variability or applying outlier detection methods to identify incorrect stride segmentations. Moreover, even though we started with population-specific patterns of walking, after applying ADEPT we end up with subject-specific strides. These strides can be used to extract subject-specific patterns, which could be used for further refinement of ADEPT at the subject level.
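The registration and averaging step described above can be illustrated with a short sketch. It is not the code used to produce Figure 5; it assumes that each segmented stride is a plain sequence of acceleration values and that the common grid consists of equally spaced points, and the names `register_stride` and `mean_stride` are hypothetical.

```python
from typing import List, Sequence


def register_stride(stride: Sequence[float], n_points: int = 200) -> List[float]:
    """Linearly interpolate one stride onto n_points equally spaced positions.

    Assumption: the first and last samples of the stride map to the first
    and last grid points, so strides of different durations become comparable.
    """
    if len(stride) < 2 or n_points < 2:
        raise ValueError("need at least two samples and two grid points")
    last = len(stride) - 1
    registered = []
    for k in range(n_points):
        # Position of grid point k on the original sample-index scale.
        pos = k * last / (n_points - 1)
        lo = min(int(pos), last - 1)
        frac = pos - lo
        registered.append((1.0 - frac) * stride[lo] + frac * stride[lo + 1])
    return registered


def mean_stride(strides: Sequence[Sequence[float]], n_points: int = 200) -> List[float]:
    """Register every stride to the same grid and average pointwise."""
    if not strides:
        raise ValueError("need at least one stride")
    registered = [register_stride(s, n_points) for s in strides]
    return [sum(column) / len(registered) for column in zip(*registered)]
```

Under these assumptions, calling `mean_stride` on all strides of one participant at one sensor location would give a curve analogous to the solid black lines in the figure, with each `register_stride` output corresponding to one thin line.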

Fig. 5.

Individual strides segmented with the proposed automatic approach (thin colored lines) together with the derived subject- and location-specific stride pattern (black line) for three study participants, across four sensor locations. The color version of the plot is available in the online version of the article.

7. Discussion

We proposed ADEPT for precise identification of individual walking strides from high-resolution raw accelerometry data. Our automated approach substantially reduces stride segmentation time, making the approach feasible for moderate and large studies. ADEPT yields results visually indistinguishable from manual segmentation for most locations, with some larger discrepancies for the non-dominant wrist. ADEPT identifies maxima of the covariance (or other distances) between the scaled and translated stride pattern and a data signal, a concept that shares characteristics with the CWT. Unlike the CWT, ADEPT uses a data-based pattern function, allows multiple pattern functions, can use other distances instead of the covariance, and does not require the pattern function to satisfy the wavelet admissibility condition. ADEPT also contains a novel fine-tuning procedure. Compared to many existing approaches, ADEPT is designed to work with data collected at various body locations and is invariant to the direction of accelerometer axes relative to body orientation.
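The core idea of maximizing the covariance over scale and translation can be sketched as follows. This is a simplified illustration, not the ADEPT implementation: it uses a single pattern, an exhaustive search, and no smoothing or fine-tuning, and the function names `resample_linear`, `covariance`, and `best_match` as well as the toy signal are assumptions made for the example.

```python
from math import pi, sin
from typing import List, Sequence, Tuple


def resample_linear(values: Sequence[float], n: int) -> List[float]:
    """Scale a pattern to n samples by linear interpolation."""
    if len(values) < 2 or n < 2:
        raise ValueError("need at least two samples and n >= 2")
    last = len(values) - 1
    out = []
    for k in range(n):
        pos = k * last / (n - 1)
        lo = min(int(pos), last - 1)
        frac = pos - lo
        out.append((1.0 - frac) * values[lo] + frac * values[lo + 1])
    return out


def covariance(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample covariance of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)


def best_match(
    signal: Sequence[float], pattern: Sequence[float], scales: Sequence[int]
) -> Tuple[float, int, int]:
    """Return (covariance, start index, window length) of the best match.

    Every candidate length in `scales` (in samples) is tried at every
    translation, and the pair with the largest covariance is kept.
    """
    best = None
    for n in scales:
        if n < 2 or n > len(signal):
            continue
        scaled = resample_linear(pattern, n)
        for start in range(len(signal) - n + 1):
            score = covariance(signal[start:start + n], scaled)
            if best is None or score > best[0]:
                best = (score, start, n)
    if best is None:
        raise ValueError("no scale fits inside the signal")
    return best


# Toy data (hypothetical): one sine-shaped "stride" of 30 samples
# embedded in a flat signal, searched with three candidate lengths.
pattern = [sin(2 * pi * k / 49) for k in range(50)]
signal = [0.0] * 15 + resample_linear(pattern, 30) + [0.0] * 15
score, start, length = best_match(signal, pattern, scales=[30, 40, 50])
```

In this toy setting the search recovers the location and duration of the embedded pattern. Replacing `covariance` with another similarity measure changes only the scoring line, which reflects the flexibility in the choice of distance noted above.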

ADEPT can be used in various studies that require segmentation of strides from subsecond accelerometry data collected during standardized walking tests (e.g., the […]-min walk test (Salbach and others, 2015)). The segmented strides can be used to estimate interval-specific walking cadence, derive subject- and population-specific stride patterns, and quantify stride-to-stride amplitude and phase deviation (Urbanek and others, 2017). However, we expect that the ADEPT approach will also work well in a range of other applications, including detection of other types of movements or even different biosignals, such as ECG and EEG. A particularly challenging area is walking identification in the free-living environment. The current ADEPT approach has several potential limitations. For example, the study participants consisted of healthy individuals, with age ranging between […] and […] years. Whether ADEPT applies to older study participants or individuals with impaired walking remains an open problem. Moreover, we only investigated data collected during continuous outdoor walking on a flat surface, so the results may not directly generalize to walking in heterogeneous and complex environments.

Supplementary Material

Acknowledgments

Conflict of Interest: None declared.

Funding

Research was partially supported by the NIMH grant R01MH108467.

References

- Attal, F., Mohammed, S., Dedabrishvili, M., Chamroukhi, F., Oukhellou, L. and Amirat, Y. (2015). Physical human activity recognition using wearable sensors. Sensors (Basel) 15, 31314–31338.

- Bai, J., Goldsmith, J., Caffo, B., Glass, T. A. and Crainiceanu, C. M. (2012). Movelets: a dictionary of movement. Electronic Journal of Statistics 6, 559–578.

- Barth, J., Oberndorfer, C., Pasluosta, C., Schülein, S., Gassner, H., Reinfelder, S., Kugler, P., Schuldhaus, D., Winkler, J., Klucken, J. and others. (2015). Stride segmentation during free walk movements using multi-dimensional subsequence dynamic time warping on inertial sensor data. Sensors 15, 6419–6440.

- Cohen, A., Daubechies, I. and Feauveau, J.-C. (1992). Biorthogonal bases of compactly supported wavelets. Communications on Pure and Applied Mathematics 45, 485–560.

- Daubechies, I. (1988). Orthogonal bases of compactly supported wavelets. Communications on Pure and Applied Mathematics 41, 909–996.

- Dijkstra, B., Zijlstra, W., Scherder, E. and Kamsma, Y. (2008). Detection of walking periods and number of steps in older adults and patients with Parkinson’s disease: accuracy of a pedometer and an accelerometry-based method. Age and Ageing 37, 436–441.

- Dirican, A. and Aksoy, S. (2017). Step counting using smartphone accelerometer and fast Fourier transform. Sigma Journal of Engineering and Natural Sciences 8, 175–182.

- Dupuis, P. and Eugene, C. (2000). Combined detection of respiratory and cardiac rhythm disorders by high-resolution differential cuff pressure measurement. IEEE Transactions on Instrumentation and Measurement 49, 498–502.

- Gadhoumi, K., Lina, J. M. and Gotman, J. (2012). Discriminating preictal and interictal states in patients with temporal lobe epilepsy using wavelet analysis of intracerebral EEG. Clinical Neurophysiology 123, 1906–1916.

- Godfrey, A., Din, S., Barry, G., Mathers, J. and Rochester, L. (2015). Instrumenting gait with an accelerometer: a system and algorithm examination. Medical Engineering and Physics 37, 400–407.

- Grossmann, A. and Morlet, J. (1984). Decomposition of Hardy functions into square integrable wavelets of constant shape. SIAM Journal on Mathematical Analysis 15, 723–736.

- Haar, A. (1910). Zur Theorie der orthogonalen Funktionensysteme. Mathematische Annalen 69, 331–371.

- He, B., Bai, J., Zipunnikov, V. V., Koster, A., Caserotti, P., Lange-Maia, B., Glynn, N. W., Harris, T. B. and Crainiceanu, C. M. (2014). Predicting human movement with multiple accelerometers using movelets. Medicine and Science in Sports and Exercise 46, 1859–1866.

- Healy, G. N., Matthews, C. E., Dunstan, D. W., Winkler, E. A. H. and Owen, N. (2011). Sedentary time and cardio-metabolic biomarkers in US adults: NHANES 2003–06. European Heart Journal 32, 590–597.

- Jayalath, S. and Abhayasinghe, N. (2013). A gyroscopic data based pedometer algorithm. In: Proceedings of the 8th International Conference on Computer Science and Education, ICCSE 2013. Colombo: IEEE, pp. 551–555.

- Jia, J., Liu, Z., Xiao, X., Liu, B. and Chou, K. C. (2015). iPPI-Esml: an ensemble classifier for identifying the interactions of proteins by incorporating their physicochemical properties and wavelet transforms into PseAAC. Journal of Theoretical Biology 377, 47–56.

- Kang, X., Huang, B. and Qi, G. (2018). A novel walking detection and step counting algorithm using unconstrained smartphones. Sensors (Basel, Switzerland) 18, 297.

- Karas, M., Bai, J., Straczkiewicz, M., Harezlak, J., Glynn, N. W., Harris, T., Zipunnikov, V., Crainiceanu, C. and Urbanek, J. K. (2019). Accelerometry data in health research: challenges and opportunities. Review and examples. Statistics in Biosciences 11, 210–237.

- Kavanagh, J. J. and Menz, H. B. (2008). Accelerometry: a technique for quantifying movement patterns during walking. Gait and Posture 28, 1–15.

- Li, Z., Zhang, M., Xin, Q., Luo, S., Cui, R., Zhou, W. and Lu, L. (2013). Age-related changes in spontaneous oscillations assessed by wavelet transform of cerebral oxygenation and arterial blood pressure signals. Journal of Cerebral Blood Flow and Metabolism 33, 692–699.

- Madeiro, J. P. V., Cortez, P. C., Marques, J. A. L., Seisdedos, C. R. V. and Sobrinho, C. R. M. R. (2012). An innovative approach of QRS segmentation based on first-derivative, Hilbert and Wavelet Transforms. Medical Engineering and Physics 34, 1236–1246.

- Matthews, C. E., Chen, K. Y., Freedson, P. S., Buchowski, M. S., Beech, B. M., Pate, R. R. and Troiano, R. P. (2008). Amount of time spent in sedentary behaviors in the United States, 2003–2004. American Journal of Epidemiology 167, 875–881.

- McCamley, J., Donati, M., Grimpampi, E. and Mazzà, C. (2012). An enhanced estimate of initial contact and final contact instants of time using lower trunk inertial sensor data. Gait and Posture 36, 316–318.

- Müller, M. (2007). Dynamic Time Warping. Berlin, Heidelberg: Springer Berlin Heidelberg, pp. 69–84.

- Nyan, M. N., Tay, E. H. F., Seah, K. H. W. and Sitoh, Y. Y. (2006). Classification of gait patterns in the time-frequency domain. Journal of Biomechanics 39, 2647–2656.

- Phinyomark, A., Limsakul, C. and Phukpattaranont, P. (2011). Application of wavelet analysis in EMG feature extraction for pattern classification. Measurement Science Review 11, 45–52.

- Pober, D. M., Staudenmayer, J., Raphael, C. and Freedson, P. S. (2006). Development of novel techniques to classify physical activity mode using accelerometers. Medicine and Science in Sports and Exercise 38, 1626–1634.

- Salbach, N. M., O’Brien, K. K., Brooks, D., Irvin, E., Martino, R., Takhar, P., Chan, S. and Howe, J. A. (2015). Reference values for standardized tests of walking speed and distance: a systematic review. Gait and Posture 41, 341–360.

- Schrack, J. A., Zipunnikov, V., Goldsmith, J., Bai, J., Simonsick, E. M., Crainiceanu, C. and Ferrucci, L. (2014). Assessing the physical cliff: detailed quantification of age-related differences in daily patterns of physical activity. Journals of Gerontology - Series A Biological Sciences and Medical Sciences 69, 973–979.

- Selles, R., Formanoy, M., Bussmann, J., Janssens, P. and Stam, H. (2005). Automated estimation of initial and terminal contact timing using accelerometers; development and validation in transtibial amputees and controls. IEEE Transactions on Neural Systems and Rehabilitation Engineering 13, 81–88.

- Sheng, Y. (2000). Wavelet transform. In: Poularikas, A. D. (editor), Transforms and Applications Handbook: Second Edition. Boca Raton: CRC Press LLC.

- Soaz, C. and Diepold, K. (2016). Step detection and parameterization for gait assessment using a single waist-worn accelerometer. IEEE Transactions on Biomedical Engineering 63, 933–942.

- Staudenmayer, J., Pober, D., Crouter, S., Bassett, D. and Freedson, P. (2009). An artificial neural network to estimate physical activity energy expenditure and identify physical activity type from an accelerometer. Journal of Applied Physiology 107, 1300–1307.

- Studenski, S., Perera, S., Patel, K., Rosano, C., Faulkner, K., Inzitari, M., Brach, J., Chandler, J., Cawthon, P., Connor, E. B. and others. (2011). Gait speed and survival in older adults. JAMA: the Journal of the American Medical Association 305, 50–58.

- Urbanek, J. K., Harezlak, J., Glynn, N. W., Harris, T., Crainiceanu, C. and Zipunnikov, V. (2017). Stride variability measures derived from wrist- and hip-worn accelerometers. Gait and Posture 52, 217–223.

- Urbanek, J. K., Zipunnikov, V., Harris, T., Fadel, W., Glynn, N., Koster, A., Caserotti, P., Crainiceanu, C. and Harezlak, J. (2018). Prediction of sustained harmonic walking in the free-living environment using raw accelerometry data. Physiological Measurement 39, 02NT02. doi: 10.1088/1361-6579/aaa74d.