Abstract

Significant efforts over the past decades to teach evidence-based practice (EBP) implementation have emphasized increasing knowledge of EBP and developing interventions to support its adoption in practice. These efforts have produced only limited sustained improvement in the daily use of evidence-based interventions in clinical practice across most health professions. Yet many new interventions with limited evidence of effectiveness are readily adopted each year, indicating that openness to change is not the problem. The selection of an intervention is the outcome of an elaborate and complex cognitive process that is shaped by how clinicians represent the problem in their minds and is largely invisible to others. Therefore, the complex thinking process that supports appropriate adoption of interventions should be taught more explicitly. Making this process visible to clinicians increases their acquisition of the skills required to judiciously select one intervention over others. The purpose of this paper is to review the selection process and the critical analysis required to decide appropriately whether to trial new intervention strategies with patients.

Keywords: evidence-based practice, clinical reasoning, causal model, intervention theory, concept mapping

1. Introduction

All health professions have mandated the use of evidence-based practice (EBP) as a tenet of ethical practice [1], as it is known to improve healthcare quality, reliability, and client outcomes [2,3]. Over the last twenty years, EBP skills and knowledge have improved significantly [4], and a great deal of effort (including the development of the fields of implementation science and knowledge translation) has gone into increasing clinicians’ use of evidence-based practices (EBPs). However, these efforts have produced limited sustained behavior change in implementing EBPs [4,5]. There is consensus that appropriate implementation of EBPs is challenging for a variety of reasons. The theorized “causes” include low EBP skill [4], organizational climate [6], and a lack of “openness” to changing practice [7]. However, there is no evidence that willingness to change practice is a causal factor: no studies have specifically measured how frequently clinicians adopt new interventions; instead, most studies examine the adoption of specific interventions of interest (e.g., Locke et al. 2019 [8]). On the contrary, evidence suggests that openness to adopting new interventions is not the problem, as “fad” interventions continue to be reported in many areas of healthcare [9,10,11]. Most professionals report learning about new interventions from peers and relying on experience to select which ones to use in practice [12,13,14,15,16]. Importantly, it is the professional’s opinion of the intervention alternatives that most strongly influences the client’s preference for action [17].

Second, we agree that some EBPs, such as handwashing to reduce infection, need to be implemented in all settings. However, not every intervention should be assumed to be the correct action in all settings with all clients. Some EBPs can be adopted as written, some may need to be modified for a specific context or patient, and some will not be appropriate to adopt at all. Appropriate implementation of any given intervention therefore depends on many factors, including confirmation that the clients are members of the same population as the sample in the intervention study being considered for adoption. Frequently, descriptions of the population to whom an EBP is directed provide insufficient information to determine whether a client is a member of that population [14,15]. EBPs are often developed using evidence from randomized controlled trials (RCTs) [14]. However, the design features of RCTs that increase internal validity often decrease external validity, and appropriate application requires significantly more information about the participants, settings, and intervention than is typically available in a published study. Registries (e.g., ClinicalTrials.gov) and manualized interventions are two strategies developed to address the limited generalizability of clinical trials. They support clinicians in assessing generalizability by providing rich information on the participants, settings, and characteristics of the interventions used [18,19,20]. However, difficulties remain because of insufficient information [21]. Most interventions are complex, with many elements included in their application, and many EBPs do not clearly identify which intervention components are required (i.e., active ingredients) and which are not (i.e., inert ingredients). As a result, a partial implementation that omits some components may achieve the same outcome as the full protocol [22]. In other words, appropriate implementation of an EBP can look different across settings.

Lastly, significant efforts and interventions have been applied over the last twenty years to increase EBP implementation rates. In fact, the main purpose of the field of implementation science is to identify the methods and strategies that facilitate clinicians’ uptake of evidence-based practice and research [23,24]. One of the main assumptions underlying implementation science research is that organizational climate affects implementation rates [6,25]. Therefore, strategies for increasing implementation of EBPs have targeted decreasing barriers, increasing supports, and strengthening cultural expectations [6]. Although we agree that these are important variables, we suggest that an alternative causal mechanism should be considered. Recent evidence suggests that organizational climate is not a “causing” variable but a “moderating” factor in EBP implementation [5]. Moreover, the adoption of new interventions may not be the issue underlying low EBP implementation, as clinicians report frequently adopting and trialing new interventions with little or no evidence [16,26]. This suggests that we may need to step back and reexamine how we define, and attempt to solve, the problem of low implementation. We suggest that students need to be explicitly taught how to assess their personal causal models and their decision-making processes.

2. Cognitive Mapping

Cognitive mapping has been used for over half a century to understand how learning changes a person’s cognitive structure of knowledge [27]. Initially, this instructional and assessment tool was used to understand meaningful learning in children. (Meaningful learning occurs when the learner has fully learned new information and has connected it, by identifying relationships, to previously acquired knowledge [28].) Meaningful learning of the concepts and theory of a profession is the foundation of all professional knowledge [29]. Evidence supports that concept mapping accurately captures a person’s knowledge and can be used to measure change in that knowledge [28]. Developing a cognitive map follows a consistent sequence: first, the domain of knowledge is identified (e.g., a segment of text, a field trip, a clinic); second, a specific question that the map will address is developed to set the context; third, the mapper uses their tacit knowledge to identify key concepts. Some suggest using a “parking lot” for key concepts so that only factors with an established relationship are placed on the cognitive map [27], while others suggest placing all the factors on the map, as the full set represents the individual’s understanding of the concept [30]. Once the concepts are on the map, the crosslinks between different factors are identified. It is identifying these crosslinks, the underlying relationships between factors, that leads to deeper understanding and the construction of new knowledge [27].

Cognitive mapping provides two avenues for improving evidence-based practice in healthcare. First, evidence suggests that cognitive mapping is an effective tool for organizing new knowledge into a coherent representation, integrating it with prior knowledge [31], and developing clinical reasoning [32]. Second, cognitive mapping is effective in supporting divergent and creative thinking [33]. Divergent thinking is the ability to think originally, flexibly, and fluently [33]. Cognitive mapping encourages these activities by reducing working memory load, which frees cognitive capacity for critical analysis and problem solving (e.g., identifying associations, searching for alternative perspectives) [29,31,33]. Evidence also indicates that it helps learners engage in dynamic (rather than linear) thinking [34] and holistic analysis of concepts or problems, and that it leads to new cognitive representations of the problem (or concept) [33]. In other words, cognitive mapping supports adaptive change by changing learners’ cognitive representations of a problem, which in turn supports identifying new solutions to “old” problems. Importantly for EBP implementation, cognitive mapping, specifically fuzzy cognitive mapping, has been identified as an effective tool for understanding complex, uncertain problems and the perceptions (e.g., the causal functions of factors) of the various stakeholders [35]. Evidence indicates that cognitive mapping of complex problems can identify the causal structures that various stakeholders use to frame the problem and that influence their action decisions [35], and that it can contribute to achieving organizational change [36,37].
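To make the idea of a fuzzy cognitive map more concrete, the Python sketch below treats concepts as nodes with activation levels between 0 and 1 and causal links as signed weights, then iterates until the activations settle. It uses one commonly used update rule in which each concept keeps its previous activation; the function names, concept names, weights, and starting values are hypothetical illustrations, not drawn from the cited studies or from any particular mapping tool.

```python
import math


def sigmoid(x: float, steepness: float = 1.0) -> float:
    """Squash a raw activation into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-steepness * x))


def run_fcm(concepts, weights, initial, max_steps=50, tol=1e-6):
    """Iterate a fuzzy cognitive map until concept activations settle.

    concepts: list of concept names.
    weights:  {(source, target): w} with w in [-1, 1]; the sign gives the
              direction of causal influence, the magnitude its strength.
    initial:  {concept: activation in [0, 1]}.
    Update rule (one common variant that keeps each concept's previous value):
        A_i(t+1) = sigmoid(A_i(t) + sum_j w_ji * A_j(t))
    """
    state = dict(initial)
    for _ in range(max_steps):
        new_state = {}
        for target in concepts:
            influence = sum(w * state[src] for (src, dst), w in weights.items() if dst == target)
            new_state[target] = sigmoid(state[target] + influence)
        change = max(abs(new_state[c] - state[c]) for c in concepts)
        state = new_state
        if change < tol:
            break
    return state


# Hypothetical stakeholder map (names and weights are illustrative only).
concepts = ["staff time", "use of new intervention", "client outcome"]
weights = {
    ("staff time", "use of new intervention"): 0.6,
    ("use of new intervention", "client outcome"): 0.7,
}
initial = {"staff time": 0.5, "use of new intervention": 0.2, "client outcome": 0.3}
for concept, activation in run_fcm(concepts, weights, initial).items():
    print(f"{concept}: {activation:.2f}")
```

Running the same concepts with a second stakeholder's weights and comparing the settled activations is one way such a sketch could surface differing causal assumptions about the same problem.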

3. EBP Curricula

We agree that the development of various EBP curricula has led to better skills and knowledge of how to appraise and synthesize evidence to support local practice. These curricula have taken a complex, multistep process and made it easier by directly teaching the specific action steps that clinicians are expected to take (e.g., Ask, Identify, Appraise, Apply, Evaluate). Further, appraisal systems and tools (e.g., GRADE, CASP, Cochrane) decrease cognitive load by providing cues or reminders of the questions that need to be answered. More elaborate systems, like GRADE, even provide decision trees to decrease the chance of making an error. The EBP process, appraisal systems, and decision-support tools have been shown to improve the quality of care and clinical outcomes, as they increase the likelihood of selecting and enacting the correct decision [1]. However, decision-support tools are typically available only for clinical questions where there is more certainty and sufficient evidence to develop EBP activities (e.g., differential diagnosis of known problems, depression medication choice, and statin choice; Available online: https://carethatfits.org/ (accessed on 14 February 2021)). Critically, many intervention decisions are made under uncertain conditions [1], in which there is no strong, unequivocal evidence that only one action is correct.

4. Problem Solving in Medicine

Since the Renaissance, science and medicine have been rooted in the assumption that problems become easier to solve when divided into smaller and simpler units [38]. These smaller units lent themselves to linear causality, i.e., one cause produces one effect [39], and to heavy reliance on linear diagrams and flow charts [40]. Recently, support has been voiced for using “holistic” or biological systems approaches to think about clinical problems; however, many technological advances continue to require increased specialization, which reinforces reductionistic approaches [41]. On the other hand, understanding a problem from a dynamic systems approach requires examining it from multiple disciplines, leading to the mandate for interprofessional care [42]. However, this same push introduces another level of complexity, as each discipline advocates for its own scope of practice, allocation of resources, and professional definitions of the problem, goal, action steps, and outcome indicators [43]. Modern healthcare services resemble the parable of the blind men and the elephant: each profession uses its own professional paradigm, experiences, and client interactions to frame and solve the client’s problem. Importantly, each professional is likely to be using a different causal model to organize their knowledge and examine the problem [44]. In fact, even members of the same profession are just as likely to hold different mental conceptualizations of the same client, which may not be supported by the evidence or by formal theories (e.g., professional paradigms) [45,46]. Critically, integrated knowledge has been implicated as a prerequisite for successful problem solving [47,48], and evidence indicates that restructuring knowledge as new information is learned leads to richer causal models, which in turn make the selection of appropriate actions more likely [49].

Evidence on what humans do when presented with a novel problem suggests that heuristic reasoning is used in time-limited situations [50], as most people require approximately eleven to sixteen seconds to process, interpret, and formulate an initial reflective response [51]. In medicine and healthcare, heuristics are strategies used when decisions need to be made quickly; they ignore some information as a trade-off between the time available and the cost of obtaining better information [52]. Fast thinking can be strongly influenced by cognitive biases and can include ambiguities [53,54]. Actions taken in the past are easier to remember than actions not taken, or than how the problem was initially defined [55]. Fast thinking (e.g., intuition, heuristics) also strongly influences the initial seeking and interpretation of information, and it can lead to early closure if purposeful, conscious thinking is not engaged [56,57]. One educational intervention frequently used to slow down closure is the “think aloud”, in which the respondent describes their evidence, making their thinking visible to communication partners [50]. This gives all involved more time to reflect on the quality of the judgment and to compare the evidence with their own reflective thinking process [50]. However, “think aloud” is not a solution to all difficulties. Reflective practice and EBP frameworks were developed to address limitations in decision-making and heuristic reasoning; both strive to increase critical reflection and the integration of nonexperiential evidence into the decision-making process [56]. There is general consensus that thinking changes with experience and expertise [58,59,60,61,62,63,64,65,66,67]. One benefit of this change is that experienced clinicians often recognize similar situations (e.g., familiar problems) more quickly [68]. However, experience may not improve clinicians’ ability to adapt commonly used strategies to new problems or to recognize errors in their own thinking processes [50,69,70,71]. Since heuristics will always have a role in decision-making because of the speed expected in real-time interactions, it is important to understand what information is used in heuristic decision-making.

4.1. Heuristics and Causal Models

In everyday life, an adaptive response is the outcome of rapid decisions based on numerous interrelated variables [72,73]. In any given situation, we identify the variables that we believe are “causing” or influencing the current situation [72,73,74]. In other words, decision-making is not linear but a dynamic process with many alternative options for achieving an outcome [72]. Interestingly, there is strong consensus that clinicians’ beliefs about clinical problems are probabilistic: each variable in the causal model is weighted by how likely it is believed to be causing or influencing the outcome [1], consistent with Bayesian inference [75]. These mental models represent a person’s theory of how the world works and include estimates of the probabilistic relationship of each variable to the situation and the desired outcome [38,73]. Clinicians use their mental model to select the actions that their personal theory posits are most likely to achieve the desired outcome in a specific situation. Significant evidence supports that it is this mental model that drives intuitive and heuristic decisions [76,77,78,79]. Deliberate practice and EBP were specifically designed to support clinicians in solving problems as they emerge. We agree that these models for supporting practice are important and should not be discounted. However, professional education curricula need to teach students directly how to think about their thinking, how to appraise their knowledge, and how to integrate information into rich, evidence-informed causal models. We also posit that explicitly teaching this skill as an expectation of ethical practice, to be completed (at least informally) whenever new information is learned, significantly raises students’ expectations of how frequently they should engage in these activities.
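The Bayesian weighting described above can be illustrated with a minimal Python sketch of how a single belief weight might be revised. It assumes, purely for illustration, that a clinician can state a prior probability that a factor is causing the problem and the probability of an observation with and without that cause; the function names and numbers are hypothetical and are not taken from the cited studies.

```python
def update_belief(prior: float, p_obs_if_cause: float, p_obs_if_not_cause: float) -> float:
    """Posterior probability that a factor is causal, given one observation.

    Bayes' rule:
        P(C | obs) = P(obs | C) P(C) / [P(obs | C) P(C) + P(obs | not C) (1 - P(C))]
    """
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must be a probability")
    numerator = p_obs_if_cause * prior
    evidence = numerator + p_obs_if_not_cause * (1.0 - prior)
    if evidence == 0.0:
        raise ValueError("observation is impossible under both hypotheses")
    return numerator / evidence


def update_sequence(prior: float, observations: list) -> float:
    """Apply update_belief for each (p_obs_if_cause, p_obs_if_not_cause) pair in turn.

    Treats the observations as conditionally independent (a simplifying assumption).
    """
    belief = prior
    for p_if_cause, p_if_not in observations:
        belief = update_belief(belief, p_if_cause, p_if_not)
    return belief


# Hypothetical example: a 30% prior belief that poor visual-motor integration is
# driving a child's illegible handwriting, followed by two observations that are
# four times more likely if that factor is causal.
belief = update_sequence(0.3, [(0.8, 0.2), (0.8, 0.2)])
print(round(belief, 3))  # prints 0.873
```

In odds form, each such observation multiplies the prior odds (3:7) by 4, so two observations give 48:7, a probability of 48/55.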

4.2. Causal Models and Interventions

Ideally, each clinician relies on their own causal model to select the interventions to use with a specific client [80]. Causal models develop over time as the individualized outcome of the clinician’s thinking process, synthesizing formal professional theories and lived experiences and overlaying the client’s preferences and values related to the problem. Causal models use “rules” to identify the relationships among the factors, the problem, and the outcomes that are achievable. These rules develop from the professional paradigm, experience, and tacit knowledge. The accuracy of the rules (and of the causal model) depends on how the clinician represents the problem, the number of factors identified, and the factors that are missing. Evidence indicates that people’s causal judgments can be influenced by observed data when the data are consistent with their mental model; this thinking process is strongly influenced by limited knowledge, missing information or ignored causal assumptions, and cognitive biases [4]. However, making the causal model visible and providing specific strategies (e.g., seeking evidence that a factor is influencing the problem and is remediable) increases the likelihood that the causal model correctly weights the factors that are actually causing the problem [11,17,73].

4.3. Causal Mapping

Linear thinking can lead to erroneous causal assumptions, as it relies on readily available data points (e.g., proximal goal attainment) and has difficulty accounting for feedback and for variables that do not fit the linear model (e.g., “what if X causes Y, rather than Y causes X, or Z causes both Y and X?”) [6,42]. Historically, cognitive maps have been used with subjects who have extensive knowledge; however, cognitive maps can also provide insight into how new information is integrated and synthesized with previous experience and knowledge [3]. Cognitive maps have been used extensively in education as a teaching tool, as they reduce cognitive load [50] and encourage deeper learning of concepts by supporting the identification of relationships between variables [80]. Empirical evidence finds that cognitive maps help students organize information into a format that is retained at higher levels, and that they allow the learner to encode information in both visual and verbal forms (i.e., conjoint retention) [80]. Visual representations of complex relationships are easier to follow than relationships described in words alone, and they foster critical thinking and reasoning [80,81]. McHugh Shuster (2016) provides an example of how concept mapping helped nurses organize patient care by organizing patient data, analyzing relationships, establishing priorities, building on previous knowledge, and encouraging a holistic view of the client [82]. Cognitive mapping has also been shown to support EBP uptake [83,84]. This may be because new information is integrated into the clinician’s causal model of the problem [84], which increases the likelihood that an EBP is viewed as an alternative action for achieving the outcome. Evidence also shows how linguistic framing and analogies, including testimonials, moderate and change an individual’s causal model [39], highlighting a method that could be used to shift causal models.

4.4. Making Decision-Making Visible

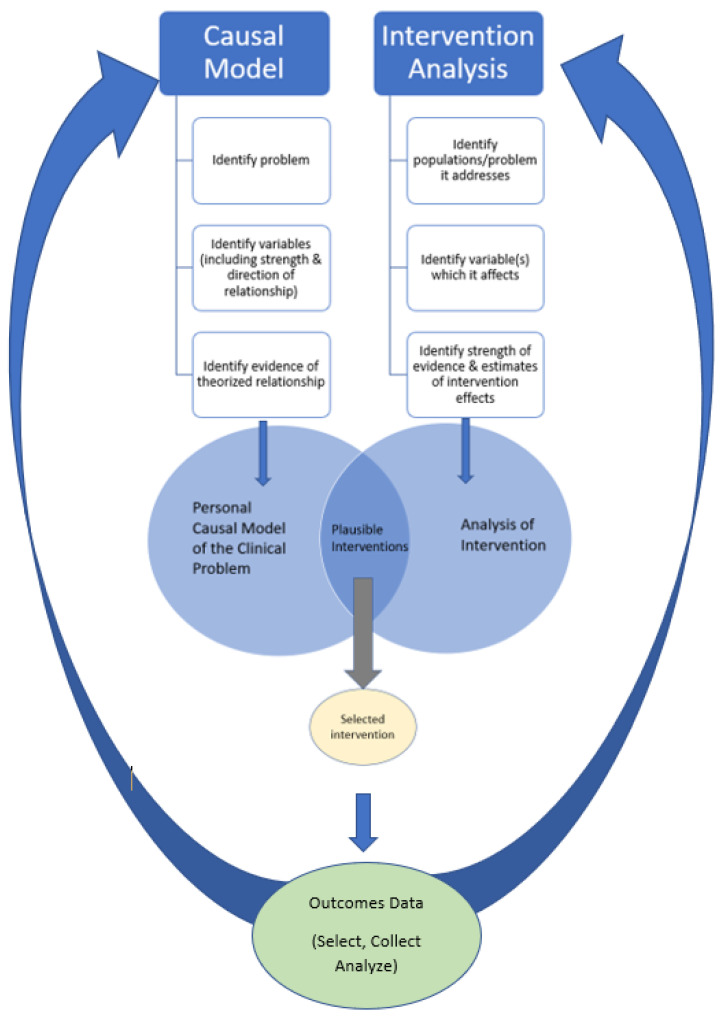

The decision to select one intervention over others arises from the intersection of two critical analysis processes: (1) the personal causal model of the problem and (2) the analysis of interventions (refer to Figure 1. Simple view of intervention selection). The outcome of this process is the selection, from all plausible interventions, of the one that the clinician believes is most likely to achieve the outcome in the specific situation, together with identification of the level of evidence (empirical or theoretical) supporting the decision and the confidence in it.

Figure 1.

Simple view of intervention selection (diagram only contains the main steps of each process).
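The intersection of a causal model with the analysis of interventions can be sketched in Python under strong simplifying assumptions. Here each link in the map carries a believed probability that the source changes the target and a label for its evidence level; an intervention is scored by its most probable path to the outcome (links treated as independent, feedback loops skipped), and the highest-scoring intervention is returned along with the evidence levels on that path. The class and function names, the handwriting variables, and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    target: str
    strength: float  # believed probability (0..1) that the source changes the target
    evidence: str    # "empirical" or "theoretical"


def strongest_path(graph, start, outcome, visited=frozenset()):
    """Return (path_strength, evidence_levels) of the strongest start -> outcome path.

    Path strength is the product of link strengths, i.e. links are treated as
    independent; feedback loops are skipped rather than modelled.
    """
    if start == outcome:
        return 1.0, ()
    visited = visited | {start}
    best = (0.0, ())
    for link in graph.get(start, []):
        if link.target in visited:
            continue
        strength, levels = strongest_path(graph, link.target, outcome, visited)
        candidate = (link.strength * strength, (link.evidence,) + levels)
        if candidate[0] > best[0]:
            best = candidate
    return best


def select_intervention(graph, interventions, outcome):
    """Pick the intervention whose strongest path to the outcome is most probable."""
    scored = {name: strongest_path(graph, name, outcome) for name in interventions}
    choice = max(scored, key=lambda name: scored[name][0])
    return choice, scored[choice]


# Hypothetical causal model for handwriting legibility (values are illustrative only).
graph = {
    "task-specific practice": [Link("fine motor control", 0.7, "empirical")],
    "fine motor control": [Link("legibility", 0.6, "empirical")],
    "weighted pencil": [Link("legibility", 0.2, "theoretical")],
}
choice, (score, evidence) = select_intervention(
    graph, ["task-specific practice", "weighted pencil"], "legibility"
)
print(choice, round(score, 2), evidence)
```

Returning the evidence levels alongside the score mirrors the point that a selection should come with a statement of how well supported it is, not just which option won.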

Many of the interventions commonly used in practice have limited evidence of effectiveness [85] and are complex, with multiple moderating and influencing variables [86]. The goal of this process is to develop an evidence-informed causal model of the clinical problem, which gives the student (or clinician) a deeper and more complex understanding of the theorized causal relationships among the variables. This process therefore brings awareness to what individuals are thinking, and how and why. It also broadens the reasons for seeking new information: one main purpose becomes keeping knowledge current about clinical problems that the clinician may address in the future and expanding the integration of knowledge from various sources. The use of expected routines and habits for thinking about practice derives from deliberate reflective practice [87,88], in which clinicians respond to triggering events through a structured process of reflection and critical analysis. In contrast, evidence-informed thinking is an expected habit of practice, done frequently and routinely and not only when triggering events occur.

In making causal models visible, students are taught that the first step when confronted with a problem (e.g., a medical condition, clinical problem, culture, spirituality) is to tentatively define the concept or problem and then critically reflect on and analyze what they think they know, believe, and value. At this stage, they use their intuition and tacit knowledge to identify variables that they think may be involved. Consistent with the brainstorming stage of problem-solving frameworks, variables are placed on the map without regard to the strength or plausibility of their relationships, as the goal is to bring to consciousness the variables that may influence thinking at later stages. In the second stage, students are explicitly taught to seek and read the literature and talk with peers in order to identify other variables that are theorized or empirically known to influence the problem. They add the newly identified variables and use linking verbs to identify the relationships between variables (theoretical or supported by evidence). They also indicate the strength and direction of each relationship (one-way, two-way, or related through other variables) [89]. Some authors suggest removing concepts that have no relationship at this point [89]. However, there may be benefits to leaving all variables on the map and including the evidence that shows the lack of a relationship. This may provide a conscious, visible “nudge” to reflect on why they believe there is a causal relationship and to analyze the phenomenon more deeply. Importantly, a “lack of evidence” may reflect a lack of data on the relationship rather than the lack of a relationship; this supports the need to identify and collect local data for use in outcomes reflection (refer to Table 1. Making thinking visible: evidence-informed causal models). This process is needed to support deliberate practice models, which, for simplicity, are not fully described here.
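The two mapping stages above can be represented as a small data structure. The Python sketch below is a hypothetical illustration (the class names, fields, and strength labels are our own): variables record whether they came from intuition or the literature, links carry a linking verb, strength, direction, and supporting evidence, and variables without any evidenced link stay on the map and are listed as prompts for reflection and local data collection rather than deleted.

```python
from dataclasses import dataclass, field


@dataclass
class CausalLink:
    source: str
    target: str
    verb: str                # linking verb, e.g. "predicts", "reduces"
    strength: str            # e.g. "weak", "moderate", "strong"
    two_way: bool = False    # True for a bidirectional relationship
    evidence: list = field(default_factory=list)  # citations or notes supporting the link


@dataclass
class CausalMap:
    problem: str
    variables: dict = field(default_factory=dict)  # name -> origin: "intuition" or "literature"
    links: list = field(default_factory=list)

    def __post_init__(self):
        self.variables.setdefault(self.problem, "problem")

    def add_variable(self, name, origin):
        # Stage 1 adds intuitive variables; stage 2 adds those found in the literature.
        self.variables.setdefault(name, origin)

    def link(self, source, target, verb, strength, two_way=False, evidence=None):
        for name in (source, target):
            if name not in self.variables:
                raise KeyError(f"add {name!r} to the map before linking it")
        self.links.append(CausalLink(source, target, verb, strength, two_way, list(evidence or [])))

    def unsupported(self):
        """Variables kept on the map without an evidenced link: prompts for
        reflection and candidates for local data collection."""
        supported = {n for ln in self.links if ln.evidence for n in (ln.source, ln.target)}
        return sorted(v for v in self.variables if v not in supported and v != self.problem)


# Hypothetical handwriting example; the evidence entry is a placeholder, not a real citation.
cmap = CausalMap("handwriting legibility")
cmap.add_variable("pencil grip", "intuition")
cmap.add_variable("fine motor control", "intuition")
cmap.add_variable("visual-motor integration", "literature")
cmap.link("visual-motor integration", "handwriting legibility", "predicts", "moderate",
          evidence=["<placeholder: study located in stage 2 search>"])
print(cmap.unsupported())
```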

Table 1.

Making thinking visible: evidence-informed causal models.

| Step | Action |
|---|---|
| Step 1 | Identify possible problem(s), concepts, etc. |
| Step 2 | Reflect on your beliefs, knowledge, experiences, and place any variable which may be affecting the problem on the map |
| Step 3 | Seek empirical evidence and background information |
| Step 4 | Identify and link interventions to the variables they affect |
| Step 5 | Assess applicability, feasibility to context and client |
| Step 6 | Identify and collect outcomes data points locally which will allow assessing accuracy of decision |
| Step 7 | Reflect on outcomes data: |


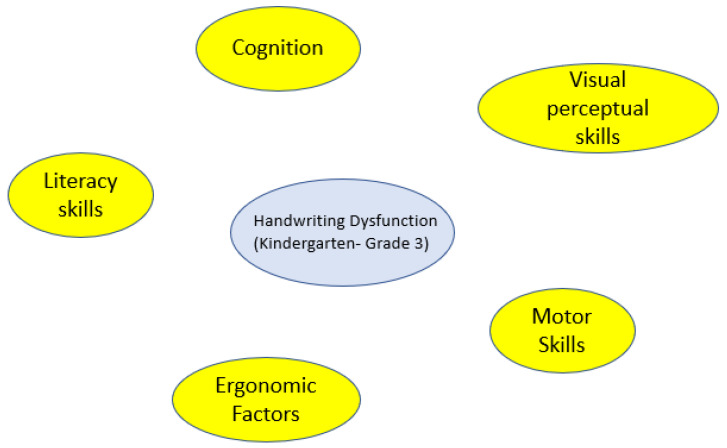

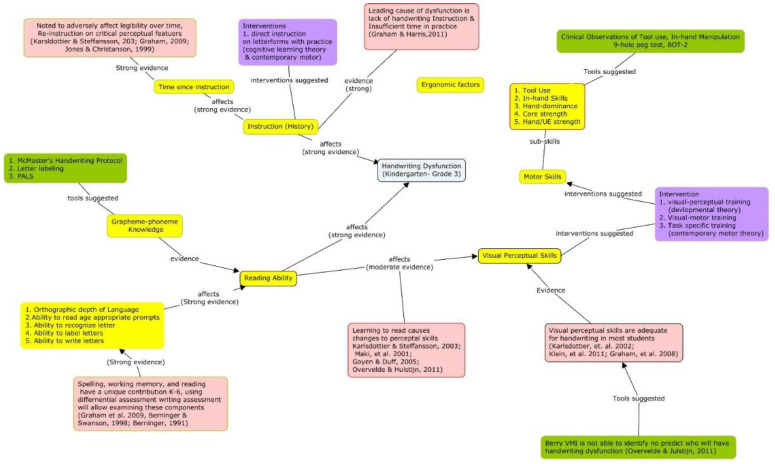

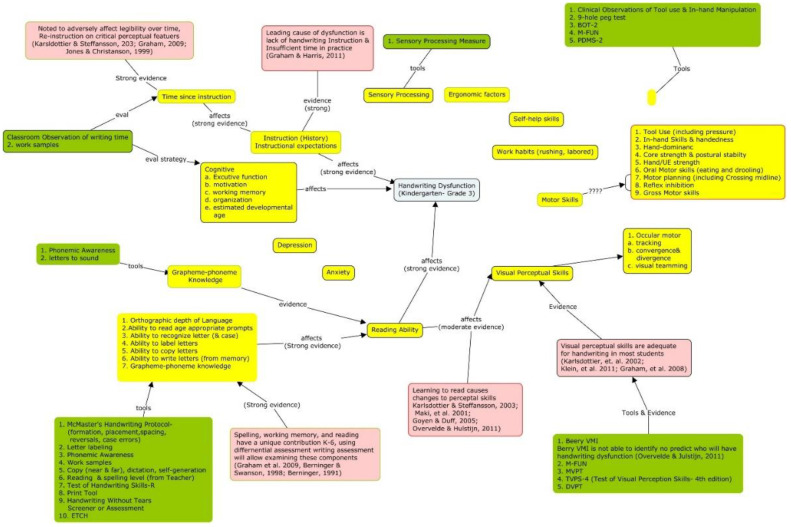

For clinical problems, causal maps provide four specific benefits. First, they make searching for evidence feasible and more efficient, as the variables can become search terms. Second, the factors can be used to identify interventions that are theorized to affect the clinical presentation and the outcome of interest. Third, a causal model to which interventions have been added will contain alternative options for achieving an outcome. Interventions connected to different variables can be inserted into the clinical question (e.g., a Population, Intervention, Comparison, Outcome (PICO) question). In other words, the variables and concepts on a cognitive map provide starting points for the formal search strategies described in EBP textbooks, which can otherwise be a very difficult and frustrating experience for people with limited knowledge of a phenomenon. Figure 2, Figure 3 and Figure 4 provide an example of this process as it relates to handwriting dysfunction in young children. Finally, this process supports the development of evidence-informed causal models and the integration of new information into tacit knowledge by having students add new information as they progress through their professional programs and link different cognitive maps together. Using cognitive mapping is consistent with spiral curricula, in which topics are purposefully revisited and each revisit builds on previous knowledge by both deepening it (and the complexity of understanding) and expanding its connectedness to other knowledge [90]. Tools such as CMapTools® (Available online: https://cmap.ihmc.us/ (accessed on 14 February 2021)) allow students to actively organize and construct their own maps, connect maps together, embed resources, and share maps with others.

Figure 2.

Intuitive factors thought to affect handwriting dysfunction.

Figure 3.

Preliminary causal model after searching the literature. It includes the direction of each causal relationship and the strength of the evidence supporting it. Placing the evidence on the map makes it possible to confirm the appraisal of that evidence.

Figure 4.

Evidence-informed causal model with interventions and assessment tools suggested in the literature. Blue indicates the target problem. Yellow indicates factors that are believed, theoretically and/or empirically, to have a relationship to the target outcome. Pink indicates evidence on the factor’s relationship to other factors or the target problem. Green indicates assessment tools.

As illustrated in the figures, cognitive maps can convey information and complex relationships cogently.
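The first and third benefits of causal maps described above (variables as search terms, and alternative interventions feeding clinical questions) can be sketched in a few lines of Python. The helper names are hypothetical, the Boolean syntax is a generic form whose quoting and operators differ between databases, and the handwriting terms and intervention names are illustrative placeholders rather than the content of Figures 2 to 4.

```python
from itertools import combinations


def search_string(population, variables, outcome):
    """Combine map variables into a generic Boolean query.

    Quoting and operator syntax differ between databases; adjust before use.
    """
    either_variable = " OR ".join(f'"{v}"' for v in variables)
    return f'"{population}" AND ({either_variable}) AND "{outcome}"'


def pico_questions(population, interventions, outcome):
    """One PICO question for each pair of alternative interventions on the map."""
    return [
        f"In {population}, does {i} compared with {c} improve {outcome}?"
        for i, c in combinations(interventions, 2)
    ]


population = "young children"
outcome = "handwriting legibility"
print(search_string(population, ["visual-motor integration", "fine motor control"], outcome))
for question in pico_questions(
    population, ["task-specific practice", "pencil grips", "sensorimotor activities"], outcome
):
    print(question)
```

Pairing every alternative with every other is a deliberate simplification; in practice a learner would keep only the comparisons that the map suggests are plausible.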

4.5. Intervention Theory

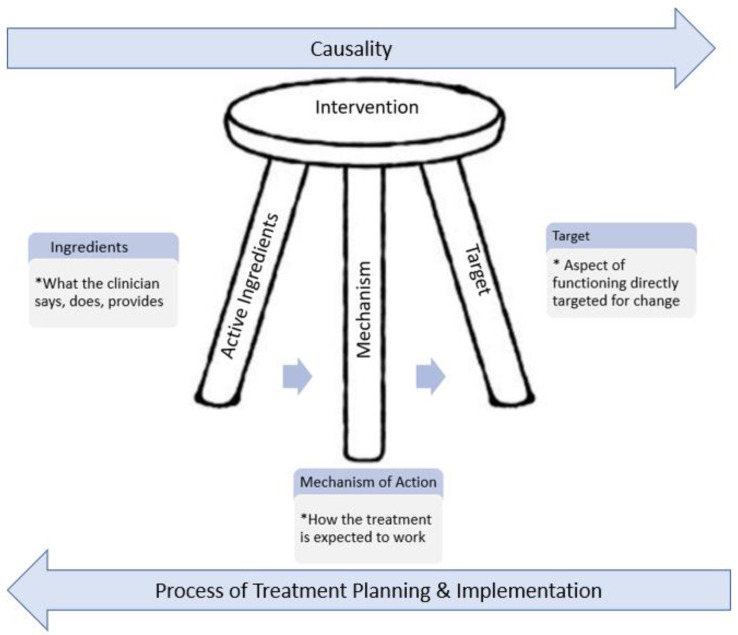

Many interventions addressing behavioral change are complex, consisting of various components that have been combined because they are theorized to change important variables affecting the clinical presentation [91]. Intervention theory consists of three components: the target that the intervention changes, the mechanism of change, and the ingredients (components) that produce the change.

Components, or “ingredients” in intervention theory, include anything the clinician does to or for a client, for example, medications, assistive devices, motivational speech, ultrasound, manual touch, instruction, feedback, and physical cues. Ingredients target small aspects of the clinical outcome. In practice, interventions contain both active ingredients, which are required to bring about change in the outcome, and inert ingredients, which have no effect on the targeted outcome [92,93]. Identifying the active ingredients can be cost-effective, as it reduces time and resource requirements: fewer activities need to be done to achieve the outcome [22]. Typically, however, it is not clear which ingredients of an intervention are active [93,94], and not all components being applied in the intervention are clearly identified. This is likely due to the difficulty of separating the complex relationships between components when change occurs in dynamic systems [91]. In other words, the form of delivery (when, where, how, how much, who, etc.) is critical to the “active ingredient” [95]. Therefore, being able to assess intervention fidelity is critical. Fidelity is “the extent to which the core components of a program, differentiated from ‘business as usual,’ are carried out as intended upon program enactment” [22] (p. 320). Fidelity in studies provides confidence that it was the intervention that caused the desired effect. Fidelity measures can also be used to differentiate the components more likely to be active ingredients from those that are inert [22]. This is done by comparing outcomes when components are delivered as defined with outcomes when they are not, which allows the effect of each component on the outcome to be assessed. If fidelity to a component is low but equal effects are achieved, the active ingredients are more likely to be among the components that were delivered [22].
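The fidelity comparison described above can be approximated with a descriptive screen. The Python sketch below assumes hypothetical local records listing which protocol components each client received and a single outcome score; it reports each component's delivery rate and the difference in mean outcome when it was delivered versus omitted. The function name, component names, and scores are illustrative only, and a difference near zero is merely a prompt to suspect an inert ingredient, not a causal test (small samples and confounding can easily mislead).

```python
from statistics import mean


def component_screen(sessions, components):
    """Descriptive fidelity screen for each intervention component.

    sessions:   list of (delivered_components, outcome_score) pairs, one per client.
    components: components defined in the protocol.
    Returns {component: (delivery_rate, mean_if_delivered - mean_if_omitted)}.
    The difference is None when a component was always or never delivered.
    """
    summary = {}
    for comp in components:
        delivered = [score for given, score in sessions if comp in given]
        omitted = [score for given, score in sessions if comp not in given]
        rate = len(delivered) / len(sessions)
        diff = mean(delivered) - mean(omitted) if delivered and omitted else None
        summary[comp] = (rate, diff)
    return summary


# Hypothetical local records: outcomes are the same whether or not ingredient_1 was delivered.
sessions = [
    ({"ingredient_1", "ingredient_2"}, 8),
    ({"ingredient_2"}, 8),
    ({"ingredient_1"}, 3),
    (set(), 3),
]
for comp, (rate, diff) in component_screen(sessions, ["ingredient_1", "ingredient_2"]).items():
    print(f"{comp}: delivered {rate:.0%}, outcome difference {diff}")
```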

A clinical example of how fidelity tools have been used to examine practice is the history of constraint-induced movement therapy (CIMT). CIMT is an intervention that addresses function of a hemiplegic upper extremity. Early protocols called for constraining the uninvolved upper extremity for 90% of waking hours and intensive therapy for six hours a day, for 10 days over two weeks [96]. However, current understanding of the effects of various treatments for increasing function and use of the affected extremity suggests that CIMT is no more effective than high-dose standard care occupational therapy or dose-matched bimanual therapy, neither of which includes the “constraining” ingredient [97]. This example shows how assessing the fidelity of local application to the original protocol allowed clinicians to identify differences in how ingredients were applied (including dose, timing, etc.). When consistent outcomes were achieved, this led to substantially reduced intensive therapy time, the use of oven mitts (instead of expensive “therapy” mitts), and eventually the examination of alternative protocols such as bimanual training [98]. Currently, the evidence suggests that these interventions (CIMT, bimanual, and intensive standard care) work because of the dose of the active ingredient, task-specific training [99]. Research on task-specific training is now exploring more specific questions (e.g., dose, timing) whose answers are needed to develop effective, cost-effective, and nonburdensome interventions [100].

One issue with many EBPs is that the descriptions of the interventions lack a clear explanation of parameters (i.e., what, when, how, or how long) for any specific activity or component [95,101]. This “black box” of intervention components creates a barrier to translation to different contexts and clients [14,101,102,103] and helps explain the commonly reported reliance on peers and on what was previously done when selecting interventions [14,101]. Treatment theory has been proposed as a tool to make intervention selection clearer and to make outcome data more interpretable and more transferable to different clients and contexts [92,94,103]. This approach specifies three structures: the target of the treatment (e.g., body function, structure, participation) that is altered by the intervention; the ingredients that produce the change; and the mechanism by which the ingredients cause change in the target of the treatment [92,94,102]. This analysis, together with traditional appraisal of estimates of effect, is what allows an intervention to be applied appropriately with a different client and setting [92,103] (see Figure 5, Three-part structure of intervention and intervention selection).

Figure 5.

The Three-part structure of Intervention and Intervention Selection. Based on [83].

4.6. Teaching How to Think about Interventions

We argue that it is important to explicitly teach students what information they should seek when learning about a new intervention. Because of the complexity of the information that bears on appropriateness, feasibility, and applicability, the quality indicators for interventions were identified from suggestions of best practice in a variety of areas: intervention theory, total quality improvement, continuing education effectiveness, clinical reasoning, and EBP. Interestingly, the recommendations were similar across these literatures. Appropriate application, and an increased likelihood of actually selecting an intervention, depend first and foremost on the learner understanding the problem (e.g., defining it) and the need for better client outcomes; in other words, recognizing that what is typically achieved is less than what is possible [91,94]. This recognition provides the motivation learners need to seek out and learn about new interventions, engaging the potential to change behavior [91]. Second, appropriate application depends on the learner gaining information about the problem: what interventions (and active components) work, why they work, and any contextual factors that may influence the effectiveness of the intervention [1,2]. Contextual factors are critical, including what components were done, when, where, how, and how much [94]. This information also includes a discussion of alternative interventions that could be used to weigh plausible courses of action [92,102] and the measurement indicators that were used to differentiate between them [94,103]. Establishing the habit of discussing commonly used alternatives can help learners access their experiential knowledge, which can be used to compare client factors, activities, etc. [2]. Having students use the intervention rubric to reflect on the information provided (from any source) about a specific intervention reduces cognitive load and encourages them to ask for and seek out needed information.
It also supports students in identifying when there is a lack of evidence (empirical or theoretical), a lack of a causal mechanism, and/or a lack of clearly identified data points that would indicate change (see Appendix A, Intervention Rubric).

Clinical Example of Intervention Analysis

By the mid-1990s, auditory integration training had become a popular intervention worldwide for people with autism. It was theorized to improve many personal factors, such as attention span, eye contact, and tantrums. Because of its widespread use, multiple efficacy studies were completed, and they showed that the intervention had no effect [104]. However, by the time the Cochrane Collaboration issued its findings, the original intervention, attributed to Berard (1993) [105], had been modified and adapted into multiple interventions with new names (e.g., the Tomatis method (Available online: https://www.tomatis.com/en (accessed on 12 February 2021)), SAMONAS Sound Therapy (Available online: https://soundsory.com/samonas-sound-therapy/ (accessed on 12 February 2021)), The Listening Program (Available online: https://advancedbrain.com/about-tlp/ (accessed on 12 February 2021)), Therapeutic Listening (Available online: https://vitallinks.com/therapeutic-listening/ (accessed on 12 February 2021)), etc.) [106]. It is only through an analysis of the critical aspects of these interventions that a clinician becomes aware that the new interventions use the same treatment theory. The differences (e.g., name, type of headphones, where to purchase the music) appear to be only surface changes. Therefore, the evidence as appraised by Sinha et al. (2011) suggests that all of these new interventions would also lack evidence of effectiveness. Importantly, these new iterations of auditory integration training continue to be used by clinicians, although developers now target different audiences (e.g., occupational therapists, educators, and parents). Notably, the information readily available on these interventions is limited and is located on highly attractive websites that present powerful narratives of client change, written by people who have financial incentives to sell the tools of the intervention.
None of the newer iterations clearly provides specific examples that would support viewing it as operating through a different causal mechanism from the one tested in the studies that showed no effect [106].

5. Conclusions

In order to select the best intervention, it is essential that the clinician use an evidence-informed causal model and appropriately integrate information on many different variables. Evidence suggests that making the complex thinking process visible supports deep learning and improves causal reasoning, which is used to predict the best intervention option. Expanding our instructional methods to include direct instruction (making thinking visible) has the potential to improve the accuracy of decisions, and therefore client outcomes, because it supports the development of clinicians' underlying thinking habits. Importantly, intervention selection and EBP are both highly influenced by the clinician’s causal model. Therefore, improving the quality and habits of thinking has the potential to increase the likelihood of selecting the “best” interventions for our clients. Specifically, teaching students, and helping them establish the habit of developing an evidence-informed causal model, has the potential to improve heuristic decision-making, because evidence suggests that one’s causal model strongly influences heuristic decision-making. Cognitive support tools such as the intervention rubric (Appendix A) also have the potential to improve the appropriate selection of interventions by encouraging deeper analysis of interventions and by identifying measurement data points that can be used to assess the accuracy of the information used to select them. Cognitive mapping, specifically causal modeling, also supports the EBP process by highlighting alternative terms and intervention strategies to use in that process.

Appendix A. Intervention Rubric

| Category | “Best Practice” | Competent | Inadequate |
|---|---|---|---|
| Name | Clearly names the intervention and provides alternative names that may be associated with it (e.g., The Listening Program and auditory integration training), and links to resources that provide specific, in-depth information about the intervention. | Provides only the name of the specific intervention being described and links to resources that provide specific, in-depth information about the intervention. | Does not clearly name the intervention or provide links to resources. |
| Theory | Clearly describes the theoretical model(s) and tenets that were used to develop the intervention, including: | Identifies the theoretical model used to develop the intervention, including: | Labels the theoretical model used to develop the intervention. |
| Population | Clearly describes which populations should be considered and/or ruled out as potential candidates for this intervention. | Labels and describes which clinical populations would and would not benefit from this intervention. | Provides only minimal information on inclusion/exclusion criteria for the intervention. |
| Measuring the impact of intervention | Clearly describes “best practice” aspects of measuring the outcomes of the intervention, including: | Provides the major indicators that should be monitored to measure the impact of the intervention. | Does not provide any information on measuring the impact of the intervention. |
| Intervention Process: “Key elements of Intervention” | Clearly describes a theorized process, or evidence of the process, by which the intervention causes the change. | Based on a broad theory (e.g., dynamic systems, diffusion of innovation), and/or relies heavily on values and beliefs. | Does not address. |
| Intervention Process: clinical reasoning | Clearly describes the important indicators that should be considered while reflecting on the client-intervention-outcome process. Clearly describes all alternative interventions that should be considered and ruled out due to clinical and/or client characteristics (e.g., consistent with differential diagnosis). | Highlights some indicators that should be considered while reflecting on the client-intervention-outcome process. Identifies some alternative interventions that should be considered. | Does not provide any indicators that should be considered while reflecting on the client-intervention-outcome process. Does not identify any alternative interventions that should be considered. |
| Evidence | Peer-reviewed evidence: Clearly describes the strength of evidence supporting use of this intervention with each population. Clinical evidence: Provides a clear synthesis of clinical evidence. | Peer-reviewed evidence: Uses multiple-person/multiple-site narratives or clinical experience to describe the client change experienced when using this intervention. Clinical evidence: Describes a systematic outcomes data collection system, including the list of data points collected; however, only some clinical outcomes data are provided. | Peer-reviewed evidence: None is provided; relies on expert opinion and personal experience. Clinical evidence: Anecdotal experience with a general description of client outcomes and heavy reliance on goal achievement (unstandardized and/or lacking evidence of reliability/validity, with limited interpretability of change scores). |

Justification for criteria:

- Name: Intervention has a clear name that allows it to be distinguished from other interventions.

- Interventions that have more resources available, such as protocols or manuals, allow a deeper understanding of the assessment, the application (activities that should and should not be done), and the theoretical underpinnings that explain how the intervention causes a different outcome.

- Fidelity Tools: Provide criteria to assess current practice for similarities and differences, and to assess whether local application is consistent with the intervention as theorized or as delivered in efficacy studies.

- Theory: “Why it works”

Interventions are developed using treatment theory, which identifies a specific group of activities and specifies the mechanism by which the active ingredients* of a treatment produce change in the treatment target. In other words, it identifies the aspect of function that is directly affected by the treatment. A well-defined treatment theory will distinguish active ingredients (those that cause the change) from inactive ingredients. See Figure 5 and Hart & Ehde [82] for a more extensive discussion of treatment theory.

- Active ingredients involve, at a minimum, the things that the clinician does, says, and applies to the client that influence a targeted outcome. These include both communicative processes and sequential healing, learning, and environmental processes.

- Essential ingredients are those activities which must be included in order for it to be a given intervention.

- Mechanism of Action: clearly identifies the processes by which the ingredients bring about change on the outcome target.

- Measurable/observable treatment targets: “things” that you measure or observe to know if the intervention is beginning to work in therapy, i.e., clinical process measures.

- Population: Clearly defines who is or is not a member of the population of interest, including clinical characteristics.

- Inclusion/exclusion criteria: The clinical or client characteristics indicating whether the intervention should or should not be applied.

- Measuring the Impact of Intervention: Clearly describes “best practice” aspects of choosing and observing/measuring the outcomes of the intervention, including:

- Using the ICF framework, identifying the measurement constructs that are indicators of change theoretically “caused” by the intervention. See Addendum A: Outcome Domains Related to Rehabilitation.

- Collecting client-reported outcomes at baseline (before therapy), during and at the end of therapy, and after discharge. Distal measures should include satisfaction, value of change, and functional change outside of the medical-model indicators (e.g., the activity, participation, or quality-of-life levels of the ICF). Distal measures will provide evidence that the clinical interventions translated to meaningful change in life circumstances.

- Identify and describe the standardized subjective and objective measures that should be used, including their psychometric properties and appropriate clinical interpretations of the scores obtained.

- Identification of assessment and evaluation tools which have known reliability, sensitivity, and predictive validity, when possible.

- Describe “best practice” for non-standardized data points, e.g., provides clinical data indicators which should be systematically collected locally if the intervention is provided.

- Intervention Process: “Key elements of Intervention”

Clinical reasoning: Clearly describes the important indicators (e.g., person, organizational, and socio-political factors) that should be considered in the intervention process, including conditional decisions regarding strategy feasibility, applicability, and appropriateness in the specific situation.

- Evidence: “What Works”

Peer-reviewed evidence:

- Clearly describes the strength of evidence supporting the use of the intervention with each population.

- Peer-reviewed evidence should be provided when possible.

Clinical evidence:

- Provides a synthesis of clinical outcomes data, including the number of sites using the intervention and the number of clinicians (indicators of replication).

- Provides the estimated number of clients who have received the intervention and who have pre-post outcome data

- Provides estimates of the number and characteristics of patients who did not respond to treatment in the expected time frame.

- Provides information on how bias was minimized and how patients were allocated to the “new” intervention (compared with standard treatment).

Author Contributions

Conceptualization, A.B. and R.B.K.; writing—original draft preparation, A.B.; writing—review and editing, R.B.K.; visualization, A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This article did not involve human or animal subjects.

Informed Consent Statement

This article did not involve any human or animal subjects.

Data Availability Statement

There is no data.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Lehane E., Leahy-Warren P., O’Riordan C., Savage E., Drennan J., O’Tuathaigh C., O’Connor M., Corrigan M., Burke F., Hayes M., et al. Evidence-based practice education for healthcare professions: An expert view. BMJ Evid. Based Med. 2019;24:103–108. doi: 10.1136/bmjebm-2018-111019.

2. Melnyk B.M., Fineout-Overholt E., Giggleman M., Choy K. A test of the ARCC© model improves implementation of evidence-based practice, healthcare culture, and patient outcomes. Worldviews Evid. Based Nurs. 2017;14:5–9. doi: 10.1111/wvn.12188.

3. Wu Y., Brettle A., Zhou C., Ou J., Wang Y., Wang S. Do educational interventions aimed at nurses to support the implementation of evidence-based practice improve patient outcomes? A systematic review. Nurse Educ. Today. 2018;70:109–114. doi: 10.1016/j.nedt.2018.08.026.

4. Saunders H., Gallagher-Ford L., Kvist T., Vehviläinen-Julkunen K. Practicing healthcare professionals’ evidence-based practice competencies: An overview of systematic reviews. Worldviews Evid. Based Nurs. 2019;16:176–185. doi: 10.1111/wvn.12363.

5. Williams N.J., Ehrhart M.G., Aarons G.A., Marcus S.C., Beidas R.S. Linking molar organizational climate and strategic implementation climate to clinicians’ use of evidence-based psychotherapy techniques: Cross-sectional and lagged analyses from a 2-year observational study. Implement. Sci. 2018;13:85. doi: 10.1186/s13012-018-0781-2.

6. Melnyk B.M. Culture eats strategy every time: What works in building and sustaining an evidence-based practice culture in healthcare systems. Worldviews Evid. Based Nurs. 2016;13:99–101. doi: 10.1111/wvn.12161.

7. Aarons G. Mental health provider attitudes towards adoption of evidence-based practice: The Evidence-Based Practice Attitudes Scale (EBPAS). Ment. Health Serv. Res. 2004;6:61–74. doi: 10.1023/B:MHSR.0000024351.12294.65.

8. Locke J., Lawson G.M., Beidas R.S., Aarons G.A., Xie M., Lyon A.R., Stahmer A., Seidman M., Frederick L., Oh C. Individual and organizational factors that affect implementation of evidence-based practices for children with autism in public schools: A cross-sectional observational study. Implement. Sci. 2019;14:1–9. doi: 10.1186/s13012-019-0877-3.

9. Konrad M., Criss C.J., Telesman A.O. Fads or facts? Sifting through the evidence to find what really works. Interv. Sch. Clin. 2019;54:272–279. doi: 10.1177/1053451218819234.

10. Goel A.P., Maher D.P., Cohen S.P. Ketamine: Miracle drug or latest fad? Pain Manag. 2017. doi: 10.2217/pmt-2017-0022.

11. Snelling P.J., Tessaro M. Paediatric emergency medicine point-of-care ultrasound: Fundamental or fad? Emerg. Med. Australas. 2017;29:486–489. doi: 10.1111/1742-6723.12848.

12. Scurlock-Evans L., Upton P., Upton D. Evidence-based practice in physiotherapy: A systematic review of barriers, enablers and interventions. Physiotherapy. 2014;100:208–219. doi: 10.1016/j.physio.2014.03.001.

13. Upton D., Stephens D., Williams B., Scurlock-Evans L. Occupational therapists’ attitudes, knowledge, and implementation of evidence-based practice: A systematic review of published research. Br. J. Occup. Ther. 2014;77:24–38. doi: 10.4276/030802214X13887685335544.

14. Jeffery H., Robertson L., Reay K.L. Sources of evidence for professional decision-making in novice occupational therapy practitioners: Clinicians’ perspectives. Br. J. Occup. Ther. 2020:0308022620941390. doi: 10.1177/0308022620941390.

15. Robertson L., Graham F., Anderson J. What actually informs practice: Occupational therapists’ views of evidence. Br. J. Occup. Ther. 2013;76:317–324. doi: 10.4276/030802213X13729279114979.

16. Hamaideh S.H. Sources of Knowledge and Barriers of Implementing Evidence-Based Practice Among Mental Health Nurses in Saudi Arabia. Perspect. Psychiatr. Care. 2016;53:190–198. doi: 10.1111/ppc.12156.

17. Shepherd D., Csako R., Landon J., Goedeke S., Ty K. Documenting and Understanding Parent’s Intervention Choices for Their Child with Autism Spectrum Disorder. J. Autism Dev. Disord. 2018;48:988–1001. doi: 10.1007/s10803-017-3395-7.

18. Mathes T., Buehn S., Prengel P., Pieper D. Registry-based randomized controlled trials merged the strength of randomized controlled trails and observational studies and give rise to more pragmatic trials. J. Clin. Epidemiol. 2018;93:120–127. doi: 10.1016/j.jclinepi.2017.09.017.

19. National Library of Medicine. ClinicalTrials.gov Background. Available online: https://clinicaltrials.gov/ct2/about-site/background (accessed on 29 January 2021).

20. Wilson G.T. Manual-based treatments: The clinical application of research findings. Behav. Res. Ther. 1996;34:295–314. doi: 10.1016/0005-7967(95)00084-4.

21. Holmqvist R., Philips B., Barkham M. Developing practice-based evidence: Benefits, challenges, and tensions. Psychother. Res. 2015;25:20–31. doi: 10.1080/10503307.2013.861093.

22. Abry T., Hulleman C.S., Rimm-Kaufman S.E. Using indices of fidelity to intervention core components to identify program active ingredients. Am. J. Eval. 2015;36:320–338. doi: 10.1177/1098214014557009.

23. Glasgow R.E., Eckstein E.T., ElZarrad M.K. Implementation Science Perspectives and Opportunities for HIV/AIDS Research: Integrating Science, Practice, and Policy. J. Acquir. Immune Defic. Syndr. 2013;63:S26–S31. doi: 10.1097/QAI.0b013e3182920286.

24. Khalil H. Knowledge translation and implementation science: What is the difference? JBI Evid. Implement. 2016;14:39–40. doi: 10.1097/XEB.0000000000000086.

25. Aarons G.A., Ehrhart M.G., Farahnak L.R., Sklar M. Aligning Leadership Across Systems and Organizations to Develop a Strategic Climate for Evidence-Based Practice Implementation. Annu. Rev. Public Health. 2014;35:255–274. doi: 10.1146/annurev-publhealth-032013-182447.

26. Yadav B., Fealy G. Irish psychiatric nurses’ self-reported sources of knowledge for practice. J. Psychiatr. Ment. Health Nurs. 2012;19:40–46. doi: 10.1111/j.1365-2850.2011.01751.x.

27. Cañas A.J., Novak J.D. Re-examining the foundations for effective use of concept maps. In: Concept Maps: Theory, Methodology, Technology. Proceedings of the Second International Conference on Concept Mapping, San Jose, Costa Rica, 5–8 September 2006. Universidad de Costa Rica; San Jose, Costa Rica. pp. 494–502.

28. Vallori A.B. Meaningful learning in practice. J. Educ. Hum. Dev. 2014;3:199–209. doi: 10.15640/jehd.v3n4a18.

29. Miri B., David B.-C., Uri Z. Purposely Teaching for the Promotion of Higher-order Thinking Skills: A Case of Critical Thinking. Res. Sci. Educ. 2007;37:353–369. doi: 10.1007/s11165-006-9029-2.

30. Elsawah S., Guillaume J.H.A., Filatova T., Rook J., Jakeman A.J. A methodology for eliciting, representing, and analysing stakeholder knowledge for decision making on complex socio-ecological systems: From cognitive maps to agent-based models. J. Environ. Manag. 2015;151:500–516. doi: 10.1016/j.jenvman.2014.11.028.

31. Wang M., Wu B., Kirschner P.A., Michael Spector J. Using cognitive mapping to foster deeper learning with complex problems in a computer-based environment. Comput. Hum. Behav. 2018;87:450–458. doi: 10.1016/j.chb.2018.01.024.

32. Wu B., Wang M., Grotzer T.A., Liu J., Johnson J.M. Visualizing complex processes using a cognitive-mapping tool to support the learning of clinical reasoning. BMC Med. Educ. 2016;16:216. doi: 10.1186/s12909-016-0734-x.

33. Sun M., Wang M., Wegerif R. Using computer-based cognitive mapping to improve students’ divergent thinking for creativity development. Br. J. Educ. Technol. 2019;50:2217–2233. doi: 10.1111/bjet.12825.

34. Derbentseva N., Safayeni F., Cañas A.J. Concept maps: Experiments on dynamic thinking. J. Res. Sci. Teach. 2007;44:448–465. doi: 10.1002/tea.20153.

35. Özesmi U., Özesmi S.L. Ecological models based on people’s knowledge: A multi-step fuzzy cognitive mapping approach. Ecol. Model. 2004;176:43–64. doi: 10.1016/j.ecolmodel.2003.10.027.

36. Täuscher K., Abdelkafi N. Visual tools for business model innovation: Recommendations from a cognitive perspective. Creat. Innov. Manag. 2017;26:160–174. doi: 10.1111/caim.12208.

37. Village J., Salustri F.A., Neumann W.P. Cognitive mapping: Revealing the links between human factors and strategic goals in organizations. Int. J. Ind. Ergon. 2013;43:304–313. doi: 10.1016/j.ergon.2013.05.001.

38. Ahn A.C., Tewari M., Poon C.-S., Phillips R.S. The limits of reductionism in medicine: Could systems biology offer an alternative? PLoS Med. 2006;3:e208. doi: 10.1371/journal.pmed.0030208.

39. Grocott M.P.W. Integrative physiology and systems biology: Reductionism, emergence and causality. Extrem. Physiol. Med. 2013;2:9. doi: 10.1186/2046-7648-2-9.

40. American Physical Therapy Association. Vision 2020. Available online: http://www.apta.org/AM/Template.cfm?Section=Vision_20201&Template=/TaggedPage/TaggedPageDisplay.cfm&TPLID=285&ContentID=32061 (accessed on 3 January 2013).

41. Wolkenhauer O., Green S. The search for organizing principles as a cure against reductionism in systems medicine. FEBS J. 2013;280:5938–5948. doi: 10.1111/febs.12311.

42. Zorek J., Raehl C. Interprofessional education accreditation standards in the USA: A comparative analysis. J. Interprofessional Care. 2013;27:123–130. doi: 10.3109/13561820.2012.718295.

43. McDougall A., Goldszmidt M., Kinsella E., Smith S., Lingard L. Collaboration and entanglement: An actor-network theory analysis of team-based intraprofessional care for patients with advanced heart failure. Soc. Sci. Med. 2016;164:108–117. doi: 10.1016/j.socscimed.2016.07.010.

44. Gambrill E. Critical Thinking in Clinical Practice. John Wiley & Sons; Hoboken, NJ, USA: 2012.

45. Fava L., Morton J. Causal modeling of panic disorder theories. Clin. Psychol. Rev. 2009;29:623–637. doi: 10.1016/j.cpr.2009.08.002.

46. Pilecki B., Arentoft A., McKay D. An evidence-based causal model of panic disorder. J. Anxiety Disord. 2011;25:381–388. doi: 10.1016/j.janxdis.2010.10.013.

47. Scaffa M.E., Wooster D.M. Effects of problem-based learning on clinical reasoning in occupational therapy. Am. J. Occup. Ther. 2004;58:333–336. doi: 10.5014/ajot.58.3.333.

48. Masek A., Yamin S. Problem based learning for epistemological competence: The knowledge acquisition perspectives. J. Tech. Educ. Train. 2011;3. Available online: https://publisher.uthm.edu.my/ojs/index.php/JTET/article/view/257 (accessed on 29 January 2021).

49. Schmidt H.G., Rikers R.M. How expertise develops in medicine: Knowledge encapsulation and illness script formation. Med. Educ. 2007;41:1133–1139. doi: 10.1111/j.1365-2923.2007.02915.x.

50. Facione N.C., Facione P.A. Critical Thinking and Clinical Judgment. In: Critical Thinking and Clinical Reasoning in the Health Sciences: A Teaching Anthology. Insight Assessment/The California Academic Press; Millbrae, CA, USA: 2008. pp. 1–13. Available online: https://insightassessment.com/wp-content/uploads/ia/pdf/CH-1-CT-CR-Facione-Facione.pdf (accessed on 29 January 2021).

51. Newell A. Unified Theories of Cognition. Cambridge University Press; Cambridge, MA, USA: 1990.

52. Howard J. In: Liebowitz J., editor. Bursting the Big Data Bubble: The Case for Intuition-Based Decision Making. Auerbach; Boca Raton, FL, USA: 2015. pp. 57–69.

53. Bornstein B.H., Emler A.C. Rationality in medical decision making: A review of the literature on doctors’ decision-making biases. J. Eval. Clin. Pract. 2001;7:97–107. doi: 10.1046/j.1365-2753.2001.00284.x.

54. Mamede S., Schmidt H.G., Rikers R. Diagnostic errors and reflective practice in medicine. J. Eval. Clin. Pract. 2007;13:138–145. doi: 10.1111/j.1365-2753.2006.00638.x.

55. Klein J.G. Five pitfalls in decisions about diagnosis and prescribing. BMJ. 2005;330:781. doi: 10.1136/bmj.330.7494.781.

56. Schwartz A., Elstein A.S. Clinical reasoning in medicine. Clin. Reason. Health Prof. 2008;3:223–234.

57. Mann K., Gordon J., MacLeod A. Reflection and reflective practice in health professions education: A systematic review. Adv. Health Sci. Educ. 2009;14:595–621. doi: 10.1007/s10459-007-9090-2.

58. Cross V. Introducing physiotherapy students to the idea of ‘reflective practice’. Med. Teach. 1993;15:293–307. doi: 10.3109/01421599309006652.

59. Donaghy M.E., Morss K. Guided reflection: A framework to facilitate and assess reflective practice within the discipline of physiotherapy. Physiother. Theory Pract. 2000;16:3–14. doi: 10.1080/095939800307566.

60. Entwistle N., Ramsden P. Understanding Learning. Croom Helm; London, UK: 1983.

- 61.Routledge J., Willson M., McArthur M., Richardson B., Stephenson R. Reflection on the development of a reflective assessment. Med. Teach. 1997;19:122–128. doi: 10.3109/01421599709019364. [DOI] [Google Scholar]

- 62.King G., Currie M., Bartlett D.J., Gilpin M., Willoughby C., Tucker M.A., Baxter D. The development of expertise in pediatric rehabilitation therapists: Changes in approach, self-knowledge, and use of enabling and customizing strategies. Dev. Neurorehabilit. 2007;10:223–240. doi: 10.1080/17518420701302670. [DOI] [PubMed] [Google Scholar]

- 63.Johns C. Nuances of reflection. J. Clin. Nurs. 1994;3:71–74. doi: 10.1111/j.1365-2702.1994.tb00364.x. [DOI] [PubMed] [Google Scholar]

- 64.Koole S., Dornan T., Aper L., Scherpbier A., Valcke M., Cohen-Schotanus J., Derese A. Factors confounding the assessment of reflection: A critical review. BMC Med. Educ. 2011;11:104. doi: 10.1186/1472-6920-11-104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Larrivee B. Development of a tool to assess teachers’ level of reflective practice. Reflective Pract. 2008;9:341–360. doi: 10.1080/14623940802207451. [DOI] [Google Scholar]

- 66.Rassafiani M. Is length of experience an appropriate criterion to identify level of expertise? Scand. J. Occup. Ther. 2009:1–10. doi: 10.1080/11038120902795441. [DOI] [PubMed] [Google Scholar]

- 67.Wainwright S., Shepard K., Harman L., Stephens J. Factors that influence the clinical decision making of novice and experienced physical therapists. Phys. Ther. 2011;91:87–101. doi: 10.2522/ptj.20100161. [DOI] [PubMed] [Google Scholar]

- 68.Benner P. From Novice to Expert. Addison-Wesley Publishing Company; Menlo Park, CA, USA: 1984. [Google Scholar]

- 69. Eva K.W., Cunnington J.P., Reiter H.I., Keane D.R., Norman G.R. How can I know what I don’t know? Poor self assessment in a well-defined domain. Adv. Health Sci. Educ. 2004;9:211–224. doi: 10.1023/B:AHSE.0000038209.65714.d4.

- 70. Eva K.W., Regehr G. “I’ll never play professional football” and other fallacies of self-assessment. J. Contin. Educ. Health Prof. 2008;28:14–19. doi: 10.1002/chp.150.

- 71. Galbraith R.M., Hawkins R.E., Holmboe E.S. Making self-assessment more effective. J. Contin. Educ. Health Prof. 2008;28:20–24. doi: 10.1002/chp.151.

- 72. Furnari S., Crilly D., Misangyi V.F., Greckhamer T., Fiss P.C., Aguilera R. Capturing causal complexity: Heuristics for configurational theorizing. Acad. Manag. Rev. 2020. doi: 10.5465/amr.2019.0298.

- 73. Gopnik A., Glymour C., Sobel D.M., Schulz L.E., Kushnir T., Danks D. A theory of causal learning in children: Causal maps and Bayes nets. Psychol. Rev. 2004;111:3–32. doi: 10.1037/0033-295X.111.1.3.

- 74. Hattori M., Oaksford M. Adaptive non-interventional heuristics for covariation detection in causal induction: Model comparison and rational analysis. Cogn. Sci. 2007;31:765–814. doi: 10.1080/03640210701530755.

- 75. Friston K., Schwartenbeck P., FitzGerald T., Moutoussis M., Behrens T., Dolan R.J. The anatomy of choice: Dopamine and decision-making. Philos. Trans. R. Soc. B Biol. Sci. 2014;369:20130481. doi: 10.1098/rstb.2013.0481.

- 76. Lu H., Yuille A.L., Liljeholm M., Cheng P.W., Holyoak K.J. Bayesian generic priors for causal learning. Psychol. Rev. 2008;115:955. doi: 10.1037/a0013256.

- 77. Sloman S. Causal Models: How People Think about the World and Its Alternatives. Oxford University Press; New York, NY, USA: 2005.

- 78. Waldmann M.R., Hagmayer Y. Causal reasoning. In: The Oxford Handbook of Cognitive Psychology. Oxford Library of Psychology; Oxford University Press; New York, NY, USA: 2013. pp. 733–752.

- 79. Rehder B., Kim S. How causal knowledge affects classification: A generative theory of categorization. J. Exp. Psychol. Learn. Mem. Cogn. 2006;32:659–683. doi: 10.1037/0278-7393.32.4.659.

- 80. Davies M. Concept mapping, mind mapping and argument mapping: What are the differences and do they matter? High. Educ. 2011;62:279–301. doi: 10.1007/s10734-010-9387-6.

- 81. Daley B.J., Torre D.M. Concept maps in medical education: An analytical literature review. Med. Educ. 2010;44:440–448. doi: 10.1111/j.1365-2923.2010.03628.x.

- 82. McHugh Schuster P. Concept Mapping: A Critical-Thinking Approach to Care Planning. F.A. Davis; Philadelphia, PA, USA: 2016.

- 83. Blackstone S., Iwelunmor J., Plange-Rhule J., Gyamfi J., Quakyi N.K., Ntim M., Ogedegbe G. Sustaining nurse-led task-shifting strategies for hypertension control: A concept mapping study to inform evidence-based practice. Worldviews Evid. Based Nurs. 2017;14:350–357. doi: 10.1111/wvn.12230.

- 84. Powell B.J., Beidas R.S., Lewis C.C., Aarons G.A., McMillen J.C., Proctor E.K., Mandell D.S. Methods to improve the selection and tailoring of implementation strategies. J. Behav. Health Serv. Res. 2017;44:177–194. doi: 10.1007/s11414-015-9475-6.

- 85. Mehlman M.J. Quackery. Am. J. Law Med. 2005;31:349–363. doi: 10.1177/009885880503100209.

- 86. Ruiter R.A., Crutzen R. Core processes: How to use evidence, theories, and research in planning behavior change interventions. Front. Public Health. 2020;8:247. doi: 10.3389/fpubh.2020.00247.

- 87. Bannigan K., Moores A. A model of professional thinking: Integrating reflective practice and evidence based practice. Can. J. Occup. Ther. 2009;76:342–350. doi: 10.1177/000841740907600505.

- 88. Krueger R.B., Sweetman M.M., Martin M., Cappaert T.A. Occupational Therapists’ Implementation of Evidence-Based Practice: A Cross Sectional Survey. Occup. Ther. Health Care. 2020:1–24. doi: 10.1080/07380577.2020.1756554.

- 89. Cole J.R., Persichitte K.A. Fuzzy cognitive mapping: Applications in education. Int. J. Intell. Syst. 2000;15:1–25. doi: 10.1002/(SICI)1098-111X(200001)15:1<1::AID-INT1>3.0.CO;2-V.

- 90. Harden R.M. What is a spiral curriculum? Med. Teach. 1999;21:141–143. doi: 10.1080/01421599979752.

- 91. Clark A.M. What are the components of complex interventions in healthcare? Theorizing approaches to parts, powers and the whole intervention. Soc. Sci. Med. 2013;93:185–193. doi: 10.1016/j.socscimed.2012.03.035.

- 92. Whyte J., Dijkers M.P., Hart T., Zanca J.M., Packel A., Ferraro M., Tsaousides T. Development of a theory-driven rehabilitation treatment taxonomy: Conceptual issues. Arch. Phys. Med. Rehabil. 2014;95:S24–S32.e22. doi: 10.1016/j.apmr.2013.05.034.

- 93. Levack W.M., Meyer T., Negrini S., Malmivaara A. Cochrane Rehabilitation Methodology Committee: An international survey of priorities for future work. Eur. J. Phys. Rehabil. Med. 2017;53:814–817. doi: 10.23736/S1973-9087.17.04958-9.

- 94. Hart T., Ehde D.M. Defining the treatment targets and active ingredients of rehabilitation: Implications for rehabilitation psychology. Rehabil. Psychol. 2015;60:126. doi: 10.1037/rep0000031.

- 95. Dombrowski S.U., O’Carroll R.E., Williams B. Form of delivery as a key ‘active ingredient’ in behaviour change interventions. Br. J. Health Psychol. 2016;21:733–740. doi: 10.1111/bjhp.12203.

- 96. Wolf S.L., Winstein C.J., Miller J.P., Taub E., Uswatte G., Morris D., Giuliani C., Light K.E., Nichols-Larsen D., EXCITE Investigators. Effect of Constraint-Induced Movement Therapy on Upper Extremity Function 3 to 9 Months After Stroke: The EXCITE Randomized Clinical Trial. JAMA. 2006;296:2095–2104. doi: 10.1001/jama.296.17.2095.

- 97. Hoare B.J., Wallen M.A., Thorley M.N., Jackman M.L., Carey L.M., Imms C. Constraint-induced movement therapy in children with unilateral cerebral palsy. Cochrane Database Syst. Rev. 2019:CD004149. doi: 10.1002/14651858.CD004149.pub3.

- 98. Gordon A.M., Hung Y.C., Brandao M., Ferre C.L., Kuo H.C., Friel K., Petra E., Chinnan A., Charles J.R. Bimanual training and constraint-induced movement therapy in children with hemiplegic cerebral palsy: A randomized trial. Neurorehabil. Neural Repair. 2011;25:692–702. doi: 10.1177/1545968311402508.

- 99. Waddell K.J., Strube M.J., Bailey R.R., Klaesner J.W., Birkenmeier R.L., Dromerick A.W., Lang C.E. Does task-specific training improve upper limb performance in daily life poststroke? Neurorehabil. Neural Repair. 2017;31:290–300. doi: 10.1177/1545968316680493.

- 100. Lang C.E., Lohse K.R., Birkenmeier R.L. Dose and timing in neurorehabilitation: Prescribing motor therapy after stroke. Curr. Opin. Neurol. 2015;28:549. doi: 10.1097/WCO.0000000000000256.

- 101. Jette A.M. Opening the Black Box of Rehabilitation Interventions. Phys. Ther. 2020;100:883–884. doi: 10.1093/ptj/pzaa078.

- 102. Lenker J.A., Fuhrer M.J., Jutai J.W., Demers L., Scherer M.J., DeRuyter F. Treatment theory, intervention specification, and treatment fidelity in assistive technology outcomes research. Assist. Technol. 2010;22:129–138. doi: 10.1080/10400430903519910.

- 103. Johnston M.V., Dijkers M.P. Toward improved evidence standards and methods for rehabilitation: Recommendations and challenges. Arch. Phys. Med. Rehabil. 2012;93:S185–S199. doi: 10.1016/j.apmr.2011.12.011.

- 104. Sinha Y., Silove N., Hayen A., Williams K. Auditory integration training and other sound therapies for autism spectrum disorders (ASD). Cochrane Database Syst. Rev. 2011. doi: 10.1002/14651858.CD003681.pub3.

- 105. Bérard G. Hearing Equals Behavior. Keats Publishing; New Canaan, CT, USA: 1993.

- 106. American Speech-Language-Hearing Association. Auditory Integration Training [Technical Report]. Available online: https://www2.asha.org/policy/TR2004-00260/ (accessed on 22 January 2021).


Data Availability Statement

No data are associated with this article.