Abstract

The Structural Assessment of Knowledge (SAK) is an implicit form of evaluation that examines the organization of knowledge structures or networks. The current study investigates variability in expert knowledge structures of neuroscience concepts, and whether different expert referents affect undergraduate students’ learning of neuronal physiology and structure and function relationships across different course levels. Experts and students made pairwise ratings of terms on their relatedness. Students completed the ratings before and after learning in the classroom. Using Pathfinder software, students’ networks were compared with three expert networks: their individual professor, an average of neuroscience professors at their institution, and an average of neuroscience professors in the field across multiple institutions. Neuroscience experts had large variability in the number of links in their networks. Furthermore, an exploratory analysis suggests that experts’ training may differentiate knowledge structures for some concepts. For student knowledge structures, the type of expert referent interacted with the type of class for neuronal physiology, but not for structure and function relationships. More specifically, for neuronal physiology, advanced students were more similar to their professor than to professors at their institution or professors in the field, but introductory students’ similarity did not vary by expert referent. These findings highlight the role that factors such as type of class, type of expert referent, and type of knowledge may play in comparisons using SAK. These issues may be more complex in interdisciplinary fields such as neuroscience.

Keywords: assessment, concept mapping, knowledge representation, expertise, structural knowledge

An overarching goal of neuroscience education is to increase the knowledge base of neuroscience concepts (Kerchner et al., 2012). Traditionally, this learning is evaluated solely with post-test explicit measures (e.g., exams), which may not encourage deep or long-lasting knowledge acquisition (Momsen et al., 2010). An alternative approach is to use implicit measures of learning, such as the structural assessment of knowledge, or SAK (Trumpower et al., 2010).

SAK comprises three phases: (1) knowledge elicitation, in which participants rate the relatedness of pairs of concepts; (2) knowledge representation, or the transformation of a participant’s ratings into a mathematical and visual representation using the Pathfinder network scaling algorithm; and (3) knowledge evaluation, or the comparison of individual or group networks (see Schvaneveldt, 1990 for additional details on the Pathfinder method).

SAK can be used both as an instructional and as an assessment tool (for an extensive review, see Trumpower and Vanapalli, 2016), and has been implemented in various disciplines including chemistry (Wilson, 1994), computer programming (Trumpower et al., 2010), mathematics (Gomez et al., 1996; Davis et al., 2003), neuroscience (Stevenson et al., 2016), nursing (Azzarello, 2007), physics (Chen and Kuljis, 2003; Trumpower and Sarwar, 2010), and psychology (Beier et al., 2010). When assessing academic performance, the similarity of students’ knowledge structures is compared with a referent (or expert) knowledge structure, typically that of the instructor (Naveh-Benjamin et al., 1986; Goldsmith et al., 1991; Beier et al., 2010) or of a group of instructors (d’Apollonia et al., 2004; Azzarello, 2007). Similarity between students’ knowledge structures and a referent knowledge structure correlates with traditional explicit measures of course performance, such as grades on an exam (Goldsmith et al., 1991) or an essay (d’Apollonia et al., 2004).

Although referent knowledge structures based on an average of multiple experts are generally preferred over a single expert (Acton et al., 1994), there are examples in which experts vary in their knowledge organization. For instance, the organization of knowledge about human-computer interface design varies depending on whether experts have knowledge in human factors or software development (Gillan et al., 1992). Similarly, the organization of knowledge about situation awareness behaviors varies between instructor roles (navigators or pilots) among military aviators (Fiore et al., 2000).

Stevenson et al. (2016) conducted the first study to demonstrate students’ acquisition of neuroscience concepts using SAK; however, students’ networks were compared with a single expert’s network. Although some individuals’ knowledge structures may serve as a referent (Acton et al., 1994), it is unknown whether a single expert or multiple experts should serve as the referent in interdisciplinary fields such as neuroscience. This question motivates the current study.

More specifically, the current study explored variability in neuroscience experts’ structural organization of neuroscience concepts, and whether different expert referents (the class instructor, neuroscience professors at the institution, and neuroscience professors in the field) affect introductory and advanced students’ similarity to the expert referent. Due to the interdisciplinary nature of neuroscience, we hypothesized that neuroscience experts would exhibit high levels of variability in their knowledge structures reflective of their training and specialization (e.g., psychology, biology, physics). As a result, we also hypothesized that students would be less similar to referents comprising neuroscience professors in the field than their class instructor due to the greater variability among multiple experts. Finally, we expected students in the advanced class to have more similar knowledge structures to the expert referents than students in the introductory class, and that all students would be more similar to the expert referents after learning than before learning.

MATERIALS AND METHODS

Participants

Seventeen individuals (11 female, 6 male) who attained their Ph.D. in a neuroscience-related field between 1970 and 2014 were used as experts in the study. Experts were recruited through a listserv of faculty who teach undergraduate courses in neuroscience or because they taught neuroscience courses at Ursinus College (including JLS and JPB due to the size of the neuroscience program). Expert faculty received a $25 gift card for their participation.

Students were recruited from either their introductory psychology course (n = 24), their advanced behavioral neuroscience course (n = 16), or their advanced cognitive neuroscience course (n = 38) to participate in the study for extra credit. These courses were selected due to their topics of neuronal physiology (e.g., oligodendrocyte, threshold) or brain anatomy and function (e.g., anterior cingulate, language comprehension).

This study was approved by the Ursinus College Institutional Review Board.

Materials and Procedure

JLS and JPB constructed five sets of neuroscience concept terms: a set of 15 terms related to neuronal physiology (i.e., action potential, axon hillock, axon terminal, dendrite, depolarization, ion channel, neuron, oligodendrocyte, receptor, resting potential, reuptake, saltatory conduction, summation, synapse, and threshold), a set of 16 terms related to brain structure and function (i.e., thalamus, long-term memory, hypothalamus, language production, hippocampus, facial processing, prefrontal cortex, visuospatial processing, anterior cingulate, executive function, fusiform gyrus, language comprehension, posterior parietal lobe, working memory, Broca’s area, and theory of mind), a set of 15 terms related to gross brain anatomy (i.e., amygdala, basal ganglia, cerebellum, cerebral cortex, cerebrospinal fluid, corpus callosum, forebrain, hindbrain, hippocampus, hypothalamus, medulla, midbrain, meninges, pons, thalamus), a set of 16 terms related to statistics and research methods (i.e., control group, correlation coefficient, dependent variable, experimental group, independent variable, normal distribution, significance, probability, causation, randomization, standard deviation, reliability, validity, variability, type I error, generalizability), and a set of 15 terms related to neuroscience techniques (i.e., confocal microscopy, DTI, EEG, electroporation, extracellular recording, fluorescence, fMRI, fNIR, intracellular recording, MEG, MRS, patch clamp, PCR, TMS, VBM). The sets of terms related to neuronal physiology and gross brain anatomy were used in a previous study (Stevenson et al., 2016).

Five different Pathfinder programs were constructed (one for each domain). Each program contained every possible pairing of the terms for a given list (e.g., 210 concept pairs for the 15-term neuronal physiology program, including both receptor-reuptake and reuptake-receptor). Each participant was asked to rate the relatedness of the terms in each pair on a scale from 1 (not at all related) to 7 (extremely related) on a computer using JRate (open source software available from Interlink Inc. at http://www.interlinkinc.net). JLS and JPB aimed to create a representative set of terms that included key terms, terms within a hierarchy (e.g., general and specific), and terms that linked together conceptually. The selection process differed slightly for each concept set. For example, for neuroscience techniques, JLS and JPB considered factors such as the representativeness of molecular/cellular, behavioral, and cognitive neuroscience techniques, as well as the goal of the technique (e.g., visualization of structure).
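Because both orders of each pair were rated, a set of n terms yields n(n − 1) ordered pairs: 15 × 14 = 210 for the 15-term sets and 16 × 15 = 240 for the 16-term sets. The following minimal Python sketch illustrates that count; the function name `ordered_pairs` is illustrative only, and the sketch makes no claim about how JRate itself generates or orders the pairs.

```python
from itertools import permutations

# Neuronal physiology terms as listed above.
NEURONAL_PHYSIOLOGY = [
    "action potential", "axon hillock", "axon terminal", "dendrite",
    "depolarization", "ion channel", "neuron", "oligodendrocyte",
    "receptor", "resting potential", "reuptake", "saltatory conduction",
    "summation", "synapse", "threshold",
]


def ordered_pairs(terms):
    """Every ordered pairing of two distinct terms (A-B and B-A both included)."""
    return list(permutations(terms, 2))


pairs = ordered_pairs(NEURONAL_PHYSIOLOGY)
print(len(pairs))  # 210
print(("receptor", "reuptake") in pairs, ("reuptake", "receptor") in pairs)  # True True
```

Calling `ordered_pairs` on any 16-term list returns 240 pairs.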

The expert participants completed all five programs, each only once. Experts were assigned one of two orders in which to complete the five programs. Experts were asked to download JRate.jar to their computer via a link provided by the research team. Once it was installed, the experts downloaded the files for the respective programs and ran them to complete the pairwise ratings. After completing all programs, experts were also asked to complete a background questionnaire in Qualtrics survey software assessing their rank, the year in which their doctoral degree was awarded, their gender, their particular training (e.g., biology, psychology, physics), their self-reported familiarity with the domains, the frequency with which they teach the concepts of each domain, and their self-reported undergraduate and graduate training in each domain. Experts were asked to complete all five programs to help establish a database of expert knowledge structures that could be used for the current study, as well as ongoing and future studies of students’ neuroscience knowledge structures.

The students enrolled in the introductory psychology course were invited to complete both the neuronal physiology program (n = 24) and the brain structure and function program (n = 21). Students in the advanced behavioral neuroscience course completed the neuronal physiology program only, and students in the advanced cognitive neuroscience course completed the brain structure and function program only. In the current study, JPB was the instructor for the introductory psychology course; JLS and JPB were the instructors for the advanced cognitive neuroscience course; a third professor at the institution was the instructor for the advanced behavioral neuroscience course. The student participants completed their respective program twice, at two separate points in time: one time before they had covered that particular material in their respective class (i.e., pre-learning), and one time after they had covered that particular content in the class (i.e., post-learning).

Networks were derived from the relatedness ratings using JPathfinder (open source software available from Interlink Inc. at http://www.interlinkinc.net) with the parameters q = n − 1 and r = ∞. JPathfinder was also used to compare individual participants’ networks (including students’ pre- and post-learning networks) with an expert comparison network. Individual Pathfinder networks were averaged using medians to create the group networks for these comparisons (i.e., professors at the institution and professors in the field). Figure 1 displays sample networks for neuronal physiology concepts for the different expert referent groups. Sample networks of introductory students compared with a single expert network are provided in Stevenson et al. (2016).
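With r = ∞ and q = n − 1, Pathfinder keeps a direct link between two terms only if no indirect path connects them through links that are all shorter; the length of a path is taken to be its single longest link. The resulting network is the union of all minimum spanning trees of the distance matrix. The Python sketch below illustrates this rule; it is not JPathfinder’s code. Two steps are assumptions made for illustration: ratings are converted to distances as 8 − rating, and the A-B and B-A ratings of a pair are averaged. The helper names are hypothetical.

```python
def ratings_to_distance(n, ratings):
    """Symmetric distance matrix from 1-7 relatedness ratings (assumptions noted)."""
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # Assumption: average the A-B and B-A ratings when both exist.
            both = [ratings[p] for p in ((i, j), (j, i)) if p in ratings]
            # Assumption: distance = 8 - rating, so a rating of 7 becomes 1.
            d = 8 - sum(both) / len(both)
            dist[i][j] = dist[j][i] = d
    return dist


def pathfinder_inf(dist):
    """Undirected links kept by PFNET(r = infinity, q = n - 1)."""
    n = len(dist)
    # minimax[i][j] = smallest possible "longest single link" on any path i..j,
    # computed with a Floyd-Warshall pass that uses max() instead of +.
    minimax = [row[:] for row in dist]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                via_k = max(minimax[i][k], minimax[k][j])
                if via_k < minimax[i][j]:
                    minimax[i][j] = via_k
    # A direct link survives only if no indirect path beats it.
    return {(i, j) for i in range(n) for j in range(i + 1, n)
            if dist[i][j] <= minimax[i][j]}


# Toy example with four terms labeled 0-3 (hypothetical ratings).
ratings = {(0, 1): 7, (1, 2): 6, (0, 2): 5, (2, 3): 6, (0, 3): 2, (1, 3): 2}
links = pathfinder_inf(ratings_to_distance(4, ratings))
print(sorted(links))  # [(0, 1), (1, 2), (2, 3)]
```

The weaker direct links 0-2, 0-3, and 1-3 are pruned because a chain of stronger links already connects those terms, which is why networks built with these parameters tend to be sparse.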

Figure 1.

Pathfinder maps for three different expert comparison groups for neuronal physiology concepts: the instructor of the introductory course (top), professors at the institution (middle), and professors in the field (bottom).

Data Analysis

Analyses were conducted to assess variability in expert knowledge structure. In addition to descriptive statistics, Pearson correlations were used to assess relationships between experts’ self-reported familiarity with the topic, undergraduate training in the topic, and graduate school training in the topic with number of links, coherence, and corrected similarity to a single expert, separately for each domain. In these analyses expert participants were compared to one expert, JPB, who was used as the expert in a previous study (Stevenson et al., 2016) due to his experience teaching various neuroscience courses and coordinating the neuroscience program.

An exploratory analysis was conducted to assess whether experts’ graduate training could account for variability in knowledge structure. Only experts who identified training in psychology (n = 4) or biology (n = 5) were included in this analysis because these were the two most commonly identified training areas. Other experts self-reported training in physics (n = 1), pharmacology (n = 1), neuroscience (n = 2), neuropharmacology (n = 1), molecular neurobiology (n = 1), and both biology and psychology (n = 1). JPB, whose training was in psychology, was not included in this analysis because he served as the expert referent. A 2 (training) x 5 (domain) mixed-design analysis of variance (ANOVA) was conducted to examine whether experts’ training or domain had an effect on corrected similarity with a single expert.

To compare student knowledge structures to a reference knowledge structure, three types of expert referent knowledge structures were computed: the class instructor (n = 1), professors at the institution excluding the class instructor (n = 4), and professors in the field at undergraduate institutions excluding professors at the institution (n = 12). Corrected similarity was calculated with the three types of experts.

To assess changes in student knowledge structures, two 2 x 2 x 3 mixed-design ANOVAs, one for neuronal physiology and one for brain structure and function relationships, were conducted to evaluate the effects of type of expert referent (professor, institution, or field), type of class (introductory or advanced), and time (pre- or post-learning) on the corrected similarity between participants and the referent network.

In all analyses, similarity is defined as C / (Links1 + Links2 − C), where C is the number of links (i.e., connections between nodes) common to the participant and referent networks, Links1 is the total number of links in the participant network, and Links2 is the total number of links in the referent network. Corrected similarity is similarity minus the similarity expected by chance. Finally, coherence is an indirect measure of relatedness that reflects the internal consistency of a participant’s ratings; unlike similarity, it does not require a referent network.
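The similarity formula can be computed directly from two sets of links. For corrected similarity, the Python sketch below assumes that chance is modeled as two random networks with the same numbers of links drawn from the n(n − 1)/2 possible undirected links, which makes the number of shared links hypergeometric. The text does not specify how JPathfinder computes the chance value, so this is an illustration rather than a reproduction; the function names are hypothetical.

```python
from math import comb


def similarity(links1, links2):
    """C / (Links1 + Links2 - C) for two sets of undirected links."""
    common = len(links1 & links2)
    return common / (len(links1) + len(links2) - common)


def expected_similarity(n_nodes, l1, l2):
    """Chance similarity for random networks with l1 and l2 links (hypergeometric model).

    Assumes at least one of the two networks has links.
    """
    total = n_nodes * (n_nodes - 1) // 2  # possible undirected links
    expected = 0.0
    for c in range(max(0, l1 + l2 - total), min(l1, l2) + 1):
        # Probability that exactly c of the l2 links fall among the l1 links.
        p = comb(l1, c) * comb(total - l1, l2 - c) / comb(total, l2)
        expected += p * c / (l1 + l2 - c)
    return expected


def corrected_similarity(links1, links2, n_nodes):
    """Observed similarity minus the similarity expected by chance."""
    chance = expected_similarity(n_nodes, len(links1), len(links2))
    return similarity(links1, links2) - chance


# Hypothetical 4-term networks: student and referent share 2 of their 3 links.
student = {(0, 1), (1, 2), (2, 3)}
referent = {(0, 1), (1, 2), (1, 3)}
print(round(similarity(student, referent), 3))               # 0.5
print(round(expected_similarity(4, 3, 3), 3))                # 0.365
print(round(corrected_similarity(student, referent, 4), 3))  # 0.135
```

Subtracting the chance value matters because sparse networks over few terms share some links by accident; in this toy case more than two thirds of the raw similarity is attributable to chance.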

RESULTS

Expert Knowledge Structure Variability

The number of links and the coherence of the experts’ knowledge structures for the five domains were extremely variable, as shown in Table 1. For example, in terms of the number of links, neuronal physiology was the least variable domain (SD = 8.00), whereas neuroscience techniques was the most variable domain (SD = 21.67). Variability was more consistent in terms of coherence, with the least variable domain being gross brain anatomy (SD = 0.15) and the most variable domain being neuroscience techniques (SD = 0.28).
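To illustrate what coherence captures, the Python sketch below follows the way coherence is commonly described for Pathfinder: the correlation between each pair’s raw rating and an indirect relatedness score, where the indirect score for terms i and j is the correlation of their ratings with every other term. JPathfinder’s exact computation may differ (for example, in how it handles distances or ties), and the function names and example ratings are hypothetical.

```python
def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def coherence(ratings):
    """Correlation between raw ratings and an indirect relatedness measure.

    ratings is a symmetric n x n matrix of relatedness ratings (n >= 4).
    Real data would need a guard for terms whose ratings with the
    remaining terms are all identical (zero variance).
    """
    n = len(ratings)
    raw, indirect = [], []
    for i in range(n):
        for j in range(i + 1, n):
            others = [k for k in range(n) if k not in (i, j)]
            raw.append(ratings[i][j])
            indirect.append(pearson([ratings[i][k] for k in others],
                                    [ratings[j][k] for k in others]))
    return pearson(raw, indirect)


# Hypothetical, perfectly consistent rater: two clusters of three terms,
# rated 7 within a cluster and 1 between clusters.
cluster = [0, 0, 0, 1, 1, 1]
ratings = [[7 if cluster[i] == cluster[j] else 1 for j in range(6)]
           for i in range(6)]
print(round(coherence(ratings), 3))  # 1.0
```

A rater whose direct judgments disagree with how the same terms relate to everything else would score lower, which is why coherence is read as rating consistency rather than as agreement with an expert.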

Table 1.

Descriptive statistics for experts’ knowledge structures in the five domains. Corrected similarity is computed relative to one expert’s knowledge structure (JPB). Values are means with standard deviations in parentheses.

| Domain | Links | Coherence | Corrected Similarity |
|---|---|---|---|
| Neuronal Physiology | 27.29 (8.00) | 0.42 (0.20) | 0.24 (0.08) |
| Structure-Function | 30.53 (17.85) | 0.40 (0.23) | 0.15 (0.12) |
| Gross Anatomy | 27.18 (10.39) | 0.44 (0.15) | 0.24 (0.10) |
| Methods/Statistics | 30.65 (20.38) | 0.33 (0.21) | 0.14 (0.06) |
| Techniques | 33.06 (21.67) | 0.56 (0.28) | 0.12 (0.07) |

Variability in experts’ knowledge structures is also apparent when comparing their similarity to a single expert as shown in Table 1. In some cases, the standard deviation is almost numerically equivalent to the mean (e.g., brain structure and function relationships: M = 0.15, SD = 0.12).

Pearson correlations revealed positive relationships between experts’ self-reported expertise and self-reported graduate school training in four of the five domains: neuronal physiology, r(15) = 0.57, p = 0.02; gross brain anatomy, r(15) = 0.60, p = 0.01; research methods and statistics, r(15) = 0.75, p < 0.001; and neuroscience techniques, r(15) = 0.60, p = 0.01. The relationship between experts’ self-reported expertise and self-reported graduate school training in brain structure and function relationships was not significant, r(15) = 0.39, p = 0.12. However, experts’ self-reported familiarity and training in a domain were generally not significantly related to measures of their knowledge structure; the only significant relationship was between self-reported familiarity with neuroscience techniques and coherence, r(15) = 0.66, p = 0.004.
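With 17 experts (df = 15), a correlation must be fairly large to reach significance. The reported pattern can be checked with the standard conversion t = r√df / √(1 − r²) and the two-tailed critical value t(15) = 2.131 at α = .05 from a t table. The Python sketch below uses only the reported r values and that tabled value, not the raw data; the names are illustrative.

```python
from math import sqrt

DF = 15          # 17 experts - 2
T_CRIT = 2.131   # two-tailed alpha = .05 for df = 15, from a standard t table


def r_to_t(r, df):
    """t statistic for testing a Pearson correlation against zero."""
    return r * sqrt(df) / sqrt(1 - r * r)


def critical_r(t_crit, df):
    """Smallest |r| that reaches the given critical t."""
    return t_crit / sqrt(df + t_crit ** 2)


reported = {"neuronal physiology": 0.57, "gross brain anatomy": 0.60,
            "methods and statistics": 0.75, "techniques": 0.60,
            "structure-function": 0.39}
for domain, r in reported.items():
    t = r_to_t(r, DF)
    print(f"{domain}: t(15) = {t:.2f}, significant: {abs(t) > T_CRIT}")
print(f"critical r = {critical_r(T_CRIT, DF):.2f}")  # about 0.48
```

Only correlations above roughly 0.48 in absolute value reach significance with this sample, so the nonsignificant r = 0.39 for structure-function relationships is still a moderate association.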

Exploratory analyses revealed a significant main effect of domain on experts’ corrected similarity to a single expert (JPB, whose graduate training was in psychology; F(4, 28) = 4.96, p = 0.004, η2partial = 0.41). The interaction between domain and type of graduate training was marginally significant (F(4, 28) = 2.22, p = 0.09, η2partial = 0.24). As shown in Figure 2, experts with graduate training in psychology tended to have greater corrected similarity to a single expert for gross brain anatomy (t(7) = 2.92, p = 0.08, d = 0.67). No other pairwise comparisons approached significance (all ps > 0.10).

Figure 2.

Experts’ corrected similarity with a single expert by domain and experts’ graduate training. Error bars represent ±1 SE.

Student Knowledge Structure

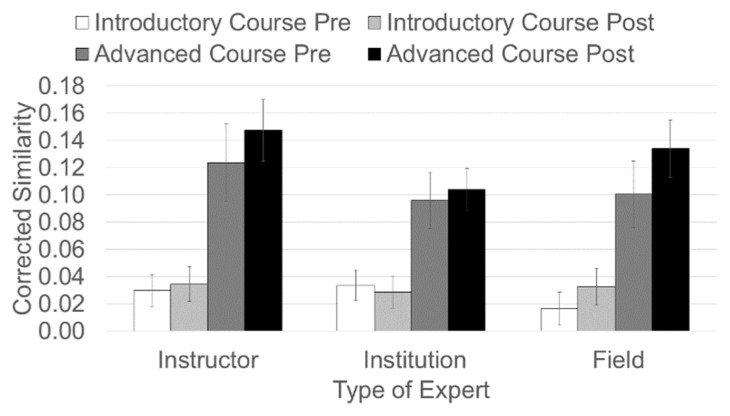

For neuronal physiology, there were significant main effects of type of expert referent (F(2, 76) = 5.93, p = 0.004, η2partial = 0.14), and type of class (F(1, 38) = 21.43, p < 0.001, η2partial = 0.36). There was also a significant interaction between type of expert referent and type of class (F(2, 76) = 5.27, p = 0.007, η2partial = 0.12). No other main effects or interactions were significant (all ps > 0.05).
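Partial eta squared can be recovered from an F ratio as η²partial = F·df_effect / (F·df_effect + df_error); the error df of 38 corresponds to 40 students (24 introductory, 16 advanced) minus 2 groups. The Python sketch below applies this to the neuronal physiology ANOVA and reproduces the reported values to within about 0.005 (e.g., 0.135 versus 0.14 for type of expert referent), a difference consistent with F being rounded to two decimals. It only re-derives reported effect sizes and is not the analysis software used in the study.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared) from the text above
reported = [
    ("expert referent", 5.93, 2, 76, 0.14),
    ("type of class", 21.43, 1, 38, 0.36),
    ("referent x class", 5.27, 2, 76, 0.12),
]
for label, f, d1, d2, eta in reported:
    print(f"{label}: computed {partial_eta_squared(f, d1, d2):.3f}, reported {eta}")
```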

As shown in Figure 3, introductory students’ corrected similarity in knowledge structures of neuronal physiology did not vary by type of expert referent (all ps > 0.05). In contrast, advanced students had greater corrected similarity with their professor than with professors at their institution (t(15) = 3.13, p = 0.007, d = 0.78) or professors in the field (t(15) = 2.57, p = 0.02, d = 0.64) for knowledge of neuronal physiology. Their similarity to professors at their institution did not differ significantly from their similarity to professors in the field (t(15) = −1.87, p = 0.08, d = −0.47).
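The effect sizes for these paired comparisons are consistent with Cohen’s d computed for paired data as d_z = t / √n, with n = 16 advanced students (e.g., 3.13 / 4 ≈ 0.78). This is inferred from the reported numbers rather than stated in the text; the Python sketch below only shows the arithmetic, and its names are illustrative.

```python
from math import sqrt


def cohens_dz(t, n):
    """Paired-samples effect size d_z = t / sqrt(n)."""
    return t / sqrt(n)


N_ADVANCED = 16  # advanced students, consistent with df = 15
for t, reported_d in [(3.13, 0.78), (2.57, 0.64), (-1.87, -0.47)]:
    print(f"t = {t:+.2f}: d_z = {cohens_dz(t, N_ADVANCED):+.3f} (reported {reported_d})")
```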

Figure 3.

Students’ corrected similarity by type of student, pre- and post-learning, and type of expert referent for neuronal physiology. Error bars represent ±1 SE.

For brain structure and function relationships, there were significant main effects of time (F(1, 57) = 6.14, p = 0.02, η2partial = 0.10), and type of class (F(1, 57) = 37.77, p < 0.001, η2partial = 0.40). No other main effects or interactions were significant (all ps > 0.05). As shown in Figure 4, all students were more similar to expert referents at post-learning than pre-learning, and advanced students were more similar to expert referents than introductory students for knowledge of brain structure and function relationships.

Figure 4.

Students’ corrected similarity by type of student, pre- and post-learning, and type of expert referent for brain structure and function relationships. Error bars represent ±1 SE.

DISCUSSION

Neuroscience experts demonstrated a large degree of variability in their knowledge structures when assessed via the number of links, coherence, and similarity to a single expert. This is consistent with our hypothesis, given the interdisciplinary nature of the field, and suggests that some, but not all, experts may be appropriate as a single referent for comparison networks (Acton et al., 1994). Experts’ self-reported expertise in a content area was positively correlated with their self-reported training in that area; however, their self-reported familiarity and training were largely unrelated to their knowledge structure metrics, including similarity to a single expert. Experience and training correlate with knowledge structure metrics (Goldsmith et al., 1991; Davis and Yi, 2004), but these relationships have not previously been explored in experts and warrant further investigation.

Variability among experts’ knowledge structures may partially be explained by their training. Exploratory analyses revealed that experts with a background in psychology were marginally more similar to a single expert for brain structure and function relationship concepts than experts with a background in biology. This is consistent with past research showing that differing roles (Fiore et al., 2000) and experience (Gillan et al., 1992) can affect the organization of experts’ knowledge structures. The current study had a relatively small number of experts (n = 17), which meant that the subsamples based on training were quite small. A future study that explores the specific role of type of training and expertise (e.g., cognitive neuroscience versus neurobiology) may help tease apart the variability among experts’ knowledge structures. Furthermore, future research should consider other ways to assess individual experts. For example, each expert network could be compared with every other expert network, or non-referent measures (e.g., coherence, number of links) could be used to compare groups of experts based on institution and teaching experience.

The similarity of students’ knowledge structures to experts significantly increased over time for brain structure and function relationships, but not for neuronal physiology, which is consistent with previous research (Stevenson et al., 2016). However, as expected, advanced students’ knowledge structures were more similar to expert networks than introductory students for both neuronal physiology and brain structure and function relationships. Thus, the knowledge structure of students appears to become more similar to experts with greater training. Combined, these results suggest that SAK is effective at demonstrating long-term changes in knowledge structure for both types of concepts from introductory to advanced levels; however, detection of short-term changes in knowledge structure may be dependent on content or other factors (e.g., teaching style).

The similarity of students’ knowledge structures to an expert referent depended on the type of class (introductory or advanced), the type of expert referent (professor, institution, or field), and the type of knowledge (neuronal physiology or brain structure and function relationships). Advanced students learning neuronal physiology were more similar to their professor than to professors at their institution or professors in the field. However, the type of expert referent did not affect similarity of knowledge structures for introductory students learning neuronal physiology or for any students learning brain structure and function relationships. These findings should be replicated to rule out potential confounds in the data; for example, professors at the institution differed from professors in the field in several ways, including their gender ratio and average time since Ph.D., which may have affected the results.

Collectively, these results suggest that the referent network may affect the evaluation of student knowledge structures. Neuroscience experts vary in their training, which may lead to greater variability in the organization of their knowledge structures (Gillan et al., 1992; Fiore et al., 2000). The “best” referent may vary depending on the goal of the assessment and the content being assessed. For neuronal physiology, advanced students were most similar to their course instructor. Additionally, a single expert (i.e., the instructor) may be the best referent for an implicit measure of learning course content if multiple experts (i.e., multiple instructors) are not available or do not have similar training (e.g., a small neuroscience program with one person trained in cellular/molecular neuroscience and one person trained in cognitive neuroscience). However, multiple experts may be desirable in other situations, such as when evaluating a program’s effectiveness.

Future studies could explore other alternatives to average networks. Top-performing students have been successfully used to create a referent network (Diekhoff, 1983; Acton et al., 1994; Rowe et al., 1997). Another alternative might be to have multiple experts work together to create the referent network; for example, Barab et al. (1996) obtained 100% agreement among three experts in a study of hypermedia navigation. Additionally, each neuroscience program may have a specific focus (e.g., cognitive neuroscience, neurophysiology) that may shape its curriculum and the way in which faculty members teach or organize their courses; thus, additional comparisons of networks based on subdisciplines within neuroscience would be invaluable.

Similar to Stevenson et al. (2016), the current study focused on short-term learning, and it was impractical to directly relate students’ course performance (e.g., exam grades) to SAK metrics. Future studies may consider the relationship between students’ explicit course performance and SAK metrics for the different types of expert referents, which may further help determine which referent best predicts course performance. Additionally, a future study could focus on longer-term assessment of the curriculum, investigating differences between newly declared neuroscience majors and students near graduation.

SAK continues to show promise as a technique for neuroscience faculty to evaluate what their students have learned, regardless of what grade they have achieved. However, the expert referent should be considered.

Footnotes

This work was supported by Ursinus College Student Achievement in Research and Creativity funding to NCY. NCY worked on this project as an undergraduate student under JLS and JPB’s supervision.

REFERENCES

- Acton WH, Johnson PJ, Goldsmith TE. Structural knowledge assessment: Comparison of referent structures. J Educ Psychol. 1994;86:303–311.

- Azzarello J. Use of the Pathfinder scaling algorithm to measure students’ structural knowledge of community health nursing. J Nurs Educ. 2007;46:313–318. doi: 10.3928/01484834-20070701-05.

- Barab SA, Fajen BR, Kulikowich JM, Young MF. Assessing hypermedia navigation through Pathfinder: Prospects and limitations. J Educ Comput Res. 1996;15:185–205.

- Beier ME, Campbell M, Crook AE. Developing and demonstrating knowledge: Ability and non-ability determinants of learning and performance. Intelligence. 2010;38:179–186.

- Chen C, Kuljis J. The rising landscape: a visual exploration of superstring revolutions in physics. J Am Soc Inf Sci Technol. 2003;54:435–446.

- d’Apollonia ST, Charles ES, Boyd GM. Acquisition of complex systemic thinking: Mental models of evolution. Educ Res Eval. 2004;10:499–521.

- Davis MA, Curtis MB, Tschetter JD. Evaluating cognitive outcomes: validity and utility of structural knowledge assessment. J Bus Psychol. 2003;18:191–206.

- Davis FD, Yi MY. Improving computer skill training: behavior modeling, symbolic mental rehearsal, and the role of knowledge structures. J Appl Psychol. 2004;89:509–523. doi: 10.1037/0021-9010.89.3.509.

- Diekhoff GM. Testing through relationship judgments. J Educ Psychol. 1983;75:227–233.

- Fiore SM, Fowlkes J, Martin-Milham L, Oser RL. Convergence or divergence of expert mental models: The utility of knowledge structure assessment in training research. Proc Hum Factors Ergon Soc Annu Meet. 2000;44:427–430.

- Gillan DJ, Breedin SD, Cooke NJ. Network and multidimensional representations of the declarative knowledge of human-computer interface design experts. Int J Man Mach Stud. 1992;36:587–615.

- Goldsmith TE, Johnson PJ, Acton WH. Assessing structural knowledge. J Educ Psychol. 1991;83:88–96.

- Gomez RL, Hadfield OD, Housner LD. Conceptual maps and simulated teaching episodes as indicators of competence in teaching elementary mathematics. J Educ Psychol. 1996;88:572–585.

- Kerchner M, Hardwick JC, Thornton JE. Identifying and using ‘core competencies’ to help design and assess undergraduate neuroscience curricula. J Undergrad Neurosci Educ. 2012;11:A27–A37.

- Momsen JL, Long TM, Wyse SA, Ebert-May D. Just the facts? Introductory undergraduate biology courses focus on low-level cognitive skills. CBE Life Sci Educ. 2010;9:435–440. doi: 10.1187/cbe.10-01-0001.

- Naveh-Benjamin M, McKeachie WJ, Lin Y-G, Tucker DG. Inferring students’ cognitive structures and their development using the “ordered tree technique.” J Educ Psychol. 1986;78:130–140.

- Rowe AL, Meyer TN, Miller TM, Steuck K. Assessing knowledge structures: Don’t always call an expert. Proc Hum Factors Ergon Soc Annu Meet. 1997;41:1203–1207.

- Schvaneveldt R. Pathfinder associative networks: studies in knowledge organization. Norwood, NJ: Ablex; 1990.

- Stevenson JL, Shah S, Bish JP. Use of structural assessment of knowledge for outcomes assessment in the neuroscience classroom. J Undergrad Neurosci Educ. 2016;15:A38–A43.

- Trumpower DL, Sarwar GS. Effectiveness of structural feedback provided by Pathfinder networks. J Educ Comput Res. 2010;43:7–24.

- Trumpower DL, Sharara H, Goldsmith TE. Specificity of structural assessment of knowledge. J Technol Learn Assess. 2010;8(5):1–32.

- Trumpower DL, Vanapalli AS. Structural assessment of knowledge as, of, and for learning. In: Spector JM, Lockee BB, Childress MD, editors. Learning, Design, and Technology: An International Compendium of Theory, Research, Practice, and Policy. Switzerland: Springer International Publishing; 2016.

- Wilson JM. Network representations of knowledge about chemical equilibrium: variations with achievement. J Res Sci Teach. 1994;31:1133–1147.