Abstract

Background/Aims:

Regulatory approval of a drug or device involves an assessment of not only the benefits but also the risks of adverse events associated with the therapeutic agent. Although randomized controlled trials (RCTs) are the gold standard for evaluating effectiveness, the number of treated patients in a single RCT may not be enough to detect a rare but serious side effect of the treatment. Meta-analysis plays an important role in the evaluation of the safety of medical products and has an advantage over analyzing a single RCT when estimating the rate of adverse events.

Methods:

In this paper, we compare 15 widely used meta-analysis models under both Bayesian and frequentist frameworks when outcomes are extremely infrequent or rare. We present extensive simulation study results and then apply these methods to a real meta-analysis that considers RCTs investigating the effect of rosiglitazone on the risks of myocardial infarction and of death from cardiovascular causes.

Results:

Our simulation studies suggest that the beta hyperprior method, which models treatment group-specific parameters and accounts for heterogeneity, performs best. Most models that ignore between-study heterogeneity give poor coverage probability when such heterogeneity exists. In the data analysis, different methods provide a wide range of log odds ratio estimates between rosiglitazone and control treatments, with mixed conclusions on their statistical significance based on 95% confidence (or credible) intervals.

Conclusions:

In the rare event setting, treatment effect estimates obtained from traditional meta-analytic methods may be biased and provide poor coverage probability. This trend worsens when the data have large between-study heterogeneity. In general, we recommend methods that first estimate summaries of treatment-specific risks across studies and then relative treatment effects based on the summaries when appropriate. Furthermore, we recommend fitting various methods, comparing the results and model performance, and investigating any significant discrepancies among them.

Keywords: Meta-analysis, randomized controlled trials, Bayesian analysis, rare event, regulatory science, rosiglitazone

Introduction

Regulatory approval of a drug or device involves an assessment of not only the benefits but also the risks of adverse events associated with the therapeutic agent. Although we design randomized controlled trials (RCTs), the gold standard for evaluating effectiveness, to be large enough to detect a benefit of clinical importance, the number of treated patients may not be enough to detect infrequent adverse events. The rarer the adverse event, the less likely one or even two randomized clinical trials will provide enough of a signal, which could be problematic if a side effect of a treatment is rare but serious. The Council for International Organizations of Medical Sciences1 defined adverse drug reactions as uncommon (or infrequent) when their frequency is between 0.1% and 1%, rare when it is between 0.01% and 0.1%, and very rare when it is below 0.01%.

Meta-analysis plays a role in the evaluation of the safety of medical products, whether drugs or medical devices. A single clinical trial may not, on its own, provide sufficient information to make statements about the differential rate of occurrence of adverse events. Meta-analysis pools information on events over trials conducted prior to regulatory approval or surveillance studies after the marketing of a product to increase the number of treated patients, and thereby increases the chance of seeing rare and unintended effects of the treatment2.

Although meta-analysis has advantages over analyzing a single clinical trial when estimating the rate of adverse events, several authors have noted that traditional meta-analysis methods may be ill-defined or perform poorly with rare events3,4,6–9,35. The main issues include (1) sparsity of data, with many trials having no events (so-called zero-event trials); (2) insufficient statistical power to infer effect heterogeneity across studies; and (3) the impact of trials with large sample sizes relative to smaller ones.

Several published studies have investigated the performance of many different meta-analysis methods via extensive simulation studies under rare-event settings6,9–11. One common limitation of most of these studies is that the simulated data sets were generated assuming no heterogeneity in treatment effects across trials. Most of them also focused mainly on traditional frequentist meta-analytic methods (such as the Peto, Mantel-Haenszel, and inverse variance estimators). Bayesian models for meta-analysis are gaining popularity12,13 and are well suited to rare events because they handle trials with zero events more naturally than frequentist methods. In addition, Bayesian methods provide model flexibility and many choices of prior specifications based on different model assumptions14. There are, however, few publications investigating the performance of different Bayesian meta-analytic methods in the setting of rare events. Other meta-analysis methods for rare events have also been proposed: likelihood-based Poisson random-effects models15, combining confidence intervals16, and the arcsine difference17.

The objective of this paper is to provide a comprehensive comparison of widely used meta-analysis methods under both frequentist and Bayesian frameworks and thereby to understand the current state of meta-analytic methods for rare events. The remainder of this paper is structured as follows. The methods section provides details of the different meta-analysis methods. The simulation study section reports the settings and results of our extensive simulation studies. We then apply all these methods to a real data example, and the rosiglitazone data analysis section presents the results. Finally, the discussion section discusses our work and unmet methodological challenges.

Methods

In this section, we present models that estimate the log odds ratio (LOR). Relative risk and risk difference scales can also be used for rare events6; the related models and results are presented in the Supplementary Material, and we summarize those results in the Discussion section.

Models may assume treatment effects to be either constant across studies or heterogeneous; we denote the former assumption as “Common Treatment Effect (CTE)” and the latter as “Heterogeneous Treatment Effect (HTE).” We include moment-based estimators for frequentist meta-analysis methods and likelihood-based approaches for Bayesian meta-analysis methods under both common and heterogeneous treatment effect assumptions. Table 1 summarizes all models we consider.

Table 1:

Model specifications

| No. | Assumption | Model name | Model description |
|---|---|---|---|
| Frequentist approaches | | | |
| 1 | CTE | Naïve | Naïve pooled estimator |
| 2 | CTE | Peto | Peto estimator |
| 3 | CTE | MH | Mantel-Haenszel (MH) estimator |
| 4 | CTE | MH(DM)¹ | MH estimator with data modification |
| 5 | CTE | CTE-IV(DM)¹ | Inverse variance estimator ignoring effect heterogeneity |
| 6 | CTE | SGS-Unwgt | Unweighted Shuster, Guo, and Skyler (SGS) estimator |
| 7 | CTE | SGS-Wgt | Weighted Shuster, Guo, and Skyler (SGS) estimator |
| 8 | HTE | HTE-DL(DM)¹ | DerSimonian-Laird inverse variance estimator accounting for effect heterogeneity |
| 9 | HTE | SA(DM)² | Simple average estimator |
| Bayesian approaches | | | |
| 10 | CTE | CTE-Logit | Logistic model ignoring effect heterogeneity |
| 11 | CTE | CTE-Beta | Beta hyperprior model ignoring effect heterogeneity |
| 12 | HTE | HTE-Logit | Logistic model accounting for effect heterogeneity |
| 13 | HTE | HTE-LogitSh | HTE-Logit with a shrinkage prior on the effect of the control group |
| 14 | HTE | AB-Logit | Arm-based logistic model |
| 15 | HTE | HTE-Beta | Beta hyperprior model accounting for effect heterogeneity |

¹ Data modification to studies with zero events.

² Data modification to all studies.

With binary data, each study in a meta-analysis forms a 2×2 table as in Table 2. Here, i indexes study (i = 1, …, M, where M is the number of studies), and k indexes treatment with k = 1 for control and k = 2 for the treated group. We assume that the outcomes follow a binomial distribution

$$y_{ik} \sim \mathrm{Binomial}(n_{ik}, p_{ik}), \qquad i = 1, \ldots, M, \; k = 1, 2, \tag{1}$$

where for the kth treatment in the ith study, yik is the number of events, nik is the number of subjects receiving treatment k, and pik is the probability of having an event.

Table 2:

Data structure for study i

| | No. of events | No. of nonevents | Total |
|---|---|---|---|
| Active treatment | $y_{i2}$ | $n_{i2} - y_{i2}$ | $n_{i2}$ |
| Control treatment | $y_{i1}$ | $n_{i1} - y_{i1}$ | $n_{i1}$ |
| Total | $y_{i\cdot}$ | $n_{i\cdot} - y_{i\cdot}$ | $n_{i\cdot}$ |

Frequentist meta-analysis models

Naïve estimator.

We first consider an estimator that naïvely pools multiple contingency tables, without accounting for effect heterogeneity across studies (denoted by “Naïve”). The naïve log odds ratio estimator is written as

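$$\widehat{\mathrm{LOR}}_{\mathrm{Naive}} = \log\left(\frac{\hat p_2/(1 - \hat p_2)}{\hat p_1/(1 - \hat p_1)}\right), \tag{2}$$

where $\hat p_1 = \sum_{i=1}^{M} y_{i1} / \sum_{i=1}^{M} n_{i1}$ and $\hat p_2 = \sum_{i=1}^{M} y_{i2} / \sum_{i=1}^{M} n_{i2}$. The standard error of $\widehat{\mathrm{LOR}}_{\mathrm{Naive}}$ is calculated as $\sqrt{1/\{\hat p_1(1 - \hat p_1)\sum_{i} n_{i1}\} + 1/\{\hat p_2(1 - \hat p_2)\sum_{i} n_{i2}\}}$.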
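The naïve estimator in (2) can be sketched in a few lines of Python. This is an illustration of the formula, not the authors' R code; it assumes each study is stored as a hypothetical `(y1, n1, y2, n2)` tuple (control events, control size, treated events, treated size).

```python
import math


def naive_lor(tables):
    """Naive pooled log odds ratio (2) and its standard error.

    tables: list of (y1, n1, y2, n2) tuples, group 1 = control, group 2 = treated.
    Requires at least one event and one non-event in each pooled arm.
    """
    y1 = sum(t[0] for t in tables)
    n1 = sum(t[1] for t in tables)
    y2 = sum(t[2] for t in tables)
    n2 = sum(t[3] for t in tables)
    p1, p2 = y1 / n1, y2 / n2  # risks from the collapsed 2x2 table
    lor = math.log(p2 / (1 - p2)) - math.log(p1 / (1 - p1))
    se = math.sqrt(1 / (n1 * p1 * (1 - p1)) + 1 / (n2 * p2 * (1 - p2)))
    return lor, se


# Two small studies; the first has zero control events.
lor, se = naive_lor([(0, 100, 1, 100), (2, 200, 3, 200)])
```

Because the tables are collapsed before the odds are computed, zero-event studies cause no numerical problem, but study membership (and any heterogeneity) is ignored entirely.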

Peto and Mantel-Haenszel estimators.

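Peto18 and Mantel-Haenszel19 estimators assume a common treatment effect across studies (denoted by “Peto” and “MH,” respectively). The Peto estimator and its variance are

$$\widehat{\mathrm{LOR}}_{\mathrm{Peto}} = \frac{\sum_{i=1}^{M}(y_{i2} - E_i)}{\sum_{i=1}^{M} V_i}, \qquad \widehat{\mathrm{Var}}(\widehat{\mathrm{LOR}}_{\mathrm{Peto}}) = \frac{1}{\sum_{i=1}^{M} V_i},$$

where $E_i = n_{i2}\, y_{i\cdot}/n_{i\cdot}$, $V_i = y_{i\cdot}(n_{i\cdot} - y_{i\cdot})\, n_{i1} n_{i2} / \{n_{i\cdot}^2 (n_{i\cdot} - 1)\}$, $n_{i\cdot} = n_{i1} + n_{i2}$, and $y_{i\cdot} = y_{i1} + y_{i2}$.

The Mantel-Haenszel estimator and its approximate variance proposed by Robins et al.20 can be written as

$$\widehat{\mathrm{LOR}}_{\mathrm{MH}} = \log\frac{\sum_{i} R_i}{\sum_{i} S_i}, \qquad \widehat{\mathrm{Var}}(\widehat{\mathrm{LOR}}_{\mathrm{MH}}) = \frac{\sum_i P_i R_i}{2\left(\sum_i R_i\right)^2} + \frac{\sum_i (P_i S_i + Q_i R_i)}{2 \sum_i R_i \sum_i S_i} + \frac{\sum_i Q_i S_i}{2\left(\sum_i S_i\right)^2},$$

where $R_i = y_{i2}(n_{i1} - y_{i1})/n_{i\cdot}$, $S_i = y_{i1}(n_{i2} - y_{i2})/n_{i\cdot}$, $P_i = (y_{i2} + n_{i1} - y_{i1})/n_{i\cdot}$, and $Q_i = (y_{i1} + n_{i2} - y_{i2})/n_{i\cdot}$.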
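The Peto and Mantel-Haenszel estimators above can be sketched in Python as follows. This illustrates the formulas only; it is not the metafor implementation used in the paper, and it assumes the same hypothetical `(y1, n1, y2, n2)` tuple layout for each study.

```python
import math


def peto_lor(tables):
    """Peto log odds ratio and its variance. tables: list of (y1, n1, y2, n2)."""
    num = 0.0  # sum of observed minus expected events in the treated group
    den = 0.0  # sum of hypergeometric variances V_i
    for y1, n1, y2, n2 in tables:
        n, y = n1 + n2, y1 + y2
        if y == 0 or y == n:
            continue  # V_i = 0 and O - E = 0: the study carries no information
        e = n2 * y / n
        v = y * (n - y) * n1 * n2 / (n ** 2 * (n - 1))
        num += y2 - e
        den += v
    return num / den, 1.0 / den  # needs at least one informative study


def mh_lor(tables):
    """Mantel-Haenszel log odds ratio with the Robins et al. variance."""
    s_r = s_s = s_pr = s_mix = s_qs = 0.0
    for y1, n1, y2, n2 in tables:
        n = n1 + n2
        r = y2 * (n1 - y1) / n
        s = y1 * (n2 - y2) / n
        p = (y2 + n1 - y1) / n
        q = (y1 + n2 - y2) / n
        s_r += r
        s_s += s
        s_pr += p * r
        s_mix += p * s + q * r
        s_qs += q * s
    lor = math.log(s_r / s_s)  # double-zero studies add 0 to both sums
    var = s_pr / (2 * s_r ** 2) + s_mix / (2 * s_r * s_s) + s_qs / (2 * s_s ** 2)
    return lor, var


mh, mh_var = mh_lor([(10, 100, 20, 100)])
peto, peto_var = peto_lor([(10, 100, 20, 100)])
```

For a single table with cells a, b, c, d, the Robins et al. variance reduces to 1/a + 1/b + 1/c + 1/d, which is a convenient sanity check on the code.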

Studies with zero cells are not a problem for the Peto and Mantel-Haenszel estimators because they do not calculate the observed log odds ratios of the individual studies. Nevertheless, a data modification that adds a constant 0.5 to all cells of the 2 × 2 table for a trial with zero events in either group as in Table 3 is often considered with the Mantel-Haenszel estimator. We denote the Mantel-Haenszel estimator with data modification by “MH(DM).” A few alternative data modification approaches have been proposed by Sweeting et al.9.

Table 3:

Data modification for study i with zero events (i.e., either yi1 or yi2 is zero) using a constant 0.5

| | No. of events | No. of nonevents | Total |
|---|---|---|---|
| Control group | $y_{i1} + 0.5$ | $n_{i1} - y_{i1} + 0.5$ | $n_{i1} + 1$ |
| Treated group | $y_{i2} + 0.5$ | $n_{i2} - y_{i2} + 0.5$ | $n_{i2} + 1$ |
| Total | $y_{i\cdot} + 1$ | $n_{i\cdot} - y_{i\cdot} + 1$ | $n_{i\cdot} + 2$ |
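The Table 3 modification amounts to a small helper. The sketch below assumes the hypothetical `(y1, n1, y2, n2)` tuple layout; `cc` is an illustrative name for the added constant (0.5 here).

```python
def add_half_if_zero(tables, cc=0.5):
    """Add cc to every cell of studies with zero events in either arm (Table 3).

    Adding cc to both the event and non-event cell of an arm raises that
    arm's total by 2 * cc, i.e. by 1 when cc = 0.5.
    """
    out = []
    for y1, n1, y2, n2 in tables:
        if y1 == 0 or y2 == 0:
            out.append((y1 + cc, n1 + 2 * cc, y2 + cc, n2 + 2 * cc))
        else:
            out.append((y1, n1, y2, n2))  # studies without zero events are unchanged
    return out


modified = add_half_if_zero([(0, 100, 2, 100), (1, 50, 3, 60)])
```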

Inverse variance estimators.

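The inverse variance method21 is commonly used in meta-analysis. This method can assume either a common or a heterogeneous treatment effect. When a heterogeneous treatment effect is assumed, we follow DerSimonian and Laird (DL)21 to estimate effect heterogeneity (i.e., the between-study variance of the log odds ratio). First, we need to calculate a study-specific weight as a combination of within-study and between-study variances. Suppose $\widehat{\mathrm{LOR}}_i = \log\{y_{i2}(n_{i1} - y_{i1}) / [y_{i1}(n_{i2} - y_{i2})]\}$ is the observed log odds ratio of study $i$ and $w_i = \{1/y_{i1} + 1/(n_{i1} - y_{i1}) + 1/y_{i2} + 1/(n_{i2} - y_{i2})\}^{-1}$; then

$$w_i^{*} = \left(\frac{1}{w_i} + \tau^2\right)^{-1}, \tag{3}$$

where $1/w_i$ is the within-study variance (i.e., the variance of $\widehat{\mathrm{LOR}}_i$) and $\tau^2$ is the between-study variance. We estimate $\tau^2$ using $\hat\tau^2$ as follows:

$$\hat\tau^2 = \max\left\{0,\ \frac{Q - df}{\sum_{i=1}^{M} w_i - \sum_{i=1}^{M} w_i^2 \big/ \sum_{i=1}^{M} w_i}\right\}, \tag{4}$$

where $df$ is the number of studies minus 1, $Q = \sum_{i=1}^{M} w_i(\widehat{\mathrm{LOR}}_i - \widehat{\mathrm{LOR}}_{F})^2$, and $\widehat{\mathrm{LOR}}_{F} = \sum_{i=1}^{M} w_i \widehat{\mathrm{LOR}}_i / \sum_{i=1}^{M} w_i$. As a result, the inverse variance estimator and its variance are written as

$$\widehat{\mathrm{LOR}}_{\mathrm{IV}} = \frac{\sum_{i=1}^{M} w_i^{*} \widehat{\mathrm{LOR}}_i}{\sum_{i=1}^{M} w_i^{*}}, \qquad \widehat{\mathrm{Var}}(\widehat{\mathrm{LOR}}_{\mathrm{IV}}) = \frac{1}{\sum_{i=1}^{M} w_i^{*}}.$$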
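The weighting in (3) and the DerSimonian-Laird moment estimator in (4) can be sketched as below. This is an illustration under the assumption of hypothetical `(y1, n1, y2, n2)` tuples with no zero cells (a data modification would be applied first); it is not the metafor code used for the paper.

```python
import math


def study_lor(y1, n1, y2, n2):
    """Observed log odds ratio of one study and its weight w_i (1 / within-study variance)."""
    lor = math.log(y2 * (n1 - y1) / (y1 * (n2 - y2)))
    var = 1 / y1 + 1 / (n1 - y1) + 1 / y2 + 1 / (n2 - y2)
    return lor, 1 / var


def inverse_variance(tables, heterogeneous=True):
    """Inverse variance pooled log OR; DerSimonian-Laird tau^2 when heterogeneous.

    Returns (estimate, variance, tau2). heterogeneous=False forces tau^2 = 0.
    """
    lors, ws = zip(*(study_lor(*t) for t in tables))
    sw = sum(ws)
    fixed = sum(w * x for w, x in zip(ws, lors)) / sw  # common-effect estimate
    tau2 = 0.0
    if heterogeneous and len(tables) > 1:
        q = sum(w * (x - fixed) ** 2 for w, x in zip(ws, lors))  # Cochran's Q
        df = len(tables) - 1
        tau2 = max(0.0, (q - df) / (sw - sum(w * w for w in ws) / sw))  # (4)
    w_star = [1 / (1 / w + tau2) for w in ws]  # (3)
    est = sum(w * x for w, x in zip(w_star, lors)) / sum(w_star)
    return est, 1 / sum(w_star), tau2


est_h, var_h, tau2_h = inverse_variance([(10, 100, 10, 100), (10, 100, 40, 100)])
```

With identical studies Q is zero, the truncation in (4) returns 0, and the estimator collapses to the common-effect version.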

We apply the data modification approach introduced in the previous section to the inverse variance method to handle zero events. Under the common treatment effect assumption, we force $\tau^2$ in (3) to be zero and denote this estimator by “CTE-IV(DM).” We denote the estimator that uses $\hat\tau^2$ to account for a heterogeneous treatment effect as “HTE-DL(DM).”

Other estimators.

Shuster, Guo, and Skyler (SGS)11 proposed estimators that are functions of risk estimates in each group. These estimators are nonparametric and assume independence between studies, an assumption called “studies at random.” They proposed unweighted and weighted estimators, denoted by “SGS-Unwgt” and “SGS-Wgt,” respectively, for the odds ratio, risk ratio, and risk difference scales.

For these estimators, one first estimates study-specific risks as $\hat p_{ik} = y_{ik}/n_{ik}$ and then calculates summary estimates of group-specific risks as $\bar p_k = \frac{1}{M}\sum_{i=1}^{M} \hat p_{ik}$, where $M$ is the number of studies. The unweighted log odds ratio estimator is given by $\widehat{\mathrm{LOR}}_{\text{SGS-Unwgt}} = \log\{\bar p_2/(1 - \bar p_2)\} - \log\{\bar p_1/(1 - \bar p_1)\}$. The weighted log odds ratio estimator applies study-specific weights, $u_i = (n_{i1} + n_{i2})/2$, to the study-specific risk estimates for group $k$, giving $\bar p_k^{w} = \sum_{i=1}^{M} u_i \hat p_{ik} / \sum_{i=1}^{M} u_i$. One then calculates the estimator from these weighted group-specific estimates as with the unweighted estimator. They provided formulas for the standard errors of the unweighted and weighted estimators11. We note that their unweighted estimator weights all studies equally, regardless of study size, while their weighted estimator weights each study by its total sample size.
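A minimal sketch of the SGS point estimates follows, assuming hypothetical `(y1, n1, y2, n2)` tuples. It computes only the unweighted and weighted log odds ratios described above; the standard-error formulas of Shuster et al. are not reproduced.

```python
import math


def sgs_lor(tables, weighted=False):
    """SGS-style log OR from averaged arm-specific risks (point estimate only).

    Unweighted: simple mean of study risks. Weighted: ratio estimator with
    study weights u_i = (n1 + n2) / 2. Zero-event studies enter with a risk of 0.
    """
    if weighted:
        u = [(n1 + n2) / 2 for _, n1, _, n2 in tables]
    else:
        u = [1.0] * len(tables)
    su = sum(u)
    p1 = sum(ui * y1 / n1 for ui, (y1, n1, _, _) in zip(u, tables)) / su
    p2 = sum(ui * y2 / n2 for ui, (_, _, y2, n2) in zip(u, tables)) / su
    return math.log(p2 / (1 - p2)) - math.log(p1 / (1 - p1))


studies = [(0, 100, 1, 100), (2, 200, 4, 200)]
unweighted = sgs_lor(studies)
weighted = sgs_lor(studies, weighted=True)
```

Because the log odds ratio is formed only after the risks are averaged, no continuity correction is needed unless an arm has zero events in every study.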

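Bhaumik et al.10 proposed a simple average estimator. It is calculated as an average of study-specific log odds ratios after adding 0.5 to all cells in Table 2 for all trials. That is, the study-specific log odds ratio is

$$\widehat{\mathrm{LOR}}_i = \log\frac{(y_{i2} + 0.5)(n_{i1} - y_{i1} + 0.5)}{(y_{i1} + 0.5)(n_{i2} - y_{i2} + 0.5)}.$$

Then, the simple average estimator and its variance are defined as

$$\widehat{\mathrm{LOR}}_{\mathrm{SA}} = \frac{1}{M}\sum_{i=1}^{M} \widehat{\mathrm{LOR}}_i, \qquad \widehat{\mathrm{Var}}(\widehat{\mathrm{LOR}}_{\mathrm{SA}}) = \frac{1}{M^2}\sum_{i=1}^{M}\left(\hat\sigma_i^2 + \hat\tau^2\right), \tag{5}$$

where $M$ is the number of studies, $\hat\sigma_i^2 = \sum_{k=1}^{2}\left[(n_{ik} + 1)\tilde p_{ik}(1 - \tilde p_{ik})\right]^{-1}$, and $\tilde p_{ik} = (y_{ik} + 0.5)/(n_{ik} + 1)$ for $k = 1$ and 2. We use $\hat\tau^2$ in (4) in the variance estimator (5). Note that this estimator uses a data modification that is different from the one used with the Mantel-Haenszel and inverse variance estimators. We denote the simple average estimator by “SA(DM).”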
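The simple average estimator can be sketched as below. The sketch assumes hypothetical `(y1, n1, y2, n2)` tuples and assumes that the variance in (5) has the form (sum of per-study variances plus M times the DerSimonian-Laird between-study variance) divided by M squared, with that between-study variance computed from the modified tables. It is an illustration, not Bhaumik et al.'s code.

```python
import math


def simple_average(tables):
    """Simple average log OR after adding 0.5 to every cell of every study.

    Variance: (sum_i sigma_i^2 + M * tau^2) / M^2, where tau^2 is a
    DerSimonian-Laird estimate from the modified tables (assumption).
    """
    lors, s2 = [], []
    for y1, n1, y2, n2 in tables:
        a, b = y2 + 0.5, n2 - y2 + 0.5  # treated events / non-events
        c, d = y1 + 0.5, n1 - y1 + 0.5  # control events / non-events
        lors.append(math.log(a * d / (b * c)))
        s2.append(1 / a + 1 / b + 1 / c + 1 / d)  # sigma_i^2
    m = len(lors)
    est = sum(lors) / m  # every study gets weight 1 / M
    tau2 = 0.0
    if m > 1:
        w = [1 / v for v in s2]
        sw = sum(w)
        fixed = sum(wi * x for wi, x in zip(w, lors)) / sw
        q = sum(wi * (x - fixed) ** 2 for wi, x in zip(w, lors))
        tau2 = max(0.0, (q - (m - 1)) / (sw - sum(wi * wi for wi in w) / sw))
    return est, (sum(s2) + m * tau2) / m ** 2


est, var = simple_average([(0, 100, 0, 100)])
```

Equal weighting is what distinguishes this estimator from the inverse variance method: large and small studies contribute equally to the point estimate.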

Bayesian meta-analysis models

Logistic regression.

Using the likelihood (1), we model the unknown parameter pik as follows:

$$\mathrm{logit}(p_{ik}) = \mu_i + d\, I(k = 2), \tag{6}$$

$$\mathrm{logit}(p_{ik}) = \mu_i + \delta_i\, I(k = 2), \tag{7}$$

where $\mu_i$ is the study-specific baseline effect (i.e., the log odds of an event in the control group) and $I(\cdot)$ is the indicator function. Under the common treatment effect assumption in (6), $d$ is the common log odds ratio between the two groups (control and treated) across studies. We assign vague $N(0, 10^2)$ priors to $\mu_i$ and $d$ and denote this model by “CTE-Logit.”

Under the heterogeneous treatment effect assumption in (7), $\delta_i$ is the study-specific log odds ratio. We assume $\delta_i \sim N(d, \tau^2)$ a priori, where $\tau$ quantifies between-study effect heterogeneity, and $d$ has a vague $N(0, 10^2)$ prior. We assume a Uniform(0, 2) prior distribution for $\tau$; this range makes the prior considerably vague relative to the scale of the log odds ratio. We consider two priors for $\mu_i$: vague and shrinkage priors (denoted by “HTE-Logit” and “HTE-LogitSh,” respectively). The HTE-Logit model assigns a vague $N(0, 10^2)$ prior to $\mu_i$. The HTE-LogitSh model sets $\mu_i \sim N(m, \tau_\mu^2)$, where $m$ is the overall mean log odds of the event in the control group and $\tau_\mu$ is the heterogeneity of the log odds across the studies' control groups. We set $m \sim N(0, 10^2)$ and $\tau_\mu \sim \mathrm{Uniform}(0, 2)$, so that the shrinkage prior allows the study-specific baseline effects ($\mu_i$), as well as the log odds ratios ($\delta_i$), to vary across studies.

Arm-based model.

Recently, Hong et al.22 proposed an arm-based parameterization in network meta-analysis, an extension of meta-analysis that compares more than two treatments simultaneously. We simplify this model to fit an arm-based meta-analysis. This model estimates the treatment-specific risks and allows heterogeneity across studies. The model can be written as

$$\mathrm{logit}(p_{ik}) = \theta_k + \eta_{ik}, \tag{8}$$

where $\theta_k$ is the log odds of treatment $k$ and the $\eta_{ik}$ are random effects allowing heterogeneity of the log odds. We assume that $(\eta_{i1}, \eta_{i2})^\top \sim \mathrm{BVN}(\mathbf{0}, \Sigma)$, where BVN stands for the bivariate normal distribution. We use priors $\theta_k \sim N(0, 10^2)$ and $\Sigma^{-1} \sim \mathrm{Wishart}(\Omega, 2)$, where $\Omega$ is a $2 \times 2$ matrix chosen so that the prior for $\Sigma^{-1}$ is vague. Note that the choice of $\Omega$ depends on the data, the outcomes, and the scales of the parameters of interest. We used a diagonal matrix with diagonal elements equal to 0.02 for the simulated data and 0.25 for the data analysis. One can obtain the log odds ratio from $\theta_2 - \theta_1$. We call this model “AB-Logit.”

Beta hyperprior distribution.

We also consider pooling studies’ arm-specific risks using beta hyperprior distributions. First, we assume that the probability of having an event with treatment $k$ is constant across studies. For the likelihood, we replace $p_{i1}$ and $p_{i2}$ in (1) with $p_1$ and $p_2$, such that

$$y_{ik} \sim \mathrm{Binomial}(n_{ik}, p_k).$$

Then, we assign prior distributions pk∼Beta(αk, βk), where αk and βk are pre-specified. We employ common α and β values instead of αk and βk for simplicity, given that we use Beta(1,1) priors. We call this model “CTE-Beta.”
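Because the Beta prior is conjugate to the binomial likelihood with a common $p_k$, the CTE-Beta posterior is available in closed form: $p_k \mid \text{data} \sim \mathrm{Beta}(\alpha + \sum_i y_{ik}, \beta + \sum_i (n_{ik} - y_{ik}))$. The sketch below samples it directly with Python's standard library instead of MCMC. It is an illustration rather than the authors' JAGS or Stan model, and the tuple layout and function name are hypothetical.

```python
import math
import random


def cte_beta_posterior_lor(tables, alpha=1.0, beta=1.0, draws=20000, seed=1):
    """Monte Carlo posterior of the log OR under CTE-Beta (common p_k, Beta priors).

    tables: list of (y1, n1, y2, n2). Returns the posterior median and a 95%
    equal-tailed credible interval, using conjugate Beta posteriors for p_1, p_2.
    """
    rng = random.Random(seed)
    y1 = sum(t[0] for t in tables)
    n1 = sum(t[1] for t in tables)
    y2 = sum(t[2] for t in tables)
    n2 = sum(t[3] for t in tables)
    samples = []
    for _ in range(draws):
        p1 = rng.betavariate(alpha + y1, beta + n1 - y1)
        p2 = rng.betavariate(alpha + y2, beta + n2 - y2)
        samples.append(math.log(p2 / (1 - p2)) - math.log(p1 / (1 - p1)))
    samples.sort()

    def quantile(prob):
        return samples[int(prob * (draws - 1))]

    return quantile(0.5), (quantile(0.025), quantile(0.975))


median, (lower, upper) = cte_beta_posterior_lor([(100, 10000, 200, 10000)])
```

Since only the pooled counts enter the posterior, this model shares the naïve estimator's disregard for between-study heterogeneity.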

We also assume heterogeneity across studies, i.e., the pik vary across studies. We define a hierarchical prior distribution for the pik, namely,

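$$p_{ik} \mid U_k, V_k \sim \mathrm{Beta}\big(U_k V_k,\ (1 - U_k) V_k\big). \tag{9}$$

With this notation, $E(p_{ik} \mid U_k, V_k) = U_k$ and $\mathrm{Var}(p_{ik} \mid U_k, V_k) = U_k(1 - U_k)/(V_k + 1)$. In terms of the more common $\mathrm{Beta}(a_k, b_k)$ parameterization, $U_k = a_k/(a_k + b_k)$ and $V_k = a_k + b_k$. We use this parameterization because we want to assign a prior distribution directly to the mean of the $p_{ik}$, which is $U_k$. Study heterogeneity on the probability scale is therefore measured by this variance, $U_k(1 - U_k)/(V_k + 1)$. We assign $V_k$ a vague prior, namely Inverse-Gamma(1, 0.01). $U_k$ has a Beta(1, 1) prior distribution. We call this model “HTE-Beta.”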
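The mean and precision parameterization in (9) can be checked numerically. The helper names below are hypothetical; the sketch maps $(U_k, V_k)$ to the usual Beta shape parameters, evaluates the between-study variance, and draws study-specific risks from the population distribution to confirm that their average is close to $U_k$.

```python
import random


def beta_ab_from_uv(u, v):
    """Map the mean/precision parameters (U_k, V_k) in (9) to Beta(a_k, b_k)."""
    return u * v, (1 - u) * v


def between_study_variance(u, v):
    """Var(p_ik | U_k, V_k) = U_k (1 - U_k) / (V_k + 1)."""
    return u * (1 - u) / (v + 1)


def simulate_study_risks(u, v, n_studies, seed=0):
    """Draw study-specific risks p_ik from the HTE-Beta population distribution."""
    rng = random.Random(seed)
    a, b = beta_ab_from_uv(u, v)
    return [rng.betavariate(a, b) for _ in range(n_studies)]


# A rare event (mean risk 1%) with moderate precision V_k = 50.
a, b = beta_ab_from_uv(0.01, 50)
risks = simulate_study_risks(0.01, 50, 20000)
```

A smaller $V_k$ spreads the study risks more widely around $U_k$, which is how this model expresses heterogeneity without any log odds ratio appearing at the study level.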

Bayesian model comparison.

We compare the performance of fitted Bayesian models in our data example using the Watanabe-Akaike or widely applicable information criterion23. This criterion evaluates predictive accuracy for a fitted model and then adjusts for overfitting based on the effective number of parameters. In addition, it provides more stable estimates than the deviance information criterion. One prefers models that have smaller values of Watanabe-Akaike information criterion24.
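A minimal sketch of the usual WAIC computation from posterior draws: the log pointwise predictive density minus a penalty equal to the summed posterior variances of the pointwise log-likelihood, with WAIC = −2 × elpd as in Table 5. The `loglik` matrix layout is an assumption for illustration; in practice R packages such as loo compute this from Stan output.

```python
import math


def waic(loglik):
    """WAIC from a pointwise log-likelihood matrix.

    loglik[s][i] is log p(y_i | theta_s) for posterior draw s and data point i
    (at least two draws). Returns (elpd_waic, p_waic, waic).
    """
    n_draws = len(loglik)
    n_points = len(loglik[0])
    lppd = 0.0
    p_waic = 0.0
    for i in range(n_points):
        col = [loglik[s][i] for s in range(n_draws)]
        top = max(col)
        # log of the posterior-mean likelihood, via log-sum-exp for stability
        lppd += top + math.log(sum(math.exp(x - top) for x in col) / n_draws)
        mean = sum(col) / n_draws
        # effective-parameter penalty: posterior variance of the log-likelihood
        p_waic += sum((x - mean) ** 2 for x in col) / (n_draws - 1)
    elpd = lppd - p_waic
    return elpd, p_waic, -2 * elpd


elpd, p_eff, criterion = waic([[-1.0, -2.0]] * 4)
```

When every draw gives the same log-likelihood, the penalty is zero and WAIC is simply −2 times the total log-likelihood.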

Computation

Simulation studies and data analyses were conducted in R25. We fit the Peto, Mantel-Haenszel, and inverse variance estimators using the R package metafor26; we used user-written functions to compute the remaining frequentist estimators. Bayesian models were fitted using the R package R2jags27 for simulations and rstan28 for the data analysis, as rstan provided reliable Watanabe-Akaike information criterion values. For the Bayesian models, our simulation studies used 10,000 posterior samples from a single Markov chain after a burn-in of 10,000 iterations, while our data analyses used two Markov chains, each with 30,000 posterior samples after a burn-in of 20,000 iterations. We checked trace plots and scatter plots of pairs of parameters to assess convergence, and they indicated good convergence except where noted in the data analysis. The R code and the associated Bayesian model files are available at https://github.com/HwanheeHong/MetaAnalysis_RareEvents.

Simulation study

Settings

In this simulation study, we compare the performance of fifteen meta-analysis methods (9 frequentist and 6 Bayesian), summarized in Table 1. The results compare bias, mean squared error, and coverage probability of 95% intervals for each method’s log odds ratio estimate. The simulation settings and results with relative risk and risk difference scales are presented in Supplementary Material.

Our simulation setup is built upon that used in Shuster et al. (2012)11. We generated 10,000 simulated meta-analysis datasets, each consisting of thirty studies comparing a treatment of interest to a control. The control group’s sample size in study i, ni1, is sampled from a Uniform(50,1000) distribution. We set the size of the corresponding treated group, ni2, to be equal to ni1 to avoid an unrealistic study design that has extremely unbalanced sample sizes. We sampled probabilities for having an event (i.e., risks) for the two groups in study i as follows: pik∼Uniform(pk(1−0.5D), pk(1+0.5D)). pk is the true risk for group k = 1 or 2, and D controls between-study heterogeneity of the risks. Table 4 shows the six pairs of true underlying risks that we consider in our six different simulation scenarios. We vary pk to consider three null cases, namely, (p1, p2) = (0.002, 0.002), (0.005, 0.005) and (0.05, 0.05), and three alternative cases, (p1, p2) = (0.002, 0.004), (0.005, 0.01), and (0.05, 0.1). The true log odds ratios are 0 and around 0.7 in the null and alternative cases, respectively. As the true risks decrease, the number of studies with zero events increases. For example, the average numbers of studies with zero events in either group over 10,000 iterations were 18, 8, and less than 1 for the three null cases. In addition, we varied the degree of between-study heterogeneity of risks by setting D to 0, 1, or 2, where D = 0 means no heterogeneity in risks. As a result, there are 6 × 3 = 18 different settings. Given pik and nik samples, we generated the binary events as yik∼Binomial(nik, pik).
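One simulated meta-analysis under the design above can be generated as follows. This is a hedged sketch, not the authors' R code: drawing the sample size as an integer with `randint` and generating binomial counts as sums of Bernoulli draws are assumptions made to keep the example within the standard library.

```python
import random


def simulate_meta(p1, p2, D, n_studies=30, seed=None):
    """One simulated meta-analysis as a list of (y1, n1, y2, n2) tuples.

    n_i1 ~ discrete Uniform{50, ..., 1000} (integer draw is an assumption),
    n_i2 = n_i1, p_ik ~ Uniform(p_k (1 - 0.5 D), p_k (1 + 0.5 D)),
    y_ik ~ Binomial(n_ik, p_ik).
    """
    rng = random.Random(seed)

    def binomial(n, p):
        # Sum of n Bernoulli(p) draws; simple and adequate for n <= 1000.
        return sum(rng.random() < p for _ in range(n))

    data = []
    for _ in range(n_studies):
        n1 = rng.randint(50, 1000)
        n2 = n1  # balanced arms, as in the design above
        pi1 = rng.uniform(p1 * (1 - 0.5 * D), p1 * (1 + 0.5 * D))
        pi2 = rng.uniform(p2 * (1 - 0.5 * D), p2 * (1 + 0.5 * D))
        data.append((binomial(n1, pi1), n1, binomial(n2, pi2), n2))
    return data


# Null scenario with the smallest risks and large heterogeneity (D = 2).
data = simulate_meta(0.002, 0.002, D=2, seed=1)
zero_studies = sum(1 for y1, _, y2, _ in data if y1 == 0 or y2 == 0)
```

With D = 0 the uniform interval collapses to the single point $p_k$, so every study shares the same true risks.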

Table 4:

True risk parameters, pk, and the associated odds ratio (OR) and log odds ratio (LOR) values used in the simulation study

| Null: $p_1$ | Null: $p_2$ | Null: OR | Null: LOR | Alternative: $p_1$ | Alternative: $p_2$ | Alternative: OR | Alternative: LOR |
|---|---|---|---|---|---|---|---|
| 0.002 | 0.002 | 1 | 0 | 0.002 | 0.004 | 2.00 | 0.70 |
| 0.005 | 0.005 | 1 | 0 | 0.005 | 0.01 | 2.01 | 0.70 |
| 0.05 | 0.05 | 1 | 0 | 0.05 | 0.1 | 2.11 | 0.75 |
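The odds ratios and log odds ratios in Table 4 follow directly from the risk pairs, which a short calculation confirms. The helper name is illustrative. For the third alternative pair the odds ratio is exactly 19/9 and the log odds ratio is about 0.747 before rounding.

```python
import math


def or_and_lor(p1, p2):
    """Odds ratio and log odds ratio of treated (p2) versus control (p1) risk."""
    odds_ratio = (p2 / (1 - p2)) / (p1 / (1 - p1))
    return odds_ratio, math.log(odds_ratio)


for p1, p2 in [(0.002, 0.004), (0.005, 0.01), (0.05, 0.1)]:
    odds_ratio, lor = or_and_lor(p1, p2)
    print(f"p1={p1}, p2={p2}: OR={odds_ratio:.3f}, LOR={lor:.3f}")
```

Doubling a rare risk doubles the odds almost exactly, whereas at a 5% baseline risk the odds ratio drifts above 2 because the odds no longer approximate the risk.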

Results

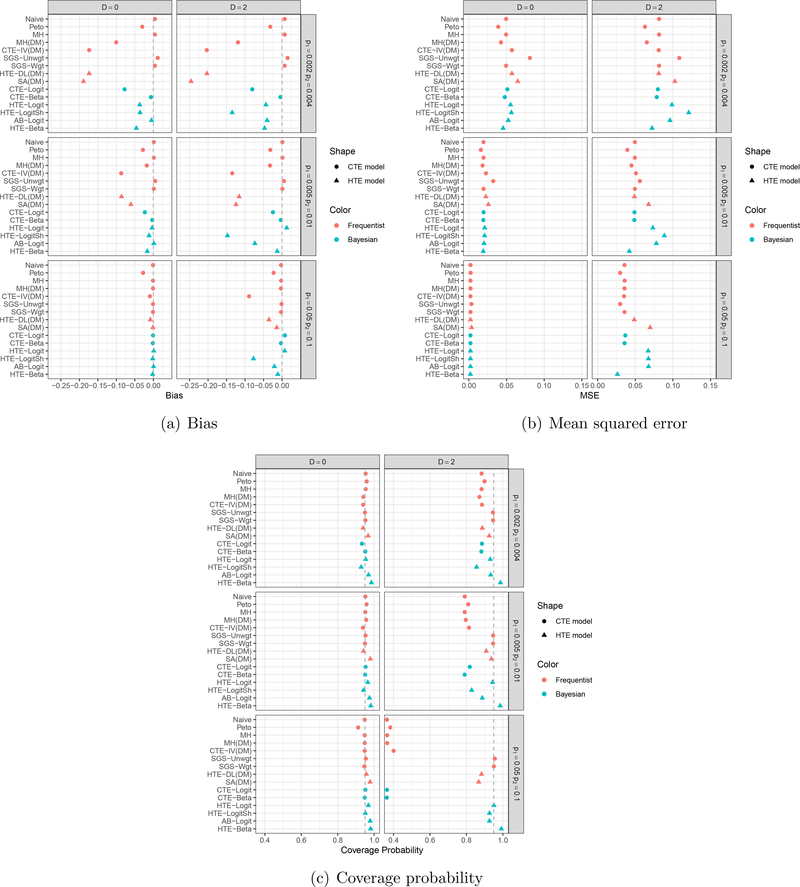

Figures 1 and 2 exhibit bias, mean squared error, and coverage probability of log odds ratio estimates for three null and three alternative cases, respectively. Frequentist and Bayesian models are plotted in red and blue, and common and heterogeneous treatment effect models are plotted using circle and triangle characters, respectively. We present results under D = 0 and 2; the results when D = 1 are presented in Supplementary Material, as they showed similar patterns to those under D = 2 with less extreme quantities.

Figure 1:

Simulation results in the null case: (a) Bias, (b) Mean squared error, and (c) Coverage probability. Frequentist and Bayesian models are plotted in red and blue, respectively; common and heterogeneous treatment effect models are plotted using circles and triangles, respectively.

Figure 2:

Simulation results in the presence of a treatment effect (i.e., the alternative case): (a) Bias, (b) Mean squared error, and (c) Coverage probability. Frequentist and Bayesian models are plotted in red and blue, respectively; common and heterogeneous treatment effect models are plotted using circles and triangles, respectively.

Under the null cases in Figure 1, all frequentist estimators, which are moment-based estimators, showed little or no bias, as did the three Bayesian methods that model treatment-specific risks, namely CTE-Beta, AB-Logit, and HTE-Beta. CTE-Logit, HTE-Logit, and HTE-LogitSh, however, showed relatively large biases when the true risks were small. The bias may arise because these three methods rely on a logistic regression model. We generated each meta-analysis dataset from a binomial distribution after sampling treatment-specific risks, $p_{ik}$, not treatment effects (i.e., log odds ratios), $\delta_i$ in (7). When the risks are small, studies will have zero cells, making study-specific estimation of the log odds ratio mathematically unwieldy. The study-specific estimates then become roughly bimodal, with some near 0 and some approaching infinity. CTE-Logit, HTE-Logit, and HTE-LogitSh model the log odds ratio directly under a normality assumption on the $\delta_i$, while CTE-Beta, AB-Logit, and HTE-Beta model treatment-specific parameters, such as the log odds of an event. HTE-LogitSh had the largest bias when D = 2, suggesting that a shrinkage normal prior on the log odds of an event in the control group may not be appropriate in the presence of large heterogeneity.

Mean squared error decreased as the true risks increased (i.e., as events became less rare). When D = 2, MH(DM), CTE-IV(DM), HTE-DL(DM), SA(DM), and HTE-Beta tended to give small mean squared errors, while SGS-Unwgt, HTE-Logit, HTE-LogitSh, and AB-Logit gave large mean squared errors. In terms of coverage probability, SGS-Unwgt, SGS-Wgt, and HTE-Beta provided coverage close to or slightly above the nominal 0.95 level across all scenarios. All common treatment effect models except SGS-Unwgt and SGS-Wgt had poor coverage in the presence of large between-study heterogeneity (D = 2), and their coverage got worse as the true risks grew larger. This may seem counterintuitive, since all common treatment effect models showed little or no bias in Panel (a). We found, however, that the estimated standard errors of these estimates were so small that their 95% confidence or credible intervals were very narrow. The exceptions were SGS-Unwgt and SGS-Wgt, whose 95% confidence intervals were sufficiently wider than those of the other common treatment effect models that their coverage probabilities were close to the nominal level of 0.95.
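The three performance measures can be computed from simulation replicates as sketched below; the `(estimate, lower, upper)` layout is a hypothetical format for one method's results across replicates. The sketch makes the point above concrete: bias and coverage are computed separately, so a method can have near-zero bias and still poor coverage when its intervals are too narrow.

```python
def performance(results, truth):
    """Bias, mean squared error, and 95% interval coverage across replicates.

    results: list of (estimate, lower, upper) for one method, one tuple per
    simulated meta-analysis; truth: the true log odds ratio of the scenario.
    """
    reps = len(results)
    bias = sum(est - truth for est, _, _ in results) / reps
    mse = sum((est - truth) ** 2 for est, _, _ in results) / reps
    coverage = sum(lo <= truth <= hi for _, lo, hi in results) / reps
    return bias, mse, coverage


# Three illustrative replicates for a null scenario (true LOR = 0).
bias, mse, coverage = performance(
    [(0.1, -0.1, 0.3), (-0.1, -0.05, -0.02), (0.3, 0.2, 0.4)], truth=0.0
)
```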

Figure 2 shows the results for the three alternative cases. The Naïve, Mantel-Haenszel, SGS-Unwgt, SGS-Wgt, CTE-Beta, AB-Logit, and HTE-Beta methods had small biases under both D = 0 and D = 2. CTE-IV(DM), HTE-DL(DM), and SA(DM) showed large biases when the true risks were small, under both D = 0 and D = 2. Again, HTE-LogitSh gave large biases only when D = 2. In terms of mean squared error, four heterogeneous treatment effect models, SA(DM), HTE-Logit, HTE-LogitSh, and AB-Logit, gave large mean squared errors when D = 2, while HTE-Beta and HTE-DL(DM) gave small mean squared errors. SGS-Unwgt and SA(DM) had larger mean squared errors in the lowest-risk scenario than any other frequentist method. The coverage probability results were similar to those in the null cases.

In the alternative cases, we note two interesting findings that were not observed under the null cases. First, applying the data modification to Mantel-Haenszel resulted in large bias when the true risks were small. That is, adding 0.5 to the contingency table of studies with zero events may bias estimation, especially when a large number of studies report zero events and there is a treatment effect. Second, SGS-Unwgt, SGS-Wgt, and SA(DM) provided very different point estimates, with SA(DM) exhibiting large biases. This discrepancy may reflect the fact that our simulated data-generating mechanism is aligned with the structure of the SGS-Unwgt and SGS-Wgt estimators but not with that of SA(DM). The SGS-Unwgt and SGS-Wgt estimators target treatment-specific risks: they calculate the log odds ratio or other treatment effects from (weighted or unweighted) estimates of the treatment-specific risks, $\bar p_k$. The target parameter of SA(DM), on the other hand, is the relative treatment effect, the log odds ratio, which is estimated as an average of the study-specific $\widehat{\mathrm{LOR}}_i$ after applying the data modification to each study's table.

Rosiglitazone data analysis

The rosiglitazone dataset29 is a popular example for studying different meta-analysis models with rare events, and many researchers have re-analyzed these data using various methods8,30–32. Nissen and Wolski later updated the data by adding a few more relevant trials33, and we use these updated data as our real data example. They collected and analyzed these data to investigate the effect of rosiglitazone on the risks of myocardial infarction and of death from cardiovascular causes. Of the 56 trials, 15 did not report any myocardial infarction and 29 did not report any cardiovascular death in either group.

We apply all models in Table 1 to the rosiglitazone data. For the Bayesian methods, we calculate the Watanabe-Akaike information criterion to compare fitted models. We conducted meta-analyses under four different data settings: (1) include all 56 studies; (2) exclude the large RECORD trial, following Nissen and Wolski33 (55 studies included); (3) select studies comparing rosiglitazone + X to X alone, where X can be either placebo or an active treatment (42 studies included); and (4) select studies strictly comparing rosiglitazone to placebo (13 studies included). In the 56 studies, the rosiglitazone group includes 19509 subjects, with 159 (pooled risk = 0.00815) myocardial infarction and 105 (0.00538) cardiovascular death events; the control group includes 16022 subjects, with 136 (0.00849) myocardial infarction and 100 (0.00624) cardiovascular death events. We note that the correlation between sample size and observed log odds ratio in each data setting varies from −0.32 to 0.37.

Figure 3 shows the estimated log odds ratios for the four data settings. Log odds ratios less than zero favor rosiglitazone, i.e., lower odds of a myocardial infarction or cardiovascular death event than in the control group. For the myocardial infarction outcome, the Peto, Mantel-Haenszel, and SGS-Wgt methods yielded 95% confidence intervals that excluded 0 when all studies were included. The estimated between-study heterogeneity of the log odds ratios was 0, 0.28, and 0.26 with HTE-DL(DM), HTE-Logit, and HTE-LogitSh, respectively. Frequentist heterogeneous treatment effect models are known to underestimate effect heterogeneity compared with their Bayesian counterparts34. Excluding the RECORD trial did not change the point estimates or conclusions much, although the 95% intervals became slightly wider.

Figure 3:

Results of the rosiglitazone data analyses: (a) myocardial infarction; (b) death from cardiovascular causes (CV death). Log odds ratios less than zero favor rosiglitazone, with lower odds of having an event than control. ∗ The HTE-Beta model converged poorly for the fourth data setting.

When we considered the trials comparing rosiglitazone + X to treatment X alone, the estimated log odds ratios became larger (i.e., a stronger effect of rosiglitazone on the odds of a myocardial infarction event) than those from the other data settings. The 95% intervals of the Peto, Mantel-Haenszel, SGS-Unwgt, SGS-Wgt, and CTE-Logit methods excluded zero. When we included only the 13 studies that compared rosiglitazone to placebo, the associated 95% intervals were wide because of the small number of events: 25 and 14 events out of 7449 and 4860 subjects in the rosiglitazone and placebo groups, respectively. In this sparse setting, the HTE-Beta model did not converge; a more informative prior on $V_k$ may improve convergence.

For cardiovascular death, all methods except SGS-Unwgt provided point estimates close to zero and 95% intervals that included zero in the 56-study data set. The estimated between-study heterogeneity values were 0, 0.41, and 0.34 with HTE-DL(DM), HTE-Logit, and HTE-LogitSh, respectively. Excluding the RECORD trial or comparing rosiglitazone + X to treatment X alone resulted in log odds ratio estimates unfavorable to rosiglitazone, although most 95% intervals included zero; the exceptions were the SGS-Unwgt and SGS-Wgt estimators. When analyzing only the 13 placebo-controlled trials, all frequentist methods except SGS-Unwgt provided point estimates similar to those in the third data setting. Again, all methods provided wider 95% intervals, and the HTE-Beta model did not converge in this sparse data setting: eleven of the thirteen trials had at most one cardiovascular death, and four of these eleven trials had no events.

Table 5 displays Watanabe-Akaike information criterion values obtained from the 6 fitted Bayesian models for all outcomes and data settings. For the myocardial infarction outcome, HTE-LogitSh was the best model by the Watanabe-Akaike information criterion in the first three data settings. Although HTE-Beta provided the smallest Watanabe-Akaike information criterion in the fourth setting, in which placebo alone was the control, the model did not converge. AB-Logit ranked second by the Watanabe-Akaike information criterion in each data setting. For the cardiovascular death outcome, HTE-Logit (first two data settings), HTE-LogitSh (third data setting), and HTE-Beta (fourth data setting) provided the smallest Watanabe-Akaike information criterion values. Again, note that HTE-Beta did not converge in the fourth data setting, where AB-Logit provided the second smallest value. Across outcomes and data settings, CTE-Beta generally provided the largest or second-largest Watanabe-Akaike information criterion values, indicating a poor fit to the rosiglitazone data.

Table 5:

Watanabe-Akaike information criterion (WAIC) values for 6 Bayesian models fit to the rosiglitazone data. The smallest Watanabe-Akaike information criterion value among the 6 models for an outcome and a data setting is in bold. The predictive accuracy is estimated by the expected log predictive density for a new data point (elpd) and is corrected by the effective number of parameters (p).

| | Myocardial Infarction | | | Cardiovascular Death | | |
|---|---|---|---|---|---|---|
| Model | elpd | p | WAIC | elpd | p | WAIC |
| All studies (56 studies) | | | | | | |
| CTE-Logit | −140.1 | 35.7 | 280.2 | −90.4 | 24 | 180.8 |
| CTE-Beta | −277.9 | 31.2 | 555.8 | −299.2 | 59.2 | 598.3 |
| HTE-Logit | −140.5 | 36.2 | 281 | −89.7 | 23.8 | 179.4 |
| HTE-LogitSh | −129.6 | 19.4 | 259.2 | −90.4 | 16.5 | 180.8 |
| AB-Logit | −132.7 | 25.2 | 265.5 | −91.8 | 20 | 183.7 |
| HTE-Beta | −136.9 | 31.3 | 273.8 | −96.2 | 25.5 | 192.3 |
| All studies except RECORD trial (55 studies) | | | | | | |
| CTE-Logit | −132.9 | 35.1 | 265.9 | −80.5 | 21.7 | 161.1 |
| CTE-Beta | −187.7 | 14.1 | 375.4 | −109.6 | 3.7 | 219.2 |
| HTE-Logit | −133.2 | 35.5 | 266.4 | −81.2 | 22.5 | 162.4 |
| HTE-LogitSh | −122.8 | 18.6 | 245.6 | −82.1 | 14.8 | 164.1 |
| AB-Logit | −125.6 | 24.3 | 251.1 | −85.1 | 19.3 | 170.3 |
| HTE-Beta | −130.7 | 29.9 | 261.4 | −92.7 | 26.1 | 185.4 |
| Trials comparing rosiglitazone + X vs. X alone (42 studies) | | | | | | |
| CTE-Logit | −103.9 | 29.4 | 207.9 | −66 | 19.4 | 132.1 |
| CTE-Beta | −104.5 | 2.7 | 209 | −88.1 | 4.14 | 176.2 |
| HTE-Logit | −104.2 | 30.1 | 208.3 | −65.9 | 19.7 | 131.9 |
| HTE-LogitSh | −95.2 | 15.7 | 190.4 | −65.1 | 12.7 | 130.2 |
| AB-Logit | −95.2 | 19.9 | 190.4 | −66.3 | 16.06 | 132.6 |
| HTE-Beta | −99.3 | 22.8 | 198.7 | −70.1 | 20.6 | 140.3 |
| Trials comparing rosiglitazone vs. placebo (13 studies) | | | | | | |
| CTE-Logit | −35.4 | 9.7 | 70.9 | −28.6 | 8.9 | 57.3 |
| CTE-Beta | −35.1 | 3.0 | 70.2 | −29.2 | 3.4 | 58.4 |
| HTE-Logit | −35.2 | 10.1 | 70.3 | −28.3 | 9.2 | 56.7 |
| HTE-LogitSh | −32.5 | 5.5 | 64.9 | −25.6 | 5 | 51.2 |
| AB-Logit | −32.1 | 6.9 | 64.1 | −24.7 | 5.8 | 49.4 |
| HTE-Beta* | −32.0 | 7.7 | 64.0 | −24.6 | 6.9 | 49.3 |

*The HTE-Beta model converged poorly in the fourth data setting.
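The elpd, p, and WAIC columns in Table 5 are linked by WAIC = −2 × elpd (for example, −2 × (−140.1) = 280.2 for CTE-Logit fitted to all 56 studies). The Python sketch below computes these quantities from a matrix of pointwise log-likelihood draws, following the definitions in Gelman et al.24. It is not the code used to produce Table 5: the function name `waic`, the toy single-arm binomial model in the usage lines (hypothetical event counts with a Beta(1, 1) prior on a common risk), and the choice of arm-level counts as the pointwise unit are all illustrative assumptions.

```python
import math

import numpy as np


def waic(log_lik: np.ndarray) -> dict:
    """WAIC from an (S posterior draws x n observations) matrix of log-likelihoods.

    Definitions follow Gelman, Hwang and Vehtari (2014):
      lppd   = sum_i log(mean_s p(y_i | theta_s))
      p_waic = sum_i var_s(log p(y_i | theta_s))
      elpd   = lppd - p_waic
      WAIC   = -2 * elpd
    """
    log_lik = np.asarray(log_lik, dtype=float)
    n_draws = log_lik.shape[0]
    # Log of the posterior-mean likelihood per observation, computed stably.
    m = log_lik.max(axis=0)
    lppd_i = m + np.log(np.exp(log_lik - m).sum(axis=0)) - math.log(n_draws)
    # Effective number of parameters: posterior variance of each pointwise log-likelihood.
    p_i = log_lik.var(axis=0, ddof=1)
    elpd = float(lppd_i.sum() - p_i.sum())
    return {"elpd": elpd, "p": float(p_i.sum()), "waic": -2.0 * elpd}


# Hypothetical usage: one arm observed in four studies, common risk theta ~ Beta(1, 1).
rng = np.random.default_rng(2024)
events = np.array([0, 1, 2, 0])
n = np.array([100, 120, 150, 80])
theta = rng.beta(1 + events.sum(), 1 + (n - events).sum(), size=4000)
log_choose = np.array(
    [math.lgamma(ni + 1) - math.lgamma(k + 1) - math.lgamma(ni - k + 1)
     for ni, k in zip(n, events)]
)
# Binomial log-likelihood of each study's count under each posterior draw.
log_lik = (log_choose
           + events * np.log(theta)[:, None]
           + (n - events) * np.log1p(-theta)[:, None])
print(waic(log_lik))
```

Using the sample variance of the pointwise log-likelihood for p follows the recommendation in Gelman et al.24; it is more stable than the alternative based on the mean log-likelihood.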

Discussion

We considered many different meta-analytic models for settings in which the outcome event is rare. We assessed and compared the performance of frequentist and Bayesian approaches via an extensive simulation study under various data generating settings, with event risks ranging from very low to moderately rare and between-study heterogeneity ranging from none to large. We also fitted all models to the rosiglitazone data and compared the Bayesian models using the Watanabe-Akaike information criterion.

Our simulation results suggest that the results of meta-analyses should be interpreted carefully in very low-frequency settings. Overall, HTE-Beta performed among the best. AB-Logit also performed consistently well across all settings, except in terms of mean squared error when between-study heterogeneity in risks existed. Among the moment-based frequentist estimators, SGS-Unwgt and SGS-Wgt performed well, although SGS-Unwgt had relatively large mean squared error in the low-risk settings. The Mantel-Haenszel estimator without data modification showed little or no bias but had somewhat large mean squared error and under-coverage. The Peto estimator performed similarly to Mantel-Haenszel but was slightly more biased in the alternative case. All frequentist and Bayesian common treatment effect models, except the SGS-Unwgt and SGS-Wgt estimators, tended to have poor coverage probabilities when between-study heterogeneity existed.
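For readers less familiar with the two classical estimators compared above, the Python sketch below computes the Mantel-Haenszel pooled log odds ratio19 with the Robins-Breslow-Greenland variance20, and the Peto one-step log odds ratio18, neither using a continuity correction. It is an illustrative sketch rather than the implementation behind the reported results; the `(events_treated, n_treated, events_control, n_control)` tuple layout and the study counts in the usage lines are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Each study: (events_treated, n_treated, events_control, n_control)
Study = Tuple[int, int, int, int]


def mantel_haenszel_log_or(studies: Sequence[Study]) -> Tuple[float, float]:
    """Mantel-Haenszel pooled log odds ratio with the Robins-Breslow-Greenland variance.

    No continuity correction: studies with zero events in both arms add nothing to any sum.
    """
    r_sum = s_sum = pr = ps_qr = qs = 0.0
    for e_t, n_t, e_c, n_c in studies:
        a, b, c, d = e_t, n_t - e_t, e_c, n_c - e_c   # 2x2 table cells
        n = n_t + n_c
        r, s = a * d / n, b * c / n
        p, q = (a + d) / n, (b + c) / n
        r_sum += r
        s_sum += s
        pr += p * r
        ps_qr += p * s + q * r
        qs += q * s
    if r_sum == 0 or s_sum == 0:
        raise ValueError("Mantel-Haenszel odds ratio is zero or undefined for these data")
    log_or = math.log(r_sum / s_sum)
    var = pr / (2 * r_sum**2) + ps_qr / (2 * r_sum * s_sum) + qs / (2 * s_sum**2)
    return log_or, var


def peto_log_or(studies: Sequence[Study]) -> Tuple[float, float]:
    """Peto one-step log odds ratio sum(O - E) / sum(V), with variance 1 / sum(V)."""
    o_minus_e = v_sum = 0.0
    for e_t, n_t, e_c, n_c in studies:
        n = n_t + n_c
        m1 = e_t + e_c          # total events in the study
        m0 = n - m1             # total non-events in the study
        o_minus_e += e_t - n_t * m1 / n   # observed minus expected treated-arm events
        v_sum += n_t * n_c * m1 * m0 / (n**2 * (n - 1))
    if v_sum == 0:
        raise ValueError("no study contains any events")
    return o_minus_e / v_sum, 1.0 / v_sum


# Hypothetical counts, including one study with no events in either arm.
studies = [(2, 100, 1, 100), (0, 50, 0, 50), (3, 200, 1, 200)]
for name, fn in [("Mantel-Haenszel", mantel_haenszel_log_or), ("Peto", peto_log_or)]:
    est, var = fn(studies)
    half = 1.96 * math.sqrt(var)
    print(f"{name}: log OR {est:.3f} (95% CI {est - half:.3f} to {est + half:.3f})")
```

For a single 2×2 table the Robins-Breslow-Greenland variance reduces to the familiar 1/a + 1/b + 1/c + 1/d, which is a convenient sanity check.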

In our data analyses, log odds ratio estimates and their 95% intervals differed by method. We would not expect all methods to agree quantitatively, but most should agree qualitatively. Large discrepancies across methods may reflect a high degree of between-study heterogeneity or a correlation between study design and risks11,35. One should investigate the source of such discrepancies, particularly in terms of model goodness of fit. Any method that assumes a common effect across studies may be misspecified when there is evidence of substantial heterogeneity across studies, for example, when the studies use different controls.

We noticed a few interesting results in the rosiglitazone data analysis. First, the Naïve and CTE-Beta methods provided similar results, because the closed-form posterior mean of the risks under the CTE-Beta model is equivalent to the Naïve pooled risks. Second, CTE-IV(DM) and HTE-DL(DM) provided exactly the same results, because the between-study heterogeneity estimated by HTE-DL(DM) was zero. Third, SGS-Unwgt point and interval estimates were very different from those of SGS-Wgt and led to a completely different conclusion in certain data settings. In the 56-study data set, SGS-Unwgt indicated an increased risk of cardiovascular death with rosiglitazone, with a 95% interval that excluded zero, whereas the SGS-Wgt estimate was close to zero and its 95% interval included zero. In addition, these two estimators tended to give markedly different point estimates even with the 13 placebo-controlled trials. Finally, SGS-Unwgt and SA(DM) occasionally provided very different point estimates. The SA(DM) estimator gave estimates close to the null across all data settings for both outcomes, whereas SGS-Unwgt, with narrow 95% intervals, provided strong evidence of a greater risk of an event with rosiglitazone. We observed similar trends in our simulation study, again because the target parameters of these two estimators differ.
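The equality of CTE-IV(DM) and HTE-DL(DM) noted above follows from how the DerSimonian-Laird estimator21 works: its moment estimate of the between-study variance τ² is truncated at zero, and when τ² = 0 the random-effects weights reduce exactly to the inverse-variance weights. The Python sketch below illustrates this. It takes study-level log odds ratios and their variances as given (how zero cells are modified before computing them is left to the caller), and the function name and example values are hypothetical.

```python
import numpy as np


def iv_and_dersimonian_laird(y, v):
    """Common-effect inverse-variance (IV) and DerSimonian-Laird (DL) pooled estimates.

    y: study-level log odds ratios; v: their within-study variances.
    Returns each pooled estimate with its standard error, plus Cochran's Q and tau^2.
    """
    y = np.asarray(y, dtype=float)
    v = np.asarray(v, dtype=float)
    w = 1.0 / v
    mu_iv = np.sum(w * y) / np.sum(w)
    q = np.sum(w * (y - mu_iv) ** 2)              # Cochran's Q statistic
    c = np.sum(w) - np.sum(w**2) / np.sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / c)       # DL moment estimate, truncated at zero
    w_re = 1.0 / (v + tau2)                       # identical to w when tau2 == 0
    mu_dl = np.sum(w_re * y) / np.sum(w_re)
    return {
        "iv": (float(mu_iv), float(np.sqrt(1.0 / np.sum(w)))),
        "dl": (float(mu_dl), float(np.sqrt(1.0 / np.sum(w_re)))),
        "Q": float(q),
        "tau2": float(tau2),
    }


# Hypothetical, nearly homogeneous studies: Q < k - 1, so tau^2 = 0 and IV equals DL.
print(iv_and_dersimonian_laird([0.2, 0.3, 0.25], [0.5, 0.4, 0.6]))
```

With sparse data, Q is often small relative to its degrees of freedom, so this truncation can make the random-effects and common-effect analyses coincide even when true heterogeneity exists.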

Furthermore, the rosiglitazone data analysis showed some findings that differed from those of the simulation study. Simulation results are valid and applicable to a real data analysis only when the simulation data generating mechanism agrees with the true data generating mechanism of the real data. It is untestable, however, whether the rosiglitazone data follow the data generating mechanism used in our simulation study. Instead, we investigated key empirical features (such as the correlation between sample size and risks) in the rosiglitazone data and assessed differences between the simulated and real data. First, although our simulation studies suggested that HTE-Beta, AB-Logit, and SGS-Wgt are generally favored models and that HTE-LogitSh may be one of the least favored, the Bayesian model comparison using the Watanabe-Akaike information criterion in our rosiglitazone data analysis suggested that HTE-LogitSh was the best-fitting model. Second, SGS-Wgt always provided much narrower 95% confidence intervals than SGS-Unwgt in the rosiglitazone data, a pattern we did not observe in our simulations. This difference may reflect a non-zero correlation between study design and the observed log odds ratios in the rosiglitazone data, whereas our simulations included no such correlation. Shuster et al. (2012)11 pointed out that SGS-Wgt can be more influenced by large trials than SGS-Unwgt. One advantage of SGS-Wgt, however, is that it tends to have narrower confidence limits as the number of studies increases.
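Both SGS estimators first summarize arm-specific risks across studies and only then form a relative effect, the strategy we recommend in our conclusions. The Python sketch below illustrates that idea and shows how a size-weighted summary can be dominated by a single large trial. It is our simplified reading, not the estimators of Shuster et al.11: we assume the weighted version weights each study's observed risk by its total sample size, we form the log odds ratio from the averaged risks, and we omit the variance calculation entirely. The function name and counts are hypothetical.

```python
import numpy as np


def _logit(p):
    return np.log(p / (1.0 - p))


def averaged_risk_log_or(e_t, n_t, e_c, n_c, weighted=False):
    """Log odds ratio computed from arm-specific risks averaged across studies.

    weighted=False: every study's observed risk counts equally.
    weighted=True:  each study's risk is weighted by its total sample size
                    (an illustrative assumption, not necessarily the SGS-Wgt weights).
    Zero-event studies are kept without continuity correction; the result is
    infinite if an arm has no events in any study.
    """
    e_t, n_t, e_c, n_c = (np.asarray(x, dtype=float) for x in (e_t, n_t, e_c, n_c))
    w = (n_t + n_c) if weighted else np.ones_like(n_t)
    p_t = np.sum(w * e_t / n_t) / np.sum(w)   # summary risk, treated arm
    p_c = np.sum(w * e_c / n_c) / np.sum(w)   # summary risk, control arm
    return float(_logit(p_t) - _logit(p_c))


# Hypothetical data: two small trials with excess risk and one large trial at the null.
e_t, n_t = [3, 3, 10], [100, 100, 5000]
e_c, n_c = [1, 1, 10], [100, 100, 5000]
print("unweighted:", averaged_risk_log_or(e_t, n_t, e_c, n_c))
print("weighted:  ", averaged_risk_log_or(e_t, n_t, e_c, n_c, weighted=True))
```

In this toy example the unweighted estimate reflects the two small trials, while the weighted estimate is pulled toward the large null trial, mirroring the concern raised by Shuster et al.11.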

Shuster and Walker35 raised two issues that meta-analysis methods should address. First, methods that weight studies by sample size may need to properly handle an interaction between sample size and treatment effect; second, the weights themselves may need to be treated as random variables. They argued that most widely used meta-analysis methods for binary outcomes address neither issue. Instead, these methods assume that within-study effects are independent of study design, an assumption called effects at random, and can therefore yield biased estimates. Our simulation study did not address these issues specifically, and further research is needed.
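One simple, if crude, check for the kind of design-outcome dependence described by Shuster and Walker35 is whether observed arm-level risks are correlated with study size. The Python sketch below shows one way to compute such a check, using Pearson correlations between log total sample size and each arm's observed risk (our choice of statistic). The function name and counts are hypothetical, and a near-zero correlation does not establish that the effects-at-random assumption holds.

```python
import numpy as np


def size_risk_correlations(e_t, n_t, e_c, n_c):
    """Pearson correlation between log total study size and each arm's observed risk.

    Returns (r_treated, r_control). The result is nan if an arm's risks are all equal.
    """
    e_t, n_t, e_c, n_c = (np.asarray(x, dtype=float) for x in (e_t, n_t, e_c, n_c))
    log_size = np.log(n_t + n_c)
    r_t = np.corrcoef(log_size, e_t / n_t)[0, 1]
    r_c = np.corrcoef(log_size, e_c / n_c)[0, 1]
    return float(r_t), float(r_c)


# Hypothetical studies in which smaller trials report higher risks in both arms.
print(size_risk_correlations([5, 6, 8, 10], [50, 100, 200, 400],
                             [1, 1, 1, 1], [50, 100, 200, 400]))
```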

The rosiglitazone data have flaws that limit our ability to assess whether rosiglitazone increases the risks of myocardial infarction and cardiovascular death. A major concern is that the control group is not well defined: the data set includes some studies with active controls and some with placebo controls. For example, some trials compared rosiglitazone with placebo, while a few compared rosiglitazone with glyburide. It is questionable whether placebo and glyburide can be considered comparable control groups. Similarly, it is questionable whether the log odds ratio of rosiglitazone versus placebo can be combined with the log odds ratio of rosiglitazone + X versus X alone. Nissen and Wolski33 addressed this issue by reporting results from several meta-analyses, each restricted to studies with the same comparator (insulin, metformin, sulfonylurea, or placebo). For these data, a network meta-analysis may be a more reasonable approach, because the treated and control groups are not comparable across trials22.

Although our findings and discussion were mainly based on the log odds ratio scale, it is important to consider other scales, such as the log relative risk and risk difference, and to check the consistency of findings36. Our simulation and data analysis results on the log relative risk scale were very similar to those on the log odds ratio scale. This is expected, because the odds ratio and relative risk are numerically similar for rare events. In our simulations on the risk difference scale, all methods had very small biases and mean squared errors, but some methods showed poor coverage when outcomes were frequent and heterogeneity was large (see Section 2 in Supplementary Material). In the data analysis, the conclusions about the treatment effect based on risk difference and log relative risk estimates were the same as those based on log odds ratio estimates. Reporting both absolute measures (e.g., risk difference) and relative measures (e.g., log odds ratio and log relative risk) may be useful in a meta-analysis of rare events. Absolute measures usually have a more clinically straightforward interpretation, while relative measures tend to show less statistical heterogeneity36. However, Efthimiou (2018) cautions against using risk differences for rare events37. Bayesian methods are advantageous here, because they flexibly estimate effects on different scales and allow interpretation through posterior probabilities.
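To illustrate the last points, the Python sketch below draws arm-level risks from conjugate Beta posteriors and summarizes the same draws on the risk difference, log relative risk, and log odds ratio scales. It assumes a single common risk per arm with a Beta(a, b) prior on pooled counts, a deliberate simplification that is not any of the models compared in this paper; under it the posterior mean risk (a + Σ events)/(a + b + Σ n) approaches the naive pooled risk as the prior weight shrinks. The function name and counts are hypothetical. Because OR = RR × (1 − p_C)/(1 − p_T), the log odds ratio and log relative risk nearly coincide when both risks are small, and the posterior probability of harm is the same on all three scales, since each is positive exactly when p_T > p_C.

```python
import numpy as np


def posterior_effect_scales(events_t, n_t, events_c, n_c, a=1.0, b=1.0,
                            draws=20000, seed=0):
    """Posterior summaries on three effect scales from independent Beta posteriors.

    Simplifying assumption: one common risk per arm with a Beta(a, b) prior, so the
    posterior for each arm is Beta(a + events, b + n - events) on pooled counts.
    """
    rng = np.random.default_rng(seed)
    p_t = rng.beta(a + events_t, b + n_t - events_t, size=draws)
    p_c = rng.beta(a + events_c, b + n_c - events_c, size=draws)
    scales = {
        "risk difference": p_t - p_c,
        "log relative risk": np.log(p_t) - np.log(p_c),
        "log odds ratio": (np.log(p_t) - np.log1p(-p_t)) - (np.log(p_c) - np.log1p(-p_c)),
    }
    summary = {}
    for name, x in scales.items():
        lo, hi = np.quantile(x, [0.025, 0.975])
        summary[name] = {
            "mean": float(x.mean()),
            "95% interval": (float(lo), float(hi)),
            # Each scale is positive exactly when p_t > p_c, so this probability
            # is the same on all three scales.
            "P(effect > 0)": float(np.mean(x > 0)),
        }
    return summary


# Hypothetical pooled counts for a rare event.
for name, s in posterior_effect_scales(30, 5000, 20, 5000).items():
    print(name, s)
```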

In summary, when a rare binary event is the outcome of interest, treatment effect estimates obtained from traditional meta-analytic methods may be biased and have poor coverage probability. This trend worsens when there is large between-study heterogeneity. Effect estimates vary across models and are likely to depend on characteristics of the data (e.g., the level of between-study heterogeneity, the rarity of outcomes, and the correlation between sample size and effect size). Inferences should therefore be drawn cautiously. In general, we recommend methods that first estimate treatment-specific risks and then estimate treatment differences based on summaries of the risks across studies. Of course, this approach makes most sense when the studies in the meta-analysis share the same treatment and control arms. To reduce the risk of a wrong decision, we recommend fitting various methods, comparing the results and model fit (for Bayesian approaches), and investigating any significant discrepancies across them.

Supplementary Material

Acknowledgements:

The authors appreciate helpful comments from the Associate Editor and two anonymous reviewers. The authors thank Drs. Estelle Russek-Cohen and Mark Levenson from the US Food and Drug Administration (FDA) for valuable comments which improved this manuscript. This work was made possible by U01 FD004977-01 from FDA, which supports the Johns Hopkins Center of Excellence in Regulatory Science and Innovation. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the Department of Health and Human Services or FDA. Hwanhee Hong was supported by R00MH111807 from the National Institute of Mental Health and Chenguang Wang and Gary L. Rosner were supported by P30CA006973 from the National Cancer Institute.

References

- [1]. CIOMS Working Group V. Current challenges in pharmacovigilance: pragmatic approaches. https://cioms.ch/wp-content/uploads/2017/01/Group5_Pharmacovigilance.pdf (2001, accessed 24 July 2019).

- [2]. U.S. Food and Drug Administration. Meta-analyses of randomized controlled clinical trials to evaluate the safety of human drugs or biological products: guidance for industry. Draft guidance, 2018.

- [3]. Stoto MA. Drug Safety Meta-Analysis: Promises and Pitfalls. Drug Safety 2015;38(3):233–243.

- [4]. Bennetts M, Whalen E, Ahadieh S, and Cappelleri JC. An appraisal of meta-analysis guidelines: how do they relate to safety outcomes? Research Synthesis Methods 2017;8(1):64–78.

- [5]. Shuster JJ and Walker MA. Low-event-rate meta-analyses of clinical trials: implementing good practices. Statistics in Medicine 2016;35:2467–2478.

- [6]. Bradburn MJ, Deeks JJ, Berlin JA, and Russell Localio A. Much ado about nothing: a comparison of the performance of meta-analytical methods with rare events. Statistics in Medicine 2007;26(1):53–77.

- [7]. Chakravarty AG and Levenson M. Regulatory Issues in Meta-Analysis of Safety Data. Quantitative Evaluation of Safety in Drug Development: Design, Analysis and Reporting 2014;67:237.

- [8]. Lane PW. Meta-analysis of incidence of rare events. Statistical Methods in Medical Research 2013;22(2):117–132.

- [9]. Sweeting MJ, Sutton AJ, and Lambert PC. What to add to nothing? Use and avoidance of continuity corrections in meta-analysis of sparse data. Statistics in Medicine 2004;23(9):1351–1375.

- [10]. Bhaumik DK, Amatya A, Normand SLT, Greenhouse J, Kaizar E, Neelon B, and Gibbons RD. Meta-analysis of rare binary adverse event data. Journal of the American Statistical Association 2012;107(498):555–567.

- [11]. Shuster JJ, Guo JD, and Skyler JS. Meta-analysis of safety for low event-rate binomial trials. Research Synthesis Methods 2012;3:30–55.

- [12]. Smith TC, Spiegelhalter DJ, and Thomas A. Bayesian approaches to random-effects meta-analysis: a comparative study. Statistics in Medicine 1995;14:2685–2699.

- [13]. Sutton AJ and Abrams KR. Bayesian methods in meta-analysis and evidence synthesis. Statistical Methods in Medical Research 2001;10:277–303.

- [14]. Carlin BP and Louis TA. Bayesian Methods for Data Analysis, 3rd edition. Boca Raton, FL: Chapman & Hall/CRC, 2009.

- [15]. Cai T, Parast L, and Ryan L. Meta-analysis for rare events. Statistics in Medicine 2010;29(20):2078–2089.

- [16]. Tian L, Cai T, Pfeffer MA, Piankov N, Cremieux PY, and Wei LJ. Exact and efficient inference procedure for meta-analysis and its application to the analysis of independent 2×2 tables with all available data but without artificial continuity correction. Biostatistics 2009;10(2):275–281.

- [17]. Rücker G, Schwarzer G, Carpenter J, and Olkin I. Why add anything to nothing? The arcsine difference as a measure of treatment effect in meta-analysis with zero cells. Statistics in Medicine 2009;28(5):721–738.

- [18]. Yusuf S, Peto R, Lewis J, Collins R, and Sleight P. Beta blockade during and after myocardial infarction: an overview of the randomised trials. Progress in Cardiovascular Diseases 1985;27(5):335–371.

- [19]. Mantel N and Haenszel W. Statistical aspects of the analysis of data from retrospective studies of disease. Journal of the National Cancer Institute 1959;22(4):719–748.

- [20]. Robins J, Breslow N, and Greenland S. Estimators of the Mantel-Haenszel variance consistent in both sparse data and large-strata limiting models. Biometrics 1986;42:311–323.

- [21]. DerSimonian R and Laird N. Meta-analysis in clinical trials. Controlled Clinical Trials 1986;7:177–188.

- [22]. Hong H, Chu H, Zhang J, and Carlin BP. A Bayesian missing data framework for generalized multiple outcome mixed treatment comparisons. Research Synthesis Methods 2016;7(1):6–22.

- [23]. Watanabe S. Asymptotic equivalence of Bayes cross validation and widely applicable information criterion in singular learning theory. Journal of Machine Learning Research 2010;11:3571–3594.

- [24]. Gelman A, Hwang J, and Vehtari A. Understanding predictive information criteria for Bayesian models. Statistics and Computing 2014;24(6):997–1016.

- [25]. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing, 2017. https://www.R-project.org/.

- [26]. Viechtbauer W. Conducting meta-analyses in R with the metafor package. Journal of Statistical Software 2010;36(3):1–48.

- [27]. Su Y and Yajima M. R2jags: Using R to Run 'JAGS'. R package version 0.5-7, 2015. https://CRAN.R-project.org/package=R2jags.

- [28]. Stan Development Team. RStan: the R interface to Stan. R package version 2.19.2, 2019. http://mc-stan.org/.

- [29]. Nissen S and Wolski K. Effect of rosiglitazone on the risk of myocardial infarction and death from cardiovascular causes. New England Journal of Medicine 2007;356(24):2457–2471.

- [30]. Böhning D, Mylona K, and Kimber A. Meta-analysis of clinical trials with rare events. Biometrical Journal 2015;57(4):633–648.

- [31]. Diamond GA, Bax L, and Kaul S. Uncertain effects of rosiglitazone on the risk for myocardial infarction and cardiovascular death. Annals of Internal Medicine 2007;147(8):578–581.

- [32]. Friedrich JO, Beyene J, and Adhikari NK. Rosiglitazone: can meta-analysis accurately estimate excess cardiovascular risk given the available data? Re-analysis of randomized trials using various methodologic approaches. BMC Research Notes 2009;2(1):5.

- [33]. Nissen SE and Wolski K. Rosiglitazone revisited: an updated meta-analysis of risk for myocardial infarction and cardiovascular mortality. Archives of Internal Medicine 2010;170(14):1191–1201.

- [34]. Hong H, Carlin BP, Shamliyan TA, Wyman JF, Ramakrishnan R, Sainfort F, and Kane RL. Comparing Bayesian and frequentist approaches for multiple outcome mixed treatment comparisons. Medical Decision Making 2013;33(5):702–714.

- [35]. Shuster JJ and Walker MA. Low-event-rate meta-analyses of clinical trials: implementing good practices. Statistics in Medicine 2016;35:2467–2478.

- [36]. CIOMS Working Group X. Evidence Synthesis and Meta-Analysis for Drug Safety: Report of CIOMS Working Group X. Geneva, Switzerland: Council for International Organizations of Medical Sciences (CIOMS), 2016.

- [37]. Efthimiou O. Practical guide to the meta-analysis of rare events. Evidence-Based Mental Health 2018;21(2):72–76.
