Abstract

Digital experiences capture an increasingly large part of life, making them a preferred, if not required, method to describe and theorize about human behavior. Digital media also shape behavior by enabling people to switch between different content easily, and create unique threads of experiences that pass quickly through numerous information categories. Current methods of recording digital experiences provide only partial reconstructions of digital lives that weave – often within seconds – among multiple applications, locations, functions and media. We describe an end-to-end system for capturing and analyzing the “screenome” of life in media, i.e., the record of individual experiences represented as a sequence of screens that people view and interact with over time. The system includes software that collects screenshots, extracts text and images, and allows searching of a screenshot database. We discuss how the system can be used to elaborate current theories about psychological processing of technology, and suggest new theoretical questions that are enabled by multiple time scale analyses. Capabilities of the system are highlighted with eight research examples that analyze screens from adults who have generated data within the system. We end with a discussion of future uses, limitations, theory and privacy.

Keywords: Mobile, internet use, cognitive science, communication, visualization, software development, personal tools

Background.

This multi-year collaboration is designed to develop new methods and analytics to understand how people use digital media, and how they are affected by digital media. The collaboration combines expertise across a number of different areas, including media psychology, behavioral science, medicine, political communication, dynamic modeling of time series data, text extraction from images, database construction, and smartphone app development.

1. INTRODUCTION

The breadth of digitized experiences is impressive. Laptop computers and smartphones can be used for email and texting, shopping and finances, business and social relationships, work spreadsheets and writing, entertainment TV, news, movies and games, and monitoring personal information about health, activity, sleep, energy, appliances, driving and even home security, lighting and irrigation. The variety of human experiences available digitally will continue to grow as more artifacts of life – from refrigerators to shoes to food to car parts – become part of the so-called “internet of things.”

Although digital promises are decades old, the ubiquity and completeness of digitization is new and has crept up on us. Life now unfolds on and through digital media, not just in the familiar media categories of entertainment and work, but across multiple life domains including tasks and platforms related to social relationships, health, finance, work, shopping, politics, school, entertainment, parenting, and more. The merging of daily and digital life prompts consideration of how we study human behavior in its natural context. It is increasingly difficult to imagine any attempt to assess the course of individuals’ thinking, feeling or behavior without recourse to information obtained from digital media.

We propose consideration of the digital screenome, i.e., a unique individual record of experiences that constitute psychological and social life on digital devices with screens, the study of which we call screenomics. Like other “omes” from the biological and social sciences, the screenome has a standardized structure. It is composed of smartphone, laptop, and cable screens, with information sequences describing the temporal organization, content, functions and context of person-screen interactions. The screenome’s most important qualities are that it defines both the general structure of everyone’s screen experiences and the individual variants within that structure that are related to unique social, psychological, and behavioral characteristics and experiences. The screenome can be usefully linked to other levels of analysis showing, for example, how biological omics might affect or be affected by digital life experiences (Chen et al., 2012), and how cultural context might change or be changed by individual experiences (Jenkins, 2006).

This article first reviews how new digital technology has changed the ways people experience life. Then we define the screenome and its elements as a fundamental description of digital life, noting the benefits and differences of this approach relative to other logging and experience sampling methods. Next, we review how psychological theory can be extended by study of the screenome, including new theoretical questions that can be asked as a result of data that includes multiple time domains of experience. We then describe a specific system for recording and analyzing the screenome, followed by eight examples illustrating a variety of ways the screenome can be analyzed. We end with a discussion of limitations, future considerations, privacy and theory.

2. PSYCHOLOGICAL IMPLICATIONS OF DIGITIZATION

The breadth of digitization is reason enough to bend research methods toward recording life in media. There is an opportunity now to know more about human behavior via media than has ever been possible. We note three important changes in media that bolster this claim.

First, the digitization of life has produced a mediatization of life (Lundby, 2014), whereby societies experience psychological, social, and cultural transformations caused by media saturation. This means more than the simple fact that analog experiences are now digital. Mediatization means that life experiences now have all of the added features, for better and worse, of a symbolic experience. Interfaces, displays, visual effects, and device forms all add unique value to analog counterparts. Digitally mediated representations of life are now a primary means by which individuals evaluate life, and make decisions about themselves, their social partners and the world.

Second, while the amount of digital information has expanded, the number of different screen sources has consolidated. Twenty years ago, before smartphones and the rise of laptop computers, there were numerous separate screens that were specialized for different experiences (e.g., music players, work computers, home theaters). Now, smartphones and laptops are prominent devices, and especially for Millennials, a third of whom have cut the cords to other screens (GfK Research, 2017). The primary implication of screen consolidation is that numerous and radically different experiences, ones that in analog life would take significant time to arrange or reorder, can now be experienced in rapid succession on a single screen. Two recent studies of content switching on a digital device found that the median time devoted to any single activity was 10 to 20 seconds (Yeykelis, Cummings & Reeves, 2014; Yeykelis, Cummings & Reeves, 2018), and studies about technology use at work find only slightly longer segments (e.g., Mark, et al., 2012). Advances in media technology are providing both more flexibility in the types of experiences that can be engaged on any given screen and the pace at which we can switch among those experiences.

Third, digitization has influenced the fragmentation of experience (Yeykelis et al, 2018). Digital technology has freed individuals from the requirement that an activity be experienced whole and uninterrupted. Most digital experiences can now be paused and restarted without missing a thing. Thus, people are increasingly free to partition experiences into smaller bits and attend to those smaller pieces whenever they choose. Individuals have increased control over digital experiences, and are now able to create threads that weave in and out of larger life categories. Psychological research has long highlighted how temporal proximity strengthens the interdependence among different types of experiences (e.g., stimulus response pairings). Contextual differences in how information is presented are well-known to influence attitudes, decisions and behaviors (e.g., Kahneman, 2011; Ross & Nisbett, 2011). Users’ quick-switching between activities creates considerable opportunity for context effects; for example, for social relationships to influence work, for work to influence play, for money to influence health, and so on. The record of life now embedded in digital life provides new opportunity to study the complexities of context effects on real-world human behavior.

3. THE SCREENOME AS A MEASURE OF DIGITAL LIFE

Any system used to study personal experiences needs to record them at the new speed of life. Recording how individuals’ digital behavior weaves in and out of different content, actions, applications, platforms and commercial products requires assessment of moment-by-moment changes in the order in which they occur. General characterizations of daily life (e.g., “I use Facebook about one hour every day”) do not capture the reality of how quickly individuals are switching experiences or of how experiences are being altered by or are altering other parts of life. Study of individuals’ in situ behavior, and the fluid movement between and among digital content thus requires tracking or logging experiences as they unfold in real time.

3.1. Literature On Life Logging Methods

A range of different methods and goals characterize research that tracks individuals’ real-world media experiences. The literatures have names ranging from experience sampling to shadowing to url logging to lifelogging, and they are in disciplines as diverse as psychology, computer science, political science and health. We review each of the techniques, noting their strengths and weaknesses in relation to our goal of understanding the psychological experiences that individuals have with diverse media content and over extended periods of time.

Offline and experience sampling methods (e.g., diaries, post-hoc surveys) have been used frequently in psychology (e.g., Fraley & Hudson, 2014; Mehl & Connor, 2012) and communication (e.g., Kubey & Csikszentmihalyi, 2013). These methods allow people to provide subjective evaluations and reports of their momentary or recent experiences, and technology is often used to assist recording (e.g., text message surveys, photo sharing, and digital diaries). People are asked to make evaluations that summarize long time periods, often no shorter than one day and only rarely shorter than one hour (Fraley & Hudson, 2014; Csikszentmihalyi & Larson, 2014). Beyond concerns about intrusiveness and errors associated with recall and subjective judgments, it is difficult for people to reconstruct digital experiences at granularities that match the speed of behavior.

There have been attempts to closely shadow information workers while they use technology during the day (e.g., Su, Brdiczka, & Begole, 2013), and one study even had researchers shadowing people in their homes and recording media use every ten seconds (Taneja, Webster, Malthouse, & Ksiazek, 2012). Those studies provide rich context, but are not able to note fine-grained details of use that go beyond genre and software titles. The effort and expense required to arrange observations, take detailed notes, and debrief participants makes such methods difficult to scale.

Lab experiments, used often in psychology and media studies, create controlled environments where quick changes can be recorded. This allows researchers to examine, for example, television program changes (Wang & Lang, 2012), and the use of features that appear only momentarily, like swiping, hovering, sliding, and zooming (Sundar, Bellur, Oh, Xu, & Jia, 2014). The constraints of lab settings (e.g., provision of limited content, imposed instructions about the goals for making changes) provide for focused study of a specific digital experience or interface. In software usability studies, for example, screen captures or video recordings of a user’s interaction are used to understand how and when users discover and use particular design features (e.g., Kaufman et al, 2003). However, by design, these paradigms inhibit movement across the wide variety of content available to people outside of the lab, and thus are difficult to generalize to real-world behavior.

Researchers have long sought measures that use technology to sample natural experiences more often and without the requirement that people interrupt their experiences to cooperate. Studies that use computer and smartphone logging are plentiful, from political science to medicine to human-computer interaction. Search and toolbar plugins that provide precise records of websites visited and search terms used (e.g., Cockburn & McKenzie, 2001; Jansen & Spink, 2006; Kumar & Tomkins, 2010; Tossell, Kortum, Rahmati, Shepard, & Zhong, 2012; White & Huang, 2010) have been used to study diverse topics such as creation of online echo chambers (Dvir-Gvirsman, Tsfati, & Menchen-Trevino, 2016), and how health, diet, and food preparation are linked to medical problems (West, White, & Horvitz, 2013). In political science, several projects have focused on analysis of single platforms, notably Twitter (e.g., Colleoni, Rozza & Arvidsson, 2014). In the study of social networks, phone and text logs have been used to describe the variety and number of contacts in social networks (Battestini, Setlur, & Sohn, 2010), how and when people change locations (Deville, et al., 2014), and differences in communications within families, at work, and in social networks (Min, Wiese, Hong, & Zimmerman, 2013). Sophisticated sensors and recordings, from call and SMS logs to Bluetooth scans to app usage, have been used in psychology to describe personality (Chittaranjan, Blom, & Gatica-Perez, 2013), student mental health and progress in college (Wang et al., 2014), health interventions (Aharony, Pan, Ip, Khayal, & Pentland, 2011), and social networks (Eagle, Pentland, & Lazer, 2009). Typically, these approaches concentrate on tracking of very specific types of content or behavior. However, these data sets continue to grow, with significant new projects underway; for example, the Kavli Human Project that is collecting everything from the genome to smartphone usage from 10,000 New Yorkers over 20 years (Azmak et al., 2016).

In computer science, lifelogging describes efforts to record, as a form of pervasive computing, the totality of an individual’s experiences using multi-modal sensors, and then store those data permanently as a personal multimedia archive (Dodge & Kitchin, 2007; Gurrin, Smeaton, & Doherty, 2014; Jacquemard, Novitzky, O’Brolcháin, Smeaton, & Gordijn, 2014). There are proposals for storing the entirety of digital traces, including MyLifeBits (e.g., Gemmell, Bell, Lueder, Drucker, & Wong, 2002), recordings that track the focus of visual attention (Dingler, Agroudy, Matheis, & Schmidt, 2016), a smartphone application, LifeMap, that can identify and store precise locations (Chon & Cha, 2011), comprehensive platforms that allow developers to create original tools (Rawassizadeh, Tomitsch, Wac, & Tjoa 2013), and systems, like “Stuff I’ve Seen,” that emphasize recording for the purpose of information reuse (Dumais et al., 2016).

The goal of lifelogging is often to obtain information about oneself, similar to an automated biography, that can be summarized on a dashboard, and then used for reflection and self-improvement. Although that goal is different from our interest in studying psychological experiences, our framework is related to lifelogging. In particular, the ability to examine relations among different kinds of experiences in multiple domains is important in lifelogging and for screenomics. The proposed breadth of lifelogging data, however, from implanted physiological sensors to cameras that provide environmental context, is more ambitious than our own, especially with respect to enabling studies of human behavior at scale.

The most important limitation of many logging techniques is that they cannot easily capture threads of experience that span different applications, software, platforms and screens. Consider a user who switches from a Facebook post about the President to a CNN news story about the President, to a Saturday Night Live video that parodied the President, to the creation of a text message about the President – all within a single minute. It is conceivable that a researcher could obtain a record of that person’s Facebook activity using an API, install a browser plug-in that would record the CNN website visit, and obtain logs about phone calls and SMS activity that would contain time-stamped text messages. However, the management necessary to combine the information would be substantial, including negotiation to obtain individual passwords for each platform-specific API and creation of plug-ins for several browsers (or limiting subjects to the use of one). This is not an unusual example, but one that is increasingly typical of how people use a wide variety of media as they follow their own interests and create unique threads of experience. We propose an alternative framework based on the collection of high-density sequences of screenshots – screenomes – for obtaining accurate records of what people actually do with technology, within and across applications, software, platforms, and screens.

3.2. The Screenome and Psychological Theory

The goal of collecting screenomes is to obtain data that can be used both to test current theory about human behavior and digital life, and to generate new research questions that have not yet been studied. In this section, we briefly review established theories that could be elaborated with screenome analyses and indicate how the screenome might enable new theories of digital behavior. We organize comments about the screenome and theory around four key aspects of behavior: time, content, function and context.

Time.

Several reviews in psychology consider time to be a critical differentiator of psychological theories (e.g., Kahneman, 2011). Early conceptions of human-computer interaction (Newell & Card, 1985) also highlight the importance of time scales (from milliseconds to years) when theorizing about complex behaviors. Education, for example, can be defined with respect to numerous time scales, from neural firings and memory traces that occur over milliseconds, to the social dialogue between students and teachers that occur during a 3-hour seminar, or with respect to institutional policy changes that occur over decades (Lemke, 2000). Media and technology are similarly complex in that they can also be approached from many time scales (Reeves, 1989; Nass & Reeves, 1991). Psychological effects of media exposure, for instance, can be defined with respect to physiological arousal and dopaminergic rewards that occur over seconds, with respect to conditioned responses built over weeks, or with respect to use patterns that change over months or years. Each different time scale, from millisecond responses to processes unfolding over years, may require a separate theoretical approach. Certainly, each requires observation and measurement that is appropriately matched to the time-scale at which the processes work.

Many studies about psychological processing of technology have examined relatively long experiences; for example, the amount of time that people say they spend with online categories like news (e.g., Bakshy, Messing, & Adamic, 2015), social media (e.g., Allcott & Gentzkow, 2017) or computer and video games (e.g., Greitemeyer & Mügge, 2014). Media use, measured in units of days, weeks and months, is conceived as an accumulation of experiences that are thought to be influential as an aggregate. For example, greater time spent playing video games is associated with increases in aggressive tendencies, a finding that supports theory about how the accumulation of general learning about normative beliefs and behavioral scripts changes behavioral tendencies (Gentile, Li, Khoo, Prot, & Anderson, 2014). The general learning model applied to video games emphasizes repeated exposure over months and years – large time units – with the process of affective habituation (a desensitization to aggression) contributing to long-term development of personality characteristics that influence behavior over a lifetime. The assessment of general patterns of media experience over the longer time units (e.g., “How many hours did you spend playing a video game this month?”) is matched to the extended process of interest.

The fine granularity of behavior recorded in screenomes simultaneously supports investigation of individuals’ aggregate experience and their moment-by-moment experiences. The multiple time-scale nature of the screenome thus provides new opportunities to address areas where there is a mismatch between the theoretically implicated time scale and the time scale at which measurements are obtained. For example, current research on addiction to technology (e.g., Petry, et al., 2014; Kubey & Czikszentmihalyi, 2002), and particularly addiction to smartphones (Kwon et al., 2013; Lin et al., 2015), typically asks people to evaluate their own patterns of use, how they feel when interacting with different content, how much they miss their device when it is not with them or how addicted they feel to their phone – one time self-reports that apply to weeks or months of device use. The biology that explains addiction, however, operates at a much different time scale. If technology addiction is indeed similar to substance addiction, then the biobehavioral responses occur within seconds after the introduction of a pleasurable stimulus. These responses, marked by momentary changes in neurochemistry, become conditioned responses over multiple repetitions (Volkow, Koob, & McLellan, 2016). The time domain of the biological response is on the order of single-digit seconds. Most measures of addiction, however, consider use patterns that manifest at substantially longer units of time, usually days, weeks and months. The mismatch between the theory and the data occurs because of the difficulty in measuring individuals’ moment-by-moment technology use. Although it is possible to examine behavioral contingencies in the laboratory, those assessments could not easily, if ever, simulate the natural experience of the hundreds of smartphone sessions an individual might engage in during a typical day in their natural environment. The screenome allows observation of both how the moment-by-moment contingencies form in the natural environment and how those contingencies develop into or transform long-term behavior.

Similar opportunities exist in other research areas. Laboratory research on emotion management, for example, examines how individuals’ switching between different kinds of media content (e.g., news, entertainment) facilitates their goals to balance or equalize their emotional experience (e.g., Bartsch, Vorderer, Mangold, & Viehoff, 2008). Highly negative experiences, or highly arousing ones, are balanced by seeking ones that are positive or calming. The balancing occurs at time scales that range from days and hours to the length of time it takes to experience intact programs that last several minutes or hours and that correspond to the units of media and time scales that researchers have been able to access. Consequently, the theories that come from the research are necessarily about the units of media that could be measured. The screenome allows observation of how individuals switch between different kinds of media content at the time scale of seconds, and thus facilitates examination of how emotion management might occur within seconds, allowing for development of new theories that account for the micro-management of emotion. New research using the screenome (some of which will be described in Section 5) has found, for example, that when technology offers the ability to easily make quick switches, arousal management may occur within seconds (i.e., seeking calm in the face of too much excitement) (Yeykelis et al., 2014). The microscopic view provided by this new data stream changes explanations for why and how individuals use technology to manage emotions. In principle, balancing emotions at the second-to-second time scale may be more reactive and less thoughtful, while balancing emotions at the hour-to-hour or day-to-day time scale may be more reflective and purposive. The screenome thus can inform existing and new theory about how “bottom-up” regulation processes and “top-down” processes combine to drive emotional experience in the natural digital environment.

The temporal density of information in the screenome means that researchers can zoom in and out across time scales, examining time segments and sequences that span seconds and months and (eventually) years. The temporal density of the behavioral observations can foster discovery of the actual and multiple time scales that govern processing of media (Ram & Diehl, 2015). Researchers can simultaneously consider the biological, psychological and social theories relevant to a single process, and note both what is unique about each level of influence and how processes that manifest at different time scales (or levels of analysis) afford or constrain processes at other time scales. For example, when considering technology effects on cognition among different aged people, multiple time domain studies can simultaneously account for micro-time changes, for example in attentional focus, and macro-time changes related to longer-term development, for example in cognitive aging (Charness, Fox, & Mitchum, 2010). Integration across multiple levels of analysis and time scales has long been advocated in developmental psychology (e.g., Gottlieb, 1996; Nesselroade, 1991), even though most research remains focused on a single time domain (Ram & Diehl, 2015). The screenome facilitates integration across levels of analysis. For example, the flexible zoom afforded by temporally dense data allows examination of the bidirectional interplay between short-term stressors that manifest at a fast time scale during digital interactions and longer-term changes in well-being that manifest across weeks or months (Charles, Piazza, Mogle, Sliwinski, & Almeida, 2013). In sum, the inherent facility for combining multiple time scales in the same inquiry by simply zooming in and out of the temporal sequences embedded in the screenome creates new opportunities to examine how processes or content in one domain (and its corresponding time scale) influence and are influenced by processes and content in another domain (and at a different time scale).

Many areas of inquiry can benefit from the multiple time scale inquiry. Theories about individuals’ information processing, for example, all consider the sequencing of information. At fast time scales, perception and interpretation of any given piece of information may be influenced by what precedes and follows, through processes like priming (Hermans, De Houwer, & Eelen, 2001; Vorberg, Mattler, Heinecke, Schmidt, & Schwarzbach, 2003), framing (Seo, Goldfarb, & Barrett, 2010; Tversky & Kahneman, 1981), and primacy and recency effects (Murphy, Hofacker, & Mizerski, 2006). At slightly slower time scales, circadian, attentional, and interpersonal rhythms are reflected in, for example, the curation of media content (Cutting, Brunick, & Candan, 2012; Zacks & Swallow, 2007), the interactive cadence between consuming and producing information in dyadic communication (Beebe, Jaffe, & Lachmann, 2005; Burgoon, Stern, & Dillman, 2007), and daily cycles in sentiment of social media posts (Golder & Macy, 2011; Yin et al., 2014). The longitudinal sequences captured in the screenome provide new data about how all such rhythms manifest (or not) in media use, and they provide the temporal precision necessary for discovering the specific and often unknown time-scales at which individual behavior is actually organized. In the addiction example mentioned earlier, it would be possible to locate the specific, and likely idiosyncratic, cadence at which individuals respond to addictive features like notifications. Given that much psychological theory has not yet considered the time scale at which specific processes operate, the temporal component of the screenome can facilitate discovery of when and how often specific kinds of behavioral sequences manifest in everyday life.

A final point about the time information in the screenome is that the longitudinal data highlight and afford analysis of intraindividual change, as opposed to analysis of interindividual, cross-sectional differences. That is, the screenome is particularly relevant to theories about how one individual changes over time rather than about how groups of people are different at any given point in time. Much of the research in psychology and media has taken a nomothetic approach, examining between-subject differences in specific digital domains (e.g., health, social relationships, business collaboration). For example, active social media users have different friendship networks than inactive users. Findings based on interindividual differences, however, do not show how any given individual moves in and out of those networks, which is something that most people do every day (Estes, 1956; Robinson, 1950). Study of behavioral and psychological processes requires an idiographic approach (Magnusson & Cairns, 1996) that examines intraindividual variation to understand behavioral sequences (Molenaar, 2004). Given that most psychological theory is about within-person processes, intensive longitudinal data, like that included in the screenome, are required (Molenaar & Campbell, 2009; Ram & Gerstorf, 2009). The ability to track intraindividual change can enable discovery and testing of person-specific theories, detailed descriptions of individual-level processes that may be subsequently aggregated across people and groups. We show examples of intraindividual changes in the last section.

Content.

Theory in media psychology is often organized around categories of media. Media are described with respect to software applications (e.g., Facebook, Twitter), companies that produce or aggregate information (e.g., CNN, YouTube), or market segments (e.g., politics, health, relationships, finances). Cross-cutting themes organize content by domain (e.g., games, retail, finance, health, and social relationships), type of problems addressed (e.g., social issues, public policy or private problems), modality (e.g., text, image, video), or whether the content that is user generated versus sent from others (Kietzmann, Hermkens, McCarthy, & Silvestre, 2011). Within any given modality, text can be described in terms of sentiment (Kramer, Guillory, & Hancock, 2014), sentence complexity (e.g., number of words, sentence logic)(Schwartz et al., 2013) or vocabulary sophistication (Agichtein et al., 2008). Pictorial content is described with respect to presence and characteristics of faces (both particular people and strangers)(Krämer & Winter, 2008), the type of activity or action depicted (e.g., illegal behavior, social gatherings)(Morgan, Snelson, & Elison-Bowers, 2010). Forms of content are mapped to information content (Thorson, Reeves, & Schleuder, 1985; Lang, 2000), for example, through quantification of visual complexity or color spectrum qualities. And this list could easily be extended.

Any media experience, even a short one, is infinitely describable, never a “pure” stimulus, one thing and nothing else (Reeves, Yeykelis & Cummings, 2016). The inherent complexity of the stimuli complicates theory, especially when the units of media chosen for study are not necessarily consistent with the units of experience that a theory is about. Most studies about media and psychology begin by looking at a “big” (often commercially defined) category of content that is accessible to researchers (e.g., Facebook use, Amazon retail purchases, Outlook email use). That content, however, is incredibly complex. For example, social media messages may contain specific words that are shared by others or that reveal personal secrets, retail purchases may include the time when prices are compared, and email software shows whether information processed is incoming or outgoing, and so on. The screenome offers a more flexible method to pinpoint specific content of theoretical interest. In the study of news consumed online, for example, researchers can observe the exact screenshots related to a particular event (e.g., what the President said about an event at a specific time) regardless of whether the screen containing the information appeared in a formal news site, a social media post, a text message or anywhere else (we will show an example of these different placements in the last section). This has advantages for both confirmatory testing of theory and inductive generation of new theory.

The screenome can contribute to deductive, confirmatory testing of theory by providing stimulus specificity and by allowing for stimulus sampling. First, the screenome can be used to examine how a particular stimulus of interest is presented, engaged, and responded to in the real world. Studies can focus on the exact stimuli of interest without having to make assumptions about whether or not that content fits within a particular commercial container (or not). Second, the screenome provides the possibility to collect a representative sample of stimuli, without having to depend (as in the case of most media psychology experiments) on researcher selected examples of content that often inadequately represent a theoretically defined type of stimulus. Stimulus sampling is a problem in all social research (Judd, Westfall, & Kenny 2012), and media psychology in particular has suffered from use of paradigms where only one or a few prototypic stimuli are used to infer how a larger and more complex class of stimuli influences behavior (Reeves, et al., 2016). Media researchers can use the detailed record embedded in the screenome to examine all instances of a stimulus category for an individual, wherever and however those stimuli present themselves in the natural environment. The screenome thus simultaneously provides for both greater specificity and generalizability when testing relations between content and behavior.

The screenome can also be used to identify and define new types of content. The data stream is well suited to inductive research strategies and machine learning approaches to studying psychological responses (Shah, Cappella, & Neuman, 2015; Cappella, 2017). In those approaches, large numbers of stimuli are clustered based on stimulus qualities that are identified by computer algorithm rather than a priori definition of the exact material that may cause any specific effect of interest. The screenome is also suited to exploratory research that attempts to uncover theoretically useful definitions of digital content, definitions that can be substantially different than the commercial categories used in most research. For example, a database of millions of screenshots that are each tagged with respect to an effect of interest (e.g., arousal potential, visual complexity, relevance to social interaction) could be clustered in an attempt to identify the similarities and differences between screenshots that are different from and perhaps orthogonal to categories suggested by current theory. The screenome thus provides the raw material needed for inductive explorations into how individuals define and organize media content.

Function.

Functional theories in media psychology have been important for decades, including recent applications to the study of online behavior (Kaye & Johnson, 2002; Quan-Haase & Young, 2010; Raacke & Bonds-Raacke, 2008). These theories assume that the reasons people attend to media significantly influence what is attended to and how that information is perceived, remembered or used. Past research in this area of media psychology has focused on analysis of very large blocks of content by examining, for example, whether online and traditional media have different functions, or how different functions (e.g., social interaction, information seeking, passing time, entertainment, and relaxation) are served by different media modalities (e.g., text, image, video) (Sundar, 2012). The screenome provides for precise identification and study of how specific kinds of content serve different psychological functions.

In the laboratory, studies show that processing can be altered by precise manipulation of the motivations to process information (Sundar, Kalyanaraman, & Brown, 2003). For example, people who are asked to view political information with a motivation to learn how candidates stand on issues are more likely to become informed but have lower confidence in their knowledge, while people asked to view the same information with a motivation to find out what candidates are like as people pay greater attention to the pictorial content and have greater confidence in their knowledge. The point being that individuals differ in how they approach and use the media they encounter. The screenome offers information about how individuals interact with and use media content in the real world at the same level of specificity obtained in the laboratory. Information about the time that individuals dwell on different elements of content or how they focus on and follow different threads of information is all embedded in the screenome.

There is also need to understand if and how other laboratory results generalize to natural settings. For example, promising new work in neuroscience examines how differences in biologically constrained motivations to share information with others are related to how individuals receive and send media information (Meshi, Tamir, & Heekeen, 2015; Tamir, Zaki, & Mitchell, 2015). Neural activity in brain regions associated with motivation and reward are related to both subjective reports on sharing of information and on sharing actions in artificially constructed behavioral games. This work is by necessity done in the laboratory. Outside the laboratory, knowledge about individuals’ motivations has typically been studied through use of large-scale questionnaires (see Nadkarni & Hoffman, 2012 for a summary). This research notes very generally that individuals’ primary motivations to share through social media include connecting with others and managing the impressions that one can make of other people. Further, beyond some knowledge about how many texts or pictures individuals share in aggregate, little is known about the temporal organization of sharing, particularly with respect to what content was engaged immediately before or after sharing. Here the screenome provides a new microscope to examine what, when, and how often an individual shares material in real-world settings, as well as preferences for particular kinds of activities (e.g., reward-based games). This new record of screen activity can inform research about how functions of media in the laboratory, and the motivations individuals report in surveys, combine in everyday digital lives. In sum, the screenome provides data about how functions of media influence real-world behavior, and inductively, about new functions that have not yet been described.

Context.

A truism acknowledged by most psychological theory is that psychological contexts are inextricably linked to individual thinking, emotions and behavior (summarized by Rauthmann, Sherman, & Funder, 2015). Most psychologists agree that they have done a better job of understanding people than they have in understanding the situations in which they exist, and particularly the interaction between persons and situations. A forceful argument about the effects of the imbalance in attention to persons vs. situations was given by Ross and Nisbett (2011) when they questioned the relevance of the entirety of social and personality psychology, noting that much of what was “known” about behavior changed when the same phenomena were examined in a different context. Admonitions to examine situations are plentiful in many areas of psychology. In developmental psychology, for example, there has been strong evidence showing how the person-context “transactions” embedded, for example, in parent-child interactions (e.g., attachment theory) or epigenetic signaling (e.g., diathesis stress model) influence long-term development (Meaney, 2010). Dynamic systems theory, in particular, promotes the idea that all change, both long-term and short-term, is driven through a bidirectional interplay of biological and environmental “co-action” (Thelen & Smith, 2006).

The easy summary is that context is an important component of psychological theory. An advantage for research that hopes to include contextual detail is that increasingly complete descriptions of situations can now be obtained outside the laboratory. Many of the logging methods reviewed in the previous section can be used to obtain information about the “situation” surrounding any given behavior or sequence of behaviors. The added theoretical opportunity for the screenome is that much of the contextual information deemed important for understanding the situation is now embedded in digital records. Much of what is believed to be important outside of a media experience is now actually embedded in the media experience itself.

To illustrate, we highlight how specific aspects of psychological situations used to define contextual information across a range of theories (see Rauthmann et al., 2014) may be seen, at least partially, in the screenome. First, the cues that compose situations include objectively quantifiable information about persons, relationships, objects, events, activities, locations and time. These cues define “who, what, where, when and why” and are the environmental structures that help individuals define a particular experience, even a short one. All of these attributes can be extracted from the screenome and associated metadata (e.g., GPS). Second, the characteristics that give situations psychological meaning include, according to recent taxonomies (e.g., Rauthmann & Sherman, 2015), information about duty (is action required), intellect (is deep processing required), adversity (are there threats), positivity (is the situation pleasant), negativity (is the situation unpleasant), deception (is there dishonesty or duplicity), and socialability (are connections with other people possible, desirable or necessary). These characteristics can often be inferred from the screenome based on identification of textual and visual content. Third, situations are grouped or clustered into classes of situations that are based on the purpose of a situation. For example, in a taxonomy proposed by Van Heck (1984) that still guides much of the literature, situations are distinguished by conflict, joint working, intimacy, recreation, traveling, rituals, sport, excesses, and trading. A newer taxonomy based on evolutionary theory distinguishes situations with respect to self-protection, disease avoidance, affiliation, kin care, mate seeking, mate retention and group status (Morse, Neel, Todd, & Funder, 2015).

Each of these context characteristics can change the impact of any given digital experience and the screenome can provide information, otherwise difficult to uncover, that is relevant to determining the class to which a digital experience belongs. It is also worth noting that the screenome provides rich data for both quantitative and qualitative inductions. For qualitative researchers, and especially those who study uses of technology, the necessity of theorizing about the situations in which people use media is essential. The screenome may be particularly useful for ethnography because it allows researchers to engage in the “deep hanging out” that “gives voice” to the breadth of particulars that define the meaningfulness of individuals’ media practices (boyd, 2015; Carey, 1992; Geertz, 1998; Turkle, 1994). While screenshots do not follow people off-line, they do offer a sense of “over the shoulder” examination that facilitates discovery.

4. A FRAMEWORK FOR SCREENSHOT COLLECTION AND PROCESSING

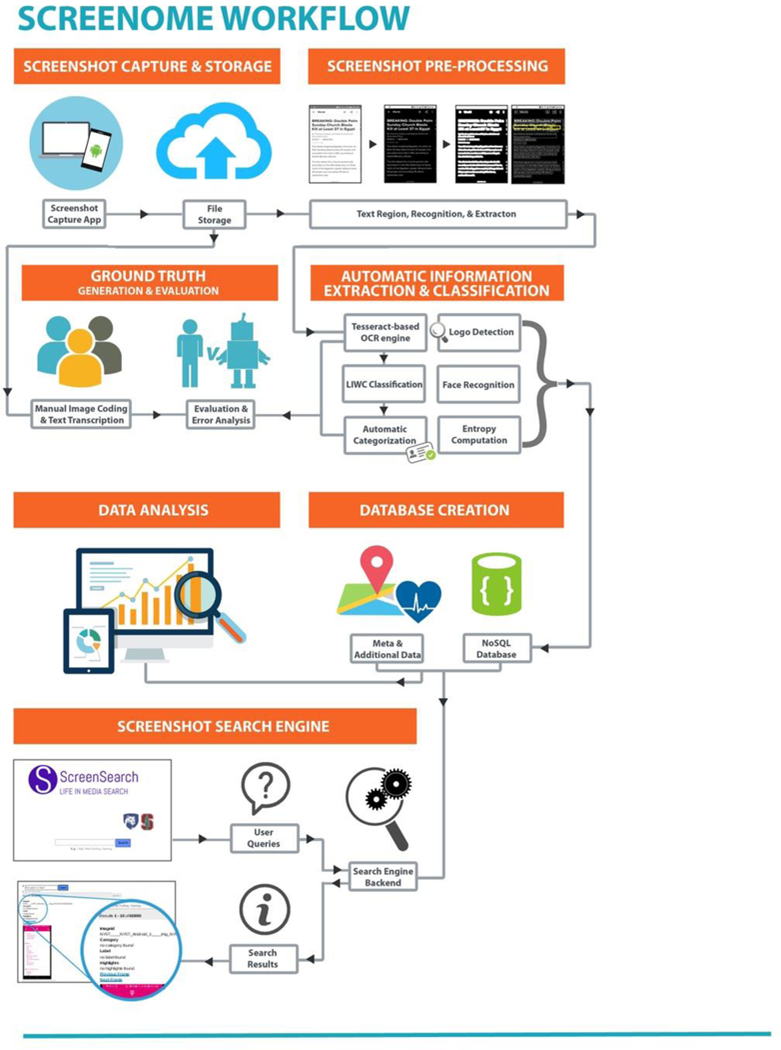

In this section we outline the framework for collecting, processing, storing, examining, and analyzing individual screenomes. The overall architecture of our system is shown in Figure 1, with each module described below. In brief, each component of the architecture considers a separate task: recording experiences via device screenshots, extracting text and graphics from screenshots, and data from laptop and smartphone services, analyzing textual and graphical content with respect to important psychological features (e.g., sentiment, subjects covered), fusing the raw and processed data into a spatiotemporal database, visualizing data via interactive dashboards, and analyzing data using search engines, machine learning and statistical models.

Figure 1.

Diagram illustrating screenome workflow.

The use of the framework for a single subject proceeds as follows. First, screenshot capture software is installed on a subject’s smartphone and/or laptop. There is a separate application for Windows and Mac laptops, and an application for Android smartphones (iPhones are currently not supported). The installation of the software can be done during a visit to the research lab or the software can be downloaded from a research website. Screenshots are then automatically encrypted, compressed and transmitted on a daily basis to secure university servers while the subject uses the devices over the course of days, weeks or months. After data collection, preprocessing of the screenshots is accomplished using the procedures described below to extract text and images, and a database is then created that synchronizes all material in time. Statistical analyses are conducted using that database. Qualitative descriptions and coding of material is facilitated by a screenshot search engine (described below).

4.1. Collection of Screenshots

The data collection module includes software that captures screenshots at researcher-chosen intervals, stores them on local devices, and encrypts and transmits bundles of screenshots to research servers at intervals that accommodate constraints in bandwidth and device memory. In-house applications take screenshots at periodic intervals (e.g., every five seconds that the device is in use), and store those images in a local folder. Once or twice per day the folder is encrypted, transmitted and then deleted from the laptop or smartphone. Data collection on Android devices (Lollipop OS) is done with a two-component application that uses functions in the Media Projection Library to capture a short three-frame video of the screen action at a regular interval set by the researcher. One frame from each video is retained and stored in a local folder. AlarmManager functions are used to invoke periodic transfer of bundled and encrypted screenshots to the research server when the device has a wireless connection, is plugged in or has reached a pre-determined memory limit. Helper functions ensure that the application starts automatically on device reboot, and allow for remote updating. The application enables capture of a continuous stream of screenshots without any participant intervention, without excessive battery drain, and (based on participant debriefing) without undue influence on individuals’ normal device use (also see notes in Section 6.0). Applications for Mac and PC work similarly, but retain screenshots directly (rather than retain a video image). The Mac application, coded in AppleScript and shell script, takes screenshots at researcher-specified intervals, and saves to a local folder that is then periodically encrypted and sent to the research server. Application startup is managed through placement in the operating system’s launch daemon. For Windows computers, we used a commercial application, TimeSnapper (version 3.9.0.3), to take the screenshots. The software was set to launch automatically at computer startup and take screenshots every five seconds. A separate application periodically encrypts the data and sends it to the research server. New versions of the applications for each platform are further optimizing functionality, including a subject enrollment interface and researcher data collection management tools.

The screenshot data stream is supplemented with other data already available from individuals’ smartphones and wearables. We make use of commercial location tracking apps that can identify locations and modes of transportation. Screenome data can be supplemented with surveys (e.g., online questionnaires), laboratory assessments (e.g., blood assays, cognitive tasks), or concurrent ambulatory monitoring (e.g., actigraphy, physiological monitoring, experience sampling). Synchronization of streams is currently done using time-stamps that are encoded from each device’s internet-updated clock.

4.2. Information Extraction

Screenshots represent the exact information that people consume and produce; however, extraction of behaviorally and psychologically relevant data from the digital record is required prior to analysis. The extraction techniques that follow were used to produce the example analytics reported in the next section, and are being updated as screenomics research develops.

Optical character recognition (OCR).

A major component of screenshot content is text. Some of the challenges typically associated with text extraction from degraded or natural images (e.g., diverse text orientation, heterogeneous background luminance) are not problematic with screenshots. But some are including inconsistency in fonts, screen layouts, and presence of multiple overlapping windows and these problems complicate identification, extraction, and organization of textual content. Our current text extraction module (Chiatti et al., 2017) makes use of open-source tools: OpenCV for image pre-processing (Culjak, Abram, Pribanic, Dzapo, & Cifrek, 2012), and Tesseract for OCR (Smith, 2007).

As shown in Figure 1, each screenshot is first converted from RGB to grayscale and then binarized to discriminate the textual foreground from surrounding background. Simple inverse thresholding combined with Otsu’s global binarization technique (Otsu, 1979) has been sufficient, given that most screenshots have consistent illumination across the image. Candidate blocks of text are then identified using a connected component approach (Talukder & Mallick, 2014) where white pixels are dilated, and a rectangular contour (i.e. bounding box) is wrapped around each region of text. Given the predominantly horizontal orientation of screenshot text, processing efficiency is maintained by skipping the skew estimation step. Each candidate block of text is then fed to a Tesseract-based OCR module to obtain a collection of text snippets that are compiled into Unicode text files, one for each screenshot. Our published studies, wherein we compared OCR results against ground-truth transcriptions of 2,000 images, show the accuracy of the text extraction procedures at 74% at the individual character level (Chiatti et al., 2017). On-going experiments support further improvements through integration of neural net-based line recognition that is trained and tuned specifically on the expanding screenshot repository, similar to the approach used in the OCRopus framework (Breuel, 2008), and included in the alpha version of Tesseract 4.0. Improvements in image segmentation, in particular, are expanding further opportunities for natural language processing analyses (e.g., LIWC; Pennebaker, Booth, Boyd, & Francis, 2015) that are then used to identify meaningful content from the extracted text.

Image analysis.

Parallel to text extraction, the pictures and images nested within each screenshot can be cataloged. This is done with open-source computer vision tools in the OpenCV library (Culjak et al., 2012) that provide for face detection, template matching, and quantification of color distributions and other image attributes. For example, identification of the screenshots that contain specific logos (e.g., ABC News, Facebook, Twitter) or screen pop-ups (e.g., keyboards, notification banners) can be accomplished through researcher selection of a reference template of interest and automated identification of edges that have the best match to the template (using Canny edge detection; Canny, 1986). Probability distributions can be examined for viable threshold values and probable matches confirmed through human tagging. Similar procedures provide for identification of faces and other common images using Haar cascades (Lienhart & Maydt, 2002; Viola & Jones, 2001) and lightweight convolutional neural nets and pre-trained detection models (Szegedy et al., 2015). Pixel-level information is also used to quantify screenshots with respect to image complexity (e.g., color entropy, Sethna, 2006.), and image velocity and flow (e.g., sum difference of RGB values for all pixels in successive screenshots, Richardson, 2003). These features are then used, in conjunction with labeled data, to identify the specific applications being used, type of content and so on. For example, smartphone screens where the user is producing textual content are identified with 98% accuracy using prediction models based on a collection of color entropy, face count, text, and logo features.

Labeling (Human Tagging).

There are some features of screenshots that are of theoretical interest but for which there are not yet automated methods for obtaining labels. Consequently, we facilitate labeling of individual screenshots with tools for human tagging. Human labeling of big data often uses public crowd-sourcing platforms (e.g., Amazon Mechanical Turk; Buhrmester, Kwang, & Gosling, 2011; Berinsky, Quek, & Sances, 2012; Bohannon, 2011; Horton, Rand, & Zeckhauser, 2011). Confidentiality and privacy protocols for the screenome require that labeling be done only by members of the research team that are authorized to see the raw data. Manual labeling and text transcription is done using a custom module built on top of the localturk opensource API (Vanderkam, 2017) and for some tasks the opensource Datavyu (2014) software. Through a secure server, screenshot coders (human subject approved university students) are presented screenshots to categorize using pre-defined response scales related to the specific features of content and function depicted in the image. For each particular project, research question or analysis, manual annotations for randomly or purposively selected subsets of screenshots are used as ground truth data to train and evaluate the performance of machine learning algorithms that are then used to propagate informative labels to the remaining data. For example, in a project focused on smartphone switching behaviors, the random forests currently used to propagate labels indicating the specific application that appears in a given screenshot currently run at greater than 85% accuracy, with misclassifications of any specific category running less than 1 percent (browsing in Facebook, 0.9% error; lock screen, 0.8%; home screen, 0.7%; browsing in Chrome, 0.6%; browsing in Instagram, 0.6%). Inaccuracies appear to be mostly in distinguishing very similar activities (e.g., browsing in Instagram vs Facebook) that are often considered together (e.g., both are social applications that serve similar functions).

4.3. Master Database

The output of information extraction and manual classification modules supplement the raw screenshots with a collection of additional “metadata.” Heterogeneity of data types is accommodated using a secure, limited access NoSQL database deployed in accordance with human subjects protocols for protection of privacy. Text, image, numeric, string, spatial, and temporal data are fused (by subject and time) within a schema-less NoSQL framework that supports flexible query and analysis. We use the open source MongoDB document-oriented framework that facilitates expansion of the metadata associated with subsets of the collection as different researchers in our group develop, refine, and add new fields and corresponding metrics to the feature set. The framework is specifically constructed to facilitate scaling, including repository expansion, parallelization, flexible workload distribution, and smooth integration with search, retrieval, and data analysis technologies.

4.4. Screenshot and Content Search

Examination of the document store is facilitated by a custom search engine that allows a user to enter a textual query (e.g., “president AND New York Times”) that returns a ranked list of screenshot thumbnails related to the input query. Indexing and search is done using a tailored vertical search engine built using Apache Solr Lucene. In brief, an xml-based document associated with each screenshot is indexed with respect to its enclosed text (with stemming and ignoring stop words) and content fields (e.g., geohash, content categories). When a researcher enters a query into the web-based user interface, all images with the exact text or content similar to the query are drawn from the document store, ranked based on relevance (e.g., using Okapi B25 metric; Robertson, Walker, Jones, Hancock-Beaulieu, & Gatford, 1995), and displayed to the researcher as a list of relevant screenshots. Summaries and links accompanying each search hit provide additional information (e.g., content category, geographic location, links to temporally adjacent screenshots). The search engine is critical for understanding the range of screen behaviors that pertain to specific content areas (e.g., health, politics), and for generating hypotheses about how screenome content is related to a wide range of thoughts, actions, and feelings.

5. RESEARCH EXAMPLES

This section presents eight examples of how the screenome framework can be used to study digital life experiences. Section 3.0 outlined advantages of theorizing with screenome data across several different literatures. The purpose of this section is not to test the range of theoretical potential but rather to offer exemplary analyses that different researchers might engage. Some of the examples follow only a single individual over the course of one day; others analyze larger samples and longer durations. All of the examples were chosen to highlight new ways to analyze technology experiences that are enabled by examination of individual screenomes.

All of the data collection followed the same general procedure. Participants were screened during short phone calls about their devices, and willingness and ability to participate. They then either visited our university lab or a central research facility. Once there, participants read and signed human subjects consent forms and filled out background questionnaires about demographics (e.g., race, sex), media use, and questions about psychological motivations and information searching. Software was then installed on their laptop computer and Android smartphone, and linked to the computational infrastructure described above. Participants then left the laboratory and went about their daily lives while the system unobtrusively recorded their device use and (in some cases) movement in physical space. The exact data associated with each example is listed in the endnotes. Figures 2 to 9 display the results of each analysis and were produced during analysis. They are not inherent to the screenome pipeline itself.

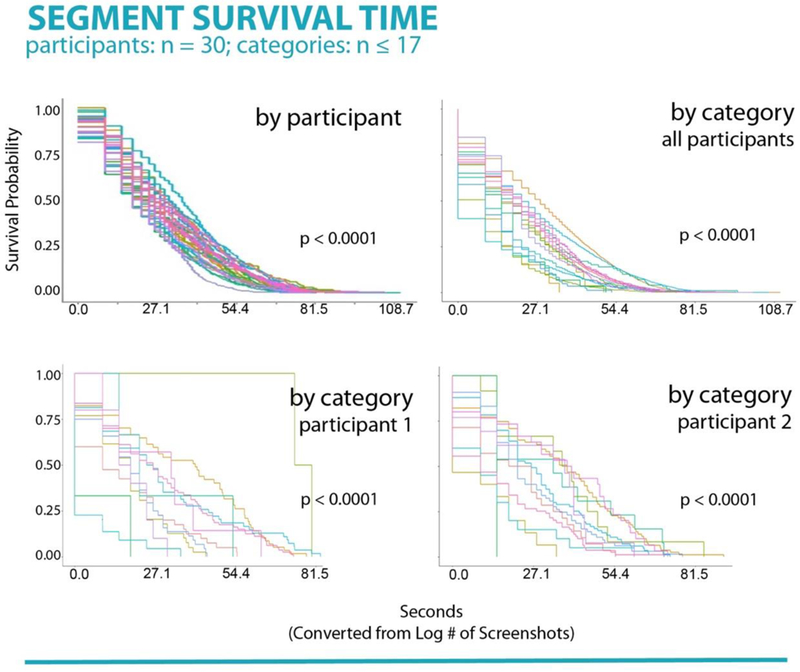

Figure 2.

Top left panel. Survival analysis for each participant in the study. Each curve represents an individual’s likelihood of switching screens at a given point in time. Individual differences in survival rates (i.e., rate of switching behaviors) were found. Top right panel. Survival analysis for each of 17 screen segment categories in the study, aggregated across the sample. Each curve represents the sample’s likelihood of switching screens given a particular screen category at each time point. Differences in survival rates (i.e., rate of switching behaviors) were found. Bottom panels. Survival analysis for each of 17 screen segment categories in the study for two individuals. Individual differences between switching behaviors given a certain screen category can be seen.

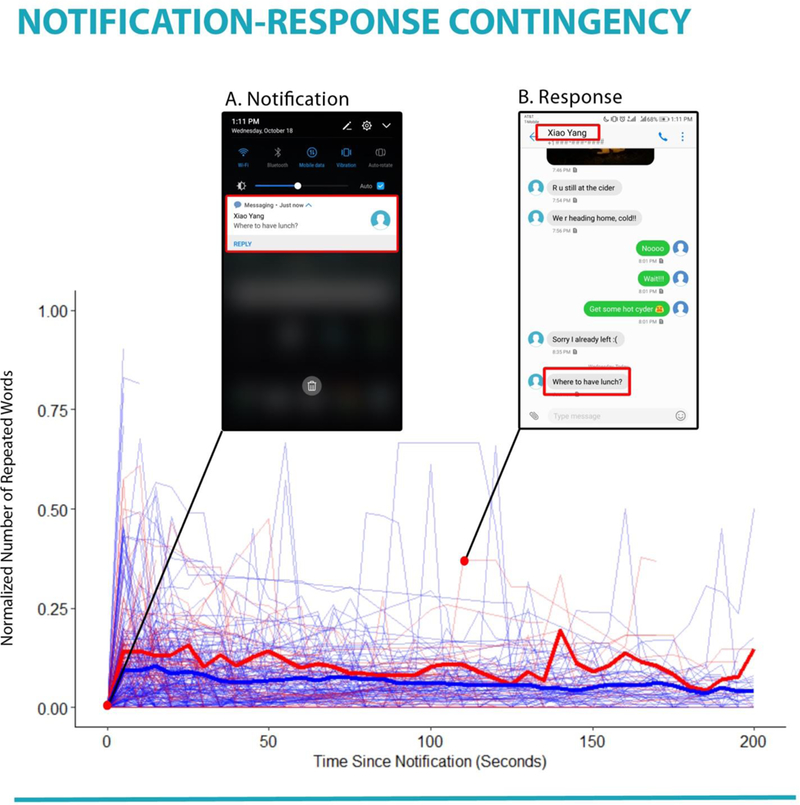

Figure 9.

The graph shows how the content of specific notification stimuli manifested in the screenshots following that notification. Red lines indicate notifications characterized as social cues, and blue lines indicate notifications with non-social cues. Bold lines provide the response profile for each type of cue, as averaged across all notifications in the two cue types. Inserts highlight a specific notification cue, and the moment, 115 s later, that the content of the cue returned (i.e., the response).

5.1. Example 1: Serial Switching Between Tasks

This example shows how time structure can be defined in the screenome with respect to the speed of switching between different content. This is of great interest in media psychology because quick switching may contribute to short attention spans (Anderson & Rainie, 2012; Brown, 2000; Rosen, Carrier, & Cheever, 2013), information overload (Bawden & Robison, 2009; DeStefano & LeFevre, 2007), and divided attention (Brasel & Gips, 2011; Hembrooke & Gay, 2003). Descriptions of switching, however, have been almost impossible, at least in natural environments, because tracking methods have not been able to follow threads of attention over time and across platforms and screens.

An example research question is: How long does one particular segment of experience last before another takes its place? To illustrate how such a question can be answered, we applied a proportional hazards model (Cox, 1972) to screenomes from 30 student laptop computers (see Yeykelis et al., 2018).i We identified median task switching time between segments at 20 seconds (e.g., switching from reading an email to conducting a Google search to texting a friend to liking a Facebook post). We were also able to consider individual and contextual differences. As shown in Figure 2 there were substantial differences in median switch-times (a) between individuals (χ2(29, N = 30) = 548, p <.01; top left panel) that were indicative of individual differences in attention, (b) between content categories such as email, information, news, pornography, shopping, social media and work (χ2(16, N = 17) = 1646, p <.01; top right panel) that were indicative of differential pull of attention across stimuli, and (c) in how different people approached the different categories (bottom panels), i.e., as indicative of person x stimuli interactions.

This analysis represents the first look at the rapid pacing of digital life in a way that considers switching between applications and platforms, between consumption and production of content, and between work and leisure domains. The results also highlight the importance of information embedded in the screenome, and the possibility of discovering behavioral “fingerprints” that represent the unique rates of switching and ordering of content that indicate how an individual seeks, learns, and organizes information. In sum, screenshots collected at five-second intervals provided a new opportunity to identify the time-invariant (e.g., age, gender, motivational style) or time-varying (e.g., sentiment, topic) characteristics of people and content that can influence how and when switching occurs.

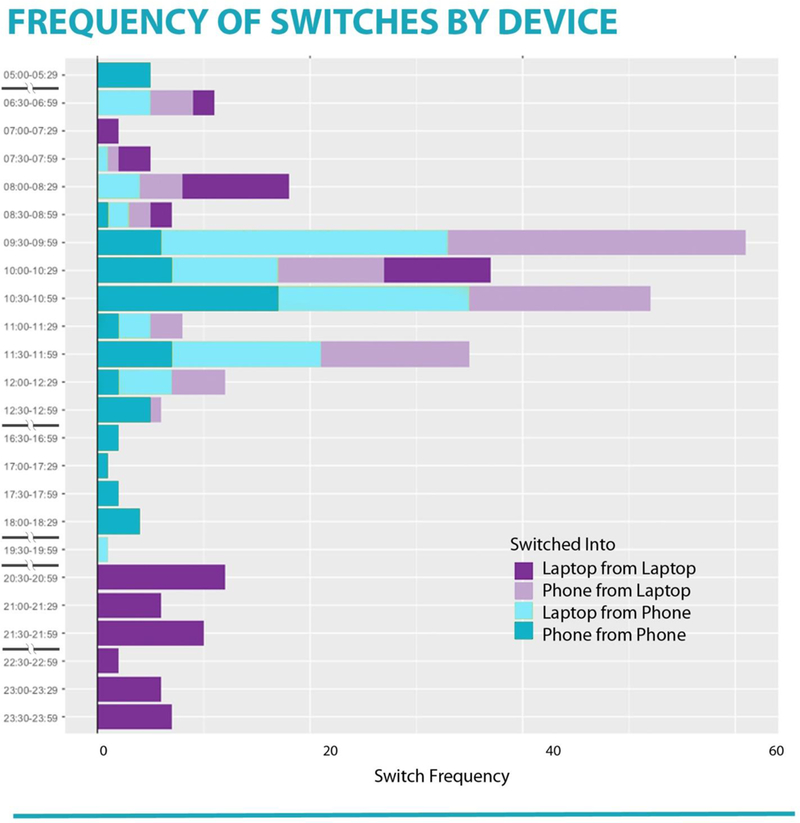

5.2. Example 2: Quick Switching Between and Within Screens

This example shows how the screenome allows content to be tracked within and between screens. Studies in media psychology and human-computer interaction often examine user interactions with a specific kind of content on a single device; for example, playing games on a computer, reading political news using a tablet app or posting on social media with a smartphone. Such research interests are limited to particular content categories and devices. Digitization, consolidation and fragmentation of media, however, increases the probability of switching between different categories of content and thus changes how content is sequenced.

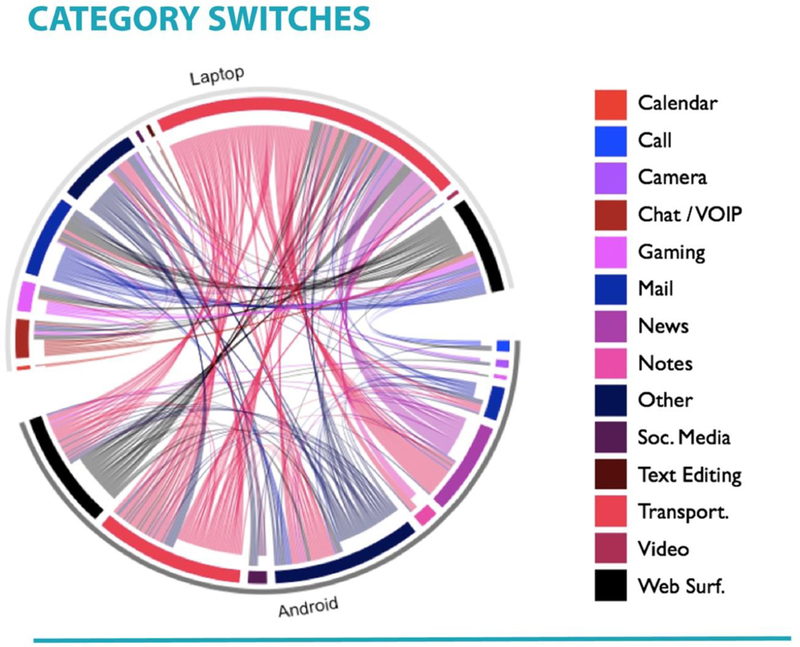

Tracking the sequence of digital experiences across traditional boundaries of commercial content and devices is important theoretically. Priming studies, for example, show how the sequencing of news and political information can change perceptions of content (Cacciatore, Scheufele, & Iyengar, 2016). Studies of excitation transfer show that there is temporal generalization of highly arousing content forward to new material that itself might be arousing (Lang, Sanders-Jackson, Wang, & Rubenking, 2013). Persuasive messages that are humorous or serious have different effects if they follow humorous or serious program segments (Bellman, Wooley, & Varan, 2016). These studies, however, have studied sequence effects using long blocks of homogeneous content (e.g., examining the influence of a TV program on a 30-second ad that follows it) or single transitions observed in a lab (e.g., examining how arousal during one experience influences arousal for content that follows). Our screenome sequences suggest that digital life is better characterized by hundreds or thousands of transitions, and often many per minute. An example research question is: How does an individual transition between content categories and devices?

To illustrate how such a question can be answered, we examined how one individual moved between devices (laptop and smartphone) and among content categories (e.g., calendar, call, chat) during one day (Figure 3).ii Transitions in the example are shown as arcs, colored by the category being used when the switch occurred. On this day, there were 312 transitions with the majority (57%) of those transitions characterized by exit from one device and entry to the other device. Some transitions were consistent: the phone calls were each followed by web surfing on the laptop; engagement with transportation content on the laptop (e.g., Google Maps) was followed by engagement with transportation (n=24, 27.9%), news (n=20, 23.3%) or web surfing (n=18, 20.9%) on the smartphone, with five other categories (n=24, 27.9%) constituting the remainder of switches. In contrast, some category transitions were more heterogeneous: chatting on the laptop was followed by instances of web surfing (n=3, 30%), gaming (n=2, 20%), checking a calendar (n=1, 10%), mail apps (10%), text editing (10%), and other (10%), all on the laptop. Notably, almost all types of content were represented on both laptop and smartphone, and only a few activities were device-specific; for example, this person used only the laptop to look at calendar and video content. In summary, this person’s screenome illustrated both simultaneous and serial engagement with multiple and overlapping content on two digital devices, sequences that would be difficult to observe with other records and methods.

Figure 3.

Visualization of within- and between- device switches by category for one person for one day.

Screenome sequences also have a time structure that allows examination of changes at different timescales. An example research question is: How is device and content switching organized across the day? Zooming out to 30-minute segments, the larger temporal structure can be examined (Figure 4). The day for this person began with a half-hour of switches among content on the phone (dark green). After actively switching between devices (light green and light purple) in the period from 8am and 1pm, this person did not use either device, and she used only the phone between 4:30pm and 7pm. The day ended with 3.5 hours of switches among content only on the laptop (dark purple). Together, Figures 3 and 4 illustrate how high-density data from the screenome can show moment-to-moment (temporal zoom-in) and hour-to-hour (temporal zoom-out) transition and patterns. This example illustrates how the screenome can be used to study technology use occurring at multiple time-scales.

Figure 4.

Transitions between and within devices for one person over the course for one day.

5.3. Example 3: Threads of Experience

As the variance in material available on digital devices grows, and as affordances for switching quickly between the material increase, technology use can be described as threads of experience that connect contiguous but different content. This next example, in the area of political communication, illustrates how following those threads can uncover previously invisible influences, and how theory about the processing of content might change as a result of tracking the connections.

An example research question is: How and when do people engage with political news and information? In studies of voting behavior, research has typically collected self-reports about the quantity and quality of news exposure, usually assessed by asking people about exposure to specific news outlets over long periods of time. Recently, more accurate measurements of exposure to political news have used web-browsing logs and posts on social media (e.g., Dilliplane, Goldman, & Mutz, 2013). In these cases, however, tracking of exposure to political information is restricted to individual platforms, either by researchers when they examine logs or by participants when they answer survey questions about use of the material (Romantan, Hornik, Price, Cappella, & Viswanath, 2008).

In contrast, the screenome provides a record of how political topics are ‘threaded’ through a variety of media experiences, across content categories and across platforms. Screenshots capture both individuals’ incidental exposure to political information, material about politics that is influential in civic learning but encountered while engaged in other functional tasks such as communicating with friends (Kim, Chen, & de Zúñiga, 2013; Wells & Thorson, 2017), and individuals’ intentional exposure through focused directed surveillance of political information for the purpose of political reinforcement or change (Eveland, Hutchens, & Shen, 2009; Ksiazek, Malthouse, & Webster, 2010; Valentino, Hutchings, Banks, & Davis, 2008). Viewing the screenome through the same lens used in the prior research, we can observe the temporal organization of individuals’ incidental and intentional exposures to political information at a level of detail that has not been measured previously and that is missing from the political communication literature.

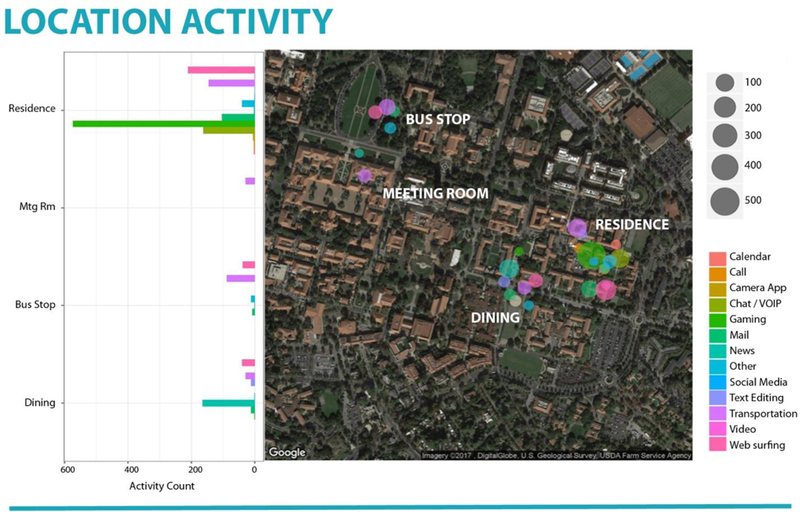

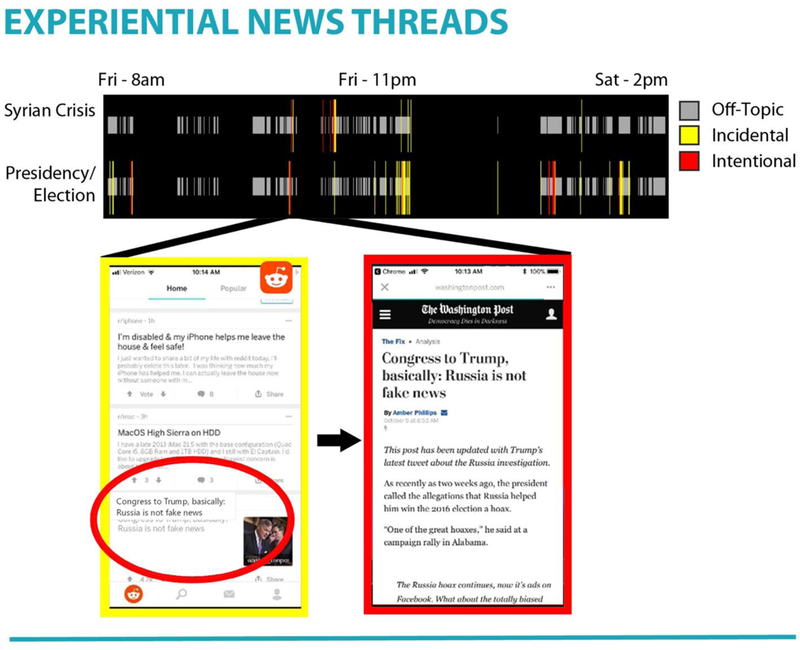

To illustrate how threads of engagement with political news manifest in the screenome, we describe how information related to the presidency, defined as presence of relevant key words, including Clinton, Donald, election, Hillary, president, Trump, White House, and information about the Syrian crisis, defined as presence of key words Assad, Iraq, Middle East, refugee, Saudi, Syria, war manifest in the 36-hour screenome obtained from one individual during mid-April 2017 and shown in Figure 7.iii Screenshots are shown as 3,700 colored vertical bars: red and yellow bars on the lower timeline (n = 171) indicating presence of information related to the presidency, and on the upper timeline (n = 42) indicating presence of information related to the Syrian crisis. Gray bars are for all other content. Most of the presidency and Syrian crisis content was encountered incidentally (in yellow, 162 of 213 screenshots, 76%) in informal settings (e.g., while browsing Twitter or Reddit). Of the 11 instances of active news-seeking, as indicated by sustained reading, active conversation, or a traditional news source, 7 were click-throughs from incidental encounters. Information related to the presidency stayed on screen for up to 50 seconds (M = 12.0, SD = 11.2) while information related to the Syrian crisis stayed on the screen for up to 60 seconds (M = 15.8, SD = 16.1), in this case through a podcast application (total listening time was 32.65 minutes). The screenome provided a record of the precise content that was engaged and the context surrounding that engagement. Expanded analysis of larger samples can provide new understanding of how and when individuals are exposed to politics, and how they formally or informally engage with politically relevant information.

Figure 7.

Barplot and map of a person’s screen activity for one day within one city. Locations visited include (from top to bottom of barplot): residence, meeting room, bus stop, and dining establishment.

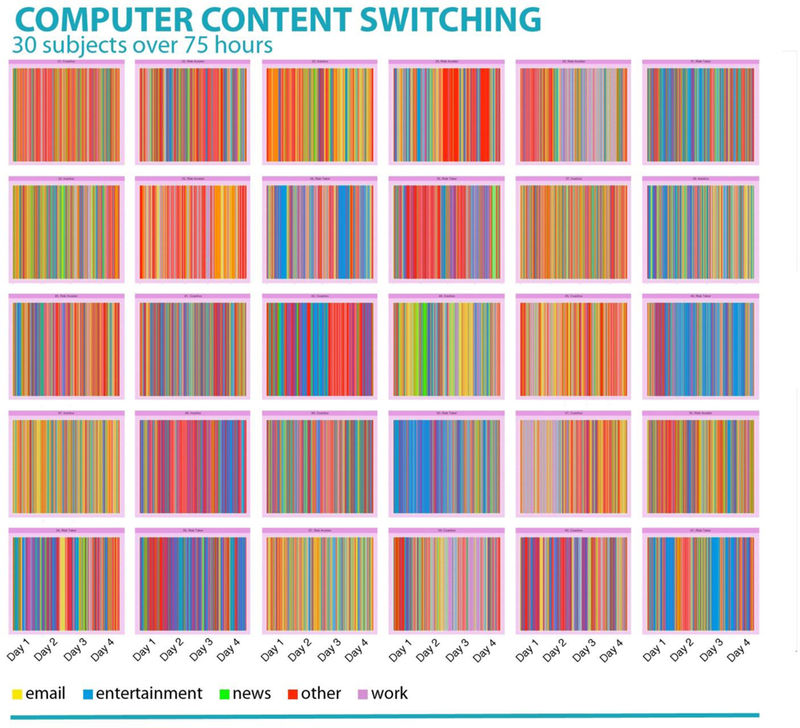

5.4. Example 4: Screenome Variance Between Groups versus Within Persons

This example illustrates the value of the screenome in assessing intraindividual as opposed to interindividual variation. The vast majority of media and life experience studies seek to differentiate groups of people from one another – interindividual differences. There are many definitions for the groups – demographics, personality, geography, viewing levels. For example, people who are sensation seekers are more likely to switch quickly between different types of content (Yeykelis et al., 2018). Extroverted personalities are more likely users of social media (Correa, Hinsely, & de Zúñiga, 2010). High media multi-taskers are better at certain visual acuity tasks (Ophir, Nass, & Wagner, 2009). Such studies seek results that generalize across persons to describe prototypical behavior. While these are worthy goals, it is also a mathematical reality that group-level averages do not indicate how any given individual behaves (i.e., the ecological fallacy), and significant errors are made by generalizing cross-sectional results to individuals (Estes, 1956; Robinson, 1950).

Questions about intraindividual variation ask: What is one individual’s media experience over time? To illustrate the issue and possibilities, Figure 6 shows four-day screenomes compiled from laptop computers for 30 undergraduate students.iv Each person is shown in a separate panel. Differences in the quantity and sequencing of engagement with five different types of content (email, entertainment, news, work, and a miscellaneous category including search) are shown by the order of colors seen in each panel. For example, the person shown on the bottom row, third from the left, has a considerable number of email interruptions, shown with orange lines. People with predominantly blue lines (e.g., the person on the far right in row three) are oriented toward entertainment (e.g., YouTube videos, movie segments, games). But even though it is possible to average across the four days for each screenome and cluster people into groups (e.g., those who work more than play), a substantial amount of the variance within each screenome would remain unexplained by the between-group difference. The screenomes in the figure show that no individual is “average.” The screenome allows consideration of each individual’s unique experience and provides rich time-series data needed to identify the patterns and irregularities embedded in experiences. Intraindividual variations are visually evident within a day and between days. Particularly with regard to interventions focused on behavioral change, the screenome may support more accurate, personalized prediction and delivery of real-time and context-sensitive services than would be possible with cross-sectional or aggregated data.

Figure 6.

Intraindividual variation in laptop screenshot content categories over the course of four days for 30 people. Each panel of vertical colored lines represents a unique person and each vertical line represents time spent in five different categories of content. Both within-person and between-person differences are evident across panels.

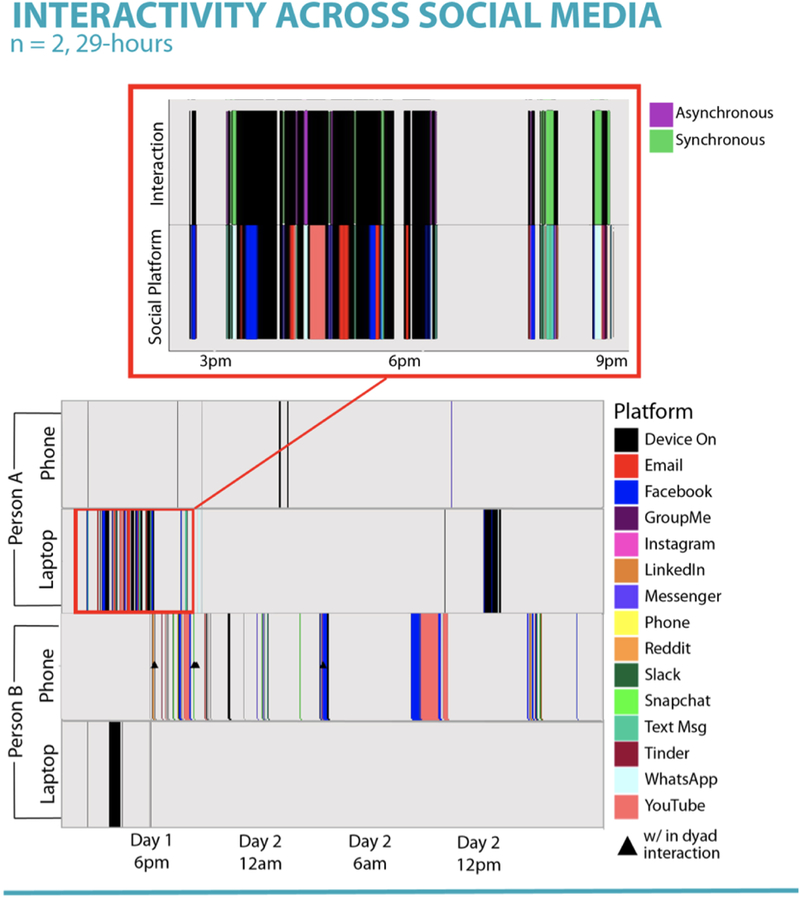

5.5. Example 5: Personal and Social Context Surrounding Media Use

This example illustrates the context that is available in the screenome and how the screenshot sequences facilitate rich description and theorizing about individual media use. The screenome is useful for ethnography because the detailed record “gives voice” to the breadth of particulars that define the meaningfulness of individuals’ media practices (Carey, 1992). The temporal sequencing, in particular, allows researchers to engage in the “deep hanging out” that makes for good ethnography (Geertz, 1998; Turkle, 1994; boyd, 2015). While screenshots do not follow people off-line, they do offer a sense of “over the shoulder” examination of the interdependencies among different content and contexts. Consider these two examples based on a qualitative analysis of one-day screenomes of two individuals.v

Story 1 – “News You Can Use”.

“Frank” lives outside of a large US city and spends hours on his phone doing everything from checking Facebook to talking to his partner to searching for repair shops for his broken-down truck to watching hours of live musical performances on YouTube and catching up on the news. One mid-April morning, at 6:15 AM, he woke up and checked the headlines. He opened the Fox News app and started reading. He scrolled quickly past the top stories (“‘STRATEGIC PATIENCE IS OVER’ Pence fires warning shots across DMZ in message to North Korea,” and “MANHUNT WIDENS 4 more states on alert for Facebook murder suspect”).

The story that caught his attention came just after the first scroll (“ANOTHER NIGHTMARE? Couple booted from United flight over seat switch”). He read the entire article, focusing for 10 seconds on the subtitle “Bride and groom on way to wedding booted off United flight.” Total time spent on the article was one minute and 55 seconds, longer than any other story that day. In isolation, we might assume that this was a salient topic within the public at large. The story came on the heels of a highly-publicized incident and widely-distributed videos and photos of a passenger being dragged from an overbooked flight. By looking at the broader context of Frank’s media day, there is a different story unique to Frank and with implications for media behavior more broadly.

Immediately after reading the article, Frank opened his e-mail and began searching for a reservation confirmation for an up-coming flight to Hawaii – on United Airlines. Frank opened the reservation details and struggled (meaning he went back and forth between steps) with online check-in for himself, his partner, and his pre-teen daughter. After the United news story, thoughts about the pending family trip make more sense.