Abstract

This study investigated how technology use affects academic performance. A proposed model postulated that academic performance could be predicted by a cognitive independent variable (executive functioning problems) and an affective independent variable (technological anxiety, or FOMO, fear of missing out), mediated by how students choose to use technology. An unobtrusive smartphone application called “Instant Quantified Self” monitored daily smartphone unlocks and daily minutes of use. Other mediators included self-reported smartphone use, self-observed studying attention, self-reported multitasking preference, and a classroom digital metacognition tool that assessed students’ ability to understand the ramifications of classroom technology use that is not relevant to the learning process. Two hundred sixteen participants collected an average of 56 days of “Instant” application data, demonstrating that their smartphones were unlocked more than 60 times a day for three to four minutes each time, for a total of 220 minutes of use per day. Results indicated that executive functioning problems predicted academic course performance, mediated by studying attention and a single classroom digital metacognition subscale concerning the availability of strategies for when to use mobile phones during lectures. FOMO predicted performance both directly and through a second classroom digital metacognition subscale concerning attitudes toward mobile phone use during lectures. Implications for college students and professors include increasing metacognition about classroom technology use and taking “tech breaks” to reduce technology anxiety.

Keywords: Classroom digital metacognition, FOMO, Anxiety, Executive functioning problems, Academic performance, Multitasking, Attention, Smartphone use

Abstract (Spanish)

This study examines the impact of technology use on academic performance. A model was proposed postulating that academic performance could be predicted by a cognitive independent variable (executive functioning problems) and an affective independent variable (technological anxiety, or FOMO, the fear of missing out), influenced by how students chose to use technology. An unobtrusive mobile application called “Instant Quantified Self” tracked daily phone unlocks and minutes of use. Other mediators included self-reported mobile phone use, studying attention as observed by the user, self-reported multitasking preferences, and a new digital classroom measurement tool that analyzed students’ ability to understand the ramifications of classroom technology use that is not relevant to the learning process. A total of 216 participants collected “Instant” application data for an average of 56 days, showing that their phones were unlocked more than 60 times a day for three to four minutes each time, for a total of 220 minutes of use per day. The results indicated that executive functioning problems predicted academic performance, mediated by studying attention and a single classroom digital metacognition subscale concerning the availability of strategies for when to use the mobile phone during lectures. FOMO predicted performance directly as well as through a second classroom digital metacognition subscale concerning attitudes toward mobile phone use during lectures. Implications for students and professors include increasing metacognition about classroom technology use and “taking breaks from technology” to reduce the anxiety it produces.

Keywords: Classroom digital metacognition, FOMO, Anxiety, Executive functioning problems, Academic performance, Multitasking, Attention, Smartphone use

In a recent handbook review of the literature on the impact of technology use on student academic performance, Bowman, Waite, and Levine (2015) summarized a wealth of data by reporting, “Researchers have consistently found that the more students use electronic media in general, the lower their GPA tends to be” (p. 391). In another comprehensive review of the literature on everyday multitasking, Carrier, Rosen, Cheever, and Lim (2015) reached similar conclusions about classroom technology use and the negative impact of classroom multitasking. The current study extends this work by testing a model that links both cognitive (executive functioning problems) and affective (technological anxiety/technological dependence) factors to academic performance, mediated by how students choose to use technology: self-reported and application-reported daily technology use, self-observed “studying attention,” multitasking preference, and classroom digital metacognition.

This study provides a theoretically based examination of student performance in a college course by investigating students’ daily technology use habits outside the classroom and how those habits are shaped by executive functioning problems and by anxiety about missing out on technology use. The following sections briefly review how technology use in the classroom, and multitasking both in class and while studying at home, affect college course grades. We then review the literature on the negative impact of executive functioning problems and technological anxiety on technology use, and finally assess prior models that attempted to predict college course performance.

Technology Use Inside and Outside of the Classroom

The negative impact of unrelated, in-class technology use on academic performance has been validated in numerous studies, including findings pinpointing the effects of specific types of technology use. Total time spent on a cell phone in class has been shown to predict lower college GPA (Bjornsen & Archer, 2015; Lepp, Barkley, & Karpinski, 2014; Olufadi, 2015; Wood et al., 2012). Specific types of technology use have been identified as negatively affecting learning, including social media (Chen & Yan, 2016; Downs, Tran, McMenemy, & Abegaze, 2015; Jacobsen & Forste, 2011; Junco, 2015; Lepp, Barkley, & Karpinski, 2015; Ravizza, Hambrick, & Fenn, 2014; Rosen, Carrier, & Cheever, 2013; Skiera, Hinz, & Spann, 2015; Wood et al., 2012), instant messaging (Wood et al., 2012), texting (Harman & Sato, 2011; Lepp et al., 2014; Ravizza et al., 2014; Rosen, Carrier et al., 2013), and email (Ravizza et al., 2014). Beyond the academic environment, research has also demonstrated that technology use habits outside the classroom are negatively related to college GPA (Bowman et al., 2015). This study will focus on these more general daily technology use habits, both self-reported and unobtrusively recorded by a smartphone application, and examine the role they play in understanding how college students perform in the classroom.

Measuring daily technology use has proven complicated. Rosen, Whaling, Carrier, Cheever, and Rokkum (2013) reported four different self-report methods in the literature: total time per day, number of uses in a particular time period, attitudinal scales, and experience sampling. Further, although self-reported time of use is the most common metric, Junco (2013) compared self-reported time estimates of various laptop uses (Facebook, Twitter, e-mail, information searching) with data recorded by background monitoring software and found that self-reports substantially overestimated time of use. Because different measurement strategies yield different results, the present study will use two estimates of daily smartphone usage: self-reported frequency of smartphone checking, and application-reported number of daily unlocks and daily minutes of smartphone use. The latter relies on an application installed on each participant’s smartphone that runs continuously in the background. This additional source of data should provide a more accurate estimate of daily smartphone usage, which is essential in testing the mediated model. Based on the cited work, the following hypothesis will be examined:

H1: Higher levels of daily technology use will predict lower academic performance.

Multitasking in the Classroom and while Studying

Wood and Zivcakova (2015) summarized the literature on multitasking in educational settings, concluding that off-task multitasking predicts lower grades, and Carrier et al. (2015) described these findings in the classroom and in everyday life. For example, Rosen, Lim, Carrier, and Cheever (2011) texted college students during a videotaped lecture, and students who received and responded to eight texts in 30 minutes obtained a substantially lower grade than those who received half that number of texts or fewer. In a study of marketing courses, Clayson and Haley (2012) concluded that grades were negatively affected by in-class multitasking. Other researchers have demonstrated similar results using self-report measures of multitasking (Bellur, Nowak, & Hull, 2015; Burak, 2012; Junco, 2015; Zhang, 2015), multitasking preference measures (Rosen, Carrier et al., 2013), measures of simultaneous technological multitasking during note-taking (Downs et al., 2015), and diaries of multitasking habits (Mokhtari, Delello, & Reichard, 2015). All of these studies conclude that classroom multitasking negatively impacts learning.

Technology use is also rampant when college students study. In one study, Rosen, Carrier et al. (2013) observed 263 students studying for 15 minutes in their (mostly) home environments and found that the students were on task for only 71% of those minutes, with short runs of attention punctuated by distractions. These distractions came mostly from texting and social media, which in turn predicted lower college GPA. Based on the cited research, the following hypothesis will be examined:

H2: Students who show a preference for multitasking and those who multitask more while studying will show reduced academic performance.

Technological Anxiety (FOMO)

Recent studies using various methodologies have demonstrated that the absence of technology affects anxiety. In a quasi-experimental study, Cheever, Rosen, Carrier, and Chavez (2014) restricted classroom cell phone use and required students to sit quietly with no distractions during the 70-minute class period. Self-reported anxiety was measured after 10 minutes and twice more during the period. Those with the lowest daily smartphone usage showed no increase in anxiety, while those with the highest usage showed increased anxiety within 10 minutes, which continued to rise over the class period. Those with moderate smartphone usage showed an initial increase in anxiety at the second testing period and no further increase after that time. In a laboratory study, Clayton, Leshner, and Almond (2015) found that preventing participants from answering their ringing phone led to increased heart rate and blood pressure as well as increased self-reported anxiety. Rosen, Carrier et al. (2013) and Rosen, Whaling, Rab, Carrier, and Cheever (2013) found anxiety associated with not being able to check in with various technologies, including social media and texting.

Often described as FOMO (fear of missing out), this form of anxiety was defined by Przybylski, Murayama, DeHaan, and Gladwell (2013) as “the fears, worries, and anxieties people may have in relation to being out of touch with events, experiences, and conversations happening across their extended social circles” (p. 1482). Researchers have found FOMO related to increased stress associated with Facebook use among adolescents (Beyens, Frison, & Eggermont, 2016), and others have found similar results with college students (Elhai, Levine, Dvorak, & Hall, 2016; Przybylski et al., 2013).

Several recent studies of college students and adolescents have examined FOMO as a possible contributor to increased technology use. For example, Lepp et al. (2015) found an interrelationship among cell phone use, anxiety, and academic performance, while Beyens et al. (2016) found a relationship between technology use and fear of missing out. In a study of older adolescents, Oberst, Wegmann, Stodt, Brand, and Chamarro (2017) found that FOMO triggered social network use, particularly among males. Similarly, a study of Portuguese adolescents found that anxiety and dependence on media and technology predicted more technology use, particularly for communication (Matos et al., 2017). In one recent study of FOMO, Abel, Buff, and Burr (2016) found that FOMO directly predicted college students’ grade point averages. Finally, Terry, Mishra, and Roseth (2016) found that FOMO was related to both multitasking and metacognition. Based on these studies, the following hypotheses will be tested:

H3: Increased anxiety and technological dependence (FOMO) will predict increased use of technology, multitasking, and classroom digital metacognition.

H4: Increased anxiety and technological dependence (FOMO) will predict lower academic performance.

Executive Functioning

Executive functioning includes cognitive processes that control attention, working memory, decision-making, multitasking, and problem solving, all clearly related to the choice to use or not use technology. Executive function has been shown to directly affect learning, particularly through components of attention regulation such as cognitive flexibility, working memory, and inhibitory control (Zelazo, Blair, & Willoughby, 2016). In a recent study of college students, smartphone separation impaired executive functions including working memory, task shifting, and inhibitory control (Hartanto & Yang, 2016). In Rosen, Carrier, Miller, Rokkum, and Ruiz’s (2016) study, executive functioning problems predicted sleep problems both directly and via a path through additional nighttime awakenings to check in with technology. Researchers have also documented relationships among technology use, college grades, and executive functioning. Burks et al. (2015) demonstrated a strong link between aspects of conscientiousness from the “Big Five” personality inventory and collegiate success, while Cetin (2015) found that goal-setting abilities were related to GPA. Extending this to technology use, Zhang (2015) reported substantial effect sizes linking self-regulation behaviors to in-class laptop multitasking, which was in turn related to course grades. Along similar lines, Lepp et al. (2015) found that self-efficacy for self-regulated learning was related to cell phone use and GPA. In a study of Turkish students, Dos (2014) found a relationship between metacognitive awareness, an executive function, and GPA. Other researchers have reported similar effects of metacognition among college students (Bowman et al., 2015; Carrier et al., 2015; Lepp et al., 2015; Terry, 2015).
In contrast, Norman and Furnes (2016) reported that, although previous studies suggested that using digital technology while studying impairs metacognitive reasoning, their own results did not support this conclusion. Based on the majority of the cited literature, the following hypotheses were tested in this study:

H5: Higher levels of executive functioning problems will directly predict reduced academic performance.

H6: Higher levels of executive functioning problems will predict increased technology use, multitasking, and classroom digital metacognition.

Models of Course Performance

Several researchers have postulated models integrating technology use, affective variables, cognitive variables, and academic course performance. Michikyan, Subrahmanyam, and Dennis (2015) tested a model of the impact of self-reported Facebook use on academic success and found that academic performance may actually predict Facebook use rather than the reverse, with lower levels of academic performance predicting increased Facebook use. Zhang (2015) tested a path model relating in-class laptop multitasking, self-regulation, and academic performance, and Werner, Cades, and Boehm-Davis (2015) applied a combination of “memory for goals” theory and Threaded Cognition theory to explain performance. Finally, in a review article, Kamal, Kevlin, and Dong (2016) suggested that the model must be more complex, with a variety of variables (external distractions, social factors, media availability, metacognition, and mental factors) predicting multitasking, which in turn predicts academic performance.

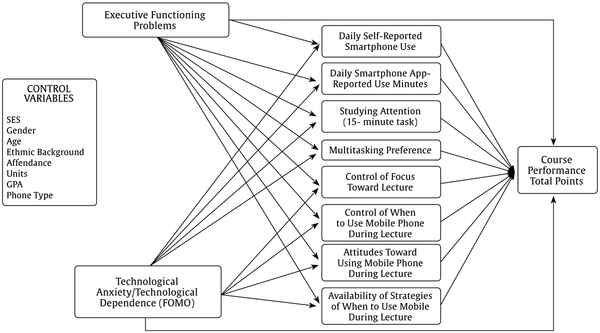

This study started with the comprehensive model suggested by Kamal et al. (2016) and then drew on the current literature and on a model originally proposed to explain sleep problems in college students (Rosen et al., 2016) to provide an explanatory theoretical model of the role that cognitive and affective variables play in determining how much technology college students use in their daily lives and how they choose to use it inside and outside the classroom. The path model tested by Rosen et al. (2016) was adopted because it integrates the cognitive and affective dimensions and because research has shown that sleep quality is related to academic performance in college students (Gaultney, 2016). The adapted sleep problem model included demographic control variables; independent variables of executive functioning problems and technological anxiety/technological dependence; and mediator variables of daily smartphone use, multitasking preference, nighttime phone location, and nighttime phone awakenings to predict sleep problems. The model test showed that executive functioning problems predicted sleep problems both directly and indirectly, through more nighttime phone awakenings. Technological anxiety (FOMO) showed a more complex pattern, predicting sleep problems through the mediators of daily smartphone use and nighttime phone awakenings, as well as predicting multitasking preference (which did not predict sleep problems). A similar model (presented in Figure 1) is proposed in the current study to examine course performance. Based on the previous research, the following hypotheses will be tested:

Figure 1.

Path analytic model and hypotheses predicting course performance from executive functioning problems (cognitive influence) and technological anxiety (FOMO; affective influence) through the mediators of smartphone usage, studying attention, multitasking preference, and classroom digital metacognition subscales.

H7: Executive functioning problems and technological anxiety (FOMO) will predict course performance mediated by smartphone use, multitasking preference, studying attention, and a new construct of classroom digital metacognition.

H8: Cognitive and affective independent variables will predict academic performance independently of their mediated contributions through technology usage.
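H7 and H8 rest on the distinction between a direct path and a mediated (indirect) path. As a minimal sketch of that distinction, the Python below estimates a single-mediator model by ordinary least squares and reports the product-of-coefficients indirect effect a × b alongside the direct effect c′. This is an illustration under stated assumptions, not the study's procedure: the actual model was estimated in AMOS with several mediators and control variables, and the function name `single_mediator_paths` and the toy data are hypothetical.

```python
from statistics import mean


def _cov(u, v):
    """Sample covariance (n - 1 denominator)."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def single_mediator_paths(x, m, y):
    """Hypothetical helper: OLS path estimates for one mediator.

    a        : slope of mediator m regressed on x
    b        : slope of y on m, controlling for x
    direct   : slope of y on x, controlling for m (c')
    indirect : a * b
    total    : slope of y regressed on x alone (c)
    """
    sxx, smm, sxm = _cov(x, x), _cov(m, m), _cov(x, m)
    sxy, smy = _cov(x, y), _cov(m, y)
    a = sxm / sxx
    # Solve the 2x2 normal equations for y ~ x + m, written in covariances.
    det = sxx * smm - sxm ** 2
    if det == 0:
        raise ValueError("x and m are perfectly collinear")
    b = (sxx * smy - sxm * sxy) / det
    direct = (smm * sxy - sxm * smy) / det
    total = sxy / sxx
    return {"a": a, "b": b, "direct": direct, "indirect": a * b, "total": total}


# Toy data (not from the study): m depends on x, and y depends on both.
x = [1, 2, 3, 4, 5]
m = [2, 5, 6, 9, 10]
y = [7, 17, 21, 31, 35]
paths = single_mediator_paths(x, m, y)
print({name: round(value, 6) for name, value in paths.items()})
```

The toy data were constructed so that a = 2, b = 3, and c′ = 1; for OLS with a single mediator, the total effect c (here 7) equals c′ + a × b exactly, which is the sense in which H8 separates direct from mediated contributions.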

Method

Participants

Students in a single upper-division general education social science lecture course were offered extra credit for completing a battery of measurement instruments and installing an application on their smartphone to monitor the device’s daily usage. Overall, 216 participants collected at least 21 days of smartphone usage data. The sample was 62% female and 38% male, with a mean age of 24.40 years (Mdn = 22.5, SD = 6.22), and was 14.8% Asian/Asian-American, 13.4% Black/African-American, 12% Caucasian/White, and 56% Hispanic/Spanish descent. Participants supplied their home ZIP code (postal code), which was converted into estimated median income (M = $53,505; SD = $19,698; Mdn = $50,873) based on U.S. Census figures (American Community Survey, 2014). Median income was used as a proxy for socioeconomic status, which was included as a demographic control variable along with age, gender, and ethnic background.

Materials

Based on the predicted path model depicted in Figure 1, four categories of measurement instruments were used: (1) demographic and academic control variables (attendance, course load, GPA); (2) executive functioning problems and technological anxiety/technological dependence; (3) smartphone use (both self-reported and recorded by the application), multitasking preference, studying attention, and classroom digital metacognition; and (4) the dependent variable, course performance, measured as points accumulated in the class.

Academic control variables.

In addition to demographic characteristics, the following variables that might influence academic performance were included as control variables. Course attendance was measured on a seven-point scale ranging from 1 = attended all class sessions to 7 = missed more than 20 meetings; 13% attended all class sessions, 36% missed 1 or 2 meetings, 34% missed 3-5 meetings, and 18% missed 6 or more sessions (Mdn = missing 3-5 sessions). Course load was assessed by the number of current semester units, ranging from 1 = 3-5 units to 7 = more than 20 units; the typical participant was taking 12-14 units (M = 4.16, Mdn = 4.00, SD = 1.05). College grade point average was assessed with a single self-report item ranging from 1 = 3.75-4.00 to 10 = 0.00-0.99 (so higher codes indicate lower GPA), with the typical participant reporting a GPA of 3.00-3.24 (M = 4.58, Mdn = 4.00, SD = 3.12). Finally, phone type was used as a control variable: 112 participants (52%) used iOS and 104 (48%) used Android.

Executive functioning problems.

Executive functioning problems were assessed using Webexec (Buchanan et al., 2010). This measurement tool was created specifically to measure executive functioning problems over the Internet and includes six items asking participants to rate the extent to which they have problems in: (1) maintaining focus, (2) concentrating, (3) multitasking, (4) maintaining a train of thought, (5) finishing tasks, and (6) acting on impulse. Each item is rated on a four-point scale from 1 = no problems experienced to 4 = a great many problems experienced. The measure provides a single total score ranging from 6 to 24, which was then converted to a mean score with higher means indicating more executive functioning problems (individual items: M = 2.12, Mdn = 2, SD = 0.61). The scale has a reported Cronbach’s α of .76 (Buchanan et al., 2010).
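Scoring Webexec is simple arithmetic: the six ratings are summed to a 6-24 total, and the mean-of-items score reported above is that total divided by six. The sketch below assumes ratings arrive as a list of integers in item order; the function name `webexec_scores` is hypothetical.

```python
def webexec_scores(items):
    """Hypothetical scorer for the six Webexec items, each rated 1-4.

    Returns (total, mean): total ranges 6-24 and mean ranges 1-4, with
    higher values indicating more executive functioning problems.
    """
    if len(items) != 6:
        raise ValueError("Webexec has exactly six items")
    if any(not 1 <= rating <= 4 for rating in items):
        raise ValueError("each item must be rated from 1 to 4")
    total = sum(items)
    return total, total / len(items)


# Hypothetical respondent reporting mostly some problems.
print(webexec_scores([2, 2, 3, 2, 2, 1]))  # (12, 2.0)
```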

Anxiety without technology/technological dependence (FOMO).

This subscale of the Media and Technology Usage and Attitudes Scale (MTUAS; Rosen, Whaling, Carrier et al., 2013) includes three items that tap technological anxiety (e.g., “I get anxious when I don’t have my cell phone”) and dependence (e.g., “I am dependent on my technology”), each rated on a five-point Likert scale ranging from strongly agree to strongly disagree. The current study found a Cronbach’s α of .78. Items were averaged to provide a mean score from 1 to 5, with lower scores indicating more anxiety without technology and more dependence on technology (M = 2.29, Mdn = 2.33, SD = 0.89).
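Cronbach’s α is reported for this subscale and for several other instruments in this section. The standard formula is α = k/(k − 1) × (1 − sum of item variances / variance of summed scores), where k is the number of items. The sketch below implements that formula with sample variances; it assumes complete data (no missing responses) and that all items are scored in the same direction, which is why the FOMO items can be averaged even though lower scores indicate more anxiety. The function name and the toy responses are hypothetical, not study data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Uses sample variances (n - 1 denominator) for both the items and the
    summed scores; the ratio is the same with population variances.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    columns = list(zip(*responses))  # one tuple of scores per item
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Toy three-item responses from four respondents (not study data).
toy = [
    [1, 2, 1],
    [2, 2, 3],
    [4, 3, 4],
    [5, 5, 4],
]
print(round(cronbach_alpha(toy), 3))
```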

Technology use, studying attention, multitasking preference, and classroom digital metacognition.

Five different measurement instruments assessed the mediator variables of technology use, studying attention, multitasking preference, and classroom digital metacognition:

The daily smartphone usage subscale of the MTUAS (Rosen, Whaling, Carrier et al., 2013) includes 9 items (e.g., “How often do you read e-mail on a mobile phone?”), each on a 10-point frequency-of-use scale ranging from 1 = never to 10 = all the time. Items are averaged with higher scores indicating more daily smartphone use (M = 7.24, Mdn = 7.44, SD = 1.40). The current study found a Cronbach’s α of .78 for this subscale.

Participants installed the Instant Quantified Self application (Emberify.com), which measured the total number of minutes that the smartphone remained unlocked each day. With a minimum requirement of 21 days of valid data, the average participant provided application data for approximately eight weeks of the 15-week semester (M = 55.80 days, Mdn = 58, SD = 17.14). Participants averaged more than three and a half hours of smartphone use per day (M = 220.33 minutes, Mdn = 214.55, SD = 92.87), ranging from 18 to 559 minutes per day. There was no significant difference in daily minutes between Android users (M = 210.85) and iOS users (M = 229.13), t(214) = 1.45, p = .149. The number of times the phone was unlocked was also recorded; however, because an early beta version of the iOS application did not count unlocks accurately, iOS unlock data were not used. The application developer indicated that, although there was a problem tallying daily unlocks, the number of minutes the phone remained unlocked was measured accurately. In addition, because the application had to remain open in the background to provide accurate, complete daily data, some users mistakenly closed it. After inspecting the unlock counts, we removed any daily data points with unlock counts more than 2.5 standard deviations below that participant’s mean. This removed a mean of 6.10 observations from iOS users, nearly all at the beginning of the study when the beta version was in use. As a precaution against differences between the two platforms, phone type was used as a control variable. Strikingly, the Android users, whose unlocks were tallied accurately, unlocked their phones more than 60 times per day (M = 72.33 unlocks, Mdn = 62.28, SD = 41.18), for an average of approximately three to four minutes per unlock (M = 3.92 minutes, Mdn = 2.80, SD = 3.30).
A recent study (Andrews, Ellis, Shaw, & Piwek, 2015) created a smartphone application and tested it with 23 young adults while also collecting their self-estimated use. Although participants unlocked their phones nearly 85 times a day for a total of five hours, there was no correlation between actual and self-estimated unlocks. There was, however, a correlation between estimated and application-recorded duration of daily use. Interestingly, in this small-sample study, 55% of all smartphone uses lasted less than 30 seconds.
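The per-participant cleaning rule described for the application data (drop days whose unlock count falls more than 2.5 standard deviations below that participant’s own mean, and require at least 21 days of valid data) can be sketched as follows. Assumptions not stated in the text: each participant’s data are a list of (unlocks, minutes) pairs, the mean and sample standard deviation are computed once over all of that participant’s days, and a flagged day is dropped entirely. All function names are hypothetical.

```python
from statistics import mean, stdev


def drop_low_unlock_days(days, cutoff_sd=2.5):
    """Drop one participant's days whose unlock count is more than
    `cutoff_sd` standard deviations below that participant's own mean.

    `days` is a list of (unlocks, minutes) tuples, one per day. A very low
    unlock count is treated as a sign that the monitoring app was closed,
    so the whole day (unlocks and minutes) is removed (an assumption).
    """
    if len(days) < 2:
        return list(days)  # a standard deviation needs at least two days
    unlocks = [u for u, _ in days]
    threshold = mean(unlocks) - cutoff_sd * stdev(unlocks)
    return [day for day in days if day[0] >= threshold]


def has_enough_days(days, min_days=21):
    """Participants needed at least 21 days of valid application data."""
    return len(days) >= min_days


def mean_daily_minutes(days):
    """Average minutes unlocked per day over the retained days."""
    return mean(minutes for _, minutes in days)


# Hypothetical participant: 25 ordinary days plus one day the app was closed.
participant = [(70, 220)] * 25 + [(2, 5)]
clean = drop_low_unlock_days(participant)
print(len(clean), has_enough_days(clean), mean_daily_minutes(clean))
```

In this toy case the cutoff lands near 34 unlocks, so the closed-app day is removed and the participant still meets the 21-day minimum.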

Studying attention was assessed in a manner similar to that described in Rosen, Carrier et al. (2013). In that study, independent observers watched students study an important assignment for 15 minutes and noted how many minutes they spent studying versus doing something else. In the current study, participants were asked to monitor their own behavior while studying something important for 15 minutes, and the means were nearly identical: Rosen, Carrier et al.’s study, M = 9.75 minutes studying, SD = 4.05; current study, M = 9.76 minutes studying, SD = 2.74. The number of minutes on task was used as a measure of “studying attention,” with more minutes on task indicating more attention.

Multitasking preference was assessed using items from the Multitasking Preference Inventory (Poposki & Oswald, 2010). The four items with the highest factor loadings were selected, each measured on a five-point Likert scale (strongly agree to strongly disagree). One sample item is: “I prefer to work on several projects in a day rather than completing one project and then switching to another.” Higher scores indicated a preference for multitasking (M = 3.3, Mdn = 3.5, SD = 0.97). The current study found a Cronbach’s α of .81 for these four items.

Classroom digital metacognition was assessed with a measurement tool that examined attitudes and behaviors surrounding classroom use of technology (Ruiz, Carrier, Lim, Rosen, Ceja, & Jacob, 2015). The scale included 29 items: 18 “attitudinal” items (e.g., “I have strategies to avoid using my mobile phone when it is not relevant to lecture” and “I am not easily distracted by my mobile phone”), each rated on a four-point Likert scale, and 11 “behavioral” items (e.g., “I use my mobile phone if I already know the material” and “I find myself using my mobile phone when I do not want to”), each rated on a four-point frequency scale of never, sometimes, often, and always. The measure is discussed in more detail in the Results section.

Academic Performance.

Academic performance was the dependent variable. The course included 1,000 possible points: two exams (400 points each) and eight one- to three-page writing assignments (200 points total). The average score was approximately 78% of the possible points (M = 785.24, Mdn = 779, SD = 96.35), and the scores were approximately normally distributed (skewness = −0.115, kurtosis = −0.395).
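The skewness and kurtosis values above serve as a check for approximate normality; values near zero are consistent with a normal distribution. Statistical packages differ in the estimator they report, so the sketch below assumes the bias-adjusted sample estimators G1 (skewness) and G2 (excess kurtosis), which we take to correspond to what SPSS reports. It also shows the percentage arithmetic behind the course mean. Function names are hypothetical, and no study data are used.

```python
from math import sqrt


def skewness_kurtosis(xs):
    """Bias-adjusted sample skewness (G1) and excess kurtosis (G2)."""
    n = len(xs)
    if n < 4:
        raise ValueError("need at least four observations")
    m = sum(xs) / n
    m2 = sum((x - m) ** 2 for x in xs) / n  # central moments, n denominator
    if m2 == 0:
        raise ValueError("data have zero variance")
    m3 = sum((x - m) ** 3 for x in xs) / n
    m4 = sum((x - m) ** 4 for x in xs) / n
    g1 = m3 / m2 ** 1.5    # moment skewness
    g2 = m4 / m2 ** 2 - 3  # moment excess kurtosis
    G1 = sqrt(n * (n - 1)) / (n - 2) * g1
    G2 = (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)
    return G1, G2


def percent_of_points(score, possible=1000):
    """Course score as a percentage of the 1,000 possible points."""
    return 100 * score / possible


print(round(percent_of_points(785.24), 1))  # 78.5
```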

Results

Preliminary Analyses

The classroom digital metacognition measurement tool included 29 original items, many of which were reverse-scored so that higher scores denoted better metacognition. An exploratory factor analysis (EFA) using varimax rotation and a minimum loading of .55 revealed four factors, labeled as follows: (1) Control of Focus Toward Lecture (7 items, α = .83); (2) Control of When to Use Mobile Phone During Lecture (4 items, α = .85); (3) Attitude Toward Using Mobile Phone During Lecture (4 items, α = .77); and (4) Availability of Strategies of When to Use Mobile Phone (4 items, α = .79). These items are listed in Table 1, which also indicates the reverse-scored items. Of the original 29 items, 10 were discarded: nine because of low loadings and one because it loaded on two factors. A confirmatory factor analysis (CFA) was then applied to test the four-factor structure of the latent construct, Classroom Digital Metacognition. The initial CFA run indicated that the measurement model had an acceptable fit, χ²(146) = 316.21, p < .001, CFI = .90, RMSEA = .074, 90% CI = .06 to .08. Thus, the CFA supported the results of the EFA.
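Reverse scoring on the four-point scale maps a rating r to 5 − r (1↔4, 2↔3), so that higher scores consistently denote better metacognition. The sketch below applies that rule and averages a respondent’s ratings over one subscale. Assumptions: subscale scores are item means (the text does not say whether means or sums were used), responses are stored as a dictionary keyed by item number, and the item numbers and [R] flags for the Availability of Strategies factor are copied from Table 1. Function names and the example ratings are hypothetical.

```python
def reverse_score(rating, low=1, high=4):
    """Reverse a rating on a low..high scale (on a 4-point scale: 1<->4, 2<->3)."""
    return low + high - rating


def subscale_mean(responses, items, reversed_items, low=1, high=4):
    """Average one respondent's ratings over `items`, reverse-scoring those in
    `reversed_items` so that higher scores always mean better metacognition.

    `responses` maps item number -> rating.
    """
    scores = [
        reverse_score(responses[i], low, high) if i in reversed_items else responses[i]
        for i in items
    ]
    return sum(scores) / len(scores)


# Factor 4 (Availability of Strategies) items and [R] flags as listed in Table 1.
STRATEGY_ITEMS = [7, 1, 5, 2]
STRATEGY_REVERSED = {7, 1, 5}

respondent = {7: 1, 1: 1, 5: 2, 2: 4}  # hypothetical ratings
print(subscale_mean(respondent, STRATEGY_ITEMS, STRATEGY_REVERSED))  # 3.75
```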

Table 1.

Factor Loadings for Four Classroom Digital Metacognition Factors (minimum factor loading .55)

| Reduced set of classroom digital metacognition items | Factor 1: Control of focus toward lecture | Factor 2: Control of when to use phone during lecture | Factor 3: Attitude toward using phone during lecture | Factor 4: Availability of strategies of when to use phone |
|---|---|---|---|---|
| 13. I am able to keep my eyes focused straight at the class lecturer if I choose to do so [R] | .78 | | | |
| 8. For important class lectures, I can stay focused on the lecture so I do not miss critical information [R] | .76 | | | |
| 6. I can motivate myself to stay focused on lecture when needed [R] | .73 | | | |
| 10. I have control over the effectiveness of my own learning [R] | .73 | | | |
| 4. I can shift my attention away from my mobile phone when class lecture begins [R] | .66 | | | |
| 12. I am not easily distracted by my mobile phone [R] | .56 | | | |
| 3. I have control over how well I restrain myself from using my mobile phone [R] | .56 | | | |
| 26. I use my mobile phone if the class lecture is boring [R] | | .81 | | |
| 19. I use my mobile phone if I already know the material [R] | | .78 | | |
| 23. I wait until class ends to use my mobile phone | | .79 | | |
| 24. I look at my mobile phone during class lecture [R] | | .77 | | |
| 15. I learn more when I use my mobile phone than when I do not use it | | | .77 | |
| 11. Using my mobile phone helps me stay on task | | | .76 | |
| 17. The benefits of using my mobile phone during class lecture outweigh the costs | | | .74 | |
| 16. There are benefits of using my mobile phone during class lecture | | | .71 | |
| 7. It is important for me to have strategies to avoid using my mobile phone during class lecture [R] | | | | .80 |
| 1. I have strategies to avoid using my mobile phone when it is not relevant to lecture [R] | | | | .79 |
| 5. I develop new strategies if I continue to be distracted by my mobile phone [R] | | | | .75 |
| 2. I know when each strategy I use to avoid using my mobile phone will be effective | | | | .71 |

Note. [R] indicates that the item is reverse-scored. Items 1–18 were rated on a 4-point Likert scale; items 19–29 were rated on a 4-point frequency scale (never, sometimes, often, always). Of the original 29 items, 19 were retained: nine items were removed because their loadings fell below the criterion, and one item was removed because it loaded on two factors.
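The note implies a simple recoding rule. On a 4-point scale, assumed here to be coded 1 to 4 (the numeric coding is not stated), a reverse-scored response x becomes (1 + 4) − x, so 1 and 4 swap, as do 2 and 3. The sketch below applies that rule to the Availability of Strategies items listed in Table 1 and averages them. Whether the authors formed subscale scores from item means or sums is not reported, so the mean is an assumption, as are the names `SCALE_MIN`, `SCALE_MAX`, and `AVAILABILITY_ITEMS`.

```python
SCALE_MIN, SCALE_MAX = 1, 4  # assumed numeric coding of the 4-point scales

# Factor 4 (Availability of Strategies) items from Table 1; True = reverse-scored [R].
AVAILABILITY_ITEMS = {7: True, 1: True, 5: True, 2: False}


def reverse_score(x, lo=SCALE_MIN, hi=SCALE_MAX):
    """Flip a response so that the top and bottom of the scale swap."""
    if not lo <= x <= hi:
        raise ValueError(f"response {x} outside {lo}-{hi}")
    return lo + hi - x


def subscale_mean(responses, items):
    """Mean subscale score; `responses` maps item number -> raw response."""
    scores = [reverse_score(responses[i]) if rev else responses[i]
              for i, rev in items.items()]
    return sum(scores) / len(scores)


raw = {7: 1, 1: 2, 5: 1, 2: 3}  # hypothetical respondent
print(subscale_mean(raw, AVAILABILITY_ITEMS))  # 3.5
```

Here items 7, 1, and 5 are recoded to 4, 3, and 4, item 2 stays at 3, and the mean of the four recoded scores is 3.5.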

Hypothesis Tests for H1, H2, H3, H4, H5, and H6

Statistical analyses were performed using SPSS 24 and AMOS to test the hypothesized path model. Table 2 presents the zero-order correlations between all variables and the dependent variable, course performance, as tests of Hypotheses 1, 2, 4, and 5. One-tailed tests were used because the hypotheses, drawn from the literature, specified the direction of each relationship. Among the mediator variables, daily smartphone usage (measured both with a self-report instrument and with a smartphone application) and studying attention were significantly correlated with course performance. Although not directly hypothesized, three of the four classroom digital metacognition subscales were also significantly correlated with course performance (Table 2). More smartphone use and reduced studying attention were correlated with worse course performance, and greater classroom digital metacognition was correlated with better course performance, but multitasking preference (an attitudinal self-report scale) was not correlated with course performance; these results support Hypothesis 1 and partially support Hypothesis 2. Neither executive functioning problems nor technological anxiety/dependence (FOMO) was correlated with course performance, so Hypotheses 4 and 5 were not supported.
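For a Pearson correlation, significance is assessed with t = r√(n − 2) / √(1 − r²) on n − 2 degrees of freedom, and a one-tailed test places the whole rejection region on the predicted side of zero. The sketch below assumes the full sample of N = 216 (the pairwise n for a given correlation may be smaller) and, to stay within the Python standard library, approximates the t tail with the standard normal distribution. That approximation is close for degrees of freedom in the hundreds, but it is not the exact t test that SPSS reports.

```python
import math


def one_tailed_r_test(r, n, predicted_sign):
    """Test H1: the correlation has the sign `predicted_sign` (+1 or -1).

    Returns (t, p). p is a normal approximation to the upper tail of the
    t distribution with n - 2 degrees of freedom.
    """
    if predicted_sign not in (1, -1):
        raise ValueError("predicted_sign must be +1 or -1")
    df = n - 2
    # Positive t means the observed r lies on the predicted side of zero.
    t = predicted_sign * r * math.sqrt(df / (1 - r * r))
    p = 0.5 * math.erfc(t / math.sqrt(2))  # P(Z >= t)
    return t, p


# Table 2: self-reported daily smartphone usage vs. course performance.
t, p = one_tailed_r_test(-0.13, 216, predicted_sign=-1)
print(f"t = {t:.2f}, one-tailed p = {p:.3f}")
```

Under these assumptions, r = −.13 with a predicted negative sign gives t ≈ 1.92 and a one-tailed p of about .03.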

Table 2.

Zero-order Correlations between all Variables and Course Performance

| Independent Variables/Mediator Variables | Zero-order Correlation Coefficient |
|---|---|
| Executive Functioning Problems | .08 |
| Anxiety/Dependence | −.08 |
| Daily Smartphone Usage | −.13* |
| Minutes Per Day Smartphone Usage | −.19** |
| On-Task Studying (Multitasking) | .30*** |
| Multitasking Preference | .07 |
| Classroom Digital Metacognition subscales: | |
| Control of focus toward lecture | .18** |
| Control when use phone during lecture | .20** |
| Attitude using phone during lecture | .16* |
| Availability of strategies when use phone | −.05 |

Note. * p < .05. ** p < .01. *** p < .001.

Table 3 presents the zero-order one-tailed correlations between the independent variables and the technology usage variables as tests of Hypotheses 3 and 6. Hypothesis 3 predicted that technological anxiety/dependence would be correlated with technology usage and multitasking. As the right column of Table 3 shows, Hypothesis 3 was supported for every measure except on-task studying attention. In addition, Table 3 indicates that all four classroom digital metacognition subscales were correlated with anxiety/dependence. Hypothesis 6 was supported by significant correlations between executive functioning and daily smartphone use, on-task studying attention, and multitasking preference, as well as three of the four classroom digital metacognition subscales.

Table 3.

Zero-order Correlations between Independent Variables and Technology Usage

| Technology Usage Measure | Executive Functioning | Anxiety/Dependence |
|---|---|---|
| Daily Smartphone Usage | −.13* | −.25*** |
| Minutes Per Day Smartphone Usage | .07 | −.17** |
| On-Task Studying (Multitasking) | −.16* | .11 |
| Multitasking Preference | −.16* | .12* |
| Classroom Digital Metacognition subscales: | | |
| Control of focus toward lecture | −.47*** | .30*** |
| Control when use phone during lecture | −.17** | .27*** |
| Attitude using phone during lecture | .02 | .12* |
| Availability of strategies when use phone | −.16* | .12* |

Note. * p < .05. ** p < .01. *** p < .001.

Hypotheses 7 and 8: Path Model Analyses

Based on the literature reviewed above, the proposed path model predicted that, after controlling for socio-economic status, gender, age, ethnic background, course attendance, college GPA, and phone type, both the cognitive and the affective component (executive functioning problems and technological anxiety/technological dependence, or FOMO) would predict course performance directly (H8) and, in addition, indirectly through the mediators of smartphone use (self-reported and application-reported), studying attention, self-reported multitasking preference, and classroom digital metacognition (H7). Figure 1 displays the hypothesized pathways predicting course performance.

To analyze the proposed model, a path analysis was conducted in AMOS with bootstrapping procedures and bias-corrected confidence intervals to test whether the hypothesized direct and indirect paths were significant. To remove the influence of the control variables (socio-economic status, gender, age, ethnic background, course attendance, college GPA, and phone type), we regressed each variable in the model on the control variables and used the residual scores in the analysis.
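Residualizing a variable is ordinary least squares: regress it on the control variables (with an intercept) and keep the residuals y − ŷ. The sketch below does this with NumPy on simulated numeric controls; the names `residualize`, `controls`, and `fomo` are hypothetical. Categorical controls such as gender, ethnic background, or phone type would first need dummy coding (the F(11, 204) tests reported below imply 11 predictor columns), and the data are made up for illustration.

```python
import numpy as np


def residualize(y, controls):
    """Residuals of y after OLS regression on the control columns plus an intercept.

    y: shape (n,); controls: shape (n, k), already dummy-coded and numeric.
    """
    design = np.column_stack([np.ones(len(y)), controls])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta


# Simulated example (hypothetical data): 216 cases, 3 numeric controls.
rng = np.random.default_rng(0)
controls = rng.normal(size=(216, 3))
fomo = 2.0 + controls @ np.array([0.4, -0.2, 0.1]) + rng.normal(size=216)
fomo_resid = residualize(fomo, controls)

# By construction the residuals have mean zero and are orthogonal to every control.
print(np.round(controls.T @ fomo_resid, 10))
```

Each model variable would be residualized separately in this way, and the path model would then be fit to the residual scores rather than the raw scores.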

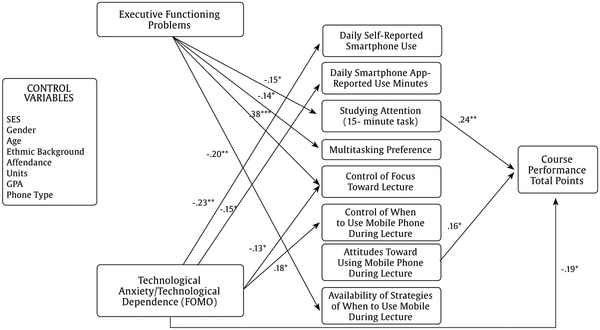

According to the literature, a root mean square error of approximation (RMSEA) lower than .08 indicates a good fit (Bagozzi & Yi, 1988), and comparative fit index (CFI) values greater than .95 indicate a good fit, with values between .90 and .95 indicating a reasonably good fit (Hu & Bentler, 1999). By these guidelines, the initial run of the path model indicated a good fit, χ2(22) = 32.04, p = .08, RMSEA = .05, 90% CI = .00 to .08, CFI = .96 (see Figure 2). Bootstrapping with 2,000 bootstrap samples and 95% bias-corrected confidence intervals revealed a significant direct path from FOMO to course performance (β = −.19, p < .05, CI = −.32 to −.04). This initial path analysis also examined paths from the control variables to the independent variables and found that only two control variables, gender (β = −.15, p = .033) and age (β = −.15, p = .041), predicted executive functioning problems, although the overall regression was not significant, F(11, 204) = 1.76, p = .064. None of the control variables predicted the second independent variable, technological anxiety/dependence, F(11, 204) = 1.08, p = .383.
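The RMSEA point estimate can be recomputed from the reported χ², degrees of freedom, and sample size: RMSEA = √(max(χ² − df, 0) / (df(N − 1))). The sketch below assumes N = 216 for both the CFA and the path model (the article reports 216 participants but not the analytic N of each model) and uses the N − 1 form of the formula; some programs divide by N instead. CFI cannot be checked the same way, because it also requires the χ² of the baseline (independence) model, which is not reported.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of the root mean square error of approximation."""
    if df <= 0 or n <= 1:
        raise ValueError("need df > 0 and n > 1")
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


print(round(rmsea(32.04, 22, 216), 3))    # path model: 0.046
print(round(rmsea(316.21, 146, 216), 3))  # classroom digital metacognition CFA: 0.074
```

Both values match the reported RMSEAs (.05 after rounding, and .074).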

Figure 2.

Path Model Testing with Beta Weights for Significant Paths Predicting Course Performance from the Independent and Mediator Variables after Removing Control Variables.

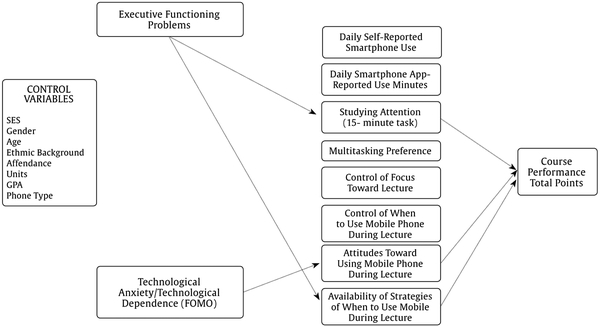

Further analyses employed user-defined estimands, which allow AMOS to estimate indirect effects within a path model via a Visual Basic or C# script. Estimands were created to test the indirect effect of each independent variable (executive functioning problems and technological anxiety/technological dependence, or FOMO) on course performance via each possible mediating path (e.g., executive functioning → daily self-reported smartphone use → course performance total points). The estimates for each mediated path are listed in Tables 4 (FOMO) and 5 (executive functioning problems), which show three significant paths: (1) Executive Functioning Problems → Studying Attention → Course Performance; (2) Executive Functioning Problems → Classroom Digital Metacognition (4 subscales) → Course Performance; and (3) Technological Anxiety/Dependence (FOMO) → Classroom Digital Metacognition (4 subscales) → Course Performance. Specifically, executive functioning problems had significant indirect effects on course performance total points through both studying attention and availability of strategies of when to use a mobile phone during lecture, and FOMO had a significant indirect effect on course performance total points through attitudes toward using a mobile phone during lecture. These paths are illustrated in Figure 3.
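AMOS estimands are written in Visual Basic or C#. Purely as an illustration, the sketch below re-expresses the idea in Python for the simplest case of one predictor X, one mediator M, and one outcome Y; it is not the authors' estimand script. The indirect effect is a × b, where a is the slope of M on X and b is the coefficient of M when Y is regressed on both M and X. The 95% bias-corrected (BC) bootstrap interval shifts the percentile cut points by z₀ = Φ⁻¹(share of bootstrap estimates below the original estimate). The study's model estimated several mediators jointly on residualized scores, so the single-mediator setup, the function names, and the simulated data are all simplifying assumptions.

```python
import numpy as np
from statistics import NormalDist


def indirect_effect(x, m, y):
    """a*b for the path X -> M -> Y using ordinary least squares."""
    a = np.polyfit(x, m, 1)[0]  # slope of M regressed on X
    design = np.column_stack([np.ones_like(x), m, x])
    b = np.linalg.lstsq(design, y, rcond=None)[0][1]  # M's coefficient, X held constant
    return a * b


def bc_bootstrap_ci(x, m, y, n_boot=2000, level=0.95, seed=0):
    """Indirect effect with a bias-corrected (BC) bootstrap confidence interval."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimate = indirect_effect(x, m, y)
    boots = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample cases with replacement
        boots[i] = indirect_effect(x[idx], m[idx], y[idx])
    # Bias correction: how far the bootstrap distribution sits from the estimate.
    share_below = float(np.mean(boots < estimate))
    share_below = min(max(share_below, 1 / (n_boot + 1)), n_boot / (n_boot + 1))
    normal = NormalDist()
    z0 = normal.inv_cdf(share_below)
    tail = (1 - level) / 2
    lo_q = normal.cdf(2 * z0 + normal.inv_cdf(tail))
    hi_q = normal.cdf(2 * z0 + normal.inv_cdf(1 - tail))
    lo, hi = np.quantile(boots, [lo_q, hi_q])
    return estimate, lo, hi


# Simulated data (hypothetical): true indirect effect = 0.5 * 0.5 = 0.25.
rng = np.random.default_rng(1)
x = rng.normal(size=216)
m = 0.5 * x + rng.normal(size=216)
y = 0.5 * m + rng.normal(size=216)
est, lo, hi = bc_bootstrap_ci(x, m, y)
print(f"a*b = {est:.3f}, 95% BC CI [{lo:.3f}, {hi:.3f}]")
```

The default of 2,000 resamples mirrors the number of bootstrap samples reported for the path model. An indirect path counts as significant when its BC interval excludes zero.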

Table 4.

User-Defined Estimands Testing Indirect Effects of FOMO

| Technological Anxiety/Technological Dependence (FOMO) → | B Estimates |
|---|---|
| Daily Self-reported Smartphone use → Course Performance Total Points | 0.79 (SE = 1.56) |
| Daily Smartphone App-reported use → Course Performance Total Points | 0.03 (SE = 1.10) |
| Studying Attention (15 minutes task) → Course Performance Total Points | 1.76 (SE = 1.61) |
| Multitasking Preference → Course Performance Total Points | 0.18 (SE = 0.75) |
| Control of Focus Toward Lecture → Course Performance Total Points | 1.63 (SE = 1.30) |
| Control of When to Use Mobile Phone During Lecture → Course Performance Total Points | 0.45 (SE = 1.37) |
| Attitudes Toward Using Mobile Phone During Lecture → Course Performance Total Points | 1.59* (SE = 1.26) |
| Availability of Strategies of When to Use Mobile During Lecture → Course Performance Total Points | −0.78 (SE = 1.10) |

Note. * p < .05.

Table 5.

User-Defined Estimands Testing Indirect Effects of Executive Functioning Problems

| Executive Functioning Problems → | B Estimates |
|---|---|
| Daily Self-reported Smartphone use → Course Performance Total Points | −0.18 (SE = 0.79) |
| Daily Smartphone App-reported use → Course Performance Total Points | −0.00 (SE = 0.72) |
| Studying Attention (15 minutes task) → Course Performance Total Points | −4.87* (SE = 2.78) |
| Multitasking Preference → Course Performance Total Points | −0.46 (SE = 1.65) |
| Control of Focus Toward Lecture → Course Performance Total Points | −7.32 (SE = 4.90) |
| Control of When to Use Mobile Phone During Lecture → Course Performance Total Points | −0.46 (SE = 1.66) |
| Attitudes Toward Using Mobile Phone During Lecture → Course Performance Total Points | 1.04 (SE = 1.69) |
| Availability of Strategies of When to Use Mobile During Lecture → Course Performance Total Points | 3.56* (SE = 2.53) |

Note. * p < .05.

Figure 3.

Path Model Estimands Effect Testing for Significant Indirect Paths Predicting Course Performance from the Independent and Mediator Variables after Removing Control Variables.

Together, the two analyses provide a picture of the variables that may affect course performance and partially support Hypotheses 7 and 8. The paths from the independent variables through the mediator variables showed the following: (1) executive functioning problems predicted studying attention, multitasking preference, and metacognition about the availability of strategies for classroom phone use, but only the paths through studying attention and through classroom digital metacognition significantly predicted course performance; (2) technological anxiety/dependence (FOMO) predicted both self-reported and application-reported smartphone usage, but neither of these paths predicted course performance; (3) technological anxiety/dependence (FOMO) predicted two subscales of classroom digital metacognition, but only one (attitudes toward classroom mobile phone use) predicted course performance; and (4) technological anxiety/dependence (FOMO) also predicted course performance directly, without the path needing to flow through any of the mediator variables.

Additional Analyses

Two different measures of daily smartphone usage were used in this study: a self-reported frequency of checking in with a smartphone and an application-reported measure of minutes per day of smartphone use. A one-tailed bivariate Pearson correlation indicated that these two measures were not significantly correlated (r = .11, p = .06), nor were they related when each was partitioned into quartiles and compared, χ2(9) = 5.64, p = .776. However, because the daily self-reported smartphone usage scale measures the number of times someone “checks” his/her smartphone, a similar correlation was computed between daily phone unlocks and daily self-reported smartphone usage for only those Android users whose unlock data were accurately tallied. This analysis yielded a significant positive relationship (r = .25, p < .01), suggesting that the self-reported measure captures checks rather than total time spent on the phone. Smartphone usage may therefore be examined in two complementary ways: how often someone checks the phone and how much time they spend using it.
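The quartile comparison is a χ² test of independence on a 4 × 4 cross-tabulation, which has (4 − 1)(4 − 1) = 9 degrees of freedom, matching the reported χ2(9). A standard-library sketch follows. When forming quartiles, ties are broken by data order, which is an assumption, since statistical packages can assign tied cases differently. The p value would additionally require a χ² distribution function (for example, from SciPy), which is omitted here.

```python
def quartiles(values):
    """Quartile index 0-3 for each value, assigned by rank (ties keep data order)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    groups = [0] * len(values)
    for rank, i in enumerate(order):
        groups[i] = rank * 4 // len(values)
    return groups


def chi_square_independence(a, b, levels=4):
    """Pearson chi-square statistic and df for two categorical codes 0..levels-1."""
    n = len(a)
    table = [[0] * levels for _ in range(levels)]
    for i, j in zip(a, b):
        table[i][j] += 1
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    chi2 = 0.0
    for i in range(levels):
        for j in range(levels):
            expected = row_totals[i] * col_totals[j] / n
            if expected > 0:
                chi2 += (table[i][j] - expected) ** 2 / expected
    return chi2, (levels - 1) ** 2


# Toy data (hypothetical): self-reported checks vs. app-reported minutes per day.
checks = [5, 12, 30, 8, 22, 15, 40, 3]
minutes = [200, 150, 260, 90, 310, 180, 240, 120]
print(chi_square_independence(quartiles(checks), quartiles(minutes)))
```

A statistic near zero means that every combination of quartiles occurs about as often as independence predicts, which is the pattern the nonsignificant χ2(9) = 5.64 reflects.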

A similar comparison between studying attention and multitasking preference showed that these two measures were not correlated (r = −.02, p = .41). This result suggests that measuring studying attention through observation may yield different results than assessing one’s self-reported preference for multitasking. These results are discussed below in relation to how this elusive construct of multitasking can be measured.

The studying attention measure yielded the number of minutes during which the participant was actually studying rather than doing something else. At each minute of the 15-minute observation, participants provided a short written description of what, if anything, distracted them from studying, and these descriptions were categorized as communication based (texting, social media, email, or talking live to someone) or other (e.g., eating, playing a video game, watching a video). Overall, 56% of the interruptions were due to communication, with the remainder due to other activities. This percentage remained relatively stable across the 15-minute observation period, ranging from 46% to 66% communication-based distractions, with no significant difference across the 15 minutes.
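The article does not describe how the written descriptions were coded. The sketch below is therefore a hypothetical keyword-based stand-in for the communication/other split, built from the communication examples named above (texting, social media, email, talking to someone) plus an illustrative keyword ("facebook") that is also an assumption. `COMMUNICATION_KEYWORDS`, `categorize`, and `communication_share` are invented names.

```python
# Hypothetical keyword list; the study's actual coding rules are not reported.
COMMUNICATION_KEYWORDS = ("text", "social media", "facebook", "email", "talk")


def categorize(description):
    """Label one distraction description as 'communication' or 'other'."""
    text = description.lower()
    if any(keyword in text for keyword in COMMUNICATION_KEYWORDS):
        return "communication"
    return "other"


def communication_share(descriptions):
    """Proportion of distractions labeled communication-based."""
    if not descriptions:
        return 0.0
    labels = [categorize(d) for d in descriptions]
    return labels.count("communication") / len(labels)


notes = ["Texting my sister", "eating dinner", "checked Facebook", "watched a video"]
print(communication_share(notes))  # 0.5
```

Substring matching is crude (for example, "context" contains "text"), so a sketch like this would at best pre-sort descriptions for human review.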

Additional Application Use Perceptions

In a final survey, a series of questions was asked of the application users. One hundred ninety-five participants (90%) completed this questionnaire, with the following results (iOS users did not differ significantly from Android users on any of them): (1) 67% of participants found the Instant application easy to use; (2) 71% checked their application data only a few times or occasionally, while 20% checked it daily; (3) 33% felt that the application-reported minutes per day were “as expected,” and an additional 50% felt they were “more than expected”; (4) 75% felt that the application measurements were “accurate,” with only 20% feeling that they might have been artificially high because the phone was left unlocked without active use; and (5) when asked whether they had made any changes based on the application information, 65% reported making no changes and 33% reported trying to reduce their usage.

Discussion

The study makes two novel contributions to the literature on the impact of technology on academic performance. First, in addition to completing a standard self-report assessment of daily technology use, participants used a smartphone application called Instant Quantified Self, or “Instant” for short (Emberify.com), which runs unobtrusively in the background and counts the number of times the phone is unlocked each day and the total number of daily minutes that it remains unlocked. This background application allowed us to compare self-reported smartphone use with actual use to determine whether self-reports accurately portray daily smartphone usage. Second, the study included a newly created measure of classroom digital metacognition. This measurement tool assesses both students’ attitudes toward smartphone use in the classroom and their self-reported in-class smartphone behavior. The classroom digital metacognition scale was factor analyzed and tested in the proposed path model as a mediator between the cognitive and affective predictors and academic performance, and the results point to potential strategies that students and professors can use to determine appropriate technology use during lectures, which are discussed later in this section.

This study hypothesized direct paths leading from two independent variables–one cognitive (executive functioning problems) and one affective (technological anxiety/technological dependence)–to course performance as well as possible paths mediated by various uses of technology (self-reported and application-reported daily smartphone use, studying attention, multitasking preference, and classroom digital metacognition) to course performance in an upper-division, general education course. The model was based on other models proposed to account for college course grades as well as a 2016 study examining the impact of cognitive and affective variables on sleep problems through mediator variables of technology usage during the day and at night (Rosen et al., 2016).

In terms of the cognitive variable, although executive functioning problems did not directly affect course performance, they did show two paths to course performance: more problems predicted less studying attention and reduced classroom digital metacognition (fewer available strategies for dealing with classroom mobile phone use), both of which, in turn, predicted reduced course performance. These are precisely the variables one would expect to relate to executive functioning, with poor decision-making leading to inattentive studying and to poor choices about technology use in the classroom and at home while studying. Correspondingly, executive functioning problems predicted a greater preference for multitasking (which did not lead to worse course performance), another variable that has been shown to be influenced by attention and decision-making issues.

The technological anxiety/technological dependence variable (FOMO) showed a different pattern: it directly predicted daily self-reported and application-reported smartphone usage as well as classroom digital metacognition, but only metacognition (attitudes toward classroom phone use) in turn predicted course performance. As hypothesized, technological anxiety/technological dependence also directly predicted poorer course performance, without any path through a technology usage variable. This is consistent with the studying attention data, which showed that when students were distracted while studying, the most common distractors were communication based, primarily texting and Facebook. These two distractors were also the most common in the original study by Rosen, Carrier et al. (2013) and in other research on student distraction (cf. Junco, 2015), and they are likely to evoke FOMO.

The proposed model was adapted from a study predicting sleep problems among college students, and the two model tests show both similarities and differences. In the sleep study, FOMO was by far the strongest predictor, with paths to sleep problems through daily smartphone use, nighttime phone location, and nighttime phone awakenings, while executive functioning problems showed only a direct path to sleep problems plus a concurrent path through nighttime phone awakenings. The present model showed no direct contribution of executive functioning problems to course performance but did show a unique direct contribution of FOMO. Interestingly, both independent variables affected course performance through classroom digital metacognition, suggesting that this special type of metacognition may not simply be a function of our cognitive qualities but may also include some affective influences.

Measuring Daily Technology Usage

One unique aspect of this study was the use of a smartphone application to assess smartphone usage unobtrusively. The application, which two-thirds of the participants found easy to use, measured the number of minutes that the smartphone remained unlocked and showed that the typical college student spent more than 3.5 hr per day with the phone unlocked. Valid unlock data from Android users showed that the phone was unlocked more than 60 times a day, which translates to three to four minutes per unlock. Another application-based assessment (Andrews et al., 2015) found similar results: young adults unlocked their phones 85 times a day for a total of five hours, or about the same three to four minutes per unlock. Although the application used in the present study did not provide data on individual unlocks, Andrews et al. found that more than half of all unlocks lasted less than 30 seconds, suggesting that participants were briefly “checking in” with their virtual world. The current data suggest that these quick checks serve to ascertain whether any (perceived) interpersonal communication “demands” immediate attention.
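The per-unlock figures follow from dividing daily minutes by daily unlocks. The check below uses the averages given in the Abstract (220 minutes and at least 60 unlocks per day) and those cited for Andrews et al. (2015) (five hours and 85 unlocks). Two caveats apply. A ratio of group averages is not the same as the average of each participant's own minutes per unlock. And because 60 is a lower bound on unlocks, 220/60 is an upper bound.

```python
def minutes_per_unlock(daily_minutes, daily_unlocks):
    """Average minutes per unlock implied by daily totals."""
    if daily_unlocks <= 0:
        raise ValueError("daily_unlocks must be positive")
    return daily_minutes / daily_unlocks


print(round(minutes_per_unlock(220, 60), 1))     # present study: 3.7
print(round(minutes_per_unlock(5 * 60, 85), 1))  # Andrews et al. (2015): 3.5
```

Both results fall within the "three to four minutes per unlock" range stated above.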

Participants were not instructed to examine their daily application-reported data, although such data were easily available. Interestingly, although the course was on the global impact of technology and a large portion of it dealt with multitasking, attention, and distraction, fewer than one in three participants monitored their application data more than a few times during the semester. Intriguingly, half the participants were surprised by the daily application-reported minutes, finding them “more than expected.” Despite that impression, only one in three participants attempted to reduce their usage. This may reflect a failure of metacognition, that is, of recognizing that checking one’s phone too often or for too long can distract from the task at hand (learning).

Measurement Issues

Several variables were used to assess technology use in daily life, while studying, in attitudes toward multitasking, and in the classroom. Two measures assessed daily smartphone use: a self-reported measure of how often the participant checks his/her smartphone and an application-reported measure of how many minutes were spent on the smartphone each day. Although self-reported checks were not correlated with application-reported minutes of use, they were correlated with the valid unlock data, which suggests that unlocks and minutes of use assess different constructs. The fact that the average unlock lasts only a few minutes, together with Andrews et al.’s (2015) finding that more than half of unlocks last less than 30 seconds, underscores the importance of specifying precisely what is being measured under the construct of smartphone use. Future research might expand on this by using an application that can not only monitor unlocks and minutes of use but also accurately record what is being accessed and how long that access lasts. This is vital if we are to understand what college students are doing with their smartphones in class and while studying, and how that might affect learning.

Limitations

This study primarily used self-report instruments, although one measure was self-observational (studying attention) and one was application-reported. The latter had some technological issues that required removing a small amount of data, but overall the median participant provided 58 days of valid data. Because not all students in the course participated in the study, it is an open question why some chose not to participate and whether those willing to use a continual monitoring application differ in any way from those who are not. A second limitation is the length of the study. Because participants monitored their application use across more than half the semester, with nearly all participants monitoring it during the last third of the semester after the application bug was located and fixed, it is possible that participants changed their behavior based on the application-reported data. Although self-report data suggest that this was not the case for two-thirds of the participants, some may have made changes, and this should be investigated further.

A final limitation comes from the model structure itself. Only two independent variables (executive functioning problems and technological anxiety/technological dependence) were used in this study. A recent study (Lepp, Barkley, & Li, 2016) suggested that boredom relief might be an important motivator of leisure-time smartphone use. Another study that monitored computer screen switches suggested that boredom was an important motivator of switching from a “work” (studying) screen to an “entertainment” (video, social media, gaming) screen, with arousal beginning nearly half a minute before the actual screen switch (Yeykelis, Cummings, & Reeves, 2014). This also corresponds with a recent survey (Bank of America, 2016) showing that 43% of younger Millennials feel bored without their smartphone. A Nielsen study polled nearly 4,000 adults and found that their top motivations for using their smartphone were being alone (70%), being bored or killing time (62%), and waiting for someone (61%). In the study that observed students studying (Rosen et al., 2011), students were asked why they switched from studying to using their phone, and the most common response after checking for messages was boredom (63%). Given John Eastwood’s work on boredom and evidence that boredom may exacerbate stress levels (Eastwood, Frischen, Fenske, & Smilek, 2012; Sparks, 2012), it may be important to introduce boredom as a potential moderator of technological anxiety in future models that predict behavior as mediated by technology use.

Implications for College Students

Given the paths we have found that may lead to poor course performance, are there options for managing technology use inside and outside the classroom? A recent study of college professors (Cheong, Shuter, & Suwinyattichaiporn, 2016) identified four in-class approaches: (1) codified rules that make explicit when and how students are allowed to use technology in the classroom; (2) strategic redirection, in which the professor directs students away from technology and back to the classroom material; (3) discursive sanctions, in which students using technology are “named and shamed”; and (4) deflection, or simply ignoring the distractions and leaving the choice to the students. A recent book, The Distracted Mind (Gazzaley & Rosen, 2016), offers several additional options for moderating general technology use: (1) increasing metacognition about the limits of our ability to task switch; (2) reducing access to specific distractors while studying, such as social media and other electronic communications, by closing any applications and screens that contain communication, thus limiting the temptation to attend to technological communications rather than the study material; and (3) decreasing the mounting anxiety of being out of touch (FOMO) by allowing short one-minute “technology breaks” during class so that students can check in and dissipate that anxiety. These three suggestions relate directly to paths in the model that lead to course performance. Finally, Bowman et al. (2015) offered additional suggestions for reducing general technology use, including self-monitoring by keeping a log (or using an application) of smartphone use, promoting metacognitive skills, teaching mindfulness meditation to reduce proneness to distraction, using technology specifically to enhance learning rather than distract from it, and teaching technological literacy.

Conclusion

The current study has demonstrated that there are complex paths to college course performance, highlighting how the effects of executive functioning problems and technological anxiety (FOMO) are mediated by technology use, studying attention, and classroom digital metacognition. The study also highlighted the special role of FOMO in directly predicting poorer course performance. The independent variables of executive functioning problems and FOMO, along with the mediator variables of studying attention and classroom digital metacognition, should prove important in identifying ways to promote strong college course performance, and suggestions have been offered for applying this model in the classroom.

Acknowledgements

Thanks to the George Marsh Applied Cognition Laboratory for their work on this project. Sincere appreciation to the CSUDH McNair Scholar’s Program for supporting Ms. Stephanie Elias and to the National Institutes of Health Minority Access to Research Careers Undergraduate Student Training in Academic Research Program (MARC U*STAR Grant No. GM008683) for supporting Mr. Joshua Lozano. Finally, thanks to the National Institutes of Health, U.S. Department of Health and Human Services, Minority Biomedical Research Support Research Initiative for Scientific Enhancement Program (MBRS-RISE Grant No. GM008683) for supporting Mr. Abraham Ruiz.

Footnotes

Conflict of Interest

The authors of this article declare no conflict of interest.

References

- Abel JP, Buff CL, & Burr SA (2016). Social Media and the Fear of Missing out: Scale Development and Assessment. Journal of Business & Economics Research, 14, 33–43. [Google Scholar]

- American Community Survey (2014). ACS data tables on American FactFinder. Retrieved from https://www.census.gov/acs/www/data/data-tables-and-tools/american-factfinder/

- Andrews S, Ellis DA, Shaw H, & Piwek L (2015). Beyond self-report: Tools to compare estimated and real-world smartphone use. PloS One, 10(10), e0139004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bagozzi RP, & Yi Y (1988). On the evaluation of structural equational models. Journal of the Academy of Marketing Science, 16(1), 74–94. [Google Scholar]

- Bank of America (2016). Trends in consumer mobility report. Retrieved from http://newsroom.bankofamerica.com/files/press_kit/additional/2016_BAC_Trends_in_Consumer_Mobility_Report.pdf.

- Bellur S, Nowak KL, & Hull KS (2015). Make it our time: In class multitaskers have lower academic performance. Computers in Human Behavior, 53, 63–70. [Google Scholar]

- Beyens I, Frison E, & Eggermont S (2016). “I don’t want to miss a thing”: Adolescents’ fear of missing out and its relationship to adolescents’ social needs, Facebook use, and Facebook related stress. Computers in Human Behavior, 64, 1–8. [Google Scholar]

- Bjornsen CA, & Archer KJ (2015). Relations between college students’ cell phone use during class and grades. Scholarship of Teaching and Learning in Psychology, 1, 326–336. [Google Scholar]

- Bowman LL, Waite BM, & Levine LE (2015). Multitasking and attention: Implications for college students. In Rosen LD, Cheever NA, & Carrier LM (Eds.), The Handbook of Psychology, Technology and Society (pp. 388–403). Hoboken, NJ: Wiley-Blackwell. [Google Scholar]

- Buchanan T, Heffernan TM, Parrott AC, Ling J, Rodgers J, & Scholey AB (2010). A short self-report measure of problems with executive function suitable for administration via the Internet. Behavior Research Methods, 42, 709–714. [DOI] [PubMed] [Google Scholar]

- Burak L (2012). Multitasking in the university classroom. International Journal for the Scholarship of Teaching and Learning, 6(2), 1–12. [Google Scholar]

- Burks SV, Lewis C, Kivi PA, Wiener A, Anderson JE, Götte L, … Rustichini A (2015). Cognitive skills, personality, and economic preferences in collegiate success. Journal of Economic Behavior & Organization, 115, 30–44. [Google Scholar]

- Carrier LM, Rosen LD, Cheever NA, & Lim AF (2015). Causes, effects, and practicalities of everyday multitasking. Developmental Review (Special Issue: Living in the Net Generation. Multitasking, Learning, and Development), 35, 64–78. [Google Scholar]

- Cetin B (2015). Academic Motivation and Self-Regulated Learning in Predicting Academic Achievement in College. Journal of International Education Research, 11(2), 95–106. [Google Scholar]

- Cheever NA, Rosen LD, Carrier LM, & Chavez A (2014). Out of sight is not out of mind: The impact of restricting wireless mobile device use on anxiety levels among low, moderate and high users. Computers in Human Behavior, 37, 290–297.

- Chen Q, & Yan Z (2016). Does multitasking with mobile phones affect learning? A review. Computers in Human Behavior, 54, 34–42.

- Cheong PH, Shuter R, & Suwinyattichaiporn T (2016). Managing student digital distractions and hyperconnectivity: Communication strategies and challenges for professorial authority. Communication Education, 65, 272–289.

- Clayson DE, & Haley DA (2013). An introduction to multitasking and texting prevalence and impact on grades and GPA in marketing classes. Journal of Marketing Education, 35, 26–40.

- Clayton RB, Leshner G, & Almond A (2015). The extended iSelf: The impact of iPhone separation on cognition, emotion, and physiology. Journal of Computer-Mediated Communication, 20, 119–135.

- Dos B (2014). The relationship between mobile phone use, metacognitive awareness and academic achievement. European Journal of Educational Research, 3, 192–200.

- Downs E, Tran A, McMenemy R, & Abegaze N (2015). Exam performance and attitudes toward multitasking in six, multimedia-multitasking classroom environments. Computers & Education, 86, 250–259.

- Eastwood JD, Frischen A, Fenske MJ, & Smilek D (2012). The unengaged mind: Defining boredom in terms of attention. Perspectives on Psychological Science, 7, 482–495.

- Elhai JD, Levine JC, Dvorak RD, & Hall BJ (2016). Fear of missing out, need for touch, anxiety and depression are related to problematic smartphone use. Computers in Human Behavior, 63, 509–516.

- Gaultney JF (2016). Risk for sleep disorder measured during students’ first college semester may predict institutional retention and grade point average over a 3-year period, with indirect effects through self-efficacy. Journal of College Student Retention: Research, Theory & Practice, 18, 333–359.

- Gazzaley A, & Rosen LD (2016). The Distracted Mind: Ancient Brains in a High-Tech World. Cambridge, MA: MIT Press.

- Harman BA, & Sato T (2011). Cell phone use and grade point average among undergraduate university students. College Student Journal, 45, 544–549.

- Hartanto A, & Yang H (2016). Is the smartphone a smart choice? The effect of smartphone separation on executive functions. Computers in Human Behavior, 64, 329–336.

- Hu LT, & Bentler PM (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55.

- Jacobsen WC, & Forste R (2011). The wired generation: Academic and social outcomes of electronic media use among university students. Cyberpsychology, Behavior, and Social Networking, 14, 275–280.

- Junco R (2013). Comparing actual and self-reported measures of Facebook use. Computers in Human Behavior, 29, 626–631.

- Junco R (2015). Student class standing, Facebook use, and academic performance. Journal of Applied Developmental Psychology, 36, 18–29.

- Kamal M, Kevlin S, & Dong Y (2016). Investigating multitasking with technology in academic settings. SAIS 2016 Proceedings (Paper 16). Retrieved from http://aisel.aisnet.org/sais2016/16

- Lepp A, Barkley JE, & Karpinski AC (2014). The relationship between cell phone use, academic performance, anxiety, and satisfaction with life in college students. Computers in Human Behavior, 31, 343–350.

- Lepp A, Barkley JE, & Karpinski AC (2015). The relationship between cell phone use and academic performance in a sample of US college students. SAGE Open, 5(1), 2158244015573169.

- Lepp A, Barkley JE, & Li J (2017). Motivations and experiential outcomes associated with leisure time cell phone use: Results from two independent studies. Leisure Sciences, 39, 144–162. doi:10.1080/01490400.2016.1160807

- Matos AP, Costa JJM, Pinheiro M. d. R., Salvador M. d. C., Vale-Dias M. d. L., & Zenha-Rela M (2016). Anxiety and dependence to media and technology use: Media, technology use and attitudes and personality variables in Portuguese adolescents. Journal of Global Academic Institute Education & Social Sciences, 2(2), 1–21.

- Michikyan M, Subrahmanyam K, & Dennis J (2015). Facebook use and academic performance among college students: A mixed-methods study with a multi-ethnic sample. Computers in Human Behavior, 45, 265–272.

- Mokhtari K, Delello J, & Reichard C (2015). Connected yet distracted: Multitasking among college students. Journal of College Reading and Learning, 45, 164–180.

- Norman E, & Furnes B (2016). The relationship between metacognitive experiences and learning: Is there a difference between digital and non-digital study media? Computers in Human Behavior, 54, 301–309.

- Oberst U, Wegmann E, Stodt B, Brand M, & Chamarro A (2017). Negative consequences from heavy social networking in adolescents: The mediating role of fear of missing out. Journal of Adolescence, 55, 51–60.

- Olufadi Y (2015). A configurational approach to the investigation of the multiple paths to success of students through mobile phone use behaviors. Computers & Education, 86, 84–104.

- Poposki EM, & Oswald FL (2010). The multitasking preference inventory: Toward an improved measure of individual differences in polychronicity. Human Performance, 23, 247–264.

- Przybylski AK, Murayama K, DeHaan CR, & Gladwell V (2013). Motivational, emotional, and behavioral correlates of fear of missing out. Computers in Human Behavior, 29, 1841–1848.

- Ravizza SM, Hambrick DZ, & Fenn KM (2014). Non-academic internet use in the classroom is negatively related to classroom learning regardless of intellectual ability. Computers & Education, 78, 109–114.

- Rosen LD, Carrier LM, & Cheever NA (2013). Facebook and texting made me do it: Media-induced task switching while studying. Computers in Human Behavior, 29, 948–958.

- Rosen LD, Carrier LM, Miller A, Rokkum J, & Ruiz A (2016). Sleeping with technology: Cognitive, affective and technology usage predictors of sleep problems among college students. Sleep Health: Journal of the National Sleep Foundation, 2, 49–56.

- Rosen LD, Lim AF, Carrier LM, & Cheever NA (2011). An empirical examination of the educational impact of text message-induced task switching in the classroom: Educational implications and strategies to enhance learning. Psicologia Educativa, 17, 163–177.

- Rosen LD, Whaling K, Carrier LM, Cheever NA, & Rokkum J (2013c). The media and technology usage and attitudes scale: An empirical investigation. Computers in Human Behavior, 29, 2501–2511.

- Rosen LD, Whaling K, Rab S, Carrier LM, & Cheever NA (2013). Is Facebook creating “iDisorders”? The link between clinical symptoms of psychiatric disorders and technology use, attitudes and anxiety. Computers in Human Behavior, 29, 1243–1254.

- Ruiz A, Carrier M, Lim A, Rosen L, Ceja L, & Jacob J (2015, March). Digital Metacognition in the College Classroom. Poster presentation at the Inaugural International Convention of Psychological Science, Amsterdam.

- Skiera B, Hinz O, & Spann M (2015). Social media and academic performance: Does the intensity of Facebook activity relate to good grades? Schmalenbach Business Review: ZFBF, 67, 54–72.

- Sparks SD (2012). Researchers argue boredom may be ‘flavor of stress.’ Education Week, 32(7), 1.

- Terry CA (2015, March). Media multitasking, technological dependency, & metacognitive awareness: A correlational study focused on pedagogical implications. Society for Information Technology & Teacher Education International Conference (pp. 1304–1311).

- Terry CA, Mishra P, & Roseth CJ (2016). Preference for multitasking, technological dependency, student metacognition, & pervasive technology use: An experimental intervention. Computers in Human Behavior, 65, 241–251.

- Werner NE, Cades DM, & Boehm-Davis DA (2015). Multitasking and interrupted task performance: From theory to application. In Rosen LD, Cheever NA, & Carrier LM (Eds.), The Handbook of Psychology, Technology and Society (pp. 436–452). Hoboken, NJ: Wiley-Blackwell.

- Wood E, & Zivcakova L (2015). Understanding multimedia multitasking in educational settings. In Rosen LD, Cheever NA, & Carrier LM (Eds.), The Handbook of Psychology, Technology and Society (pp. 404–419). Hoboken, NJ: Wiley-Blackwell.

- Wood E, Zivcakova L, Gentile P, Archer K, De Pasquale D, & Nosko A (2012). Examining the impact of off-task multi-tasking with technology on real-time classroom learning. Computers & Education, 58, 365–374.

- Yeykelis L, Cummings JJ, & Reeves B (2014). Multitasking on a single device: Arousal and the frequency, anticipation, and prediction of switching between media content on a computer. Journal of Communication, 64, 167–192.

- Zelazo PD, Blair CB, & Willoughby MT (2016). Executive Function: Implications for Education (NCER 2017–2000). National Center for Education Research. Institute of Education Sciences. U.S. Department of Education. Washington, DC. Retrieved from http://ies.ed.gov/

- Zhang W (2015). Learning variables, in-class laptop multitasking and academic performance: A path analysis. Computers & Education, 81, 82–88.