Abstract

Amid the coronavirus outbreak, many countries are facing a dramatic situation in terms of the global economy and human social activities, including education. The shutdown of schools is affecting many students around the world, with face-to-face classes suspended. Many countries imposed class suspension at an early stage of the outbreak, and Asia was one of the earliest regions to implement live online learning. Despite previous research on online teaching and learning, students' readiness to participate in the real-time online learning implemented during the coronavirus outbreak is not yet well understood. This study explored several key factors in the research framework related to students' learning motivation, learning readiness and self-efficacy in participating in live online learning during the coronavirus outbreak, taking into account gender differences and differences among sub-degree (SD), undergraduate (UG) and postgraduate (PG) students. Technology readiness was used instead of conventional online/internet self-efficacy to determine students' live online learning readiness. The hypothetical model was validated using confirmatory factor analysis (CFA). The results revealed no statistically significant differences between males and females.

On the other hand, the post hoc test showed that the mean scores for PG students were higher than those for UG and SD students. We argue that during the coronavirus outbreak, gender differences were reduced because students were forced to take more initiative in their learning. We also suggest that students studying at a higher degree level may have higher expectations of their academic achievement and differed significantly in their online learning readiness. This study has important implications for educators in implementing live online learning, particularly for the design of teaching contexts for students from different educational levels. More virtual activities should be considered to enhance the motivation of students undertaking lower-level degrees, and student-to-student interactions should be encouraged.

Keywords: Live online learning, Learning readiness, Multi-group analysis, Post hoc test, Heterotrait-monotrait, Higher education, Coronavirus, COVID-19, Pandemic

1. Introduction

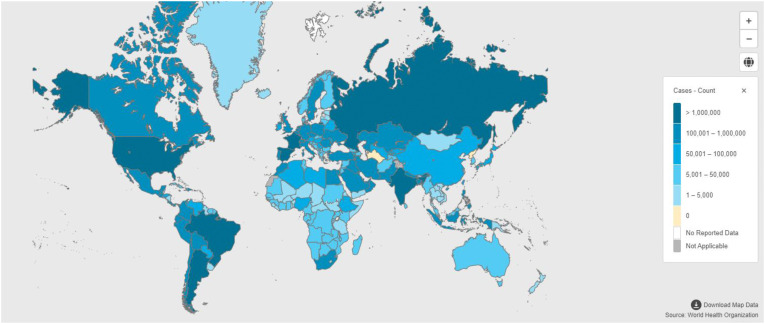

In 2003, a type of upper respiratory tract pneumonia, Severe Acute Respiratory Syndrome (SARS), killed hundreds of people in China and Hong Kong. After nearly two decades, a more destructive novel coronavirus (COVID-19), possibly originating from Wuhan (Huang et al., 2020; Yang, 2020), has spread throughout China, as well as around the world (Guan et al., 2020). The coronavirus outbreak resulted in over one million deaths, with more than 42 million people infected (WHO, 2020) by the end of October 2020 (Fig. 1).

Fig. 1.

Confirmed cases of COVID-19 in different countries, as reported by the end of 2020 (WHO, 2020).

Despite the declining trend of new cases in China, the virus has continued its momentum in other parts of the globe. The coronavirus is damaging the global economy (Duan, 2020) and affects human social activities, especially education (Qiu, 2018). Many countries have implemented various policies to control the situation, including border control and public health policies. These measures also affect the education sector, with schools forced to close in many countries (Stancati, 2020; BBC, 2020; MOE, 2020; Education Bureau, 2020).

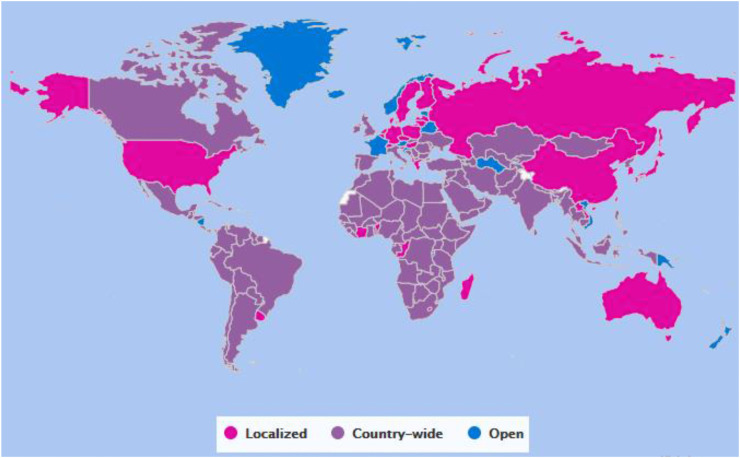

In the midst of the outbreak, many countries suspended face-to-face classes from the early stage of the pandemic. These nationwide closures impacted over 146 countries, and the number of students accounted for 67.7% of the world's population. The global implementation of coronavirus travel restrictions and bans affected a great number of additional learners. According to statistics from the United Nations Educational, Scientific and Cultural Organization (UNESCO), the number of learners affected by COVID-19 was almost 1,500 million. Over 190 countries were affected by school closures in mid-April 2020 (UNESCO, 2020). This figure has been dropping since April 2020. However, students from over 100 countries were still unable to attend school by June 2020, which affected more than 900 million learners. In early 2021, 250 million learners were still affected by school or university closures.

Fig. 2 shows the impact of COVID-19 on school closures corresponding to learners enrolled at all education levels. The closures affect the studies of lower form students. In addition, the shutdown of borders significantly damaged the higher education sector because most higher education institutions have many international and exchange students. These students were either not allowed to enter other countries for study or were required to quarantine for 14 days before entering (HKSAR, 2020).

Fig. 2.

The nationwide closures of schools caused by COVID-19 at the end of May 2020 (UNESCO, 2020).

In response to the coronavirus outbreak, remote learning seems to be the only solution for the education sector. With face-to-face classes suspended, tertiary institutes worldwide have revisited the feasibility of online learning to minimize the impact on the academic progression of students (Hart et al., 2019; Shah and Barkas, 2018).

While online teaching enabled teaching and learning to continue, minimizing the impact on students' study progress and allowing overseas students who could not leave their countries to learn at a distance, several challenges stand in the way of implementing online learning. On the students' side, in many communities, especially in mainland China, large numbers of students do not have internet access, have a slow internet connection at home, or need to bypass a firewall (Zhang, 2020). This means that a switch to online learning could worsen longstanding equity problems. On the teachers' side, the readiness of the infrastructure (i.e. internet access, hardware, etc.) and software are concerns. Therefore, it is worthwhile to explore the key attributes that influence learning effectiveness in blended learning, including online and real-time learning (Law et al., 2019).

Confronted with the viral pandemic, little is known about students’ readiness for online learning, particularly how effective the adoption of online learning can be in coping with the pandemic. This paper explores the learning motivation, learning readiness and self-efficacy of students engaging in online learning, including the differences among sub-degree, degree and postgraduate students. In particular, student readiness for live or real-time online learning is not yet well understood. Compared with classroom learning, online learning requires stronger fundamental computer skills (Sun et al., 2020), efficient human-human and human-machine interaction (Cuadrado-García et al., 2010), and study motivation (Hartnett, 2016). Technology readiness is adopted as one of the independent variables in the hypothetical model of this study. It can determine students' skills in practising with technological tools and identify students' proficiency in adopting the online platform to participate in live online learning. However, these attributes are usually associated with gender and educational background. For instance, males traditionally have higher technology proficiency (Yawson et al., 2021), while females are more likely than males to express their emotions in online forums or other communication channels (Zhang et al., 2013). These differences could contribute to the success of individual online learning.

Nevertheless, differences in perceptions of live online learning environments between students of different education levels and genders have seldom been discussed. Given the current pandemic situation and the wider adoption of digital technologies in this era, the live online learning mode may become the new normal in the future. However, many uncertainties remain when an educator runs a real-time online class in practice, including how to adjust teaching approaches, handle different groups of students on the online platform, and design the teaching pedagogy. Therefore, it is important to determine students’ learning readiness for this mode and evaluate the differences between groups of students during the pandemic. This study contributes to understanding students' perceptions of the live learning approach and the differences between student groups. Educators can also actively adjust and adopt a new teaching pedagogy in their real-time classes based on this research. This study contributes to the knowledge of learning readiness and its determinants for live online teaching. The comparison between different groups of students for live online learning provides a good reference for educational research relating to learning pedagogies.

To this end, the present study describes an investigation of a number of student perceptions of live online learning in the academic year 2019–2020. Student readiness and differences in gender and education levels were analyzed and presented. Specifically, this study focused on four major research questions:

1. What underlying factors contribute to students' live online learning readiness during the coronavirus pandemic in the higher education sector?
2. Is student readiness for live online learning affected by gender?
3. Is student readiness for live online learning affected by the education levels of students' degrees, including sub-degree (SD), undergraduate (UG), and postgraduate (PG) degrees?
4. What core factors contribute to differences in student readiness for live online learning between genders and education levels?

The rest of this paper is organized as follows. Section 2 discusses the theoretical background of the research and the development of the model. Section 3 describes the methodology, including the online learning tools, survey design, participants, validity testing, and analysis used in this study. Section 4 presents the results. Section 5 discusses the factors contributing to live online learning readiness, differences between gender and education levels, and the implications for educators. Lastly, the conclusion and future research directions are given in section 6.

2. Theoretical development

2.1. Online teaching and learning

Although online teaching and learning have been implemented for many years, the impacts remain unsatisfactory. Many teachers have refused to adopt online teaching tools because of the non-traditional teaching approach. Mohamad et al. (2015) investigated the factors affecting student motivation in participating in online teaching tools. Motivating teachers to change their teaching approach or style is one of the biggest obstacles. Baran (2011) investigated successful online teaching practices and found that teachers “themselves” and their participatory role within an online environment are very important.

On the other hand, many students are not used to using online learning platforms for study. Many studies have reviewed the practices in providing effective online teaching and learning for students. Technology and communication competencies are the key factors to enhance student satisfaction and retention, but motivation and presence in online learning are the key issues for student participation (Law et al., 2019; Widjaja, 2017). Learning activities, including practice-related scenarios for integrating theory and practice, video lessons, self-assessment activities, exercises, etc., are recommended for educators to enhance students’ online learning presence (Rensburg, 2018; Rohrbach, 2014).

Higher education institutions are forced to shift their teaching strategies towards more flexible, student-oriented approaches by adopting active learning (Law, 2019), flipped classrooms (Rover, Astatke, Bakshi, & Vahid, 2013; Nouri, 2016), blended learning (Kintu, 2017; Bernard, 2014; Dziuban, Graham, Moskal, Norberg, & Sicilia, 2018), and virtual technologies (Tang, Au, Lau, Ho, & Wu, 2020, Tang et al., 2020). Successful implementation of these methods still greatly relies on students attending classes face-to-face. In spite of the various teaching approaches proposed to enhance students' learning, the key challenge is that students do not participate. Successful implementation of the learning pedagogies relies on whether students are motivated to use such online learning.

2.2. Live online learning readiness

Live online learning refers to the teaching and learning activities conducted through live broadcasting online, in real-time (Abdous, 2010; Zhao et al., 2018). Teachers must post the teaching materials to the learning platform in advance, deliver lessons, including lectures and tutorials, in real-time, respond to students' questions, and allow discussion in the lessons. Although motivation and engagement are the key success factors of online learning, the situation is different during the coronavirus pandemic. Online teaching and learning are compulsory for every stakeholder, including teachers and students. Teachers have to adapt regardless of their teaching style, participatory role, and technological barriers. To implement online teaching, universities have provided designated online teaching tools, infrastructure and technical support from the information technology (IT) department to support teaching in real-time. Nevertheless, students can attend the live lessons anywhere, which makes it impossible for teachers to monitor or control them, and student readiness for a live online learning environment is still unknown.

Students' readiness for live online learning is believed to be one of the prerequisite conditions for an effective learning process and educational achievement (Dangol & Shrestha, 2019). However, unlike traditional face-to-face teaching in class, remote learning does not guarantee the attendance of students, and it is thus difficult to determine the degree of concentration of students in online learning (Cheon, 2012; Li & Yang, 2016). The live online learning readiness of students is important because it affects students' willingness to participate in class and the quality of live online learning. Therefore, investigation of the core factors contributing to students’ live online learning readiness is important.

Readiness for learning online has been studied for many years (Warner, Christie, & Choy, 1998). Some studies define it in terms of students' perception of delivery, self-confidence in using e-communication channels, and autonomy in learning participation. Different factors have been used to measure students' readiness for online learning. Recently, Walia (2019) investigated students' readiness for online learning based on their study program and gender differences. Seven measurement components were used in the study: student access to technology, technology skills, lifestyle factors, teaching presence, cognitive presence, social presence, and skills and study habits. Engin (2017) assessed students' emotional intelligence levels to investigate their online learning readiness. Five factors were used for the measurements, among which computer/internet self-efficacy was adopted to measure students' tendency to use a computer. Hung (2010) developed a similar instrument to reveal college students' readiness for online learning based on several student attributes. A systematic review was conducted to investigate the tools, the number of factors, and the items used in measuring student online readiness (Alem, Plaisent, Bernard, & Chitu, 2014). Based on the investigation, students' readiness can be determined by up to 45 questionnaire items (Kerr et al., 2006). It is suggested that multidimensional e-learning readiness is constructed from computer skills, internet self-efficacy, self-direction, motivation, interaction, and attitude.

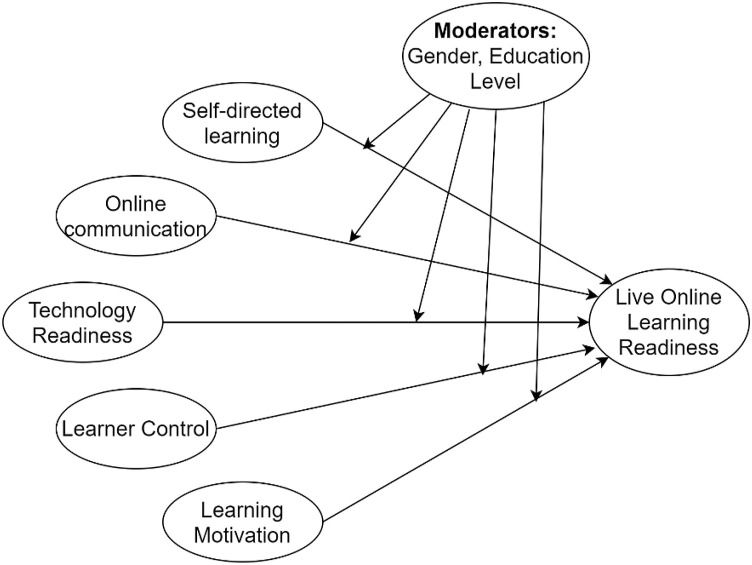

Despite the wide use of online learning, research focusing on live (real-time) online teaching is still very limited. Virtual teaching and real-time teaching platforms for live broadcast teaching were implemented in some case studies (Liu, 2018; Barbour, 2015). The methods and strategies for teaching in real-time were explored (Miranda, 2015). Nevertheless, these studies did not specifically focus on the higher education sector, where students' live online learning readiness has not been investigated. Therefore, there is a need to explore students' readiness for live online learning in the higher education sector, involving students at sub-degree, degree and postgraduate levels. Referring to the generalized factors of e-learning readiness in the systematic review in Kerr et al. (2006) and Law et al. (2019c), we generalized students' learning attitudes to measure students' readiness for live online learning with the following five key factors: technology readiness (Phan & Dang, 2017; Glenda, 2016), self-directed learning, learner control, motivation for learning, and online communication self-efficacy (Engin, 2017; Hung et al., 2010). Fig. 3 shows the research model of the five key factors contributing to students' readiness for live online learning. Each factor is elaborated in sections 2.3 to 2.7.

Fig. 3.

Five key factors contributing to a student's readiness for the live online learning environment.

2.3. Self-directed learning (SDL)

Self-directed learning has been discussed since Dewey (1916), arising from adult education principles. It is suggested that all persons have an unconstrained capacity for development and growth. The idea of education is that teachers are the facilitators, and thus it is inappropriate to impede or control the learning process.

Knowles (1975) proposed that self-directed learning is a process in which students take the initiative to diagnose their learning needs, formulate their own learning goals, identify and implement appropriate learning strategies, and finally evaluate their learning outcomes. Geng et al. (2019) proposed that self-directed learning emphasizes student initiatives, such as setting goals and making choices. A self-directed student will also search for information or other resources online. It was found that students' collaborative learning perceptions and the use of technology can promote students' self-directed learning. Two major characteristics for interpreting self-directed learning are whether students have authentic control over most decisions and whether they can gain access to appropriate resources. In this study, we draw on these two major characteristics of self-directed learning in setting up the questionnaire items on students' readiness for live online learning.

2.4. Online communication self-efficacy (OCS)

Online communication self-efficacy refers to the ability of students to develop their own personal and purposeful relationships, including the ability to communicate effectively in group discussion (Alqurashi, 2016). In the past few decades, the advancement of information technology has enhanced the development of online communication. Li et al. (2014) estimated that around 110 million Chinese college students were internet users. The internet provides a wide communication channel for the younger generation. Online communication has increasing significance for students because it helps them attain their objectives effectively, for instance, drawing out information, doing assignments, maintaining social relationships, and communicating interactively (Jang & Kim, 2012). Ansari and Khan (2020) and Li et al. (2014) suggested that online communication contributes to collaborative learning, fulfills the psychological need for satisfaction, encourages the social construction of knowledge, and helps in the adoption of critical thinking skills. In terms of online communication self-efficacy, text-based asynchronous online communication, discussions (e.g., chat rooms), instant messaging, and participation are critical for exchanging ideas and information (Roper, 2007).

2.5. Technology readiness (TR)

Parasuraman (2000) proposed that technology readiness is “peoples' propensity to embrace and use new technologies for accomplishing goals in home life and at work”. Incorporating technology is a complicated process that needs readiness (Blut & Wang, 2020). Technology readiness has been identified as a key element in enhancing behavioural intention towards high technology services or products. The influence of technology readiness on students' behavioural intention toward online learning needs investigation (Badia et al., 2014; Shirahada et al., 2019). Indeed, innovativeness and optimism are important to technology readiness, while insecurity and discomfort usually discourage users' technology readiness (Summak et al., 2010; Kim et al., 2020). Based on the study by Hawkins and Mothersbaugh (2010), technology readiness is similar to consumer behaviour. To this end, internal factors (e.g., personality, learning) and external factors (e.g., social status, culture) need to be considered so that technology readiness can be used effectively to study students' tendency towards adopting new technologies (Shirahada et al., 2019).

2.6. Learner control (LC)

Learner control is defined as “the learners will benefit if given more control over the pace or style of instruction they receive” (Snow, 1980, p. 151). Learner control describes enabling individual learners to have the judgment on choosing learning examples, arranging a sequence of learning tasks, structure, practice, and pacing the number of learning sessions based on their individual cognitive needs (Chang & Ho, 2009; Chen & Yen, 2019). Several researchers have addressed the significance of learner control (e.g., Chang & Ho, 2009; Chen & Yen, 2019; Orvis et al., 2010). Educators have recognized that effective learning needs dynamic participation and learner control during the learning process (Oxford, 1990). Thus, learners understand learning approaches and find a way to adjust the various learning content in an ever-changing environment (Chang & Ho, 2009). In this way, the design and implementation of online learning require aligning with their preferences to make the students respond more satisfactorily to the online learning programme so that such positive learning attitudes improve engagement in the learning task itself (Orvis et al., 2010).

2.7. Motivation in learning (ML)

Motivation in learning is one of the key success factors for student learning today. However, Fredman (2014) and Lau and Ng (2015) indicated that students' attributions for changes in their actual motivation for learning remain under-researched. Motivation can affect students' perception, attitude, and determination of their learning success (Lee & Pang, 2014). Motivation theorists emphasized the contribution of environment, socialization and personal beliefs (Hufton et al., 2003; Oqvist & Malmstrom, 2016). Oqvist and Malmstrom (2016) identified typical ways of enhancing students' motivation for learning, including modelling, guidance, giving sufficient choices, reinforcement, enthusiasm, and interest induction. In doing so, a learning environment is created that encourages high educational motivation and positive well-being among students regarding their studies. Motivation is one of the essential factors for the success of many students' learning activities.

3. Methodology

3.1. Research framework

The learning readiness model generalized from Phan and Dang (2017) and Hung et al. (2010) was used. The research framework consists of five key factors: technology readiness, self-directed learning, learner control, motivation for learning, and online communication self-efficacy. Moderating factors, including gender (King, 2013) and education levels (Sheikh, 2014), were used to determine their differences in the measured outcomes. This research validates the underlying factors of the proposed hypothetical model in determining student readiness for live online learning by using CFA and comparing their differences by taking into account the moderating factors.

3.2. Online learning tools

Several institutes in the higher education sector in Hong Kong, Macau and several cities in China participated in this study. Since the online learning environment was targeted, we recruited teachers from various institutes who delivered classes in an online mode. Due to the travel ban, some exchange students studying in other regions had to stay in their home cities. To help students based in Mainland China access online teaching with teachers in Hong Kong, a new infrastructure with higher bandwidth capacity was established using a secure virtual private network (VPN) connection. The VPN gateway was arranged for a direct connection to the learning tools to reduce the possible impact of students' connection problems. The live online learning platforms adopted in Hong Kong and Macau were mainly Microsoft Teams and Zoom Meeting, along with Blackboard Collaborate Ultra, Moodle, edX, Google Meet, etc. In China, the supported live online learning platforms were mainly Ding Ding and Tencent Meeting.

3.3. Survey design

The survey consisted of three sections. The first section included a brief introduction to the current study, which aimed to collect background information. The second section contained the 35 core items of the 5 measurement factors. The selection of the 5 measurement factors contributing to live online learning readiness is explained in Section 2.2. The core questionnaire items were designed and reviewed by referring to the existing literature from Kerr et al. (2006), Law et al. (2019), and Geng et al. (2019). Among the 35 items, 8 items each were used to measure motivation for learning and self-directed learning, 7 items each were used to measure learner control and technology readiness, and 5 items were used to measure online communication self-efficacy. To ensure content validity, we referred to the approach adopted in Beck (2020). The scale was reviewed by five experts with professional expertise in online teaching and learning from various regions, including Hong Kong, Macau, China, and Australia. The questionnaire items were then adjusted by identifying unclear content and misleading items and by rephrasing and rewording them. The factors and measurement items for students’ readiness for live online learning used in the questionnaire are summarized in Appendix 1. The last section included demographic questions such as age group, gender, education level, study department, etc., to collect the background information for student segmentation.

3.4. Participants and procedures

This study investigated students' live online learning readiness in higher education, and the participants were students from universities or institutes of higher education who were enrolled in live online learning. Three higher academic institutions in Hong Kong were involved in the investigation: the Hong Kong Polytechnic University, the Hang Seng University, and the College of Professional and Continuing Education of the Hong Kong Polytechnic University. The participants included students from Hong Kong, China, and exchange students at the sub-degree (SD), degree (UG), and postgraduate (PG) levels. SD includes Higher Diploma and Associate Degree programmes. Since students from different education levels participated in the study for comparison purposes, the teaching curricula were different. For the data collection, teachers from the participating institutions were invited to distribute the online questionnaire during class. An invitation e-mail was sent to teachers from different institutions who were interested in this study. Students who elected to participate voluntarily were required to fill out an anonymous survey. Written consent was obtained from all individual participants included in the data collection process. The questionnaires were distributed using online survey tools 2–4 weeks after students attended a live online learning class, from February to March of 2020.

3.5. Learning context

The learning context comprises lectures, students' study processes, teaching experience, and assignments or tests (Govender, 2009). In this study, the participants were recruited from different disciplines in the higher education sector, for example, the Faculty of Business, Faculty of Engineering, Social Sciences, and Applied Sciences. The faculties from each institute were randomly selected. The online learning environment in this study has two learning contexts: the lecture setting and the assignment setting. The lecture setting is a forum for a teacher to transfer knowledge and content to the students. In the lecture setting, we strove to mimic the conventional pre-pandemic learning environment by providing live lectures. Students were required to attend lessons on time, and the lecture content was delivered and supplemented in real-time. Students were also able to post questions during lessons, verbally or in writing in the chat box. The assignment setting refers to the exercises or tasks for assessing students' understanding at the lecture requirement level. The assessment tasks may also be used for the continuous assessment of students. Solving problems in assignment settings confirms the students’ intention to understand the lecture context (Mo & Tang, 2017). In live online learning, assignments were usually delivered, submitted, and marked through the online learning platform. The scores and feedback were also provided to the students directly online.

3.6. Validity test and analysis

The analysis packages IBM SPSS and AMOS were used for statistical analysis of the collected data. At the beginning of the data analysis, CFA was used to measure the statistical fitness of the hypothetical model in this study. Fit indices, including the chi-square to degrees of freedom ratio, root mean square error of approximation (RMSEA), comparative fit index (CFI) and standardized root mean square residual (SRMR), were used to determine the model fitness. To evaluate the acceptable fitness of the models, we referred to the values suggested by MacCallum et al. (1996), Moutinho (2011) and Xia and Yang (2019), i.e. chi-square to degrees of freedom value < 5, RMSEA value < 0.08, CFI value ≥ 0.9, and SRMR < 0.05. In addition, Cronbach's alpha, composite reliability (CR), and average variance extracted (AVE) were used to test the model's reliability and validity. A Cronbach's alpha value of 0.7 was used as the threshold for satisfactory reliability, while the acceptable values of CR and AVE were 0.7 and 0.5, respectively (Taber, 2018). This study adopted a newer criterion to determine the model's discriminant validity by calculating the heterotrait-monotrait (HTMT) ratio of the correlations, which demonstrated superior performance in a Monte Carlo simulation study (Henseler et al., 2015). The HTMT is calculated as the average of the heterotrait-heteromethod correlations relative to the average of the monotrait-heteromethod correlations, that is, the correlations of the indicators across constructs relative to the correlations of indicators within the same construct. Thus, the HTMT of the ith and jth constructs is given by Equation (1):

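$$
\mathrm{HTMT}_{ij} = \frac{\dfrac{1}{K_i K_j}\sum_{g=1}^{K_i}\sum_{h=1}^{K_j} r_{ig,jh}}{\left(\dfrac{2}{K_i (K_i - 1)}\sum_{g=1}^{K_i - 1}\sum_{h=g+1}^{K_i} r_{ig,ih} \cdot \dfrac{2}{K_j (K_j - 1)}\sum_{g=1}^{K_j - 1}\sum_{h=g+1}^{K_j} r_{jg,jh}\right)^{1/2}} \tag{1}
$$

where $K_i$ and $K_j$ denote the number of indicators of constructs $i$ and $j$, respectively, and $r_{ig,jh}$ is the correlation between indicator $g$ of construct $i$ and indicator $h$ of construct $j$.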
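The HTMT definition above can be illustrated with a minimal Python sketch. It is not the SPSS/AMOS procedure used in this study: the function name `htmt`, the index-list interface, and the 4 × 4 correlation matrix are hypothetical and chosen only for illustration, and each construct is assumed to have at least two indicators.

```python
from itertools import combinations
from math import sqrt


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


def htmt(corr, items_i, items_j):
    """HTMT ratio of two constructs (hypothetical helper, not the study's code).

    corr    : square item correlation matrix as a list of lists
    items_i : indices of the indicators of construct i (at least two)
    items_j : indices of the indicators of construct j (at least two)
    """
    # Heterotrait-heteromethod: correlations between items of different constructs.
    hetero = mean(corr[g][h] for g in items_i for h in items_j)
    # Monotrait-heteromethod: correlations between distinct items of one construct.
    mono_i = mean(corr[g][h] for g, h in combinations(items_i, 2))
    mono_j = mean(corr[g][h] for g, h in combinations(items_j, 2))
    # Ratio of the cross-construct average to the geometric mean of the
    # two within-construct averages.
    return hetero / sqrt(mono_i * mono_j)


# Illustrative correlation matrix (made-up values, not the survey data):
# items 0-1 belong to construct A, items 2-3 to construct B.
example_corr = [
    [1.00, 0.64, 0.42, 0.42],
    [0.64, 1.00, 0.42, 0.42],
    [0.42, 0.42, 1.00, 0.49],
    [0.42, 0.42, 0.49, 1.00],
]

print(f"HTMT(A, B) = {htmt(example_corr, [0, 1], [2, 3]):.3f}")
```

For this made-up matrix the ratio is 0.42 / sqrt(0.64 × 0.49) = 0.75, which would fall below both the 0.85 and 0.9 cut-offs referred to in Section 4.1.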

The mean scores and standard deviations (σ) of the students on the 35 measurement items of the five factors were used to compare their readiness for live online learning. We performed two tests to determine the effects of gender and education level on students' readiness for live online learning. The independent-samples t-test was used to test for statistical differences between genders. The differences among students' education levels on the five measurement factors were compared using Multivariate Analysis of Variance (MANOVA). In both tests, a 5% significance level (p < 0.05) was used. Finally, this research also investigated how different factors affected the live online learning readiness of different groups of students through the CFA's multi-group analysis (MGA). The MGA was performed to compare student groups, namely male and female students and PG, UG, and SD students.

4. Results

In this research, the questionnaire was distributed to 1189 students and a total of 913 valid questionnaires were collected, giving an overall response rate of approximately 76.8%. Table 1 shows the descriptive analysis of the collected feedback. The respondents comprised 323 (35.4%) SD students, 372 (40.7%) UG students and 218 (23.9%) PG students, of whom 383 (41.9%) were male and 530 (58.1%) were female. The largest group of students, N = 359 (39.3%), were studying in the business faculty, followed by engineering, N = 175 (19.2%), social sciences, N = 162 (17.7%), and other faculties, N = 217 (23.8%), including applied science, social science, design, humanities, language, etc.

Table 1.

Students' characteristics based on their educational background.

| Variables | | SD students | UG students | PG students |
|---|---|---|---|---|
| Total | Number (%) | 323 (35.4%) | 372 (40.7%) | 218 (23.9%) |
| Gender | Male | 150 (16.4%) | 146 (16.0%) | 87 (9.5%) |
| | Female | 173 (18.9%) | 226 (24.8%) | 131 (14.3%) |
| Faculties | Engineering | 12 (1.3%) | 135 (14.8%) | 28 (3.1%) |
| | Business | 217 (23.8%) | 69 (7.6%) | 73 (8.0%) |
| | Social Sciences | 28 (3.1%) | 101 (11.1%) | 33 (3.6%) |
| | Others | 66 (7.2%) | 67 (7.3%) | 84 (9.2%) |

4.1. Validity test

In this test, CFA was used to validate the hypothetical model. The model contains 35 measurement items across five factors to determine students' live online learning readiness. Standardized factor loadings were used, and all items exceeded the suggested value of 0.5 (Hair et al., 2010). The loadings ranged from 0.78 to 0.84 for learner control, 0.68 to 0.86 for motivation for learning, 0.51 to 0.77 for technology readiness, 0.67 to 0.77 for self-directed learning, and 0.72 to 0.82 for online communication self-efficacy (see Table 2). The factor loadings of live online learning readiness on technology readiness, learner control, online communication self-efficacy, self-directed learning, and motivation for learning were 0.81, 0.98, 0.95, 0.79, and 0.90, respectively. The overall Cronbach's alpha was 0.927, while the values for all individual factors were over 0.7, showing satisfactory reliability. The measured composite reliability (CR) for the factors ranged from 0.785 to 0.932, higher than the target threshold of 0.7 suggested by Bacon et al. (1995). The values for the average variance extracted (AVE) ranged from 0.486 to 0.661. The AVE was higher than 0.5 for all factors except technology readiness. However, according to Fornell and Larcker (1981), the convergent validity of a construct is still adequate when its CR is higher than 0.6, so an AVE higher than 0.4 can be accepted for technology readiness. The results for discriminant validity using the HTMT values are shown in Table 3. The HTMT values for the pairs of constructs ranged from 0.711 to 0.946, in which 6 pairs of constructs were smaller than the HTMT0.85 criterion (Kline, 2011) and 3 pairs of constructs were smaller than the HTMT0.9 criterion (Mat Yusoff et al., 2020). The results indicated that only HTMTinference supports discriminant validity between all construct measures.

Table 2.

Results of the confirmatory factor analysis, the corresponding factor loadings and reliabilities of the Model.

| Factors/Items | Mean | SD | Factor Loadings | Cronbach's alpha | Composite reliability (CR) | Average variance extracted (AVE) |
|---|---|---|---|---|---|---|
| Technology readiness | | | | 0.754 | 0.867 | 0.486 |
| TR1 | 3.54 | 0.919 | 0.76 | | | |
| TR2 | 3.74 | 0.890 | 0.74 | | | |
| TR3 | 3.50 | 0.855 | 0.77 | | | |
| TR4 | 3.15 | 0.921 | 0.67 | | | |
| TR5 | 3.36 | 0.891 | 0.77 | | | |
| TR6 | 3.37 | 0.913 | 0.62 | | | |
| TR7 | 3.55 | 0.850 | 0.51 | | | |
| Learner control | | | | 0.884 | 0.932 | 0.661 |
| LC1 | 3.47 | 0.862 | 0.81 | | | |
| LC2 | 3.45 | 0.832 | 0.78 | | | |
| LC3 | 3.51 | 0.829 | 0.83 | | | |
| LC4 | 3.49 | 0.886 | 0.84 | | | |
| LC5 | 3.45 | 0.878 | 0.81 | | | |
| LC6 | 3.42 | 0.890 | 0.81 | | | |
| LC7 | 3.47 | 0.862 | 0.81 | | | |
| Online Communication self-efficacy | | | | 0.838 | 0.785 | 0.591 |
| OC1 | 3.51 | 0.822 | 0.76 | | | |
| OC2 | 3.44 | 0.935 | 0.72 | | | |
| OC3 | 3.26 | 0.924 | 0.73 | | | |
| OC4 | 3.36 | 0.900 | 0.81 | | | |
| OC5 | 3.26 | 0.932 | 0.82 | | | |
| Self-directed learning | | | | 0.740 | 0.901 | 0.532 |
| SDL1 | 3.41 | 0.911 | 0.74 | | | |
| SDL2 | 3.12 | 0.885 | 0.74 | | | |
| SDL3 | 3.15 | 0.917 | 0.74 | | | |
| SDL4 | 3.52 | 0.891 | 0.77 | | | |
| SDL5 | 3.39 | 0.845 | 0.75 | | | |
| SDL6 | 3.27 | 0.908 | 0.72 | | | |
| SDL7 | 3.57 | 0.823 | 0.70 | | | |
| SDL8 | 3.41 | 0.911 | 0.67 | | | |
| Motivation for learning | | | | 0.835 | 0.931 | 0.629 |
| MFL1 | 3.51 | 0.898 | 0.80 | | | |
| MFL2 | 3.66 | 0.886 | 0.80 | | | |
| MFL3 | 3.52 | 0.908 | 0.83 | | | |
| MFL4 | 3.58 | 0.928 | 0.86 | | | |
| MFL5 | 3.57 | 0.897 | 0.84 | | | |
| MFL6 | 3.23 | 0.956 | 0.72 | | | |
| MFL7 | 3.15 | 1.009 | 0.68 | | | |
| MFL8 | 3.39 | 0.945 | 0.80 | | | |
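
The CR and AVE columns of Table 2 can be related to the standardized factor loadings with the usual formulas, AVE as the mean squared loading and CR as the squared sum of loadings divided by itself plus the summed error variances. The sketch below is illustrative only: the function names are ours, and it assumes uncorrelated errors with error variance 1 − λ² for each item, which may differ from the exact AMOS output.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR, assuming each item's error variance equals 1 - loading**2."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l * l for l in loadings)
    return squared_sum / (squared_sum + error_variance)


# Standardized loadings taken from Table 2.
technology_readiness = [0.76, 0.74, 0.77, 0.67, 0.77, 0.62, 0.51]
learner_control = [0.81, 0.78, 0.83, 0.84, 0.81, 0.81, 0.81]

for name, loadings in [("Technology readiness", technology_readiness),
                       ("Learner control", learner_control)]:
    cr = composite_reliability(loadings)
    ave = average_variance_extracted(loadings)
    print(f"{name}: CR = {cr:.3f}, AVE = {ave:.3f}")
```

For these two factors the sketch gives CR = 0.867 and AVE = 0.486 for technology readiness, and CR = 0.932 and AVE = 0.661 for learner control, in line with the values reported in Table 2.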

Table 3.

The HTMT results among each measured item.

| Measurement Items | TR | LC | OC | SDL |
|---|---|---|---|---|
| Technology readiness | – | | | |
| Learner control | 0.789 | – | | |
| Online communication self-efficacy | 0.794 | 0.946 | – | |
| Self-directed learning | 0.747 | 0.760 | 0.711 | – |
| Motivation for learning | 0.738 | 0.879 | 0.855 | 0.755 |

The fit index values of the proposed model were also tested and are summarized in Table 4: χ2/df = 4.87 < 5, RMSEA = 0.065 < 0.08, CFI = 0.904 ≥ 0.9 and SRMR = 0.044 < 0.05. All of the fit statistics met the thresholds for acceptable model fit. Therefore, we argue that the tested model has good reliability and validity. In addition, the proportion of variance explained (R2), as suggested by Hair et al. (2011), was calculated to evaluate the predictive power of the structural model and thus its quality. The variance of each latent dependent factor explained by the model was calculated, as shown in Table 5, and interpreted according to the rule of thumb proposed in Henseler et al. (2009): the predictive power is described as substantial, moderate, or weak for R2 values above 0.75, 0.50, or 0.25, respectively. The results revealed that technology readiness and self-directed learning have moderate predictive power, while learner control, online communication self-efficacy, and motivation for learning have substantial predictive power in the study.

Table 4.

Statistics of several fit indices of the hypothetical model.

| χ2 | df | χ2/df | RMSEA | CFI | SRMR |

|---|---|---|---|---|---|

| 2681.00 | 550 | 4.87 | 0.065 | 0.909 | 0.044 |
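
The comparison of the Table 4 statistics with the acceptance thresholds stated in the methods can be written out as a short check. This is only a sketch; the function name `check_fit` and the dictionary layout are assumptions made for illustration.

```python
def check_fit(chi2, df, rmsea, cfi, srmr):
    """Compare fit statistics with the thresholds used in this study."""
    ratio = chi2 / df
    return {
        "chi2/df": (round(ratio, 2), ratio < 5),
        "RMSEA": (rmsea, rmsea < 0.08),
        "CFI": (cfi, cfi >= 0.9),
        "SRMR": (srmr, srmr < 0.05),
    }


# Values as reported in Table 4.
table4 = check_fit(chi2=2681.00, df=550, rmsea=0.065, cfi=0.909, srmr=0.044)
for name, (value, acceptable) in table4.items():
    status = "meets" if acceptable else "fails"
    print(f"{name} = {value}: {status} the threshold")
```

With the Table 4 values, 2681.00 / 550 gives a χ2/df ratio of 4.87, and all four indices fall on the acceptable side of their thresholds.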

Table 5.

The predictive power of each factor in the measured model.

| Factors | Technology readiness | Learner control | Online Communication self-efficacy | Self-directed learning | Motivation for learning |

|---|---|---|---|---|---|

| R2 | 0.66 | 0.96 | 0.91 | 0.63 | 0.80 |

| Predictive Power | Moderate | Substantial | Substantial | Moderate | Substantial |
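
The rule of thumb used to label the predictive power in Table 5 can be expressed as a small helper. The function name and the "Below weak" label for values under 0.25 are our own assumptions; the cut-offs are those quoted in the text.

```python
def predictive_power(r2):
    """Label an R2 value using the 0.75 / 0.50 / 0.25 rule of thumb."""
    if r2 > 0.75:
        return "Substantial"
    if r2 > 0.50:
        return "Moderate"
    if r2 > 0.25:
        return "Weak"
    return "Below weak"  # label assumed for illustration


# R2 values as reported in Table 5.
r2_values = {
    "Technology readiness": 0.66,
    "Learner control": 0.96,
    "Online Communication self-efficacy": 0.91,
    "Self-directed learning": 0.63,
    "Motivation for learning": 0.80,
}
labels = {factor: predictive_power(r2) for factor, r2 in r2_values.items()}
for factor, label in labels.items():
    print(f"{factor}: {label}")
```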

4.2. Students’ readiness for different genders

In this study, 383 (41.9%) males and 530 (58.1%) females participated in live online learning. To investigate the differences between male and female students' readiness for live online learning, an independent samples t-test was used. The results are summarized in Table 6. The mean scores of males and females were close for all contributing factors: males scored slightly higher in technology readiness, online communication self-efficacy and self-directed learning, while females scored slightly higher in learner control and motivation for learning. However, none of the differences between the two groups were significant (p-values ranged from 0.189 to 0.864, all > 0.05).

Table 6.

Summary of the gender differences in students’ readiness for live online learning.

| Measurement Factors | Male, Mean (SD) | Female, Mean (SD) | t | p |
|---|---|---|---|---|
| Technology readiness | 3.49 (0.74) | 3.44 (0.60) | 1.11 | 0.269 |
| Learner control | 3.44 (0.80) | 3.45 (0.67) | −0.17 | 0.864 |
| Online Communication self-efficacy | 3.37 (0.79) | 3.36 (0.70) | 0.27 | 0.787 |
| Self-directed learning | 3.42 (0.74) | 3.36 (0.63) | 1.32 | 0.189 |
| Motivation for learning | 3.43 (0.84) | 3.47 (0.70) | −0.77 | 0.443 |
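
To illustrate how the t statistics in Table 6 relate to the group means and standard deviations, the sketch below recomputes the technology readiness comparison from the summary statistics alone. It assumes the equal-variance (pooled) form of the independent samples t-test and uses a normal approximation for the p-value; both choices, and the function name `pooled_t`, are assumptions, since the study reports only the SPSS output.

```python
from math import erfc, sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Equal-variance independent samples t statistic from summary data."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Technology readiness row of Table 6: males (n = 383), females (n = 530).
t_tr, df_tr = pooled_t(3.49, 0.74, 383, 3.44, 0.60, 530)

# Two-sided p-value from a normal approximation (an assumption that is
# reasonable only because the degrees of freedom are large).
p_tr = erfc(abs(t_tr) / sqrt(2))
print(f"t({df_tr}) = {t_tr:.2f}, approximate p = {p_tr:.3f}")
```

With the rounded values in Table 6, the sketch gives a t statistic of about 1.13, close to the reported 1.11. A small gap is expected because the table reports rounded means and standard deviations.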

4.3. Students' readiness for different education levels

In this study, 323 (35.4%) SD students, 372 (40.7%) UG students and 218 (23.9%) PG students participated. Students' readiness on the five factors was determined, and Multivariate Analysis of Variance (MANOVA) was used for the statistical analysis. The mean scores on the five measured factors for the PG students were generally higher than those of students in UG and SD programmes, while UG students did not show an obvious difference from SD students. Students' education levels made a significant difference in their readiness for live online learning (F = 1.660, p = 0.013 < 0.05). Wilks' Lambda and Hotelling's Trace for the education level difference were 6.18 and 6.23, respectively. Based on the MANOVA test, education level made a significant difference for technology readiness (F = 4.80, p = 0.008 < 0.01), learner control (F = 6.76, p = 0.001 < 0.01), self-directed learning (F = 4.89, p = 0.008 < 0.01), and motivation for learning (F = 18.19, p < 0.001). The education level differences in students' live online learning readiness are summarized in Table 7.

Table 7.

Summary of the education level differences in students’ readiness for live online learning.

| Measurement Factors | PG, Mean (SD) | UG, Mean (SD) | SD, Mean (SD) | F | Partial Eta Squared |
|---|---|---|---|---|---|
| Technology readiness | 3.57 (0.60) | 3.40 (0.69) | 3.45 (0.66) | 4.80** | 0.010 |
| Learner control | 3.60 (0.63) | 3.38 (0.78) | 3.43 (0.72) | 6.76** | 0.015 |
| Online Communication self-efficacy | 3.46 (0.73) | 3.35 (0.77) | 3.33 (0.71) | 2.21 | 0.005 |
| Self-directed learning | 3.51 (0.61) | 3.35 (0.70) | 3.34 (0.69) | 4.89** | 0.011 |
| Motivation for learning | 3.72 (0.66) | 3.35 (0.80) | 3.38 (0.74) | 18.19** | 0.038 |

*p < 0.05; **p < 0.01.
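
The partial eta squared values in Table 7 can be related to the F statistics through the standard identity η²p = F·df_effect / (F·df_effect + df_error). The sketch below assumes df_effect = 2 (three education levels) and df_error = 910 (913 students minus three groups); these degrees of freedom are our assumption, as they are not stated in the table.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic."""
    return f_value * df_effect / (f_value * df_effect + df_error)


DF_EFFECT = 2   # assumed: three education levels minus one
DF_ERROR = 910  # assumed: 913 respondents minus three groups

# F values as reported in Table 7.
f_values = {
    "Technology readiness": 4.80,
    "Learner control": 6.76,
    "Online Communication self-efficacy": 2.21,
    "Self-directed learning": 4.89,
    "Motivation for learning": 18.19,
}
effect_sizes = {
    factor: round(partial_eta_squared(f, DF_EFFECT, DF_ERROR), 3)
    for factor, f in f_values.items()
}
for factor, eta in effect_sizes.items():
    print(f"{factor}: {eta:.3f}")
```

Under these assumed degrees of freedom the sketch returns 0.010, 0.015, 0.005, 0.011 and 0.038, matching the last column of Table 7. All of these are small effects, even where the F test is significant.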

To identify which pairs of means were significantly different, Fisher's Least Significant Difference (LSD) post hoc tests were performed. Table 8 summarizes the results and the corresponding interpretation for the five measurement factors. PG students differed significantly from UG and SD students in most of the measurement factors, including technology readiness (UG: p = 0.02 < 0.05; SD: p = 0.045 < 0.05), learner control (UG: p < 0.001; SD: p = 0.005 < 0.01), self-directed learning (UG: p = 0.006 < 0.01; SD: p = 0.004 < 0.01) and motivation for learning (UG: p < 0.001; SD: p < 0.001). In online communication self-efficacy, PG students also showed a significant mean difference (p = 0.045 < 0.05) compared with the SD students. The positive mean differences indicate that the mean scores of the PG students were higher than those of the UG and SD students. In contrast, UG students did not show any significant mean difference from the SD students. The interpretation is given in the last column of Table 8.

Table 8.

The mean differences between PG, UG and SD students, and the corresponding interpretation in 5 measurement factors.

| Measurement Factors | Mean difference: PG and UG | Mean difference: PG and SD | Mean difference: UG and SD | Interpretation |
|---|---|---|---|---|
| Technology readiness | 0.174** | 0.116* | −0.058 | PG > UG; PG > SD |
| Learner control | 0.222** | 0.177** | −0.045 | PG > UG; PG > SD |
| Online Communication self-efficacy | 0.109 | 0.130* | 0.021 | PG > SD |
| Self-directed learning | 0.157** | 0.170** | 0.013 | PG > UG; PG > SD |
| Motivation for learning | 0.362** | 0.333** | −0.030 | PG > UG; PG > SD |

*p < 0.05; **p < 0.01.
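
As a rough illustration of Fisher's LSD procedure, the sketch below applies it to the technology readiness means, standard deviations and group sizes reported in Table 7. Several assumptions are made: the error mean square is pooled from the three group standard deviations as in a one-way ANOVA, the two-sided 5% critical t value for 910 degrees of freedom is hard-coded as approximately 1.963, and the function name `lsd_compare` is ours. It is not a reproduction of the SPSS output.

```python
from math import sqrt

T_CRIT = 1.963  # assumed: approximate two-sided 5% critical t for 910 df


def lsd_compare(groups, t_crit=T_CRIT):
    """Pairwise Fisher LSD comparisons from (mean, sd, n) summaries."""
    n_total = sum(n for _, _, n in groups.values())
    df_error = n_total - len(groups)
    # Within-group (error) mean square pooled from the group SDs.
    mse = sum((n - 1) * sd**2 for _, sd, n in groups.values()) / df_error
    names = list(groups)
    results = {}
    for index, first in enumerate(names):
        for second in names[index + 1:]:
            mean_a, _, n_a = groups[first]
            mean_b, _, n_b = groups[second]
            lsd = t_crit * sqrt(mse * (1 / n_a + 1 / n_b))
            diff = mean_a - mean_b
            results[(first, second)] = (diff, lsd, abs(diff) > lsd)
    return results


# Technology readiness row of Table 7: (mean, SD, group size).
technology_readiness = {
    "PG": (3.57, 0.60, 218),
    "UG": (3.40, 0.69, 372),
    "SD": (3.45, 0.66, 323),
}
lsd_results = lsd_compare(technology_readiness)
for (first, second), (diff, lsd, significant) in lsd_results.items():
    print(f"{first} - {second}: diff = {diff:.2f}, LSD = {lsd:.3f}, "
          f"significant = {significant}")
```

Under these assumptions the PG−UG and PG−SD differences exceed the least significant difference while the UG−SD difference does not, which agrees with the interpretation "PG > UG; PG > SD" given for technology readiness in Table 8.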

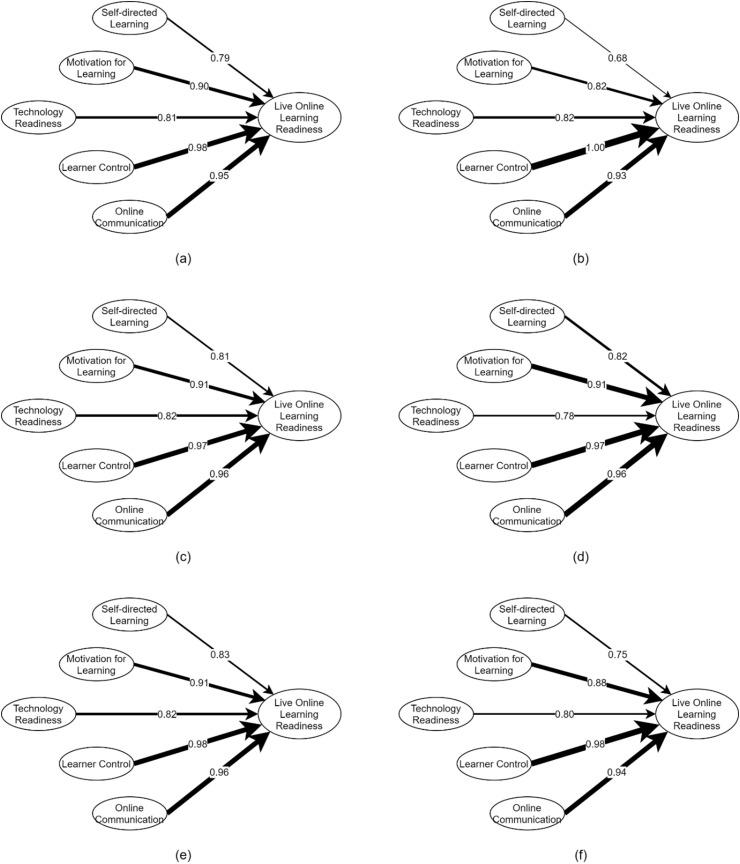

4.4. Multi-group analysis

MGA was also undertaken to examine how the different factors affect students' live online learning readiness. In the MGA, CFA was conducted to investigate each factor's loading on students' live online learning readiness. All the factors showed a high influence on students' live online learning readiness. The MGA results are illustrated in Fig. 4. In the analysis, the full model involving all students in the collected data (Fig. 4a) was compared with the models for the PG, UG, and SD student groups (Fig. 4b–d). The factor loadings for the PG, UG, and SD students were 0.78 to 0.97, 0.82 to 0.98, and 0.75 to 0.94, respectively. The analysis revealed that motivation for learning, online communication self-efficacy, and learner control were the main factors influencing students' live online learning readiness in this study.

Fig. 4.

Multi-group analysis (MGA) of the CFA for (a) full model; (b) PG students; (c) UG students; (d) SD students; (e) males; and (f) females for all education levels.

The male and female groups across all education levels (Fig. 4e and f) were also compared. The factor loadings ranged from 0.68 to 1.00 for the male group and from 0.81 to 0.97 for the female group, so both groups showed similar results. Most of the factors strongly influenced students' live online learning readiness, except that the influence of self-directed learning for male students was moderate. Similar results were found for the PG, UG, and SD groups.

5. Discussion

5.1. Factors contributing to online learning readiness

This study explores the factors contributing to students' readiness for live online learning. Our results agree with previous studies (Hung et al., 2010; Melih, 2017), which used the same model fit indices. In our model, χ2/df, SRMR, RMSEA and CFI all met the fit criteria. The value of χ2/df is greater than 3; however, it is still within the acceptable range. The factor loadings for each corresponding factor are generally higher in our model. The convergent and discriminant validity of the model were also determined in this study. The AVE was higher than 0.5 for all factors except technology readiness, for which the convergent validity can still be accepted because its CR exceeded the recommended level. Moreover, we adopted a recent approach for determining discriminant validity based on the HTMT criterion. The results showed discriminant validity between all construct measures based on HTMTinference.

Technology self-efficacy was used instead of computer/internet self-efficacy. Since computer and internet skills are already well established in this generation and the latest technologies are developing rapidly, the current study revised the approach of previous studies by adopting the technology self-efficacy model (Bigatel et al., 2012). Technology was one of the core factors in adopting online teaching and learning in many papers (Phan & Dang, 2017; Yurdagül et al., 2014; Glenda, 2016). We believe our model can effectively reflect students' readiness for live online learning.

5.2. Difference between genders

Our results showed no significant difference between males and females with respect to the five contributing factors. This agrees with previous studies, particularly for communication self-efficacy and self-directed learning (Cuadrado-García, 2010; Little-Wiles et al., 2014). In contrast, some existing studies claimed that female students might have higher motivation (Yukselturk and Bulut, 2009) and communicate better in online learning than males (Atkinson & Blankenship, 2009). The motivation of female students was reported to be higher because they are more enthusiastic about using communication and technological tools for learning (Ünal, Alır, & Soydal, 2014). The better communication of female students may also be explained by females preferring written communication more than male students do, or preferring written over spoken communication.

Moreover, live online learning has been reported to be more attractive to female students than to males (Caspi et al., 2008; Mae and Stuart, 2001). We argue that the live format and the coronavirus outbreak may have pushed male students to participate in live online learning more actively, thus narrowing the gender differences in student readiness in motivation and communication, as well as in the other contributing factors. The MGA also indicated similar factor loadings for males and females, which agrees with the findings of other studies (Ramírez-Correa et al., 2015).

5.3. Difference between education levels

Previous research on the relationship between online learning readiness and the level of degree study, especially among SD, UG and PG students, is minimal. In our study, student readiness for live online learning differed significantly among the education level groups. The results agree with the findings of Rasouli et al. (2016) that there is a significant difference between the readiness of UG students, PG students, and graduates in e-learning. Our results also agree with Wojciechowski and Palmer (2005) that older students, especially those studying at Master's level or above, are more successful in online classes, as readiness for learning is one of the key factors for educational achievement (Dangol & Shrestha, 2019). Sudha (2011) suggested that education level did not significantly contribute to students' readiness for e-learning; this is reflected in our finding that there was no significant difference between UG and SD students. We believe that academic results are important for SD students who wish to pursue UG studies after graduation. Therefore, their learning readiness did not differ significantly from that of UG students.

The results of this study imply that the changes to teaching and learning prompted by the coronavirus outbreak may have led to a difference in student readiness, particularly for students at the higher education levels. We argue that the expectation of academic achievement is higher for PG students. They were more ready to accept live online learning than UG and SD students, as a positive correlation between student readiness and academic achievement was indicated in Özkan (2015). Although student readiness for live online learning was investigated here, more research could investigate the psychological changes in online learning during the pandemic for students at different education levels. The MGA for different education levels indicated factor loadings similar to those of the full model and the gender groups. It also indicated that learner control and online communication have relatively higher factor loadings than the other factors. Based on Mayende et al. (2017) and Taipjutorus (2012), we believe that these two factors are influenced by the learning platform. Thus, the learning platform can be regarded as a mediating factor, which may be considered for further study in the future.

5.4. Implications for educators

The findings from the current study have several important implications for educators implementing live online learning in the future. According to the statistical analysis in this study, students' live online learning readiness is determined by five core factors. These factors are believed to be prerequisites for effective learning and academic achievement. The comparison of each contributing factor across the investigated moderating factors provides a basis for educators to design the teaching context, teaching strategy, lecture setting, assessment method, etc., to enhance students' live online learning. Although the gender difference in student readiness for live online learning is insignificant, educators should take this opportunity to enhance peer-to-peer communication. Student motivation and communication self-efficacy, especially for male students, can be enhanced so that students learn more actively from peers in the future. Educators can consider various strategies through which students receive peer-to-peer support, such as creating communities, encouraging teamwork, and using existing social networking tools to promote students' collaborative learning. In addition, more effort can be made to enhance the motivation of students at lower education levels towards live online learning, for example by designing more learning activities such as virtual games (Bovermann et al., 2018) to increase their readiness. Although PG students demonstrated significantly higher readiness in most of the measured factors, there was no significant difference in online communication self-efficacy, suggesting that teacher-student communication, student-to-student interactions, and question and answer sessions can be promoted to develop better online communication habits and enhance students' live online learning readiness.

6. Conclusions

This study intended to investigate students’ readiness for live online learning. Thirty-five (35) measurement items corresponding to five contributing factors, technology readiness, self-directed learning, learner control, motivation for learning, and online communication self-efficacy, were used for investigation. We explored the key factors of the research frameworks in learning motivation, learning readiness and self-efficacy of students attending online learning, the gender difference and the difference among sub-degree, degree and postgraduate students.

The analysis revealed that the difference between male and female students was not significant. However, the mean scores in student readiness for live online learning between PG, UG and SD students were significantly different. The post hoc test results found that PG students have higher motivation for learning than UG and SD students. PG students also have higher technology readiness, learner control, and self-directed learning ability than SD students. However, no significant difference was found for online communication self-efficacy.

Students' readiness for live online learning during the coronavirus outbreak was investigated. There are some limitations to this research. First, the results revealed that the difference between males and females was not significant; however, no comparison with the period before the coronavirus outbreak was conducted to determine whether there was any change in their learning readiness. The current research used the MGA and MANOVA to determine the core factors contributing to students' readiness for live online learning during the coronavirus pandemic in different education level and gender groups. The models revealed that technology readiness, self-directed learning, motivation, learner control, and online communication can be explained using the model with good reliability and validity. However, each of the core factors was not investigated in depth. In addition, student disciplines were not included in the study due to the skewed distribution of the population. In the future, more research could be conducted to investigate the psychological changes in each of the contributing factors due to the coronavirus outbreak in live online learning for different genders, education levels, and disciplines. Since this study determined student readiness in the first few weeks of live online learning, an extension of the current study could be conducted at the end of the teaching period or after this dramatic period is over. We also suggest that determining the intrinsic and extrinsic reasons affecting each contributing factor is essential, especially for students at higher education levels. The findings can be used to drive improvements for students at lower education levels. Since teaching through real-time online broadcasting is becoming more popular, different live online learning approaches, such as flipped classrooms, real-time lectures, blended learning, etc., should be further investigated.

Finally, data collection in this study relied on the students' direct responses. Students may have had difficulty understanding the questions, which could influence the accuracy of the collected data. In the future, we will consider using other data sources, such as students' attendance rate, utilization frequency, and duration, to supplement the direct data.

The coronavirus pandemic prompted the first worldwide attempt in history to implement live online learning. Thus, this research took the initiative to investigate live online learning readiness across student demographics, including gender and education level. Although the factors contributing to students' live online learning readiness were investigated, it would still be important to investigate further the degree of emotional change in students' learning during the coronavirus pandemic. The current research has various control variables whose effects on the live online learning environment are not yet known. Further investigations can be conducted to determine how variables such as institutions and students' backgrounds mediate the effects on their learning readiness. Such work could further improve the practice and strategies of online teaching and provide evidence-based guidance to front-line teachers conducting online teaching now and in the future.

Credit author statement

YM Tang: Conceptualization, Methodology, Formal analysis, Writing-final draft. PC Chen: Reviewing and Editing. Kris M.Y. Law: Conceptualization, Methodology, Writing-first draft. CH Wu: Writing-first draft, Data collection. Yui-yip Lau: Writing-first draft, Data collection. Jieqi Guan: Data collection. Dan He: Data collection. GTS Ho: Data collection.

Acknowledgements

This project is substantially supported by the Department of Industrial and Systems Engineering, The Hong Kong Polytechnic University. We also acknowledge this project is partially supported by the Learning and Teaching Development Grant (LTG16-19/LS/ISE2) from the Hong Kong Polytechnic University, Hong Kong, for the research, authorship and/or publication of this article.

Appendix 1 Contributing factors and measurement items for students' readiness in online learning

| Items | Questions |

|---|---|

| Technology Readiness (TR) | |

| TR1 | I prefer to use the most advanced technology available |

| TR2 | Technology gives me more freedom of mobility |

| TR3 | I feel confident that machines will follow through with what you instructed them to do |

| TR4 | In general, you are among the first in your circle of friends to acquire new technology when it appears |

| TR5 | You enjoy the challenge of figuring out high-tech gadgets |

| TR6 | There should be caution in replacing important people-tasks with technology because new technology can break down or get disconnected |

| TR7 | If you provide information to a machine or over the Internet, you can never be sure it really gets to the right place |

| Learner Control (LC) | |

| LC1 | I am able to acquire knowledge from the course easily |

| LC2 | I am able to explore more information related to the course from other means of learning (e.g. videos, games, and discussion). |

| LC3 | I am able to link the information learnt from the course. |

| LC4 | The course provides the chance for me to reflect what I learned. |

| LC5 | The course provides clear guideline on learning |

| LC6 | The tools or technologies used in the course facilitate learning and interaction |

| LC7 | I am satisfied with the information delivery channels. |

| Online Communication self-efficacy (OCS) | |

| OCS1 | The course provides the chances for me to express my opinions |

| OCS2 | The course offers the opportunity for me to interact with fellow students informally (e.g. online chat room or forum). |

| OCS3 | The course provides enough collaborative activities. |

| OCS4 | I enjoy participating in the course activities |

| OCS5 | I have a sense of belonging to the course. |

| Self-directed learning (SDL) | |

| SDL1 | I regularly learn things on my own outside of class |

| SDL2 | I am better at learning things on my own than most students. |

| SDL3 | I am very good at finding out answers on my own for things that the teacher does not explain in class |

| SDL4 | If there is something I don't understand in a class, I always find a way to learn it on my own |

| SDL5 | I am good at finding the right resources to help me do well in school |

| SDL6 | I view self-directed learning based on my own initiative as very important for success in school and in my future career. |

| SDL7 | I am very motivated to learn on my own without having to rely on other people |

| SDL8 | I like to be in charge of what I learn and when I learn it. |

| Motivation for learning (MFL) | |

| MFL1 | I am motivated when I can complete the tasks distributed in the course successfully. |

| MFL2 | I am motivated when I have the ability to complete the tasks successfully. |

| MFL3 | I am interested in the course content, and it motivates me to learn from the course. |

| MFL4 | Improving my competence and knowledge in this course motivates me to learn. |

| MFL5 | The knowledge learnt from the course provides insights or long-term benefits to me, it motivates me to study in this course. |

| MFL6 | I am motivated by the course, because I would have strong relationship with my teacher. |

| MFL7 | I am motivated by the course, because I would have strong relationship with my fellow classmates. |

| MFL8 | I am glad that I feel connected to the course. |

References

- Abdous M., Yen C.J. A predictive study of learner satisfaction and outcomes in face-to-face, satellite broadcast, live video-streaming learning environments. The Internet and Higher Education. 2010;13(4):248–257. doi: 10.1016/j.iheduc.2010.04.005.

- Alqurashi E. Self-efficacy in online learning environments: A literature review. Contemporary Issues in Education Research. 2016;9(1):45–52. doi: 10.19030/cier.v9i1.9549.

- Alem F., Plaisent M., Bernard P., Chitu O. Student online readiness assessment tools: A systematic review approach. The Electronic Journal of e-Learning. 2014;12(4):375–383.

- Ansari J.A.N., Khan N.A. Exploring the role of social media in collaborative learning the new domain of learning. Smart Learning Environments. 2020;7:9. doi: 10.1186/s40561-020-00118-7.

- Atkinson J., Blankenship R. Online learning readiness of undergraduate college students: A comparison between male and female students. The Journal of Learning in Higher Education. 2009;5:49–56.

- Bacon D.R., Sauer P.L., Young M. Composite reliability in structural equations modeling. Educational and Psychological Measurement. 1995;55(3):394–406. doi: 10.1177/0013164495055003003.

- Badia A., Garcia C., Meneses J. Factors influencing university instructors' adoption of the conception of online teaching as a medium to promote learners' collaboration in virtual learning environments. Procedia - Social and Behavioral Sciences. 2014;141:369–374. doi: 10.1016/j.sbspro.2014.05.065.

- Baran E. The transformation of online teaching practice: Tracing successful online teaching in higher education (Doctoral dissertation). 2011. Retrieved from ProQuest Dissertations and Theses. (Order No. 3472990).

- Barbour M. Real-time virtual teaching: Lessons learned from a case study in a rural school. Online Learning. 2015;19:54–68. doi: 10.24059/olj.v19i5.705.

- BBC News. Coronavirus: Japan to close all schools to halt spread. 2020, February 27. Retrieved from https://www.bbc.com/news/world-asia-51663182.

- Beck K. Ensuring content validity of psychological and educational tests – the role of experts. Frontline Learning Research. 2020;8(6):1–37. doi: 10.14786/flr.v8i6.517.

- Bernard R.M., Borokhovski E., Schmid R.F., Tamim R.M., Abrami P.C. A meta-analysis of blended learning and technology use in higher education: From the general to the applied. Journal of Computing in Higher Education. 2014;26(1):87–122.

- Bigatel P.M., Ragan L.C., Kennan S., May J., Redmond B.F. The identification of competencies for online teaching success. Journal of Asynchronous Learning Networks. 2012;16(1):59–77.

- Blut M., Wang C. Technology readiness: A meta-analysis of conceptualizations of the construct and its impact on technology usage. Journal of the Academy of Marketing Science. 2020;48:649–669. doi: 10.1007/s11747-019-00680-8.

- Bovermann K., Weidlich J., Bastiaens T. Online learning readiness and attitudes towards gaming in gamified online learning – a mixed methods case study. International Journal of Educational Technology in Higher Education. 2018;15:27. doi: 10.1186/s41239-018-0107-0.

- Caspi A., Chajut E., Saporta K. Participation in class and in online discussions: Gender differences. Computers & Education. 2008;50:718–724. doi: 10.1016/j.compedu.2006.08.003.

- Chang M.M., Ho C.M. Effects of locus of control and learner-control on web-based language learning. Computer Assisted Language Learning. 2009;22(3):189–206.

- Chen C.Y., Yen R.R. Learner control, segmenting, and modality effects in animated demonstrations used as the before-class instructions in the flipped classroom. Interactive Learning Environments. 2019.

- Cheon J., Lee S., Crooks S.M., Song J. An investigation of mobile learning readiness in higher education based on the theory of planned behavior. Computers & Education. 2012;59(3):1054–1064. doi: 10.1016/j.compedu.2012.04.015.

- Cuadrado-García M., Ruiz-Molina M., Montoro-Pons J.D. 2010. Are there gender differences in e-learning use and assessment? Evidence from an interuniversity online project in Europe. [Google Scholar]

- Dangol R., Shrestha M. Learning readiness and educational achievement among school students. The International Journal of Indian Psychology. 2019;7:467–476. doi: 10.25215/0702.056. [DOI] [Google Scholar]

- Dewey J. Macmillan; New York: 1916. Education and democracy. [Google Scholar]

- Duan H., Wang S., Yang C. Coronavirus: Limit short-term economic damage. Nature. 2020;578(7796):515. doi: 10.1038/d41586-020-00522-6. [DOI] [PubMed] [Google Scholar]

- Dziuban C., Graham C.R., Moskal P.D., Norberg A., Sicilia N. Blended learning: The new normal and emerging technologies. Int J Educ Technol High Educ. 2018;15:3. doi: 10.1186/s41239-017-0087-5. [DOI] [Google Scholar]

- Education Bureau . 2020. Arrangements on deferral of class resumption for all schools. Retrieved from https://www.edb.gov.hk/attachment/en/sch-admin/admin/about-sch/diseases-prevention/edb_20200225_eng.pdf. [Google Scholar]

- Engin M. Analysis of students' online learning readiness based on their emotional intelligence level. Educational Research and Evaluation. 2017;5 doi: 10.13189/ujer.2017.051306. [DOI] [Google Scholar]

- Fornell Larcker D.F. Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research. 1981:39–50. 1981. [Google Scholar]

- Fredman N. Understanding motivation for study: Human capital or human capacity? International Journal of Training Research. 2014;12(2):93–105. [Google Scholar]

- Geng S., Law K.M.Y., Niu B. Investigating self-directed learning and technology readiness in blending learning environment. Int J Educ Technol High Educ. 2019;16:17. doi: 10.1186/s41239-019-0147-0. [DOI] [Google Scholar]

- Glenda G. An assessment of online instructor e-learning readiness before, during, and after course delivery. Journal of Computing in Higher Education. 2016;28:199–220. doi: 10.1007/s12528-016-9115-z. [DOI] [Google Scholar]

- Govender I. The learning context: Influence on learning to program. Computers & Education. 2009;53:1218–1230. doi: 10.1016/j.compedu.2009.06.005. [DOI] [Google Scholar]

- Guan W., Ni Z., Hu Y., Liang W., Ou C., He J.…Zhong N. Clinical characteristics of coronavirus disease 2019 in China. New England Journal of Medicine. 2020 doi: 10.1056/NEJMoa2002032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hair J.F., Jr., Black W.C., Babin B.J., Anderson R.E. 7th ed. Prentice Hall; NY: 2010. Multivariate data analysis. [Google Scholar]

- Hair J.F., Ringle C.M., Sarstedt M. PLS-SEM: Indeed a silver bullet. Journal of Marketing Theory and Practice. 2011;19(2):139–152. [Google Scholar]

- Hart C.M.D., Berger D., Jacob B., Loeb S., Hill M. AERA Open; 2019. Online learning, offline outcomes: Online course taking and high school student performance. [DOI] [Google Scholar]

- Hartnett M. Motivation in online education. Springer; Singapore: 2016. The importance of motivation in online learning. [DOI] [Google Scholar]

- Hawkins D.I., Mothersbaugh D.I. 11th ed. McGraw-Hill; Irwin: 2010. Consumer behavior: Building marketing strategy. [Google Scholar]

- Henseler J., Ringle C.M., Sarstedt M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science. 2015;43:115–135. doi: 10.1007/s11747-014-0403-8. [DOI] [Google Scholar]

- Henseler J., Ringle C., Sinkovics R. The use of partial least squares path modeling in international marketing. Advances in International Marketing (AIM) 2009;20:277–320. [Google Scholar]

- HKSAR . 2020. COVID-19 thematic website, together, we fight the virus. Inbound Travel. (n.d.). Retrieved from https://www.coronavirus.gov.hk/eng/inbound-travel.html. [Google Scholar]

- Huang C., Wang Y., Li X., Ren L.L., Zhao J.P., Hu Y.…Cao B. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet 2020. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5. published online Jan 24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hufton N.R., Elliott J.G., Illushin L. Teachers' beliefs about student motivation: Similarities and differences across cultures. Comparative Education. 2003;39(3):367–389. [Google Scholar]

- Hung M.L., Chou C., Chen C.H., Own Z.Y. Learner readiness for online learning: Scale development and student perceptions. Computers & Education. 2010;55(3):1080–1090. doi: 10.1016/j.compedu.2010.05.004. [DOI] [Google Scholar]

- Jang J.Y., Kim Y.C. The effects of parent-child communication patterns on children's interactive communication in online communities: Focusing on social self-efficacy and unwillingness to communicate as mediating factors. Asian Journal of Communication. 2012;22(5):493–505. [Google Scholar]

- Kerr M.S., Rynearson K., Kerr M.C. Student characteristics for online learning success. The Internet and Higher Education. 2006;9(2):91–105. [Google Scholar]

- Kim M.J., Lee C.K., Preis M.W. The impact of innovation and gratification on authentic experience, subjective well-being, and behavioral intention in tourism virtual reality: The moderating role of technology readiness. Telematics and Informatics. 2020 doi: 10.1016/j.tele.2020.101349. [DOI] [Google Scholar]

- King P.S. In: Encyclopedia of behavioral medicine. Gellman M.D., Turner J.R., editors. Springer; New York, NY: 2013. Moderators/moderating factors. [DOI] [Google Scholar]

- Kintu M.J., Zhu C., Kagambe E. Blended learning effectiveness: The relationship between student characteristics, design features and outcomes. Int J Educ Technol High Educ. 2017;14:7. doi: 10.1186/s41239-017-0043-4. [DOI] [Google Scholar]

- Kline R.B. 3rd ed. Guilford Press; 2011. Methodology in the Social Sciences.Principles and practice of structural equation modeling. [Google Scholar]

- Knowles M. Cambridge Books; New York: 1975. Self-directed learning: A guide for learners and teachers. [Google Scholar]

- Lau Y.Y., Ng A.K.Y. The motivations and expectations of students pursuing maritime education. WMU Journal of Maritime Affairs. 2015;14:313–331. [Google Scholar]

- Law K.M. Teaching project management using project-action learning (PAL) games: A case involving engineering management students in Hong Kong. International Journal of Engineering Business Management. 2019;11:1–7. doi: 10.1177/1847979019828570. [DOI] [Google Scholar]

- Law K.M.Y., Geng S., Li T. Student enrollment, motivation and learning performance in a blended learning environment: The mediating effects of social, teaching, and cognitive presence. Computers & Education. 2019;136(1):1–12. [Google Scholar]

- Lee P.L., Pang V. The influence of motivational orientations on academic achievement among working adults in continuing education. International Journal of Training Research. 2014;12(1):5–15. [Google Scholar]

- Li C., Shi X., Dang J. Online communication and subjective well-being in Chinese college students: The mediating role of shyness and social self-efficacy. Computers in Human Behavior. 2014;34:89–95. [Google Scholar]

- Little-Wiles J., Fernandez E., Fox P. IEEE Frontiers in Education Conference (FIE) Proceedings; Madrid: 2014. Understanding gender differences in online learning; pp. 1–4. [Google Scholar]

- Liu J.Q. Construction of real-time interactive mode-based online course live broadcast teaching platform for physical training. International Journal of Emerging Technologies in Learning (iJET) 2018 doi: 10.3991/ijet.v13i06.8583. 13.73. [DOI] [Google Scholar]

- Li X., Yang X. Effects of learning styles and interest on concentration and achievement of students in mobile learning. Journal of Educational Computing Research. 2016;54(7):922–945. doi: 10.1177/0735633116639953. [DOI] [Google Scholar]

- MacCallum R.C., Browne M.W., Sugarwara H.M. Power analysis and determination of sample size for covariance structure modeling. Psychological Methods. 1996;1(2):130–149. [Google Scholar]

- Mae M.S., Stuart Y. Does gender matter in online learning? Research in Learning Technology. 2001;9 doi: 10.3402/rlt.v9i2.12024. [DOI] [Google Scholar]

- Mat Yusoff A., Fan S.P., Razak F., Mustafa W. Discriminant validity assessment of religious teacher acceptance: The use of HTMT criterion. Journal of Physics: Conference Series. 2020;1529 doi: 10.1088/1742-6596/1529/4/042045. [DOI] [Google Scholar]

- Mayende G., Prinz A., Isabwe G. Improving communication in online learning systems. Proceedings of the 9th International Conference on Computer Supported Education. 2017;1:300–307. doi: 10.5220/0006311103000307. [DOI] [Google Scholar]

- Miranda R.J., Hermann R.S. Methods & strategies: Teaching in real time. Science and Children. 2015;53(1):80–85. [Google Scholar]

- 2020. Ministry of education of the People’s Republic of China.http://www.moe.gov.cn/ [Google Scholar]

- Mohamad S.N.M., Salleh M.A.A., Salam S. Factors affecting lecturers motivation in using online teaching tools. Procedia - Social and Behavioral Sciences. 2015;195:1778–1784. doi: 10.1016/j.sbspro.2015.06.378. [DOI] [Google Scholar]

- Mo J.P.T., Tang Y.M. Project-based learning of systems engineering V model with the support of 3D printing. Australasian Journal of Engineering Education. 2017;22(1):3–13. doi: 10.1080/22054952.2017.1338229. [DOI] [Google Scholar]

- Moutinho L. In: The SAGE dictionary of quantitative management research. Moutinho L., Hutcheson G., editors. SAGE Publications Ltd; London: 2011. Exploratory or confirmatory factor analysis; pp. 111–116. [DOI] [Google Scholar]

- Nouri J. The flipped classroom: For active, effective and increased learning – especially for low achievers. Int J Educ Technol High Educ. 2016;13:33. doi: 10.1186/s41239-016-0032-z. [DOI] [Google Scholar]

- Oqvist A., Malmstrom M. Teachers' leadership: A maker or a breaker of students' educational motivation. School Leadership & Management. 2016;36(4):365–380. [Google Scholar]

- Orvis K.A., Brusso R.C., Wasserman M.E., Fisher S.L. E-nabled for e-learning? The moderating role of personality in determining the optimal degree of learner control in an e-learning environment. Human Performance. 2010;24(1):60–78. [Google Scholar]

- Oxford R.L. Newbury House/Harper & Row; New York: 1990. Language learning strategies: What every teacher should know. [Google Scholar]

- Özkan K. The influence of learner readiness on student satisfaction and academic achievement in an online program at higher education. Turkish Online Journal of Educational Technology. 2015;14:133–142. [Google Scholar]

- Parasuraman A. Technology readiness index (TRI) a multiple-item scale to embrace new technologies. Journal of. Service Research. 2000;2(4):307–320. [Google Scholar]

- Phan T.T.N., Dang L.T.T. Teacher readiness for online teaching: A critical review. The International Journal on Open and Distance e-Learning. 2017;3(1) [Google Scholar]

- Qiu W.Q., Chu C., Mao A., Wu Jing. The impacts on health, society, and economy of SARS and H7N9 outbreaks in China: A case comparison study. Journal of Environmental and Public Health. 2018:1–7. doi: 10.1155/2018/2710185. [DOI] [PMC free article] [PubMed] [Google Scholar]