Abstract

The search for a scientific theory of consciousness should result in theories that are falsifiable. However, here we show that falsification is especially problematic for theories of consciousness. We formally describe the standard experimental setup for testing these theories. Based on a theory’s application to some physical system, such as the brain, testing requires comparing a theory’s predicted experience (given some internal observables of the system like brain imaging data) with an inferred experience (using report or behavior). If there is a mismatch between inference and prediction, a theory is falsified. We show that if inference and prediction are independent, it follows that any minimally informative theory of consciousness is automatically falsified. This is deeply problematic since the field’s reliance on report or behavior to infer conscious experiences implies such independence, so this fragility affects many contemporary theories of consciousness. Furthermore, we show that if inference and prediction are strictly dependent, it follows that a theory is unfalsifiable. This affects theories which claim consciousness to be determined by report or behavior. Finally, we explore possible ways out of this dilemma.

Keywords: consciousness, theories and models

Introduction

Successful scientific fields move from exploratory studies and observations to the point where theories are proposed that can offer precise predictions. Within neuroscience, the attempt to understand consciousness has moved out of the exploratory stage and there are now a number of theories of consciousness capable of predictions that have been advanced by various authors (Koch et al. 2016).

At this point in the field’s development, falsification has become relevant. In general, scientific theories should strive to make testable predictions (Popper 1968). In the search for a scientific theory of consciousness, falsifiability must be considered explicitly because it is commonly assumed that consciousness itself cannot be directly observed; instead, it can only be inferred from report or behavior.

Contemporary neuroscientific theories of consciousness first began to be proposed in the early 1990s (Crick 1994). Some have been based directly on neurophysiological correlates, such as proposing that consciousness is associated with neurons firing at a particular frequency (Crick and Koch 1990) or activity in some particular area of the brain like the claustrum (Crick and Koch 2005). Other theories have focused more on the dynamics of neural processing, such as the degree of recurrent neural connectivity (Lamme 2006). Others yet have focused on the “global workspace” of the brain, based on how signals are propagated across different brain regions (Baars 1997). Specifically, Global Neuronal Workspace (GNW) theory claims that consciousness is the result of an “avalanche” or “ignition” of widespread neural activity created by an interconnected but dispersed network of neurons with long-range connections (Sergent and Dehaene 2004).

Another avenue of research strives to derive a theory of consciousness from analysis of phenomenal experience. The most promising example thereof is Integrated Information Theory (IIT) (Tononi 2004, 2008; Oizumi et al. 2014). Historically, IIT is the first well-formalized theory of consciousness. It was the first (and arguably may still be the lone) theory that makes precise quantitative predictions about both the contents and level of consciousness (Tononi 2004). Specifically, the theory takes the form of a function, the input of which is data derived from some physical system’s internal observables, while the output of this function is predictions about the contents of consciousness (represented mathematically as an element of an experience space) and the level of consciousness (represented by a scalar value $\Phi$).

Both GNW and IIT have gained widespread popularity, sparked a general interest in consciousness, and have led to dozens if not hundreds of new empirical studies (Massimini et al. 2005; Del Cul et al. 2007; Dehaene and Changeux 2011; Gosseries et al. 2014; Wenzel et al. 2019). Indeed, there are already significant resources being spent attempting to falsify either GNW or IIT in the form of a global effort pre-registering predictions from the two theories so that testing can be conducted in controlled circumstances by researchers across the world (Ball 2019; Reardon 2019). We therefore often refer to both GNW and IIT as exemplar theories within consciousness research and show how our results apply to both. However, our results and reasoning apply to most contemporary theories, e.g. (Lau and Rosenthal 2011; Chang et al. 2019), particularly in their ideal forms. Note that we refer to both “theories” of consciousness and also “models” of consciousness, and use these interchangeably (Seth 2007).

Due to IIT’s level of formalization as a theory, it has triggered the most in-depth responses, expansions, and criticisms (Cerullo 2015; Bayne 2018; Mediano et al. 2019; Kleiner and Tull 2020) since well-formalized theories are much easier to criticize than nonformalized theories. Recently, one criticism levied against IIT was based on how the theory predicts feedforward neural networks have zero $\Phi$ and recurrent neural networks have nonzero $\Phi$. Since a given recurrent neural network can be “unfolded” into a feedforward one while preserving its output function, this has been argued to render IIT outside the realm of science (Doerig et al. 2019). Replies have criticized the assumptions which underlie the derivation of this argument (Tsuchiya et al. 2019; Kleiner 2020).

Here, we frame and expand concerns around testing and falsification of theories by examining a more general question: what are the conditions under which theories of consciousness (beyond IIT alone) can be falsified? We outline a parsimonious description of theory testing with minimal assumptions based on first principles. In this agnostic setup, falsifying a theory of consciousness is the result of finding a mismatch between the inferred contents of consciousness (usually based on report or behavior) and the contents of consciousness as predicted by the theory (based on the internal observables of the system under question).

This mismatch between prediction and inference is critical for an empirically meaningful scientific agenda, because a theory’s prediction of the state and content of consciousness on its own cannot be assessed. For instance, imagine a theory that predicts (based on internal observables like brain dynamics) that a subject is seeing an image of a cat. Without any reference to report or outside information, there can be no falsification of this theory, since it cannot be assessed whether the subject was actually seeing a “dog” rather than “cat.” Falsifying a theory of consciousness is based on finding such mismatches between reported experiences and predictions.

In the following work, we formalize this by describing the prototypical experimental setup for testing a theory of consciousness. We come to a surprising conclusion: a widespread experimental assumption implies that most contemporary theories of consciousness are already falsified.

The assumption in question is the independence of an experimenter’s inferences about consciousness from a theory’s predictions. To demonstrate the problems this independence creates for contemporary theories, we introduce a “substitution argument.” This argument is based on the fact that many systems are equivalent in their reports (e.g. their outputs are identical for the same inputs), and yet their internal observables may differ greatly. This argument constitutes both a generalization and correction of the “unfolding argument” against IIT presented in Doerig et al. (2019). Examples of such substitutions may involve substituting a brain with a Turing machine or a cellular automaton since both types of systems are capable of universal computation (Turing 1937; Wolfram 1984) and hence may emulate the brain’s responses, or replacing a deep neural network with a single-layer neural network, since both types of networks can approximate any given function (Hornik et al. 1989; Schäfer and Zimmermann 2006).
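As a toy illustration of the idea behind a substitution, the following Python sketch builds two systems that give identical outputs on every input while exposing different internal observables. The parity task, the function names, and the notion of a recorded "trace" are assumptions chosen purely for illustration; they are not taken from any of the theories discussed.

```python
from itertools import product

def recurrent_parity(bits):
    """Compute parity by updating one internal state bit step by step."""
    state = 0
    trace = []  # record of internal states (stand-in for internal observables)
    for b in bits:
        state ^= b
        trace.append(state)
    return state, tuple(trace)

# Precomputed table for all 3-bit inputs: same input-output map, no dynamics.
TABLE = {bits: sum(bits) % 2 for bits in product((0, 1), repeat=3)}

def lookup_parity(bits):
    """Compute parity by a single table lookup; there is no internal trace."""
    return TABLE[tuple(bits)], ()

inputs = list(product((0, 1), repeat=3))

# The "reports" (outputs) agree on every input ...
same_outputs = all(recurrent_parity(x)[0] == lookup_parity(x)[0] for x in inputs)
# ... while the internal observables do not.
same_internals = all(recurrent_parity(x)[1] == lookup_parity(x)[1] for x in inputs)

print(same_outputs, same_internals)
```

Any inference that relies only on the outputs cannot distinguish the two systems, whereas a prediction that relies on the internal trace may treat them very differently.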

Crucially, our results do not imply that falsifications are impossible. Rather, they show that the independence assumption implies that whenever there is an experiment where a theory’s predictions based on internal observables and a system’s reports agree, there exists also an actual physical system that falsifies the theory. One consequence is that the “unfolding argument” concerning IIT (Doerig et al. 2019) is merely a small subset of a much larger issue that affects all contemporary theories which seek to make predictions about experience off of internal observables. Our conclusion shows that if independence holds, all such theories come falsified a priori. Thus, instead of putting the blame of this problem on individual theories of consciousness, we show that it is due to issues of falsification in the scientific study of consciousness, particularly the field’s contemporary usage of report or behavior to infer conscious experiences.

A simple response to avoid this problem is to claim that prediction and inference are not independent. This is the case, e.g., in behaviorist theories of consciousness, but arguably also in Global Workspace Theory (Baars 2005), the “attention schema” theory of consciousness (Graziano and Webb 2015) or “fame in the brain” (Dennett 1991) proposals. We study this answer in detail and find that making a theory’s predictions and an experimenter’s inferences strictly dependent leads to pathological unfalsifiability.

Our results show that if the independence of prediction and inference holds true, as in contemporary cases where report about experiences is relied upon, it is likely that no current theory of consciousness is correct. Alternatively, if the assumption of independence is rejected, theories rapidly become unfalsifiable. While this dilemma may seem like a highly negative conclusion, we take it to show that our understanding of testing theories of consciousness may need to change to deal with these issues.

Formal Description of Testing Theories

Here, we provide a formal framework for experimentally testing a particular class of theories of consciousness. The class we consider makes predictions about the conscious experience of physical systems based on observations or measurements. This class describes many contemporary theories, including leading theories such as IIT (Oizumi et al. 2014), GNW Theory (Dehaene and Changeux 2004), Predictive Processing [when applied to account for conscious experience (Hohwy 2012; Hobson et al. 2014; Seth 2014; Clark 2019; Dolega and Dewhurst 2020)], or Higher Order Thought Theory (Rosenthal 2002). These theories may be motivated in different ways, or contain different formal structures, such as those of category theory (Tsuchiya et al. 2016). In some cases, contemporary theories in this class may lack the specificity to actually make precise predictions in their current form. Therefore, the formalisms we introduce may sometimes describe a more advanced form of a theory, one that can actually make predictions.

In the following section, we introduce the necessary terms to define how to falsify this class of theories: how the measurement of a physical system’s observables results in datasets (Experiments section), how a theory makes use of those datasets to offer predictions about consciousness (Predictions section), how an experimenter makes inferences about a physical system’s experiences (Inferences section), and finally how falsification of a theory occurs when there is a mismatch between a theory’s prediction and an experimenter’s inference (Falsification section). In the Summary section, we give a summary of the introduced terms. In subsequent sections, we explore the consequences of this setup, such as how all contemporary theories are already falsified if the data used by inferences and predictions are independent, and also how theories are unfalsifiable if this is changed to a strict form of dependency.

Experiments

All experimental attempts to either falsify or confirm a member of the class of theories we consider begin by examining some particular physical system which has some specific physical configuration, state, or dynamics, p. This physical system is part of a class P of such systems which could have been realized, in principle, in the experiment. For example, in IIT, the class of systems P may be some Markov chains, set of logic gates, or neurons in the brain, and every $p \in P$ denotes that system being in a particular state at some time t. On the other hand, for GNW, P might comprise the set of long-range cortical connections that make up the global workspace of the brain, with p being the activity of that global workspace at that time.

Testing a physical system necessitates experiments or observations. For instance, neuroimaging tools like fMRI or EEG have to be used in order to obtain information about the brain. This information is used to create datasets such as functional networks, wiring diagrams, models, or transition probability matrices. To formalize this process, we denote by $\mathcal{O}$ all possible datasets that can result from observations of P. Each $o \in \mathcal{O}$ is one particular dataset, the result of carrying out some set of measurements on p. We denote the datasets that can result from measurements on p as $\mathrm{obs}(p)$. Formally:

$$\mathrm{obs}: P \twoheadrightarrow \mathcal{O} \tag{1}$$

where obs is a correspondence, which is a “generalized function” that allows more than one element in the image (functions are a special case of correspondences). A correspondence is necessary because, for a given p, various possible datasets may arise, e.g., due to different measurement techniques such as fMRI vs. EEG, or due to the stochastic behavior of the system, or due to varying experimental parameters. In the real world, data obtained from experiments may be incomplete or noisy, or neuroscientific findings difficult to reproduce (Gilmore et al. 2017). Thus for every $p \in P$, there is a whole class of datasets which can result from the experiment.
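As a minimal sketch of what a correspondence is, the following Python snippet models obs as a dictionary from system configurations to sets of datasets. The configuration names and dataset labels are hypothetical placeholders and carry no meaning beyond the illustration.

```python
# Toy model of a correspondence obs: P -> subsets of O.
# All names below ("awake", "fmri_a", ...) are hypothetical placeholders.

P = {"awake", "asleep"}

# One configuration can yield several datasets (different techniques, noise).
obs = {
    "awake": {"fmri_a", "eeg_a"},
    "asleep": {"eeg_b"},
}

# The class O of all possible datasets is the union of the images obs(p).
O = set().union(*obs.values())

def is_function(corr):
    """A correspondence is a function when every image has exactly one element."""
    return all(len(image) == 1 for image in corr.values())

print(sorted(O))
print(is_function(obs))
```

In this toy case obs is a genuine correspondence rather than a function, because the configuration "awake" admits more than one dataset.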

Note that obs describes the experiment, the choice of observables, and all conditions during an experiment that generates the dataset o necessary to apply the theory, which may differ from theory to theory, such as interventions in the case of IIT. In all realistic cases, the correspondence obs is likely quite complicated since it describes the whole experimental setup. For our argument, it simply suffices that this mapping exists, even if it is not known in detail.

It is also worth noting here that all leading neuroscientific theories of consciousness, from IIT to GNW, assume that experiences are not observable or directly measurable when applying the theory to physical systems. That is, experiences themselves are never identified or used in obs but are rather inferred based on some dataset o that contains report or other behavioral indicators.

Next, we explore how the datasets in $\mathcal{O}$ are used to make predictions about the experience of a physical system.

Predictions

A theory of consciousness makes predictions about the experience of some physical system in some configuration, state, or dynamics, p, based on some dataset o. To this end, a theory carries within its definition a set or space E whose elements correspond to various different conscious experiences a system could have. The interpretation of this set varies from theory to theory, ranging from descriptions of the level of conscious experience in early versions of IIT, descriptions of the level and content of conscious experience in contemporary IIT (Kleiner and Tull 2020), or the description only of whether a presented stimulus is experienced in GNW or HOT. We sometimes refer to elements e of E simply as experiences.

Formally, this means that a prediction considers an experimental dataset $o \in \mathcal{O}$ (determined by obs) and specifies an element of the experience space E. We denote this as pred, for “prediction,” which is a map from $\mathcal{O}$ to E. The details of how individual datasets are being used to make predictions again do not matter for the sake of our investigation. What matters is that a procedure exists, and this is captured by pred. However, we have to take into account that a single dataset $o \in \mathcal{O}$ may not predict only one single experience. In general, pred may only allow an experimenter to constrain experience of the system in that it only specifies a subset of all experiences a theory models. We denote this subset by $\mathrm{pred}(o) \subseteq E$. Thus, pred is also a correspondence, $\mathrm{pred}: \mathcal{O} \twoheadrightarrow E$.

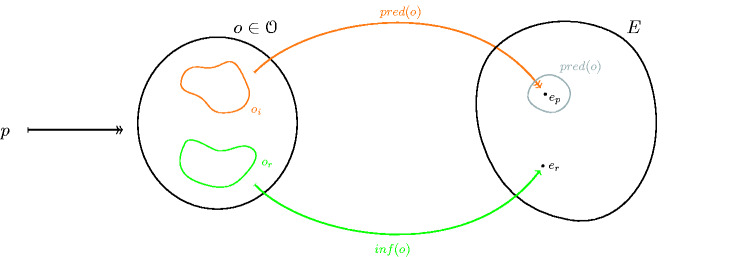

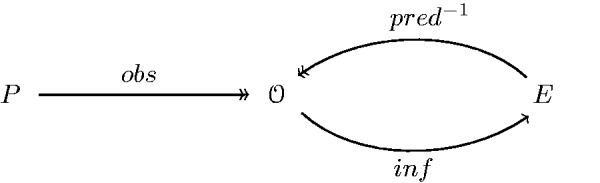

Shown in Fig. 1 is the full set of terms needed to formally define how most contemporary theories of consciousness make predictions about the experience. So far, what we have said is very general. Indeed, the force and generalizability of our argument comes from the fact that we do not have to define pred explicitly for the various models we consider. It suffices that it exists, in some form or the other, for the models under consideration.

Figure 1.

We assume that an experimental setup apt for a particular model of consciousness has been chosen for some class of physical systems P, wherein $p \in P$ represents the dynamics or configurations of a particular physical system. $\mathcal{O}$ then denotes all datasets that can arise from observations or measurements on P. Measuring the observables of p maps to datasets $o \in \mathcal{O}$, which is denoted by the obs correspondence. E represents the mathematical description of experience given by the theory or model of consciousness under consideration. In the simplest case, this is just a set whose elements indicate whether a stimulus has been perceived consciously or not, but far more complicated structures can arise (e.g. in IIT). The correspondence pred describes the process of prediction as a map from $\mathcal{O}$ to E.

It is crucial to note that predicting states of consciousness alone does not suffice to test a model of consciousness. Some have previously criticized theories of consciousness, IIT in particular, just based off of their counter-intuitive predictions. An example is the criticism that relatively simple grid-like networks have high $\Phi$ (Aaronson 2014; Tononi 2014). However, debates about counter-intuitive predictions are not meaningful by themselves, since pred alone does not contain enough information to say whether a theory is true or false. The most a theory could be criticized for is either not fitting our own phenomenology or not being parsimonious enough, neither of which is necessarily violated by counter-intuitive predictions. For example, it may actually be parsimonious to assume that many physical systems have consciousness (Goff 2017). That is, speculation about acceptable predictions by theories of consciousness must implicitly rely on a comparative reference to be meaningful, and speculations that are not explicit about their reference are uninformative.

Inferences

As discussed in the previous section, a theory is unfalsifiable given just predictions alone, and so pred must be compared to something else. Ideally, this would be the actual conscious experience of the system under investigation. However, as noted previously, the class of theories we focus on here assumes that experience itself is not part of the observables. For this reason, the experience of a system must be inferred separately from a theory’s prediction to create a basis of comparison. Most commonly, such inferences are based on reports. For instance, an inference might be based on an experimental participant reporting on the switching of some perceptually bistable image (Blake et al. 2014) or on reports about seen vs. unseen images in masking paradigms (Alais et al. 2010).

It has been pointed out that report in a trial may interfere with the actual isolation of consciousness, and there has recently been the introduction of so-called “no-report paradigms” (Tsuchiya et al. 2015). In these cases, report is first correlated to some autonomous phenomenon like optokinetic nystagmus (stereotyped eye movement), and then the experimenter can use this instead of the subject’s direct reports to infer their experiences. Indeed, there can even be simpler cases where report is merely assumed: e.g., that in showing a red square, a participant will experience a red square without necessarily asking the participant since previously that participant has proved compos mentis. Similarly, in cases of nonhumans incapable of verbal report, “report” can be broadly construed as behavior or output.

All these cases can be broadly described as being a case of inference from some data. These data might be actual reports (like a participant’s button pushes) or may be based on physiological reactions (like no-report paradigms) or may be the outputs of a neural network or set of logic gates, such as the results of an image classification task (LeCun et al. 2015). Therefore, the inference can be represented as a function, inf, between a dataset o generated by observation or measurement of the physical system, and the set of postulated experiences in the model of consciousness, E. In symbols, $\mathrm{inf}: \mathcal{O} \to E$.

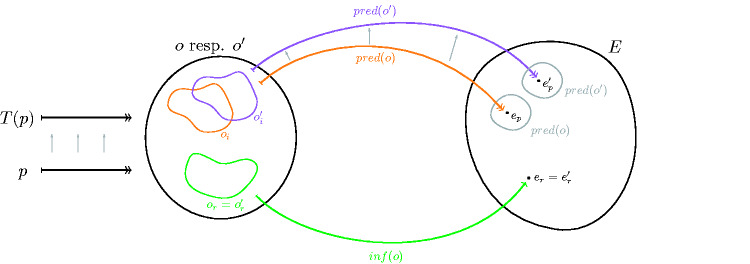

Defining inf as a function means that we assume that for every experimental dataset o, one single experience in E is inferred during the experiment. Here, we use a function instead of a correspondence for technical and formal ease, which does not affect our results: if two correspondences to the same space are given, one of them can be turned into a function. (If inf is a correspondence, one defines a new space $E'$ by $E' := \{\mathrm{inf}(o) \mid o \in \mathcal{O}\}$. Every individual element of this space describes exactly what can be inferred from one dataset $o \in \mathcal{O}$, so that $\mathrm{inf}': \mathcal{O} \to E'$ is a function. The correspondence pred is then redefined, for every $e' \in E'$, by the requirement that $e' \in \mathrm{pred}'(o)$ iff $e \in \mathrm{pred}(o)$ for some $e \in e'$.) The inf function is flexible enough to encompass direct report, no-report, input/output analysis, and also assumed-report cases. It is a mapping that describes the process of inferring the conscious experience of a system from data recorded in the experiments. Both inf and pred are depicted in Fig. 2.
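The construction that turns an inference correspondence into a function can be sketched in a few lines of Python. This is one reading of the construction under stated assumptions: the dataset labels, the experiences, and the helper names `inf_fn` and `pred_prime` are hypothetical, and each element of the new space is represented as a frozenset of original experiences.

```python
# Hypothetical toy data: inf as a correspondence (dataset -> set of experiences).
inf_corr = {
    "o1": frozenset({"red", "orange"}),
    "o2": frozenset({"blue"}),
}
# pred as a correspondence into the original experience space E.
pred = {
    "o1": {"red"},
    "o2": {"green"},
}

# New experience space E': each element is one inferred set of experiences.
E_prime = set(inf_corr.values())

def inf_fn(o):
    """inf as a function into E': exactly one element of E' per dataset."""
    return inf_corr[o]

def pred_prime(o):
    """Redefined prediction: e' is predicted iff some e in e' lies in pred(o)."""
    return {e_prime for e_prime in E_prime if e_prime & pred[o]}

# Membership test of the inferred element in the redefined prediction.
for o in sorted(inf_corr):
    print(o, inf_fn(o) in pred_prime(o))
```

With this representation, the inferred element lies in the redefined prediction exactly when the original inferred set and the original predicted set overlap.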

Figure 2.

Two maps are necessary for a full experimental setup, one that describes a theory’s predictions about experience (pred), another that describes the experimenter’s inference about it (inf). Both map from a dataset $o \in \mathcal{O}$ collected in an experimental trial to some subset of experiences described by the model, E.

It is worth noting that we have used here the same class $\mathcal{O}$ as in the definition of the prediction mapping pred above. This makes sense because the inference process also uses data obtained in experimental trials, such as reports by a subject. So both pred and inf can be described to operate on the same total dataset measured, even though they usually use different parts of this dataset (cf. below).

Falsification

We have now introduced all elements which are necessary to formally say what a falsification of a theory of consciousness is. Falsifying a theory of consciousness requires a mismatch between an experimenter’s inference (generally based on report) and the predicted consciousness of the subject. In order to describe this, we consider some particular experimental trial, as well as inf and pred.

Definition 2.1.

There is a falsification at $o \in \mathcal{O}$ if we have

$$\mathrm{inf}(o) \notin \mathrm{pred}(o). \tag{2}$$

This definition can be spelled out in terms of individual components of E. To this end, for any given dataset $o \in \mathcal{O}$, let $e_r := \mathrm{inf}(o)$ denote the experience that is being inferred, and let $e_p \in \mathrm{pred}(o)$ be one of the experiences that is predicted from that dataset. Then (2) simply states that we have $e_p \neq e_r$ for all possible predictions $e_p$. None of the predicted states of experience is equal to the inferred experience.

What does Equation (2) mean? There are two possible cases. Either the prediction based on the theory of consciousness is correct and the inferred experience is wrong, or the prediction is wrong, in which case the model would be falsified. In short: either the prediction process or the inference process is wrong.

We remark that if there is a dataset o on which the inference procedure or the prediction procedure cannot be used, then this dataset cannot be used in falsifying a model of consciousness. Thus, when it comes to falsifications, we can restrict to datasets o for which both procedures are defined.
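The following Python sketch spells out Definition 2.1 on a toy experiment, together with the restriction to datasets on which both procedures are defined. The trial labels and experiences are hypothetical placeholders; pred is modeled as a dictionary into sets (a correspondence) and inf as a dictionary into single values (a function).

```python
# Hypothetical toy experiment; dataset labels and experiences are placeholders.
pred = {  # correspondence: dataset -> set of predicted experiences
    "trial_1": {"sees_cat"},
    "trial_2": {"sees_cat", "sees_dog"},
    "trial_3": {"sees_nothing"},
}
inf = {  # function: dataset -> one inferred experience
    "trial_1": "sees_cat",
    "trial_2": "sees_dog",
    "trial_3": "sees_cat",
    "trial_4": "sees_dog",  # no prediction is available for this dataset
}

def falsification_at(o):
    """Definition 2.1: there is a falsification at o if inf(o) is not in pred(o)."""
    return inf[o] not in pred[o]

# Only datasets on which both procedures are defined can be used.
usable = sorted(set(pred) & set(inf))
falsified = [o for o in usable if falsification_at(o)]

print(usable)
print(falsified)
```

Here only the third trial yields a mismatch, and the fourth trial is excluded because the prediction procedure is not defined on it.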

In order to understand in more detail what is going on if (2) holds, we have to look into a single dataset $o \in \mathcal{O}$. This will be of use later.

Generally, inf and pred will make use of different parts of the data obtained in an experimental trial. For example, in the context of IIT or GNW, data about the internal structure and state of the brain will be used for the prediction. These data can be obtained from an fMRI scan or EEG measurement. The state of consciousness on the other hand can be inferred from verbal reports. Pictorially, we may represent this as in Fig. 3. We use the following notation:

$o_i$: For a chosen dataset $o \in \mathcal{O}$, we denote the part of the dataset which is used for the prediction process by $o_i$ (for “internal” data). This can be thought of as data about the internal workings of the system. We call $o_i$ the prediction data in o.

$o_r$: For a chosen dataset $o \in \mathcal{O}$, we denote the part of the dataset which is used for inferring the state of experience by $o_r$ (for “report” data). We call it the inference data in o.

Note that in both cases, the subscript can be read similarly as the notation for restricting a set. We remark that a different kind of prediction could be considered as well, where one makes use of the inverse of pred. In Appendix B, we prove that this is in fact equivalent to the case considered here, so that Definition 2.1 indeed covers the most general situation.

Figure 3.

This figure represents the same setup as Fig. 2. The left circle depicts one single dataset o. $o_i$ (orange) is the part of the dataset used for prediction. $o_r$ (green) is the part of the dataset used for inferring the state of experience. Usually the green area comprises verbal reports or button presses, whereas the orange area comprises the data obtained from brain scans. The right circle depicts the experience space E of a theory under consideration. $e_p$ denotes a predicted experience while $e_r$ denotes the inferred experience. Therefore, in total, to represent some specific experimental trial we use $o \in \mathcal{O}$, $e_r \in E$ and $e_p \in E$, where $e_r = \mathrm{inf}(o)$ and $e_p \in \mathrm{pred}(o)$.

Summary

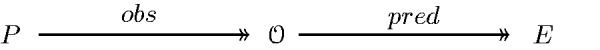

In summary, for testing of a theory of consciousness we have introduced the following notation:

$P$ denotes a class of physical systems that could have been tested, in principle, in the experiment under consideration, each in various different configurations. In most cases, every $p \in P$ thus describes a physical system in a particular state, dynamical trajectory, or configuration.

obs is a correspondence which contains all details on how the measurements are set up and what is measured. It describes how measurement results (datasets) are determined by a system configuration under investigation. This correspondence is given, though usually not explicitly known, once a choice of measurement scheme has been made.

$\mathcal{O}$ is the class of all possible datasets that can result from observations or measurements of the systems in the class P. Any single experimental trial results in a single dataset $o \in \mathcal{O}$, whose data are used for making predictions based on the theory of consciousness and for inference purposes.

pred describes the process of making predictions by applying some theory of consciousness to a dataset o. It is therefore a mapping from $\mathcal{O}$ to E.

E denotes the space of possible experiences specified by the theory under consideration. The result of the prediction is a subset of this space, denoted as $\mathrm{pred}(o)$. Elements of this subset are denoted by $e_p$ and describe predicted experiences.

inf describes the process of inferring a state of experience from some observed data, e.g., verbal reports, button presses or using no-report paradigms. Inferred experiences are denoted by $e_r$.

The Substitution Argument

Substitutions are changes of physical systems (i.e. the substitution of one for another) that leave the inference data invariant, but may change the result of the prediction process. A specific case of substitution, the unfolding of a reentrant neural network to a feedforward one, was recently applied to IIT to argue that IIT cannot explain consciousness (Doerig et al. 2019).

Here, we show that, in general, the contemporary notion of falsification in the science of consciousness exhibits this fundamental flaw for almost all contemporary theories, rather than being a problem for a particular theory. This flaw is based on the independence between the data used for inferences about consciousness (like reports) and the data used to make predictions about consciousness. We discuss various responses to this flaw in Objections section.

We begin by defining what a substitution is in the Substitutions section, show that it implies falsifications in the “Substitutions imply falsifications” section, and analyze the particularly problematic case of universal substitutions in the “Universal substitutions imply complete falsification” section. In the “When does a universal substitution exist?” section, we prove that universal substitutions exist if prediction and inference data are independent and give some examples of already-known cases.

Substitutions

In order to define formally what a substitution is, we work with the inference content $o_r$ of a dataset o as introduced in the Falsification section. We first denote the class of all physical configurations which could have produced the inference content $o_r$ upon measurement by $P_{o_r}$. Using the correspondence obs, which describes the relation between physical systems and measurement results, this can be defined as

$$P_{o_r} := \{\, p \in P \mid o_r \in \mathrm{obs}(p) \,\} \tag{3}$$

where $\mathrm{obs}(p)$ denotes all possible datasets that can be measured if the system p is under investigation and where $o_r \in \mathrm{obs}(p)$ is a shorthand for $o \in \mathrm{obs}(p)$ with inference content $o_r$.
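To make Equation (3) concrete, the following Python sketch computes the class of configurations that can produce a given inference content. It assumes, purely for illustration, that each dataset splits cleanly into an internal part and a report part; the class `Dataset`, the configuration names, and the labels are hypothetical.

```python
from typing import NamedTuple

class Dataset(NamedTuple):
    internal: str  # o_i, the prediction data
    report: str    # o_r, the inference data

# Hypothetical obs correspondence: configuration -> set of possible datasets.
obs = {
    "brain": {Dataset("recurrent_activity", "reports_cat")},
    "unfolded_network": {Dataset("feedforward_activity", "reports_cat")},
    "brain_other_state": {Dataset("recurrent_activity_2", "reports_dog")},
}

def configurations_with_report(o_r):
    """P_{o_r}: all p with at least one dataset in obs(p) whose report part is o_r."""
    return {
        p for p, datasets in obs.items()
        if any(o.report == o_r for o in datasets)
    }

print(sorted(configurations_with_report("reports_cat")))
```

Two quite different configurations end up in the same class because they share the same report data.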

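Any map of the form $S: P_{o_r} \to P_{o_r}$ takes a system configuration p which can produce inference content $o_r$ to another system’s configuration S(p) which can produce the same inference content. This allows us to define what a substitution is formally. In what follows, $\circ$ indicates the composition of the correspondences obs and pred to give a correspondence from P to E, which could also be denoted as $\mathrm{pred}(\mathrm{obs}(p))$. That is, $\mathrm{pred} \circ \mathrm{obs}(p) = \{\, e \in E \mid e \in \mathrm{pred}(o) \text{ for some } o \in \mathrm{obs}(p) \,\}$; it is the image under pred of the set $\mathrm{obs}(p)$. The symbol $\cap$ denotes the intersection of sets.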

Definition 3.1.

There is an $o_r$-substitution if there is a transformation $S: P_{o_r} \to P_{o_r}$ such that, for at least one $p \in P_{o_r}$,

$$\mathrm{pred} \circ \mathrm{obs}(p) \,\cap\, \mathrm{pred} \circ \mathrm{obs}(S(p)) = \emptyset. \tag{4}$$

In words, a substitution requires there to be a transformation S which keeps the inference data constant but changes the prediction of the system, so much in fact that the prediction of the original configuration p and of the transformed configuration S(p) are fully incompatible, i.e. there is no single experience e which is contained in both predictions. Given some inference data $o_r$, an $o_r$-substitution then requires this to be the case for at least one system configuration p that gives this inference data. In other words, the transformation S is such that for at least one p, the predictions change completely, while the inference content $o_r$ is preserved.

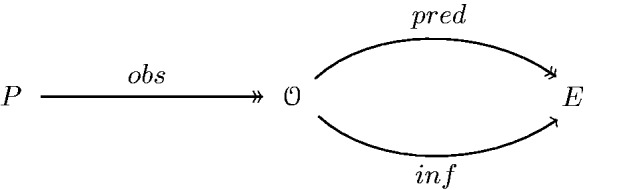

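A pictorial definition of substitutions is given in Fig. 4. We remark that if obs and pred were functions, so that $\mathrm{pred} \circ \mathrm{obs}(p)$ only contained one element, Equation (4) would be equivalent to $\mathrm{pred}(\mathrm{obs}(p)) \neq \mathrm{pred}(\mathrm{obs}(S(p)))$.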
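Definition 3.1 can be checked mechanically on a finite toy example. The sketch below assumes that a dataset is a pair of internal data and report data and that the toy theory predicts from the internal data alone; all configuration names, labels, and the candidate maps `swap` and `identity` are hypothetical.

```python
# Hypothetical toy setup. A dataset is a pair (o_i, o_r).
obs = {
    "brain": {("recurrent", "reports_cat")},
    "unfolded": {("feedforward", "reports_cat")},
}
# Toy theory: the prediction depends only on the internal data o_i.
pred_from_internal = {
    "recurrent": {"sees_cat"},
    "feedforward": {"no_experience"},
}

def pred_obs(p):
    """Composition of pred and obs: union of pred(o) over all o in obs(p)."""
    return set().union(*(pred_from_internal[o_i] for o_i, _ in obs[p]))

def configurations_with_report(o_r):
    """P_{o_r}: configurations that can produce the report data o_r."""
    return {p for p, datasets in obs.items() if any(r == o_r for _, r in datasets)}

def is_substitution(S, o_r):
    """Definition 3.1 for a candidate map S, given as a dict on P_{o_r}."""
    P_or = configurations_with_report(o_r)
    maps_into_P_or = all(S.get(p) in P_or for p in P_or)
    return maps_into_P_or and any(
        pred_obs(p).isdisjoint(pred_obs(S[p])) for p in P_or
    )

swap = {"brain": "unfolded", "unfolded": "brain"}
identity = {"brain": "brain", "unfolded": "unfolded"}

print(is_substitution(swap, "reports_cat"), is_substitution(identity, "reports_cat"))
```

Swapping the two configurations preserves the report data while making the predictions disjoint, so it qualifies; the identity map does not, because each nonempty prediction overlaps with itself.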

We will find below that the really problematic case arises if there is an $o_r$-substitution for every possible inference content $o_r$. We refer to this case as a universal substitution.

Figure 4.

This picture illustrates substitutions. Assume that some dataset o with inference content $o_r$ is given. A substitution is a transformation S of physical systems which leaves the inference content $o_r$ invariant but which changes the result of the prediction process. Thus whereas p and S(p) have the same inference content $o_r$, the prediction content of experimental datasets is different; different in fact to such an extent that the predictions of consciousness based on these datasets are incompatible (illustrated by the nonoverlapping gray circles on the right). Here, we have used that by definition of $P_{o_r}$, every system configuration in $P_{o_r}$ yields at least one dataset $o'$ with the same inference content as o and have identified o and $o'$ in the drawing.

Definition 3.2.

There is a universal substitution if there is an $o_r$-substitution $S_{o_r}: P_{o_r} \to P_{o_r}$ for every $o_r$.

We recall that according to the notation introduced in Falsification section, the inference content of any dataset $o \in \mathcal{O}$ is denoted by $o_r$ (adding the subscript $r$). Thus, the requirement is that there is an $o_r$-substitution for every inference data that can arise in the experiment under consideration (for every inference data that is listed in $\mathcal{O}$). The subscript $o_r$ of $S_{o_r}$ indicates that the transformation $S$ in Definition 3.1 can be chosen differently for different $o_r$. Definition 3.2 does not require there to be one single transformation that works for all $o_r$.

Substitutions imply falsifications

The force of our argument comes from the fact that if there are substitutions, then this necessarily leads to mismatches between inferences and predictions. This is shown by the following lemma.

Lemma 3.3.

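If there is an $o_r$-substitution, there is a falsification at some $o \in \mathcal{O}$.

Proof. Let $p$ be the physical system in Definition 3.1 and define $p' = S(p)$. Let $o \in \mathrm{obs}(p)$ be a dataset of $p$ which has inference content $o_r$ and let $o'$ be a dataset of $p'$ which has the same inference content $o_r$, guaranteed to exist by the definition of $P_{o_r}$ in (3). Equation (4) implies that

$$\mathrm{pred}(o) \cap \mathrm{pred}(o') = \emptyset. \tag{5}$$

Since, however, $o_r = o'_r$, we have $\mathrm{inf}(o) = \mathrm{inf}(o')$. Thus we have either $\mathrm{inf}(o) \notin \mathrm{pred}(o)$ or $\mathrm{inf}(o') \notin \mathrm{pred}(o')$, or both. Thus there is either a falsification at $o$, a falsification at $o'$, or both. □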

The last lemma shows that if there are substitutions, then there are necessarily falsifications. This might, however, not be considered too problematic, since it could always be the case that the model is right whereas the inferred experience is wrong. Inaccessible predictions are not unusual in science. A fully problematic case only arises for universal substitutions, i.e., if there is an $o_r$-substitution for every inference content $o_r$ that can arise in an experiment under consideration.

Universal substitutions imply complete falsification

In Falsification section, we have defined falsifications for individual datasets $o \in \mathcal{O}$. Using the “insight view” of single datasets, we can refine this definition somewhat by relating it to the inference content only.

Definition 3.4.

There is an $o_r$-falsification if there is a falsification for some $o \in \mathcal{O}$ which has inference content $o_r$.

This definition is weaker than the original definition, because among all datasets which have inference content $o_r$, only one needs to exhibit a falsification. Using this notion, the next lemma specifies the exact relation between substitutions and falsifications.

Lemma 3.5.

If there is an $o_r$-substitution, there is an $o_r$-falsification.

Proof. This lemma follows directly from the proof of Lemma 3.3 because the datasets $o$ and $o'$ used in that proof both have inference content $o_r$. □

This finally allows us to show our first main result. It shows that if a universal substitution exists, the theory of consciousness under consideration is falsified. We explain the meaning of this proposition after the proof.

Proposition 3.6.

If there is a universal substitution, there is an $o_r$-falsification for all possible inference contents $o_r$.

Proof. By definition of universal substitution, there is an $o_r$-substitution for every $o_r$. Thus, the claim follows directly from Lemma 3.5. □
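The content of this proposition can be checked by brute force in a small finite model. The sketch below is an illustration under invented assumptions (four configurations, two reports, two experiences, and predictions that are single experiences); it enumerates every inference rule that depends on the report alone and records which reports end up with at least one mismatching dataset.

```python
from itertools import product

# Toy model with a substitution for every report; all values are
# illustrative assumptions. A dataset is a pair (o_i, o_r).
OBS = {
    "p1": {("i_a", "r_yes")},
    "p2": {("i_b", "r_yes")},
    "p3": {("i_a", "r_no")},
    "p4": {("i_b", "r_no")},
}
PRED = {"i_a": {"e1"}, "i_b": {"e2"}}
EXPERIENCES = ["e1", "e2"]
REPORTS = ["r_yes", "r_no"]


def falsified_reports(inf):
    """Reports o_r for which some dataset has inf(o_r) not in pred(o_i)."""
    return {
        o_r
        for data in OBS.values()
        for o_i, o_r in data
        if inf[o_r] not in PRED[o_i]
    }


# Every inference rule that assigns one experience to each report.
all_rules = [
    dict(zip(REPORTS, choice))
    for choice in product(EXPERIENCES, repeat=len(REPORTS))
]
every_rule_fully_falsified = all(
    falsified_reports(inf) == set(REPORTS) for inf in all_rules
)
print(len(all_rules), every_rule_fully_falsified)
```

Whatever experience the inference rule assigns to a report, one of the two configurations producing that report carries an incompatible prediction, so every report has a falsification in this toy model.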

In combination with Definition 3.4, this proposition states that for every possible report (or any other type of inference procedure, cf. our use of terminology in Falsification section), there is a dataset $o$ which contains the report’s data and for which we have

$$\mathrm{inf}(o_r) \notin \mathrm{pred}(o), \tag{6}$$

where we have slightly abused notation in writing $\mathrm{inf}(o_r)$ instead of $\mathrm{inf}(o)$ for clarity. This implies that one of two cases must hold. Either at least one of the inferred experiences is correct, in which case the corresponding prediction is wrong and the theory needs to be considered falsified. Or, for all inference contents $o_r$, the prediction is correct, which by (6) implies that no single inference points at the correct experience, so that the inference procedure is completely wrong. This shows that Proposition 3.6 can equivalently be stated as follows.

Proposition 3.7.

If there is a universal substitution, either every single inference operation is wrong or the theory under consideration is already falsified.

Next, we discuss under which circumstances a universal substitution exists.

When does a universal substitution exist?

In the last section, we have seen that if a universal substitution exists, this has strong consequences. In this section, we discuss under what conditions universal substitutions exist.

Theories need to be minimally informative

We have taken great care above to make sure that our notion of prediction is compatible with incomplete or noisy datasets. This is the reason why $\mathrm{pred}$ is a correspondence, the most general object one could consider. For the purpose of this section, we add a gentle assumption which restricts $\mathrm{pred}$ slightly: we assume that every prediction carries at least a minimal amount of information. In our case, this means that for every prediction $\mathrm{pred}(o)$, there is at least one other prediction $\mathrm{pred}(o')$ which is different from $\mathrm{pred}(o)$. Put in simple terms, this means that we do not consider theories of consciousness which have only a single prediction.

In order to take this into account, for every $o \in \mathcal{O}$, we define $\bar{o} := \mathrm{obs}(\mathrm{obs}^{-1}(o))$, which comprises exactly all those datasets which can be generated by physical systems $p$ that also generate $o$. When applying our previous definitions, this can be fleshed out as

$$\bar{o} = \{\, o' \in \mathcal{O} \mid \exists\, p \in P \text{ such that } o \in \mathrm{obs}(p) \text{ and } o' \in \mathrm{obs}(p) \,\}. \tag{7}$$

Using this, we can state our minimal information assumption in a way that is compatible with the general setup displayed in Fig. 2:

We assume that the theories of consciousness under consideration are minimally informative in that for every $o \in \mathcal{O}$, there exists an $o' \in \mathcal{O}$ such that

$$\mathrm{pred}(\bar{o}) \cap \mathrm{pred}(\bar{o}') = \emptyset. \tag{8}$$

Inference and prediction data are independent

We have already noted that in most experiments, the prediction content $o_i$ and inference content $o_r$ consist of different parts of a dataset. What is more, they are usually assumed to be independent, in the sense that changes in $o_i$ are possible while keeping $o_r$ constant. This is captured by the next definition.

Definition 3.8.

Inference and prediction data are independent if for any $o_i$, $o_i'$ and $o_r$, there is a variation

$$\nu: P \to P \tag{9}$$

such that $o_i \in \mathrm{obs}(p)$ and $o_i' \in \mathrm{obs}(\nu(p))$, but $o_r \in \mathrm{obs}(p)$ and $o_r \in \mathrm{obs}(\nu(p))$, for some $p \in P$.

Here, we use the same shorthand as in (3). For example, the requirement $o_i \in \mathrm{obs}(p)$ is a shorthand for there being an $o \in \mathrm{obs}(p)$ which has prediction content $o_i$. The variation $\nu$ in this definition is a variation in $P$, which describes physical systems which could, in principle, have been realized in an experiment (cf. Summary section). We note that a weaker version of this definition can be given which still implies our results below, cf. Appendix A. Note that if inference and prediction data are not independent, e.g., because they have a common cause, problems of tautologies loom large, cf. Objections section. Throughout the text, we often refer to Definition 3.8 simply as “independence.”

Universal substitutions exist

Combining the last two sections, we can now prove that universal substitutions exist.

Proposition 3.9.

If inference and prediction data are independent, universal substitutions exist.

Proof. To show that a universal substitution exists, we need to show that for every $o \in \mathcal{O}$, an $o_r$-substitution exists (Definition 3.1). Thus assume that an arbitrary $o \in \mathcal{O}$ is given. The minimal information assumption guarantees that there is an $o'$ such that Equation (8) holds. As before, we denote the prediction content of $o$ and $o'$ by $o_i$ and $o_i'$, respectively, and the inference content of $o$ by $o_r$.

Since inference and prediction data are independent, there exists a $p \in P$ as well as a $\nu: P \to P$ such that $o_i \in \mathrm{obs}(p)$, $o_i' \in \mathrm{obs}(\nu(p))$, $o_r \in \mathrm{obs}(p)$ and $o_r \in \mathrm{obs}(\nu(p))$. By Definition (7), the first two of these four conditions imply that $\mathrm{obs}(p) \subset \bar{o}$ and $\mathrm{obs}(\nu(p)) \subset \bar{o}'$. Thus, Equation (8) applies and allows us to conclude that

$$\mathrm{pred} \circ \mathrm{obs}(p) \,\cap\, \mathrm{pred} \circ \mathrm{obs}(\nu(p)) = \emptyset.$$

Via Equation (3), the latter two of the four conditions imply that $p \in P_{o_r}$ and $\nu(p) \in P_{o_r}$. Thus, we may restrict $\nu$ to $P_{o_r}$ to obtain a map

$$S: P_{o_r} \to P_{o_r},$$

which in light of the first part of this proof exhibits at least one $p \in P_{o_r}$ which satisfies (4). Thus we have shown that an $o_r$-substitution exists. Since $o$ was arbitrary, it follows that a universal substitution exists. □

The intuition behind this proof is very simple. In virtue of our assumption that theories of consciousness need to be minimally informative, for any dataset $o$, there is another dataset $o'$ which makes a nonoverlapping prediction. But in virtue of inference and prediction data being independent, we can find a variation that changes the prediction content as prescribed by $o$ and $o'$ but keeps the inference content constant. This suffices to show that there exists a transformation $S$ as required by the definition of a substitution.

Combining this result with Proposition 3.7, we finally can state our main theorem.

Theorem 3.10.

If inference and prediction data are independent, either every single inference operation is wrong or the theory under consideration is already falsified.

Proof. The theorem follows by combining Propositions 3.9 and 3.7. □

In the next section, we give several examples of universal substitutions, before discussing various possible responses to our result in Objections section.

Examples of data independence

Our main theorem shows that testing a theory of consciousness will necessarily lead to its falsification if inference and prediction data are independent (Definition 3.8), and if at least one single inference can be trusted (Theorem 3.10). In this section, we give several examples that illustrate the independence of inference and prediction data. We take report to mean output, behavior, or verbal report itself and assume that prediction data derives from internal measurements.

Artificial neural networks. ANNs, particularly those trained using deep learning, have grown increasingly powerful and capable of human-like performance (LeCun et al. 2015; Bojarski et al. 2016). For any ANN, report (output) is a function of node states. Crucially, this function is noninjective, i.e., some nodes are not part of the output. For example, in deep learning, the report is typically taken to consist of the last layer of the ANN, while the hidden layers are not taken to be part of the output. Correspondingly, for any given inference data, one can construct an ANN with arbitrary prediction data by adding nodes, changing connections, and changing those nodes which are not part of the output. Put differently, one can always substitute a given ANN with another with different internal observables but identical or near-identical reports. From a mathematical perspective, it is well known that both feedforward ANNs and recurrent ANNs can approximate any given function (Hornik et al. 1989; Schäfer and Zimmermann 2007). Since reports are just some function, it follows that there are viable universal substitutions.
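As a minimal sketch of such a substitution, consider two hand-built one-hidden-layer ReLU networks in plain Python. The weights, the inputs, and the helper `run` are invented for illustration. The second network doubles the hidden weights, halves the output weights, and adds a hidden node with zero output weight, so its output (the "report") agrees with the first network while its hidden activations (the internal observables) differ.

```python
def relu(v):
    """Elementwise rectified linear unit."""
    return [max(0.0, x) for x in v]


def matvec(m, v):
    """Multiply a matrix (list of rows) with a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def run(net, x):
    """Return (hidden activations, output) of a one-hidden-layer ReLU net."""
    hidden = relu(matvec(net["w_in"], x))
    output = matvec(net["w_out"], hidden)
    return hidden, output


# Illustrative weights only; not taken from any trained model.
net_a = {"w_in": [[1.0, -1.0], [0.5, 2.0]], "w_out": [[1.0, 1.0]]}
# Same input-output map: hidden weights doubled, output weights halved,
# plus an extra hidden node whose output weight is zero.
net_b = {
    "w_in": [[2.0, -2.0], [1.0, 4.0], [3.0, 3.0]],
    "w_out": [[0.5, 0.5, 0.0]],
}

inputs = [[1.0, 0.0], [0.0, 1.0], [2.0, -1.0], [-1.0, 3.0]]
same_reports = all(run(net_a, x)[1] == run(net_b, x)[1] for x in inputs)
different_internals = any(run(net_a, x)[0] != run(net_b, x)[0] for x in inputs)
print(same_reports, different_internals)
```

Because ReLU satisfies relu(2z) = 2·relu(z), the agreement of outputs is not specific to the sampled inputs; the rescaling by powers of two also keeps the floating-point comparison exact in this example.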

A special case thereof is the unfolding transformation considered in Doerig et al. (2019) in the context of IIT. The arguments in this article constitute a proof of the fact that for ANNs, inference and prediction data are independent (Definition 3.8). Crucially, our main theorem shows that this has implications for all minimally informative theories of consciousness. A similar result (using a different characterization of theories of consciousness than minimally informative) has been shown in Kleiner (2020).

Universal computers. Turing machines are extremely different in architecture from ANNs. Since they are capable of universal computation (Turing 1937), they should provide an ideal candidate for a universal substitution. Indeed, this is exactly the reasoning behind the Turing test of conversational artificial intelligence (Turing 1950). Therefore, if one believes it is possible for a sufficiently fast Turing machine to pass the Turing test, one needs to accept that substitutions exist. Notably, Turing machines are just one example of universal computation, and there are other instances of different parameter spaces or physical systems that are capable thereof, such as cellular automata (Wolfram 1984).

Universal intelligences. There are models of universal intelligence that allow for maximally intelligent behavior across any set of tasks (Hutter 2003). For instance, consider the AIXI model, the gold standard for universal intelligence, which operates via Solomonoff induction (Solomonoff 1964; Hutter 2004). The AIXI model generates optimal decision making over some class of problems, and methods linked to it have already been applied to a range of behaviors, such as creating “AI physicists” (Wu and Tegmark 2019). Its universality indicates it is a prime candidate for universal substitutions. Notably, unlike a Turing machine, it avoids issues of precisely how it is accomplishing universal substitution of report, since the algorithm that governs the AIXI model behavior is well-described and “relatively” simple.

The above are all real and viable classes of systems that are used every day in science and engineering, and all of them provide different viable universal substitutions if inferences are based on reports or outputs. They show that in normal experimental setups such as the ones commonly used in neuroscientific research into consciousness (Frith et al. 1999), inference and prediction data are indeed independent, and dependency is neither investigated nor properly considered. It is always possible to substitute the physical system under consideration with another that has different internal observables, and therefore different predictions, but similar or identical reports. Indeed, recent research using the framework introduced in this work shows that even different spatiotemporal models of a system can be substituted for one another, leading to falsification (Hanson and Walker 2020). We have not considered possible but less reasonable examples of universal substitutions, like astronomically large look-up ledgers of reports.

As an example of our Main Theorem 3.10, we consider the case of IIT. Since the theory is normally applied in Boolean networks, logic gates, or artificial neural networks, one usually takes report to mean “output.” In this case, it has already been proven that systems with different internal structures, and hence different predicted experiences, can have identical input/output (and therefore identical reports or inferences about report) (Albantakis and Tononi 2019). To take another case: within IIT it has already been acknowledged that a Turing machine may have wildly different predicted contents of consciousness for the same behavior or reports (Koch 2019). Therefore, data independence during testing has already been shown to apply to IIT under its normal assumptions.

Inference and Prediction Data Are Strictly Dependent

An immediate response to our main result showing that many theories suffer from a priori falsification would be to claim that it offers support of theories which define conscious experience in terms of what is accessible to report. This is the case, e.g., for behaviorist theories of consciousness but might arguably also be the case for some interpretations of global workspace theory or fame in the brain proposals. In this section, we show that this response is not valid, as theories of this kind, where inference and prediction data are strictly dependent, are unfalsifiable.

In order to analyze this case, we first need to outline specifically how theories can be pathologically unfalsifiable. Clearly, the goal of the scientific study as a whole is to find, eventually, a theory of consciousness that is empirically adequate and therefore corroborated by all experimental evidence. Not being falsified in experiments is thus a necessary (though not sufficient) condition that any purportedly “true” theory of consciousness needs to satisfy, and not being falsifiable by the set of possible experiments is not per se a bad thing. We seek to distinguish this from cases of unfalsifiability due to pathological assumptions that underlie a theory of consciousness, assumptions which render an experimental investigation meaningless. Specifically, a pathological dependence between inferences and predictions leads to theories which are unfalsifiable.

Such unfalsifiable theories can be identified neatly in our formalism. To see how, recall that $\mathcal{O}$ denotes the class of all datasets that can result from an experiment investigating the physical systems in the class $P$. Put differently, it contains all datasets that could, in principle, appear when probed in the experiment. This is not the class of all possible datasets of type $\mathcal{O}$ one can think of. Many datasets which are of the same form as elements of $\mathcal{O}$ might simply not arise in the experiment under consideration. We denote the class of all possible datasets as $\overline{\mathcal{O}}$.

Intuitively, in terms of possible worlds semantics, $\mathcal{O}$ describes the datasets which could appear, for the type of experiment under consideration, in the actual world. $\overline{\mathcal{O}}$, in contrast, describes the datasets which could appear in this type of experiment in any possible world. For example, $\overline{\mathcal{O}}$ contains datasets which can only occur if consciousness attaches to the physical in a different way than it actually does in the actual world.

By construction, $\mathcal{O}$ is a subset of $\overline{\mathcal{O}}$, which describes which among the possible datasets actually arise across experimental trials. Hence, $\mathcal{O}$ also determines which theory of consciousness is compatible with (i.e., not falsified by) experimental investigation. However, $\overline{\mathcal{O}}$ defines all possible datasets independent of any constraint by real empirical results, i.e., all possible imaginable datasets.

Introduction of $\overline{\mathcal{O}}$ allows us to distinguish the pathological cases of unfalsifiability mentioned above. Whereas any purportedly true theory should only fail to be falsified with respect to the experimental data $\mathcal{O}$, a pathological unfalsifiability arises if a theory cannot be falsified at all, i.e., over $\overline{\mathcal{O}}$. This is captured by the following definition.

Definition 4.1.

A theory of consciousness which does not have a falsification over $\overline{\mathcal{O}}$ is empirically unfalsifiable.

Here, we use the term “empirically unfalsifiable” to highlight and refer to the pathological notion of unfalsifiability. Intuitively speaking, a theory which satisfies this definition appears to be true independently of any experimental investigation, and without the need for any such investigation. Using $\overline{\mathcal{O}}$, we can also define the notion of strict dependence in a useful way.

Definition 4.2.

Inference and prediction data are strictly dependent if there is a function $f$ such that for any $o \in \overline{\mathcal{O}}$, we have $o_i = f(o_r)$.

This definition says that there exists a function $f$ which, for every possible inference data $o_r$, allows one to deduce the prediction data $o_i$. We remark that the definition refers to $\overline{\mathcal{O}}$ and not $\mathcal{O}$, as the dependence of inference and prediction considered here holds by assumption and is not simply asserting a contingency in nature.

The definition is satisfied, e.g., if inference data is equal to prediction data, i.e., if $o_i = o_r$, where $f$ is simply the identity. This is the case, e.g., for behaviorist theories (Skinner 1938) of consciousness, where consciousness is equated directly with report or behavior, or for precursors of functionalist theories of consciousness that are based on behavior or input/output (Putnam 1960). The definition is also satisfied in the case where prediction data are always a subset of the inference data:

$$o_i \subset o_r. \tag{10}$$

Here, $f$ is simply the restriction function. This arguably applies to global workspace theory (Baars 2005), the “attention schema” theory of consciousness (Graziano and Webb 2015) or “fame in the brain” (Dennett 1991) proposals.

In all these cases, consciousness is generated by—and hence needs to be predicted via—what is accessible to report or output. In terms of Block’s distinction between phenomenal consciousness and access consciousness (Block 1996), Equation (10) holds true whenever a theory of consciousness is under investigation where access consciousness determines phenomenal consciousness.

Our second main theorem is the following.

Theorem 4.3.

If a theory of consciousness implies that inference and prediction data are strictly dependent, then it is either already falsified or empirically unfalsifiable.

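Proof. To prove the theorem, it is useful to consider the inference and prediction content of datasets explicitly. The possible pairings that can occur in an experiment are given by

$$\mathcal{O}_{\mathrm{exp}} := \{\, (o_i, o_r) \mid o \in \mathcal{O} \,\}, \tag{11}$$

where we have again used our notation that $o_i$ denotes the prediction data of $o$, and similarly for $o_r$. To define the possible pairings that can occur in $\overline{\mathcal{O}}$, we let $\overline{\mathcal{O}}_i$ denote the class of all prediction contents that arise in $\overline{\mathcal{O}}$, and $\overline{\mathcal{O}}_r$ denote the class of all inference contents that arise in $\overline{\mathcal{O}}$. The set of all conceivable pairings is then given by

$$\mathcal{O}_{\mathrm{all}} := \{\, (o_i, o_r') \mid o_i \in \overline{\mathcal{O}}_i,\ o_r' \in \overline{\mathcal{O}}_r \,\} \tag{12}$$

$$\phantom{\mathcal{O}_{\mathrm{all}}} = \{\, (o_i, o_r') \mid o \in \overline{\mathcal{O}},\ o' \in \overline{\mathcal{O}} \,\}. \tag{13}$$

Crucially, here, $o_i$ and $o_r'$ do not have to be part of the same dataset $o$. Combined with Definition 2.1, we conclude that there is a falsification over $\mathcal{O}$ if for some $(o_i, o_r) \in \mathcal{O}_{\mathrm{exp}}$, we have $\mathrm{inf}(o_r) \notin \mathrm{pred}(o_i)$, and there is a falsification over $\overline{\mathcal{O}}$ if for some $(o_i, o_r') \in \mathcal{O}_{\mathrm{all}}$, we have $\mathrm{inf}(o_r') \notin \mathrm{pred}(o_i)$.

Next we show that if inference and prediction data are strictly dependent, then $\mathcal{O}_{\mathrm{all}} = \mathcal{O}_{\mathrm{exp}}$ holds. We start with the set $\mathcal{O}_{\mathrm{all}}$ as defined in (12). Expanding this definition in words, it reads

$$\mathcal{O}_{\mathrm{all}} = \{\, (d_i, d_r) \mid d_r = o_r \text{ for some dataset } o, \text{ and } d_i = o_i' \text{ for some dataset } o' \,\}, \tag{14}$$

where we have used the symbols $d_i$ and $d_r$ to denote prediction and inference data independently of any dataset $o$.

Assume that the first condition in this expression, $d_r = o_r$, holds for some dataset $o$. Since inference and prediction data are strictly dependent, we have $d_i = f(d_r)$. Furthermore, for the same reason, the prediction content $o_i$ of the dataset $o$ satisfies $o_i = f(o_r)$. Applying the function $f$ to both sides of the first condition gives $f(d_r) = f(o_r)$, which thus in turn implies $o_i = d_i$. This means that the $o$ that satisfies the first condition in (14) automatically also satisfies the second condition. Therefore, due to inference and prediction data being strictly dependent, (14) is equivalent to

$$\{\, (d_i, d_r) \mid d_r = o_r \text{ and } d_i = o_i \text{ for some dataset } o \,\}. \tag{15}$$

This, however, is exactly $\mathcal{O}_{\mathrm{exp}}$ as defined in (11). Thus we conclude that if inference and prediction data are strictly dependent, $\mathcal{O}_{\mathrm{all}} = \mathcal{O}_{\mathrm{exp}}$ necessarily holds.

Returning to the characterization of falsification in terms of $\mathcal{O}_{\mathrm{exp}}$ and $\mathcal{O}_{\mathrm{all}}$ above, what we have just found implies that there is a falsification over $\mathcal{O}$ if and only if there is a falsification over $\overline{\mathcal{O}}$. Thus either there is a falsification over $\mathcal{O}$, in which case the theory is already falsified, or there is no falsification over $\overline{\mathcal{O}}$, in which case the theory under consideration is empirically unfalsifiable. □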

The gist of this proof is that if inference and prediction data are strictly dependent, then as far as the inference and prediction contents go, $\mathcal{O}$ and $\overline{\mathcal{O}}$ are the same. That is, the experiment does not add anything to the evaluation of the theory. It is sufficient to know only all possible datasets to decide whether there is a falsification. In practice, this would mean that knowledge of the experimental design (which reports are to be collected, on the one hand, and which possible data a measurement device can produce, on the other) is sufficient to evaluate the theory, which is clearly at odds with the role of empirical evidence required in any scientific investigation. Thus, such theories are empirically unfalsifiable.

To give an intuitive example of the theorem, let us examine a theory that uses the information accessible to report in a system to predict conscious experience (perhaps this information is “famous” in the brain or is within some accessible global workspace). In terms of our notation, we can assume that $o_r$ denotes everything that is accessible to report, and $o_i$ denotes that part which is used by the theory to predict conscious experience. Thus, in this case we have $o_i \subset o_r$. Since the predicted contents are always part of what can be reported, there can never be any mismatch between reports and predictions. However, this is not only the case for $\mathcal{O}$ but also, in virtue of the theory’s definition, for all possible datasets, i.e., $\overline{\mathcal{O}}$. Therefore, such theories are empirically unfalsifiable. Experiments add no information to whether the theory is true or not, and such theories are empirically uninformative or tautological.
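The following Python sketch illustrates this example under deliberately simple, invented assumptions: a report is a fixed-length tuple of features, the theory’s prediction data is the restriction of the report to its first entries (a hypothetical "workspace"), the inferred experience is the report taken at face value, and the prediction is the set of all experiences that agree with the workspace contents. Enumerating every conceivable report, not just those an experiment might produce, yields no mismatch.

```python
from itertools import product

# All values below are illustrative assumptions, not part of any theory.
FEATURES = ["red", "green"]  # hypothetical reportable contents
SLOTS = 3                    # hypothetical length of a report
WORKSPACE = 2                # leading slots used by the theory


def f(o_r):
    """Strict dependence: prediction data is a restriction of the report."""
    return o_r[:WORKSPACE]


def inf(o_r):
    """Inferred experience: the report taken at face value."""
    return o_r


def pred(o_i):
    """Predicted experiences: every experience that agrees with o_i."""
    return {o_i + rest for rest in product(FEATURES, repeat=SLOTS - WORKSPACE)}


# Enumerate every conceivable report, not only experimentally observed ones.
all_reports = list(product(FEATURES, repeat=SLOTS))
mismatches = [o_r for o_r in all_reports if inf(o_r) not in pred(f(o_r))]
print(len(all_reports), mismatches)
```

The absence of mismatches here follows from the construction alone, before any data are collected, which is the sense in which such a theory is empirically unfalsifiable.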

Objections

In this section, we discuss a number of possible objections to our results.

Restricting inferences to humans only

The examples given in section 3.4.4 show that data independence holds during the usual testing setups. This is because prima facie it seems reasonable to base inferences either on report capability or intelligent behavior in a manner agnostic of the actual physical makeup of the system. Yet this entails independence, so in these cases our conclusions apply.

One response to our results might be to restrict all testing of theories of consciousness solely to humans. In our formalism, this is equivalent to basing the strength of inferences not on reports themselves but on an underlying biological homology. Such an $\mathrm{inf}$ function may still pick out specific experiences via reports, but the weight of the inference is carried by homology rather than report or behavior. This would mean that the substitution argument does not significantly affect consciousness research, as reports of nonhuman systems would simply not count. Theories of consciousness, so this idea goes, would be supported by abductive reasoning from testing in humans alone.

Overall, there are strong reasons to reject this restriction of inferences. One significant issue is that this objection is equivalent to saying that reports or behavior in nonhumans carry no information about consciousness, an incredibly strong claim. Indeed, this is highly problematic for consciousness research, which often uses nonhuman animal models (Boly et al. 2013). For instance, cephalopods are among the most intelligent animals yet are quite distant on the tree of life from humans and have a distinct neuroanatomy, and still are used for consciousness research (Mather 2008). Even in artificial intelligence research, there is increasing evidence that deep neural networks produce brain-like structures such as grid cells, shape tuning, visual illusions, and many others (Richards et al. 2019). Given these similarities, it becomes questionable why the strength of inferences should be based on homology instead of capability of report or intelligence.

What is more, restricting inferences to humans alone is unlikely to be sufficient to avoid our results. Depending on the theory under consideration, data independence might exist just in human brains alone. That is, it is probable that there are transformations (as in Equation (9)) available within the brain wherein $o_r$ is fixed but $o_i$ varies. This is particularly true once one allows for interventions on the human brain by experimenters, such as perturbations like transcranial magnetic stimulation, which is already used in consciousness research (Rounis et al. 2010; Napolitani et al. 2014).

For these reasons this objection does not appear viable. At minimum, it is clear that if the objection were taken seriously, it would imply significant changes to consciousness research which would make the field extremely restricted with strong a priori assumptions.

Reductio ad absurdum

Another hypothetical objection to our results is to argue that they could just as well be applied to scientific theories in other fields. If this turned out to be true, this would not imply our argument is necessarily incorrect. But the fact that other scientific theories do not seem especially problematic with regard to falsification would raise the question of whether some assumption is illegitimately strong. In order to address this, we explain which of our assumptions is specific to theories of consciousness and would not hold when applied to other scientific theories. Subsequently, we give an example to illustrate this point.

The assumption in question is that $\mathcal{O}$, the class of all datasets that can result from observations or measurements of a system, is determined by the physical configurations in $P$ alone. That is, every single dataset $o$, including both its prediction content $o_i$ and its inference content $o_r$, is determined by $p$, and not by a conscious experience in $E$. In Fig. 2, this is reflected in the fact that there is an arrow from $P$ to $\mathcal{O}$, but no arrow from $E$ to $\mathcal{O}$.

This assumption expresses the standard paradigm of testing theories of consciousness in neuroscience, according to which both the data used to predict a state of consciousness and the reports of a system are determined by its physical configuration alone. This, in turn, may be traced back to consciousness’ assumed subjective and private nature, which implies that any empirical access to states of consciousness in scientific investigations is necessarily mediated by a subject’s reports, and to general physicalist assumptions.

This is different from experiments in other natural sciences. If there are two quantities of interest whose relation is to be modeled by a scientific theory, then in all reasonable cases there are two independent means of collecting information relevant to a test of the theory, one providing a dataset that is determined by the first quantity, and one providing a dataset that is determined by the second quantity.

Consider, as an example, the case of temperature $T$ and its relation to microphysical states. To apply our argument, the temperature $T$ would replace the experience space $E$ and $p$ would denote a microphysical configuration. In order to test any particular theory about how temperature is determined by microphysical states, one would make use of two different measurements. The first measurement would access the microphysical states and would allow measurement of, say, the mean kinetic energy (if that’s what the theory under consideration utilizes). This first measurement would provide a dataset $o_m$ that replaces the prediction data $o_i$ above. For the second measurement, one would use a thermometer or some other measuring device to obtain a dataset $o_t$ that replaces our inference data $o_r$ above. Comparison of the inferred temperature with the temperature that is predicted based on $o_m$ would allow testing of the theory under consideration. These independent means provide independent access to each of the two datasets in question. Combining $o_m$ and $o_t$ in one dataset $o$, the diagrammatic representation is

which differs from the case of theories of consciousness considered here, wherein the physical system determines both datasets.

Theories could be based on phenomenology

Another response to the issue of independence/dependence identified here is to propose that a theory of consciousness may not have to be falsified but can be judged by other characteristics. This is reminiscent of ideas put forward in connection with String Theory, which some have argued can be judged by elegance or parsimony alone (Carroll 2018).

In addition to elegance and parsimony, in consciousness science, one could in particular consider a theory’s fit with phenomenology, i.e., how well a theory describes the general structure of conscious experience. Examples of theories that are constructed based on a fit with phenomenology are recent versions of IIT (Oizumi et al. 2014) or any view that proposes developing theories based on isomorphisms between the structure of experiences and the structure of physical systems or processes (Tsuchiya et al. 2019).

It might be suggested that phenomenological theories might be immune to aspects of the issues we outline in our results (Negro 2020). We emphasize that in order to avoid our results, and indeed the need for any experimental testing at all, a theory constructed from phenomenology has to be uniquely derivable from conscious experience. However, to date, no such derivation exists, as phenomenology seems to generally underdetermine the postulates of IIT (Bayne 2018; Barrett and Mediano 2019), and because it is unknown what the scope and nature of nonhuman experience is. Therefore, theories based on phenomenology can only confidently identify systems with human-like conscious experiences and cannot currently do so uniquely. Thus they cannot avoid the need for testing.

As long as no unique and correct derivation exists across the space of possible conscious experiences, the use of experimental tests to assess theories of consciousness, and hence our results, cannot be avoided.

Rejecting falsifiability

Another response to our findings might be to deny the importance of falsifications within scientific methodology. Such responses may reference a Lakatosian conception of science, according to which science does not proceed by discarding theories immediately upon falsification, but instead consists of research programs built around a family of theories (Lakatos 1980). These research programs have a protective belt which consists of nonessential assumptions that are required to make predictions, and which can easily be modified in response to falsifications, as well as a hard core that is immune to falsifications. Within the Lakatosian conception of science, research programs are either progressive or degenerating, depending on whether or not they can “anticipate theoretically novel facts in its growth” (Lakatos 1980).

It is important to note, however, that Lakatos does not actually break with falsificationism. This is why Lakatos’s description of science is often called “refined falsificationism” in philosophy of science (Radnitzky 1991). Thus cases of testing theories’ predictions remain relevant in a Lakatosian view in order to distinguish between progressive and degenerating research programs. Therefore, our results generally translate into this view of scientific progress. In particular, Theorem 3.10 shows that for every single inference procedure that is taken to be valid, there exists a system for which the theory makes a wrong prediction. This necessarily implies that a research program is degenerating. That is, independence implies that there is always an available substitution that can falsify any particular prediction coming from the research program.

Conclusion

In this article, we have subjected the usual scheme for testing theories of consciousness to a thorough formal analysis. We have shown that there appear to be deep problems inherent in this scheme which need to be addressed.

Crucially, in contrast to other similar results (Doerig et al. 2019), we do not put the blame on individual theories of consciousness, but rather show that a key assumption that is usually being made is responsible for the problems: an experimenter’s inference about consciousness and a theory’s predictions are generally implicitly assumed to be independent during testing across contemporary theories. As we formally prove, if this independence holds, substitutions or changes to physical systems are possible that falsify any given contemporary theory. Whenever there is an experimental test of a theory of consciousness on some physical system which does not lead to a falsification, there necessarily exists another physical system which, if it had been tested, would have produced a falsification of that theory. We emphasize that this problem does not only affect one particular type of theory, e.g., those based on causal interactions like IIT; our theorems apply to all contemporary neuroscientific theories of consciousness if independence holds.

In the second part of our results, we examine the case where independence does not hold. We show that if an experimenter’s inferences about consciousness and a theory’s predictions are instead considered to be strictly dependent, empirical unfalsifiability follows, which renders any type of experiment to test a theory uninformative. This affects all theories wherein consciousness is predicted off of reports or behavior (such as behaviorism), theories based off of input/output functions, and also theories that equate consciousness with accessible or reportable information.

Thus, theories of consciousness seem caught between Scylla and Charybdis, requiring delicate navigation. In our opinion, there may only be two possible paths forward to avoid these dilemmas, which we briefly outline below. Each requires a revision of the current scheme of testing or developing theories of consciousness.

Lenient dependency

When combined, our main theorems show that both independence and strict dependence of inference and prediction data are problematic and thus neither can be assumed in an experimental investigation. This raises the question of whether there are reasonable cases where inference and prediction are dependent, but not strictly dependent.

A priori, in the space of possible relationships between inference and prediction data, there seems to be room for relationships that are neither independent (The substitution argument section) nor strictly dependent (Inference and prediction data are strictly dependent section). We define relationships of this kind as cases of lenient dependency. No current theory or testing paradigm that we know of satisfies this definition. Yet the existence of such cases cannot be excluded. Such cases would technically not be beholden to either Theorem 3.10 or Theorem 4.3.

There seem to be two general possibilities for how lenient dependencies could be built. On the one hand, one could hope to find novel forms of inference that allow one to surpass the problems we have identified here. This would likely constitute a major change in the methodologies of experimental testing of theories of consciousness. On the other hand, another possibility to attain lenient dependence would be to construct theories of consciousness which yield prediction functions that are designed to explicitly have a leniently dependent link to inference functions. This would likely constitute a major change in constructing theories of consciousness.

Physics is not causally closed

Another way to avoid our conclusion is to only consider theories of consciousness which do not describe the physical as causally closed (Kim 1998). That is, the presence or absence of a particular experience itself would have to make a difference to the configuration, dynamics, or states of physical systems above and beyond what would be predicted with just information about the physical system itself. If a theory of consciousness does not describe the physical as closed, a whole other range of predictions becomes possible: predictions which concern the physical domain itself, e.g., changes in the dynamics of the system which depend on the dynamics of conscious experience. These predictions are not considered in our setup and may serve to test a theory of consciousness without the problems we have explored here.

Supplementary Materials

(A) Weak Independence

In this section, we show how Definition 3.8 can be substantially relaxed while still ensuring our results to hold. To this end, we need to introduce another bit of formalism: We assume that predictions can be compared to establish how different they are. This is the case, e.g., in IIT where predictions map to the space of maximally irreducible conceptual structures (MICS), sometimes also called the space of Q-shapes, which carries a distance function analogous to a metric (Kleiner and Tull, 2020). We assume that for any given prediction, one can determine which of all those predictions that do not overlap with the given one is most similar to the latter, or equivalently which is least different. We call this a minimally differing prediction and use it to induce a notion of minimally differing datasets below. Uniqueness is not required.

Let an arbitrary dataset $o \in \mathcal{O}$ be given. The minimal information assumption from Theories need to be minimally informative section ensures that there is at least one dataset $o'$ such that Equation (8) holds. For what follows, let $o^\perp$ denote the set of all datasets which satisfy Equation (8) with respect to $o$,

$$o^\perp := \{\, o' \in \mathcal{O} \mid \mathrm{pred}(o) \cap \mathrm{pred}(o') = \emptyset \,\} \qquad (16)$$

Thus $o^\perp$ contains all datasets whose prediction completely differs from the prediction of $o$.

Definition A.1.

We denote by $\min(o)$ those datasets in $o^\perp$ whose prediction is least different from the prediction of $o$.

In many cases $\min(o)$ will only contain one dataset, but here we treat the general case where this is not so. We emphasize that the minimal information assumption guarantees that $\min(o)$ exists. We can now specify a much weaker version of Definition 3.8.

Definition A.2.

Inference and prediction data are weakly independent if for any $o \in \mathcal{O}$ and $o' \in \min(o)$, there is a variation

$$\nu: P \to P \qquad (17)$$

such that $o_i \in \mathrm{obs}(p)$ and $o_i' \in \mathrm{obs}(\nu(p))$, but $o_r \in \mathrm{obs}(p)$ and $o_r \in \mathrm{obs}(\nu(p))$ for some $p \in P$.

The difference between Definitions A.2 and 3.8 is that for a given $o \in \mathcal{O}$, the latter requires the transformation $\nu$ to exist for any $o' \in \mathcal{O}$, whereas the former only requires it to exist for minimally different datasets $o' \in \min(o)$. The corresponding proposition is the following.

Proposition A.3.

If inference and prediction data are weakly independent, universal substitutions exist.

Proof. To show that a universal substitution exists, we need to show that for every $o \in \mathcal{O}$, an $o_r$-substitution exists (Definition 3.1). Thus assume that an arbitrary $o \in \mathcal{O}$ is given and pick an $o' \in \min(o)$. As before, we denote the prediction content of $o$ and $o'$ by $o_i$ and $o_i'$, respectively, and the inference content of $o$ by $o_r$.

Since inference and prediction data are weakly independent, there exists a $p \in P$ as well as a $\nu: P \to P$ such that $o_i \in \mathrm{obs}(p)$, $o_i' \in \mathrm{obs}(\nu(p))$, $o_r \in \mathrm{obs}(p)$ and $o_r \in \mathrm{obs}(\nu(p))$. By Definition (7), the first two of these four conditions imply that $\mathrm{pred} \circ \mathrm{obs}(p) = \mathrm{pred}(o)$ and $\mathrm{pred} \circ \mathrm{obs}(\nu(p)) = \mathrm{pred}(o')$. Since $o'$ is in particular an element of $o^\perp$, Equation (8) applies and allows us to conclude that

$$\mathrm{pred} \circ \mathrm{obs}(p) \,\cap\, \mathrm{pred} \circ \mathrm{obs}(\nu(p)) = \emptyset .$$

Via Equation (3), the latter two of the four conditions imply that $p \in P_{o_r}$ and $\nu(p) \in P_{o_r}$. Thus we may restrict $\nu$ to $P_{o_r}$ to obtain a map

$$S: P_{o_r} \to P_{o_r}$$

which in light of the first part of this proof exhibits at least one $p \in P_{o_r}$ which satisfies (4). Thus we have shown that an $o_r$-substitution exists. Since $o$ was arbitrary, it follows that a universal substitution exists. □

The following theorem shows that Definition A.2 is sufficient to establish the claim of Theorem 3.10.

Theorem A.4.

If inference and prediction data are weakly independent, either every single inference operation is wrong or the theory under consideration is already falsified.

Proof. The theorem follows by combining Propositions A.3 and 3.7. □
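The construction of minimally differing datasets used in this appendix can be illustrated on a small finite example. The following is only a sketch under stated assumptions: the dataset labels, the predicted experiences, the hue-based distance, and the function names are all hypothetical and chosen for illustration; they are not taken from any theory discussed in this article.

```python
from itertools import product

# Hypothetical toy theory: each dataset label maps to the set of
# experiences the theory predicts for it (a correspondence).
PRED = {
    "o1": {"red"},
    "o2": {"red", "orange"},
    "o3": {"orange"},
    "o4": {"blue"},
}

# Hypothetical positions of experiences on a one-dimensional hue scale,
# used only to compare how different two predictions are.
HUE = {"red": 0, "orange": 1, "blue": 5}


def distance(a, b):
    """Smallest hue gap between any experience in a and any in b."""
    return min(abs(HUE[x] - HUE[y]) for x, y in product(a, b))


def non_overlapping(o):
    """Datasets whose prediction shares no experience with that of o."""
    return {q for q in PRED if not (PRED[o] & PRED[q])}


def minimally_differing(o):
    """Non-overlapping datasets whose prediction is least different from o's."""
    candidates = non_overlapping(o)
    if not candidates:
        return set()
    best = min(distance(PRED[o], PRED[q]) for q in candidates)
    return {q for q in candidates if distance(PRED[o], PRED[q]) == best}


print(non_overlapping("o1"))      # the datasets o3 and o4
print(minimally_differing("o1"))  # only o3, the closest non-overlapping one
```

In this toy setting, weak independence would only require a variation to exist between "o1" and "o3", whereas the stronger Definition 3.8 would also require one between "o1" and "o4".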

(B) Inverse Predictions

When defining falsification, we have considered predictions that take as input data about the physical configuration of a system and yield as output a state of consciousness. An alternative would be to consider the inverse procedure: a prediction which takes as input a reported state of consciousness and yields as output some constraint on the physical configuration of the system that is having the conscious experience. In this section, we discuss the second case in detail.

As before, we assume that some dataset $o$ has been measured in an experimental trial, which contains both the inference data $o_r$ (which includes report and behavioral indicators of consciousness used in the experiment under consideration) as well as some data $o_i$ that provides information about the physical configuration of the system under investigation. For simplicity, we will also call this prediction data here. Also as before, we take into account that the state of consciousness of the system has to be inferred from $o_r$, and again denote this inference procedure by $\mathrm{inf}$.

The theory under consideration provides a correspondence $\mathrm{pred}: \mathcal{O} \twoheadrightarrow E$ which describes the process of predicting states of consciousness mentioned above. If we ask which physical configurations are compatible with a given state $e$ of consciousness, this is simply the preimage $\mathrm{pred}^{-1}(e)$ of $e$ under $\mathrm{pred}$, defined as

$$\mathrm{pred}^{-1}(e) := \{\, o \in \mathcal{O} \mid e \in \mathrm{pred}(o) \,\} \qquad (18)$$

Accordingly, the class of all prediction data which is compatible with the inferred experience $\mathrm{inf}(o)$ is

$$\mathrm{pred}^{-1}(\mathrm{inf}(o)) \qquad (19)$$

depicted in Fig. 5, and a falsification occurs in case the observed $o$ has a prediction content $o_i$ which is not in this set. Referring to the previous definition of falsification as type-1 (Definition 2.1), we define this new form of falsification as type-2.

Fig. 5.

The case of an inverse prediction. Rather than comparing the inferred and predicted state of consciousness, one predicts the physical configuration of a system based on the system’s report and compares this with measurement results.

Definition B.1.

There is a type-2 falsification at $o \in \mathcal{O}$ if we have

$$o \notin \mathrm{pred}^{-1}(\mathrm{inf}(o)) \qquad (20)$$

In terms of the notation introduced in Summary section, Equation (20) could equivalently be written as $o_i \notin \mathrm{pred}^{-1}(\mathrm{inf}(o_r))$. The following lemma shows that there is a type-2 falsification if and only if there is a type-1 falsification. Hence all of our previous results apply as well to type-2 falsifications.

Lemma B.2.

There is a type-2 falsification at $o$ if and only if there is a type-1 falsification at $o$.

Proof. Equation (18) implies that $o \in \mathrm{pred}^{-1}(e)$ if and only if $e \in \mathrm{pred}(o)$. Applied to $e = \mathrm{inf}(o)$, this implies:

$$o \notin \mathrm{pred}^{-1}(\mathrm{inf}(o)) \quad \text{if and only if} \quad \mathrm{inf}(o) \notin \mathrm{pred}(o)$$

The former is the definition of a type-2 falsification. The latter is Equation (2) in the definition of a type-1 falsification. Hence the claim follows. □
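The equivalence stated in Lemma B.2 can be checked mechanically on a finite toy model. The sketch below is illustrative only: the datasets, the predicted experiences, the inferred experiences, and the function names are hypothetical and not drawn from any actual theory or experiment. It computes the preimage of the prediction correspondence and compares the two notions of falsification dataset by dataset.

```python
# Hypothetical toy model; all names and values are assumptions for illustration.

# Prediction correspondence: dataset -> set of predicted experiences.
PRED = {"o1": {"red"}, "o2": {"red", "green"}, "o3": {"none"}}

# Inference function: dataset -> experience inferred from report or behavior.
INF = {"o1": "red", "o2": "none", "o3": "green"}


def preimage(e):
    """All datasets whose prediction contains experience e."""
    return {o for o, predicted in PRED.items() if e in predicted}


def type1_falsified(o):
    """Inferred experience is not among the predicted experiences."""
    return INF[o] not in PRED[o]


def type2_falsified(o):
    """Dataset is not among those compatible with the inferred experience."""
    return o not in preimage(INF[o])


results = {o: (type1_falsified(o), type2_falsified(o)) for o in PRED}
for o, (t1, t2) in sorted(results.items()):
    print(o, "type-1:", t1, "type-2:", t2)
```

In this example, the two checks agree on every dataset, as the lemma requires: "o1" is not falsified in either sense, while "o2" and "o3" are falsified in both.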

Acknowledgments

We would like to thank David Chalmers, Ned Block, and the participants of the NYU philosophy of mind discussion group for valuable comments and discussion. Thanks also to Ryota Kanai, Jake Hanson, Stephan Sellmaier, Timo Freiesleben, Mark Wulff Carstensen, and Sofiia Rappe for feedback on early versions of the manuscript.

Conflict of interest statement. None declared.

References

- Aaronson S. Why I am not an integrated information theorist (or, the unconscious expander). In: Shtetl-Optimized: The Blog of Scott Aaronson. eds. Radin D., Richard D., & Karim T., 2014.

- Alais D, Cass J, O’Shea RP, et al. Visual sensitivity underlying changes in visual consciousness. Curr Biol 2010;20:1362–7.

- Albantakis L, Tononi G. Causal composition: Structural differences among dynamically equivalent systems. Entropy 2019;21:989.

- Baars BJ. In the theatre of consciousness. Global workspace theory, a rigorous scientific theory of consciousness. J Conscious Stud 1997;4:292–309.

- Baars BJ. Global workspace theory of consciousness: toward a cognitive neuroscience of human experience. Progr Brain Res 2005;150:45–53.

- Ball P. Neuroscience readies for a showdown over consciousness ideas. Quanta Mag 2019;6.

- Barrett AB, Mediano PA. The phi measure of integrated information is not well-defined for general physical systems. J Conscious Stud 2019;26:11–20.

- Bayne TJ. On the axiomatic foundations of the integrated information theory of consciousness. Neurosci Conscious 2018;4:1–8.

- Blake R, Brascamp J, Heeger DJ. Can binocular rivalry reveal neural correlates of consciousness? Philos Trans R Soc B 2014;369:20130211.

- Block N. How can we find the neural correlate of consciousness? Trends Neurosci 1996;19:456–9.